PERSPECTIVE article

Front. Neurosci., 01 December 2015

Sec. Neuromorphic Engineering

Volume 9 - 2015 | https://doi.org/10.3389/fnins.2015.00449

This article is part of the Research Topic Benchmarks and Challenges for Neuromorphic Engineering.

Neuromorphic hardware is designed by drawing inspiration from biology to overcome limitations of current computer architectures while forging the development of a new class of autonomous systems that can exhibit adaptive behaviors. Several recent designs are capable of emulating large scale networks but avoid complexity in network dynamics by minimizing the number of dynamic variables that are supported and tunable in hardware. We believe that this is due to the lack of a clear understanding of how to design self-tuning complex systems. It has been widely demonstrated that criticality appears to be the default state of the brain and manifests in the form of spontaneous scale-invariant cascades of neural activity. Experiment, theory and recent models have shown that neuronal networks at criticality demonstrate optimal information transfer, learning and information processing capabilities that affect behavior. In this perspective article, we argue that understanding how large scale neuromorphic electronics can be designed to enable emergent adaptive behavior will require an understanding of how networks emulated by such hardware can self-tune local parameters to maintain criticality as a set-point. We believe that such capability will enable the design of truly scalable intelligent systems using neuromorphic hardware that embrace complexity in network dynamics rather than avoiding it.

What role does the brain serve for producing adaptive behavior? This intriguing question is a long-standing one. So far, most attempts to understand brain function for adaptive behavior have primarily described it as the computation of behavioral responses from internal representations of stimuli and stored representations of past experience, a description we will take issue with below.

As computational systems grew in functional complexity, the analogy between computers and the brain began to be widely adopted. The basic premise for this analogy was that both computers and the brain receive information and act upon it in complex ways to produce an output. This analogy between computers and the brain (also known as the computer metaphor) has provided a candidate mechanism for cognition, equating it with a digital computer program that can manipulate internal representations according to a set of rules.

The extensive use of the computer metaphor has led to notions of symbolic computation and serial processing being applied to construct human-like adaptive behaviors. The task of brain science has become focused on answering the question of how the brain computes (Piccinini and Shagrir, 2014). The key issues, such as serial vs. parallel processing, analog vs. digital coding, and symbolic vs. non-symbolic representations, are being addressed using the computer metaphor, wherein perception, action, and cognition are taken to be input, output, and computation. Despite several decades of research to develop machines that exhibit adaptive behaviors, traditional algorithms derived by adopting the computer metaphor have yielded very limited utility in complex, real-world environments.

This impasse has forced us to rethink the notion of how adaptive behavior might be realized in machines. One inspiration comes from a key observation made in the 1950s by Ashby (1947), when he designed a machine called the homeostat (Ashby, 1960). According to him, animals are driven by survival as the objective function, and animals that survive are very successful in keeping their essential variables within physiological limits. These variables and their limits are fixed through evolution. For example, in humans, if the systolic blood pressure (an example of an essential variable) drops from 120 mm of mercury to 30, the change will result in death. The term homeostasis dates from 1926, when Cannon (1929) used it to describe the specialized mechanisms unique to living systems that preserve internal equilibrium in the face of an inconstant world (Moore-Ede, 1986). Ashby's thesis, then, was that systems that exhibit adaptive behaviors are striving to keep their essential variables within limits. Our take-away is that machines that exhibit adaptive behaviors are like a control system that strives to keep a variable within or around a set point.
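The control-system reading of Ashby's thesis can be illustrated with a minimal sketch of proportional negative feedback. The function name `regulate`, the gain value, and the size of the disturbance are assumptions chosen for illustration; the set point of 120 simply reuses the blood pressure figure above and is not a physiological model.

```python
def regulate(set_point, disturbances, gain=0.5):
    """Hold a single essential variable near set_point under disturbances.

    Hypothetical illustration: at each step the environment perturbs the
    variable, then feedback removes a fixed fraction (gain) of the error.
    """
    value = set_point
    trace = []
    for disturbance in disturbances:
        value += disturbance                    # the environment perturbs the variable
        value += gain * (set_point - value)     # feedback pulls it back toward the set point
        trace.append(value)
    return trace


# One large downward disturbance followed by a quiet period.
trace = regulate(set_point=120.0, disturbances=[-40.0] + [0.0] * 20)
print(trace[0], round(trace[-1], 3))
```

With a gain between 0 and 1 the error shrinks by a constant factor at every step, so the variable returns to the set point geometrically after each disturbance.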

A related observation is that the complexity of adaptive behaviors increases with the number of physiological parameters that are to be maintained within their limits. We believe that this includes collective essential variables that are learned during the animal's interaction with the environment. However, approaching this from a control system point of view, it can be interpreted as the system striving to maintain stability across a complex set of interacting or coupled control loops with several set points. It is known that maintaining stability of such complex networks with multiple set points is a non-trivial task (Buldyrev et al., 2010). A second non-triviality is due to the inherent delays associated with homeostatic mechanisms. These delays require that homeostatic processes are also predictive. Indeed, analysis of periodic variations in essential variables, such as plasma cortisol levels in humans, shows that the so-called responses of homeostatic mechanisms are largely anticipatory (Moore-Ede, 1986).

The homeostat can be thought of as a simple kind of self-organization. Once its structure is set, external forces interact with various internal forces that automatically balance and create feedback that affects those same external forces. A special kind of dynamic balance, one that persists through and because of constant change, is known as criticality (for the notion of criticality intended, see Bak et al., 1987; Beggs and Plenz, 2003; Legenstein and Maass, 2007). A critical system is poised to react quickly to deviations or perturbations, because of a different balance at the system level—between decay and explosion. Homeostatic balance is a balance of forces, while critical balance is a balance of the dynamics themselves. A system that contains both kinds of balance is typical of so-called self-organized criticality (SOC). A slight reorganization of SOC puts criticality under the control of a homeostatic balance. Such a system would be driven toward criticality.
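The balance between decay and explosion can be made concrete with a branching process, a standard toy model of cascading activity. The sketch below is an assumption-laden illustration and not the model used in the cited work: each active unit tries to activate two downstream units, each with probability `sigma / 2`, so that `sigma` (which must not exceed 2 here) is the expected number of descendants per active unit. The names `avalanche_size` and `summarize` and the cap of 1000 activations are hypothetical.

```python
import random


def avalanche_size(sigma, rng, max_size=1000):
    """Total activations in one cascade started by a single active unit."""
    active, size = 1, 1
    while active > 0 and size < max_size:
        # Each active unit makes two independent attempts with probability sigma / 2.
        active = sum(rng.random() < sigma / 2 for _ in range(2 * active))
        size += active
    return size


def summarize(sigma, trials=500, max_size=1000, seed=0):
    """Return (mean cascade size, fraction of cascades that reach the cap)."""
    rng = random.Random(seed)
    sizes = [avalanche_size(sigma, rng, max_size) for _ in range(trials)]
    mean_size = sum(sizes) / trials
    runaway = sum(s >= max_size for s in sizes) / trials
    return mean_size, runaway


for sigma in (0.5, 1.0, 1.5):
    mean_size, runaway = summarize(sigma)
    print(f"sigma={sigma}: mean size={mean_size:.1f}, runaway fraction={runaway:.2f}")
```

For `sigma` below 1 cascades die out quickly, for `sigma` above 1 most of them grow until they hit the cap, and at `sigma = 1` cascades of widely varying sizes occur without a typical scale.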

The mammalian cortex is a complex physical dynamical system. Several lines of research (Linkenkaer-Hansen et al., 2001; Beggs and Plenz, 2003; Petermann et al., 2009; Shew et al., 2011; Tagliazucchi et al., 2012; Yang et al., 2012) demonstrate that spontaneous cortical activity has structure, manifested as cascades of activity termed neuronal avalanches. Theory predicts that networks at criticality, or edge-of-chaos, maximize information capacity and transmission (Shew et al., 2011), the number of metastable states (Haldeman and Beggs, 2005), and dynamic range (Kinouchi and Copelli, 2006). The ubiquity of scale invariance in nature combined with its advantages for complex dynamics suggests that each of the foregoing properties would be beneficial for both models and artificial systems (Avizienis et al., 2012; Srinivasa and Cruz-Albrecht, 2012).

Such rich and ceaseless dynamics have been reported across spatial and temporal scales and contain both spatial and temporal structure (Beggs and Plenz, 2003; Kitzbichler et al., 2009; Shew et al., 2011; Tagliazucchi et al., 2012; Yang et al., 2012; Haimovici et al., 2013). Brain activity exists in a highly flexible state, simultaneously maximizing both integration and segregation of information, with optimized information transfer and processing capabilities (Friston et al., 1997; Bressler and Kelso, 2001; Shew and Plenz, 2013; Tognoli and Kelso, 2014). One remarkable feature of spontaneous neural dynamics is that they are present in all but the most extreme brain states (e.g., deep anesthesia, Scott et al., 2014) and have been observed across many different brain configurations (e.g., with different ages, structural differences across individuals and across species).

A range of theoretical models has aimed to simulate brain activity at a macroscopic scale and explain how dynamics occur (Deco et al., 2009; Cabral et al., 2011; Hellyer et al., 2014; Messé et al., 2014). They developed several measures to describe the rich dynamics observed, e.g., metastability (Friston et al., 1997; Bressler and Kelso, 2001; Shanahan, 2010) and the notion of criticality (Shew and Plenz, 2013). These models demonstrate the primary importance of the underlying structural network organization but other factors are also important such as neural noise, time delays, the strength of connectivity as well as the balance of local excitation and inhibition (Deco et al., 2014). While simulating these macroscopic networks, the desired characteristics of the network (i.e., rich and stable dynamics observed with fMRI data) only occur within a narrow window of parameters. Outside this window, models will often fall into a pathological state: either (i) no dynamics (global activity at ceiling or floor); or (ii) random activity with little or no temporal or spatial structure. This is in contrast to actual brain activity, which maintains non-trivial dynamics in the face of a changing external environment as well as structural changes (e.g., the configuration of the brain's structural connectivity or neurotransmitter levels during aging) and across development and different species. A theoretical account of how spontaneous dynamics emerge in the brain must therefore include mechanisms that regulate those dynamics.

One potential mechanism for maintaining neural dynamics is inhibitory plasticity. Recent computational work suggests that, at the neural level, inhibitory plasticity can serve as a homeostatic mechanism by regulating the balance between excitatory and inhibitory (E/I) activity (Vogels et al., 2011). Moreover, this form of inhibitory plasticity has been shown to induce critical dynamics in a mean-field model of coupled excitatory and inhibitory neurons (Magnasco et al., 2009; Cowan et al., 2013), and to support robust self-organization (Srinivasa and Jiang, 2013), pattern discrimination (Srinivasa and Cho, 2014), and cell assembly formation (Litwin-Kumar and Doiron, 2014). These theoretical results suggest that inhibitory homeostatic plasticity may provide a mechanism to stabilize brain dynamics at the macroscopic level, and may be relevant for understanding macroscopic patterns of brain activity.
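How inhibitory plasticity can act as a homeostatic mechanism may be easier to see in a rate-based toy model. The sketch below is a deliberate simplification in the spirit of Vogels et al. (2011), not their spike-based rule: a single threshold-linear neuron receives a fixed excitatory drive and one plastic inhibitory input, and the inhibitory weight grows when the neuron fires above a target rate and shrinks when it fires below. The function name, learning rate, and all numerical values are assumptions.

```python
def balance_inhibition(exc_drive, inh_rate, target_rate, eta=0.001, steps=2000):
    """Adapt one inhibitory weight until the output rate settles at target_rate.

    Hypothetical rate-based simplification: the weight change is proportional to
    presynaptic inhibitory rate times (postsynaptic rate - target_rate).
    """
    w = 0.0
    for _ in range(steps):
        post_rate = max(0.0, exc_drive - w * inh_rate)    # threshold-linear neuron
        w += eta * inh_rate * (post_rate - target_rate)   # strengthen inhibition if too active
        w = max(0.0, w)                                   # inhibitory weight stays non-negative
    return w, max(0.0, exc_drive - w * inh_rate)


# The same rule finds a different weight for each level of excitatory drive,
# but the resulting firing rate is the same in both cases.
for drive in (20.0, 40.0):
    w, rate = balance_inhibition(exc_drive=drive, inh_rate=10.0, target_rate=5.0)
    print(f"drive={drive}: weight={w:.3f}, rate={rate:.3f}")
```

The point of the example is that the target rate, not the weight, is the quantity being regulated: the weight is whatever it has to be to cancel the excess excitation.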

Given the benefits, it would be useful for networks to be able to maintain a state of criticality. What follows is a description of an artificial neural system that appears to do so through a process of self-organization. We modeled a recurrent EI neuronal network consisting of 10,000 leaky integrate-and-fire (LIF) neurons (Vogels et al., 2005) composed of 8,000 excitatory (E) neurons and 2,000 inhibitory (I) neurons with a fixed connection probability of 1%. Several physiological phenomena were also modeled: two types of synaptic current dynamics, excitatory (AMPA) and inhibitory (GABA); short-term plasticity (STP) (Markram and Tsodyks, 1996; Tsodyks et al., 1998), affecting instantaneous synaptic efficacy; and spike-timing-dependent plasticity (STDP) (Markram et al., 1997), affecting long-term synaptic strength such that synapses are strengthened when presynaptic spikes precede postsynaptic spikes, and weakened otherwise. There is abundant neurophysiological evidence for the role of STP and STDP in shaping network structure and dynamics (Markram et al., 1997; Bi and Poo, 1998; Young et al., 2007). For details about this network design, see Stepp et al. (2015).
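The structural part of this description can be sketched in a few lines. The code below is an illustration only, not the implementation of Stepp et al. (2015): it assumes the 1% figure is an independent connection probability for every ordered pair of distinct neurons, it builds a scaled-down network of 1,000 neurons so that it runs quickly in pure Python, and the STDP amplitudes and time constant are placeholder values.

```python
import math
import random


def expected_synapses(n_neurons, p_connect):
    """Expected synapse count, assuming no self-connections (an assumption)."""
    return round(n_neurons * (n_neurons - 1) * p_connect)


def build_network(n_neurons=1000, frac_exc=0.8, p_connect=0.01, seed=0):
    """Return (is_exc flags, list of (pre, post) synapses) for a random EI network."""
    rng = random.Random(seed)
    n_exc = int(round(n_neurons * frac_exc))
    is_exc = [i < n_exc for i in range(n_neurons)]
    synapses = [(pre, post)
                for pre in range(n_neurons)
                for post in range(n_neurons)
                if pre != post and rng.random() < p_connect]
    return is_exc, synapses


def stdp_dw(dt_ms, a_plus=0.01, a_minus=0.012, tau_ms=20.0):
    """Pair-based STDP weight change for dt_ms = t_post - t_pre.

    Strengthen when the presynaptic spike precedes the postsynaptic spike,
    weaken otherwise. Amplitudes and time constant are placeholders.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_ms)
    return -a_minus * math.exp(dt_ms / tau_ms)


print(expected_synapses(10_000, 0.01))      # full-size network: 999900 expected synapses
is_exc, synapses = build_network()
print(sum(is_exc), len(is_exc) - sum(is_exc), len(synapses))
print(stdp_dw(10.0) > 0, stdp_dw(-10.0) < 0)
```

Under these assumptions the full-size network has on the order of a million synapses, which gives a sense of why tuning individual weights by hand is impractical.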

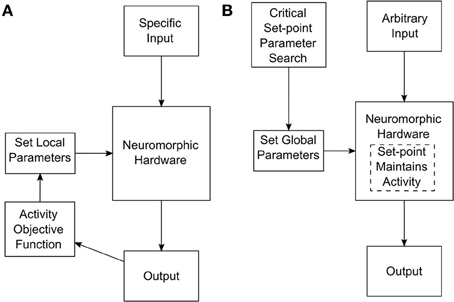

To achieve a system that self-tunes toward criticality, i.e., treats criticality as a set-point, we performed a parameter search at the level of global STP, STDP, and synaptic kinetics parameters. This search resulted in several network configurations that maintained themselves in a state of criticality, even after being perturbed by external inputs (for details about the parameter search and associated measurements, see Stepp et al., 2015). A parameter search of this sort should be distinguished from a search at the level of individual synaptic characteristics. For instance, tuning a network to maintain useful activity while receiving certain input might involve setting individual synaptic weights. These weights would generally have to be set differently for different classes of input. The search for self-tuning criticality happens at a more global network level, and the resulting network configuration is appropriate for many classes of input. The two different approaches are depicted in Figure 1.

Figure 1. (A) Tuning process required if synaptic weights or other low-level parameters need to be tuned for specific network inputs. (B) Tuning process for a self-tuning critical network. In this case, the network is tuned at a high level to select for critical dynamics that are adaptively maintained internally.

For a system that must support adaptive behavior, criticality is directly beneficial. Critical systems, by virtue of their balancing act, have quick access to a large number of metastable states (Haldeman and Beggs, 2005). Being able to quickly diverge from one dynamical trajectory to another is clearly appropriate for a system that must adapt to a changing environment. Accordingly, the ability of non-autonomous neural circuits (i.e., ones that receive external inputs) to discriminate patterns tends to be increased for systems near edge-of-chaos states (Legenstein and Maass, 2007). In a recent work by our group (Srinivasa and Cho, 2014), we described a spiking neural model that can learn to discriminate patterns in an unsupervised manner. This can be construed as an adaptive behavior. For this network to function properly, it must have inhibitory plasticity, because this enables a dynamic balance of network currents between excitation and inhibition. It is during the transient imbalances in the currents that the network adapts its synaptic weights via STDP and thus learns patterns. The ability of the network to perform pattern discrimination (adaptive behavior) is impaired when inhibitory synaptic plasticity is shut off. A further recent study of this network (unpublished work) shows that it exhibits avalanche dynamics when its spiking activity is evaluated using the methods described in Stepp et al. (2015). Avalanche dynamics along with balanced inhibitory and excitatory currents strongly suggest that this network is critical. If implemented in neuromorphic hardware, the network would therefore be expected to function well in a configuration that seeks criticality, without needing an application-specific configuration. Adaptive behavior, to be truly adaptive, must also have some element of permanence. There is evidence that criticality could be a crucial ingredient for learning (de Arcangelis and Herrmann, 2010). As such, the same feature appears to support both quick and lasting change, apropos for a phenomenon marked by a balance between opposing tendencies.
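Avalanche dynamics are usually assessed from binned population activity. The sketch below follows the common convention from the neuronal avalanche literature (Beggs and Plenz, 2003), in which an avalanche is a run of consecutive non-empty time bins bounded by empty bins and its size is the total spike count in that run. It is an illustration under that assumption and does not reproduce the specific procedure of Stepp et al. (2015); the function name and the example counts are hypothetical.

```python
from collections import Counter


def avalanche_sizes(binned_counts):
    """Sizes of avalanches in a sequence of per-bin population spike counts."""
    sizes, current = [], 0
    for count in binned_counts:
        if count > 0:
            current += count        # the avalanche continues
        elif current > 0:
            sizes.append(current)   # an empty bin closes the avalanche
            current = 0
    if current > 0:
        sizes.append(current)       # recording ended mid-avalanche (size may be truncated)
    return sizes


counts = [0, 2, 3, 0, 0, 1, 0, 4, 1, 1]
sizes = avalanche_sizes(counts)
print(sizes)                        # [5, 1, 6]
print(Counter(sizes))               # size histogram, the input to a power-law check
```

In a critical network the histogram of sizes collected over a long recording is expected to follow an approximate power law; fitting and testing that distribution is a separate step not shown here.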

There has been recent interest in developing large scale neuromorphic electronics systems (DARPA SyNAPSE: http://en.wikipedia.org/wiki/SyNAPSE; Human Brain Project: http://www.humanbrainproject.eu/neuromorphic-computing-platform) to enable a new generation of computing platforms with energy efficiencies that compare to biological systems while being capable of learning from their interaction with their environment. For example, at HRL Laboratories LLC, the multidisciplinary project funded by DARPA SyNAPSE (Srinivasa and Cruz-Albrecht, 2012) attempts to develop a theoretical foundation inspired by neuroscience to engineer electronic systems that exhibit intelligence. The ultimate goal of the project is to build neuromorphic electronics at large scales (for example with 10^8 neurons and 10^11 synapses) to realize autonomous systems that exhibit high combinatorial complexity in adapting to a large variety of environments.

A major problem recognized by the field in developing such large scale systems is the need to monitor their dynamics for debugging purposes and to tune the parameters of such a massive chip. This is currently not feasible, owing to inherent limitations in accessing all the chip components as well as the lack of a clear understanding of how to design such self-tuning complex systems. In integrated circuit implementations of large neural networks it is useful, or even necessary, to avoid the requirement of monitoring all the internal signals of the network. The internal signals could include, for example, spiking signals produced by internal neurons (Cruz-Albrecht et al., 2013), and digital or analog weights of the synapses located between neurons (Cruz-Albrecht et al., 2012, 2013). Monitoring the internal signals of the chip requires circuitry connecting internal neurons and synapses to the terminals, or pads, of the chip. The monitoring circuits are detrimental to the density of the network, reducing the number of neurons and synapses that can be implemented for a given area of the chip. They are also detrimental to the efficiency of the network, reducing the number of neural operations per unit of time that can be performed for a given amount of electrical power consumed by the chip. As CMOS technology scales to smaller features, the number of neurons and synapses that can be implemented in a chip grows faster than the number of chip terminals. Similarly, the internal bandwidth (Zorian, 1999) of the chips is expected to increase faster than the interface bandwidth (Scholze et al., 2011) with scaling. Therefore, as technology scales, it is expected that a smaller portion of all the internal signals of large chips can be monitored simultaneously in real time. If the neural chip is more analog in nature (Cruz-Albrecht et al., 2012), the problems described above are further exacerbated.

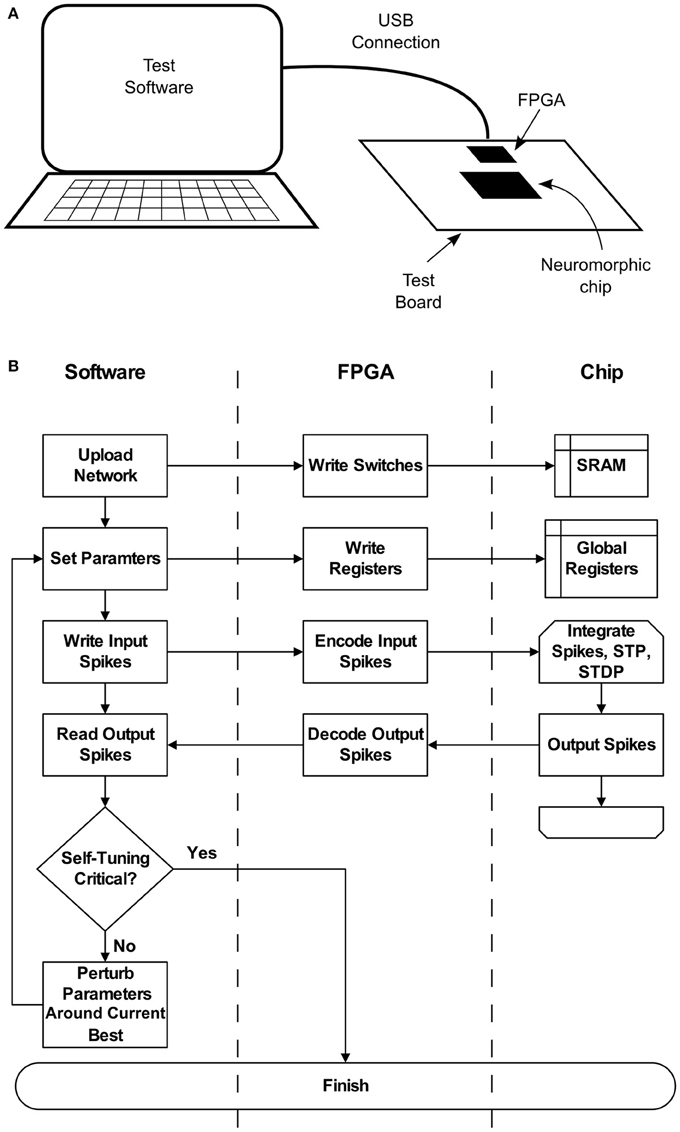

These deficiencies may be addressed if the chip can be set up to operate with criticality as a set-point. To achieve this, a high-level parameter search similar to the one described in Stepp et al. (2015) can be run on the hardware itself. Figure 2 depicts a typical setup, where a neuromorphic chip is installed on a test board, along with a supporting FPGA. A general purpose computer communicates with the FPGA via a USB connection, which enables software to configure the chip as well as send and receive spikes. Once a network is uploaded, parameters can be quickly set and re-set by modifying on-chip registers. A typical search requires fewer than 100 iterations of 300 s each, amounting to a worst-case runtime of approximately 8 h. This search would result in a configuration that could be set once, without requiring access to low-level parameters such as synaptic weights. Self-tuning criticality, again as shown in Stepp et al. (2015), would then ensure that the network maintains a useful level of activity without input-specific tuning. If parts of the hardware break or begin to function differently, we expect some degree of fault tolerance. Even without self-tuning criticality, neural networks of this sort are already relatively fault tolerant (Srinivasa and Cho, 2012). The self-tuning aspect described here extends this intrinsic capability. At some point, however, the network dynamics will become too different and the search will have to be repeated. The nature of this breaking point is not well understood, and is a subject for further study.
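The runtime estimate and the overall shape of such a search can be sketched as follows. Everything beyond the two numbers quoted above (100 iterations, 300 s each) is an assumption made for illustration: the text does not specify the search strategy, so plain random search is swapped in, the parameter names `stp_scale` and `stdp_rate` are hypothetical, and `toy_criticality_error` is a stand-in for configuring the chip, running it for 300 s, and scoring how far its activity is from criticality.

```python
import random

ITERATIONS = 100               # upper bound quoted in the text
SECONDS_PER_ITERATION = 300    # duration of one hardware run quoted in the text


def worst_case_hours(iterations=ITERATIONS, seconds=SECONDS_PER_ITERATION):
    """Worst-case wall-clock time of the search, in hours."""
    return iterations * seconds / 3600


def toy_criticality_error(params):
    """Hypothetical stand-in for one hardware run; 0.0 would mean critical."""
    return abs(params["stp_scale"] * params["stdp_rate"] - 1.0)


def random_search(evaluate, iterations=ITERATIONS, tolerance=0.01, seed=0):
    """Sample global parameters at random and keep the best-scoring setting."""
    rng = random.Random(seed)
    best_params, best_error, used = None, float("inf"), 0
    for _ in range(iterations):
        used += 1
        params = {"stp_scale": rng.uniform(0.1, 2.0),
                  "stdp_rate": rng.uniform(0.1, 2.0)}
        error = evaluate(params)            # on hardware: write registers, run, measure
        if error < best_error:
            best_params, best_error = params, error
        if best_error <= tolerance:
            break                           # stop early once the setting is good enough
    return best_params, best_error, used


print(f"worst case: {worst_case_hours():.2f} h")
best_params, best_error, used = random_search(toy_criticality_error)
print(best_params, round(best_error, 4), used)
```

The arithmetic gives 100 × 300 s = 30,000 s, or about 8.3 h. Note that the search only ever touches a handful of global parameters, which is what allows it to run through the register interface without access to individual synaptic weights.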

Figure 2. (A) A typical configuration for interacting with the neuromorphic hardware, for instance when conducting a parameter search. Test software runs on a general purpose computer, which communicates with an FPGA over a USB connection. The connection allows software to upload networks to the chip, set hardware parameters, and perform spike-based input and output. (B) Flow chart detailing the parameter search process and its relation to each system component.

We believe that this will result in a novel paradigm for computing and enable the design of a wide range of systems, from small to large scales, that exhibit robust adaptive behavior in the face of uncertainties. This may also lead to large scale neuromorphic system designs that more accurately account for brain-like dynamics compared to current designs (Eliasmith et al., 2012). Finally, it could also enable the design of truly scalable intelligent systems, since constructing self-tuning critical networks, which in turn enable adaptive behaviors, removes the need for manual tuning of model or chip parameters.

NS conceived of the work and drafted the manuscript, NDS drafted the manuscript and performed analyses, JC drafted the manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This material is based upon work supported by the Defense Advanced Research Projects Agency (DARPA) under contract HR0011-10-C-0052. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the Defense Advanced Research Projects Agency. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Ashby, W. R. (1947). Principles of the self-organizing dynamic system. J. Gen. Psychol. 37, 125–128. doi: 10.1080/00221309.1947.9918144

Ashby, W. R. (1960). “The homeostat,” in Design for a Brain (New York, NY: J. Wiley & Sons), 100–121.

Avizienis, A. V., Sillin, H. O., Martin-Olmos, C., Shieh, H. H., Aono, M., Stieg, A. Z., et al. (2012). Neuromorphic atomic switch networks. PLoS ONE 7:e42772. doi: 10.1371/journal.pone.0042772

Bak, P., Tang, C., and Wiesenfeld, K. (1987). Self-organized criticality: an explanation of the 1/f noise. Phys. Rev. Lett. 59, 381–384. doi: 10.1103/PhysRevLett.59.381

Beggs, J. M., and Plenz, D. (2003). Neuronal avalanches in neocortical circuits. J. Neurosci. 23, 11167–11177.

Bi, G. Q., and Poo, M. M. (1998). Activity-induced synaptic modifications in hippocampal culture, dependence on spike timing, synaptic strength and cell type. J. Neurosci. 18, 464–472.

Bressler, S. L., and Kelso, J. S. (2001). Cortical coordination dynamics and cognition. Trends Cogn. Sci. 5, 26–36. doi: 10.1016/S1364-6613(00)01564-3

Buldyrev, S. V., Parshani, R., Paul, G., Stanley, H. E., and Havlin, S. (2010). Catastrophic cascade of failures in interdependent networks. Nature 464, 1025–1028. doi: 10.1038/nature08932

Cabral, J., Hugues, E., Sporns, O., and Deco, G. (2011). Role of local network oscillations in resting-state functional connectivity. Neuroimage 57, 130–139. doi: 10.1016/j.neuroimage.2011.04.010

Cowan, J., Neuman, J., Kiewiet, B., and van Drongelen, W. (2013). Self-organized criticality in a network of interacting neurons. J. Stat. Mech. 2013:P04030. doi: 10.1088/1742-5468/2013/04/P04030

Cruz-Albrecht, J. M., Derosier, T., and Srinivasa, N. (2013). A scalable neural chip with synaptic electronics using CMOS integrated memristors. Nanotechnology 24:384011. doi: 10.1088/0957-4484/24/38/384011

Cruz-Albrecht, J. M., Yung, M. W., and Srinivasa, N. (2012). Energy-efficient neuron, synapse and STDP integrated circuits. IEEE Trans. Biomed. Circ. Syst. 6, 246–256. doi: 10.1109/TBCAS.2011.2174152

de Arcangelis, L., and Herrmann, H. J. (2010). Learning as a phenomenon occurring in a critical state. Proc. Natl. Acad. Sci. U.S.A. 107, 3977–3981. doi: 10.1073/pnas.0912289107

Deco, G., Jirsa, V., McIntosh, A., Sporns, O., and Kötter, R. (2009). Key role of coupling, delay, and noise in resting brain fluctuations. Proc. Natl. Acad. Sci. U.S.A. 106, 10302–10307. doi: 10.1073/pnas.0901831106

Deco, G., McIntosh, A. R., Shen, K., Hutchison, R. M., Menon, R. S., Everling, S., et al. (2014). Identification of optimal structural connectivity using functional connectivity and neural modeling. J. Neurosci. 34, 7910–7916. doi: 10.1523/JNEUROSCI.4423-13.2014

Eliasmith, C., Stewart, T. C., Choo, X., Bekolay, T., DeWolf, T., Tang, Y., et al. (2012). A large-scale model of the functioning brain. Science 338, 1202–1205. doi: 10.1126/science.1225266

Friston, K., Buechel, C., Fink, G., Morris, J., Rolls, E., and Dolan, R. (1997). Psychophysiological and modulatory interactions in neuroimaging. Neuroimage 6, 218–229. doi: 10.1006/nimg.1997.0291

Haimovici, A., Tagliazucchi, E., Balenzuela, P., and Chialvo, D. R. (2013). Brain organization into resting state networks emerges at criticality on a model of the human connectome. Phys. Rev. Lett. 110:178101. doi: 10.1103/PhysRevLett.110.178101

Haldeman, C., and Beggs, J. M. (2005). Critical branching captures activity in living neural networks and maximizes the number of metastable states. Phys. Rev. Lett. 94:058101. doi: 10.1103/PhysRevLett.94.058101

Hellyer, P. J., Shanahan, M., Scott, G., Wise, R. J., Sharp, D. J., and Leech, R. (2014). The control of global brain dynamics: opposing actions of frontoparietal control and default mode networks on attention. J. Neurosci. 34, 451–461. doi: 10.1523/JNEUROSCI.1853-13.2014

Kinouchi, O., and Copelli, M. (2006). Optimal dynamical range of excitable networks at criticality. Nat. Phys. 2, 348–351. doi: 10.1038/nphys289

Kitzbichler, M. G., Smith, M. L., Christensen, S. R., and Bullmore, E. (2009). Broadband criticality of human brain network synchronization. PLoS Comput. Biol. 5:e1000314. doi: 10.1371/journal.pcbi.1000314

Legenstein, R., and Maass, W. (2007). Edge of chaos and prediction of computational performance for neural circuit models. Neural Netw. 20, 323–334. doi: 10.1016/j.neunet.2007.04.017

Linkenkaer-Hansen, K., Nikouline, V. V., Palva, J. M., and Ilmoniemi, R. J. (2001). Long-range temporal correlations and scaling behavior in human brain oscillations. J. Neurosci. 21, 1370–1377.

Litwin-Kumar, A., and Doiron, B. (2014). Formation and maintenance of neuronal assemblies through synaptic plasticity. Nat. Commun. 5:5319. doi: 10.1038/ncomms6319

Magnasco, M. O., Piro, O., and Cecchi, G. A. (2009). Self-tuned critical anti-hebbian networks. Phys. Rev. Lett. 102:258102. doi: 10.1103/PhysRevLett.102.258102

Markram, H., Lübke, J., Frotscher, M., and Sakmann, B. (1997). Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science 275, 213–215. doi: 10.1126/science.275.5297.213

Markram, H., and Tsodyks, M. (1996). Redistribution of synaptic efficacy between neocortical pyramidal neurons. Nature 382, 807–810. doi: 10.1038/382807a0

Messé, A., Rudrauf, D., Benali, H., and Marrelec, G. (2014). Relating structure and function in the human brain: relative contributions of anatomy, stationary dynamics, and non-stationarities. PLoS Comput. Biol. 10:e1003530. doi: 10.1371/journal.pcbi.1003530

Moore-Ede, M. C. (1986). Physiology of the circadian timing system: predictive versus reactive homeostasis. Am. J. Physiol. Regul. Integr. Comp. Physiol. 250, R737–R752.

Petermann, T., Thiagarajan, T. C., Lebedev, M. A., Nicolelis, M. A. L., Chialvo, D. R., and Plenz, D. (2009). Spontaneous cortical activity in awake monkeys composed of neuronal avalanches. Proc. Natl. Acad. Sci. U.S.A. 106, 15921–15926. doi: 10.1073/pnas.0904089106

Piccinini, G., and Shagrir, O. (2014). Foundations of computational neuroscience. Curr. Opin. Neurobiol. 25, 25–30. doi: 10.1016/j.conb.2013.10.005

Scholze, S., Schiefer, S., Partzsch, J., Hartmann, S., Mayr, C. G., Höppner, S., et al. (2011). VLSI implementation of a 2.8 Gevent/s packet-based AER interface with routing and event sorting functionality. Front. Neurosci. 5:117. doi: 10.3389/fnins.2011.00117

Scott, G., Fagerholm, E. D., Mutoh, H., Leech, R., Sharp, D. J., Shew, W. L., et al. (2014). Voltage imaging of waking mouse cortex reveals emergence of critical neuronal dynamics. J. Neurosci. 34, 16611–16620. doi: 10.1523/JNEUROSCI.3474-14.2014

Shanahan, M. (2010). Metastable chimera states in community-structured oscillator networks. Chaos Interdiscipl. J. Nonlinear Sci. 20:013108. doi: 10.1063/1.3305451

Shew, W. L., and Plenz, D. (2013). The functional benefits of criticality in the cortex. Neuroscientist 19, 88–100. doi: 10.1177/1073858412445487

Shew, W. L., Yang, H., Yu, S., Roy, R., and Plenz, D. (2011). Information capacity and transmission are maximized in balanced cortical networks with neuronal avalanches. J. Neurosci. 31, 55–63. doi: 10.1523/JNEUROSCI.4637-10.2011

Srinivasa, N., and Cho, Y. (2012). Self-organizing spiking neural model for learning fault-tolerant spatio-motor transformations. IEEE Trans. Neural Netw. Learn. Syst. 23, 1526–1538. doi: 10.1109/TNNLS.2012.2207738

Srinivasa, N., and Cho, Y. (2014). Unsupervised discrimination of patterns in spiking neural networks with excitatory and inhibitory synaptic plasticity. Front. Comput. Neurosci. 8:159. doi: 10.3389/fncom.2014.00159

Srinivasa, N., and Cruz-Albrecht, J. (2012). Neuromorphic adaptive plastic scalable electronics: analog learning systems. IEEE Pulse 3, 51–56. doi: 10.1109/MPUL.2011.2175639

Srinivasa, N., and Jiang, Q. (2013). Stable learning of functional maps in self-organizing spiking neural networks with continuous synaptic plasticity. Front. Comput. Neurosci. 7:10. doi: 10.3389/fncom.2013.00010

Stepp, N., Plenz, D., and Srinivasa, N. (2015). Synaptic plasticity enables adaptive self-tuning critical networks. PLoS Comput. Biol. 11:e1004043. doi: 10.1371/journal.pcbi.1004043

Tagliazucchi, E., Balenzuela, P., Fraiman, D., and Chialvo, D. R. (2012). Criticality in large-scale brain fMRI dynamics unveiled by a novel point process analysis. Front. Physiol. 3:15. doi: 10.3389/fphys.2012.00015

Tognoli, E., and Kelso, J. S. (2014). The metastable brain. Neuron 81, 35–48. doi: 10.1016/j.neuron.2013.12.022

Tsodyks, M., Pawelzik, K., and Markram, H. (1998). Neural networks with dynamic synapses. Neural Comput. 10, 821–835. doi: 10.1162/089976698300017502

Vogels, T. P., Rajan, K., and Abbott, L. F. (2005). Neural network dynamics. Annu. Rev. Neurosci. 28, 357–376. doi: 10.1146/annurev.neuro.28.061604.135637

Vogels, T. P., Sprekeler, H., Zenke, F., Clopath, C., and Gerstner, W. (2011). Inhibitory plasticity balances excitation and inhibition in sensory pathways and memory networks. Science 334, 1569–1573. doi: 10.1126/science.1211095

Yang, H., Shew, W. L., Roy, R., and Plenz, D. (2012). Maximal variability of phase synchrony in cortical networks with neuronal avalanches. J. Neurosci. 32, 1061–1072. doi: 10.1523/JNEUROSCI.2771-11.2012

Young, J. M., Waleszczyk, W. J., Wang, C., Calford, M. B., Dreher, B., and Obermayer, K. (2007). Cortical reorganization consistent with spike timing–but not correlation-dependent plasticity. Nat. Neurosci. 10, 887–895. doi: 10.1038/nn1913

Keywords: neuromorphic electronics, adaptive behavior, criticality, spiking, self-organization, synaptic plasticity, homeostasis, current balance

Citation: Srinivasa N, Stepp ND and Cruz-Albrecht J (2015) Criticality as a Set-Point for Adaptive Behavior in Neuromorphic Hardware. Front. Neurosci. 9:449. doi: 10.3389/fnins.2015.00449

Received: 11 August 2015; Accepted: 13 November 2015;

Published: 01 December 2015.

Edited by:

Michael Pfeiffer, University of Zurich and ETH Zurich, Switzerland

Reviewed by:

Terrence C. Stewart, Carleton University, Canada

Copyright © 2015 Srinivasa, Stepp and Cruz-Albrecht. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Narayan Srinivasa

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
