- ¹ Music in the Brain, Department of Clinical Medicine, Center of Functionally Integrative Neuroscience, Aarhus University, Aarhus, Denmark

- ² Interacting Minds Centre, Aarhus University, Aarhus, Denmark

- ³ Department of Psychology, Goldsmiths, University of London, London, UK

- ⁴ The Royal Academy of Music, Aarhus, Denmark

Music is a potent source for eliciting emotions, but not everybody experiences emotions in the same way. Individuals with autism spectrum disorder (ASD) show difficulties with social and emotional cognition. Impairments in emotion recognition are widely studied in ASD and have been associated with atypical brain activation in response to emotional expressions in faces and speech. Whether these impairments and atypical brain responses generalize to other domains, such as emotional processing of music, is less clear. Using functional magnetic resonance imaging, we investigated neural correlates of emotion recognition in music in high-functioning adults with ASD and neurotypical adults. Both groups engaged similar neural networks during processing of emotional music, and individuals with ASD rated emotional music comparably to the neurotypical group. However, in the ASD group, increased activity in response to happy compared to sad music was observed in dorsolateral prefrontal regions and in the rolandic operculum/insula; we propose that this reflects increased cognitive processing and physiological arousal in response to emotional musical stimuli in this group.

Introduction

Music is highly emotional; it communicates emotions and synchronizes emotions between people (Huron, 2006; Overy, 2012). The social-emotional nature of music is often proposed as an explanation for why music has sustained such prominence in human culture (Huron, 2001; Fitch, 2005). Indeed, people spend a large amount of their time listening to music. A recent Danish survey found that 79% of people aged 12 to 76 years listened to music for more than 1 h daily (Moesgaard, 2010), and when people are asked why they listen to music, they consistently say that music induces and regulates emotions (Dubé and Le Bel, 2003; Rentfrow and Gosling, 2003). Processing of emotional music has been found to engage limbic and paralimbic brain areas, including regions related to reward processing (for reviews see Koelsch, 2010; Peretz, 2010; Zald and Zatorre, 2011). However, not everybody experiences and processes emotions in the same way. For instance, people with autism spectrum disorder (ASD) are often found to be impaired in recognizing, understanding, and expressing emotions (Hobson, 2005).

ASD is a complex neurodevelopmental disorder characterized by difficulties in social and interpersonal communication, combined with stereotyped and repetitive behaviors and interests (APA, 2013). Despite somewhat conflicting findings, studies indicate that people with ASD have difficulties identifying emotions from facial expressions (Boucher and Lewis, 1992; Celani et al., 1999; Baron-Cohen et al., 2000; Philip et al., 2010; see however Jemel et al., 2006), affective speech (Lindner and Rosén, 2006; Golan et al., 2007; Mazefsky and Oswald, 2007; Philip et al., 2010; see however Jones et al., 2011), non-verbal vocal expressions (Hobson, 1986; Heaton et al., 2012), and body movements (Hubert et al., 2007; Hadjikhani et al., 2009; Philip et al., 2010). These difficulties in emotion recognition are associated with altered brain activation in people with ASD compared to neurotypical (NT) controls, including reduced activation of the fusiform gyrus and amygdala when viewing emotional faces (Critchley et al., 2000; Schultz et al., 2000; Ashwin et al., 2007; Corbett et al., 2009), and abnormal activation of the superior temporal gyrus (STG)/sulcus and inferior frontal gyrus when listening to speech (Gervais et al., 2004; Wang et al., 2006; Eigsti et al., 2012; Eyler et al., 2012). Accordingly, it has been suggested that people with ASD do not automatically direct their attention to emotional cues in their surroundings, but instead tend to perceive emotions more analytically (Jemel et al., 2006; Nuske et al., 2013).

It has previously been argued that general social-emotional difficulties could make people with ASD less emotionally affected by music and less able to recognize emotions expressed in music (Huron, 2001; Levitin, 2006). Nonetheless, anecdotal reports dating back to Kanner's (1943) first descriptions of autism seem to suggest quite the opposite, namely that people with autism enjoy listening to music, become emotionally affected by music, and are often musically talented. Behavioral studies have shown that people with ASD process musical contour and intervals as well as NT individuals (Heaton, 2005), and that they display superior pitch processing (Bonnel et al., 1999; Heaton et al., 1999; Heaton, 2003) and pitch memory (Heaton, 2003; Stanutz et al., 2014). Interestingly, behavioral studies have also shown that children and adults with ASD identify a wide range of emotions in music as accurately as NT individuals (Heaton et al., 2008; Allen et al., 2009a,b; Caria et al., 2011; Quintin et al., 2011). A qualitative study by Allen et al. (2009b) found that adults with ASD listened to music as often as people without ASD, and when asked why they listened to music, they reported being emotionally affected by it and feeling a sense of belonging to a particular musical culture. Moreover, Allen et al. (2013) recently showed that physiological responses to music are intact in people with ASD, despite lower verbal responsiveness to music in this group.

Only one brain imaging study of the processing of musical emotions in ASD has been published to date. Caria et al. (2011) scanned 8 adults with Asperger's syndrome (AS) and a group of NT controls while they listened to excerpts of classical and self-chosen musical pieces. Emotion ratings of valence and arousal showed no difference between the two groups. Their main neurobiological finding was significant activation of a variety of cortical and subcortical brain regions, including bilateral STG, cerebellum, inferior frontal cortex, insula, putamen, and caudate nucleus, in response to emotional music, common to both the AS and NT groups. Yet, between-group comparisons revealed less brain activation in individuals with AS relative to NT individuals in response to both happy and sad music. For happy music, AS individuals showed decreased activation relative to NT individuals in the right precentral gyrus, supplementary motor area, and cerebellum. When self-chosen favorite happy music was compared with standard happy music, the AS group showed decreased responses in supplementary motor area, insula, and inferior frontal gyrus compared to the NT group. For sad music, individuals with AS showed decreased activation in precentral gyrus, insula, and inferior frontal gyrus. Taken together, Caria et al. (2011) conclude that the most prominent difference between the two groups is the decreased activation of the left insula in individuals with AS relative to NT individuals during processing of emotional music. This difference might be explained by higher levels of alexithymia (Fitzgerald and Bellgrove, 2006; Bird et al., 2010), an inability to identify and describe feelings, in the AS group compared to the NT group. This study is important in that it is the first to directly investigate the neural processing of emotional music in individuals with ASD.
However, the study is limited by a fairly small sample size, and it is not apparent whether the AS and NT groups were matched on IQ and/or verbal IQ. Previous studies have shown that differences in emotion processing might depend on verbal IQ rather than on having ASD as such (Lindner and Rosén, 2006; Golan et al., 2007; Heaton et al., 2008; Anderson et al., 2010). In addition, the stimuli used by Caria et al. (2011) included a mix of familiar and unfamiliar music (5 familiar/self-chosen and 5 unfamiliar excerpts each of happy and sad music). More studies are needed to generalize these findings to larger groups of people with ASD. Empirical investigations of similarities and differences in the neurocognitive processing of music are relevant for understanding the nature of emotional impairments in individuals with ASD. In the literature on musical emotions, the distinction between emotion perception and emotion induction is central (Gabrielsson, 2002; Juslin and Laukka, 2004; Konečni, 2008). The present study focused on emotion perception from music in individuals with ASD, because it was designed as a parallel to ASD studies of emotion recognition in other domains (facial expressions, affective prosody, body movements, etc.). Thus, the aim of the present study was to investigate emotion recognition and neural processing of happy, sad, and neutral music in high-functioning adults with ASD compared to a group of NT individuals matched on age, gender, full-scale IQ, and verbal IQ.

Materials and Methods

Participants

A total of 43 participants took part in the study; 23 of these had a formal diagnosis of ASD. Participants with ASD were recruited through the national autism and Asperger's association, assisted living services for young people with ASD, and specialized educational facilities. The structural MRI scans of three participants with ASD showed abnormal ventricular enlargement (not an uncommon finding; see Gillberg and Coleman, 1996), and these participants were excluded before data analysis began. One ASD participant was unable to relax in the scanner and did not complete the testing. Consequently, a total of 19 high-functioning adults with ASD (2 females, 17 males) and 20 NT adults (2 females, 18 males) were included in the data analysis.

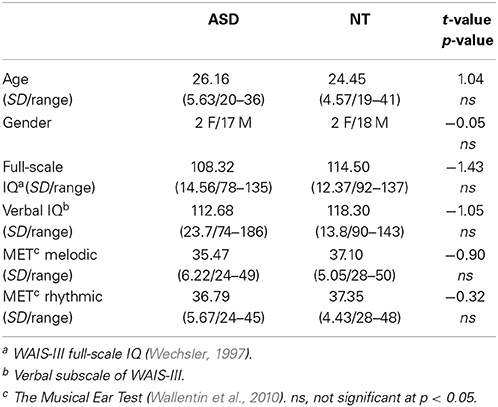

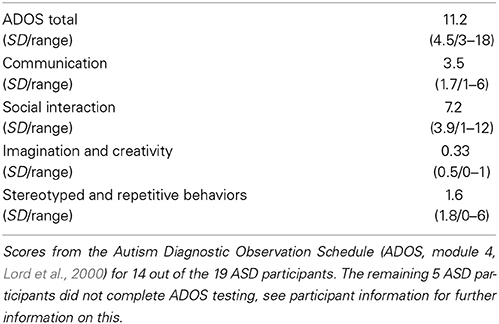

All participants were right-handed native speakers of Danish with normal hearing. Groups were matched on gender, age, IQ, and verbal IQ (Table 1). All participants were IQ-tested using the Wechsler Adult Intelligence Scale (WAIS-III; Wechsler, 1997). None of the NT participants had any history of neurological or psychiatric illness. All participants with ASD had a previous formal diagnosis of ASD, which was supported by the Autism Diagnostic Observation Schedule (ADOS-G; Lord et al., 2000) at the time of the study. All participants with ASD were invited back for ADOS testing after the scanning session, but five participants were unable to return because of long travel times or because they needed special assistance. Thus, 14 of the 19 ASD participants completed ADOS testing (Table 2). Nonetheless, specialized psychiatrists had previously diagnosed all participants with ASD, and we were given access to their medical records to further confirm the diagnoses. All ASD participants were medication-naïve at the time of the study and did not have any comorbid psychiatric disorders. All participants gave written informed consent and were compensated for their time and transportation expenses. The study was approved by the local ethics committee and was conducted in accordance with the Declaration of Helsinki.

Measures of Musical Experience

All participants completed a musical background questionnaire asking about their musical preferences, musical training, listening habits, and general physiological and emotional responses to music. For questions about physiological and emotional responses to music, participants rated how much they agreed (from 1 = least to 5 = most) with statements such as “I find it easy to recognize whether a melody is happy or sad” or “I can feel my body responding physically when I listen to music.” To compare musical abilities between the two groups, participants completed the Musical Ear Test (Wallentin et al., 2010), which measures melodic and rhythmic competence with a same/different listening task.

Stimuli

Emotional stimuli (happy/sad) were 12 s instrumental excerpts taken from the beginning of existing music pieces of different genres (see Appendix A for a complete list of musical excerpts). They were selected from a corpus of 120 musical excerpts based on pilot data from a separate group of 12 neurotypical adults, who rated each excerpt for emotionality on a 5-point Likert scale ranging from very sad to very happy. A total of 40 excerpts (20 happy, 20 sad) were selected for the fMRI experiment. All 20 happy excerpts were rated as happy or very happy by every participant in the pilot group; similarly, all 20 sad excerpts were rated as sad or very sad. During piloting, participants were also asked whether they were familiar with the music and whether they could name the artist or part of the title of the piece. If any pilot participant could name the artist or part of the title of the piece from which an excerpt was taken, the excerpt was excluded from the final stimulus set for the fMRI experiment. In addition to the 20 happy and 20 sad excerpts, a 12 s chromatic scale was used as a neutral control condition. This condition acted as an “auditory baseline” for the two emotion conditions, providing some degree of auditory stimulation across all conditions while only the emotional intensity varied. All stimuli were matched on duration and volume.
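The selection rule above can be written as a small filter. This is an illustrative sketch only: the numeric coding of the 5-point scale (1 = very sad, 5 = very happy), the `PilotExcerpt` record, the `classify` and `eligible` helpers, and the toy ratings are all hypothetical and are not the study's pilot data. How excerpts were chosen when more than 20 qualified in a category is not stated, so that step is left out.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class PilotExcerpt:
    """Pilot data for one candidate excerpt (hypothetical record)."""
    name: str
    ratings: List[int]       # one rating per pilot listener: 1 = very sad ... 5 = very happy (assumed coding)
    named_by_anyone: bool    # True if any listener could name the artist or part of the title


def classify(excerpt: PilotExcerpt) -> Optional[str]:
    """Return 'happy', 'sad', or None if the excerpt fails the selection rule."""
    if excerpt.named_by_anyone:
        return None                      # recognizable excerpts were excluded
    if all(r >= 4 for r in excerpt.ratings):
        return "happy"                   # every listener: happy or very happy
    if all(r <= 2 for r in excerpt.ratings):
        return "sad"                     # every listener: sad or very sad
    return None                          # mixed or neutral ratings


def eligible(corpus: List[PilotExcerpt]) -> Dict[str, List[str]]:
    """Group the names of excerpts that pass the rule by emotion category."""
    pools: Dict[str, List[str]] = {"happy": [], "sad": []}
    for excerpt in corpus:
        category = classify(excerpt)
        if category is not None:
            pools[category].append(excerpt.name)
    return pools


# Toy ratings from 12 hypothetical pilot listeners
corpus = [
    PilotExcerpt("excerpt_001", [5, 4, 5, 4, 4, 5, 5, 4, 4, 5, 4, 5], False),
    PilotExcerpt("excerpt_002", [1, 2, 1, 1, 2, 2, 1, 1, 2, 1, 2, 1], False),
    PilotExcerpt("excerpt_003", [5, 4, 5, 3, 4, 5, 5, 4, 4, 5, 4, 5], False),  # one neutral rating
    PilotExcerpt("excerpt_004", [5] * 12, True),                               # recognized by a listener
]
print(eligible(corpus))  # {'happy': ['excerpt_001'], 'sad': ['excerpt_002']}
```

Requiring unanimity (all ratings on the same side of neutral) is stricter than thresholding the mean rating, which is why a single neutral rating is enough to exclude an excerpt.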

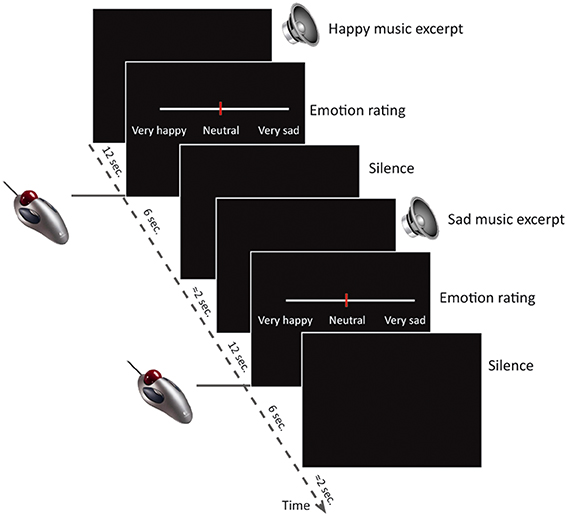

Design

Participants listened to the music excerpts and rated the perceived emotion inside the fMRI scanner. The study used a block design, in which 20 trials of happy instrumental music, 20 trials of sad music, and 20 trials of neutral music were presented in pseudo-random order. After each excerpt (12 s), participants had 6 s to rate the perceived emotional intensity of the music on a visual analog scale ranging from very sad through neutral to very happy (Figure 1). The scale was displayed on an MR-compatible screen in the center of the participant's visual field, and ratings were given with an MR-compatible scroll ball. Participants were told that neutral was in the middle of the scale, and the cursor always started in the neutral position. No visual feedback was displayed during music listening, but participants were instructed to keep their eyes open during the entire scan. All participants completed 5 practice trials outside the scanner to ensure that they were familiar with the task and understood the instructions. It was emphasized that they should rate the emotion expressed in the music, not the emotion or pleasantness they experienced in response to it. Participants also completed a similar task while listening to emotional linguistic stimuli; the data from this task will be analyzed and published separately from the present study.

Figure 1. Study design. The study consisted of 60 trials (20 happy, 20 sad, 20 neutral) inside the MR scanner. Each trial consisted of a 12 s musical excerpt, followed by a visual analog scale shown on the screen in front of the participant for 6 s, during which participants indicated their emotion intensity ratings using an MR-compatible scroll-ball mouse. After the rating, there was approximately 2 s of silence before the next trial began.
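The trial structure in Figure 1 can be checked with a short sketch. It assumes a fixed 2 s gap (the text says approximately 2 s) and a hypothetical pseudo-randomization constraint of at most three consecutive trials of the same condition; the source does not state which constraint, if any, was used. Under these assumptions each trial lasts 20 s, so 60 trials take 1200 s, the same as two runs of 200 volumes at TR = 3 s.

```python
import random
from collections import Counter
from typing import List, Tuple

CONDITIONS = ("happy", "sad", "neutral")
TRIALS_PER_CONDITION = 20
MUSIC_S = 12.0   # excerpt duration
RATING_S = 6.0   # visual analog scale on screen
GAP_S = 2.0      # "approximately 2 s" in the text; fixed here as an assumption
MAX_RUN = 3      # hypothetical limit on identical consecutive trials


def longest_run(sequence: List[str]) -> int:
    """Length of the longest block of identical consecutive items."""
    longest = run = 1
    for prev, cur in zip(sequence, sequence[1:]):
        run = run + 1 if cur == prev else 1
        longest = max(longest, run)
    return longest


def pseudo_random_order(seed: int) -> List[str]:
    """Reshuffle 20 trials per condition until no condition repeats more than MAX_RUN times in a row."""
    rng = random.Random(seed)
    trials = [c for c in CONDITIONS for _ in range(TRIALS_PER_CONDITION)]
    while True:
        rng.shuffle(trials)
        if longest_run(trials) <= MAX_RUN:
            return trials


def music_onsets(order: List[str]) -> List[Tuple[float, str]]:
    """Onset (s) of each excerpt, treating all trials as one continuous sequence."""
    trial_s = MUSIC_S + RATING_S + GAP_S
    return [(i * trial_s, condition) for i, condition in enumerate(order)]


order = pseudo_random_order(seed=1)
print(Counter(order))                             # 20 trials of each condition
print(len(order) * (MUSIC_S + RATING_S + GAP_S))  # 1200.0
```

Rejection sampling keeps the condition counts exactly balanced while enforcing the run-length limit; with three conditions and 60 trials, an acceptable order is typically found within a few shuffles.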

MRI Acquisition

Brain images were acquired using a Siemens 3T Tim Trio whole-body magnetic resonance scanner located at the Center of Functionally Integrative Neuroscience at Aarhus University Hospital, Denmark. Two 10.5 min experimental EPI sequences were acquired with 200 volumes per session and the following parameters: TR = 3000 ms, TE = 27 ms, flip angle = 90°, voxel size = 2.00 × 2.00 × 2.00 mm, #voxels = 96 × 96 × 55, slice thickness 2 mm, no gaps. Participants wore MR-compatible headphones inside a 12-channel head coil and held a trackball in their right hand for the valence ratings. After the functional scans, a sagittal T1-weighted anatomical scan was acquired for later co-registration with the functional data, with the following parameters: TR = 1900 ms, TE = 2.52 ms, flip angle = 9°, voxel size = 0.98 × 0.98 × 1 mm, #voxels = 256 × 256 × 176, slice thickness 1 mm, no gaps, 176 slices. Participants were instructed to lie still and avoid movement during the scan.
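The acquisition parameters imply the spatial coverage and temporal sampling computed below. This is plain arithmetic on the reported values; it assumes the third matrix dimension of each sequence is the slice axis, and `coverage_mm` is a hypothetical helper name.

```python
from typing import Tuple


def coverage_mm(matrix: Tuple[int, ...], voxel_mm: Tuple[float, ...]) -> Tuple[float, ...]:
    """Extent covered along each axis: number of voxels times voxel size (mm)."""
    return tuple(round(n * size, 2) for n, size in zip(matrix, voxel_mm))


epi_coverage = coverage_mm((96, 96, 55), (2.0, 2.0, 2.0))      # (192.0, 192.0, 110.0)
t1_coverage = coverage_mm((256, 256, 176), (0.98, 0.98, 1.0))  # (250.88, 250.88, 176.0)

TR_S = 3.0
VOLUMES_PER_RUN = 200
volume_time_s = TR_S * VOLUMES_PER_RUN  # 600.0 s of volume acquisition per EPI run
volumes_per_excerpt = 12.0 / TR_S       # 4.0 volumes span one 12 s excerpt
```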

Behavioral Data Analysis

Age, gender, IQ, musicianship, and answers on the background questionnaire were compared between groups using independent-samples t-tests. Emotion ratings were analyzed using a 2 (group: ASD, NT) × 3 (emotion condition: happy, neutral, sad) mixed-model analysis of variance (ANOVA).
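To show how a 2 × 3 mixed-model ANOVA partitions variance, a minimal pure-Python sketch follows. It implements the textbook split-plot decomposition (between-subjects factor: group; within-subjects factor: emotion condition) without any sphericity correction. The source does not state which software or corrections were used, and the function names are hypothetical. With unequal group sizes, the condition and interaction terms use the weighted-means decomposition.

```python
from statistics import fmean
from typing import Dict, List, Tuple

Groups = Dict[str, List[List[float]]]  # group label -> subjects -> per-condition scores


def sums_of_squares(groups: Groups) -> Tuple[Dict[str, float], int, int, int]:
    """Split-plot sums of squares plus (number of groups, subjects, conditions)."""
    subjects = [(g, s) for g, subs in groups.items() for s in subs]
    b = len(subjects[0][1])
    grand = fmean(x for _, s in subjects for x in s)
    gmean = {g: fmean(x for s in subs for x in s) for g, subs in groups.items()}
    cmean = [fmean(s[j] for _, s in subjects) for j in range(b)]
    cell = {g: [fmean(s[j] for s in subs) for j in range(b)] for g, subs in groups.items()}
    ss = {
        "total": sum((x - grand) ** 2 for _, s in subjects for x in s),
        "group": sum(len(subs) * b * (gmean[g] - grand) ** 2 for g, subs in groups.items()),
        "subjects": sum(b * (fmean(s) - gmean[g]) ** 2 for g, s in subjects),
        "condition": len(subjects) * sum((m - grand) ** 2 for m in cmean),
        # condition x subjects-within-group residual
        "error": sum((s[j] - fmean(s) - cell[g][j] + gmean[g]) ** 2
                     for g, s in subjects for j in range(b)),
    }
    # Cell deviations from group means contain condition + interaction variance
    cells = sum(len(subs) * (cell[g][j] - gmean[g]) ** 2
                for g, subs in groups.items() for j in range(b))
    ss["interaction"] = cells - ss["condition"]
    return ss, len(groups), len(subjects), b


def mixed_anova(groups: Groups) -> Dict[str, Tuple[float, int, int]]:
    """Return {effect: (F, df_effect, df_error)} for group, condition, and interaction."""
    ss, a, n, b = sums_of_squares(groups)
    df = {"group": a - 1, "subjects": n - a, "condition": b - 1,
          "interaction": (a - 1) * (b - 1), "error": (n - a) * (b - 1)}
    ms = {k: ss[k] / df[k] for k in df}
    return {
        "group": (ms["group"] / ms["subjects"], df["group"], df["subjects"]),
        "condition": (ms["condition"] / ms["error"], df["condition"], df["error"]),
        "interaction": (ms["interaction"] / ms["error"], df["interaction"], df["error"]),
    }
```

With 19 ASD and 20 NT participants, this univariate decomposition yields df = (1, 37) for the group effect and (2, 74) for the condition and interaction terms. p-values can then be read from the F distribution, for example with `scipy.stats.f.sf(F, df1, df2)`.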

fMRI Analysis

fMRI data analysis was performed using Statistical Parametric Mapping (SPM8 version 4667; http://www.fil.ion.ucl.ac.uk/spm) (Penny et al., 2011). Preprocessing was done using the default settings in SPM8. The functional images of each participant were realigned to correct for head motion, spatially normalized to MNI space using the SPM EPI template and trilinear interpolation (Ashburner and Friston, 1999), and smoothed with an 8 mm full-width at half-maximum (FWHM) Gaussian kernel.
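An 8 mm FWHM kernel corresponds to a Gaussian standard deviation of σ = FWHM / (2√(2 ln 2)) ≈ 3.40 mm, or about 1.70 voxels, assuming 2 mm voxels as in the EPI acquisition. The sketch below shows that conversion and a normalized 1D kernel sampled at voxel centres; an isotropic 3D kernel is the separable product of three such kernels. It is a generic sampled Gaussian with a hypothetical three-sigma truncation, not a reproduction of SPM's own smoothing routine.

```python
import math
from typing import List


def fwhm_to_sigma(fwhm: float) -> float:
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def gaussian_kernel_1d(fwhm_mm: float, voxel_mm: float, radius_sigmas: float = 3.0) -> List[float]:
    """Normalized 1D Gaussian sampled at voxel centres, truncated at radius_sigmas."""
    sigma_vox = fwhm_to_sigma(fwhm_mm) / voxel_mm
    half = math.ceil(radius_sigmas * sigma_vox)
    weights = [math.exp(-0.5 * (i / sigma_vox) ** 2) for i in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]


sigma_mm = fwhm_to_sigma(8.0)            # about 3.397 mm
kernel = gaussian_kernel_1d(8.0, 2.0)    # 13 taps: sigma is about 1.70 voxels, truncated at 6 voxels
```

By construction, the unnormalized Gaussian falls to exactly half its peak at ±FWHM/2 (±4 mm here), which is what "full width at half maximum" means.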

For each participant, condition effects were estimated using the general linear model (Friston et al., 1994). To identify clusters of significant activity across the two groups, one-sample t-contrasts for the main effect of emotional vs. neutral music were computed across all participants. For between-group differences, random-effects analyses were performed using independent-samples t-tests. All results are thresholded at p < 0.01 after family-wise error correction (FWE; Friston et al., 1996) with an extent threshold of 10 voxels. Because p < 0.01 FWE-corrected is a relatively conservative threshold, all between-group analyses were also performed at p < 0.05 FWE-corrected to reduce the risk of type II errors. Figures show t-statistics displayed on standard MNI T1 images. Brain regions were labeled according to the Wake Forest University (WFU) PickAtlas (Lancaster et al., 2000; Tzourio-Mazoyer et al., 2002; Maldjian et al., 2003). However, the WFU PickAtlas does not label subcortical structures very precisely, so to identify activity in the ventral striatum and nucleus accumbens we used an atlas specialized for the basal ganglia (Ahsan et al., 2007). Tables indicate coordinates of peak voxels significant at both peak and cluster level.
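The first-level estimation described above can be sketched as follows: each condition is modeled as a 12 s boxcar convolved with a hemodynamic response function, the model is fit by ordinary least squares, and a contrast vector such as [1, −1, 0, 0] yields the happy > sad effect that enters the group-level t-tests. This is a simplified pure-Python illustration, not SPM8's implementation. It uses a double-gamma HRF with the shape parameters commonly used as SPM's canonical defaults (response shape 6, undershoot shape 16, ratio 1/6); omits high-pass filtering, temporal autocorrelation modeling, and the rating period; and fits one simulated, noise-free voxel with a hypothetical fixed trial order. All names are hypothetical.

```python
import math
from typing import List

TR_S = 3.0
N_SCANS = 200     # volumes in one EPI run
DT_S = 0.5        # integration step for the convolution (assumption)
TRIAL_S = 20.0    # 12 s music + 6 s rating + ~2 s gap


def hrf(t: float) -> float:
    """Double-gamma HRF: gamma(shape 6) response minus 1/6 of a gamma(shape 16) undershoot."""
    if t <= 0.0:
        return 0.0
    response = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return response - undershoot / 6.0


def regressor(onsets: List[float], duration_s: float) -> List[float]:
    """Boxcar of duration_s at each onset convolved with the HRF, sampled once per TR."""
    steps = int(duration_s / DT_S)
    column = []
    for scan in range(N_SCANS):
        t = scan * TR_S
        column.append(sum(hrf(t - (onset + (k + 0.5) * DT_S)) * DT_S
                          for onset in onsets for k in range(steps)))
    return column


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Gauss-Jordan elimination with partial pivoting for a small square system."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * p for x, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(columns: List[List[float]], y: List[float]) -> List[float]:
    """Least-squares betas from the normal equations X'X b = X'y."""
    xtx = [[sum(u * v for u, v in zip(ci, cj)) for cj in columns] for ci in columns]
    xty = [sum(u * v for u, v in zip(ci, y)) for ci in columns]
    return solve(xtx, xty)


conditions = ("happy", "sad", "neutral")
order = list(conditions) * 10   # hypothetical fixed order: 30 trials fill one 600 s run
onsets = {c: [i * TRIAL_S for i, o in enumerate(order) if o == c] for c in conditions}
design = [regressor(onsets[c], 12.0) for c in conditions] + [[1.0] * N_SCANS]

# Simulated noise-free voxel: betas 2, 1, 0.5 plus a baseline of 100
signal = [2.0 * h + 1.0 * s + 0.5 * n + 100.0 for h, s, n, _ in zip(*design)]
betas = ols(design, signal)
contrast = [1.0, -1.0, 0.0, 0.0]                      # happy > sad
effect = sum(c * b for c, b in zip(contrast, betas))  # 1.0 up to rounding error
```

Because the simulated voxel was built from betas of 2, 1, and 0.5, the estimated happy − sad contrast recovers 1.0. In a random-effects analysis, one such contrast value per participant and voxel is carried to the second level, where the groups are compared with an independent-samples t-test.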

Results

Behavioral Results

No statistically significant group differences were found with regard to gender, age, full-scale IQ, or verbal IQ (Table 1). There was no group difference with regard to musicianship, t(37) = −0.85, p = 0.403, or musical abilities as measured with the Musical Ear Test (Table 1). On the musical background questionnaire, 11 of the 19 ASD participants, compared to 5 of the 20 NT participants, reported that specific tones had a great influence or special significance for them (i.e., were perceived as especially annoying or particularly pleasant), t(37) = 2.157, p = 0.038. There were no statistically significant group differences on the questions regarding emotional impact and recognition (“I get emotionally affected by music”; “I find it easy to recognize whether a melody is happy or sad”; “when I am feeling down I often like to listen to sad music”; “It makes me happy to listen to happy music”; “If I am sad, it cheers me up to listen to happy music”). Nor were there any differences on questions relating to physical arousal associated with music (“It energizes me to listen to music”; “I can feel my body responding physically when I listen to music”; “I often get chills when I listen to music”).
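The reported t(37) = 2.157 for the tone-significance item can be reproduced from the counts alone, assuming each answer was coded 1 (yes) or 0 (no) and a Student's (pooled-variance) independent-samples t-test was used. Both are assumptions, since only the counts and the statistic are reported. `pooled_t` is a hypothetical helper; `scipy.stats.ttest_ind(a, b, equal_var=True)` computes the same statistic along with its p-value.

```python
import math
from typing import List, Tuple


def pooled_t(x: List[float], y: List[float]) -> Tuple[float, int]:
    """Student's independent-samples t statistic with pooled variance; returns (t, df)."""
    nx, ny = len(x), len(y)
    mx, my = sum(x) / nx, sum(y) / ny
    ss_x = sum((v - mx) ** 2 for v in x)
    ss_y = sum((v - my) ** 2 for v in y)
    df = nx + ny - 2
    pooled_var = (ss_x + ss_y) / df
    se = math.sqrt(pooled_var * (1 / nx + 1 / ny))
    return (mx - my) / se, df


asd = [1.0] * 11 + [0.0] * 8   # 11 of 19 ASD participants answered yes
nt = [1.0] * 5 + [0.0] * 15    # 5 of 20 NT participants answered yes
t, df = pooled_t(asd, nt)      # t is about 2.157, df = 37
```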

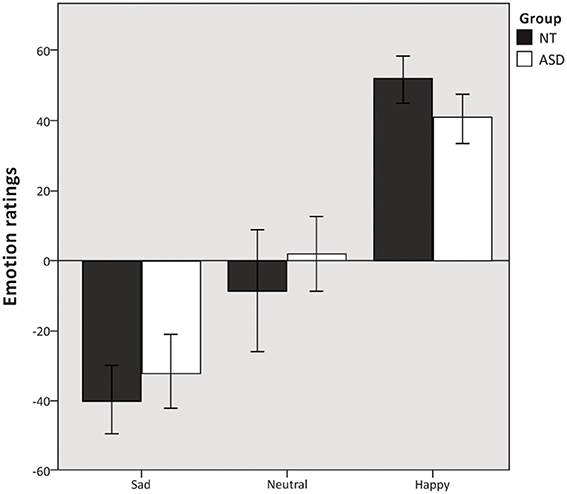

Emotion Ratings

A mixed-model ANOVA revealed a significant main effect of emotion condition, F(2, 37) = 120.19, p < 0.001. There was no main effect of group, F(1, 37) = 0.365, p = 0.550 (Figure 2), and no significant group × emotion condition interaction, F(2, 37) = 2.5, p = 0.091. An additional independent-samples t-test revealed no difference in the number of missing responses between the two groups, t(37) = −0.928, p = 0.359.

Figure 2. Emotion ratings. Mean emotion ratings (on a visual analog scale from −100 to 100) of sad, neutral and happy musical excerpts. Error bars indicate 95% confidence intervals.

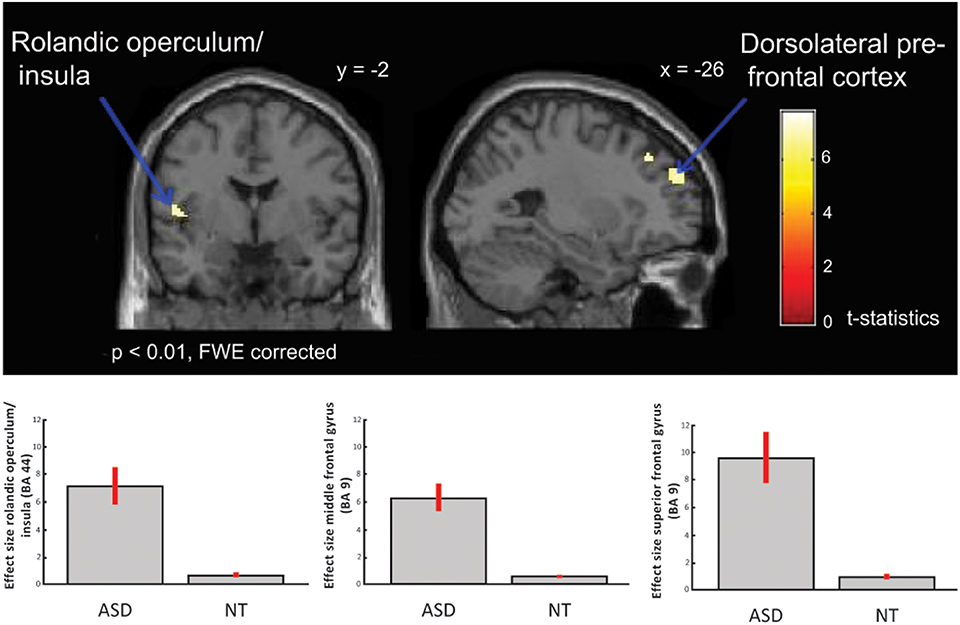

fMRI Results

Between-group comparisons showed no differences for the contrasts of emotional (happy and sad) vs. neutral music, happy vs. neutral music, or sad vs. neutral music, either at p < 0.01 FWE-corrected or at the less conservative threshold of p < 0.05 FWE-corrected. However, the ASD group showed significantly greater activation than the NT group in response to happy compared to sad music in left dorsolateral prefrontal regions, i.e., the middle frontal gyrus [x = −24, y = 34, z = 42; T(1, 38) = 7.75; BA: 9], the left rolandic operculum/insula [x = −50, y = 2, z = 8; T(1, 38) = 7.41; BA: 6], and the superior frontal gyrus [x = −26, y = 52, z = 32; T(38) = 7.23; BA: 9] (Table 3 and Figure 3).

Figure 3. ASD > NT: group difference for happy vs. sad music (FWE p < 0.01). Individuals with ASD showed increased activation in dorsolateral prefrontal cortex, i.e., middle and superior frontal gyrus, and in insula/rolandic operculum. Box plots show mean effect size for each group in the peak voxel for each region, with 95% confidence intervals.

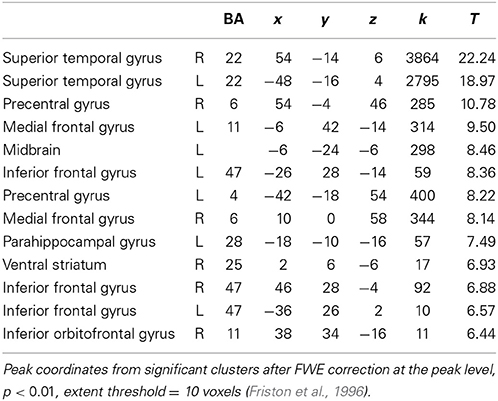

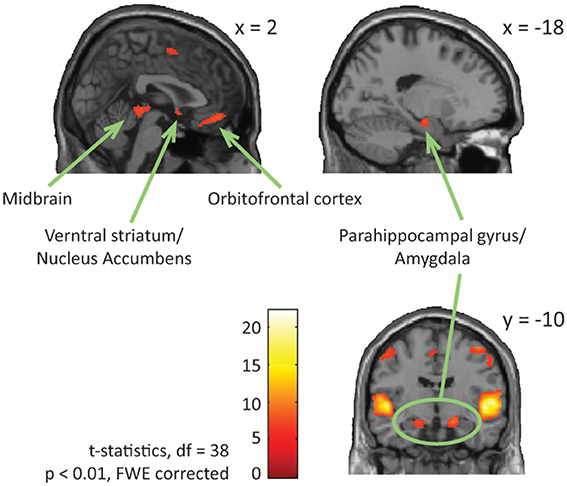

Across both groups, we found significant activation in response to emotional (happy and sad) compared to neutral music in bilateral STG [BA: 22], precentral gyrus [BA: 6, 4], parahippocampal gyrus [BA: 34, 28], left medial orbitofrontal gyrus [BA: 11], left midbrain, bilateral inferior frontal gyrus [BA: 47], right medial frontal gyrus [BA: 6], right ventral striatum/nucleus accumbens, and orbitofrontal cortex [BA: 11] (Table 4, Figure 4).

Figure 4. ASD and NT: main effect of emotional vs. neutral music. The figure shows brain activations in response to emotional compared to neutral music across all participants, including activations in midbrain, parahippocampal gyrus extending into amygdala, ventral striatum/nucleus accumbens, and orbitofrontal cortex.

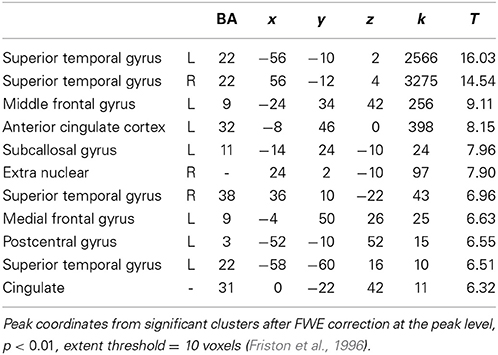

Comparing happy with sad music across groups showed greater activation for happy music bilaterally in STG [BA: 22, 38], anterior cingulate and cingulate cortex [BA: 32, 31], subcallosal gyrus, midbrain, medial frontal gyrus [BA: 9], and postcentral gyrus [BA: 3] (Table 5). No brain regions showed more activity in response to sad than happy music across groups.

Discussion

Our results demonstrate intact emotion recognition and largely intact neural processing of emotional music in high-functioning adults with ASD compared to NT adults. Across both ASD and NT individuals, we found increased activation in limbic and paralimbic brain areas, such as the parahippocampal gyrus extending into the amygdala, and in midbrain structures, as well as in reward regions such as the medial orbitofrontal cortex and ventral striatum. These regions are highly interconnected and have previously been identified as core regions for emotional processing of music (Koelsch, 2010; see Figure 4) and of other emotional stimuli (Adolphs, 2002). Meanwhile, individuals with ASD displayed significantly greater activation than NT individuals in the left dorsolateral prefrontal cortex (i.e., middle and superior frontal gyrus) and the left rolandic operculum/insula in response to happy contrasted with sad music (Figure 3).

The group difference in brain activation in response to happy compared to sad music could be interpreted as heightened arousal and increased reliance on cognitive processing for recognizing emotion in happy music in individuals with ASD. The dorsolateral prefrontal region is associated with higher cognitive functions, such as working memory and executive functions (Boisgueheneuc et al., 2006). With regard to emotion processing, the medial parts of the prefrontal cortex have been found to be involved in emotion appraisal (Etkin et al., 2011). Hence, the increased activation of the dorsolateral prefrontal cortex found in this study is likely related to a more cognitively demanding emotion recognition strategy for happy music in the ASD group. This would be consistent with the more analytical and cognitive strategies suggested to govern face perception in individuals with ASD (Jemel et al., 2006), and with findings of atypical verbal reporting of emotions (Heaton et al., 2012; Bird and Cook, 2013), including musical emotions, in people with ASD (Allen et al., 2009a, 2013).

Meanwhile, the insula is critically involved in mediating cognitive and emotional processing, for instance in emotion monitoring and regulation (Menon and Uddin, 2010; Gasquoine, 2014). The insula is highly connected with limbic, sensory, and motor regions of the brain and is considered central to sensorimotor, visceral, and interoceptive processing, homeostatic/allostatic functions, and emotional awareness of self and others (Craig, 2002; Critchley, 2005). Furthermore, the insula is posited to be involved in monitoring emotional salience (Craig, 2009). Consistent with this, the insula has previously been found to be involved in emotional responses to music (Blood and Zatorre, 2001; Brown et al., 2004; Griffiths et al., 2004; Trost et al., 2012). The insula activation associated with emotional music in these studies might therefore reflect increased physiological arousal. Indeed, happy music is often found to be more arousing than sad music (see, for instance, Trost et al., 2012). Thus, the stronger insula activation found in the ASD group in this study might be linked to enhanced bodily arousal in response to happy music compared to NT individuals. This corresponds well with self-reports of individuals with ASD describing stronger physiological responses to music (Allen et al., 2009a).

Interestingly, we found this differential brain processing between individuals with ASD and NT individuals only for happy compared to sad music. Future studies are needed to clarify whether the recruitment of additional cognitive resources is unique to the happy-sad distinction or extends to other musical emotions.

While subtle differences in brain processing were found between the two groups in recognizing happy music, we found no evidence of differences in emotion ratings between the ASD and NT groups (Figure 2). The successful emotion recognition seen in our behavioral ratings was further substantiated by the music background questionnaire, on which the ASD group, comparably to the NT group, reported being emotionally affected by listening to music, finding it easy to recognize emotions in music, and experiencing physiological arousal when listening to music in everyday settings. This is consistent with previous findings of intact emotion recognition (Heaton et al., 2008; Quintin et al., 2011) and intact physiological responses to music in high-functioning individuals with ASD (Caria et al., 2011; Allen et al., 2013). Physiological arousal during music listening is associated with emotional and pleasurable responses (Grewe et al., 2005; Salimpoor et al., 2009). Thus, the presence of typical physiological responses to music indicates that people with ASD are not only capable of correctly recognizing emotions in music but also experience full-fledged emotions from listening to music, although they might need extra effort to report them. In summary, our study shows that individuals with ASD do not have general deficits in emotion recognition from music, but in certain instances rely on partially different strategies for decoding emotions from music, which may result in subtle differences in brain processing.

Looking at brain responses to emotional compared with neutral music, we did not find any differences between the two groups. Across all participants, we found increased activation in bilateral STG, parahippocampal gyrus extending into amygdala, inferior frontal gyrus, precentral gyrus, left midbrain, and right ventral striatum. This is consistent with what is generally found in studies of music emotion processing (Koelsch et al., 2006; Peretz, 2010; Brattico et al., 2011; Trost et al., 2012; Park et al., 2013). Indeed, highly arousing music, such as joyful music, is associated with increased activation of the STG (Koelsch et al., 2006, 2013; Mitterschiffthaler et al., 2007; Brattico et al., 2011; Mueller et al., 2011; Trost et al., 2012), which corresponds to our finding of increased STG activation in response to happy compared to sad music. Besides being engaged in auditory processing, the STG is also central to social and emotional processing (Zilbovicius et al., 2006) and is proposed to code the communicative and emotional significance of all social stimuli (Redcay, 2008). Music is a rich tool for communicating emotions (Huron, 2006; Juslin and Västfjäll, 2008), and accordingly emotional music may be expected to elicit more STG activation than neutral music, as was the case in our study. The precentral and inferior frontal gyri were also more active during emotional than neutral music. Precentral activity has been found to correlate with the arousal dimension of music (Trost et al., 2012), and arousal levels generally correlate with emotional responses to music (Grewe et al., 2005; Salimpoor et al., 2009). Increased activation of the inferior frontal gyrus has also been found in response to pleasant compared to scrambled music and is suggested to reflect music-syntactic analysis (Koelsch et al., 2006).

We found increased activation of the parahippocampal gyrus extending into the amygdala in response to emotional music across all individuals. The parahippocampal gyrus and amygdala have primarily been found to respond to negative affective states, including varying degrees of musical dissonance (Blood et al., 1999; Koelsch et al., 2006, 2008), but also to positive affective states and happy music (Mitterschiffthaler et al., 2007). In our study, activation of the parahippocampal gyrus and amygdala was not significantly greater in response to sad than to happy music, suggesting that these structures are implicated in processing both happy and sad music. We also found increased activation in midbrain structures, overlapping the thalamus, in response to emotional music. Thalamic activity has been found to correlate with music-induced psychophysiological arousal and pleasurable chills (Blood and Zatorre, 2001) and is central in processing temporal and ordinal complexity (Janata and Grafton, 2003). Emotional and pleasurable responses to music seem to be associated with optimal levels of complexity (Berlyne, 1971; North and Hargreaves, 1995; Witek et al., 2014); accordingly, it makes sense that we found greater activity in these regions during emotional than neutral music.

Emotional music also engaged parts of the right ventral striatum, including the nucleus accumbens, and the medial orbitofrontal cortex. These regions are central parts of the brain's dopaminergic reward system (Gebauer et al., 2012) and have previously been associated with strong emotional and pleasurable responses to music (Blood and Zatorre, 2001; Brown et al., 2004; Menon and Levitin, 2005; Salimpoor et al., 2011, 2013). ASD has previously been suggested to be associated with deficient reward processing (Kohls et al., 2013); however, the finding of intact activation of the reward system in response to emotional music suggests that music listening has the same pleasurable and motivational impact on people with ASD as on NT individuals.

Despite general agreement between the findings of this study and those of the previous neuroimaging study of emotional music perception in high-functioning adults with AS (Caria et al., 2011), there are some discrepancies. We found increased activation in the ASD group compared to the NT group in response to happy compared to sad music, and no group differences in processing of emotional compared to neutral music overall. In contrast, Caria et al. (2011) found less activation in various brain regions, including precentral gyrus, cerebellum, supplementary motor area, insula, and inferior frontal gyrus, in response to both happy and sad music in individuals with AS relative to NT individuals. However, the study designs are quite different. First, Caria et al. (2011) employed a relatively small sample and had only 5 trials in each condition for some of their comparisons. Second, our study required participants to decode the emotional intensity of experimenter-selected music directly inside the scanner, whereas Caria et al. (2011) had their participants bring half of the music themselves and rate the emotion and arousal of all music before scanning. Consequently, our finding of intact emotion recognition and brain responses to music in individuals with ASD might be facilitated by the explicit nature of our task, in which participants were directly instructed to evaluate the perceived emotion of the musical excerpts. Other studies have found that individuals with ASD generally perform better on emotion recognition tasks when given more explicit instructions (for review see Nuske et al., 2013) and show more “normalized” brain activity (Wang et al., 2007). The difference in brain activation between this study and that of Caria et al. (2011) might therefore relate to differences between active decoding of emotions from music and passive music listening.
Also, the participants in Caria et al.'s (2011) study knew the music in advance, and it is well established that familiarity influences the way we perceive music (Pereira et al., 2011; Van den Bosch et al., 2013) and may change the emotional experience inside the scanner.

Future studies should investigate differences between implicit and explicit emotion perception in music in individuals with ASD, and should preferably include more emotions than happiness and sadness. It would also be interesting to conduct similar experiments using alternative scanning protocols, such as sparse temporal sampling or interleaved silent steady state imaging, which might optimize signal intensity from auditory and subcortical regions compared to continuous scanning (Mueller et al., 2011; Perrachione and Ghosh, 2013). Finally, future studies should investigate emotional responses to music in low-functioning and non-verbal individuals with ASD, since they may benefit most from using music to communicate and share emotions.

Conclusion

Individuals with ASD showed intact emotion recognition from music, as reflected both in their behavioral ratings and in typical brain processing of emotional music overall, with activation of limbic and paralimbic areas, including reward regions. However, in response to happy compared to sad music, individuals with ASD showed increased activation of left dorsolateral prefrontal regions and the rolandic operculum/insula. This suggests a more cognitively demanding strategy for decoding happy music and potentially higher levels of physiological arousal in individuals with ASD.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgment

This work was supported by the Lundbeck Foundation (R32-A2846 to Line Gebauer).

Supplementary Material

The Supplementary Material for this article can be found online at: http://www.frontiersin.org/journal/10.3389/fnins.2014.00192/abstract

References

Adolphs, R. (2002). Neural systems for recognizing emotion. Curr. Opin. Neurobiol. 12, 169–177. doi: 10.1016/S0959-4388(02)00301-X

Ahsan, R. L., Allom, R., Gousias, I. S., Habib, H., Turkheimer, F. E., Free, S., et al. (2007). Volumes, spatial extents and a probabilistic atlas of the human basal ganglia and thalamus. Neuroimage 38, 261–270. doi: 10.1016/j.neuroimage.2007.06.004

Allen, R., Davis, R., and Hill, E. (2013). The effects of autism and alexithymia on physiological and verbal responsiveness to music. J. Autism Dev. Disord. 43, 432–444. doi: 10.1007/s10803-012-1587-8

Allen, R., Hill, E., and Heaton, P. (2009a). The subjective experience of music in autism spectrum disorder. Ann. N.Y. Acad. Sci. 1169, 326–331. doi: 10.1111/j.1749-6632.2009.04772.x

Allen, R., Hill, E., and Heaton, P. (2009b). ‘Hath Charms to Soothe…’: an exploratory study of how high-functioning adults with ASD experience music. Autism 13, 21–41. doi: 10.1177/1362361307098511

Anderson, J. S., Lange, N., Froehlich, A., DuBray, M. B., Druzgal, T. J., Froimowitz, M. P., et al. (2010). Decreased left posterior insular activity during auditory language in autism. AJNR Am. J. Neuroradiol. 31, 131–139. doi: 10.3174/ajnr.A1789

APA. (2013). Diagnostic and Statistical Manual of Mental Disorders. 5th Edn. Arlington, VA: American Psychiatric Publishing.

Ashburner, J., and Friston, K. J. (1999). Nonlinear spatial normalization using basis functions. Hum. Brain Mapp. 7, 254–266. doi: 10.1002/(SICI)1097-0193(1999)7:4<254::AID-HBM4>3.0.CO;2-G

Ashwin, C., Baron-Cohen, S., Wheelwright, S., O'Riordan, M., and Bullmore, E. T. (2007). Differential activation of the amygdala and the ‘social brain’ during fearful face-processing in Asperger syndrome. Neuropsychologia 45, 2–14. doi: 10.1016/j.neuropsychologia.2006.04.014

Baron-Cohen, S., Ring, H. A., Bullmore, E. T., Wheelwright, S., Ashwin, C., and Williams, S. C. (2000). The amygdala theory of autism. Neurosci. Biobehav. Rev. 24, 355–364. doi: 10.1016/S0149-7634(00)00011-7

Bird, G., and Cook, R. (2013). Mixed emotions: the contribution of alexithymia to the emotional symptoms of autism. Transl. Psychiatry 3, e285. doi: 10.1038/tp.2013.61

Bird, G., Silani, G., Brindley, R., White, S., Frith, U., and Singer, T. (2010). Empathic brain responses in insula are modulated by levels of alexithymia but not autism. Brain 133(Pt 5), 1515–1525. doi: 10.1093/brain/awq060

Blood, A. J., and Zatorre, R. J. (2001). Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc. Natl. Acad. Sci. U.S.A. 98, 11818–11823. doi: 10.1073/pnas.191355898

Blood, A. J., Zatorre, R. J., Bermudez, P., and Evans, A. C. (1999). Emotional responses to pleasant and unpleasant music correlate with activity in paralimbic brain regions. Nat. Neurosci. 2, 382–387. doi: 10.1038/7299

Boisgueheneuc, F. D., Levy, R., Volle, E., Seassau, M., Duffau, H., Kinkingnehun, S., et al. (2006). Functions of the left superior frontal gyrus in humans: a lesion study. Brain 129, 3315–3328. doi: 10.1093/brain/awl244

Bonnel, A., Mottron, L., Peretz, I., Trudel, M., Gallun, E., and Bonnel, A.-M. (1999). Enhanced pitch sensitivity in individuals with autism: a signal detection analysis. J. Cogn. Neurosci. 15, 226–235.

Boucher, J., and Lewis, V. (1992). Unfamiliar face recognition in relatively able autistic children. J. Child Psychol. Psychiatry 33, 843–850. doi: 10.1111/j.1469-7610.1992.tb01960.x

Brattico, E., Alluri, V., Bogert, B., Jacobsen, T., Vartiainen, N., Nieminen, S., et al. (2011). A functional MRI study of happy and sad emotions in music with and without lyrics. Front. Psychol. 2:308. doi: 10.3389/fpsyg.2011.00308

Brown, S., Martinez, M. J., and Parsons, L. M. (2004). Passive music listening spontaneously engages limbic and paralimbic systems. Neuroreport 15, 2033–2037. doi: 10.1097/00001756-200409150-00008

Caria, A., Venuti, P., and de Falco, S. (2011). Functional and dysfunctional brain circuits underlying emotional processing of music in autism spectrum disorders. Cereb. Cortex 21, 2838–2849. doi: 10.1093/cercor/bhr084

Celani, G., Battacchi, M. W., and Arcidiacono, L. (1999). The understanding of the emotional meaning of facial expressions in people with autism. J. Autism Dev. Disord. 29, 57–66. doi: 10.1023/A:1025970600181

Corbett, B. A., Carmean, V., Ravizza, S., Wendelken, C., Henry, M. L., Carter, C., et al. (2009). A functional and structural study of emotion and face processing in children with autism. Psychiatry Res. 173, 196–205. doi: 10.1016/j.pscychresns.2008.08.005

Craig, A. D. (2009). How do you feel—now? The anterior insula and human awareness. Nat. Rev. Neurosci. 10, 59–70. doi: 10.1038/nrn2555

Craig, A. D. (2002). How do you feel? Interoception: the sense of the physiological condition of the body. Nat. Rev. Neurosci. 3, 655–666. doi: 10.1038/nrn894

Critchley, H. D. (2005). Neural mechanisms of autonomic, affective, and cognitive integration. J. Comp. Neurol. 493, 154–166. doi: 10.1002/cne.20749

Critchley, H. D., Daly, E. M., Bullmore, E. T., Williams, S. C., Van Amelsvoort, T., and Robertson, D. M. (2000). The functional neuroanatomy of social behaviour: changes in cerebral blood flow when people with autistic disorder process facial expressions. Brain 123(Pt 11), 2203–2212. doi: 10.1093/brain/123.11.2203

Dubé, L., and Le Bel, J. (2003). The content and structure of laypeople's concept of pleasure. Cogn. Emot. 17, 263–295. doi: 10.1080/02699930302295

Eigsti, I.-M., Schuh, J., and Paul, R. (2012). The neural underpinnings of prosody in autism. Child Neuropsychol. 18, 600–617. doi: 10.1080/09297049.2011.639757

Etkin, A., Egner, T., and Kalisch, R. (2011). Emotional processing in anterior cingulate and medial prefrontal cortex. Trends Cogn. Sci. 15, 85–93. doi: 10.1016/j.tics.2010.11.004

Eyler, L. T., Pierce, K., and Courchesne, E. (2012). A failure of left temporal cortex to specialize for language is an early emerging and fundamental property of autism. Brain 135(Pt 3), 949–960. doi: 10.1093/brain/awr364

Fitch, W. T. (2005). The evolution of music in comparative perspective. Ann. N. Y. Acad. Sci. 1060, 29–49. doi: 10.1196/annals.1360.004

Fitzgerald, M., and Bellgrove, M. A. (2006). The overlap between alexithymia and Asperger's syndrome. J. Autism Dev. Disord. 36, 573–576. doi: 10.1176/appi.ajp.161.11.2134

Friston, K. J., Holmes, A., Poline, J.-B., Price, C. J., and Frith, C. (1996). Detecting activations in PET and fMRI: levels of inference and power. Neuroimage 4, 223–235. doi: 10.1006/nimg.1996.0074

Friston, K. J., Holmes, A. P., Worsley, K. J., Poline, J.-P., Frith, C. D., and Frackowiak, R. S. J. (1994). Statistical parametric maps in functional imaging: a general linear approach. Hum. Brain Mapp. 2, 189–210. doi: 10.1002/hbm.460020402

Gabrielsson, A. (2002). Emotion perceived and emotion felt: same or different? Music. Sci. 5(suppl. 1), 123–147. doi: 10.1177/10298649020050S105

Gasquoine, P. G. (2014). Contributions of the insula to cognition and emotion. Neuropsychol. Rev. 24, 77–87. doi: 10.1007/s11065-014-9246-9

Gebauer, L., Kringelbach, M. L., and Vuust, P. (2012). Ever-changing cycles of musical pleasure: the role of dopamine and anticipation. Psychomusicology 22, 152–167. doi: 10.1037/a0031126

Gervais, H., Belin, P., Boddaert, N., Leboyer, M., Coez, A., Sfaello, I., et al. (2004). Abnormal cortical voice processing in autism. Nat. Neurosci. 7, 801–802. doi: 10.1038/nn1291

Gillberg, C., and Coleman, M. (1996). Autism and medical disorders: a review of the literature. Dev. Med. Child Neurol. 38, 191–202. doi: 10.1111/j.1469-8749.1996.tb15081.x

Golan, O., Baron-Cohen, S., Hill, J. J., and Rutherford, M. D. (2007). The ‘reading the mind in the voice’ test-revised: a study of complex emotion recognition in adults with and without autism spectrum conditions. J. Autism Dev. Disord. 37, 1096–1106. doi: 10.1007/s10803-006-0252-5

Grewe, O., Nagel, F., Kopiez, R., and Altenmüller, E. (2005). How does music arouse “chills”? Investigating strong emotions, combining psychological, physiological, and psychoacoustical methods. Ann. N. Y. Acad. Sci. 1060, 446–449. doi: 10.1196/annals.1360.041

Griffiths, T., Warren, J., Dean, J., and Howard, D. (2004). “When the feeling's gone”: a selective loss of musical emotion. J. Neurol. Neurosurg. Psychiatry 75, 344–345. doi: 10.1136/jnnp.2003.015586

Hadjikhani, N., Joseph, R. M., Manoach, D. S., Naik, P., Snyder, J., Dominick, K., et al. (2009). Body expressions of emotion do not trigger fear contagion in autism spectrum disorder. Soc. Cogn. Affect. Neurosci. 4, 70–78. doi: 10.1093/scan/nsn038

Heaton, P. (2003). Pitch memory, labelling and disembedding in autism. J. Child Psychol. Psychiatry 44, 543–551. doi: 10.1111/1469-7610.00143

Heaton, P. (2005). Interval and contour processing in autism. J. Autism Dev. Disord. 35, 787–793. doi: 10.1007/s10803-005-0024-7

Heaton, P., Allen, R., Williams, K., Cummins, O., and Happe, F. (2008). Do social and cognitive deficits curtail musical understanding? Evidence from Autism and Down Syndrome. Br. J. Dev. Psychol. 26, 171–182. doi: 10.1348/026151007X206776

Heaton, P., Pring, L., and Hermelin, B. (1999). A pseudo-savant: a case of exceptional musical splinter skills. Neurocase 5, 503–509. doi: 10.1080/13554799908402745

Heaton, P., Reichenbacher, L., Sauter, D., Allen, R., Scott, S., and Hill, E. (2012). Measuring the effects of alexithymia on perception of emotional vocalizations in autistic spectrum disorder and typical development. Psychol. Med. 42, 2453–2459. doi: 10.1017/S0033291712000621

Hobson, P. (2005). “Autism and emotion,” in Handbook of Autism and Pervasive Developmental Disorders, Vol. 1: Diagnosis, Development, Neurobiology, and Behavior, 3rd Edn., eds F. R. Volkmar, R. Paul, A. Klin, and D. Cohen (Hoboken, NJ: John Wiley and Sons Inc), 406–422.

Hobson, R. P. (1986). The autistic child's appraisal of expressions of emotion. J. Child Psychol. Psychiatry 27, 321–342.

Hubert, B., Wicker, B., Moore, D. G., Monfardini, E., Duverger, H., Fonseca, D. D., et al. (2007). Brief report: recognition of emotional and non-emotional biological motion in individuals with autistic spectrum disorders. J. Autism Dev. Disord. 37, 1386–1392. doi: 10.1007/s10803-006-0275-y

Huron, D. (2001). Is music an evolutionary adaptation? Ann. N. Y. Acad. Sci. 930, 43–61. doi: 10.1111/j.1749-6632.2001.tb05724.x

Huron, D. (2006). Sweet Anticipation: Music and the Psychology of Expectation. Cambridge, MA: MIT Press.

Janata, P., and Grafton, S. T. (2003). Swinging in the brain: shared neural substrates for behaviors related to sequencing and music. Nat. Neurosci. 6, 682–687. doi: 10.1038/nn1081

Jemel, B., Mottron, L., and Dawson, M. (2006). Impaired face processing in autism: fact or artifact? J. Autism Dev. Disord. 36, 91–106. doi: 10.1007/s10803-005-0050-5

Jones, C. R. G., Pickles, A., Falcaro, M., Marsden, A. J. S., Happé, F., Scott, S. K., et al. (2011). A multimodal approach to emotion recognition ability in autism spectrum disorders. J. Child Psychol. Psychiatry 52, 275–285. doi: 10.1111/j.1469-7610.2010.02328.x

Juslin, P. N., and Laukka, P. (2004). Expression, perception, and induction of musical emotions: a review and a questionnaire study of everyday listening. J. New Music Res. 33, 217–238. doi: 10.1080/0929821042000317813

Juslin, P. N., and Västfjäll, D. (2008). Emotional responses to music: the need to consider underlying mechanisms. Behav. Brain Sci. 31, 559–575; discussion 575–621. doi: 10.1017/S0140525X08005293

Koelsch, S. (2010). Towards a neural basis of music-evoked emotions. Trends Cogn. Sci. 14, 131–137. doi: 10.1016/j.tics.2010.01.002

Koelsch, S., Fritz, T., von Cramon, D. Y., Müller, K., and Friederici, A. D. (2006). Investigating emotion with music: an fMRI study. Hum. Brain Mapp. 27, 239–250. doi: 10.1002/hbm.20180

Koelsch, S., Fritz, T., and Schlaug, G. (2008). Amygdala activity can be modulated by unexpected chord functions during music listening. Neuroreport 19, 1815–1819. doi: 10.1097/WNR.0b013e32831a8722

Koelsch, S., Skouras, S., Fritz, T., Herrera, P., Bonhage, C., Küssner, M. B., et al. (2013). The roles of superficial amygdala and auditory cortex in music-evoked fear and joy. Neuroimage 81, 49–60. doi: 10.1016/j.neuroimage.2013.05.008

Kohls, G., Schulte-Rüther, M., Nehrkorn, B., Müller, K., Fink, G. R., Kamp-Becker, I., et al. (2013). Reward system dysfunction in autism spectrum disorders. Soc. Cogn. Affect. Neurosci. 8, 565–572. doi: 10.1093/scan/nss033

Konečni, V. J. (2008). Does music induce emotion? A theoretical and methodological analysis. Psychol. Aesth. Creat. Arts 2, 115–129. doi: 10.1037/1931-3896.2.2.115

Lancaster, J. L., Woldorff, M. G., Parsons, L. M., Liotti, M., Freitas, C. S., Rainey, L., et al. (2000). Automated Talairach atlas labels for functional brain mapping. Hum. Brain Mapp. 10, 120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8

Levitin, D. J. (2006). This is Your Brain on Music: The Science of a Human Obsession. New York, NY: Dutton.

Lindner, J. L., and Rosén, L. A. (2006). Decoding of emotion through facial expression, prosody and verbal content in children and adolescents with Asperger's syndrome. J. Autism Dev. Disord. 36, 769–777. doi: 10.1007/s10803-006-0105-2

Lord, C., Risi, S., Lambrecht, L., Cook, E. H. Jr., Leventhal, B. L., DiLavore, P. C., et al. (2000). The autism diagnostic observation schedule-generic: a standard measure of social and communication deficits associated with the spectrum of autism. J. Autism Dev. Disord. 30, 205–223. doi: 10.1023/A:1005592401947

Maldjian, J. A., Laurienti, P. J., Burdette, J. B., and Kraft, R. A. (2003). An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. Neuroimage 19, 1233–1239. doi: 10.1016/S1053-8119(03)00169-1

Mazefsky, C. A., and Oswald, D. P. (2007). Emotion perception in Asperger's syndrome and high-functioning autism: the importance of diagnostic criteria and cue intensity. J. Autism Dev. Disord. 37, 1086–1095. doi: 10.1007/s10803-006-0251-6

Menon, V., and Levitin, D. J. (2005). The rewards of music listening: response and physiological connectivity of the mesolimbic system. Neuroimage 28, 175–184. doi: 10.1016/j.neuroimage.2005.05.053

Menon, V., and Uddin, L. Q. (2010). Saliency, switching, attention and control: a network model of insula function. Brain Struct. Funct. 214, 655–667. doi: 10.1007/s00429-010-0262-0

Mitterschiffthaler, M. T., Fu, C. H. Y., Dalton, J. A., Andrew, C. M., and Williams, S. C. R. (2007). A functional MRI study of happy and sad affective states induced by classical music. Hum. Brain Mapp. 28, 1150–1162. doi: 10.1002/hbm.20337

Moesgaard, K. (2010). Musik som brandingplatform (Eng. title: Music as a Branding Platform). Available online at: http://www.promus.dk/files/rapporter/mec_20musikundersoegelse_20september_202010.pdf

Mueller, K., Mildner, T., Fritz, T., Lepsien, J., Schwarzbauer, C., Schroeter, M. L., et al. (2011). Investigating brain response to music: a comparison of different fMRI acquisition schemes. Neuroimage 54, 337–343. doi: 10.1016/j.neuroimage.2010.08.029

North, A. C., and Hargreaves, D. J. (1995). Subjective complexity, familiarity and liking of popular music. Psychomusicology 14, 77–93. doi: 10.1037/h0094090

Nuske, H. J., Vivanti, G., and Dissanayake, C. (2013). Are emotion impairments unique to, universal, or specific in autism spectrum disorder? A comprehensive review. Cogn. Emot. 27, 1042–1061. doi: 10.1080/02699931.2012.762900

Overy, K. (2012). Making music in a group: synchronization and shared experience. Ann. N.Y. Acad. Sci. 1252, 65–68. doi: 10.1111/j.1749-6632.2012.06530.x

Park, M., Hennig-Fast, K., Bao, Y., Carl, P., Pöppel, E., Welker, L., et al. (2013). Personality traits modulate neural responses to emotions expressed in music. Brain Res. 1523, 68–76. doi: 10.1016/j.brainres.2013.05.042

Penny, W. D., Friston, K. J., Ashburner, J. T., Kiebel, S. J., and Nichols, T. E. (Eds.). (2011). Statistical Parametric Mapping: The Analysis of Functional Brain Images. London: Academic Press.

Pereira, C. S., Teixeira, J., Figueiredo, P., Xavier, J., Castro, S. L., and Brattico, E. (2011). Music and emotions in the brain: familiarity matters. PLoS ONE 6:e27241. doi: 10.1371/journal.pone.0027241

Peretz, I. (2010). “Towards a neurobiology of musical emotions,” in Handbook of Music and Emotion: Theory, Research, Applications, eds P. N. Juslin and J. A. Sloboda (Oxford: Oxford University Press), 99–126.

Perrachione, T. K., and Ghosh, S. S. (2013). Optimized design and analysis of sparse-sampling FMRI experiments. Front. Neurosci. 7:55. doi: 10.3389/fnins.2013.00055

Philip, R. C. M., Whalley, H. C., Stanfield, A. C., Sprengelmeyer, R., Santos, I. M., Young, A. W., et al. (2010). Deficits in facial, body movement and vocal emotional processing in autism spectrum disorders. Psychol. Med. 40, 1919–1929. doi: 10.1017/S0033291709992364

Quintin, E.-M., Bhatara, A., Poissant, H., Fombonne, E., and Levitin, D. J. (2011). Emotion perception in music in high-functioning adolescents with autism spectrum disorders. J. Autism Dev. Disord. 41, 1240–1255. doi: 10.1007/s10803-010-1146-0

Redcay, E. (2008). The superior temporal sulcus performs a common function for social and speech perception: implications for the emergence of autism. Neurosci. Biobehav. Rev. 32, 123–142. doi: 10.1016/j.neubiorev.2007.06.004

Rentfrow, P. J., and Gosling, S. D. (2003). The do re mi's of everyday life: the structure and personality correlates of music preferences. J. Pers. Soc. Psychol. 84, 1236–1256. doi: 10.1037/0022-3514.84.6.1236

Salimpoor, V. N., Benovoy, M., Larcher, K., Dagher, A., and Zatorre, R. J. (2011). Anatomically distinct dopamine release during anticipation and experience of peak emotion to music. Nat. Neurosci. 14, 257–262. doi: 10.1038/nn.2726

Salimpoor, V. N., Benovoy, M., Longo, G., Cooperstock, J., and Zatorre, R. (2009). The rewarding aspects of music listening are related to degree of emotional arousal. PLoS ONE 4:e7487. doi: 10.1371/journal.pone.0007487

Salimpoor, V. N., van den Bosch, I., Kovacevic, N., McIntosh, A. R., Dagher, A., and Zatorre, R. J. (2013). Interactions between the nucleus accumbens and auditory cortices predict music reward value. Science 340, 216–219. doi: 10.1126/science.1231059

Schultz, R. T., Gauthier, I., Klin, A., Fulbright, R. K., Anderson, A. W., Volkmar, F., et al. (2000). Abnormal ventral temporal cortical activity during face discrimination among individuals with autism and Asperger syndrome. Arch. Gen. Psychiatry 57, 331–340. doi: 10.1001/archpsyc.57.4.331

Stanutz, S., Wapnick, J., and Burack, J. (2014). Pitch discrimination and melodic memory in children with autism spectrum disorder. Autism 18, 137–147. doi: 10.1177/1362361312462905

Trost, W., Ethofer, T., Zentner, M., and Vuilleumier, P. (2012). Mapping aesthetic musical emotions in the brain. Cereb. Cortex 22, 2769–2783. doi: 10.1093/cercor/bhr353

Tzourio-Mazoyer, N., Landeau, B., Papathanassiou, D., Crivello, F., Etard, O., Delcroix, N., et al. (2002). Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage 15, 273–289. doi: 10.1006/nimg.2001.0978

Van den Bosch, I., Salimpoor, V. N., and Zatorre, R. J. (2013). Familiarity mediates the relationship between emotional arousal and pleasure during music listening. Front. Hum. Neurosci. 7:534. doi: 10.3389/fnhum.2013.00534

Wallentin, M., Nielsen, A. H., Friis-Olivarius, M., Vuust, C., and Vuust, P. (2010). The musical ear test, a new reliable test for measuring musical competence. Learn. Individ. Differ. 20, 188–196. doi: 10.1016/j.lindif.2010.02.004

Wang, A. T., Lee, S. S., Sigman, M., and Dapretto, M. (2006). Neural basis of irony comprehension in children with autism: the role of prosody and context. Brain 129(Pt 4), 932–943. doi: 10.1093/brain/awl032

Wang, A. T., Lee, S. S., Sigman, M., and Dapretto, M. (2007). Reading affect in the face and voice. Arch. Gen. Psychiatry 64, 698–708. doi: 10.1001/archpsyc.64.6.698

Wechsler, D. (1997). WAIS-III/WMS-III Technical Manual. San Antonio, TX: The Psychological Corporation.

Witek, M., Clarke, E., Wallentin, M., Kringelbach, M. L., and Vuust, P. (2014). Rhythmic complexity, body-movement and pleasure in groove music. PLoS ONE 9:e94446. doi: 10.1371/journal.pone.0094446

Zald, D. H., and Zatorre, R. J. (2011). “Music,” in Neurobiology of Sensation and Reward, Frontiers in Neuroscience, Chapter 19, ed J. A. Gottfried (Boca Raton, FL: CRC Press), 405–429.

Keywords: autism spectrum disorder, music, emotion, fMRI

Citation: Gebauer L, Skewes J, Westphael G, Heaton P and Vuust P (2014) Intact brain processing of musical emotions in autism spectrum disorder, but more cognitive load and arousal in happy vs. sad music. Front. Neurosci. 8:192. doi: 10.3389/fnins.2014.00192

Received: 28 February 2014; Accepted: 19 June 2014;

Published online: 15 July 2014.

Edited by:

Eckart Altenmüller, University of Music and Drama Hannover, Germany

Reviewed by:

Elvira Brattico, University of Helsinki, Finland

Catherine Y. Wan, Beth Israel Deaconess Medical Center and Harvard Medical School, USA

Copyright © 2014 Gebauer, Skewes, Westphael, Heaton and Vuust. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Line Gebauer, Department of Clinical Medicine, Center of Functionally Integrative Neuroscience, Aarhus University, Noerrebrogade 44, Build. 10 G, 5th floor, Aarhus 8000, Denmark e-mail: gebauer@pet.auh.dk; linegebauer@gmail.com