REVIEW article

Front. Neurosci. , 24 May 2012

Sec. Decision Neuroscience

Volume 6 - 2012 | https://doi.org/10.3389/fnins.2012.00070

Investigation into the neural and computational bases of decision-making has proceeded in two parallel but distinct streams. Perceptual decision-making (PDM) is concerned with how observers detect, discriminate, and categorize noisy sensory information. Economic decision-making (EDM) explores how options are selected on the basis of their reinforcement history. Traditionally, the sub-fields of PDM and EDM have employed different paradigms, proposed different mechanistic models, explored different brain regions, and disagreed about whether decisions approach optimality. Nevertheless, we argue that there is a common framework for understanding decisions made in both tasks, under which an agent has to combine sensory information (what is the stimulus) with value information (what is it worth). We review computational models of the decision process typically used in PDM, based around the idea that decisions involve a serial integration of evidence, and assess their applicability to decisions between goods and gambles. Subsequently, we consider the contribution of three key brain regions – the parietal cortex, the basal ganglia, and the orbitofrontal cortex (OFC) – to PDM and EDM, with a focus on the mechanisms by which sensory and reward information are integrated during choice. We find that although the parietal cortex is often implicated in the integration of sensory evidence, there is also evidence for its role in encoding the expected value of a decision. Similarly, although much research has emphasized the role of the striatum and OFC in value-guided choices, they may also play an important role in the categorization of perceptual information. In conclusion, we consider how findings from the two fields might be brought together, in order to move toward a general framework for understanding decision-making in humans and other primates.

Over the past 10 years, there has been a resurgence of interest in the neural and computational mechanisms by which humans and other primates make decisions under uncertainty. This work has bridged multiple levels of description, with some researchers focusing on the contributions of individual neurons to decision-making, and others trying to map entire brain circuits for voluntary choice. Computational accounts have ranged from biophysically plausible neural network models to large-scale simulations in which the behavior of millions of neurons is captured in a single variable. Correspondingly, the techniques involved have included those focused on local neuronal circuits, such as single-cell electrophysiology or microstimulation, and those focused on global brain systems, such as functional neuroimaging, lesion studies, and pharmacological manipulations.

Curiously however, this research program has largely been carried out in two distinct but parallel streams. One stream, which is sometimes called “perceptual decision-making (PDM),” grew out of classical psychophysics, and is concerned with how humans choose an appropriate action during the detection, discrimination, or categorization of sensory information. The other stream, which we refer to as “economic decision-making (EDM),” has asked how humans choose among different options on the basis of their associated reinforcement history. To date, we would argue, researchers in either stream have been surprisingly reluctant to import concepts or approaches from the other. Instead, researchers interested in perceptual and economic choices have tended to use different classes of computational model, focused on distinct neural circuits, and have arrived at different conclusions about whether humans make good choices or not.

However, we would argue that an understanding of the computational neurobiology of voluntary choice would benefit from increased cross-fertilization between the literatures concerned with perceptual and economic decisions. It might be worth considering, for example, that all perceptual decisions are ultimately motivated by reward (or the avoidance of loss) whereas all economic decisions require perceptual appraisal of the alternatives on offer. Moreover, there is a common structure to virtually all decision-making tasks employed across the literature: an agent is required to identify one or more stimuli in a given sensory modality (what is it?), and then to select a response which will maximize the probability of positive feedback or reward (what is it worth?). In what follows, we will argue that despite the differences of approach between the two streams, one can conceive of the problem they seek to understand under one general conceptual framework, in which decisions are sensorimotor acts that depend on the integration of sensory evidence with information about reward value.

In this review, we summarize the work of computational, behavioral, and cognitive neuroscientists who have reached across the divide between the sub-fields of perceptual and EDM. Some researchers have considered, for example, how reward might influence sensory discrimination (Feng et al., 2009; Rorie et al., 2010; Serences and Saproo, 2010; Summerfield and Koechlin, 2010; Weil et al., 2010; Mulder et al., 2012), or how process models used to describe perceptual decisions might be applied in the economic domain (Basten et al., 2010; Philiastides et al., 2010; Hare et al., 2011b; Krajbich and Rangel, 2011; Krajbich et al., 2011; Hunt et al., 2012). In doing so, we emphasize a number of issues which we believe to be of key interest for researchers concerned with decision-making. In particular, we review work that has asked how perceptual decisions are biased by economic information, for example where the response options may have asymmetric costs and benefits. We also consider the mechanisms by which rewards (or informative feedback) might drive learning about sensorimotor acts. These considerations prompt a discussion about where in the primate brain information about the likely identity of a stimulus and its likely reinforcement value are combined. In conclusion, we aim to move toward a more general framework for understanding the neurobiology of decision-making, drawing upon approaches from the sub-fields of both perceptual and EDM.

It is beyond the scope of the current article to provide a comprehensive overview of the literatures concerned with perceptual and economic choice, and we refer the reader instead to a number of excellent summaries published in recent years (Gold and Shadlen, 2007; Heekeren et al., 2008; Kable and Glimcher, 2009; Rangel and Hare, 2010; Rushworth et al., 2011). Rather, here we aim to highlight the similarities and differences between the methods and approaches in the two fields.

Perceptual decision-making is concerned with the mechanisms by which observers categorize sensory signals, and as such, tasks typically require observers to classify weak or noisy sensory information. For example, one influential paradigm called the “random-dot kinetogram” or RDK task (Britten et al., 1993) requires observers to classify the net direction of motion of a cloud of randomly moving dots. However, whilst the sensory information in these tasks is ambiguous, the reinforcement contingencies (i.e., which action leads to reward, given the identity of the stimulus) are usually clear and over-learned. Thus, it is the identity of the stimulus that is uncertain, not the value of its associated action. By contrast, EDM tasks tend to employ stimuli that are perceptually unambiguous, often in the visual domain. For example, in classic “multi-armed bandit” tasks, agents usually view two easily discriminable shapes or symbols, each associated with distinct reward statistics (Sutton and Barto, 1998; Daw et al., 2006). However, whilst perceptual uncertainty on these tasks is negligible, the task is challenging because the reinforcement value associated with the two options may drift or jump unpredictably across the experiment (Behrens et al., 2007; Summerfield et al., 2011), or in different situations, because the agent has to choose between two or more assets whose value (learned prior to the experiment) is roughly comparable (Kable and Glimcher, 2007; Plassmann et al., 2007), and the value representations are themselves noisy. Thus, the relative value of each stimulus and/or its associated action is uncertain, but its identity is known to the agent.

Because uncertainty in PDM tasks is owing to the identity of the stimulus itself, these experiments often take place in the “proficient” stage of task performance, where the response-reward contingencies have been either unambiguously instructed or learned through extensive training. Thus, the computational models that have been used to characterize performance have focused on the choice period itself, rather than on any reinforcement learning that occurs following feedback. One class of model that has attracted a great deal of recent interest is premised on the idea that choices depend on a serial sampling mechanism, in which evidence about the identity of the stimulus is collected and integrated until a criterial level of certainty is reached (Wald and Wolfowitz, 1948; Bogacz et al., 2006; Ratcliff and McKoon, 2008). Many variants of this model have been proposed (see Serial Sampling Models of PDM below), but they share a common advantage, namely, the ability to predict both choices and choice latencies (i.e., reaction times) in judgment tasks. A major theme of research into PDM is thus to understand the mental chronometry (i.e., the changing information processing over time) of the choice process.

Two main classes of computational model have informed the literature on EDM. One very successful class of model, that draws upon a rich literature from learning theory in experimental psychology (Rescorla and Wagner, 1972) and machine learning (Sutton and Barto, 1998), describes the mechanisms by which the value of stimuli or actions is learned (reinforcement learning or RL models). This model proposes that these values are updated according to how surprising an outcome is (a “prediction error”) scaled by a further parameter that controls the rate of learning. Models of the choice process in this field have tended to describe the weighting that agents give to different magnitudes or probabilities of reward. The other successful account, called Prospect Theory has been applied to decisions where the probabilistic information about the choice is not learned by feedback but explicitly instructed. Prospect Theory can account for proposed violations of rational economic behavior, including preference reversals, risk aversion, and susceptibility to framing effects, via appeal to non-linear weighting functions mapping objective probabilities and magnitudes of reward to their subjective, internal counterparts (Kahneman and Tversky, 1979). However, Prospect Theory describes human economic behavior without providing a normative framework for understanding choice, and offers no account of the processes that underpin decision-making. Similarly, in most reinforcement learning tasks, choices are typically modeled by assuming that agents simply choose the most valuable option (a “greedy” policy), or choose according to a sigmoidal “softmax” function that privileges the most valuable option whilst permitting some stochasticity. Below, we argue that the near-absence of process models in EDM is a major limitation to the current state of the field, and that the application of serial sampling models to economic choices may represent a fruitful avenue for future research.
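As a minimal sketch of the two ingredients described above, the prediction-error update and the softmax choice rule can be written in a few lines of Python. The function names, the two-armed bandit with fixed reward probabilities, and all parameter values (learning rate, temperature, initial values) are assumptions chosen for illustration; they are not taken from any of the cited studies.

```python
import math
import random


def update_value(value, reward, learning_rate):
    """Delta-rule update: shift the value estimate toward the observed outcome."""
    prediction_error = reward - value  # how surprising the outcome was
    return value + learning_rate * prediction_error


def softmax_probs(values, temperature):
    """Choice probabilities; a lower temperature approaches a greedy policy."""
    max_v = max(values)  # subtract the maximum for numerical stability
    weights = [math.exp((v - max_v) / temperature) for v in values]
    total = sum(weights)
    return [w / total for w in weights]


def run_bandit(reward_probs, n_trials=500, learning_rate=0.1,
               temperature=0.2, seed=0):
    """Simulate an agent learning option values from binary feedback."""
    rng = random.Random(seed)
    values = [0.5] * len(reward_probs)  # assumed neutral starting estimates
    for _ in range(n_trials):
        probs = softmax_probs(values, temperature)
        choice = rng.choices(range(len(values)), weights=probs)[0]
        reward = 1.0 if rng.random() < reward_probs[choice] else 0.0
        values[choice] = update_value(values[choice], reward, learning_rate)
    return values


# Hypothetical two-armed bandit: option 0 pays off more often than option 1.
learned_values = run_bandit([0.8, 0.2])
print(learned_values)
```

Note that this sketch specifies which option is chosen but says nothing about how long the choice takes, which is the gap that process models are intended to fill.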

A related concern in both the fields of PDM and EDM is whether humans make good decisions or not. In EDM, the descriptive account offered by Prospect Theory catalogs the heuristics and biases that characterize human economic decisions, arriving at the conclusion that humans often make poor and irrational choices. In one classic example, it was shown that faced with an equivalent alternative, humans will favor the option to save 400/600 people from a fictitious disease, but will reject an offer to allow 200/600 people to fall ill, despite the mathematical equivalence of the two prospects (Tversky and Kahneman, 1981). Other examples abound in the behavioral economic literature (Kahneman et al., 1982). This contrasts sharply, however, with the approach taken in psychophysical investigations of perceptual choice, where a strong emphasis has been placed on the optimality of detection and categorization judgments. For example, humans integrate evidence from different sources or modalities according to its reliability, exactly as a statistically ideal observer should (Ashby and Gott, 1988; Ernst and Banks, 2002; Kording and Wolpert, 2004). Once again, however, the notion that agents are optimal for perceptual choice and suboptimal for economic choices may reflect a bias in the approach or emphasis of researchers in the two sub-fields, rather than a fundamental difference in the relevant computational mechanisms. For example, agents may approximate optimal behavior in multi-armed bandit problems (Behrens et al., 2007); on the other hand, sensory detection thresholds may typically be set too high, leading to overly conservative or poorly adjusted detection judgments (Maloney, 1991). Below, we consider the possibility that agents appear to be closer to optimal for perceptual choices mainly because we have a clearer notion of what is being optimized in psychophysical judgment tasks (see Decision Optimality in PDM and EDM).
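To make the ideal-observer benchmark for combining sources of evidence concrete, the following sketch shows reliability-weighted integration for independent Gaussian cues, in which each estimate is weighted by its inverse variance. The function name and the numerical values are hypothetical, chosen only to illustrate the principle.

```python
def combine_cues(estimates, variances):
    """Inverse-variance weighting of independent Gaussian cues.

    Returns the combined estimate and its variance, which is never larger
    than the variance of the most reliable single cue.
    """
    weights = [1.0 / v for v in variances]  # reliability = 1 / variance
    total = sum(weights)
    combined = sum(w * e for w, e in zip(weights, estimates)) / total
    return combined, 1.0 / total


# Hypothetical example: a reliable cue (variance 1) and a noisier cue (variance 4).
combined, variance = combine_cues([10.0, 14.0], [1.0, 4.0])
print(combined, variance)
```

In this example the combined estimate lies closer to the more reliable cue, and its variance is lower than that of either cue alone.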

Researchers concerned with PDM and EDM share the goal of identifying a final common pathway for decisions, that is, a critical stage at which all decision-relevant information has been integrated, and options can be compared in a “common neural currency.” Nevertheless, researchers in the two fields have tended to pin their hopes on very different neural circuits. In PDM, where simple, over-learned sensorimotor tasks are a ubiquitous tool, the focus has been on dorsal stream cortical regions that receive inputs from the sensory cortices, but which contain at least some neurons which code information in the frame of reference of the response. For example, researchers using RDK stimuli in conjunction with a saccadic response have focused on a lateral parietal area that receives input from motion-sensitive extrastriate area MT, but which contains neurons coding for spatial targets of an eye movement (Roitman and Shadlen, 2002; Bennur and Gold, 2011). In other work, recordings have been made from frontal cortical zones with similar properties (Kim and Shadlen, 1999; de Lafuente and Romo, 2006). By contrast, in EDM, researchers have focused on structures such as the dopaminergic midbrain or orbitofrontal cortex (OFC), where neurons respond directly to the reinforcing properties of food or money (Schultz, 1986; Critchley and Rolls, 1996; O’Doherty et al., 2001), and on structures such as the striatum or anterior cortex, where neuronal responses scale with reward prediction errors (Schultz et al., 1997; Matsumoto et al., 2007). These predilections might seem a natural reflection of the different sources of uncertainty typically manipulated in PDM and EDM tasks (about the identity of the stimulus, presumably determined in cortical circuits; and about the value of the stimulus, presumably determined in subcortical and limbic circuits and interconnected structures). However, there may also be strong reasons to suspect the involvement of cortical regions, such as the parietal cortex, in representing the expected value of a choice (Sugrue et al., 2004), as well as evidence that the OFC and basal ganglia (BG) play an important role in discrimination and categorization judgments even in the absence of explicit reward (Eacott and Gaffan, 1991; Ashby et al., 2010). Below, we review this evidence, with a view to providing an integrated account of the neural systems underlying decision-making in primates.

In summary, researchers interested in perceptual and economic choices have made different assumptions, used different approaches, focused on different models and neural circuits, and, not surprisingly, drawn different conclusions. However, we can conceive of the decisions made in both perceptual and economic choice tasks under a common framework – the agent must (i) disambiguate one or more stimuli, and (ii) estimate their worth. Whilst we know much from the PDM and EDM literatures about the neural and computational mechanisms underlying these two processes separately, we know very little about how perceptual and reward information is integrated in the primate brain. In other words, we have as yet no general understanding of the mechanisms by which primates make decisions.

A renewed interest in the computational mechanisms underlying decision-making has enriched the field in recent years. In this section, we focus on the sequential sampling framework, the most prominent computational theory in PDM. Crucially, however, we also point to successful applications of serial sampling models in accounting for economic choices, and argue that such models may be promising candidates for inclusion in a unified theory of choice. We start this section with a general introduction to sequential sampling models (SSMs) of PDM, and motivate their use in theorizing about value-guided behavior. We continue our discussion by considering computational accounts of how decision-relevant information is fed into the decision process. Finally, we attempt to provide a mechanistic overview of the decision process with regard to optimality.

Two prominent frameworks have been proposed to account for the psychology of PDM: signal detection theory (SDT; Green and Swets, 1966) and SSMs (Laming, 1968; Ratcliff, 1978; Vickers, 1979). While SDT assumes that a decision is settled on the basis of a single sample of information, SSMs suggest that multiple samples of evidence are integrated across time up to a critical level of certainty. Because SSMs are dynamic, they have predictive power unavailable to static SDT accounts, allowing us to model not only choice behavior but also the full time-course of the deliberation process. Theoretical research in the field has explored these models’ relation to statistically optimal inference (Bogacz et al., 2006; Bogacz, 2007), and used both behavioral and neural recordings to validate and compare between models (Ratcliff et al., 2007; Kiani et al., 2008; Ditterich, 2010; Tsetsos et al., 2011). By contrast, in EDM the focus has not been placed on developing explanatory mechanisms of the deliberative process but on ad hoc, descriptive modifications of the normative theory (Von Neumann and Morgenstern, 1944) in order to account for choice biases and apparently paradoxical behavior (Kahneman and Tversky, 1984; Gilovich et al., 2002). The absence of process models in EDM stands in sharp contrast with the state-of-the-art in PDM and poses a serious challenge for the development of a unified theory of choice. However, the recent development of dynamical models of preference formation, which build upon the tradition of SSMs, promises to establish a theoretical link between PDM and EDM (Busemeyer and Townsend, 1993; Usher and McClelland, 2004; Johnson and Busemeyer, 2005; Otter et al., 2008). We next provide an overview of serial sampling models of PDM and subsequently motivate their use in EDM.

In mathematical statistics, the optimal solution to the problem of disambiguating two competing hypotheses given a series of noisy samples of information is provided by the sequential probability ratio test (SPRT; Wald, 1947; Gold and Shadlen, 2001). For a fixed error rate, the SPRT uses the minimum possible amount of evidence in order to generate a categorical decision (Wald and Wolfowitz, 1948). This is achieved by updating at each sampling step the log likelihood ratio of the evidence given the two alternative hypotheses, until it exceeds a pre-defined threshold, at which point the process is terminated and a decision occurs favoring the hypothesis with the larger likelihood. This simple, optimal process explains fundamental aspects of human choices, such as the speed-accuracy trade-off (SAT), whereby higher decision thresholds, and thus more prolonged sampling, lead to more accurate choices (Johnson, 1939). However, although the SPRT has proved able to capture many aspects of human binary choices, it assumes that the observer has perfect prior knowledge of the distributions of evidence. Thus, psychological models of PDM have attempted to approximate the sequential sampling process in more psychologically plausible and computationally feasible ways.
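The test can be sketched in Python under one simplifying assumption: the two hypotheses are Gaussian with means +mu and -mu and a shared, known standard deviation sigma, so that each sample x changes the log likelihood ratio by 2·mu·x/sigma². The function names, the symmetric threshold, and all parameter values are illustrative assumptions. The simulation at the end compares a low and a high threshold to illustrate the speed-accuracy trade-off.

```python
import random


def sprt(samples, mu, sigma, threshold):
    """Sequential probability ratio test for H1: mean=+mu versus H2: mean=-mu.

    Returns (choice, n_samples_used); choice is None if the samples run out
    before either threshold is crossed.
    """
    log_lr = 0.0
    n = 0
    for x in samples:
        n += 1
        # Log likelihood ratio increment for equal-variance Gaussians.
        log_lr += 2.0 * mu * x / sigma ** 2
        if log_lr >= threshold:
            return "H1", n
        if log_lr <= -threshold:
            return "H2", n
    return None, n


def simulate(threshold, n_trials=2000, mu=0.2, sigma=1.0, seed=1):
    """Accuracy and mean sample count when H1 is the true hypothesis."""
    rng = random.Random(seed)
    correct, total_samples = 0, 0
    for _ in range(n_trials):
        stream = (rng.gauss(mu, sigma) for _ in range(10_000))
        choice, n = sprt(stream, mu, sigma, threshold)
        correct += choice == "H1"
        total_samples += n
    return correct / n_trials, total_samples / n_trials


fast_acc, fast_n = simulate(threshold=1.0)
slow_acc, slow_n = simulate(threshold=3.0)
print(f"low threshold:  accuracy={fast_acc:.2f}, mean samples={fast_n:.1f}")
print(f"high threshold: accuracy={slow_acc:.2f}, mean samples={slow_n:.1f}")
```

The sketch also makes the limitation noted above explicit: the increment can only be computed because mu and sigma are passed in, that is, because the observer is assumed to know the evidence distributions in advance.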

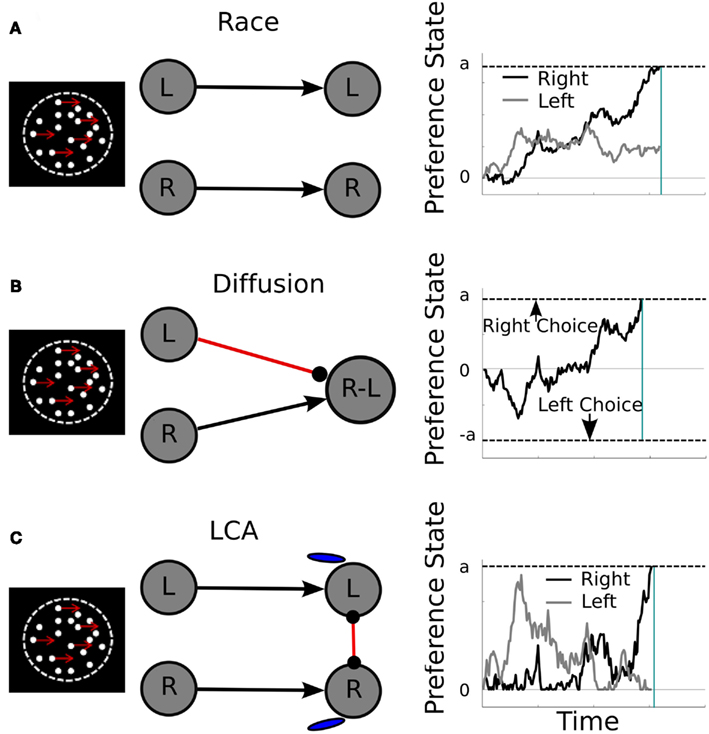

Two different broad classes of SSM of PDM have been proposed in the literature. The first class encompasses accumulator or race models (Figure 1A) that assume the independent integration of pieces of sensory evidence toward a common response criterion, analogous to a race among athletes running on independent tracks (Vickers, 1979; Townsend and Ashby, 1983; Brown and Heathcote, 2008). This mechanism contrasts with that of the diffusion model (Figure 1B), in which the net difference in evidence favoring either option is accumulated (Laming, 1968; Ratcliff, 1978; Ratcliff and McKoon, 2008). Thus, we can differentiate among PDM models according to whether the input to the decision process is an absolute or a relative signal (see Decision Input). A third class of PDM models has also emerged, building on mechanisms of existing mathematical models but also on principles of neural computation, such as the leaky competing accumulator model or LCA (Usher and McClelland, 2001) and the Wang model (Wang, 2002; Figure 1C). These models share with the race framework the idea that the absolute evidence for each alternative is integrated. However, similar to the diffusion model, they induce competition among the alternative hypotheses in the form of lateral inhibition at the response level (see Decision Processes and their Relation to Optimality).

Figure 1. Computational architecture (middle panels) and representative activation trajectories (right panels) of the race (A), diffusion (B), and LCA (C) models in a motion discrimination task (left panels). Middle panels: black lines with arrowheads represent excitatory connections, and red lines terminating in filled circles correspond to inhibitory connections. Gray circles represent units encoding left (L) and right (R) responses or their difference (R-L). Blue “tears” stand for activation leakage. Right panels: representative activation over time (x-axis) in L (gray) and R (black) units, or a unit encoding their difference of activation (black, part B only). Bounds on activation level, at which a choice is initiated, are indicated by the dashed line signaled with lowercase letter a (or – a, part B only). Vertical cyan line, estimated reaction time for the representative trial. In the race model, the two options race independently toward a common upper decision boundary. In the diffusion model, choice is determined by which boundary is first reached (upper or lower). In the LCA model, the two options compete against each other toward a common response criterion.
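The three architectures can be contrasted in a minimal discrete-time simulation. This is a sketch under simplifying assumptions (Gaussian noise added at every step, a single common bound, activations in the LCA floored at zero) with hypothetical parameter values, and it is not a reimplementation of any published model fit.

```python
import random


def race_trial(drift_l, drift_r, bound, noise, rng, max_steps=10_000):
    """Two independent accumulators; the first to reach `bound` wins."""
    x_l = x_r = 0.0
    for t in range(1, max_steps + 1):
        x_l += drift_l + rng.gauss(0.0, noise)
        x_r += drift_r + rng.gauss(0.0, noise)
        if x_l >= bound or x_r >= bound:
            return ("L" if x_l > x_r else "R"), t
    return None, max_steps


def diffusion_trial(drift_l, drift_r, bound, noise, rng, max_steps=10_000):
    """One accumulator tracks the difference R - L between +bound and -bound."""
    x = 0.0
    for t in range(1, max_steps + 1):
        x += (drift_r - drift_l) + rng.gauss(0.0, noise)
        if x >= bound:
            return "R", t
        if x <= -bound:
            return "L", t
    return None, max_steps


def lca_trial(drift_l, drift_r, bound, noise, rng,
              leak=0.02, inhibition=0.02, max_steps=10_000):
    """Leaky accumulators with mutual (lateral) inhibition."""
    x_l = x_r = 0.0
    for t in range(1, max_steps + 1):
        new_l = x_l + drift_l - leak * x_l - inhibition * x_r + rng.gauss(0.0, noise)
        new_r = x_r + drift_r - leak * x_r - inhibition * x_l + rng.gauss(0.0, noise)
        # Activations cannot fall below zero.
        x_l, x_r = max(new_l, 0.0), max(new_r, 0.0)
        if x_l >= bound or x_r >= bound:
            return ("L" if x_l > x_r else "R"), t
    return None, max_steps


# Rightward motion is assumed to be the correct answer (drift_r > drift_l).
rng = random.Random(0)
for name, model in [("race", race_trial), ("diffusion", diffusion_trial), ("LCA", lca_trial)]:
    results = [model(0.05, 0.10, 3.0, 0.3, rng) for _ in range(500)]
    accuracy = sum(choice == "R" for choice, _ in results) / len(results)
    mean_steps = sum(t for _, t in results) / len(results)
    print(f"{name}: accuracy={accuracy:.2f}, mean steps={mean_steps:.1f}")
```

The structural difference lies in the update line of each function: the race model integrates each input separately, the diffusion model integrates only their difference, and the LCA integrates each input separately while letting the accumulators suppress one another.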

Most PDM tasks require the observer to categorize noisy evidence presented in series. Serial sampling thus provides a natural mechanism for optimizing decisions, by averaging out the noise-driven fluctuations over time and steadily enhancing the signal-to-noise ratio. In EDM tasks, however, stimuli tend to be static and perceptually unambiguous. What benefit might be conferred by serial sampling in EDM tasks, and what might be accumulated? In EDM tasks, uncertainty is derived from variability in internal information about the expected value of each option. A growing consensus indicates that dedicating more processing time to an economic choice confers similar benefits as in PDM tasks, as if the participants were “accumulating” internal information about economic value, rather than averaging over external noise. For example, subjective values may be sampled stochastically from long-term memory, allowing a subjective value representation to be actively constructed on the basis of past experience (Sigman and Dehaene, 2005; Milosavljevic et al., 2010). These samples could be defined either with respect to the immediate context (i.e., how good is an option compared to the other alternatives), or in relation to memory contents (how good is an option relative to other similar options encountered in the past; Stewart et al., 2006). Whether this covert sampling process is governed by similar principles and mechanisms to the mental process that underlies PDM remains an open question.

One important model, called Decision Field Theory (DFT; Busemeyer and Townsend, 1993; Johnson and Busemeyer, 2005), argues that sampling of competing options is biased in part by expected reward, so that more valuable sources are sampled more frequently. In DFT, different attributes of a percept or good are sampled in turn according to where attention is oriented, such that the decision variable (DV) corresponds to the attention-weighted sum of the sampled information. Attention might be oriented stochastically, or directed preferentially to a subset of the information, such as the most valuable option. DFT is able to explain preference reversals in economic behavior, such as the Allais paradox (Johnson and Busemeyer, 2005), and contextual effects in multi-attribute choice (Roe et al., 2001). A related account, Decision by Sampling (DbS), proposes that utilities are constructed afresh through sampling attribute values from both the immediate context and long-term memory, and considering the rank of the target value within the current set of samples (Stewart et al., 2006). By assuming that the contents of memory reflect the real world distribution of decision-relevant quantities, DbS explains a range of biases such as aversion to losses, overestimation of small probabilities and underestimation of large probabilities, and hyperbolic temporal discounting. These models contrast with more descriptive accounts such as Prospect Theory (Kahneman and Tversky, 1979), that simply assume that these principles are primitives of decision behavior, rather than explaining how they occur in a plausible computational framework.
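The rank-based valuation at the heart of DbS can be illustrated with a short sketch. Here the subjective value of a target amount is simply the proportion of sampled comparison values that it exceeds. The function name is hypothetical, and the exponential distribution of remembered gains (many small amounts, few large ones) is an assumption made for illustration rather than an empirical estimate.

```python
import random


def dbs_value(target, comparison_sample):
    """Relative rank: proportion of sampled values that the target exceeds."""
    favorable = sum(target > other for other in comparison_sample)
    return favorable / len(comparison_sample)


rng = random.Random(0)
# Assumed memory contents: skewed gains with a mean of 100 units.
memory = [rng.expovariate(1 / 100) for _ in range(5000)]
# Each decision draws only a small sample of comparison values from memory.
sample = rng.sample(memory, 200)

for amount in (50, 100, 200, 400):
    print(amount, round(dbs_value(amount, sample), 2))
```

Because small amounts are more common in the assumed memory distribution, equal increments in the target amount produce progressively smaller gains in rank, which yields a concave subjective value function without assuming one as a primitive.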

The serial sampling approach has been applied with success to PDM tasks where evidence is noisy and sequential. One might argue that this approach is tailored to the serial nature of the PDM tasks. However, the recent success of SSMs in explaining classic puzzles and paradoxes in EDM suggests that they may offer a domain-general mechanism by which uncertainty can be reduced in decision-making, irrespective of whether that uncertainty arises from the sensory or value representation. In the next subsection we discuss what information might serve as input to the decision process, and we then overview computational accounts of how this process might work.

In the PDM literature a major controversy is whether decisions are settled on the basis of the relative or the absolute amount of the accumulated evidence. Race models (Figure 1A), which assume independence among the accumulated tallies of evidence, offer prima facie neurobiological plausibility, and have the virtue of being easy to extend to decisions between more than two alternatives (Bogacz et al., 2007; Furman and Wang, 2008; Tsetsos et al., 2011). By contrast, accounts based on the SPRT, such as the diffusion model (Figure 1B), offer closer approximations to statistically optimal choice behavior, and are also supported by neurophysiological evidence (see Orbitofrontal Cortex below).

Paradoxically, the same question has provoked a major debate in the field of behavioral economics over the last 60 years, but with converse claims about optimality. There, expected utility theory, the cornerstone of theories of rational choice, argues that utilities are derived in absolute terms, independent of the context, whereas relativist theories appear to provide a better empirical description of human choice behavior (see Vlaev et al., 2011 for a review). Early psychological theories proposed that the normative expected utility is modified in several ways during choice. For example, Prospect Theory assumes that values are calculated with respect to a reference point, or status quo, and introduces non-linearities to value and probability functions. Crucially however, these theories conserve the notion that the value of an option is independent of other available options (Kahneman and Tversky, 1979; Keeney and Raiffa, 1993). By contrast, relative theories of decision-making propose that option values are computed afresh in the context of each decision (Tversky and Simonson, 1993; Parducci and Fabre, 1995; Gonzalez-Vallejo, 2002). Thus, the value assigned to an option reflects not only its properties but also those of the other available alternatives. On the empirical front, context effects such as preference reversal (Simonson, 1989; Roe et al., 2001; Johnson and Busemeyer, 2005) and prospect relativity (Stewart et al., 2003) have supported the notion of relative valuation. Consider for example a hypothetical choice between two laptop computers; one is expensive and very light (A) while the other one is heavy and cheaper (B). Paradoxically, an initial tendency to favor B can be reversed by the appearance of a third option (C), which is similar in weight to A but more expensive. This asymmetric dominance effect (Huber et al., 1982; Simonson, 1989) is representative of a class of contextual preference reversals that pose a serious challenge to independent valuation theories, which would predict that the valuation of A and B is a function of their attribute values only and that irrelevant alternatives, like option C, should not affect this valuation. This question of whether decision-relevant brain regions encode value in an absolute (“menu-invariant”) or relative framework is a major concern in neuroscientific studies of EDM (see Orbitofrontal Cortex and Absolute Stimulus Value below).

Therefore, a central question for both literatures is whether the input to the decision process is an absolute or a relative quantity. Interestingly, debate has focused on how the information is transformed before being processed by the decision mechanism, under the assumption that all available information is utilized. An alternative approach posits that the sampling process is biased by selective attention or endogenous factors (e.g., preference states). In what follows we review the literature, drawing attention to the distinction between unbiased and biased sampling of information.

In most PDM tasks, choices are typically made on the basis of a single stimulus feature or dimension, and observers are instructed to hold fixation steady (but see Siegel et al., 2008 for an exception). It is thus implicitly assumed that fluctuations in visual attention are controlled for, such that information is sampled evenly for all alternatives. However, choices are known to be biased by attentional factors, such as where observers place their gaze (Russo and Rosen, 1975; Russo and Leclerc, 1994; Payne, 1976; Glockner and Herbold, 2011). This phenomenon was investigated recently on an economic decision task, in which eye movements were measured whilst hungry observers chosen between two food items displayed visually on either side of the screen (Krajbich et al., 2011). The authors compared the ability of variants of the drift-diffusion model (DDM) to account for choices and choice latencies made in the experiment, reporting that the winning model was one in which the gain of accumulation was modulated multiplicatively by the value of the currently fixated item. A follow-up study demonstrated comparable effects for trinary choices, using a multi-alternative version of the DDM (Krajbich and Rangel, 2011). The modeling aspects of the work demonstrate the applicability of serial sampling models to EDM, and highlight the importance of recognizing the capacity-limited nature of the choice process, something which has been notably absent particularly from PDM models, which prefer to emphasize that decisions are made in a strictly optimal fashion (Bogacz et al., 2006; Bogacz, 2007; van Ravenzwaaij et al., 2012). 
The specific interpretation of the data offered by the authors, that economic preferences depend on a stochastic sampling of the (external) world via attention, is intriguing, but another possibility is that the sampling process itself is biased by the observers’ preferences (Svenson and Benthorn, 1992; Jonas et al., 2001; Doll et al., 2011; Le Mens and Denrell, 2011). For example, if the decision maker is leaning toward one alternative, she might sample from it more often, seeking confirmatory evidence. This would be consistent with the more general observation that agents seek to confirm, rather than to disconfirm, hypotheses that they already entertain, and would suggest that momentary preference states could strongly bias the input to the evidence accumulation process in a bidirectional fashion (Holyoak and Simon, 1999; Shimojo et al., 2003).
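The idea of an accumulation process whose gain depends on where the observer is looking can be made concrete with a small simulation. The sketch below is only illustrative and is not the model fitted by Krajbich and colleagues: it assumes that the value of the unfixated item is discounted by a factor `theta`, that gaze alternates between the two items at a fixed interval, and that all parameter names and default values (`theta`, `drift_scale`, `noise_sd`, `fixation_steps`) are hypothetical choices made for the example.

```python
import random

def simulate_attentional_ddm(value_left, value_right, theta=0.3,
                             drift_scale=0.002, noise_sd=0.02, bound=1.0,
                             fixation_steps=300, first_fixation="left",
                             max_steps=100_000, rng=None):
    """Simulate one choice between two valued items.

    Evidence drifts toward the fixated item because the value of the
    unfixated item is multiplied by theta (0 <= theta <= 1).
    Returns (choice, number_of_steps); choice is None if no bound is reached.
    """
    rng = rng or random.Random()
    evidence = 0.0
    fixated = first_fixation
    for step in range(1, max_steps + 1):
        if fixated == "left":
            drift = drift_scale * (value_left - theta * value_right)
        else:
            drift = drift_scale * (theta * value_left - value_right)
        evidence += drift + rng.gauss(0.0, noise_sd)
        if evidence >= bound:
            return "left", step
        if evidence <= -bound:
            return "right", step
        # Assumed gaze pattern: switch items after a fixed number of steps.
        if step % fixation_steps == 0:
            fixated = "right" if fixated == "left" else "left"
    return None, max_steps

if __name__ == "__main__":
    rng = random.Random(0)
    choices = [simulate_attentional_ddm(5.0, 5.0, rng=rng)[0] for _ in range(200)]
    print("P(choose first-fixated item):", choices.count("left") / len(choices))
```

With two equally valued items, this sketch tends to favor whichever item is fixated first, which is the kind of gaze-dependent bias described above. Note that it treats fixations as given; it does not address the alternative possibility that preferences themselves steer where the observer looks.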

In EDM problems whose structure is more complex, with each option varying along several dimensions, the selective allocation of resources to a subset of the choice information becomes more pressing. Empirical research in this area has attempted to track the regularities in information acquisition and identify what strategies people might use in order to compare options (e.g., within-option evaluation across all attributes, or across-option evaluation attribute by attribute; Russo and Rosen, 1975; Dhar et al., 2000; Fellows, 2006; Glockner and Herbold, 2011). One possibility, attributable to DFT (Roe et al., 2001), is that people switch their attentional focus back and forth from attribute to attribute (Tversky, 1972). The subjective attribute values for each option are accumulated across time and a decision is initiated once a threshold is breached. This proposal is appealing as it provides a generic framework of integration across attributes, even if these attributes are incommensurate and thus cannot be represented in a “common currency.” For example, DFT has applied a similar attention switching approach to address how people’s decisions about sensory information are biased by rewards, under the assumption that observers switch their attention between sensory evidence and information about the likely payoffs (Diederich and Busemeyer, 2006; see Decision Optimality in PDM and EDM). Finally, another plausible case where biased sampling might be critical is when people are faced with multi-alternative problems in either PDM or EDM tasks (Krajbich and Rangel, 2011). These problems might be broken down into a multitude of binary comparisons which can be based on the similarity of the items in the decision space (Russo and Rosen, 1975). Further empirical work is needed to identify how people allocate their attention when confronted with a large number of options, for example when dining at a restaurant with an extensive menu.

We describe above the current debate regarding what information constitutes the input to the decision process. In this subsection we consider how this process works, namely what mechanisms transform input into decisions. A central question in both PDM and EDM is whether the decision mechanism reflects an optimized process. However, while in PDM optimality is defined in statistical terms (optimization of the speed-accuracy trade-off, SAT), in EDM it is defined as the maximization of the reward rate. In the next section we review recent attempts to examine how these two different notions of optimality are combined when making decisions, and what aspects of the choice mechanisms are responsible for suboptimal biases. We next turn to another important aspect of the decision mechanism, temporal weighting, and its relation to choice optimality. Temporal weighting is often considered to be a suboptimal property leading to order effects and biases. We argue that in special cases overweighting early or late information might result in better decisions. Finally, we consider response inhibition as being responsible for conflict in difficult choice problems, and we discuss the computational and descriptive merits of this mechanism.

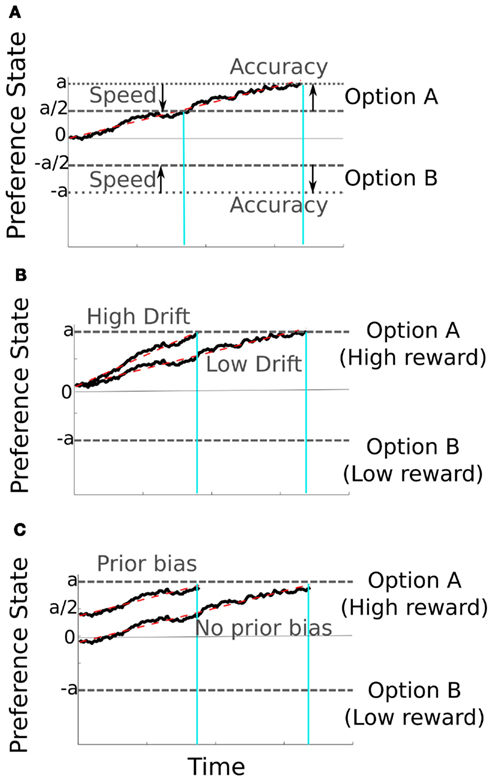

Humans’ choice behavior obeys a simple principle by which the advantages of speed and accuracy are traded off against one another (Johnson, 1939; Wickelgren, 1977; Bogacz et al., 2010). Within the sequential sampling framework this SAT is controlled by a single parameter, the height of the decision bound (Laming, 1968); a low bound implies that decisions will be fast but overly influenced by noise fluctuations, while a high bound produces accurate but delayed responses (Figure 2A). For fixed reward rates there exists a specific response criterion value that optimizes this trade-off, and most PDM work has focused on identifying this bound or testing whether human behavior respects it (Bogacz et al., 2006). However, in both the real world and the lab, responses are often associated with unstable, asymmetric rewards, raising the question of how people simultaneously maximize their accuracy and the reward rate. For example, a radiographer examining medical scans might have to impose alternately a liberal or conservative criterion for identifying an atypicality, such as an incipient tumor, depending on the relative costs and benefits of missing the first signs of disease, or inconveniencing the patient with more tests (Diederich and Busemeyer, 2006; Feng et al., 2009; Summerfield and Koechlin, 2010; Gao et al., 2011). In such cases, participants exhibit a bias toward the high-reward option (Figures 2B,C), but the computational mechanisms by which this occurs are a topic of ongoing research. One possibility is that a higher reward changes the way the sensory evidence for the high-reward hypothesis is perceived, by increasing the drift rate (i.e., slope) of the corresponding accumulator (Figure 2B). An alternative hypothesis is that the way the input is processed remains unaffected but the starting point of the accumulation for the high-reward hypothesis is shifted closer to the decision bound (Figure 2C).
Specific behavioral patterns observed in humans and other primates, such as fast errors when the high-reward option was incorrect, could be captured only by the latter hypothesis (Summerfield and Koechlin, 2010; Gao et al., 2011). This shift of the starting point, prior to the onset of evidence, is independent of the decision bound and the SAT of the observer. Other accounts emphasize that human participants try to find a compromise between the perceived benefits of accuracy and overt reward (Bohil and Maddox, 2003; Simen et al., 2009).
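The dependence of speed and accuracy on the bound, and the effect of a shifted starting point, can be illustrated with standard closed-form results for a diffusion process with constant drift and symmetric bounds at plus and minus `bound` (expressions of this kind are discussed at length by Bogacz et al., 2006). The sketch below is a minimal illustration under stated assumptions: the `overhead` term (time per trial not spent deciding), the definition of reward rate as accuracy divided by total trial time, and all parameter values are choices made for the example, not quantities taken from the studies cited above.

```python
import math

def error_rate(drift, bound, noise_sd=1.0):
    """Probability of hitting the wrong bound from an unbiased start (drift > 0)."""
    return 1.0 / (1.0 + math.exp(2.0 * drift * bound / noise_sd ** 2))

def mean_decision_time(drift, bound, noise_sd=1.0):
    """Mean time to reach either bound from an unbiased start (drift > 0)."""
    return (bound / drift) * math.tanh(drift * bound / noise_sd ** 2)

def prob_upper_bound(drift, bound, start=0.0, noise_sd=1.0):
    """Probability of hitting +bound when accumulation starts at `start`."""
    if drift == 0.0:
        return (start + bound) / (2.0 * bound)
    k = 2.0 * drift / noise_sd ** 2
    return (1.0 - math.exp(-k * (start + bound))) / (1.0 - math.exp(-2.0 * k * bound))

def reward_rate(drift, bound, overhead=1.0, noise_sd=1.0):
    """Correct responses per unit time; `overhead` is an assumed fixed delay per trial."""
    accuracy = 1.0 - error_rate(drift, bound, noise_sd)
    return accuracy / (mean_decision_time(drift, bound, noise_sd) + overhead)

def best_bound(drift, candidates, overhead=1.0):
    """Grid search for the bound that maximizes the assumed reward rate."""
    return max(candidates, key=lambda b: reward_rate(drift, b, overhead))

if __name__ == "__main__":
    for b in (0.5, 1.0, 2.0):
        print(f"bound={b}: error={error_rate(1.0, b):.3f}, "
              f"time={mean_decision_time(1.0, b):.3f}")
    grid = [0.05 * i for i in range(1, 61)]
    print("reward-rate-maximizing bound:", best_bound(1.0, grid))
    print("P(upper) with start shifted toward upper bound:",
          round(prob_upper_bound(0.0, 1.0, start=0.3), 3))
```

Raising the bound lowers the error rate at the cost of longer decision times, and for a given drift and overhead there is an intermediate bound that maximizes the assumed reward rate. Shifting the starting point toward one bound raises the probability of that response even when the drift is zero, which is the additive bias sketched in Figure 2C.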

Figure 2. Representative activation trajectories from the diffusion model with noise (black traces) and without noise (dashed red traces). Decision bounds are signaled by the dashed lines marked with the lowercase letter a (or −a). In part (A), the speed-accuracy trade-off is determined by the height of the response boundary, with lower boundaries resulting in faster and less accurate decisions (and vice versa for higher boundaries). (B,C) Choice can be biased by the presence of asymmetric rewards. This is achieved either by increasing the rate of evidence accumulation for the high-reward option [high drift trajectory in (B)] or by increasing its initial activation, prior to the onset of accumulation (C). Vertical cyan lines show RTs for representative trials under conditions where speed or accuracy are emphasized (A) or where decisions are biased by reward (B,C).

Thus, although PDM tasks have emphasized the optimization of accuracy, observers are swayed by factors that change the underlying reward rate (Summerfield and Koechlin, 2010; Gao et al., 2011). In EDM, optimality has tended to refer to the maximization of reward within the timeframe of the experiment, a tradition owing to neoclassical economics, where the agent is fully informed about all available alternatives and her behavior is rational when it maximizes reward rate. Failure to maximize rewards is typically considered to be irrational. In the real world, however, information about the environment is not provided but must be actively sampled, leading to a trade-off between exploiting current resources and exploring new alternatives. Thus, although any organism that optimizes its fitness should jointly maximize reward and information, traditional interpretations of laboratory findings have failed to consider optimality in this broader perspective. Although phenomena such as hyperbolic delay-discounting (Loewenstein and Thaler, 1989) and loss-aversion (Tversky and Kahneman, 1991) are trademarks of irrational behavior within behavioral economics, there may be circumstances in the wild where it is optimal to demonstrate such behavior – for example, losses can have fatal consequences for an organism, whereas gains may be short-lived. These concerns have been addressed in EDM by the research program of ecological rationality, which highlights that choice optimality should not be assessed independently of the environmental structure and the way it influences the trade-off between information and reward acquisition (Oaksford and Chater, 1996; Gigerenzer and Selten, 2001; Shanks et al., 2002; Behrens et al., 2007).

When people make decisions on the basis of sequential evidence, they often weigh information differentially according to its position in the sequence. For example, a judge may arrive at a hasty conclusion on the basis of the first witness (primacy bias), or a voter may judge a politician on his or her most recent debating performance (recency bias). Such order effects have been encountered in both PDM (Usher and McClelland, 2001; Kiani et al., 2008) and EDM tasks (Hogarth and Einhorn, 1992; Newell et al., 2009). The PDM literature has focused on mechanistic models where the temporal weighting of information emerges from the dynamics of evidence integration (Usher and McClelland, 2001). On the other hand, in EDM order effects have been captured mostly in a descriptive fashion, with different weights being assigned to different pieces of information (Anderson, 1981; Hogarth and Einhorn, 1992). It is important for decision theorists to clarify what aspects of the generic (domain-independent) decision mechanism generate order effects. An appealing possibility has been put forward in biologically inspired models of PDM. There, depending on different parameter values, the decision mechanism can overweight (i.e., attractor dynamics) or downweight early information (i.e., leaky integration; see also Bogacz et al., 2007 for an extensive discussion).

What is the merit of weighing information differently at different times? Applying stronger weights to early information (attractor dynamics and primacy) is a useful mechanism that prevents endless procrastination when the information is weak or ambivalent (see also next subsection). In these cases the choice will be determined by random noise fluctuations early on, ensuring that the decision maker does not engage in excessively prolonged deliberation (Usher and McClelland, 2001). On the other hand, overweighting late information (recency) is useful when the sensory environment is volatile, because forgetting early information and emphasizing the latest state of the world results in faster adaptation to changes in the underlying statistical structure of the environment. Thus, whereas biased temporal weighting of information (either perceptual or economic) might appear suboptimal from the pure perspective of accuracy maximization, it confers benefits on reward-maximization if the decisions are challenging or if the environment is unstable.
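How a single integration parameter can yield either primacy or recency can be seen in a deliberately simplified linear integrator, x[t+1] = (1 + feedback) * x[t] + s[t]. This is only a sketch: the attractor models cited above are nonlinear, and the linear form with positive `feedback` should be read as an approximation of their early, self-amplifying phase. The function name and the parameter `feedback` are introduced here for illustration.

```python
def temporal_weights(n_samples, feedback):
    """Normalized influence of each sample s[0..n-1] on the final state of
    the linear integrator x[t+1] = (1 + feedback) * x[t] + s[t], with x[0] = 0.

    feedback < 0: leaky integration, so late samples dominate (recency).
    feedback = 0: perfect integration, so all samples weigh equally.
    feedback > 0: self-excitation, so early samples dominate (primacy).
    """
    gain = 1.0 + feedback
    raw = [gain ** (n_samples - 1 - i) for i in range(n_samples)]
    total = sum(raw)
    return [w / total for w in raw]

if __name__ == "__main__":
    for label, fb in (("leaky", -0.2), ("perfect", 0.0), ("self-exciting", 0.2)):
        weights = temporal_weights(5, fb)
        print(label, [round(w, 3) for w in weights])
```

In this toy case the weights fall off geometrically from the most recent sample when the integrator leaks, and from the earliest sample when it amplifies its own state.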

Choosing between two alternatives that are similar in terms of value or sensory evidence will result in response conflict, longer decision times (Laming, 1968; Ratcliff, 1978; Usher and McClelland, 2001), and less confidence associated with the final decision (Vickers, 1979; Pleskac and Busemeyer, 2010). The prolongation of deliberation in such cases might occur because the decision bound is raised to allow a clear winner to emerge among closely matched alternatives. However, adjusting the bound to the decision input requires either a priori knowledge about the difficulty of the decision problem or the online adjustment of the criterion as the input unfolds. A more plausible way to produce longer decision times for more difficult problems is by introducing competition between the alternatives. In PDM this competition can be incorporated in two ways: in the diffusion model it takes place at the input level (section Decision Input and Figure 1B), while in accumulator models where the decision inputs are assumed to be independent (as in race models, Figure 1A), competitive interactions are achieved via lateral inhibition at the response level (Usher and McClelland, 2001; Wang, 2002), as illustrated in Figure 2C. Response inhibition in PDM brings some computational advantages in multi-alternative problems, where the activation in favor of poor options will be suppressed early and the decision process will continue evaluating only the strong or informative options (Bogacz et al., 2007). Additionally, response inhibition results in attractor dynamics (discussed in Temporal Weighting), which can facilitate resolving difficult decision problems within reasonable time (Wang, 2002; Bogacz et al., 2007).
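The way lateral inhibition lengthens decisions between closely matched options can be sketched with a small accumulator simulation written in the spirit of the leaky competing accumulator of Usher and McClelland (2001). It is a simplified illustration, not a reimplementation of their model: the Euler update, the truncation of activations at zero, and every parameter value (`leak`, `inhibition`, `threshold`, `dt`) are assumptions made for the example.

```python
import math
import random

def simulate_lca(inputs, leak=0.2, inhibition=0.2, noise_sd=0.0,
                 threshold=1.0, dt=0.01, max_steps=100_000, rng=None):
    """Race between accumulators that leak and inhibit one another.

    Each unit i follows dx_i = (input_i - leak * x_i - inhibition * sum of
    the other units) * dt + noise, with activations truncated at zero.
    Returns (index_of_winner, decision_time), or (None, elapsed_time).
    """
    rng = rng or random.Random()
    x = [0.0] * len(inputs)
    for step in range(1, max_steps + 1):
        total = sum(x)
        updated = []
        for i, inp in enumerate(inputs):
            others = total - x[i]
            dx = (inp - leak * x[i] - inhibition * others) * dt
            dx += rng.gauss(0.0, noise_sd) * math.sqrt(dt)
            updated.append(max(0.0, x[i] + dx))
        x = updated
        winner = max(range(len(x)), key=x.__getitem__)
        if x[winner] >= threshold:
            return winner, step * dt
    return None, max_steps * dt

if __name__ == "__main__":
    print("hard, with inhibition:", simulate_lca([1.0, 0.9]))
    print("easy, with inhibition:", simulate_lca([1.0, 0.5]))
    print("hard, no inhibition:  ", simulate_lca([1.0, 0.9], inhibition=0.0))
    print("easy, no inhibition:  ", simulate_lca([1.0, 0.5], inhibition=0.0))
```

In the noise-free runs above, the winning unit receives the same input in both problems. Without inhibition its time to threshold is therefore identical, as in an independent race. With inhibition the stronger rival slows it down more, so the harder problem takes longer without any change to the bound.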

In EDM, conflict is captured in relative valuation models of preference by assuming that the input is transformed according to the value of its rivals (similar to the PDM diffusion, see also Biased Sampling). Typically the actual integration of the transformed input does not involve competitive interactions among alternatives. One exception is encountered in SSM of multi-attribute decision-making, like DFT (Roe et al., 2001) and LCA for value-based choice (Usher and McClelland, 2004), where it is assumed that different alternatives compete via response inhibition. It is noteworthy that in DFT a type of local, distance-dependent response inhibition is the key mechanism that explains a series of preference reversal effects (e.g., the asymmetric dominance effect, see Decision Input for an example). According to this account, alternatives that are similar to each other in the decision space compete more strongly while dissimilar alternatives do not interact with each other [see also (Tsetsos et al., 2010) and (Hotaling et al., 2010) for discussion of this mechanism].

PDM models assume that choice is the result of the accumulation of sequentially sampled sensory evidence. By contrast, EDM models have sought to define deviations from rationality but said little about the underlying mental process. The advent of process models of economic preference that capitalize on the serial sampling property of PDM promises to provide an improved and mechanistic understanding of EDM (see Serial sampling models of EDM). In the preceding section we provided an overview of currently debated mechanistic aspects of PDM and EDM. We first reviewed how information is transformed before being processed by the decision mechanism (relative vs. absolute input, see Decision Input), highlighting also the possibility that these quantities might be actively sampled, subject to exogenous (e.g., visual attention) or endogenous (e.g., preference states) biases. We then discussed how the relevant input is transformed into a decision (see Decision Processes and their Relation to Optimality). In parallel to describing specific computational elements of choice (e.g., temporal weighting, response competition) we also discussed their relation to optimality. Although traditional views state that EDM is suboptimal while PDM is optimal, we propose that this contradiction may have arisen because each literature has defined optimality in a different way.

A number of different brain regions have been proposed as key components of the circuit underlying simple decisions about visual stimuli. In the primate, three of the clearest candidates are (i) dorsal stream cortical circuits, such as the parietal and premotor cortices, (ii) the striatum and related circuitry of the basal ganglia, and (iii) the medial and lateral OFC. Other regions, such as the anterior cingulate cortex (ACC) and prefrontal cortex (PFC) clearly play important roles in decision-making too; for example, the ACC may be important for learning the value of actions (Rushworth and Behrens, 2008), and the PFC for integrating information over multiple time scales in the service of action selection (Koechlin and Summerfield, 2007). However, in the interests of brevity, we do not consider these in detail, referring the reader instead to other reviews that have considered these regions more comprehensively (Summerfield and Koechlin, 2009; Rushworth et al., 2011). Below, we consider the role of parietal/premotor, basal ganglia, and orbitofrontal structures in the decision process, with a specific focus on how each region might contribute to the processing and integration of perception and reward.

The parietal cortex has long been implicated in the mechanisms by which sensation is converted to action, with initial debates concerning whether parietal neurons encode spatial information in the frame of reference of the stimulus or the response (Colby and Goldberg, 1999). Patients with unilateral lesions of the parietal cortex fail to orient saccades or other actions to the contralesional side of space (Robertson and Halligan, 1999), and bilateral damage provokes an inability to combine information from multiple spatial locations, for example to permit accurate judgments of similarity or dissimilarity (Friedman-Hill et al., 1995). Prominent theories of the parietal cortex suggest that it combines information across visual features (Treisman and Gelade, 1980) to generate a map of the relative salience of different locations of external space (Gottlieb, 2007). However, the parietal cortex also seems to be important for combining information across time. This is clear from neuropsychological studies, in which recently encoded spatial information is rapidly lost (Husain et al., 2001), and from neuroimaging studies which highlight increases in parietal blood-oxygen-level-dependent (BOLD) signals during visual short-term memory maintenance, for example in studies requiring detection of change in sequential arrays (Xu and Chun, 2006). Integration of information across a cluttered visual scene is facilitated by repeated sampling of the scene with saccadic eye movements, whose generation depends on dedicated regions of the parietal cortex (Gottlieb and Balan, 2010).

However, it is single-cell research conducted over the past ten years that has generated the most prominent evidence in favor of the idea that parietal neurons act as cortical integrators, and that has emphasized a role in PDM. During viewing of a noisy stimulus such as an RDK, neurons in lateral intraparietal area LIP whose receptive fields (RFs) overlap with one of two saccadic choice targets exhibit firing rates that accelerate with a gain proportional to the level of evidence (motion coherence) in the stimulus favoring a response at that target (Roitman and Shadlen, 2002). Initial investigations were at pains to demonstrate that activity in these cells was not a mere reflection of the sensory input, or the motor response (Gold and Shadlen, 2007). For example, the signal grows during constant stimulation, persists after the stimulus has been extinguished, and deviates from zero even when there is no motion signal in the stimulus (0% coherence trials). Similarly, the parietal activity does not predict the motor parameters of the eventual response (e.g., saccadic latency, velocity, or precision), suggesting that it is not a mere motor preparatory signal. However, more recent work in which sensory and oculomotor codes are dissociated has demonstrated that LIP responses are more heterogeneous, with some neurons responding to the motion direction, and others to the saccadic choice (Bennur and Gold, 2011). Nevertheless, at least some parietal neurons encode decision-relevant information in the frame of reference of the selected action.

One interpretation of this stereotyped increase in firing rate during perceptual judgment is that unlike earlier visual regions that encode the instantaneous sensory information, parietal neurons represent information that is integrated across a temporal window extending for many hundreds of milliseconds. The integration of serial samples of evidence is critical to optimizing a decision process, because repeated sampling of a noisy stimulus enhances the precision of the estimated information in a well-described mathematical fashion. It is this intuition that informs serial sampling models of the decision process described above, and prompted the suggestion that parietal neurons might implement the accumulation-to-bound process that is known to describe decisions and decision latencies on a wide variety of perceptual choice tasks. Indeed, after an initial burst, the firing rates of neurons whose RFs overlap with the alternative, disfavored target tend to decrease with a gain proportional to the motion coherence, seemingly favoring one class of serial sampling models over all others – the DDM, in which accumulators are coupled by mutual inhibition, such that the DV represents a scalar quantity corresponding to the relative evidence in favor of either choice [see Serial Sampling Models of PDM; but see Huk and Shadlen (2005, p. 3027) for a discussion of the possibility that attractor models (Wang, 2002) better explain LIP responses]. Moreover, response-locked analyses show that the parietal activity drops off sharply about 70 ms prior to saccade initiation, at a criterial firing rate that does not depend on the level of information in the stimulus. This satisfies another important prediction of most serial sampling models, namely that a decision is made when a criterial evidence level is reached.
This work has generated a great deal of excitement, and prompted the claim that the parietal cortex plays a key role in the decision processes that underlie sensorimotor control, by integrating evidence up to a choice threshold, at which point an appropriate response is generated (Gold and Shadlen, 2007).
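The statement that repeated sampling improves precision in a well-described way can be written out for the simplest case. The expressions below assume, purely for illustration, that the samples x_1, ..., x_n are independent, each with mean μ (the signal) and variance σ² (the noise); real sensory samples need not satisfy these assumptions.

```latex
\bar{x}_n = \frac{1}{n}\sum_{i=1}^{n} x_i ,
\qquad
\operatorname{Var}(\bar{x}_n) = \frac{\sigma^{2}}{n} ,
\qquad
\frac{\mu}{\operatorname{SD}(\bar{x}_n)} = \sqrt{n}\,\frac{\mu}{\sigma}
```

Under these assumptions the signal-to-noise ratio of the averaged evidence grows with the square root of the number of samples, so quadrupling the integration time doubles the precision of the estimate.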

One question that has received less attention, however, is how the reinforcement value of the options is integrated into the DV during perceptual choice tasks such as motion discrimination. In the experiments described above, the monkey is working to receive a liquid reward following each correct saccadic movement, but recordings are typically made during proficient performance of the task, where the monkey has been trained on many thousands of trials over which the reward contingencies have remained unchanged. Recall that most of the relevant parietal neurons encode the information in the frame of reference of the choice that will eventually be made, even when this is dissociated from the sensory information in the stimulus. In other words, the strength of synapses linking the visual and parietal cortical representations must be adjusted during training to encode information about the likely reinforcement value of each sensorimotor pairing. Recent work has shown that the improvement in perceptual performance on psychophysical tasks such as the random-dot kinetogram is better explained by plastic changes in the parietal and prefrontal regions than those in sensory cortex (Law and Gold, 2008; Kahnt et al., 2011), and that the trajectory of learning is well-described by a reinforcement learning scheme in which visuo-parietal connection weights are gradually updated according to a prediction error signal (Law and Gold, 2009). This leads to a steeper gain of accumulation after repeated feedback-mediated learning, and consequently, more sensitive perceptual judgments. However, it remains unknown whether this prediction error is computed at the cortex, or is dependent on processing of the subsequent reward in subcortical regions (Kahnt et al., 2011). One study suggests that during perceptual categorization of options that can change unpredictably, requiring constant tracking of category statistics, both cortical and subcortical mechanisms are employed. 
However, systems based in the striatum and medial PFC underlie optimal decisions in stable environments, whereas the dorsolateral PFC mediates decisions in fast-changing situations, where it is useful to base decisions on recently buffered information (Summerfield et al., 2011). In general, however, these mechanisms have been explored in considerably less detail for perceptual than for economic choices.
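The notion that a prediction error could gradually strengthen the readout of sensory evidence can be illustrated with a toy delta-rule learner. This is a loose sketch inspired by the kind of scheme described by Law and Gold (2009), not their model: the single scalar weight, the Gaussian sensory signal, the logistic reward prediction, and all parameter values are assumptions introduced for the example.

```python
import math
import random

def train_readout_weight(n_trials=2000, signal=1.0, learning_rate=0.05,
                         initial_weight=0.1, rng=None):
    """Learn one readout weight from reward feedback.

    On each trial a noisy sensory response x is drawn around +signal or
    -signal, the decision variable is w * x, and the choice is its sign.
    The weight changes in proportion to the reward prediction error.
    Returns the list of weights after each trial.
    """
    rng = rng or random.Random()
    w = initial_weight
    history = []
    for _ in range(n_trials):
        direction = rng.choice((-1, 1))
        x = rng.gauss(direction * signal, 1.0)    # noisy sensory response
        dv = w * x                                # decision variable
        choice = 1 if dv >= 0 else -1
        predicted = 1.0 / (1.0 + math.exp(-abs(dv)))   # expected reward
        reward = 1.0 if choice == direction else 0.0
        delta = reward - predicted                # prediction error
        w += learning_rate * delta * choice * x   # delta-rule update
        history.append(w)
    return history

if __name__ == "__main__":
    weights = train_readout_weight(rng=random.Random(1))
    print("initial weight: 0.1, final weight:", round(weights[-1], 2))
```

In this sketch the weight grows with training because correct choices are rewarded more often than the initially weak prediction expects, so the same sensory response comes to drive the decision variable more steeply. That is one way of picturing the steeper gain of accumulation after learning described above.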

Perceptual learning experiments chart the gradual improvement to performance that comes with extensive training and feedback. However, the costs and benefits associated with different types of perceptual error can sometimes change rapidly and unpredictably, on the basis of instructions or other contextual factors. Thus, another line of research has attempted to characterize the neural and computational mechanisms by which perceptual decisions are biased by instructions or cues that signal the relative outcomes associated with each response. As outlined above, serial sampling models in which asymmetric rewards bias the starting point of the accumulation process – an additive, a priori bias in favor of the more valuable response – fit observers’ performance better than models in which the bias influences accumulation rate in a multiplicative fashion (Whiteley and Sahani, 2008; Feng et al., 2009; Pleger et al., 2009; Simen et al., 2009; Summerfield and Koechlin, 2010; Mulder et al., 2012). Correspondingly, there is evidence from single-cell recordings that the responses of parietal neurons reflect an outcome-related bias as an additive increase in firing at or before the moment of stimulus onset (Platt and Glimcher, 1999; Rorie et al., 2010). This occurs during both challenging perceptual discriminations, for example of the RDK stimulus (Rorie et al., 2010), as well as during choices based on perceptually conspicuous information (Platt and Glimcher, 1999). Similar results have also been observed in the premotor cortex. Consistent with this finding, model-based fMRI studies have revealed that an additive reward-mediated bias is correlated with the BOLD signal in the lateral parietal cortex during signal detection (Fleming et al., 2010; Summerfield and Koechlin, 2010).

Relatedly, the responses of parietal neurons track the evolving value of an option in n-armed bandit and other economic choice tasks in which rewards are learned by feedback (Dorris and Glimcher, 2004; Sugrue et al., 2004, 2005). For example, LIP signals during a value-based choice task are well-described by a probabilistic choice model (akin to an RL model) that engages in a leaky integration of information across recent trials, producing classical “matching” behavior typically observed in bandit and reversal learning tasks (Sugrue et al., 2004). More recently, LIP neurons have been observed to correlate with the delay-discounted value of an offer shortly after its onset, before coming to code for a choice in the build-up to action (Louie and Glimcher, 2010). These and other findings have led some authors to propose that the signal-dependent build-up of activity observed during viewing of the RDK stimulus reflects a growing expectation that an eye movement into the target field will be rewarded, an expectation that builds faster on high coherence trials, and is normalized by the value of other options (Kable and Glimcher, 2009). Thus, like brain regions classically implicated in economic choices (see below), parietal signals can adapt rapidly to reflect the changing expected value associated with a choice (Sugrue et al., 2005).
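A leaky integration of recent rewards that produces matching can be sketched in a few lines. The example below is a simplified local-income rule written for illustration, not the model fitted by Sugrue et al. (2004): the time constant `tau`, the function names, and the assumption that each option's reward history is integrated on every trial are all hypothetical simplifications.

```python
def leaky_income(rewards, tau):
    """Exponentially weighted running average of a reward sequence.

    Each new outcome moves the estimate a fraction 1/tau of the way toward
    itself, so outcomes older than roughly tau trials are largely forgotten.
    """
    income = 0.0
    for reward in rewards:
        income += (reward - income) / tau
    return income

def choice_probability(income_a, income_b):
    """Matching rule: choose option A in proportion to its share of income."""
    total = income_a + income_b
    return 0.5 if total == 0 else income_a / total

if __name__ == "__main__":
    # Option A pays off on 3 of every 10 trials, option B on 1 of every 10.
    rewards_a = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0] * 100
    rewards_b = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0] * 100
    p_a = choice_probability(leaky_income(rewards_a, tau=50),
                             leaky_income(rewards_b, tau=50))
    print("P(choose A):", round(p_a, 3))
```

With reward rates in a 3:1 ratio, the choice probability settles near 0.75, which is the signature of matching. A shorter `tau` would make the estimate track changes in reward rates faster at the cost of noisier choices.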

There is good evidence, thus, that the parietal cortex is involved in integrating sensory information during deliberation, and that this integration occurs largely in the frame of reference of the action. However, the relevant sensorimotor contingencies are most likely learned via a reinforcement learning mechanism; and parietal signals reflect the relative reward of different perceptual alternatives, even when their value changes rapidly and unpredictably. In other words, although much work has focused on the parietal contribution to integration of evidence in PDM, single-cell responses there are strongly biased by the expected economic value of the choice-relevant response, and reward-guided visuo-motor learning rescales the responses of parietal neurons, leading to faster accumulation for more practiced choices. In subsequent sections, we contrast the involvement of the parietal cortex in the decision process to that of other candidate structures, such as the basal ganglia.

The basal ganglia are a family of interconnected subcortical nuclei that have been implicated in a complex array of overlapping cognitive and motor behaviors. A detailed review of the functional architecture of the basal ganglia is beyond the scope of this review; we refer the reader to excellent accounts elsewhere (Alexander and Crutcher, 1990; Chevalier and Deniau, 1990; Doll and Frank, 2009; Redgrave et al., 2011). However, we begin by highlighting two architectural features of the basal ganglia that are of particular relevance to our understanding of their contribution to decision-making. Firstly, the striatum receives inputs from a number of cortical regions, including those concerned with processing both sensory and motor information, as well as receiving information about reward from the ventral midbrain (Alexander and Crutcher, 1990). Moreover, its neurons show gradually accelerating firing rates in response to a noisy RDK stimulus, “ramping” activity that is similar in many respects to that observed at the cortex (Ding and Gold, 2010). It is thus ideally placed to contribute to sensorimotor integration and reward-guided learning during perceptual choice tasks. Secondly, information passing through the basal ganglia is routed via one of two major conduits, known as the direct and indirect pathways, that have opposing inhibitory and disinhibitory control over the thalamus and thus over subsequent motor structures such as the premotor area or superior colliculus. Striatal inputs can thus lead to selective disinhibition of the relevant motor area, a hallmark of any system engaged in efficient selection of one action over two or more competing alternatives (Chevalier and Deniau, 1990; Redgrave et al., 1999). Additionally, neuropsychological evidence attests to the importance of the striatum in reward- and feedback-guided sensorimotor learning.
Caudate lesions impair the ability to learn new visuo-motor associations from feedback (Packard et al., 1989), a phenomenon also observed following degeneration of the nigro-striatal pathway in Parkinson’s Disease (Ashby et al., 2003), whereas disconnection of all visual cortical outputs except those to the striatum spares visuo-motor discrimination (Eacott and Gaffan, 1991). In other words, sensory input to the striatum seems to be both necessary and sufficient for the learning mechanisms that lead to accurate perceptual category judgments. Together, these considerations point to the basal ganglia playing a key role in the mechanisms by which actions are selected, on the basis of integrated sensory and reward information.

Information from cortical integrators, such as those LIP neurons that exhibit ramping activity during discrimination judgments about randomly moving dots, is subsequently routed to the striatum (Pare and Wurtz, 2001). A consensus holds that Hebbian synaptic plasticity at the striatum depends on the presence of phasic dopaminergic inputs arising in midbrain regions sensitive to reinforcement (Wickens, 1993). Dopamine signaling from the ascending nigro-striatal pathway thus gates cortico-striatal plasticity, such that rewards occurring in a window lasting for a few seconds after an action strengthen synapses linking sensory information to the relevant action (Kerr and Wickens, 2001). The sign, amplitude, and timing of these dopaminergic inputs are tightly correlated with those of hypothetical “prediction error” signals that guide simulated reinforcement learning. Intriguingly, there is evidence that both the presence and absence of dopamine are functionally significant at the striatum. Increased dopamine uptake at D1 receptors promotes potentiation of sensorimotor responses that evoke positive outcomes, whereas decreased uptake at a separate, D2-receptor-mediated mechanism leads to reduced sensorimotor efficacy where the relevant pairing is followed by a punishment (Doll and Frank, 2009). Thus, patients with Parkinson’s disease, where DA signaling is chronically lowered, tend to learn better from negative than positive outcomes, and may even learn about punishment more effectively than controls (Frank et al., 2004). These “go” and “no-go” signals may be routed to the thalamus via separate direct and indirect pathways that exert inhibitory and disinhibitory control over the thalamus respectively (Frank, 2005).
Thus, the architecture of the basal ganglia is well disposed to allow learning about the value of responding (or of inhibiting a response) in a given sensory context to proceed in a supervised fashion, via integration with information about unexpected reward from the ventral midbrain.
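The asymmetry between learning from positive and negative prediction errors can be illustrated with a minimal sketch. It assumes a simple delta rule with separate learning rates for positive errors (standing in for D1-mediated "go" potentiation) and negative errors (standing in for the D2-mediated "no-go" mechanism). The function names, the outcome coding (+1 reward, -1 punishment), and the learning rates are hypothetical choices for illustration and are not taken from the cited models.

```python
def update_value(value, outcome, alpha_gain, alpha_loss):
    """Update one stimulus-action value from a single outcome.

    Positive prediction errors use alpha_gain (a stand-in for D1 'go' learning);
    negative prediction errors use alpha_loss (a stand-in for D2 'no-go' learning).
    """
    delta = outcome - value                      # reward prediction error
    alpha = alpha_gain if delta > 0 else alpha_loss
    return value + alpha * delta, delta


def learn(outcomes, alpha_gain, alpha_loss):
    """Apply the update rule over a sequence of outcomes, starting from zero."""
    value = 0.0
    for outcome in outcomes:
        value, _ = update_value(value, outcome, alpha_gain, alpha_loss)
    return value


rewarded = [1.0] * 10    # a pairing that is always rewarded
punished = [-1.0] * 10   # a pairing that is always punished

# Assumption: lowered dopamine is modeled only as a smaller alpha_gain.
control = (learn(rewarded, 0.2, 0.2), learn(punished, 0.2, 0.2))
low_dopamine = (learn(rewarded, 0.05, 0.2), learn(punished, 0.05, 0.2))

print("control:      reward value %.3f, punishment value %.3f" % control)
print("low dopamine: reward value %.3f, punishment value %.3f" % low_dopamine)
```

Under these assumptions the low-dopamine learner acquires the punished pairing as quickly as the control learner but lags on the rewarded pairing, which is the qualitative pattern described above for learning from negative versus positive outcomes.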

One consequence of enhanced responsiveness of cortico-striatal synapses could be to effectively reduce the level of cortical activity required to elicit a response in the striatum, and thus to disinhibit the relevant output neurons in the cortex or superior colliculus. Cortico-striatal plasticity has thus been proposed as a plausible neurobiological mechanism by which the decision threshold (for example, the “bound” in the DDM) could be lowered in order to bias responding by reward (Ito and Doya, 2011), or to adapt response times to optimize reward rate under different conditions that emphasize speed or accuracy (Bogacz et al., 2010), as discussed above. This account draws support from biologically realistic network simulations of threshold modulation in the random-dot motion task, in which dopamine-mediated cortico-striatal plasticity determines the threshold level that must be achieved for an all-or-none disinhibition of choice-appropriate neurons in the superior colliculus mediating the required saccadic response (Lo and Wang, 2006).
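The effect of selectively lowering one bound can be sketched with a simple simulation of a drift-diffusion process. This is a minimal illustration, assuming a zero-drift (uninformative) stimulus, Euler integration, and arbitrary units; the function names and parameter values are hypothetical and do not reproduce the network models cited above.

```python
import random


def simulate_ddm(drift, upper, lower, noise=1.0, dt=0.01, rng=None, max_steps=100_000):
    """Simulate one trial; return (choice, decision_time).

    The decision variable starts at 0 and diffuses until it reaches +upper
    (the 'rewarded' option) or -lower (the 'other' option).
    """
    rng = rng or random.Random()
    x, t = 0.0, 0.0
    for _ in range(max_steps):
        x += drift * dt + noise * (dt ** 0.5) * rng.gauss(0.0, 1.0)
        t += dt
        if x >= upper:
            return "rewarded", t
        if x <= -lower:
            return "other", t
    return None, t  # no bound reached within max_steps


def choice_rate(upper, lower, n_trials=2000, seed=0):
    """Fraction of zero-drift trials that end at the 'rewarded' bound."""
    rng = random.Random(seed)
    hits = sum(simulate_ddm(0.0, upper, lower, rng=rng)[0] == "rewarded"
               for _ in range(n_trials))
    return hits / n_trials


print("symmetric bounds:      ", choice_rate(upper=1.0, lower=1.0))
print("lowered rewarded bound:", choice_rate(upper=0.5, lower=1.0))
```

With symmetric bounds the two options are chosen about equally often. Halving the distance to the rewarded bound shifts choices toward that option even though the sensory evidence is uninformative; for a zero-drift process the expected proportion is lower / (upper + lower), i.e., about two thirds here.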

However, one caveat to this account is that adaptation of synaptic efficacy might not occur fast enough to mediate the rapid switching between speed- and accuracy-based responding, or to accommodate situations in which the rewards associated with two perceptual alternatives reverse rapidly, as is required by laboratory tasks (Furman and Wang, 2008). Nevertheless, dopaminergic inputs might also act to bias action selection via direct excitatory inputs to the striatum. For example, when choosing a saccadic response conditioned on the spatial location of a target, the responses of striatal neurons reflect the integration of the action and its expected value (Hikosaka, 2007). Striatal signals reflecting a bias toward a more valuable option can be seen even in the period before stimulation begins, and thus are good candidate substrates for the offset in the accumulation of evidence toward a more rewarded choice in serial decision models such as the DDM (Lauwereyns et al., 2002; Ding and Hikosaka, 2007). However, selective dopaminergic receptor blockade abolishes the striatal responses with a corresponding effect on reaction times (Nakamura and Hikosaka, 2006), suggesting that the influence of reward on the choice process depends on direct inputs from the dopaminergic system (but see Choi et al., 2005). In a similar vein, the observed functional connectivity occurring when speed is emphasized over accuracy might reflect a selective boosting of striatal activity by phasic input from cortical structures such as the supplementary motor area, rather than slow plastic changes at cortico-striatal synapses (Bogacz et al., 2010). In humans, support for this view is offered by the finding that cortico-striatal functional (Harsay et al., 2011) and structural (Forstmann et al., 2010) connectivity both predict individual differences in the extent to which a motivating stimulus enhances choice performance.
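The pre-stimulus offset can be made concrete with the standard first-passage result for a diffusion between two absorbing bounds. The sketch below assumes bounds at 0 and `bound`, a starting point `start` between them, constant drift, and Gaussian noise; the function name and the parameter values are hypothetical.

```python
import math


def p_upper(drift, start, bound, noise=1.0):
    """Probability that a diffusion starting at `start` reaches `bound` before 0.

    Standard result for a Wiener process with constant drift:
        P = (1 - exp(-2*v*z/s^2)) / (1 - exp(-2*v*a/s^2)),
    which reduces to z / a when the drift v is zero.
    """
    if not 0.0 < start < bound:
        raise ValueError("start must lie strictly between 0 and bound")
    if abs(drift) < 1e-12:
        return start / bound
    k = 2.0 * drift / noise ** 2
    return (1.0 - math.exp(-k * start)) / (1.0 - math.exp(-k * bound))


# Unbiased start (midpoint) versus a start shifted toward the rewarded (upper) bound.
for z in (0.5, 0.7):
    print("start=%.1f  no evidence: %.3f  weak evidence: %.3f"
          % (z, p_upper(0.0, z, 1.0), p_upper(1.0, z, 1.0)))
```

Shifting the starting point toward the rewarded bound raises the probability of choosing that option at every evidence strength, and the shift is already present before any evidence arrives, which is why baseline striatal activity is a plausible correlate of this parameter.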

Selectively lowering the threshold for one course of action (decision bound) is one candidate mechanism by which an economically favorable action might be privileged over other alternatives. As described in section “Conflict and Response Competition” above, however, another possibility is that active competition occurs between rival options coupled by local inhibitory connections. This competition is proposed by several computational models of perceptual choice, including those that have attempted to describe decisions among three or more options, such as the Wang model (Wang, 2002; Wong and Wang, 2006), the LCA model (Usher and McClelland, 2001), and the MSPRT (Bogacz and Larsen, 2011). When choice alternatives conflict, an ideal observer will prolong deliberation in order to increase the chances that a single clear winner will emerge. Indeed, faced with the choice between two highly valued options, humans deliberate for longer than when choosing between a high- and a low-valued option, apparently inhibiting the impulse to respond rapidly on the basis of prior reinforcement (Ratcliff and Frank, 2012). At least two models propose that this inhibition depends on computations occurring as information flows through the basal ganglia. Bogacz and colleagues (Bogacz and Gurney, 2007; Bogacz and Larsen, 2011) suggest that restraining responses according to the cumulative evidence in favor of the alternatives might be one function of the indirect basal ganglia pathway, whereas Frank (Frank et al., 2007; Doll and Frank, 2009) has convincingly argued that this function is the province of a third, hyper-direct pathway through the basal ganglia, which links the cortex to the basal ganglia output nuclei via the subthalamic nucleus (STN). For example, patients undergoing disruptive deep-brain stimulation of the STN will respond impulsively on choices between two highly valued options, unlike healthy controls (Frank et al., 2007).
Recent evidence suggests that the STN may act to raise the decision threshold under conditions of response competition by modulating activity in the medial PFC (Cavanagh et al., 2011). This is consistent with a study implicating the anterior cingulate cortex in threshold modulation, during decisions about visual stimuli linked by fixed transitional probabilities (Domenech and Dreher, 2010).
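How mutual inhibition alone can prolong deliberation under conflict is illustrated by the following minimal sketch. It assumes a noise-free, two-unit race with leak and lateral inhibition, loosely in the spirit of the LCA model but not a reimplementation of it; the function name, parameter values, and input strengths are hypothetical.

```python
def lca_race(input_a, input_b, leak=1.0, inhibition=2.0,
             threshold=0.8, dt=0.001, max_time=20.0):
    """Race two leaky, mutually inhibiting accumulators to a common threshold.

    Returns (winner, decision_time); winner is None if neither unit reaches
    the threshold before max_time.
    """
    xa = xb = 0.0
    t = 0.0
    while t < max_time:
        dxa = (input_a - leak * xa - inhibition * xb) * dt
        dxb = (input_b - leak * xb - inhibition * xa) * dt
        xa = max(0.0, xa + dxa)   # activations are rectified at zero
        xb = max(0.0, xb + dxb)
        t += dt
        if xa >= threshold or xb >= threshold:
            return ("a" if xa >= xb else "b"), t
    return None, t


winner_low, rt_low = lca_race(1.0, 0.2)     # high- versus low-valued option
winner_high, rt_high = lca_race(1.0, 0.9)   # two highly valued options
print("low conflict:  winner %s after %.2f time units" % (winner_low, rt_low))
print("high conflict: winner %s after %.2f time units" % (winner_high, rt_high))
```

In both cases the stronger option wins, but when the two inputs are similar each unit suppresses the other for longer before one escapes, so the decision time increases. An explicit threshold adjustment of the kind attributed to the STN would add to this effect and is not modeled here.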

There is thus compelling evidence that the basal ganglia contribute to sensorimotor learning, via dopamine-gated changes in cortico-striatal plasticity. Potentiated cortico-striatal connections might be one mechanism by which an economically advantageous alternative might be favored in a perceptual choice task such as the RDK paradigm. Relatedly, basal ganglia structures such as the STN might act to raise the decision threshold, particularly in the immediate post-stimulus period, when several competing responses simultaneously appear promising (Ratcliff and Frank, 2012). Finally, direct excitatory inputs from cortical or subcortical structures might provoke a pre-stimulus bias toward a more rewarding option that is visible in baseline levels of activity in striatal neurons, a factor that can account for the influence of reward-mediated bias on reaction times in serial sampling models such as the DDM. In the following section, we consider how we might reconcile these findings with the suggestion that parietal signals are also modulated by expected value during PDM (Sugrue et al., 2005), or that cortico-cortical plasticity is an important substrate for sensorimotor learning (Balleine et al., 2009).

The work described in sections “Parietal Cortex” and “The Basal Ganglia Nuclei” above suggests that both the parietal cortex and the basal ganglia make an important contribution to the integration of perceptual evidence about the identity of a stimulus with information about its economic value. For example, (i) both parietal and striatal neurons seem to act as integrators during noisy perceptual decision tasks such as the RDK paradigm; (ii) cortico-cortical and cortico-striatal plasticity both seem good candidates for mediating reward-guided learning mechanisms during perceptual discrimination and categorization judgments, and (iii) biases toward a more economically valuable option seem to be reflected in an additive offset to pre-stimulus activity in both the parietal cortex and striatum. How can we reconcile these two accounts? Might it be that evolution has equipped primates with two mechanisms for learning the value of sensorimotor acts, and if so, why?

According to classic accounts, cortical and subcortical regions contribute to decisions over distinct timescales, with explicit action planning about novel response contingencies occurring in the cortex, before being consolidated to more phylogenetically ancient subcortical circuits implicated in habit-based behaviors (Dickinson and Balleine, 2002). For example, one computational model suggests that long-run average action values are “cached” in dorsolateral striatal territories, providing a stable but inflexible representation of the value of actions that is immune to noise-driven fluctuations in the value of different actions, in contrast to more labile representations in the PFC (Daw et al., 2005). According to this and many similar proposals, slow “model-free” RL processes depend on the basal ganglia, whereas the neocortex and hippocampus provide the agent with an explicit model of the world that can be used to control behavior in a cognitively sophisticated, “model-based” fashion (Glascher et al., 2010).
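The trade-off between a stable cached value and a labile one can be sketched with a running delta-rule average. This is a minimal illustration that assumes the only difference between the two representations is the learning rate; the function names, learning rates, and outcome sequence are hypothetical and do not implement the cited model.

```python
def cached_value(rewards, alpha, v0=0.0):
    """Return the trial-by-trial delta-rule estimate of an action's value."""
    v, history = v0, []
    for r in rewards:
        v += alpha * (r - v)   # move the estimate toward the latest outcome
        history.append(v)
    return history


def spread(values):
    """Range of the estimate, used here as a crude index of instability."""
    return max(values) - min(values)


# Deterministic stand-in for noisy outcomes with a long-run average of 0.5.
rewards = [1.0, 0.0] * 50

slow = cached_value(rewards, alpha=0.05)   # stable, 'cached' estimate
fast = cached_value(rewards, alpha=0.8)    # labile estimate

print("slow estimate, last 20 trials: spread %.3f" % spread(slow[-20:]))
print("fast estimate, last 20 trials: spread %.3f" % spread(fast[-20:]))
```

The slow learner settles close to the long-run average and barely moves from trial to trial, whereas the fast learner swings with each outcome. The same low learning rate that confers stability would also make the cached value slow to follow a genuine change in contingencies.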

However, experimental evidence suggests that learning in the basal ganglia can, in fact, occur quite rapidly. For example, in neurophysiological recordings (Schultz et al., 1997) and functional neuroimaging studies (O’Doherty et al., 2003), ventral midbrain or striatal responses are found to track choice values even when they change over just tens of trials. Although this is slower than the moment-by-moment contextual control over action selection demonstrably afforded by the dorsolateral PFC in humans following explicit instructions (Koechlin et al., 2003), it is much faster than the practice-driven cortico-cortical changes observed in incremental perceptual learning studies. Correspondingly, although both the cortex and the striatum are the targets of ascending dopaminergic signals presumed to carry reward prediction errors, only in the striatum does dopamine reuptake occur rapidly (Cragg et al., 1997). By contrast, the neocortical dopamine response to a single reward, such as a food pellet, can still be detected many minutes later (Feenstra and Botterblom, 1996). This presumably allows striatal dopamine to reinforce punctate sensorimotor events, rather than prolonged tasks or episodes.

Thus, one possibility is that during perceptual discrimination tasks, supervised, dopamine-gated reinforcement learning occurs relatively rapidly at the striatum, giving information about more rewarding stimuli a greater opportunity to flow through the basal ganglia loops to drive motor output structures back in the cortex. Simultaneously, cortico-cortical learning proceeds in an unsupervised fashion, allowing sensory and motor representations that are frequently reinforced and linked through the circuitry of the basal ganglia to be associated via Hebbian principles. One way of thinking about this is that if we wish to term the subcortical system “habitual,” then this cortico-cortical system is “super-habitual” (M. J. Frank, personal communication). This theory, proposed in a number of guises over recent years (Houk and Wise, 1995; Ashby et al., 2007) and incorporated into leading models of basal ganglia function (Frank, 2005; Bogacz and Larsen, 2011), draws upon the idea familiar from theories of memory that new associations are consolidated to the cortex, providing complementary flexible and stable control over behavior (Norman and O’Reilly, 2003). The most compelling evidence in favor of this view comes from single-cell recordings in the striatum and PFC during reversal learning, which have revealed that responses in the caudate adapt earlier than in the cortex – within as little as five trials of an unpredicted switch (Pasupathy and Miller, 2005). Effective connectivity analyses of fMRI data in humans performing a discrimination task also suggest that the instantiation of connectivity between sensory regions and the frontal cortex depends on the mediating influence of the basal ganglia (den Ouden et al., 2010).
Finally, a recent perceptual learning experiment demonstrated that while practice-driven enhancements to the gain of encoding of the DV in parietal cortex and ACC were predicted by an RL model, prediction error signals generated by the model correlated with activity in the ventral striatum (Kahnt et al., 2011). These latter studies point to striatal prediction error signals as a ubiquitous mechanism by which the cortex might learn slowly about appropriate sensorimotor contingencies, irrespective of whether they lead to explicit incentives (as in EDM) or merely informative feedback (as in PDM).
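The two-timescale proposal can be sketched as a fast, prediction-error-driven striatal association that in turn trains a slow cortical association. This is a minimal illustration under strong simplifying assumptions: each system is reduced to a single scalar weight, and unsupervised cortical learning is approximated as slow tracking of the striatally driven output. The function name, learning rates, and trial counts are hypothetical.

```python
def simulate_reversal(n_pre=100, n_post=10, alpha_striatum=0.4, eta_cortex=0.02):
    """Return per-trial (striatal, cortical) weights for one stimulus-action pair.

    The action is rewarded (+1) for n_pre trials and punished (-1) thereafter.
    """
    w_str, w_ctx = 0.0, 0.0
    trace = []
    for trial in range(n_pre + n_post):
        reward = 1.0 if trial < n_pre else -1.0
        delta = reward - w_str                    # striatal prediction error
        w_str += alpha_striatum * delta           # fast, supervised update
        w_ctx += eta_cortex * (w_str - w_ctx)     # slow drift toward striatal output
        trace.append((w_str, w_ctx))
    return trace


trace = simulate_reversal()
before = trace[99]    # last trial before the reversal
after = trace[104]    # fifth trial after the reversal
print("before reversal: striatum %.2f, cortex %.2f" % before)
print("5 trials after:  striatum %.2f, cortex %.2f" % after)
```

Within five trials of the reversal the striatal weight has changed sign while the cortical weight still largely reflects the old contingency, which mirrors the ordering of caudate and PFC adaptation reported in the reversal-learning recordings described above.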