EDITORIAL article

Front. Neuroinform., 26 November 2024

Volume 18 - 2024 | https://doi.org/10.3389/fninf.2024.1520012

This article is part of the Research Topic: Reproducible Analysis in Neuroscience

Editorial on the Research Topic

Reproducible analysis in neuroscience

One of the key ingredients of scientific progress is the ability to independently repeat, replicate, and reproduce important scientific findings. In recent years, independent groups have failed to replicate the results of several experiments in various research areas, giving rise to the so-called “reproducibility crisis.” One reason behind these failures may be excessive trust in results obtained with digital computers. Indeed, little attention has been given to the implementation of the principal algorithm and method, to the variation introduced by the use of different software and hardware systems, to how difficult a finding can be to recover after weeks or years, or to the level of precision with which a computational experiment was performed (Donoho et al., 2009; Peng, 2011).

To disentangle this tightly knit set of terms, it is important to define precisely what reproducing, replicating, and repeating mean, in line with the terminology long established in the experimental sciences (Plesser, 2018). The Association for Computing Machinery (ACM) has adopted the following definitions for these three widely used research terms (Association for Computing Machinery, 2016).

Repeatability (Same team, same experimental setup, and same data): the measurements (findings) can be obtained with precision by the same team, using the same measurement procedure (experimental protocol), the same measuring system, under the same operating conditions (e.g., neuroimaging system like MRI 3T with the same set-up, time of the day, etc.), in the same location (Lab) following a multiple trial acquisition protocol. For solely computational experiments, it practically means that a researcher can reliably repeat his/her own computations.

Replicability (different team, same experimental setup, and different data): the measurements (findings) can be obtained with precision by a different team, using the same measurement procedure (experimental protocol), the same measuring system, under the same operating conditions (e.g., neuroimaging system like MRI 3T with the same set-up, time of the day, etc.), in the same location (Lab) following a multiple trial acquisition protocol. For solely computational experiments, it practically means that an independent group can obtain the same result by employing the author's own experimental artifacts.

Reproducibility (different team, different experimental setup, and different data): the measurements (findings) can be obtained with precision by a different team, using a different measurement procedure (experimental protocol), a different measuring system, under similar operating conditions (e.g., neuroimaging system like MRI 3T with the same set-up, time of the day, etc.) in a different location (Lab) following a multiple trial acquisition protocol. For solely computational experiments, it practically means that an independent group can obtain the same result using experimental artifacts produced completely independently from the author's artifacts.
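The three definitions above differ only in who performs the work and whether the experimental setup is shared. As a minimal sketch of that distinction, the following Python snippet maps the two attributes onto the three terms; the `Attempt` class and `classify` function are illustrative names introduced here, not part of the ACM policy or of any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attempt:
    """Describes how a follow-up experiment relates to the original one."""
    same_team: bool
    same_setup: bool


def classify(attempt: Attempt) -> str:
    """Map an attempt onto the three terms as defined in the text above."""
    if attempt.same_team and attempt.same_setup:
        return "repeatability"
    if not attempt.same_team and attempt.same_setup:
        return "replicability"
    if not attempt.same_team and not attempt.same_setup:
        return "reproducibility"
    # Same team with a different setup is not covered by the three definitions.
    return "undefined"


for team, setup in [(True, True), (False, True), (False, False)]:
    print(team, setup, classify(Attempt(same_team=team, same_setup=setup)))
```

The fourth combination (same team, different setup) is deliberately left unclassified, since the definitions quoted above do not name it.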

For more information, an interested researcher can read informative studies discussing this terminology (Crook et al., 2013; Goodman et al., 2016; Nichols et al., 2017).

In computational neuroscience, there are two types of studies: simulation experiments and advanced analyses of experimental data. In both types of studies, methods reproducibility refers to obtaining the same results when running the same code again. This type of reproducibility therefore demands access to the experimental data, code, and simulation specifications (Botvinik-Nezer and Wager, 2023). Results reproducibility also demands access to the experimental data, but the analysis can be carried out with different pipelines (combinations of methods and code), e.g., different analysis packages or neural simulators.
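Methods reproducibility, as described above, can be checked mechanically: run the same code twice and compare the outputs. The sketch below does this for a toy stochastic simulation, assuming a fixed random seed and a SHA-256 digest of the serialized result. The function names `run_simulation` and `fingerprint` are hypothetical and stand in for a real simulation or analysis script.

```python
import hashlib
import json
import random


def run_simulation(seed: int, n_steps: int = 1000) -> list[float]:
    """Toy stochastic simulation: a Gaussian random walk from a seeded generator."""
    rng = random.Random(seed)  # local generator, independent of global state
    state = 0.0
    trajectory = []
    for _ in range(n_steps):
        state += rng.gauss(0.0, 1.0)
        trajectory.append(state)
    return trajectory


def fingerprint(result: list[float]) -> str:
    """SHA-256 digest of the serialized result, used to compare two runs."""
    payload = json.dumps(result).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


first = fingerprint(run_simulation(seed=42))
second = fingerprint(run_simulation(seed=42))
print("runs identical:", first == second)
```

A matching digest on one machine does not guarantee a match on another, because numerical libraries and hardware can differ; this is one reason versioned and containerized environments are useful.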

In the methodological part of a study, data analysis demands many decisions. In one prominent example, 70 independent analysis teams tested nine prespecified hypotheses using the same task–functional magnetic resonance imaging (fMRI) dataset (Botvinik-Nezer et al., 2020). The 70 teams selected 70 different analytical pipelines, and this variation affected the results, including the statistical maps and the conclusions drawn regarding the prespecified hypotheses. In a recent study, Luppi et al. (2024) systematically evaluated 768 data-processing pipelines for network construction from resting-state functional MRI, assessing the effect of brain parcellation, global signal regression, and connectivity definition.
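The number of candidate pipelines grows multiplicatively with each analytical decision. The following sketch enumerates every combination of a few processing choices with `itertools.product`. The option names and values are hypothetical placeholders chosen for illustration; they are not the options evaluated by Luppi et al. (2024) or used by the teams in Botvinik-Nezer et al. (2020).

```python
from itertools import product

# Hypothetical option lists, for illustration only.
options = {
    "parcellation": ["atlas_A", "atlas_B", "atlas_C"],
    "global_signal_regression": [True, False],
    "connectivity": ["pearson", "partial_correlation"],
}


def enumerate_pipelines(choices: dict) -> list[dict]:
    """Return one dict per pipeline, covering every combination of choices."""
    keys = list(choices)
    combos = product(*(choices[key] for key in keys))
    return [dict(zip(keys, combo)) for combo in combos]


pipelines = enumerate_pipelines(options)
print(len(pipelines))  # 3 * 2 * 2 = 12 pipelines
print(pipelines[0])
```

Adding one more binary decision to this toy example would double the count to 24, which illustrates how a handful of defensible choices can produce hundreds of distinct pipelines.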

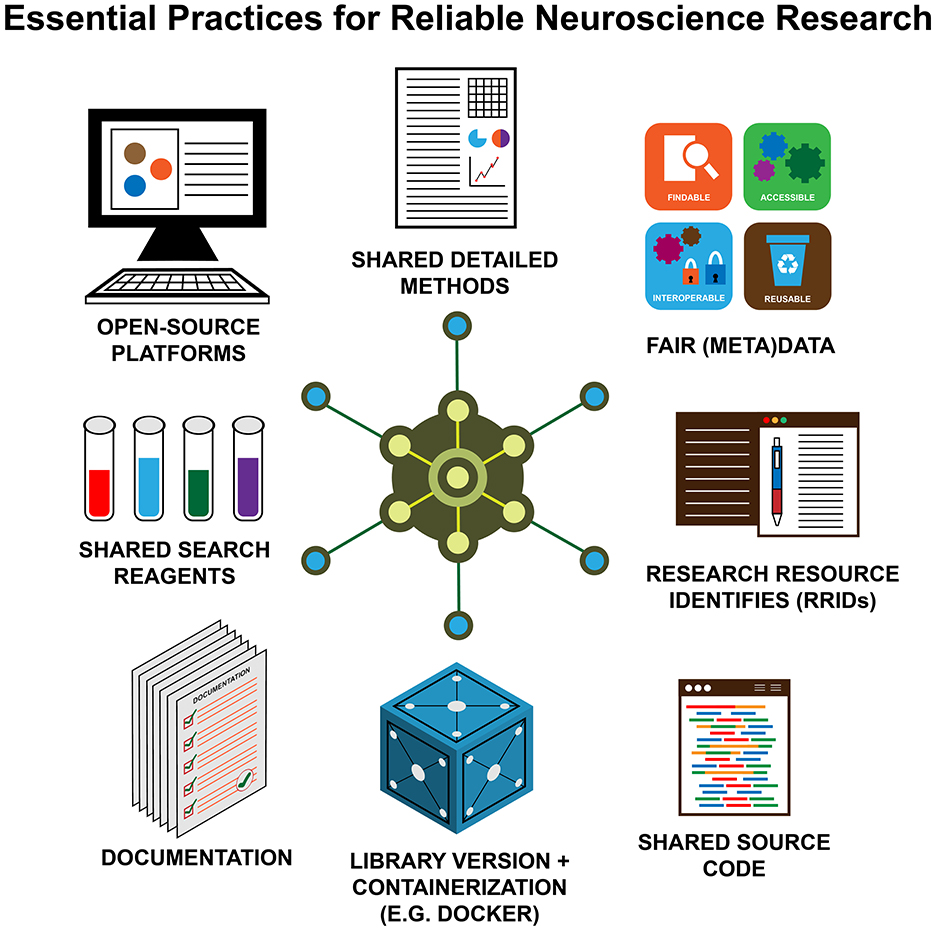

In recent years, several organizations worldwide have tried to increase awareness of the importance of reproducibility and replicability in different disciplines (e.g., www.repro4everyone.org, Global Reproducibility Networks). Open repositories are also available for research resources (e.g., Zenodo), protocols, source code (e.g., GitHub), datasets, etc. Figure 1 illustrates schematically the key ingredients needed to make a research study reproducible and replicable (Auer et al., 2021).

Figure 1. The key elements of reproducible (neuro)science. Open-source platforms; FAIR (meta)data; shared, detailed methods; shared source code; shared research reagents; documentation; research resource identifiers (RRIDs); library version + containerization (e.g., Docker).

In recent years, myriad tools have been developed to support rigorous, reproducible, and replicable research findings. Another key term that the community has adopted to support these practices is open science. Open science rests on three main pillars: data, code, and articles (Gorgolewski and Poldrack, 2016).

On the data side, several efforts have been made to build platforms for openly uploading experimental data and organizing it into a common, shareable format (e.g., BIDS). Code sharing is also crucial, since open data should be processed with tools and scripts that are available and versioned. In this regard, the adoption of collaborative versioning platforms such as GitHub has increased the accessibility of publication-specific software. Moreover, tools that automate and containerize software have reduced the problems caused by differing software versions and the time needed to manage them. Finally, articles should describe methods and data well and be published, if possible, in open-access journals or as preprints to increase transparency.
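Short of full containerization, a lightweight way to reduce version-related problems is to store a snapshot of the software environment next to the results. The sketch below collects the Python version, the platform string, and the installed package versions using only the standard library. The function name `environment_snapshot` and the layout of the returned dictionary are assumptions made for this example.

```python
import json
import platform
from importlib import metadata


def environment_snapshot() -> dict:
    """Collect interpreter, platform, and installed package versions."""
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with missing metadata
            packages[name] = dist.version
    return {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": dict(sorted(packages.items())),
    }


snapshot = environment_snapshot()
print(json.dumps(snapshot, indent=2)[:300])  # preview of the JSON record
```

Saving this record alongside each set of results makes it possible to see later which library versions produced them. It does not capture system libraries or hardware, which is where container images go further.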

In this Research Topic, we collected articles that promote reproducibility and the adoption of open science practices in neuroscience.

Ioanas et al. focused on a new concept: reexecution. This concept, which is innovative in reproducibility studies, concerns the possibility of running the very same pipeline, using the tools and data shared with the publication, to reproduce the findings of a study. They presented an automated workflow for full, end-to-end article reexecution that generates the full research communication output from the raw data and automatically executable code. This study underlines the feasibility of article regeneration as a process that takes advantage of data and tool sharing in conjunction with containerization.

As we mentioned above, sharing data is crucial for reproducibility, and McPhee et al. discussed in a transparent way the different challenges of collecting, harmonizing, and analyzing data collected in a collaborative research network. They faced problems in the harmonization of data across different disorders, partners, and formats. Their proposed strategy overcame the aforementioned restrictions faced by other research groups and will further help the researchers understand the health research outcomes in children with neurodevelopmental disorders (NDDs).

Naseri et al. discussed the disparities between MRI and PET research in terms of scientific standards, analytic plan pre-registration, data and code sharing, containerized workflows, and standardized processing pipelines. They argued that the PET research community should follow the general practices of MRI research in order to unlock the full potential of brain PET research.

Another important ingredient for open science and reproducibility is code. In addition to sharing the analysis pipelines, it is also important to build software that allows reproducibility. In this Research Topic, different articles propose their tools to ease transparency and reproducibility.

Winchester et al. demonstrated the Eventer website as a common framework to upload, analyze, and share the findings, including meta-data and supervised learning-assisted models as a way for enhancing reproducibility when analyzing datasets of spontaneous synaptic activity.

Meyers introduced a package that makes it easy to perform decoding neural analyses in the R programming language, implementing a range of different analytic pipelines. This new R package will help researchers create reproducible and shared decoding analyses.

Ister et al. introduced a MATLAB package called SuMRak. It integrates brain segmentation, volumetry, image registration, and parameter map generation into a unified interface, thereby reducing the number of separate tools that researchers may require for straightforward data handling. This package offers efficient MRI data processing for preclinical brain images, enabling researchers to extract consistent and precise measurements, significantly reducing the operating time, and allowing the analysis of large datasets.

Routier et al. presented Clinica, an open-source software platform designed to make clinical neuroscience studies easier and more reproducible. Clinica provides processing pipelines for MRI and PET images that involve the combination of different software packages, the transformation of input data to the Brain Imaging Data Structure (BIDS), and the storage of the output data using the ClinicA Processed Structure (CAPS). This platform supports reproducible analysis in neuroimaging research.

Ji et al. demonstrated the QuNex software tool, which is an integrative platform for reproducible multimodal neuroimaging analytics. It allows the entire neuroimaging workflow of a study, from data onboarding to analyses, to be customized and executed end to end via a single command. The QuNex platform is optimized for high-performance computing (HPC) or cloud-based environments, enabling high-throughput parallel processing of large-scale open neuroimaging datasets. This platform supports reproducible neuroimaging analysis.

In conclusion, the eight articles published in this Research Topic emphasize the strength and importance of replicating research studies to confirm and advance our knowledge in neuroscience. We would like to draw the attention of editors and reviewers to the handling of replication studies, as we have found that not every researcher understands the importance of publishing them. An independent reviewer should always check for the existence of the source code, the availability of research data in openly accessible repositories, and the study design as a minimal confirmation of the robustness and generalizability of novel findings. We hope this Research Topic and editorial will stimulate the research community to conduct more replication studies, following the important rules of reproducible neuroscience and understanding the importance and value of reproducibility and replicability in (neuro)science.

SD: Conceptualization, Writing – original draft, Writing – review & editing. VM: Writing – original draft, Writing – review & editing. RG: Writing – original draft, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. SD is supported by a Beatriu de Pinós research fellowship (2020 BP 00116). RG was supported by a grant from the BIAL Foundation (Grant Number: PT/FB/BL-2022-142). VM was supported by funding to the Blue Brain Project, a research center of the École Polytechnique Fédérale de Lausanne (EPFL), from the Swiss Government's ETH Board of the Swiss Federal Institutes of Technology.

The author(s) would like to thank Dr. Huthkarsha Merum for helping prepare the figure. The authors acknowledge the support of Mercey Mathew in conceptualizing this Research Topic.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Association for Computing Machinery (2016). Artifact Review and Badging. Available at: https://www.acm.org/publications/policies/artifact-review-badging (accessed November 24, 2017).

Auer, S., Haelterman, N. A., Weissberger, T. L., Erlich, J. C., Susilaradeya, D., Julkowska, M., et al. (2021). Reproducibility for everyone team. A community-led initiative for training in reproducible research. Elife 10:e64719. doi: 10.7554/eLife.64719

Botvinik-Nezer, R., Holzmeister, F., Camerer, C. F., Dreber, A., Huber, J., Johannesson, M., et al. (2020). Variability in the analysis of a single neuroimaging dataset by many teams. Nature 582, 84–88. doi: 10.1038/s41586-020-2314-9

Botvinik-Nezer, R., and Wager, T. D. (2023). Reproducibility in neuroimaging analysis: challenges and solutions. Biol. Psychiat. 8, 780–788. doi: 10.1016/j.bpsc.2022.12.006

Crook, S., Davison, A. P., and Plesser, H. E. (2013). “Learning from the past: approaches for reproducibility in computational neuroscience,” in 20 Years in Computational Neuroscience, ed. J. M. Bower (New York, NY: Springer Science+Business Media), 73–102.

Donoho, D. L., Maleki, A., Rahman, I. U., Shahram, M., and Stodden, V. (2009). 15 years of reproducible research in computational harmonic analysis. Comput. Sci. Eng. 11, 8–18. doi: 10.1109/MCSE.2009.15

Goodman, S. N., Fanelli, D., and Ioannidis, J. P. A. (2016). What does research reproducibility mean? Sci. Transl. Med. 8:341ps12. doi: 10.1126/scitranslmed.aaf5027

Gorgolewski, K. J., and Poldrack, R. A. (2016). A practical guide for improving transparency and reproducibility in neuroimaging research. PLoS Biol. 14:e1002506. doi: 10.1371/journal.pbio.1002506

Luppi, A. I., Gellersen, H. M., Liu, Z. Q., Peattie, A. R. D., Manktelow, A. E., Adapa, R., et al. (2024). Systematic evaluation of fMRI data-processing pipelines for consistent functional connectomics. Nat. Commun. 15:4745. doi: 10.1038/s41467-024-48781-5

Nichols, T. E., Das, S., Eickhoff, S. B., Evans, A. C., Glatard, T., Hanke, M., et al. (2017). Best practices in data analysis and sharing in neuroimaging using MRI. Nat. Neurosci. 20, 299–303. doi: 10.1038/nn.4500

Peng, R. D. (2011). Reproducible research in computational science. Science 334, 1226–1227. doi: 10.1126/science.1213847

Keywords: reproducible analysis, repeatability and reproducibility, R&R studies, neuroscience, neuroimaging (anatomic and functional), analytic pipelines, reproducibility of results

Citation: Dimitriadis SI, Muddapu VR and Guidotti R (2024) Editorial: Reproducible analysis in neuroscience. Front. Neuroinform. 18:1520012. doi: 10.3389/fninf.2024.1520012

Received: 30 October 2024; Accepted: 12 November 2024;

Published: 26 November 2024.

Edited and reviewed by: Michael Denker, Jülich Research Centre, Germany

Copyright © 2024 Dimitriadis, Muddapu and Guidotti. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Stavros I. Dimitriadis
