PERSPECTIVE article

Front. Neuroinform., 09 March 2023

Volume 17 - 2023 | https://doi.org/10.3389/fninf.2023.1154080

This article is part of the Research Topic Navigating the Landscape of FAIR Data Sharing and Reuse: Repositories, Standards, and Resources.

Heidi Kleven, Ingrid Reiten, Camilla H. Blixhavn, Ulrike Schlegel, Martin Øvsthus, Eszter A. Papp, Maja A. Puchades, Jan G. Bjaalie, Trygve B. Leergaard and Ingvild E. Bjerke*

Brain atlases are widely used in neuroscience as resources for conducting experimental studies, and for integrating, analyzing, and reporting data from animal models. A variety of atlases are available, and it may be challenging to find the optimal atlas for a given purpose and to perform efficient atlas-based data analyses. Comparing findings reported using different atlases is also not trivial, and represents a barrier to reproducible science. With this perspective article, we provide a guide to how mouse and rat brain atlases can be used for analyzing and reporting data in accordance with the FAIR principles that advocate for data to be findable, accessible, interoperable, and re-usable. We first introduce how atlases can be interpreted and used for navigating to brain locations, before discussing how they can be used for different analytic purposes, including spatial registration and data visualization. We provide guidance on how neuroscientists can compare data mapped to different atlases and ensure transparent reporting of findings. Finally, we summarize key considerations when choosing an atlas and give an outlook on the relevance of increased uptake of atlas-based tools and workflows for FAIR data sharing.

Converting the increasing amounts of multifaceted neuroscience data into knowledge about the healthy and diseased brain requires that relevant data are accumulated and combined in a common context. The FAIR principles put forward by Wilkinson et al. (2016), stating that data should be findable, accessible, interoperable, and reusable, facilitate such data integration. In neuroscience, these principles can be implemented in practice by using brain atlases as a common framework and equipping the data with metadata describing their location in the brain. Brain atlases contain standardized references to brain locations, and their utility for integrating neuroscience data is already well established (Toga and Thompson, 2001; Zaslavsky et al., 2014; Bjerke et al., 2018b).

Neuroscientists use atlases at several stages of a research project, from planning and conducting studies to analyzing data and publishing results. A variety of atlases exist, revealing different features of rat and mouse (collectively referred to as murine) neuroanatomy. However, different atlases follow different traditions for defining and naming brain regions, which hampers interpretation and comparison of data from locations specified using different atlases. Thus, while atlases provide common frameworks for neuroscience data integration, researchers might find it challenging to know which atlas to choose and how to use it. This makes it difficult for researchers to interpret and analyze their data efficiently using atlases, and to report and share data in accordance with the FAIR principles. Here, we provide a guide to using murine brain atlases for efficient analysis, reporting, and comparison of data, offering the perspective that open volumetric brain atlases are essential for these purposes.

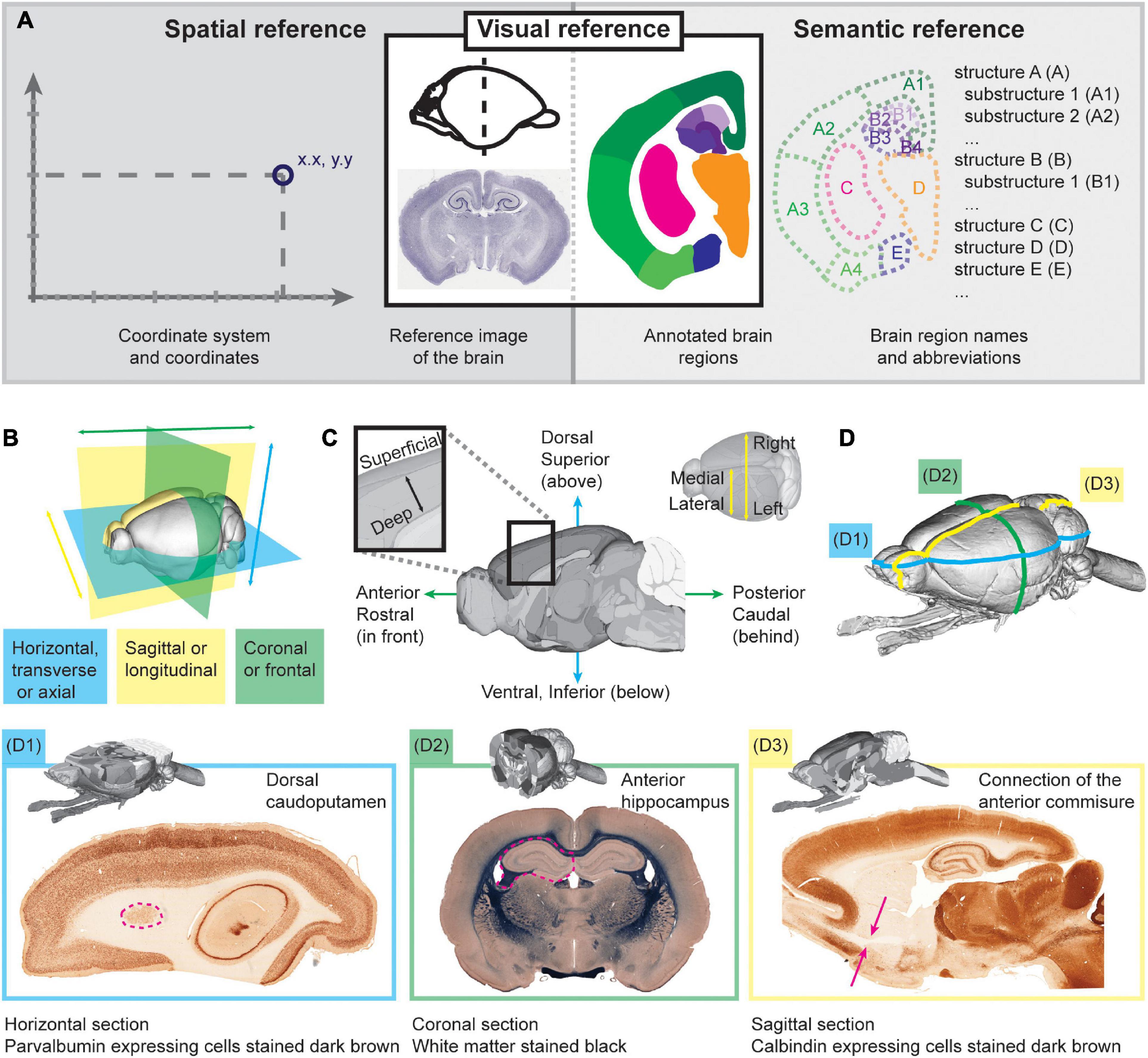

There are two types of murine brain atlases: traditional two-dimensional (2D) atlases with serial section images (e.g., Paxinos and Watson, 2013; Swanson, 2018) and digital volumetric (3D) atlases (e.g., Papp et al., 2014; Barrière et al., 2019; Wang et al., 2020). The traditional atlases rank among the most cited neuroscience publications. However, they are limited by the distance between section images and by their fixed plane(s) of orientation. They are also poorly suited for automated whole-brain analysis and digital workflows, and reuse of atlas images in publications may require permission from the publisher. The digital volumetric atlases are typically shared openly and allow data analysis independent of the plane of sectioning. The most detailed and commonly used volumetric atlas for the mouse is the Allen Mouse Brain Common Coordinate Framework (Allen Mouse Brain CCF; Wang et al., 2020), which has been instrumental for the acquisition and sharing of the Allen Institute’s large data collections (Lein et al., 2007; Oh et al., 2014; Tasic et al., 2016). For the rat, the most detailed volumetric atlas is the Waxholm Space atlas of the Sprague Dawley rat brain (WHS rat brain atlas; RRID:SCR_017124; Papp et al., 2014; Kjonigsen et al., 2015; Osen et al., 2019; Kleven et al., 2023a). Other murine brain atlases are also available [see summary by Barrière et al. (2019)]. Regardless of whether they are 2D or 3D, murine brain atlases can be navigated and interpreted using their spatial, visual, and semantic references (Figure 1A; Kleven et al., 2023a).

Figure 1. Navigating brain atlases to find anatomical locations. (A) Simplified version of the brain atlas ontology model (AtOM, Kleven et al., 2023a). The main elements of an atlas include the coordinate system, the reference image (here exemplified with a coronal platypus brain section; Mikula et al., 2007), the annotated brain regions, and the brain region names. The elements provide different entry points for navigating the atlas, through a spatial, semantic or visual reference. (B) Illustration of the three standard planes (horizontal, blue; sagittal, yellow; coronal, green) typically used to cut brain sections. (C) Illustration of the essential terminology typically used for indicating positions in the brain (e.g., the terms “rostral” and “caudal” to refer to positions towards the front and back of the brain, respectively). (D) Illustration of useful landmark regions in the murine brain [adapted from Bjerke et al. (2023)], with examples from the horizontal (D1), coronal (D2; Leergaard et al., 2018), and sagittal (D3) planes.

The spatial reference consists of a coordinate system and a reference image. The reference image of an atlas may originate from a single specimen (Papp et al., 2014) or represent a population average of multiple specimens (Wang et al., 2020), and different brain region characteristics (e.g., cyto- or chemoarchitecture, and gene expression) are visible depending on the imaging modality. The coordinate system makes the reference image measurable. Most brain atlases use a 3D Cartesian coordinate system with a defined origin and each of the x, y, and z axes perpendicular to one of the standard anatomical planes. Atlases typically follow the neurological orientation of axes described by the right-anterior-superior (RAS) scheme, where the x-axis points toward the right (R), the y-axis toward anterior (A), and the z-axis toward superior (S)¹. The origin may be defined by skull features (stereotaxic coordinate system; Paxinos and Watson, 2013), internal landmarks (Waxholm Space; Papp et al., 2014), or the physical limits of the reference image, such as the corner of a volume (Wang et al., 2020).
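As a minimal sketch of how a coordinate system makes a reference volume measurable, the Python function below converts a voxel index into RAS coordinates in millimeters relative to an origin voxel. It assumes isotropic voxels and describes the storage order of the volume with a three-letter orientation code (e.g., "PIR" for axes increasing toward posterior, inferior, and right). The function name, the orientation codes, and the example values are illustrative assumptions, not the conventions of any particular atlas file.

```python
from typing import Dict, Sequence, Tuple

# Each axis code names the anatomical direction in which a voxel axis
# increases, mapped to (index of the matching RAS axis, sign relative to RAS).
AXIS_CODES: Dict[str, Tuple[int, int]] = {
    "R": (0, 1), "L": (0, -1),
    "A": (1, 1), "P": (1, -1),
    "S": (2, 1), "I": (2, -1),
}


def voxel_to_ras(
    index: Sequence[int],
    origin_voxel: Sequence[float],
    voxel_size_mm: float,
    orientation: str = "RAS",
) -> Tuple[float, float, float]:
    """Convert a voxel index to RAS coordinates (mm) relative to an origin voxel.

    Assumes isotropic voxels; `orientation` gives, for each voxel axis in
    storage order, the direction in which that axis increases.
    """
    if len(orientation) != 3 or sorted(AXIS_CODES[c][0] for c in orientation) != [0, 1, 2]:
        raise ValueError(f"orientation {orientation!r} must contain one each of R/L, A/P and S/I")
    ras = [0.0, 0.0, 0.0]
    for code, i, o in zip(orientation, index, origin_voxel):
        target, sign = AXIS_CODES[code]
        # Distance from the origin along this voxel axis, flipped when the
        # axis points opposite to the RAS convention.
        ras[target] = sign * (i - o) * voxel_size_mm
    return (ras[0], ras[1], ras[2])
```

For instance, `voxel_to_ras((12, 8, 3), (10, 10, 10), 0.025, "PIR")` gives approximately (-0.175, -0.05, 0.05). In practice, the origin and voxel size should be taken from the documentation of the specific atlas version used.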

The visual reference consists of the reference image and a set of brain region boundaries (annotations), defined using criteria-based interpretations (e.g., differences in gene expression patterns, and changes in cyto-, myelo-, or chemoarchitecture). Easily recognizable features that are consistent across individuals are often used as landmarks when positioning an experimental image in an atlas (Sergejeva et al., 2015). For example, the beginning and end of easily distinguished brain regions, such as the caudoputamen or hippocampus (Figure 1D), are highly useful for orientation. Such landmarks are particularly useful for guiding and assessing the quality of the spatial registration of experimental section images to an atlas (Puchades et al., 2019; see section on analysis below), as well as for detecting abnormal anatomical features in the images. A selection of useful murine brain landmarks is given by Bjerke et al. (2023).

The semantic reference consists of the brain region annotations and their names. Regions, areas, and nuclei of the brain may be named after the person who first defined them, or after distinct features, such as their architecture or relative position within a broader region. While murine brain atlas terminologies often combine terms from different conventions, most atlases present white matter regions with a lower case first letter and gray matter regions with a capital first letter. Digital atlases may also use color coding schemes to indicate relationships between region annotations, e.g., using the same color for all white matter regions or for regions at the same level of the hierarchy of gray matter regions (Wang et al., 2020).

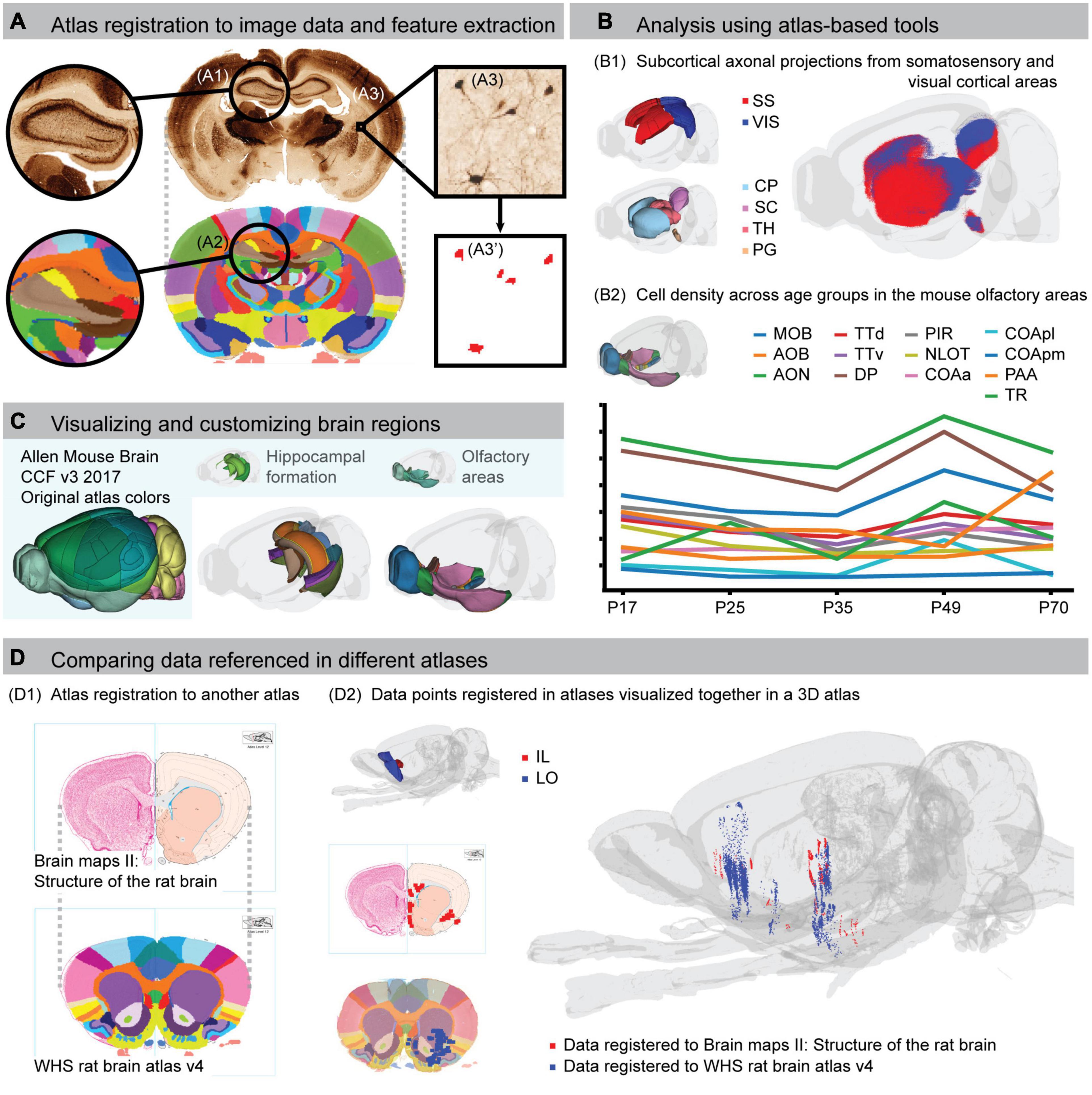

Atlas coordinates provide spatial references in machine-readable units. When coupled to the atlas terminology, they enable automated analysis of data registered to that atlas. A broad range of software incorporating atlases, here called atlas-based tools, is available for various digital analyses of brain image data. Atlas-based analyses rely on spatial registration, here defined as the process of assigning an anatomical location to each pixel or voxel of the data (Figure 2A). This is achieved by aligning 2D and/or 3D data with the reference image of the atlas.
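The coupling of coordinates and terminology can be illustrated with a toy annotation volume in which each voxel stores an integer label and a separate table maps labels to region names. The sketch below is hypothetical: the nested-list volume, the label values, and the convention that 0 means "outside the brain" are assumptions for illustration; real atlases distribute annotations as volumetric files with their own label tables.

```python
from typing import Dict, List, Optional, Sequence

# Toy annotation volume indexed as volume[i][j][k]; each voxel stores an
# integer region label, and 0 is assumed to mean "outside the brain".
Volume = List[List[List[int]]]


def region_at(volume: Volume, label_names: Dict[int, str], index: Sequence[int]) -> Optional[str]:
    """Return the region name at a voxel index, or None outside the volume or brain."""
    i, j, k = index
    inside = (
        0 <= i < len(volume)
        and 0 <= j < len(volume[i])
        and 0 <= k < len(volume[i][j])
    )
    if not inside:
        return None
    label = volume[i][j][k]
    if label == 0:
        return None
    # The semantic reference: a label is only meaningful through the name table.
    return label_names.get(label, f"unknown label {label}")


if __name__ == "__main__":
    volume = [[[0, 1], [1, 2]], [[2, 2], [0, 1]]]
    names = {1: "Caudoputamen", 2: "Hippocampal formation"}
    print(region_at(volume, names, (0, 1, 1)))  # Hippocampal formation
```

Once data are registered, every pixel or voxel can be passed through such a lookup, which is what makes region-wise analysis automatable.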

Figure 2. Using brain atlases for spatial registration, analysis, visualization and comparison of data. (A) Example of spatial registration of a histological section to the Waxholm Space (WHS) rat brain atlas. Landmark regions, such as the hippocampus (A1,A2), are used to find corresponding positions between the image and the atlas. Features in the images (A3), with spatial metadata from the registration, can be extracted (A3’). The example in (A) shows a histological image stained for parvalbumin neurons registered to the WHS atlas. (B) The principal workflow of combining atlas registration with extracted features illustrated in (A) can be used for different types of atlas-based analyses. (B1) 3D dot map visualization of corticostriatal, corticotectal, and corticopontine axonal projections originating from the primary somatosensory cortex (SS, red) and visual cortex (VIS, blue) cortical areas, extracted from anterograde tract tracing data (Oh et al., 2014) registered to the Allen mouse brain CCFv3-2017 (Ovsthus et al., 2022). (B2) Analysis of dopamine 1 receptor positive cell densities in olfactory regions of the mouse brain across five postnatal day (P) age groups [y axis values not shown, preliminary data extracted from images provided by Bjerke et al. (2022)]. (C) Visualization of customized regions from the Allen mouse brain CCFv3-2017. The left panel shows the entire atlas with the default color scheme. The middle panel shows a transparent view of the brain with regions of the hippocampal formation color coded to their corresponding region in the WHS rat brain atlas, facilitating cross-species comparisons. In the right panel, to better visualize the extent of individual regions, they are coded with contrasting colors, whereas the original atlas uses the same or highly similar colors. (D) Example of how co-registration of brain atlases supports comparison of data referenced in different atlases. 
The stereotaxic atlas by Swanson (1998) has been spatially registered to the WHS rat brain atlas (D1). Data that have been extracted and mapped to the two atlases can therefore be co-visualized in the same 3D space (D2). In this example, the red points are extracted from a previous study in which retrograde projections from injections in the infralimbic cortex were represented as schematic drawings of terminal fields on atlas plates from the Swanson atlas (Figure 8, data mirrored for comparison; Hoover and Vertes, 2007). The blue points are extracted from a public dataset showing the anterograde projections originating from the lateral orbitofrontal cortex (case F1 BDA; Kondo et al., 2022). AOB, accessory olfactory bulb; AON, anterior olfactory nucleus; CP, caudoputamen; COAa, cortical amygdalar area, anterior part; COApl, cortical amygdalar area, posterior part, lateral zone; COApm, cortical amygdalar area, posterior part, medial zone; DP, dorsal peduncular area; IL, infralimbic cortex; LO, lateral orbitofrontal cortex; MOB, main olfactory bulb; NLOT, nucleus of the lateral olfactory tract; PAA, piriform-amygdalar area; PG, pontine gray; PIR, piriform area; SC, superior colliculus; SS, somatosensory area; TH, thalamus; TR, postpiriform transition area; TTd, taenia tecta dorsal part; TTv, taenia tecta ventral part; VIS, visual area.

Several computational methods for registering 2D image data to atlases have been developed. However, implementations are typically tailored to specific data types (e.g., fluorescent images or 3D data) and may require coding skills. Tools with a graphical user interface that are applicable to a broad range of data types have therefore also been developed, often as part of analytic workflows (Tappan et al., 2019; Ueda et al., 2020; BICCN Data Ecosystem Collaboration et al., 2022; Tyson and Margrie, 2022). An example of a standalone tool for spatial registration of histological sections to volumetric atlases is QuickNII (RRID:SCR_016854; Puchades et al., 2019). QuickNII is available with the WHS rat brain atlas (v2, v3, and v4) and the Allen Mouse Brain CCF (v3-2015 and v3-2017). Manual alignment of individual section images is relatively time-consuming and can benefit greatly from machine learning-based section alignment, such as that implemented in DeepSlice for coronal rat and mouse brain sections² (Carey et al., 2022). While these tools rely on linear registration methods, murine brains show individual variability (Badea et al., 2007; Scholz et al., 2016) that cannot always be compensated for by linear transformations. Histological brain sections are also prone to physical damage and deformation caused by tissue processing (Simmons and Swanson, 2009). To address this, linearly registered murine section images can be refined with non-linear adjustments using VisuAlign (RRID:SCR_017978).
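To make linear registration of a section concrete, the sketch below maps a pixel in a registered section image to 3D atlas coordinates. It assumes the section position is described by a plane parameterization similar to the anchoring vectors stored by QuickNII: an origin o at the top-left corner of the image and two vectors u and v spanning the image width and height in atlas space. The exact file format should be checked in the QuickNII documentation; the function and parameter names here are hypothetical.

```python
from typing import Sequence, Tuple


def pixel_to_atlas(
    x: float,
    y: float,
    width: int,
    height: int,
    o: Sequence[float],
    u: Sequence[float],
    v: Sequence[float],
) -> Tuple[float, float, float]:
    """Map pixel (x, y) of a linearly registered section to 3D atlas coordinates.

    o: atlas position of the image's top-left corner
    u: atlas vector spanning the full image width (left to right)
    v: atlas vector spanning the full image height (top to bottom)
    """
    s, t = x / width, y / height  # normalized in-plane position, 0..1
    px, py, pz = (o[k] + s * u[k] + t * v[k] for k in range(3))
    return (px, py, pz)


if __name__ == "__main__":
    o, u, v = (100.0, 200.0, 50.0), (200.0, 0.0, 0.0), (0.0, 0.0, -100.0)
    print(pixel_to_atlas(200, 100, 400, 200, o, u, v))  # (200.0, 200.0, 0.0)
```

With these example vectors the image lies in a plane of constant y, i.e., a coronal plane in RAS terms. Because the same o, u, and v apply to every pixel, such a mapping cannot correct local deformations, which is why non-linear refinement is needed for damaged or distorted sections.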

Murine brain research increasingly includes 3D imaging data acquired by magnetic resonance or diffusion tensor imaging, functional ultrasound imaging (Gesnik et al., 2017), serial two-photon imaging (Oh et al., 2014), or light sheet microscopy (Ueda et al., 2020). Because these data are spatially coherent and free of the deformation and damage seen in histological sections, they lend themselves well to volume-to-volume registration with 3D reference atlases. Several groups have developed computational methods for this type of alignment, most often toward the Allen Mouse Brain CCF [see reviews by BICCN Data Ecosystem Collaboration et al. (2022)³ and Tyson and Margrie (2022)]. The Elastix toolbox (Klein et al., 2010) also offers a collection of algorithms that can be used for 3D image registration.
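Volume-to-volume registration often starts from a global affine transform before any non-linear refinement. As a minimal sketch, and not the algorithm used by Elastix or any specific tool, the Python code below estimates a 3D affine transform from corresponding landmark pairs by ordinary least squares, solving the normal equations with Gaussian elimination so that only the standard library is needed. It assumes the landmark coordinates are already expressed in the coordinate systems of the experimental volume and of the atlas; all names are illustrative.

```python
from typing import List, Sequence

Point3 = Sequence[float]
Affine = List[List[float]]  # 3 rows of [a, b, c, translation]


def _solve(matrix: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [list(row) + [rhs[r]] for r, row in enumerate(matrix)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("landmarks are degenerate (e.g., all coplanar)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def fit_affine_3d(src: Sequence[Point3], dst: Sequence[Point3]) -> Affine:
    """Least-squares 3D affine transform mapping src landmarks onto dst landmarks."""
    if len(src) != len(dst) or len(src) < 4:
        raise ValueError("need at least 4 corresponding landmark pairs")
    rows = [[float(x), float(y), float(z), 1.0] for x, y, z in src]
    # Normal equations (A^T A) m = A^T d, solved once per output coordinate.
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(4)] for i in range(4)]
    affine = []
    for axis in range(3):
        atd = [sum(row[i] * d[axis] for row, d in zip(rows, dst)) for i in range(4)]
        affine.append(_solve(ata, atd))
    return affine


def apply_affine(affine: Affine, point: Point3) -> tuple:
    """Apply a 3x4 affine matrix to a 3D point."""
    x, y, z = point
    return tuple(r[0] * x + r[1] * y + r[2] * z + r[3] for r in affine)
```

At least four non-coplanar landmark pairs are needed; with more pairs, the least-squares fit averages out small placement errors. Landmark-based fitting is only one option; intensity-based methods instead optimize a measure of image similarity.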

Spatially registered image data can be used in analytic workflows for region-based annotation, quantification, and reconstruction of features in and across images. Such workflows typically entail three steps: (1) registration of image data (2D or 3D) to an atlas, (2) feature extraction, and (3) quantification and/or visualization of the extracted features (Figure 2B). Several authors have demonstrated how such workflows can be used to quantify features of the brain (Kim et al., 2017; Pallast et al., 2019; Newmaster et al., 2020). Although many rely on custom code, workflows based on both commercial and open source tools exist. For example, NeuroInfo from MBF Bioscience (Tappan et al., 2019) supports reconstruction of sections into a volume and registration to an atlas, with automatic image segmentation and quantification. Alternatively, the free and open source QUINT workflow (Yates et al., 2019) aligns histological section images to an atlas and uses the Nutil tool (Groeneboom et al., 2020; RRID:SCR_017183) to apply the same alignment to segmented images in which a given feature (e.g., labeled cell bodies) is represented by a single color. For registration of electrode positions or viral expression, the HERBS software (Fuglstad et al., 2023) offers integrated spatial registration and feature extraction, with results that can be visualized directly in 3D.
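The three workflow steps can be sketched in a few lines of Python. In this hypothetical example, step (1) is represented by a `region_of(row, col)` function standing in for the result of atlas registration, step (2) groups 4-connected pixels of a binary segmentation into objects, and step (3) counts objects per region using the region at each object's rounded centroid. This illustrates the general idea only; it is not how Nutil or any other tool assigns objects to regions.

```python
from collections import deque
from typing import Callable, Dict, List, Tuple

Image = List[List[int]]  # segmented image: 1 = feature pixel, 0 = background


def extract_objects(segmented: Image) -> List[List[Tuple[int, int]]]:
    """Step 2: group 4-connected feature pixels into objects (e.g., labeled cells)."""
    if not segmented:
        return []
    rows, cols = len(segmented), len(segmented[0])
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r in range(rows):
        for c in range(cols):
            if segmented[r][c] != 1 or seen[r][c]:
                continue
            pixels, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:  # breadth-first flood fill
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and segmented[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            objects.append(pixels)
    return objects


def quantify(segmented: Image, region_of: Callable[[int, int], str]) -> Dict[str, int]:
    """Step 3: count objects per region, assigning each object by its centroid pixel."""
    counts: Dict[str, int] = {}
    for pixels in extract_objects(segmented):
        cy = round(sum(y for y, _ in pixels) / len(pixels))
        cx = round(sum(x for _, x in pixels) / len(pixels))
        region = region_of(cy, cx)  # stands in for step 1 (atlas registration)
        counts[region] = counts.get(region, 0) + 1
    return counts
```

Assigning each object by its centroid is a simplifying design choice; objects straddling a region boundary could instead be split or assigned by majority of pixels.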

Spatial metadata make it possible to view and interact with atlases and image data in several online atlas viewers. The Scalable Brain Atlas Composer⁴ (SBA; Bakker et al., 2015) can display 2D or 3D images in a range of formats. In addition, the SBA can display spatial metadata (e.g., from QuickNII or DeepSlice) together with .png images of histological sections. Another online tool is the EBRAINS interactive atlas viewer⁵, which is available for all versions of the WHS rat brain atlas and the Allen Mouse Brain CCF. This viewer also allows users to upload their own data. For example, the user can drag and drop a .nii volume to view it in the three standard planes and slice it at arbitrary angles, with region annotations available as an overlay. Additionally, coordinate-based data, such as point clouds representing tracer distributions or cell bodies, can be rendered in 3D online via MeshView (RRID:SCR_017222). MeshView allows volumes containing point clouds to be sliced in user-defined planes for inspection and analysis of topographical patterns (see, e.g., Tocco et al., 2022).
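Slicing a point cloud in a user-defined plane amounts to keeping the points that lie within a slab around that plane. The sketch below shows this geometry in Python for a plane given by a point and a normal vector; it is not MeshView's implementation, and the function name and slab-thickness parameter are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def points_in_slab(
    points: Sequence[Point],
    plane_point: Sequence[float],
    normal: Sequence[float],
    thickness: float,
) -> List[Point]:
    """Keep points whose distance to the plane is at most thickness / 2."""
    length = math.sqrt(sum(n * n for n in normal))
    if length == 0:
        raise ValueError("normal must be a non-zero vector")
    unit = [n / length for n in normal]
    half = thickness / 2
    selected = []
    for p in points:
        # Signed distance from the plane, measured along the unit normal.
        d = sum((p[k] - plane_point[k]) * unit[k] for k in range(3))
        if abs(d) <= half:
            selected.append(p)
    return selected
```

With `plane_point = (0, 0, 0)` and `normal = (0, 1, 0)`, the slab is a coronal slice in RAS coordinates; any other normal gives an oblique slice.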

Open access digital brain atlases allow researchers to customize the anatomical annotations, reference images, or terminology of the atlas for specific analyses. Several tools take advantage of this and let the user customize the atlas interactively through a user interface. For example, QCAlign (RRID:SCR_023088; Gurdon et al., in preparation) allows interactive exploration of the hierarchy and grouping of brain region names; the resulting groupings can then be used in the QUINT workflow to merge brain regions into broader, custom regions for analysis. This can be used, for example, to merge and rename regions to make them compatible with a different naming convention, e.g., for cross-species comparisons where atlases for different species must be harmonized (Figure 2C; Bjerke et al., 2021). Merging regions can also facilitate teaching by introducing students to macrostructure before revealing details. A more advanced use case is to modify or create brain region annotations. For this purpose, open access segmentation software such as ITK-SNAP (Yushkevich et al., 2006) is useful for viewing and editing volumetric files in a range of formats.
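Merging regions into broader custom groups can be expressed as walking up the region hierarchy until a chosen group is reached. The sketch below assumes the hierarchy is given as a child-to-parent dictionary forming a tree, and uses a toy, simplified set of hippocampal region names for illustration; it does not reproduce QCAlign's file format or algorithm.

```python
from typing import Dict, Iterable, Optional


def merge_regions(parent: Dict[str, Optional[str]], groups: Iterable[str]) -> Dict[str, str]:
    """Map each region to itself or its nearest ancestor that is a custom group.

    `parent` maps every region name to its parent (None for the root) and is
    assumed to form a tree. Regions outside all groups are omitted.
    """
    targets = set(groups)
    merged: Dict[str, str] = {}
    for region in parent:
        node: Optional[str] = region
        while node is not None and node not in targets:
            node = parent.get(node)  # walk one level up the hierarchy
        if node is not None:
            merged[region] = node
    return merged


def merge_counts(counts: Dict[str, int], mapping: Dict[str, str]) -> Dict[str, int]:
    """Sum per-region values (e.g., cell counts) into their custom groups."""
    totals: Dict[str, int] = {}
    for region, n in counts.items():
        group = mapping.get(region)
        if group is not None:
            totals[group] = totals.get(group, 0) + n
    return totals


if __name__ == "__main__":
    toy_parent = {
        "Hippocampal formation": None,
        "Hippocampal region": "Hippocampal formation",
        "Retrohippocampal region": "Hippocampal formation",
        "CA1": "Hippocampal region",
        "CA3": "Hippocampal region",
        "Entorhinal area": "Retrohippocampal region",
    }
    mapping = merge_regions(toy_parent, ["Hippocampal region", "Retrohippocampal region"])
    print(merge_counts({"CA1": 10, "CA3": 5, "Entorhinal area": 7}, mapping))
```

Keeping the mapping as an explicit table, rather than editing the atlas files, also makes the customization easy to document and share alongside the results.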

A major challenge across atlases is the variety of brain region annotations and terminologies (Swanson, 2000; Bohland et al., 2009). When different names are used for the same brain region, or similar names for partly overlapping regions, confusion is inevitable (Van De Werd and Uylings, 2014; Bjerke et al., 2020). Unequivocal referencing (see “Citing atlases and anatomical locations”) can mitigate some of this, but the challenge remains that different terminologies often reflect different criteria for annotating brain regions. Differences in brain region annotations across atlases and atlas versions make it difficult to compare data whose locations are reported using different atlases. To address this, Khan et al. (2018) co-registered versions of the stereotaxic rat brain atlases. They migrated data originally registered to one of the earliest atlases by Paxinos and Watson (1986) to the corresponding plates of the most recent version of Swanson’s atlas (Swanson, 2018), making the data comparable. It is also possible to migrate legacy data to a volumetric atlas, where different datasets can be compared and co-visualized in 3D space (Figure 2D). To support such efforts, we have spatially registered several versions of the traditional stereotaxic atlases to the WHS rat brain atlas and the Allen Mouse Brain CCF (Bjerke et al., 2020). The co-registration data are available for download through the EBRAINS Knowledge Graph⁶ in QuickNII-compatible format (see, e.g., Bjerke et al., 2018b and the related EBRAINS project on the web portal)⁷. The open access Swanson atlases are also available in an interactive viewer. Thus, although the variety of atlases, and the fact that different data are referenced to different atlases, is a challenge, it can be mitigated by mapping atlases to each other.

Brain locations may be specified by names or coordinates, but for the reported locations to be reproducible, the specific atlas used must be cited. A further challenge is that researchers often report the name of a brain region they are familiar with, rather than the name recorded in the atlas they have used (Bjerke et al., 2020). For example, a researcher may use “striatum” to refer to the dorsal part of the striatal complex, which is called “caudoputamen” in most atlases. While the researcher may see these names as interchangeable, a reader may consider “striatum” to include the nucleus accumbens, which is also a common convention. This creates confusion even when an atlas is cited. We have previously put forward a set of recommendations for unambiguously referring to anatomical locations in the murine brain (Bjerke et al., 2018a), e.g., highlighting the importance of using terms as they appear in the atlas, or otherwise specifying how the terms used relate to those in the atlas.
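One way to apply this recommendation in practice is to keep an explicit mapping from the terms used in a manuscript to the terms as they appear in the cited atlas, and to flag any term that is neither an atlas term nor mapped. The sketch below is hypothetical; the relation labels, the exact (case-sensitive) string matching, and the example terms are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass(frozen=True)
class TermMapping:
    reported_term: str  # term used in the manuscript
    atlas_term: str     # term exactly as it appears in the cited atlas
    relation: str       # hypothetical relation label, e.g. "same as", "part of"


def terms_needing_mapping(
    reported: Iterable[str], atlas_terms: Set[str], mappings: Iterable[TermMapping]
) -> List[str]:
    """Return reported terms that are neither atlas terms nor explicitly mapped."""
    mapped = {m.reported_term for m in mappings}
    return [t for t in reported if t not in atlas_terms and t not in mapped]


def describe(mappings: Iterable[TermMapping], atlas_citation: str) -> List[str]:
    """Render mappings as sentences suitable for a methods section."""
    return [
        f'"{m.reported_term}" refers to "{m.atlas_term}" ({m.relation}) in {atlas_citation}'
        for m in mappings
    ]
```

For example, with "striatum" mapped to "Caudoputamen" and "Nucleus accumbens" used exactly as in the atlas, only an unmapped term such as "ventral pallidum" would be flagged for clarification.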

Citation of an atlas should include its version. This is straightforward for traditional atlases that follow a linear versioning track (Paxinos and Watson, 2007; Swanson, 2018). However, volumetric digital atlases are often provided as several files that may be versioned separately. To facilitate correct citation, Kleven et al. (2023a) proposed an atlas ontology model and gave an overview of the versioning of the two most commonly used volumetric murine brain atlases, the WHS rat brain atlas and the Allen Mouse Brain CCF. Beyond consistent and correct citation of atlases, any customizations (see “Customizing brain atlases for analysis and visualization”) should be clearly documented (Rodarie et al., 2021).

When using atlas-based software, it is important to be aware that software versioning is often independent of atlas versioning. The software and atlas versions therefore have separate citation policies (usually along with separate RRIDs; Bandrowski and Martone, 2016) and should be named and cited accordingly when reporting data acquired using atlas-based software. Multiple atlases may be available in the same tool, in which case it is critical to record which atlas and version were used.
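The separation of atlas and software versions can be made explicit in the metadata recorded with a dataset. The sketch below is a hypothetical record structure, not a community standard; the field names are assumptions, and the angle-bracket strings are placeholders to be replaced with the versions and customizations actually used.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class ResourceRef:
    name: str
    version: str
    rrid: Optional[str] = None


@dataclass
class AtlasProvenance:
    atlas: ResourceRef  # the atlas (and version) used
    software: List[ResourceRef]  # tools, versioned independently of the atlas
    customizations: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not self.atlas.version:
            raise ValueError("record the atlas version, not only the atlas name")

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, ensure_ascii=False)


if __name__ == "__main__":
    record = AtlasProvenance(
        atlas=ResourceRef("Waxholm Space atlas of the Sprague Dawley rat brain", "v4", "RRID:SCR_017124"),
        software=[ResourceRef("Nutil", "<version used>", "RRID:SCR_017183")],
        customizations=["<description of merged or renamed regions>"],
    )
    print(record.to_json())
```

Refusing to create a record without an atlas version is a deliberate design choice: it forces the version to be captured at analysis time rather than reconstructed later.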

With several atlases available, it can be challenging to know what sets them apart, and the most appropriate brain atlas depends on its intended purpose. First, reproducibility and availability should be considered. Most laboratories have 2D book atlases on the shelf. While their physical availability makes book atlases convenient during experimental work, many are challenging to use for transparent reporting because of restrictive licenses and high costs for reproducing figures. Choosing an open access atlas makes it easier to communicate findings transparently and ensure their replicability. Second, reference images differ among atlases and should ideally match the experimental data at hand. For example, different strains are used across available rat brain atlases, with Wistar rats used in the Paxinos atlases (Paxinos and Watson, 2013) and Sprague Dawley rats used in Swanson’s atlases (Swanson, 2018) and in the Waxholm Space rat brain atlas (Papp et al., 2014). Other characteristics, such as age category, sex, wild type or transgenic specimens, and data modality will also influence how well the atlas can be applied to experimental data. In general, the more characteristics the experimental subjects share with the reference atlas, the better the atlas will represent the data, an essential consideration for analyses. A third important feature of an atlas is its interoperability with other atlases and with analysis software. A researcher intending to analyze data based on an atlas will benefit from a digital 3D atlas incorporated in digital tools and workflows. Whether the atlas has been used in similar studies or is part of a data integration effort may also be relevant (Oh et al., 2014; Bjerke et al., 2018b; Erö et al., 2018), as this facilitates comparison of findings with published data and enables similar comparisons in the future.
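The second consideration can be turned into a simple checklist. The sketch below compares metadata describing the experimental subjects with metadata describing an atlas and lists characteristics that differ or are undocumented. The dictionary keys and the function name are hypothetical; only the species and strains in the usage note come from the text above.

```python
from typing import Dict, List


def metadata_mismatches(subject: Dict[str, str], atlas: Dict[str, str]) -> List[str]:
    """List subject characteristics that differ from, or are undocumented for, the atlas."""
    issues: List[str] = []
    for key, value in subject.items():
        if key not in atlas:
            issues.append(f"{key}: not documented for atlas")
        elif atlas[key].lower() != value.lower():  # case-insensitive comparison
            issues.append(f"{key}: subject {value!r} vs atlas {atlas[key]!r}")
    return issues
```

For Wistar rats compared against an atlas based on Sprague Dawley rats, the function reports the strain mismatch. A mismatch does not necessarily rule out an atlas, but it indicates where registration and interpretation may need extra care.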

Brain atlases are continuously created and refined to meet researchers’ needs for appropriate references for, among others, subjects of different ages (developing or aging) or strains, or data acquired with various imaging modalities. In particular, there has been an increasing focus on the need for a common coordinate framework to map data across developmental stages. A challenge with current developmental resources is that they either do not cover early postnatal and embryonic stages (Newmaster et al., 2020) or have delineations that are not readily compatible with adult atlases (Young et al., 2021). Additionally, there is a need for brain atlases capturing fine details of brain regions distinguished by, e.g., topographical organization of connections (Zingg et al., 2014; Hintiryan et al., 2016). For example, Chon et al. (2019) created an atlas with highly granular annotations of the mouse caudoputamen using cortico- and thalamo-striatal connectivity data. By combining delineations from the Allen Mouse Brain CCF and the Franklin and Paxinos atlases, this atlas also helps alleviate some of the inconsistencies in nomenclature (Chon et al., 2019). As these examples show, several atlases are required to cater to current needs, and future methods and findings will create further possibilities and needs for the continued development and refinement of atlases. For such new atlases to enable researchers to cite, (re-)analyze, and compare data independently of the original atlas used, it is essential that they are openly shared and properly documented (Kleven et al., 2023a).

In this perspective, we have provided a guide to murine brain atlases with a focus on how to use them for spatial registration, efficient analysis, and transparent reporting of data. Powerful analytic pipelines will hopefully incentivize more researchers to spatially register their data to atlases. We anticipate that the increasing availability and automation of atlas-based software with graphical user interfaces will fundamentally change how neuroscience is performed and lead to a major increase in the amount of easily interpretable neuroscience data. For the field to benefit maximally from this shift, it is crucial that datasets and their spatial metadata are openly shared in public repositories. This can be achieved with open access volumetric atlases, which are essential resources for making the wealth of multifaceted neuroscience data FAIR.

The original contributions presented in this study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

HK and IB conceptualized and wrote the manuscript with input from TL. HK, IR, MØ, and IB prepared figures. All authors contributed to the development of concepts and resources described in the manuscript, and to manuscript revision.

This work was funded by the European Union’s Horizon 2020 Framework Program for Research and Innovation under the Specific Grant Agreement No. 945539 (Human Brain Project SGA3) and the Research Council of Norway under Grant Agreement No. 269774 (INCF Norwegian Node).

We thank Gergely Csucs and Dmitri Darine for their expert technical assistance.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Badea, A., Ali-Sharief, A., and Johnson, G. (2007). Morphometric analysis of the C57BL/6J mouse brain. Neuroimage 37, 683–693. doi: 10.1016/j.neuroimage.2007.05.046

Bakker, R., Tiesinga, P., and Kötter, R. (2015). The scalable brain atlas: instant web-based access to public brain atlases and related content. Neuroinformatics 13, 353–366. doi: 10.1007/s12021-014-9258-x

Bandrowski, A., and Martone, M. (2016). RRIDs: a simple step toward improving reproducibility through rigor and transparency of experimental methods. Neuron 90, 434–436. doi: 10.1016/j.neuron.2016.04.030

Barrière, D., Magalhães, R., Novais, A., Marques, P., Selingue, E., Geffroy, F., et al. (2019). The SIGMA rat brain templates and atlases for multimodal MRI data analysis and visualization. Nat. Commun. 10, 1–13. doi: 10.1038/s41467-019-13575-7

BICCN Data Ecosystem Collaboration, Hawrylycz, M. J., Martone, M. E., Hof, P. R., Lein, E. S., Regev, A., et al. (2022). The BRAIN initiative cell census network data ecosystem: a user’s guide. bioRxiv doi: 10.1101/2022.10.26.513573

Bjerke, I., Cullity, E., Kjelsberg, K., Charan, K., Leergaard, T., and Kim, J. (2022). DOPAMAP, high-resolution images of dopamine 1 and 2 receptor expression in developing and adult mouse brains. Sci. Data 9, 1–11. doi: 10.1038/s41597-022-01268-8

Bjerke, I., Øvsthus, M., Andersson, K., Blixhavn, C., Kleven, H., Yates, S., et al. (2018a). Navigating the murine brain: toward best practices for determining and documenting neuroanatomical locations in experimental studies. Front. Neuroanat. 12:82. doi: 10.3389/FNANA.2018.00082

Bjerke, I., Øvsthus, M., Papp, E., Yates, S., Silvestri, L., Fiorilli, J., et al. (2018b). Data integration through brain atlasing: Human Brain Project tools and strategies. Eur. Psychiatry 50, 70–76. doi: 10.1016/j.eurpsy.2018.02.004

Bjerke, I., Ovsthus, M., Checinska, M., and Leergaard, T. (2023). An illustrated guide to landmarks in histological rat and mouse brain images. Zenodo. doi: 10.5281/zenodo.7575515

Bjerke, I., Puchades, M., Bjaalie, J., and Leergaard, T. (2020). Database of literature derived cellular measurements from the murine basal ganglia. Sci. Data 7:211. doi: 10.1038/s41597-020-0550-3

Bjerke, I., Yates, S., Laja, A., Witter, M., Puchades, M., Bjaalie, J., et al. (2021). Densities and numbers of calbindin and parvalbumin positive neurons across the rat and mouse brain. iScience 24:101906. doi: 10.1016/j.isci.2020.101906

Bohland, J., Bokil, H., Allen, C., and Mitra, P. (2009). The brain atlas concordance problem: quantitative comparison of anatomical parcellations. PLoS One 4:e0007200. doi: 10.1371/journal.pone.0007200

Carey, H., Pegios, M., Martin, L., Saleeba, C., Turner, A., Everett, N., et al. (2022). DeepSlice: rapid fully automatic registration of mouse brain imaging to a volumetric atlas. bioRxiv doi: 10.1101/2022.04.28.489953

Chon, U., Vanselow, D., Cheng, K., and Kim, Y. (2019). Enhanced and unified anatomical labeling for a common mouse brain atlas. Nat. Commun. 10:5067. doi: 10.1038/s41467-019-13057-w

Erö, C., Gewaltig, M., Keller, D., and Markram, H. (2018). A cell atlas for the mouse brain. Front. Neuroinform. 12:84. doi: 10.3389/fninf.2018.00084

Fuglstad, J., Saldanha, P., Paglia, J., and Whitlock, J. (2023). HERBS: histological e-data registration in rodent brain spaces. eLife doi: 10.1101/2021.10.01.462770 [Epub ahead of print].

Gesnik, M., Blaize, K., Deffieux, T., Gennisson, J. L., Sahel, J. A., Fink, M., et al. (2017). 3D functional ultrasound imaging of the cerebral visual system in rodents. Neuroimage 149, 267–274. doi: 10.1016/j.neuroimage.2017.01.071

Groeneboom, N., Yates, S., Puchades, M., and Bjaalie, J. (2020). Nutil: a pre- and post-processing toolbox for histological rodent brain section images. Front. Neuroinform. 14:37. doi: 10.3389/fninf.2020.00037

Gurdon, B., Yates, S. C., Csucs, G., Groeneboom, N. E., Hadad, N., Telpoukhovskaia, M., et al. (in preparation). Detecting the effect of genetic diversity on brain-wide cellular and pathological changes in a novel Alzheimer’s disease mouse model. Manuscript in preparation.

Hintiryan, H., Foster, N., Bowman, I., Bay, M., Song, M., Gou, L., et al. (2016). The mouse cortico-striatal projectome. Nat. Neurosci. 19, 1100–1114. doi: 10.1038/nn.4332

Hoover, W. B., and Vertes, R. P. (2007). Anatomical analysis of afferent projections to the medial prefrontal cortex in the rat. Brain Struct. Funct. 212, 149–179. doi: 10.1007/s00429-007-0150-4

Khan, A., Perez, J., Wells, C., and Fuentes, O. (2018). Computer vision evidence supporting craniometric alignment of rat brain atlases to streamline expert-guided, first-order migration of hypothalamic spatial datasets related to behavioral control. Front. Syst. Neurosci. 12:7. doi: 10.3389/fnsys.2018.00007

Kim, Y., Yang, G., Pradhan, K., Venkataraju, K., Bota, M., García del Molino, L., et al. (2017). Brain-wide maps reveal stereotyped cell-type-based cortical architecture and subcortical sexual dimorphism. Cell 171, 456–469. doi: 10.1016/j.cell.2017.09.020

Kjonigsen, L., Lillehaug, S., Bjaalie, J., Witter, M., and Leergaard, T. (2015). Waxholm Space atlas of the rat brain hippocampal region: three-dimensional delineations based on magnetic resonance and diffusion tensor imaging. Neuroimage 108, 441–449. doi: 10.1016/j.neuroimage.2014.12.080

Klein, S., Staring, M., Murphy, K., Viergever, M. A., and Pluim, J. P. W. (2010). Elastix: a toolbox for intensity-based medical image registration. IEEE Trans. Med. Imaging 29, 196–205. doi: 10.1109/TMI.2009.2035616

Kleven, H., Gillespie, T. H., Zehl, L., Dickscheid, T., and Bjaalie, J. G. (2023a). AtOM, an ontology model for standardizing use of brain atlases in tools, workflows, and data infrastructures. bioRxiv doi: 10.1101/2023.01.22.525049

Kleven, H., Bjerke, I., Clascá, F., Groenewegen, H., Bjaalie, J., and Leergaard, T. (2023b). Waxholm Space atlas of the rat brain: A 3D atlas supporting data analysis and integration. [Preprint].

Kondo, H., Olsen, G., Gianatti, M., Monterotti, B., Sakshaug, T., and Witter, M. (2022). Anterograde visualization of projections from orbitofrontal cortex in rat (v1.1). EBRAINS doi: 10.25493/2MX9-3XF

Leergaard, T. B., Lillehaug, S., Dale, A., and Bjaalie, J. G. (2018). Atlas of normal rat brain cyto- and myeloarchitecture [Data set]. Human Brain Project Neuroinformatics Platform. doi: 10.25493/C63A-FEY

Lein, E., Hawrylycz, M., Ao, N., Ayres, M., Bensinger, A., Bernard, A., et al. (2007). Genome-wide atlas of gene expression in the adult mouse brain. Nature 445, 168–176. doi: 10.1038/nature05453

Mikula, S., Trotts, I., Stone, J., and Jones, E. (2007). Internet-enabled high-resolution brain mapping and virtual microscopy. Neuroimage 35, 9–15. doi: 10.1016/j.neuroimage.2006.11.053

Newmaster, K., Nolan, Z., Chon, U., Vanselow, D., Weit, A., Tabbaa, M., et al. (2020). Quantitative cellular-resolution map of the oxytocin receptor in postnatally developing mouse brains. Nat. Commun. 11, 1–12. doi: 10.1038/s41467-020-15659-1

Oh, S., Harris, J., Ng, L., Winslow, B., Cain, N., Mihalas, S., et al. (2014). A mesoscale connectome of the mouse brain. Nature 508, 207–214. doi: 10.1038/nature13186

Osen, K., Imad, J., Wennberg, A., Papp, E., and Leergaard, T. (2019). Waxholm Space atlas of the rat brain auditory system: three-dimensional delineations based on structural and diffusion tensor magnetic resonance imaging. Neuroimage 199, 38–56. doi: 10.1016/j.neuroimage.2019.05.016

Ovsthus, M., Van Swieten, M. M. H., Bjaalie, J. G., and Leergaard, T. B. (2022). Point coordinate data showing spatial distribution of corticostriatal, corticothalamic, corticocollicular, and corticopontine projections in wild type mice. EBRAINS. doi: 10.25493/QT31-PJS

Pallast, N., Wieters, F., Fink, G., and Aswendt, M. (2019). Atlas-based imaging data analysis tool for quantitative mouse brain histology (AIDAhisto). J. Neurosci. Methods 326:108394. doi: 10.1016/j.jneumeth.2019.108394

Papp, E., Leergaard, T., Calabrese, E., Johnson, G., and Bjaalie, J. (2014). Waxholm Space atlas of the Sprague Dawley rat brain. Neuroimage 97, 374–386. doi: 10.1016/j.neuroimage.2014.04.001

Paxinos, G., and Watson, C. (1986). The Rat Brain in Stereotaxic Coordinates, 2nd Edn. Burlington, MA: Academic Press.

Paxinos, G., and Watson, C. (2007). The Rat Brain in Stereotaxic Coordinates, 6th Edn. Burlington, MA: Academic Press.

Paxinos, G., and Watson, C. (2013). The Rat Brain in Stereotaxic Coordinates, 7th Edn. Burlington, NJ: Elsevier Inc.

Puchades, M., Csucs, G., Ledergerber, D., Leergaard, T., and Bjaalie, J. (2019). Spatial registration of serial microscopic brain images to three-dimensional reference atlases with the QuickNII tool. PLoS One 14:e0216796. doi: 10.1371/journal.pone.0216796

Rodarie, D., Verasztó, C., Roussel, Y., Reimann, M., Keller, D., Ramaswamy, S., et al. (2021). A method to estimate the cellular composition of the mouse brain from heterogeneous datasets. PLoS Comput. Biol. 18:e1010739. doi: 10.1371/journal.pcbi.1010739

Scholz, J., LaLiberté, C., van Eede, M., Lerch, J., and Henkelman, M. (2016). Variability of brain anatomy for three common mouse strains. Neuroimage 142, 656–662. doi: 10.1016/j.neuroimage.2016.03.069

Sergejeva, M., Papp, E. A., Bakker, R., Gaudnek, M. A., Okamura-Oho, Y., Boline, J., et al. (2015). Anatomical landmarks for registration of experimental image data to volumetric rodent brain atlasing templates. J. Neurosci. Methods 240, 161–169. doi: 10.1016/j.jneumeth.2014.11.005

Simmons, D., and Swanson, L. (2009). Comparing histological data from different brains: sources of error and strategies for minimizing them. Brain Res. Rev. 60, 349–367. doi: 10.1016/j.brainresrev.2009.02.002

Swanson, L. (2000). What is the brain? Trends Neurosci. 23, 519–527. doi: 10.1016/S0166-2236(00)01639-8

Swanson, L. (2018). Brain maps 4.0—Structure of the rat brain: an open access atlas with global nervous system nomenclature ontology and flatmaps. J. Comp. Neurol. 526, 935–943. doi: 10.1002/cne.24381

Tappan, S. J., Eastwood, B. S., O’Connor, N., Wang, Q., Ng, L., Feng, D., et al. (2019). Automatic navigation system for the mouse brain. J. Comp. Neurol. 527, 2200–2211. doi: 10.1002/cne.24635

Tasic, B., Menon, V., Nguyen, T., Kim, T., Jarsky, T., Yao, Z., et al. (2016). Adult mouse cortical cell taxonomy revealed by single cell transcriptomics. Nat. Neurosci. 19, 335–346. doi: 10.1038/nn.4216

Tocco, C., Øvsthus, M., Bjaalie, J., Leergaard, T., and Studer, M. (2022). Topography of corticopontine projections is controlled by postmitotic expression of the area-mapping gene Nr2f1. Development 149:26. doi: 10.1242/dev.200026

Tyson, A. L., and Margrie, T. W. (2022). Mesoscale microscopy and image analysis tools for understanding the brain. Prog. Biophys. Mol. Biol. 168, 81–93. doi: 10.1016/j.pbiomolbio.2021.06.013

Ueda, H. R., Dodt, H. U., Osten, P., Economo, M. N., Chandrashekar, J., and Keller, P. J. (2020). Whole-brain profiling of cells and circuits in mammals by tissue clearing and light-sheet microscopy. Neuron 106, 369–387. doi: 10.1016/j.neuron.2020.03.004

Van De Werd, H., and Uylings, H. (2014). Comparison of (stereotactic) parcellations in mouse prefrontal cortex. Brain Struct. Funct. 219, 433–459. doi: 10.1007/s00429-013-0630-7

Wang, Q., Ding, S., Li, Y., Royall, J., Feng, D., Lesnar, P., et al. (2020). The Allen mouse brain common coordinate framework: a 3D reference atlas. Cell 181, 1–18. doi: 10.1016/j.cell.2020.04.007

Wilkinson, M., Dumontier, M., Aalbersberg, I., Appleton, G., Axton, M., Baak, A., et al. (2016). The FAIR guiding principles for scientific data management and stewardship. Sci. Data 3, 1–9. doi: 10.1038/sdata.2016.18

Yates, S., Groeneboom, N., Coello, C., Lichtenthaler, S., Kuhn, P., Demuth, H., et al. (2019). QUINT: workflow for quantification and spatial analysis of features in histological images from rodent brain. Front. Neuroinform. 13:75. doi: 10.3389/fninf.2019.00075

Young, D., Darbandi, S. F., Schwartz, G., Bonzell, Z., Yuruk, D., Nojima, M., et al. (2021). Constructing and optimizing 3D atlases from 2D data with application to the developing mouse brain. eLife 10:e61408.

Yushkevich, P., Piven, J., Hazlett, H., Smith, R., Ho, S., Gee, J., et al. (2006). User-guided 3D active contour segmentation of anatomical structures: significantly improved efficiency and reliability. Neuroimage 31, 1116–1128. doi: 10.1016/j.neuroimage.2006.01.015

Zaslavsky, I., Baldock, R., and Boline, J. (2014). Cyberinfrastructure for the digital brain: spatial standards for integrating rodent brain atlases. Front. Neuroinform. 8:74. doi: 10.3389/fninf.2014.00074

Keywords: brain atlases, FAIR data, reporting practices, spatial registration, rat brain, mouse brain, brain-wide analysis, neuroinformatics

Citation: Kleven H, Reiten I, Blixhavn CH, Schlegel U, Øvsthus M, Papp EA, Puchades MA, Bjaalie JG, Leergaard TB and Bjerke IE (2023) A neuroscientist’s guide to using murine brain atlases for efficient analysis and transparent reporting. Front. Neuroinform. 17:1154080. doi: 10.3389/fninf.2023.1154080

Received: 30 January 2023; Accepted: 21 February 2023;

Published: 09 March 2023.

Edited by:

Maaike M. H. Van Swieten, Integral Cancer Center Netherlands (IKNL), Netherlands

Reviewed by:

Yongsoo Kim, Penn State Health Milton S. Hershey Medical Center, United States

Copyright © 2023 Kleven, Reiten, Blixhavn, Schlegel, Øvsthus, Papp, Puchades, Bjaalie, Leergaard and Bjerke. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ingvild E. Bjerke, i.e.bjerke@medisin.uio.no

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
