95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

METHODS article

Front. Neuroinform. , 24 June 2022

Volume 16 - 2022 | https://doi.org/10.3389/fninf.2022.876012

This article is part of the Research Topic Nonlinear Connectivity, Causality and Information Processing in Neuroscience View all 5 articles

In many scientific fields including neuroscience, climatology or physics, complex relationships can be described most parsimoniously by non-linear mechanics. Despite their relevance, many neuroscientists still apply linear estimates in order to evaluate complex interactions. This is partially due to the lack of a comprehensive compilation of non-linear methods. Available packages mostly specialize in only one aspect of non-linear time-series analysis and most often require some coding proficiency to use. Here, we introduce NoLiTiA, a free open-source MATLAB toolbox for non-linear time series analysis. In comparison to other currently available non-linear packages, NoLiTiA offers (1) an implementation of a broad range of classic and recently developed methods, (2) an implementation of newly proposed spatially and time-resolved recurrence amplitude analysis and (3) an intuitive environment accessible even to users with little coding experience due to a graphical user interface and batch-editor. The core methodology derives from three distinct fields of complex systems theory, including dynamical systems theory, recurrence quantification analysis and information theory. Besides established methodology including estimation of dynamic invariants like Lyapunov exponents and entropy-based measures, such as active information storage, we include recent developments of quantifying time-resolved aperiodic oscillations. In general, the toolbox will make non-linear methods accessible to the broad neuroscientific community engaged in time series processing.

In science, researchers usually strive to explain natural phenomena by the most parsimonious way possible. Based on experimental evidence, the scientist formulates a model of how the data was generated and successively tests it. A model is deemed fitting if it is able to make prediction with an acceptable accuracy. For simple relationships such as force and acceleration in classic Newton mechanics, linear models offer the best fit. In these models, small variations of the inputs lead to small differences in the output observables. All of the remaining variance left unexplained by the model is thought to be generated by noise, either due to the circumstances of the measurement, or due to some inherent stochasticity of the underlying system (e.g., Brownian motion). While linear models capture some phenomena in nature, they insufficiently explain more complex systems, e.g., as studied in neuroscience (Faure and Korn, 2001), climatology (Ghil et al., 1991), geophysics (Lovejoy et al., 2009), or genetics (Mazur et al., 2009). Complex systems are often characterized by non-linear relationships, with unique features not observed in linear systems. Here, small input variations may lead to drastic differences in a system’s future behavior, e.g., observed in neurons during generation of action potentials (Fitzhugh, 1960). Also, non-linear systems often produce emergent behavior, e.g., swarm behavior (Garnier et al., 2007), where its collective dynamics cannot trivially be described by a sum of its parts (principle of superposition). Whereas linear models typically need stochastic components to capture complex relationships, non-linear models – e.g., for predator–prey relationships (Danca et al., 1997) – often suffice with simple deterministic equations, thereby increasing their predictive value. One highly relevant area for the application of non-linear methods is time series analysis. A time series is defined as a series of data points indexed in temporal order, as e.g., measured by electroencephalography in neuroscience. For non-linear time series analysis, the measured data is implicitly assumed to be sampled from a non-linear system. While a linear time series is completely characterized by its first two moments and expresses a Gaussian distribution, non-linear time series may express high order temporal dynamics. As such linear time series may be discriminated from non-linear time series by means of surrogate tests employing, e.g., phase randomization techniques (Schreiber and Schmitz, 1996). The complex temporal dynamics of non-linear time series also often exhibit complex phase-space representations, which may be characterized by their geometric properties.

In neuroscience, single-cell activity is highly non-linear as neurons produce spiking activity according to the all-or-nothing principle. However, while non-linearity is a hallmark of microscopic dynamics it is still unclear if and how this transposes to the macroscopic scale. Electroencephalographic measurements representing large scale brain activity have been shown to possess characteristics of chaotic processes in the 1980s and early 1990s (Babloyantz et al., 1985; Soong and Stuart, 1989; Pritchard and Duke, 1992; Stam et al., 1994). Chaotic processes are characterized by highly unstable non-linear dynamics with a very complex topography in the state-space representations of their dynamics (Kantz and Schreiber, 2004). However, with the introduction of surrogate techniques it became clear, that certain linear systems, i.e., filtered linear noise could also produce spurious chaotic hallmarks (Schreiber and Schmitz, 1996). Thus, many previous findings on chaos in the brain might not hold up today as they have not been rigorously compared to surrogate data. While the early enthusiasm for simple chaotic dynamics in the brain had somewhat faded in the 1990s, non-linear dynamics in macroscopic neurophysiological data got a reappraisal in the early 2000s which still holds on and challenges the view of large-scale brain dynamics being the result of linear filtered noise processes (for a review see Stam, 2005). Instead, studies applying surrogate testing on resting state EEG data of healthy subjects suggest that brain dynamics might fluctuate between non-linear limit-cycle and filtered noise processes (Stam et al., 1999; Valdes et al., 1999; Freyer et al., 2009, 2011). Similarly, high-amplitude non-linear activity originating from limit-cycle or even chaotic dynamics have been shown to be present in pathological states, e.g., during seizures of epilepsy patients (Jing and Takigawa, 2000; Andrzejak et al., 2001; Ferri et al., 2001) or in Parkinson’s patients (Pezard et al., 2001; Chen et al., 2010). More recently, the increasing research focus on certain macroscopic oscillatory activities with characteristic asymmetric waveform shapes, e.g., the mu rhythm observed in the sensorimotor cortex undermines the importance of non-linear research in neuroscience (for a review see Cole and Voytek, 2017). Non-linearity in neuroscience is often attributed to the anatomical and physiological complexity of the neuronal networks present at all scales, ranging from small scale cell-to-cell interactions up to synchronous oscillations of millions of neurons in the cortex.

The field of complex systems theory is a synthesis of many research areas involved in non-linear analysis, including the study of dynamical systems, information theory and recurrence analysis (Nicolis and Rouvas-Nicolis, 2007). With increasing computational capabilities of modern computers, these methods become more easily applicable and may thus become complementary to standard linear techniques. With the advent of machine learning approaches, non-linear statistics might prove to be valuable features complementing linear statistics, as has been shown, e.g., in studies to distinguish neurological from healthy subjects in depression (Hosseinifard et al., 2013), Parkinson’s (Pezard et al., 2001), fibromyalgia (Paul et al., 2019), Alzheimer’s (Houmani et al., 2015), or epilepsy patients (Yuan et al., 2011; Li et al., 2020). Current state-of-the-art machine learning techniques like long short-term memory neural networks (LSTM) are especially suited for experimental data since they were explicitly developed for prediction and classification of non-linear time series. Studies have used them, e.g., for estimation of effective connectivity (Wang et al., 2018; Abbasvandi and Nasrabadi, 2019), emotion detection (Zangeneh Soroush et al., 2018; Dar et al., 2022) or classification of EEG-based motor imagery (Khademi et al., 2022). Further, methods from signal-processing may produce spurious results for non-linear signals, as has been recently demonstrated for phase-amplitude coupling (Gerber et al., 2016; Lozano-Soldevilla et al., 2016). Up to this point a comprehensive toolbox combining methods from the wide field of complex systems theory within a unified framework is lacking in the field of neuroscience. Thus, we developed NoLiTiA, a free, open-source MATLAB toolbox for non-linear time series analysis. The toolbox covers established and novel methods from three distinct fields of complex systems theory: dynamical systems theory (Kantz and Schreiber, 2004), recurrence quantification analysis (Eckmann et al., 1987) and information theory (Shannon and Weaver, 1949, Figure 1). Our objective is to provide an easily accessible, intuitive toolbox to a broad scientific community.

In the following sections we will first give a short introduction to the topics covered by the toolbox, explain the general workflow and implementation, and finally validate the core methodology and present some example applications.

The toolbox comprises methods from three different fields of complex systems theory: (a) dynamical systems theory, (b) recurrence quantification analysis, and (c) information theory (Figure 1). While information theory is completely independent from (a) and (b), recurrence quantification analysis was originally derived from dynamical systems theory. However, as recurrence quantification analysis becomes more developed and the field of methods increasingly extensive, especially through seminal works from Eckmann et al. (1987); Marwan and Kurths (2002), Romano et al. (2004), and Marwan et al. (2007), it gained its own standing which is why we defined it as an independent field alongside dynamical systems theory. In this section we will shortly introduce all three fields alongside some of the most relevant methods and estimators implemented in NoLiTiA. The full documentation of all functions and parameters can be found in the toolbox manual (see Supplementary Material).

The field of dynamical systems theory includes methods to characterize the temporal evolution of phase-space observables (states), i.e., the combination of all independent variables uniquely determining a state in multidimensional systems (Kantz and Schreiber, 2004). An intuitive example is the pendulum, of which the current state is uniquely determined by its momentary position and acceleration. Thus, its phase-space is two dimensional and in the case of no friction forms an unstable periodic orbit, i.e., closed trajectory in response to small perturbations of its resting position. With friction, phase-space trajectories spiral into a fixed point at the resting position of the pendulum. In the case of a driven pendulum, the phase-space also forms a closed trajectory, which attracts nearby trajectories induced by small perturbations. In contrast to the undriven pendulum with no friction it is thus a stable periodic orbit, as it is resistant to small perturbations. Both, the fixed point of an undriven pendulum with friction and the closed trajectory of a driven pendulum are examples of so-called attractors and determine the systems qualitative behavior. As the name implies, attractors attract system states in its near vicinity and keep its dynamics bounded to a specific region in phase-space. Non-linear systems may possess very complex attractors which are composed of an indefinite number of fixed points and periodic orbits. Using methods from dynamical systems theory, it is possible to characterize such attractors and estimate short- and long-term behavior of non-linear systems, e.g., how fast initially similar states diverge over time in phase-space. This allows crucial insights into the temporal stability of the underlying system and furthermore makes it possible to distinguish random from chaotic or highly complex but deterministic behavior. By studying the dynamic skeleton of the system, i.e., its fixed points and (unstable) periodic orbits, it is also possible to control its behavior to a certain extent (Bielawski et al., 1994; Schöll, 2010; Zhu et al., 2019). For most methods from dynamical systems theory, it is necessary to have a phase-space representation of the measured time series. Although, in most experimental setups it is not possible to measure all state variables simultaneously, under certain conditions, Takens’ delay embedding theorem (see section below) guarantees the reconstruction of a topologically equivalent phase-space from univariate time series (Takens, 1981). The implemented methods include, e.g., testing for non-linearity (Schreiber and Schmitz, 1997), phase-space reconstruction (Takens, 1981), topological complexity measures (correlation dimension, Grassberger and Procaccia, 1983, false nearest neighbors, Kennel et al., 1992), non-linear prediction (Ragwitz and Kantz, 2002), measures for detecting temporal instability (Lyapunov exponents, Rosenstein et al., 1993; Kantz, 1994) and for detecting unstable periodic orbits (So et al., 1996).

Many methods from classic signal processing like Fourier analysis, but also newer methods like phase-amplitude coupling rely on the assumption of linearity. NoLiTiA utilizes a test for non-linearity based on the generation of surrogates. According to Schreiber and Schmitz (1997) quantification of temporal asymmetry of a time series X with data points xt is most sensitive to distinguish linear from non-linear processes:

with <.. > indicating the average. The measure quantifies the sharpness of a signal’s transition backwards in time. Smoother signals are more likely to be generated by linear processes. However, to exclude a stochastic origin, results need to be compared to surrogates. The time-inversion statistic Qt is first calculated for the original data and then for a specified number of surrogates. A two-sided Z-statistic is then calculated and compared to the distribution of surrogates. If the absolute Z-score is smaller than 1.96 the test is significant at an alpha-level of 5%. The toolbox provides several algorithms for surrogate data generation to test the null hypothesis of test data being generated by a linear process. The implemented algorithms include shuffling of time points, shuffling of data segments, phase-randomization and amplitude-adjusted phase-randomization. Briefly, the latter algorithm for generating surrogates is implemented as follows: (1) original data is Fourier transformed, (2) phases of Fourier transformed data are randomized, (3) the inverse of the Fourier transform is performed on phase randomized data. After these steps the following four steps are repeated iteratively: (4) rank sort surrogate data according to original data (5) calculate Fourier transform of surrogates, (6) exchange surrogate amplitudes with amplitudes of original data, (7) calculate the inverse of Fourier transform (Schreiber and Schmitz, 1996). The test may be invoked by calling the nta_timerev.m function. At least the surrogate method (default: phase-randomization) and the number of surrogates (default: 100) should be specified.

Embedding of univariate time series in phase-space is implemented using Takens’ delay embedding theorem (Takens, 1981). By time-shifting a univariate time series x(t) d times by a factor τ, the time series can be embedded in a d-dimensional phase-space:

where TP indicates the transpose and xt being the d-dimensional state vector at time t.

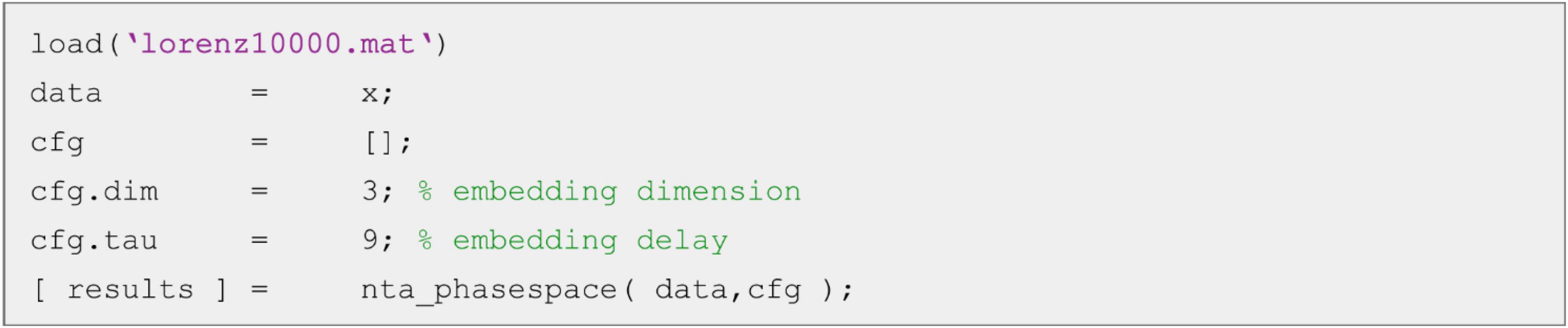

The phase-space may be reconstructed using the nta_phasespace.m function (Figure 2).

Figure 2. Example script for phase-space reconstruction. The minimum necessary parameters are the embedding dimension cfg.dim and the embedding delay cfg.tau.

The two embedding parameters cfg.dim ( = d) and cfg.tau need to be specified to get reasonable results. The phase-space matrix (time × embedding dimension) is returned in the field embTS of the results structure array. As phase-space embedding is a key procedure in non-linear time series analysis, nta_phasespace.m is called as a subfunction by most other routines in the toolbox.

Despite the possibility to define the necessary parameters for phase-space reconstruction ad hoc, both parameters dim and τ may be automatically optimized based on whether data is generated by a stochastic or deterministic process. The deterministic approach involves the method of false nearest neighbors for dimensionality optimization and auto-mutual information for τ optimization (Kennel et al., 1992; Cao, 1997, see section “Information Theoretic Measures”). The stochastic approach co-optimizes dim and τ using the Ragwitz prediction criterion for Markov-models (Ragwitz and Kantz, 2002) (see section Non-linear Prediction).

Embedding optimization may be invoked by calling the nta_optimize_embedding.m function.

For the reconstruction of the phase-space, the dimension d may be chosen ad hoc or optimized with regard to some advantageous criteria. One possibility is to optimize d with regard to the topology of the phase-space using the false nearest neighbors approach introduced by Kennel et al. (1992). The algorithm (nta_fnn.m) quantifies the number of false neighboring points of a time series X in a reconstructed phase-space which arise due to an insufficient embedding. If the embedding dimension is chosen too low, points which should be far away on the attractor may become direct neighbors, due to them being projected into each other’s vicinity. A toy example would be two points in a Cartesian two-dimensional coordinate system, which have the same x-coordinate but different y-coordinates. Projecting both points on the x-axis, i.e., reducing the dimension, would results in both points being on top of each other, or in other words, become false neighbors. On the other hand, projecting both points into the next higher dimension does not increase their distance to each other. The false nearest neighbors approach embeds the time series successively into higher dimensions and counts the reduction of neighbors, which arise due to projections:

with θ being the Heaviside-step function, i being the temporal index of x, j being the temporal index of n, n(xi) indicating the next neighbor of xi,||..|| indicating the maximum norm, Rtol being a distance threshold, Atol being a loneliness threshold and SD indicating the standard deviation of the time series. The distance threshold Rtol (default: 10) determines the minimum distance that a neighbor needs to reach in the next higher dimension to be considered a false nearest neighbor. The loneliness threshold Atol (default: 2) corrects for nearest neighbors which initially are already too far away and thus cannot move much further apart from each other due to the limits of the attractor’s diameter [≈SD(x)]. The Atol threshold is especially important for short data sets which generate only sparsely filled phase-spaces. The lowest dimension, for which the percentage of nearest neighbors drops to zero is a reasonable choice for embedding (Kennel et al., 1992). Besides τ a range of embedding dimensions should be specified to test for (default: 1–9).

While the false nearest neighbors algorithm is well suited for determining the embedding dimension of deterministic systems it may return spurious results if the underlying process is generated by a stochastic process. Ragwitz and Kantz (2002) proposed the application of a simple non-linear predictor (Farmer and Sidorowich, 1987) to embed stochastic processes with the Markov property, i.e., processes with finite memory. Here d-dimensional states xt at each time point t are predicted by averaging the iterates of all closest spatial neighbors of a neighborhood U within a distance ε (function: nta_ragwitz.m):

The root mean squared prediction error (RMSPE) is subsequently calculated.

This procedure is repeated for all query points N for each combination of embedding parameters dim and τ. The combination of embedding parameters for which the RMSPE is minimum is suggested for further usage. The same approach has been implemented, e.g., in the TRENTOOL and JIDT software packages for the estimation of information theoretic measures (Lindner et al., 2011; Lizier, 2014). Possible configuration parameters include the range of embedding dimensions (default: 2–9) and delays (default: 10–100% autocorrelation time).

The phase-space representation of non-linear processes, i.e., the attractor often possesses a scale invariance of its geometrical topology. This so called fractality commonly serves as an indicator for the complexity of the analyzed system (Shekatkar et al., 2017; Wiltshire et al., 2017; Hoang et al., 2019). It can be quantified by estimating the correlation dimension D2 (Grassberger and Procaccia, 1983) defined by:

where C(ε) counts all pairs of states xi and xj on the attractor which are closer than a distance ε:

with N being the total number of points, θ being the Heaviside-step function and | | ..| | indicating some distance norm.

For chaotic systems, a definite fractal dimension can be quoted, if an estimate of D2 can be found for a reasonable scaling range and if this estimate is invariant for a reasonable number of embedding dimensions higher than the order of the process (Kantz and Schreiber, 2004). According to Theiler (1986) temporal neighbors in phase-space have to be excluded. For this so-called Theiler window, one may choose twice the autocorrelation time, i.e., the first zero crossing of the autocorrelation function (Theiler, 1986). Another option is to use a so called space-time separation plot (Provenzale et al., 1992), which allows to depict spatial distances in phase-space as a function of temporal distances (function: nta_spacetimesep.m). Estimation of D2 may be invoked by using the nta_corrdim.m function.

It is calculated by estimating the slope of a line fitted to the logarithm of C as a function of log(ε):

Among the most important parameters to specify is the range of distances ε to test (default: 1–100% of attractor diameter).

A characteristic feature of non-linear systems is the exponential divergence of similar states in phase-space (Rosenstein et al., 1993; Kantz, 1994):

with δt0 indicating the initial distance of two states, δΔt indicating the distance of the two states after t time steps and λ being the largest Lyapunov exponent, i.e., the divergence rate. A large positive Lyapunov exponent indicates a very unstable system, where small differences of states, e.g., introduced by external perturbances may quickly lead to drastic changes of the temporal behavior. A negative exponent is related to dissipative, i.e., converging dynamics. Estimation of Lyapunov exponents have e.g., been used to explain and even predict seizures in epilepsy (Lehnertz and Elger, 1998; Aarabi and He, 2017; Usman et al., 2019). In the context of signal processing it has been shown, that the Lyapunov exponent is also relevant for the estimation of other measures like Granger-causality, as systems in which λ approaches zero from below have very unstable autoregressive representations (Friston et al., 2014).

The toolbox implements two algorithms for estimating the maximum Lyapunov exponent:

Kantz’ algorithm (Kantz, 1994):

Rosenstein’s algorithm (Rosenstein et al., 1993):

The difference between both algorithms is that Rosenstein’s algorithm only utilizes the closest neighbor of each point, while Kantz’ algorithm takes the average of all neighbors within a given neighborhood-size. Both, however, yield very similar results (Dingwell, 2006).

Similar to the correlation dimension an estimation of the maximum Lyapunov exponent for chaotic systems should be invariant for succeeding embedding dimensions of at least the order of the underlying process or else it cannot be concluded to be a property of an invariant attractor of a deterministic system (Kantz and Schreiber, 2004). The function nta_lya.m may be used to estimate the largest Lyapunov exponent.

It is calculated similar to the estimate of the correlation dimension by fitting a straight line to the logarithm of δΔt as a function of t. Important parameters to specify are the number of temporal iterations δΔt (default: 10) and, in the case of the Kantz’ algorithm, the neighborhood-size U (default: 5% of attractor diameter).

In non-linear systems unstable periodic orbits form its skeleton, thus determining its overall dynamics. By detecting these periodic orbits it is possible to control the systems long term behavior by applying a feedback control scheme (Bielawski et al., 1994; Schöll, 2010; Zhu et al., 2019). The function nta_upo.m may be used to determine the location of any period one orbits in a two-dimensional phase-space. The algorithm implemented in NoLiTiA is the one proposed by So et al. (1996). Briefly, the algorithm applies a transformation procedure, where it locally linearizes the phase-space and projects any points in its vicinity on the nearest fixed point, while simultaneously dispersing any unrelated points far away. The procedure may be repeated n times for maximal efficiency. A surrogate approach using the same routines as described for the non-linearity testing may be applied to test for statistical significance of any possible fixed points. For one-dimensional maps plotted in two-dimensional phase-space the fixed points lie on the intersection of the first diagonal and the attractor. Thus, only the first diagonal needs to be tested statistically. Most importantly, the user should specify the number of transformations to perform (default: 100) and whether and if how many surrogates should be generated for statistical testing (default: 0).

Recurrence-based measures are derived from the field of dynamical systems theory and exploit the neighborhood-relationships of states by reducing arbitrarily high dimensional phase-spaces to two dimensional recurrence matrices M (Eckmann et al., 1987; Marwan et al., 2007):

where θ indicates the Heaviside-step function, ||..|| indicates a distance norm (e.g., Euclidean norm), ε is the neighborhood-size in phase-space and xt is the phase-space vector at time t. As the name suggests each value one represents one recurrence in time which is coloured in black in the graphical representation of the recurrence matrix (Figure 3). Depending on the local dynamics of the system, the recurrence plot shows different motifs. Parallel diagonal lines are a hallmark of periodicity and determinism. In contrast, vertical lines appear due to laminar, i.e., unchanging behavior. White corners may appear due to slow drifts i.e., non-stationarity and isolated dots most often indicate stochastic behavior (Eckmann et al., 1987; Marwan et al., 2007).

Figure 3. Examples for recurrence plots with different motifs. (A) White noise, (B) periodic signal with a period of 100 samples, (C) Lorenz-system (see section “Validation and Example Applications” for an explanation of the Lorenz-system). X and Y axis represent temporal order of states. Each black dot indicates a recurrence of state i at time j. Figure adapted from Weber and Oehrn (2022).

The recurrence matrix as well as several derived measures like determinism (Marwan and Kurths, 2002), laminarity (Marwan et al., 2002), generalized autocorrelation (Romano et al., 2005) or recurrence period density entropy (Little et al., 2007) may be calculated using the nta_recurrenceplot.m function. An important parameter to specify is either the neighborhood-size ε (default: 0) or the recurrence rate, which automatically determines the fill-rate of the recurrence plot in percent of the total possible fill-rate, by adjusting individual neighborhood-sizes for each point in phase-space (default: 5%).

Aside from classic recurrence quantification analysis measures, as well as bivariate extensions (joint nta_jointrecurrenceplot.m) and cross recurrence plots (nta_crossrecurrenceplot.m, Marwan and Kurths, 2002; Romano et al., 2004), two recently proposed measures are implemented: time- and scale-resolved recurrence amplitude analysis (Weber and Oehrn, 2022).

Oscillatory phenomena can be abundantly observed in many scientific fields. Examples are the El-Nino-Southern Oscillation in climatology (Timmermann et al., 2018), the Belousov-Zhabotinsky reaction in chemistry (Hudson and Mankin, 1981), predator–prey relationships in biology (Danca et al., 1997) or electrophysiological brain activity in neuroscience (Buzsáki and Draguhn, 2004). Oscillatory phenomena are often analyzed by means of spectral analysis methods like Fourier- or Wavelet analysis (Muthuswamy and Thakor, 1998; Oehrn et al., 2018). A major property of these methods is the assumption, that the time series on which they are applied to can be best characterized by a superposition of sine waves. However, many natural oscillations are non-sinusoidal and would be imperfectly represented by standard spectral methods (Philander and Fedorov, 2003; Cole and Voytek, 2017). Application of these methods results in spurious harmonics in the frequency domain and might even lead to false positive observations of derived methods, like phase-amplitude coupling (Gerber et al., 2016; Lozano-Soldevilla et al., 2016). Another basic assumption when applying classic methods is a certain periodicity of the analyzed signal. Studies frequently observe oscillatory behavior which is not perfectly periodic, but nearly periodic or quasi-periodic (Ko et al., 2011; Li et al., 2011; Thompson et al., 2014; Yousefi et al., 2018). Such signals are represented by broad peaks in the frequency domain, which might even mask adjacent frequency components. Over the last years methods from recurrence analysis have become an alternative to classic spectral methods, especially when analysing quasi-periodic (Zou et al., 2008) or non-sinusoidal (Little et al., 2007) oscillatory activity. In the following, we will briefly describe the estimation procedure of the time-resolved recurrence amplitude spectrum (TRAS) and spatially-resolved recurrence period spectrum (SREPS).

A d-dimensional state xt is defined to be recurrent if, after Δt time steps, it is within a neighborhood Uε of xt (Eckmann et al., 1987):

For the limit of ε→ 0 xt+Δt is defined to be periodic with period Δt, if Δt is the same for all t. Based on the methods of close returns (Lathrop and Kostelich, 1989) the recurrence time T of any closest temporal neighbor xt + Δt of xt within a spatial neighborhood Uε may be estimated as the difference (Little et al., 2007; Ngamga et al., 2007):

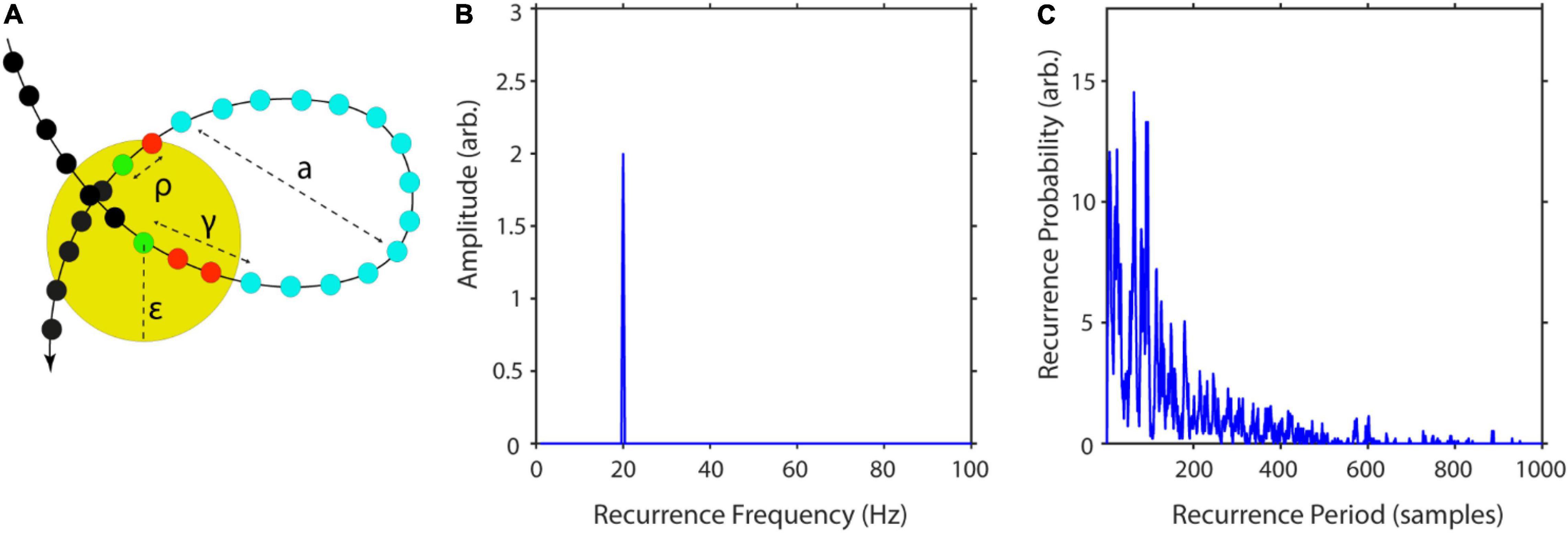

where γ is the difference in samples between xt and xt first leaving Uε. ρ is the sample difference between xt reentering Uε and xt+Δt (Figure 4A). This is equivalent to calculating the number of adjacent, vertical zeros in M (equation 12, see also Figure 3). After repeating this procedure for all state vectors, one can calculate a histogram R(T) with bin size equal to 1 sample and a number of bins equal to the longest recurrence time Tmax. By normalizing R(T) by the total number of recurrences, one gets the recurrence probability density (Little et al., 2007; Figure 4C). In the following, we will refer to this measure simply as recurrence probability. To account for the high number of short period recurrences in noisy data, P(T) can be calculated for a predefined range of T, i.e., specifying a Tmin and Tmax.

Figure 4. Computation steps for recurrence amplitudes estimator. (A) Example of a recurrent trajectory with amplitude a. The yellow circle indicates the neighborhood of size ε of the reference point (green). The arrow indicates the temporal flow, while the small colored circles represent succeeding states. In this example, it takes t = 14 samples for the reference point to leave and re-enter the neighborhood. (B) Recurrence amplitude of a 20 Hz sinusoid with amplitude a = 2. (C) Example of recurrence probability as a function of recurrence periods for the Lorenz system. Raw recurrence amplitudes are weighted with their respective recurrence probabilities to correct for spurious rare recurrences with high amplitudes. Figure adapted from Weber and Oehrn (2022).

The recurrence probability thus measures the probability of a recurrence occurring after T time steps.

As recurrent states form closed trajectories in phase-space, their energy is contained within the phase-space volume. Thus, a reasonable approximation of the recurrent amplitude of a specific frequency is to estimate the maximum phase-space diameter and weight it by the respective recurrence probability of this frequency (Figure 4B):

where q is the number of recurrences per T and n is the number of samples per recurrence (see Figure 4A).

The estimation of P(T) is highly dependent on ε. A choice of ε too small would result in many empty neighborhoods and thus in a high degree of statistical errors. If ε is chosen too large recurrences are not local anymore and recurrence periods are underestimated. To avoid ambiguity and to effectively eliminate the parameter ε one may calculate P(T) over a wide range of values, resulting in a spatially-resolved recurrence period spectrum (SREPS).

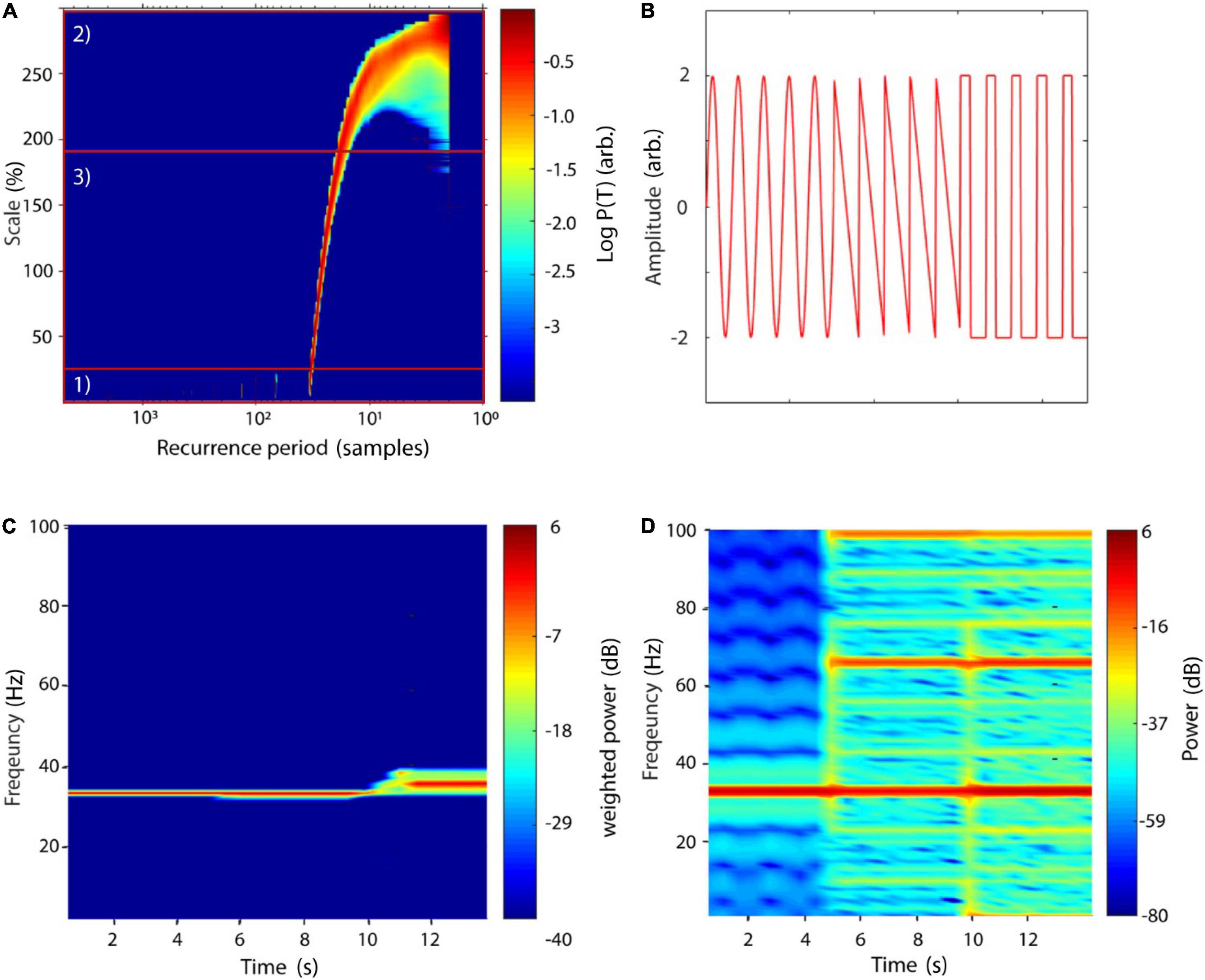

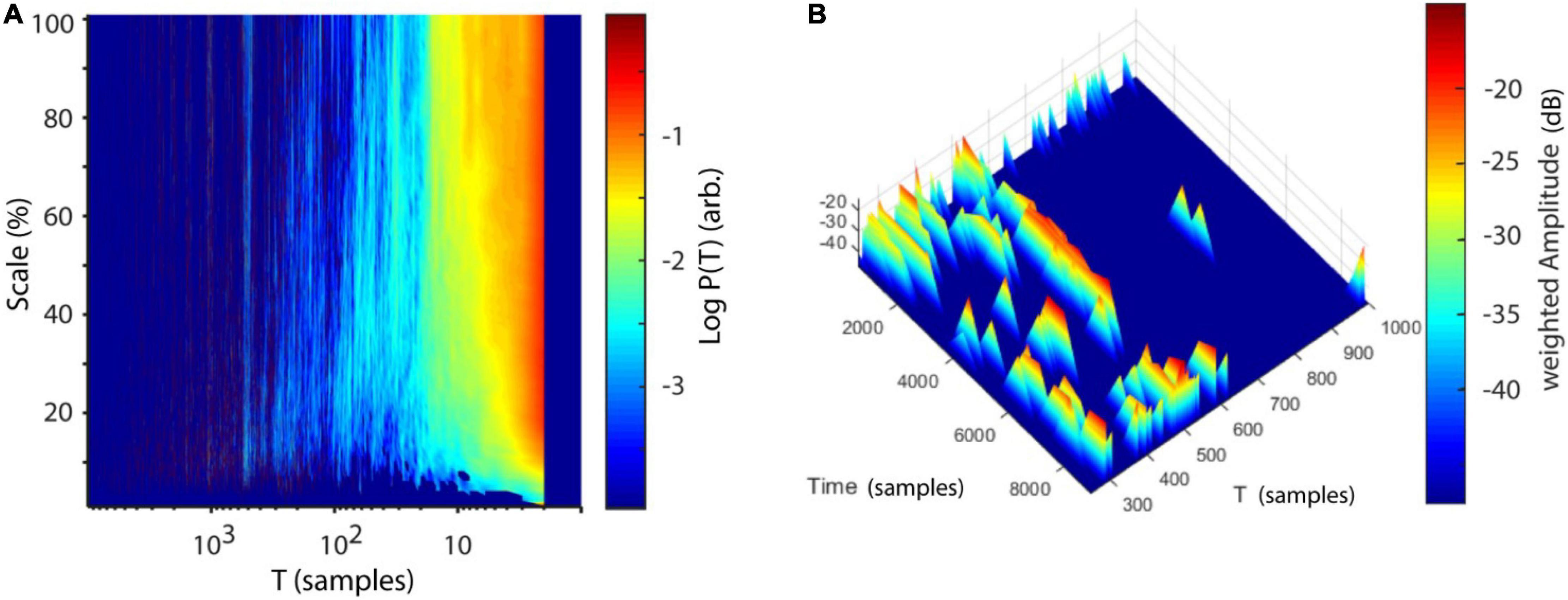

In NoLiTiA, we calculate SREPS as a function of T and ε. ε may be defined in percent of the standard deviation of the analyzed time series. In the SREPS one would expect to find three regions of interest depending on ε (e.g., see Figure 5A). For very small ε the SREPS is governed by statistical errors and a uniform distribution across all T. At the crossing of the noise level, multiples of the true recurrence periods might appear, due to the recurrent points slightly missing the neighborhood of the reference point. For very high ε the distribution of P(T) is heavily shifted to small T with only few points leaving and re-entering any neighborhood, with the extreme case of a neighborhood-size fully engulfing the phase-space. If the time series contains any oscillatory activity, slowly shifting but continuous spectral peaks occur in the intermediate range of ε. The shifting occurs, as the increasing neighborhood-size succeedingly engulfs more and more adjacent samples, which leads to an underestimation of the recurrence period. As stated above, the best estimate of the recurrence period can thus be found at the crossing of the continuous spectral peaks and the noise regime for small ε (Figure 5A). SREPS may be estimated using the nta_recfreq_en_scan.m function. The most important parameter is the range of neighborhood-sizes, which may be specified by setting the parameter cfg.ens to a three element vector including minimum, step size and maximum ε (default: [1 1 100], i.e., 1–100 % of SD of data).

Figure 5. SREPS and TRAS of a compound signal. (A) SREPS of a 50 s time series with a 3 Hz oscillation, sampled at 100 Hz (+8% noise relative to STD of raw signal). Note the three regions: (1) for small neighborhood-sizes SREPS is governed by noise, (2) for large neighborhoods SREPS is biased toward small periods, (3) at the crossing of the noise level, the true recurrence period of 33 samples can be observed. (B) Signal of three concatenated oscillations, each with a duration of 5 s, sampled at 1000 Hz: (1) sinusoid of 33 Hz, (2) sawtooth signal at 33 Hz, and (3) rectangle signal at 33 Hz. Note that shorter segments of each oscillatory signal are depicted for illustration purposes. Arb., arbitrary units. (C) TRAS of the compound signal (window length: 1100 ms, overlap 50%) with weighted amplitudes. (D) Short-time Fourier transform of the same signal (window length: 1100 ms, overlap: 50%). (A,C,D) Adapted from Weber and Oehrn (2022).

One disadvantage of the method is its lack of temporal resolution. This, however, is important for many scientific fields, where non-stationary data is present. In neuroscience one is often interested in contrasting a baseline at rest with neuronal activity modulated by an internal or external stimulus (Oehrn et al., 2015). A straightforward solution for implementing a time-dependent recurrence amplitude spectrum (TRAS) is to simply divide the time series into sections of equal length and compute the spectrum for each of them.

with wn indicating the nth temporal window of input time series x, with window size s = [xn… xn +windowsize-1].

A similar approach is typically used for short-time Fourier transform (STFT). Naturally, the choice for the window length determines the longest resolvable recurrence period. In order to smooth the boundaries of each temporal segment, NoLiTiA uses windows with 50% overlap. In Figure 5C we compare TRAS to short-time Fourier transform (STFT, Figure 5D) using a concatenated signal of three different oscillations each sampled at 1000 Hz: (1) a sinusoid of 33 Hz, (2) a sawtooth signal at 33 Hz and (3) a rectangle signal at 33 Hz (Figure 5B). Note the occurrence of harmonics in Figure 5D for the non-sinusoidal signals, which are absent in the TRAS. Estimation of TRAS may be invoked by calling the nta_wind_recfreq.m function. Besides an appropriate neighborhood-size, it is also important to specify a reasonable time window length (default: 1/10 of data length in samples). There is no theoretical basis on how to define ε for experimental data and as such data should only be interpreted in the light of transparent reporting. However, from a practical point of view it can be argued that a good start is choosing ε in the range of 50–100% of the SD of neuronal time series data, as the measurement noise typically is of that order of magnitude (Ryynänen et al., 2004). As can be seen, in Figure 5A the estimate of recurrence periods is quite robust above the noise regime and only shifts very slowly toward lower periods.

One possibility to study complex systems is by analysing its distributed computing capabilities (Fernández and Solé, 2006; Lizier, 2012). Distributed computing describes a systems information sharing and processing routines. Especially in neuroscience, information theoretic measures have witnessed a surge of interest over the last decade (Dimitrov et al., 2011) and were e.g., used to study neural coding (Quiroga and Panzeri, 2009) or communication (Weber et al., 2020). Measures from information theory quantify the information content of variables or equivalently the amount of uncertainty reduced when measuring the outcome of a random event. The most basic information theoretic measure is the Shannon entropy H (Shannon and Weaver, 1949):

or for continuous variables the differential entropy:

where p(x) indicates the probability of variable X taking the value x.

For most information theoretic measures, two different estimators are supplied:

(1) an estimator using a simple binning approach (Cover and Joy, 1991).

(2) a nearest neighbors-based estimator (Kozachenko and Leonenko, 1987), with the latter being more computational demanding but unbiased for mutual information.

Most of the functions provided offer an average, as well as a time-resolved local variant of the information theoretic measures (Lizier, 2012).

Based on the concept of Shannon entropy, the mutual information determines the shared information content between two processes X and Y (Cover and Joy, 1991, Figure 6):

A derived concept is the auto-mutual information between a signal X and a time shifted copy of itself. The auto-mutual information may be used to estimate the optimal delay τ for reconstructing the phase-space (Fraser and Swinney, 1986). It is used as a subroutine for the function nta_optimize_embedding.m when specifying the input parameter cfg.method as “deterministic” (see section Phase-Space Reconstruction).

By calculating the mutual information between the present and the immediate past state of a process X, one can estimate the active information storage (AIS). AIS quantifies how much information is currently in use for computing the next state (Lizier, 2012):

As the estimation of all entropy-based measures strongly depends on the estimation of the involved probability functions, the number of bins (for binning algorithms, default: 0) and the number of neighbors (for the nearest neighbors-based algorithms, default: 4) should be specified. An optional optimization algorithm for selecting the number of bins for the estimation of probabilities is implemented using the Freedman–Diaconis rule (Freedman and Diaconis, 1981).

For a complete list of functions, implemented methods, and parameters please refer to the manual in the Supplementary Material.

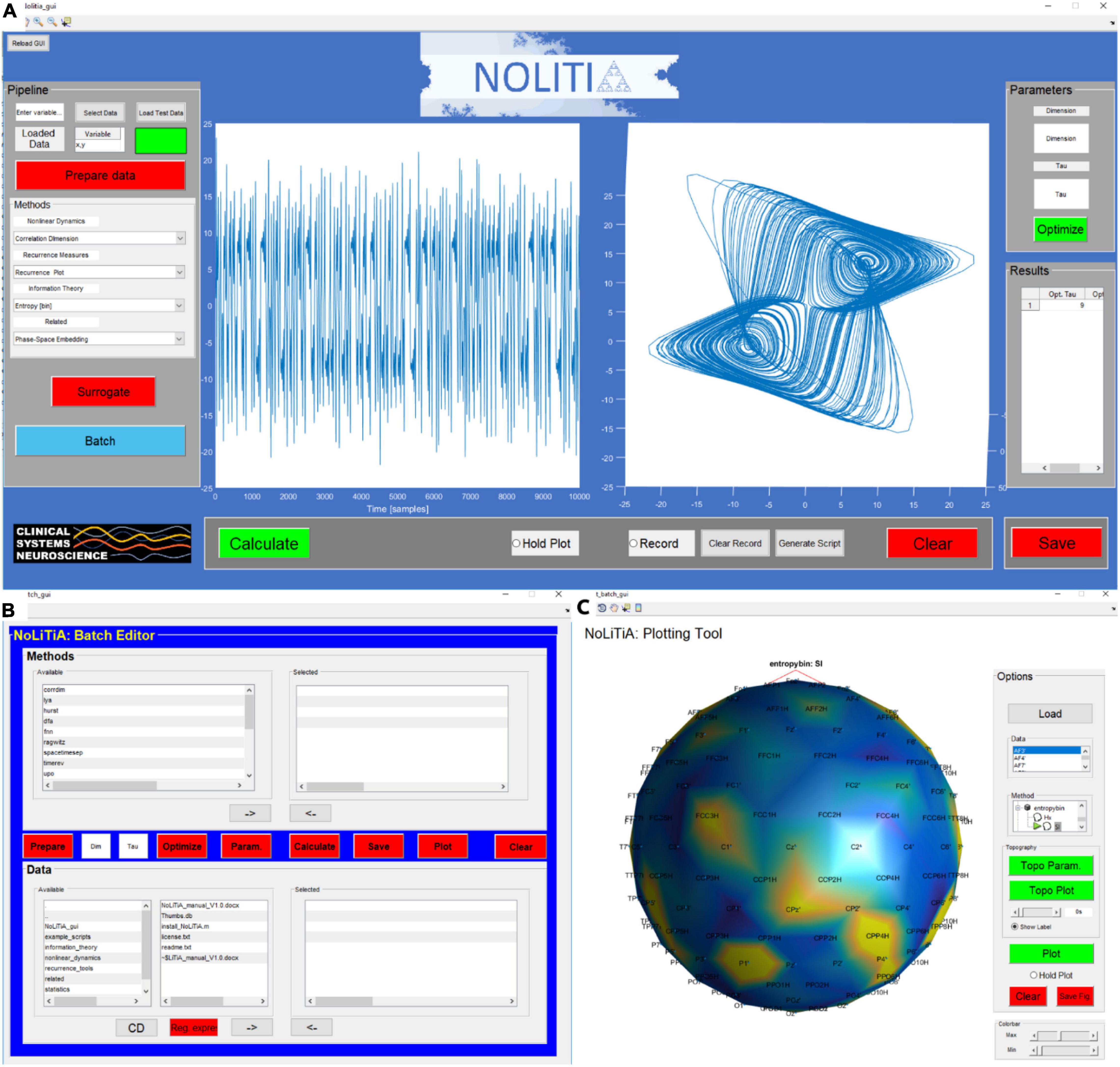

Depending on the degree of needed flexibility, experience in programming and ease of use, NoLiTiA offers three different ways to analyse data: (1) a graphical user interface (GUI), (2) a batch-editor, and (3) custom-made MATLAB-scripts (Figure 7).

Despite slight differences, all three pathways share the same basic processing pipeline. Depending on the user’s analysis pathway, data may be loaded either from a file or directly from workspace (GUI). NoLiTiA offers the possibility to pre-process data. The user may specify a time-region of interest, to subtract linear trends, to normalize and/or to filter data [for filtering, the Signal Processing Toolbox™ (The Mathworks, Inc.) needs to be installed]. The user may choose methods from the three topics: (1) non-linear dynamics, (2) recurrence-based methods, (3) information theoretic measures (Figure 1). Most of the methods necessitate to define embedding parameters (dim = dimension, τ = embedding delay) for phase-space reconstruction. The three pathways offer either to define them ad hoc by the user or to optimize them based on two different approaches. The optimization procedure should be chosen depending on whether data was generated by a deterministic or a stochastic process (see section “Information Theoretic Measures.” and Phase-Space Reconstruction).

Every method has at least one parameter, which may be specified by the user. If a parameter is left unspecified, the default value is applied (see the manual in the Supplementary Material for a list of all parameters including default values).

Depending on the analysis pathway, results are either plotted automatically (GUI), or the user may optionally choose to do so (batch and custom-made scripts).

The graphical user interface (GUI) is intended to be the most beginner’s friendly option for analysis. The GUI can be invoked by typing nolitia_gui in the command window (Figure 8A). The interface is composed of four main regions: on the left side, the user loads the input data, chooses whether and how to pre-process, specifies analysis methods, generates surrogate data and enters batch-mode. On the right side, the user may enter embedding parameters (dim and τ) ad hoc or choose to optimize them using two different approaches. Method-specific results are displayed in a designated table. In the middle of the interface, two axes display method-specific figures after calculation. By clicking the ‘Hold Plot’ radar button the user may superimpose results of subsequent analyses. By toggling the ‘Record’-button, all main steps and commands done by the user are saved in a queue. Clicking the ‘Generate Script’-button automatically generates a MATLAB-script of all operations saved in the queue.

Figure 8. Interfaces of NoLiTiA. (A) GUI depicting a phase-space reconstruction of the Lorenz attractor, (B) batch-editor, (C) plotting-tool showing a topographic representation of Shannon entropies estimated from artificial EEG data.

The batch-editor is intended to be a compromise between the accessibility of the GUI and the flexibility of custom-made scripts. In contrast to the GUI, it allows for a semi-automatized stacked analysis of multiple datasets and methods. The batch-editor can be invoked by either clicking on the ‘batch’ button in the GUI (Figure 8A) or by typing batch_gui in the command line of MATLAB. The batch-editor is composed of three main parts. In the bottom half, the user loads datasets and chooses which data to analyse. In the top half, the user chooses which methods to use for analysis. In the center, linearly arranged buttons guide the user through the analysis pipeline (Figure 8B).

Either clicking on the ‘Plot’-button or typing plot_batch_gui in the command line loads the Plotting-Tool (Figure 8C). The plotting-tool is intended to be used for displaying results generated by the batch-editor. If the tool is loaded by clicking the Plot-button after computation in the batch-editor, the results of the first data set are automatically loaded into the plotting-tool. Alternatively, the user may load saved data by clicking the ‘Load’-button. Available methods are represented by a hierarchical tree structure, which can be unfolded by clicking on Methods in the method panel. Methods which can be plotted using the Plot-button are indicated by a green arrow next to its name. Results are plotted in the centre axis.

The plotting tool may be used to analyse electroencephalographic (EEG)- data, as each time series may represent one recorded channel. The plotting-tool allows for a topographical representation of results per channel. By clicking on the red ‘Topo Param.’-button the user is prompted to select an electrode-position file containing channel names and electrode positions. Additionally, the user may specify specific electrodes to plot, as well as a sampling frequency. Finally, after selecting a method from the list, the topographic plot is displayed after clicking the ‘Topo Plot’-button (Figure 8C). Methods, which can be plotted using the ‘Topo Plot’ -button are indicated by a head shape next to its name. Methods with a green arrow, as well as a head shape symbol next to it may be plotted time resolved. This can be done by first clicking the ‘Topo Plot’-button and then using the slider below to scroll forward or backward in time. Supported function are listed in the Supplementary Material.

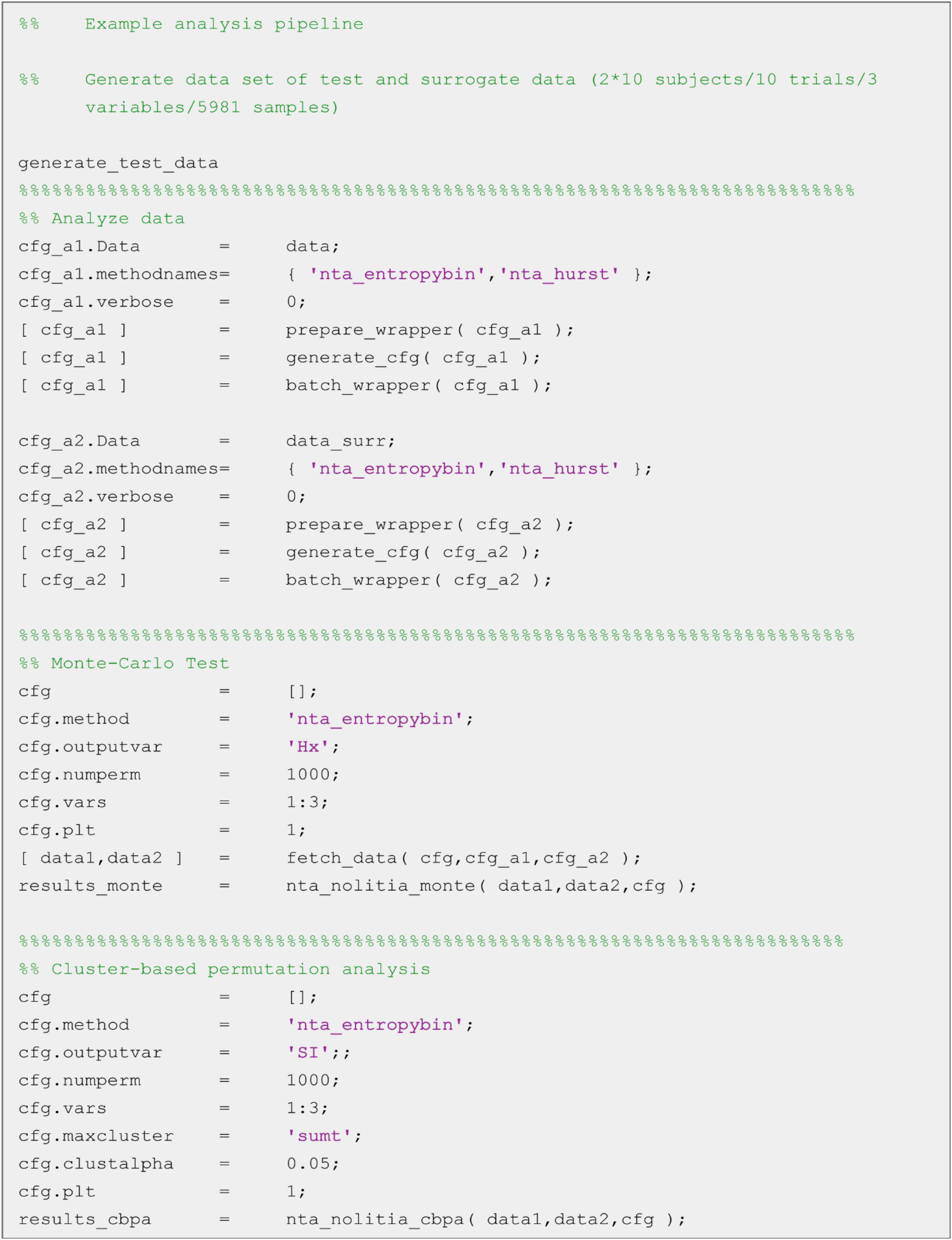

The final option to analyse data is by using custom-made scripts (Figure 9). This option offers the highest flexibility but requires some experience in MATLAB-scripting. All core functions are designed in the same way. Following the naming convention of other MATLAB toolboxes like FieldTrip (Oostenveld et al., 2011) or SPM (The Wellcome Dept. of Imaging Neuroscience, London1) most of NoLiTiAs functions start with a prefix (nta_). The user must provide up to two datasets (depending on whether the method is uni- or bivariate) and a configuration structure cfg containing method-specific parameters. An example script is shown below. Default values exist for all parameters. For a complete list of parameters per method see the manual in the Supplementary Material. For beginners the GUI offers the possibility to record processing steps made in the GUI and to save them as a MATLAB script. Differences between datasets, e.g. measured conditions in cognitive neuroscience may be statistically tested using non-parametric procedures, i.e., a Monte-Carlo simulation (nta_nolitia_monte.m) or cluster-based permutation algorithm (nta_nolitia_cbpa.m, Oostenveld et al., 2011).

Figure 9. Example script for statistical analysis. After generating test data, original and surrogate data get analyzed using binning estimator of Shannon entropy (‘nta_entropybin’). Statistical differences between original and surrogate data can then be estimated for the output variables average Shannon entropy (‘Hx’) and local Shannon information (‘SI’) using non-parametric Monte-Carlo Test or Cluster-based permutation analysis, respectively.

The scripts of the following examples can be found in the Supplementary Material. The first example data set comprises 250 s of the X-component of the Lorenz system (Lorenz, 1963):

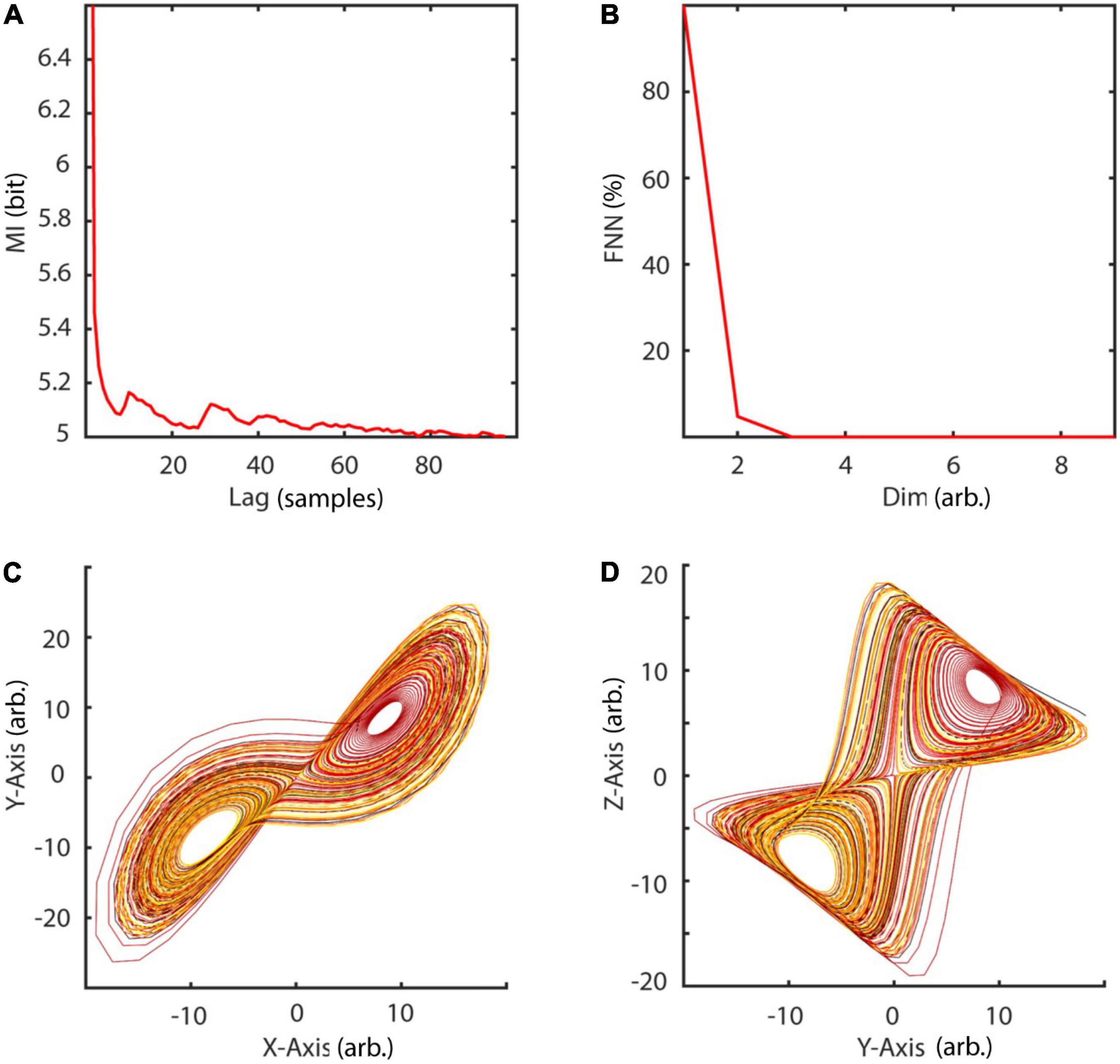

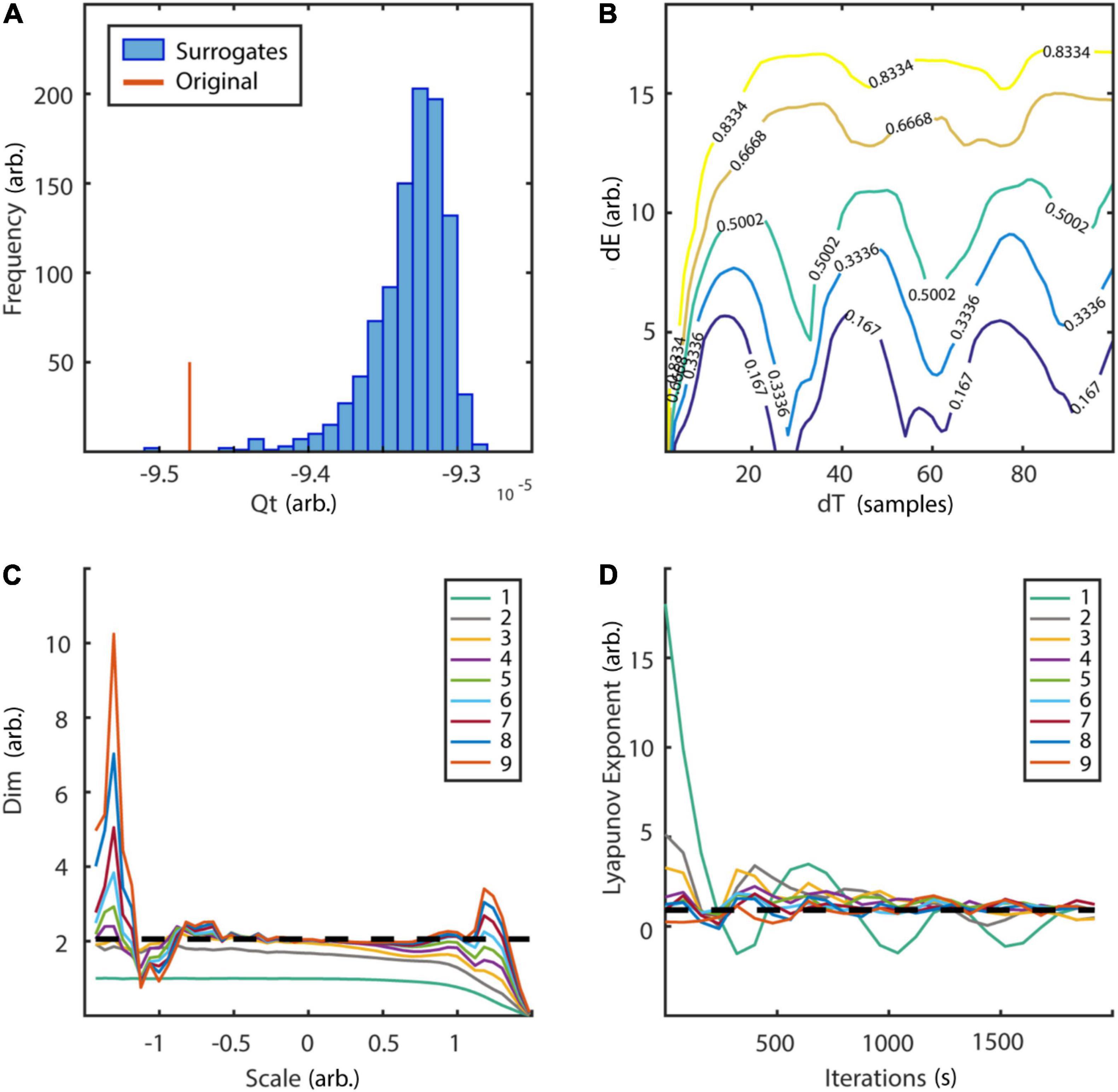

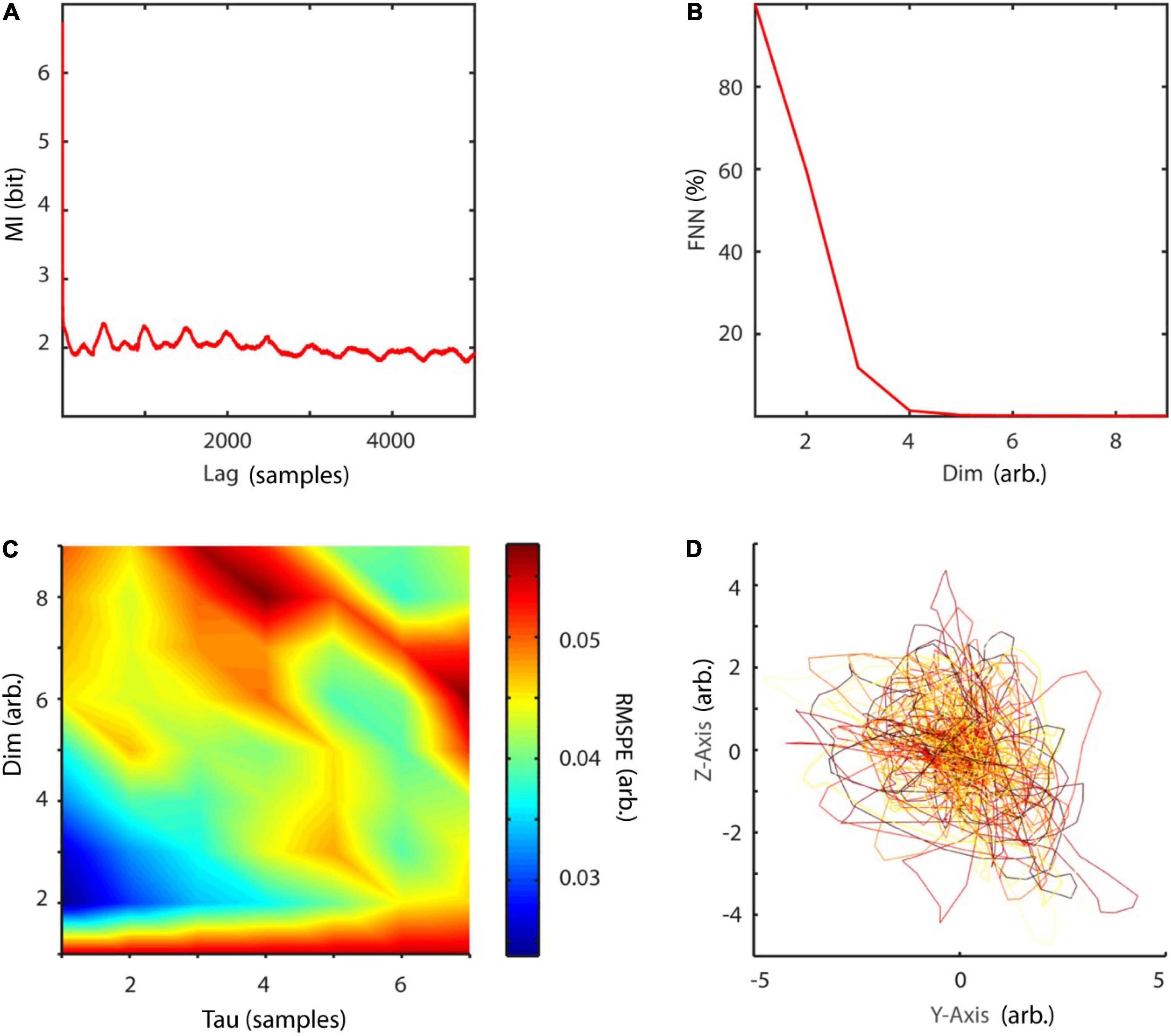

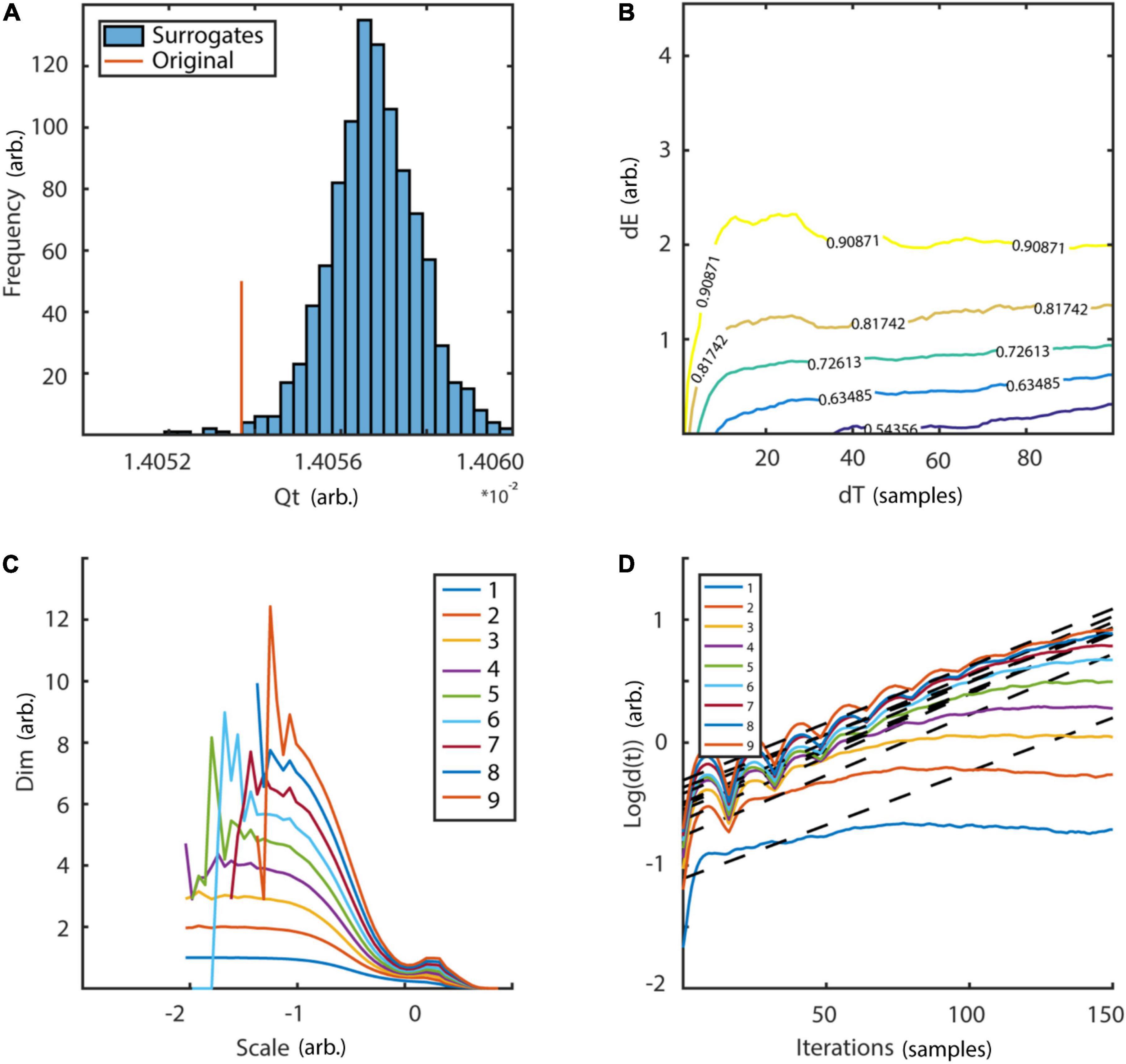

with random initial condition and sampled at 40 Hz. The Lorenz system is a classic mathematical model, initially designed to study atmospheric convection. However, it is better known for its well characterized chaotic behavior invoked by using specific parameters. Typical parameters a = 10, b = 28, and c = 8/3 were used in order to invoke chaotic behaviour (Lorenz, 1963). To reconstruct the phase-space, the embedding delay τ = 8 was chosen according to the first minimum of the auto-mutual information (Figure 10A) and the embedding dimension parameter dim = 3 using the false nearest neighbors algorithm (Kennel et al., 1992) over a range of embedding dimensions d = 1–9 (Figure 10B). The original as well as the reconstructed attractor are topologically similar (Figures 10C,D). The typical attractor with its two wings can be recognized both in the original (Figure 10C) and reconstructed phase-space (Figure 10D). Next, non-linearity was tested using the time inversion statistic and 1,000 amplitude-adjusted phase randomized surrogates as suggested by Schreiber and Schmitz (1997). As expected for a non-linear system, the null hypothesis of linearity was rejected at an alpha-level of 5% (Z-score = –2.19, Figure 11A). The correlation dimension was estimated using the Grassberger-Procaccia algorithm (Grassberger and Procaccia, 1983) and the largest Lyapunov exponent using the Kantz algorithm (Kantz, 1994). The Theiler-window of temporal correlations was chosen according to the plateau region of the space-time separation plot at about dt = 20 (Figure 11B).

Figure 10. Embedding of Lorenz data. (A) The embedding delay τ = 8 was chosen according to the first minimum of the auto-mutual information function. (B) Cao’s method of using the false nearest neighbors algorithm revealed d = 3 as the optimal embedding dimension. (C,D) Original and reconstructed Lorenz attractor. MI, mutual information; FNN, false nearest neighbors; dim, dimension.

Figure 11. Tests for non-linearity and determinism. (A) Frequency distribution of time reversibility statistic of 1,000 surrogates in comparison to original data. The null hypothesis of linearity could be rejected at an alpha level of 5% (Z-score = –2.19). (B) Space-time separation plot revealed a plateau at ∼dT = 20 which was subsequently used as Theiler-window. Colored lines indicate the proportion of states indicated by the superimposed numbers. (C) Estimation of correlation dimension for embedding dimension d = 1–9. Analysis revealed a plateau region slightly above two for embedding dimension 3–9 between spatial scales of ∼-0.7 to 0.7, which is in line with the analytic value (slashed line). (D) Estimation of largest Lyapunov exponent for embedding dimensions d = 1–9. In accordance with the analytic value (slashed line = 0.906) estimation revealed a maximum Lyapunov exponent of ∼0.9. arb., arbitrary units; dE, spatial separation; dT, temporal separation; dim, dimension. Scale: logarithmic neighborhood-size in phase-space.

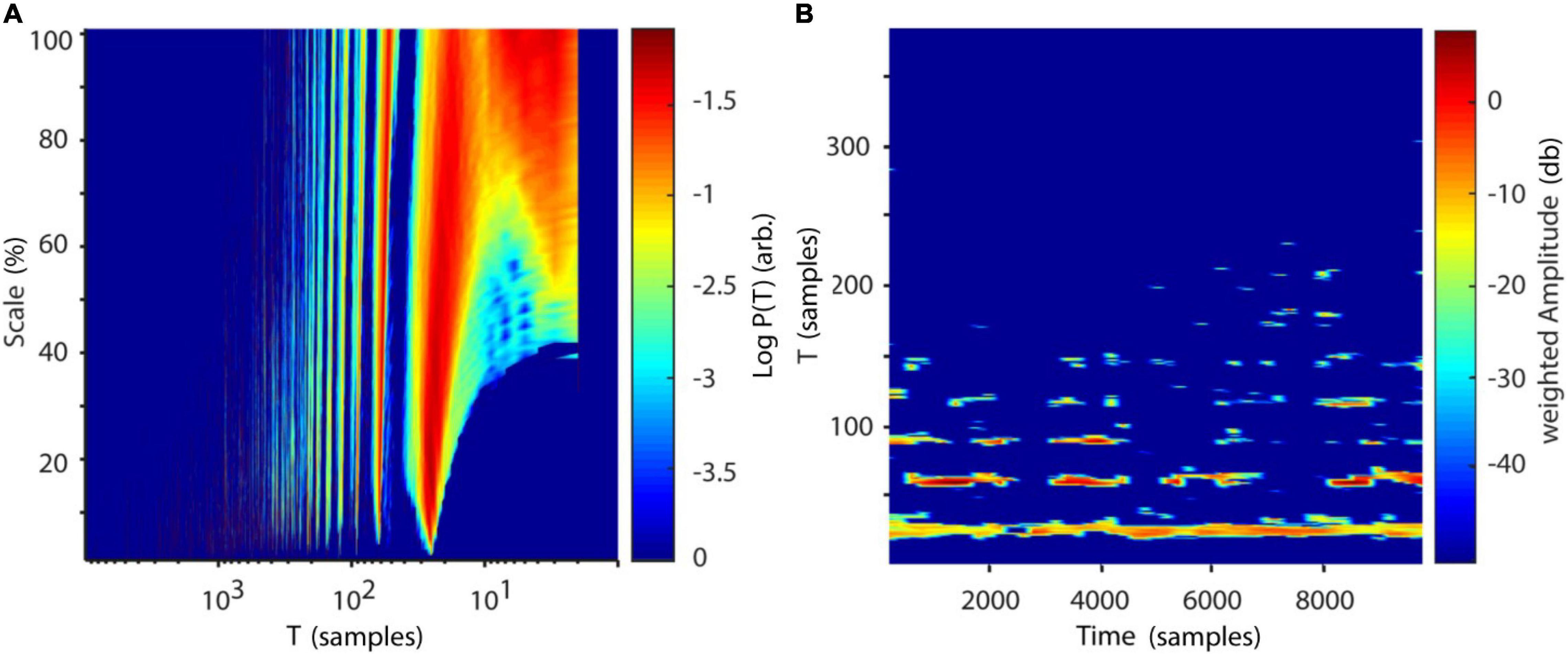

Results for correlation dimension and Lyapunov exponent both asymptotically reach their analytically calculated values (Figures 11C,D, D2 = 2.05, λ = 0.906, Grassberger and Procaccia, 1983; Sprott, 2003). Finally, a spatially (SREPS), as well as a temporally (TRAS) resolved recurrence periodogram was created. It quantifies the probability of recurrences in phase-space as a function of recurrence periods and either spatial scales (equation 18), i.e., neighborhood-sizes or time using overlapping temporal windows (equation 19). For the example analysis, spatial scales ranging from 1 to 100% of the standard deviation and temporal windows of 400 samples with 50% overlap were used. Results are summarized in Figures 12A,B and reveal intermittent recurrences with periods at multiples of 24 samples.

Figure 12. Recurrence periodograms of Lorenz data. (A) Recurrence probabilities as a function of recurrence periods and spatial scale. A high probability of recurrence can be seen at multiples of T∼24 samples (∼1.67 Hz). (B) Time-resolved recurrence periodogram with window size w = 400 samples at 50% overlap. Similar to (A) multiples of a fundamental period of ∼24 samples can be detected. The windowing approach reveals intermittent notches in the spectrum (e.g., at ∼2000 samples), which are concurrent with the Lorenz attractor reinjecting its trajectory to the center of its “wings” (see Figures 10C,D). Scale: Neighborhood-size in % of standard deviations of Lorenz data. T, recurrence period; P(T), probability of recurrence at period T.

In order to validate the estimation of period one unstable periodic orbits, 100 iterates of the logistic map (Ausloss and Dirickx, 2006) were created with random initial condition and a parameter a = 3.92 to invoke chaotic dynamics:

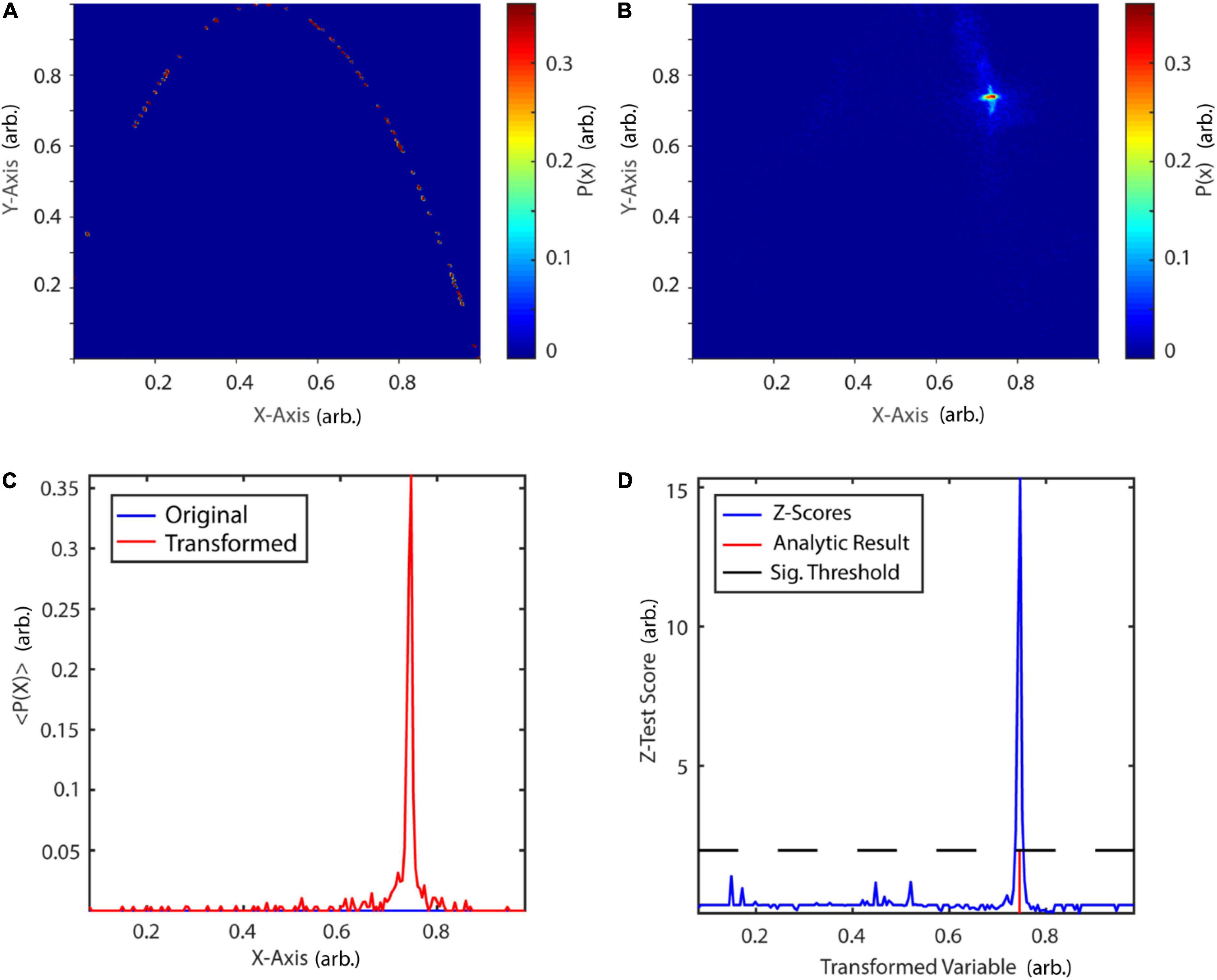

The transformation procedure was performed 500 times. The histogram of the original two-dimensional phase-space is depicted in Figure 13A, while Figure 13B shows the phase-space after the transformation procedure. In Figure 13C, the probability of a phase-space occurrence is displayed as a function of X-axis coordinates of the first diagonal, both, for the original and transformed phase-space. After the transformation procedure, points of the phase space have been accumulated at approximately 0.75. A permutation test with 1,000 amplitude-adjusted phase randomized surrogates was applied to test for significance of the correctly detected fixpoint at 0.75 at an alpha-level of 5% (Z-score = 15.32, Figure 13D). The results are in accordance with previously reported results (So et al., 1996).

Figure 13. Periodic orbit transform of the logistic map. (A) Probability map of the original phase-space. (B) Probability map after periodic orbit transform. (C) Diagonals of the original and transformed probability map. After transformation, states in its vicinity are mapped onto the fixpoint at 0.745. (D) Surrogate test reveals a significant fixpoint at 0.746. P(x): probability of state x, < P(x) > : mean probability over 500 transformations. arb., arbitrary units.

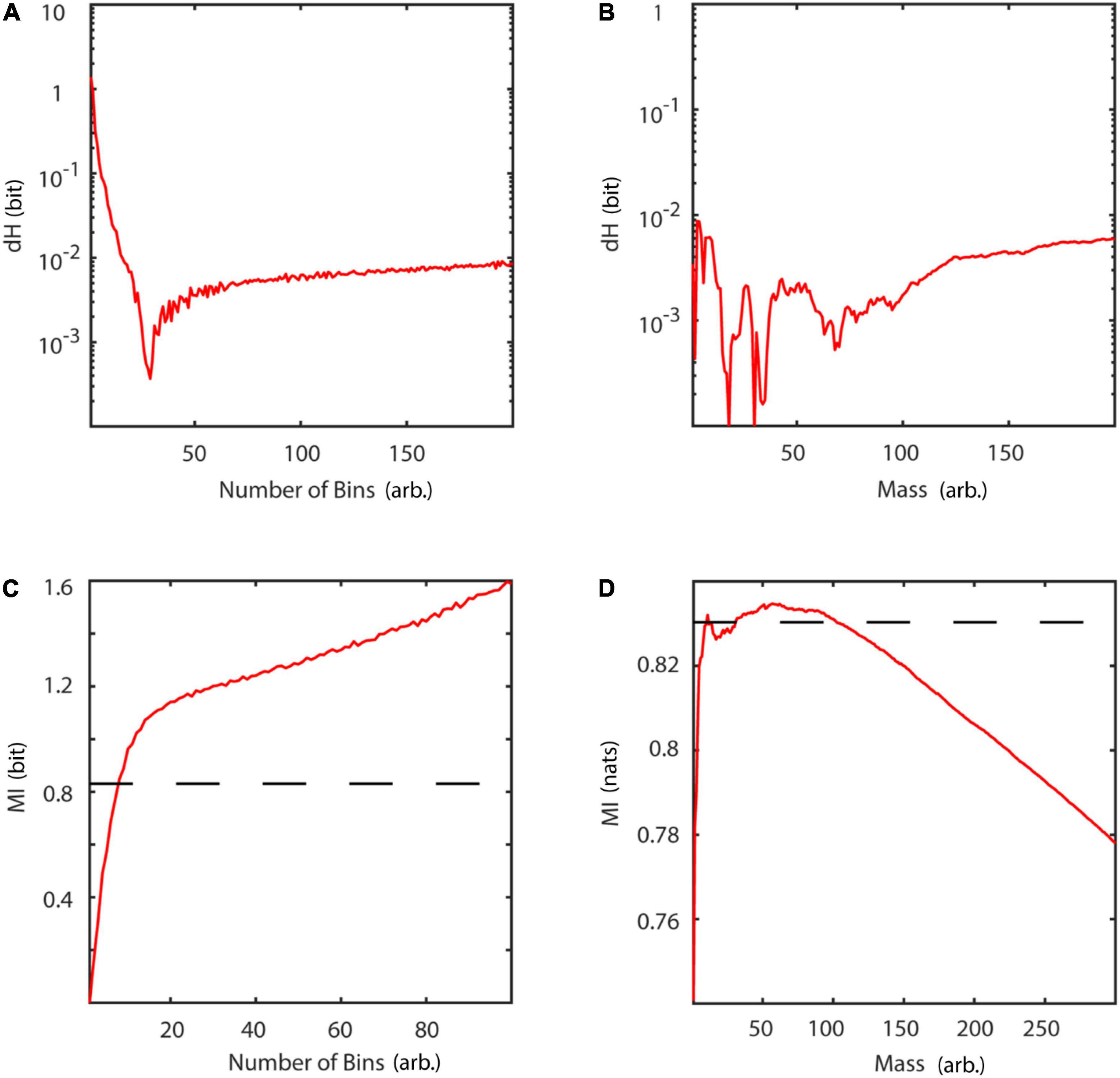

Information theoretic measures were validated using Gaussian distributions of unit variance and a covariance of 0.9. Figures 14A,B summarize estimation results in comparison to analytic values for the binning (Cover and Joy, 1991) and neighborhood-based estimator (Kozachenko and Leonenko, 1987) of Shannon entropy and differential entropy, respectively. Entropy can be calculated analytically for Gaussian distributions according to:

Figure 14. Basic information theoretic measures. (A) Difference between analytic and estimated Shannon entropy as a function of number of bins. (B) Difference between analytic and estimated differential entropy as a function of mass using the Kozachenko-Leonenko estimator. (C) Mutual information as a function of number of bins. (D) Mutual information as a function of mass, using Kraskov’s estimator. Slashed line: analytic value. dH, entropy difference between analytic and estimated value; MI, mutual information; mass, number of neighbors used for estimation of probability densities.

Similarly, Figures 14C,D display results for mutual information estimation using a binning (Cover and Joy, 1991) and a nearest neighbor-based approach (Kraskov et al., 2004). Mutual information for a bivariate Gaussian distribution is given by:

The results of the implemented algorithms are in accordance with previously published results (Kraskov et al., 2004). Both, the binning and the nearest neighbors estimator of entropy asymptotically reach a stable difference dH with respect to the analytical values at approximately 10–2. Locally, for few neighbors, the nearest neighbors estimator even reaches a difference of approximately 10–4. Comparison of mutual information estimators with analytical values shows, that the nearest neighbors estimator is reliable for a broader parameter range, than the binning estimator. While the binning estimator overestimates mutual information for increasing number of bins, the nearest neighbors estimator underestimates it for a number of neighbors larger than 100.

In a fourth example ∼4 s (10,000 samples) of intraoperatively recorded electromyographic activity (EMG) of a Parkinson’s disease patient at rest during tremor activity was analysed. The aim was to characterize tremor activity with respect to frequency content and whether it can be described to be generated by either a linear stochastic, non-linear stochastic or non-linear deterministic process. The data was recorded from the right extensor digitorum communis (EDC) using the INOMED ISIS MER-system (INOMED Corp., Teningen, Germany) and sampled at a frequency of 2456 Hz. The patient signed written informed consent prior to publication. Data collection was approved by the local ethics committee and was in accordance with the Declaration of Helsinki. The same data was previously published by Florin et al. (2010).

Using NoLiTiA, data was first trend corrected and normalized to zero mean and unit variance. Next, a fourth order, bidirectional, 20 Hz high-pass Butterworth filter was applied to remove activity unrelated to EMG. Since no a priori assumption could be made regarding the question whether the generating process was deterministic or stochastic, the optimal embedding dimension was estimated using the false nearest neighbors algorithm and the Ragwitz criterion and results compared (Figures 15B,C). The embedding delay was co-optimized by the Ragwitz criterion and additionally determined using the first minimum of the auto-mutual information function (Figures 15A,C). An example embedding is illustrated in Figure 15D.

Figure 15. Embedding procedure of EMG data. (A) The auto-mutual information revealed a first-minimum at 14 samples, which was subsequently used as embedding delay. (B) The method of false nearest neighbors led to an optimal embedding dimension of 5. (C) Additionally, the Ragwitz criterion was used to co-optimize embedding dimension and delay. As expected, the procedure suggested a much smaller delay and embedding dimension with 1 and 3, respectively. (D) Embedding of EMG data with τ = 14 and d = 3 for illustration purposes. arb., arbitrary units; MI, mutual information; FNN, false nearest neighbors; dim, dimension; RMSPE, root-mean-square prediction error.

Non-linearity was tested using a surrogate test and the time-inversion statistic as suggested by Schreiber and Schmitz (1997). Thousand amplitude-adjusted phase randomized surrogates were created. The null hypothesis of linearity was rejected at an alpha-level of 5% Figure 16A.

Figure 16. Testing for non-linearity and determinism. Distribution of 1,000 surrogates for non-linearity test. (A) Following the surrogate test, which employed a time inversion statistic, the null hypothesis of linearity could be rejected at an alpha-level of 5% (Z-score = 2.85). (B) The space-time separation plot shows a plateau region at ∼20 samples which was subsequently used as Theiler-window. Colored lines indicate proportion of data points as indexed by superimposed numbers. (C) Estimation of correlation dimension for embedding dimensions d = 1–9 as a function of logarithmic spatial scales. Neither a clear scaling region, nor any convergence for subsequent embedding dimensions could be detected. (D) Average distances of neighboring states in phase-space as a function of temporal iterations are plotted for embedding dimensions d = 1–9. Neither a clear exponential expansion rate nor any convergence for subsequent embedding dimensions could be detected. Qt, time-inversion statistic; dE, spatial distance; dT, temporal distance; dim, dimension; d(t), average distance of next neighbors after t iterations.

Next, determinism was tested by estimating the correlation dimension and maximum Lyapunov exponent over a range of embedding dimensions d = 1–9. If a stable embedding dimension invariant estimate of either statistic could be detected the null hypothesis of stochasticity could be rejected. For the Theiler-window of temporal correlations, twice the autocorrelation time was excluded. Additionally, a space-time separation plot was produced to verify the choice (Figure 16B). Results are summarized in Figures 16C,D. While the null hypothesis of linearity could be rejected, neither an embedding dimension invariant scaling range for the correlation dimension nor exponential divergence of neighboring states, i.e., a positive maximum Lyapunov exponent could be detected.

Finally, the frequency content of the EMG was analyzed by calculating (1) recurrence periods time independent over a range of spatial scales (SREPS) ε = 1–100% of the standard deviation of EMG data and (2) by estimating recurrence periods time-resolved using overlapping windows of 0.5 s and a fixed spatial scale of ε = 10% (TRAS). Temporally and spatially resolved recurrence period analysis revealed prominent recurrence periods at multiples of ∼500 samples, i.e., 203 ms (4.9 Hz), probably indicating tremor activity (Figures 17A,B). In conclusion, the EMG-data of the Parkinson’s patients can be characterized as generated by a non-linear stochastic oscillator at approximately 5 Hz.

Figure 17. Analysis of recurrence periods. (A) Logarithmic recurrence period probabilities as a function of recurrence periods T and spatial scale (SREPS). (B) Logarithmic recurrence period probabilities as a function of recurrence periods T and time using windows of 1200 samples with 50% overlap (TRAS). In both plots prominent recurrence periods are visible at multiples of ∼500 samples (∼4.9 Hz).

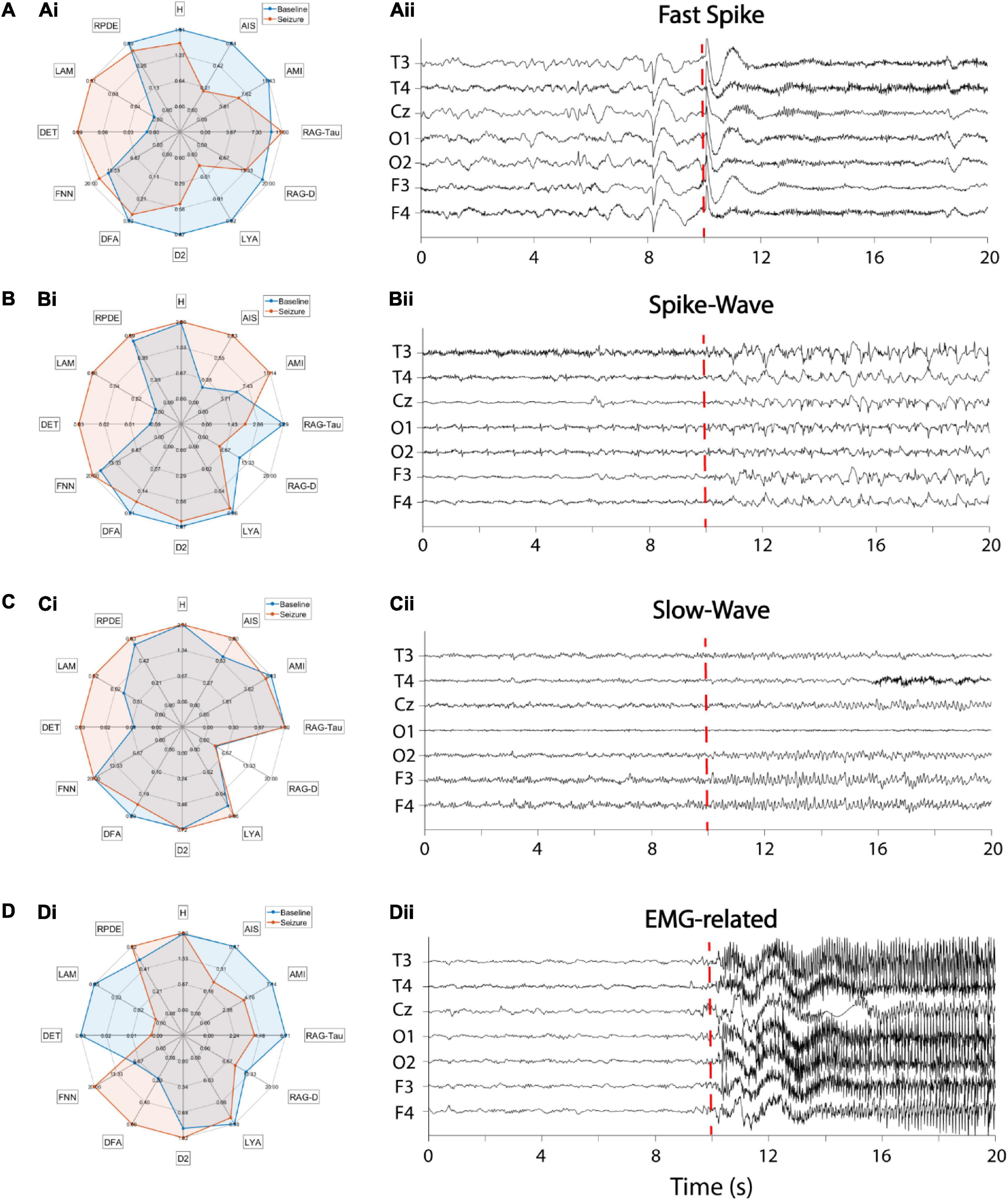

In a final example we demonstrate the application of the toolbox to characterize four different epileptic activity patterns in the EEG of four epilepsy patients. To this end, we used the freely available epilepsy dataset supplied at https://github.com/ieeeWang/EEG-feature-seizure-detection. The same dataset has been used in previous studies, e.g., Wang et al. (2017a,b). Twenty seconds of resting-state EEG data was recorded at seven electrodes with a sampling rate of 100 Hz. At each of the four datasets different epileptic activity patterns commence after a 10 s baseline period, i.e., (a) fast spike activity, (b) spike-wave complexes, (c) slow-wave complexes, and (d) seizure-related EMG artifacts (Figures 18Aii–Dii). Twelve different non-linear features were estimated for each baseline and seizure period [information theory: active information storage (AIS), Shannon entropy (H), auto-mutual information (AMI); dynamical systems theory: Ragwitz-Tau, Ragwitz-d, Lyapunov exponent (LYA), correlation dimension (D2), detrended fluctuation analysis (DFA), false nearest neighbors (FNN); Recurrence Quantification Analysis: determinism (DET), laminarity (LAM), recurrence period density entropy (RPDE)]. Non-linear features were subsequently averaged over electrodes and compared in a radar chart (Figures 18Ai–Di). Each of the four epileptic activity patterns exhibits distinct non-linear profiles in comparison to their respective baseline activity and to each other. Overall, recurrence-based features DET and LAM, as well as the information-theory based feature AIS seem to best distinguish epileptic from baseline activity. In comparison to EMG-related activity, fast-spike activity, spike-wave and slow-wave complexes exhibit higher DET and LAM scores in comparison to their respective baselines. Spike-wave and slow-wave complexes both show very similar non-linear profiles with the exception that the former has larger differences in AIS and AMI scores between seizure and baseline activity. In contrast to spike-wave and slow-wave complexes fast spike activity shows both, a smaller AIS score in comparison to baseline, as well as a much smaller LYA score. In summary, the specific non-linear profiles of epileptic discharge patterns might hint at the combination of non-linear methods being valuable features to use in future machine learning studies on seizure detection.

Figure 18. Non-linear profiles of different epileptic EEG patterns of four patients. (Ai–Di) Radar charts of 12 non-linear features comparing baseline with epileptic EEG activity. Blue area: baseline activity, red area: epileptic activity. Features were averaged across seven EEG channels. (Aii–Dii) Corresponding EEG time series. The red dashed line indicates the seizure onset. (A) Fast-spike activity, (B) Spike-wave complexes, (C) Slow-wave activity, (D) EMG-related activity. AIS, active information storage; AMI, auto-mutual information; D2, correlation dimension; DET, determinism; DFA, detrended fluctuation analysis; FNN, false nearest neighbors; H, Shannon entropy; LAM, laminarity; LYA, largest Lyapunov exponent; RAG-D, Ragwitz dimension; RAG-Tau, Ragwitz delay; RPDE, recurrence period density entropy.

In this study a free, open-source toolbox for non-linear time series analysis, including several established and novel measures, was presented. The implementation of methods, ranging from dynamical systems theory, recurrence analysis to information theory were validated on artificial data. An example application was given for electrophysiological data of a Parkinson’s disease patient and four epilepsy patients. In comparison to similar toolboxes (Hegger et al., 1999; Lizier, 2014; Donges et al., 2015), NoLiTiA offers three advantages: (1) By combining methods and algorithms from three broad fields of complexity theory, the package covers a wide range of applications within the same framework, e.g., ranging from characterizing stochastic or deterministic systems to analysing oscillatory phenomena. (2) The GUI and batch-editor offer accessibility of non-linear methods even for scientists unexperienced in programming. (3) Aside from established methods, two recently proposed measures for quantification of oscillatory activity were implemented (Weber and Oehrn, 2022). SREPS and TRAS each aim to extend the recurrence period density estimation (Little et al., 2007) by estimation of the recurrence amplitude, as well as a spatial and temporal dimension, respectively. SREPS may be applied to choose an optimal neighborhood-size for a subsequent time-resolved analysis. Similar to STFT, TRAS allows to analyze oscillatory activity as a function of time. However, an advantage of TRAS is its applicability on non-linear signals, i.e., non-sinusoidal oscillations (Weber and Oehrn, 2022). Analysis of such signals by means of STFT and related measures lead to the appearance of spurious harmonics in the resulting spectra. As the shape of the oscillations is irrelevant for TRAS, as long as similar states recur, even highly asymmetric signals, like sawtooth oscillations, generate sharp peaks in the TRAS. Preliminary code of NoLiTiA has already been successfully applied in a diverse range of previous studies ranging from a normative study for action pictures and naming latencies to analysis of EEG and invasive recordings in Parkinson’s patients (Weber et al., 2020; Busch et al., 2021; Loehrer et al., 2021; Oehrn et al., 2021; Weber and Oehrn, 2022). Future updates will continuously add recent methods to offer the most up-to-date software library for non-linear data analysis in neuroscience.

With the exception of the low-level function to estimate distances in phase-space (nta_neighsearch.mex64), all functions are implemented and validated in MATLAB 2016b. To accelerate computation, the former is implemented in C as a Mex-function and provided as a compiled Mex64 file for 64bit operating systems. To guarantee ease of use, most functions are implemented in the same way, demanding up to two input data sets and one configuration structure. The output is a single “results” structure consisting of fields for provided input parameters, as well as estimation results. Methods were validated using analytic solutions of the Lorenz-system, logistic map and standard distributions, i.e., uniform and Gaussian distributions. The toolbox is open-source and distributed under 2-clause BSD license.

The toolbox is readily available to download at: http://nolitia.com. Analysis code and data that were used for examples may be downloaded at: https://rushfiles.one/client/publiclink.aspx?id=UjgM7RHEb1. Epilepsy data were downloaded at: https://github.com/ieeeWang/EEG-feature-seizure-detection.

The study involving the Parkinson’s patient was reviewed and approved by the Ethics Commission of Cologne University’s Faculty of Medicine. The patient/participant provided written informed consent to participate in this study. Collection of epilepsy data was approved by Kempenhaeghe’s Ethical Review Board. See the original publication of Wang et al. (2017a) for details.

IW developed and implemented the measures, performed the analyses, and wrote the manuscript. CO performed the measurements, wrote the manuscript, discussed the results, and helped supervising the project. Both authors contributed to the article and approved the submitted version.

Open access funding was provided by the Open Access Publication Fund of Philipps-Universität Marburg with the support of the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The authors thank Dr. Michael von Papen for discussions during the implementation of methods, Prof. Dr. Esther Florin for providing an example data set, Prof. Dr. Lars Timmermann for guidance during the planning of the study and Jonas Gerards for testing the toolbox and its functions.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fninf.2022.876012/full#supplementary-material

Aarabi, A., and He, B. (2017). Seizure prediction in patients with focal hippocampal epilepsy. Clin. Neurophysiol. 128, 1299–1307. doi: 10.1016/j.clinph.2017.04.026

Abbasvandi, Z., and Nasrabadi, A. M. (2019). A self-organized recurrent neural network for estimating the effective connectivity and its application to EEG data. Comput. Biol. Med. 110, 93–107. doi: 10.1016/j.compbiomed.2019.05.012

Andrzejak, R. G., Widman, G., Lehnertz, K., Rieke, C., David, P., and Elger, C. E. (2001). The epileptic process as nonlinear deterministic dynamics in a stochastic environment: an evaluation on mesial temporal lobe epilepsy. Epilepsy Res. 44, 129–140. doi: 10.1016/s0920-1211(01)00195-4

Babloyantz, A., Salazar, J. M., and Nicolis, C. (1985). Evidence of chaotic dynamics of brain activity during the sleep cycle. Phys. Lett. A 111, 152–156. doi: 10.1016/0375-9601(85)90444-x

Bielawski, S., Derozier, D., and Glorieux, P. (1994). Controlling unstable periodic orbits by a delayed continuous feedback. Phys. Rev. E 49:R971. doi: 10.1103/physreve.49.r971

Busch, J. L., Haeussler, F. S., Domahs, F., Timmermann, L., Weber, I., and Oehrn, C. R. (2021). German normative data with naming latencies for 283 action pictures and 600 action verbs. Behav. Res. Methods 54, 649–662. doi: 10.3758/s13428-021-01647-w

Buzsáki, G., and Draguhn, A. (2004). Neuronal Oscillations in Cortical Networks. Science 304, 1926–1929.

Cao, L. (1997). Practical method for determining the minimum embedding dimension of a scalar time series. Phys. D: Nonlinear Phenom. 110, 43–50. doi: 10.1016/S0167-2789(97)00118-8

Chen, C. C., Hsu, Y. T., Chan, H. L., Chiou, S. M., Tu, P. H., Lee, S. T., et al. (2010). Complexity of subthalamic 13–35Hz oscillatory activity directly correlates with clinical impairment in patients with Parkinson’s disease. Exp. Neurol. 224, 234–240. doi: 10.1016/j.expneurol.2010.03.015

Cole, S. R., and Voytek, B. (2017). Brain oscillations and the importance of waveform shape. Trends Cogn. Sci. 21, 137–149. doi: 10.1016/j.tics.2016.12.008

Danca, M., Codreanu, S., and Bakó, B. (1997). Detailed analysis of a nonlinear prey-predator model. J. Biol. Phys. 23, 11–20. doi: 10.1023/A:1004918920121

Dar, M. N., Akram, M. U., Yuvaraj, R., Gul Khawaja, S., and Murugappan, M. (2022). EEG-based emotion charting for parkinson’s disease patients using convolutional recurrent neural networks and cross dataset learning. Comput. Biol. Med. 144:105327. doi: 10.1016/j.compbiomed.2022.105327

Dimitrov, A. G., Lazar, A. A., and Victor, J. D. (2011). Information theory in neuroscience. J. Comput. Neurosci. 30, 1–5.

Dingwell, J. B. (2006). “Lyapunov exponents,” in Wiley Encyclopedia of Biomedical Engineering, ed. M. Akay (New York, NY: Willey).

Donges, J. F., Heitzig, J., Beronov, B., Wiedermann, M., Runge, J., Feng, Q. Y., et al. (2015). Unified functional network and nonlinear time series analysis for complex systems science: The pyunicorn package. Chaos 25:113101. doi: 10.1063/1.4934554

Eckmann, J.-P., Kamphorst, S. O., and Ruelle, D. (1987). Recurrence plots of dynamical systems. Europhys. Lett. 4:9. doi: 10.1063/1.5026743

Farmer, J. D., and Sidorowich, J. J. (1987). Predicting chaotic time series. Phys. Rev. Lett. 59:845.

Faure, P., and Korn, H. (2001). Is There Chaos in the Brain? I. Concepts of Nonlinear Dynamics and Methods of Investigation. C. R. Acad. Sci. III 324, 773–793. doi: 10.1016/S0764-4469(01)01377-4

Fernández, P., and Solé, R. V. (2006). “The Role of Computation in Complex Regulatory Networks,” in Power laws, Scale-free networks and Genome Biology. Berlin: Springer, 206–225.

Ferri, R., Elia, M., Musumeci, S. A., and Stam, C. J. (2001). Non-linear EEG analysis in children with epilepsy and electrical status epilepticus during slow-wave sleep (ESES). Clin. Neurophysiol. 112, 2274–2280. doi: 10.1016/s1388-2457(01)00676-9

Fitzhugh, R. (1960). Thresholds and Plateaus in the Hodgkin-Huxley Nerve Equations. J. General Physiol. 43, 867–896. doi: 10.1085/jgp.43.5.867

Florin, E., Gross, J., Reck, C., Maarouf, M., Schnitzler, A., Sturm, V., et al. (2010). Causality between local field potentials of the subthalamic nucleus and electromyograms of forearm muscles in Parkinson’s disease. Eur. J. Neurosci. 31, 491–498. doi: 10.1111/j.1460-9568.2010.07083.x

Fraser, A. M., and Swinney, H. L. (1986). Independent coordinates for strange atractors from mutual information. Phys. Rev. A 33:1134. doi: 10.1103/physreva.33.1134

Freedman, D., and Diaconis, P. (1981). On the histogram as a density estimator: L 2 theory. Zeitschrift Wahrscheinlichkeitstheorie und verwandte Gebiete 57, 453–476.

Freyer, F., Aquino, K., Robinson, P. A., Ritter, P., and Breakspear, M. (2009). Bistability and non-gaussian fluctuations in spontaneous cortical activity. J. Neurosci. 29, 8512–8524. doi: 10.1523/JNEUROSCI.0754-09.2009

Freyer, F., Roberts, J. A., Becker, R., Robinson, P. A., Ritter, P., and Breakspear, M. (2011). Biophysical mechanisms of multistability in resting-state cortical rhythms. J. Neurosci. 31, 6353–6361. doi: 10.1523/JNEUROSCI.6693-10.2011

Friston, K. J., Bastos, A. M., Oswal, A., van Wijk, B., Richter, C., and Litvak, V. (2014). Granger causality revisited. NeuroImage 101, 796–808. doi: 10.1016/j.neuroimage.2014.06.062

Garnier, S., Gautrais, J., and Theraulaz, G. (2007). The biological principles of swarm intelligence. Swarm Intell. 1, 3–31. doi: 10.1007/s11721-007-0004-y

Gerber, E. M., Sadeh, B., Ward, A., Knight, R. T., and Deouell, L. Y. (2016). Non-sinusoidal activity can produce cross-frequency coupling in cortical signals in the absence of functional interaction between neural sources. PLoS One 11:e0167351. doi: 10.1371/journal.pone.0167351

Ghil, M., Kimoto, M., and Neelin, J. D. (1991). Nonlinear dynamics and predictability in the atmospheric sciences. Rev. Geophys. 29, 46–55.

Grassberger, P., and Procaccia, I. (1983). Measuring the strangeness of strange attractors. Physica D: Nonlinear Phenom. 9, 189–208. doi: 10.1016/0167-2789(83)90298-1

Hegger, R., Kantz, H., and Schreiber, T. (1999). Practical implementation of nonlinear time series methods: the TISEAN package. Chaos 9, 413–435. doi: 10.1063/1.166424

Hoang, P., Jacquir, S., Lemus, S., and Ma, Z. (2019). Quantification of contractile dynamic complexities exhibited by human stem cell-derived cardiomyocytes using nonlinear dimensional analysis. Sci. Rep. 9:14714. doi: 10.1038/s41598-019-51197-7

Hosseinifard, B., Moradi, M. H., and Rostami, R. (2013). Classifying depression patients and normal subjects using machine learning techniques and nonlinear features from EEG signal. Comput. Methods Prog. Biomed. 109, 339–345. doi: 10.1016/j.cmpb.2012.10.008

Houmani, N., Dreyfus, G., and Vialatte, F. B. (2015). Epoch-based entropy for early screening of alzheimer’s disease. Int. J. Neural Syst. 25:1550032. doi: 10.1142/S012906571550032X

Hudson, J. L., and Mankin, J. C. (1981). Chaos in the Belousov-Zhabotinskii Reaction. J. Chem. Phys. 74, 6171–6177. doi: 10.1063/1.4918595

Jing, H., and Takigawa, M. (2000). Topographic analysis of dimension estimates of EEG and filtered rhythms in epileptic patients with complex partial seizures. Biol. Cybern. 83, 391–397. doi: 10.1007/s004220000183

Kantz, H. (1994). A robust method to estimate the maximal Lyapunov exponent of a time series. Phys. Lett. A 185, 77–87. doi: 10.1016/0375-9601(94)90991-1

Kantz, H., and Schreiber, T. (2004). Nonlinear Time Series Analysis. Cambridge, CA: Cambridge university press.

Kennel, M. B., Brown, R., and Abarbanel, H. D. I. (1992). Determining embedding dimension for phase-space reconstruction using a geometrical construction. Phys. Rev. A 45, 3403–3411. doi: 10.1103/PhysRevA.45.3403

Khademi, Z., Ebrahimi, F., and Kordy, H. M. (2022). A transfer learning-based CNN and LSTM hybrid deep learning model to classify motor imagery EEG signals. Comput. Biol. Med. 143:105288. doi: 10.1016/j.compbiomed.2022.105288

Ko, A. L., Darvas, F., Poliakov, A., Ojemann, J., and Sorensen, L. B. (2011). Quasi-periodic fluctuations in default mode network electrophysiology. J. Neurosci. 31, 11728–11732. doi: 10.1523/JNEUROSCI.5730-10.2011

Kozachenko, L. F., and Leonenko, N. (1987). Sample Estimate of the Entropy of a Random Vector. Problemy Peredachi Inform. 23, 9–16.

Kraskov, A., Stögbauer, H., and Grassberger, P. (2004). Estimating mutual information. Phys. Rev. E 69:66138. doi: 10.1103/PhysRevE.69.066138

Lathrop, D. P., and Kostelich, E. J. (1989). Characterization of an experimental strange attractor by periodic orbits. Phys. Rev. A 40, 4028–4031. doi: 10.1103/PhysRevA.40.4028

Lehnertz, K., and Elger, C. E. (1998). Can epileptic seizures be predicted? Evidence from nonlinear time series analysis of brain electrical activity. Phys. Rev. Lett. 80:5019.