95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

TECHNOLOGY AND CODE article

Front. Neuroinform. , 27 May 2022

Volume 16 - 2022 | https://doi.org/10.3389/fninf.2022.835657

This article is part of the Research Topic Neuroinformatics of Large-Scale Brain Modelling View all 12 articles

Mean-field theory of neuronal networks has led to numerous advances in our analytical and intuitive understanding of their dynamics during the past decades. In order to make mean-field based analysis tools more accessible, we implemented an extensible, easy-to-use open-source Python toolbox that collects a variety of mean-field methods for the leaky integrate-and-fire neuron model. The Neuronal Network Mean-field Toolbox (NNMT) in its current state allows for estimating properties of large neuronal networks, such as firing rates, power spectra, and dynamical stability in mean-field and linear response approximation, without running simulations. In this article, we describe how the toolbox is implemented, show how it is used to reproduce results of previous studies, and discuss different use-cases, such as parameter space explorations, or mapping different network models. Although the initial version of the toolbox focuses on methods for leaky integrate-and-fire neurons, its structure is designed to be open and extensible. It aims to provide a platform for collecting analytical methods for neuronal network model analysis, such that the neuroscientific community can take maximal advantage of them.

Biological neuronal networks are composed of large numbers of recurrently connected neurons, with a single cortical neuron typically receiving synaptic inputs from thousands of other neurons (Braitenberg and Schüz, 1998; DeFelipe et al., 2002). Although the inputs of distinct neurons are integrated in a complex fashion, such large numbers of weak synaptic inputs imply that average properties of entire populations of neurons do not depend strongly on the contributions of individual neurons (Amit and Tsodyks, 1991). Based on this observation, it is possible to develop analytically tractable theories of population properties, in which the effects of individual neurons are averaged out and the complex, recurrent input to individual neurons is replaced by a self-consistent effective input (reviewed, e.g., in Gerstner et al., 2014). In classical physics terms (e.g., Goldenfeld, 1992), this effective input is called mean-field, because it is the self-consistent mean of a field, which here is just another name for the input the neuron is receiving. The term self-consistent refers to the fact that the population of neurons that receives the effective input is the same that contributes to this very input in a recurrent fashion: the population's output determines its input and vice-versa. The stationary statistics of the effective input therefore can be found in a self-consistent manner: the input to a neuron must be set exactly such that the caused output leads to the respective input.

Mean-field theories have been developed for many different kinds of synapse, neuron, and network models. They have been successfully applied to study average population firing rates (van Vreeswijk and Sompolinsky, 1996, 1998; Amit and Brunel, 1997b), and the various activity states a network of spiking neurons can exhibit, depending on the network parameters (Amit and Brunel, 1997a; Brunel, 2000; Ostojic, 2014), as well as the effects that different kinds of synapses have on firing rates (Fourcaud and Brunel, 2002; Lindner, 2004; Schuecker et al., 2015; Schwalger et al., 2015; Mattia et al., 2019). They have been used to investigate how neuronal networks respond to external inputs (Lindner and Schimansky-Geier, 2001; Lindner and Longtin, 2005), and they explain why neuronal networks can track external input on much faster time scales than a single neuron could (van Vreeswijk and Sompolinsky, 1996, 1998). Mean-field theories allow studying correlations of neuronal activity (Sejnowski, 1976; Ginzburg and Sompolinsky, 1994; Lindner et al., 2005; Trousdale et al., 2012) and were able to reveal why pairs of neurons in random networks, despite receiving a high proportion of common input, can show low output correlations (Hertz, 2010; Renart et al., 2010; Tetzlaff et al., 2012; Helias et al., 2014), which for example has important implication for information processing. They describe pair-wise correlations in network with spatial organization (Rosenbaum and Doiron, 2014; Rosenbaum et al., 2017; Dahmen et al., 2022) and can be generalized to correlations of higher orders (Buice and Chow, 2013). Mean-field theories were utilized to show that neuronal networks can exhibit chaotic dynamics (Sompolinsky et al., 1988; van Vreeswijk and Sompolinsky, 1996, 1998), in which two slightly different initial states can lead to totally different network responses, which has been linked to the network's memory capacity (Toyoizumi and Abbott, 2011; Schuecker et al., 2018). Most of the results mentioned above have been derived for networks of either rate, binary, or spiking neurons of a linear integrate-and-fire type. But various other models have been investigated with similar tools as well; for example, just to mention a few, Hawkes processes, non-linear integrate-and-fire neurons (Brunel and Latham, 2003; Fourcaud-Trocmé et al., 2003; Richardson, 2007, 2008; Grabska-Barwinska and Latham, 2014; Montbrió et al., 2015), or Kuramoto-type models (Stiller and Radons, 1998; van Meegen and Lindner, 2018). Additionally, there is an ongoing effort showing that many of the results derived for distinct models are indeed equivalent and that those models can be mapped to each other under certain circumstances (Ostojic and Brunel, 2011; Grytskyy et al., 2013; Senk et al., 2020).

Other theories for describing mean population rates in networks with spatially organized connectivity, based on taking a continuum limit, have been developed. These theories, known as neural field theories, have deepened our understanding of spatially and temporally structured activity patterns emerging in cortical networks, starting with the seminal work by Wilson and Cowan (1972, 1973), who investigated global activity patterns, and Amari (1975, 1977), who studied stable localized neuronal activity. They were successfully applied to explain hallucination patterns (Ermentrout and Cowan, 1979; Bressloff et al., 2001), as well as EEG and MEG rhythms (Nunez, 1974; Jirsa and Haken, 1996, 1997). The neural field approach has been used to model working memory (Laing et al., 2002; Laing and Troy, 2003), motion perception (Giese, 2012), cognition (Schöner, 2008), and more; for extensive reviews of the literature, we refer the reader to Coombes (2005), Bressloff (2012), and Coombes et al. (2014).

Clearly, analytical theories have contributed to our understanding of neuronal networks and they provide a plethora of powerful and efficient methods for network model analysis. Comparing the predictions of analytical theories to simulations, experimental data, or other theories necessitates a numerical implementation applicable to various network models, depending on the research question. Such an implementation is often far from straightforward and at times requires investing substantial time and effort. Commonly, such tools are implemented as the need arises, and their reuse is not organized systematically and restricted to within a single lab. This way, not only are effort and costs spent by the neuroscientific community duplicated over and over again, but also are many scientists deterred from taking maximal advantage of those methods although they might open new avenues for investigating their research questions.

In order to make analytical tools for neuronal network model analysis accessible to a wider part of the neuroscientific community, and to create a platform for collecting well-tested and validated implementations of such tools, we have developed the Python toolbox NNMT (Layer et al., 2021), short for Neuronal Network Mean-field Toolbox. We would like to emphasize that NNMT is not a simulation tool; NNMT is a collection of numerically solved mean-field equations that directly relate the parameters of a microscopic network model to the statistics of its dynamics. NNMT has been designed to fit the diversity of mean-field theories, and the key features we are aiming for are modularity, extensibility, and a simple usability. Furthermore, it features an extensive test suite to ensure the validity of the implementations as well as a comprehensive user documentation. The current version of NNMT mainly comprises tools for investigating networks of leaky integrate-and-fire neurons as well as some methods for studying binary neurons and neural field models. The toolbox is open-source and publicly available on GitHub.1

In the following, we present the design considerations that led to the structure and implementation of NNMT as well as a representative set of use cases. Section 2 first introduces its architecture. Section 3 then explains its usage by reproducing previously published network model analyses from Schuecker et al. (2015), Bos et al. (2016), Sanzeni et al. (2020), and Senk et al. (2020). Section 4 compares NNMT to other available toolboxes for neuronal network model analysis, discusses its use cases from a more general perspective, indicates current limitations and prospective advancements of NNMT, and explains how new tools can be contributed.

What are the requirements a package for collecting analytical methods for neuronal network model analysis needs to fulfill? To begin with, it should be adaptable and modular enough to accommodate many and diverse analytical methods while avoiding code repetition and a complex interdependency of package components. It should enable the application of the collected algorithms to various network models in a simple and transparent manner. It should make the tools easy to use for new users, while also providing experts with direct access to all parameters and options. Finally, the methods need to be thoroughly tested and well documented.

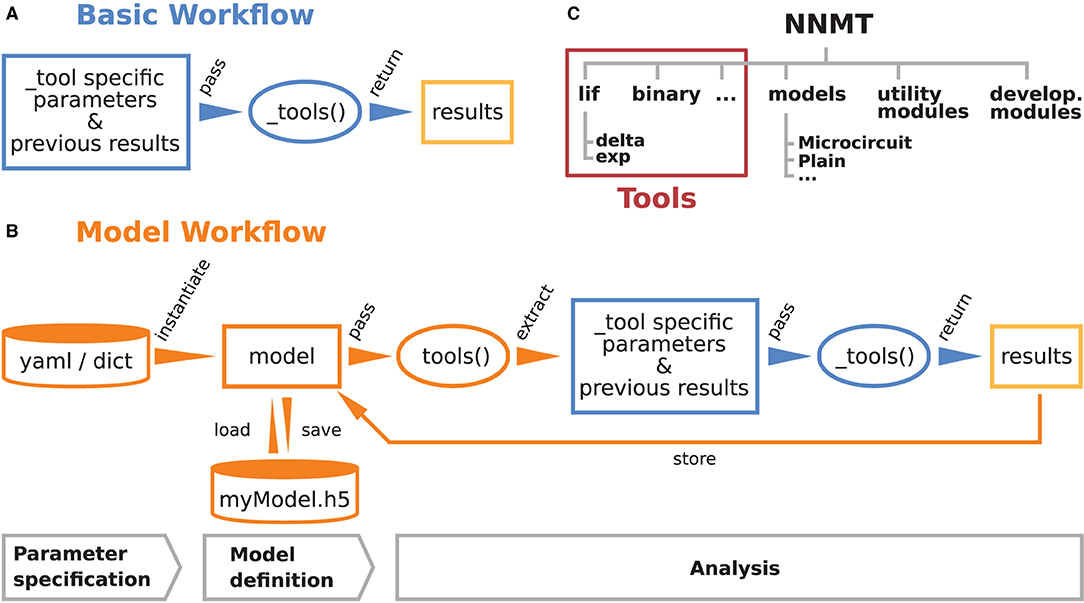

These are the main considerations that guided the development of NNMT. Figures 1A,B illustrate how the toolbox can be used in to two different workflows, depending on the preferences and goals of the user. In the basic workflow the individual method implementations called tools are directly accessed, whereas the model workflow provides additional functionality for the handling of parameters and results.

Figure 1. Structure and workflows of the Neuronal Network Mean-field Toolbox (NNMT). (A) Basic workflow: individual mean-field based analysis methods are implemented as functions, called _tools(), that can be used directly by explicitly passing the required arguments. (B) Model workflow: to facilitate the handling of parameters and results, they can be stored in a model class instance, which can be passed to a tool(), which wraps the basic workflow of the respective _tool(). (C) Structure of the Python package. In addition to the tool collection (red frame), containing the tools() and the _tools(), and pre-defined model classes, the package provides utility functions for handling parameter files and unit conversions, as well as software aiding the implementation of new methods.

The core of NNMT is a collection of low-level functions that take specific parameters (or pre-computed results) as input arguments and return analytical results of network properties. In Figure 1A, we refer to such basic functions as _tools(), as their names always start with an underscore. We term this lightweight approach of directly using these functions the basic workflow. The top part of Listing 1 demonstrates this usage; for example, the quantity to be computed could be the mean firing rate of a neuronal population and the arguments could be parameters which define neuron model and external drive. While the basic workflow gives full flexibility and direct access to every parameter of the calculation, it remains the user's responsibility to insert the arguments correctly, e.g., in the right units.

Listing 1: The two modes of using NNMT: In the basic workflow (top), quantities are calculated by passing all required arguments directly to the underscored tool functions available in the submodules of NNMT. In the model workflow (bottom), a model class is instantiated with parameter sets and the model instance is passed to the non-underscored tool functions which automatically extract the relevant parameters.

The model workflow is a convenient wrapper of the basic workflow (Figure 1B). A model in this context is an object that stores a larger set of parameters and can be passed directly to a tool(), the non-underscored wrapper of the respective _tool(). The tool() automatically extracts the relevant parameters from the model, passes them as arguments to the corresponding core function _tool(), returns the results, and stores them in the model. The bottom part of Listing 1 shows how a model is initialized with parameters and then passed to a tool() function.

Models are implemented as Python classes and can be found in the submodule nnmt.models. We provide the class nnmt.models.Network as a parent class and a few child classes which inherit the generic methods and properties but are tailored to specific network models; custom models can be created straightforwardly. The parameters distinguish network parameters, which define neuron models and network connectivity, and analysis parameters; an example for an analysis parameter is a frequency range over which a function is evaluated. Upon model instantiation, parameter sets defining values and corresponding units are passed as Python dictionaries or yaml files. The model constructor takes care of reading in these parameters, computing dependent parameters from the imported parameters, and converting all units to SI units for internal computations. Consequently, the parameters passed as arguments and the functions for computing dependent parameters of a specific child class need to be aligned. This design encourages a clear separation between a concise set of base parameters and functionality that transforms these parameters to the generic (vectorized) format that the tools work with. To illustrate this, consider the weight matrix of a network of excitatory and inhibitory neuron populations in which all excitatory connections have the same weight and all inhibitory ones another weight. As argument one could pass just a tuple of two different weight values and the corresponding model class would take care of constructing the full weight matrix. This happens in the example presented in Section 3.2.2: The parameter file network_params_microcircuit.yaml contains the excitatory synaptic weight and the ratio of inhibitory to excitatory weights. On instantiation, the full weight matrix is constructed from these two parameters, following the rules defined in nnmt.models.Microcircuit.

When a tool() is called, it checks whether the provided model object contains all required parameters and previously computed results. Then the tool() extracts the required arguments, calls the respective _tool(), and caches and returns the result. If the user attempts to compute the same property twice, using identical parameters, the tool() will retrieve the already computed result from the model's cache and return that value. Results can be exported to an HDF5 file and also loaded.

Using the model workflow instead of the basic workflow comes with the initial overhead of choosing a suitable combination of parameters and a model class, but has the advantages of a higher level of automation with built-in mechanisms for checking correctness of input (e.g., regarding units), reduced redundancy, and the options to store and load results. Both modes of using the toolbox can also be combined.

The structure of the Python package NNMT is depicted in Figure 1C. It is subdivided into submodules containing the tools (e.g., nnmt.lif.exp, or nnmt.binary), the model classes (nnmt.models), helper routines for handling parameter files and unit conversions, as well as modules that collect reusable code employed in implementations for multiple neuron models (cf. Section 4.4). The tools are organized in a modular, extensible fashion with a streamlined hierarchy. To give an example, a large part of the currently implemented tools apply to networks of leaky integrate-and-fire (LIF) neurons, and they are located in the submodule nnmt.lif. The mean-field theory for networks of LIF neurons distinguishes between neurons with instantaneous synapses, also called delta synapses, and those with exponentially decaying post-synaptic currents. Similarly, the submodule for LIF neurons is split further into the two submodules nnmt.lif.delta and nnmt.lif.exp. NNMT also collects different implementations for computing the same quantity using different approximations or numerics, allowing for a comparison of different approaches.

Apart from the core package, NNMT comes with an extensive online documentation,2 including a quickstart tutorial, all examples presented in this paper, a complete documentation of all tools, as well as a guide for contributors.

Furthermore, we provide an extensive test suite that validates the tools by checking them against previously published results and alternative implementations where possible. This ensures that future improvements of the numerics do not break the tools.

In this section, we demonstrate the practical use of NNMT by replicating a variety of previously published results. The examples presented have been chosen to cover a broad range of common use cases and network models. We include analyses of both stationary and dynamic network features, as mean-field theory is typically divided into two parts: stationary theory, which describes time-independent network properties of systems in a stationary state, and dynamical theory, which describes time-dependent network properties. Additionally, we show how to use the toolbox to map a spiking to a simpler rate model, as well as how to perform a linear stability analysis. All examples, including the used parameter files, are part of the online documentation.2

The toolbox can be either installed using pip:

or by installing it directly from the repository, which is described in detail in the online documentation. After the installation, the module can be imported:

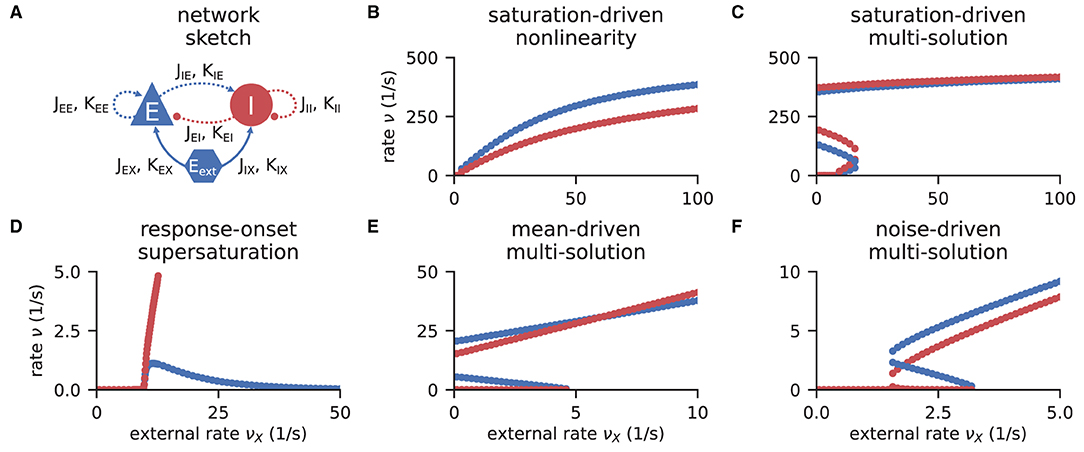

Networks of excitatory and inhibitory neurons (EI networks, Figure 2A) are widely used in computational neuroscience (Gerstner et al., 2014), e.g., to show analytically that a balanced state featuring asynchronous, irregular activity emerges dynamically in a broad region of the parameter space (van Vreeswijk and Sompolinsky, 1996, 1998; Brunel, 2000; Hertz, 2010; Renart et al., 2010). Remarkably, such balance states emerge in inhibition dominated networks for a variety of neuron models if the indegree is large, K ≫ 1, and the weights scale as (Sanzeni et al., 2020; Ahmadian and Miller, 2021). Furthermore, in a balanced state, a network responds linearly to external input in the limit K → ∞ (van Vreeswijk and Sompolinsky, 1996, 1998; Brunel, 2000; Sanzeni et al., 2020; Ahmadian and Miller, 2021). How do EI networks of LIF neurons respond to external input at finite indegrees? Sanzeni et al. (2020) uncover five different types of nonlinearities in the network response depending on the network parameters. Here, we show how to use the toolbox to reproduce their result (Figures 2B–F).

Figure 2. Response nonlinearities in EI-networks. (A) Network diagram with nodes and edges according to the graphical notation proposed by Senk et al. (in press). (B–F) Firing rate of excitatory (blue) and inhibitory (red) population for varying external input rate νX. Specific choices for synaptic weights (J, Jext) and in-degrees (K, Kext) lead to five types of nonlinearities: (B) saturation-driven nonlinearity, (C) saturation-driven multi-solution, (D) response-onset supersaturation, (E) mean-driven multi-solution, and (F) noise-driven multi-solution. See Figure 8 in Sanzeni et al. (2020) for parameters.

The network consists of two populations, E and I, of identical LIF neurons with instantaneous (delta) synapses (Gerstner et al., 2014). The subthreshold dynamics of the membrane potential Vi of neuron i obeys

where τm denotes the membrane time constant, R the membrane resistance, and Ii the input current. If the membrane potential exceeds a threshold Vth, a spike is emitted and the membrane voltage is reset to the reset potential V0 and clamped to this value during the refractory time τr. After the refractory period, the dynamics continue according to Equation (1). For instantaneous synapses, the input current is given by

where Jij is the synaptic weight from presynaptic neuron j to postsynaptic neuron i (with Jij = 0 if there is no synapse), the tj,k are the spike times of neuron j, and dij is a synaptic delay (in this example dij = d for all pairs of neurons). In total, there are NE and NI neurons in the respective populations. Each neuron is connected to a fixed number of randomly chosen presynaptic neurons (fixed in-degree); additionally, all neurons receive external input from independent Poisson processes with rate νX. The synaptic weights and in-degrees of recurrent and external connections are population-specific:

All weights are positive, implying an excitatory external input.

The core idea of mean-field theory is to approximate the input to a neuron as Gaussian white noise ξ(t) with mean 〈ξ(t)〉 = μ and noise intensity . This approximation is well-suited for asynchronous, irregular network states (van Vreeswijk and Sompolinsky, 1996, 1998; Amit and Brunel, 1997b). For a LIF neuron driven by such Gaussian white noise, the firing rate is given by (Siegert, 1951; Tuckwell, 1988; Amit and Brunel, 1997b)

where the rescaled reset- and threshold-voltages are

The first term in Equation (4) is the refractory period and the second term is the mean first-passage time of the membrane voltage from reset to threshold. The mean and the noise intensity of the input to a neuron in a population a ∈ {E, I}, which control the mean first-passage time through Equation (5), are determined by (Amit and Brunel, 1997b)

respectively, where each term reflects the contribution of one population, with the corresponding firing rates of the excitatory νE, inhibitory νI, and external population νX. Note that we use the letters i, j, k, … to index single neurons and a, b, c, … to index neuronal populations. Both μa and σa depend on the firing rate of the neurons νa, which is in turn given by Equation (4). Thus, one arrives at the self-consistency problem

which is coupled across the populations due to Equation (6) and Equation (7).

Our toolbox provides two algorithms to solve Equation (8): (1) Integrating the auxiliary ordinary differential equation (ODE) with initial values νa(0) = νa,0 using scipy.integrate.solve_ivp (Virtanen et al., 2020) until it reaches a fixed point , where Equation (8) holds by construction. (2) Minimizing the quadratic deviation , using the least squares (LSTSQ) solver scipy.optimize.least_squares (Virtanen et al., 2020) starting from an initial guess νa,0. The ODE method is robust to changes in the initial values and hence a good first choice. However, it cannot find self-consistent solutions that correspond to an unstable fixed point of the auxiliary ODE (note that the stability of the auxiliary ODE does not indicate the stability of the solution). To this end, the LSTSQ method can be used. Its drawback is that it needs a good initial guess, because otherwise the found minimum might be a local one where the quadratic deviation does not vanish, , and which accordingly does not correspond to a self-consistent solution, νa ≠ ϕ(μa, σa). A prerequisite for both methods is a numerical solution of the integral in Equation (4); this is discussed in Section A.1 in the Appendix.

The solutions of the self-consistency problem Equation (8) for varying νX and fixed J, Jext, K, and Kext reveal the five types of response nonlinearities (Figure 2). Different response nonlinearities arise through specific choices of synaptic weights, J and Jext, and in-degrees, K and Kext, which suggests that already a simple EI-network possesses a rich capacity for nonlinear computations. Whenever possible, we use the ODE method and resort to the LSTSQ method only if the self-consistent solution corresponds to an unstable fixed point of the auxiliary ODE. Combining both methods, we can reproduce the first columns of Figure 8 in Sanzeni et al. (2020), where all five types of nonlinearities are presented.

In all cases, we chose appropriate initial values νa,0 for either method. Note that an exploratory analysis is necessary if the stability properties of a network model are unknown, and potentially multiple fixed points are to be uncovered because there are, to the best of our knowledge, no systematic methods in d > 1 dimensions that provide all solutions of a nonlinear system of equations.

In Listing 2, we show a minimal example to produce the data shown in Figure 2B. After importing the function that solves the self-consistency Equation (8), we collect the neuron and network parameters in a dictionary. Then, we loop through different values for the external rate νX and determine the network rates using the ODE method, which is sufficient in this example. In Listing 2 and to produce Figure 2B, we use the basic workflow because only one isolated tool of NNMT (nnmt.lif.delta._firing_rates()) is employed, which requires only a few parameters defining the simple EI-network.

Listing 2: Example script to produce the data shown in Figure 2B using the ODE method (initial value νa,0 = 0 for population a ∈ {E, I}).

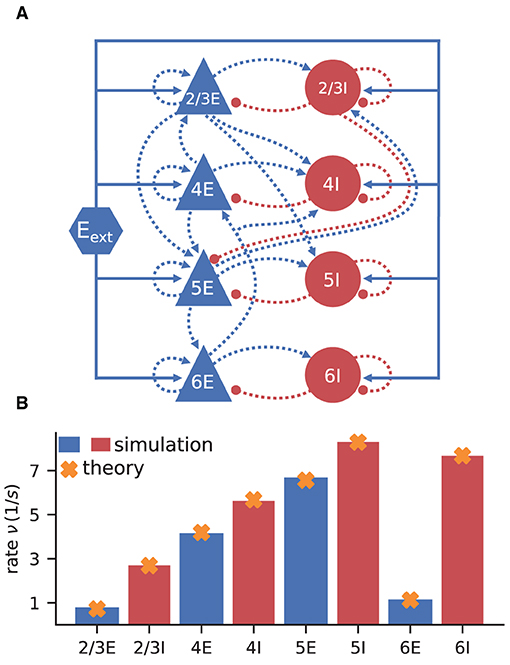

Here we show how to use the model workflow to calculate the firing rates of the cortical microcircuit model by Potjans and Diesmann (2014). The circuit is a simplified point neuron network model with biologically plausible parameters, which has been recently used in a number of other works: for example, to study network properties such as layer-dependent attentional processing (Wagatsuma et al., 2011), connectivity structure with respect to oscillations (Bos et al., 2016), and the effect of synaptic weight resolution on activity statistics (Dasbach, Tetzlaff, Diesmann, and Senk, 2021); to assess the performance of different simulator technologies such as neuromorphic hardware (van Albada et al., 2018) and GPUs (Knight and Nowotny, 2018; Golosio et al., 2021); to demonstrate forward-model prediction of local-field potentials from spiking activity (Hagen et al., 2016); and to serve as a building block for large-scale models (Schmidt et al., 2018).

The model consists of eight populations of LIF neurons, corresponding to the excitatory and inhibitory populations of four cortical layers: 2/3E, 2/3I, 4E, 4I, 5E, 5I, 6E, and 6I (see Figure 3A). It defines the number of neurons in each population, the number of connections between the populations, the single neuron properties, and the external input. Simulations show that the model yields realistic firing rates for the different populations as observed in particular in the healthy resting-state of early sensory cortex (Potjans and Diesmann, 2014, Table 6).

Figure 3. Cortical microcircuit model by Potjans and Diesmann (2014). (A) Network diagram (only the strongest connections are shown as in Figure 1 of the original publication). Same notation as in Figure 2A. (B) Simulation and mean-field estimate for average population firing rates using the parameters from Bos et al. (2016).

In contrast to the EI-network model investigated in Section 3.2.1, the neurons in the microcircuit model have exponentially shaped post-synaptic currents: Equation (2) is replaced by Fourcaud and Brunel (2002)

with synaptic time constant τs. Note that Jij is a measure in volts here. As discussed in Section 3.2.1, in mean-field theory the second term, representing the neuronal input, is approximated by Gaussian white noise. The additional synaptic filtering leads to the membrane potential (Equation 1) receiving colored noise input. Fourcaud and Brunel (2002) developed a method for calculating the firing rate for this synapse type. They have shown that, if the synaptic time constant τs is much smaller than the membrane time constant τm, the firing rate for LIF neurons with exponential synapses can be calculated using Equation (4) with shifted integration boundaries

with the rescaled reset- and threshold-voltages from Equation (5) and , where ζ(x) denotes the Riemann zeta function; the subscript cn stands for “colored noise”.

The microcircuit has been implemented as an NNMT model (nnmt.models.Microcircuit). We here use the parameters of the circuit as published in Bos et al. (2016) which is slightly differently parameterized than the original model (see Table A1 in the Appendix). The parameters of the model are specified in a yaml file, which uses Python-like indentation and a dictionary-style syntax. List elements are indicated by hyphens, and arrays can be defined as nested lists. Parameters with units can be defined by using the keys val and unit, whereas unitless variables can be defined without any keys. Listing 3 shows an example of how some of the microcircuit network parameters used here are defined. Which parameters need to be provided in the yaml file depends on the model used and is indicated in their respective docstrings.

Listing 3: Some microcircuit network parameters defined in a yaml file. A dictionary-like structure with the keys val (value) and unit is used to define the membrane time constant, which is the same across all populations. The numbers of neurons in each population are defined as a list. Only the numbers for the first three populations are displayed.

Once the parameters are defined, a microcircuit model is instantiated by passing the respective parameter file to the model constructor; the units are automatically converted to SI units. Then the firing rates are computed. For comparison, we finally load the simulated rates from Bos et al. (2016):

The simulated rates have been obtained by a numerical network simulation (for simulation details see Bos et al., 2016) in which the neuron populations are connected according to the model's original connectivity rule: “random, fixed total number with multapses (autapses prohibited)”, see Senk et al. (in press) as a reference for connectivity concepts. The term multapses refers to multiple connections between the same pair of neurons and autapses are self-connections; with this connectivity rule multapses can occur in a network realization but autapses are not allowed. For simplicity, the theoretical predictions assume a connectivity with a fixed in-degree for each neuron. Dasbach et al. (2021) show that simulated spike activity data of networks with these two different connectivity rules are characterized by differently shaped rate distributions (“reference” in their Figures 3d and 4d). In addition, the weights in the simulation are normally distributed while the theory replaces each distribution by its mean; this corresponds to the case Nbins = 1 in Dasbach et al. (2021). Nevertheless, our mean-field theoretical estimate of the average population firing rates is in good agreement with the simulated rates (Figure 3B).

One of the most important dynamical properties of a neuronal network is how it reacts to external input. A systematic way to study the network response is to apply an oscillatory external input current leading to a periodically modulated mean input μ(t) = μ+δμRe(eiωt) (cf. Equation 6), with fixed frequency ω, phase, and amplitude δμ, and observe the emerging frequency, phase, and amplitude of the output. If the amplitude of the external input is small compared to the stationary input, the network responds in a linear fashion: it only modifies phase and amplitude, while the output frequency equals the input frequency. This relationship is captured by the input-output transfer function N(ω) (Brunel and Hakim, 1999; Brunel et al., 2001; Lindner and Schimansky-Geier, 2001), which describes the frequency-dependent modulation of the output firing rate of a neuron population

Note that in this section we only study the linear response to a modulation of the mean input, although in general, a modulation of the noise intensity (Equation 7) can also be included (Lindner and Schimansky-Geier, 2001; Schuecker et al., 2015). The transfer function N(ω) is a complex function: Its absolute value describes the relative modulation of the firing rate. Its phase, the angle relative to the real axis, describes the phase shift that occurs between input and output. We denote the transfer function for a network of LIF neurons with instantaneous synapses in linear-response approximation as

with the rescaled reset- and threshold-voltages and as defined in Equation (5) and using the parabolic cylinder functions as defined in (Abramowitz and Stegun, 1974, Section 19.3) and (Olver et al., 2021, Section 12.2). denotes the first derivative by x. A comparison of our notation and the transfer function given in Schuecker et al. (2015, Equation 29) can be found in Section A.2.1 in the Appendix.

For a neuronal network of LIF neurons with exponentially shaped post-synaptic currents, introduced in Section 3.2.2, Schuecker et al. (2014, 2015) show that an analytical approximation of the transfer function can be obtained by a shift of integration boundaries, akin to Equation (10):

To take into account the effect of the synaptic dynamics, we include an additional low-pass filter:

If the synaptic time constant is much smaller than the membrane time constant (τs ≪ τm), an equivalent expression for the transfer function is obtained by a Taylor expansion around the original boundaries (cf. Schuecker et al. 2015, Equation 30). The toolbox implements both variants and offers choosing between them by setting the argument method of nnmt.lif.exp.transfer_function to either shift or taylor.

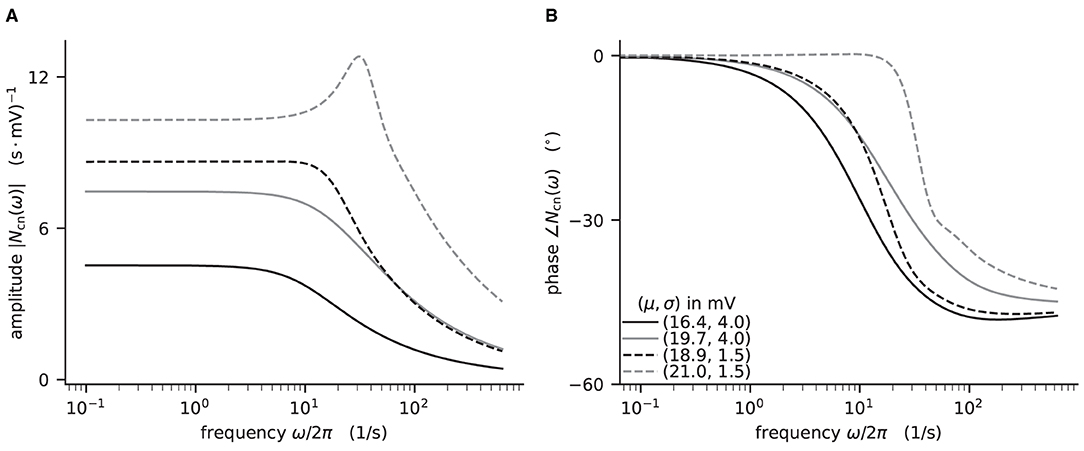

Here, we demonstrate how to calculate the analytical “shift version” of the transfer function for different means and noise intensities of the input current (see Figure 4) and thereby reproduce Figure 4 in Schuecker et al. (2015).

Figure 4. Colored-noise transfer function Ncn of LIF model in different regimes. (A) Absolute value and (B) phase of the “shift” version of the transfer function as a function of the log-scaled frequency. Neuron parameters are set to Vth = 20 mV, V0 = 15 mv, τm = 20 ms, and τs = 0.5 ms. For given noise intensities of input current, σ = 4 mV (solid line) and σ = 1.5 mV (dashed line), the mean input μ is chosen such that firing rates ν = 10 Hz (black) and ν = 30 Hz (gray) are obtained.

The crucial parts for producing Figure 4 using NNMT are shown in Listing 4 for one example combination of mean and noise intensity of the input current. Instead of using the model workflow with nnmt.lif.exp.transfer_function, we here employ the basic workflow, using nnmt.lif.exp._transfer_function directly. This allows changing the mean input and its noise intensity independently of a network model's structure, but requires two additional steps: First, the necessary parameters are loaded from a yaml file, converted to SI units and then stripped off the units using the utility function nnmt.utils._convert_to_si_and_strip_units. Second, the analysis frequencies are defined manually. In this example we choose logarithmically spaced frequencies, as we want to plot the results on a log-scale. Finally, the complex-valued transfer function is calculated and then split into its absolute value and phase. Figure 4 shows that the transfer function acts as a low-pass filter that suppresses the amplitude of high frequency activity, introduces a phase lag, and can lead to resonance phenomena for certain configurations of mean input current and noise intensity.

Listing 4: Example script for computing a transfer function shown in Figure 4 using the method of shifted integration boundaries.

The replication of the results from Schuecker et al. (2015) outlined here is also used in the integration tests of the toolbox. Note that the implemented analytical form of the transfer function by Schuecker et al. (2015) is an approximation for low frequencies, and deviations from a simulated ground truth are expected for higher frequencies (ω/2π ≳ 100 Hz at the given parameters).

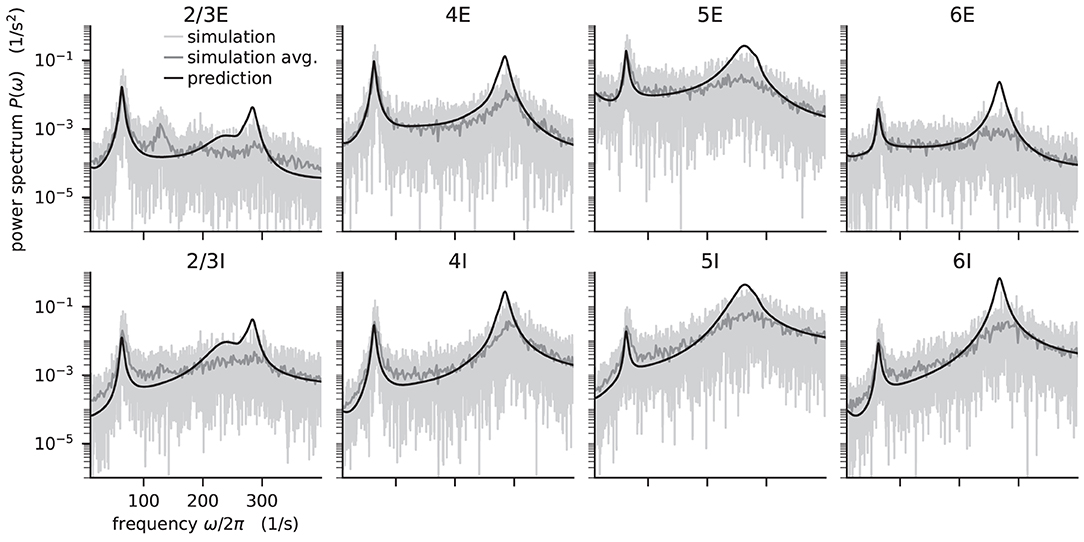

Another frequently studied dynamical property is the power spectrum, which describes how the power of a signal is distributed across its different frequency components, revealing oscillations of the population activity. The power is the Fourier transformed auto-correlation of the population activities (c.f. Bos et al. 2016, Equations 16-18). Linear response theory on top of a mean-field approximation, allows computing the power, dependent on the network architecture, the stationary firing rates, and the neurons' transfer function (Bos et al., 2016). The corresponding analytical expression for the power spectra of population a at angular frequency ω is given by the diagonal elements of the correlation matrix

with ⊘ denoting the elementwise (Hadamard) division, the effective connectivity matrix , where the dot denotes the scalar product, while ⊙ denotes the elementwise (Hadamard) product, the mean population firing rates ν, and the numbers of neurons in each population n. The effective connectivity combines the static, anatomical connectivity J ⊙ K, represented by synaptic weight matrix J and in-degree matrix K, and dynamical quantities, represented by the transfer functions Ncn,s,a(ω) (Equation (13)), and the contribution of the delays in (Equation 13), represented by their Fourier transformed distributions Dab(ω) (cf. Bos et al. 2016, Equations 14, 15).

The modular structure in combination with the model workflow of this toolbox permits a step-by-step calculation of the power spectra, as shown in Listing 5. The inherent structure of the theory is emphasized in these steps: After instantiating the network model class with given network parameters, we determine the working point, which characterizes the statistics of the model's stationary dynamics. It is defined by the population firing rates, the mean, and the standard deviation of the input to a neuron of the respective population. This is necessary for determining the transfer functions. The calculation of the delay distribution matrix is then required for calculating the effective connectivity and to finally get an estimate of the power spectra. Figure 5 reproduces Figure 1E in Bos et al. (2016) and shows the spectra for each population of the adjusted version (see Table A1 in the Appendix) of the microcircuit model.

Listing 5: Example script to produce the theoretical prediction (black lines) shown in Figure 5B.

Figure 5. Power spectra of the population spiking activity in the adapted cortical microcircuit from Bos et al. (2016). The spiking activity of each population in a 10 s simulation of the model is binned with 1 ms resolution and the power spectrum of the resulting histogram is calculated by a fast Fourier transform (FFT; light gray curves). In addition, the simulation is split into 500 ms windows, the power spectrum calculated for each window and averaged across windows (gray curves). Black curves correspond to analytical prediction obtained with NNMT as described in Listing 5. The panels show the spectra for the excitatory (top) and inhibitory (bottom) populations within each layer of the microcircuit.

The numerical predictions obtained from the toolbox largely coincide with simulated data taken from the original publication (Bos et al., 2016) and reveal dominant oscillations of the population activities in the low-γ range around 63 Hz. Furthermore, faster oscillations with peak power around 300 Hz are predicted with higher magnitudes in the inhibitory populations 4I, 5I, and 6I.

The deviation between predicted and simulated power spectra seen at ~130 Hz in population 2/3E could be a harmonic of the correctly predicted, prominent 63 Hz peak; a non-linear effect not captured in linear response theory. Furthermore, the systematic overestimation of the power spectrum at large frequencies is explained by the limited validity of the analytical approximation of the transfer function for high frequencies.

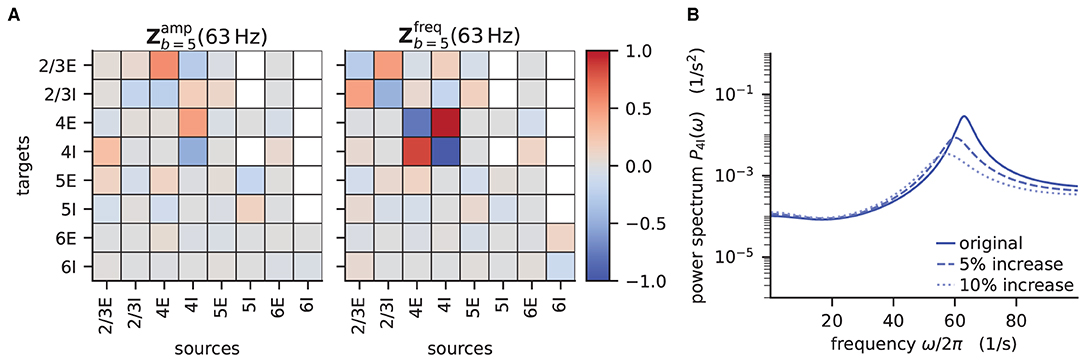

The power spectra shown in the previous section exhibit prominent peaks at certain frequencies, which indicate oscillatory activity. Naturally, this begs the question: which mechanism causes these oscillations? Bos et al. (2016) expose the crucial role that the microcircuit's connectivity plays in shaping the power spectra of this network model. They have developed a method called sensitivity measure to directly relate the influence of the anatomical connections, especially the in-degree matrix, on the power spectra.

The power spectrum of the a-th population Pa(ω) receives a contribution from each eigenvalue λb of the effective connectivity matrix, . Such a contribution consequently diverges as the complex-valued λb approaches 1+0i in the complex plane, which is referred to as the point of instability. This relation can be derived by replacing the effective connectivity matrix in Equation (14) by its eigendecomposition. The sensitivity measure leverages this relationship and evaluates how a change in the in-degree matrix affects the eigenvalues of the effective connectivity and thus indirectly the power spectrum. Bos et al. (2016) introduce a small perturbation αcd of the in-degree matrix, which allows writing the effective connectivity matrix as , where we dropped the delay subscript d. The sensitivity measure Zb,cd(ω) describes how the b-th eigenvalue of the effective connectivity matrix varies when the cd-th element of the in-degree matrix is changed

where is the partial derivative of the eigenvalue with respect to a change in connectivity, and ub are the left and right eigenvectors of corresponding to eigenvalue λb(ω).

The complex sensitivity measure can be understood in terms of two components: is the projection of the matrix Zb onto the direction in the complex plane defined by 1 − λb(ω); it describes how, when the in-degree matrix is perturbed, the complex-valued λb(ω) moves toward or away from the instability 1 + 0i, and consequently how the amplitude of the power spectrum at frequency ω increases or decreases. is the projection onto the perpendicular direction and thus describes how the peak frequency of the power spectrum changes with the perturbation of the in-degree matrix. For a visualization of these projections, refer to Figure 5B in Bos et al. (2016).

The toolbox makes this intricate measure accessible by supplying two tools: After computing the required working point, transfer function, and delay distribution, the tool nnmt.lif.exp.sensitivity_measure computes the sensitivity measure at a given frequency for one specific eigenvalue. By default, this is the eigenvalue which is closest to the instability 1 + 0i. To perform the computation, we just need to add one line to Listing 5:

The result is returned in form of a dictionary that contains the sensitivity measure and its projections. The tool nnmt.lif.exp.sensitivity_measure_all_eigenmodes wraps that basic function and calculates the sensitivity measure for all eigenvalues at the frequency for which each eigenvalue is closest to instability.

According to the original publication (Bos et al., 2016), the peak around 63Hz has contributions from one eigenvalue of the effective connectivity matrix. Figure 6 shows the projections of the sensitivity measure at the frequency for which this eigenvalue is closest to the instability, as illustrated in Figure 4 of Bos et al. (2016). The sensitivity measure returns one value for each connection between populations in the network model. For a negative value indicates that increasing the in-degrees of a specific connection causes the amplitude of the power spectrum at the evaluated frequency to drop. If the value is positive, the amplitude is predicted to grow as the in-degrees increase. Similarly, for positive the frequency of the peak in the power spectrum shifts toward higher values as in-degrees increase, and vice versa. The main finding in this analysis is that the low-γ peak seems to be affected by excitatory-inhibitory loops in layer 2/3 and layer 4.

Figure 6. Sensitivity measure at low-γ frequency and corresponding power spectrum of microcircuit with adjusted connectivity. (A) Sensitivity measure of one eigenmode of the effective connectivity relevant for low-γ oscillations. The sensitivity measure for this mode is evaluated at the frequency where the corresponding eigenvalue is closest to the point of instability 1 + 0i in complex plane. (left subpanel) visualizes the influence of a perturbation of a connection on the peak amplitude of the power spectrum. (right subpanel) shows the impact on the peak frequency. Non-existent connections are masked white. (B) Mean-field prediction of power spectrum of population 4I with original connectivity parameters (solid line), 5% increase (dashed line) and 10% increase (dotted line) in connections K4I → 4I. The increase in inhibitory input to population 4I was counteracted by an increase of the excitatory external input Kext → 4I to maintain the working point.

To decrease the low–γ peak in the power spectrum, one could therefore increase the 4I to 4I connections (cp. Figure 6A):

and calculate the power spectrum as in Listing 5 again to validate the change. Note that a change in connectivity leads to a shift in the working point. We are interested in the impact of the modified connectivity on the fluctuation dynamics at the same working point and thus need to counteract the change in connectivity by adjusting the external input. In the chosen example this is ensured by satisfying J4I → 4IΔK4I → 4Iν4I = −Jext → 4IΔKext → 4Iνext, which yields .

If several eigenvalues of the effective connectivity matrix influence the power spectra in the same frequency range, adjustments of the connectivity are more involved. This is because a change in connectivity would inevitably affect all eigenvalues simultaneously. Further care has to be taken because the sensitivity measure is subject to the same constraints as the current implementation of the transfer function, which is only valid for low frequencies and enters the sensitivity measure directly.

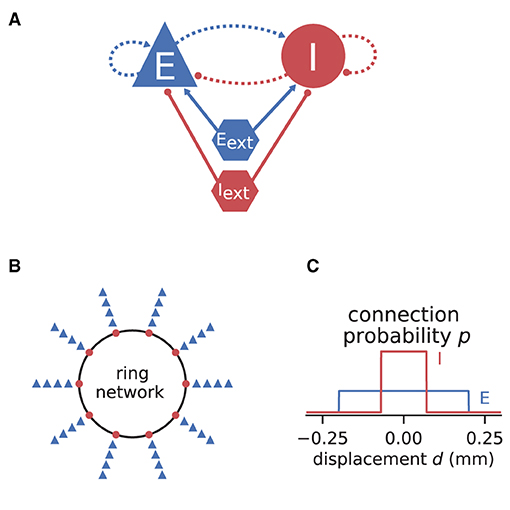

If the neurons of a network are spatially organized and connected according to a distance-dependent profile, the spiking activity may exhibit pattern formation in space and time, including wave-like phenomena. Senk et al. (2020) set out to scrutinize the non-trivial relationship between the parameters of such a network model and the emerging activity patterns. The model they use is a two-population network of excitatory E and inhibitory I spiking neurons, illustrated in Figure 7. All neurons are of type LIF with exponentially shaped post-synaptic currents. The neuron populations are recurrently connected to each other and themselves and they receive additional external excitatory Eext and inhibitory Iext Poisson spike input of adjustable rate as shown in Figure 7A. The spatial arrangement of neurons on a ring is illustrated in Figure 7B and the boxcar-shaped connectivity profiles in Figure 7C.

Figure 7. Illustrations of spiking network model by Senk et al. (2020). (A) Excitatory and inhibitory neuronal populations randomly connected with fixed in-degree and multapses allowed (autapses prohibited). External excitatory and inhibitory Poisson drive to all neurons. Same notation as in Figure 2A. (B) One inhibitory and four excitatory neurons per grid position on a one-dimensional domain with periodic boundary conditions (ring network). (C) Normalized, boxcar-shaped connection probability with wider excitation than inhibition; the grid spacing is here 10−3 mm. For model details and parameters, see Tables II–IV of Senk et al. (2020); the specific values given in the caption of their Figure 6 are used throughout here.

In the following, we consider a mean-field approximation of the spiking model with spatial averaging, that is a time and space continuous approximation of the discrete model as derived in Senk et al. (2020, Section E. Linearization of spiking network model). We demonstrate three methods used in the original study: First, Section 3.4.1 explains how a model can be brought to a defined state characterized by its working point. The working point is given by the mean μ and noise intensity σ of the input to a neuron, which are both quantities derived from network parameters and require the calculation of the firing rates. With the spiking model in that defined state, Section 3.4.2 then maps its transfer function to the one of a rate model. Section 3.4.3 finally shows that this working-point dependent rate model allows for an analytical linear stability analysis of the network accounting for its spatial structure. This analysis can reveal transitions to spatial and temporal oscillatory states which, when mapped back to the parameters of the spiking model, may manifest in distinct patterns of simulated spiking activity after a startup transient.

With network and analysis parameters predefined in yaml files, we set up a network model using the example model class Basic:

Upon initialization the given parameters are automatically converted into the format used by NNMT's tools. For instance, relative spike reset and threshold potentials are derived from the absolute values, connection strengths in units of volt are computed from the post-synaptic current amplitudes in ampere, and all values are scaled to SI units.

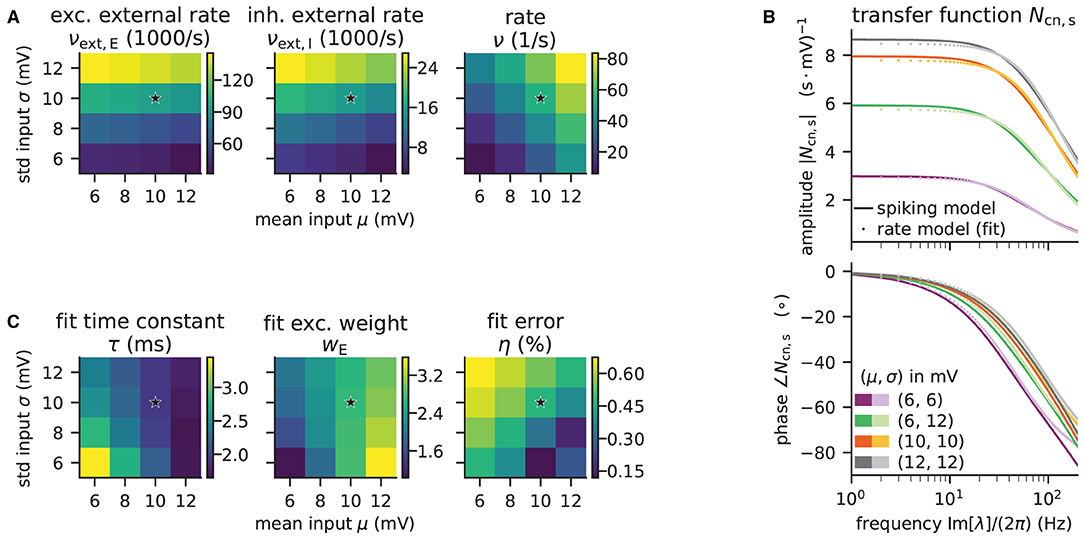

We aim to bring the network to a defined state by fixing the working point but also want to explore if the procedure of fitting the transfer function still works for different network states. For a parameter space exploration, we use a method to change parameters provided by the model class and scan through a number of different working points of the network. To obtain the required input for a target working point, we adjust the external excitatory and inhibitory firing rates accordingly; NNMT uses a vectorized version of the equations given in Senk et al. (2020, Appendix F: Fixing the working point) to calculate the external rates needed:

The implementation uses only one excitatory and one inhibitory Poisson source to represent the external input rates which typically originate from a large number of external source neurons. These two external sources are connected to the network with the same relative inhibition g as used for the internal connections. The resulting external rates for different choices of (μ, σ) are color-coded in the first two plots of Figure 8A. The third plot shows the corresponding firing rates of the neurons, which are stored in the results of the model instance when computing the working point explicitly:

Figure 8. Network parameters and mean-field results from scanning through different working points. Working point (μ, σ) combines mean input μ and noise intensity of input σ. (A) External excitatory νext,E and inhibitory νext,I Poisson rates required to set (μ, σ) and resulting firing rates ν. (B) Transfer function Ncn,s of spiking model and fitted rate-model approximation with low-pass filter for selected (μ, σ) (top: amplitude, bottom: phase). (C) Fit results (time constants τ and excitatory weights wE) and fit errors η. The inhibitory weights are wI = −gwE with g = 5. Star marker in panels (A) and (C) denotes target working point (10, 10) mV. Similar displays as in Senk et al. (2020, Figure 5).

Although the external rates are substantially higher than the firing rates, since a neuron is recurrently connected to hundreds of neurons, the total external and recurrent inputs are of the same order.

We map the parameters of the spiking model to a corresponding rate model by, first, computing the transfer function Ncn,s given in Equation (13) of the spiking model, and second, fitting the simpler transfer function of the rate model, for details see Senk et al. (2020, Section F. Comparison of neural-field and spiking models). The dynamics of the rate model can be written as a differential equation for the linearized activity ra with populations a, b ∈ {E, I}:

with the delay d; τ is the time constant and wb are the unitless weights that only depend on the presynaptic population. The transfer function is just the one of a low-pass filter, NLP = 1/(1 + λτ), where λ is the frequency in Laplace domain. The tool to fit the transfer function requires that the actual transfer function Ncn,s has been computed beforehand and fits NLPw to τmNcn,s·J ⊙ K for the same frequencies together with τ, w, and the combined fit error η:

The absolute value of the transfer function is fitted with non-linear least-squares using the solver scipy.optimize.curve_fit. Figure 8B illustrates the amplitude and phase of the transfer function and its fit for a few (μ, σ) combinations. The plots of Figure 8C show the fitted time constants, the fitted excitatory weight, and the combined fit error. The inhibitory weight is proportional to the excitatory one in the same way as the post-synaptic current amplitudes.

Sections 3.4.1 and 3.4.2 considered a mean-field approximation of the spiking model without taking space into account. In the following, we assume a spatial averaging of the discrete network depicted in Figure 7 and introduce the spatial connectivity profiles pa(x). Changing Equation (16) to the integro-differential equation

yields a neural field model defined in continuous space x. This model lends itself to analytical linear stability analysis, as described in detail in Senk et al. (2020, Section A. Linear stability analysis of a neural-field model). In brief, we analyze the stability of a fixed-point solution to this differential equation system which, together with parameter continuation methods and bifurcation analysis, allows us to determine points in parameter space where transitions from homogeneous steady states to oscillatory states can occur. These transitions are given as a function of a bifurcation parameter, here the constant delay d, which is the same for all connections. The complex-valued, temporal eigenvalue λ of the linearized time-delay system is an indicator for the system's overall stability and can serve as a predictor for temporal oscillations, whereas the spatial oscillations are characterized by the real-valued wave number k. Solutions that relate λ and k with the model parameters are given by a characteristic equation, which in our case reads (Senk et al., 2020, Equation 7):

with the time constant of the rate model τ, the multi-valued Lambert WB function3 on branch B (Corless et al., 1996), and the effective connectivity profile c(k), which combines the weights wb and the Fourier transforms of the spatial connectivity profiles. Note that the approach generalizes from the boxcar-shaped profiles used here to any symmetric probability density function. NNMT provides an implementation to solve this characteristic equation in its linear_stability module using the spatial module for the profile:

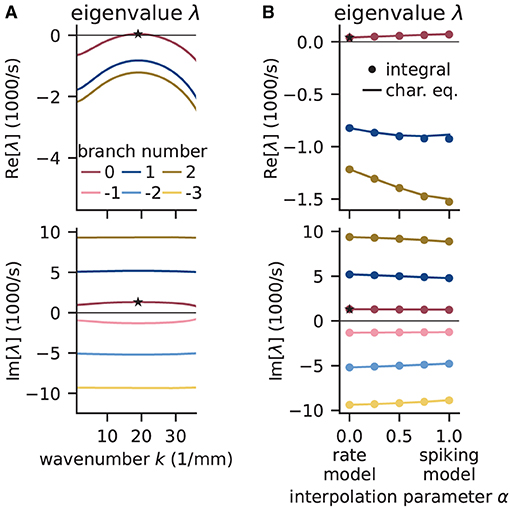

Figure 9A shows that the computed eigenvalues come for the given network parameters in complex conjugate pairs. The branch with the largest real part is the principal branch (B = 0). Temporal oscillations are expected to occur if the real part of the eigenvalue on the principal branch becomes positive; the oscillation frequency can then be read off the imaginary part of that eigenvalue. In this example, the largest eigenvalue λ* on the principal branch has a real part that is just above zero. There exists a supercritical Hopf bifurcation and the delay as the bifurcation parameter is chosen large enough such that the model is just beyond the bifurcation point separating the stable from the instable state. The respective wave number k* is positive, which indicates spatial oscillations as well. The oscillations in both time and space predicted for the rate model imply that the activity of the corresponding spiking model might exhibit wave trains, i.e., temporally and spatially periodic patterns. The predicted propagation speed of the wave trains is given by the phase velocity Im[λ*]/k*.

Figure 9. Linear stability analysis of spatially structured network model. (A) Analytically exact solution for real (top) and imaginary (bottom) part of eigenvalue λ vs. wavenumber k using rate model derived by fit of spiking model at working point (μ, σ) = (10, 10) mV. Color-coded branches of Lambert WB function; maximum real eigenvalue (star marker) on principal branch (B = 0). (B) Linear interpolation between rate (α = 0) and spiking model (α = 1) by numerical integration of Senk et al. (2020, Equation 30) (solid line) and by numerically solving the characteristic equation in Senk et al. (2020, Equation 29) (circular markers). Star markers at same data points as in (A). Similar displays as in Senk et al. (2020, Figure 6).

To determine whether the results obtained with the rate model are transferable to the spiking model, Figure 9B interpolates the analytical solutions of the rate model [α = 0, evaluating Equation (18)] to solutions of the spiking model (α = 1, accounting for the transfer function Ncn,s), which can only be computed numerically. Thus, the parameter α interpolates between the characteristic equations of these two models which primarily differ in their transfer function; for details see Senk et al. (2020, Section F.2 Linear interpolation between the transfer functions). Since the eigenvalues estimated this way show only little differences between rate and spiking model, we conclude that predictions from the rate model should hold also for the spiking model in the parameter regime tested. Following the argument of Senk et al. (2020), the predicted pattern formation could next be tested in a numerical simulation of the discrete spiking network model. Their Figure 7c for the delay d = 1.5 ms shows such results with the same parameters as used here; this figure also illustrates transitions from homogeneous states to oscillatory states by changing the delay (panels b, c, and e).

Mean-field theory grants important insights into the dynamics of neuronal networks. However, the lack of a publicly available numerical implementation for most methods entails a significant initial investment of time and effort prior to any scientific investigations. In this paper, we present the open-source toolbox NNMT, which currently focuses on methods for LIF neurons but is intended as a platform for collecting standard implementations of various neuronal network model analyses based on mean-field theory that have been thoroughly tested and validated by the neuroscientific community (Riquelme and Gjorgjieva, 2021). As use cases, we reproduce known results from the literature: the non-linear relation between the firing rates and the external input of an E-I-network (Sanzeni et al., 2020), the firing rates of a cortical microcircuit model, its response to oscillatory input, its power spectrum, and the identification of the connections that predominantly contribute to the model's low frequency oscillations (Schuecker et al., 2015; Bos et al., 2016), and pattern formation in a spiking network, analyzed by mapping it to a rate model and conducting a linear stability analysis (Senk et al., 2020).

In the remainder of the discussion, we compare NNMT to other tools suited for network model analysis. We expand on the different use cases of NNMT and also point out the inherent limitations of analytical methods for neuronal network analysis. We conclude with suggestions on how new tools can be added to NNMT and how the toolbox may grow and develop in the future.

There are various approaches and corresponding tools that can help to gain a better understanding of a neuronal network model. There are numerous simulators that numerically solve the dynamical equations for concrete realizations of a network model and all its stochastic components, often focusing either on the resolution of single-neurons, for example NEST (Gewaltig and Diesmann, 2007), Brian (Stimberg et al., 2019), or Neuron (Hines and Carnevale, 2001), or on the population level, for example TheVirtualBrain (Sanz Leon et al., 2013). Similarly, general-purpose dynamical system software like XPPAUT (Ermentrout, 2002) can be used. Simulation tools, like DynaSim (Sherfey et al., 2018), come with enhanced functionality for simplifying batch analysis and parameter explorations. All these tools yield time-series of activity, and some of them even provide the methods for analyzing the generated data. However, simulations only indirectly link a model's parameters with its activity: to gain an understanding of how a model's parameters influence the statistics of their activity, it is necessary to run many simulations with different parameters and analyze the generated data subsequently.

Other approaches provide a more direct insight into a model's behavior on an abstract level: TheVirtualBrain and the Brain Dynamics Toolbox (Heitmann et al., 2018), for example, allow plotting a model's phase space vector field while the parameters can be changed interactively, allowing for exploration of low-dimensional systems defined by differential equations without the need for simulations. XPPAUT has an interface to AUTO-07P (Doedel and Oldeman, 1998), a software for performing numerical bifurcation and continuation analysis. It is worth noting that such tools are limited to models that are defined in terms of differential equations. Models specified in terms of update rules, such as binary neurons, need to be analyzed differently, for example using mean-field theory.

A third approach is to simplify the model analytically and simulate the simplified version. The simulation platform DiPDE4 utilizes the population density approach to simulate the statistical evolution of a network model's dynamics. Schwalger et al. (2017) start from a microscopic model of generalized integrate-and-fire neurons and derive mesoscopic mean-field population equations, which reproduce the statistical and qualitative behavior of the homogeneous neuronal sub-populations. Similarly, Montbrió et al. (2015) derive a set of non-linear differential equations describing the dynamics of the rate and mean membrane potentials of a population of quadratic integrate-and-fire (QIF) neurons. The simulation platform PyRates (Gast et al., 2019) provides an implementation of this QIF mean-field model, and allows simulating them to obtain the temporal evolution of the population activity measures.

However, mean-field and related theories can go beyond such reduced dynamical equations: they can directly link model parameters to activity statistics, and they can even provide access to informative network properties that might not be accessible otherwise. The spectral bound (Rajan and Abbott, 2006) of the effective connectivity matrix in linear response theory (Lindner et al., 2005; Pernice et al., 2011; Trousdale et al., 2012) is an example of such a property. It is a measure for the stability of the linearized system and determines, for example, the occurrence of slow dynamics and long-range correlations (Dahmen et al., 2022). Another example is the sensitivity measure presented in Section 3.3.3, which directly links a network model's connectivity with the properties of its power spectrum. These measures are not accessible via simulations. They require analytical calculation.

Similarly, NNMT is not a simulator. NNMT is a collection of mean-field equation implementations that directly relate a model's parameters to the statistics of its dynamics or to other informative properties. It provides these implementations in a format that makes them applicable to as many network models as possible. This is not to say that NNMT does not involve numerical integration procedures; solving self-consistent equations, such as in the case of the firing rates calculations in Section 3.2.1 and Section 3.2.2, is a common task, and a collection of respective solvers is part of NNMT.

In Section 3, we present concrete examples of how to apply some of the tools available. Here, we revisit some of the examples to highlight the use cases NNMT lends itself to, as well as provide some ideas for how the toolbox could be utilized in future projects.

Analytical methods have the advantage of being fast, and typically they only require a limited amount of computational resources. The computational costs for calculating analytical estimates of dynamical network properties like firing rates, as opposed to the costs of running simulations of a network model, are independent of the number of neurons the network is composed of. This is especially relevant for parameter space explorations, for which many simulations have to be performed. To speed up prototyping, a modeler can first perform a parameter scan using analytical tools from NNMT to get an estimate of the right parameter regimes and subsequently run simulations on this restricted set of parameters to arrive at the final model parameters. An example of such a parameter scan is given in Section 3.2.1, where the firing rates of a network are studied as a function of the external input.

Additionally to speeding up parameter space explorations, analytical methods may guide parameter space explorations in another way: namely, by providing an analytical relation between network model parameters and network dynamics, which allows a targeted adjustment of specific parameters to achieve a desired network activity. The prime example implemented in NNMT is the sensitivity measure presented in Section 3.3.3, which provides an intuitive relation between the network connectivity and the peaks of the power spectrum corresponding to the dominant oscillation frequencies. As shown in the final part of Section 3.3.3, the sensitivity measure identifies the connections which need to be adjusted in order to modify the dominant oscillation mode in a desired manner. This illustrates a mean-field method that provides a modeler with additional information about the origin of a model's dynamics, such that a parameter space exploration can be restricted to the few identified crucial model parameters.

A modeler investigating which features of a network model are crucial for the emergence of certain activity characteristics observed in simulations might be interested in comparing models of differing complexity. The respective mappings can be derived in mean-field theory, and one variant included in NNMT, which is presented in Section 3.4, allows mapping a LIF network to a simpler rate network. This is useful to investigate whether spiking dynamics is crucial for the observed phenomenon.

On a general note, which kind of questions researchers pursue is limited by and therefore depends on the tools they have at hand (Dyson, 2012). The availability of sophisticated neural network simulators for example has lead to the development of conceptually new and more complex neural network models, precisely because their users could focus on actual research questions instead of implementations. We hope that collecting useful implementations of analytical tools for network model analysis will have a similar effect on the development of new tools and that it might lead to new, creative ways of applying them.

As a collection of analytical methods, NNMT comes with inherent limitations that apply to any toolbox for analytical methods: it is restricted to network, neuron, and synapse models, as well as observables, for which a mean-field theory exists, and the tools are based on analytical assumptions, simplifications, and approximations, restricting their valid parameter regimes and their explanatory power, which we expand upon in the next paragraphs.

Analytical methods can provide good estimates of network model properties, but there are limitations that must be considered when interpreting results provided by NNMT: First of all, the employed numerical solvers introduce numerical inaccuracies, but they can be remedied by changing hyperparameters such as integration step sizes or iteration termination thresholds. More importantly, analytical methods almost always rely on approximations, which can only be justified if certain assumptions are fulfilled. Typical examples of such assumptions are fast or slow synapses, or a random connectivity. If such assumptions are not met, at least approximately, and the valid parameter regime of a tool is left, the corresponding method is not guaranteed to give reliable results. Hence, it is important to be aware of a tool's limitations, which we aim to document as thoroughly as possible.

An important assumption of mean-field theory is uncorrelated Poissonian inputs. As discussed in Section 3.2.1, asynchronous irregular activity is a robust feature of inhibition dominated networks, and mean-field theory is well-suited to describe the activity of such models. However, if a network model features highly correlated activity, or strong external input common to many neurons, approximating the input by uncorrelated noise no longer holds and mean-field estimates become unreliable.

In addition to the breakdown of such assumptions, some approaches, like linear response theory, rely on neglecting higher order terms. This restricts the tools' explanatory power, as they cannot predict higher order effects, such as the presence of higher harmonics in a network's power spectrum. Addressing these deficiencies necessitates using more elaborate analyses, and users should be aware of such limitations when interpreting the results.

Finally, a specific limitation of NNMT is that it currently only collects methods for LIF neurons. However, one of the aims of this paper is to encourage other scientists to contribute to the collection, and we outline how to do so in the following section.

A toolbox like NNMT always is an ongoing project, and there are various aspects that can be improved. In this section, we briefly discuss how available methods could be improved, what and how new tools could be added, as well as the benefits of implementing a new method with the help of NNMT.

First of all, NNMT in its current state is partly vectorized but the included methods are not parallelized, e.g., using multiprocessing or MPI for Python (mpi4py). Vectorization relies on NumPy (Harris et al., 2020) and SciPy (Virtanen et al., 2020), which are thread-parallel for specific backends, e.g., IntelMKL. With the tools available in the toolbox at the moment, run-time only becomes an issue in extensive parameter scans, for instance, when the transfer function needs to be calculated for a large range of frequencies. To further reduce the runtime, the code could be made fully vectorized. Alternatively, parallelization of many tools in NNMT is straightforward, as many of them include for loops over the different populations of a network model and for loops over the different analysis frequencies. A third option is just-in-time compilation, as provided by Numba (Lam et al., 2015).

Another aspect to consider is the range of network models a tool can be applied to. Thus far, the toolbox primarily supports arbitrary block structured networks. Future developments could extend the class of networks to even more general models.

Due to the research focus at our lab, NNMT presently mainly contains tools for LIF neurons in the fast synaptic regime and networks with random connectivity. Nonetheless, the structure of NNMT allows for adding methods for different neuron types, like for example binary (Ginzburg and Sompolinsky, 1994) or conductance-based neurons (Izhikevich, 2007; Richardson, 2007), as well as more elaborate network models. Another way to improve the toolbox is adding tools that complement the existing ones: As discussed in Section 4.3, many mean-field methods only give valid results for certain parameter ranges. Sometimes, there exist different approximations for the same quantity, valid in complementary parameter regimes. A concrete example is the currently implemented version of the transfer function for leaky integrate-and-fire neurons, based on Schuecker et al. (2015), which gives a good estimate for small synaptic time constants compared to the membrane time constant τs/τm ≪ 1. A complementary estimate for τs/τm ≫ 1 has been developed by Moreno-Bote and Parga (2006). Similarly, the current implementation of the firing rates of leaky integrate-and-fire neurons, based on the work of Fourcaud and Brunel (2002), is valid for τs/τm ≪ 1. Recently, van Vreeswijk and Farkhooi (2019) have developed a method accurate for all combinations of synaptic and membrane time constants.

In the following, we explain how such implementations can be added and how using NNMT helps implementing new methods. Clearly, the implementations of NNMT help implementing methods that build on already existing ones. An example is the firing rate for LIF neurons with exponential synapses nnmt.lif.exp._firing_rates() which wraps the calculation of firing rates for LIF neurons with delta synapses nnmt.lif.delta._firing_rates(). Additionally, the toolbox may support the implementation of tools for other neuron models. As an illustration, let us consider adding the computation of the mean activity for a network of binary neurons (included in NNMT 1.1.0). We start with the equations for the mean input μa, its variance , and the firing rates m (Helias et al., 2014, Equations 4, 6, and 7)

with indegree matrix Kab from population b to population a, synaptic weight matrix Jab, and firing-threshold Θa. The sum may include an external population providing input to the model. This set of self-consistent equations has the same structure as the self-consistent equations for the firing rates of a network of LIF neurons, Equation (8): the input statistics are given as functions of the rate, and the rate is given as a function of the input statistics. Therefore, it is possible to reuse the firing rate integration procedure for LIF neurons, providing immediate access to the two different methods presented in Section 3.2.1. Accordingly, it is sufficient to implement Equation (19) in a new submodule nnmt.binary and apply the solver provided by NNMT to extend the toolbox to binary neurons.

The above example demonstrates the benefits of collecting analytical tools for network model analysis in a common framework. The more methods and corresponding solvers the toolbox comprises, the easier implementing new methods becomes. Therefore, contributions to the toolbox are highly welcome; this can be done via the standard pull request workflow on GitHub (see the “Contributors guide” of the official documentation of NNMT2). We hope that in the future, many scientists will contribute to this collection of analytical methods for neuronal network model analysis, such that, at some point, we will have tools from all parts of mean-field theory of neuronal networks, made accessible in a usable format to all neuroscientists.

Publicly available datasets were used in this study, and the corresponding sources are cited in the main text. The toolbox's repository can be found at https://github.com/INM-6/nnmt, and the parameter files used in the presented examples can be found in the examples section of the online documentation https://nnmt.readthedocs.io/en/latest/.

HB and MH developed and implemented the code base and the initial version of the toolbox. ML, JS, and SE designed the current version of the toolbox. ML implemented the current version of the toolbox, vectorized and generalized tools, developed and implemented the test suite, wrote the documentation, and created the example shown in Section 3.2.2. AM improved the numerics of the firing rate integration (Methods) and created the example shown in Section 3.2.1. SE implemented integration tests, improved the functions related to the sensitivity_measure, and created the examples shown in Section 3.3. JS developed and implemented the tools used in Section 3.4 and created the respective example. ML, JS, SE, AM, and MH wrote this article. All authors approved the submitted version.

This project has received funding from the European Union's Horizon 2020 Framework Programme for Research and Innovation under Specific Grant Agreement Nos. 720270 (HBP SGA1), 785907 (HBP SGA2), and 945539 (HBP SGA3), has been partially funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) – 368482240/GRK2416, and has been partially funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) – 491111487. This research was supported by the Joint Lab “Supercomputing and Modeling for the Human Brain”.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We would like to thank Jannis Schuecker, who has contributed to the development and implementation of the code base and the initial version of the toolbox, and Angela Fischer, who supported us designing Figure 1. Additionally, we would also like to thank our reviewers for the thorough and constructive feedback, which lead to significant improvements.

1. ^https://github.com/INM-6/nnmt

2. ^https://nnmt.readthedocs.io/

3. ^The Lambert WB function is defined as for z ∈ ℂ and has infinitely many solutions, numbered by the branches B.

Abramowitz, M., and Stegun, I. A. (1974). Handbook of Mathematical Functions: With Formulas, Graphs, and Mathematical Tables (New York: Dover Publications).

Ahmadian, Y., and Miller, K. D. (2021). What is the dynamical regime of cerebral cortex? Neuron 109, 3373–3391. doi: 10.1016/j.neuron.2021.07.031

Amari, S.-I. (1975). Homogeneous nets of neuron-like elements. Biol. Cybern. 17, 211–220. doi: 10.1007/BF00339367

Amari, S.-I. (1977). Dynamics of pattern formation in lateral-inhibition type neural fields. Biol. Cybern. 27, 77–87. doi: 10.1007/bf00337259

Amit, D. J., and Brunel, N. (1997a). Dynamics of a recurrent network of spiking neurons before and following learning. Netw. Comp. Neural Sys. 8, 373–404. doi: 10.1088/0954-898x_8_4_003

Amit, D. J., and Brunel, N. (1997b). Model of global spontaneous activity and local structured activity during delay periods in the cerebral cortex. Cereb. Cortex 7, 237–252. doi: 10.1093/cercor/7.3.237