- 1INRIA Bordeaux Sud-Ouest, Talence, France

- 2Institut des Maladies Neurodégénératives, Université de Bordeaux, Centre National de la Recherche Scientifique UMR 5293, Bordeaux, France

- 3LaBRI, Université de Bordeaux, Bordeaux INP, Centre National de la Recherche Scientifique UMR 5800, Talence, France

Scientific code is different from production software. Scientific code, by producing results that are then analyzed and interpreted, participates in the elaboration of scientific conclusions. This imposes specific constraints on the code that are often overlooked in practice. We articulate, with a small example, five characteristics that a scientific code in computational science should possess: re-runnable, repeatable, reproducible, reusable, and replicable. The code should be executable (re-runnable) and produce the same result more than once (repeatable); it should allow an investigator to reobtain the published results (reproducible) while being easy to use, understand and modify (reusable), and it should act as an available reference for any ambiguity in the algorithmic descriptions of the article (replicable).

Introduction (R0)

Replicability1 is a cornerstone of science. If an experimental result cannot be re-obtained by an independent party, it merely becomes, at best, an observation that may inspire future research (Mesirov, 2010; Open Science Collaboration, 2015). Replication issues have received increased attention in recent years, with a particular focus on medicine and psychology (Iqbal et al., 2016). One could think that computational research would mostly be shielded from such issues, since a computer program describes precisely what it does and is easily disseminated to other researchers without alteration.

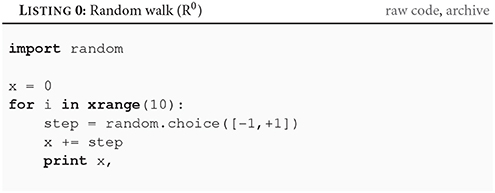

But precisely because it is easy to believe that if a program runs once and gives the expected results it will do so forever, crucial steps to transform working code into meaningful scientific contributions are rarely undertaken (Schwab et al., 2000; Sandve et al., 2013; Collberg and Proebsting, 2016). Computational research is plagued by replication problems, in part, because it seems impervious to them. Unlike production software, which provides a service geared toward a practical outcome, scientific code is motivated by the testing of a hypothesis. While in some instances production software and scientific code are indistinguishable, the reasons why they were created are different, and, therefore, so are the criteria to evaluate their success. A program can fail as a scientific contribution in many different ways for many different reasons. Borrowing the terms coined by Goble (2016), for a program to contribute to science, it should be re-runnable (R1), repeatable (R2), reproducible (R3), reusable (R4), and replicable (R5). Let us illustrate this with a small example, a random walk (Hughes, 1995) written in Python:

In the code above, the random.choice function randomly returns either +1 or −1. The instruction “for i in xrange(10):” executes the next three indented lines ten times. Executed, this program would display:

What could go wrong with such a simple program?

Well…

Re-runnable (R1)

Have you ever tried to re-run a program you wrote some years ago? It can often be frustratingly hard. Part of the problem is that technology is evolving at a fast pace and you cannot know in advance how the system, the software and the libraries your program depends on will evolve. Since you wrote the code, you may have reinstalled or upgraded your operating system. The compiler, interpreter or set of libraries installed may have been replaced with newer versions. You may find yourself battling with arcane issues of library compatibility—thoroughly orthogonal to your immediate research goals—to execute again a code that worked perfectly before. To be clear, it is impossible to write future-proof code, and the best efforts can be stymied by the smallest change in one of the dependencies. At the same time, modernizing an unmaintained ten-year-old code can reveal itself to be an arduous and expensive undertaking—and precarious, since each change risks affecting the semantics of the program. Rather than trying to predict the future or painstakingly dusting off old code, an often more straightforward solution is to recreate the old execution environment2. For this to happen however, the dependencies in terms of systems, software, and libraries must be made clear enough.

A re-runnable code is one that can be run again when needed, and in particular more than the one time that was needed to produce the results. It is important to notice that the re-runnability of a code is not an intrinsic property. Rather, it depends on the context, and becomes increasingly difficult as the code ages. Therefore, to be and remain re-runnable on the computers of other researchers, a re-runnable code should describe—with enough details to be recreated—an execution environment in which it is executable. As shown by Collberg and Proebsting (2016), this is far from being either obvious or easy.

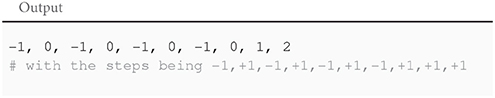

In our case, the R0 version of our tiny walker seems to imply that any version of Python would be fine. This is not the case: it uses the print statement and the xrange function, both specific to Python 2. The print statement, available in Python 2 (a version still widely used; support is scheduled to stop in 2020), was removed in Python 3 (first released in 2008, almost a decade ago) in favor of a print function, while xrange has been replaced by range in Python 3. In order to try to future-proof the code a bit, we might as well target Python 3, as is done in the R1 version. Incidentally, it remains compatible with Python 2. But whichever version is chosen, the crucial step here is to document it.
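The R1 listing itself is not reproduced in this text. As a minimal sketch of what such a version could look like, assuming the walker described in the introduction, the code below targets Python 3 and carries a leading comment documenting the environment. The function name `random_walk` and the placeholder wording of the comment are illustrative assumptions, not the authors' code.

```python
# Tested with: <interpreter, version and operating system actually used>
# (placeholder line: what matters is that it exists and is kept accurate)
import random


def random_walk(n_steps=10):
    """Return the successive positions of a +1/-1 random walk."""
    x = 0
    positions = []
    for _ in range(n_steps):          # range, not the Python 2-only xrange
        x += random.choice([-1, +1])  # each step is either +1 or -1
        positions.append(x)
    return positions
```

Calling `print(*random_walk())` displays the ten positions on one line, using the print function rather than the Python 2 print statement.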

Repeatable (R2)

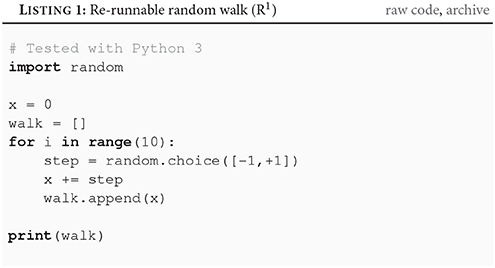

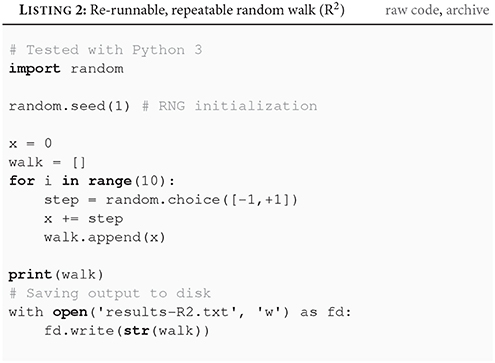

The code is running and producing the expected results. The next step is to make sure that you can produce the same output over successive runs of your program. In other words, the next step is to make your program deterministic, producing repeatable output. Repeatability is valuable. If a run of the program produces a particularly puzzling result, repeatability allows you to scrutinize any step of the execution of the program by re-running it with extraneous prints, or inside a debugger. Repeatability is also useful to prove that the program did indeed produce the published results. Repeatability is not always possible or easy (Diethelm, 2012; Courtès and Wurmus, 2015). But for sequential and deterministically parallel programs (Hines and Carnevale, 2008; Collange et al., 2015) not depending on analog inputs, it often comes down to controlling the initialization of the pseudo-random number generators (RNG).

For our program, that means setting the seed of the random module. We may also want to save the output of the program to a file, so that we can easily verify that consecutive runs do produce the same output: eyeballing differences is unreliable and time-consuming, and therefore won't be done systematically.
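A minimal sketch of these two measures, assuming a Python 3 walker, is given below. The function names and the one-position-per-line file format are hypothetical choices made for illustration.

```python
import random


def random_walk(n_steps, seed):
    """Return a +1/-1 random walk generated from an explicit seed."""
    rng = random.Random(seed)  # generator seeded explicitly
    x = 0
    positions = []
    for _ in range(n_steps):
        x += rng.choice([-1, +1])
        positions.append(x)
    return positions


def save_walk(positions, path):
    """Write one position per line so two runs can be compared with a diff tool."""
    with open(path, "w") as f:
        for x in positions:
            f.write("%d\n" % x)
```

Running `save_walk(random_walk(10, seed=1), "run1.txt")` twice with different file names and comparing the files replaces eyeballing by a mechanical check. A dedicated `random.Random` instance is used here to avoid global state; for a single-script program, calling `random.seed` on the module-level generator serves the same purpose.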

Setting seeds should be done carefully. Using 439 as a seed in the previous program would result in ten consecutive +1 steps3, which, although a perfectly valid random walk, lends itself to a gross misinterpretation of the overall dynamics of the algorithm. Verifying that the qualitative aspects of the results and the conclusions that are made are not tied to a specific initialization of the pseudo-random generator is an integral part of any scientific undertaking in computational science; this is usually done by repeating the simulations multiple times with different seeds.
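As a sketch of such a check, the code below repeats the walk over a range of seeds and summarizes the final positions. The helper names are hypothetical, and the choice of the final position as the summarized quantity is only an assumption made for the example.

```python
import random
import statistics


def final_position(n_steps, seed):
    """Return the end point of a +1/-1 random walk for a given seed."""
    rng = random.Random(seed)
    return sum(rng.choice([-1, +1]) for _ in range(n_steps))


def summarize(n_steps, seeds):
    """Return mean and standard deviation of final positions over all seeds."""
    finals = [final_position(n_steps, s) for s in seeds]
    return statistics.mean(finals), statistics.pstdev(finals)
```

For an unbiased walk, `summarize(10, range(1000))` should give a mean close to 0 and a standard deviation close to the square root of the number of steps. A single seed that yields ten steps in the same direction would not reveal this.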

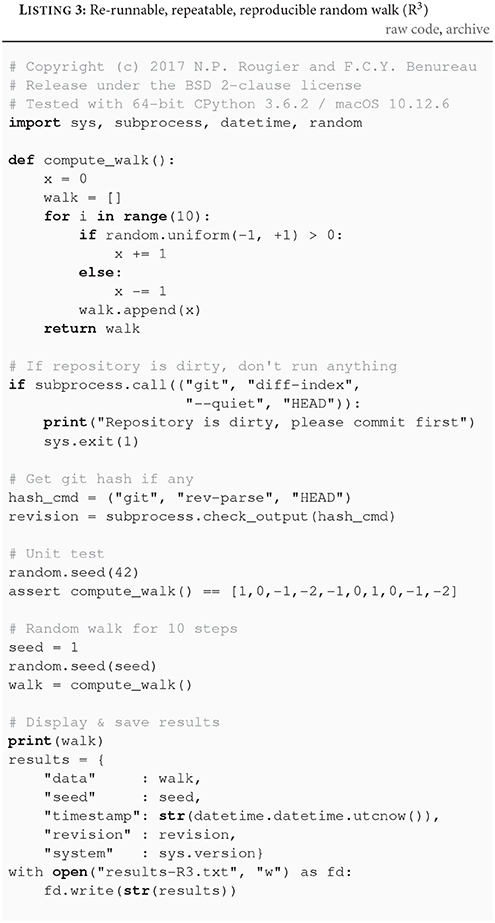

Reproducible (R3)

The R2 code seems fine enough, but it hides several problems that come to light when trying to reproduce results. A result is said to be reproducible if another researcher can take the original code and input data, execute it, and re-obtain the same result (Peng et al., 2006). As explained by Donoho et al. (2009), scientific practice must expect that errors are ubiquitous, and therefore be robust to them. Ensuring reproducibility is a fundamental step toward this: it provides other researchers the means to verify that the code does indeed produce the published results, and to scrutinize the procedures it employed to produce them. As demonstrated by Mesnard and Barba (2017), reproducibility is hard.

For instance, the R2 program will not produce the same results all the time. It will, because it is repeatable, produce the same results over repeated executions. But it will not necessarily do so over different execution environments. The cause is to be found in a change that occurred in the pseudo-random number generator between Python 3.2 and Python 3.3. Executed with Python 2.7–3.2, the code will produce the sequence −1, 0, 1, 0, −1, −2, −1, 0, −1, −2. But with Python 3.3–3.6, it will produce −1, −2, −1, −2, −1, 0, 1, 2, 1, 0. With future versions of the language, it may change still. For the R3 version, we abandon the use of the random.choice function in favor of the random.uniform function, whose behavior is consistent across versions 2.7–3.6 of Python.
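The change described above can be sketched as follows. This is an illustration under the assumption that the step is derived from the sign of the uniform draw; the function name is hypothetical, and no output sequence is claimed here since it depends on the interpreter used.

```python
import random


def random_walk(n_steps, seed):
    """Return a +1/-1 random walk whose steps come from random.uniform."""
    rng = random.Random(seed)
    x = 0
    positions = []
    for _ in range(n_steps):
        # Use the sign of a uniform draw between -1 and +1 instead of choice
        step = 1 if rng.uniform(-1, +1) > 0 else -1
        x += step
        positions.append(x)
    return positions
```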

Because any dependency of a program, down to the most basic one, the language itself, can change its behavior from one version to the other, executability (R1) and determinism (R2) are necessary but not sufficient for reproducibility. The exact execution environment used to produce the results must also be specified, rather than the broadest set of environments where the code can be effectively run. In other words, assertions such as “the results were obtained with CPython 3.6.1” are more valuable, in a scientific context, than “the program works with Python 3.x and above”. With the increasing complexity of computational stacks, retrieving this information and deciding what is pertinent (CPU architecture? operating system version? endianness?) might be non-trivial. A good rule of thumb is to include more information than necessary rather than not enough, and some rather than none.
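One possible way to collect such information from within the program, using only the standard library, is sketched below. The selection of fields is an assumption made for illustration; a real project may need more (library versions, for instance).

```python
import platform
import sys


def execution_environment():
    """Return a dictionary describing the interpreter and the platform."""
    return {
        "implementation": platform.python_implementation(),  # e.g. "CPython"
        "python_version": platform.python_version(),
        "platform": platform.platform(),   # operating system and version
        "machine": platform.machine(),     # CPU architecture
        "byteorder": sys.byteorder,        # "little" or "big"
    }
```

The returned dictionary can be stored next to the results, so that the statement "the results were obtained with ..." is produced by the program itself rather than typed by hand.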

Recording the execution environment is only the first step. The R2 program uses a random seed but does not keep a trace of it except in the code. Should the code change after the production of the results, someone provided with the last version of the code will not be able to know which seed was used to produce the results, and would need to iterate through all possible random seeds, an impossible task in practice4.
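To make the cost of a lost seed concrete, here is a sketch of the brute-force search that would be needed to recover one. The function names and the search bound `max_seed` are hypothetical.

```python
import random


def random_walk(n_steps, seed):
    """Return a +1/-1 random walk generated from an explicit seed."""
    rng = random.Random(seed)
    x = 0
    positions = []
    for _ in range(n_steps):
        x += rng.choice([-1, +1])
        positions.append(x)
    return positions


def find_seed(target, max_seed):
    """Return the smallest seed in [0, max_seed] reproducing target, or None."""
    for seed in range(max_seed + 1):
        if random_walk(len(target), seed) == target:
            return seed
    return None
```

For a 10-step walk a matching seed is found quickly, since only 2^10 distinct sequences exist. For a 100-step walk, a given seed matches with probability 2^-100, so the search is hopeless; recording the seed with the results avoids it altogether.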

This is why result files should come alongside their context, i.e., an exhaustive list of the parameters used as well as a precise description of the execution environment, as the R3 code does. The code itself is part of that context: the version of the code must be recorded. It is common for different results or different figures to have been generated by different versions of the code. Ideally, all results should originate from the same (and last) version of the code. But for long or expensive computations, this may not be feasible. In that case, the result files should contain the version of the code that was used to produce them. This information can be obtained from the version control software. This also allows, if some errors are found and corrected after some results have been obtained, to identify which ones should be recomputed. In R3, the code records the git revision, and prevents execution if the repository holds uncommitted changes when the computation starts.
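A possible shape for this bookkeeping is sketched below, assuming the code lives in a git repository and that results are stored as JSON. The function names and the layout of the record are hypothetical; only the two git commands (`git rev-parse HEAD` and `git status --porcelain`) are standard.

```python
import json
import subprocess


def git_revision():
    """Return the current git revision, or None if it cannot be determined."""
    try:
        out = subprocess.check_output(
            ["git", "rev-parse", "HEAD"], stderr=subprocess.DEVNULL)
    except (OSError, subprocess.CalledProcessError):
        return None
    return out.decode("ascii").strip()


def has_uncommitted_changes():
    """Return True if `git status --porcelain` reports any change."""
    out = subprocess.check_output(["git", "status", "--porcelain"])
    return bool(out.strip())


def save_results(path, positions, seed, n_steps, revision):
    """Store the data together with the parameters and the code version."""
    record = {
        "data": positions,
        "parameters": {"seed": seed, "n_steps": n_steps},
        "code_revision": revision,
    }
    with open(path, "w") as f:
        json.dump(record, f, indent=2)
```

A driver script would typically call `has_uncommitted_changes()` first and stop if it returns True, then pass `git_revision()` to `save_results`. The execution environment can be added to the record in the same way.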

Published results should obviously come from versions of the code where every change and every file has been committed. This includes pre-processing, post-processing, and plotting code. Plotting code may seem mundane, but it is as vulnerable as any other piece of the code to bugs and errors. When it comes to checking that the reproduced data match those published in the article, however, figures can reveal themselves to be imprecise and cumbersome, and sometimes plain unusable. To avoid having to manually overlay pixelated plots, published figures should be accompanied by their underlying data (coordinates of the plotted points) in the supplementary data to allow straightforward numeric comparisons.
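As an illustration, the coordinates behind a plot of the walk could be exported as a small CSV file. The column names and helper names below are assumptions made for the example.

```python
import csv


def save_figure_data(path, positions):
    """Write the plotted points as (step, position) rows with a header."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["step", "position"])
        for step, x in enumerate(positions, start=1):
            writer.writerow([step, x])


def load_figure_data(path):
    """Read the points back as a list of (step, position) integer pairs."""
    with open(path, newline="") as f:
        rows = list(csv.reader(f))
    return [(int(step), int(x)) for step, x in rows[1:]]
```

Someone reproducing the figure can then compare their own points with `load_figure_data(...)` numerically instead of overlaying images.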

Another good practice is to make the code self-verifiable. In R3, a short unit test is provided that allows the code to verify its own reproducibility. Should this test fail, then there is little hope of reproducing the results. Of course, passing the test does not guarantee anything.
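The idea can be sketched as a function that recomputes a short walk and compares it with a stored reference. In practice the reference would be the sequence recorded when the published results were produced; no such sequence is hard-coded here, because its values depend on the interpreter used. All names are hypothetical.

```python
import random


def random_walk(n_steps, seed):
    """Return a +1/-1 random walk whose steps come from random.uniform."""
    rng = random.Random(seed)
    x = 0
    positions = []
    for _ in range(n_steps):
        x += 1 if rng.uniform(-1, +1) > 0 else -1
        positions.append(x)
    return positions


def check_reproducibility(reference, n_steps, seed):
    """Raise RuntimeError if recomputing the walk does not match reference."""
    recomputed = random_walk(n_steps, seed)
    if recomputed != reference:
        raise RuntimeError(
            "walk differs from reference: %r != %r" % (recomputed, reference))
```

Running this check at the start of every execution makes a change of behavior in the environment visible immediately, before any new result is produced.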

It is obvious that reproducibility implies availability. As shown in Collberg and Proebsting (2016), code is often unavailable, or only available upon request. While the latter may seem sufficient, changes in email address, changes in career, retirement, a busy inbox or poor archiving practices can make a code just as unreachable. Code and input data and result data should be available with the published article, as supplementary data, or through a DOI link to a scientific repository such as Figshare, Zenodo5 or a domain specific database, such as ModelDB for computational neuroscience. The codes presented in this article are available in the GitHub repository github.com/rougier/random-walk and at doi.org/10.5281/zenodo.848217.

To recap, reproducibility implies re-runnability and repeatability and availability, yet imposes additional conditions. Dependencies and platforms must be described as precisely and as specifically as possible. Parameter values, the version of the code, and inputs should accompany the result files. The data and scripts behind the graphs must be published. Unit tests are a good way to embed self-diagnostics of reproducibility in the code. Reproducibility is hard, yet tremendously necessary.

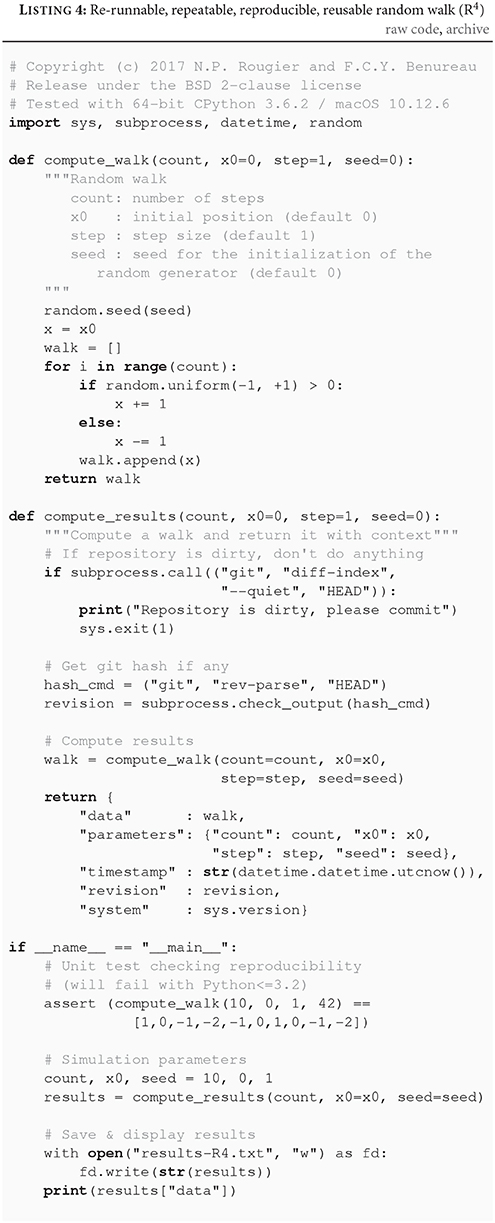

Reusable (R4)

Making your program reusable means it can be easily used, and modified, by you and other people, inside and outside your lab. Ensuring your program is reusable is advantageous for a number of reasons.

For you, first. Because the you now and the you in 2 years are two different persons. Details on how to use the code, its limitations, its quirks, may be present in your mind now, but will probably escape you in 6 months (Donoho et al., 2009). Here, comments and documentation can make a significant difference. Source code reflects the results of the decisions that were made during its creation, but not the reasons behind those decisions. In science, where the method and its justification matter as much as the results, those reasons are precious knowledge. In that context, a comment on how a given parameter was chosen (optimization, experimental data, educated guess) or why a library was chosen over another (conceptual or technical reasons?) is valuable information.

Reusability of course directly benefits other researchers from your team and outside of it. The easier it is to use your code, the lower the threshold is for others to study, modify and extend it. Scientists constantly face the constraint of time: if a model is available, documented, and can be installed, run, and understood all in a few hours, it will be preferred over another that would require weeks to reach the same stage. A reproducible and reusable code offers a platform both verifiable and easy-to-use, fostering the development of derivative works by other researchers on solid foundations. Those derivative works contribute to the impact of your original contribution.

Having more people examining and using your code also means that potential errors have a higher chance to be caught. If people start using your program, they will most likely report bugs or malfunctions they encounter. If you're lucky enough, they might even propose either bug fixes or improvements, hence improving the overall quality of your software. This process contributes to the long-term reproducibility to the extent people continue to use and maintain the program.

Despite all this, reusability is often overlooked, and it is not hard to see why. Scientists are rarely trained in software engineering, and reusability can represent an expensive endeavor if undertaken as an afterthought, for little tangible short-term benefits, for a codebase that might, after all, only see a single use. And, in fact, reusability is not as indispensable a requirement as re-runnability, repeatability, and reproducibility. Yet, some simple measures can tremendously increase reusability, and at the same time strengthen reproducibility and re-runnability over the long-term.

Avoid hardcoded or magic numbers. Magic numbers are numbers present directly in the source code, that do not have a name and therefore can be difficult to interpret semantically. Hardcoded values are variables that cannot be changed through a function argument or a parameter configuration file. To be modified, they involve editing the code, which is cumbersome and error-prone. In the R3 code, the seed and the number of steps are respectively hardcoded and magic.
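A minimal sketch of how the seed and the number of steps could be given names and exposed on the command line is shown below. The option names `--seed` and `--steps` and the default values are assumptions made for illustration.

```python
import argparse
import random

DEFAULT_SEED = 1       # named default instead of a hardcoded value
DEFAULT_N_STEPS = 10   # named default instead of a magic number


def parse_arguments(argv=None):
    """Parse the command line; argv=None means use sys.argv."""
    parser = argparse.ArgumentParser(description="Run a +1/-1 random walk.")
    parser.add_argument("--seed", type=int, default=DEFAULT_SEED,
                        help="seed of the random number generator")
    parser.add_argument("--steps", type=int, default=DEFAULT_N_STEPS,
                        help="number of steps of the walk")
    return parser.parse_args(argv)


def random_walk(n_steps, seed):
    """Return a +1/-1 random walk generated from an explicit seed."""
    rng = random.Random(seed)
    x = 0
    positions = []
    for _ in range(n_steps):
        x += 1 if rng.uniform(-1, +1) > 0 else -1
        positions.append(x)
    return positions
```

A script would call `args = parse_arguments()` and then `random_walk(args.steps, args.seed)`, so that changing either value no longer requires editing the source.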

Similarly, code behavior should not be changed by commenting/uncommenting code (Wilson et al., 2017). Modification of the behavior of the code, required when different experiments examine slightly different conditions, should always be explicitly set through parameters accessible to the end-user. This improves reproducibility in two ways: it allows those conditions to be recorded as parameters in the result files, and it allows to define separate scripts to run or configuration files to load to produce each of the figures of the published paper. With documentation explaining which script or configuration file corresponds to which experiment, reproducing the different figures becomes straightforward.
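One way to do this is to describe each experiment by a small configuration and to store that configuration with the output. The sketch below assumes JSON configurations and a hypothetical `step_size` condition; neither comes from the original code.

```python
import json
import random


def random_walk(n_steps, seed, step_size=1):
    """Return a random walk with steps of +step_size or -step_size."""
    rng = random.Random(seed)
    x = 0
    positions = []
    for _ in range(n_steps):
        x += step_size if rng.uniform(-1, +1) > 0 else -step_size
        positions.append(x)
    return positions


def run_experiment(config_text):
    """Run the walk described by a JSON configuration string.

    The parameters are returned with the data so that they can be saved
    together in the result file.
    """
    parameters = json.loads(config_text)
    return {"parameters": parameters, "data": random_walk(**parameters)}
```

Each figure of a paper can then correspond to one configuration, for example `run_experiment('{"n_steps": 10, "seed": 1, "step_size": 2}')`, instead of to a particular set of commented-out lines.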

Documentation is one of the most potent tools for reusability. Proper documentation on how to install and run the software often makes the difference between other researchers managing to use it or not. A comment describing what each function does, however evident, can avoid hours of head-scratching. Great code may need few comments. Scientists, however, are not always brilliant developers. Of course, bad, complicated code should be rewritten until it is simple enough to explain itself. But realistically, this is not always going to be done: there is simply not enough incentive for it. There, a comment that explains the intentions and reasons behind a block of code can be tremendously useful.

Reusability is not a strict requirement for scientific code. But it has many benefits, and a few simple measures can foster it considerably. To complement the R4 version provided here, we provide an example repository of a re-runnable, repeatable, reproducible and reusable random walk code. The repository is available on GitHub github.com/benureau/r5 and at doi.org/10.5281/zenodo.848284.

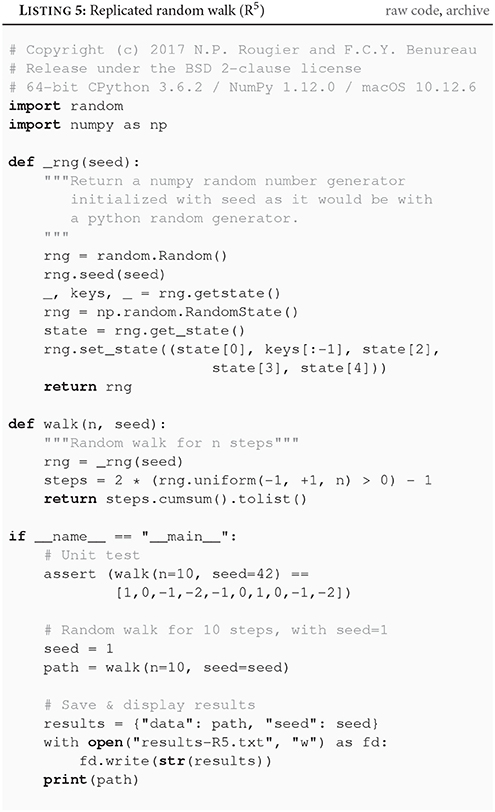

Replicable (R5)

Making software reusable offers an additional way to find errors, especially if your scientific contribution is popular. Unfortunately, this is not always effective, and some recent cases have shown that bugs can lurk in well-used open-source code, impacting the false positive rates of fMRI studies (Eklund et al., 2016), or the encryption of communications over the Internet (Durumeric et al., 2014). Let's be clear: the goal here is not to remove all bugs and mistakes from science. The goal is to have methods and practices in place that make it possible for the inevitable errors that will be made to be caught and corrected by motivated investigators. This is why, as explained by Peng et al. (2006), the replication of important findings by multiple independent investigators is fundamental to the accumulation of scientific evidence.

Replicability is the implicit assumption that an article that does not provide the source code makes: that the description it provides of the algorithms is sufficiently precise and complete to re-obtain the results it presents. Here, replicating implies writing a new code matching the conceptual description of the article, in order to reobtain the same results. Replication affords robustness to the results because, should the original code contain an error, a different codebase creates the possibility that this error will not be repeated, in the same way that replicating a laboratory experiment in a different laboratory can ferret out subtle biases. While every published article should strive for replicability, it is seldom obtained. In fact, absent an explicit effort to make an algorithmic description replicable, there is little probability that it will be.

This is because most papers strive to communicate the main ideas behind their contribution in terms as simple and as clear as possible, so that the reader may be able to easily understand them. Trying to ensure replicability in the main text adds a myriad of esoteric details that are not conceptually significant and clutter the explanations. Therefore, unless the writer dedicates an addendum or a section of the supplementary information to technical details specifically aimed at replicability, the information will not be there, because clarity and concision represent enticing incentives not to include it.

But even when those details are present, the best efforts may fall short because of an oversight, a typo or a difference between what is evident for the writer and for the reader (Mesnard and Barba, 2017). Minute changes in the numerical estimation of a common first-order differential equation can have significant impact (Crook et al., 2013). Hence, a reproducible code plays an important role alongside its article: it is an objective catalog of all the implementation details.

A researcher seeking to replicate published results might first consider only the article. If she fails to replicate the results, she will consult the original code, and with it be able to pinpoint why her code and the code of the authors differ in behavior. Because of a mistake on their part? Hers? Or a difference in a seemingly innocuous implementation detail? A fine analysis of why a particular algorithmic description is lacking or ambiguous or why a minor implementation decision is in fact crucial to obtain the published results is of great scientific value. Such an analysis can only be done with access to both the article and the code. With only the article, the researcher will often be unable to understand why she failed to replicate the results, and will naturally be inclined to only report replication successes.

Replicability, therefore, does not negate the necessity of reproducibility. In fact, it often relies on it. To illustrate this, let us consider what could be the description of the random walker, as it would be written in an article describing it:

The model uses the Mersenne Twister generator initialized with the seed 1. At each iteration, a uniform number between −1 (included) and +1 (excluded) is drawn and the sign of the result is used for generating a positive or negative step.

This description, while somewhat precise, forgoes, as is common, the initialization of the variables (here the starting value of the walk: 0), and the technical details about which implementation of the RNG is used.

It may look innocuous. After all, the Python documentation states that “Python uses the Mersenne Twister as the core generator. It produces 53-bit precision floats and has a period of 2**19937-1”. Someone trying to replicate the work, however, might choose to use the RNG from the NumPy library. The NumPy library is extensively used in the science community, and it provides an implementation of the Mersenne Twister generator too. Unfortunately, the way the seed is interpreted by the two implementations is different, yielding different random sequences.

Here we are able to replicate exactly6 the behavior of the pure-Python random walker by setting the internal state of the NumPy RNG appropriately, but only because we have access to specific technical details of the original code (the use of the random module of the standard Python library of CPython 3.6.1), or to the code itself.
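The state transfer mentioned above can be sketched as follows, assuming CPython's `random` module and NumPy's legacy `RandomState` interface. The function names are hypothetical. The sketch relies on the layout of the tuple returned by `random.getstate()` (624 state words followed by a position index), which is an implementation detail of CPython rather than a documented guarantee.

```python
import random

import numpy as np


def walk_python(n_steps, seed):
    """Random walk driven by the standard library generator."""
    random.seed(seed)
    x, positions = 0, []
    for _ in range(n_steps):
        x += 1 if random.uniform(-1, +1) > 0 else -1
        positions.append(x)
    return positions


def walk_numpy(n_steps, seed):
    """Random walk driven by NumPy, starting from Python's generator state."""
    random.seed(seed)
    _version, internal_state, _gauss_next = random.getstate()
    # internal_state holds the 624 Mersenne Twister words, then the position
    keys, position = internal_state[:-1], internal_state[-1]
    rng = np.random.RandomState()
    rng.set_state(("MT19937", np.array(keys, dtype=np.uint32), position))
    steps = np.where(rng.uniform(-1, +1, n_steps) > 0, 1, -1)
    return np.cumsum(steps).tolist()
```

With the state copied in this way, `walk_numpy(10, 1)` and `walk_python(10, 1)` return the same list, whereas seeding NumPy directly with `np.random.RandomState(1)` interprets the seed differently.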

But there are still more subtle problems with the description given above. If we look more closely at it, we can realize that nothing is said about the specific case of 0 when generating a step. Do we have to consider 0 to be a positive or a negative step? Without further information and without the original code, it is up to the reader to decide. Likewise, the description is ambiguous regarding the first element of the walk. Is the initialization value included (it was not in our codes so far)? This slight difference might affect the statistics of short runs.
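One way to remove these ambiguities is to turn each of them into an explicit, named parameter. The sketch below is an illustration only; the parameter names `x0`, `include_start` and `zero_is_positive` are hypothetical, and their defaults are arbitrary choices, not the authors'.

```python
import random


def sign_step(value, zero_is_positive):
    """Map a drawn value to a +1 or -1 step, with an explicit rule for 0."""
    if value > 0:
        return 1
    if value < 0:
        return -1
    return 1 if zero_is_positive else -1


def random_walk(n_steps, seed, x0=0, include_start=False,
                zero_is_positive=False):
    """Return the walk; every choice left open by the description is explicit."""
    rng = random.Random(seed)
    x = x0
    positions = [x0] if include_start else []
    for _ in range(n_steps):
        x += sign_step(rng.uniform(-1, +1), zero_is_positive)
        positions.append(x)
    return positions
```

With `include_start=True` the returned list has one more element, the starting value, which changes statistics such as the mean position of a short walk.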

All these ambiguities in the description of an algorithm pile up; some are inconsequential (the 0 case has null probability), but some may affect the results in important ways. They are mostly inconspicuous to the reader and oftentimes, to the writer as well. In fact, the best way to ferret out the ambiguities, big and small, of an article is to replicate it. This is one of the reasons why the ReScience journal (Rougier et al., 2017) was created (the second author, Nicolas Rougier, is one of the editors-in-chief of ReScience). This open-access journal, run by volunteers, targets computational research and encourages the explicit replication of already published research, promoting new and open-source implementations in order to ensure that the original research is reproducible.

Code is a key part of a submission to the ReScience journal. During the review process, reviewers run the submitted code, may criticize its quality and its ease-of-use, and verify the reproducibility of the replication. The Journal of Open Source Software (Smith et al., 2017) functions similarly: testing the code is a fundamental part of the review process.

Conclusion

Throughout the evolution of a small random walk example implemented in Python, we illustrated some of the issues that may plague scientific code. The code may be correct and of good quality, and still possess many problems that reduce its contribution to the scientific discourse. To make these problems explicit, we articulated five characteristics that a code should possess to be a useful part of a scientific publication: it should be re-runnable, repeatable, reproducible, reusable, and replicable.

Running old code on tomorrow's computer and software stacks may not be possible. But recreating the old code's execution environment may be: to ensure the long-term re-runnability of a code, its execution environment must be documented. For our example, a single comment went a long way to transform the R0 code into the R1 (re-runnable) one.

Science is built on verifying the results of others. This is harder to do if each execution of the code produces a different result. While for complex concurrent workflows this may not be possible, in all instances where it is feasible the code should be repeatable. This allows future researchers to examine exactly how a specific result was produced. Most of the time, what is needed is to set or record the initial state of the pseudo-random number generator, as is done in the R2 (repeatable) version.

Even more care is needed to make a code reproducible. The exact execution environment, code and parameters used must be recorded and embedded in the results files, as the R3 (reproducible) version does. Furthermore, the code must be made available as supplementary data with the whole computational workflow, from preprocessing steps to plotting scripts.

Making code reusable is a stretch goal that can yield tremendous benefits for you, your team, and other researchers. Taken into account during development rather than as an afterthought, simple measures can avoid hours of head-scratching for others, and for yourself in a few years. Documentation is paramount here, even if it is a single comment per function, as was done in the R4 (reusable) version.

Finally, there is the belief that an article should suffice by itself: the descriptions of the algorithms present in the paper should suffice to reobtain (to replicate) the published results. For well-written papers that precisely dissociate conceptually significant aspects from irrelevant implementation details, that may be. But scientific practice should not assume the best of cases. Science assumes that errors can crop up everywhere. Every paper is a mistake or a forgotten parameter away from irreplicability. Replication efforts use the paper first, and then the reproducible code that comes along with it whenever the paper falls short of being precise enough.

In conclusion, the R3 (reproducible) form should be accepted as the minimum scientific standard (Wilson et al., 2017). This means this should be actually checked by reviewers and publishers when code is part of a work worth being published. It's hardly the case today.

Compared to psychology or biology, the replication issues of computational works have reasonable and efficient solutions. But articles such as this one will not, by themselves, ensure that these solutions are adopted. Just like in other fields, we have to modify the incentives for researchers to publish by adopting requirements, enforced domain-wide, on what constitutes an acceptable scientific computational work.

Author Contributions

We both contributed equally to the ideas, the text and the code.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fninf.2017.00069/full#supplementary-material

Footnotes

1. ^Reproducibility and replicability are employed differently by different authors and in different domains (see for instance the report from the U.S. National Academies of Sciences, 2016). Here, we place ourselves in the context of computational works, where data is produced by a program. In this paper, we call a result reproducible if one can take the original source code, re-execute it and reobtain the original result. Conversely, a result is replicable if one can create a code that matches the algorithmic descriptions given in the published article and reobtain the original result.

2. ^To be clear, and although virtual machines are often a great help here, this is not always possible. It is, however, always more difficult when the original execution environment is unknown.

3. ^With CPython 3.3–3.6. See the next section for details.

4. ^Here, with 2^10 possibilities for a 10-step random walk, the seed used or another matching the generated sequence could certainly be found. For instance, 436 is the smallest positive integer seed to reproduce the results of R0 with Python 2.7 (1151, 3800, 4717 or 11235813 work as well). Such a search becomes intractable for a 100-step walk.

5. ^Online code repositories such as GitHub are not scientific repositories, and may disappear, change name, or change their access policy at any moment. Direct links to them are not perpetual, and, when used, they should always be supplemented by a DOI link to a scientific archive.

6. ^Striving, as we do here, for a perfect quantitative match may seem unnecessary. Yet, in replication projects, in particular in computational research, quantitative comparisons are a simple and effective way to verify that the behavior has been reproduced. Moreover, they are particularly helpful to track exactly where the code of a tentative replication fails to reproduce the published results. For a discussion about statistical ways to assess replication see the report of the U.S. National Academies of Sciences (2016).

References

Collange, S., Defour, D., Graillat, S., and Iakymchuk, R. (2015). Numerical reproducibility for the parallel reduction on multi- and many-core architectures. Parallel Comput. 49, 83–97. doi: 10.1016/j.parco.2015.09.001

Collberg, C., and Proebsting, T. A. (2016). Repeatability in computer systems research. Commun. ACM 59, 62–69. doi: 10.1145/2812803

Courtès, L., and Wurmus, R. (2015). “Reproducible and user-controlled software environments in HPC with Guix,” in 2nd International Workshop on Reproducibility in Parallel Computing (RepPar) (Vienna).

Crook, S. M., Davison, A. P., and Plesser, H. E. (2013). “Learning from the past: approaches for reproducibility in computational neuroscience,” in 20 Years of Computational Neuroscience, ed J. M. Bower (New York, NY: Springer), 73–102.

Diethelm, K. (2012). The limits of reproducibility in numerical simulation. Comput. Sci. Eng. 14, 64–72. doi: 10.1109/mcse.2011.21

Donoho, D. L., Maleki, A., Rahman, I. U., Shahram, M., and Stodden, V. (2009, January). Reproducible research in computational harmonic analysis. Comput. Sci. Eng. 11, 8–18. doi: 10.1109/mcse.2009.15

Durumeric, Z., Payer, M., Paxson, V., Kasten, J., Adrian, D., Halderman, J. A., et al. (2014). “The matter of heartbleed,” in Proceedings of the 2014 Conference on Internet Measurement Conference - IMC'14 (Vancouver, BC: ACM Press).

Eklund, A., Nichols, T. E., and Knutsson, H. (2016). Cluster failure: why fMRI inferences for spatial extent have inflated false-positive rates. Proc. Natl. Acad. Sci. U.S.A. 113, 7900–7905. doi: 10.1073/pnas.1602413113

Goble, C. (2016). “What is reproducibility? The R* brouhaha,” in First International Workshop on Reproducible Open Science (Hannover). Available online at: http://repscience2016.research-infrastructures.eu/img/CaroleGoble-ReproScience2016v2.pdf (September 9, 2016).

Hines, M. L., and Carnevale, N. T. (2008). Translating network models to parallel hardware in NEURON. J. Neurosci. Methods 169, 425–455. doi: 10.1016/j.jneumeth.2007.09.010

Hughes, B. D. (1995). Random Walks and Random Environments. Oxford; New York, NY: Clarendon Press; Oxford University Press.

Iqbal, S. A., Wallach, J. D., Khoury, M. J., Schully, S. D., and Ioannidis, J. P. A. (2016). Reproducible research practices and transparency across the biomedical literature. PLoS Biol. 14:e1002333. doi: 10.1371/journal.pbio.1002333

Mesirov, J. P. (2010). Accessible reproducible research. Science 327, 415–416. doi: 10.1126/science.1179653

Mesnard, O., and Barba, L. A. (2017). Reproducible and replicable computational fluid dynamics: it's harder than you think. Comput. Sci. Eng. 19, 44–55. doi: 10.1109/mcse.2017.3151254

Open Science Collaboration (2015). Estimating the reproducibility of psychological science. Science 349:aac4716. doi: 10.1126/science.aac4716

Peng, R. D., Dominici, F., and Zeger, S. L. (2006). Reproducible epidemiologic research. Am. J. Epidemiol. 163:783. doi: 10.1093/aje/kwj093

Rougier, N., Hinsen, K., Alexandre, F., Arildsen, T., Barba, L., Benureau, F., et al. (2017, July). Sustainable computational science: the ReScience initiative. ArXiv e-prints. arXiv:1707.04393.

Sandve, G. K., Nekrutenko, A., Taylor, J., and Hovig, E. (2013). Ten simple rules for reproducible computational research. PLoS Comput. Biol. 9:e1003285. doi: 10.1371/journal.pcbi.1003285

Schwab, M., Karrenbach, N., and Claerbout, J. (2000). Making scientific computations reproducible. Comput. Sci. Eng. 2, 61–67. doi: 10.1109/5992.881708

Smith, A., Niemeyer, K. E., Katz, D., Barba, L., Githinji, G., Gymrek, M., et al. (2017, July). Journal of Open Source Software (JOSS): design and first-year review. ArXiv e-prints. arXiv:1707.02264.

U.S. National Academies of Sciences, Engineering, and Medicine (2016). Statistical Challenges in Assessing and Fostering the Reproducibility of Scientific Results: Summary of a Workshop, ed M. Schwalbe. Washington, DC: The National Academies Press.

Keywords: replicability, reproducibility of results, reproducible science, reproducible research, computational science, software development, best practices

Citation: Benureau FCY and Rougier NP (2018) Re-run, Repeat, Reproduce, Reuse, Replicate: Transforming Code into Scientific Contributions. Front. Neuroinform. 11:69. doi: 10.3389/fninf.2017.00069

Received: 28 August 2017; Accepted: 17 November 2017; Published: 04 January 2018.

Edited by:

Sharon Crook, Arizona State University, United States

Reviewed by:

Thomas E. Nichols, Independent Researcher, Oxford, United Kingdom

Paul Pavlidis, University of British Columbia, Canada

Thomas Marston Morse, Department of NeuroScience, Yale School of Medicine, Yale University, United States

Copyright © 2018 Benureau and Rougier. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fabien C. Y. Benureau, fabien@benureau.com
