- ¹Department of Biomedical Engineering, Columbia University, New York, NY, United States

- ²Department of Applied Mathematics, Columbia University, New York, NY, United States

- ³Department of Psychiatry, Columbia University, New York, NY, United States

- ⁴Mortimer B. Zuckerman Mind Brain Behavior Institute, Columbia University, New York, NY, United States

Brain tissue segmentation has demonstrated great utility in quantifying MRI data by serving as a precursor to further post-processing analysis. However, manual segmentation is highly labor-intensive, and automated approaches, including convolutional neural networks (CNNs), have struggled to generalize well due to properties inherent to MRI acquisition, leaving a great need for an effective segmentation tool. This study introduces a novel CNN-Transformer hybrid architecture designed to improve brain tissue segmentation by taking advantage of the increased performance and generality conferred by Transformers for 3D medical image segmentation tasks. We first demonstrate the superior performance of our model on various T1w MRI datasets. Then, we rigorously validate our model's generality across four multi-site T1w MRI datasets, covering different vendors, field strengths, scan parameters, and neuropsychiatric conditions. Finally, we highlight the reliability of our model on test-retest scans acquired at different time points. In all situations, our model achieved the greatest generality and reliability compared to the benchmarks. As such, our method is inherently robust and can serve as a valuable tool for brain-related T1w MRI studies. The code for the TABS network is available at: https://github.com/raovish6/TABS.

Introduction

Brain tissue segmentation represents an important application of medical image processing, in which an MRI image of the brain is segmented into three classes: gray matter (GM), white matter (WM), and cerebrospinal fluid (CSF). Brain tissue segmentation is a critical step in Voxel Based Morphometry (VBM), a method used to quantitatively analyze MRI scans. VBM can highlight subtle structural abnormalities by estimating differences in GM and WM brain tissue volume. As such, VBM has been prevalent for characterizing and monitoring conditions such as schizophrenia (Wright et al., 1995), Alzheimer's (Hirata et al., 2005), Huntington's (Kassubek et al., 2004), and bipolar disorder (Nugent et al., 2006). VBM has also been used as an integral preprocessing tool in machine learning and deep learning based disease classification pipelines (Salvador et al., 2017; Nemoto et al., 2021). Outside of VBM, brain tissue segmentation is useful for characterizing tissue volume in particular regions of interest. It is often used with magnetic resonance spectroscopy to quantify metabolites by tissue type, and both techniques have been applied together to investigate morphological differences associated with various disorders (Auer et al., 2001; Bagory et al., 2011) as well as to correct metabolite measurements for differing tissue fractions (Harris et al., 2015).

Despite the demonstrated utility of brain tissue segmentation, there is no universally accepted method capable of segmenting accurately and efficiently across a wide variety of datasets. Manual segmentation of brain tissue is extremely labor intensive, often impractical given larger datasets, and difficult even for experts. Alternatively, automated segmentation has proven challenging due to properties inherent to the MRI scans themselves. Changes in vendors or field strength have both been linked with increased variance in repeated scan measures (Han et al., 2006), and scans acquired through different imaging protocols tend to fluctuate more in terms of volumetric brain measures (Kruggel et al., 2010). Time of day as well as time between scans have been associated with variable tissue volume estimation (Karch et al., 2019), while neuropsychiatric conditions such as schizophrenia have been linked with subtle brain tissue anatomical changes (Koutsouleris et al., 2015). Together, these inconsistencies make it difficult for brain tissue segmentation solutions to be applicable across datasets of differing vendors, collection parameters, time points, and neuropsychiatric conditions.

Many of the earlier proposed automated solutions have depended on intensity thresholding (Dora et al., 2017), population-based atlases (Cabezas et al., 2011), clustering (Mahmood et al., 2015; Dora et al., 2017), statistical methods (Zhang and Brady, 2000; Marroquín et al., 2002; Greenspan et al., 2006; Angelini et al., 2007), and standard machine learning algorithms. Thresholding-based approaches often struggle to segment low contrast input images with overlapping brain tissue intensity histograms. Alternatively, atlas-based algorithm performance heavily depends on the quality of the population derived brain atlas. While machine learning algorithms such as support vector machine (SVM; Bauer and Nolte, 2011), random forest (Dadar and Collins, 2021), and neural networks (Amiri et al., 2013) have demonstrated reasonable segmentation performance, their accuracy largely relies on the quality of manually extracted features. In general, many of these algorithms require a priori information to properly segment brain tissue, which is often not feasible to acquire for all new scans segmented. FSL FAST is a popular statistical brain tissue segmentation toolkit that combines Gaussian mixture models with hidden Markov random fields to achieve reliable segmentation performance across a variety of datasets (Zhang and Brady, 2000). However, segmentation via FAST is time consuming and therefore not ideal for many real-time segmentation applications.

Convolutional neural networks (CNNs) have recently emerged as a superior alternative to standard machine learning algorithms for classification-based brain segmentation given their feature-encoding capabilities (Akkus et al., 2017). CNNs have been found to outperform machine learning algorithms such as random forest and SVM specifically for brain tissue segmentation (Zhang et al., 2015). Following their introduction, many other CNN-based networks have been proposed for brain tissue segmentation (Moeskops et al., 2016; Khagi and Kwon, 2018) as well as brain tumor segmentation (Beers et al., 2017; Mlynarski et al., 2019; Feng et al., 2020a), including both 2D and 3D approaches. Unet represents one popular segmentation algorithm (Ronneberger and Fischer, 2015; Çiçek et al., 2016), which consists of symmetric encoding and decoding convolutional operations that allow for the preservation of the initial image resolution following segmentation. Variants of Unet have been successfully applied to brain tissue segmentation, achieving state-of-the-art performance. For example, one study achieved a DICE score of 0.988 using 3D Unet, which even outperformed human experts (Kolarík et al., 2018). More recently, 2D patch-based Unet and Unet-inspired implementations have gained traction (Lee et al., 2020; Yamanakkanavar and Lee, 2020) to better preserve and account for local details; such models have outperformed their non-patch-based variants.

Despite the impressive performance CNNs have demonstrated for brain tissue segmentation, they often struggle to generalize well when presented with new datasets. Many prior brain tissue segmentation approaches only report test performance on the same dataset upon which the model was trained. While such metrics validate the generality of the proposed model on MRI scans from the same dataset, they fail to quantify model performance across different datasets where changes in acquisition parameters can impact MRI image features and thus decrease the model's generality. Given the importance of brain tissue segmentation in VBM and pre-processing, it is not practical to retrain a CNN model every time a scan is obtained differently. As such, model generality is especially imperative to developing a widely applicable automated brain tissue segmentation solution.

Transformers are an alternative to CNNs that have recently demonstrated state-of-the-art results in natural image segmentation. Emerging evidence suggests that Transformers coupled with CNNs may improve performance and generalization for medical image segmentation tasks including brain tissue segmentation (Chen et al., 2021; Hatamizadeh et al., 2021; Sun et al., 2021; Wang et al., 2021). In this study, we sought to improve the traditional Unet architecture using Transformers to not only achieve higher brain tissue segmentation performance, but also generalize better across different datasets while remaining reliable. Here, we propose Transformer-based Automated Brain Tissue Segmentation (TABS), a new 3D CNN-Transformer hybrid deep learning architecture for brain tissue segmentation. In doing so, we elucidate the benefits of embedding a Transformer module within a CNN encoder-decoder architecture specifically for brain tissue segmentation. Furthermore, after achieving improved within-dataset performance, we are the first to rigorously demonstrate model generality and reliability across multiple vendors, field strengths, scan parameters, time points, and neuropsychiatric conditions.

Materials and methods

Study design

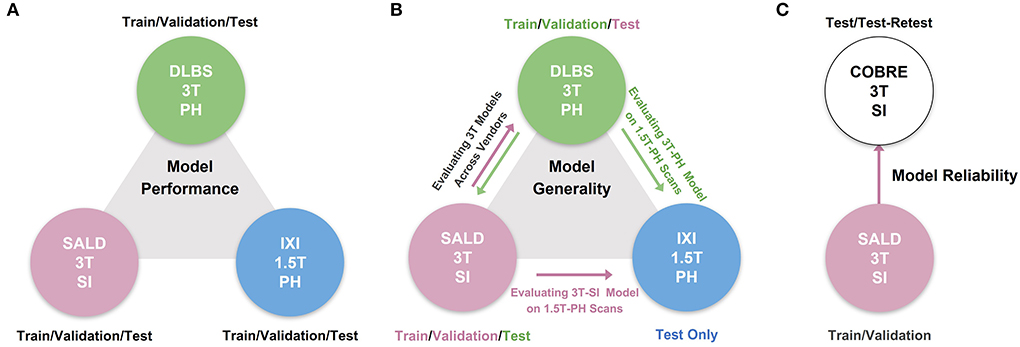

We conducted three experiments to evaluate model performance, generality, and reliability for brain tissue segmentation. The experimental pipeline for these experiments is visualized in Figure 1. First, we trained and tested all of the models on three separate datasets (DLBS, SALD, and IXI) of differing acquisition parameters along with an aggregate total dataset containing all of the scans combined. We then evaluated model generality across field strength and vendors; models trained on 3T datasets were tested on the 1.5T dataset and models trained on 3T datasets from different vendors were tested on one another. Finally, we extended our generalization testing to an alternate dataset (COBRE) containing test-retest repeated scans of both schizophrenia patients and healthy participants. We applied models pre-trained on the 3T SALD dataset to COBRE to give them the best chance of generalizing well, as SALD and COBRE were collected using similar acquisition parameters. After confirming that TABS generalized the best on this dataset, we compared the reliability of TABS to that of the ground truth by evaluating the similarity of outputs on the test-retest repeated scans. Given that each pair of scans was acquired from the same subject within a small time frame, we expected a more reliable tool to output very similar segmentation predictions across both scans.

Figure 1. Overview of experimental pipeline. (A) Model performance test, where each model was trained and tested on individual datasets. (B) Model generality test, where models pre-trained on 3T DLBS/SALD datasets were tested on one another and on the 1.5T IXI dataset. (C) Model reliability test, where the best generalizing model to the COBRE dataset was compared to FAST based on similarity in segmentation outputs for repeated scans.

We compared TABS to three other benchmark CNN models in our experiments: vanilla Unet, Unet-SE, and ResUnet. We chose Unet given its prior state of the art performance in 3D brain tissue segmentation (Kolarík et al., 2018), and we also compared to prior attempts at improving Unet including squeeze-excitation (SE) blocks (Hu et al., 2018) before each downsampling operation (Unet-SE) and residual connections (ResUnet; Zhang et al., 2018). Moreover, given that the model architecture for TABS is identical to that of ResUnet except for the Vision Transformer, comparing to ResUnet allowed us to highlight the specific benefits conferred by the Transformer. All of the tested models were the same depth and encoded the same number of features. Finally, we also compared to FSL FAST, the tool used to generate the ground truths, in our reliability evaluation.

Datasets and pre-processing

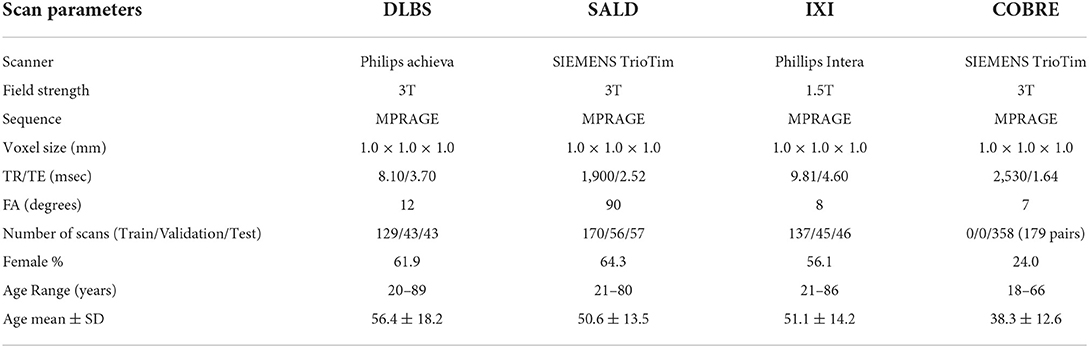

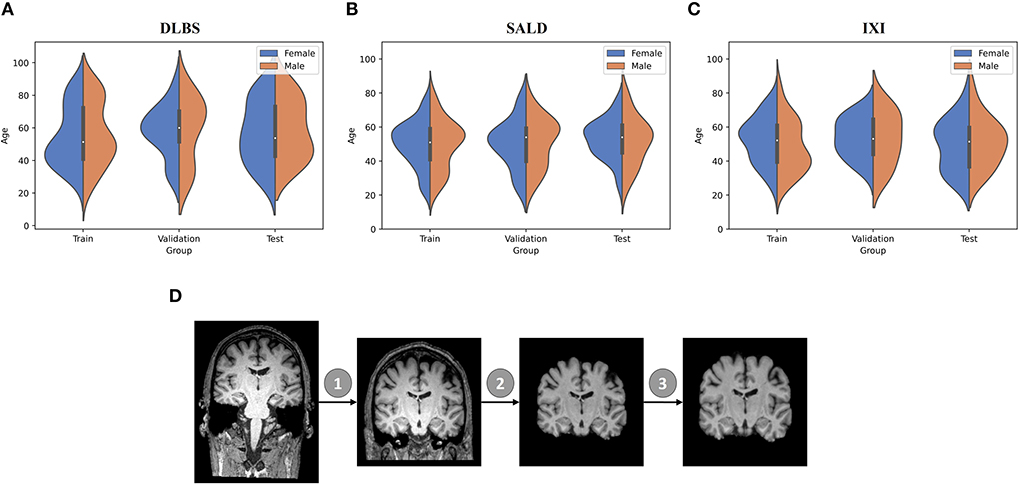

We collected MRI scans of healthy participants over a broad age range from three datasets for our first two experiments: DLBS (Rodrigue et al., 2012), SALD (Wei et al., 2018), and IXI (Biomedical Image Analysis Group et al., 2018). While they all use an MPRAGE sequence, the datasets vary in terms of their other acquisition parameters. Firstly, they differ by field strength, where DLBS and SALD contain 3T scans and IXI contains 1.5T scans. Moreover, all three datasets were acquired using different scanners, with the SALD dataset acquired using a Siemens manufactured scanner as opposed to Philips. Lastly, the datasets differ in terms of scan parameters such as repetition/echo time and flip angle. We split each dataset into 3:1:1 train/validation/test groups while maintaining a broad age distribution across each subsection. The age distributions across these splits for each of these datasets are shown in Figures 2A–C. We also collected paired test-retest scans taken at different time points of healthy participants and schizophrenia patients from the COBRE dataset (Bustillo et al., 2017) for our third experiment. The demographic information and acquisition parameters for all four datasets are outlined in Table 1.
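One simple way to obtain a 3:1:1 split that keeps a broad age range in every subset is to sort subjects by age and deal them out in repeating blocks of five. The sketch below illustrates that idea only. The text does not state how the split was actually drawn, and the function `split_by_age` and the subject records are hypothetical.

```python
def split_by_age(subjects):
    """Assign (subject_id, age) pairs to train/validation/test in a 3:1:1 ratio.

    Assumption (not from the paper): subjects are sorted by age and dealt
    out in repeating blocks of five, so each subset spans the age range.
    """
    pattern = ["train", "train", "train", "validation", "test"]
    groups = {"train": [], "validation": [], "test": []}
    ordered = sorted(subjects, key=lambda subject: subject[1])
    for index, subject in enumerate(ordered):
        groups[pattern[index % len(pattern)]].append(subject)
    return groups


# Ten made-up subjects with ages from 20 to 83.
subjects = [(f"sub-{i:02d}", 20 + 7 * i) for i in range(10)]
groups = split_by_age(subjects)
for name, members in groups.items():
    print(name, [age for _, age in members])
```

With real data, a stratified split over age bins (and possibly sex) would serve the same purpose; the block pattern here is just the shortest way to show the ratio.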

Figure 2. Data demographic and pre-processing visualization. (A–C) Age distribution by gender for train/validation/test groups of DLBS, SALD, and IXI, respectively. (D) MRI pre-processing pipeline consisting of 1. Bias field correction 2. Brain extraction 3. Affine correction.

We followed the initial pre-processing protocol outlined by Feng et al. (2020b) for all of the datasets, which includes bias field correction (Sled et al., 1998), brain extraction using FreeSurfer (Ségonne et al., 2004), and affine registration to the 1 mm³ isotropic MNI152 brain template with trilinear interpolation using FSL FLIRT (Jenkinson et al., 2002). After these steps, the DLBS/SALD/IXI MRI images were 182 × 218 × 182, and the COBRE images were 193 × 229 × 193. We padded and cropped the images to reach an input dimension of 192 × 192 × 192, using a maximum intensity projection across all scans for each dataset to ensure that we did not remove important anatomical components. Finally, we normalized the intensities for each scan to values between −1 and 1. The pre-processing pipeline is visualized in Figure 2D.
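The padding, cropping, and intensity scaling described above can be sketched with per-axis arithmetic. This is a minimal illustration under two assumptions that the text does not confirm: the pad or crop is centered on each axis (the authors instead chose the crop window from a maximum intensity projection), and the scaling to [−1, 1] is a min-max rescaling. The helper names are hypothetical.

```python
def center_pad_crop(size, target=192):
    """Return (pad_before, pad_after, crop_before, crop_after) for one axis.

    Assumption: padding and cropping are centered on the axis.
    """
    if size < target:
        total = target - size
        return total // 2, total - total // 2, 0, 0
    total = size - target
    return 0, 0, total // 2, total - total // 2


def rescale_to_unit_range(values):
    """Min-max rescale a sequence of intensities to the interval [-1, 1]."""
    low, high = min(values), max(values)
    if high == low:
        # A constant image carries no contrast; map it to zero.
        return [0.0 for _ in values]
    return [2.0 * (v - low) / (high - low) - 1.0 for v in values]


# Image sizes reported after registration for the two groups of datasets.
for shape in [(182, 218, 182), (193, 229, 193)]:
    print(shape, [center_pad_crop(axis) for axis in shape])

print(rescale_to_unit_range([0, 5, 10]))
```

For the 182 × 218 × 182 images, this pads the first and third axes by 10 voxels in total and crops 26 voxels from the second axis, which is why checking the crop against the anatomy matters.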

Model architecture and implementation

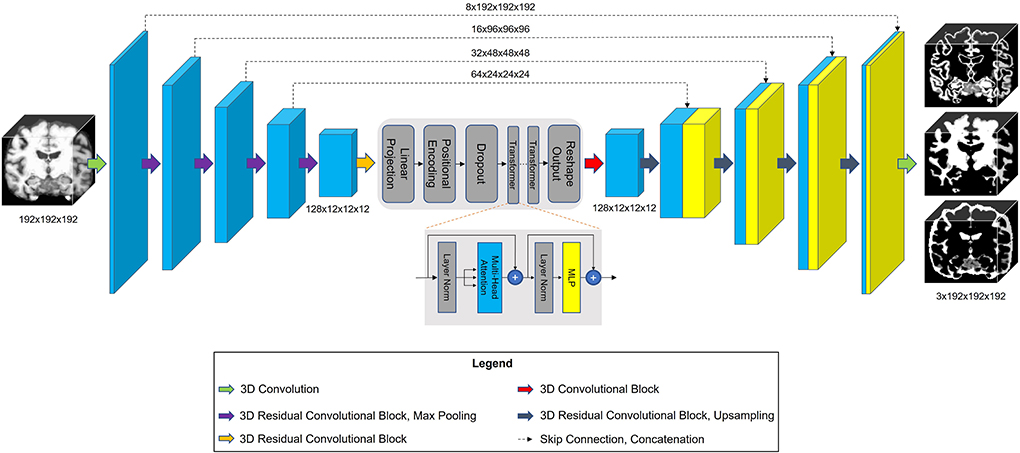

The architecture of our proposed model is shown in Figure 3. TABS is a ResUnet (Zhang et al., 2018) inspired model that consists of a 5-layered 3D CNN encoder and decoder. TABS takes an input dimension of 192 × 192 × 192, and the five encoder layers downsample the original image to f × 12 × 12 × 12, where f represents the number of encoded features. For this specific implementation, we chose an f value of 128. We followed the same “linear projection and learned positional embedding” operations introduced in Wang et al. (2021) to convert the encoded feature tensor into 512 tokenized vectors that are sequentially fed into the Transformer module in the order determined by the learned positional embeddings. Our Transformer encoder consists of 4 layers and 8 heads following the implementation initially described by Vaswani et al. (2017). The output of the Transformer is 512 × 1,728, which we then reshape to 512 × 12 × 12 × 12 and reduce the feature dimensionality to f via convolution. The decoder portion of the network reconstructs the image to the original input dimension, and a final convolution operation is applied to generate a 3-channel output with each channel corresponding to an individual tissue type. We used a Softmax activation function to ensure that the probabilities for each voxel across the three channels add up to 1.
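The tensor sizes quoted above can be checked with a few lines of arithmetic. The sketch below is only shape bookkeeping plus a per-voxel softmax, not the TABS implementation. The observation that 192 / 12 = 16 corresponds to four halvings of resolution is an inference from the stated sizes, and the example logits are made up.

```python
import math

INPUT_SIZE = 192    # voxels per axis at the network input
ENCODED_SIZE = 12   # voxels per axis after the encoder
EMBED_DIM = 512     # first dimension of the reported Transformer output

# 192 / 12 = 16 = 2**4, i.e. the resolution is halved four times overall
# (inferred from the sizes; the text does not list per-layer strides).
downsample_factor = INPUT_SIZE // ENCODED_SIZE
halvings = int(math.log2(downsample_factor))

# Flattening the 12 x 12 x 12 grid gives 1,728 spatial positions.
positions = ENCODED_SIZE ** 3

# The 512 x 1,728 Transformer output holds exactly as many values as the
# 512 x 12 x 12 x 12 tensor it is reshaped into.
transformer_output_shape = (EMBED_DIM, positions)
reshaped = (EMBED_DIM, ENCODED_SIZE, ENCODED_SIZE, ENCODED_SIZE)
same_size = math.prod(transformer_output_shape) == math.prod(reshaped)


def softmax(logits):
    """Numerically stable softmax over one voxel's three channel values."""
    shift = max(logits)
    exps = [math.exp(value - shift) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up logits for one voxel, one value per tissue channel.
probs = softmax([2.0, 1.0, 0.1])
print(halvings, positions, same_size, round(sum(probs), 6))
```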

Figure 3. Model architecture for TABS, including a 5-layer encoder/decoder with a Vision Transformer between the encoder and decoder.

Training protocol

All four models were trained using the same parameters described below. We trained for 350 epochs with early stopping based on validation loss. We selected pre-trained models based on the best validation performance. We used FAST to generate ground truth probability maps for each brain tissue type and stacked and cropped them to generate a three-channel image matching the output shape of our models (3 × 192 × 192 × 192). The models were trained on three 24 GB NVIDIA Quadro 6000 graphics processing units using mean-squared-error (MSE) loss with a batch size of 3. We used group normalization as opposed to batch normalization due to group normalization's increased stability for smaller batch sizes (Wu and He, 2018). We trained using Adam (Kingma and Ba, 2014) as the optimization algorithm with a learning rate of 1E-5 and weight decay set to 1E-6.
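Early stopping and checkpoint selection on validation loss can be sketched as follows. The stopping rule and the `patience` parameter are assumptions for illustration, since the text does not give the criterion used, and the loss values are invented.

```python
def best_epoch_with_early_stopping(val_losses, patience):
    """Return (best_epoch, stop_epoch) for per-epoch validation losses.

    Training stops once `patience` epochs have passed without improving on
    the best validation loss; the checkpoint from best_epoch is the one kept.
    `patience` is a hypothetical parameter, not a value from the paper.
    """
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    # Ran through every epoch without triggering the stopping rule.
    return best_epoch, len(val_losses) - 1


# Invented validation MSE values for seven epochs.
losses = [0.050, 0.031, 0.024, 0.026, 0.025, 0.027, 0.023]
print(best_epoch_with_early_stopping(losses, patience=3))
print(best_epoch_with_early_stopping(losses, patience=10))
```

The two calls show the trade-off: a short patience stops at the first plateau, while a longer one lets training reach a later, slightly better epoch.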

Evaluation metrics

All evaluation metrics were only taken for the portion of the outputs containing the brain, meaning that the background voxels outside of the segmentation field were not considered. Additionally, all metrics were calculated individually for each brain tissue type. Segmentation similarity using continuous probability estimates was quantified using Pearson correlation, Spearman correlation, and MSE. Segmentation maps for each tissue type were then generated from the probability estimations by taking the argmax along the channel axis. We generated binary maps for each tissue type based on the numerical value assigned to each voxel of the argmax output. Segmentation similarity between these binary maps was quantified using DICE Score, Jaccard Index, and Hausdorff Distance (HD; Beauchemin et al., 1998).
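The path from probability maps to overlap scores can be sketched on a toy example. The code below assumes the voxels have already been restricted to the brain and flattened into a list, and it covers only the argmax step, DICE, Jaccard, MSE, and Pearson correlation; Spearman correlation and Hausdorff Distance are omitted. All function names and the four example voxels are hypothetical.

```python
import math


def argmax_labels(probabilities):
    """Map per-voxel (GM, WM, CSF) probabilities to the index of the largest."""
    return [max(range(len(p)), key=lambda c: p[c]) for p in probabilities]


def binary_map(labels, tissue):
    """Binary mask that is 1 where the argmax label equals `tissue`."""
    return [1 if label == tissue else 0 for label in labels]


def dice(a, b):
    overlap = sum(x * y for x, y in zip(a, b))
    size = sum(a) + sum(b)
    return 2.0 * overlap / size if size else 1.0


def jaccard(a, b):
    overlap = sum(x * y for x, y in zip(a, b))
    union = sum(a) + sum(b) - overlap
    return overlap / union if union else 1.0


def mse(x, y):
    return sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)


def pearson(x, y):
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Four made-up brain voxels: (GM, WM, CSF) probabilities.
pred = [(0.7, 0.2, 0.1), (0.1, 0.8, 0.1), (0.6, 0.3, 0.1), (0.2, 0.2, 0.6)]
truth = [(0.9, 0.1, 0.0), (0.2, 0.7, 0.1), (0.3, 0.6, 0.1), (0.1, 0.1, 0.8)]

pred_labels = argmax_labels(pred)
truth_labels = argmax_labels(truth)

for tissue, name in enumerate(["GM", "WM", "CSF"]):
    p_bin = binary_map(pred_labels, tissue)
    t_bin = binary_map(truth_labels, tissue)
    p_prob = [voxel[tissue] for voxel in pred]
    t_prob = [voxel[tissue] for voxel in truth]
    print(name, dice(p_bin, t_bin), jaccard(p_bin, t_bin), mse(p_prob, t_prob))
```

The third voxel is labeled GM by the prediction and WM by the reference, so GM and WM both lose overlap while CSF agrees exactly.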

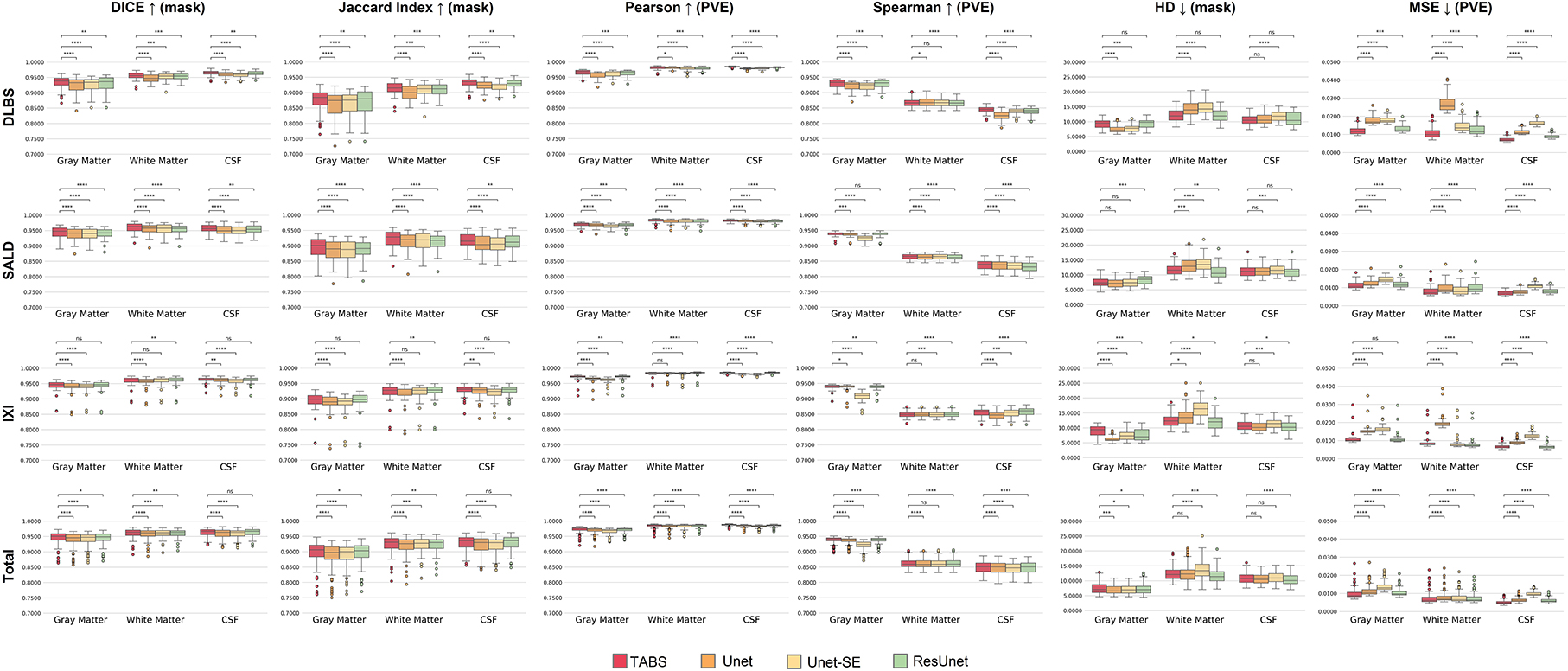

Model performance across each metric was compared using paired non-parametric Wilcoxon tests. Specifically, for each tissue type, TABS's performance was compared pairwise with each of the benchmark models. We used an α value of 0.05. Significant differences are shown in box plots, with the number of * indicating the level of significance (ns, not significant, *p < 0.05, **p < 0.01, ***p < 0.001, ****p < 0.0001).
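The star notation used in the box plots maps directly onto p-value thresholds. The helper below is a small sketch of that mapping; the function name is hypothetical, and the p-values themselves would come from a paired Wilcoxon signed-rank test (for example `scipy.stats.wilcoxon` applied to per-scan metric pairs), which is not reproduced here.

```python
def significance_label(p_value):
    """Translate a p-value into the annotation used in the box plots."""
    # Checked from the strictest threshold to the loosest.
    thresholds = [(0.0001, "****"), (0.001, "***"), (0.01, "**"), (0.05, "*")]
    for cutoff, label in thresholds:
        if p_value < cutoff:
            return label
    return "ns"


# Made-up p-values spanning every annotation level.
for p in [0.2, 0.03, 0.005, 0.0005, 0.00005]:
    print(p, significance_label(p))
```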

Results

Model performance

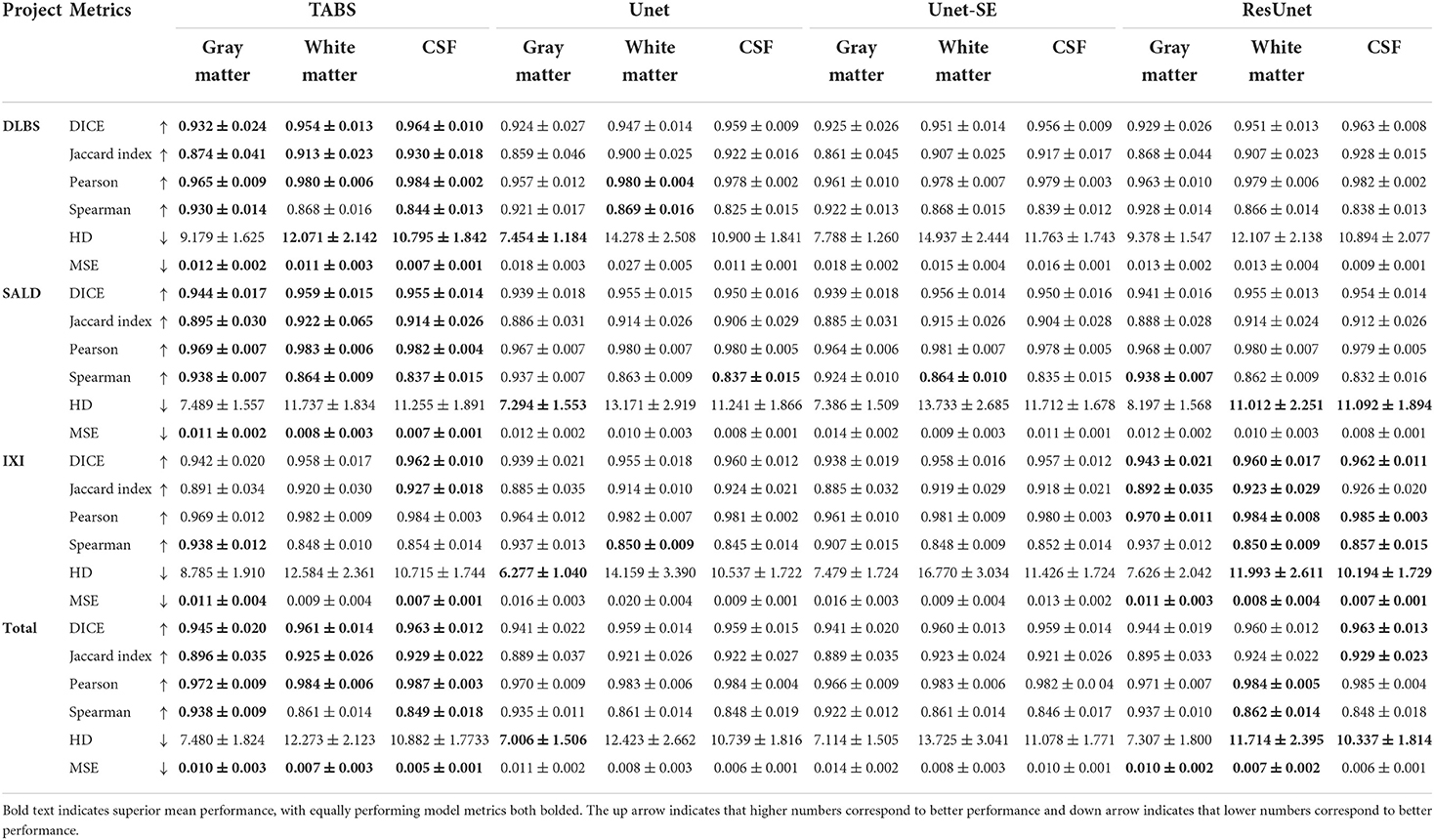

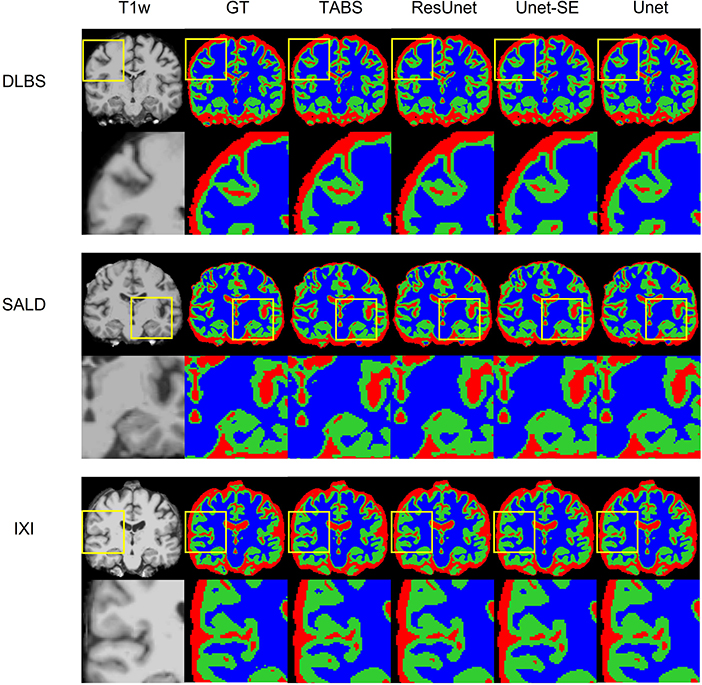

The performance results for each model trained and tested on DLBS, IXI, SALD, and Total datasets individually are reported in Table 2 and visualized in Figure 4. TABS outperformed ResUnet, Unet-SE, and Unet on all the datasets for most metrics except for the 1.5T IXI dataset, where TABS outperformed Unet-SE and Unet while only performing slightly worse than ResUnet. TABS consistently achieved higher DICE/Jaccard metrics across all tissue types along with higher correlation and lower MSE on most tissue types. In general, all models performed better on WM and CSF as opposed to GM. Figure 5 plots representative segmentation outputs for performance testing for each of the datasets.

Figure 4. Box plots visualizing model performance with Wilcoxon pairwise comparisons. Each * indicates order of significance.

Figure 5. Visualization of model performance for DLBS, SALD, and IXI. Segmentation maps for the ground truth, TABS, and the three benchmark models are shown from left to right following the T1w scan. Zoom in regions are included below each image.

Model generality—DLBS, IXI, and SALD

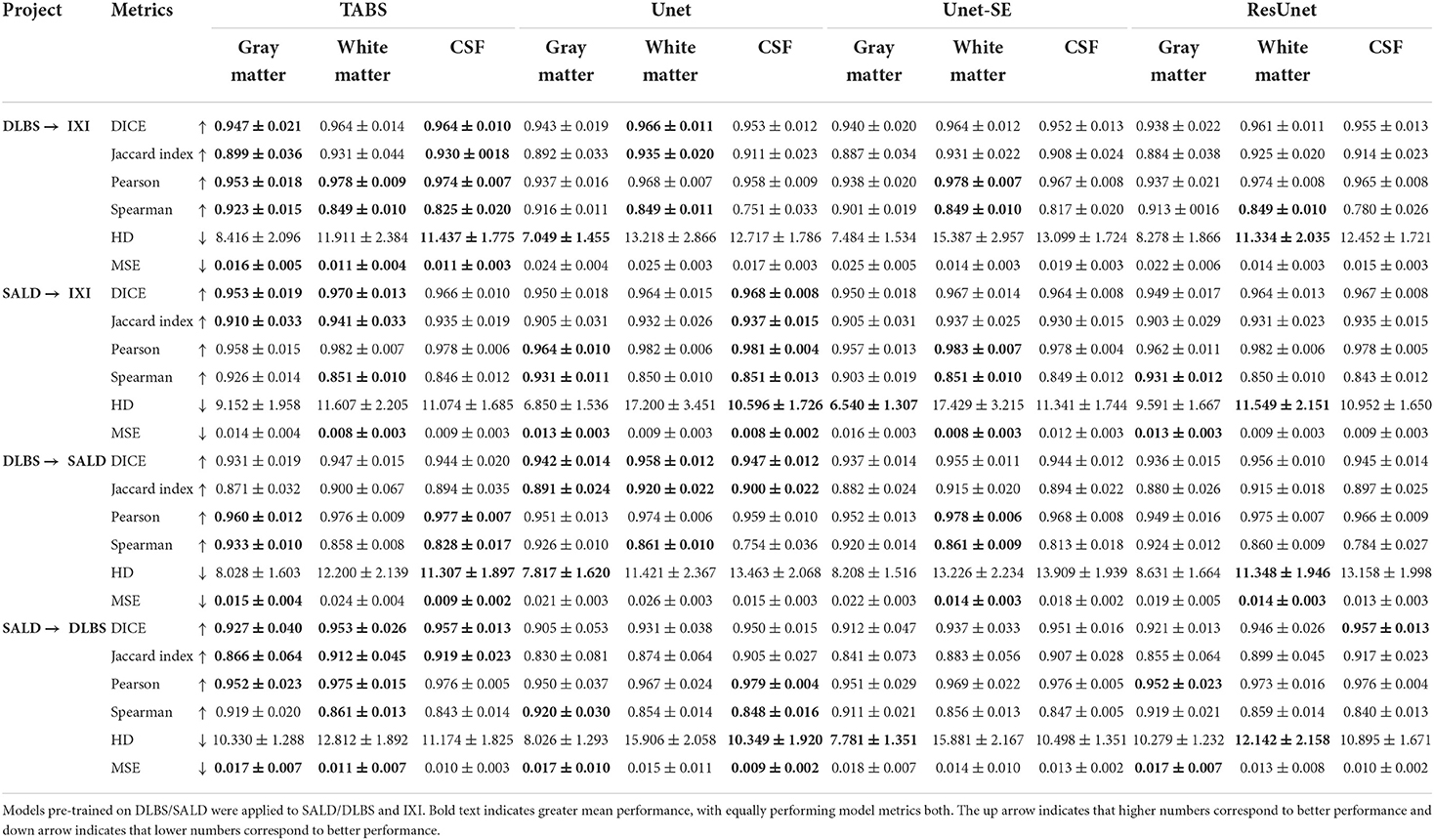

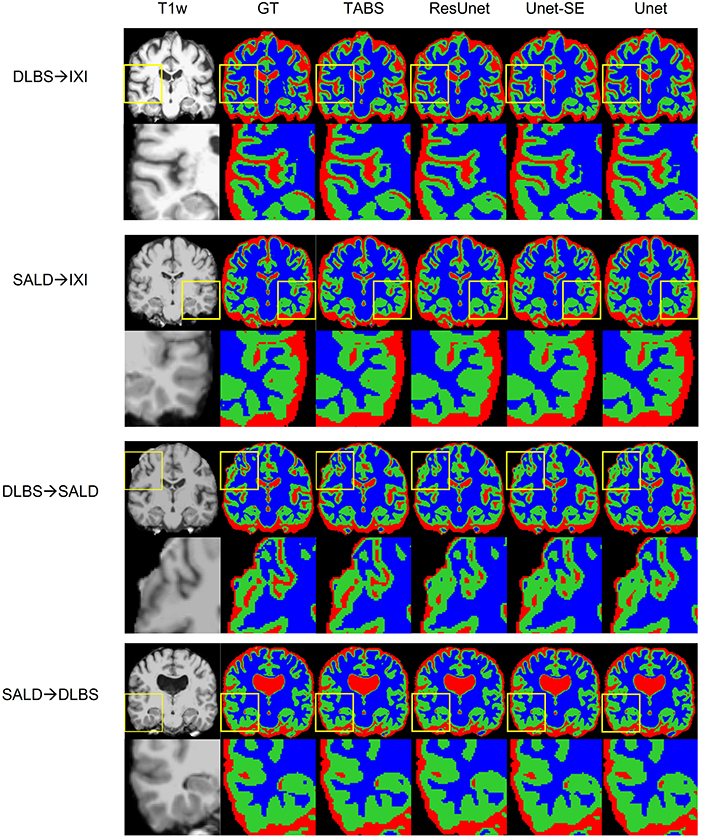

The generality results for all models trained on DLBS/SALD and applied to IXI as well as trained on DLBS/SALD and applied to SALD/DLBS are shown in Table 3 and visualized in Figure 6. TABS generalized better across datasets on most metrics for the DLBS → IXI and SALD → DLBS tests, with higher DICE/Jaccard and correlation metrics for at least two tissue types. Additionally, for the SALD → IXI generalization test, TABS reached higher DICE/Jaccard metrics for both GM and WM. We observed that models trained on SALD performed better when applied to IXI than models trained on IXI itself. TABS also exhibited a similar increase in performance when pre-trained on DLBS and applied to IXI compared to TABS trained on IXI. Representative segmentation outputs for all models for each test scenario are shown in Figure 7.

Figure 6. Box plots visualizing model generality with Wilcoxon pairwise comparisons. Each * indicates order of significance. (A) Model generality across field strengths. (B) Model generality across vendor.

Figure 7. Visualization of model generality across vendors, field strength, and scanning parameters. Segmentation maps for the ground truth, TABS, and the three benchmark models are shown from left to right following the T1w scan. Zoom in regions are included below each image.

Model generality—COBRE

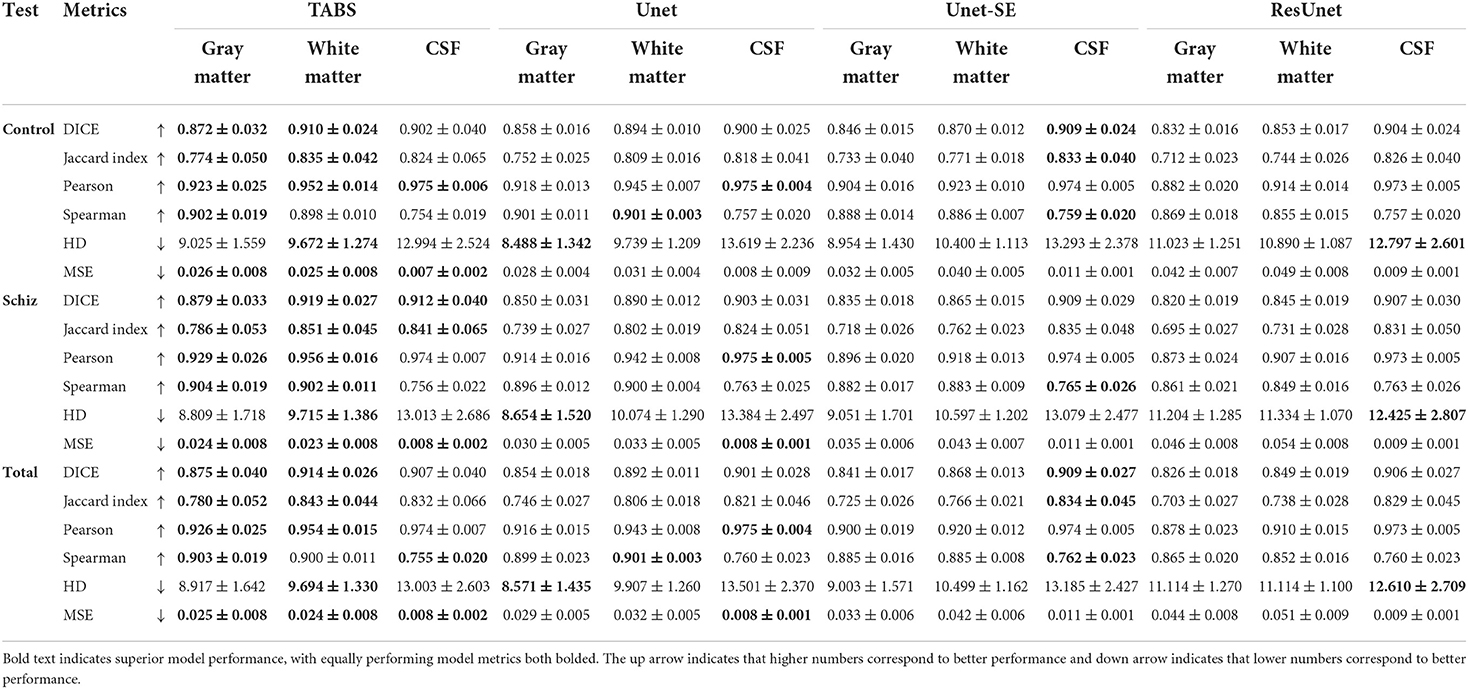

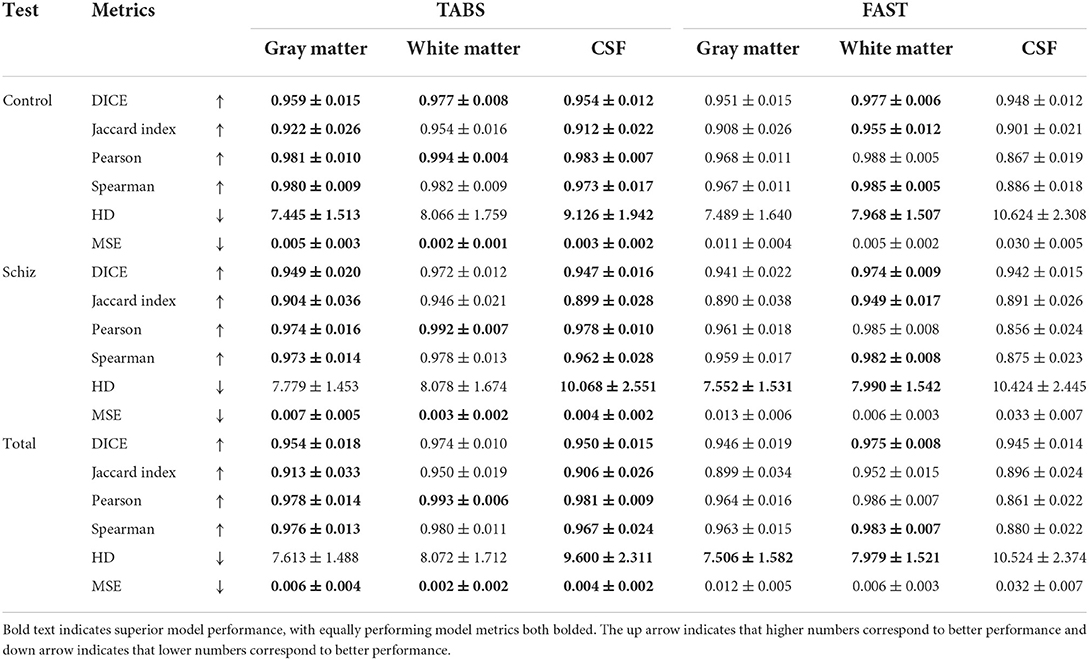

We extended our generalization testing to the COBRE dataset, consisting of healthy and schizophrenia test-retest repeated scans. The generalization performance for all models is reported in Table 4 and visualized in Figure 8. TABS generalized better for GM and WM across the control, schizophrenia, and aggregate total dataset compared to the benchmark models for most metrics. Moreover, TABS also achieved higher DICE/Jaccard metrics for CSF for schizophrenia patients.

Figure 8. Box plots visualizing model generality and test-retest reliability on the COBRE dataset with Wilcoxon pairwise comparisons. Each * indicates order of significance.

COBRE test-retest

TABS showcased better reliability compared to FAST, the tool used to generate the ground truths. Similarity metrics between test-retest repeated images for both TABS and FAST are shown in Table 5 for the control, schizophrenia, and total aggregate datasets and visualized in Figure 8. TABS proved consistently more reliable across almost all metrics for GM and CSF. Moreover, TABS reached a higher Pearson correlation and lower MSE over all tissue types, and only performed slightly worse than FAST on WM DICE/Jaccard. Representative segmentation outputs for paired repeated scans from both control and schizophrenia datasets are visualized in Figure 9.

Table 5. Test-retest reliability results across time-points and neuropsychiatric condition for TABS compared to FAST (ground truth) for control, schizophrenia, and aggregate total datasets from COBRE.

Figure 9. Visualization of test-retest reliability results across time-points and neuropsychiatric condition. Segmentation maps for TABS and FAST following the T1w scan are shown for each pair of repeated scans for control and schizophrenia groups. Zoom in regions are included below each image.

Discussion

In this study, we present TABS, a new Transformer-CNN hybrid deep learning architecture designed for brain tissue segmentation. TABS showcased superior performance compared to prior state-of-the-art CNN implementations while also generalizing exceptionally well across datasets and remaining reliable between paired test-retest scans. These traits are critical to developing a useful and more widely applicable brain tissue segmentation toolkit. Through TABS, we also demonstrate the methodological utility of using a Vision Transformer to improve the Unet architecture for brain tissue segmentation.

Our experimental protocol was designed to elucidate the real-world applicability of TABS compared to various benchmark models. The datasets included in this study were chosen with the goal of emulating the extreme differences in MRI input a brain tissue segmentation algorithm would receive in real-world applications; the DLBS, SALD, and IXI datasets varied in terms of manufacturer, field strengths, and scanner parameters. Moreover, our test-retest dataset consisted of repeated scans from schizophrenia and healthy patients taken at different time points, presenting an even more challenging segmentation task. Due to these factors, we believe our evaluation methodology accurately captures the versatility of TABS.

We first found that TABS was the best performing model when trained and tested on the same dataset. While TABS achieved significantly higher performance than both Unet and Unet-SE, we observed marginal performance benefits over ResUnet. We hypothesize that the residual connections are responsible for the bulk of the performance gain over the traditional Unet models, with the Transformer module providing a small but consistent performance increase within datasets.

Throughout our generality testing, TABS performed the best on most datasets compared to the benchmark Unet models. The most significant generalization differences we observed were between TABS and ResUnet. Given that their model architectures are identical except for the Transformer, we believe that the addition of the Transformer significantly improves model generality. CNNs are not well-suited to capture long-range dependencies in the input image due to the local receptive fields of convolutional kernels, whereas the self-attention mechanism of a Transformer relates every token to every other token and can therefore model global context. We believe that this property could make Transformer-based networks agnostic to dataset-specific variations and thus more generalizable. The addition of the Transformer allows TABS to preserve and even improve the within-dataset performance conferred by residual connections while also generalizing better than the vanilla Unet, where ResUnet struggled.

We also noticed that all of the models tested improved in performance when trained on SALD and applied to IXI as opposed to training on IXI itself. This disparity could be due to the difference in field strength: the higher quality 3T MRI images from SALD may provide more globally relevant features than the 1.5T MRI images from IXI. However, for TABS specifically, we observed this same effect when pre-trained on 3T DLBS scans. These results indicate that TABS can potentially take better advantage of higher quality training data compared to the benchmark models.

Furthermore, we found that TABS generalized the best on an alternate COBRE dataset consisting of both healthy and schizophrenia scans. Schizophrenia patients often exhibit subtle anatomical differences compared to healthy subjects, such as alterations in GM volume (Koutsouleris et al., 2015). These changes make generalizing to the schizophrenia dataset an especially difficult task. Additionally, the mean age of the COBRE dataset was slightly lower than that of the datasets TABS was originally trained on, making generalizing to COBRE potentially even more challenging. TABS generalized the best compared to the benchmark models on the overall COBRE dataset, with even more pronounced differences for the schizophrenia portion. Therefore, we believe that TABS may excel in more difficult segmentation cases where standard Unet models yield errors.

Finally, our test-retest experiment highlights the reliability of TABS, the best generalizing model on the COBRE dataset, compared with FAST, the algorithm used to generate the ground truths. The test-retest repeated scans used in this study were taken from the same patient within a short time frame, meaning that we expected minimal differences in the segmentation output. Through this test, we find that TABS not only generalizes well on the COBRE dataset, but also maintains this performance more reliably than FAST.

In general, while traditional approaches such as FAST have demonstrated compelling brain tissue segmentation performance, there are several advantages to deep learning-based alternatives such as TABS. First and foremost, the production times for segmented scans using FAST are significantly higher than those of TABS. For example, in our testing on the same machine, TABS could generate the segmentations for an aggregate set of 146 T1w MRI scans 57× faster, with an average time of 6.2 s per scan. In contrast, FAST required 353.7 s per scan. Deep learning algorithms also offer more room for customization, as loaded models can be fine-tuned and altered for particular tasks as well as directly built into post-processing pipelines.
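The reported speed-up follows from the two per-scan timings, and the same figures give a rough sense of total runtime for the 146-scan set. The short calculation below assumes scans are processed one after another, which the text does not specify.

```python
SECONDS_PER_SCAN_TABS = 6.2     # reported average for TABS
SECONDS_PER_SCAN_FAST = 353.7   # reported average for FAST
N_SCANS = 146                   # size of the aggregate test set

# Ratio of per-scan times, which is the quoted speed-up factor.
speedup = SECONDS_PER_SCAN_FAST / SECONDS_PER_SCAN_TABS

# Total wall-clock time, assuming strictly sequential processing.
total_tabs_minutes = N_SCANS * SECONDS_PER_SCAN_TABS / 60
total_fast_hours = N_SCANS * SECONDS_PER_SCAN_FAST / 3600

print(f"speed-up: {speedup:.1f}x")
print(f"TABS total: {total_tabs_minutes:.1f} min")
print(f"FAST total: {total_fast_hours:.1f} h")
```

Under that assumption the full set takes roughly 15 minutes with TABS and a little over 14 hours with FAST.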

Despite the demonstrated advantages of TABS, there are certain limitations in our work that can be addressed in subsequent studies. 3D CNN models often require a large amount of computational power to train efficiently. While we were able to use full resolution MRI inputs for our model, we were limited to a batch size of 3 due to memory constraints. Using a larger batch size may have resulted in better performance. Additionally, even though we trained TABS on three large datasets, our model could be further improved by increasing our sample size. An increase in sample size could account for variations in MRI image characteristics not captured in the four datasets we investigated. In fact, Fletcher et al. (2021) required 8,000 training images from 11 cohorts to develop and validate a sufficiently generalized skull stripping model. Lastly, recent findings suggest that patch-based 2D CNN approaches perform better than non-patch-based variants for brain tissue segmentation (Lee et al., 2020; Yamanakkanavar and Lee, 2020). As such, we believe that we could extend TABS to a patch-based 3D model in future studies to better capture local information that may be lost by processing the entire image at once.

Code availability statement

The code used in this project is proprietary. The code for the TABS model is available at https://github.com/raovish6/TABS, and the entire TABS package is available upon request of the corresponding author. The code for TABS is © 2021 The Trustees of Columbia University in the City of New York. This work may be reproduced and distributed for academic non-commercial purposes only. The data used in this study can be obtained from the following sources: Rodrigue et al. (2012), Biomedical Image Analysis Group et al. (2018), Wei et al. (2018), and Bustillo et al. (2017).

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Author contributions

VR: conceptualization, methodology, software, formal analysis, investigation, data curation, writing—original draft, review and editing, and visualization. ZW, SA, and DM: software, formal analysis, visualization, and writing—review and editing. P-YL: formal analysis, visualization, and writing—review and editing. YT: writing—original draft and review and editing. XZ: software, formal analysis, investigation, and writing—review and editing. AL: writing—review and editing. JG: supervision, project administration, conceptualization, data curation, visualization, and writing—original draft and review and editing. All authors contributed to the article and approved the submitted version.

Acknowledgments

The content of this manuscript has previously appeared online as a preprint (Rao et al., 2022).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Akkus, Z., Galimzianova, A., Hoogi, A., Rubin, D. L., Erickson, B. J. (2017). Deep learning for brain MRI segmentation: state of the art and future directions. J. Digit. Imaging 30, 449–459. doi: 10.1007/s10278-017-9983-4

Amiri, S., Movahedi, M. M., Kazemi, K., Parsaei, H. (2013). An automated MR image segmentation system using multi-layer perceptron neural network. J. Biomed. Phys. Eng. 3:115.

Angelini, E. D., Song, T., Mensh, B. D., Laine, A. F. (2007). Brain MRI segmentation with multiphase minimal partitioning: a comparative study. Int. J. Biomed. Imaging 2007:10526. doi: 10.1155/2007/10526

Auer, D. P., Wilke, M., Grabner, A., Heidenreich, J. O., Bronisch, T., Wetter, T. C. (2001). Reduced NAA in the thalamus and altered membrane and glial metabolism in schizophrenic patients detected by 1H-MRS and tissue segmentation. Schizophr. Res. 52, 87–99. doi: 10.1016/S0920-9964(01)00155-4

Bagory, M., Durand-Dubief, F., Ibarrola, D., Comte, J. C., Cotton, F., Confavreux, C., et al. (2011). Implementation of an absolute brain 1H-MRS quantification method to assess different tissue alterations in multiple sclerosis. IEEE Trans. Biomed. Eng. 59, 2687–2694. doi: 10.1109/TBME.2011.2161609

Bauer, S., Nolte, L. P., Reyes, M. (2011). “Fully automatic segmentation of brain tumor images using support vector machine classification in combination with hierarchical conditional random field regularization,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (Berlin; Heidelberg: Springer), 354–361. doi: 10.1007/978-3-642-23626-6_44

Beauchemin, M., Thomson, K. P., Edwards, G. (1998). On the Hausdorff distance used for the evaluation of segmentation results. Can. J. Remote Sensing 24, 3–8. doi: 10.1080/07038992.1998.10874685

Beers, A., Chang, K., Brown, J., Sartor, E., Mammen, C. P., Gerstner, E., et al. (2017). Sequential 3d u-nets for biologically-informed brain tumor segmentation. arXiv preprint arXiv:1709.02967. doi: 10.1117/12.2293941

Biomedical Image Analysis Group, Imperial College London, and Centre for the Developing Brain, King's College London (2018). Information eXtraction From Images. Available online at: https://brain-development.org/ixi-dataset/ (accessed December 15, 2021).

Bustillo, J. R., Jones, T., Chen, H., Lemke, N., Abbott, C., Qualls, C., et al. (2017). Glutamatergic and neuronal dysfunction in gray and white matter: a spectroscopic imaging study in a large schizophrenia sample. Schizophr. Bull. 43, 611–619. doi: 10.1093/schbul/sbw122

Cabezas, M., Oliver, A., Lladó, X., Freixenet, J., Cuadra, M. B. (2011). A review of atlas-based segmentation for magnetic resonance brain images. Comput. Methods Prog. Biomed. 104, e158–e177. doi: 10.1016/j.cmpb.2011.07.015

Chen, J., Lu, Y., Yu, Q., Luo, X., Adeli, E., Wang, Y., et al. (2021). Transunet: Transformers make strong encoders for medical image segmentation. arXiv [Preprint]. arXiv:2102.04306. doi: 10.48550/arXiv.2102.04306

Çiçek, Ö., Abdulkadir, A., Lienkamp, S. S., Brox, T., Ronneberger, O. (2016). “3D U-Net: learning dense volumetric segmentation from sparse annotation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (Cham: Springer), 424–432. doi: 10.1007/978-3-319-46723-8_49

Dadar, M., Collins, D. L. (2021). BISON: brain tissue segmentation pipeline using T1-weighted magnetic resonance images and a random forest classifier. Magn. Reson. Med. 85, 1881–1894. doi: 10.1002/mrm.28547

Dora, L., Agrawal, S., Panda, R., Abraham, A. (2017). State-of-the-art methods for brain tissue segmentation: a review. IEEE Rev. Biomed. Eng. 10, 235–249. doi: 10.1109/RBME.2017.2715350

Feng, X., Lipton, Z. C., Yang, J., Small, S. A., Provenzano, F. A., Alzheimer's Disease Neuroimaging Initiative, Frontotemporal Lobar Degeneration Neuroimaging Initiative (2020a). Estimating brain age based on a uniform healthy population with deep learning and structural magnetic resonance imaging. Neurobiol. Aging 91, 15–25. doi: 10.1016/j.neurobiolaging.2020.02.009

Feng, X., Tustison, N. J., Patel, S. H., Meyer, C. H. (2020b). Brain tumor segmentation using an ensemble of 3d u-nets and overall survival prediction using radiomic features. Front. Comput. Neurosci. 14:25. doi: 10.3389/fncom.2020.00025

Fletcher, E., Decarli, C., Fan, A. P., Knaack, A. (2021). Convolutional neural net learning can achieve production-level brain segmentation in structural magnetic resonance imaging. Front. Neurosci. 15:683426. doi: 10.3389/fnins.2021.683426

Greenspan, H., Ruf, A., Goldberger, J. (2006). Constrained Gaussian mixture model framework for automatic segmentation of MR brain images. IEEE Trans. Med. Imaging 25, 1233–1245. doi: 10.1109/TMI.2006.880668

Han, X., Jovicich, J., Salat, D., van der Kouwe, A., Quinn, B., Czanner, S., et al. (2006). Reliability of MRI-derived measurements of human cerebral cortical thickness: the effects of field strength, scanner upgrade and manufacturer. Neuroimage 32, 180–194. doi: 10.1016/j.neuroimage.2006.02.051

Harris, A. D., Puts, N. A., Edden, R. A. (2015). Tissue correction for GABA-edited MRS: considerations of voxel composition, tissue segmentation, and tissue relaxations. J. Magn. Reson. Imaging 42, 1431–1440. doi: 10.1002/jmri.24903

Hatamizadeh, A., Tang, Y., Nath, V., Yang, D., Myronenko, A., Landman, B., et al. (2021). Unetr: transformers for 3d medical image segmentation. arXiv [Preprint]. arXiv:2103.10504. doi: 10.1109/WACV51458.2022.00181

Hirata, Y., Matsuda, H., Nemoto, K., Ohnishi, T., Hirao, K., Yamashita, F., et al. (2005). Voxel-based morphometry to discriminate early Alzheimer's disease from controls. Neurosci. Lett. 382, 269–274. doi: 10.1016/j.neulet.2005.03.038

Hu, J., Shen, L., Sun, G. (2018). “Squeeze-and-excitation networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Beijing: IEEE), 7132–7141. doi: 10.1109/CVPR.2018.00745

Jenkinson, M., Bannister, P., Brady, M., Smith, S. (2002). Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage 17, 825–841. doi: 10.1006/nimg.2002.1132

Karch, J. D., Filevich, E., Wenger, E., Lisofsky, N., Becker, M., Butler, O., et al. (2019). Identifying predictors of within-person variance in MRI-based brain volume estimates. NeuroImage 200, 575–589. doi: 10.1016/j.neuroimage.2019.05.030

Kassubek, J., Juengling, F. D., Kioschies, T., Henkel, K., Karitzky, J., Kramer, B., et al. (2004). Topography of cerebral atrophy in early Huntington's disease: a voxel based morphometric MRI study. J. Neurol. Neurosurg. Psychiatry 75, 213–220.

Khagi, B., Kwon, G. R. (2018). Pixel-label-based segmentation of cross-sectional brain MRI using simplified SegNet architecture-based CNN. J. Healthc. Eng. 2018:3640705. doi: 10.1155/2018/3640705

Kingma, D. P., Ba, J. (2014). Adam: A method for stochastic optimization. arXiv [Preprint]. arXiv:1412.6980. doi: 10.48550/arXiv.1412.6980

Kolarík, M., Burget, R., Uher, V., Dutta, M. K. (2018). “3D dense-U-net for MRI brain tissue segmentation,” in 2018 41st International Conference on Telecommunications and Signal Processing (Brno), 1–4. doi: 10.1109/TSP.2018.8441508

Koutsouleris, N., Riecher-Rössler, A., Meisenzahl, E. M., Smieskova, R., Studerus, E., Kambeitz-Ilankovic, L., et al. (2015). Detecting the psychosis prodrome across high-risk populations using neuroanatomical biomarkers. Schizophr. Bull. 41, 471–482. doi: 10.1093/schbul/sbu078

Kruggel, F., Turner, J., Muftuler, L. T., Alzheimer's Disease Neuroimaging Initiative (2010). Impact of scanner hardware and imaging protocol on image quality and compartment volume precision in the ADNI cohort. Neuroimage 49, 2123–2133. doi: 10.1016/j.neuroimage.2009.11.006

Lee, B., Yamanakkanavar, N., Choi, J. Y. (2020). Automatic segmentation of brain MRI using a novel patch-wise U-net deep architecture. PLoS ONE 15:e0236493. doi: 10.1371/journal.pone.0236493

Mahmood, Q., Chodorowski, A., Persson, M. (2015). Automated MRI brain tissue segmentation based on mean shift and fuzzy c-means using a priori tissue probability maps. IRBM 36, 185–196. doi: 10.1016/j.irbm.2015.01.007

Marroquín, J. L., Vemuri, B. C., Botello, S., Calderon, E., Fernandez-Bouzas, A. (2002). An accurate and efficient Bayesian method for automatic segmentation of brain MRI. IEEE Trans. Med. Imaging 21, 934–945. doi: 10.1109/TMI.2002.803119

Mlynarski, P., Delingette, H., Criminisi, A., Ayache, N. (2019). 3D convolutional neural networks for tumor segmentation using long-range 2D context. Comput. Med. Imaging Graph. 73, 60–72. doi: 10.1016/j.compmedimag.2019.02.001

Moeskops, P., Viergever, M. A., Mendrik, A. M., De Vries, L. S., Benders, M. J., Išgum, I. (2016). Automatic segmentation of MR brain images with a convolutional neural network. IEEE Trans. Med. Imaging 35, 1252–1261. doi: 10.1109/TMI.2016.2548501

Nemoto, K., Sakaguchi, H., Kasai, W., Hotta, M., Kamei, R., Noguchi, T., et al. (2021). Differentiating dementia with lewy bodies and Alzheimer's disease by deep learning to structural MRI. J. Neuroimaging 31, 579–587. doi: 10.1111/jon.12835

Nugent, A. C., Milham, M. P., Bain, E. E., Mah, L., Cannon, D. M., Marrett, S., et al. (2006). Cortical abnormalities in bipolar disorder investigated with MRI and voxel-based morphometry. Neuroimage 30, 485–497. doi: 10.1016/j.neuroimage.2005.09.029

Rao, V. M., Wan, Z., Ma, D., Lee, P.-Y., Tian, Y., Laine, A. F., et al. (2022). Improving across-dataset brain tissue segmentation using transformer. arXiv [Preprint]. arXiv:2201.08741. doi: 10.48550/arXiv.2201.08741

Rodrigue, K. M., Kennedy, K. M., Devous, M. D., Rieck, J. R., Hebrank, A. C., Diaz-Arrastia, R., et al. (2012). β-Amyloid burden in healthy aging: regional distribution and cognitive consequences. Neurology 78, 387–395.

Ronneberger, O., Fischer, P., Brox, T. (2015). “U-net: convolutional networks for biomedical image segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (Cham: Springer), 234–241. doi: 10.1007/978-3-319-24574-4_28

Salvador, R., Radua, J., Canales-Rodríguez, E. J., Solanes, A., Sarró, S., Goikolea, J. M., et al. (2017). Evaluation of machine learning algorithms and structural features for optimal MRI-based diagnostic prediction in psychosis. PLoS ONE 12:e0175683. doi: 10.1371/journal.pone.0175683

Ségonne, F., Dale, A. M., Busa, E., Glessner, M., Salat, D., Hahn, H. K., et al. (2004). A hybrid approach to the skull stripping problem in MRI. Neuroimage 22, 1060–1075. doi: 10.1016/j.neuroimage.2004.03.032

Sled, J. G., Zijdenbos, A. P., Evans, A. C. (1998). A nonparametric method for automatic correction of intensity nonuniformity in MRI data. IEEE Trans. Med. Imaging 17, 87–97. doi: 10.1109/42.668698

Sun, Q., Fang, N., Liu, Z., Zhao, L., Wen, Y., Lin, H. (2021). HybridCTrm: bridging CNN and transformer for multimodal brain image segmentation. J. Healthc. Eng. 2021:7467261. doi: 10.1155/2021/7467261

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., et al. (2017). “Attention is all you need,” in Advances in Neural Information Processing Systems (San Francisco, CA), 5998–6008.

Wang, W., Chen, C., Ding, M., Yu, H., Zha, S., Li, J. (2021). “Transbts: multimodal brain tumor segmentation using transformer,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (Cham: Springer), 109–119. doi: 10.1007/978-3-030-87193-2_11

Wei, D., Zhuang, K., Ai, L., Chen, Q., Yang, W., Liu, W., et al. (2018). Structural and functional brain scans from the cross-sectional Southwest University adult lifespan dataset. Sci. Data 5, 1–10. doi: 10.1038/sdata.2018.134

Wright, I. C., McGuire, P. K., Poline, J. B., Travere, J. M., Murray, R. M., Frith, C. D., et al. (1995). A voxel-based method for the statistical analysis of gray and white matter density applied to schizophrenia. Neuroimage 2, 244–252. doi: 10.1006/nimg.1995.1032

Wu, Y., He, K. (2018). “Group normalization,” in Proceedings of the European Conference on Computer Vision (Menlo Park, CA), 3–19. doi: 10.1007/978-3-030-01261-8_1

Yamanakkanavar, N., Lee, B. (2020). Using a patch-wise m-net convolutional neural network for tissue segmentation in brain mri images. IEEE Access 8, 120946–120958. doi: 10.1109/ACCESS.2020.3006317

Zhang, W., Li, R., Deng, H., Wang, L., Lin, W., Ji, S., et al. (2015). Deep convolutional neural networks for multi-modality isointense infant brain image segmentation. NeuroImage 108, 214–224. doi: 10.1016/j.neuroimage.2014.12.061

Zhang, Y., Brady, J. M., Smith, S. (2000). “Hidden Markov random field model for segmentation of brain MR image,” in Medical Imaging 2000: Image Processing, Vol. 3979 (Oxford: International Society for Optics and Photonics), 1126–1137. doi: 10.1117/12.387617

Keywords: MRI, transformer, deep learning, segmentation, investigation, brain tissue segmentation

Citation: Rao VM, Wan Z, Arabshahi S, Ma DJ, Lee P-Y, Tian Y, Zhang X, Laine AF and Guo J (2022) Improving across-dataset brain tissue segmentation for MRI imaging using transformer. Front. Neuroimaging 1:1023481. doi: 10.3389/fnimg.2022.1023481

Received: 19 August 2022; Accepted: 24 October 2022; Published: 21 November 2022.

Edited by:

Sairam Geethanath, Icahn School of Medicine at Mount Sinai, United States

Reviewed by:

Hamid Osman, Taif University, Saudi Arabia

Evan Fletcher, University of California, Davis, United States

Copyright © 2022 Rao, Wan, Arabshahi, Ma, Lee, Tian, Zhang, Laine and Guo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jia Guo, jg3400@columbia.edu
