- 1Department of ECE, NRI Institute of Technology (Autonomous), Vijayawada, India

- 2School of Electronics Engineering, VIT-AP University, Andhra Pradesh, India

- 3Department of ECE, Koneru Lakshmaiah Education Foundation, Hyderabad, India

Introduction: Brain cancer occurs worldwide and develops mostly from tumors in or around the brain. Its prevalence and incidence are much lower than those of other cancer types (breast, skin, lung, etc.). However, brain cancers are associated with high mortality rates, especially in adults, owing to misidentification of tumor types and delays in diagnosis. Reducing the false detection of brain tumor types and enabling early diagnosis are therefore crucial for improving patient survival. To this end, many researchers have recently developed deep learning (DL)-based approaches, which have shown remarkable performance, particularly in classification tasks.

Methods: This article proposes a novel DL architecture named BrainCDNet. The model concatenates the pooling layers and addresses overfitting by initializing the layer weights with ‘He Normal’ initialization, together with batch normalization and global average pooling (GAP). We first sharpen the input images using a Nimble filter, which preserves edges and fine details. We then employ BrainCDNet to extract relevant features and perform classification. Two magnetic resonance imaging (MRI) databases, one binary (healthy vs. pathological) and one multiclass (glioma vs. meningioma vs. pituitary), are used for all experiments.

Results and discussion: Empirical evidence suggests that the presented model attains high accuracy on both datasets relative to state-of-the-art approaches, with 99.45% (binary) and 96.78% (multiclass). Hence, the proposed model can serve as a decision-support tool for radiologists during the diagnosis of brain cancer patients.

1 Introduction

Brain tumors are characterized by the uncontrolled growth of cells within or near the brain. According to the American Cancer Society and the National Brain Tumor Foundation (NBTF) report, so far, more than 150 distinct brain tumors have been documented. Among them, glioma and meningioma tumors occur frequently, while pituitary tumors rarely happen. Typically, brain tumors are categorized into two groups: primary and secondary or metastatic. Primary brain tumors originate from the brain or its surroundings and are divided into benign (non-cancerous) or malignant (cancerous) (Rasheed et al., 2023). Tumors that do not have active cells and have less effect on human life are called benign. Meningioma and most pituitary tumors are benign. Tumors that contain active cells and highly impact human life are called malignant. Gliomas (astrocytoma, ependymoma, and oligodendroglioma) are malignant tumors. To identify these tumors, we require an adequate radiological examination and estimation. Physicians utilize medical imaging or scanning techniques, such as X-ray, computed tomography (CT), and magnetic resonance imaging (MRI) to meet this criterion. Among them, MRI is a frequently used non-invasive scanning procedure for identifying the abnormalities of brain tissues since it produces high spatial resolution images and is safe from radiation. Hence, it is most suitable for all subjects, such as children, adults, pregnant women, etc. In addition, MRI yields accurate visualization of anatomical structures in the human body, especially the soft tissues of the brain. Based on these details, radiologists or doctors will quickly decide to provide appropriate treatment to the patient, such as radiotherapy, chemotherapy, and surgery.

According to the Brain Cancer Statistics 2019 (Ilic and Ilic, 2023), 347,992 new cases (187,491 males and 160,501 females) were registered across the globe, and 246,253 people (138,605 males and 107,648 females) died from brain cancer. These statistics show that incidence and mortality are higher in males than in females. The incidence rate is 4.8/100,000 for males and 3.6/100,000 for females, while the mortality rate is 3.9/100,000 for males and 2.6/100,000 for females. European countries have higher incidence and mortality rates than these global figures (incidence rate: 7.9/100,000 in males and 5.5/100,000 in females; mortality rate: 5.4/100,000 in males and 3.5/100,000 in females).

From these statistics, we conclude that early diagnosis of brain tumors is crucial for extending a patient’s lifespan. However, manually inspecting MRI images for long periods is tedious and error-prone. Computer-aided diagnosis therefore plays a vital role in assisting clinicians, and in this work we propose a novel approach called BrainCDNet.

1.1 Highlights of this study

In this study, first, we exploited a Nimble filtering algorithm to highlight edge details within the image. Following this, we developed a novel deep neural network called BrainCDNet to extract meaningful features by addressing issues encountered in existing approaches, such as training parameters and network stability. The internal architecture of the suggested network is designed to enhance model accuracy by adjusting hyperparameters such as optimizer, learning rate, epochs, batch size, and the number of layers. Our model is implemented on binary (healthy vs. pathological) and multiclass (glioma vs. meningioma vs. pituitary) classification problems using 5-fold cross-validation and hold-out. The proposed framework achieved optimal performance with an accuracy of 99.45% (binary) and 96.78% (multiclass). Experimental outcomes demonstrate that the presented technique achieves better classification accuracy with fewer learning parameters (997,123, including 994,563 trainable and 2,560 non-trainable) than existing approaches.

2 Related works

Over the past two decades, researchers have focused on classifying brain tumors from MRI images using machine learning (ML) and deep learning (DL) methods. In this section, we outline a few recently developed approaches.

Islam et al. (2021) proposed an enhanced brain tumor detection approach using super pixels and principal component analysis (PCA)-based feature extraction followed by template-based k-means (TKM) clustering. Through this sequence of steps, they attained 95% accuracy. Demir et al. (2023) suggested a deep learning (DL)-based model with an accuracy of 99% using MobileNetV2, ReliefF feature selection, and k-nearest neighbors (KNN). With the help of pre-trained convolutional neural networks (CNN) such as VGG-16, Srinivas et al. (2022) implemented a deep transfer learning framework that obtained 86.04% classification accuracy.

Shanthi et al. (2022) developed an optimized hybrid CNN (OHCNN) methodology using a CNN followed by long short-term memory (LSTM) and adaptive RIDER optimization (ARO). The CNN-LSTM-ARO-based model reached an accuracy of 97.25%. Reddy et al. (2023) outlined a diagnosis model that utilized local texture features, blue monkey extended bald eagle optimization (BMEBEO), and a deep belief network (DBN) followed by Bi-LSTM (DBN-LSTM). The presented technique obtained an accuracy of 92.61%.

Vankdothu and Hameed (2022) offered an automated brain tumor detection approach with an accuracy of 95.17% for identifying the pathological behavior of MRI images. The proposed framework includes segmentation by improved k-means clustering (IKMC), feature extraction using gray-level co-occurrence matrix (GLCM), and classification based on recurrent CNN (RCNN). Zhu et al. (2023) developed a novel architecture: ResNet-based bat extreme learning machine (RBELM) to discriminate between normal and abnormal brain MRI images, gaining 99% detection accuracy.

Mijwil et al. (2023) suggested a MobileNetV1-based DL model to detect brain tumors from MRI images, achieving 97.3% accuracy. Rahman and Islam (2023) developed a parallel deep CNN (PDCNN) architecture to diagnose brain tumors from MRI images, attaining 97.33% accuracy. To classify brain tumors as malignant or benign, Mehrotra et al. (2020) proposed an artificial intelligence (AI)-based DL methodology with an accuracy of 99.04%.

Nanda et al. (2023) presented a new hybrid model that utilized saliency k-means clustering (KMC), social spider optimization, and a radial basis neural network (RBNN), obtaining 92% accuracy. Kibriya et al. (2023) implemented an ensemble model by concatenating VGG-16 and GLCM features. These features were then fed to a support vector machine (SVM) to detect the pathological behavior of brain MRI images. The presented ensemble architecture attained 99.03% accuracy.

Abiwinanda et al. (2019) attempted to classify MRI-based brain tumors by developing an optimized CNN architecture. By this network, the authors achieved a detection rate of 84.19%. Pashaei et al. (2018) extracted relevant features using CNN and then employed a kernel-based extreme learning machine (KELM) for detecting brain tumors. Here, the suggested approach attained 93.68% accuracy.

Anaraki et al. (2019) proposed a CNN and genetic algorithm (GA)-based model to grade brain MRI images into glioma, meningioma, and pituitary classes, attaining 94.2% accuracy. Afshar et al. (2019) offered a modified CapsNet to analyze brain tumors from MRI images. With this model, the authors achieved 90.89% accuracy, a better performance than conventional CNN approaches.

To implement an accurate brain tumor identification model, Gumaei et al. (2019) utilized a hybrid feature extraction framework, PCA followed by a gradient image descriptor (PCA-GIST), together with a regularized ELM (RELM), reaching a maximum accuracy of 94.23%. Swati et al. (2019) used a pre-trained CNN, namely VGG-19, with block-wise fine-tuning to distinguish MRI-based brain tumors, achieving 94.82% classification accuracy.

Das et al. (2019) described a customized CNN-based strategy to interpret brain MRI images that achieved an accuracy of 94.39%. Kurmi and Chaurasia (2020) outlined an automated prognosis system with 92.6% accuracy based on hand-crafted features, neighborhood component analysis (NCA), and a multilayer perceptron (MLP). Noreen et al. (2021) designed a hybrid technique using Xception and ensemble techniques. To improve the performance of the model, the authors further employed fine-tuning and obtained 94.34% accuracy.

Mukherkjee et al. (2022) offered a DL-based framework using an aggregation of generative adversarial networks (AggrGAN) and ResNet-152 to identify the type of brain tumor, obtaining 93.88% accuracy. Deepak and Ameer (2023) utilized deep feature fusion and a majority voting mechanism to categorize brain tumors from an imbalanced MRI database. Here, the suggested approach attained 95.4% accuracy. To improve the performance of brain tumor diagnosis, Khan et al. (2023) developed a hybrid network with 95.10% accuracy using DenseNet169 and ML frameworks such as random forest (RF), SVM, and XGBoost.

3 Materials and methods

This section describes the dataset used in the work and the relevant steps involved in the proposed model to identify the pathology of brain tumors using MRI images. Figure 1 indicates the flow diagram of the suggested BrainCDNet. Subsequent sections describe each block of Figure 1.

3.1 Dataset

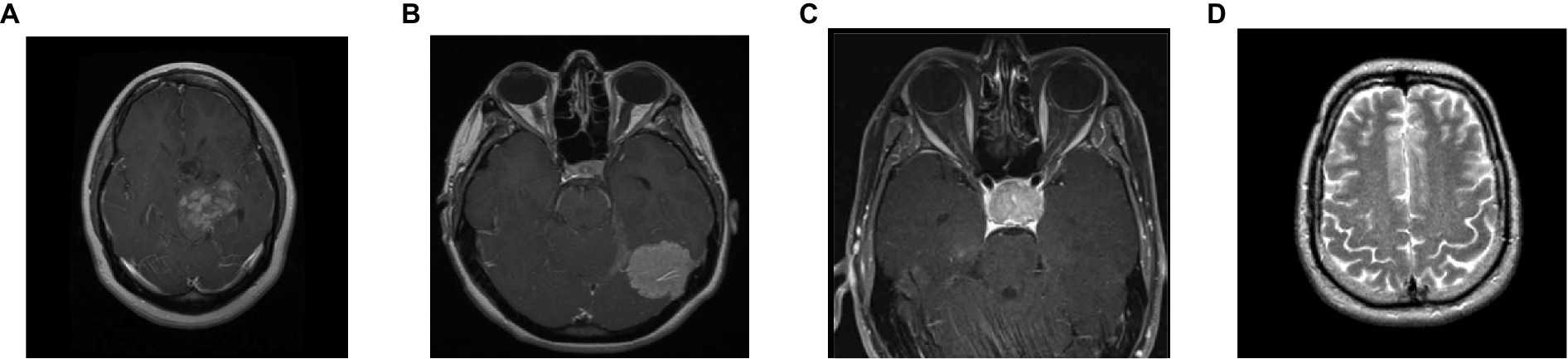

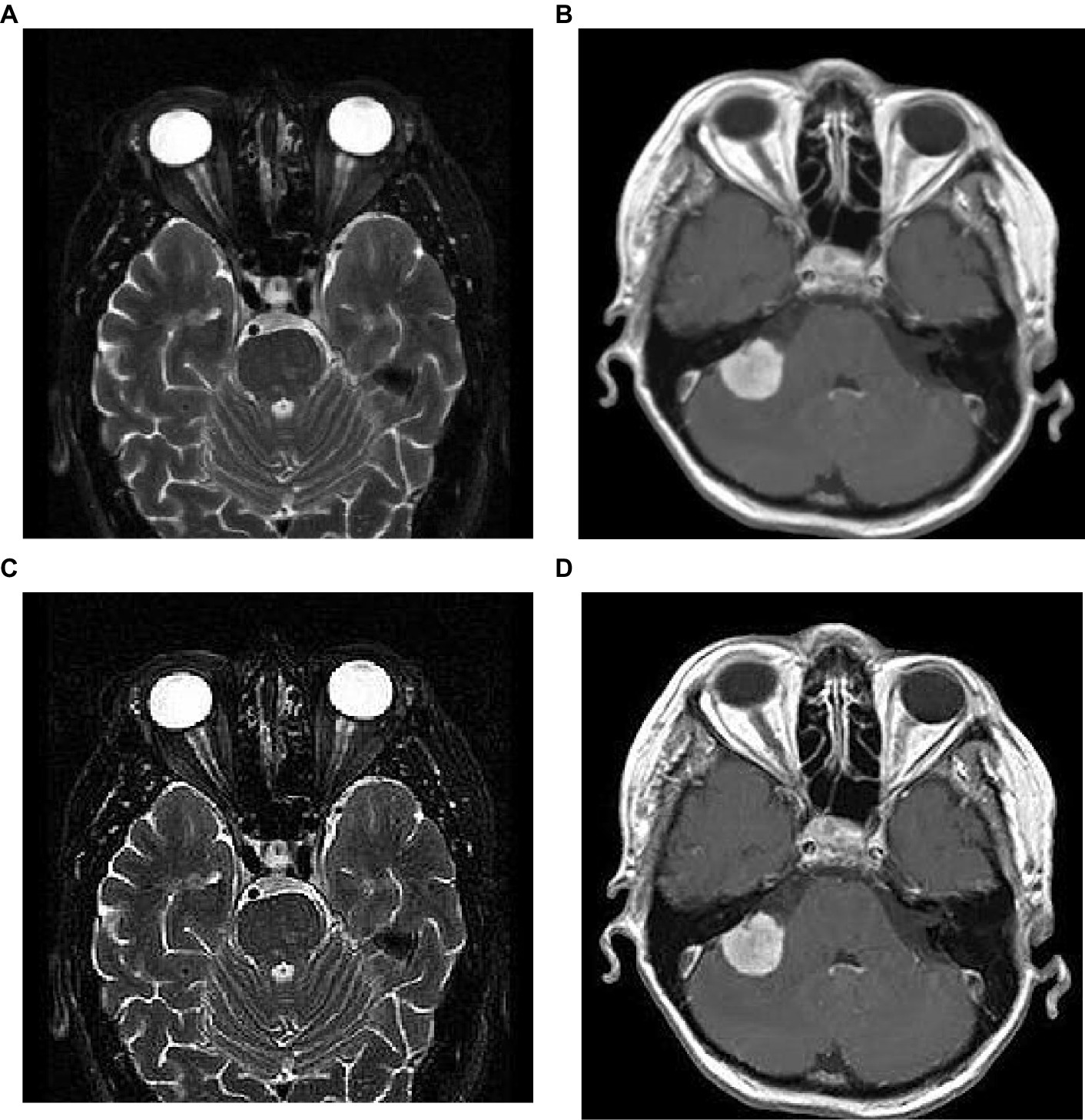

To analyze the presented framework, we consider two brain MRI dataset scenarios: binary (healthy vs. pathological) and multiclass (glioma vs. meningioma vs. pituitary). The first scenario comprises 2,376 T2-weighted MRI images, including 1,746 pathological (glioma, sarcoma, meningioma, and Alzheimer’s) and 630 healthy images (Kaggle, n.d.). The second scenario comprises 2,764 T1-weighted contrast-enhanced MRI images with 926 glioma, 937 meningioma, and 901 pituitary gland tumor images (MRI, n.d.). Figure 2 shows sample images used in this work. The images vary in resolution; to feed them to the proposed deep network, we resize them to 224 × 224 × 3.

3.2 Image sharpening

Image sharpening is a digital image processing technique used to enhance the contrast and detail of an image. Its goal is to improve visual perception by enhancing edges, contours, texture, and fine details that may have been blurred or softened during image capture or processing. Over the last few decades, various approaches have been developed for image sharpening; unsharp masking, Laplacian sharpening, deconvolutional sharpening, and frequency-domain filtering are among the most popular. However, image sharpening remains an open problem because of over-sharpening (Toh and Isa, 2011) and amplification of image noise (Sheppard et al., 2004). To minimize these problems, we used the recently developed Nimble filter (Zohair, 2018), which proceeds as follows:

1. First, generate a blurred version of the original image, I(m,n), by averaging the horizontal and vertical shifts of the source image, then multiply it by a scaling factor α. The resulting image retains the spatial details of the original image.

2. Secondly, multiply the original image with a scaling factor α.

3. Finally, add outcomes of steps 1 and 2 to obtain the resultant sharpened image.

The Nimble filter is described mathematically in Equation 1 as follows:

where S(m,n) is the sharpened image; m and n are the spatial coordinates; and α is a scaling factor, always greater than 1, that controls the amount of sharpness enhancement. In this work, we set α = 2; the resulting effect of image sharpening is shown in Figure 3. The figure shows that sharpening highlights the patterns (see Figure 3C) and removes the blurring (see Figure 3D) present in the original images.
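The ingredients of the steps above (a shift-averaged blur and a scaling factor α) can be illustrated with a small sketch. It is not the Nimble filter of Zohair (2018) itself: it is a generic unsharp-style variant, under the assumption that the blurred term is subtracted with weight (α − 1) so that flat regions stay unchanged. The four one-pixel shifts, the edge clamping, the clipping range, and the function names `shift_average` and `sharpen` are likewise illustrative assumptions.

```python
def shift_average(img):
    """Mean of the four one-pixel shifts (up, down, left, right).

    Border pixels are clamped to the nearest valid pixel (an assumption).
    """
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for m in range(rows):
        for n in range(cols):
            up = img[max(m - 1, 0)][n]
            down = img[min(m + 1, rows - 1)][n]
            left = img[m][max(n - 1, 0)]
            right = img[m][min(n + 1, cols - 1)]
            out[m][n] = (up + down + left + right) / 4.0
    return out


def sharpen(img, alpha=2.0, lo=0.0, hi=255.0):
    """Unsharp-style sharpening: S = alpha * I - (alpha - 1) * B, clipped to [lo, hi].

    The sign and weight of the blurred term B are assumptions of this sketch.
    """
    blurred = shift_average(img)
    return [
        [min(hi, max(lo, alpha * i - (alpha - 1.0) * b)) for i, b in zip(row_i, row_b)]
        for row_i, row_b in zip(img, blurred)
    ]


# Toy grayscale patches (illustrative values, not taken from the datasets)
flat = [[100.0] * 4 for _ in range(4)]
edge = [[50.0, 50.0, 200.0, 200.0] for _ in range(4)]

sharp_flat = sharpen(flat)   # a flat region is left unchanged
sharp_edge = sharpen(edge)   # the step edge gains contrast
print(sharp_edge[0])
```

With α = 2, the flat patch is left untouched, while each row of the step edge changes from 50, 50, 200, 200 to 50, 12.5, 237.5, 200, so the contrast across the edge increases.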

3.3 The BrainCDNet architecture

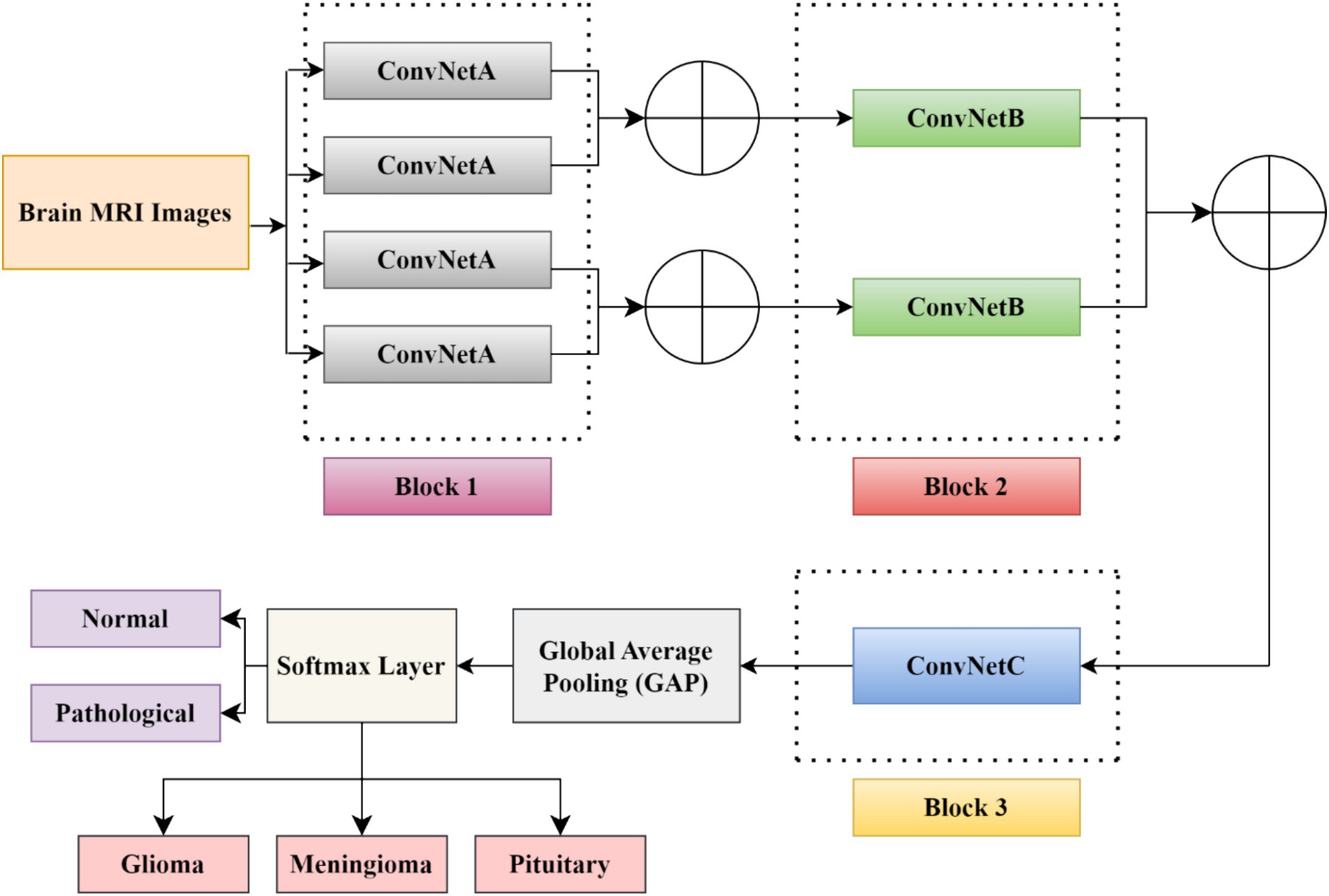

In this study, we developed a BrainCDNet model by concatenating the pooling layers, which is shown in Figure 4. The suggested architecture mainly includes three CNN blocks (blocks 1 to 3), global average pooling, and a softmax layer.

The first block (or block 1) consists of four ConvNetA architectures, which can be used as a feature extractor. Each ConvNetA includes a 3 × 3 convolutional layer with 64 filters and a stride 2, scaled exponential linear unit (SELU), batch normalization, and 2 × 2 max-pooling with stride 2. Features obtained from each ConvNetA are concatenated using Equation 2 as follows (Barzekar and Zeyun, 2022):

where (p,q) are the spatial dimensions of the ConvNet output; x and y denote the feature maps; (cm,cn) represent the number of channels of each ConvNet output; and ⨁ denotes the concatenation operator.

The above process is applied to the top two and the bottom two ConvNetA architectures. The corresponding feature maps obtained from the first block are fed to the second block CNN framework.
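Channel-wise concatenation of two ConvNetA outputs can be sketched in plain Python. The sketch assumes feature maps stored as nested lists of shape (p, q, c) and assumes the usual shape relation (p, q, cm) ⨁ (p, q, cn) → (p, q, cm + cn); the helper names `concat_channels` and `shape` are hypothetical.

```python
def concat_channels(x, y):
    """Concatenate feature maps of shape (p, q, c_m) and (p, q, c_n) along channels."""
    if len(x) != len(y) or len(x[0]) != len(y[0]):
        raise ValueError("spatial dimensions (p, q) must match")
    # For every spatial position, append the channels of y after those of x
    return [[px + py for px, py in zip(row_x, row_y)] for row_x, row_y in zip(x, y)]


def shape(t):
    """Return (p, q, channels) of a nested-list feature map."""
    return (len(t), len(t[0]), len(t[0][0]))


p, q = 2, 2                                              # toy spatial size
x = [[[1.0] * 64 for _ in range(q)] for _ in range(p)]   # p x q x 64
y = [[[2.0] * 64 for _ in range(q)] for _ in range(p)]   # p x q x 64
z = concat_channels(x, y)
print(shape(z))
```

Two 64-channel maps of equal spatial size yield one 128-channel map, which is the channel count that each ConvNetB is assumed to receive here.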

The second block (or block 2) incorporates two ConvNetB architectures. Each ConvNetB includes two convolutional layers with kernel sizes of 3 × 3 and 1 × 1, each with stride 1 and 128 filters. The 1 × 1 convolution keeps the model’s computational complexity and the number of feature maps comparatively low. The 3 × 3 convolution is followed by SELU activation and batch normalization; the 1 × 1 convolution is likewise followed by SELU activation and batch normalization, and then by 2 × 2 max-pooling with stride 2. The outputs of both ConvNetB architectures are concatenated using Equation 2, and the resulting feature maps are fed as input to block 3.

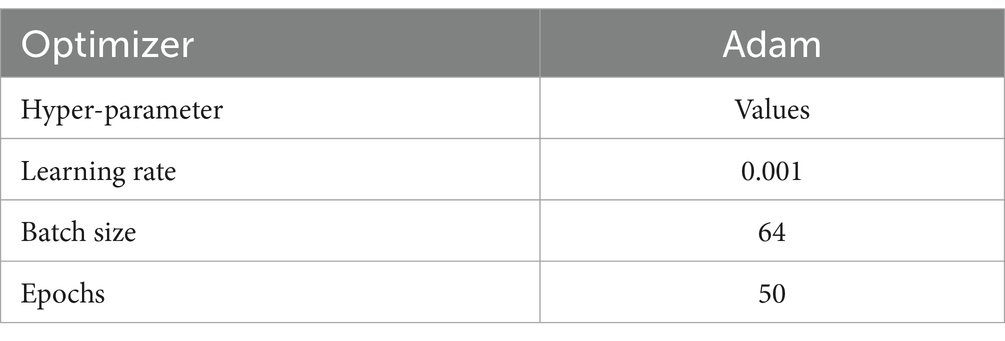

The third block (or block 3) contains one CNN architecture, namely ConvNetC, configured as follows: one convolutional layer with a filter size of 3 × 3, stride 1, and 256 filters, followed by a Gaussian error linear unit (GELU) and batch normalization. In addition, we incorporated a 1 × 1 convolution with the same configuration. The features obtained from the third block are then summarized by global average pooling (GAP), which reduces the number of training parameters and helps prevent overfitting. Finally, these summarized features are fed to the softmax layer to classify the brain MRI images in the two scenarios: healthy vs. pathological, and glioma vs. meningioma vs. pituitary. The hyperparameters of the BrainCDNet architecture are presented in Table 1.
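As a sanity check on the architecture described above, the learnable parameters can be tallied layer by layer. The sketch below rests on several assumptions that the text does not state explicitly: a 3-channel input, biases in every convolution, batch normalization with two trainable (scale, shift) and two non-trainable (running mean, running variance) values per channel, four parallel ConvNetA branches applied to the input, pairwise concatenation to 128 channels before each ConvNetB, concatenation to 256 channels before ConvNetC, and a dense softmax layer after GAP. The function names are hypothetical.

```python
def conv_params(k, c_in, c_out):
    """Weights plus biases of a k x k convolution."""
    return k * k * c_in * c_out + c_out


def bn_params(channels):
    """(trainable, non_trainable) values of a batch-normalization layer."""
    return 2 * channels, 2 * channels


def count_braincdnet(num_classes=3, in_channels=3):
    trainable = 0
    non_trainable = 0

    def add_conv_bn(k, c_in, c_out):
        nonlocal trainable, non_trainable
        t, nt = bn_params(c_out)
        trainable += conv_params(k, c_in, c_out) + t
        non_trainable += nt

    # Block 1: four parallel ConvNetA branches (3x3 conv, 64 filters)
    for _ in range(4):
        add_conv_bn(3, in_channels, 64)

    # Pairwise concatenation gives two 128-channel streams.
    # Block 2: two ConvNetB branches (3x3 conv then 1x1 conv, 128 filters each)
    for _ in range(2):
        add_conv_bn(3, 128, 128)
        add_conv_bn(1, 128, 128)

    # Concatenation gives 256 channels.
    # Block 3: ConvNetC (3x3 conv then 1x1 conv, 256 filters each)
    add_conv_bn(3, 256, 256)
    add_conv_bn(1, 256, 256)

    # GAP and pooling layers have no parameters; dense softmax on 256 features
    trainable += 256 * num_classes + num_classes
    return trainable, non_trainable


trainable, non_trainable = count_braincdnet(num_classes=3)
print(trainable, non_trainable, trainable + non_trainable)
```

Under these assumptions, the three-class model has 994,563 trainable and 2,560 non-trainable parameters (997,123 in total), which agrees with the counts quoted in Section 1.1.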

4 Results and discussions

This section presents the simulation results of the proposed method shown in Figure 1. As mentioned in the previous section, the MRI images are first preprocessed using the Nimble filter for better visualization and diagnosis. We then followed two classification scenarios. In the first scenario, we considered 2,376 T2-weighted MRI images from healthy and pathological subjects. The experiments on this group discriminate only between these two classes. In scenario 2, we considered 2,764 T1-weighted MRI images from three classes, namely glioma, meningioma, and pituitary gland tumor images. This set of experiments classifies images into these three pathological classes.

4.1 Performance metrics

The choice of performance metrics is crucial for the quantitative analysis of any ML- or DL-based model. In this work, we adopted the metrics most often used in the literature (Srinivas et al., 2022): true positive rate (TPR)/sensitivity, true negative rate (TNR)/specificity, positive predictive value (PPV)/precision, F-score, area under the curve (AUC), and accuracy. Higher values of all these metrics indicate better performance.
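The threshold-based metrics listed above follow directly from the four confusion-matrix counts. The sketch below uses the standard definitions and assumes that the F-score is the F1-score (harmonic mean of precision and sensitivity); the counts are invented for illustration and are not results from this study.

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion-matrix counts.

    Assumes every denominator is non-zero (both classes present and predicted).
    """
    tpr = tp / (tp + fn)                      # sensitivity / recall
    tnr = tn / (tn + fp)                      # specificity
    ppv = tp / (tp + fp)                      # precision
    f_score = 2 * ppv * tpr / (ppv + tpr)     # F1: harmonic mean of PPV and TPR
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return {
        "sensitivity": tpr,
        "specificity": tnr,
        "precision": ppv,
        "f_score": f_score,
        "accuracy": accuracy,
    }


# Hypothetical counts: 100 pathological and 100 healthy test images
m = binary_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

AUC is not included here because it depends on the ranking of prediction scores rather than on a single set of counts.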

4.1.1 Scenario 1 (binary classification)

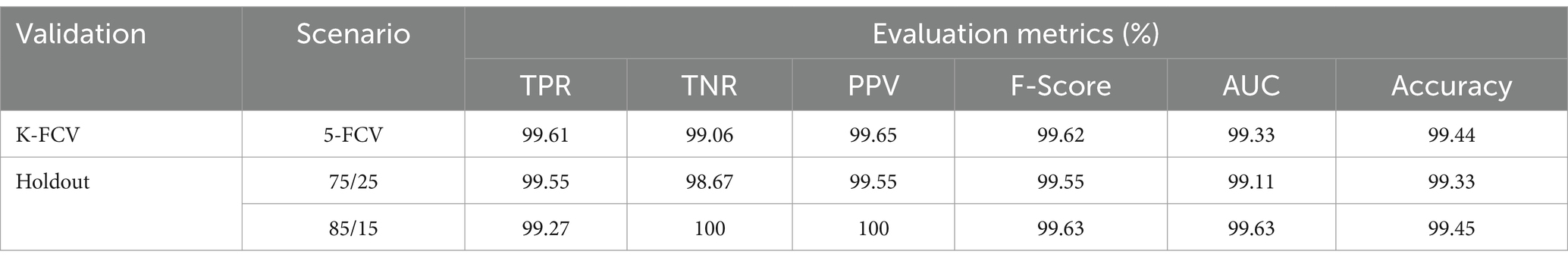

Before training any DL model, the dataset must be separated into training and test sets for proper validation, so the selection of a validation scheme is a significant step. In this work, we used two types of validation schemes, K-fold cross-validation and holdout, to estimate the performance of the proposed method. In the K-fold scheme, the whole dataset is divided into K portions of approximately equal size. One portion is kept for testing, and the remaining K-1 portions are used for training the model. This process repeats K times, with a different test portion in each run, and the average result of the K runs is reported for the model. The holdout scheme randomly divides the dataset into training and testing sets while ensuring that data from all classes are present in both. Here, we considered K = 5 for the K-fold scheme and two holdout cases (Case 1: 75% of the data for training and 25% for testing; Case 2: 85% of the data for training and 15% for testing).
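Both validation schemes can be sketched with index bookkeeping alone. The code below is a minimal illustration, not the splitting code used in this study: the K-fold split is a plain shuffled split (whether the folds were stratified is not stated), the holdout split is stratified per class so that every class appears on both sides, and the fixed seed and function names are assumptions. The label list mirrors the class sizes of the binary dataset.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train, test) index lists; each sample is tested exactly once."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]          # K nearly equal portions
    for i in range(k):
        test = folds[i]
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test


def stratified_holdout(labels, test_fraction=0.25, seed=0):
    """Random split that keeps roughly the same fraction of every class for testing."""
    rng = random.Random(seed)
    by_class = {}
    for i, lab in enumerate(labels):
        by_class.setdefault(lab, []).append(i)
    train, test = [], []
    for members in by_class.values():
        rng.shuffle(members)
        n_test = max(1, round(len(members) * test_fraction))
        test.extend(members[:n_test])
        train.extend(members[n_test:])
    return train, test


labels = ["pathological"] * 1746 + ["healthy"] * 630   # binary scenario sizes
splits = list(k_fold_indices(len(labels), k=5))
train_idx, test_idx = stratified_holdout(labels, test_fraction=0.25)
print([len(test) for _, test in splits], len(train_idx), len(test_idx))
```

For the 85/15 holdout case, the same function would be called with `test_fraction=0.15`; the reported K-fold score is then the mean of the per-fold metrics.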

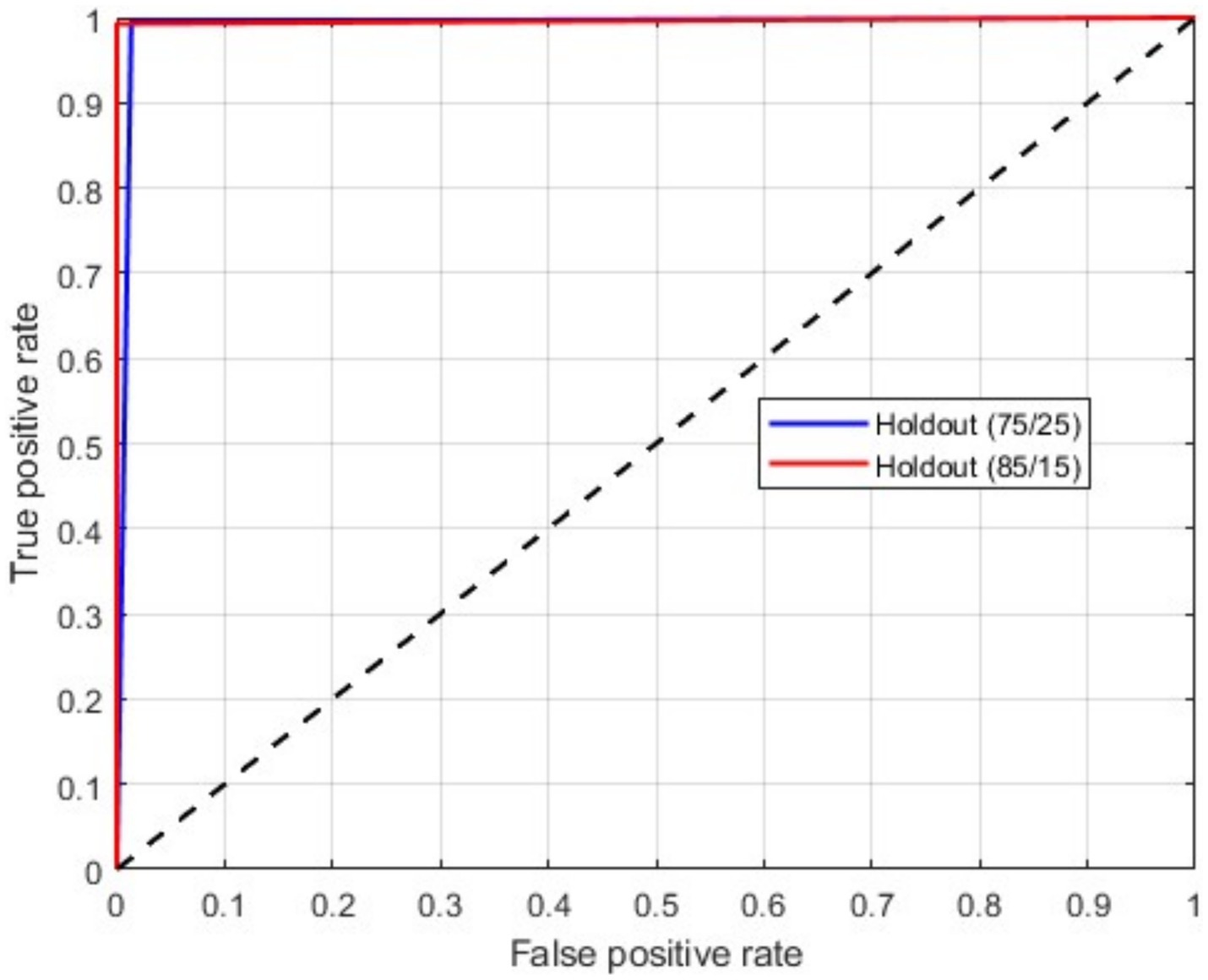

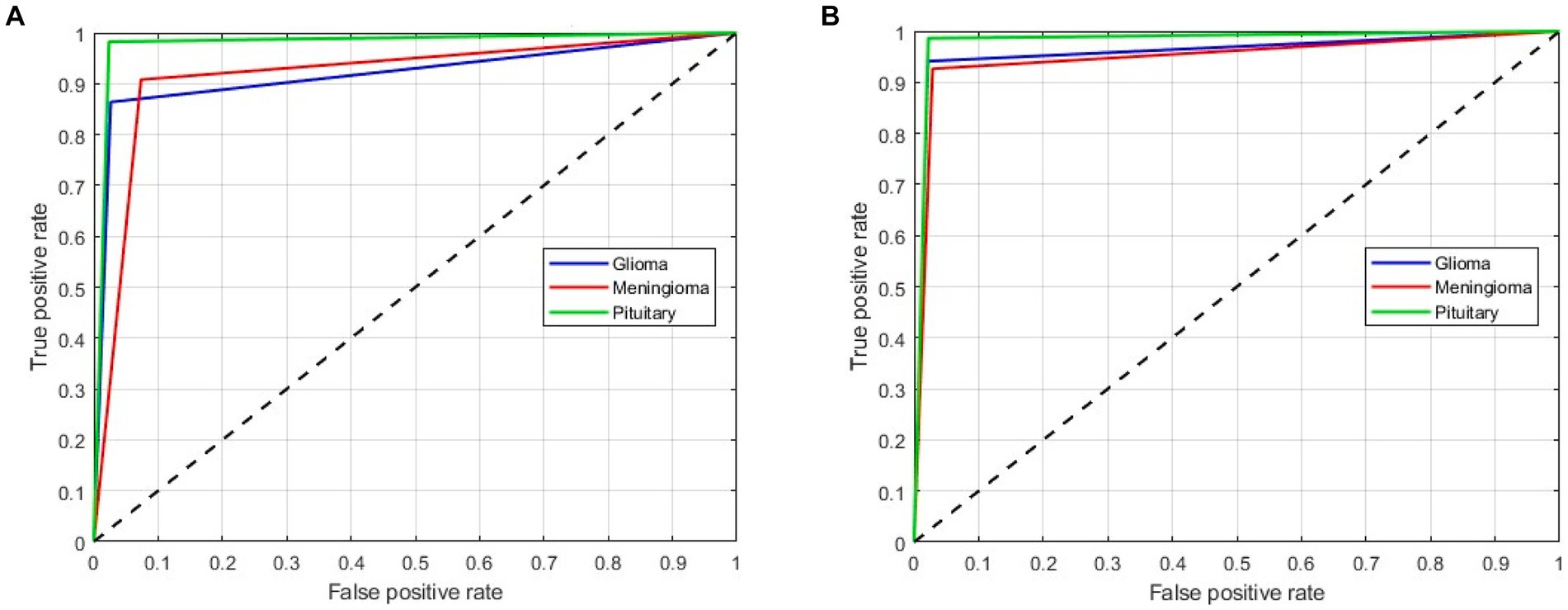

Table 2 presents the binary classification results of the proposed BrainCDNet using 5-fold cross-validation and the two holdout schemes mentioned above. Our method performs well in all validation scenarios, and the most significant results are highlighted in bold font. Figure 5 shows the receiver operating characteristic (ROC) curves of the holdout method. The holdout (85/15) curve covers a larger area than the holdout (75/25) curve, which indicates better classification performance.
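The area under an ROC curve can be computed without drawing the curve, since it equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one (ties counted as one half). The sketch below uses this pairwise formulation; the scores and labels are invented for illustration, and the function name `roc_auc` is hypothetical.

```python
def roc_auc(scores, labels):
    """AUC via pairwise comparison of positive and negative scores.

    labels: 1 for the positive (pathological) class, 0 for the negative class.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    # Count positive/negative pairs ranked correctly; a tie counts as half
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical softmax scores for the positive class
scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
labels = [1, 1, 1, 0, 0, 0]
auc = roc_auc(scores, labels)
print(round(auc, 4))
```

Here eight of the nine positive/negative pairs are ranked correctly, giving an AUC of 8/9; a classifier that separates the classes perfectly would reach 1.0.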

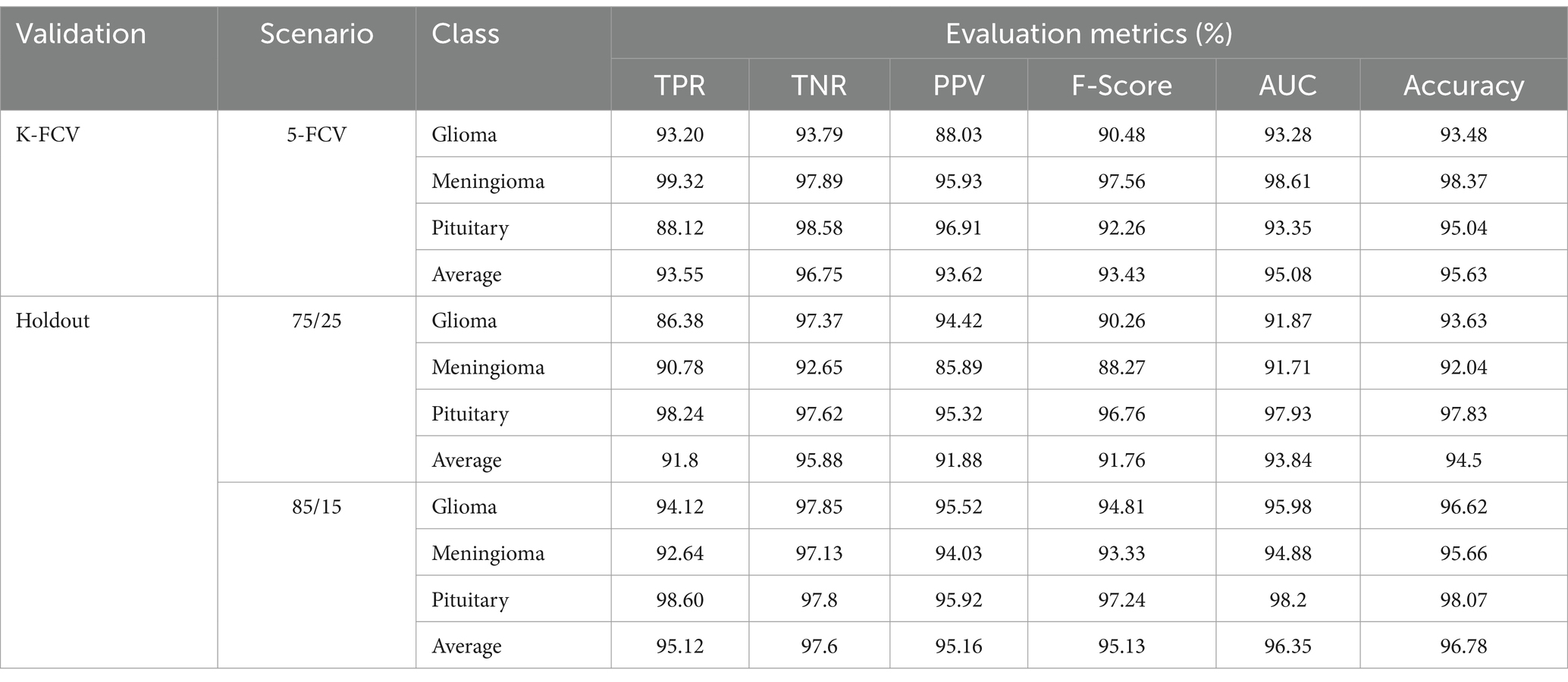

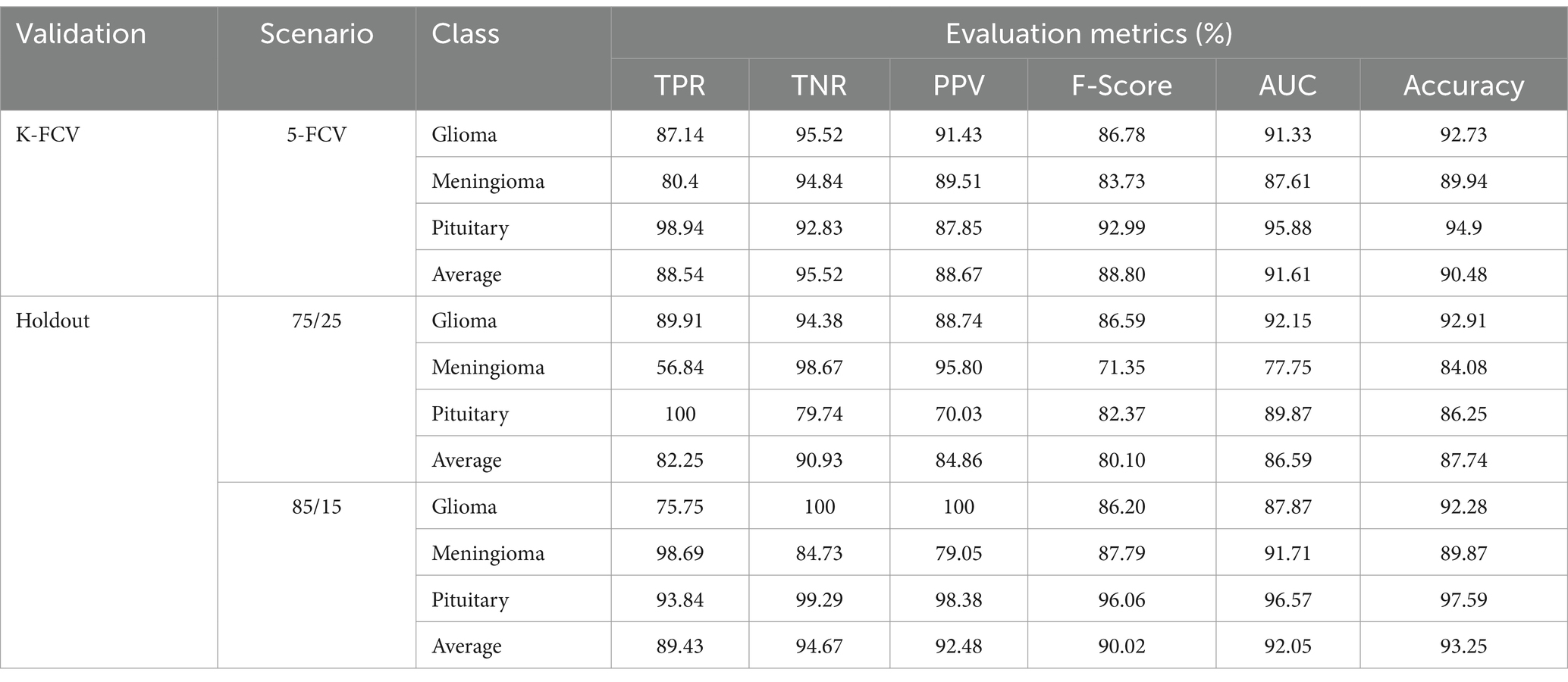

4.1.2 Scenario 2 (multiclass classification)

As mentioned above, this scenario considers multiclass classification: the given MRI images are assigned to one of three pathological classes, glioma, meningioma, or pituitary. This setting is important for real-world use. Table 3 presents the simulation results of this scenario. The table shows that the proposed approach scores above 90% in all validation schemes, with the holdout scheme performing best. The average results of each scheme are presented in boldface. Another important observation from the table is that our approach provides high metric values for each class. Figure 6 illustrates the ROC curves for the multiclass classification using the holdout method. These curves show that pituitary tumors are classified more accurately than the other primary tumors.
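Per-class metrics in a multiclass setting are commonly obtained by treating each class in turn as positive and the remaining classes as negative (one-vs-rest). The sketch below assumes this convention, which the text does not state explicitly; the confusion matrix is invented for illustration and does not reproduce Table 3.

```python
def per_class_metrics(confusion, class_names):
    """One-vs-rest metrics from a confusion matrix.

    confusion[i][j] = number of samples of true class i predicted as class j.
    """
    total = sum(sum(row) for row in confusion)
    results = {}
    for k, name in enumerate(class_names):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                    # class k missed
        fp = sum(row[k] for row in confusion) - tp     # other classes called k
        tn = total - tp - fn - fp
        results[name] = {
            "sensitivity": tp / (tp + fn),
            "specificity": tn / (tn + fp),
            "precision": tp / (tp + fp),
        }
    accuracy = sum(confusion[k][k] for k in range(len(class_names))) / total
    return results, accuracy


classes = ["glioma", "meningioma", "pituitary"]
confusion = [          # hypothetical counts, 50 test images per class
    [45, 4, 1],
    [3, 44, 3],
    [0, 2, 48],
]
metrics, accuracy = per_class_metrics(confusion, classes)
print(round(accuracy, 4), metrics["pituitary"])
```

Averaging the per-class values gives the macro-averaged score for a validation scheme.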

Figure 6. ROC curves for a multiclass classification using holdout method: (A) Holdout (75/25); (B) Holdout (85/15).

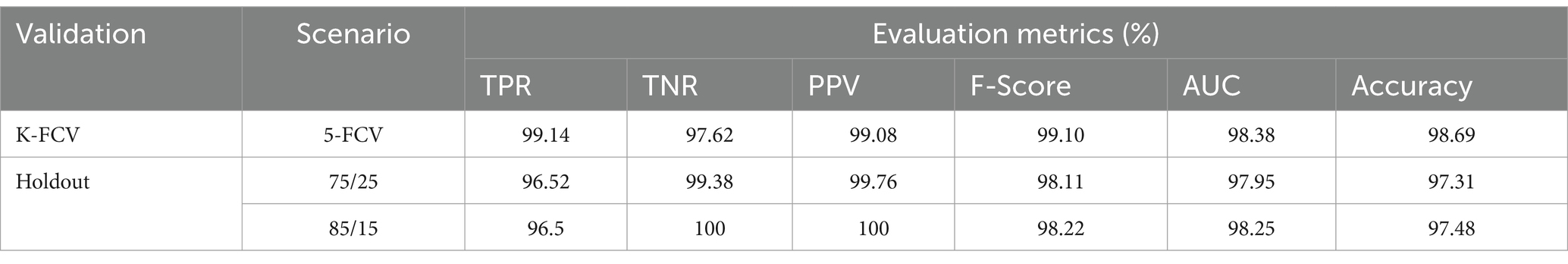

4.2 Ablation study

To evaluate the contribution of Nimble-filter image enhancement to the overall performance of our model, we conducted an ablation study by training and evaluating the model with and without the Nimble filter. Tables 2 and 3 present the results with the Nimble filter, and Tables 4 and 5 present the results without it.

Table 5. Evaluation measures of the proposed multiclass classification model (without Nimble filter).

Comparing the metric values in Table 2 with those in Table 4, and those in Table 3 with those in Table 5, shows that the results improve when the Nimble filter is used; in particular, the accuracy of the model is higher. These results indicate that the Nimble filter plays a crucial role in improving the performance of our model.

4.3 Discussion

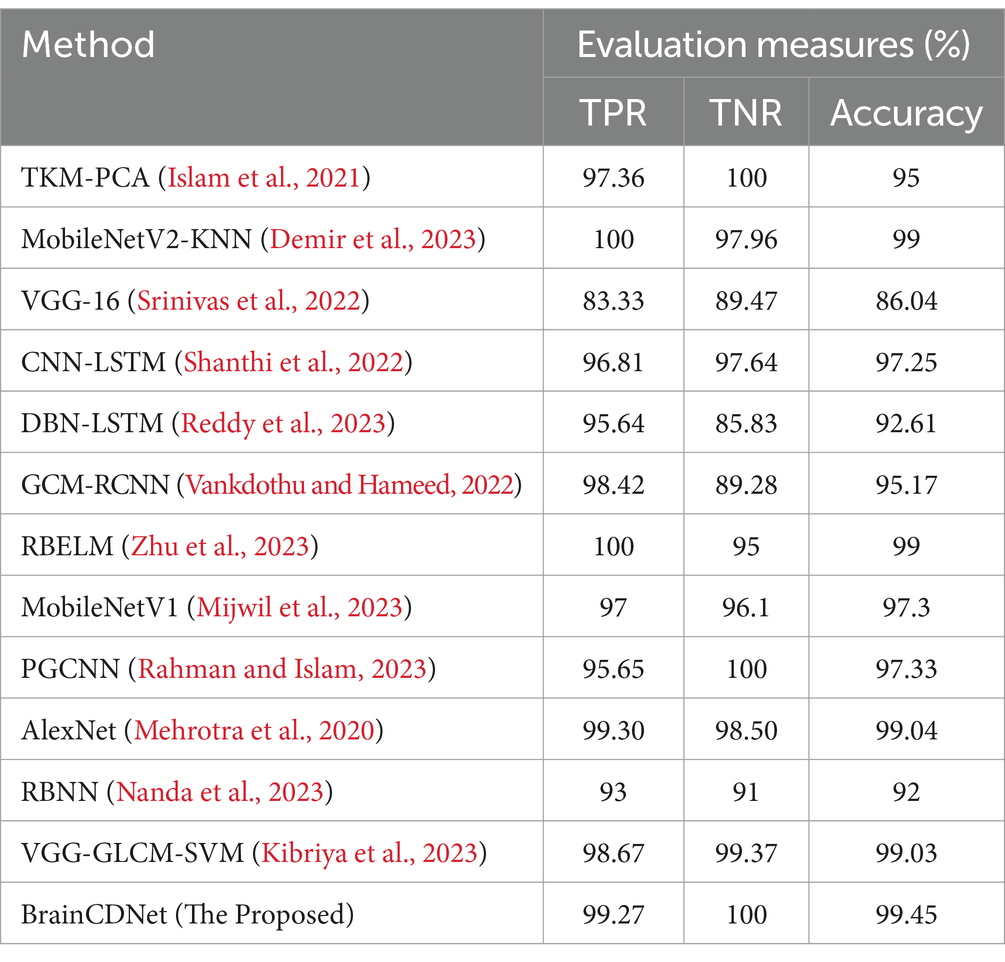

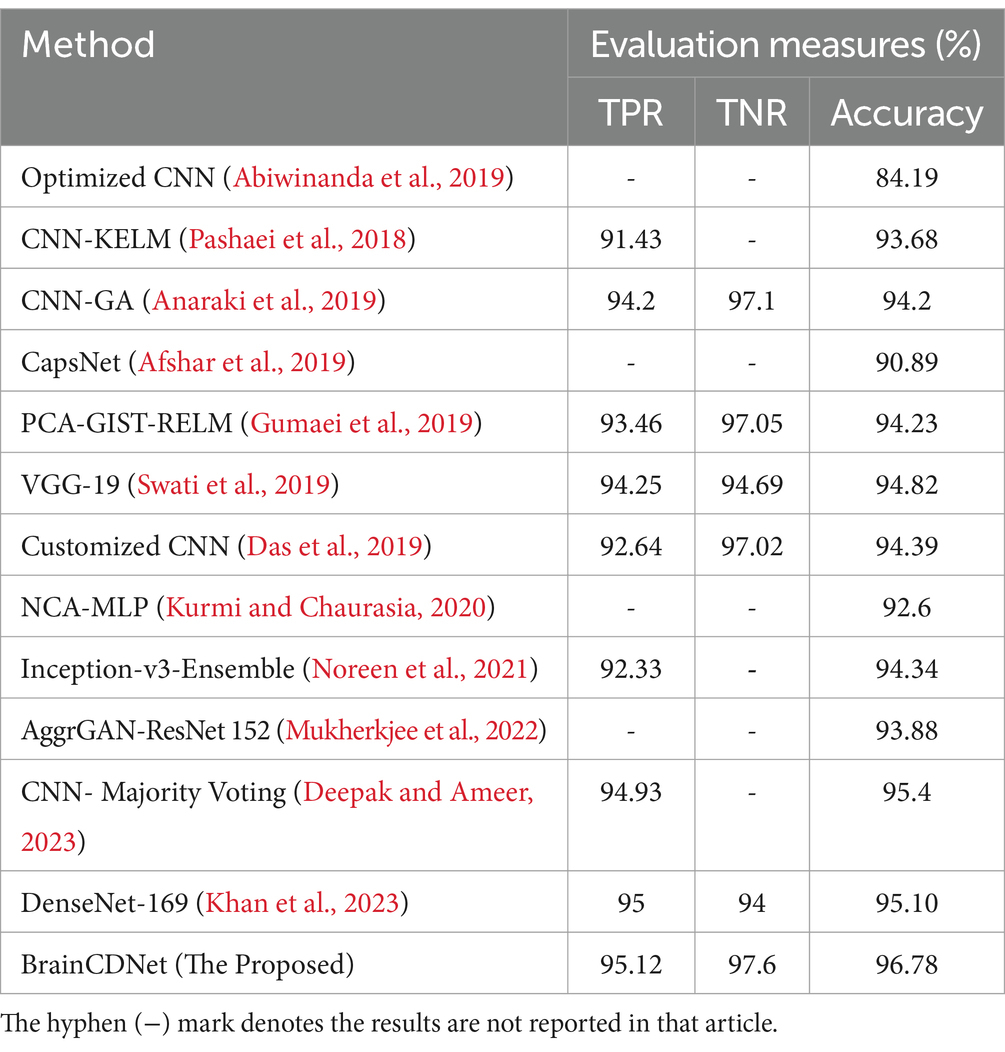

To understand the efficacy of our proposed method, we conducted a comparative analysis with the existing state-of-the-art. We compared our approach with existing methods by scenario (binary and multiclass) for fair analysis. Table 6 shows the results of the existing literature and proposed approach for binary classification, and Table 7 is about multiclass classification. In these tables, we compared the metric accuracy for all the methods as it is a standard measure used by all the mentioned works.

Table 7 shows the results of the multiclass classification. It is evident from the results that the proposed work achieved considerable improvement over the other works. The discussion on the proposed work with the compared works is given below.

Tables 6 and 7 show that many of the existing studies achieved over 95% accuracy in MRI image classification. However, these studies suffer from several limitations.

The study in Islam et al. (2021) utilized a combination of datasets, including the one in (Kaggle, n.d.), yet their classification dataset consisted of only about 40 MRI images, which is nearly 60 times smaller than the dataset used in our research. Similarly, the studies in Shanthi et al. (2022), Srinivas et al. (2022), Demir et al. (2023), Reddy et al. (2023), Zhu et al. (2023), Mehrotra et al. (2020), and Nanda et al. (2023) employed deep neural networks but were constrained by relatively small datasets, ranging from 100 to 1,000 MRI images. To fully exploit deep networks, it is essential to train them with extensive data. While the work in Vankdothu and Hameed (2022) used a substantial dataset of 2,870 images, the authors first extracted hand-crafted features before applying them to the RCNN. In contrast, our proposed algorithm bypasses this manual feature extraction step, achieving the desired result more efficiently. In another study (Mijwil et al., 2023), the authors grouped MRI images of glioma, pituitary, and meningioma tumors into one category, totaling 7,788 images, and treated 2,500 images as no-tumor images. Despite employing a considerable number of MRI images, they followed only a binary classification scheme. In contrast, our proposed approach considered two distinct scenarios for improved predictive analysis. The study in Rahman and Islam (2023) utilized three datasets for classification, employing a significant number of MRI images and various holdout schemes for validation. Despite achieving a high accuracy of 97.33%, which is roughly 2 percentage points below our binary result of 99.45%, the employed deep neural network architecture required more parameters. Our proposed BrainCDNet architecture reduces the number of training parameters and enhances network stability. Finally, in another study (Kibriya et al., 2023), the authors achieved a 99% classification result by combining the features of a deep network and GLCM and using an SVM for classification. However, this approach does not constitute a fully adaptive feature extraction scheme like our proposed BrainCDNet.

4.4 Overall remarks

In contrast to many existing works in the literature that focus on either binary or multiclass classification, our proposed approach demonstrates improved results for both cases. While many existing studies relied on a single validation scheme, we provided results for three different validation schemes, enhancing the robustness and reliability of our findings. Compared to many existing studies, we considered a larger MRI image dataset, allowing for a more comprehensive analysis and evaluation of our proposed approach. Our proposed BrainCDNet scheme is implemented in a block-wise manner, significantly reducing the number of trainable parameters. This reduction in parameters not only streamlines the implementation process but also enhances the efficiency and stability of the network.

In addition, the results of the ablation study demonstrate the importance of the image enhancement filter in improving the performance of our model. By enhancing the input images before feeding them into the model, we achieved higher accuracy and better image quality. This supports the use of image enhancement as a preprocessing step for the proposed deep network. Medical image diagnosis often relies on fine details and clear edges, which the Nimble filter is designed to enhance. Traditional sharpening filters can amplify existing noise in images, whereas the Nimble filter aims to sharpen while minimizing the impact on noise levels.

5 Conclusion

In conclusion, developing accurate and efficient methods for detecting and classifying brain tumors is paramount for improving patient outcomes. This study introduces BrainCDNet, a novel DL architecture for brain tumor classification using MRI. By combining Nimble filtering for image sharpening, batch normalization to address overfitting, and GAP to summarize the extracted features, BrainCDNet demonstrates good performance on both binary (healthy vs. pathological) and multiclass (glioma vs. meningioma vs. pituitary) MRI databases.

The results presented in this study showcase the effectiveness of BrainCDNet in accurately identifying and classifying brain tumors, achieving an impressive accuracy of 99.45% for binary classification and 96.78% for multiclass classification. These findings highlight the potential of DL-based approaches in medical image analysis and underscore the importance of early detection and precise diagnosis of brain cancer where timely intervention is critical.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found at: https://www.kaggle.com/datasets/navoneel/brain-mri-images-for-brain-tumor-detection; https://www.kaggle.com/datasets/sartajbhuvaji/brain-tumor-classification-mri.

Ethics statement

Ethical approval was not required for the studies involving humans because publicly available datasets were used.

Author contributions

KRe: Writing – original draft. KRa: Writing – original draft. RD: Writing – review & editing. VK: Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abiwinanda, N., Hanif, M., Hesaputra, S. T., Handayani, A., and Mengko, T. R. (2019). “Brain tumor classification using convolutional neural network” in World congress on medical physics and biomedical engineering 2018: June 3–8, 2018, Prague, Czech Republic, Vol. 1 (Singapore: Springer), 183–189.

Afshar, P., Plataniotis, K. N., and Mohammadi, A. (2019). Capsule networks for brain tumor classification based on MRI images and coarse tumor boundaries. ICASSP 2019–2019 IEEE international conference on acoustics, speech and signal processing (ICASSP) (pp. 1368–1372).

Anaraki, A. K., Ayati, M., and Kazemi, F. (2019). Magnetic resonance imaging-based brain tumor grades classification and grading via convolutional neural networks and genetic algorithms. Biocybernet. Biomed. Eng. 39, 63–74. doi: 10.1016/j.bbe.2018.10.004

Barzekar, H., and Zeyun, Y. (2022). C-net: a reliable convolutional neural network for biomedical image classification. Expert Syst. Appl. 187:116003. doi: 10.1016/j.eswa.2021.116003

Das, S., Aranya, O. R., and Labiba, N. N. (2019). Brain tumor classification using convolutional neural network. In 2019 1st International Conference on Advances in Science, Engineering and Robotics Technology (ICASERT) (pp. 1–5).

Deepak, S., and Ameer, P. M. (2023). Brain tumor categorization from imbalanced MRI dataset using weighted loss and deep feature fusion. Neurocomputing 520, 94–102. doi: 10.1016/j.neucom.2022.11.039

Demir, K., Berna, A. R., and Demir, F. (2023). Detection of brain tumor with a pre-trained deep learning model based on feature selection using MR images. Firat Univ. J. Exp. Comput. Eng. 2, 23–31. doi: 10.5505/fujece.2023.36844

Gumaei, A., Hassan, M. M., Hassan, M. R., Alelaiwi, A., and Fortino, G. (2019). A hybrid feature extraction method with regularized extreme learning machine for brain tumor classification. IEEE Access 7, 36266–36273. doi: 10.1109/ACCESS.2019.2904145

Ilic, I., and Ilic, M. (2023). International patterns and trends in the brain cancer incidence and mortality: an observational study based on the global burden of disease. Heliyon 9:e18222. doi: 10.1016/j.heliyon.2023.e18222

Islam, M. K., Ali, M. S., Miah, M. S., Rahman, M. M., Alam, M. S., and Hossain, M. A. (2021). Brain tumor detection in MR image using superpixels, principal component analysis and template based K-means clustering algorithm. Machine Learn. Appl. 5:100044. doi: 10.1016/j.mlwa.2021.100044

Kaggle. (n.d.) Brain MRI Images for Brain Tumor Detection. Available at: https://www.kaggle.com/datasets/navoneel/brain-mri-images-for-brain-tumor-detection (Accessed October 2023).

Khan, S. U., Zhao, M., Asif, S., and Chen, X. (2023). Hybrid-NET: a fusion of DenseNet169 and advanced machine learning classifiers for enhanced brain tumor diagnosis. Int. J. Imaging Syst. Technol. 34:e22975. doi: 10.1002/ima.22975

Kibriya, H., Amin, R., Kim, J., Nawaz, M., and Gantassi, R. (2023). A novel approach for brain tumor classification using an ensemble of deep and hand-crafted features. Sensors 23:4693. doi: 10.3390/s23104693

Kurmi, Y., and Chaurasia, V. (2020). Classification of magnetic resonance images for brain tumour detection. IET Image Process. 14, 2808–2818. doi: 10.1049/iet-ipr.2019.1631

Mehrotra, R., Ansari, M. A., Agrawal, R., and Anand, R. S. (2020). A transfer learning approach for AI-based classification of brain tumors. Machine Learn. Appl. 2:100003. doi: 10.1016/j.mlwa.2020.100003

Mijwil, M. M., Doshi, R., Hiran, K. K., Unogwu, O. J., and Bala, I. (2023). MobileNetV1-based deep learning model for accurate brain tumor classification. Mesopotamian J. Comput. Sci. 2023, 32–41. doi: 10.58496/MJCSC/2023/005

MRI. (n.d.) Brain Tumor Classification (MRI). Available at: https://www.kaggle.com/datasets/sartajbhuvaji/brain-tumor-classification-mri (Accessed October 2023).

Mukherkjee, D., Saha, P., Kaplun, D., Sinitca, A., and Sarkar, R. (2022). Brain tumor image generation using an aggregation of GAN models with style transfer. Sci. Rep. 12:9141. doi: 10.1038/s41598-022-12646-y

Nanda, A., Barik, R. C., and Bakshi, S. (2023). SSO-RBNN driven brain tumor classification with saliency-K-means segmentation technique. Biomed. Signal Process. Control 81:104356. doi: 10.1016/j.bspc.2022.104356

Noreen, N., Palaniappan, S., Qayyum, A., Ahmad, I., and Alassafi, M. O. (2021). Brain tumor classification based on fine-tuned models and the ensemble method. Comput. Mater. Contin. 67, 3967–3982. doi: 10.32604/cmc.2021.014158

Pashaei, A., Sajedi, H., and Jazayeri, N. (2018). Brain tumor classification via convolutional neural network and extreme learning machines. In 2018 8th International Conference on Computer and Knowledge Engineering (ICCKE) (pp. 314–319).

Rahman, T., and Islam, M. S. (2023). MRI brain tumor detection and classification using parallel deep convolutional neural networks. Meas. Sens. 26:100694.

Rasheed, Z., Ma, Y. K., Ullah, I., Ghadi, Y. Y., Khan, M. Z., Khan, M. A., et al. (2023). Brain tumor classification from MRI using image enhancement and convolutional neural network techniques. Brain Sci. 13:1320. doi: 10.3390/brainsci13091320

Reddy, A. V., Mallick, P. K., Srinivasa Rao, B., and Kanakamedala, P. (2023). An efficient brain tumor classification using MRI images with hybrid deep intelligence model. Imaging Sci. J. 72, 1–5. doi: 10.1080/13682199.2023.2207892

Shanthi, S., Saradha, S., Smitha, J. A., Prasath, N., and Anandakumar, H. (2022). An efficient automatic brain tumor classification using optimized hybrid deep neural network. Int. J. Intel. Netw. 3, 188–196. doi: 10.1016/j.ijin.2022.11.003

Sheppard, A. P., Sok, R. M., and Averdunk, H. (2004). Techniques for image enhancement and segmentation of tomographic images of porous materials. Physica A 339, 145–151. doi: 10.1016/j.physa.2004.03.057

Srinivas, C., NP, K. S., Zakariah, M., Alothaibi, Y. A., Shaukat, K., Partibane, B., et al. (2022). Deep transfer learning approaches in performance analysis of brain tumor classification using MRI images. J. Healthcare Eng. 2022, 1–17. doi: 10.1155/2022/3264367

Swati, Z. N., Zhao, Q., Kabir, M., Ali, F., Ali, Z., Ahmed, S., et al. (2019). Brain tumor classification for MR images using transfer learning and fine-tuning. Comput. Med. Imaging Graph. 75, 34–46. doi: 10.1016/j.compmedimag.2019.05.001

Toh, K. K., and Isa, N. A. (2011). Locally adaptive bilateral clustering for image deblurring and sharpness enhancement. IEEE Trans. Consum. Electron. 57, 1227–1235. doi: 10.1109/TCE.2011.6018878

Vankdothu, R., and Hameed, M. A. (2022). Brain tumor MRI images identification and classification based on the recurrent convolutional neural network. Meas. Sens. 24:100412. doi: 10.1016/j.measen.2022.100412

Zhu, Z., Khan, M., Wang, S. H., and Zhang, Y. (2023). RBEBT: a ResNet-based BA-ELM for brain tumor classification. Comput. Mater. Contin. 74, 101–111. doi: 10.32604/cmc.2023.030790

Keywords: brain tumors, deep learning, magnetic resonance imaging, Nimble filter, normalization

Citation: Reddy KR, Rajesh KNVPS, Dhuli R and Kumar VR (2024) BrainCDNet: a concatenated deep neural network for the detection of brain tumors from MRI images. Front. Hum. Neurosci. 18:1405586. doi: 10.3389/fnhum.2024.1405586

Edited by:

Kang Hao Cheong, Singapore University of Technology and Design, Singapore

Reviewed by:

Saim Rasheed, King Abdulaziz University, Saudi Arabia

Emanuele Torti, University of Pavia, Italy

Copyright © 2024 Reddy, Rajesh, Dhuli and Kumar. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kandala N. V. P. S. Rajesh, kandala.rajesh2014@gmail.com
