ORIGINAL RESEARCH article

Front. Hum. Neurosci., 08 January 2024

Sec. Brain Health and Clinical Neuroscience

Volume 17 - 2023 | https://doi.org/10.3389/fnhum.2023.1325215

This article is part of the Research Topic Online Data Collection for Human Neuroscience: Challenges and Opportunities.

A correction has been applied to this article in:

Corrigendum: Remote assessment of cognition in Parkinson's disease and cerebellar ataxia: the MoCA test in English and Hebrew

There is a critical need for accessible neuropsychological testing for basic research and translational studies worldwide. Traditional in-person neuropsychological studies are inherently difficult to conduct because testing requires the recruitment and participation of individuals with neurological conditions. Consequently, studies are often based on small sample sizes, are highly time-consuming, and lack diversity. To address these challenges, in the last decade, the utilization of remote testing platforms has demonstrated promising results regarding the feasibility and efficiency of collecting patient data online. Herein, we tested the validity and generalizability of remote administration of the Montreal Cognitive Assessment (MoCA) test. We administered the MoCA to English and Hebrew speakers from three different populations: Parkinson’s disease, Cerebellar Ataxia, and healthy controls via video conferencing. First, we found that the online MoCA scores do not differ from traditional in-person studies, demonstrating convergent validity. Second, the MoCA scores of both our online patient groups were lower than controls, demonstrating construct validity. Third, we did not find differences between the two language versions of the remote MoCA, supporting its generalizability to different languages and the efficiency of collecting binational data (USA and Israel). Given these results, future studies can utilize the remote MoCA, and potentially other remote neuropsychological tests to collect data more efficiently across multiple different patient populations, language versions, and nations.

Neuropsychological testing is essential for understanding cognitive processes and brain functionality across various patient groups, languages, and countries. Neuropsychological research provides insight into how different areas of the brain function, allowing for neuroanatomical localization and network level understanding of different brain regions (Grahn et al., 2009; Zald and Andreotti, 2010; O’Halloran et al., 2012). By testing individuals with brain disorders, we have advanced our understanding of brain-behavior relationships and gained a more detailed understanding of cognition (Lezak, 2000). In cognitive research, there is often a selection bias, focusing mainly on cortical function (Parvizi, 2009; Janacsek et al., 2022; Saban and Gabay, 2023). As a result, the role of subcortical regions is often overlooked (Saban et al., 2018a,b, 2021; Soloveichick et al., 2021).

However, studying patients with subcortical brain pathologies can help us understand the role of these regions in cognition (Rossetti et al., 2011; Malek-Ahmadi et al., 2018; Saban and Ivry, 2021; Saban and Gabay, 2023). For instance, neuropsychological testing of people with Parkinson’s disease (PD) provides insights into the function of the basal ganglia (BG) (Orozco et al., 2020). Similarly, studying individuals with Cerebellar Ataxia (CA) helps us understand the function of the cerebellum (Saban and Gabay, 2023).

Parkinson’s disease and CA are neurodegenerative disorders that affect the central nervous system, leading to profound impacts on motor processes. In PD, the loss of dopamine-producing neurons in the substantia nigra results in motor symptoms, such as tremors and rigidity. However, cognitive impairment is also a manifestation of PD, with deficits in executive function, attention, and memory (Kandiah et al., 2014; Weintraub et al., 2015; Moustafa et al., 2016). CA leads to difficulty in coordination, balance, and fine motor control. Cognitive impairment in CA is also expected as the disease progresses, especially in attention and executive functions (Fancellu et al., 2013; Tran et al., 2020; Malek et al., 2022). Motor function is frequently evaluated in PD via the Unified Parkinson’s Disease Rating Scale (UPDRS; Goetz, 2003), and in CA via the Scale for Assessment and Rating of Ataxia (SARA; Schmitz-Hübsch et al., 2006). Testing the cognitive profiles of both the PD and CA populations can allow researchers to better understand how subcortical brain structures relate to human cognitive abilities. The Montreal Cognitive Assessment (MoCA; Nasreddine et al., 2005), a globally recognized test, is commonly used by healthcare providers and researchers to screen and assess cognition in a wide variety of neurological diseases, including both PD and CA.

While testing cognition in PD and CA is crucial for understanding cognitive processes and the human brain, recruitment and testing of these individuals in person is challenging. This is partly due to the rarity of CA, which affects less than 0.03% of the population (Salman, 2018), and the mobility restrictions faced by individuals with PD or CA. These challenges often result in prolonged study periods (e.g., 2 years) and small sample sizes, typically fewer than 15 participants (Breska and Ivry, 2018; Olivito et al., 2018; Wang et al., 2018). Moreover, many studies rely on participants from the same geographic area or family (McDougle et al., 2021), which leads to a lack of diversity in the sample and potential bias.

In addition, collecting sufficient data across multiple patient groups for sensitivity or specificity testing can be a significant challenge with traditional in-person testing methods. These limitations highlight the need for alternative approaches to data collection in neuropsychological studies. The challenges of traditional in-person methods have led to the rise of online methods in behavioral studies. Research shows that remote testing, including video telehealth approaches, is as reliable and valid as in-person testing (Casler et al., 2013; Chandler and Shapiro, 2016; Buhrmester et al., 2018; Bilder et al., 2020; Geddes et al., 2020; Marra et al., 2020; Saban and Ivry, 2021; Binoy et al., 2023).

Remote testing offers several advantages. For example, it makes research participation more convenient for individuals with neurological conditions by eliminating the need for travel (Barbosa et al., 2020). It also allows for rapid data collection and comprehensive assessments (Binoy et al., 2023), reaching a wider and more diverse pool of participants (Saban and Ivry, 2021; Binoy et al., 2023). However, online testing has its limitations. For example, it may be biased toward those with internet access and technological literacy, and home testing environments can vary across participants (Hewitt et al., 2020).

In recent years, remote methods have been increasingly used to identify individuals with cognitive impairment. The Montreal Cognitive Assessment (MoCA; Nasreddine et al., 2005), a globally recognized test, is commonly used by healthcare providers and researchers to assess mild cognitive impairment (MCI). The MoCA has been employed to screen for mild cognitive impairment in PD and CA (Saban and Ivry, 2021), and to evaluate cognition (Butcher et al., 2017; Wu et al., 2017). However, a significant challenge lies in making remote evaluations of cognitive impairment, such as the MoCA, more accessible while ensuring their validity in both healthy and clinical populations.

Accordingly, with the growing use of technology, there has been wide interest in the validity of administering the MoCA test remotely. A validated telephone version of the MoCA (T-MoCA) exists, which may be used when face-to-face (F2F) administration is not feasible (Klil-Drori et al., 2022). This telephone-based method could expand the recruitment pool to include individuals who lack videoconferencing access. However, it is mainly useful for a simplified classification of patient cognitive status (Carlew et al., 2020). A preliminary study tested the T-MoCA on 21 PD participants, who also completed the traditional F2F MoCA. The study found only a modest correlation between the T-MoCA and traditional neuropsychological measures (verbal delayed recall = 0.35, trail making = 0.21, digit span = 0.55, Stroop interference trail = 0.39) (Benge and Kiselica, 2021). Another study tested an electronic version of the MoCA (eMoCA) via a touchscreen. The study compared the eMoCA to the regular paper version on a sample of 40 healthy older adults in the same session, finding a strong correlation (r = 0.68) between the two scores (Wallace et al., 2019). However, to our knowledge, the eMoCA has yet to be tested remotely on patients with PD or CA.

Several studies have investigated a video-conferencing version of the MoCA. A recent study compared MoCA scores obtained F2F with those obtained via video telehealth in a large sample of healthy English-speaking participants (Loring et al., 2023). The study found no differences between the two methods, supporting the validity of remote MoCA administration. Another study on English-speaking patients with mild-to-severe dementia found that the average MoCA score was not different in those tested remotely compared to those tested F2F, with an excellent intra-class coefficient reliability (ICC = 0.93) (Lindauer et al., 2017). Interestingly, a study amongst Japanese-speaking older adults found that the ICC for the MoCA was high overall but varied depending on the subgroup. The ICCs were lower in healthy controls (0.53) compared to those with mild cognitive impairment (MCI) (0.82) or dementia (0.82), and depended on disease severity (Iiboshi et al., 2020). Given these studies, it remains unclear whether online MoCA is valid in clinical populations.

A few studies have demonstrated the feasibility and efficiency of administering the MoCA to PD or CA participants through video conferencing. One study on a small sample of English-speaking PD patients (n = 8) showed the feasibility of remotely administering the MoCA to patients with movement disorders. However, this study did not compare the video conferencing patient data to F2F results (Abdolahi et al., 2016). In a pilot study on a small sample (n = 11) of English-speaking PD participants, participants completed the F2F MoCA and the videoconferencing MoCA 1 week later (Stillerova et al., 2016). No differences were found between the two methods of administration; however, due to the small sample size, the validity of the videoconferencing MoCA remains to be tested. While most prior studies have small sample sizes, one study used a large database (n = 166) of PD participants. The researchers assessed patients via videoconferencing, which included the MoCA, showing the feasibility of the remote MoCA (Dorsey et al., 2015). Although this study had a large sample of PD participants, no comparison was made between patients and healthy participants. Online administration of the MoCA to participants with CA has been tested in only one study. In that pilot study, which administered a modified online version of the MoCA to a small sample of English-speaking CA participants (n = 18), no differences were found between online administration and previous in-person studies (Binoy et al., 2023).

As the studies reviewed above show, most research using the remote MoCA was conducted in English, with only one exception in Japanese. However, there is a notable over-reliance on English speakers in cognitive science (Blasi et al., 2022). English is the dominant language in the study of human cognition and behavior, and both the subjects of cognitive science studies and the researchers themselves are often English speakers. This reliance on English as the primary language of participants (and researchers) introduces a clear bias in the measurement of cognitive functions and hinders cognitive assessments (Blasi et al., 2022).

Online assessment allows for broader geographic reach and more diverse patient populations, supporting the generalizability of online testing in populations that do not consist solely of English speakers. The MoCA has been translated into 36 different languages, including Hebrew. While the in-person Hebrew version has been validated (Lifshitz et al., 2012), the remote version has yet to be validated in healthy or clinical populations.

To bridge the above-mentioned gaps, the current study aimed to assess the validity and generalizability of administering the MoCA online in two different languages (English and Hebrew) and across three populations: PD, CA, and healthy controls. We tested the convergent validity of the online MoCA by comparing our online data to in-person studies in all three groups, predicting no difference between the administration methods. The construct validity was also tested by comparing our online patient groups to healthy controls, hypothesizing similar patterns to previous in-person literature. Lastly, we examined the generalizability of online testing across different language-speaking populations: English and Hebrew.

A total of 120 participants were evaluated. The participants responded to online advertisements (e.g., Facebook groups). For interested individuals, we followed up with an email and a video call to describe the project in detail. Our initial recruitment email indicated that participation would require the ability to use a computer. We ensured there were no video or audio issues before starting each session, so that such issues would not interrupt the assessment. If there was any issue, we resolved it during the meeting or, in rare cases, rescheduled the session. For each participant, we obtained a medical history and screened for MCI using the MoCA (version 8.1). This protocol was approved by the Tel Aviv University ethics committee, and all participants provided informed consent.

See Table 1 for demographic information of all groups. Fifty percent of the participants (n = 60) were assessed via the English version, and the remaining participants via the Hebrew version (Lifshitz et al., 2012). All participants reported that they speak only one language, either Hebrew or English. For each language, we administered the MoCA to 20 participants in each group: Control, PD, and CA.

The Hebrew-speaking CA group consisted of 17 individuals with a known genetic subtype of cerebellar ataxia (SCA3) and 3 with degenerative disorders of unknown etiology. Their mean duration since diagnosis was 6.1 (SD = 5.4) years and their SARA score was 12.1 (SD = 5.3). The English-speaking CA group consisted of 12 individuals with a known genetic subtype of CA (1 SCA1, 1 SCA28, 7 SCA3, 2 SCA5, 1 SCA6), and 8 with degenerative disorders of unknown etiology. Their mean duration since diagnosis was 5.5 (SD = 3.6) years and their SARA score was 13.3 (SD = 4.9). For the Hebrew and English-speaking PD group, we did not include individuals with surgical intervention (e.g., DBS), and all participants were tested while on their current medication regimen. The English-speaking PD group’s mean duration since diagnosis was 8 (SD = 4.9) years and their UPDRS score was 20.5 (SD = 5.3). The Hebrew-speaking PD group’s mean duration since diagnosis was 6.3 (SD = 4.5) years and their UPDRS score was 21.4 (SD = 12.6). All PD participants’ Hoehn and Yahr scores were below 4. The diagnosis of both patient groups was also based on self-report. Self-report assessment has evolved considerably in recent years, emerging as a robust and effective data collection method. Previous studies have found high concurrence rates between self-report and clinician-determined diagnosis (Kim et al., 2018; Winslow et al., 2018; Smolensky et al., 2020). The age across all groups ranged from 47.5 to 64 years, and MoCA scores did not change significantly within this age range (Rossetti et al., 2011; Freitas et al., 2012). The years of education of all groups ranged from 14.6 to 16.9 years, and it was found that variance above 12 years of education did not affect the MoCA score (Rossetti et al., 2011).

To calculate the required sample sizes, we conducted a power analysis (alpha = 0.05; power = 0.99) using effect sizes derived from five in-person studies that compared each patient group (PD or CA) with a neurotypical group on the MoCA test (PD: Hoops et al., 2009; Dalrymple-Alford et al., 2010; Hu et al., 2014; Kandiah et al., 2014; Biundo et al., 2016; D = −1.152: large effect size; CA: Tunc et al., 2019; Zhang et al., 2020; Chen et al., 2022; Schniepp et al., 2023; van Prooije et al., 2023; D = −1.265: large effect size). These analyses suggested a minimal sample size of 14 participants for each patient group (PD = 13.39; CA = 11.38). As such, the sample sizes of our groups (20) had sufficient power to detect group differences. To our knowledge, no previous study has compared the three groups on two language versions of the MoCA.

Our online MoCA tests are in accordance with the official instructions that appear on the MoCA website. Changes were made to minimize deviations from standard F2F administration. Six MoCA items (visuospatial and naming) were presented using PowerPoint slides by the share screen Zoom option. For the trail-making test, participants were asked to say the number-letter sequence aloud (rather than drawing lines to connect the circle) according to the instructions provided on the official MoCA website for videoconferencing administration, which have been validated in a healthy control group (Loring et al., 2023). The copy cube stimulus slide contained “Cube copy” next to the figure to closely mimic the paper and pencil presentation. Similarly, the draw clock stimulus was presented on the screen during clock drawing. Participants were instructed to draw the cube and the clock on their own piece of paper and present their drawings in front of the camera. Following the clock drawing, participants were instructed to put the paper and writing utensils aside. Naming stimuli were presented individually on the screen. Orientation for place and city asked for the participant’s location.

First, to assess the convergent validity of the online MoCA, we compared our online results to previous in-person studies (called “literature value”) using a one-sample t-test. For the literature value, we obtained the mean and standard deviation from relevant papers. Papers were selected based on their relevance (healthy, CA, and PD groups); we included studies that administered the MoCA in person and were published within the last 15 years. The MoCA literature value used for the healthy control group comparison was taken from the original in-person MoCA validation study (Nasreddine et al., 2005). The literature values for the PD and CA groups were derived from five in-person studies for each group (PD: Hoops et al., 2009; Dalrymple-Alford et al., 2010; Hu et al., 2014; Kandiah et al., 2014; Biundo et al., 2016; CA: Tunc et al., 2019; Zhang et al., 2020; Chen et al., 2022; Schniepp et al., 2023; van Prooije et al., 2023).

The average MoCA score for our control group was 27.3, which falls within the normal range (≥26). We did not find a significant difference from the literature value [μ = 27.4; n = 90, t(39) = 0.491, p = 0.626, effect size = 0.078]. Similarly, the online MoCA scores for the PD and CA groups were not significantly different from the literature values [PD: μ = 25.096, n = 523, t(39) = 1.770, p = 0.085, effect size = 0.280; CA: μ = 24.689, n = 195, t(39) = 0.846, p = 0.403, effect size = 0.134]. Given that the literature values were obtained in person, these results show that our online approach produces typical results, supporting the convergent validity of the online approach in all groups.
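The comparison against a literature value can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the authors' analysis code: the function name `one_sample_t` and the example scores are hypothetical. The last lines check that the reported effect sizes are consistent with Cohen's d computed as t/√n with n = 40 per group, which is an inference from the reported numbers rather than something the text states.

```python
import math
from statistics import mean, stdev


def one_sample_t(scores, literature_mean):
    """Return (t, df, cohens_d) for a one-sample t-test against a fixed mean."""
    n = len(scores)
    diff = mean(scores) - literature_mean
    sd = stdev(scores)               # sample SD (n - 1 in the denominator)
    t_stat = diff / (sd / math.sqrt(n))
    d = diff / sd                    # Cohen's d for a one-sample design
    return t_stat, n - 1, d


# Hypothetical scores, for illustration only (not data from the study).
example_scores = [27, 28, 26, 29, 27, 25, 28, 27]
t_stat, df, d = one_sample_t(example_scores, literature_mean=27.4)

# For a one-sample test, d equals t / sqrt(n). Applying this to the reported
# t values, assuming n = 40 per group, recovers the reported effect sizes.
reported = {"Control": (0.491, 0.078), "PD": (1.770, 0.280), "CA": (0.846, 0.134)}
implied_d = {
    group: round(t_val / math.sqrt(40), 3)
    for group, (t_val, _) in reported.items()
}
```

A p-value would additionally require the t distribution with `df` degrees of freedom, which the Python standard library does not provide; a statistics package would be needed for that step.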

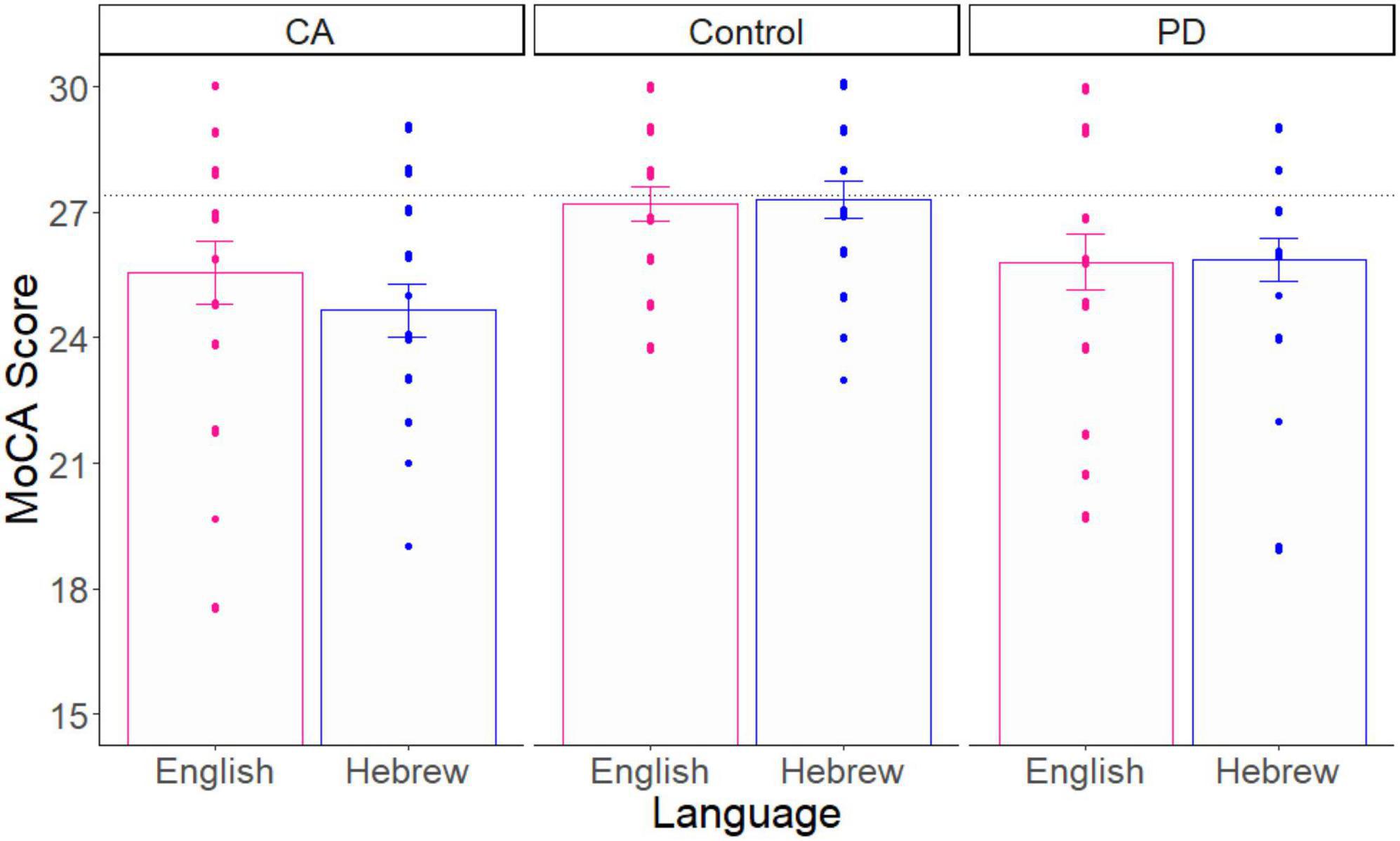

Second, since these patient groups typically show lower MoCA scores than healthy controls, we assessed the construct validity of the online MoCA by comparing our online neurotypical group to our patient groups. Third, to assess generalizability to other languages, we compared our Hebrew-speaking participants to our English-speaking participants. To achieve these last two goals, we carried out a two-way analysis of variance (ANOVA; in R software) with Group (Control, PD, CA) and Language (Hebrew, English) as the independent variables, and the MoCA score as the dependent measure. See Figure 1 for a comparison of the three groups and two languages (n = 60/Language).

Figure 1. The average MoCA score as a function of group and language. The score for each participant is a dot. The black dotted line is the literature value of healthy control participants. Error bars = SE.
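The two-way design described above (Group × Language, equal cell sizes) can be sketched as follows. The authors ran their ANOVA in R; this standard-library Python version is only an illustration of how the F statistics arise in a balanced design. The function name `two_way_anova_balanced` and the example scores are hypothetical, and p-values are omitted because they require the F distribution.

```python
from statistics import mean


def two_way_anova_balanced(cells):
    """F statistics for a fully crossed, balanced two-way design.

    cells maps (group, language) -> list of scores; all lists share one length.
    Returns {effect: (df_effect, df_error, F)}.
    """
    groups = sorted({g for g, _ in cells})
    languages = sorted({lang for _, lang in cells})
    n = len(next(iter(cells.values())))
    if len(cells) != len(groups) * len(languages) or any(
        len(v) != n for v in cells.values()
    ):
        raise ValueError("design must be fully crossed and balanced")

    grand = mean([x for v in cells.values() for x in v])
    g_mean = {g: mean([x for lang in languages for x in cells[(g, lang)]]) for g in groups}
    l_mean = {lang: mean([x for g in groups for x in cells[(g, lang)]]) for lang in languages}
    c_mean = {key: mean(v) for key, v in cells.items()}

    # Sums of squares for the main effects, the interaction, and the error term.
    ss_group = n * len(languages) * sum((g_mean[g] - grand) ** 2 for g in groups)
    ss_lang = n * len(groups) * sum((l_mean[lang] - grand) ** 2 for lang in languages)
    ss_inter = n * sum(
        (c_mean[(g, lang)] - g_mean[g] - l_mean[lang] + grand) ** 2
        for g in groups for lang in languages
    )
    ss_error = sum((x - c_mean[key]) ** 2 for key, v in cells.items() for x in v)

    df_group, df_lang = len(groups) - 1, len(languages) - 1
    df_inter = df_group * df_lang
    df_error = len(cells) * (n - 1)
    ms_error = ss_error / df_error
    return {
        "Group": (df_group, df_error, ss_group / df_group / ms_error),
        "Language": (df_lang, df_error, ss_lang / df_lang / ms_error),
        "Group x Language": (df_inter, df_error, ss_inter / df_inter / ms_error),
    }


# Hypothetical scores, for illustration only (not data from the study).
example = {
    ("Control", "English"): [27, 28, 29], ("Control", "Hebrew"): [28, 27, 29],
    ("PD", "English"): [25, 26, 24], ("PD", "Hebrew"): [24, 26, 25],
    ("CA", "English"): [24, 25, 23], ("CA", "Hebrew"): [25, 23, 24],
}
result = two_way_anova_balanced(example)
```

With six cells of 20 participants each, the same function yields 2, 1, and 2 numerator degrees of freedom and 114 error degrees of freedom.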

As expected from previous literature, this analysis showed a significant main effect of Group on the MoCA score [F(2, 114) = 7.100, p = 0.001, effect size = 0.110]. Planned-comparison analyses revealed that the Control group scored significantly higher than the two patient groups, which did not differ significantly from each other [PD vs. Control: t(78) = 2.785, p = 0.006, effect size = 0.623; CA vs. Control: t(78) = 3.766, p = 0.0003, effect size = 0.842; PD vs. CA: t(78) = 1.139, p = 0.258, effect size = 0.255]. There was no significant main effect of Language [F(1, 114) = 0.292, p = 0.590, effect size = 0.002]. Finally, we did not find a significant interaction effect between Group and Language [F(2, 114) = 0.464, p = 0.630, effect size = 0.008]. These results demonstrate the construct validity and generalizability of the online MoCA test.
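The degrees of freedom and pairwise effect sizes reported above follow from the design by simple arithmetic, sketched here in Python. The helper `d_from_t` is a hypothetical name, and reading the pairwise effect sizes as Cohen's d is an assumption; the text does not name the measure, although the reported values are consistent with it.

```python
import math

# Design: 120 participants, 3 groups x 2 languages, 20 per cell.
n_total, n_groups, n_languages = 120, 3, 2
df_error = n_total - n_groups * n_languages      # 120 - 6 = 114
df_group = n_groups - 1                          # 2
df_language = n_languages - 1                    # 1
df_interaction = df_group * df_language          # 2

# Planned comparisons pool both languages: 40 participants per group.
n_per_group = 40
df_pairwise = 2 * n_per_group - 2                # 78


def d_from_t(t_stat, n1, n2):
    """Cohen's d implied by an independent-samples t statistic."""
    return t_stat * math.sqrt(1 / n1 + 1 / n2)


reported_t = {"PD vs. Control": 2.785, "CA vs. Control": 3.766, "PD vs. CA": 1.139}
implied_d = {
    pair: round(d_from_t(t_stat, n_per_group, n_per_group), 3)
    for pair, t_stat in reported_t.items()
}
```

Under these assumptions, `implied_d` reproduces the three reported pairwise effect sizes (0.623, 0.842, and 0.255).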

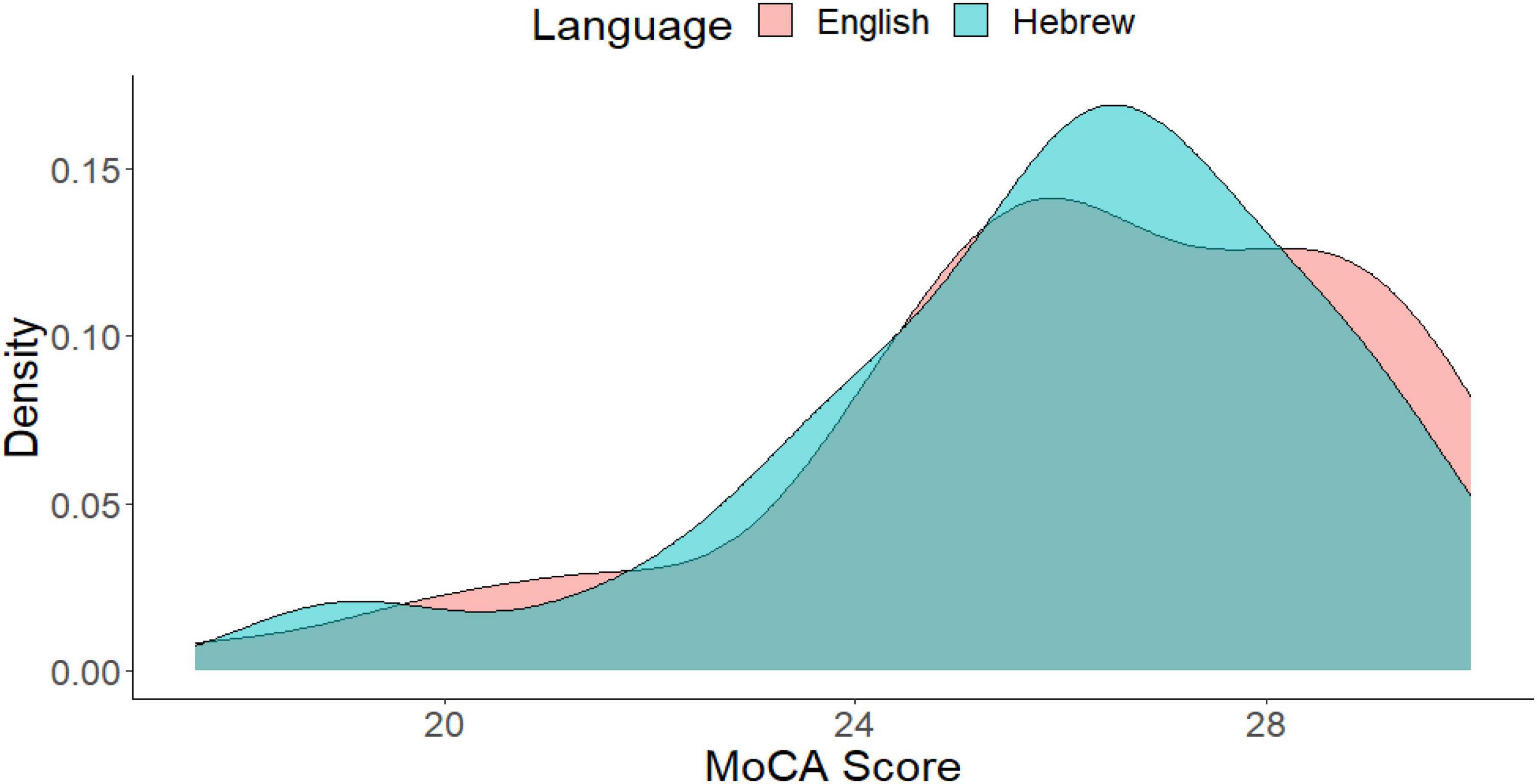

We also examined the degree of overlap between the score distributions of the two language versions of the MoCA, as shown in Figure 2. Over the range shared by the two distributions, we calculated the integral of the pointwise minimum of their densities. The resulting overlap was 91%, indicating that the two language distributions are highly similar.

Figure 2. Histogram of the MoCA score as a function of language. The degree of overlap between the two distributions is 91%.
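The overlap measure described above can be sketched as follows. The text does not say how the densities were estimated, so this sketch assumes normalized histograms over integer MoCA scores, in which case the integral of the pointwise minimum reduces to a sum. The function name `overlap_coefficient` and the example scores are hypothetical.

```python
from collections import Counter


def overlap_coefficient(scores_a, scores_b):
    """Sum, over score values, of the smaller of the two relative frequencies."""
    freq_a, freq_b = Counter(scores_a), Counter(scores_b)
    n_a, n_b = len(scores_a), len(scores_b)
    values = set(freq_a) | set(freq_b)
    # Counter returns 0 for a score that never occurs in one of the samples.
    return sum(min(freq_a[s] / n_a, freq_b[s] / n_b) for s in values)


# Hypothetical integer MoCA scores, for illustration only (not study data).
english = [27, 26, 25, 28, 27, 24, 26, 29]
hebrew = [26, 27, 25, 27, 28, 23, 26, 29]
overlap = overlap_coefficient(english, hebrew)
```

The coefficient is 1 for identical distributions and 0 for distributions that share no score values, so a value of 0.91 would indicate that the two histograms share 91% of their probability mass.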

Our study provides evidence supporting the equivalence of online MoCA testing with traditional in-person testing. We evaluated the validity of online MoCA administration in three groups: PD, CA, and healthy controls. The results indicate construct validity for online MoCA administration. As anticipated, the patient groups (PD and CA) scored lower than the healthy control group, reflecting the known cognitive impacts of these conditions. Interestingly, we observed no differences between the English and Hebrew versions of the test in each group. Our findings suggest that online MoCA testing is generalizable across two different language versions and can be valid across three populations.

Our current study has four primary limitations. First, while our total sample size was 120 participants, each subgroup consisted of only 20 individuals. This limited sample size per subgroup restricts our ability to assess the specific items within the MoCA for each subgroup, as well as cognitive abilities within each specific subtype (e.g., SCA3 vs. SCA6). Future research could use the remote MoCA to recruit larger samples for each group, which would also allow for a more detailed analysis of individual MoCA items. Second, a more direct comparison between in-person and online administration could be achieved by conducting both types of assessments with the same participants from the PD and CA groups. This approach would provide a direct measure of validity by comparing these two testing methods. Third, conducting tests and experiments online may introduce an inherent selection bias, as participants are expected to have computer proficiency, potentially skewing the results in favor of technology-proficient populations. In our study, some participants found the attention and sentence repetition tasks more challenging due to internet connectivity issues that may have obscured the audio. However, in general, participants provided positive feedback, stating that they found the remote format to be more convenient, saving them the time and cost associated with traveling to an in-person testing site. Finally, there is also a potential selection bias in favor of participants with less severe disease, who are more capable of participating in online studies. These considerations offer possible directions for future studies aiming to further our understanding of online cognitive assessments.

One interesting point of comparison is the eMoCA, which enables automated testing (Wallace et al., 2019). We propose that the videoconferencing MoCA has some advantages for PD and CA patients, as can be observed from our study. The videoconferencing MoCA is a simpler alternative to the eMoCA, which requires mailing a touchscreen tablet to participants or installing an application on the participant’s device. Videoconferencing methods can also simplify the process for older participants who may not be as skilled with technology. Video conferencing allows researchers to maintain an adaptable human presence for participants to interact with while performing the assessment. Because videoconferencing involves a researcher who can mediate the computer interface, it offers a more accessible approach than the eMoCA, which requires independent work with a tablet and application. Additionally, the eMoCA is not available in all languages; for example, it is not available in Hebrew. However, one limitation of videoconferencing methods compared to the eMoCA is that videoconferencing requires an administrator to be present, while the eMoCA is fully automated.

Despite these limitations, our study underscores the potential of the online MoCA in facilitating multinational data collection. The creation of a multinational database for patients with neurological conditions, especially rare conditions such as CA, can lead to a more representative and diverse sample size. Given that the MoCA is available in many languages, the remote version of the MoCA offers researchers an opportunity to gather more representative data across multiple language-speaking populations. This could help overcome major limitations in neuropsychological research (Saban and Ivry, 2021) related to language barriers and constraints imposed by the neurological conditions being tested.

We collected data from 120 participants in a 1-month period. Thus, the online approach is not only valid but also much more efficient in terms of data collection. Our sample included individuals currently residing in more than 20 US states, such as New York and California, and in different cities in Israel, such as Jerusalem and Tel Aviv. Accordingly, our online sample is much more geographically diverse than that of a typical laboratory-based study. Recruitment across countries and different populations is critical for diversity and, potentially, better representation of the population. Our remote videoconferencing MoCA shows promise for telemedicine, where it could be especially useful for early detection and screening of neurodegenerative conditions that require long-term repeated evaluation of symptoms. This would improve accessibility for rural and underserved populations, as well as those with mobility restrictions.

Remote testing presents an encouraging avenue for broadening the scope and depth of neuropsychological research. It opens possibilities for investigating interactions between other areas of function, such as motor abilities, and comparing conditions like PD and CA with other neurological conditions, such as Huntington’s disease. There is a need for future studies to develop new strategies to validate remote diagnostic tests, such as the SARA and the UPDRS.

Our promising results underscore the potential use of technological advancements to revolutionize both research and clinical communities. Establishing the validity of online cognitive assessment is particularly vital in the era of tailored therapy and biomarker development. Online cognitive batteries using large and diverse cohorts can allow researchers to profile cognitive abilities more accurately in different pathologies. The increased efficiency in collecting data and diversity in sample sizes resulting from binational efforts could significantly enhance our understanding of PD and CA. The impact of technological advancements could lead to improved diagnosis, treatment, and care for individuals affected by these conditions. It is our hope that neuropsychological assessment developments will continue to evolve in tandem with technological capabilities, ultimately benefiting patients worldwide.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

The studies involving humans were approved by the Tel Aviv University Center for Accessible Neuropsychology. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

SB: Writing – original draft, Writing – review and editing. LM-K: Writing – review and editing. PP: Writing – review and editing. WS: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Supervision, Validation, Writing – original draft, Writing – review and editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by the TAU startup grant for WS.

We acknowledge Dr. Kathleen Poston for her constructive feedback.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abdolahi, A., Bull, M. T., Darwin, K. C., Venkataraman, V., Grana, M. J., Dorsey, E. R., et al. (2016). A feasibility study of conducting the Montreal Cognitive Assessment remotely in individuals with movement disorders. Health Inform. J. 22, 304–311. doi: 10.1177/1460458214556373

Barbosa, W., Zhou, K., Waddell, E., Myers, T., and Dorsey, E. R. (2020). Improving access to care: telemedicine across medical domains. Annu. Rev. Public Health 42, 463–481. doi: 10.1146/annurev-publhealth-090519-093711

Benge, J. F., and Kiselica, A. M. (2021). Rapid communication: Preliminary validation of a telephone adapted Montreal Cognitive Assessment for the identification of mild cognitive impairment in Parkinson’s disease. Clin. Neuropsychol. 35, 133–147. doi: 10.1080/13854046.2020.1801848

Bilder, R. M., Postal, K. S., Barisa, M., Aase, D. M., Munro Cullum, C., Gillaspy, S. R., et al. (2020). Inter organizational practice committee recommendations/guidance for teleneuropsychology in response to the COVID-19 pandemic. Arch. Clin. Neuropsychol. 35, 647–659. doi: 10.1093/arclin/acaa046

Binoy, S., Woody, R., Ivry, R. B., and Saban, W. (2023). Feasibility and efficacy of online neuropsychological assessment. Sensors 23:5160. doi: 10.3390/s23115160

Biundo, R., Weis, L., Bostantjopoulou, S., Stefanova, E., Falup-Pecurariu, C., Kramberger, M. G., et al. (2016). MMSE and MoCA in Parkinson’s disease and dementia with Lewy bodies: a multicenter 1-year follow-up study. J. Neural Transm. 123, 431–438. doi: 10.1007/s00702-016-1517-6

Blasi, D. E., Henrich, J., Adamou, E., Kemmerer, D., and Majid, A. (2022). Over-reliance on English hinders cognitive science. Trends Cogn. Sci. 26, 1153–1170. doi: 10.1016/j.tics.2022.09.015

Breska, A., and Ivry, R. B. (2018). Double dissociation of single-interval and rhythmic temporal prediction in cerebellar degeneration and Parkinson’s disease. Proc. Natl. Acad. Sci. U. S. A. 115, 12283–12288.

Buhrmester, M. D., Talaifar, S., and Gosling, S. D. (2018). An evaluation of amazon’s mechanical Turk, its rapid rise, and its effective use. Perspect. Psychol. Sci. 13, 149–154. doi: 10.1177/1745691617706516

Butcher, P. A., Ivry, R. B., Kuo, S. H., Rydz, D., Krakauer, J. W., and Taylor, J. A. (2017). The cerebellum does more than sensory prediction error-based learning in sensorimotor adaptation tasks. J. Neurophysiol. 118, 1622–1636. doi: 10.1152/JN.00451.2017

Carlew, A. R., Fatima, H., Livingstone, J. R., Reese, C., Lacritz, L., Pendergrass, C., et al. (2020). Cognitive Assessment via Telephone: A Scoping Review of Instruments. Arch. Clin. Neuropsychol. 35, acaa096. doi: 10.1093/arclin/acaa096

Casler, K., Bickel, L., and Hackett, E. (2013). Separate but equal? A comparison of participants and data gathered via Amazon’s MTurk, social media, and face-to-face behavioral testing. Comput. Hum. Behav. 29, 2156–2160. doi: 10.1016/J.CHB.2013.05.009

Chandler, J., and Shapiro, D. (2016). Conducting clinical research using crowdsourced convenience samples. Annu. Rev. Clin. Psychol. 12, 53–81. doi: 10.1146/ANNUREV-CLINPSY-021815-093623

Chen, H., Dai, L., Zhang, Y., Feng, L., Jiang, Z., Wang, X., et al. (2022). Network reconfiguration among cerebellar visual, and motor regions affects movement function in spinocerebellar ataxia type 3. Front. Aging Neurosci. 14:773119. doi: 10.3389/fnagi.2022.773119

Dalrymple-Alford, J. C., MacAskill, M. R., Nakas, C. T., Livingston, L., Graham, C., Crucian, G. P., et al. (2010). The MoCA: well-suited screen for cognitive impairment in Parkinson disease. Neurology 75, 1717–1725. doi: 10.1212/WNL.0B013E3181FC29C9

Dorsey, E. R., Wagner, J. D., Bull, M. T., Rizzieri, A., Grischkan, J., Achey, M. A., et al. (2015). Feasibility of virtual research visits in fox trial finder. J. Parkinsons Dis. 5:150549. doi: 10.3233/JPD-150549

Fancellu, R., Paridi, D., Tomasello, C., Panzeri, M., Castaldo, A., Genitrini, S., et al. (2013). Longitudinal study of cognitive and psychiatric functions in spinocerebellar ataxia types 1 and 2. J. Neurol. 260, 3134–3143. doi: 10.1007/S00415-013-7138-1

Freitas, S., Simões, M. R., Alves, L., Vicente, M., and Santana, I. (2012). Montreal cognitive assessment (MoCA): Validation study for vascular dementia. J. Int. Neuropsychol. Soc. 18, 1031–1040. doi: 10.1017/S135561771200077X

Geddes, M. R., O’Connell, M. E., Fisk, J. D., Gauthier, S., Camicioli, R., and Ismail, Z. (2020). Remote cognitive and behavioral assessment: Report of the Alzheimer society of Canada task force on dementia care best practices for COVID-19. Alzheimer Dement. 12:12111. doi: 10.1002/dad2.12111

Goetz, C. C. (2003). The Unified Parkinson’s Disease Rating Scale (UPDRS): status and recommendations. Mov. Disord. 18, 738–750. doi: 10.1002/MDS.10473

Grahn, J. A., Parkinson, J. A., and Owen, A. M. (2009). The role of the basal ganglia in learning and memory: neuropsychological studies. Behav. Brain Res. 199, 53–60. doi: 10.1016/J.BBR.2008.11.020

Hewitt, K. C., Rodgin, S., Loring, D. W., Pritchard, A. E., and Jacobson, L. A. (2020). Transitioning to telehealth neuropsychology service: Considerations across adult and pediatric care settings. Clin. Neuropsychol. 34, 1335–1351. doi: 10.1080/13854046.2020.1811891

Hoops, S., Nazem, S., Siderowf, A. D., Duda, J. E., Xie, S. X., Stern, M. B., et al. (2009). Validity of the MoCA and MMSE in the detection of MCI and dementia in Parkinson disease. Neurology 73, 1738–1745. doi: 10.1212/WNL.0B013E3181C34B47

Hu, M. T. M., Szewczyk-Królikowski, K., Tomlinson, P., Nithi, K., Rolinski, M., Murray, C., et al. (2014). Predictors of cognitive impairment in an early stage Parkinson’s disease cohort. Move. Disord. 29, 351–359. doi: 10.1002/mds.25748

Iiboshi, K., Yoshida, K., Yamaoka, Y., Eguchi, Y., Sato, D., Kishimoto, M., et al. (2020). A validation study of the remotely administered Montreal cognitive assessment tool in the elderly Japanese population. Telemed. J. E Health 26, 920–928. doi: 10.1089/tmj.2019.0134

Janacsek, K., Evans, T. M., Kiss, M., Shah, L., Blumenfeld, H., and Ullman, M. T. (2022). Subcortical cognition: The fruit below the rind. Annu. Rev. Neurosci. 45, 361–386. doi: 10.1146/annurev-neuro-110920-013544

Kandiah, N., Zhang, A., Cenina, A. R., Au, W. L., Nadkarni, N., and Tan, L. C. (2014). Montreal Cognitive Assessment for the screening and prediction of cognitive decline in early Parkinson’s disease. Parkinson. Relat. Disord. 20, 1145–1148. doi: 10.1016/J.PARKRELDIS.2014.08.002

Kim, H. M., Leverenz, J. B., Burdick, D. J., Srivatsal, S., Pate, J., Hu, S. C., et al. (2018). Diagnostic validation for participants in the Washington state Parkinson disease registry. Parkinsons Dis. 2018:3719578. doi: 10.1155/2018/3719578

Klil-Drori, S., Phillips, N., Fernandez, A., Solomon, S., Klil-Drori, A. J., and Chertkow, H. (2022). Evaluation of a telephone version for the Montreal cognitive assessment: establishing a cutoff for normative data from a cross-sectional study. J. Geriatr. Psychiatry Neurol. 35, 374–381. doi: 10.1177/08919887211002640

Lezak, M. D. (2000). “Nature, applications, and limitations of neuropsychological assessment following traumatic brain injury,” in International handbook of neuropsychological rehabilitation, eds. A. -L. Christensen and B. P. Uzzell (Boston, MA: Springer), 67–79. doi: 10.1007/978-1-4757-5569-5_4

Lifshitz, M., Dwolatzky, T., and Press, Y. (2012). Validation of the Hebrew version of the MoCA test as a screening instrument for the early detection of mild cognitive impairment in elderly individuals. J. Geriatr. Psychiatry Neurol. 25. doi: 10.1177/0891988712457047

Lindauer, A., Seelye, A., Lyons, B., Dodge, H. H., Mattek, N., Mincks, K., et al. (2017). Dementia care comes home: Patient and caregiver assessment via telemedicine. Gerontologist 57, gnw206. doi: 10.1093/geront/gnw206

Loring, D. W., Lah, J. J., and Goldstein, F. C. (2023). Telehealth equivalence of the Montreal cognitive assessment (MoCA): Results from the Emory healthy brain study (EHBS). J. Am. Geriatr. Soc. 71, 1931–1936. doi: 10.1111/jgs.18271

Malek, N., Makawita, C., Al-Sami, Y., Aslanyan, A., and de Silva, R. (2022). A Systematic Review of the Spectrum and Prevalence of Non-Motor Symptoms in Adults with Hereditary Cerebellar Ataxias. Mov. Disord. Clin. Pract. 9, 1027–1039. doi: 10.1002/mdc3.13532

Malek-Ahmadi, M., O’Connor, K., Schofield, S., Coon, D. W., and Zamrini, E. (2018). Trajectory and variability characterization of the Montreal cognitive assessment in older adults. Aging Clin. Exp. Res. 30, 993–998. doi: 10.1007/s40520-017-0865-x

Marra, D. E., Hamlet, K. M., Bauer, R. M., and Bowers, D. (2020). Validity of teleneuropsychology for older adults in response to COVID-19: A systematic and critical review. Clin. Neuropsychol. 34, 1411–1452. doi: 10.1080/13854046.2020.1769192

McDougle, S. D., Tsay, J., Pitt, B., King, M., Saban, W., Taylor, J. A., et al. (2021). Continuous manipulation of mental representations is compromised in cerebellar degeneration. bioRxiv [Preprint]. doi: 10.1101/2020.04.08.032409

Moustafa, A. A., Chakravarthy, S., Phillips, J. R., Gupta, A., Keri, S., Polner, B., et al. (2016). Motor symptoms in Parkinson’s disease: A unified framework. Neurosci. Biobehav. Rev. 68, 727–740. doi: 10.1016/J.NEUBIOREV.2016.07.010

Nasreddine, Z. S., Phillips, N. A., Bédirian, V., Charbonneau, S., Whitehead, V., Collin, I., et al. (2005). The montreal cognitive assessment, MoCA: A brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 53, 695–699. doi: 10.1111/J.1532-5415.2005.53221.X

O’Halloran, C. J., Kinsella, G. J., and Storey, E. (2012). The cerebellum and neuropsychological functioning: a critical review. J. Clin. Exp. Neuropsychol. 34, 35–56. doi: 10.1080/13803395.2011.614599

Olivito, G., Lupo, M., Iacobacci, C., Clausi, S., Romano, S., Masciullo, M., et al. (2018). Structural cerebellar correlates of cognitive functions in spinocerebellar ataxia type 2. J. Neurol. 265, 597–606. doi: 10.1007/S00415-018-8738-6

Orozco, J. L., Valderrama-Chaparro, J. A., Pinilla-Monsalve, G. D., Molina-Echeverry, M. I., Castaño, A. M. P., Ariza-Araújo, Y., et al. (2020). Parkinson’s disease prevalence, colombiaage distribution and staging in. Neurol. Int. 12:8401. doi: 10.4081/ni.2020.8401

Parvizi, J. (2009). Corticocentric myopia: old bias in new cognitive sciences. Trends Cogn. Sci. 13, 354–359. doi: 10.1016/j.tics.2009.04.008

Rossetti, H. C., Lacritz, L. H., Munro Cullum, C., and Weiner, M. F. (2011). Normative data for the Montreal Cognitive Assessment (MoCA) in a population-based sample. Neurology 77, 1272–1275. doi: 10.1212/WNL.0b013e318230208a

Saban, W., and Gabay, S. (2023). Contributions of lower structures to higher cognition: Towards a dynamic network model. J. Intell. 11, 121.

Saban, W., and Ivry, R. B. (2021). PONT: A protocol for online neuropsychological testing. J. Cogn. Neurosci. 33, 2413–2425. doi: 10.1162/JOCN_A_01767

Saban, W., Klein, R. M., and Gabay, S. (2018a). Probabilistic versus “Pure” volitional orienting: A monocular difference. Atten. Percept. Psychophys. 80, 669–676. doi: 10.3758/s13414-017-1473-8

Saban, W., Sekely, L., Klein, R. M., and Gabay, S. (2018b). Monocular channels have a functional role in endogenous orienting. Neuropsychologia 111, 341–349. doi: 10.1016/j.neuropsychologia.2018.01.002

Salman, M. S. (2018). Epidemiology of cerebellar diseases and therapeutic approaches. Cerebellum 17, 4–11. doi: 10.1007/S12311-017-0885-2

Saban, W., Raz, G., Grabner, R. H., Gabay, S., and Kadosh, R. C. (2021). Primitive visual channels have a causal role in cognitive transfer. Sci. Rep. 11:8759. doi: 10.1038/s41598-021-88271-y

Schmitz-Hübsch, T., Du Montcel, S. T., Baliko, L., Berciano, J., Boesch, S., Depondt, C., et al. (2006). Scale for the assessment and rating of ataxia: development of a new clinical scale. Neurology 66, 1717–1720. doi: 10.1212/01.WNL.0000219042.60538.92

Schniepp, R., Huppert, A., Decker, J., Schenkel, F., Dieterich, M., Brandt, T., et al. (2023). Multimodal mobility assessment predicts fall frequency and severity in cerebellar ataxia. Cerebellum 22, 85–95. doi: 10.1007/s12311-021-01365-1

Smolensky, L., Amondikar, N., Crawford, K., Neu, S., Kopil, C. M., Daeschler, M., et al. (2020). Fox Insight collects online, longitudinal patient-reported outcomes and genetic data on Parkinson’s disease. Sci. Data 7:67. doi: 10.1038/s41597-020-0401-2

Soloveichick, M., Kimchi, R., and Gabay, S. (2021). Functional involvement of subcortical structures in global-local processing. Cognition 206:104476. doi: 10.1016/j.cognition.2020.104476

Stillerova, T., Liddle, J., Gustafsson, L., Lamont, R., and Silburn, P. (2016). Remotely assessing symptoms of Parkinson’s disease using videoconferencing: A feasibility study. Neurol. Res. Int. 2016:4802570. doi: 10.1155/2016/4802570

Tran, H., Nguyen, K. D., Pathirana, P. N., Horne, M. K., Power, L., and Szmulewicz, D. J. (2020). A comprehensive scheme for the objective upper body assessments of subjects with cerebellar ataxia. J. Neuroeng. Rehabil. 17:162. doi: 10.1186/s12984-020-00790-3

Tunc, S., Baginski, N., Lubs, J., Bally, J. F., Weissbach, A., Baaske, M. K., et al. (2019). Predictive coding and adaptive behavior in patients with genetically determined cerebellar ataxia—-A neurophysiology study. Neuroimage Clin. 24:102043. doi: 10.1016/j.nicl.2019.102043

van Prooije, T., Knuijt, S., Oostveen, J., Kapteijns, K., Vogel, A. P., and van de Warrenburg, B. (2023). Perceptual and Acoustic Analysis of Speech in Spinocerebellar ataxia Type 1. Cerebellum doi: 10.1007/s12311-023-01513-9 [Epub ahead of print].

Wallace, S. E., Donoso Brown, E. V., Simpson, R. C., D’Acunto, K., Kranjec, A., Rodgers, M., et al. (2019). A comparison of electronic and paper versions of the montreal cognitive assessment. Alzheimer Dis. Assoc. Disord. 33, 272–278. doi: 10.1097/WAD.0000000000000333

Wang, R. Y., Huang, F. Y., Soong, B. W., Huang, S. F., and Yang, Y. R. (2018). A randomized controlled pilot trial of game-based training in individuals with spinocerebellar ataxia type 3. Sci. Rep. 8:7816. doi: 10.1038/S41598-018-26109-W

Weintraub, D., Simuni, T., Caspell-Garcia, C., Coffey, C., Lasch, S., Siderowf, A., et al. (2015). Cognitive performance and neuropsychiatric symptoms in early, untreated Parkinson’s disease. Mov. Disord. 30, 919–927. doi: 10.1002/MDS.26170

Winslow, A. R., Hyde, C. L., Wilk, J. B., Eriksson, N., Cannon, P., Miller, M. R., et al. (2018). Self-report data as a tool for subtype identification in genetically-defined Parkinson’s Disease. Sci. Rep. 8:12992. doi: 10.1038/s41598-018-30843-6

Wu, X., Liao, X., Zhan, Y., Cheng, C., Shen, W., Huang, M., et al. (2017). Microstructural alterations in asymptomatic and symptomatic patients with spinocerebellar ataxia type 3: A tract-based spatial statistics study. Front. Neurol. 8:714. doi: 10.3389/FNEUR.2017.00714/FULL

Zald, D. H., and Andreotti, C. (2010). Neuropsychological assessment of the orbital and ventromedial prefrontal cortex. Neuropsychologia 48, 3377–3391. doi: 10.1016/J.NEUROPSYCHOLOGIA.2010.08.012

Keywords: online, neuropsychological testing, MoCA, Parkinson’s, ataxia, basal ganglia, cerebellum, Hebrew

Citation: Binoy S, Montaser-Kouhsari L, Ponger P and Saban W (2024) Remote assessment of cognition in Parkinson’s disease and Cerebellar Ataxia: the MoCA test in English and Hebrew. Front. Hum. Neurosci. 17:1325215. doi: 10.3389/fnhum.2023.1325215

Received: 20 October 2023; Accepted: 06 December 2023; Published: 08 January 2024.

Edited by:

Rebecca J. Hirst, University of Nottingham, United Kingdom

Reviewed by:

Luca Marsili, University of Cincinnati, United States

Copyright © 2024 Binoy, Montaser-Kouhsari, Ponger and Saban. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: William Saban, willsaban@tauex.tau.ac.il
