ORIGINAL RESEARCH article

Front. Hum. Neurosci., 06 December 2023

Sec. Speech and Language

Volume 17 - 2023 | https://doi.org/10.3389/fnhum.2023.1228808

This article is part of the Research Topic Rising Stars in Speech and Language 2023

The role of the left ventral occipitotemporal cortex (vOT) in reading is well-established in both sighted and blind readers. Its role in speech processing remains only partially understood. Here, we test the involvement of the left vOT in phonological processing of spoken language in the blind (N = 50, age: 6.76–60.32) and in the sighted (N = 54, age: 6.79–59.83) by means of whole-brain and region-of-interest (including individually identified) fMRI analyses. We confirm that the left vOT is sensitive to phonological processing (shows greater involvement in rhyming compared to control spoken language task) in both blind and sighted participants. However, in the sighted, the activation was observed only during the rhyming task and in the speech-specific region of the left vOT, pointing to task and modality specificity. In contrast, in the blind group, the left vOT was active during speech processing irrespective of task and in both speech and reading-specific vOT regions. Only in the blind, the left vOT presented a higher degree of sensitivity to phonological processing than other language nodes in the left inferior frontal and superior temporal cortex. Our results suggest a changed development of the left vOT sensitivity to spoken language, resulting from visual deprivation.

The left ventral occipitotemporal cortex (vOT) is known to be an important part of the reading network. Though the exact function of this region remains a subject of debate (Dehaene and Cohen, 2011; Price and Devlin, 2011), it is activated universally during reading by readers of very different scripts (Rueckl et al., 2015). Moreover, the left vOT is activated not only during print reading using vision but also in a similar manner in Braille readers, both blind and sighted, who read tactually (Reich et al., 2011; Siuda-Krzywicka et al., 2016; Raczy et al., 2019). Its sensitivity to written words changes in the course of reading acquisition (Dehaene-Lambertz et al., 2018), and its connectivity with language processing areas probably has a shaping role in this development (Saygin et al., 2016). The impact of visual deprivation on the course of the vOT development remains unknown. Some findings, however, suggest that this region, along with other parts of the occipital cortex, may become a part of the language network independently of the Braille reading acquisition in the blind (Bedny et al., 2015).

In the blind, the left vOT is also active during speech processing. This activation was shown to be related to syntactic processing (Kim et al., 2017), but some studies suggest that it may also be implicated in phonological processing. Arnaud et al. (2013) found a repetition suppression effect during spoken vowel presentation in the vOT, whereas Burton et al. (2003) presented activation in the vOT during the auditory rhyming task. The role of the left vOT in speech processing in the sighted population was also discussed. Though weaker than in the blind, in the sighted the left vOT can be activated during speech processing (Yoncheva et al., 2010; Ludersdorfer et al., 2016; Planton et al., 2019). It was often explained as automatic activation of the orthographic codes stored in the left vOT connected to the successful reading acquisition (Dehaene et al., 2015) and thus less pronounced in illiterate (Dehaene et al., 2010) or poor readers (Blau et al., 2010; Desroches et al., 2010; Dȩbska et al., 2019). Activation of the left vOT during speech processing in the sighted was shown to depend on the task. When the task does not require access to the orthographic representation, a specific deactivation is observed instead (Yoncheva et al., 2010; Ludersdorfer et al., 2016). It was also suggested that the left vOT in the sighted contains separate neuronal populations for written and spoken language processing (Pattamadilok et al., 2019).

Both learning- and deprivation-induced neuroplasticity have an unquestionable influence on the functional organization of the human brain. These influences may interact with each other. The change in the reading modality and lack of visual inputs to the left vOT region may change its function in a significant way. Recently, we have demonstrated that the superior temporal regions are relatively disengaged during Braille reading (Dziȩgiel-Fivet et al., 2021), a finding that was also apparent in previous studies on the blind (Burton et al., 2002; Gizewski et al., 2003; Kim et al., 2017). In the sighted population, these temporal sites are thought to be engaged in phonological processing during reading (Kovelman et al., 2012) and speech processing, and to be related to the sequential, phonology-based strategy of reading used by beginning readers (Jobard et al., 2003; Martin et al., 2015). Similar findings of decreased activation in temporal regions accompanied by increased activation of the vOT in the blind compared to the sighted were observed during sound categorization (Mattioni et al., 2022). The authors found reduced decoding accuracy in the temporal cortex that was concomitant with an enhanced representation of sound categories in the occipital cortex. This effect was specific to the human voice. These results suggest that visual deprivation may trigger a redeployment mechanism, in which a certain type of processing is relocated from the intact to the deprived cortices. This could be the case for phonological processing, which typically engages the superior temporal cortex.

This study aimed to test whether the left vOT is involved in the phonological processing of spoken language in the blind and to see if this involvement is different from the one observed in the sighted participants. We predicted that the left vOT will be sensitive to phonological processing showing greater activation during the phonological than control spoken language task in both blind and sighted individuals. However, we expected this sensitivity to be greater in the blind than in the sighted. Furthermore, we explored whether sensitivity to phonology depends on specific areas of the vOT selectively involved in reading or speech processing. Additionally, we compared the left vOT activation to other typical language areas and the primary visual cortex. If in the blind the vOT is part of the language network, it should exhibit the same degree of sensitivity to phonological processing as other language nodes in the left inferior frontal and superior temporal cortex.

Data from 51 blind (31 women, mean age = 23.94, SD = 14.11, range 6.76–60.32) and 54 sighted (31 women, mean age = 22.97, SD = 13.58, range 6.79–59.83) participants were included in the analyses. Sample sizes in this study are higher than the sample sizes usually found in studies with blind participants (typically 10–20). The blind sample was a convenience sample: all the blind participants matching the inclusion criteria (early blindness, Braille as a primary script for reading acquisition, no knowledge of print Latin alphabet, and no contraindications for MRI scanning) were tested. The sighted sample was recruited to match the blind in terms of age, sex, handedness, and education level. Handedness was measured using the Edinburgh Handedness Questionnaire translated into Polish. Most of the participants were right-handed (45 blind and 47 sighted), but almost half of the blind participants preferred using their left hand for reading Braille (22 participants). None of the blind participants had ever learned to read the Latin alphabet visually.

None of the participants had any history of neurological illness or brain damage (other than the cause of blindness) and all of the participants declared having normal hearing. All of the anatomical images were assessed by a radiologist and no brain damage was found in any of the participants. Blind participants were congenitally (N = 42) or early blind (N = 9, from 6 years of age at the latest) due to pathology in or anterior to the optic chiasm (details on the blindness causes and onset can be found under this link: https://osf.io/kzjw2/).

One blind participant was excluded from all analyses due to excessive motion during scanning. Two sighted participants were not included in the whole-brain analyses because of missing data in their individual masks. Thus, the final group sizes in the whole-brain analyses were as follows: blind: 50 participants, sighted: 52 participants, and the final group sizes in the ROI analyses were as follows: blind: 50 participants, sighted: 54 participants.

As the delayed onset of blindness may influence the organization of language processing in the blind (Burton et al., 2002; Bedny et al., 2012), the analyses were repeated excluding the blind participants who were not congenitally blind. The differences in comparison to the results on the complete sample were minor and thus only the complete sample results are presented in the main text. The results of the restricted sample are presented in the Supplementary material. Since the inclusion of a wide age range could potentially limit the interpretation of results, we report separate ROI analyses on the adult participants only in Supplementary material. Again, the differences in comparison to the results on the complete sample were minor and therefore here we report the complete sample results.

Two fMRI tasks were used to answer our research questions: a language localizer and a phonological task. The stimuli for the tasks were presented using the Presentation software (Neurobehavioral Systems, Albany, CA). Auditory stimuli were presented via noise-attenuating headphones (NordicNeuroLab), visual stimuli were displayed on an LCD monitor, and tactile stimuli were presented via the NeuroDevice Tacti™ Braille display (Debowska et al., 2013).

The language localizer was previously described by Dziȩgiel-Fivet et al. (2021). The participants were asked to silently read (sighted participants from the screen and blind participants from the Braille display) and listen to stimuli in three conditions: real words, pronounceable but nonsense pseudowords, and non-linguistic control stimuli (for the visual condition: 3 or 4 hash signs; for the tactile condition: 3 or 4 six-dot Braille signs; for the auditory condition: vocoded speech stimuli). There was no other task than to read and listen to the stimuli. The task was presented in three runs. Each run consisted of 36 blocks: 18 auditory and 18 tactile or visual, with 6 blocks per condition in each modality. Within each block, four different stimuli from the same condition were presented in succession. Auditory and visual stimuli were displayed for 1,000 ms, while tactile stimuli were displayed for 3,000 ms (Veispak et al., 2012; Kim et al., 2017), with a 1,000 ms interstimulus interval. Blocks were separated with 3,000–6,000 ms breaks. For a more detailed description of the localizer task, please refer to Dziȩgiel-Fivet et al. (2021). Here, only the real words and non-linguistic control conditions were considered. The comparison of these two conditions is supposed to delineate language-specific activations, which are not connected to purely sensory perception.
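As a rough arithmetic check of the block structure described above, the following sketch counts the blocks per run and estimates nominal block durations. It assumes that every stimulus, including the last one in a block, is followed by one interstimulus interval, which the text does not state; all names are illustrative and not taken from the study's scripts.

```python
# Illustrative arithmetic only; constants come from the task description,
# function and variable names are hypothetical.
STIMULI_PER_BLOCK = 4
ISI_MS = 1000
STIMULUS_MS = {"auditory": 1000, "visual": 1000, "tactile": 3000}
CONDITIONS = ("words", "pseudowords", "control")
BLOCKS_PER_CONDITION = 6  # per modality, per run


def blocks_per_run(n_modalities=2):
    """Each run mixes two modalities (auditory plus visual or tactile)."""
    return n_modalities * len(CONDITIONS) * BLOCKS_PER_CONDITION


def block_duration_ms(modality):
    """Assumption: each of the four stimuli is followed by one ISI."""
    return STIMULI_PER_BLOCK * (STIMULUS_MS[modality] + ISI_MS)


print(blocks_per_run())              # total blocks in one run
print(block_duration_ms("tactile"))  # nominal tactile block length in ms
```

Under this assumption a tactile block lasts twice as long as an auditory or visual block, which matters when block regressors are built from onsets and durations.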

During the phonological (rhyming) task, participants were asked to judge whether auditorily presented pairs of words rhyme or not. In the control task, in which phonological processing was minimal (see Section 5), participants had to decide whether they heard the same word twice or whether the word pair consisted of two different words (Kovelman et al., 2012). The yes/no answers (50% correct responses were “yes” and 50% were “no”) were given by pressing a corresponding button. Each task consisted of 20 common word pairs (all one- to two-syllable nouns), presented in blocks of four pairs each. There were 10 rhyming/same pairs and 10 non-rhyming/different pairs. Both the rhyming and the control task included the same stimuli but were presented during separate runs. Words in pairs were separated by 2 s, and after the second word in a pair, there was a 4-s break for an answer.

The data were obtained on the 3T Siemens Trio Scanner. The functional images were acquired in a whole-brain echo-planar imaging (EPI) sequence with 12 channel head coil (language localizer: 32 slices, slice-thickness = 4 mm, TR = 2,000 ms, TE = 30 ms, flip angle = 80°, FOV = 220 mm3, matrix size = 64 × 64, voxel size: 3.4 × 3.4 × 4 mm; phonological tasks: 35 slices, slice-thickness = 3.5 mm, TR = 2,000 ms, TE = 30 ms, flip angle = 90°, FOV = 224 mm3, matrix size = 64 × 64, and voxel size: 3.5 × 3.5 × 3.5 mm). The anatomical images were acquired using T1-weighted (T1w) MPRAGE sequence with 32 channel head coil (176 slices, slice-thickness: 1 mm, TR = 2,530 ms, TE = 3.32 ms, flip angle = 7°, matrix size = 256 × 256, and voxel size = 1 × 1 × 1 mm).

Preprocessing of the MRI data and whole-brain analyses were conducted using SPM12 (Wellcome Trust Centre for Neuroimaging, London, UK) running on Matlab 2017b (The MathWorks Inc., Natick, MA, USA). The standard preprocessing pipeline was applied. First, for all of the functional data, the realignment parameters were estimated (realignment to the mean functional image), and the data were slice-time corrected and resliced. The anatomical images were then coregistered to the mean functional image and segmented based on the template provided in SPM. Afterward, the functional data were normalized to the MNI space with a voxel size of 2 × 2 × 2 mm. Finally, images were smoothed with an 8 mm isotropic Gaussian kernel. The ART toolbox (https://www.nitrc.org/projects/artifact_detect) was additionally used to create movement regressors and to detect excessive in-scanner motion: movement over 2 mm and rotation over 0.2 mm in relation to the previous volume (default ART toolbox settings). To include a session in the analyses, 80% of the volumes needed to be artifact free. One session of one participant had to be excluded. As this session was the control task run needed for the phonological activity analysis, this participant had to be excluded from all analyses.
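The session inclusion rule described above can be sketched as follows. This is a simplified illustration and not the ART toolbox's own algorithm: it assumes that a single scalar translation summary and a single scalar rotation summary are available for each volume, and it reuses the thresholds exactly as quoted in the text. Function names are hypothetical.

```python
def flag_outlier_volumes(translation, rotation,
                         max_translation=2.0, max_rotation=0.2):
    """Flag volumes whose change relative to the previous volume
    exceeds either threshold (thresholds as quoted in the text)."""
    flags = [False]  # the first volume has no predecessor
    for i in range(1, len(translation)):
        d_trans = abs(translation[i] - translation[i - 1])
        d_rot = abs(rotation[i] - rotation[i - 1])
        flags.append(d_trans > max_translation or d_rot > max_rotation)
    return flags


def session_usable(flags, min_clean_fraction=0.8):
    """A session is kept if at least 80% of its volumes are artifact free."""
    clean = sum(1 for flagged in flags if not flagged)
    return clean / len(flags) >= min_clean_fraction


# Hypothetical five-volume session with one sudden 2.9 mm jump.
flags = flag_outlier_volumes([0.0, 0.1, 3.0, 3.1, 3.0], [0.0] * 5)
print(flags, session_usable(flags))
```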

Preprocessed data were analyzed using a voxel-wise GLM approach. The condition blocks were convolved with the canonical hemodynamic function, and movement and motion outliers regressors were added to the model. The masking threshold in the first level model specification was defined as 0.5 to ensure good coverage of the temporal and occipitotemporal regions by the individual participants' brain masks.
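To illustrate what convolving condition blocks with a hemodynamic response function means, here is a minimal sketch. It uses a generic double-gamma shape with assumed parameters (gamma shapes 6 and 16, undershoot ratio 1/6) sampled at the 2 s TR. It is not SPM's implementation, and the block length of 10 volumes is hypothetical.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, defined as zero for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)
            / (math.gamma(shape) * scale ** shape))


def double_gamma_hrf(tr=2.0, duration=32.0):
    """Double-gamma HRF sampled every `tr` seconds (assumed parameters)."""
    n = int(duration / tr) + 1
    hrf = [gamma_pdf(i * tr, 6.0) - gamma_pdf(i * tr, 16.0) / 6.0
           for i in range(n)]
    total = sum(hrf)
    return [h / total for h in hrf]  # normalise to unit sum


def convolve(boxcar, hrf):
    """Causal discrete convolution, truncated to the length of the boxcar."""
    out = [0.0] * len(boxcar)
    for i in range(len(boxcar)):
        for j, h in enumerate(hrf):
            if i - j >= 0:
                out[i] += boxcar[i - j] * h
    return out


# Hypothetical run: 5 rest volumes, a 10-volume block, 15 rest volumes.
boxcar = [0] * 5 + [1] * 10 + [0] * 15
regressor = convolve(boxcar, double_gamma_hrf())
```

The resulting regressor rises a few volumes after block onset and falls back to baseline after the block ends. This delayed, smoothed time course is what the GLM fits to each voxel.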

For detailed results of the localizer task, see the Supplementary material. In a larger sample that included children, we replicated the main findings of Dziȩgiel-Fivet et al. (2021), i.e., the relative deactivation of the temporal sites during Braille reading and the inclusion of the vOT in the modality-independent language network in the blind.

The second-level analyses were conducted on the phonological task. One-sample t-tests were used to delineate regions involved in phonological processing (rhyming > control) within groups and two-sample t-tests to show the differences between the groups.

Additionally, in Supplementary material, we present the results of analyses where each task was compared to baseline (i.e., rhyming > baseline and control > baseline). Deactivation in both tasks was also analyzed (i.e., baseline > rhyming and baseline > control), as previous studies suggested that the activation of the vOT during speech processing in the sighted depends on the task (Yoncheva et al., 2010; Ludersdorfer et al., 2016). If not otherwise specified, whole-brain results are reported at a p < 0.001 voxel-level threshold with FWE p < 0.05 cluster-level correction.

The literature-based left vOT ROI was created as a sum of two 10 mm radius spheres around two peaks: one from the Lerma-Usabiaga et al. (2018) study, the averaged LEX contrast peak coordinates (−41.54, −57.67, −10.18), and the second from the Kim et al. (2017) study, the peak of activation for the auditory words > backward speech contrast averaged between the blind participants (−41, −44, −17). The two spheres were intersected with the Inferior Temporal Gyrus (ITG) and Fusiform Gyrus (FG) masks from the AAL3 atlas (Rolls et al., 2020) in order to exclude voxels from the cerebellum. The ROI was created using the MarsBar toolbox (Brett et al., 2002). The resulting ROI consisted of 761 voxels.
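The geometric part of this ROI definition (the union of two spheres) can be sketched as below. This is an illustration rather than the MarsBar procedure: it assumes a regular 2 mm grid whose voxel centres fall on multiples of 2 mm, and it omits the intersection with the AAL3 ITG and FG masks, so the voxel count will not match the reported 761.

```python
import itertools

# Peak coordinates (mm, MNI space) as given in the text.
PEAKS_MM = [(-41.54, -57.67, -10.18), (-41.0, -44.0, -17.0)]


def sphere_union_voxels(peaks, radius_mm=10.0, voxel_mm=2.0):
    """Return grid indices (i, j, k) of voxel centres lying within
    `radius_mm` of at least one peak. Grid alignment is an assumption."""
    voxels = set()
    for px, py, pz in peaks:
        lo = [int((c - radius_mm) // voxel_mm) for c in (px, py, pz)]
        hi = [int((c + radius_mm) // voxel_mm) + 1 for c in (px, py, pz)]
        ranges = [range(a, b + 1) for a, b in zip(lo, hi)]
        for i, j, k in itertools.product(*ranges):
            x, y, z = i * voxel_mm, j * voxel_mm, k * voxel_mm
            if (x - px) ** 2 + (y - py) ** 2 + (z - pz) ** 2 <= radius_mm ** 2:
                voxels.add((i, j, k))
    return voxels


roi = sphere_union_voxels(PEAKS_MM)
print(len(roi))  # size of the unmasked union, in 2 mm voxels
```

Because the two peaks lie roughly 15 mm apart, the spheres overlap and the union is smaller than the sum of the two spheres. Calling the same function with `radius_mm=20.0` gives the geometry of a larger search volume around the same peaks.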

In order to compare the pattern of activation of the left vOT to other parts of the language network, three additional literature-based ROIs were defined. First, the primary visual cortex and Broca's region ROIs were extracted from the Anatomy Toolbox (Eickhoff et al., 2005). Additionally, the left superior temporal gyrus (STG) ROI was defined based on the recent meta-analysis of the studies tapping into phonological and semantic processing (Hodgson et al., 2021) as a 10 mm radius sphere around a peak for phonological > semantic activations in the superior temporal cortex (coordinates: −58, −23, 8).

As the location of the language-sensitive voxels in the vOT can be highly variable (Saxe et al., 2006), we used an individual ROIs approach. The individual ROIs were defined based on the language localizer task activation within the volume of the search defined as the sum of two 20 mm radius spheres around the same peaks as the literature-based ROI, intersected with the ITG and FG masks (2,658 voxels).

First, we wanted to select the parts of the vOT that are language sensitive, irrespective of the modality, i.e., areas sensitive to reading processing and/or speech processing. To this end, the 50 most activated voxels (with the highest t-value) in the volume of search in the speech > non-linguistic control and reading words > non-linguistic control contrasts were marked. Then, the marked voxels from the two contrasts (reading and speech-processing related) were combined to create the individual language ROI (ranging from 60 to 100 voxels; 50 voxels would reflect a complete overlap between the speech and reading-related ROIs and 100 voxels would reflect no overlap between the speech and reading-related ROIs).
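The selection logic described above (the top 50 voxels per contrast, then their union) can be sketched in a few lines. The t-maps here are hypothetical dictionaries mapping a voxel identifier to its t-value within the search volume; they are placeholders, not the study's data structures.

```python
def top_n_voxels(t_values, n=50):
    """Return the identifiers of the n voxels with the highest t-value."""
    ranked = sorted(t_values, key=t_values.get, reverse=True)
    return set(ranked[:n])


def language_roi(speech_t, reading_t, n=50):
    """Union of the top-n speech and top-n reading voxels,
    together with the number of voxels shared by both sets."""
    speech = top_n_voxels(speech_t, n)
    reading = top_n_voxels(reading_t, n)
    return speech | reading, len(speech & reading)


# Hypothetical maps over 200 voxels with opposite preferences.
speech_t = {v: float(v) for v in range(200)}
reading_t = {v: float(-v) for v in range(200)}
roi, overlap = language_roi(speech_t, reading_t)
print(len(roi), overlap)
```

The ROI size always equals 100 minus the number of shared voxels, so it is bounded between 50 (identical selections) and 100 (disjoint selections).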

In fact, there was little to no overlap between the speech and reading-related voxels in both the blind (mean number of overlapping voxels = 8.78, SD = 12.04) and the sighted (mean number of overlapping voxels = 4.57, SD = 7.61), and the groups did not differ in this respect (W = 1570.5, p = 0.134). The ROIs were not necessarily constructed of contiguous voxels. In the blind group, the voxel presenting the largest interparticipant overlap (15 ROIs overlapping) was located at (−40, −46, −14). In the sighted group, the voxel presenting the largest interparticipant overlap (13 ROIs overlapping) was located at (−39, −46, −16). The location of the individual ROIs is consistent with the results presented in Dziȩgiel-Fivet et al. (2021), where the localization of the peaks of activation related to reading did not differ discernibly between the blind and the sighted group.

Next, we defined two ROIs in the left vOT selective either to reading or speech processing. The speech-selective ROIs were defined as voxels activated during speech-processing more than for reading [(speech > non-linguistic control) > (reading > non-linguistic control)] in the localizer task and the reading-selective ROIs were defined as the voxels activated for reading more than for speech-processing [(reading > non-linguistic control) > (speech > non-linguistic control)]. The 50 most activated voxels were selected for each contrast (highest t-value).

In-house scripts written in Matlab that used SPM12 functions (the “spm_summarize” function for the extraction of the contrast estimate values) were used to extract ROI data. The contrast estimates for the rhyming > baseline and control > baseline contrasts from the phonological tasks were analyzed. Scripts in R (version 4.0.4, R Core Team, 2023) were used to analyze the ROI data, i.e., to compare groups and conditions and to conduct correlation analyses with reading skills, phonological awareness, and age (see Supplementary material).

Both blind and sighted participants performed the rhyming and control tasks near the ceiling level. In the rhyming task, the sighted group achieved 98.67% (SD = 2.97%) accuracy on average, and the blind group achieved 99.15% accuracy (SD = 4.59%). There was a significant difference between the groups for the rhyming task accuracy, tested with the Mann–Whitney U test (W = 1,486, p = 0.036). In the control task, the sighted group scored 99.43% (SD = 1.88%) on average, whereas the blind group scored 99.15% (SD = 2.51%). The difference between the groups was not significant for the control task (W = 1259.5, p = 0.632).
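For readers unfamiliar with the statistic behind the between-group comparisons, the sketch below computes a Mann–Whitney statistic by counting pairs. It assumes the reported W follows the convention of R's `wilcox.test`, in which W is the number of pairs where an observation from the first sample exceeds one from the second, with ties counted as one half. Which group was entered first is not stated in the text, and the example values are made up.

```python
def mann_whitney_w(x, y):
    """Count pairs (a, b) with a from x and b from y where a > b;
    ties contribute 0.5. Ranges from 0 to len(x) * len(y)."""
    w = 0.0
    for a in x:
        for b in y:
            if a > b:
                w += 1.0
            elif a == b:
                w += 0.5
    return w


# Made-up example values, not study data.
print(mann_whitney_w([3, 4], [1, 3]))
```

With groups of 50 and 54 participants the statistic can range from 0 to 2,700, and values near 1,350 indicate no systematic difference between the groups.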

The analysis of reaction times (RT) indicated that the control task was significantly easier (evoked shorter reaction times) than the experimental rhyming task. This was the case for both blind (mean RT rhyming = 1.28 s, SD RT rhyming = 0.28 s, mean RT control = 1.16 s, SD RT control = 0.34 s, W = 1,049, p < 0.001) and sighted (mean RT rhyming = 1.33 s, SD RT rhyming = 0.30 s, mean RT control = 1.13 s, SD RT control = 0.27 s, W = 1,335, p < 0.001). The differences between the groups in reaction times were not significant for either of the tasks (rhyming W = 1,159, p = 0.444; control W = 1,323, p = 0.731).

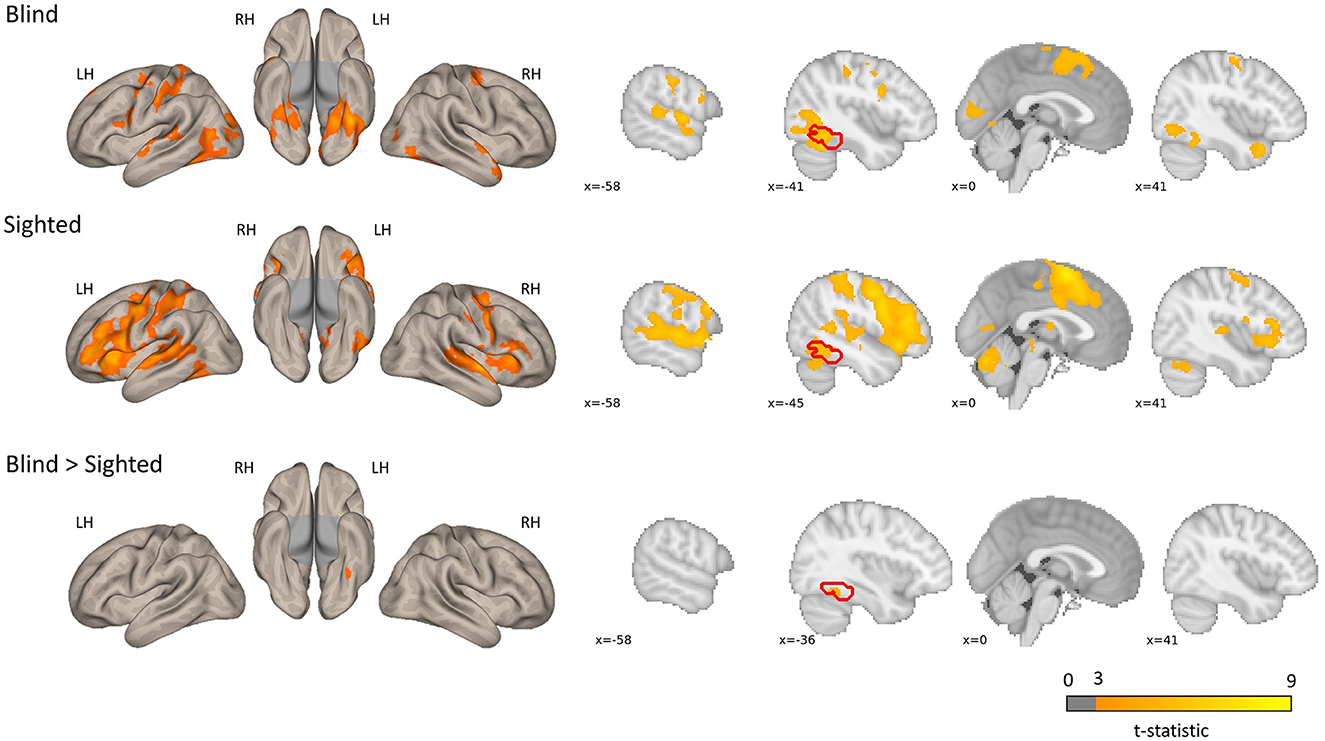

The regions sensitive to phonological processing were delineated using a contrast comparing the rhyming task to the control task. In this contrast, both groups activated the typical network including perisylvian regions (inferior frontal gyrus, middle temporal gyrus, and superior temporal gyrus), as well as the left vOT (see Figure 1 and Table 1). The blind group additionally activated the primary visual cortex. In the group comparison, a significant difference was found in the left vOT only when a more lenient statistical threshold (p < 0.001, cluster extent 50 voxels, as used by Bedny et al., 2015) was applied: the blind group activated the left vOT cluster to a larger extent than the sighted group. At the statistical threshold used in all other comparisons (p < 0.001 on the voxel level, FWE cluster corrected at p < 0.05), there were no significant group differences for this contrast.

Figure 1. Group level activations and regions activated more by the blind than the sighted for the rhyming > baseline contrast. The blind > sighted contrast did not survive the standard cluster threshold correction (p < 0.001 at voxel level, p < 0.05 FWE cluster level) and is presented with a more lenient threshold (p < 0.001, k = 50 voxels). The inverse comparison (sighted > blind) did not yield any significant activations. The left vOT region is marked in red.

Table 1. Group-level activations and the results of the group comparison of the activations in the rhyming > control contrast.

When each condition was compared to baseline, the suprathreshold activation in the left vOT (and other parts of the occipital cortex) was observed only in the blind group in both tasks (Supplementary Figure 5 and Supplementary Table 6 for rhyming and Supplementary Figure 6 and Supplementary Table 7 for control), and this activation was larger than in the sighted group. Both blind and sighted participants showed deactivation mainly in regions that are a part of the default mode network (anterior, middle, and posterior cingulate, angular gyrus, precuneus, medial frontal cortex; Supplementary Figure 7 and Supplementary Table 8). However, in the sighted group, occipital regions were largely deactivated too. During the control task, the deactivation included bilateral vOT regions. This indicates that the relatively increased activation in the sighted vOT in the rhyming compared to the control task may be a consequence of deactivation in the control task.

First, we examined the effect of group (blind vs. sighted) and task (rhyming vs. control task) in the literature-based and individual language-sensitive left vOT ROIs. Next, we examined these effects in speech- and reading-selective left vOT ROIs. Additionally, in order to control for the deactivation effects in the vOT, the activations within the ROIs were compared to zero in every group using the non-parametric one-sample Wilcoxon signed-rank test. Finally, we compared the activation of the left vOT to other literature-based ROIs (V1, IFG, and STG).

The assumptions of the parametric approach (multilevel-model ANOVA using the “lme” function from the “nlme” package) were not met, as the model residuals were not independent of the fitted values. Therefore, a robust ANOVA method (the “bwtrim” function from the “WRS2” package) was used.
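Robust ANOVA methods of this kind are built on trimmed means rather than ordinary means. The sketch below shows the two building blocks, a trimmed mean and a winsorized variance, and is not a reimplementation of “bwtrim”. The 20% trimming level is an assumption, as the text does not report the level used.

```python
import math


def trimmed_mean(values, tr=0.2):
    """Mean after dropping the lowest and highest floor(tr * n) values."""
    x = sorted(values)
    g = math.floor(tr * len(x))
    kept = x[g:len(x) - g]
    return sum(kept) / len(kept)


def winsorized_variance(values, tr=0.2):
    """Sample variance after replacing the g lowest and g highest values
    with the nearest retained value."""
    x = sorted(values)
    n = len(x)
    g = math.floor(tr * n)
    w = [x[g]] * g + x[g:n - g] + [x[n - g - 1]] * g
    m = sum(w) / n
    return sum((v - m) ** 2 for v in w) / (n - 1)


# Made-up sample with one extreme value.
sample = [1, 2, 3, 4, 100]
print(trimmed_mean(sample), winsorized_variance(sample))
```

In this example the ordinary mean is 22, whereas the trimmed mean is 3. This illustrates why trimming makes group comparisons less sensitive to extreme contrast estimates.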

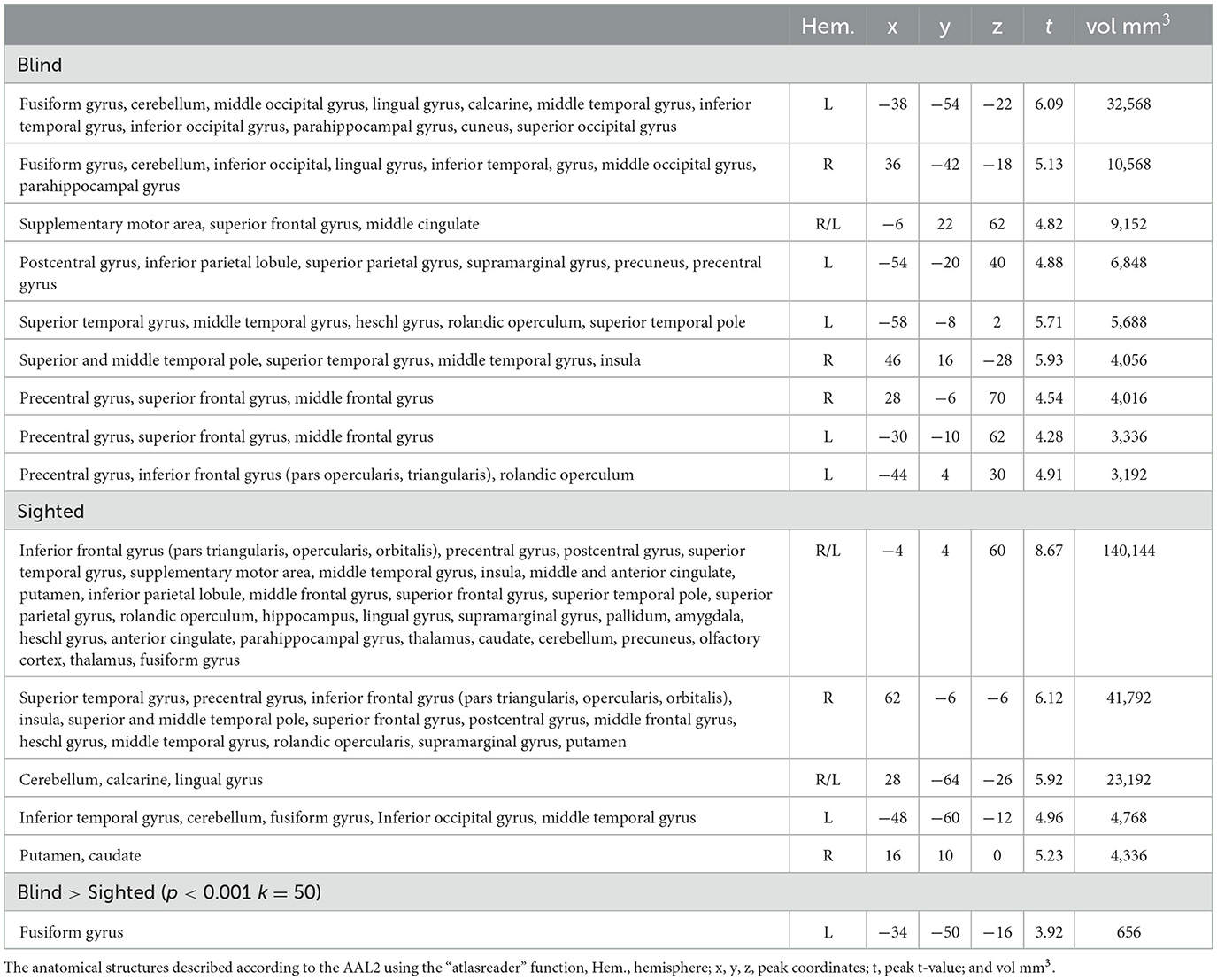

When the literature-based ROI data were analyzed, there was a significant main effect of group [F(1, 45.98) = 47.47, p < 0.001] with the blind group showing higher activation compared to the sighted group, as well as a significant main effect of condition [F(1, 48.83) = 42.39, p < 0.001] with rhyming task evoking higher activation than the control task. The interaction of group and condition did not reach significance [F(1, 48.83) = 3.92, p = 0.053, Figure 2].

Figure 2. Contrast estimates extracted from the literature-based ROI (its location presented on the brain image) and individual ROIs (color bar depicts the overlap of the ROIs between participants) for the experimental conditions in both groups.

Analyses of the data from the individually defined ROIs gave similar results. There was a significant effect of group [F(1, 46.28) = 61.16, p < 0.001] and condition [F(1, 57.94) = 41.02, p < 0.001]. The interaction of group and condition was not significant [F(1, 57.94) = 2.36, p = 0.130, Figure 2].

The vOT activations were significantly greater than zero for both conditions in the blind group, independently of the ROI type (literature-based ROI: rhyming task mean contrast estimates = 0.45, SD = 0.31, W = 1,258, p < 0.001, control task mean contrast estimates = 0.23, SD = 0.35, W = 1,030, p < 0.001; individual ROIs: rhyming task mean contrast estimates = 0.81, SD = 0.55, W = 1,266, p < 0.001, control task mean contrast estimates = 0.52, SD = 0.47, W = 1,234, p < 0.001). On the other hand, in the sighted the vOT activation was significantly greater than zero only when the rhyming task activation in the individually defined ROIs was taken into consideration (mean contrast estimates = 0.15, SD = 0.37, W = 1,081, p = 0.007). The activity during the control task was significantly below zero when the literature-based ROI data were analyzed (mean contrast estimates = −0.09, SD = 0.24, W = 395, p = 0.006). The rhyming task-related activity in the literature-based ROI (mean contrast estimates = 0.05, SD = 0.26, W = 879, p = 0.242) and the control task-related activity in the individually defined ROIs (mean contrast estimates = −0.04, SD = 0.35, W = 640, p = 0.380) were not significantly different from zero in the sighted group. The deactivation pattern observed in the whole-brain analysis was thus largely confirmed, with the vOT deactivation being task-dependent only in the sighted group. The blind group activated the vOT for speech processing for both rhyming and control conditions.
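The one-sample tests against zero reported above are based on the signed-rank statistic, which can be sketched as follows. The sketch assumes the reported values follow the convention of R's `wilcox.test`, where the statistic is the sum of the ranks of the positive differences. It returns the statistic only and does not compute a p-value; the example values are made up.

```python
def signed_rank_statistic(values, mu=0.0):
    """Sum of the ranks of positive deviations from mu.
    Zero deviations are dropped; tied absolute values share an average rank."""
    diffs = [v - mu for v in values if v != mu]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        while (j + 1 < len(order)
               and abs(diffs[order[j + 1]]) == abs(diffs[order[i]])):
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return sum(r for r, d in zip(ranks, diffs) if d > 0)


def max_statistic(n):
    """Largest possible value: all n deviations positive."""
    return n * (n + 1) // 2


print(signed_rank_statistic([0.5, -0.2, 0.3, 0.1]))
print(max_statistic(50), max_statistic(54))
```

With 50 participants the statistic cannot exceed 1,275, so values above 1,250 in the blind group mean that nearly all participants had positive contrast estimates. With 54 participants the maximum is 1,485.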

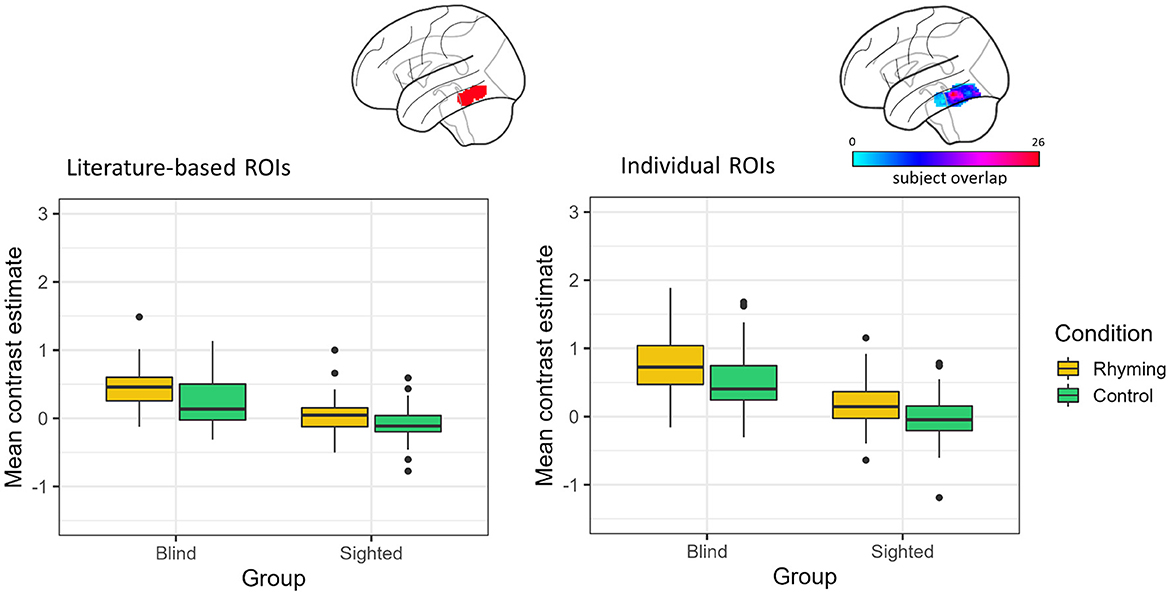

The effect of group and condition was analyzed using two-way robust ANOVA (“bwtrim” function) within either speech- or reading-selective ROIs. In both reading- and speech-selective ROIs, there was a significant main effect of group [reading: F(1, 48.54) = 35.01, p < 0.001, speech: F(1, 48.16) = 13.42, p < 0.001; Figure 3] and condition [reading: F(1, 52.95) = 13.68, p < 0.001, speech: F(1, 57.62) = 23.70, p < 0.001]. The interaction effect was not significant either in the reading-selective ROIs [F(1, 52.95) = 0.09, p = 0.249] or in the speech-selective ROIs [F(1, 57.62) = 1.39, p = 0.244].

Figure 3. Contrast estimates extracted from the reading and speech-selective individual ROIs. The color bar represents the overlap of the individual ROIs.

For both tasks, the blind group's activations were greater than zero (rhyming: reading: V = 1,150, p < 0.001, speech: V = 1,180, p < 0.001; control: reading: V = 970, p = 0.001, speech: V = 1,002, p < 0.001). Thus, across tasks and ROI definitions, the blind group activated the left vOT for speech processing (and to a greater extent than the sighted group, see Figure 3).

In the sighted group, the mean contrast estimates were greater than zero only when the rhyming task activations in the speech-selective ROIs were analyzed (V = 1,050, p = 0.008, Figure 3). The one-sample Wilcoxon signed-rank tests were not significant for the control task in the speech-selective ROIs (V = 779, p = 0.757) or for the rhyming task in the reading-selective ROIs (V = 578, p = 0.158). When the control task activations in the reading-selective ROIs were analyzed, they turned out to be significantly lower than zero (V = 344, p = 0.001).

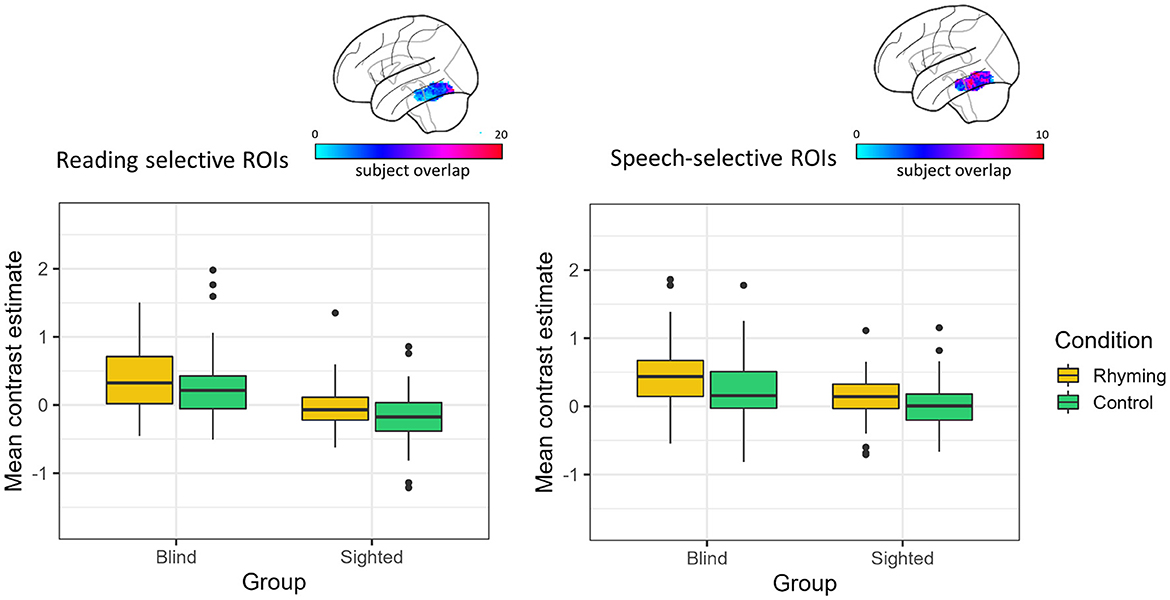

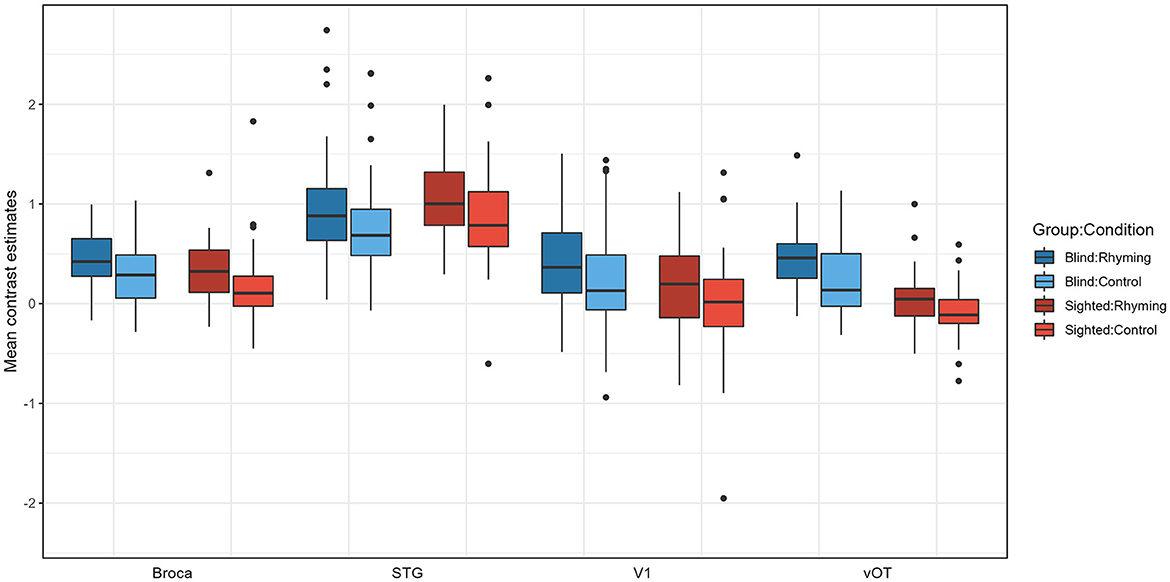

Activation of the left vOT was compared to the brain response in V1, STG, and Broca's area. A three-way mixed ANOVA was conducted with ROI (vOT vs. V1 vs. STG vs. Broca's area) and condition (rhyming vs. control) as the within-participants factors and group (blind vs. sighted) as the between-participants factor. The residuals homoscedasticity assumption was not met; however, there are no robust methods for three-way ANOVA, so a classical three-way ANOVA (“anovaRM” function from the Jamovi statistical package) was used nevertheless. As the assumption of sphericity was also violated, the Greenhouse–Geisser correction was applied.

There was a significant main effect of group [F(1, 102) = 9.70, p = 0.002], ROI [F(2.39, 243.83) = 183.11, p < 0.001], and condition [F(1, 102) = 34.84, p < 0.001], as well as a significant group × ROI interaction [F(2.39, 243.83) = 12.74, p < 0.001, Figure 4]. The condition × ROI interaction [F(2.39, 244.20) = 0.17, p = 0.879], group × condition interaction [F(1, 102) = 0.00, p = 0.965], and the three-way group × ROI × condition interaction [F(2, 244.20) = 1.05, p = 0.361] were not significant. The significance of the main effects of group and ROI and of the group × ROI interaction was confirmed for both rhyming and control tasks by the robust two-way ANOVA (“bwtrim” function from the “WRS2” package) conducted within conditions.
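The fractional degrees of freedom above follow from the Greenhouse–Geisser correction, which multiplies both the effect and the error degrees of freedom by a sphericity estimate ε. The sketch below recovers the ε implied by the reported error degrees of freedom for the ROI effect and checks that it reproduces the reported effect degrees of freedom. It assumes 104 participants in 2 groups and 4 ROI levels, as described in the text; the function names are illustrative.

```python
def gg_adjusted_df(levels, n_participants, n_groups, epsilon):
    """Greenhouse-Geisser adjusted (effect, error) degrees of freedom
    for a within-participants factor in a mixed design."""
    df_effect = levels - 1
    df_error = (n_participants - n_groups) * (levels - 1)
    return epsilon * df_effect, epsilon * df_error


def implied_epsilon(reported_df_error, levels, n_participants, n_groups):
    """Recover epsilon from a reported corrected error df."""
    return reported_df_error / ((n_participants - n_groups) * (levels - 1))


eps = implied_epsilon(243.83, levels=4, n_participants=104, n_groups=2)
df_effect, df_error = gg_adjusted_df(4, 104, 2, eps)
print(round(eps, 3), round(df_effect, 2), round(df_error, 2))
```

The uncorrected values would be 3 and 306. An implied ε of about 0.80 scales them to roughly 2.39 and 243.83, matching the values reported for the ROI main effect.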

Figure 4. Contrast estimates extracted from the four ROIs for experimental conditions for both groups.

Pairwise comparisons were conducted (with the default Tukey adjustment of p-values and a p < 0.05 significance threshold). Post-hoc tests showed that, in the blind group, activation for rhyming was higher than for the control task in the vOT but not in the other ROIs (vOT: p < 0.001, V1: p = 0.368, STG: p = 0.107, Broca's area: p = 0.252). In the sighted group, this difference was present in Broca's area and the STG, and marginally in the vOT (vOT: p = 0.052, V1: p = 0.295, STG: p = 0.010, Broca's area: p = 0.027). The group × ROI interaction can be interpreted as stemming from the fact that in the vOT ROI, for both conditions, activation was higher in the blind group than in the sighted group (rhyming: p < 0.001, control: p < 0.001), whereas the differences between the groups were not significant for Broca's area (rhyming: p = 0.814, control: p = 0.622), V1 (rhyming: p = 0.420, control: p = 0.686), and the STG ROI (rhyming: p = 1.000, control: p = 1.000). In the blind group, for both conditions, STG ROI activation was higher than in the three other ROIs (p-values of all comparisons < 0.001), and the differences between Broca's area, V1, and vOT were not significant (p-values of all comparisons > 0.889). On the other hand, in the sighted group, for both conditions, not only did the STG ROI have higher activation than the three other ROIs (p-values of all comparisons < 0.001), but Broca's area also had higher activation than the vOT ROI (p < 0.001). The differences between the vOT and V1, as well as between V1 and Broca's area, were not significant (p-values of all comparisons > 0.242).

This study aimed to test the involvement of the left vOT in phonological processing in both blind and sighted participants. We confirmed that the left vOT is sensitive to phonological processing in both groups, showing increased activation during the rhyming task as compared to the control task (which was also based on linguistic stimuli but should evoke only minimal phonological processing). At the same time, the blind group engaged the left vOT to a greater extent than the sighted group during both tasks. These results resemble the pattern obtained by Kim et al. (2017), where the left vOT in sighted participants responded more to auditory words than to backward speech, albeit to a lesser degree than in the blind group. Higher activation for both conditions in the blind group, together with the absence of an interaction between group and condition, suggests that the blind vOT is more responsive to phonology than the sighted vOT, but that the response profiles do not significantly differ between the groups. Interestingly, in the blind group, the left vOT was activated above baseline during the control task. This contrasted with the sighted participants, in whom we found left vOT deactivation during the control task, consistent with previous findings (Yoncheva et al., 2010; Ludersdorfer et al., 2016; Planton et al., 2019). In the sighted, activation in the left vOT was observed only during the rhyming task and specifically within the neuronal populations specialized in processing spoken language. This pattern of results could potentially be explained by the presence of groups of neurons within the left vOT that are specifically attuned to phonological features of stimuli (Pattamadilok et al., 2019; for a review of alternative hypotheses, see Dȩbska et al., 2023). When phonological processing was not task relevant, auditory stimuli induced a general deactivation of the visual cortex, including the vOT, relative to rest in the sighted group.

There were no significant differences between the blind and the sighted groups when typical language regions were considered (STG, Broca's area). Yet, only in the blind group, the left vOT presented a higher degree of sensitivity to phonological processing than other language-network nodes. In the sighted, both STG and Broca's area showed stronger activation than the vOT. Interestingly, there was no difference between the activation of V1 and Broca's area in the sighted group. These results suggest that following visual deprivation, vOT becomes a regular node of the language network (engaged in language processing similarly to STG and Broca's region) and is recruited in language processing independently of task demands.

The literature-based ROI used in the current study was quite large and spanned portions of the vOT that may have diverse functional roles (Cohen et al., 2004; Pammer et al., 2004; Vinckier et al., 2007; Bouhali et al., 2019; Ludersdorfer et al., 2019). Additionally, the significant group differences peaked anteriorly to the classical localization of print-sensitive vOT (y = −48 for rhyming > baseline, y = −50 for rhyming > control, compared to y = −57/−58 reported by Cohen et al., 2000; Lerma-Usabiaga et al., 2018). In the sighted, a gradient of specialization was observed with the more anterior parts of the vOT engaged in processing the increasingly complex stimuli with lexical content (Vinckier et al., 2007). The observed group differences may not be bound to the part of the vOT that encompasses orthographic representations in the sighted, but rather to the part of the vOT connected to the semantic system. However, the results of the literature-based ROIs and individually localized ROIs that tapped into the parts of the vOT specifically engaged in reading were the same. The observed pattern of activations was thus present in the area functionally connected to reading. Additionally, the gradient of specialization in the vOT was recently shown to be absent in blind Braille readers (Tian et al., 2023). Current results point to a changed role of the left vOT in the language system of blind individuals.

Although our data do not permit testing this hypothesis directly, we think that the observed differences between the blind and the sighted in activation during a phonological task reflect different developmental trajectories in the two groups. The left vOT region is connected to the perisylvian language areas as well as to the occipital cortex (Yeatman et al., 2013). In the sighted population, this unique set of connections is thought to define its crucial role in reading (Dehaene et al., 2015; Saygin et al., 2016). As the left vOT is connected to both visual and linguistic areas, it is a perfect candidate for a region binding the newly learned written form of language with the known spoken form. This association is so strong that the left vOT may also present some sensitivity to spoken language (Planton et al., 2019).

In individuals who are congenitally or early blind, the connections of the left vOT probably stay largely unchanged (Noppeney, 2007); however, the nature of the input from the connected areas is different (Bedny, 2017). We know that the language network in the blind is very similar to the one observed in the sighted population, the difference being the inclusion of the occipital cortex (Röder et al., 2002; Dziȩgiel-Fivet et al., 2021). The occipital cortex in the blind is thought to be involved in many high-order cognitive processes and language is one of them (Bedny et al., 2011, 2015). Thus, the left vOT, along with other occipital areas like V1, might also be incorporated into the language processing network, even before Braille reading acquisition. When blind individuals learn how to read, the left vOT becomes active during tactile reading but this activation may reflect more general linguistic processes and not solely the activation of the orthographic representations (Tian et al., 2023).

First, the rhyming task used was quite easy for the participants, as demonstrated by the analysis of the performance data. The choice of such an easy task was dictated by the large age range of the participants of the study: the task had to be feasible for the youngest participants. Second, the control task was chosen based on previous studies focused on phonological processing (Kovelman et al., 2012; Raschle et al., 2014; Yu et al., 2018). However, it consisted of linguistic stimuli, and some phonologically related activation, albeit low-level, may also have been evoked by the control stimuli. Third, it could be the case that our design, based on the comparison of rhyming to the control task, did not strictly isolate phonological processing but additionally included other linguistic (e.g., semantic) or attention-related processes. Therefore, future studies should use other, more sensitive contrasts to isolate phonology. Finally, the age range in the current study was wider than is usually encountered in this type of study. We decided to include such a wide range of participants to maximize the size of the blind sample and thus increase the power of the analyses. Nevertheless, only a few participants at the beginning of reading acquisition were included in the study, which prevented us from studying developmental changes in the vOT sensitivity to language.

To conclude, we suggest that phonological sensitivity to spoken language in the left vOT in blind participants is different in nature from the one observed in the sighted population. Blind participants activated the left vOT during both the rhyming and the control spoken language tasks and across the ROI definition methods. In contrast, in the sighted group, activation for speech processing was present only in the rhyming task and only within the left vOT neuronal populations specialized in processing spoken language. We hypothesize that in the sighted the sensitivity to spoken language in the left vOT is secondary to its involvement in reading, whereas in the blind the sensitivity to speech in this region comes first. Further longitudinal studies are needed to confirm the proposed developmental account of the left vOT response to spoken language in relation to reading acquisition in the sighted and blind populations.

The second-level data from the phonological task, participants' demographic characteristics, contrast estimates extracted for the ROI analyses, and scripts used for the ROI analyses can be found at: https://osf.io/kzjw2/.

The study was approved by the Scientific Studies Ethics Committee of the Institute of Psychology, Jagiellonian University, which follows the rules of the Declaration of Helsinki. Adult participants signed an informed consent form at the beginning of the experimental session. The form was sent to the blind participants beforehand in a format readable by screen-reading software. For non-adult participants, the consent form was signed by their parents and the verbal consent of the children was obtained.

GD-F: Conceptualization, Data curation, Formal analysis, Investigation, Project administration, Visualization, Writing—original draft, Writing—review & editing. JB: Conceptualization, Data curation, Investigation, Methodology, Project administration, Writing—review & editing. KJ: Conceptualization, Data curation, Funding acquisition, Project administration, Methodology, Supervision, Writing—review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was funded by a grant from the National Science Centre (2016/22/E/HS6/00119).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnhum.2023.1228808/full#supplementary-material

Arnaud, L., Sato, M., Ménard, L., and Gracco, V. L. (2013). Repetition suppression for speech processing in the associative occipital and parietal cortex of congenitally blind adults. PLoS ONE 8, e64553. doi: 10.1371/journal.pone.0064553

Bedny, M. (2017). Evidence from blindness for a cognitively pluripotent cortex. Trends Cogn. Sci. 21, 637–648. doi: 10.1016/j.tics.2017.06.003

Bedny, M., Pascual-Leone, A., Dodell-Feder, D., Fedorenko, E., and Saxe, R. (2011). Language processing in the occipital cortex of congenitally blind adults. Proc. Natl. Acad. Sci. U.S.A. 108, 4429–4434. doi: 10.1073/pnas.1014818108

Bedny, M., Pascual-Leone, A., Dravida, S., and Saxe, R. (2012). A sensitive period for language in the visual cortex: distinct patterns of plasticity in congenitally versus late blind adults. Brain Lang. 122, 167–170. doi: 10.1016/j.bandl.2011.10.005

Bedny, M., Richardson, H., and Saxe, R. (2015). “Visual” cortex responds to spoken language in blind children. J. Neurosci. 35, 11674–11681. doi: 10.1523/JNEUROSCI.0634-15.2015

Blau, V., Reithler, J., van Atteveldt, N., Seitz, J., Gerretsen, P., Goebel, R., et al. (2010). Deviant processing of letters and speech sounds as proximate cause of reading failure: a functional magnetic resonance imaging study of dyslexic children. Brain 133, 868–879. doi: 10.1093/brain/awp308

Bouhali, F., Bézagu, Z., Dehaene, S., and Cohen, L. (2019). A mesial-to-lateral dissociation for orthographic processing in the visual cortex. Proc. Natl. Acad. Sci. U.S.A. 116, 43. doi: 10.1073/pnas.1904184116

Brett, M., Anton, J. L., Valabregue, R., and Poline, J. B. (2002). “Region of interest analysis using an SPM toolbox,” in Paper Presented at the 8th International Conference on Functional Mapping of the Human Brain (Sendai).

Burton, H., Diamond, J. B., and McDermott, K. B. (2003). Dissociating cortical regions activated by semantic and phonological tasks: a fMRI study in blind and sighted people. J. Neurophysiol. 90, 1965–1982. doi: 10.1152/jn.00279.2003

Burton, H., Snyder, A. Z., Conturo, T. E., Akbudak, E., Ollinger, J. M., and Raichle, M. E. (2002). Adaptive changes in early and late blind: a fMRI study of braille reading. J. Neurophysiol. 87, 589–607. doi: 10.1152/jn.00285.2001

Cohen, L., Dehaene, S., Naccache, L., Lehéricy, S., Dehaene-Lambertz, G., Hénaff, M.-A., et al. (2000). The visual word form area. Brain 123, 291–307. doi: 10.1093/brain/123.2.291

Cohen, L., Jobert, A., Le Bihan, D., and Dehaene, S. (2004). Distinct unimodal and multimodal regions for word processing in the left temporal cortex. NeuroImage 23, 1256–1270. doi: 10.1016/j.neuroimage.2004.07.052

Debowska, W., Wolak, T., Soluch, P., Orzechowski, M., and Kossut, M. (2013). Design and evaluation of an innovative MRI-compatible Braille stimulator with high spatial and temporal resolution. J. Neurosci. Methods 213, 32–38. doi: 10.1016/j.jneumeth.2012.12.002

Dȩbska, A., Chyl, K., Dziȩgiel, G., Kacprzak, A., Łuniewska, M., Plewko, J., et al. (2019). Reading and spelling skills are differentially related to phonological processing: behavioral and fMRI study. Dev. Cogn. Neurosci. 39, 100683. doi: 10.1016/j.dcn.2019.100683

Dȩbska, A., Wójcik, M., Chyl, K., Dziȩgiel-Fivet, G., and Jednoróg, K. (2023). Beyond the Visual Word Form Area – a cognitive characterization of the left ventral occipitotemporal cortex. Front. Hum. Neurosci. 17, 1199366. doi: 10.3389/fnhum.2023.1199366

Dehaene, S., and Cohen, L. (2011). The unique role of the visual word form area in reading. Trends Cogn. Sci. 15, 254–262. doi: 10.1016/j.tics.2011.04.003

Dehaene, S., Cohen, L., Morais, J., and Kolinsky, R. (2015). Illiterate to literate: behavioural and cerebral changes induced by reading acquisition. Nat. Rev. Neurosci. 16, 234–244. doi: 10.1038/nrn3924

Dehaene, S., Pegado, F., Braga, L. W., Ventura, P., Filho, G. N., Jobert, A., et al. (2010). How learning to read changes the cortical networks for vision and language. Science 330, 1359–1364. doi: 10.1126/science.1194140

Dehaene-Lambertz, G., Monzalvo, K., and Dehaene, S. (2018). The emergence of the visual word form: longitudinal evolution of category-specific ventral visual areas during reading acquisition. PLoS Biol. 16, e2004103. doi: 10.1371/journal.pbio.2004103

Desroches, A. S., Cone, N. E., Bolger, D. J., Bitan, T., Burman, D. D., and Booth, J. R. (2010). Children with reading difficulties show differences in brain regions associated with orthographic processing during spoken language processing. Brain Res. 1356, 73–84. doi: 10.1016/j.brainres.2010.07.097

Dziȩgiel-Fivet, G., Plewko, J., Szczerbiński, M., Marchewka, A., Szwed, M., and Jednoróg, K. (2021). Neural network for Braille reading and the speech-reading convergence in the blind: similarities and differences to visual reading. NeuroImage 231, 117851. doi: 10.1016/j.neuroimage.2021.117851

Eickhoff, S. B., Stephan, K. E., Mohlberg, H., Grefkes, C., Fink, G. R., Amunts, K., et al. (2005). A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage 25, 1325–1335. doi: 10.1016/j.neuroimage.2004.12.034

Gizewski, E. R., Gasser, T., De Greiff, A., Boehm, A., and Forsting, M. (2003). Cross-modal plasticity for sensory and motor activation patterns in blind subjects. NeuroImage 19, 968–975. doi: 10.1016/S1053-8119(03)00114-9

Hodgson, V. J., Ralph, M. A. L., and Jackson, R. L. (2021). Multiple dimensions underlying the functional organisation of the language network. NeuroImage 241, 118444. doi: 10.1016/j.neuroimage.2021.118444

Jobard, G., Crivello, F., and Tzourio-Mazoyer, N. (2003). Evaluation of the dual route theory of reading: a metanalysis of 35 neuroimaging studies. NeuroImage 20, 693–712. doi: 10.1016/S1053-8119(03)00343-4

Kim, J. S., Kanjlia, S., Merabet, L. B., and Bedny, M. (2017). Development of the visual word form area requires visual experience: evidence from blind Braille readers. J. Neurosci. 37, 11495–11504. doi: 10.1523/JNEUROSCI.0997-17.2017

Kovelman, I., Norton, E. S., Christodoulou, J. A., Gaab, N., Lieberman, D. A., Triantafyllou, C., et al. (2012). Brain basis of phonological awareness for spoken language in children and its disruption in dyslexia. Cereb. Cortex 22, 754–764. doi: 10.1093/cercor/bhr094

Lerma-Usabiaga, G., Carreiras, M., and Paz-Alonso, P. M. (2018). Converging evidence for functional and structural segregation within the left ventral occipitotemporal cortex in reading. Proc. Natl. Acad. Sci. U.S.A. 115, E9981–E9990. doi: 10.1073/pnas.1803003115

Ludersdorfer, P., Price, C. J., Kawabata Duncan, K. J., DeDuck, K., Neufeld, N. H., and Seghier, M. L. (2019). Dissociating the functions of superior and inferior parts of the left ventral occipito-temporal cortex during visual word and object processing. NeuroImage 199, 325–335. doi: 10.1016/j.neuroimage.2019.06.003

Ludersdorfer, P., Wimmer, H., Richlan, F., Schurz, M., Hutzler, F., and Kronbichler, M. (2016). Left ventral occipitotemporal activation during orthographic and semantic processing of auditory words. NeuroImage 124, 834–842. doi: 10.1016/j.neuroimage.2015.09.039

Martin, A., Schurz, M., Kronbichler, M., and Richlan, F. (2015). Reading in the brain of children and adults: A meta-analysis of 40 functional magnetic resonance imaging studies. Hum. Brain Mapp. 36, 1963–1981. doi: 10.1002/hbm.22749

Mattioni, S., Rezk, M., Battal, C., Vadlamudi, J., and Collignon, O. (2022). Impact of blindness onset on the representation of sound categories in occipital and temporal cortices. eLife 11, e79370. doi: 10.7554/eLife.79370.sa2

Noppeney, U. (2007). The effects of visual deprivation on functional and structural organization of the human brain. Neurosci. Biobehav. Rev. 31, 1169–1180. doi: 10.1016/j.neubiorev.2007.04.012

Pammer, K., Hansen, P. C., Kringelbach, M. L., Holliday, I., Barnes, G., Hillebrand, A., et al. (2004). Visual word recognition: the first half second. NeuroImage 22, 1819–1825. doi: 10.1016/j.neuroimage.2004.05.004

Pattamadilok, C., Planton, S., and Bonnard, M. (2019). Spoken language coding neurons in the Visual Word Form Area: evidence from a TMS adaptation paradigm. NeuroImage 186, 278–285. doi: 10.1016/j.neuroimage.2018.11.014

Planton, S., Chanoine, V., Sein, J., Anton, J. L., Nazarian, B., Pallier, C., et al. (2019). Top-down activation of the visuo-orthographic system during spoken sentence processing. NeuroImage 202, 116135. doi: 10.1016/j.neuroimage.2019.116135

Price, C. J., and Devlin, J. T. (2011). The interactive account of ventral occipitotemporal contributions to reading. Trends Cogn. Sci. 15, 246–253. doi: 10.1016/j.tics.2011.04.001

R Core Team (2023). R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing.

Raczy, K., Urbańczyk, A., Korczyk, M., Szewczyk, J. M., Sumera, E., and Szwed, M. (2019). Orthographic priming in braille reading as evidence for task-specific reorganization in the ventral visual cortex of the congenitally blind. J. Cogn. Neurosci. 31, 1065–1078. doi: 10.1162/jocn_a_01407

Raschle, N. M., Stering, P. L., Meissner, S. N., and Gaab, N. (2014). Altered neuronal response during rapid auditory processing and its relation to phonological processing in prereading children at familial risk for dyslexia. Cereb. Cortex 24, 2489–2501. doi: 10.1093/cercor/bht104

Reich, L., Szwed, M., Cohen, L., and Amedi, A. (2011). A ventral visual stream reading center independent of visual experience. Curr. Biol. 21, 363–368. doi: 10.1016/j.cub.2011.01.040

Röder, B., Stock, O., Bien, S., Neville, H., and Rösler, F. (2002). Speech processing activates visual cortex in congenitally blind humans. Eur. J. Neurosci. 16, 930–936. doi: 10.1046/j.1460-9568.2002.02147.x

Rolls, E. T., Huang, C. C., Lin, C. P., Feng, J., and Joliot, M. (2020). Automated anatomical labelling atlas 3. NeuroImage 206, 116189. doi: 10.1016/j.neuroimage.2019.116189

Rueckl, J. G., Paz-Alonso, P. M., Molfese, P. J., Kuo, W.-J., Bick, A., Frost, S. J., et al. (2015). Universal brain signature of proficient reading: evidence from four contrasting languages. Proc. Natl. Acad. Sci. U.S.A. 112, 15510–15515. doi: 10.1073/pnas.1509321112

Saxe, R., Brett, M., and Kanwisher, N. (2006). Divide and conquer: a defense of functional localizers. NeuroImage 30, 1088–1096. doi: 10.1016/j.neuroimage.2005.12.062

Saygin, Z. M., Osher, D. E., Norton, E. S., Youssoufian, D. A., Beach, S. D., Feather, J., et al. (2016). Connectivity precedes function in the development of the visual word form area. Nat. Neurosci. 19, 1250–1255. doi: 10.1038/nn.4354

Siuda-Krzywicka, K., Bola, Ł., Paplińska, M., Sumera, E., Jednoróg, K., Marchewka, A., et al. (2016). Massive cortical reorganization in sighted braille readers. eLife 5, e10762. doi: 10.7554/eLife.10762

Tian, M., Saccone, E. J., Kim, J. S., Kanjlia, S., and Bedny, M. (2023). Sensory modality and spoken language shape reading network in blind readers of Braille. Cereb. Cortex 33, 2426–2440. doi: 10.1093/cercor/bhac216

Veispak, A., Boets, B., Männamaa, M., and Ghesquière, P. (2012). Probing the perceptual and cognitive underpinnings of braille reading. An Estonian population study. Res. Dev. Disabil. 33, 1366–1379. doi: 10.1016/j.ridd.2012.03.009

Vinckier, F., Dehaene, S., Jobert, A., Dubus, J. P., Sigman, M., and Cohen, L. (2007). Hierarchical coding of letter strings in the ventral stream: dissecting the inner organization of the visual word-form system. Neuron 55, 143–156. doi: 10.1016/j.neuron.2007.05.031

Yeatman, J. D., Rauschecker, A. M., and Wandell, B. A. (2013). Anatomy of the visual word form area: adjacent cortical circuits and long-range white matter connections. Brain Lang. 125, 146–155. doi: 10.1016/j.bandl.2012.04.010

Yoncheva, Y. N., Zevin, J. D., Maurer, U., and McCandliss, B. D. (2010). Auditory selective attention to speech modulates activity in the visual word form area. Cereb. Cortex 20, 622–632. doi: 10.1093/cercor/bhp129

Keywords: blind, fMRI, language, plasticity, vOT, VWFA

Citation: Dziȩgiel-Fivet G, Beck J and Jednoróg K (2023) The role of the left ventral occipitotemporal cortex in speech processing—The influence of visual deprivation. Front. Hum. Neurosci. 17:1228808. doi: 10.3389/fnhum.2023.1228808

Received: 25 May 2023; Accepted: 13 November 2023;

Published: 06 December 2023.

Edited by:

Sharon Geva, University College London, United Kingdom

Reviewed by:

Judy Kim, Princeton University, United States

Copyright © 2023 Dziȩgiel-Fivet, Beck and Jednoróg. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gabriela Dziȩgiel-Fivet, g.dziegiel@nencki.edu.pl; Katarzyna Jednoróg, k.jednorog@nencki.edu.pl
