
ORIGINAL RESEARCH article

Front. Hum. Neurosci., 10 October 2023

Sec. Cognitive Neuroscience

Volume 17 - 2023 | https://doi.org/10.3389/fnhum.2023.1174104

This article is part of the Research Topic Simultaneous EEG-fMRI Applications in Cognitive Neuroscience

Introduction: Emotions play a critical role in human communication, exerting a significant influence on brain function and behavior. One effective method of observing and analyzing these emotions is through electroencephalography (EEG) signals. Although numerous studies have been dedicated to emotion recognition (ER) using EEG signals, achieving improved accuracy in recognition remains a challenging task. To address this challenge, this paper presents a deep-learning approach for ER using EEG signals.

Background: ER is a dynamic field of research with diverse practical applications in healthcare, human-computer interaction, and affective computing. In ER studies, EEG signals are frequently employed as they offer a non-invasive and cost-effective means of measuring brain activity. Nevertheless, accurately identifying emotions from EEG signals poses a significant challenge due to the intricate and non-linear nature of these signals.

Methods: The present study proposes a novel approach for ER that encompasses multiple stages, including feature extraction, feature selection (FS) employing clustering, and classification using Dual-LSTM. The experiments were conducted on the DEAP dataset, and a clustering technique was applied to Hurst exponent and statistical features during the FS phase. Finally, Dual-LSTM was employed for ER.

Results: The proposed method achieved an accuracy of 97.5% in classifying emotions across the dimensions of arousal, valence, liking/disliking, dominance, and familiarity. This high accuracy serves as strong evidence for the effectiveness of the deep-learning approach to emotion recognition (ER) using EEG signals.

Conclusion: The deep-learning approach proposed in this paper has shown promising results in emotion recognition using EEG signals. This method can be useful in various applications, such as developing more effective therapies for individuals with mood disorders or improving human-computer interaction by allowing machines to respond more intelligently to users’ emotional states. However, further research is needed to validate the proposed method on larger datasets and to investigate its applicability to real-world scenarios.

Emotions constitute a valuable facet of an individual’s character and play a pivotal role in the development and acquisition of virtues (Mullins and Sabherwal, 2018). Emotions are of great significance in human communication and are manifested through a range of expressive patterns to which emotional labels are assigned. Numerous previous studies have focused on emotion classification, often examining commonly studied basic emotions such as happiness, sadness, anger, fear, disgust, and surprise. These emotions are typically depicted on a two-dimensional diagram, with valence and arousal serving as the key dimensions (Al-Nafjan et al., 2017).

Multiple methods have been employed to discern human emotions, including the analysis of speech patterns and tone of voice (Moriyama and Ozawa, 2003; Zeng et al., 2009). However, such vocal cues can be manipulated or imitated (Schuller and Schuller, 2021). Facial expressions and their changes are also commonly used for emotion recognition; however, individuals can deliberately modify these expressions, which makes it difficult to discern their genuine emotions (Aryanmehr et al., 2018; Dzedzickis et al., 2020; Harouni et al., 2022). Electroencephalography (EEG) is a technique for monitoring brain activity by measuring the voltage changes generated by the collective neural activity within the brain (San-Segundo et al., 2019; Dehghani et al., 2020, 2022, 2023; Sadjadi et al., 2021; Mosayebi et al., 2022). EEG reflects the brain’s activity and functioning, and it has diverse applications, including but not limited to emotion recognition (Dehghani et al., 2011a,b, 2013; Ebrahimzadeh and Alavi, 2013; Nikravan et al., 2016; Soroush et al., 2017, 2018a,b, 2019a,b, 2020; Bagherzadeh et al., 2018; Alom et al., 2019; Ebrahimzadeh et al., 2019a,b,c, 2021, 2022, 2023; Bagheri and Power, 2020; Karimi et al., 2022; Rehman et al., 2022; Yousefi et al., 2022, 2023).

Methods rooted in machine learning (ML), pattern recognition, and data mining have been employed to detect and identify emotions through the analysis of EEG signals (Martínez-Tejada et al., 2020). ML is a highly effective approach for identifying, diagnosing, and classifying emotions, as it facilitates the discovery of patterns that distinguish different types of emotion (Zhang et al., 2020). However, while the accuracy of ML tends to improve as the volume of data increases, there is a threshold beyond which additional data yields no significant improvement in accuracy (Raftarai et al., 2021). Deep learning (DL) algorithms have emerged as a solution to this problem: the accuracy of DL-based methods tends to keep increasing as the data become larger and more comprehensive (Alom et al., 2019; Dargan et al., 2020; Karimi et al., 2021).

DL is a subfield of ML (Shrestha and Mahmood, 2019) that encompasses various architectures. One example is the Multilayer Perceptron (MLP), which consists of an input layer, an output layer, and multiple hidden layers, each containing hidden units. Another notable type is the Recurrent Neural Network (RNN), known for its effectiveness in time-series prediction, rapid convergence, and adaptability (Rout et al., 2017). In an RNN, the output of the hidden layer is fed back through a buffer layer, giving each neuron a feedback connection to the network’s previous state. This feedback mechanism significantly enhances the RNN’s capacity for learning, recognition, and pattern generation.

In the RNN architecture, each hidden neuron is linked to a single feedback neuron through a fixed weight of one. Consequently, the feedback layer captures and represents the previous state of the hidden layer, and the number of feedback neurons equals the number of hidden neurons (Jia et al., 2021). In theory, traditional RNNs of sufficient size can generate sequences of arbitrary complexity, but in practice this is often not the case. RNNs struggle to retain information from past inputs over extended periods, so their memory is effectively short-term. This limitation hampers the network’s ability to model long-term structure and can lead to instability during sequence generation. Long Short-Term Memory (LSTM) RNNs were introduced to address this challenge. Unlike traditional RNNs, which overwrite their content at each time step, LSTM RNNs incorporate gates that enable them to selectively retain important information. When an LSTM unit detects a significant feature early in the input sequence, it can carry this information forward over a long span. This capability allows LSTMs to handle long-term dependencies and makes them particularly suitable as memory units within recurrent networks. By leveraging their long-term memory, LSTMs can improve predictions by drawing on past context, even when their representation of recent history is imperfect (Yin et al., 2021).
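The feedback arrangement described at the start of this paragraph, in which each hidden unit feeds exactly one context unit through a fixed weight of one, is the classic Elman-style network. The NumPy sketch below shows its forward pass; the weight names (`W_in`, `W_ctx`, `W_out`) and the `tanh` activation are illustrative assumptions, not details taken from the cited work.

```python
import numpy as np

def elman_forward(xs, W_in, W_ctx, b, W_out):
    """Forward pass of an Elman-style RNN over a sequence xs of shape (T, D).

    The context (feedback) layer has one unit per hidden unit and simply
    stores a copy of the previous hidden state, i.e. a fixed weight of one.
    """
    n_hidden = W_ctx.shape[0]
    context = np.zeros(n_hidden)          # no history before the first step
    outputs = []
    for x_t in xs:
        h = np.tanh(W_in @ x_t + W_ctx @ context + b)
        outputs.append(W_out @ h)
        context = h.copy()                # feedback: context <- hidden state (weight 1)
    return np.array(outputs), context

# Example: the same input at every step still yields different outputs,
# because the context layer carries the previous hidden state forward.
rng = np.random.default_rng(0)
D, H, K, T = 3, 5, 2, 4
W_in, W_ctx = rng.normal(size=(H, D)), rng.normal(size=(H, H))
b, W_out = rng.normal(size=H), rng.normal(size=(K, H))
xs = np.ones((T, D))
ys, last_context = elman_forward(xs, W_in, W_ctx, b, W_out)
```

Zeroing `W_ctx` removes the memory entirely, so identical inputs then produce identical outputs at every step.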

LSTM networks are an advancement over traditional RNNs, specifically designed to retain past information in memory. By overcoming the rapidly fading memory of standard recurrent neural networks, LSTM networks offer improved capabilities for tasks such as classifying, processing, and forecasting time series with uncertain time lags. LSTM networks are trained with back-propagation (through time), a widely used technique for model optimization and learning (Yin et al., 2021).
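The gating idea can be made concrete with a single LSTM time step. The sketch below uses the common formulation with input, forget, and output gates and an additive cell-state update; the stacked gate order `[input, forget, candidate, output]` and all parameter names are assumptions for illustration. The usage shows the selective retention described above: with the forget gate saturated open and the input gate saturated shut, the cell state passes through a step essentially unchanged.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases,
    stacked in the (assumed) order [input, forget, candidate, output].
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])            # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])        # forget gate: how much old cell state to keep
    g = np.tanh(z[2 * H:3 * H])    # candidate content
    o = sigmoid(z[3 * H:4 * H])    # output gate: how much of the cell to expose
    c = f * c_prev + i * g         # additive update instead of overwriting
    h = o * np.tanh(c)
    return h, c

# Example: forget gate pinned open, input gate pinned shut -> memory is kept.
D, H = 3, 2
W, U = np.zeros((4 * H, D)), np.zeros((4 * H, H))
b = np.concatenate([np.full(H, -20.0), np.full(H, 20.0), np.zeros(H), np.zeros(H)])
c_prev = np.array([0.7, -1.2])
h, c = lstm_step(np.ones(D), np.zeros(H), c_prev, W, U, b)
```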

Numerous studies have addressed the recognition of emotions from brain signals. For example, the Phase Lag Index (PLI) was used to identify multiband static networks (Liu et al., 2019).

In the study by Ozel et al. (2019), a novel approach for event-related analysis was introduced, based on the time-frequency analysis of multichannel EEG signals using a multivariate transform. The study evaluated several classifiers, including Support Vector Machine (SVM), K-Nearest Neighbors (KNN), Decision Tree (DT), and an ensemble classifier. Similarly, Islam and Ahmad (2019) presented a method that used discrete wavelet analysis of EEG signals and classified emotions with a KNN classifier. Ordonez-Bolanos et al. (2019) developed a three-component emotion recognition (ER) method that combines improved complete ensemble empirical mode decomposition, the discrete wavelet transform, and the maximal overlap discrete wavelet transform of the brain signal. To determine the class of each feature set, linear discriminant analysis (LDA) and KNN classifiers were used in a cascade architecture. Lee et al. (2019) introduced a database and a new neural-network-based method for identifying emotions; the study described three identification approaches: a support vector machine, a neural network, and a deep belief network. Yang et al. (2019) used a convolutional neural network with max pooling for feature extraction in ER. They employed a 50-layer deep architecture that combined max pooling and average pooling to generate feature vectors; these were concatenated into the final feature vector, and two LSTM models were used for classification. Shukla et al. (2021) employed an optimal feature selection (FS) method to improve the accuracy of emotion recognition from EEG data, and classified the selected features with KNN, SVM, and multilayer perceptron neural networks. Yang et al. (2020) proposed an emotion recognition approach based on the Fourier transform and Mel energy dynamic spectral coefficients, and classified the feature vector of each signal with an SVM. Wei et al. (2020) developed an emotion recognition method that combines two convolutional neural networks (CNNs) with an RNN: one CNN generates high-level features, the other generates features that are correlated with each other, and an LSTM RNN then processes and classifies the resulting feature vectors. AlZoubi et al. (2023) classified naturalistic expressions of emotion using brain and environmental signals. Khateeb et al. (2021) proposed a method for improving ER accuracy from EEG signals by combining several feature extraction techniques: features were extracted in both the time and frequency domains, and a multi-class SVM optimized with a genetic algorithm led to improved performance. In the study by Algarni et al. (2022), a range of statistical and Hurst features was employed for classification. The Binary Gray Wolf Optimizer was used to select the most relevant features, and ER was performed with a stacked bidirectional LSTM (Bi-LSTM) model. The classification task targeted three emotion dimensions: arousal, valence, and liking.

Despite significant advances in EEG-based emotion recognition, several persistent challenges continue to shape the field. Existing research has made valuable contributions, but it is not without limitations. First, EEG signals are susceptible to various sources of noise, including environmental interference and artifacts, which can undermine the accuracy of emotion recognition systems. Second, traditional feature extraction methods may struggle to capture the nuanced and complex emotional states that individuals experience. Third, selecting the most informative features for classification remains difficult. Finally, modeling temporal dependencies and making effective use of the long-term contextual information in EEG data is an ongoing challenge. Together, these limitations underscore the need for innovative approaches that improve the accuracy and efficiency of EEG-based emotion recognition systems. The existing literature highlights the potential of DL techniques, such as RNN and LSTM, to achieve high accuracy in data classification; however, a critical challenge lies in selecting appropriate features for training these models. This study aims to improve the accuracy of ER by leveraging DL approaches and brain signals. By employing an effective feature selection technique and combining several classification methods, it seeks to achieve both high ER accuracy and reduced computational complexity. The primary objective of this paper is to propose an LSTM-based approach for ER using EEG signals.

The proposed method consists of several steps: preprocessing, feature extraction, dimensionality reduction, and classification. In the preprocessing step, EEG signals undergo quality improvement and noise removal. Time-domain features are then extracted from the EEG signals, and the most relevant and informative of them are selected for classification. Classification is performed using the LSTM method.

This study’s key contributions include the extraction of discriminative features from EEG signals, the introduction of an efficient feature selection method, and the improvement of the LSTM architecture for classification purposes. By addressing the challenges of feature selection and leveraging the power of LSTM networks, this approach aims to enhance the accuracy and effectiveness of ER using EEG signals.

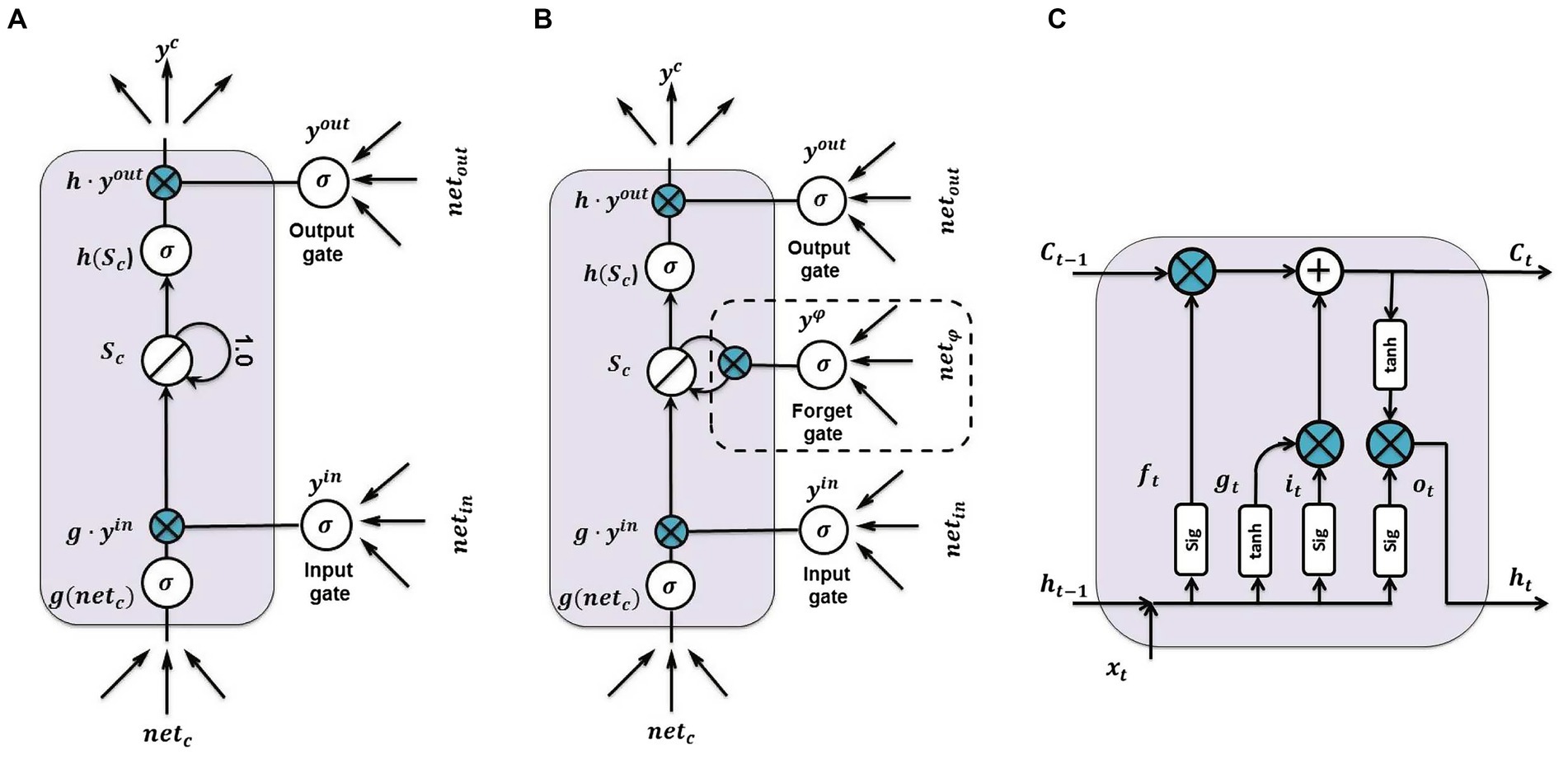

Deep learning uses multiple layers of nonlinear processing to extract complex patterns and representations from data. The DL workflow consists of two essential stages: training and inference. During training, large quantities of labeled data are examined to learn their distinctive features. During inference, the trained model draws conclusions about previously unseen data and classifies or labels it (Leevy et al., 2020). DL, also known as deep structured learning or hierarchical learning, is distinguished by its multilayered architecture, whose layers use nonlinear processing units to transform the data and extract significant features from it (Dargan et al., 2020). In this paper, LSTM is used for ER. Figure 1 illustrates three different configurations of LSTM cells (Smagulova and James, 2019). These networks incorporate feedback loops that retain information from previous time steps, allowing it to persist within the network. LSTM, a type of recurrent neural network architecture, is specifically designed to store and retrieve information better than conventional recurrent networks: instead of overwriting their content at each time step, LSTM networks use gating mechanisms to selectively retain valuable information. Recurrent neural networks derive their name from the recurrent connection through which the hidden state computed at one time step is fed back as input at the next, which gives them the ability to retain information from previously observed data. Although LSTM is acknowledged as a powerful tool, it can still suffer from vanishing and exploding gradients (Smagulova and James, 2019).

Figure 1. Three LSTM cell configurations: (A) basic LSTM structure with one memory cell and two gates, (B) LSTM cell with a forget gate, and (C) updated LSTM model with a forget gate (Smagulova and James, 2019).

Accurate analysis during the ER process is crucial, particularly in the context of behavioral and mental disorders. However, conducting ER and obtaining precise results can be a challenging task. Previous research papers have highlighted variations in outcomes attributed to various factors, including network experience, environmental conditions, data preprocessing techniques, and classifier selection. As a result, there is an urgent need to develop a more effective methodology to achieve optimal performance. In this regard, this paper presents a novel and efficient approach that leverages the power of DL, specifically utilizing LSTM for ER.

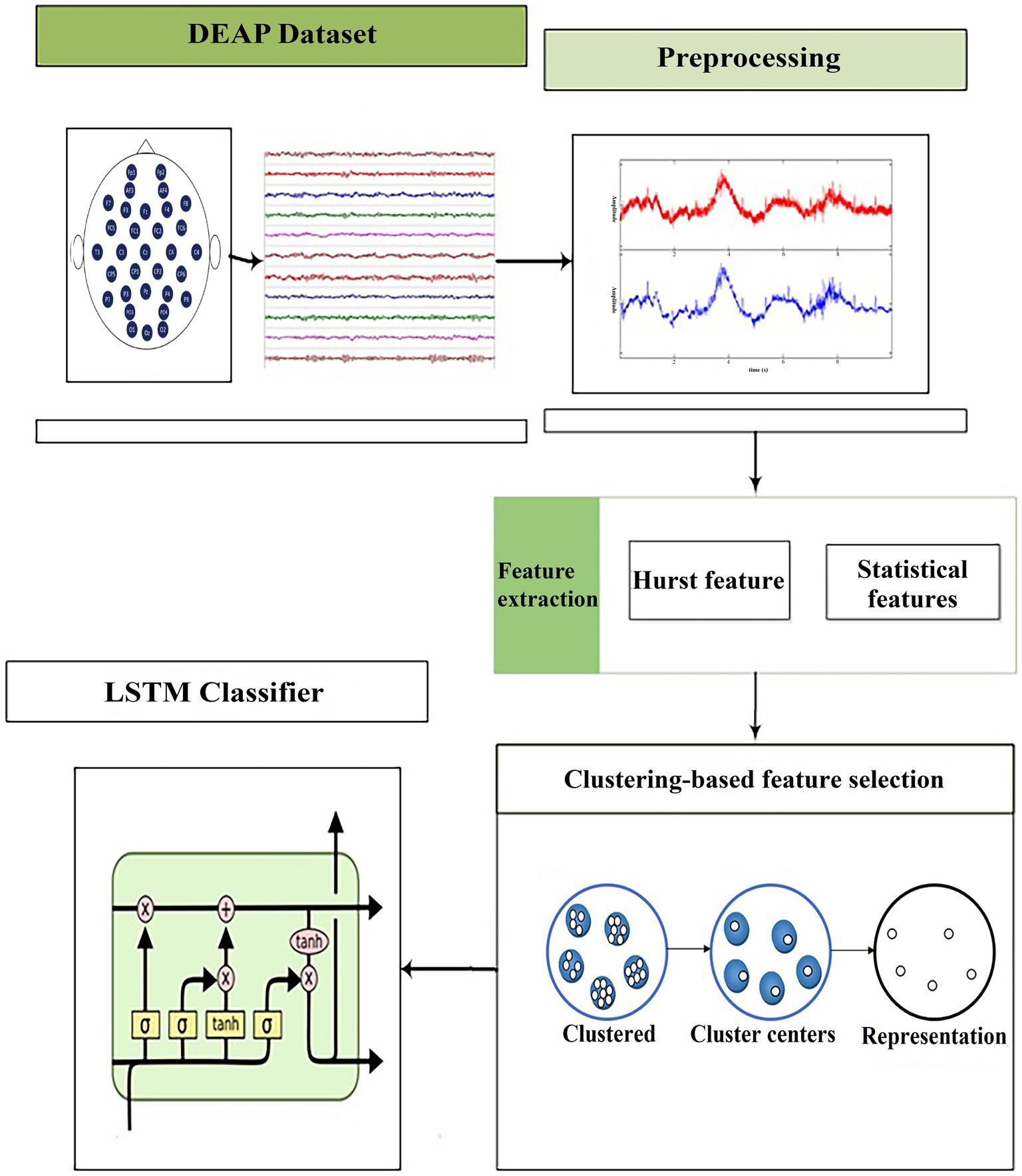

This paper focuses on performing ER using EEG signals and employs a DL-based classification approach with an LSTM classifier. The LSTM classifier requires key features, such as Hurst and statistical features, to be extracted from the preprocessed input signals. To enhance the classifier’s performance, a filter-based FS method is used to choose the most distinctive features for classification. The selected features are then used to train the proposed LSTM, a DL-based classifier, to recognize different emotions. The proposed method for ER using brain signals is depicted in Figure 2, which illustrates the stages of preprocessing, feature extraction, dimensionality reduction, and classification. These stages are explained in detail in the following sections.

Figure 2. Block diagram of the proposed method (Cho and Hwang, 2020).

Signals are often accompanied by varying degrees of noise, which in EEG recordings may appear as artifacts and other disturbances. The EEG data in the DEAP dataset had already been preprocessed, including artifact removal (specifically EOG), filtering, and down-sampling. A visual inspection was also conducted to identify and address any remaining artifacts. In addition, in this paper, the Empirical Mode Decomposition (EMD) method was employed to remove noise from the EEG signal (Huang et al., 1998). EMD decomposes the signal x[n] into a set of amplitude- and frequency-modulated components b_i[n], referred to as intrinsic mode functions (IMFs). EMD is an empirical, data-driven technique, and each IMF must satisfy two conditions: over the entire signal, the number of extrema and the number of zero crossings must be equal or differ by at most one; and at every point, the mean of the envelopes defined by the local maxima and the local minima must be zero. The signal is thus represented as the sum of its IMFs and a final residue, $x[n] = \sum_{i=1}^{M} b_i[n] + r[n]$ for $n = 1, \dots, m$, where M is the number of IMFs, r[n] is the residue, and m is the number of samples of the signal. Figure 3 depicts the denoised signal after applying the EMD method.
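To make the decomposition and its reconstruction identity concrete, here is a deliberately simplified EMD sketch in NumPy. It is not the procedure used in the paper: standard EMD builds the envelopes with cubic splines and stops sifting with a convergence criterion, whereas this sketch uses linear interpolation, a fixed number of sifting iterations, and crude end-point handling. The text does not state which IMFs were discarded for denoising; dropping the first (highest-frequency) IMF is only an assumed, commonly used heuristic.

```python
import numpy as np

def local_extrema(x):
    """Indices of strict interior local maxima and minima."""
    d = np.diff(x)
    maxima = np.flatnonzero((d[:-1] > 0) & (d[1:] < 0)) + 1
    minima = np.flatnonzero((d[:-1] < 0) & (d[1:] > 0)) + 1
    return maxima, minima

def envelope(idx, x):
    """Linear envelope through x[idx]; the end samples are added as knots
    (a crude boundary treatment assumed for this sketch)."""
    knots = np.concatenate(([0], idx, [len(x) - 1]))
    return np.interp(np.arange(len(x)), knots, x[knots])

def sift(x, n_sifts=10):
    """Extract one IMF candidate by repeatedly removing the mean envelope."""
    h = x.copy()
    for _ in range(n_sifts):
        maxima, minima = local_extrema(h)
        if len(maxima) < 2 or len(minima) < 2:
            break
        mean_env = (envelope(maxima, h) + envelope(minima, h)) / 2.0
        h = h - mean_env
    return h

def emd(x, max_imfs=6):
    """Return (imfs, residue) such that x == imfs.sum(axis=0) + residue."""
    residue = np.asarray(x, dtype=float).copy()
    imfs = []
    for _ in range(max_imfs):
        maxima, minima = local_extrema(residue)
        if len(maxima) < 2 or len(minima) < 2:   # residue is (close to) monotonic
            break
        imf = sift(residue)
        imfs.append(imf)
        residue = residue - imf
    return np.array(imfs), residue

# Example: a 5 Hz rhythm plus noise, 2 s at 128 Hz
rng = np.random.default_rng(0)
t = np.arange(256) / 128.0
noisy = np.sin(2 * np.pi * 5 * t) + 0.3 * rng.standard_normal(t.size)
imfs, residue = emd(noisy)
# Assumed heuristic: treat the first (highest-frequency) IMF as noise
denoised = imfs[1:].sum(axis=0) + residue
```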

Feature extraction plays a crucial role in effective EEG-based ER methods. In this study, features are extracted after preprocessing has produced a noise-free dataset. Feature extraction enables a better understanding of the data and reduces computational costs, data storage requirements, and training time. Only time-domain features are extracted in this paper: the Hurst exponent, a notable time-domain feature extraction technique, and a set of statistical features.

During the feature extraction process, Hurst’s exponent is employed to measure changes in EEG time series. This feature helps in identifying the presence or absence of long-term trends in sequential one-dimensional signals, such as EEG signal sequences. Equation (1) is used to calculate the Hurst index (Geng et al., 2011; Ebrahimzadeh and Najararaabi, 2016; Algarni et al., 2022).

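The Hurst exponent (HE) quantifies the level of persistence in a time series. For a partial time series $x_1, \dots, x_n$ of length n, it is computed from the cumulative deviation of the mean-centered signal, as expressed by the following equation:

$$Y_t = \sum_{i=1}^{t} \left(x_i - \bar{x}_n\right), \quad t = 1, \dots, n, \qquad R(n) = \max_{1 \le t \le n} Y_t - \min_{1 \le t \le n} Y_t \tag{1}$$

In this equation, $\bar{x}_n$ is the average of the signal over the window of length n, and R(n) is the range of the cumulative deviation. HE can then be defined through the scaling law:

$$E\left[\frac{R(n)}{S(n)}\right] = C\, n^{H} \tag{2}$$

In Equation (2), E[·] refers to the expected value, S(n) is the standard deviation of the partial time series, C is a constant, and H is the Hurst exponent, obtained as the slope of $\log E[R(n)/S(n)]$ against $\log n$.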
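The rescaled-range (R/S) estimate of the Hurst exponent can be sketched in a few lines of NumPy: split the signal into non-overlapping windows of length n, divide the range of the cumulative deviation from the window mean by the window's standard deviation, average over windows to estimate the expectation, and read H off the slope of a log-log fit. The window sizes are arbitrary choices for illustration; the paper does not report which ones were used. As a sanity check, white noise should give a value somewhat above 0.5 (R/S is biased upward for short windows), and a random walk a value close to 1.

```python
import numpy as np

def rescaled_range(w):
    """R(n)/S(n) for one window w of length n."""
    y = np.cumsum(w - w.mean())   # cumulative deviation from the window mean
    r = y.max() - y.min()         # range R(n)
    s = w.std()                   # standard deviation S(n)
    return r / s

def hurst_rs(x, window_sizes=(16, 32, 64, 128, 256)):
    """Estimate the Hurst exponent as the slope of log E[R/S] against log n."""
    x = np.asarray(x, dtype=float)
    log_n, log_rs = [], []
    for n in window_sizes:
        k = len(x) // n           # number of non-overlapping windows of length n
        if k == 0:
            continue
        rs = [rescaled_range(x[i * n:(i + 1) * n]) for i in range(k)]
        log_n.append(np.log(n))
        log_rs.append(np.log(np.mean(rs)))   # window average stands in for E[.]
    slope, _intercept = np.polyfit(log_n, log_rs, 1)
    return slope

rng = np.random.default_rng(1)
white = rng.standard_normal(4096)
h_white = hurst_rs(white)              # uncorrelated series
h_walk = hurst_rs(np.cumsum(white))    # strongly persistent series
```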

Because the EEG signal is non-stationary in the time domain, statistical features are used to characterize it. The statistical features used for ER include skewness (the degree of asymmetry of a distribution), kurtosis, entropy, mean, variance, signal energy, and Shannon entropy.
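A NumPy sketch of these statistical features for one channel of one trial follows. Assumptions: the "curve elongation" (kurtosis) feature is reported in the Pearson convention, where a Gaussian scores 3; Shannon entropy is estimated from an amplitude histogram with an arbitrary bin count; and the separately listed, unspecified "entropy" is left out because the text does not define it.

```python
import numpy as np

def statistical_features(x, n_bins=32):
    """Time-domain statistical features of a 1-D signal x."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    variance = x.var()
    z = (x - mean) / np.sqrt(variance)          # standardized signal
    counts, _ = np.histogram(x, bins=n_bins)
    p = counts[counts > 0] / counts.sum()       # empirical amplitude distribution
    return {
        "mean": mean,
        "variance": variance,
        "skewness": np.mean(z ** 3),            # asymmetry of the distribution
        "kurtosis": np.mean(z ** 4),            # peakedness / tail weight
        "energy": np.sum(x ** 2),
        "shannon_entropy": -np.sum(p * np.log2(p)),  # in bits
    }

feats = statistical_features([1.0, 2.0, 3.0, 4.0])
```

For the four-sample example, each value lands in its own histogram bin, so the Shannon entropy is exactly 2 bits.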

This paper uses an FS method for ER that involves three steps of redundancy and relevance analysis, as described below:

1. The feature space is established using the Euclidean distance criterion in this study.

2. The features are grouped using a clustering method such as K-means.

3. To obtain the optimal subset of features, an FS method based on the minimum redundancy maximum relevance (mRMR) approach is applied within each cluster. The resulting subset of features is then used for classification, with the cluster heads serving as the final features. This approach is explained in detail in Ismi et al. (2016).
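Steps 1 and 2 above can be sketched as k-means over the features themselves: each z-scored feature column becomes a point, so Euclidean distance groups features that carry similar information. The sketch uses farthest-first initialization (an assumption made here to keep the example deterministic) and, as a simple stand-in for the per-cluster mRMR of step 3, takes the member closest to each centroid as the cluster head. All function names are hypothetical.

```python
import numpy as np

def _assign(points, centers):
    # squared Euclidean distance from every point to every center
    d = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)

def kmeans(points, k, n_iter=100, seed=0):
    rng = np.random.default_rng(seed)
    # farthest-first initialization: start at a random point, then repeatedly
    # add the point farthest from all centers chosen so far
    idx = [int(rng.integers(len(points)))]
    for _ in range(k - 1):
        d = ((points[:, None, :] - points[idx][None, :, :]) ** 2).sum(axis=2).min(axis=1)
        idx.append(int(d.argmax()))
    centers = points[idx].astype(float)
    for _ in range(n_iter):
        labels = _assign(points, centers)
        new_centers = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return _assign(points, centers), centers

def cluster_heads(X, k, seed=0):
    """X: (n_samples, n_features). Returns one representative feature index per cluster."""
    Z = (X - X.mean(axis=0)) / X.std(axis=0)    # z-score each feature column
    F = Z.T                                      # one row per feature
    labels, centers = kmeans(F, k, seed=seed)
    heads = []
    for j in range(k):
        members = np.flatnonzero(labels == j)
        if members.size:
            dist = ((F[members] - centers[j]) ** 2).sum(axis=1)
            heads.append(int(members[dist.argmin()]))
    return sorted(heads), labels

# Example: 9 features that are noisy copies of 3 independent signals.
rng = np.random.default_rng(0)
base = rng.standard_normal((200, 3))
X = np.repeat(base, 3, axis=1) + 0.05 * rng.standard_normal((200, 9))
heads, feature_labels = cluster_heads(X, k=3)
```

Redundant copies of the same signal fall into one cluster, so the three heads cover the three underlying signals.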

The proposed FS method is depicted in Figure 4. First, the feature space is clustered, and the resulting cluster centers are regarded as the selected features for the clustered data. Strongly relevant features are identified as essential (core) features, which form the foundation of the conditional features. The optimization focuses on relevance and redundancy analysis, which amounts to two optimization problems. To optimize the selected features, the classic mRMR criterion is used, which combines Max-Relevance and Min-Redundancy.

The mutual-information-based mRMR approach selects features that have maximal mutual information with the class label (maximum relevance) while minimizing the mutual information among the selected features (minimum redundancy). This can improve the classification efficiency of the selected features. With the proposed FS method, the most effective features are selected and then classified using an LSTM model.
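A compact NumPy sketch of greedy mRMR in its difference form (relevance minus mean redundancy) follows. Assumptions: continuous features are discretized into equal-frequency bins before mutual information is estimated from joint histograms, and labels are non-negative integer class codes. The bin count and function names are illustrative, not taken from the paper or from Ismi et al. (2016).

```python
import numpy as np

def discretize(v, n_bins=8):
    """Map a continuous feature to integer codes 0..n_bins-1 (equal-frequency bins)."""
    edges = np.quantile(v, np.linspace(0, 1, n_bins + 1)[1:-1])
    return np.searchsorted(edges, v, side="right")

def mutual_info(a, b):
    """Mutual information (nats) between two integer-coded vectors."""
    joint = np.zeros((a.max() + 1, b.max() + 1))
    np.add.at(joint, (a, b), 1)                 # joint histogram
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)
    py = pxy.sum(axis=0, keepdims=True)
    nz = pxy > 0
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))

def mrmr(X, y, n_select, n_bins=8):
    """Greedy mRMR: maximize relevance to y minus mean redundancy with chosen features."""
    codes = np.column_stack([discretize(X[:, j], n_bins) for j in range(X.shape[1])])
    relevance = np.array([mutual_info(codes[:, j], y) for j in range(X.shape[1])])
    selected = [int(relevance.argmax())]
    while len(selected) < n_select:
        best, best_score = None, -np.inf
        for j in range(X.shape[1]):
            if j in selected:
                continue
            redundancy = np.mean([mutual_info(codes[:, j], codes[:, s]) for s in selected])
            score = relevance[j] - redundancy
            if score > best_score:
                best, best_score = j, score
        selected.append(best)
    return selected

# Example: f1 duplicates f0, f2 is equally relevant but independent, f3 is noise.
rng = np.random.default_rng(0)
n = 2000
y = rng.integers(0, 2, size=n)
f0 = y + 0.5 * rng.standard_normal(n)
f1 = f0 + 0.01 * rng.standard_normal(n)
f2 = y + 0.5 * rng.standard_normal(n)
f3 = rng.standard_normal(n)
selected = mrmr(np.column_stack([f0, f1, f2, f3]), y, n_select=2)
```

The redundancy term is what keeps the near-duplicate pair f0/f1 from being selected together, even though both are highly relevant.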

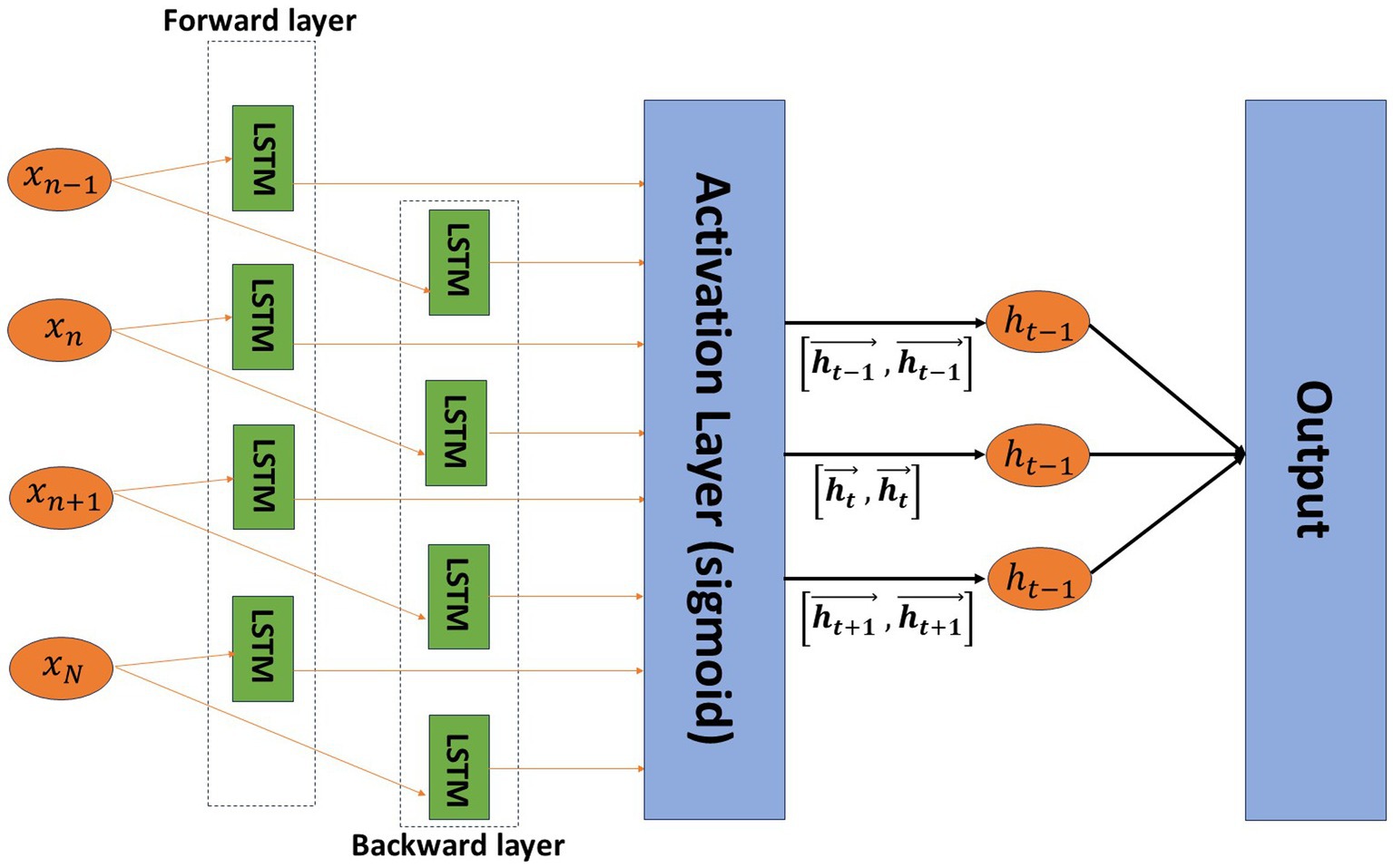

To enhance the classification performance of LSTM, we propose the Dual-LSTM model. This classifier is trained with the optimally selected features and is designed for emotion classification. In this paper, the Dual-LSTM is used for emotion recognition; it is a DL algorithm that processes the input sequence in both chronological and reverse chronological order through two separate networks. As illustrated in Figure 5, the outputs of these two networks are concatenated at each time step. The stacked-layer architecture of the Dual-LSTM enables it to capture both forward and backward information about the sequence at each time step, resulting in high classification accuracy.
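The bidirectional idea behind the Dual-LSTM can be illustrated with a single bidirectional layer in NumPy: one LSTM reads the sequence forward, a second reads it reversed, the backward states are flipped back into time order, and the two are concatenated at each time step. This is a sketch of the general technique only; the stacking depth, layer sizes, and classification head of the authors' five-layer model are not reproduced, and the parameter layout is an assumption.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_sequence(xs, W, U, b):
    """Run one LSTM over xs (T, D); gates stacked as [input, forget, candidate, output]."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    states = []
    for x_t in xs:
        z = W @ x_t + U @ h + b
        i, f = sigmoid(z[:H]), sigmoid(z[H:2 * H])
        g, o = np.tanh(z[2 * H:3 * H]), sigmoid(z[3 * H:])
        c = f * c + i * g
        h = o * np.tanh(c)
        states.append(h)
    return np.array(states)

def bilstm(xs, fwd_params, bwd_params):
    """Return (T, 2H): forward and backward hidden states concatenated per step."""
    h_fwd = lstm_sequence(xs, *fwd_params)
    h_bwd = lstm_sequence(xs[::-1], *bwd_params)[::-1]   # re-align with time order
    return np.concatenate([h_fwd, h_bwd], axis=1)

def init_params(rng, D, H):
    """Small random weights (hypothetical initialization for the example)."""
    return (0.3 * rng.standard_normal((4 * H, D)),
            0.3 * rng.standard_normal((4 * H, H)),
            np.zeros(4 * H))

rng = np.random.default_rng(0)
T, D, H = 6, 4, 3
xs = rng.standard_normal((T, D))
fwd, bwd = init_params(rng, D, H), init_params(rng, D, H)
out = bilstm(xs, fwd, bwd)     # one 2H-dimensional vector per time step
```

Because every time step gets both halves, the representation at step t summarizes the past (forward half) and the future (backward half) of the sequence.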

Figure 5. Dual-LSTM structure used in this study (Algarni et al., 2022).

The proposed ER method was validated on DEAP, a widely used dataset for ER. The dataset comprises recordings from 32 participants while they watched music videos: 40 videos carefully selected to represent varying levels of arousal, valence, liking/disliking, dominance, and familiarity. Arousal and valence were rated on a numerical scale from 1 to 9. The EEG was recorded at a sampling rate of 512 Hz. Table 1 summarizes the key information about the dataset. In this study, the preprocessed data files were used; each participant’s file contains two matrices, data and labels (Table 1). The data matrix has dimensions of 40 × 40 × 8,064 (video/trial × channel × sample), and the label matrix has dimensions of 40 × 4 (video/trial × label), containing the ratings of valence, arousal, dominance, and liking. The data were band-pass filtered at 4–45 Hz and down-sampled to 128 Hz (George et al., 2019).
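The array layout above can be handled as sketched below. Several details are assumptions, not statements from the paper: the file layout follows the DEAP preprocessed Python release (one pickled dictionary per participant with `data` and `labels` keys, typically read with `encoding="latin1"` under Python 3); the first 32 of the 40 channels are EEG; the first 3 s (384 samples) of each 63-s trial are a pre-trial baseline and are dropped, as is common practice; and ratings are binarized at 5 into high/low classes, a threshold the paper does not state.

```python
import pickle
import numpy as np

FS = 128          # sampling rate of the preprocessed data (Hz)
BASELINE_S = 3    # assumed pre-trial baseline at the start of each trial (s)
N_EEG = 32        # assumed: channels 0..31 are EEG, the remaining 8 are peripheral

def load_participant(path):
    """Load one participant file (path such as 's01.dat' is hypothetical)."""
    with open(path, "rb") as fh:
        record = pickle.load(fh, encoding="latin1")   # assumed Python 2 pickle
    return record["data"], record["labels"]           # (40, 40, 8064), (40, 4)

def prepare_trials(data, labels, threshold=5.0):
    """Keep EEG channels, drop the baseline, binarize ratings (assumed threshold)."""
    eeg = data[:, :N_EEG, BASELINE_S * FS:]     # 60 s of stimulus EEG per trial
    classes = (labels > threshold).astype(int)  # columns: valence, arousal, dominance, liking
    return eeg, classes

# Example with placeholder arrays of the documented shapes
data = np.zeros((40, 40, 8064))
labels = np.tile([2.0, 7.5, 5.0, 9.0], (40, 1))
eeg, classes = prepare_trials(data, labels)
n_records = 32 * 40                 # 32 participants x 40 trials
n_train = n_records * 7 // 10       # 70% training split
```

With 32 participants and 40 trials each, a 70/30 split gives 896 training and 384 test records, which matches the counts reported below.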

This paper applies the proposed Dual-LSTM DL neural method with appropriate settings to classify the preprocessed EEG data of 32 participants from the DEAP dataset who watched 40 videos, with the objective of identifying the emotions evoked by the videos. The Dual-LSTM model is particularly advantageous when considering the entire time sequence at each time step in the signal, as it effectively captures long-term dependencies between time steps. The Dual-LSTM model utilizes two layers of LSTM, operating in both forward and reverse modes for learning. For emotion detection in the proposed method, a five-layer classifier was trained, and the following parameters were employed:

• The maximum number of epochs was set to 35, so the model made at most 35 full passes over the training data, each consisting of several mini-batch weight updates. The weights are updated using the selected (informative) features.

• The mini-batch size was set to 80.

• An initial learning rate of 0.01 was used; a relatively high learning rate in the early cycles speeds up training. The gradient threshold was set to 1 to stabilize training by keeping gradients at a reasonable magnitude.

• The “Plots” option was set to “Training Progress” to display graphs of the training progress as the iterations proceed.
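The epoch and gradient-threshold settings can be illustrated with a small sketch. It assumes L2-norm clipping (a gradient whose norm exceeds the threshold is rescaled down to the threshold) and shows that one epoch over the 896 training records at a mini-batch size of 80 is 11 full mini-batches plus a partial one; whether the remainder is used or dropped depends on the training framework and is not stated in the paper.

```python
import math
import numpy as np

def clip_by_l2_norm(grad, threshold=1.0):
    """Rescale grad so that its L2 norm is at most `threshold`."""
    norm = np.linalg.norm(grad)
    return grad * (threshold / norm) if norm > threshold else grad

def updates_per_epoch(n_train, batch_size, drop_last):
    """Number of weight updates in one pass over the training data."""
    return n_train // batch_size if drop_last else math.ceil(n_train / batch_size)

g = np.array([3.0, 4.0])                      # norm 5, above the threshold
clipped = clip_by_l2_norm(g, threshold=1.0)   # rescaled to norm 1
per_epoch = updates_per_epoch(896, 80, drop_last=True)
total_updates = 35 * per_epoch                # over 35 epochs
```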

Table 2 summarizes the parameters and settings of the classifier. Random search evaluates the proposed model on randomly selected sets of hyperparameters. A main challenge is that hyperparameters such as the learning rate and the number of hidden-layer units have a significant impact on classifier accuracy. For instance, a low learning rate can result in slow convergence, while a high value can lead to erratic and unstable training. The number of hidden-layer units also affects how well the model fits the data. Training dynamics are further influenced by the batch size: a large batch size can result in poor generalization and increased memory requirements during training, while a very small batch size can make convergence on the training data slow and noisy. In this study, a heuristic random search algorithm is employed to identify parameter values that balance the performance and computational efficiency of the Dual-LSTM model. The model was trained with the Adam optimization algorithm, an extension of stochastic gradient descent that is widely used in deep learning and often converges faster. Adam combines the advantages of the AdaGrad and RMSProp algorithms, which makes it effective for sparse gradients and noisy data, and its computational efficiency makes it suitable for large datasets or parameter sets. We used 70% of the data (896 records of the feature matrix) to train the Dual-LSTM and the remaining 30% (384 records) for testing. The number of features extracted from each EEG signal is shown in Table 3.
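For reference, the Adam update is sketched below on a toy quadratic. It keeps a running mean of gradients (momentum-like) and a running mean of squared gradients (RMSProp-like), corrects both for their zero initialization, and scales each step per parameter. The decay rates and epsilon are the commonly used defaults, the learning rate of 0.01 mirrors the initial rate reported above, and the objective is purely illustrative.

```python
import numpy as np

def adam_minimize(grad_fn, x0, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    x = np.asarray(x0, dtype=float).copy()
    m = np.zeros_like(x)   # running mean of gradients (first moment)
    v = np.zeros_like(x)   # running mean of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)               # bias correction for zero initialization
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (np.sqrt(v_hat) + eps)   # per-parameter step size
    return x

# Toy objective f(x) = ||x - target||^2 with gradient 2 (x - target)
target = np.array([3.0, -2.0])
x_opt = adam_minimize(lambda x: 2.0 * (x - target), x0=np.zeros(2))
```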

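The performance of the model was evaluated using multiple criteria: accuracy, recall, precision, and specificity. These criteria are defined in Equation (3):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \quad \text{Recall} = \frac{TP}{TP + FN}, \quad \text{Precision} = \frac{TP}{TP + FP}, \quad \text{Specificity} = \frac{TN}{TN + FP} \tag{3}$$

In Equation (3), TP, TN, FN, and FP are the numbers of true positives, true negatives, false negatives, and false positives, respectively.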
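The four criteria can be computed directly from confusion-matrix counts, as in the plain-Python sketch below: accuracy is (TP + TN) / (TP + TN + FP + FN), recall is TP / (TP + FN), precision is TP / (TP + FP), and specificity is TN / (TN + FP). For more than two classes these are typically computed one-vs-rest per class; how the paper aggregated them is not stated, and the function name is hypothetical. The sketch does not guard against empty classes.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, recall, precision and specificity from confusion-matrix counts."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "recall": tp / (tp + fn),          # sensitivity / true-positive rate
        "precision": tp / (tp + fp),
        "specificity": tn / (tn + fp),     # true-negative rate
    }

y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 1, 0, 1, 0, 1]
m = binary_metrics(y_true, y_pred)
# TP=3, TN=2, FP=2, FN=1 -> accuracy 0.625, recall 0.75, precision 0.6, specificity 0.5
```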

To assess the proposed method, we first evaluate each emotion individually and then use the collection of all emotions as input for classification. Accuracy and ROC are assessed separately for each emotion dimension (arousal, valence, liking/disliking, dominance, and familiarity), both before and after FS. We also compare the proposed recurrent DL classifier with the conventional LSTM classifier.

Table 4 shows the results of ER classification using statistical features, Hurst features, a combination of Hurst and statistical features, and the FS method, with LSTM and Dual-LSTM. As presented in Table 4, LSTM in FS mode outperforms the non-FS mode. Dual-LSTM yields a noticeable improvement over LSTM alone, which can be attributed to its memory cells and forget gates operating in both directions over the sequence. Finally, combining Hurst and statistical features with Dual-LSTM led to the best performance for ER using EEG signals. In Table 5, the results of the proposed method are compared with previous ones.


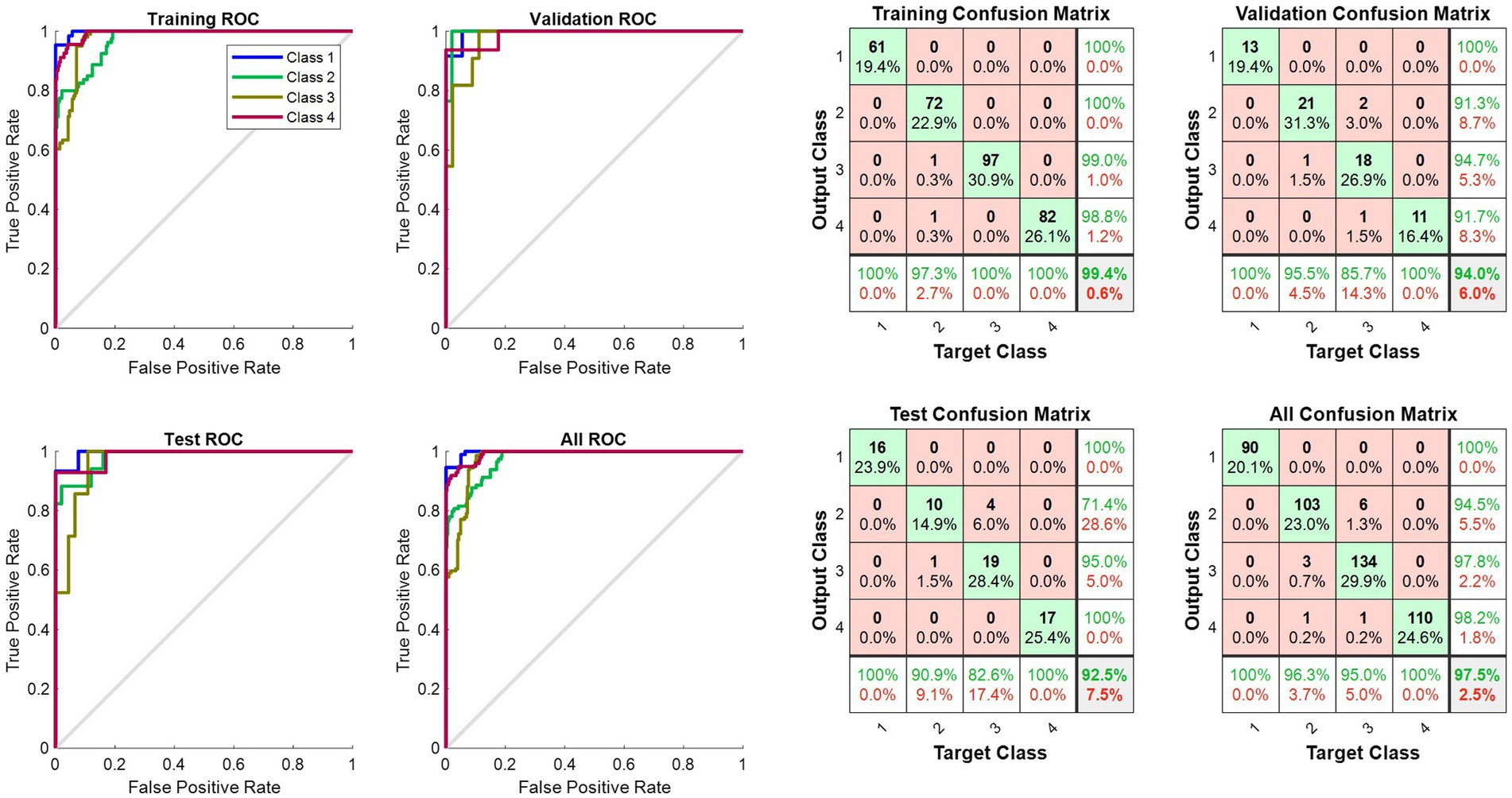

Figure 6 shows the ROC curve and confusion matrix for ER using the FS method on Hurst and statistical features with the Dual-LSTM. As depicted in Figure 6, the proposed method classifies most emotion types with a high accuracy rate.

Figure 6. The ROC curve and confusion matrix for ER using the FS method on Hurst and statistical features and using the Dual-LSTM.

Table 5 compares the results of the proposed method with those of previous studies. The accuracy achieved in this study (98.73%) exceeds that of most previous studies.

The recognition of emotions using EEG signals presents several challenges due to their nonstationary nature and complexity. This paper proposed an effective solution for ER based on DL. The proposed deep learning approach significantly enhances the accuracy of ER using EEG signals. In this study, DL was utilized for ER with EEG signals, employing a DL-based classification approach using the LSTM classifier. The LSTM classifier relied on key features, such as Hurst and statistical features, which were extracted from preprocessed input signals. To select the most distinctive features for emotion recognition and classification, a filter-based FS method was employed. The proposed method consisted of several stages, including preprocessing, feature extraction, dimensionality reduction through feature selection, and classification. The combined use of multiple feature series led to improved recognition accuracy, with the Dual-LSTM model outperforming the LSTM model in FS mode. Table 4 displays the results of ER using FS and other features, including statistical and Hurst features, based on LSTM and Dual-LSTM classification. The results demonstrated that the feature selection algorithm enhanced the classification performance, with Dual-LSTM achieving higher accuracy, sensitivity, precision, and specificity compared to LSTM. Dual-LSTM captures information from both past and future time steps, resulting in a better understanding of the sequence dynamics. In contrast, LSTM processes the input sequence only in the forward direction. Table 5 compared the accuracy of the proposed model with other DL and ML methods using the DEAP dataset. The results showed a significant improvement over previous models, thanks to the adoption of an improved approach compared to traditional LSTM models. However, differences in feature extraction techniques, classification methods, and parameters led to varying classification accuracies, despite utilizing the same dataset. 
Choosing an appropriate FS method is crucial to the model's performance. The proposed method also outperformed prior work based on RNN and LSTM models. Moreover, earlier studies focused mainly on valence and arousal, whereas this study classifies all four emotion categories.
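The clustering-based filter FS stage can be sketched as follows, under explicit assumptions. The features are z-scored, and K-means groups the features rather than the trials, so redundant features share a cluster. Within each cluster, the feature with the highest Fisher score against the emotion labels is kept. The Fisher criterion, the number of clusters, and the helper names (`kmeans`, `fisher_scores`, `cluster_filter_select`) are illustrative choices, not details taken from the paper.

```python
import numpy as np


def kmeans(points, k, n_iter=100, seed=0):
    """Plain k-means (Lloyd's algorithm) on the rows of `points`; returns cluster labels."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign every point to its nearest center by squared Euclidean distance.
        dists = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to the mean of its points (keep it if the cluster is empty).
        new_centers = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels


def fisher_scores(X, y):
    """Per-feature Fisher score: between-class scatter divided by within-class scatter."""
    overall = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for c in np.unique(y):
        Xc = X[y == c]
        between += len(Xc) * (Xc.mean(axis=0) - overall) ** 2
        within += len(Xc) * Xc.var(axis=0)
    return between / (within + 1e-12)


def cluster_filter_select(X, y, n_clusters, seed=0):
    """Keep one representative feature (highest Fisher score) per feature cluster.

    X: (n_trials, n_features) feature matrix; y: (n_trials,) emotion labels.
    """
    Z = (X - X.mean(axis=0)) / (X.std(axis=0) + 1e-12)  # z-score each feature
    # Cluster the features, not the trials: each feature is a point described by its
    # standardized values across all trials, so near-duplicate features group together.
    labels = kmeans(Z.T, n_clusters, seed=seed)
    scores = fisher_scores(X, y)
    selected = []
    for j in range(n_clusters):
        members = np.flatnonzero(labels == j)
        if members.size:
            selected.append(members[np.argmax(scores[members])])
    return np.sort(np.array(selected))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    y = np.repeat([0, 1], 50)                      # synthetic binary labels (e.g., low/high arousal)
    informative = y + 0.3 * rng.standard_normal(100)
    X = np.column_stack([
        informative,
        2.0 * informative + 0.05 * rng.standard_normal(100),  # redundant copy of feature 0
        rng.standard_normal((100, 4)),                         # pure-noise features
    ])
    print("selected feature indices:", cluster_filter_select(X, y, n_clusters=3))
```

Clustering the features first and scoring them second is what makes this a filter method. The selection never trains the downstream LSTM, so it stays cheap, and it discards near-duplicate features that would otherwise inflate the input dimension.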

This study employs Dual-LSTM deep neural networks for ER. After the statistical and Hurst features are extracted, the proposed clustering-based FS method reduces their dimensionality, and the Dual-LSTM classifier performs the classification. Its performance was compared with that of other classifiers, including LSTM, in terms of accuracy, precision, recall, and specificity. The results demonstrate that combining Dual-LSTM with dimensionality reduction of the statistical and Hurst features outperforms the other methods. In addition, the LSTM-based approach with feature extraction and FS stages performed better than the other methods, likely because of its data-driven nature.
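The following minimal NumPy sketch of a bidirectional LSTM forward pass makes the contrast between LSTM and Dual-LSTM concrete. It assumes that Dual-LSTM denotes a bidirectional LSTM, which is what the description of past and future time steps suggests. Under that assumption, one LSTM reads the sequence forward and a second reads it backward, and their hidden states are concatenated. The weights are random, training is omitted, and all function names are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_lstm_params(input_dim, hidden_dim, rng):
    """Random weights for one LSTM; gate blocks are stacked as [input, forget, cell, output]."""
    scale = 1.0 / np.sqrt(hidden_dim)
    W = rng.uniform(-scale, scale, (4 * hidden_dim, input_dim))   # input-to-hidden
    U = rng.uniform(-scale, scale, (4 * hidden_dim, hidden_dim))  # hidden-to-hidden
    b = np.zeros(4 * hidden_dim)
    return W, U, b


def lstm_forward(x, W, U, b):
    """Run one LSTM over x of shape (T, input_dim); return hidden states of shape (T, hidden_dim)."""
    hidden_dim = U.shape[1]
    h = np.zeros(hidden_dim)
    c = np.zeros(hidden_dim)
    states = []
    for x_t in x:
        z = W @ x_t + U @ h + b
        i = sigmoid(z[:hidden_dim])                    # input gate
        f = sigmoid(z[hidden_dim:2 * hidden_dim])      # forget gate
        g = np.tanh(z[2 * hidden_dim:3 * hidden_dim])  # candidate cell update
        o = sigmoid(z[3 * hidden_dim:])                # output gate
        c = f * c + i * g
        h = o * np.tanh(c)
        states.append(h)
    return np.array(states)


def bilstm_forward(x, fwd_params, bwd_params):
    """Bidirectional LSTM: concatenate a forward pass with a backward (time-reversed) pass."""
    h_fwd = lstm_forward(x, *fwd_params)
    # Read the sequence from last to first step, then flip the result back so that
    # row t of h_bwd summarizes steps t..T-1 (the "future" relative to t).
    h_bwd = lstm_forward(x[::-1], *bwd_params)[::-1]
    return np.concatenate([h_fwd, h_bwd], axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    T, d, hdim = 5, 7, 16            # toy sizes: 5 time steps of 7 features each
    fwd = init_lstm_params(d, hdim, rng)
    bwd = init_lstm_params(d, hdim, rng)
    x = rng.standard_normal((T, d))
    out = bilstm_forward(x, fwd, bwd)
    x_changed = x.copy()
    x_changed[-1] += 1.0             # perturb only the last time step
    out_changed = bilstm_forward(x_changed, fwd, bwd)
    print("output shape:", out.shape)
    print("forward half at t=0 changed: ", not np.allclose(out[0, :hdim], out_changed[0, :hdim]))
    print("backward half at t=0 changed:", not np.allclose(out[0, hdim:], out_changed[0, hdim:]))
```

The backward LSTM reads the sequence from the end. Its hidden state at the first time step therefore already depends on the last input, while the forward hidden state at that step does not. The perturbation check in the usage block shows exactly this. In a full classifier, the concatenated states, taken for example at the final step or averaged over time, would feed a dense softmax layer. That part is omitted here.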

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethical approval was not required for the study involving humans in accordance with the local legislation and institutional requirements. Written informed consent to participate in this study was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and the institutional requirements.

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Algarni, M., Saeed, F., Al-Hadhrami, T., Ghabban, F., and Al-Sarem, M. (2022). Deep learning-based approach for emotion recognition using electroencephalography (EEG) signals using bi-directional Long Short-Term Memory (bi-LSTM). Sensors 22:2976. doi: 10.3390/s22082976

Alhagry, S., Aly, A., and Reda, A. (2017). Emotion recognition based on EEG using LSTM recurrent neural network. Int. J. Adv. Comput. Sci. Appl. 8, 355–358. doi: 10.14569/IJACSA.2017.081046

Al-Nafjan, A., Hosny, M., Al-Ohali, Y., and Al-Wabil, A. (2017). Review and classification of emotion recognition based on EEG brain-computer interface system research: a systematic review. Appl. Sci. 7:1239. doi: 10.3390/app7121239

Alom, M. Z., Taha, T. M., Yakopcic, C., Westberg, S., Sidike, P., Nasrin, M. S., et al. (2019). A state-of-the-art survey on deep learning theory and architectures. Electronics 8:292. doi: 10.3390/electronics8030292

AlZoubi, O., AlMakhadmeh, B., Yassein, M. B., and Mardini, W. (2023). Detecting naturalistic expression of emotions using physiological signals while playing video games. J. Ambient. Intell. Humaniz. Comput. 14, 1133–1146. doi: 10.1007/s12652-021-03367-7

Aryanmehr, S., Karimi, M., and Boroujeni, F. Z. (2018). “CVBL IRIS gender classification database image processing and biometric research, computer vision and biometric laboratory (CVBL).” In 2018 IEEE 3rd international conference on image, vision and computing (ICIVC). Available at: https://ieee-dataport.org/documents/cvbl-iris-super-resolution-dataset

Bagheri, M., and Power, S. D. (2020). EEG-based detection of mental workload level and stress: the effect of variation in each state on classification of the other. J. Neural Eng. 17:056015. doi: 10.1088/1741-2552/abbc27

Bagherzadeh, S., Maghooli, K., Farhadi, J., and Zangeneh Soroush, M. (2018). Emotion recognition from physiological signals using parallel stacked autoencoders. Neurophysiology 50, 428–435. doi: 10.1007/s11062-019-09775-y

Cho, J., and Hwang, H. (2020). Spatio-temporal representation of an electoencephalogram for emotion recognition using a three-dimensional convolutional neural network. Sensors 20:3491. doi: 10.3390/s20123491

Dargan, S., Kumar, M., Ayyagari, M. R., and Kumar, G. (2020). A survey of deep learning and its applications: a new paradigm to machine learning. Arch. Comput. Methods Eng. 27, 1071–1092. doi: 10.1007/s11831-019-09344-w

Dehghani, A., Ghassabi, Z., Moghaddam, H. A., and Moin, M. S. (2013). Human recognition based on retinal images and using new similarity function. EURASIP J. Image Video Process. 2013:58. doi: 10.1186/1687-5281-2013-58

Dehghani, A., Moghaddam, H. A., and Moin, M. S. (2011a). “Retinal identification based on rotation invariant moments.” In 2011 5th international conference on bioinformatics and biomedical engineering, 1–4. IEEE. Wuhan, China.

Dehghani, A., Moradi, A., Dehghani, M., and Ahani, A. (2011b). Nonlinear solution for radiation boundary condition of heat transfer process in human eye. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2011, 166–169. doi: 10.1109/IEMBS.2011.6089920

Dehghani, A., Soltanian-Zadeh, H., and Hossein-Zadeh, G.-A. (2020). Global data-driven analysis of brain connectivity during emotion regulation by electroencephalography neurofeedback. Brain Connect. 10, 302–315. doi: 10.1089/brain.2019.0734

Dehghani, A., Soltanian-Zadeh, H., and Hossein-Zadeh, G.-A. (2022). Probing FMRI brain connectivity and activity changes during emotion regulation by EEG neurofeedback. Front. Hum. Neurosci. 16:988890. doi: 10.3389/fnhum.2022.988890

Dehghani, A., Soltanian-Zadeh, H., and Hossein-Zadeh, G.-A. (2023). Neural modulation enhancement using connectivity-based EEG neurofeedback with simultaneous fMRI for emotion regulation. Neuroimage 279:120320.

Dzedzickis, A., Kaklauskas, A., and Bucinskas, V. (2020). Human emotion recognition: review of sensors and methods. Sensors 20:592. doi: 10.3390/s20030592

Ebrahimzadeh, E., and Alavi, S. M. (2013). Implementation and designing of line-detection system based on electroencephalography (EEG). Ann. Mil. Health Sci. Res. 11:e66739.

Ebrahimzadeh, E., Fayaz, F., Rajabion, L., Seraji, M., Aflaki, F., Hammoud, A., et al. (2023). Machine learning approaches and non-linear processing of extracted components in frontal region to predict RTMS treatment response in major depressive disorder. Front. Syst. Neurosci. 17:919977. doi: 10.3389/fnsys.2023.919977

Ebrahimzadeh, E., and Najararaabi, B. (2016). A novel approach to predict sudden cardiac death using local feature selection and mixture of experts. Comput. Electr. Eng. 7, 15–32.

Ebrahimzadeh, E., Saharkhiz, S., Rajabion, L., Oskouei, H. B., Seraji, M., Fayaz, F., et al. (2022). Simultaneous EEG-FMRI for assessment of human brain function. Front. Syst. Neurosci. 16:934266. doi: 10.3389/fnsys.2022.934266

Ebrahimzadeh, E., Shams, M., Fayaz, F., Rajabion, L., Mirbagheri, M., Araabi, B. N., et al. (2019a). Quantitative determination of concordance in localizing epileptic focus by component-based EEG-FMRI. Comput. Methods Prog. Biomed. 177, 231–241. doi: 10.1016/j.cmpb.2019.06.003

Ebrahimzadeh, E., Shams, M., Jounghani, A. R., Fayaz, F., Mirbagheri, M., Hakimi, N., et al. (2019b). Epilepsy presurgical evaluation of patients with complex source localization by a novel component-based EEG-FMRI approach. Iran. J. Radiol. 16:99134. doi: 10.5812/iranjradiol.99134

Ebrahimzadeh, E., Shams, M., Jounghani, A. R., Fayaz, F., Mirbagheri, M., Hakimi, N., et al. (2021). Localizing confined epileptic foci in patients with an unclear focus or presumed multifocality using a component-based EEG-FMRI method. Cogn. Neurodyn. 15, 207–222. doi: 10.1007/s11571-020-09614-5

Ebrahimzadeh, E., Soltanian-Zadeh, H., Araabi, B. N., Fesharaki, S. S. H., and Habibabadi, J. M. (2019c). Component-related BOLD response to localize epileptic focus using simultaneous EEG-FMRI recordings at 3T. J. Neurosci. Methods 322, 34–49. doi: 10.1016/j.jneumeth.2019.04.010

Geng, S., Zhou, W., Yuan, Q., Cai, D., and Zeng, Y. (2011). EEG non-linear feature extraction using correlation dimension and Hurst exponent. Neurol. Res. 33, 908–912. doi: 10.1179/1743132811Y.0000000041

George, F. P., Shaikat, I. M., Hossain, P. S. F., Parvez, M. Z., and Uddin, J. (2019). Recognition of emotional states using EEG signals based on time-frequency analysis and SVM classifier. Int. J. Electr. Comput. Eng. 9:1012. doi: 10.11591/ijece.v9i2.pp1012-1020

Girardi, D., Lanubile, F., and Novielli, N. (2017). Emotion detection using noninvasive low cost sensors. ArXiv [Cs.HC] Available at: http://arxiv.org/abs/1708.06664

Harouni, M., Karimi, M., Nasr, A., Mahmoudi, H., and Najafabadi, Z. (2022). “Health monitoring methods in heart diseases based on data mining approach: a directional review” in Prognostic models in healthcare: AI and statistical approaches (Singapore: Springer Nature), 115–159.

Huang, N. E., Zheng, S., Long, S. R., Wu, M. C., Shih, H. H., Zheng, Q., et al. (1998). The empirical mode decomposition and the Hilbert Spectrum for nonlinear and non-stationary time series analysis. Proc. Math. Phys. Eng. Sci. 454, 903–995. doi: 10.1098/rspa.1998.0193

Islam, M. R., and Ahmad, M. (2019). “Wavelet analysis based classification of emotion from EEG signal.” In 2019 international conference on electrical, computer and communication engineering (ECCE). IEEE. Cox's Bazar, Bangladesh.

Ismi, D. P., Panchoo, S., and Murinto, M. (2016). K-means clustering based filter feature selection on high dimensional data. Int. J. Adv. Intell. Informatics 2:38. doi: 10.26555/ijain.v2i1.54

Jia, Z., Lin, Y., Wang, J., Feng, Z., Xie, X., and Chen, C. (2021). HetEmotionNet: two-stream heterogeneous graph recurrent neural network for multi-modal emotion recognition. ArXiv [Cs.LG] Available at: http://arxiv.org/abs/2108.03354

Karimi, M., Harouni, M., Jazi, E. I., Nasr, A., and Azizi, N. (2022). “Improving monitoring and controlling parameters for Alzheimer’s patients based on IoMT” in Studies in Big Data (Singapore: Springer Nature Singapore), 213–237.

Karimi, M., Harouni, M., Nasr, A., and Tavakoli, N. (2021). “Automatic lung infection segmentation of Covid-19 in CT scan images” in Intelligent computing applications for COVID-19 (Boca Raton, FL: CRC Press), 235–253.

Khateeb, M., Anwar, S. M., and Alnowami, M. (2021). Multi-domain feature fusion for emotion classification using DEAP dataset. IEEE Access 9, 12134–12142. doi: 10.1109/ACCESS.2021.3051281

Lee, H., Choi, J., Kim, S., Jun, S. C., and Lee, B.-G. (2019). A compressive sensing-based automatic sleep-stage classification system with radial basis function neural network. IEEE Access 7, 186499–186509. doi: 10.1109/ACCESS.2019.2961326

Leevy, J. L., Khoshgoftaar, T. M., and Villanustre, F. (2020). Survey on RNN and CRF models for De-identification of medical free text. J. Big Data 7, 1–22. doi: 10.1186/s40537-020-00351-4

Liu, X., Li, T., Tang, C., Tao, X., Chen, P., Bezerianos, A., et al. (2019). Emotion recognition and dynamic functional connectivity analysis based on EEG. IEEE Access 7, 143293–143302. doi: 10.1109/ACCESS.2019.2945059

Martínez-Tejada, L. A., Maruyama, Y., Yoshimura, N., and Koike, Y. (2020). Analysis of personality and EEG features in emotion recognition using machine learning techniques to classify arousal and valence labels. Mach. Learn. Knowl. Extr. 2, 99–124. doi: 10.3390/make2020007

Moriyama, T., and Ozawa, S. (2003). “Emotion recognition and synthesis system on speech.” In Proceedings IEEE international conference on multimedia computing and systems. IEEE Comput. Soc. Florence, Italy.

Mosayebi, R., Dehghani, A., and Hossein-Zadeh, G.-A. (2022). Dynamic functional connectivity estimation for neurofeedback emotion regulation paradigm with simultaneous EEG-FMRI analysis. Front. Hum. Neurosci. 16:933538. doi: 10.3389/fnhum.2022.933538

Mullins, J. K., and Sabherwal, R. (2018). Gamification: a cognitive-emotional view. J. Bus. Res. 106, 304–314. doi: 10.1016/j.jbusres.2018.09.023

Nikravan, M., Ebrahimzadeh, E., Izadi, M. R., and Mikaeili, M. (2016). Toward a computer aided diagnosis system for lumbar disc herniation disease based on MR images analysis. Biomed. Eng. - Appl. Basis Commun. 28:1650042. doi: 10.4015/S1016237216500423

Ordonez-Bolanos, O. A., Gomez-Lara, J. F., Becerra, M. A., Peluffo-Ordonez, D. H., Duque-Mejia, C. M., Medrano-David, D., et al. (2019). “Recognition of emotions using ICEEMD-based characterization of multimodal physiological signals.” In 2019 IEEE 10th Latin American symposium on Circuits & Systems (LASCAS). IEEE. Armenia, Colombia

Ozel, P., Akan, A., and Yilmaz, B. (2019). Synchrosqueezing transform based feature extraction from EEG signals for emotional state prediction. Biomed. Signal Process. Control 52, 152–161. doi: 10.1016/j.bspc.2019.04.023

Pandey, P., and Seeja, K. R. (2022). Subject independent emotion recognition from EEG using VMD and deep learning. J. King Saud Univ. - Comput. Inf. 34, 1730–1738. doi: 10.1016/j.jksuci.2019.11.003

Raftarai, A., Mahounaki, R. R., Harouni, M., Karimi, M., and Olghoran, S. K. (2021). “Predictive models of hospital readmission rate using the improved AdaBoost in COVID-19” in Intelligent computing applications for COVID-19 (Boca Raton: CRC Press), 67–86.

Rehman, A., Harouni, M., Karimi, M., Saba, T., Bahaj, S. A., and Awan, M. J. (2022). Microscopic retinal blood vessels detection and segmentation using support vector machine and K-nearest neighbors. Microsc. Res. Tech. 85, 1899–1914. doi: 10.1002/jemt.24051

Rout, A. K., Dash, P. K., Dash, R., and Bisoi, R. (2017). Forecasting financial time series using a low complexity recurrent neural network and evolutionary learning approach. J. King Saud Univ. - Comput. Inf. 29, 536–552. doi: 10.1016/j.jksuci.2015.06.002

Sadjadi, S. M., Ebrahimzadeh, E., Shams, M., Seraji, M., and Soltanian-Zadeh, H. (2021). Localization of epileptic foci based on simultaneous EEG-FMRI data. Front. Neurol. 12:645594. doi: 10.3389/fneur.2021.645594

San-Segundo, R., Gil-Martín, M., D’Haro-Enríquez, L. F., and Pardo, J. M. (2019). Classification of epileptic EEG recordings using signal transforms and convolutional neural networks. Comput. Biol. Med. 109, 148–158. doi: 10.1016/j.compbiomed.2019.04.031

Schuller, D. M., and Schuller, B. W. (2021). A review on five recent and near-future developments in computational processing of emotion in the human voice. Emot. Rev. 13, 44–50. doi: 10.1177/1754073919898526

Shrestha, A., and Mahmood, A. (2019). Review of deep learning algorithms and architectures. IEEE Access 7, 53040–53065. doi: 10.1109/ACCESS.2019.2912200

Shukla, J., Barreda-Angeles, M., Oliver, J., Nandi, G. C., and Puig, D. (2021). Feature extraction and selection for emotion recognition from Electrodermal activity. IEEE Trans. Affect. Comput. 12, 857–869. doi: 10.1109/TAFFC.2019.2901673

Smagulova, K., and James, A. P. (2019). A survey on LSTM Memristive neural network architectures and applications. Eur. Phys. J. Spec. Top. 228, 2313–2324. doi: 10.1140/epjst/e2019-900046-x

Soroush, M. Z., Maghooli, K., Setarehdan, S. K., and Nasrabadi, A. M. (2018a). Emotion classification through nonlinear EEG analysis using machine learning methods. Int. J. Clin. Neurosci. 5, 135–149. doi: 10.15171/icnj.2018.26

Soroush, M. Z., Maghooli, K., Setarehdan, S. K., and Nasrabadi, A. M. (2017). A review on EEG signals based emotion recognition. Int. J. Clin. Neurosci. 4, 118–129. doi: 10.15171/icnj.2017.01

Soroush, M. Z., Maghooli, K., Setarehdan, S. K., and Nasrabadi, A. M. (2018b). A novel method of EEG-based emotion recognition using nonlinear features variability and Dempster–Shafer theory. Biomed. Eng. - Appl. Basis Commun. 30:1850026. doi: 10.4015/S1016237218500266

Soroush, M. Z., Maghooli, K., Setarehdan, S. K., and Nasrabadi, A. M. (2019a). A novel EEG-based approach to classify emotions through phase space dynamics. SIViP 13, 1149–1156. doi: 10.1007/s11760-019-01455-y

Soroush, M. Z., Maghooli, K., Setarehdan, S. K., and Nasrabadi, A. M. (2019b). Emotion recognition through EEG phase space dynamics and Dempster-Shafer theory. Med. Hypotheses 127, 34–45. doi: 10.1016/j.mehy.2019.03.025

Soroush, M. Z., Maghooli, K., Setarehdan, S. K., and Nasrabadi, A. M. (2020). Emotion recognition using EEG phase space dynamics and Poincare intersections. Biomed. Signal Process. Control 59:101918. doi: 10.1016/j.bspc.2020.101918

Thammasan, N., Moriyama, K., Fukui, K.-I., and Numao, M. (2017). Familiarity effects in EEG-based emotion recognition. Brain Inform. 4, 39–50. doi: 10.1007/s40708-016-0051-5

Wei, C., Chen, L.-L., Song, Z.-Z., Lou, X.-G., and Li, D.-D. (2020). EEG-based emotion recognition using simple recurrent units network and ensemble learning. Biomed. Signal Process. Control 58:101756. doi: 10.1016/j.bspc.2019.101756

Xing, X., Li, Z., Xu, T., Shu, L., Hu, B., and Xu, X. (2019). SAE+LSTM: a new framework for emotion recognition from Multi-Channel EEG. Front. Neurorobot. 13:37. doi: 10.3389/fnbot.2019.00037

Yang, N., Dey, N., Sherratt, R. S., and Shi, F. (2020). Recognize basic emotional States in speech by machine learning techniques using Mel-frequency cepstral coefficient features. J. Intell. Fuzzy Syst. 39, 1925–1936. doi: 10.3233/JIFS-179963

Yang, H., Han, J., and Min, K. (2019). A multi-column CNN model for emotion recognition from EEG signals. Sensors 19:4736. doi: 10.3390/s19214736

Yin, Y., Zheng, X., Hu, B., Zhang, Y., and Cui, X. (2021). EEG emotion recognition using fusion model of graph convolutional neural networks and LSTM. Appl. Soft Comput. 100:106954. doi: 10.1016/j.asoc.2020.106954

Yousefi, M. R., Dehghani, A., and Amini, A. A. (2022). Construction of multi-resolution wavelet based mesh free method in solving Poisson and imaginary Helmholtz problem. Int. J. Smart Electr. Eng. 11, 215–222.

Yousefi, M. R., Dehghani, A., Golnejad, S., and Hosseini, M. M. (2023). Comparing EEG-based epilepsy diagnosis using neural networks and wavelet transform. Appl. Sci. 13:10412.

Zeng, Z., Pantic, M., Roisman, G. I., and Huang, T. S. (2009). A survey of affect recognition methods: audio, visual, and spontaneous expressions. IEEE Trans. Pattern Anal. Mach. Intell. 31, 39–58. doi: 10.1109/TPAMI.2008.52

Keywords: emotion recognition, electroencephalography, deep learning, DEAP dataset, Hurst’s view and statistical features, Dual-LSTM

Citation: Yousefi MR, Dehghani A and Taghaavifar H (2023) Enhancing the accuracy of electroencephalogram-based emotion recognition through Long Short-Term Memory recurrent deep neural networks. Front. Hum. Neurosci. 17:1174104. doi: 10.3389/fnhum.2023.1174104

Received: 25 February 2023; Accepted: 25 September 2023;

Published: 10 October 2023.

Edited by:

Elias Ebrahimzadeh, University of Tehran, Iran

Reviewed by:

Morteza Zangeneh Soroush, Islamic Azad University, Tehran, Iran

Copyright © 2023 Yousefi, Dehghani and Taghaavifar. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mohammad Reza Yousefi, mr-yousefi@iaun.ac.ir
