ORIGINAL RESEARCH article

Front. Hum. Neurosci., 10 May 2023

Sec. Cognitive Neuroscience

Volume 17 - 2023 | https://doi.org/10.3389/fnhum.2023.1160981

This article is part of the Research Topic New Insights in the Cognitive Neuroscience of Attention.

Introduction: The focus of cognitive and psychological approaches to narrative has not been so much on elucidating important aspects of narrative as on using narratives as tools for investigating the higher-order cognitive processes they elicit (e.g., understanding, empathy). In this study, we work toward a scalar model of narrativity that can provide testable criteria for selecting and classifying communication forms by their level of narrativity. We investigated whether exposure to videos with different levels of narrativity modulates shared neural responses, measured by inter-subject correlation, and engagement levels.

Methods: Thirty-two participants watched video advertisements with high and low levels of narrativity while their neural responses were recorded using electroencephalography (EEG). Additionally, participants’ engagement levels were calculated as the composite of their self-reported attention and immersion scores.

Results: Both inter-subject correlation and engagement scores were significantly higher for high-level than for low-level video ads, suggesting that narrativity level modulates inter-subject correlation and engagement.

Discussion: We believe that these findings are a step toward elucidating how viewers process and understand a given communication artifact as a function of the narrative qualities expressed by its level of narrativity.

Attention is involved in all cognitive and perceptual processes (Chun et al., 2011). To some degree, an attentive state toward an external stimulus implies the silencing of internally oriented mental processing (Dmochowski et al., 2012). Sufficiently strong attentive states can hamper conscious awareness of one’s environment and oneself (Busselle and Bilandzic, 2009). Compared with neutral stimuli, emotional stimuli attract greater and more focused attention (see Vuilleumier, 2005, for a review). As stories can generate and evoke strong feelings (Hogan, 2011), narratives, through their implicit pathos, could be considered attention-seeking stimuli. In fact, people tend to engage emotionally with stories (Hogan, 2011). Attentional focus on a narrative stimulates complex processing (Houghton, 2021), with narratives inducing “emotionally laden attention” (Dmochowski et al., 2012). In the narrative domain, attentional focus and the sense of being absorbed into the story are part of narrative engagement (Busselle and Bilandzic, 2009). Indeed, researchers have suggested that narratives’ inherent persuasiveness is related to the feelings of immersion they evoke, a phenomenon that has been termed “transportation effects” (Green and Brock, 2000). In this context, “transportation” denotes a combination of attention, feelings, and imagery in which different perceptual, cognitive, and affective systems and capacities converge on the narrative events (Green and Brock, 2000). During narrative comprehension, involuntary autobiographical memories triggered by the story appear to impair attention only momentarily (Tchernev et al., 2021); this is not the case for internally or externally generated daydreaming or distraction (Tchernev et al., 2021). Thus, narrative engagement can be hampered when thoughts unrelated to the narrative arise (Busselle and Bilandzic, 2009).
In addition, the degree to which audiences engage with a narrative varies with the delivery modality (e.g., audio or visual) (Richardson et al., 2020), over the course of narrative comprehension (Song et al., 2021a), and across individuals (Ki et al., 2016; Sonkusare et al., 2019). Nonetheless, narratives are naturally engaging (Sonkusare et al., 2019) and reflect daily experiences, making them potentially useful devices for studying cognitive processes such as engagement.

The use of simplified and abstract stimuli has been common in cognitive neuroscience for decades (e.g., Dini et al., 2022a). Although such stimuli enable highly controlled experiments and isolation of the study variables, they tend to lack ecological validity. Hence, recent studies have employed stimuli that simulate real-life situations, including narratives, termed “naturalistic stimuli” (see Sonkusare et al., 2019, for a review). From this perspective, advertising is considered a naturalistic stimulus that is designed to be emotionally persuasive (Sonkusare et al., 2019). Video ads deliver narrative content in a short period of time; hence, advertisers focus on conveying a key message through stories with different levels of narrativity. In fact, companies use narrative-style ads because stories captivate, entertain, and involve consumers (Escalas, 1998; Coker et al., 2021). Researchers have found that, at the neural level, narratives (particularly more structured ones) tend to induce similar affective and cognitive states across viewers (Dmochowski et al., 2012; Song et al., 2021a). Whether consumers perceive stories in similar ways is nonetheless of great interest, as this might affect whether they ultimately engage with the advertisement as expected. To investigate the cognitive processes elicited by narratives, many studies have used traditional methods such as electroencephalogram (EEG) power analysis (Wang et al., 2016). Though valid, the metrics provided by traditional methods may not be ideal for treating a narrative as a continuous stimulus, because such methods typically require stimulus repetition. In addition, they do not capture how the same information is processed across individuals.

Inter-subject correlation (ISC) is an appropriate neural metric for investigating shared neural responses, especially to naturalistic stimuli, including media messages (see Schmälzle, 2022, for a discussion). This data-driven method assumes that a narrative elicits common brain responses across individuals, which improves the generalizability of the findings. By correlating neural data across individuals, this metric can identify localized neural activity that responds to a narrative synchronously (i.e., in a time-locked manner) (Nastase et al., 2019). ISC is well suited to the analysis of both functional magnetic resonance imaging (fMRI) (Redcay and Moraczewski, 2020) and EEG (Petroni et al., 2018; Imhof et al., 2020) data. ISC obtained using naturalistic stimuli has been used to investigate episodic encoding and memory (Hasson et al., 2008; Cohen and Parra, 2016; Simony et al., 2016; Song et al., 2021a), social interaction (Nummenmaa et al., 2012), audience preferences (Dmochowski et al., 2014), information processing (Regev et al., 2019), narrative comprehension (Song et al., 2021b), and, similar to this study, attention and engagement (Dmochowski et al., 2012; Ki et al., 2016; Cohen et al., 2017; Poulsen et al., 2017; Imhof et al., 2020; Schmälzle and Grall, 2020; Grall et al., 2021; Song et al., 2021a; Grady et al., 2022).
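A minimal sketch of the basic idea, assuming one time-locked signal per participant for the same stimulus (a single channel, not the multichannel component method described later in the Methods): ISC is taken here as the mean Pearson correlation over all participant pairs. The function name `pairwise_isc` and the synthetic signals are illustrative assumptions.

```python
import itertools
import numpy as np

def pairwise_isc(signals):
    """Mean Pearson correlation over all pairs of subjects.

    signals: array of shape (subjects, samples), time-locked to the same stimulus.
    """
    corrs = [np.corrcoef(signals[k], signals[l])[0, 1]
             for k, l in itertools.combinations(range(signals.shape[0]), 2)]
    return float(np.mean(corrs))

rng = np.random.default_rng(0)
t = np.linspace(0, 10, 1000)
shared = np.sin(2 * np.pi * 0.5 * t)                      # stimulus-driven activity
locked = shared + 0.5 * rng.standard_normal((5, t.size))  # shared response + noise
unlocked = rng.standard_normal((5, t.size))               # no shared response
print(pairwise_isc(locked), pairwise_isc(unlocked))
```

Signals that share a time-locked component yield a clearly positive ISC, whereas independent signals hover around zero.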

Inter-subject correlation calculated from EEG data has been shown to predict levels of attentional engagement with auditory and audio-visual narratives (Ki et al., 2016; Cohen et al., 2017). Cohen et al. (2017) found that neural engagement with narratives measured through ISC was positively correlated with behavioral measures of engagement, including real-world engagement. Another study demonstrated that dynamic ISC tracks the reported suspense levels of a narrative (Schmälzle and Grall, 2020). Attention has been shown to modulate the processing of attended narratives at high levels of the cortical hierarchy (Regev et al., 2019). Ki et al. (2016) found that ISC was weaker when participants concurrently performed mental arithmetic while attending to a narrative than when they attended only to the narrative. This was especially the case for audiovisual narratives compared with auditory-only narratives. They also reported that the ISC difference between the two attentional states, as well as its magnitude, was more pronounced when the stimulus was a cohesive narrative than when it was a meaningless one. Moreover, they demonstrated that audiovisual narratives generated stronger ISC than audio-only narratives. In line with this finding, a recent fMRI study indicated that sustained attention network dynamics are correlated with engagement while attending to audiovisual narratives, but not audio-only narratives (Song et al., 2021a). Nevertheless, for audio-only stimuli, ISC is higher for personal narratives than for a non-narrative description or a meaningless narrative (i.e., a reversed version of the personal narratives) (Grall et al., 2021). Furthermore, Song et al. (2021a) found higher interpersonal synchronization of default mode network activity during moments of higher self-reported engagement with both audio-visual and audio-only narratives.
In addition, highly engaging moments of a narrative evoke higher ISC in the mentalizing network compared to less engaging moments (Grady et al., 2022). Finally, ISC was observed to diminish when viewers watched video clips for the second time (Dmochowski et al., 2012; Poulsen et al., 2017; Imhof et al., 2020), which was supported by a study showing lower ISC when viewers were presented with a scrambled narrative for the second time compared to the first time (Song et al., 2021b).

In summary, while some previous studies have examined the relationship between ISC and narrative engagement (or its correlate, attention) (Dmochowski et al., 2012; Ki et al., 2016; Cohen et al., 2017; Poulsen et al., 2017; Regev et al., 2019; Schmälzle and Grall, 2020; Grall et al., 2021; Song et al., 2021a; Grady et al., 2022), they assumed that “being a narrative” is an either-or quality. For example, a cohesive narrative is considered a narrative, whereas a meaningless narrative (e.g., a scrambled narrative) is not. From the narratological perspective, there has been increasing interest in “narrativity” as a scalar property; that is, artifacts (pictures, videos, stories, and other forms of representation) may have different degrees of narrativity. In other words, narrative may be a matter of more-or-less rather than either-or (Ryan, 2007). How different levels of narrativity affect narrative engagement therefore remains an open question. Moreover, whether narrativity levels lead to different degrees of ISC, and whether narrativity level can be predicted from ISC values, has not yet been explored. Our main research question for this study is therefore as follows: does narrativity level [high (HL) vs. low (LL)] modulate self-reported engagement and ISC? To answer this question, we asked 32 participants to watch 12 real narrative video ads varying in their degree of narrativity, twice each (with sound removed). We defined “narrativity level” as the degree to which an artifact is perceived to evoke a complete narrative script (Ryan, 2007). Essentially, this refers to the degree to which a story seems complete in all its structural elements, such as the presence of defined characters in a stipulated context, clear causal links between events, and a sense of closure resulting from the intertwining of these events (Ryan, 2007).
While participants watched the videos, we recorded their EEG signals to investigate shared neural responses to the video ads across participants; that is, we investigated the interpersonal reliability of neural responses (expressed by ISC). We further assessed how narrativity level affected self-reported engagement with the ads by asking participants to rate how much each ad captured their attention and how immersed in it they felt. Furthermore, we examined how engagement and ISC differ for video ads watched for the first time versus the second time. Based on the aforementioned studies, which explore how narratives engage individuals and indicate that ISC is higher during moments of higher narrative engagement, we postulate that HL video ads will elicit higher ISC than LL video ads and that self-reported engagement will be higher for HL ads than for LL ads. Moreover, we expect both engagement and ISC to be higher the first time the videos are seen than the second time.

This study was approved by the local ethics committee (Technical Faculty of IT and Design, Aalborg University) and performed in accordance with the Danish Code of Conduct for Research and the European Code of Conduct for Research Integrity. All participants signed a written informed consent form at the beginning of the session, were debriefed at the end of the experiment, and were given a symbolic payment as a token of gratitude for their time and effort. Data collection took place throughout November 2020. This study was part of a larger study; here, we report only the information relevant to the present study.

We recruited 32 (13 women) right-handed participants of 16 nationalities between the ages of 20 and 37 (M = 26.84, SD = 4.33). Regarding occupation, 69% were students, 16% were workers, and 15% were both. Regarding educational level, 12% had completed or were completing a bachelor’s degree, and 88% had completed or were completing a master’s degree. We asked participants not to consume caffeine for at least 2 h prior to the experimental session.

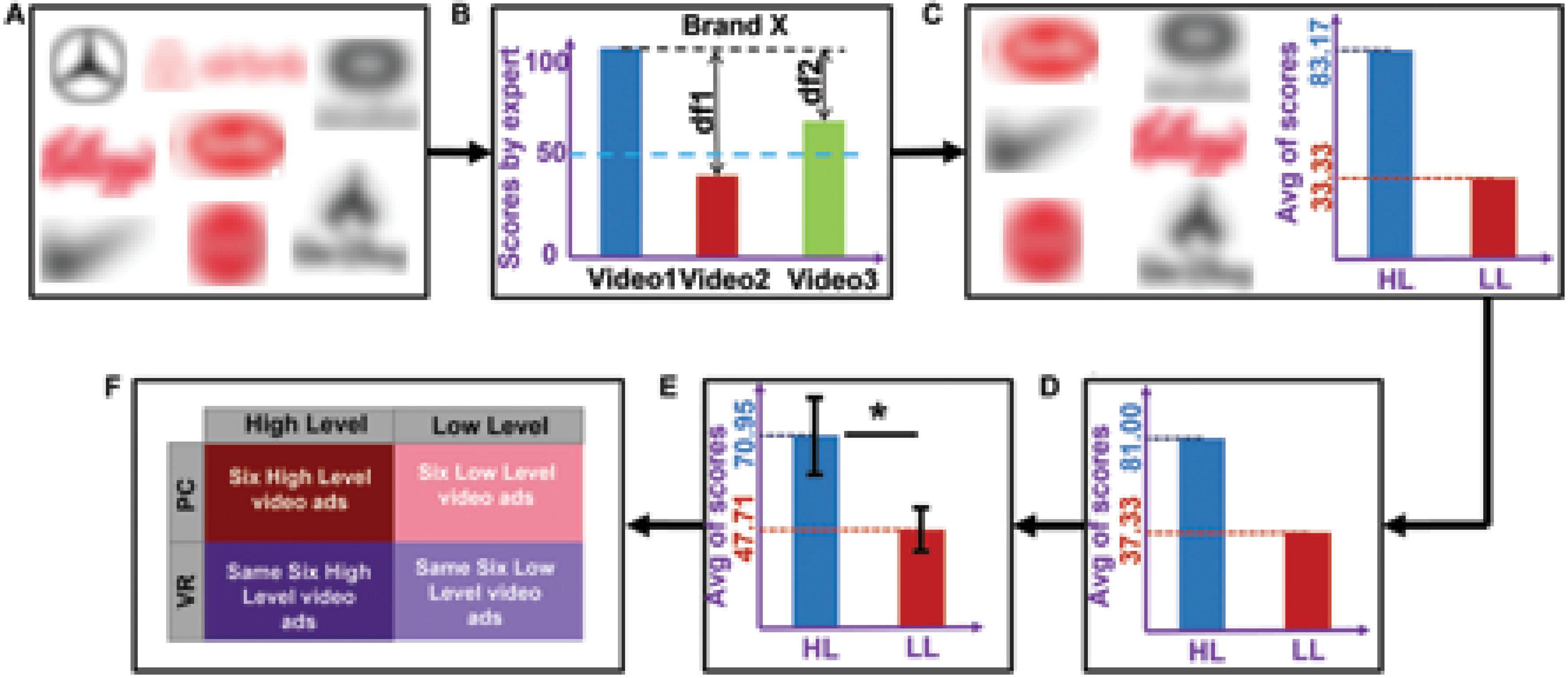

To select the stimuli, we initially pre-selected 22 video ads (eight brands; Figure 1A) varying in the number of elements that constitute different narrative levels according to Ryan (2007). An expert in the narrative field then preliminarily rated the narrativity level of these video ads from 0 to 100. Based on these scores, we selected the six brands with the largest difference in score between two of their ads (to maximize the difference in narrativity level between two ads of the same brand), provided that one ad scored below 50 and the other above 50 (Figure 1B). We then grouped the video ads into two categories: HL and LL narrativity. Although treating narrativity as continuous (i.e., not categorizing the videos) would better align with a scalar model of narrativity, it would have compromised the statistical validity of our results. Because the videos were real ads and therefore not created specifically for the study, they had idiosyncratic features unrelated to narrativity level (e.g., different scenarios, characters, and plots). These features could either mask a potential effect of narrativity level or lead to the misattribution of an effect. By categorizing the videos into two narrativity levels, we sought to mitigate the potential effects of these individual features and make the differences in narrativity level more salient. The final selection consisted of six video ads with HL narrativity (M = 83.17, SD = 10.70) and six with LL narrativity (M = 33.33, SD = 10.70), with each of the six brands contributing one video to each group (Figure 1C). The videos can be seen here: https://youtu.be/RBMo0wvFKoY. To validate this categorization, a second, independent expert in the narrative field rated the narrativity level of the 12 video ads from 0 to 100; these ratings were consistent with those of the first expert (HL: M = 81.00, SD = 13.70; LL: M = 37.33, SD = 15.37; Figure 1D).
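
The brand and video selection rule can be sketched as follows, under stated assumptions: for each brand, we keep the pair of ads with the largest expert-score difference among pairs in which one score is above 50 and the other below 50, and brands are then ranked by that difference. The data layout, the function names `best_pair` and `select_brands`, and the scores below are hypothetical and are not the study's ratings.

```python
from itertools import combinations

def best_pair(videos):
    """videos: list of (video_id, score). Return the pair with the largest score
    difference such that one score is above 50 and the other below 50, or None."""
    best = None
    for (va, sa), (vb, sb) in combinations(videos, 2):
        (hi_id, hi), (lo_id, lo) = ((va, sa), (vb, sb)) if sa >= sb else ((vb, sb), (va, sa))
        if hi > 50 > lo:
            diff = hi - lo
            if best is None or diff > best["diff"]:
                best = {"HL": hi_id, "LL": lo_id, "diff": diff}
    return best

def select_brands(ratings, n_brands=6):
    """ratings: {brand: [(video_id, score), ...]} from the expert rating step."""
    candidates = {}
    for brand, videos in ratings.items():
        pair = best_pair(videos)
        if pair is not None:  # skip brands without a valid above/below-50 pair
            candidates[brand] = pair
    ranked = sorted(candidates, key=lambda b: candidates[b]["diff"], reverse=True)
    return {b: candidates[b] for b in ranked[:n_brands]}

# Illustrative scores only; not the study's ratings.
ratings = {
    "brand_a": [("a1", 90), ("a2", 30), ("a3", 60)],
    "brand_b": [("b1", 55), ("b2", 45)],
    "brand_c": [("c1", 70), ("c2", 80)],
}
print(select_brands(ratings, n_brands=2))
```

In this toy example, brand_c is never eligible because both of its ads score above 50.
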
To assess whether the general public would also perceive a difference in narrativity level between the two categories of videos, we conducted an online validation test on the Clickworker platform with an independent panel of participants. A total of 156 participants were assigned to one of four groups, and each group watched three video ads, each from a distinct brand. To capture the degree of perceived narrativity in each video ad, participants answered five questions used by Kim et al. (2017), such as “the commercial tells a story” and “the commercial shows the main actors or characters in a story.” All questions were rated from 0 (strongly disagree) to 100 (strongly agree). The average response to these five questions indicated whether the video ads were indeed perceived as belonging to the HL (higher scores) or LL (lower scores) category. The responses confirmed that our initial categorization of the videos was valid (see section “3. Results” and Figure 1E).
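As a sketch of how the online validation scores could be summarized, we assume that each response's score is the mean of the five 0–100 items and that HL and LL scores are compared with an equal-variance (Student's) independent-samples t-test. The code computes only the t statistic; a p-value could then be obtained from a t distribution with n_HL + n_LL − 2 degrees of freedom (e.g., with `scipy.stats.ttest_ind`). Function names and ratings are illustrative.

```python
import numpy as np

def narrativity_score(item_ratings):
    """Mean of the five 0-100 narrativity items for one participant and one ad."""
    return float(np.mean(item_ratings))

def students_t(a, b):
    """Independent-samples t statistic assuming equal variances (pooled variance)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    na, nb = a.size, b.size
    pooled_var = ((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2)
    return float((a.mean() - b.mean()) / np.sqrt(pooled_var * (1 / na + 1 / nb)))

# Hypothetical item ratings: one row per participant-ad response.
hl_items = [[90, 85, 80, 95, 70], [75, 80, 85, 70, 90]]
ll_items = [[30, 20, 40, 35, 25], [45, 30, 20, 40, 35]]
hl = [narrativity_score(r) for r in hl_items]
ll = [narrativity_score(r) for r in ll_items]
print(students_t(hl, ll))
```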

Figure 1. Video ads selection procedure, division into high (HL) and low (LL) categories, results of the ratings, and the resulting design. (A) The eight pre-selected brands from which we chose 22 video ads. (B) Process of selecting videos based on the expert’s rating. Suppose that, from brand X, we pre-selected three videos that were rated by the expert. The two videos with the maximum score difference (df1), of which one score was >50 and the other <50, were selected as the final videos from brand X. (C) The six selected brands with average scores. For each brand, the video with the higher score was assigned to the HL category (six videos) and the video with the lower score to the LL category (six videos). (D) Average of the second expert’s ratings across the six selected videos. (E) Average scores (across participants and stimuli) for narrativity level, rated by 124 participants. An independent-samples t-test showed that the narrativity scores of the HL videos were significantly higher than those of the LL videos (p < 0.001). The black error bars represent the standard deviation of the sample. (F) The 2 × 2 experimental design consisted of the following conditions: HL–PC, HL–VR, LL–PC, and LL–VR. *p < 0.05.

The stimuli therefore comprised twelve 2D video ads from six brands (Barilla, Coke, Disney, Kellogg’s, Nike, and Oculus), with two video ads per brand, one for each narrativity level. We selected different brands to mitigate the influence of brand and product category. The ads were real commercials retrieved from YouTube. We removed the audio and slightly edited some of the videos to adjust their length (which ranged from 57 to 63 s). We selected non-verbal narratives in video format because motion picture narratives are less susceptible to interindividual differences and generate more homogeneous experiences across individuals than verbal (oral) narratives (Jajdelska et al., 2019). This may be because the visual images are directly related to the narrative content, which reduces personal interpretations of the story (Richardson et al., 2020).

We conducted a 2 × 2 full factorial within-subjects study with two levels of narrativity [high level (HL) vs. low level (LL)] and two media [computer screen (PC) vs. virtual reality (VR)]. The study included four conditions: six HL video ads presented on a PC (HL–PC), six HL video ads presented in VR (HL–VR), six LL video ads presented on a PC (LL–PC), and six LL video ads presented in VR (LL–VR). See Figure 1F.

Initially, the EEG device (32 channels, 10–20 system) was placed on each participant’s scalp, and the impedance of the active electrodes was brought below 25 kΩ using a conductive gel, according to the manufacturer’s guidelines. The signals were recorded at a sampling rate of 500 Hz using Brain Products software. The HTC Vive Pro VR headset was placed on top of the EEG electrodes, and the impedance of the electrodes was checked again. The task comprised two sessions of approximately 25 min each, separated by a 20 min interval. During one session, participants watched the ads on a PC; during the other session, they watched them in VR. During each session, participants watched all of the video ads and answered a questionnaire after each one. A 2-s fixation cross was shown before each new video. For half of the participants, the videos were displayed on the PC first. The video presentation order was counterbalanced across participants but kept the same across media. The ten-item questionnaire included two questions of interest for this study: (i) “this commercial really held my attention” and (ii) “this ad draws me in”; both were scored from 0 (strongly disagree) to 100 (strongly agree). We took these questions from the “being hooked” scale (Escalas et al., 2004), where they are meant to capture consumers’ sustained attention to the advertisement. High levels of focused attention and high levels of immersion (feelings of transportation) can indicate high levels of narrative engagement. Thus, we calculated our engagement metric by averaging the attention and immersion scores.
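The engagement metric is a simple average of the two ratings. A minimal sketch, assuming ratings are stored per condition as lists aligned by ad (a hypothetical layout with made-up values):

```python
import numpy as np

def engagement(attention, immersion):
    """Engagement = mean of the 'held my attention' and 'draws me in' ratings (0-100)."""
    return (np.asarray(attention, dtype=float) + np.asarray(immersion, dtype=float)) / 2.0

# Hypothetical ratings for one participant, keyed by condition (two ads each).
ratings = {
    "HL-PC": {"attention": [80, 70], "immersion": [60, 90]},
    "LL-PC": {"attention": [40, 50], "immersion": [20, 30]},
}
per_condition = {c: engagement(r["attention"], r["immersion"]).mean() for c, r in ratings.items()}
print(per_condition)
```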

We performed all preprocessing and processing steps in Matlab R2020b (The MathWorks, Inc., Natick, MA, USA) with in-house code and tools from the FieldTrip 20210128 and EEGLAB 2021.03 toolboxes. To remove high- and low-frequency noise, we applied a third-order Butterworth band-pass filter with 1–40 Hz cut-off frequencies to the raw data. Next, we detected bad channels using an automated rejection process with a voltage threshold of +500 μV and, after confirmation by an expert, removed them from the channel list. We then interpolated the removed channels with the spherical spline method, based on the activity of six surrounding channels, using the FieldTrip toolbox. The average number of rejected channels per participant was 1.92 ± 1.48. One participant was excluded from the analyses for having more than five bad channels, and two participants were excluded due to missing trials. Subsequently, we segmented the filtered EEG data corresponding to the 12 video ads and concatenated the segments. We then excluded the EEG data recorded while participants were answering the questionnaires. Next, we conducted independent component analysis (ICA) on the concatenated data to remove remaining noise, estimating source activity with the second-order blind identification method. We then identified eye-related artifacts and other noisy components, which were confirmed by an expert and removed from the component list. By applying the inverse ICA coefficients to the remaining components, we obtained the denoised data. Finally, we re-referenced the denoised data to the average activity of all electrodes.
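Two of the simpler preprocessing steps can be sketched in NumPy, assuming data in microvolts with shape (channels, samples). The first flags channels whose absolute amplitude ever exceeds 500 μV; applying the threshold to absolute values is our assumption. The second re-references to the common average. This is an illustration only: the study used FieldTrip and EEGLAB in Matlab, and the expert review, filtering, interpolation, and ICA steps are not reproduced here.

```python
import numpy as np

def find_bad_channels(eeg_uv, threshold_uv=500.0):
    """Indices of channels whose absolute amplitude exceeds the threshold at any
    sample; this is only a candidate list, to be confirmed by an expert."""
    return np.flatnonzero(np.any(np.abs(eeg_uv) > threshold_uv, axis=1))

def average_reference(eeg):
    """Subtract the instantaneous mean across electrodes from every electrode."""
    return eeg - eeg.mean(axis=0, keepdims=True)

rng = np.random.default_rng(0)
eeg = 20 * rng.standard_normal((32, 5000))  # synthetic: 32 channels, 10 s at 500 Hz, in µV
eeg[5, 1200] = 800.0                        # simulated artifact on channel 5
print(find_bad_channels(eeg))
reref = average_reference(eeg)
```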

To evaluate whether the evoked responses to the stimuli were shared among participants, we calculated the ISC of neural responses to the video ads by correlating EEG activity among participants. Researchers have established that, for an evoked response across participants (or trials) to be reproducible, each participant (trial) must provide a reliable response (Ki et al., 2016). In this sense, ISC is similar to traditional methods that capture the reliability of a response by measuring the increase in magnitude of neural activity; the important difference is that, by measuring reliability across participants, we avoid presenting the stimuli multiple times to a single participant. In addition, this method is compatible with continuous naturalistic stimuli such as video ads. The goal of the ISC analysis is thus to identify correlated EEG components that are maximally shared across participants. By “EEG component,” we mean a linear combination of electrodes, which can be considered a “virtual source” (Ki et al., 2016). This correlated component analysis is similar to principal component analysis, except that it maximizes the correlation among datasets rather than the variance within a dataset. Like other component extraction methods, it identifies components by solving an eigenvalue problem (Parra and Sajda, 2003; de Cheveigné and Parra, 2014). Below, we describe our ISC calculation procedure for multiple stimuli, following Cohen and Parra (2016) and Ki et al. (2016).

To construct the input data, we combined data from participants in all four conditions (six videos per condition; see Figure 2A for one stimulus) and obtained 24 three-dimensional EEG matrices (channels × data samples × participants). For each of these 24 matrices, we separately calculated the between-subject cross-covariance using Eq. 1, where N is the number of participants:

$$R_b = \frac{1}{N(N-1)} \sum_{k=1}^{N} \sum_{l=1,\, l \neq k}^{N} R_{kl} \qquad (1)$$

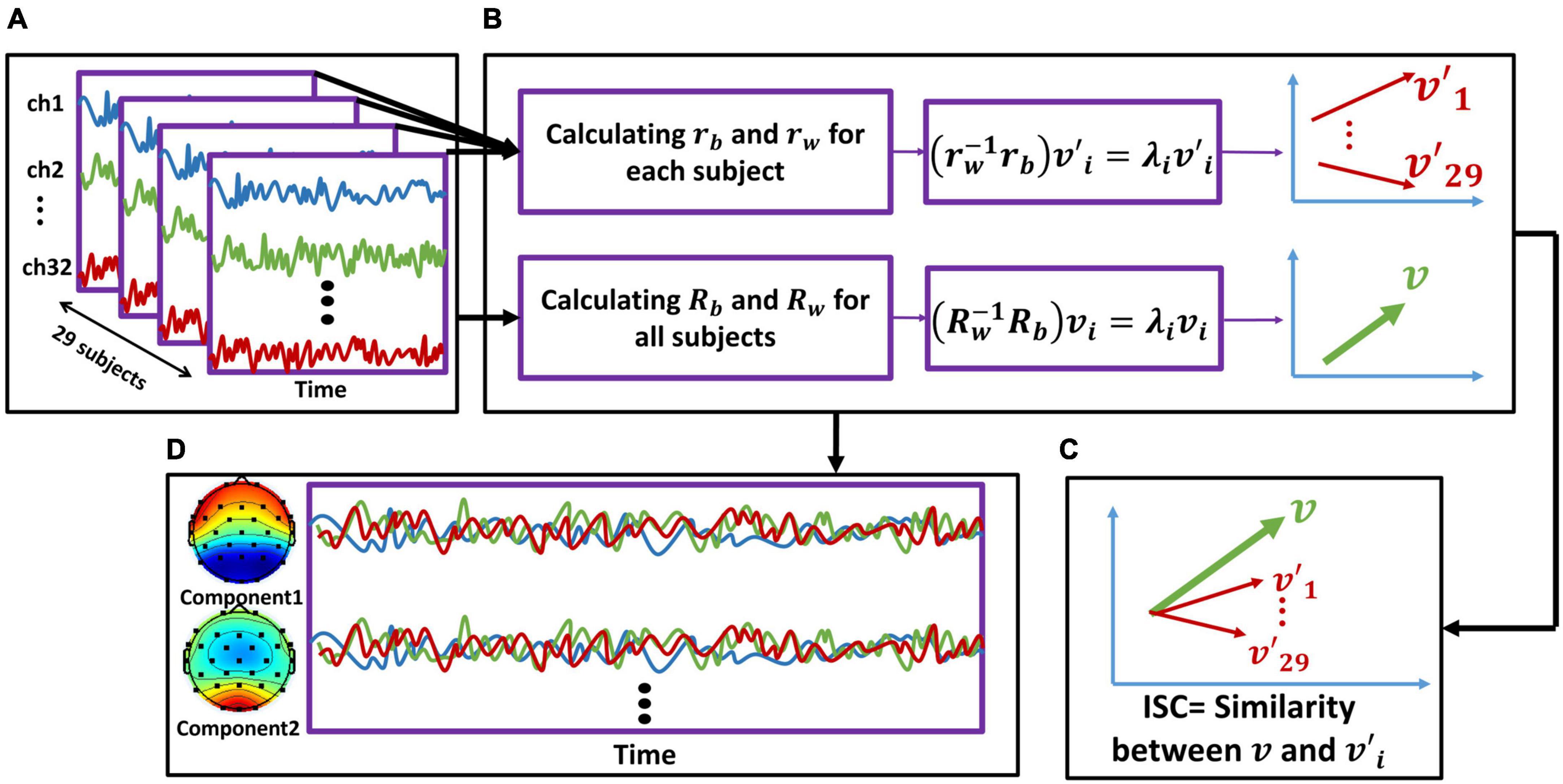

Figure 2. The inter-subject correlation (ISC) calculation and component activity estimation procedure. We repeated this procedure separately for each stimulus. (A) We first concatenated the EEG activity of all participants. (B: upper line) For each subject, we calculated rb and rw (using Eqs. 1 and 2, respectively) based on the cross-covariance matrices. By solving the eigenvalue problem (Eq. 4), we computed the eigenvectors of each subject. The red arrows represent each subject’s eigenvector (v′). We therefore obtained 29 v′, corresponding to the number of participants. Note that each v′i is a 32-dimensional vector corresponding to the number of electrodes (for simplicity, the vectors are shown in two dimensions). (B: lower line) We summed rb across all participants to calculate Rb and did the same for rw to calculate Rw. Then, by solving the eigenvalue problem, we obtained vi, which represents the maximal correlation across participants. Note that v is a 32-dimensional vector corresponding to the number of electrodes (for simplicity, the vectors are shown in two dimensions). (C) We calculated the similarity between the representative vector of all participants (v) and each subject’s vector (v′1 to v′29) to obtain ISC. (D) Using the calculated eigenvectors and the forward model, we calculated the scalp activity of each component, where components are linear combinations of electrode activity (32 components were calculated, ordered from strongest to weakest). The activity of the first two strongest components is displayed here.

where $R_{kl}$ is defined as follows:

$$R_{kl} = \sum_{t} \big(x_k(t) - \bar{x}_k\big)\big(x_l(t) - \bar{x}_l\big)^{\top}$$

$R_{kl}$ is the cross-covariance between all electrodes of subject k and all electrodes of subject l. The vector $x_k(t)$ contains the activity of the 32 electrodes (pre-processed data) of subject k at time t, and $\bar{x}_k$ is the average of $x_k(t)$ over time. Additionally, we separately calculated the within-subject cross-covariance for each of the abovementioned matrices using Eq. 2:

$$R_w = \frac{1}{N} \sum_{k=1}^{N} R_{kk} \qquad (2)$$

$R_{kk}$ is calculated in the same manner as $R_{kl}$, except that it considers only the electrode activity of subject k. We then summed the $R_b$ and $R_w$ calculated for all 24 matrices to obtain the pooled between- and within-subject cross-covariances, representing data on all stimuli.
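A sketch of Eqs. 1 and 2 in NumPy, assuming one array of shape (channels, samples, subjects) per stimulus and the normalizations 1/(N(N−1)) for the between-subject sum and 1/N for the within-subject sum (an assumption here; uniform scaling does not change the eigenvectors). Instead of looping over all subject pairs, it uses the identity that the sum of all R_kl over k and l (including k = l) equals the outer product of the subject-summed, mean-centered data; subtracting the within-subject terms leaves the k ≠ l terms. Function names are hypothetical.

```python
import numpy as np

def cross_covariances(x):
    """Between- (R_b) and within-subject (R_w) cross-covariances for one stimulus.

    x: array of shape (channels, samples, subjects).
    """
    D, T, N = x.shape
    xc = x - x.mean(axis=1, keepdims=True)      # subtract each subject's temporal mean
    R_w_sum = np.zeros((D, D))
    for k in range(N):
        R_w_sum += xc[:, :, k] @ xc[:, :, k].T  # R_kk
    s = xc.sum(axis=2)                          # data summed over subjects, (D, T)
    R_all = s @ s.T                             # sum of R_kl over all k, l (incl. k == l)
    R_b = (R_all - R_w_sum) / (N * (N - 1))     # keep only the k != l terms
    R_w = R_w_sum / N
    return R_b, R_w

def pooled_cross_covariances(stimuli):
    """Sum R_b and R_w over a list of per-stimulus arrays (e.g., 24 of them)."""
    R_b_total, R_w_total = 0.0, 0.0
    for x in stimuli:
        R_b, R_w = cross_covariances(x)
        R_b_total = R_b_total + R_b
        R_w_total = R_w_total + R_w
    return R_b_total, R_w_total

rng = np.random.default_rng(0)
stimuli = [rng.standard_normal((32, 500, 29)) for _ in range(3)]  # synthetic data
R_b, R_w = pooled_cross_covariances(stimuli)
print(R_b.shape, R_w.shape)
```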

Then, to improve the robustness of our analysis against outliers, we regularized the within-subject cross-covariance matrix using shrinkage (Blankertz et al., 2011; Eq. 3):

$$\tilde{R}_w = (1-\gamma)\, R_w + \gamma\, \bar{\lambda}\, I \qquad (3)$$

where $\bar{\lambda}$ is the average of the eigenvalues of $R_w$, $I$ is the identity matrix, and $\gamma = 0.5$.
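A minimal sketch of the shrinkage step, assuming that the target is the identity scaled by the mean eigenvalue of R_w and that γ = 0.5. Because the mean eigenvalue equals the trace divided by the number of channels, no eigendecomposition is needed. The regularized matrix keeps the same trace while its eigenvalues are pulled toward their mean, which improves conditioning.

```python
import numpy as np

def shrink(R_w, gamma=0.5):
    """Shrink R_w toward a scaled identity: (1 - gamma) * R_w + gamma * mean_eig * I."""
    D = R_w.shape[0]
    mean_eig = np.trace(R_w) / D   # mean eigenvalue of R_w
    return (1.0 - gamma) * R_w + gamma * mean_eig * np.eye(D)

rng = np.random.default_rng(0)
A = rng.standard_normal((32, 100))
R_w = A @ A.T / 100
R_w_reg = shrink(R_w)
print(np.linalg.cond(R_w), np.linalg.cond(R_w_reg))  # condition number decreases
```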

Next, by solving the eigenvalue problem for $\tilde{R}_w^{-1} R_b$ (Eq. 4), we obtained the eigenvectors $v_i$:

$$\tilde{R}_w^{-1} R_b\, v_i = \lambda_i\, v_i \qquad (4)$$

These eigenvectors are projections that maximize the correlation among participants, ordered from strongest to weakest by their eigenvalues $\lambda_i$.
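One numerically stable way to solve Eq. 4 is to turn it into a symmetric problem using a Cholesky factor of the regularized R_w, as sketched below. This is a standard linear algebra route, not necessarily the one used in the authors' Matlab code. It assumes that the regularized R_w is positive definite (which the shrinkage step helps ensure) and returns eigenvectors as columns, sorted by decreasing eigenvalue.

```python
import numpy as np

def corrca_eig(R_b, R_w_reg):
    """Solve R_w_reg^{-1} R_b v = lambda v; return eigenvalues (descending) and eigenvectors."""
    L = np.linalg.cholesky(R_w_reg)          # R_w_reg = L @ L.T (must be positive definite)
    L_inv = np.linalg.inv(L)
    M = L_inv @ R_b @ L_inv.T                # equivalent symmetric problem M u = lambda u
    M = (M + M.T) / 2.0                      # remove round-off asymmetry
    eigvals, U = np.linalg.eigh(M)           # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    V = L_inv.T @ U[:, order]                # back-transform: v = L^{-T} u
    return eigvals[order], V

rng = np.random.default_rng(0)
A = rng.standard_normal((5, 50))
B = rng.standard_normal((5, 50))
R_w_reg = A @ A.T / 50
R_b = B @ B.T / 50
lam, V = corrca_eig(R_b, R_w_reg)
```

Each returned column v satisfies R_b v = λ R_w v, which is Eq. 4 multiplied through by the regularized R_w.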

After this step, we obtained the projections that are maximally correlated among all participants, considering all stimuli (Figure 2B). Next, to measure the reliability of individual participants’ EEG responses, we calculated, separately for each stimulus, the correlation of each subject’s projected data with the projected data of the group (Figure 2C). This metric shows how similar the brain activity of a single subject was to that of all other participants. Next, we calculated the ISC using the correlation of these projections for each stimulus (video ad) and component, averaged across all possible combinations of participants. Thus, for each stimulus and each subject, we obtained a component matrix. By summing the first two strongest components, we obtained the ISC for each subject k (Eq. 5):

$$\mathrm{ISC}_k = \sum_{i=1}^{2} r_i^k \qquad (5)$$

where $r_i^k$ is the Pearson correlation coefficient averaged across all pairs of participants, applied to the component projection $y_i^k$, which is defined as follows (Eq. 6):

$$y_i^k(t) = v_i^{\top} x_k(t) \qquad (6)$$

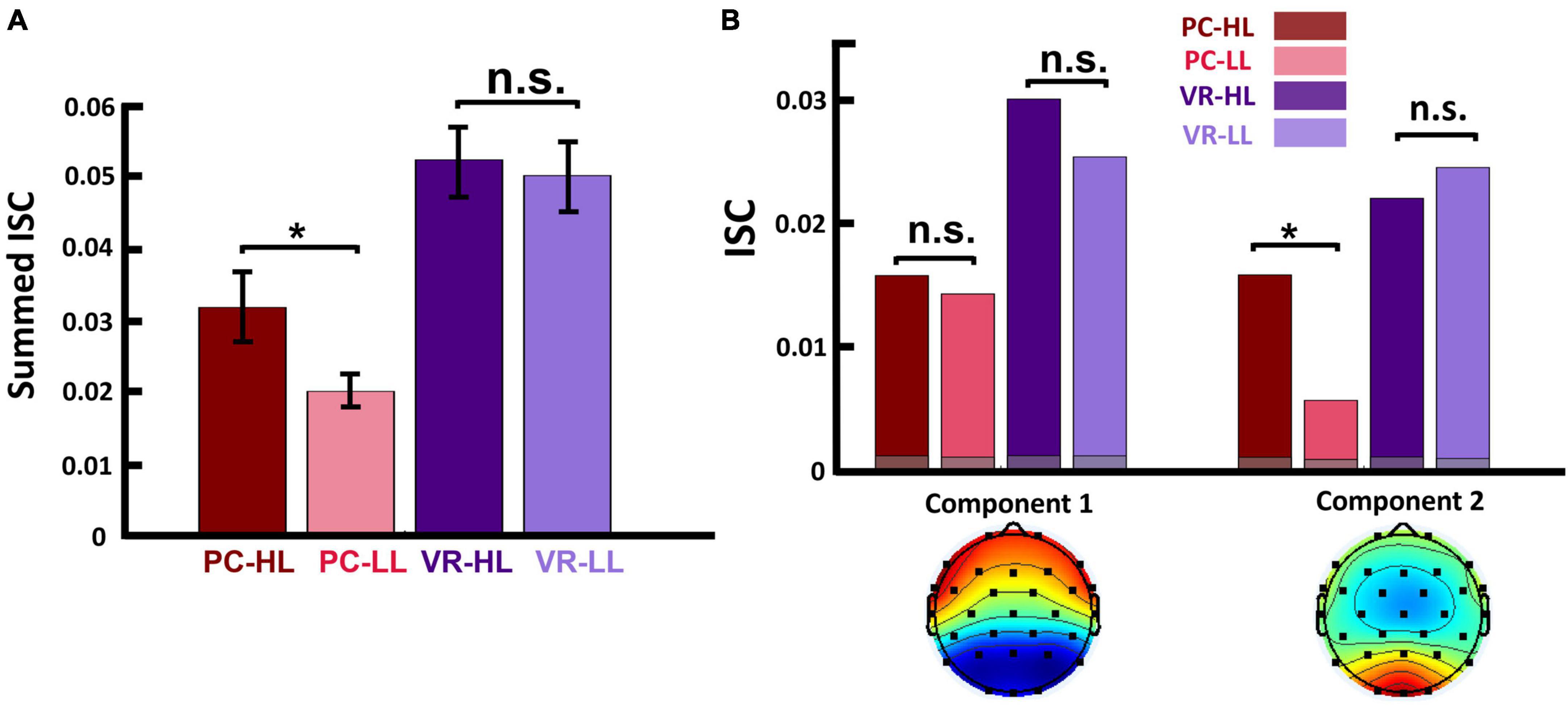

Next, we summed the first two strongest components to represent the ISC of each subject for each stimulus (i = 1, 2) and ignored the weaker components because they were below chance level in one condition. Finally, we summed the calculated ISC over the six stimuli of each condition to obtain the ISC of all participants in each of the four conditions. To illustrate the scalp activity of each component, we used the corresponding forward model, following previous studies (Parra et al., 2005; Haufe et al., 2014; Figure 2D). In Figure 3A, the bars show the sum of the first two components.
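The per-subject ISC of Eqs. 5 and 6 can be sketched as follows. We assume that r_i^k is the mean Pearson correlation between subject k's projection y_i^k and every other subject's projection on the same component (our reading of the text), and that V holds eigenvectors as columns ordered from strongest to weakest. The identity matrix used as V in the example is a stand-in, not a fitted solution.

```python
import numpy as np

def subject_isc(x, V, n_components=2):
    """Per-subject ISC summed over the strongest components.

    x: (channels, samples, subjects); V: (channels, components), strongest first.
    """
    N = x.shape[2]
    y = np.einsum("dc,dtk->ctk", V[:, :n_components], x)  # y[i, :, k] = v_i^T x_k(t)
    isc = np.zeros(N)
    for k in range(N):
        for i in range(n_components):
            r = [np.corrcoef(y[i, :, k], y[i, :, l])[0, 1] for l in range(N) if l != k]
            isc[k] += np.mean(r)      # r_i^k: mean correlation with the other subjects
    return isc

rng = np.random.default_rng(0)
T, N = 2000, 6
shared = np.sin(np.linspace(0, 40 * np.pi, T))
x = rng.standard_normal((3, T, N))
x[0] += 2 * shared[:, None]          # channel 0 carries a time-locked shared signal
V = np.eye(3)                        # stand-in eigenvectors (hypothetical)
print(subject_isc(x, V, n_components=1))
```

Summing the per-subject values over the six stimuli of a condition would then give the condition-level ISC described above.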

Figure 3. (A) Summed ISC over the first two strongest components in the four conditions. In the PC condition, the ISC of HL video ads was significantly higher than that of LL video ads (p = 0.029); in the VR condition, the ISC of HL video ads was also higher than that of LL video ads, but the difference was not statistically significant (p = 0.638). (B-top) ISC calculated separately for each component within each condition. For the first component, the difference between HL and LL video ads is not significant in either the PC or the VR condition. For the second component, however, the ISC of HL video ads was significantly higher than that of LL video ads in the PC condition (p = 0.002) but not in the VR condition (p = 0.313). The gray bars show the average over phase-randomized iterations. (B-bottom) Scalp activity of the first two strongest components. Activity in component 1 spans the anterior and posterior regions, whereas in component 2 it is concentrated in the posterior region. In both panels, the colored dots represent the calculated ISC values for each participant. *p < 0.05.

To determine chance-level ISC, we first generated phase-randomized EEG data using a method that randomizes the phases of the EEG signals in the frequency domain (Theiler et al., 1992; Ki et al., 2016). This yields new time series whose temporal fluctuations are not aligned with the original signals and are therefore not correlated across participants. We then applied the same steps to the randomized data: for each condition, we computed the within- and between-subject cross-covariances, projected the data onto the eigenvectors, calculated ISC, and summed it over stimuli.
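A sketch of phase randomization with NumPy's real FFT, assuming one (channels, samples) array per participant. Applying the same random phases to every channel of a participant is our assumption: it keeps each channel's power spectrum and the zero-lag covariance between channels while destroying time locking to the stimulus. The DC and Nyquist terms are left unchanged so that the output stays real.

```python
import numpy as np

def phase_randomize(x, rng):
    """Phase-randomized surrogate of x (channels, samples), same phases on all channels."""
    T = x.shape[1]
    X = np.fft.rfft(x, axis=1)
    phases = rng.uniform(0.0, 2.0 * np.pi, X.shape[1])
    phases[0] = 0.0                  # keep the DC term real
    if T % 2 == 0:
        phases[-1] = 0.0             # keep the Nyquist term real
    return np.fft.irfft(X * np.exp(1j * phases), n=T, axis=1)

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 1000))
surrogate = phase_randomize(x, rng)
```

Repeating this with fresh random phases for every participant and stimulus, and rerunning the ISC pipeline each time, yields one null ISC value per repetition.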

We generated 5000 sets of such randomized data and continued the process as described above to obtain a null distribution representing the random ISC activity. Next, we tested the actual ISC values against the null distribution to evaluate whether the actual ISCs are above the chance level. To do this, we used a two-tailed significance test with p = (1 + number of null ISC values ≥ empirical ISC) / (1 + number of permutations). See Supplementary Figure 1.
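The p-value formula stated above translates directly into code. As written, it counts null values at least as large as the empirical ISC (the upper tail). With 5000 randomizations, the smallest attainable p-value is 1/5001. The function name and the null values are illustrative.

```python
import numpy as np

def permutation_p(empirical, null):
    """p = (1 + number of null values >= empirical) / (1 + number of permutations)."""
    null = np.asarray(null, dtype=float)
    return float((1 + np.sum(null >= empirical)) / (1 + null.size))

rng = np.random.default_rng(0)
null_isc = rng.normal(0.0, 0.01, 5000)   # hypothetical null distribution
print(permutation_p(0.05, null_isc))
```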

Some studies have reported that ISC values are higher in lower frequency bands and lower in higher frequency bands (Lankinen et al., 2014; Thiede et al., 2020). Although evaluating the effect of narrativity level on the ISC of different frequency bands was not an aim of this study, we explored it. To do so, we filtered the EEG data into frequency bins using the Butterworth filter described above. The bins were 1–2 Hz, 2–3 Hz, 3–4 Hz, 4–5 Hz, 5–6 Hz, 6–7 Hz, alpha (8–12 Hz), low beta (13–20 Hz), and high beta (21–40 Hz). We used a high resolution in the lower frequencies to verify whether our results aligned with previous findings in these bands (Lankinen et al., 2014). After filtering, we repeated the ISC calculation procedure described above to obtain the ISC for each frequency band.

To explore whether the ISC differences are driven by changes in engagement scores or by changes in narrativity level, we performed two correlation analyses: ISC vs. engagement scores and ISC vs. narrativity level. We used partial correlations with subject and either engagement or narrativity level as covariates, depending on the correlation (e.g., for the correlation of ISC vs. engagement, narrativity level was a covariate).
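A partial correlation can be computed by regressing the covariates out of both variables and correlating the residuals. The sketch below assumes one row per observation (e.g., participant × video ad) and expects categorical covariates such as subject ID to be passed as dummy-coded columns; `partial_corr` is a hypothetical helper, not the authors’ code.

```python
import numpy as np

def partial_corr(x, y, covariates):
    """Pearson correlation of x and y after removing linear effects of covariates.

    covariates: (n_obs, n_cov) array; an intercept column is added here.
    lstsq tolerates rank-deficient designs (e.g., full subject dummies).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    Z = np.column_stack([np.ones(len(x)), np.asarray(covariates, dtype=float)])
    # Residuals after least-squares projection onto the covariate space
    rx = x - Z @ np.linalg.lstsq(Z, x, rcond=None)[0]
    ry = y - Z @ np.linalg.lstsq(Z, y, rcond=None)[0]
    return float(np.corrcoef(rx, ry)[0, 1])
```

For ISC vs. engagement, `x` would hold ISC, `y` engagement, and `covariates` the narrativity-level indicator plus subject dummies.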

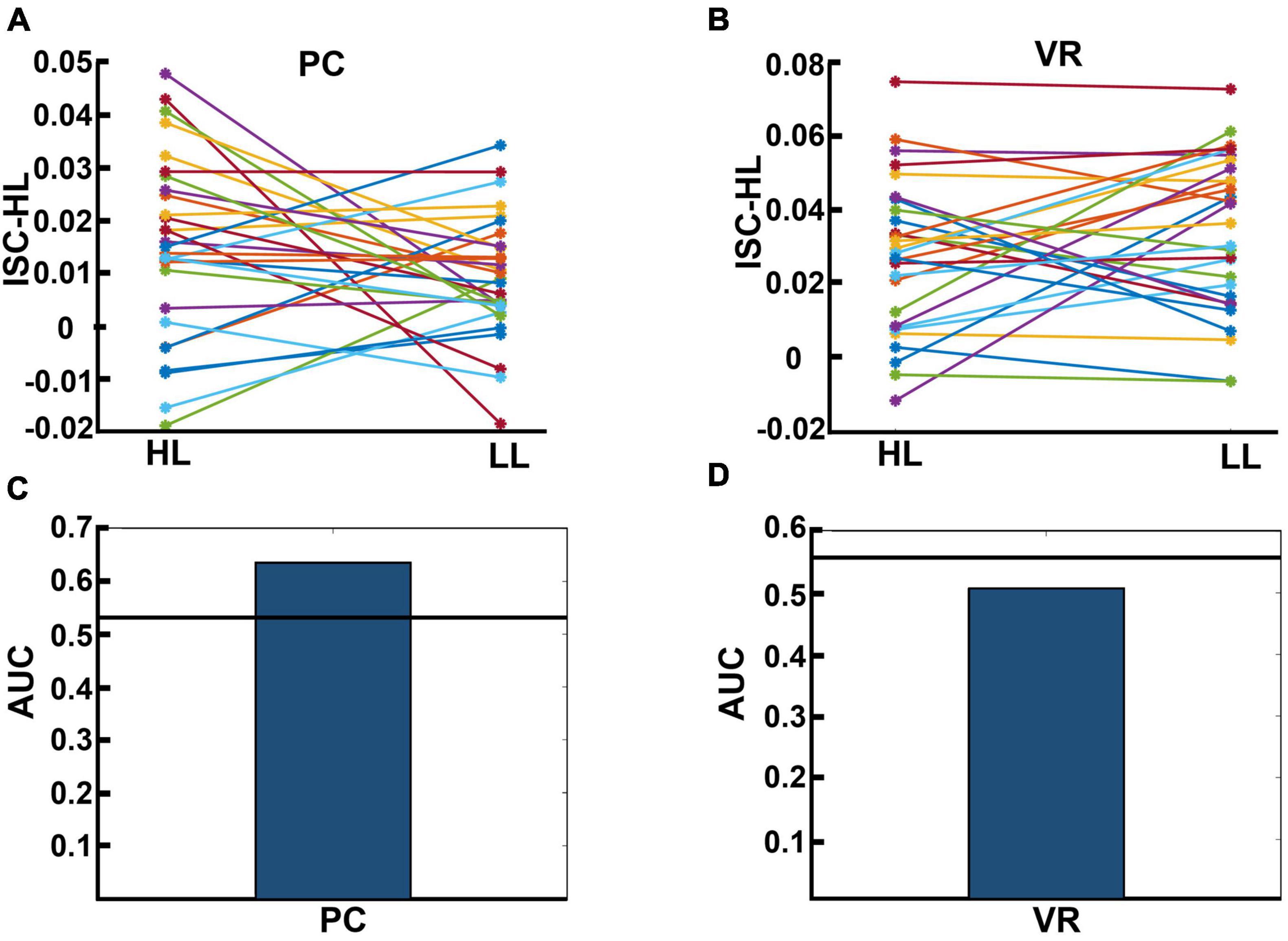

We also tested whether a single participant could be assigned to the HL or LL group based on their brain activity. In other words, we tested whether we could predict, from the EEG activity recorded while participants watched HL and LL videos, the level of narrativity to which each participant was exposed. To do so, we calculated the projections vi using only the data from the HL group. We then calculated the ISC of each participant (from both the HL and LL groups) based on these projections and called the result “ISC-HL.” Thus, in each group, each participant had an ISC-HL value indicating how similar their neural activity was to the activity of all participants in the HL group. The difference between ISC-HL and ISC is that ISC measures how similar a participant’s activity is to that of their own group (whether HL or LL), whereas ISC-HL measures how similar the activity of participants in both groups (HL and LL) is to that of the HL group only. We hypothesized that the brain activity of participants in the HL group would be more similar to the overall activity of the HL group (higher ISC-HL) and that the brain activity of participants in the LL group would be less similar to it (lower ISC-HL). To avoid bias, we excluded the test subject from the HL group when computing the projections vi and then calculated the ISC-HL for that subject. As described in the previous section, we summed ISC-HL over the two strongest components and over the six stimuli of each condition. Additionally, we assessed classification performance for the two groups (HL vs. LL) using the area under the receiver operating characteristic curve (AUC) of a support vector machine fitted with a leave-one-out approach.
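The ISC-HL idea can be sketched as follows, under simplifying assumptions that are ours rather than the authors’: each participant’s EEG for one stimulus is a (samples × channels) array; the projections are the leading generalized eigenvectors of the between- and within-subject covariance matrices (correlated component analysis); and a participant’s ISC-HL is approximated as the mean Pearson correlation between their projected time courses and those of the HL reference group, summed over the two strongest components. In practice this would be repeated for each stimulus and summed. All function names and the ridge constant `reg` are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh

def corrca_weights(group, n_components=2, reg=1e-6):
    """Leading eigenvectors of Rb v = lambda Rw v for a list of (time x channels) arrays."""
    X = np.stack([x - x.mean(axis=0) for x in group])   # (subjects, time, channels)
    n_sub, n_t, n_ch = X.shape
    Rw_sum = sum(x.T @ x for x in X) / n_t               # summed within-subject covariances
    total = X.sum(axis=0)
    Rt = total.T @ total / n_t                           # covariance of the summed signal
    Rb = (Rt - Rw_sum) / (n_sub * (n_sub - 1))           # mean between-subject cross-covariance
    Rw = Rw_sum / n_sub                                  # mean within-subject covariance
    Rw = Rw + reg * np.trace(Rw) / n_ch * np.eye(n_ch)   # small ridge for numerical stability
    evals, evecs = eigh(Rb, Rw)                          # generalized symmetric eigenproblem
    order = np.argsort(evals)[::-1]                      # strongest components first
    return evecs[:, order[:n_components]]

def isc_to_group(test, group, weights):
    """Mean correlation of the test subject's components with each group member's,
    summed over components (a simplified per-subject ISC)."""
    y_test = test @ weights
    total = 0.0
    for k in range(weights.shape[1]):
        rs = [np.corrcoef(y_test[:, k], (g @ weights)[:, k])[0, 1] for g in group]
        total += np.mean(rs)
    return float(total)

def isc_hl(test, hl_group, test_index=None, n_components=2):
    """ISC-HL: projections learned on the HL group only, leaving out the
    test subject (test_index) when it belongs to that group."""
    ref = [g for i, g in enumerate(hl_group) if i != test_index]
    weights = corrca_weights(ref, n_components)
    return isc_to_group(test, ref, weights)
```

Leaving the test subject out of `ref` mirrors the bias control described above: the subject never contributes to the projections it is evaluated on.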
To determine the chance-level AUC, we randomly shuffled the labels 1,000 times. In each iteration, AUC was calculated through an identical process, starting from extracting the correlated components of the HL-labeled group and following all subsequent steps described above. We calculated p-values as (1 + number of null AUC values ≥ actual AUC) / (1 + number of permutations) to determine whether the observed AUC was above chance level. We repeated all of these steps separately for the PC and VR conditions.
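The classification and its chance level can be sketched with scikit-learn, again as an illustration under assumptions: one ISC-HL value per participant and condition is the only feature, a linear-kernel SVM is used (the kernel is not stated in the text), and AUC is computed from pooled leave-one-out decision values. For brevity, this sketch shuffles labels only at the classifier stage, whereas the procedure described above re-extracts the correlated components for each shuffled HL-labeled group.

```python
import numpy as np
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import LeaveOneOut, cross_val_predict
from sklearn.svm import SVC

def loo_auc(features, labels):
    """AUC of a linear SVM from pooled leave-one-out decision values."""
    labels = np.asarray(labels)
    X = np.asarray(features, dtype=float).reshape(len(labels), -1)
    scores = cross_val_predict(SVC(kernel="linear"), X, labels,
                               cv=LeaveOneOut(), method="decision_function")
    return roc_auc_score(labels, scores)

def auc_permutation_test(features, labels, n_perm=1000, seed=0):
    """Observed AUC, mean AUC under shuffled labels, and permutation p-value."""
    rng = np.random.default_rng(seed)
    observed = loo_auc(features, labels)
    null = np.array([loo_auc(features, rng.permutation(labels))
                     for _ in range(n_perm)])
    p = (1 + np.count_nonzero(null >= observed)) / (1 + n_perm)
    return observed, float(null.mean()), float(p)
```

The mean of `null` corresponds to the averaged randomized AUC drawn as a reference line in Figures 7C, D.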

To test for statistical differences across narrativity levels, we conducted a two-way repeated-measures ANOVA. Based on the study design, the independent variables were narrativity level (HL vs. LL) and medium (PC vs. VR). The dependent variables were the scores from the two questionnaires (i.e., attention and immersion) and the calculated neural reliability (expressed as ISC). This analysis yields the main effects of “narrativity level” and “medium” and the “narrativity level × medium” interaction. To test for differences across viewing order, we used the same procedure and dependent variables, but with narrativity level (HL vs. LL) and viewing order (first vs. second) as independent variables. Participants watched each video twice, once in each medium, and the medium used first was counterbalanced across participants. For this analysis, we created one group for the first viewing and one for the second viewing, regardless of the medium used. In both groups, participants watched the same videos, including both HL and LL video ads. Because this study focused on the effects of narrativity level and viewing order on brain activity patterns, comparing the calculated features between HL and LL conditions and between first and second viewings, we do not report results for the main effect of medium. Nevertheless, we included medium as a factor in the statistical analyses to control for its effect. We performed post hoc analyses whenever we identified interaction effects, and also when we judged it appropriate to report simple effects. The p-values of the post hoc tests were corrected using the Bonferroni method.
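The 2 × 2 within-subject design maps onto the `AnovaRM` class in `statsmodels`. In this sketch the column names (`subject`, `level`, `medium`, `score`) are hypothetical, the simulated scores are synthetic and only illustrate the required long-format input, and the viewing-order analysis would be obtained by replacing `medium` with an order column. Post hoc tests and the Bonferroni correction are not shown.

```python
import numpy as np
import pandas as pd
from statsmodels.stats.anova import AnovaRM

def rm_anova_2x2(df, depvar, factors=("level", "medium")):
    """Two-way repeated-measures ANOVA on long-format data with exactly one
    row per subject and cell; returns F, Num DF, Den DF, and Pr > F."""
    result = AnovaRM(df, depvar=depvar, subject="subject",
                     within=list(factors)).fit()
    return result.anova_table

def simulate_scores(n_subjects=20, level_effect=10.0, seed=0):
    """Synthetic engagement-like scores for illustration only (not study data)."""
    rng = np.random.default_rng(seed)
    rows = []
    for subj in range(n_subjects):
        baseline = rng.normal(50, 8)          # subject-specific offset
        for level in ("HL", "LL"):
            for medium in ("PC", "VR"):
                bump = level_effect if level == "HL" else 0.0
                rows.append({"subject": subj, "level": level, "medium": medium,
                             "score": baseline + bump + rng.normal(0, 3)})
    return pd.DataFrame(rows)
```

For example, `rm_anova_2x2(simulate_scores(), "score")` returns a table with rows `level`, `medium`, and `level:medium`.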
Finally, to control for multiple comparisons across repeated statistical tests (such as comparing HL and LL within each of the two extracted components; see Results), we corrected the p-values for the number of tests using the Benjamini–Hochberg false discovery rate (FDR) method (Gerstung et al., 2014).
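The Benjamini–Hochberg adjustment takes only a few lines of NumPy. This sketch should agree with `statsmodels.stats.multitest.multipletests(pvals, method="fdr_bh")`, which could be used instead; `bh_adjust` is a hypothetical helper.

```python
import numpy as np

def bh_adjust(pvals):
    """Benjamini-Hochberg FDR-adjusted p-values (step-up procedure)."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    # p_(i) * m / i for the sorted p-values
    ranked = p[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity by taking the running minimum from the largest p down
    ranked = np.minimum.accumulate(ranked[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.clip(ranked, 0.0, 1.0)
    return adjusted
```

With two tests (e.g., one per component), the smaller p-value is doubled and the larger one is left unchanged unless that would make it smaller than the adjusted smaller one.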

Of the 156 participants who completed the online test, we considered 124 responses valid; the remaining participants answered too quickly or failed to answer the attention question correctly. The online test confirmed that the general public also perceived ads in the HL category as having a high narrativity level (MHL = 70.95, SDHL = 10.79) and ads in the LL category as having a low narrativity level (MLL = 47.71, SDLL = 13.52; see Figure 1E in section “2. Materials and methods”). All six HL ads received higher scores than any of the six LL ads. An independent-samples t-test confirmed that the difference between the two categories was statistically significant [t(61) = 7.554, p < 0.001].

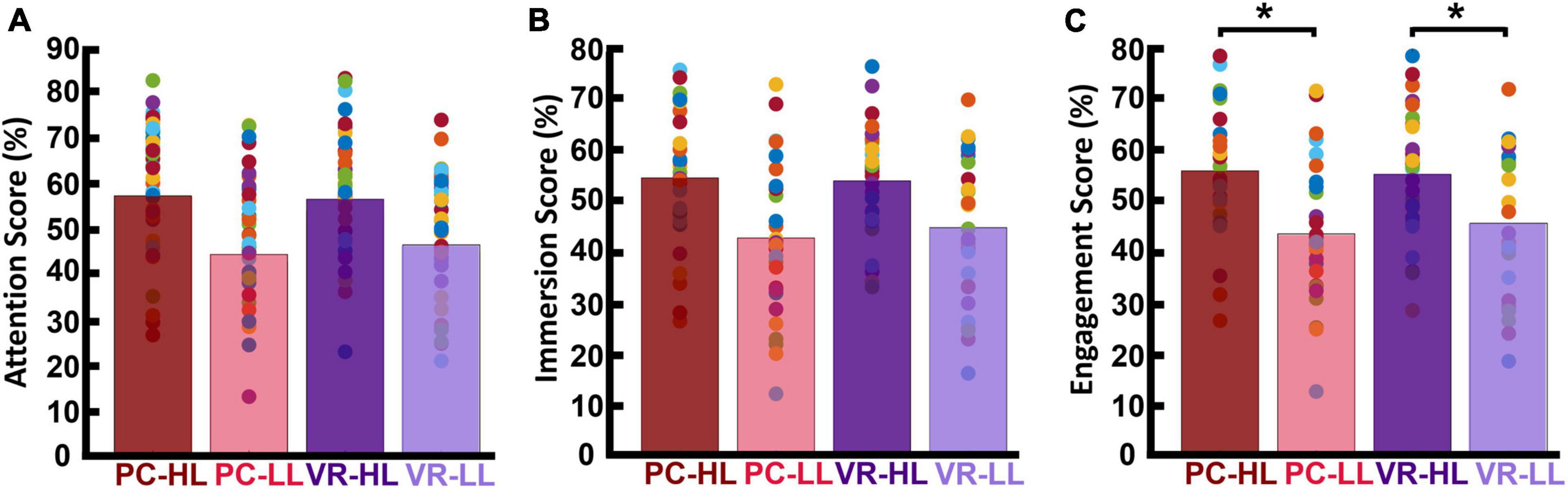

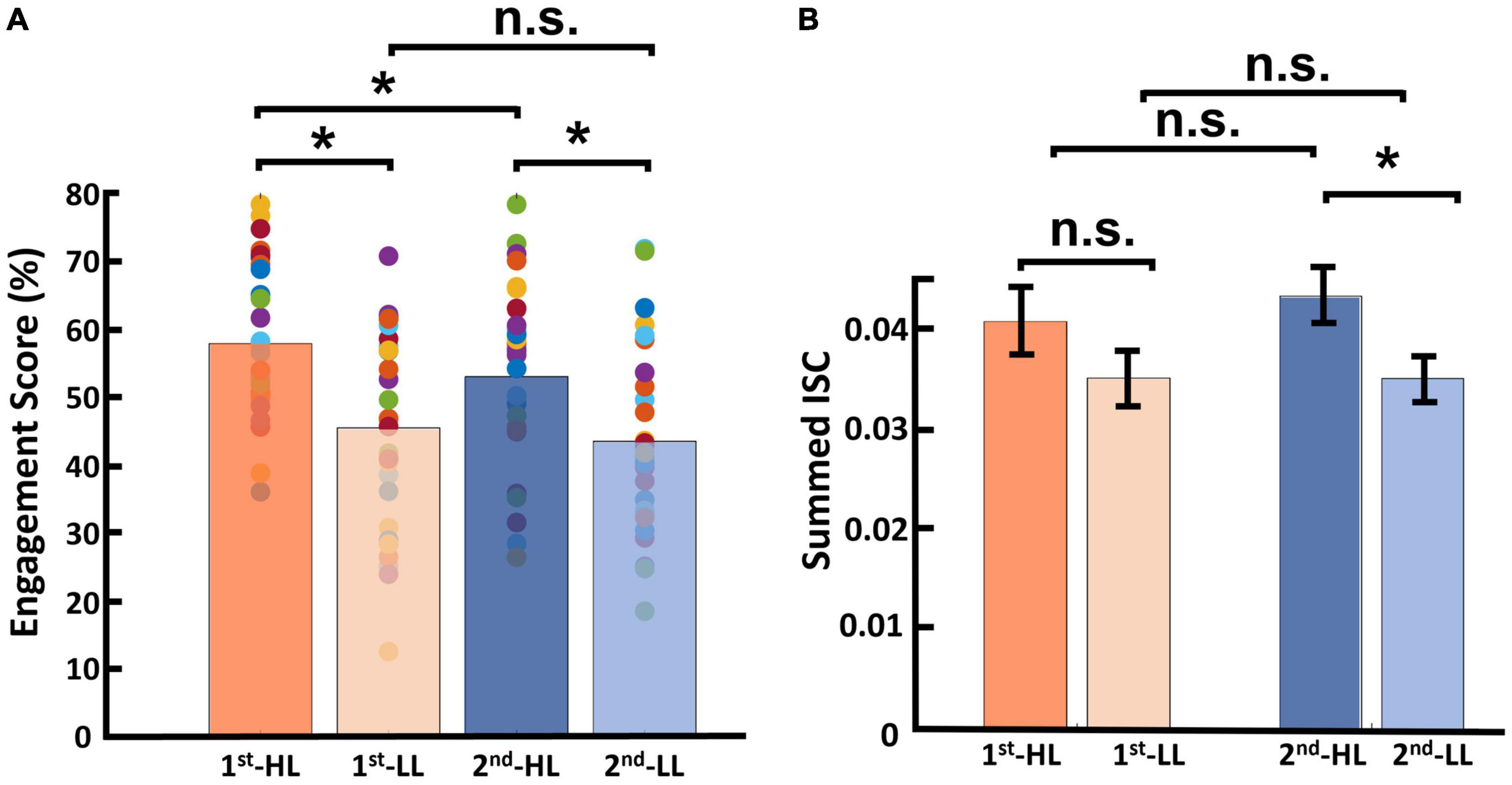

We first report our descriptive analysis of the attention, immersion, and engagement scores for each of the four conditions (HL–PC, HL–VR, LL–PC, and LL–VR; see section “2. Materials and methods” for abbreviations) and then report the results of the statistical analysis conducted on engagement scores. Note that our engagement metric is the average of the attention and immersion scores. Attention scores for the PC conditions were as follows: MHL–PC was 57.03 (SDHL–PC = 14.76), and MLL–PC was 44.16 (SDLL–PC = 13.92). Attention scores for the VR conditions were as follows: MHL–VR was 56.26 (SDHL–VR = 14.19), and MLL–VR was 46.28 (SDLL–VR = 14.41). Figure 4A shows the average attention scores in each of the four conditions. Immersion scores for the PC conditions were as follows: MHL–PC was 54.26 (SDHL–PC = 13.28), and MLL–PC was 42.53 (SDLL–PC = 15.09). Immersion scores for the VR conditions were as follows: MHL–VR was 53.67 (SDHL–VR = 10.81), and MLL–VR was 44.56 (SDLL–VR = 14.15). Figure 4B shows the average immersion scores in each of the four conditions. Engagement scores for the PC conditions were as follows: MHL–PC was 55.65 (SDHL–PC = 13.54), and MLL–PC was 43.35 (SDLL–PC = 14.04). Engagement scores for the VR conditions were as follows: MHL–VR was 54.97 (SDHL–VR = 12.16), and MLL–VR was 45.42 (SDLL–VR = 14.00). The 2 × 2 repeated-measures ANOVA showed a main effect of narrativity level on perceived engagement [F(1,28) = 19.779, p < 0.001]. Post hoc analysis showed that participants engaged more with HL ads than with LL ads in both the PC [F(1,28) = 17.03, p < 0.001] and VR [F(1,28) = 20.320, p < 0.001] conditions. See Figure 4C.
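Because engagement is defined as the mean of attention and immersion, and averaging is linear, the condition means reported above should satisfy the same relation up to rounding. The short Python check below uses only those reported means. Standard deviations do not combine this way, since they also depend on the correlation between attention and immersion scores.

```python
def engagement(attention, immersion):
    """Engagement composite as defined in the text: mean of attention and immersion."""
    return (attention + immersion) / 2

# Reported condition means: (attention, immersion, engagement)
REPORTED = {
    "HL-PC": (57.03, 54.26, 55.65),
    "LL-PC": (44.16, 42.53, 43.35),
    "HL-VR": (56.26, 53.67, 54.97),
    "LL-VR": (46.28, 44.56, 45.42),
}

for condition, (att, imm, eng) in REPORTED.items():
    # Means are reported to two decimals, so allow half a unit of rounding
    assert abs(engagement(att, imm) - eng) <= 0.005 + 1e-9, condition
```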

Figure 4. Participants’ self-reported scores. Each bar is the average of the scores across participants and across corresponding video ads. The colored dots represent the score for each participant averaged across the corresponding videos. (A) Self-reported attention scores. (B) Self-reported immersion scores. (C) Engagement scores (average of attention and immersion scores). This shows that the HL engagement scores were significantly higher than the LL engagement scores in both PC (p < 0.001) and VR (p < 0.001) conditions. *p < 0.05.

First, we tested whether the actual ISC values were significantly above chance level; all calculated ISCs were significantly higher than the generated null distribution (see Supplementary Figure 1 for details). Next, we applied the same statistical analysis used for the self-reported data to assess whether our neural metric (ISC) changed according to narrativity level. To do so, we used the ISC summed over the two strongest components (see section “2. Materials and methods”) as a measure of neural reliability. The 2 × 2 repeated-measures ANOVA showed a main effect of narrativity level, with higher ISC when participants watched the HL ads than when they watched the LL ads [F(1,28) = 4.467, p = 0.044]. Although the interaction effect was not significant, we conducted post hoc analyses to determine whether this difference occurred in the PC or the VR condition. ISC was significantly higher for the HL ads (MHL = 0.031, SDHL = 0.019) than for the LL ads (MLL = 0.020, SDLL = 0.016) in the PC condition [F(1,28) = 5.266, p = 0.017]. In the VR condition, the ISC of HL ads (MHL = 0.052, SDHL = 0.027) was still higher than that of LL ads (MLL = 0.050, SDLL = 0.023), but this difference was not significant [F(1,28) = 0.226, p = 0.638]. See Figure 3A.

Furthermore, to investigate which components contributed to these differences in neural reliability, we compared the ISC of HL video ads with that of LL video ads separately for each component. Because this comparison was repeated for each of the two components, we corrected the p-values; the results are shown in the top part of Figure 3B. For the first component, the main effect of narrativity was not significant [F(1,28) = 1.145, corrected p = 0.294]. For the second component, however, there was a significant main effect of narrativity, with higher ISC for HL than for LL video ads [F(1,28) = 5.412, corrected p = 0.045]. Post hoc analysis of the second component revealed that the ISC of HL ads (MHL = 0.015, SDHL = 0.010) was significantly higher than that of LL ads (MLL = 0.005, SDLL = 0.009) in the PC condition [F(1,28) = 13.676, corrected p = 0.002], whereas there was no significant difference between HL and LL ads in the VR condition [F(1,28) = 1.056, corrected p = 0.313]. In addition, the correlated components showed different patterns of scalp activity (Figure 3B, bottom).

The correlation analysis revealed no statistically significant correlation between ISC and engagement in either the PC (r = −0.101, corrected p = 0.421) or the VR condition (r = 0.047, corrected p = 0.722; Supplementary Figure 2A). In contrast, ISC was significantly correlated with narrativity level in the PC condition (r = 0.314, corrected p = 0.017) but not in the VR condition (r = 0.055, corrected p = 0.678), as shown in Supplementary Figure 2B.

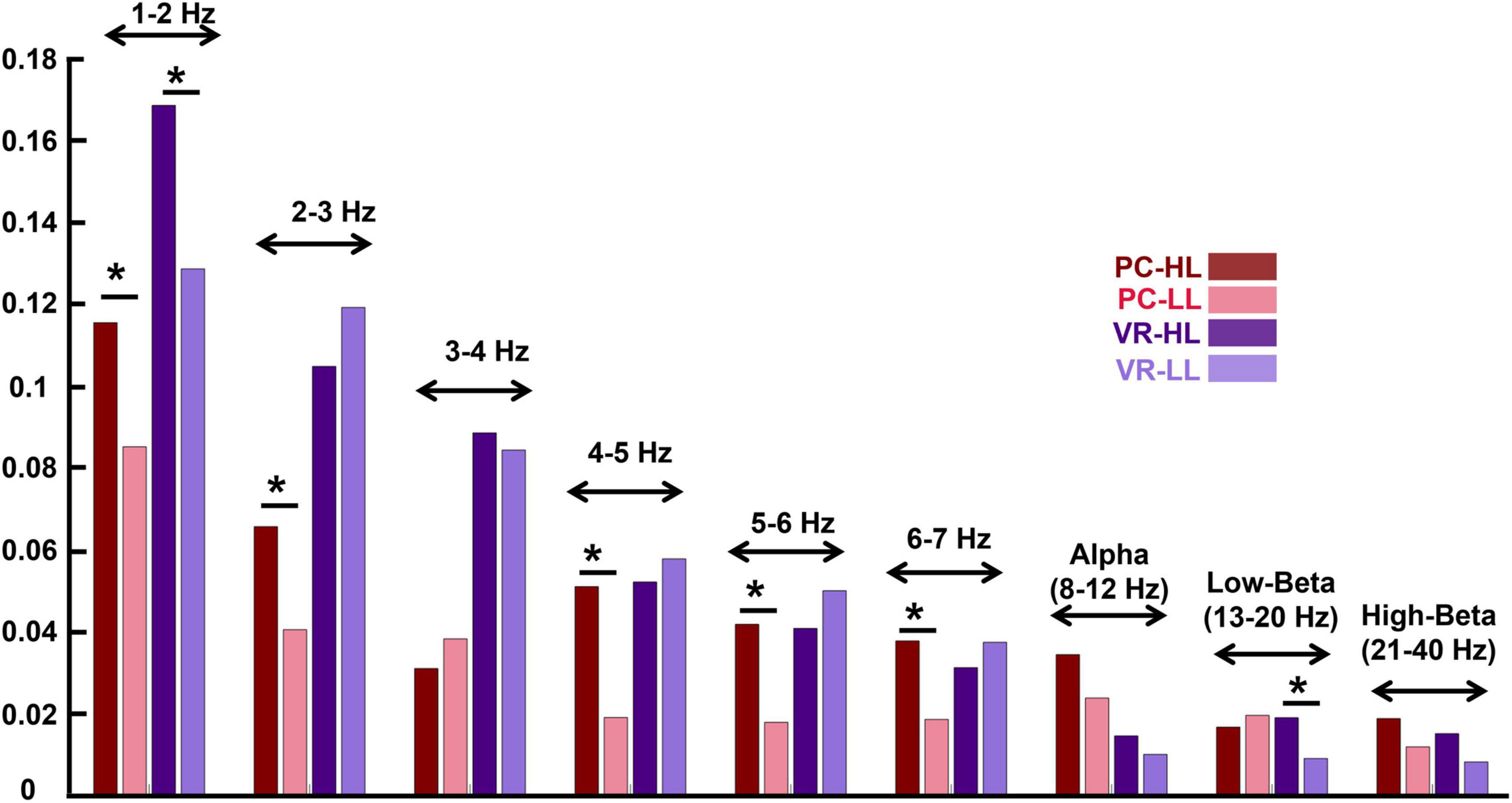

The effects of narrativity level on ISC in the different frequency bands were similar to those observed for the wide EEG band; they are illustrated in Figure 5.

Figure 5. Inter-subject correlation of different frequency bins for the four conditions (see the figure legend). The stars above the bars indicate a significant difference between the two conditions (p < 0.05). The frequency bands are indicated above the bar charts of the four conditions. The figure indicates that in the lower frequency bands, the ISC values are higher compared to the ISC of higher frequency bands. In addition, the effect of the narrativity level on ISC values in the frequency bands is the same as its effect in wide-band EEG: In the PC condition, the ISC of HL is higher than the ISC of LL [in all frequency bins except for 3–4 Hz and low-beta; in alpha and high-beta, it is marginally significant (p = 0.052; p = 0.056, respectively)]. *p < 0.05.

In the PC condition, the ISC was significantly higher in the HL than in the LL condition in the following frequency bands: 2–3 Hz (MHL = 0.066, SDHL = 0.054, MLL = 0.041, SDLL = 0.045, F(1,28) = 2.207, corrected p = 0.031), 4–5 Hz [MHL = 0.051, SDHL = 0.458, MLL = 0.198, SDLL = 0.33, F(1,28) = 3.846, corrected p < 0.001], 5–6 Hz [MHL = 0.042, SDHL = 0.035, MLL = 0.018, SDLL = 0.037, F(1,28) = 2.478, corrected p = 0.016], and 6–7 Hz [MHL = 0.038, SDHL = 0.029, MLL = 0.019, SDLL = 0.035, F(1,28) = 2.232, corrected p = 0.029]. The remaining frequency bands did not show significant differences between the HL and LL conditions: 1–2 Hz (MHL = 0.116, SDHL = 0.069, MLL = 0.085, SDLL = 0.071, F(1,28) = 1.639, corrected p = 0.106), 3–4 Hz [MHL = 0.031, SDHL = 0.048, MLL = 0.039, SDLL = 0.043, F(1,28) = −0.694, corrected p = 0.490], alpha (MHL = 0.035, SDHL = 0.022, MLL = 0.024, SDLL = 0.020, F(1,28) = 2.011, corrected p = 0.052), low-beta (MHL = 0.017, SDHL = 0.019, MLL = 0.020, SDLL = 0.021, F(1,28) = −0.539, corrected p = 0.592), and high-beta (MHL = 0.019, SDHL = 0.013, MLL = 0.012, SDLL = 0.013, F(1,28) = 1.946, corrected p = 0.056).

In the VR condition, the ISC differed significantly between the HL and LL conditions in the 1–2 Hz band (MHL = 0.169, SDHL = 0.097, MLL = 0.129, SDLL = 0.060, F(1,28) = 2.264, corrected p = 0.027) and in the low-beta band (MHL = 0.019, SDHL = 0.017, MLL = 0.009, SDLL = 0.016, F(1,28) = 2.261, corrected p = 0.027). The other frequency bands did not show any significant difference between the HL and LL conditions: 2–3 Hz [MHL = 0.169, SDHL = 0.097, MLL = 0.129, SDLL = 0.060, F(1,28) = −1.111, corrected p = 0.271], 3–4 Hz [MHL = 0.089, SDHL = 0.036, MLL = 0.085, SDLL = 0.050, F(1,28) = 0.444, corrected p = 0.658], 4–5 Hz [MHL = 0.052, SDHL = 0.045, MLL = 0.058, SDLL = 0.041, F(1,28) = −0.538, corrected p = 0.592], 5–6 Hz [MHL = 0.041, SDHL = 0.037, MLL = 0.050, SDLL = 0.034, F(1,28) = −0.993, corrected p = 0.324], 6–7 Hz [MHL = 0.032, SDHL = 0.028, MLL = 0.038, SDLL = 0.029, F(1,28) = −0.889, corrected p = 0.377], alpha [MHL = 0.153, SDHL = 0.026, MLL = 0.100, SDLL = 0.022, F(1,28) = 0.723, corrected p = 0.472], and high-beta [MHL = 0.015, SDHL = 0.014, MLL = 0.009, SDLL = 0.013, F(1,28) = 1.955, corrected p = 0.055].

To evaluate whether viewing order affects engagement scores and ISC, we implemented the abovementioned statistical procedure with narrativity level and viewing order as independent variables. We first report the resulting engagement scores and ISC scores.

Regarding engagement scores, the average and standard deviation for the first viewing group were as follows: MHL was 57.71 (SDHL = 2.10), and MLL was 45.42 (SDLL = 2.65). For the second viewing group, they were as follows: MHL was 52.90 (SDHL = 2.57), and MLL was 43.36 (SDLL = 2.56; see Figure 6A). The statistical analysis revealed a significant main effect of viewing order. Post hoc analysis indicated that this main effect was driven by the HL category, whose videos received significantly higher engagement scores upon first viewing than upon second viewing [F(1,28) = 12.784, p = 0.001]. In the LL condition, however, there was no significant difference between the first and second viewing groups [F(1,28) = 1.686, p = 0.205]. There was also a main effect of narrativity level: in the first viewing group, HL videos received significantly higher engagement scores than LL videos [F(1,28) = 23.047, p < 0.001], and this was also the case in the second viewing group [F(1,28) = 13.391, p = 0.001].

Figure 6. Results of viewing order (first and second) considering narrativity level (HL and LL). (A) Results of engagement scores, where for videos in the HL condition, engagement scores upon first viewing were significantly higher than those upon second viewing. Moreover, HL videos received significantly higher engagement scores than LL videos upon both first and second viewings. (B) Results for calculated ISC of viewing order, where the only significant difference was between the second viewing of HL videos and that of LL videos. *p < 0.05.

Regarding ISC, the average and standard deviation for videos in the first viewing group were as follows: MHL was 0.040 (SDHL = 0.025), and MLL was 0.035 (SDLL = 0.027). For the second viewing group, they were as follows: MHL was 0.043 (SDHL = 0.027), and MLL was 0.035 (SDLL = 0.023; see Figure 6B). The statistical analysis showed no significant main effect of viewing order. Post hoc analysis showed that HL video ads received higher ISC scores than LL video ads upon second viewing [F(1,28) = 4.411, p = 0.045], but not upon first viewing [F(1,28) = 1.144, p = 0.294].

We calculated ISC-HL to test whether it was possible to determine, from a single participant’s neural activity, whether they were watching an HL or an LL video ad. Figures 7A, B display the calculated ISC-HL in the PC and VR conditions. As expected, in the PC condition the neural responses of 23 (76.6%) participants were more similar to those of the HL group (i.e., had higher ISC-HL) when they attended to HL videos than when they attended to LL videos (Figure 7A). In the VR condition, the neural responses of 15 (50%) participants showed higher ISC-HL while attending to HL videos than while attending to LL videos (Figure 7B). With ISC-HL as the predictor of narrativity level, the classifier performed above chance in the PC condition but not in the VR condition (Figures 7C, D). In the PC condition, the actual AUC was 0.637, whereas the chance-level AUC was 0.548 (p < 0.001). In the VR condition, the actual AUC was 0.507, whereas the chance-level AUC was 0.543 (p = 0.245).

Figure 7. (A,B) Calculated ISC-HL for each participant in the PC and VR conditions. Lines and markers of each color show the ISC-HL of one participant in the HL and LL conditions. (A) In the PC condition, 76.6% of participants had higher ISC-HL when they attended to HL video ads than when they attended to LL video ads; that is, most participants showed higher similarity to the trained model (derived from data of the HL condition) when exposed to HL video ads than when exposed to LL video ads. (B) The same evaluation as in panel (A), but in the VR condition, where 50% of participants had higher ISC-HL. (C,D) Area under the curve (AUC) of classification performance for predicting exposure to HL and LL video ads in the PC and VR conditions, respectively. The black lines show the average over randomized AUCs. (C) The AUC of classification based on participants’ neural activity in the PC condition is higher than chance level, whereas (D) the AUC based on participants’ neural activity in the VR condition is below chance level.

In this study, we tested whether different levels of narrativity (HL vs. LL) lead to differences in information processing reliability (represented by ISC) while watching video ads. Furthermore, we evaluated whether different levels of narrativity cause differences in self-reported engagement ratings. To this end, we presented HL and LL video ads to 32 participants while recording their EEG signals. In addition, for each video ad, participants self-reported their levels of attention and immersion, which we considered two core factors of engagement. We calculated each participant’s ISC as the similarity of their correlated components to those of the participant pool. One advantage of ISC analysis is that it does not require stimulus repetition, which matters because repetition decreases attention and engagement (Dmochowski et al., 2012; Ki et al., 2016). As expected, both the calculated ISC and the engagement scores of HL video ads were significantly higher than those of LL video ads, suggesting that narrativity level modulates ISC and engagement. However, the modulation of ISC was not correlated with the degree of engagement with the narrative.

Previous studies have evaluated the relationship between narrative engagement (or its core component, attention) and ISC. Cohen et al. (2017) measured self-reported engagement scores and ISC while participants were exposed to naturalistic videos. They found that more engaging videos were processed uniformly in participants’ brains, leading to higher ISC. Consistent with their results, Poulsen et al. (2017) reported that a lack of engagement manifests as an unreliable neural response (i.e., lower ISC), and they introduced EEG-ISC as a marker of engagement. Song et al. (2021a) investigated whether engagement ratings modulate ISC by relating continuous self-reported engagement ratings to ISC using continuous naturalistic stimuli. They reported higher ISC during highly engaging narrative moments and concluded that ISC reflects engagement levels. In line with this, Schmälzle and Grall (2020) demonstrated that dynamic ISC aligns with reported levels of suspense (a proxy for engagement) in a narrative, and Grady et al. (2022) showed that ISC is higher during intrinsically engaging moments of a narrative. In another study, Dmochowski et al. (2012) used short video clips to evaluate attention and emotion using ISC. They found a close correspondence between expected engagement and neural correlation, suggesting that maximally correlated components (ISC) reflect cortical processing of attention or emotion. Finally, Ki et al. (2016) investigated whether attentional states modulate ISC for audio and audiovisual narratives and concluded that higher attention leads to higher neural reliability across subjects. Inspired by previous studies (Busselle and Bilandzic, 2009; Dmochowski et al., 2012; Lim et al., 2019), we measured narrative engagement by averaging two of its important components, attention and immersion.

Our results indicate that, in the PC condition, ISC significantly discriminated between levels of narrativity: the ISC of HL video ads was significantly higher than that of LL video ads (Figure 3A). Consistent with this discrimination, self-reported engagement ratings were significantly higher for HL video ads than for LL ones (Figure 4C). In addition, in the PC condition, ISC-HL predicted the narrativity level from neural responses (measured via EEG) to the stimuli; that is, the model predicted significantly above chance whether participants were attending to HL or LL video ads. Classification performance was weaker than that previously reported for predicting attentional states (Ki et al., 2016); however, this may reflect the more pronounced differences between experimental conditions in that study, in which participants were asked to count backward during the task to diminish their attentional state. This finding is a first step toward predicting exposure to different levels of narrativity based solely on the ISC of stimulus-evoked neural activity, which could lead to a better understanding of the brain mechanisms that process narrativity levels. Further studies with different designs should be conducted with a more specific focus on predicting narrativity level.

Unlike other studies, we did not find a relationship between engagement levels and ISC, as indicated by the correlation analysis. A plausible explanation for this finding is the type of stimulus employed. Several studies compared the ISC of interrupted, scrambled, or reversed narratives with that of unmodified narratives. Dmochowski et al. (2012) reported higher ISC for intact narratives than for disrupted or scrambled ones. Ki et al. (2016), Poulsen et al. (2017), and Grall et al. (2021) found higher ISC for a cohesive narrative than for a meaningless, scrambled, or reversed one. These findings demonstrate that meaningless narratives are linked to lower ISC. Song et al. (2021b) used scrambled video clips to identify the moments in which narrative comprehension occurs and reported that story comprehension occurs when events are causally related to each other. Moreover, they showed that, in such moments, the underlying brain states were strongly correlated across subjects. The stimulus manipulations applied in previous studies (e.g., scrambling the narrative or presenting narratives that lacked causality) created greater differences between experimental conditions than those in our study. While we shared these studies’ interest in participants’ engagement and other factors related to the reception of the narrative, we were also interested in investigating the possibility of classifying and discriminating structural characteristics of narrative artifacts. Therefore, we defined the narrativity levels (HL and LL) based on narrative structural elements and properties, such as the presence of defined characters in an identifiable context, clear causal links between events, and closure resulting from the intertwining of these events. Our conceptualization of narrativity levels was inspired by Ryan (2007).

From this perspective, our results align with those of previous studies, indicating that information processing is highly consistent across subjects when participants are exposed to HL narrativity. In other words, the fact that the ISC of HL video ads is significantly higher than that of LL video ads in the PC condition (Figure 3A) is a promising indication that EEG-ISC could be used to explore levels of narrativity (and correlative engagement) in different kinds of media artifacts. However, unlike previous studies using stimuli that either possessed narrativity or did not (an “either-or” distinction), our ISC was not mediated by engagement levels. This finding suggests that ISC can capture cognitive processes beyond engagement. Evaluating the activity of the first two components separately (Figure 3B) provides some insight in this regard. For the first component, activity was distributed throughout the anterior and posterior regions, whereas for the second component, activity was concentrated in the posterior region. Overall, the second component drove the modulation by narrativity level in response to the HL narrative video ads. While previous studies relating ISC to engagement found more widespread ISC (e.g., Dmochowski et al., 2012, 2014; Cohen et al., 2017; Song et al., 2021a), strong posterior ISC has been linked to a shared psychological perspective (Lahnakoski et al., 2014) and a shared understanding of narrative (Nguyen et al., 2019). Lahnakoski et al. (2014) asked participants to adopt one perspective or another when interpreting the events of a movie. When participants adopted the same perspective, posterior ISC was stronger than when they adopted different perspectives. The authors posited that ISC represented a shared understanding of the environment. This was supported by Nguyen et al. (2019), who showed that participants with similar recalls of a narrative had stronger ISC in the posterior medial cortex and angular gyrus than those with dissimilar recalls. In our case, high levels of narrativity immersed participants in the story world better than low levels did. This might have made it easier for participants to take the perspective of the character(s) in HL videos. In addition, videos with low narrativity levels might have been less successful in leading to similar perspective-taking and shared understanding because their stories were more fragmented than those of HL video ads. Although ISC appears to be related to both engagement and shared understanding or perspective-taking, our findings suggest that it is more sensitive to the latter than to the former (see Dini et al., 2022c). However, further investigation is necessary to elucidate why narrativity level did not appear to affect ISC in the same way in the VR condition. Chang et al. (2015) reported that ISC might change due to fatigue in participants in an fMRI scanner. Thus, one possible explanation for the non-significant effect of narrativity level on ISC in the VR condition is fatigue. Wearing the VR headset and the EEG cap for almost 25 min might have caused fatigue and discomfort (e.g., related to posture, weight, or itching), which might have masked the effects of narrativity level on ISC. Another plausible explanation relates to the VR medium itself. As VR is an increasingly popular immersive technology, participants’ expectations about the VR modality may have affected their level of attention. Participants may have been disappointed to find a 2D stimulus that failed to meet their expectations, which may in turn have diminished their active attention.

In the field of narratology, there is a substantial, ongoing debate about whether narrative should be considered an either-or property or a scalar property (i.e., a matter of more or less) (Ryan, 2007; Abbott, 2008). Accordingly, over the last two decades, some narratologists have introduced the notion that different artifacts may have different levels of narrativity (Ryan, 2007; Oatley, 2011). It is widely accepted that, even though the events in a story need not be chronologically ordered, their sequence must nonetheless follow a narrative logic if closure is to be achieved (a point commonly encapsulated in the distinction between “story” and “discourse”). A “scrambled” narrative therefore ceases to be a narrative; instead, it is a series of unrelated events lacking an internal temporal logic. If there is no discernible, recoverable chronological order of connected events, the sequence can hardly be considered a narrative. Although the previous studies presented in our literature review provided the methodological bases for our study, they were not centered on narrative qualities. In our study, we focus on investigating the plausibility of testing a particular expressive artifact for levels of narrativity; therefore, our stimuli presented two different degrees of narrativity (LL and HL). We showed that small differences in narrativity levels (i.e., between HL and LL) have effects on ISC and engagement similar to those of more evident differences (e.g., scrambled vs. non-scrambled narratives). However, the differences in ISC between narratives with different degrees of narrativity did not reflect differences in perceived engagement; rather, they seemed related to perspective-taking and shared understanding. These findings are a step toward understanding narrative comprehension, especially when narrative is considered a scalar property (Bruni et al., 2021).

Previous studies have reported that ISC values are sensitive to the frequency content of the input signal (Lankinen et al., 2014; Thiede et al., 2020), with higher ISC values in lower frequency bands and lower values in higher bands. In this study, we used a wide EEG band (1–40 Hz) for the analysis, and the resulting ISC values were lower than those reported in other studies (e.g., Ki et al., 2016). We therefore evaluated whether ISC values change across frequency bands: 1–2 Hz, 2–3 Hz, 3–4 Hz, 4–5 Hz, 5–6 Hz, 6–7 Hz, alpha (8–12 Hz), low-beta (13–20 Hz), and high-beta (21–40 Hz). In line with previous studies (Lankinen et al., 2014), our results showed higher ISC values in lower frequency bands and lower ISC values in higher frequency bands (Figure 5). One possible explanation is that, at high frequencies, even small timing variations can substantially decrease the correlation across signals, leading to lower ISC values. These differences may also derive from phase variations across subjects at higher frequencies. Although this study does not aim to elaborate on the effect of narrativity level on the ISC of different frequency bands, our results point to an interesting finding. Consistent with the wide-band analysis, the ISC of HL was significantly higher than that of LL in several frequency bins. Even though some frequency bins did not show this effect, most of them show a tendency toward higher ISC for HL than for LL. In summary, the frequency-resolved ISC analysis supported the wide-band findings regarding the effect of narrativity on shared neural responses across participants.
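The timing argument can be made concrete. For a sinusoid of frequency f and a copy delayed by τ, the correlation over whole cycles is cos(2πfτ), so the same small lag costs far more correlation at high frequencies. The short NumPy check below uses an illustrative 10 ms lag (an arbitrary value, not one estimated from the data): at 1.5 Hz the correlation stays close to 1 (cos(0.094) ≈ 0.996), while at 30 Hz it falls to about −0.31. The function name is hypothetical.

```python
import numpy as np

def jitter_correlation(freq_hz, lag_s, fs=1000.0, duration_s=10.0):
    """Pearson correlation between a sinusoid and a copy delayed by lag_s seconds."""
    t = np.arange(int(duration_s * fs)) / fs      # integer sample count avoids float-step drift
    a = np.sin(2 * np.pi * freq_hz * t)
    b = np.sin(2 * np.pi * freq_hz * (t - lag_s))
    return float(np.corrcoef(a, b)[0, 1])

for f in (1.5, 30.0):
    print(f"{f:>5} Hz: r = {jitter_correlation(f, 0.010):+.3f}")
```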

To test whether viewing order modulates engagement and ISC, we separated the data into two groups (first and second viewing) and conducted statistical analyses with narrativity level and viewing order as independent variables. Dmochowski et al. (2012) found that attentional engagement decreases when participants watch a stimulus for the second time compared to the first, and they reported significantly lower ISC for the second viewing. Ki et al. (2016) confirmed and extended these results, reporting that neural responses become less reliable upon a second viewing of a stimulus, as shown by significantly lower ISC. In an fMRI study, Song et al. (2021b) investigated the effect of viewing order on neural activity by having participants watch scrambled videos twice. Their results replicated the findings of the aforementioned studies and of others (Poulsen et al., 2017; Imhof et al., 2020), showing that neural states across participants were less synchronized when the videos, including the scrambled ones, were watched for the second time. However, Chang et al. (2015) conducted a combined EEG and magnetoencephalography (MEG) study and reported increased ISC during the second viewing of the stimuli. They argued that participants’ ability to predict the story structure increased during the second viewing, resulting in more similar EEG and MEG activity across participants, and that the contradiction with previous studies might be due to the different time window selected for the ISC calculation. Our results showed that engagement dropped during the second viewing, in line with previous studies (Dmochowski et al., 2012). For the HL video ads, engagement scores for the first viewing were significantly higher than those for the second viewing, although this was not the case for the LL ads (Figure 6A). The decrease in engagement scores for HL but not LL ads might be due to differences intrinsic to the classification of our stimuli. 
While videos in the HL category included all or almost all narrativity elements (Ryan, 2007), those in the LL category included only a few. In other words, the HL video ads were more storytelling-based than the LL video ads. It is therefore possible that factors such as suspense, expectation, and suspension of disbelief, which naturally arise when watching stories, are attenuated when a story is viewed for the second time, lowering engagement with the HL video ads. These factors are absent, or present to a lesser extent, when watching LL video ads, so engagement with those ads was not affected. However, ISC did not differ significantly between the first and second viewing for either HL or LL video ads, suggesting that viewing order did not modulate ISC in our data. Although the current dataset cannot provide definitive answers, this discrepancy with previous findings might be explained by our study design. First, our participants were exposed to 12 short video ads, a greater number of stimuli than previous studies of viewing order employed (Dmochowski et al., 2012; Chang et al., 2015; Ki et al., 2016; Poulsen et al., 2017; Song et al., 2021b). Watching 12 videos in sequence might have reduced participants’ memory of story details. Hence, when the videos were watched for the second time (although they were perceived as less engaging), a substantial amount of information remained to be processed, which could have been reflected in the ISC of the second viewing. Another factor that might have hindered participants’ short-term memory of the videos, and thereby affected ISC, is the time span and the tasks performed between the two viewings. In our study, the second exposure to the video ads was separated from the first by about 20 min, during which participants performed two different tasks for a separate study. 
Therefore, the current dataset was not able to capture the neural underpinnings of viewing order. Future studies that address these limitations may be able to capture this effect.
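The within-level comparison of first and second viewing can be sketched as a paired test on per-participant scores. This section does not specify which statistical test the study used, so the sketch below is only one simple option: a paired t statistic together with a two-sided sign-flip permutation p-value, written with the Python standard library. The scores are randomly generated placeholders, not the study's data, and the function names (`paired_t`, `sign_flip_p`) are hypothetical.

```python
import math
import random
import statistics


def paired_t(first, second):
    """Paired t statistic for the per-participant differences first - second."""
    diffs = [a - b for a, b in zip(first, second)]
    return statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(len(diffs)))


def sign_flip_p(first, second, n_perm=5000, seed=0):
    """Two-sided permutation p-value; under H0 each difference is equally likely +d or -d."""
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(first, second)]
    observed = abs(sum(diffs) / len(diffs))
    hits = 0
    for _ in range(n_perm):
        flipped_mean = sum(d if rng.random() < 0.5 else -d for d in diffs) / len(diffs)
        if abs(flipped_mean) >= observed:
            hits += 1
    # The +1 terms count the observed labeling as one of the permutations.
    return (hits + 1) / (n_perm + 1)


# Placeholder scores for 32 participants (NOT the study's data): a built-in
# drop on second viewing for "HL", no systematic change for "LL".
gen = random.Random(42)
scores = {}
for level, drop in (("HL", 0.8), ("LL", 0.0)):
    first = [gen.gauss(5.0, 1.0) for _ in range(32)]
    second = [x - drop + gen.gauss(0.0, 0.5) for x in first]
    scores[level] = (first, second)

results = {}
for level, (first, second) in scores.items():
    results[level] = (paired_t(first, second), sign_flip_p(first, second))
    print(f"{level}: t = {results[level][0]:.2f}, permutation p = {results[level][1]:.4f}")
```

The sign-flip test was chosen here because it needs no distributional assumptions beyond the symmetry of the paired differences under the null hypothesis. A full narrativity level × viewing order analysis would additionally test the interaction, for example with a repeated-measures model.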

This study investigated whether high or low levels of narrativity in video advertisements significantly affect self-reported engagement with the ads and shared neural responses across individuals, measured through EEG-ISC. The findings demonstrated that a higher narrativity level led to increased engagement with the ad and increased ISC over the posterior part of the brain, including during a second viewing. Interestingly, the results suggest that ISC may be more sensitive than engagement levels to shared perspective taking, which could also indicate a shared understanding of the narrative. Moreover, the findings imply that narratives with higher narrativity levels evoke more similar interpretations across their audience than narratives with lower narrativity levels. This study advances our understanding of how viewers process and understand a given communication artifact as a function of the narrative qualities expressed by the level of narrativity.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Local Ethics Committee (Technical Faculty of IT and Design, Aalborg University) and performed in accordance with the Danish Code of Conduct for Research and the European Code of Conduct for Research Integrity. The participants provided their written informed consent to participate in this study.

AS, HD, LB, and EB designed the experiment. AS and HD collected the data and wrote the main manuscript. HD analyzed the neural data and prepared the figures. AS analyzed the self-reported data. LB and EB contributed to writing the manuscript. All authors revised and approved the manuscript.

This work was supported by Rhumbo (European Union’s Horizon 2020 Research and Innovation Program under the Marie Skłodowska-Curie Grant Agreement No. 813234).

We are grateful to Tirdad Seifi Ala for helping with the technical aspects of the data analysis and revising an early version of the manuscript, and Thomas Anthony Pedersen for providing technical support and help during the early stages of the study. This study is available as a preprint (Dini et al., 2022b).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnhum.2023.1160981/full#supplementary-material

Abbott, H. P. (2008). The Cambridge introduction to narrative, 2nd Edn. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511816932

Blankertz, B., Lemm, S., Treder, M., Haufe, S., and Müller, K.-R. (2011). Single-trial analysis and classification of ERP components—a tutorial. Neuroimage 56, 814–825. doi: 10.1016/j.neuroimage.2010.06.048

Bruni, L. E., Dini, H., and Simonetti, A. (2021). “Narrative cognition in mixed reality systems: Towards an empirical framework,” in Virtual, augmented and mixed reality, eds J. Y. C. Chen and G. Fragomeni (Cham: Springer International Publishing), 3–17. doi: 10.1007/978-3-030-77599-5_1

Busselle, R., and Bilandzic, H. (2009). Measuring narrative engagement. Media Psychol. 12, 321–347. doi: 10.1080/15213260903287259

Chang, W.-T., Jääskeläinen, I. P., Belliveau, J. W., Huang, S., Hung, A.-Y., Rossi, S., et al. (2015). Combined MEG and EEG show reliable patterns of electromagnetic brain activity during natural viewing. Neuroimage 114, 49–56. doi: 10.1016/j.neuroimage.2015.03.066

Chun, M. M., Golomb, J. D., and Turk-Browne, N. B. (2011). A taxonomy of external and internal attention. Annu. Rev. Psychol. 62, 73–101. doi: 10.1146/annurev.psych.093008.100427

Cohen, S. S., Henin, S., and Parra, L. C. (2017). Engaging narratives evoke similar neural activity and lead to similar time perception. Sci. Rep. 7:4578. doi: 10.1038/s41598-017-04402-4

Cohen, S. S., and Parra, L. C. (2016). Memorable audiovisual narratives synchronize sensory and supramodal neural responses. eNEURO 3, ENEURO.0203-16.2016. doi: 10.1523/ENEURO.0203-16.2016

Coker, K. K., Flight, R. L., and Baima, D. M. (2021). Video storytelling ads vs argumentative ads: How hooking viewers enhances consumer engagement. J. Res. Interact. Mark. 15, 607–622. doi: 10.1108/JRIM-05-2020-0115

de Cheveigné, A., and Parra, L. C. (2014). Joint decorrelation, a versatile tool for multichannel data analysis. Neuroimage 98, 487–505. doi: 10.1016/j.neuroimage.2014.05.068

Dini, H., Simonetti, A., Bigne, E., and Bruni, L. E. (2022a). EEG theta and N400 responses to congruent versus incongruent brand logos. Sci. Rep. 12:4490. doi: 10.1038/s41598-022-08363-1

Dini, H., Simonetti, A., Bigne, E., and Bruni, L. E. (2022b). Higher levels of narrativity lead to similar patterns of posterior EEG activity across individuals. bioRxiv [Preprint]. doi: 10.1101/2022.09.23.509168

Dini, H., Simonetti, A., and Bruni, L. E. (2022c). Exploring the neural processes behind narrative engagement: An EEG study. bioRxiv [Preprint]. doi: 10.1101/2022.11.28.518174

Dmochowski, J. P., Bezdek, M. A., Abelson, B. P., Johnson, J. S., Schumacher, E. H., and Parra, L. C. (2014). Audience preferences are predicted by temporal reliability of neural processing. Nat. Commun. 5:4567. doi: 10.1038/ncomms5567

Dmochowski, J. P., Sajda, P., Dias, J., and Parra, L. C. (2012). Correlated components of ongoing EEG point to emotionally laden attention – a possible marker of engagement? Front. Hum. Neurosci. 6:112. doi: 10.3389/fnhum.2012.00112

Escalas, J. E. (1998). “Advertising narratives: What are they and how do they work?,” in Representing consumers, ed. B. Stern (Oxfordshire: Routledge), 267–289. doi: 10.4324/9780203380260-19

Escalas, J. E., Moore, M. C., and Britton, J. E. (2004). Fishing for feelings? Hooking viewers helps! J. Consum. Psychol. 14, 105–114. doi: 10.1207/s15327663jcp1401&2_12

Gerstung, M., Papaemmanuil, E., and Campbell, P. J. (2014). Subclonal variant calling with multiple samples and prior knowledge. Bioinformatics 30, 1198–1204. doi: 10.1093/bioinformatics/btt750

Grady, S. M., Schmälzle, R., and Baldwin, J. (2022). Examining the relationship between story structure and audience response. Projections 16, 1–28. doi: 10.3167/proj.2022.160301

Grall, C., Tamborini, R., Weber, R., and Schmälzle, R. (2021). Stories collectively engage listeners’ brains: Enhanced intersubject correlations during reception of personal narratives. J. Commun. 71, 332–355. doi: 10.1093/joc/jqab004

Green, M. C., and Brock, T. C. (2000). The role of transportation in the persuasiveness of public narratives. J. Pers. Soc. Psychol. 79, 701–721. doi: 10.1037/0022-3514.79.5.701

Hasson, U., Furman, O., Clark, D., Dudai, Y., and Davachi, L. (2008). Enhanced intersubject correlations during movie viewing correlate with successful episodic encoding. Neuron 57, 452–462. doi: 10.1016/j.neuron.2007.12.009

Haufe, S., Meinecke, F., Görgen, K., Dähne, S., Haynes, J.-D., Blankertz, B., et al. (2014). On the interpretation of weight vectors of linear models in multivariate neuroimaging. Neuroimage 87, 96–110.

Hogan, P. C. (2011). Affective narratology: The emotional structure of stories. Nebraska: University of Nebraska Press.

Houghton, D. M. (2021). Story elements, narrative transportation, and schema incongruity: A framework for enhancing brand storytelling effectiveness. J. Strateg. Mark. 1–16. doi: 10.1080/0965254X.2021.1916570

Imhof, M. A., Schmälzle, R., Renner, B., and Schupp, H. T. (2020). Strong health messages increase audience brain coupling. Neuroimage 216:116527. doi: 10.1016/j.neuroimage.2020.116527

Jajdelska, E., Anderson, M., Butler, C., Fabb, N., Finnigan, E., Garwood, I., et al. (2019). Picture this: A review of research relating to narrative processing by moving image versus language. Front. Psychol. 10:1161. doi: 10.3389/fpsyg.2019.01161

Ki, J. J., Kelly, S. P., and Parra, L. C. (2016). Attention strongly modulates reliability of neural responses to naturalistic narrative stimuli. J. Neurosci. 36, 3092–3101. doi: 10.1523/JNEUROSCI.2942-15.2016

Kim, E., Ratneshwar, S., and Thorson, E. (2017). Why narrative ads work: An integrated process explanation. J. Advert. 46, 283–296. doi: 10.1080/00913367.2016.1268984

Lahnakoski, J. M., Glerean, E., Jääskeläinen, I. P., Hyönä, J., Hari, R., Sams, M., et al. (2014). Synchronous brain activity across individuals underlies shared psychological perspectives. Neuroimage 100, 316–324. doi: 10.1016/j.neuroimage.2014.06.022

Lankinen, K., Saari, J., Hari, R., and Koskinen, M. (2014). Intersubject consistency of cortical MEG signals during movie viewing. Neuroimage 92, 217–224. doi: 10.1016/j.neuroimage.2014.02.004

Lim, S., Yeo, M., and Yoon, G. (2019). Comparison between concentration and immersion based on EEG analysis. Sensors 19:1669. doi: 10.3390/s19071669

Nastase, S. A., Gazzola, V., Hasson, U., and Keysers, C. (2019). Measuring shared responses across subjects using intersubject correlation. Soc. Cogn. Affect. Neurosci. 14, 669–687. doi: 10.1093/scan/nsz037

Nguyen, M., Vanderwal, T., and Hasson, U. (2019). Shared understanding of narratives is correlated with shared neural responses. Neuroimage 184, 161–170. doi: 10.1016/j.neuroimage.2018.09.010

Nummenmaa, L., Glerean, E., Viinikainen, M., Jääskeläinen, I. P., Hari, R., and Sams, M. (2012). Emotions promote social interaction by synchronizing brain activity across individuals. Proc. Natl. Acad. Sci. U.S.A. 109, 9599–9604. doi: 10.1073/pnas.1206095109

Parra, L., and Sajda, P. (2003). Blind source separation via generalized eigenvalue decomposition. J. Mach. Learn. Res. 4, 1261–1269.

Parra, L. C., Spence, C. D., Gerson, A. D., and Sajda, P. (2005). Recipes for the linear analysis of EEG. Neuroimage 28, 326–341.

Petroni, A., Cohen, S. S., Ai, L., Langer, N., Henin, S., Vanderwal, T., et al. (2018). The variability of neural responses to naturalistic videos change with age and sex. eNEURO 5, ENEURO.0244-17.2017. doi: 10.1523/ENEURO.0244-17.2017

Poulsen, A. T., Kamronn, S., Dmochowski, J., Parra, L. C., and Hansen, L. K. (2017). EEG in the classroom: Synchronised neural recordings during video presentation. Sci. Rep. 7:43916. doi: 10.1038/srep43916

Redcay, E., and Moraczewski, D. (2020). Social cognition in context: A naturalistic imaging approach. Neuroimage 216:116392. doi: 10.1016/j.neuroimage.2019.116392