- Department of Biomedical Engineering, Faculty of Engineering, Shahed University, Tehran, Iran

Emotion recognition systems have long been of interest to researchers, and advances in brain-computer interface systems have made EEG-based emotion recognition more attractive. These systems aim to recognize emotions automatically. Many approaches exist, differing mainly in the feature extraction methods used to analyze the EEG signals. However, since the brain is assumed to be a nonlinear dynamic system, a nonlinear dynamic analysis tool may yield more suitable results. This study introduces a novel approach in Symbolic Time Series Analysis (STSA) for partitioning the signal phase space and generating symbol sequences. Symbolic sequences are produced by spherical partitioning of the phase space; they are then compared and classified based on the maximum value of a similarity index. Because emotional content is subject-dependent, building an automatic, subject-independent EEG-based emotion recognition system remains an open problem. Here we introduce a subject-independent protocol to address this generalization problem. To evaluate the method, we used the DEAP dataset and reached an accuracy of 98.44% for classifying happiness versus sadness (two emotion groups), 93.75% for three groups (happiness, sadness, and joy), 89.06% for four groups (happiness, sadness, joy, and terrible), and 85% for five groups (happiness, sadness, joy, terrible, and mellow). These results indicate that our subject-independent method is more accurate than many methods reported in other studies. Moreover, subject independence is not considered in most studies in this field.

Introduction

Emotions, which refer to a psychophysiological process resulting from understanding an object or situation, affect our daily lives by directing actions and moderating motivation. Most human activities are influenced by emotions, from how we think to how we make decisions, behave, and communicate. Positive emotions enhance human health and work effectiveness, whereas negative emotions may pave the way for health issues. For example, the World Health Organization (WHO) predicted that depression would be the most common disease in the world by 2020 (Byun et al., 2019), and untreated depression increases the mortality rate and may cause suicidal behavior, which is a severe public health problem (Franklin et al., 2017). Moreover, it has been confirmed that learning processes are deeply affected by emotional intelligence, especially for information extraction, in which its importance is most apparent (Salovey and Mayer, 1990; Goleman, 1995).

In recent years, Human-Computer Interaction (HCI) systems have become commonplace in many applications (Chai et al., 2016). One class of HCI applications that has recently attracted attention comprises systems that need to detect and analyze emotions, such as rehabilitation and health care systems and computer video games. Designing such systems requires comprehending and recognizing emotions (Stickel et al., 2009; Bajaj and Pachori, 2015; Verma and Tiwary, 2017), so understanding the user’s emotional state is a significant factor. In the past decade, research on emotion recognition from different modalities [e.g., physiological signals (Khezri et al., 2015; Yin et al., 2017), facial expression (Cohen et al., 2000; Ioannou et al., 2005; Flynn et al., 2020; Proverbio et al., 2020), etc.] has grown. More recently, thanks to cost-effective devices for capturing brain signals [electroencephalographic (EEG) signals] as input for systems that decode the relationship between emotions and EEG variation, researchers in the field of Brain-Computer Interface (BCI) have been studying emotion recognition to build affective BCI (aBCI) systems (Mühl et al., 2011; Ju et al., 2020; Torres et al., 2020).

Emotion recognition is the research area that aims to design systems and devices capable of identifying, interpreting, and processing human emotions, which could lead to machines capable of interacting with emotions. Emotional states play an essential role in decision-making and problem-solving, and emotional self-awareness can help people manage their mental health and optimize their work performance. Some researchers believe that the use of EEG-based BCI in emotion recognition systems will soon increase, and that such systems could be used in daily life for several purposes, such as gaming and entertainment, health care facilities, teaching-learning scenarios, and optimizing performance in the workplace (Torres et al., 2020). The study of emotion recognition is therefore of considerable practical value. One of the most important challenges in this field is to decode the information in the EEG and map it to specific emotions, which we intend to address in this study. Several investigations using various procedures have been conducted in the past, which we review in the next section.

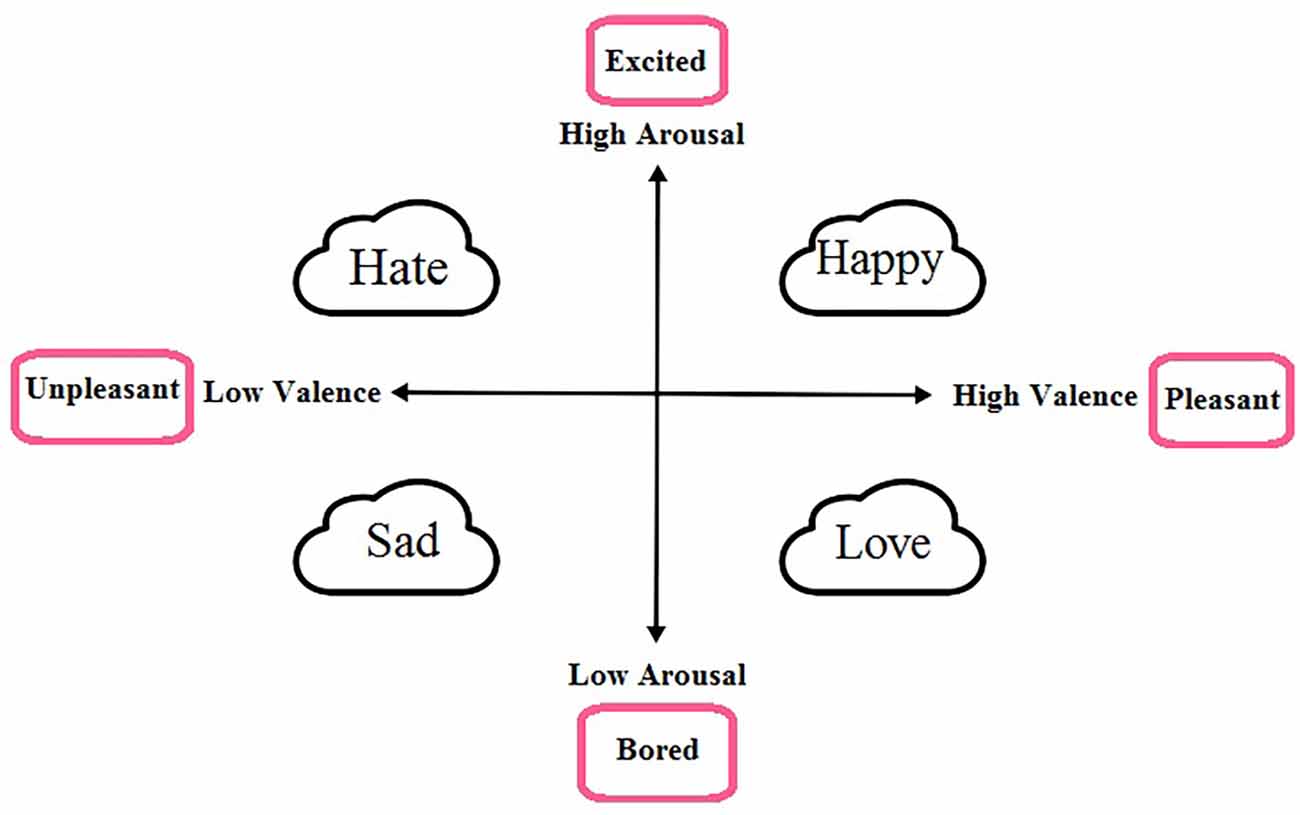

Describing a person’s emotional state is one of the main issues in emotion recognition. Two kinds of models are used for this purpose: discrete and dimensional. In general, discrete models are expressed through a set of fundamental emotional states, such as sadness, happiness, hate, joy, surprise, terrible, and anger (Ekman, 1992), whereas the dimensional model is represented by the valence-arousal space (Mehrabian, 1996). The dimensional model describes all emotional states using the valence and arousal axes, each of which ranges from negative (low) to positive (high). High to low activity on the arousal axis represents excited to bored conditions, while positive and negative valence scores correspond to pleasant (e.g., happiness) and unpleasant (e.g., sadness) feelings. Figure 1 illustrates the arousal-valence dimensional model and some of the discrete emotions.

Figure 1. Representation of the dimensional model of emotions. This model defines all emotions in a two-dimensional space of valence and arousal. Valence denotes the polarity of emotions [positive (pleasant) or negative (unpleasant)], and arousal shows the amount of excitement [high (excited) or low (bored)]. As seen in the figure, discrete emotions can be placed in this dimensional model according to their arousal and valence values.

In recent years, emotion recognition has received much attention in many fields of science. Several approaches have been introduced to identify or classify emotions, which will be reviewed below.

Since a person’s emotional state is outwardly visible on his/her face, one of the most common approaches to recognizing emotions is facial expression recognition (Koelstra et al., 2012; Sharma et al., 2019; Yadav, 2021). Another common approach is speech analysis, which has been used in a wide range of studies (Schuller et al., 2003; Jahangir et al., 2021). Despite the positive results reported for these methods, a person may want to hide his/her inner feelings. These recognition methods also have a critical limitation: they depend on the cultural and social environment of the subjects. This limitation may be overcome by using physiological signals such as electromyogram (EMG), electroencephalogram (EEG), skin temperature, blood volume pulse, etc. (Yoon and Chung, 2013). Emotion recognition systems have therefore moved towards processing physiological signals, which are considered more reliable because the subject cannot control them. Physiological changes are the basis of emotions in our body (Alhagry et al., 2017), so analyzing signals such as electrocardiogram (ECG), electromyogram (EMG), galvanic skin response (GSR), blood volume pulse (BVP), and electroencephalogram (EEG) allows emotions to be recognized (Koelstra et al., 2012; Moharreri et al., 2018; Bao et al., 2021; Ebrahimzadeh et al., 2021a). Because the source of a person’s emotions is the central nervous system (CNS), brain signals seem to be the most appropriate option for extracting emotional information. The most common signal that shows the brain’s electrical activity is the electroencephalogram (EEG), which is widely used for extracting and analyzing information about the brain due to its non-invasiveness, ease of recording, and very high temporal resolution (Ebrahimzadeh et al., 2019a, 2021b; Zhong et al., 2020; Sadjadi et al., 2021). The EEG signal measures the activity of the brain, which is responsible for regulating and controlling emotions (Soroush et al., 2020), so emotion recognition systems based on EEG signals have been favored by researchers (Takahashi, 2004; Bos, 2006; Petrantonakis and Hadjileontiadis, 2011; Bajaj and Pachori, 2015; Pham et al., 2015; Singh and Singh, 2017).

EEG-based emotion recognition systems (Zhong et al., 2020; Bao et al., 2021) have used several linear feature extraction techniques, including time and frequency domain methods (Taran and Bajaj, 2019), together with a variety of traditional machine learning methods, such as Support Vector Machines (SVM), Linear Discriminant Analysis (LDA), and Artificial Neural Networks (ANN), and functional/effective connectivity (Zhang et al., 2020; Ebrahimzadeh et al., 2021c; Seraji et al., 2021). Statistical features of EEG signals, such as the mean value, the power of the signal, and the first and second differences, are usually used as time-domain features (Takahashi and Tsukaguchi, 2003), and characteristics such as the power spectrum of the different EEG bands are used as frequency-domain features (Wang et al., 2011). These are all linear analysis methods. However, the EEG signal is generated by a very complex system (i.e., the brain) that is assumed to have nonlinear, non-stationary, and chaotic behavior (Soroush et al., 2020). It is therefore preferable to adopt a suitable nonlinear method to extract information from this nonlinear complex system.

Given the nonlinear nature of EEG (Stam, 2005), nonlinear features and tools appear to outperform linear ones in emotion recognition. Nonlinear features, such as fractal dimension (FD; Sourina and Liu, 2011; Liu and Sourina, 2014), sample entropy (Jie et al., 2014; Raeisi et al., 2020), and nonstationary index (Kroupi et al., 2011), have been used in the cited studies to recognize emotions. Some of the nonlinear approaches require advanced combinations of a large number of features or use complicated systems or algorithms, so their computational cost is high, and the clinical meaning of each variable is blurred within complex classifiers (García-Martínez et al., 2017; Ebrahimzadeh et al., 2019b). In this study, we therefore propose a method with a low computational cost to overcome this issue.

One of the nonlinear analysis tools is Symbolic Time Series Analysis (STSA), which has been favored over the last few years in many research areas, including mechanical systems, artificial intelligence, data mining, and bio-signal processing (Alcaraz, 2018). One of the main advantages of symbols is that the efficiency of numerical computation can be considerably increased relative to what is achievable by directly analyzing the original time series (Daw et al., 2003). This is essential for systems with limited computational speed and memory capacity, such as real-time mobile platform applications. Additionally, the analysis of symbolic data is typically robust to measurement noise (Daw et al., 2003; Reinbold et al., 2021). Therefore, to obtain low-cost and relatively simple devices, symbolization can be performed directly in the instrumentation software (Chin et al., 2005). It has also been shown that, in the case of noisy signals, symbolization can boost the signal-to-noise ratio (Daw et al., 2003). Symbolic analysis has been used to investigate many features of biological systems (Daw et al., 2003; Glass and Siegelmann, 2010; Schulz and Voss, 2017; Awan et al., 2018); among these, the diagnosis of neural pathologies (Lehnertz and Dickten, 2015) and laboratory measurements of neural systems are the most notable candidates. For example, Azarnoosh et al. (2011) used symbolic analysis methods to determine mental fatigue.

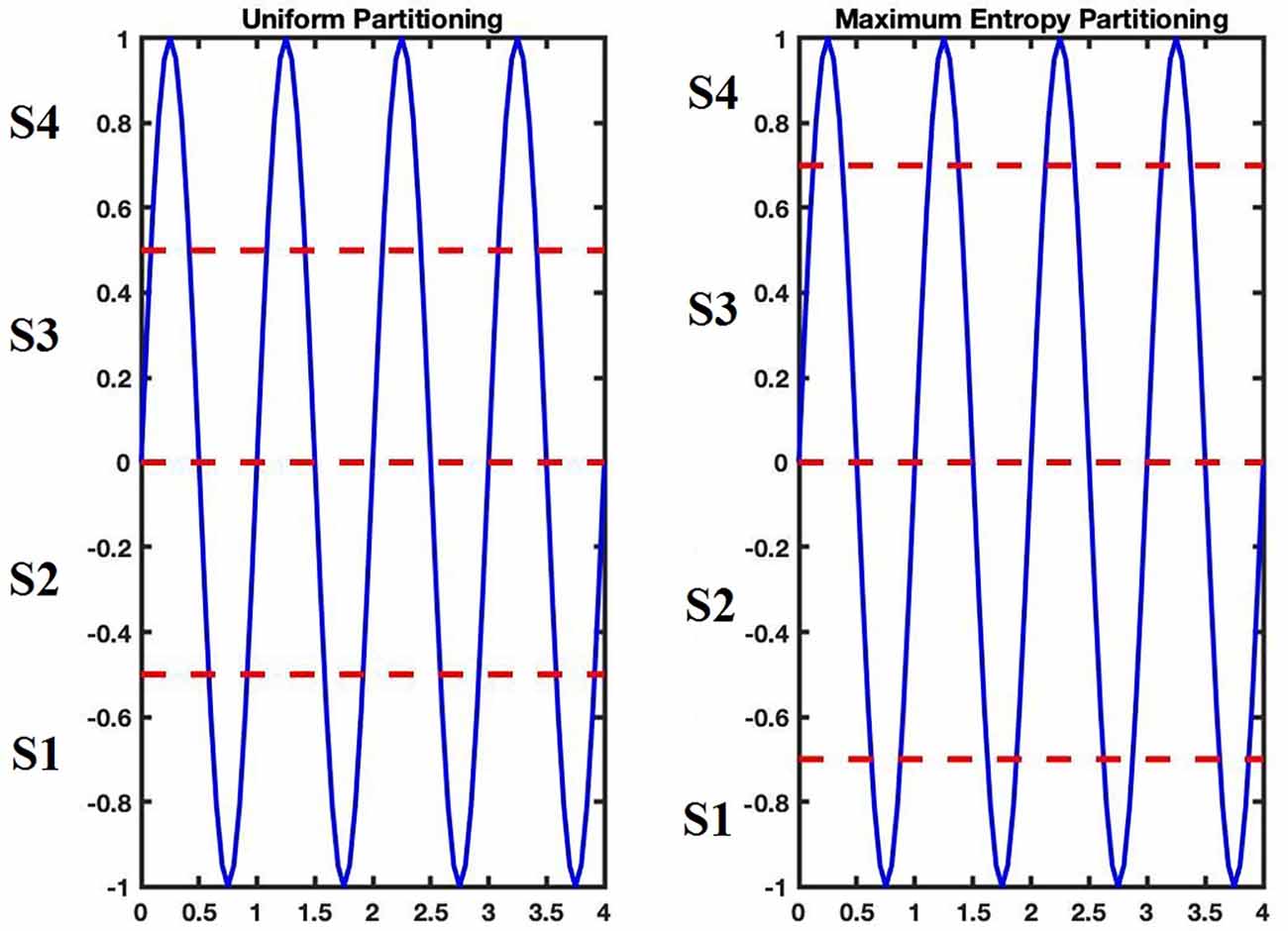

The original data must be discretized into a matching sequence of symbols to perform this technique. The transformed version of the original data contains temporal information of the original signal. So, an important stage in STSA is the data partitioning for generating the symbol sequence (Daw et al., 2003). Traditional methods for generating the symbols and selecting the location of partitions have used the mean, midpoint, or median of the data. Other methods that have been suggested are: to make equal size intervals over the data range (“Uniform Partitioning”; Rajagopalan and Ray, 2006) or equal probability regions over the data range (“Maximum Entropy partitioning”; Rajagopalan and Ray, 2006). Tang et al. (1997) argued that a mean-based binary symbol set partitioning could be used for dynamics reconstruction of nonlinear models, even upon noisy dynamics. Uniform partitioning of EEG signals was applied to identify precursors to seizures by Hively et al. (2000). There are also other approaches to defining symbols, including “symbolic false nearest neighbors partitioning (SFNNP)” (Buhl and Kennel, 2005) and “wavelet-space partitioning (WSP)” (Rajagopalan and Ray, 2006).

One of the essential tools in nonlinear system analysis is the phase space, which has important information about the system (Soroush et al., 2020). Relying on the precious knowledge of the phase space, in the present study, we try to extract this information by the symbolic analysis of this space to find a general pattern for emotions and classify some emotional states based on these patterns.

Another problem with most methods used to recognize or classify emotions is that they are subject-dependent. Because people’s perception of feelings is subjective (Soroush et al., 2020), dependence on the individual is a weakness for an emotion recognition system, and a system that is independent of the individual and can find a general pattern for each emotion has a significant advantage. This study uses the leave-one-subject-out validation method to provide a subject-independent system for emotion recognition.

The present study introduces the theoretical framework of a novel STSA-based approach for recognizing dynamical patterns, together with its experimental validation for emotion recognition using EEG signals. Considering the advantages of symbolic time series analysis for signals and nonlinear systems (such as reduced sensitivity to noise and reduced numerical computation) and the complex, nonlinear nature of the EEG signal, our goal is to develop a new method based on symbolic time series analysis that uses the most informative content of the phase space at the lowest computational cost. To extract information from the whole brain system, all 32 EEG channels are used to construct the phase space. This, however, dramatically increases the dimensionality and the computational demands. To overcome this issue, we propose Spherical Phase Space Partitioning (SPSP), which is used for partitioning the phase space, generating the symbolic sequences, and obtaining a general index pattern for each emotional state. The symbolic sequences are then compared and classified based on the maximum value of a similarity index. A subject-independent protocol is used to enhance the generalization ability.

So, this study aims to improve the precision of emotion recognition systems with a simple, low computational cost and subject-independent algorithm. The remainder of the manuscript is organized as follows. “Materials and Methods” explains the database put to analysis in the study, provides a brief description of the traditional STSA and cosine similarity index methods, and finally introduces the proposed method for symbolization. The results are given in “Results” Section and then discussed in “Discussion” Section. Ultimately, the most important conclusions are brought to light in the final part of “Discussion” Section.

Materials and Methods

Dataset

The DEAP database, a multimodal database for the analysis of human emotions recorded from subjects watching music videos (Koelstra et al., 2012), was used in the current study. It consists of EEG and peripheral physiological signals of 32 subjects, each of whom watched 40 music videos. EEG and peripheral signals were recorded at a sampling rate of 512 Hz. In this study, the pre-processed data provided by the DEAP authors were used: the EEG data were down-sampled to 128 Hz and averaged to the common reference, then eye artifacts were removed and a high-pass filter was applied. Each video lasted 1 min, and after each video, subjects rated it on each of the five dimensions of a self-assessment questionnaire: dominance, valence, arousal, liking, and familiarity. Moreover, some videos have discrete labels such as happiness and sadness. This study is based on the discrete model of emotion, using five emotional states: sadness, happiness, hate, joy, and mellow.

Symbolic Time Series Analysis

Symbolic analysis of a signal is an approach in which a continuous signal is converted to a symbol sequence by partitioning the continuous signal domain (Srivastav, 2014). This method is a special case of symbolic dynamics and is used in its place for signals, because symbolic dynamics (which is usually applied to systems) has inherent limitations when applied to signals, particularly in the presence of noise (Daw et al., 2003).

According to the concept of symbolic dynamics, the features of a dynamical system can be described by partitioning its phase space into K mutually disjoint sets {S1, S2,…, SK}, so that each possible trajectory is transformed into a sequence of symbols (Robinson, 1998). Typically, applying the concepts of symbolic dynamics requires a generating partition, which assigns a unique symbolic sequence to every trajectory of the system. This requirement is usually ignored in real-world applications because of noise; yet, even when there is no noise, generating partitions are either not available or cannot be estimated (Hirata et al., 2004). Hence, as an alternative to symbolic dynamics, Symbolic Time Series Analysis (STSA) has recently been favored in many applications (Donner et al., 2008).

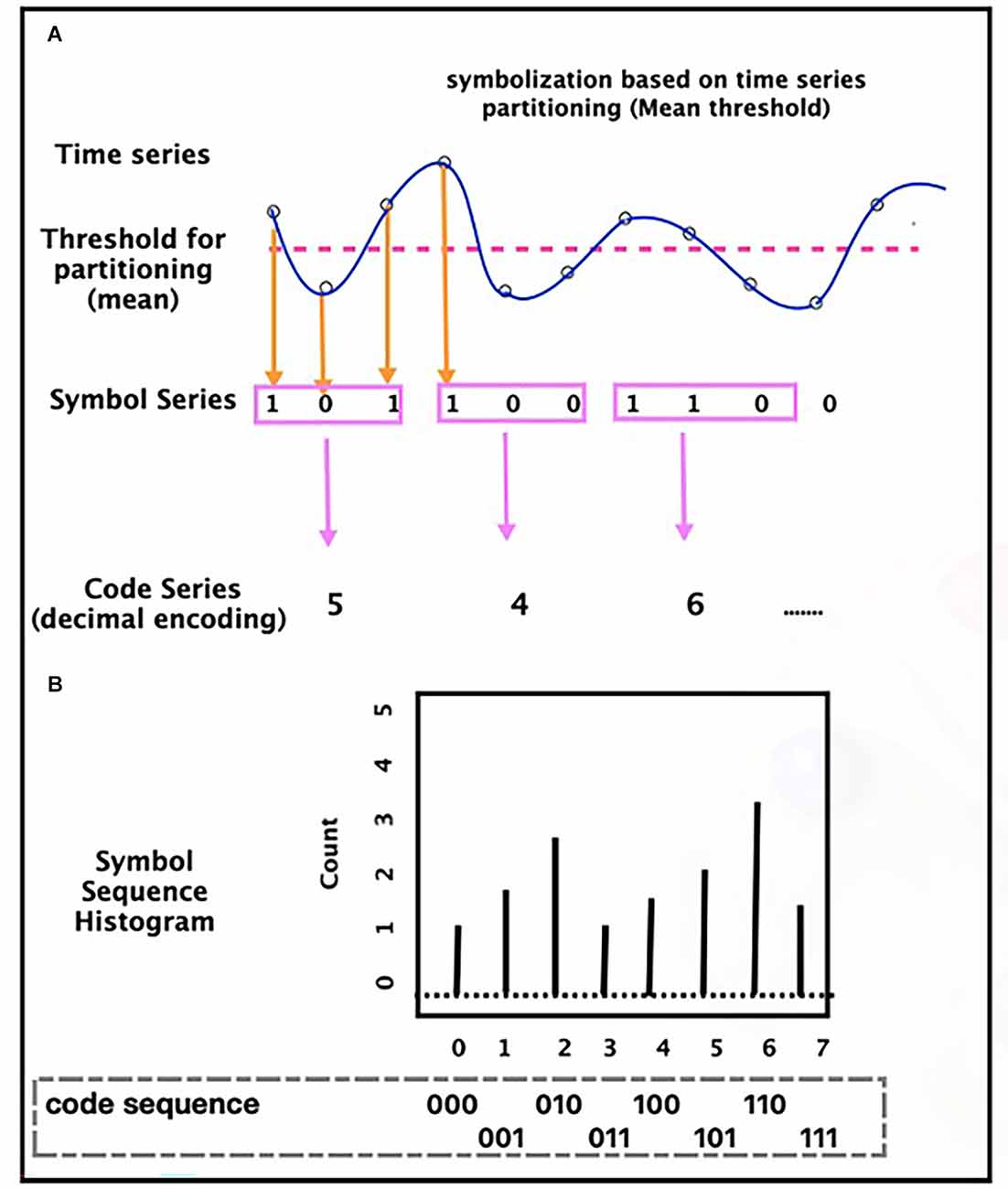

In traditional symbolic dynamics (or symbolic time series analysis), the next step after symbolization is the construction of symbolic sequences (called words in the symbolic dynamics literature), which are formed by grouping symbols together to identify temporal patterns. Finally, these symbol sequences (words) are usually analyzed statistically through the frequency of occurrence of each word in the symbolic sequence. Figure 2 illustrates this process for a time series.

Figure 2. Time series symbolization process (A) and tabulation of a histogram of the symbol sequence (B). Based on a threshold (for example, the mean in this figure), the time series is converted to a symbol sequence. Based on the chosen word length, the sequence of symbols becomes a series of words. The words are then usually analyzed statistically through their occurrence frequency, for example with a histogram.
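The process in Figure 2 can be illustrated with a minimal Python sketch. It assumes a binary alphabet with the mean as the threshold and overlapping words of fixed length; the function names `symbolize_by_mean` and `word_histogram` and the toy series are hypothetical and are not taken from the study.

```python
from collections import Counter
from statistics import mean


def symbolize_by_mean(series):
    """Binary symbolization: 1 if a sample lies above the series mean, else 0."""
    threshold = mean(series)
    return [1 if value > threshold else 0 for value in series]


def word_histogram(symbols, word_length):
    """Count overlapping words made of `word_length` consecutive symbols."""
    words = [
        tuple(symbols[i:i + word_length])
        for i in range(len(symbols) - word_length + 1)
    ]
    return Counter(words)


# Toy time series (illustrative values only).
series = [0.1, 0.9, 0.8, 0.2, 0.3, 0.7, 0.6, 0.1]
symbols = symbolize_by_mean(series)
histogram = word_histogram(symbols, word_length=2)
```

The resulting `histogram` maps each word (a tuple of symbols) to its number of occurrences, which is the quantity that the statistical analysis of word frequencies operates on.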

As mentioned in the Introduction, there are several criteria for partitioning and discretizing the signal, such as the mean, the median, and mean ± variance, as well as newer approaches such as False Nearest Neighbor Partitioning (Kennel and Buhl, 2003), Wavelet Partitioning (Rajagopalan and Ray, 2006), and Hilbert–Hung Partitioning (Sarkar et al., 2009). This article introduces a new approach for partitioning continuous data in the phase space based on spherical partitioning, which is explained in the “Proposed STSA-Based Emotion Recognition Method” section.

Cosine Similarity

A similarity measure is an important tool for determining the degree of similarity between two objects. Similarity measures are considered advantageous in pattern recognition, image processing, and machine learning (Ye, 2011). The cosine similarity measure is one of them, a widely used metric that is both simple and effective (Xia et al., 2015). It is defined as the cosine of the angle between two vectors and indicates whether the two vectors point in roughly the same direction (Salton and McGill, 1986).

This classic measure is applied in information extraction and is among the most useful measures for assessing vector similarity (Salton and McGill, 1986). The cosine similarity is given by:

$$\cos(\theta) = \frac{A \cdot B}{\|A\| \, \|B\|} = \frac{\sum_{i} A_i B_i}{\sqrt{\sum_{i} A_i^{2}} \, \sqrt{\sum_{i} B_i^{2}}} \tag{1}$$

where Ai and Bi are the components of vectors A and B, respectively, and ||x|| indicates the length (norm) of the vector x. As can be seen, the cosine similarity is obtained by dividing the inner product of the two vectors by the product of their lengths. This division eliminates the effect of the “length” or “magnitude” of the vectors, which is an important feature of cosine similarity. This insensitivity to magnitude is essential in our study, where we work with “codes” or “symbols” instead of “amplitudes” or “real values”. We therefore use this measure to find the similarity between each emotional symbolic sequence and each emotion index for classification purposes.
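As a brief illustration of the definition above, the following Python sketch computes the cosine similarity of two equal-length vectors. The function name `cosine_similarity` and the example vectors are hypothetical; the zero-vector check is an added safeguard, not something specified in the text.

```python
import math


def cosine_similarity(a, b):
    """Inner product of a and b divided by the product of their lengths."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of components")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Scaling a vector does not change the similarity (magnitude is factored out).
same_direction = cosine_similarity([1, 2, 3], [2, 4, 6])
orthogonal = cosine_similarity([1, 0], [0, 1])
```

In this sketch, `same_direction` is 1 up to floating-point rounding even though the second vector is twice as long, which is the magnitude insensitivity discussed above.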

Proposed STSA-Based Emotion Recognition Method

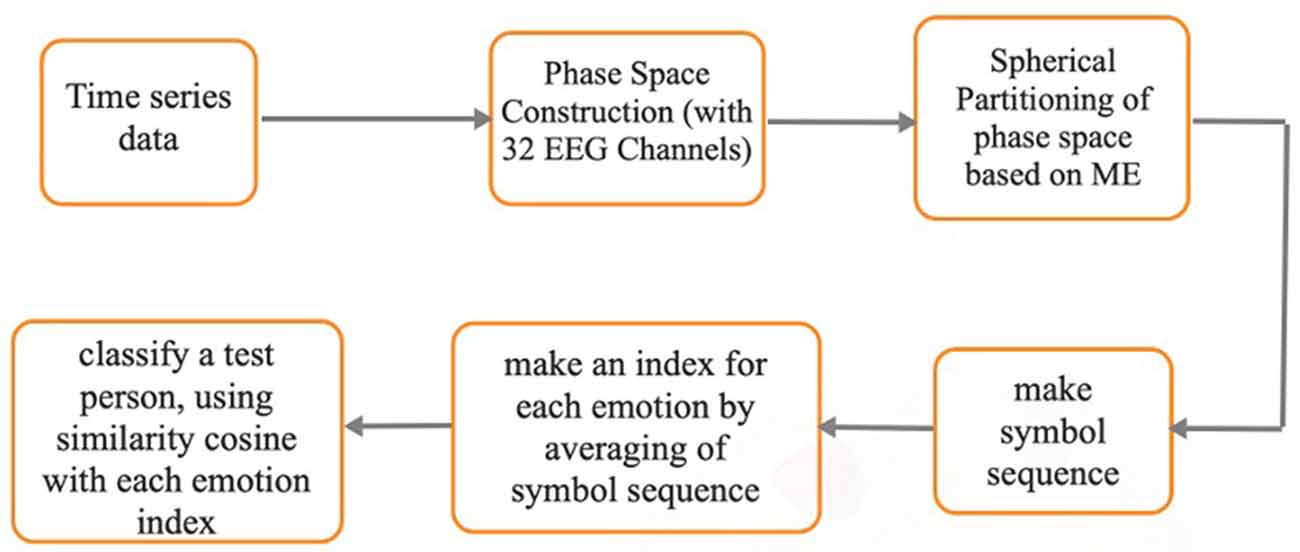

This section briefly explains the STSA procedure adopted in this study to recognize emotions. The adapted STSA emotion recognition method uses the vector information generated by partitioning the phase space in which the time-series trajectory evolves. The steps are as follows:

• Partitioning of the signal’s phase space and transforming time-series data from the continuous domain to the symbolic domain.

• Calculation of the similarity between each emotional state vector and reference (index) vectors for the classification of each emotional test vector.

Spherical Phase Space Partitioning Based Symbolic Time Series Analysis (SPSP–STSA)

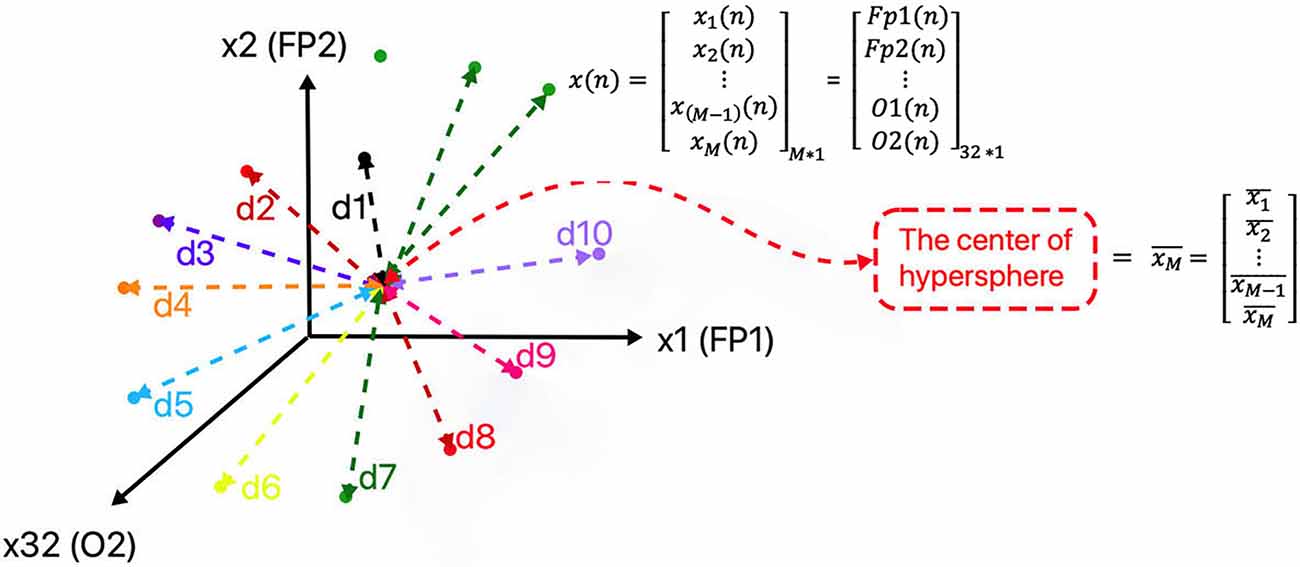

Because of the advantages of phase space in representing the nonlinear features of a system, phase space partitioning was employed in this study. To analyze a set of signals by partitioning the phase space, we first need to construct the phase space trajectory. All 32 EEG channels (Fp1, Fp2, AF3, AF4, Fz, F3, F4, F7, F8, FC1, FC2, FC5, FC6, Cz, C3, C4, T7, T8, CP1, CP2, CP5, CP6, Pz, P3, P4, P7, P8, PO3, PO4, Oz, O1, O2) are used to construct the phase space, and each sample point “n” in the phase space is constructed as follows:

$$\mathbf{x}(n) = [x_1(n), x_2(n), \ldots, x_M(n)]^{T} \tag{2}$$

where n = 1:N denotes the samples, N = 8,064 is the total number of points of each signal, M = 32 is the number of channels (the dimension of the phase space), xi(n) denotes the ith dimension of the space at sample “n”, and x(n) denotes each M-dimensional point of the phase space (Figure 3). The matrix of all trajectory points for one EEG signal (for example, one movie of one person, with a length of N points) in the phase space is as follows:

$$X = [\mathbf{x}(1), \mathbf{x}(2), \ldots, \mathbf{x}(N)] = \begin{bmatrix} x_1(1) & x_1(2) & \cdots & x_1(N) \\ x_2(1) & x_2(2) & \cdots & x_2(N) \\ \vdots & \vdots & \ddots & \vdots \\ x_M(1) & x_M(2) & \cdots & x_M(N) \end{bmatrix} \tag{3}$$

Figure 3. Representation of points and the distances of points from the center of the hypersphere, in the constructed phase space of the signal (due to the limitation of representation of space dimensions number, only three dimensions of phase space are displayed).

where X is an M × N matrix that contains all the points of the trajectory for one EEG signal.

Then we use the new spherical partitioning idea to discretize the phase space and make symbols.

In the partitioning scheme, there are two main approaches:

• Uniform Partitioning

• Maximum Entropy (ME) Partitioning

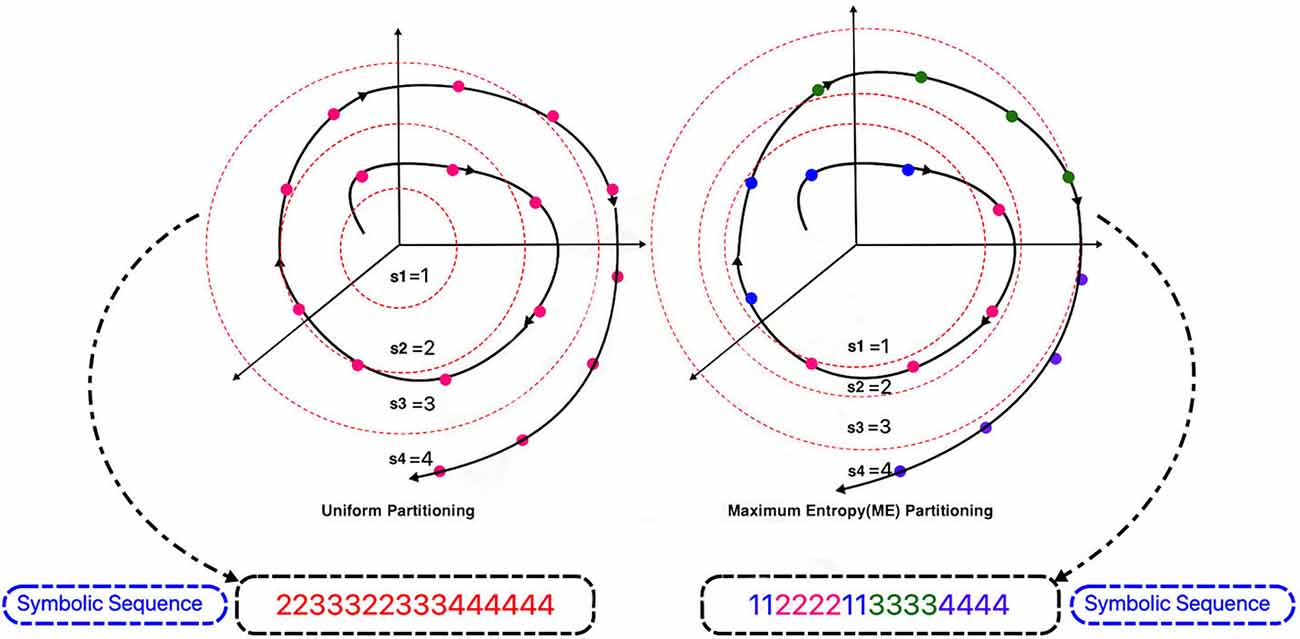

In Uniform Partitioning, the maximum and minimum of the distances from the mean point are first determined, and equal-sized regions are obtained by partitioning the range between them. These regions are mutually disjoint. Each region is then assigned a symbol from the alphabet (the set of symbols), and a data point is coded with the symbol of the region in which it lies. In this way, a sequence of symbols is generated from a given sequence of time-series data.

It seems more appropriate, however, to partition the regions according to their information content, i.e., the higher the information, the finer the partitioning, and vice versa. To this end, a partitioning method was applied that maximizes the entropy of the generated symbol sequence (Rajagopalan and Ray, 2005). The process for obtaining an ME partition is explained below.

Suppose the length of the signal is L and the number of symbols (the size of the alphabet) is S. The L samples are sorted in ascending order. Starting from the first sorted data point, each data segment of length [L/S] forms a separate element of the partition, where [x] denotes the integer part of x. Figure 4 shows the difference between these two types of partitioning for a sine signal, and Figure 5 shows this difference for a sample signal in three-dimensional phase space. The plot on the right in Figure 5 shows ME partitioning for a sample signal with S = 4. As expected, the partitions are not equal in size; nevertheless, the symbols are equally likely. Discrepancies in data patterns are more likely to be revealed by ME partitioning than by other partitioning methods (Rajagopalan and Ray, 2006).
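The two schemes can be contrasted with a small Python sketch on scalar data. The function names and the toy values are hypothetical; assigning any leftover points (when L is not divisible by S) to the last symbol is an assumption of this sketch, since the text does not specify how the remainder is handled.

```python
def uniform_partition(values, n_symbols):
    """Equal-width regions between the minimum and maximum of the data."""
    low, high = min(values), max(values)
    width = (high - low) / n_symbols
    symbols = []
    for value in values:
        index = int((value - low) / width) if width > 0 else 0
        symbols.append(min(index, n_symbols - 1))  # the maximum goes in the last region
    return symbols


def max_entropy_partition(values, n_symbols):
    """Equal-frequency regions: sort the data and cut it into segments of floor(L/S) points."""
    segment = len(values) // n_symbols
    if segment == 0:
        raise ValueError("need at least one data point per symbol")
    order = sorted(range(len(values)), key=lambda i: values[i])
    symbols = [0] * len(values)
    for rank, i in enumerate(order):
        # Assumption: points beyond S full segments are assigned to the last symbol.
        symbols[i] = min(rank // segment, n_symbols - 1)
    return symbols


# Toy data with one outlier to make the difference visible.
values = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 10.0]
uniform_symbols = uniform_partition(values, n_symbols=4)
me_symbols = max_entropy_partition(values, n_symbols=4)
```

With the outlier present, uniform partitioning places almost every point in the first region, whereas the ME partition uses all four symbols equally often, which illustrates why its regions differ in size while the symbols remain equally likely.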

Figure 5. Comparison of uniform and maximum entropy partitioning in spherical partitioning and symbol sequence generation phase.

In our proposed spherical partitioning method, based on the ME partitioning approach, at first, each point’s distance (d) is calculated from the center of the hypersphere (Figure 3). Then the partitioning is done based on the number of symbols selected according to the rule described below.

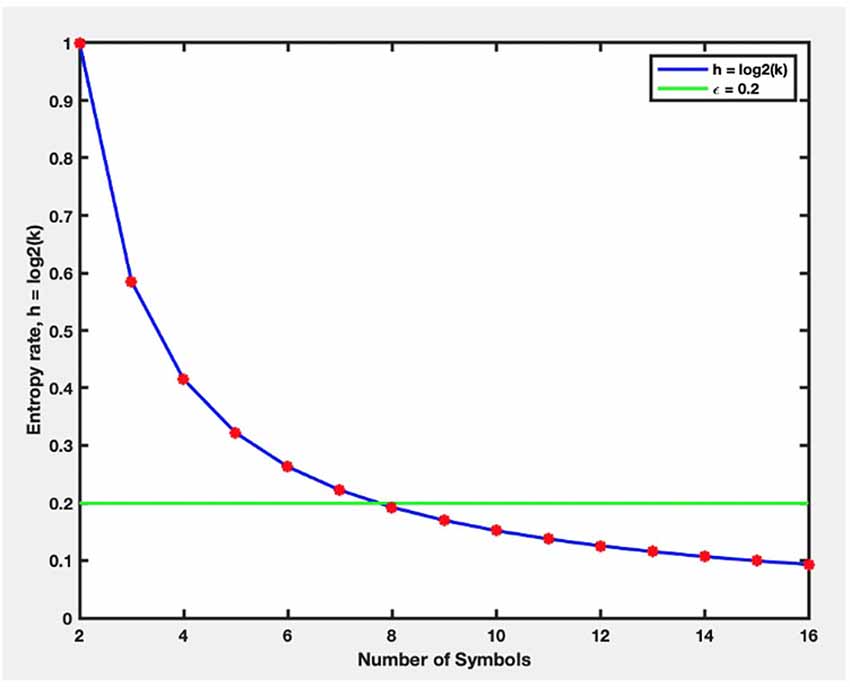

The selection of the number of symbols S is essential in STSA and is an active research area. A small value of S might be insufficient to capture the features of the time series data, whereas a large value of S could cause redundancy and waste computational resources. To select S, Rajagopalan and Ray (2006) applied an entropy-based approach, which we also used in this study. Let H(k) denote the Shannon entropy of the symbol sequence obtained after partitioning with k symbols:

$$H(k) = -\sum_{i=1}^{k} p_i \log_2 p_i \tag{4}$$

where pi indicates the probability of occurrence of the symbol si and H(1) = 0.

If the underlying data set has sufficient information content, as in the Maximum Entropy Partitioning case, then H(k) equals log2(k). To express the change in entropy with respect to the number of symbols, we define h(·) as follows:

$$h(k) = H(k) - H(k-1), \quad k \geq 2 \tag{5}$$

A threshold εh is defined, where 0 < εh << 1. Starting with k = 2, for each k the symbol probabilities pi (i = 1, 2, …, k) are computed, and H(k) and h(k) are calculated by Equations (4) and (5), respectively. When h(k) < εh, the algorithm exits and k is chosen as the number of symbols S (Rajagopalan and Ray, 2006; Figure 6).
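The selection rule can be sketched in Python as follows. This is only an illustration under stated assumptions: the entropy change is taken to be h(k) = H(k) − H(k−1), the symbols are produced by a rank-based equal-frequency (ME-style) partition, and the function names, the `max_symbols` cap, and the sine test signal are hypothetical choices that do not come from the study.

```python
import math
from collections import Counter


def equal_frequency_partition(values, n_symbols):
    """Rank-based ME-style partition: each symbol receives floor(L/S) sorted points."""
    segment = len(values) // n_symbols
    if segment == 0:
        raise ValueError("need at least one data point per symbol")
    order = sorted(range(len(values)), key=lambda i: values[i])
    symbols = [0] * len(values)
    for rank, i in enumerate(order):
        symbols[i] = min(rank // segment, n_symbols - 1)
    return symbols


def shannon_entropy(symbols):
    """Shannon entropy (in bits) of the empirical symbol distribution."""
    total = len(symbols)
    return -sum(
        (count / total) * math.log2(count / total)
        for count in Counter(symbols).values()
    )


def select_symbol_count(values, epsilon, max_symbols=32):
    """Return the first k >= 2 whose entropy gain h(k) drops below epsilon."""
    previous_entropy = 0.0  # H(1) = 0
    for k in range(2, max_symbols + 1):
        entropy = shannon_entropy(equal_frequency_partition(values, k))
        if entropy - previous_entropy < epsilon:  # assumed h(k) = H(k) - H(k-1)
            return k
        previous_entropy = entropy
    raise ValueError("entropy gain never dropped below epsilon")


# Toy signal; 840 points so that every k from 2 to 8 divides the length evenly.
values = [math.sin(0.1 * n) for n in range(840)]
chosen = select_symbol_count(values, epsilon=0.2)
```

Because the equal-frequency partition makes the symbols equally likely, H(k) stays close to log2(k) in this sketch, so the stopping point depends mainly on the threshold rather than on the particular signal.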

After the number of symbols is selected, the phase space is partitioned; in this study, a new approach, Spherical Phase Space Partitioning (SPSP), is used for this purpose. Consider “M” EEG channels denoted by xm(n), m = 1, …, M (m: dimension counter, M: total number of state-space dimensions, i.e., EEG channels); n = 1, …, N (n: sample counter, N: length of the time series). First, the phase space is constructed using the 32 channels. The mean point (center of the hypersphere) is calculated as follows:

$$\bar{x}_m = \frac{1}{N} \sum_{n=1}^{N} x_m(n), \quad m = 1, \ldots, M; \qquad \bar{\mathbf{x}} = [\bar{x}_1, \bar{x}_2, \ldots, \bar{x}_M]^{T} \tag{6}$$

where $\bar{\mathbf{x}}$ denotes the center of the hypersphere in the M-dimensional state space and $\bar{x}_m$ indicates the mean value of each dimension. Then, based on the distance d of the trajectory points from the center point (Figure 3) and on the ME approach, the phase space is partitioned into S regions, one per symbol. As the trajectory evolves in the phase space, the symbol sequence is generated (Figure 5).
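A minimal Python sketch of this spherical partitioning step is given below. It assumes the Euclidean distance from the mean point and a rank-based equal-frequency (ME-style) split of the distances; the function name `spherical_symbols`, the zero-based symbol labels, and the two-channel toy data are hypothetical and serve only to illustrate the idea.

```python
import math


def spherical_symbols(channels, n_symbols):
    """Symbolize a multichannel trajectory by its distance from the mean point.

    `channels` is a list of M equal-length lists (one per channel), so that
    sample n of the trajectory is the point (channels[0][n], ..., channels[M-1][n]).
    """
    n_samples = len(channels[0])
    # Center of the hypersphere: the mean of each dimension.
    center = [sum(channel) / n_samples for channel in channels]
    # Assumption: Euclidean distance of every trajectory point from the center.
    distances = [
        math.sqrt(sum((channel[n] - c) ** 2 for channel, c in zip(channels, center)))
        for n in range(n_samples)
    ]
    # ME-style split: sort the distances and give each shell floor(N/S) points.
    segment = n_samples // n_symbols
    if segment == 0:
        raise ValueError("need at least one sample per symbol")
    order = sorted(range(n_samples), key=lambda n: distances[n])
    symbols = [0] * n_samples
    for rank, n in enumerate(order):
        symbols[n] = min(rank // segment, n_symbols - 1)
    return symbols, distances


# Toy two-channel trajectory (M = 2, N = 8) whose mean point is the origin.
channels = [
    [0, 1, -1, 2, -2, 3, -3, 0],
    [0, 1, -1, -2, 2, 3, -3, 0],
]
symbols, distances = spherical_symbols(channels, n_symbols=4)
```

Each symbol then corresponds to a spherical shell around the mean point, and the symbol sequence records which shell the trajectory visits at each sample, regardless of the number of channels M.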

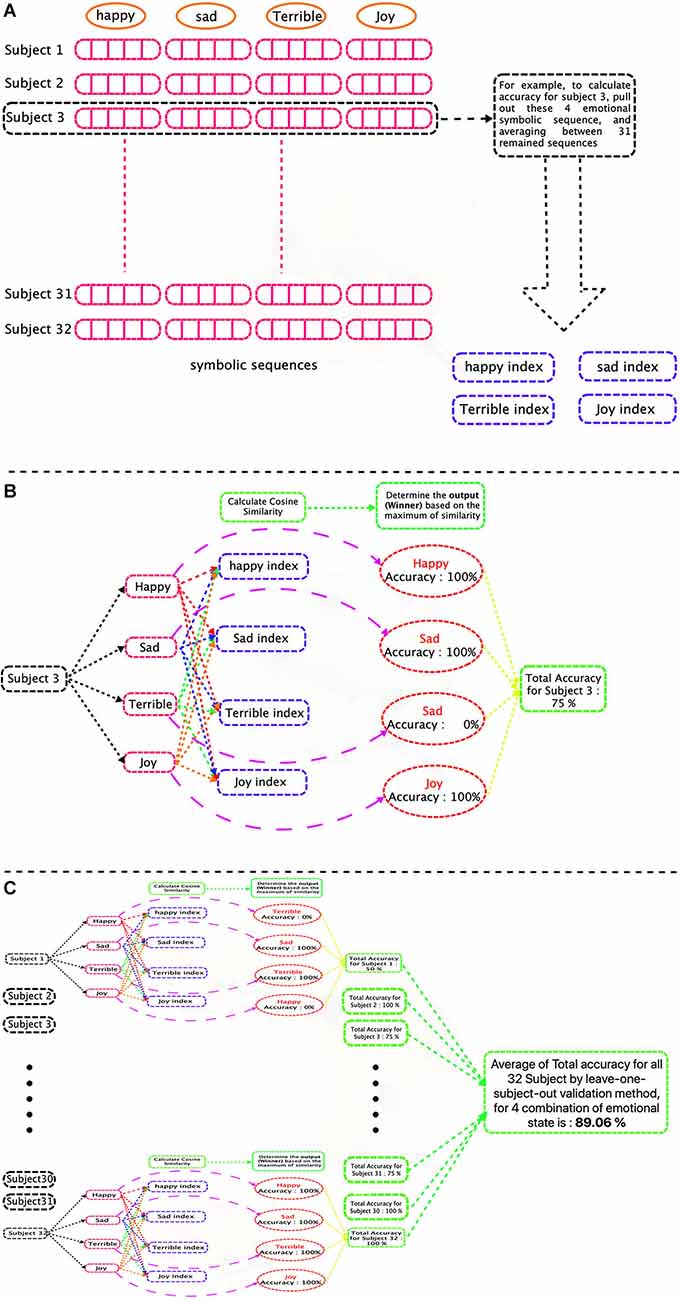

Classification: Cosine Similarity

After partitioning the phase space and generating the symbol sequences, we need to compare each person’s emotional state symbolic vector with some emotional indexes and detect and classify each emotional state. We use cosine similarity for this purpose. For each subject, we calculated the cosine similarity of the symbolic sequence of that person with the index of each emotional group, which is obtained by averaging over the symbolic sequences of other subjects, and the classification of each test data is based on the maximum value of this criterion. The performances of these models were examined using the leave-one-subject-out cross-validation method.
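The classification rule described above can be sketched in Python as follows. The nested dictionary layout `data[subject][emotion]`, the function names, and the toy symbol sequences are hypothetical; the sketch assumes one symbolic sequence per subject and emotion, all of equal length, with symbol labels starting at 1.

```python
import math


def cosine_similarity(a, b):
    """Inner product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mean_sequence(sequences):
    """Element-wise average of equal-length symbol sequences (the emotion index)."""
    return [sum(column) / len(column) for column in zip(*sequences)]


def leave_one_subject_out_accuracy(data):
    """Average per-subject accuracy; `data[subject][emotion]` is a symbol sequence."""
    per_subject = []
    for held_out, trials in data.items():
        # Emotion indexes are built only from the remaining subjects.
        indexes = {
            emotion: mean_sequence(
                [data[s][emotion] for s in data if s != held_out]
            )
            for emotion in trials
        }
        correct = 0
        for true_emotion, sequence in trials.items():
            # Assign the emotion whose index has the maximum cosine similarity.
            predicted = max(
                indexes, key=lambda e: cosine_similarity(sequence, indexes[e])
            )
            correct += predicted == true_emotion
        per_subject.append(correct / len(trials))
    return sum(per_subject) / len(per_subject)


# Toy data: three subjects, two emotions, symbol labels in 1..4.
data = {
    "s01": {"happiness": [1, 1, 4, 4], "sadness": [4, 4, 1, 1]},
    "s02": {"happiness": [1, 2, 4, 4], "sadness": [4, 3, 1, 1]},
    "s03": {"happiness": [1, 1, 4, 3], "sadness": [4, 4, 2, 1]},
}
accuracy = leave_one_subject_out_accuracy(data)
```

Because the held-out subject never contributes to the indexes used to classify that subject's sequences, the estimate reflects subject-independent performance.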

The process of the proposed method is shown in Figure 7. We are also preparing the code in a user-friendly form and will make it available on GitHub once it is ready.

Results

Our proposed STSA approach was applied to the openly accessible DEAP emotion dataset (Koelstra et al., 2012). All 32 EEG channels were used for phase-space construction. The phase space was constructed and partitioned individually for each person and each video. Selecting the number of symbols S, one of the critical steps in STSA, was performed using the entropy-based criterion described in the “Proposed STSA-Based Emotion Recognition Method” section. The threshold parameter εh was set to 0.2. Figure 8 shows the change in entropy h (Equation 5) against the number of symbols S. As shown, h decreases monotonically as S increases and falls below εh at S = 8. Thus, the number of symbols S was set to 8.
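As a rough consistency check on this choice, suppose the entropy change is h(k) = H(k) − H(k−1) and that ME partitioning attains H(k) = log2(k), as stated in the method description. Both are idealizing assumptions rather than values read from Figure 8. Under them, the threshold of 0.2 is first crossed at k = 8:

```latex
h(k) = \log_2 k - \log_2 (k-1) = \log_2 \frac{k}{k-1}, \qquad
h(7) = \log_2 \frac{7}{6} \approx 0.222 > 0.2, \qquad
h(8) = \log_2 \frac{8}{7} \approx 0.193 < 0.2 .
```

This idealized calculation agrees with the reported selection of S = 8.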

Figure 8. The change in entropy (h) against the number of symbols (S). h decreases monotonically as S increases and falls below εh at S = 8.

After selecting the number of symbols, the phase space was constructed and partitioned for each person’s movie based on the ME partitioning approach. As the system evolves through time, it moves through several blocks in its phase space, and the matching symbol j (j = 1, 2,…, 8) is allocated to it; thus, a data sequence is converted to a symbol sequence si1, si2, si3,…. The symbol sequences therefore represent a coarse-graining of the time evolution of the trajectories. After all intended movies of all participants were symbolized, we used cosine similarity (Equation 1) to find the maximum similarity between each person’s symbolic emotional EEG signals and each emotional index for classification purposes.

To validate the classification results, we used the leave-one-subject-out cross-validation method: to compute the accuracy for each subject, that person was excluded, and the average of the 31 remaining sequences was calculated as the index for each emotional state. Then, the cosine similarity between each emotional index and the test vector was computed, the accuracy for each subject was obtained, and the total accuracy was calculated by averaging over all subjects. This procedure is shown in Figures 9A–C.

Figure 9. Graphical flowchart of the classification process for a signal (four-emotion case). (A) Training phase: calculation of the emotional indices. (B) Test phase: each test subject’s symbolic EEG sequence is compared with the four symbolic indices (one per emotion) and assigned to the emotional group with the maximum cosine similarity; the accuracy is then calculated. (C) Calculation of the total accuracy over all subjects.
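The validation loop in Figure 9 can be sketched as follows. This is a minimal illustration on synthetic symbol sequences, assuming one equal-length sequence per subject and emotion; `make_toy_sequences` and `loso_accuracy` are hypothetical names, and the toy data are not DEAP recordings.

```python
import numpy as np


def make_toy_sequences(n_subjects=32, n_emotions=4, seq_len=200, flip=0.1, seed=0):
    """Hypothetical stand-in data: one symbol sequence per subject and emotion.

    Each emotion has a random template of symbols 1..8; every subject gets a
    copy in which about `flip` of the positions are replaced at random.
    """
    rng = np.random.default_rng(seed)
    templates = rng.integers(1, 9, size=(n_emotions, seq_len))
    data = np.repeat(templates[None, :, :], n_subjects, axis=0).astype(float)
    noise = rng.integers(1, 9, size=data.shape)
    mask = rng.random(data.shape) < flip
    data[mask] = noise[mask]
    return data  # shape: (n_subjects, n_emotions, seq_len)


def loso_accuracy(sequences):
    """Leave-one-subject-out accuracy with maximum-cosine-similarity assignment."""
    n_subjects, n_emotions, _ = sequences.shape
    per_subject = []
    for test in range(n_subjects):
        # Training phase: average the remaining subjects into one index per emotion.
        train = np.delete(sequences, test, axis=0)
        indices = train.mean(axis=0)
        # Test phase: cosine similarity of each test sequence with each index.
        x = sequences[test]
        sims = (x @ indices.T) / (
            np.linalg.norm(x, axis=1, keepdims=True)
            * np.linalg.norm(indices, axis=1)
        )
        predicted = sims.argmax(axis=1)
        per_subject.append(np.mean(predicted == np.arange(n_emotions)))
    # Total accuracy: average of the per-subject accuracies.
    return float(np.mean(per_subject))


toy = make_toy_sequences()
accuracy = loso_accuracy(toy)
```

Since every subject contributes the same number of test sequences, averaging the per-subject accuracies gives the same value as pooling all test decisions.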

The procedure was performed for four different combinations of emotions, adding one emotion at each step. We started with two emotions, namely happiness and sadness, which the proposed method classified with an accuracy of 98.44%. In the next step, we added joy, and the three groups were distinguished with an accuracy of 93.75%. For four groups (happiness, sadness, joy, and terrible), the classification accuracy was 89.06%, and for five groups (happiness, sadness, joy, terrible, and mellow), it was 85%.
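As a quick plausibility check, the reported accuracies can be converted back into counts of correct decisions. The sketch assumes that each of the 32 participants contributes exactly one test sequence per emotion, which is implied by the leave-one-subject-out description but not stated as a count in this section; `implied_correct` is a hypothetical helper.

```python
def implied_correct(num_classes, accuracy_percent, n_subjects=32):
    """Return (correct, total) decisions implied by a reported accuracy.

    Assumes one test sequence per subject and emotion (an assumption of
    this sketch, not a figure quoted from the paper).
    """
    total = num_classes * n_subjects
    correct = round(accuracy_percent / 100 * total)
    return correct, total


reported = {2: 98.44, 3: 93.75, 4: 89.06, 5: 85.0}
for k, acc in reported.items():
    correct, total = implied_correct(k, acc)
    print(f"{k} emotions: {correct}/{total} = {100 * correct / total:.2f}%")
```

Under this assumption, every reported percentage corresponds to a whole number of correct decisions (63/64, 90/96, 114/128, and 136/160).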

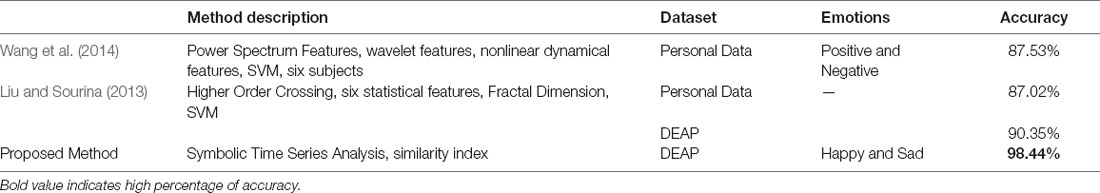

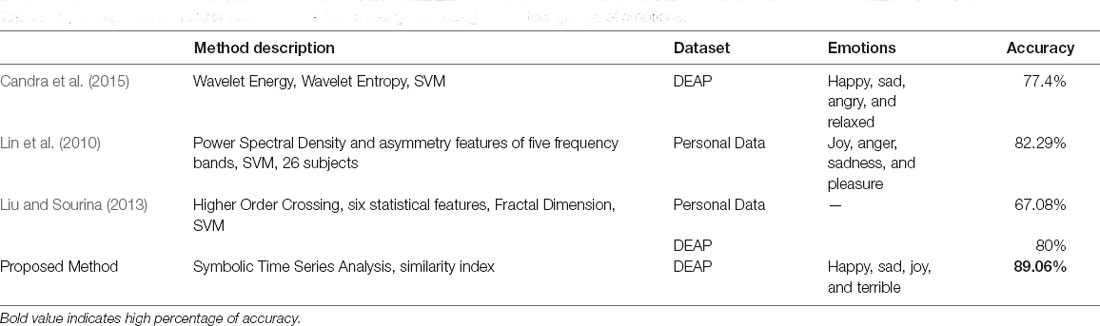

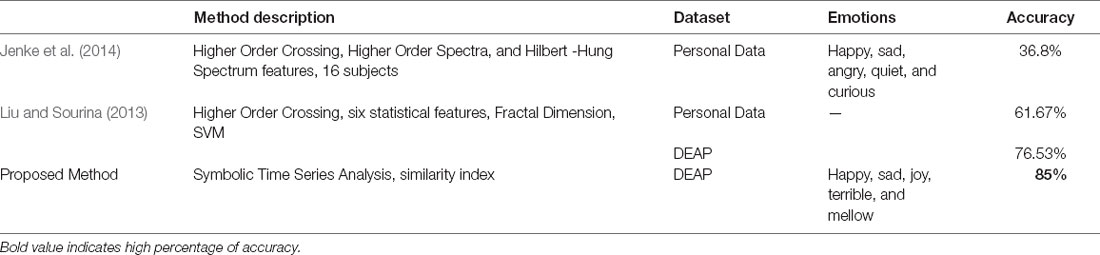

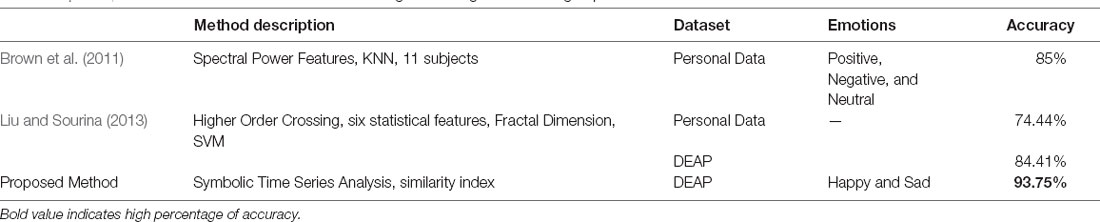

It should be noted that in all four combinations of emotional states, the classification accuracy is higher than in several previous studies. Tables 1–4 compare the results of our proposed method with other methods in the literature, applied to the same dataset or to other datasets.

Table 2. Comparison of different studies for emotion recognition using EEG—three groups of emotions.

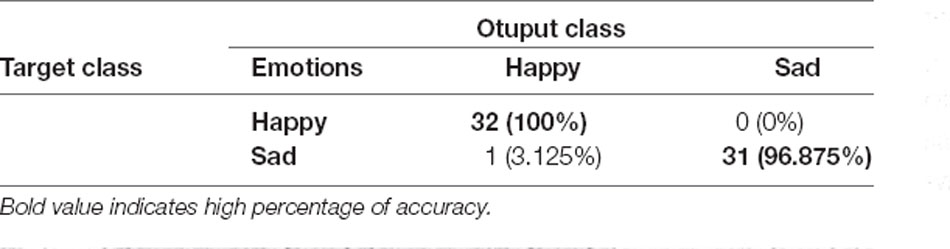

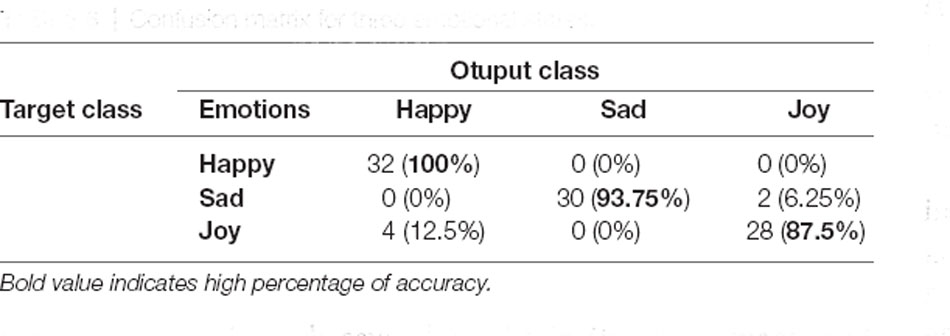

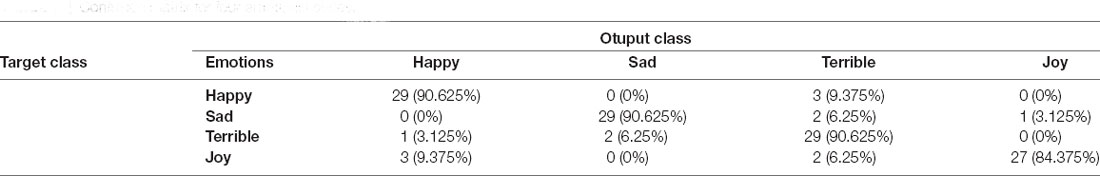

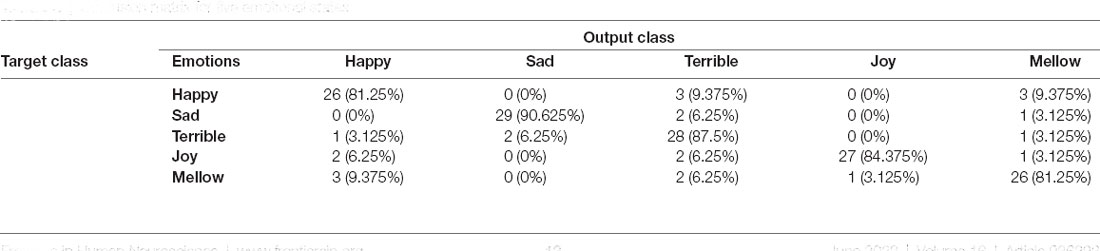

The classification accuracy is reported in Tables 1–4, but the confusion matrices are useful for determining which emotions are the easiest and which are the most difficult to distinguish. The confusion matrices for each group of emotional states are shown in Tables 5–8. Tables 6 and 7 show, for example, that joy is the most difficult emotional state to classify and has the highest misclassification rate in the three- and four-emotion cases. The other emotional states can be analyzed in the same way using Tables 5–8.

Discussion

Emotion recognition using EEG signals has received much attention recently. EEG is an invaluable source of information about brain dynamics and is inherently nonlinear and highly complex (Soroush et al., 2020). External or internal stimulation, such as eliciting emotions, causes brain activity to become more complex. Accordingly, studying such systems calls for nonlinear time series descriptors like the one used in this study. Given the potential of Symbolic Time Series Analysis (STSA) for nonlinear analysis of EEG dynamics, it was used here to recognize emotional states. Low computational complexity and noise robustness have also been shown to be advantages of this method (Daw et al., 2003). Most STSA approaches have been proposed for one-dimensional signal space. A person’s emotions, however, are not localized in any particular area of the brain; the neural circuits responsible for emotion regulation are distributed across different brain regions (Soroush et al., 2020). Therefore, to capture more information, we performed the analysis in the phase space of all 32 EEG channels (instead of applying STSA directly to the EEG time series themselves) and partitioned the phase space using our proposed Spherical Phase Space Partitioning. Applied to the DEAP dataset, the proposed method successfully classified all four combinations of emotional states. The comparison with other methods applied to the same dataset (DEAP) or to other datasets is shown in Tables 1–4. The evaluation indicates that our proposed method recognizes emotions more accurately than several other methods (shown in bold in the tables). For instance, the emotion recognition system proposed by Liu and Sourina (2013), based on Higher-Order Crossing (HOC) features and a Support Vector Machine (SVM) classifier, reached an accuracy of 90.35% for two emotional states of DEAP, whereas our proposed method reached 98.44% for the same number of emotional states of DEAP. For three emotions, their accuracy was 84.41%, while ours was 93.75%. A similar increase in classification accuracy is seen in the four- and five-emotion cases.

For four emotional states, the confusion matrix in Table 7 indicates that happiness, sadness, and terrible were each classified with an accuracy of 90.625%, and joy with 84.375%. Joy is therefore the most difficult state to classify in this group, possibly because the brain’s dynamic pattern during joy resembles that of states such as happiness: in 9.375% of cases, joy was mistakenly classified as happiness.
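The per-class figures quoted above can be tied back to the overall four-emotion accuracy with a short calculation. The sketch assumes balanced classes with 32 test sequences each (one per participant); only the percentages come from the text, and the variable names are illustrative.

```python
# Per-class accuracies (in percent) quoted from Table 7 in the text.
per_class = {"happiness": 90.625, "sadness": 90.625, "terrible": 90.625, "joy": 84.375}

# Assumption of this sketch: 32 test sequences per class, one per participant.
n_per_class = 32
correct_counts = {name: round(p / 100 * n_per_class) for name, p in per_class.items()}

# With balanced classes, overall accuracy is the plain mean of the class accuracies.
overall = sum(per_class.values()) / len(per_class)

# The 9.375% of joy cases assigned to happiness, expressed as a count.
joy_as_happiness = round(9.375 / 100 * n_per_class)

print(correct_counts, overall, joy_as_happiness)
```

The mean of the four class accuracies is 89.0625%, which agrees with the 89.06% reported for the four-emotion case.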

Conclusion

This study proposed an emotion recognition system based on a new Symbolic Time Series Analysis approach. Using the DEAP dataset, the proposed EEG-based system distinguished happiness from sadness with an accuracy of 98.44% in a subject-independent setting. As the number of emotions increased, accuracies of 93.75%, 89.06%, and 85% were obtained for three, four, and five groups of emotions, respectively. The key strength of the proposed method is that it preserves the dynamic characteristics of the signals while remaining simple and fast, which is a significant advantage, especially in real-time applications. A potential direction for future work is to reduce the number of channels according to the importance of each brain area for the emotional states, which methods such as ICA could support. In addition, since the DEAP videos are mostly labeled according to the dimensional definition of emotions and only a few are labeled according to the discrete definition, most studies on DEAP have classified emotions along the arousal and valence axes. Future work can apply our proposed method to classify the arousal and valence axes or the four areas of that space.

Data Availability Statement

The data analyzed in this study is subject to the following licenses/restrictions: In this study, the DEAP database has been used. This database is available at https://www.eecs.qmul.ac.uk/mmv/datasets/deap/download.html, but access requires a password, which must be requested from the dataset’s collectors. Requests to access these datasets should be directed to https://anaxagoras.eecs.qmul.ac.uk/request.php?dataset=DEAP.

Author Contributions

AM and HT contributed to the conception and design of the study. AM is the supervisor and corresponding author. HT performed the analysis and wrote the first draft of the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We express our gratitude to the Cognitive Science and Technologies Council (COGC), Tehran, Iran for their support.

References

Alcaraz, R. (2018). Symbolic entropy analysis and its applications. Entropy (Basel) 20:568. doi: 10.3390/e20080568

Alhagry, S., Fahmy, A. A., and El-Khoribi, R. A. (2017). Emotion recognition based on EEG using LSTM recurrent neural network. Int. J. Adv. Comp. Sci. Appl. 8, 355–358. doi: 10.14569/IJACSA.2017.081046

Awan, I., Aziz, W., Shah, I. H., Habib, N., Alowibdi, J. S., Saeed, S., et al. (2018). Studying the dynamics of interbeat interval time series of healthy and congestive heart failure subjects using scale based symbolic entropy analysis. PLoS One 13:e0196823. doi: 10.1371/journal.pone.0196823

Azarnoosh, M., Nasrabadi, A. M., Mohammadi, M. R., and Firoozabadi, M. (2011). Investigation of mental fatigue through EEG signal processing based on nonlinear analysis: symbolic dynamics. Chaos Solitons Fractals 44, 1054–1062. doi: 10.1016/j.chaos.2011.08.012

Bajaj, V., and Pachori, R. B. (2015). “Detection of human emotions using features based on the multiwavelet transform of EEG signals,” in Brain-Computer Interfaces, Vol. 74, eds A. Hassanien, and A. Azar (Cham: Springer). doi: 10.1007/978-3-319-10978-7_8

Bao, G., Zhuang, N., Tong, L., Yan, B., Shu, J., Wang, L., et al. (2021). Two-level domain adaptation neural network for eeg-based emotion recognition. Front. Hum. Neurosci. 14:605246. doi: 10.3389/fnhum.2020.605246

Bos, D. O. (2006). EEG-based emotion recognition. The influence of visual and auditory stimuli. 56, 1–17.

Brown, L., Grundlehner, B., and Penders, J. (2011). Towards wireless emotional valence detection from EEG. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2011, 2188–2191. doi: 10.1109/IEMBS.2011.6090412

Buhl, M., and Kennel, M. B. (2005). Statistically relaxing to generating partitions for observed time-series data. Phys. Rev. E. Stat. Nonlin. Soft Matter Phys. 71:046213. doi: 10.1103/PhysRevE.71.046213

Byun, S., Kim, A. Y., Jang, E. H., Kim, S., Choi, K. W., Yu, H. Y., et al. (2019). Detection of major depressive disorder from linear and nonlinear heart rate variability features during mental task protocol. Comput. Biol. Med. 112:103381. doi: 10.1016/j.compbiomed.2019.103381

Candra, H., Yuwono, M., Handojoseno, A., Chai, R., Su, S., and Nguyen, H. T. (2015). Recognizing emotions from EEG subbands using wavelet analysis. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2015, 6030–6033. doi: 10.1109/EMBC.2015.7319766

Chai, X., Wang, Q., Zhao, Y., Liu, X., Bai, O., and Li, Y. (2016). Unsupervised domain adaptation techniques based on auto-encoder for non-stationary EEG-based emotion recognition. Comput. Biol. Med. 79, 205–214. doi: 10.1016/j.compbiomed.2016.10.019

Chin, S. C., Ray, A., and Rajagopalan, V. (2005). Symbolic time series analysis for anomaly detection: a comparative evaluation. Signal Process. 85, 1859–1868. doi: 10.1016/j.sigpro.2005.03.014

Cohen, I., Garg, A., and Huang, T. S. (2000). “Emotion recognition from facial expressions using multilevel HMM,” in Neural Information Processing Systems, Vol. 2, (State College, PA, USA: Citeseer).

Daw, C. S., Finney, C. E. A., and Tracy, E. R. (2003). A review of symbolic analysis of experimental data. Rev. Sci. Instrum. 74, 915–930. doi: 10.1063/1.1531823

Donner, R., Hinrichs, U., and Scholz-Reiter, B. (2008). Symbolic recurrence plots: a new quantitative framework for performance analysis of manufacturing networks. Eur. Phys. J. Special Top. 164, 85–104. doi: 10.1140/epjst/e2008-00836-2

Ebrahimzadeh, E., Asgarinejad, M., Saliminia, S., Ashoori, S., and Seraji, M. (2021a). Predicting clinical response to transcranial magnetic stimulation in major depression using time-frequency EEG signal processing. Biomed. Eng. Appl. Basis Commun. 33:2150048. doi: 10.4015/S1016237221500484

Ebrahimzadeh, E., Shams, M., Jounghani, A. R., Fayaz, F., Mirbagheri, M., Hakimi, N., et al. (2021b). Localizing confined epileptic foci in patients with an unclear focus or presumed multifocality using a component-based EEG-fMRI method. Cogn. Neurodyn. 15, 207–222. doi: 10.1007/s11571-020-09614-5

Ebrahimzadeh, E., Shams, M., Seraji, M., Sadjadi, S. M., Rajabion, L., and Soltanian-Zadeh, H. (2021c). Localizing epileptic foci using simultaneous EEG-fMRI Recording: template component cross-correlation. Front. Neurol. 12:695997. doi: 10.3389/fneur.2021.695997

Ebrahimzadeh, E., Shams, M., Fayaz, F., Rajabion, L., Mirbagheri, M., Araabi, B. N., et al. (2019a). Quantitative determination of concordance in localizing epileptic focus by component-based EEG-fMRI. Comput. Methods Programs Biomed. 177, 231–241. doi: 10.1016/j.cmpb.2019.06.003

Ebrahimzadeh, E., Soltanian-Zadeh, H., Araabi, B. N., Fesharaki, S. S. H., and Habibabadi, J. M. (2019b). Component-related BOLD response to localize epileptic focus using simultaneous EEG-fMRI recordings at 3T. J. Neurosci. Methods 322, 34–49. doi: 10.1016/j.jneumeth.2019.04.010

Ekman, P. (1992). An argument for basic emotions. Cogn. Emotion 6, 169–200. doi: 10.1080/02699939208411068

Flynn, M., Effraimidis, D., Angelopoulou, A., Kapetanios, E., Williams, D., Hemanth, J., et al. (2020). Assessing the effectiveness of automated emotion recognition in adults and children for clinical investigation. Front. Hum. Neurosci. 14:70. doi: 10.3389/fnhum.2020.00070

Franklin, J. C., Ribeiro, J. D., Fox, K. R., Bentley, K. H., Kleiman, E. M., Huang, X., et al. (2017). Risk factors for suicidal thoughts and behaviors: a meta-analysis of 50 years of research. Psychol. Bull. 143, 187–232. doi: 10.1037/bul0000084

García-Martínez, B., Martínez-Rodrigo, A., Zangróniz, R., Pastor, J. M., and Alcaraz, R. (2017). Symbolic analysis of brain dynamics detects negative stress. Entropy 19:196. doi: 10.3390/e19050196

Glass, L., and Siegelmann, H. T. (2010). Logical and symbolic analysis of robust biological dynamics. Curr. Opin. Genet. Dev. 20, 644–649. doi: 10.1016/j.gde.2010.09.005

Goleman, D. P. (1995). Emotional Intelligence: Why It Can Matter More Than IQ for Character, Health and Lifelong Achievement. New York, NY: Bantam Books.

Hirata, Y., Judd, K., and Kilminster, D. (2004). Estimating a generating partition from observed time series: symbolic shadowing. Phys. Rev. E. Stat. Nonlin. Soft Matter Phys. 70:016215. doi: 10.1103/PhysRevE.70.016215

Hively, L. M., Protopopescu, V. A., and Gailey, P. C. (2000). Timely detection of dynamical change in scalp EEG signals. Chaos 10, 864–875. doi: 10.1063/1.1312369

Ioannou, S. V., Raouzaiou, A. T., Tzouvaras, V. A., Mailis, T. P., Karpouzis, K. C., and Kollias, S. D. (2005). Emotion recognition through facial expression analysis based on a neurofuzzy network. Neural Netw. 18, 423–435. doi: 10.1016/j.neunet.2005.03.004

Jahangir, R., Teh, Y. W., Hanif, F., and Mujtaba, G. (2021). Deep learning approaches for speech emotion recognition: state of the art and research challenges. Multimed. Tools Appl. 80, 23745–23812. doi: 10.1007/s11042-020-09874-7

Jenke, R., Peer, A., and Buss, M. (2014). Feature extraction and selection for emotion recognition from EEG. IEEE Trans. Affect. Comput. 5, 327–339. doi: 10.1109/TAFFC.2014.2339834

Jie, X., Cao, R., and Li, L. (2014). Emotion recognition based on the sample entropy of EEG. Biomed. Mater. Eng. 24, 1185–1192. doi: 10.3233/BME-130919

Ju, Z., Gun, L., Hussain, A., Mahmud, M., and Ieracitano, C. (2020). A novel approach to shadow boundary detection based on an adaptive direction-tracking filter for brain-machine interface applications. Appl. Sci. 10:6761. doi: 10.3390/app10196761

Kennel, M. B., and Buhl, M. (2003). Estimating good discrete partitions from observed data: symbolic false nearest neighbors. Phys. Rev. Lett. 91:084102. doi: 10.1103/PhysRevLett.91.084102

Khezri, M., Firoozabadi, M., and Sharafat, A. R. (2015). Reliable emotion recognition system based on dynamic adaptive fusion of forehead biopotentials and physiological signals. Comput. Methods Programs Biomed. 122, 149–164. doi: 10.1016/j.cmpb.2015.07.006

Koelstra, S., Muhl, C., Soleymani, M., Lee, J.-S., Yazdani, A., Ebrahimi, T., et al. (2012). Deap: a database for emotion analysis; using physiological signals. IEEE Trans. Affect. Comput. 3, 18–31. doi: 10.1109/T-AFFC.2011.15

Kroupi, E., Yazdani, A., and Ebrahimi, T. (2011). “EEG correlates of different emotional states elicited during watching music videos,” in Affective Computing and Intelligent Interaction, eds S. D’Mello, A. Graesser, B. Schuller, and J. C. Martin (Berlin, Heidelberg: Springer), doi: 10.1007/978-3-642-24571-8_58

Lehnertz, K., and Dickten, H. (2015). Assessing directionality and strength of coupling through symbolic analysis: an application to epilepsy patients. Philos. Trans. A Math. Phys. Eng. Sci. 373:20140094. doi: 10.1098/rsta.2014.0094

Lin, Y.-P., Wang, C.-H., Jung, T.-P., Wu, T.-L., Jeng, S.-K., Duann, J.-R., et al. (2010). EEG-based emotion recognition in music listening. IEEE Trans. Biomed. Eng. 57, 1798–1806. doi: 10.1109/TBME.2010.2048568

Liu, Y., and Sourina, O. (2013). “EEG databases for emotion recognition,” in 2013 International Conference on Cyberworlds (Yokohama, Japan), 302–309. doi: 10.1109/CW.2013.52

Liu, Y., and Sourina, O. (2014). “Real-time subject-dependent EEG-based emotion recognition algorithm,” in Transactions on Computational Science XXIII, eds M. L. Gavrilova, C J. K. Tan, X. Mao, and L. Hong (Berlin, Heidelberg: Springer), 199–223. doi: 10.1007/978-3-662-43790-2_11

Mehrabian, A. (1996). Pleasure-arousal-dominance: a general framework for describing and measuring individual differences in temperament. Curr. Psychol. 14, 261–292. doi: 10.1007/BF02686918

Moharreri, S., Dabanloo, N. J., and Maghooli, K. (2018). Modeling the 2D space of emotions based on the poincare plot of heart rate variability signal. Biocybern. Biomed. Eng. 38, 794–809. doi: 10.1016/j.bbe.2018.07.001

Mühl, C., Brouwer, A. M., van Wouwe, N. C., van den Broek, E. L., Nijboer, F., and Heylen, D. K. J. (2011). Modality-Specific Affective Responses and Their Implications for Affective BCI. Graz, Austria: Verlag der Technischen Universität.

Petrantonakis, P. C., and Hadjileontiadis, L. J. (2011). A novel emotion elicitation index using frontal brain asymmetry for enhanced EEG-based emotion recognition. IEEE Trans. Inf. Technol. Biomed. 15, 737–746. doi: 10.1109/TITB.2011.2157933

Pham, T. D., Tran, D., Ma, W., and Tran, N. Y. (2015). “Enhancing performance of EEG-based emotion recognition systems using feature smoothing,” in Neural Information Processing, eds S. Arik, T. Huang, W. Lai and Q. Liu (Cham: Springer), 95–102. doi: 10.1007/978-3-319-26561-2_12

Proverbio, A. M., Camporeale, E., and Brusa, A. (2020). Multimodal recognition of emotions in music and facial expressions. Front. Hum. Neurosci. 14:32. doi: 10.3389/fnhum.2020.00032

Raeisi, K., Mohebbi, M., Khazaei, M., Seraji, M., and Yoonessi, A. (2020). Phase-synchrony evaluation of EEG signals for multiple sclerosis diagnosis based on bivariate empirical mode decomposition during a visual task. Comput. Biol. Med. 117:103596. doi: 10.1016/j.compbiomed.2019.103596

Rajagopalan, V., and Ray, A. (2006). Symbolic time series analysis via wavelet-based partitioning. Signal Process. 86, 3309–3320. doi: 10.1016/j.sigpro.2006.01.014

Rajagopalan, V., and Ray, A. (2005). “Wavelet-based space partitioning for symbolic time series analysis,” in Proceedings of the 44th IEEE Conference on Decision and Control, (Seville, Spain), 5245–5250. doi: 10.1109/CDC.2005.1582995

Reinbold, P. A. K., Kageorge, L. M., Schatz, M. F., and Grigoriev, R. O. (2021). Robust learning from noisy, incomplete, high-dimensional experimental data via physically constrained symbolic regression. Nat. Commun. 12:3219. doi: 10.1038/s41467-021-23479-0

Robinson, C. (1998). Dynamical Systems: Stability, Symbolic Dynamics and Chaos. Boca Raton, FL: CRC press.

Sadjadi, S. M., Ebrahimzadeh, E., Shams, M., Seraji, M., and Soltanian-Zadeh, H. (2021). Localization of epileptic foci based on simultaneous EEG-fMRI data. Front. Neurol. 12:645594. doi: 10.3389/fneur.2021.645594

Salovey, P., and Mayer, J. D. (1990). Emotional intelligence. Imagination Cogn. Personal. 9, 185–211. doi: 10.2190/DUGG-P24E-52WK-6CDG

Salton, G., and McGill, M. J. (1986). Introduction to Modern Information Retrieval. New York: McGraw-Hill.

Sarkar, S., Mukherjee, K., and Ray, A. (2009). Generalization of Hilbert transform for symbolic analysis of noisy signals. Signal Process. 89, 1245–1251. doi: 10.1016/j.sigpro.2008.12.009

Schuller, B., Rigoll, G., and Lang, M. (2003). “Hidden Markov model-based speech emotion recognition,” in 2003 International Conference on Multimedia and Expo, (Baltimore, MD, USA), 401–404. doi: 10.1109/ICME.2003.1220939

Schulz, S., and Voss, A. (2017). “Symbolic dynamics, Poincaré plot analysis and compression entropy estimate complexity in biological time series,” in Complexity and Nonlinearity in Cardiovascular Signals, ed G. Valenza (Berlin: Springer International Publishing).

Seraji, M., Mohebbi, M., Safari, A., and Krekelberg, B. (2021). Multiple sclerosis reduces synchrony of the magnocellular pathway. PLoS One 16:e0255324. doi: 10.1371/journal.pone.0255324

Sharma, M., Jalal, A. S., and Khan, A. (2019). Emotion recognition using facial expression by fusing key points descriptor and texture features. Multimed. Tools Appl. 78, 16195–16219. doi: 10.1007/s11042-018-7030-1

Singh, M. I., and Singh, M. (2017). Development of a real time emotion classifier based on evoked EEG. Biocybern. Biomed. Eng. 37, 498–509. doi: 10.1016/j.bbe.2017.05.004

Soroush, M. Z., Maghooli, K., Setarehdan, S. K., and Nasrabadi, A. L. (2020). Emotion recognition using EEG phase space dynamics and Poincare intersections. Biomed. Signal Process. Control 59:101918. doi: 10.1016/j.bspc.2020.101918

Sourina, O., and Liu, Y. (2011). A fractal-based algorithm of emotion recognition from EEG using arousal-valence model. Biosignals 2, 209–214. doi: 10.5220/0003151802090214

Srivastav, A. (2014). “Estimating the size of temporal memory for symbolic analysis of time-series data,” in American Control Conference, (Portland, OR, USA), 1126–1131. doi: 10.1109/ACC.2014.6858929

Stam, C. J. (2005). Nonlinear dynamical analysis of EEG and MEG: review of an emerging field. Clin. Neurophysiol. 116, 2266–2301. doi: 10.1016/j.clinph.2005.06.011

Stickel, C., Ebner, M., Steinbach-Nordmann, S., Searle, G., and Holzinger, A. (2009). “Emotion detection: application of the valence arousal space for rapid biological usability testing to enhance universal access,” in Universal Access in Human-Computer Interaction, ed C. Stephanidis (Berlin, Heidelberg: Springer), 615–624. doi: 10.1007/978-3-642-02707-9_70

Takahashi, K. (2004). “Remarks on emotion recognition from bio-potential signals,” in 2nd International conference on Autonomous Robots and Agents, (Palmerston North, New Zealand), 186–191.

Takahashi, K., and Tsukaguchi, A. (2003). “Remarks on emotion recognition from multi-modal bio-potential signals,” in 2003 IEEE International Conference on Systems, Man and Cybernetics. Conference Theme-System Security and Assurance (Cat. No. 03CH37483), (Hammamet, Tunisia). doi: 10.1109/ICSMC.2003.1244650

Tang, X. Z., Tracy, E. R., and Brown, R. (1997). Symbol statistics and spatio-temporal systems. Physica D Nonlin. Phenomena 102, 253–261. doi: 10.1016/S0167-2789(96)00201-1

Taran, S., and Bajaj, V. (2019). Emotion recognition from single-channel EEG signals using a two-stage correlation and instantaneous frequency-based filtering method. Comput. Methods Programs Biomed. 173, 157–165. doi: 10.1016/j.cmpb.2019.03.015

Torres, E. P., Torres, E. A., Hernández-Álvarez, M., and Yoo, S. G. (2020). EEG-based BCI emotion recognition: a survey. Sensors (Basel) 20:5083. doi: 10.3390/s20185083

Verma, G. K., and Tiwary, U. S. (2017). Affect representation and recognition in 3d continuous valence-arousal-dominance space. Multimed. Tools Appl. 76, 2159–2183. doi: 10.1007/s11042-015-3119-y

Wang, X.-W., Nie, D., and Lu, B.-L. (2011). “EEG-based emotion recognition using frequency domain features and support vector machines,” in Neural Information Processing, eds B. L. Lu, L. Zhang, and J. Kwok (Berlin, Heidelberg: Springer), 734–743. doi: 10.1007/978-3-642-24955-6_87

Wang, X.-W., Nie, D., and Lu, B.-L. (2014). Emotional state classification from EEG data using machine learning approach. Neurocomputing 129, 94–106. doi: 10.1016/j.neucom.2013.06.046

Xia, P., Zhang, L., and Li, F. (2015). Learning similarity with cosine similarity ensemble. Inform. Sci. 307, 39–52. doi: 10.1016/j.ins.2015.02.024

Yadav, S. P. (2021). Emotion recognition model based on facial expressions. Multimed. Tools Appl. 80, 26357–26379. doi: 10.1007/s11042-021-10962-5

Ye, J. (2011). Cosine similarity measures for intuitionistic fuzzy sets and their applications. J. Math. Comput. Model. 53, 91–97. doi: 10.1016/j.mcm.2010.07.022

Yin, Z., Zhao, M., Wang, Y., Yang, J., and Zhang, J. (2017). Recognition of emotions using multimodal physiological signals and an ensemble deep learning model. Comput. Methods Programs Biomed. 140, 93–110. doi: 10.1016/j.cmpb.2016.12.005

Yoon, H. J., and Chung, S. Y. (2013). EEG-based emotion estimation using Bayesian weighted-log-posterior function and perceptron convergence algorithm. Comput. Biol. Med. 43, 2230–2237. doi: 10.1016/j.compbiomed.2013.10.017

Zhang, S., Hu, B., Ji, C., Zheng, X., and Zhang, M. (2020). Functional connectivity network based emotion recognition combining sample entropy. IFAC-PapersOnLine 53, 458–463. doi: 10.1016/j.ifacol.2021.04.125

Keywords: emotion recognition, subject-independent classification systems, brain-computer interface, nonlinear dynamic analysis, symbolic time series analysis, phase space partitioning, cosine similarity

Citation: Tavakkoli H, Motie Nasrabadi A (2022) A Spherical Phase Space Partitioning Based Symbolic Time Series Analysis (SPSP–STSA) for Emotion Recognition Using EEG Signals. Front. Hum. Neurosci. 16:936393. doi: 10.3389/fnhum.2022.936393

Received: 05 May 2022; Accepted: 01 June 2022;

Published: 29 June 2022.

Edited by:

Lutz Jäncke, University of Zurich, Switzerland

Reviewed by:

Masoud Seraji, University of Texas at Austin, United States

Ali Rahimpour Jounghani, University of California, Merced, United States

Mohammad Shams, George Mason University, United States

Copyright © 2022 Tavakkoli and Motie Nasrabadi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ali Motie Nasrabadi, bmFzcmFiYWRpQHNoYWhlZC5hYy5pcg==
