PERSPECTIVE article

Front. Hum. Neurosci., 24 June 2022

Sec. Brain Imaging and Stimulation

Volume 16 - 2022 | https://doi.org/10.3389/fnhum.2022.920558

This article is part of the Research Topic: Methods and Protocols in Brain Stimulation

Behavioral effects of non-invasive brain stimulation techniques (NIBS) can dramatically change as a function of different factors (e.g., stimulation intensity, timing of stimulation). In this framework, there has lately been growing interest in inter-individual differences in baseline performance and in how they relate to the behavioral effects of NIBS. However, assessing how baseline performance level is associated with the behavioral effects of brain stimulation techniques raises crucial methodological issues. How can we test whether the performance at baseline is predictive of the effects of NIBS, when NIBS effects themselves are estimated with reference to baseline performance? In this perspective article, we discuss the limitations of widely used strategies for analyzing the association between baseline value and NIBS effects, and review solutions to properly address this type of question.

Converging evidence demonstrates that the behavioral effects of transcranial magnetic stimulation (TMS) can change dramatically as a function of different factors, such as stimulation intensity (Moliadze et al., 2003; Abrahamyan et al., 2011, 2015; Silvanto et al., 2017), timing of stimulation (Kammer, 2007; de Graaf et al., 2014; Chiau et al., 2017; Silvanto et al., 2017) and the initial brain “state” when stimulation is applied (Siebner, 2004; Silvanto and Pascual-Leone, 2008; Ruzzoli et al., 2010; Schwarzkopf et al., 2011; Perini et al., 2012; Romei et al., 2016; Silvanto and Cattaneo, 2017). In this framework, there has lately been growing interest in considering inter-individual differences in baseline performance when describing the impact of TMS. Although few studies have directly investigated the role of inter-individual differences in determining the behavioral effects of non-invasive brain stimulation techniques (NIBS), there is currently a strong drive to explore how NIBS effects covary with individual characteristics, especially with the performance at baseline (see Silvanto et al., 2018).

A consistent body of independent and recent evidence suggests that baseline performance modulates TMS effects (Schwarzkopf et al., 2011; Painter et al., 2015; Emrich et al., 2017; Juan et al., 2017; Paracampo et al., 2018; Silvanto et al., 2018). Furthermore, the effects of other brain stimulation techniques, such as transcranial direct current stimulation (tDCS) or transcranial random noise stimulation (tRNS), also seem to interact with baseline performance level (Jones and Berryhill, 2012; Tseng et al., 2012; Hsu et al., 2014, 2016; Benwell et al., 2015; Learmonth et al., 2015; Juan et al., 2017; Penton et al., 2017; Schaal et al., 2017; Yang and Banissy, 2017; see also Vergallito et al., 2022 for a recent review). Together, these findings have been interpreted as indicating the importance of adopting an individual-differences approach when describing the effect of NIBS. This is indeed a potentially important issue for brain stimulation studies: even when no modulation of performance emerges at the group level, a deeper analysis focusing on individual differences may disclose stimulation effects characterizing specific classes of individuals (Silvanto et al., 2018). According to this view, baseline performance can be seen as an indirect measure of neural excitability that, in interaction with the TMS intensity, contributes to the behavioral outcome (Silvanto and Cattaneo, 2017; Silvanto et al., 2017, 2018). The facilitatory vs. inhibitory effect of TMS as a function of neuronal excitability is a well-established mechanism, and it is consistently observed when TMS is applied during a behavioral task following a predictable manipulation of the initial neural state, such as adaptation or priming (see Silvanto et al., 2008 for a review). State-dependent TMS effects in paradigms based on priming/adaptation have been observed in a range of different domains, from number and letter processing (Kadosh et al., 2010; Cattaneo Z. et al., 2010; Renzi et al., 2011) to action observation (Cattaneo, 2010; Cattaneo L. et al., 2010; Jacquet and Avenanti, 2015) and perception of emotion (Mazzoni et al., 2017).

Assessing how baseline performance level (and brain state) determines the behavioral effects of brain stimulation techniques is therefore an important question, which raises crucial methodological issues. How should we assess the association between baseline value and subsequent change? Or, in other words, how can we test whether the performance at baseline is predictive of the effect of NIBS? An approach that has typically been used to provide evidence of an association between baseline performance and its change after stimulation is the correlation approach. This consists in regressing or correlating the magnitude of the induced stimulation effect (defined as the performance in the effective TMS/tDCS condition minus the performance in the baseline/sham condition) with the baseline level of performance (sham stimulation) (Emrich et al., 2017; Penton et al., 2017; Yang and Banissy, 2017; Paracampo et al., 2018; Silvanto et al., 2018; Diana et al., 2021; Wu et al., 2021). Another conceptually similar approach is the categorization approach (Tu et al., 2005). It consists in categorizing subjects according to threshold values, such as the median baseline performance (i.e., median split), and subsequently comparing the effect of NIBS in terms of changes in the behavioral outcome (defined as the active TMS/tDCS condition minus the baseline performance) across the two subgroups (i.e., “low” performers vs. “high” performers) (Tseng et al., 2012; Hsu et al., 2014, 2016; Benwell et al., 2015; Learmonth et al., 2015; Juan et al., 2017; Schaal et al., 2017; Silvanto et al., 2018). However, these approaches are subject to severe biases in estimating the effects. Although such biases are well documented (Oldham, 1962; Tu et al., 2005; Chiolero et al., 2013), they have been neglected in several TMS/tDCS studies. We first illustrate the biases connected to these methods, and we conclude by discussing techniques that have been proposed to investigate baseline modulatory effects without incurring such biases.

The correlation approach consists in correlating a baseline with a deviation from the baseline, or equivalently in regressing the deviation from the baseline on the baseline. One issue with this strategy is that it does not take into account that the estimate of the deviation from a baseline depends on the baseline itself (Oldham, 1962). This issue is known as mathematical coupling, and can take place when a correlation is estimated between two variables that share a common source of variation (Blance et al., 2005). Let us denote as $x_i$ the observed baseline performance of the i-th individual and as $y_i$ the performance observed after NIBS. The deviation of i's performance from the baseline is computed as $d_i = y_i - x_i$. The relationship between baseline performance and NIBS effect can then be estimated as the correlation $r_{d,x} = r_{y-x,x}$. We should suspect mathematical coupling because x contributes to both of the variables being correlated. Since x enters the baseline with a positive sign and the deviation $y - x$ with a negative sign, the expected correlation is negative (Spearman, 1913).

A simple numeric example is probably the most effective way to illustrate how dramatic the effects of mathematical coupling can be (Oldham, 1962). We can use the R statistical language (R Core Team, 2021) to generate random data representing the performance of N = 50 subjects in the baseline (x) and experimental stimulation (y) conditions.

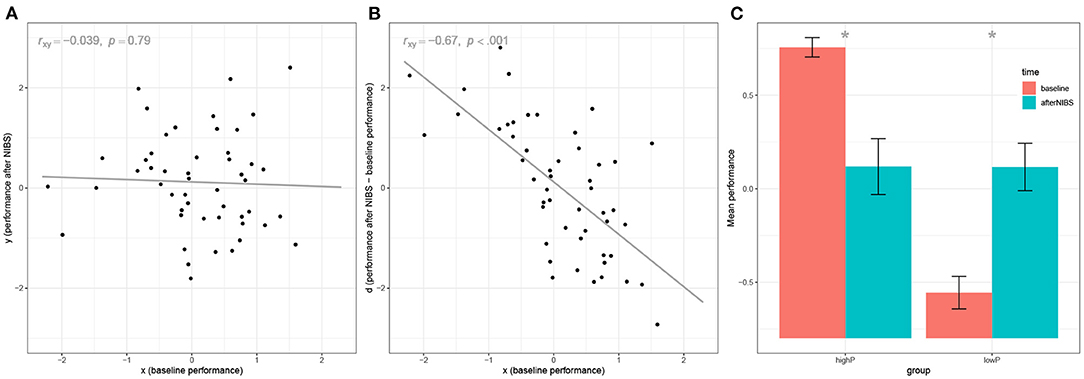

The first line, set.seed(1), serves to fix the random number generation procedure, such that readers will be able to reproduce our exact results on their computers. Lines 2 and 3 generate the data at baseline and after NIBS. In this example, all datapoints are independently sampled from a standard normal distribution with μ = 0 and σ = 1. Thus, data come from a population in which there is no relationship between the variables involved and no effect of neurostimulation whatsoever. In short, such data come from a population in which the null hypothesis is true for all parameters of interest. In fact, at line 4, we can test the effect of the NIBS by comparing performance before and after stimulation, and obtain a null result, t(49) = −0.09, p = 0.93, as could be expected. Similarly, at line 5, we test the correlation between x and y and obtain a null result, r = −0.039, p = 0.79 (Figure 1A). However, at line 6 we test the correlation between the baseline and the deviation from the baseline, and we obtain $r_{y-x,x} = -0.67$, p < 0.001 (Figure 1B). If we repeated the example with a different random seed, we would obtain slightly different results each time. On average, it can be demonstrated that our results would converge toward the value $-1/\sqrt{2} \approx -0.71$ (Spearman, 1913; Chiolero et al., 2013).1 Thus, under the null hypothesis of no relationships and no effect of neurostimulation, a researcher using the correlation approach would expect to find a correlation between the baseline and the deviation that can be considered very large (Cohen, 1988).
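The convergence toward $-1/\sqrt{2}$ can be checked by repeating the simulation many times. The following is a minimal sketch in Python rather than the R listing referred to in the text; the helper names (`pearson`, `simulate_coupling`), the number of replications, and the seed are illustrative assumptions. Because Python's random number generator differs from R's, it will not reproduce the exact values reported above.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


def simulate_coupling(n_subjects=50, n_replications=2000, seed=1):
    """Average r(x, y) and r(y - x, x) over many null data sets."""
    rng = random.Random(seed)
    r_xy, r_dx = [], []
    for _ in range(n_replications):
        x = [rng.gauss(0, 1) for _ in range(n_subjects)]  # baseline
        y = [rng.gauss(0, 1) for _ in range(n_subjects)]  # after NIBS
        d = [yi - xi for xi, yi in zip(x, y)]             # deviation from baseline
        r_xy.append(pearson(x, y))
        r_dx.append(pearson(d, x))
    return sum(r_xy) / n_replications, sum(r_dx) / n_replications


mean_r_xy, mean_r_dx = simulate_coupling()
print(f"mean r(x, y)     = {mean_r_xy:.3f}")
print(f"mean r(y - x, x) = {mean_r_dx:.3f} (theory: {-1 / math.sqrt(2):.3f})")
```

Although x and y are generated independently, the average correlation between baseline and deviation should sit close to the theoretical value, while the average correlation between x and y stays near zero.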

Figure 1. Illustration of mathematical coupling and regression to the mean using a numeric example with N = 50 subjects. (A) visualizes the relationship between the simulated baseline performance (x) and the simulated performance after NIBS (y). Data are simulated to be uncorrelated. (B) shows the biases in the correlation approach, by visualizing the relationship between the baseline performance (x) and the deviation from the baseline after NIBS (d). (C) shows the biases in the categorization approach: The sample is split into high-performers (highP) and low-performers (lowP) and the baseline performance is compared with the performance after NIBS. Bars represent ±1 SE.

Another bias connected to the correlation approach is regression toward the mean due to measurement error at baseline (Nesselroade et al., 1980; Blomqvist, 1987; Tu et al., 2005). Let us now consider the fact that individual performance is always assessed with a certain degree of error. Variations in observed performance could reflect transient and non-systematic factors (tiredness, distraction, etc.), which introduce noise in the assessment. The observed performance at baseline of the i-th subject can be decomposed into $x_i = X_i + e_{x_i}$, where the observed performance $x_i$ is given by the sum of the true performance $X_i$ and the measurement error $e_{x_i}$. The same holds for the performance after NIBS, $y_i = Y_i + e_{y_i}$. A researcher's aim would be to estimate the true correlation, which is the correlation involving the true latent performance, $r_{Y-X,X}$, but the researcher would typically approximate that value by estimating the correlation involving observed performance, $r_{y-x,x}$. However, the observed difference $y_i - x_i$ is equal to $Y_i - X_i + e_{y_i} - e_{x_i}$. The correlation $r_{y-x,x}$ is thus affected by the measurement error $e_{x_i}$ being present both in the independent and in the dependent variable, with opposite signs. In particular, Blomqvist (1987) has shown that the relationship between the observed correlation (or regression slope) and the true value is $r_{y-x,x} = r_{Y-X,X}(1-k) - k$, where $k = \sigma^2_{e_x}/\sigma^2_x$ is the measurement error, i.e., the ratio of the error variance of the observed baseline performance, $\sigma^2_{e_x}$, to the total variance of the observed baseline performance, $\sigma^2_x$. For example, let us assume a situation in which the true correlation is very low, $r_{Y-X,X} = 0.01$, and the error variance in performance assessment is 40%, i.e., k = 0.40 (a value that is not uncommon in tasks used in the field, e.g., Fan et al., 2002). The researcher would expect to observe a correlation of $r_{y-x,x} = -0.394$, which would be regarded as significantly different from zero (p < 0.05) on a sample of more than N = 25 participants.
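The arithmetic of this example follows directly from the relation attributed to Blomqvist (1987), observed = true × (1 − k) − k. The short Python sketch below reproduces it; the function name `observed_estimate` is a hypothetical helper introduced here for illustration only.

```python
def observed_estimate(true_value, k):
    """Observed correlation/slope implied by the relation
    observed = true * (1 - k) - k, where k is the share of
    error variance in the observed baseline (0 <= k < 1)."""
    if not 0 <= k < 1:
        raise ValueError("k must lie in [0, 1)")
    return true_value * (1 - k) - k


# Example from the text: near-zero true association, 40% error variance.
r_observed = observed_estimate(true_value=0.01, k=0.40)
print(f"{r_observed:.3f}")  # prints -0.394

# The bias grows with the amount of measurement error at baseline.
for k in (0.1, 0.2, 0.4):
    print(k, round(observed_estimate(0.01, k), 3))
```

With no measurement error (k = 0) the observed and true values coincide, which is why the bias is specifically attributable to unreliability of the baseline assessment.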

Interestingly, both biases lead to the same type of results, which is observing a negative correlation between baseline and change. This is exactly what most of the studies in the field reviewed above reported.

The categorization approach consists in categorizing subjects into two groups, one including those with higher baseline performance (e.g., above the median) and the other including the remaining subjects. The change in performance is then compared between the two groups (e.g., using a t-test or ANOVA). The categorization approach avoids mathematical coupling but is nonetheless affected by regression toward the mean.

As for the correlation approach, an easy way to understand why the categorization approach is problematic is to consider what would happen if it were applied under the null hypothesis. Let us define the null hypothesis as the one in which the neurostimulation has no effect whatsoever and the true performance of all participants is the same. For simplicity, let us assume that the true performance takes value zero (i.e., $X_i = Y_i = 0, \forall i$). In this situation, any variance in the observed performance is just measurement noise. Following the typical categorization approach, we would nonetheless perform a median or mean split, relying on the observed baseline performance, and divide participants into high performers (highP) and low performers (lowP). By construction, the observed baseline performance of the highP group will always be larger than that of the lowP group, but this will not be true for the performance after neurostimulation, because the noise that determined group membership at baseline does not carry over to the second assessment. Therefore, we will typically observe a performance increase after stimulation for the lowP group and a performance decrease for the highP group.

This bias can also be easily illustrated by continuing the simple numeric example used above. In particular, lines 7–10 in the code below perform the median split, separating the x and y variables for those who have better or worse observed baseline performance. Lines 11–12 perform a paired-samples t-test to examine changes in performance in the lowP group and calculate the effect size dz (Cohen, 1988; Perugini et al., 2018). Lines 13–14 replicate the analysis for the highP group.

The results of the t-test show, as expected, a significant performance improvement for the lowP group, t(24) = −2.90, p = 0.008, $d_z$ = 0.58, as well as a significant decrease in performance for the highP group, t(24) = 2.89, p = 0.008, $d_z$ = −0.58 (Figure 1C). This is of course an example based on a few randomly generated data points: if one repeated this example with different random data, the effect size would converge toward the values $\pm\sqrt{2/\pi}/\sqrt{2 - 2/\pi}$ (i.e., ±0.68),2 an effect that Cohen considers above medium size [i.e., “one large enough to be visible to the naked eye” (Cohen, 1988)], with a positive sign for the lowP group and a negative sign for the highP group.
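The long-run behavior of the median split under the null hypothesis can be sketched as follows. This is an illustrative Python simulation, not the R listing referred to in the text; the helper names (`cohen_dz`, `simulate_median_split`), the number of replications, and the seed are assumptions. The theoretical value printed at the end, $1/\sqrt{\pi - 1}$, is an algebraically equivalent form of $\sqrt{2/\pi}/\sqrt{2 - 2/\pi}$.

```python
import math
import random
import statistics


def cohen_dz(before, after):
    """Paired-samples effect size: mean of (after - before) over its SD."""
    diffs = [a - b for b, a in zip(before, after)]
    return statistics.mean(diffs) / statistics.stdev(diffs)


def simulate_median_split(n_subjects=50, n_replications=2000, seed=1):
    """Average d_z in each subgroup when x and y are pure noise."""
    rng = random.Random(seed)
    dz_low, dz_high = [], []
    for _ in range(n_replications):
        x = [rng.gauss(0, 1) for _ in range(n_subjects)]  # observed baseline
        y = [rng.gauss(0, 1) for _ in range(n_subjects)]  # observed after NIBS
        cut = statistics.median(x)                        # median split on baseline
        low = [(xi, yi) for xi, yi in zip(x, y) if xi < cut]
        high = [(xi, yi) for xi, yi in zip(x, y) if xi >= cut]
        dz_low.append(cohen_dz(*zip(*low)))
        dz_high.append(cohen_dz(*zip(*high)))
    return statistics.mean(dz_low), statistics.mean(dz_high)


mean_dz_low, mean_dz_high = simulate_median_split()
theory = 1 / math.sqrt(math.pi - 1)
print(f"lowP:  mean d_z = {mean_dz_low:.2f}")
print(f"highP: mean d_z = {mean_dz_high:.2f}")
print(f"theoretical magnitude = {theory:.2f}")
```

In finite samples the average $d_z$ can be expected to lie slightly above the theoretical magnitude, since the standardized mean difference is a somewhat upward-biased estimator in small groups.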

We have shown how a researcher using either the correlation approach or the categorization approach could easily come to believe that a potentially interesting effect has been found, even in a situation in which no effect is present, just because of mathematical coupling and regression to the mean. However, investigating how baseline performance can modulate NIBS effects is a very interesting research question and should not be neglected. Methods for testing such effects have been developed that reduce the impact of such systematic biases.

The first method was proposed by Oldham (1962), and it consists in simply correlating the mean (or, equivalently, the sum) of the performance at baseline and after the stimulation (i.e., $(x+y)/2$) with the performance change (x−y). Although this method might appear very similar to the correlation method illustrated above, it can be demonstrated that it gets rid of the mathematical coupling (Tu and Gilthorpe, 2007). This method can be used in any situation in which the correlation approach is used, by simply changing one of the terms.
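A minimal Python sketch of Oldham's method under the null hypothesis is given below, alongside the naive correlation approach for comparison. The function names (`oldham_correlation`, `naive_correlation`) and simulation settings are illustrative assumptions; the change is coded here as y − x, which only flips the sign of the correlation relative to x − y.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


def naive_correlation(x, y):
    """Correlation approach: baseline vs. change from baseline."""
    change = [yi - xi for xi, yi in zip(x, y)]
    return pearson(x, change)


def oldham_correlation(x, y):
    """Oldham's method: mean of the two assessments vs. change."""
    change = [yi - xi for xi, yi in zip(x, y)]
    average = [(xi + yi) / 2 for xi, yi in zip(x, y)]
    return pearson(average, change)


rng = random.Random(1)
naive, oldham = [], []
for _ in range(2000):
    x = [rng.gauss(0, 1) for _ in range(50)]  # baseline, pure noise
    y = [rng.gauss(0, 1) for _ in range(50)]  # after NIBS, pure noise
    naive.append(naive_correlation(x, y))
    oldham.append(oldham_correlation(x, y))

mean_naive = sum(naive) / len(naive)
mean_oldham = sum(oldham) / len(oldham)
print(f"naive approach:  mean r = {mean_naive:.3f}")
print(f"Oldham's method: mean r = {mean_oldham:.3f}")
```

Because the covariance between x + y and y − x equals the difference between the variances of y and x, Oldham's correlation averages to zero whenever the two variances are equal, whereas the naive correlation remains strongly negative.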

It has been shown that Oldham's method is equivalent to testing a change in variance between x and y (Tu and Gilthorpe, 2007) and that a differential effect of NIBS according to the baseline implies a change in variance (Chiolero et al., 2013). An alternative test similar to Oldham's method is to directly test the difference between the variances of x and y (Tu and Gilthorpe, 2007). However, an important limitation of both this and Oldham's method is that any factor increasing or decreasing variance after NIBS other than genuine stimulation effects, namely a change in error variance after NIBS, could lead to spurious conclusions (Tu et al., 2005; Chiolero et al., 2013).

In the above discussion, we have shown that if x and y are unrelated, the expected correlation between the baseline and the deviation from the baseline is $-1/\sqrt{2}$. Researchers could then wonder whether it would be possible to test the correlation observed in their samples against this value, instead of zero. The issue is slightly complicated by the fact that the expected correlation is not always $-1/\sqrt{2}$, but depends on the correlation between x and y. Tu and colleagues (Tu et al., 2005) showed that the correct value can be calculated as $-\sqrt{(1 - r_{xy})/2}$. This method showed performance comparable to Oldham's method in simulation (Tu et al., 2005). Like Oldham's method, this strategy assumes that error variance in the assessment of performance does not differ before and after NIBS (Tu et al., 2005).
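As a sketch of how such a test could be set up, the Python code below computes the null value $-\sqrt{(1 - r_{xy})/2}$ (which follows from assuming equal variances of x and y) and compares an observed correlation against it. Fisher's z transformation is used here as one standard way of testing a correlation against a nonzero value; treat that choice, and the function names `null_change_correlation` and `fisher_z_test`, as assumptions of this sketch. The inputs are the rounded values from the numeric example above.

```python
import math


def null_change_correlation(r_xy):
    """Expected r(y - x, x) under equal variances of x and y."""
    return -math.sqrt((1 - r_xy) / 2)


def fisher_z_test(r_observed, r_null, n):
    """Two-sided z-test of r_observed against r_null (Fisher's z)."""
    z = (math.atanh(r_observed) - math.atanh(r_null)) * math.sqrt(n - 3)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return z, p


# Rounded values from the numeric example: r(x, y) = -0.039,
# r(y - x, x) = -0.67, N = 50.
r_null = null_change_correlation(-0.039)
z, p = fisher_z_test(r_observed=-0.67, r_null=r_null, n=50)
print(f"null value = {r_null:.3f}, z = {z:.2f}, p = {p:.2f}")
```

Tested against zero, the observed correlation of −0.67 appeared highly significant; tested against the appropriate null value, it is unremarkable, as expected for data generated without any true association.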

Another method has been proposed by Blomqvist (1987), which corrects the distortion introduced by regression to the mean due to measurement error in baseline performance. This method requires estimating the parameter k mentioned above, to recover the true unbiased correlation from the observed correlation or regression slope using the formula $r_{Y-X,X} = (r_{y-x,x} + k)/(1 - k)$.3 Parameter k, the measurement error, can be estimated as one minus the reliability of the test used for assessing performance (see Parsons et al., 2019 for guidance on how to estimate reliability in cognitive tests), and should be obtained on data independent of those used for the baseline (Tu and Gilthorpe, 2007). A limitation of Blomqvist's method is that it does not correct for regression to the mean due to factors other than measurement error, such as that due to genuine heterogeneity in the responses of patients to treatments (Tu and Gilthorpe, 2007).
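The correction can be illustrated with a small simulation in Python, shown here for the unstandardized regression slope of the change on the baseline. All settings (sample size, k = 0.40, a true slope of 0.01) and the names `slope` and `blomqvist_correction` are assumptions made for illustration. In this sketch k is known by construction, whereas in practice it would have to be estimated from independent reliability data.

```python
import math
import random


def slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


def blomqvist_correction(observed, k):
    """Invert observed = true * (1 - k) - k to recover the true value."""
    return (observed + k) / (1 - k)


rng = random.Random(1)
n, k, true_slope = 20000, 0.40, 0.01
sd_true, sd_error = math.sqrt(1 - k), math.sqrt(k)  # total baseline variance = 1

x_obs, change_obs = [], []
for _ in range(n):
    X = rng.gauss(0, sd_true)           # true baseline performance
    Y = X + true_slope * X              # true performance after NIBS
    x = X + rng.gauss(0, sd_error)      # observed baseline
    y = Y + rng.gauss(0, sd_error)      # observed performance after NIBS
    x_obs.append(x)
    change_obs.append(y - x)

b_observed = slope(x_obs, change_obs)
b_corrected = blomqvist_correction(b_observed, k)
print(f"observed slope  = {b_observed:.3f}")
print(f"corrected slope = {b_corrected:.3f} (true: {true_slope})")
```

The observed slope should fall near −0.39 despite a true slope close to zero, and the corrected value should return to the neighborhood of the true slope.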

Methods based on multilevel linear models have also been suggested to obtain unbiased estimates. In particular, if one has available many repeated measures over time and is interested in estimating whether the (e.g., linear) trend in change over time is related to the baseline, one can employ multilevel linear models and estimate the correlation between the random intercept (i.e., the interindividual variance in the baseline performance) and the random slope (i.e., the interindividual variance in the change over time) (Byth and Cox, 2005; Chiolero et al., 2013). This is also possible if only two assessments of performance are available, but estimating such models requires constraining error variance to zero to make the model identified (Blance et al., 2005). When using mixed models, it is crucial to center the predictor variable (i.e., time should be coded as −0.5 if before NIBS and +0.5 if after NIBS, not as 0 and 1), otherwise estimates will be vulnerable to mathematical coupling (Blance et al., 2005). Unlike the other methods reviewed, this solution allows testing more elaborate models that also include covariates (Blance et al., 2005).
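Why the coding of time matters can be seen in the simplest case of two assessments and no residual error, where each subject's line passes exactly through both observations. The following is a sketch under those simplifying assumptions; the symbols $p_{it}$ (performance of subject i at time code t), $a_i$ (subject-specific intercept) and $b_i$ (subject-specific slope) are introduced here for illustration.

```latex
\begin{align*}
p_{it} &= a_i + b_i\,t, \\
t \in \{0, 1\} &\;\Rightarrow\; a_i = x_i, \quad b_i = y_i - x_i, \\
t \in \{-0.5, +0.5\} &\;\Rightarrow\; a_i = \tfrac{x_i + y_i}{2}, \quad b_i = y_i - x_i.
\end{align*}
```

With 0/1 coding, the intercept-slope correlation is the correlation between the baseline and the deviation from it, which is subject to mathematical coupling. With centered coding, it is the correlation between the mean of the two assessments and their difference, as in Oldham's method.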

The main goal of this perspective article was to clarify the main biases connected to widely used methods for examining the association between baseline performance and NIBS effects, and to review solutions proposed in the literature. In particular, we have shown that mathematical coupling and regression to the mean can have large distorting effects on estimates, leading to extremely biased conclusions even when the null hypothesis is true. We also reviewed several solutions to mitigate such biases. None of the methods reviewed can be considered a perfect solution, and whether one of these methods is superior to the others is still debated (Hayes, 1988; Tu et al., 2005; Tu and Gilthorpe, 2007; Chiolero et al., 2013). However, any of these methods will be superior to both the correlation and the categorization approaches that have been used in the field of NIBS. In situations in which it is difficult to determine which biases are more likely to affect one's estimate, we suggest applying different methods (e.g., Oldham's and Blomqvist's methods) and inspecting the results after considering different sources of bias.

We wish to stress that the biases and the solutions reviewed here are not recent findings. Some of them have been known for more than fifty years (Oldham, 1962; Blomqvist, 1987). Furthermore, these biases are not strictly specific to NIBS, but are relevant whenever one is interested in examining the relationships between baseline levels and deviations from such levels. Nonetheless, knowledge of such biases and solutions does not seem to be effectively integrated in the NIBS literature. The present work thus provides a strong contribution to a deeper understanding of the non-linear effects observed in brain stimulation studies (Schwarzkopf et al., 2011; Jones and Berryhill, 2012; Tseng et al., 2012; Hsu et al., 2014; Benwell et al., 2015; Painter et al., 2015; Learmonth et al., 2015; Emrich et al., 2017; Penton et al., 2017; Schaal et al., 2017; Yang and Banissy, 2017; Paracampo et al., 2018; Silvanto et al., 2018), and represents a step forward toward a full exploitation of the potential of brain stimulation techniques.

CL, LC, and GC: conceptualization and writing—review and editing. CL and GC: manuscript preparation. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. If we used an unstandardized regression approach, our results would converge toward a slope b = −1 (Blomqvist, 1987), which is equivalent to a correlation of $-1/\sqrt{2}$. This can be seen by considering that the population variances of x and y are $\sigma^2_x = \sigma^2_y = 1$ and their correlation is $\rho_{xy} = 0$. The standard deviation of y−x is thus $\sigma_{y-x} = \sqrt{\sigma^2_x + \sigma^2_y} = \sqrt{2}$. The regression slope $b_{y-x,x}$ can be standardized with the formula $r_{y-x,x} = b_{y-x,x}\,\sigma_x/\sigma_{y-x} = -1/\sqrt{2} \approx -0.71$.

2. This value can be obtained by considering that $y_{lowP}$ and $y_{highP}$ have been sampled from a standard normal distribution. Since the median of a standard normal distribution is zero, $x_{highP}$ and $x_{lowP}$ follow a half-normal distribution, with mean $M = \pm\sqrt{2/\pi}$ (positive for highP, negative for lowP) and $SD = \sqrt{1 - 2/\pi}$ (Olmos et al., 2012). The expected value of the effect size $d_z$ can then be calculated as the mean of the differences $y_{lowP} - x_{lowP}$ or $y_{highP} - x_{highP}$, which are respectively $\sqrt{2/\pi}$ and $-\sqrt{2/\pi}$, divided by the standard deviation of such differences, which is in both cases $\sqrt{2 - 2/\pi}$. The expected effect size is then given by $\pm\sqrt{2/\pi}/\sqrt{2 - 2/\pi} \approx \pm 0.68$.

3. Notice that Blomqvist (1987) reports a slightly different formula from the one used here, in which k is subtracted from, rather than added to, the observed correlation/regression slope. This is because in some fields the deviation from baseline is calculated as y−x (i.e., improvement from baseline), whereas in other fields the same change is calculated as x−y (i.e., deterioration from baseline), thus implying a change in sign.

Abrahamyan, A., Clifford, C. W. G., Arabzadeh, E., and Harris, J. A. (2011). Improving visual sensitivity with subthreshold transcranial magnetic stimulation. J. Neurosci. 31, 3290. doi: 10.1523/JNEUROSCI.6256-10.2011

Abrahamyan, A., Clifford, C. W. G., Arabzadeh, E., and Harris, J. A. (2015). Low intensity TMS enhances perception of visual stimuli. Brain Stimul. 8, 1175. doi: 10.1016/j.brs.2015.06.012

Benwell, C. S. Y., Learmonth, G., Miniussi, C., Harvey, M., and Thut, G. (2015). Non-linear effects of transcranial direct current stimulation as a function of individual baseline performance: evidence from biparietal tDCS influence on lateralized attention bias. Cortex 69, 152–165. doi: 10.1016/j.cortex.2015.05.007

Blance, A., Tu, Y. K., and Gilthorpe, M. S. (2005). A multilevel modelling solution to mathematical coupling. Stat. Methods Med. Res. 14, 553–565. doi: 10.1191/0962280205sm418oa

Blomqvist, N. (1987). On the bias caused by regression toward the mean in studying the relation between change and initial value. J. Clin. Periodontol. 14, 34–37. doi: 10.1111/j.1600-051X.1987.tb01510.x

Byth, K., and Cox, D. R. (2005). On the relation between initial value and slope. Biostatistics 6, 395–403. doi: 10.1093/biostatistics/kxi017

Cattaneo, L. (2010). Tuning of ventral premotor cortex neurons to distinct observed grasp types: a TMS-priming study. Exp. Brain Res. 207, 165–172. doi: 10.1007/s00221-010-2454-5

Cattaneo, L., Sandrini, M., and Schwarzbach, J. (2010). State-dependent TMS reveals a hierarchical representation of observed acts in the temporal, parietal, and premotor cortices. Cereb. Cortex 20, 2252–2258. doi: 10.1093/cercor/bhp291

Cattaneo, Z., Devlin, J. T., Salvini, F., Vecchi, T., and Silvanto, J. (2010). The causal role of category-specific neuronal representations in the left ventral premotor cortex (PMv) in semantic processing. NeuroImage 49, 2728–2734. doi: 10.1016/j.neuroimage.2009.10.048

Chiau, H. Y., Muggleton, N. G., and Juan, C. H. (2017). Exploring the contributions of the supplementary eye field to subliminal inhibition using double-pulse transcranial magnetic stimulation. Hum. Brain Mapp. 38, 339. doi: 10.1002/hbm.23364

Chiolero, A., Paradis, G., Rich, B., and Hanley, J. A. (2013). Assessing the relationship between the baseline value of a continuous variable and subsequent change over time. Front. Public Heal. 1, 29. doi: 10.3389/fpubh.2013.00029

Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences. New York, NY: Erlbaum.

de Graaf, T. A., Koivisto, M., Jacobs, C., and Sack, A. T. (2014). The chronometry of visual perception: review of occipital TMS masking studies. Neurosci. Biobehav. Rev. 45, 295–304. doi: 10.1016/j.neubiorev.2014.06.017

Diana, L., Pilastro, P.N, Aiello, E.K, Eberhard-Moscicka, A., et al. (2021). “Saccades, attentional orienting and disengagement: the effects of anodal tDCS over right posterior parietal cortex (PPC) and frontal eye field (FEF),” in ACM Symposium on Eye Tracking Research and Applications (New York, NY: ACM), 1–7. doi: 10.1145/3448018.3457995

Emrich, S. M., Johnson, J. S., Sutterer, D. W., and Postle, B. R. (2017). Comparing the effects of 10-Hz repetitive TMS on tasks of visual STM and attention. J. Cogn. Neurosci. 29, 286–297. doi: 10.1162/jocn_a_01043

Fan, J., McCandliss, B. D., Sommer, T., Raz, A., and Posner, M. I. (2002). Testing the efficiency and independence of attentional networks. J. Cogn. Neurosci. 14, 340–347. doi: 10.1162/089892902317361886

Hayes, R. J. (1988). Methods for assessing whether change depends on initial value. Stat. Med. 7, 915–927. doi: 10.1002/sim.4780070903

Hsu, T.-Y., Tseng, P., Liang, W.-K., Cheng, S.-K., and Juan, C.-H. (2014). Transcranial direct current stimulation over right posterior parietal cortex changes prestimulus alpha oscillation in visual short-term memory task. NeuroImage 98, 306–313. doi: 10.1016/j.neuroimage.2014.04.069

Hsu, T. Y., Juan, C. H., and Tseng, P. (2016). Individual differences and state-dependent responses in transcranial direct current stimulation. Front. Hum. Neurosci. 10, 643. doi: 10.3389/fnhum.2016.00643

Jacquet, P. O., and Avenanti, A. (2015). Perturbing the action observation network during perception and categorization of actions' goals and grips: state-dependency and virtual lesion TMS effects. Cereb. Cortex 25, 598–608. doi: 10.1093/cercor/bht242

Jones, K. T., and Berryhill, M. E. (2012). Parietal contributions to visual working memory depend on task difficulty. Front. Psychiatry 3, 81. doi: 10.3389/fpsyt.2012.00081

Juan, C.-H., Tseng, P., and Hsu, T.-Y. (2017). Elucidating and modulating the neural correlates of visuospatial working memory via noninvasive brain stimulation. Curr. Dir. Psychol. Sci. 26, 165–173. doi: 10.1177/0963721416677095

Kadosh, R. C., Muggleton, N., Silvanto, J., and Walsh, V. (2010). Double dissociation of format-dependent and number-specific neurons in human parietal cortex. Cereb. Cortex 20, 2166–2171. doi: 10.1093/cercor/bhp273

Kammer, T. (2007). Masking visual stimuli by transcranial magnetic stimulation. Psychol. Res. 71, 659. doi: 10.1007/s00426-006-0063-5

Learmonth, G., Thut, G., Benwell, C. S. Y., and Harvey, M. (2015). The implications of state-dependent tDCS effects in aging: behavioural response is determined by baseline performance. Neuropsychologia 74, 108–119. doi: 10.1016/j.neuropsychologia.2015.01.037

Mazzoni, N., Jacobs, C., Venuti, P., Silvanto, J., and Cattaneo, L. (2017). State-dependent TMS reveals representation of affective body movements in the anterior intraparietal cortex. J. Neurosci. 37, 7231–7239. doi: 10.1523/JNEUROSCI.0913-17.2017

Moliadze, V., Zhao, Y., Eysel, U., and Funke, K. (2003). Effect of transcranial magnetic stimulation on single-unit activity in the cat primary visual cortex. J. Physiol. 553, 665–679. doi: 10.1113/jphysiol.2003.050153

Nesselroade, J. R., Stigler, S. M., and Baltes, P. B. (1980). Regression toward the mean and the study of change. Psychol. Bull. 88, 622–637. doi: 10.1037/0033-2909.88.3.622

Oldham, P. D. (1962). A note on the analysis of repeated measurements of the same subjects. J. Chronic Dis. 15, 969–977. doi: 10.1016/0021-9681(62)90116-9

Olmos, N. M., Varela, H., Gómez, H. W., and Bolfarine, H. (2012). An extension of the half-normal distribution. Stat. Pap. 53, 875–886. doi: 10.1007/s00362-011-0391-4

Painter, D. R., Dux, P. E., and Mattingley, J. B. (2015). Distinct roles of the intraparietal sulcus and temporoparietal junction in attentional capture from distractor features: an individual differences approach. Neuropsychologia 74, 50–62. doi: 10.1016/j.neuropsychologia.2015.02.029

Paracampo, R., Pirruccio, M., Costa, M., Borgomaneri, S., and Avenanti, A. (2018). Visual, sensorimotor and cognitive routes to understanding others' enjoyment: an individual differences rTMS approach to empathic accuracy. Neuropsychologia 116, 86–98. doi: 10.1016/j.neuropsychologia.2018.01.043

Parsons, S., Kruijt, A.-W., and Fox, E. (2019). Psychological science needs a standard practice of reporting the reliability of cognitive-behavioral measurements. Adv. Methods Pract. Psychol. Sci. 2, 378–395. doi: 10.1177/2515245919879695

Penton, T., Dixon, L., Evans, L. J., and Banissy, M. J. (2017). Emotion perception improvement following high frequency transcranial random noise stimulation of the inferior frontal cortex. Sci. Rep. 7, 1–7. doi: 10.1038/s41598-017-11578-2

Perini, F., Cattaneo, L., Carrasco, M., and Schwarzbach, J. V. (2012). Occipital transcranial magnetic stimulation has an activity-dependent suppressive effect. J. Neurosci. 32, 12361–12365. doi: 10.1523/JNEUROSCI.5864-11.2012

Perugini, M., Gallucci, M., and Costantini, G. (2018). A practical primer to power analysis for simple experimental designs. Int. Rev. Soc. Psychol. 31, 1–23. doi: 10.5334/irsp.181

R Core Team. (2021). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna. Available online at: https://www.R-project.org/.

Renzi, C., Vecchi, T., Silvanto, J., and Cattaneo, Z. (2011). Overlapping representations of numerical magnitude and motion direction in the posterior parietal cortex: a TMS-adaptation study. Neurosci. Lett. 490, 145–149. doi: 10.1016/j.neulet.2010.12.045

Romei, V., Thut, G., and Silvanto, J. (2016). Information-based approaches of noninvasive transcranial brain stimulation. Trends Neurosci. 39, 782–795. doi: 10.1016/j.tins.2016.09.001

Ruzzoli, M., Marzi, C. A., and Miniussi, C. (2010). The neural mechanisms of the effects of transcranial magnetic stimulation on perception. J. Neurophysiol. 103, 2982–2989. doi: 10.1152/jn.01096.2009

Schaal, N. K., Kretschmer, M., Keitel, A., Krause, V., Pfeifer, J., and Pollok, B. (2017). The significance of the right dorsolateral prefrontal cortex for pitch memory in non-musicians depends on baseline pitch memory abilities. Front. Neurosci. 11, 677. doi: 10.3389/fnins.2017.00677

Schwarzkopf, D. S., Silvanto, J., and Rees, G. (2011). Stochastic resonance effects reveal the neural mechanisms of transcranial magnetic stimulation. J. Neurosci. 31, 3143–3147. doi: 10.1523/JNEUROSCI.4863-10.2011

Siebner, H. R. (2004). Preconditioning of low-frequency repetitive transcranial magnetic stimulation with transcranial direct current stimulation: evidence for homeostatic plasticity in the human motor cortex. J. Neurosci. 24, 3379–3385. doi: 10.1523/JNEUROSCI.5316-03.2004

Silvanto, J., Bona, S., and Cattaneo, Z. (2017). Initial activation state, stimulation intensity and timing of stimulation interact in producing behavioral effects of TMS. Neuroscience 363, 134–141. doi: 10.1016/j.neuroscience.2017.09.002

Silvanto, J., Bona, S., Marelli, M., and Cattaneo, Z. (2018). On the mechanisms of Transcranial Magnetic Stimulation (TMS): how brain state and baseline performance level determine behavioral effects of TMS. Front. Psychol. 9, 741. doi: 10.3389/fpsyg.2018.00741

Silvanto, J., and Cattaneo, Z. (2017). Common framework for “virtual lesion” and state-dependent TMS: the facilitatory/suppressive range model of online TMS effects on behavior. Brain Cogn. 119, 32–38. doi: 10.1016/j.bandc.2017.09.007

Silvanto, J., Muggleton, N., and Walsh, V. (2008). State-dependency in brain stimulation studies of perception and cognition. Trends Cogn. Sci. 12, 447–454. doi: 10.1016/j.tics.2008.09.004

Silvanto, J., and Pascual-Leone, A. (2008). State-dependency of transcranial magnetic stimulation. Brain Topogr. 21, 1–10. doi: 10.1007/s10548-008-0067-0

Spearman, C. (1913). Correlations of sums or differences. Br. J. Psychol. 5, 417–426. doi: 10.1111/j.2044-8295.1913.tb00072.x

Tseng, P., Hsu, T. Y., Chang, C. F., Tzeng, O. J. L., Hung, D. L., Muggleton, N. G., et al. (2012). Unleashing potential: transcranial direct current stimulation over the right posterior parietal cortex improves change detection in low-performing individuals. J. Neurosci. 32, 10554–10561. doi: 10.1523/JNEUROSCI.0362-12.2012

Tu, Y. K., Bælum, V., and Gilthorpe, M. S. (2005). The relationship between baseline value and its change: problems in categorization and the proposal of a new method. Eur. J. Oral Sci. 113, 279–288. doi: 10.1111/j.1600-0722.2005.00229.x

Tu, Y. K., and Gilthorpe, M. S. (2007). Revisiting the relation between change and initial value: a review and evaluation. Stat. Med. 26, 443–457. doi: 10.1002/sim.2538

Vergallito, A., Feroldi, S., Pisoni, A., and Romero Lauro, L. J. (2022). Inter-individual variability in tDCS effects: a narrative review on the contribution of stable, variable, and contextual factors. Brain Sci. 12, 522. doi: 10.3390/brainsci12050522

Wu, D., Zhou, Y. J., Lv, H., Liu, N., and Zhang, P. (2021). The initial visual performance modulates the effects of anodal transcranial direct current stimulation over the primary visual cortex on the contrast sensitivity function. Neuropsychologia 156, 1–8. doi: 10.1016/j.neuropsychologia.2021.107854

Keywords: brain stimulation, baseline performance, mathematical coupling, regression to the mean, measurement error

Citation: Lega C, Cattaneo L and Costantini G (2022) How to Test the Association Between Baseline Performance Level and the Modulatory Effects of Non-Invasive Brain Stimulation Techniques. Front. Hum. Neurosci. 16:920558. doi: 10.3389/fnhum.2022.920558

Received: 14 April 2022; Accepted: 06 June 2022;

Published: 24 June 2022.

Edited by:

Fa-Hsuan Lin, University of Toronto, Canada

Reviewed by:

Alexandra Lackmy-Vallée, Sorbonne Universités, France

Copyright © 2022 Lega, Cattaneo and Costantini. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Carlotta Lega, Carlotta.lega@unimib.it

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
