
ORIGINAL RESEARCH article

Front. Hum. Neurosci. , 06 December 2022

Sec. Brain-Computer Interfaces

Volume 16 - 2022 | https://doi.org/10.3389/fnhum.2022.1051463

Emotion classification using electroencephalography (EEG) data and machine learning techniques has been on the rise in recent years. However, past studies have used data from medical-grade EEG setups with long set-up times and environmental constraints. This paper focuses on classifying emotions on the valence-arousal plane using various feature extraction, feature selection, and machine learning techniques. We evaluate different feature extraction and selection techniques and propose the optimal set of features and electrodes for emotion recognition. Images from the OASIS image dataset were used to elicit valence and arousal emotions, and the EEG data were recorded using the Emotiv Epoc X mobile EEG headset. For benchmarking, the analysis is also carried out on the publicly available DEAP and DREAMER datasets. We propose a novel feature ranking technique and an incremental learning approach to analyze how performance depends on the number of participants. Leave-one-subject-out cross-validation was carried out to identify subject bias in emotion elicitation patterns. The importance of different electrode locations was calculated, which could be used to design a headset for emotion recognition. The collected dataset and pipeline are also published. Our study achieved a root mean square error (RMSE) of 0.905 on DREAMER, 1.902 on DEAP, and 2.728 on our dataset for the valence label, and an RMSE of 0.749 on DREAMER, 1.769 on DEAP, and 2.3 on our proposed dataset for the arousal label.

The role of human emotion in cognition is vital and has long been studied with different experimental and behavioral paradigms. Psychology researchers have long tried to understand human perception through surveys. Recently, with the growing need to study human perception free of human biases and of differences in how people conceive of various emotions (Ekman, 1972), neurophysiological recordings and brain imaging methods have become increasingly popular. Since emotions are triggered almost instantly, electroencephalography (EEG) is an attractive choice owing to its high temporal resolution and the availability of mobile recording devices (Lang, 1995; Moss et al., 2003; Koelstra et al., 2012; Katsigiannis and Ramzan, 2018; Ko et al., 2021; Tuncer et al., 2021).

The algorithmic pipeline for decoding user intentions from neurophysiological signals consists of denoising, pre-processing, feature extraction, electrode and feature selection, and classification. Deep-learning algorithms (Haselsteiner and Pfurtscheller, 2000; Übeyli, 2009; Schirrmeister et al., 2017; Karlekar et al., 2018; Zhou et al., 2018; Jeevan et al., 2019; Jin and Kim, 2020; Tao et al., 2020) claim to perform frequency decomposition, feature extraction, and classifier training within their hidden layers, but their explainability is limited and they require large amounts of training data. Machine learning with time-domain features performs weighted spatial-temporal averaging of EEG signals combined with pattern recognition. Feature extraction methods (Ting et al., 2008; Al-Fahoum and Al-Fraihat, 2014; Oh et al., 2014; Zhang et al., 2016) require human effort and expertise to identify the appropriate features and electrode locations, which depend on the modality, stimulus, recording instrument, and participant. Moreover, current benchmarks of feature extraction and selection methods (Song et al., 2018; Dar et al., 2020) for emotion recognition focus on emotions elicited by video stimuli, which limits the applicability of the proposed methods to emotional responses elicited by static images. Most pattern recognition benchmarks (Placidi et al., 2016; Kusumaningrum et al., 2020; Dhingra and Ram Avtar Jaswal, 2021) for decoding human emotions from EEG signals have been performed with research-grade EEG recording systems, which involve long setup times, sophisticated recording setups, and high cost. Although a portable EEG headset has a lower signal-to-noise ratio, its low cost and ease of use make it an attractive choice for collecting data from a wider population sample and for overcoming the scarcity of uniform EEG data for algorithmic research.

In this study, we first propose a protocol for eliciting emotions by presenting selected images from the OASIS dataset (Kurdi et al., 2016) and recording signals with a low-cost, portable EEG headset. Second, we create a pipeline of pre-processing, feature extraction, electrode and feature selection, and a classifier for decoding emotional responses (valence and arousal), and evaluate it on our dataset and two open-source datasets; we also present incremental training to demonstrate how performance depends on the population sample size. Third, we rank different categories of feature extraction techniques to evaluate how well they highlight patterns indicative of emotional responses. Moreover, we analyze electrode importance and rank different brain regions by their importance. The electrodes' relative importance can help explain the significance of different regions for emotion elicitation, lead to optimized electrode configurations in neural-recording studies, and inspire the development of advanced feature extraction techniques for decoding emotional responses. Fourth, we ask whether feature selection and electrode selection can be automated for BCI pipeline engineering, and validate the procedure through qualitative and quantitative comparison with the neuroscience literature. Importantly, we validate the pipeline on two open-source datasets based on video stimuli and on signals recorded with the proposed image-based emotion elicitation protocol. The variety of stimuli, recording instruments, and population demographics helps mitigate bias and enables rigorous analysis of the different pipeline components. Lastly, we publish the proposed pipeline and the recorded dataset for the community.

Over time, the scope of using electrophysiological data for emotion prediction has widened, leading to the standardized two-dimensional emotion metrics of valence and arousal (Russell, 1980) for training and evaluating pattern recognition algorithms. Human brain-recording experiments have been conducted to associate emotions quantitatively with words, pictures, sounds, and videos (Lang, 1995; Lane et al., 1999; Gerber et al., 2008; Eerola and Vuoskoski, 2011; Leite et al., 2012; Moors et al., 2013; Warriner et al., 2013; Kurdi et al., 2016; Mohammad, 2018). Different EEG frequency bands dominate during different functional roles, corresponding to various emotional and cognitive states (Klimesch et al., 1990; Klimesch, 1996, 1999, 2012; Bauer et al., 2007; Berens et al., 2008; Jia and Kohn, 2011; Kamiński et al., 2012). Besides spectral energy values, researchers use many other features, such as frontal asymmetry, differential entropy, and indexes of attention, approach motivation, and memory. “Approach” emotions, such as happiness, are associated with left-hemisphere brain activity, whereas “withdrawal” emotions, such as disgust, are associated with right-hemisphere brain activity (Davidson et al., 1990; Coan et al., 2001). The left-to-right alpha activity is therefore used as an index of approach motivation. Occipito-parietal alpha power has been found to correlate with attention (Smith and Gevins, 2004; Misselhorn et al., 2019). Fronto-central increases in theta and gamma activity have been shown to be essential for memory-related cognitive functions (Shestyuk et al., 2019). Combining differential entropy with hemispheric asymmetry yields features such as the differential and rational asymmetry of EEG segments, which are recent developments used as input features for neural networks (Duan et al., 2013; Torres et al., 2020).

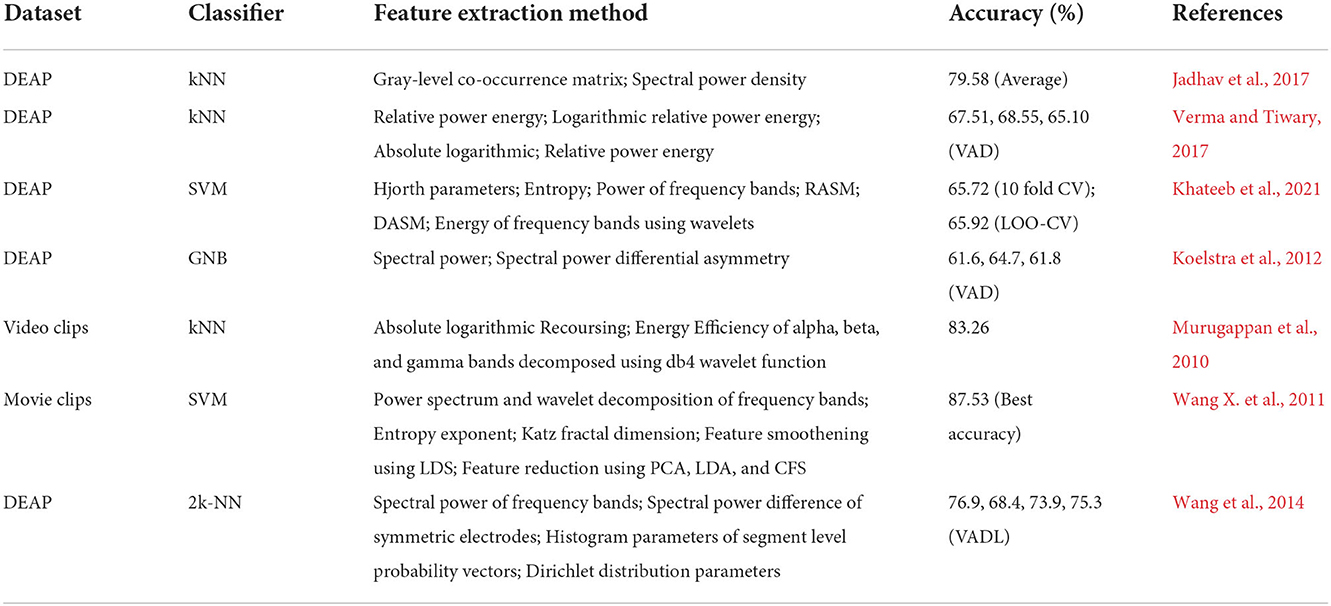

Many time-domain, frequency-domain, continuity, complexity (Gao et al., 2019; Galvão et al., 2021), statistical, microstate (Lehmann, 1990; Milz et al., 2016; Shen X. et al., 2020), wavelet-based (Jie et al., 2014), and empirical (Patil et al., 2019; Subasi et al., 2021) feature extraction techniques have been proposed for classifying emotions from EEG signals. The latest studies using EEG to recognize emotional states are summarized in Table 1.

Table 1. Summary of machine learning algorithms and features used to classify emotions on various datasets, with reported accuracy.

This paper is organized as follows. Section 2.1 describes the three datasets used in our analysis. Section 2.2 discusses the theoretical background and the details of the pre-processing steps (referencing, filtering, motion artifact removal, and rejection and repair of bad trials). Section 2.3 details feature extraction and gives an overview of the extracted features. Section 2.4 describes the feature selection procedure adopted in this work. Section 3 presents our experiments and results, which are discussed in Section 4. Finally, Section 5 concludes the paper and outlines the scope for future work.

The OASIS image dataset (Kurdi et al., 2016) consists of a total of 900 images from various categories, such as natural locations, people, events, and inanimate objects with various valence and arousal elicitation values. Out of 900 images, 40 were selected to cover the valence and arousal rating spectrum, as shown in Figure 1.

Figure 1. Valence and arousal ratings of OASIS dataset. Valence and arousal ratings of the entire OASIS (Kurdi et al., 2016) image dataset (blue) and the images selected for our experiment (red). The images were selected to represent each quadrant of the 2D space.

The experiment was conducted in a closed room, with a 21-inch Samsung 1080p monitor as the only light source. Data were collected from fifteen participants (ten males and five females; mean age 22) using an EMOTIV Epoc EEG headset with 14 electrodes placed according to the 10–20 montage system, at a sampling rate of 128 Hz. Only the EEG data corresponding to the image-viewing periods were segmented using markers and used for analysis.

The study was approved by the Institutional Ethics Committee of BITS, Pilani (IHEC-40/16-1). All EEG experiments/methods were performed in accordance with the relevant guidelines and regulations of the Institutional Ethics Committee of BITS, Pilani. The experimental protocol was explained to all participants, and written consent for recording EEG data for research purposes was obtained from each subject.

The meaning of valence and arousal was explained to the subjects before the start of the experiment, and they were seated 80–100 cm from the monitor.

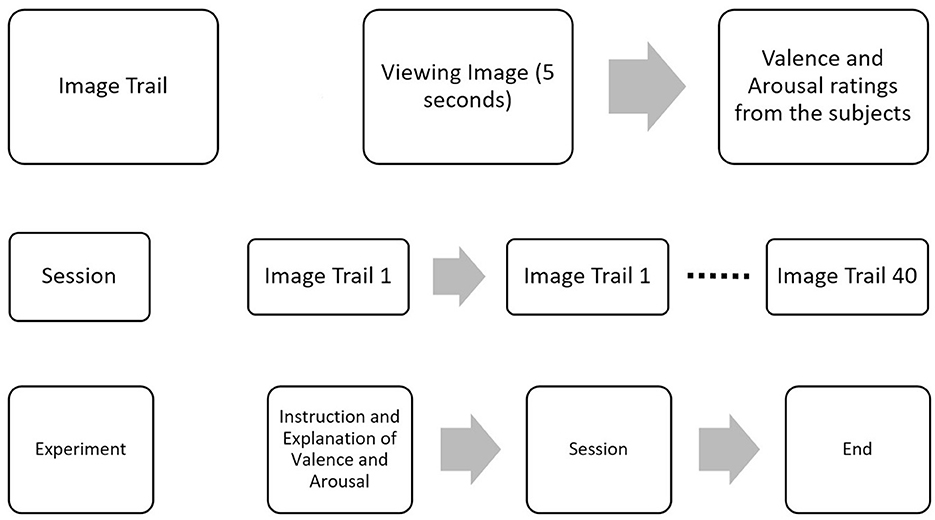

The images were shown for 5 s using PsychoPy (Peirce et al., 2019), and after each image the participants were asked to rate valence and arousal on a scale of 1–10 before proceeding to the next image, as shown in Figure 2. Additionally, the participants' ratings were compared with the original ratings provided in the OASIS image dataset, as shown in Supplementary Figure 1; the MSE between the two was 1.34 for valence and 1.39 for arousal.

Figure 2. EEG data collection protocol. Experiment protocol for the collection of EEG data. Forty images from the OASIS dataset were shown to elicit emotion in the valence and arousal planes. After presenting each image, ratings were collected from participants.

The DEAP dataset (Koelstra et al., 2012) contains data from 32 subjects, each of whom was shown 40 one-minute music videos. Participants rated each video for arousal, valence, like/dislike, dominance, and familiarity. Data were recorded from 32 EEG electrodes placed according to the standard 10–20 montage system (plus eight peripheral physiological channels, for 40 channels in total), and the data used here are sampled at 128 Hz. This analysis considers only 14 channels (AF3, F7, F3, FC5, T7, P7, O1, O2, P8, T8, FC6, F4, F8, AF4) for uniformity with the other two datasets.

The DREAMER dataset (Katsigiannis and Ramzan, 2018) contains data from 23 subjects, each of whom was shown 18 film clips; the signals were recorded at a sampling frequency of 128 Hz. These audio-visual stimuli were used to elicit emotional reactions from the participants while EEG and ECG data were recorded. After viewing each film clip, participants evaluated their emotions by reporting the felt arousal (ranging from uninterested/bored to excited/alert), valence (ranging from unpleasant/stressed to happy/elated), and dominance. EEG data were recorded using 14 electrodes.

Raw EEG signals obtained from the recording device are continuous, unprocessed signals that contain various kinds of noise, artifacts, and irrelevant neural activity. Without pre-processing, these components lower the signal-to-noise ratio and carry unwanted artifacts into later stages of the analysis. In the pre-processing step, the noise and artifacts present in the raw EEG signals are identified and removed to make the signals suitable for further analysis. The following subsections discuss each pre-processing step (referencing, filtering, motion artifact removal, and rejection and repair of bad trials) in more detail.

Common average referencing was applied: at each time point, the average amplitude across all electrodes was calculated and subtracted from every electrode. This was done for all time points across all trials.
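The referencing step reduces to a single array operation. A minimal NumPy sketch, assuming each trial is stored as an array of shape (channels, time points) and a stack of trials as (trials, channels, time points); the function name `common_average_reference` is illustrative:

```python
import numpy as np


def common_average_reference(eeg: np.ndarray) -> np.ndarray:
    """Re-reference EEG to the common average.

    eeg: (n_channels, n_times) for one trial, or
         (n_trials, n_channels, n_times) for a stack of trials.
    The channel mean is computed separately for every time point (and trial)
    and subtracted from all channels.
    """
    channel_axis = -2  # channels are the second-to-last axis in both layouts
    return eeg - eeg.mean(axis=channel_axis, keepdims=True)


# Hypothetical example: 40 trials, 14 channels, 5 s at 128 Hz.
rng = np.random.default_rng(0)
trials = rng.normal(size=(40, 14, 640))
referenced = common_average_reference(trials)
```

After re-referencing, the mean across channels is zero at every time point.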

A 4th-order Butterworth bandpass filter was applied to retain frequencies between 0.1 and 40 Hz.
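A sketch of this filter using SciPy. It assumes a 128 Hz sampling rate and zero-phase (forward-backward) filtering via `sosfiltfilt`. The text does not say whether the filter was applied causally or in zero-phase, so that choice is an assumption:

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass(eeg, low_hz=0.1, high_hz=40.0, fs=128.0, order=4):
    """4th-order Butterworth band-pass along the last (time) axis."""
    # Second-order sections are numerically safer than (b, a) coefficients
    # for a very low cutoff such as 0.1 Hz.
    sos = butter(order, [low_hz, high_hz], btype="bandpass", fs=fs, output="sos")
    # Forward-backward filtering (zero phase) is an assumption of this sketch.
    return sosfiltfilt(sos, eeg, axis=-1)


fs = 128.0
t = np.arange(0, 5, 1 / fs)
# A 10 Hz component (inside the pass band) plus a 55 Hz component (outside it).
signal = np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 55 * t)
filtered = bandpass(signal, fs=fs)
```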

Motion artifacts were removed using Pearson correlation coefficients (Onikura et al., 2015). The motion-sensor (gyroscope/accelerometer) readings and EEG data corresponding to each trial were used. Each EEG trial was separated into its independent sources using the Independent Component Analysis (ICA) algorithm. For the sources of a single trial, Pearson coefficients were calculated between each source signal and each axis of the accelerometer data for that trial. The mean and standard deviation of these coefficients were then calculated for each axis across all sources. Sources whose Pearson coefficient exceeded the mean by more than 2 standard deviations for any axis were high-pass filtered at 3 Hz using a Butterworth filter, since motion artifacts lie predominantly in this low-frequency range. The corrected sources were then projected back into the original EEG channel space using the mixing matrix estimated by ICA.
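The sketch below shows how this correlation-guided cleaning could be written with scikit-learn's `FastICA` and SciPy. It is not the authors' implementation. The following are assumptions: the ICA variant (FastICA), the 4th-order high-pass filter, and the synthetic accelerometer signals. The signed (not absolute) coefficient is compared with the mean + 2 SD threshold, exactly as the text describes.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt
from sklearn.decomposition import FastICA


def remove_motion_artifacts(eeg, accel, fs=128.0, n_std=2.0, cutoff_hz=3.0, seed=0):
    """Correlation-guided ICA cleaning of one trial (illustrative sketch).

    eeg:   (n_channels, n_times) EEG of the trial.
    accel: (3, n_times) motion-sensor axes recorded over the same trial.
    Returns the cleaned EEG and a boolean mask of the filtered sources.
    """
    ica = FastICA(n_components=eeg.shape[0], random_state=seed, max_iter=1000)
    sources = ica.fit_transform(eeg.T)  # (n_times, n_sources)

    # Pearson coefficient between every source and every motion axis.
    n_src = sources.shape[1]
    corr = np.corrcoef(sources.T, accel)[:n_src, n_src:]  # (n_sources, 3)

    # Per-axis threshold over sources: mean + n_std * standard deviation.
    thresh = corr.mean(axis=0) + n_std * corr.std(axis=0)
    flagged = (corr > thresh).any(axis=1)

    if flagged.any():
        # Filter order 4 is an assumption; the text only says "Butterworth".
        sos = butter(4, cutoff_hz, btype="highpass", fs=fs, output="sos")
        sources[:, flagged] = sosfiltfilt(sos, sources[:, flagged], axis=0)

    # Back-project the corrected sources with the ICA mixing matrix.
    return ica.inverse_transform(sources).T, flagged


rng = np.random.default_rng(0)
fs, n_times = 128.0, 640
t = np.arange(n_times) / fs
accel = np.vstack([np.sin(2 * np.pi * 0.5 * t), np.cos(2 * np.pi * 0.3 * t), rng.normal(size=n_times)])
eeg = rng.laplace(size=(14, n_times))
eeg[:3] += 5 * accel[0]  # hypothetical motion leaking into three channels
cleaned, flagged = remove_motion_artifacts(eeg, accel, fs=fs)
```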

Autoreject is an algorithm developed by Jas et al. (2017) for rejecting bad trials in magneto-/electroencephalography (M/EEG) data. It uses a cross-validation framework to find the optimal peak-to-peak threshold for rejecting data:

• We first consider a set of candidate thresholds $\Phi$.

• The data are arranged as a matrix $X \in \mathbb{R}^{N \times P}$ (epochs × (channels · time points)), where N is the number of trials/epochs and P = Q·T is the number of features, with Q the number of sensors and T the number of time points per sensor.

• The trials are split into K folds. Each fold serves once as the test (validation) set, and the remaining K − 1 folds form the training set.

• For each candidate threshold $\tau_l \in \Phi$, we apply this candidate peak-to-peak (ptp) threshold to the training set. Trials whose ptp amplitude exceeds it are marked as bad trials, and the remaining trials form the set of good training trials

$$\mathcal{G}_l = \{\, i \in \text{train}_k \mid \mathcal{A}(X_i) < \tau_l \,\}$$

where $X_i$ denotes a particular trial.

• $\mathcal{A}(X_i)$ is the peak-to-peak amplitude of trial $X_i$ (for the global variant, the largest peak-to-peak amplitude across sensors), so $\mathcal{G}_l$ is the set of trials whose ptp is below the candidate threshold being considered.

• The mean amplitude of the good training trials is then calculated for each sensor and time point: $\bar{X}_{\mathcal{G}_l} = \frac{1}{|\mathcal{G}_l|}\sum_{i \in \mathcal{G}_l} X_i$.

• The median amplitude of all trials in the test set, $\tilde{X}_{\text{val}_k}$, is calculated in the same way.

• The Frobenius norm of their difference, $e_k(\tau_l) = \lVert \bar{X}_{\mathcal{G}_l} - \tilde{X}_{\text{val}_k} \rVert_{\text{Fro}}$, is calculated for all K folds, giving K errors $e_k \in E$; the mean of these errors is assigned to the candidate threshold $\tau_l$.

• The optimal threshold is the one that gives the smallest mean error: $\tau^{*} = \arg\min_{\tau_l \in \Phi} \frac{1}{K}\sum_{k=1}^{K} e_k(\tau_l)$.

• The analysis above considers all channels at once and is therefore known as global autoreject.

• A similar process can be applied to each channel independently, i.e., with a data matrix of shape (epochs × 1 × time points). This is known as local autoreject and yields an optimal threshold for each sensor independently.
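The global variant of this threshold search can be sketched in a few lines of NumPy. This is a simplified illustration, not the `autoreject` package itself, which also handles interpolation and local thresholds. The following are assumptions: the function names, the 5-fold shuffled split, and the synthetic step artifacts.

```python
import numpy as np
from sklearn.model_selection import KFold


def global_ptp(epochs):
    """A(X_i): largest peak-to-peak amplitude across channels, per epoch."""
    return np.ptp(epochs, axis=2).max(axis=1)


def global_autoreject_threshold(epochs, candidates, n_folds=5, seed=0):
    """epochs: (n_epochs, n_channels, n_times). Returns (best tau, mean error per tau)."""
    candidates = np.asarray(candidates, dtype=float)
    ptp = global_ptp(epochs)
    folds = list(KFold(n_splits=n_folds, shuffle=True, random_state=seed).split(epochs))
    mean_errors = np.empty(candidates.size)
    for idx, tau in enumerate(candidates):
        errors = []
        for train_idx, val_idx in folds:
            good = train_idx[ptp[train_idx] < tau]  # G_l within the training folds
            if good.size == 0:
                errors.append(np.inf)  # no usable training trials for this tau
                continue
            mean_good = epochs[good].mean(axis=0)  # mean of good training trials
            median_val = np.median(epochs[val_idx], axis=0)  # median of validation trials
            # For a 2-D (channels x times) array, np.linalg.norm is the Frobenius norm.
            errors.append(np.linalg.norm(mean_good - median_val))
        mean_errors[idx] = np.mean(errors)
    return candidates[int(np.argmin(mean_errors))], mean_errors


rng = np.random.default_rng(0)
epochs = rng.normal(size=(60, 14, 128))
bad = rng.choice(60, size=6, replace=False)
epochs[bad, 0, 64:] += 80.0  # hypothetical step artifacts in six epochs
best_tau, errs = global_autoreject_threshold(epochs, np.linspace(1, 150, 150))
```

The selected threshold keeps the clean epochs and excludes the artifact-laden ones, because including them inflates the mean of the training trials relative to the robust validation median.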

As bad trials had already been rejected in the DEAP and DREAMER datasets, we did not perform automatic trial rejection on these two datasets.

In this work, the following set of 36 features was extracted from the EEG signal data with the help of EEGExtract library (Saba-Sadiya et al., 2020) for all three datasets:

• Shannon Entropy (S.E.)

• Sub-band Information Quantity for Alpha [8–12 Hz], Beta [12–30 Hz], Delta [0.5–4 Hz], Gamma [30–45 Hz], and Theta [4–8 Hz] bands (S.E.A., S.E.B., S.E.D., S.E.G., S.E.T.)

• Hjorth Mobility (H.M.)

• Hjorth Complexity (H.C.)

• False Nearest Neighbor (F.N.N.)

• Differential Asymmetry (D.A., D.B., D.D., D.G., D.T.)

• Rational Asymmetry (R.A., R.B., R.D., R.G., R.T.)

• Median Frequency (M.F.)

• Band Power (B.P.A., B.P.B., B.P.D., B.P.G., B.P.T.)

• Standard Deviation (S.D.)

• Diffuse Slowing (D.S.)

• Spikes (S.K.)

• Sharp spike (S.S.N.)

• Delta Burst after Spike (D.B.A.S.)

• Number of Bursts (N.B.)

• Burst length mean and standard deviation (B.L.M., B.L.S.)

• Number of Suppressions (N.S.)

• Suppression length mean and standard deviation (S.L.M., S.L.S.).

These features were extracted using a 1 s sliding window with no overlap. They can be divided into two groups, complexity features and continuity features, according to the signal property they measure. The reader is referred to Ghassemi (2018) for an in-depth discussion of these features.

Complexity features represent the degree of randomness and irregularity associated with the EEG signal. Different features in the form of entropy and complexity measures were extracted to gauge the information content of non-linear and non-stationary EEG signal data.

Shannon entropy (Shannon, 1948) is a measure of the uncertainty (or variability) associated with a random variable. Let X be a discrete random variable taking values in a finite set $\{x_1, \dots, x_n\}$. Its Shannon entropy, H(X), is defined as

$$H(X) = -c \sum_{i=1}^{n} p(x_i) \log p(x_i)$$

where c is a positive constant and $p(x_i)$ is the probability of $x_i \in X$, such that

$$\sum_{i=1}^{n} p(x_i) = 1$$

Higher entropy values indicate high complexity and less predictability in the system (Phung et al., 2014).
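As an illustration, H(X) for a continuous EEG segment can be estimated by discretizing amplitudes into histogram bins. The bin count, c = 1, and the base-2 logarithm below are assumptions of this sketch and may differ from the EEGExtract implementation:

```python
import numpy as np


def shannon_entropy(x, bins=32, c=1.0, base=2.0):
    """Histogram estimate of H(X) = -c * sum_i p(x_i) log p(x_i)."""
    counts, _ = np.histogram(x, bins=bins)
    p = counts / counts.sum()  # empirical probabilities, summing to 1
    p = p[p > 0]  # the term 0 * log 0 is taken as 0
    return float(-c * np.sum(p * np.log(p)) / np.log(base))


rng = np.random.default_rng(0)
segment_entropy = shannon_entropy(rng.normal(size=128))  # one 1 s segment at 128 Hz
```

Four equally likely amplitude levels give H = 2 bits. A constant signal gives H = 0, matching the intuition that higher entropy means less predictability.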

Sub-band Information Quantity (SIQ) is the entropy of the decomposed EEG (wavelet) signal in each of the five frequency bands (Jia et al., 2008; Valsaraj et al., 2020). In our analysis, the EEG signal was decomposed using a Butterworth filter of order 7, followed by an FIR/IIR filter. The Shannon entropy H(X) of the resulting band-limited signal is the SIQ of that frequency band. Because it tracks dynamic changes in both amplitude and frequency content, this feature has been used to measure information in the brain (Shin et al., 2006; Kanungo et al., 2021).
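A sketch of SIQ under simplifying assumptions. A single order-7 Butterworth band-pass replaces the Butterworth plus FIR/IIR stages, filtering is zero-phase, and entropy is estimated from a 32-bin histogram. The band edges are those listed in Section 2.3, and the function names are illustrative:

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

BANDS = {"delta": (0.5, 4), "theta": (4, 8), "alpha": (8, 12), "beta": (12, 30), "gamma": (30, 45)}


def shannon_entropy(x, bins=32):
    """Histogram-based Shannon entropy in bits (binning is an assumption)."""
    counts, _ = np.histogram(x, bins=bins)
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def subband_information_quantity(x, fs=128.0, order=7, bins=32):
    """Entropy of the band-limited signal for each of the five bands."""
    siq = {}
    for name, (lo, hi) in BANDS.items():
        sos = butter(order, [lo, hi], btype="bandpass", fs=fs, output="sos")
        siq[name] = shannon_entropy(sosfiltfilt(sos, x), bins=bins)
    return siq


rng = np.random.default_rng(0)
siq = subband_information_quantity(rng.normal(size=640))  # 5 s of noise at 128 Hz
```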

Hjorth parameters, introduced by Hjorth (1970), describe statistical properties of a signal in the time domain. Because they are variance-based, they are cheap to compute, which makes them well suited to EEG signal analysis. We use the mobility and complexity parameters (Das and Pachori, 2021) in our analysis. Hjorth mobility is a proxy for the mean frequency of the power spectrum. It is computed as the ratio of the standard deviation of the signal's first derivative to the standard deviation of the signal, and is defined as:

$$\mathrm{Mobility}(x(t)) = \sqrt{\frac{\mathrm{var}\left(\frac{dx(t)}{dt}\right)}{\mathrm{var}\left(x(t)\right)}}$$

where var(.) denotes the variance operator and x(t) denotes the EEG time-series signal.

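Hjorth complexity reflects the change in frequency. It has been used to measure the signal's similarity to a sine wave, for which the complexity equals 1. It is defined as:

$$\mathrm{Complexity}(x(t)) = \frac{\mathrm{Mobility}\left(\frac{dx(t)}{dt}\right)}{\mathrm{Mobility}\left(x(t)\right)}$$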
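In discrete time, the derivative can be approximated by first differences. The following is a minimal NumPy sketch: mobility is in per-sample units and the function names are illustrative. It is checked on a pure sine, for which complexity should be close to 1:

```python
import numpy as np


def hjorth_mobility(x):
    """sqrt(var(x') / var(x)), with x' approximated by first differences."""
    return float(np.sqrt(np.var(np.diff(x)) / np.var(x)))


def hjorth_complexity(x):
    """Mobility of the derivative divided by mobility of the signal."""
    return hjorth_mobility(np.diff(x)) / hjorth_mobility(x)


fs = 128.0
t = np.arange(256) / fs
sine = np.sin(2 * np.pi * 4 * t)  # 4 Hz, exactly 8 cycles
mobility = hjorth_mobility(sine)
complexity = hjorth_complexity(sine)
```

For a sampled sine of frequency f, the first difference is again a sine of amplitude 2 sin(πf/fs). Mobility is therefore about 2 sin(πf/fs), and complexity is about 1.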

False Nearest Neighbor is a measure of signal continuity and smoothness. It is used to quantify the deterministic content in the EEG time series data without assuming chaos (Kennel et al., 1992; Hegger and Kantz, 1999).

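We incorporate Differential Entropy (DE) (Zheng et al., 2014) into our analysis to construct two features for each of the five frequency bands, namely Differential Asymmetry (DASM) and Rational Asymmetry (RASM). Mathematically, DE [h(X)] is defined as:

$$h(X) = -\int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi\sigma^{2}}}\, e^{-\frac{(x-\mu)^{2}}{2\sigma^{2}}} \log\left(\frac{1}{\sqrt{2\pi\sigma^{2}}}\, e^{-\frac{(x-\mu)^{2}}{2\sigma^{2}}}\right) dx = \frac{1}{2}\log\left(2\pi e\sigma^{2}\right)$$

where X follows the Gaussian distribution $N(\mu, \sigma^2)$, x is the integration variable, and π and e are constants.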

Differential Asymmetry (DASM) (Duan et al., 2013) for each frequency band was calculated as the difference between the differential entropies of the two electrodes in each of the seven hemispheric asymmetry electrode pairs, $\mathrm{DASM} = h(X_{\text{left}}) - h(X_{\text{right}})$.

Rational Asymmetry (RASM) (Duan et al., 2013) for each frequency band was calculated as the ratio of the differential entropies of the two electrodes in each of the seven hemispheric asymmetry electrode pairs, $\mathrm{RASM} = h(X_{\text{left}}) / h(X_{\text{right}})$.
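A sketch of DE, DASM, and RASM for one frequency band. It assumes the input signals are already band-filtered. It also assumes that the seven pairs are the symmetric left/right pairs among the 14 channels listed in Section 2.1; that pairing is our assumption and is not stated in the text. DE uses the Gaussian closed form above:

```python
import numpy as np

# Assumed symmetric left/right pairs among the 14 channels used in this study.
PAIRS = [("AF3", "AF4"), ("F7", "F8"), ("F3", "F4"), ("FC5", "FC6"),
         ("T7", "T8"), ("P7", "P8"), ("O1", "O2")]


def differential_entropy(x):
    """Gaussian closed form: 0.5 * ln(2 * pi * e * sigma^2)."""
    return float(0.5 * np.log(2 * np.pi * np.e * np.var(x)))


def asymmetry_features(band_signals):
    """band_signals: dict mapping channel name -> band-filtered 1-D signal."""
    dasm, rasm = {}, {}
    for left, right in PAIRS:
        h_left = differential_entropy(band_signals[left])
        h_right = differential_entropy(band_signals[right])
        dasm[(left, right)] = h_left - h_right
        # DE can be near zero or negative for very small variances
        # (below 1 / (2 * pi * e)), which makes the ratio unstable.
        rasm[(left, right)] = h_left / h_right
    return dasm, rasm


rng = np.random.default_rng(0)
signals = {ch: rng.normal(scale=10.0, size=128) for pair in PAIRS for ch in pair}
dasm, rasm = asymmetry_features(signals)
```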

Continuity features capture clinically relevant characteristics of EEG signals (Hirsch et al., 2013; Ghassemi, 2018). They have been proposed as qualitative descriptors of human brain states and are considered important in emotion recognition.

Median frequency is the 50% quantile, or median, of the power spectrum distribution, i.e., the frequency below which half of the total spectral power lies. It has been studied extensively owing to its observed correlation with awareness (Schwilden, 1989) and its ability to predict imminent arousal (Drummond et al., 1991). It is a frequency-domain (spectral) feature.
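A sketch of median frequency estimation that uses Welch's method (`scipy.signal.welch`) for the power spectrum. The text does not specify the spectral estimator, so this choice and the segment length are assumptions:

```python
import numpy as np
from scipy.signal import welch


def median_frequency(x, fs=128.0, nperseg=128):
    """Frequency below which half of the total spectral power lies."""
    freqs, psd = welch(x, fs=fs, nperseg=nperseg)
    cumulative = np.cumsum(psd)
    # First frequency bin at which the cumulative power reaches 50 %.
    idx = int(np.searchsorted(cumulative, cumulative[-1] / 2))
    return float(freqs[idx])


fs = 128.0
x = np.sin(2 * np.pi * 10 * np.arange(512) / fs)  # 4 s of a 10 Hz sine
mf = median_frequency(x, fs=fs)
```

With a 128-sample segment the frequency resolution is 1 Hz, so the estimate for a pure 10 Hz tone lands on the 10 Hz bin.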

Band power is the average power of the signal in a specific frequency band. The powers of the delta, theta, alpha, beta, and gamma frequency bands were used as spectral features. To calculate band power, a Butterworth filter of order seven was first applied to the EEG signal. An IIR/FIR filter was then applied to isolate the part of the signal corresponding to a specific frequency band. The average power spectral density of the resulting signal was calculated using a periodogram. The signal processing sub-module (scipy.signal) of the SciPy library (Virtanen et al., 2020) in Python was used to compute the band power feature.
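The band power computation described above can be sketched with `scipy.signal`. In this sketch, a single order-7 Butterworth band-pass with zero-phase filtering stands in for the Butterworth plus IIR/FIR stages; both are assumptions. The band edges follow Section 2.3:

```python
import numpy as np
from scipy.signal import butter, periodogram, sosfiltfilt

BANDS = {"delta": (0.5, 4), "theta": (4, 8), "alpha": (8, 12), "beta": (12, 30), "gamma": (30, 45)}


def band_power(x, band, fs=128.0, order=7):
    """Mean periodogram PSD of the band-limited signal."""
    lo, hi = band
    sos = butter(order, [lo, hi], btype="bandpass", fs=fs, output="sos")
    _, psd = periodogram(sosfiltfilt(sos, x), fs=fs)
    return float(np.mean(psd))


fs = 128.0
x = np.sin(2 * np.pi * 10 * np.arange(512) / fs)  # 10 Hz falls in the alpha band
powers = {name: band_power(x, band, fs=fs) for name, band in BANDS.items()}
```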

Standard Deviation has proved to be an important time-domain feature in past experiments (Panat et al., 2014; Amin et al., 2017). Mathematically, it is defined as the square root of the variance of the EEG signal segment.

Previous studies (Boutros, 1996) have shown that diffuse slowing correlates with impaired awareness, concentration, and memory; hence, it is a potentially important feature for estimating valence and arousal levels from EEG signal data.

Spikes (Hirsch et al., 2013) are peaks in the EEG signal that exceed a threshold fixed at the mean plus three standard deviations. The number of spikes was computed by finding local maxima (peaks) in the EEG signal over a window of seven samples using the scipy.signal.find_peaks method of the SciPy library (Virtanen et al., 2020).

The change in delta activity before and after a spike is computed epoch-wise by adding the means of the seven delta-band samples before and after the spike, and is used as a continuity feature.

Sharp spikes are spikes that last less than 70 ms; they are a clinically important feature in electroencephalography (Hirsch et al., 2013).
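Spike and sharp-spike counting can be sketched with `scipy.signal.find_peaks`. This sketch makes two assumptions. It interprets the seven-sample window as the `distance` argument. It measures spike duration as the peak width at half prominence:

```python
import numpy as np
from scipy.signal import find_peaks


def count_spikes(x, min_distance=7):
    """Peaks above mean + 3 SD, at least `min_distance` samples apart."""
    height = x.mean() + 3 * x.std()
    peaks, _ = find_peaks(x, height=height, distance=min_distance)
    return len(peaks)


def count_sharp_spikes(x, fs=128.0, max_duration_s=0.070, min_distance=7):
    """Spikes whose width (at half prominence) is below 70 ms."""
    height = x.mean() + 3 * x.std()
    max_width = max_duration_s * fs  # 70 ms expressed in samples
    peaks, _ = find_peaks(x, height=height, distance=min_distance, width=(None, max_width))
    return len(peaks)


x = np.zeros(256)
x[[50, 120, 200]] = 10.0  # three one-sample spikes
x[150:170] = 10.0  # one broad 20-sample event (about 156 ms at 128 Hz)
n_spikes = count_spikes(x)
n_sharp = count_sharp_spikes(x)
```

Here all four events exceed the threshold, but only the three narrow ones qualify as sharp spikes.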

The number of amplitude bursts (or simply the number of bursts) is another significant feature (Hirsch et al., 2013).

Statistical properties of the bursts, mean μ and standard deviation σ of the burst lengths, have been used as continuity features.

Burst suppression refers to a pattern in which high-voltage activity is followed by an inactive period; it is generally a characteristic feature of deep anesthesia (Ching et al., 2012). We use the number of contiguous segments with amplitude suppression as a continuity feature, with the threshold fixed at 10 μV (Saba-Sadiya et al., 2020).

Statistical properties like mean μ and standard deviation σ of the suppression lengths are used as a continuity feature.
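Counting suppressions and their lengths is a run-length computation. This sketch assumes the signal is in microvolts. It also assumes that a sample counts as suppressed when its absolute amplitude is below the 10 μV threshold. Lengths are returned in samples, and the function name is illustrative:

```python
import numpy as np


def suppression_stats(x, threshold_uv=10.0):
    """Return (number of suppressions, mean length, std of length) in samples."""
    suppressed = np.abs(x) < threshold_uv
    # Pad with False on both sides so runs touching either end are closed.
    edges = np.diff(np.concatenate(([False], suppressed, [False])).astype(int))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    lengths = ends - starts
    if lengths.size == 0:
        return 0, 0.0, 0.0
    return int(lengths.size), float(lengths.mean()), float(lengths.std())


n_supp, mean_len, std_len = suppression_stats(np.array([50, 1, 2, 50, 50, 3, 4, 5, 50.0]))
```

In this example the two suppressed runs have lengths 2 and 3, giving a count of 2, a mean of 2.5, and a standard deviation of 0.5.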

After feature extraction, feature selection is performed to select and rank features, reduce model complexity, decrease computation time, and improve learning precision. Feature selection eliminates redundant features that do not contribute to model performance while preserving the relevant information in the EEG signals. Selecting the right predictor variables can therefore improve the learning process of any machine learning pipeline. In this work, zero-variance (constant) features were first eliminated from the set of 36 extracted EEG features using the VarianceThreshold feature selection method of the scikit-learn package (Pedregosa et al., 2011). Next, the subset of 25 features common to all three datasets (DREAMER, DEAP, and OASIS EEG) after VarianceThreshold filtering was retained for further analysis, so that our approach could be validated on a common set of features. The remaining 11 features (S.E., F.N.N., D.S., S.K., D.B.A.S., N.B., B.L.M., B.L.S., N.S., S.L.M., S.L.S.) were excluded from further analysis, reducing the feature space from 36 to 25 features.

For each feature, a feature matrix of shape [nc, ns] is generated, where nc is the number of channels and ns is the number of segments. Stacking these matrices for all nf features yields a matrix of shape [nc*nf, ns]. This matrix is transposed so that each column is a feature and each row a segment, i.e., a matrix of shape [ns, nc*nf]. These feature column vectors serve as input to the SelectKBest algorithm, which performs feature selection and ranking for all three datasets. SelectKBest (Pedregosa et al., 2011) is a filter-based, univariate feature selection method that retains the k highest-scoring features according to a univariate statistical test.

In our work, f_regression was used as the scoring function, since valence and arousal are continuous numeric target variables. It uses the Pearson correlation coefficient, defined in Equation (8), to compute the correlation between each feature vector $X_j$ (column j of the input matrix X) and the target variable y:

$$r_j = \frac{\sum_{i=1}^{n}\left(X_{ij} - \bar{X}_j\right)\left(y_i - \bar{y}\right)}{\sqrt{\sum_{i=1}^{n}\left(X_{ij} - \bar{X}_j\right)^2}\,\sqrt{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}} \tag{8}$$

The corresponding F-value is then calculated as:

$$F_j = \frac{r_j^2}{1 - r_j^2}\,(n - 2) \tag{9}$$

where n is the number of samples.

The SelectKBest method then ranks the feature vectors by the F-scores returned by f_regression; higher scores correspond to better features.
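The matrix layout and scoring described above can be reproduced on synthetic data. This sketch stacks per-feature [nc, ns] matrices and transposes them. It then computes Equations (8) and (9) by hand and checks them against scikit-learn's `f_regression`. All sizes, the choice k = 10, and the random data are hypothetical:

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_regression

rng = np.random.default_rng(0)
n_channels, n_features, n_segments = 14, 3, 200  # hypothetical nc, nf, ns

# One [nc, ns] matrix per feature (random stand-ins for real features).
feature_mats = [rng.normal(size=(n_channels, n_segments)) for _ in range(n_features)]
stacked = np.vstack(feature_mats)  # [nc * nf, ns]
X = stacked.T  # [ns, nc * nf]: one row per segment, one column per feature
# Synthetic continuous target driven only by column 5.
y = 2.0 * X[:, 5] + rng.normal(scale=0.5, size=n_segments)

# Equation (8): Pearson r between each column of X and y.
Xc, yc = X - X.mean(axis=0), y - y.mean()
r = (Xc * yc[:, None]).sum(axis=0) / np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum())
# Equation (9): F = r^2 / (1 - r^2) * (n - 2).
F_manual = r ** 2 / (1 - r ** 2) * (n_segments - 2)

F_sklearn, _ = f_regression(X, y)
selector = SelectKBest(score_func=f_regression, k=10).fit(X, y)
ranking = np.argsort(selector.scores_)[::-1]  # column indices, best first
```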

Random forest is an ensemble estimator that fits a number of decision trees on various sub-samples of the dataset and averages their predictions to improve predictive accuracy and control over-fitting (Pedregosa et al., 2011). It has also been found to be suitable for high-dimensional data. In this experiment, a random forest regressor with 100 trees and the squared-error split criterion was implemented using the scikit-learn library.

The following regression evaluation metrics were assessed to gauge the model performance as part of this experiment:

Root Mean Square Error (RMSE) is the square root of the mean squared residual, as shown in Equation (10); when the residuals have zero mean, it equals their standard deviation. RMSE therefore measures how far the actual values deviate from the model's predictions. A lower RMSE corresponds to more accurate predictions and smaller residual errors. Because the errors are squared, RMSE is more sensitive to outliers than MAE.

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \tag{10}$$

The R² score is a statistic that denotes the proportion of variance in the dependent variable (y) explained by the independent variables (x) of the machine learning model. Higher R² values indicate that the independent variables explain more of the variance in the dependent variable. Since the R² score depends on the sample size of the dataset and the number of predictor variables, it is not meaningfully comparable across datasets of different dimensionality (MAR, 2021). The R² score can be computed as:

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2} \tag{11}$$

where $\bar{y}$ is the mean of the actual values.

Mean Absolute Error (MAE), or L1 loss, is the mean of the absolute difference between the predicted value ($\hat{y}_i$) and the actual value ($y_i$) of the dependent variable, as shown in Equation (12). MAE is a popular regression metric expressed in the same units as the observed values. Like RMSE, MAE is a negatively oriented metric: lower values correspond to more accurate predictions.

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \tag{12}$$

Explained variance (EV) measures the part of the total variance in the actual data that is accounted for by the model, and thus reflects the discrepancy between the model and the data. EV differs from the R² score in that it does not account for a systematic offset: it is computed from the (biased) variance of the residuals rather than from their mean square. Hence, if the mean error of the predictor is zero (i.e., the predictor is unbiased), the EV and R² scores are equal. EV can be calculated as:

$$\mathrm{EV} = 1 - \frac{\mathrm{Var}\{y - \hat{y}\}}{\mathrm{Var}\{y\}} \tag{13}$$

where Var{θ} is the variance operator for variable θ.

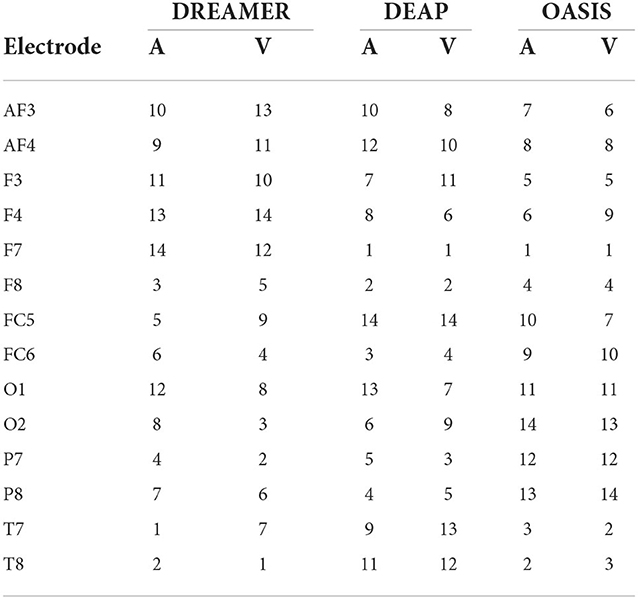

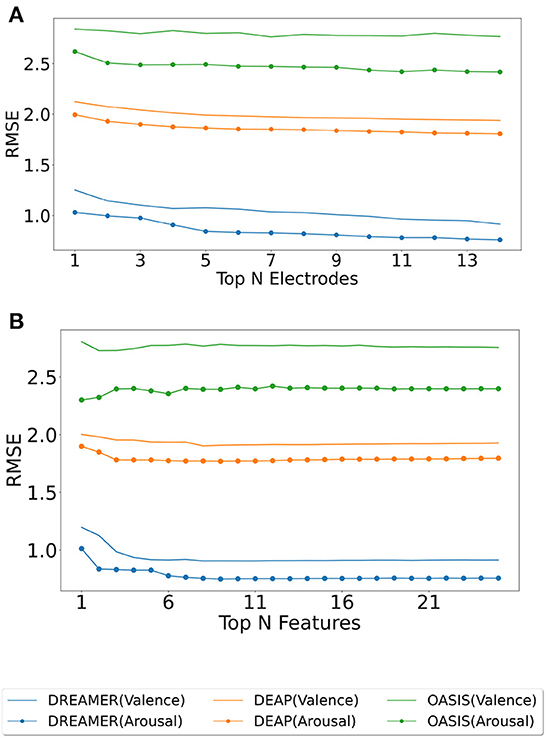

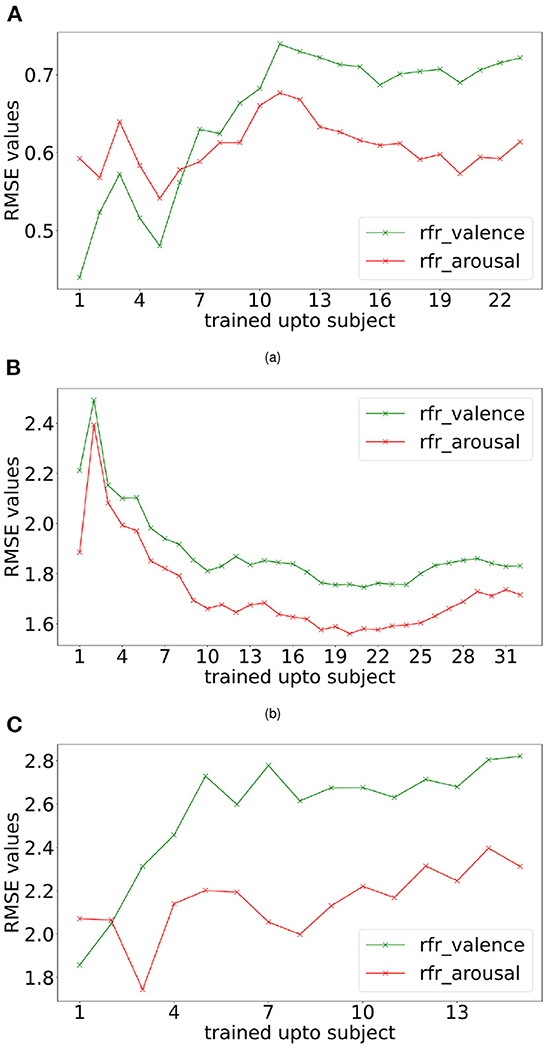

The electrodes were ranked for the three datasets using the SelectKBest method, as discussed in Section 2.4, and the ranks are tabulated for valence and arousal labels in Table 2. To evaluate the top N electrodes, only the feature data of the N highest-ranked electrodes were used. The resulting matrix was split 80:20 into training and test sets for training and evaluating the random forest regressor. This procedure was repeated for N = 1 to 14, until all electrodes were taken into account, and the resulting RMSE values are shown in Figure 3A. Note that, unlike in the feature analysis, the data of the five DASM and five RASM features were excluded from this electrode-wise RMSE study, since these features are constructed from pairs of opposite electrodes.

Table 2. Electrode ranking for valence label (V) and arousal label (A) based on SelectKBest feature selection method.

Figure 3. Model evaluation for feature and electrode selection. The random forest regressor was trained on the training set (80%) corresponding to top N electrodes (ranked using SelectKBest feature selection method), and RMSE was computed on the test set (20%) for valence (plain) and arousal (dotted) label on DREAMER, DEAP, and OASIS EEG datasets as shown in (A). A similar analysis was performed for top N features for DREAMER, DEAP, and OASIS EEG datasets, as shown in (B).

Each extracted feature was used to generate its feature matrix of shape (nbChannels, nbSegments), and these feature matrices were ranked using the SelectKBest feature selection method. The ranks are tabulated for valence and arousal labels in Table 3. First, the feature matrix of the best-ranked feature alone was split 80:20 into training and test data. A random forest regressor was trained on the training data, its predictions on the test data were compared with the actual test labels, and RMSE was computed. In the second run, the feature matrices of the best and second-best features were combined, and the split, training, and evaluation steps were repeated. This procedure was continued until all features had been taken into account. The resulting RMSE values are shown in Figure 3B.
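The top-N procedure, used above for both electrodes and features, can be sketched as a loop over ranked column blocks. This sketch ranks a block by the mean `f_regression` F-score of its columns, which is an assumption. The synthetic data and the function names are also assumptions:

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.feature_selection import f_regression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split


def rank_blocks(blocks, y):
    """Rank column blocks (one per feature or electrode) by mean F-score, best first."""
    scores = [f_regression(block, y)[0].mean() for block in blocks]
    return list(np.argsort(scores)[::-1])


def top_n_rmse(blocks, ranking, y, seed=0):
    """Test RMSE when training on the top 1, 2, ..., N blocks (80:20 split)."""
    rmses = []
    for n in range(1, len(ranking) + 1):
        X = np.hstack([blocks[i] for i in ranking[:n]])
        X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, random_state=seed)
        model = RandomForestRegressor(n_estimators=100, random_state=seed).fit(X_tr, y_tr)
        rmses.append(float(np.sqrt(mean_squared_error(y_te, model.predict(X_te)))))
    return rmses


rng = np.random.default_rng(0)
# Hypothetical: 4 features, each a [n_segments, 14 channels] block.
blocks = [rng.normal(size=(200, 14)) for _ in range(4)]
y = 5 + blocks[2][:, :3].sum(axis=1) + rng.normal(scale=0.5, size=200)
ranking = rank_blocks(blocks, y)
curve = top_n_rmse(blocks, ranking, y)
```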

Table 3. Feature ranking for valence label (V) and arousal label (A) based on SelectKBest feature selection method.

The best features identified by the feature analysis above were used to generate a feature matrix for valence and arousal for each dataset. This feature matrix was then used to train a random forest regressor as part of the incremental learning algorithm.

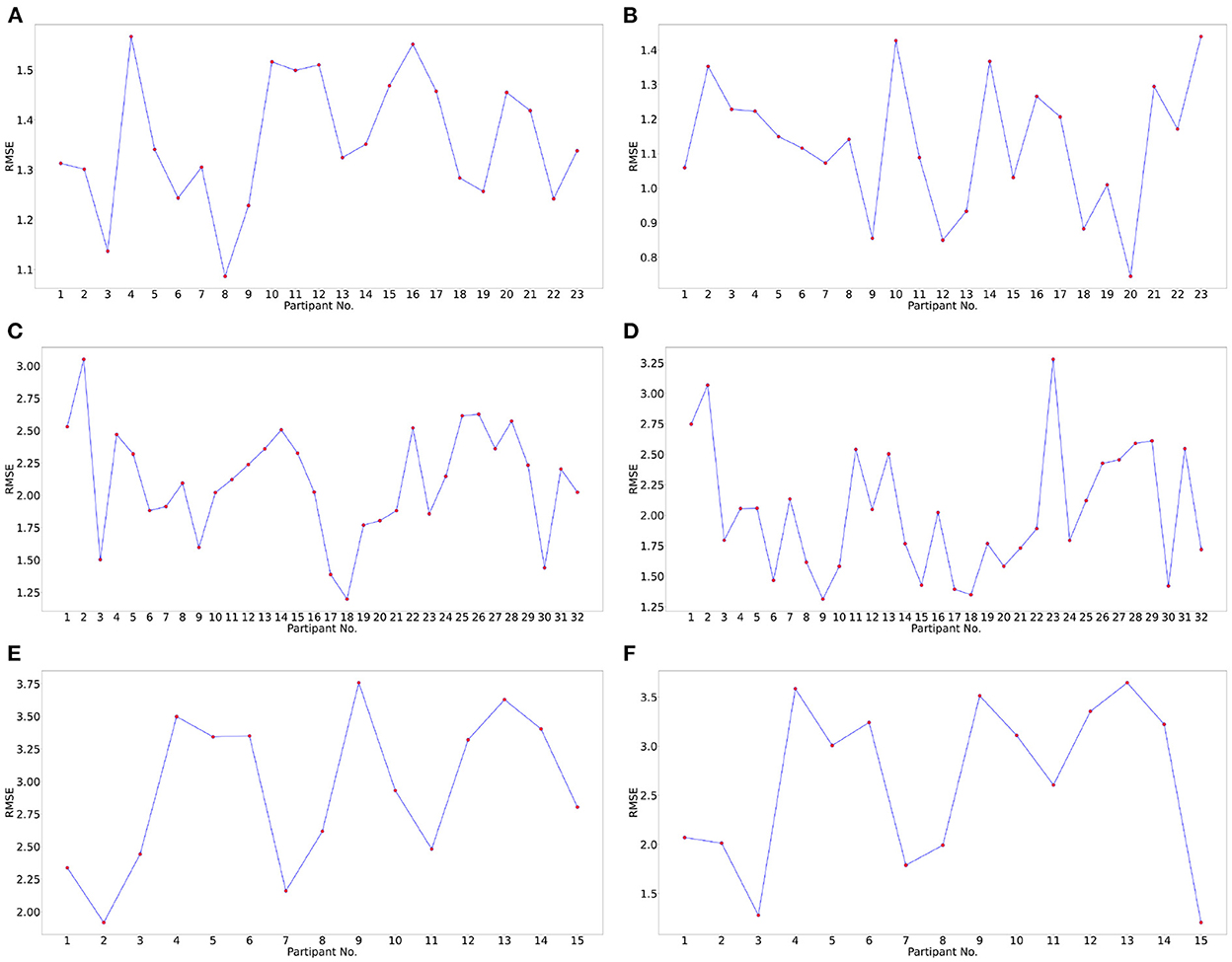

Incremental learning was performed by progressively adding subjects' data. First, the data of subject 1 were taken, the trial order was shuffled, and the data were split 80:20 into training and test sets. A random forest regressor was trained on the training split, and predictions were made for the test split. Next, the data of subject 2 were added to those of subject 1, and the shuffling, splitting, training, and prediction steps were repeated. This procedure was continued until the data of all subjects had been used for RMSE computation. RMSE was recorded at each training step, i.e., for training data from subject 1, then subjects 1 and 2, then subjects 1, 2, and 3, and so on. The RMSE values for the individual training steps are plotted in Figure 4.
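The subject-incremental procedure can be sketched as follows. The data shapes, random seed, and rating model are hypothetical. A fresh random forest is fitted at each step, as described above:

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split


def incremental_subject_rmse(X_by_subject, y_by_subject, seed=0):
    """Retrain on subjects 1..s for s = 1..S and return the test RMSE per step."""
    rmses = []
    for s in range(1, len(X_by_subject) + 1):
        X = np.vstack(X_by_subject[:s])
        y = np.concatenate(y_by_subject[:s])
        # shuffle=True randomizes the trial order before the 80:20 split.
        X_tr, X_te, y_tr, y_te = train_test_split(
            X, y, test_size=0.2, shuffle=True, random_state=seed)
        model = RandomForestRegressor(n_estimators=100, random_state=seed).fit(X_tr, y_tr)
        rmses.append(float(np.sqrt(mean_squared_error(y_te, model.predict(X_te)))))
    return rmses


rng = np.random.default_rng(0)
# Hypothetical data: 5 subjects x 40 trials x 20 features, ratings on a 1-10 scale.
X_by_subject = [rng.normal(size=(40, 20)) for _ in range(5)]
y_by_subject = [np.clip(5 + 2 * X[:, 0] + rng.normal(size=40), 1, 10) for X in X_by_subject]
rmse_curve = incremental_subject_rmse(X_by_subject, y_by_subject)
```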

Figure 4. Incremental learning performance. Valence and arousal RMSE readings were obtained with incremental learning for DREAMER (A), DEAP (B), and OASIS EEG (C) datasets using random forest regressor (rfr).

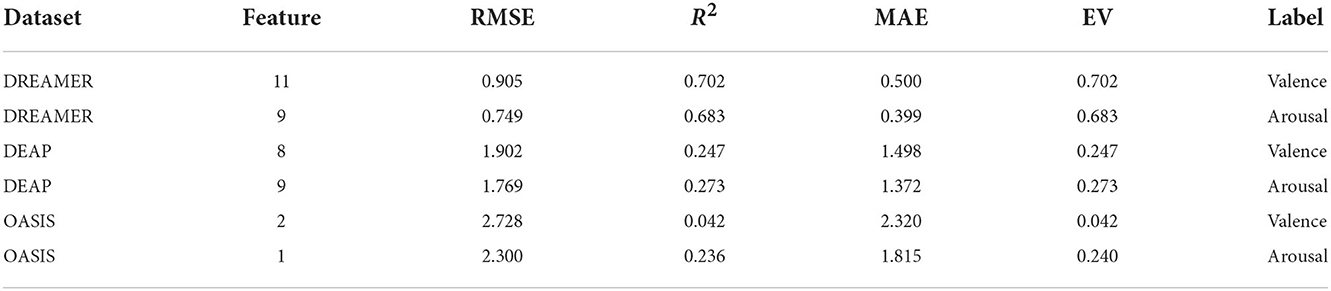

Subject generalization is a crucial problem in identifying EEG signal patterns. To prevent over-fitting and avoid learning subject-dependent patterns, we trained the model with data from all subjects except one and evaluated it on the remaining subject. The model is thus evaluated on each subject in turn, which exposes subject bias and over-fitting. When building a machine learning model, it is standard practice to validate the results on a held-out portion of the data; in this work, we used leave-one-subject-out cross-validation to avoid participant bias and to evaluate the generalization capabilities of the pipeline. Leave-one-subject-out cross-validation is a form of k-fold cross-validation in which the number of folds, k, equals the number of participants in the dataset. The cross-validated RMSE values for all participants in the three datasets are plotted in Figure 5.

Figure 5. Subject-wise performance analysis for valence and arousal labels. Leave-one-subject-out cross-validation performance for the valence label on the (A) DREAMER, (C) DEAP, and (E) OASIS datasets and for the arousal label on the (B) DREAMER, (D) DEAP, and (F) OASIS datasets, respectively. In this cross-validation technique, one subject was chosen as the test subject, and the models were trained on the data of the remaining subjects.

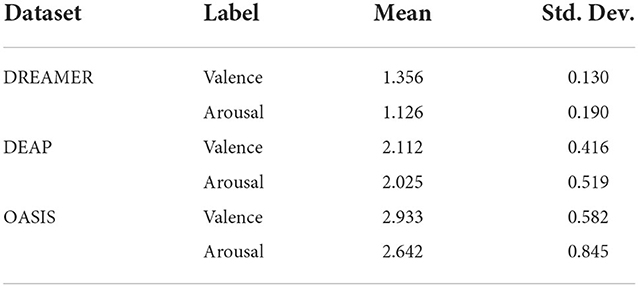

The mean and standard deviation of the RMSE values for the valence and arousal labels after cross-validation are summarized in Table 6. The best RMSE values lie within the mean ± standard deviation range of the leave-one-subject-out cross-validation results; hence, the inferences drawn from them are consistent with the cross-validated performance.
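Leave-one-subject-out cross-validation maps directly onto scikit-learn's `LeaveOneGroupOut` splitter when subject IDs are passed as groups. The sketch below assumes the same hypothetical `X`, `y`, `subjects` layout as above and a random forest regressor; it returns one RMSE per held-out subject together with their mean and standard deviation, the kind of quantities reported in Figure 5 and Table 6. Whether the original analysis used the population or sample standard deviation is not stated, so the `ddof=0` default here is an assumption.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import LeaveOneGroupOut


def loso_rmse(X, y, subjects, seed=0):
    """Train on all subjects but one, test on the held-out subject, for every subject."""
    per_subject = {}
    for train_idx, test_idx in LeaveOneGroupOut().split(X, y, groups=subjects):
        model = RandomForestRegressor(n_estimators=50, random_state=seed)
        model.fit(X[train_idx], y[train_idx])
        held_out = subjects[test_idx][0]  # every test row belongs to the same subject
        rmse = np.sqrt(mean_squared_error(y[test_idx], model.predict(X[test_idx])))
        per_subject[held_out] = float(rmse)
    values = np.array(list(per_subject.values()))
    # np.std defaults to the population standard deviation (ddof=0)
    return per_subject, float(values.mean()), float(values.std())
```

Comparing the smallest per-subject RMSE with the `mean ± std` interval gives the kind of check described in the paragraph above.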

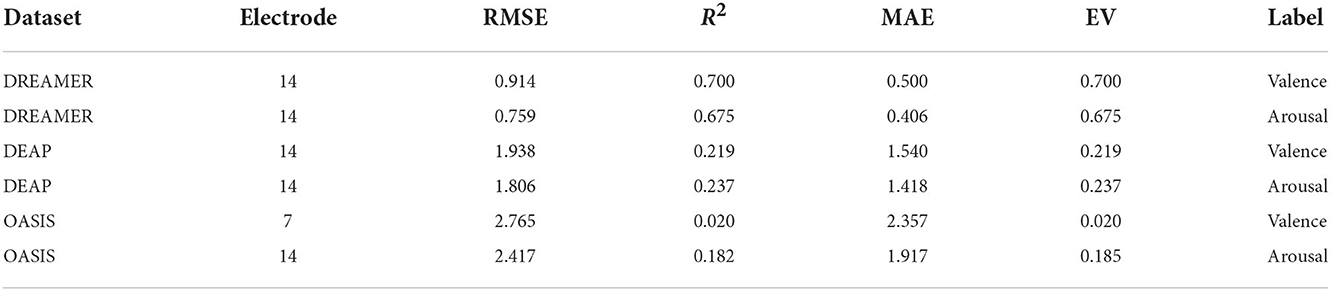

Table 4 shows that the optimum values of RMSE, R² score, MAE, and explained variance (EV) obtained on the test set (20%) using the optimum set of features were 0.905, 0.702, 0.500, 0.702 and 0.749, 0.683, 0.399, 0.683 on the DREAMER dataset; 1.902, 0.247, 1.498, 0.247 and 1.769, 0.273, 1.372, 0.273 on the DEAP dataset; and 2.728, 0.042, 2.320, 0.042 and 2.300, 0.236, 1.815, 0.240 on the OASIS dataset for valence and arousal, respectively. For leave-one-subject-out cross-validation, we achieved best RMSE values of 1.35 and 1.12 on DREAMER, 2.11 and 2.02 on DEAP, and 2.93 and 2.64 on the OASIS dataset for valence and arousal, respectively, as shown in Figure 5.
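The four metrics in Table 4 correspond to standard scikit-learn functions. The sketch below assumes that EV denotes scikit-learn's explained variance score; `regression_report` is a hypothetical helper name.

```python
import numpy as np
from sklearn.metrics import (
    explained_variance_score,
    mean_absolute_error,
    mean_squared_error,
    r2_score,
)


def regression_report(y_true, y_pred):
    """RMSE, R2, MAE, and explained variance (EV) for one set of predictions."""
    return {
        "RMSE": float(np.sqrt(mean_squared_error(y_true, y_pred))),
        "R2": float(r2_score(y_true, y_pred)),
        "MAE": float(mean_absolute_error(y_true, y_pred)),
        "EV": float(explained_variance_score(y_true, y_pred)),
    }


# Residuals 1, 0, -1, 0 have zero mean, so R2 and EV coincide here (both 0.9)
print(regression_report([3, 5, 7, 9], [2, 5, 8, 9]))
```

This also explains why R² and EV are nearly identical in Table 4. With residuals e = y - ŷ, EV = 1 - Var(e)/Var(y) while R² = 1 - mean(e²)/Var(y), and since mean(e²) = Var(e) + mean(e)², the two agree exactly when the mean residual is zero and EV exceeds R² otherwise. The single visible gap (0.236 vs. 0.240 for OASIS arousal) therefore suggests that the predictions are close to unbiased on average.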

Table 4. Regression evaluation metrics values for valence and arousal labels on the test set (20%) of DEAP, DREAMER, and OASIS datasets for the optimum set of features.

The dimension of the feature vector depends on the number of electrodes and features used to train the machine learning model. Training a model on high-dimensional data requires a proportionally large sample size to avoid over-fitting. Limited training data and participant bias are classic drawbacks of EEG datasets, especially for emotional state recognition. Therefore, determining the optimum number of electrodes and features is a critical step.

To analyze the subject generalization capability of the proposed methods, two experiments were conducted: incremental learning, shown in Figure 4, and leave-one-out cross-validation, shown in Figure 5 and Table 6. As shown in Supplementary Figure 2, the incremental learning (IL) error is lower than the leave-one-out cross-validation (LOCV) error for most participants. For the DEAP dataset (32 participants), performance improves as the number of participants used to train the model increases (Figure 4B). For the DREAMER and OASIS EEG datasets, performance worsens as the number of participants increases (Figures 4A,C), yet IL performance is substantially better than LOCV performance (Supplementary Figure 2), indicating that participant bias is higher in these two datasets. Moreover, the IL error saturates after 10 participants in DREAMER and 5 participants in the OASIS EEG dataset. Therefore, the model overfits when trained with data from only a few subjects, and the generalization capabilities of the proposed model scale with sample size.

The optimum number (N) of electrodes (Table 5) and features (Table 4) is the value that produces the minimum RMSE during model evaluation as N is increased, as shown in Figures 3A,B. In Figure 3A, the error generally declines as the number of electrodes increases, indicating the importance of high electrode density.

Table 5. Regression evaluation metrics values for valence and arousal labels on the test set (20%) of DEAP, DREAMER, and OASIS datasets for the optimum set of electrodes.

For the number of features, the downward trend saturates and even reverses (Figure 3B) when the number of features is increased beyond a limit. This is also reflected in Table 4, where N is less than half the total number of features for all datasets. Interestingly, the lowest RMSE for decoding arousal on the OASIS EEG dataset was obtained with just a single feature. This might be explained by the OASIS EEG dataset being smaller than the other two datasets, so that increasing the feature vector length leads to over-fitting.

The subject generalization capabilities of the learned model can be estimated by comparing the leave-one-out cross-validation results (Table 6) with those of the standard 80:20 split (Table 4). The former error is higher than the latter by 50, 14, and 9% for the DREAMER, DEAP, and OASIS EEG datasets, respectively. The number of selected features is also highest for DREAMER and lowest for the OASIS EEG dataset, suggesting that more features increase participant bias; the number of features should therefore be determined carefully.

Table 6. Mean and standard deviation (Std. Dev.) of RMSE values for valence and arousal label data after leave-one-subject-out cross-validation.

As shown in Tables 2, 3, three rankings, one per dataset, were obtained for each label. For the valence label, of the top 25% electrodes, 33% were in the frontal regions (F3, F4, F7, F8, AF3, AF4, FC5, FC6), 33% in the temporal regions (T7, T8), 22% in the parietal regions (P7, P8), and 11% in the occipital regions (O1, O2). Of the top 50% electrodes, 57% were in the frontal regions, 19% in the temporal regions, 19% in the parietal regions, and 4% in the occipital regions.

For the arousal label, of the top 25% electrodes, 55% were in the frontal regions and 44% in the temporal regions. Of the top 50% electrodes, 57% were in the frontal regions, 19% in the temporal regions, 19% in the parietal regions, and 4% in the occipital regions.
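The region percentages above come from pooling the top-ranked electrodes of the three dataset-specific rankings and counting how many fall in each region. The tally can be sketched as below, assuming the 14-channel Emotiv montage grouped into regions as in the text and taking floor(14 × fraction) electrodes per ranking (3 for the top 25%, 7 for the top 50%). The rankings in the example are made up for illustration and are not the rankings in Table 2.

```python
from collections import Counter

# Region of each electrode in the 14-channel Emotiv montage, grouped as in the text
REGION = {
    **dict.fromkeys(["AF3", "AF4", "F3", "F4", "F7", "F8", "FC5", "FC6"], "frontal"),
    **dict.fromkeys(["T7", "T8"], "temporal"),
    **dict.fromkeys(["P7", "P8"], "parietal"),
    **dict.fromkeys(["O1", "O2"], "occipital"),
}


def region_shares(rankings, fraction):
    """Pool the top `fraction` of every ranking and return the percentage per region."""
    pooled = []
    for ranking in rankings:
        k = int(len(ranking) * fraction)  # floor, e.g. 14 * 0.25 -> 3 electrodes
        pooled.extend(ranking[:k])
    counts = Counter(REGION[ch] for ch in pooled)
    return {region: 100 * n / len(pooled) for region, n in counts.items()}


# Hypothetical rankings for three datasets (illustration only)
rankings = [
    ["F4", "T7", "P7", "AF3", "F8", "O1", "FC5", "T8", "P8", "O2", "F3", "F7", "FC6", "AF4"],
    ["T8", "F3", "O2", "AF4", "F7", "P8", "FC6", "T7", "P7", "O1", "F4", "F8", "FC5", "AF3"],
    ["F8", "T7", "P8", "F4", "AF3", "T8", "O1", "P7", "O2", "F3", "F7", "FC5", "FC6", "AF4"],
]
print({r: round(s, 1) for r, s in region_shares(rankings, 0.25).items()})
# {'frontal': 33.3, 'temporal': 33.3, 'parietal': 22.2, 'occipital': 11.1}
```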

Therefore, the frontal region was the most significant brain region for recognizing valence and arousal, followed by the temporal, parietal, and occipital regions. This is consistent with previous work on EEG channel selection (Alotaiby et al., 2015; Shen J. et al., 2020).

The optimum set of features was obtained using the feature rankings in Table 3 and the model evaluation results in Table 4. For the DREAMER dataset, this set was (S.E.G, S.E.B, H.M, H.C, B.P.A, S.D, S.E.A, B.P.B, R.B, D.B, D.A) for valence and (S.E.G, H.C, S.E.B, D.A, R.A, H.M, B.P.A, S.D, B.P.B) for arousal. The minimum RMSE values obtained using these optimal features on the DREAMER dataset were 0.905 and 0.749 for valence and arousal, respectively (Table 4). Therefore, these features were critical for recognizing emotional states and can be used in future studies to evaluate models such as artificial neural networks and ensembles.

As shown in Table 3, the band power and sub-band information quantity features for the gamma and beta frequency bands performed better at estimating valence and arousal than those for other frequency bands. Hence, the gamma and beta frequency bands are the most critical for emotion recognition (Wang X.-W. et al., 2011; Zheng et al., 2017).
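Band power per frequency band can be estimated from a Welch power spectral density, as in the sketch below. The band edges (e.g., beta 13-30 Hz, gamma 30-45 Hz), the 2-second Welch segments, and the 128 Hz sampling rate in the example are assumptions for illustration, not values taken from the published pipeline.

```python
import numpy as np
from scipy.signal import welch

# Assumed band edges in Hz (conventions vary between studies)
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 45)}


def band_powers(signal, fs):
    """Absolute power per band: Welch PSD summed over the band's bins times the bin width."""
    freqs, psd = welch(signal, fs=fs, nperseg=min(len(signal), 2 * fs))
    df = freqs[1] - freqs[0]
    return {
        name: float(psd[(freqs >= lo) & (freqs < hi)].sum() * df)
        for name, (lo, hi) in BANDS.items()
    }


fs = 128
t = np.arange(0, 10, 1 / fs)
x = np.sin(2 * np.pi * 20 * t) + 0.05 * np.random.default_rng(0).normal(size=t.size)
powers = band_powers(x, fs)
print(max(powers, key=powers.get))  # a 20 Hz sine falls in the beta band, so this prints beta
```

Summing PSD bins times the bin width is a simple rectangle-rule integral; Simpson's rule or relative (normalized) band power are common alternatives.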

Table 3 shows that H.M was ranked among the top three features for predicting valence and arousal labels in most cases. Similarly, H.C was ranked among the top four features. This is consistent with previous studies reporting the importance of the time-domain Hjorth parameters for accurate EEG classification (Cecchin et al., 2010; Türk et al., 2017).
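For reference, the Hjorth parameters (Hjorth, 1970) are activity = var(x), mobility = sqrt(var(x′)/var(x)), and complexity = mobility(x′)/mobility(x). A minimal NumPy sketch, assuming first differences approximate the derivatives, so mobility is expressed per sample rather than in Hz:

```python
import numpy as np


def hjorth(x):
    """Return Hjorth activity, mobility, and complexity of a 1-D signal."""
    x = np.asarray(x, dtype=float)
    dx = np.diff(x)    # first derivative (finite difference)
    ddx = np.diff(dx)  # second derivative
    activity = np.var(x)
    mobility = np.sqrt(np.var(dx) / activity)
    complexity = np.sqrt(np.var(ddx) / np.var(dx)) / mobility
    return activity, mobility, complexity


fs = 128
t = np.arange(0, 10, 1 / fs)
_, m_sine, c_sine = hjorth(np.sin(2 * np.pi * 10 * t))
_, _, c_noise = hjorth(np.random.default_rng(0).normal(size=t.size))
print(round(c_sine, 2), c_noise > c_sine)  # a pure sine has complexity close to 1
```

Complexity measures how far a signal departs from a pure sinusoid, so broadband noise scores higher than a single oscillation.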

Previous studies have reported that statistical properties such as the standard deviation of reconstructed EEG signals are significant descriptors of the signal (Panda et al., 2010; Malini and Vimala, 2016), which supports the results obtained in this study: SD was generally ranked among the top eight features.

Additionally, spatial filtering that optimizes covariance matrices on the training data, using common spatial patterns (CSP) or Riemannian geometry (Barachant et al., 2011), has been used to improve classification results (Simar et al., 2020). However, these methods are formulated for classification tasks, and extending them to regression problems is beyond the scope of this study. Lastly, the model could be further optimized using advanced ensemble learning techniques (Fang et al., 2021) or deep networks, as in the bag-of-deep-features approach (Asghar et al., 2019).

EEG is a low-cost, noninvasive neuroimaging technique that provides high temporal resolution information about brain activity, and it has become an indispensable tool for decoding cognitive neural signatures. However, decoding requires a multi-stage signal processing pipeline with several essential steps: pre-processing, feature extraction, feature selection, and classifier training. In this work, we propose a generalized open-source neural signal processing pipeline based on machine learning to accurately predict emotional states on the continuous valence-arousal plane from EEG signals. We statistically investigated and validated artifact rejection, automated bad-trial rejection, state-of-the-art spatiotemporal feature extraction techniques, and feature selection techniques on a self-curated dataset, recorded with a portable headset in response to the OASIS emotion elicitation image dataset, and on two open-source EEG datasets. The static images also reduce demographic biases such as language and social context and enable generalized benchmarks of different feature extraction techniques for emotional response detection across various recording setups. The published dataset could be used in future studies of intelligent signal processing methods such as deep learning, reinforcement learning, and neuromorphic computing. The published, deliberately simple Python pipeline would help researchers focus on innovation in specific signal processing steps, such as feature selection or machine learning, without needing to recreate the entire pipeline from scratch. Consistent with the neuroscience literature, our proposed system identified the optimum set of electrodes and features that produce the minimum RMSE during emotion recognition for a given dataset. It also supported the claim that the beta and gamma frequency bands are more effective than other bands for emotion recognition. The OASIS EEG dataset collection was limited to 15 participants due to the COVID-19 pandemic.
In the future, we plan to collect data from at least 40 participants to draw stronger inferences. Future work will also include the analysis of end-to-end neural networks and transfer learning for emotion recognition. The published dataset can further advance machine learning systems for emotional state detection with data recorded from portable headsets. The published EEG processing pipeline of artifact rejection, feature extraction, feature ranking, feature selection, and machine learning could be expanded and adapted to process EEG signals recorded in response to a variety of stimuli.

The code supporting this study is made publicly available at https://github.com/rohitgarg025/Decoding_EEG. The OASIS EEG dataset is published at https://zenodo.org/record/7332684#.Y3b_Dt9OlhE.

The studies involving human participants were reviewed and approved by Institutional Ethics Committee of BITS, Pilani (IHEC-40/16-1). The patients/participants provided their written informed consent to participate in this study.

NG and VB conceptualized the research. RG, NG, and AA performed the experiments and analyzed the data. VB supervised the study. NG, RG, AA, and VB contributed to writing and approved the manuscript. All authors contributed to the article and approved the submitted version.

This work was supported by the Department of Science and Technology, Government of India, vide Reference No: SR/CSI/50/2014(G) through the Cognitive Science Research Initiative (CSRI). NG acknowledges financial support from the EU: ERC-2017-COG project IONOS (GA 773228).

We acknowledge Mr. Parrivesh N. S. and Mr. V. A. S. Abhinav for their valuable suggestions and assistance in data collection during the planning and initial development of this research work. We acknowledge the non-financial support from Ironwork Insights Inc. in experiment design.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnhum.2022.1051463/full#supplementary-material

Al-Fahoum, A. S., and Al-Fraihat, A. A. (2014). Methods of EEG signal features extraction using linear analysis in frequency and time-frequency domains. Int. Scholar. Res. Notices 2014:730218. doi: 10.1155/2014/730218

Alotaiby, T., Abd El-Samie, F. E., Alshebeili, S. A., and Ahmad, I. (2015). A review of channel selection algorithms for EEG signal processing. EURASIP J. Adv. Signal Process. 2015, 1–21. doi: 10.1186/s13634-015-0251-9

Amin, H. U., Mumtaz, W., Subhani, A. R., Saad, M. N. M., and Malik, A. S. (2017). Classification of EEG signals based on pattern recognition approach. Front. Comput. Neurosci. 11:103. doi: 10.3389/fncom.2017.00103

Asghar, M. A., Khan, M. J., Fawad Amin, Y., Rizwan, M., Rahman, M., Badnava, S., et al. (2019). EEG-based multi-modal emotion recognition using bag of deep features: an optimal feature selection approach. Sensors 19, 1–16. doi: 10.3390/s19235218

Barachant, A., Bonnet, S., Congedo, M., and Jutten, C. (2011). Multiclass brain-computer interface classification by Riemannian geometry. IEEE Trans. Biomed. Eng. 59, 920–928. doi: 10.1109/TBME.2011.2172210

Bauer, E. P., Paz, R., and Paré, D. (2007). Gamma oscillations coordinate amygdalo-rhinal interactions during learning. J. Neurosci. 27, 9369–9379. doi: 10.1523/JNEUROSCI.2153-07.2007

Berens, P., Keliris, G. A., Ecker, A. S., Logothetis, N. K., and Tolias, A. S. (2008). Comparing the feature selectivity of the gamma-band of the local field potential and the underlying spiking activity in primate visual cortex. Front. Syst. Neurosci. 2:8. doi: 10.3389/neuro.06.002.2008

Boutros, N. N. (1996). Diffuse electroencephalogram slowing in psychiatric patients: a preliminary report. J. Psychiatry Neurosci. 21:259.

Cecchin, T., Ranta, R., Koessler, L., Caspary, O., Vespignani, H., and Maillard, L. (2010). Seizure lateralization in scalp EEG using Hjorth parameters. Clin. Neurophysiol. 121, 290–300. doi: 10.1016/j.clinph.2009.10.033

Ching, S., Purdon, P. L., Vijayan, S., Kopell, N. J., and Brown, E. N. (2012). A neurophysiological metabolic model for burst suppression. Proc. Natl. Acad. Sci. U.S.A. 109, 3095–3100. doi: 10.1073/pnas.1121461109

Coan, J. A., Allen, J. J., and Harmon-Jones, E. (2001). Voluntary facial expression and hemispheric asymmetry over the frontal cortex. Psychophysiology 38, 912–925. doi: 10.1111/1469-8986.3860912

Dar, M. N., Akram, M. U., Khawaja, S. G., and Pujari, A. N. (2020). CNN and LSTM-based emotion charting using physiological signals. Sensors 20:4551. doi: 10.3390/s20164551

Das, K., and Pachori, R. (2021). Schizophrenia detection technique using multivariate iterative filtering and multichannel EEG signals. Biomed. Signal Process. Control 67:102525. doi: 10.1016/j.bspc.2021.102525

Davidson, R. J., Ekman, P., Saron, C. D., Senulis, J. A., and Friesen, W. V. (1990). Approach-withdrawal and cerebral asymmetry: emotional expression and brain physiology I. J. Pers. Soc. Psychol. 58, 330–341. doi: 10.1037/0022-3514.58.2.330

Dhingra, R. C., and Ram Avtar Jaswal, S. (2021). Emotion recognition based on EEG using DEAP dataset. Eur. J. Mol. Clin. Med. 8, 3509–3517. Available online at: https://ejmcm.com/article_11758.html

Drummond, J., Brann, C., Perkins, D., and Wolfe, D. (1991). A comparison of median frequency, spectral edge frequency, a frequency band power ratio, total power, and dominance shift in the determination of depth of anesthesia. Acta Anaesthesiol. Scand. 35, 693–699. doi: 10.1111/j.1399-6576.1991.tb03374.x

Duan, R.-N., Zhu, J.-Y., and Lu, B.-L. (2013). “Differential entropy feature for EEG-based emotion classification,” in 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER), 81–84. doi: 10.1109/NER.2013.6695876

Eerola, T., and Vuoskoski, J. K. (2011). A comparison of the discrete and dimensional models of emotion in music. Psychol. Mus. 39, 18–49. doi: 10.1177/0305735610362821

Ekman, P. (1972). “Universals and cultural differences in facial expressions of emotion,” in Nebraska Symposium on Motivation.

Fang, Y., Yang, H., Zhang, X., Liu, H., and Tao, B. (2021). Multi-feature input deep forest for EEG-based emotion recognition. Front. Neurorobot. 14:617531. doi: 10.3389/fnbot.2020.617531

Galvão, F., Alarcão, S. M., and Fonseca, M. J. (2021). Predicting exact valence and arousal values from EEG. Sensors 21:3414. doi: 10.3390/s21103414

Gao, Z., Cui, X., Wan, W., and Gu, Z. (2019). Recognition of emotional states using multiscale information analysis of high frequency EEG oscillations. Entropy 21:609. doi: 10.3390/e21060609

Gerber, A. J., Posner, J., Gorman, D., Colibazzi, T., Yu, S., Wang, Z., et al. (2008). An affective circumplex model of neural systems subserving valence, arousal, and cognitive overlay during the appraisal of emotional faces. Neuropsychologia 46, 2129–2139. doi: 10.1016/j.neuropsychologia.2008.02.032

Ghassemi, M. M. (2018). Life after death: techniques for the prognostication of coma outcomes after cardiac arrest (Ph.D. thesis). Massachusetts Institute of Technology, Cambridge, MA, United States.

Haselsteiner, E., and Pfurtscheller, G. (2000). Using time-dependent neural networks for EEG classification. IEEE Trans. Rehabil. Eng. 8, 457–463. doi: 10.1109/86.895948

Hegger, R., and Kantz, H. (1999). Improved false nearest neighbor method to detect determinism in time series data. Phys. Rev. E 60:4970. doi: 10.1103/PhysRevE.60.4970

Hirsch, L., LaRoche, S., Gaspard, N., Gerard, E., Svoronos, A., Herman, S., et al. (2013). American clinical neurophysiology society's standardized critical care EEG terminology: 2012 version. J. Clin. Neurophysiol. 30, 1–27. doi: 10.1097/WNP.0b013e3182784729

Hjorth, B. (1970). EEG analysis based on time domain properties. Electroencephalogr. Clin. Neurophysiol. 29, 306–310. doi: 10.1016/0013-4694(70)90143-4

Jadhav, N., Manthalkar, R., and Joshi, Y. (2017). Electroencephalography-based emotion recognition using gray-level co-occurrence matrix features. Adv. Intell. Syst. Comput. 459, 335–343. doi: 10.1007/978-981-10-2104-6_30

Jas, M., Engemann, D. A., Bekhti, Y., Raimondo, F., and Gramfort, A. (2017). Autoreject: automated artifact rejection for MEG and EEG data. NeuroImage 159, 417–429. doi: 10.1016/j.neuroimage.2017.06.030

Jeevan, R. K., Venu Madhava Rao, S. P., Pothunoori, S. K., and Srivikas, M. (2019). “EEG-based emotion recognition using LSTM-RNN machine learning algorithm,” in Proceedings of 1st International Conference on Innovations in Information and Communication Technology, ICIICT 2019 (Chennai), 1–4. doi: 10.1109/ICIICT1.2019.8741506

Jia, X., Koenig, M. A., Nickl, R., Zhen, G., Thakor, N. V., and Geocadin, R. G. (2008). Early electrophysiologic markers predict functional outcome associated with temperature manipulation after cardiac arrest in rats. Crit. Care Med. 36:1909. doi: 10.1097/CCM.0b013e3181760eb5

Jia, X., and Kohn, A. (2011). Gamma rhythms in the brain. PLoS Biol. 9:e1001045. doi: 10.1371/journal.pbio.1001045

Jie, X., Rui, C., and Li, L. (2014). Emotion recognition based on the sample entropy of EEG. Biomed. Mater. Eng. 24, 1185–1192. doi: 10.3233/BME-130919

Jin, L., and Kim, E. Y. (2020). Interpretable cross-subject EEG-based emotion recognition using channel-wise features. Sensors 20, 1–18. doi: 10.3390/s20236719

Kamiński, J., Brzezicka, A., Gola, M., and Wróbel, A. (2012). Beta band oscillations engagement in human alertness process. Int. J. Psychophysiol. 85, 125–128. doi: 10.1016/j.ijpsycho.2011.11.006

Kanungo, L., Garg, N., Bhobe, A., Rajguru, S., and Baths, V. (2021). “Wheelchair automation by a hybrid BCI system using SSVEP and eye blinks,” in 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC) (Melbourne, VIC), 411–416. doi: 10.1109/SMC52423.2021.9659266

Karlekar, S., Niu, T., and Bansal, M. (2018). Detecting linguistic characteristics of Alzheimer's dementia by interpreting neural models. ACL Anthol. 701–707. doi: 10.18653/v1/N18-2110

Katsigiannis, S., and Ramzan, N. (2018). DREAMER: a database for emotion recognition through EEG and ECG signals from wireless low-cost off-the-shelf devices. IEEE J. Biomed. Health Informatics 22, 98–107. doi: 10.1109/JBHI.2017.2688239

Kennel, M. B., Brown, R., and Abarbanel, H. D. (1992). Determining embedding dimension for phase-space reconstruction using a geometrical construction. Phys. Rev. A 45:3403. doi: 10.1103/PhysRevA.45.3403

Khateeb, M., Anwar, S., and Alnowami, M. (2021). Multi-domain feature fusion for emotion classification using DEAP dataset. IEEE Access 9, 12134–12142. doi: 10.1109/ACCESS.2021.3051281

Klimesch, W. (1996). Memory processes, brain oscillations and EEG synchronization. Int. J. Psychophysiol. 24, 61–100. doi: 10.1016/S0167-8760(96)00057-8

Klimesch, W. (1999). EEG alpha and theta oscillations reflect cognitive and memory performance: a review and analysis. Brain Res. Rev. 29, 169–195. doi: 10.1016/S0165-0173(98)00056-3

Klimesch, W. (2012). Alpha-band oscillations, attention, and controlled access to stored information. Trends Cogn. Sci. 16, 606–617. doi: 10.1016/j.tics.2012.10.007

Klimesch, W., Pfurtscheller, G., Mohl, W., and Schimke, H. (1990). Event-related desynchronization, ERD-mapping and hemispheric differences for words and numbers. Int. J. Psychophysiol. 8, 297–308. doi: 10.1016/0167-8760(90)90020-E

Ko, L. W., Su, C. H., Yang, M. H., Liu, S. Y., and Su, T. P. (2021). A pilot study on essential oil aroma stimulation for enhancing slow-wave EEG in sleeping brain. Sci. Rep. 11, 1–11. doi: 10.1038/s41598-020-80171-x

Koelstra, S., Mühl, C., Soleymani, M., Lee, J. S., Yazdani, A., Ebrahimi, T., et al. (2012). DEAP: a database for emotion analysis; Using physiological signals. IEEE Trans. Affect. Comput. 3, 18–31. doi: 10.1109/T-AFFC.2011.15

Kurdi, B., Lozano, S., and Banaji, M. R. (2016). Introducing the open affective standardized image set (OASIS). Behav. Res. Methods 49, 457–470. doi: 10.3758/s13428-016-0715-3

Kusumaningrum, T., Faqih, A., and Kusumoputro, B. (2020). Emotion recognition based on DEAP database using EEG time-frequency features and machine learning methods. J. Phys. Conf. Ser. 1501:012020. doi: 10.1088/1742-6596/1501/1/012020

Lane, R. D., Chua, P. M., and Dolan, R. J. (1999). Common effects of emotional valence, arousal and attention on neural activation during visual processing of pictures. Neuropsychologia 37, 989–997. doi: 10.1016/S0028-3932(99)00017-2

Lang, P. (1995). International Affective Picture System (IAPS). Technical manual and affective ratings. NIMH Center Study Emot. Attent. 1, 39–58. Available online at: https://www2.unifesp.br/dpsicobio/adap/instructions.pdf

Lehmann, D. (1990). “Brain electric microstates and cognition: The atoms of thought,” in Machinery of the Mind: Data, Theory, and Speculations About Higher Brain Function, eds J. E. Roy, T. Harmony, L. S. Prichep, M. Valdes-Soso, and P. A. Valdes-Sosa (Boston, MA: Birkhauser Boston), 209–224. doi: 10.1007/978-1-4757-1083-0_10

Leite, J., Carvalho, S., Galdo-Alvarez, S., Alves, J., Sampaio, A., and Gonçalves, Ó. F. (2012). Affective picture modulation: valence, arousal, attention allocation and motivational significance. Int. J. Psychophysiol. 83, 375–381. doi: 10.1016/j.ijpsycho.2011.12.005

Malini, A., and Vimala, V. (2016). “An epileptic seizure classifier using EEG signal,” in 2016 International Conference on Computing Technologies and Intelligent Data Engineering (ICCTIDE'16), 1–4. doi: 10.1109/ICCTIDE.2016.7725334

MAR, D. W. M. V. A. (2021). Missing data and regression. Available online at: https://web.pdx.edu/~newsomj/mvclass/ho_missing.pdf

Milz, P., Faber, P. L., Lehmann, D., Koenig, T., Kochi, K., and Pascual-marqui, R. D. (2016). The functional significance of EEG microstates—associations with modalities of thinking. NeuroImage 125, 643–656. doi: 10.1016/j.neuroimage.2015.08.023

Misselhorn, J., Friese, U., and Engel, A. K. (2019). Frontal and parietal alpha oscillations reflect attentional modulation of cross-modal matching. Sci. Rep. 9, 1–11. doi: 10.1038/s41598-019-41636-w

Mohammad, S. M. (2018). “Obtaining reliable human ratings of valence, arousal, and dominance for 20,000 English words,” in ACL 2018 - 56th Annual Meeting of the Association for Computational Linguistics, Proceedings of the Conference (Long Papers), Vol. 1, 174–184. doi: 10.18653/v1/P18-1017

Moors, A., De Houwer, J., Hermans, D., Wanmaker, S., van Schie, K., Van Harmelen, A. L., et al. (2013). Norms of valence, arousal, dominance, and age of acquisition for 4,300 Dutch words. Behav. Res. Methods 45, 169–177. doi: 10.3758/s13428-012-0243-8

Moss, M., Cook, J., Wesnes, K., and Duckett, P. (2003). Aromas of rosemary and lavender essential oils differentially affect cognition and mood in healthy adults. Int. J. Neurosci. 113, 15–38. doi: 10.1080/00207450390161903

Murugappan, M., Ramachandran, N., and Sazali, Y. (2010). Classification of human emotion from EEG using discrete wavelet transform. J. Biomed. Sci. Eng. 3, 390–396. doi: 10.4236/jbise.2010.34054

Oh, S.-H., Lee, Y.-R., and Kim, H.-N. (2014). A novel EEG feature extraction method using Hjorth parameter. Int. J. Electron. Electr. Eng. 2, 106–110. doi: 10.12720/ijeee.2.2.106-110

Onikura, K., Katayama, Y., and Iramina, K. (2015). Evaluation of a method of removing head movement artifact from EEG by independent component analysis and filtering. Adv. Biomed. Eng. 4, 67–72. doi: 10.14326/abe.4.67

Panat, A., Patil, A., and Deshmukh, G. (2014). “Feature extraction of EEG signals in different emotional states,” in IRAJ Conference.

Panda, R., Khobragade, P. S., Jambhule, P. D., Jengthe, S. N., Pal, P., and Gandhi, T. K. (2010). “Classification of EEG signal using wavelet transform and support vector machine for epileptic seizure diction,” in 2010 International Conference on Systems in Medicine and Biology (Kharagpur), 405–408. doi: 10.1109/ICSMB.2010.5735413

Patil, M., Garg, N., Kanungo, L., and Baths, V. (2019). “Study of motor imagery for multiclass brain system interface with a special focus in the same limb movement,” in 2019 IEEE 18th International Conference on Cognitive Informatics & Cognitive Computing (ICCI* CC). Milan: IEEE. 90–96. doi: 10.1109/ICCICC46617.2019.9146105

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., et al. (2011). Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830. Available online at: https://www.jmlr.org/papers/volume12/pedregosa11a/pedregosa11a.pdf

Peirce, J., Gray, J., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., et al. (2019). Psychopy2: Experiments in behavior made easy. Behav. Res. Methods 51, 195–203. doi: 10.3758/s13428-018-01193-y

Phung, D. Q., Tran, D., Ma, W., Nguyen, P., and Pham, T. (2014). “Using shannon entropy as EEG signal feature for fast person identification,” in ESANN, Vol. 4 (Citeseer), 413–418.

Placidi, G., Di Giamberardino, P., Petracca, A., Spezialetti, M., and Iacoviello, D. (2016). “Classification of emotional signals from the DEAP dataset,” in International Congress on Neurotechnology, Electronics and Informatics, Vol. 2 (SCITEPRESS), 15–21. doi: 10.5220/0006043400150021

Russell, J. A. (1980). A circumplex model of affect. J. Pers. Soc. Psychol. 39, 1161–1178. doi: 10.1037/h0077714

Saba-Sadiya, S., Chantland, E., Alhanai, T., Liu, T., and Ghassemi, M. M. (2020). Unsupervised EEG artifact detection and correction. Front. Digit. Health 2:57. doi: 10.3389/fdgth.2020.608920

Schirrmeister, R. T., Springenberg, J. T., Fiederer, L. D. J., Glasstetter, M., Eggensperger, K., Tangermann, M., et al. (2017). Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 38, 5391–5420. doi: 10.1002/hbm.23730

Schwilden, H. (1989). Use of the median EEG frequency and pharmacokinetics in determining depth of anaesthesia. Baillière's Clin. Anaesthesiol. 3, 603–621. doi: 10.1016/S0950-3501(89)80021-2

Shannon, C. E. (1948). A mathematical theory of communication. Bell Syst. Techn. J. 27, 379–423. doi: 10.1002/j.1538-7305.1948.tb01338.x

Shen, J., Zhang, X., Huang, X., Wu, M., Gao, J., Lu, D., et al. (2020). An optimal channel selection for EEG-based depression detection via kernel-target alignment. IEEE J. Biomed. Health Informatics. 25, 2545–2556. doi: 10.1109/JBHI.2020.3045718

Shen, X., Hu, X., Liu, S., Song, S., and Zhang, D. (2020). “Exploring EEG microstates for affective computing: decoding valence and arousal experiences during video watching,” in Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS (Montreal, QC), 841–846. doi: 10.1109/EMBC44109.2020.9175482

Shestyuk, A. Y., Kasinathan, K., Karapoondinott, V., Knight, R. T., and Gurumoorthy, R. (2019). Individual EEG measures of attention, memory, and motivation predict population level TV viewership and Twitter engagement. PLoS ONE 14:e0214507. doi: 10.1371/journal.pone.0214507

Shin, H.-C., Tong, S., Yamashita, S., Jia, X., Geocadin, G., and Thakor, V. (2006). Quantitative EEG and effect of hypothermia on brain recovery after cardiac arrest. IEEE Trans. Biomed. Eng. 53, 1016–1023. doi: 10.1109/TBME.2006.873394

Simar, C., Cebolla, A.-M., Chartier, G., Petieau, M., Bontempi, G., Berthoz, A., et al. (2020). Hyperscanning EEG and classification based on Riemannian geometry for festive and violent mental state discrimination. Front. Neurosci. 14:588357. doi: 10.3389/fnins.2020.588357

Smith, M. E., and Gevins, A. (2004). Attention and brain activity while watching television: components of viewer engagement. Media Psychol. 6, 285–305. doi: 10.1207/s1532785xmep0603_3

Song, T., Zheng, W., Song, P., and Cui, Z. (2018). EEG emotion recognition using dynamical graph convolutional neural networks. IEEE Trans. Affect. Comput. 11, 532–541. doi: 10.1109/TAFFC.2018.2817622

Subasi, A., Tuncer, T., Dogan, S., Tanko, D., and Sakoglu, U. (2021). EEG-based emotion recognition using tunable Q wavelet transform and rotation forest ensemble classifier. Biomed. Signal Process. Control 68:102648. doi: 10.1016/j.bspc.2021.102648

Tao, W., Li, C., Song, R., Cheng, J., Liu, Y., Wan, F., and Chen, X. (2020). EEG-based emotion recognition via channel-wise attention and self attention. IEEE Trans. Affect. Comput. 3045, 1–12. doi: 10.1109/TAFFC.2020.3025777

Ting, W., Guo-Zheng, Y., Bang-Hua, Y., and Hong, S. (2008). EEG feature extraction based on wavelet packet decomposition for brain computer interface. Measurement 41, 618–625. doi: 10.1016/j.measurement.2007.07.007

Torres, P. E. P., Torres, E. A., Hernández-Álvarez, M., and Yoo, S. G. (2020). EEG-based BCI emotion recognition: a survey. Sensors 20, 1–36. doi: 10.3390/s20185083

Tuncer, T., Dogan, S., and Subasi, A. (2021). A new fractal pattern feature generation function based emotion recognition method using EEG. Chaos Solit. Fract. 144:110671. doi: 10.1016/j.chaos.2021.110671

Türk, Ö., Şeker, M., Akpolat, V., and Özerdem, M. S. (2017). “Classification of mental task EEG records using Hjorth parameters,” in 2017 25th Signal Processing and Communications Applications Conference (SIU) (Antalya), 1–4. doi: 10.1109/SIU.2017.7960608

Übeyli, E. D. (2009). Analysis of EEG signals by implementing eigenvector methods/recurrent neural networks. Digit. Signal Process. 19, 134–143. doi: 10.1016/j.dsp.2008.07.007

Valsaraj, A., Madala, I., Garg, N., Patil, M., and Baths, V. (2020). “Motor imagery based multimodal biometric user authentication system using EEG,” in 2020 International Conference on Cyberworlds (CW) (Caen), 272–279. doi: 10.1109/CW49994.2020.00050

Verma, G. K., and Tiwary, U. S. (2017). Affect representation and recognition in 3D continuous valence-arousal-dominance space. Multim. Tools Appl. 76, 2159–2183. doi: 10.1007/s11042-015-3119-y

Virtanen, P., Gommers, R., Oliphant, T. E., Haberland, M., Reddy, T., Cournapeau, D., et al. (2020). SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272. doi: 10.1038/s41592-020-0772-5

Wang, X.-W., Nie, D., and Lu, B.-L. (2011). “EEG-based emotion recognition using frequency domain features and support vector machines,” in International Conference on Neural Information Processing (Springer), 734–743. doi: 10.1007/978-3-642-24955-6_87

Wang, X.-W., Nie, D., and Lu, B.-L. (2014). Emotional state classification from EEG data using machine learning approach. Neurocomputing 129, 94–106. doi: 10.1016/j.neucom.2013.06.046

Warriner, A. B., Kuperman, V., and Brysbaert, M. (2013). Norms of valence, arousal, and dominance for 13,915 English lemmas. Behav. Res. Methods 45, 1191–1207. doi: 10.3758/s13428-012-0314-x

Zhang, Y., Ji, X., and Zhang, S. (2016). An approach to EEG-based emotion recognition using combined feature extraction method. Neurosci. Lett. 633, 152–157. doi: 10.1016/j.neulet.2016.09.037

Zheng, W.-L., Dong, B.-N., and Lu, B.-L. (2014). “Multimodal emotion recognition using EEG and eye tracking data,” in 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (Chicago, IL), 5040–5043.

Zheng, W.-L., Zhu, J.-Y., and Lu, B.-L. (2017). Identifying stable patterns over time for emotion recognition from EEG. IEEE Trans. Affect. Comput. 10, 417–429. doi: 10.1109/TAFFC.2017.2712143

Keywords: signal processing, electroencephalography (EEG), machine learning, valence, arousal, emotion, feature extraction, artifact rejection

Citation: Garg N, Garg R, Anand A and Baths V (2022) Decoding the neural signatures of valence and arousal from portable EEG headset. Front. Hum. Neurosci. 16:1051463. doi: 10.3389/fnhum.2022.1051463

Received: 22 September 2022; Accepted: 08 November 2022;

Published: 06 December 2022.

Edited by:

Bin He, Carnegie Mellon University, United States

Reviewed by:

Jiahui Pan, South China Normal University, China

Copyright © 2022 Garg, Garg, Anand and Baths. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rohit Garg, f20180193@goa.bits-pilani.ac.in; Veeky Baths, veeky@goa.bits-pilani.ac.in

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.