ORIGINAL RESEARCH article

Front. Hum. Neurosci., 21 October 2021

Sec. Sensory Neuroscience

Volume 15 - 2021 | https://doi.org/10.3389/fnhum.2021.757254

This article is part of the Research Topic: Changes in the Auditory Brain Following Deafness, Cochlear Implantation, and Auditory Training, Volume II

One of the biggest challenges facing cochlear implant (CI) users is the highly variable hearing outcome of implantation across patients. Since speech perception requires the detection of various dynamic changes in acoustic features (e.g., frequency, intensity, timing) in speech sounds, it is critical to examine the ability to detect the within-stimulus acoustic changes in CI users. The primary objective of this study was to examine the auditory event-related potential (ERP) evoked by the within-stimulus frequency changes (F-changes), one type of the acoustic change complex (ACC), in adult CI users, and its correlation to speech outcomes. Twenty-one adult CI users (29 individual CI ears) were tested with psychoacoustic frequency change detection tasks, speech tests including the Consonant-Nucleus-Consonant (CNC) word recognition, Arizona Biomedical Sentence Recognition in quiet and noise (AzBio-Q and AzBio-N), and the Digit-in-Noise (DIN) tests, and electroencephalographic (EEG) recordings. The stimuli for the psychoacoustic tests and EEG recordings were pure tones at three different base frequencies (0.25, 1, and 4 kHz) that contained an F-change at the midpoint of the tone. Results showed that the frequency change detection threshold (FCDT), ACC N1′ latency, and P2′ latency did not differ across frequencies (p > 0.05). ACC N1′-P2′ amplitude was significantly larger for 0.25 kHz than for other base frequencies (p < 0.05). The mean N1′ latency across three base frequencies was negatively correlated with CNC word recognition (r = −0.40, p < 0.05) and CNC phoneme (r = −0.40, p < 0.05), and positively correlated with mean FCDT (r = 0.46, p < 0.05). The P2′ latency was positively correlated with DIN (r = 0.47, p < 0.05) and mean FCDT (r = 0.47, p < 0.05). There was no statistically significant correlation between N1′-P2′ amplitude and speech outcomes (all ps > 0.05).
Results of this study indicated that variability in CI speech outcomes assessed with the CNC, AzBio-Q, and DIN tests can be partially explained (approximately 16–21%) by the variability of cortical sensory encoding of F-changes reflected by the ACC.

The cochlear implant (CI) is a prosthetic device that provides an effective treatment for individuals with bilateral severe-to-profound hearing loss. Currently there are approximately 750,000 CIs registered worldwide. The CI has been reported to improve hearing ability and quality of life in most patients, language development in children, and possibly cognitive function in older adults (Vermeire et al., 2005; Claes et al., 2018; Andries et al., 2021).

One of the major issues with CI users’ hearing ability is that their spectral resolution is poor and they cannot perform well in tasks that heavily depend on pitch cues such as speech perception in noisy backgrounds, music melody recognition, voice pitch differentiation, and talker identification (Galvin et al., 2007; Sagi and Svirsky, 2017; Gaudrain and Başkent, 2018; Fowler et al., 2021). Unlike normal acoustic hearing with a healthy cochlea that transmits the temporal and spectral information of sounds through approximately 3,000 inner hair cells, CI users’ hearing is constrained at the peripheral stage not only by the limitation of CI signal processing algorithms that discard temporal fine structures but also by the use of only up to 22 electrodes for sound delivery (Gaudrain and Başkent, 2018; Berg et al., 2020). CI users’ capability to detect frequency changes is further exacerbated by deafness (Moore, 1985). Therefore, the temporal and place cues available to CI users are limited for differentiating sound frequencies (Zeng, 2002; Pretorius and Hanekom, 2008; Swanson et al., 2009; Oxenham, 2013).

Despite similar CI technology limitations across all CI users in spectral resolution, some patients are star CI users whose performance of some tasks is within the range of normal hearing listeners’ performance while others barely benefit from their CIs (Maarefvand et al., 2013; Hay-McCutcheon et al., 2018). Understanding the source of this variability is critical for customized rehabilitation (Fu and Galvin, 2008). The variability appears to be related to a variety of potential factors including patient demographics (e.g., patient’s age, age of implantation, duration of deafness, duration of CI use, and etiology of hearing loss), cochlear abnormalities, surgical issues, electrode insertion (e.g., insertion depth and location), clinical mapping (e.g., frequency-place mismatch), device maintenance, neural status (e.g., survival of spiral ganglion neurons, and cortical neural plasticity), and higher-level cognitive functions (e.g., verbal working memory, attention, executive function, and learning processes, Blamey et al., 1992; Alexiades et al., 2001; Doucet et al., 2006; Finley et al., 2008; Reiss et al., 2008; Grasmeder et al., 2014; Jeong and Kim, 2015; Moberly et al., 2018; Berg et al., 2020; Heutink et al., 2020; Kim et al., 2021). With so many influencing factors, it is difficult to predict the likelihood of CI success using only demographic data (Lachowska et al., 2014).

Psychoacoustic methods may help us understand some of the fundamental reasons of this variability and offer single-point non-language-based measures of CI outcomes (Drennan et al., 2014). Studies have reported that CI users’ speech performance was significantly correlated to their capability to detect sound changes in spectral or frequency domain (Drennan et al., 2014; Gifford et al., 2014; Kenway et al., 2015; Sheft et al., 2015; Turgeon et al., 2015). In CI users, frequency discrimination tasks can be conducted with acoustic stimuli delivered through the sound processor, or with electrical stimuli directly presented to the CI electrodes. While the latter approach is effective to control the type of cues used (temporal vs. place cues, Nelson et al., 1995; Zeng, 2002; Kenway et al., 2015), tasks presented in the free field can provide information on patients’ performance through the sound processor, which is the way CI users perceive speech in daily lives (Martin, 2007; Pretorius and Hanekom, 2008; Vandali et al., 2015).

For frequency discrimination, many studies have used pitch discrimination or pitch ranking tasks (Gfeller et al., 2007; Won et al., 2007; Goldsworthy, 2015; Turgeon et al., 2015). These tasks require participants to identify the target stimulus that has a different pitch relative to the reference stimulus at a certain pitch or to determine the direction of the pitch change of the target stimulus relative to the reference. Therefore, these tasks assess the ability to detect across-stimulus frequency changes. Daily life sounds contain dynamic changes of acoustic features that serve as critical cues for speech and music perception. Examples of these acoustic changes include voice fundamental frequency contours, spectral shapes of the vowels, formant transitions, and melodic contours (Moore, 1985; Parikh and Loizou, 2005; McDermott and Oxenham, 2008). As described in Zhang et al. (2019), our lab used a frequency change detection task that required the participants to identify pure tones (base frequencies of 0.25, 1, and 4 kHz) containing frequency changes in the middle of the tone (within-stimulus F-changes). The results showed that there was a strong correlation (R2 ranges from 0.71 to 0.74) between the mean frequency change detection thresholds (FCDTs) across the three base frequencies and speech perception outcomes assessed with Consonant-Nucleus-Consonant (CNC) word, CNC phoneme, Arizona Biomedical Sentence Recognition in quiet and noise (AzBio-Q and AzBio-N), and the Digit-in-Noise (DIN) tests. Moreover, we suggested that a mean FCDT of 10% be used as a cutoff to separate CI users into moderate-to-good (e.g., >60% for CNC and AzBio-Q) and poor performers. This finding was consistent with that in Turgeon et al. (2015): proficient CI users (>65% word recognition) had a frequency discrimination threshold of less than 10% for 0.5 and 4 kHz. 
If the FCDT is to be used as a convenient non-language test to predict CI outcomes, it is necessary to compare CI users with good vs. poor FCDTs to determine the neurophysiological differences between these groups. This information will deepen our understanding of the neural correlates underlying the variability in patients’ speech outcomes.

The auditory event-related potentials (ERPs) recorded with electroencephalographic (EEG) techniques in response to within-stimulus sound change, also called acoustic change complexes (ACCs), have attracted interest from researchers (Ostroff et al., 1998; Martin and Boothroyd, 2000; Friesen and Tremblay, 2006; Brown et al., 2015). The ACC is a type of cortical auditory evoked potential (CAEP), which could be evoked by stimulus onset (onset-CAEP), the within-stimulus sound change (ACC), and stimulus offset (offset-CAEP). The ACC does not require the individual’s attention to the stimuli or behavioral response and thus is suitable for difficult-to-test patients. The ACC measures (e.g., the minimum sound change that can evoke an ACC, the ACC peak amplitudes and latencies) were found to be in agreement with the behavioral performance of auditory discrimination tasks (Martin, 2007; Mathew et al., 2017; Han and Dimitrijevic, 2020). The ACC has been shown to be reliable in normal hearing listeners, individuals with hearing loss, and CI users (Tremblay et al., 2003; Friesen and Tremblay, 2006; Martin, 2007; Martinez et al., 2013; Mathew et al., 2017).

Most previous ACC studies involving CI users have used acoustic stimuli containing changes of multiple dimensions (e.g., the changes of frequency components, intensity, and periodicity) presented in the sound field or electrical stimuli containing changes that are delivered through the CI electrode (Ostroff et al., 1998; Martin, 2007; Kim et al., 2009). Simple pure tones containing only F-changes presented in sound field can be used for both psychoacoustic and ACC experimental designs to better reveal brain-behavior relationships regarding within-stimulus detection in the frequency domain alone. Our group first examined the ACC using pure tones (160 and 1,200 Hz) containing F-changes (5 and 50%) in both normal hearing listeners (Liang et al., 2016) and CI users (Liang et al., 2018). In the CI study, we found that ACC N1′ latency to the 160 Hz tone containing the 50% F-change was significantly correlated to the behaviorally measured FCDT and clinically collected word recognition score (Liang et al., 2018). A recently published study (Vonck et al., 2021) has used tones containing F-changes to evoke the ACC in normal hearing listeners and hearing-impaired listeners. The results showed that the ACC threshold (the minimum F-change that can evoke an ACC) was significantly correlated to behavioral performance of frequency discrimination threshold. Participants with higher ACC thresholds had poorer speech perception in noise.

The current study is a companion study of Zhang et al. (2019), which did not have electrophysiological results. This study examined the ACCs evoked by F-changes at different base frequencies (fbases): 0.25, 1, and 4 kHz. These fbases are assigned to the electrodes at different regions of the cochlea (Skinner et al., 2002). Therefore, the ACC results of the current study would provide information about how auditory cortex may process F-changes at different fbases (Pratt et al., 2009).

The current study addresses the following questions: (1) How do CI users process F-changes of different fbases at the cortical level? (2) Can ACCs be used as objective tools to estimate the behavioral performance of F-change detection and speech perception? (3) If the mean FCDT is used to separate the CI ears into good and poor performers, as suggested in Zhang et al. (2019), what are the profiles of these two groups in demographic factors, speech performance, and ACC measures? (4) Are there within-subject differences between Left and Right ears in bilateral CI users? The current results would provide clinically relevant information on the substantial variability in CI outcomes across and within subjects.

Twenty-one adult CI users (nine females and 12 males; 20–83 years old; nine unilateral and 12 bilateral CI users) participated in this study. There was no upper age limit for subject recruitment, as previous research showed that age is not a factor limiting CI use (Buchman et al., 1999; Wong et al., 2016). The means and standard deviations (M ± SD) of age, age at implantation, duration of deafness, and duration of CI use were 57.85 ± 14.61, 50.66 ± 16.44, 28.98 ± 18.52, and 5.22 ± 4.69 years, respectively. All participants were right-handed, native English speakers with no history of neurological or psychological disorders. All participants except two were post-lingually deafened. All CI users wore devices from Cochlear Corporation. Of the 12 bilateral CI users, eight were tested in the two CI ears separately; the remaining four were tested in one CI ear only because, for personal reasons, they did not return for testing of the other CI ear. Therefore, a total of 29 CI ears were tested separately. All patients had used the CI for at least 3 months (Blamey et al., 1992). A 3-month cutoff was used for recruitment because: (1) previous studies reported that CI users exhibited the greatest improvement in speech perception in the first 3 months of implant use (Spivak and Waltzman, 1990; Kelsall et al., 2021); and (2) one study (Drennan et al., 2014) examining the ability of adult CI users to detect spectral changes of sound over the first year of implantation reported that the improvement occurred between 1 and 3 months but not between 3 and 12 months. Demographic data of participants are shown in Table 1. This study was approved by the Institutional Review Board of the University of Cincinnati. Participants gave written informed consent before participating in the study and received financial compensation for their participation.

Pure tones of 1-s duration (including 20-ms raised-cosine onset and offset ramps) at fbases of 0.25, 1, and 4 kHz were generated using MATLAB at a sample rate of 44.1 kHz. Then a series of tones at these three fbases that contained different magnitudes of upward F-changes at 500 ms after the tone onset were generated. The F-change occurred at 0 phase (zero crossing) and there was no audible transient when the F-change occurred (Dimitrijevic et al., 2008; Pratt et al., 2009). The electrodogram of the stimuli was provided in Zhang et al. (2019), suggesting that the transient cue at the transition was minimal. The amplitudes of all stimuli were normalized.
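The stimulus construction described above can be illustrated with a minimal sketch. It is written in Python rather than the MATLAB used in the study, and the function name `make_fchange_tone` and its parameters are hypothetical. The sketch keeps the phase continuous across the F-change; for the three fbases used here, 500 ms contains a whole number of cycles, so the change lands on a zero crossing as described in the text. Peak normalization is an assumption, since the text does not state how amplitudes were normalized.

```python
import math

def make_fchange_tone(f_base_hz, change_pct, fs=44100, dur_s=1.0,
                      change_at_s=0.5, ramp_s=0.020):
    """Return samples of a tone whose frequency steps upward mid-tone."""
    n_total = int(round(dur_s * fs))
    n_change = int(round(change_at_s * fs))
    n_ramp = int(round(ramp_s * fs))
    f_new_hz = f_base_hz * (1.0 + change_pct / 100.0)

    # Phase reached by the base-frequency segment at the moment of change.
    phase_at_change = 2.0 * math.pi * f_base_hz * n_change / fs

    samples = []
    for n in range(n_total):
        if n < n_change:
            phase = 2.0 * math.pi * f_base_hz * n / fs
        else:
            # Continue from the phase at the change point (no discontinuity).
            phase = phase_at_change + 2.0 * math.pi * f_new_hz * (n - n_change) / fs
        samples.append(math.sin(phase))

    # Raised-cosine onset and offset ramps.
    for n in range(n_ramp):
        gain = 0.5 * (1.0 - math.cos(math.pi * n / n_ramp))
        samples[n] *= gain
        samples[n_total - 1 - n] *= gain

    # Peak normalization (assumed; the source only says "normalized").
    peak = max(abs(s) for s in samples)
    return [s / peak for s in samples]

# Example: 0.25 kHz base frequency with a 70% upward F-change.
tone = make_fchange_tone(250.0, 70.0)
```

Calling the function with `change_pct=0` yields the no-change reference tone under the same assumptions.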

Participants were first tested for pure-tone hearing thresholds to ensure the audibility of the stimuli presented through their clinical processors. They were seated on a comfortable chair in a sound-treated booth for the following tests, with the stimuli presented in the sound field at approximately 70 dBA through a speaker 1 m away from the participant’s head at 0-degree azimuth. For CI users, it is important to present the stimuli at the same perceived loudness level rather than at a fixed intensity level (Pretorius and Hanekom, 2008). Therefore, CI users were allowed to adjust their processor sensitivity setting to the most comfortable level, i.e., a loudness level of 6–7 on a 0–10 scale before testing (Hoppe et al., 2001).

The stimuli were tones of three fbases (0.25, 1, and 4 kHz) containing F-changes, with the magnitude varied from 0.5 to 200%. The FCDT for each fbase was measured using an adaptive, 3-alternative forced-choice (3AFC) procedure in which the participants were instructed to identify the target stimulus by pressing a button on the computer screen; no visual feedback was given. Each trial consisted of two reference stimuli without the F-change and one target stimulus with an F-change in the middle of the tone. The order of reference and target stimuli was randomized, and the silent interval between the stimuli in a trial was 500 ms. The target stimulus of the first trial contained an 18% change, and the step size was adjusted according to a 2-down 1-up staircase technique based on the participants’ responses. The FCDT at each fbase was calculated as the average of the last six reversals. Details of the FCDT procedure were described in our previous study (Zhang et al., 2019).
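The 2-down 1-up rule can be sketched as follows. This is an illustration only, not the procedure code used in the study: the multiplicative step `factor`, the stopping rule of eight reversals, and the `respond` callback are assumptions, because the text does not give the step sizes or the total number of reversals. The starting level (18%), the 0.5 to 200% range, and averaging the last six reversals come from the description above.

```python
def run_staircase(respond, start_pct=18.0, factor=1.5, min_pct=0.5,
                  max_pct=200.0, n_reversals=8, n_average=6, max_trials=500):
    """Estimate a threshold with a 2-down 1-up adaptive staircase.

    respond(level) must return True when the listener picks the target.
    """
    level = start_pct
    correct_streak = 0
    last_direction = 0          # -1 = level going down, +1 = level going up
    reversals = []

    for _ in range(max_trials):
        if respond(level):
            correct_streak += 1
            if correct_streak < 2:
                continue        # need two correct in a row before stepping down
            correct_streak = 0
            direction = -1
        else:
            correct_streak = 0
            direction = +1      # one incorrect response steps the level up

        # A reversal is a change in the direction of the track.
        if last_direction != 0 and direction != last_direction:
            reversals.append(level)
            if len(reversals) == n_reversals:
                break
        last_direction = direction

        if direction < 0:
            level = max(min_pct, level / factor)
        else:
            level = min(max_pct, level * factor)

    if len(reversals) < n_average:
        raise ValueError("not enough reversals to estimate a threshold")
    tail = reversals[-n_average:]
    return sum(tail) / len(tail)

# Example with an idealized listener who detects any change of 5% or more.
fcdt = run_staircase(lambda level: level >= 5.0)
```

With a real listener the responses near threshold are probabilistic (chance performance in a 3AFC task is one in three), so the track would wander more than in this idealized example.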

The following speech tests were administered: (1) CNC Word Recognition Test (Peterson and Lehiste, 1962). The results were scored both for words and phonemes correctly identified in terms of percent correct. (2) AzBio sentences in quiet (AzBio-Q, Spahr et al., 2012) and in noise (AzBio-N) with a signal-to-noise ratio (SNR) of +10 dB (Brant et al., 2018). Results were scored as words correctly identified in terms of percent correct. (3) Digit-in-Noise Test (DIN). Results were expressed as the speech reception threshold (SRT) in dB. A lower SRT indicates better performance (Smits et al., 2016).

The 40-channel Neuroscan EEG system (Compumedics Neuroscan, Inc., Charlotte, NC, United States) was used to collect EEG data. The electrode cap was placed according to the International 10–20 system. Electro-ocular activity (EOG) was monitored so that eye movement artifacts could be identified and rejected during offline data analysis. The average electrode impedance was lower than 10 kΩ. One to three electrodes located over the CI coil were not used for recording. During testing, participants read self-selected books or magazines to stay alert and to avoid attention effects on the ERPs. They were asked to ignore the acoustic stimuli. The continuous EEG data were recorded using tones at three fbases (0.25, 1, and 4 kHz) containing different percentages of F-changes (0, 10, and 70%). There was a total of 400 trials for each of the nine types of stimuli (3 fbases × 3 changes). The stimulus conditions were randomized across participants to prevent order effects. The inter-stimulus interval was 800 ms. Continuous EEG data were collected from participants with a band-pass filter setting from 0.1 to 100 Hz and a sampling rate of 1,000 Hz. The raw EEG data were saved on a computer for the subsequent EEG data analysis.

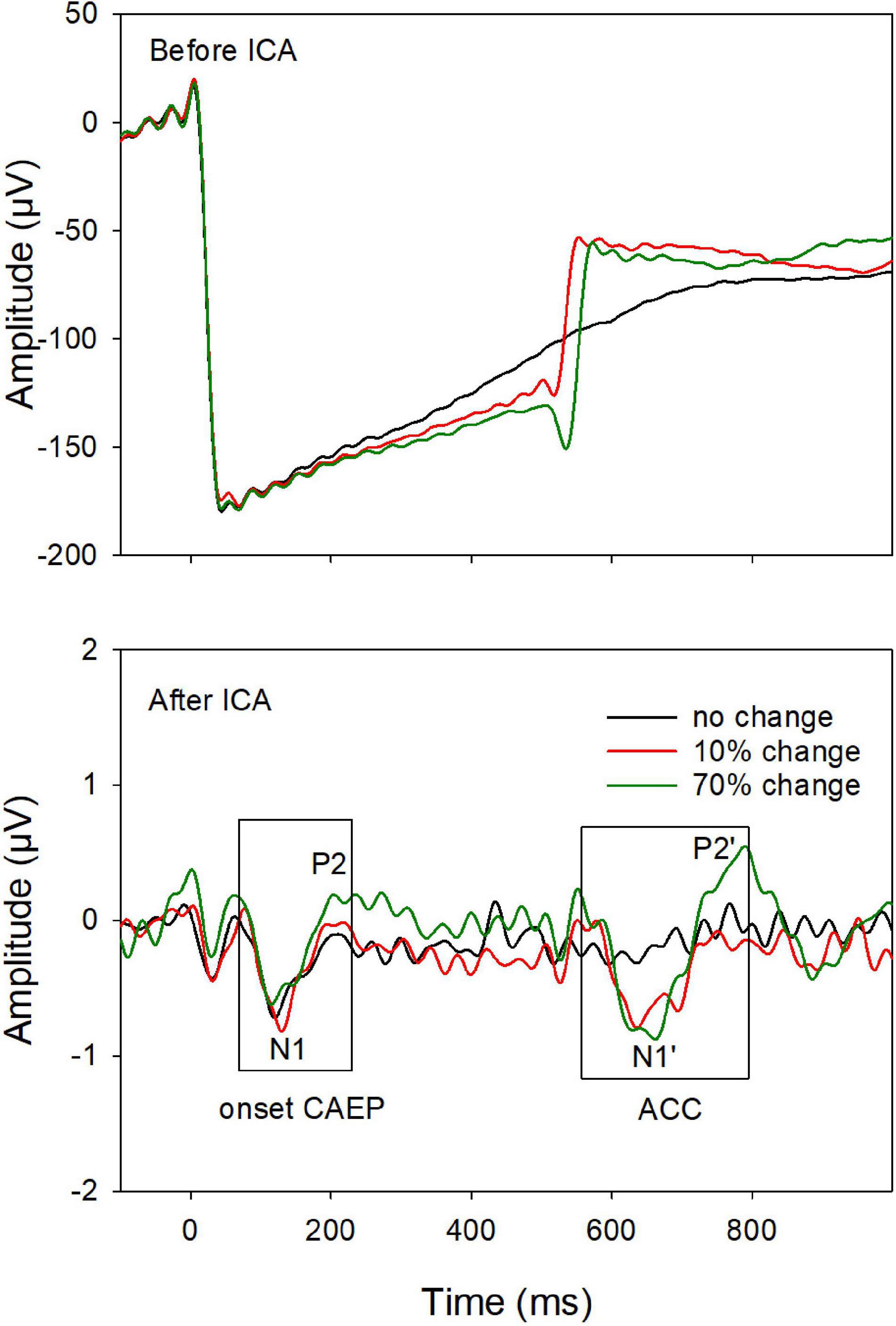

Continuous EEG data were digitally filtered (0.1–30 Hz) and then separated into segments over windows of −100 to 1,000 ms relative to the tone onset. Further data processing was performed using the EEGLAB toolbox (Delorme and Makeig, 2004) running within MATLAB (MathWorks, United States). Each data segment was visually inspected, and segments contaminated by non-stereotyped artifacts were rejected. After this procedure, there were at least 200 segments/trials for each type of stimulus in each CI ear. The data were baseline-corrected and re-referenced using a common average reference. Independent component analysis (ICA) was then applied. The ICA decomposes the EEG dataset into mutually independent components, including those from artifactual and neural EEG sources. The artifact components were identified using the criteria of CI artifacts (e.g., the component lasts for the whole duration of the stimulus; the scalp projection of the component shows a centroid on the CI side) and linearly subtracted from the EEG data (Gilley et al., 2006; Debener et al., 2008; Sandmann et al., 2009; Zhang et al., 2013). Figure 1 demonstrates that the ICA procedure was effective for artifact removal. Before artifact removal, large CI artifacts (more than a hundred microvolts) dominated the EEG responses (top plot); after the artifact removal (bottom plot), the onset CAEP (N1-P2 complex) and the ACC (N1′-P2′ complex) were revealed and showed morphologies similar to those we reported in normal hearing listeners, despite smaller amplitudes in the CI user (Liang et al., 2016).
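As a rough illustration of the segmentation and baseline-correction steps for a single channel, the sketch below is written in plain Python and is not the EEGLAB code used in the study. The function names are hypothetical, and it assumes that the baseline is the mean of the 100-ms pre-stimulus interval, which the text does not state explicitly. Filtering, re-referencing, and ICA are not shown.

```python
def epoch_and_baseline(signal, onsets, fs=1000, pre_ms=100, post_ms=1000):
    """Cut one-channel data into epochs and subtract the pre-stimulus mean.

    signal: list of samples; onsets: tone-onset sample indices.
    """
    pre = pre_ms * fs // 1000
    post = post_ms * fs // 1000
    epochs = []
    for onset in onsets:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > len(signal):
            continue                      # skip epochs that run off the recording
        segment = signal[start:stop]
        baseline = sum(segment[:pre]) / pre
        epochs.append([v - baseline for v in segment])
    return epochs

def average_epochs(epochs):
    """Average accepted epochs sample by sample to obtain the ERP."""
    n = len(epochs)
    return [sum(column) / n for column in zip(*epochs)]
```

At the 1,000-Hz sampling rate reported above, each epoch holds 1,100 samples, with sample 100 corresponding to tone onset.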

Figure 1. An example of event-related-potentials (ERPs) before (top) and after (bottom) artifact removal using the independent component (ICA) analysis. After removing the artifacts, the onset-CAEP and ACC are revealed. The response peaks (N1 and P2 for the onset-CAEP and the N1′ and P2′ for the ACC, as shown in the two boxes) are marked.

After artifact components were removed, the remaining components were recombined to form the final EEG data, which were later filtered and averaged. EEG data from the electrodes close to the CI coil were replaced by linearly interpolated values computed from neighboring EEG signals. The averaged ERP waveform was derived for each type of stimulus. All ERPs were analyzed from electrode site Cz, where the cortical responses display the largest amplitude relative to other electrodes (Martin, 2007). The focus of this study is the ACC evoked by the 70% F-change, as the ACC evoked by the 10% F-change was missing in multiple CI ears. The presence of the ACC was determined on the ERPs based on two criteria: (i) an expected ACC wave morphology (N1′-P2′ complex) within the expected time window (approximately 580–680 ms after the tone onset), and (ii) a visual difference in the waveforms between the F-change conditions vs. the no-change condition. Finally, the peak components of the ACC (N1′ and P2′) were labeled. The N1′-P2′ peak-to-peak amplitude was used to represent the amplitude of the ACC, as in previous studies (Tremblay et al., 2001; Kim et al., 2009).
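The peak measures can be illustrated with a simple automatic search. In the study the peaks were labeled after visual judgment, so this is only a sketch under assumptions: N1′ is taken as the most negative point in the 580–680 ms window, and P2′ as the most positive point between N1′ and a hypothetical upper limit `p2_end_ms`. The function name and all parameter names are illustrative.

```python
def pick_acc_peaks(erp, fs=1000, epoch_start_ms=-100,
                   n1_window_ms=(580, 680), p2_end_ms=800):
    """Return N1' and P2' latencies (ms) and the N1'-P2' amplitude."""
    def to_index(t_ms):
        return int(round((t_ms - epoch_start_ms) * fs / 1000))

    def to_ms(index):
        return epoch_start_ms + index * 1000.0 / fs

    lo, hi = to_index(n1_window_ms[0]), to_index(n1_window_ms[1])
    # N1': most negative sample inside the expected window.
    n1_idx = min(range(lo, hi + 1), key=lambda i: erp[i])
    # P2': most positive sample following N1', up to the assumed limit.
    p2_idx = max(range(n1_idx, to_index(p2_end_ms) + 1), key=lambda i: erp[i])

    return {
        "n1_latency_ms": to_ms(n1_idx),
        "p2_latency_ms": to_ms(p2_idx),
        "n1_p2_amplitude": erp[p2_idx] - erp[n1_idx],
    }
```

Latencies returned this way are relative to tone onset; subtracting 500 ms expresses them relative to the F-change.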

One-way repeated-measures Analysis of Variance (ANOVA) was used to examine the effect of fbase on ACC measures and FCDTs. Bonferroni correction was applied when assessing pairwise comparisons for fbases. Pearson correlation analysis was used to examine the correlation between ACC measures and other measures. All CI ears were categorized as good CI ears or poor CI ears using the 10% FCDT as a cutoff. All other data (demographic data, speech perception performance, and ACC outcomes) were compared between good and poor CI ears using independent t-tests. All data were compared between the Left and Right ears in the eight bilateral CI users using paired t-tests. Statistical significance was defined as p < 0.05 for all analyses. Bonferroni correction was applied to adjust the p-value for multiple comparisons. If data normality was not achieved and the above parametric tests were not appropriate, the corresponding non-parametric tests were used for statistical analyses. Analyses were performed in SigmaPlot Version 14 (Systat Software Inc.) and SAS Version 9.4 (SAS Institute, Cary, NC, United States).
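Two of the simpler computations named above, the Pearson correlation coefficient and the Bonferroni adjustment, can be written out directly. The study ran its analyses in SigmaPlot and SAS; the plain-Python sketch below only illustrates the arithmetic, and the function names are hypothetical. It does not compute p-values.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

An adjusted p-value is then compared against the same 0.05 criterion as an unadjusted one.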

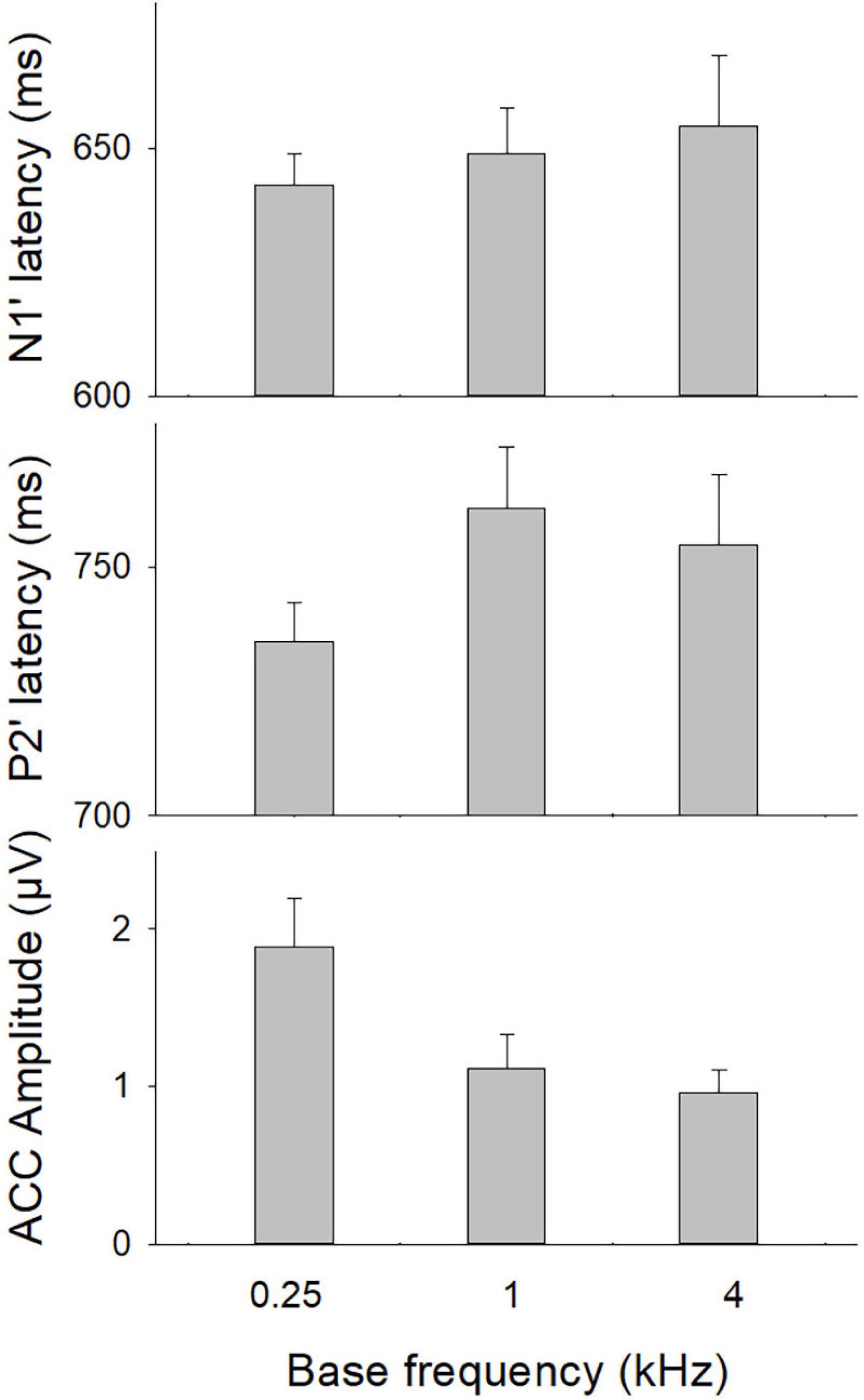

The ratios of present ACCs evoked by 70% F-changes, calculated with the number of present ACCs divided by the number of CI ears, were 87.5, 70.4, and 53.6% for fbases at 0.25, 1, and 4 kHz, respectively. Figure 2 shows the means for the ACC measures at three fbases. The amplitude of the ACC was larger and peak latencies were shorter for fbase at 0.25 kHz than for 1 and 4 kHz.

Figure 2. The ACC measures (N1′ and P2′ latencies, N1′-P2′ amplitude) at three base frequencies. Error bars indicate the standard error. A one-way repeated ANOVA was conducted separately for N1′ latency, P2′ latency, and N1′-P2′ amplitude. Results showed that there was a statistical significance for N1′-P2′ amplitude (p < 0.01). Bonferroni follow-up test showed a larger ACC amplitude for 0.25 kHz than for other frequencies (p < 0.01).

A one-way repeated-measures ANOVA was conducted separately for N1′ latency, P2′ latency, and N1′-P2′ amplitude. Results showed a statistically significant effect of fbase on N1′-P2′ amplitude [F(2,48) = 15.21; p < 0.01]. Bonferroni follow-up tests showed that the N1′-P2′ amplitude was larger for 0.25 kHz (1.77 ± 1.42 μV) than for the other frequencies (1.10 ± 0.85 μV for 1 kHz; 0.73 ± 0.57 μV for 4 kHz, p < 0.01). There was no difference in the N1′-P2′ amplitude between 1 and 4 kHz (p > 0.05). The N1′ and P2′ peak latencies did not differ significantly across frequencies (p > 0.05). Therefore, the mean N1′ latency and P2′ latency across three base frequencies were calculated and used for further correlation analyses.

The mean FCDTs at the three fbases were 8.68, 4.43, and 7.69%, respectively, for 0.25, 1, and 4 kHz. A one-way repeated-measures ANOVA was planned to examine the differences in the FCDT among different fbases. Because the normality test failed, a Friedman Repeated Measures Analysis of Variance on Ranks was conducted instead, and the results showed no difference in the FCDT among different fbases (p > 0.05).

The correlations between ACC measures (peak latencies and N1′-P2′ amplitude) and speech outcomes were examined using Pearson correlation analysis. The mean N1′ latency was negatively correlated with CNC word recognition (r = −0.40, p < 0.05) and CNC phoneme (r = −0.40, p < 0.05), and positively correlated with mean FCDT (r = 0.46, p < 0.05). The P2′ latency was positively correlated with DIN (r = 0.47, p < 0.05) and mean FCDT (r = 0.47, p < 0.05). There was no statistically significant correlation between N1′-P2′ amplitude and speech outcomes (all ps > 0.05). Table 2 shows the results of correlation analyses.

With the criterion of 10% FCDT, all CI ears were categorized as good CI ears (n = 21, mean FCDT < 10%) and poor CI ears (n = 8, mean FCDT ≥ 10%). Compared to poor CI ears, good CI ears showed a shorter duration of deafness (M = 26.07 years for good vs. 40.38 years for poor CI ears), but the difference did not reach statistical significance (p > 0.05). There was no difference in the duration of CI use (3.09 vs. 6.04 years, p > 0.05), or age at implantation (46.25 vs. 52.33 years, p > 0.05). The mean speech performance in poor CI ears was worse than in good CI ears in all tests (18.5 vs. 65% for CNC word, 34.75 vs. 77.17% for CNC phoneme, 25.9 vs. 78.2% for AzBio-Q, 16.5 vs. 54.5% for AzBio-N, and 12.5 vs. 3.9 dB for DIN). Statistical results showed that there was a significant group difference in the CNC word (t = −5.0, p < 0.05), CNC phoneme (t = −4.86, p < 0.05), AzBio-Q (Mann–Whitney U Statistic = 14.50, p < 0.05), AzBio-N (t = −3.65, p < 0.05), and DIN (t = 3.28, p < 0.05). After correcting for multiple comparisons, these differences remained statistically significant (p < 0.05).

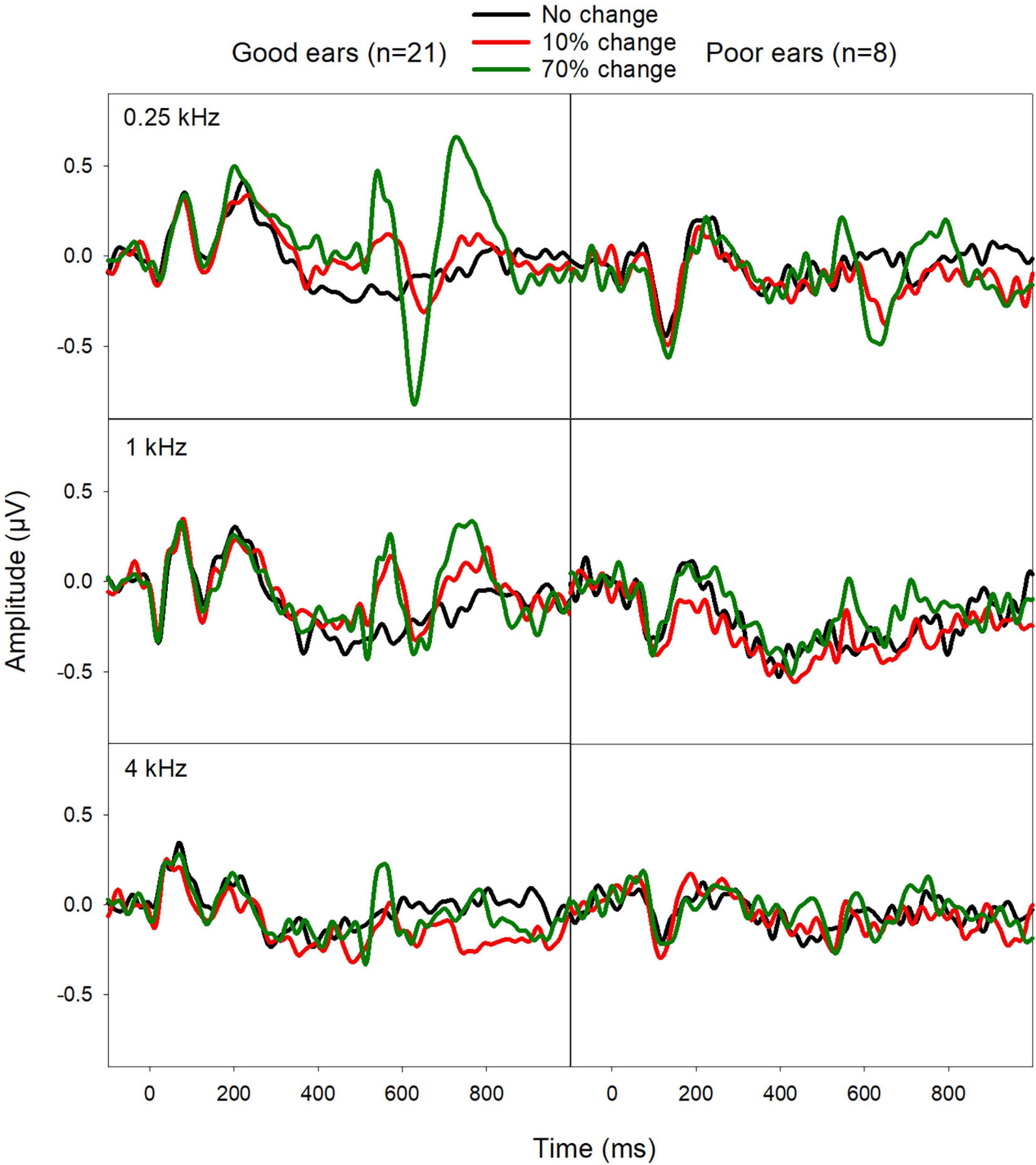

Figure 3 shows the EEG data from the good and poor CI ears. Good CI ears differed from poor CI ears in the ACCs rather than the onset-CAEP. The ACC N1′-P2′ amplitude was greater for good CI ears than for poor CI ears, especially for the fbase at 0.25 kHz. The N1′ peak showed a shorter latency for good CI ears than for poor CI ears.

Figure 3. Mean ERPs from good CI ears (mean FCDT < 10%, n = 21) and poor CI ears (mean FCDT ≥ 10%, n = 8). The ACCs were more prominent for the fbase at 0.25 kHz than for 1 and 4 kHz. The ACCs were worse in the poor CI ears compared to the good CI ears.

The mean ACC measures evoked by the 70% F-change across three fbases were compared between poor and good CI ears. No group difference was found in N1′-P2′ amplitude (Mann–Whitney U Statistic = 35.0, p > 0.05), N1′ latency (t = 1.90, p > 0.05), or P2′ latency (t = 1.77, p > 0.05). Figure 4 shows the mean ACC amplitude and latencies for poor and good CI ears.

Figure 4. The mean ACC measures (N1′ and P2′ latencies as well as the N1′-P2′ amplitude) in good and poor CI ears at the three fbases. Mann–Whitney Rank Sum Test showed that the difference in ACC measures between these two subgroups did not reach statistical significance (p > 0.05).

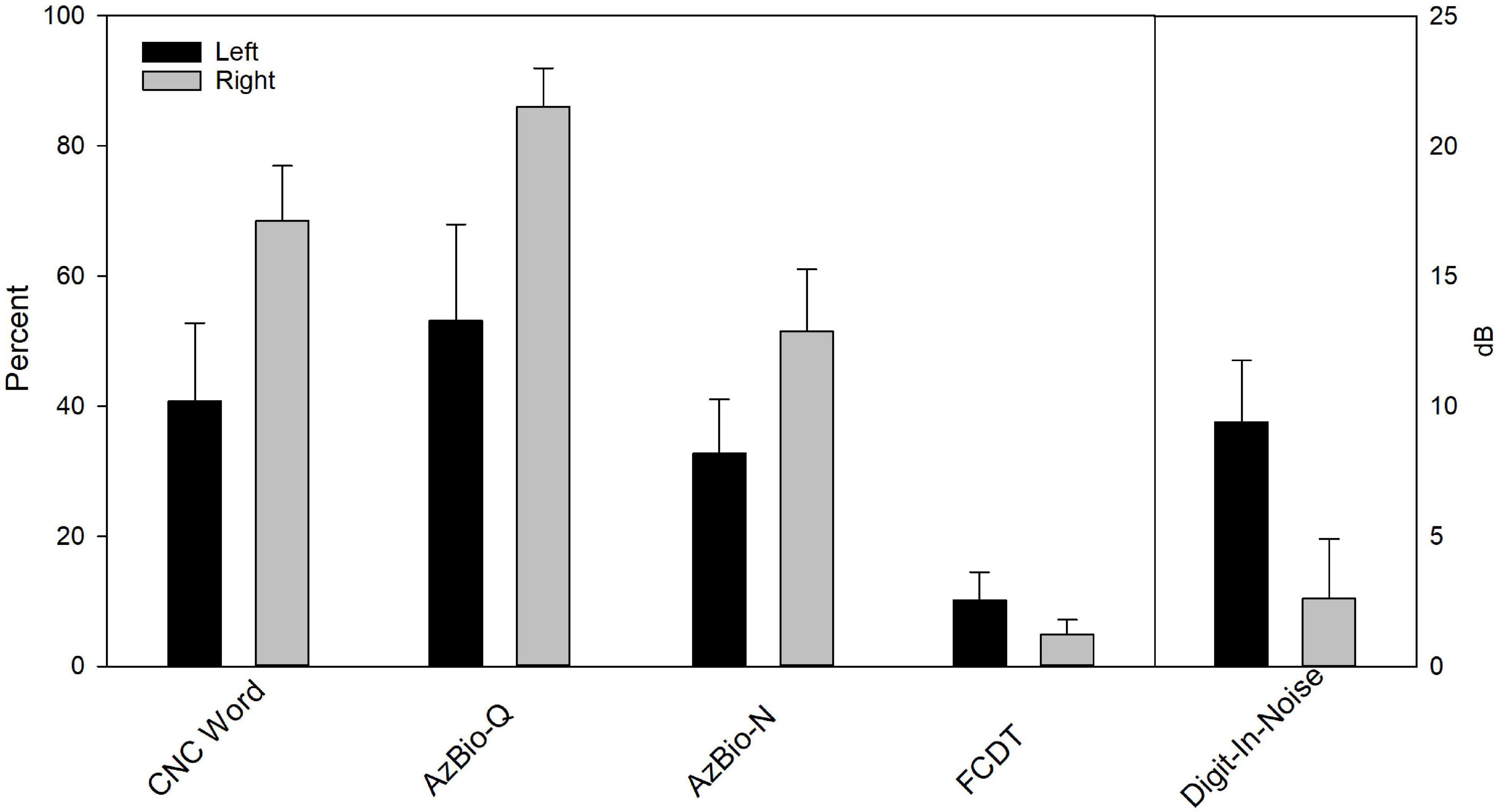

To examine whether there is an ear difference (within-subject variability), the data from bilateral CI users were singled out to compare Left and Right CI ears for demographic factors (age at implantation, duration of deafness, and duration of CI use), ACC data, and behavioral data. Paired t-tests showed no statistical differences between Left and Right CI ears in age at implantation (48.13 vs. 42.75 years, p > 0.05), duration of deafness (28.50 vs. 27.25 years, p > 0.05), or duration of CI use (3.38 vs. 8.69 years, p > 0.05).

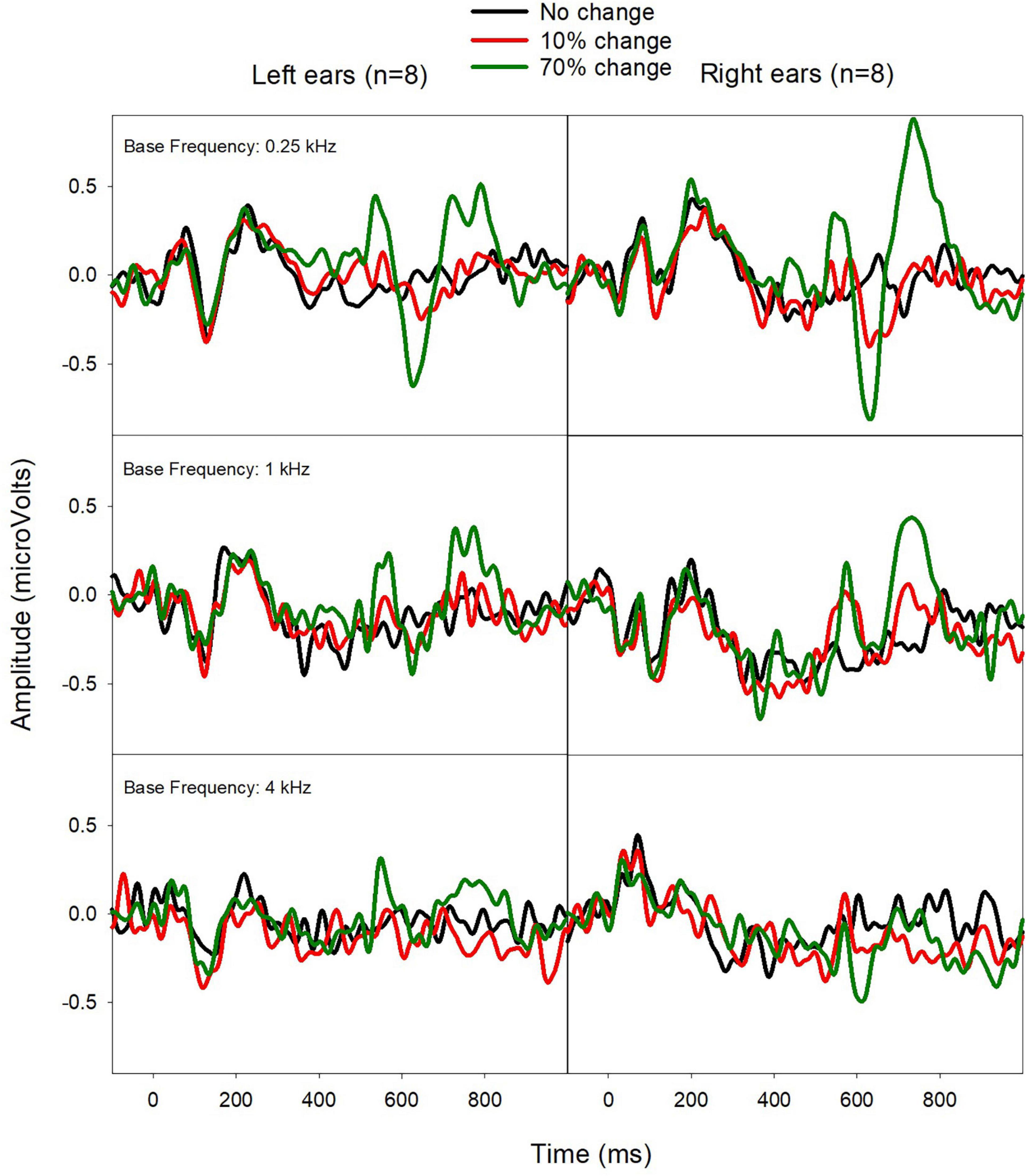

Figure 5 shows the mean ERPs from Left and Right ears. The ACC shows some ear difference, with the Right CI ears displaying a larger ACC amplitude than the Left CI ears for the fbase at 0.25 kHz. However, paired t-tests showed no statistical difference in N1′ latency, P2′ latency, or ACC N1′-P2′ amplitude (p > 0.05).

Figure 5. Mean ERPs from Left (left) and Right CI ears (right) in eight bilateral CI users. The ACCs were larger for Right CI ears for the fbase at 0.25 kHz.

Figure 6 shows the mean CI outcomes (speech performance and FCDT) in Left and Right ears, which suggest a better performance in Right ears. Wilcoxon Signed Rank Tests showed that Right CI ears had a better CNC score than Left CI ears (p < 0.05), but the difference in other measures did not reach statistical significance (FCDT, AzBio-Q, AzBio-N, and DIN, p > 0.05). After correcting for multiple pairs of comparisons, the difference between Left and Right ears in the CNC score did not reach statistical significance (p > 0.05).

Figure 6. The behavioral performance for Left (left) and Right CI ears (right) in eight bilateral CI users. Wilcoxon Signed Rank Tests showed Right CI ears had better CNC score than Left CI ears (p < 0.05). After correcting for multiple pairs of comparisons, the difference did not reach statistical significance (p > 0.05).

This study examined the ACC in response to within-stimulus F-changes and its correlation to behavioral performance of F-change detection (FCDT) and speech perception in adult CI users. The main results showed that ACC peak (N1′ and P2′) latencies were correlated with the FCDT and speech scores. Together with previous ACC studies in CI users and non-CI individuals (Martin, 2007; He et al., 2012; Kim, 2015; Liang et al., 2016; Vonck et al., 2021), our findings further support the conclusion that the ACC is a promising tool to objectively assess listeners’ ability to detect sound changes and to predict the speech perception performance.

Numerous studies have examined automatic cortical response to sound changes using another ERP, the mismatch negativity (MMN). The MMN is evoked by the oddball stimulus paradigm consisting of frequently presented standard stimuli and rarely presented deviants that differ from the standards in a certain acoustic feature (e.g., amplitude, frequency, or duration, Tervaniemi et al., 2005; Sandmann et al., 2010; Naatanen et al., 2017). However, the MMN is a neural response to the between-stimulus change (from standards to deviants that are separated with a quiet period) rather than within-stimulus sound changes, which serve as critical acoustic cues for speech perception in our daily lives. Moreover, the MMN reflects the discrepancy between the neural responses to deviants and the standard stimuli and thus it is a response to both sound change and stimulus onset rather than the acoustic change per se. The ACC is a true cortical response to the within-stimulus sound change and it is not affected by the stimulus onset if the duration of the sound portion prior to the occurrence of the sound change is long enough. Compared to the MMN, the ACC is more time-efficient (every stimulus trial contributes to the ACC), sensitive, and has a larger, more stable amplitude, and better test-retest reliability (Friesen and Tremblay, 2006; Kim, 2015).

This study examined whether the variability of CI users’ speech performance can be explained by the variability in cortical responses to within-stimulus F-changes reflected by the ACC. The variability across CI ears for each speech measure was large, with a range of 2–94% for CNC words (M = 52.2%), 6–98% for CNC phoneme (M = 65.5%), 0–99% for AzBio-Q (M = 65.5%), 0–95% for AzBio-N (M = 43.7%), and 17.7 to −5.2 dB for DIN (M = 6.2 dB). The mean N1′ latency was negatively correlated with CNC scores and positively correlated with the mean FCDT. The P2′ latency was positively correlated with the DIN SRT (i.e., longer latencies accompanied poorer DIN performance) and with the mean FCDT (see Table 2). Moreover, good CI ears tended to show shorter N1′ and P2′ latencies and larger N1′-P2′ amplitudes than poor CI ears (Figure 4), although the difference did not reach statistical significance, possibly because of the small sample size in the poor CI ear group. The ACC reflects automatic sensory processing of within-stimulus sound changes, as the response was recorded without participants’ voluntary participation or attention. Therefore, the finding of the current study suggests that the variability in the cortical sensory encoding of F-changes partially (about 16–21%, R2 ≈ 0.16–0.21, p < 0.05) contributes to the variability in CI users’ speech perception performance.
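The proportion of variance explained is the square of the Pearson coefficient. As a small worked example, the sketch below squares three coefficients reported in the Results; the helper name `variance_explained` and the labels are illustrative.

```python
def variance_explained(r):
    """Proportion of shared variance for a Pearson coefficient r."""
    return r * r

# Coefficients as reported in the Results section.
reported = [
    ("N1' latency vs. CNC word", -0.40),
    ("N1' latency vs. mean FCDT", 0.46),
    ("P2' latency vs. DIN", 0.47),
]

for label, r in reported:
    print(f"{label}: r = {r:+.2f}, r^2 = {variance_explained(r):.2f}")
```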

Our current findings also indirectly indicate that, in addition to cortical sensory processing of sound, cognitive functions play a critical role in CI speech outcomes, as suggested by behavioral studies (Moberly et al., 2017, 2018; Tamati et al., 2020). Specifically, this study found that the variability in the cortical sensory encoding of F-changes was not sufficient to account for the variability in speech outcomes (R2 ≈ 0.16–0.21, p < 0.05). In our previous study (Zhang et al., 2019), we reported that the mean FCDT across three fbases was highly correlated with the CNC, AzBio-Q, and DIN tests (R2 = 0.71–0.74, p < 0.05). Together, these findings may suggest that top-down cognitive processing, which plays a critical role in both behavioral F-change detection and speech perception, is not reflected by the ACC, which represents only the cortical sensory encoding of sound changes.

The ACC for the 0.25 kHz fbase displayed a larger amplitude than the ACC for the other fbases. We suspect that this may be because the neural integrity of the auditory pathway is uneven across fbases. Most of the participants in this study were post-lingually deafened and may have had gradual, progressive deafness (most likely from high to low frequencies) before implantation. When they were fitted with hearing aids, their perception of sounds was better at lower frequencies than at higher frequencies, even as their hearing deteriorated over time. Their prolonged low-frequency hearing experience might have helped slow down the deafness-related neural degeneration along the auditory pathway. Therefore, when sounds were reintroduced after cochlear implantation, their cortical sensory encoding of F-changes (reflected by the ACC) was more robust for the low fbase than for the higher fbases.

It is interesting to note that, although the ACC at 0.25 kHz was larger than that at the higher fbases, the FCDTs at different fbases were not significantly different. Several previous studies examining CI users’ frequency discrimination ability using tones presented in the sound field also reported no statistical differences between low and high frequency ranges. For instance, Turgeon et al. (2015) reported no statistical difference in the frequency discrimination thresholds between 0.5 and 4 kHz (approximately 9% vs. 8% for proficient CI users and 25% vs. 20% for non-proficient CI users, respectively). Pretorius and Hanekom (2008) reported that the frequency discrimination threshold, expressed as the ratio of the actual threshold frequency to the reference frequency, was constant in the tested frequency range from 200 to 1,200 Hz. Gfeller et al. (2002) reported similar frequency discrimination thresholds at 200, 400, 800, 1,600, and 3,200 Hz, with many of the tested CI users being able to discriminate frequency differences smaller than 6%. One explanation for the similar performance in the high and low frequency ranges, according to some authors, is that CI users can use non-temporal cues, such as place cues alone or other cues (e.g., sound brightness related to timbre), for pitch change detection in the high frequency ranges (Swanson et al., 2009). With the ACC data available in this study, we also speculate that top-down modulation, which is not reflected by the ACC, may have played a role in the behavioral performance of sound discrimination (Parker et al., 2002; Schneider et al., 2011).

Ear differences (left vs. right ears) in bilateral CI users have been reported in some studies using behavioral assessment methods (Henkin et al., 2008, 2014; Kraaijenga et al., 2018). However, neurophysiological data for adult CI users are lacking in the literature. This study revealed an ear difference in speech perception performance (CNC word, p < 0.05), but the significance disappeared after correction for multiple comparisons. The ACC also showed a slightly larger amplitude for the right CI ears than for the left CI ears at the low fbase, but the difference did not reach statistical significance (p > 0.05). No ear difference was found in the major demographic data (e.g., duration of CI use, age at implantation, and duration of deafness). It is likely that the small number of bilateral CI users (n = 8) prevented a potential ear difference from being revealed. Nevertheless, these data provide a direction for future research to examine, with a larger sample, whether there is a statistically significant and clinically relevant ear difference.

The FCDT test used in this study consists of pure tones with only a within-stimulus change in the frequency domain. The unique feature of this test is that it avoids interference from acoustic changes in other domains. This study found that a 10% FCDT criterion can separate good from poor performers among CI users, and the two groups also differed significantly in speech performance (p < 0.05). This task may provide an easy, quick, and non-linguistic tool to “screen out” poor CI ears for targeted intervention. Such a tool would be useful when a clinical evaluation is not realistic, when patients cannot reliably perform clinical speech tests (e.g., young children), or when patients have language barriers (e.g., non-native speakers).
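The screening idea described above can be sketched as a simple threshold rule on the mean FCDT across the three fbases. This is an illustration only: the text does not state whether an ear exactly at 10% counts as good or poor, so the `<=` comparison is an assumption, and the function name and the example thresholds are hypothetical rather than study data.

```python
from statistics import mean

FCDT_CRITERION_PCT = 10.0  # mean-FCDT criterion reported in the text


def classify_ear(fcdt_by_fbase):
    """Label a CI ear from its FCDTs (% of base frequency) at each fbase (kHz)."""
    mean_fcdt = mean(fcdt_by_fbase.values())
    # Assumption: an ear at or below the criterion is treated as "good".
    label = "good" if mean_fcdt <= FCDT_CRITERION_PCT else "poor"
    return mean_fcdt, label


# Hypothetical thresholds for two ears; these are NOT data from the study.
ears = {
    "ear_A": {0.25: 2.0, 1.0: 3.5, 4.0: 5.0},
    "ear_B": {0.25: 12.0, 1.0: 18.0, 4.0: 25.0},
}

for name, thresholds in ears.items():
    mean_fcdt, label = classify_ear(thresholds)
    print(f"{name}: mean FCDT = {mean_fcdt:.1f}% -> {label}")
```

Averaging across fbases before applying the criterion mirrors the use of the mean FCDT in the text; a per-frequency rule would be a different design.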

The capacity of the ACC to reflect automatic auditory discrimination has been of interest to researchers looking for objective tools for assessing auditory discrimination ability. The ACC peak latencies evoked by the within-stimulus F-change were correlated with behavioral performance of F-change detection and speech perception in CI users in our previous study (Liang et al., 2016) and in the current study, even though different fbases were used in the two studies. The ACC amplitude appeared to be a less sensitive measure than the N1′ and P2′ latencies for predicting speech performance and FCDTs, although it tended to be smaller in poor CI ears than in good CI ears (Figure 4). Together with previous studies (Kim, 2015; Han and Dimitrijevic, 2020), this study further confirms that the ACC can be used as an objective tool to assess CI users’ ability to detect sound changes and to predict their speech outcomes.

This study used pure tones containing an F-change that occurred at 0 phase to evoke the ACC. No audible transient click was reported by the participants. However, these stimuli did contain a small transient cue at the transition when the change occurred, as shown in the electrodogram in Zhang et al. (2019). The spread of excitation may have introduced a loudness cue that can enhance the ACC evoked by the F-change. Moreover, because frequency discrimination performance can be affected by the position of the fbase relative to the frequency response of the filters in the CI user’s clinical map (Pretorius and Hanekom, 2008), the fixed fbases selected for testing (0.25, 1, and 4 kHz) for all participants did not take individual clinical map differences into consideration. We will address the above issues with the following approaches: (1) We will add a transition period of a fast-logarithmic frequency modulation sweep with a frequency change [fbase × (F-change)] between the two segments of the tone {fbase and [fbase + (F-change)]} to prevent transient signals, as suggested in a recent study using tones containing F-changes to evoke the ACC in normal-hearing and hearing-impaired listeners (Vonck et al., 2021); (2) We will compare the ACCs evoked by the F-change with vs. without a simultaneous intensity change to determine the extent to which the sound intensity change contributes to the ACCs evoked by the F-change; (3) We will use individualized fbases for each CI user according to the frequency response of the filters in the participant’s clinical map.
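To make the stimulus description above concrete, the following minimal sketch synthesizes a tone whose frequency steps up at the midpoint, with an optional logarithmic glide like the one proposed in approach (1). It is not the authors' stimulus code: the function name, the 1 s duration, the 44.1 kHz sampling rate, and the sweep duration are all assumed for illustration. Phase is accumulated sample by sample so the waveform has no discontinuity at the transition.

```python
import math


def f_change_tone(fbase_hz, change_frac, dur_s=1.0, fs=44100, sweep_s=0.0):
    """Tone at fbase for the first half, then fbase * (1 + change_frac).

    If sweep_s > 0, the second half begins with a logarithmic glide of that
    duration from fbase to the target frequency. All defaults are assumptions.
    """
    n = int(round(dur_s * fs))
    half = n // 2
    f_target = fbase_hz * (1.0 + change_frac)
    n_sweep = min(int(round(sweep_s * fs)), n - half)

    samples = []
    phase = 0.0  # the tone starts at 0 phase
    for i in range(n):
        samples.append(math.sin(phase))
        if i < half:
            f_inst = fbase_hz
        elif i < half + n_sweep:
            # Geometric interpolation gives a glide that is linear in log-frequency.
            frac = (i - half) / n_sweep
            f_inst = fbase_hz * (f_target / fbase_hz) ** frac
        else:
            f_inst = f_target
        # Integrate the instantaneous frequency to keep the phase continuous.
        phase += 2.0 * math.pi * f_inst / fs
    return samples


# Example: 0.25 kHz base with a 10% upward change, without and with a 10 ms glide.
abrupt = f_change_tone(250.0, 0.10)
glided = f_change_tone(250.0, 0.10, sweep_s=0.010)
```

With these assumed defaults, the 0.25 kHz tone completes a whole number of cycles (125) before the midpoint, so the change falls at 0 phase, as in the stimuli described in the text. Other fbase and duration combinations would need the same check.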

Additionally, this study did not have an age-matched control group with normal hearing, which made it difficult to assess the extent of influence from patients’ age on the ACC results. Furthermore, some heterogeneity within the CI users may result in sampling effects on the results. Future studies will include an age-matched control group and a group of homogeneous CI users.

This study examined the ACC evoked by within-stimulus F-changes in adult CI users, behavioral performance of frequency change detection, and speech perception performance. The mean N1′ and P2′ latencies were significantly correlated to the mean FCDT and speech scores. Using the criterion of 10% for the mean FCDT allows the separation of good and poor CI ears with significantly different speech outcomes. The ACC amplitude was significantly larger for 0.25 kHz than for higher fbases, indicating that the cortical sensory processing is more robust at 0.25 kHz. The lack of effects of fbase on the FCDT may be the result of an additional role of the higher-level top-down modulation in frequency change detection.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Institutional Review Board of the University of Cincinnati. The patients/participants provided their written informed consent to participate in this study.

KM collected and analyzed the data and wrote the first draft of the manuscript. FZ designed the framework of this study, helped with data analysis, and manuscript writing. NZ helped with statistical analysis and data interpretation. GF helped with participant recruitment and data collection. All authors contributed to the article and approved the submitted version.

This research was partially supported by the University Research Council (URC Pilot) grant at the University of Cincinnati and the National Institutes of Health R15 grant (NIH 1 R15 DC016463-01) to FZ. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health and other funding agencies.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We would like to thank all participants for their participation in this research.

Alexiades, G., Roland, J. T., Fishman, A. J., Shapiro, W., Waltzman, S. B., and Cohen, N. L. (2001). Cochlear reimplantation: surgical techniques and functional results. Laryngoscope, 111, 1608–1613. doi: 10.1097/00005537-200109000-00022

Andries, E., Gilles, A., Topsakal, V., Vanderveken, O. M., Van de Heyning, P., Van Rompaey, V., et al. (2021). Systematic review of quality of life assessments after cochlear implantation in older adults. Audiol. Neuro-Otol. 26, 61–75. doi: 10.1159/000508433

Berg, K. A., Noble, J. H., Dawant, B. M., Dwyer, R. T., Labadie, R. F., and Gifford, R. H. (2020). Speech recognition with cochlear implants as a function of the number of channels: Effects of electrode placement. J. Acoust. Soc. Am. 147:3646. doi: 10.1121/10.0001316

Blamey, P. J., Pyman, B. C., Gordon, M., Clark, G. M., Brown, A. M., Dowell, R. C., et al. (1992). Factors predicting postoperative sentence scores in postlinguistically deaf adult cochlear implant patients. Ann. Otol. Rhinol. Laryngol. 101, 342–348. doi: 10.1177/000348949210100410

Brant, J. A., Eliades, S. J., Kaufman, H., Chen, J., and Ruckenstein, M. J. (2018). AzBio speech understanding performance in quiet and noise in high performing cochlear implant users. Otol. Neurotol. 39, 571–575.

Brown, C. J., Jeon, E. K., Chiou, L. K., Kirby, B., Karsten, S. A., Turner, C. W., et al. (2015). Cortical auditory evoked potentials recorded from nucleus hybrid cochlear implant users. Ear Hear. 36, 723–732. doi: 10.1097/AUD.0000000000000206

Buchman, C. A., Fucci, M. J., and Luxford, W. M. (1999). Cochlear implants in the geriatric population: benefits outweigh risks. Ear Nose Throat J. 78, 489–494.

Claes, A. J., Van de Heyning, P., Gilles, A., Van Rompaey, V., and Mertens, G. (2018). Cognitive outcomes after cochlear implantation in older adults: A systematic review. Cochlear Implants Int. 19, 239–254. doi: 10.1080/14670100.2018.1484328

Debener, S., Hine, J., Bleeck, S., and Eyles, J. (2008). Source localization of auditory evoked potentials after cochlear implantation. Psychophysiology, 45, 20–24.

Delorme, A., and Makeig, S. (2004). EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21.

Dimitrijevic, A., Michalewski, H. J., Zeng, F. G., Pratt, H., and Starr, A. (2008). Frequency changes in a continuous tone: Auditory cortical potentials. Clin. Neurophysiol. 119, 2111–2124.

Doucet, M. E., Bergeron, F., Lassonde, M., Ferron, P., and Lepore, F. (2006). Cross-modal reorganization and speech perception in cochlear implant users. Brain J. Neurol. 129, 3376–3383.

Drennan, W. R., Anderson, E. S., Won, J. H., and Rubinstein, J. T. (2014). Validation of a clinical assessment of spectral-ripple resolution for cochlear implant users. Ear Hear. 35:92. doi: 10.1097/AUD.0000000000000009

Finley, C. C., Holden, T. A., Holden, L. K., Whiting, B. R., Chole, R. A., Neely, G. J., et al. (2008). Role of electrode placement as a contributor to variability in cochlear implant outcomes. Otol. Neurotol. 29, 920–928.

Fowler, S. L., Calhoun, H., and Warner-Czyz, A. D. (2021). Music perception and speech-in-noise skills of typical hearing and cochlear implant listeners. Am. J. Audiol. 30, 170–181. doi: 10.1044/2020_AJA-20-00116

Friesen, L. M., and Tremblay, K. L. (2006). Acoustic change complexes recorded in adult cochlear implant listeners. Ear Hear. 27, 678–685.

Fu, Q. J., and Galvin, J. J. III. (2008). Maximizing cochlear implant patients’ performance with advanced speech training procedures. Hear. Res. 242, 198–208. doi: 10.1016/j.heares.2007.11.010

Galvin, J. J. III, Fu, Q. J., and Nogaki, G. (2007). Melodic contour identification by cochlear implant listeners. Ear Hear. 28, 302–319. doi: 10.1097/01.aud.0000261689.35445.20

Gaudrain, E., and Başkent, D. (2018). Discrimination of voice pitch and vocal-tract length in cochlear implant users. Ear Hear. 39, 226–237. doi: 10.1097/AUD.0000000000000480

Gfeller, K., Turner, C., Mehr, M., Woodworth, G., Fearn, R., Knutson, J. F., Witt, S., and Stordahl, J. (2002). Recognition of familiar melodies by adult cochlear implant recipients and normal-hearing adults. Cochlear Implants Int. 3, 29–53. doi: 10.1179/cim.2002.3.1.29

Gfeller, K., Turner, C., Oleson, J., Zhang, X., Gantz, B., Froman, R., et al. (2007). Accuracy of cochlear implant recipients on pitch perception, melody recognition, and speech reception in noise. Ear Hear. 28, 412–423.

Gifford, R. H., Hedley-Williams, A., and Spahr, A. J. (2014). Clinical assessment of spectral modulation detection for adult cochlear implant recipients: a non-language based measure of performance outcomes. Int. J. Audiol. 53, 159–164. doi: 10.3109/14992027.2013.851800

Gilley, P. M., Sharma, A., Dorman, M., Finley, C. C., Panch, A. S., and Martin, K. (2006). Minimization of cochlear implant stimulus artifact in cortical auditory evoked potentials. Clin. Neurophysiol. 117, 1772–1782. doi: 10.1016/j.clinph.2006.04.018

Goldsworthy, R. L. (2015). Correlations between pitch and phoneme perception in cochlear implant users and their normal hearing peers. J. Assoc. Res. Otolaryngol. 16, 797–809.

Grasmeder, M. L., Verschuur, C. A., and Batty, V. B. (2014). Optimizing frequency-to-electrode allocation for individual cochlear implant users. J. Acoust. Soc. Am. 136:3313. doi: 10.1121/1.4900831

Han, J. H., and Dimitrijevic, A. (2020). Acoustic change responses to amplitude modulation in cochlear implant users: relationships to speech perception. Front. Neurosci. 14:124. doi: 10.3389/fnins.2020.00124

Hay-McCutcheon, M. J., Peterson, N. R., Pisoni, D. B., Kirk, K. I., Yang, X., and Parton, J. (2018). Performance variability on perceptual discrimination tasks in profoundly deaf adults with cochlear implants. J. Commun. Disord. 72, 122–135.

He, S., Grose, J. H., and Buchman, C. A. (2012). Auditory discrimination: the relationship between psychophysical and electrophysiological measures. Int. J. Audiol. 51, 771–782. doi: 10.3109/14992027.2012.699198

Henkin, Y., Swead, R. T., Roth, D. A., Kishon-Rabin, L., Shapira, Y., Migirov, L., Hildesheimer, M., and Kaplan-Neeman, R. (2014). Evidence for a right cochlear implant advantage in simultaneous bilateral cochlear implantation. Laryngoscope, 124, 1937–1941. doi: 10.1002/lary.24635

Henkin, Y., Taitelbaum-Swead, R., Hildesheimer, M., Migirov, L., Kronenberg, J., and Kishon-Rabin, L. (2008). Is there a right cochlear implant advantage? Otol. Neurotol. 29, 489–494. doi: 10.1097/MAO.0b013e31816fd6e5

Heutink, F., Verbist, B. M., Mens, L. H. M., Huinck, W. J., and Mylanus, E. A. M. (2020). The evaluation of a slim perimodiolar electrode: surgical technique in relation to intracochlear position and cochlear implant outcomes. Eur. Arch. Oto-Rhino-Laryngol. 277, 343–350. doi: 10.1007/s00405-019-05696-y

Hoppe, U., Rosanowski, F., Iro, H., and Eysholdt, U. (2001). Loudness perception and late auditory evoked potentials in adult cochlear implant users. Scand. Audiol. 30, 119–125. doi: 10.1080/010503901300112239

Jeong, S. W., and Kim, L. S. (2015). A new classification of cochleovestibular malformations and implications for predicting speech perception ability after cochlear implantation. Audiol. Neuro-Otol. 20, 90–101. doi: 10.1159/000365584

Kelsall, D., Lupo, J., and Biever, A. (2021). Longitudinal outcomes of cochlear implantation and bimodal hearing in a large group of adults: A multicenter clinical study. Am. J. Otolaryngol. 42:102773. doi: 10.1016/j.amjoto.2020.102773

Kenway, B., Tam, Y. C., Vanat, Z., Harris, F., Gray, R., Birchall, J., Carlyon, R., and Axon, P. (2015). Pitch discrimination: An independent factor in cochlear implant performance outcomes. Otol. Neurotol. 36, 1472–1479. doi: 10.1097/MAO.0000000000000845

Kim, J. R. (2015). Acoustic change complex: clinical implications. J. Audiol. Otol. 19, 120–124. doi: 10.7874/jao.2015.19.3.120

Kim, J. R., Brown, C. J., Abbas, P. J., Etler, C. P., and O’Brien, S. (2009). The effect of changes in stimulus level on electrically evoked cortical auditory potentials. Ear Hear. 30, 320–329. doi: 10.1097/AUD.0b013e31819c42b7

Kim, Y., Lee, J. Y., Kwak, M. Y., Park, J. T., Kang, W. S., Ahn, J. H., et al. (2021). High-frequency cochlear nerve deficit region: Relationship with deaf duration and cochlear implantation performance in postlingual deaf adults. Otol. Neurotol. 42, 844–850. doi: 10.1097/MAO.0000000000003092

Kraaijenga, V., Derksen, T. C., Stegeman, I., and Smit, A. L. (2018). The effect of side of implantation on unilateral cochlear implant performance in patients with prelingual and postlingual sensorineural hearing loss: A systematic review. Clin. Otolaryngol. 43, 440–449. doi: 10.1111/coa.12988

Lachowska, M., Pastuszka, A., Glinka, P., and Niemczyk, K. (2014). Benefits of cochlear implantation in deafened adults. Audiol. Neuro-Otol. 19, 40–44. doi: 10.1159/000371609

Liang, C., Earl, B., Thompson, I., Whitaker, K., Cahn, S., Xiang, J., et al. (2016). Musicians are better than non-musicians in frequency change detection: Behavioral and electrophysiological evidence. Front. Neurosci. 10:464. doi: 10.3389/fnins.2016.00464

Liang, C., Houston, L. M., Samy, R. N., Abedelrehim, L. M. I., and Zhang, F. (2018). Cortical processing of frequency changes reflected by the acoustic change complex in adult cochlear implant users. Audiol. Neuro-Otol. 23, 152–164.

Maarefvand, M., Marozeau, J., and Blamey, P. J. (2013). A cochlear implant user with exceptional musical hearing ability. Int. J. Audiol. 52, 424–432.

Martin, B. A. (2007). Can the acoustic change complex be recorded in an individual with a cochlear implant? Separating neural responses from cochlear implant artifact. J. Am. Acad. Audiol. 18, 126–140.

Martin, B. A., and Boothroyd, A. (2000). Cortical, auditory, evoked potentials in response to changes of spectrum and amplitude. J. Acoust. Soc. Am. 107, 2155–2161.

Martinez, A. S., Eisenberg, L. S., and Boothroyd, A. (2013). The acoustic change complex in young children with hearing loss: A preliminary study. Semin. Hear. 34, 278–287. doi: 10.1055/s-0033-1356640

Mathew, R., Undurraga, J., Li, G., Meerton, L., Boyle, P., Shaida, A., et al. (2017). Objective assessment of electrode discrimination with the auditory change complex in adult cochlear implant users. Hear. Res. 354, 86–101.

McDermott, J. H., and Oxenham, A. J. (2008). Music perception, pitch, and the auditory system. Curr. Opin. Neurobiol. 18, 452–463.

Moberly, A. C., Houston, D. M., Harris, M. S., Adunka, O. F., and Castellanos, I. (2017). Verbal working memory and inhibition-concentration in adults with cochlear implants. Laryngoscope Invest. Otolaryngol. 2, 254–261. doi: 10.1002/lio2.90

Moberly, A. C., Patel, T. R., and Castellanos, I. (2018). Relations between self-reported executive functioning and speech perception skills in adult cochlear implant users. Otol. Neurotol. 39, 250–257. doi: 10.1097/MAO.0000000000001679

Moore, B. C. (1985). Frequency selectivity and temporal resolution in normal and hearing-impaired listeners. Br. J. Audiol. 19, 189–201. doi: 10.3109/03005368509078973

Naatanen, R., Petersen, B., Torppa, R., Lonka, E., and Vuust, P. (2017). The MMN as a viable and objective marker of auditory development in CI users. Hear. Res. 353, 57–75.

Nelson, D. A., Van Tasell, D. J., Schroder, A. C., Soli, S., and Levine, S. (1995). Electrode ranking of “place pitch” and speech recognition in electrical hearing. J. Acoust. Soc. Am. 98, 1987–1999. doi: 10.1121/1.413317

Ostroff, J. M., Martin, B. A., and Boothroyd, A. (1998). Cortical evoked response to acoustic change within a syllable. Ear Hear. 19, 290–297. doi: 10.1097/00003446-199808000-00004

Oxenham, A. J. (2013). Revisiting place and temporal theories of pitch. Acoust. Sci. Technol. 34, 388–396.

Parikh, G., and Loizou, P. C. (2005). The influence of noise on vowel and consonant cues. J. Acoust. Soc. Am. 118, 3874–3888. doi: 10.1121/1.2118407

Parker, S., Murphy, D. R., and Schneider, B. A. (2002). Top-down gain control in the auditory system: evidence from identification and discrimination experiments. Percep. Psychophys. 64, 598–615. doi: 10.3758/bf03194729

Peterson, G. E., and Lehiste, I. (1962). Revised CNC lists for auditory tests. J. Speech Hear. Disord. 27, 62–70. doi: 10.1044/jshd.2701.62

Pratt, H., Starr, A., Michalewski, H. J., Dimitrijevic, A., Bleich, N., and Mittelman, N. (2009). Auditory-evoked potentials to frequency increase and decrease of high- and low-frequency tones. Clin. Neurophysiol. 120, 360–373.

Pretorius, L. L., and Hanekom, J. J. (2008). Free field frequency discrimination abilities of cochlear implant users. Hear. Res. 244, 77–84.

Reiss, L. A., Gantz, B. J., and Turner, C. W. (2008). Cochlear implant speech processor frequency allocations may influence pitch perception. Otol. Neurotol. 29, 160–167. doi: 10.1097/mao.0b013e31815aedf4

Sagi, E., and Svirsky, M. A. (2017). Contribution of formant frequency information to vowel perception in steady-state noise by cochlear implant users. J. Acoust. Soc. Am. 141:1027. doi: 10.1121/1.4976059

Sandmann, P., Eichele, T., Buechler, M., Debener, S., Jancke, L., Dillier, N., et al. (2009). Evaluation of evoked potentials to dyadic tones after cochlear implantation. Brain J. Neurol. 132, 1967–1979.

Sandmann, P., Kegel, A., Eichele, T., Dillier, N., Lai, W., Bendixen, A., Debener, S., Jancke, L., and Meyer, M. (2010). Neurophysiological evidence of impaired musical sound perception in cochlear-implant users. Clin. Neurophysiol. 121, 2070–2082. doi: 10.1016/j.clinph.2010.04.032

Schneider, B. A., Parker, S., and Murphy, D. (2011). A model of top-down gain control in the auditory system. Attent. Percep. Psychophys. 73, 1562–1578. doi: 10.3758/s13414-011-0097-7

Sheft, S., Cheng, M. Y., and Shafiro, V. (2015). Discrimination of stochastic frequency modulation by cochlear implant users. J. Am. Acad. Audiol. 26, 572–581. doi: 10.3766/jaaa.14067

Skinner, M. W., Arndt, P. L., and Staller, S. J. (2002). Nucleus 24 advanced encoder conversion study: Performance versus preference. Ear Hear. 23, 2S–17S.

Smits, C., Watson, C. S., Kidd, G. R., Moore, D. R., and Goverts, S. T. (2016). A comparison between the Dutch and American-English digits-in-noise (DIN) tests in normal-hearing listeners. Int. J. Audiol. 55, 358–365. doi: 10.3109/14992027.2015.1137362

Spahr, A. J., Dorman, M. F., Litvak, L. M., Van Wie, S., Gifford, R. H., Loizou, P. C., et al. (2012). Development and validation of the AzBio sentence lists. Ear Hear. 33, 112–117.

Spivak, L. G., and Waltzman, S. B. (1990). Performance of cochlear implant patients as a function of time. J. Speech Hear. Res. 33, 511–519. doi: 10.1044/jshr.3303.511

Swanson, B., Dawson, P., and McDermott, H. (2009). Investigating cochlear implant place-pitch perception with the modified melodies test. Cochlear Implants Int. 10, 100–104. doi: 10.1179/cim.2009.10.Supplement-1.100

Tamati, T. N., Ray, C., Vasil, K. J., Pisoni, D. B., and Moberly, A. C. (2020). High- and low-performing adult cochlear implant users on high-variability sentence recognition: Differences in auditory spectral resolution and neurocognitive functioning. J. Am. Acad. Audiol. 31, 324–335. doi: 10.3766/jaaa.18106

Tervaniemi, M., Just, V., Koelsch, S., Widmann, A., and Schroger, E. (2005). Pitch discrimination accuracy in musicians vs nonmusicians: an event-related potential and behavioral study. Exper. Brain Res. 161, 1–10. doi: 10.1007/s00221-004-2044-5

Tremblay, K. L., Friesen, L., Martin, B. A., and Wright, R. (2003). Test-retest reliability of cortical evoked potentials using naturally produced speech sounds. Ear Hear. 24, 225–232.

Tremblay, K., Kraus, N., McGee, T., Ponton, C., and Otis, B. (2001). Central auditory plasticity: changes in the N1-P2 complex after speech-sound training. Ear Hear. 22, 79–90. doi: 10.1097/00003446-200104000-00001

Turgeon, C., Champoux, F., Lepore, F., and Ellemberg, D. (2015). Deficits in auditory frequency discrimination and speech recognition in cochlear implant users. Cochlear Implants Int. 16, 88–94.

Vandali, A., Sly, D., Cowan, R., and van Hoesel, R. (2015). Training of cochlear implant users to improve pitch perception in the presence of competing place cues. Ear Hear. 36, e1–e13.

Vermeire, K., Brokx, J. P., Wuyts, F. L., Cochet, E., Hofkens, A., and Van de Heyning, P. H. (2005). Quality-of-life benefit from cochlear implantation in the elderly. Otol. Neurotol. 26, 188–195.

Vonck, B. M. D., Lammers, M. J. W., Schaake, W. A. A., van Zanten, G. A., Stokroos, R. J., and Versnel, H. (2021). Cortical potentials evoked by tone frequency changes compared to frequency discrimination and speech perception: Thresholds in normal-hearing and hearing-impaired subjects. Hear. Res. 401:108154. doi: 10.1016/j.heares.2020.108154

Won, J. H., Drennan, W. R., and Rubinstein, J. T. (2007). Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users. J. Assoc. Res. Otolaryngol. 8, 384–392. doi: 10.1007/s10162-007-0085-8

Wong, D. J., Moran, M., and O’Leary, S. J. (2016). Outcomes after cochlear implantation in the very elderly. Otol. Neurotol. 37, 46–51. doi: 10.1097/MAO.0000000000000920

Zhang, F., Benson, C., and Cahn, S. J. (2013). Cortical encoding of timbre changes in cochlear implant users. J. Am. Acad. Audiol. 24, 46–58.

Keywords: cochlear implant, hearing loss, frequency change detection, acoustic change complex, speech perception

Citation: McGuire K, Firestone GM, Zhang N and Zhang F (2021) The Acoustic Change Complex in Response to Frequency Changes and Its Correlation to Cochlear Implant Speech Outcomes. Front. Hum. Neurosci. 15:757254. doi: 10.3389/fnhum.2021.757254

Received: 11 August 2021; Accepted: 01 October 2021;

Published: 21 October 2021.

Edited by:

Hidehiko Okamoto, International University of Health and Welfare (IUHW), Japan

Reviewed by:

Takwa Gabr, Kafrelsheikh University, Egypt

Copyright © 2021 McGuire, Firestone, Zhang and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fawen Zhang, Fawen.Zhang@uc.edu
