
METHODS article

Front. Hum. Neurosci., 22 November 2021

Sec. Cognitive Neuroscience

Volume 15 - 2021 | https://doi.org/10.3389/fnhum.2021.726998

Omer Ashmaig1,2, Liberty S. Hamilton1,3,4, Pradeep Modur5, Robert J. Buchanan1,5,6,7, Alison R. Preston2,3,8,9 and Andrew J. Watrous1,2,3,8,10*

Intracranial recordings in epilepsy patients are increasingly utilized to gain insight into the electrophysiological mechanisms of human cognition. There are currently several practical limitations to conducting research with these patients, including patient and researcher availability and the cognitive abilities of patients, which limit the amount of task-related data that can be collected. Prior studies have synchronized clinical audio, video, and neural recordings to understand naturalistic behaviors, but these recordings are centered on the patient to understand their seizure semiology and thus do not capture and synchronize audiovisual stimuli experienced by patients. Here, we describe a platform for cognitive monitoring of neurosurgical patients during their hospitalization that benefits both patients and researchers. We provide the full specifications for this system and describe some example use cases in perception, memory, and sleep research. We provide results obtained from a patient passively watching TV as proof-of-principle for the naturalistic study of cognition. Our system opens up new avenues to collect more data per patient using real-world behaviors, affording new possibilities to conduct longitudinal studies of the electrophysiological basis of human cognition under naturalistic conditions.

There are currently 65 million active cases of epilepsy worldwide and approximately thirty percent of these patients are resistant to current medications (Kwan et al., 2011; Feigin et al., 2019). In these medication-resistant cases of epilepsy, patients may undergo invasive intracranial monitoring using indwelling electrodes to localize the source of their seizures. Depending on the purview of the clinical team and the individual case, patients may be implanted with multiple electrodes that record from hundreds of locations simultaneously. These patients often stay in the hospital for at least a week, presenting rare opportunities to directly measure local field potential (LFP) and/or single neuron activity in the behaving human brain over days or weeks with high spatiotemporal resolution (Jacobs and Kahana, 2010; Parvizi and Kastner, 2018).

Given the insights which can be gained from direct electrophysiological recordings, it follows that maximizing the amount of data collected in this setting will prove beneficial to furthering our understanding of the human brain. However, there are several practical challenges when collecting human intracranial recordings. First, testing in this patient population often requires finding a “goldilocks” testing window in which the research team and patient are both available to conduct research. The invasive nature of electrode implantation often results in both physical and cognitive challenges that can affect a patient’s ability to focus on performing experimental tasks. For example, pain medications may cause drowsiness, and the physical connections of electrodes make patient mobility a challenge. Thus, to allow for optimal testing conditions and prevent interruptions during testing, the patient must feel physically and cognitively well enough to perform cognitive tasks. Researchers must prioritize the needs of the patient and clinical team, so the research team frequently remains physically present until a testing window becomes available. These windows may occur during nights or weekends and place a burden on the research team to work beyond traditional work hours.

Second, researchers must employ tasks that accommodate a variety of cognitive abilities and impairments in epilepsy patients (Motamedi and Meador, 2003; Holmes, 2015). Practically, the researcher may ask an uncomfortable patient to perform a cognitive task with focus and effort instead of watching TV or browsing the internet. Humans prefer tasks that are appropriately challenging for their skillset (Csíkszentmihályi, 1990). Thus, if an experiment is too easy or too demanding, the patient may not agree to perform the task at all or may only perform it once, reducing the amount of data collected per patient. This can be even more problematic when multiple research groups are working with the same patient. As the patient performs different tasks, they naturally gravitate toward the tasks that are most engaging and appropriately challenging. Researchers with less suitable tasks may therefore be unlikely to obtain more than one session of data per patient, limiting statistical power and generalizability of research findings. Altogether, the practical limitations of finding the “goldilocks” testing window and employing an appropriate task reduce the amount of usable data that can be gained from each patient.

Despite these challenges, cognitive neuroscientific inquiry has increasingly leveraged human intracranial recordings (Brazier, 1968; Jacobs et al., 2010; Parvizi and Kastner, 2018). Born out of the traditions of cognitive psychology and stimulus-response views of cognition, most of this work has measured behavior using “classical” tasks with experimenter-generated stimuli. While such well-specified and controlled designs benefit researchers, these designs often have seemingly arbitrary task demands from the patients’ point of view. Thus, while classical tasks provide tight experimental control, they may do so at the expense of ecological validity. The arbitrary and repetitive nature of such tasks may limit a patient’s enthusiasm to repeat experiments, reducing the amount of data collected per patient. Given the value of human intracranial recordings to cognitive neuroscientific progress, research protocols that collect the maximum amount of useful behavioral and neural data will expedite our understanding of cognition.

It is increasingly recognized that neuroscientific models should be developed and tested in more real-world contexts (Yoder and Belmonte, 2010; Podvalny et al., 2017; Matusz et al., 2019; Hamilton and Huth, 2020; Nastase et al., 2020). For example, recent work has focused on understanding cognition using naturalistic stimuli during memory encoding (Baldassano et al., 2017; Chen et al., 2017; Davis et al., 2020; Heusser et al., 2020; Michelmann et al., 2020; Antony et al., 2021) or spatial navigation (Stangl et al., 2021). While naturalistic experimental designs present additional challenges in understanding the data compared to classical paradigms, naturalistic tasks such as video games can provide novel benefits and insights into a diverse range of cognitive mechanisms (Boot, 2015; Palaus et al., 2017). For example, video games have been shown to enhance motivation compared to traditional neuropsychological tasks (Lohse et al., 2013; Ferreira-Brito et al., 2019; Reid, 2012). Furthermore, the real world does not operate in a uni- or bi-modal fashion as the brain must perceive and respond to multisensory information (Stein and Stanford, 2008; Sella et al., 2014; Van Atteveldt et al., 2014). More real-world experimental designs may therefore not only motivate patients to perform more tasks during their hospitalization but also may enhance behavioral performance and lead to unique cortical activity compared to “classic” experiments (David et al., 2004; Sella et al., 2014; Matusz et al., 2019; Bijanzadeh et al., 2020).

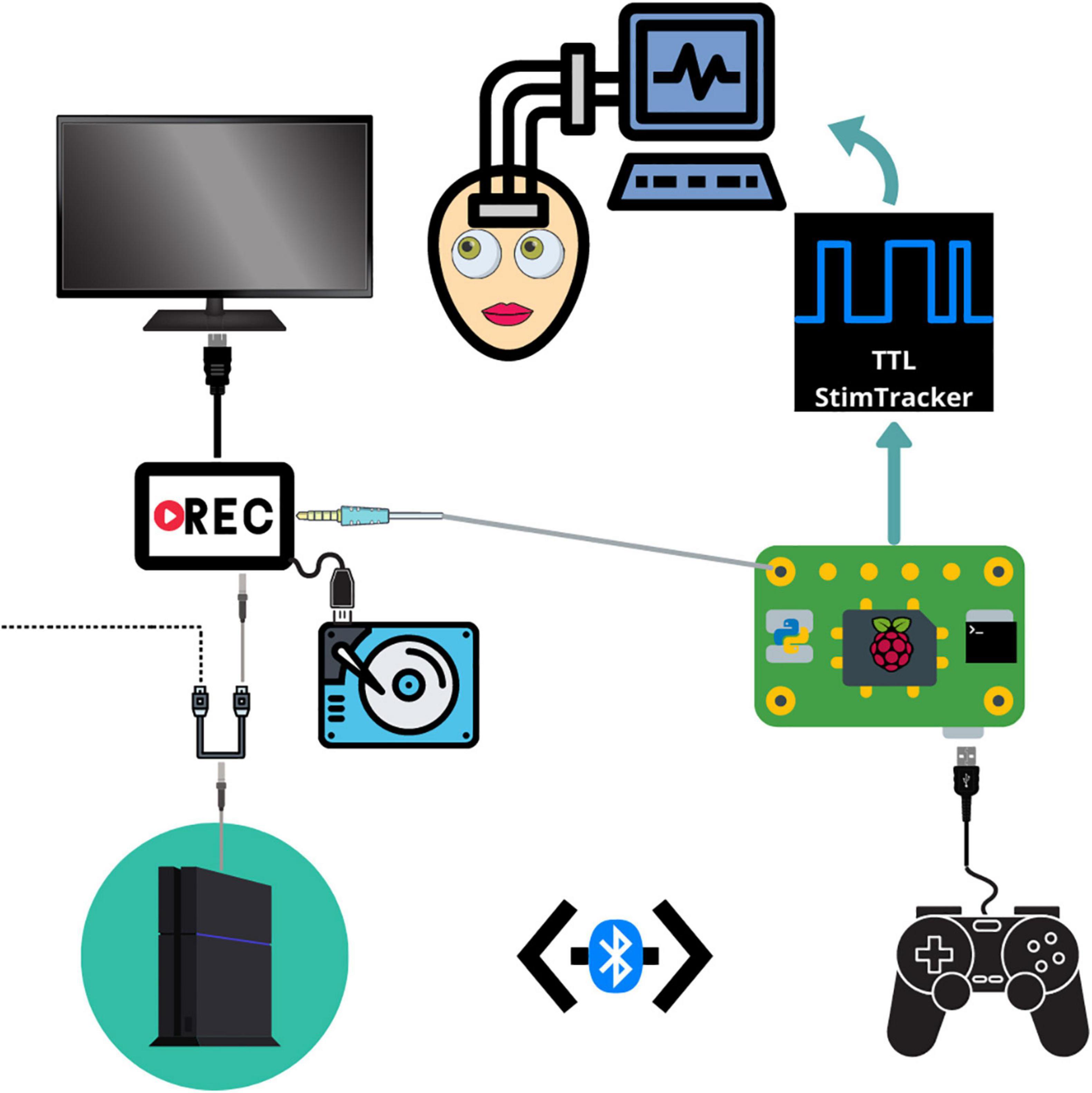

To address the above challenges, we describe here a platform for continuous cognitive monitoring of epilepsy patients undergoing intracranial monitoring during their hospitalization (Figures 1, 2). This platform aims to maximize the amount of useful behavioral and neural data per patient and further our understanding of the working mechanisms of cognition during naturalistic behaviors. This system can provide novel insights by adding content and external validity to the current “classic” neuroscientific literature.

Figure 1. Schematic depicting the connections between each component. The PS4 system outputs audiovisual stimuli to a video recorder through an HDMI splitter. The video recorder saves the audiovisual media to an external hard drive and then outputs the signal to a monitor. The Raspberry Pi detects and logs PS4 controller button responses and sends a unique tone for each button which is embedded in the audiovisual recording. The Raspberry Pi simultaneously sends event markers to the Cedrus StimTracker, which generates a unique TTL event recorded alongside the neural data.

• PlayStation 4 with controller.

• Raspberry Pi.

• HDMI to DVI converter.

• DVI to HDMI converter.

• HDMI splitter.

• Hauppauge Standalone Video Recorder.

• Micro USB cable (8 foot minimum).

• Monitor or TV with HDMI port.

• USB A or mini USB A cable (depends on StimTracker model).

• Cedrus StimTracker Duo or Quad.

• 3 HDMI to HDMI Cables.

• Mobile AV Cart.

• Power Extension Cord.

• Large Hard Drive (at least 1 TB).

• Python and Bash Scripts (supplemental).

Our testing platform synchronizes continuous neural recordings with audiovisual stimuli and button-press responses (Supplementary Movie 1), allowing researchers to relate any activity on the PlayStation 4 console to the neural data and to perform analyses across recording modalities. All system components are placed on a mobile cart that is rolled into the patient’s room and placed near the foot of the bed so that the monitor can swing out over the patient’s feet. Each button press is logged, overlays a unique auditory tone on the audiovisual recording, and sends a customizable, jitter-free event marker that is delayed in the neural recordings by precisely 2 ms. This setup allows for precise offline syncing between the neural recordings, audiovisual stimuli, and motor responses. Through the PlayStation 4 console, researchers have a spectrum of possibilities for tasks, from using currently available video games and movies to fully designing a well-controlled experiment through a game creation system such as “Dreams,” a video game that allows users and/or researchers to create their own games (Skrebels, 2020). Custom experiments built in Unity can also be imported onto the PlayStation 4 console.
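As a rough sketch of the offline syncing step, the snippet below aligns button-press times found in the audiovisual recording with the matching event markers in the neural recording. It assumes the two event lists have already been paired one-to-one, removes the 2 ms marker delay, and fits a linear mapping (offset plus clock drift) by ordinary least squares. The function names, the example timestamps, and the linear drift model are assumptions made for illustration; they are not taken from the published scripts.

```python
MARKER_DELAY_S = 0.002  # event markers arrive 2 ms late in the neural recording


def fit_clock_mapping(av_times, neural_times, marker_delay=MARKER_DELAY_S):
    """Least-squares fit of neural_time = slope * av_time + offset.

    av_times: tone onset times (s) in the audiovisual recording.
    neural_times: matching event-marker times (s) in the neural recording.
    Both lists are assumed to be paired one-to-one (hypothetical input).
    """
    if len(av_times) != len(neural_times) or len(av_times) < 2:
        raise ValueError("need at least two paired events")
    # Undo the fixed marker delay before fitting.
    corrected = [t - marker_delay for t in neural_times]
    n = len(av_times)
    mean_x = sum(av_times) / n
    mean_y = sum(corrected) / n
    sxx = sum((x - mean_x) ** 2 for x in av_times)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(av_times, corrected))
    slope = sxy / sxx
    offset = mean_y - slope * mean_x
    return slope, offset


def av_to_neural(t_av, slope, offset):
    """Map a time in the audiovisual recording onto the neural clock."""
    return slope * t_av + offset


# Made-up example: the neural clock started 12.5 s before the video recording.
av = [1.0, 5.0, 9.0, 20.0]
neural = [t + 12.5 + MARKER_DELAY_S for t in av]
slope, offset = fit_clock_mapping(av, neural)
```

With a mapping like this, any frame or audio sample in the recording can be placed on the neural time axis, not only the moments at which a button was pressed.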

In our implementation, a stand-alone video recorder continuously records audiovisual media from the gaming console to an external hard drive. The PS4 controller sends button press events to the Cedrus StimTracker via a Raspberry Pi, allowing for offline synchronization of behavioral and neural data (Supplementary Figure 1). The Raspberry Pi runs two Python scripts simultaneously. A Python 2 script detects and logs controller activity via a micro USB cable and sends an event code to the Cedrus StimTracker via a USB cable. Each button corresponds to a unique, prime-numbered TTL pulse width embedded in the neural recordings. A Python 3 script writes a unique tone into the audiovisual recording for each button press; patients do not hear these tones. These scripts are available on GitHub at github.com/oeashmaig/CCM. Two Python versions and scripts are required in the presented setup because of compatibility issues with specific Python modules.
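To illustrate how prime-numbered pulse widths can be turned back into button labels offline, here is a minimal sketch. The button names, the specific widths in milliseconds, and the matching tolerance are all hypothetical; the mapping actually used by the scripts in the repository may differ.

```python
# Hypothetical mapping; the widths used by the published scripts may differ.
BUTTON_PULSE_WIDTH_MS = {
    "cross": 2,
    "circle": 3,
    "square": 5,
    "triangle": 7,
    "L1": 11,
    "R1": 13,
}
WIDTH_TO_BUTTON = {w: b for b, w in BUTTON_PULSE_WIDTH_MS.items()}


def decode_pulse(onset_s, offset_s, tolerance_ms=0.5):
    """Return the button whose pulse width matches a TTL pulse, else None."""
    width_ms = (offset_s - onset_s) * 1000.0
    nearest = min(WIDTH_TO_BUTTON, key=lambda w: abs(w - width_ms))
    if abs(nearest - width_ms) <= tolerance_ms:
        return WIDTH_TO_BUTTON[nearest]
    return None


def decode_events(pulses):
    """pulses: list of (onset_s, offset_s) TTL edges from the neural recording."""
    events = []
    for onset, offset in pulses:
        button = decode_pulse(onset, offset)
        if button is not None:
            events.append((onset, button))
    return events


# Made-up pulses: 3 ms and 7 ms widths match a button; 4.2 ms matches none.
example = [(10.000, 10.003), (12.500, 12.507), (15.000, 15.0042)]
decoded = decode_events(example)
```

Pulses whose width falls outside the tolerance are dropped rather than guessed, which keeps spurious TTL edges from being labeled as button presses.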

For our particular configuration, an HDMI splitter between the PS4 system and the audiovisual recorder resolves compatibility issues between the two devices and splits the video signal to a second external hard drive and monitor, enabling family and friends to watch alongside the patient. The second hard drive holds a copy of the original audiovisual signal, which is useful for removing the button tones during offline processing.
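Whether the goal is to sync on the button tones or to check that a cleaned audio track no longer contains them, the tones first have to be located in the recording. The sketch below uses the Goertzel recurrence to measure power at a few candidate frequencies within a short audio window. The tone frequencies, window length, and detection ratio are assumptions for illustration and are not the values used by the published scripts.

```python
import math

# Hypothetical tone frequencies (Hz); the published scripts may use others.
BUTTON_TONES_HZ = {"cross": 1000.0, "circle": 1500.0, "square": 2000.0}


def goertzel_power(samples, sample_rate, freq):
    """Signal power at one frequency using the Goertzel recurrence."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq / sample_rate)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def identify_tone(samples, sample_rate, min_ratio=10.0):
    """Return the button whose tone dominates the window, else None."""
    powers = {b: goertzel_power(samples, sample_rate, f)
              for b, f in BUTTON_TONES_HZ.items()}
    ranked = sorted(powers, key=powers.get, reverse=True)
    best, runner_up = ranked[0], ranked[1]
    # Require the strongest tone to clearly exceed the next candidate.
    if powers[best] > min_ratio * max(powers[runner_up], 1e-12):
        return best
    return None


# Synthetic 50 ms window (8 kHz sampling) containing only the 1500 Hz tone.
rate = 8000
window = [math.sin(2 * math.pi * 1500.0 * n / rate) for n in range(400)]
detected = identify_tone(window, rate)
```

In a real recording the tones sit on top of program audio, so the ratio threshold and window length would need tuning against actual data.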

Basic instructions are given here. Full line-by-line details on installation are also provided on the GitHub page.1

1. Download the Python and Bash scripts from GitHub.

2. Install the required system packages and Python 2 and 3 modules: qt5-default, libasound2-dev, pyxid, simpleaudio, pygame.

3. Download and install the D2xx driver from FTDI chip.2

4. Edit the /etc/rc.local file to run the bash script after startup.

1. Connect the PS4 controller to the PS4 system via Bluetooth before booting the Raspberry Pi.

2. On the PS4 system, navigate to “System Settings” and disable HDCP.

3. Plug the HDMI-to-DVI-to-HDMI converter into the PS4 system.

4. Connect the HDMI output from the PS4 to the HDMI splitter, and connect the splitter to the Hauppauge Video Recorder, which is connected to a storage device.

5. Configure the Hauppauge device and connect it to a high capacity external hard drive (format as Windows NTFS with Master Boot Record, ≥ 2TB recommended).

6. Connect an audio cable from the Raspberry Pi to the Hauppauge Video Recorder.

7. Connect an HDMI cable from the Hauppauge Video Recorder to the TV/monitor.

8. Connect the controller to the Raspberry Pi via a long micro USB cable.

9. Connect the Raspberry Pi to the Cedrus StimTracker via a USB A cable.

1. Begin neural recordings using NeuraLynx research electrophysiology system.

2. Ensure all system components are plugged in and all devices have power.

3. Turn on the PS4.

4. Ensure the Hauppauge shows a green LED and then start recording (indicated by a solid red LED).

5. Instruct the patient on how to use the system, including streaming services, various game tasks, and other downloadable multimedia.

6. Confirm button presses are being detected as event markers in the NeuraLynx system.

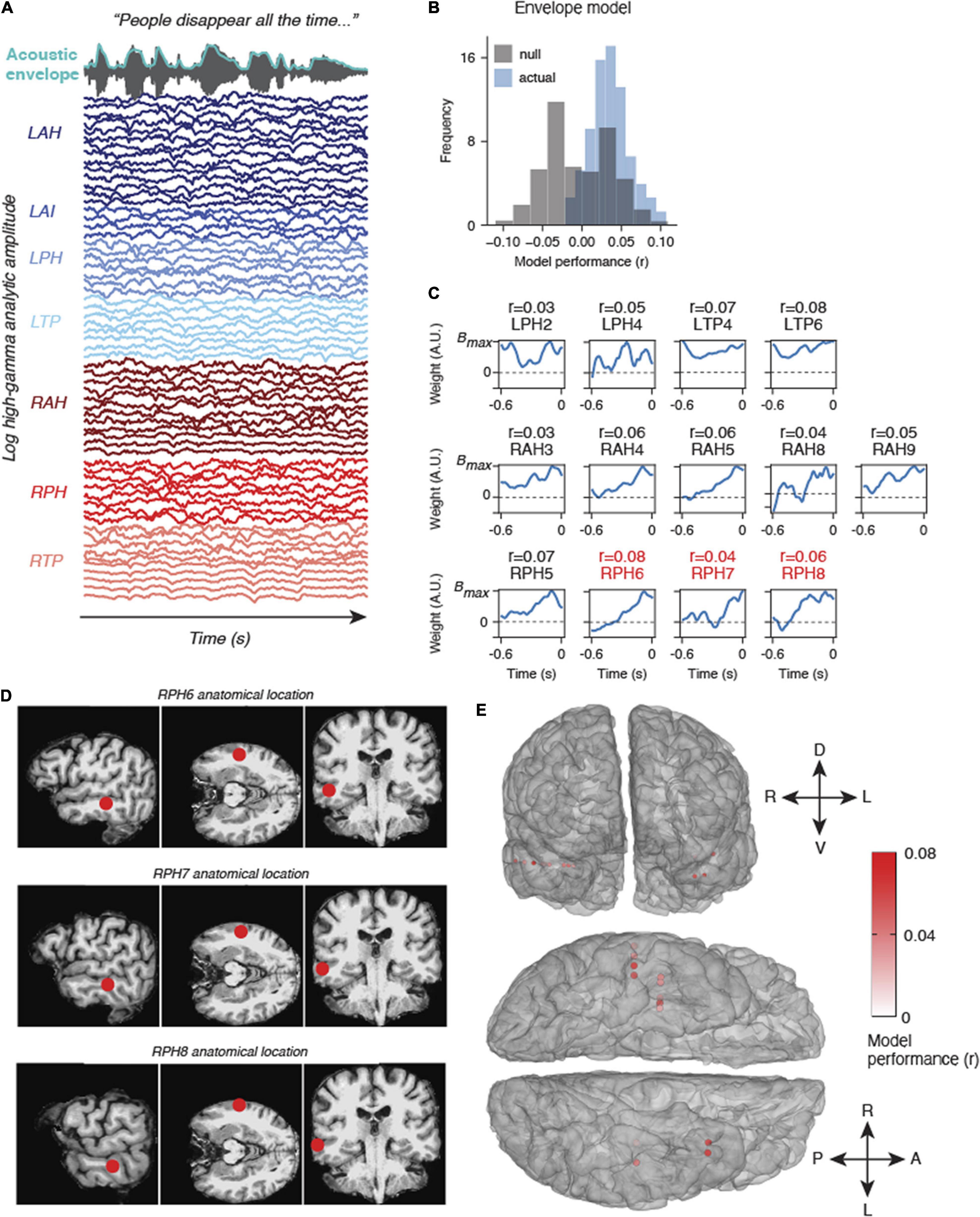

To demonstrate the utility of our approach to uncover insights from the neural data, we provide example results from one patient watching and listening to Outlander Season 1, Episode 1, while intracranial recordings were acquired from bilateral contacts in the temporal lobe (Figure 3A and Supplementary Figure 2). For this analysis, we used a simple acoustic envelope model, though in practice researchers could fit models to phonological information (Mesgarani et al., 2014), semantic information (Huth et al., 2016; Broderick et al., 2018), visual information (Nishimoto and Gallant, 2011), and more. The participant was a 48-year-old male with 63 electrode contacts implanted across the following regions: left anterior temporal lobe to hippocampus (LAH, 14 contacts), left anterior temporal lobe to insula (LAI, 4 contacts), left posterior temporal lobe to hippocampus (LPH, 7 contacts), left temporal pole (LTP, 8 contacts), right anterior temporal lobe to hippocampus (RAH, 12 contacts), right posterior temporal lobe to hippocampus (RPH, 8 contacts), and right temporal pole (RTP, 10 contacts). Electrodes were localized using iELVis (Groppe et al., 2016).

Figure 3. Example results from natural listening dataset. (A) One participant watched Season 1, Episode 1 of Outlander while their brain activity was recorded. The audio and acoustic envelope of this movie stimulus are shown at the top, along with aligned high gamma neural activity from each electrode colored by electrode device. (B) Results from an encoding model using the acoustic envelope as a stimulus feature indicate that some electrodes are modeled significantly better than chance (null model, gray). (C) Encoding model weights for electrodes that were modeled significantly better than chance (p < 0.05, bootstrap permutation test). R values above indicate the correlation between the predicted high gamma response and actual high gamma on held out data. The locations of RPH6-8 are also shown in panel (D). (D) Anatomical location of three example electrodes from (C). These three electrodes in device RPH were localized near the superior temporal sulcus (STS), a higher order speech area. (E) All significantly modeled electrodes shown on a 3D mesh of the participant’s cerebral cortex, shown in frontal view (top) and ventral view (bottom). Electrodes are colored according to model performance (r).

Data were acquired at 2 kHz using the NeuraLynx ATLAS system. The data were converted to fif format for use in MNE-Python. During preprocessing, we first inspected the data for large motion artifacts and epileptiform activity. The data were then notch filtered at 60 Hz and its harmonics at 120 and 180 Hz. Next, a common average reference was applied across all electrodes. Finally, we computed the high gamma analytic amplitude of each electrode using a Hilbert transform of the 70–150 Hz bandpass filtered signal (Mesgarani et al., 2014; Hamilton et al., 2018). After averaging across the high gamma frequency range using 8 logarithmically spaced center frequency bands, we downsampled the high gamma time series to 100 Hz. These data were then z-scored within each electrode prior to model fitting.
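The preprocessing steps above can be sketched with NumPy and SciPy as follows. This is not the authors' MNE-Python pipeline: the notch quality factor, the third-order Butterworth bandpass filters, and the use of band edges (rather than center frequencies) to define the 8 logarithmically spaced bands are assumptions chosen to keep the example short, and the function name is hypothetical.

```python
import numpy as np
from scipy.signal import butter, filtfilt, hilbert, iirnotch, resample_poly, sosfiltfilt


def preprocess_high_gamma(data, fs=2000, out_fs=100, n_bands=8):
    """data: array (n_channels, n_samples). Returns z-scored high gamma at out_fs."""
    x = np.asarray(data, dtype=float)
    # Notch out line noise at 60 Hz and its harmonics at 120 and 180 Hz.
    for f0 in (60.0, 120.0, 180.0):
        b, a = iirnotch(f0, Q=30.0, fs=fs)
        x = filtfilt(b, a, x, axis=-1)
    # Common average reference across all electrodes.
    x = x - x.mean(axis=0, keepdims=True)
    # Analytic amplitude in log-spaced bands spanning 70-150 Hz, then average.
    edges = np.logspace(np.log10(70.0), np.log10(150.0), n_bands + 1)
    amp = np.zeros_like(x)
    for lo, hi in zip(edges[:-1], edges[1:]):
        sos = butter(3, [lo, hi], btype="bandpass", fs=fs, output="sos")
        amp += np.abs(hilbert(sosfiltfilt(sos, x, axis=-1), axis=-1))
    amp /= n_bands
    # Downsample (with anti-aliasing) and z-score within each electrode.
    hg = resample_poly(amp, out_fs, fs, axis=-1)
    return (hg - hg.mean(axis=-1, keepdims=True)) / hg.std(axis=-1, keepdims=True)


# Synthetic stand-in for a recording: 4 channels, 2 s at 2 kHz.
rng = np.random.default_rng(0)
demo = rng.standard_normal((4, 4000))
hg = preprocess_high_gamma(demo)
```

Artifact inspection is left out because it was done by visual review; in practice, flagged segments would be excluded before the z-scoring step.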

We computed the acoustic envelope of the audio signal from the recorded TV show stimulus using a Hilbert transform followed by a low-pass filter (third-order Butterworth filter; cutoff frequency, 25 Hz). We then fit a linear encoding model to predict the high gamma signals at each electrode separately as a function of the envelope at different time delays (0–0.6 s). Such linear encoding models are commonly used to uncover acoustic/phonetic tuning in ECoG, EEG, and multi-unit recordings using natural continuous stimuli (Theunissen et al., 2001; Di Liberto et al., 2015; Crosse et al., 2016; Broderick et al., 2018; Hamilton et al., 2018; Desai et al., 2021). This takes the form of the following equation:
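A minimal sketch of the envelope computation, assuming a mono audio array and SciPy, is shown below. The zero-phase application of the filter and the resampling to 100 Hz (to match the high gamma time series) are assumptions; the text specifies only the Hilbert transform and the third-order, 25 Hz low-pass Butterworth filter. The function name is hypothetical.

```python
import numpy as np
from scipy.signal import butter, hilbert, resample_poly, sosfiltfilt


def acoustic_envelope(audio, fs, cutoff_hz=25.0, out_fs=100):
    """Hilbert envelope of a mono audio signal, low-passed and resampled."""
    audio = np.asarray(audio, dtype=float)
    # Magnitude of the analytic signal gives the broadband envelope.
    env = np.abs(hilbert(audio))
    # Third-order Butterworth low-pass at 25 Hz, applied forward and backward.
    sos = butter(3, cutoff_hz, btype="low", fs=fs, output="sos")
    env = sosfiltfilt(sos, env)
    # Resample to the rate of the neural features.
    return resample_poly(env, out_fs, int(fs))


# Synthetic example: a 440 Hz tone whose amplitude is modulated at 4 Hz.
fs = 16000
t = np.arange(fs) / fs  # 1 s of audio
audio = (1.0 + 0.5 * np.sin(2 * np.pi * 4 * t)) * np.sin(2 * np.pi * 440 * t)
env = acoustic_envelope(audio, fs)
```

For the synthetic signal, the recovered envelope follows the 4 Hz modulation, which is the kind of slow amplitude fluctuation the encoding model uses as its stimulus feature.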

$$\hat{x}(t) = \sum_{f} \sum_{\tau} w(f,\tau)\, S(f,t-\tau)$$

where $\hat{x}(t)$ is the predicted high gamma time series at time t, w is the receptive field weight matrix for a given feature f at time delay τ, and S(f,t−τ) is the delayed stimulus matrix. We chose to use one single feature (the acoustic envelope) at 60 time delays to predict the recorded sEEG data. Time delays from 0 to 0.6 s were used at 100 Hz resolution, representing neural responses that were evoked from acoustic activity up to 0.6 s in the past. We fit the weight matrix w using cross-validated ridge regression, with a random 80% subset of the data used to train the model and the remaining 20% held out as a test set. Model performance was measured as the correlation between the predicted response on held-out data and the actual LFP data. To determine whether this correlation was significantly higher than would be expected by chance, we used a bootstrap permutation analysis in which we randomly shuffled chunks of the response dataset for training 100 times (to break the relationship between stimulus and response) and computed a distribution of null models. For each of the 100 shuffles, we computed the correlation between the predicted LFP from the null model and the actual LFP. We then calculated how often the correlation of the null model exceeded the correlation of the true model to compute a p-value (with a floor value of p = 0.01).
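The encoding model and permutation test described above can be sketched in NumPy as follows. Several simplifications are assumptions rather than the authors' procedure: the ridge penalty is fixed instead of cross-validated, the train/test split is contiguous rather than random, the chunk length is arbitrary, and all function names are hypothetical.

```python
import numpy as np


def delay_matrix(stim, delays):
    """Stack time-shifted copies of a 1-D stimulus: column j is stim(t - delays[j])."""
    stim = np.asarray(stim, dtype=float)
    X = np.zeros((len(stim), len(delays)))
    for j, d in enumerate(delays):
        X[d:, j] = stim[:len(stim) - d]
    return X


def fit_ridge(X, y, alpha):
    """Closed-form ridge regression weights."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ y)


def shuffle_chunks(y, chunk_len, rng):
    """Permute fixed-length chunks of the response to break stimulus alignment."""
    n_chunks = len(y) // chunk_len
    order = rng.permutation(n_chunks)
    chunks = [y[i * chunk_len:(i + 1) * chunk_len] for i in order]
    chunks.append(y[n_chunks * chunk_len:])  # leftover samples stay at the end
    return np.concatenate(chunks)


def encoding_model_test(stim, resp, fs=100, max_delay_s=0.6, alpha=1.0,
                        n_shuffles=100, chunk_len=200, seed=0):
    """Fit envelope -> response weights and test them against shuffled nulls."""
    rng = np.random.default_rng(seed)
    delays = np.arange(int(round(max_delay_s * fs)))  # 60 delays at 100 Hz
    X = delay_matrix(stim, delays)
    split = int(0.8 * len(resp))  # contiguous 80/20 split (assumption)
    Xtr, Xte, ytr, yte = X[:split], X[split:], resp[:split], resp[split:]
    w = fit_ridge(Xtr, ytr, alpha)
    r_true = np.corrcoef(Xte @ w, yte)[0, 1]
    r_null = np.empty(n_shuffles)
    for i in range(n_shuffles):
        w0 = fit_ridge(Xtr, shuffle_chunks(ytr, chunk_len, rng), alpha)
        r_null[i] = np.corrcoef(Xte @ w0, yte)[0, 1]
    # p-value with a floor of 1 / n_shuffles (0.01 for 100 shuffles).
    p = max(np.mean(r_null >= r_true), 1.0 / n_shuffles)
    return w, r_true, p


# Synthetic check: the response follows the stimulus with a peak lag of 100 ms.
rng = np.random.default_rng(1)
stim = rng.standard_normal(3000)  # stand-in for the envelope at 100 Hz
kernel = np.exp(-0.5 * ((np.arange(60) - 10) / 2.0) ** 2)
resp = delay_matrix(stim, np.arange(60)) @ kernel + 0.5 * rng.standard_normal(3000)
w, r_true, p = encoding_model_test(stim, resp)
```

On the synthetic data the fitted weights should peak near the simulated 100 ms lag. A real acoustic envelope is temporally autocorrelated, unlike the white-noise stimulus used here, which is one reason the ridge penalty is normally chosen by cross-validation.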

To date, we have deployed the cognitive monitoring system in 4 patients. Each patient has used the system for several hours to play a variety of movies, TV shows, and games during simultaneous neural recordings. All patients watched the first episode of Sherlock along with patient-specific movies and games based on their preferences. These data will enable investigation of neural correlates of volitional behavior for patient-specific choices. In addition, future analyses using these data will focus on generating spatiotemporal receptive fields using the movie data and spectrotemporal receptive fields using the audio data. We first provide results obtained from such an analysis in a single patient before describing the anticipated advantages of the testing platform.

We fit models that predicted the high gamma signals recorded in one patient from the acoustic envelope of a TV show. This model performed significantly above chance in a number of electrodes across the left and right temporal lobes (Figure 3). The envelope weights for each of the significant electrodes are shown in Figure 3C. Most of these weights follow a characteristic time course indicating a broad, increased high gamma response following envelope onsets at a latency of approximately 100 ms. While these regions are not in canonical early auditory areas, they do appear to be adjacent to higher-order speech cortex including areas of the superior temporal sulcus (STS, see Figures 3D,E). Other electrodes in regions such as the anterior insula or deeper temporal lobe structures (e.g., posterior hippocampus) were not well-modeled by acoustic envelope features, as expected. Overall, these results provide just one example of many analyses that could be performed on a naturalistic dataset. We now describe the anticipated advantages of our system.

The primary benefit to researchers is the ability to collect more neural and behavioral data per patient while providing a common set of tasks that all patients can perform regardless of cognitive ability. Our system provides a means for patients to entertain themselves while providing useful neural recordings with millisecond-precision, synchronized stimuli at their leisure. For example, we have found our system to be particularly useful in patients undergoing sleep deprivation to induce seizures. These patients are often looking for something to do to stay awake late at night and thus readily engage in low-effort cognitive tasks such as playing video games or watching movies. This platform thus reduces the need for the research team to be physically present in the hospital, mitigating issues with patient and research team availability.

Our system also affords the opportunity for researchers to capture activities of daily living, such as listening to music, watching streaming audio or video services, or playing video games. This allows researchers to investigate a diverse array of cognitive functions within a naturalistic context. Even in periods of fatigue, most patients have been quite willing to watch Netflix “for science.” We anticipate that this increase in multimodal data will allow researchers to build more robust within-patient models of cognitive processes.

Many patients spend most of their time in a hospital bed for at least a week and become quite bored. Our platform provides the patient with an alternative to the hospital-provided television, their primary form of entertainment. At the same time, we leverage each patient’s unique interests to investigate a wide range of cognitive processes. The patient can choose either to watch their favorite shows or to play games from a pre-selected library. Naturally, these two options are less cognitively demanding for the patient than “classic” tasks and minimize the subjective feeling of volunteering time for a typical task. Furthermore, our typical patient population has a variable and limited number of controlled experiments they can perform in a single day without significantly losing motivation and energy. Thus, patients can provide additional useful data on their own schedule based on their own preferences and choices. Finally, the testing platform reduces possible experimental bias and/or stress experienced by patients due to being observed by a researcher (Yoder and Belmonte, 2010).

Video game controllers are designed to be a more intuitive interface for patients compared to using arbitrary keyboard mappings or a button box, minimizing the need for patient training. All patients thus far have either had some familiarity with using the PS4 or required no more than 5 min of instruction. We also provide a “cheat sheet” for patients to understand what they could do on our system, how to do it, and a simple explanation of why it would improve our understanding of the human brain.

We anticipate that our system can benefit several other research endeavors. First, video games induce seizures in a small subset of epileptic patients. In cases of reflex and musicogenic epilepsy, some patients experience seizures due to photic or auditory stimulation (Ferrie et al., 1994; Millett et al., 1999; Stern, 2015). However, the underlying mechanisms of seizure propagation in these patient populations remain unclear. Furthermore, in non-photosensitive epilepsy patients, the current literature is unclear whether video games induce seizures due to non-photic factors such as changes in arousal or simply due to chance (Ferrie et al., 1994; Millett et al., 1999). Through continuous cognitive and electrophysiological monitoring preceding seizures, both clinicians and researchers may better understand the underlying pathophysiology and manifestation of seizures on both an individual and population level.

Second, there has been a growing interest in understanding the role of sleep in systems memory consolidation, especially the role of sharp wave ripples (Jiang et al., 2019). These processes have been studied primarily in rodents, given the rare and limited ability to investigate them directly in human recordings. Thus, the use of our testing platform presents the opportunity to significantly increase the amount of recorded human neural data during sleep. Finally, our system employs a PS4 system to collect neuroscientific data, and the same setup could also be distributed to the general public to collect a large sample of normative behavioral data.

While we have presented a specific use case here for a NeuraLynx system with a Cedrus StimTracker, our setup is also transferable to other research recording systems. For example, with Tucker Davis Technologies (TDT) RZ2 hardware including analog and digital inputs, the Cedrus StimTracker can be bypassed altogether, and audio button press signals may be recorded directly from the Raspberry Pi to an analog input that is synchronized with neural data. A similar process may be performed for split output audio signals such that one output goes to a speaker that the patient hears, and the other is passed as an analog input to the research system. We have tested this system successfully with TDT and NeuraLynx systems, but it should in principle work for any research system that is able to take in audio as an analog input or generate TTL pulses from the data as is shown here.

There are downsides to implementing our system, although we consider these minor compared to its advantages. First, more equipment and setup time are needed, though our typical setup time for the system is under 15 min. Second, the additional electronic components in the patient’s room could introduce 60 Hz noise to the neural recordings. Notably, we have not found this in our recordings to date, and this issue could be further mitigated with an uninterruptible power supply. Third, given the significant increases in recorded behavioral and neural data, more storage is required on both data acquisition systems. Typically, neural recordings require approximately 2 TB of storage per patient and the audiovisual recordings require 100 GB of storage per day.
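For planning purposes, the storage figures above translate into a simple estimate. The sketch below assumes decimal units (1 TB = 1000 GB) and that audiovisual recording runs every day of the stay; the patient count and stay length in the example are hypothetical.

```python
NEURAL_TB_PER_PATIENT = 2.0  # approximate figure from the text
AV_GB_PER_DAY = 100.0        # approximate figure from the text


def storage_estimate_tb(n_patients, days_per_patient):
    """Rough total storage (TB) for neural plus audiovisual recordings."""
    av_tb = AV_GB_PER_DAY * days_per_patient / 1000.0
    return n_patients * (NEURAL_TB_PER_PATIENT + av_tb)


# Hypothetical example: 4 patients, each recorded for 7 days.
total_tb = storage_estimate_tb(4, 7)  # 4 * (2.0 + 0.7) = 10.8 TB
```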

This significant addition of behavioral and neural data requires additional computational resources during offline processing. Although more data may be obtained from video games in some patients, most current video games are considered to be less statistically powerful per unit time for a specific hypothesis and may present challenges in removing or isolating confounding stimulus features (Hamilton and Huth, 2020). However, at the same time, we can also obtain more behavioral responses per unit time, especially in motor-related tasks. In addition, the variety of acoustic and linguistic information present in hours of Netflix movies may surpass the variety that can be presented in a short “classic” task. In either case, recent computational advances in computer vision using deep learning models (Mathis et al., 2018) and reinforcement learning models (Nichol et al., 2018) can help circumvent these high-dimensional, computationally costly issues (Hamilton and Huth, 2020; Yang et al., 2021).

As previously discussed, patients may be intrinsically motivated to use this testing platform. This may introduce a potential issue for the research team when a patient prefers to use this platform over more “classic” experiments, although we have not yet observed this in our patient population. To mitigate this issue, we introduce our system to patients after we have collected data with our “classical” tasks.

In cases where a research team is interested in investigating various possible cognitive mechanisms through movies or TV, but is not physically present to observe the patient, an important question arises – how do you know if a patient is paying attention or if they are watching with loud TV volume? While we ask patients to pause during periods of conversation or other moments of distraction, clinical video recordings of the patient could be used to verify attention toward the screen. Using such recordings requires the researcher to follow the appropriate ethical guidelines and consent process. Eye tracking could also be added in future upgrades to our platform in order to more directly measure viewing behavior.

While the system is designed to give patients freedom of choice, researchers must narrow the list of possible media to be included on the system. If each patient watches a different movie based on their own preference, how would researchers perform group level analysis? We have addressed this issue by taking a hybrid approach, asking patients to watch a small subset of content on the system before watching whatever they choose. This should allow researchers to study how volitional behaviors and their associated neural dynamics augment task performance, for instance during memory encoding (Voss et al., 2011; Gureckis and Markant, 2012; Markant and Gureckis, 2014; Fried et al., 2017; Estefan et al., 2021). Currently, we ask patients to first watch Sherlock and play Pac-Man before doing other tasks on the system, as these stimuli have been used in several previous fMRI studies (Baldassano et al., 2017; Chen et al., 2017; Vodrahalli et al., 2018). In cases where patients watch different shows, we can study language at a population level by using transcripts and computational models to find overlap in natural language representations. For auditory and visual tasks, the spectrogram of the audio or a wavelet decomposition of the visual information could be used to predict neural responses (Desai et al., 2021).

We have provided the design, specifications, and code for implementing a continuous cognitive monitoring platform. We implemented this system for use with epilepsy patients undergoing invasive monitoring for seizures but believe it could be modified for standard EEG monitoring or other patient populations. Furthermore, while this platform is designed for use with a PlayStation 4 console and a Cedrus StimTracker, the general methodological approach can be easily modified with alternative hardware. Future work may load custom experiments onto the PlayStation 4 console. As technology continues to innovate, we believe the idea of continuous cognitive monitoring is more important than the specific implementation of the system as described here.

Proof of principle results from one patient demonstrated significant auditory encoding of the acoustic envelope in high gamma activity. The relatively low correlations of the model are likely a result of the locations of the electrodes in this particular patient – higher performance would be expected for electrodes in the superior temporal gyrus and Heschl’s gyrus (Hamilton et al., 2021; Khalighinejad et al., 2021). However, more lateral electrodes (higher numbers on each device) generally showed better envelope following, which is consistent with what we would expect based on functional neuroanatomy.

One consideration when developing methods to maximize data acquisition is the cognitive abilities of patients. Although not every patient can perform cognitively demanding tasks, nearly all patients can either play simple arcade-style video games or watch a movie. Movies and TV shows present opportunities to investigate event-segmentation, language, and memory processes within an ecologically valid context, thus adding content validity to our understanding of diverse cognitive functions (Berezutskaya et al., 2020). We believe that expanding our neuroscientific research protocols to more complex, real-world contexts will provide novel insights into the working mechanisms of cognition.

Another issue likely to arise with our system is how researchers will make sense of increasing amounts of multimodal data. Clearly, more computational resources will be required, and we anticipate that recent advances in statistical and computational models will allow us to analyze these complex data. For example, recent work has used clinical audio and video recordings of patients to characterize naturalistic behavior (Wang et al., 2016; Bijanzadeh et al., 2020). Our system builds upon this work and provides a means to track what the patient is doing more closely than can be derived from clinical recordings alone. Moreover, the platform we describe is useful in gently guiding patients into performing particular cognitive operations, such as watching, listening, and playing. Furthermore, innovations in computer vision using deep learning models, such as DeepLabCut (Mathis et al., 2018), require minimal training and should be able to accurately track task performance in individuals. Unsupervised learning models and dimensionality reduction methods can help us develop unbiased behavioral and neural insights into these multimodal recordings without a priori hypotheses or pre-defined features of interest (Wang et al., 2016; Cabañero-Gómez et al., 2018; Hamilton and Huth, 2020). While many video games may be considered less statistically powerful (Matusz et al., 2019), they have a greater natural effect size, meaning that they contribute external and content validity in addition to supporting the detection of an effect and conveying its importance.

The system we describe can accommodate the spectrum of possible experimental designs from more “classical” to more naturalistic. “Classical” experiments are well-controlled and offer valuable insights, but lack applicability to real-world situations. In our view, the goal is not to get rid of “classical” experimental paradigms, but instead to present a method to collect additional and novel data, integrate both experimental designs into our existing workflow, and enrich real-world neuroscientific research. While continuous cognitive monitoring is more complex and may be more challenging to analyze, we anticipate that it will offer content and external validity to diverse scientific fields in cognitive neuroscience. By expanding the analysis of neural recordings from well-controlled, simplistic paradigms to more complex stimuli, we believe our system can provide data to validate and complement the current neuroscientific literature.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Institutional Review Board (IRB) at the University of Texas at Austin. The patients/participants provided their written informed consent to participate in this study.

AW and OA contributed to conception and design of the testing platform, and wrote the first draft of the manuscript. OA wrote the Python scripts. LH performed the auditory encoding model. All authors contributed to manuscript revision, read, and approved the submitted version.

This work was supported by the NIMH R21MH127842-01.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnhum.2021.726998/full#supplementary-material

Supplementary Figure 1 | Synchronization process. Controller button presses are logged on the Raspberry Pi. Each button sends a unique sine wave tone in the video audio and a unique TTL pulse that is aligned with the neural signal. The controller log, TTL pulses, and the embedded tones in the video audio allow for offline synchronization between the PS4 system and the neural recordings.

Supplementary Figure 2 | Electrode localization from the patient analyzed with the acoustic encoding model.

Supplementary Video 1 | Example synchronized behavioral and neural data generated using our testing platform. Upper panel shows Pac-Man gameplay. Simultaneously recorded neural data from an example hippocampal electrode and button press events are shown in the middle and lower panels, respectively.
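The offline synchronization summarized for Supplementary Figure 1 can be sketched as a clock-alignment problem: given button-press timestamps from the controller log and the times of the matching TTL pulses in the neural recording, fit a line that absorbs both the offset and any drift between the two clocks. The example below is a hedged illustration only. It assumes events have already been matched one-to-one (plausible because each button has a unique TTL pulse), and the timestamps, drift, and offset values, as well as the names `fit_clock_map` and `to_neural_time`, are made up for the example.

```python
import numpy as np

def fit_clock_map(log_times, ttl_times):
    """Least-squares line mapping controller-log time to neural-recording time."""
    slope, intercept = np.polyfit(log_times, ttl_times, deg=1)
    return slope, intercept

# Hypothetical button-press times from the controller log (seconds).
log_times = np.array([1.0, 4.5, 9.2, 15.0, 22.3, 30.1])

# Hypothetical matching TTL pulse times in the neural recording, simulated
# with a small clock drift (slope) and a fixed offset between the systems.
true_slope, true_offset = 1.00002, 12.5
ttl_times = true_slope * log_times + true_offset

slope, intercept = fit_clock_map(log_times, ttl_times)

def to_neural_time(t):
    """Convert a controller-log timestamp to the neural recording's time base."""
    return slope * t + intercept

aligned = to_neural_time(10.0)
```

The same fitted mapping could be applied to any other event logged on the controller side, and the embedded audio tones offer an analogous set of anchor points for aligning the video.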

Antony, J. W., Hartshorne, T. H., Pomeroy, K., Gureckis, T. M., Hasson, U., McDougle, S. D., et al. (2021). Behavioral, physiological, and neural signatures of surprise during naturalistic sports viewing. Neuron 109, 377–390.

Baldassano, C., Chen, J., Zadbood, A., Pillow, J., Hasson, U., and Norman, K. (2017). Discovering event structure in continuous narrative perception and memory. Neuron 95, 709–721.e5. doi: 10.1016/j.neuron.2017.06.041

Berezutskaya, J., Freudenburg, Z. V., Ambrogioni, L., Güçlü, U., van Gerven, M. A. J., and Ramsey, N. F. (2020). Cortical network responses map onto data-driven features that capture visual semantics of movie fragments. Sci. Rep. 10:12077. doi: 10.1038/s41598-020-68853-y

Bijanzadeh, M., Khambhati, A. N., Desai, M., Wallace, D. L., Shafi, A., Dawes, H. E., et al. (2020). Naturalistic affective behaviors decoded from spectro-spatial features of multi-day human intracranial recordings. BioRxiv [Preprint] doi: 10.1101/2020.11.26.400374

Boot, W. R. (2015). Video games as tools to achieve insight into cognitive processes. Front. Psychol. 6:3.

Brazier, M. A. (1968). Electrical activity recorded simultaneously from the scalp and deep structures of the human brain. J. Nervous Mental Dis. 147, 31–39.

Broderick, M. P., Anderson, A. J., Di Liberto, G. M., Crosse, M. J., and Lalor, E. C. (2018). Electrophysiological Correlates of Semantic Dissimilarity Reflect the Comprehension of Natural, Narrative Speech. Curr. Biol. 28, 803–809.e3. doi: 10.1016/j.cub.2018.01.080

Cabañero-Gómez, L., Hervas, R., Bravo, J., and Rodriguez-Benitez, L. (2018). Computational EEG analysis techniques when playing video games: a systematic review. Proceedings 2:483. doi: 10.3390/proceedings2190483

Chen, J., Leong, Y. C., Honey, C. J., Yong, C. H., Norman, K. A., and Hasson, U. (2017). Shared memories reveal shared structure in neural activity across individuals. Nat. Neurosci. 20, 115–125. doi: 10.1038/nn.4450

Crosse, M. J., Di Liberto, G. M., Bednar, A., and Lalor, E. C. (2016). The multivariate temporal response function (mTRF) toolbox: a MATLAB toolbox for relating neural signals to continuous stimuli. Front. Hum. Neurosci. 10:604.

Csíkszentmihályi, M. (1990). Flow: The Psychology of Optimal Experience, Vol. 1990. Manhattan, NY: Harper & Row.

David, S. V., Vinje, W. E., and Gallant, J. L. (2004). Natural stimulus statistics alter the receptive field structure of v1 neurons. J. Neurosci. 24, 6991–7006.

Davis, E. E., Chemnitz, E., Collins, T. K., Geerligs, L., and Campbell, K. L. (2020). Looking the same, but remembering differently: preserved eye-movement synchrony with age during movie-watching. PsyArXiv [Preprint] doi: 10.31234/osf.io/xazdw

Desai, M., Holder, J., Villarreal, C., Clark, N., and Hamilton, L. S. (2021). Generalizable EEG encoding models with naturalistic audiovisual stimuli. BioRxiv [Preprint] doi: 10.1101/2021.01.15.426856

Di Liberto, G. M., O’Sullivan, J. A., and Lalor, E. C. (2015). Low-frequency cortical entrainment to speech reflects phoneme-level processing. Curr. Biol. 25, 2457–2465. doi: 10.1016/j.cub.2015.08.030

Estefan, D. P., Zucca, R., Arsiwalla, X., Principe, A., Zhang, H., Rocamora, R., et al. (2021). Volitional learning promotes theta phase coding in the human hippocampus. Proc. Natl. Acad. Sci. U.S.A. 118:e2021238118.

Feigin, V. L., Nichols, E., Alam, T., Bannick, M. S., Beghi, E., Blake, N., et al. (2019). Global, regional, and national burden of neurological disorders, 1990–2016: a systematic analysis for the Global Burden of Disease Study 2016. Lancet Neurol. 18, 459–480.

Ferreira-Brito, F., Fialho, M., Virgolino, A., Neves, I., Miranda, A. C., Sousa-Santos, N., et al. (2019). Game-based interventions for neuropsychological assessment, training and rehabilitation: which game-elements to use? A systematic review. J. Biomed. Inform. 98:103287.

Ferrie, C. D., De Marco, P., Grünewald, R. A., Giannakodimos, S., and Panayiotopoulos, C. P. (1994). Video game induced seizures. J. Neurol. Neurosurg. Psychiatry 57, 925–931. doi: 10.1136/jnnp.57.8.925

Fried, I., Haggard, P., He, B. J., and Schurger, A. (2017). Volition and action in the human brain: processes, pathologies, and reasons. J. Neurosci. 37, 10842–10847.

Groppe, D. M., Bickel, S., Dykstra, A. R., Wang, X., Mégevand, P., Mercier, M. R., et al. (2016). iELVis: an open source MATLAB toolbox for localizing and visualizing human intracranial electrode data. BioRxiv [Preprint] doi: 10.1101/069179

Gureckis, T. M., and Markant, D. B. (2012). Self-directed learning: a cognitive and computational perspective. Perspect. Psychol. Sci. 7, 464–481.

Hamilton, L. S., Edwards, E., and Chang, E. F. (2018). A spatial map of onset and sustained responses to speech in the human superior temporal gyrus. Curr. Biol. 28, 1860–1871.e4. doi: 10.1016/j.cub.2018.04.033

Hamilton, L. S., and Huth, A. G. (2020). The revolution will not be controlled: natural stimuli in speech neuroscience. Lang. Cogn. Neurosci. 35, 573–582.

Hamilton, L. S., Oganian, Y., Hall, J., and Chang, E. F. (2021). Parallel and distributed encoding of speech across human auditory cortex. Cell 184, 4626–4639.e13. doi: 10.1016/j.cell.2021.07.019

Heusser, A. C., Fitzpatrick, P. C., and Manning, J. R. (2020). Geometric models reveal behavioral and neural signatures of transforming naturalistic experiences into episodic memories. BioRxiv [Preprint] doi: 10.1101/409987

Holmes, G. L. (2015). Cognitive impairment in epilepsy: the role of network abnormalities. Epileptic Disord. 17, 101–116.

Huth, A. G., de Heer, W. A., Griffiths, T. L., Theunissen, F. E., and Gallant, J. L. (2016). Natural speech reveals the semantic maps that tile human cerebral cortex. Nature 532, 453–458. doi: 10.1038/nature17637

Jacobs, J., and Kahana, M. J. (2010). Direct brain recordings fuel advances in cognitive electrophysiology. Trends Cogn. Sci. 14, 162–171.

Jacobs, J., Korolev, I. O., Caplan, J. B., Ekstrom, A. D., Litt, B., Baltuch, G., et al. (2010). Right-lateralized brain oscillations in human spatial navigation. J. Cogn. Neurosci. 22, 824–836. doi: 10.1162/jocn.2009.21240

Jiang, X., Gonzalez-Martinez, J., and Halgren, E. (2019). Coordination of human hippocampal sharpwave ripples during NREM sleep with cortical theta bursts, spindles, downstates, and upstates. J. Neurosci. 39, 8744–8761.

Khalighinejad, B., Patel, P., Herrero, J. L., Bickel, S., Mehta, A. D., and Mesgarani, N. (2021). Functional Characterization of Human Heschl’s Gyrus in Response to Natural Speech. NeuroImage 235:118003. doi: 10.1016/j.neuroimage.2021.118003

Kwan, P., Schachter, S. C., and Brodie, M. J. (2011). Drug-resistant epilepsy. New Engl. J. Med. 365, 919–926. doi: 10.1056/NEJMra1004418

Lohse, K., Shirzad, N., Verster, A., Hodges, N., and Van der Loos, H. F. M. (2013). Video games and rehabilitation: using design principles to enhance engagement in physical therapy. J. Neurol. Phys. Ther. 37, 166–175.

Markant, D. B., and Gureckis, T. M. (2014). Is it better to select or to receive? Learning via active and passive hypothesis testing. J. Exp. Psychol. Gen. 143:94.

Mathis, A., Mamidanna, P., Cury, K. M., Abe, T., Murthy, V. N., Mathis, M. W., et al. (2018). DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nat. Neurosci. 21, 1281–1289.

Matusz, P. J., Dikker, S., Huth, A. G., and Perrodin, C. (2019). Are we Ready for Real-World Neuroscience? Cambridge, MA: MIT Press.

Mesgarani, N., Cheung, C., Johnson, K., and Chang, E. F. (2014). Phonetic feature encoding in human superior temporal gyrus. Science 343, 1006–1010. doi: 10.1126/science.1245994

Michelmann, S., Price, A. R., Aubrey, B., Doyle, W. K., Friedman, D., Dugan, P. C., et al. (2020). Moment-by-moment tracking of naturalistic learning and its underlying hippocampo-cortical interactions. BioRxiv [Preprint] doi: 10.1038/s41467-021-25376-y

Millett, C. J., Fish, D. R., Thompson, P. J., and Johnson, A. (1999). Seizures during video-game play and other common leisure pursuits in known epilepsy patients without visual sensitivity. Epilepsia 40, 59–64.

Nastase, S. A., Goldstein, A., and Hasson, U. (2020). Keep it real: rethinking the primacy of experimental control in cognitive neuroscience. NeuroImage 222:117254. doi: 10.1016/j.neuroimage.2020.117254

Nichol, A., Achiam, J., and Schulman, J. (2018). On first-order meta-learning algorithms. ArXiv [Preprint] arXiv:1803.02999v3

Nishimoto, S., and Gallant, J. L. (2011). A three-dimensional spatiotemporal receptive field model explains responses of area MT neurons to naturalistic movies. J. Neurosci. 31, 14551–14564. doi: 10.1523/JNEUROSCI.6801-10.2011

Palaus, M., Marron, E. M., Viejo-Sobera, R., and Redolar-Ripoll, D. (2017). Neural basis of video gaming: a systematic review. Front. Hum. Neurosci. 11:248.

Parvizi, J., and Kastner, S. (2018). Promises and limitations of human intracranial electroencephalography. Nat. Neurosci. 21, 474–483. doi: 10.1038/s41593-018-0108-2

Podvalny, E., Yeagle, E., Mégevand, P., Sarid, N., Harel, M., Chechik, G., et al. (2017). Invariant temporal dynamics underlie perceptual stability in human visual cortex. Curr. Biol. 27, 155–165. doi: 10.1016/j.cub.2016.11.024

Sella, I., Reiner, M., and Pratt, H. (2014). Natural stimuli from three coherent modalities enhance behavioral responses and electrophysiological cortical activity in humans. Int. J. Psychophysiol. 93, 45–55.

Skrebels, J. (2020). Explaining Art’s Dream, the “Story Mode” Inside Dreams. San Francisco, CA: IGN.

Stangl, M., Topalovic, U., Inman, C. S., Hiller, S., Villaroman, D., Aghajan, Z. M., et al. (2021). Boundary-anchored neural mechanisms of location-encoding for self and others. Nature 589, 420–425. doi: 10.1038/s41586-020-03073-y

Stein, B. E., and Stanford, T. R. (2008). Multisensory integration: current issues from the perspective of the single neuron. Nat. Rev. Neurosci. 9, 255–266.

Theunissen, F. E., David, S. V., Singh, N. C., Hsu, A., Vinje, W. E., and Gallant, J. L. (2001). Estimating spatio-temporal receptive fields of auditory and visual neurons from their responses to natural stimuli. Network (Bristol, England) 12, 289–316.

Van Atteveldt, N., Murray, M. M., Thut, G., and Schroeder, C. E. (2014). Multisensory integration: flexible use of general operations. Neuron 81, 1240–1253.

Vodrahalli, K., Chen, P.-H., Liang, Y., Baldassano, C., Chen, J., Yong, E., et al. (2018). Mapping between fMRI responses to movies and their natural language annotations. NeuroImage 180, 223–231.

Voss, J. L., Gonsalves, B. D., Federmeier, K. D., Tranel, D., and Cohen, N. J. (2011). Hippocampal brain-network coordination during volitional exploratory behavior enhances learning. Nat. Neurosci. 14, 115–120.

Wang, N. X. R., Olson, J. D., Ojemann, J. G., Rao, R. P. N., and Brunton, B. W. (2016). Unsupervised decoding of long-term, naturalistic human neural recordings with automated video and audio annotations. Front. Hum. Neurosci. 10:165. doi: 10.3389/fnhum.2016.00165

Yang, Q., Lin, Z., Zhang, W., Li, J., Chen, X., Zhang, J., et al. (2021). Monkey plays pac-man with compositional strategies and hierarchical decision-making. BioRxiv [Preprint] doi: 10.1101/2021.10.02.462713

Keywords: iEEG (intracranial EEG), movies and other media, epilepsy monitoring and recording, naturalistic, behavior and cognition, video games, intracerebral EEG recordings

Citation: Ashmaig O, Hamilton LS, Modur P, Buchanan RJ, Preston AR and Watrous AJ (2021) A Platform for Cognitive Monitoring of Neurosurgical Patients During Hospitalization. Front. Hum. Neurosci. 15:726998. doi: 10.3389/fnhum.2021.726998

Received: 17 June 2021; Accepted: 29 October 2021;

Published: 22 November 2021.

Edited by:

Stefan Pollmann, Otto von Guericke University Magdeburg, Germany

Reviewed by:

Pierre Mégevand, Université de Genève, Switzerland

Copyright © 2021 Ashmaig, Hamilton, Modur, Buchanan, Preston and Watrous. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Andrew J. Watrous
