- 1Computational Neuroscience Group, Center for Brain and Cognition, Department of Information and Communication Technologies, Universitat Pompeu Fabra, Barcelona, Spain

- 2Cognitive Neuroscience and Neuroinformatics Unit, D'Or Institute for Research and Education (IDOR), Rio de Janeiro, Brazil

- 3Biomedical Engineering Program, Instituto Alberto Luiz Coimbra de Pós-Graduação e Pesquisa de Engenharia, Federal University of Rio de Janeiro, Rio de Janeiro, Brazil

- 4Institució Catalana de la Recerca i Estudis Avançats, Barcelona, Spain

- 5Department of Neuropsychology, Max Planck Institute for Human Cognitive and Brain Sciences, Leipzig, Germany

- 6Turner Institute for Brain and Mental Health, Monash University, Melbourne, VIC, Australia

- 7Scients Institute, Palo Alto, CA, United States

During the last decades, neurofeedback training for emotional self-regulation has received significant attention from scientific and clinical communities. Most studies have investigated emotions using functional magnetic resonance imaging (fMRI), including the real-time application in neurofeedback training. However, the electroencephalogram (EEG) is a more suitable tool for therapeutic application. Our study aims at establishing a method to classify discrete complex emotions (e.g., tenderness and anguish) elicited through a near-immersive scenario that can be later used for EEG-neurofeedback. EEG-based affective computing studies have mainly focused on emotion classification based on dimensions, commonly using passive elicitation through single-modality stimuli. Here, we integrated both passive and active elicitation methods. We recorded electrophysiological data during emotion-evoking trials, combining emotional self-induction with a multimodal virtual environment. We extracted correlational and time-frequency features, including frontal-alpha asymmetry (FAA), using Complex Morlet Wavelet convolution. Thinking about future real-time applications, we performed within-subject classification using 1-s windows as samples and we applied trial-specific cross-validation. We opted for a traditional machine-learning classifier with low computational complexity and sufficient validation in online settings, the Support Vector Machine. Results of individual-based cross-validation using the whole feature sets showed considerable between-subject variability. The individual accuracies ranged from 59.2 to 92.9% using time-frequency/FAA and 62.4 to 92.4% using correlational features. We found that features of the temporal, occipital, and left-frontal channels were the most discriminative between the two emotions. Our results show that the suggested pipeline is suitable for individual-based classification of discrete emotions, paving the way for future personalized EEG-neurofeedback training.

1. Introduction

“This world's anguish is no different from the love we insist on holding back,” wrote Aberjhani in the book “Elemental: The Power of Illuminated Love” published in 2008 with the prize-winner artist Luther E. Vann. A decade later, there is still much to discover about the neurophysiology of complex emotions, which, according to some, represent a combination of basic emotions (Barrett et al., 2007) or primary emotive states and cognitive components such as event-feature-emotion complexes (Moll et al., 2005, 2008). The main novelty of this study is that we tried to classify complex affective states, which have been rarely explored with the EEG. We focus on tenderness as an affiliative feeling because it is the basis for empathy and prosocial behavior (Eslinger, 1998; Zahn et al., 2009), associated with social bonding, care, and well-being. On the contrary, anguish reflects a negative state of mental suffering linked to social dysfunction, withdrawal, and poor mental health (Corbett, 2015).

Given the importance of affiliative emotions for a range of psychosocial processes (Baumeister and Leary, 1995), psychotherapeutic approaches have embraced the training of such emotions as part of the therapeutic process (Gilbert and Procter, 2006; Lutz et al., 2008; Germer and Siegel, 2012; Neff and Germer, 2013). Real-time fMRI-Neurofeedback (NFB) training, a type of closed-loop brain-computer interface (BCI), has already been explored concerning the self-regulation of complex emotional states. Recently, Lorenzetti et al. (2018) demonstrated that the self-regulation of anguish and tenderness is possible by targeting the septohypothalamic network, engaged in affiliative feelings (Moll et al., 2011, 2012). In their experiment, Lorenzetti and colleagues validated a new protocol combining NFB with a multimodal, immersive virtual environment BCI and musical excerpts as means to deliver feedback to participants (Lorenzetti et al., 2018). However, the fMRI comes with several disadvantages, such as the signal delay (Friston et al., 1994), the high scanning cost, and an environment that can be hostile and uncomfortable. In contrast, EEG offers a higher temporal resolution and a direct measure of information processing. It is cheaper and relatively simple to use, making it a more accessible clinical environment tool. Therefore, the purpose of our work is to validate the use of the EEG with the protocol developed in Lorenzetti et al. (2018) to classify complex emotions.

Another novelty here is that we employed a multisensory virtual-reality (VR) scenario with audio input to elicit complex emotions. Music has been proven to be an effective stimulus in evoking genuine feelings in the laboratory setting (Scherer, 2004; Ribeiro et al., 2019). Moreover, the employment of VR has largely increased in the last years, and its validity for BCI application has been demonstrated (Coogan and He, 2018). For example, the work of Johnson et al. (2018) showed the feasibility of using VR BCI-training for motor recovery after stroke. Other studies have shown that integrating heterogeneous sensory stimuli leads to improved BCI performance by enhancing brain patterns (Wang et al., 2017; Huang et al., 2019) and that using multiple sensory modalities can improve the individuals' engagement and motivation in psychological and cognitive interventions (Cho et al., 2002; Lécuyer et al., 2008; Kovacevic et al., 2015). Furthermore, the study conducted by Baños and colleagues in 2004 has pointed out the advantage of immersion by means of VR scenarios when eliciting emotions through simulation of real experiences (Baños et al., 2004). To the contrary, widely used research paradigms in affective computing and BCI have typically employed single-modality or non-immersive stimuli to elicit emotions, such as the international affective pictures (IAPS; Lang et al., 1997), music, or movie clips. The ability to evoke affective states reliably and ethically in an experimental setting remains a critical challenge in emotion-recognition research (Kory and D'Mello, 2015). Therefore, we also encouraged participants to use personalized “mantras” (i.e., short emotionally loaded sentences) to facilitate self-induced emotional states. This approach to emotion induction can be of great advantage when using neurofeedback as self-regulation training (Yankauer, 1999).

To date, research in emotion classification using EEG signals mostly focused on distinguishing emotion based on psychological models (Russell, 2003; Rubin and Talarico, 2009), primarily employing the “circumplex model” (Lang et al., 1993), which portrays different types of emotions in a two-dimensional space comprising valence and arousal. Another model, the discrete emotional model, has mainly been used to explore basic emotions (i.e., sadness, happiness, fear, surprise, anger, and disgust; Baumgartner et al., 2006; Balconi and Mazza, 2009; Li and Lu, 2009). Attempts to differentiate tenderness from negative emotions through EEG features using machine learning (ML) algorithms have been made, as in the two studies conducted by Zhao et al. (2018a,b). In these studies, the authors found the frontal alpha asymmetry (FAA) and the midline theta power to be useful features to classify such emotional states.

Recently, a growing body of research investigated emotion-recognition with deep learning algorithms. Particularly convolutional neural networks showed promising results, outperforming traditional ML models in distinguishing emotional states (Aloysius and Geetha, 2017; Li et al., 2018; Moon et al., 2018; Yang et al., 2019; Zhang et al., 2020). However, these models are still in their infancy and have various limitations for EEG-based BCI classification due to the limited training data available (Lotte et al., 2018).

Here, we conducted a proof-of-concept study to establish a reliable method that can be later applied for EEG-based NFB on the self-regulation of complex emotional states. Therefore, we opted for a traditional ML algorithm, the linear Support Vector Machine (SVM), which is characterized by high generalization properties, low computational capacity, and sufficient validation in real-time application. We extracted both FAA and time-frequency, together with correlational features across the whole scalp. We tested the model's performance and generalization properties by applying a trial-specific cross-validation procedure. Furthermore, we applied a feature-ranking algorithm to reduce our dataset's dimensionality and to investigate which channels and frequency bands were the most discriminative between the two emotional states. We hypothesized that tenderness and anguish have different electrophysiological correlates, not reducible just to the FAA and that they can be distinguished using an ML approach. Finally, we calculated Spearman's correlation coefficient between subjective ratings and subject-specific classification accuracy to understand whether the SVM performance was associated with the self-evaluation of the emotional intensity, the mantras' utility, the fatigue, and concentration levels that subjects felt throughout the experimental blocks.

2. Materials and Methods

2.1. Participants

We recruited 14 healthy adults or young adults (7 males and 7 females) with no history of psychiatric disorder or neurological illness for this proof of concept experiment. Before analysis, we discarded EEG recordings from three participants (2 males and 1 female) due to the presence of excessive artifacts. The average age of the 11 remaining participants was 27 years (SD = 7), ranging from 19 to 46. All participants had normal or corrected-to-normal vision and had Brazilian nationality, except one German participant who fluently spoke Portuguese.

2.2. Emotion-Inducing Stimuli

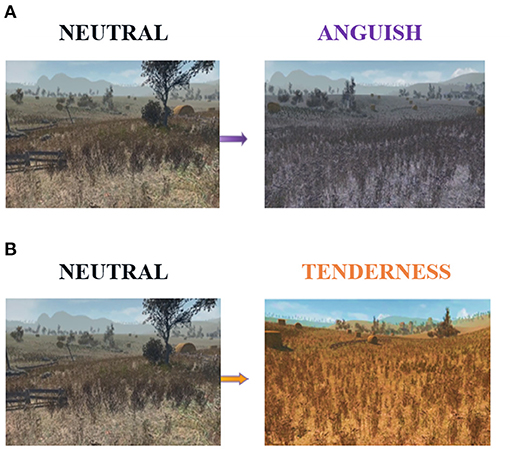

We implemented a multimodal stimulation with a virtual scenario, an edited version of the Unity 3D asset Autumnal Nature Pack, accompanied by emotion-specific musical excerpts. The virtual environment displayed the first-person view over a landscape of hills and cornfields, differently colored according to the emotion elicited: purple for anguish and orange for tenderness (Figure 1).

Figure 1. The virtual reality scenario, which we used to induce emotions during the experiment. Compared to the neutral-colored scene on the left, the color hue of the landscape turned purple (A) for anguish trials, while for all tenderness trials, it turned into a vivid orange (B).

Together with these visual stimuli, participants listened to eight different instrumental musical excerpts of 46 s. Music excerpts were fixed for each trial type (i.e., 4 for anguish and 4 for tenderness) and normalized with the root mean square feature on the software Audacity (Audacity, http://www.audacityteam.org). For all tenderness trials, we played a piece of soft, harmonic, and gentle music. In contrast, we electronically manipulated the anguish trials; unpleasant stimuli from the originally pleasant tunes used for the tenderness condition. We used four new sound-files, in which the actual melodic excerpts were simultaneously played with two pitch-shifted versions of the same excerpts. The pitch-shifted versions were one tone above and a tritone below the original pitch (samples of the stimuli are provided at http://www.stefan-koelsch.de/Music_Emotion1), resulting in dissonant and distorted music. For the neutral condition, the landscape was typically colored, and no background music was presented.

2.3. Experimental Procedure

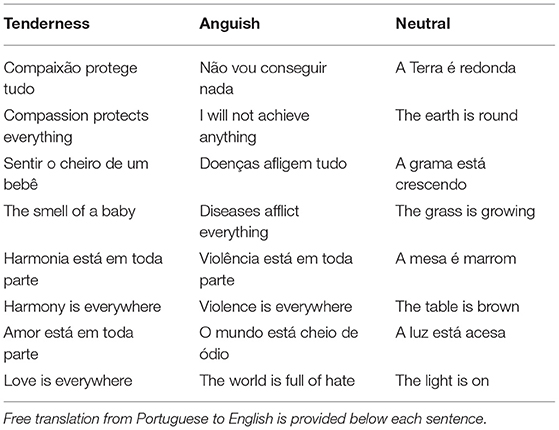

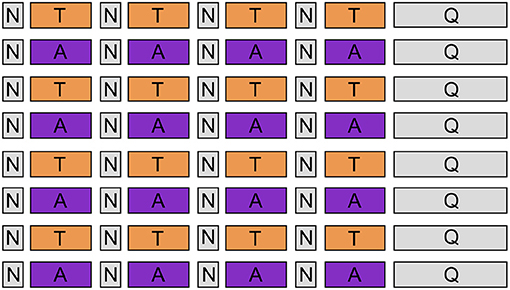

Upon arrival, all participants signed the informed consent and filled up the State-Trait Anxiety Inventory (STAI), the Beck's Depression Inventory (BDI), and The Positive and Negative Affect Schedule (PANAS). After the EEG cap setup, we instructed participants to use mantras and personally recalled memories to facilitate emotional self-induction during the experiment. As guidance, we provided a list of suggested mantras (Table 1), but they were free to choose mantras personally. Throughout the experiment, subjects were comfortably seated in an armed chair approximately 50 cm away from the screen, wearing padded headphones. The experimental procedure consisted of 8 emotion-alternating blocks (4 for anguish trials and 4 for tenderness), as represented in Figure 2. Each block included four emotion-eliciting trials (46 s) interleaved by four short neutral trials (12 s), which allowed for a brief rest between each emotional induction. The auditory and visual stimuli used for each trial are the ones described in the previous section. We recorded EEG signals throughout the whole experiment, and we gave no break between the blocks.

Figure 2. Experimental design. Eight emotion-alternating blocks (orange boxes refer to “tenderness” condition, and purple boxes to “anguish”), each consisting of 4 emotion-inducing trials of 46 s, interleaved by 4 short neutral trials of 12 s. At the end of each block, participants had to fill out a self-assessment questionnaire.

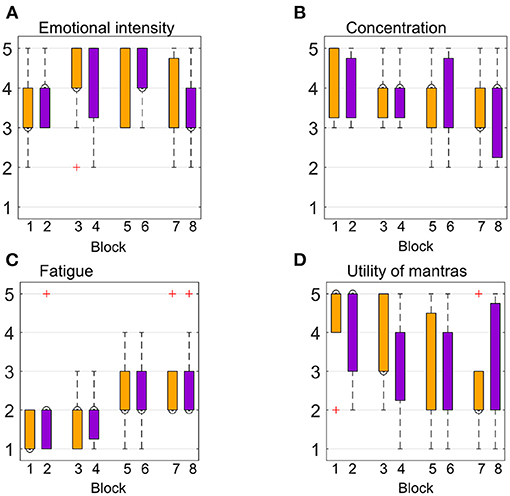

2.3.1. Subjective Ratings

At the end of each emotional block, participants had to fill a short self-assessment questionnaire in which they had to rate on a 5-point Likert scale: (i) the emotional intensity (from 1 = very mild to 5 = highly intense), (ii) the utility of mantras (from 1 = not useful to 5 = very useful), (iii) the concentration and (iv) the fatigue levels (from 1 = very low to 5 = very high) they felt throughout the experimental block.

2.4. EEG Acquisition

EEG data were recorded using the Brain Vision Recorder software. The signal was acquired from the BrainAmp amplifier and the standard 64 channels EEG-MR Plus cap (Brainproducts, Germany) at a sampling rate of 1,000 Hz and a bandwidth of 0.01–250 Hz. All the electrodes had sintered Ag/AgCl sensors placed according to the standardized international 10/20 EEG placement system, and their impedance was kept under seven kΩ. We used one of the 64 channels to record the ECG signal, and we set channel FCz as the reference electrode and channel AFz as the ground. We programmed the entire processing pipeline on Matlab (The Mathworks, Inc.).

2.5. EEG Preprocessing

We performed the offline analysis of EEG data using the EEGLAB toolbox (Delorme and Makeig, 2004) on Matlab R2019b (The Mathworks, Inc.). First, the preprocessing pipeline included downsampling the signal to 250 Hz and applying a bandpass Butterworth filter of 0.01–45 Hz. The Independent Component Analysis (ICA) algorithm was used to correct for eyeblinks and muscular artifacts. Components that captured artifacts were manually pruned independently for each subject. After artifact removal, the EEG dataset was epoched and cut into three distinct datasets for each subject according to the experimental condition. For each participant, we created one dataset for the 16 tenderness trials of 46 s length each, one for all the 16 anguish trials of 46 s length each, and one containing all 32 neutral trials of 12 s length. Data were then visually inspected, but we did not apply any further artifact or epoch rejection method. This choice was based on our future goal of using a similar setup for real-time neurofeedback studies, which will preclude the possibility to inspect and reject bad epochs visually. Therefore, we pursued a method allowing for effective classification despite the presence of some residual artifacts. Finally, we applied the surface Laplacian transform by implementing algorithms (Cohen, 2014) inspired by the spherical spline method described by Perrin et al. (1987a,b, 1989). This spatial filter allows reducing volume conduction effects for connectivity analysis purposes.

2.6. Feature Extraction

2.6.1. Time-Frequency Analysis

The EEG data were transformed into the time-frequency domain using Complex Morlet Wavelet convolution to preserve information about the temporal dynamics. The Complex Morlet wavelet (CMW) is a complex-valued sine wave tapered by a Gaussian window described by the following equation:

Where e−t2/2s2 is the real-valued Gaussian and ei2πft is the result of the Euler's formula combined with a sine wave (Cohen, 2019). The time t is centered with regard to the wavelet by taking −2: sampling rate:+2 to avoid phase shifts.

One of the benefits of the CMW convolution over other methods such as Short-time Fourier Transform or the Hilbert Transform is the Gaussian-shaped wavelet in the frequency-domain. However, the caveat when doing convolution with a Complex Morlet Wavelet is to accurately define the width of the Gaussian, here defined as s, a key parameter for determining the trade-off between temporal and spatial resolution of the time-frequency analysis (see Cohen, 2019).

The parameter s is expressed as s = c /2πf, where c denotes the number of cycles of the wavelet, which is dependent on the frequency f of the same. A narrower Gaussian with fewer cycles in the time domain leads to a high temporal resolution but reduced spectral precision, and vice-versa with a wider Gaussian. Therefore, we applied a variable number of cycles ranging from 3 up to 10, increasing as a function of frequency to have a balanced trade-off between temporal and spectral resolutions. Since we were interested in all frequency bands, we selected a range of frequencies going from 1 up to 45 Hz. After applying CMW convolution, we extracted the power from the coefficients, and we applied a decibel-baseline normalization. Considering that we were mainly interested in changes in spectral features from a neutral mental state to the two distinct emotional ones, we used all the neutral trials as a baseline. To increase the number of samples, we cut the time-frequency data of each trial with a sliding-windows approach. Each window was 1 second long with a half-second overlap, resulting in a total of 91 windows per trial.

Then, we calculated the average change in power compared to the neutral baseline for seven frequency bands (delta 1–4 Hz, theta 4–8 Hz, low alpha 8–10 Hz, high alpha 10–12 Hz, low beta 13–18 Hz, high beta 18–30 Hz, and gamma 31–45 Hz). Within each window and for all the 63 channels and the seven frequency bands, the features extracted were the mean power, the standard deviation of the mean, and the frontal alpha asymmetry (FAA). The FAA coefficients were calculated for the channel pairs Fp1-Fp2 and F3-F4 in both low-alpha (8–10 Hz) and high-alpha (10–12 Hz) bands. The resulting feature array consisted of 2,912 samples for both classes (1,456 for anguish and 1,456 for tenderness) with a total of 886 features.

2.6.2. Amplitude Time-Series Correlation

After CMW, we extracted each channel's amplitude information for each frequency component in the range of 1-45 Hz. We then applied the sliding-window approach again. Within each of the 91 windows and for each of the seven frequency bands aforementioned, we calculated the Spearman correlation coefficient of the 63*63 channels matrix. This time-frequency correlational analysis allows characterizing both the time-varying and frequency-varying properties of non-stationary signals such as electrophysiological signals. To eliminate redundant information and reduce the feature array's size, we only selected all the coefficients in the upper triangle of the correlational matrices. The resulting high-dimensional feature array consisted of 13,671 features (pairwise channel correlation coefficients for each frequency band) and 2,912 windows as samples for both emotional classes.

2.7. Classification and Feature Selection

We used the MATLAB R2019b Statistics and Machine Learning Toolbox for classification and visualization of the data. We opted for a linear Support Vector Machine (SVM) algorithm for high-dimensional data for binary classification of the feature arrays. SVMs are supervised learning algorithms that define a hyperplane as a decision boundary, such that the margin of separation between two classes is maximized. Herewith, SVMs provide a measure that allows scaling the certainty to which a window sample is assigned to one of the two classes: the sample's distance from the separating hyperplane. Regarding future applications in NFB, SVM allows for scaling the feedback (e.g., gradually changing color hue) and, thus, more precise response to emotional intensity changes.

We applied 8-fold cross-validation to train and validate the classifier. Therefore, we assigned all windows of one trial to the same fold. Thinking about possible future applications in NFB studies, we chose this method to estimate event-specific markers' impact on classification accuracy. As the model will be trained before the NFB session, we aimed at testing its performance on an entirely unknown set of trials. The four trials' windows (2 tenderness, 2 anguish) were thus kept as test sets, while the classifier was trained with the remaining 28 trials. We iterated the 8-fold cross-validation ten times and averaged across classification runs.

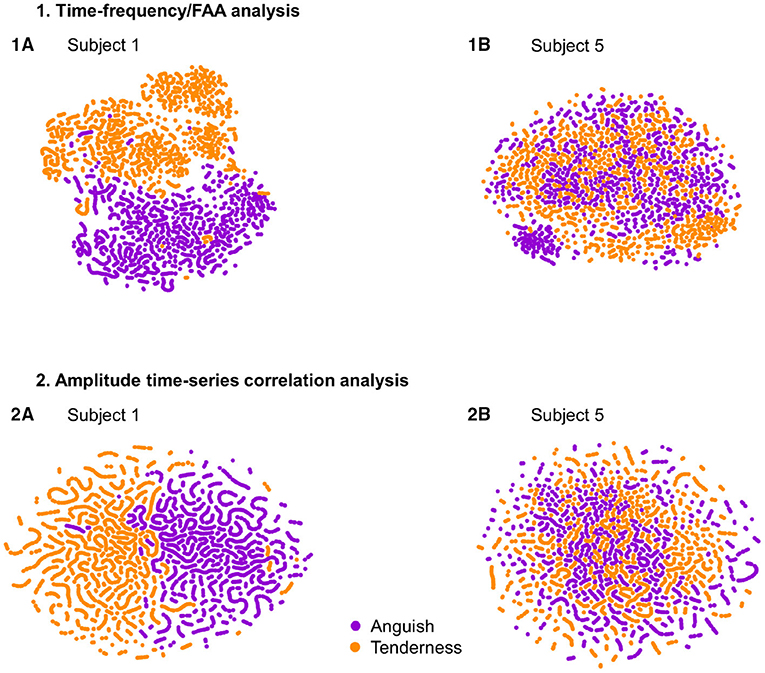

To visualize the datasets and understand the variability in performance across participants, we used t-Distributed Statistic Neighbour Embedding (t-SNE) (Van der Maaten and Hinton, 2008). T-SNE aims to preserve the local and global data structure when plotting all samples in a two-dimensional plane. The high-dimensional data is converted into a set of pairwise similarities and embedded in two dimensions such that similar samples are grouped together (Van der Maaten and Hinton, 2008). We applied t-SNE to the feature sets (time-frequency/FAA and correlational features) of the participant with the best and the one with the worst performance and tested whether it could succeed in finding two global data clusters, separating the two emotions.

Due to our dataset's high dimensionality, we performed feature selection through a feature-ranking algorithm using the Bioinformatics Toolbox of MATLAB 2019b. We aimed to improve the classifier's learning performance and identify the most common discriminative features across participants. Feature extraction and selection methods, besides improving predictive power, also help lower computational complexity and build models with better generalization properties (Liu et al., 2005; Anthony and Ruther, 2007). Using the t-test as an independent criterion for binary classification, we ranked the features after their significance between the classes. MATLAB's built-in function determines the absolute value of the two-sample t-test with pooled variance estimate for each feature. Essentially, the algorithm estimates how unlikely it is for a given feature that the difference in both emotional states' mean and variance occurred by chance. We set up two new feature arrays for each participant, using only the first 100 features selected by the feature-ranking algorithm. We classified the emotional states with the reduced sets of features using the abovementioned 8-fold validation method to test performance improvements. Furthermore, we extracted the 20 highest-ranked features for each subject to evaluate features' re-occurrence across participants.

2.7.1. Statistical Comparisons

We performed statistical analysis of accuracy distributions using the Wilcoxon rank-sum method in order to understand which type of features performed better. We also wanted to investigate whether the SVM performances were significantly above chance, thus we statistically compared accuracy distributions of real-labeled data with surrogate data (i.e., randomly shuffled labels). Finally, we computed Spearman's correlation coefficients between participants' self-assessment questionnaire and SVM accuracy to assess whether there was a relationship between subjective ratings and differences in performance.

3. Results

First, we investigated through ML whether the two complex emotional states could be accurately differentiated in a subject-wise manner using time-frequency/FAA and correlational features extracted within 1 s windows. To ensure that classification performance did not depend on the high feature-to-sample ratio, we performed a feature-ranking procedure and we repeated classification with a drastically reduced number of features. Furthermore, we aimed at comprehending the inter-subject variability by visually inspecting the data distribution of individual subjects by using the t-SNE method. Then, we investigated the common highest-ranked features across subjects to shed light on the spatial and spectral properties that allowed discriminating between tenderness and anguish. Finally, we explored whether subjective emotional experience (i.e., self-ratings) showed any association with subject-dependent classification performance.

3.1. Classification Results Using SVM

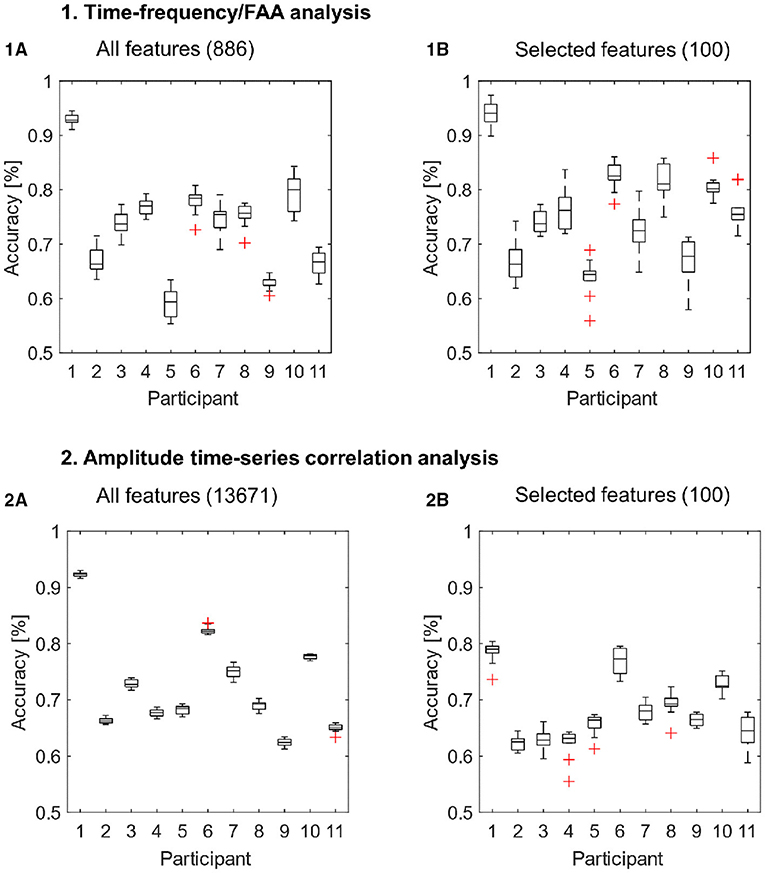

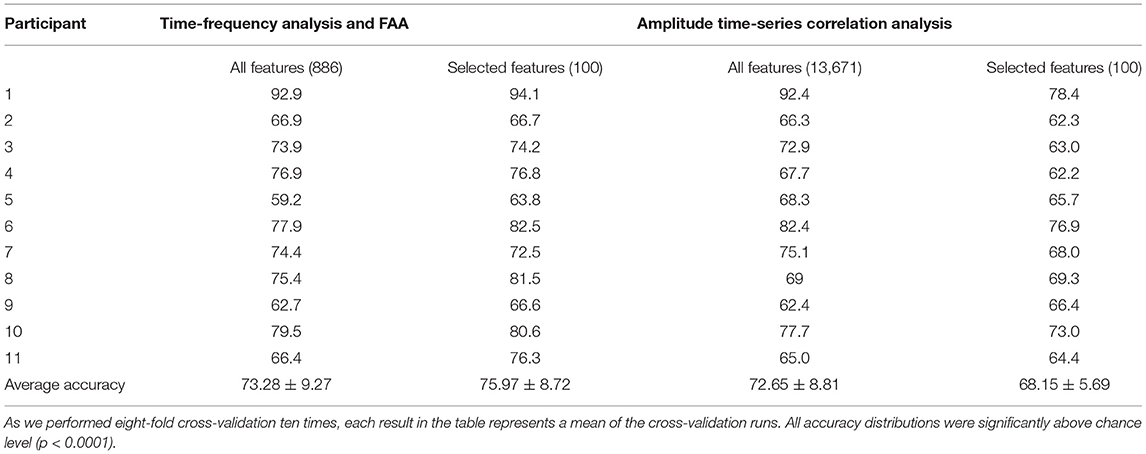

The results of all cross-validation runs for each participant are presented in Figure 3. Corresponding average accuracies for each subject are summarized in Table 2. Furthermore, we compared the accuracy distributions for both types of features or feature arrays (i.e., whole features sets and subsets of selected features) using the Wilcoxon rank-sum test, reporting corresponding p-values.

Figure 3. Classification results for the features extracted with the time-frequency/FAA analysis (1A,1B) and amplitude time-series correlation analysis (2A,2B). The classification was performed with all available features (1A,2A) and exclusively with the 100 selected features (1B,2B) for each feature extraction method. We performed eight-fold cross-validation ten times, such that the boxplots depict the results of 10 classification runs for each participant.

Table 2. Classification accuracy in percent for each participant, feature extraction method, and the number of features.

For the whole feature array extracted with the time-frequency/FAA analysis, we reported accuracies ranging from 59.2% up to 92.9% (mean = 73.28, SD = 9.27) when applying cross-validation (Figure 3, Subplot 1A). We report a range of accuracies from 62.4 to 92.4% (mean = 72.65, SD = 8.81) for the entire feature set extracted with amplitude time-series correlation analysis (Figure 3, Subplot 2A). The SVM performance was highly above chance-level (i.e., compared to surrogate data) for all participants using both time-frequency/FAA and amplitude correlation features (p < 0.0001). These results show that it is possible to discriminate between anguish and tenderness within-participants, despite the presence of a high inter-subject variability across both feature sets.

Then, due to the high-dimensionality of both feature sets, we performed a feature-ranking procedure and we repeated the 8-fold cross-validation with the selected subsets of 100 features. The subset of selected features significantly improved the model's predictive power for time-frequency/FAA features (p = 0.021; Figure 3, Subplot 1B). The results of 8-fold cross-validation using the 100 selected features through the feature-ranking algorithm yielded accuracies ranging from 63.8% up to 94.1% for the time-frequency/FAA feature set (mean = 75.97, SD = 8.72). In contrast, the performance significantly worsened (p = < 0.0001, Figure 3, Subplot 2B) for the selected 100 amplitude correlation features, with accuracies ranging from 62.2 to 78.4% (mean = 68.15, SD = 5.69). Nevertheless, the model's performance was highly above chance for both subsets of selected features (p = < 0.0001).

To understand the variability of performance across subjects, we plotted with t-SNE the datasets of participant 1 and participant 5, who had the best and one of the worst classification accuracies, respectively. The datasets' global geometry of subject 1 (Figure 4, Subplot 1A and 2A) can be separated into two clusters corresponding to the emotional states. In contrast, we could not find the same global structure for the datasets of participant 5 (Figure 4, Subplot 1B and 2B). Notably, the datasets resulting from the amplitude time-series correlation analysis (Figure 4, Subplot 2A and 2B) displayed a more defined regional pattern: samples clustering into lines. These clusters consisted of initially temporally adjacent samples. For the participant with the worst classification accuracy, participant 5, this regional pattern dominated the global data structure (Figure 4, Subplot 2B), suggesting that this phenomenon depends on temporal properties of connectivity rather than changes in neural correlation directly related to the emotional states.

Figure 4. T-SNE visualization for the feature sets of subjects 1 and 5, with the best performance (1A,2A) and one of the worst classification performances (1B,2B), respectively. Samples of the classes “Anguish” and “Tenderness” are plotted in violet and orange, respectively. The upper two plots (1A,1B) depict the time-frequency analysis datasets. The two plots at the bottom (2A,2B) display the two participants' amplitude time-series correlation features.

3.2. Highest-Ranked Features Across Participants

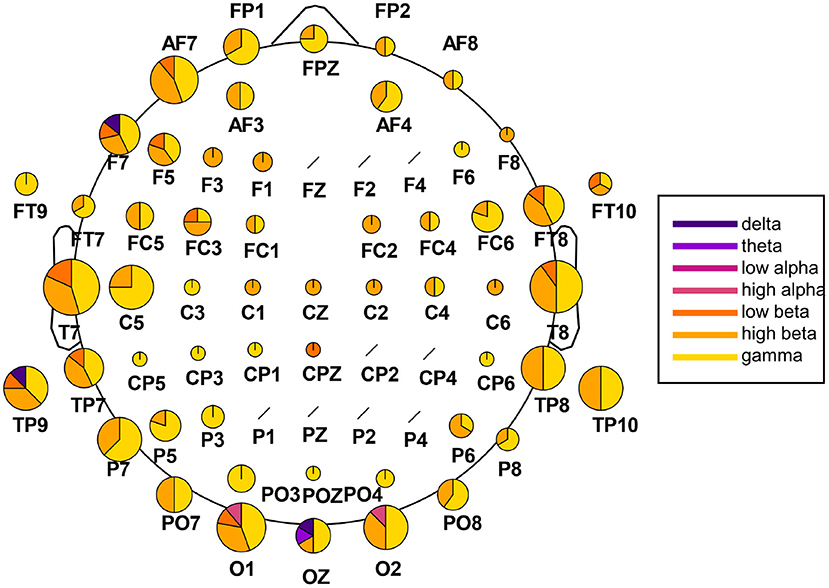

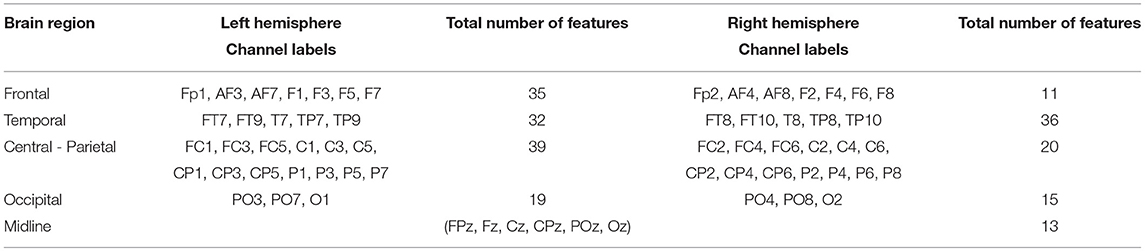

We extracted the twenty highest-ranked features for each subject and each feature array in order to highlight which channels and frequency bands were the most discriminative between the two emotions. These main 220 features of the time-frequency/FAA analysis are presented in Figure 5. Notably, the FAA coefficients did not occur in this selection. Additionally, we summarized the features separated after brain region and hemisphere, irrespective of the frequency band, in Table 3. In the frontal site, we noticed a strong prominence of features in the left hemisphere compared to the corresponding right with a ratio of approximately 3:1 (35 to 11 features), when evaluating channels pairs Fp1/Fp2, AF3/AF4, AF7/AF8, F1/F2, F3/F4, F5/F6, and F7/F8. We showed the particular importance of channel AF7 compared to the complementary right-hemispheric channel AF8 and the strong dominance of gamma and high-beta bands in the highest-ranked features. Moreover, the extracted features showed the relevance of the occipital channels O1, O2, and Oz and both hemispheres' temporal sites.

Figure 5. Extraction of the highest-ranked features for classification from the time-frequency analysis. The analysis was carried out across participants, with 220 features in total (20 per participant). The size of the channel-specific pie plot reflects how often the respective channel occurred in the main features. The pie plots' distribution indicates the relative amount of features from each frequency band,ranging from delta (dark violet) to gamma (yellow). Channels marked with a black slash did not occur in the main features.

Table 3. Extraction of the highest-ranked features for classification from the time-frequency analysis across participants separated after brain region and left/right hemisphere.

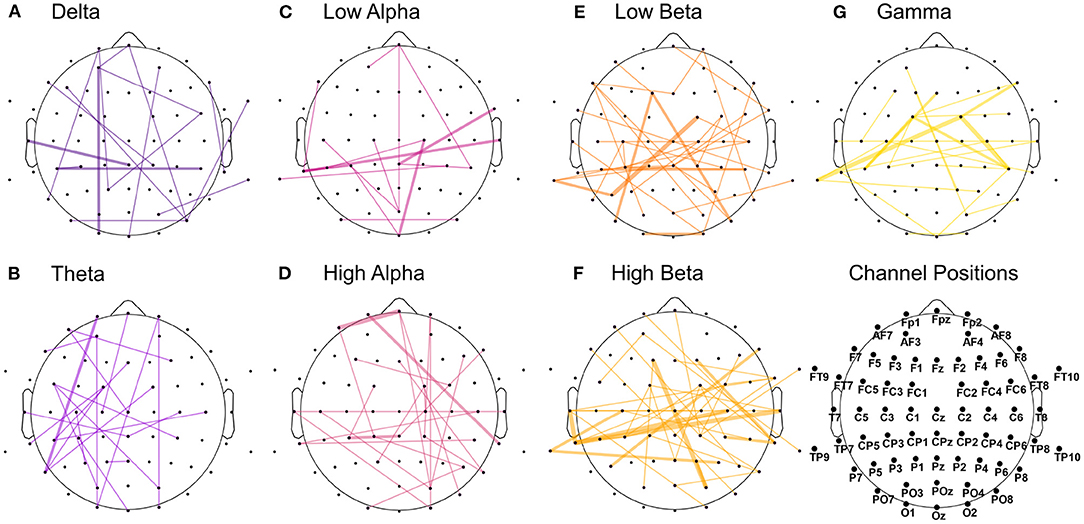

On the other side, the highest-ranked features extracted from the amplitude time-series correlation analysis appeared to be more equally distributed across the seven analyzed frequency bands (Figure 6). In particular, theta and high-alpha ranges showed asymmetrical distribution in correlation, with a prominence in the left hemisphere for the former and in the right one for the latter. Significant correlations in low-alpha and beta, especially high-beta, appeared to be more prominent in the posterior part than the frontal one. The results also highlighted the importance of temporoparietal and temporo-temporal correlations in the beta and gamma bands (Figures 6E–G). In contrast, correlations in lower frequencies appeared to be dominant in the frontal and occipital sites (Figures 6A–D).

Figure 6. Features with the highest significance in distinguishing the emotional states from the amplitude time-series correlation analysis. The analysis was carried out across participants, with 220 features in total (20 per participant). The graph plots are separated into the seven extracted frequency bands, from delta (dark violet) to gamma (yellow). The connection's width reflects how often the correlation occurred in the main features across participants, with the thicker line representing higher occurrence across subjects. (A) Delta. (B) Theta. (C) Low alpha. (D) High alpha. (E) Low beta. (F) High beta. (G) Gamma.

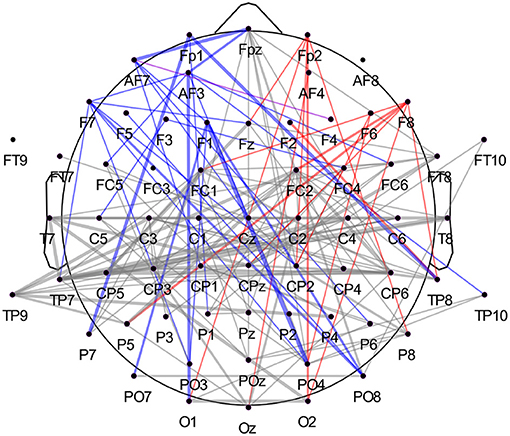

We observed that correlations involving the left frontal area occurred with more prevalence in the highest-ranked features across participants than correlations involving the right frontal hemisphere. The ratio was approximately 2:1 (44 to 20 features) when comparing channels Fp1/Fp2, AF3/AF4, AF7/AF8, F1/F2, F3/F4, F5/F6, and F7/F8. In Figure 7, we summarized all frequency bands and highlighted the correlations of these left and right frontal channels in blue and red, respectively. Once more, we report a high involvement of the left-hemispheric channel AF7 compared to the complementary right-hemispheric channel AF8, particularly in the high alpha band (Figures 6D, 7).

Figure 7. Highest-ranked amplitude time-series correlation features from all frequency bands. When separating correlations involving left frontal channels (in blue, Fp1, AF3, AF7, F1, F3, F5, F7) and right frontal channels (in red, Fp2, AF4, AF8, F2, F4, F6, F8), we observe a high prevalence of the left frontal cortex compared to the right. The analysis was carried out across participants, with 220 features in total (20 per participant). Again, the connection's width reflects how often the correlation occurred in the main features across participants, with the thicker line representing higher occurrence across subjects. The correlation between AF7 and F4 is plotted in violet and counted as both left- and right-hemispheric frontal correlation.

3.3. Subjective Ratings and Correlation With SVM Performance

We assessed emotional intensity, the usefulness of mantras, fatigue, and concentration levels at the end of each block. An overview of subjective ratings is presented in Figure 8. On average, the emotional intensity was rated “moderate” to “high” for the first and the last blocks, with a slight increase for the second and the fourth blocks. Figure 8A suggests that participants felt both tenderness and anguish with the same intensity. Concentration levels show minor differences across conditions and blocks, with the highest concentration level reported during the first block for both emotions. As expected, fatigue ratings showed a slightly increasing trend throughout the experiment, with a “very low” and “low” level for the first blocks and up to “moderate” levels for the last blocks. Average responses across participants with regards to mantras usefulness showed higher variance compared to the other self-rated measures across blocks and conditions, as shown in Figure 8D. Correlation analysis between subjective ratings and classifier performances using both feature sets was not significant for any of the four measures (p > 0.05).

Figure 8. Participants' subjective ratings were assessed at the end of each of the eight blocks (four for each emotion). Orange refers to the “tenderness” condition, while purple refers to “anguish.” The y-axis represents the 5-point Likert scale, going from “very mild” to “very intense” for (A), from “very low” up to “very high” for (B,C), and from “not useful” to “very useful” for (D).

4. Discussion

Our study demonstrated the feasibility of using EEG and ML tools to distinguish complex emotional states using a near-immersive emotion-elicitation paradigm. We selected stimuli, such as VR with music, that potentially serve as BCI for NFB experiments. We showed that, despite low spatial resolution and the inability to infer activity in subcortical structures, EEG is a suitable tool for classifying discrete emotions, such as tenderness and anguish. We performed cross-validation using SVM and we reached moderate to high accuracy using time-frequency/FAA and correlational features calculated in short time-windows. Furthermore, we were interested in identifying the most relevant features for classification that were shared among participants. To do so, we performed a feature-selection procedure and we repeated cross-validation using a subset of 100 selected features. We found that the most discriminative features belonged to channels of the frontal left hemisphere, of the temporal, and the occipital lobes. The feature-selection procedure also highlighted the importance of high-beta and gamma bands to distinguish between anguish and tenderness trials. Finally, we calculated the Pearson correlation coefficient between subjective ratings (i.e., the intensity of emotion, the utility of mantras, fatigue, and concentration levels) and classification performance across participants to investigate the relationship between the self-reported ratings and inter-subject variability in SVM performance. We reported no significant association between self-assessment measures and accuracy distributions.

One crucial challenge of neuroimaging studies on affective processes has usually concerned participants' difficulty in engaging and sustaining valid emotional states in an experimental setting, especially when trying to elicit complex emotions. Here, the use of a multimodal realistic virtual environment (VR) may have eased and encouraged the participants' involvement in the experiment by providing an engaging setup, as proven by previous studies (Cho et al., 2002; Lécuyer et al., 2008; Kim et al., 2014; Kovacevic et al., 2015). Moreover, musical excerpts' accompaniment may have facilitated emotion-elicitation, given that music is a powerful tool for inducing strong emotional experience (Brown et al., 2004; Trost et al., 2012; Koelsch, 2014). Lastly, the self-induction of emotional states through personalized mantras may have influenced the good outcome of discrete emotion classification, since we combined external elicitation with an internal locus of emotional induction. However, this may also have played a role in the substantial inter-subject variability in classification accuracy, given that self-reported ratings showed high variance in participants' responses to the mantras' usefulness. Nonetheless, it has to be noted that we did not find any significant correlation between any self-ratings measures and model's performance, suggesting that subjecting ratings were not associated with higher/lower performance.

Using time-frequency and FAA features, we reported accuracies ranging from 59.2% up to 92.9% when testing the model on unseen data. Similarly, features extracted from the amplitude time-series correlation analysis showed a comparable model performance when tested on unknown data, with the lowest accuracy being 62.4% and the highest 92.4%. The non-ergodicity in human subjects studies can explain the high interindividual variability we found in these results (Fisher et al., 2018). Several studies have observed individual differences in emotional processing (Canli, 2004; Aftanas et al., 2006; Kuppens et al., 2009; Molenaar and Campbell, 2009), stressing the importance of analyzing data at the individual level. Moreover, we assume that different amounts of noise in the datasets may have contributed to the inter-subject variability of classification results. As stated in the section 2, we decided not to apply any artifact removal or epoch rejection going beyond ICA to remove eyeblink and muscular artifacts. We wanted to test the classifier's performance as close as possible to the practical NFB scenario, in which epochs cannot be rejected during online analysis. We prioritized our model's utility in real-world application and aimed for a robust classification, despite the inclusion of noisy window-samples and a conservative validation with samples from unseen trials. Notwithstanding, reducing the datasets' dimensionality through a feature selection procedure led to lower performance variability across subjects, especially for correlational features.

Analysis of the highest-ranked features across participants did not show the importance of FAA features, as found in previous classification studies involving tenderness (Zhao et al., 2018b). However, as shown in Figure 5, the left frontal electrodes, especially channel AF7, appeared to be particularly discriminative for the two emotions. The relevance of the electrode AF7 is in line with previous studies using MUSE EEG headband comprising four channels (TP9, AF7, AF8, TP10) for emotion classification purposes, highlighting the importance of channel AF7 to accurately distinguish between mental states (Bird et al., 2019; Raheel et al., 2019). Interesting to notice is that channels from the right frontal hemisphere did not appear to be as discriminative as their left-sided counterparts, both for time-frequency and correlational features, as shown in Table 3 and Figure 7, respectively. The most common features across participants and both feature sets also revealed the relevance of temporal and occipital sites to discriminate between tenderness and anguish. We are not the first to report the importance of the temporal lobes for distinguishing between emotions. Zheng and colleagues conducted a study aimed at finding stable electrophysiological patterns across subjects and sessions of emotion recognition (Zheng et al., 2017). The authors found a stronger activation of lateral temporal areas for positive compared to negative emotions, both in the beta and gamma bands. Subject-independent features stemmed mostly from those brain areas and frequency bands (Zheng et al., 2017). Similarly, Jatupaiboon et al. (2013) found that the temporal lobe was particularly useful for classifying happy from unhappy emotions. Moreover, activation of sensory areas, such as temporal and occipital lobes, has been linked to emotional processing, regardless of the stimulus type. For example, in several neurological and psychiatric disorders, the temporal cortex's abnormal structure and function have been linked to emotion processing difficulties. Patients with temporal lobe epilepsy show deficits in emotion recognition, particularly fear and other negative emotions (Monti and Meletti, 2015). In schizophrenic patients, Goghari et al. (2011) found a correlation between reduced temporal lobe gray matter and poor facial emotion recognition (Goghari et al., 2011). Similarly, in frontotemporal dementia, a core problem is the impaired processing of emotional signals (Marshall et al., 2019). A different neuronal response has been observed in the occipital cortex in response to stimuli eliciting distinct emotions. Kragel et al. (2019) succeeded in predicting 11 specific emotion categories with convolutional neural networks, solely based on the occipital cortex's activity. Mattavelli et al. (2016) hypothesize that the frontal cortex exerts an early influence on the occipital cortex for discriminating emotions when a subject is presented with emotionally loaded visual stimuli. Regarding the contribution of the different frequency bands, high-beta and gamma bands had the highest impact on discrimination between emotional states across subjects. This result is also consistent with the literature providing evidence of these bands' importance for distinguishing different emotional states (Glauser and Scherer, 2008; Li and Lu, 2009; Daly et al., 2014; Li et al., 2019). However, it has to be noted that the feature-ranking algorithm does not allow us to infer which of the two classes showed increased or decreased spectral power or amplitude-related coherence patterns.

Although conducted in an offline manner, we would like to emphasize that the present study is a proof of concept addressing the crucial aspect of the classification of discrete complex emotions in short time-windows using EEG signals. Here we did not aim at maximizing classification accuracy at the expense of applicability in real-time. Deep learning methods such as CNNs are to date unsuitable for an individual-based real-time EEG experiment, where training data is limited and EEG feature sets are high-dimensional and sparse. As a rule of thumb, these networks are recommended to be given 10x the number of samples as compared to parameters in the network (Miotto et al., 2018). Moreover, parameter tuning for large networks trough trial and error is both time consuming and impractical, and online re-training of deep learning algorithms still remains under research (Aloysius and Geetha, 2017). Therefore, we chose the SVM as a ML algorithm with a low computational cost and online re-training ability, requiring both little training data and time when compared to the above-mentioned methods. Moreover, we provided appropriate validation techniques given the methods usually employed in an online setting.

Affiliative emotions, such as tenderness, have been proven to play a central role in a range of psychological and social processes. Mental training of such emotions has been increasingly applied in clinical settings. For example, there is evidence that Compassion-focused Therapy (CFT) is helpful for a broad range of mental health and neurological issues (Gilbert and Procter, 2006; Germer and Siegel, 2012). Loving-kindness meditation training has also shown positive effects on psychological well-being for both clinical and non-clinical populations (Lutz et al., 2008; Neff and Germer, 2013). With the EEG being the preferred tool in the clinical environment, the findings of this work are significant because they contribute to advances in the field of biofeedback and non-invasive neuroimaging approaches. Our proof-of-concept study promotes the development of personalized EEG-based NFB training on the regulation of such complex emotions, which may have therapeutic potential in restoring balanced neural activity for those suffering from emotional disturbances.

However, this study presents some pitfalls. Most importantly, the small sample size and the non-randomization of trials across participants, together with the presence of repetitive stimuli. The usage of the same stimulus presentation with no variety between trials (i.e., the naturalistic landscape) may have influenced sensory processing and classification results. At the same time, using identical musical tracks for all subjects may have influenced participants' emotional elicitation differently due to variances in individual taste in music. For future work on emotion elicitation, choosing personalized musical tracks could improve individuals' induction of emotional states. Another interesting future perspective would be to evaluate whether the combination of visual and auditory stimuli is more efficacious in inducing the desired emotions than either one sensory modality alone. Moreover, the dominance of a sensory domain at evoking emotions could be investigated by exposing participants to either only visual or auditory or mismatched stimuli. Another limitation of our study, as already stressed above, is the high inter-subject variability we found in the two affective states' classification. Although we assessed emotional intensity at the end of each block, we do not have any fine-grained assessment of the duration of the emotional experience throughout the experiment. Since emotions, both at experiential and neurophysiological levels, cannot be reduced to constant patterns across individuals (Barrett et al., 2007; Kuppens et al., 2009), future studies should involve a bigger sample size in order to shed light both on the individual differences and the common characteristics of emotional dynamics. Future directions would be to test this experimental protocol and SVM model on the training of such emotions through EEG-based NFB, which can represent another tool for exploring these affective states and contribute to the development of personalized interventions.

5. Conclusions

This proof of concept study showed that a multimodal near-immersive emotion-eliciting scenario is a feasible approach to evoke complex emotions, such as anguish and tenderness, in a laboratory setting. We proposed a pipeline that may be applicable for EEG-based BCI and NFB applications that require real-time data processing. Our findings indicate that the SVM classification of discrete emotions using EEG signals is achievable in a subject-wise manner, using short time-windows as in an online experimental setting. We identified features from the temporal, occipital, and left frontal sites as the most discriminative between the two emotions. Remarkably, we found a different involvement of left frontal and right frontal features, particularly in channel AF7 compared to channel AF8. The high-beta and gamma frequency bands appeared to have a substantial role in differentiating between anguish and tenderness. Together our results suggest that the EEG is a suitable tool for classifying discrete emotions, and that the proposed pipeline may be implemented in real-time to enable EEG-based NFB.

Data Availability Statement

The dataset analyzed and the codes used in this study are available upon reasonable request from the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by D'Or Institute Ethics and Scientific Committee. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

ED: methodology, formal analysis, data curation, writing—original, draft, review, and editing. MW: formal analysis, visualization, validation, writing—original, draft, review, and editing. BM: conceptualization, software, and investigation. CT-C: conceptualization, investigation, and supervision. TB: conceptualization, funding acquisition, and project administration. GD: methodology and supervision. JM: funding acquisition, resources, and supervision. All authors contributed to the article and approved the submitted version.

Funding

This study was supported by internal grants from the D'Or Institute for Research and Education (IDOR), and grants by the National Institute for Translational Neuroscience (INNT/Brazil) and the Fundação de Amparo à Pesquisa do Estado do Rio de Janeiro (FAPERJ; E-26/ 202.962/2015).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aftanas, L., Reva, N., Savotina, L., and Makhnev, V. (2006). Neurophysiological correlates of induced discrete emotions in humans: an individually oriented analysis. Neurosci. Behav. Physiol. 36, 119–130. doi: 10.1007/s11055-005-0170-6

Aloysius, N., and Geetha, M. (2017). “A review on deep convolutional neural networks,” in 2017 International Conference on Communication and Signal Processing (ICCSP) (Chennai: IEEE), 588–592. doi: 10.1109/ICCSP.2017.8286426

Anthony, G., and Ruther, H. (2007). “Comparison of feature selection techniques for SVM classification,” in 10th International Symposium on Physical Measurements and Signatures in Remote Sensing (Davos), 1–6.

Balconi, M., and Mazza, G. (2009). Brain oscillations and BIS/BAS (behavioral inhibition/activation system) effects on processing masked emotional cues.: ERS/ERD and coherence measures of alpha band. Int. J. Psychophysiol. 74, 158–165. doi: 10.1016/j.ijpsycho.2009.08.006

Baños, R. M., Botella, C., Alcañiz, M., Liaño, V., Guerrero, B., and Rey, B. (2004). Immersion and emotion: their impact on the sense of presence. Cyberpsychol. Behav. 7, 734–741. doi: 10.1089/cpb.2004.7.734

Barrett, L. F., Mesquita, B., Ochsner, K. N., and Gross, J. J. (2007). The experience of emotion. Annu. Rev. Psychol., 58, 373–403. doi: 10.1146/annurev.psych.58.110405.085709

Baumeister, R. F., and Leary, M. R. (1995). The need to belong: desire for interpersonal attachments as a fundamental human motivation. Psychol. Bull. 117:497. doi: 10.1037/0033-2909.117.3.497

Baumgartner, T., Esslen, M., and Jäncke, L. (2006). From emotion perception to emotion experience: emotions evoked by pictures and classical music. Int. J. Psychophysiol. 60, 34–43. doi: 10.1016/j.ijpsycho.2005.04.007

Bird, J. J., Ekart, A., Buckingham, C., and Faria, D. R. (2019). “Mental emotional sentiment classification with an EEG-based brain-machine interface,” in Proceedings of theInternational Conference on Digital Image and Signal Processing (DISP'19) (Oxford, UK). doi: 10.1109/IS.2018.8710576

Brown, S., Martinez, M. J., and Parsons, L. M. (2004). Passive music listening spontaneously engages limbic and paralimbic systems. Neuroreport 15, 2033–2037. doi: 10.1097/00001756-200409150-00008

Canli, T. (2004). Functional brain mapping of extraversion and neuroticism: learning from individual differences in emotion processing. J. Pers. 72, 1105–1132. doi: 10.1111/j.1467-6494.2004.00292.x

Cho, B.-H., Ku, J., Jang, D. P., Kim, S., Lee, Y. H., Kim, I. Y., et al. (2002). The effect of virtual reality cognitive training for attention enhancement. CyberPsychol. Behav. 5, 129–137. doi: 10.1089/109493102753770516

Cohen, M. X. (2014). Analyzing Neural Time Series Data: Theory and Practice. Cambridge: MIT Press. doi: 10.7551/mitpress/9609.001.0001

Cohen, M. X. (2019). A better way to define and describe morlet wavelets for time-frequency analysis. Neuroimage 199, 81–86. doi: 10.1016/j.neuroimage.2019.05.048

Coogan, C. G., and He, B. (2018). Brain-computer interface control in a virtual reality environment and applications for the internet of things. IEEE Access 6, 10840–10849. doi: 10.1109/ACCESS.2018.2809453

Corbett, L. (2015). The Soul in Anguish: Psychotherapeutic Approaches to Suffering. Asheville: Chiron Publications.

Daly, I., Malik, A., Hwang, F., Roesch, E., Weaver, J., Kirke, A., et al. (2014). Neural correlates of emotional responses to music: an EEG study. Neurosci. Lett. 573, 52–57. doi: 10.1016/j.neulet.2014.05.003

Delorme, A., and Makeig, S. (2004). EEGlab: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Eslinger, P. J. (1998). Neurological and neuropsychological bases of empathy. Eur. Neurol. 39, 193–199. doi: 10.1159/000007933

Fisher, A. J., Medaglia, J. D., and Jeronimus, B. F. (2018). Lack of group-to-individual generalizability is a threat to human subjects research. Proc. Natl. Acad. Sci. U.S.A. 115, E6106–E6115. doi: 10.1073/pnas.1711978115

Friston, K. J., Jezzard, P., and Turner, R. (1994). Analysis of functional MRI time-series. Hum. Brain Mapp. 1, 153–171. doi: 10.1002/hbm.460010207

Germer, C. K., and Siegel, R. D. (2012). Wisdom and Compassion in Psychotherapy: Deepening Mindfulness in Clinical Practice. New York, NY: The Guilford Press.

Gilbert, P., and Procter, S. (2006). Compassionate mind training for people with high shame and self-criticism: overview and pilot study of a group therapy approach. Clin. Psychol. Psychother. Int. J. Theory Pract. 13, 353–379. doi: 10.1002/cpp.507

Glauser, E. S. D., and Scherer, K. R. (2008). Neuronal processes involved in subjective feeling emergence: oscillatory activity during an emotional monitoring task. Brain Topogr. 20, 224–231. doi: 10.1007/s10548-008-0048-3

Goghari, V. M., MacDonald, A. W. III., and Sponheim, S. R. (2011). Temporal lobe structures and facial emotion recognition in schizophrenia patients and nonpsychotic relatives. Schizophr. Bull. 37, 1281–1294. doi: 10.1093/schbul/sbq046

Huang, H., Xie, Q., Pan, J., He, Y., Wen, Z., Yu, R., et al. (2019). An EEG-based brain computer interface for emotion recognition and its application in patients with disorder of consciousness. IEEE Trans. Affect. Comput (IEEE). doi: 10.1109/TAFFC.2019.2901456

Jatupaiboon, N., Pan-Ngum, S., and Israsena, P. (2013). Real-time EEG-based happiness detection system. Sci. World J. 2013:618649. doi: 10.1155/2013/618649

Johnson, N., Carey, J., Edelman, B., Doud, A., Grande, A., Lakshminarayan, K., et al. (2018). Combined rtms and virtual reality brain-computer interface training for motor recovery after stroke. J. Neural Eng. 15:016009. doi: 10.1088/1741-2552/aa8ce3

Kim, K., Rosenthal, M. Z., Zielinski, D. J., and Brady, R. (2014). Effects of virtual environment platforms on emotional responses. Comput. Methods Prog. Biomed. 113, 882–893. doi: 10.1016/j.cmpb.2013.12.024

Koelsch, S. (2014). Brain correlates of music-evoked emotions. Nat. Rev. Neurosci. 15, 170–180. doi: 10.1038/nrn3666

Kory, J. M., and D'Mello, S. K. (2015). “Affect elicitation for affective computing,” in The Oxford Handbook of Affective Computing, eds R. A. Calvo, S. K. D'Mello, J. Gratch, and A. Kappas (Oxford University Press), 371–383. doi: 10.1093/oxfordhb/9780199942237.013.001

Kovacevic, N., Ritter, P., Tays, W., Moreno, S., and McIntosh, A. R. (2015). “My virtual dream”: collective neurofeedback in an immersive art environment. PLoS ONE 10:e0130129. doi: 10.1371/journal.pone.0130129

Kragel, P. A., Reddan, M. C., LaBar, K. S., and Wager, T. D. (2019). Emotion schemas are embedded in the human visual system. Sci. Adv. 5:eaaw4358. doi: 10.1126/sciadv.aaw4358

Kuppens, P., Stouten, J., and Mesquita, B. (2009). Individual differences in emotion components and dynamics: introduction to the special issue. Cogn. Emot. 23, 1249–1258. doi: 10.1080/02699930902985605

Lang, P. J., Bradley, M. M., and Cuthbert, B. N. (1997). International affective picture system (IAPS): technical manual and affective ratings. NIMH Center Study Emot. Attent. 1, 39–58.

Lang, P. J., Greenwald, M. K., Bradley, M. M., and Hamm, A. O. (1993). Looking at pictures: affective, facial, visceral, and behavioral reactions. Psychophysiology 30, 261–273. doi: 10.1111/j.1469-8986.1993.tb03352.x

Lécuyer, A., Lotte, F., Reilly, R. B., Leeb, R., Hirose, M., and Slater, M. (2008). Brain-computer interfaces, virtual reality, and videogames. Computer 41, 66–72. doi: 10.1109/MC.2008.410

Li, J., Zhang, Z., and He, H. (2018). Hierarchical convolutional neural networks for EEG-based emotion recognition. Cogn. Comput. 10, 368–380. doi: 10.1007/s12559-017-9533-x

Li, M., and Lu, B.-L. (2009). “Emotion classification based on gamma-band EEG,” in 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (Minneapolis, MN: IEEE), 1223–1226.

Li, P., Liu, H., Si, Y., Li, C., Li, F., Zhu, X., et al. (2019). EEG based emotion recognition by combining functional connectivity network and local activations. IEEE Trans. Biomed. Eng. 66, 2869–2881. doi: 10.1109/TBME.2019.2897651

Liu, H., Dougherty, E. R., Dy, J. G., Torkkola, K., Tuv, E., Peng, H., et al. (2005). Evolving feature selection. IEEE Intell. Syst. 20, 64–76. doi: 10.1109/MIS.2005.105

Lorenzetti, V., Melo, B., Basilio, R., Suo, C., Yücel, M., Tierra-Criollo, C. J., et al. (2018). Emotion regulation using virtual environments and real-time fmri neurofeedback. Front. Neurol. 9:390. doi: 10.3389/fneur.2018.00390

Lotte, F., Bougrain, L., Cichocki, A., Clerc, M., Congedo, M., Rakotomamonjy, A., et al. (2018). A review of classification algorithms for EEG-based brain-computer interfaces: a 10 year update. J. Neural Eng. 15:031005. doi: 10.1088/1741-2552/aab2f2

Lutz, A., Brefczynski-Lewis, J., Johnstone, T., and Davidson, R. J. (2008). Regulation of the neural circuitry of emotion by compassion meditation: effects of meditative expertise. PLoS ONE 3:e1897. doi: 10.1371/journal.pone.0001897

Marshall, C. R., Hardy, C. J., Russell, L. L., Bond, R. L., Sivasathiaseelan, H., Greaves, C., et al. (2019). The functional neuroanatomy of emotion processing in frontotemporal dementias. Brain 142, 2873–2887. doi: 10.1093/brain/awz204

Mattavelli, G., Rosanova, M., Casali, A. G., Papagno, C., and Lauro, L. J. R. (2016). Timing of emotion representation in right and left occipital region: evidence from combined tms-EEG. Brain Cogn. 106, 13–22. doi: 10.1016/j.bandc.2016.04.009

Miotto, R., Wang, F., Wang, S., Jiang, X., and Dudley, J. T. (2018). Deep learning for healthcare: review, opportunities and challenges. Brief. Bioinform. 19, 1236–1246. doi: 10.1093/bib/bbx044

Molenaar, P. C., and Campbell, C. G. (2009). The new person-specific paradigm in psychology. Curr. Direct. Psychol. Sci. 18, 112–117. doi: 10.1111/j.1467-8721.2009.01619.x

Moll, J., Bado, P., de Oliveira-Souza, R., Bramati, I. E., Lima, D. O., Paiva, F. F., et al. (2012). A neural signature of affiliative emotion in the human septohypothalamic area. J. Neurosci. 32, 12499–12505. doi: 10.1523/JNEUROSCI.6508-11.2012

Moll, J., De Oliveira-Souza, R., and Zahn, R. (2008). The neural basis of moral cognition: sentiments, concepts, and values. Ann. N. Y. Acad. 1124, 161–180. doi: 10.1196/annals.1440.005

Moll, J., Zahn, R., de Oliveira-Souza, R., Bramati, I. E., Krueger, F., Tura, B., et al. (2011). Impairment of prosocial sentiments is associated with frontopolar and septal damage in frontotemporal dementia. Neuroimage 54, 1735–1742. doi: 10.1016/j.neuroimage.2010.08.026

Moll, J., Zahn, R., de Oliveira-Souza, R., Krueger, F., and Grafman, J. (2005). The neural basis of human moral cognition. Nat. Rev. Neurosci. 6, 799–809. doi: 10.1038/nrn1768

Monti, G., and Meletti, S. (2015). Emotion recognition in temporal lobe epilepsy: a systematic review. Neurosci. Biobehav. Rev. 55, 280–293. doi: 10.1016/j.neubiorev.2015.05.009

Moon, S.-E., Jang, S., and Lee, J.-S. (2018). “Convolutional neural network approach for EEG-based emotion recognition using brain connectivity and its spatial information,” in 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (Calgary: IEEE), 2556–2560. doi: 10.1109/ICASSP.2018.8461315

Neff, K. D., and Germer, C. K. (2013). A pilot study and randomized controlled trial of the mindful self-compassion program. J. Clin. Psychol. 69, 28–44. doi: 10.1002/jclp.21923

Perrin, F., Bertrand, O., and Pernier, J. (1987a). Scalp current density mapping: value and estimation from potential data. IEEE Trans. Biomed. Eng. 4, 283–288. doi: 10.1109/TBME.1987.326089

Perrin, F., Pernier, J., Bertnard, O., Giard, M.-H., and Echallier, J. (1987b). Mapping of scalp potentials by surface spline interpolation. Electroencephalogr. Clin. Neurophysiol. 66, 75–81. doi: 10.1016/0013-4694(87)90141-6

Perrin, F., Pernier, J., Bertrand, O., and Echallier, J. (1989). Spherical splines for scalp potential and current density mapping. Electroencephalogr. Clin. Neurophysiol. 72, 184–187. doi: 10.1016/0013-4694(89)90180-6

Raheel, A., Majid, M., Anwar, S. M., and Bagci, U. (2019). “Emotion classification in response to tactile enhanced multimedia using frequency domain features of brain signals,” in 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (Berlin: IEEE), 1201–1204. doi: 10.1109/EMBC.2019.8857632

Ribeiro, F. S., Santos, F. H., Albuquerque, P. B., and Oliveira-Silva, P. (2019). Emotional induction through music: measuring cardiac and electrodermal responses of emotional states and their persistence. Front. Psychol. 10:451. doi: 10.3389/fpsyg.2019.00451

Rubin, D. C., and Talarico, J. M. (2009). A comparison of dimensional models of emotion: evidence from emotions, prototypical events, autobiographical memories, and words. Memory 17, 802–808. doi: 10.1080/09658210903130764

Russell, J. A. (2003). Core affect and the psychological construction of emotion. Psychol. Rev. 110:145. doi: 10.1037/0033-295X.110.1.145

Scherer, K. R. (2004). Which emotions can be induced by music? What are the underlying mechanisms? and how can we measure them? J. New Music Res. 33, 239–251. doi: 10.1080/0929821042000317822

Trost, W., Ethofer, T., Zentner, M., and Vuilleumier, P. (2012). Mapping aesthetic musical emotions in the brain. Cereb. Cortex 22, 2769–2783. doi: 10.1093/cercor/bhr353

Van der Maaten, L., and Hinton, G. (2008). Visualizing data using t-SNE. J. Mach. Learn. Res. 9, 2579–2605.

Wang, F., He, Y., Qu, J., Xie, Q., Lin, Q., Ni, X., et al. (2017). Enhancing clinical communication assessments using an audiovisual BCI for patients with disorders of consciousness. J. Neural Eng. 14:046024. doi: 10.1088/1741-2552/aa6c31

Yang, H., Han, J., and Min, K. (2019). A multi-column cnn model for emotion recognition from EEG signals. Sensors 19:4736. doi: 10.3390/s19214736

Yankauer, A. (1999). The therapeutic mantra of Emile Coué. Perspect. Biol. Med. 42, 489–495. doi: 10.1353/pbm.1999.0012

Zahn, R., Moll, J., Paiva, M., Garrido, G., Krueger, F., Huey, E. D., et al. (2009). The neural basis of human social values: evidence from functional mri. Cereb. Cortex 19, 276–283. doi: 10.1093/cercor/bhn080

Zhang, Y., Chen, J., Tan, J. H., Chen, Y., Chen, Y., Li, D., et al. (2020). An investigation of deep learning models for EEG-based emotion recognition. Front. Neurosci. 14:622759. doi: 10.3389/fnins.2020.622759

Zhao, G., Zhang, Y., and Ge, Y. (2018a). Frontal EEG asymmetry and middle line power difference in discrete emotions. Front. Behav. Neurosci. 12:225. doi: 10.3389/fnbeh.2018.00225

Zhao, G., Zhang, Y., Ge, Y., Zheng, Y., Sun, X., and Zhang, K. (2018b). Asymmetric hemisphere activation in tenderness: evidence from EEG signals. Sci. Rep. 8, 1–9. doi: 10.1038/s41598-018-26133-w

Keywords: emotions, electroencephalography, classification, machine-learning, neuro-feedback, multimodal virtual scenario

Citation: De Filippi E, Wolter M, Melo BRP, Tierra-Criollo CJ, Bortolini T, Deco G and Moll J (2021) Classification of Complex Emotions Using EEG and Virtual Environment: Proof of Concept and Therapeutic Implication. Front. Hum. Neurosci. 15:711279. doi: 10.3389/fnhum.2021.711279

Received: 18 May 2021; Accepted: 29 July 2021;

Published: 26 August 2021.

Edited by:

Lourdes De Martínez, Panamerican University, MexicoReviewed by:

Bradley Jay Edelman, Max Planck Institute of Neurobiology (MPIN), GermanyCaterina Cinel, University of Essex, United Kingdom

Copyright © 2021 De Filippi, Wolter, Melo, Tierra-Criollo, Bortolini, Deco and Moll. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Eleonora De Filippi, ZWxlb25vcmEuZGVmaWxpcHBpQHVwZi5lZHU=

†These authors have contributed equally to this work

Eleonora De Filippi

Eleonora De Filippi Mara Wolter

Mara Wolter Bruno R. P. Melo

Bruno R. P. Melo Carlos J. Tierra-Criollo

Carlos J. Tierra-Criollo Tiago Bortolini

Tiago Bortolini Gustavo Deco

Gustavo Deco Jorge Moll

Jorge Moll