ORIGINAL RESEARCH article

Front. Hum. Neurosci., 14 July 2021

Sec. Motor Neuroscience

Volume 15 - 2021 | https://doi.org/10.3389/fnhum.2021.602723

Irini Giannopulu* and Haruo Mizutani

Motor imagery (MI) is assimilated to a perception-action process, which is mentally represented. Although several models suggest that MI, and its equivalent motor execution, engage very similar brain areas, the mechanisms underlying MI and their associated components are still under investigation today. While EEG was recorded with 22 Ag/AgCl electrodes, 19 healthy participants (nine males and 10 females) with an average age of 25.8 years (sd = 3.5 years) were required to imagine moving several parts of their body (i.e., first-person perspective) one by one: left and right hand, tongue, and feet. Network connectivity analysis based on graph theory, together with a correlational analysis, was performed on the data. The findings suggest evidence for motor and somesthetic neural synchronization and underline the role of the parietofrontal network for the tongue imagery task only. At both unilateral and bilateral cortical levels, only the tongue imagery task appears to be associated with motor and somatosensory representations, that is, kinesthetic representations, which might contribute to verbal actions. As such, the present findings support the idea that imagined tongue movements, involving segmentary kinesthetic actions, could be the prerequisite of language.

Internal imagery, specifically mental imagery, is one of the most frequently used aspects of the mind as it allows for the simulation of sensations, motor, and/or verbal actions (Fox, 1914; Guillot et al., 2012; Bruno et al., 2018). The mental imagery preceding action accomplishment implies that actions need to be thought, prepared, planned, and organized before any execution takes place. Imagined actions, motor actions, in particular, occur on the basis of a specific cognitive process classically named “Motor imagery” (MI; Ehrsson et al., 2003). When used in research, participants are required to imagine executing an action without doing the corresponding movement (Papaxanthis et al., 2002; Fadiga et al., 2005). MI comprises the visualization of the required movement, which necessitates the implication of a dynamic brain network (Fadiga and Craighero, 2004; Li et al., 2018). Regardless of whether the movement is global, i.e., involving the whole body, or segmental, i.e., involving part of the body, the neural composition of this network is still under investigation. During MI, information associated with ongoing and preceding brain connections is activated (Friston, 2011). Multimodal in nature, this contains fundamental components of intra-structure synergy among motor, somatosensory, and visuospatial inputs (Naito et al., 2002; Vogt et al., 2013; Giannopulu, 2016, 2017, 2018). It has therefore been reported that during MI, people have similar kinesthetic (Jeannerod, 1994; Chholak et al., 2019) and/or tactile (Schmidt and Blankenburg, 2019) sensations to those they experience when effectively performing the movement that obviously involves motor and somesthetic functions (Frackoviak, 2004).

The “motor simulation hypothesis” suggests that MI associated with global or segmental body movements would enlist analogous motor representations (Fadiga and Craighero, 2004; Corballis, 2010). In accordance with this, some experimental studies support the idea of a temporal congruence between imagined and actual actions (Guillot et al., 2012). Others suggest that the spatial distribution of neural activity during MI mirrors the spatial distribution of neural activity during actual movements (Miller et al., 2010). The latter is important for understanding the synchronization and coherence of neural activity between several brain areas. Neuroimaging studies in humans showed that when MI involves body parts, and more particularly hand movements, it typically engages various areas associated with sensorimotor control, including the bilateral premotor, prefrontal, and supplementary motor areas, the left posterior parietal area, and the caudate nuclei (Gerardin et al., 2000). Recent brain network analysis has shown that frontal and parietal brain areas are also involved while performing MI of the right hand in the third-person perspective (i.e., the subject was looking at a robotic hand movement) but not in the first-person perspective (i.e., the subject mentally controlled the robot’s hand movement; Alanis-Espinosa and Gutiérrez, 2020). Moreover, in the aforementioned study, prefrontal and sensorimotor brain areas demonstrated higher brain activity in the first- than in the third-person perspective. Fewer contralateral and bilateral sensorimotor networks were observed for the left-hand MI, but no hemispheric lateralization for either left- or right-hand mental imagery was reported in the sensorimotor areas (Li et al., 2019). When right-hand finger movements are considered, Binkofski et al. (2000) reported that they only involve Broca’s area. Based on the hypothesis that motor preparation shares the same mechanisms as MI (Jeannerod, 1994; Lotze and Halsband, 2006), Wang et al. (2020) analyzed the EEG patterns associated with the preparation of voluntary motion of left and right fingers and demonstrated that sensorimotor processes (essentially at the central C3 and C4 electrodes) were implicated. Open issues exist with regard to the neural involvement of the primary motor cortex in MI tasks, but studies have shown that the engagement of fingers, toes, and tongue leads to the activation of different subdivisions of the primary motor cortex together with the premotor cortex (Ehrsson et al., 2003). The premotor cortex and primary somatosensory area have shown significant connections during unilateral left and right foot imagery movements in healthy participants (Gu et al., 2020). It was also proposed that regardless of the effector related to the observed movement, i.e., toe, finger, or tongue, the somatotopic organization reflecting the sensory homunculus would be engaged (Penfield and Boldrey, 1937; Penfield and Rasmussen, 1950; Stippich et al., 2002). Taken together, the above-mentioned data are consistent with the assumption that the MI associated with kinesthetic sensations affects somatotopic representations (Ehrsson et al., 2003).

The processes underlying motor action and verbal action are known to involve overlapping neural populations. For example, Broca’s area is not only implicated in language expression but is also activated during the execution of hand, oro-laryngeal, and oro-facial movements (Skipper et al., 2007; Clerget et al., 2009; Ferpozzi et al., 2018). Verbal information conveyed through hand gestures and oral sounds is processed in a similar way (Kohler et al., 2002; Fadiga et al., 2005). Nevertheless, mouth gestures seem to serve to disambiguate hand gestures (Emmorey et al., 2002). It was therefore suggested that mouth actions, which are consistently associated with voicing and tongue actions, were progressively granted authority over hand actions (Corballis, 2003). With that in mind, it seems that the evolutionary transition would have been from hand to face to voice, in other words, from gesticulation to verbal generation, and it was considered to be mediated by the mirror neuron system (MNS). This system becomes active during communicative mouth actions, i.e., lip-smacking (Ferrari et al., 2005), which are theorized to include somatosensory and motor patterns of the tongue and other areas within the mouth. In humans, moving the tongue is directly and unambiguously related to verbal actions expressed through complex sounds that take on specific meanings when people communicate with one another (Corballis, 2010, 2018).

In light of the aforementioned neuroanatomical and physiological arguments, it was hypothesized that tongue action imagery, which is widely associated with verbal actions, and obviously with the symbolic representations of these actions (Fadiga and Craighero, 2004; Rizzolatti and Sinigaglia, 2010), might cause stronger neural network activity and connectivity in the frontal areas than imagery of movements involving the upper (i.e., hands) and lower (i.e., feet) limbs. To that end, network connectivity analysis of the topology of the whole brain was carried out via graph theory (Beharelle and Small, 2016). In contrast to Qingsong et al. (2019), where the feature extraction of four-class motor signals of the same paradigm was performed on a general functional brain network, that is, without neural network specifications, the present study explores the implication of anterior (frontal) and posterior (parietal) brain networks in tongue MI. Centrality measures [i.e., degree centrality (DC) and betweenness centrality (BC)] and integration measures (i.e., local and global efficiency; Beharelle and Small, 2016) were considered while healthy participants performed MI tasks involving different body parts, namely the tongue, left and right hand, and feet (Leeb et al., 2007; Bullmore and Sporns, 2009; Carlson and Millan, 2013). Specifically, it was hypothesized that degree centrality and betweenness centrality would be significantly higher in anterior frontal than in posterior parietal areas, and that local and global efficiency would be significantly higher in frontal than in parietal areas, for the MI of the tongue than for the other body parts.

Nineteen volunteers, nine males and 10 females, participated in the study. The mean age was 25.8 years (sd = 3.5 years). All were free from any known neurological disorder. All participants were right-handed. The experimental procedure was approved by the local ethics committee, the Bond University Human Research Ethics Committee (BUHREC 16065), and conformed to the National Statement and the Declaration of Helsinki 2.0. All participants provided informed consent.

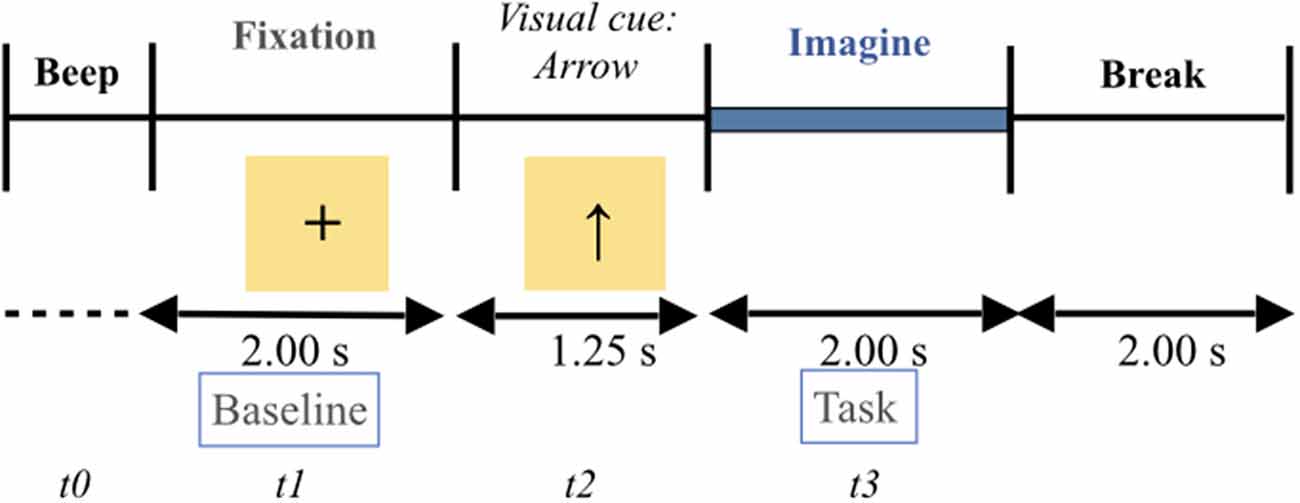

The participants were seated in an armchair in front of an LCD monitor. The experimental paradigm is a repetition of visual cue-based synchronous trials of four different MI tasks (e.g., Naeem et al., 2009; Tangermann et al., 2012; Friedrich et al., 2013). In accordance with this paradigm, all participants were instructed to imagine the movement of their body parts (i.e., first-person perspective) one by one: left hand (task 1), right hand (task 2), feet (task 3), and tongue (task 4). A typical trial run is described in Figure 1. As presented in the figure, the start of each trial was signaled by a short “beep tone”. This corresponds to t = 0. Immediately, a fixation cross appeared in the middle of the screen for 2 s, i.e., t = 1. This 2 s period constitutes the baseline. After these 2 s, an arrow indicating either left, right, up, or down direction appeared for 1.25 s on the screen. The arrow was the visual cue, i.e., t = 2. By definition, each direction of the arrow corresponds to a body part, as follows: left arrow ← left hand, right arrow → right hand, up arrow ↑ tongue, and down arrow ↓ feet. Each arrow required the participant to perform the corresponding MI task. Once the arrow disappeared from the screen, each participant, facing the black screen, performed an MI task, one at a time, for 2 s, i.e., t = 3. At the end of each MI task, each participant was allowed to take a break and relax. The inter-trial interval was around 2 s. The dataset of each participant consisted of two sessions. Each session comprised six sequences. Each of the four types of MI tasks (left hand, right hand, tongue, and feet) was displayed 12 times within each sequence in a randomized order, i.e., 72 trials per task per session. There were 288 trials in each session for each participant. The total number of trials across the 19 participants and two sessions was 10,944 (19 × 2 × 288).
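The trial counts reported above can be checked with a few lines of arithmetic. The sketch below uses only the numbers given in the text; reading the figure of 72 as a per-task count within a session is an assumption, chosen because it is the interpretation under which all reported totals agree.

```python
# Trial bookkeeping for the paradigm (all constants are taken from the text).
N_PARTICIPANTS = 19
N_SESSIONS = 2
N_SEQUENCES_PER_SESSION = 6
N_TASKS = 4                    # left hand, right hand, feet, tongue
N_REPEATS_PER_SEQUENCE = 12    # presentations of each task within one sequence

# Trials in one sequence: every task shown 12 times.
trials_per_sequence = N_TASKS * N_REPEATS_PER_SEQUENCE

# Trials of a single task within one session (assumed meaning of "72").
trials_per_task_per_session = N_REPEATS_PER_SEQUENCE * N_SEQUENCES_PER_SESSION

# All trials in one session, per participant, and across the whole sample.
trials_per_session = trials_per_sequence * N_SEQUENCES_PER_SESSION
trials_per_participant = trials_per_session * N_SESSIONS
total_trials = trials_per_participant * N_PARTICIPANTS

print(trials_per_sequence, trials_per_task_per_session,
      trials_per_session, trials_per_participant, total_trials)
```

Under these assumptions the script reproduces the 288 trials per session and the overall total of 10,944 trials before artifact rejection.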

The data analysis in this article compared the following periods of all the sequences of each session: fixation time (baseline), i.e., 2 s, with the MI task, i.e., 2 s.

Figure 1. Timing of the four-task motor imagery experimental paradigm. The start of each trial was signaled by a short “beep tone”. This corresponds to t = 0. Immediately, a fixation cross appeared in the middle of the screen for 2 s, i.e., t = 1. This 2 s period constitutes the baseline. After these 2 s, an arrow indicating either left, right, up, or down direction appeared for 1.25 s on the screen. The arrow is the visual cue, i.e., t = 2. By definition, each direction of the arrow corresponds to a body part, as follows: left arrow ← left hand (i.e., task 1), right arrow → right hand (i.e., task 2), up arrow ↑ tongue (i.e., task 3), and down arrow ↓ feet (i.e., task 4). Each arrow required the participant to perform the corresponding MI task. Once the arrow disappeared from the screen, each participant, facing the black screen, performed an MI task, one at a time, for 2 s, i.e., t = 3. At the end of each motor imagery task, each participant was allowed to take a break and relax. The inter-trial interval was around 2 s.

Twenty-two Ag/AgCl electrodes with an inter-electrode distance of 3.5 cm were used to record the EEG. The electrode montage corresponds to the international 10–20 system: Fz, FC1, FC3, FCz, FC2, FC4, T7, C3, C1, Cz, C2, C4, T8, CP3, CP1, CPz, CP2, CP4, P3, Pz, P2, Oz. The signals were recorded monopolarly with the left mastoid serving as the reference and the right mastoid as ground. The signals were sampled at 250 Hz and bandpass-filtered between 0.5 Hz and 100 Hz. The sensitivity of the amplifier was set to 100 μV (Naeem et al., 2009). An additional 50 Hz notch filter was used to suppress line noise. Three additional monopolar EOG (electrooculogram) channels were recorded and also sampled at 250 Hz. They were bandpass-filtered between 0.5 Hz and 100 Hz with the 50 Hz notch filter enabled, and the sensitivity of the amplifier was set to 1 mV (Ang et al., 2012). The EOG channels were used for the subsequent application of artifact processing methods and were not used for classification. An expert visually inspected all datasets and marked the trials containing artifacts. In total, 4.8% of trials with EOG artifacts and 1.2% of trials with EMG (electromyographic) artifacts were marked and eliminated, while 94% of the trials were preserved. ICA algorithms decomposing the EEG signal into individual source signals were used.
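To illustrate what a 50 Hz notch does at a 250 Hz sampling rate, the following sketch implements a generic second-order IIR notch in plain Python. It is not the filter used during acquisition, whose design is not described in the text; the biquad form, the quality factor `q = 30`, and the helper names are assumptions made for illustration only.

```python
import math

def notch_coefficients(f0_hz, fs_hz, q=30.0):
    """Second-order IIR notch coefficients, normalized so that a[0] == 1."""
    w0 = 2.0 * math.pi * f0_hz / fs_hz      # notch frequency in rad/sample
    alpha = math.sin(w0) / (2.0 * q)        # bandwidth parameter (q is assumed)
    a0 = 1.0 + alpha
    b = [1.0 / a0, -2.0 * math.cos(w0) / a0, 1.0 / a0]
    a = [1.0, -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0]
    return b, a

def apply_biquad(b, a, x):
    """Filter the sequence x with the biquad difference equation."""
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        y.append(yn)
        x2, x1 = x1, xn
        y2, y1 = y1, yn
    return y

def rms(x):
    """Root-mean-square amplitude of a sequence."""
    return math.sqrt(sum(v * v for v in x) / len(x))

FS = 250.0                                   # sampling rate from the text
b, a = notch_coefficients(50.0, FS)
t = [n / FS for n in range(2000)]            # 8 s of synthetic signal
line_noise = [math.sin(2 * math.pi * 50.0 * ti) for ti in t]
ten_hz = [math.sin(2 * math.pi * 10.0 * ti) for ti in t]

# Measure steady-state amplitude on the last 2 s, after the transient decays.
residual_50 = rms(apply_biquad(b, a, line_noise)[-500:])
passed_10 = rms(apply_biquad(b, a, ten_hz)[-500:])
print(f"50 Hz residual RMS: {residual_50:.2e}, 10 Hz RMS: {passed_10:.4f}")
```

A synthetic 50 Hz sinusoid is suppressed almost completely once the filter settles, while a 10 Hz component keeps essentially its original amplitude, which is the behavior expected of a narrow line-noise notch.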

A simple graph, G = ⟨V, E⟩, consisting of a set of vertices (V) connected by unweighted, undirected edges (E), was used. The vertices were the 22 EEG electrodes (i.e., ch1 to ch22); the edges corresponded to the pairs of electrodes identified by the adjacency matrix. The adjacency matrices for each imagined body part (i.e., tongue, left hand, right hand, and feet) and for the baseline were created with 22 rows and 22 columns (i.e., 22 vertices × 22 vertices), and cross-correlation coefficients were calculated for the 22 × 22 combinations for each participant to assess the strength of the connection between each pair of electrodes (i.e., edges). To analyze the trends of the whole-brain network topology, the properties of centrality and integration were considered. Centrality was represented by degree centrality and betweenness centrality. Integration was represented by local and global efficiency: (1) Degree centrality (DC) describes the number of vertices that are connected to a vertex. By definition, the more vertices are connected to a vertex, the more important that vertex is, and similarly connected areas tend to communicate with each other; (2) betweenness centrality (BC) defines the fraction of all the shortest paths in the network that run through a given vertex. It facilitates functional integration. BC represents the vertex’s ability to make connections with other vertices of the graph. The closer the vertices are to each other, the shorter the path length and the more efficient the transfer of information between them; and (3) efficiency (local and global) (E) measures topological distances between vertices and how efficiently the vertices communicate with one another. Local efficiency reflects how interconnected neighboring vertices are, and global efficiency indexes the interconnectedness of all vertices across the entire brain.
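As a rough illustration of these measures, the sketch below computes degree centrality, betweenness centrality, and local and global efficiency on a small unweighted, undirected graph using textbook definitions. The five-vertex graph is invented for the example (the electrode labels are borrowed only as names), and the normalization used by the authors’ software is not stated in the text, so the values are not comparable with the reported ones.

```python
from collections import deque

def build_graph(edges):
    """Adjacency sets for an unweighted, undirected simple graph."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj

def bfs_distances(adj, source):
    """Shortest path lengths (in edges) from source to every reachable vertex."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        v = queue.popleft()
        for w in adj[v]:
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    return dist

def degree_centrality(adj):
    """Number of vertices directly connected to each vertex."""
    return {v: len(nbrs) for v, nbrs in adj.items()}

def global_efficiency(adj):
    """Mean inverse shortest path length over all ordered vertex pairs."""
    n = len(adj)
    if n < 2:
        return 0.0
    total = 0.0
    for s in adj:
        total += sum(1.0 / d for t, d in bfs_distances(adj, s).items() if t != s)
    return total / (n * (n - 1))

def local_efficiency(adj):
    """Average global efficiency of the subgraph formed by each vertex's neighbors."""
    values = []
    for nbrs in adj.values():
        sub = {u: adj[u] & nbrs for u in nbrs}
        values.append(global_efficiency(sub))
    return sum(values) / len(values)

def betweenness_centrality(adj):
    """Unnormalized betweenness via Brandes' algorithm (each pair counted once)."""
    bc = dict.fromkeys(adj, 0.0)
    for s in adj:
        order, preds = [], {v: [] for v in adj}
        sigma = dict.fromkeys(adj, 0)   # number of shortest paths from s
        sigma[s] = 1
        dist = {s: 0}
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = dict.fromkeys(adj, 0.0)
        for w in reversed(order):       # accumulate dependencies back toward s
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1.0 + delta[w])
            if w != s:
                bc[w] += delta[w]
    return {v: value / 2.0 for v, value in bc.items()}

# Hypothetical toy network; these edges are NOT taken from the study.
toy_edges = [("FC3", "C3"), ("FC3", "Cz"), ("C3", "Cz"), ("Cz", "C1"), ("C1", "FCz")]
graph = build_graph(toy_edges)
dc = degree_centrality(graph)
bc = betweenness_centrality(graph)
e_glob = global_efficiency(graph)
e_loc = local_efficiency(graph)
print(dc, bc, round(e_glob, 4), round(e_loc, 4))
```

In this toy network Cz has the highest degree and lies on the most shortest paths, which is the sense in which a vertex is described as central.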

The adjacency matrices for each MI task and the baseline were considered, and the resulting functional connectivity values were transformed into z-scores (i.e., normalization with zero mean and variance three separately for each participant). In that way, each element of each correlation matrix corresponded to the z-score for each pair of vertices (Rubinov and Sporns, 2010). In addition, a permuted t-test was computed to assess the differences between each imagery task (i.e., left hand, right hand, feet, and tongue) and the baseline. To regulate the statistical confidence measures (i.e., the resulting values; Genovese et al., 2002; Rouam, 2013), the False Discovery Rate (FDR) approach was then applied, and q-values (i.e., adjusted p-values) were used instead of p-values to assess the resulting differences. Such an approach allowed the proportion of incorrectly rejected null hypotheses, i.e., type I errors, among all significant results to be controlled (Benjamini and Hochberg, 1995; Schwartzman and Lin, 1999).
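The statistical steps can be sketched as follows, assuming a paired sign-flip permutation test as one common form of a permuted t-test and the Benjamini-Hochberg procedure for the q-values. The exact permutation scheme used in the study is not specified in the text, and the differences and p-values below are invented for illustration.

```python
import math
import random

def paired_t(diffs):
    """One-sample t statistic of paired differences (task minus baseline)."""
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return mean / math.sqrt(var / n)

def sign_flip_permutation_p(diffs, n_perm=2000, seed=0):
    """Two-sided p-value from randomly flipping the sign of each difference."""
    rng = random.Random(seed)
    observed = abs(paired_t(diffs))
    hits = 0
    for _ in range(n_perm):
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(paired_t(flipped)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)    # add-one correction avoids p == 0

def benjamini_hochberg(p_values):
    """BH-adjusted p-values (q-values), returned in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    q = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so that q-values stay monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        q[i] = running_min
    return q

# Invented paired differences for one electrode pair (illustration only).
example_diffs = [0.9, 1.1, 1.3, 0.7, 1.2, 0.8, 1.05, 0.95]
p_perm = sign_flip_permutation_p(example_diffs)

# Invented p-values for four electrode pairs, corrected jointly.
q_values = benjamini_hochberg([0.01, 0.04, 0.03, 0.005])
print(p_perm, q_values)
```

A connection would then be reported as significant when its q-value, rather than its raw p-value, falls below the chosen level.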

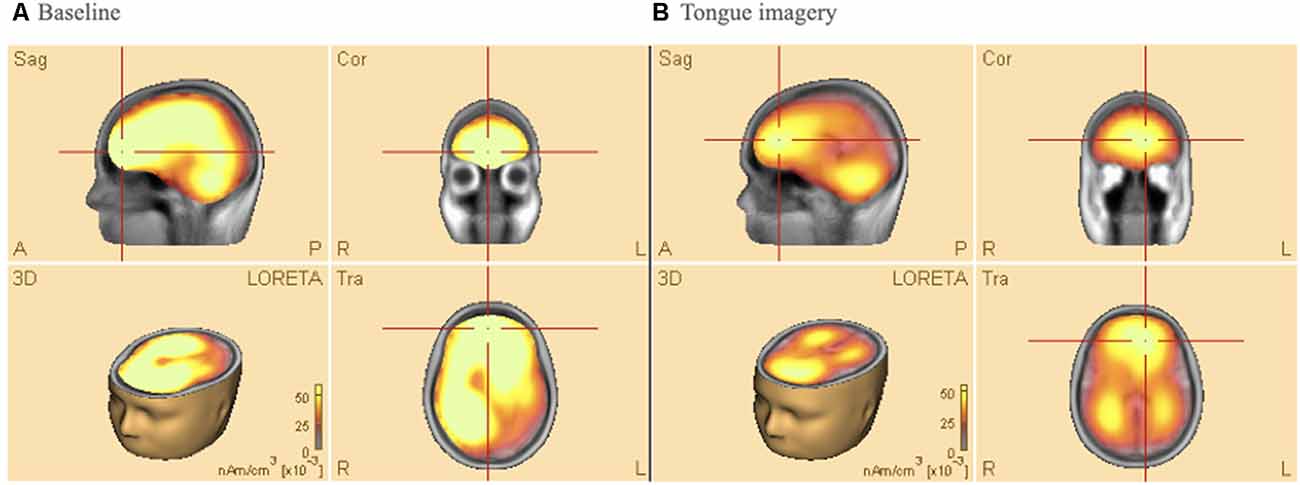

The Low-Resolution Brain Electromagnetic Tomography (LORETA) transform was used to perform source analysis in BESA® Research 7.0 software (BESA®) in order to identify which brain areas were activated during the baseline and the MI task. LORETA was used to localize brain areas in 3D space. Time windows of 50 ms were selected at 2 s (baseline) and 2 s (imagery task) from the beginning of the epoch. The scale was set between 0 and 400 × 10⁻³ nAm/cm³.

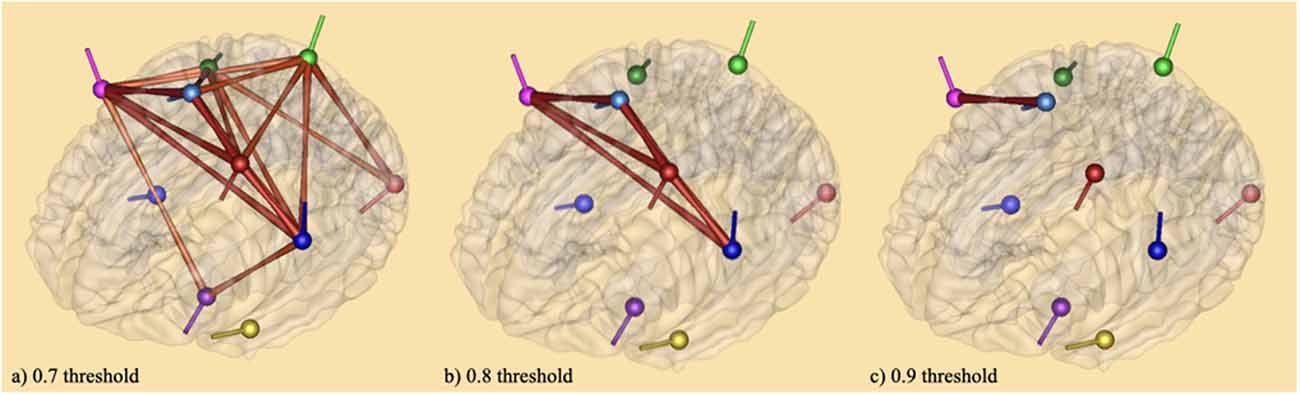

To characterize the cortical network across the sources, i.e., global EEG coherence across all frequency bands (1–45 Hz), a functional connectivity analysis was performed by applying the coherence method (Rosenberg et al., 1989; Laptinskaya et al., 2020) followed by complex demodulation with a frequency and time sampling of 0.5 Hz and 100 ms, respectively, in BESA Connectivity 1.0 software (BESA®). The number of signal sources for the connectivity analysis was set to 10, and the locations and orientations of the dipoles in the brain were calculated using the genetic algorithm. The connectivity between these sources was displayed in 3D brain mode using the LORETA software.

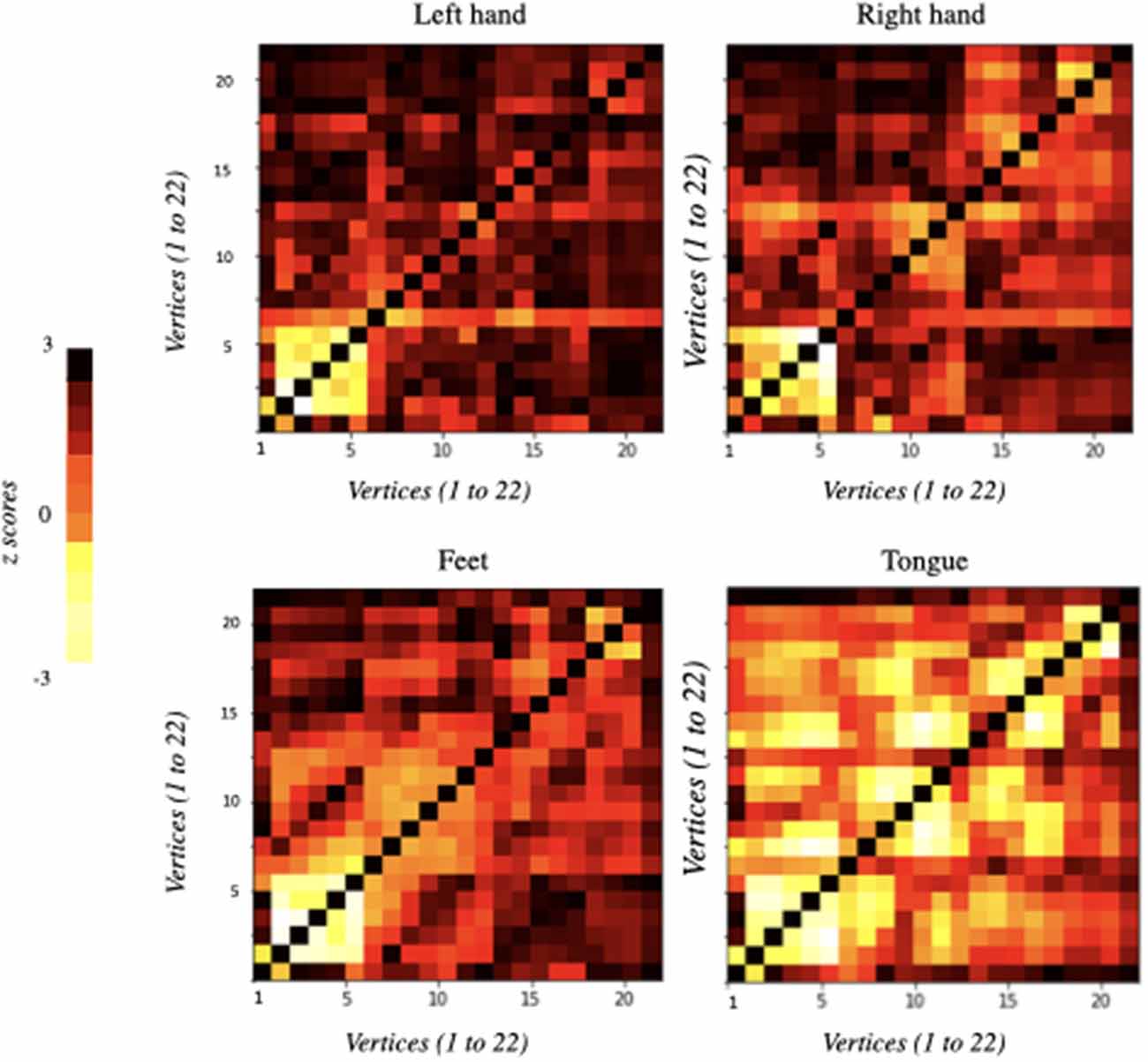

The functional connectivity matrices resulting from the differences between the baseline and each MI task separately (i.e., left hand, right hand, feet, and tongue) are represented in Figure 2. The only statistically significant results were observed for the tongue imagery task. None of the vertex correlations in the remaining imagery tasks was statistically significant. Only the statistically significant results will be considered in the following analysis.

Figure 2. Functional connectivity matrices obtained from the comparison of EEG data between baseline and imagery tasks. They were ordered according to the motor imagery task: left hand, right hand, feet, and tongue. Information flows from the vertices marked on the vertical axis to the vertices marked on the horizontal axis: 22 vertices × 22 vertices (i.e., 22 electrodes × 22 electrodes). Each element of the correlation matrix shows z-scores corresponding to each node comparison. Statistically significant results were only obtained for tongue motor imagery (p < 0.05).

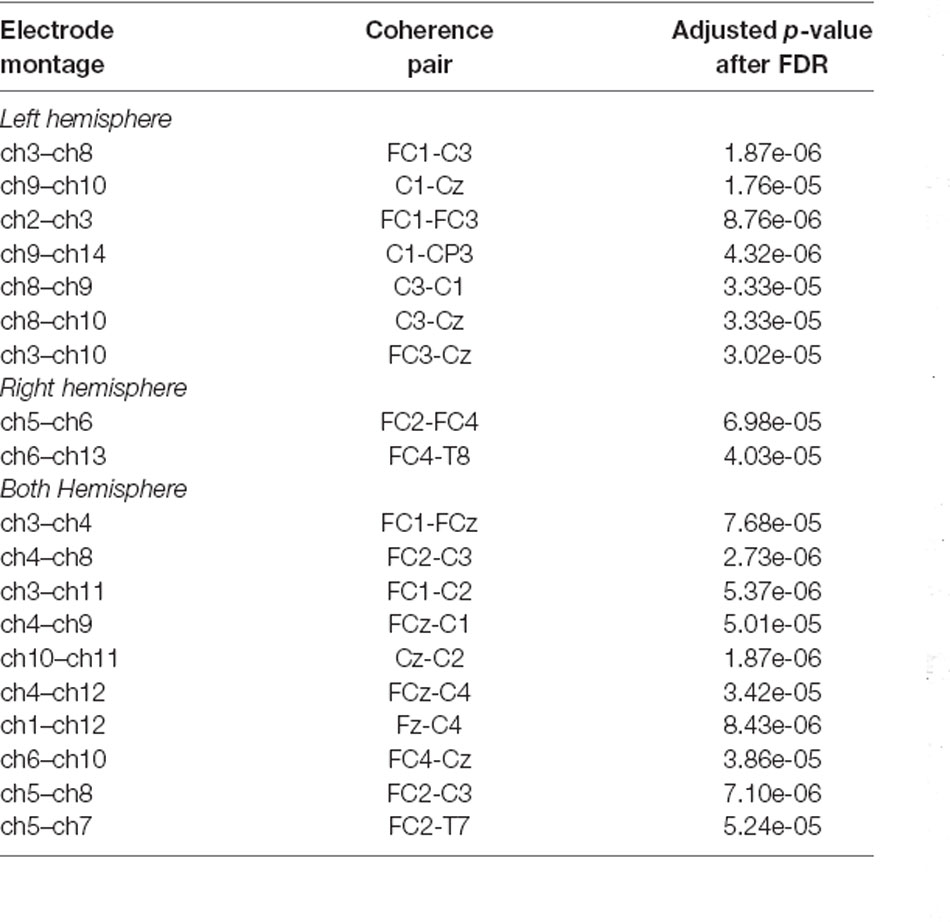

Table 1 displays the 19 largest vertex correlations that were significantly reduced during tongue imagery movement compared to the baseline (adjusted p-values are described above). The 19 statistically significant combinations in the tongue imagery task include seven in the left hemisphere, two in the right hemisphere, and 10 over both hemispheres.

Table 1. Inter-hemispheric and intra-hemispheric coherence combinations between 22 × 22 vertices and adjusted p-values (i.e., q-values) after False Discovery Rate (FDR) computation. The top 19 statistically significant vertex combinations in which a reduction appeared during the four-task experimental paradigm are associated with tongue motor imagery only.

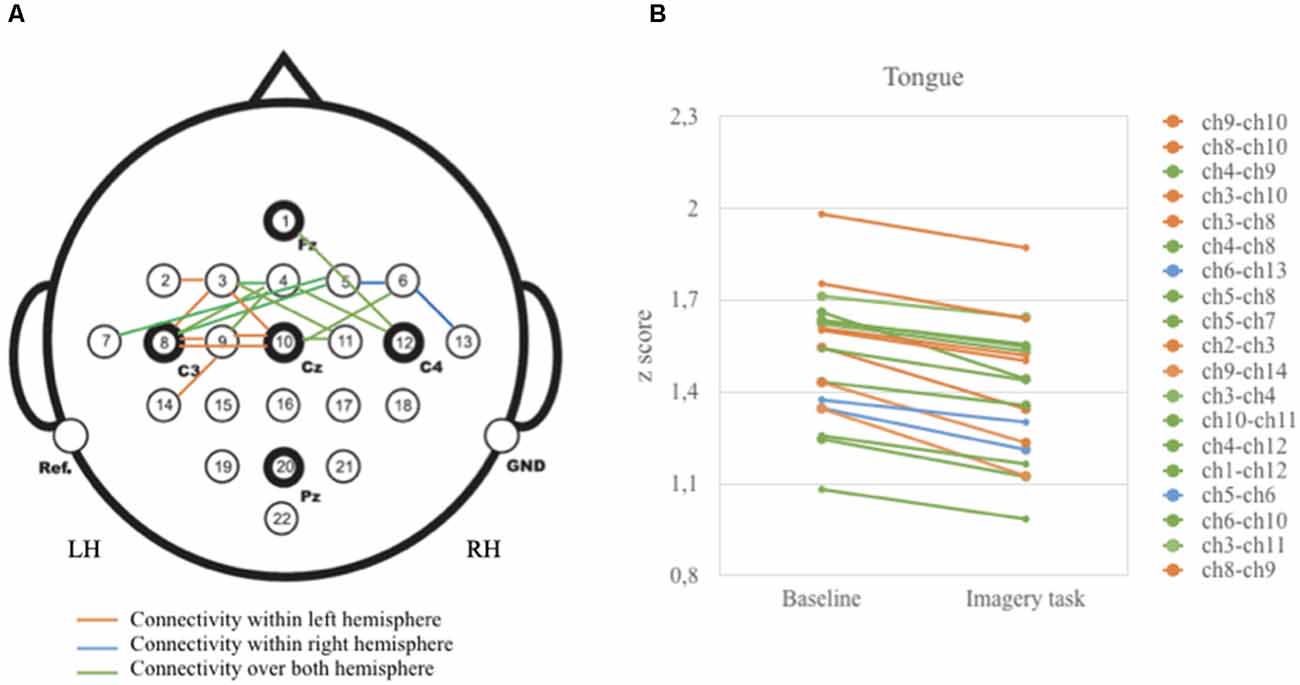

As illustrated in Figure 3, vertex correlations in the left hemisphere and over both left and right hemispheres displayed relatively higher z-scores than those in the right hemisphere. These correlations are concentrated in the anterior (i.e., frontal) and central (i.e., frontal and parietal) areas.

Figure 3. Graphical presentation of the relationship between baseline and tongue imagery task: (A) the 19 significant vertex correlations; (B) configuration of z-scores. Brain activity is reduced when participants performed the tongue imagery task. Note that vertex correlations were statistically significant for the anterior and central brain areas (p < 0.05).

Three key parameters representing network properties (Rubinov and Sporns, 2010), covering measures of centrality and integration, were computed. Measures of centrality were typified by degree centrality (DC) and betweenness centrality (BC). The mean degree centrality was 3.87 (sd = 0.872), with the highest number of connected vertices (5) at FC3, C3, and Cz, followed by FCz and C1 (4 vertices), and FC2 and FC4 (3 vertices). In other words, degree centrality was higher in anterior (frontal) and central (frontoparietal) than in posterior brain areas. Betweenness centrality (BC) measures gave the highest index for anterior (frontal) and central (frontoparietal) areas for the MI of the tongue (t = 13.32, p < 0.05, Cohen’s d = 0.78) but not for the MI of the other body parts (i.e., t = 0.65, p > 0.05 for the left hand; t = 1.25, p > 0.05 for the right hand; t = 1.02, p > 0.05 for the feet).

Measures of integration were represented by both local and global efficiency (E). Local efficiency describes how interconnected neighboring vertices are to each other. Local efficiency was consistently higher during the rest condition (mean = 2.154, sd = 0.054) than during the imagined condition for the tongue (mean = 1.646, sd = 0.032; t = 12.94, p < 0.05, Cohen’s d = 0.69). However, local efficiency did not differ significantly from rest in the other imagined conditions (i.e., t = 0.52, p > 0.05 for the left hand; t = 0.88, p > 0.05 for the right hand; t = 0.23, p > 0.05 for the feet). Similarly, global efficiency, that is, the measure of the interconnectedness of all vertices across the entire brain, was significantly higher during the rest condition (mean = 1.323, sd = 0.021) than during the tongue imagery condition (mean = 0.634, sd = 0.015; t = 13.22, p < 0.05, Cohen’s d = 0.71) but not compared to the other MI conditions (i.e., t = 0.22, p > 0.05 for the left hand; t = 2.15, p > 0.05 for the right hand; t = 0.99, p > 0.05 for the feet).

In order to further verify the validity of the above results, the topology of the electrical activity in the brain based on multichannel surface EEG (i.e., LORETA) and brain maps of coherence connectivity were computed and described. The direct comparison of the four body parts (i.e., left hand, right hand, tongue, and feet) was also reported.

Figure 4 shows the average results of the Low-Resolution Electromagnetic Tomography (LORETA) source analysis in both the baseline (a) and imagery task (b) conditions when the participants performed the tongue MI. The data of all participants were analyzed to determine, in 3D, the intensity of brain activity in those conditions. The estimated LORETA images mirror the intensity of the brain activity, which tended to decrease during the tongue MI in anterior (premotor and motor areas) and posterior (parietal and visual) areas of both hemispheres (t = 13.67, p < 0.01, Cohen’s d = 0.75 and t = 18.7, p < 0.01, Cohen’s d = 0.68, respectively).

Figure 4. Low-resolution electromagnetic tomography (LORETA) source analysis during (A) baseline and (B) tongue imagery. On average, the mirrored intensity of the brain activity was reduced for the tongue imagery in premotor, motor, parietal, and visual areas bilaterally (p < 0.01). The orange/yellow scale is a positive one, i.e., the higher the value, the more intense the activity. Orange/yellow shades indicate decreased sources. Abbreviations: L: left; R: right; A: anterior; P: posterior; Sag: sagittal; Cor: coronal.

The results of the connectivity analysis using coherence, described in the method section, are given in Figure 5. The coherence method was applied to the average source analyses of the 19 individual subjects. This method gives the functional connectivity of different brain areas, that is, the synchronicity of brain activities at both bilateral and unilateral levels (Friston, 2011). It was followed by complex demodulation with a time and frequency sampling of 100 ms and 0.5 Hz, respectively, in BESA Connectivity 1.0 software (BESA®). Thresholding was based on the following observations: (1) thresholds can be defined arbitrarily (Garrison et al., 2014); (2) a narrow range of thresholds (r = 0.05–0.4 or r = 0.03–0.5) can lead to incomplete or misleading results (van den Heuvel et al., 2009; Fornito et al., 2010); (3) thresholds can be applied based on a correlation coefficient of r = 0.1–0.8 (Buckner et al., 2009; Tomasi and Volkow, 2010). To avoid inaccurate results, the connections between the sources in the brain areas were illustrated with three thresholds: 0.7 (a), 0.8 (b), and 0.9 (c). At the 0.7 threshold (a), the connections across the vertices were significant for the baseline vs. tongue imagery task. The vertices represent the significant areas revealed by the source space analysis; the edges are graded and colored according to their connectivity strength. The edges connecting the sources indicate the cortical connections. The networks were significant at p < 0.05. Some of the strongest connectivities are depicted in the frontal (i.e., premotor, motor) and somatosensory cortical areas (i.e., kinesthetic areas) for the entire tongue imagery task with reference to the baseline. These areas also appear to be connected with motor-related (frontal) areas in both hemispheres, which appear to be strongly associated with tongue imagery.
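The effect of the three thresholds can be sketched on a small coherence matrix. The source labels and coherence values below are invented for illustration and are not taken from the BESA output; the helper name `threshold_edges` is likewise hypothetical.

```python
def threshold_edges(coherence, labels, threshold):
    """Return (label_i, label_j, value) for source pairs with coherence >= threshold."""
    edges = []
    n = len(labels)
    for i in range(n):
        for j in range(i + 1, n):       # upper triangle: each pair counted once
            if coherence[i][j] >= threshold:
                edges.append((labels[i], labels[j], coherence[i][j]))
    return edges

# Hypothetical sources and symmetric coherence values (illustration only).
labels = ["premotor_L", "M1_L", "S1_L", "S1_R"]
coherence = [
    [1.00, 0.92, 0.75, 0.40],
    [0.92, 1.00, 0.85, 0.72],
    [0.75, 0.85, 1.00, 0.65],
    [0.40, 0.72, 0.65, 1.00],
]

# Edges retained at each of the three thresholds named in the text.
edges_by_threshold = {thr: threshold_edges(coherence, labels, thr)
                      for thr in (0.7, 0.8, 0.9)}
for thr, edges in edges_by_threshold.items():
    print(thr, [(a, b) for a, b, _ in edges])
```

Raising the threshold from 0.7 to 0.9 progressively prunes weaker connections, so only the most strongly synchronized source pairs remain in the densest-threshold map.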

Figure 5. Brain maps of coherence connectivity at (A) the 0.7 threshold, (B) the 0.8 threshold, and (C) the 0.9 threshold in relation to tongue imagery. A coherence method followed by complex demodulation with a time and frequency sampling of 100 ms and 0.5 Hz, respectively, was applied in BESA Connectivity 1.0 software (BESA®). When the 0.7 threshold is considered, the connections across the vertices were significant for the baseline vs. tongue imagery (p < 0.05) only. The vertices represent the significant brain areas reported by the source space analysis. The edges are weighted and colored according to the connectivity strength. They indicate significantly higher (intense color) and lower (soft color) areas of synchronization both bilaterally and unilaterally.

A one-way ANOVA was conducted to test for differences in neural activity during MI of the body parts (left and right hand, feet, and tongue). In completing the imagery tasks, participants’ neural activity was significantly affected, F(2,18) = 6.638, p < 0.05, η² = 0.1579. Tukey post hoc comparisons showed that tongue mental imagery (M = 2.65, sd = 0.54) involves greater neural activity than the mental imagery of the left (M = 1.29, sd = 0.99) and right (M = 1.01, sd = 0.67) hand and the feet (M = 1.38, sd = 0.77).
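For readers unfamiliar with the statistics reported here, the sketch below shows how an F ratio and η² are obtained in a plain between-groups one-way ANOVA. The three groups of values are invented, and the text does not state whether a repeated-measures variant was used, so this should be read as an illustration of the quantities rather than a reproduction of the reported analysis.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within, eta_squared) for independent groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]

    # Variability of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variability of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)

    df_between, df_within = k - 1, n_total - k
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_ratio, df_between, df_within, eta_squared

# Invented scores for three conditions (illustration only).
example_groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
f_ratio, df_b, df_w, eta_sq = one_way_anova(example_groups)
print(f"F({df_b},{df_w}) = {f_ratio:.3f}, eta squared = {eta_sq:.4f}")
```

Here η² is the share of total variance attributable to the condition, which is how the effect size accompanying the F test is interpreted.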

In the present study, brain activity was analyzed when healthy adults were required to imagine performing voluntary movements of their body parts: left and right hands, feet, and the tongue. To scrutinize interactions between regions on a whole-brain level and to identify the role of individual regions within a network, a graph theory method and a coherence approach were used (Beharelle and Small, 2016). Consistent with the hypothesis, the results revealed significant neural implications only when participants imagined complete tongue movements. The present findings might reflect a complex network connected to the left hemisphere and to both hemispheres. More precisely, the brain areas associated with tongue imagery appear to involve the premotor cortex of the left hemisphere (unilaterally), and the primary motor cortex (M1) and the primary somatosensory area (S1) bilaterally. Interestingly, and regardless of the type of tongue movement, these results are coherent with existing data (Ehrsson et al., 2003) reporting that tongue MI appears to preferentially engage the edge areas, and likely 4a and 4p. Both these areas have extensive cortico-cortical projections from higher-order motor regions, specifically Broca’s area (Buccino et al., 2004), and receive inputs from somesthetic areas (Geyer et al., 1999). During tongue MI, the premotor cortex seems to be activated too. The involvement of this cortical region is consistent with the fact that area 6 is considered to play a role in planning movement, in using abstract rules to achieve tasks, and in verbal production. From a neuroanatomical point of view, the premotor cortex (area 6) could be seen to have distinct interconnectivity with the primary motor cortex (area 4), and the latter is interconnected with the ascending parietal cortex, i.e., the primary somatosensory cortex (1, 2, 3), as well as with the superior (5, 7) and inferior (39, 40) parietal cortices. 
This suggests that the motor and somatosensory activations observed during the imagery task, i.e., both premotor and primary motor areas, might be interconnected and can be assumed to depend on top-down influences from other motor areas of the frontal cortex (Lissek et al., 2013) including Broca’s area in the left and the right hemispheres.

Consistent with previous studies (Ehrsson et al., 2003; Kilteni et al., 2018), the current findings imply that imagery of tongue movement might involve somatotopically organized parts of the frontal and parietal cortices bilaterally. In other words, tongue imagery would engage the motor and somatosensory areas, that is, kinesthetic areas (Jacobs, 2011). Altogether, the findings support the idea that in healthy participants the brain would construct internal kinesthetic simulations of tongue movements. The patterns of motor activation during mental imagery observed in the present study are not only consistent with existing studies, but also provide support for the simulation hypothesis of MI (Papaxanthis et al., 2002; Fadiga and Craighero, 2004; Corballis, 2010; Piedimonte et al., 2014). Following this hypothesis, the motor representations engaged when an action is executed are also present when the same action is imagined. In the present situation, this signifies that internal imagery of specific body parts, i.e., the tongue, activates analogous somesthetic representations in addition to motor representations. When qualified movements are achieved, such as the ones that have been used in this study, the somatosensory cortex, and more specifically, the primary somatosensory cortex, has a key role in processing afferent somatosensory inputs. The somatosensory cortex appears to support the integration of both sensory and motor signals on the basis of co-representations (Borich et al., 2015). Studies have revealed a relationship between the type of stimulation, i.e., visual stimulation that the participants have imagined, and the activation of the corresponding brain areas, i.e., the visual brain areas (O’Craven and Kanwisher, 2000). In the present study, even though a visual cue preceded the imagery task, only motor and somatosensory activations, i.e., kinesthetic activations, were associated with it; visual activations were not significantly correlated with the imagery task. 
Taken together, the present findings and the above-mentioned interconnections between frontal and parietal regions build on the existing observations related to both motor and somatosensory areas. Accordingly, this suggests that a relationship exists between the movement that has been imagined and the activation patterns of somatotopically organized motor and somesthetic areas, i.e., kinesthetic areas. Specifically, the tongue imagery task would be associated with both motor and somatosensory representations, namely kinesthetic representations, not only in the left but also in the right hemisphere.

Contrary to studies reporting somatotopic unilateral or bilateral forward (i.e., prefrontal and/or frontal) and backward (i.e., parietal) activations when participants were instructed to perform MI tasks of the right or left hand (Li et al., 2019; Alanis-Espinosa and Gutiérrez, 2020) and the left or right foot (Gu et al., 2020), the current investigation did not find somatotopic motor and/or somesthetic activations, i.e., motor and/or somesthetic representations, of the upper and lower limbs. The inconsistencies between our results and the above-mentioned findings could be understood from a methodological point of view. First, the sample size differs substantially between the studies: only two subjects performed the motor hand imagery task via brain-computer interfaces (BCI) in the investigation of Alanis-Espinosa and Gutiérrez (2020), and 10 participants performed the feet imagery task in the Gu et al. (2020) study. In the study of Li et al. (2019), a conventional number of 40 participants was initially included, but only the data of 22 subjects, heavily unbalanced between males (21 subjects) and females (one subject), were analyzed. Although limited, the sample of our study was balanced, as it was composed of nine males and 10 females. Second, the studies differ with regard to their objectives. For instance, the aim of the Alanis-Espinosa and Gutiérrez (2020) study was to control the movement of a robotic hand using MI from the first- and third-person perspectives. For the other studies, the aim was to imagine moving different body parts according to a defined experimental design using various visual stimuli (Li et al., 2019; Gu et al., 2020). Also, a specific MI paradigm was included in each study. More particularly, BCI was utilized to control an immersive telepresence system via MI (Alanis-Espinosa and Gutiérrez, 2020), whereas MI of the upper limbs (Li et al., 2019) and the lower limbs (Gu et al., 2020) was performed in the other studies.
In the present study, an entirely different procedure was employed, in which participants were instructed to complete a 4-class imagery task of the tongue and the upper and lower body limbs. Next, even if graph theory was employed in all the aforementioned studies, each study applied specific graph-theoretical parameters to the EEG data, associated with regions of interest (ROIs) that were or were not predefined, and statistical analyses that depended on the hypotheses put forward. Some studies analyzed event-related desynchronization/synchronization (ERD/ERS) on specific electrodes (Li et al., 2019) while others investigated alpha and beta oscillation networks (Gu et al., 2020) or theta, alpha, beta, and gamma oscillations (Alanis-Espinosa and Gutiérrez, 2020) associated with predefined ROIs. Lastly, as all the above results are dependent on the selected neuroimaging techniques, the obtained observations might reflect the limited spatial resolution of EEG. Specifically, in the current study, 22 Ag/AgCl electrodes were used. In contrast, 15 Ag/AgCl and 32 Ag/AgCl electrodes were used by Li et al. (2019) and Alanis-Espinosa and Gutiérrez (2020) respectively, and 64 Ag/AgCl electrodes were employed by Gu et al. (2020). Note that the electrode montage differs with regard to the international electrode system (i.e., 10–10 vs. 10–20), the corresponding electrode impedance, EEG data digitalization, and bandpass filter. Moreover, the differences between the present results and the aforementioned studies can also be explained by the impossibility of obtaining the real source signal (Gu et al., 2020), and also by the fact that the physiological correlates of actual, not imagined, movements are not always precisely organized (Ehrsson et al., 2003).
Finally, given the temporal resolution of the EEG technique, it is also likely that the time it takes to imagine a specific movement depends on the procedure that has been used and the instructions that have been given to the participants. In this study, a classic 4-class imagery paradigm was used (e.g., Naeem et al., 2009; Tangermann et al., 2012; Friedrich et al., 2013) where the participants were invited to mentally simulate the movements of different body parts without receiving explicit instructions, i.e., what kind of movement the participants should and should not imagine. One could expect that the absence of explicit instructions would facilitate the activation of cortical areas due to electromyographic (EMG) activity (Bruno et al., 2018). On the contrary, the current findings support the idea that motor activation associated with muscle activity does not occur during “non pure” MI tasks, even when explicit instructions to avoid overt movements are not given to the participants. With that in mind, these findings are consistent with studies demonstrating that patterns of activation that are very close at the cortical level, as is the case for the feet imagery motor task, cannot really be discriminated (Penfield and Boldrey, 1937; Penfield and Rasmussen, 1950; Graimann et al., 2010). Additionally, the presence of motor and somesthetic activations during the tongue imagery task and their absence during the hand imagery tasks is also consistent with the assumption that tongue actions gradually overshadow hand actions (Corballis, 2003).

In the present study, all participants were untrained and instructed to imagine the movement of their body parts: right and left hand, feet, and tongue. Because existing evidence indicates that motor commands for muscle contractions are blocked by the motor system via inhibitory processes even when prepared during the imagery task (Jeannerod, 1994; Roosink and Zijdewind, 2010; Guillot et al., 2012), EMG activity was not recorded during the imagery tasks. Instead, all trials containing EMG and EOG artifacts were marked and eliminated after visual inspection by an expert. The presence of cortical activity during the tongue MI and its absence during hand and feet imagery simply indicate that there is no reason to speculate that the obtained findings refer to actual muscle contraction instead of an imagery task. In addition, in the current situation, the tongue imagery task that participants performed was a voluntary active mental imagery of common movements: the movements people perform when they verbally communicate with other people. Interestingly, similar results were reported even when participants were instructed to perform specific tongue movements during which electromyographic activity was recorded (Ehrsson et al., 2003). Note that tongue movements are verbal and nonverbal, i.e., onomatopoeias, movements per se. The participants were invited to a conscious mental action, that is, to mentally manipulate an internal representation of their own body parts, i.e., a kinesthetic representation. Recent studies theorized that the mental imagery of the upper limbs performing motor actions depends on the intensity of their “ownership” (Alimardani et al., 2013, 2014). Taken together, the above and the present findings suggest that the ownership hypothesis could concern both nonverbal motor actions and verbal actions.

Limitations of the study are partly due to its exploratory nature and methodological choices. Although the obtained results are statistically significant, it is acknowledged that the sample size of the current study is limited to 19 participants. To further validate the results, it is planned to increase the sample size and also include neurological patients (e.g., with frontal and parietal diseases) to perform intra- and inter-individual comparisons. A classic 4-class experimental paradigm (left hand, right hand, feet, and tongue) was utilized and untrained healthy participants were tested. This paradigm is commonly used, and several studies have been published (e.g., Naeem et al., 2009; Tangermann et al., 2012; Friedrich et al., 2013), but it is agreed that a new topology has to be introduced in order to improve it. The paradigm can be improved by the introduction of new cues. For instance, in the present study, visual cues were utilized (i.e., four arrows) and participants were instructed to imagine their body parts one by one (i.e., each arrow corresponds to a body part). It would be interesting to facilitate participants’ insight by introducing direct visual (i.e., the image of the body part to imagine) and/or somesthetic cues (i.e., touching each body part to imagine) into the paradigm. Moreover, when body parts are taken into account, the left and the right foot should be included separately in a future study. This is not the case in the present study. The current research proposes new paradigms for maximizing neural network recruitment, but these paradigms depend on the limited spatial resolution of the 22-channel EEG system that has been used. In a future study, a 62-channel EEG system and additional graph theory parameters should be employed for a deeper analysis of the data. Finally, given the results, it is obvious that the speculated relationship between the imagined tongue movements and language (both expression and comprehension) needs to be tested on a new methodological ground.

In conclusion, using the internal perspective essentially associated with visual and kinesthetic information, the results of the present study support the idea that only one movement, the movement of the tongue, which is a skilled movement in itself, was imagined using the motor and the somesthetic mechanisms from a first-person view (i.e., the imagined speaker). This makes particular sense when considering that the tongue and the mouth are both structurally and conceptually connected to verbal communication (Corballis, 2003, 2010; Buccino et al., 2004; Gallese, 2005; Xu et al., 2009). It implies that even if the participants were not explicitly instructed to imagine a specific tongue movement, tongue movements are specific per se and are directly and/or indirectly related to verbal actions. It also implies that the neural architecture that supports verbal actions would be embrained in a complex kinesthetic connectedness, and should not be considered as working independently of similarly organized cortico-cortical circuits (Falk, 2012). This suggests intimate connections between imagined tongue movements and verbal action that concern both the left and right hemispheres. The results of the current study provide support for the idea that imagined tongue movements, which are sophisticated kinesthetic systems in essence, could be the precursors of verbal actions.

The raw data supporting the conclusions will be made available by the authors, without undue reservation, for all participants who consented to make their data available.

The studies involving human participants were reviewed and approved by Bond University Human Research Ethics Committee (BUHREC 16065).

IG and HM performed the study and prepared the manuscript. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We would like to thank Brunner, C., Leeb, R., Muller-Putz, G. R., Schlögl, A., and Pfurtscheller, G. from the Institute for Knowledge Discovery and the Institute for Human-Computer Interfaces of the Graz University of Technology, Austria for the experimental paradigm and partial dataset collection of nine participants that are part of BNCI Horizon 2020.

Alanis-Espinosa, M., and Gutiérrez, D. (2020). On the assessment of functional connectivity in an immersive brain-computer interface during motor imagery. Front. Psychol. 11:1301. doi: 10.3389/fpsyg.2020.01301

Alimardani, M., Nishio, S., and Ishiguro, H. (2013). Humanlike robot hands controlled by brain activity arouse illusion of ownership in operators. Sci. Rep. 3:2396. doi: 10.1038/srep02396

Alimardani, M., Nishio, S., and Ishiguro, H. (2014). Effect of biased feedback on motor imagery learning in BCI-teleoperation system. Front. Syst. Neurosci. 8:52. doi: 10.3389/fnsys.2014.00052

Ang, K. K., Chin, Z. Y., Wang, C., Guan, C., and Zhang, H. (2012). Filter bank common spatial pattern algorithm on BCI competition IV Datasets 2a and 2b. Front. Neurosci. 6:39. doi: 10.3389/fnins.2012.00039

Beharelle, A. R., and Small, S. L. (2016). “Imaging brain networks for language: methodology and examples from the neurobiology of reading,” in Neurobiology of Language, (Amsterdam, Netherlands: Elsevier Inc), 805–814.

Benjamini, Y., and Hochberg, Y. (1995). Controlling the false discovery rate - a practical and powerful approach to multiple testing. J. R. Stat. Soc. B. 57, 289–300. doi: 10.1111/j.2517-6161.1995.tb02031.x

Binkofski, F., Amunts, K., Stephan, K. M., Posse, S., Schormann, T., Freund, H. J., et al. (2000). Broca’s region subserves imagery of motion: a combined cytoarchitectonic and fMRI study. Hum. Brain. Mapp. 11, 273–285. doi: 10.1002/1097-0193(200012)11:4<273::aid-hbm40>3.0.co;2-0

Borich, M. R., Brodie, S. M., Gray, W. A., Ionta, S., and Boyd, L. A. (2015). Understanding the role of the primary somatosensory cortex: opportunities for rehabilitation. Neuropsychologia 79, 246–255. doi: 10.1016/j.neuropsychologia.2015.07.007

Bruno, V., Fossataro, C., and Garbarini, F. (2018). Inhibition or facilitation? Modulation of corticospinal excitability during motor imagery. Neuropsychologia 111, 360–368. doi: 10.1016/j.neuropsychologia.2018.02.020

Buccino, G., Binkofski, F., and Riggio, L. (2004). The mirror neuron system and action recognition. Brain Lang. 89, 370–376. doi: 10.1016/S0093-934X(03)00356-0

Buckner, R. L., Sepulcre, J., Talukdar, T., Krienen, F. M., Liu, H., Hedden, T., et al. (2009). Cortical hubs revealed by intrinsic functional connectivity: mapping, assessment of stability and relation to Alzheimer’s disease. J. Neurosci. 29, 1860–1873. doi: 10.1523/JNEUROSCI.5062-08.2009

Bullmore, E., and Sporns, O. (2009). Complex brain networks: graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 10, 186–198. doi: 10.1038/nrn2575

Carlson, T., and Millan, J. D. R. (2013). Brain-controlled wheelchairs: a robotic architecture. IEEE Robot. Autom. Mag. 20, 65–73. doi: 10.1109/MRA.2012.2229936

Chholak, P., Niso, G., Maksimenko, V. A., Kurkin, S. A., Frolov, N. S., Pitsik, E. N., et al. (2019). Visual and kinesthetic modes affect motor imagery classification in untrained subjects. Sci. Rep. 9:9838. doi: 10.1038/s41598-019-46310-9

Clerget, E., Winderickx, A., Fadiga, L., and Olivier, E. (2009). Role of Broca’s area in encoding sequential human actions: a virtual lesion study. NeuroReport 20, 1496–1499. doi: 10.1097/WNR.0b013e3283329be8

Corballis, M. C. (2010). Mirror neurons and the evolution of language. Brain Lang. 112, 25–35. doi: 10.1016/j.bandl.2009.02.002

Corballis, M. C. (2018). Mirror-image equivalence and interhemispheric mirror-image reversal. Front. Hum. Neurosci. 12:140. doi: 10.3389/fnhum.2018.00140

Corballis, M. C. (2003). From mouth to hand: gesture, speech and the evolution of right-handedness. Behav. Brain Sci. 26, 199–208. doi: 10.1017/s0140525x03000062

Ehrsson, H. H., Geyer, S., and Naito, E. (2003). Imagery of voluntary movement of fingers, toes and tongue activates corresponding body part specific motor representations. J. Neurophysiol. 90, 3304–3316. doi: 10.1152/jn.01113.2002

Emmorey, K., Damasio, H., McCullough, S. S., Grabowski, T., Ponto, L. L. B., Hichwa, R. D., et al. (2002). Neural systems underlying spatial language in American Sign Language. Neuroimage 17, 812–824. doi: 10.1006/nimg.2002.1187

Fadiga, L., Craighero, L., and Olivier, E. (2005). Human motor cortex excitability during the perception of others’ action. Curr. Opin. Neurobiol. 15, 213–218. doi: 10.1016/j.conb.2005.03.013

Fadiga, L., and Craighero, L. (2004). Electrophysiology of action representation. J. Clin. Neurophysiol. 21, 157–169. doi: 10.1097/00004691-200405000-00004

Falk, D. (2012). “Hominin paleoneurology: where are we now,” in Evolution of the Primate Brain: From Neuron to Behaviour, eds M. A. Hofman, and D. Falk (London: Elsevier), 255–272.

Ferpozzi, V., Fornia, L., Montagna, M., Siodambro, C., Castellano, A., Borroni, P., et al. (2018). Broca’s area as a pre-articulatory phonetic encoder: gating the motor program. Front. Hum. Neurosci. 12:64. doi: 10.3389/fnhum.2018.00064

Ferrari, P. F., Maiolini, C., Addessi, E., Fogassi, L., and Visalberghi, E. (2005). The observation and hearing of eating actions activates motor programs related to eating in macaque monkeys. Behav. Brain Res. 161, 95–101. doi: 10.1016/j.bbr.2005.01.009

Frackoviak, R. S. J. (2004). “Somesthetic function,” in Human Brain Function, 2nd edition, eds Karl J. Friston, Christopher D. Frith, Raymond J. Dolan, Cathy J. Price, Semir Zeki, John T. Ashburner, and William D. Penny (London, UK: Elsevier), 75–103.

Friedrich, E. V. C., Scherer, R., and Neuper, C. (2013). Long-term evaluation of a 4-class imagery-based brain-computer interface. Clin. Neurophysiol. 124, 916–927. doi: 10.1016/j.clinph.2012.11.010

Friston, K. J. (2011). Functional and effective connectivity: a review. Brain Connect. 1:1. doi: 10.1089/brain.2011.0008

Fornito, A., Zalesky, A., and Bullmore, E. T. (2010). Network scaling effects in graph analytic studies of human resting-state fMRI data. Front. Syst. Neurosci. 4:22. doi: 10.3389/fnsys.2010.00022

Fox, C. (1914). The conditions which arouse mental images in thought. Br. J. Psychol. 6, 420–431. doi: 10.1111/j.2044-8295.1914.tb00101.x

Gallese, V. (2005). Embodied simulation: from neurons to phenomenal experience. Phenomenol. Cogn. Sci. 4, 23–48. doi: 10.3389/fpsyg.2012.00279

Garrison, K. A., Scheinost, D., Constable, R. T., and Brewer, J. A. (2014). BOLD signal and functional connectivity associated with loving kindness meditation. Brain Behav. 4, 337–347. doi: 10.1002/brb3.219

Genovese, C. R., Lazar, N. A., and Nichols, T. (2002). Thresholding of statistical maps in functional neuroimaging using the false discovery rate. NeuroImage 15, 870–888. doi: 10.1006/nimg.2001.1037

Gerardin, E., Sirigu, A., Lehéricy, S., Poline, J. B., Gaymard, B., Marsault, C., et al. (2000). Partially overlapping neural networks for real and imagined hand movements. Cereb. Cortex 10, 1093–1104. doi: 10.1093/cercor/10.11.1093

Geyer, S., Schleicher, A., and Zilles, K. (1999). Areas 3a, 3b and 1 of human primary somatosensory cortex: 1. Microstructural organization and interindividual variability. NeuroImage 10, 63–83. doi: 10.1006/nimg.1999.0440

Giannopulu, I. (2016). “Enrobotment: Toy robots in the developing brain,” in Handbook of Digital Games and Entertainment Technologies, ed R. Nakatsu (Singapore: Springer Science), 1011–1039.

Giannopulu, I. (2017). “Visuo-vestibular and somesthetic contributions to spatial navigation in children and adults,” in Mobility of Visually Impaired People, eds E. Pissaloux, and R. Velazquez (Berlin, Germany: Springer), 201–233.

Giannopulu, I. (2018). Neuroscience, Robotics and Virtual Reality: Internalised vs Externalised Mind/Brain. Berlin, Germany: Springer International Publishing.

Graimann, B., Allison, B. Z., and Pfurtscheller, G. Eds. (2010). Brain-Computer Interfaces. Revolutionizing Human-Computer Interaction. New York: Springer Science.

Gu, L., Yu, Z., Ma, T., Wang, H., Li, Z., Fan, H., et al. (2020). EEG-based classification of lower limb motor imagery with brain network analysis. Neuroscience 436, 93–109. doi: 10.1016/j.neuroscience.2020.04.006

Guillot, A., Hoyek, N., Louis, M., and Collet, C. (2012). Understanding the timing of motor imagery: recent findings and future directions. Int. Rev. Sport Exerc. Psychol. 5, 3–22. doi: 10.1080/1750984X.2011.623787

Jacobs, K. M. (2011). “Somatosensory System,” in Encyclopedia of Clinical Neuropsychology, eds J. S. Kreutzer, J. DeLuca, and B. Caplan (New York, NY, USA: Springer), 171–203.

Jeannerod, M. (1994). The representative brain: neural correlates of motor intention and imagery. Behav. Brain Sci. 17, 187–202. doi: 10.1017/S0140525X00034026

Kilteni, K., Andersson, B. J., Houborg, C., and Ehrsson, H. (2018). Motor imagery involves predicting the sensory consequences of the imagined movement. Nat. Commun. 9:1617. doi: 10.1038/s41467-018-03989-0

Kohler, E., Keysers, C., Umiltà, M. A., Fogassi, L., Gallese, V., and Rizzolatti, G. (2002). Hearing sounds, understanding actions: action representation in mirror neurons. Science 297, 846–848. doi: 10.1126/science.1070311

Laptinskaya, D., Fissler, P., Küster, O. C., Jakob Wischniowski, J., Thurm, F., Elbert, T., et al. (2020). Global EEG coherence as a marker for cognition in older adults at risk for dementia. Psychophysiology 57:e13515. doi: 10.1111/psyp.13515

Leeb, R., Friedman, D., Muller-Putz, G., Scherer, R., Slater, M., and Pfurtscheller, G. (2007). Self-paced (Asynchronous) BCI Control of a wheelchair in virtual environments: a case study with a tetraplegic. Comput. Intell. Neurosci. 1:79642. doi: 10.1155/2007/79642

Li, F., Zhang, T., Li, B. J., Zhang, W., Zhao, J., and Song, L. P. (2018). Motor imagery training induces changes in brain neural networks in stroke patients. Neural Regen. Res. 13:1771. doi: 10.4103/1673-5374.238616

Li, F., Peng, W., Jiang, Y., Song, L., Liao, Y., Yi, C., et al. (2019). The dynamic brain networks of motor imagery: time-varying causality analysis of scalp EEG. Int. J. Neural. Syst. 29:1850016. doi: 10.1142/S0129065718500168

Lissek, S., Vallana, G. S., Güntürkün, O., Dinse, H., and Tegenthoff, M. (2013). Brain activation in motor sequence learning is related to the level of native cortical excitability. PLoS One 8:e61863. doi: 10.1371/journal.pone.0061863

Lotze, M., and Halsband, U. (2006). Motor imagery. J. Physiol. Paris 99, 386–395. doi: 10.1016/j.jphysparis.2006.03.012

Miller, K. J., Schalk, G., Fetz, E. E., den Nijs, M., Ojemann, J. G., and Rao, R. P. N. (2010). Cortical activity during motor execution, motor imagery and imagery-based online feedback. Proc. Nat. Acad. Sci. USA 107, 4430–4435. doi: 10.1073/pnas.0913697107

Naito, E., Kochiyama, T., Kitada, R., Nakamura, S., Matsumura, M., Yonekura, Y., et al. (2002). Internally simulated movement sensations during motor imagery activate cortical motor areas and the cerebellum. J. Neurosci. 22, 3683–3691. doi: 10.1523/JNEUROSCI.22-09-03683.2002

Naeem, M., Brunner, C., and Pfurtscheller, G. (2009). Dimensionality reduction and channel selection of motor imagery electroencephalographic data. Comput. Intell. Neurosci. 8. doi: 10.1155/2009/537504

O’Craven, K. M., and Kanwisher, N. (2000). Mental imagery of faces and places activates corresponding stimulus-specific brain regions. J. Cogn. Neurosci. 12, 1013–1023. doi: 10.1162/08989290051137549

Qingsong, A., Anqi, C., Kun, C., Quan, L., Tichao, Z., Sijin, X., et al. (2019). Feature extraction of four-class motor imagery EEG signals based on functional brain network. J. Neural Eng. 16:026032. doi: 10.1088/1741-2552/ab0328

Papaxanthis, C., Schieppati, M., Gentili, R., and Pozzo, T. (2002). Imagined and actual arm movements have similar durations when performed under different conditions of direction and mass. Exp. Brain Res. 143, 447–452. doi: 10.1007/s00221-002-1012-1

Penfield, W., and Boldrey, E. (1937). Somatic motor and sensory representation in the cerebral cortex in man as studied by electrical stimulation. Brain 60, 389–443. doi: 10.1093/brain/60.4.389

Penfield, W., and Rasmussen, T. (1950). The cerebral cortex of man. A clinical study of localization of function. JAMA 144:1412. doi: 10.1001/jama.1950.02920160086033

Piedimonte, A., Garbarini, F., Rabuffetti, M., Pia, L., and Berti, A. (2014). Executed and imagined bimanual movements: a study across different ages. Dev. Psychol. 50, 1073–1080. doi: 10.1037/a0034482

Rizzolatti, G., and Sinigaglia, C. (2010). The functional role of the parieto-frontal mirror circuit: interpretations and misinterpretations. Nat. Rev. Neurosci. 11, 264–274. doi: 10.1038/nrn2805

Roosink, M., and Zijdewind, I. (2010). Corticospinal excitability during observation and imagery of simple and complex hand tasks: implications for motor rehabilitation. Behav. Brain Res. 213, 35–41. doi: 10.1016/j.bbr.2010.04.027

Rosenberg, J. R., Amjad, A. M., Breeze, P., Brillinger, D. R., and Halliday, D. M. (1989). The Fourier approach to the identification of functional coupling between neuronal spike trains. Prog. Biophys. Mol. Biol. 53, 1–31. doi: 10.1016/0079-6107(89)90004-7

Rouam, S. (2013). “False Discovery Rate (FDR)”, in Encyclopedia of Systems Biology, eds W. Dubitzky, O. Wolkenhauer, K. H. Cho, and H. Yokota (New York, NY: Springer), 42–141.

Rubinov, M., and Sporns, O. (2010). Complex network measures of brain connectivity: Uses and interpretations. NeuroImage 52, 1059–1069. doi: 10.1016/j.neuroimage.2009.10.003

Schmidt, T. T., and Blankenburg, F. (2019). The somatotopy of mental tactile imagery. Front. Hum. Neurosci. 13:10. doi: 10.3389/fnhum.2019.00010

Schwartzman, A., and Lin, X. (1999). The effect of correlation in false discovery rate estimation. Biometrika 98, 199–214. doi: 10.1093/biomet/asq075

Skipper, J. I., van Wassenhove, V., Nusbaum, H. C., and Small, S. L. (2007). Hearing lips and seeing voices: how cortical areas supporting speech production mediate audiovisual speech perception. Cereb. Cortex 17, 2387–2399. doi: 10.1093/cercor/bhl147

Stippich, C., Ochmann, H., and Sartor, K. (2002). Somatotopic mapping of the primary sensorimotor cortex during motor imagery and motor execution by functional magnetic resonance imaging. Neurosci. Lett. 331, 50–54. doi: 10.1016/s0304-3940(02)00826-1

Tangermann, M., Müller, K. R., Aertsen, A., Birbaumer, N., Braun, C., Brunner, C., et al. (2012). Review of the BCI competition IV. Front. Neurosci. 6:55. doi: 10.3389/fnins.2012.00055

Tomasi, D., and Volkow, N. D. (2010). Functional connectivity density mapping. Proc. Natl. Acad. Sci. U S A 107, 9885–9890. doi: 10.1073/pnas.1001414107

van den Heuvel, M. P., Stam, C. J., Kahn, R. S., and Hulshoff Pol, H. E. (2009). Efficiency of functional brain networks and intellectual performance. J. Neurosci. 29, 7619–7624. doi: 10.1523/JNEUROSCI.1443-09.2009

Vogt, S., Di Rienzo, F., Collet, C., Collins, A., and Guillot, A. (2013). Multiple roles of motor imagery during action observation. Front. Hum. Neurosci. 7:807. doi: 10.3389/fnhum.2013.00807

Wang, K., Xu, S., Chen, L., and Ming, D. (2020). Enhance decoding of pre-movement EEG patterns for brain-computer interfaces. J. Neural. Eng. 17:016033. doi: 10.1088/1741-2552/ab598f

Keywords: connectivity, kinesthetic representations, verbal actions, body parts, motor mental imagery

Citation: Giannopulu I and Mizutani H (2021) Neural Kinesthetic Contribution to Motor Imagery of Body Parts: Tongue, Hands, and Feet. Front. Hum. Neurosci. 15:602723. doi: 10.3389/fnhum.2021.602723

Received: 08 September 2020; Accepted: 31 May 2021;

Published: 14 July 2021.

Edited by:

Mariano Serrao, Sapienza University of Rome, Italy

Reviewed by:

Gianluca Coppola, Sapienza University of Rome, Italy

Copyright © 2021 Giannopulu and Mizutani. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Irini Giannopulu, igiannop@bond.edu.au

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
