- 1 Laboratory of Brain & Cognitive Sciences for Convergence Medicine, Hallym University College of Medicine, Anyang, South Korea

- 2 Department of Otorhinolaryngology, College of Medicine, Hallym University, Anyang, South Korea

Objective: The ability to detect frequency variation is a fundamental skill necessary for speech perception. Musical expertise is known to be associated with a range of auditory perceptual skills, including the discrimination of frequency change, which suggests that the neural encoding of spectral features can be enhanced by musical training. In this study, we measured auditory cortical responses to frequency change in musicians and non-musicians to examine the relationships between N1/P2 responses and both behavioral performance and musical training.

Methods: Behavioral and electrophysiological data were obtained from professional musicians and age-matched non-musician participants. Behavioral data included frequency discrimination thresholds in quiet (no threshold-equalizing noise, TEN) and in TEN at +5, 0, and −5 dB signal-to-noise ratio (SNR). Auditory-evoked responses were measured using a 64-channel electroencephalogram (EEG) system in response to frequency changes in ongoing pure tones with base frequencies of 250 and 4,000 Hz. The frequency changes were 10%, 25%, or 50% of the base frequency. N1 and P2 amplitudes and latencies, as well as dipole source activation in the left and right hemispheres, were measured for each condition.

Results: Compared with the non-musician group, the musician group had lower behavioral thresholds for frequency discrimination in the quiet condition only. Scalp-recorded N1 amplitudes were modulated as a function of frequency change. P2 amplitudes were larger in the musician group than in the non-musician group. Dipole source analysis showed that P2 dipole activity to frequency changes was lateralized to the right hemisphere. Activity was greater in the musician group in both hemispheres. Additionally, N1 amplitudes to frequency changes were positively related to behavioral thresholds for frequency discrimination, while enhanced P2 amplitudes were associated with a longer duration of musical training.

Conclusions: Our results demonstrate that auditory cortical potentials evoked by frequency change are related to behavioral thresholds for frequency discrimination in musicians. Larger P2 amplitudes in musicians than in non-musicians reflect musical training-induced neural plasticity.

Introduction

Understanding speech and other everyday sounds requires processing the temporal and spectral information they contain. Psychoacoustically, pitch perception is the ability to extract the frequency information of a complex stimulus. It relies on spectral cues because frequencies must be mapped onto meaningful speech or music (Stangor and Walinga, 2014). In both speech and music, pitch provides spectral information. This information supports the perception of musical structure and, through pitch contour and prosody, the understanding of speech (Moore, 2008; Oxenham, 2012). Pitch processing is even more crucial for understanding sounds under adverse listening conditions such as background noise (Fu et al., 1998; Won et al., 2011). Difficulties with listening in noise have been attributed to a reduced ability to segregate spectral cues from noise (Gaudrain et al., 2007).

Researchers have tried to relate frequency coding to music perception by investigating the neural activity underlying auditory function in people who have undergone musical training. A large body of literature has assessed whether long-term musical training alters frequency coding (Shahin et al., 2003; Micheyl et al., 2006; Deguchi et al., 2012; Liang et al., 2016), since examining the neural processing of sounds in musicians can provide a conceptual model of auditory training. Several studies have reported a strong relationship between musical training and speech perception. For example, musical training can facilitate the acquisition of a foreign language through enhanced neural encoding of speech-relevant cues such as formant frequencies (Intartaglia et al., 2017). Musical training in early life has an even greater influence on neural and behavioral speech processing than training in adulthood. Young children engaged in piano training have enhanced cortical responses to pitch changes, and these neural changes are associated with their behavioral performance in word discrimination (Nan et al., 2018). These improvements suggest a link between musical training and functional and structural changes in the human brain. Neuroimaging studies have provided converging evidence that brain regions related to speech processing are larger in musicians than in non-musicians. This indicates neurophysiological changes resulting from training-induced brain plasticity (Schneider et al., 2002; Gaser and Schlaug, 2003; Bermudez et al., 2009; Hyde et al., 2009). These findings indicate that music and speech processing rely on partially overlapping neural and cognitive resources.

Perceiving music requires listeners to integrate various sources of sound information, including pitch, timbre, and rhythm, and these musical features are linked to cognitive and perceptual processing at the cortical level. Previous studies have found that musical training can change various auditory functions, including sound discrimination (Zuk et al., 2013), listening to a foreign language (Marques et al., 2007), and auditory attention (Seppänen et al., 2012). These studies have also shown that the neural changes underlying these training-related perceptual and cognitive changes can be reflected in cortical responses to complex stimuli (Pantev et al., 1998; Shahin et al., 2003, 2007). In general, musicians show larger N1/P2 and late positive responses to musical and tonal stimuli than non-musicians. These enhanced cortical responses in musicians have been interpreted as experience-driven neural changes (Shahin et al., 2003; Marques et al., 2007; Seppänen et al., 2012). However, some studies have suggested that the apparent neuroplasticity in musicians may instead reflect innate properties of people whose auditory function is superior to that of others, rather than the effects of musical experience (Schellenberg, 2015, 2019; Mankel and Bidelman, 2018).

Cortical N1/P2 responses can be elicited by changes in various types of sound: speech (Han et al., 2016), tones (Martin and Boothroyd, 2000), and noise (Bidelman et al., 2018). Studies using frequency-modulated tonal stimuli have found that N1/P2 responses vary with the rate and magnitude of the frequency change (Dimitrijevic et al., 2008; Pratt et al., 2009; Vonck et al., 2019). Furthermore, N1/P2 responses to frequency change are enhanced by long-term auditory training. For instance, Liang et al. (2016) found that N1/P2 amplitudes increased with the magnitude of frequency change and that this increase was more evident in musicians than in non-musicians. However, they found no relationship between behavioral performance for frequency change detection and the N1/P2 response measures.

Extensive research indicates that musical training can change the neural processing of spectral change (Koelsch et al., 1999; Schneider et al., 2002, 2005; Bidelman et al., 2014; Hutka et al., 2015). Even so, whether these altered neural responses are induced by long-term musical training or by other environmental or congenital factors is still controversial. Tracking the frequency pattern of a tone and extracting the pitch of musical sounds both rely on spectral processing in the auditory cortex. Assessing the neural sensitivity of musicians to subtle frequency changes can therefore provide insight into the auditory processing that underlies music and speech perception. We therefore examined how the central auditory system of musicians encodes frequency information differently from that of non-musicians and related this to their behavioral perception ability. Determining whether behavioral performance relates to objective cortical activity is important. Such a relationship would indicate that cortical responses evoked by frequency change can serve as a marker of behavioral frequency discrimination. To address this question, we used base frequencies of 250 and 4,000 Hz. A previous study reported that cortical responses elicited by stimuli at these frequencies were strongly related to psychoacoustic frequency discrimination thresholds (Dimitrijevic et al., 2008). We hypothesized that cortical activity to frequency change would be more enhanced in musicians than in non-musicians. We further predicted that behavioral thresholds and the duration of musical training would relate to the measures of cortical activity. We also measured behavioral frequency discrimination in both quiet and noise conditions and related it to the cortical responses, because musical training has been reported to improve the perception of sound in noise (Parbery-Clark et al., 2009b; Yoo and Bidelman, 2019).
We hypothesized that musicians would perceive sounds in noise better than non-musicians, reflecting a musician advantage for sound-in-noise perception.

Materials and Methods

The Participants

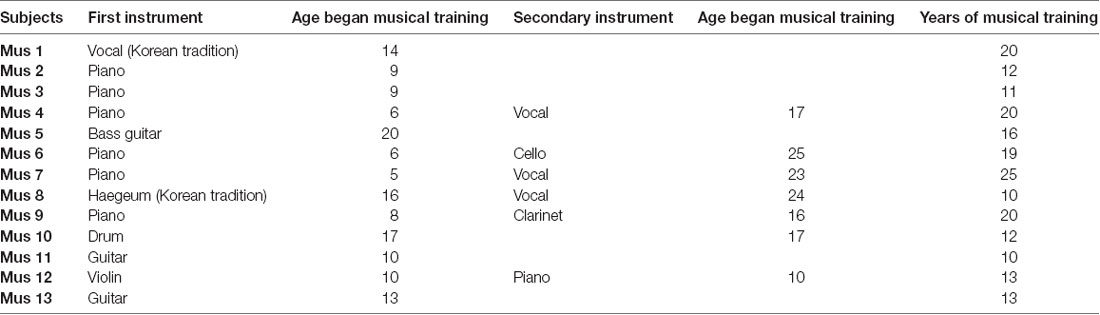

A total of 13 (six male) musicians [mean age ± standard deviation (SD) = 27.1 ± 5.0 years, all right-handed] and 11 (six male) age-matched non-musicians (mean age ± SD = 26.8 ± 5.31 years, all right-handed) participated in this study. A two-sample t-test showed no significant difference in age between the musician and non-musician groups (p = 0.904), suggesting that age is unlikely to account for group differences. All musicians reported that they had received regular professional musical training for more than 10 years, with training at least three times a week during the training period. The types of musical training were vocal, piano, drum, haegeum, guitar, and violin. Details of the musicians’ training are provided in Table 1. All participants were recruited through online advertising and were compensated for their participation. Participants in both groups had normal pure-tone thresholds (below 20 dB hearing level, HL) at octave test frequencies from 250 to 8,000 Hz and no history of neurological or hearing disorders. The study protocol was approved by the Institutional Review Board of Hallym University Sacred Hospital, Gangwon-Do, South Korea (File No. 2018-02-019-001), and written informed consent was obtained from each participant.
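The age-matching check can be approximated from the reported summary statistics. The sketch below assumes the equal-variance (Student) form of the two-sample t-test. It compares the statistic against a standard t-table critical value instead of computing an exact p-value. Because the published means and SDs are rounded, it will not reproduce the reported p = 0.904 exactly.

```python
import math

def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Student's two-sample t statistic (equal variances assumed) from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se_diff = math.sqrt(pooled_var * (1 / n1 + 1 / n2))  # standard error of the mean difference
    return (m1 - m2) / se_diff, df

# Reported ages: musicians 27.1 ± 5.0 (n = 13), non-musicians 26.8 ± 5.31 (n = 11)
t_stat, dof = pooled_t_from_summary(27.1, 5.0, 13, 26.8, 5.31, 11)
T_CRIT = 2.074  # two-tailed critical t for alpha = 0.05 and 22 degrees of freedom (t table)
print(f"t({dof}) = {t_stat:.2f}, exceeds critical value: {abs(t_stat) > T_CRIT}")
```

Here t(22) is about 0.14, far below the critical value. This is consistent with the reported absence of an age difference between groups.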

Psychoacoustics: Frequency Discrimination Test

Frequency discrimination thresholds were measured for each subject with a standard adaptive three-interval, three-alternative forced-choice, two-down, one-up procedure. The base frequencies were 250 and 4,000 Hz. On each trial, two of the three intervals contained a pure tone at the base frequency, while the remaining interval contained a pure tone of higher frequency. Individual tones were 300 ms in duration and separated by an inter-stimulus interval of 500 ms. The order of the three intervals was randomized. The initial frequency differences between the base and the change were 50 Hz for the 250 Hz base and 100 Hz for the 4,000 Hz base. From the second trial onward, the step size was 12 Hz for 250 Hz and 50 Hz for 4,000 Hz. The difference was decreased after two consecutive correct responses and increased after a single incorrect response. When the current step size was larger than the difference, it was reduced to half the difference. Each condition ended after a maximum of 60 trials or 12 reversals. Each subject’s difference limen (DL) was computed as the mean of the last eight reversals. Subjects were instructed to identify the interval containing the frequency change by clicking with a mouse on a computer screen. Four background threshold-equalizing noise (TEN) conditions were used: no TEN and TEN at +5, 0, and −5 dB signal-to-noise ratio (SNR). The noise conditions for each base tone were presented in random order to avoid carryover effects (Logue et al., 2009; Dochtermann, 2010; Bell, 2013). During testing, subjects were seated in a sound-attenuated booth, and stimuli were presented through two-channel speakers at 70 dB HL.
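The adaptive track can be sketched as a short simulation. This is a minimal sketch, not the software used in the study. `respond` is a hypothetical stand-in for the listener. The step-size rule follows our reading of the text: the step shrinks to half the current difference whenever it would reach or overshoot the difference, which keeps the track above 0 Hz. A two-down, one-up rule converges on roughly the 70.7%-correct point of the psychometric function.

```python
import math
import random

def run_staircase(respond, start_diff, step, max_trials=60, max_reversals=12, n_avg=8):
    """Two-down, one-up adaptive track; returns (threshold, reversals, n_trials).

    respond(diff) -> bool says whether the listener picked the odd interval
    on a trial with frequency difference `diff` (Hz).
    """
    diff, step = float(start_diff), float(step)
    n_correct = 0      # consecutive correct responses since the last change
    last_dir = None    # direction of the previous change: -1 (harder) or +1 (easier)
    reversals, trials = [], 0
    while trials < max_trials and len(reversals) < max_reversals:
        trials += 1
        if respond(diff):
            n_correct += 1
            if n_correct < 2:
                continue          # need two correct in a row before making it harder
            n_correct, direction = 0, -1
        else:
            n_correct, direction = 0, +1
        if last_dir is not None and direction != last_dir:
            reversals.append(diff)  # the track changed direction at this difference
        last_dir = direction
        if step >= diff:            # assumed reading of the step-size rule
            step = diff / 2
        diff += direction * step
    tail = reversals[-n_avg:]       # DL = mean of the last eight reversals
    threshold = sum(tail) / len(tail) if tail else float("nan")
    return threshold, reversals, trials

def threshold_listener(diff):
    """Hypothetical deterministic listener: correct whenever diff >= 10 Hz."""
    return diff >= 10.0

rng = random.Random(0)
def noisy_listener(diff, true_dl=8.0, slope=2.0):
    """Hypothetical 3AFC listener: chance = 1/3, logistic rise around true_dl (Hz)."""
    p_correct = 1 / 3 + (2 / 3) / (1 + math.exp(-(diff - true_dl) / slope))
    return rng.random() < p_correct

# 250 Hz settings from the text: start 50 Hz above the base, 12 Hz steps
dl, revs, n_trials = run_staircase(threshold_listener, start_diff=50, step=12)
noisy_dl, _, _ = run_staircase(noisy_listener, start_diff=50, step=12)
```

The deterministic listener is correct whenever the difference is at least 10 Hz. With it, the track descends from 50 Hz, overshoots to 2 Hz, and then climbs in 1 Hz steps. It settles into oscillating between 9 and 10 Hz, giving a DL of 9.5 Hz after 12 reversals.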

Outliers were identified with the interquartile range (IQR) method (Kokoska and Zwillinger, 2000). This method flags sample values lying more than a factor k of the IQR below the 25th percentile or above the 75th percentile. We used k = 3 to identify extreme outliers. No musicians were classified as outliers, whereas two non-musicians were excluded.
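The outlier rule can be written in a few lines. The sketch below computes the quartiles with Python’s `statistics.quantiles` using the "inclusive" method. This choice is an assumption: different quartile conventions shift the fences slightly, and the text does not say which one was used. The example values are hypothetical.

```python
import statistics

def iqr_outliers(values, k=3.0):
    """Return values lying more than k*IQR below Q1 or above Q3 (k = 3 flags extreme outliers)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

# Hypothetical DLs (Hz): one listener is far outside the rest of the group
example_dls = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
flagged = iqr_outliers(example_dls)
```

With k = 1.5 the same function gives the conventional "mild" outlier fences. k = 3 is the stricter criterion used here.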

Electroencephalogram (EEG) Acquisition and Analysis

Stimuli and Experimental Procedure

Stimuli for the frequency change and the experimental procedures were based on a previous frequency change experiment (Dimitrijevic et al., 2008). Auditory stimuli were generated in MATLAB (MathWorks, Inc., Natick, MA, USA) at a sampling rate of 48,828 Hz. Frequency change stimuli were constructed from two continuous base tones, 250 and 4,000 Hz, each with upward frequency changes of 10%, 25%, or 50% lasting 400 ms. The order of the frequency changes was randomized. Each frequency change was equated in loudness to the base frequency. Each frequency change was followed by a base frequency tone whose duration varied from 1.6 to 2.2 s, so that subjects could not anticipate when the next frequency change would occur. To avoid transient clicks at the transitions, the stimuli were constructed so that each change occurred at zero phase. Figure 1 shows a schematic of the frequency changes in the stimulus. A minimum of 100 trials for each frequency change was presented in two blocks. The total electroencephalogram (EEG) recording time for each subject was approximately 30 min. During recording, subjects were seated in a comfortable reclining chair and watched a closed-captioned movie of their choice. The stimuli were presented through two-channel speakers located 1.0 m from the subject.
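A minimal sketch of the stimulus sequence, written in Python rather than the MATLAB used in the study. It assumes that "changes at zero phase" can be achieved by giving every segment a whole number of cycles, so each segment starts at a zero crossing. Loudness equalization and the number of trials per block are not modelled. The function names and the `seed` parameter are hypothetical.

```python
import math
import random

def zero_phase_segment(freq, dur_s, fs):
    """Sine tone with a whole number of cycles: starts at zero phase and ends near it."""
    n_cycles = max(1, round(dur_s * freq))
    n_samples = round(n_cycles * fs / freq)
    return [math.sin(2 * math.pi * freq * i / fs) for i in range(n_samples)]

def build_sequence(base, changes_pct, fs=48828, change_dur=0.4, gap=(1.6, 2.2), seed=None):
    """Base tone, 400 ms upward change, base tone, ... with the changes in random order.

    Returns the samples, the sample index of each change onset, and the change frequencies.
    """
    rng = random.Random(seed)
    order = list(changes_pct)
    rng.shuffle(order)                       # randomized order of frequency changes
    samples, onsets, freqs = [], [], []
    for pct in order:
        samples += zero_phase_segment(base, rng.uniform(*gap), fs)  # jittered base tone
        onsets.append(len(samples))          # onset of the frequency change (for EEG epochs)
        f_change = base * (1 + pct / 100)
        freqs.append(f_change)
        samples += zero_phase_segment(f_change, change_dur, fs)
    samples += zero_phase_segment(base, rng.uniform(*gap), fs)      # trailing base tone
    return samples, onsets, freqs

sig, onsets, freqs = build_sequence(250.0, [10, 25, 50], seed=1)
```

Every change segment begins at sin(0) = 0. The returned `onsets` are the sample indices that the EEG epochs would later be time-locked to.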

Figure 1. Schematic representation of the frequency change stimulus. Continuous tones with base frequencies of 250 or 4,000 Hz are presented with occasional upward frequency changes of 10%, 25%, or 50% lasting 400 ms.

EEG Acquisition and Data Processing

Multi-channel EEG data were acquired using the actiCHamp recording system (Brain Products GmbH, Germany). Scalp potentials were recorded at 64 equidistant electrode sites, with all electrodes referenced to the reference electrode. Electrode impedances were kept below 10 kΩ, and EEG signals were amplified and digitized at 1,000 Hz. During recording, the continuous data were band-pass filtered from 0.1 to 120 Hz, and a notch filter was applied to remove 60 Hz line noise.

EEG Data Analysis

All EEG data were preprocessed offline using Brain Vision Analyzer 2.2 (Brain Products GmbH, Germany). Eye-blink and horizontal eye-movement artifacts were removed from the continuous data using independent component analysis (ICA). After ICA correction, the data were further analyzed in MATLAB. Continuous EEG data were down-sampled to 250 Hz and band-pass filtered from 0.1 to 40 Hz. The data were segmented from −100 to 400 ms relative to the onset of the frequency change (0 ms). Segmented data were baseline-corrected using the −100 to 0 ms interval and re-referenced to the average reference. Separate averages were computed for each frequency change. N1 and P2 peaks were detected at the frontocentral electrodes near the vertex. N1 was defined as the first negative peak between 70 and 150 ms after the onset of the frequency change, and P2 as the most positive peak between 120 and 230 ms.
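The epoching, baseline correction, average re-referencing, and N1/P2 peak-picking steps can be sketched with NumPy. This is an illustrative sketch under stated assumptions, not the authors’ MATLAB pipeline. The data are assumed to be already ICA-corrected, down-sampled to 250 Hz, and filtered. `fc_channels` is a hypothetical list of frontocentral channel indices. "First negative peak" is taken to mean the first local minimum below 0 µV in the N1 window, with the window minimum as a fallback.

```python
import numpy as np

def erp_peaks(eeg, onsets, fs, fc_channels, pre_ms=100, post_ms=400,
              n1_win=(70, 150), p2_win=(120, 230)):
    """Average epochs time-locked to frequency-change onsets and pick N1/P2.

    eeg: array (channels x samples); onsets: sample indices of the frequency changes.
    Returns latencies (ms) and amplitudes of N1 and P2 at the frontocentral channels.
    """
    pre = int(round(pre_ms * fs / 1000))
    post = int(round(post_ms * fs / 1000))
    times = np.arange(-pre, post) * 1000.0 / fs                       # ms relative to onset
    epochs = np.stack([eeg[:, o - pre:o + post] for o in onsets])      # trials x ch x time
    epochs = epochs - epochs[:, :, times < 0].mean(axis=2, keepdims=True)  # baseline -100..0 ms
    epochs = epochs - epochs.mean(axis=1, keepdims=True)               # average reference
    wave = epochs.mean(axis=0)[fc_channels].mean(axis=0)               # frontocentral ERP

    # N1: first local minimum below zero in the window (fallback: the window minimum)
    n1_idx = np.flatnonzero((times >= n1_win[0]) & (times <= n1_win[1]))
    n1 = next((k for k in n1_idx
               if wave[k] < 0 and wave[k] < wave[k - 1] and wave[k] < wave[k + 1]),
              n1_idx[np.argmin(wave[n1_idx])])
    # P2: most positive point in the window
    p2_idx = np.flatnonzero((times >= p2_win[0]) & (times <= p2_win[1]))
    p2 = p2_idx[np.argmax(wave[p2_idx])]
    return {"n1_ms": times[n1], "n1_uv": wave[n1], "p2_ms": times[p2], "p2_uv": wave[p2]}

# Synthetic check: 5 channels with DC offsets; an N1 (100 ms) and P2 (180 ms) on channels 0-1
fs = 250
eeg = np.zeros((5, 20 * fs)) + np.arange(5)[:, None] * 2.0
onsets = [500, 1500, 2500, 3500]
sample_idx = np.arange(eeg.shape[1])
for o in onsets:
    t_ms = (sample_idx - o) * 1000.0 / fs
    resp = (-5 * np.exp(-(t_ms - 100) ** 2 / (2 * 15 ** 2))
            + 4 * np.exp(-(t_ms - 180) ** 2 / (2 * 20 ** 2)))
    eeg[0] += resp
    eeg[1] += resp
res = erp_peaks(eeg, onsets, fs, fc_channels=[0, 1])
```

Baseline correction removes the channel offsets. The average reference scales the injected response on the frontocentral channels by 3/5 but leaves the peak latencies unchanged.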

Dipole Source Analysis

Dipole source analysis was performed using BESA Research 7.0 (Brain Electrical Source Analysis, GmbH, Germany), as described previously (Han et al., 2016). The analysis was performed on individual averaged waveforms after band-pass filtering (0.5–40 Hz, 12 dB/octave, zero-phase). First, two symmetric regional dipole sources were placed near the auditory cortical regions. For N1 and P2 dipole fitting, the mean area over a 20 ms window around the N1 and P2 peaks in the global field power was used. The dipole sources were allowed to vary in location, orientation, and strength, and the maximum tangential sources were fitted at the N1 and P2 peaks. The residual variance was examined for each 20 ms window and was 5% or less for all subjects. Statistical differences in the grand mean source waveforms were assessed across conditions and subject groups.
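The 20 ms fitting windows are defined on the global field power (GFP), and fits are accepted only when the residual variance (RV) is at most 5%. The sketch below shows these two quantities with NumPy using their usual definitions. GFP is the spatial standard deviation across channels of average-referenced data. RV is the percentage of measured signal power not explained by the model. This is not BESA’s implementation, whose internals may differ, and the helper names are hypothetical.

```python
import numpy as np

def global_field_power(erp):
    """GFP: spatial standard deviation across channels at each time point (erp: channels x time)."""
    return erp.std(axis=0)

def residual_variance(measured, modeled):
    """Percentage of the measured scalp field not explained by the dipole model."""
    measured = np.asarray(measured, dtype=float)
    modeled = np.asarray(modeled, dtype=float)
    return 100.0 * np.sum((measured - modeled) ** 2) / np.sum(measured ** 2)

def window_around_peak(times_ms, gfp, t_lo, t_hi, half_width_ms=10.0):
    """Boolean mask for a 20 ms window centred on the GFP maximum within [t_lo, t_hi]."""
    in_range = (times_ms >= t_lo) & (times_ms <= t_hi)
    t_peak = times_ms[in_range][np.argmax(gfp[in_range])]
    return np.abs(times_ms - t_peak) <= half_width_ms
```

A fit would be kept when `residual_variance(data_window, model_window) <= 5.0`. Here `data_window` and `model_window` are the measured and dipole-modelled scalp data inside the mask.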

Statistical Analysis

For the behavioral thresholds, the effects of group (musician vs. non-musician), noise level (+5, 0, and −5 dB SNR; noise condition only), and base frequency (250 and 4,000 Hz) were examined using repeated-measures analysis of variance (rmANOVA). The quiet and noise conditions were analyzed separately. For the cortical measures, rmANOVA was used with frequency change (10%, 25%, and 50%) and base frequency as within-subject factors, both set as continuous variables. Group (musician vs. non-musician) was included as a between-subject factor. These analyses were performed using the fitrm and ranova functions in MATLAB. Post hoc testing used Tukey’s honestly significant difference (HSD) test, and paired t-tests were conducted for group comparisons. Pearson’s product-moment correlation coefficient was used to assess relationships between the electrophysiological measures and the behavioral and demographic measures. P values for multiple pairwise comparisons were adjusted using the false discovery rate (FDR). All data are expressed as the mean ± standard error (SE) unless otherwise stated.
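The text states only that p values were adjusted with the false discovery rate. The sketch below assumes the Benjamini–Hochberg step-up procedure, the most common FDR adjustment, and uses hypothetical p values.

```python
def fdr_bh(pvals):
    """Benjamini-Hochberg adjusted p values, returned in the original order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # indices from smallest to largest p
    adjusted = [0.0] * m
    running_min = 1.0                                  # adjusted p values are capped at 1
    for rank in range(m, 0, -1):                       # walk down from the largest p value
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min                      # enforce monotonicity across ranks
    return adjusted

raw_p = [0.01, 0.04, 0.03, 0.005]  # hypothetical uncorrected p values
adj_p = fdr_bh(raw_p)
```

Each raw p value is scaled by m/rank, and the running minimum keeps the adjusted values monotone in rank. As a result, a smaller raw p never receives a larger adjusted p.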

Results

Behavioral Frequency Discrimination

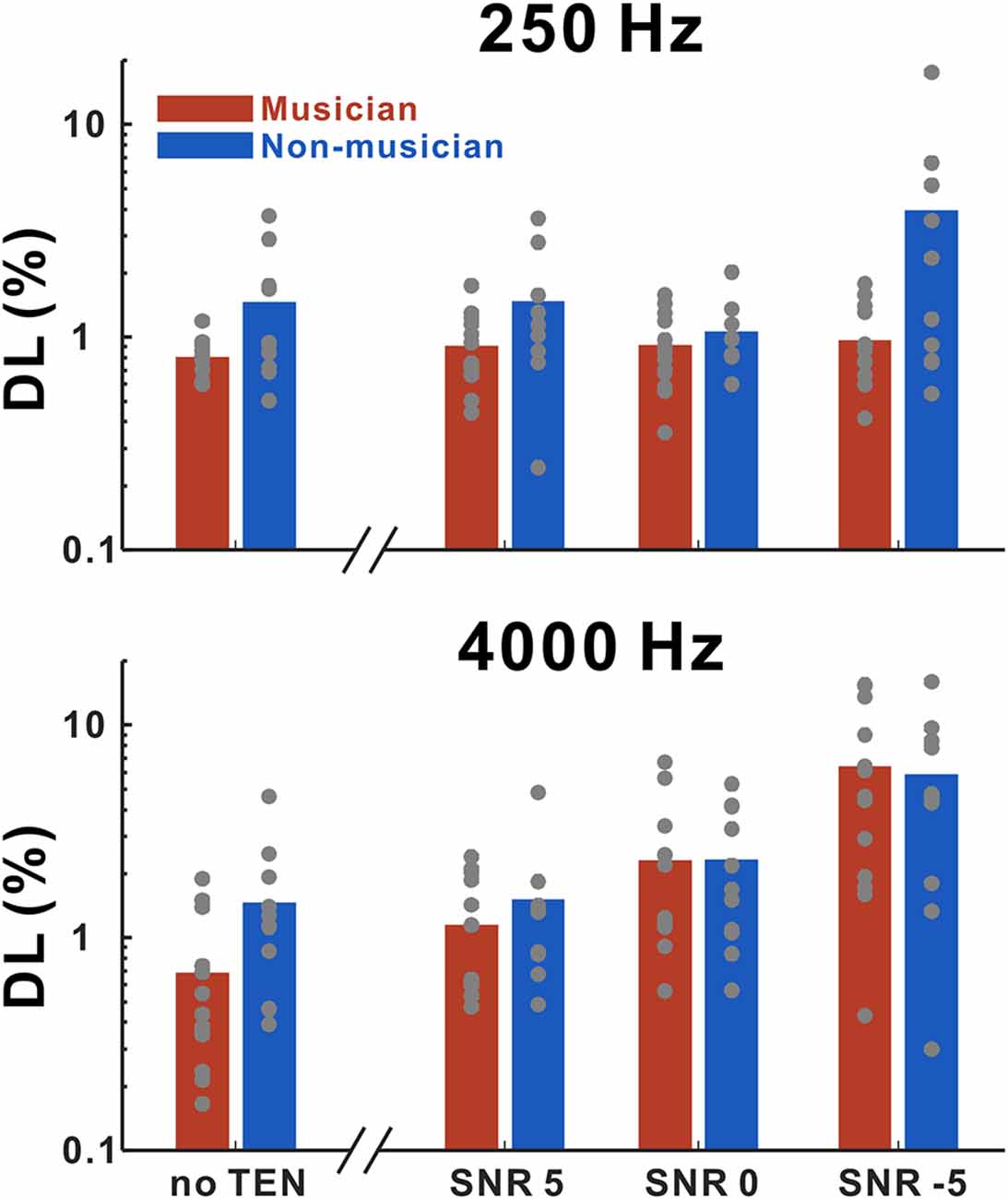

Figure 2 shows frequency discrimination thresholds for 250 and 4,000 Hz as a function of listening condition. We performed rmANOVA to examine the effects of group, base frequency, and noise level (noise condition only), separately for the quiet and noise conditions. In the quiet condition, there was a significant main effect of group (F(1,20) = 5.18; p = 0.034), with lower thresholds in musicians than in non-musicians. In the noise condition, there was a significant interaction between noise level and base frequency (F(2,34) = 64.75; p < 0.0001). Tukey’s HSD test showed that thresholds at the 250 Hz base frequency were significantly lower than those at the 4,000 Hz base frequency in the −5 dB SNR (p < 0.0001) and 0 dB SNR (p = 0.0086) conditions. In addition, thresholds at the 4,000 Hz base frequency differed significantly between +5 and 0 dB SNR (p = 0.0168), +5 and −5 dB SNR (p < 0.0001), and 0 and −5 dB SNR (p < 0.0001).

Figure 2. Mean frequency discrimination thresholds for 250 and 4,000 Hz in musicians and non-musicians as a function of listening condition: no threshold-equalizing noise (TEN), SNR +5 dB, SNR 0 dB, and SNR −5 dB. Gray dots indicate individual subjects. Musicians show lower thresholds than non-musicians for both 250 and 4,000 Hz in the no TEN condition.

Electrophysiology

N1/P2 Cortical Responses

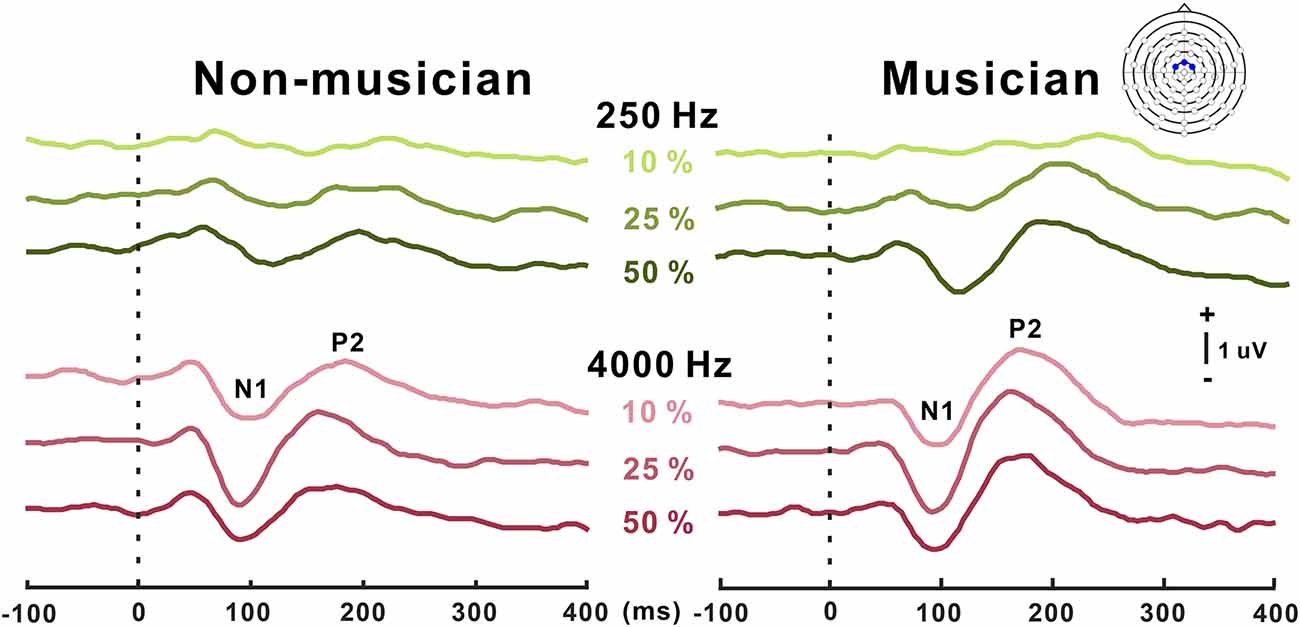

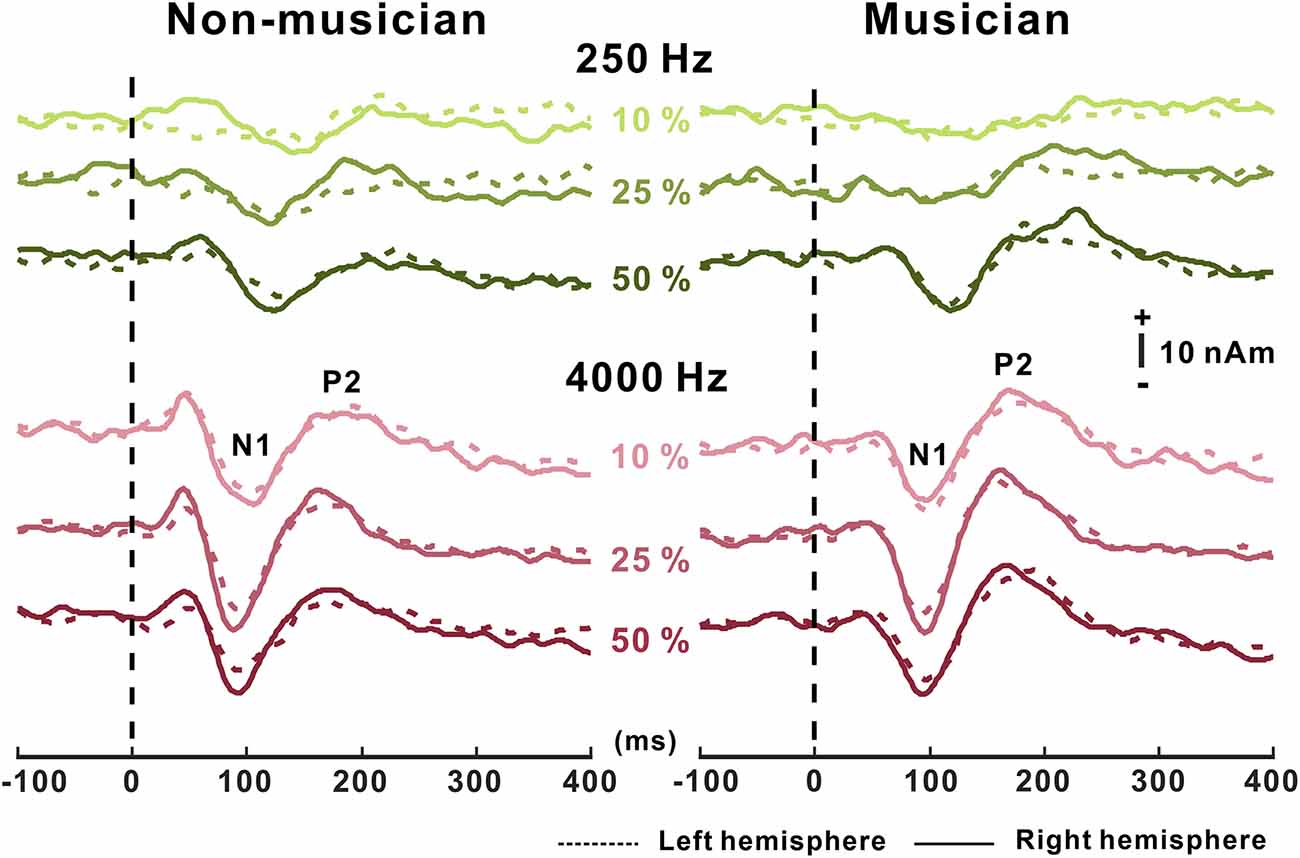

Grand mean waveforms as a function of frequency change for non-musicians and musicians are shown in Figure 3. In general, the N1/P2 cortical responses were modulated by frequency change. The modulation was more evident at 4,000 Hz than at 250 Hz and in the musician group than in the non-musician group.

Figure 3. Grand average N1/P2 responses to frequency changes in non-musicians (left) and musicians (right). Green and red waveforms represent cortical responses for the 250 and 4,000 Hz base frequencies, respectively. The amount of frequency change is indicated as a percentage. The inset at top right shows the equidistant cap layout, with the frontocentral electrodes marked by blue dots.

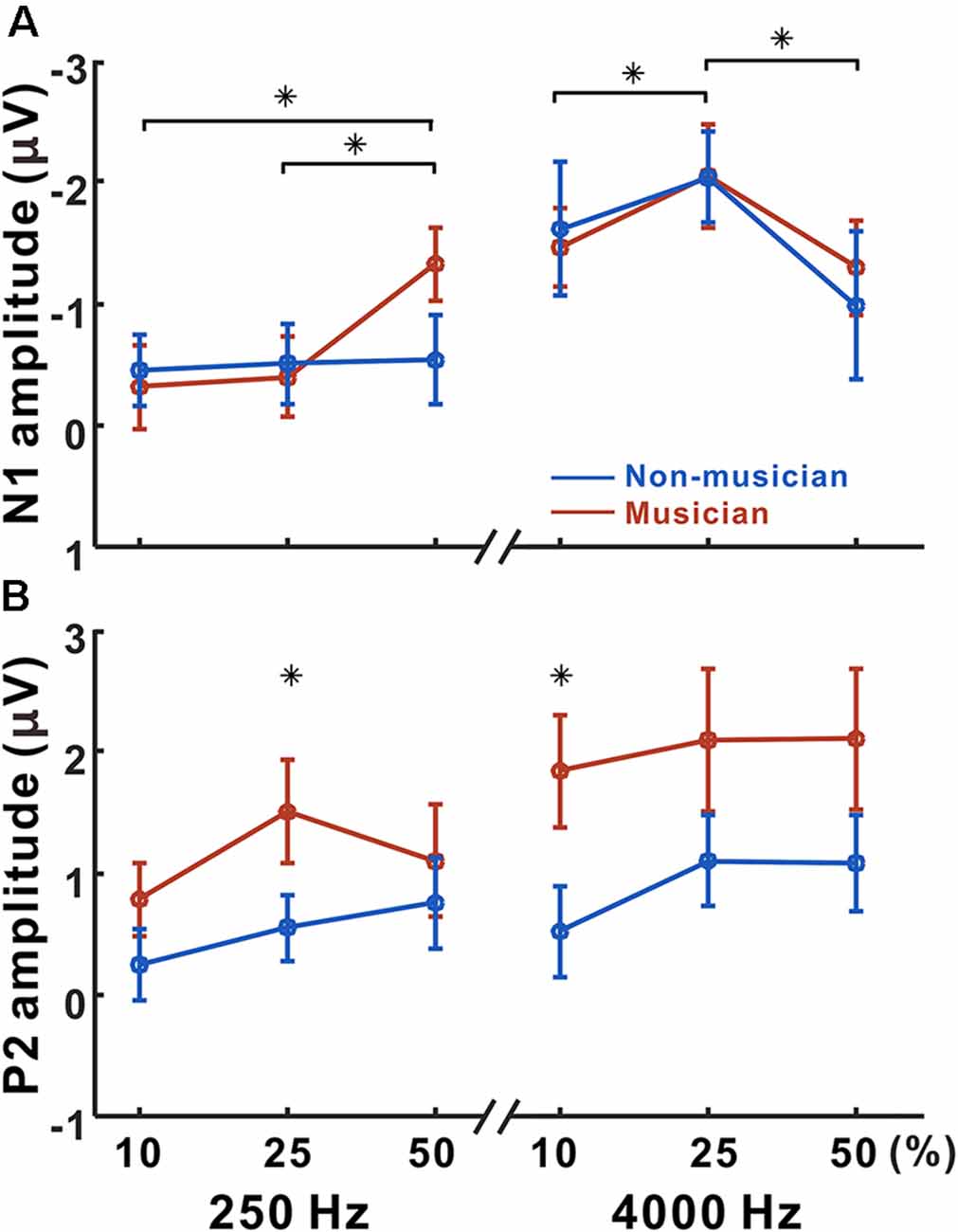

Figure 4 shows N1 and P2 amplitudes as a function of frequency change from the 250 and 4,000 Hz base frequencies for the musician and non-musician groups. rmANOVA on the N1 response revealed a significant frequency change × base frequency interaction for N1 amplitude (F(1,22) = 24.32; p < 0.0001) and latency (F(1,22) = 54.43; p < 0.0001). Post hoc analysis confirmed that at the 250 Hz base frequency, the N1 amplitude to the 50% change was larger than those to the 10% (p = 0.005) and 25% (p = 0.024) changes. At the 4,000 Hz base frequency, the N1 amplitude to the 25% change was larger than those to the 10% (p = 0.038) and 50% (p < 0.0001) changes. In addition, N1 amplitudes were significantly larger for 4,000 Hz than for 250 Hz with the 10% (p < 0.0001) and 25% (p < 0.0001) frequency changes. N1 latency was significantly shorter at 4,000 Hz than at 250 Hz for all frequency changes (p < 0.0001). No significant group differences were found for N1.

Figure 4. Mean N1 and P2 amplitudes as a function of frequency change. Mean N1 (A) and P2 (B) amplitudes in musicians and non-musicians are shown. Significant differences among frequency change conditions are found for N1 (250 Hz: 10% vs. 50% and 25% vs. 50%; 4,000 Hz: 10% vs. 25% and 25% vs. 50%). Differences between musicians and non-musicians are found for P2 amplitude (250 Hz: 25%; 4,000 Hz: 10%). Asterisks (*) indicate significant differences (p < 0.05).

Significant base frequency × frequency change interactions were found for P2 amplitude (F(1,22) = 10.97; p = 0.003) and latency (F(1,22) = 14.64; p < 0.0001). Post hoc tests showed that the P2 amplitude to the 25% change was greater than that to the 10% change for 250 Hz only (p = 0.004). For P2 latency, the 25% frequency change elicited significantly shorter responses than the 10% change for 4,000 Hz (p = 0.019). Compared with 4,000 Hz, P2 responses for 250 Hz were significantly smaller in amplitude for the 10% (p = 0.012), 25% (p = 0.022), and 50% (p = 0.015) frequency changes. They were also longer in latency for the 10% (p < 0.0001), 25% (p < 0.0001), and 50% (p = 0.015) changes. P2 amplitudes differed significantly between groups, being larger in musicians than in non-musicians (F(1,22) = 6.58; p = 0.018). There was also a significant group × frequency change interaction (F(1,22) = 4.67; p = 0.042). Post hoc tests showed that P2 amplitudes were greater in musicians than in non-musicians for the 10% (p = 0.005) and 25% (p = 0.022) frequency changes. In addition, P2 amplitudes for the 25% frequency change were greater than those for the 10% change in both the musician group (p = 0.009) and the non-musician group (p = 0.029). No group differences were found for P2 latency.

Dipole Source Activity

The grand average N1 dipole source waveforms as a function of frequency change for the 250 and 4,000 Hz base frequencies are shown in Figure 5. N1/P2 dipoles were fitted using two symmetric single equivalent dipoles, and the amplitudes and latencies of the N1/P2 source waveforms were averaged for each hemisphere. The overall morphology of the N1 dipole waveforms was similar to that of the scalp-recorded N1 waveforms: N1 activity increased as the frequency change became greater, more clearly at 4,000 Hz than at 250 Hz. P2 dipole source analysis showed greater P2 dipole activity in the musician group than in the non-musician group.

Figure 5. Dipole source waveforms to frequency changes in the non-musician and musician groups. N1 dipole source waveforms to frequency change stimuli in non-musicians (left) and musicians (right). Green and red waveforms represent dipole activity for the 250 and 4,000 Hz base frequencies, respectively. Dashed lines represent dipole activity in the left hemisphere and solid lines activity in the right hemisphere.

A significant frequency change × base frequency × hemisphere interaction was found for N1 dipole amplitude (F(1,22) = 6.45; p = 0.019). Post hoc tests showed that N1 dipole amplitudes were greater in the right hemisphere than in the left hemisphere for the 25% (p = 0.019) and 50% (p = 0.031) changes at 4,000 Hz. A similar frequency change × base frequency × hemisphere interaction was found for N1 dipole latency (F(1,22) = 6.63; p = 0.017). Dipole latencies were shorter in the right hemisphere than in the left hemisphere for the 50% frequency change at 4,000 Hz (p = 0.03).

For P2 dipole amplitude, two interactions were found: frequency change × hemisphere (F(1,22) = 9.43; p = 0.006) and frequency change × base frequency (F(1,22) = 114.04; p < 0.0001). P2 dipole amplitudes were greater in the right hemisphere than in the left hemisphere for the 50% change (p = 0.019), with a similar trend for the 25% change (p = 0.053). P2 dipole amplitudes were also greater for 4,000 Hz than for 250 Hz for the 10% (p = 0.005), 25% (p = 0.007), and 50% (p = 0.029) frequency changes. For P2 dipole latency, a significant base frequency × frequency change interaction was found (F(1,22) = 3,159; p < 0.0001). P2 latencies for the 25% frequency change were significantly shorter than those for the 10% change at 4,000 Hz (p = 0.006). P2 dipole latencies for 250 Hz were also prolonged compared with 4,000 Hz for the 25% (p = 0.002) and 50% (p = 0.004) frequency changes.

The effect of musical training on hemispheric asymmetry in spectral processing was examined by comparing P2 dipole activation between the musician and non-musician groups, separately for the left and right hemispheres. Two-sample t-tests revealed significant group differences in both the left (p = 0.001) and right (p = 0.013) hemispheres. P2 dipole source activity in both hemispheres was therefore larger in musicians than in non-musicians.

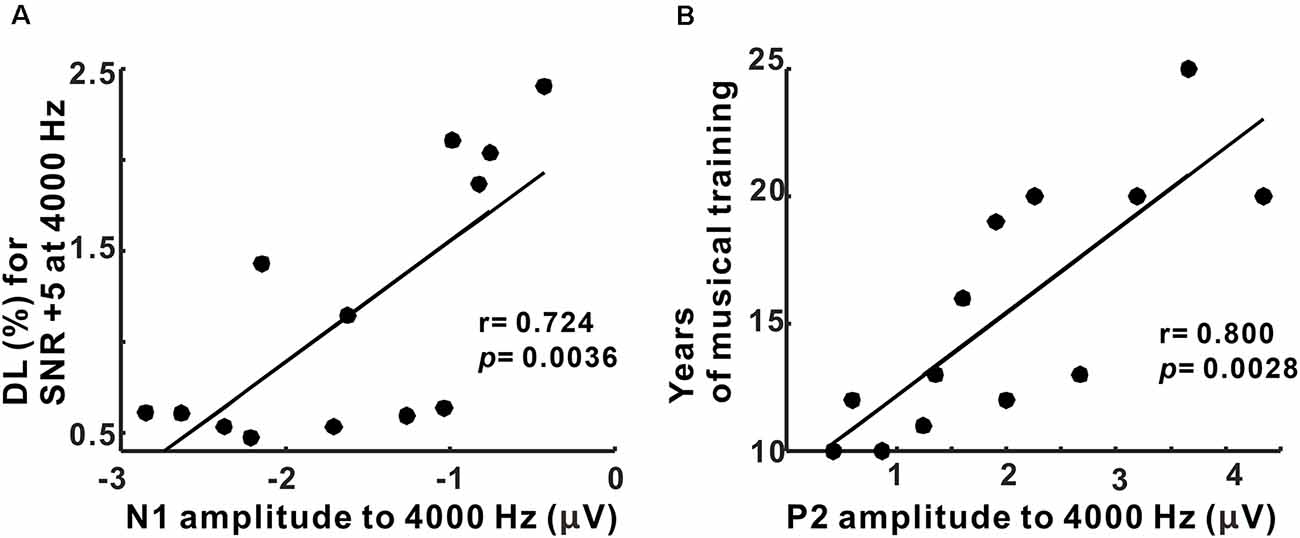

Relationship Between N1/P2 Cortical Response and Behavioral Performance/Duration of Musical Training

Pearson’s correlation analysis showed that N1 amplitudes to the 4,000 Hz base tone were positively correlated with behavioral thresholds at 4,000 Hz with +5 dB SNR TEN (p = 0.0036, corrected for multiple comparisons; Figure 6A). Moreover, P2 amplitudes to the 4,000 Hz base tone were associated with the duration of musical training (p = 0.0028, corrected for multiple comparisons; Figure 6B). However, none of the latency measures were correlated with behavioral performance or musical training.
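For reference, the sketch below computes Pearson’s r and the corresponding t statistic, t = r·sqrt((n − 2)/(1 − r²)), with n − 2 degrees of freedom. The significance of a correlation is based on this statistic before any FDR correction. The data are hypothetical; the individual values behind Figure 6 are not reproduced here.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def r_to_t(r, n):
    """t statistic (df = n - 2) for testing whether r differs from zero."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))

# Hypothetical data: e.g. years of training vs. P2 amplitude (arbitrary units)
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r = pearson_r(x, y)
t = r_to_t(r, len(x))
```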

Figure 6. Correlations between behavioral performance/duration of musical training and N1/P2 amplitudes in musicians. (A) N1 amplitudes in the 4,000 Hz condition are significantly related to frequency discrimination thresholds for SNR +5 dB at 4,000 Hz. (B) P2 amplitudes in the 4,000 Hz condition are significantly related to the duration of musical training.

Discussion

Our aim in this study was to examine the effect of musical training on behavioral frequency discrimination and on N1/P2 cortical responses elicited by tones containing frequency changes. We also assessed how these responses relate to frequency discrimination thresholds and the duration of musical training. Our results demonstrate that P2 amplitude was larger in musicians than in non-musicians, whereas N1 was more stimulus-dependent in that it was modulated by frequency change. Dipole source analysis showed that N1/P2 dipole activity in response to the frequency change stimuli was greater in the right hemisphere. It also showed that P2 dipole source activity was larger in musicians than in non-musicians in both hemispheres. Finally, N1 and P2 amplitudes were related to behavioral performance and the duration of musical training, respectively.

Effect of Musical Training on Behavioral Frequency Discrimination

In the behavioral frequency discrimination test, the thresholds of the musicians were lower than those of the non-musicians in the no TEN condition. These results indicate that the musicians could discriminate smaller spectral differences than the non-musicians under quiet listening conditions. Previous studies assessing pitch discrimination in quiet have reported relatively consistent results: musicians outperform non-musicians in discriminating the spectral features of stimuli, confirming their better pitch perception (Tervaniemi et al., 2005; Micheyl et al., 2006; Liang et al., 2016). Musical training thus appears to enhance the ability to track frequency change and detect spectral cues in sounds. In the noise condition, on the other hand, frequency discrimination thresholds did not differ between musicians and non-musicians. This is consistent with recent studies reporting that any advantage of musical training for sound perception is questionable in the presence of noise masking (Ruggles et al., 2014; Boebinger et al., 2015; Madsen et al., 2019). Whether a musician advantage for auditory perception in noise exists remains controversial. Behavioral studies of musicians have shown that musical training can improve speech-in-noise perception (Parbery-Clark et al., 2009a, 2012; Yoo and Bidelman, 2019). Related studies have provided neurophysiological evidence of better speech-in-noise perception in musicians (Musacchia et al., 2007; Parbery-Clark et al., 2011, 2012; Zendel et al., 2015; reviewed in Coffey et al., 2017). In the current study, however, no musician advantage for perception in noise was evident. One possible reason relates to the test paradigm and the type of stimulus used to evoke a response.
Boebinger et al. (2015) used speech with multiple maskers that varied in content and similarity to speech. They found that musicians performed better on a frequency discrimination task, but this advantage did not carry over to speech-in-noise perception. Similarly, Micheyl et al. (2006) and Ruggles et al. (2014) reported that musicians have an advantage in pitch discrimination that is not present when perceiving masked sounds. Another explanation for the absence of a musical training effect on sound-in-noise processing is that musician benefits in noise may be restricted to sounds that are more linguistically and cognitively demanding. Several studies have suggested that the musician advantage in noise depends on the complexity of the target sounds or tasks (Krizman et al., 2017; Yoo and Bidelman, 2019). For example, Yoo and Bidelman (2019) reported that musicians perceived sentences in noise better than non-musicians but showed no advantage for words in noise. Taken together, these studies suggest that any advantage in sound perception conferred by musical training is questionable in the presence of noise masking and may depend on the complexity of the task (Ruggles et al., 2014; Boebinger et al., 2015; Madsen et al., 2019).

Investigating the neural overlap between pitch perception and the perception of sound in noise could uncover a mechanism that explains the perceptual advantages observed in musicians. Musical practice is a complex form of training that draws on dozens of perceptual and cognitive skills, including hearing, selective attention, and auditory memory. However, previous work examining the relationship between musical experience and cognitive/perceptual skills has shown that musical training is related only to specific musical abilities such as pitch, melody, and rhythm perception (Ruggles et al., 2014). Thus, the selective listening involved in perceiving masked sounds may not be closely linked to musical training. Moreover, it has been suggested that the outcomes of musical training may not generalize beyond tasks closely related to music perception (Geiser et al., 2009; Okada and Slevc, 2018). Rather, high sensitivity to sound in noise seems to be associated with everyday music-related behavior, such as listening to music in daily life (Kliuchko et al., 2015). Alternatively, our result may reflect the neural basis of music perception itself, as the cortical areas governing music and speech may not fully share their neural substrates (Albouy et al., 2020; reviewed in Peretz et al., 2015).

Effects of Musical Training on N1/P2 Cortical Potentials

N1 Modulation as a Function of Frequency Change

We found that N1 was modulated as a function of frequency change to a greater degree than P2, whereas P2 reflected musical training-induced enhancement in the musicians. Previous work has shown that N1/P2 responses are evoked by acoustic changes within a sound, whether in amplitude modulation (Han and Dimitrijevic, 2015), intensity (Dimitrijevic et al., 2009), or frequency (Shahin et al., 2003; Dimitrijevic et al., 2008; Pratt et al., 2009). The N1 can be evoked by frequency changes close to behavioral frequency discrimination thresholds; conversely, it may not be elicited by frequency changes that listeners do not perceive (Martin and Boothroyd, 2000; Jones and Perez, 2001). In general, N1 amplitude is modulated by frequency increases, an effect that is more apparent for stimuli above 1,000 Hz (Picton, 2011). This amplitude enhancement has been attributed to the level of neuronal activation associated with the extent of basilar membrane deflection (Rose et al., 1967; Picton, 2011).

In our study, N1/P2 amplitudes were larger for the higher frequency than for the lower one. Although cortical responses are often reported to be larger for lower than for higher frequencies, some studies using the mismatch negativity (MMN) paradigm have reported results similar to ours. For example, using a frequency change as the deviant stimulus, Novitski et al. (2004) found larger MMN responses at a higher frequency than at a lower one. The increased cortical responses to the higher frequency may be related to the design of our frequency change experiment, in which change stimuli were embedded in ongoing tones. This design resembles the MMN paradigm (Lavikainen et al., 1995), in which infrequent deviant stimuli are presented among repetitive standard sounds. From this point of view, we speculate that the frequency change condition activates neuronal populations in a way similar to the MMN paradigm (see the review in Alho, 1995).

P2 Response Reflecting Musical-Training Induced Plasticity

In contrast to N1, P2 responses to frequency changes were more robust in musicians than in non-musicians. Human electrophysiological studies have also shown enhanced P2 cortical activity in individuals with short-term auditory training (reviewed in Tremblay, 2007; Tremblay et al., 2014), language experience (Wagner et al., 2013), and short- or long-term musical training (Atienza et al., 2002; Shahin et al., 2003; Tremblay, 2007; Tong et al., 2009). More recently, increased P2 responses to trained pitch sounds during passive listening have suggested that training-induced cortical plasticity reflects lasting perceptual changes rather than effects of selective attention (Wisniewski et al., 2020). Growing neuroimaging evidence supports the notion that the neural representations of complex sounds such as music, at both peripheral and central levels, are shaped extensively by experience or training (Parbery-Clark et al., 2012; Sankaran et al., 2020). Perceiving music requires listeners to integrate multiple sources of information, including amplitude, timbre, and pitch, each of which provides cues for music perception. Neuroanatomically, music is thought to be processed through a hierarchy of neural representations distributed across different brain areas. Previous studies have proposed that music perception activates multiple brain areas involved not only in sound discrimination but also in cognitive/perceptual skills (Okada and Slevc, 2018). Among the long-latency responses, P2 is related to neural processes mediating cognitive/perceptual aspects of sound processing (Näätänen et al., 1993; Alain et al., 2007). We therefore propose that P2 indexes training-induced cortical plasticity because it is sensitive to acoustic features that contribute importantly to music perception.

In neuromusicology, there has been a long-standing debate about whether central auditory processing of musical features is shaped by musical training (nurture) or by preexisting factors (nature). Previous findings support experience-driven plasticity in musicians by showing that training changes the neural representation of acoustic features in individuals with extensive musical experience (Pantev et al., 2001; Shahin et al., 2008). Skilled musicians have been reported to exhibit enhanced cortical representations of the timbre of the instrument on which they trained (Pantev et al., 2001; Pantev and Herholz, 2011). Such timbre specificity, constrained to the principal instrument, supports the view that changes in neural activity in musicians are mainly driven by experience (reviewed in Pantev and Herholz, 2011). Furthermore, in studies of the effect of short-term training on cortical plasticity, Tremblay et al. (2001) and Atienza et al. (2002) found that P2 responses were enhanced by short-term intensive training in non-musicians. These results indicate that auditory cortical responses can be altered even by relatively brief training in non-musicians. Given that professional musicians train far longer than the participants in such short-term studies, training-induced cortical plasticity might be expected to be greater in musicians. Numerous studies have reported effects of musical training on auditory processing at both the functional level (Shahin et al., 2003, 2008; Tervaniemi et al., 2005; Liang et al., 2016; Intartaglia et al., 2017) and the structural level (Gaser and Schlaug, 2003; Bermudez et al., 2009; Hyde et al., 2009). Meanwhile, other studies have attempted to explain the musician advantage in terms of innate properties. 
These studies support the view that genetic factors may be involved in the etiology of musical traits, including absolute pitch (Gregersen et al., 1999, 2001; Theusch et al., 2009; Theusch and Gitschier, 2011), congenital amusia (Peretz et al., 2007), and music perception (Drayna et al., 2001; Pulli et al., 2008; Ukkola et al., 2009; Ukkola-Vuoti et al., 2013; Oikkonen et al., 2015). In a study of non-musicians, individuals with superior musical ability showed enhanced neural encoding of speech and, like professional musicians, were less susceptible to noise (Mankel and Bidelman, 2018). Swaminathan and Schellenberg (2018) examined how musical training and non-musical factors relate to musical ability in order to identify predictors of musical competence. In that study, non-musical factors such as socioeconomic status, short-term memory, general cognitive ability, and personality were indirectly associated with musical ability, alongside musical training, suggesting that musical competence arises from complex interactions between nature and nurture. At this point, it seems difficult to resolve the nature vs. nurture debate. To clarify the issue, further studies are needed that compare multiple factors related to musical ability in a large group of musicians.

N1/P2 Correlation With Behavioral Performance and Musical Training Experience

In our study, N1 amplitude was correlated with behavioral sensitivity to frequency change in musicians, whereas P2 amplitude was related to the duration of musical training. The lack of a consistent relationship between N1 and musical training may be explained by the notion that N1 reflects neural processing of frequency information in sound rather than musical experience. In particular, N1 amplitudes to frequency change were related to frequency discrimination thresholds in noise (see Figure 6). This is consistent with previous findings that spectral processing is associated with the perception of sound in noise (Fu et al., 1998; Won et al., 2007) and that difficulty perceiving sound in noise has been attributed to a reduced ability to distinguish acoustic signals from noise (Gaudrain et al., 2007). For P2, amplitude increased with longer duration of musical training but not with age at onset of training (data not shown). Relationships between musical training and auditory-evoked P2 have been reported in previous studies of adults (Atienza et al., 2002; Shahin et al., 2003; Choi et al., 2014) as well as children (Shahin et al., 2003, 2004). In particular, the P2 amplitude elicited by musical tones is correlated with musical training (Choi et al., 2014). These results suggest that continuous musical training may help to maintain cortical synaptic plasticity regardless of when training began. Meanwhile, a previous study relating speech-in-noise thresholds to cognitive measures showed that these thresholds were associated with cognitive factors such as non-verbal IQ but not with musical experience (Boebinger et al., 2015); the authors suggested that the musician advantage may be accounted for by co-variation of higher-order cognitive factors with musicianship. 
To better understand the complex relationships among musical training, cognition, and perception, further studies should compare perceptual measures of sound processing, cortical activity, and cognitive factors, which are interconnected through both bottom-up and top-down auditory pathways.
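The brain-behavior relationships described above (N1 amplitude vs. frequency discrimination threshold, P2 amplitude vs. years of musical training) are per-participant correlations. The exact statistic is not restated in this section, so the following is only a minimal sketch assuming a Pearson correlation, written with the Python standard library. The function name `pearson_r` is hypothetical and is not the authors' analysis code.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Return the Pearson correlation between two paired samples.

    Illustrative sketch (hypothetical helper): x could hold one value per
    participant, such as N1 amplitudes in microvolts, and y the paired
    behavioral measure, such as frequency discrimination thresholds.
    """
    n = len(x)
    if n != len(y):
        raise ValueError("x and y must have the same length")
    if n < 3:
        raise ValueError("need at least 3 paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Cross-products and squared deviations from each sample mean
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


# Toy check with a perfectly linear relation (not study data)
print(pearson_r([1, 2, 3, 4], [2, 4, 6, 8]))  # 1.0
```

Because N1 is a negative-going deflection, the sign of r depends on how amplitude is coded: if amplitudes are kept as signed values in microvolts, a positive r with thresholds means that larger (more negative) N1 responses go with lower, i.e., better, thresholds. In practice a library routine such as `scipy.stats.pearsonr`, which also returns a p-value, would normally be used instead of a hand-written function.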

Asymmetrical Hemispheric Activation to Frequency Change

We investigated whether hemispheric asymmetry in the processing of frequency change exists at the cortical level. The N1/P2 dipole source analysis showed that source activation in response to frequency changes was greater in the right hemisphere than in the left hemisphere. This result is consistent with previous reports (Shahin et al., 2003, 2007; Dimitrijevic et al., 2008; Pratt et al., 2009; Okamoto and Kakigi, 2015) showing that the processing of frequency information is lateralized to the right hemisphere. Right-hemisphere dominance for processing frequency change appears to reflect fundamental brain mechanisms closely related to the functional specialization of the right hemisphere for pitch perception (Zatorre and Belin, 2001). A study of a large sample of musicians reported that musicians were sensitive to pitch change and that their behavioral sensitivity was associated with rightward asymmetry in pitch processing (Schneider et al., 2005). Moreover, a lesion study reported impaired discrimination of pitch direction in patients who had undergone removal of the right Heschl’s gyrus (Johnsrude et al., 2000). Zatorre and Belin (2001) also showed that spectral processing recruits anterior superior temporal regions bilaterally, with greater activation in the right hemisphere.

By comparing left- and right-hemisphere activity separately in musicians and non-musicians, we found that the dipole source activity evoked by frequency changes was larger in musicians than in non-musicians in both hemispheres. This increased bilateral engagement in musicians was mainly attributable to the group difference, and effects of musical training on hemispheric reorganization were observed only for the P2 dipole. Increased bilateral hemispheric activation following long-term musical experience has been reported previously. Using near-infrared spectroscopy, Gibson et al. (2009) found greater bilateral frontal activity in musicians than in non-musicians during a cognitively demanding task and suggested that extensive musical experience yields more symmetrical activity. Tremblay et al. (2009) also reported that short-term auditory training altered the pattern of hemispheric asymmetry, such that P2 dipole sources to training-specific stimuli increased in the left hemisphere. This is consistent with our finding that musicians showed enhanced cortical activity in the left as well as the right hemisphere. Furthermore, all of the musicians in our study except the vocalists play instruments that require both hands. While playing, musicians use auditory feedback to adjust the motor responses of both hands within a very short time. Because this auditory-motor interaction involves interplay between the left and right hemispheres, it may strengthen direct interhemispheric connections (reviewed in Zatorre et al., 2007).
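One common way to quantify the hemispheric asymmetry discussed above is a laterality index computed from left and right dipole source strengths. This section does not state that such an index was computed in this study, so the following is offered only as an illustrative assumption, with hypothetical source strengths $A_R$ and $A_L$ (for example, dipole moments in nAm) for the right and left hemispheres:

$$\mathrm{LI} = \frac{A_R - A_L}{A_R + A_L}, \qquad -1 \le \mathrm{LI} \le 1 \quad (A_R, A_L > 0)$$

Here $\mathrm{LI} > 0$ indicates right-hemisphere dominance and $\mathrm{LI} = 0$ perfect symmetry. Because the index is a ratio, it separates two ways a group could show larger activity in both hemispheres. With hypothetical values $A_R = 30$ and $A_L = 20$, $\mathrm{LI} = 10/50 = 0.2$. Scaling both by the same factor (e.g., 1.5, giving 45 and 30) leaves $\mathrm{LI} = 15/75 = 0.2$ unchanged, whereas adding the same amount to both (e.g., +10, giving 40 and 30) lowers it to $10/70 \approx 0.14$, i.e., more symmetrical. A bilateral increase therefore implies more symmetrical processing only when the left-hemisphere gain is proportionally larger than the right-hemisphere gain.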

Conclusions

In the present study, we showed that the effect of frequency change was more apparent for N1, whereas P2 responses were closely related to musical training. An enhanced N1 response to frequency changes was associated with better frequency discrimination, whereas P2 responses were positively related to the duration of musical training, indicating training-induced cortical plasticity. Musicians also had more robust P2 source activation in both hemispheres, suggesting that musical experience may make the hemispheric processing of frequency change more symmetrical. Given that enhanced P2 activity to frequency change reflects changes in the summation of postsynaptic field potentials in the auditory cortex, our findings suggest that neural plasticity induced by long-term musical training can alter the cortical representation of frequency change even during passive listening. In future studies, we will compare cortical activity to frequency change under noise masking with that in quiet to define the neural overlap between pitch perception and the perception of sound in noise. The effect of attention on spectral processing is also worth investigating, because selective attention in musicians is thought to increase the neural encoding of sound and suppress background noise, enhancing their speech-in-noise perception (Strait and Kraus, 2011).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the ethics committee of Hallym University Sacred Heart Hospital. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

JL and J-HH contributed to the conception and design of the study and wrote the manuscript. JL performed the experiment and statistical analysis. JL, J-HH, and H-JL contributed to manuscript revision, read, and approved the submitted version.

Funding

This project was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2020R1I1A1A0107091411 and 2019R1A2B5B01070129), the Center for Women in Science, Engineering and Technology (WISET) Grant funded by the Ministry of Science, ICT and Future Planning of Korea (MSIP) under the Program for Returners into R&D (WISET-2020-203), and the Hallym University Research Fund (HURF-2017-79).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank Dr. Andrew Dimitrijevic for providing the MATLAB code used to generate the stimuli and perform the behavioral frequency discrimination test.

References

Alain, C., Snyder, J. S., He, Y., and Reinke, K. S. (2007). Changes in auditory cortex parallel rapid perceptual learning. Cereb. Cortex 17, 1074–1084. doi: 10.1093/cercor/bhl018

Albouy, P., Benjamin, L., Morillon, B., and Zatorre, R. J. (2020). Distinct sensitivity to spectrotemporal modulation supports brain asymmetry for speech and melody. Science 367, 1043–1047. doi: 10.1126/science.aaz3468

Alho, K. (1995). Cerebral generators of mismatch negativity (MMN) and its magnetic counterpart (MMNm) elicited by sound changes. Ear Hear. 16, 38–51. doi: 10.1097/00003446-199502000-00004

Atienza, M., Cantero, J. L., and Dominguez-Marin, E. (2002). The time course of neural changes underlying auditory perceptual learning. Learn. Mem. 9, 138–150. doi: 10.1101/lm.46502

Bell, A. (2013). Randomized or fixed order for studies of behavioral syndromes? Behav. Ecol. 24, 16–20. doi: 10.1093/beheco/ars148

Bermudez, P., Lerch, J. P., Evans, A. C., and Zatorre, R. J. (2009). Neuroanatomical correlates of musicianship as revealed by cortical thickness and voxel-based morphometry. Cereb. Cortex 19, 1583–1596. doi: 10.1093/cercor/bhn196

Bidelman, G. M., Davis, M. K., and Pridgen, M. H. (2018). Brainstem-cortical functional connectivity for speech is differentially challenged by noise and reverberation. Hear. Res. 367, 149–160. doi: 10.1016/j.heares.2018.05.018

Bidelman, G. M., Weiss, M. W., Moreno, S., and Alain, C. (2014). Coordinated plasticity in brainstem and auditory cortex contributes to enhanced categorical speech perception in musicians. Eur. J. Neurosci. 40, 2662–2673. doi: 10.1111/ejn.12627

Boebinger, D., Evans, S., Rosen, S., Lima, C. F., Manly, T., and Scott, S. K. (2015). Musicians and non-musicians are equally adept at perceiving masked speech. J. Acoust. Soc. Am. 137, 378–387. doi: 10.1121/1.4904537

Choi, I., Bharadwaj, H. M., Bressler, S., Loui, P., Lee, K., and Shinn-Cunningham, B. G. (2014). Automatic processing of abstract musical tonality. Front. Hum. Neurosci. 8:988. doi: 10.3389/fnhum.2014.00988

Coffey, E. B. J., Mogilever, N. B., and Zatorre, R. J. (2017). Speech-in-noise perception in musicians: a review. Hear. Res. 352, 49–69. doi: 10.1016/j.heares.2017.02.006

Deguchi, C., Boureux, M., Sarlo, M., Besson, M., Grassi, M., Schön, D., et al. (2012). Sentence pitch change detection in the native and unfamiliar language in musicians and non-musicians: behavioral, electrophysiological and psychoacoustic study. Brain Res. 1455, 75–89. doi: 10.1016/j.brainres.2012.03.034

Dimitrijevic, A., Lolli, B., Michalewski, H. J., Pratt, H., Zeng, F. G., and Starr, A. (2009). Intensity changes in a continuous tone: auditory cortical potentials comparison with frequency changes. Clin. Neurophysiol. 120, 374–383. doi: 10.1016/j.clinph.2008.11.009

Dimitrijevic, A., Michalewski, H. J., Zeng, F.-G., Pratt, H., and Starr, A. (2008). Frequency changes in a continuous tone: auditory cortical potentials. Clin. Neurophysiol. 119, 2111–2124. doi: 10.1016/j.clinph.2008.06.002

Dochtermann, N. A. (2010). Behavioral syndromes: Carryover effects, false discovery rates and a priori hypotheses. Behav. Ecol. 21, 437–439. doi: 10.1093/beheco/arq021

Drayna, D., Manichaikul, A., de Lange, M., Snieder, H., and Spector, T. (2001). Genetic correlates of musical pitch recognition in humans. Science 291, 1969–1972. doi: 10.1126/science.291.5510.1969

Fu, Q.-J., Shannon, R. V., and Wang, X. (1998). Effects of noise and spectral resolution on vowel and consonant recognition: acoustic and electric hearing. J. Acoust. Soc. Am. 104, 3586–3596. doi: 10.1121/1.423941

Gaser, C., and Schlaug, G. (2003). Brain structures differ between musicians and non-musicians. J. Neurosci. 23, 9240–9245. doi: 10.1523/JNEUROSCI.23-27-09240.2003

Gaudrain, E., Grimault, N., Healy, E. W., and Béra, J. C. (2007). Effect of spectral smearing on the perceptual segregation of vowel sequences. Hear. Res. 231, 32–41. doi: 10.1016/j.heares.2007.05.001

Geiser, E., Ziegler, E., Jancke, L., and Meyer, M. (2009). Early electrophysiological correlates of meter and rhythm processing in music perception. Cortex 45, 93–102. doi: 10.1016/j.cortex.2007.09.010

Gibson, C., Folley, B. S., and Park, S. (2009). Enhanced divergent thinking and creativity in musicians: a behavioral and near-infrared spectroscopy study. Brain Cogn. 69, 162–169. doi: 10.1016/j.bandc.2008.07.009

Gregersen, P. K., Kowalsky, E., Kohn, N., and Marvin, E. W. (1999). Absolute pitch: prevalence, ethnic variation and estimation of the genetic component. Am. J. Hum. Genet. 65, 911–913. doi: 10.1086/302541

Gregersen, P. K., Kowalsky, E., Kohn, N., and Marvin, E. W. (2001). Early childhood music education and predisposition to absolute pitch: teasing apart genes and environment. Am. J. Med. Genet. 98, 280–282. doi: 10.1002/1096-8628(20010122)98:3<280::aid-ajmg1083>3.0.co;2-6

Han, J. H., and Dimitrijevic, A. (2015). Acoustic change responses to amplitude modulation: a method to quantify cortical temporal processing and hemispheric asymmetry. Front. Neurosci. 9:38. doi: 10.3389/fnins.2015.00038

Han, J. H., Zhang, F., Kadis, D. S., Houston, L. M., Samy, R. N., Smith, M. L., et al. (2016). Auditory cortical activity to different voice onset times in cochlear implant users. Clin. Neurophysiol. 127, 1603–1617. doi: 10.1016/j.clinph.2015.10.049

Hutka, S., Bidelman, G. M., and Moreno, S. (2015). Pitch expertise is not created equal: cross-domain effects of musicianship and tone language experience on neural and behavioural discrimination of speech and music. Neuropsychologia 71, 52–63. doi: 10.1016/j.neuropsychologia.2015.03.019

Hyde, K. L., Lerch, J., Norton, A., Forgeard, M., Winner, E., Evans, A. C., et al. (2009). Musical training shapes structural brain development. J. Neurosci. 29, 3019–3025. doi: 10.1523/jneurosci.5118-08.2009

Intartaglia, B., White-Schwoch, T., Kraus, N., and Schön, D. (2017). Music training enhances the automatic neural processing of foreign speech sounds. Sci. Rep. 7:12631. doi: 10.1038/s41598-017-12575-1

Johnsrude, I. S., Penhune, V. B., and Zatorre, R. J. (2000). Functional specificity in the right human auditory cortex for perceiving pitch direction. Brain 123, 155–163. doi: 10.1093/brain/123.1.155

Jones, S. J., and Perez, N. (2001). The auditory “C-process”: analyzing the spectral envelope of complex sounds. Clin. Neurophysiol. 112, 965–975. doi: 10.1016/s1388-2457(01)00515-6

Kliuchko, M., Heinonen-Guzejev, M., Monacis, L., Gold, B. P., Heikkilä, K. V., Spinosa, V., et al. (2015). The association of noise sensitivity with music listening, training and aptitude. Noise Health 17, 350–357. doi: 10.4103/1463-1741.165065

Koelsch, S., Schröger, E., and Tervaniemi, M. (1999). Superior pre-attentive auditory processing in musicians. Neuroreport 10, 1309–1313. doi: 10.1097/00001756-199904260-00029

Kokoska, S., and Zwillinger, D. (2000). CRC Standard Probability and Statistics Tables and Formulae, Student Edition. Boca Raton: CRC Press, 200.

Krizman, J., Bradlow, A. R., Lam, S. S. Y., and Kraus, N. (2017). How bilinguals listen in noise: linguistic and non-linguistic factors. Biling. 20, 834–843. doi: 10.1017/s1366728916000444

Lavikainen, J., Huotilainen, M., Ilmoniemi, R. J., Simola, J. T., and Näätänen, R. (1995). Pitch change of a continuous tone activates two distinct processes in human auditory cortex: a study with whole-head magnetometer. Electroencephalogr. Clin. Neurophysiol. 96, 93–96. doi: 10.1016/0013-4694(94)00283-q

Liang, C., Earl, B., Thompson, I., Whitaker, K., Cahn, S., Xiang, J., et al. (2016). Musicians are better than non-musicians in frequency change detection: behavioral and electrophysiological evidence. Front. Neurosci. 10:464. doi: 10.3389/fnins.2016.00464

Logue, D. M., Mishra, S., McCaffrey, D., Ball, D., and Cade, W. H. (2009). A behavioral syndrome linking courtship behavior toward males and females predicts reproductive success from a single mating in the hissing cockroach, Gromphadorhina portentosa. Behav. Ecol. 20, 781–788. doi: 10.1093/beheco/arp061

Madsen, S. M. K., Marschall, M., Dau, T., and Oxenham, A. J. (2019). Speech perception is similar for musicians and non-musicians across a wide range of conditions. Sci. Rep. 9:10404. doi: 10.1038/s41598-019-46728-1

Mankel, K., and Bidelman, G. M. (2018). Inherent auditory skills rather than formal music training shape the neural encoding of speech. Proc. Natl. Acad. Sci. U S A 115, 13129–13134. doi: 10.1073/pnas.1811793115

Marques, C., Moreno, S., Castro, S. L., and Besson, M. (2007). Musicians detect pitch violation in a foreign language better than nonmusicians: behavioral and electrophysiological evidence. J. Cogn. Neurosci. 19, 1453–1463. doi: 10.1162/jocn.2007.19.9.1453

Martin, B. A., and Boothroyd, A. (2000). Cortical, auditory, evoked potentials in response to changes of spectrum and amplitude. J. Acoust. Soc. Am. 107, 2155–2161. doi: 10.1121/1.428556

Micheyl, C., Delhommeau, K., Perrot, X., and Oxenham, A. J. (2006). Influence of musical and psychoacoustical training on pitch discrimination. Hear. Res. 219, 36–47. doi: 10.1016/j.heares.2006.05.004

Moore, B. C. J. (2008). The role of temporal fine structure processing in pitch perception, masking and speech perception for normal-hearing and hearing-impaired people. J. Assoc. Res. Otolaryngol. 9, 399–406. doi: 10.1007/s10162-008-0143-x

Musacchia, G., Sams, M., Skoe, E., and Kraus, N. (2007). Musicians have enhanced subcortical auditory and audiovisual processing of speech and music. Proc. Natl. Acad. Sci. U S A 104, 15894–15898. doi: 10.1073/pnas.0701498104

Näätänen, R., Paavilainen, P., Tiitinen, H., Jiang, D., and Alho, K. (1993). Attention and mismatch negativity. Psychophysiology 30, 436–450. doi: 10.1111/j.1469-8986.1993.tb02067.x

Nan, Y., Liu, L., Geiser, E., Shu, H., Gong, C. C., Dong, Q., et al. (2018). Piano training enhances the neural processing of pitch and improves speech perception in Mandarin-speaking children. Proc. Natl. Acad. Sci. U S A 115, E6630–E6639. doi: 10.1073/pnas.1808412115

Novitski, N., Tervaniemi, M., Huotilainen, M., and Näätänen, R. (2004). Frequency discrimination at different frequency levels as indexed by electrophysiological and behavioral measures. Cogn. Brain Res. 20, 26–36. doi: 10.1016/j.cogbrainres.2003.12.011

Oikkonen, J., Huang, Y., Onkamo, P., Ukkola-Vuoti, L., Raijas, P., Karma, K., et al. (2015). A genome-wide linkage and association study of musical aptitude identifies loci containing genes related to inner ear development and neurocognitive functions. Mol. Psychiatry 20, 275–282. doi: 10.1038/mp.2014.8

Okada, B. M., and Slevc, L. R. (2018). Individual differences in musical training and executive functions: a latent variable approach. Mem. Cognit. 46, 1076–1092. doi: 10.3758/s13421-018-0822-8

Okamoto, H., and Kakigi, R. (2015). Encoding of frequency-modulation (FM) rates in human auditory cortex. Sci. Rep. 5:18143. doi: 10.1038/srep18143

Oxenham, A. J. (2012). Pitch perception. J. Neurosci. 32, 13335–13338. doi: 10.1523/JNEUROSCI.3815-12.2012

Pantev, C., and Herholz, S. C. (2011). Plasticity of the human auditory cortex related to musical training. Neurosci. Biobehav. Rev. 35, 2140–2154. doi: 10.1016/j.neubiorev.2011.06.010

Pantev, C., Roberts, L. E., Schulz, M., Engelien, A., and Ross, B. (2001). Timbre-specific enhancement of auditory cortical representations in musicians. Neuroreport 12, 169–174. doi: 10.1097/00001756-200101220-00041

Pantev, C., Ross, B., Berg, P., Elbert, T., and Rockstroh, B. (1998). Study of the human auditory cortices using a whole-head magnetometer: left vs. right hemisphere and ipsilateral vs. contralateral stimulation. Audiol. Neurootol. 3, 183–190. doi: 10.1159/000013789

Parbery-Clark, A., Anderson, S., Hittner, E., and Kraus, N. (2012). Musical experience strengthens the neural representation of sounds important for communication in middle-aged adults. Front. Aging Neurosci. 4:30. doi: 10.3389/fnagi.2012.00030

Parbery-Clark, A., Marmel, F., Bair, J., and Kraus, N. (2011). What subcortical-cortical relationships tell us about processing speech in noise. Eur. J. Neurosci. 33, 549–557. doi: 10.1111/j.1460-9568.2010.07546.x

Parbery-Clark, A., Skoe, E., and Kraus, N. (2009a). Musical experience limits the degradative effects of background noise on the neural processing of sound. J. Neurosci. 29, 14100–14107. doi: 10.1523/jneurosci.3256-09.2009

Parbery-Clark, A., Skoe, E., Lam, C., and Kraus, N. (2009b). Musician enhancement for speech-in-noise. Ear Hear. 30, 653–661. doi: 10.1097/AUD.0b013e3181b412e9

Peretz, I., Cummings, S., and Dubé, M. P. (2007). The genetics of congenital amusia (tone deafness): a family-aggregation study. Am. J. Hum. Genet. 81, 582–588. doi: 10.1086/521337

Peretz, I., Vuvan, D., Lagrois, M.-É., and Armony, J. L. (2015). Neural overlap in processing music and speech. Philos. Trans. R. Soc. Lond. B Biol. Sci. 370:20140090. doi: 10.1098/rstb.2014.0090

Picton, T. (2011). Human Auditory Evoked Potentials. 1st Edn. (San Diego, CA: Plural Publishing Inc).

Pratt, H., Starr, A., Michalewski, H. J., Dimitrijevic, A., Bleich, N., and Mittelman, N. (2009). Auditory-evoked potentials to frequency increase and decrease of high- and low-frequency tones. Clin. Neurophysiol. 120, 360–373. doi: 10.1016/j.clinph.2008.10.158

Pulli, K., Karma, K., Norio, R., Sistonen, P., Göring, H. H. H., Järvelä, I., et al. (2008). Genome-wide linkage scan for loci of musical aptitude in Finnish families: evidence for a major locus at 4q22. J. Med. Genet. 45, 451–456. doi: 10.1136/jmg.2007.056366

Rose, J. E., Brugge, J. F., Anderson, D. J., and Hind, J. E. (1967). Phase-locked response to low-frequency tones in single auditory nerve fibers of the squirrel monkey. J. Neurophysiol. 30, 769–793. doi: 10.1152/jn.1967.30.4.769

Ruggles, D. R., Freyman, R. L., and Oxenham, A. J. (2014). Influence of musical training on understanding voiced and whispered speech in noise. PLoS One 9:e86980. doi: 10.1371/journal.pone.0086980

Sankaran, N., Carlson, T. A., and Thompson, W. F. (2020). The rapid emergence of musical pitch structure in human cortex. J. Neurosci. 40, 2108–2118. doi: 10.1523/JNEUROSCI.1399-19.2020

Schellenberg, E. G. (2015). Music training and speech perception: a gene-environment interaction. Ann. N Y Acad. Sci. 1337, 170–177. doi: 10.1111/nyas.12627

Schellenberg, E. G. (2019). Music training, music aptitude and speech perception. Proc. Natl. Acad. Sci. U S A 116, 2783–2784. doi: 10.1073/pnas.1821109116

Schneider, P., Scherg, M., Dosch, H. G., Specht, H. J., Gutschalk, A., and Rupp, A. (2002). Morphology of Heschl’s gyrus reflects enhanced activation in the auditory cortex of musicians. Nat. Neurosci. 5, 688–694. doi: 10.1038/nn871

Schneider, P., Sluming, V., Roberts, N., Scherg, M., Goebel, R., Specht, H. J., et al. (2005). Structural and functional asymmetry of lateral Heschl’s gyrus reflects pitch perception preference. Nat. Neurosci. 8, 1241–1247. doi: 10.1038/nn1530

Seppänen, M., Hämäläinen, J., Pesonen, A. K., and Tervaniemi, M. (2012). Music training enhances rapid neural plasticity of N1 and P2 source activation for unattended sounds. Front. Hum. Neurosci. 6:43. doi: 10.3389/fnhum.2012.00043

Shahin, A. J., Roberts, L. E., Chau, W., Trainor, L. J., and Miller, L. M. (2008). Music training leads to the development of timbre-specific gamma band activity. NeuroImage 41, 113–122. doi: 10.1016/j.neuroimage.2008.01.067

Shahin, A. J., Roberts, L. E., Pantev, C., Aziz, M., and Picton, T. W. (2007). Enhanced anterior-temporal processing for complex tones in musicians. Clin. Neurophysiol. 118, 209–220. doi: 10.1016/j.clinph.2006.09.019

Shahin, A., Bosnyak, D. J., Trainor, L. J., and Roberts, L. E. (2003). Enhancement of neuroplastic P2 and N1c auditory evoked potentials in musicians. J. Neurosci. 23, 5545–5552. doi: 10.1523/jneurosci.23-13-05545.2003

Shahin, A., Roberts, L. E., and Trainor, L. J. (2004). Enhancement of auditory cortical development by musical experience in children. Neuroreport 15, 1917–1921. doi: 10.1097/00001756-200408260-00017

Stangor, C., and Walinga, J. (2014). Introduction to Psychology – 1st Canadian Edition. Chapter 5.3, Hearing (p. 709). Available online at https://opentextbc.ca/introductiontopsychology/.

Strait, D. L., and Kraus, N. (2011). Can you hear me now? Musical training shapes functional brain networks for selective auditory attention and hearing speech in noise. Front. Psychol. 2:113. doi: 10.3389/fpsyg.2011.00113

Swaminathan, S., and Schellenberg, E. G. (2018). Musical competence is predicted by music training, cognitive abilities and personality. Sci. Rep. 8:9223. doi: 10.1038/s41598-018-27571-2

Tervaniemi, M., Just, V., Koelsch, S., Widmann, A., and Schröger, E. (2005). Pitch discrimination accuracy in musicians vs. nonmusicians: an event-related potential and behavioral study. Exp. Brain Res. 161, 1–10. doi: 10.1007/s00221-004-2044-5

Theusch, E., and Gitschier, J. (2011). Absolute pitch twin study and segregation analysis. Twin Res. Hum. Genet. 14, 173–178. doi: 10.1375/twin.14.2.173

Theusch, E., Basu, A., and Gitschier, J. (2009). Genome-wide study of families with absolute pitch reveals linkage to 8q24.21 and locus heterogeneity. Am. J. Hum. Genet. 85, 112–119. doi: 10.1016/j.ajhg.2009.06.010

Tong, Y., Melara, R. D., and Rao, A. (2009). P2 enhancement from auditory discrimination training is associated with improved reaction times. Brain Res. 1297, 80–88. doi: 10.1016/j.brainres.2009.07.089

Tremblay, K. L. (2007). Training-related changes in the brain: evidence from human auditory-evoked potentials. Semin. Hear. 28, 120–132. doi: 10.1055/s-2007-973438

Tremblay, K. L., Ross, B., Inoue, K., McClannahan, K., and Collet, G. (2014). Is the auditory evoked P2 response a biomarker of learning? Front. Syst. Neurosci. 8:28. doi: 10.3389/fnsys.2014.00028

Tremblay, K. L., Shahin, A. J., Picton, T., and Ross, B. (2009). Auditory training alters the physiological detection of stimulus-specific cues in humans. Clin. Neurophysiol. 120, 128–135. doi: 10.1016/j.clinph.2008.10.005

Tremblay, K., Kraus, N., McGee, T., Ponton, C., and Otis, B. (2001). Central auditory plasticity: changes in the N1–P2 complex after speech-sound training. Ear Hear. 22, 79–90. doi: 10.1097/00003446-200104000-00001

Ukkola, L. T., Onkamo, P., Raijas, P., Karma, K., and Järvelä, I. (2009). Musical aptitude is associated with AVPR1A-haplotypes. PLoS One 4:e5534. doi: 10.1371/journal.pone.0005534

Ukkola-Vuoti, L., Kanduri, C., Oikkonen, J., Buck, G., Blancher, C., Raijas, P., et al. (2013). Genome-wide copy number variation analysis in extended families and unrelated individuals characterized for musical aptitude and creativity in music. PLoS One 8:e56356. doi: 10.1371/journal.pone.0056356

Vonck, B. M. D., Lammers, M. J. W., van der Waals, M., van Zanten, G. A., and Versnel, H. (2019). Cortical auditory evoked potentials in response to frequency changes with varied magnitude, rate and direction. J. Assoc. Res. Otolaryngol. 20, 489–498. doi: 10.1007/s10162-019-00726-2

Wagner, M., Shafer, V. L., Martin, B., and Steinschneider, M. (2013). The effect of native-language experience on the sensory-obligatory components, the P1–N1–P2 and the T-complex. Brain Res. 1522, 31–37. doi: 10.1016/j.brainres.2013.04.045

Wisniewski, M. G., Ball, N. J., Zakrzewski, A. C., Iyer, N., Thompson, E. R., and Spencer, N. (2020). Auditory detection learning is accompanied by plasticity in the auditory evoked potential. Neurosci. Lett. 721:134781. doi: 10.1016/j.neulet.2020.134781

Won, J. H., Clinard, C. G., Kwon, S., Dasika, V. K., Nie, K., Drennan, W. R., et al. (2011). Relationship between behavioral and physiological spectral-ripple discrimination. J. Assoc. Res. Otolaryngol. 12, 375–393. doi: 10.1007/s10162-011-0257-4

Won, J. H., Drennan, W. R., and Rubinstein, J. T. (2007). Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users. J. Assoc. Res. Otolaryngol. 8, 384–392. doi: 10.1007/s10162-007-0085-8

Yoo, J., and Bidelman, G. M. (2019). Linguistic, perceptual and cognitive factors underlying musicians’ benefits in noise-degraded speech perception. Hear. Res. 377, 189–195. doi: 10.1016/j.heares.2019.03.021

Zatorre, R. J., and Belin, P. (2001). Spectral and temporal processing in human auditory cortex. Cereb. Cortex 11, 946–953. doi: 10.1093/cercor/11.10.946

Zatorre, R. J., Chen, J. L., and Penhune, V. B. (2007). When the brain plays music: auditory-motor interactions in music perception and production. Nat. Rev. Neurosci. 8, 547–558. doi: 10.1038/nrn2152

Zendel, B. R., Tremblay, C.-D., Belleville, S., and Peretz, I. (2015). The impact of musicianship on the cortical mechanisms related to separating speech from background noise. J. Cogn. Neurosci. 27, 1044–1059. doi: 10.1162/jocn_a_00758

Keywords: frequency change, spectral processing, musical training, N1/P2 auditory evoked potential, hemispheric asymmetry

Citation: Lee J, Han J-H and Lee H-J (2020) Long-Term Musical Training Alters Auditory Cortical Activity to the Frequency Change. Front. Hum. Neurosci. 14:329. doi: 10.3389/fnhum.2020.00329

Received: 29 April 2020; Accepted: 24 July 2020;

Published: 21 August 2020.

Edited by:

Cunmei Jiang, Shanghai Normal University, China

Reviewed by:

Gavin M. Bidelman, University of Memphis, United States
Fawen Zhang, University of Cincinnati, United States

Copyright © 2020 Lee, Han and Lee. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hyo-Jeong Lee, hyojlee@hallym.ac.kr