ORIGINAL RESEARCH article

Front. Hum. Neurosci., 03 April 2020

Sec. Sensory Neuroscience

Volume 14 - 2020 | https://doi.org/10.3389/fnhum.2020.00109

Frederique J. Vanheusden1,2*, Mikolaj Kegler3, Katie Ireland4, Constantina Georga4, David M. Simpson2, Tobias Reichenbach3, Steven L. Bell2

Background: Cortical entrainment to speech correlates with speech intelligibility and attention to a speech stream in noisy environments. However, there is a lack of data on whether cortical entrainment can help in evaluating hearing aid fittings for subjects with mild to moderate hearing loss. One particular problem that may arise is that hearing aids may alter the speech stimulus during (pre-)processing steps, which might alter cortical entrainment to the speech. Here, the effect of hearing aid processing on cortical entrainment to running speech in hearing-impaired subjects was investigated.

Methodology: Seventeen native English-speaking subjects with mild-to-moderate hearing loss participated in the study. Hearing function and hearing aid fitting were evaluated using standard clinical procedures. Participants then listened to a 25-min audiobook under aided and unaided conditions at 70 dBA sound pressure level (SPL) in quiet conditions. EEG data were collected using a 32-channel system. Cortical entrainment to speech was evaluated using decoders reconstructing the speech envelope from the EEG data. Null decoders, obtained from EEG and the time-reversed speech envelope, were used to assess the chance level reconstructions. Entrainment in the delta- (1–4 Hz) and theta- (4–8 Hz) band, as well as wideband (1–20 Hz) EEG data was investigated.

Results: Significant cortical responses could be detected for all but one subject in all three frequency bands under both aided and unaided conditions. However, no significant differences could be found between the two conditions in the number of responses detected, nor in the strength of cortical entrainment. The results show that the relatively small change in speech input provided by the hearing aid was not sufficient to elicit a detectable change in cortical entrainment.

Conclusion: For subjects with mild to moderate hearing loss, cortical entrainment to speech in quiet at an audible level is not affected by hearing aids. These results clear the pathway for exploring the potential to use cortical entrainment to running speech for evaluating hearing aid fitting at lower speech intensities (which could be inaudible when unaided), or using speech in noise conditions.

Accurate speech understanding is essential in day-to-day communication. There is currently much interest in gaining insight into the entrainment of neural activity in the auditory cortex to running speech stimuli (Zatorre et al., 2002; Schroeder and Lakatos, 2009; Giraud and Poeppel, 2012; Ding et al., 2017; Riecke et al., 2018). Cortical entrainment can be defined as the phase adjustment of neuronal oscillations in the auditory cortex to ensure high sensitivity to relevant (quasi-)rhythmic speech features (Lakatos et al., 2005; Giraud and Poeppel, 2012; Peelle et al., 2013; Alexandrou et al., 2018). These phase adjustments are considered to persist over time (Lakatos et al., 2005). Alternatively, cortical entrainment has been defined as the “observation of a constant phase of neural response to the same speech stimulus,” which avoids the need for an intrinsic relationship between the speech stimulus and neuronal oscillations (Alexandrou et al., 2018).

There is strong evidence that the neural activity in the auditory cortex entrains to low-frequency modulations in speech (in the delta-, theta-, and gamma-band, see e.g., Giraud and Poeppel, 2012; Ding et al., 2017). This idea stems from studies that showed high speech recognition of vowels and consonants after removing high-frequency spectral cues (Shannon et al., 1995). Furthermore, it has been shown that running speech shows a dominant frequency component in this slow modulation range resulting from rhythmic jaw movement associated with syllable structure in English (Peelle et al., 2013), especially around 4 Hz (Golumbic et al., 2012). When removing these low-frequency components, cortical envelope tracking is reduced, with the reduction in correlation consistent with a decrease in perceived speech intelligibility (Doelling et al., 2014). These results have led to the hypothesis that cortical entrainment to speech can be an objective measure for evaluating speech understanding and intelligibility and for an evaluation of hearing loss treatment strategies (Somers et al., 2018).

The exact electrophysiological mechanisms behind cortical entrainment to speech remain unclear. One electrophysiological model suggests that the auditory cortex segments (running) speech into discrete units based on temporal speech features, which allows cortical readout of syllabic and phonetic units (Giraud and Poeppel, 2012). Here, speech onsets are suggested to trigger a stronger coupling between theta-band (4–8 Hz) activity and gamma-band (25–40 Hz) activity cortical generators, with gamma-band activity controlling the excitability of neurons and theta-band activity tracking the temporal speech envelope. Cortical entrainment to these oscillations has also been suggested to be asymmetric, with theta-band activity more strongly represented in the right hemisphere and gamma-band activity more strongly represented in the left hemisphere (Morillon et al., 2012). Computer models of coupled theta-band/gamma-band coupling have further shown that theta-band activity can regulate a phoneme-level response based on gamma-band spiking activity (Hyafil et al., 2015). Studies have also found that theta-band phase-locking of the cortex to speech stimuli is an important mechanism for discriminating speech (Luo and Poeppel, 2007; Howard and Poeppel, 2012).

On the other hand, some studies have suggested that theta-band activity only reflects perceptual processing of speech, whereas delta-band (1–4 Hz) activity is involved with understanding speech (Molinaro and Lizarazu, 2018). This is in line with speech spectrum studies showing spectral peaks at sentence and word rates (0.5 Hz and 2.5 Hz, respectively) and showing that delta-band activity contains prosodic information which if removed reduces perceived speech intelligibility (Woodfield and Akeroyd, 2010; Edwards and Chang, 2013). One study comparing EEG responses to speech in noise at different frequencies with behavioral responses showed a decline in delta-band activity with reduced speech signal-to-noise ratio resembling the decline in subjectively rated speech intelligibility, whereas theta-band activity showed a linear decline (Ding and Simon, 2013). Another study showed that delta-band, low theta-band and high theta-band entrainment correspond to different features of the speech stream, indicating that both delta-band and theta-band entrainment might be necessary for optimal speech understanding (Cogan and Poeppel, 2011).

Several studies have focused on cortical responses to short speech-like sounds using magneto- (MEG) or electro-encephalography (EEG) (Shahin et al., 2007; Ding and Simon, 2013; Millman et al., 2015; Mirkovic et al., 2015; Di Liberto et al., 2018a). Traditionally, these studies focused on evoked cortical responses to short stimuli such as words, consonants and vowels, or speech-like tones (Friedman et al., 1975; Cone-Wesson and Wunderlich, 2003; Tremblay et al., 2003; Shahin et al., 2007; Van Dun et al., 2012). Often, these stimuli are short so that they can be repeated, which allows the signal-to-noise ratio of the cortical response to be enhanced through coherent averaging (Ahissar et al., 2001; Aiken and Picton, 2008), or through correlation between template and response EEG averages (Suppes et al., 1998). These studies have shown that cortical responses differ for different speech tokens (Cone-Wesson and Wunderlich, 2003; Tremblay et al., 2003), even in infancy (Van Dun et al., 2012). They have also been used to estimate auditory thresholds in adults (Lightfoot, 2016). Furthermore, it has been suggested that cortical evoked potentials can reflect speech-in-noise performance in children (Anderson et al., 2010).

The main issue with these approaches is that these short speech stimuli consist of individual, independent onsets and offsets to which the evoked response is measured. Running speech, however, is a continuous flow of onsets and offsets, which are dependent on the stimulus and adapt to the spectro-temporal structures of the stimulus. Running speech therefore gives the potential to track a collective of speech features (often referred to as cortical entrainment), rather than only onsets (evoked responses) (Ding and Simon, 2014). Another aspect of repeated short stimuli is that they do not reflect ecologically relevant stimuli that are encountered in everyday life (Alexandrou et al., 2018). In most cases, running speech is taken from audiobooks (Ding and Simon, 2013; O’Sullivan et al., 2014; Di Liberto et al., 2015; Mirkovic et al., 2015). Although still not exactly the same as naturally occurring every-day speech (dialogues), these stimuli are considered more relevant as they can be encountered in naturally occurring circumstances such as theater visits or news bulletins (Alexandrou et al., 2018).

Evaluation of cortical entrainment to running speech can be achieved through coherence analysis, with a focus on finding responses in the relevant frequency bands (Luo and Poeppel, 2007; Doelling et al., 2014). A technique that has gained much popularity for reconstructing running speech features from EEG (and MEG) signals is the temporal response function (TRF), which represents a linear mapping between features of the speech stimulus and the neural response (Lalor et al., 2006; O’Sullivan et al., 2014; Crosse et al., 2016a). TRF algorithms can be used either to predict EEG signals from stimulus features (forward model) or to reconstruct stimulus features from collected EEG signals (backward model) (Crosse et al., 2016a). Early studies used forward models to predict EEG responses to unseen stimuli based on a single stimulus feature (e.g., the speech envelope) (Lalor and Foxe, 2010). Recently, however, multivariate TRF models have been used to predict EEG responses in separate frequency bands based on speech spectrograms (Crosse et al., 2016a) and even phonetic features (Di Liberto et al., 2015). Similarly, although traditional backward models mostly attempted to only reconstruct a single feature from recorded EEG data (Ding and Simon, 2012, 2013, 2014; Mirkovic et al., 2015), multimodal algorithms have been developed that allow extracting information from both audio and visual features simultaneously (Crosse et al., 2016b). The TRF has shown the potential to identify an attended speaker in a cocktail party setting of many competing voices (Power et al., 2012; Horton et al., 2013; O’Sullivan et al., 2014), to predict speech-in-noise thresholds (Vanthornhout et al., 2018), to decode speech comprehension (Etard and Reichenbach, 2019), and has been used to investigate atypical speech processing in subjects suffering from dyslexia (Di Liberto et al., 2018b). 
TRF algorithms have also been shown to provide better response detection than a cross-correlation analysis between EEG signals and speech stimuli (Crosse et al., 2016a).

Currently, interest is growing to apply TRF algorithms for evaluating hearing function and hearing aid fitting (Decruy et al., 2019). Better audibility, due to wearing a hearing aid, is expected to correlate with the level of cortical tracking of the speech envelope. Some studies have, however, shown that presenting vowel stimuli through hearing aids may affect cortical evoked responses, possibly due to the effect of hearing aid speech processing software on the speech spectrum (Easwar et al., 2012; Jenstad et al., 2012). The potential effect of hearing aid processing on cortical entrainment has, however, not yet been explored.

This study aimed to determine how cortical entrainment to the temporal envelope of running speech stimuli is affected by hearing aids in a cohort of mild-to-moderate hearing-impaired subjects when presenting the speech stimuli at an audible level. This was achieved by comparing the correlation between the original temporal speech envelope and the envelope reconstructed from EEG signals under aided and unaided conditions using a backward TRF algorithm. Subjects with mild to moderate hearing impairment were chosen as they represent the largest group of users seen in typical hearing aid clinics (Suppes et al., 1998). If no effect of hearing aid processing were observed, this would provide a first step toward objective audiological evaluation of hearing aid fitting using ecologically relevant stimuli, rather than clicks or tone stimuli (Billings, 2013). It might also facilitate the application of TRF algorithms in future real-time hearing aid speech processing, for example by providing input for optimization of hearing aid algorithms through cortical entrainment evaluations obtained from in-the-ear EEG systems (Mikkelsen et al., 2015).

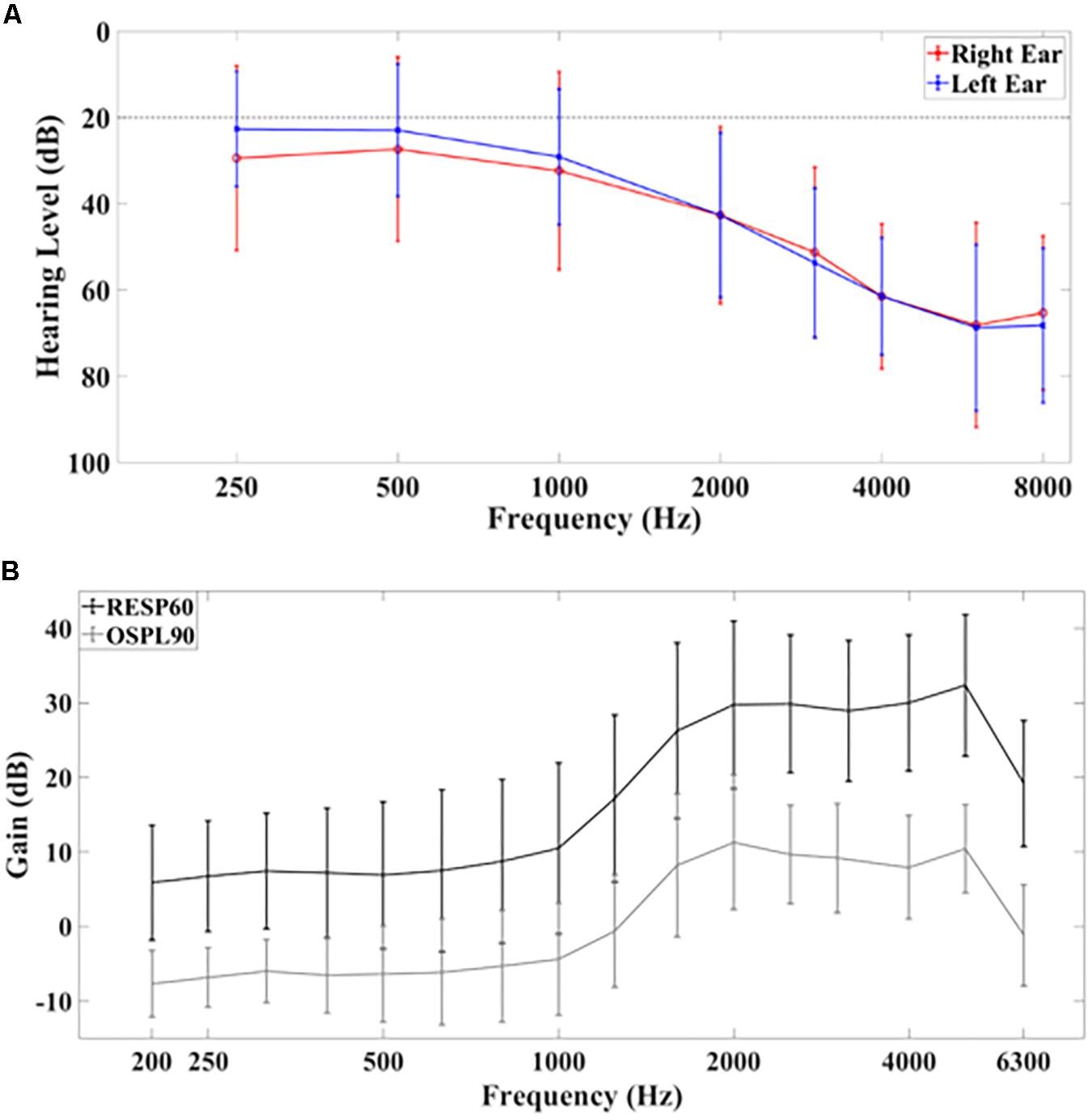

Seventeen native English-speaking subjects (11 males, 6 females, age 65 ± 5 years) with mild to moderate sensorineural and bilateral hearing impairment were recruited for this study (for full demographics, see Table 1). Hearing function was assessed through pure-tone audiometry (PTA). Figure 1A shows the average PTA hearing levels (in dB). Levels for the left ear at 250 Hz, 500 Hz, 1000 Hz, 2000 Hz, 3000 Hz, 4000 Hz, 6000 Hz, and 8000 Hz were: 23 dB ± 13 dB, 23 dB ± 15 dB, 29 dB ± 16 dB, 43 dB ± 19 dB, 54 dB ± 17 dB, 61 dB ± 14 dB, 69 dB ± 19 dB, and 68 dB ± 18 dB, respectively (mean ± standard deviation). For the right ear, these values were: 29 dB ± 21 dB, 27 dB ± 21 dB, 32 dB ± 23 dB, 43 dB ± 21 dB, 51 dB ± 20 dB, 61 dB ± 17 dB, 68 dB ± 24 dB, and 65 dB ± 18 dB, respectively.

Figure 1. (A) Average hearing levels for the left and right ear based on pure tone audiometry. The dashed line at 20 dB indicates the threshold above which hearing is considered to be normal. (B) Hearing aid gain according to RESP60 (black) and OSPL90 (gray) tests. Error bars indicate ±1 standard deviation.

All subjects had hearing aids fitted binaurally based on NAL-NL2 guidelines with real-ear measurements (see Table 2 for hearing aid features) (Aazh and Moore, 2007; Keidser et al., 2011). The average use of hearing aids over all subjects was 84 ± 48 months (mean ± standard deviation, range 4–191 months). The average real ear hearing aid gain for ISTS noise at 60 dB SPL input (RESP60, black) and the output sound pressure level with input of 90 dB SPL and gain full on (OSPL90, gray) measurements are shown in Figure 1B. Speech understanding was further evaluated by asking the participants to repeat a set of randomized Bamford-Kowal-Bench (BKB) sentences (Bench et al., 1979) presented at 65 dBA SPL under aided and unaided conditions. The sentence list was different for both conditions. All participants gave informed consent for the study. The study was approved by the local National Health Service (NHS) ethics committee.

Subjects were asked to listen to eight running speech segments of about 3 min each under aided and unaided conditions (total stimulus length of about 25 min). The stimulus was taken from a freely available audiobook¹ narrated by a female speaker. Speech was sampled at 44,100 Hz and low-pass filtered at 3,000 Hz using a 120th-order finite impulse response (FIR) filter before presentation. The order of conditions was randomized amongst subjects. Segments were presented at 70 dBA equivalent sound pressure level (LeqA SPL) through a loudspeaker positioned 1.2 m directly in front of the subject. After each segment, participants were asked multiple-choice questions about the segment’s contents to determine whether they had paid attention to and understood the speech. Simultaneously, EEG data were collected using a 32-channel EEG system (BioSemi, Netherlands, sampling rate 2048 Hz) with two additional electrodes, one positioned at each mastoid. The electrodes were positioned according to the standard 10–20 system and referenced to the average EEG signal over all electrodes.

Objective assessment of speech understanding was based on measuring the entrainment of slow neural oscillations to speech, by correlating the actual stimulus speech envelope with that reconstructed from the EEG data using a linear model.

The recorded EEG was bandpass filtered (FIR filter, Hamming window, one-pass forward and compensated for delay) into distinct frequency bands of slow neural oscillations. In particular, the corner frequencies of the applied zero-phase filters were 1–4 Hz (transition bandwidths: 1 Hz (low), 2 Hz (high), order 6759), 4–8 Hz (transition bandwidths: 2 Hz (low), 2 Hz (high), order 3379), and 1–20 Hz (transition bandwidths: 1 Hz (low), 5 Hz (high), order 6759), corresponding to the delta-, theta-, and broad-band EEG activity, respectively. The resulting signals were then down-sampled to 64 Hz. All the pre-processing steps specified above were performed using functions from the MNE-Python package (Gramfort et al., 2013, 2014).
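The filtering and down-sampling step can be illustrated with a minimal sketch. It uses NumPy and SciPy rather than the MNE functions used in the study, and the function name `bandpass_and_downsample`, its arguments, and the choice to down-sample by plain sample dropping are assumptions made for illustration only.

```python
import numpy as np
from scipy.signal import firwin


def bandpass_and_downsample(eeg, fs_in, fs_out, low_hz, high_hz, numtaps):
    """Band-pass filter each EEG channel and down-sample by an integer factor.

    eeg has shape (n_samples, n_channels); numtaps must be odd.
    (Hypothetical helper, not the MNE routine used in the study.)
    """
    if numtaps % 2 == 0:
        raise ValueError("numtaps must be odd for an integer group delay")
    factor = int(round(fs_in / fs_out))
    # Hamming-window, linear-phase FIR band-pass design.
    taps = firwin(numtaps, [low_hz, high_hz], pass_zero=False,
                  window="hamming", fs=fs_in)
    # mode="same" keeps the centre of the full convolution, which removes the
    # (numtaps - 1) / 2 sample group delay of the one-pass forward filter.
    filtered = np.column_stack(
        [np.convolve(channel, taps, mode="same") for channel in eeg.T]
    )
    # Assumption: the band-passed signal is already limited well below
    # fs_out / 2, so samples are simply dropped without a second filter.
    return filtered[::factor]
```

With a 2048 Hz recording, a call such as `bandpass_and_downsample(eeg, 2048, 64, 1.0, 4.0, numtaps=6759)` would approximate the delta-band branch; the tap count here is only a stand-in for the filter order quoted in the text.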

To extract the temporal speech envelope from the stimulus, an absolute value of its analytic signal was computed. Specifically, the analytic signal was a complex signal composed of the original stimulus as a real part and its Hilbert transform as an imaginary part. The stimulus’ temporal speech envelope obtained this way was subsequently filtered and down-sampled in the same way as the EEG recordings.
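As a minimal sketch of this envelope extraction, the analytic signal can be obtained with `scipy.signal.hilbert`, which returns the complex signal whose real part is the input and whose imaginary part is its Hilbert transform. The function name `temporal_envelope` is hypothetical, and the subsequent filtering and down-sampling are not shown.

```python
import numpy as np
from scipy.signal import hilbert


def temporal_envelope(stimulus):
    """Return the magnitude of the analytic signal of a real 1-D waveform."""
    # hilbert() returns s(t) + i * H[s](t), not just the Hilbert transform.
    analytic = hilbert(np.asarray(stimulus, dtype=float))
    return np.abs(analytic)
```

For an amplitude-modulated tone, the result follows the slow modulator rather than the fast carrier, which is the property exploited when tracking the speech envelope.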

To reconstruct the stimulus’ temporal speech envelope from the EEG data, a spatiotemporal model was established. Specifically, at each time instance $t_n$, the temporal speech envelope $y(t_n)$ was estimated as a linear combination of the neural recordings $x_j(t_n + \tau_k)$ at delays $\tau_k$:

$$\hat{y}(t_n) = \sum_{j=1}^{N} \sum_{k=1}^{T} \beta_{j,k}\, x_j(t_n + \tau_k)$$

The index $j$ refers to the recording channel, $\tau_k$ to the delay of the EEG with respect to the stimulus, ranging from −100 ms to 400 ms, and $\beta_{j,k}$ is the set of decoder weights. For each subject, the model coefficients were obtained by ridge regression: $\beta = (X^{t}X + \lambda I)^{-1}X^{t}y$, where $X$ is the design matrix, $X^{t}$ is the transpose of $X$, $\lambda$ is a regularization parameter and $I$ is an identity matrix. The $NT$ columns of the design matrix ($N$ channels at $T$ delays) correspond to recording channels at different latencies $x_j(t_n + \tau_k)$ and each row represents a different time $t_n$.
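The decoder described above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: the helper names (`decoder_lags`, `lagged_design_matrix`, `fit_ridge_decoder`), the rounding of the delay range to whole samples, and the zero-padding at the edges of the recording are all choices made here.

```python
import numpy as np


def decoder_lags(fs, t_min=-0.1, t_max=0.4):
    """Integer sample delays covering t_min..t_max seconds (rounding assumed)."""
    return np.arange(int(round(t_min * fs)), int(round(t_max * fs)) + 1)


def lagged_design_matrix(eeg, lags):
    """Build X with one column per (delay, channel) pair.

    eeg has shape (n_samples, n_channels). Row n of the block for delay
    tau holds eeg[n + tau]; samples outside the recording are set to zero.
    """
    n_samples, n_channels = eeg.shape
    X = np.zeros((n_samples, n_channels * len(lags)))
    for k, lag in enumerate(lags):
        shifted = np.zeros((n_samples, n_channels))
        if lag > 0:
            shifted[:-lag] = eeg[lag:]
        elif lag < 0:
            shifted[-lag:] = eeg[:lag]
        else:
            shifted[:] = eeg
        X[:, k * n_channels:(k + 1) * n_channels] = shifted
    return X


def fit_ridge_decoder(X, y, lam):
    """Solve (X^t X + lam * I) beta = X^t y for the decoder weights."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)
```

Given weights `beta`, the reconstructed envelope is `X @ beta`. Solving the linear system directly avoids forming the matrix inverse explicitly, which is usually the more stable choice.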

To evaluate the reconstruction performance of the decoder, a five-fold cross-validation procedure was applied for each participant. In each of the five iterations, 80% of the data (∼20 min) was used to estimate the model and the remaining 20% (∼5 min) was used to reconstruct the temporal speech envelope from the EEG (ŷ = Xβ). The reconstructed envelope (ŷ) and the actual envelope (y) were subsequently divided into 10-s segments (∼30 segments per fold of data) and the Pearson’s correlation coefficient between the two was computed for each segment. For each subject, 50 different regularization parameters with values ranging from $10^{-15}$ to $10^{15}$ were tested to optimize the decoder. The optimal regularization parameter was the one that yielded the largest correlation coefficient averaged across all the testing folds and segments. For the optimal regularization parameter, the correlation coefficients obtained from all the testing segments across all five folds were then pooled to form a single distribution. The mean and standard deviation of this distribution reflected the envelope reconstruction performance of the optimized decoder.
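The cross-validation and regularization sweep can be sketched as follows. The function names are hypothetical, the folds are assumed to be contiguous blocks of samples, and a logarithmic spacing of the 50 regularization values is an assumption, since the text gives only their range.

```python
import numpy as np


def segment_correlations(y_true, y_pred, seg_len):
    """Pearson correlation for each consecutive, non-overlapping segment."""
    n_seg = len(y_true) // seg_len
    return np.array([
        np.corrcoef(y_true[i * seg_len:(i + 1) * seg_len],
                    y_pred[i * seg_len:(i + 1) * seg_len])[0, 1]
        for i in range(n_seg)
    ])


def cross_validate_decoder(X, y, lambdas, seg_len, n_folds=5):
    """Return the best regularization value and its pooled test correlations."""
    lambdas = [float(lam) for lam in lambdas]
    folds = np.array_split(np.arange(len(y)), n_folds)  # contiguous folds
    eye = np.eye(X.shape[1])
    pooled = {lam: [] for lam in lambdas}
    for test_idx in folds:
        train = np.ones(len(y), dtype=bool)
        train[test_idx] = False
        # Covariance terms depend only on the fold, not on lambda.
        xtx = X[train].T @ X[train]
        xty = X[train].T @ y[train]
        for lam in lambdas:
            beta = np.linalg.solve(xtx + lam * eye, xty)
            pooled[lam].append(
                segment_correlations(y[test_idx], X[test_idx] @ beta, seg_len)
            )
    mean_r = {lam: np.concatenate(r).mean() for lam, r in pooled.items()}
    best_lam = max(mean_r, key=mean_r.get)
    return best_lam, np.concatenate(pooled[best_lam])
```

At a 64 Hz sampling rate, 10-s segments correspond to `seg_len=640`, and a sweep such as `np.logspace(-15, 15, 50)` covers the stated range.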

To assess the empirical chance level reconstruction performance, the same procedure, including the same cross-validation and the optimization of the regularization parameter, was applied but the temporal speech envelope was reversed in time. The obtained correlation coefficients from short testing segments were similarly pooled together across all the five folds to form a null distribution. The chance-level correlations were subsequently compared to those obtained from the forward speech model, using the same methodology, via a Wilcoxon signed-rank test.
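A minimal sketch of the chance-level comparison is given below. It assumes the segment-wise correlations of the real and null decoders are available as paired arrays of equal length, and it uses the default two-sided form of `scipy.stats.wilcoxon`; the source does not state whether a one- or two-sided test was used, and the function names are hypothetical.

```python
import numpy as np
from scipy.stats import wilcoxon


def time_reversed(envelope):
    """Envelope reversed in time, used to train and test the null decoder."""
    return np.asarray(envelope)[::-1].copy()


def compare_to_chance(r_true, r_null):
    """Paired Wilcoxon signed-rank test between real and null correlations."""
    statistic, p_value = wilcoxon(r_true, r_null)
    return statistic, p_value
```

Reversing the envelope keeps its spectrum and amplitude distribution but breaks its temporal alignment with the EEG, so any remaining correlation reflects chance.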

As Pearson’s correlation coefficients, used here to assess the temporal speech envelope reconstruction, were non-normally distributed, non-parametric tests were used during the study. EEG correlations and behavioral results under aided and unaided conditions were compared using a Wilcoxon signed-rank test. A Kruskal–Wallis test was used for comparing differences in variances. Linear correlations between cortical entrainment correlations and behavioral data were fitted using a bisquare robust regression algorithm. As two subjects could not complete the BKB sentence test due to experiments overrunning, their EEG data were excluded for this part of the study. Significance was assessed after adjusting for multiple comparisons based on expected false-discovery rates according to the Benjamini–Yekutieli algorithm (Benjamini and Yekutieli, 2001). Note that this adjustment allows for p-values to be higher than 1.
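The Benjamini–Yekutieli adjustment can be sketched as below. This is the standard step-up form with a running minimum over ranks; whether the authors' software applied exactly this variant is an assumption. The `clip` flag is a hypothetical option: left at `False`, adjusted values may exceed 1, as noted above.

```python
import numpy as np


def benjamini_yekutieli(pvals, clip=False):
    """Benjamini-Yekutieli adjusted p-values, returned in the input order."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    ranks = np.arange(1, m + 1)
    # Harmonic-sum correction that makes the procedure valid under dependence.
    c_m = np.sum(1.0 / ranks)
    scaled = p[order] * m * c_m / ranks
    # Running minimum from the largest rank down keeps the values monotone.
    adj_sorted = np.minimum.accumulate(scaled[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = adj_sorted
    return np.minimum(adjusted, 1.0) if clip else adjusted
```

For three raw p-values of 0.01, 0.04 and 0.03, the correction factor is 3 × (1 + 1/2 + 1/3) = 5.5, so the smallest p-value becomes 0.055 and the other two share the value 0.04 × 5.5 / 3 ≈ 0.073.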

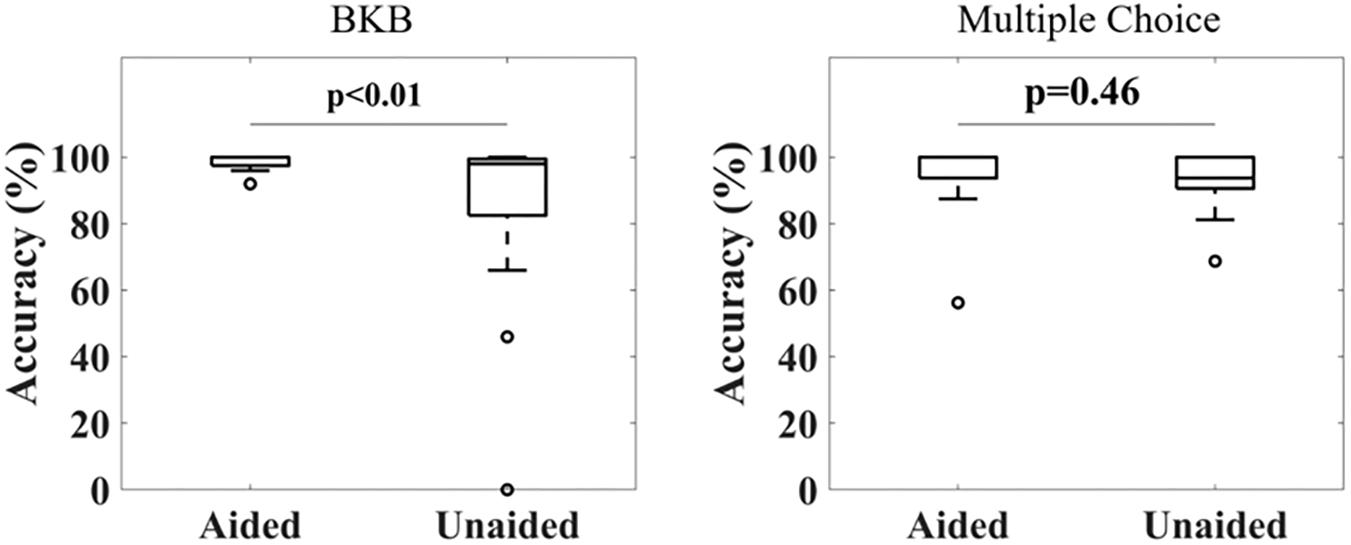

Figure 2 shows the accuracy in repeating the BKB sentences (left) and answering the multiple-choice questions (right) for all subjects under aided and unaided conditions. For the BKB sentences, 16 out of 17 subjects achieved a score above 95% under aided conditions, with the other subject scoring 92% (mean ± standard deviation: 98% ± 2%). The scores for unaided conditions were distributed over a larger range, with four subjects scoring less than 90% and another three subjects scoring below 95% (mean ± standard deviation: 84% ± 28%). Applying a Wilcoxon signed-rank test showed a significant difference in the distribution means of both conditions (p < 0.001). A significant difference could still be observed after removing data from two outliers scoring <50% in the unaided condition (p = 0.04). Similarly, the Kruskal–Wallis test showed a significant difference in variance between both conditions (p = 0.032). For the multiple-choice questions, high accuracy was obtained for both aided (mean ± standard deviation: 95% ± 10%) and unaided (mean ± standard deviation: 92% ± 11%) conditions. No significant difference between the distributions was found (Wilcoxon signed-rank test).

Figure 2. Distribution of correct response ratios under aided and unaided conditions for a BKB sentence list (Left) and multiple choice questions related to the audiobook (Right). A significant difference in accuracy was observed for the BKB sentence lists under aided compared to unaided conditions, but not for the multiple choice questions (Wilcoxon signed-rank test, α = 0.05).

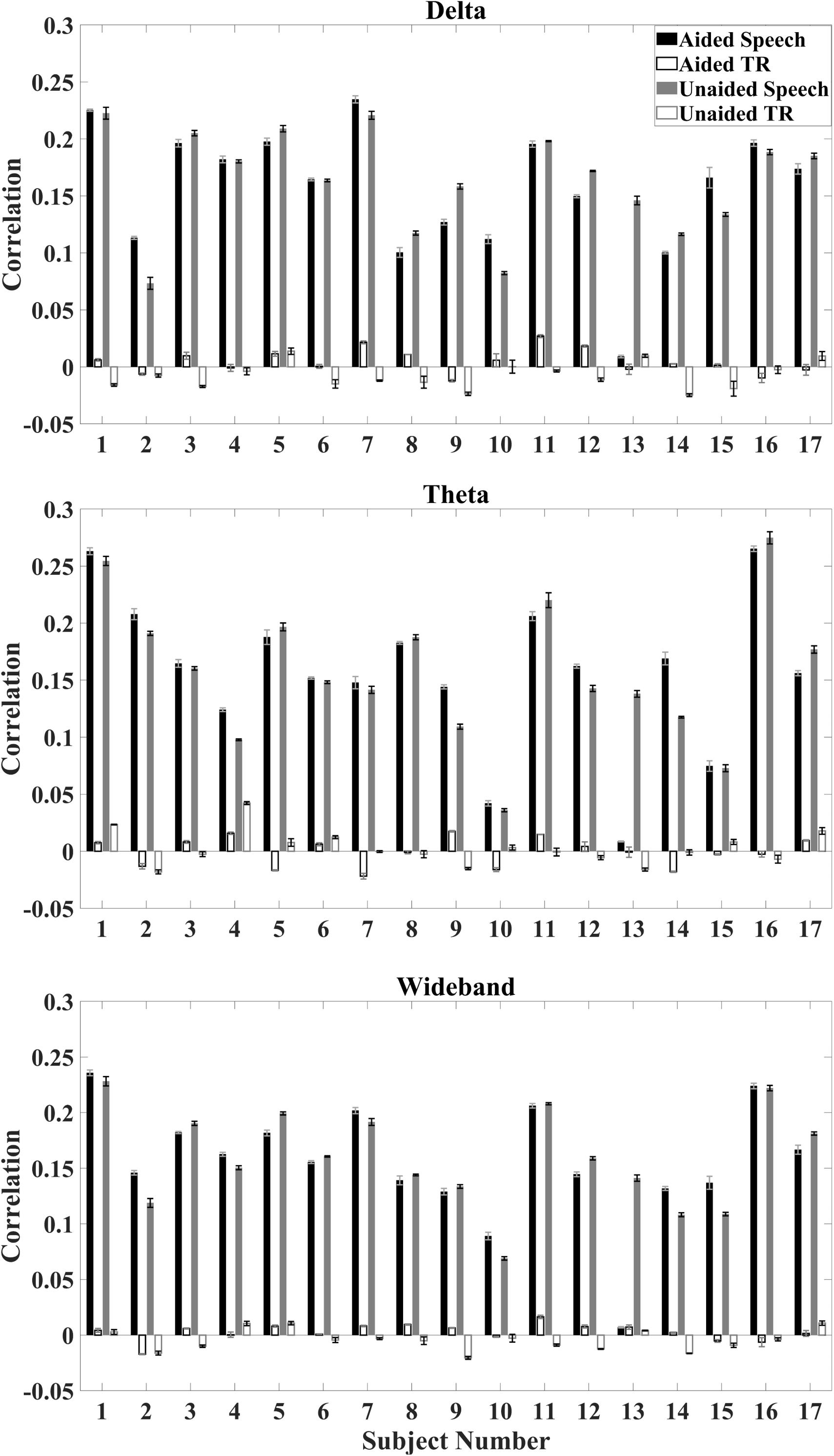

Correlations between the reconstructed temporal envelope based on the decoder algorithm and the time-aligned as well as time-reversed speech envelope were evaluated on the individual subject and the population level. On an individual level, the median correlation between reconstructed envelopes and the aligned speech envelope is higher than the median correlation between the reconstructed envelopes and time-reversed speech envelope for all subjects (Figure 3).

Figure 3. Average correlation for individual subjects under aided and unaided conditions for speech envelopes reconstructed from EEG signals to the aligned speech and time-reversed (TR) speech envelope. Error bars indicate standard deviations. Top: delta activity; Middle: theta activity; Bottom: wideband activity.

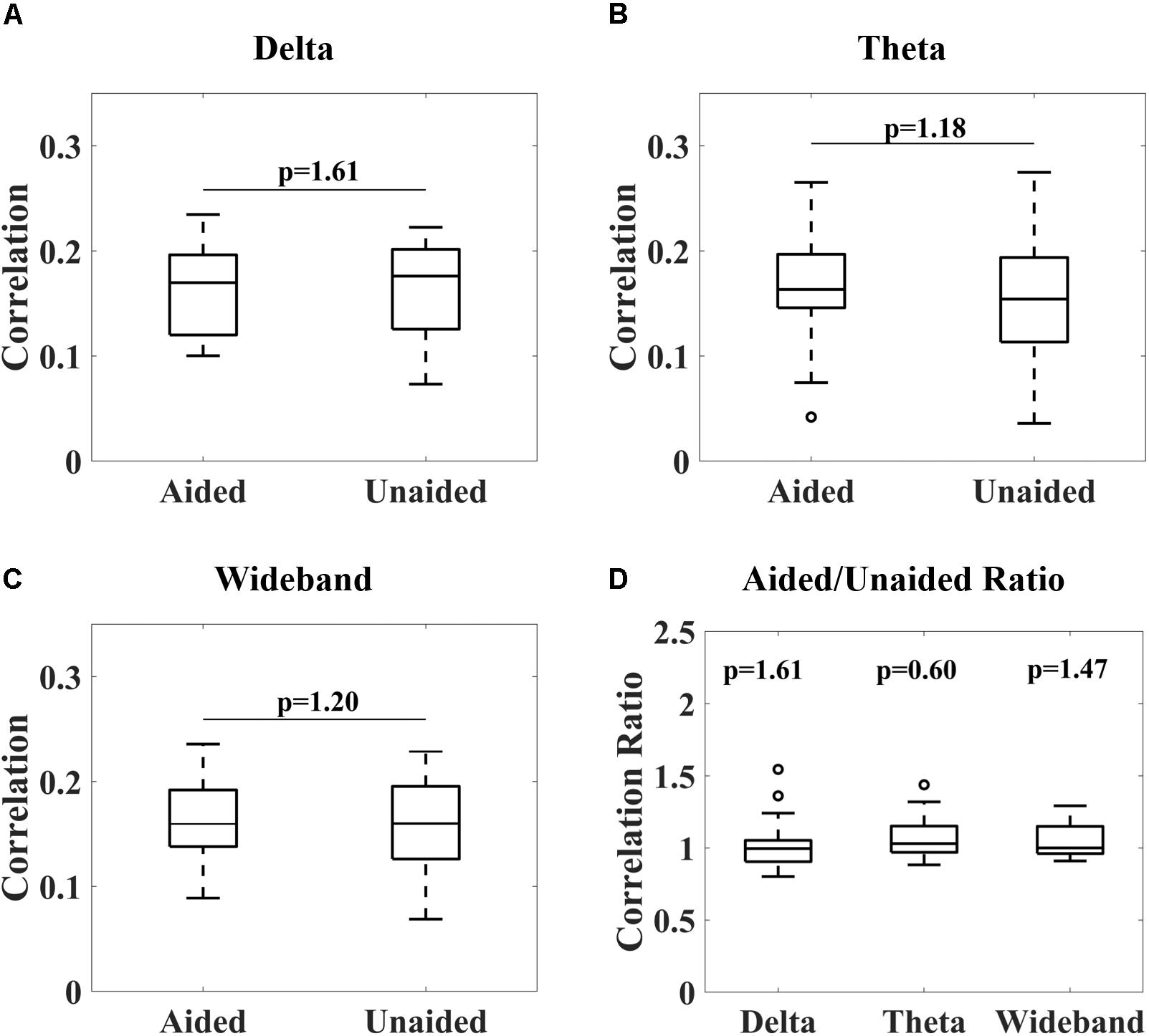

Figure 4 shows the overall distribution of correlations between the reconstructed and speech envelope for the different EEG bands under aided and unaided conditions for the remaining subjects. Generally, results show that hearing aids did not significantly alter cortical entrainment to the speech envelope. For all EEG bands, correlations varied between 0.07 and 0.24 for wideband and delta-band activity and 0.04 and 0.27 for theta-band activity over all subjects except subject 13. After checking the power spectral density function, it was observed that a technical issue occurred while collecting subject 13’s data, which were therefore removed from further analysis.

Figure 4. Correlations between reconstructed and real audiobook speech envelopes for delta-band (1–4 Hz, A), theta-band (4–8 Hz, B) and wideband (1–20 Hz, C) activity under aided and unaided conditions. No significant differences in distribution could be observed (Wilcoxon signed-rank test, p-values adjusted for multiple comparisons according to Benjamini–Yekutieli algorithm). A Wilcoxon signed-rank test comparing aided/unaided ratios further showed these ratios were not significantly different from 1 (D).

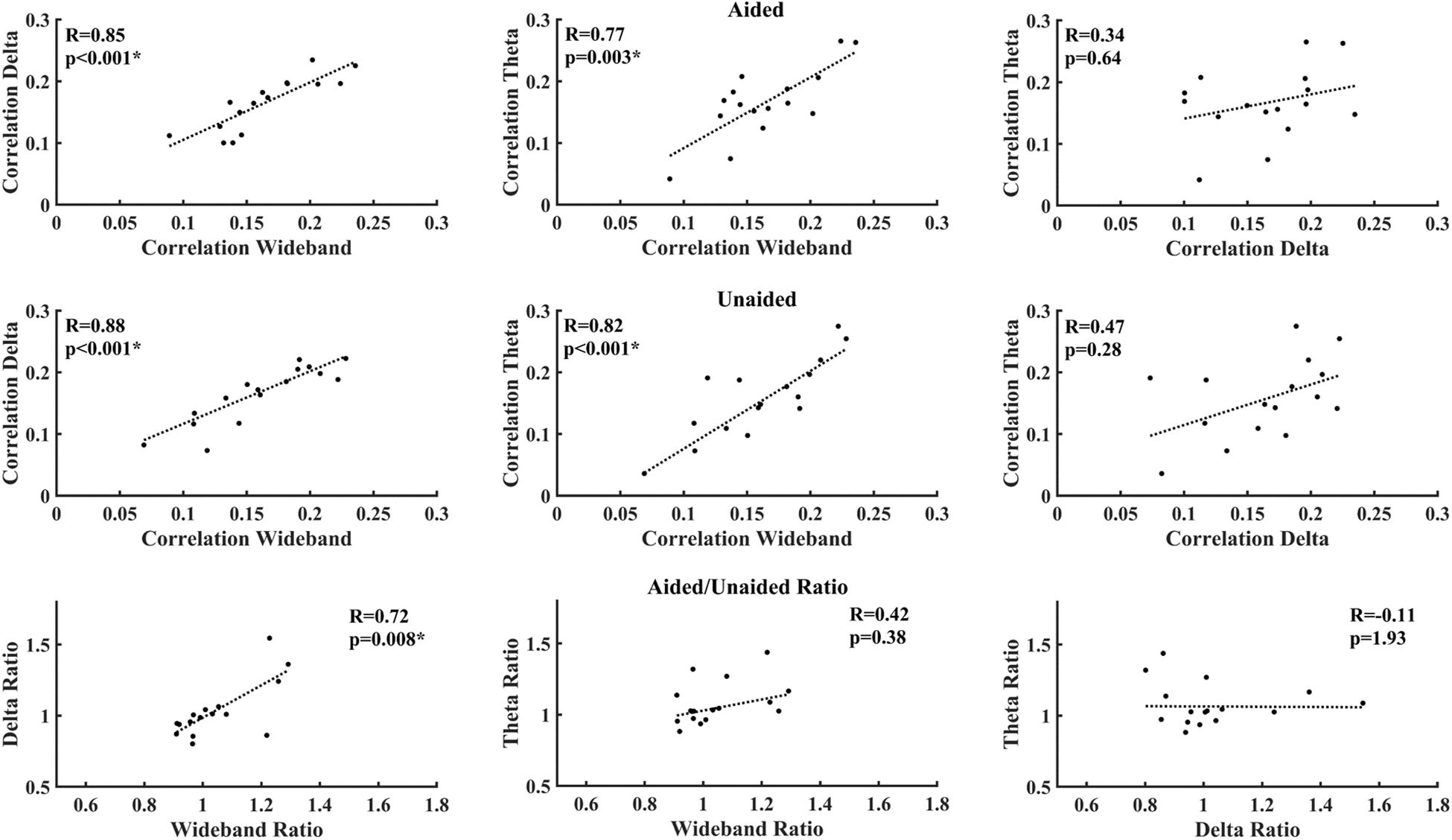

Figure 5 shows the correlations between different EEG bands under aided and unaided conditions. Under both conditions, delta-band and theta-band activity each show a strong correlation with the wideband activity that remains significant after correcting for multiple comparisons. However, the correlation between delta-band and theta-band activity is lower and not significant.

Figure 5. Correlation between average decoder correlation values under aided (Top) and unaided conditions (Middle), as well as between aided/unaided ratios (Bottom). Asterisks indicate significant correlations after correcting for multiple comparisons (Benjamini–Yekutieli adjusted p-values).

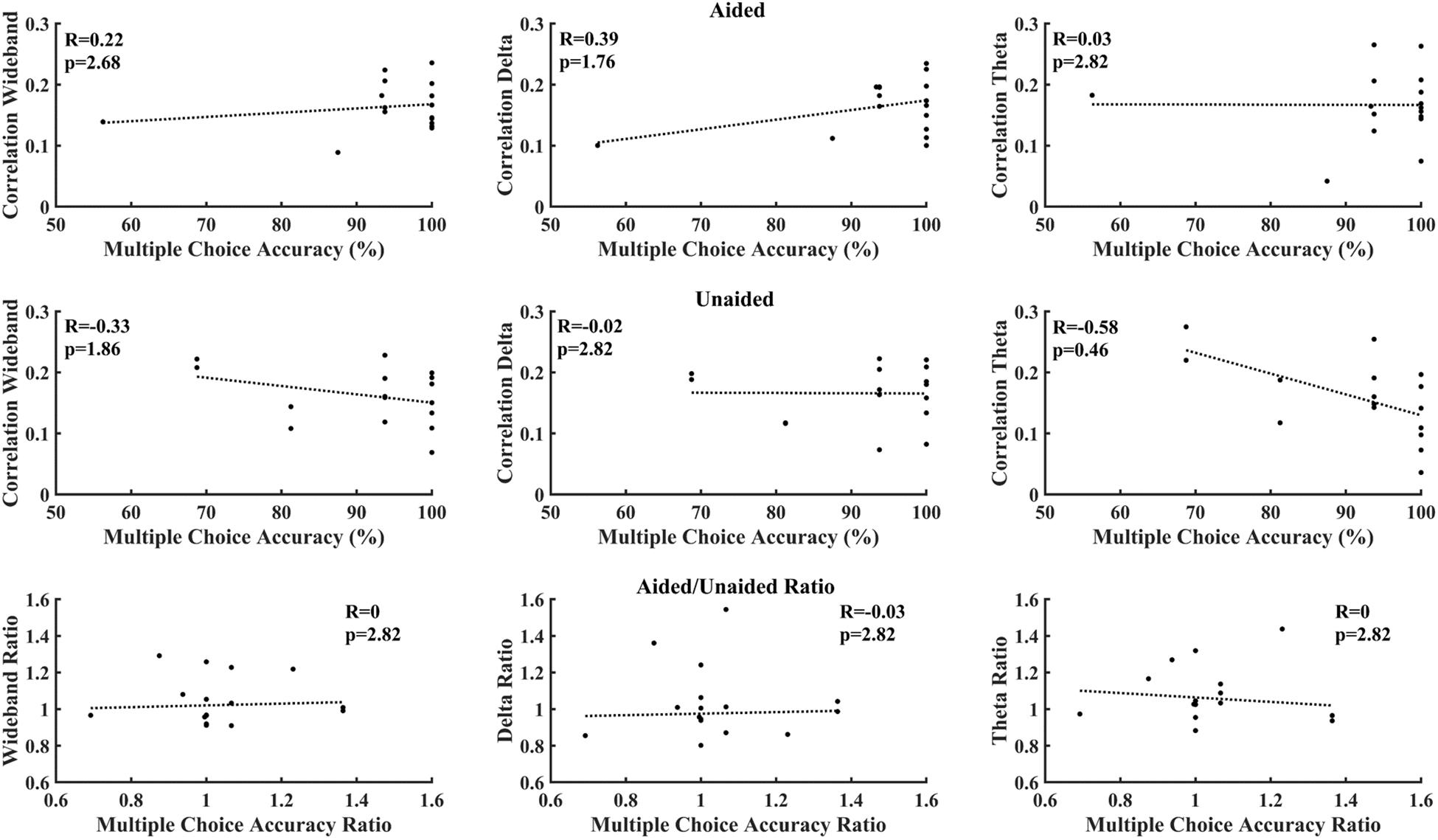

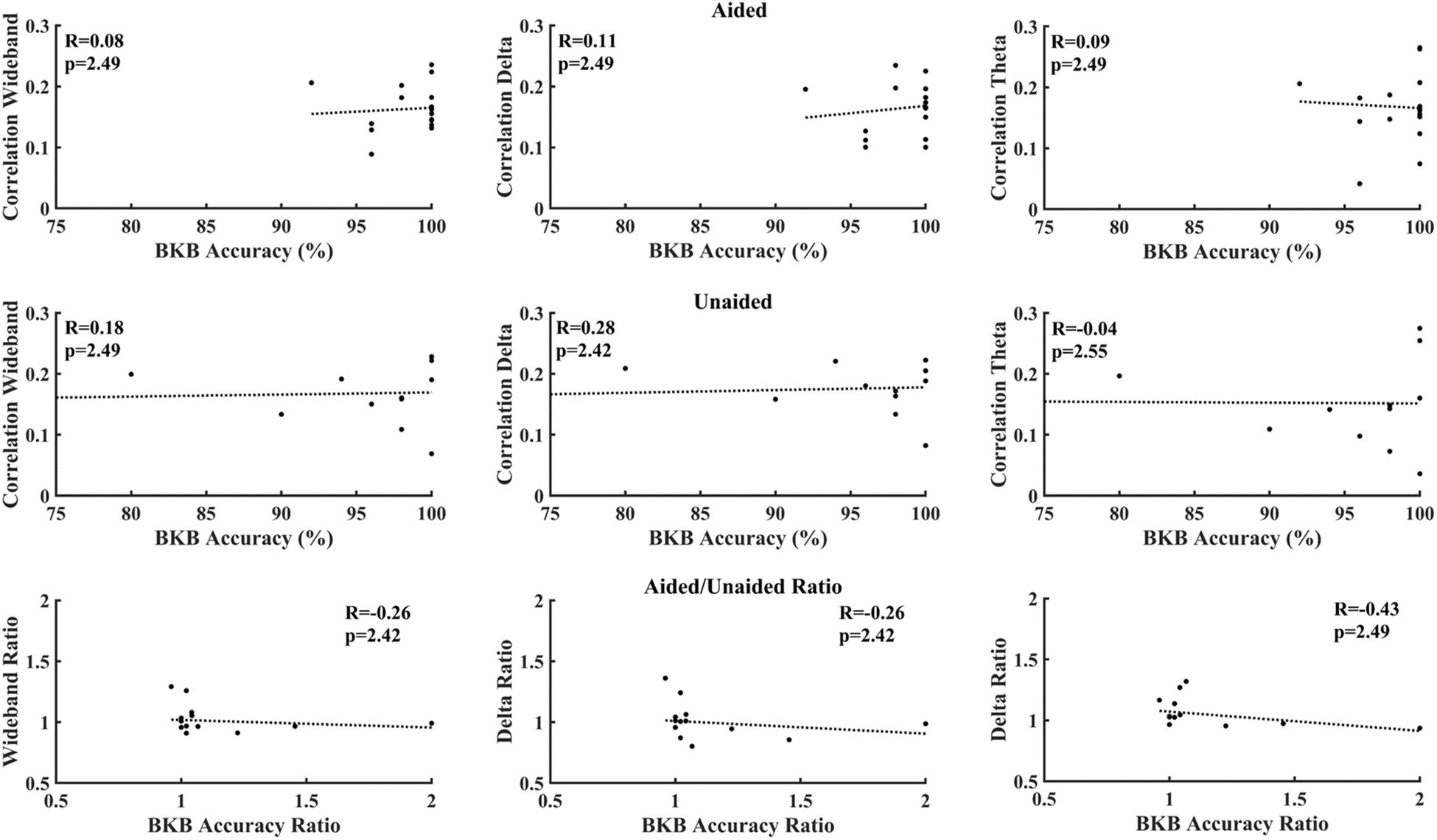

Figure 6 correlates cortical entrainment with the multiple-choice scores. No significant correlations were found between the EEG activity and multiple-choice scores after correcting for multiple comparisons (Benjamini–Yekutieli adjusted p-value). Changes in cortical entrainment did also not correlate with change in multiple-choice scores when taking the difference in correlation in cortical entrainment and multiple-choice scores between aided and unaided conditions. Similarly, no significant correlations could be found between behavioral scores obtained from BKB sentences and neural entrainment (Figure 7).

Figure 6. Correlation between multiple choice accuracy and decoder reconstruction accuracy. Top: aided; Middle: unaided; Bottom: ratio (aided/unaided). No significant correlations could be observed between EEG activity and multiple-choice accuracy after correction for multiple comparisons (Benjamini–Yekutieli adjusted p-values).

Figure 7. Correlation between BKB test accuracy and decoder reconstruction accuracy. Top: aided; Middle: unaided; Bottom: ratio (aided/unaided). No significant correlations could be observed between EEG activity and BKB test accuracy after correction for multiple comparisons (Benjamini–Yekutieli adjusted p-values).

Evaluation of low-frequency cortical entrainment to speech stimuli has been suggested as a potential indicator of speech understanding and intelligibility (Cogan and Poeppel, 2011; Ding and Simon, 2013; Di Liberto et al., 2015; Vanthornhout et al., 2018), and therefore evaluation of hearing function and hearing aid fitting. However, the effect of hearing aid speech processing software on this entrainment has not yet been investigated (Easwar et al., 2012; Jenstad et al., 2012). This study investigated if cortical entrainment to temporal speech envelopes is affected when presenting the speech stimuli at an audible level under aided against un-aided conditions in a cohort of mild-to-moderate hearing-impaired subjects. As the speech was audible for all our subjects, differences in cortical entrainment observed would be caused by the application of hearing aids. Speech stimuli used in this study were taken from an audiobook. Although not equal to and less frequently occurring in everyday life compared to natural conversations, this type of stimulus is considered more ecologically relevant compared to repeating sentences (Alexandrou et al., 2018).

Behavioral results (Figure 2) showed that subjects were able to hear the speech, and that under aided conditions, subjects performed significantly better in reproducing BKB sentences. One subject had a lower score of 92% accuracy under aided conditions, which could not be explained through demographic or hearing aid characteristics nor the duration of hearing aid use. However, we did not test our subjects for cognitive impairment, which even in mild conditions may affect performance in speech tests and has been shown to affect cortical and brainstem responses to sound stimuli (Moore et al., 2014; Bidelman et al., 2017). For the multiple-choice questions, higher variability in performance could be observed compared to BKB sentences, which again could not be directly explained through demographics or hearing aid features. Apart from the suggested effect of cognitive function, this might have been due to increased fatigue as it has been shown that listening attentively to long-duration speech stimuli requires more effort for hearing impaired subjects (Nachtegaal et al., 2009; Ayasse et al., 2017).

Based on decoder analysis for individual subjects, no specific trend between aided and unaided conditions could be observed (Figure 3). Some subjects show a higher correlation under aided conditions, whereas others show a higher correlation under unaided conditions. One subject (subject 13) showed a specifically low correlation for the time-aligned speech under aided conditions for all EEG bands.

This gave cause to analyze whether the results were confounded by which condition was presented first. A sign test showed that the median difference in correlation between the first and second condition played to each subject was not significantly different from 0, so the strength of the correlation did not depend on which condition was played first. This indicates that, for the remaining subjects, differences in correlation were not due to a lack of attention during the repeat of the stimulus.

From Figure 4, it can be observed that no significant differences in cortical entrainment occurred for delta-band, theta-band or wideband activity (Wilcoxon signed-rank test, Benjamini–Yekutieli adjusted p-value). Unaided against aided correlation ratios were also tested for each frequency band to determine if trends in cortical entrainment could be observed (Figure 4D). However, this analysis also failed to show any significant trend. The correlations found in this study are similar to those found in previous studies, which mostly used study populations consisting of younger test subjects (Ding and Simon, 2012; Crosse et al., 2016b; Vanthornhout et al., 2018). The absence of a difference between conditions is probably caused by the speech stimuli being presented at a comparatively high intensity, well audible for the participants even without their hearing aid. The good audibility already present in the unaided condition, corroborated by the good unaided speech comprehension (Figure 2, right), led to a significant neural response to the unaided speech envelope that presumably was not further increased by the hearing aid. The rationale for using quite a high speech level of 70 dBA was to ensure good entrainment to speech was possible for subjects even under unaided conditions (Etard and Reichenbach, 2019), and therefore to establish that hearing aids do not significantly alter this entrainment under fully audible conditions. Due to long test durations, we could not explore the effect of lower intensity stimuli in this study. Evaluations of cortical entrainment under lower intensity stimuli conditions will be an important area of future research to determine if changes in cortical entrainment can be observed when hearing-impaired subjects are listening to stimuli only audible under aided conditions, and therefore if cortical entrainment can find applications in hearing aid fitting evaluation.

Although hearing aid processing may alter the temporal speech envelope, our data show that such alterations do not significantly alter cortical entrainment. It could be that the decoder technique is robust to small changes in the speech envelope. This would agree with a previous study showing that different methods of computing the amplitude modulation of speech (using the Hilbert envelope, or a more involved model of the auditory periphery with an auditory filter bank and non-linear compression) did not greatly affect the neural entrainment as measured from scalp EEG (Biesmans et al., 2016). This study, therefore, shows that the application of hearing aids does not significantly affect cortical entrainment to speech in quiet at a sound intensity above the hearing threshold. This provides some reassurance that cortical entrainment to speech can be evaluated in future studies to determine its potential in improving hearing aid fitting strategies for speech presented in noise or at an intensity below threshold for subjects with mild-to-moderate hearing impairment (where the hearing aid should then make the speech audible). Recent studies have already shown that cortical entrainment might have potential in assessing hearing function in severely hearing-impaired subjects who have received a cochlear implant (Somers et al., 2018).

Correlations between activities of individual EEG bands are shown in Figure 5. Although both delta-band and theta-band entrainment showed a strong and significant correlation with the wideband activity, as expected, the correlation between delta-band and theta-band activity was not significant. This weaker correlation possibly indicates that delta-band and theta-band activity entrain to different features of speech, as suggested in previous work (Giraud and Poeppel, 2012; Ding and Simon, 2013, 2014). For aided-unaided ratios, the only strong and significant correlation was observed between the wideband and delta-band ratio, suggesting that wideband activity might be mostly driven by delta-band activity.

Figures 6, 7 evaluate the correlation between cortical entrainment and behavioral results (multiple-choice questions and BKB sentence analysis) for all EEG frequency bands of interest. No significant correlation between cortical entrainment and behavioral results could be observed, possibly because the fluctuations in cortical entrainment were larger than those in behavioral scores and because of the low number of participants in the current study. Another study evaluating cortical entrainment to speech in noise in normal-hearing subjects did find a correlation between strength of entrainment and behavioral responses (Vanthornhout et al., 2018). Further studies on larger hearing-impaired cohorts will be required to evaluate the strength of the correlation between behavioral responses to speech and cortical entrainment under aided and unaided conditions, with speech in quiet and in noise, to determine the applicability of cortical entrainment analysis to hearing aid fitting evaluation.

Another interesting aspect was that trends in behavioral responses to running speech (multiple-choice questions) differed from those for BKB sentences. Correct response ratios for BKB sentences under unaided conditions were significantly lower than under aided conditions, whereas no difference could be found for multiple-choice questions (Figure 2). Apart from a difference in intensity, a possible reason for this is that it might be easier to derive the correct answer from the context available in running speech. Answers to the multiple-choice questions were often repeated during the audiobook or could be derived from the storyline. This repetition can lead to mind wandering and loss of attention, yet studies have shown that this would only affect performance if strong detachment from the task occurs (Mooneyham and Schooler, 2016). Through observation, we were able to ensure participants never fully lost attention to the speech stimulus. With audiobook speech generally being clear and slower than natural conversation, repetition might have improved recall of answers to the multiple-choice questions under unaided conditions, as studies have shown that a reduced speech rate can decrease cognitive load (Donahue et al., 2017; Millman and Mattys, 2017). BKB sentences, on the other hand, are independent from one another and not repeated, preventing subjects from deriving correct answers by using context or recall from memory.

This paper investigated if cortical entrainment to running speech is affected by hearing aid processing in a cohort of mild-to-moderately impaired subjects. Speech was presented at audible levels in aided and unaided conditions. Results show that measurement of entrainment to the temporal speech envelope is reliable with and without hearing aids. However, at these levels, hearing aids do not significantly alter cortical entrainment to the speech envelope acquired before hearing aid processing. As speech was presented at an audible level, behavioral data indicated high understanding of the unaided speech stimulus. No significant correlation between cortical entrainment and behavioral data could be found. Future studies measuring cortical entrainment to speech in more challenging conditions, for example presented at or below subject-specific hearing levels or in a noisy environment, could further clarify the potential of cortical responses to optimize hearing aid fitting evaluation.

All data supporting this study are openly available from the University of Southampton repository at https://doi.org/10.5258/SOTON/D1140.

The studies involving human participants were reviewed and approved by the local National Health Service (NHS) ethics committee. The patients/participants provided their written informed consent to participate in this study.

FV, KI, DS, TR, and SB contributed to the conception and design of the study. FV, KI, and CG organized the database and data collection. FV and MK performed data analysis and statistical analysis. FV wrote the first draft of the manuscript and MK wrote sections of the manuscript. All authors contributed to manuscript revision, read and approved the submitted version.

This project was funded by the Engineering and Physical Sciences Research Council, United Kingdom (Grant Nos. EP/M026728/1 and EP/R032602/1).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Aazh, H., and Moore, B. C. (2007). The value of routine real ear measurement of the gain of digital hearing aids. J. Am. Acad. Audiol. 18, 653–664. doi: 10.3766/jaaa.18.8.3

Ahissar, E., Nagarajan, S., Ahissar, M., Protopapas, A., Mahncke, H., and Merzenich, M. M. (2001). Speech comprehension is correlated with temporal response patterns recorded from auditory cortex. Proc. Natl. Acad. Sci. U.S.A. 98, 13367–13372. doi: 10.1073/pnas.201400998

Aiken, S. J., and Picton, T. W. (2008). Human cortical responses to the speech envelope. Ear Hear. 29, 139–157. doi: 10.1097/aud.0b013e31816453dc

Alexandrou, A. M., Saarinen, T., Kujala, J., and Salmelin, R. (2018). Cortical entrainment: what we can learn from studying naturalistic speech perception. Lang. Cogn. Neurosci. 1–13. (in press). doi: 10.1080/23273798.2018.1518534

Anderson, S., Chandrasekaran, B., Yi, H.-G., and Kraus, N. (2010). Cortical-evoked potentials reflect speech-in-noise perception in children. Eur. J. Neurosci. 32, 1407–1413. doi: 10.1111/j.1460-9568.2010.07409.x

Ayasse, N. D., Lash, A., and Wingfield, A. (2017). Effort not speed characterizes comprehension of spoken sentences by older adults with mild hearing impairment. Front. Aging Neurosci. 8:329. doi: 10.3389/fnagi.2016.00329

Bench, J., Kowal, A., and Bamford, J. (1979). The BKB (Bamford-Kowal-Bench) sentence lists for partially-hearing children. Br. J. Audiol. 13, 108–112. doi: 10.3109/03005367909078884

Benjamini, Y., and Yekutieli, D. (2001). The control of the false discovery rate in multiple testing under dependency. Ann. Statist. 29, 1165–1188. doi: 10.1214/aos/1013699998

Bidelman, G. M., Lowther, J. E., Tak, S. H., and Alain, C. (2017). Mild cognitive impairment is characterized by deficient brainstem and cortical representations of speech. J. Neurosci. 37, 3610–3620. doi: 10.1523/JNEUROSCI.3700-16.2017

Biesmans, W., Das, N., Francart, T., and Bertrand, A. (2016). Auditory-inspired speech envelope extraction methods for improved EEG-based auditory attention detection in a cocktail party scenario. IEEE Trans. Neural Syst. Rehabil. Eng. 25, 402–412. doi: 10.1109/TNSRE.2016.2571900

Billings, C. J. (2013). Uses and limitations of electrophysiology with hearing aids. Semin. Hear. 34, 257–269. doi: 10.1055/s-0033-1356638

Cogan, G. B., and Poeppel, D. (2011). A mutual information analysis of neural coding of speech by low-frequency MEG phase information. J. Neurophysiol. 106, 554–563. doi: 10.1152/jn.00075.2011

Cone-Wesson, B., and Wunderlich, J. (2003). Auditory evoked potentials from the cortex: audiology applications. Curr. Opin. Otolaryngol. Head Neck Surg. 11, 372–377. doi: 10.1097/00020840-200310000-00011

Crosse, M. J., Di Liberto, G. M., Bednar, A., and Lalor, E. C. (2016a). The multivariate temporal response function (mTRF) toolbox: a MATLAB toolbox for relating neural signals to continuous stimuli. Front. Hum. Neurosci. 10:604. doi: 10.3389/fnhum.2016.00604

Crosse, M. J., Di Liberto, G. M., and Lalor, E. C. (2016b). Eye can hear clearly now: inverse effectiveness in natural audiovisual speech processing relies on long-term crossmodal temporal integration. J. Neurosci. 36, 9888–9895. doi: 10.1523/JNEUROSCI.1396-16.2016

Decruy, L., Vanthornhout, J., and Francart, T. (2019). Evidence for enhanced neural tracking of the speech envelope underlying age-related speech-in-noise difficulties. J. Neurophysiol. 122, 601–615. doi: 10.1152/jn.00687.2018

Di Liberto, G. M., Lalor, E. C., and Millman, R. E. (2018a). Causal cortical dynamics of a predictive enhancement of speech intelligibility. Neuroimage 166, 247–258. doi: 10.1016/j.neuroimage.2017.10.066

Di Liberto, G. M., Peter, V., Kalashnikova, M., Goswami, U., Burnham, D., and Lalor, E. C. (2018b). Atypical cortical entrainment to speech in the right hemisphere underpins phonemic deficits in dyslexia. Neuroimage 175, 70–79. doi: 10.1016/j.neuroimage.2018.03.072

Di Liberto, G. M., O’Sullivan, J. A., and Lalor, E. C. (2015). Low-frequency cortical entrainment to speech reflects phoneme-level processing. Curr. Biol. 25, 2457–2465. doi: 10.1016/j.cub.2015.08.030

Ding, N., Patel, A. D., Chen, L., Butler, H., Luo, C., and Poeppel, D. (2017). Temporal modulations in speech and music. Neurosci. Biobehav. Rev. 81, 181–187. doi: 10.1016/j.neubiorev.2017.02.011

Ding, N., and Simon, J. Z. (2012). Emergence of neural encoding of auditory objects while listening to competing speakers. Proc. Natl. Acad. Sci. U.S.A. 109, 11854–11859. doi: 10.1073/pnas.1205381109

Ding, N., and Simon, J. Z. (2013). Adaptive temporal encoding leads to a background-insensitive cortical representation of speech. J. Neurosci. 33, 5728–5735. doi: 10.1523/JNEUROSCI.5297-12.2013

Ding, N., and Simon, J. Z. (2014). Cortical entrainment to continuous speech: functional roles and interpretations. Front. Hum. Neurosci. 8:311. doi: 10.3389/fnhum.2014.00311

Doelling, K. B., Arnal, L. H., Ghitza, O., and Poeppel, D. (2014). Acoustic landmarks drive delta-theta oscillations to enable speech comprehension by facilitating perceptual parsing. Neuroimage 85, 761–768. doi: 10.1016/j.neuroimage.2013.06.035

Donahue, J., Schoepfer, C., and Lickley, R. (2017). The effects of disfluent repetitions and speech rate on recall accuracy in a discourse listening task. Proc. DISS 2017, 17–20.

Easwar, V., Purcell, D. W., and Scollie, S. D. (2012). Electroacoustic comparison of hearing aid output of phonemes in running speech versus isolation: implications for aided cortical auditory evoked potentials testing. Intern. J. Otolaryngol. 2012:10. doi: 10.1155/2012/518202

Edwards, E., and Chang, E. F. (2013). Syllabic (~ 2-5 Hz) and fluctuation (~ 1-10 Hz) ranges in speech and auditory processing. Hear. Res. 305, 113–134. doi: 10.1016/j.heares.2013.08.017

Etard, O., and Reichenbach, T. (2019). The neural tracking of the speech envelope in the delta and in the theta frequency band differentially encodes comprehension and intelligibility of speech in noise. J. Neurosci. 39, 5750–5759. doi: 10.1523/jneurosci.1828-18.2019

Friedman, D., Simson, R., Ritter, W., and Rapin, I. (1975). Cortical evoked potentials elicited by real speech words and human sounds. Electroencephalogr. Clin. Neurophysiol. 38, 13–19. doi: 10.1016/0013-4694(75)90205-9

Giraud, A.-L., and Poeppel, D. (2012). Cortical oscillations and speech processing: emerging computational principles and operations. Nat. Neurosci. 15:511. doi: 10.1038/nn.3063

Golumbic, E. M. Z., Poeppel, D., and Schroeder, C. E. (2012). Temporal context in speech processing and attentional stream selection: a behavioral and neural perspective. Brain Lang. 122, 151–161. doi: 10.1016/j.bandl.2011.12.010

Gramfort, A., Luessi, M., Larson, E., Engemann, D. A., Strohmeier, D., Brodbeck, C., et al. (2013). MEG and EEG data analysis with MNE-Python. Front. Neurosci. 7:267. doi: 10.3389/fnins.2013.00267

Gramfort, A., Luessi, M., Larson, E., Engemann, D. A., Strohmeier, D., Brodbeck, C., et al. (2014). MNE software for processing MEG and EEG data. Neuroimage 86, 446–460. doi: 10.1016/j.neuroimage.2013.10.027

Horton, C., D’Zmura, M., and Srinivasan, R. (2013). Suppression of competing speech through entrainment of cortical oscillations. J. Neurophysiol. 109, 3082–3093. doi: 10.1152/jn.01026.2012

Howard, M. F., and Poeppel, D. (2012). The neuromagnetic response to spoken sentences: co-modulation of theta band amplitude and phase. Neuroimage 60, 2118–2127. doi: 10.1016/j.neuroimage.2012.02.028

Hyafil, A., Fontolan, L., Kabdebon, C., Gutkin, B., and Giraud, A.-L. (2015). Speech encoding by coupled cortical theta and gamma oscillations. eLife 4:e06213. doi: 10.7554/eLife.06213

Jenstad, L. M., Marynewich, S., and Stapells, D. R. (2012). Slow cortical potentials and amplification—Part II: Acoustic measures. Intern. J. Otolaryngol. 2012:14. doi: 10.1155/2012/386542

Keidser, G., Dillon, H., Flax, M., Ching, T., and Brewer, S. (2011). The NAL-NL2 prescription procedure. Audiol. Res. 1:e24.

Lakatos, P., Shah, A. S., Knuth, K. H., Ulbert, I., Karmos, G., and Schroeder, C. E. (2005). An oscillatory hierarchy controlling neuronal excitability and stimulus processing in the auditory cortex. J. Neurophysiol. 94, 1904–1911. doi: 10.1152/jn.00263.2005

Lalor, E. C., and Foxe, J. J. (2010). Neural responses to uninterrupted natural speech can be extracted with precise temporal resolution. Eur. J. Neurosci. 31, 189–193. doi: 10.1111/j.1460-9568.2009.07055.x

Lalor, E. C., Pearlmutter, B. A., Reilly, R. B., McDarby, G., and Foxe, J. J. (2006). The VESPA: a method for the rapid estimation of a visual evoked potential. Neuroimage 32, 1549–1561. doi: 10.1016/j.neuroimage.2006.05.054

Lightfoot, G. (2016). Summary of the N1-P2 cortical auditory evoked potential to estimate the auditory threshold in adults. Semin. Hear. 37, 1–8. doi: 10.1055/s-0035-1570334

Luo, H., and Poeppel, D. (2007). Phase patterns of neuronal responses reliably discriminate speech in human auditory cortex. Neuron 54, 1001–1010. doi: 10.1016/j.neuron.2007.06.004

Mikkelsen, K. B., Kappel, S. L., Mandic, D. P., and Kidmose, P. (2015). EEG recorded from the ear: characterizing the ear-EEG method. Front. Neurosci. 9:438. doi: 10.3389/fnins.2015.00438

Millman, R. E., Johnson, S. R., and Prendergast, G. (2015). The role of phase-locking to the temporal envelope of speech in auditory perception and speech intelligibility. J. Cogn. Neurosci. 27, 533–545. doi: 10.1162/jocn_a_00719

Millman, R. E., and Mattys, S. L. (2017). Auditory verbal working memory as a predictor of speech perception in modulated maskers in listeners with normal hearing. J. Speech Lang. Hear. Res. 60, 1236–1245. doi: 10.1044/2017_JSLHR-S-16-0105

Mirkovic, B., Debener, S., Jaeger, M., and De Vos, M. (2015). Decoding the attended speech stream with multi-channel EEG: implications for online, daily-life applications. J. Neural Eng. 12:046007. doi: 10.1088/1741-2560/12/4/046007

Molinaro, N., and Lizarazu, M. (2018). Delta (but not theta)-band cortical entrainment involves speech-specific processing. Eur. J. Neurosci. 48, 2642–2650. doi: 10.1111/ejn.13811

Mooneyham, B. W., and Schooler, J. W. (2016). Mind wandering minimizes mind numbing: Reducing semantic-satiation effects through absorptive lapses of attention. Psychonom. Bull. Rev. 23, 1273–1279. doi: 10.3758/s13423-015-0993-2

Moore, D. R., Edmondson-Jones, M., Dawes, P., Fortnum, H., McCormack, A., Pierzycki, R. H., et al. (2014). Relation between speech-in-noise threshold, hearing loss and cognition from 40-69 years of age. PLoS One 9:e107720. doi: 10.1371/journal.pone.0107720

Morillon, B., Liégeois-Chauvel, C., Arnal, L. H., Bénar, C. G., and Giraud, A.-L. (2012). Asymmetric function of theta and gamma activity in syllable processing: an intra-cortical study. Front. Psychol. 3:248. doi: 10.3389/fpsyg.2012.00248

Nachtegaal, J., Kuik, D. J., Anema, J. R., Goverts, S. T., Festen, J. M., and Kramer, S. E. (2009). Hearing status, need for recovery after work, and psychosocial work characteristics: results from an internet-based national survey on hearing. Intern. J. Audiol. 48, 684–691. doi: 10.1080/14992020902962421

O’Sullivan, J. A., Power, A. J., Mesgarani, N., Rajaram, S., Foxe, J. J., Shinn-Cunningham, B. G., et al. (2014). Attentional selection in a cocktail party environment can be decoded from single-trial EEG. Cereb. Cortex 25, 1697–1706. doi: 10.1093/cercor/bht355

Peelle, J. E., Gross, J., and Davis, M. H. (2013). Phase-locked responses to speech in human auditory cortex are enhanced during comprehension. Cereb. Cortex 23, 1378–1387. doi: 10.1093/cercor/bhs118

Power, A. J., Foxe, J. J., Forde, E.-J., Reilly, R. B., and Lalor, E. C. (2012). At what time is the cocktail party? A late locus of selective attention to natural speech. Eur. J. Neurosci. 35, 1497–1503. doi: 10.1111/j.1460-9568.2012.08060.x

Riecke, L., Formisano, E., Sorger, B., Baskent, D., and Gaudrain, E. (2018). Neural entrainment to speech modulates speech intelligibility. Curr. Biol. 28, 161–169.

Schroeder, C. E., and Lakatos, P. (2009). Low-frequency neuronal oscillations as instruments of sensory selection. Trends Neurosci. 32, 9–18. doi: 10.1016/j.tins.2008.09.012

Shahin, A. J., Roberts, L. E., Miller, L. M., McDonald, K. L., and Alain, C. (2007). Sensitivity of EEG and MEG to the N1 and P2 auditory evoked responses modulated by spectral complexity of sounds. Brain Topogr. 20, 55–61. doi: 10.1007/s10548-007-0031-4

Shannon, R. V., Zeng, F.-G., Kamath, V., Wygonski, J., and Ekelid, M. (1995). Speech recognition with primarily temporal cues. Science 270, 303–304. doi: 10.1126/science.270.5234.303

Somers, B., Verschueren, E., and Francart, T. (2018). Neural tracking of the speech envelope in cochlear implant users. J. Neural Eng. 16:016003. doi: 10.1088/1741-2552/aae6b9

Suppes, P., Han, B., and Lu, Z.-L. (1998). Brain-wave recognition of sentences. Proc. Natl. Acad. Sci. U.S.A. 95, 15861–15866. doi: 10.1073/pnas.95.26.15861

Tremblay, K., Friesen, L., Martin, B., and Wright, R. (2003). Test-retest reliability of cortical evoked potentials using naturally produced speech sounds. Ear Hear. 24, 225–232. doi: 10.1097/01.aud.0000069229.84883.03

Van Dun, B., Carter, L., and Dillon, H. (2012). Sensitivity of cortical auditory evoked potential detection for hearing-impaired infants in response to short speech sounds. Audiol. Res. 2:e13. doi: 10.4081/audiores.2012.e13

Vanthornhout, J., Decruy, L., Wouters, J., Simon, J. Z., and Francart, T. (2018). Speech Intelligibility Predicted from Neural Entrainment of the Speech Envelope. J. Assoc. Res. Otolaryngol. 19, 181–191. doi: 10.1007/s10162-018-0654-z

Woodfield, A., and Akeroyd, M. A. (2010). The role of segmentation difficulties in speech-in-speech understanding in older and hearing-impaired adults. J. Acoust. Soc. Am. 128, EL26–EL31. doi: 10.1121/1.3443570

Keywords: cortical entrainment, hearing impairment, hearing aid evaluation, speech detection, electroencephalography

Citation: Vanheusden FJ, Kegler M, Ireland K, Georga C, Simpson DM, Reichenbach T and Bell SL (2020) Hearing Aids Do Not Alter Cortical Entrainment to Speech at Audible Levels in Mild-to-Moderately Hearing-Impaired Subjects. Front. Hum. Neurosci. 14:109. doi: 10.3389/fnhum.2020.00109

Received: 21 November 2019; Accepted: 11 March 2020;

Published: 03 April 2020.

Edited by:

Nathalie Linda Maitre, Nationwide Children’s Hospital, United States

Reviewed by:

Celine Richard, Nationwide Children’s Hospital, United States

Copyright © 2020 Vanheusden, Kegler, Ireland, Georga, Simpson, Reichenbach and Bell. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Frederique J. Vanheusden, frederique.vanheusden@ntu.ac.uk

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
