- 1School of Computer Science and Electrical Engineering, Handong Global University, Pohang, South Korea

- 2Wadsworth Center, New York State Department of Health, Albany, NY, United States

- 3Department of Psychiatry, University of North Carolina at Chapel Hill, Chapel Hill, NC, United States

- 4School of Electrical Engineering and Computer Science, Gwangju Institute of Science and Technology, Gwangju, South Korea

Performance variation is a critical issue in motor imagery brain–computer interfaces (MI-BCI), and various neurophysiological, psychological, and anatomical correlates have been reported in the literature. Although the main aim of such studies is to predict MI-BCI performance for the prescreening of poor performers, studies that focus on the user’s sense of the motor imagery process and directly estimate MI-BCI performance through the user’s self-prediction are lacking. In this study, we tested the idea of user self-prediction on a motor imagery experimental dataset. Fifty-two subjects participated in a classical, two-class motor imagery experiment and were asked to evaluate the easiness of motor imagery and to predict their own MI-BCI performance. During the motor imagery experiment, an electroencephalogram (EEG) was recorded; however, no feedback on motor imagery was given to subjects. From the EEG recordings, the offline classification accuracy was estimated and compared with several questionnaire scores of subjects, as well as with each subject’s self-prediction of MI-BCI performance. The subjects’ performance predictions made during the motor imagery task showed a high positive correlation with the offline classification accuracy (r = 0.64, p < 0.01). Interestingly, the self-prediction became more accurate as the subjects conducted more motor imagery tasks, in terms of both the correlation coefficient (pre-task to 2nd run: r = 0.02 to r = 0.54, p < 0.01) and the root mean square error (pre-task to 3rd run: 17.7% to 10%, p < 0.01). We demonstrated that subjects may accurately predict their MI-BCI performance even without feedback information. This implies that the human brain is an active learning system and, by self-experiencing the endogenous motor imagery process, it can sense and adapt to the quality of the process. Thus, users may be able to predict their MI-BCI performance, and these results may contribute to a better understanding of low performance and to advancing BCI.

Introduction

Motor imagery based brain–computer interfaces (BCIs) have become increasingly prevalent, and in recent decades researchers have made valuable achievements and demonstrated the feasibility of BCI for various applications, such as communication, control, rehabilitation, entertainment, and others (Wolpaw et al., 2002; Millán et al., 2010; Ortner et al., 2012; LaFleur et al., 2013; Ahn M. et al., 2014; Bundy et al., 2017; Guger et al., 2017). Despite such advances, there is still a significant hurdle to overcome before BCI can move forward to the public market. Reportedly, about 10–30% of BCI users do not modulate the classifiable brain signals that are critical to run a BCI system; this phenomenon is called “BCI-illiteracy” (Blankertz et al., 2010). In recent years, researchers have investigated the BCI-illiteracy phenomenon and proposed techniques to improve current BCI systems in the context of control paradigm, system feedback modality, algorithms, and training protocols (Hwang et al., 2009; Grosse-Wentrup et al., 2011; Kübler et al., 2011; Fazli et al., 2012; Hammer et al., 2012; Maeder et al., 2012; Ahn S. et al., 2014; Suk et al., 2014; Jeunet et al., 2015a, 2016a,c; Pacheco et al., 2017).

According to a recent review (Ahn and Jun, 2015), researchers have approached this problem from different perspectives, all aiming to answer one question: “What is the best correlate of performance variation?” The aims of such studies can be summarized as follows: First, identify what distinct characteristics exist in poor performers; second, understand why these traits are common in the lower performers; and, lastly, use the correlates to classify the poor performers in advance, prior to using BCI.

To resolve performance variation, many ideas have been proposed. These methods include introducing advanced signal processing techniques such as co-adaptive learning (Vidaurre et al., 2011; Xia et al., 2012; Merel et al., 2015), training users until they are able to generate classifiable signals (Mahmoudi and Erfanian, 2006; Hwang et al., 2009; Tan et al., 2014), and brain tuning that shifts the current brain state to a better state for motor imagery using tactile (Ahn S. et al., 2014) or electrical (Pichiorri et al., 2011; Wei et al., 2013; Yi et al., 2017) stimulation. These ideas are based on the fact that the human brain is able to reflect on, learn from, and even adapt to its experiences. The classical signal processing methods overlooked this aspect and focused instead on designing a better feature extractor or classifier that served as a fixed decoder (McFarland et al., 1997; Ramoser et al., 2000; Lemm et al., 2005). In recent years, advanced BCI system designs have been proposed that employ the concept of co-adaptation, the process whereby a user learns to control the BCI while the system, in turn, adapts to the user's changing brain states. However, in studies of performance variation, this functionality of the brain has not been seriously considered.

In general, the feedback modality of a BCI system is considered important (Kübler et al., 2001; Hinterberger et al., 2004; Blankertz et al., 2006; Cincotti et al., 2007; Leeb et al., 2007; Nijboer et al., 2008; Gomez-Rodriguez et al., 2011; Ramos-Murguialday et al., 2012; McCreadie et al., 2014; Jeunet et al., 2015b, 2016b). User feedback may be as important as system feedback. Interestingly, a few studies have evaluated feedback from users (Burde and Blankertz, 2006; Hammer et al., 2012; Vuckovic and Osuagwu, 2013). These studies used indirect measures to correlate with BCI performance. However, the variation of such parameters was not investigated while subjects performed the motor imagery tasks. Our ultimate aim is to predict a user’s performance prior to using the BCI system; therefore, it may make more sense to let users predict their performance directly. The brain can adapt to the external environment. This functionality has been exploited by co-adaptive learning algorithms in BCI systems; likewise, we expect that users may be able to directly estimate their own BCI performance. This idea is supported by a study by Vuckovic and Osuagwu (2013) that investigated the motor imagery quality indicated by users. The authors demonstrated that the proprioceptive sensation of a movement (kinesthetic imagery), which was assessed with 5-point rating scales, can be used as a test to differentiate between “good” and “poor” performers in motor imagery BCI.

Several benefits are conceivable with the self-prediction of BCI performance. First, it removes or reduces time-consuming preparation or installation and recording procedures that usually require tremendous effort from users and experimenters. Note that relevant studies employed brain signal recording (Blankertz et al., 2010; Ahn et al., 2013a,b), brain imaging (Halder et al., 2011, 2013) and intensive psychological tests (Hammer et al., 2012). However, if self-prediction is possible and usable, the only necessary step is to ask the user how his/her BCI performance will be based on his/her own feelings or experiences. This is the best scenario because it does not require any further behavioral, psychological, or experimental tasks. In a study by Vuckovic and Osuagwu (2013), subjects also conducted some behavioral and mental tasks, and they could therefore answer questions based on their sensations. Therefore, the best-case scenario, in which the user immediately answers questions about his/her performance, is less likely. Rather, the user may need to perform some tasks—either behavioral, mental, or motor imagery—to give enough experience and time to build the sense of their individual motor imagery proficiency. Even if this is proven true, however, we can still reap the other benefits of self-prediction. BCI performance may be somewhat influenced by unique characteristics of the user and the hardware/software of the system (Kübler et al., 2011). Therefore, a BCI user may realize that he/she is good or bad at conducting motor imagery at some point during the training or testing of BCI; the user is then able to clarify where or when the BCI problem occurs. This is the second benefit. The third benefit is that the inferior data can be separated from the good dataset. This is important because poor-quality data is much more likely to introduce a bias in classification results and can eventually lead to incorrect conclusions in research.

In summary, the brain is a good learning system and motor imagery is an endogenous process. Therefore, a user’s self-assessment provides valuable feedback and includes information relevant to understanding performance variations. However, to the best of our knowledge, this aspect has not been well investigated in the existing studies on BCI illiteracy; thus, a rigorous analysis on it would be quite interesting and informative. To address the issue of whether a user’s self-predicted score is correlated with offline BCI performance and, if so, how long it may take for the user to get a sense of the connection, we investigated the user’s self-assessed parameters, including mental and physical states, the quality of motor imagery, and self-predictions of motor imagery performance before and between runs.

Materials and Methods

Motor Imagery Task

In this study, 52 healthy subjects (26 males, 26 females; mean age: 24.8 ± 3.86 years) participated. Six of the subjects had prior experience with BCI or biofeedback based on neurophysiological signals. The Institutional Review Board of the Gwangju Institute of Science and Technology approved this experiment. All participants were informed of the purpose and process of the experiment, and written consent was obtained from each participant before conducting the experiment. We used BCI2000 software (Schalk et al., 2004) and a BioSemi ActiveTwo system (64 channels, sampling rate: 512 Hz) for stimulus presentation and electroencephalography (EEG) signal acquisition, respectively. At the beginning of the experiment, we recorded 1-min resting state signals under eyes-open conditions, and we then conducted a conventional two-class motor imagery experiment. A detailed step-by-step description of the motor imagery experiment follows.

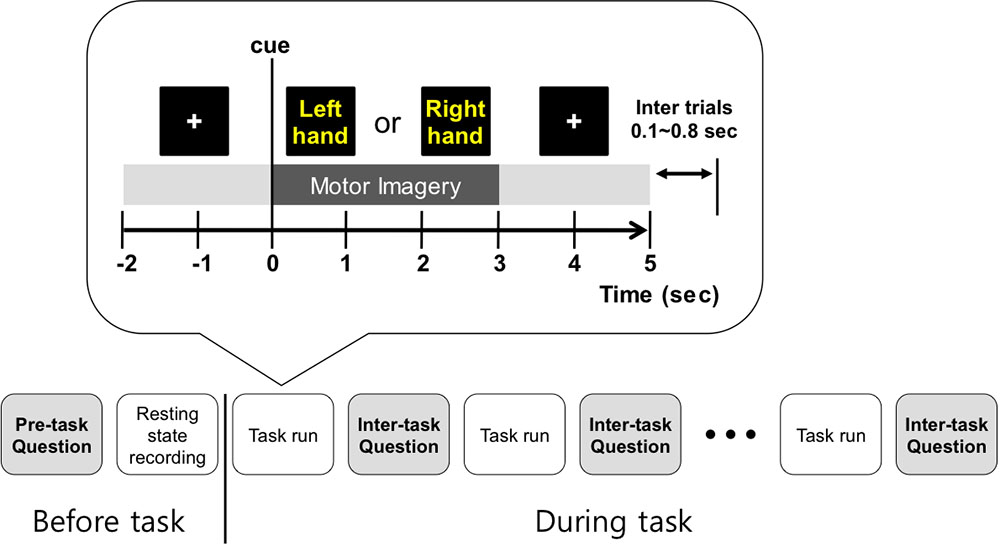

On the monitor screen, the visual instruction was presented. At the beginning of each trial, a cross appeared for 2 s, and then text indicating left or right was shown for 3 s. Subjects were asked to imagine left- or right-hand movement according to the presented direction at the motor imagery phase. Right after the motor imagery phase, a cross appeared for 2 s again. Thus, the total time of each trial was 7 s and the inter-trial interval was set randomly to between 0.1 and 0.8 s. In each run, 20 trials for each condition (left or right) were collected, and at the end of each run we asked the subject if he/she could perform the next run. Only when the subjects responded ‘Yes’ would they perform the next run. All subjects conducted 5 or 6 runs with about a 2-min break between runs. Thus, we obtained a total of 100 or 120 trials for each condition for each subject. During the experiment, there was no feedback given on how well subjects performed the motor imagery tasks. The procedure for one trial is illustrated in Figure 1.

FIGURE 1. Overall experiment design. Before the 1st run, personal information was collected, and subjects practiced motor imagery and predicted their classification accuracy. Then, resting state EEG was recorded. Between tasks, the follow-up questions were asked to collect the self-assessed condition levels and motor imagery related scores, including prediction of BCI performance.

Questionnaire Survey

Prior to the motor imagery experiment, the subjects were asked to practice kinesthetic imagery rather than visual imagery since kinesthetic imagery yields better discriminable brain wave patterns than visual imagery does (Neuper et al., 2005; Vuckovic and Osuagwu, 2013). An explanation regarding the meaning of binary classification was also given, so subjects would understand the concept of poor (50%) and perfect (100%) accuracies. Thus, most of the subjects answered between 50 and 100%, with the exception of six subjects (sbj7, sbj21, sbj28, sbj36, sbj51, and sbj52) as seen in Supplementary Table 1.

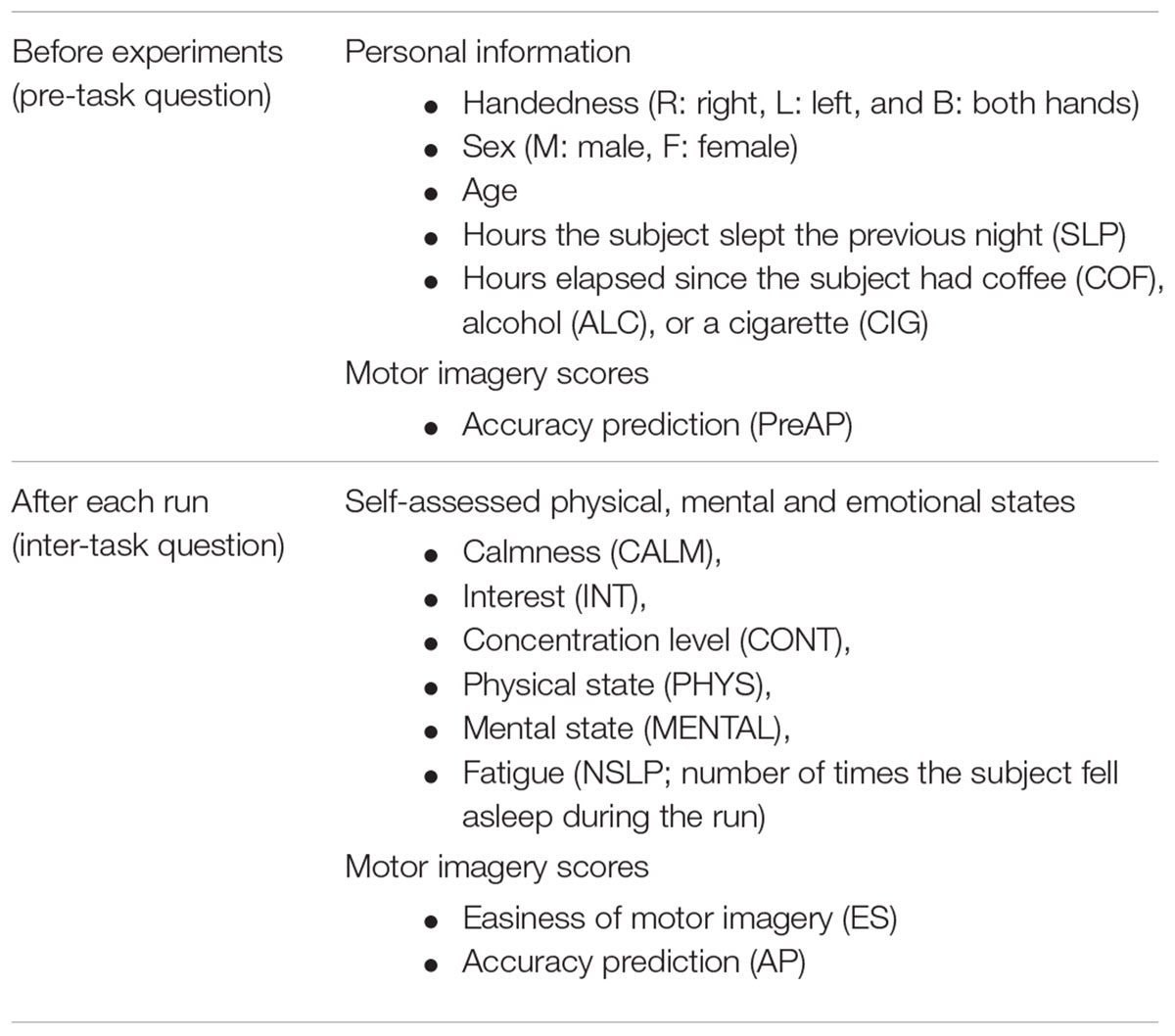

Two questionnaires were generated: pre-task and inter-task forms (Table 1). Each subject filled out a pre-task questionnaire that contained question items regarding age, handedness, sex, hours the subject had slept the previous night, hours elapsed since the subject had ingested substances (coffee, alcohol, or cigarettes), and predicted accuracy. Then the subject started the actual motor imagery task. In order to obtain the feedback about physical, mental, and emotional states and motor imagery scores of each run, an inter-task questionnaire was provided at the end of each run, and the subject filled out the form.

Subjects scored the easiness of motor imagery on a scale from 1 to 5 (1: easiest to 5: very difficult) and the prediction of their classification accuracies between 50 and 100%. We note that the subjects were not given any feedback on their motor imagery performance during the experiment; their predicted motor imagery scores were purely based on their own experiences or expectations. In conducting the self-assessment of physical, mental, and emotional states (except for fatigue), each question was answered according to a 1–5 scale (1: most or best to 5: least or worst). The overall procedure of the experiment is illustrated in Figure 1. For detailed experiment information and questionnaire forms, refer to the literature (Cho et al., 2017).

Motor Imagery Classification Accuracy

Motor imagery classification accuracy was calculated in a conventional way, using Common Spatial Pattern (CSP) and Fisher Linear Discriminant Analysis (FLDA) (Ramoser et al., 2000; Ahn et al., 2013b). To obtain a statistically reliable estimate, training and testing trials were regrouped multiple times (120 repetitions), and the final accuracy was obtained from this cross-validation. The detailed procedure is as follows. First, EEG signals were band-pass filtered (8–30 Hz) and restricted to a temporal interval (0.4–2.4 s after cue onset) to include the informative event-related (de)synchronization in the alpha and beta bands (Pfurtscheller and Lopes da Silva, 1999; Ahn et al., 2012), which has been reported as the primary feature in motor imagery BCI. The filtered epochs were then grouped into 10 subsets of equal size, and these 10 subsets were separated into seven training and three testing subsets. The total number of such possible separations is therefore 120 (the number of ways to choose 3 testing subsets out of 10). For each separation, the 10 most significant spatial filters were extracted from the training trials by CSP, and a class separation line was then generated through FLDA. By applying these 10 spatial filters and the classification line to the corresponding testing trials, the correct rate (the number of correct epochs divided by the total number of epochs) was estimated. This procedure was repeated for all other separations. Finally, all correct rates were averaged, and this average was defined as the motor imagery classification accuracy.
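The count of 120 separations can be checked with a short sketch. The following Python snippet is an illustration only, not the authors' implementation: the function names `make_subsets` and `train_test_separations` are hypothetical, the subsets are formed from contiguous trial indices (the text does not state how trials were assigned to subsets), and the CSP and FLDA steps are omitted.

```python
from itertools import combinations


def make_subsets(n_trials, n_subsets=10):
    """Split trial indices 0..n_trials-1 into equally sized subsets.

    Assumption: contiguous blocks of indices; the source does not say
    whether trials were shuffled or balanced per class beforehand.
    """
    if n_trials % n_subsets != 0:
        raise ValueError("n_trials must be divisible by n_subsets")
    size = n_trials // n_subsets
    return [list(range(i * size, (i + 1) * size)) for i in range(n_subsets)]


def train_test_separations(subsets, n_test=3):
    """Yield (train_indices, test_indices) for every choice of test subsets."""
    ids = range(len(subsets))
    for test_ids in combinations(ids, n_test):
        test = [t for i in test_ids for t in subsets[i]]
        train = [t for i in ids if i not in test_ids for t in subsets[i]]
        yield train, test


# 200 trials corresponds to 5 runs x 40 trials (100 per class).
subsets = make_subsets(200)
separations = list(train_test_separations(subsets))
print(len(separations))  # 120 separations: 7 training + 3 testing subsets
```

In a full pipeline, each `(train, test)` pair would be passed to the spatial filtering and classification steps, and the per-separation correct rates would be averaged.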

Analysis

In the analysis of personal information, for each comparison we divided subjects into two groups according to Sex (male vs. female), Age (older vs. younger than 24.8 years, which is the mean age of subjects), or Coffee/Alcohol/Cigarettes (subjects who had coffee/alcohol/cigarettes within 24 h vs. subjects who did not); the substance items were dichotomized because many subjects answered that they had not consumed the substances. Most of the subjects were right-handed; thus, handedness was excluded from further analysis. Then, the offline classification accuracy was compared between the two groups. Here, the Wilcoxon rank-sum test was applied to check statistical significance. For the other questions, which were scored on a 5-point scale, we first flipped the self-assessed scores in the following way: score 1 was switched to score 5, 2 to 4, and so on. Then, the correlation coefficient between the scores (for each personal information category) and motor imagery classification accuracy over subjects, together with the corresponding p-values, was calculated using the MATLAB “corr()” function, which computes Pearson’s correlation. To obtain statistically reliable results, a permutation test was conducted (n = 2,500), and the p-values were corrected for multiple comparisons using the False Discovery Rate (FDR) (Benjamini and Hochberg, 1995; Genovese et al., 2002) with a q value of 0.1.

These processing steps made the results more intuitive: if a question item was favorably related to motor imagery classification accuracy, it would show a positive correlation. For pre-task questions, the raw answer values were used, whereas the scores from the inter-task questions were averaged over runs for further analysis. Pearson’s correlation coefficient with actual classification accuracy was also computed. A permutation test in which the actual BCI performance was shuffled was conducted (n = 2,500), and the statistical significance was corrected for multiple comparisons.
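The score flipping, correlation, permutation test, and FDR correction described above can be sketched in a few lines. The Python code below is a hedged illustration rather than the analysis code of the study (which used MATLAB): the function names are hypothetical, the permutation test is assumed to be two-sided with the common (hits + 1) / (n + 1) estimate, the FDR step is the standard Benjamini-Hochberg procedure, and the numbers are toy values.

```python
import random
from math import sqrt


def flip_score(score, low=1, high=5):
    """Reverse a 1-5 rating so that 1 <-> 5, 2 <-> 4, and 3 stays 3."""
    return low + high - score


def pearson_r(x, y):
    """Pearson's correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def permutation_p_value(x, y, n_perm=2500, seed=0):
    """Two-sided permutation p-value for pearson_r, shuffling y (the accuracy).

    Assumption: two-sided test; the source does not state the sidedness.
    """
    rng = random.Random(seed)
    observed = abs(pearson_r(x, y))
    shuffled = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if abs(pearson_r(x, shuffled)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)


def benjamini_hochberg(p_values, q=0.1):
    """Return a list of booleans: True where the hypothesis is rejected."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= q * rank / m:
            k_max = rank  # largest rank passing the step-up criterion
    rejected = [False] * m
    for rank, i in enumerate(order, start=1):
        rejected[i] = rank <= k_max
    return rejected


# Toy values for illustration only; NOT data from the study.
easiness_raw = [1, 2, 2, 3, 3, 4, 4, 5]          # 1: easiest ... 5: very difficult
accuracy = [88.0, 81.5, 70.0, 72.5, 64.0, 58.5, 61.0, 52.0]
easiness = [flip_score(s) for s in easiness_raw]  # now higher = easier
r = pearson_r(easiness, accuracy)
p = permutation_p_value(easiness, accuracy)
# The remaining p-values stand in for other (hypothetical) questionnaire items.
significant = benjamini_hochberg([p, 0.04, 0.30, 0.75], q=0.1)
print(round(r, 2), significant)
```

Because the rating is flipped before correlating, a positive `r` here reads directly as "easier imagery goes with higher accuracy", which is the intuition the flipping step is meant to provide.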

For investigation of the variations of self-prediction over time, session-wise classification accuracy was compared with the self-prediction at each run. The correlation coefficient and the corresponding Root Mean Square Error (RMSE) were quantified. In addition, the cross-validated classification accuracy at each run was computed using the same method (six CSP filters and FLDA) and compared with the prediction to see the evolution of run-wise accuracy and prediction across runs. The result from this analysis will be used in the Section “Discussion.”
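As a minimal sketch of the RMSE measure mentioned above, the following Python snippet compares self-predictions at each time point against the session-wise accuracy. The helper name `rmse`, the dictionary layout, and all numbers are assumptions made for illustration; they are not taken from the study.

```python
from math import sqrt


def rmse(predicted, actual):
    """Root mean square error between predicted and actual accuracies (in %)."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return sqrt(sum(squared) / len(squared))


# Toy values for illustration only; NOT data from the study.
# One entry per subject.
session_accuracy = [85.0, 62.0, 55.0, 71.0]
predictions = {
    "pre-task": [60.0, 80.0, 75.0, 90.0],
    "run 1": [75.0, 70.0, 65.0, 80.0],
    "run 2": [80.0, 65.0, 60.0, 70.0],
}
rmse_by_run = {label: rmse(pred, session_accuracy)
               for label, pred in predictions.items()}
for label, value in rmse_by_run.items():
    print(label, round(value, 1))
```

A decreasing RMSE across time points, as in this toy example, would indicate that predictions move closer to the measured accuracy in absolute terms, which complements the correlation coefficient (a measure of ordering rather than of absolute error).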

Results

Personal Information and Conditions

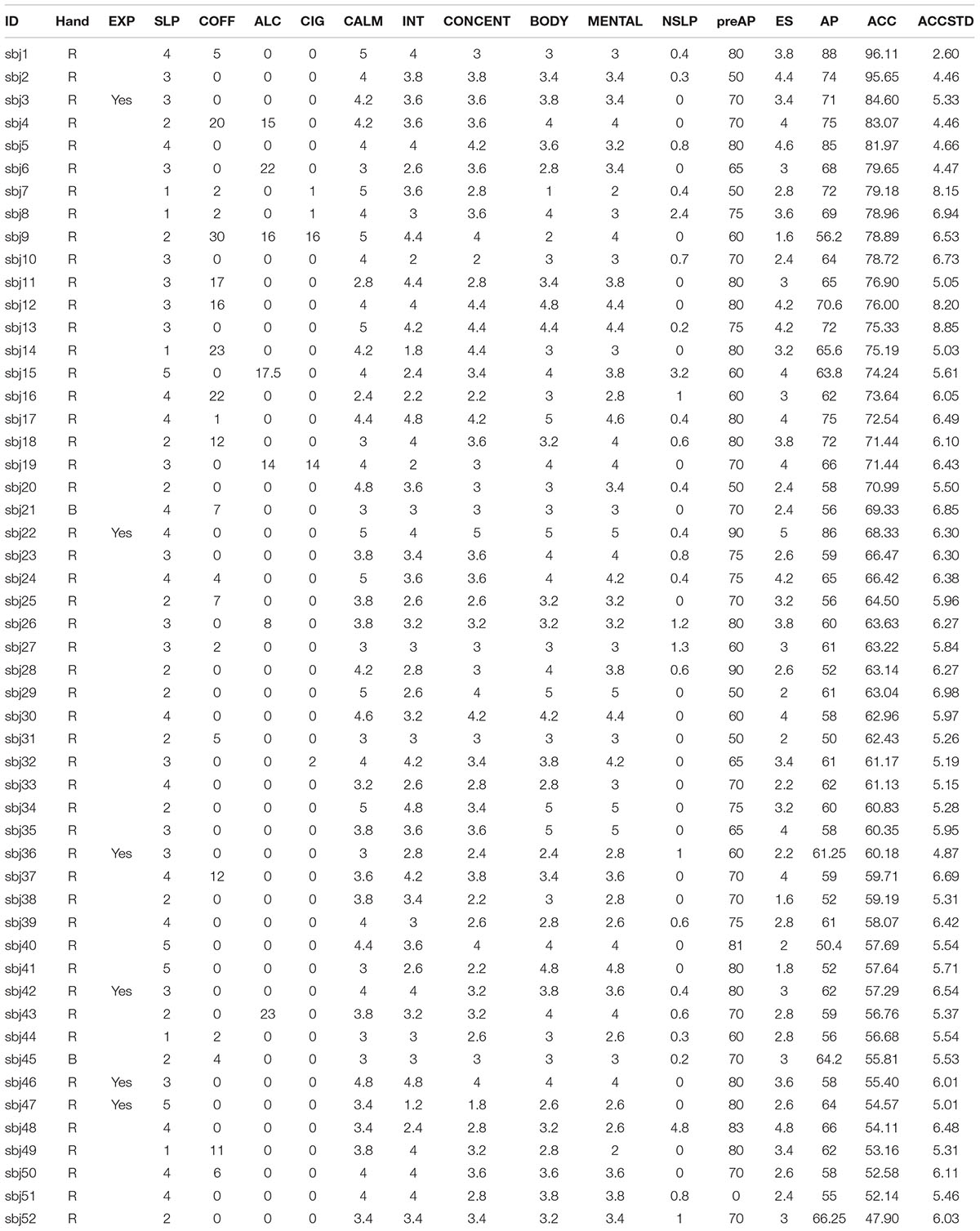

The scores from the questionnaire survey and offline classification accuracy are tabulated in Table 2 (for accuracy prediction at each run, see Supplementary Table 1). The overall classification accuracy was 66.93 ± 11.05% (average ± standard deviation), ranging from a minimum of 46.9% to a maximum of 96.1%. The classification accuracies were widely scattered, so our collected datasets covered a broad spectrum of subjects, from poor to good performers, in terms of motor imagery proficiency.

TABLE 2. Questionnaire results and neurophysiological index. For detailed information about items, please see the text.

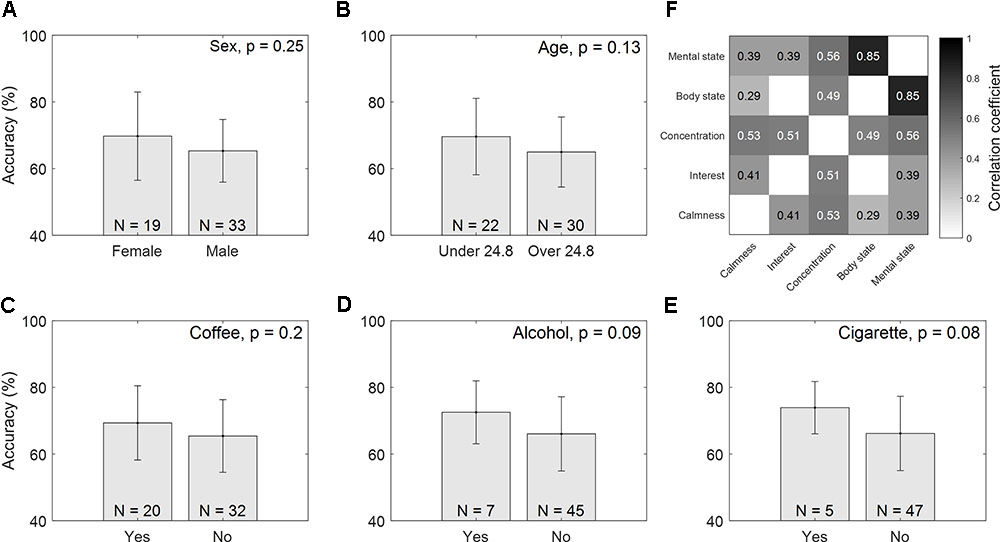

Figures 2A–E show the accuracy comparisons of different groups classified according to Sex, Age, Coffee, Alcohol, and Cigarettes. There are apparent differences between groups. Female subjects and younger subjects seemed to perform better than male subjects and subjects older than 24.8 years, respectively. Additionally, subjects who had coffee/alcohol/cigarettes in the 24 h preceding the tests tended to show higher motor imagery accuracy than the subjects who had not. However, these differences were not statistically significant (Sex: p = 0.25, Age: p = 0.07, Coffee: p = 0.2, Alcohol: p = 0.09, and Cigarettes: p = 0.08). In the correlation analysis of physical and mental states, none of the items (SLP: r = -0.06, NSLP: r = -0.04, Calmness: r = 0.25, Interest: r = 0.08, Concentration: r = 0.25, Physical state: r = -0.08, or Mental state: r = 0.0; p > 0.05 for all results) were significantly correlated with classification accuracy, although they were highly correlated with each other, as shown in Figure 2F.

FIGURE 2. Accuracy comparisons between different groups. Mean accuracies with standard deviations are presented, and the total number of subjects within each group is noted at the bottom of each panel: (A) Sex, (B) Age, (C) Coffee, (D) Alcohol, and (E) Cigarettes. (F) Correlation coefficients between physical/mental scores (FDR-corrected).

Easiness of Motor Imagery and Self-Prediction of Accuracy

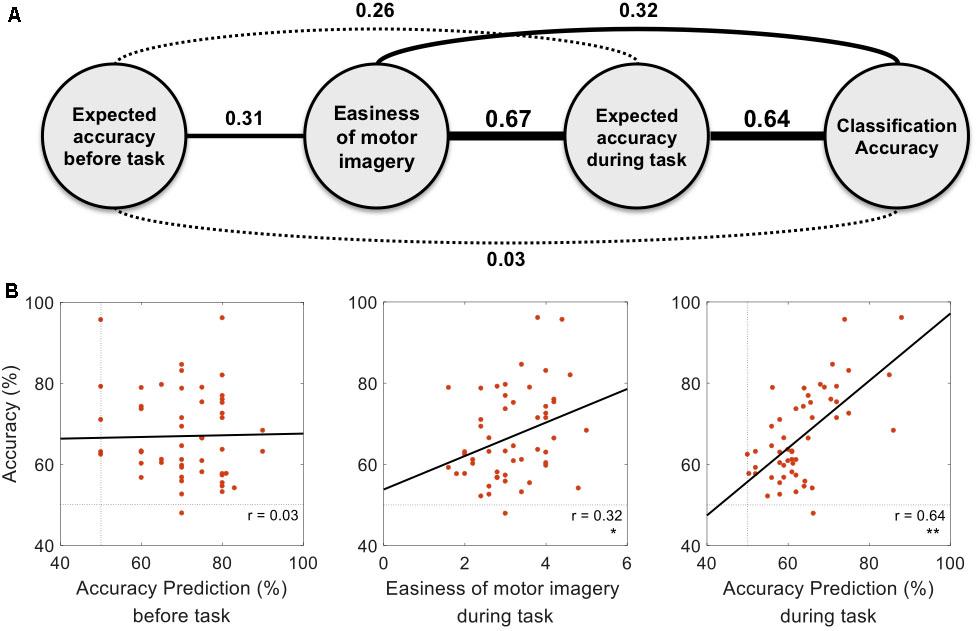

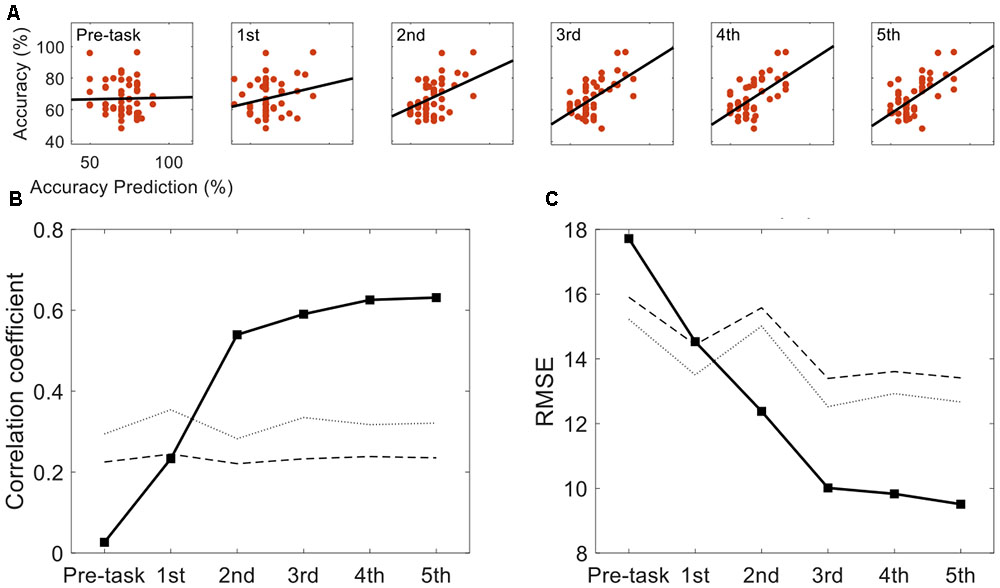

Figure 3 shows the results of the correlation analysis. Most measures were positively correlated with classification accuracy, but the strength of the correlation varied across measures. The predicted accuracy during runs (the average of the predictions made across runs) showed a higher correlation coefficient (r = 0.64, p < 0.01, permutation test n = 2,500) than the easiness of motor imagery (r = 0.32, p < 0.05, permutation test n = 2,500), even though the two measures were themselves strongly correlated (r = 0.67). On the other hand, the classification accuracy that subjects predicted before the task yielded no significant correlation with offline classification accuracy (r = 0.03, p > 0.05, permutation test n = 2,500). These results suggest that subjects could estimate their motor imagery proficiency reasonably well during the task, but not before performing it. A more in-depth analysis was conducted with the individual estimates at each run. Figure 4A shows how self-prediction changed as the subjects progressed from pre-task to the 5th run. The correlation coefficient clearly tended to increase: it jumped from the pre-task expectation (r = 0.03) to the 1st (r = 0.23) and 2nd (r = 0.54) runs (Figure 4B) and then increased only marginally afterward. Similarly, as shown in Figure 4C, the RMSE decreased steeply from pre-task (RMSE = 17.7%) to the 3rd run (RMSE = 10%) and then fluctuated slightly. Permutation tests (n = 2,500) revealed that the correlation coefficient and RMSE in the 2nd to 5th runs were statistically significant with respect to the two significance thresholds [p = 0.05 (dashed line) and p = 0.01 (dotted line)].

FIGURE 3. Correlations with actual classification accuracy. (A) The relationships between measures are presented with correlation coefficients. Non-significant correlations (p > 0.05) and significant correlations (p < 0.05) are presented with dotted and solid lines, respectively. Line width represents the strength of correlation. (B) Dots in each panel represent subjects, and the black line is the linear regression line fitted to the data points. Statistical significance is marked with one star (p < 0.05) or two stars (p < 0.01) at the bottom right of each panel (p-values were FDR corrected).

FIGURE 4. Self-prediction comparison across task runs. (A) The evolution of accuracy and self-prediction from pre-task to the 5th run. (B) Correlation coefficients between self-predicted performance and actual classification performance across pre-task and the 1st to 5th runs. (C) Corresponding Root Mean Square Error between the predicted performance and actual classification performance. Significance thresholds are marked with dotted (p = 0.01) and dashed (p = 0.05) lines.

Discussion

In this study, we instructed subjects to predict their motor imagery performance and investigated how their predictions correlated with actual (offline) classification accuracy. Initially, participants estimated their performance poorly: as observed for the pre-task and 1st run in Figure 4B, the correlation coefficients were non-significant according to the permutation test. However, as participants completed more of the motor imagery task, they seemed to get a better sense of their motor imagery quality; from the 2nd to the 5th run, the correlation coefficients were statistically significant and increasing.

Subjects evaluated how easy their motor imagery was, and the self-assessed motor imagery scores were positively correlated with offline classification accuracy. This relationship agrees with the reports of Vuckovic and Osuagwu (2013) and Marchesotti et al. (2016), which stated that simply asking questions about the quality of motor imagery can be used to predict motor imagery performance. Interestingly, the predicted accuracy during tasks was also highly positively correlated with actual classification accuracy (r = 0.64, p < 0.01), and this value was notably larger than the correlation coefficient of the scores on the easiness of motor imagery (r = 0.32, p < 0.05).

Considering Kübler et al.’s (2001) statement that “perception of the electrocortical changes is obviously related to the control of these changes,” perception is an important feature for understanding the internal state, and self-report is the tool for expressing such information to the external world. Related to this notion, the above-mentioned results raise an interesting question. The information that the subjects used to predict their performance accuracy may have come from the same source they used for evaluating the easiness of motor imagery, so the two measures may overlap in a certain domain. Our results support this, since the two measures are highly correlated (r = 0.67), as shown in Figure 3A. However, we observed a large difference between the two measures in terms of their correlation coefficients with actual classification accuracy. Considering that many self-report psychometrics (e.g., vividness of motor imagery, mental rotation, and visuo-motor coordination) were confirmed to be significant measures for predicting an individual’s BCI performance (Hammer et al., 2012; Jeunet et al., 2015a; Marchesotti et al., 2016), self-assessment seems to be a useful tool for evaluating motor imagery. These results demonstrate the psychological capacity of a participant to sense his or her internal state based on a certain model that depends on the given question or task to perform.

In this study, subjects may have developed an internal model for evaluating themselves in the accuracy domain. Easiness of motor imagery is simply a score selected from a 1 to 5 scale. To predict classification accuracy, however, subjects were given an explanation regarding the method of classification and the range of accuracy (50–100%). Thus, in transforming the subject’s actual feeling regarding the quality of motor imagery into another domain (the accuracy measure), the self-evaluated value seems to take a form closer to accuracy itself. From this result, we learned two important lessons. First, directly estimating the target measure (here, BCI performance) that researchers want to see may be better than correlating an indirect value (any correlate) with the target measure. Second, subjects should be given sufficient information on what they are evaluating.

Based on our results, the ideal case—wherein subjects predict their performance in a short time without performing any tasks—may not be achievable; they need time to develop an accurate sense of how to evaluate their motor imagery quality and to predict BCI performance. In this context, an interesting question is how many trials are sufficient for users to build a sense of predicting BCI performance. Figure 4 gives us a brief clue. At the 1st and 2nd runs, the correlation between BCI performance and self-prediction showed dramatic increases, and the RMSE steeply decreased from 17.7% (pre-task) to 10% at the 3rd run. Because 40 motor imagery trials (for both left and right imagery) were collected at each run, it is inferred that 80–120 motor imagery trials (roughly 9–16 min in time) would be required for subjects to develop the ability to achieve relatively accurate self-assessment. In conclusion, BCI-illiterate persons could stop at about half the number of planned tasks (the planned number of trials: 200 or 240) in our experiment.
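The estimate of roughly 9–16 min can be reproduced from the trial structure given in the Motor Imagery Task section (2 s cross, 3 s cue, 2 s cross, and an inter-trial interval of 0.1 to 0.8 s). The Python sketch below is a worked calculation under the assumption that breaks between runs are excluded and that the bounds come from the shortest and longest possible inter-trial interval; the source does not state how the range was derived, and the constant and function names are illustrative.

```python
# Trial timing as described in the Motor Imagery Task section.
CROSS_S = 2.0      # fixation cross before the cue
CUE_S = 3.0        # left/right instruction (motor imagery phase)
POST_S = 2.0       # cross after the motor imagery phase
ITI_MIN_S = 0.1    # shortest inter-trial interval
ITI_MAX_S = 0.8    # longest inter-trial interval
TRIALS_PER_RUN = 40  # 20 left + 20 right


def duration_minutes(n_trials, iti_s):
    """Total task time in minutes, excluding the breaks between runs."""
    trial_s = CROSS_S + CUE_S + POST_S + iti_s
    return n_trials * trial_s / 60.0


# Two runs (80 trials) with the shortest ITI: about 9.5 min.
lower = duration_minutes(2 * TRIALS_PER_RUN, ITI_MIN_S)
# Three runs (120 trials) with the longest ITI: 15.6 min.
upper = duration_minutes(3 * TRIALS_PER_RUN, ITI_MAX_S)
print(round(lower, 1), round(upper, 1))
```

Including the breaks of about 2 min between runs would lengthen both bounds, so the values should be read as task time only.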

Related to the evolution of self-prediction, another interesting question is whether users can recall the experience from one session and accurately evaluate themselves in the following session. If that were the case, then it would be possible for participants to accurately predict their performance without having training time to sense their abilities. Multi-session data will help to answer that question.

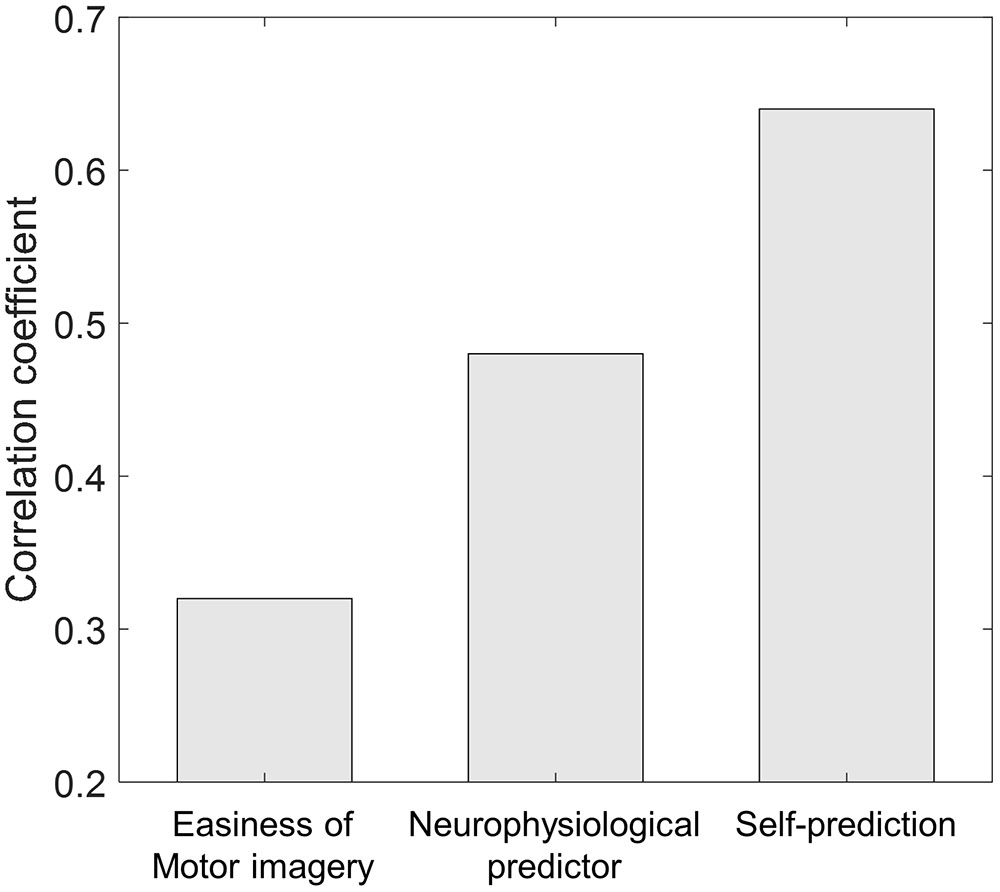

Another interesting question is whether self-prediction is better as a predictor for BCI performance than other correlates. In the literature, various types of correlates were investigated and researchers reported the following correlation coefficients: r = 0.59 (Burde and Blankertz, 2006) and r = 0.50 (Hammer et al., 2012) in psychological parameters; r = 0.72 and r = 0.63 (Halder et al., 2011, 2013) in anatomical parameters; and r = 0.53 (Blankertz et al., 2010) in neurophysiological parameters. Results from our previous study indicate that low alpha and high theta are the typical neurophysiological pattern of a BCI-illiterate user, and a performance predictor combining four different values of spectral band power (theta, alpha, beta, and gamma) was proposed and evaluated using the same data. Figure 5 demonstrates the comparison between the correlation coefficients of the three methods. Interestingly, the proposed neurophysiological predictor yielded r = 0.48 and self-prediction yielded r = 0.64. Even though more thorough investigation of physiological, anatomical, and psychological correlates should be conducted using the same dataset, we may conclude from our findings that self-prediction is quite comparable to the existing predictors; moreover, it is an easy and quick way to predict BCI performance because it does not require long preparations for the installation of systems or complex brain imaging.

FIGURE 5. Correlation coefficient comparison across different methods. For the neurophysiological predictor, the value from the study by Ahn et al. (2013b) was adopted.

As we observed, self-prediction may not be the most suitable strategy to quickly determine BCI-illiteracy in advance. However, the results revealed that this domain, which has thus far been overlooked, should be taken into account when investigating BCI-illiteracy. This means that user feedback is just as important as other factors. Thus, it would be interesting to introduce user feedback in updating and advancing current BCI training protocols, control paradigms (e.g., P300 BCI), algorithms, different imaging modalities (functional near-infrared spectroscopy and magnetoencephalography), and hybrid BCIs.

Here, we discuss the limitations of this study. First, the observed self-prediction became closer to the session-wise performance, which was calculated from all trials from the 1st run to the last run. Thus, the significant correlation could be due either to a good estimation of their performance by the participants or to subjects becoming bored and no longer putting effort into the task. Considering the statistics of accuracy (mean: 66.9%, median: 63.4%, and standard deviation: 11.0%) and prediction (mean: 63.5%, median: 62.0%, and standard deviation: 8.5%), and the fact that the numbers of subjects whose classification accuracy or prediction was below 60% were N = 18 (accuracy), N = 16 (prediction), and N = 9 (both), the latter reason might have influenced the result. If this is true, performance would be expected to fall to around chance level.

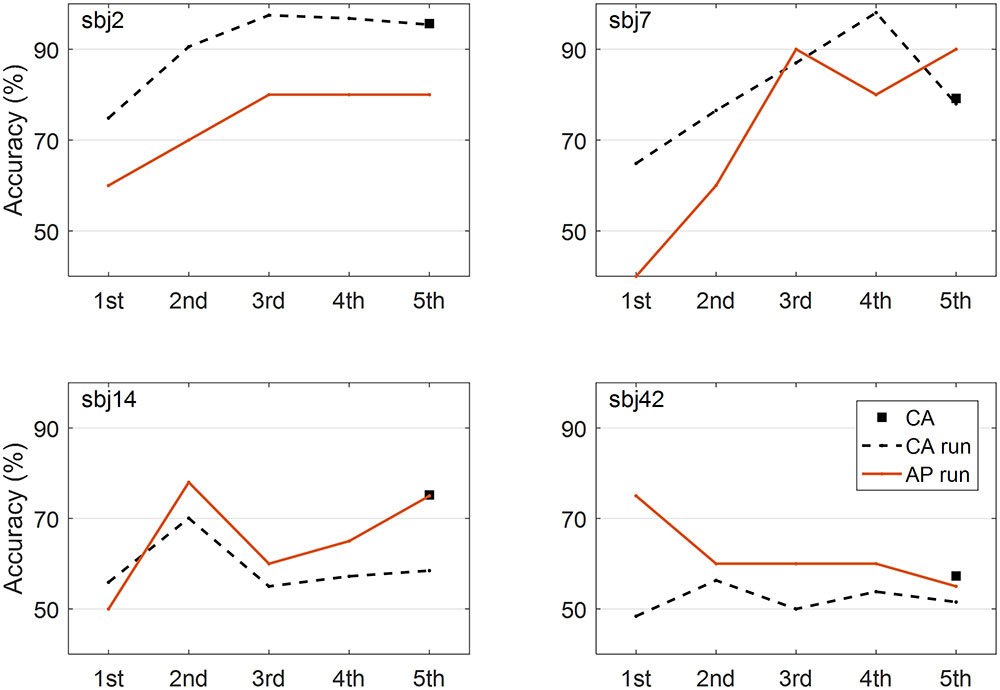

To address this issue, the performance at each run was estimated using the same method (CSP with six filters and FLDA), and the evolution of performance and prediction was investigated for all subjects. Figure 6 shows the results from four representative subjects. We did not find that performance and prediction both converged to chance level. However, only 20 trials per class were collected in each run, so a trustworthy classification accuracy is difficult to obtain when comparing accuracy and prediction at each run, because a small number of trials dramatically raises the chance level (Müller-Putz et al., 2008). In addition, considering the relatively large portion of low performers (N = 16), further investigation with a sufficient number of trials should be done to reach a solid conclusion on this issue.
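To illustrate why a small number of trials raises the effective chance level, the following Python sketch computes the smallest accuracy that a two-class classifier must reach to be significantly better than random guessing. It uses an exact one-sided binomial tail, which is one common way to quantify this effect and not necessarily the procedure of the cited work; the function name `significance_threshold` and the choice of α = 0.05 are assumptions.

```python
from math import comb


def significance_threshold(n_trials, alpha=0.05, p_chance=0.5):
    """Smallest accuracy k/n with P(X >= k) <= alpha for X ~ Binomial(n, p_chance).

    Returns None if even a perfect score would not be significant.
    """
    tail = 0.0
    # Accumulate the upper tail, starting from a perfect score.
    for k in range(n_trials, -1, -1):
        tail += comb(n_trials, k) * p_chance ** k * (1 - p_chance) ** (n_trials - k)
        if tail > alpha:
            # k is no longer significant, so k + 1 is the smallest significant count.
            return (k + 1) / n_trials if k + 1 <= n_trials else None
    return 0.0


# One run: 40 trials (20 per class). Whole session: 200 trials (5 runs).
per_run = significance_threshold(40)
per_session = significance_threshold(200)
print(per_run, per_session)  # per_run is 0.65, i.e., 26 of 40 trials correct
```

With 40 trials, an accuracy below 65% cannot be distinguished from guessing at this significance level, whereas the threshold for the 200-trial session is considerably closer to 50%; this is why run-wise accuracies are less trustworthy than the session-wise accuracy.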

FIGURE 6. Evolution of performance and prediction. Panels for four representative subjects show how performance and prediction evolve across runs. Classification accuracy (CA) from all the trials (5 runs), classification accuracy (CA run) at each run, and accuracy prediction (AP) at each run are presented.

Second, only the task without feedback was evaluated in this study. Given the report that training with feedback (e.g., neurofeedback-based training; Hwang et al., 2009) helps users to modulate classifiable brain waves in BCI, it would be interesting to see how much influence feedback from the system has on building the sense of motor imagery quality; such feedback may be helpful or may lead users to form a biased model of self-sensing. We believe that a direct comparison of the two tasks, with and without feedback, would give a clearer indication of the influence of feedback on the internal model; this will be investigated in future work.

Third, we used general techniques (i.e., CSP and FLDA) that are common in the BCI field, and sixteen subjects showed accuracy lower than 60%, which may be considered chance level. Therefore, it is worthwhile to check whether this subpopulation influenced the results. However, re-computation without these subjects showed that the high correlation is still observable (r = 0.67), as seen in Supplementary Figure 1. More complex algorithms may find better features and construct a more accurate decision hyperplane, thus yielding higher classification accuracy. However, according to the existing literature (Samek et al., 2012; Lotte, 2015; Alimardani et al., 2017), the order of performance across subjects hardly changes. Therefore, we believe that a more sophisticated machine learning algorithm would not weaken the significance of the result.
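The re-computation described above amounts to dropping subjects below an accuracy threshold and recomputing the Pearson correlation between accuracy and self-prediction. The sketch below shows one way this could be done; the function names are hypothetical, the 60% threshold follows the text, and the numbers in the usage example are made-up toy values, not data from this study.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_without_low_performers(accuracy, prediction, threshold=60.0):
    """Correlation after excluding subjects whose accuracy is below threshold.

    Returns the coefficient and the number of subjects kept.
    """
    kept = [(a, p) for a, p in zip(accuracy, prediction) if a >= threshold]
    acc, pred = zip(*kept)
    return pearson_r(acc, pred), len(kept)


if __name__ == "__main__":
    # Toy values in percent, invented purely for illustration.
    accuracy = [52.0, 55.0, 58.0, 61.0, 64.0, 70.0, 78.0, 85.0, 92.0]
    prediction = [60.0, 55.0, 62.0, 58.0, 65.0, 66.0, 70.0, 80.0, 85.0]
    print("all subjects:", pearson_r(accuracy, prediction))
    print("accuracy >= 60%:", r_without_low_performers(accuracy, prediction))
```

Comparing the two coefficients indicates whether the correlation is driven by the near-chance subjects or persists among the remaining ones.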

Conclusion

We investigated the correlations of the personal information/conditions and self-assessed motor imagery scores of study participants with actual (offline) classification accuracy in MI-BCI. None of the personal information or conditions was significantly correlated with actual classification accuracy. However, we observed a high positive correlation between the self-predicted BCI performance and actual classification accuracy. Additionally, our results demonstrate that such self-prediction improves as the subjects experience more motor imagery trials (after roughly 16 min), even though no feedback on performance was given to the subjects. In conclusion, self-prediction by a BCI user is also useful information for understanding BCI performance variation.

Ethics Statement

This study was carried out in accordance with the recommendations of the Institutional Review Board in Gwangju Institute of Science and Technology with written informed consent from all subjects. All subjects gave written informed consent in accordance with the Declaration of Helsinki. The protocol was approved by the Institutional Review Board in Gwangju Institute of Science and Technology.

Author Contributions

MA, HC, SA, and SJ designed the study. MA, HC, and SA collected data. MA analyzed data and wrote the manuscript. MA and SJ contributed to the editing of the final manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by the Institute for Information and Communications Technology Promotion (IITP) grant funded by the Korean government (MSIT) (No. 2017-0-00451) and the GIST Research Institute (GRI) grant funded by the Gwangju Institute of Science and Technology (GIST) in 2018.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnhum.2018.00059/full#supplementary-material

References

Ahn, M., Ahn, S., Hong, J. H., Cho, H., Kim, K., Kim, B. S., et al. (2013a). Gamma band activity associated with BCI performance: simultaneous MEG/EEG study. Front. Hum. Neurosci. 7:848. doi: 10.3389/fnhum.2013.00848

Ahn, M., Cho, H., Ahn, S., and Jun, S. C. (2013b). High theta and low alpha powers may be indicative of bci-illiteracy in motor imagery. PLOS ONE 8:e80886. doi: 10.1371/journal.pone.0080886

Ahn, M., Hong, J. H., and Jun, S. C. (2012). Feasibility of approaches combining sensor and source features in brain–computer interface. J. Neurosci. Methods 204, 168–178. doi: 10.1016/j.jneumeth.2011.11.002

Ahn, M., and Jun, S. C. (2015). Performance variation in motor imagery brain-computer interface: a brief review. J. Neurosci. Methods 243, 103–110. doi: 10.1016/j.jneumeth.2015.01.033

Ahn, M., Lee, M., Choi, J., and Jun, S. C. (2014). A review of brain-computer interface games and an opinion survey from researchers, developers and users. Sensors 14, 14601–14633. doi: 10.3390/s140814601

Ahn, S., Ahn, M., Cho, H., and Jun, S. C. (2014). Achieving a hybrid brain–computer interface with tactile selective attention and motor imagery. J. Neural Eng. 11:066004. doi: 10.1088/1741-2560/11/6/066004

Alimardani, F., Boostani, R., and Blankertz, B. (2017). Weighted spatial based geometric scheme as an efficient algorithm for analyzing single-trial EEGS to improve cue-based BCI classification. Neural Netw. 92, 69–76. doi: 10.1016/j.neunet.2017.02.014

Benjamini, Y., and Hochberg, Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B Methodol. 57, 289–300. doi: 10.2307/2346101

Blankertz, B., Dornhege, G., Krauledat, M., Schröder, M., Williamson, J., Murray-Smith, R., et al. (2006). “The berlin brain-computer interface presents the novel mental typewriter hex-o-spell,” in Proceedings of the 3rd International Brain-Computer Interface Workshop Training Course, Graz, 108–109.

Blankertz, B., Sannelli, C., Halder, S., Hammer, E. M., Kübler, A., Müller, K.-R., et al. (2010). Neurophysiological predictor of SMR-based BCI performance. Neuroimage 51, 1303–1309. doi: 10.1016/j.neuroimage.2010.03.022

Bundy, D. T., Souders, L., Baranyai, K., Leonard, L., Schalk, G., Coker, R., et al. (2017). Contralesional brain–computer interface control of a powered exoskeleton for motor recovery in chronic stroke survivors. Stroke 48, 1908–1915. doi: 10.1161/STROKEAHA.116.016304

Burde, W., and Blankertz, B. (2006). “Is the locus of control of reinforcement a predictor of brain-computer interface performance?,” in Proceedings of the 3rd International Brain–Computer Interface Workshop and Training Course, Berlin, 76–77.

Cho, H., Ahn, M., Ahn, S., Kwon, M., and Jun, S. C. (2017). EEG datasets for motor imagery brain computer interface. Gigascience 6, 1–8. doi: 10.1093/gigascience/gix034

Cincotti, F., Kauhanen, L., Aloise, F., Palomäki, T., Caporusso, N., Jylänki, P., et al. (2007). Vibrotactile feedback for brain-computer interface operation. Comput. Intell. Neurosci. 2007:12. doi: 10.1155/2007/48937

Fazli, S., Mehnert, J., Steinbrink, J., Curio, G., Villringer, A., Müller, K.-R., et al. (2012). Enhanced performance by a hybrid NIRS–EEG brain computer interface. Neuroimage 59, 519–529. doi: 10.1016/j.neuroimage.2011.07.084

Genovese, C. R., Lazar, N. A., and Nichols, T. (2002). Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage 15, 870–878. doi: 10.1006/nimg.2001.1037

Gomez-Rodriguez, M., Peters, J., Hill, J., Schölkopf, B., Gharabaghi, A., and Grosse-Wentrup, M. (2011). Closing the sensorimotor loop: haptic feedback facilitates decoding of motor imagery. J. Neural Eng. 8:036005. doi: 10.1088/1741-2560/8/3/036005

Grosse-Wentrup, M., Schölkopf, B., and Hill, J. (2011). Causal influence of gamma oscillations on the sensorimotor rhythm. Neuroimage 56, 837–842. doi: 10.1016/j.neuroimage.2010.04.265

Guger, C., Allison, B., Cao, F., and Edlinger, G. (2017). A brain-computer interface for motor rehabilitation with functional electrical stimulation and virtual reality. Arch. Phys. Med. Rehabil. 98:e24. doi: 10.1016/j.apmr.2017.08.074

Halder, S., Agorastos, D., Veit, R., Hammer, E. M., Lee, S., Varkuti, B., et al. (2011). Neural mechanisms of brain-computer interface control. Neuroimage 55, 1779–1790. doi: 10.1016/j.neuroimage.2011.01.021

Halder, S., Bogdan, M., Kübler, A., Sitaram, R., and Birbaumer, N. (2013). Prediction of brain-computer interface aptitude from individual brain structure. Front. Hum. Neurosci. 7:105. doi: 10.3389/fnhum.2013.00105

Hammer, E. M., Halder, S., Blankertz, B., Sannelli, C., Dickhaus, T., Kleih, S., et al. (2012). Psychological predictors of SMR-BCI performance. Biol. Psychol. 89, 80–86. doi: 10.1016/j.biopsycho.2011.09.006

Hinterberger, T., Neumann, N., Pham, M., Kübler, A., Grether, A., Hofmayer, N., et al. (2004). A multimodal brain-based feedback and communication system. Exp. Brain Res. 154, 521–526.

Hwang, H.-J., Kwon, K., and Im, C.-H. (2009). Neurofeedback-based motor imagery training for brain-computer interface (BCI). J. Neurosci. Methods 179, 150–156. doi: 10.1016/j.jneumeth.2009.01.015

Jeunet, C., Jahanpour, E., and Lotte, F. (2016a). Why standard brain-computer interface (BCI) training protocols should be changed: an experimental study. J. Neural Eng. 13:036024. doi: 10.1088/1741-2560/13/3/036024

Jeunet, C., Lotte, F., and N’Kaoua, B. (2016b). “Human learning for brain–computer interfaces,” in Brain–Computer Interfaces 1, eds U. Clerc, L. Bougrain, and F. Lotte (Hoboken, NJ: John Wiley & Sons, Inc), 233–250.

Jeunet, C., N’Kaoua, B., and Lotte, F. (2016c). Advances in user-training for mental-imagery-based BCI control: psychological and cognitive factors and their neural correlates. Prog. Brain Res. 228, 3–35. doi: 10.1016/bs.pbr.2016.04.002

Jeunet, C., N’Kaoua, B., Subramanian, S., Hachet, M., and Lotte, F. (2015a). Predicting mental imagery-based BCI performance from personality, cognitive profile and neurophysiological patterns. PLOS ONE 10:e0143962. doi: 10.1371/journal.pone.0143962

Jeunet, C., Vi, C., Spelmezan, D., N’Kaoua, B., Lotte, F., and Subramanian, S. (2015b). “Continuous tactile feedback for motor-imagery based brain-computer interaction in a multitasking context,” in Human-Computer Interaction – INTERACT 2015 Lecture Notes in Computer Science, eds J. Abascal, S. Barbosa, M. Fetter, T. Gross, P. Palanque, and M. Winckler (Cham: Springer), 488–505. doi: 10.1007/978-3-319-22701-6_36

Kübler, A., Blankertz, B., Müller, K.-R., and Neuper, C. (2011). “A model of BCI-control,” in Proceedings of the 5th International Brain–Computer Interface Workshop and Training Course, Graz, 100–103.

Kübler, A., Kotchoubey, B., Kaiser, J., Wolpaw, J. R., and Birbaumer, N. (2001). Brain-computer communication: unlocking the locked in. Psychol. Bull. 127, 358–375.

LaFleur, K., Cassady, K., Doud, A., Shades, K., Rogin, E., and He, B. (2013). Quadcopter control in three-dimensional space using a noninvasive motor imagery-based brain-computer interface. J. Neural Eng. 10:046003. doi: 10.1088/1741-2560/10/4/046003

Leeb, R., Lee, F., Keinrath, C., Scherer, R., Bischof, H., and Pfurtscheller, G. (2007). Brain-computer communication: motivation, aim, and impact of exploring a virtual apartment. IEEE Trans. Neural Syst. Rehabil. Eng. 15, 473–482. doi: 10.1109/TNSRE.2007.906956

Lemm, S., Blankertz, B., Curio, G., and Müller, K.-R. (2005). Spatio-spectral filters for improving the classification of single trial EEG. IEEE Trans. Biomed. Eng. 52, 1541–1548. doi: 10.1109/TBME.2005.851521

Lotte, F. (2015). Signal processing approaches to minimize or suppress calibration time in oscillatory activity-based brain–computer interfaces. Proc. IEEE 103, 871–890. doi: 10.1109/JPROC.2015.2404941

Maeder, C. L., Sannelli, C., Haufe, S., and Blankertz, B. (2012). Pre-stimulus sensorimotor rhythms influence brain-computer interface classification performance. IEEE Trans. Neural Syst. Rehabil. Eng. 20, 653–662. doi: 10.1109/TNSRE.2012.2205707

Mahmoudi, B., and Erfanian, A. (2006). Electro-encephalogram based brain-computer interface: improved performance by mental practice and concentration skills. Med. Biol. Eng. Comput. 44, 959–969. doi: 10.1007/s11517-006-0111-8

Marchesotti, S., Bassolino, M., Serino, A., Bleuler, H., and Blanke, O. (2016). Quantifying the role of motor imagery in brain-machine interfaces. Sci. Rep. 6:srep24076. doi: 10.1038/srep24076

McCreadie, K. A., Coyle, D. H., and Prasad, G. (2014). Is sensorimotor BCI performance influenced differently by mono, stereo, or 3-D auditory feedback? IEEE Trans. Neural Syst. Rehabil. Eng. 22, 431–440. doi: 10.1109/TNSRE.2014.2312270

McFarland, D. J., McCane, L. M., David, S. V., and Wolpaw, J. R. (1997). Spatial filter selection for EEG-based communication. Electroencephalogr. Clin. Neurophysiol. 103, 386–394.

Merel, J., Pianto, D. M., Cunningham, J. P., and Paninski, L. (2015). Encoder-decoder optimization for brain-computer interfaces. PLOS Comput. Biol. 11:e1004288. doi: 10.1371/journal.pcbi.1004288

Millán, J. D. R., Rupp, R., Müller-Putz, G. R., Murray-Smith, R., Giugliemma, C., Tangermann, M., et al. (2010). Combining brain–computer interfaces and assistive technologies: state-of-the-art and challenges. Front. Neurosci. 4:161. doi: 10.3389/fnins.2010.00161

Müller-Putz, G. R., Scherer, R., Brunner, C., Leeb, R., and Pfurtscheller, G. (2008). Better than random? A closer look on BCI results. Int. J. Bioelectromagn. 10, 52–55.

Neuper, C., Scherer, R., Reiner, M., and Pfurtscheller, G. (2005). Imagery of motor actions: Differential effects of kinesthetic and visual–motor mode of imagery in single-trial EEG. Cogn. Brain Res. 25, 668–677. doi: 10.1016/j.cogbrainres.2005.08.014

Nijboer, F., Sellers, E. W., Mellinger, J., Jordan, M. A., Matuz, T., Furdea, A., et al. (2008). A P300-based brain-computer interface for people with amyotrophic lateral sclerosis. Clin. Neurophysiol. 119, 1909–1916. doi: 10.1016/j.clinph.2008.03.034

Ortner, R., Irimia, D.-C., Scharinger, J., and Guger, C. (2012). A motor imagery based brain-computer interface for stroke rehabilitation. Stud. Health Technol. Inform. 181, 319–323.

Pacheco, K., Acuna, K., Carranza, E., Achanccaray, D., and Andreu-Perez, J. (2017). Performance predictors of motor imagery brain-computer interface based on spatial abilities for upper limb rehabilitation. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2017, 1014–1017. doi: 10.1109/EMBC.2017.8036998

Pfurtscheller, G., and Lopes da Silva, F. H. (1999). Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin. Neurophysiol. 110, 1842–1857.

Pichiorri, F., Fallani, F. D. V., Cincotti, F., Babiloni, F., Molinari, M., Kleih, S. C., et al. (2011). Sensorimotor rhythm-based brain–computer interface training: the impact on motor cortical responsiveness. J. Neural Eng. 8:025020. doi: 10.1088/1741-2560/8/2/025020

Ramoser, H., Muller-Gerking, J., and Pfurtscheller, G. (2000). Optimal spatial filtering of single trial EEG during imagined hand movement. IEEE Trans. Rehabil. Eng. 8, 441–446. doi: 10.1109/86.895946

Ramos-Murguialday, A., Schürholz, M., Caggiano, V., Wildgruber, M., Caria, A., Hammer, E. M., et al. (2012). Proprioceptive feedback and Brain Computer Interface (BCI) based neuroprostheses. PLOS ONE 7:e47048. doi: 10.1371/journal.pone.0047048

Samek, W., Vidaurre, C., Müller, K.-R., and Kawanabe, M. (2012). Stationary common spatial patterns for brain–computer interfacing. J. Neural Eng. 9:026013. doi: 10.1088/1741-2560/9/2/026013

Schalk, G., McFarland, D. J., Hinterberger, T., Birbaumer, N., and Wolpaw, J. R. (2004). BCI2000: A general-purpose brain-computer interface (BCI) system. IEEE Trans. Biomed. Eng. 51, 2004.

Suk, H.-I., Fazli, S., Mehnert, J., Müller, K.-R., and Lee, S.-W. (2014). Predicting BCI subject performance using probabilistic spatio-temporal filters. PLOS ONE 9:e87056. doi: 10.1371/journal.pone.0087056

Tan, L.-F., Dienes, Z., Jansari, A., and Goh, S.-Y. (2014). Effect of mindfulness meditation on brain-computer interface performance. Conscious. Cogn. 23, 12–21. doi: 10.1016/j.concog.2013.10.010

Vidaurre, C., Sannelli, C., Müller, K.-R., and Blankertz, B. (2011). Co-adaptive calibration to improve BCI efficiency. J. Neural Eng. 8:025009. doi: 10.1088/1741-2560/8/2/025009

Vuckovic, A., and Osuagwu, B. A. (2013). Using a motor imagery questionnaire to estimate the performance of a Brain-Computer Interface based on object oriented motor imagery. Clin. Neurophysiol. 124, 1586–1595. doi: 10.1016/j.clinph.2013.02.016

Wei, P., He, W., Zhou, Y., and Wang, L. (2013). Performance of motor imagery brain-computer interface based on anodal transcranial direct current stimulation modulation. IEEE Trans. Neural Syst. Rehabil. Eng. 21, 404–415. doi: 10.1109/TNSRE.2013.2249111

Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., and Vaughan, T. M. (2002). Brain-computer interfaces for communication and control. Clin. Neurophysiol. 113, 767–791.

Xia, B., Zhang, Q., Xie, H., Li, S., Li, J., and He, L. (2012). “A co-adaptive training paradigm for motor imagery based brain-computer interface,” in Advances in Neural Networks – ISNN 2012 Lecture Notes in Computer Science, eds J. Wang, G. G. Yen, and M. M. Polycarpou (Berlin: Springer), 431–439.

Keywords: BCI-illiteracy, performance variation, prediction, motor imagery, BCI

Citation: Ahn M, Cho H, Ahn S and Jun SC (2018) User’s Self-Prediction of Performance in Motor Imagery Brain–Computer Interface. Front. Hum. Neurosci. 12:59. doi: 10.3389/fnhum.2018.00059

Received: 21 August 2017; Accepted: 31 January 2018;

Published: 15 February 2018.

Edited by:

Stefan Haufe, Technische Universität Berlin, Germany

Reviewed by:

Camille Jeunet, École Polytechnique Fédérale de Lausanne, Switzerland

Noman Naseer, Air University, Pakistan

Copyright © 2018 Ahn, Cho, Ahn and Jun. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sung C. Jun, scjun@gist.ac.kr
