
METHODS article

Front. Hum. Neurosci., 14 August 2017

Sec. Brain Imaging and Stimulation

Volume 11 - 2017 | https://doi.org/10.3389/fnhum.2017.00409

This article is part of the Research Topic Pathophysiology of the Basal Ganglia and Movement Disorders: Gaining New Insights from Modeling and Experimentation, to Influence the Clinic.

Neural systems are characterized by their complex dynamics, reflected in the signals produced by neurons and neuronal ensembles. This complexity exhibits specific features in health, in disease and in different states of consciousness, and can be considered a hallmark of certain neurologic and neuropsychiatric conditions. To measure complexity from neurophysiologic signals, a number of different nonlinear tools of analysis are available. However, not all of these tools are easy to implement or able to handle clinical data, which are often obtained under less than ideal conditions compared with laboratory or simulated data. Recently, the temporal structure function has emerged as a powerful tool for the analysis of complex properties of neuronal activity. The temporal structure function is computationally efficient and can be estimated robustly from short signals. However, the application of this tool to neuronal data is relatively new, making the interpretation of results difficult. In this methods paper we describe a step-by-step algorithm for the calculation and characterization of the structure function. We apply this algorithm to oscillatory, random and complex toy signals, and test the effect of added noise. We show that: (1) the mean slope of the structure function is zero in the case of random signals; (2) oscillations are reflected in the shape of the structure function, but they do not modify the mean slope if complex correlations are absent; (3) nonlinear systems produce structure functions with nonzero slope up to a critical point, where the function turns into a plateau. Two characteristic numbers can be extracted to quantify the behavior of the structure function in the case of nonlinear systems: (1) the point where the plateau starts (the inflection point, where the slope change occurs), and (2) the height of the plateau.
While the inflection point is related to the scale at which correlations weaken, the height of the plateau is related to the noise present in the signal. To exemplify our method we calculate structure functions of neuronal recordings from the basal ganglia of parkinsonian and healthy rats, and draw guidelines for their interpretation in light of the results obtained from our toy signals.

The nervous system is complex at many levels. It is built as a network of nonlinear elements, the neurons, which themselves have complex dynamics (Rulkov, 2002; Korn and Faure, 2003). As a result, the output of the nervous system exhibits complex dynamics at multiple scales, which is reflected in neural signals from the level of single neurons to microcircuits and larger neuronal networks. This complexity can be measured from different kinds of data, for instance microelectrode recordings (MER), electroencephalograms (EEG) and functional magnetic resonance imaging (fMRI) (Mpitsos et al., 1988; Elger et al., 2000; Yang et al., 2013a,b). Although the theoretical concepts used to characterize these different signals are not scale specific (chaoticity, entropy, nonlinearity, fractality), the tools of analysis required to calculate complexity measures from different signals do differ, and need to be tuned for each particular case.

Our motivation comes from the observation of complex properties in the neuronal activity of the basal ganglia (Darbin et al., 2006; Lim et al., 2010; Andres et al., 2011). Different complexity measures of basal ganglia activity correlate with physiologic and pathologic conditions, such as arousal level or the presence of specific disorders (dystonia, parkinsonism) (Sanghera et al., 2012; Andres et al., 2014a; Alam et al., 2015). Moreover, some of these measures can be modified by therapeutic interventions, suggesting that a correct characterization of basal ganglia complexity has potentially high clinical impact (Dorval et al., 2008; Lafreniere-Roula et al., 2010). However, complexity measures are not always easily transferred to the clinic. Nonlinear tools are sensitive not only to parameter settings, but also to the amount of data analyzed (the length of the recordings), to noise, and to linear correlations present in the signals. For this reason, the correct implementation of nonlinear tools depends critically on how the tool at hand behaves for the particular system under study (Schreiber, 1999).

In this context, the temporal structure function has emerged as a powerful tool for the analysis of basal ganglia activity. In previous work we tested this tool on human, animal and simulated neuronal data, and observed that abnormalities in the temporal structure of basal ganglia spike trains correlate with parkinsonism (Andres et al., 2014b, 2015, 2016). Importantly, the calculation of temporal structure functions of spike trains is robust and computationally efficient. However, the interpretation of results remains difficult, partly because the use of temporal structure functions for the characterization of spike trains is relatively new. Here, we analyze the temporal structure of toy signals, i.e., signals with known properties, as a means to characterize the behavior of the tool. This task has been partly attempted before (Yu et al., 2003), but that analysis included neither a complex system nor a comparison with neuronal data, and is therefore insufficient for our purposes. Our goal is to draw general guidelines for the interpretation of structure functions of neuronal data. To illustrate the method, we calculate the structure function of spike trains obtained from the basal ganglia of healthy and parkinsonian rats during the transition from deep anesthesia to alertness.

Consider the following time series:

$$I(t) = \{I_{t_1}, I_{t_2}, \ldots, I_{t_n}\}$$

where $I_{t_1}, I_{t_2}, \ldots, I_{t_n}$ are successive recordings of the variable $I$ at times $t_1, t_2, \ldots, t_n$, so that the length of the time series is $n$. For this time series, the temporal structure function of order $q$ is a function of the scale $\tau$, defined as the average of the absolute value of the differences between elements of the time series separated by a time lag $\tau$, raised to the power $q$ (Lin and Hughson, 2001):

$$S_q(\tau) = \langle |\Delta I(\tau)|^q \rangle = \langle |I(t+\tau) - I(t)|^q \rangle$$

Here, Δ denotes the difference, |·| denotes the absolute value and 〈·〉 denotes the average.
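As a worked example of the definition for q = 1, consider a hypothetical four-element series I = (1, 3, 2, 5), so that n = 4 and each average runs over the n − τ available differences:

$$
\begin{aligned}
S_1(1) &= \tfrac{1}{3}\left(|3-1| + |2-3| + |5-2|\right) = \tfrac{6}{3} = 2,\\
S_1(2) &= \tfrac{1}{2}\left(|2-1| + |5-3|\right) = \tfrac{3}{2} = 1.5,\\
S_1(3) &= \tfrac{1}{1}\,|5-1| = 4.
\end{aligned}
$$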

It is often the case for complex systems that structure functions of increasing order follow a power-law relation of the form (Lin and Hughson, 2001):

$$S_q(\tau) \propto \tau^{\zeta(q)}$$

This justifies plotting structure functions on double logarithmic axes, since the exponent ζ can then be recovered as the slope of a linear regression, because:

$$\log S_q(\tau) = \zeta(q)\,\log \tau + c$$

where $c$ is a constant.

Therefore, when discussing the slope of $S_q(\tau)$ in a log-log plot, we are in fact referring to the exponent ζ. In addition, double logarithmic plotting improves the visualization of small scales.
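In practice, ζ(q) is estimated as the least-squares slope of log S_q(τ) against log τ. The sketch below assumes NumPy and uses a Gaussian random walk as a test signal (our choice for illustration, not a signal from this paper): its increments over a lag τ are normal with variance τ, so S_1(τ) grows as τ^0.5 and the fitted slope should come out close to 0.5. The helper names are hypothetical.

```python
import numpy as np

def structure_function(x, taus, q=1.0):
    """S_q(tau) = <|x(t+tau) - x(t)|^q>, averaged over the n - tau available pairs."""
    x = np.asarray(x, dtype=float)
    return np.array([np.mean(np.abs(x[tau:] - x[:-tau]) ** q) for tau in taus])

def loglog_slope(taus, s):
    """Slope of the least-squares line through (log tau, log S), i.e. an estimate of zeta(q)."""
    slope, _intercept = np.polyfit(np.log(taus), np.log(s), 1)
    return slope

# Test signal (assumption): Gaussian random walk, for which zeta(1) = 0.5 in theory.
rng = np.random.default_rng(0)
walk = np.cumsum(rng.normal(size=20_000))
taus = np.arange(1, 101)
zeta_1 = loglog_slope(taus, structure_function(walk, taus, q=1))
print(f"estimated zeta(1) = {zeta_1:.2f}")
```

The regression is done on the log-transformed values, so it fits a power law rather than a straight line in linear axes.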

An analogous definition applies to spatial series of data, where I(x) are recordings obtained simultaneously at different positions, instead of at successive times (Stotskii et al., 1998). In that case, the structure function is called the spatial structure function, as opposed to the temporal structure function, which is our interest here. In the case of a velocity field, the spatial and temporal structure functions are related by a linear transformation given by the velocity.

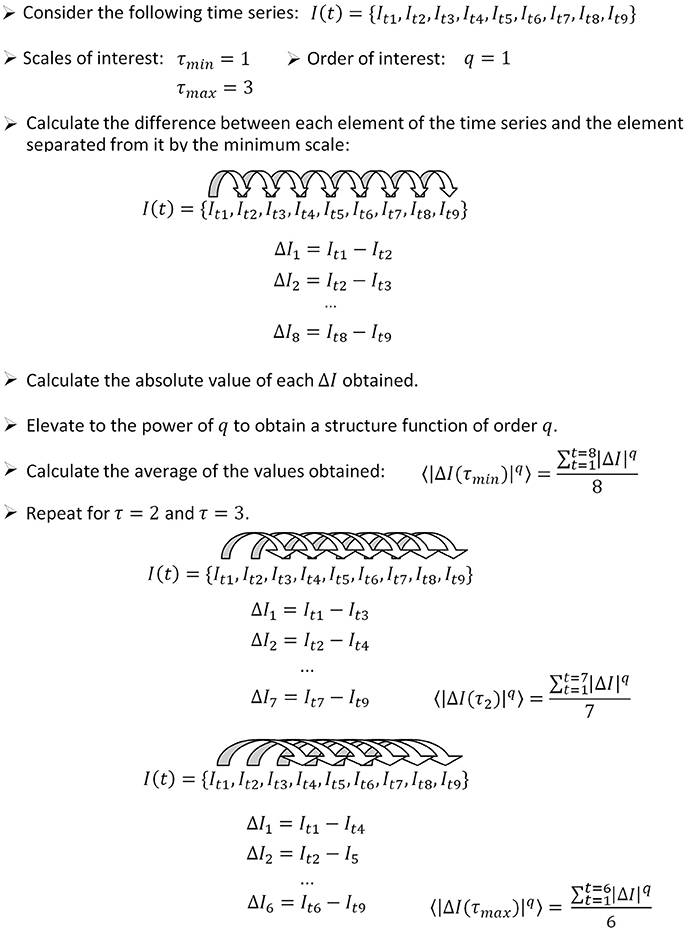

In this section, we briefly introduce a step-by-step algorithm for the calculation of the temporal structure function of a given signal. The procedure is illustrated in Figure 1. To calculate the temporal structure function of a signal I(t), proceed as follows.

Figure 1. How to apply the algorithm for the calculation of the temporal structure function to a sample time series. In this example, we calculate the structure function of order 1, so the power step is not needed. The number of differences computed for each scale (used as the denominator of the average) equals the length of the time series minus the scale. We show how to calculate three points of a temporal structure function, S(τ) for τ = 1, 2 and 3. Typically, wide ranges of scales are of interest to characterize short- and long-term dynamics (for instance, τ = 1 to 1000).

Step 1: Define the range of scales of interest, from the minimum scale $\tau_{\min}$ to the maximum scale $\tau_{\max}$.

Step 2: Define the range of orders of interest, from the minimum order $q_{\min}$ to the maximum order $q_{\max}$.

Step 3: Calculate the difference between each element of the time series $I(t)$ and the element separated from it by a number of elements equal to $\tau_{\min}$:

$$\Delta I(\tau_{\min}) = I(t+\tau_{\min}) - I(t)$$

The number of values obtained is equal to the length of the time series minus the scale ($n - \tau_{\min}$).

Step 4: Calculate the absolute value of the differences obtained in step 3.

Step 5: Raise the values obtained in step 4 to the power $q_{\min}$.

Step 6: Calculate the average of all the values obtained in step 5.

Step 7: Increase τ and repeat steps 3–6 until $\tau_{\max}$ is reached. In this way, one obtains $S_{q_{\min}}(\tau)$.

Step 8: To calculate structure functions of higher order, increase q and repeat steps 3–7 until $q_{\max}$ is reached.

In this paper we focus on temporal structure functions of order 1, in which case steps 5 and 8 are unnecessary. The behavior of the slope of the structure function with increasing q indicates the kind of fractal structure present in the signal: mono- vs. multifractal properties (Lin and Hughson, 2001). These properties might indeed be useful for a better characterization of neuronal signals. However, in previous work we found that the structure function of pallidal neurons of the rat shows no major differences for orders up to q = 6 (Andres et al., 2014b). Therefore, for simplicity, in this paper we use order 1. As is good practice, we normalized S(τ) by dividing it by its initial value, so all the structure functions shown in this paper start at S(1) = 1.
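Steps 1–8, including the normalization by the initial value, can be sketched in Python with NumPy as follows. The function name and its defaults are illustrative assumptions; normalization divides by the value at the smallest scale, which equals S(1) when τ_min = 1.

```python
import numpy as np

def temporal_structure_function(signal, tau_min=1, tau_max=100, q_values=(1,), normalize=True):
    """Return (taus, {q: S_q(tau)}) following steps 1-8 (hypothetical helper)."""
    x = np.asarray(signal, dtype=float)
    n = len(x)
    if not 1 <= tau_min <= tau_max < n:
        raise ValueError("need 1 <= tau_min <= tau_max < len(signal)")
    taus = np.arange(tau_min, tau_max + 1)            # Step 1: range of scales
    result = {}
    for q in q_values:                                # Steps 2 and 8: loop over orders
        s = np.empty(len(taus))
        for i, tau in enumerate(taus):                # Step 7: loop over scales
            diffs = x[tau:] - x[:-tau]                # Step 3: n - tau differences
            s[i] = np.mean(np.abs(diffs) ** q)        # Steps 4-6: |.|, power q, average
        if normalize:
            s = s / s[0]                              # divide by the first value: S(tau_min) = 1
        result[q] = s
    return taus, result

# Usage on a short hypothetical series, without normalization:
taus, s = temporal_structure_function([1, 3, 2, 5], tau_min=1, tau_max=3, normalize=False)
# S_1 for tau = 1, 2, 3 is 2.0, 1.5 and 4.0
```

A loop over scales keeps the code close to the step-by-step description; for long recordings and wide scale ranges it is still fast because each step 3 is a single vectorized subtraction.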

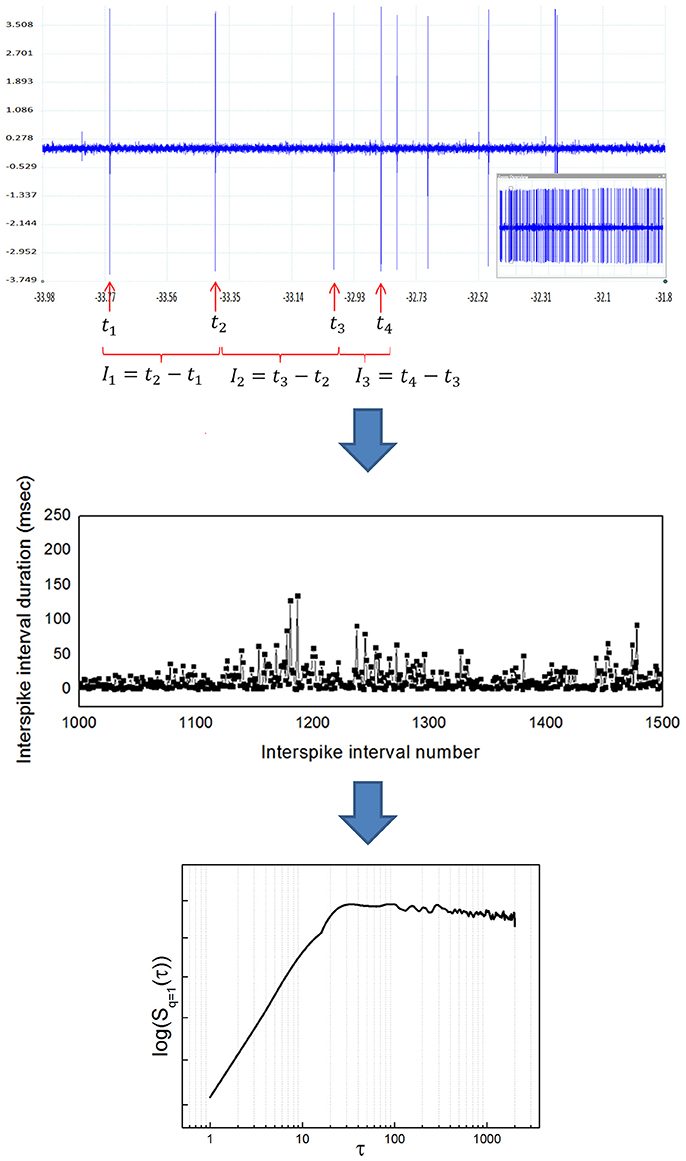

If the signal of interest is a neuronal recording of spiking activity, some preprocessing is needed before applying the algorithm described above. The preliminary steps are: (1) isolate single-neuron activity using a spike sorting algorithm (see for example Quiroga et al., 2004); (2) detect the times of occurrence of spikes; and (3) build a time series of interspike intervals (ISI) to obtain the time series I(t). Figure 2 illustrates the whole transformation process from the raw neuronal recording to the structure function.
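Steps (2) and (3) reduce to a few lines once spikes from a single unit are available. The sketch below assumes NumPy, spike times in seconds, and a naive threshold-crossing detector that is only a hypothetical stand-in for step (2); it does not perform spike sorting (step 1), which requires a dedicated algorithm such as the one cited above. Extracellular spikes are often negative-going, in which case the trace can be inverted before thresholding.

```python
import numpy as np

def detect_spike_times(voltage, fs, threshold):
    """Naive detector (assumption): time in s of each upward crossing of `threshold`."""
    v = np.asarray(voltage, dtype=float)
    above = v >= threshold
    # indices where the trace goes from below to at/above threshold
    crossings = np.flatnonzero(~above[:-1] & above[1:]) + 1
    return crossings / fs

def isi_series(spike_times_s):
    """Step (3): interspike intervals in ms, in order of occurrence."""
    return np.diff(np.sort(np.asarray(spike_times_s, dtype=float))) * 1000.0

# Synthetic example: four one-sample "spikes" in a 1 s trace sampled at an assumed 20 kHz
fs = 20_000.0
v = np.zeros(20_000)
v[[1000, 3000, 3500, 9000]] = 1.0
isi = isi_series(detect_spike_times(v, fs, threshold=0.5))   # about 100, 25 and 275 ms
```

The resulting `isi` array is the time series I(t) to which the structure function algorithm is then applied, with τ counted in ISI positions rather than in seconds.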

Figure 2. Transformation from a raw neuronal recording into a temporal structure function. (Upper) Sample raw extracellular microelectrode recording of neuronal activity. This recording was obtained from the entopeduncular nucleus of a healthy rat (technical details can be found in Andres et al., 2014a). The vertical axis indicates electric potential (mV) and the horizontal axis indicates time (s). The inset at the lower right shows the whole recording, of which a zoomed segment is shown in the larger window. Individual spikes are marked with a red arrow. Once spikes are classified as belonging to the activity of a single neuron, interspike intervals (ISI) are calculated as shown (ISI = time elapsed between one spike and the next). (Middle) Sample time series of interspike intervals, obtained from a neuronal recording like the one shown in the upper panel. The vertical axis indicates ISI duration (ms) and the horizontal axis indicates ISI number (position in the time series). Notice the high variability of the ISI, typical of complex systems. (Lower) Temporal structure function obtained from a time series of ISI like the one shown in the middle panel. The vertical axis is the value of the function S(τ) and the horizontal axis is the scale τ. In pallidal neurons it is common to observe a positive slope of the function at small scales, followed by a breakpoint and a plateau at larger scales, which is also typical of complex systems. The double logarithmic scale helps visualize small values of τ.

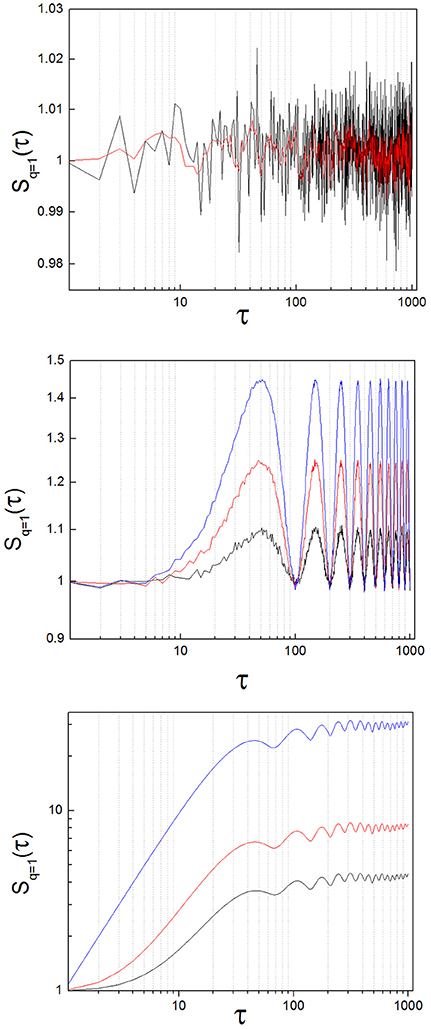

Theoretical work demonstrates that the structure function of random signals has a mean slope of zero (Lin and Hughson, 2001). To test the practical implementation of this statement, we generated 30 random signals following a normal distribution (mean = 1, SD = 0.1), each with a length of 10⁴ samples. We obtained S(τ) of these random signals and calculated the slope of S(τ) with a linear regression (Figure 3, upper panel). The slope had a value close to zero in all cases (8.07·10⁻⁸ ± 2.31·10⁻⁷, mean ± standard deviation, SD).
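The random-signal test can be reproduced along these lines. This is a sketch assuming NumPy, a scale range of 1 to 200 and a regression of log S(τ) on log τ; the text does not specify either choice, so the printed numbers will not match the values reported above, although the mean slope should likewise be close to zero.

```python
import numpy as np

def structure_function(x, taus):
    """Order-1 structure function S(tau) = <|x(t+tau) - x(t)|>."""
    x = np.asarray(x, dtype=float)
    return np.array([np.mean(np.abs(x[t:] - x[:-t])) for t in taus])

rng = np.random.default_rng(1)
taus = np.arange(1, 201)                 # assumed scale range
slopes = []
for _ in range(30):                      # 30 signals, normal(mean=1, SD=0.1), 10**4 samples each
    x = rng.normal(loc=1.0, scale=0.1, size=10_000)
    s = structure_function(x, taus)
    s = s / s[0]                         # normalize so that S(1) = 1
    slope, _ = np.polyfit(np.log(taus), np.log(s), 1)   # assumed log-log regression
    slopes.append(slope)

print(f"slope: {np.mean(slopes):.2e} ± {np.std(slopes):.2e} (mean ± SD)")
```

For independent samples, x(t+τ) − x(t) has the same distribution at every lag τ ≥ 1, which is why S(τ) stays flat apart from estimation noise.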

Figure 3. Structure function of toy signals, with and without added noise. (Upper) Random signal, before (black) and after (red) applying a low-pass filter. The slope calculated with a linear regression lies around zero in both cases. (Middle) Oscillatory signal (sin(x)) with increasing levels of added noise (noise factor: blue ×1.0, red ×1.5, black ×2.0; see the text). The slope is zero on average, but the oscillations of the signal are clearly translated into the structure function. As added noise increases, the amplitude of the oscillations diminishes. (Lower) Lorenz system (x variable; parameters: σ = 10, ρ = 28, β = 8/3) with increasing levels of added noise (blue: no noise; red: ×1.0; black: ×2.0; see the text). The breaking point lies in the range 40 < τ1 < 110 for this example. Observe that the position of the breaking point in the structure function does not change as added noise increases, but the height of the plateau diminishes. In the limit, the initial ascending phase disappears and the slope of the structure function is zero at all scales, as random dynamics prevail over the nonlinear system.

A well-known measure in time series analysis is the autocorrelation function. For random series, the autocorrelation falls rapidly to zero, indicating that the elements of the signal are independent. This behavior is hard to distinguish from the rapid loss of autocorrelation of complex nonlinear systems with highly variable output, like the Lorenz system. In contrast, the zero-slope behavior of the structure function of random signals is clearly different from the temporal structure of complex systems.

We calculated S(τ) from sin(x) (Figure 3, middle panel). Structure functions of oscillatory signals oscillate steadily between a lower and an upper bound. In general, the frequency of oscillation of the signal is translated into the structure function following the rule

$$f_{S(\tau)} = \frac{f_{I(t)}}{samp}$$

where $f_{I(t)}$ is the frequency of the original signal I(t), $f_{S(\tau)}$ is the frequency of oscillation of S(τ) in cycles per unit of scale (one sample), and samp is the sampling rate. As is the case with random signals, the mean slope of S(τ) of perfectly oscillatory signals is close to zero (linear regression, slope = −1.18 × 10⁻⁴).
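Recovering the original frequency from S(τ) can be sketched as follows, assuming NumPy, a sampling rate of 1000 Hz and a 5 Hz sinusoid (both values chosen only for illustration). For a sinusoid averaged over many periods, S_1(τ) is proportional to |sin(π f τ / samp)|, so it returns to approximately zero after exactly one period; the lag of that minimum gives the period in samples.

```python
import numpy as np

def structure_function(x, taus):
    """Order-1 structure function S(tau) = <|x(t+tau) - x(t)|>."""
    x = np.asarray(x, dtype=float)
    return np.array([np.mean(np.abs(x[t:] - x[:-t])) for t in taus])

samp = 1000.0                            # assumed sampling rate (Hz)
f_signal = 5.0                           # assumed frequency of I(t) (Hz)
t = np.arange(20_000) / samp
x = np.sin(2 * np.pi * f_signal * t)

taus = np.arange(1, 301)                 # covers 1.5 periods (period = 200 samples)
s = structure_function(x, taus)
period_samples = taus[np.argmin(s)]      # first return of S(tau) to ~0
f_recovered = samp / period_samples      # f_I = samp * f_S, with f_S = 1 / period
print(period_samples, f_recovered)       # 200 5.0
```

Searching only up to 1.5 periods keeps a single minimum in range; with noisy data a smoothed S(τ) or a spectral estimate of S(τ) would be more robust than a plain `argmin`.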

To test the effect of adding increasing noise levels to an oscillatory signal, we multiplied a time series of random numbers with normal distribution (0 ± 1, mean ± SD) by a factor of 1.0, 1.5 and 2.0 successively, and added it to sin(x). We calculated S(τ) from the three resulting time series; the results are plotted in blue, red and black, respectively, in the middle panel of Figure 3. The slope of the temporal structure function S(τ) remains around zero as the noise level increases, while the amplitude of its oscillations diminishes, because the random component increasingly dominates the average in the definition of the structure function. Note that the slope of S(τ) of a periodic signal is not zero if only scales smaller than the period are considered. However, the mean slope of S(τ) of a periodic signal is close to zero when the range of scales analyzed is sufficiently longer than the period itself, as observed in our results.
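The damping of the oscillations by noise can be sketched as below, assuming NumPy, a sinusoid with a period of 200 samples and 5·10⁴ samples (illustrative choices). As in the text, a single N(0, 1) series is rescaled by each factor; the peak-to-trough range of the normalized S(τ) is used here as a simple, assumed measure of oscillation amplitude.

```python
import numpy as np

def structure_function(x, taus):
    """Order-1 structure function S(tau) = <|x(t+tau) - x(t)|>."""
    x = np.asarray(x, dtype=float)
    return np.array([np.mean(np.abs(x[t:] - x[:-t])) for t in taus])

rng = np.random.default_rng(2)
n, period = 50_000, 200                  # assumed length and period (samples)
clean = np.sin(2 * np.pi * np.arange(n) / period)
noise = rng.normal(0.0, 1.0, size=n)     # one N(0, 1) series, rescaled by each factor
taus = np.arange(1, 401)                 # two full periods

amplitudes = {}
for factor in (1.0, 1.5, 2.0):
    s = structure_function(clean + factor * noise, taus)
    s = s / s[0]                         # normalize so that S(1) = 1
    amplitudes[factor] = s.max() - s.min()   # peak-to-trough range of the oscillation
    print(f"noise x{factor}: oscillation range of S(tau) = {amplitudes[factor]:.3f}")
```

The noise contributes a lag-independent term to every S(τ), including S(1), so after normalization the periodic part becomes a smaller fraction of the total as the factor grows.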

To analyze the structure function of nonlinear systems with complex properties, we obtained a signal representative of the time evolution of the well-known chaotic Lorenz attractor (Strogatz, 1994). We numerically integrated the Lorenz equations with the Euler method, extracted the variable x(t), and calculated the structure function of x(t) (Figure 3, lower panel). The results show a structure function with a positive slope at small scales and a clear breaking point. At this breaking or inflection point, which we have called τ1 in previous work, the function turns, more or less abruptly, into a zero-slope plateau (Andres et al., 2015). This behavior is related to the loss of autocorrelation of the system, associated with its chaoticity. However, the autocorrelation function C(τ) is very similar for random and complex systems. In contrast, complex systems with long-range, nonlinear correlations (like the Lorenz system) exhibit structure functions S(τ) dramatically different from those of random and oscillatory signals. The main difference is observed in the mean slope of S(τ), which is no longer zero at every scale.
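A minimal sketch of this experiment, assuming NumPy, a time step dt = 0.01, an initial state (1, 1, 1) and a discarded transient of 1,000 steps (none of which are stated in the text). The log-log slope is expected to be clearly positive at the smallest scales, where x(t) changes smoothly between samples, and close to zero at large scales, beyond the breaking point.

```python
import numpy as np

def lorenz_x_euler(n_steps, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0,
                   state=(1.0, 1.0, 1.0), n_transient=1_000):
    """Forward-Euler integration of the Lorenz equations; returns x(t) after a transient."""
    x, y, z = state
    xs = np.empty(n_steps)
    for i in range(n_transient + n_steps):
        # right-hand side is evaluated with the old (x, y, z): explicit Euler step
        x, y, z = (x + dt * sigma * (y - x),
                   y + dt * (x * (rho - z) - y),
                   z + dt * (x * y - beta * z))
        if i >= n_transient:
            xs[i - n_transient] = x
    return xs

def normalized_sf(x, taus):
    """Order-1 structure function divided by its first value, so that S(1) = 1."""
    s = np.array([np.mean(np.abs(x[t:] - x[:-t])) for t in taus])
    return s / s[0]

def loglog_slope(taus, s, lo, hi):
    """Log-log regression slope restricted to lo <= tau <= hi."""
    m = (taus >= lo) & (taus <= hi)
    return np.polyfit(np.log(taus[m]), np.log(s[m]), 1)[0]

x = lorenz_x_euler(100_000)
taus = np.arange(1, 1001)
s = normalized_sf(x, taus)
print(f"slope at small scales (1-10):     {loglog_slope(taus, s, 1, 10):.2f}")
print(f"slope at large scales (300-1000): {loglog_slope(taus, s, 300, 1000):.2f}")
```

Forward Euler is used only to mirror the text; a higher-order integrator (for example Runge-Kutta) would follow the true trajectory more accurately for the same dt.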

To test the effect of added noise on this nonlinear system, we followed the same procedure as for the oscillatory signals. We multiplied a time series of random numbers with normal distribution (0 ± 1, mean ± SD) by a factor of 1.0 and 2.0 successively, and added it to the nonlinear time series. The position of the breaking point does not change as noise is added to the signal (Figure 3, lower panel; blue line: no noise; red and black lines: increasing noise levels). However, the height of the plateau of the structure function is sensitive to the noise level, and as a consequence the slope of the function tends to zero as noise is added.
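The noise test can be sketched by adding the same N(0, 1) series, scaled by 0, 1 and 2, to x(t) and comparing the plateau height of the normalized S(τ). The integration settings (forward Euler, dt = 0.01, initial state (1, 1, 1)) and the plateau window (300 ≤ τ ≤ 1000 samples) are assumptions made for this illustration, assuming NumPy. Because normalization divides by S(1), which grows with the noise, the normalized plateau should decrease as noise increases.

```python
import numpy as np

def lorenz_x_euler(n_steps, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0,
                   state=(1.0, 1.0, 1.0), n_transient=1_000):
    """Forward-Euler Lorenz integration (assumed settings); returns x(t) after a transient."""
    x, y, z = state
    xs = np.empty(n_steps)
    for i in range(n_transient + n_steps):
        x, y, z = (x + dt * sigma * (y - x),
                   y + dt * (x * (rho - z) - y),
                   z + dt * (x * y - beta * z))
        if i >= n_transient:
            xs[i - n_transient] = x
    return xs

def normalized_sf(x, taus):
    """Order-1 structure function divided by its first value, so that S(1) = 1."""
    s = np.array([np.mean(np.abs(x[t:] - x[:-t])) for t in taus])
    return s / s[0]

x = lorenz_x_euler(50_000)
noise = np.random.default_rng(3).normal(0.0, 1.0, size=x.size)
taus = np.arange(1, 1001)
plateau = (taus >= 300) & (taus <= 1000)     # assumed plateau window (samples)

heights = {}
for factor in (0.0, 1.0, 2.0):
    s = normalized_sf(x + factor * noise, taus)
    heights[factor] = s[plateau].mean()
    print(f"noise x{factor}: normalized plateau height {heights[factor]:.2f}")
```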

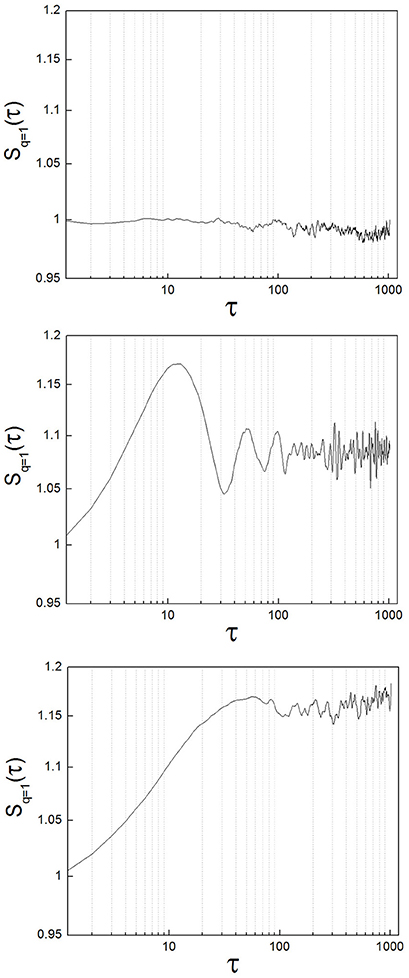

To exemplify the implementation of the method, we analyzed neuronal recordings from the entopeduncular nucleus of the rat (analogous to the internal segment of the globus pallidus in primates and humans: GPi). The experimental protocol was reviewed and approved by the FLENI Ethics Committee, Buenos Aires, Argentina. Recordings belonged to two groups of animals: healthy and parkinsonian rats. Detailed methodological information can be found in Andres et al. (2014a). Briefly, in adult Sprague-Dawley rats we induced parkinsonism by means of a partial retrograde 6-hydroxydopamine (6-OHDA) lesion of the nigrostriatal pathway. We recorded spontaneous neuronal activity of the GPi under intraperitoneal chloral hydrate anesthesia and at increasing levels of alertness. Alertness levels are as described in Andres et al. (2014a): level (1) deep anesthesia; level (2) mild alertness; level (3) full alertness. We analyzed a total of 45 neuronal recordings, belonging to the following groups: 22 recordings from 11 healthy animals (level 1: n = 5; level 2: n = 11; level 3: n = 6) and 23 recordings from 9 parkinsonian animals (level 1: n = 7; level 2: n = 9; level 3: n = 7). In Figure 4 we show sample structure functions of neuronal recordings to illustrate that neuronal activity displays features like those of the toy systems (randomness, oscillations and nonlinear properties). A majority of the recordings (64%) showed marked nonlinear behavior (corresponding to type A neurons of Andres et al., 2015). Additionally, 13% of the recordings presented clear oscillations. A minority of neurons (33%) presented a zero slope of the structure function at all scales, indicating random behavior. These percentages did not vary significantly between the control and the parkinsonian group or across levels of alertness, but these results need to be tested further with larger numbers of recordings.

Figure 4. Different types of structure functions from neuronal recordings show features of random, oscillatory, and nonlinear systems. (Upper) Sample neuron with a structure function showing zero slope at all scales, indicating random behavior. (Middle) Sample neuron with a structure function showing oscillations. (Lower) Sample neuron representative of the most common type among the neurons analyzed (64%), with a structure function showing clear nonlinear behavior.

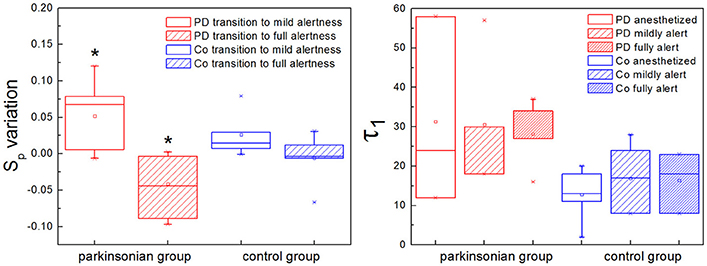

Two characteristic numbers can be extracted from S(τ) to quantify its behavior when the change of slope typical of nonlinear systems is observed: the inflection point τ1, where the slope of the function changes, and the height of the plateau, which we call Sp. In previous work we developed an algorithm for the calculation of τ1, and observed a higher τ1 in GPi neurons with nigrostriatal lesion (Andres et al., 2015). That observation was made under full alertness, i.e., animals were under local anesthesia plus analgesia, alert and head-restrained at the time of surgery. Here we calculated τ1 with the same algorithm from neuronal recordings obtained throughout the arousal process, from deep anesthesia to mild and full alertness. All statistical comparisons were performed with the Kolmogorov-Smirnov test; results were considered statistically significant when p < 0.05. The inflection point τ1 was higher in the parkinsonian group at all alertness levels, with a more pronounced effect as alertness increased (i.e., as the animal awakened from anesthesia; Figure 5, right panel). However, these differences were not statistically significant (p > 0.05) and need to be tested on more experimental data.
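The algorithm we used for τ1 is described in Andres et al. (2015) and is not reproduced here. As a hypothetical stand-in, the sketch below (assuming NumPy) picks the scale that best splits log S(τ) into a straight rising segment followed by a flat plateau, in the least-squares sense. This two-segment fit is a different, simpler technique than the published algorithm and may give different values.

```python
import numpy as np

def estimate_breakpoint(taus, s, min_points=3):
    """Hypothetical tau_1 estimate: best split of log S(tau) into a line followed by a constant."""
    log_t, log_s = np.log(taus), np.log(s)
    best_b, best_sse = None, np.inf
    for k in range(min_points, len(taus) - min_points + 1):
        # segment 1: least-squares line through the first k points (rising phase)
        coef = np.polyfit(log_t[:k], log_s[:k], 1)
        sse1 = np.sum((np.polyval(coef, log_t[:k]) - log_s[:k]) ** 2)
        # segment 2: constant through the remaining points (zero-slope plateau)
        tail = log_s[k:]
        sse2 = np.sum((tail - tail.mean()) ** 2)
        if sse1 + sse2 < best_sse:
            best_b, best_sse = taus[k - 1], sse1 + sse2
    return best_b

# Synthetic S(tau): power-law rise up to tau = 50, flat afterwards
taus = np.arange(1, 201)
s = np.minimum(taus, 50) ** 0.8
print(estimate_breakpoint(taus, s))     # close to 50
```

The estimate depends on the scale range and on `min_points`; on real spike trains it should be checked visually against the log-log plot.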

Figure 5. The plateau height (Sp) and the inflection point (τ1) characterize the structure function if nonlinearity is present. (Left) The plateau height Sp can be used to compare the activity of the same neuron at different time points, assuming that recording conditions do not change. In the parkinsonian group, Sp varies significantly more between anesthesia and mild alertness than between mild and full alertness (*p < 0.01). This is new evidence that, in the parkinsonian condition (PD), basal ganglia neurons do not handle the awakening process well. This effect is not observed in the control group of animals (Co). (Right) The inflection point τ1 is higher in the parkinsonian group at all alertness levels, with a more pronounced effect as alertness increases. These results are not statistically significant (p > 0.05) and need to be confirmed with larger data sets.

We calculated the plateau height Sp as the mean value of S(τ) for 100 < τ < 200, a range in which all the neurons analyzed had reached a plateau, if one was present. As shown in the previous section, the plateau height is sensitive to the amount of noise added to a signal. Therefore, Sp might not be reliable as a raw measure for comparing neuronal data from different experimental groups. This disadvantage can be overcome by studying variations of Sp instead of raw values, i.e., subtracting from a given Sp an earlier value obtained from the same neuron under the same recording conditions. In our study we recorded activity from single neurons over long periods of time (1–3 h), and we can safely assume that environmental conditions (electrical noise and any other source of interference) did not vary during the whole recording. We calculated Sp from isolated segments of activity obtained at the beginning, middle and end of the recording, corresponding to deep anesthesia, mild alertness and full alertness, respectively. Thus, we obtained two differences: Sp1−2, the plateau height at mild alertness minus the plateau height at deep anesthesia, and Sp2−3, the plateau height at full alertness minus the plateau height at mild alertness. In the control group we did not observe any difference between Sp1−2 and Sp2−3, whereas under parkinsonian conditions Sp1−2 was significantly higher than Sp2−3 (p < 0.01; Figure 5, left panel).
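The plateau height and its within-neuron changes can be sketched as follows, assuming NumPy and an already normalized S(τ). The function names are hypothetical, and the Sp values in the usage line are made-up numbers for illustration, not data from this study.

```python
import numpy as np

def plateau_height(taus, s_norm, lo=100, hi=200):
    """Sp: mean of the normalized structure function over lo < tau < hi."""
    taus = np.asarray(taus)
    window = (taus > lo) & (taus < hi)
    if not window.any():
        raise ValueError("no scales inside the plateau window")
    return float(np.mean(np.asarray(s_norm, dtype=float)[window]))

def sp_changes(sp_deep, sp_mild, sp_full):
    """Within-neuron changes: (Sp1-2, Sp2-3) = (mild - deep, full - mild)."""
    return sp_mild - sp_deep, sp_full - sp_mild

# Hypothetical Sp values for one neuron at the three alertness levels
print(sp_changes(2.0, 3.5, 3.75))   # (1.5, 0.25)
```

Working with differences rather than raw Sp values cancels any noise contribution that stays constant over the recording, which is the reason given above for this choice.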

Nonlinear properties of neuronal activity are critical for normal basal ganglia functioning and deteriorate in specific ways in disease, in particular in movement disorders (Parkinson's disease, dystonia, and others) (Montgomery, 2007; Darbin et al., 2013; Alam et al., 2015). Moreover, therapeutic interventions can restore such properties toward normal, suggesting the clinical importance of quantifying nonlinear features of neuronal activity (Rubin and Terman, 2004; Lafreniere-Roula et al., 2010). However, the community has not yet agreed on any method as a gold standard for quantifying nonlinear properties of the basal ganglia. This is partly due to difficulties in the implementation of nonlinear methods of analysis, which are typically sensitive to a wide range of parameters. In contrast, the temporal structure function S(τ) is a nonlinear tool that is easy to implement and robust for short recordings, but it is not well known and is therefore difficult to interpret. We analyzed the behavior of S(τ) for signals with known properties (toy systems), and observed that: (1) S(τ) has zero slope at every scale for random systems; (2) S(τ) is oscillatory and bounded for oscillatory systems, and the frequency of oscillation can be recovered from S(τ) if the sampling rate is known; and (3) S(τ) has a positive slope at small scales for nonlinear systems, which changes to a zero-slope plateau at large scales.

In the light of our observations for toy systems, we analyzed a number of neuronal recordings from healthy and parkinsonian basal ganglia at different levels of alertness (from deep anesthesia to full alertness). In a majority of the neurons studied, nonlinear behavior was clearly present. In these cases we extracted two characteristic numbers from S(τ) to quantify its behavior: (1) the height of the plateau (Sp), and (2) the scale of the inflection point or slope change (τ1). Since the plateau height is sensitive to noise added to the signal, we used it in a relative way, measuring the changes of Sp across alertness transitions within single neuronal recordings, where recording conditions can be assumed to be stationary. For parkinsonian neurons the change of plateau height from deep anesthesia to mild alertness was significantly larger than from mild to full alertness (Sp1−2 > Sp2−3, p < 0.01). This difference in the variation of Sp was not observed in the control group. The fact that Sp1−2 is significantly higher than Sp2−3 in parkinsonian animals agrees with previous observations indicating that the basal ganglia of animals with dopamine depletion do not handle the awakening process well (Andres et al., 2014a). Importantly, as a consequence of the averaging process, Sp is by definition independent of the firing rate of the neurons, which resolves a previous controversy about the structure function method (Darbin et al., 2016). Regarding the inflection point τ1, it was higher in parkinsonian than in control neurons, with a more pronounced effect at higher alertness levels, but this effect was not statistically significant (p > 0.05) and needs to be tested further in larger studies. Although preliminary, our findings are relevant for understanding results obtained during surgery in patients with Parkinson's disease, which is usually performed with the patient awake, under local anesthesia only.
We report that pallidal neurons in the parkinsonian condition fail to handle the transition from anesthesia to alertness normally, which might be a key finding for a better understanding of the pathophysiology of the basal ganglia.

In previous work, we determined that the positive slope at small scales of the log-log temporal structure function is associated with particular properties of neuronal dynamics. Specifically, in a neuronal network with nonlinear properties we showed that the slope depends on the coupling strength (Andres et al., 2014b). This indicates that the temporal structure function captures critical properties of the underlying dynamics of the system. Importantly, in our previous modeling study we observed that a minority of neurons behave randomly, which seems to be related to the stability of the system (Andres et al., 2014b). This finding is reproduced here in our experimental results.

Finally, we would like to draw attention to other nonlinear tools that are available for the characterization of neurophysiologic signals (Amigó et al., 2004; Pereda et al., 2005; Song et al., 2007). Every tool has advantages and shortcomings, making it more or less suitable for the study of specific neurologic systems. Recently, Zunino et al. introduced two methods that seem to be particularly powerful for the analysis of physiologic time series (Zunino et al., 2015, 2017). A detailed review and comparison of the performance of the temporal structure function with these other tools is beyond the scope of this paper. Nevertheless, we are still interested in a detailed analysis of the temporal structure function, because it has previously been shown to be useful for the characterization of neuronal spike trains obtained from human patients with Parkinson's disease, which tend to be short (around 5,000 data points per time series) (Andres et al., 2016). Another limitation of our work is that we have studied the temporal structure function of only one nonlinear system (i.e., the Lorenz attractor). However, our results are supported by results from other well-known nonlinear systems, which exhibit similar behavior (Lin and Hughson, 2001).

To conclude, we would like to draw some brief guidelines for the interpretation of the temporal structure function of neuronal activity. The most important feature distinguishing S(τ) of random vs. complex signals is its slope. If S(τ) has zero slope for every τ, randomness can be assumed at the scales analyzed, meaning that the order of the events in the time series (in this case interspike intervals) is not different from what would be observed for an independent variable. On the other hand, if S(τ) has an initial segment with positive slope that then turns into a plateau, the behavior falls into the category of complex systems. In this case, one can look at τ1 and Sp. The breaking point τ1 is related to the memory limit of the system, and therefore to its chaoticity. For scales below τ1 the order of events (ISI) is not random, and therefore memory or temporal organization is present. In other words, at scales smaller than τ1 the times of occurrence of single spikes are not independent of each other; nonlinear organization plays a role in the signal. At scales larger than τ1 the system behaves randomly. Regarding the neural code, it follows that at scales larger than τ1 only a rate code or another averaged coding scheme can be used, since complex time patterns cannot be transmitted from neuron to neuron beyond the memory limit of the system (Bialek et al., 1991; Ferster and Spruston, 1995). The second quantitative measure that can be obtained from the temporal structure function is Sp. While τ1 is related to the memory limit and is robust to noisy signals, Sp is sensitive to added noise. Therefore, caution is needed when comparing Sp across experimental data sets unless the data can be assured to have been obtained under similar conditions, in particular regarding external noise and interference.
If recording conditions can be safely assumed to be stationary, then variations of Sp indicate a change in the power of the random components of the system. Finally, oscillations of the original signal are translated into the structure function, and the original frequency can be recovered from S(τ) if the sampling rate is known.

All animal experiments and procedures were conducted in adherence to the norms of the Basel Declaration. The experimental protocol was reviewed and approved by our local ethics committee CEIB, Buenos Aires, Argentina.

DA designed the study and conducted the experiments. FN processed and analyzed the data. Both authors contributed to the preparation of the manuscript and approved its final version.

The work of Daniela Andres is supported by the Ministry of Science and Innovative Production, Argentina, and by the National University of San Martin.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors acknowledge the work of the technical personnel at the National University of San Martin who made this work possible.

Alam, M., Sanghera, M. K., Schwabe, K., Lutjens, G., Jin, X., Song, J., et al. (2015). Globus pallidus internus neuronal activity: a comparative study of linear and non-linear features in patients with dystonia or Parkinson's disease. J. Neural Transm. 123, 231–240. doi: 10.1007/s00702-014-1277-0

Amigó, J. M., Szczepański, J., Wajnryb, E., and Sanchez-Vives, M. V. (2004). Estimating the entropy rate of spike trains via Lempel-Ziv complexity. Neural Comput. 16, 717–736. doi: 10.1162/089976604322860677

Andres, D. S., Cerquetti, D., and Merello, M. (2011). Finite dimensional structure of the GPI discharge in patients with Parkinson's disease. Int. J. Neural Syst. 21, 175–186. doi: 10.1142/S0129065711002778

Andres, D. S., Cerquetti, D., and Merello, M. (2015). Neural code alterations and abnormal time patterns in Parkinson's disease. J. Neural Eng. 12:026004. doi: 10.1088/1741-2560/12/2/026004

Andres, D. S., Cerquetti, D., and Merello, M. (2016). Multiplexed coding in the human basal ganglia. J. Phys. Conf. Ser. 705:012049. doi: 10.1088/1742-6596/705/1/012049

Andres, D. S., Cerquetti, D., Merello, M., and Stoop, R. (2014a). Neuronal entropy depends on the level of alertness in the Parkinsonian globus pallidus in vivo. Front. Neurol. 5:96. doi: 10.3389/fneur.2014.00096

Andres, D. S., Gomez, F., Ferrari, F. A., Cerquetti, D., Merello, M., et al. (2014b). Multiple-time-scale framework for understanding the progression of Parkinson's disease. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 90:062709. doi: 10.1103/PhysRevE.90.062709

Bialek, W., Rieke, F., de Ruyter van Steveninck, R. R., and Warland, D. (1991). Reading a neural code. Science 252, 1854–1857. doi: 10.1126/science.2063199

Darbin, O., Adams, E., Martino, A., Naritoku, L., Dees, D., and Naritoku, D. (2013). Non-linear dynamics in parkinsonism. Front. Neurol. 4:211. doi: 10.3389/fneur.2013.00211

Darbin, O., Jin, X., Von Wrangel, C., Schwabe, K., Nambu, A., Naritoku, D., et al. (2016). Neuronal entropy-rate feature of entopeduncular nucleus in rat model of Parkinson's disease. Int. J. Neural Syst. 26:1550038. doi: 10.1142/S0129065715500380

Darbin, O., Soares, J., and Wichmann, T. (2006). Nonlinear analysis of discharge patterns in monkey basal ganglia. Brain Res. 1118, 84–93. doi: 10.1016/j.brainres.2006.08.027

Dorval, A. D., Russo, G. S., Hashimoto, T., Xu, W., Grill, W. M., and Vitek, J. L. (2008). Deep brain stimulation reduces neuronal entropy in the MPTP-primate model of Parkinson's disease. J. Neurophysiol. 100, 2807–2818. doi: 10.1152/jn.90763.2008

Elger, C. E., Widman, G., Andrzejak, R., Arnhold, J., David, P., and Lehnertz, K. (2000). Nonlinear EEG analysis and its potential role in epileptology. Epilepsia 41(Suppl. 3), S34–S38. doi: 10.1111/j.1528-1157.2000.tb01532.x

Ferster, D., and Spruston, N. (1995). Cracking the neuronal code. Science 270, 756–757. doi: 10.1126/science.270.5237.756

Korn, H., and Faure, P. (2003). Is there chaos in the brain? II. Experimental evidence and related models. C. R. Biol. 326, 787–840. doi: 10.1016/j.crvi.2003.09.011

Lafreniere-Roula, M., Darbin, O., Hutchison, W. D., Wichmann, T., Lozano, A. M., and Dostrovsky, J. O. (2010). Apomorphine reduces subthalamic neuronal entropy in parkinsonian patients. Exp. Neurol. 225, 455–458. doi: 10.1016/j.expneurol.2010.07.016

Lim, J., Sanghera, M. K., Darbin, O., Stewart, R. M., Jankovic, J., and Simpson, R. (2010). Nonlinear temporal organization of neuronal discharge in the basal ganglia of Parkinson's disease patients. Exp. Neurol. 224, 542–544. doi: 10.1016/j.expneurol.2010.05.021

Lin, D. C., and Hughson, R. L. (2001). Modeling heart rate variability in healthy humans: a turbulence analogy. Phys. Rev. Lett. 86, 1650–1653. doi: 10.1103/PhysRevLett.86.1650

Montgomery, E. B. Jr. (2007). Basal ganglia physiology and pathophysiology: a reappraisal. Parkinsonism Relat. Disord. 13, 455–465. doi: 10.1016/j.parkreldis.2007.07.020

Mpitsos, G. J., Burton, R. M. Jr., Creech, H. C., and Soinila, S. O. (1988). Evidence for chaos in spike trains of neurons that generate rhythmic motor patterns. Brain Res. Bull. 21, 529–538. doi: 10.1016/0361-9230(88)90169-4

Pereda, E., Quiroga, R. Q., and Bhattacharya, J. (2005). Nonlinear multivariate analysis of neurophysiological signals. Prog. Neurobiol. 77, 1–37. doi: 10.1016/j.pneurobio.2005.10.003

Quiroga, R. Q., Nadasdy, Z., and Ben-Shaul, Y. (2004). Unsupervised spike detection and sorting with wavelets and superparamagnetic clustering. Neural Comput. 16, 1661–1687. doi: 10.1162/089976604774201631

Rubin, J. E., and Terman, D. (2004). High frequency stimulation of the subthalamic nucleus eliminates pathological thalamic rhythmicity in a computational model. J. Comput. Neurosci. 16, 211–235. doi: 10.1023/B:JCNS.0000025686.47117.67

Rulkov, N. F. (2002). Modeling of spiking-bursting neural behavior using two-dimensional map. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 65(4 Pt. 1):041922. doi: 10.1103/PhysRevE.65.041922

Sanghera, M. K., Darbin, O., Alam, M., Krauss, J. K., Friehs, G., Jankovic, J., et al. (2012). Entropy measurements in pallidal neurons in dystonia and Parkinson's disease. Mov. Disord. 27(Suppl. 1), S1–S639. (Abstracts of the Sixteenth International Congress of Parkinson's Disease and Movement Disorders, June 17–21, 2012, Dublin, Ireland).

Schreiber, T. (1999). Interdisciplinary application of nonlinear time series methods. Phys. Rep. 308, 1–64. doi: 10.1016/S0370-1573(98)00035-0

Song, D., Chan, R. H. M., Marmarelis, V. Z., Hampson, R. E., Deadwyler, S. A., and Berger, T. W. (2007). Nonlinear dynamic modeling of spike train transformations for hippocampal-cortical prostheses. IEEE Trans. Biomed. Eng. 54, 1053–1066. doi: 10.1109/TBME.2007.891948

Stotskii, A., Elgered, K. G., and Stotskaya, I. M. (1998). Structure analysis of path delay variations in the neutral atmosphere. Astron. Astrophys. Trans. 17, 59–68. doi: 10.1080/10556799808235425

Yang, A. C., Huang, C. C., Yeh, H. L., Liu, M. E., Hong, C. J., and Tu, P. C. (2013a). Complexity of spontaneous BOLD activity in default mode network is correlated with cognitive function in normal male elderly: a multiscale entropy analysis. Neurobiol. Aging 34, 428–438. doi: 10.1016/j.neurobiolaging.2012.05.004

Yang, A. C., Wang, S. J., Lai, K. L., Tsai, C. F., Yang, C. H., Hwang, J. P., et al. (2013b). Cognitive and neuropsychiatric correlates of EEG dynamic complexity in patients with Alzheimer's disease. Prog. Neuropsychopharmacol. Biol. Psychiatry 47, 52–61. doi: 10.1016/j.pnpbp.2013.07.022

Yu, C. X., Gilmore, M., Peebles, W. A., and Rhodes, T. L. (2003). Structure function analysis of long-range correlations in plasma turbulence. Phys. Plasmas 10, 2772–2779. doi: 10.1063/1.1583711

Zunino, L., Olivares, F., Bariviera, A. F., and Rosso, O. A. (2017). A simple and fast representation space for classifying complex time series. Phys. Lett. A 381, 1021–1028. doi: 10.1016/j.physleta.2017.01.047

Keywords: Parkinson's disease, neuronal activity, interspike intervals, 6-hydroxydopamine, alertness, basal ganglia, complexity, temporal structure

Citation: Nanni F and Andres DS (2017) Structure Function Revisited: A Simple Tool for Complex Analysis of Neuronal Activity. Front. Hum. Neurosci. 11:409. doi: 10.3389/fnhum.2017.00409

Received: 18 May 2017; Accepted: 25 July 2017;

Published: 14 August 2017.

Edited by:

Felix Scholkmann, University Hospital Zurich, University of Zurich, Switzerland

Reviewed by:

Luciano Zunino, Consejo Nacional de Investigaciones Científicas y Técnicas (CONICET), Argentina

Copyright © 2017 Nanni and Andres. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Daniela S. Andres, dandres@unsam.edu.ar

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
