95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Hum. Neurosci. , 14 July 2017

Sec. Brain-Computer Interfaces

Volume 11 - 2017 | https://doi.org/10.3389/fnhum.2017.00359

This article is part of the Research Topic Detection and Estimation of Working Memory States and Cognitive Functions Based on Neurophysiological Measures View all 17 articles

We studied the capability of a Hybrid functional neuroimaging technique to quantify human mental workload (MWL). We have used electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS) as imaging modalities with 17 healthy subjects performing the letter n-back task, a standard experimental paradigm related to working memory (WM). The level of MWL was parametrically changed by variation of n from 0 to 3. Nineteen EEG channels were covering the whole-head and 19 fNIRS channels were located on the forehead to cover the most dominant brain region involved in WM. Grand block averaging of recorded signals revealed specific behaviors of oxygenated-hemoglobin level during changes in the level of MWL. A machine learning approach has been utilized for detection of the level of MWL. We extracted different features from EEG, fNIRS, and EEG+fNIRS signals as the biomarkers of MWL and fed them to a linear support vector machine (SVM) as train and test sets. These features were selected based on their sensitivity to the changes in the level of MWL according to the literature. We introduced a new category of features within fNIRS and EEG+fNIRS systems. In addition, the performance level of each feature category was systematically assessed. We also assessed the effect of number of features and window size in classification performance. SVM classifier used in order to discriminate between different combinations of cognitive states from binary- and multi-class states. In addition to the cross-validated performance level of the classifier other metrics such as sensitivity, specificity, and predictive values were calculated for a comprehensive assessment of the classification system. The Hybrid (EEG+fNIRS) system had an accuracy that was significantly higher than that of either EEG or fNIRS. Our results suggest that EEG+fNIRS features combined with a classifier are capable of robustly discriminating among various levels of MWL. Results suggest that EEG+fNIRS should be preferred to only EEG or fNIRS, in developing passive BCIs and other applications which need to monitor users' MWL.

Mental workload (MWL) affects people who are interacting with computers and other devices. The use of technology in everyday life may impose high cognitive demands as users navigate complex interfaces. Mental overload may compromise users' performance and sometimes safety, by increasing error rates and engendering fatigue, decline in motivation, higher reaction times (Xie and Salvendy, 2000; Young and Stanton, 2002), and neglect of critical information, known as cognitive tunneling (Thomas and Wickens, 2001; Dixon et al., 2013; Dehais et al., 2014). Taking into account the users' cognitive characteristics and limitations are thus critical in improving the design of human-machine interfaces (BMI) and for operating them efficiently by installing adaptive features that can respond to changes in the MWL (Kaber et al., 2000; Parasuraman and Wilson, 2008; Gagnon et al., 2012).

MWL has been defined as the proportion of the human operator's mental capabilities that is occupied during the performance of a given task (Boff et al., 1994). According to the prevalent Multiple Resources theory (Navon and Gopher, 1979; Wickens, 2002), performing different tasks requires a subject to tap into a set of separate resources, which are limited in capacity and distributable among tasks (Horrey and Wickens, 2003). In general, these resources can be categorized among four dimensions: processing stage (perception or cognition vs. response), perceptual modality (visual vs. auditory), visual channel (focal vs. ambient), and processing code (verbal vs. spatial; Wickens, 2002; Horrey and Wickens, 2003). Based on the Multiple Resources theory, equal resource demands between two tasks that both recruit one level of a given dimension will interfere with each other more than two tasks that recruit separate levels on the dimension (Wickens, 2002), and may create bottlenecks and consequent decrements in performance. Similar conclusions have been reached in the areas of aviation (Stanney and Hale, 2012; Causse and Matton, 2014; Durantin et al., 2014), education (Palmer and Kobus, 2007; Spüler et al., 2016), and a variety of clinical situations (Carswell et al., 2005; Stefanidis et al., 2007; Prabhu et al., 2010; Yurko et al., 2010; Byrne, 2013; Guru et al., 2015). In the case of driving while having a phone conversation, in addition to the interference of resources the “engagement phenomenon” also controls the outcome of multi-tasking scenario. This happens when one of the tasks attracts so much attention that the advantage of separate resource demand would be eliminated (Strayer and Johnston, 2001; Strayer and Drews, 2007).

MWL is a construct that arises from the interaction of the properties of a task, the environment in which it is performed, and the characteristics of the human operator performing it (Longo, 2016). Task properties include the difficulty and monotony of the task and the types of resources that it engages. The environment may contain various degrees of distraction and noise. The subject characteristics involve training and expertise as well as changing levels of fatigue, motivation, and vigilance. Thus, the MWL can be systematically adjusted by tuning a subset of these variables while controlling for the rest.

Methods of determining MWL fall into three broad categories: (1) Self-reporting and subjective ratings using standard questionnaires such as the NASA-TLX (Hart and Staveland, 1988); (2) Behavioral measures, such as primary- and secondary-task performance; and (3) Measures based on the physiology of the user, including heart rate variability, oculomotor activity, pupillometry, electromyography, galvanic skin response, and brain activity (Xiao et al., 2005; Wickens, 2008; Sahayadhas et al., 2012). Self-reporting and behavioral based information tends to be delayed, sporadic, and intrusive to obtain. Performance based information, in addition, can be misleading since multiple degrees of MWL may accompany the same level of performance (Yurko et al., 2010). Physiological measures, on the other hand, do not require overt behavior, can be arranged to have little or no interference with task execution, and can supply information continuously without significant delay. Progresses in miniaturization and wireless technology have amplified these advantages of physiological measures (Sahayadhas et al., 2012).

Most studies of MWL based on brain function have utilized electroencephalography (EEG), following a large number of studies using EEG for developing BMI (Wolpaw and Wolpaw, 2012). Functional near-infrared spectroscopy (fNIRS) as a newer modality have shown promising capabilities in BMI applications for discrimination of different motor tasks (Naseer and Hong, 2013) or decoding subjects' binary decisions (Naseer et al., 2014). The relationship between MWL and central nervous system activity is well-established (McBride and Schmorrow, 2005). BMIs that do not attempt to directly control a device but modulate its user interface based on real-time user status are referred as passive BMIs (Gateau et al., 2015). In such applications, of paramount importance. Recently multi-modal techniques utilizing concurrent EEG and fNIRS have gained popularity due to their relatively richer information content (Hirshfield et al., 2009; Liu Y. et al., 2013; Liu T. et al., 2013; Keles et al., 2014b; Aghajani and Omurtag, 2016; Buccino et al., 2016; Omurtag et al., 2017). Available evidence indicates that brain activity measures of MWL are more informative than ocular or peripheral physiology measures (Hogervorst et al., 2014).

Concurrent EEG and fNIRS, which we refer to as EEG+fNIRS, is promising as a practical technique that is more accurate than the individual modalities alone. fNIRS provides information that is complementary to EEG, by measuring the changes in cerebral blood flow (CBF) and related hemoglobin concentrations through near-infrared light source/detectors on the scalp. It is comparable to EEG in portability. fNIRS does not have electromyographic (EMG) and blink artifacts and its signal closely correlates with the blood oxygen level dependent (BOLD) signal from functional magnetic resonance imaging (fMRI; Strangman et al., 2002; Huppert et al., 2006), which is a gold-standard for measuring cerebral hemodynamics. In addition to the advantages of pooling different types of signals, EEG+fNIRS offers new types of features, ultimately based on neurovascular coupling (NVC), the cascade of processes by which neural activity modulates local blood flow and oxygenation, and NVC related features are not resolvable by a uni-modal signal sensitive to only neural activity (e.g., EEG) or only hemodynamics (e.g., BOLD).

Working memory (WM) is a brain system that provides transient holding and processing of the information necessary for complex cognitive tasks (Baddeley, 2003). It has been investigated in previous functional neuroimaging studies, which identified the prefrontal cortex (PFC) as the most relevant area of activation (Cohen et al., 1997; Smith and Jonides, 1997; Hoshi et al., 2003; Owen et al., 2005). MWL detection using WM load as an experimental paradigm has been studied using EEG (Berka et al., 2007; Dornhege et al., 2007; Grimes et al., 2008; Brouwer et al., 2012), fNIRS (Hoshi et al., 2003; Izzetoglu et al., 2003; Ayaz et al., 2012; Durantin et al., 2014; Herff et al., 2014), and concurrent EEG and fNIRS (Hirshfield et al., 2009; Coffey et al., 2012). We have previously shown that aspects of NVC are characterizable by EEG+fNIRS, by taking advantage of the synergistic interaction between the modalities (Keles et al., 2016). The potential of EEG+fNIRS for active BCIs has recently been investigated (Fazli et al., 2012; Liu Y. et al., 2013; Tomita et al., 2014; Buccino et al., 2016). In this study, we built on this work to explore the unique properties of EEG+fNIRS for MWL detection.

The n-back task was introduced by Kirchner (1958). n-back is a continues-performance task for measurement of WM capacity, which has been used frequently in the field of cognitive neuroscience. Gevins (Gevins et al., 1997, 1998; Smith and Gevins, 2005a) and Smith (Smith and Gevins, 2005b) showed during high task-load conditions EEG theta activity increases in the frontal midline and alpha activity attenuates during the performance of an n-back task. In addition, fNIRS revealed WM load while performing n-back task activates PFC (Ayaz et al., 2012; Sato et al., 2013; Fishburn et al., 2014; Herff et al., 2014; Mandrick et al., 2016b). The n-back task engages WM and becomes more demanding as the value of n increases. We have therefore used the n-back task as our experimental paradigm with n ranging from 0 to 3, allowing us to tune the task difficulty. Our study maintained all other conditions constant by employing only healthy adult volunteers with no previous experience with this task, all performing under the same laboratory conditions with no distractions or additional activities. Our experimental design is consistent with numerous BMI studies that take WM load as a proxy for MWL (Berka et al., 2007; Grimes et al., 2008; Coffey et al., 2012; Herff et al., 2014).

The aim of this study was, first, to introduce and validate a state of the art EEG+fNIRS set up in a single headpiece. Since PFC is the main region of interest in WM load detection (Owen et al., 2005), our design had the advantage of frontal lobe coverage by fNIRS. We had whole-head coverage by EEG. We used the term whole-head to refer to the fact that we placed EEG electrodes at all (except frontopolar) standard 10–20 sites bilaterally covering the frontal, central, temporal, parietal, and occipital areas. Having fNIRS optodes on the forehead not only improved the quality of acquired signal but also reduced the preparation time. The second aim of this study was to develop EEG+fNIRS measures that discriminate among levels of MWL and show that they are promising for the practical and accurate quantification of MWL in real-world settings. We developed such measures by extracting EEG, fNIRS, and Hybrid (EEG+fNIRS) based features from the full set of signals. Most discriminating features were selected and fed into support vector machines (SVM) to perform binary or multi-class classification. The handful of EEG+fNIRS studies currently available (Coffey et al., 2012; Putze et al., 2014; Buccino et al., 2016) have not systematically quantified the performance of subsets of a Hybrid system and its features contribute to the accuracy of classification. Therefore, the third aim of this study was to rigorously compare the performance of uni-modal and Hybrid systems.

Seventeen healthy volunteers (16 males, 1 female) with a mean age of 26.2 and standard deviation of 7.7 years from University of Houston students or employees participated in the experiment. The experimental procedures involving human subjects described in this paper were approved by the Institutional Review Board of the University of Houston. The participants gave written informed consent prior to the experiments and were compensated for their effort by being given a gift card from a major retailer. During the performance of the verbal n-back task, target letters should be detected by the operator by means of pressing Space button on the keyboard. All subjects were right-handed and used their dominant hand for performing the experiment. This will reduce the variability of brain signals based on the motor function through all subjects. None of the subjects had ever taken part in an n-back study, thus no training effects were expected.

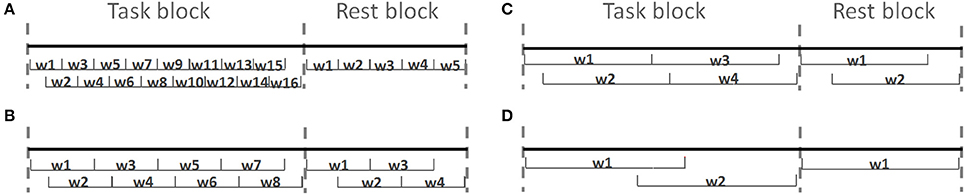

One of the most common WM paradigm for MWL assessment is the n-back task, which was first introduced by Kirchner (1958). In the letter n-back task, participants observe a sequence of single letters separated by a certain amount of time each; for each letter they decide whether it is a target, i.e., identical to the item that appeared n items back in the sequence. The value of n is kept constant during a segment of the experiment referred to as a session. As n increases the difficulty of the task becomes higher. In the literature usually 0-back task has been used as a control state. Figure 1 illustrates how letter n-back task works when n is 0, 1, 2, or 3. Depending on n, subject should find the target letter and interact with the user-interface.

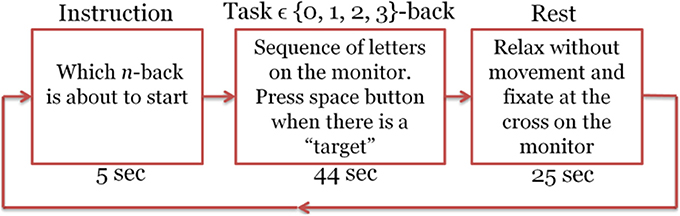

In each experiment, we had a total number of 40 sessions. These sessions were presented in pseudorandom order, 10 sessions per n. Each session started with an instruction block that is displayed for 5 s on the screen and informed the subject about which type of the n-back tasks was about to start (instruction block). Then 22 randomly selected letters (out of 10 candidate pool of letters) appeared in sequence on the screen (task block). Each letter stayed on the screen for 500 ms and the subject had 1,500 ms to press the space button in case that the letter was a target according to the type of session. At the end of each session there was a 25 s resting block. During this block the subject remained relaxed and fixated at a cross on the screen to let the brain activation return to its baseline and get ready for the next n-back session (Herff et al., 2014). Figure 2 shows one sample session. Total recording time was 50 min. The program for implementation of this task was written using Presentation software (Neurobehavioral Systems, Inc.). All the information about the appearance time of each letter, session type, subject's response time, and also whether the presented letter was a target or not was recorded by this software and stored as a text file for later processing. The objective performance of the subjects within each session was computed from this information. Subjects who had too low accuracy (<90% in the 0- or <80% in the 1-back) were deemed insufficiently focused on the task. Performance level was measured by computing the accuracy defined as the fraction of correct responses. We considered a missed target as an incorrect response.

Figure 2. Experimental design for the letter n-back task. Each session includes the Instruction, task, and rest blocks.

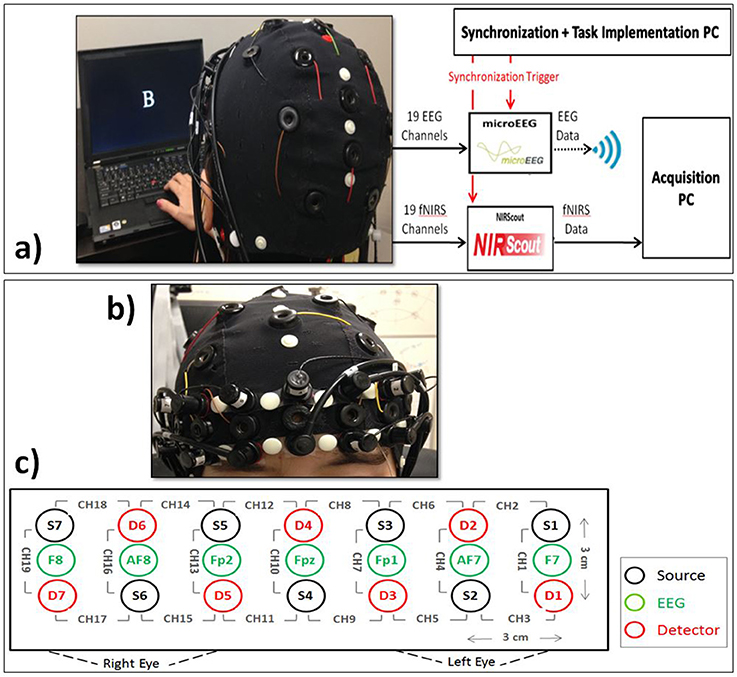

A quantitative meta-analysis has found the cortical regions that were activated robustly during letter n-back task (Broadman Areas 6, 7, 8, 9, 10, 32, 40, 45, 46, 47, and supplementary motor area; Owen et al., 2005). We used this information together with the results of previous EEG studies to choose the optimum locations for our 19 EEG electrodes (F7, F8, F3, F4, Fz, Fc1, Fc2, T3, T4, C3, C4, Cp1, Cp2, P3, P4, Pz, Poz, O1, O2). We used Fpz as the ground and Cz as the reference electrode. In the literature, several different reference electrode positioning is indicated, which have their own set of strengths and weaknesses. Among them, linked ears and vertex (Cz) are the most common. Cz reference is advantageous when it is located in the middle among active electrodes, however, for close points, it may result in poor resolution (Teplan, 2002). Based on the previous studies, central brain region in not majorly involved during the performance of a WM task compared to the frontal and parietal lobes and choosing Cz as the reference may be more appropriate rather than any other electrode in the 10–20 system. microEEG (a portable device made by Bio-Signal Group Inc., Brooklyn, New York) was used to sample EEG at 250 Hz (Figure 3a). Electrode impedances were kept below 10 kΩ. A 128-channel electrode cap with Ag/AgCl electrodes (EasyCap, Germany) was used to physically stabilize the sensors and provide uniform scalp coverage. We located the fNIRS optodes on the subject's forehead to fully cover the PFC, which plays a significant role in WM (Fitzgibbon et al., 2013). Seven sources and seven detectors were located on the forehead resulting in 19 optical channels, each consisting of a source–detector (S–D) pair separated by a distance of 3 cm. The 19 optical channels used in this study are shown in Figure 3c. The S–D placement starts from the left hemisphere and ends on the right hemisphere. S4 and D4 are located at the center of forehead, where D4 is located at the AFz location and channel 10 is located at the Fpz location according to the standard international 10–20 system (Figures 3b,c). We used our triplet-holders (Keles et al., 2014a) on the forehead to keep each EEG electrode in the middle of an S–D pair and fix the distances between the sensors. fNIRS signals were acquired at 8.93 Hz via NIRScout extended (NIRx Medical Technologies, New York) device, which was synchronized with the EEG data by means of common event triggers (Figure 3a). NIRScout is a dual wavelength continuous wave system. The EEG signal was band-pass filtered (0.5–80 Hz), and a 60 Hz notch filter was used to reduce the power line noise.

Figure 3. (a) EEG+fNIRS recording setup. Subject interaction with the computer, synchronization of EEG and fNIRS signal, recording of EEG and fNIRS signals, and data transmission to the acquisition platform. (b) Coronal view of the subject showing the close view of the placement fNIRS optodes and EEG electrodes. (c) Topographical view of fNIRS sources (Si, black) and detectors (Di, red) and EEG electrodes (green). Each pair of source and detector separated by 3 cm creates a channel (CHi). We used the signals from F7, Fpz, and F8.

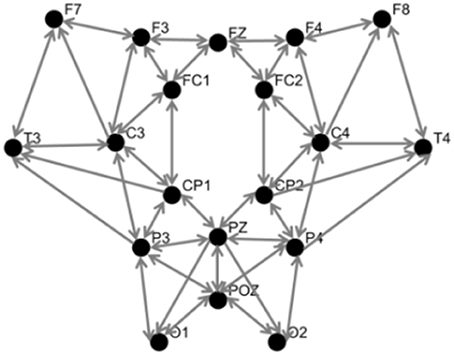

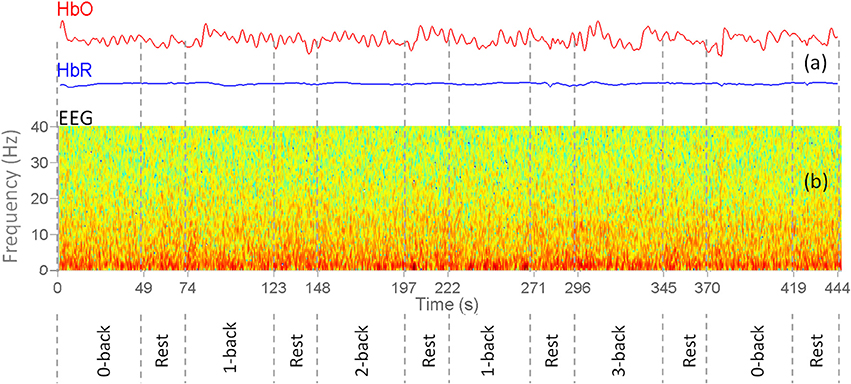

The spatial Laplacian transform is generally effective in muscle artifact removal from EEG signal (Fitzgibbon et al., 2013). We subtracted the mean EEG voltage of the neighbor electrodes from each EEG signal. Figure 4 shows the configuration of neighbor electrodes for 19 EEG channels. Each detector in NIRScout device records the signal from each separate source in two different wavelengths (760 and 850 nm). Oxy- and deoxyhemoglobin concentration changes (HbO and HbR) were computed using the modified Beer-Lambert law (Sassaroli and Fantini, 2004) using standard values for the chromophore extinction coefficients and differential path-length factor (Keles et al., 2016). fNIRS might be contaminated with the movement, heart rate, and Mayer wave artifacts. In order to reduce these artifacts while retaining the maximum possible amount of information, a band pass filter of 0.01–0.5 Hz was applied to fNIRS signals. After the preprocessing step, two subjects were excluded from the rest of analysis due to the poor quality of the signal and excessive noise. The processed signals were inspected visually for the presence of muscle and motion, eye movements, and other artifacts. The recordings that were contaminated in excess of 10% by artifact were excluded as a whole (Keles et al., 2016). In addition, one subject was excluded since he was not sufficiently focused in the experiment according to 0-back low accuracy cut-off. Figure 5 shows a segment of preprocessed data for one of the subjects. The figure indicates the temporal variations in the fNIRS signals and the EEG frequency bands, which are utilized in feature extraction. First and second rows are HbO and HbR of fNIRS channel 17, respectively. Third row is the EEG time-frequency map for channel O2.

Figure 4. Topographic view of EEG electrodes showing neighborhood pattern for Laplacian spatial filtering. Inward arrows to each node indicate the corresponding neighbors used for spatial filtering.

Figure 5. Sample preprocessed EEG+fNIRS data for one of the subjects. Vertical dashes separate different n-back task and rest blocks. (a) Concentration changes of oxy-hemoglobin (red curve) and deoxy-hemoglobin (blue) for channel 17. (b) EEG Time-frequency map of the channel O2.

After preprocessing, each task block ({0, 1, 2, 3}-back) and rest block was divided into 5, 10, 20, or 25 s epochs in order to assess the effect of window size on classification results. Figure 6 shows four different epoch type with window size from 5 to 25 s. In most of the cases there is an overlap between adjacent epochs (half size of epoch's length). This overlap was considered in order to capture the unique temporal response for each individual, as there could be inter-subject variability in the time required for the hemodynamic response to peak, and/or in the number of peaks (Power et al., 2012). In addition, during the classification phase, an imbalance in the number of features within each class biases the training procedure in favor of the class with a higher number of training features (He and Garcia, 2009). In our experiment design we have 40 rest blocks and 10 blocks from each n-back task type. From each task block, 16, 8, 4, and 2 features were extracted when we changed the size of the window from 5 to 25 s, respectively. From each rest block 5, 4, 2, and 1 features were extracted when we changed the size of the window from 5 to 25 s, respectively.

Figure 6. Four different epoch styles based on length of windows. The task and rest blocks are divided into (A) 5, (B) 10, (C) 20, and (D) 25 s windows (wi).

We extracted from each window three main categories of features for all 19 EEG electrodes and 19 fNIRS channels: EEG (uni-modal), fNIRS (uni-modal), and EEG+fNIRS (multi-modal or Hybrid).

EEG-based features were computed from the frequency band power (PSD), phase locking value (PLV), phase-amplitude coupling (PAC), and the asymmetry of frequency band power between right and left hemispheres (Asym_PSD). Initially, the spectrogram was calculated using short-time Fourier transform method with windows of 1 s and half window size overlapping and frequency resolution of 1 Hz. The power was calculated in eight frequency bands each with a width of 4 Hz in the range 0 to 32 Hz. The ranges are referred to by their conventional labels: delta (0–4 Hz), theta (4–8), alpha (8–12), followed by five intervals ranging from low beta (12–16) to high beta (28–32). We also used the labels f1, f2,…, f8 for these frequency bands. EEG frequency band power for each epoch was extracted by integration of the corresponding power over each frequency band. We imposed the 32 Hz cutoff since higher frequencies in scalp EEG are generally not considered informative about cortical activity (Goncharova et al., 2003; Muthukumaraswamy, 2013). PLV is a measure of phase synchrony between two distinct neuronal populations, which is computed between two selected EEG electrodes as an estimate the inter-area synchrony (Vinck et al., 2011). PLV was estimated between electrode pairs that were selected to assess three different types of synchrony: intra-hemispheric (F3-P3, F4-P4, Fc1-Cp1, Fc2-Cp2, Fz-Poz), symmetric inter-hemispheric (F7-F8, F3-F4, Fc1-Fc2, C3-C4, T3-T4, Cp1-Cp2, P3-P4, O1-O2), and asymmetric inter-hemispheric (F3-P4, F4-P3, Fc1-Cp2, Fc2-Cp1). PLV was computed for four band of interest ([3–5], [9–11], [19–21], [39–41] Hz). PAC measures coupling between the phase of a low frequency (here [4–7], [9–13] Hz) oscillations and the amplitude of a high frequency ([15–35], [30–60] Hz) oscillation (Radwan et al., 2016). It provides an estimate of local, multi-frequency organization of neuronal activity (Dvorak and Fenton, 2014). We chose 8 EEG channel pairs between right and left hemispheres (F8-F7, F4-F3, Fc2-Fc1, T4-T3, C4-C3, Cp2-Cp1, P4-P3, and O2-O1) for Asym_PSD feature.

fNIRS features were based on HbO and HbR amplitude (HbO/R Amp.), slope of HbO and HbR (HbO/R slope), standard deviation of HbO and HbR (HbO/R Std.), skewness of HbO and HbR (HbO/R Skew.), and kurtosis of HbO and HbR (HbO/R Kurt.). The statistics of HbO and HbR are commonly used as features in fNIRS studies of MWL and BMIs (Naseer and Hong, 2015; Naseer et al., 2016a,b). Our inspection of the fNIRS data revealed patterns of correlation between HbO and HbR that were time and area dependent. Hence, we also included the zero-lagged correlation between HbO and HbR (HbO-HbR Corr.) as an additional feature. Hybrid features were based on EEG and fNIRS features in addition to specifically Hybrid quantities that depend simultaneously on both systems.

We chose to focus on a straightforward quantity, which can be easily calculated within the time windows of interest: the zero-lagged correlation between the Hb (HbO or HbR) amplitude and the EEG frequency band power (in eight separate bands described above). These neurovascular features based on HbO and HbR were denoted NVO (oxygenated neurovascular coupling) and NVR (deoxygenated neurovascular coupling), respectively. To calculate NVO/R for the left hemisphere, the correlation between each fNIRS channel (CH1 to CH9) and each frequency band of F7 EEG channel was calculated (band-passed filter within the specific frequency range). For the right hemisphere, the correlation between each fNIRS channel (CH11–CH19) and each frequency band of F8 EEG channel was calculated. For the fNIRS channel 10, which is located at the center we used the average of F7 and F8 channels to find NVO and NVR. This resulted in 152 (19 × 8) NVO and 152 NVR features from each window. Each set of features extracted from one subject's data were dc-shifted and scaled in order to have a mean value of zero and standard deviation of one.

Following feature extraction, we implemented SVM classification and k-fold cross-validation with k = 10. SVM is a non-parametric supervised classification method, which already showed promising results in the medical diagnostics, optical character recognition, electric load forecasting, and other fields. SVM can be a useful tool in the case of non-regularity in the data, for example when the data are not regularly distributed or have an unknown distribution (Auria and Moro, 2008). Linear SVM constructs an optimal hyperplane creating a decision surface maximizing the margin of separation between the closest data points belonging to different classes (Aghajani et al., 2013). The observations were randomly partitioned into k groups of approximately the same size. One group was selected as the testing and the rest as the training data. Principal component analysis (PCA) was applied to the training set. We applied PCA separately on each feature subgroup (11 subgroups, with the subgroups divided further by frequency bands as described in our feature extraction methods). For example, the EEG alpha frequency band power (8–12 Hz) consisted of the time series from 19 EEG channels. After PCA, these signals yielded 19 principal components (PC) and their associated time series as the new set of features. A similar PCA was applied to each feature subgroup. The resulting PCs contained a set of weights (for the EEG channels), which could be interpreted as an activation map. PCA therefore allowed us to interpret the topographic distribution of activation associated with every feature. PCA also yielded an eigenvalue corresponding to the variance of that feature. The eigenvalues typically decrease sharply, the sum of the first few accounting for almost all of the total energy of the 19 PCs. However, the most energetic PCs are not necessarily the most informative, as shown in Results (Table 1). In order to estimate the features' discriminating ability, we used the Pearson correlation coefficient method (Mwangi et al., 2014). A reference time series was constructed by labeling each window by a distinct integer that represented the rest or the task difficulty level ({0 (rest), 1 (0-back), 2 (1-back), 3 (2-back), 4 (3-back)}). We used R2, the square of the Pearson correlation between the time series and the reference signal, to rank the set of features. The testing data were projected into the PC space that was obtained from the training data and the testing features were ranked by using the same method. In part of our analysis, we have chosen to reduce the number of features of the systems (EEG, fNIRS, and Hybrid) by truncating all systems at the same fixed size, eliminating the lowest ranked features. The labeled training examples were fed into a binary linear SVM. Each training example contained a vector of feature values in a given window and its label that denoted one of the two classes of interest. The SVM constructed an optimal hyper-plane creating a decision surface maximizing the margin of separation between the closest data points belonging to different classes (Aghajani et al., 2013). In this study there were 10 possible pairs of binary classifications corresponding to our five distinct classes. In order to investigate the ability to discriminate WM loading against a baseline, we have chosen the pairs {1-back v rest}, {2-back v rest}, {3-back v rest}, {1-back v 0-back}, {2-back v 0-back}, and {3-back v 0-back} and performed binary classifications on them. We also investigated the ability to discriminate between degrees of MWL by using a multi-class scheme. For this purpose we utilized the error-correcting output code multiclass model (ECOC), which employs a set of binary classifiers. We adopted an all-pairs ECOC model to train a binary classifier on the pairs of classes in the training data and, for each new instance in the testing data, assigned the label that minimizes the aggregate Hamming loss from the predictions of all binary classifiers (Dietterich and Bakiri, 1995). In comparison to its alternatives, this approach has been shown to enhance accuracy while maintaining a low run-time complexity (Fürnkranz, 2002). We investigated four groups of multi-class sets that contained narrow gradations of MWL: {3-back v 2-back v 1-back}, {3-backv 2-back v 1-back v 0-back}, {3-back v 2-back v 1-back v rest}, and {3-back v 2-back v 1-back v 0-back v rest}. The accuracy was computed as the fraction of labels in the testing data that were correctly identified by the SVM. Finally, the cross-validation was repeated k times with each group of observations being used exactly once as the testing data. The overall accuracy was calculated as the mean of the repetitions. In addition to overall accuracy, confusion matrices yield a very detailed overview of a classifier's performance. Usually, the confusion matrix is further summarized by some proportions extracted from the confusion matrix. The main metrics are (a) sensitivity of class A (Sens.A) which describes how well the classifier recognize observations of class A, (b) specificity of class A (Spec.A) which describes how well the classifier recognizes that an observation does not belong to class A, (c) positive predictive value of class A (PPVA) tells us given the prediction is class A, what is the probability that the observation truly belongs to class A?, (d) negative predictive value of class A (NPVA) tells us given a prediction does not belong to class A, what is the probability that the sample truly does not belong to class A (Beleites et al., 2013)? We pooled all the k confusion matrices of the k-fold cross validation to calculate Sens.A, Spec.A, PPVA, and NPVA. For all the calculations described in this paper we used Matlab v.8.6.0.267246 (R2015b) (The MathWorks, Inc., Natick, Massachusetts, United States).

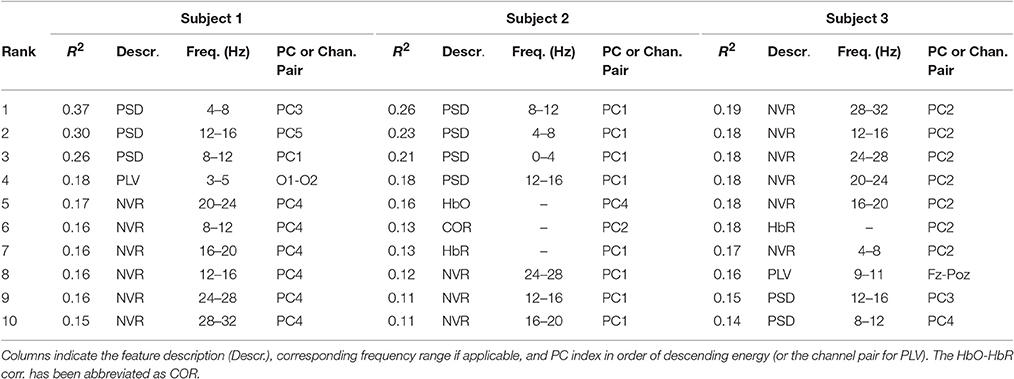

Table 1. The top R2 ranked features for three representative subjects for the binary rest v 3-back classification.

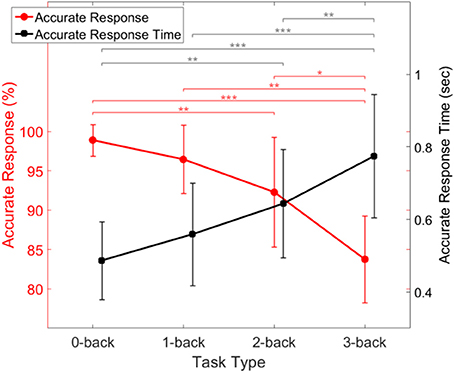

We initially investigated the relationship between the subjects' performance and task difficulty, in order to insure that it was consistent with expectations. Figure 7 shows the accuracy and response time of all subjects with error bars showing the standard deviation of inter-subject variability. The figure shows that the fraction of accurate responses decreased with increasing task difficulty. There was little or no accuracy decrement between 0- and 1-back tasks, as expected (Jonides et al., 1997). Furthermore, the time it took subjects to produce a correct response increased (and eventually more than doubled) with task difficulty (Figure 7).

Figure 7. Behavioral performance of the subjects during task conditions of increasing difficulty, showing response accuracy (red) and response time (black). Error bars indicate the standard deviation of inter-subject variability. Asterisks indicate statistical significance derived from a two-way ANOVA comparison of each two response accuracy (red) or response time (black) (*p < 0.05, **p < 0.001, ***p < 0.0001).

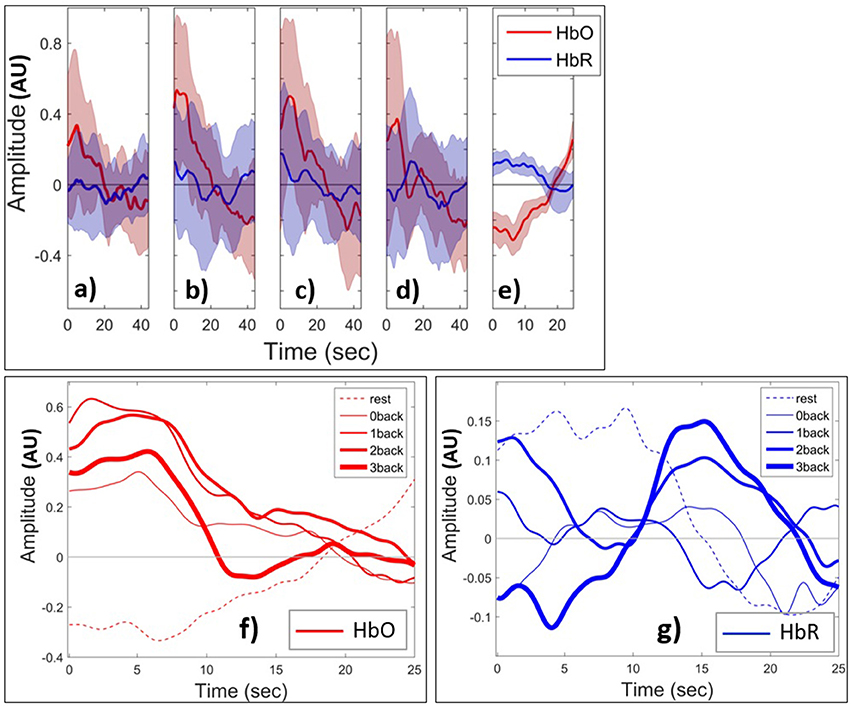

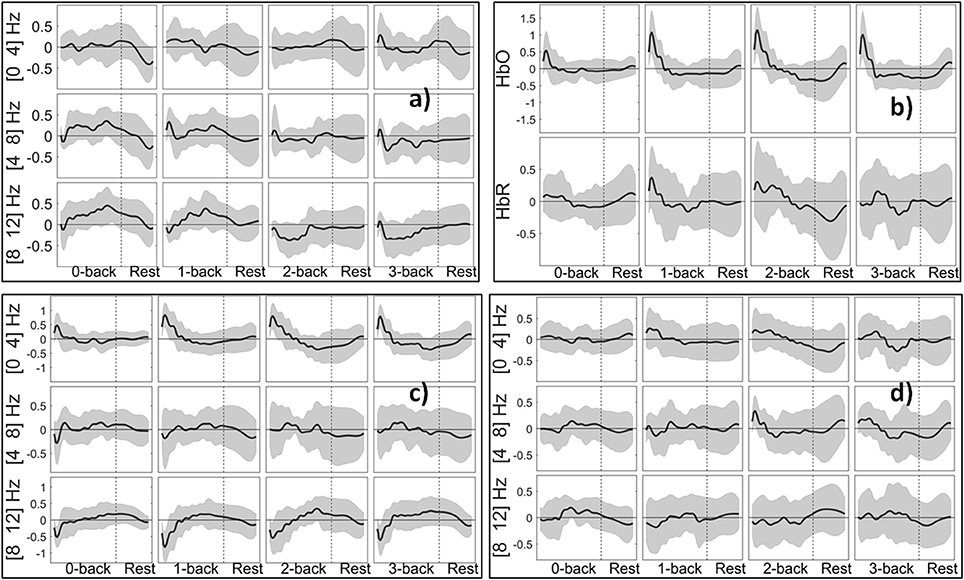

We next examined the HbO and HbR patterns during changes in mental load. Figures 8 (a–e) shows the grand block average of HbO (red) and HbR (blue) amplitude. The shaded area shows the standard deviation of the inter-subject variability. In this paper, the term grand block averaging denotes the average over the blocks of the same class and over all channels and subjects. Following neural activation, local blood flow and volume typically increase on a time scale of seconds and, at the beginning of the task, there is a localized rise in oxygenation in PFC (Huppert et al., 2006), which creates the positive peak of HbO. After a few seconds due to the metabolic consumption of oxygen the oxyhemoglobin concentration decreases leading to a negative HbO amplitude. During the rest state which comes after the task block, the oxyhemoglobin concentration starts to increase and HbO returns to baseline. Toward the end of rest window there is an apparent task anticipating rise in HbO. The range of changes of HbO is obviously higher than those of HbR during the task periods. From 0- to 2-back the positive peak of HbO increases and then decreases from 2- to 3-back. HbO and HbR have the opposite sign and are hence negatively short time correlated in the rest state. However, this appears to change during task in ways depending on the value of n. The range of HbO changes increases with n although they appear to slightly decrease as n changes from 2 to 3. In (Figures 8f,g) we show the grand block average of all tasks v rest state for one specific fNIRS channel (channel 10, which is located at Fpz, near the center of the forehead). In this figure the curves corresponding to rest and task with all values of n have been shown in one plot to make the comparison easier. And we just show the first 25 s of the n-back task block (out of the full 44 s). The shaded areas for standard deviation are omitted for clarity. Figure 8f shows that the peak amplitude of HbO is positive for task performance and negative for rest. In addition, it decreases with increasing load for n > 0. The area under curve clearly discriminates between rest and task since it is negative during rest and positive for all other n-back tasks. By contrast, the peaks of the amplitude of grand block average of HbR (Figure 8g) that occur after the 10 s have a positive correlation with the level of mental load. These patterns are not observed in the case of the 0-back task since it is related to perception only and is less involved with WM system. We also examined the time course of selected features that were extracted from the signals.

Figure 8. Grand block average of normalized HbO (red) and HbR (blue) during (a) 0-back, (b) 1-back, (c) 2-back, (d) 3-back, (e) rest. The thick curves show the average over all channels and subjects. The shaded area indicates the standard deviation of inter-subject variability. Grand block average of HbO (f) and HbR (g) for rest (dashed curves) and task (solid). Increasing thickness of solid curves corresponds to increasing task difficulty from 0- to 3-back. AU means arbitrary units.

Figure 9 shows the PSD extracted from EEG, HbO/R Amp. from fNIRS, and NVO/R features from EEG+fNIRS change in relation to the degree of WM load. We use the term session to denote a task block and the following rest block. For each cognitive state, we then have 10 sessions per subject. In the case of 5 s windowing, for each feature, we have 21 values in each session (16 from task block and 5 from rest block). The curves in Figure 9 were computed by first applying a simple triangular moving average filter covering three samples at each step, and then a cubic spline interpolation. The figure shows that the theta and alpha bands of EEG are positive during 0- and 1-back, although they become negative for 2- and 3-back tasks. The positive peak of HbO increases from 0- to 2-back and has a slightly lower peak for 3-back compared to 2-back. The figure also shows that the Hybrid features (such as NVO in the delta range) generally resemble the corresponding uni-modal features (such as HbO and PSD in the delta range) however they were dominated by neither, suggesting that the Hybrid feature contained additional information.

Figure 9. Grand block average of normalized features from 5 s windows: (a) PSD (delta, theta, alpha bands) of channel O2. (b) HbO/R Amp. for channel 10. (c) NVO (delta, theta, alpha bands) for channel 10. (d) NVR features (delta, theta, alpha bands) for channel 10. Shaded areas indicate the standard deviation of inter-subject variability.

Table 1 shows the top 10 highest ranked features (based on R2) for three subjects obtained during the 3-back v rest training set. The features are characterized by the description (e.g., PSD, PLV, HbO, as described in Section Methods) and the particular frequency band, where applicable. The frequency band is applicable only to the EEG and neurovascular features. The table also indicates the order of the feature according to the magnitude of its eigenvalue [ordered from the most energetic (1) to the least (19)]. Since PCA was not used in the case of PLV, the channel label is given instead of the PC order. For example, the highest ranked feature for subject one was the third most energetic PC from the EEG frequency band power in the theta range (4–8 Hz). For subject 3, the highest ranked feature was the second most energetic PC from the neurovascular feature based on the correlation between HbR and the EEG frequency band power in the high beta range (28–32 Hz). The table illustrates that the types of features in the top ranked group may vary among subjects and that high discriminating ability of a feature does not imply high energy in the sense of the PCA.

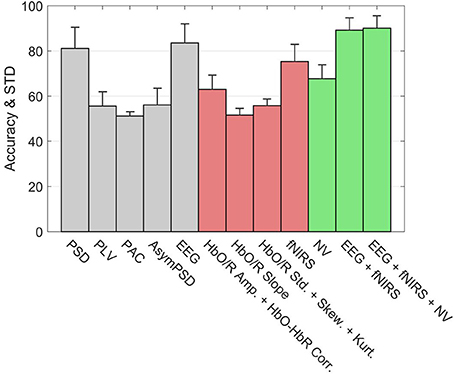

Figure 10 shows the classification accuracies of various subsystems as well as the Hybrid system for the 3-back v rest using 5 s windows. The error bars represent the standard deviation of inter-subject variability. Within the EEG group (gray bars), the leftmost bar is the accuracy of a system based only the PSD features. On its immediate right is the accuracy of the subsystem based only on PLV features, and similarly for PAC and other feature types. The rightmost bar in the EEG group shows the accuracy of the full EEG system which includes all feature types based on EEG signals. Clearly the PSD is the primary contributor to the discriminating ability of the EEG, however, the accuracy appears to be slightly enhanced by including the other types of features. Among the fNIRS systems (red) the leftmost bar indicates that Hb amplitudes together with the HbO-HbR correlation is the primary contributor to the accuracy of detection. Unlike the EEG system, the other feature types such as slope and higher order statistics significantly enhance the accuracy of the fNIRS system. The overall accuracy of the fNIRS system is lower than the overall accuracy of the EEG system. The accuracy based only on the neurovascular features is indicated by the leftmost bar in the Hybrid group (green). The middle bar in the Hybrid group represents the pooling of all features from the EEG and fNIRS systems. Finally the inclusion of the neurovascular features in the Hybrid system (rightmost green bar) appears to slightly enhance the accuracy.

Figure 10. Accuracy of types of features in classifying rest v 3-back with 5 s feature windows. The error bars indicate the standard deviation of inter-subject variability. The union of neurovascular features is abbreviated as NV. Features are extracted from different systems: EEG (gray bars), fNIRS (red bars), and Hybrid (green bars).

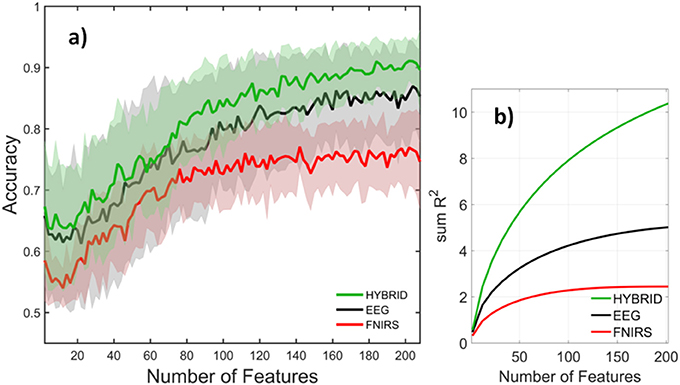

Figure 10 compared the accuracies of various systems with each system containing the full set of features that belonged to it. The number of features in the full set was different for each system, for example the EEG, fNIRS, and Hybrid systems contained 360, 209, and 873 features, respectively. This may raise the question of to what extent these systems' accuracies were influenced by the number of features they contained, rather than by the information content per feature. In order to examine this topic, we computed the systems' accuracies after they had been truncated to contain the same number of features. This was done by selecting the top ranking group after the features had been sorted in order of descending values of R2. The goal was to perform a comparison on an equal footing by truncating each system in the same way. The calculation was repeated by varying the number of features from two to the available maximum. Figure 11a indicates that the fNIRS system had the lowest accuracy over the entire range of the number of features. The EEG system performed better, while the Hybrid accuracy was consistently superior to either system, similar to the results shown in Figure 10. Figure 11b shows the cumulative sums of R2 index v number of features for three systems which qualitatively agree with Figure 11a. The calculations are for the 3-back v rest using 5 s windows and they qualitatively agree with the results (not shown) of binary classifications of other pairs of classes and window sizes. The shaded areas indicate the standard deviation of inter-subject variability.

Figure 11. (a) Accuracy and (b) cumulative sum of R2 for EEG (black), fNIRS (red), and Hybrid (green) systems as a function of system size. Mean and standard deviation over subjects are indicated by the solid curves and shaded areas, respectively. The classification task was rest v 3-back and feature window size was 5 s.

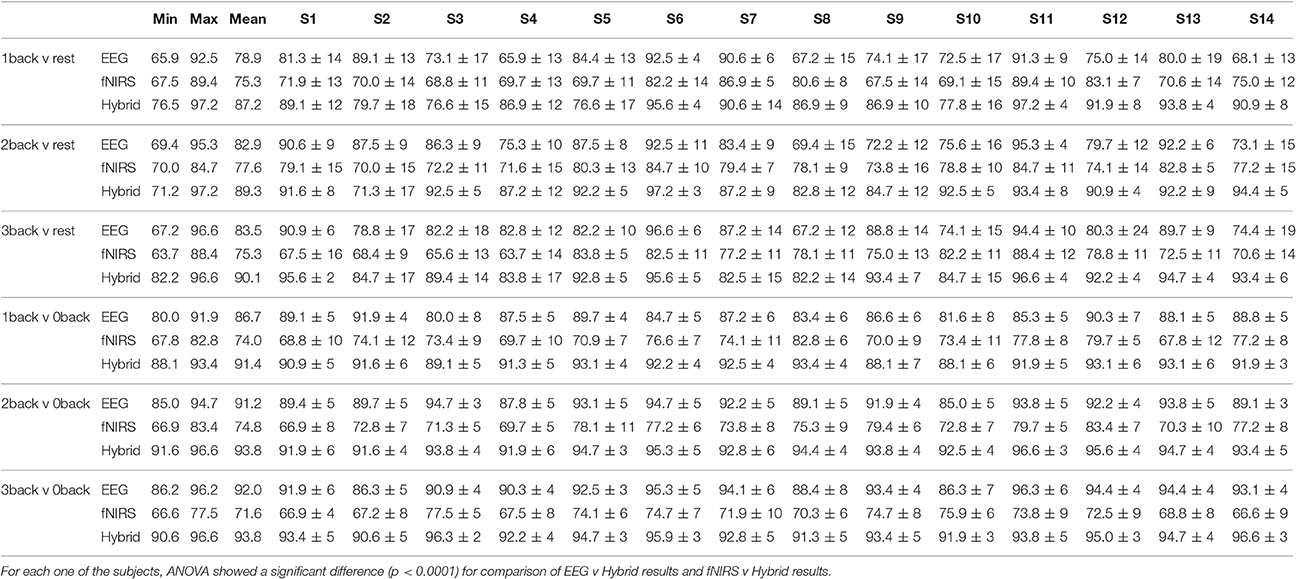

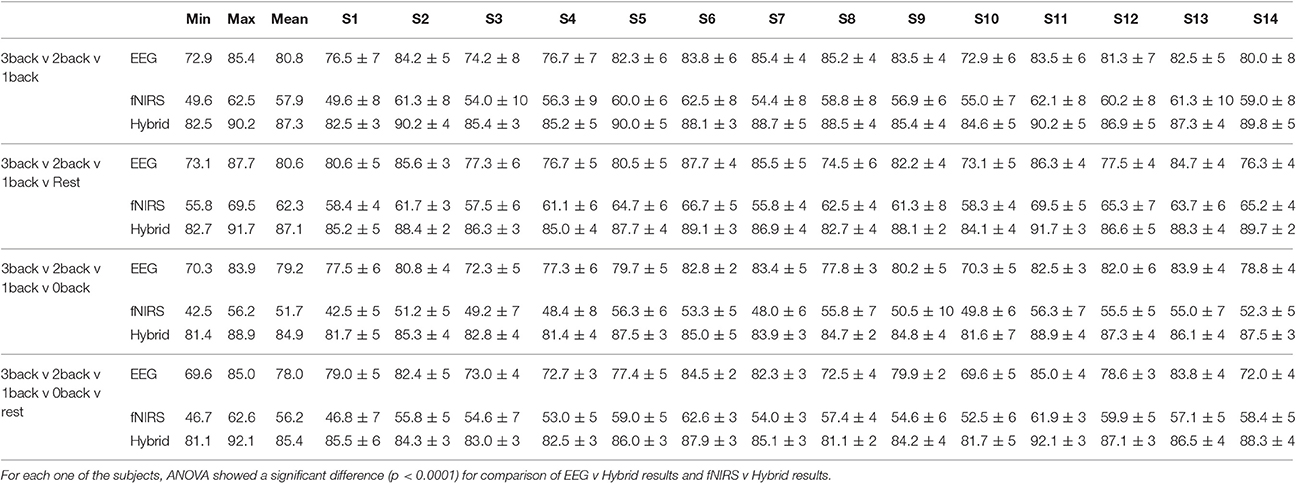

Figure 10 provided the results for only one type of binary classification (3-back v rest) and the variability over subjects as a standard deviation. However, it is highly instructive to examine the result for each subject as well as for every binary and multi-class problem that was described previously in our Methods. Tables 2, 3 break down the accuracy of classification for each subject (S1, S2,…, S14), system type (EEG, fNIRS, Hybrid), and the type of classification problem. The mean as well as the minimum and maximum of the values for the subject population are provided as three separate columns on the left. The height of the rightmost bars within the EEG (gray), fNIRS (red), and Hybrid (green) groups in Figure 10 correspond in Table 2 to the accuracy percentages 83.5, 75.3, and 90.1 shown under the column “Mean” and the row “3-back v rest.” In the columns for individual subjects, Table 2 shows the mean accuracy and the standard deviation from the trials in the 10-fold cross validation.

Table 2. Binary classification accuracy for all subjects included in the study (S1 to S14) for 10-fold cross validation.

Table 3. Multi-class classification accuracy for all subjects included in the study (S1 to S14) for 10-fold cross validation.

Table 2 suggests that the mean accuracy of classifying task against a baseline increases with n, as expected. The accuracy of detecting 0-back v n-back appears to be slightly greater than that of detecting rest v n-back (n > 0). For example, 87.2% for 1-back v rest and 91.4% for 1-back v 0-back. Table 3 shows the results for multi-class classification. In this case, the accuracy tends to decline slightly as more classes are included in the classification problem. In all subjects and classification problems, the Hybrid system has the greatest accuracy without exception. We investigated whether the observed superiority of the Hybrid system was statistically significant. A two-way ANOVA was performed on every classification problem (a row of Table 2 or Table 3) by using as the two factors the type of system (EEG, fNIRS, Hybrid) and the subject. The analysis was repeated by taking the classification problems as, first, the binary types in Table 2 and, second, the multi-class types in Table 3. In all cases, the differences of accuracy among the subjects were not significant and there were no interactions between system type and subject, while the differences in accuracy between the Hybrid and the uni-modal system was significant with a p < 0.001.

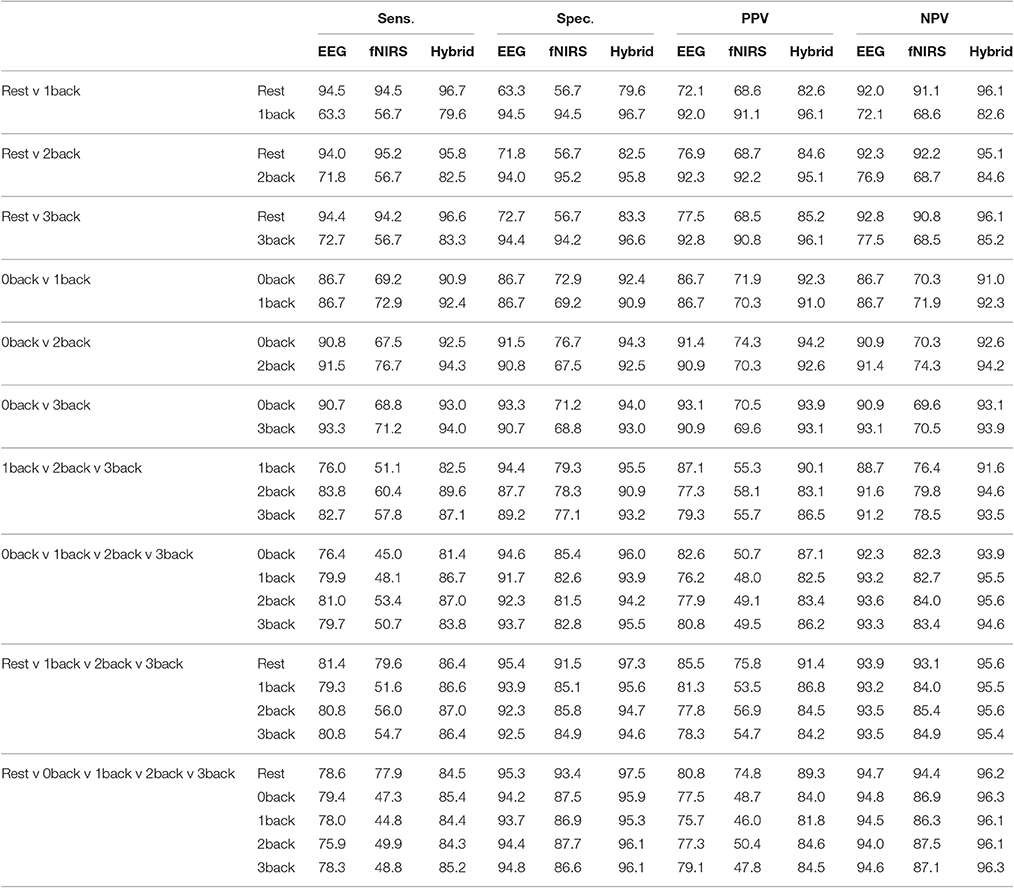

Table 4 lists the sensitivity (Sens.), specificity (Spec.), positive predictive value (PPV), and negative predictive value (NPV) for each individual class within a classification case. For example for the case of {Rest v 3back}, each one of rest and 3-back classes would have a Sens., Spec., PPV, and NPV, respectively. In addition, this table summarizes all these metrics for EEG, fNIRS, and Hybrid systems in order to make it easier to compare between their capabilities.

Table 4. Sensitivity (Sens.), specificity (Spec.), positive predictive value (PPV), and negative predictive value (NPV) are listed in percentage (%) for all classification cases (binary and multi-class) and all systems (EEG, fNIRS, Hybrid).

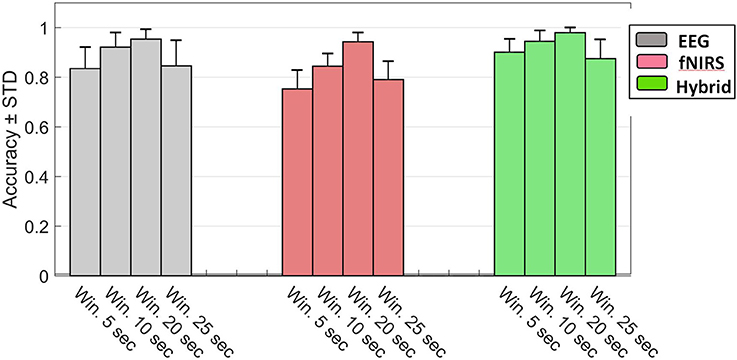

The foregoing results corresponded to 5 s windows but qualitatively agreed with patterns we observed with other window sizes as well. We also assessed the effect of window length on classification accuracy for EEG, fNIRS, and Hybrid systems. Figure 12 shows the results of this assessment. We examined four different lengths for the windows (5, 10, 20, and 25 s). Change of window length has the same effect on all three types of systems. By increasing the length from 5 to 20 the accuracy increases and declines thereafter.

Figure 12. Accuracy of the rest v 3-back classification as a function of window size for EEG (gray), fNIRS (red), and Hybrid (green) systems. Error bars indicate the standard deviation of inter-subject variability.

The functional activity of the human brain can be observed with various imaging techniques including fMRI, fNIRS, and EEG. Each of these modalities has its advantages and disadvantages. The advantage of using Hybrid EEG+fNIRS system can be divided into two main categories: First, each of these modalities is measuring the changes in a specific brain physiology. EEG results directly from the electrical activity of cortical and subcortical neurons with a sub-millisecond temporal resolution. On the other hand, fNIRS yields local measures of changes in HbO and HbR concentration and is, therefore, an indicator of metabolic/hemodynamic changes associated with neural activity. Second, the physics of measurement behind EEG and fNIRS are quite different. This property, for example, makes EEG signal prone to blink and muscle artifacts, while this is not the case for fNIRS. Hence using a multimodal recording system we are able to assess brain behavior from different physiological perspectives in addition to compensating for some weaknesses of one modality by the other one.

Our results suggest that EEG+fNIRS combined with a classifier are capable of robustly discriminating among various levels of MWL. In our study, the Hybrid system had an accuracy higher than either EEG or fNIRS alone for every subject. The pooling of EEG and fNIRS features and the inclusion of neurovascular features resulted in a synergistic enhancement, rather than in a diluting effect (which would have given a performance intermediate between the two modalities). In mission-critical contexts such as aviation or surgery, even small improvements in MWL detection can translate into significant gains in safety and efficiency. Our experiments were designed to use WM load (adjusted through the value of n in the n-back task) as a correlate of MWL in general. Furthermore, EEG and fNIRS can be integrated without excessive cost, effort, or intrusiveness for the user. The combination of all these considerations suggests that EEG+fNIRS should be preferred to only EEG or fNIRS, in developing passive BCIs and other applications which need to monitor users' MWL.

Our preliminary analysis of the experimental data was consistent with expectations. For example Figure 7 indicated that the fraction of accurate responses declined more steeply as n increased. This can be explained by noting that temporal tagging is the cognitive process that imposes the greatest load in the n-back task, as compared to the other processes which are also involved such as encoding, storage, matching, and inhibition to dampen the oldest memory traces (Jonides et al., 1997).

Temporal tagging, unused in the 0- and 1-back, begins to affect MWL substantially as n > 1. Another interesting preliminary result was the observation that HbO showed an anticipatory increase near the end of the rest sessions (Figure 8E). This is consistent with related fMRI findings (Sakai and Passingham, 2003) and with the fact that the PFC is involved in planning future action. A negative correlation between HbO and HbR has been seen in the literature. Based on (Figures 8A–E), the shaded area, which is the standard deviation of normalized HbO and HbR variations within the block for all of the subjects and all of the fNIRS channels, is relatively high. This shows the high level of inter-subjects variability and that might be the reason that we are not seeing such anti-correlated pattern between HbO and HbR in Figure 8. Izzetoglu et al. (2004) showed that the reason behind the drop of the peak of HbO in Figure 8d is that when a participant reaches his maximum performance capacity or in another word starts to overloads cognitively, he loses his concentration on the task and as a result performance as well as the oxygenated hemoglobin changes decline.

Figure 5 did not show any differences between rest and task states that were obvious to visual inspection of the preprocessed EEG or fNIRS signals. Subject and block averaging of various features shown in Figure 9 did, however, indicate that such systematic variations existed. Lower values of the EEG alpha band power in the 2- and 3-back task, and higher values of HbO in the beginning of the task period in the n-back (increasing with n) relative to those in the rest state were examples of such visible variations. To take advantage of such variations we employed discrimination through linear SVM. In the case of non-linear SVM, the kernel can help with the non-linearly separable data and map it into a new feature space in which the dataset are separable with a linear SVM. In non-linear SVM in order to improve the accuracy choosing the optimum kernel parameters, is necessary. This can reduce classifier's generalization potential for new subjects when we don't want to adjust the kernel's parameters. It will increase the probability of overfitting occurrence, since increasing the complexity level of a classifier gives it the flexibility to match exactly to the train set. In addition, the option of choosing a non-linear SVM depends on the exact application to make a trade-off between a slightly higher accuracy rather than calculation speed. The same trade-off we face to choose the windows size. The results shown in Figure 10, Tables 2, 3 were highly promising for accurately discriminating among the rest and task states. As Tables 2, 3 show, the subject averaged accuracy of the Hybrid system in binary discrimination was lowest (87.2%) for 1-back v rest and highest (96.6%) for 3-back v 0-back. The corresponding lowest and highest results for uni-modal systems were fNIRS (71.6%) and EEG (92.0%), both for 3-back v rest. We calculated the overall average of accuracy one time for all of the binary cases and one time for all of the muli-class cases. We did this calculation for EEG, fNIRS, and Hybrid systems separately. The results show that EEG, fNIRS, and Hybrid system, in the case of binary classification, have 85.9, 74.8, and 90.9% overall accuracy, respectively. EEG, fNIRS, and Hybrid system, in the case of multi-class classification, have 79.6, 57.0, 86.2% overall accuracy, respectively. These numbers convey that the accuracy of each one of EEG, fNIRS, and Hybrid systems are higher for the binary cases. The multi-class accuracies were generally lower; however, note that the chance level accuracy for multi-class classification is less than binary classification (33% for 3-back v 2-back v 1-back, 25% for 3-back v 2-back. v 1-back v rest and also 3-back v 2-back. v 1-back v 0-back, and 20% for 3-back v 2-back. v 1-back v 0-back v rest).

Table 4 reveals that, for all of the four extracted metrics from the confusion matrix (sensitivity, specificity, PPV, NPV), always Hybrid system has a higher value than EEG system and EEG system has a higher value than fNIRS system. In the cases of binary classification, for those that we are detecting between task and rest, the sensitivity of detection of rest state is significantly higher than the sensitivity of detection of task state. As its complement, the specificity of detecting task state is higher than detecting the rest state. In the cases of binary classification, for those that we are detecting between task and task, the sensitivity of detecting the task with a higher difficulty level is more than those with less level of difficulty, although the difference is not very significant. The PPV and NPV are usually more important than sensitivity and specificity. Patients and doctors want to know whether this particular patient is ill rather than whether the test can recognize ill people (Beleites et al., 2013). Here, our result (Table 4) shows that Hybrid system has at the same time a very promising PPV and NPV for all of the classification cases. Except for the case of {1-back v rest}, the minimum of sensitivity, specificity, PPV, NPV for the Hybrid system are 81.4, 82.5, 81.8, and 84.6%, respectively.

Selecting an optimal subset from the full set of features is crucial for achieving high accuracy and avoiding over-fitting. In some applications, e.g., those involving on-board real-time analysis, it may also be important to keep the system size small and avoid computational delays. Figure 11b shows the cumulative sum of R2 v number of features for three systems which qualitatively agrees with Figure 11a, suggesting that R2 ranking is an effective method of feature selection. We have not used an explicit artifact rejection step in our analysis. However, it is well known that PCA can segregate non-cerebral artifacts (typically of higher amplitude than contributions of cortical origin) into distinct PCs. Our feature selection based on R2 then assigns a lower rank to such PCs and they are excluded from a truncated system.

One of the main considerations in developing an online system is computational speed. It is instructive to review the computational loads of particular feature types in conjunction with how effectively they discriminate among rest and task states. For example, Figure 10 shows that PAC is the least discriminating EEG feature. This may be important in designing a compact and efficient detector, as PAC is also the most computationally time-consuming feature. By contrast, the most effective EEG feature (PSD) was also the fastest to compute. In our study, the central processing unit (CPU) time required for computing PSD, PLV, PAC, and Asym_PSD were, respectively, 0.1, 14.3, 44.4, and 0.2 s. The CPU times required for other features were as follows: HbO/R Amp. and HbO-HbR Corr. (0.1 s), HbO/R slope (14.3 s), Std., Skew. and Kurt. collectively (3.3 s), and NVO and NVR (3.4 s).

Our results suggest that Hybrid outperforms the uni-modal systems for each subject (Tables 2, 3), every classification problem (Tables 2, 3), every number of features (Figure 11), and every window size (Figure 12). This could have been due to the neurovascular features that the uni-modal systems do not contain. NV obviously had a higher classification performance rather than any of fNIRS based feature subgroups. However, Figure 10 indicates that such features contribute little if any to the accuracy (two rightmost bars) after the other EEG and fNIRS features have been pooled. The likely explanation instead is related to inter-subject variability. We have found that the top ranked (in terms of R2) features tend to differ among different subjects. Although, the EEG frequency band power (especially in the alpha range) tended to play an important role for most subjects, for other subjects other feature types, fNIRS- or Hybrid based, dominated the top ranks. An example of this is provided in Table 1 where the third subject's most discriminating PCs were neurovascular. EEG and fNIRS use different physical processes for detection and the underlying physiology which they detect are different. Hence deficiencies such as artifacts, weak sensor coupling, or subject variability leading to a weak signal would selective affect only one modality rather than both. The Hybrid advantage may be associated primarily with the complementary nature of the individual modalities.

Figure 12 indicates that accuracy can be increased by using larger windows. But this presents a tradeoff between accuracy and rapid detection. The windows with the highest accuracy were 20 s long and may be impractical for some applications if the online response to rapid changes in MWL is desirable. As window size increases, although the amount of information per window likely increases, the number of windows available for training the classifier decreases. Fewer training data are expected to cause the classifiers to underperform (Grimes et al., 2008). The decline in accuracy in Figure 12 for windows >20 s may be due to the excessively small number of data available for training.

A handful of studies on concurrent EEG and fNIRS and WM tasks have been previously published. Hirshfield et al. (2009) combined an 8 channel fNIRS covering the forehead with 32 channel whole-head EEG with N = 4 subjects as they performed a counting and mental arithmetic task with adjustable difficulty. They used separate classifiers for the fNIRS and EEG (k-nearest neighbor and Naive Bayes classification, respectively) and obtained a maximum accuracies of 64% (with fNIRS) and 82% (EEG). They did not attempt to use the multi-modal data concurrently. The generally higher accuracy of the EEG is consistent with our results. Their overall lower accuracies relative to our results may be due to the relatively short 2 s feature extraction window. Liu Y. et al. (2013) used a 16-optode fNIRS system covering the forehead and 28 EEG sensors at the standard 10–20 sites, with N = 16 subjects performing a n-back task. They found significant correlations between WM load and some EEG frequency band powers as well as HbO and HbR, however did not attempt classification. Their study focused on discovering neural correlates of the effects of practice time on performance. Coffey et al. (2012) recorded three fNIRS channels over the left forehead together with 8 EEG electrodes placed mainly in the frontal and central areas, from N = 10 subjects in a n-back task. They extracted EEG frequency band power and fNIRS Hb amplitude features from 5 s windows and employed them in linear discriminant analysis classifiers. They report maximum accuracies of 89.6% (EEG), 79.7% (fNIRS), and 91.0% (Hybrid). Their results differed from ours in that in some subjects all their systems had very low accuracies and their Hybrid accuracies were not always higher than those of both uni-modal systems. However, the fact that EEG generally had the higher uni-modal accuracy and that Hybrid could attain the highest observed accuracy were consistent with our findings. The differences from our results could be attributed to the relatively lower number of sensors and fewer types of features they employed.

Acquiring the very low-frequency (VLF) oscillations (< 0.5 Hz) in the EEG signal requires highly specialized amplifiers (DC-coupled, high input impedance, high DC stability, and a wide dynamic range; Demanuele et al., 2007). In addition, VLF oscillations are known to be linked with specific pathologies such as epileptic seizures or attention deficit hyperactivity disorder (Steriade et al., 1993; Vanhatalo et al., 2004; Demanuele et al., 2007) that are not within the range of interest in this study. On the other hand, some studies (Gevins et al., 1997; Berka et al., 2007; So et al., 2017) demonstrated EEG within gamma range as a biomarker for discrimination between different cognitive states. We defined the band-pass filter cutoff frequency (0.5–80 Hz) based on these criteria. Although in the feature extraction section, we did not consider gamma frequency range features and have considered this as the future work.

The present study had several limitations which we have not directly addressed due to constraints of available time or effort. Firstly, the group of subjects included only one female. This may have been due to the demographics of the subjects, who happened to be interested in volunteering for our study. In addition to the neural correlates of MWL, we have recorded the subjects' performance characteristics. However, it may prove insightful to collect data on the MWL by using additional techniques such as self-reporting, which was not done in this study. In some studies for assessment of MWL participants filled out the NASA Task Load Index (NASA TLX) questionnaire (Hart and Staveland, 1988) to provide a subjective evaluation of the mental demand induced by different levels of task difficulty. In this study, we implicitly used the assumption that an increase in the level of task difficulty will result in a higher MWL. This can be also considered in future studies. In addition, it is possible that during the course of an experiment the subjects' performance and MWL change through training effects. Studying the performance and neural correlates of MWL for subsets of our data could reveal differences in the beginning and at the end of the study. This would also require an additional investigation of statistical validity, and was not attempted. The statistical significance of the results of our study was demonstrated through a two-way ANOVA that showed significant differences in the accuracy of the Hybrid v uni-modal systems. However, we have not investigated whether a smaller group of subjects would still yield a significant result. We have investigated the capabilities of various subsets of the types of features that were available. It would also be illuminating to investigate the classification accuracy of subsets of the full array of our sensors. Such information can help design more compact headsets and is the subject of an ongoing study. The headset we used is lightweight and no discomfort was reported by any of the subjects. However, wearing it may nevertheless affect performance, and this could be revealed in a parallel set of experiments which we have not done. The primary goal of our study was to apply machine learning techniques in discriminating levels of MWL. We used multiple statistical techniques to ensure that the statistical significance of the values of accuracy that we obtained for such discrimination. Our observations regarding the range of changes of Hb are therefore only qualitative and observational, serving to ensure that our results are consistent with expectations.

In this study, we have taken steps toward investigating the EEG+fNIRS feature extraction and analysis methods by using a popular WM task. We anticipate and hope that converging efforts in Hybrid hardware integration (Safaie et al., 2013) and data analysis (Biessmann et al., 2011; Keles et al., 2016), potentially based on detailed knowledge of underlying physiology (Bari et al., 2012; Mandrick et al., 2016a), will lead to more effective passive BMIs and other applications in neuroergonomics.

HA and AO designed the study. HA collected and analyzed the data. HA, AO, and MG wrote the manuscript.

This work is based partly on support by the National Science Foundation I/UCRC for Cyber-Physical Systems for the Hospital Operating Room under Grant no. IIP-1266334 and by industry partners. We would also like to thank the Department of Biomedical Engineering and the Cullen College of Engineering at University of Houston for its financial support (Award no. R413022).

AO participated in the development of the wireless portable EEG device (microEEG), which was used in this research. He holds a financial interest in Bio-Signal Group which is the maker of microEEG.

The other authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Aghajani, H., and Omurtag, A. (2016). “Assessment of mental workload by EEG + fNIRS,” in 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (Orlando, FL), 3773–3776.

Aghajani, H., Zahedi, E., Jalili, M., Keikhosravi, A., and Vahdat, B. V. (2013). Diagnosis of early Alzheimer's disease based on EEG source localization and a standardized realistic head model. IEEE J. Biomed. Health Inform. 17, 1039–1045. doi: 10.1109/JBHI.2013.2253326

Auria, L., and Moro, R. A. (2008). Support Vector Machines (SVM) as a Technique for Solvency Analysis. Rochester, NY: Social Science Research Network.

Ayaz, H., Shewokis, P. A., Bunce, S., Izzetoglu, K., Willems, B., and Onaral, B. (2012). Optical brain monitoring for operator training and mental workload assessment. Neuroimage 59, 36–47. doi: 10.1016/j.neuroimage.2011.06.023

Baddeley, A. (2003). Working memory: looking back and looking forward. Nat. Rev. Neurosci. 4, 829–839. doi: 10.1038/nrn1201

Bari, V., Calcagnile, P., Molteni, E., Re, R., Contini, D., Spinelli, L., et al. (2012). From neurovascular coupling to neurovascular cascade: a study on neural, autonomic and vascular transients in attention. Physiol. Meas. 33, 1379–1397. doi: 10.1088/0967-3334/33/8/1379

Beleites, C., Salzer, R., and Sergo, V. (2013). Validation of soft classification models using partial class memberships: an extended concept of sensitivity & co. applied to grading of astrocytoma tissues. Chemom. Intell. Lab. Syst. 122, 12–22. doi: 10.1016/j.chemolab.2012.12.003

Berka, C., Levendowski, D. J., Lumicao, M. N., Yau, A., Davis, G., Zivkovic, V. T., et al. (2007). EEG correlates of task engagement and mental workload in vigilance, learning, and memory tasks. Aviat. Space Environ. Med. 78(5 Suppl.), B231–B244.

Biessmann, F., Plis, S., Meinecke, F. C., Eichele, T., and Müller, K.-R. (2011). Analysis of multimodal neuroimaging data. IEEE Rev. Biomed. Eng. 4, 26–58. doi: 10.1109/RBME.2011.2170675

Boff, K. R., Kaufman, L., and Thomas, J. P. (1994). Handbook of Perception and Human Performance, Vol. 2, Cognitive Processes and Performance. New York, NY: John Wiley & Sons.

Brouwer, A.-M., Hogervorst, M. A., van Erp, J. B. F., Heffelaar, T., Zimmerman, P. H., and Oostenveld, R. (2012). Estimating workload using EEG spectral power and ERPs in the N-back task. J. Neural Eng. 9:045008. doi: 10.1088/1741-2560/9/4/045008

Buccino, A. P., Keles, H. O., and Omurtag, A. (2016). Hybrid EEG-fNIRS asynchronous brain-computer interface for multiple motor tasks. PLoS ONE 11:e0146610. doi: 10.1371/journal.pone.0146610

Byrne, A. (2013). Mental workload as a key factor in clinical decision making. Adv. Health Sci. Educ. Theory Pract. 18, 537–545. doi: 10.1007/s10459-012-9360-5

Carswell, C. M., Clarke, D., and Seales, W. B. (2005). Assessing mental workload during laparoscopic surgery. Surg. Innov. 12, 80–90. doi: 10.1177/155335060501200112

Causse, M., and Matton, N. (2014). “Using near infrared spectroscopy to detect mental overload in flight simulator,” in Advances in Cognitive Engineering and Neuroergonomics, eds M. K. Stanney, and S. K. Hale. Available online at: http://oatao.univ-toulouse.fr/16199/

Coffey, E. B. J., Brouwer, A.-M., and van Erp, J. B. F. (2012). Measuring workload using a combination of electroencephalography and near infrared spectroscopy. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 56, 1822–1826. doi: 10.1177/1071181312561367

Cohen, J. D., Perlstein, W. M., Braver, T. S., Nystrom, L. E., Noll, D. C., Jonides, J., et al. (1997). Temporal dynamics of brain activation during a working memory task. Nature 386, 604–608. doi: 10.1038/386604a0

Dehais, F., Causse, M., Vachon, F., Régis, N., Menant, E., and Tremblay, S. (2014). Failure to detect critical auditory alerts in the cockpit: evidence for inattentional deafness. Hum. Factors 56, 631–44. doi: 10.1177/0018720813510735

Demanuele, C., James, C. J., and Sonuga-Barke, E. J. (2007). Distinguishing low frequency oscillations within the 1/f spectral behaviour of electromagnetic brain signals. Behav. Brain Funct. 3, 62. doi: 10.1186/1744-9081-3-62

Dietterich, T. G., and Bakiri, G. (1995). Solving multiclass learning problems via error-correcting output codes. J Artif. Int. Res. 2, 263–286.

Dixon, B. J., Daly, M. J., Chan, H., Vescan, A. D., Witterick, I. J., and Irish, J. C. (2013). Surgeons blinded by enhanced navigation: the effect of augmented reality on attention. Surg Endosc. 27, 454–61. doi: 10.1007/s00464-012-2457-3

Dornhege, G., Millán, J. R., Hinterberger, T., McFarland, D., and Müller, K. (2007). “Improving human performance in a real operating environment through real-time mental workload detection,” in Toward Brain-Computer Interfacing (MIT Press), 409–422. Available online at: http://ieeexplore.ieee.org/xpl/articleDetails.jsp?arnumber=6281209

Durantin, G., Gagnon, J.-F., Tremblay, S., and Dehais, F. (2014). Using near infrared spectroscopy and heart rate variability to detect mental overload. Behav. Brain Res. 259, 16–23. doi: 10.1016/j.bbr.2013.10.042

Dvorak, D., and Fenton, A. A. (2014). Towards a proper estimation of phase-amplitude coupling in neural oscillations. J. Neurosci. Methods 225, 42–56. doi: 10.1016/j.jneumeth.2014.01.002

Fazli, S., Mehnert, J., Steinbrink, J., Curio, G., Villringer, A., Müller, K.-R., et al. (2012). Enhanced performance by a hybrid NIRS–EEG brain computer interface. Neuroimage 59, 519–529. doi: 10.1016/j.neuroimage.2011.07.084

Fishburn, F. A., Norr, M. E., Medvedev, A. V., and Vaidya, C. J. (2014). Sensitivity of fNIRS to cognitive state and load. Front. Hum. Neurosci. 8:76. doi: 10.3389/fnhum.2014.00076

Fitzgibbon, S. P., Lewis, T. W., Powers, D. M. W., Whitham, E. W., Willoughby, J. O., and Pope, K. J. (2013). Surface laplacian of central scalp electrical signals is insensitive to muscle contamination. IEEE Trans. Biomed. Eng. 60, 4–9. doi: 10.1109/TBME.2012.2195662

Fürnkranz, J. (2002). Round Robin classification. J. Mach. Learn. Res. 2, 721–747. doi: 10.1162/153244302320884605

Gagnon, J.-F., Durantin, G., Vachon, F., Causse, M., Tremblay, S., and Dehais, F. (2012). “Anticipating human error before it happens: towards a psychophysiological model for online prediction of mental workload,” in Human Factors: A View from an Integrative Perspective. 57–66. Available online at: https://goo.gl/Ma6HbT

Gateau, T., Durantin, G., Lancelot, F., Scannella, S., and Dehais, F. (2015). Real-time state estimation in a flight simulator using fNIRS. PLoS ONE 10:e0121279. doi: 10.1371/journal.pone.0121279

Gevins, A., Smith, M. E., Leong, H., McEvoy, L., Whitfield, S., Du, R., et al. (1998). Monitoring working memory load during computer-based tasks with EEG pattern recognition methods. Hum. Factors 40, 79–91. doi: 10.1518/001872098779480578

Gevins, A., Smith, M. E., McEvoy, L., and Yu, D. (1997). High-Resolution EEG mapping of cortical activation related to working memory: effects of task difficulty, type of processing, and practice. Cereb. Cortex 7, 374–385. doi: 10.1093/cercor/7.4.374

Goncharova, I. I., McFarland, D. J., Vaughan, T. M., and Wolpaw, J. R. (2003). EMG contamination of EEG: spectral and topographical characteristics. Clin. Neurophysiol. 114, 1580–1593. doi: 10.1016/S1388-2457(03)00093-2

Grimes, D., Tan, D. S., Hudson, S. E., Shenoy, P., and Rao, R. P. N. (2008). “Feasibility and pragmatics of classifying working memory load with an electroencephalograph,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '08) (New York, NY: ACM), 835–844.

Guru, K. A., Shafiei, S. B., Khan, A., Hussein, A. A., Sharif, M., and Esfahani, E. T. (2015). Understanding cognitive performance during robot-assisted surgery. Urology 86, 751–757. doi: 10.1016/j.urology.2015.07.028

Hart, S. G., and Staveland, L. E. (1988). “Development of NASA-TLX (Task Load Index): results of empirical and theoretical research,” in Advances in Psychology, Vol. 52, Human Mental Workload, eds P. A. Hancock and N. Meshkati, (Elsevier), 139–183. Available online at: http://www.sciencedirect.com/science/article/pii/S0166411508623869

He, H., and Garcia, E. A. (2009). Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 21, 1263–1284. doi: 10.1109/TKDE.2008.239

Herff, C., Heger, D., Fortmann, O., Hennrich, J., Putze, F., and Schultz, T. (2014). Mental workload during n-back task—quantified in the prefrontal cortex using fNIRS. Front. Hum. Neurosci. 7:935. doi: 10.3389/fnhum.2013.00935

Hirshfield, L. M., Chauncey, K., Gulotta, R., Girouard, A., Solovey, E. T., Jacob, R. J. K., et al. (2009). “Combining electroencephalograph and functional near infrared spectroscopy to explore users' mental workload,” in Foundations of Augmented Cognition Neuroergonomics and Operational Neuroscience, eds D. D. Schmorrow, I. V. Estabrooke, M. Grootjen (Berlin; Heidelberg: Springer), 239–247. Available online at: http://link.springer.com/chapter/10.1007/978-3-642-02812-0_28

Hogervorst, M. A., Brouwer, A.-M., and van Erp, J. B. F. (2014). Combining and comparing EEG, peripheral physiology and eye-related measures for the assessment of mental workload. Front. Neurosci.8:322. doi: 10.3389/fnins.2014.00322

Horrey, W. J., and Wickens, C. D. (2003). Multiple Resource Modeling of Task Interference in Vehicle Control, Hazard Awareness and in-Vehicle Task Performance. Available online at: https://trid.trb.org/view.aspx?id=698780

Hoshi, Y., Tsou, B. H., Billock, V. A., Tanosaki, M., Iguchi, Y., Shimada, M., et al. (2003). Spatiotemporal characteristics of hemodynamic changes in the human lateral prefrontal cortex during working memory tasks. Neuroimage 20, 1493–1504. doi: 10.1016/S1053-8119(03)00412-9

Huppert, T. J., Hoge, R. D., Diamond, S. G., Franceschini, M. A., and Boas, D. A. (2006). A temporal comparison of BOLD, ASL, and NIRs hemodynamic responses to motor stimuli in adult humans. Neuroimage 29, 368–382. doi: 10.1016/j.neuroimage.2005.08.065

Izzetoglu, K., Bunce, S., Izzetoglu, M., Onaral, B., and Pourrezaei, K. (2003). “fNIR spectroscopy as a measure of cognitive task load,” in Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vol.4 (IEEE Cat No03CH9) (Cancun), 3431–3434.

Izzetoglu, K., Bunce, S., Onaral, B., Pourrezaei, K., and Chance, B. (2004). Functional optical brain imaging using near-infrared during cognitive tasks. Int. J. Hum. Comp. Inter. 17, 211–227. doi: 10.1207/s15327590ijhc1702_6

Jonides, J., Schumacher, E. H., Smith, E. E., Lauber, E. J., Awh, E., Minoshima, S., et al. (1997). Verbal working memory load affects regional brain activation as measured by PET. J. Cogn. Neurosci. 9, 462–475. doi: 10.1162/jocn.1997.9.4.462

Kaber, D. B., Onal, E., and Endsley, M. R. (2000). Design of automation for telerobots and the effect on performance, operator ‘situation awareness, and subjective workload. Hum. Factors Ergon. Manuf. Serv. Ind. 10, 409–430. doi: 10.1002/hfm.20168

Keles, H. O., Barbour, R. L., Aghajani, H., and Omurtag, A. (2014b). “Multimodality mapping approach for evolving functional brain connectivity patterns: a fNIRs-EEG study,” in Biomedical Optics (Optical Society of America) BT5B.2. OSA Technical Digest. Available online at: http://www.opticsinfobase.org/abstract.cfm?URI=BIOMED-2014-BT5B.2

Keles, H. O., Barbour, R. L., Aghajani, H., and Omurtag, A. (2014a). Investigation of the Neurovascular Coupling from Simultaneous fNIRS-EEG System Using the Triplet Holder. Montreal, QC. Available online at: https://goo.gl/UVmtwT

Keles, H. O., Barbour, R. L., and Omurtag, A. (2016). Hemodynamic correlates of spontaneous neural activity measured by human whole-head resting state EEG+fNIRs. Neuroimage 138, 76–87. doi: 10.1016/j.neuroimage.2016.05.058

Kirchner, W. K. (1958). Age differences in short-term retention of rapidly changing information. J. Exp. Psychol. 55, 352–358. doi: 10.1037/h0043688

Liu, T., Xiao, T., and Shi, J.-N. (2013). Neural correlates of conflict control on facial expressions with a flanker paradigm. PLoS ONE 8:e69683. doi: 10.1371/journal.pone.0069683