- 1Department of English, Miyagi Gakuin Women's University, Sendai, Japan

- 2Department of Foreign Languages, Kyoto Women's University, Kyoto, Japan

- 3Graduate School of Arts and Letters, Tohoku University, Sendai, Japan

- 4Institute of Development, Aging and Cancer, Tohoku University, Sendai, Japan

Children naturally acquire a language in social contexts where they interact with their caregivers. Indeed, research shows that social interaction facilitates lexical and phonological development at the early stages of child language acquisition. It is not clear, however, whether the relationship between social interaction and learning applies to adult second language acquisition of syntactic rules. Does learning second language syntactic rules through social interactions with a native speaker or without such interactions impact behavior and the brain? The current study aims to answer this question. Adult Japanese participants learned a new foreign language, Japanese sign language (JSL), either through a native deaf signer or via DVDs. Neural correlates of acquiring new linguistic knowledge were investigated using functional magnetic resonance imaging (fMRI). The participants in each group were indistinguishable in terms of their behavioral data after the instruction. The fMRI data, however, revealed significant differences in the neural activities between two groups. Significant activations in the left inferior frontal gyrus (IFG) were found for the participants who learned JSL through interactions with the native signer. In contrast, no cortical activation change in the left IFG was found for the group who experienced the same visual input for the same duration via the DVD presentation. Given that the left IFG is involved in the syntactic processing of language, spoken or signed, learning through social interactions resulted in an fMRI signature typical of native speakers: activation of the left IFG. Thus, broadly speaking, availability of communicative interaction is necessary for second language acquisition and this results in observed changes in the brain.

Introduction

It is a trivial fact that all normal children effortlessly acquire a particular language used around them. Less trivial is the fact that children do so through social interactions: children cannot acquire a language from linguistic input such as TV, or computer presentations (Sachs et al., 1981; Baker, 2001; Kuhl et al., 2003). This fact is all the more worth remarking, considering that other cognitive systems such as the visual system do not require human interaction for them to develop properly from birth. In this sense, language is uniquely human in that it is inherently social (de Saussure, 1916/1972).

In addition to the atypical cases of children raised in social isolation such as the wild boy of Aveyron (Lane, 1976) and Genie (Curtiss, 1977), the importance of a communicative partner in language acquisition has been illustrated by Sachs et al. (1981): the case of hearing children raised by deaf parents, who attempted in vain to teach them spoken English via television. Kuhl et al. (2003) provide more direct experimental evidence for the effects of social interactions on phonetic learning (discrimination) in a foreign language. Infants less than 6 months of age can discriminate various speech contrasts in the world's languages that do not exist in their mother tongues (Eimas et al., 1971; Werker and Tees, 1984), but they lose this discriminating ability between 6 and 12 months of age (Werker and Tees, 1984). During this period, they grow from “universal listeners” into “native listeners.” In Kuhl et al.'s experiment, 9-to-10-month-old American babies were exposed to a new language, Mandarin Chinese, over 4–6 weeks through four different speakers of Mandarin Chinese or via televised recordings of Mandarin Chinese speakers. After exposure, the researchers performed a head-turn phonetic discrimination task of a Mandarin fricative-affricate contrast that does not exist in English. Only infants exposed to live Mandarin Chinese speakers retained their sensitivity to the non-native Mandarin speech contrast and showed the same level of phonetic discrimination as native speakers of Mandarin Chinese. The result clearly indicates that phonetic learning is not triggered by simple exposure to linguistic input, but that infants must be exposed to a language in socially interactive situations to develop speech perception (Kuhl, 2007). TV programs or DVDs cannot be substitutes for human instruction in the early periods of phonetic learning.

Social interactions provide a variety of information needed for language development, so that several explanations have been offered for the findings in Kuhl et al. (2003). Social interactions may “attract more attention and increase motivation” in infants (Verga and Kotz, 2013, p. 3) resulting in phonetic learning; joint attention may provide more referential information needed for the association of a word and its referent (Kuhl et al., 2003); social contingency or back-and-forth feedback from humans may play a vital role in language development (Kuhl, 2007; Roseberry et al., 2014); infants may not be familiar or experienced with DVD presentations. These explanations are not mutually exclusive or implausible in that infants acquire a language through social interactions with their caregivers that involve child-directed speech (Bruner, 1983). The reader is referred to Hoff (2006) and Verga and Kotz (2013) for the review of relevant studies showing that social interaction influences language learning in infants.

Despite the alleged importance of social interaction in language development, previous language learning studies on social interaction only focused on vocabulary learning (Kuhl, 2007) and phonetic discrimination (Kuhl et al., 2003; Kuhl, 2007) in a foreign language during childhood, and word learning in a first language (Krcmar et al., 2007; Roseberry et al., 2009; Verga and Kotz, 2013). Language is, however, more than words and sounds. Human language is a computational system of connecting meaning and sound (or a visual-manual channel in sign languages) by means of syntactic structure. Syntactic structure has not been observed in other species (Hauser et al., 2002), so structure dependence in this sense is the most characterizing feature of human language (Chomsky, 2013a,b; Everaert et al., 2015; Berwick and Chomsky, 2016).

In spite of the fact that syntax is “the basic property” of human language (Chomsky, 2013a,b) and that social interaction plays a key role in early language development, syntax has not been discussed in adult second language acquisition research from the perspective of social interaction. Although the number of neuroscience studies on social interactions has increased exponentially over the last decade (for a research review, see Verga and Kotz, 2013), little social neuroscience research has until recently dealt with adult second language acquisition. One of the few studies on second language acquisition in different social settings is Jeong et al. (2010). Jeong and her colleagues tested the effects of social interactions on the acquisition of second language vocabulary by adult learners. They compared the retrieval of words learned from text-based learning (written translations) with that of words learned from situation-based learning (real-life situations). The result shows that the comprehension of words learned through movie-clips depicting a social situation elicited activity in the right supramarginal gyrus similar to that evoked by the comprehension of vocabulary in one's native language. The result indicates that social interaction in second language acquisition of vocabulary affects the brain, but it should be noted that participants in situation-based learning contexts learned foreign language vocabulary through “artificial” movie-clips of a dialogue. Therefore, it remains to be elucidated what difference natural social interaction with a teacher makes in second language acquisition in comparison to learning a second language with artificial interaction such as DVDs (Verga and Kotz, 2013). In fact, no study, to our knowledge, has yet investigated how social interaction during foreign language learning in adulthood affects neural mechanisms. Thus, whether adult learners benefit from learning in social contexts is still an open issue.

Given that linguistic knowledge is internalized in the brain and that more people are using computer-assisted learning without human interaction, we reasonably address the non-trivial question of whether social interaction will have distinctive effects on the brains of adults learning foreign language syntax, which is more complex than vocabulary and phonetic learning. Due to resource constraints, non-interactive learning through a combination of audio and video is common among second language learners who have few opportunities to interact with native speakers of a target language. It should be noted, however, that there is no clear evidence that computer-supported learning without social interaction has the same effects on the learning of syntactic rules in a foreign language as learning through human interaction. Most studies on social interactions are based on behavior or performance data, but behavioral data have some limitations. First, behavioral scores of linguistic knowledge are blurred by numerous factors such as attention, cognition, and perception. It is, therefore, extremely difficult, if not impossible, to tease them apart, which in turn makes the interpretation of the performance data inconclusive (Raizada et al., 2008). Second, behavioral data do not reveal the neurocognitive mechanisms responsible for the processing of second language knowledge. Third, similar behavioral data do “not necessarily implicate reliance on similar neural mechanisms” (Morgan-Short et al., 2012, p. 934). Indeed, several brain imaging studies (Musso et al., 2003; Osterhout et al., 2008; Sakai et al., 2009) have reported the evidence for the difference between performance data and their respective neuroscience data.

The present paper discusses whether presence or absence of a human being has distinct effects on neural (fMRI) and behavioral (performance) measures of syntactic processing of a foreign language in adults. As a foreign language, we tested the acquisition of Japanese sign language (JSL) by Japanese adults who had not learned JSL. A sign language is mistakenly conceived to be a kind of artificial pantomime-like gesture lacking linguistic structure or at least a variant of a spoken language, but neither is well-grounded. A great deal of research in recent years demonstrates that sign language is a natural language with rich grammatical properties that characterize other natural languages such as spoken English or Japanese (Sandler and Lillo-Martin, 2006). Cecchetto et al. (2012), for example, demonstrate that Italian sign language respects structure dependence based on abstract hierarchical syntactic structure characteristic of only human languages (Chomsky, 2013a,b; Everaert et al., 2015). Studies of language development have also provided evidence that deaf children experience almost the same stages of language development as hearing children (Petitto and Marentette, 1991). Deaf babies, for instance, experience a stage of manual babbling during the same period as hearing children go through a stage of vocalization babbling. This confirms that irrespective of superficial speech modality differences, the same mechanism applies to core functions of sign and spoken languages. Differences between the two languages derive from the modalities in which they are produced and comprehended (MacSweeney et al., 2008). Furthermore, neuroimaging studies show that comprehension of spoken and sign languages activates the classical language brain regions including the left inferior frontal gyrus (IFG) (Sakai et al., 2005) in addition to the left superior temporal gyrus and sulcus (for a relevant literature review, see MacSweeney et al., 2008).

Areas in the left IFG, specifically the posterior pars opercularis (BA 44) and the more anterior pars triangularis (BA 45) of Broca's area, are known to be involved in processing linguistic and non-linguistic information (e.g., Koechlin and Jubault, 2006; Tettamanti and Weniger, 2006). This leads to the suggestion that Broca's area works as a “supramodal processor of hierarchical structures” (Tettamanti and Weniger, 2006). The “supramodal syntactic processor” (Clerget et al., 2013) has been localized either in BA 44 (Bahlmann et al., 2009; Fazio et al., 2009) or in BA 45 (Santi and Grodzinsky, 2010; Pallier et al., 2011). We will not go into the issue of which region, BA 44 or BA 45, is selectively responsible for processing syntactic structure (Musso et al., 2003; Pallier et al., 2011; Yusa, 2012; Goucha and Friederici, 2015; Zaccarella and Friederici, 2015; Zaccarella et al., 2017), but instead follow previous research showing that syntactic processing in a first language and a second language activates Broca's area in the left IFG (Perani and Abutalebi, 2005; Abutalebi, 2008). In particular, syntactic rules satisfying structure dependence selectively activate the language area of the brain, specifically the left IFG, while syntactic rules violating structure-dependent rules do not (Musso et al., 2003; Yusa et al., 2011). In addition, instruction effects of syntax in a second language are reflected in the left IFG (Musso et al., 2003; Sakai et al., 2009; Yusa et al., 2011). Recent extensive research on syntax processing also validates the claim that the left IFG is responsible for processing syntactic structure (Moro et al., 2001; Musso et al., 2003; Friederici et al., 2011; Goucha and Friederici, 2015). All taken together, we assume that activation of the left IFG is indicative of the acquisition of syntactic rules respecting structure-dependence.

We show, by examining the acquisition of JSL under two different social learning conditions, that learning through interaction with a deaf signer resulted in a stronger activation of the left IFG than learning through identical input via DVD presentations, though behavioral data did not show distinct differences.

Japanese Sign Language

JSL has the basic word or constituent order of SOV (subject-object-verb), but exhibits free word order as spoken Japanese does. The basic word order SOV can be changed into its topicalized order OSV with the topicalized O accompanied by a set of non-manual markers (NMM) such as eyebrow raising and nodding. There are, however, some restrictions on constituent order. Consider the following wh-cleft sentence “/PT-I/ /FATHER/ /OCCUPATION/ /WHAT/ /DOCTOR/,” which means “What my father is is a doctor.” (Following conventions, signs are written as glosses in capital letters and PT stands for “pointing to the nose or chest with the index finger of either hand”). In JSL, possessives cannot be moved away from the head nouns they modify, a phenomenon that has been discussed for spoken languages in terms of the Left Branch Condition since Ross (1967). We call this the Possessive Construction Restriction. For example, the possessive pronoun MY indicated by /PT-I/ cannot be separated from FATHER as in “/FATHER/ /OCCUPATION/ /PT-I/ /WHAT/ /DOCTOR/,” which is ungrammatical. Although languages differ as to whether they allow left-branch extraction (Bošković, 2005), it suffices to note for the purpose of the present paper that left-branch extraction is disallowed in JSL. What matters here is the syntactic difference between the optionality of topicalization of objects and the prohibition of the movement of possessives away from the nouns they modify. In this sense, movement of a constituent respects structure dependence in that it depends on the syntactic structure of the moved constituent.

It is interesting to note at this point that even a native speaker of JSL in our experiment had not considered the possessive construction restriction until it was pointed out, so it is natural that no book on JSL we know of refers to any aspects of the possessive construction restriction.

Materials and Methods

Participants

Forty-six Japanese adults without any knowledge of JSL participated in our experiment. Participants were all recruited from Miyagi Gakuin Women's University, Sendai, Japan. They were divided into the Live-Exposure Group and the DVD-Exposure Group on the basis of working memory measured by the reading span test. As a result, the two groups were indistinguishable on working memory before the JSL lessons (t(44) = 0.249, p = 0.80). Before the experiment, all participants were provided with detailed explanations of the experiment and its safety. They gave written informed consent for the study, and right-handedness was verified using the Edinburgh Inventory (Oldfield, 1971). All experiments were performed in compliance with the relevant institutional guidelines approved by Tohoku University. Approval for the study was obtained from the Ethics Committee of the Institute of Development, Aging and Cancer, Tohoku University.
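The group-matching check reported above can be illustrated with a minimal sketch of a pooled-variance independent-samples t-test, whose degrees of freedom (n1 + n2 − 2) are consistent with the reported 44 for groups of 22 and 24. The function name `pooled_t_test` and the reading-span scores are hypothetical placeholders, not the study's data or analysis code; the source does not state which software computed this test.

```python
import math
import statistics


def pooled_t_test(group_a, group_b):
    """Return (t, df) for Student's independent-samples t-test with pooled variance."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    # Pool the two sample variances, weighting each by its own degrees of freedom.
    pooled_var = (
        (n_a - 1) * statistics.variance(group_a)
        + (n_b - 1) * statistics.variance(group_b)
    ) / df
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t_value = (statistics.mean(group_a) - statistics.mean(group_b)) / standard_error
    return t_value, df


# Hypothetical reading-span scores (NOT the study's data): 22 and 24 learners.
live_scores = [2.0 + 0.5 * (i % 5) for i in range(22)]
dvd_scores = [2.0 + 0.5 * (i % 5) for i in range(24)]

t_value, df = pooled_t_test(live_scores, dvd_scores)
print(f"t({df}) = {t_value:.3f}")
```

A t value close to zero, as in this made-up example, is what one would expect when the groups have been balanced on the matching variable.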

Procedure and Stimuli

The Live-Exposure Group and the DVD-Exposure Group learned JSL in two different contexts. Twenty-two participants in the Live-Exposure Group learned JSL through social interactions with a native signer of JSL in ten 80-min classes in 1 month, where they learned the JSL expressions related to self-introduction, numbers, family, transportation, weather, hobbies, food and so on. The native signer did not teach the participants the grammar of JSL but rather taught a large number of JSL expressions in an implicit way. On the other hand, 24 participants in the DVD-Exposure Group learned JSL in the same number of classes during the same period through DVDs that recorded the class lessons of the Live-Exposure Group. Therefore, the difference between the Live-Exposure Group and the DVD-Exposure Group was the presence or absence of social interchanges with a deaf signer.

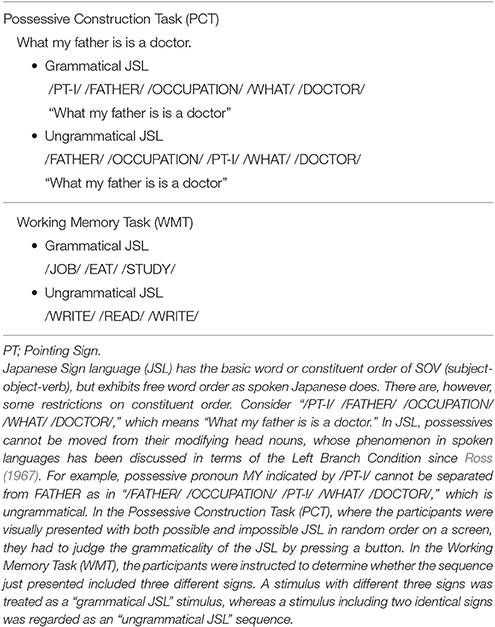

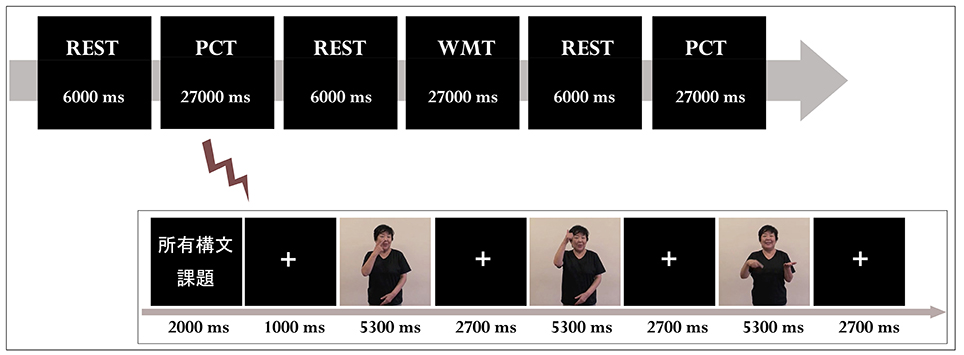

The participants in both groups underwent two sets of fMRI measurements after the 4th class (TEST 1) and the 10th class (TEST 2). Stimuli were visually presented to the participants in a block design (Figure 1). The total number of stimuli was 72, which was divided into two sessions with 36 stimuli each. Each session consisted of three conditions: Possessive Construction Task (correct/incorrect), Working Memory Task (correct/incorrect), and Rest Task (Table 1). In the Possessive Construction Task (PCT), the participants were visually presented with both possible and impossible JSL in random order on a screen; they had to judge the grammaticality of the JSL by pressing a button. The second task was the Working Memory Task (WMT): the participants were presented with three signs in sequence and had to judge whether the sequence included three different signs: A stimulus with three different signs was judged as a “grammatical JSL,” whereas a stimulus involving two identical signs was regarded as an “ungrammatical JSL.”

Figure 1. Timeline in the experimental task. PCT, Possessive Construction Task; WMT, Working Memory Task; REST, Rest Task. The experiment was performed in a block design. Participants were asked to judge whether the JSL they saw on the screen was correct. Response time was recorded from the beginning of each stimulus sentence until the button was pressed. E-prime ver. 2.0 (Psychology Software Tools) was used to present the stimuli and obtain the behavioral data.

The Rest Task (REST) required the participants to gaze at a fixation cross. All stimuli were controlled using E-prime ver. 2.0 (Psychology Software Tools). Figure 1 shows how the experiments proceeded. Following Hashimoto and Sakai (2002), we employed the WMT in our experiment. Its rationale was to dissociate working memory effects from the comprehension of JSL. The comprehension of a language is based on structure-dependent operations. Moreover, language comprehension is incremental in that linguistic information of a lexical item is processed immediately every time it is encountered (Neville et al., 1991; Phillips, 2003). Therefore, the PCT implicitly required the participants to encode linguistic information of signs and decode it from working memory when they judged the JSL.

Image Acquisition

Functional neuroimaging data were acquired with a 3.0 Tesla MRI scanner (Philips Achieva Quasar Dual, Philips Medical Systems, Best, The Netherlands) using a gradient echo planar image (EPI) sequence ([TE] = 30 ms, field of view [FOV] = 192 mm, flip angle [FA] = 70°, slice thickness = 5 mm, slice gap = 0 mm). Thirty-two axial slices spanning the entire brain were obtained every 2 s. After the attainment of functional imaging, T1-weighted anatomical images were also acquired from each participant.

Analysis

All data processing and group analyses were performed using MATLAB (The Mathworks Inc., Natick, MA, USA) and SPM8 (Wellcome Department of Cognitive Neurology, London, UK). The acquisition timing of each slice was corrected using the middle (16th in time) slice as a reference for EPI data. In order to correct for head movement artifacts, functional images were first resliced and subsequently realigned with the first scan of the subjects. After alignment to the AC-PC line, each participant's T1-weighted image was coregistered to the mean functional EPI image and segmented using the standard tissue probability maps provided in SPM8. The coregistered structural image was spatially normalized to the Montreal Neurological Institute (MNI) standard brain template. All normalized functional images were then smoothed with a Gaussian kernel of 8 mm full-width at half-maximum (FWHM). An analysis of the tasks for each participant was conducted at the first statistical stage and a group statistical analysis at the second stage. Contrasts in the PCT – WMT condition were calculated using a one-sample t-test. The threshold for significant activation of each contrast was set at p < 0.001, uncorrected. The spatial extent threshold was set at k = 10 voxels. Finally, we performed a region of interest (ROI) analysis in the brain area obtained from the comparison [PCT – WMT(TEST 2)] – [PCT – WMT (TEST 1)]. Activation maxima are reported as MNI-coordinates and anatomical regions are based on the Talairach Client (Lancaster and Fox, Research Imaging Center, University of Texas Health Science Center San Antonio; Talairach and Tournoux, 1988; Lancaster et al., 2000).
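As a rough illustration of the quantities involved, the sketch below works through three pieces of arithmetic implied by this description: the Gaussian standard deviation corresponding to an 8 mm FWHM kernel, the acquisition time of the 16th slice under the assumption that the 32 slices are evenly spaced across the 2 s volume time, and the difference-of-differences contrast [PCT – WMT(TEST 2)] – [PCT – WMT(TEST 1)] for a single participant. The function names and the example response estimates are hypothetical; the actual processing was carried out in SPM8, and this is not SPM code.

```python
import math

TR_SECONDS = 2.0   # one volume every 2 s (from the text)
N_SLICES = 32      # axial slices per volume (from the text)
FWHM_MM = 8.0      # smoothing kernel width (from the text)


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian full-width at half-maximum to its standard deviation."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def reference_slice_time(tr, n_slices, reference_index):
    """Time offset of a slice within the volume.

    Assumes (hypothetically) that slices are evenly spaced in time across
    the TR; reference_index is 1-based in acquisition order.
    """
    return (reference_index - 1) * tr / n_slices


def learning_effect(pct_t1, wmt_t1, pct_t2, wmt_t2):
    """Difference of differences: [PCT - WMT](TEST 2) minus [PCT - WMT](TEST 1)."""
    return (pct_t2 - wmt_t2) - (pct_t1 - wmt_t1)


sigma_mm = fwhm_to_sigma(FWHM_MM)
slice_time_s = reference_slice_time(TR_SECONDS, N_SLICES, 16)

# Hypothetical response estimates for one participant (NOT the study's data).
effect = learning_effect(pct_t1=0.20, wmt_t1=0.10, pct_t2=0.90, wmt_t2=0.30)

print(f"sigma = {sigma_mm:.3f} mm, reference slice at {slice_time_s:.4f} s")
print(f"learning effect = {effect:.2f}")
```

Subtracting the WMT from the PCT within each test removes activity shared with the working memory control before the two time points are compared, so a positive value reflects an increase specific to the possessive construction judgments.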

Predictions

If linguistic input is sufficient to induce JSL learning in adults, then exposure to JSL via a deaf signer or DVDs should result in the same changes in behavioral and imaging data. Instead, if social interaction is required and is an important factor in JSL learning, the Live-Exposure Group and the DVD-Exposure Group should show a different pattern of activation in the brain, or more specifically, the former group should have greater activation in the left IFG than the latter group.

Results

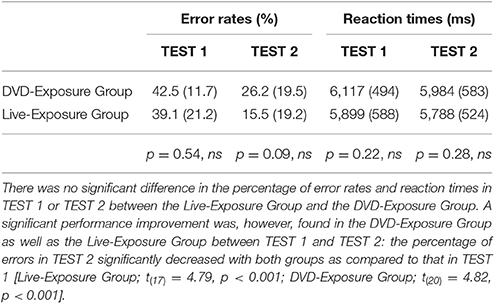

All data processing analyses were performed using SPM8. The threshold for significant activation of each contrast was set at p < 0.001, uncorrected. We analyzed data from 18 participants (mean age ± SD: 20.7 ± 0.76 years) in the Live-Exposure Group and 21 participants (mean age ± SD: 20.6 ± 0.76 years) in the DVD-Exposure Group. There was no significant difference in the percentage of error rates in TEST 1 or TEST 2 between the Live-Exposure Group and the DVD-Exposure Group [TEST 1, t(37) = −0.62, p = 0.54; TEST 2, t(37) = −1.71, p = 0.09] (Table 2). The result indicates that the participants in both groups developed the same level of knowledge of the Possessive Construction at the 4th and 10th trainings; their performance or behavior results were not significantly different. No significant difference in reaction times was observed in TEST 1 or TEST 2 between the Live-Exposure Group and the DVD-Exposure Group, either [TEST 1, t(37) = −1.26, p = 0.22; TEST 2, t(37) = −1.09, p = 0.28].

A significant performance improvement was, however, found in the DVD-Exposure Group as well as the Live-Exposure Group between TEST 1 and TEST 2 (Table 2): the percentage of errors in TEST 2 decreased significantly in both groups as compared to that in TEST 1, indicating that teaching JSL through a native signer or DVDs had significant effects on the acquisition of the Possessive Construction Restriction [Live-Exposure Group; t(17) = 4.79, p < 0.001; DVD-Exposure Group; t(20) = 4.82, p < 0.001].

Imaging Data

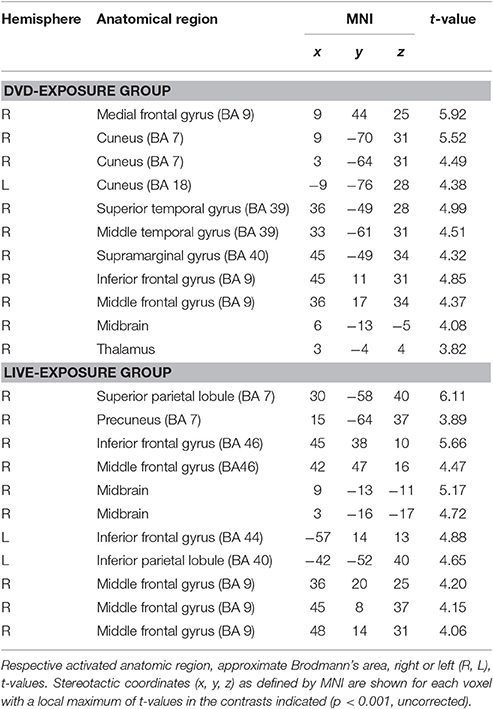

To identify cortical activation generated in two different learning contexts (i.e., via social interactions with a deaf signer and through DVDs), we subtracted [PCT – WMT(TEST 1)] from [PCT – WMT(TEST 2)]. Table 3 shows the activated regions in the comparison of [PCT – WMT(TEST 2)] – [PCT – WMT (TEST 1)]. For the DVD-Exposure Group, we found increased activations in the right middle frontal gyrus, the bilateral cuneus, the right superior temporal gyrus, the right middle temporal gyrus, and the right IFG. For the Live-Exposure Group, activations in the right parietal lobule, the right IFG, the right middle frontal gyrus, the left IFG, the left inferior parietal gyrus, and the middle frontal gyrus increased.

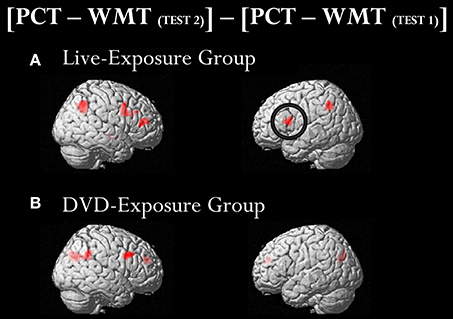

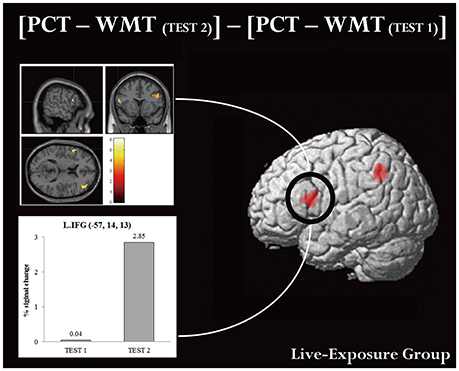

An ROI analysis of each cluster was conducted using SPSS 19 (SPSS Inc., IBM, Armonk, NY, USA) on the value of the single voxel of the peak coordinate, which was obtained using an in-house SPM-compatible MATLAB script. The ROI was set at the activated area in the contrast [PCT – WMT(TEST 2)] – [PCT – WMT(TEST 1)], pooling the data from the two groups. Activity in this ROI was compared in each group between [PCT – WMT(TEST 1)] and [PCT – WMT(TEST 2)] using a paired t-test. Significant activations in the left IFG, an area assumed to be involved in the processing of syntactic rules (Musso et al., 2003; Abutalebi, 2008; Yusa, 2012; Zaccarella et al., 2017), were found only for the Live-Exposure Group [paired t-test: t(17) = −4.88, p < 0.001]. No significant cortical activation change in the left IFG, by contrast, was found for the DVD-Exposure Group, who experienced the same visual input for the same duration via the DVD presentations [paired t-test: t(20) = −0.29, p = 0.78, n.s.] (Figures 2, 3; Table 3). This result shows that (superficially) similar performance between the groups “does not necessarily implicate reliance on similar neural mechanisms” (Morgan-Short et al., 2012, p. 934). Given that the left IFG is involved in the syntactic processing of language, spoken or signed, only training in an interactional setting resulted in an fMRI signature typical of native speakers: activation of the left IFG.
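The within-group ROI comparison can be sketched as a paired t-test on per-participant peak-voxel values. The sketch assumes, as the negative reported t values suggest but the text does not state explicitly, that differences are taken as TEST 1 minus TEST 2, so that a negative t means larger values in TEST 2. The function name `paired_t_test` and the values are hypothetical and are not the study's data; the reported analysis was run in SPSS 19.

```python
import math
import statistics


def paired_t_test(test1, test2):
    """Return (t, df) for a paired t-test on TEST 1 minus TEST 2 differences."""
    if len(test1) != len(test2):
        raise ValueError("paired samples must have the same length")
    differences = [a - b for a, b in zip(test1, test2)]
    n = len(differences)
    # Standard error of the mean difference.
    standard_error = statistics.stdev(differences) / math.sqrt(n)
    t_value = statistics.mean(differences) / standard_error
    return t_value, n - 1


# Hypothetical [PCT - WMT] peak-voxel values for three learners (NOT real data).
roi_test1 = [0.1, 0.2, 0.3]
roi_test2 = [0.4, 0.6, 0.5]

roi_t, roi_df = paired_t_test(roi_test1, roi_test2)
print(f"t({roi_df}) = {roi_t:.2f}")
```

With 18 and 21 analyzed participants, the same computation yields the 17 and 20 degrees of freedom reported for the Live-Exposure and DVD-Exposure Groups, respectively.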

Figure 2. Brain activated regions in the contrast [PCT – WMT(TEST 2)] − [PCT – WMT(TEST 1)]. The participants in both groups underwent two sets of fMRI measurements after the 4th class (TEST 1) and the 10th class (TEST 2). To identify cortical activation generated after the instruction, we subtracted [PCT − WMT (TEST 1)] from [PCT − WMT(TEST 2)]. Significant activations in the left inferior frontal gyrus (IFG) were found only for the Live-Exposure Group (A). No significant cortical activation change, by contrast, was found for the DVD-Exposure Group, who experienced the same visual input for the same duration via the DVD presentations (B).

Figure 3. Brain activation in MNI space and ROI analysis for the left IFG. An ROI analysis was conducted in the left IFG, which is assumed to be involved in the processing of language. (Upper panel) cortical activation in [PCT – WMT(TEST 2)] – [PCT – WMT (TEST 1)] condition. (Lower panel) histograms for averaged maximum amplitudes of fitted hemodynamic responses at the local maximum in the left IFG. Each bar represents signal changes for TEST 1 and TEST 2, respectively. Note that signal changes in TEST 2 were significantly larger than in TEST 1 [t(17) = −4.88, p < 0.001, d = −0.89].

Discussion

The aim of the current study was to investigate the effects of social interaction on JSL learning in adults. To examine social impacts on learning, we set up two types of learning contexts (that is, learning JSL through a deaf signer or through DVDs). Our results show that participants learned JSL equally well in terms of behavioral data in both contexts, but that social interaction caused significant changes in the brain, particularly in the left IFG. This suggests that in addition to early speech learning in infants (Kuhl, 2007), social interaction is crucial in order for adult second language learners to come to rely on native-like neural mechanisms in processing syntactic rules or in using them efficiently. Social interaction through the interchanges with a deaf native signer may make it easier to “crack the JSL code,” neurologically supporting the view that language is inherently social (de Saussure, 1916/1972). Thus, learning accompanied by changes in brain functions is not triggered solely by linguistic input such as DVDs, but is enhanced by social interaction. The current research provides a significant platform for studies on second language learning in adults: linguistic input is necessary for second language learning, but the influences of a social partner differ from those exerted by a source without social interactions.

Numerous studies reveal that JSL has linguistic characteristics distinct from spoken Japanese (Fischer, 1996, 2017; Matsuoka, 2015), which are to be discussed below. One might, however, object that the participants in our experiment simply transferred the knowledge of the possessive construction restriction in JSL from spoken Japanese, since extraction of possessives from their modifying nouns is also prohibited in spoken Japanese. This objection is plausible in light of the finding that in bilingualism both languages unconsciously influence each other (Kroll et al., 2006; Jarvis and Pavlenko, 2008), but it cannot explain why only the Live-Exposure Group experienced functional changes in the left IFG. If transfer from spoken Japanese had been a crucial factor in the learning of JSL in our experiment, learning JSL via DVD presentations would also have elicited similar activity in the left IFG. However, the lack of activation in the left IFG in the DVD Group rules out this possibility. Thus, the differences in the left IFG suggest that the two groups employed different mechanisms to learn JSL.

This raises an interesting question of what the DVD Group actually learned in our experiment. On this question, the activation of the right supramarginal gyrus in the DVD Group is suggestive in light of the result in Jeong et al. (2010): the right supramarginal gyrus is crucially involved in the retrieval of words learned by means of situation-based learning using movie-clips of a dialogue. Note here that situation-based learning in Jeong et al. (2010) roughly corresponds to learning via DVD recordings in our experiment. The right supramarginal gyrus is part of the right parietal lobule, which is considered to play a key role in incorporating multimodal information from different senses (Macaluso and Driver, 2003). Jeong et al. (2010) suggest that the activation of the right supramarginal gyrus is associated with imitation learning, since the area is proposed to constitute a part of human mirror neuron systems (Chong et al., 2008). Mirror neurons are active not only during the execution of an action but also during the observation of the same action (Gallese, 2008). Learners in the DVD Group might have developed the knowledge of JSL only by observing the DVD recordings, inferring the intentions of a signer recorded there and imitating JSL to adapt to a given situation in learning sessions. The imitation of familiar gestures is also known to invoke activation in the right supramarginal gyrus (Peigneux et al., 2004). The right IFG [45, 11, 31] can also be considered the anterior component of the mirror neuron system. Putting these together, it might be reasonable to conclude that participants in the DVD Group developed the knowledge of JSL through imitation learning.

We have assumed, following the generative tradition (Chomsky, 2013a,b; Everaert et al., 2015; Berwick and Chomsky, 2016), that aside from externalization at the sensory-motor level (sign language or speech), the brain contains a universal computational system that merges smaller elements into larger constituents, generating hierarchical structures. This structure-building operation, called Merge, is universal and so does not need to be learned. If JSL and spoken Japanese differ only in “their modality of externalization” while sharing the same syntactic operations, one might ask what participants in the Live-Exposure Group learned. It is interesting to note here that activity in the right middle frontal gyrus ([45, 8, 37], [48, 14, 31]) in the Live-Exposure Group might reflect the involvement of the anterior component of the mirror neuron system, suggesting a role for the mirror neuron system in the acquisition of JSL in this group. It is natural to think that the Live-Exposure Group learned JSL by observing a teacher use JSL, but second language acquisition involves much more than imitation.

Successful second language acquisition involves assembling or mapping syntactic, semantic, and phonological features into new configurations. That is, second language learners are required to reconfigure features from the way they are coded in the first language into the configuration in which they are represented in the second language, a proposal termed the “Feature Reassembly Hypothesis” (Lardiere, 2009). On this hypothesis, second language learners of JSL must develop the knowledge of which signs and non-manual markers (NMMs), such as facial expressions, and their variants represent which syntactic, semantic, and phonological features. In addition, they must acquire the knowledge of whether such signs are obligatory, optional, or prohibited under which syntactic, semantic, phonological, lexical, and pragmatic conditions (Hwang and Lardiere, 2013; Slabakova, 2016). Adopting the Feature Reassembly Hypothesis, we assume that what developed in the Live-Exposure Group is the knowledge of reassembling relevant features in spoken Japanese into new configurations in JSL by associating abstract features carrying grammatical information in spoken Japanese with their exponents (signs) in JSL.

To be more specific, at least two points are relevant to the question of the relation between learning second language syntactic rules and feature-reassembly. One is the knowledge of the wh-cleft in JSL and the other is the knowledge of the possessive construction in JSL.

The wh-cleft in JSL differs in at least three respects from the wh-cleft in spoken Japanese (for the wh-cleft in American Sign Language, see Caponigro and Davidson, 2011). First, the wh-phrase in JSL must be accompanied by NMMs such as “a repeated weak headshake and furrowed eyebrows” (Matsuoka, 2015) as well as “the following fixation of the head” (Ichida, 2005). Following the analysis of wh-interrogatives in JSL by Uchibori and Matsuoka (2016), we assume that the wh-element in JSL is morphologically made up of a wh-phrase (represented by a wh-sign) and a Q-particle or a wh-interrogative marker (represented by wh-NMMs). The lack of these NMMs results in ungrammatical wh-cleft sentences. The wh-phrase and the Q-particle ka in spoken Japanese are pronounced in different positions, while in JSL the wh-phrase and the Q-particle must co-occur. Therefore, the participants had to reassemble the Q or wh-interrogative feature into the NMM in JSL and to express the wh-phrase and the NMM simultaneously. Incidentally, it is interesting to note here that Shushi Nihongo or Nihongotaiou Shuwa “Signed Japanese,” a variant of spoken Japanese, lacks NMMs (Kimura, 2011).

Second, semantics is different: the element following the wh-phrase in JSL does not receive a focus interpretation, while the counterpart in spoken Japanese is in focus. Third, pragmatics is different; the wh-cleft in JSL is commonly used and does not sound “orotund” unlike the wh-cleft in spoken Japanese (Matsuoka, 2015). These differences are what the participants learned in our experiments.

Regarding the possessive construction in JSL, nominative “I,” expressed by POINTING AT THE SPEAKER, is not accompanied by the NMM of nodding. When nodding co-occurs with pointing at the speaker, it means “and.” Thus, the difference between “my father” and “I and father” depends on the NMM (nodding). Therefore, the participants had to learn that the possessive pronoun is morphologically composed of two parts: the sign meaning the first person and the absence of nodding (NMM). It is clear that learning of the wh-cleft and the possessive construction is related to externalization, which is in turn related to the fact that a sign language can use more than one articulator simultaneously.

Second language acquisition is influenced by similarities and differences between the feature arrays characterizing the first language and those in the second language input. Consequently, the magnitude of feature reassembly depends on the nature of the input: “feature reassembly may occur slowly or not at all if the relevant evidence is rare or ambiguous in the input” (Slabakova et al., 2014, p. 602). Thus, the knowledge of the association interacts with structure-building operations to result in the knowledge of specific constructions such as the possessive construction in JSL. From this perspective, it is more appropriate to say that as a result of feature reassembly the participants in the Live-Exposure Group learned several constructions, including the possessive construction. Even so, it is noteworthy that learning JSL through social interactions with a communicative partner had a different impact on the left IFG from learning it via DVD presentations without such interactions. Our result also suggests that the association might be influenced by the source of information, human or non-human, at least in the early stages of foreign language acquisition in adults.

We conclude the paper by pointing out four remaining issues. The first issue is concerned with the fMRI data of the Live-Exposure Group. The difference between TEST 1 and TEST 2 was found only at uncorrected thresholds. One possible explanation for this result is that the Live-Exposure Group had already learned the possessive construction at the time of TEST 1, which was conducted just after the fourth class; knowledge of the possessive construction at TEST 1 could have washed out clear instruction effects at TEST 2, so that the difference was detectable only at uncorrected thresholds. Had TEST 1 been carried out before the instruction of JSL started, results significant at corrected thresholds might have been obtained.

The second concerns the behavioral results in TEST 2 obtained just after the tenth class, which did not show any significant differences in error rates between the Live-Exposure Group and the DVD-Exposure Group. This result may seem surprising, but it is consistent with previous research showing that identical performance outcomes do not necessarily reflect the use of the same brain system (Poldrack et al., 2001; Foerde et al., 2006; Morgan-Short et al., 2012). Greater changes in the brain may be needed to show the corresponding changes in behavior (Boyke et al., 2008). It is not clear from our experiment whether knowledge acquired from DVD-Exposure learning is as durable as knowledge obtained from social interactions with a deaf signer. The impact of social interaction on the long-term retention of newly acquired knowledge in adults is an issue for future research.

The third has to do with interactive learning tools such as video chat (e.g., Skype or FaceTime), which combine properties of social interaction with those of video. The interactive situation resembles a natural learning situation between a teacher and a student. Positive effects of interactive media use on second-language learning, if confirmed, will provide new insights into the issue of quality and quantity of input in second-language learning, and thereby prompt a rethinking of the issue of critical or sensitive periods in second-language learning. In birds, richer social interaction can delay the critical period closure for learning (Brainard and Knudsen, 1998). Even adults beyond sensitive periods in second language acquisition may benefit from richer social interaction (Zhang et al., 2009). In addition to the quantity of input, its quality, not age, matters in the attainment of native-like processing of a second language (Piske and Young-Scholten, 2009).

The last is concerned with the relation between linguistic experience (input) and innate mechanisms in language acquisition. Whatever the approach to language acquisition, there is some consensus that language grows in the brain from the interaction of at least three factors: genetic endowment (innate mechanisms), experience (linguistic input), and language-independent properties (Chomsky, 2005; Everaert et al., 2015). Although the importance of the second factor (input) for the ontogenesis of language in an individual is not controversial, the question of what properties are attributed to innate mechanisms distinguishes two approaches to language acquisition. One approach (called the generative approach) assumes that a human is born with a language-dedicated cognitive system (called Universal Grammar), which grows into knowledge of a particular language through interaction with linguistic experience; the other (called a general or nativist emergent approach) denies a language-specific innate mechanism and instead proposes an innate domain-general learning mechanism that includes statistical learning. On the latter account, linguistic knowledge emerges as a result of linguistic experience or linguistic usage through statistical learning (O'Grady, 2005). However, the current minimalist program in generative grammar has dramatically minimized the innate language-specific properties by reducing them to other cognitive systems (see Chomsky, 2005, 2013a,b). As a result, the two approaches just mentioned are not as mutually exclusive as they used to be (Yang, 2004; Kirby, 2014). Further research needs to examine whether and to what extent the two approaches converge. It should be noted that generative grammar has never claimed that social interaction or frequency of words plays no role in language acquisition. Then, what effects does social interaction have on second language acquisition? Our data show that learning second language syntax in social and non-social contexts can lead to differences in brain processing that are not reflected in behavioral data. Future research will be needed to characterize the details of the relationship between social interaction and adult second language learning, and thereby to maximize the brain development responsible for learning.

Conclusion

The current study investigated the effects of social interaction on the acquisition of syntax in adult second language learners. We found that learning JSL through interactions with a deaf signer resulted in a stronger activation of the left IFG than learning through identical input via DVD presentations, though behavioral data did not show distinct differences. This study provides the first neuroimaging data to show that interaction with a human being aids the acquisition of syntactic rules and in turn causes significant changes in the brain. If the activation in the left IFG is indicative of native-like processing of syntax, one implication for second language learning is that learning second language syntax in a richer social context may well lead to native-like attainment of second language processing. This implication calls for further studies on whether interactive media such as Skype or FaceTime induce changes distinct from those induced by traditional learning media such as DVDs and TV programs.

Author Contributions

NY contributed to the experimental design. NY, JK, and MK created experimental materials and conducted the experiment with MS and RK. JK analyzed the data. NY wrote the manuscript with advice from JK, MK, and MK, except for the Image Acquisition and Analysis sections, which JK wrote. JK prepared the figures. NY financially supported the experiment.

Funding

This work was supported in part by the Grants-in-Aid for Scientific Research on Priority Areas (#20020022, Noriaki Yusa, PI), Challenging Exploratory Research (#25580133, 16K13266, Noriaki Yusa, PI) from the Japan Society for the Promotion of Science, and the 2010 Special Research Grant from Miyagi Gakuin Women's University (Noriaki Yusa, PI).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer VH and handling Editor declared their shared affiliation, and the handling Editor states that the process nevertheless met the standards of a fair and objective review.

Acknowledgments

We would like to thank Neal Snape for proofreading an early version of this paper as well as making useful comments on it, and Kazumi Matsuoka for answering our questions about JSL. We are also grateful to Keiko Hanzawa, Yoko Mano, Jo Matsuzaki, Noriko Sakamoto, Kei Takahashi, Takashi Tsukiura, Sanae Yamaguchi, Satoru Yokoyama, and Taichi Yusa for their valuable support for our experiment of the present study. The usual disclaimers apply.

References

Abutalebi, J. (2008). Neural aspects of second language representation and language control. Acta Psychol. 128, 466–478. doi: 10.1016/j.actpsy.2008.03.014

Bahlmann, J., Schubotz, R. I., Mueller, J. L., Koester, D., and Friederici, A. D. (2009). Neural circuits of hierarchical visuo-spatial sequence processing. Brain Res. 1298, 161–170. doi: 10.1016/j.brainres.2009.08.017

Baker, M. (2001). The Atoms of Language: The Mind's Hidden Rules of Grammar. New York, NY: Basic Books.

Bošković, Ž. (2005). On the locality of left branch extraction and the structure of NP. Stud. Linguist. 59, 1–45. doi: 10.1111/j.1467-9582.2005.00118.x

Boyke, J., Driemeyer, J., Gaser, C., Büchel, C., and May, A. (2008). Training-induced brain structure changes in the elderly. J. Neurosci. 28, 7031–7035. doi: 10.1523/JNEUROSCI.0742-08.2008

Brainard, M. S., and Knudsen, E. I. (1998). Sensitive periods for visual calibration of the auditory space map in the barn owl optic tectum. J. Neurosci. 18, 3929–3942.

Caponigro, I., and Davidson, K. (2011). Ask, and tell as well: question–answer clauses in American Sign Language. Natural Lang. Semant. 19, 323–371. doi: 10.1007/s11050-011-9071-0

Cecchetto, C., Checchetto, A., Geraci, C., and Zucchi, S. (2012). The same constituent structure. Formal Exp. Adv. Sign Lang. 2012, 22.

Chomsky, N. (2005). Three factors in language design. Linguist. Inq. 36, 1–22. doi: 10.1162/0024389052993655

Chomsky, N. (2013a). What kind of creatures are we? J. Philos. CX, 645–662. doi: 10.5840/jphil2013110121

Chong, T. T. J., Cunnington, R., Williams, M. A., Kanwisher, N., and Mattingley, J. B. (2008). fMRI adaptation reveals mirror neurons in human inferior parietal cortex. Curr. Biol. 18, 1576–1580. doi: 10.1016/j.cub.2008.08.068

Clerget, E., Andre, M. A., and Olivier, E. (2013). Deficit in complex sequence processing after a virtual lesion of BA 45. PLoS ONE 8:e63722. doi: 10.1371/journal.pone.0063722

Curtiss, S. (1977). Genie: A Psycholinguistic Study of Modern-day “Wild Child.” New York, NY: Academic Press.

Eimas, P. D., Siqueland, E. R., Jusczyk, P., and Vigorito, J. (1971). Speech perception in infants. Science 171, 303–306. doi: 10.1126/science.171.3968.303

Everaert, M. B. H., Huybregts, M. A. C., Chomsky, N., Berwick, R. C., and Bolhuis, J. J. (2015). Structures, not strings: linguistics as part of the cognitive sciences. Trends Cogn. Sci. 19, 729–743. doi: 10.1016/j.tics.2015.09.008

Fazio, P., Cantagallo, A., Craighero, L., D'Ausilio, A., Roy, A. C., Pozzo, T., et al. (2009). Encoding of human action in Broca's area. Brain 132, 1980–1988. doi: 10.1093/brain/awp118

Fischer, S. (1996). The role of agreement and auxiliaries in sign language. Lingua 98, 103–119. doi: 10.1016/0024-3841(95)00034-8

Fischer, S. (2017). Crosslinguistic variation in sign language syntax. Annu. Rev. Linguist. 3, 1.1–1.23. doi: 10.1146/annurev-linguistics-011516-034150

Foerde, K., Knowlton, B. J., and Poldrack, R. A. (2006). Modulation of competing memory systems by distraction. Proc. Natl. Acad. Sci. U.S.A. 103, 11778–11783. doi: 10.1073/pnas.0602659103

Friederici, A. D., Bahlmann, J., Friedrich, R., and Makuuchi, M. (2011). The neural basis of recursion of complex syntactic hierarchy. Biolinguistics 5, 87–104.

Gallese, V. (2008). Mirror neurons and the social nature of language: the neural exploitation hypothesis. Soc. Neurosci. 3, 317–333. doi: 10.1080/17470910701563608

Goucha, T., and Friederici, A. D. (2015). The language skeleton after dissecting meaning: a functional segregation within Broca's Area. Neuroimage 114, 294–302. doi: 10.1016/j.neuroimage.2015.04.011

Hashimoto, R., and Sakai, K. L. (2002). Specialization in the left prefrontal cortex for sentence comprehension. Neuron 35, 589–597. doi: 10.1016/S0896-6273(02)00788-2

Hauser, M. D., Chomsky, N., and Fitch, W. T. (2002). The faculty of language: what is it, who has it, and how did it evolve? Science 298, 1569–1579. doi: 10.1126/science.298.5598.1569

Hoff, E. (2006). How social contexts support and shape language development. Dev. Rev. 26, 55–88. doi: 10.1016/j.dr.2005.11.002

Hwang, S. H., and Lardiere, D. (2013). Plural-marking in L2 Korean: a feature-based approach. Second Lang. Res. 29, 57–86. doi: 10.1177/0267658312461496

Ichida, Y. (2005). Bun koozoo to atama no ugoki: Nihon shuwa no bunpoo (6), gojun, hobun, kankei setu (Clause structures and head movements: the grammar of Japanese Sign Language (6), word orders, complement clauses and relative clauses). Gekkan Gengo 34, 91–99.

Jarvis, S., and Pavlenko, A. (2008). Crosslinguistic Influence in Language and Cognition. London: Routledge.

Jeong, H., Sugiura, M., Sassa, Y., Wakusawa, K., Horie, K., Sato, S., et al. (2010). Learning second language vocabulary: neural dissociation of situation-based learning and text-based learning. Neuroimage 50, 802–809. doi: 10.1016/j.neuroimage.2009.12.038

Kimura, H. (2011). Nihon Shuwa to Nihongo Taioo Shuwa (Shushi Nihongo): Aida ni aru “fukai tani” (Japanese Sign Language and Signed Japanese: A “deep gap” Between Them). Tokyo: Seikatsu Shoin.

Kirby, S. (2014). “Major theories in acquisition of syntax research,” in The Routledge Handbook of Syntax, eds A. Carnie, Y. Sato, and D. Siddiqi (London; New York, NY: Routledge), 426–445.

Koechlin, E., and Jubault, T. (2006). Broca's area and the hierarchical organization of human behavior. Neuron 50, 963–974. doi: 10.1016/j.neuron.2006.05.017

Krcmar, M., Grela, B., and Lin, K. (2007). Can toddlers learn vocabulary from television? An experimental approach. Media Psychol. 10, 41–63. doi: 10.1080/15213260701300931

Kroll, J. F., Bobb, S., and Wodniecka, Z. (2006). Language selectivity is the exception, not the rule: arguments against a fixed locus of language selection in bilingual speech. Bilingualism Lang. Cogn. 9, 119–135. doi: 10.1017/S1366728906002483

Kuhl, P. (2007). Is speech learning ‘gated’ by the social brain? Dev. Sci. 10, 110–120. doi: 10.1111/j.1467-7687.2007.00572.x

Kuhl, P. K., Tsao, F. M., and Liu, H. M. (2003). Foreign-language experience in infancy: effects of short-term exposure and social interaction on phonetic learning. Proc. Natl. Acad. Sci. U.S.A. 100, 9096–9101. doi: 10.1073/pnas.1532872100

Lancaster, J. L., Woldorff, M. G., Parsons, L. M., Liotti, M., Freitas, C. S., Rainey, L., et al. (2000). Automated Talairach atlas labels for functional brain mapping. Hum. Brain Mapp. 10, 120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8

Lardiere, D. (2009). Some thoughts on the contrastive analysis of features in second language acquisition. Second Lang. Res. 25, 173–227. doi: 10.1177/0267658308100283

Macaluso, E., and Driver, J. (2003). Multimodal spatial representations in the human parietal cortex: evidence from functional imaging. Adv. Neurol. 93, 219–233.

MacSweeney, M., Capek, C. M., Campbell, R., and Woll, B. (2008). The signing brain: the neurobiology of sign language. Trends Cogn. Sci. 12, 432–440. doi: 10.1016/j.tics.2008.07.010

Matsuoka, K. (2015). Nihonshuwa de Manabu Shuwa Gengogaku no Kiso (Foundations of Sign Language Linguistics with a Special Reference to Japanese Sign Language). Tokyo: Kuroshio.

Morgan-Short, K., Steinhauer, K., Sanz, C., and Ullman, M. T. (2012). Explicit and implicit second language training differentially affect the achievement of native-like brain activation patterns. J. Cogn. Neurosci. 24, 933–947. doi: 10.1162/jocn_a_00119

Moro, A., Tettamanti, M., Perani, D., Donati, C., Cappa, S. F., and Fazio, F. (2001). Syntax and the brain: disentangling grammar by selective anomalies. Neuroimage 13, 110–118. doi: 10.1006/nimg.2000.0668

Musso, M., Moro, A., Glauche, V., Rijntjes, M., Reichenbach, J., Büchel, C., et al. (2003). Broca's area and the language instinct. Nat. Neurosci. 6, 774–781. doi: 10.1038/nn1077

Neville, H., Nicol, J. L., Barss, A., Forster, K. I., and Garrett, M. F. (1991). Syntactically based sentence processing classes: evidence from event-related brain potentials. J. Cogn. Neurosci. 3, 151–165. doi: 10.1162/jocn.1991.3.2.151

O'Grady, W. (2005). Syntactic Carpentry: An Emergentist Approach to Syntax. Mahwah, NJ: Lawrence Erlbaum.

Oldfield, R. C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9, 97–113. doi: 10.1016/0028-3932(71)90067-4

Osterhout, L., Poliakov, A., Inoue, K., McLaughlin, J., Valentine, G., Pitkanen, I., et al. (2008). Second-language learning and changes in the brain. J. Neuroling. 21, 509–521. doi: 10.1016/j.jneuroling.2008.01.001

Pallier, C., Devauchelle, A.-D., and Dehaene, S. (2011). Cortical representation of the constituent structure of sentences. Proc. Natl. Acad. Sci. U.S.A. 108, 2522–2527. doi: 10.1073/pnas.1018711108

Peigneux, P., Van der Linden, M., Garraux, G., Laureys, S., Degueldre, C., Aerts, J., et al. (2004). Imaging a cognitive model of apraxia: the neural substrate of gesture-specific cognitive processes. Hum. Brain Mapp. 21, 119–142. doi: 10.1002/hbm.10161

Perani, D., and Abutalebi, J. (2005). The neural basis of first and second language processing. Curr. Opin. Neurobiol. 15, 202–206. doi: 10.1016/j.conb.2005.03.007

Petitto, L. A., and Marentette, P. F. (1991). Babbling in the manual mode: evidence for the ontogeny of language. Science 251, 1493–1496. doi: 10.1126/science.2006424

Phillips, C. (2003). Linear order and constituency. Linguist. Inq. 34, 37–90. doi: 10.1162/002438903763255922

Piske, T., and Young-Scholten, M. (eds.) (2009). Input Matters in SLA. Bristol: Multilingual Matters.

Poldrack, R., Clark, J., Paré-Blagoev, E. J., Shohamy, D., Creso Moyano, J., Myers, C., et al. (2001). Interactive memory systems in the human brain. Nature 414, 546–550. doi: 10.1038/35107080

Raizada, R. D. S., Richards, T. L., Meltzoff, A. N., and Kuhl, P. K. (2008). Socioeconomic status predicts hemispheric specialization of the left inferior frontal gyrus in young children. Neuroimage 40, 1392–1401. doi: 10.1016/j.neuroimage.2008.01.021

Roseberry, S., Hirsh-Pasek, K., and Golinkoff, R. M. (2014). Skype me! Socially contingent interactions help toddlers learn language. Child Dev. 85, 956–970. doi: 10.1111/cdev.12166

Roseberry, S., Hirsh-Pasek, K., Parish-Morris, J., and Golinkoff, R. M. (2009). Live action: can young children learn verbs from video? Child Dev. 80, 1360–1375. doi: 10.1111/j.1467-8624.2009.01338.x

Ross, J. R. (1967). Constraints on Variables in Syntax. Unpublished doctoral dissertation, Massachusetts Institute of Technology.

Sachs, J., Bard, B., and Johnson, M. L. (1981). Language learning with restricted input: case studies of two hearing children of deaf parents. Appl. Psycholinguist. 2, 33–54. doi: 10.1017/S0142716400000643

Sakai, K. L., Nauchi, A., Tatsuno, Y., Hirano, K., Muraishi, Y., Kimura, M., et al. (2009). Distinct roles of left inferior frontal regions that explain individual differences in second language acquisition. Hum. Brain Mapp. 30, 2440–2452. doi: 10.1002/hbm.20681

Sakai, K. L., Tatsuno, Y., Suzuki, K., Kimura, H., and Ichida, Y. (2005). Sign and speech: amodal commonality in left hemisphere dominance for comprehension of sentences. Brain 128, 1407–1417. doi: 10.1093/brain/awh465

Sandler, W., and Lillo-Martin, D. (2006). Sign Language and Linguistic Universals. Cambridge: Cambridge University Press.

Santi, A., and Grodzinsky, Y. (2010). fMRI adaptation dissociates syntactic complexity dimensions. Neuroimage 51, 1285–1293. doi: 10.1016/j.neuroimage.2010.03.034

Slabakova, R., Leal, T. L., and Liskin-Gasparro, J. (2014). We have moved on: current concepts and positions in generative SLA. Appl. Linguist. 35, 601–606. doi: 10.1093/applin/amu027

Talairach, J., and Tournoux, P. (1988). Co-planar Stereotaxic Atlas of the Human Brain. New York, NY: Thieme.

Tettamanti, M., and Weniger, D. (2006). Broca's area: a supramodal hierarchical processor? Cortex 42, 491–494. doi: 10.1016/S0010-9452(08)70384-8

Uchibori, A., and Matsuoka, K. (2016). Split movement of wh-elements in Japanese Sign Language: a preliminary study. Lingua 183, 107–125. doi: 10.1016/j.lingua.2016.05.008

Verga, L., and Kotz, S. A. (2013). How relevant is social interaction in second language learning? Front. Hum. Neurosci. 7:550. doi: 10.3389/fnhum.2013.00550

Werker, J. F., and Tees, R. C. (1984). Cross language speech perception-evidence for perceptual reorganization during the first year of life. Infant Behav. Dev. 7, 49–63. doi: 10.1016/S0163-6383(84)80022-3

Yang, C. (2004). Universal grammar, statistics, or both? Trends Cogn. Sci. 8, 451–456. doi: 10.1016/j.tics.2004.08.006

Yusa, N. (2012). “Structure dependence in the brain,” in Five Approaches to Language Evolution. Proceedings of the Workshops of the 9th International Conference on the Evolution of Language, eds L. McCrohon, K. Fujita, R. Martin, K. Okanoya, and R. Suzuki (Kyoto: Evolang9 Organizing Committee), 25–26.

Yusa, N., Koizumi, M., Kim, J., Kimura, N., Uchida, S., Yokoyama, S., et al. (2011). Second-language instinct and instruction effects: nature and nurture in second-language acquisition. J. Cogn. Neurosci. 23, 2716–2730. doi: 10.1162/jocn.2011.21607

Zaccarella, E., and Friederici, A. D. (2015). Merge in the human brain: a sub-region based functional investigation in the left pars opercularis. Front. Psychol. 6:1818. doi: 10.3389/fpsyg.2015.01818

Zaccarella, E., Meyer, L., Makuuchi, M., and Friederici, A. D. (2017). Building by syntax: the neural basis of minimal linguistic structures. Cereb. Cortex 27, 411–421. doi: 10.1093/cercor/bhv234

Keywords: social interaction, foreign language learning, fMRI, Japanese sign language, syntax, left inferior frontal gyrus

Citation: Yusa N, Kim J, Koizumi M, Sugiura M and Kawashima R (2017) Social Interaction Affects Neural Outcomes of Sign Language Learning As a Foreign Language in Adults. Front. Hum. Neurosci. 11:115. doi: 10.3389/fnhum.2017.00115

Received: 28 October 2016; Accepted: 23 February 2017;

Published: 31 March 2017.

Edited by:

Mila Vulchanova, Norwegian University of Science and Technology, Norway

Reviewed by:

Viktória Havas, Norwegian University of Science and Technology, Norway

Koji Fujita, Kyoto University, Japan

Copyright © 2017 Yusa, Kim, Koizumi, Sugiura and Kawashima. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Noriaki Yusa
