94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

REVIEW article

Front. Hum. Neurosci., 09 December 2015

Sec. Cognitive Neuroscience

Volume 9 - 2015 | https://doi.org/10.3389/fnhum.2015.00648

This article is part of the Research TopicPerceiving and Acting in the real world: from neural activity to behaviorView all 26 articles

Flavia Filimon1,2*

Flavia Filimon1,2*The use and neural representation of egocentric spatial reference frames is well-documented. In contrast, whether the brain represents spatial relationships between objects in allocentric, object-centered, or world-centered coordinates is debated. Here, I review behavioral, neuropsychological, neurophysiological (neuronal recording), and neuroimaging evidence for and against allocentric, object-centered, or world-centered spatial reference frames. Based on theoretical considerations, simulations, and empirical findings from spatial navigation, spatial judgments, and goal-directed movements, I suggest that all spatial representations may in fact be dependent on egocentric reference frames.

Do animals use spatial reference frames that are independent of an egocentric viewpoint? In other words, does the brain represent map-like spatial layouts, or spatial locations of objects and landmarks, in an allocentric, or “other-centered” spatial reference frame, independent of the ego's perspective or location? Does the choice of spatial reference frame depend on (passive) perception vs. sensorimotor interactions with the environment, such as target-directed movements or navigation?

It is well-established that neurons in many brain regions, especially parieto-frontal cortex, represent the spatial location of objects in egocentric spatial reference frames, centered on various body parts such as the eye (retina), the head, or the hand (Colby, 1998; Hagler et al., 2007; Sereno and Huang, 2014). However, whether the brain also represents spatial locations of external objects relative to other objects in an allocentric or object-centered spatial reference frame, or constructs an abstract map of such relationships that is independent of the egocentric perspective, is debated (Bennett, 1996; Driver and Pouget, 2000; Wang and Spelke, 2002; Burgess, 2006; Wehner et al., 2006; Rorden et al., 2012; Li et al., 2014).

Here, I review empirical (behavioral, neuropsychological, neurophysiological, and neuroimaging) evidence for and against allocentric vs. egocentric spatial representations. In addition, I discuss theoretical considerations and computational models addressing this distinction.

Based on theoretical considerations and empirical evidence, I suggest that object-centered, allocentric, or world-centered spatial representations may be explained via egocentric spatial reference frames. I shall argue that allocentric task effects could alternatively be explained via the following processes:

(1) mentally shifting (translating, rotating) an object, thereby lining it up with the egocentric midline (or fovea), such that the object's left (right) and the ego's left (right) are equivalent. Spatial decisions regarding where targets are relative to the object are thus translated into egocentric left/right decisions (ego-relative remapping);

(2) mental transformations of the ego (e.g., mental rotation or translation of the ego into a new imagined orientation or position, then referencing the location of objects and landmarks to this new, mentally transformed, egocentric position);

(3) rule-based decision making; for instance, prefrontal top-down control is exerted on a number of brain regions, including on sensorimotor parieto-frontal areas (e.g., top-down inputs from dorsolateral prefrontal cortex to supplementary eye fields or posterior parietal regions such as areas LIP or 7a). Here, rather than using an allocentric spatial reference frame to represent spatial locations, neurons appear to learn to respond categorically in a learned, rule-based fashion, not because of bottom-up construction of an allocentric spatial reference frame based on visual input, but because of categorical signals from prefrontal cortex. This rule-based response only emerges after training, in contrast to, e.g., bottom-up retinotopic representations;

(4) object, landmark, or scene recognition, whereby an object, landmark, or scene has been encoded from one or multiple (egocentric) viewpoints (e.g., by medial temporal lobe memory networks). View-dependent object or scene recognition then predominantly activates the ventral, rather than dorsal, visual stream, as well as hippocampal and related structures, depending on the task.

The latter point suggests that landmark or scene recognition via viewpoint-matching is more akin to object recognition than a spatial representation of object coordinates and locations relative to an external, environment-based reference frame. As such, the brain might not rely on allocentric spatial reference frames either for spatial judgments in spatial perception, or during navigation, or in sensorimotor transformations for goal-directed movements (e.g., grasping, pointing, or eye movements) toward external objects. Thus, I will argue that neither the way we encode space, nor the way we interact with space, need make use of allocentric spatial reference frames independent of egocentric representations. Object-based representations do exist, especially in the ventral visual stream, but are not spatial in the sense of referring locations external to the viewer to another external object. Ventral object-centered representations are essentially akin to object recognition, with spatial decisions remaining anchored to a fundamentally egocentric spatial reference frame.

I will commence with some theoretical examples for why it is difficult if not impossible to relate spatial locations (whether left or right, up or down, or simply “the center of”) to external, non-egocentric coordinates. I will then review empirical evidence for different spatial reference frames in navigation, spatial judgments, and goal-directed movements (interactions with spatial targets), as well as computational (simulation) explanations for the effects observed. By attempting to unify a wide range of findings from multiple research areas, this review will necessarily not be fully comprehensive within each domain, but will instead highlight representative studies. Finally, I will conclude with a new suggested categorization of networks contributing to spatial processing, as well as with several predictions made by the egocentric account.

Different definitions have been used to define the term “allocentric.” Klatzky (1998), for instance, distinguishes between three “functional modules”: egocentric locational representation, allocentric locational representation, and allocentric heading. Whereas egocentric locational representations reference locations of objects to the observer (ego), allocentric representations reference object locations to space external to the perceiver. For instance, positions could be represented in Cartesian or Polar coordinates with the origin centered on an external reference object (Klatzky, 1998). Allocentric heading, on the other hand, defines the angle between an object's axis of orientation and an external reference direction. Other authors have proposed distinctions between “allocentric” and “object-centered” representations (e.g., Humphreys et al., 2013).

Although different authors have used the terms “allocentric,” “object-centered,” or “world-centered” in many different ways, the majority of the spatial cognition literature has used these terms to refer to representations of spatial relationships between objects or landmarks that do not reference objects' locations to the viewer's body, but to other, external objects (Foley et al., 2015). Here, I shall refer to “allocentric,” “object-centered,” “object-based,” “object-relative,” “world-centered,” or “cognitive map-like” interchangeably, to refer to the representation of the spatial location of an object relative to that of another external object, independent of the ego's position or orientation, whether present, imagined, or remembered. This is equivalent to Klatzky's (1998) allocentric locational representation.

In contrast, I shall refer to “egocentric” or “ego-relative” spatial reference frames whenever the observer invokes the position or orientation of the present, remembered, or imagined (e.g., mentally rotated or translated) self, as opposed to an external landmark, to represent the location of external objects.

A spatial reference frame means the receptive field (RF) of a neuron, or the response of the neural population as a whole, is anchored to a particular reference point. For instance, an eye-centered reference frame moves with the eyes (Colby, 1998). A cell preferring stimulation in the left visual field only signals objects when they fall in that cell's RF, which is anchored to the retina. As the eyes move across the visual field, objects' spatial locations change constantly relative to the retina (e.g., an object “left of the eyes” can suddenly be “right of the eyes”). Objects' spatial locations are thus constantly updated such that different eye-centered cells, with spatial RFs tiling the visual space, signal the new eye-centered location. An external, “abstract” reference frame, on the other hand, would represent object locations relative to an external reference point, independent of where the observer is (e.g., the location of the microwave relative to the fridge).

For the purpose of this paper it is irrelevant whether neurons with similar reference frames are arranged in a map of space, such as a retinotopic map of space where cells with similar preferences (e.g., “left half of space”) are clustered together. Cells can be eye-centered and yet be part of either an orderly retinotopic map or a scrambled map of space, with neighboring eye-centered cells having retinal response fields in different locations (Filimon, 2010). I also do not distinguish between reference frames or maps of space represented at the single cell or population level—e.g., the entire population may signal “left of me,” but individual cells' responses may be less clear-cut. The important point addressed here is whether any neural representations, at the single-cell or population level, explicitly signal spatial relationships between objects independent of their spatial location relative to the ego, i.e., whether an explicit object-centered or allocentric spatial representation is formed at whichever computational stage of processing. As defined by Deneve and Pouget (2003), an explicit representation would involve neurons with invariant responses in object-centered coordinates—e.g., the cell should only respond to “left of object,” regardless of where the object is relative to the ego.

Klatzky (1998) also made the distinction between primitive parameters conveyed by a spatial representation and derived parameters which can be computed from primitives in one or more computational steps. Thus, allocentric location is a primitive parameter in an allocentric locational representation, just like egocentric location is a primitive in the egocentric locational representation (Klatzky, 1998). However, I will review evidence that suggests that allocentric location representations are unlikely to be primitives, but are instead derived from egocentric representations at higher levels of the computational hierarchy, and may not be represented explicitly.

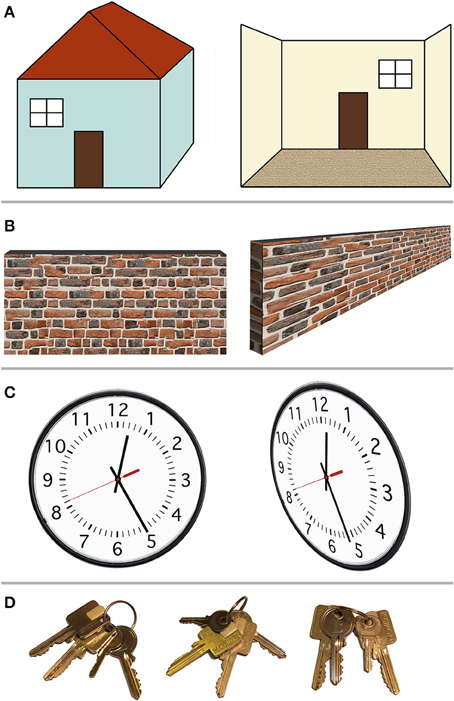

Figure 1 shows several examples of spatial arrangements that would at first instance appear to be object-based, allocentric spatial relationships. For instance, one could refer to left/right terminology to describe the spatial location of a window relative to a door.

Figure 1. Example scenarios in which so-called allocentric spatial representations in fact depend on the egocentric viewpoint. (A) Left–right relationships. The window may be defined as “left of the door” (left). However, this only holds when viewing the door and the window from outside the house; when stepping inside the house (right), the window is now “right of the door,” lining up with the egocentric left and right. (B) Center-of-object spatial decisions. The center or middle point of a wall (or bar) is easily perceived when the ego is positioned perpendicular to the wall, in front of that center (left). When viewed from the side, however (right), the center point of the wall is harder to determine, because the egocentric perspective distorts the image of the wall on the retina. (C) Relative alignment between two objects. The alignment between the minute hand and the individual minute lines (left) suggests the time is 12:25. From a different (egocentric) viewpoint, however, this so-called allocentric spatial relationship shifts, with the new perspective indicating 12:26. (D) Proximity (closest to landmark) relationships. Out of two identical-looking square keys, the square key next to the little round key is the one we want. Here, the target square key can be identified independent of the viewer's viewpoint. However, this resembles object recognition with the square and round key forming one unit, followed by rule-based decision making: first identify the little round key, then find the square key closest to it. This seems less like a spatial representation than object recognition.

In Figure 1A (left) one could argue that the window is “left of the door” and the door is “right of the window,” regardless of whether the observer is located left of the house (where both the window and the door are on the egocentric right) or to the right of the house (where both objects are on the egocentric left). The fact that the window is “left” of another object, even though it is egocentrically on the right, could be interpreted as an object-centered, ego-independent spatial representation. However, as can be seen in Figure 1A (right), this arrangement is nevertheless dependent on the egocentric viewpoint. Once the observer has walked inside the house, viewing the door and window from the inside, the left–right relationship is reversed: now the window is to the right of the door and the door is to the left of the window. This example demonstrates the ego-dependence of “left” and “right” spatial judgments. The observer merely has to imagine the house aligned with the egocentric center point, such that the house's left (right) and the egocentric left (right) are congruent. Such imagined rotation or imagined translation that transforms the ego's orientation or position relative to an object, or conversely the position of an object relative to the ego, has been called imaginal updating (Klatzky, 1998). Since the definition of left and right depends on the egocentric perspective, this definition of left/right relative to the object (the house or any landmark on it) is not an example of true allocentric or object-centered (ego-independent) spatial representations.

Figure 1B demonstrates another possible way of conceptualizing object-centered spatial representations. Instead of using spatial judgment terms such as “left” and “right,” which appear tied to egocentric perspectives, one could use “center of an object.” Clearly something that is in the center of an object should remain in the center of the object regardless of whether the observer is in front or behind that object. However, as Figure 1B demonstrates, establishing the center point of, e.g., a wall, remains dependent of the egocentric perspective: as soon as the observer is positioned at one end of the object (e.g., at the left end of the wall), the distorted retinal perspective obtained from that (egocentric) location makes it much harder to determine where the center point of the wall is. This may not apply to small objects that can be foveated. However, for small objects (which can be mentally shifted to line up with the fovea), the egocentric left/right and the object's left/right are congruent, and the center point can be estimated based on retinal extent. Alternatively, small objects may be treated as a point in space. As explained above, the critical test for an allocentric representation is independence of object locations from any egocentric perspective, thus relying on abstract spatial relationships between objects independent of the observer.

Avoiding “left/right” and “center of” terminology, one might devise a stimulus (Figure 1C) where the relative spatial alignment of two objects is what matters (e.g., the alignment on a clock between the minutes hand and the minute mark corresponding to 25 min). Does the clock indicate 25 min past the hour? As Figure 1C (right) demonstrates, this depends on the egocentric perspective: viewed from the side, the alignment between the minute hand and the twenty-fifth minute mark appears shifted such that one is unsure if the time is 12:25 or 12:26. Thus, even relative spatial alignment between two objects does not appear ego-independent.

Finally, ignoring examples that rely on absolute spatial location (either left or right of center, estimating the center based on distance from the edge, or detecting alignment based on distance between two objects), what about spatial proximity? Figure 1D shows two square keys that appear identical. One of the square keys is located next to a little round key. One can argue that no matter what egocentric perspective one assumes (no matter how the keys are rotated on the key chain), the square key in question will always be closer to the little round key than the other square key. Therefore, this should constitute an allocentric, ego-independent spatial representation. However, rather than involving spatial cognition, this example may rely on object recognition followed by rule-based reasoning: identify the little key first, then take the square key next to it (regardless of spatial distances or locations). Whereas egocentric spatial selectivity (however malleable) is already present before training, rules need learned. Alternatively, the square key and little key could be encoded holistically as a unit, with one feature activating the entire object configuration in object memory. For instance, in face perception, the spatial location of the nose could be represented relative to the spatial location of the eyes, or the face could be perceived holistically. Holistic object recognition relies on matching entire configurations of features to a stored template. This differs from representing individual features' spatial location relative to other features' spatial location in an allocentric spatial frame, because the spatial relationship between feature A and C should remain unchanged if other parts of the object (features B, D, E, for example) are removed. Logothetis (2000) has argued that not only faces, but even arbitrary objects are processed holistically, as a unit, with neurons responding to particular feature configurations rather than processing individual features.

The examples in Figure 1 primarily pertain to reference frames for spatial judgments. However, it could be argued that the main purpose of allocentric spatial frames is navigation and orienting in the environment. Perhaps identifying locations as “north of” or “west of” another object would reveal true allocentric spatial cognition. After all, north remains north regardless of an animal's orientation or location.

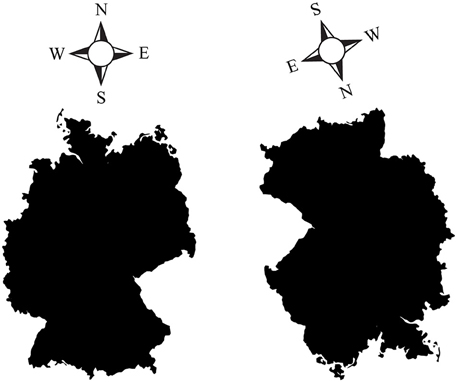

However, even seemingly external, allocentric, coordinates such as north, south, west, and east may be re-centered on the ego's up, down, left and right coordinates. Figure 2 (left) shows a right-side up map of Germany, with north pointing up. In this orientation, it is easy to figure out, for instance, that Moscow (Russia), located east/north-east relative to Germany, is somewhere slightly up and to the right of the image. However, when the map is rotated downward (Figure 2, right), it is much harder to guess where Moscow is, despite the fact that the cardinal directions are still indicated. Why are upside-down maps hard to read? Subjectively, it seems that we perform better when “north” is lined up with the egocentric “up,” and when west and east correspond to the egocentric left and right, because we are then able to rely on our egocentric spatial reference frame to point relative to us. It is likely that most people mentally rotate the map upright to match their egocentric coordinates when making such spatial decisions, rather than relying on an abstract, allocentric map independent of our egocentric coordinates.

Figure 2. (Left) A “right side up” map of Germany, with the four cardinal directions (North, South, West, East) indicated. (Right) An upside-down (rotated) map of Germany, with correspondingly rotated cardinal directions. Pointing to Moscow (Russia) is easy with the left map, but harder with the rotated map on the right. Despite the cardinal directions being indicated, it is much harder to orient oneself in the map on the right. This is presumably due to the fact that we tend to mentally line up north, south, west and east with our egocentric coordinates: north is up, south is down, west is left, east is right. As soon as the familiar, egocentric arrangement is disturbed, it takes us longer to mentally rotate what is supposedly an abstract, viewer-independent, hence allocentric map, back up to match our egocentric coordinates.

Multiple animal species may rely on magnetoreception to orient relative to cardinal directions (Eder et al., 2012; Wu and Dickman, 2012). Note that comparing the ego's heading to an external reference direction is not the same as allocentric heading in Klatzky's (1998) terminology, which would involve comparing the axis of orientation of an external object and the external reference direction (e.g., “north”). The magnetic field axis appears to be used as an external reference direction to which the egocentric axis is compared during navigation. In other words, the deviation of the ego's axis from an external axis, not the relationship between one object's axis and another external axis, is signaled. Thus, the question remains: does this magnetic sense allow animals to compute the location of one object relative to another object (e.g., object A is “north” of object B), independent of the animal's orientation, or does it signal “I'm still too far south” or “if I head this way, the destination is ahead?” The latter still entails referencing places in the environment relative to the ego.

The process of aligning oneself with an external axis so that, e.g., north-selective cells receive the strongest stimulation, could be viewed as similar to a primate moving its fovea onto an object in order to get the best (egocentric) viewpoint on it. Aligning one's “magnetic fovea” with the magnetic field's north-south (or east-west, or other) orientation could be viewed as no more allocentric and independent of the ego than aligning one's retinal fovea with a source of visual stimulation in order to get a better (fovea-centered) view of the object, and hence the strongest stimulation. This is also separate from the question of whether distances are represented (e.g., “50 miles north of me”), as opposed to local chemical and other sensory cues being used to recognize landmarks upon arrival. Navigating directly toward recognized objects or landmarks does not constitute using an allocentric spatial map (Bennett, 1996).

The question should be not whether an external point or axis can be represented relative to one's own body. This would be equivalent to assuming that “representation of any external point must be allocentric, because that point is, after all, external to the perceiving ego.” Any external point can be represented relative to the ego in egocentric coordinates, thus an external object does not by default imply allocentric processing.

Rather, the question is: are external objects represented relative to other external object locations, independent of the egocentric perspective (whether actual or imagined/remembered)? Evidence for the latter would constitute a true allocentric representation. This is precisely the role hippocampal place cells have been proposed to play in navigation, discussed next.

Upon the discovery of place cells in the rat hippocampus (O'Keefe and Dostrovsky, 1971; O'Keefe and Nadel, 1978), it was suggested that place cells, together with head direction cells and grid cells, form an internal ‘cognitive map’ (Tolman, 1948) of the environment, representing allocentric space (for reviews, see McNaughton et al., 2006; Moser et al., 2008).

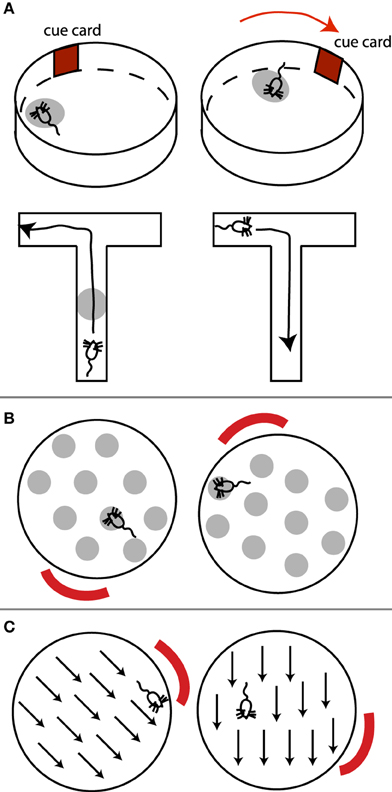

Hippocampal place cells fire at a particular location in the environment (the cell's place field), independent of the rat's orientation inside that place field (Figure 3A, top). “Grid cells,” located in medial entorhinal cortex, display similar spatial tuning, except that each cell has multiple firing fields, effectively forming a periodic array or grid that tiles the environment (Figure 3B; Moser et al., 2008). Similarly, head direction cells (Figure 3C), present in multiple regions including the presubiculum and thalamus, indicate the direction the animal's head is facing, independent of the position or orientation of the animal in the environment (McNaughton et al., 2006). All these cells are anchored to (visual or other sensory) environmental cues (landmarks), and rotate or move their place fields or preferred head direction relative to such external distal cues, if the cues are rotated (Muller and Kubie, 1987; Moser et al., 2008). In other words, these cells appear to signal where the animal thinks it is located (or the direction it thinks it is facing). Place fields, grid fields, and head direction signals also persist in the dark, suggesting a reliance on self-motion (path integration) information for maintaining and updating such representations (Moser et al., 2008; e.g., by keeping track of how many steps the animal has taken, or vestibular, head turning signals).

Figure 3. (A) Place cells, (B) grid cells, and (C) head direction cells. (A) (top) A place cell's place field (light gray oval) rotates with the rotation of an external cue. Note place field is independent of the rat's orientation within it. (A) (bottom) In T-maze environments where routes from one point to the next can be planned, place cells exhibit directional selectivity. This place cell only fires when the rat is moving up the maze, but not when the rat is returning. (B) Example grid field rotating with an external cue. The rat's orientation within a grid spot does not matter. (C) Preferred direction of a head direction cell rotates with an external cue.

Due to the independence of place and grid fields of the direction from which the animal enters a place or grid field, and hence of the animal's egocentric orientation, it has been suggested that these cells contribute to an allocentric map of the environment (Moser et al., 2008).

However, several pieces of evidence suggest alternative interpretations to an allocentric observer-independent map of space. Although a rat's orientation appears to have no influence on place cells in simple laboratory environments such as high-walled cylinders or open circular platforms, place fields are in fact spatially and directionally selective in environments that require the animal to plan a route between points of special significance, such as in radial mazes where food has been placed (Markus et al., 1995). In such cases, place cells respond at a particular location in the environment only if the animal traverses that location in a particular direction, but not in the other direction (Figure 3A). This contradicts an abstract map-like representation of the environment, since a place on the map should remain the same regardless of how it is traversed. By “abstract map” I mean a “cartographer-like map” independent of the animal's orientation, goals, motivations, memory, or other factors unrelated to the spatial relationship between objects.

In addition, the size of a place field depends on the amount of incoming sensory information. In big brown bats, hippocampal place fields are small immediately after an echolocating call, but rapidly start to diffuse as time passes and echo information decreases (Ulanovsky and Moss, 2011). Moreover, the size of the place field depends on the exploratory mode of the animal: when the bat is scanning the environment from a fixed location using echolocation (akin to a primate saccading around from a fixed position), place fields are more diffuse, and place cells exhibit lower firing rates, than during locomotion through the environment (Ulanovsky and Moss, 2011). The fact that place cells respond differently to the same locations in the environment depending on the animal's behavior, and amount of sensory information received, seems to contradict an abstract map signaling fixed, allocentric, ego-independent relationships between places. After all, the relationship between a door and a window should not change depending on whether the ego is observing this relationship remotely or is passing by. Note that this is unlikely due to a difference in recall: the animal is scanning the landmark in question in both cases, i.e., the landmark has been activated in memory (recalled). What appears to differ is the egocentric relationship of the animal relative to the landmark.

Moreover, place fields are over-represented at motivationally salient locations, such as around a hidden platform in a water maze toward which rats are trained to swim (Hollup et al., 2001). This suggests a dependence of the spatial representation on the ego's behavioral goals, rather than a cartographer-like map of the environment.

Place cells are also re-activated during sleep, when the animal dreams about, imagines, or remembers being in a certain place (Pavlides and Winson, 1989). However, this is consistent with the idea that place cells signal the animal's current, remembered, or imagined position in the environment relative to some landmark.

Thus, although place cells might appear to encode a cognitive, map-like representation of an environment, place cells might not signal abstract spatial relationships between two places or two landmarks, independent of where the animal is located. Place cells may instead signal place recognition, e.g., “I'm by the door,” regardless of whether I have my back to the door or am facing the door. If the door moves (without the animal noticing), a place cell's place field shifts to continue signaling “I am by the door,” even though this is a new geocentric location. Such cells may not indicate “The door is by the window.” In this sense, place cells might act more like object recognition cells than cells that represent spatial relationships between landmarks independent of the observer.

Similarly, while grid cells may map out a regular grid across an environment, with cells responding at fixed, regular intervals as the animal traverses it, the rigid grid-like structure would seem to preclude a flexible spatial representation of one object relative to another object, since no specific object-based relationship is signaled by such an arrangement. Both grid cells and place cells are driven by self-motion cues as the animal keeps track of its changing position (Moser et al., 2008).

Head direction cells signal the animal's heading relative to an external landmark. As described above, however, this signal may compare an egocentric (head) orientation with an external landmark, not the orientation of an external object to a reference landmark.

A recently discovered type of cells, entorhinal border cells, respond along the boundaries of an environment and may form a reference frame for place representations (Solstad et al., 2008). However, such cells do not fire at a distance from a wall or other boundary, but only along the boundary. This may suggest that rather than forming an abstract allocentric reference frame, they signal to the animal “I am near the wall.” Thus, rather than signaling an abstract, allocentric environmental geometry, border cells may similarly represent the ego relative to some landmark, or conversely the landmark relative to the ego, not one landmark relative to another landmark.

Further support for the idea that hippocampal place cells are involved in place recognition in a process more akin to object recognition than spatial cognition comes from recent evidence that human place cells are reactivated during retrieval of objects associated with specific episodic memories (Miller et al., 2013). Participants navigated in a virtual environment, where they were presented with different objects at different locations. At the end of each trial, participants were asked to recall as many of the items as possible, in any order. The authors found that place cells' firing patterns during spontaneous recall of an item were similar to those during exploration of the environment where they had encountered the item. This suggests that recall of objects reactivates their spatial context, but also that place cells encode episodic memories more generally (Miller et al., 2013). Similar to rat place cells (Markus et al., 1995), the majority of human place cells were direction-dependent, only exhibiting place fields when traversed in a particular direction (Miller et al., 2013). This is consistent with an egocentric-dependent viewpoint in scene encoding and recognition, rather than an abstract, allocentric map implemented by place cells.

Finally, it is unknown whether place cells, grid cells, and other types of cells that have been studied in small-scale laboratory environments contribute to navigation in much larger, natural, environments, because it has been impossible to record from such cells in kilometer-sized environments (Geva-Sagiv et al., 2015). In most laboratory experiments, the entire spatial environment can be perceived with little or no movement, meaning that all information needed to calculate the spatial location of different landmarks is available from the animal's current location (Wolbers and Wiener, 2014). This means that in practice, the use of allocentric as opposed to egocentric information may be poorly controlled.

While the functional interpretation of place, grid, head direction, and boundary cells and their contribution to an allocentric map of the environment remains unclear, behavioral studies on animal navigation have also questioned whether animals make use of an allocentric, cognitive map during navigation.

Bennett (1996) has argued that a critical test of a “cognitive map” of space is the ability to take novel shortcuts, instead of following previously experienced routes. According to Bennett, previous evidence for shortcut-taking and putative cognitive maps in insects, birds, rodents, as well as human and non-human primates can be explained more simply either as path integration or recognition of familiar landmarks from a different angle, followed by movement toward them. The animal would thus only need to memorize routes and recognize landmarks to navigate toward them, rather than store a detailed cognitive map of spatial relationships between landmarks. The lack of shortcut-taking ability and hence absence of evidence for a cognitive map is supported by more recent research in a variety of species (Wehner et al., 2006; Grieves and Dudchenko, 2013). Instead, many species appear to rely on view-dependent place recognition, and to match learned viewpoints when approaching landmarks (Wang and Spelke, 2002).

However, when path integration and view-dependent place recognition fail, subjects do appear to be reorienting based on the geometry of a room or based on the “shape of the surface layout” (Wang and Spelke, 2002). Disoriented subjects search for target objects both at the correct corner and geometrically opposite corner of a room—but do not appear to be relying on the spatial configuration between objects (Wang and Spelke, 2002). In other words, not all allocentric information is represented; instead, simpler, geometric layout information is used, which perhaps functions more like object recognition.

In summary, it is unclear if place cells, grid cells, border cells, and head direction cells form the building blocks of an abstract, allocentric map of the environment for navigation, and to what extent these cells are involved in representing the spatial location of an external object relative to another object. Behavioral studies have questioned whether animals actually use an allocentric map for navigation, and whether whichever internal representation is used has the same characteristics as an abstract, cartographic map of the environment (Ekstrom et al., 2014).

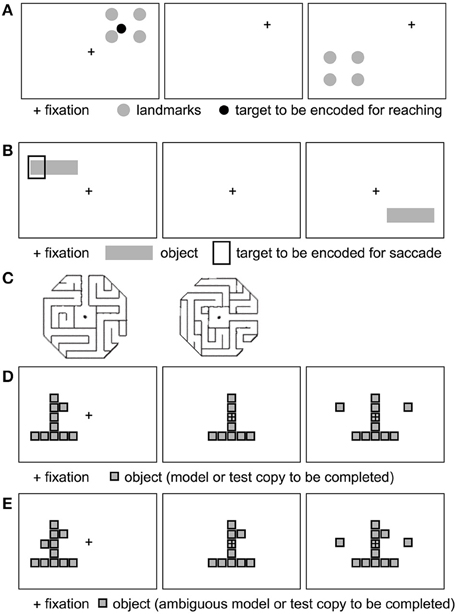

Several behavioral studies have investigated which spatial reference frames are used in goal-directed actions, such as (delayed or immediate) pointing, reaching, grasping, or saccades to (visual or remembered) targets (for reviews, see Battaglia-Mayer et al., 2003; Crawford et al., 2011). Many such studies have investigated spatial reference frames in the context of spatial updating (Colby, 1998; Crawford et al., 2011), where a spatial target is briefly presented, followed by a change in gaze direction before the reach (or saccade) to the remembered location of the target. Saccade or reach endpoint errors and other metrics can then be investigated in the context of landmarks being present vs. absent at the moment of target presentation (Figure 4).

Figure 4. Example stimuli used to probe allocentric spatial reference frames (see text). (A) Four landmarks surround an initially displayed reach target. Following a gaze shift (fixation cross moves), the landmarks reappear at a novel location, prompting the subject to point to the remembered target relative to the landmarks. Example based on Chen et al. (2011). (B) A target is displayed relative to a horizontal bar. After a delay, the bar reappears without the target. The monkey saccades to the bar-relative location of the target. Inspired by Olson (2003). (C) Example maze stimuli to test for maze solving. Adapted from Crowe et al. (2004), with permission. (D, E) Example object construction tasks. Panel (D) shows a model, followed by the removal of a critical element defined in relation to the model object. Following a delay, the monkey selects the missing piece to complete the object. (E) Ambiguous model object: the monkey does not know which of the two knobs (left and right squares) will be removed. Adapted from Chafee et al. (2005) (see text), with permission.

Substantial evidence exists for gaze-centered (egocentric) updating of reach targets following an intervening saccade, for both immediate and delayed movements (Henriques et al., 1998; Medendorp and Crawford, 2002; Thompson and Henriques, 2008; Rogers et al., 2009; Selen and Medendorp, 2011). These studies suggest that the spatial location of a visual target is maintained in an eye-centered reference frame (i.e., as the retinal distance between the current gaze direction or fixation point, and the remembered target location), and is updated across eye movements. While some evidence suggests that gaze-centered updating persists even after long delays (Fiehler et al., 2011), others have suggested that allocentric spatial representations are used when movements are delayed (Westwood and Goodale, 2003).

Several studies have demonstrated more accurate reaching in the presence of landmarks following gaze shifts (e.g., Byrne et al., 2010), and that integration of egocentric and allocentric or landmark information may depend on the stability of visual cues; i.e., the weight assigned to landmarks depends on whether the landmark is moving around (Byrne and Crawford, 2010). Note that the presence of a landmark should not automatically be assumed to involve allocentric (object-centered) reference frames. Both the landmark and the target could be represented relative to the ego. However, can behavioral differences between memory-guided reaches with and without landmarks be explained without relying on the assumption that an allocentric spatial reference frame is used? What accounts for the observed behavioral effects? I will describe two representative experiments in detail to illustrate how egocentrically-encoded landmarks could contribute to such differences.

In a study by Schütz et al. (2013), subjects reached to remembered target locations after intervening saccades, either in the presence or absence of visual landmarks. Subjects foveated a briefly displayed target, and continued fixating its location after its disappearance. After a delay of 0, 8, or 12 s subjects then saccaded to a new fixation cross which appeared at various visual eccentricities. Following the gaze shift, the fixation cross also disappeared and subjects reached to the remembered target location in complete darkness. In the allocentric condition, two light tubes were present left and right of the screen, respectively. Pointing errors varied systematically with gaze shift, e.g., when fixating to the left, subjects overshot the remembered target location in the opposite direction, in both the visual landmark and the no-landmark condition. This is consistent with previous evidence that reaching is carried out in eye-centered (hence, egocentric) coordinates (Henriques et al., 1998). Moreover, the different delays led to similar reach endpoint errors, i.e., the effect of the (egocentric) gaze shift remained the same regardless of a delay or not. This suggests that both immediate and delayed reaches rely on gaze-dependent (egocentric) spatial representations.

In addition to varying with gaze shift (an egocentric influence), however, endpoint errors were reduced in the landmark condition. One possible interpretation of this landmark influence is that egocentric and allocentric spatial representations are combined (Schütz et al., 2013). While it is possible that reach targets are represented relative to both landmarks and gaze position, an entirely egocentric explanation cannot be ruled out. For instance, both the initial target and the landmarks could be represented in gaze-centered coordinates. In the no-landmark condition, the target disappears before the fixation cross reappears at a novel location, with the subject sitting in complete darkness during the variable delay. When the novel fixation cross appears, the egocentric estimate of how far the eyes have moved relative to the remembered target (the retinal distance) is less precise. Even in the 0 s delay condition, the target still disappears before the new fixation cross appears, i.e., the new fixation location and the target are never simultaneously displayed, which may lead to a less precise calculation of the saccade vector from (former) target location to (novel) fixation cross location. Previous research (Chen et al., 2011) has shown egocentric information decays gradually, with decay commencing as soon as the target disappears (Westwood and Goodale, 2003). In the absence of external visual landmarks, these factors could thus contribute to a less accurate estimation of how far the eyes have moved away from the initial target location, or greater uncertainty regarding gaze position relative to the former target location (in retinal coordinates), when the reach is initiated. In contrast, in the allocentric landmark condition the landmarks are present throughout the trial, which can lead to a more accurate retinal (egocentric) estimate of how far the eyes have moved. Subjects can represent both the target and the landmarks relative to their gaze when initially viewing the target, and update this eye-centered representation after the saccade. For instance, the left landmark may be at −10° of visual angle relative to the target in the beginning, and at −5° after the saccade to the new fixation cross, when the reach target has disappeared. The gaze shift vector (in eye-centered coordinates) will thus be estimated more accurately, and can be subtracted from the previous eye-centered position of the hand, to more accurately lead the hand to the remembered target position (in eye-centered coordinates, e.g., Medendorp and Crawford, 2002).

Thus, although the combination of allocentric and egocentric cues remains a possibility, the reduced endpoint reach error in the landmark condition could be explained in terms of less accurate egocentric updating. This explanation is more parsimonious, as it involves a single (egocentric) spatial reference frame. To tease apart these competing accounts, the egocentric account makes a testable prediction: if the new fixation cross were to appear before the target is extinguished, there should be reduced uncertainty regarding how far the eyes have moved, even in the absence of landmarks, and hence reduced endpoint errors, similar to the landmark condition. Future experiments could address this prediction. A second prediction could be tested to tease apart allocentric vs. egocentric influences: the two light tubes (landmarks) could be briefly turned off at the same time as the target, during the saccade to a new fixation cross. The landmarks could reappear just before or at the time of the reach. The prediction is that a disruption in egocentric updating of how far the eyes have moved will lead to greater reach error, even when the landmarks reappear later. This would support an egocentric explanation of the landmark effect.

In another study, Chen et al. (2011) compared the rate of memory decay for egocentric and allocentric reach targets, using delayed reaching to remembered target locations following intervening saccades.

In the egocentric condition, a target appeared in the periphery relative to the fixation cross. After the target disappeared, subjects shifted their gaze to a new fixation location. Following a variable (short, medium, or long) delay, the fixation cross disappeared, and subjects reached to the remembered (and egocentrically remapped) location of the reach target.

In the allocentric condition (Figure 4A), the target was surrounded by four landmarks. These landmarks reappeared at a different location following the short, medium or long delay after the gaze shift, and subjects reached to the remembered (and remapped) target location, relative to the landmarks.

In a similar third condition, the allo-to-ego conversion condition, the four landmarks reappeared at the new location both before and after the variable delay.

The authors found that in the egocentric and allo-to-ego conversion condition, reaching variance (endpoint error, reduced precision) increased from short to medium delays, whereas reaching variance remained constant across delays in the allocentric condition. Similarly, reaction times in the egocentric and allo-to-ego conditions were longer at short delays compared to longer delays, whereas reaction times did not vary according to delay in the allocentric condition. The authors concluded that egocentric representations of target locations decay faster than allocentric representations. It was also suggested that allocentric information is converted to an egocentric representation at the first possible opportunity (Chen et al., 2011). Thus, the allocentric landmarks appearing both before and after the delay in the allo-to-ego condition could be used to infer the location of the target in egocentric coordinates before the delay (an allo-to-ego conversion at the first opportunity), and this egocentric information decays with increasing delays. This interpretation could explain the increase in endpoint errors across delays in the egocentric and the allo-to-ego conditions, and the absence of a modulation by delay in the allocentric condition (when landmarks only appear after delays).

Can these behavioral differences between egocentric and allocentric conditions be explained using a purely egocentric reference frame? It is possible that both the target and the surrounding landmarks were represented in egocentric coordinates, and were mentally shifted to center on the fovea (i.e., the center of mass of the square in Figure 4A would line up with the fixation point). As such, a target closer to e.g., the bottom left landmark would also be in the egocentric lower left relative to the fovea. When the landmarks reappeared at a new egocentric location, the new target location could be remapped in egocentric coordinates based on shifting the entire structure (landmarks plus retinocentrically remapped/remembered target) to the new retinal location. Alternatively, even without mentally shifting the landmarks to imagine them around the fixation point, retinal distance vectors can be computed from the fixation point to both the landmark nearest the target (“vector x”) and to the target (“vector y”). The difference between vectors x and y can be stored as a retinal vector (“z”). When the landmark reappears at a different location in the visual field, the retinal vector to its (egocentric) coordinates is calculated, and the difference vector z can be added to infer the new target location in egocentric, rather than allocentric, coordinates. Egocentric remapping of targets has been demonstrated in multiple brain regions (Colby, 1998).

Why then were there differences between egocentric and allocentric reach accuracies and reaction times? Unlike in the egocentric condition, the allocentric landmarks reappear after the delay, just before movement onset, thereby facilitating remapping of the remembered target in egocentric coordinates just before movement onset. Since the landmarks are displayed just before movement onset in each of the three delay conditions, with the delays preceding, not following, the reappearance of the landmarks at the new location, the (egocentrically) remapped location does not get a chance to decay before movement onset. This could explain the shorter and constant reaction times in the landmark condition compared to the egocentric condition. In contrast, in the egocentric condition no new cues are presented after the intervening saccade and variable delay. The longer the delay, the greater the egocentric information decay, consistent with the authors' interpretation (Chen et al., 2011).

What about the allo-to-ego condition, which resembled the egocentric condition in terms of an increase in reach errors across delays? In the allo-to-ego condition, the amount of time the landmarks are displayed at the new location is halved: instead of reappearing for 1.5 s after the delay, they appear for only 0.75 s before and 0.75 s after the variable delay. This shorter presentation time may have led subjects to rely on the first reappearance of the landmarks to update both landmarks and the target in egocentric coordinates, as suggested by the authors. Since the variable delay follows the first reappearance of landmarks, egocentric information decays just like in the no-landmark, egocentric, condition.

In summary, although it is possible that a fundamental difference exists between egocentric spatial representations, thought to decay rapidly across delays, and allocentric spatial representations, which are thought to be more stable and decay less rapidly, these results are equally compatible with an egocentric remapping of all targets, whether surrounded by landmarks or not, accompanied by an egocentric decay in all cases where the remapped information precedes a variable delay. This and similar studies therefore do not necessarily demonstrate the existence of allocentric spatial representations.

Behavioral studies have also investigated visual illusions such as the Müller–Lyer illusion, in which a line segment is flanked by either pointed arrow heads or arrow tails. Subjects perceive identical-length segments with arrow tails as longer than those with arrow heads, which could be interpreted as evidence of allocentric encoding of object features relative to each other. However, Howe and Purves (2005) have shown that this illusion can be explained by natural image statistics where the physical sources giving rise to a 2D retinal image of a line segment with arrow heads tend to belong to the same plane (object, or surface area), whereas physical sources for arrow tails are less likely to come from the same plane. The illusion could thus arise from a probabilistic interpretation of 2D retinal projections of the real world—and would not require allocentric spatial encoding of individual features. A review of 33 studies of pointing to Müller–Lyer stimuli showed that visually-guided pointing (rather than from memory) is typically not subject to the Müller–Lyer illusion, suggesting that this illusion is mediated by the ventral rather than dorsal visual stream (Bruno et al., 2008).

Other studies have investigated pointing accuracy to surrounding objects after subjects were disoriented through self-rotation, with objects hidden from view (Wang and Spelke, 2000, 2002). In such experiments subjects show increased configuration pointing errors, i.e., a deterioration in the internal representation of the angular relationship between targets (e.g., where the TV is relative to the table). This has been interpreted as a disruption to dynamic egocentric updating of target locations (relative to the current ego location), even after controlling for vestibular stimulation, re-orientation via an external light, and other factors, contradicting an enduring cognitive map of allocentric spatial relations between objects independent of the observer (Wang and Spelke, 2000).

Conversely, other studies have shown that disorientation leads to much lower error in “judgments of relative direction” (JRD tasks), where, rather than pointing from the current ego location to objects' locations, subjects imagine themselves by an object and point to another object from that imagined location (e.g., imagining the ego by the door and pointing toward the TV from that location; Burgess, 2006; Waller and Hodgson, 2006; Ekstrom et al., 2014).

However, it is unclear whether higher performance in the JRD task necessarily means subjects rely on stored allocentric representations of object locations relative to each other. The JRD task may simply involve accessing stored egocentric viewpoints, mentally rotating (shifting) the ego to one of the objects, and making an egocentric decision as to where objects are—relative to the ego. In Waller and Hodgson's study (Waller and Hodgson, 2006), for example, participants walked past each of the objects to be encoded, thereby presumably obtaining multiple egocentric viewpoints on the scene layout. Disorientation does not affect JRDs compared to pointing from the current ego orientation, because JRDs rely on stored egocentric viewpoints, whereas orientation-dependent pointing requires re-establishing ego-relative object locations anew. Behavioral differences or effects between two experimental conditions thus do not necessarily demonstrate that allocentric vs. egocentric spatial reference frames are used. The two tasks can be viewed as different egocentric tasks, with differences due different egocentric mechanisms being activated (mental rotation of the ego and recall of egocentric viewpoints vs. remapping current target locations relative to the ego following disorientation). Such mental rotations are supported by evidence that recognition times of arrays of objects displayed on a circular table, when rotated to various degrees, increase linearly with the angle of rotation away from the original display (Wang and Spelke, 2002).

If allocentric tasks can be solved by mentally rotating or shifting either the ego or a display of landmarks back to an egocentric (perhaps retinal) center, what if only subsets of objects are shifted in a scene—could reach errors reveal whether subjects encode targets relative to objects rather than the ego? Fiehler et al. (2014) found that the greater the number of objects shifted, the greater the deviation of reach endpoints in the direction of object shifts. While this suggests a plausible allocentric mechanism whereby target locations are encoded relative to other objects, rather than relative to the ego, this could depend on whether an egocentric reference point is provided during encoding of object (target) locations. If a retinal reference point is missing (no fixation cross provided during encoding), subjects may not notice shifts in clusters of objects and still rely on view-dependent (partial) scene recognition, with reaching performed relative to a presumed egocentric reference point that could not be accurately established during encoding. Shifting single large or single smaller local objects had no effect on reach endpoint errors (Fiehler et al., 2014). Similar view-dependent local scene encoding or retinal visual distance calculations can account for other studies in which combined egocentric and allocentric influences were examined (Byrne and Henriques, 2012; Camors et al., 2015).

A number of neuropsychological studies of hemineglect patients have identified seemingly dissociable egocentric vs. object-centered (or allocentric) neglect symptoms, as well as dissociable brain damage sites (for reviews and critiques, see Olson, 2003; Rorden et al., 2012; Yue et al., 2012; Humphreys et al., 2013; Li et al., 2014).

Following damage to (predominantly) the right hemisphere, patients exhibit unawareness of the contralateral (egocentric left) side of space (Humphreys et al., 2013). In addition to egocentrically-defined hemineglect, some patients ignore the left half of an object or of objects, even if presented in their intact (egocentrically right) hemifield, or even if rotated such that the left half of the object falls on the (intact) right visual field (e.g., Caramazza and Hillis, 1990; Driver and Halligan, 1991; Behrmann and Moscovitch, 1994; Behrmann and Tipper, 1994; for review, see Humphreys et al., 2013). The fact that the left half of an object is neglected even when rotated and presented in the egocentric right half of space has been interpreted as evidence for object-centered spatial representations.

However, alternative explanations have been proposed for this pattern of object-based hemineglect. For instance, rotated objects presented in non-canonical orientations may be mentally rotated back upright to match an egocentric, canonical (mental) representation of the object, the left half of which is then ignored (Buxbaum et al., 1996; Humphreys et al., 2013).

Similarly, computational models suggest that a decreasing attentional gradient from (the egocentric) right to left could lead to the left half of any item anywhere in the visual field being less salient and therefore more likely to be ignored (Driver and Pouget, 2000; Pouget and Sejnowski, 2001). Models relying on such “relative egocentric neglect” (Driver and Pouget, 2000; Pouget and Sejnowski, 2001) have successfully modeled what appears to be object-centered neglect (Pouget and Sejnowski, 1997, 2001; Mozer, 1999, 2002).

Such a lesion-induced (egocentric) gradient of salience, which could affect either the stored representation of an object or the allocation of attention to this representation, is supported by evidence that the severity of allocentric neglect is modulated by egocentric position, with milder allocentric deficits at more ipsilesional egocentric positions (Niemeier and Karnath, 2002; Karnath et al., 2011). The field of view across which such a gradient in salience is exhibited may be flexibly adjusted (similar to a zoom lens; Niemeier and Karnath, 2002; Karnath et al., 2011; Rorden et al., 2012). For instance, exploratory eye movement patterns in neglect patients did not differ between egocentric and allocentric neglect, but rather differed according to the task goal and strategies, with the same item either detected or neglected depending on the task (Karnath and Niemeier, 2002).

However, double dissociations between egocentric and allocentric neglect have been reported, together with apparent double-dissociations in lesion sites (Humphreys and Heinke, 1998; Humphreys et al., 2013). Egocentric neglect tends to be associated with more anterior sites in supramarginal gyrus and superior temporal cortex, whereas allocentric neglect tends to correlate with more posterior injuries such as to the angular gyrus (Medina et al., 2009; Chechlacz et al., 2010; Verdon et al., 2010).

In contrast, several recent studies have reported that allocentric neglect co-occurs with egocentric neglect, and that the lesion sites overlap (Rorden et al., 2012; Yue et al., 2012; Li et al., 2014). Rorden et al. argue that previous studies have used vague or categorical criteria in classifying patients with allocentric vs. egocentric neglect, leading to an apparent double dissociation between deficits. (For instance, a patient with both egocentric and allocentric deficits would be categorized as allocentric-only, leading to an apparent double-dissociation). To identify whether egocentric and object-centered neglect are dissociable, Rorden et al. used a “defect detection” task in which right-hemisphere stroke patients had to separately circle intact circles and triangles as well as circles and triangles with a “defect” (e.g., a gap in the left half of a circle). Unlike previous studies, which had coded allocentric and egocentric neglect in a categorical, dichotomous manner, thereby ignoring the varying severity of deficits, Rorden et al. used a continuous measure. Allocentric neglect scores were calculated based on the number of correctly detected items with defects as well as intact items correctly marked, on both the contralesional and ipsilesional side. In addition, they also used a center of cancelation task to calculate egocentric neglect scores based on how many targets (e.g., the letter A) were identified in a cluttered field of letters, weighted according to their position from left to right.

Confirming previous findings by Yue et al. (2012), Rorden et al. (2012) found that allocentric deficits were always observed in conjunction with egocentric deficits, with no pure cases of allocentric neglect. In contrast, egocentric neglect did occur on its own. The allocentric neglect score was strongly correlated with patients' egocentric neglect score, and substantial allocentric neglect was only present with substantial egocentric neglect, suggesting that allocentric neglect is a function of severe egocentric neglect.

Moreover, the regions of brain damage associated with egocentric and allocentric neglect strongly overlapped. Rorden et al. (2012) suggest that previous findings of an association between posterior temporo-parietal lesions with allocentric neglect, and superior and middle temporal lesions with egocentric neglect, may in fact result from the same mechanism, namely the extent to which the middle cerebral artery territory is affected by stroke. According to this account, allocentric deficits may be subclinical in milder forms of neglect, which are associated with damage restricted to the central aspect of the middle cerebral artery territory, thus producing what appears to be purely egocentric neglect. In contrast, more severe forms of neglect, comprising both egocentric and allocentric deficits, are due to damage to a larger extent of middle cerebral artery territory, including more posterior regions typically associated with allocentric neglect.

The fact that patterns of object-centered neglect can be explained in terms of an egocentric gradient in salience, as well as recent evidence of a lack of double-dissociation between egocentric and allocentric neglect symptoms and lesion sites, argue against independent egocentric and allocentric spatial representations, and support a single (egocentric) mechanism.

Other neuropsychological investigations have focused on lesions to the ventral visual stream. For instance, patient D.F. shows impairment in (conscious) visual shape perception, but accurate visuomotor performance (such as correct grip aperture) in actions directed to different object shapes (Goodale and Milner, 1992; Goodale and Humphrey, 1998). This has been interpreted as evidence for separate vision for perception (ventral) and vision for action (dorsal) streams (Goodale and Milner, 1992). Schenk (2006) has questioned whether D.F.'s impairment is perceptual, rather than allocentric. In Schenk (2006), D.F. was impaired on a visuomotor task that involved proprioceptively-guided pointing to the right or left of the current hand position by a similar amount as displayed visually between a visual cross and visual target. As suggested by Milner and Goodale (2008), however, the impairment could have been due to the task requiring D.F. to make a perceptual judgment (visual estimate) of the distance between the visual stimuli, before being able to translate that visual distance into a visuomotor plan to a different location. Moreover, this estimate could happen via a “perspectival” (egocentric viewpoint-dependent) mechanism (Foley et al., 2015), rather than an allocentric mechanism. The latter interpretation would thus suggest the ventral visual stream is involved in perceptual (e.g., visual size) estimates underlying shape perception, not necessarily allocentric spatial cognition.

Foley et al. (2015) have argued that whereas the dorsal visual stream uses effector-based egocentric spatial representations, the ventral visual stream may use a perspectival egocentric representation of scenes or objects. Note that this perspectival account is compatible with holistic configural scene or object processing (Logothetis, 2000). Moreover, according to Foley et al., the purpose of ventral visual stream computations is object recognition, attaching emotional or reward value to such a representation, or habitual learning (i.e., what to do with such an object, regardless of the current egocentric perspective on it).

These proposed processes are consistent with the findings presented in the present review, and are compatible with an egocentric account of spatial processing.

Neurophysiological studies have shown that multiple egocentric (e.g., hand-centered and eye-centered) representations of the same target can co-exist in parallel or change fluidly during sensorimotor transformations (Battaglia-Mayer et al., 2003). In fact, many neurons exhibit hybrid (e.g., both eye and hand-centered) reference frames (Avillac et al., 2005; Mullette-Gillman et al., 2009). Here I examine whether single-unit neurophysiology evidence supports the representation of an allocentric reference frame at the neuronal level at any point in the sensorimotor transformation. A number of single-unit recording studies have reported object-centered spatial representations in both prefrontal and posterior parietal cortex. In a series of studies, Olson and colleagues (Olson and Gettner, 1995, 1999; Olson and Tremblay, 2000; Tremblay et al., 2002; for review, see Olson, 2003) reported object-centered spatial selectivity in macaque supplementary eye field (SEF) neurons during saccade planning. A typical task (Figure 4B) involves first presenting a horizontal bar with a cue left or right on the bar, at various retinal locations, while the monkey is fixating centrally. Following a variable-duration delay, the horizontal bar is presented at another location in the visual field. After a second variable-length delay, the fixation point disappears and the monkey executes a saccade to the remembered target location relative to the object, i.e., left or right on the bar, regardless of whether the bar is now in the left or right visual field. Interestingly, many SEF neurons show differential activity during the post-cue delay prior to object-left vs. object-right saccades, even though the monkey does not yet know the direction of the physical saccade. In other words, while the monkey is holding the object-centered location in working memory, after the bar and cue disappear, but before the new horizontal bar appears, SEF cells selectively signal object-right vs. object-left locations, suggesting object-centered spatial selectivity. This effect is also obtained if color cues or discontinuous objects/cues (e.g., left vs. right of two dots) are used to instruct left vs. right saccades relative to the object (Olson, 2003).

While these results are consistent with object-centered spatial representations in SEF, several additional findings allow for an alternative interpretation. For instance, the neurons that prefer the bar-right condition are predominantly in the left hemisphere, while bar-left neurons predominate in the right hemisphere (Olson, 2003), consistent with an egocentric contralateral representation of each half of space. The fact that neurons selective for object coordinates are arranged according to egocentric space in the brain could suggest a recentering of the mental representation of the object during the delay, such that the left half of the object falls in the (egocentrically) left visual field and the right half of the object in the (egocentrically) right visual field.

The idea that a (re-)centered mental representation is driving these responses is also supported by other characteristics of SEF neurons' responses: the object-centered spatial selectivity emerges during the post-cue delay, even when the new target bar isn't visible yet, i.e., before a new object-relative target position can be calculated (e.g., Figures 1, 4, in Olson, 2003).

Interestingly, color cues take longer (200 ms) than spatial configuration cues to evoke object-centered activity, suggesting a top-down, rule-based decision process, perhaps coming from other prefrontal regions such as dorsolateral prefrontal cortex (DLPFC). SEF neurons can also learn to respond to color instructions even if the color cue that signals an object-left rule appears at the right of the object (dot array; Olson, 2003). In such cases, the neuron indicates both the object-relative location of the cue (i.e., if the neuron prefers left on the object, yet the cue signaling a future left-object saccade appears on the right, the neuron responds weakly to the cue) and the object-relative location of the target (i.e., if the target then appears on the left in a left-object preferring neuron, a strong response is obtained; Olson, 2003). This pattern has been interpreted as object-centered spatial selectivity, and that the target could not be selected by an object-centered rule (since the cue appeared on the right of the object, and yet instructed a left response; Olson, 2003). However, this response pattern occurred in SEF neurons previously trained to select targets using precisely an object-centered rule. Importantly, as discussed by Olson (2003), SEF neurons only show weak object-centered signals before training.

This training-dependence suggests that rather than responding to object-based spatial locations in a bottom-up manner (via object-centered spatial selectivity), such putatively object-centered neurons require extensive training, i.e., respond most likely to top-down signals. This could suggest rule-based decision making signals from other (perhaps dorsolateral prefrontal) regions, rather than spatial perception in an object-centered spatial reference frame. A testable prediction is that DLPFC activity should precede SEF activity on such tasks. In humans, a testable fMRI prediction would be that the effective connectivity between e.g., DLPFC and SEF should increase when the rule needs applied.

This interpretation of a superimposition of a rule onto SEF neuronal activity is also consistent with the fact that SEF neurons showed a modulation by egocentric saccade directions, i.e., a right-object selective SEF neuron still showed some preference for physically (egocentric) rightward saccades even if they fell on the (non-preferred) left end of the object (Olson, 2003).

Other studies have investigated object-centered representations in posterior parietal areas (for review, see Chafee and Crowe, 2012). Crowe et al. (2004) recorded from inferior parietal area 7a while monkeys were shown visual stimuli depicting octogonal mazes (viewed from the top), with a straight main path extending from the center box out (Figure 4C). In exit mazes, the main path exited to the perimeter, whereas in no-exit mazes, the main path ended in a dead end inside the maze. Monkeys mentally solved mazes to determine whether each maze had an exit path or not, without moving their eyes from the fixation point located at the center of each maze. While mentally solving the maze task, one quarter of neurons in parietal area 7a exhibited spatial tuning for maze path directions.

Interestingly, and consistent with the top-down hypothesis of object-centered processing, neuronal tuning for maze path direction only emerged after training (Crowe et al., 2004). In other words, naive animals that viewed the same maze stimuli without solving them did not show tuning to path direction. This argues against an existing, object-centered spatial representation, i.e., an “allocentric lens” through which spatial relationships in the world are viewed. If object-based spatial relationships did exist, these neurons should have represented them in a “bottom-up” manner just like retinocentric or egocentric spatial relationships are represented, which do not require task training. A neuron that has a preference for a certain object-centered spatial relationship (e.g., maze path exiting to the right of the maze) should exhibit such an object-centered preference whenever the monkey is looking at such a stimulus. It is possible that allocentric spatial tuning takes longer to develop with more complex visual stimuli, where multiple object-centered spatial relationships could be represented. Such training dependence, however, is also observed for simple bar stimuli, as reported by Olson (2003).

As in SEF, object-centered parietal area 7a neurons had a preference for contralateral path directions. In other words, neurons located in the left hemisphere preferred maze exits to the egocentric right. However, preferred maze path directions (e.g., up and to the right) were largely independent of receptive field (RF) locations as mapped with spot stimuli (Crowe et al., 2004). Spatial tuning for path direction in the maze task was also not systematically related to saccade direction tuning as mapped in an oculomotor control task. While this dissociation between the RFs mapped using control tasks and maze path direction would seem to suggest an independence of egocentric variables, it is also possible that individual neurons' RFs obtained with the visually more complex maze object shift dynamically with more complex tasks. The fact that the maze task needs solved mentally (without moving the eyes) would suggest that some mental remapping of information across receptive fields is necessary. I.e., neurons might dynamically and predictively represent the information expected to fall in their RFs if the eyes were moved. Thus, the classically defined RF location as mapped by spot light stimuli would seem less relevant than finding out what kind of remapping might be happening during mental solving of the maze task. Remapping of information even prior to saccades has been demonstrated in neighboring area LIP (Colby, 1998).

In fact, a subsequent study of the maze task (Crowe et al., 2005) studied the neuronal population dynamics during maze task solving. Crowe et al. (2005) found that following presentation of the maze, the population vector (the direction signaled by the majority of cells) in parietal area 7a began to grow in the direction of the exit path. In trials in which maze paths had a right-angle turn, the population vector rotated in the direction of the turn, however, not 90°, but 45°.

In other words, imagine a triangle corner centered at the fovea, with one triangle side extending vertically up from the fixation point; from the top of the vertical side, another side extends to the right, forming a right angle with the vertical line. If you were to move your eyes up one side of the triangle and then turn 90° right, the hypotenuse is 45° relative to the vertical meridian from your initial fixation point. In object coordinates, the configuration of the path toward the exit is first up, then 90° to the right. However, the populations of cells that became active were first cells preferring up, then cells preferring 45° to the right.

This is the vector angle one would expect if the vector origin were anchored to the fovea (initial fixation point), with the tip of the vector signaling the maze exit from the foveal origin to 45° up and to the right, as suggested by the authors (Crowe et al., 2005; Chafee and Crowe, 2012). This suggests the maze problem was solved from an egocentric, specifically retinocentric, perspective, and is less consistent with an object-centered representation, at least at the population level.

Another approach to studying object-centered spatial representations is to use a visual “object construction task” (Figures 4D,E), in which presentation of a model object consisting of a configuration of elements is followed by a test object in which one element is missing (Chafee et al., 2005, 2007). For instance, an inverted T-like structure consisting of Tetris-like blocks arranged vertically and horizontally was followed by a test structure where one block was missing left or right of the vertical object axis. Monkeys were trained to then “complete” the test object by choosing between two elements, one of which was on the correct side of the missing element location. Once the element was chosen, it was attached to the test object at the appropriate location.

By presenting either the test object or the model object at different retinal locations, Chafee et al. (2005, 2007) could investigate whether neurons in area 7a are sensitive to the object-referenced location of the missing element (e.g., top right of the object) regardless of the egocentric (retinal) location of the element. Chafee and colleagues found two populations of neurons in area 7a. One population coded the missing element in viewer-referenced (egocentric) coordinates, whereas a partially overlapping population encoded the missing element in object-referenced coordinates, signaling the missing piece both when the test object appeared left and right of the fixation cross. Object-centered neurons showed object-centered responses both when the whole shape (model) was presented, and when the test object (with a missing piece) was shown.

Several neurons indicated a joint viewer- and object-referenced influence, responding more strongly when both the element and the object were on the preferred side (for instance, both on the egocentric left and object-referenced left).