
ORIGINAL RESEARCH article

Front. Hum. Neurosci., 20 April 2012

Sec. Motor Neuroscience

Volume 6 - 2012 | https://doi.org/10.3389/fnhum.2012.00084

This article is part of the Research Topic Meaning in mind: Semantic richness effects in language processing.

The theory of embodied cognition postulates that the brain represents semantic knowledge as a function of the interaction between the body and the environment. The goal of our research was to provide a neuroanatomical examination of embodied cognition using action-related pictures and words. We used functional magnetic resonance imaging (fMRI) to examine whether there were shared and/or unique regions of activation between an ecologically valid semantic generation task and a motor task in the parietal-frontocentral network (PFN), as a function of stimulus format (pictures versus words) for two stimulus types (hand and foot). Unlike other neuroimaging analysis methods that rely on subtractive logic or conjunction analyses, the method used here first isolates shared and unique regions of activation within participants before generating an averaged map. The results demonstrated shared activation between the semantic generation and motor tasks that was organized somatotopically in the PFN, as well as unique activation for the semantic generation tasks in proximity to the hand or foot motor cortex. We also found unique and shared regions of activation in the PFN as a function of stimulus format (pictures versus words). These results further elucidate embodied cognition by showing that brain regions activated during actual motor movements were also activated when participants verbally generated action-related semantic information. Disembodied cognition theories and the limitations of the study are also discussed.

Determining how the interaction between the body and the environment influences the evolution, development, organization, and processing of the human brain is important for understanding how conceptual information is represented (Barsalou, 1999; Wilson, 2002; Gibbs, 2006; Siakaluk et al., 2008a). According to Barsalou's (1999) Perceptual Symbol Systems theory, there is a strong relationship between an individual's perceptual experiences and his or her conceptual representations. Specifically, the perceptual symbols, or representations, of an experience are encoded in the brain; these representations form a conceptual simulation of that experience, and when an individual retrieves information about a concept, the perceptual symbols (or experiences) associated with the concept are simulated. Conceptual information is therefore grounded in perceptual, or sensorimotor, experiences that are simulated when interactions with stimuli are re-enacted (see also Barsalou, 2008, 2009). These notions are the theoretical underpinnings of embodied cognition, which proposes that cognitive processes are embedded in sensorimotor processing (Wilson, 2002; Siakaluk et al., 2008b). Accordingly, embodied cognition theorists suggest that cognition is bodily based, in that the mind is used to guide action, and that the brain developed as a function of interaction with the environment to facilitate sensory and motor processing (Wilson, 2002; Gibbs, 2006). On this view, if conceptual information is grounded in sensorimotor processes, then the sensorimotor regions involved in encoding conceptual knowledge should also be active during conceptual (i.e., semantic) processing.

A recent goal of cognitive neuroscience has been to determine whether the brain regions that control actions are also involved in responding to action-related language (for a review see Willems and Hagoort, 2007). That is, are motor regions active when people perform semantic tasks with action-related language, even when the task involves no overt movement? If sensorimotor regions are active when action-related language is used in semantic tasks, this could be taken as neuroanatomical support for embodied cognition theories. In the action-semantics and embodied cognition literature, activation of the parietal-frontocentral network (PFN; including the supramarginal gyrus, the inferior and superior parietal lobules, and the sensory cortex of the parietal lobes, as well as the supplementary motor area (SMA) and the premotor regions of the frontal lobes) in response to action-related language is typically referred to as somatotopic-semantics. Evidence of somatotopic-semantics is therefore taken to support the idea that action-related conceptual knowledge is grounded in action and perceptual systems (Pulvermuller, 2005; Barsalou, 2008; Boulenger et al., 2009).

Recent neuroimaging studies have shown that sensorimotor regions are activated somatotopically during the processing of action-related language, much like Penfield's map of the sensory and motor homunculus (Penfield and Rasmussen, 1950), and this has been taken as neuroanatomical evidence of embodied cognition (Hauk et al., 2004; Tettamanti et al., 2005; Esopenko et al., 2008; Boulenger et al., 2009; Raposo et al., 2009). Such research has shown that action-related language activates the PFN in a somatotopic arrangement that depends on the body part the object or action represents (Hauk et al., 2004; Pulvermuller, 2005; Tettamanti et al., 2005; Esopenko et al., 2008; Raposo et al., 2009). For example, when participants covertly read action words (Hauk et al., 2004), listen to action-related sentences referring to specific body parts (mouth, arm, leg; Tettamanti et al., 2005), or overtly generate a response describing how they would use a hand- or foot-related object presented in word format (Esopenko et al., 2008), regions proximal to where the body part is represented on the motor cortex are activated. Aziz-Zadeh et al. (2006) found that regions activated by observing actions overlapped, in a somatotopic arrangement, with regions activated by reading phrases depicting those actions. Taken together, this research suggests that the PFN is accessed somatotopically during the processing of action-related language; in other words, the semantic representations for action-related language are embodied. However, embodied cognition theories have also been criticized. The major criticism is that the motor system is not required for the processing of action-related stimuli, and that any activation in the motor system occurs after the semantic analysis of the stimulus is complete (Caramazza et al., 1990; Mahon and Caramazza, 2005, 2008). In other words, on this view, motor activation is an automatic by-product of processing such stimuli rather than a necessary part of it. We will return to this issue in the Discussion.

Previous neuroimaging research on embodied cognition has not directly compared activation in the regions shown to process embodied information (i.e., the PFN) using the same stimuli in both picture and word format. Patient research has shown that recognition deficits can depend on stimulus format (e.g., Lhermitte and Beauvois, 1973; Bub et al., 1988; Lambon Ralph and Howard, 2000). Behavioral research has also shown that pictures and words differ in their access to action-related semantic knowledge. Specifically, Thompson-Schill et al. (2006) have suggested that pictorial stimuli contain form information and thus have privileged access to manipulation knowledge compared to word stimuli. For example, Chainey and Humphreys (2002) found that participants are faster at making action decisions to picture stimuli than to word stimuli, which is suggested to occur because stored associations for actions are more easily accessed through the visual properties of the object. In addition, Saffran et al. (2003) have shown that significantly more verbs are produced for pictures than for words. The authors suggest that this could occur because pictures provide affordances for how the object can be used, making it easier to produce a verb representing the object's use. Such research suggests that although both pictures and words access general semantic knowledge, they differ in the information they activate. Thus, it is important to examine whether pictures and words activate unique, as well as common, brain regions that process action-related stimuli.

Our research examined whether there were differences in the neuroanatomical processing of embodied, or action-related, stimuli presented in picture and word format. To examine this, participants completed either the hand or foot variant of the semantic generation task from Esopenko et al. (2008, 2011), with the same stimuli presented in both picture and word formats, so that processing in the PFN could be compared directly for pictures versus words. We also examined whether the semantic generation and motor localization tasks showed a shared network of activation in the PFN. We compared a word semantic generation task, a picture semantic generation task, and a motor localization task to examine the following hypotheses: (1) to the extent that conceptual knowledge is grounded in sensorimotor processing (i.e., embodied), the semantic generation tasks should activate the PFN in a somatotopic fashion (i.e., in proximity to the hand and foot sensory and motor cortices); (2) to the extent that this network in the PFN is common to both picture and word format, we should see similar shared activation maps between the semantic generation and motor localization tasks for both pictures and words; and (3) to the extent that pictures and words have differential access to action-related knowledge, there should also be unique regions of activation for pictures versus words representing the embodied action-related knowledge in the PFN.

To examine the extent of unique and overlapping activation in the PFN for the processing of action-related stimuli, we used unique and shared activation maps developed in our lab (Borowsky et al., 2005a,b, 2006, 2007). Shared maps allow one to determine, within participants, which activation is common to two tasks, whereas unique maps allow one to determine which regions are uniquely activated by each task. This differs from the traditional subtraction approach, in which a region is counted as unique to a task whenever that task produces the higher-intensity activation when the two tasks are pitted against each other. The unique and shared maps used in the fMRI analysis therefore offer an additional perspective on what is unique to each of two processes (e.g., motor vs. semantic; picture vs. word) and on what is shared between them. Because the shared and unique maps are computed within participants and then averaged to produce the final maps, the final shared map is mathematically independent of (not mutually exclusive with) the final unique map.

University undergraduate students (N = 16; mean age = 23; all right-handed) with normal or corrected-to-normal vision participated in this experiment. The research was approved by the University of Saskatchewan Behavioral Sciences Ethics Committee.

In the following experiment, participants completed a motor localization task and two semantic generation tasks (pictures and words). Each participant completed both the picture and word semantic generation tasks with either hand or foot stimuli (eight participants in the foot condition and eight in the hand condition). The motor localization task was used to determine the location of the hand and foot motor cortex. To allow comparison with the tasks described below, a visual cue was given on each trial: the word “Hand” (hand condition) or “Foot” (foot condition) was presented on the screen, and participants were instructed to move the corresponding body part while the word was on the screen. For the hand condition, movement involved sequential bimanual finger-to-thumb movements. For the foot condition, movement involved bimanual foot-pedaling motions. The order of these two motor tasks was counterbalanced across participants. For the foot-pedaling motions, participants were instructed to move only their feet and no other part of their body. Placing a large-angled piece of foam under the knees ensured that the foot-pedaling condition did not create any motion in the upper body.

For the semantic generation tasks, the stimuli were visually presented pictures and words referring to objects that are typically used with the hand (e.g., stapler) or the foot (e.g., soccerball). There were 50 objects in each of the picture and word conditions (Appendix A), and the same objects were presented in both conditions. Although we do not compare the hand and foot conditions with each other, we nevertheless matched the hand and foot stimuli as closely as possible on length [t (51) = 1.157, p = 0.253] and subtitle word frequency (SUBTLEX frequency per million words) [t (51) = −1.173, p = 0.246], using the norms from the English Lexicon Project (Balota et al., 2007); some words could not be matched because they were not included in the database. Order of presentation format (picture/word) and stimulus type (hand/foot) was counterbalanced across participants. Participants were presented with five blocks of pictures or words (five items per block) referring to objects that are primarily used with the hand or the foot, and were instructed to quickly describe how they would physically interact with each object during a gap in image acquisition (i.e., using a sparse-sampling image acquisition method). This paradigm allows participants to report their own conceptual knowledge about the objects, rather than judging whether they agree with a predetermined categorization of the objects. The gap also allowed the experimenter to listen to each response to ensure that it was appropriate for the task (e.g., Borowsky et al., 2005a, 2006, 2007; Esopenko et al., 2008). An example hand response (for pen) is “write with it,” and an example foot response (for soccerball) is “kick it.”

The imaging was conducted using a 1.5 T Siemens Symphony (Erlangen, Germany) magnetic resonance imager. For both the motor and semantic generation tasks, 55 image volumes were obtained, each consisting of 12 axial single-shot fat-saturated echo-planar imaging (EPI) slices; TR = 3300 ms, with a 1650 ms gap of no image acquisition at the end of the TR; TE = 55 ms; 64 × 64 acquisition matrix; 128 × 128 reconstruction matrix. Each slice was 8 mm thick with a 2 mm interslice gap and was acquired in an interleaved sequence (e.g., slices 1, 3, 5, 2, 4, etc.) to reduce partial-volume crosstalk in the slice dimension. For all tasks, the first five image volumes were used to achieve a steady state and were discarded prior to analysis. The remaining volumes were organized into five blocks of 10 volumes each, for a total of 50 image volumes. Each block consisted of five image volumes collected during the presentation of, and response to, the stimuli, followed by five image volumes collected during rest. A computer running E-Prime software (Psychology Software Tools, Inc., Pittsburgh, PA) was used to trigger each image acquisition in synchrony with the presentation of visual stimuli. The stimuli were presented using a data projector (interfaced with the E-Prime computer) and a back-projection screen that was visible to the participant through a mirror attached to the head coil. To capture a full-cortex volume of images for each participant, either the third or fourth inferior-most slice was centered on the posterior commissure, depending on the superior-inferior distance between the posterior commissure and the top of the brain. T1-weighted high-resolution spin-echo anatomical images (TR = 400 ms, TE = 12 ms, 256 × 256 acquisition matrix, 8 mm slice thickness with 2 mm between slices) were acquired in axial, sagittal, and coronal planes. The positions of the twelve T1 axial images matched the EPI.
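
The timing implied by these acquisition parameters can be checked with a few lines of arithmetic. The Python sketch below uses only values stated above (TR, silent gap, number of volumes, block structure, slice thickness, and interslice gap); the full odd-then-even slice order is an assumption that extends the example sequence given in the text, and all function names are illustrative.

```python
# Arithmetic check of the acquisition protocol. Every constant is taken from the text,
# except the full interleaved slice order, which is an assumed odd-then-even scheme.

TR_MS = 3300           # repetition time
SILENT_GAP_MS = 1650   # gap with no image acquisition at the end of each TR
N_VOLUMES = 55         # volumes acquired per run
N_DISCARDED = 5        # initial volumes discarded to reach steady state
N_BLOCKS = 5           # task/rest cycles per run
TASK_VOLS = 5          # volumes per task period
REST_VOLS = 5          # volumes per rest period
N_SLICES = 12          # axial EPI slices per volume
SLICE_MM = 8           # slice thickness
GAP_MM = 2             # interslice gap


def run_timing():
    """Timing quantities implied by the protocol, in milliseconds."""
    analysed = N_VOLUMES - N_DISCARDED
    assert analysed == N_BLOCKS * (TASK_VOLS + REST_VOLS)  # 50 = 5 blocks of 10
    return {
        "acquisition_window_ms": TR_MS - SILENT_GAP_MS,  # slices are acquired here
        "silent_gap_ms": SILENT_GAP_MS,                  # used for spoken responses
        "run_duration_ms": N_VOLUMES * TR_MS,
        "block_duration_ms": (TASK_VOLS + REST_VOLS) * TR_MS,
        "task_period_ms": TASK_VOLS * TR_MS,
    }


def block_labels():
    """Label each analysed volume: 5 task volumes then 5 rest volumes, repeated 5 times."""
    return (["task"] * TASK_VOLS + ["rest"] * REST_VOLS) * N_BLOCKS


def slab_coverage_mm():
    """Superior-inferior extent from the outer edge of the first slice to that of the last."""
    return N_SLICES * SLICE_MM + (N_SLICES - 1) * GAP_MM


def interleaved_order(n_slices=N_SLICES):
    """Assumed 1-based odd-then-even acquisition order (1, 3, 5, ..., 2, 4, ...)."""
    return list(range(1, n_slices + 1, 2)) + list(range(2, n_slices + 1, 2))
```

Under these values, each run lasts 181.5 s, each task/rest block lasts 33 s (16.5 s of task), and the 12-slice slab spans 118 mm from the edge of the first slice to the edge of the last.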

The motor and semantic generation tasks were analyzed using the BOLDfold technique, which involves correlating the raw data with the averaged BOLD function. This method requires that sufficient time elapse between tasks for the hemodynamic response function (HRF or BOLD function) to return fully to baseline. After correcting for baseline drift, the mean BOLD function for each voxel, collapsing across the repetitions of task and baseline, was determined empirically, then repeated and correlated with the actual data as a measure of consistency across repetitions. The squared correlation (r²) represents the goodness of fit between the mean BOLD function and the observed BOLD data, capturing the variance in the data accounted for by the mean BOLD response. This method also reduces the number of false activations associated with the traditional t test method and, in particular, is less sensitive to motion artifacts (Sarty and Borowsky, 2005). A threshold correlation of r = 0.60 was used to define an active voxel. With this threshold, the false-positive probability is p < 0.05 using a Bonferroni correction for 100,000 comparisons (the approximate number of voxels in an image volume). The combination of a gap in image acquisition and the BOLDfold analysis method minimizes motion artifact (see Sarty and Borowsky, 2005 for a detailed description).
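
The BOLDfold consistency measure can be sketched for a single voxel as follows. This is a minimal illustration written for this description, not the authors' implementation: it assumes the time series has already been drift-corrected and cropped to the 50 analysed volumes (five blocks of ten), folds it into blocks, averages the blocks into a mean BOLD function, repeats that function once per block, and correlates it with the data. The function names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def boldfold(series, n_blocks=5):
    """Fold a drift-corrected voxel time series into blocks and measure consistency.

    Returns (r, r_squared, mean_block): the correlation between the data and the
    mean BOLD function repeated once per block, its square, and the mean function.
    """
    if len(series) % n_blocks:
        raise ValueError("series length must be a whole number of blocks")
    block_len = len(series) // n_blocks
    blocks = [series[k * block_len:(k + 1) * block_len] for k in range(n_blocks)]
    # Mean BOLD function: average each within-block time point across repetitions.
    mean_block = [sum(b[t] for b in blocks) / n_blocks for t in range(block_len)]
    # Repeat the mean function and correlate it with the observed data.
    template = mean_block * n_blocks
    r = pearson_r(series, template)
    return r, r * r, mean_block


def is_active(series, theta=0.60, n_blocks=5):
    """Binary mask value chi: 1 if the consistency correlation exceeds theta, else 0."""
    r, _, _ = boldfold(series, n_blocks)
    return 1 if r > theta else 0
```

A perfectly repeating response gives r = 1, whereas a response whose sign flips from block to block yields a low r and is excluded. At the threshold used here, r = 0.60 corresponds to r² = 0.36, i.e., the repeated mean BOLD function accounts for 36% of the variance in the voxel's time series.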

fMRI maps were computed for the motor and semantic generation tasks using a technique for separating activations unique to each condition from those that are shared between conditions (Borowsky et al., 2005a, 2007). For each condition $C$, for each participant, a threshold map $r_C(p)$ of correlation values and a visibility map $V_C(p)$ (intensity of BOLD amplitude) were computed, where $p$ is a voxel coordinate. The corresponding activation map for $C$, for each participant, was defined as $M_C(p) = \chi_{C,\theta}(p)\,V_C(p)$, where $\chi_{C,\theta}(p) = 1$ if $r_C(p) > \theta$ and zero otherwise. The threshold $\theta$ represents the minimal acceptable correlation between the original BOLD function and its mean (repeated across the five blocks), and thus serves as a BOLD response consistency threshold. In other words, a voxel is included in the binary mask (value 1) if the correlation representing consistency between the original BOLD function and its mean exceeds $\theta = 0.60$, and is excluded (value 0) otherwise. Shared maps ($M_{\mathrm{shared}}$) and unique maps ($M_{\mathrm{unique}}$) were computed for paired conditions A and B for each participant according to:

$$M_{\mathrm{unique}}(p) = \left[M_A(p) - M_B(p)\right]\left|\chi_{A,\theta}(p) - \chi_{B,\theta}(p)\right| \tag{1}$$

$$M_{\mathrm{shared}}(p) = \chi_{A,\theta}(p)\,\chi_{B,\theta}(p)\,\frac{M_A(p) + M_B(p)}{2} \tag{2}$$

Equation 1 (unique activation) examines the two conditions for each voxel within each participant: if both conditions surpass the BOLD consistency threshold correlation, then the latter part of the equation amounts to [1–1] and the unique activation for that voxel would be zero; if only one condition surpasses the consistency threshold, then the latter part of the equation amounts to [1–0] and the unique activation for that voxel for that condition would be determined by the earlier part of the equation [BOLD intensity for condition A–0] or [0–BOLD intensity for condition B]. In other words, unique activation is driven by only one, but not both, conditions passing the BOLD consistency threshold. Equation 2 (shared activation) also examines the same two conditions for each voxel within each participant: if both conditions surpass the BOLD consistency threshold correlation, then the earlier part of the equation amounts to 1 * 1 and the shared activation for that voxel would be determined by the average of the BOLD intensities for both conditions; if only one condition surpasses the consistency threshold, then multiplication by 0 results in zero shared activation for that voxel. In other words, shared activation is driven by both conditions passing the BOLD consistency threshold.
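
Equations 1 and 2 can be expressed per voxel as in the sketch below, which handles one voxel of one participant at a time with θ = 0.60 as in the text. The function names are hypothetical. For contrast, the sketch also computes a conventional subtraction value, which assigns a voxel to whichever condition has the larger amplitude even when both conditions pass the consistency threshold.

```python
def activation(r, v, theta=0.60):
    """Return (chi, M): chi = 1 if r > theta else 0, and M = chi * V."""
    chi = 1 if r > theta else 0
    return chi, chi * v


def unique_shared(r_a, v_a, r_b, v_b, theta=0.60):
    """Per-voxel unique (Eq. 1) and shared (Eq. 2) values for conditions A and B."""
    chi_a, m_a = activation(r_a, v_a, theta)
    chi_b, m_b = activation(r_b, v_b, theta)
    # Eq. 1: +V_A if only A passes, -V_B if only B passes, 0 if both or neither pass.
    unique = (m_a - m_b) * abs(chi_a - chi_b)
    # Eq. 2: mean amplitude of A and B if both pass, otherwise 0.
    shared = chi_a * chi_b * (m_a + m_b) / 2
    return unique, shared


def subtraction(v_a, v_b):
    """Conventional subtraction value, for contrast: the sign follows the larger amplitude."""
    return v_a - v_b


def maps_for_participant(r_a, v_a, r_b, v_b, theta=0.60):
    """Apply Eqs. 1 and 2 voxel by voxel to equal-length per-voxel lists."""
    pairs = [unique_shared(*vox, theta=theta) for vox in zip(r_a, v_a, r_b, v_b)]
    return [u for u, _ in pairs], [s for _, s in pairs]
```

For a voxel where both conditions pass θ with amplitudes 3.0 and 1.0, `unique_shared(0.8, 3.0, 0.7, 1.0)` returns a unique value of 0 and a shared value of 2.0, whereas the subtraction value of 2.0 would count the same voxel as specific to condition A.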

The unique map represents the symmetric difference (A∪B)\(A∩B) and shows task subtraction for activations that are not common to conditions A and B (voxels unique to A are coded positive, and voxels unique to B negative). The shared map represents the intersection A∩B, showing activation common to conditions A and B, with the activation amplitude coded as the average of A and B. Unique and shared maps were averaged across participants separately for each condition, after smoothing and transformation to Talairach coordinates (Talairach and Tournoux, 1988), to produce the final maps described below. Consistent and significant low-intensity BOLD functions are as important to understanding perception and cognition as consistent and significant high-intensity BOLD functions; thus, the maps are presented without scaling the color to vary with intensity (i.e., the maps are binary; see also Borowsky et al., 2005a, 2006, 2007, 2012; Esopenko et al., 2008).
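
The sketch below illustrates why the final averaged maps are not mutually exclusive even though each participant's maps are. It represents each map as a flat list of voxel values on a common grid and, for simplicity, ignores smoothing and Talairach transformation (smoothing would itself blur the within-participant boundary). The function names and the numbers in the usage note are invented for illustration.

```python
def average_maps(per_participant):
    """Average per-participant (unique_map, shared_map) pairs voxel by voxel.

    Each map is a list of voxel values assumed to lie on a common voxel grid
    (smoothing and Talairach transformation are not modelled here).
    """
    n = len(per_participant)
    n_vox = len(per_participant[0][0])
    mean_unique = [sum(u[i] for u, _ in per_participant) / n for i in range(n_vox)]
    mean_shared = [sum(s[i] for _, s in per_participant) / n for i in range(n_vox)]
    return mean_unique, mean_shared


def exclusive(unique_map, shared_map):
    """True if no voxel is nonzero in both maps (always true within one participant)."""
    return all(u == 0 or s == 0 for u, s in zip(unique_map, shared_map))


def overlapping_voxels(unique_map, shared_map):
    """Indices of voxels that are nonzero in both maps."""
    return [i for i, (u, s) in enumerate(zip(unique_map, shared_map)) if u != 0 and s != 0]
```

With two participants whose (unique, shared) maps are ([0, 2], [3, 0]) and ([1, 0], [0, 2]), each participant's maps are exclusive, but the group averages are [0.5, 1.0] and [1.5, 1.0], so both voxels appear in both group maps.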

Using the AFNI software (Cox, 1996), voxels separated by 1.1 mm (i.e., the effective in-plane voxel resolution) were clustered, and clusters with a volume of less than 100 μL were clipped out at the participant level. The data were then spatially blurred using an isotropic Gaussian kernel with a full width at half maximum (FWHM) of 3.91 mm. The images were then averaged across participants after Talairach transformation to a standardized brain atlas (Talairach and Tournoux, 1988). Visual inspection of the individual participants' anatomical images did not reveal any structural abnormalities that would compromise the averaging of data in Talairach space. Mean activation maps in Talairach coordinates were determined for each map type, along with the corresponding one-sample t statistic for map amplitude (against zero) at each voxel. The final maps for the motor and semantic generation conditions show voxels that surpassed the θ threshold at the individual level and the one-tailed t test of map amplitude against zero at the group level [t (7) = 1.895, p < 0.05].
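
The group-level step can be sketched with two small helpers. The first converts the 3.91 mm FWHM to the standard deviation of the Gaussian kernel, σ = FWHM / (2√(2 ln 2)). The second computes a one-sample t test of one voxel's map amplitudes (one value per participant, n = 8) against zero and compares it with the one-tailed critical value t (7) = 1.895 reported above. This is an illustrative sketch, not the AFNI procedure, and the function names are hypothetical. The text does not state how negatively coded voxels unique to condition B were tested, so the sketch tests only the positive direction.

```python
import math

T_CRIT_DF7_ONE_TAILED = 1.895  # critical t for n = 8 participants (df = 7), p < .05


def fwhm_to_sigma(fwhm_mm):
    """Standard deviation of a Gaussian kernel with the given full width at half maximum."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def one_sample_t(values):
    """One-sample t statistic of the values against zero (sample SD with n - 1)."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    if sd == 0:
        raise ValueError("t is undefined when all values are identical")
    return mean / (sd / math.sqrt(n))


def passes_group_test(values, t_crit=T_CRIT_DF7_ONE_TAILED):
    """One-tailed test in the positive direction; the default t_crit assumes 8 values (df = 7)."""
    return one_sample_t(values) > t_crit
```

For a 3.91 mm FWHM, σ ≈ 1.66 mm.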

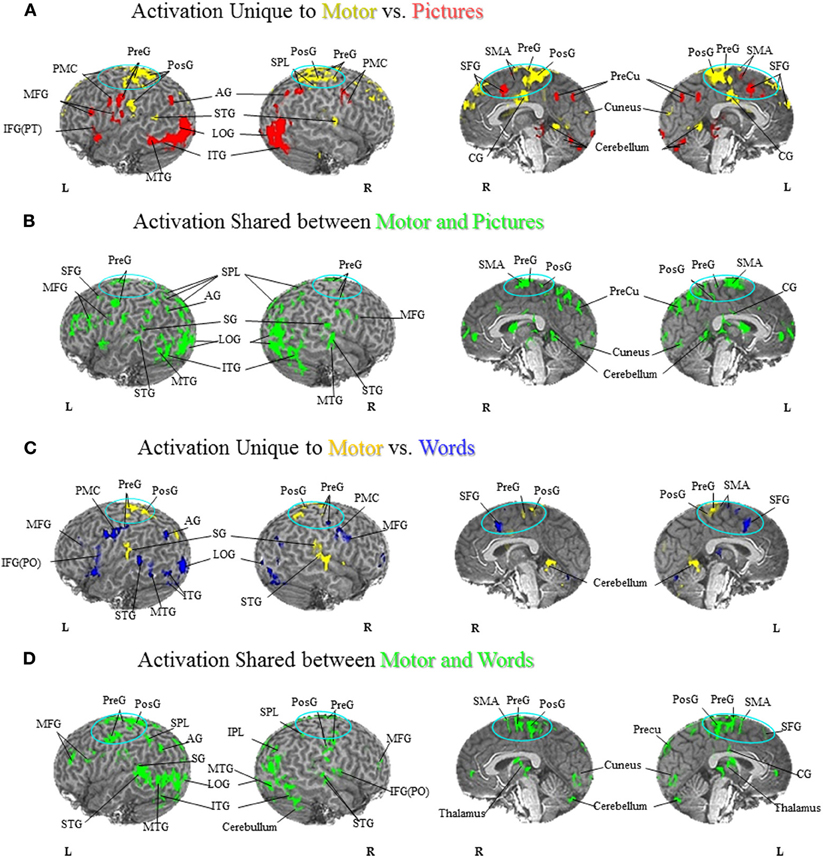

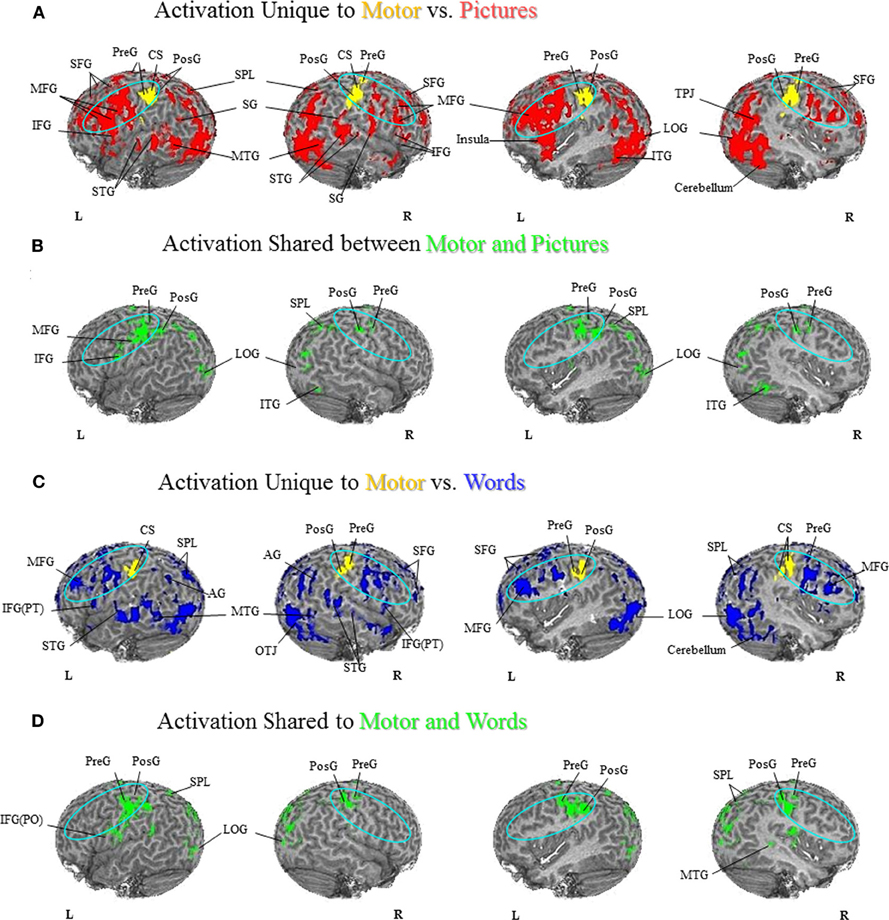

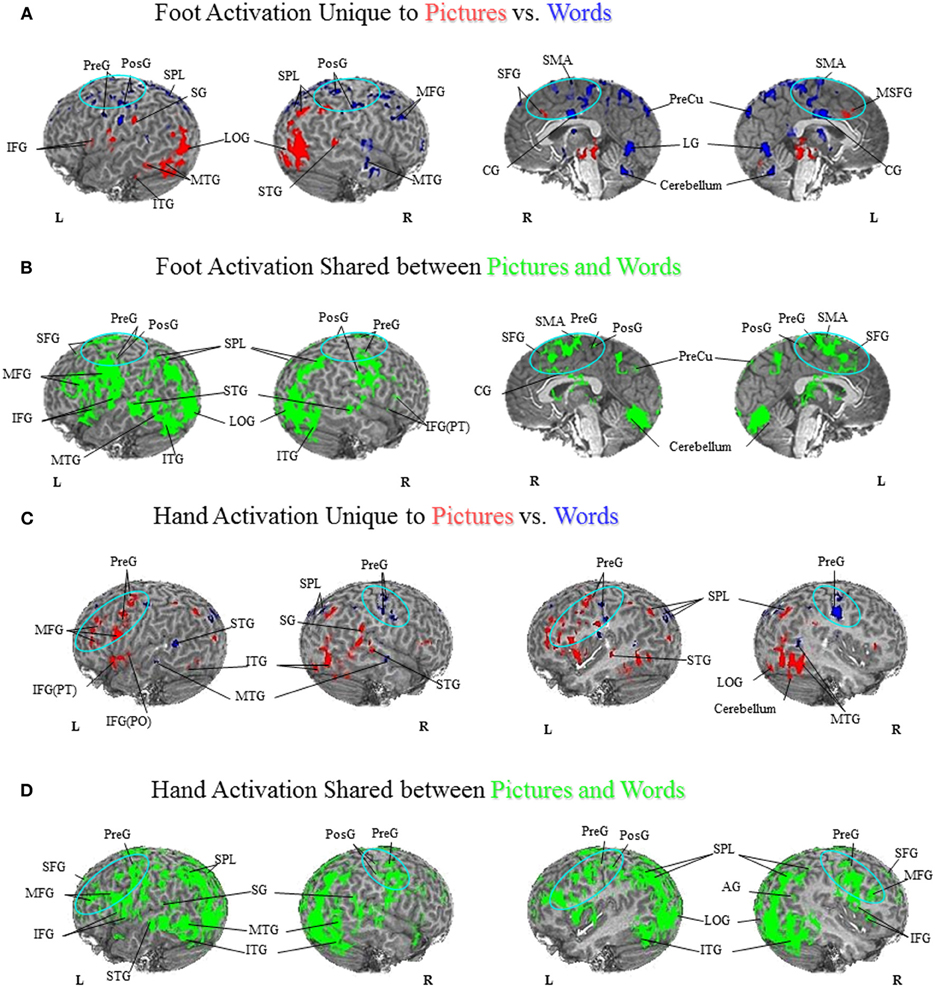

Comparison of unique versus shared activation in the PFN is central to the hypotheses evaluated in this paper. A detailed description of the regions activated in all tasks is given in the captions of Figures 1–3. Figures 1–3 show both significant unique and significant shared activation in the comparison of the motor localization task with the picture and word tasks, for both hand and foot stimuli. The main finding of our experiment was somatotopically organized shared activation in proximity to the sensorimotor and premotor cortices for the hand and foot semantic generation and motor localization tasks (see areas within the ellipses in Figures 1B,D and 2B,D). Furthermore, the hand and foot semantic generation tasks produced somatotopically organized unique activation in proximity to the regions activated by the hand and foot motor localization tasks (see areas within the ellipses in Figures 1A,C and 2A,C). In addition, in the comparison of semantic generation to pictures versus words, we found significant shared and unique activation in the ventral stream (Figure 3). This is to be expected given previous research showing that the ventral stream processes semantic information (for a review see Martin, 2001, 2007; Martin and Chao, 2001). For example, previous research has shown that generating action words to visually presented pictures and words activates the middle temporal gyrus (Martin and Chao, 2001), while the loss of conceptual object knowledge is associated with damage to the left posterior temporal cortex (Hart and Gordon, 1990).

Figure 1. Foot Motor Localization versus Semantic Generation. (A) Activation Unique to Motor vs. Pictures. Activation unique to the motor localization task (yellow coded activation) was found in bilateral superior temporal gyrus (STG), precentral gyrus (PreG) and postcentral gyrus (PosG), and the right superior parietal lobule (SPL) on the lateral surface. Activation was also found in bilateral PreG, PosG, supplementary motor area (SMA), superior frontal gyrus (SFG), and cingulate gyrus (CG) along the midline. For the semantic generation to foot picture stimuli (red coded activation), activation was found in bilateral premotor cortex (PMC) and angular gyrus (AG) on the lateral surface. Activation was also found in the left PreG, middle frontal gyrus (MFG), and inferior frontal gyrus [pars triangularis; IFG(PT)], as well as the right SPL on the lateral surface. Activation along the midline was found in bilateral SMA, SFG, and precuneus (PreCu). Regions of unique activation for the semantic generation to foot picture stimuli and motor localization task outside the PFN were found in bilateral inferior temporal gyrus (ITG) and lateral occipital gyrus (LOG), as well as the left middle temporal gyrus (MTG) on the lateral surface. Activation along the midline was found in bilateral cuneus and cerebellum. (B) Activation Shared between Motor and Pictures. Activation shared between the motor localization and semantic generation to foot picture stimuli (green coded activation) was found in bilateral PreG, SPL, and MFG, as well as the left SFG, supramarginal gyrus (SG), and AG on the lateral surface. Activation was also found in bilateral SMA, PreG, PosG, PreCu, and CG along the midline. Regions of activation shared between the motor localization and the semantic generation to foot picture stimuli outside the PFN were found in bilateral ITG, MTG, STG, LOG, and right cerebellum on the lateral surface, and bilateral cuneus and cerebellum along the midline.
(C) Activation Unique to Motor vs. Words. Activation unique to the motor localization task (yellow coded activation) was found in bilateral PreG, PosG, and SG, and right STG on the lateral surface. Activation was also found in bilateral PreG and PosG, as well as the left SMA along the midline. For the semantic generation to foot word stimuli (blue coded activation), activation was found in bilateral PMC, MFG, and PreG, as well as the left IFG [pars opercularis; IFG(PO)] and AG, and the right inferior parietal lobule (IPL) on the lateral surface. Activation was also found in bilateral SFG and left SMA along the midline. Regions of unique activation for the semantic generation to foot word stimuli and motor localization task outside the PFN were found in the bilateral LOG and cerebellum, as well as the left STG, MTG, and ITG on the lateral surface. (D) Activation Shared between Motor and Words. Shared activation between the motor localization and semantic generation to foot word stimuli (green coded activation) was found in bilateral MFG, PreG, PosG, and SPL, as well as the left AG and SG, the right IPL, and IFG(PO) on the lateral surface. Activation was also found in bilateral SMA, PreG, and PosG, and the left SFG, PreCu, and CG along the midline. Regions of shared activation between the motor localization and semantic generation to foot word stimuli outside the PFN were found in bilateral STG, ITG, MTG, LOG, and cerebellum on the lateral surface, and in bilateral cuneus and cerebellum along the midline.

Figure 2. Hand Motor Localization versus Semantic Generation. (A) Activation Unique to Motor vs. Pictures. Activation unique to the motor localization task (yellow coded activation) was found in bilateral PreG and PosG. For the semantic generation to hand picture stimuli (red coded activation), activation was found in bilateral MFG, SFG, PMC, IFG, SPL, and SG, as well as the right temporal-parietal junction and IPL. Regions of unique activation outside the PFN for the semantic generation to hand picture stimuli were found in bilateral STG, MTG, and LOG, and right cerebellum. (B) Activation Shared between Motor and Pictures. Shared activation between the motor localization and semantic generation to hand picture stimuli (green coded activation) was found in bilateral PreG, PosG, and SPL, as well as the left PMC and IFG. Regions of shared activation between the motor localization and semantic generation to hand picture stimuli outside the PFN were found in the bilateral LOG and right ITG. (C) Activation Unique to Motor vs. Words. Activation unique to the motor localization task (yellow coded activation) was found in bilateral PreG and PosG. For the semantic generation to hand word stimuli (blue coded activation), activation was found in bilateral MFG, SFG, IFG(PT), and SPL, and left AG, as well as right IPL. Regions of unique activation for the semantic generation to hand word stimuli outside the PFN were found in bilateral STG, MTG, and LOG, as well as the right cerebellum. (D) Activation Shared between Motor and Words. Shared activation between the motor localization and semantic generation to hand word stimuli (green coded activation) was found in bilateral PreG, PosG, and SPL, and the left SG and IFG(PO). Regions of shared activation between the motor localization task and semantic generation to words outside the PFN were found in bilateral LOG and right MTG.

Figure 3. Semantic Generation of Pictures versus Words. (A) Foot Activation Unique to Pictures vs. Words. For the semantic generation to foot picture stimuli (red coded activation), activation was found in the left IFG and SG and right IPL on the lateral surface, and bilateral SFG along the midline. For the semantic generation to foot word stimuli (blue coded activation), unique activation was found in bilateral PreG, PosG, and SPL, and right MFG on the lateral surface, and bilateral SMA, CG, and PreCu, as well as right SFG along the midline. Regions of unique activation for the semantic generation to foot picture stimuli outside the PFN were found in the bilateral LOG, the left ITG and MTG, and the right STG on the lateral surface. Regions of unique activation for the semantic generation to foot word stimuli outside the PFN were found in the right MTG on the lateral surface, and bilateral lingual gyrus and cerebellum along the midline. (B) Foot Activation Shared between Pictures and Words. Shared activation between the semantic generation to foot picture stimuli and foot word stimuli (green coded activation) was found in bilateral PreG, PosG, PMC, and IFG, and left SPL, AG, MFG, and SFG on the lateral surface. Activation was also found in bilateral SFG, SMA, PreG, PosG, CG, and PreCu along the midline. Regions of shared activation for the semantic generation to foot picture and word stimuli outside the PFN were found in bilateral STG, ITG, and LOG, as well as the left MTG on the lateral surface, and bilateral cerebellum along the midline. (C) Hand Activation Unique to Pictures vs. Words. For the semantic generation to hand picture stimuli (red coded activation), activation was found in the left STG, MFG, PMC, IFG(PO), IFG(PT), and PreG, the right SG, and bilateral SPL. For the semantic generation to hand word stimuli (blue coded activation), activation was found in bilateral PreG and SPL.
Regions of unique activation for the semantic generation to hand picture stimuli outside the PFN were found in bilateral ITG and MTG, and the right STG, LOG, and cerebellum. Regions of unique activation for the semantic generation to hand word stimuli outside the PFN were found in the left STG and bilateral MTG. (D) Hand Activation Shared between Pictures and Words. Shared activation between the semantic generation to hand picture stimuli and hand word stimuli (green coded activation) was found in bilateral MFG, IFG, SFG, PreG, PosG, PMC, SG, and SPL, as well as the left AG and right IPL. Regions of shared activation for the semantic generation to hand picture and hand word stimuli outside the PFN were found in bilateral STG, MTG, ITG, and LOG.

The goal of our current research was to examine whether semantic generation to pictures and words activated the PFN somatotopically and, moreover, whether the semantic generation and motor localization tasks activated a shared network in the PFN. That is, we asked whether generating a use for a hand or foot stimulus activates regions in proximity to the sensorimotor cortices, and whether there is shared activation between the semantic generation and motor localization tasks in proximity to the hand or foot sensorimotor cortices (see areas included in the ellipses in Figures 1–3). The word task in our experiment replicated earlier work from our lab showing unique and shared activation between motor localization and semantic generation to words in the premotor regions. Our results show that, for motor localization, the foot-pedaling task produced unique activation in the foot region of the motor cortex (Figures 1A,C), while the finger-touch task produced unique activation in the hand region of the motor cortex (Figures 2A,C). In the comparison of the foot motor localization and semantic generation tasks, when participants described how they would interact with foot stimuli presented in both picture and word format, there was a superior dorsal network of activation in the PFN (see areas in ellipses: Figures 1A,C). In the comparison of the hand motor localization and semantic generation tasks, when participants described how they would interact with hand stimuli presented in both picture and word format, there was a dorsolateral network of activation in the PFN (see areas in ellipses: Figures 2A,C). As such, these results not only replicate earlier findings from the Esopenko et al. (2008) semantic generation task with words, but also show that generating responses to picture stimuli activates regions proximal to the sensorimotor cortices.
In addition, we found a shared network of activation between the hand and foot motor localization and semantic generation tasks in the PFN regardless of presentation format (i.e., pictures and words; see areas in ellipses: Figures 1B,D and Figures 2B,D). This shared activation was somatotopically organized in accordance with the sensorimotor somatotopic locations, whereby foot stimuli activated the dorsal regions of the PFN, while hand stimuli activated the dorsolateral regions of the PFN.

Embodied cognition theorists suggest that the brain represents semantic knowledge as a function of interacting with the environment, in a way that facilitates the processing of sensorimotor information (Wilson, 2002; Gibbs, 2006). According to Gallese and Lakoff (2005, p. 456), an individual's “conceptual knowledge is embodied,” whereby conceptual knowledge is “mapped within our sensory-motor system.” Moreover, theories of mental simulation hold that conceptual processing is bodily based, in that it makes use of our sensorimotor system via simulation of action and perception (Svensson and Ziemke, 2004; Gallese and Lakoff, 2005). As such, the neural structures that are responsible for processing action and perceptual information would also be responsible for the conceptual processing of action-related language (Svensson and Ziemke, 2004; Grafton, 2009). Hence, if the brain represents semantic knowledge in a way that facilitates sensorimotor processing, we should see evidence of embodiment in the regions that process sensorimotor information (Barsalou, 1999). Past neuroimaging research has shown evidence consistent with embodied cognition, demonstrating somatotopic semantic organization in the PFN when responding to action-related language (Hauk et al., 2004; Tettamanti et al., 2005; Boulenger et al., 2009). These results show that regions proximal to the motor cortex are activated when processing action-related language. However, to determine whether these regions reflect organization consistent with the theory of embodied cognition, in the current study we examined whether motor and conceptual language tasks produce common regions of activation (see also the Esopenko et al., 2008 experiment with word stimuli).
The comparisons involving the hand and foot motor localization tasks and semantic generation to pictures and words showed that generating responses to hand and foot stimuli activated regions proximal to the sensorimotor and premotor cortices in a somatotopic fashion. Our results are consistent with the hypothesis that regions that encode sensorimotor experiences are activated when retrieving sensorimotor information, and thus provide neuroanatomical support for the theory of embodied cognition. In addition, these results show that the PFN responds to action-related stimuli regardless of word versus picture presentation format.

A major criticism of embodied theories is that motor system activation may be a by-product of the semantic analysis of a stimulus rather than a requirement for semantic processing (Caramazza et al., 1990; Mahon and Caramazza, 2005, 2008). According to the disembodied view, conceptual representations are abstract and symbolic and are distinct from sensory and motor experiences (Caramazza et al., 1990; Mahon and Caramazza, 2005, 2008). On this view the motor system may still be activated, but its activation is not required. Specifically, although both conceptual and motor regions may be activated when processing conceptual information, processing occurs in conceptual regions and then spreads to the motor regions (Mahon and Caramazza, 2008). As such, conceptual processing does not depend on the simulation of sensorimotor experiences, and such simulation is not required to understand the meaning of a conceptual representation (Mahon and Caramazza, 2005, 2008). Evidence in favor of the disembodied account comes from apraxia patients who are impaired when using objects, but can name and pantomime the use associated with an object (Negri et al., 2007). This suggests that action-related language can remain intact despite damage to the motor regions. However, it should be noted that the patients examined by Negri and colleagues had lesions that were not restricted to the motor, sensory, and parietal regions, but were widespread, including regions outside the PFN (e.g., the temporal lobe). Nevertheless, disembodied theories hold that activation in the premotor regions must be due to activation spreading from other regions after the semantic processing of a stimulus.
That being said, even though the disembodied perspective can provide a plausible explanation for motor activation during conceptual processing, there is evidence that the involvement of the sensorimotor and premotor cortices in processing action-related semantic information reflects more than spreading activation.

Previous neuroimaging research has shown that the sensorimotor and premotor cortices are activated when processing action-related stimuli (e.g., silently reading action words or listening to action-related sentences), and that this activation is organized somatotopically according to the effector the stimulus represents (Hauk et al., 2004; Pulvermuller, 2005; Tettamanti et al., 2005; Esopenko et al., 2008; Boulenger et al., 2009; Raposo et al., 2009; Boulenger and Nazir, 2010). In addition, behavioral research has shown that the sensorimotor properties of a sentence can affect an individual’s ability to make a physical response to that sentence (Glenberg and Kaschak, 2002). Furthermore, behavioral studies have shown that the degree of physical interaction associated with a stimulus affects responding: stimuli that are easier to interact with are responded to faster and more accurately in tasks that target semantic, phonological, and orthographic processing (Siakaluk et al., 2008a,b). Finally, studies of patient groups with damage to the motor system and motor pathways show deficits in responding to action-related language, suggesting that the motor system is involved in responding to action-related semantic information (Bak et al., 2001; Boulenger et al., 2008; Cotelli et al., 2006a,b).

Recent electrophysiological and stimulation studies have provided some support for the theory of embodied cognition by demonstrating that the motor system is activated quickly, likely during semantic processing and not just after it. To determine whether the sensorimotor and premotor regions are activated during or after semantic processing, previous research has used either (1) magnetoencephalography (MEG), to examine whether semantic processing occurs before the sensorimotor system is activated or whether the motor system is activated quickly following the presentation of a stimulus, which would suggest that semantic processing requires the motor system; or (2) transcranial magnetic stimulation (TMS) of the motor system, to determine whether responding to action words is facilitated or inhibited when these regions are stimulated. Using MEG, Pulvermuller et al. (2005b) examined the spatial and temporal processing of spoken face-related (e.g., eat) and leg-related (e.g., kick) action words. They found that face-related and leg-related words activated the frontocentral and temporal regions. Of particular interest, Pulvermuller et al. found that the processing of face-related and leg-related words activated the frontocentral cortex somatotopically: face-related words more strongly activated inferior frontocentral regions, while leg-related words more strongly activated more dorsal, superior central regions. Moreover, Pulvermuller and colleagues (2005b) found that semantic processing occurred early in these regions, in that the inferior frontocentral and superior central regions were activated approximately 170–200 ms after the presentation of word stimuli. They also found early activation peaking around 160 ms in the superior temporal regions, but suggested that this activation was likely related to phonological, acoustic, and lexical processing rather than semantic processing.
As such, the authors proposed that access to semantic information in the frontocentral motor regions occurs quite early, and thus that activation in these regions is unlikely to occur only after semantic processing has taken place. Furthermore, research has shown that semantic activation typically occurs later than 200 ms after stimulus onset. For example, Pulvermuller et al. (2000) showed that differentiation between classes of verbs referring to different action types began 240 ms after the onset of an action word. Moreover, Pulvermuller et al. (1999) showed that the semantic distinction between noun and verb word classes emerges between 200 and 230 ms, again suggesting that the early activation in the motor cortices reported by Pulvermuller et al. (2005b) most likely occurs just prior to, or at least during, the semantic analysis of the stimulus.

Given the findings that the motor system is involved in the processing of language, Pulvermuller et al. (2005a) examined whether stimulating the motor system with TMS affects the processing of action-related language. Sub-threshold TMS was applied to hand and leg cortical areas while participants performed a lexical decision task on arm-related (e.g., grasp) and leg-related (e.g., kick) words and pseudowords. They found that applying TMS to motor regions facilitated responses to action words. In particular, when TMS was applied to the arm motor regions, lexical decisions to arm words were faster than lexical decisions to leg words, whereas when TMS was applied to the leg motor regions, lexical decisions to leg words were faster than lexical decisions to arm words. Based on the finding that sub-threshold TMS facilitates responding to effector-specific action words, Pulvermuller and colleagues (2005a) proposed that the activation of the motor regions is not simply due to these regions being activated after semantic processing, but rather that these regions are actively involved in processing action-related language. Furthermore, they suggested that the sensorimotor regions process effector-specific language information, and thus play a significant role in the processing of effector-specific action words. Taken together, the findings from both studies suggest that the involvement of the motor system in the processing of action-related language is not simply a by-product of the semantic processing of the stimulus, but rather that the motor system plays a role in that semantic processing.

Our functional imaging results are consistent with the theory of embodied cognition, in that they show that the motor and premotor systems are involved in responding to action-related stimuli. Specifically, the results demonstrated shared, or overlapping, activation in regions that were activated both during a motor localization task and during a semantic generation task in which no arm or leg movements were made. Here, shared activation was isolated within each participant before averaging, unlike other conjoint analysis methods in the literature that do not first isolate shared regions within participants. Measured this way, the shared activation between the motor and semantic tasks can be taken as support for embodied cognition in the spatial domain of brain topography, as it shows that activation of the motor system overlaps spatially with activation for conceptual representations. However, research is still needed to determine the temporal dynamics of this system using electrophysiological methods with higher temporal resolution (e.g., event-related potentials, electroencephalography, and MEG) during an overt semantic generation task. If such methods ultimately demonstrate that semantic activation occurs prior to activation in these shared regions (as measured by a motor task and an overt semantic generation task), then there would be more compelling evidence in support of disembodied cognition in the temporal domain of mental chronometry. That said, one must also use caution when employing this logic, as it ignores the issue of top-down processing.
For example, activation that has been reported in primary visual cortex as a function of mental imagery (e.g., Kosslyn et al., 1995) cannot begin before some degree of semantic activation has occurred (i.e., one needs to know what one is to imagine before the primary visual regions can simulate the referent), but it does not follow that this primary visual activation is just a by-product of imagination; it clearly reflects top-down activation of an essential component of visual imagery. Similarly, later motor/somatosensory activation in the current context could simply reflect a top-down effect of semantics on the motor/sensory system, but this would not make the involvement of the motor/sensory system any less interesting or important. Indeed, if one were to redefine embodied cognition as simply another example of imagery (or simulation), like visual imagery but in the motor/sensory domain, it would be part of a larger (and less contentious) field of research on top-down processing.
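The within-participant logic described above (isolate the shared voxels in each participant, then aggregate across the group) can be contrasted with intersecting group-averaged maps using a toy example. The sketch below is a minimal Python illustration under stated assumptions, not the analysis pipeline used in this study: each participant's thresholded map is represented as a set of hypothetical voxel coordinates, the function names `partition_maps`, `group_map`, `shared_within_then_average`, and `average_then_intersect` are illustrative, the group criterion `min_fraction` (0.5 of participants) is an assumed placeholder, and registration of all maps to a common stereotaxic space is assumed to have been done already.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

# Hypothetical representation: a voxel is an (x, y, z) grid index, and a
# thresholded activation map is the set of voxels that passed threshold.
Voxel = Tuple[int, int, int]


def partition_maps(task_a: Set[Voxel], task_b: Set[Voxel]) -> Dict[str, Set[Voxel]]:
    """Split ONE participant's two thresholded maps into shared and unique voxels."""
    return {
        "shared": task_a & task_b,    # active in both tasks for this participant
        "unique_a": task_a - task_b,  # active only in task A
        "unique_b": task_b - task_a,  # active only in task B
    }


def group_map(maps: List[Set[Voxel]], min_fraction: float) -> Set[Voxel]:
    """Keep voxels present in at least `min_fraction` of the participants' maps."""
    if not maps:
        return set()
    counts = Counter(v for voxel_set in maps for v in voxel_set)
    n = len(maps)
    return {v for v, c in counts.items() if c / n >= min_fraction}


def shared_within_then_average(maps_a: List[Set[Voxel]], maps_b: List[Set[Voxel]],
                               min_fraction: float = 0.5) -> Set[Voxel]:
    """Isolate shared voxels per participant first, then aggregate across the group."""
    shared = [partition_maps(a, b)["shared"] for a, b in zip(maps_a, maps_b)]
    return group_map(shared, min_fraction)


def average_then_intersect(maps_a: List[Set[Voxel]], maps_b: List[Set[Voxel]],
                           min_fraction: float = 0.5) -> Set[Voxel]:
    """Aggregate each task across the group first, then intersect the group maps."""
    return group_map(maps_a, min_fraction) & group_map(maps_b, min_fraction)


# Toy data for two hypothetical participants: voxel (0, 0, 0) is active in both
# tasks for each person; voxel (1, 0, 0) is active in the motor task for one
# participant and in the semantic task for the other, but never in both.
motor = [{(0, 0, 0), (1, 0, 0)}, {(0, 0, 0)}]
semantic = [{(0, 0, 0)}, {(0, 0, 0), (1, 0, 0)}]

print(sorted(shared_within_then_average(motor, semantic)))  # [(0, 0, 0)]
print(sorted(average_then_intersect(motor, semantic)))      # [(0, 0, 0), (1, 0, 0)]
```

In the toy data, voxel (1, 0, 0) survives the average-then-intersect route even though no single participant activated it in both tasks, whereas the within-participant route excludes it. This illustrates one way in which the order of operations can change what counts as "shared" activation.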

Previous patient and behavioral research suggests that pictures and words have differential access to action-related knowledge (Lhermitte and Beauvois, 1973; Bub et al., 1988; Lambon Ralph and Howard, 2000; Chainey and Humphreys, 2002; Saffran et al., 2003; Thompson-Schill et al., 2006). Furthermore, previous neuroimaging research has shown that pictures and words are processed in both shared and unique brain regions (Vandenberghe et al., 1996; Borowsky et al., 2005a). Based on these results, we predicted that if pictures and words both provide access to action-related semantic representations, we should see shared regions of activation in the PFN. However, given that patients can show a deficit in retrieving information when a stimulus is presented in picture format, yet still have access to the same information when it is presented in word format (and vice versa), we predicted that we should also see unique activation in the PFN. As such, our research examined whether action-related processes elicited by pictures and words occur in the same regions, as shown by shared activation, or in separate regions, as shown by unique activation. As shown in Figure 3, there is substantial shared activation in the PFN between pictures and words, demonstrating that both stimulus formats have access to action representations in the PFN. However, our results also show activation in the PFN that is unique to pictures or to words, suggesting that there is differential access to action-related knowledge. Taken together, our results suggest that there is both shared and unique activation for pictures and words in regions that process embodied information.

One limitation of our research was that each participant responded to either arm or leg stimuli, but not both. Given that our analysis for computing unique and shared activation maps requires within-participant manipulation of conditions, we could not determine whether, or to what degree, shared or unique activation in the PFN was due to overlap between effectors or to some general overlap in processing. In other words, responding to hand and foot stimuli might produce cross-effector activation, whereby some hand stimuli activate foot regions and some foot stimuli activate hand regions. Such overlapping motor programs could affect the shared and unique activation in the PFN. As such, one avenue for future research is to have each participant complete both the hand and the foot motor localization and semantic generation tasks. Such a design would allow us to compare all possible combinations of conditions, and to determine whether any shared activation is due to overlap between hand and foot motor programs.

The fMRI experiment presented here provides a comprehensive examination of a variant of a semantic generation task that permits participants to express their own semantic knowledge in response to action-related picture and word stimuli. Using this ecologically valid task, and a method of analysis that allows for a fair separation of shared regions of processing from unique regions, the functional neuroimaging results extend the data pertinent to evaluating the theory of embodied cognition. Sensorimotor and premotor regions are activated when participants overtly respond to action-related stimuli, and there is shared activation between the motor localization tasks and the semantic generation tasks in the PFN for both word and picture action-related stimuli.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This research was supported by the Natural Sciences and Engineering Research Council (NSERC) of Canada in the form of post-graduate scholarships to C. Esopenko and J. Cummine, a research assistantship to L. Gould, an undergraduate student research award to N. Kuhlmann, and grants to G. E. Sarty and R. Borowsky. R. Borowsky is currently funded by NSERC Discovery Grant 183968–2008–2013. The lead author (C. Esopenko) and senior author (R. Borowsky) contributed equally to this work.

Aziz-Zadeh, L., Wilson, S. M., Rizzolatti, G., and Iacoboni, M. (2006). Congruent embodied representations for visually presented actions and linguistic phrases describing actions. Curr. Biol. 16, 1818–1823.

Bak, T. H., O’Donovan, D. G., Xuereb, J. H., Boniface, S., and Hodges, J. R. (2001). Selective impairment of verb processing associated with pathological changes in Brodmann areas 44 and 45 in the motor neurone disease-dementia-aphasia syndrome. Brain 124, 103–120.

Balota, D. A., Yap, M. J., Cortese, M. J., Hutchison, K. A., Kessler, B., Loftis, B., Neely, J. H., Nelson, D. L., Simpson, G. B., and Treiman, R. (2007). The English Lexicon Project. Behav. Res. Methods 39, 445–459.

Barsalou, L. W. (2009). Simulation, situated conceptualization, and prediction. Philos. Trans. R. Soc. Lond. B Biol. Sci. 364, 1281–1289.

Borowsky, R., Cummine, J., Owen, W. J., Friesen, C. K., Shih, F., and Sarty, G. (2006). fMRI of ventral and dorsal processing streams in basic reading processes: insular sensitivity to phonology. Brain Topogr. 18, 233–239.

Borowsky, R., Esopenko, C., Cummine, J., and Sarty, G. E. (2007). Neural representations of visual words and objects: a functional MRI study on the modularity of reading and object processing. Brain Topogr. 20, 89–96.

Borowsky, R., Esopenko, C., Gould, L., Kuhlmann, N., Sarty, G., and Cummine, J. (2012). Localisation of function for noun and verb reading: converging evidence for shared processing from fMRI activation and reaction time. Lang. Cogn. Processes Spec. Sect. Cogn. Neurosci. Lang.

Borowsky, R., Loehr, J., Friesen, C. K., Kraushaar, G., Kingstone, A., and Sarty, G. (2005a). Modularity and intersection of “what”, “where”, and “how” processing of visual stimuli: a new method of fMRI localization. Brain Topogr. 18, 67–75.

Borowsky, R., Owen, W. J., Wile, T. A., Friesen, C. K., Martin, J. L., and Sarty, G. E. (2005b). Neuroimaging of language processes: fMRI of silent and overt lexical processing and the promise of multiple process imaging in single brain studies. Can. Assoc. Radiol. J. 56, 204–213.

Boulenger, V., Hauk, O., and Pulvermuller, F. (2009). Grasping ideas with the motor system: semantic somatotopy in idiom comprehension. Cereb. Cortex 19, 1905–1914.

Boulenger, V., Mechtouff, L., Thobois, S., Broussolle, E., Jeannerod, M., and Nazir, T. A. (2008). Word processing in Parkinson’s disease is impaired for action verbs but not for concrete nouns. Neuropsychologia 46, 743–756.

Boulenger, V., and Nazir, T. A. (2010). Interwoven functionality of the brain’s action and language systems. Ment. Lexicon 5, 231–254.

Bub, D., Black, S., Hampson, E., and Kertesz, A. (1988). Semantic encoding of pictures and words: some neuropsychological observations. Cogn. Neuropsychol. 5, 27–66.

Caramazza, A., Hillis, A. E., Rapp, B. C., and Romani, C. (1990). The multiple semantics hypothesis: multiple confusions? Cogn. Neuropsychol. 7, 161–189.

Chainey, H., and Humphreys, G. W. (2002). Privileged access to action for objects relative to words. Psychon. Bull. Rev. 9, 348–355.

Cotelli, M., Borroni, B., Manenti, R., Alberici, A., Calabria, M., Agosti, C., Arevalo, A., Ginex, V., Ortelli, P., Binetti, G., Zanetti, O., Padovani, A., and Cappa, S. F. (2006a). Action and object naming in frontotemporal dementia, progressive supranuclear palsy, and corticobasal degeneration. Neuropsychology 20, 558–565.

Cotelli, M., Manenti, R., Cappa, S. F., Geroldi, C., Zanetti, O., Rossini, P. M., and Miniussi, C. (2006b). Effect of transcranial magnetic stimulation on action naming in patients with Alzheimer’s disease. Arch. Neurol. 63, 1602–1604.

Cox, R. W. (1996). AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput. Biomed. Res. 29, 162–173.

Esopenko, C., Borowsky, R., Cummine, J., and Sarty, G. (2008). Mapping the semantic homunculus: a functional and behavioral analysis of overt semantic generation. Brain Topogr. 22, 22–35.

Esopenko, C., Crossley, M., Haugrud, N., and Borowsky, R. (2011). Naming and semantic processing of action-related stimuli following right versus left hemispherectomy. Epilepsy Behav. 22, 261–271.

Gallese, V., and Lakoff, G. (2005). The brain’s concepts: the role of the sensory-motor system in conceptual knowledge. Cogn. Neuropsychol. 22, 455–479.

Glenberg, A. M., and Kaschak, M. P. (2002). Grounding language in action. Psychon. Bull. Rev. 9, 558–565.

Grafton, S. T. (2009). Embodied cognition and the simulation of action to understand others. Ann. N.Y. Acad. Sci. 1156, 97–117.

Hart, J., and Gordon, B. (1990). Delineation of single-word semantic comprehension deficits in aphasia, with anatomical correlation. Ann. Neurol. 27, 226–231.

Hauk, O., Johnsrude, I., and Pulvermuller, F. (2004). Somatotopic representation of action words in human motor and premotor cortex. Neuron 41, 301–307.

Kosslyn, S. M., Thompson, W. L., Kim, I. J., and Alpert, N. M. (1995). Topographic representations of mental images in primary visual cortex. Nature 378, 496–498.

Lambon Ralph, M. A., and Howard, D. (2000). Gogi aphasia or semantic dementia? Simulating and assessing poor verbal comprehension in a case of progressive fluent aphasia. Cogn. Neuropsychol. 17, 437–465.

Lhermitte, F., and Beauvois, M. F. (1973). A visual-speech disconnexion syndrome: report of a case with optic aphasia, agnosia alexia, and colour agnosia. Brain 96, 695–714.

Mahon, B. Z., and Caramazza, A. (2005). The orchestration of the sensory-motor systems: clues from neuropsychology. Cogn. Neuropsychol. 22, 480–494.

Mahon, B. Z., and Caramazza, A. (2008). A critical look at the embodied cognition hypothesis and a new proposal for grounding conceptual content. J. Physiol. Paris 102, 59–70.

Martin, A. (2001). “Functional neuroimaging of semantic memory,” in Handbook of functional neuroimaging of cognition, eds R. Cabeza and A. Kingstone (Cambridge, MA: MIT Press), 153–186.

Martin, A. (2007). The representation of object concepts in the brain. Annu. Rev. Psychol. 58, 25–45.

Martin, A., and Chao, L. L. (2001). Semantic memory and the brain: structure and processes. Curr. Opin. Neurobiol. 11, 194–201.

Negri, G., Rumiati, R., Zadini, A., Ukmar, M., Mahon, B., and Caramazza, A. (2007). What is the role of motor simulation in action and object recognition? Evidence from apraxia. Cogn. Neuropsychol. 24, 795–816.

Penfield, W., and Rasmussen, T. (1950). The Cerebral Cortex of Man: A Clinical Study of Localization of Function. Oxford, England: Macmillan.

Pulvermuller, F., Harle, M., and Hummel, F. (2000). Neurophysiological distinction of verb categories. Neuroreport 11, 2789–2793.

Pulvermuller, F., Hauk, O., Nikulin, V. V., and Ilmoniemi, R. J. (2005a). Functional links between motor and language systems. Eur. J. Neurosci. 21, 793–797.

Pulvermuller, F., Lutzenberger, W., and Preissl, H. (1999). Nouns and verbs in the intact brain: evidence from event-related potentials and high-frequency cortical responses. Cereb. Cortex 9, 497–506.

Pulvermuller, F., Shtyrov, Y., and Ilmoniemi, R. J. (2005b). Brain signatures of meaning access in action word recognition. J. Cogn. Neurosci. 17, 884–892.

Raposo, A., Moss, H. E., Stamatakis, E. A., and Tyler, L. (2009). Modulation of motor and premotor cortices by actions, action words, and action sentences. Neuropsychologia 47, 388–396.

Saffran, E. M., Coslett, H. B., and Keener, M. T. (2003). Differences in word associations to pictures and words. Neuropsychologia 41, 1541–1546.

Sarty, G., and Borowsky, R. (2005). Functional MRI activation maps from empirically defined curve fitting. Concepts Magn. Reson. Part B Magn. Reson. Eng. 24B, 46–55.

Siakaluk, P. D., Pexman, P. M., Aguilera, L., Owen, W. J., and Sears, C. R. (2008b). Evidence for the activation of sensorimotor information during visual word recognition: the body-object interaction effect. Cognition 106, 433–443.

Siakaluk, P. D., Pexman, P. M., Sears, C. R., Wilson, K., Locheed, K., and Owen, W. J. (2008a). The benefits of sensorimotor knowledge: body-object interaction facilitates semantic processing. Cogn. Sci. 32, 591–605.

Svensson, H., and Ziemke, T. (2004). “Making sense of embodiment: simulation theories and the sharing of neural circuitry between sensorimotor and cognitive processes,” presented at the 26th Annual Cognitive Science Society Conference, Chicago, IL.

Talairach, J., and Tournoux, P. (1988). Co-Planar Stereotaxic Atlas of the Human Brain. New York, NY: Thieme Medical Publishers, Inc.

Tettamanti, M., Buccino, G., Saccuman, M. C., Gallese, V., Danna, M., Scifo, P., Fazio, F., Rizzolatti, G., Cappa, S. F., and Perani, D. (2005). Listening to action-related sentences activates fronto-parietal motor circuits. J. Cogn. Neurosci. 17, 273–281.

Thompson-Schill, S. L., Kan, I. P., and Oliver, R. T. (2006). “Functional neuroimaging of semantic memory,” in Handbook of Functional Neuroimaging of Cognition, 2nd Edn, eds R. Cabeza and A. Kingstone (Cambridge, MA: MIT Press), 149–190.

Vandenberghe, R., Price, C., Wise, R., Josephs, O., and Frackowiak, R. S. J. (1996). Functional anatomy of a common semantic system for words and pictures. Nature 383, 254–256.

Willems, R. M., and Hagoort, P. (2007). Neural evidence for the interplay between language, gesture, and action: a review. Brain Lang. 101, 278–289.

Stimuli (see Table 1, available on the Frontiers in Human Neuroscience website: http://www.frontiersin.org/Human_Neuroscience/10.3389/fnhum.2012.00084/abstract)

AG Angular gyrus

CG Cingulate gyrus

IFG(PO) Inferior frontal gyrus (pars opercularis)

IFG(PT) Inferior frontal gyrus (pars triangularis)

IPL Inferior parietal lobule

ITG Inferior temporal gyrus

LOG Lateral occipital gyrus

MFG Middle frontal gyrus

MTG Middle temporal gyrus

PMC Premotor cortex

PreCu Precuneus

PreG Precentral gyrus

PosG Postcentral gyrus

SFG Superior frontal gyrus

SG Supramarginal gyrus

SMA Supplementary motor area

SPL Superior parietal lobule

STG Superior temporal gyrus

Keywords: embodied cognition, fMRI, semantic generation, pictures, words, action-related

Citation: Esopenko C, Gould L, Cummine J, Sarty GE, Kuhlmann N and Borowsky R (2012) A neuroanatomical examination of embodied cognition: semantic generation to action-related stimuli. Front. Hum. Neurosci. 6:84. doi: 10.3389/fnhum.2012.00084

Received: 02 December 2011; Accepted: 25 March 2012;

Published online: 20 April 2012.

Edited by:

Penny M. Pexman, University of Calgary, Canada

Reviewed by:

Diane Pecher, Erasmus University Rotterdam, Netherlands

Copyright: © 2012 Esopenko, Gould, Cummine, Sarty, Kuhlmann, and Borowsky. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: Ron Borowsky, Department of Psychology, University of Saskatchewan, 9 Campus Drive, Saskatoon, SK S7N 5A5, Canada. e-mail: ron.borowsky@usask.ca

Carrie Esopenko, Rotman Research Institute, Baycrest Centre, 3560 Bathurst St. Toronto, ON M6A 2E1, Canada. e-mail:cesopenko@rotman-baycrest.on.ca

