- ¹Department of Microsurgery, Orthopedic Trauma and Hand Surgery, The First Affiliated Hospital, Sun Yat-sen University, Guangzhou, China

- ²Department of Hand and Foot Rehabilitation, Guangdong Provincial Work Injury Rehabilitation Hospital, Guangzhou, China

- ³Guangdong Provincial Engineering Laboratory for Soft Tissue Biofabrication, Guangzhou, China

- ⁴Guangdong Provincial Key Laboratory for Orthopedics and Traumatology, Guangzhou, China

Background: Radial, ulnar, or median nerve injuries are common peripheral nerve injuries. They usually produce specific abnormal signs on the hand that hand surgeons use as diagnostic evidence. However, without specialized knowledge, primary healthcare providers find it difficult to interpret these abnormalities and recognize the underlying nerve injuries, which often leads to misdiagnosis. Technologies that automatically detect abnormal hand gestures could assist general practitioners with early diagnosis and treatment.

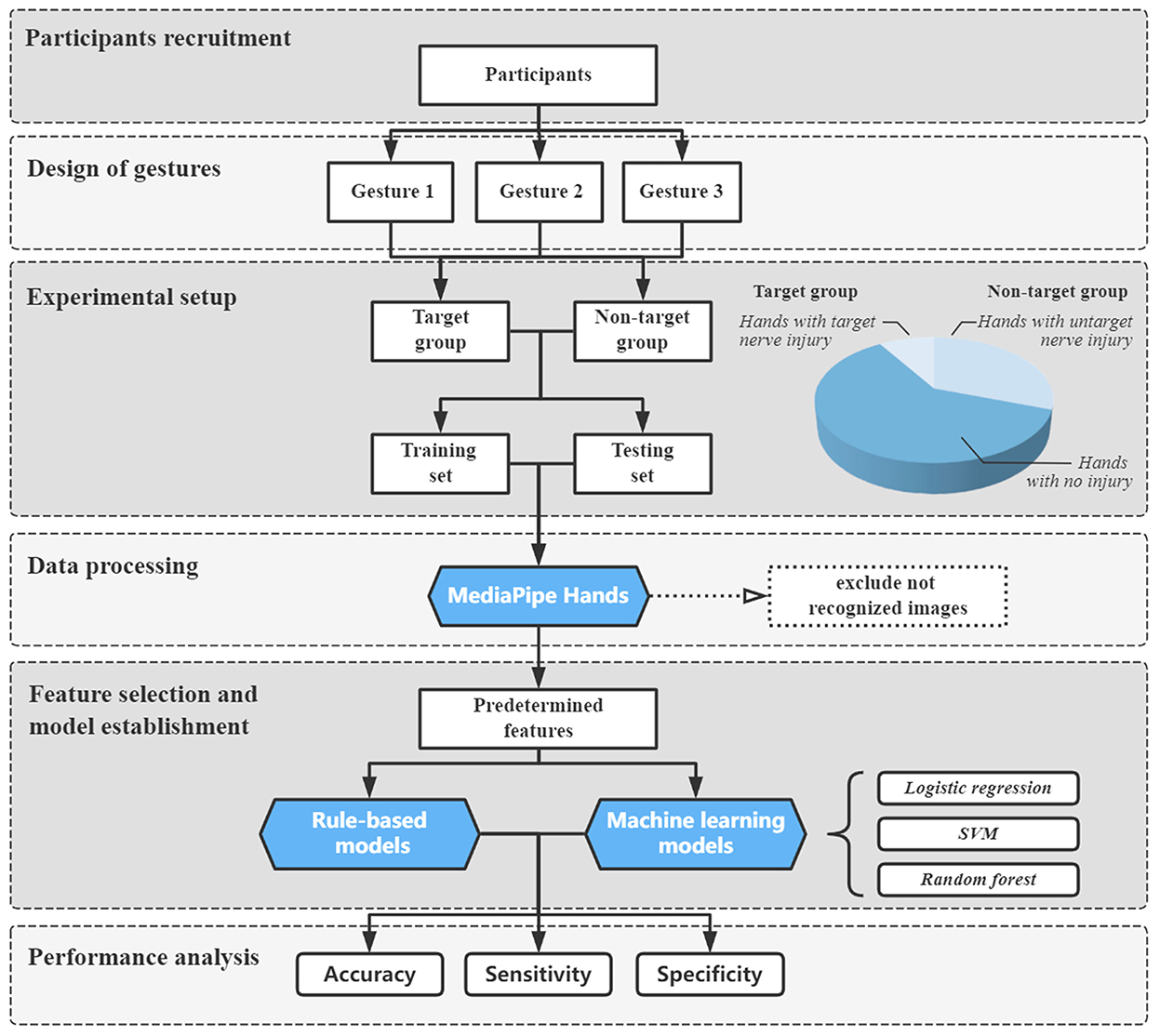

Methods: Based on expert experience, we selected three hand gestures with predetermined features and rules, treating them as three independent binary classification tasks for abnormal gesture detection. Images of patients with unilateral radial, ulnar, or median nerve injuries and of healthy volunteers were obtained using a smartphone. Landmark coordinates were extracted with Google MediaPipe Hands to calculate the features, and the receiver operating characteristic curve was used for feature selection. We compared the performance of rule-based models with that of logistic regression, support vector machine, and random forest machine learning models by evaluating accuracy, sensitivity, and specificity.

Results: The study included twenty-two patients and thirty-four volunteers, from whom 1,344 images were obtained. Eight features were finally selected for the rule-based models. The accuracy, sensitivity, and specificity of the rule-based models were (1) 98.2, 91.7, and 99.0% for radial nerve injury detection; (2) 97.3, 83.3, and 99.0% for ulnar nerve injury detection; and (3) 96.4, 87.5, and 97.1% for median nerve injury detection, respectively. All machine learning models had an accuracy above 95%, with sensitivity ranging from 37.5 to 100%.

Conclusion: Our study provides a helpful tool for detecting abnormal gestures in radial, ulnar, or median nerve injuries with satisfactory accuracy, sensitivity, and specificity. It confirms that hand pose estimation can automatically analyze and detect abnormalities in images of these patients, and it has the potential to become a simple and convenient screening method for primary healthcare and telemedicine applications.

Introduction

Peripheral nerve injury (PNI) is devastating and frequently results in lifelong disability, severely decreasing patients' quality of life. It affects more than one million people worldwide, with an incidence of ~13–23 per 100,000 persons per year (1, 2). Among PNIs of the upper limb, radial, ulnar, and median nerve injuries are common, causing sensory and motor dysfunction in the territories supplied by the damaged nerves. In medical practice, early detection of nerve injury may spare patients delayed diagnosis, delayed repair, and potentially worse outcomes. However, the anatomical complexity of the hand can make examination and diagnosis difficult (3). According to a large German database of hand and forearm injuries, nerve injuries were among the most commonly missed injuries, accounting for 24.8% of missed hand injuries (4). The rate of missed diagnosis is likely considerably higher in areas with insufficient specialized services. In China, for example, reports indicate that most patients treated for missed nerve injuries came from primary healthcare hospitals (5, 6).

The clinical diagnosis of nerve injury depends on the clinical history, clinical symptoms, and physical and neurological examinations (7, 8). Among the examinations, electromyography (EMG), nerve conduction studies (NCS), MRI, and high-resolution ultrasonography have been successfully used as diagnostic methods for PNI (9–11). However, these examinations are expensive, some are invasive, and all rely on specialized equipment and professional operators, which restricts their use in primary assessment. There is therefore a need for an affordable, non-invasive, and accessible method to detect possible nerve injuries during the initial evaluation of the hand; such a method would help clinicians who are not specialized in hand surgery and benefit patients in remote or rural areas. Some abnormal signs or deformities of the hand may indicate nerve injuries, such as limited extension of the wrist and digits in radial nerve injury, the claw hand deformity and the Wartenberg sign in ulnar nerve injury, and the ape hand deformity in median nerve injury. These clinical observations are well acknowledged in hand surgery, where nerve injuries lead to different morphological changes according to the innervation pattern of the hand (12, 13). Deformities of specific digital joints have been used as supporting evidence of specific nerve injuries (14–16). This specific link between an abnormality and a nerve injury arises because each nerve independently innervates its own set of muscles. Moreover, these abnormalities can be observed through a few gestures. Therefore, detecting abnormal gestures could be a simple method for predicting hand nerve injuries.

With advances in computer vision, hand pose estimation, which automatically detects the hand and predicts the locations of its articulated joints from images or videos, has become possible (17, 18). Hand pose estimation is a key and popular component of several tasks in general populations, such as gesture recognition (19), action recognition (20), and sign language recognition (21). Interest in hand pose estimation for medical research and applications is growing, for example in the automatic assessment of hand rehabilitation (22) and the classification of Parkinson's disease with 3D cameras (23). These techniques can help extract features of the hand. Among them, MediaPipe Hands is a deep-learning-based hand-tracking solution provided by Google. It requires no specialized hardware, is light enough to run in real time on mobile devices, and predicts 21 landmarks of a hand from a single RGB camera with high prediction quality (24). MediaPipe Hands is popular in human-computer interaction systems and in virtual and augmented reality applications (21, 24, 25). However, it remains unknown whether MediaPipe Hands can detect abnormalities in patients with nerve injuries, distinguish normal from abnormal gestures in medical applications, and further predict possible injuries with rule-based methods.

The purpose of the present study was to propose an automatic method for detecting abnormal hand gestures caused by radial, ulnar, or median nerve injury. We hypothesized that hand pose estimation could provide specific features to detect the presence of nerve injury and predict the exact type of injury.

Materials and methods

Participants recruitment

The experimental procedure of this study is illustrated in Figure 1. From June 2021 to October 2022, we recruited preoperative patients with unilateral radial, ulnar, or median nerve injuries who were scheduled to undergo surgery in our department. The diagnosis was made after a comprehensive evaluation of the relevant history, observation of typical abnormal gestures, and EMG or ultrasound examination, and was further confirmed during surgical exploration. Patients with any musculoskeletal disease (such as tendon rupture or arthritis) or neurological disease (such as stroke or traumatic brain injury) that would affect the movement of the hand (excluding the wrist joint), and those who refused to participate, were excluded. A group of healthy volunteers was also recruited during this period. The inclusion criteria for the volunteers were the absence of symptoms such as clumsiness or numbness of the hands and no abnormal findings on physical examination indicating nerve injury. All participants understood the study protocol and cooperated in taking the images. Verbal consent was obtained for the use of their images for research purposes. This study was approved by the institutional review board of the First Affiliated Hospital of Sun Yat-sen University (ID: [2021]387).

Design of gestures

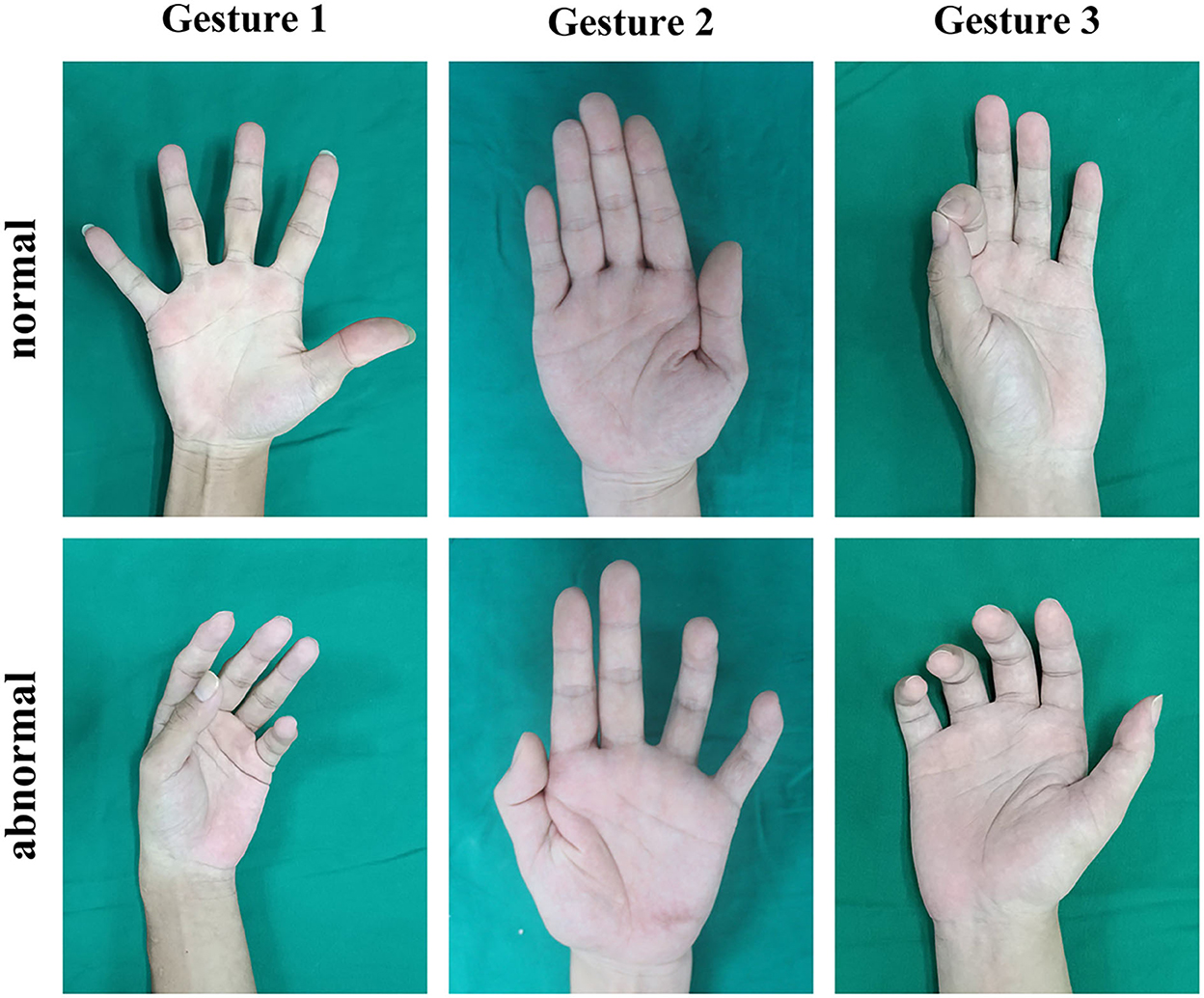

Based on the expert experience mentioned above (12, 13), we chose three gestures (Figure 2) to detect abnormalities caused by radial, ulnar, or median nerve injuries, treating each as a binary classification task. The normal and expected abnormal gestures are described as follows:

• Gesture 1 was used to detect radial nerve injuries. Participants were asked to abduct the fully extended digits maximally toward the medial and lateral sides. Patients with radial nerve injury would have impaired function of the musculus abductor pollicis longus, the musculus extensor pollicis longus, the musculus extensor pollicis brevis, and the musculus extensor digitorum, and were therefore expected to have difficulty extending all metacarpophalangeal (MCP) joints.

• Gesture 2 was used to detect ulnar nerve injuries. Participants were asked to adduct the fully extended digits toward the middle finger. Patients with ulnar nerve injury would have impaired function of the musculi lumbricales 3 and 4 and the musculi interossei palmares. This would lead to flexion of the proximal interphalangeal (PIP) and distal interphalangeal (DIP) joints of the ring and little fingers (the claw hand deformity) and limited adduction of the ring finger and particularly the little finger (the Wartenberg sign).

• Gesture 3 was used to detect median nerve injuries. Participants were asked to perform a tip-to-tip pinch between the thumb and the index finger, i.e., to form an “OK” gesture. Patients with median nerve injury would have impaired function of the musculus opponens pollicis, the musculus flexor pollicis brevis, and the musculi lumbricales 1 and 2. When performing this gesture, they would show a decreased range of palmar abduction of the thumb and difficulty flexing the thumb and index finger, possibly even failing to bring the tips of the two digits into contact.

Experimental setup

Before the images were taken, all participants washed and cleaned their hands, and jewelry, watches, and clothing on the wrist or hand were removed. The hand was then placed on the test table with the forearm in supination. Participants first performed Gesture 1 for radial nerve classification. Hands with radial nerve injury were assigned to the target group; hands with ulnar or median nerve injury and hands without nerve injury were assigned to the non-target group. Stratified random sampling within the target and non-target groups was then used to form the training and testing sets, at a ratio of ~3:1. Gesture 2 and Gesture 3 were then performed, and their training and testing sets were formed in the same way.
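The ~3:1 stratified split can be sketched in plain Python as below. This is a minimal sketch under assumptions the text does not state: the sampling unit is taken to be the hand (so the four images of one hand never fall into both sets), and the function name `stratified_hand_split`, the 0.25 test fraction, and the fixed random seed are hypothetical, illustrative choices.

```python
import random
from collections import defaultdict


def stratified_hand_split(hand_labels, test_fraction=0.25, seed=0):
    """Split hand IDs into training and testing sets (~3:1), stratified by group.

    hand_labels maps a hypothetical hand ID to 1 (target group) or 0
    (non-target group). Splitting by hand rather than by image is an
    assumption: it keeps the four images of one hand in the same set.
    """
    rng = random.Random(seed)
    groups = defaultdict(list)
    for hand_id, label in sorted(hand_labels.items()):
        groups[label].append(hand_id)

    train, test = [], []
    for hands in groups.values():
        rng.shuffle(hands)
        # round() is Python's round-half-to-even.
        n_test = round(len(hands) * test_fraction)
        test.extend(hands[:n_test])
        train.extend(hands[n_test:])
    return sorted(train), sorted(test)
```

Sampling within each group separately is what keeps the proportion of target hands roughly equal in the two sets, which matters when the target group is as small as it is here.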

Each gesture was performed four times, with a rest interval of ~10 s, to increase the amount of available data, so four images were obtained for every gesture of each hand. The images were taken with a smartphone (iPhone XS Max, Apple Inc., image resolution: 1,980 × 1,080 pixels) held 40–50 cm above the hand, at viewpoints ranging from slightly radial to slightly ulnar to increase variation among the images. If supination of the forearm was limited, the images were taken from the same distance with the camera parallel to the palm.

Data processing

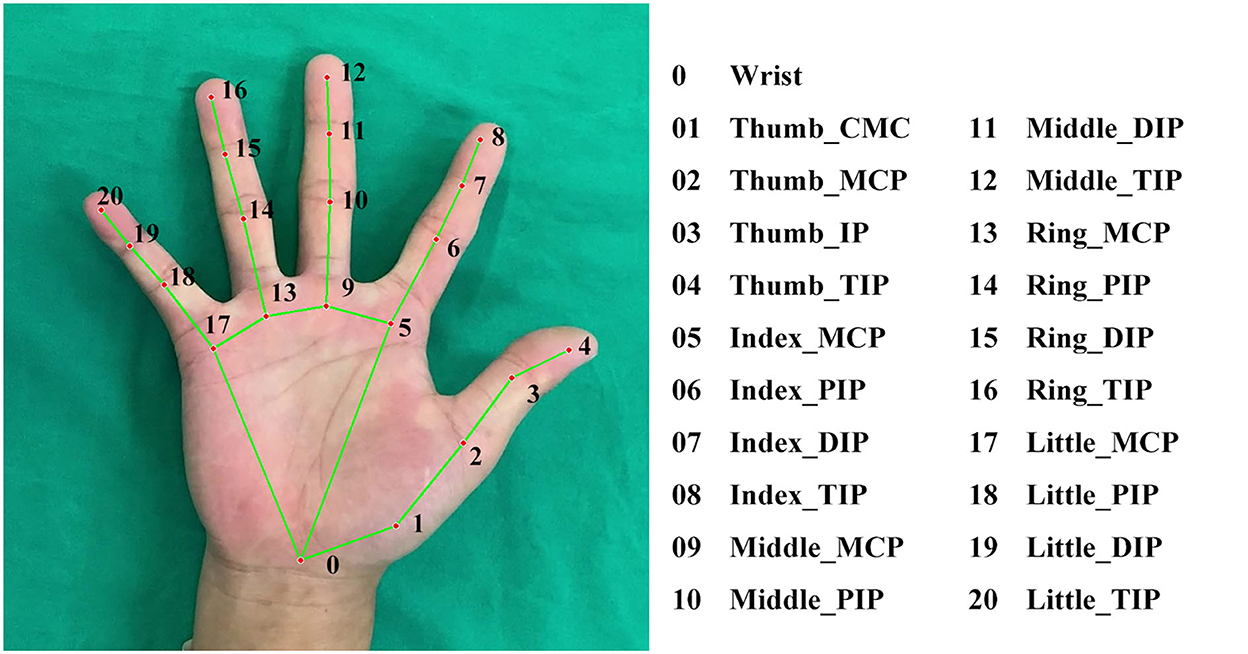

All images were analyzed using the MediaPipe Hands solution (Version 0.8.9) with Python (Version 3.8). MediaPipe Hands first detects and locates the hand with a palm detector model. If the hand was successfully located in an image, the coordinates of its landmarks were obtained with the landmark model (24) (Figure 3). If the hand was not detected, the image could not be recognized and was excluded from further analysis. From the landmark coordinates, the features were calculated automatically using the following equations:

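$$\theta = \arccos\left(\frac{\vec{v}_1 \cdot \vec{v}_2}{\lVert \vec{v}_1 \rVert \, \lVert \vec{v}_2 \rVert}\right) \tag{1}$$

$$d = \sqrt{(x_1 - x_2)^2 + (y_1 - y_2)^2 + (z_1 - z_2)^2} \tag{2}$$

$$d' = \frac{d_{\text{original}}}{d_{\text{standard}}} \tag{3}$$

where

Equation (1) calculates the targeted angle θ, where $\vec{v}_1$ and $\vec{v}_2$ represent the vectors of the related phalanges (26);

Equation (2) calculates the targeted distance d, where $(x_1, y_1, z_1)$ and $(x_2, y_2, z_2)$ represent the coordinates of the related landmarks;

Equation (3) standardizes a distance by dividing its original value $d_{\text{original}}$ by the distance between the thumb tip and thumb IP joint landmarks, $d_{\text{standard}}$, giving the standardized distance $d'$.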
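Equations (1)–(3) can be sketched in plain Python as follows. The landmark indices are those of the MediaPipe Hands 21-point model (0 = wrist, 3/4 = thumb IP/tip, 5/6/8 = index MCP/PIP/tip). The two example features, and in particular the use of the wrist-to-MCP vector as the metacarpal in the MCP angle, are our assumptions; the exact feature definitions are listed in Table 1, not here.

```python
import math

# MediaPipe Hands landmark indices used below (the model outputs 21 per hand).
WRIST = 0
THUMB_IP, THUMB_TIP = 3, 4
INDEX_MCP, INDEX_PIP, INDEX_TIP = 5, 6, 8


def vector(p, q):
    """Vector pointing from landmark p to landmark q (each an (x, y, z) tuple)."""
    return tuple(b - a for a, b in zip(p, q))


def joint_angle(v1, v2):
    """Equation (1): angle in degrees between two segment vectors."""
    dot = sum(a * b for a, b in zip(v1, v2))
    cos_theta = dot / (math.hypot(*v1) * math.hypot(*v2))
    # Clamp to [-1, 1] so floating-point error cannot break acos().
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def distance(p, q):
    """Equation (2): Euclidean distance between two landmarks."""
    return math.dist(p, q)


def standardized_distance(landmarks, i, j):
    """Equation (3): distance i-j divided by the thumb tip-to-IP distance."""
    d_standard = distance(landmarks[THUMB_TIP], landmarks[THUMB_IP])
    return distance(landmarks[i], landmarks[j]) / d_standard


def index_mcp_angle(landmarks):
    """Hypothetical example feature: index MCP angle, with wrist->MCP as the
    metacarpal (MediaPipe has no landmark at the metacarpal base)."""
    metacarpal = vector(landmarks[WRIST], landmarks[INDEX_MCP])
    proximal_phalanx = vector(landmarks[INDEX_MCP], landmarks[INDEX_PIP])
    return joint_angle(metacarpal, proximal_phalanx)


def thumb_index_tip_distance(landmarks):
    """Hypothetical example feature: standardized thumb-tip to index-tip distance."""
    return standardized_distance(landmarks, THUMB_TIP, INDEX_TIP)
```

With this convention a straight joint gives an angle of 0°, matching the expectation for fully extended digits. A `landmarks` list could be built from MediaPipe output as `[(p.x, p.y, p.z) for p in results.multi_hand_landmarks[0].landmark]`; since MediaPipe's x and y are normalized to the image size and z is a relative depth, the ratio in Equation (3) presumably helps make distances comparable across hand sizes and camera distances.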

Feature selection and model establishment

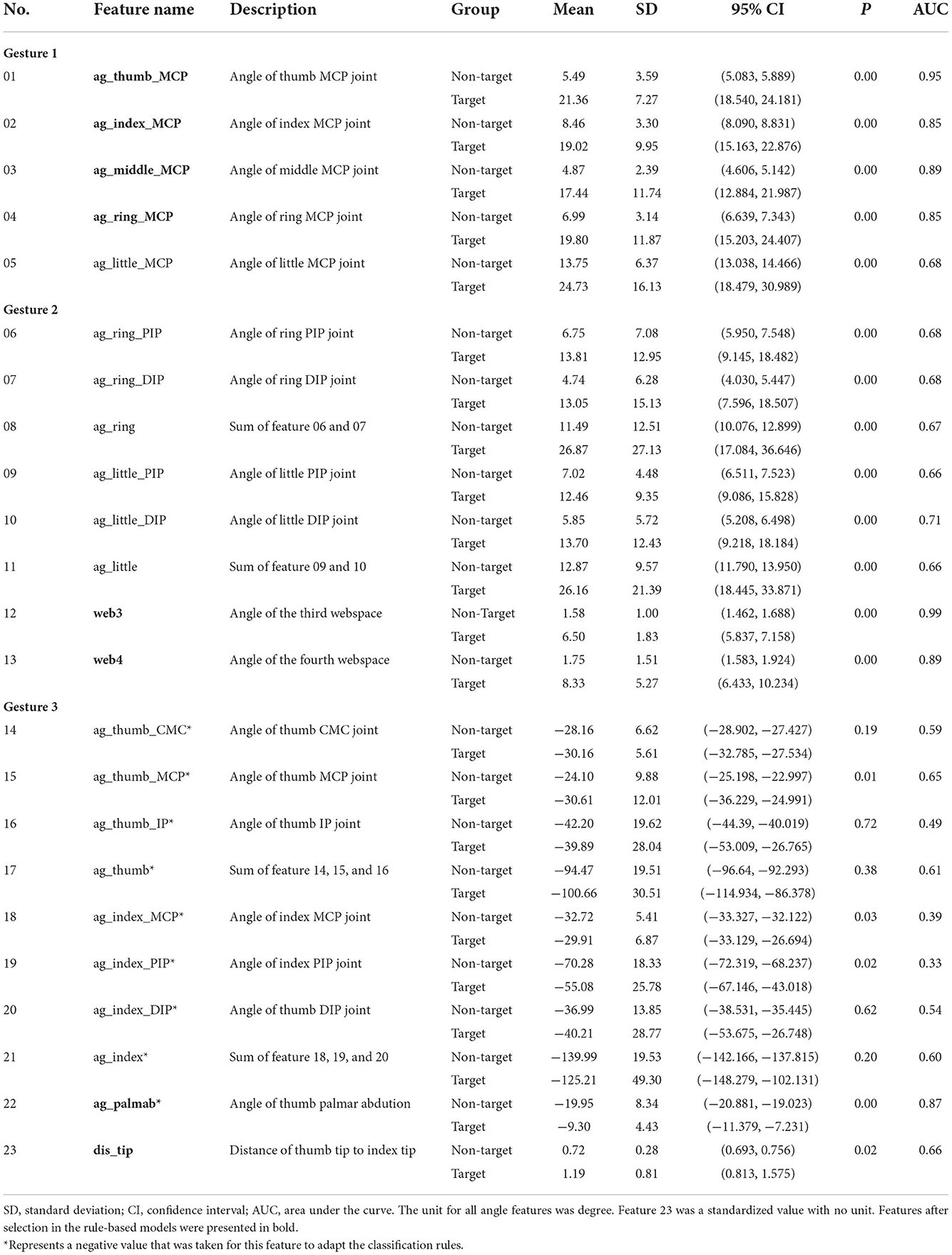

Because the radial, ulnar, and median nerves have independent innervations to the muscles (see the “Design of gestures” section), medical knowledge and expert experience can provide a series of primary, anatomy-based features. For example, the ulnar nerve is related to the movement of the ring and little fingers, so features of these two fingers are much more important than those of the other digits, and irrelevant features can be ignored. With this in mind, we predetermined 23 features based on our experience, including joint angles, distances between key points, and combinations of these (Table 1), for feature selection and model establishment.

Two different methods for model establishment were compared. The first was the rule-based method, in which the model was built with manually designed feature selection and decision-making processes based on knowledge and experience. The second was the machine learning (ML) method, in which the whole process was learned from the data. Logistic regression (LR), support vector machine (SVM), and random forest (RF) models were chosen for the ML method. For each gesture, one rule-based model and three ML models were established.

In the rule-based method, the predetermined features were screened using the receiver operating characteristic (ROC) curve, a standard method for assessing the performance of binary classification models (27, 28). The classification efficacy of each feature was assessed by the area under the curve (AUC), graded as poor (< 0.6), fair (0.6–0.7), good (0.7–0.8), very good (0.8–0.9), or excellent (> 0.9) (29). We calculated the AUC of the predetermined features on the training sets, filtered out features with an AUC below 0.8, and selected at least two features per gesture classification model as classifiers. Next, we determined the threshold value of each classifier from its ROC curve. Finally, all classifiers were combined according to the rule of the model: if every classifier was below its threshold, the gesture was predicted as normal and the hand regarded as uninjured; otherwise, the gesture was predicted as abnormal and the hand regarded as injured.
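The rule-based pipeline can be sketched as follows. The text says only that thresholds were determined from the ROC curves; choosing the cut-off that maximizes Youden's J (sensitivity + specificity − 1) is our assumption, as is orienting every feature so that larger values mean a more abnormal gesture (a feature that decreases in abnormal gestures would be negated first). The sketch keeps every feature with a training AUC of at least 0.8, whereas the authors then chose at least two features per model; all function names are hypothetical.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve, computed as the probability that a randomly
    chosen abnormal case scores higher than a randomly chosen normal case
    (ties count one half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def youden_threshold(scores, labels):
    """Cut-off t (predict abnormal when score >= t) maximizing Youden's J.
    Assumption: the paper does not name its threshold criterion."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best_t, best_j = None, -1.0
    for t in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= t)
        tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < t)
        j = tp / n_pos + tn / n_neg - 1
        if j > best_j:
            best_t, best_j = t, j
    return best_t


def select_features(train_features, labels, min_auc=0.8):
    """Keep features with training AUC >= min_auc, each with its cut-off.
    train_features maps a feature name to its values, oriented so that
    larger values mean a more abnormal gesture (assumption)."""
    return {
        name: youden_threshold(values, labels)
        for name, values in train_features.items()
        if roc_auc(values, labels) >= min_auc
    }


def predict_abnormal(sample, rules):
    """Rule from the text: normal (0) only if every selected feature is
    below its threshold; otherwise abnormal (1)."""
    return int(any(sample[name] >= t for name, t in rules.items()))
```

Because a single feature above its cut-off is enough to flag a gesture, this "any" rule favors sensitivity over specificity, which suits a screening tool.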

In the ML method, all predetermined features were standardized and used for training on the training sets with the scikit-learn Python application programming interface (API) (https://scikit-learn.org.cn/). Grid search with 5-fold cross-validation was performed to find the best hyperparameters for each ML model. Feature selection in the ML models was “embedded,” that is, completed during training. We used the “coef_” attribute of the LR and SVM models and the “feature_importances_” attribute of the RF model to evaluate feature importance and to compare the results of feature selection between the rule-based and ML methods.
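A minimal scikit-learn sketch of the ML branch follows. `StandardScaler`, `Pipeline`, `GridSearchCV`, and the three estimators are real scikit-learn classes, but the hyperparameter grids are hypothetical (the values actually searched are reported in Supplemental material 1). Two design points are worth noting: `SVC` exposes `coef_` only with a linear kernel, so a linear SVM is assumed; and an L1 penalty in the LR grid is one plausible, unconfirmed reason why only two features remained in the Gesture 2 LR model.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Hypothetical grids: the values actually searched are not reproduced here.
MODELS = {
    # liblinear supports both L1 and L2 penalties for binary problems.
    "LR": (LogisticRegression(solver="liblinear", max_iter=1000),
           {"clf__penalty": ["l1", "l2"], "clf__C": [0.01, 0.1, 1, 10]}),
    # SVC exposes coef_ only when the kernel is linear.
    "SVM": (SVC(kernel="linear"), {"clf__C": [0.01, 0.1, 1, 10]}),
    "RF": (RandomForestClassifier(random_state=0),
           {"clf__n_estimators": [100, 200], "clf__max_depth": [None, 3, 5]}),
}


def fit_models(X_train, y_train):
    """Standardize features, tune each model by 5-fold grid search, and return
    each refitted pipeline with its per-feature weights or importances."""
    fitted = {}
    for name, (clf, grid) in MODELS.items():
        pipe = Pipeline([("scale", StandardScaler()), ("clf", clf)])
        # For classifiers, cv=5 means stratified 5-fold cross-validation.
        search = GridSearchCV(pipe, grid, cv=5, scoring="accuracy")
        search.fit(X_train, y_train)
        best = search.best_estimator_.named_steps["clf"]
        if name == "RF":
            weights = best.feature_importances_
        else:
            weights = best.coef_.ravel()  # shape (1, n_features) -> (n_features,)
        fitted[name] = (search.best_estimator_, weights)
    return fitted
```

Putting the scaler inside the pipeline means it is refitted on each training fold, so no information from the validation fold leaks into standardization. Because each hand contributes four images, a `GroupKFold` split keyed on hand ID would additionally keep one hand's images out of both training and validation folds; the text does not say whether this was done.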

Performance analysis

The performance of the rule-based models and the LR, SVM, and RF models was evaluated on the testing sets in terms of accuracy, sensitivity, and specificity. Confusion matrices were used to visualize the agreement between the predictions and the actual labels. The indices used in this study were calculated as follows:

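$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

$$\text{Specificity} = \frac{TN}{TN + FP}$$

where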

TP represents the number of abnormal gestures correctly predicted as abnormal,

FP represents the number of normal gestures wrongly predicted as abnormal,

FN represents the number of abnormal gestures wrongly predicted as normal,

TN represents the number of normal gestures correctly predicted as normal.
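The three indices follow directly from the confusion-matrix counts. The sketch below uses 1 for an abnormal gesture and 0 for a normal one; the function names and the counts in the usage note are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, TN with 1 = abnormal gesture and 0 = normal gesture."""
    pairs = list(zip(y_true, y_pred))
    tp = pairs.count((1, 1))
    fp = pairs.count((0, 1))
    fn = pairs.count((1, 0))
    tn = pairs.count((0, 0))
    return tp, fp, fn, tn


def classification_metrics(tp, fp, fn, tn):
    """Accuracy, sensitivity and specificity from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "sensitivity": tp / (tp + fn),  # share of abnormal gestures detected
        "specificity": tn / (tn + fp),  # share of normal gestures passed
    }
```

For example, hypothetical counts TP = 8, FP = 2, FN = 2, TN = 88 give an accuracy of 0.96, a sensitivity of 0.80, and a specificity of about 0.978. When the abnormal test set is small, each misclassified abnormal image moves sensitivity by a large step (12.5 percentage points if there were only eight abnormal images), which is worth keeping in mind when comparing sensitivities across models.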

Statistical analysis

For the predetermined features, values in the target and non-target groups are presented as mean ± standard deviation (SD) with 95% confidence intervals (CIs). An independent t-test was used to analyze differences between the target and non-target groups, and a subgroup analysis of the features in each rule-based model was also performed with independent t-tests. A P-value of < 0.05 was considered statistically significant. The statistical analysis was performed using IBM SPSS version 25 (IBM, Armonk, NY, USA).
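The descriptive statistics and the independent t-test can also be reproduced outside SPSS. This sketch assumes SciPy is available and uses Student's t-test with equal variances assumed; SPSS also reports the Welch variant, obtainable with `equal_var=False`. The function names are hypothetical.

```python
import statistics

from scipy import stats


def describe(values, confidence=0.95):
    """Return mean, sample SD, and a t-based confidence interval for the mean."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1 denominator)
    t_crit = stats.t.ppf((1 + confidence) / 2, df=n - 1)
    half_width = t_crit * sd / n ** 0.5
    return mean, sd, (mean - half_width, mean + half_width)


def compare_groups(target, non_target, alpha=0.05):
    """Independent-samples (Student's) t-test for one feature between two groups."""
    t_stat, p_value = stats.ttest_ind(target, non_target, equal_var=True)
    return t_stat, p_value, p_value < alpha
```

Running `compare_groups` once per feature also makes the multiple-comparison issue visible: with 23 features tested, a few nominally significant results could arise by chance, so the P < 0.05 criterion is best read feature by feature.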

Results

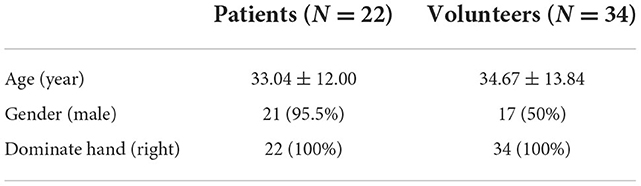

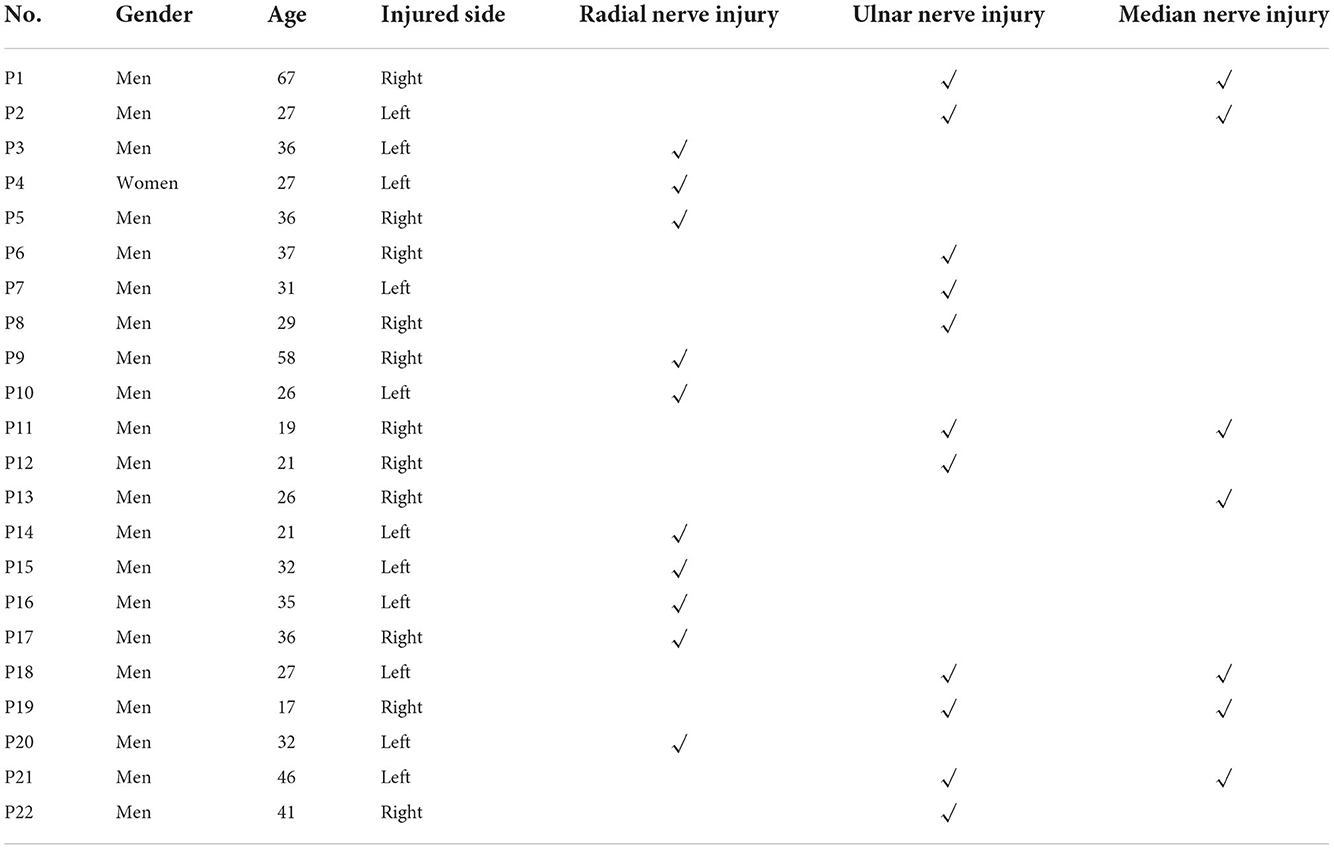

The study included twenty-two patients and thirty-four healthy volunteers who met the inclusion and exclusion criteria. The demographics of the participants are summarized in Table 2; the mean ages of patients and volunteers were 33.04 ± 12.00 and 34.67 ± 13.84 years, respectively. Detailed patient information is shown in Table 3. Among the patients, ten were diagnosed with radial nerve injury, five with ulnar nerve injury, one with median nerve injury, and six with combined ulnar and median nerve injuries. We obtained 1,344 images in total, 448 for each gesture classification task. MediaPipe Hands failed to recognize four images, giving an overall recognition rate of 99.7%; all non-recognized images belonged to the training set of Gesture 3. In total, three rule-based models and nine ML models were established.
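As a quick check of these counts, assuming that both hands of every participant were imaged (the text implies this but does not state it outright), the image totals and the recognition rate work out as follows:

$$(22 + 34) \times 2 \times 4 = 448 \ \text{images per gesture}, \qquad 3 \times 448 = 1{,}344 \ \text{images}$$

$$\text{recognition rate} = \frac{1{,}344 - 4}{1{,}344} = \frac{1{,}340}{1{,}344} \approx 99.7\%$$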

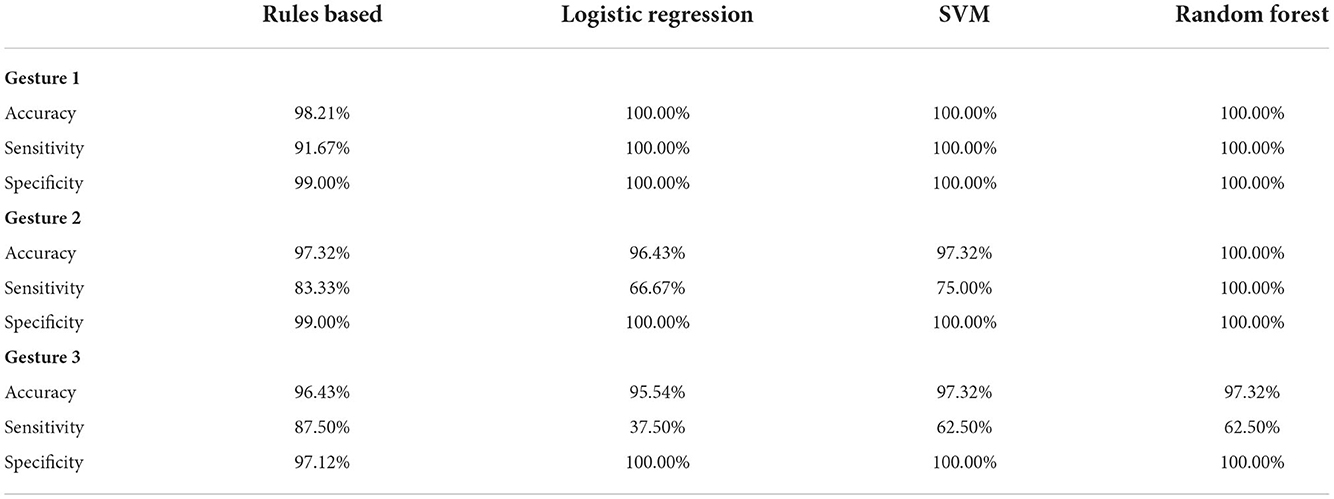

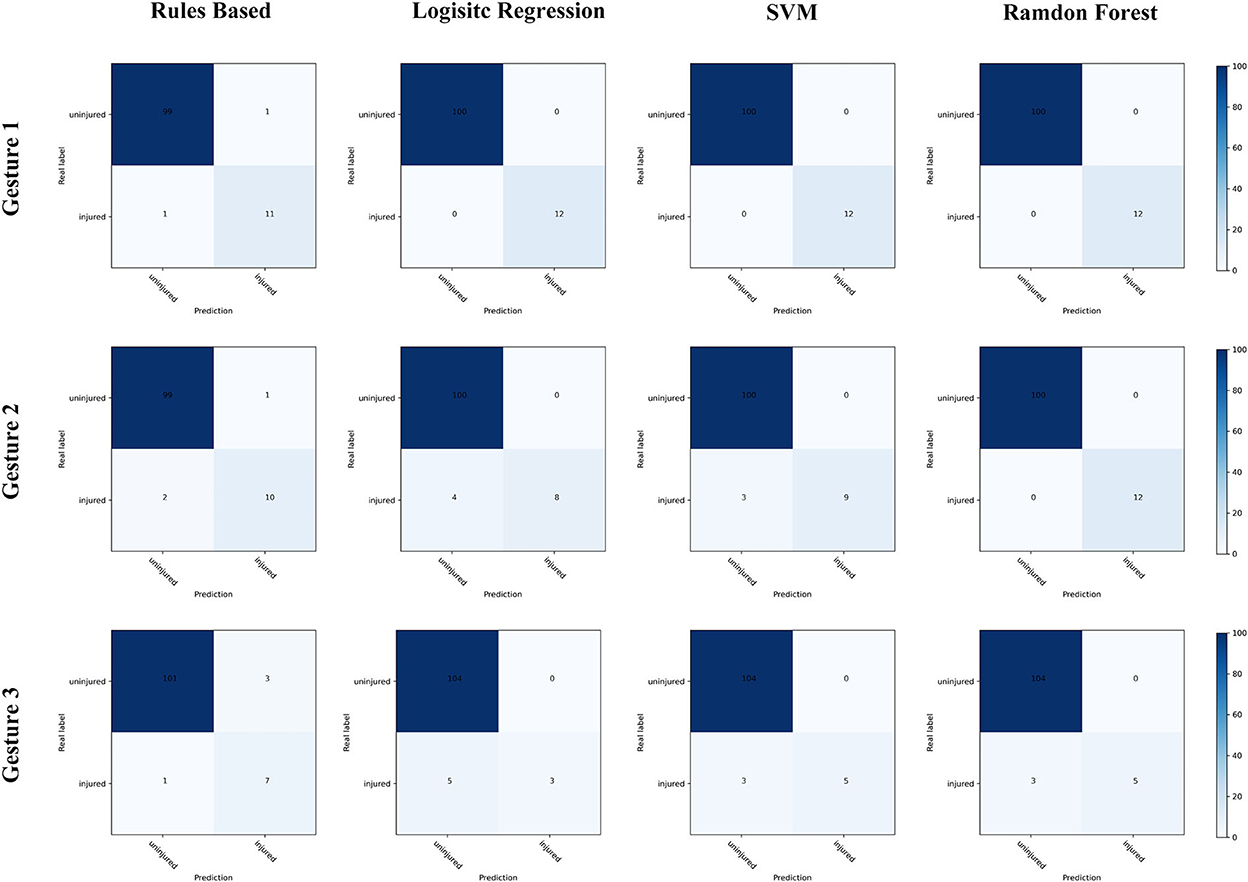

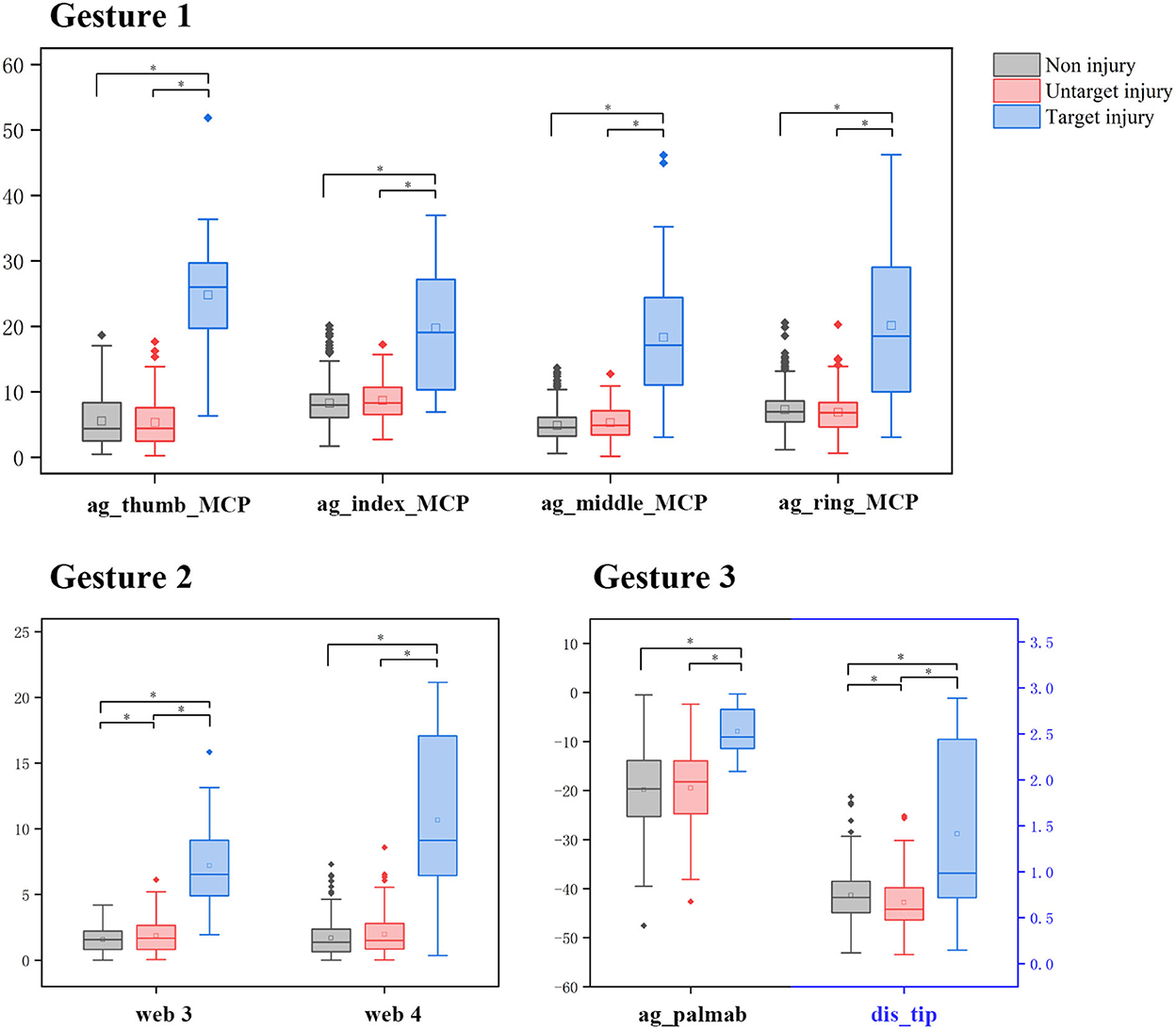

The statistical analysis of the predetermined features is shown in Table 1. In the independent t-test, all 23 predetermined features differed significantly between the target and non-target groups, except for five in Gesture 3. In the rule-based method, eight features were retained after feature selection. In the subgroup analysis (Figure 4), most selected features showed no significant difference between the two non-target subgroups (hands without injury and hands with an untargeted injury), except the angle of the third webspace and the tip distance between the thumb and the index finger. Compared with the ML method, the features selected in the rule-based method also had higher weights or importance in the ML models. For example, in Gesture 2, the angles of the third and fourth webspaces were selected in the rule-based model and were the only features in the logistic regression model. The hyperparameters, coefficients, and feature importance of all ML models are summarized in Supplemental material 1.

Figure 4. Subgroup analysis of the selected features. *Indicates a significant difference in a selected feature among hands with no injury, an untargeted injury, or a targeted injury (P < 0.05, independent t-test).

The performance of all models is shown in Table 4 and Figure 5. The accuracy, sensitivity, and specificity of the rule-based models were (1) 98.2, 91.7, and 99.0% for radial nerve injury detection; (2) 97.3, 83.3, and 99.0% for ulnar nerve injury detection; and (3) 96.4, 87.5, and 97.1% for median nerve injury detection, respectively. Among the ML models, radial nerve injury classification achieved the best performance, with 100% accuracy. The rule-based method maintained sensitivity above 80% in all classification tasks. In contrast, the ML models showed lower sensitivity in median nerve injury classification: 62.5% for the SVM and RF models and only 37.5% for the LR model.

Discussion

Well-trained hand surgeons can recognize an abnormal gesture as a sign of radial, ulnar, or median nerve injury before any diagnostic examination. Following this clinical reasoning, we designed an automatic procedure for detecting abnormal gestures, enabled by an advanced hand pose estimation algorithm and rule-based models. Our results show that several features help detect abnormalities in images and predict the type of nerve injury. Nevertheless, our study is not intended to replace clinicians or to create a diagnostic method. We aimed to provide a simple, effective, and convenient way, grounded in anatomical knowledge and clinical experience, to alert unspecialized healthcare providers to possible nerve injuries.

In this study, MediaPipe Hands, a recent pose estimation technique, was used as an automatic feature extraction method for classification tasks. We observed that the angles calculated from the landmark coordinates did not agree precisely with manual assessment. For example, in the non-target groups of Gesture 1 and Gesture 2, the angles of all MCP joints and of the PIP and DIP joints of the ring and little fingers were greater than 5°, and the mean angle of the little finger MCP joint reached 13.75°. When the digits are completely extended, these values are expected to be near 0° in people without nerve injury. Several reasons may explain these differences. First, pose estimation predicts the spatial coordinates of the landmarks, whereas manual goniometry usually measures landmarks on the surface of the hand. Second, the landmarks are not exactly the same: MediaPipe skeleton models have no landmarks at the bases of the metacarpals, only a single wrist landmark (24, 30), which may cause noticeable differences in the measured angles of the MCP joints. In similar studies, Leap Motion, a portable infrared camera, has been used for functional assessment in hand rehabilitation (22), and researchers found that its measurements did not agree well with manual measurement (31–34).

Under such circumstances, the threshold value of each feature could not be determined directly from clinical experience. Therefore, the training sets were used for feature selection and for determining the cutoffs. In the rule-based method, we used a filter strategy based on ROC curves because clear distinctions exist between injured and uninjured hands, both in the images and in clinical practice. According to the independent t-test, all selected features differed significantly between normal and abnormal gestures, supporting their use for categorization. In addition, the subgroup analysis showed that, for each gesture, features differed markedly between targeted and untargeted injuries, whereas features of untargeted injuries and non-injured hands were similar. In other words, our method is consistent with anatomical knowledge. Our study shows that MediaPipe Hands is capable of providing features for qualitative analysis. However, the four unrecognized images in Gesture 3, one from the uninjured hand of a patient and three from hands with radial nerve injury, suggest that there is still room to improve pose estimation. More annotated datasets are needed (35), especially from medical populations and settings.

The rule-based models were compared with three machine learning models commonly used for binary classification in medical research (36–38). In feature selection, the rule-based and ML methods gave similar results: the features selected in the rule-based models had higher coefficients in the LR models and higher importance in the RF models. In the performance analysis, the high specificity of all models shows that uninjured hands are identified easily and correctly. The sensitivity of the ML models fluctuated from 37.5 to 100%, whereas the sensitivity of our rule-based models was stable, from 83.3 to 91.7%, mainly because our rules seek the most essential and valuable features, although they might miss some synergistic effects among them. The overall performance of our method is satisfactory. We believe that medical knowledge and clinical experience were key to maximizing classification performance, even with minimal features and simple rules. They act as a shortcut to the right features and make the detection performance in our tasks comparable to that of machine learning models.

The rule-based method is also interpretable (39, 40), meaning that the process is easier to understand and modify in human terms. It can deliver expertise more conveniently and spread expert knowledge to primary healthcare providers. Tang et al. (11) reported a quantitative assessment method for upper-limb traumatic PNI in which the presence of radial, ulnar, or median nerve injury was identified by an expert system using surface EMG data, with 81.82% sensitivity and 98.90% specificity. Compared with Tang et al., our method achieved slightly higher sensitivity with a more straightforward implementation. Unlike surface EMG, taking images is more convenient and comfortable for patients. We are confident that our method yields reliable results and is much easier for primary healthcare providers to apply. As smartphones have become accessible and accepted tools for telemedicine (41), and especially since the COVID-19 pandemic, virtual hand examinations through images and videos have attracted considerable interest in the medical field (42, 43). The proposed method has the potential to be a convenient screening tool for online health services and remote areas.

The relatively small sample size is the main limitation of the present study. Because this was a prospective study and no dataset of hand images with PNIs was available, our dataset must be continually updated to provide better automatic healthcare solutions. The smallest subgroup in our study was patients with median nerve injury, which might weaken the generalizability of the proposed method. Although the differences between normal and abnormal gestures are noticeable, the selected features and thresholds may well change slightly as the sample size grows. At present, our method only detects nerve injuries and cannot detect abnormal gestures caused by other injuries, such as tendon rupture. In addition, detection accuracy depends on user compliance. Further studies should recruit more users in remote or rural areas to verify whether the correct gestures can be performed without the supervision of clinicians. Finally, our method functions as decision support to prevent missed nerve injuries in the primary assessment. After a nerve injury is initially identified, comprehensive examinations, differential diagnoses, and medical treatment are necessary.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by Institutional Review Board of The First Affiliated Hospital of Sun Yat-sen University (ID: [2021]387). Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author contributions

XL, JY, and QZ contributed to the conception and design of the study. FG, JF, and CC performed the investigation and wrote the original draft. ZW organized the database and performed the statistical analysis. All authors contributed to the manuscript revision, read, and approved the submitted version.

Acknowledgments

The authors thank Mr. Bai Leng, Mr. Zhenguo Lao, and Ms. Jianwen Zheng for technical help, writing assistance, and general support.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fneur.2022.1052505/full#supplementary-material

References

1. Yu TH, Xu YX, Ahmad MA, Javed R, Hagiwara H, Tian XH. Exosomes as a promising therapeutic strategy for peripheral nerve injury. Curr Neuropharmacol. (2021) 19:2141–51. doi: 10.2174/1570159X19666210203161559

2. Sullivan R, Dailey T, Duncan K, Abel N, Borlongan CV. Peripheral nerve injury: stem cell therapy and peripheral nerve transfer. Int J Mol Sci. (2016) 17:2101. doi: 10.3390/ijms17122101

3. Sanderson M, Mohr B, Abraham MK. The emergent evaluation and treatment of hand and wrist injuries: an update. Emerg Med Clin North Am. (2020) 38:61–79. doi: 10.1016/j.emc.2019.09.004

4. Fitschen-Oestern S, Lippross S, Lefering R, Kluter T, Behrendt P, Weuster M, et al. Missed hand and forearm injuries in multiple trauma patients: an analysis from the TraumaRegister DGU®. Injury. (2020) 51:1608–17. doi: 10.1016/j.injury.2020.04.022

5. Che Y, Xu L. Analysis on misdiagnosis of ulnar nerve injury: a report of 18 cases. China J Orthop Trauma. (2008) 21:769–70.

6. Liang XJ, Liu YL, Yan XF. Analysis of misdiagnosis of 49 cases of peripheral nerve injury. Chin J Mod Med. (1998) 8:36–7.

7. Edshage S. Peripheral nerve injuries–diagnosis and treatment. N Engl J Med. (1968) 278:1431–6. doi: 10.1056/NEJM196806272782606

8. Dahlin LB, Wiberg M. Nerve injuries of the upper extremity and hand. EFORT Open Rev. (2017) 2:158–70. doi: 10.1302/2058-5241.2.160071

9. Pridmore MD, Glassman GE, Pollins AC, Manzanera Esteve IV, Drolet BC, Weikert DR, et al. Initial findings in traumatic peripheral nerve injury and repair with diffusion tensor imaging. Ann Clin Transl Neurol. (2021) 8:332–47. doi: 10.1002/acn3.51270

10. Zeidenberg J, Burks SS, Jose J, Subhawong TK, Levi AD. The utility of ultrasound in the assessment of traumatic peripheral nerve lesions: report of 4 cases. Neurosurg Focus. (2015) 39:E3. doi: 10.3171/2015.6.FOCUS15214

11. Tang W, Zhang X, Sun Y, Yao B, Chen X, Chen X, et al. Quantitative assessment of traumatic upper-limb peripheral nerve injuries using surface electromyography. Front Bioeng Biotechnol. (2020) 8:795. doi: 10.3389/fbioe.2020.00795

12. Gu YD, Wang SD, Shi D. Gu Yudong and Wang Shuhuan's Hand Surgery. Shanghai: Shanghai Scientific & Technical Publishers Press (2002).

13. Kim DH, Kline DG, Hudson AR. Kline and Hudson's Nerve Injuries: Operative Results for Major Nerve Injuries, Entrapments and Tumors. Singapore: Saunders Elsevier Press (2008).

14. Larson EL, Santosa KB, Mackinnon SE, Snyder-Warwick AK. Median to radial nerve transfer after traumatic radial nerve avulsion in a pediatric patient. J Neurosurg Pediatr. (2019) 24:209–14. doi: 10.3171/2019.3.PEDS18550

15. Bauer B, Chaise F. Correction of ulnar claw hand and Wartenberg's sign. Hand Surg Rehabil. (2022) 41S:S118–27. doi: 10.1016/j.hansur.2020.11.012

16. Soldado F, Bertelli JA, Ghizoni MF. High median nerve injury: motor and sensory nerve transfers to restore function. Hand Clin. (2016) 32:209–17. doi: 10.1016/j.hcl.2015.12.008

17. Erol A, Bebis G, Nicolescu M, Boyle RD, Twombly X. Vision-based hand pose estimation: a review. Comp Vis Image Understand. (2007) 108:52–73. doi: 10.1016/j.cviu.2006.10.012

18. Pham T, Pathirana PN, Trinh H, Fay P. A non-contact measurement system for the range of motion of the hand. Sensors. (2015) 15:18315–33. doi: 10.3390/s150818315

19. Zhou YM, Jiang GL, Lin YR. A novel finger and hand pose estimation technique for real-time hand gesture recognition. Pattern Recognit. (2016) 49:102–14. doi: 10.1016/j.patcog.2015.07.014

20. Hong S, Kim Y. Dynamic pose estimation using multiple RGB-D cameras. Sensors. (2018) 18:3865. doi: 10.3390/s18113865

21. Adhikary S, Talukdar AK, Sarma KK. A Vision-Based System for Recognition of Words used in Indian Sign Language Using MediaPipe. Guwahati: Gauhati University (2021).

22. Li C, Cheng L, Yang H, Zou Y, Huang F. An automatic rehabilitation assessment system for hand function based on leap motion and ensemble learning. Cybern Syst. (2021) 52:3–25. doi: 10.1080/01969722.2020.1827798

23. Butt AH, Rovini E, Dolciotti C, De Petris G, Bongioanni P, Carboncini MC, et al. Objective and automatic classification of Parkinson disease with Leap Motion controller. Biomed Eng Online. (2018) 17:168. doi: 10.1186/s12938-018-0600-7

24. Zhang F, Bazarevsky V, Vakunov A, Tkachenka A, Sung G, Chang CL, et al. MediaPipe hands: On-device real-time hand tracking. arXiv [Preprint]. (2020). arXiv: 2006.10214. Available online at: https://arxiv.org/abs/2006.10214

25. Chunduru V, Roy M, Chittawadigi RG. Hand Tracking in 3D Space Using MediaPipe and PnP Method for Intuitive Control of Virtual Globe. Bengaluru: Amrita Vishwa Vidyapeetham, Amrita School of Engineering (2021).

26. Zhu Y, Lu W, Gan W, Hou W. A contactless method to measure real-time finger motion using depth-based pose estimation. Comput Biol Med. (2021) 131:104282. doi: 10.1016/j.compbiomed.2021.104282

27. Mushari NA, Soultanidis G, Duff L, Trivieri MG, Fayad ZA, Robson P, et al. Exploring the utility of radiomic feature extraction to improve the diagnostic accuracy of cardiac sarcoidosis using FDG PET. Front Med. (2022) 9:840261. doi: 10.3389/fmed.2022.840261

28. Koyama T, Fujita K, Watanabe M, Kato K, Sasaki T, Yoshii T, et al. Cervical myelopathy screening with machine learning algorithm focusing on finger motion using noncontact sensor. Spine. (2022) 47:163–71. doi: 10.1097/BRS.0000000000004243

29. Jorgensen HS, Behets G, Viaene L, Bammens B, Claes K, Meijers B, et al. Diagnostic accuracy of noninvasive bone turnover markers in renal osteodystrophy. Am J Kidney Dis. (2022) 79:667–76.e1. doi: 10.1053/j.ajkd.2021.07.027

30. Ganguly A, Rashidi G, Mombaur K. Comparison of the performance of the leap motion controller (TM) with a standard marker-based motion capture system. Sensors. (2021) 21:1750. doi: 10.3390/s21051750

31. Gamboa E, Serrato A, Castro J, Toro D, Trujillo M. Advantages and limitations of leap motion from a developers', physical therapists', and patients' perspective. Methods Inf Med. (2020) 59:110–6. doi: 10.1055/s-0040-1715127

32. Chophuk P, Chumpen S, Tungjitkusolmun S, Phasukkit P. Hand postures for evaluating trigger finger using leap motion controller. In: 2015 8th Biomedical Engineering International Conference (BMEiCON). Pattaya (2015).

33. Nizamis K, Rijken NHM, Mendes A, Janssen M, Bergsma A, Koopman B. A novel setup and protocol to measure the range of motion of the wrist and the hand. Sensors. (2018) 18:3230. doi: 10.3390/s18103230

34. Trejo RL, Vazquez JPG, Ramirez MLG, Corral LEV, Marquez IR. Hand goniometric measurements using leap motion. In: 2017 14th IEEE Annual Consumer Communications & Networking Conference (CCNC). Las Vegas, NV (2017). p. 137–41.

35. Chatzis T, Stergioulas A, Konstantinidis D, Dimitropoulos K, Daras P. A comprehensive study on deep learning-based 3D hand pose estimation methods. Appl Sci. (2020) 10:6850. doi: 10.3390/app10196850

36. Yokoyama M, Yanagisawa M. Logistic regression analysis of multiple interosseous hand-muscle activities using surface electromyography during finger-oriented tasks. J Electromyogr Kinesiol. (2019) 44:117–23. doi: 10.1016/j.jelekin.2018.12.006

37. Mebarkia K, Reffad A. Multi optimized SVM classifiers for motor imagery left and right hand movement identification. Australas Phys Eng Sci Med. (2019) 42:949–58. doi: 10.1007/s13246-019-00793-y

38. Bouacheria M, Cherfa Y, Cherfa A, Belkhamsa N. Automatic glaucoma screening using optic nerve head measurements and random forest classifier on fundus images. Phys Eng Sci Med. (2020) 43:1265–77. doi: 10.1007/s13246-020-00930-y

39. Ribeiro MT, Singh S, Guestrin C. “Why should I trust you?” Explaining the predictions of any classifier. In: KDD '16: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. San Francisco, CA (2016). p. 1135–44.

40. Doshi-Velez F, Kim B. Towards a rigorous science of interpretable machine learning. arXiv [Preprint]. (2017). arXiv: 1702.08608. Available online at: https://arxiv.org/abs/1702.08608

41. Hsieh C, Yun D, Bhatia AC, Hsu JT, Ruiz De Luzuriaga AM. Patient perception on the usage of smartphones for medical photography and for reference in dermatology. Dermatol Surg. (2015) 41:149–54. doi: 10.1097/DSS.0000000000000213

42. Wainberg MC, Jurisson ML, Johnson SE, Brault JS. The telemedicine hand examination. Am J Phys Med Rehabil. (2020) 99:883. doi: 10.1097/PHM.0000000000001555

Keywords: peripheral nerve injury, hand pose estimation, hand gesture, abnormal gesture detection, expert system, machine learning

Citation: Gu F, Fan J, Cai C, Wang Z, Liu X, Yang J and Zhu Q (2022) Automatic detection of abnormal hand gestures in patients with radial, ulnar, or median nerve injury using hand pose estimation. Front. Neurol. 13:1052505. doi: 10.3389/fneur.2022.1052505

Received: 24 September 2022; Accepted: 14 November 2022; Published: 07 December 2022.

Edited by:

Lei Xu, Fudan University, China

Reviewed by:

Adriano De Oliveira Andrade, Federal University of Uberlândia, Brazil
Ivan Stojkovic, Temple University, United States

Copyright © 2022 Gu, Fan, Cai, Wang, Liu, Yang and Zhu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jiantao Yang, yangjt5@mail.sysu.edu.cn; Qingtang Zhu, zhuqingt@mail.sysu.edu.cn

†These authors have contributed equally to this work and share first authorship
