- 1 Department of Physical Therapy, Steinhardt School of Culture, Education, and Human Development, New York University, New York, NY, United States

- 2 Vestibular Rehabilitation, New York Eye and Ear Infirmary of Mount Sinai, New York, NY, United States

- 3 Department of Applied Statistics, Social Science, and Humanities, Steinhardt School of Culture, Education, and Human Development, New York University, New York, NY, United States

- 4 Department of Otolaryngology-Head and Neck Surgery, New York Eye and Ear Infirmary of Mount Sinai, New York, NY, United States

Virtual reality allows for testing of multisensory integration for balance using portable Head Mounted Displays (HMDs). HMDs provide head kinematics data while showing a moving scene to participants who are themselves not moving. Are HMDs useful to investigate postural control? We used an HMD to investigate postural sway and head kinematics changes in response to auditory and visual perturbations and whether this response varies by context. We tested 25 healthy adults and a small sample of people with diverse monaural hearing (n = 7) or unilateral vestibular dysfunction (n = 7). Participants stood naturally on a stable force-plate and looked at 2 environments via the Oculus Rift (abstract “stars;” busy “street”) with 3 visual and auditory levels (static, “low,” “high”). We quantified medio-lateral (ML) and anterior-posterior (AP) postural sway path from the center-of-pressure data and ML, AP, pitch, yaw, and roll head path from the headset. We found no difference between the different combinations of “low” and “high” visuals and sounds. We then combined all perturbation data into a single “dynamic” level and compared it to the static level. The increase in path between “static” and “dynamic” was significantly larger in the city environment for postural sway ML and for head ML, AP, pitch, and roll. The majority of the vestibular group moved more than controls, particularly around the head, when the scenes, especially the city, were dynamic. Several patients with monaural hearing performed similarly to controls whereas others, particularly older participants, performed worse. In conclusion, responses to sensory perturbations are magnified around the head. Significant differences in performance between environments support the importance of context in sensory integration. Future studies should further investigate the sensitivity of head kinematics to diagnose vestibular disorders and the implications of aging with hearing loss to postural control. Balance assessment and rehabilitation should be conducted in different environmental contexts.

Introduction

The ability to adapt to changes in the sensory environment is considered critical for balance (1). Healthy individuals are able to maintain their balance with their eyes closed, for example, because they will rely on other senses (e.g., vestibular, somatosensory) for postural control (2, 3). An inability to reweight sensory information may lead to loss of balance with environmental changes, e.g., darkness, rapidly moving vehicles (due to visual dependence), or slippery surfaces (due to somatosensory dependence) (2, 4). Historically, the inputs considered for balance consisted of visual, vestibular, and somatosensory, but recent studies suggest that auditory input may serve as a 4th balance input (5). The presence of stationary white noise has been shown to be associated with reduced postural sway, particularly during challenging balance tasks such as standing on foam or closing the eyes (6). To understand the role of sounds in postural control, it is important to combine different levels of auditory and visual cues to better reflect day-to-day postural responses in healthy individuals.

Context has been shown to have an important impact on balance performance, potentially induced by cognitive and emotional aspects, such as postural threats, fear of imbalance or symptoms related to past experiences within specific environments (7). This top-down modulation can interact with the multisensory integration process to affect the motor plan and cannot be captured without providing an environmental context. To facilitate transfer of balance control, it is imperative that we test and train individuals in conditions as close as possible to those commonly encountered during daily activities (8). The importance of context may be analogous for auditory stimuli. While limited research exists on the relationship between auditory input and postural control, a few studies incorporated natural sounds (e.g., a fountain) and suggested that differences in response to natural sounds relate to the properties of the sounds (greater variety of binaural and monaural cues including static and moving features) (9) and the innate emotional/cognitive responses of the individual (5). However, the majority of studies reporting that balance is context-dependent refer to the task (single or dual, static or dynamic) or the surface type (10–13). Current virtual reality technology allows context-based testing of multisensory integration and balance using Head Mounted Displays (HMDs) (14).

A novel HMD-based sensory integration paradigm where visual and auditory cues are manipulated in different contexts could be of particular importance to people with sensory loss. Individuals with vestibular dysfunction appear to develop a substitution strategy whereby the remaining sensory inputs (e.g., vision) are weighted more heavily (15–21). Such a strategy is problematic in hectic environments (22). Indeed, individuals with vestibular dysfunction complain of worsening dizziness and balance loss in complex settings such as busy streets (23–25). Data are accumulating regarding the importance of sounds for balance (6). Likewise, several studies suggested an independent relationship between hearing loss, balance impairments and increased risk for falls (26, 27). At present, the mechanism underlying imbalance in patients with hearing loss who do not present with vestibular symptoms is not clear; potential mechanisms include a common inner ear pathology, abnormal sensory weighting/reweighting, cognitive processing or a combination of these (6). Additional research is necessary to explore these and/or other mechanisms mediating imbalance in these groups.

Postural responses to visual perturbations and the contributions of sounds to balance are typically quantified via postural sway (6). HMDs, designed to move the virtual scene according to the participant's head movement, accurately (28) record head position at 60–90 Hz with no additional equipment (29). Some studies, however, found differences in head kinematics, and not in postural sway, between patients with visual sensitivity or vestibular dysfunction and controls in response to visual perturbations (30–33). Indeed, people with vestibular loss demonstrated increased head movement compared with controls in response to head perturbations, potentially associated with excessive work of their neck muscles in order to control the head in space (34, 35). In related work we observed that responses to visual cues were magnified at the head segment also among healthy young adults. Head kinematics may provide an important additional facet of postural control beyond postural sway.

The aims of this study were as follows:

1. Determine how postural sway and head kinematics change in healthy adults in response to auditory perturbations when combined with visual perturbations. We expected more movement in response to the visual perturbations, particularly around the head but also for postural sway. Based on prior studies showing a significant reduction in head movement among healthy adults with broadband white noise via speakers (36) or a reduction in head movement with 2 speakers projecting a 500 Hz wave in people who are congenitally blind (37), we hypothesized that head movement would also increase with the sound perturbations.

2. Determine whether the response to sensory perturbations (via postural sway and head kinematics) varies by context. Given that balance is known to be context-dependent, we expected the responses to sensory perturbations to be magnified in a semi-real contextual scene.

3. Explore the feasibility of this novel HMD assessment in individuals with vestibular loss and hearing loss and establish a protocol for future research.

Methods

Sample

Healthy controls (N = 25) were recruited from the University community. Adults with chronic (>3 months) unilateral vestibular hypofunction (N = 7) participating in vestibular rehabilitation for complaints of dizziness or imbalance were recruited from the vestibular rehabilitation clinic at the New York Eye and Ear Infirmary of Mount Sinai (NYEEIMS). Adults with monaural hearing (N = 7) for various reasons who had no current vestibular issues or self-reported imbalance were recruited from the Otolaryngology clinic at the NYEEIMS. We defined monaural hearing as an ability to hear on one side only due to a single-sided hearing loss or due to bilateral profound hearing loss corrected with a single cochlear implant (CI). Inclusion criteria for all groups were: 18 or older, normal or corrected to normal vision, normal sensation at the bottom of the feet, and ability to comprehend and sign an informed consent in English. This study was approved by the Institutional Review Board of Mount Sinai and by the New York University Committee on Activities Involving Human Subjects.

System

Visuals were designed in C# language using standard Unity Engine version 2018.1.8f1 (64-bit) (Unity Tech., San Francisco, CA, USA). The scenes were delivered via the Oculus Rift headset (Facebook Technologies, LLC) controlled by a Dell Alienware laptop 15 R3 (Round Rock, TX, USA) with a single sensor placed on a tripod 1.6 meters in front of the participant. The Rift has a resolution of 1,080 × 1,200 pixels per eye and uses accelerometers and gyroscopes to monitor head position with a refresh rate of 90 Hz. It has a field of view of 80° horizontal and 90° vertical. Environmental auditory cues were captured with the Sennheiser Ambeo microphone in first order Ambisonics format. The background sounds were merged through a sound design process that involved simulating the detailed environmental sounds that exist within the natural environment to develop a real-world sonic representation. Abstract sounds were generated in Matlab. The audio files were processed in Wwise and integrated into Unity.

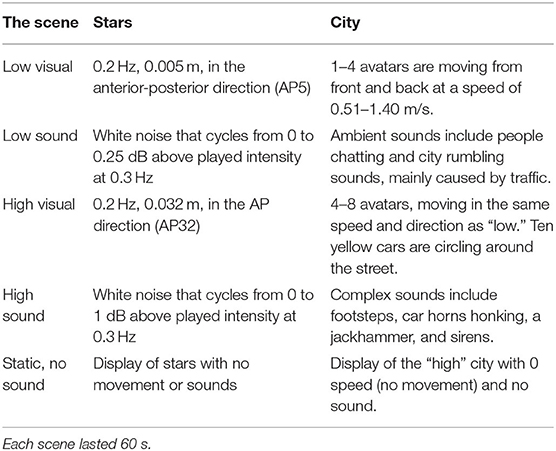

Scenes

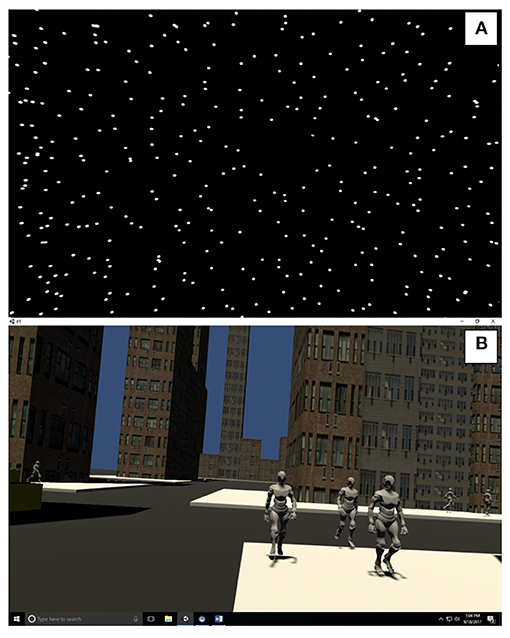

Stars

The participants observed a 3-wall (front and 2 sides) display of randomly distributed white spheres (diameter 0.02 m) on a black background (see Figure 1A and Supplementary Material) (38). Each wall was 6.16 by 3.2 m with a clear central area of occlusion of 0.46 m in diameter to suppress the visibility of aliasing effects in the foveal region (39). Similar to Polastri and Barela (40), the spheres were either static or moving at a constant frequency of 0.2 Hz with either a “low” amplitude (5 mm, AP5) or “high” amplitude (32 mm, AP32). The sounds were developed as rhythmic white noise, scaled to the visual input (Table 1).
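To make the two amplitude levels concrete, the following is a minimal sketch of what a 0.2 Hz oscillation at 5 mm and 32 mm could look like. The text gives only the frequency and the amplitudes; the sinusoidal waveform, the reading of "amplitude" as peak displacement from the center position, and the function names are assumptions made for illustration and are not taken from the study's Unity implementation.

```python
import math

FREQUENCY_HZ = 0.2                             # oscillation frequency stated in the text
AMPLITUDES_MM = {"low": 5.0, "high": 32.0}     # AP5 and AP32 levels stated in the text


def sphere_offset_mm(t_seconds, amplitude_mm, frequency_hz=FREQUENCY_HZ):
    """Displacement of the sphere field at time t.

    Assumption: a sinusoidal waveform whose amplitude is the peak
    displacement from the center position.
    """
    return amplitude_mm * math.sin(2.0 * math.pi * frequency_hz * t_seconds)


def peak_speed_mm_per_s(amplitude_mm, frequency_hz=FREQUENCY_HZ):
    """Peak speed of a sinusoid: 2 * pi * f * A."""
    return 2.0 * math.pi * frequency_hz * amplitude_mm


period_s = 1.0 / FREQUENCY_HZ  # duration of one full oscillation cycle
peak_speeds = {level: peak_speed_mm_per_s(a) for level, a in AMPLITUDES_MM.items()}
```

Under these assumptions, both levels share the same cycle duration and differ only in how far and how fast the spheres travel, which is one way to read "low" versus "high" visual load in the stars scene.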

City

The city scene simulates a street with buildings at randomly generated heights, cars and pedestrian avatars (see Figure 1B and Supplementary Material). The difference between “low” and “high” visuals was the number of avatar pedestrians and the addition of moving cars. Pre-recorded sounds were also scaled to “low” and “high” levels of complexity (Table 1).

Testing Protocol

Participants stood hip-width apart on a stable force-platform wearing the Oculus Rift and were asked to look straight ahead and do whatever felt natural to them to maintain their balance. Participants were guarded by a student physical therapist. Two quad canes were placed on either side of the force-platform for safety and to help with stepping on and off the force-platform. Most participants completed 3 repetitions of each dynamic combination (low, low; low, high; high, low; high, high) and 1 of the “static visuals/no sound” scene per environment. To monitor cybersickness, the Simulator Sickness Questionnaire (SSQ) (41) was administered at baseline, breaks and at the end of the session. The Dizziness Handicap Inventory (DHI) (42), the Activities Specific Balance Confidence Scale (ABC) (24), and a demographic questionnaire were completed during their rest breaks.
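The nominal trial schedule described above can be enumerated as a short sketch. The tuple layout, the label "none" for the no-sound condition, and the function name are illustrative assumptions. The presentation order used in the study is not stated here, so the list below should not be read as the actual order.

```python
from itertools import product

ENVIRONMENTS = ("stars", "city")
DYNAMIC_LEVELS = ("low", "high")   # applied to visuals and to sounds
DYNAMIC_REPETITIONS = 3            # repetitions per dynamic combination
STATIC_REPETITIONS = 1             # "static visuals/no sound" scene


def build_trial_list():
    """Return (environment, visual level, auditory level) for every nominal trial."""
    trials = []
    for environment in ENVIRONMENTS:
        # One static baseline per environment.
        for _ in range(STATIC_REPETITIONS):
            trials.append((environment, "static", "none"))
        # Four visual x auditory combinations, each repeated three times.
        for visual, audio in product(DYNAMIC_LEVELS, repeat=2):
            for _ in range(DYNAMIC_REPETITIONS):
                trials.append((environment, visual, audio))
    return trials


trials = build_trial_list()
trials_per_environment = len(trials) // len(ENVIRONMENTS)
```

This makes the imbalance in the design explicit: each environment contributes 12 dynamic trials but only a single static baseline.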

Data Reduction and Outcome Measures

The scenes were 60-s long and the last 55 s were used for analysis (38). Postural sway was recorded at 100 Hz by Qualisys software for a Kistler 5233A force-platform (Winterthur, Switzerland). Head kinematics were recorded at 90 Hz by custom-made software for the Oculus Rift headset. The criterion validity of the Oculus Rift to quantify head kinematics within postural tasks as compared with a motion capture system has been established (28). We applied a low-pass 4th order Butterworth filter with a conservative cutoff frequency at 10 Hz (43). Directional Path (DP) (44) was calculated as the total path length of the position curve for a selected direction. DP is a measure of postural steadiness and is used as an indication of how much static balance was perturbed with a given sensory manipulation. DP was calculated in 2 directions for force-platform data (AP, ML in mm) and 5 directions of head data [AP, ML in mm, pitch (up and down rotation), yaw (side to side rotation), roll (side flexion) in radians]. DP derived from a force platform is a valid and reliable measure of postural steadiness (45). We previously demonstrated the test-retest reliability of postural sway DP within a similar protocol without the sounds (46).
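As an illustration of the data reduction described above, the sketch below trims a recording to its final 55 s and computes a directional path as the sum of absolute sample-to-sample changes along one axis. This is one straightforward reading of "total path length of the position curve for a selected direction". The function names and the toy trace are hypothetical, and the low-pass Butterworth filtering step is assumed to have been applied beforehand and is not implemented here.

```python
def trim_to_last_seconds(samples, sample_rate_hz, keep_seconds):
    """Keep only the final `keep_seconds` of a recording."""
    n_keep = int(round(sample_rate_hz * keep_seconds))
    if n_keep <= 0:
        return []
    return list(samples[-n_keep:])


def directional_path(positions):
    """Total path length along one axis.

    Computed as the sum of absolute differences between consecutive
    samples, so the unit matches the input (mm or radians).
    """
    return sum(abs(b - a) for a, b in zip(positions, positions[1:]))


# Toy example (hypothetical values): a 60 s trace sampled at 100 Hz
# that alternates between 0 mm and 0.5 mm on every sample.
toy_trace_mm = [0.5 * (i % 2) for i in range(6000)]
analysed = trim_to_last_seconds(toy_trace_mm, sample_rate_hz=100, keep_seconds=55)
dp_mm = directional_path(analysed)
```

The same two functions apply unchanged to the 90 Hz headset data; only `sample_rate_hz` differs, which is why each direction (AP, ML, pitch, yaw, roll) can be reduced to a single DP value per trial.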

Statistical Analysis

Aim 1

We generated box plots for each outcome measure (postural sway DP AP and ML and head DP AP, ML, pitch, yaw, roll) per environment across the 5 conditions (Table 1). We conducted a visual inspection of the box plots to determine whether the distributions differed by sensory perturbations among healthy adults.

Aim 2

Given that Aim 1 showed complete overlap of the box plots for the 4 dynamic conditions regardless of environment or variable, we combined all dynamic scenes into a single level (dynamic). For each of the 7 variables, we fit a linear mixed-effects model (47, 48) to estimate overall differences between environments (stars, city) and 2 levels of sensory perturbations (static, dynamic) in healthy adults. Based on initial inspection of the residual plots for these models we used a log-transformation of the response variable to limit the impact of heteroscedasticity. These models account for the individual-level variation that is inherently present when repeated measures are obtained from individuals through a random intercept for each individual. No random slopes were used. We present the model coefficients and their 95% confidence interval (CI) for each environment (stars, city) and level (static, dynamic). P-values for each fixed effect are calculated through the Satterthwaite approximation for the degrees of freedom for the t-distribution (49). In addition, for ease of clinical interpretation, we provide the estimated marginal means for each of the 4 conditions on the original response scale (mm or radians) along with their confidence intervals. Analyses and figures were created in RStudio version 1.1.423 (50).
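One way to write the model described above, for trial j of participant i, is sketched below. The indicator (treatment) coding with the static stars scene as the reference condition is an assumption inferred from how the results are reported, and the symbols are introduced here for illustration only.

```latex
\[
\log(\mathrm{DP}_{ij}) = \beta_0
  + \beta_1\,\mathrm{Dynamic}_{ij}
  + \beta_2\,\mathrm{City}_{ij}
  + \beta_3\,(\mathrm{Dynamic}_{ij} \times \mathrm{City}_{ij})
  + u_i + \varepsilon_{ij},
\qquad
u_i \sim \mathcal{N}(0, \sigma_u^2),
\quad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
\]
```

Under this coding, the coefficient on Dynamic is the static-to-dynamic change in the stars scene, the coefficient on City is the city-versus-stars difference in the static scene, and the interaction coefficient is the additional static-to-dynamic change in the city. The term u_i is the random intercept for each participant.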

Aim 3

We used descriptive statistics and inspected violin plots to explore how the patients are distributed around the controls' mean performance. The violin plot depicts the kernel density estimate where the width of each curve corresponds with the frequency of data points in each region. A box plot is overlaid to provide median and interquartile range. Individual data points are represented as black dots.

Results

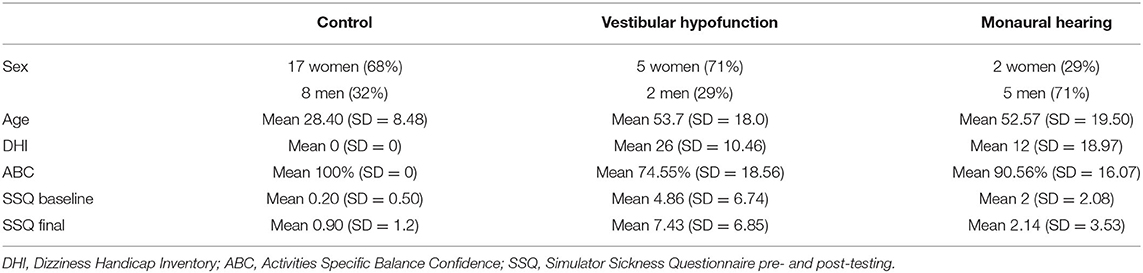

For a description of the sample see Table 2.

Aim 1

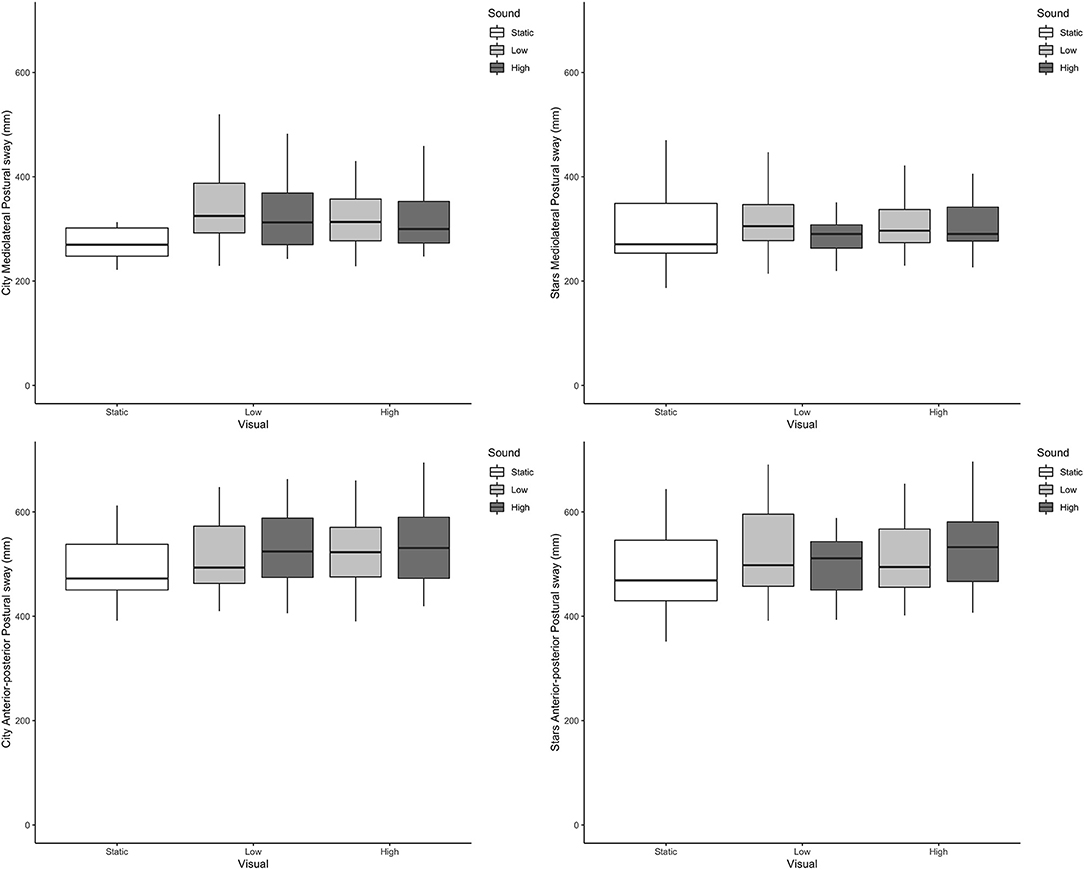

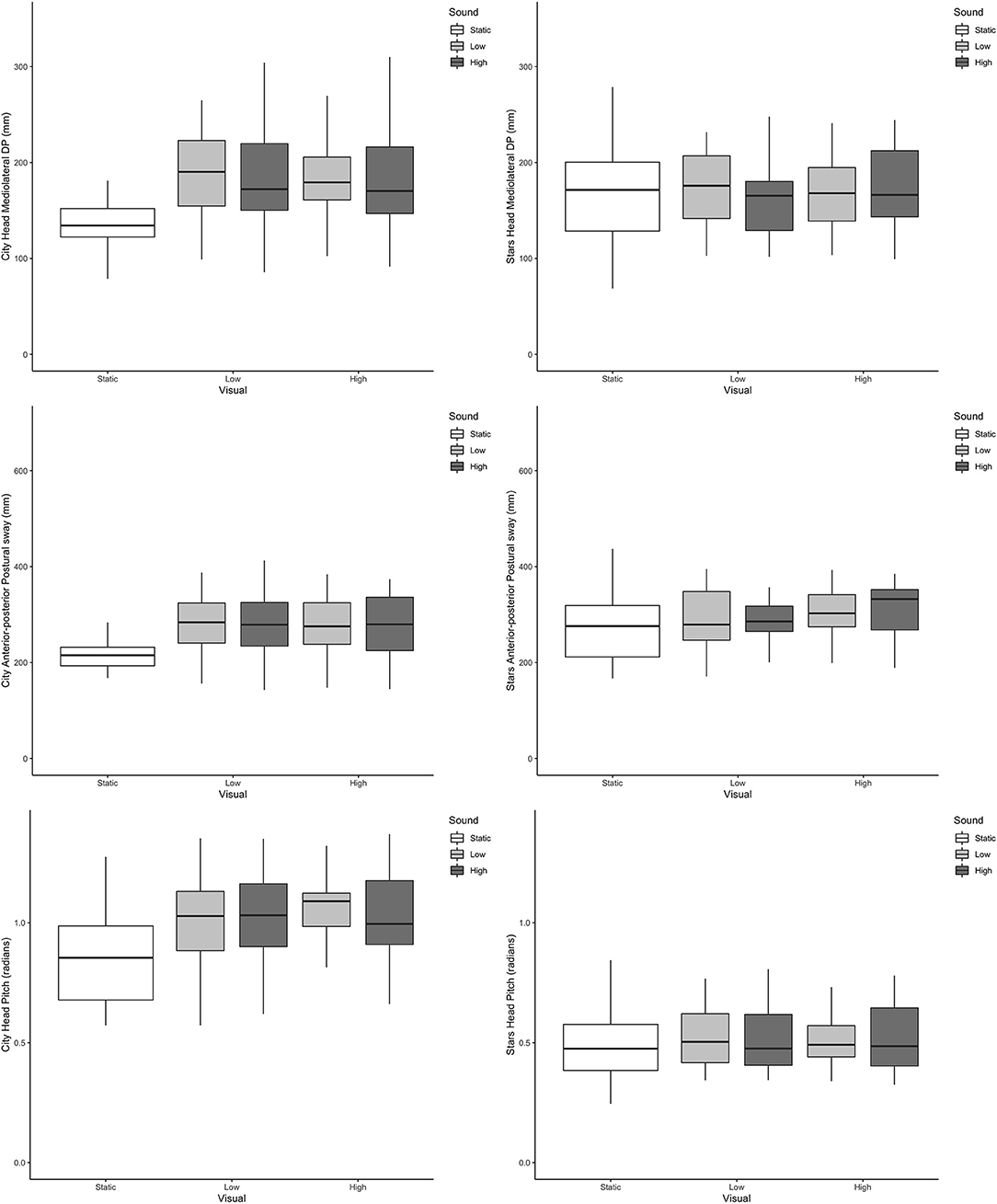

Across all outcome measures and all conditions, box plots of the scenes that included dynamic visual and auditory perturbations showed a complete overlap. Representative examples from postural sway and head data can be seen in Figures 2, 3, respectively.

Figure 2. Boxplots of postural sway directional path in mm (Y axis) across the different visual and auditory levels for the city scene (left-hand side) and stars scene (right-hand side) in the medio-lateral direction (top) and anterior-posterior direction (bottom).

Figure 3. Boxplots of head directional path (Y axis) across the different visual and auditory levels for the city scene (left-hand side) and stars scene (right-hand side) in the medio-lateral direction (top, mm); anterior-posterior direction (middle, mm) and pitch (bottom, radians).

Aim 2

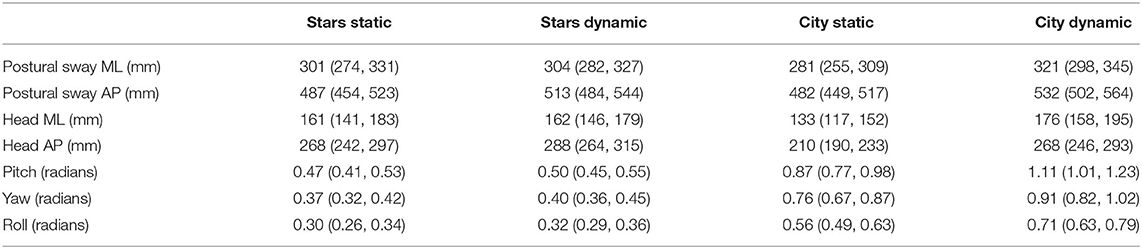

Given the lack of difference between “low” and “high” perturbations, we combined all perturbations data into a single “dynamic” category and compared it to the static level. All model coefficients are presented on the log scale whereas estimated marginal means in the response scale are presented in Table 3.

Table 3. Directional path estimated marginal means in the response scale with 95% confidence intervals.

Postural Sway ML

We observed no significant main effects of sensory perturbations or environment, but a significant sensory perturbation by environment interaction such that the increase in Sway ML was larger between static and dynamic conditions in the city (β = 0.124, 95% CI 0.028, 0.221, P = 0.012) compared with the stars.

Postural Sway AP

We observed a significant increase in Sway AP between static and dynamic for both scenes (β = 0.051, 95% CI 0.006, 0.096, P = 0.025), with no main effect of environment or sensory perturbations by environment interaction.

Head ML

There were no significant differences between static and dynamic for Head ML for the stars scene. A significant main effect of environment was observed, such that Head ML was significantly lower in the city compared with the stars (β = −0.191, 95% CI −0.311, −0.07, P = 0.002), along with a significant sensory perturbation by environment interaction (β = 0.274, 95% CI 0.148, 0.399, P < 0.001) such that there was a significant increase with the dynamic condition in the city.

Head AP

We observed a significant increase in Head AP DP between static and dynamic for both scenes (β = 0.073, 95% CI 0.015, 0.13, P = 0.013), a significantly lower Head AP DP with city compared with stars (β = −0.244, 95% CI −0.322, −0.166, P < 0.001) and a significant sensory perturbations by environment interaction (β = 0.172, 95% CI 0.09, 0.253, P < 0.001) such that the increase with the dynamic condition was higher in the city compared with the stars.

Head Pitch, Yaw, and Roll

There was no significant main effect of sensory perturbations for pitch, yaw or roll. We observed a significant main effect of environment for pitch (β = 0.628, 95% CI 0.519, 0.737, P < 0.001) and roll (β = 0.623, 95% CI 0.521, 0.726, P < 0.001) but not for yaw. Significant sensory perturbations by environment interactions were also observed for pitch (β = 0.177, 95% CI 0.063, 0.291, P = 0.002) and roll (β = 0.171, 95% CI 0.065, 0.278, P = 0.002), but not for yaw.
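Because the coefficients above are on the log scale, they can be read more intuitively after exponentiation. The sketch below converts a few of the reported coefficients into approximate percent changes. It assumes a natural-log transformation, which is not stated explicitly in the Methods, and the helper name and dictionary labels are illustrative.

```python
import math

# Log-scale coefficients copied from the Aim 2 results above.
reported_coefficients = {
    "Sway ML: perturbation x environment interaction": 0.124,
    "Sway AP: static to dynamic": 0.051,
    "Head ML: perturbation x environment interaction": 0.274,
    "Head AP: static to dynamic": 0.073,
}


def log_coef_to_percent_change(beta):
    """Convert a natural-log-scale coefficient to a percent change.

    exp(beta) is the multiplicative ratio on the original scale, so
    (exp(beta) - 1) * 100 is the corresponding percent change.
    """
    return (math.exp(beta) - 1.0) * 100.0


percent_changes = {
    label: log_coef_to_percent_change(beta)
    for label, beta in reported_coefficients.items()
}
```

Under this assumption, the Sway ML interaction of 0.124 corresponds to a ratio of roughly 1.13. For interaction terms this is a ratio of ratios: the static-to-dynamic increase in the city is about 13% larger than the corresponding increase in the stars scene. The estimated marginal means in Table 3 remain the authoritative values on the response scale.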

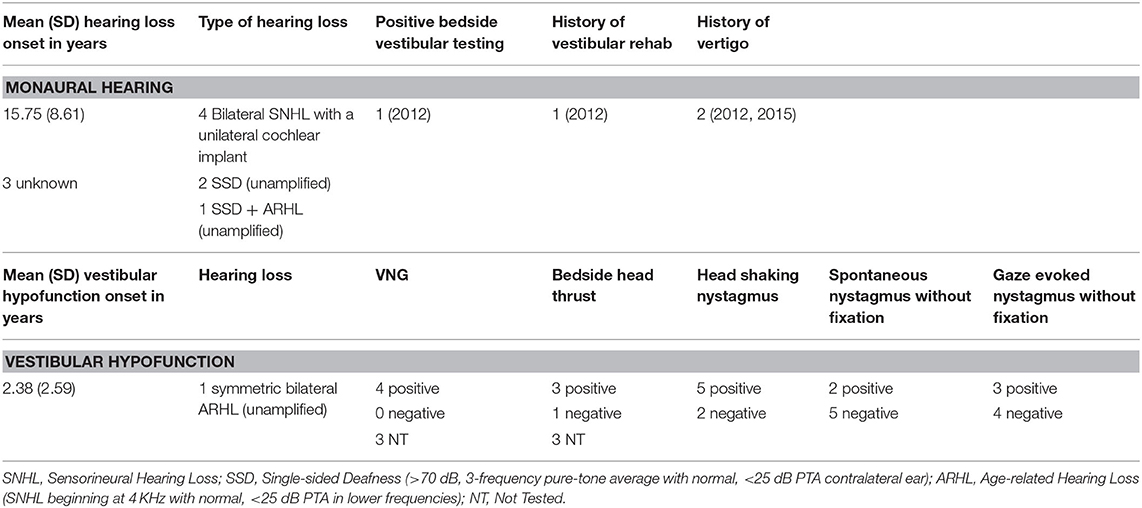

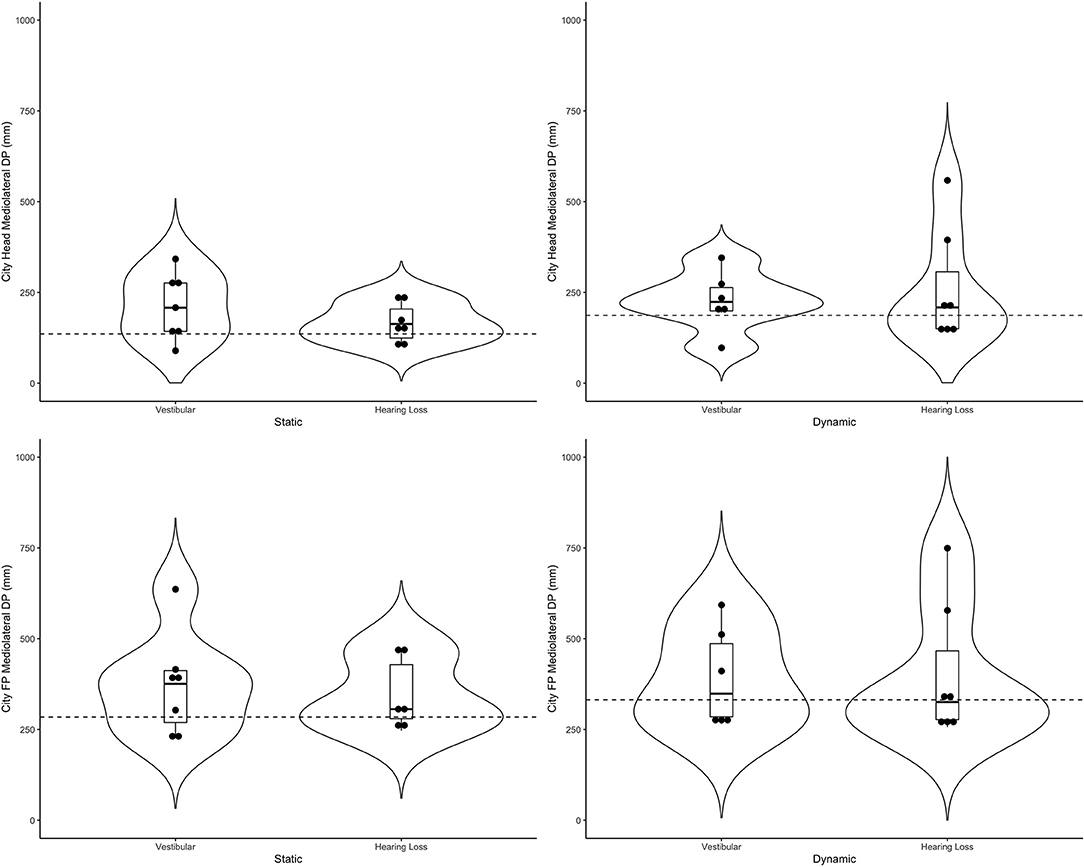

Aim 3

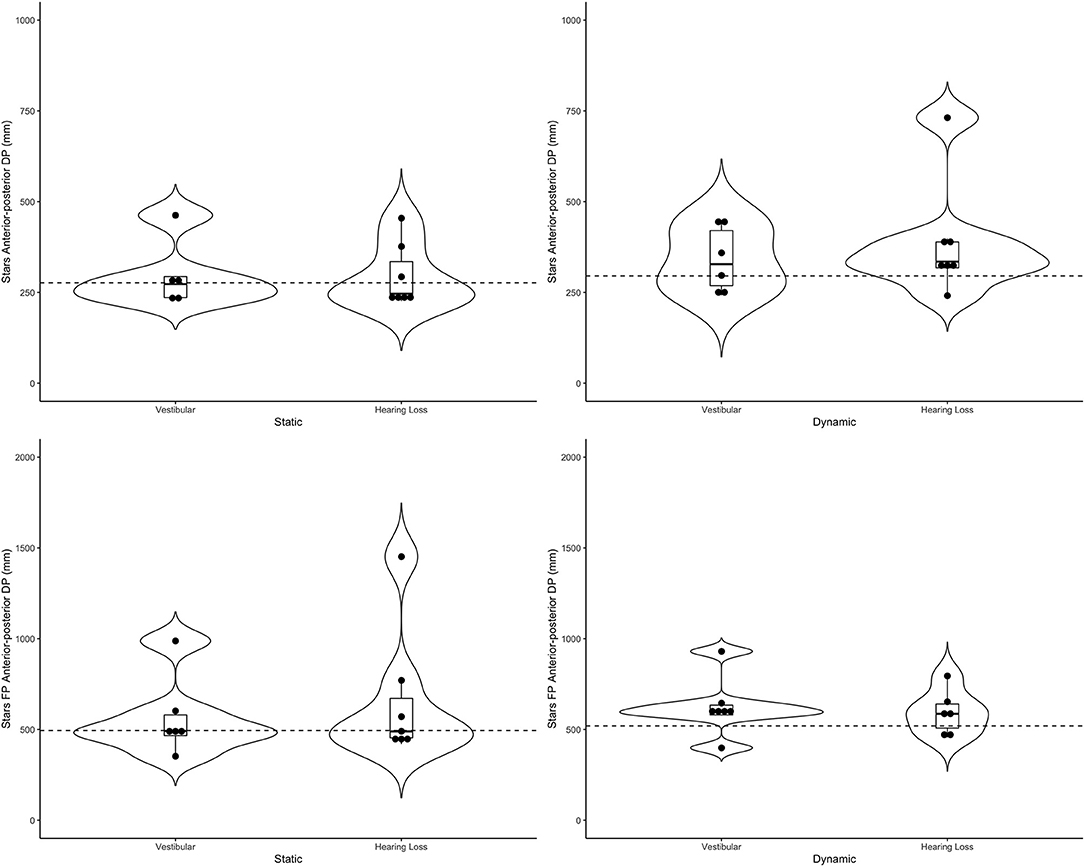

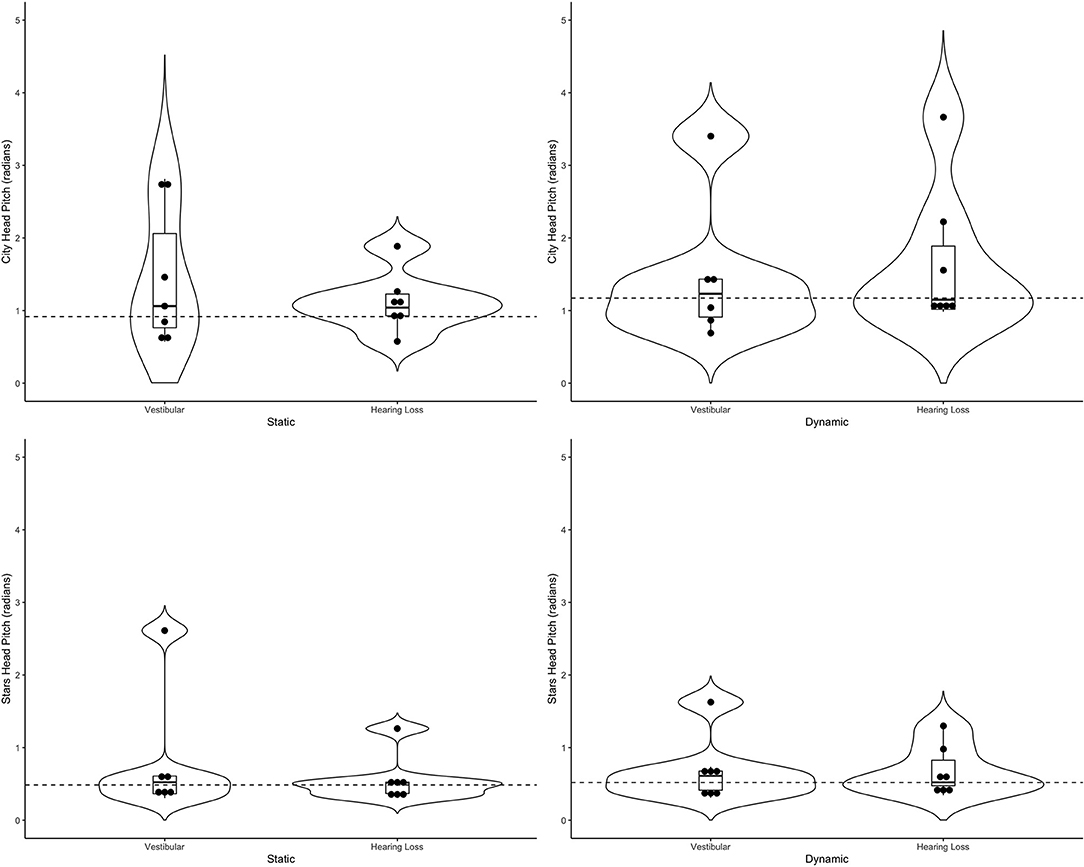

Table 4 includes a detailed description of the clinical groups. Representative violin plots can be seen in Figure 4 (city ML, postural sway, and head), Figure 5 (stars AP, postural sway, and head) and Figure 6 (pitch). All descriptive statistics per group can be found in Appendices A and B.

Figure 4. Violin plots representing the distribution of both clinical groups around the mean of the control group (represented by the dashed line) for the city scene in the medio-lateral direction. Top plots show head data and bottom plots represent postural sway data (FP = forceplate). Left-hand side represents the static scenes and right-hand side represents the dynamic scenes.

Figure 5. Violin plots representing the distribution of both clinical groups around the mean of the control group (represented by the dashed line) for the stars scene in the anterior-posterior direction. Top plots show head data and bottom plots represent postural sway data (FP = forceplate). Left-hand side represents the static scenes and right-hand side represents the dynamic scenes.

Figure 6. Violin plots representing the distribution of head pitch (radians) of both clinical groups around the mean of the control group (represented by the dashed line) for the city scene (top) and stars scene (bottom). Left-hand side represents the static scenes and right-hand side represents the dynamic scenes.

Generally speaking, the majority of the vestibular group moved more than controls when the scenes were dynamic, particularly in the city scene. The monaural hearing group was more diverse. While 4 out of 7 performed similarly to controls, 3 patients emerged outside of the group on dynamic scenes. Of the 3, 1 had prior vestibular rehab and 1 had reduced hearing compared to the rest of the sample. Two of the 3 were elderly CI users (above 70 years of age), but 2 other younger CI users performed similarly to controls. Two of the 3 had the worst DHI (above 30 when the rest of the group was at 0) and ABC (the only 2 below 90%) scores (for averages see Table 2).

Discussion

This pilot study provided insights into the role of sounds in postural control and the importance of context, and provided further support for head kinematics as an important additional metric (32, 33) that goes beyond the information provided by postural sway alone. The headset used for this scientific inquiry, the Oculus Rift, allows for simultaneous manipulation of high-quality visuals and sounds while obtaining accurate head kinematics. The study also generated hypotheses for future research investigating postural control in people with monaural hearing and contextual sensory integration in people with vestibular loss.

The first question of this study was how postural sway and head kinematics change in healthy adults in response to auditory perturbations when combined with visual perturbations. We expected to see changes in postural sway between the “low” and “high” visual environments based on previous research using similar visual conditions (38, 40). We also hypothesized that head movement would increase with the sound perturbations. However, these hypotheses were not supported: in the current study, the “low” and “high” levels of auditory and visual stimulation did not make a difference in participants' movement when they were standing on the floor with their feet hip-width apart (based on descriptive statistics as well as median-based comparisons not shown). By combining the “low” and “high” data, we were able to explore the role of context, but could no longer study the contribution of sounds to balance alone. It is possible that the addition of sounds, which were developed for this study, masked those differences. While all patients noticed the subtle differences between the dynamic scenes, most healthy adults could only detect a difference between the static and dynamic environments.

Our second question was whether the response to sensory perturbations (via postural sway and head kinematics) varies by context. Several observations with respect to this question should be discussed. First, medio-lateral postural sway increased with sensory perturbations in the city scene more than the stars scene. Anterior-posterior postural sway increased with sensory perturbations similarly in both environments. This is probably because the visual perturbation in both environments was in the anterior-posterior plane (flow of people or stars), and so a greater response in this direction is expected with an increased visual weight (51). As expected, further insights into people's motor behavior can be obtained from the head segment. For both medio-lateral and anterior-posterior head directional path we observed less movement on the static city environment vs. the static stars. This could be explained by the fact that a static street may feel more natural to people as compared with the “space” feeling many participants reported within the stars scene. This finding highlights the importance of including a static baseline scene to every environment to be studied. Interestingly, the transition from static to dynamic perturbations was larger in the city scene. Our sample also showed significantly more head pitch and roll on the city vs. stars environment. Potentially the participants were more influenced by the moving avatars than the moving stars, and perhaps felt a need to look away or avoid the flow of avatars that were moving toward them.

Our clinical sample was small and diverse, and the analysis of this sample was exploratory. Vestibular and auditory anatomy are closely linked (52), and peripheral vestibular hypofunction is often accompanied by various degrees of hearing loss. The current study did not include diagnostic vestibular testing on patients with hearing loss, so it is possible that these patients with hearing loss had an undiagnosed (or well-compensated) vestibulopathy. Patients with vestibular hypofunction were recruited from a physical therapy clinic whereas patients with monaural hearing loss were recruited from the physician's office where they were seen for their hearing. Patients with vestibular hypofunction also had a higher level of simulator sickness than the other 2 groups, particularly after testing. Clinically, diagnostic vestibular testing is not routinely done in people with hearing loss unless they complain of dizziness. Interestingly, the two patients with monaural hearing loss who consistently showed much larger movement in response to sensory perturbation than the rest of the group (particularly AP postural sway and head movement, mostly in the stars scene) were the oldest in the group and had the worst DHI and ABC scores. It is possible that issues related to balance and hearing loss emerge in older age. This also suggests that all people with hearing loss should be regularly queried regarding dizziness and balance. The vestibular group had increased ML postural sway and head movement, particularly in the dynamic city scene. Even though the visual flow of the avatars was in the AP direction, it is possible that patients with vestibular dysfunction attempted to avoid collision by increasing lateral movement, even more than controls did, despite the fact that they were not asked to do so. All participants were asked to “do whatever feels natural to them to maintain their balance.” Anecdotally, patients in the vestibular group were more likely to report some fear and discomfort with the avatars walking toward them (see Supplementary Video). In our prior work, we observed that participants with unilateral vestibular hypofunction had larger head movement than controls on different dynamic scenes (32, 33) but we did not include a static scene. The current pilot work suggests that larger head movement in the vestibular group particularly occurred in response to the dynamic scene and is not a constant difference (movement was closer to controls on the static scenes). It is important to consider that the descriptive differences observed between the clinical groups and controls were seen despite the fact that the current protocol was done when the patients were standing on a stable surface in a comfortable hip-width stance and the visual perturbations themselves were quite mild. Therefore, any behavior observed in response to the dynamic scenes could be potentially interpreted as excessive visual dependence associated with other sensory loss. To tap into somatosensory dependence we could add a challenging support surface to the paradigm (53). It is likely that further differences would arise between the vestibular group and controls when the surface does not provide stable, reliable somatosensory cues (19).

In addition to the small sample, other design limitations should be mentioned. Given that the study was designed with multilevel sensory load, the static/no sound scene was only repeated once. In the future, we will separate the auditory load from the visual load and include abstract as well as ecological sounds and perform the same number of repetitions on all scenes. Because previous studies did not find loudness/volume of the sound to be a factor in postural sway (6, 54–57), we used the same volume with a range of 62 dB (stars) to 68 dB (city) for all participants. It is therefore possible that some participants, particularly in the hearing loss group, were not impacted by the addition of the sounds because the sounds were not loud enough for them. In the future, in order to assess individual responses to sounds we will project the sounds at the “loudest that is still comfortable” level. Baseline levels of postural sway and head movement without an HMD were not obtained and could further contribute to the interpretation of the data. The lack of diagnostic vestibular testing on all groups is a limitation as well and needs to be added in future studies.

Conclusion And Future Research

The current settings were too subtle to test differences between responses to visuals and sounds. Future studies should isolate each modality in the presence of the other. Our data show the importance of context in the study of sensory integration and the feasibility of an HMD setup to do so. In addition, a static baseline scene should be included for each environment. We hypothesize that a varying context will show particular importance in people with vestibular disorders who may be anxious due to the flow of avatars in the immersive contextual environment. The head is an extension of postural sway and responses to HMD-derived sensory perturbations will be magnified around the head segment. While the clinical importance of this observation should be further investigated, it currently appears that HMD-based head kinematics augment data derived from a force platform, and could potentially provide a distinct characteristic of people with vestibular loss. This should be further studied, potentially in combination with electromyography (EMG) of the neck muscles. Fall risk in people with hearing loss has been shown in older adults and our pilot data suggest balance impairments in people with single-sided hearing are more likely to arise in older participants with moderate DHI scores. Future studies utilizing HMDs should further assess aging with and without hearing loss and its impact on postural performance in a larger sample with a more cohesive diagnosis. Vestibular testing needs to be conducted for a clear separation between vestibular-related and hearing-related pathologies and to clarify whether observed performance deficits relate to an underlying vestibular problem or directly to their hearing loss.

Data Availability Statement

The datasets generated for this study are available at: Lubetzky, Anat (2020), “Auditory Pilot Summer 2020”, Mendeley Data, v1 http://dx.doi.org/10.17632/9jwcp78xzf.1.

Ethics Statement

The studies involving human participants were reviewed and approved by the Institutional Review Board of Mount Sinai and the New York University Committee on Activities Involving Human Subjects. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

AL, JK, BH, DH, and MC: substantial contributions to the conception or design of the work, or interpretation of data for the work. JK, BH, and MC: recruitment of participants. AL: data acquisition and drafting the work. DH and JL: statistical analysis of the data. All authors: revising the work critically for important intellectual content, final approval of the submitted version, and agreement to be accountable for all aspects of the work.

Funding

This research was supported in part by the Hearing Health Foundation Emerging Research Grant 2019. AL and DH were funded by a grant from the National Institutes of Health National Rehabilitation Research Resource to Enhance Clinical Trials (REACT) pilot award. The sponsors had no role in the study design, collection, analysis and interpretation of data; in the writing of the manuscript; or in the decision to submit the manuscript for publication.

Disclaimer

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Conflict of Interest

MC reported unpaid participation in research on cochlear implants and other implantable devices manufactured by Advanced Bionics, Cochlear Americas, MED-El, and Oticon Medical outside the submitted work.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Zhu Wang, PhD candidate, NYU Courant Future Reality Lab and Tandon School of Engineering, for the development of the testing applications. We thank Susan Lunardi and Madison Pessel, physical therapy students at NYU, for their assistance with data collection.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fneur.2021.597404/full#supplementary-material

Keywords: sensory integration for postural control, Head Mounted Display, vestibular disorders, hearing loss, balance

Citation: Lubetzky AV, Kelly JL, Hujsak BD, Liu J, Harel D and Cosetti M (2021) Postural and Head Control Given Different Environmental Contexts. Front. Neurol. 12:597404. doi: 10.3389/fneur.2021.597404

Received: 21 August 2020; Accepted: 07 May 2021;

Published: 03 June 2021.

Edited by:

Judith Erica Deutsch, Rutgers, The State University of New Jersey, United States

Reviewed by:

Antonino Naro, Centro Neurolesi Bonino Pulejo (IRCCS), Italy

Dara Meldrum, Trinity College Dublin, Ireland

Copyright © 2021 Lubetzky, Kelly, Hujsak, Liu, Harel and Cosetti. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Anat V. Lubetzky
