ORIGINAL RESEARCH article

Front. Neurol., 17 July 2020

Sec. Neurotrauma

Volume 11 - 2020 | https://doi.org/10.3389/fneur.2020.00670

Jonas Stenberg1,2*; Justin E. Karr3,4,5,6,7; Douglas P. Terry3,5,6; Simen B. Saksvik8,9; Anne Vik1,2; Toril Skandsen1,9; Noah D. Silverberg10,11,12; Grant L. Iverson3,5,6,7

Background: Measuring cognitive functioning is common in traumatic brain injury (TBI) research, but no universally accepted method for combining several neuropsychological test scores into composite, or summary, scores exists. This study examined several possible composite scores for the test battery used in the large-scale study Collaborative European NeuroTrauma Effectiveness Research in Traumatic Brain Injury (CENTER-TBI).

Methods: Participants with mild traumatic brain injury (MTBI; n = 140), orthopedic trauma (n = 72), and healthy community controls (n = 70) from the Trondheim MTBI follow-up study completed the CENTER-TBI test battery at 2 weeks after injury. The battery includes both traditional paper-and-pencil tests and tests from the Cambridge Neuropsychological Test Automated Battery (CANTAB). Seven composite scores were calculated for the paper-and-pencil tests, the CANTAB tests, and all tests combined (i.e., 21 composites): the overall test battery mean (OTBM); global deficit score (GDS); neuropsychological deficit score-weighted (NDS-W); low score composite (LSC); and the number of scores ≤5th percentile, ≤16th percentile, or <50th percentile.

Results: The OTBM and the number of scores <50th percentile composites had distributional characteristics approaching a normal distribution. The other composites were in general highly skewed and zero-inflated. When the MTBI group, the trauma control group, and the community control group were compared, effect sizes were negligible to small for all composites. Subgroups with vs. without loss of consciousness at the time of injury did not differ on the composite scores and neither did subgroups with complicated vs. uncomplicated MTBIs. Intercorrelations were high within the paper-and-pencil composites, the CANTAB composites, and the combined composites and lower between the paper-and-pencil composites and the CANTAB composites.

Conclusion: None of the composites revealed significant differences between participants with MTBI and the two control groups. Some of the composite scores were highly correlated and may be redundant. Additional research on patients with moderate to severe TBIs is needed to determine which scores are most appropriate for TBI clinical trials.

The European Commission has funded a large-scale, multi-national longitudinal observational study called the Collaborative European NeuroTrauma Effectiveness Research in Traumatic Brain Injury (CENTER-TBI) (1–4). Collectively, CENTER-TBI aspires to identify best practices, develop precision medicine, and improve outcomes for people with TBIs via comparative-effectiveness studies. Repositories of comprehensive clinical patient data, neuroimaging, genetics, and blood biomarkers are being developed that can be used to advance the field of brain injury medicine in diverse ways, including improving diagnosis, clinical management, and prognostication (1). The cognitive test battery used in CENTER-TBI includes both computerized and traditional paper-and-pencil tests. Tests from the Cambridge Neuropsychological Test Automated Battery (CANTAB) (5), a battery of computerized cognitive tests that has been used in research on a variety of neurological disorders, including TBI (6–12), were included in the CENTER-TBI battery. The present study evaluates candidate cognitive endpoints, or composite scores, for the CENTER-TBI neuropsychological battery using data from the Trondheim MTBI follow-up study. In this study, as well as in the CENTER-TBI study, patients with mild traumatic brain injury (MTBI) were assessed 2 weeks after the injury. The extent of cognitive deficits 2 weeks after MTBI is uncertain, and empirical studies report effect sizes ranging from very small to medium (13–16).

A cognitive composite score combines several test scores into a single score (17). If an injury to the brain is associated with a deficit in one specific cognitive domain, it could be argued that this deficit will be washed out in a composite that includes measures of several domains, such as the overall mean score of a battery of tests. However, there is substantial variability between studies regarding which cognitive domains may be most affected after MTBI (18), suggesting heterogeneity in deficits between patients (e.g., some patients have attentional deficits and others have memory problems). Under these circumstances, a cognitive composite that considers each person's individual profile of low test scores might be well-suited for identifying deficits not only for the person, but also at the group level. A well-validated cognition composite score could serve as a primary or secondary endpoint in rehabilitation clinical trials (i.e., a summary variable that is used as a primary outcome to gauge the efficacy of an intervention), or as a variable of interest in a broad range of diagnostic or prognostic TBI studies involving neuroimaging and serum biomarkers. An endpoint that has strong psychometric properties would be sensitive to detecting small changes in cognitive functioning, which could be especially helpful in the TBI field given the variability of cognitive domains that could be affected by the injury (18). However, there is no well-validated and widely accepted cognition endpoint at present, neither in the TBI field in general (17), nor in the CENTER-TBI study. Seven candidate cognition summary scores have been developed in prior studies and applied to the Automated Neuropsychological Assessment Metrics (Version 4) Traumatic Brain Injury Military (ANAM4 TBI-MIL) (19) and the Delis-Kaplan Executive Function System (D-KEFS) (20) as part of a program of research designed to validate a cognition endpoint for TBI clinical trials (17). 
These previous studies evaluated a set of neuropsychological tests that are from the same publisher and are co-normed. However, in both research and clinical practice, neuropsychologists commonly administer a variety of tests that are standardized on different normative samples, and the composites have not yet been evaluated in neuropsychological test batteries that are not co-normed. Given that the test battery in CENTER-TBI consists of tests from different publishers and the study is conducted in multiple nations, a single normative group is not possible to use. Evaluating cognitive composites in a battery using different normative reference groups is important. The purpose of this study is to compare and contrast the seven cognition composite scores using the CENTER-TBI test battery in adults with MTBI, orthopedic injuries, and in healthy community controls. More specifically, we will (1) evaluate if some composites reveal greater group differences than others, and (2) assess the degree of intercorrelation between the composites to investigate whether certain endpoints could be considered redundant.

The participants in the present study were part of the Trondheim MTBI Follow-Up Study (total N = 378) (21). Patients with MTBI were recruited from April 2014 to December 2015. In the present study, adult patients were included if they were between ages 18 and 59 years and sustained an MTBI per the criteria described by the WHO Collaborating Center Task Force on MTBI: (a) mechanical energy to the head from external physical forces; (b) Glasgow Coma Scale (GCS) score of 13–15 at presentation to the emergency department; and (c) either witnessed loss of consciousness (LOC) <30 min, confusion, or post-traumatic amnesia (PTA) <24 h, or intracranial traumatic lesion not requiring surgery (22). Exclusion criteria were: (a) non-fluency in the Norwegian language; (b) pre-existing severe neurological (e.g., stroke, multiple sclerosis), psychiatric, somatic, or substance use disorders, determined to be severe enough to likely interfere with follow-up; (c) a prior history of a complicated mild, moderate, or severe TBI; (d) other concurrent major trauma (e.g., multiple fractures or internal bleeding) or brain injuries more severe than an MTBI.

Recruitment took place at a level 1 trauma center in Trondheim, Norway, and at the municipal emergency clinic, an outpatient clinic run by general practitioners. Patients were identified by daily screening of all referrals to head CT and patient lists at the municipal emergency clinic. Patients with a likely or possible MTBI were approached in the hospital ward or in the emergency department by study personnel, or contacted by telephone if they had left the emergency departments. LOC was self-reported and was categorized as present only if it was witnessed. Duration of PTA was also based on self-report and defined as the time after injury for which the patient had no continuous memory. It was dichotomized to either <1 or 1–24 h. A structured interview was conducted to assess LOC, PTA, and pre-injury health problems. Intracranial traumatic findings were obtained from Magnetic Resonance Imaging (MRI), performed within 72 h. MRI was performed on a 3.0 Tesla Siemens Skyra system (Siemens Healthcare, Erlangen, Germany) with a 32-channel head coil. The MTBI was classified as uncomplicated if there were no intracranial traumatic lesions on MRI, as described in detail previously (23). All patients in the present study underwent MRI. In addition, CT was performed in the majority of the patients, but none of the patients had intracranial findings on CT that were not detected on MRI (23).

Two control groups were recruited. One group consisted of patients with orthopedic injuries, free from trauma affecting the head, neck, or the dominant upper extremity (i.e., trauma controls). The trauma controls were identified by screening patient lists from the emergency departments. The other group consisted of healthy community controls. The community controls were recruited among hospital and university staff, students, and acquaintances of staff, students, and patients. The exclusion criteria were the same for the control groups and the MTBI group, but in addition, the community control group could not receive treatment for a serious psychiatric condition, even if they might be able to comply with follow-up. With this exception, medication in itself (e.g., analgesics) was not an exclusion criterion for either the MTBI group or the control groups. The study was approved by the regional committee for research ethics (REK 2013/754) and was conducted in accordance with the Helsinki declaration. All participants gave informed consent.

Participants with MTBI and trauma controls underwent neuropsychological testing approximately 2 weeks after the injury (MTBI: M = 16.6 days, SD = 3.1 days; Trauma controls: M = 17.1 days, SD = 3.4 days). The tests were administered by research staff with at least a Bachelor's degree in clinical psychology or neuroscience who were supervised by a licensed clinical psychologist. The total test time was around 90 min. The testing involved a larger battery, but in line with the purpose of the present study, only the tests included in the CENTER-TBI neuropsychological battery were analyzed in the current study. It should be noted that Norwegian norms do not exist for the CENTER-TBI battery and it is specified below which norms were used for each test. The Vocabulary subtest from the Norwegian version of the Wechsler Abbreviated Scale of Intelligence (WASI) was used to estimate premorbid intellectual functioning and raw scores were converted to age-referenced T scores using the normative data in the manual (24, 25). The Vocabulary subtest is commonly used for this purpose in TBI research because test performance is relatively unaffected by cognitive impairment following TBI (26, 27).

The traditional paper-and-pencil tests included in the CENTER-TBI battery are the Trail Making Test (TMT) Parts A and B and the Rey Auditory Verbal Learning Test (RAVLT). On the TMT Part A (28), the task is to connect the numbers 1–25 with a line as fast as possible. On the TMT Part B, the participant is asked to draw a line alternating between numbers and letters as fast as possible. The outcome measure is time-to-completion and norms from Mitrushina et al. were used to calculate age-referenced T scores (29). On the RAVLT (28), the administrator reads a list of 15 words, and the participant is asked to recall as many words as possible. The test includes five trials. Then, an interference list is read and participants are asked to recall the words from the interference list. Thereafter, they are asked to recall the words from the original list immediately after the interference list, and again after 20 min. The sum of words remembered across the five trials and the number of words recalled following a 20-min delay (i.e., delayed recall score) were the outcome measures included in composite score calculation. Norms from Schmidt (30) published in Strauss et al. (28) were used to calculate age-referenced T scores. No stand-alone performance validity test was administered. For exploratory purposes, we examined rates of unusually low RAVLT scores that might reflect poor effort. Boone et al. (31) examined the RAVLT in patients suspected of giving non-credible memory performance based on their results on stand-alone performance validity tests. They reported that the Recognition Trial was the most useful for identifying possible poor effort. Given that the Recognition Trial was not administered as part of the CENTER-TBI battery, we selected two other cutoff scores from Boone et al. that reflect unusually low scores that might reflect poor effort: Trial 5 ≤ 6 and Trials 1–5 ≤ 28.
In our samples, the percentages of subjects who scored ≤6 on Trial 5 were as follows: MTBI = 2.1% (n = 3), trauma controls = 0%, and community controls = 0%. The percentages who scored ≤28 on Trials 1–5 were as follows: MTBI = 2.1% (n = 3), trauma controls = 1.4% (n = 1), and community controls = 0%. Given that there were no incentives to deliberately under-perform and the rates of these low scores were so low and of uncertain meaning, we did not exclude any subjects on the basis of these scores.

The CANTAB involves a tablet-based assessment. The tests included in the CENTER-TBI battery are Attention Switching Task (AST), Paired Associates Learning (PAL), Rapid Visual Processing (RVP), Spatial Working Memory (SWM), Reaction Time Index (RTI), and Stockings of Cambridge (SOC). Raw scores were converted to age-referenced T scores using the CANTAB software. No norms are available for the AST (5), and this test was therefore not included in the present study (i.e., five CANTAB tests were included). Each CANTAB test generates multiple outcome measures (up to 53 for a single test, e.g., SWM). For inclusion in the composite scores, one outcome measure for each of the five tests was chosen. The outcome measure chosen for each test was the variable with normative data closest to being a total achievement/summary score in the “Recommended Measures Report” (5). On PAL, several boxes that contain different patterns are shown. Each pattern is subsequently shown for 1 s and the participant is asked to identify which box contains that pattern. “Total errors adjusted” was chosen as the outcome measure, with more errors indicative of worse performance (“adjusted” means that the score is adjusted for the number of trials completed). On RVP, participants are presented with numbers appearing on the screen at the rate of 100 digits per minute. The task is to press a button each time one of three target sequences (three digits) is shown. “A prime,” the outcome measure chosen, is a measure of the ability to identify the target sequence (i.e., the relationship between the probability of identifying a target sequence and the probability of identifying a non-target sequence). A higher score is indicative of better performance. On SWM, the task is to search through boxes for a token. When the token is found, a new token is placed in a different box. A token is not hidden in the same box twice; and to avoid errors, participants must remember where previous tokens appeared.
The outcome measure chosen, “between errors,” is defined as the number of times the subject revisits a box in which a token has previously been found. A lower score is indicative of better performance. On RTI, the participant responds as fast as possible when a yellow dot is presented in one of five white circles. Response time in milliseconds was chosen as the outcome measure, with faster responding indicative of better performance. On SOC, two displays with three balls presented inside stockings appear on the screen, and the aim is to move the balls in one display such that it is identical to the arrangement of balls in the other display. The number of problems solved with the minimum possible moves was chosen as the outcome measure, with a higher score indicative of better performance.

Seven different composite scores, previously described in detail (19, 20), were calculated for the present study. Each composite score was calculated for the traditional paper-and-pencil tests only, the CANTAB tests only, and all tests (i.e., a combined composite), leading to 21 composites in total. All raw scores were converted to age-adjusted T scores (M = 50, SD = 10, in the normative sample), with higher scores indicative of better performance, before the composites, described below, were calculated. To avoid a disproportionate impact by unusual results on the composite scores, no subject was given a T score below 10 or above 90 (e.g., if a participant's score was converted to a T score of 9, this was set to 10). Only participants who completed all the nine tests included in the composite scores were included in the present study.
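The truncation rule described above can be illustrated with a minimal Python sketch. The linear conversion from a normative mean and standard deviation is an assumption made for illustration only (published norms are often applied through lookup tables or, for the CANTAB, by the test software), and the function and parameter names are hypothetical.

```python
def to_t_score(raw, norm_mean, norm_sd, higher_is_better=True):
    """Linear T score (M = 50, SD = 10) from an assumed normative mean and SD."""
    z = (raw - norm_mean) / norm_sd
    if not higher_is_better:
        z = -z  # e.g., completion times or error counts, where lower raw scores are better
    return 50 + 10 * z


def clamp_t_score(t, low=10, high=90):
    """Truncate a T score to the 10-90 range described in the text."""
    return max(low, min(high, t))


# A converted T score of 9 is set to 10, as in the example given in the text.
print(clamp_t_score(9))   # 10
print(clamp_t_score(47))  # 47
```

Orienting every score so that higher values indicate better performance before truncation keeps the later composite calculations uniform across timed, error-based, and accuracy-based measures.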

• The Overall Test Battery Mean (OTBM) was calculated by averaging T scores for all tests (32, 33). Lower scores equal worse performance.

• The Global Deficit Score (GDS) (34, 35) was calculated by assigning the following weights to T scores from each test: ≥40 = 0, 39–35 = 1, 34–30 = 2, 29–25 = 3, 24–20 = 4, and ≤19 = 5. Each participant's mean weight was then calculated for each of the batteries. Higher scores equal worse performance.

• The Neuropsychological Deficit Score-Weighted (NDS-W) is a new composite calculated in previous cognition endpoint research only (19, 20). It assigns the following weights to T scores: ≥50 = 0, 49–47 = 0.25, 46–44 = 0.5, 43–41 = 1, 40–37 = 1.5, 36–35 = 2, 34–31 = 3, 30–28 = 4, 27–24 = 5, 23–21 = 6, and ≤20 = 7. The mean weight was then calculated for each of the batteries. Higher scores equal worse performance. This new deficit score is similar to the GDS, but provides an increase in gradations to lower the floor effect of the GDS.

• The Low Score Composite (LSC) is a new composite calculated in previous cognition endpoint research only (19, 20). T scores of 50 or higher are assigned a weight of 50, and T scores below 50 are assigned a weight that equals the T score (i.e., a T score of 40 would equal a weight of 40). The mean weight was then calculated for each of the batteries. Lower scores equal worse performance. This new composite score provides an even greater increase in gradation than the NDS-W.

• The number of scores at or below the 5th percentile (#≤ 5th %tile) is calculated by assigning the value 1 to scores at or below the 5th percentile (T score 34) and a zero to scores above the 5th percentile. These values are then summed for each participant. Higher scores equal worse performance. This score has been used in research calculating multivariate base rates for a range of neuropsychological test batteries (29–36).

• The number of scores at or below the 16th percentile (#≤ 16th %tile) is calculated by assigning the value 1 to scores at or below the 16th percentile (T score 40) and a zero to scores above the 16th percentile. These values are then summed for each participant. Higher scores equal worse performance. This score has also been calculated in previous multivariate base rate research (29–36).

• The number of scores below the 50th percentile (#< 50th %tile) is a new composite score, inspired by research on multivariate base rates, and previously calculated in cognition endpoint research only (19, 20). It is calculated by assigning the value 1 to scores below the 50th percentile (T score 49) and a zero to scores at or above the 50th percentile. These values are then summed for each participant. Higher scores equal worse performance.
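The seven definitions above can be sketched in a few lines of Python. This is an illustrative implementation under stated assumptions, not the authors' analysis code (the analyses were run in SPSS): the weight bands and cutoffs are transcribed from the list above, T scores are assumed to be integers already truncated to 10–90, and the function names and the example profile are hypothetical.

```python
from statistics import mean

# (lower bound, weight) pairs transcribed from the text; a T score takes the
# weight of the first band whose lower bound it meets or exceeds.
GDS_BANDS = [(40, 0), (35, 1), (30, 2), (25, 3), (20, 4)]
NDSW_BANDS = [(50, 0.0), (47, 0.25), (44, 0.5), (41, 1.0), (37, 1.5),
              (35, 2.0), (31, 3.0), (28, 4.0), (24, 5.0), (21, 6.0)]


def band_weight(t, bands, floor_weight):
    """Return the deficit weight for one T score."""
    for lower_bound, weight in bands:
        if t >= lower_bound:
            return weight
    return floor_weight  # T score below the lowest listed band


def composite_scores(t_scores):
    """Compute the seven composites for one participant's T scores."""
    return {
        "OTBM": mean(t_scores),
        "GDS": mean([band_weight(t, GDS_BANDS, 5) for t in t_scores]),
        "NDS-W": mean([band_weight(t, NDSW_BANDS, 7.0) for t in t_scores]),
        "LSC": mean([min(t, 50) for t in t_scores]),
        "n<=5th": sum(1 for t in t_scores if t <= 34),
        "n<=16th": sum(1 for t in t_scores if t <= 40),
        "n<50th": sum(1 for t in t_scores if t < 50),
    }


# Hypothetical nine-test profile (not study data).
example = [55, 48, 38, 33, 50, 42, 61, 29, 45]
scores = composite_scores(example)
for name, value in scores.items():
    print(name, round(value, 2))
```

The same function can be applied to the four paper-and-pencil scores, the five CANTAB scores, or all nine scores to obtain the three versions of each composite.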

Mann-Whitney U-tests and Kruskal-Wallis Rank Sum tests were used to examine differences in demographic variables, individual test scores, and in cognitive composite scores between the groups. These non-parametric methods were chosen because the majority of the composite scores showed non-normal distributions. The groups compared were: (1) participants with MTBI, trauma controls, and community controls; (2) participants with and without intracranial findings (i.e., complicated vs. uncomplicated MTBI); (3) participants with witnessed LOC and participants without LOC; (4) participants with long PTA (1–24 h) and participants with short PTA (<1 h). Cliff's delta was used to determine effect sizes between the groups on the composite scores. Cliff's delta is a measure of overlap between two distributions and is a suitable effect size measure for non-normal distributions (36). A Cliff's delta of 0 indicates complete overlap between the distributions, while a Cliff's delta of 1 or −1 indicates no overlap. As a guideline, a Cliff's delta of 0.11 is considered a small effect size, 0.28 a moderate effect size, and 0.43 a large effect size (37). Cohen's ds are also reported, but these should be interpreted with caution because of the non-normal distribution characterizing most of the composite scores. A Cohen's d of 0.20 is considered small, 0.50 moderate, and 0.80 large (38). The effect sizes were coded such that worse cognitive outcome in the presumed most affected group would result in a positive effect size. Notably, no corrections for multiple comparisons were applied because this study was designed to comprehensively explore a large number of candidate composite scores derived from the CENTER-TBI battery, with an emphasis on effect size interpretation as opposed to significance testing. Spearman's rho was used to examine the intercorrelations between the composite scores. All analyses were performed in IBM SPSS Statistics v. 25.

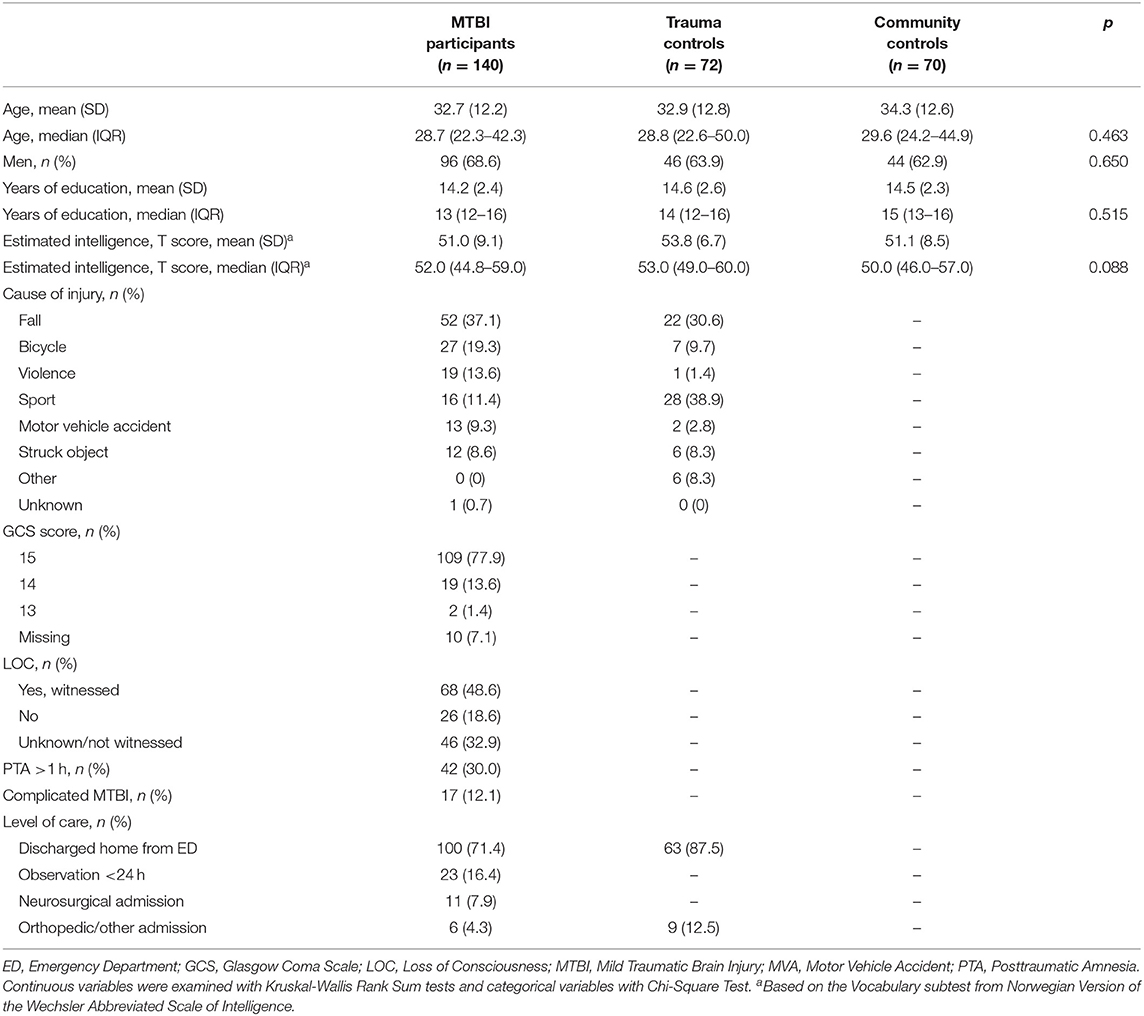

In total, 166 adults (≥18 years old) with MTBI were scheduled for the neuropsychological assessment 2 weeks following injury, as were 75 trauma controls and 74 community controls. Included in the present study are the 140 (84.3% of the scheduled patients) participants with MTBI, the 72 trauma controls (96.0%), and the 70 community controls (94.6%) who completed all nine tests constituting the composite scores. Demographic and clinical characteristics of the three groups are reported in Table 1. There were no statistically significant differences in age, sex, education, or estimated intelligence between the groups.

Table 1. Demographic and clinical characteristics of patients with MTBI, trauma controls, and community controls.

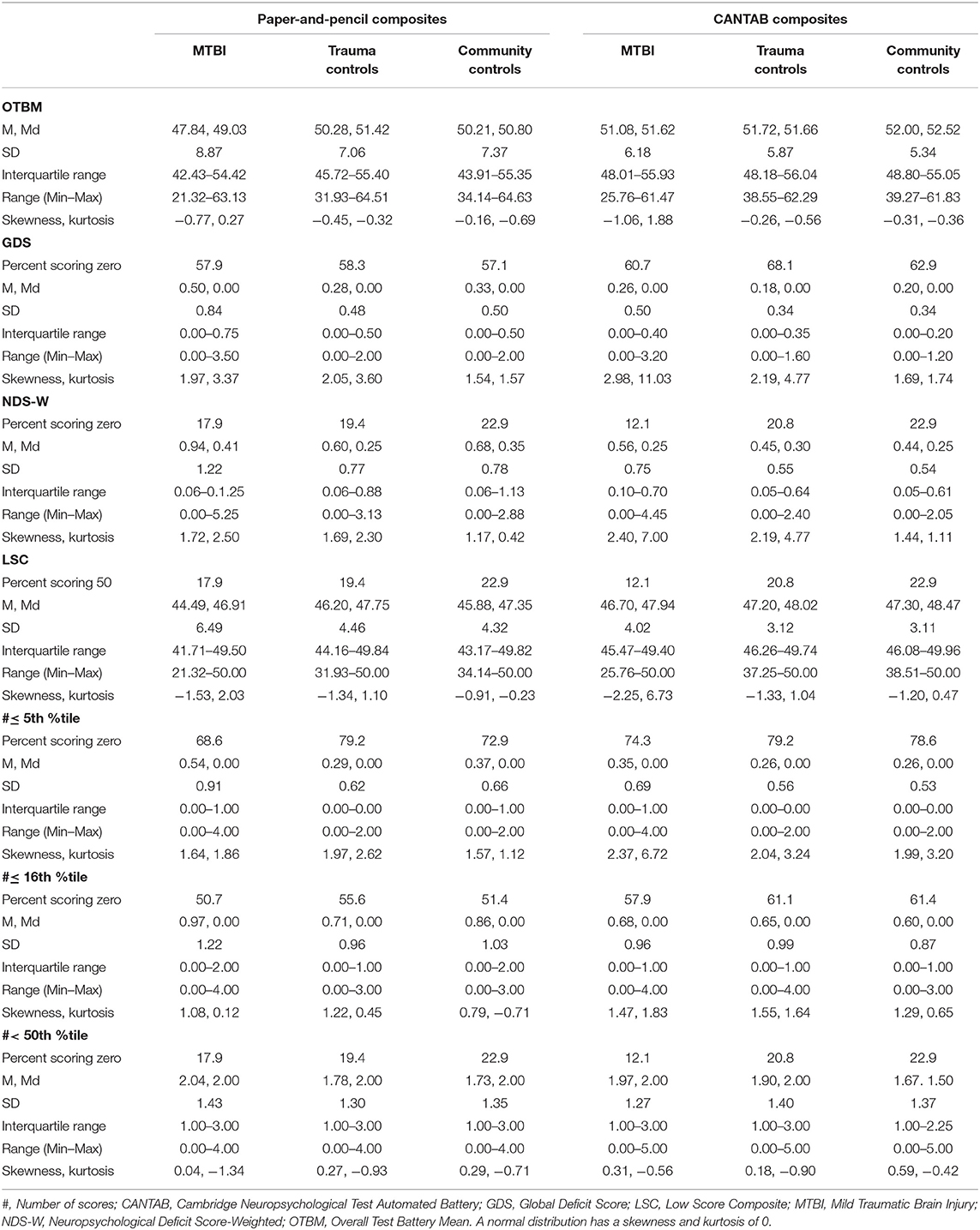

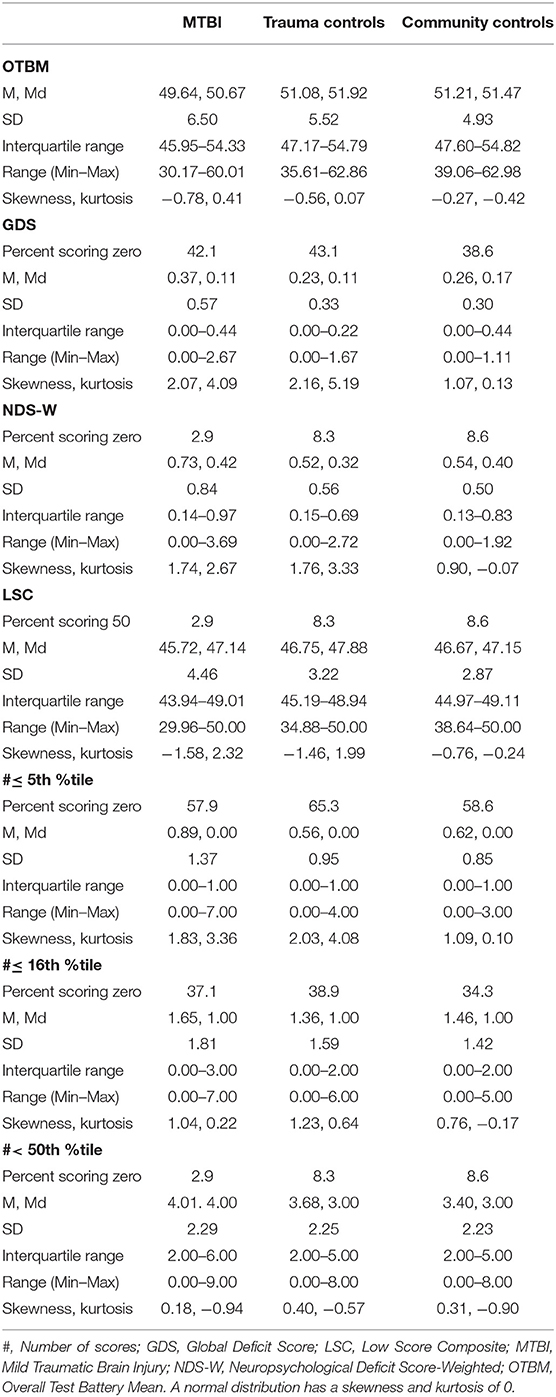

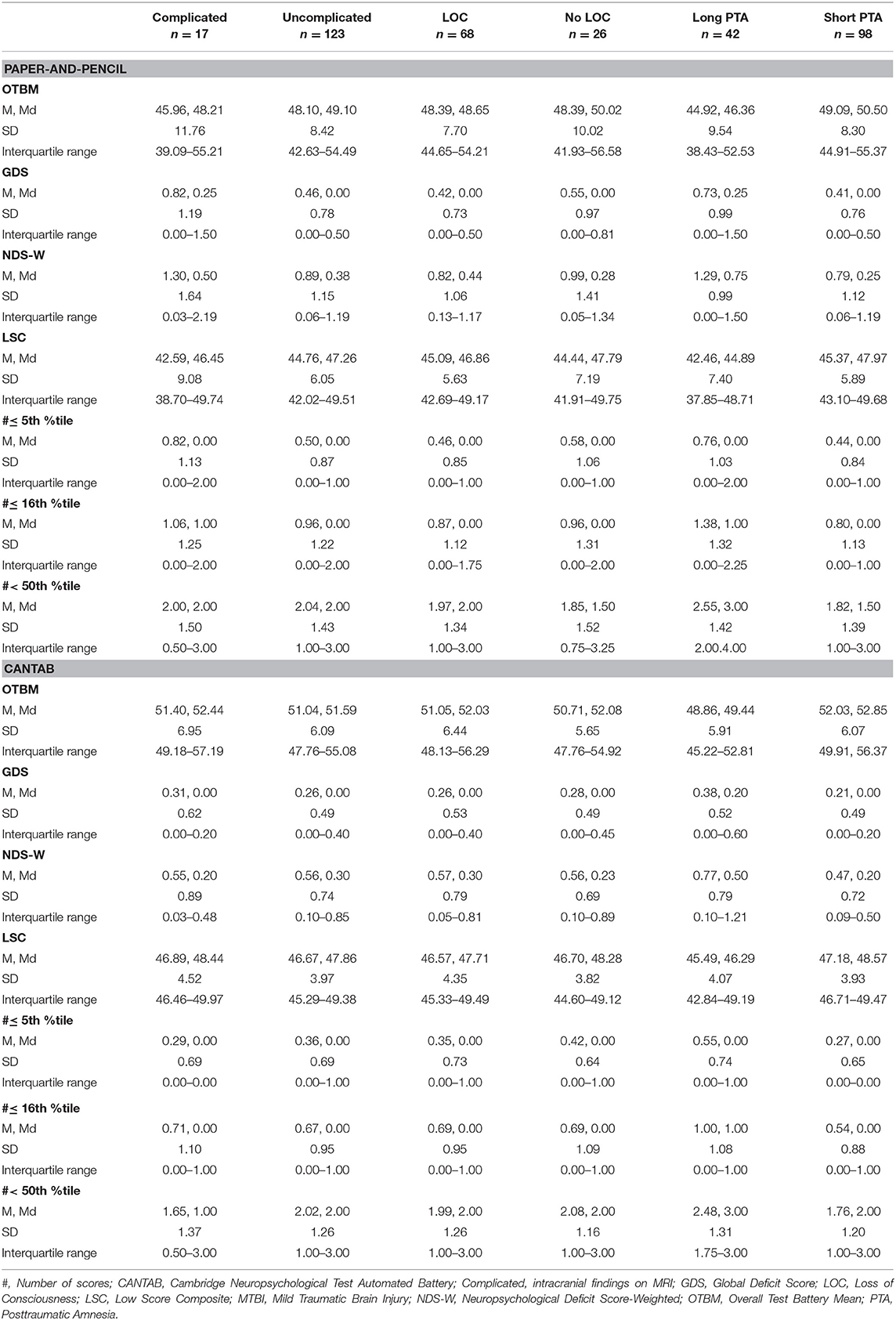

Scores from the individual tests that constitute the composite scores are reported in Table 2. There were no significant differences between the MTBI group, the trauma control group, and the community control group on any of the individual tests. Descriptive statistics and distributional characteristics for the composite scores in the MTBI group, the trauma control group, and the community control group are shown in Tables 3, 4, and the descriptive statistics for the composites in the severity subgroups (i.e., complicated MTBI, LOC, long PTA) are shown in Tables 5, 6. The OTBM and the #< 50th %tile composites had distributional characteristics (i.e., skewness and kurtosis) approaching a normal distribution, even if some deviations were seen (e.g., the CANTAB OTBM composite score had a skewness of −1.06 in the MTBI group). The other composites were, in general, highly skewed and zero-inflated (except the LSC, where 50 was the most common score). Group comparisons (p-values and effect sizes) of the 21 composite scores are shown in Table 7. There were no significant differences between the MTBI group, the trauma control group, and the community control group on the composite scores and effect sizes were negligible (Cliff's delta <0.11) to small (Cliff's delta <0.28) for all composites. To investigate whether only including participants who completed all nine tests biased the results, we also calculated the OTBM (combined battery) for all participants who completed at least seven tests (i.e., the missing test score/s were replaced by the mean of that participant's available test scores).
The difference between this imputed OTBM and the one presented in the paper was negligible [for the MTBI group: 49.38 ± 6.59 (compared to the OTBM presented in Table 4: 49.64 ± 6.50); for the trauma control group 50.91 ± 5.80 (compared to the OTBM presented in Table 4: 51.08 ± 5.52); and for the community control group 50.82 ± 5.06 (compared to the OTBM presented in Table 4: 51.21 ± 4.93)].
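The sensitivity analysis described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' procedure as implemented: missing scores are represented by `None`, the function name is hypothetical, and the example profile is invented.

```python
from statistics import mean


def imputed_otbm(t_scores, min_tests=7):
    """OTBM with person-mean imputation; None marks a missing test score.

    Returns None when fewer than `min_tests` scores are available.
    """
    available = [t for t in t_scores if t is not None]
    if len(available) < min_tests:
        return None
    person_mean = mean(available)
    filled = [person_mean if t is None else t for t in t_scores]
    return mean(filled)


# Hypothetical participant with two of nine scores missing (not study data).
profile = [50, 40, 60, None, 50, 50, 50, None, 50]
print(imputed_otbm(profile))
```

Because each missing value is replaced by the participant's own mean, the imputed OTBM equals the mean of the available scores; the imputation step matters mainly for composites that count or weight individual scores.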

Table 3. Descriptive statistics of the seven composite scores based on the traditional paper and pencil tests (4 individual test scores) and the CANTAB tests (5 individual test scores).

Table 4. Descriptive statistics of the combined composite scores (based on all 9 individual test scores).

Table 5. Descriptive statistics of the traditional paper-and-pencil composite scores and the CANTAB composite scores in the severity subgroups.

There were no significant differences in age (p = 0.745), sex (p = 0.560), education (p = 0.354), or estimated intelligence (p = 0.491) between participants with complicated MTBI and uncomplicated MTBI. None of the composites revealed statistically significant differences between the groups and effect sizes (Cliff's delta) were negligible to small (Table 7). Similarly, there were no significant differences in age (p = 0.852), sex (p = 0.489), education (p = 0.542), or estimated intelligence (p = 0.802) between participants with and without LOC, and none of the composites revealed statistically significant differences between the groups (Table 7). Participants with long PTA had significantly lower estimated intelligence than participants with short PTA (long PTA: M = 48.1, SD = 9.3; short PTA: M = 52.2, SD = 8.8; p = 0.011), but there were no significant differences in age (p = 0.181), sex (p = 0.266), or education (p = 0.101). All composites except the paper-and-pencil GDS and #≤ 5th %tile differed significantly between participants with long and short PTA, with lower cognitive functioning in participants with long PTA (Table 7). Effect sizes ranged from small to moderate. On the combined composites, the largest effect sizes (Cliff's delta) were observed on the #< 50th %tile (0.36) and the OTBM (0.35) composites and the smallest on the #≤ 5th %tile (0.26) composite. Similar effect sizes (small to moderate) were seen on the paper-and-pencil composites and on the CANTAB composites.

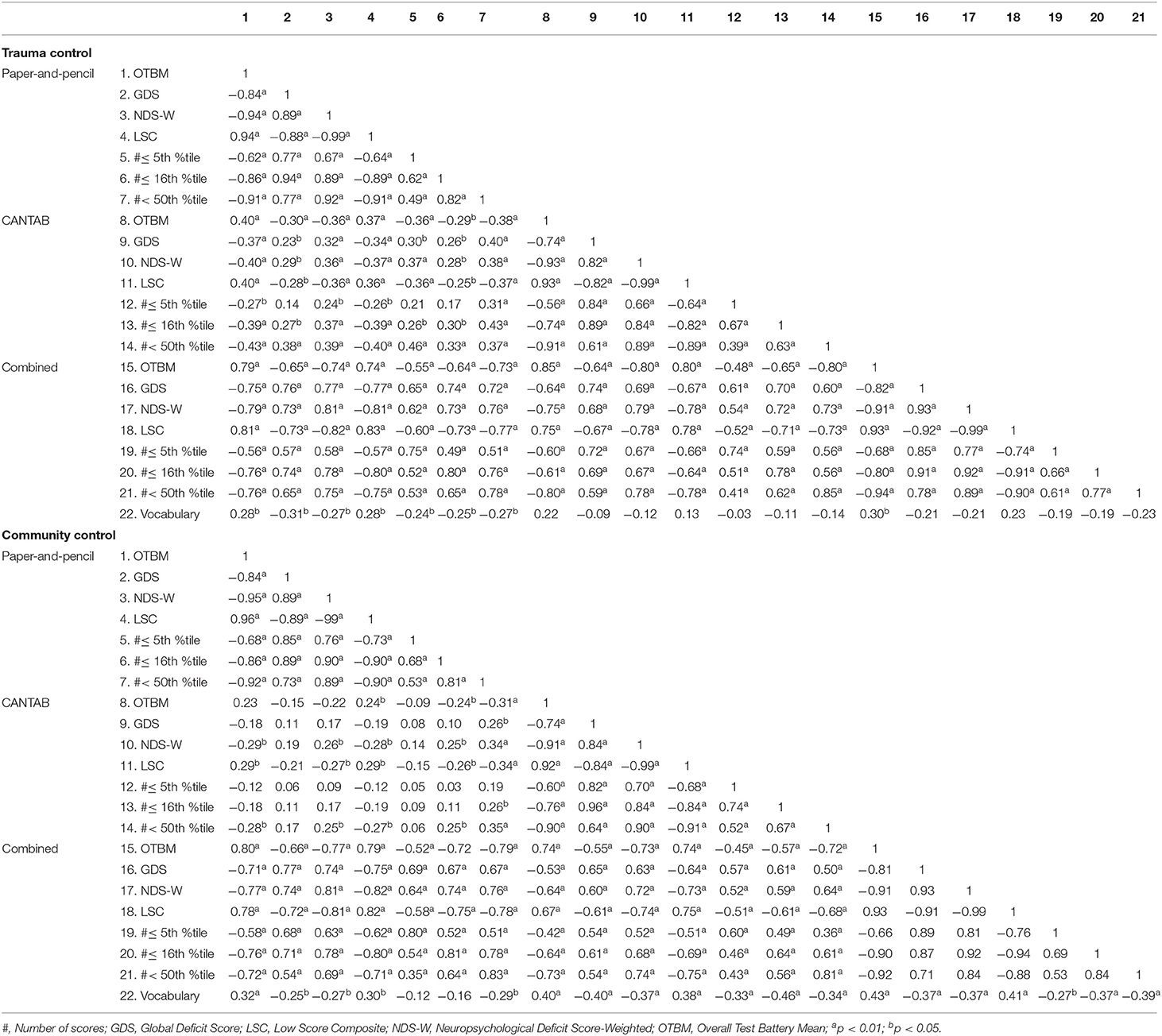

In general, the intercorrelations between the composite scores were high on analyses that stratified the data by group (i.e., MTBI; trauma control; community control) and test battery (i.e., paper-and-pencil, the CANTAB, and combined; Tables 8, 9). For example, in the MTBI group, the correlations between the composite scores on the combined battery ranged from 0.68 (between the #≤ 5th %tile and the #< 50th %tile) to −0.99 (between the NDS-W and the LSC). Correlations between the paper-and-pencil composites and the CANTAB composites were lower, ranging in the MTBI group from 0.55 (between the paper-and-pencil and CANTAB OTBM composites) to 0.33 (between the paper-and-pencil #≤ 5th %tile and the CANTAB #≤ 16th %tile composites). In the control groups, the correlations were somewhat lower between the paper-and-pencil composites and the CANTAB composites. For example, the correlation between the paper-and-pencil #≤ 5th %tile and the CANTAB #≤ 16th %tile composites was 0.26 in the trauma control group and 0.09 in the community control group. Correlations between estimated intelligence (the Vocabulary subtest) and the composite scores are also shown in Tables 8, 9, and most composites were significantly correlated with estimated intelligence.
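The intercorrelations reported above are Spearman rank correlations. As an illustration, the sketch below computes Spearman's rho in plain Python as the Pearson correlation of average ranks (so tied scores, which are common in zero-inflated composites, share a rank). The function names and example values are hypothetical, and the function assumes that neither variable is constant.

```python
from math import sqrt
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def spearman_rho(xs, ys):
    """Spearman's rho: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = mean(rx), mean(ry)
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    return sxy / sqrt(sxx * syy)


# Hypothetical NDS-W (higher = worse) and LSC (lower = worse) values for four people.
ndsw = [0.0, 0.5, 1.25, 3.0]
lsc = [50.0, 47.5, 44.0, 38.0]
print(spearman_rho(ndsw, lsc))
```

The negative sign in this example reflects only the opposite scoring directions of the two composites, which is also why the NDS-W and LSC correlation is reported as −0.99 rather than 0.99.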

Table 9. Spearman intercorrelation matrices for the composite scores in the trauma control group and the community control group.

This study is part of a program of research designed to develop and evaluate a cognition endpoint suitable for TBI research and clinical trials (17, 19, 20). This study calculated and evaluated seven candidate composite scores for the neuropsychological battery used in the CENTER-TBI. The MTBI sample, on average, performed in the broadly normal range on all of the individual cognitive test scores at 2 weeks post injury (Table 2), which is generally consistent with studies examining neuropsychological outcome at 2 weeks after injury (13–16) and reflects the favorable cognitive outcome experienced by this sample. Further, there were no statistically significant differences in any of the composite scores, or in the individual tests, when comparing the MTBI, trauma control, and community control groups (Tables 2, 7). Within the MTBI group, subgroups were created based on injury severity. There were no statistically significant differences on any composite when comparing those with complicated vs. uncomplicated MTBI (Table 7), which is somewhat surprising given that participants were evaluated only 2 weeks following injury. However, the literature on neuropsychological outcome from complicated MTBI during the acute and subacute period following injury is mixed, with some studies showing greater cognitive deficits and some studies showing comparable cognitive functioning to those with uncomplicated MTBIs (39–48). The majority of the patients were discharged to home from the emergency department. Thus, our sample represents the milder end of MTBI, possibly contributing to the lack of differences between complicated and uncomplicated MTBI. There were no statistically significant differences on any composite when comparing participants with and without LOC at the time of injury (Table 7). 
There were significant differences on multiple composites between those with a greater duration of PTA at the time of injury and those with a shorter duration of PTA (Table 7), a finding that aligns with some previous research (49, 50). However, these results are confounded by participants with longer duration of PTA having lower estimated longstanding intellectual functioning than those with short duration PTA. Because intellectual functioning is known to be correlated with neuropsychological test performance (51–53), and with the composite scores in the present study (Tables 8, 9), the findings are likely in part related to premorbid differences in intelligence.

Two of the composite scores had distributional characteristics that were approximately normal (i.e., the OTBM and the #< 50th %tile composites; Tables 3, 4). Most of the composite scores, however, had skewed distributions because they are based on deficit scores, or the number of low scores. Some have notable zero-inflation, meaning that a large number of people obtain a score of zero (Tables 3, 4). The distributional characteristics of the composite scores are important to consider when choosing a composite score for TBI research and clinical trials because these characteristics have consequences for the statistical methods available for data analyses. There is often a need to control for demographic variables, such as education or intelligence, or injury severity variables, in the statistical analyses in TBI research. The OTBM score will approach a normal distribution in most cases and covariates can easily be included in traditional parametric methods, such as ordinary least squares regression. The zero-inflated composites, however, likely require non-parametric methods (e.g., Mann-Whitney U-tests used in the present study), which do not allow for covariates. If there is a need to include covariates, regression models developed for count data (e.g., Poisson or negative binomial regression) could be an alternative for the zero-inflated composite scores.

Intercorrelations between the composite scores were higher within a test battery (paper-and-pencil; CANTAB; combined) than between different test batteries (Tables 8, 9). The moderate correlation of 0.55 between the paper-and-pencil OTBM composite and the CANTAB OTBM composite in the MTBI group is not surprising, considering that concurrent validity studies on neuropsychological tests commonly find only moderate correlations even between tests designed to measure the same cognitive function (54, 55). Moreover, the correlation might be somewhat attenuated due to method variance (i.e., computerized vs. traditional testing). Despite the moderate correlation, the effect sizes were broadly similar for the paper-and-pencil battery and the CANTAB battery when groups and subgroups were compared. Based on this, we cannot conclude that one of the batteries (paper-and-pencil or CANTAB) is more sensitive than the other. Within the paper-and-pencil composites and the CANTAB composites, the NDS-W and LSC were extremely highly correlated (i.e., 0.99 in almost all groups and all batteries), which is consistent with previous findings (19, 20). It is likely that these two composites are redundant. The OTBM and the #< 50th %tile composites were also highly correlated. Correlations above 0.90 between these composites have also been found in the D-KEFS battery (20) and in the ANAM4 TBI-MIL battery (19). Therefore, researchers interested in using more than one of these composite scores are encouraged to avoid including redundant scores as outcome measures and instead choose two composites with lower intercorrelation (such as the OTBM and the #≤ 5th %tile).

The present study differs from previous cognition endpoint research (19, 20) in that the tests in the neuropsychological battery used were not co-normed. Thus, when the age-referenced T scores were calculated (Table 2), different norm groups were used for the different neuropsychological tests. In both TBI research and neuropsychological clinical practice, this is a very common scenario. There are dozens of tests recommended as common data elements outcome measures following TBI (56), and these tests have diverse sets of normative reference values. Using cognitive tests from multiple normative samples, as we did in this study, mirrors typical research and clinical practice and increases the generalizability of these findings. However, the use of different norms requires some extra considerations. Even though T scores are designed so that the mean is 50 and the standard deviation is 10 in the normative sample, this is not necessarily the case in the groups of interest in a study. Obtained scores in a study sample often vary from that expected distribution due to characteristics of the sample (e.g., level of education or intellectual functioning), differences in the normative reference groups, or both. For example, in the community control group in the present study, PAL scores derived from the CANTAB normative sample had a mean of 51.80 and a standard deviation of 6.55, while RAVLT Trial 1–5 scores derived from the Schmidt et al. meta-norms (30) had a mean of 48.19 and a standard deviation of 11.60 (Table 2). Assuming a normal distribution of the scores in the community control group, this means that a score at the 16th percentile (i.e., one standard deviation below the mean for the community control group) for the PAL would be a norm-referenced T score of about 45 and a score at the 16th percentile on the RAVLT would be a norm-referenced T score of about 37. Consequently, observed deficit scores would vary by individual measure. For example, fewer participants would have T scores low enough to lead to a positive GDS on the PAL than on the RAVLT, because the GDS requires the score to be lower than a norm-referenced T score of 40 to be a non-zero value. Although a similar proportion of the community control group (~16%) obtained a T score ≤45 on the PAL and ≤37 on the RAVLT, the influence these scores have on the GDS would not be equivalent. It should be noted that the same situation can also arise from batteries that are co-normed (i.e., the standard deviations will, more or less, deviate from 10 in the study groups), but that differences may be more pronounced when different norms are used.
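The arithmetic behind this example can be sketched as follows, using the community control means and standard deviations quoted above and assuming, as the text does, that the scores are normally distributed. The variable names are hypothetical, and the computed proportions are model-based expectations rather than observed frequencies from the study.

```python
from statistics import NormalDist

# Community control means and SDs of norm-referenced T scores (as quoted from Table 2).
pal = NormalDist(mu=51.80, sigma=6.55)
ravlt = NormalDist(mu=48.19, sigma=11.60)

# One SD below each group mean, used in the text as the ~16th percentile.
pal_16th = pal.mean - pal.stdev
ravlt_16th = ravlt.mean - ravlt.stdev

# Expected proportion below T = 40, the point below which the GDS becomes
# non-zero, under the normality assumption.
GDS_T_CUTOFF = 40
pal_below = pal.cdf(GDS_T_CUTOFF)
ravlt_below = ravlt.cdf(GDS_T_CUTOFF)

print(f"PAL:   16th percentile T = {pal_16th:.1f}, P(T < 40) = {pal_below:.3f}")
print(f"RAVLT: 16th percentile T = {ravlt_16th:.1f}, P(T < 40) = {ravlt_below:.3f}")
```

Under these assumptions, roughly 4% of the community controls would be expected to score below a T score of 40 on the PAL, compared with roughly 24% on the RAVLT, which illustrates why the same normative cutoff can weigh the two tests unequally in a deficit-based composite.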

Prior developmental work on this topic has been done exclusively with people with MTBIs or with healthy participants (19, 20). Although this study extends prior work by having MTBI participants, trauma controls, and community controls in the same study, there is a need to compare and contrast these composite scores in people who have sustained moderate or severe TBIs and who have varying degrees of cognitive impairments before determining which cognition endpoint is most useful for TBI research and clinical trials. An additional limitation of the present study relates to the representativeness of the norms used for composite score calculation. The sample was recruited from Norway and the tests were administered in Norwegian, but none of the normative samples were composed of people from Norway. This is the clinical and research reality in many countries; normative samples for many neuropsychological tests are from North America, mostly from the United States. The comparisons in the present study, however, were between groups, all of which were assessed with the same tests and norms, making the representativeness of the norms less confounding. Further, the mean OTBM for the community controls was 51.21 (i.e., close to the norm group mean of 50), indicating that the mean performances for the observed sample were similar to those of the normative samples. The subgroup comparisons in the present study are limited by the relatively small number of patients with complicated MTBI (n = 17) and patients without LOC (n = 26). Because we required LOC to be witnessed in order to be coded as present, many patients were excluded from the LOC vs. no LOC comparisons due to uncertain LOC status. Thus, the lack of differences between patients with complicated and uncomplicated MTBI, and between patients with and without LOC, should be interpreted with caution. Also, the duration of PTA was self-reported and there is some evidence that people tend to overestimate their PTA duration, especially patients with cognitive deficits (57). The finding of lower cognitive functioning in patients with longer PTA could have been influenced by reporting bias. Finally, no stand-alone performance validity tests were included in this study. The context in which this study took place may make this concern less critical, although it remains important. We recruited a representative sample of MTBI patients in the acute phase rather than patients seeking health care later, and the test results from this study were used solely for research purposes, were not available to anyone else, and could not lead to financial gain.

This study adds to a body of literature exploring methods to aggregate neuropsychological test scores in a manner that could be useful to produce summary outcome scores for use in observational research and clinical trials. It is an explicit goal of this program of research to develop a cognition endpoint score suitable for diverse batteries of tests, administered in different countries and in different languages, to people with TBIs of broad severity and at varying time points following injury (17). In the present study, cross-sectional comparisons between MTBI, trauma control, and community control groups 2 weeks following injury showed that the seven composites had similar effect sizes of group differences, along with significant redundancy between some of the composite scores as evidenced by high intercorrelations. If future studies on moderate to severe TBI confirm similar effect sizes between the composites, the OTBM composite may be preferred as a clinical trial endpoint because no data reduction (i.e., using cutoffs for defining a low score that are then changed into specific values) is needed when this composite is calculated. Also, the risk of unequal impact from different scores when several normative samples are used might be smaller for the OTBM score because this composite does not rely on cutoffs based on normative data. For studies seeking to enrich or stratify, however, a composite score that classifies people as having mild cognitive impairment might be preferred. The studies to date have examined the distributional characteristics of composites, similarities between them, and group differences based on mild injuries. These studies have had largely similar findings. Future studies examining additional psychometric aspects of the composite scores (e.g., test-retest reliability, reliable change, and minimal clinically important differences) are needed.

The datasets generated for this study are available on request to the corresponding author.

The studies involving human participants were reviewed and approved by the regional committee for research ethics (REK 2013/754). The patients/participants provided their written informed consent to participate in this study.

JS, JK, DT, NS, and GI designed the study. JS performed the statistical analyses. TS and AV secured funding for the larger parent study and directed that study. JS, SS, and TS collected the data. JS and GI drafted the manuscript. All authors critically reviewed the manuscript and read and approved the final version.

Foundations for this work were funded in part by the U.S. Department of Defense as part of the TBI Endpoints Development Initiative with a grant entitled Development and Validation of a Cognition Endpoint for Traumatic Brain Injury Clinical Trials (subaward from W81XWH-14-2-0176). JS received funding from the Liaison Committee between the Central Norway Regional Health Authority (RHA) and the Norwegian University of Science and Technology (NTNU) (project number 90157700). Unrestricted philanthropic support was provided by the Spaulding Research Institute. JK acknowledges support from the Spaulding Research Institute Leadership Catalyst Fellowship. NS received salary support from the Michael Smith Foundation for Health Research (Health Professional Investigator Award).

GI serves as a scientific advisor for BioDirection, Inc., Sway Operations, LLC, and Highmark, Inc. He has a clinical and consulting practice in forensic neuropsychology, including expert testimony, involving individuals who have sustained mild TBIs. He has received research funding from several test publishing companies, including ImPACT Applications, Inc., CNS Vital Signs, and Psychological Assessment Resources (PAR, Inc.). He received royalties from one neuropsychological test (WCST-64; PAR, Inc.). He has received research funding as a principal investigator from the National Football League, and salary support as a collaborator from the Harvard Integrated Program to Protect and Improve the Health of National Football League Players Association Members. He acknowledges unrestricted philanthropic support from ImPACT Applications, Inc., the Heinz Family Foundation, and the Mooney-Reed Charitable Foundation.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

1. Wheble JL, Menon DK. TBI-the most complex disease in the most complex organ: the CENTER-TBI trial-a commentary. J R Army Med Corps. (2016) 162:87–9. doi: 10.1136/jramc-2015-000472

2. Maas AI, Menon DK, Steyerberg EW, Citerio G, Lecky F, Manley GT, et al. Collaborative European NeuroTrauma Effectiveness Research in Traumatic Brain Injury (CENTER-TBI): a prospective longitudinal observational study. Neurosurgery. (2015) 76:67–80. doi: 10.1227/NEU.0000000000000575

3. Burton A. The CENTER-TBI core study: the making-of. Lancet Neurol. (2017) 16:958–9. doi: 10.1016/S1474-4422(17)30358-7

4. Steyerberg EW, Wiegers E, Sewalt C, Buki A, Citerio G, De Keyser V, et al. Case-mix, care pathways, and outcomes in patients with traumatic brain injury in CENTER-TBI: a European prospective, multicentre, longitudinal, cohort study. Lancet Neurol. (2019) 18:923–34. doi: 10.1016/S1474-4422(19)30232-7

5. Cambridge Cognition Ltd. CANTABeclipse Test Administration Guide. Bottisham, CA: Cambridge Cognition Ltd. (2012).

6. Pearce AJ, Rist B, Fraser CL, Cohen A, Maller JJ. Neurophysiological and cognitive impairment following repeated sports concussion injuries in retired professional rugby league players. Brain Inj. (2018) 32:498–505. doi: 10.1080/02699052.2018.1430376

7. Pearce AJ, Hoy K, Rogers MA, Corp DT, Davies CB, Maller JJ, et al. Acute motor, neurocognitive and neurophysiological change following concussion injury in Australian amateur football. A prospective multimodal investigation. J Sci Med Sport. (2015) 18:500–6. doi: 10.1016/j.jsams.2014.07.010

8. Pearce AJ, Hoy K, Rogers MA, Corp DT, Maller JJ, Drury HG, et al. The long-term effects of sports concussion on retired Australian football players: a study using transcranial magnetic stimulation. J Neurotrauma. (2014) 31:1139–45. doi: 10.1089/neu.2013.3219

9. Sterr A, Herron K, Hayward C, Montaldi D. Are mild head injuries as mild as we think? neurobehavioral concomitants of chronic post-concussion syndrome. BMC Neurol. (2006) 6:1–10. doi: 10.1186/1471-2377-6-7

10. Kim GH, Kang I, Jeong H, Park S, Hong H, Kim J, et al. Low prefrontal GABA levels are associated with poor cognitive functions in professional boxers. Front Hum Neurosci. (2019) 13:193. doi: 10.3389/fnhum.2019.00193

11. Lunter CM, Carroll EL, Housden C, Outtrim J, Forsyth F, Rivera A, et al. Neurocognitive testing in the emergency department: a potential assessment tool for mild traumatic brain injury. Emerg Med Australas. (2019) 31:355–61. doi: 10.1111/1742-6723.13163

12. Salmond CH, Chatfield DA, Menon DK, Pickard JD, Sahakian BJ. Cognitive sequelae of head injury: involvement of basal forebrain and associated structures. Brain. (2005) 128:189–200. doi: 10.1093/brain/awh352

13. Mayer AR, Hanlon FM, Dodd AB, Ling JM, Klimaj SD, Meier TB. A functional magnetic resonance imaging study of cognitive control and neurosensory deficits in mild traumatic brain injury. Hum Brain Mapp. (2015) 36:4394–406. doi: 10.1002/hbm.22930

14. Mayer AR, Ling J, Mannell MV, Gasparovic C, Phillips JP, Doezema D, et al. A prospective diffusion tensor imaging study in mild traumatic brain injury. Neurology. (2010) 74:643–50. doi: 10.1212/WNL.0b013e3181d0ccdd

15. Waljas M, Lange RT, Hakulinen U, Huhtala H, Dastidar P, Hartikainen K, et al. Biopsychosocial outcome after uncomplicated mild traumatic brain injury. J Neurotrauma. (2014) 31:108–24. doi: 10.1089/neu.2013.2941

16. Nelson LD, Furger RE, Gikas P, Lerner EB, Barr WB, Hammeke TA, et al. Prospective, head-to-head study of three computerized neurocognitive assessment tools part 2: utility for assessment of mild traumatic brain injury in emergency department patients. J Int Neuropsychol Soc. (2017) 23:293–303. doi: 10.1017/S1355617717000157

17. Silverberg ND, Crane PK, Dams-O'Connor K, Holdnack J, Ivins BJ, Lange RT, et al. Developing a cognition endpoint for traumatic brain injury clinical trials. J Neurotrauma. (2017) 34:363–71. doi: 10.1089/neu.2016.4443

18. Karr JE, Areshenkoff CN, Garcia-Barrera MA. The neuropsychological outcomes of concussion: a systematic review of meta-analyses on the cognitive sequelae of mild traumatic brain injury. Neuropsychology. (2014) 28:321–36. doi: 10.1037/neu0000037

19. Iverson GL, Ivins BJ, Karr JE, Crane PK, Lange RT, Cole WR, et al. Comparing composite scores for the ANAM4 TBI-MIL for research in mild traumatic brain injury. Arch Clin Neuropsychol. (2019) 35:56–69. doi: 10.1093/arclin/acz021

20. Iverson G, Karr J, Terry D, Garcia-Barrera M, Holdnack J, Ivins B, et al. Developing an executive functioning composite score for research and clinical trials. Arch Clin Neuropsychol. (2019) 35:312–25. doi: 10.1093/arclin/acz070

21. Skandsen T, Einarsen CE, Normann I, Bjoralt S, Karlsen RH, McDonagh D, et al. The epidemiology of mild traumatic brain injury: the trondheim MTBI follow-up study. Scand J Trauma Resusc Emerg Med. (2018) 26:34. doi: 10.1186/s13049-018-0495-0

22. Carroll LJ, Cassidy JD, Holm L, Kraus J, Coronado VG. Methodological issues and research recommendations for mild traumatic brain injury: the WHO collaborating centre task force on mild traumatic brain injury. J Rehabil Med. (2004) (43 Suppl):113–25. doi: 10.1080/16501960410023877

23. Einarsen CE, Moen KG, Haberg AK, Eikenes L, Kvistad KA, Xu J, et al. Patients with mild traumatic brain injury recruited from both hospital and primary care settings: a controlled longitudinal magnetic resonance imaging study. J Neurotrauma. (2019) 36:3172–82. doi: 10.1089/neu.2018.6360

24. Wechsler D. Wechsler Abbreviated Scale of Intelligence. San Antonio, TX: Psychological Corporation (1999).

25. Wechsler D. Wechsler Abbreviated Scale of Intelligence (Norwegian Version). San Antonio, TX: Pearson Assessment (2007).

26. Levi Y, Rassovsky Y, Agranov E, Sela-Kaufman M, Vakil E. Cognitive reserve components as expressed in traumatic brain injury. J Int Neuropsychol Soc. (2013) 19:664–71. doi: 10.1017/S1355617713000192

27. Donders J, Tulsky DS, Zhu J. Criterion validity of new WAIS-III subtest scores after traumatic brain injury. J Int Neuropsychol Soc. (2001) 7:892–8. doi: 10.1017/S1355617701777132

28. Strauss E, Sherman EMS, Spreen O. A Compendium of Neuropsychological Tests: Administration, Norms, and Commentary. New York, NY: Oxford University Press (2006).

29. Mitrushina M, Boone KB, Razani J, D'Elia LF. Handbook of Normative Data for Neuropsychological Assessment. New York, NY: Oxford University Press (2005).

30. Schmidt M. Rey Auditory-Verbal Learning Test. Los Angeles, CA: Western Psychological Services (1996).

31. Boone KB, Lu P, Wen J. Comparison of various RAVLT scores in the detection of noncredible memory performance. Arch Clin Neuropsychol. (2005) 20:301–19. doi: 10.1016/j.acn.2004.08.001

32. Miller LS, Rohling ML. A statistical interpretive method for neuropsychological test data. Neuropsychol Rev. (2001) 11:143–69. doi: 10.1023/a:1016602708066

33. Rohling ML, Meyers JE, Millis SR. Neuropsychological impairment following traumatic brain injury: a dose-response analysis. Clin Neuropsychol. (2003) 17:289–302. doi: 10.1076/clin.17.3.289.18086

34. Kendler KS, Gruenberg AM, Kinney DK. Independent diagnoses of adoptees and relatives as defined by DSM-III in the provincial and national samples of the Danish adoption study of Schizophrenia. Arch Gen Psychiatry. (1994) 51:456–68. doi: 10.1001/archpsyc.1994.03950060020002

35. Carey CL, Woods SP, Gonzalez R, Conover E, Marcotte TD, Grant I, et al. Predictive validity of global deficit scores in detecting neuropsychological impairment in HIV infection. J Clin Exp Neuropsychol. (2004) 26:307–19. doi: 10.1080/13803390490510031

36. Cliff N. Dominance statistics: ordinal analyses to answer ordinal questions. Psychol Bull. (1993) 114:494. doi: 10.1037/0033-2909.114.3.494

37. Vargha A, Delaney HD. A critique and improvement of the CL common language effect size statistics of McGraw and Wong. J Educ Behav Stat. (2000) 25:101–32. doi: 10.3102/10769986025002101

38. Cohen J. Statistical Power Analysis for the Behavioral Sciences. 2nd ed. Hillsdale, NJ: Lawrence Erlbaum Associates (1988).

39. Iverson GL, Franzen MD, Lovell MR. Normative comparisons for the controlled oral word association test following acute traumatic brain injury. Clin Neuropsychol. (1999) 13:437–41. doi: 10.1076/1385-4046(199911)13:04;1-Y;FT437

40. Hofman PA, Stapert SZ, van Kroonenburgh MJ, Jolles J, de Kruijk J, Wilmink JT. MR imaging, single-photon emission CT, and neurocognitive performance after mild traumatic brain injury. AJNR Am J Neuroradiol. (2001) 22:441–9.

41. Hughes DG, Jackson A, Mason DL, Berry E, Hollis S, Yates DW. Abnormalities on magnetic resonance imaging seen acutely following mild traumatic brain injury: correlation with neuropsychological tests and delayed recovery. Neuroradiology. (2004) 46:550–8. doi: 10.1007/s00234-004-1227-x

42. Lange RT, Iverson GL, Zakrzewski MJ, Ethel-King PE, Franzen MD. Interpreting the trail making test following traumatic brain injury: comparison of traditional time scores and derived indices. J Clin Exp Neuropsychol. (2005) 27:897–906. doi: 10.1080/1380339049091290

43. Iverson GL. Complicated vs uncomplicated mild traumatic brain injury: acute neuropsychological outcome. Brain Inj. (2006) 20:1335–44. doi: 10.1080/02699050601082156

44. Lee H, Wintermark M, Gean AD, Ghajar J, Manley GT, Mukherjee P. Focal lesions in acute mild traumatic brain injury and neurocognitive outcome: CT versus 3T MRI. J Neurotrauma. (2008) 25:1049–56. doi: 10.1089/neu.2008.0566

45. Levin HS, Hanten G, Roberson G, Li X, Ewing-Cobbs L, Dennis M, et al. Prediction of cognitive sequelae based on abnormal computed tomography findings in children following mild traumatic brain injury. J Neurosurg Pediatr. (2008) 1:461–70. doi: 10.3171/PED/2008/1/6/461

46. Lange RT, Iverson GL, Franzen MD. Neuropsychological functioning following complicated vs. uncomplicated mild traumatic brain injury. Brain Inj. (2009) 23:83–91. doi: 10.1080/02699050802635281

47. de Guise E, Lepage JF, Tinawi S, LeBlanc J, Dagher J, Lamoureux J, et al. Comprehensive clinical picture of patients with complicated vs uncomplicated mild traumatic brain injury. Clin Neuropsychol. (2010) 24:1113–30. doi: 10.1080/13854046.2010.506199

48. Iverson GL, Lange RT, Waljas M, Liimatainen S, Dastidar P, Hartikainen KM, et al. Outcome from complicated versus uncomplicated mild traumatic brain injury. Rehabil Res Pract. (2012) 2012:415740. doi: 10.1155/2012/415740

49. Stulemeijer M, Vos PE, van der Werf S, van Dijk G, Rijpkema M, Fernandez G. How mild traumatic brain injury may affect declarative memory performance in the post-acute stage. J Neurotrauma. (2010) 27:1585–95. doi: 10.1089/neu.2010.1298

50. Shores EA, Lammel A, Hullick C, Sheedy J, Flynn M, Levick W, et al. The diagnostic accuracy of the revised westmead PTA scale as an adjunct to the glasgow coma scale in the early identification of cognitive impairment in patients with mild traumatic brain injury. J Neurol Neurosurg Psychiatry. (2008) 79:1100–6. doi: 10.1136/jnnp.2007.132571

51. Steward KA, Kennedy R, Novack TA, Crowe M, Marson DC, Triebel KL. The role of cognitive reserve in recovery from traumatic brain injury. J Head Trauma Rehabil. (2018) 33:E18–E27. doi: 10.1097/HTR.0000000000000325

52. Rabinowitz AR, Arnett PA. Intraindividual cognitive variability before and after sports-related concussion. Neuropsychology. (2013) 27:481–90. doi: 10.1037/a0033023

53. Leary JB, Kim GY, Bradley CL, Hussain UZ, Sacco M, Bernad M, et al. The association of cognitive reserve in chronic-phase functional and neuropsychological outcomes following traumatic brain injury. J Head Trauma Rehabil. (2018) 33:E28–E35. doi: 10.1097/HTR.0000000000000329

54. Allen BJ, Gfeller JD. The Immediate Post-Concussion Assessment and Cognitive Testing battery and traditional neuropsychological measures: a construct and concurrent validity study. Brain Inj. (2011) 25:179–91. doi: 10.3109/02699052.2010.541897

55. Jaeggi SM, Buschkuehl M, Perrig WJ, Meier B. The concurrent validity of the N-back task as a working memory measure. Memory. (2010) 18:394–412. doi: 10.1080/09658211003702171

56. Hicks R, Giacino J, Harrison-Felix C, Manley G, Valadka A, Wilde EA. Progress in developing common data elements for traumatic brain injury research: version two – the end of the beginning. J Neurotrauma. (2013) 30:1852–61. doi: 10.1089/neu.2013.2938

Keywords: brain concussion, brain injury, cognition, neuropsychology, psychometrics

Citation: Stenberg J, Karr JE, Terry DP, Saksvik SB, Vik A, Skandsen T, Silverberg ND and Iverson GL (2020) Developing Cognition Endpoints for the CENTER-TBI Neuropsychological Test Battery. Front. Neurol. 11:670. doi: 10.3389/fneur.2020.00670

Received: 09 March 2020; Accepted: 05 June 2020;

Published: 17 July 2020.

Edited by:

Firas H. Kobeissy, University of Florida, United States

Reviewed by:

Leia Cherie Vos, Milwaukee VA Medical Center, United States

Copyright © 2020 Stenberg, Karr, Terry, Saksvik, Vik, Skandsen, Silverberg and Iverson. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jonas Stenberg, jonas.stenberg@ntnu.no

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
