- 1MGH Institute of Health Professions, Boston, MA, United States

- 2Speech-Language Pathology Department, Spaulding Rehabilitation Hospital, Boston, MA, United States

- 3Speech-Language and Swallow Department, Brigham and Women's Hospital, Boston, MA, United States

The research to practice gap is a significant problem across all disciplines of healthcare. A major challenge associated with the adoption of evidence into routine clinical care is the disconnect between findings that are identified in a controlled research setting, and the needs and challenges of a real-world clinical practice setting. Implementation Science, which is the study of methods to promote research into clinical practice, provides frameworks to promote the translation of findings into practice. To begin to bridge the research-practice gap in assessing recovery in individuals with aphasia in the acute phases of recovery following stroke, clinicians in an acute care hospital and an inpatient rehabilitation hospital followed an implementation science framework to select and implement a standardized language assessment to evaluate early changes in language performance across multiple timepoints. Using a secure online database to track patient data and language metrics, clinically-accessible information was examined to identify predictors of recovery in the acute phases of stroke. We report on the feasibility of implementing such standardized assessments into routine clinical care via measures of adherence. We also report on initial analyses of the data within the database that provide insights into the opportunities to track change. This initiative highlights the feasibility of collecting clinical data using a standardized assessment measure across acute and inpatient rehabilitation care settings. Practice-based evidence may inform future research by contributing pilot data and systematic observations that may lead to the development of empirical studies, which can then feed back into clinical practice.

Introduction

Two million people in the United States are living with aphasia—an impairment in language comprehension and production. Speech-language pathologists play a central role in the assessment and diagnosis of individuals with language deficits following stroke, and current clinical practice for the assessment of language skills following stroke is variable across and within clinical practice settings (1). Lack of consistency places limitations on the understanding of early stroke recovery, and limits care continuity between settings and clinicians. Further, there is a disconnect between what occurs within clinical practice and advancements being made in research to inform recovery predictions.

The research to practice gap, defined as the discrepancy between evidence-based interventions and what takes place in practice, has been well-documented (2–6). Studies have suggested that it takes 17 years for 14% of healthcare research to be adopted into routine clinical practice (7). This slow translation of research to the clinic limits the ability of healthcare systems and clinicians to optimize care for patients. The limited uptake of research has been attributed to a variety of factors, including the level of relevance of research findings to practice, organizational constraints that impact the adoption of findings into practice, and the degree of benefit to the target population to sustain the practice (4, 6, 8).

Evidence-based medicine calls for the integration of the best available evidence from systematic research in the care and clinical decision-making process for individual patients (9). Evidence-based practice (EBP) involves the integration of (1) external scientific evidence, (2) clinical expertise, and (3) client, patient, and caregiver values and perspectives (9–11). A major challenge associated with the adoption of EBP, however, is the disconnect between the findings identified in a controlled lab setting and those that are ultimately implemented in a real-world clinical practice setting (3, 12–16). The scientific pipeline has generally prioritized scientific control for internal validity; while categorically important, the focus on internal validity may come at the expense of external validity, or generalizability across setting and time (3). By bringing research closer to the actual practice setting and creating practice-based evidence, results may be more relevant, tailored, and actionable to patients and clinicians (3, 17–19).

Important for any attempts to bring research close to the practice setting is Implementation Science. Implementation Science is the study of methods that promote systematic uptake of research into routine clinical practice (8, 20–22), offering frameworks and structure to help guide successful implementation [e.g., (22–24)]. Additionally, practice-based evidence, the concept that clinicians can structure practice and measure outcomes in the real-world care setting, offers an opportunity to inform research needs and speed the research to practice transfer (3, 17–20, 25).

Prior work has demonstrated that, with the guidance of implementation science frameworks, a standardized process for the evaluation of language was feasible in acute care and improved diagnosis and reporting of aphasia [see (26)]. The current manuscript describes a follow-up study that reports on the long-term adherence to the implemented measure, and on the extension to an inpatient rehabilitation facility. Clinicians in acute care and inpatient rehabilitation hospitals, both within the same healthcare network, have been working together with the long-term goal of populating a database with consistent measures of language performance across the early stages of aphasia diagnosis to begin to inform early language recovery patterns. Stroke-related factors, including lesion size and lesion location, have been identified as key in predicting language outcomes, and initial aphasia severity has been acknowledged as the most robust factor in predicting language recovery (27–33). The extent to which clinicians make recovery predictions and share them with their patients, however, is limited. One of the reasons research knowledge about recovery has not translated to the clinic is that current predictive information is either not fine-grained enough to capture clinically-observable skills at the individual level, or requires high-level analyses that are more consistent with the research setting. Standardizing clinical practice to gather data may help shed light on the types of data that are feasible to capture clinically and could be informative to outcomes.

Thus, to begin to bridge the research-practice gap in predicting language abilities in individuals with aphasia in the acute phases of recovery following stroke, this manuscript reports on (1) the feasibility of adhering to a standardized language assessment protocol in acute care over a 2-year period, (2) the iterative implementation process utilized in an inpatient rehabilitation care facility following an implementation science framework, and (3) a pilot evaluation of data collected through standardized assessments to begin to evaluate predictive models of language recovery after stroke.

Part I—The Feasibility of Adhering to a Standardized Language Assessment Protocol in Acute Care

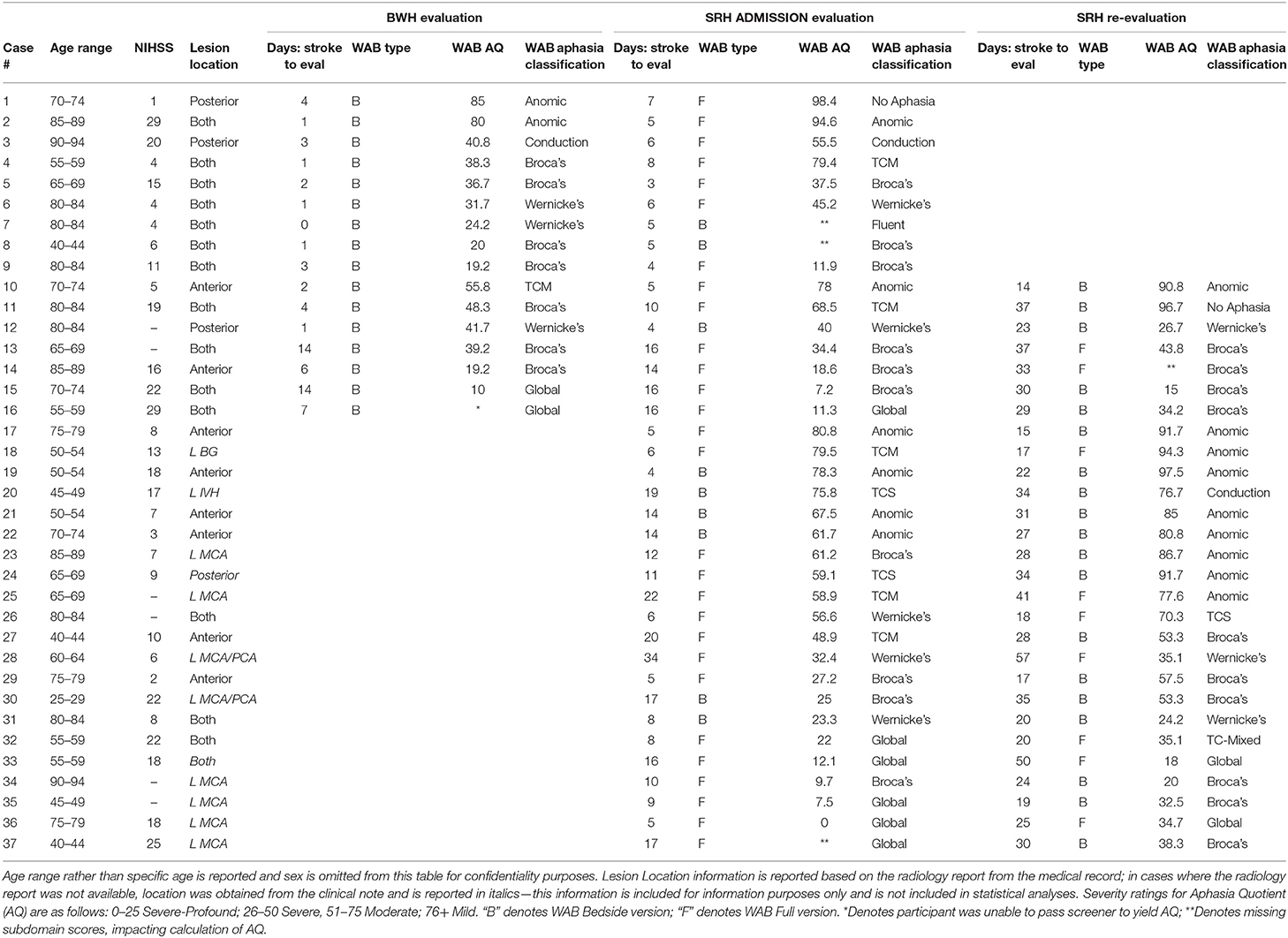

Between October of 2016 and June of 2017, an iterative process of implementation was carried out at Brigham and Women's Hospital (BWH) to standardize the process of language evaluation. BWH is a 777-bed acute-care teaching hospital of Harvard Medical School within the Partners HealthCare Network. The hospital transitioned from paper medical records to electronic medical records in 2015, which created an opportunity for clinicians to assess clinical practices and consider how to most effectively integrate clinical expertise within the new documentation structure. The goal of this implementation project was to identify a clinical process to improve the evaluation and diagnosis of aphasia within the constraints of the acute care setting and to maximize efficiency and clarity of information within the electronic medical record. In brief review [see (26) for full report], a team of researchers and clinicians formed an implementation team and carried out the implementation process using the fourteen-step, four-phase Quality Implementation Framework (QIF) proposed by Meyers et al. [(22), see Figure 1].

Figure 1. Fourteen critical steps of quality implementation according to the Quality Implementation Framework (QIF) established by Meyers et al. (22).

In QIF Phase 1 (considerations of the host setting), readiness for change was facilitated by the transition to the electronic record. During QIF Phase 2 (creating a structure for implementation), a literature review was performed by the implementation team to select an assessment that was feasible to administer in acute care and that addressed the implementation goal of improved diagnosis [the Western Aphasia Battery-Bedside Version (WAB-Bedside)], and software was selected to support data collection and entry into the medical record [REDCap, a secure online database supported by Partners HealthCare (34)]. In QIF Phase 3 (maintenance of the structure once implementation begins), a screening tool was developed to assess patient ability to participate in assessment, given that certain patients seen in acute care were not sufficiently alert and oriented to attempt purposeful responses [see (26) for additional details regarding the screener]; training sessions were held to educate staff about the measure; and surveys were collected to gather data about evolving practice patterns and needs. QIF Phase 4 (improving future applications) involved evaluation of the implementation to improve future practice. Evaluation of the implementation, carried out through medical record review of 50 records (25 pre-implementation and 25 post-implementation), demonstrated improved consistency of reporting on the language domains of repetition ability, naming ability, yes-no question response accuracy, and awareness of errors, as well as a significant increase in the reporting of specific aphasia diagnosis (26). In addition to quantifiable improvements, the team felt that administering a standardized measure helped improve handoff communication and streamlined practice. Therefore, in July of 2017, training sessions were held to expand the standardized measure to the entire BWH clinical team. In this follow-up study, we aimed to determine adherence to the standardized protocol over the two-year period since expansion to the clinical team.

Methods

Based on processes established via the implementation referenced above, since 2017, when a consult was placed requesting a language evaluation at BWH, patients were screened to determine if they were sufficiently alert to complete the standardized assessment. Patients who passed the screen were given the spoken and auditory comprehension portions of the WAB-Bedside (35), and data were entered directly into an online database supported by REDCap. The onboarding of new staff involved training, provided by supervisors and senior staff, on the administration of the standardized assessment and on the data entry process in REDCap. Once new clinicians were ready to administer the measure in their clinical practice, they were observed by a senior clinician who provided feedback on administration. Clinicians were accompanied by a senior clinical team member until they were judged to adhere to the standardized protocol. Standardized evaluation procedures were reinforced quarterly through staff meetings.

Retrospective medical record review was conducted to evaluate adherence to the standardized evaluation process in acute care. This retrospective medical record review was approved by the Institutional Review Board of Partners HealthCare. Partners HealthCare has a Research Patient Data Registry (RPDR), which allows data to be queried based on International Classification of Diseases (ICD-10) diagnosis codes. Using the RPDR, we identified patients older than 18 who were admitted to BWH from July 2017 to August 2019 with diagnosis codes that contained the search terms: speech and language deficits (following cerebrovascular disease, cerebral infarction, hemorrhage, etc.), aphasia, cognitive deficits, cognitive impairment, cognitive functions, and brain neoplasm (see Data Sheet 1 for a full list of query items). Billing data from these queries were searched for Current Procedural Terminology (CPT) billing codes 92523 (Evaluation of speech sound production; with evaluation of language comprehension and expression) and 96105 (Assessment of aphasia and cognitive performance testing), the two billing codes used at BWH for language evaluations. In this manner, medical record numbers for patients admitted to BWH who received language evaluations were identified. Duplicate entries were removed, and billing data were compared to language evaluation data in the REDCap database to determine the percentage of language evaluations that were performed using the standardized process over the 2-year period.

Results

The RPDR data pull resulted in 371 entries corresponding to patients who were billed for receiving a language evaluation in the period from June 2017 until August 2019. These patients represented primary diagnoses that included cerebral infarction, non-traumatic hemorrhage, and malignant neoplasm. An examination of adherence demonstrates that of the 371 entries, 260 individuals (70.1%) received the standardized assessment protocol.
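The adherence figure above is a simple proportion: billed language evaluations that also appear in the standardized-assessment database, divided by all billed evaluations after duplicates are removed. The following is a minimal sketch of that comparison, not the authors' actual procedure; the function name `adherence_rate` and the record identifiers are hypothetical, and only the counts 260 and 371 come from the text.

```python
def adherence_rate(billed_ids, standardized_ids):
    """Share of billed evaluations that also appear in the standardized database.

    Both arguments are iterables of record identifiers (hypothetical here).
    """
    billed = set(billed_ids)  # set() drops duplicate billing entries
    if not billed:
        raise ValueError("no billed evaluations to compare against")
    matched = billed & set(standardized_ids)  # records present in both sources
    return len(matched) / len(billed)


# Toy identifiers for illustration only; these are not patient data.
billed = ["A01", "A02", "A02", "A03", "A04"]
database = ["A01", "A03", "A04", "B99"]
toy_rate = adherence_rate(billed, database)  # 3 of 4 unique billed records

# Counts reported in the text: 260 of 371 entries.
reported_rate = 260 / 371
print(f"{reported_rate:.1%}")  # prints 70.1%
```

Matching on a shared identifier after de-duplication mirrors the description in the Methods; in practice the matching key and any date-window logic would depend on how the two data sources are structured.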

Part II—The Iterative Implementation Process Utilized in an Inpatient Rehabilitation Care Facility Following an Implementation Science Framework

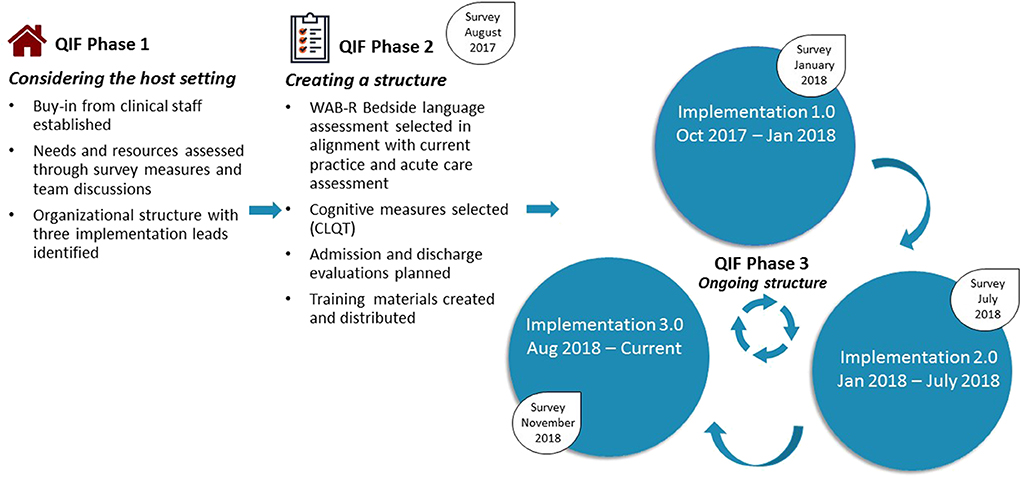

In early 2016, clinicians at Spaulding Rehabilitation Hospital (SRH), an acute rehabilitation hospital within the Partners HealthCare Network, recognized the need for standardization and began trialing a standardized language assessment tool with all patients admitted to the Stroke Rehabilitation Program, where all admitted patients carry a diagnosis of stroke. The standardization process followed an informal procedure until 2017, when a collaboration was formed with Brigham and Women's Hospital.

Methods

In June of 2017, based on prior work, teams worked together to initiate an iterative implementation based on the four phases of the Quality Implementation Framework (QIF) proposed by Meyers et al. (22), with the goal of aligning procedures and resources across the two facilities. Three phases of the QIF have been implemented, with key considerations and/or changes identified in Figure 2. Survey measures were administered to clinical staff at each phase of implementation to gather feedback and evaluate for potential improvements.

Figure 2. Summary of QIF Process at Spaulding Rehabilitation Hospital. Implementation 1.0 included the WAB Bedside, as well as four subtests from the CLQT (Clock Drawing, Symbol Cancellation, Design Memory, Design Generation). Assessment of reading and writing skills was formalized to improve consistency of administration. The assessment measure was administered to all patients with CVA admitted to the Stroke Program. Implementation 2.0 included the addition of a screening tool, administration of the full WAB (rather than WAB Bedside) upon admission for individuals with L MCA stroke, and re-evaluation via the WAB Bedside at 10–14 days post admission. Implementation 3.0 included training and expansion for assessment administration by all clinicians, including full-time employees and per diem weekend staff, as well as expansion to CVA admissions hospital-wide (rather than just those admitted to the Stroke Program).

QIF Phase 1: Initial Considerations Regarding the Host Setting

Initial considerations of the host setting demonstrated that buy-in from stakeholders was already established, as the SRH clinical team had recognized the need for standardization 1 year prior. SRH was using the WAB-bedside assessment, which aligned with the measure implemented at BWH, making alignment of measures readily feasible. An organizational structure was implemented, with three members of the clinical team identified as implementation leads. A survey was distributed to clinicians to identify aspects of current practice that were effective and those that might be improved upon (see Data Sheet 2). Implementation leads held meetings with BWH researcher-clinicians to gather insights about the process at BWH and establish a plan for SRH.

QIF Phase 2: Creating a Structure for Implementation

In addition to the WAB-bedside language evaluation, data on cognitive measures were important for SRH clinicians to gather; therefore, four subtests of the Cognitive Linguistic Quick Test [CLQT (36)] were added to the standardized process. In addition, it was determined that re-evaluation prior to discharge would be meaningful to evaluate change, and re-administration of the WAB-bedside and CLQT subtests was targeted to occur within 48 h of planned discharge. While WAB-bedside data at BWH were entered directly into a REDCap database, the CLQT is not as seamlessly administered on a computer, and SRH clinicians felt paper administration was more conducive to the rehabilitation environment; therefore, a decision was made to use traditional paper and pencil formats for both assessments. It was decided that the standardized implementation would first be carried out only by full-time clinicians within the Stroke Program of SRH. Staff training occurred through meetings and printed materials distributed throughout the Stroke Program. This plan, referred to as Implementation 1.0, was put into place in October of 2017 and maintained until January 2018.

QIF Phase 3: Ongoing Structure Once Implementation Begins

At the end of Implementation 1.0, a survey was distributed to full-time clinical staff. Survey responses and observations from implementation leads revealed that clinicians felt that the WAB-bedside was not sufficient, in many cases, to evaluate language abilities for individuals having experienced left middle cerebral artery (MCA) or anterior cerebral artery (ACA) cerebrovascular accidents, who most consistently present with aphasia. A more comprehensive evaluation was requested. In addition, re-administering measures within 48 h of discharge was difficult in the setting of shifting discharge plans and caseloads. In response to these observations, the standardized process was modified to (1) include different language assessments for patients experiencing left hemisphere strokes (Full WAB-R) vs. those affecting the right hemisphere and/or cerebellum (WAB-Bedside) (see Supplementary Figure 1), and (2) schedule re-testing to take place 10–14 days after initial assessment so that calendar alerts could be programmed and re-testing scheduled. This structure (Implementation 2.0) was carried out until July 2018, when another survey was administered. Survey data and implementation-guided observation led to Implementation 3.0, characterized by the creation of templates to guide write-ups of evaluations and expansion of the measure to include per diem staff for improved consistency. Educational materials about the standardized process were distributed to all staff, and full-time clinicians were identified as point-people for per diem clinicians. To establish a process for evaluating data, research staff also joined the project, and, on a weekly basis, a research assistant at the MGH Institute of Health Professions pulled standardized language evaluation data from the Spaulding electronic medical record into the REDCap database. Implementation 3.0 was carried out in the Stroke Program from August 2018 until December 2018. This period is referred to as Implementation 3.0-Stroke Program. In January 2019, educational meetings were held and the standardized process was expanded to include clinicians on other services within SRH who were also involved in language evaluations. The period from January 2019 to August 2019 is referred to as Implementation 3.0-Hospital.

Evaluation of Adherence

Adherence to the implementation measure was evaluated over Implementation 3.0-Stroke Program and Implementation 3.0-Hospital. To do so, Spaulding Rehabilitation Hospital admission data were retrieved for all patients admitted with diagnosis classifications of stroke rehabilitation, physical medicine and rehabilitation (PMR) stroke, acute neurology stroke, PMR neurology, neurology and brain injury. For the period from August 2018 to December 2018 (Implementation 3.0-Stroke Program), data were filtered to only consider patients admitted to the Stroke Program. From January 2019 to August 2019 (Implementation 3.0-Hospital), all stroke admissions data were included. Admissions data were then compared with REDCap data to determine whether patients received the standardized protocol or another assessment procedure.

Results

From August 2018 to December 2018, there were a total of 169 admissions to the Stroke Program comprising 79 with L MCA/ACA strokes and 90 with other stroke locations. Eighty-three percent of these patients were evaluated using a standardized assessment for language. Examining specific adherence to the administration of the Full WAB for L MCA/ACA CVA patients, however, demonstrated 33% adherence, with the remaining 50% receiving the WAB bedside. See Table 1 for additional adherence rates.

For Implementation 3.0 Hospital (January 2019–August 2019) there were a total of 402 stroke admissions hospital-wide with 170 and 232 admissions for L MCA/ACA strokes and other stroke locations, respectively. Sixty-four percent of these patients were evaluated using a standardized assessment for language. Examining specific adherence to the administration of the Full WAB for L MCA/ACA CVA patients, however, demonstrated 27% adherence, with the remaining 37% receiving the WAB bedside.

Part III—Pilot Evaluation of Data Collected Through Standardized Assessments to Begin to Evaluate Predictive Models of Language Recovery After Stroke

One of the long-term goals of the collaborative standardization of evaluations is to contribute to a language database that can be used to inform recovery predictions of language in the acute phase of recovery. In order to begin to evaluate data, we conducted pilot analyses over cases with at least two time points of evaluation.

Methods

Participants

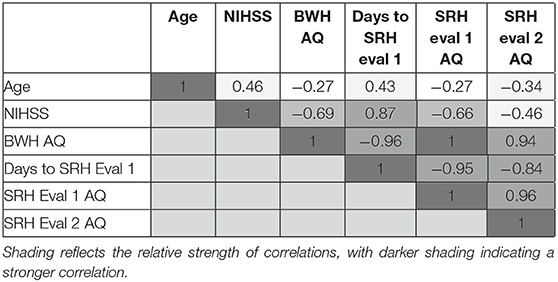

Records from standardized language evaluations completed at BWH and SRH per clinical protocol were retrieved for patients who were evaluated at a minimum of two timepoints between June 2017 and July 2019. To be included in pilot analyses, patients had to be native English speakers, 18 years of age or older, and have sustained a left MCA stroke that could have extended into anterior cerebral artery (ACA) and posterior cerebral artery (PCA) territory within the same hemisphere. Patients with prior history of stroke or comorbidities including developmental delay or other significant neurologic history (e.g., neurodegenerative disorder) were excluded. See Table 2 for demographic and stroke-related information, including WAB Aphasia Quotient (AQ) and Aphasia Classification information across timepoints.

Of the 796 database entries, 37 patients met inclusion criteria and were evaluated at two or more timepoints. Of these 37 patients, 9 were evaluated at BWH admission and then again at SRH admission, while another 7 were evaluated at all three timepoints: BWH admission, SRH admission, and SRH re-evaluation. The remaining 21 patients received evaluation at the two SRH timepoints, SRH admission and SRH re-evaluation. In addition to language evaluation data, patient age, sex, NIH Stroke Scale (NIHSS) score, receipt of tissue plasminogen activator (tPA), date of stroke, and date of hospital admission were retrieved from the database.

Radiology Scan Information

Radiology reports and clinical scans (MRI) were retrieved from the Partners HealthCare Research Patient Data Registry (RPDR) for all patients whose acute care hospitalization was within the Partners HealthCare Network. Clinical scans were retrieved with the intent of completing lesion masking (outlining the lesion) and calculating lesion volume and location based on regions of interest. The fact that these were clinical scans, however, presented several challenges for lesion masking and normalization. Motion artifacts were present in many samples, and structural scans varied in their alignment, slice resolution, and whole-brain coverage, with many of the higher resolution scans only including partial brains. It was determined that reliable lesion volumes would not be obtainable from these non-standardized scans; therefore, based on the lesion information outlined in radiology reports, as well as clinical scan data, lesions were classified as anterior lesions, posterior lesions, or both anterior/posterior lesions. Classifications were reviewed by two study staff. For patients admitted to SRH from a hospital outside the Partners HealthCare Network for whom radiology reports were not available, lesion data were retrieved from clinical notes within the medical record for informational purposes only, and these lesion data were not included in statistical analyses, with the exception of two patients for whom complete radiology report information was available.

Data Analysis

Statistical analyses were performed using R Software for Statistical Computing (37). The first set of analyses examined the dependent variable, SRH Admission AQ. Data on this dependent measure were available from 34 patients, as three of the patients in our sample were missing a WAB subdomain score, which prevented calculation of an AQ. Regression analyses were run in a forward selection manner to evaluate the relationship between independent and dependent variables, and the strength of potential models, entering up to three variables due to our sample size. Variables were entered into the model based on their hypothesized predictive value as reported in the literature and on correlation strength with the dependent variable. The first regression evaluated aphasia severity (AQ) accounting for days post-onset of evaluation. Then, additional models were evaluated in a step-up manner, adding lesion location, coded as anterior/posterior only or both, and NIHSS. We then ran a second set of analyses using a different outcome variable: aphasia severity (AQ) at SRH re-evaluation.
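To make the step-up procedure concrete, the sketch below fits nested ordinary least squares models (days only, then adding lesion coding, then adding NIHSS) and reports R-squared and its change at each step. It is an illustration in plain Python rather than the R code used in the study; the helper names `solve` and `r_squared` are hypothetical, the 0/1 lesion coding is an assumption, and the input values are made-up toy numbers, not study data.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def r_squared(predictors, y):
    """R^2 of an OLS fit of y on the given predictor columns plus an intercept."""
    n = len(y)
    rows = [[1.0] + [col[i] for col in predictors] for i in range(n)]
    k = len(rows[0])
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(r[a] * r[b] for r in rows) for b in range(k)] for a in range(k)]
    xty = [sum(rows[i][a] * y[i] for i in range(n)) for a in range(k)]
    beta = solve(xtx, xty)
    fitted = [sum(bj * xj for bj, xj in zip(beta, r)) for r in rows]
    mean_y = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Made-up toy values for illustration only; NOT study data.
days = [5, 7, 6, 9, 8, 10, 4, 12]        # days post-onset at evaluation
lesion = [0, 1, 0, 1, 1, 0, 0, 1]        # assumed coding: 0 = anterior or posterior only, 1 = both
nihss = [4, 12, 6, 15, 9, 7, 3, 18]
aq = [85.0, 40.0, 78.0, 30.0, 55.0, 70.0, 90.0, 22.0]

steps = [("days", [days]),
         ("+ lesion", [days, lesion]),
         ("+ NIHSS", [days, lesion, nihss])]
r2_steps = [r_squared(cols, aq) for _, cols in steps]

previous = 0.0
for (label, _), r2 in zip(steps, r2_steps):
    print(f"{label:10s} R^2 = {r2:.3f}  change = {r2 - previous:+.3f}")
    previous = r2
```

Because the models are nested, R-squared can only stay the same or increase as predictors are added, which is why the significance of each model still has to be assessed separately.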

In addition to pilot regression analyses, we were interested in examining the proportion of maximal recovery made by each patient. Given that patients varied in their initial severity, a proportional maximal recovery was computed for each patient to account for the differences in potential change. This was calculated as the observed change (the difference between scores) divided by the maximum potential change: (T2 severity - T1 severity)/(severity score maximum - T1 severity) (28, 38). An important limitation to address here is a lack of consistency over whether the WAB-Bedside or Full WAB was entered into this comparison. Both tests yield an Aphasia Quotient and, according to the WAB testing manual, interpretation of the WAB-Bedside sections and tasks is consistent with the full test (35), suggesting that a comparison is possible but should be interpreted with caution.
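The proportional recovery formula above can be written as a short function. This is a minimal sketch under the assumption that the severity score is the WAB AQ with a maximum of 100; the function name `proportional_recovery` and the example scores are hypothetical and are not drawn from the study data.

```python
AQ_MAX = 100.0  # assumed ceiling of the WAB Aphasia Quotient


def proportional_recovery(t1, t2, score_max=AQ_MAX):
    """(T2 - T1) / (score_max - T1): observed change over maximum potential change."""
    if not (0 <= t1 <= score_max and 0 <= t2 <= score_max):
        raise ValueError("scores must lie between 0 and score_max")
    if t1 == score_max:
        raise ValueError("no room for change when T1 is already at the maximum")
    return (t2 - t1) / (score_max - t1)


# Hypothetical scores: different raw gains can yield the same proportion.
severe = proportional_recovery(40, 70)  # gained 30 of a possible 60 points
mild = proportional_recovery(80, 90)    # gained 10 of a possible 20 points
print(severe, mild)                     # prints 0.5 0.5
```

Scaling by the remaining room for improvement is what allows patients with different initial severity to be compared on the same footing.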

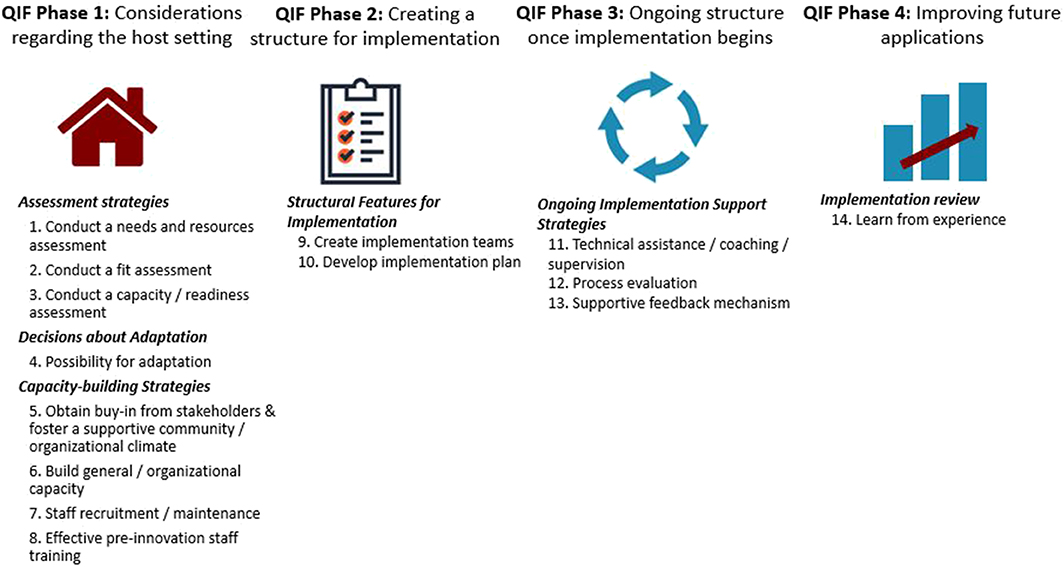

Results

Correlation across continuous variables of interest was assessed (Table 3). A very strong negative correlation was observed between time (number of days from stroke until rehabilitation admission evaluation) and aphasia severity (AQ) at all three timepoints. A strong correlation was observed between time and NIHSS, and a minimal to moderate correlation was observed between time and age. NIHSS was moderately to strongly negatively correlated with initial BWH acute care severity; however, the correlation was weaker by the time of SRH re-evaluation. Predictor variables were not highly correlated with each other.

Predictors of SRH Admission AQ and SRH Re-Evaluation AQ

Regression analysis with days post-onset of evaluation as the predictor and SRH Admission AQ as the outcome variable accounted for only 3% of the variance, and was not statistically significant (p = 0.321). Consistent with prior studies, when lesion was included in the model as a predictor, the model was statistically significant, accounting for 26.0% of the variance in SRH Admission AQ [F(2, 22) = 3.871, p = 0.03]. NIHSS, which was the next most highly correlated variable, was added to the model and contributed an R-squared change of 5.4%. Though this model accounted for a larger percentage of the variance, the model was not significant [F(3, 18) = 2.75, p = 0.07].

Regression analysis with days post-onset of evaluation as the predictor, and SRH Re-Evaluation AQ as the outcome variable, was not statistically significant (p = 0.285) and only accounted for 4.4% of the variance in the model. Including lesion in the model as a predictor explained an additional 14.3% of the variance in SRH Re-Evaluation AQ, but was again not statistically significant [F(2, 15) = 1.728, p = 0.21]. Similarly, the addition of NIHSS explained an additional 9.6% of the variance, but the model was not statistically significant.
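For readers who want to relate the reported variance explained to the reported F statistics, the overall F for a regression model can be recovered from R-squared and the degrees of freedom as F = (R²/df_model) / ((1 - R²)/df_residual). The sketch below applies this standard identity to the values reported above; it assumes the stated percentages are model R-squared values (cumulative where an "additional" percentage is given) and is offered as a consistency check, not as a reproduction of the authors' analysis. The function name `f_from_r2` is hypothetical.

```python
def f_from_r2(r2, df_model, df_resid):
    """Overall F statistic of a regression model from its R^2 and degrees of freedom."""
    return (r2 / df_model) / ((1.0 - r2) / df_resid)


# SRH Admission AQ: days + lesion, reported F(2, 22) = 3.871
f_admission_two = f_from_r2(0.260, 2, 22)
# SRH Admission AQ: days + lesion + NIHSS, reported F(3, 18) = 2.75
f_admission_three = f_from_r2(0.260 + 0.054, 3, 18)
# SRH Re-Evaluation AQ: days + lesion, reported F(2, 15) = 1.728
f_reeval_two = f_from_r2(0.044 + 0.143, 2, 15)

for label, value in [("admission, 2 predictors", f_admission_two),
                     ("admission, 3 predictors", f_admission_three),
                     ("re-evaluation, 2 predictors", f_reeval_two)]:
    print(f"{label}: F = {value:.2f}")
```

The recomputed values agree with the reported statistics to within rounding of the published R-squared percentages.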

Language Severity Change

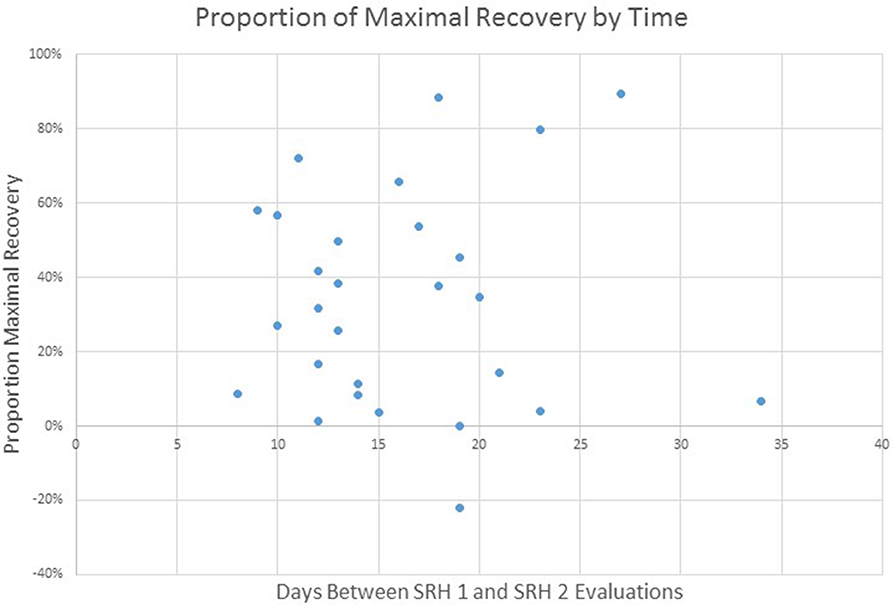

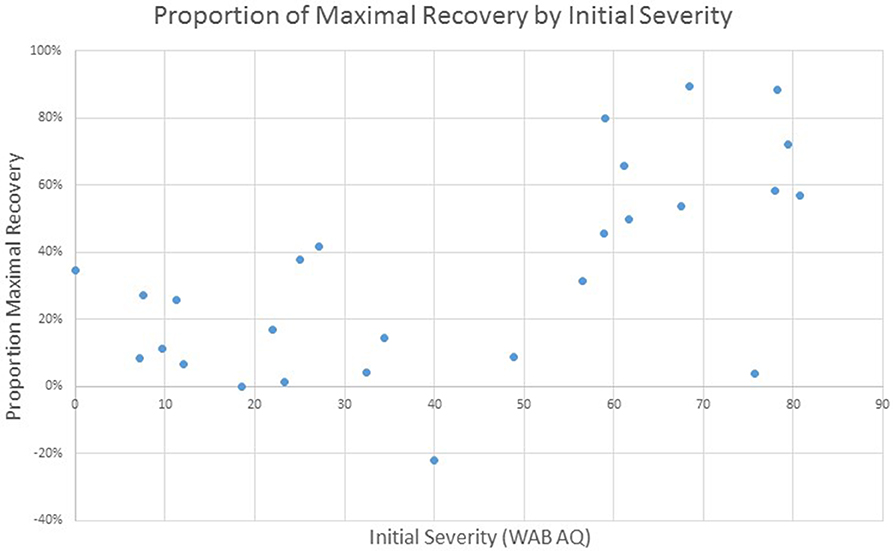

Given the focus of this project on the implementation of standardized language assessment measures in acute care and inpatient rehabilitation, we were interested in examining the proportion of maximal recovery made by individual patients. Comparisons of aphasia severity at SRH admission and SRH re-evaluation showed a wide range of proportional change, from 1% to 89% of maximal recovery. The correlation between time between evaluations (as measured in days) and change was not significant, r(27) = 0.01, p = 0.95 (see Figure 3). The correlation between proportion of maximal recovery and aphasia severity at initial evaluation was significant, r(27) = 0.62, p < 0.001 (see Figure 4). Individuals with lower AQ scores, corresponding to more severe language impairment, showed more limited proportional recovery over this limited timeframe.

Figure 3. WAB AQ proportion of maximal recovery from SRH initial evaluation to re-evaluation as a function of time (days) between evaluations.

Figure 4. WAB AQ proportion of maximal recovery from SRH initial evaluation to re-evaluation as a function of severity at initial assessment.
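The correlations reported in this section follow the r(df) convention, where the degrees of freedom equal the number of paired observations minus two. The sketch below shows a plain Pearson correlation and the t statistic used to test it, t = r * sqrt(df / (1 - r²)); the function names and the toy lists are hypothetical, and only the values r = 0.62, r = 0.01, and df = 27 come from the text.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two sequences of equal length with at least 3 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def t_from_r(r, df):
    """t statistic for testing a Pearson r against zero, with df = n - 2."""
    return r * math.sqrt(df / (1.0 - r * r))


# Toy sequences for illustration only; these are not study data.
toy_r = pearson_r([1, 2, 3, 4], [2, 4, 6, 8])  # perfectly linear, so r is 1

# Values reported in the text, both with df = 27 (29 paired observations).
t_severity = t_from_r(0.62, 27)  # roughly 4.1, a large t for 27 df
t_time = t_from_r(0.01, 27)      # close to zero
print(f"{t_severity:.2f} {t_time:.2f}")
```

Converting the t statistic to a p-value requires the t distribution with the same degrees of freedom, which is omitted here to keep the sketch dependency-free.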

Discussion

Results from the current study demonstrate that implementing standardized processes for the evaluation of language is feasible in acute care and inpatient rehabilitation settings, though an ideal process has yet to be identified, particularly for the inpatient rehabilitation setting. For the acute care setting, adherence to a standardized protocol over a 2-year period was 70%. In a survey regarding practice patterns in the acute stages post-stroke, 70.1% of clinicians reported completing informal assessment measures and 51.1% reported using individualized assessments developed by clinicians or the institution (1); thus, 70% adherence over a two-year period with limited reinforcement measures is encouraging. Follow-up conversations with clinicians have revealed that, in some cases, computers were not available in rooms, sessions were interrupted by other caregivers, or exclusionary conditions, such as evaluating non-English-speaking patients, prevented the complete administration of the bedside WAB. Clinicians continue to express satisfaction with the measure, stating that the administration is efficient and informative and that using a standardized vocabulary across caregivers is helpful for patient hand-off. In this acute practice setting, the primary needs are to determine the presence or absence of aphasia, administer a diagnosis, initiate therapy, and determine the next level of recommended care, all of which are satisfied by the measure. Importantly, clinicians continue to supplement the standardized protocol, evaluating additional cognitive-linguistic domains based on their clinical judgment.

Within the inpatient rehabilitation setting, the QIF and a collaborative approach have led to multiple iterations of implementation. Overall results of this initiative demonstrated that administering a standardized assessment in inpatient rehabilitation is feasible, with standardized language or cognitive assessment being completed upon admission for between 52 and 71% of patients in the Stroke Program. Clinician adherence is consistent with rates reported in studies that examine standardized assessment practices within other rehabilitation disciplines, such as physical therapy [48–66% adherence (39, 40)], and those specifically examining post-stroke standardized assessment practice patterns [52–88% adherence (41, 42)]. Incorporating measurable outcomes into clinical practice has been recognized as important for evaluating the effect of interventions and quality of care, and for advancing knowledge and policy (43–45). Although the standardization initiatives represented changes in practice, these changes were feasible and were adhered to over time in acute care.

The iterative process of implementation, however, revealed challenges in identifying a suitable language measure for all patients. Initially, the WAB-Bedside was judged to be too abbreviated for L MCA CVA patients in the inpatient rehabilitation setting, yet closer examination once the Full WAB was recommended revealed low rates of administration. This indicates a need to revisit assessment procedures to improve adherence in a way that supports clinical data collection and decision-making. The inpatient rehabilitation setting offers more time for evaluation relative to acute care, but these evaluations establish foundations for goals that are targeted over a longer period of time than in acute care and that must ready the patient, in many cases, for discharge home. Language interventions are often characterized as being either impairment-based, focusing on stimulating impaired subdomains of speaking, listening, reading, or writing; or communication-based, focused on building functional communication through a variety of methods (46). The WAB is an impairment-based measure, which may not capture the range of deficits and abilities important to evaluate when selecting a combination of impairment-based and communication-based interventions, particularly for patients returning home or to work and resuming activities of daily life [e.g., work demands, finances, group and/or social activities, routine home activities; see (47)]. While clinicians expressed an interest in utilizing the full WAB, it may be that on a case-by-case basis the more abbreviated bedside WAB, which provides an overall evaluation of language ability, accompanied by more comprehensive impairment-based testing of specific domains and/or evaluations of communication functioning, is better suited than the full WAB.
In the acute rehabilitation setting, language evaluations are used to plan interventions that must stimulate the language system and also provide access to functional communication sufficient for the home, work or next level of care. The inclusion of functional measures should be considered in future iterations of implementation as they may more appropriately capture patient performance and level of functioning, important for guiding planning for participation at the next level of care.

Interestingly, clinician adherence to the standardized protocol was higher for the CLQT than for language assessment in both the stroke program and the hospital. This may reflect the fact that there are fewer alternate assessments of cognitive abilities that are suitable for stroke patients and individuals with language deficits. This may also reflect the importance of insights gained from the assessment of cognitive domains for intervention goals at this level of care. Clinicians are tasked with making initial recommendations regarding discharge planning early in each patient's rehabilitation stay. Discharge recommendations (e.g., discharge home independently, 24-h supervision, or skilled nursing services) go beyond considerations of language ability to consider level of cognitive functioning and safety, making cognitive evaluations meaningful.

Based on the data obtained through standardized assessment of language skills across settings, initial model evaluations over pilot data support previous studies that have found that lesion location and size are predictive of outcomes (31, 33, 48–50). Though limited in power, models that incorporated lesion location accounted for the largest degree of variance. Initial evaluation of proportion maximal recovery demonstrated greater proportion of recovery for individuals with lower severities of aphasia at initial assessment, consistent with prior studies which have shown that patients with more severe levels of impairment show more limited improvement (28, 51).

The current evaluation of predictors of outcomes was only preliminary given the small sample size. Furthermore, the assessment measures incorporated in the current implementation were impairment-based measures that present potential limitations. We propose that an improved understanding of the predictors of recovery will come through consideration of both impairment-based and functional outcome measures. Next steps in evaluating appropriate outcome measures should also examine practice patterns to better understand how outcome measures are utilized to guide intervention planning, as information obtained in assessments needs to be deemed meaningful to clinical practice. Clinical decision-making tools, such as algorithms, have been shown to reduce variability in clinical care practices and improve patient outcomes (52). Guidelines that help align outcome measurement with treatment selection, however, are not readily available to guide aphasia assessment and intervention practices.

Additionally, future work will involve exploring metrics obtained by other disciplines, including physical and occupational therapy, through interdisciplinary partnerships to identify which measures are meaningful and clinically feasible. Adoption into routine clinical practice offers the potential to contribute data that can then be evaluated via new predictive models of improvement. While analyses of data collected in a clinical context may not advance knowledge in the same manner as highly controlled empirical studies, enlisting clinicians and creating practice-based evidence may inform the research trajectory and contribute pilot data or systematic observations that can lead to the development of well-controlled empirical studies, which can then feed back into clinical practice. A pattern of practice, evaluation, analysis, and knowledge transfer has the potential to result in research findings that more readily translate into clinical practice, strengthening the bridge that links research and practice.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by Partners IRB. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author Contributions

MS, SV-R, and CT-H organized the database. MS performed statistical analyses and wrote the first draft of the manuscript. SV-R and CT-H wrote sections of the manuscript. All authors contributed to the conception and design of the study and contributed to the manuscript review and revision.

Funding

Institutional funding was provided from MGH Institute of Health Professions to support graduate students on this ongoing initiative.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors offer their sincere thanks to graduate students Carly Levine, Heidi Blackham, and Eilis Welsh, who assisted in database organization and data entry. The authors also wish to thank Dr. Annie B. Fox, who provided statistical consultation. Finally, the authors wish to sincerely thank the speech-language pathology teams and leadership at Brigham and Women's Hospital and Spaulding Rehabilitation Hospital, without whom this work would not have been possible.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fneur.2020.00412/full#supplementary-material

References

1. Vogel AP, Maruff P, Morgan AT. Evaluation of communication assessment practices during the acute stages post stroke. J Eval Clin Pract. (2010) 16:1183–8. doi: 10.1111/j.1365-2753.2009.01291.x

2. Glasgow RE, Emmons KM. How can we increase translation of research into practice? Types of evidence needed. Annu Rev Public Health. (2007) 28:413–33. doi: 10.1146/annurev.publhealth.28.021406.144145

3. Green LW. Making research relevant: if it is an evidence-based practice, where's the practice-based evidence? Family Pract. (2008) 25:i20–4. doi: 10.1093/fampra/cmn055

4. Mulhall A. Bridging the research-practice gap: breaking new ground in health care. Int J Palliative Nurs. (2001) 7:389–94. doi: 10.12968/ijpn.2001.7.8.9010

5. Upton DJ. How can we achieve evidence-based practice if we have a theory-practice gap in nursing today? J Adv Nurs. (1999) 29:549–55. doi: 10.1046/j.1365-2648.1999.00922.x

6. Wallis L. Barriers to implementing evidence-based practice remain high for U.S. nurses. Am J Nurs. (2012) 112:15. doi: 10.1097/01.NAJ.0000423491.98489.70

7. Balas EA, Boren SA. Managing clinical knowledge for health care improvement. Yearb Med Inform. (2000) 9:65–70. doi: 10.1055/s-0038-1637943

8. Olswang LB, Prelock PA. Bridging the gap between research and practice: implementation science. J Speech Lang Hearing Res. (2015) 58:S1818–26. doi: 10.1044/2015_JSLHR-L-14-0305

9. Sackett DL. Evidence based medicine: what it is and what it isn't. BMJ. (1996) 312:70–2. doi: 10.1136/bmj.312.7023.71

10. ASHA. Evidence-Based Practice in Communication Disorders [Position Statement]. Rockville, MD: American Speech-Language-Hearing Association (2005).

11. Yorkston KM, Baylor CR. Evidence-based practice: applying research outcomes to inform clinical practice. In: Golper LA, Frattali C, editors. Outcomes in Speech-Language Pathology, 2nd ed. New York, NY: Thieme (2013). p. 265–78.

12. Bayley MT, Hurdowar A, Richards CL, Korner-Bitensky N, Wood-Dauphinee S, Eng JJ, et al. Barriers to implementation of stroke rehabilitation evidence: findings from a multi-site pilot project. Disabil Rehab. (2012) 34:1633–8. doi: 10.3109/09638288.2012.656790

13. Hughes AM, Burridge JH, Demain SH, Ellis-Hill C, Meagher C, Tedesco-Triccas L, et al. Translation of evidence-based Assistive Technologies into stroke rehabilitation: users' perceptions of the barriers and opportunities. BMC Health Serv Res. (2014) 14:124. doi: 10.1186/1472-6963-14-124

14. Laffoon JM, Nathan-Roberts D. Increasing the use of evidence based practices in stroke rehabilitation. Proc Int Symp Hum Factors Ergonomics Health Care. (2018) 7:249–54. doi: 10.1177/2327857918071058

15. Pollock AS, Legg L, Langhorne P, Sellars C. Barriers to achieving evidence-based stroke rehabilitation. Clin Rehab. (2000) 14:611–7. doi: 10.1191/0269215500cr369oa

16. Salbach NM, Jaglal SB, Korner-Bitensky N, Rappolt S, Davis D. Practitioner and organizational barriers to evidence-based practice of physical therapists for people with stroke. Phys Ther. (2007) 87:1–20. doi: 10.2522/ptj.20070040

17. Deutscher D, Hart DL, Dickstein R, Horn SD, Gutvirtz M. Implementing an integrated electronic outcomes and electronic health record process to create a foundation for clinical practice improvement. Phys Ther. (2008) 88:270–85. doi: 10.2522/ptj.20060280

18. Horn SD, Dejong G, Deutscher D. Practice-based evidence research in rehabilitation: an alternative to randomized controlled trials and traditional observational studies. Arch Phys Med Rehabil. (2012) 93:S127–37. doi: 10.1016/j.apmr.2011.10.031

19. Horn SD, Gassaway J. Practice-based evidence study design for comparative effectiveness research. Med Care. (2007) 45(10 Suppl. 2):50–7. doi: 10.1097/MLR.0b013e318070c07b

20. Douglas NF, Burshnic VL. Implementation science: tackling the research to practice gap in communication sciences and disorders. Perspect ASHA Special Interest Groups. (2019) 4:3–7. doi: 10.1044/2018_PERS-ST-2018-0000

21. Eccles M, Mittman B. Welcome to implementation science. Implement Sci. (2006) 1:1. doi: 10.1186/1748-5908-1-1

22. Meyers DC, Durlak JA, Wandersman A. The quality implementation framework: a synthesis of critical steps in the implementation process. Am J Commun Psychol. (2012) 50:462–80. doi: 10.1007/s10464-012-9522-x

23. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. (2009) 4:1–15. doi: 10.1186/1748-5908-4-50

24. Gaglio B, Shoup JA, Glasgow RE. The RE-AIM framework: a systematic review of use over time. Am J Public Health. (2013) 103:e38–46. doi: 10.2105/AJPH.2013.301299

25. Green LW, Ottoson JM. From efficacy to effectiveness to community and back: evidence-based practice vs. practice-based evidence. In: Conference From Clinical Trials to Community: The Science of Translating Diabetes and Obesity Research. Bethesda, MD: National Institutes of Health (2004).

26. Vallila-Rohter S, Kasparian L, Kaminski O, Schliep M, Koymen S. Implementing a standardized assessment battery for aphasia in acute care. Semin Speech Lang. (2018) 39:37–52. doi: 10.1055/s-0037-1608857

27. Laska AC, Hellblom A, Murray V, Kahan T, Von Arbin M. Aphasia in acute stroke and relation to outcome. J Int Med. (2001) 249:413–22. doi: 10.1046/j.1365-2796.2001.00812.x

28. Lazar RM, Minzer B, Antoniello D, Festa JR, Krakauer JW, Marshall RS. Improvement in aphasia scores after stroke is well predicted by initial severity. Stroke. (2010) 41:1485–8. doi: 10.1161/STROKEAHA.109.577338

29. Nicholas ML, Helm-Estabrooks N, Ward-Lonergan J, Morgan AR. Evolution of severe aphasia in the first two years post onset. Arch Phys Med Rehabil. (1993) 74:830–6. doi: 10.1016/0003-9993(93)90009-Y

30. Pedersen P, Jorgensen HS, Nakayama H, Raaschou HO, Olsen TS. Aphasia in acute stroke: incidence, determinants, and recovery. Ann. Neurol. (1995) 38:659–66. doi: 10.1002/ana.410380416

31. Plowman E, Hentz B, Ellis C. Post-stroke aphasia prognosis: a review of patient-related and stroke-related factors. J Eval Clin Pract. (2011) 18:689–94. doi: 10.1111/j.1365-2753.2011.01650.x

32. Wade DT, Hewer RL, David RM, Enderby P. Aphasia after stroke: natural history and associated deficits. J Neurol Neurosurg Psychiatry. (1986) 49:11–6. doi: 10.1136/jnnp.49.1.11

33. Watila MM, Balarabe B. Factors predicting post-stroke aphasia recovery. J Neurol Sci. (2015) 352:12–8. doi: 10.1016/j.jns.2015.03.020

34. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap) - a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. (2009) 42:377–81. doi: 10.1016/j.jbi.2008.08.010

36. Helm-Estabrooks N. Cognitive Linguistic Quick Test (CLQT). San Antonio, TX: The Psychological Corporation (2001).

37. R Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing (2019). Available online at: https://www.r-project.org/

38. Dunn LE, Schweber AB, Manson DK, Lendaris A, Herber C, Marshall RS, et al. Variability in motor and language recovery during the acute stroke period. Cerebrovasc Dis Extra. (2016) 6:12–21. doi: 10.1159/000444149

39. Jette DU, Halbert J, Iverson C, Miceli E, Shah P. Use of standardized outcome measures in physical therapist practice: perceptions and applications. Phys Ther. (2009) 89:125–35. doi: 10.2522/ptj.20080234

40. Russek L, Wooden M, Ekedahl S, Bush A. Attitudes toward standardized data collection. Phys Ther. (1997) 77:714–29. doi: 10.1093/ptj/77.7.714

41. Bland MD, Sturmoski A, Whitson M, Harris H, Connor LT, Fucetola R, et al. Clinician adherence to a standardized assessment battery across settings and disciplines in a poststroke rehabilitation population. Arch Phys Med Rehabil. (2013) 94:1048–53. doi: 10.1016/j.apmr.2013.02.004

42. Duncan PW, Horner RD, Reker DM, Samsa GP, Hoenig H, Hamilton B, et al. Adherence to postacute rehabilitation guidelines is associated with functional recovery in stroke. Stroke. (2002) 33:167–77. doi: 10.1161/hs0102.101014

43. Jones TL. Outcome measurement in nursing: imperatives, ideals, history, and challenges. Online J Iss Nurs. (2016) 21:1–19. doi: 10.3912/OJIN.Vol21No02Man01

44. Wilson L, Kane HL, Falkenstein K. The importance of measurable outcomes. J Perianesthesia Nurs. (2008) 23:345–8. doi: 10.1016/j.jopan.2008.07.008

45. Arnold H, Wallace SJ, Ryan B, Finch E, Shrubsole K. Current practice and barriers and facilitators to outcome measurement in aphasia rehabilitation: a cross-sectional study using the theoretical domains framework. Aphasiology. (2020) 34:47–69. doi: 10.1080/02687038.2019.1678090

46. Davis A. Aphasia Therapy Guide. (2011). Available online at: https://www.aphasia.org/aphasia-resources/aphasia-therapy-guide/

47. Reiman MP, Manske RC. The assessment of function: how is it measured? A clinical perspective. J Manual Manipul Ther. (2011) 19:91–9. doi: 10.1179/106698111X12973307659546

48. Hillis AE, Beh YY, Sebastian R, Breining B, Tippett DC, Wright A, et al. Predicting recovery in acute poststroke aphasia. Ann Neurol. (2018) 83:612–22. doi: 10.1002/ana.25184

49. Hope TMH, Seghier ML, Leff AP, Price CJ. Predicting outcome and recovery after stroke with lesions extracted from MRI images. NeuroImage Clin. (2013) 2:424–33. doi: 10.1016/j.nicl.2013.03.005

50. Maas MB, Lev MH, Ay H, Singhal AB, Greer DM, Smith WS, et al. The prognosis for aphasia in stroke. J Stroke Cerebrovasc Dis. (2012) 21:350–7. doi: 10.1016/j.jstrokecerebrovasdis.2010.09.009

51. Pedersen PM, Vinter K, Olsen TS. Aphasia after stroke: type, severity and prognosis: the Copenhagen aphasia study. Cerebrovasc Dis. (2004) 17:35–43. doi: 10.1159/000073896

Keywords: implementation science, aphasia, standardized assessment, acute care, rehabilitation, stroke recovery

Citation: Schliep ME, Kasparian L, Kaminski O, Tierney-Hendricks C, Ayuk E, Brady Wagner L, Koymen S and Vallila-Rohter S (2020) Implementing a Standardized Language Evaluation in the Acute Phases of Aphasia: Linking Evidence-Based Practice and Practice-Based Evidence. Front. Neurol. 11:412. doi: 10.3389/fneur.2020.00412

Received: 13 August 2019; Accepted: 20 April 2020;

Published: 01 June 2020.

Edited by:

Lisa Tabor Connor, Washington University in St. Louis, United States

Reviewed by:

Evy Visch-brink, Erasmus University Medical Center Rotterdam, Rotterdam, Netherlands

Robert Peter Fucetola, Washington University in St. Louis, United States

Copyright © 2020 Schliep, Kasparian, Kaminski, Tierney-Hendricks, Ayuk, Brady Wagner, Koymen and Vallila-Rohter. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Megan E. Schliep, mschliep@mghihp.edu; Sofia Vallila-Rohter, svallilarohter@mghihp.edu
