- 1International Center for Primate Brain Research (ICPBR), Center for Excellence in Brain Science and Intelligence Technology (CEBSIT), Chinese Academy of Sciences (CAS), Shanghai, China

- 2Department of Cognitive Neurophysiology, Max Planck Institute for Biological Cybernetics, Tübingen, Germany

- 3Graduate School of Neural and Behavioral Sciences, International Max Planck Research School, Eberhard-Karls University of Tübingen, Tübingen, Germany

- 4Centre for Imaging Sciences, Biomedical Imaging Institute, The University of Manchester, Manchester, United Kingdom

- 5Department of Empirical Inference, Max Planck Institute for Intelligent Systems, Tübingen, Germany

Introduction: Transient phenomena play a key role in coordinating brain activity at multiple scales, however their underlying mechanisms remain largely unknown. A key challenge for neural data science is thus to characterize the network interactions at play during these events.

Methods: Using the formalism of Structural Causal Models and their graphical representation, we investigate the theoretical and empirical properties of Information Theory based causal strength measures in the context of recurring spontaneous transient events.

Results: After showing the limitations of Transfer Entropy and Dynamic Causal Strength in this setting, we introduce a novel measure, relative Dynamic Causal Strength, and provide theoretical and empirical support for its benefits.

Discussion: These methods are applied to simulated and experimentally recorded neural time series and provide results in agreement with our current understanding of the underlying brain circuits.

1 Introduction

During both wakefulness and sleep, the mammalian brain is able to implement numerous functions key to our survival with extraordinary reliability. This implies precise coordination of transient mechanisms at multiple spatiotemporal scales ensuring both the synergy between brain regions contributing to the same task, and the non-interference between network activities in charge of different functions. Evidence for such transient mechanisms is provided by the variety of neural events that can be observed in brain activity across multiple structures. Such phenomena may occur in response to stimuli, as has been observed for gamma oscillations (Tallon-Baudry and Bertrand, 1999; Fries, 2015), and may play a role in the dynamic encoding of information. However, key phenomena can also occur spontaneously, as exemplified by the variety of events occurring during sleep. These include Sharp Wave-Ripples (SWR) complexes that occur in the hippocampus during the same sleep stages, and take the form of a slow deflection (the sharp wave, SW) superimposed with a fast short-lived oscillation (the ripple). SWRs have been extensively studied and a large set of evidence supports their key role in episodic memory consolidation and the recall of previous experiences (Lee and Wilson, 2002; Diba and Buzsaki, 2007; Ego-Stengel and Wilson, 2010).

In order to understand how these transient phenomena operate mechanistically, causality measures based on observed neural time series can be very helpful to quantify the underlying transient influences between brain structures. Several measures of causality have been proposed, starting in the econometrics literature with Granger causality (GC) (Granger, 1969), relying on vector auto-regressive models. This measure can be generalized to an information-theoretic quantity: Transfer Entropy (TE) (Schreiber, 2000). In the present work, we focus on “model-free” quantities such as TE that are defined independently of the specific functional relationships entailed by a particular model of the dynamics. TE and GC have been used to assess the significance of causal links, but also the “strength” of these links. However, whether they are appropriate quantities to measure such strength is debated (Janzing et al., 2013; Stokes and Purdon, 2017).

Structural Causal Models (SCM, see Supplementary Section SA for background) also allow causal strength measures to be evaluated by their ability to reflect the magnitude of the changes resulting from removing the causal links. In this context, the relevance of causality measures has been investigated by Ay and Polani (2008), who discuss how to account for the effect of knockout experiments, and introduce a measure of information flow, emphasizing its desirable properties; Janzing et al. (2013) provide interesting theoretical justifications for this kind of measure and extend it to define causal strength (CS) of an arbitrary set of arrows in a graphical model. With respect to TE, information flow and CS have the benefit to be local, in the sense that they depend only on the direct causes of the observed effects and their associated mechanisms. This makes CS a good candidate to measure transient connectivity changes during non-stationary neural events, as they would be able to restrict themselves to causal influences that take place at a specific time, associated to specific arrows in the “unrolled” causal graph describing time-varying interactions.

However, we will argue that, even in simple unidirectional settings where a “source” region is driving events in a target region, such TE and CS may not reflect well a key element for neuroscientists: the role played by transient dynamics. Based on the potential outcome framework (Rubin, 1974), causal reasoning has also been used to provide intuitive measures of the causal impact of a specific phenomenon happening at a given time point (Brodersen et al., 2015), by comparing it to a putative scenario where this phenomenon does not happen and called synthetic control in economics (Abadie, 2021). This inspired us to take into account the peri-event change of signals compared to a pre-event stage as another component of causal influence.

Therefore, we look at causal influences through the lens of interventions in SCMs to propose a principled quantification of the strength of causal interactions in peri-event time series, i.e., datasets collected specifically around the times of occurrence of an identified phenomenon in neural signals. Based on information theoretic analyses, we assess the relevance and issues raised by a time-varying implementation of GC, TE and causal strength (DCS, where D stands for Dynamic), and extend DCS to a novel measure, the relative DCS (rDCS), to quantify causal influences reflected by both the connectivity and the event-related change at the cause. We show theoretically that rDCS is effective in uncovering dynamic causal influences for task-dependent events that are often accompanied with a deterministic component, as well as for spontaneous events. We also demonstrate how choices made for aligning peri-event time series collected across multiple occurrences of these events may bias causality measures, and we propose a way to align the detected events favoring the recovery of the ground truth causal direction for a uni-directionally coupled system. The benefits of rDCS over TE and DCS is demonstrated by both simulated toy models and neurophysiological recordings of SWRs. Overall, our results suggest that rDCS helps better quantify the causal interactions between transient dynamical events, and thus uncover elementary mechanisms that shape brain activities.

2 Methods

2.1 General principles for the analysis of event-related causal interactions

2.1.1 Structural Causal Models (SCM)

Mathematically, an SCM for variables {V1, …, Vd} is a collection of so-called “structural assignments” of the form

where the right hand side function fj determines the assignment of the value of Vj on the left-hand side based on the values of parents variables PAj ⊂ {V1, …, Vd} and of the so-called exogenous random variable Nj. The SCM is associated to a directed graph, the causal graph, where each variable Vj is represented by a node, and the parent-child relations between them is indicated by a “parent → child” arrow. While SCMs do not necessary include time information, we can exploit them to study dynamic interactions between two subsystems by considering causal relations linking variables representing one subsystem at past time points, to variables representing the other subsystem at a future time point. As an example, Figure 1A shows two uni-directionally coupled brain regions whose activities are represented by time series

where:

•

•

• the t subscript in all parameters

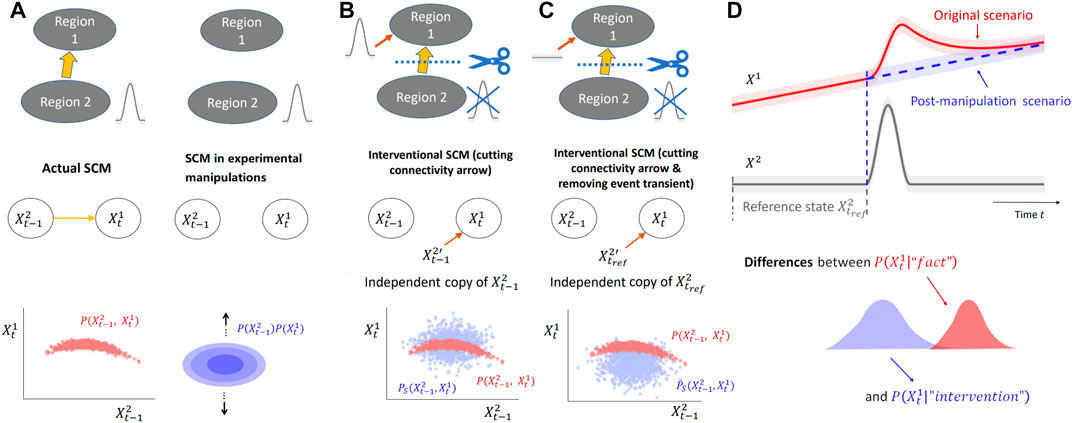

FIGURE 1. Analysis of event-related causality via interventions in SCMs. (A) (Top) Diagrams representing two brain regions with uni-directional connectivity from Region 2 to Region 1 and the post-manipulation scenario where the connectivity is removed. Region 2, as the “cause region”, exhibits transient events (grey) that influence Region 1 by propagating along the anatomical connection. (middle) SCMs underlying the diagrams, where

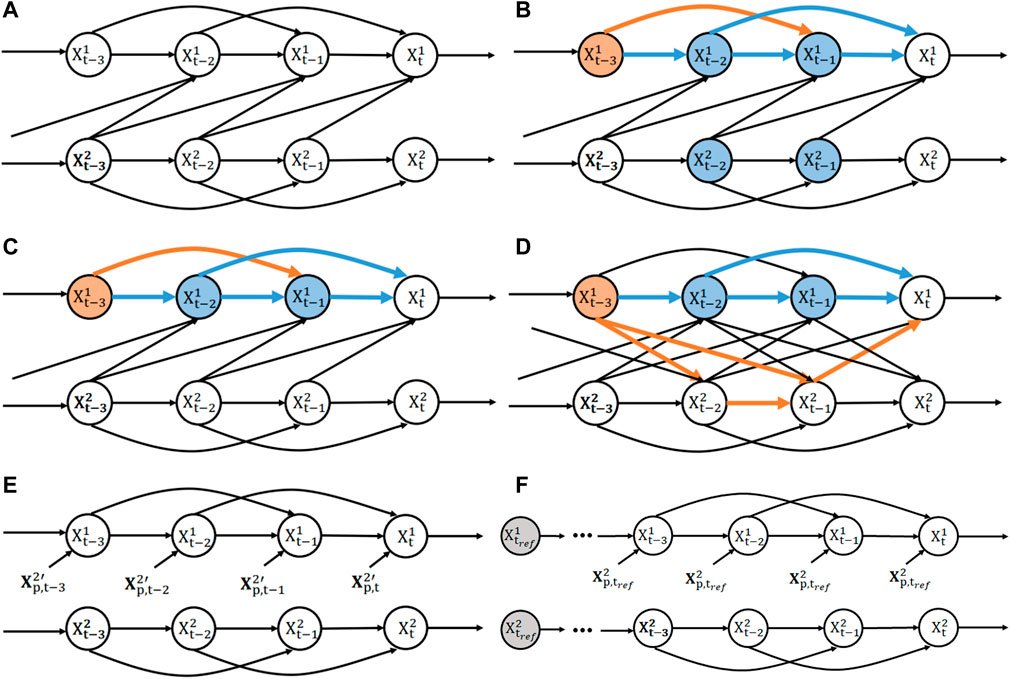

FIGURE 2. D-separation of bi-variate SVAR(2) model. (A) Structural causal model of a bi-variate SVAR(2) model defined in Eq. 2a with uni-directional coupling from X2 to X1. (B) Conditioning on both past states of X1 and X2 blocks all paths from

Note that the constraint of independence between exogenous variables

2.1.2 Interventions in SCMs

One key question in causality is estimating the effect of (possibly imaginary) manipulations (see, e.g., Woodward, 2001) of the system of interest from data, which boils down to comparing two “worlds” or scenarios (Shpitser and Pearl, 2008): the original world where no manipulation is performed, and the “post-manipulation” world.

Both original and post-manipulation worlds typically cannot be measured simultaneously (e.g., “treatment” and “no treatment” in the same patient). However, estimating their differences arguably forms the basis of causal investigations in empirical sciences, for example, by performing randomized experiments on multiple instances of a system designed with mutually exclusive treatments to infer the outcome of manipulations of this system. However, even performing carefully controlled experiments on close to identical instances of a system is often challenging in reality, as many physical and physiological phenomena cannot be easily reproduced or manipulated. This is typically the case for spontaneous transient neural events investigated in this paper, where neurophysiological experimental techniques limit the understanding and control of their conditions of occurrence, as well as the ability to precisely modify some aspects of network activity to test assumptions on the underlying mechanisms.

Under additional model assumptions, such as the absence of unobserved confounders, the framework of SCMs (as briefly introduced in Section 2.1.1 and Supplementary Section SA), can be leveraged to infer the outcome of manipulations based on observational data only. Assuming those assumptions are met (see also Discussion for examples), the SCM inferred from data can be modified using a family of operations named interventions to model the manipulations of the system described by the SCM (Pearl, 2000; Peters et al., 2017). Intervening typically refers to modifying the structural equation associated to one node in the SCM, to study the modifications it brings about in the system. When interventions are performed, the only affected mechanistic relations (represented by arrows in an SCM) are the ones between the intervened nodes and their parent nodes. For instance, one can impose a fixed deterministic value to a node, or that this node’s variable is drawn from a given distribution, independently from other variables in the SCM (Janzing et al., 2013; Correa and Bareinboim, 2020; Peters et al., 2017, Chapter 3). Both such interventions lead to an intervened causal graph where the arrows between the node intervened upon and its parents are removed. For a better understanding of the manipuation modelled in these two cases, consider examples from experimental manipulations in electrophysiology. The first case involves fixing a value, as in voltage clamp techniques used to study channel conductance. By fixing the membrane potential of a single neuron, the causal arrow between the membrane potential and extracellular ion concentration is broken. An example of the second case is injecting current in plasticity studies to maintain certain firing rates in a patch-clamped neuron, ensuring that the pharmacological shutdown of certain ion channels does not cause a change in neuronal firing patterns.

Importantly, while an intervention modifies the graph associated to an SCM, the variables’ joint distribution can still be obtained by exploiting interventional knowledge, (unintervened) observational data and prior assumptions related to the unaffected conditionals.

For the SCM introduced in Eq. 1, intervening on Vk consists in replacing its structural assignment by a new one:

where the new function

2.1.3 From causal strength to a measure of event-based causal influence

Typically, one brain region influences another through axonal propagation of spiking activity in afferent neurons, and synaptic transmission to dendrites of the target region. In the simplest bi-variate case (i.e., if we focus on two brain regions with direct synaptic projections), this relationship can be represented in an SCM by Figure 1A(left) where two nodes representing the neural activities of the two regions are linked by a uni-directional arrow. For the sake of simplicity, we ignore for now the influence of the past of X2 on itself, such that the only causal link is from the past (at t − 1) of region 2 to the present (at t) of region 1. The joint probability can be causally factorized as

A natural manipulation to study the causal mechanism is to shut down the synaptic transmission during event occurrence and compare the outcomes, e.g., via physically cutting the pre-synaptic dendrite attached to the synapse, or pharmacological blockade of the relevant ion channel. Suppose that the experiment could be done, the data obtained in this hypothetical manipulated scenario can be modeled by another SCM without the arrow between

This approach will be thoroughly discussed in Section 2.2.3 but here we explain it briefly in this simplified example to provide the readers with an intuitive understanding of the principles. Figure 1B illustrates the intervention performed in the SCM: cut the causal arrow from

• choose

• replace the cause → effect mechanism

The strength of the causal arrow is then quantified by the Kullback-Leibler (KL) divergence DKL between original (unintervened) and intervened joint densities

However, we will argue that this choice of intervention is not an ideal way to measure the event-based causal influences between two brain regions. Going back to the manipulation experiment, despite the transient activities occurring at the pre-synaptic neurons as an input to the synapse, due to the cutoff of afferents or blockage of ion channels, the activity of the post-synaptic neuron (i.e., the effect variable) is expected to remain at baseline level without being influenced by the event occurring in the cause. In this context, the operation of feeding the effect node

2.2 Candidate time-varying causality measures

We now present the time-varying versions of commonly-adopted causal strength measures of a given direction of causation X2 → X1 and discuss their properties in the context of transient event-based causality analysis, in light of the above principles. The candidate measures include time-varying extensions of Granger causality (GC), Transfer Entropy (TE) and Causal Strength (CS) (Janzing et al., 2013). To make the comparison quantitative, time series are modeled using the bivariate linear SVAR model of Eq. 2a and Eq. 2b. As we will see, all measures boil down to comparing the “full” bivariate model to a model where the contribution of the cause time series to the effect is removed in some way. Generalization to more than two observed time series is possible in all cases and briefly mentioned for each approach.

2.2.1 Granger causality

Granger causality (GC), as well as its information-theoretic extension, Transfer Entropy (TE) is rooted in Wiener’s principle of causality. For the bivariate case, Granger (1969) defines the statement of (Granger-)causality from X2 to X1 whenever knowledge of

• Scenario 1: predict

• Scenario 2: predict

The predictive model describing the first scenario is referred to as the full model (Geweke, 1984) and corresponds to Eq. 2a of the SVAR model, where the first variable X1 is dependent on both variables X1 and X2. An estimate of the innovation variance of

where the model order p′, the coefficient a′, the innovations mean

If X2 Granger-causes X1, then the full model should fit the data more accurately compared to the reduced model as measured by the estimated variance

where the factor 1/2 is chosen for consistency with TE (see Section 2.2.2). While the above linear SVAR model is the most widely used, Granger causality has been extended to non-linear models following the same predictive approach (e.g., Marinazzo et al., 2008; Marinazzo et al., 2011b; Diks and Wolski, 2016; Wismüller et al., 2021, for a recent review see Shojaie and Fox, 2022).

2.2.2 Transfer Entropy

TE is an information-theoretic implementation of Wiener’s principle, where a comparison between the prediction performance of the above two scenarios is quantified with conditional entropy. TE quantifies to which amount X2 causes X1 in the Granger sense and is defined by the entropy difference

Interestingly, using the Kullback-Leibler (KL) divergence DKL between two probability densities

As noticed by Barnett et al. (2009), under stationary Gaussian SVAR assumptions the analytic expression of Gaussian entropy applied to Eq. 6 leads to

A key issue is that the reduced model ignores but does not remove the influence of past values of X2

As a consequence, the reduced model cannot be generally interpreted as an intervention on the original SCM that would result in a model where arrows associated to the causal influence of interest would be removed. In addition, in case of bi-directional coupling, the reduced model of Eq. 4 is misspecified (in a generic case) for any finite order. This can be seen easily by exploiting the d-separation criterion (Supplementary Section SA), as illustrated in Figure 2. Figure 2B shows the estimation in the full model, where conditioning on both

This issue has been both raised and addressed in the literature, in particular by resorting to Autoregressive Moving Average models and state space models for defining an appropriate reduced model (e.g., (Barnett and Seth, 2015; Solo, 2016)). However, this remains an important limitation when extending TE to time-varying versions, where the model is assumed to be stationary at best locally in time. For example, when defining a non-stationary SVAR model as Eq. 2a, we assume a different linear model in each 1-point time window. The non-locality of TE is particularly problematic for such a time-varying model assumption because of the implicit influence of past activities on this quantity.

2.2.3 Dynamic causal strength

To overcome the limitations of TE and GC, Ay and Polani (2008) have proposed a measure of information flow to quantify the influence of some variables on others in a system, which has been further studied and generalized in Janzing et al. (2013) as a measure of the Causal Strength (CS) of an arbitrary set of arrows in a graphical model. In the present paper, we define CS in the context of time-inhomogeneous vector autoregressive processes and their associated unrolled causal graph, and thus call it Dynamic Causal Strength (DCS).

DCS can be naturally defined using the SCM interventional formalism (Pearl (2000); Peters et al. (2017), see Section 2.1.2). Briefly, interventions are performed on nodes in order to remove the specific arrows from the causal graph whose influence we wish to quantify. In agreement with Ay and Polani (2008) and Janzing et al. (2013), in the context of inhomogeneous SVAR models (as illustrated in Figure 2A), an appropriate intervention to remove the causal influence from

which does not depend on

A parametric formulation under linear Gaussian model assumptions is given in Supplementary Section SD.5. Generalization to more than two time series is also straightforward following (Janzing et al., 2013): pDCS and DCS are simply computed by also including conditioning on the past of all other time series, in addition to

Remark: In contrast with Janzing et al. (2013), but in line with Ay and Polani (2008), we do not use jointly independent copies of each component of

2.3 Near deterministic behavior of TE and DCS

The analysis of transient neural events leads us to analyze signals that have limited stochasticity in several respects: on the one hand, strongly synchronized oscillatory signals can be represented by SVAR models with low innovation variance, relative to the variance of the measured signal. Moreover, when a study focuses on a reproducible type of transient pattern, it is often characterized by a waveform that has little variability across collected trials. Such a situation can be modeled with a time-varying deterministic innovation, exhibiting strong variation of its mean across time, but no or little variance. We investigate the theoretical properties of TE and DCS in this regime, showing a benefit of DCS with respect to TE, but also remaining limitations.

2.3.1 TE behavior for strongly synchronized signals

Besides, it has also been pointed out that the definition of TE in Eq. 7 has some other non-intuitive implications (Ay and Polani, 2008; Janzing et al., 2013). In particular, there are situations in which TE(X2 → X1) almost vanishes, although the influence is intuitively clear. How frequent are the practical situations in which we have these detrimental effects is unclear; however, theoretical analysis suggests that this can happen when the time series are strongly correlated.

To see this, we can derive from Eq. 7 the case where X2 is a deterministic function of X1 such that TE vanishes. Take the special case where

Plugging into Supplementary Equation S8 in Supplementary Section SD.4 yields,

However, a strong correlation between two observed time series does not necessarily imply that causal interactions between them are weak, from an SCM perspective. We will investigate this case in Section 3.1 and compare with the results of DCS to show that DCS does not suffer from this non-intuitive vanishing problem.

2.3.2 Insensitivity of TE and DCS to deterministic perturbations

While several intuitive properties make DCS a good candidate to quantify causal influences, we exhibit a counterintuitive property common to TE and DCS in the context of peri-event time series. Transient neural events are mainly investigated in two types of analyses: 1) stimulus-triggered (or response-triggered) data that are temporally aligned by task (or response) onset and 2) event-triggered data where occurrences of a type of brain-activity pattern are detected along the time course of the recordings (manually or algorithmically) and used to create peri-event trials.

In both cases, neural activities are likely to have a deterministic component appearing in the peri-event ensembles, due the similarity of the response to successive stimuli in case 1), or due to the similarity of the neural patterns detected in the recordings in case 2). Here we will show that, in a linear setting, TE and DCS are insensitive to such a deterministic component. Specifically, TE and DCS values are unaffected by interventions on the innovations’ mean at any time point.

First, we exhibit the role played by a deterministic perturbation in an example.

Example 1. Consider the bi-variate SVAR(1) model in the following form

with a, b ≠ 0 and a stationary innovation for X1,

When varying α, this models a intervention on the second time series. Then it can be easily shown that the expected time course of X1 is

This witnesses the causal influence of

We will show this in the more general setting of the SVAR(p) model of Eq. 2a and Eq. 2b.

Proposition 1. For linear SVAR models defined by Eq. 2a and Eq. 2b, TE and DCS measures are invariant to deterministic perturbations, i.e., to changes in the mean of the innovation’s distributions

Proof. Without loss of generality, we will show invariance to an elementary intervention at a single time t0 that transforms

To compute how the intervention distribution of the new variables denoted

which is a set of linear deterministic difference equations with a unique solution making X and

which implies the same for conditional marginal densities, e.g.,

and

As a consequence TE on the intervention distribution writes

And by change of variable we get the invariance property:

which can be generalized to arbitrary deterministic perturbations. The same reasoning can be applied to DCS leading to invariance as well (Supplementary Section SB) and this concludes the proof.

Arguably, this result is not what we would expect from an event-related measure of influence, because in the above example of Eq. 10a and Eq. 10b, setting a large α intuitively leads to a large influence of X2 on X1 provided b ≠ 0. Provided that TE and DCS can be made arbitrarily small by reducing the innovation’s variance

As elaborated above, this is in contrast to what would be expected in the neuroscientific context, and directly relates to the observational, event-related setting that we investigate: the deterministic component is due to the alignment of the data with respect to an event of interest, and we do not have a different condition to contrast the occurrence of this event with what would have happened in its absence. This analysis calls for building a synthetic baseline condition that would allow deterministic changes to be detected.

2.4 A novel measure: relative Dynamic Causal Strength

2.4.1 Motivation

Following the guidelines for event-based causality (presented in Section 2.1), we propose a novel measure, the relative Dynamic Causal Strength (rDCS), as a modification of DCS. This measure aims at taking into account the influence of event-based changes in the cause signals independent from the connectivity (the mechanism), and notably those driven by deterministic exogenous inputs. In the specific problem we are investigating, the cause is the past states of X2, denoted

In the case where X2 is driven by a deterministic exogenous input in a transient window, the cause exhibits significant changes relative to baseline; thus, intuitively, the causal effect should also be enhanced even if the causal arrow remains the same (i.e., the coefficient b stays unchanged). Apart from intervening on the causal arrow, further intervention can be implemented on the cause node to construct a post-intervention scenario where the cause receives no time-varying innovations. Therefore, inspired by causal impact (Section 2.1.3) which characterizes the difference between the current state and a baseline state, we propose (additionally to DCS) to replace the marginal of

with

The implementation of rDCS given a SVAR model is derived in Supplementary Section SD.6. Generalization to more than two time series can be done in the same way as for DCS, by including extra conditioning on the past of other time series for all quantities.

Intuitively, the term relative originates from the comparison between the current past state

2.4.2 Sensitivity of rDCS to deterministic perturbations

The definition of rDCS implies sensitivity to deterministic perturbations. Indeed, taking the example in Section 2.3.2, the reference state

because

2.5 Alignment for spontaneous events

The relevance of peri-event time-varying causal analysis using the proposed rDCS, as well as TE and DCS, depends on the modeling assumptions of peri-event data. In particular, we assume that the neural events we want to study reflect a sequence of continuously changing hidden states and that values at each peri-event time point t′ are sampled i.i.d. across trials from the same ground truth distribution (Shao et al., 2022) at t′. This is easily justified for stimulus-evoked events, as addressed in Section 2.3.2 and Section 2.4.2, with an intrinsic reference time for occurrence (i.e., the triggering time). However, analyzing spontaneous events whose occurrence times are not known a priori, such as transient events observed during sleep, requires 1) a selection procedure to identify them and 2) a procedure to choose a reference time point for each detected event, which is used to align all of them on a common peri-event time grid. The idea of reference points for alignment is similar to the anchor points in Phase Rectified Signal Averaging (Bauer et al., 2006). In contrast with such work, we focus on a transient phenomenon at the time scale of a peri-event time window instead of a very fast increase in the signal amplitude. Given a signal exhibiting spontaneous events, common procedures involve 1) selecting the events by thresholding a filtered version of this signal (that amplifies the events’ features of interest); 2) aligning events according to the local peak of this same filtered signal to best reflect the evolution of the underlying state. The result may only approximately recover the ground truth distribution of the events, as it is influenced by the choice of filtered signal and putative signal perturbations.

Importantly, selection may lead to a biased estimation of event statistics and peri-event dynamics, due to selecting data based on a specific detection signal, resulting in a misleading characterization of causal interactions (e.g., wrong causal directions as seen in Supplementary Figure S6B). We will thus study how event selection affects the estimation of causal influence and propose an appropriate procedure on this basis. To model the effect of selection, we use an SCM-based perspective on selection bias (Bareinboim and Pearl, 2012; Bareinboim et al., 2014). We can modify the SCM in Figure 2A to incorporate an additional node S representing the selection variable, which is a binary variable indicating whether the time window, with specified reference time point, is selected (Supplementary Section SC for background). Typically S is defined by testing whether a continuous random variable D goes over a predefined threshold. D is itself a function of the time series nodes within the peri-event time window, corresponding, for example, to the aforementioned filtering operation. A practical example is the detection of oscillatory events using a band-pass filter, where the dependency of D (and thus S) on other nodes reflects the dependency of the filtered signals on past samples of X through the coefficients of a causal Finite Impulse Response (FIR) filter.

In practice, we can a priori choose S to depend either on the cause variables X2 (Figure 3A) or on the effect variables X1 (Figure 3B). Assuming that the filter (i.e., for constructing the continuous RV) is well chosen, and the selection threshold is high enough, choosing windows satisfying S = 1 will typically “over-select”, i.e., exclude some peri-event time series that would actually be relevant for our analysis. Figure 3C(left, top right) illustrates how thresholding selects only a subset of peri-event trajectory samples at t′ = 0 in a simulated scenario. This over-selection can then be modeled as sampling peri-event data from a conditional peri-event distribution p(X|S), while we are interested in analyzing a ground truth distribution p(X). This conditioning may induce a so-called selection bias in the estimation of quantities we are interested in, notably the conditional distributions that enter the calculations of TE, DCS and rDCS. The impact of such bias on those quantities as been investigated in Bareinboim and Pearl (2012); Bareinboim et al. (2014) within the SCM framework, as we describe in the following.

FIGURE 3. Illustration of selection bias due to thresholding and alignment. (A) SCM of a bi-variate SVAR(2) model with uni-directional coupling from X2 to X1 and a selection node S depending on states of the cause variable before peri-event time (t′< =0). The selection node S represents partial selection of samples due to thresholding of the filtered cause signal (as the detection signal). Orange arrows makes the recoverable arrows with the current selection node, while purple arrows indicates the unrecoverable ones. (B) The same SCM as in (A) with the selection node depending in a similar way on the effect signal. (C) An example event ensemble for the cause variable

For simplicity and consistency with the Results section, we will restrict ourselves to models with a unidirectional causal effect (either X1 → X2 or X2 → X1) and assume that S is only dependent on a finite number of past peri-event times (t′ ≤ 0) as in the case of a causal FIR filter (for other cases, refer to Supplementary Section SC.2). Figures 3A, B illustrate in this setting that the conditional associated to causal arrow (X2 → X1) can be recovered at any peri-event time only when the selection node depends on the cause variable (Supplementary Section SC.2 for justification). Specifically, this means that

Therefore, the KL divergence for the ground truth direction X2 → X1 can be estimated correctly when selecting the event based on the ground-truth cause variable “S(X2)”, while this does not hold for the opposite direction X2 → X1 nor when selecting based on the ground-truth effect “S(X1)”. As the true causal direction is unknown, we thus propose that, to investigate the dominant causal direction between two event ensembles, we should focus on comparing the causality measures (TE, DCS and rDCS) for each direction when the events are aligned on the putative cause, i.e., X2 → X1|S(X2) compared to X1 → X2|S(X1). Although rDCS is expected to be biased for the second case when aligned by the effect variable, for uni-directionally coupled systems, as the ground truth rDCS is zero, we expect the bias to still lead to a comparatively small rDCS value relative to ground truth, such that the contrast between two directions is preserved.

Other factors may affect the estimation of causal strength. Since rDCS is defined as the expectation over the KL divergence over the past states

Next, the above mentioned alignment procedure may affect causal strength estimation. Perfect alignment (considered ground truth) refers to the condition where the ground-truth hidden states are identical for all trials at each peri-event time t′ in an extracted event ensemble, as shown in Figure 3D for t′ = 0. In this scenario, no further alignment is needed as all trials are intrinstically aligned. In order to study the influence of the aforementioned selection bias specifically due to thresholding, we may still apply selection to the perfectly aligned dataset, resulting in excluding below threshold samples from the estimation procedure. We refer to this situation as single-time selection of events where trials are aligned based on known ground-truth reference time. This setting assumes that one knows the hidden state, which is possible only for stimulus triggered or simulated events, but impossible for experimentally observed spontaneous events. In the latter case, by thresholding over the whole observed signal one typically end up selecting successive sliding time windows that all have a detection signal exceeding the threshold (e.g., Figure 3D(Bottom right)). Selecting all these points can be interpreted as smoothing the ground truth state over all these neighboring state space points, an alignment scenario which we name as smoothed alignment of events. In practice, a common alternative is to further select among above-threshold overlapping peri-event time-windows the local peaks as the reference points, which can be understood as a non-uniform subsampling of the smoothed alignment and can be unified into the same scenario category.

2.6 Data processing pipeline

The whole analysis procedure can be conducted in two phases: event selection and causal analysis. We will elaborate on the detailed steps in each phase in the following.

• Phase 1: Event Selection

1. Filtering: given a bi-variate signal (as a simple case), for different purposes of study, one would need to find an appropriate filter to apply to the original signals such that certain features of the underlying system can be amplified. For example, to locate the Sharp Wave-Ripples (introduced in the Introduction and analyzed in Section 3.3) that are prominent in the ripple band [80–250]Hz, one would use a bandpass filter such that the irrelevant components are attenuated. Events can be also detected with a template matching procedure, which is another type of filtering (Supplementary Figure S2).

2. Thresholding: a certain threshold is determined beforehand (up to the specific feature of the question) and applied to the filtered signal. As the filtered signal is designed to amplify the feature, time points where the filtered signals are over the threshold are candidate reference points. Reference points define the peri-event time t’ and are used to extract peri-event data as multiple trials.

3. Alignment: the thresholding procedure can be applied to either the cause or effect signals. One can select all candidate reference points obtained by filtering either signal (for the smoothed alignment case) or the time points of local peaks (of the filtered signal) as reference points. Then the bi-variate peri-event trials are extracted in a fixed-length window surrounding the reference points, thus forming the peri-event ensemble for further analysis.

• Phase 2: causal analysis

1. Model order selection: as mentioned in Section 2.2.1, our estimation of information theoretic quantities is based on time-inhomogeneous SVAR models. One thus needs to determine the optimal SVAR model order that best reflects the underlying dynamics. A common approach for model order selection is the Bayesian Information Criterion (BIC), which we have extended to the time-varying case in Shao et al. (2022) using the extracted event ensembles obtained in the first phase.

2. SVAR model estimation: Shao et al. (2022) also provide a way to estimate the SVAR model with the extracted event ensemble and the optimized model order. Thus we will obtain an estimate of the autoregressive parameters, i.e., the autoregressive coefficients and innovation mean and variance.

3. Computation of causality measures: with the estimated autoregressive parameters and the signals second order statistics, we can estimate the time-varying causality measures as detailed in Supplementary Section SD: TE based on Supplementary Equation S8, DCS on Supplementary Equation S9 and rDCS on Supplementary Equation S10.

Notably, the causal analysis procedure can be applied to event ensembles obtained with any type of alignment. However, as elaborated in Section 2.5, we propose to compare the causality measures in two different directions from the event ensembles where trials are aligned by the putative causes. To facilitate the application of this analysis framework, we have made available the code that performs the aforementioned experimental procedure (see https://github.com/KaidiShao/event_causality_frontiers).

3 Results

In this section, we first focus on illustrating the properties of TE, DCS and rDCS with simulated toy models. The problem of vanishing TE occurring with synchronized signals and the benefits of DCS in the same situation will be investigated in Section 3.1. Next, we simulate a simple uni-directionally coupled SVAR(4) system with rhythmic perturbations of the cause variable to generate transient events, where we will show that rDCS is able to reflect the change of causal effects due to this perturbation while TE and DCS fail. We also study the influence of the alignment method in the same example, as well as in experimental in vivo recordings from uni-directionally coupled hippocampal regions during SWRs.

3.1 The case of strongly-correlated signals

As mentioned in Section 2.3.1, TE does not capture well causal influences when the cause and effect signals are strongly correlated with each other, contray to DCS. Here, to illustrate such contrast, we simulate a bivariate dynamical system in the form of two synchronized continuous harmonic oscillators x(t) and y(t), with uni-directional coupling (i.e., x(t) driving y(t)):

In this system, x(t) is designed as an under-damped oscillator (ζx = 0.015722), which approximately oscillates at a period Tx = 200 samples corresponding to natural (angular) frequency ωx = 2π/Tx = 0.0314 rad/sample. To achieve synchrony, y(t) is also designed as an under-damped oscillator (ζy = 0.2) whose intrinsic oscillation gradually vanishes and finally follows the oscillation of x(t) with a coupling strength of c = 0.098. For y(t), Ty = 20, ωy = 2π/Ty = 0.314. We also add small Gaussian innovations to both oscillators:

Using the Euler method with a time step of 1 and random initial points

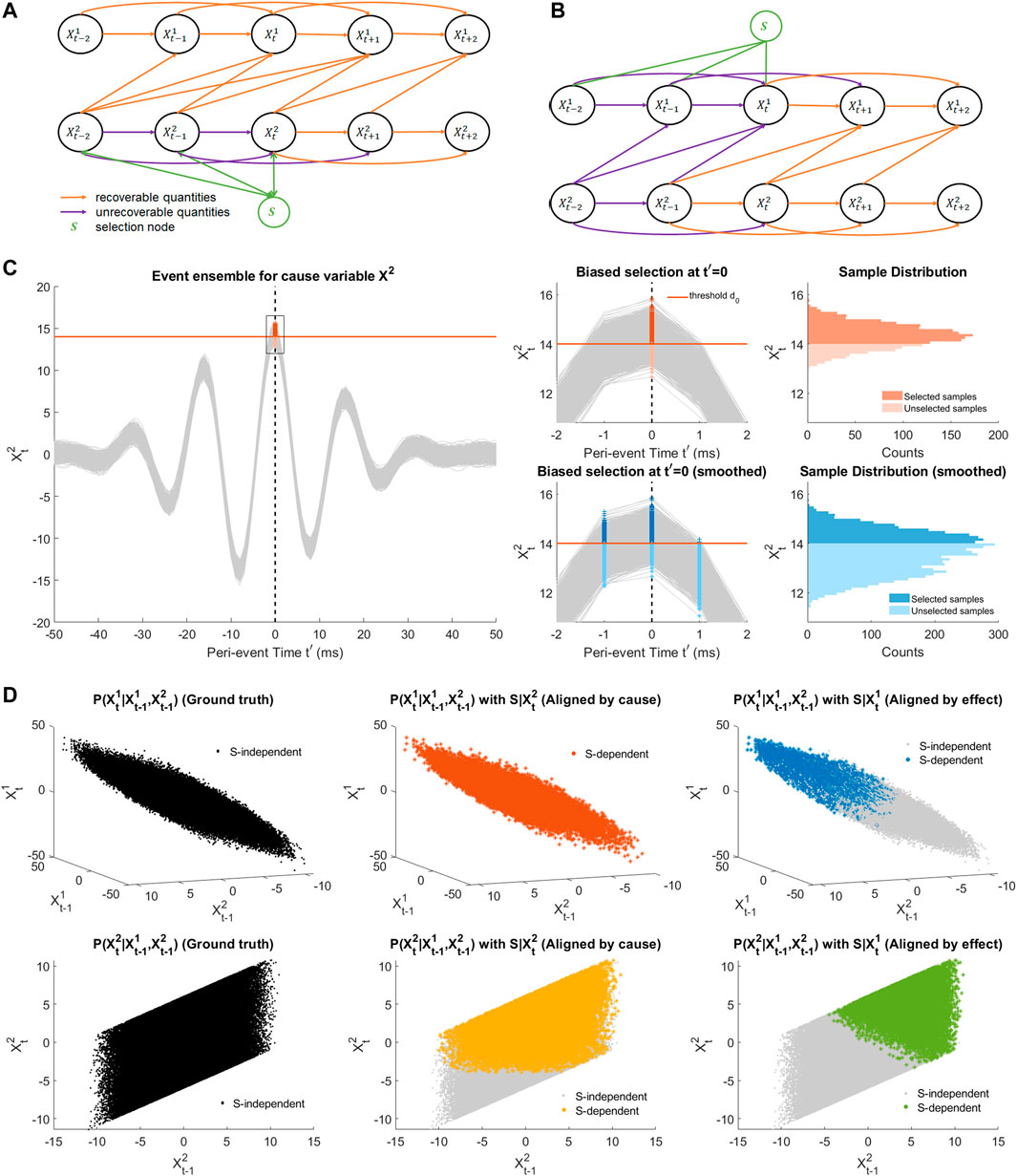

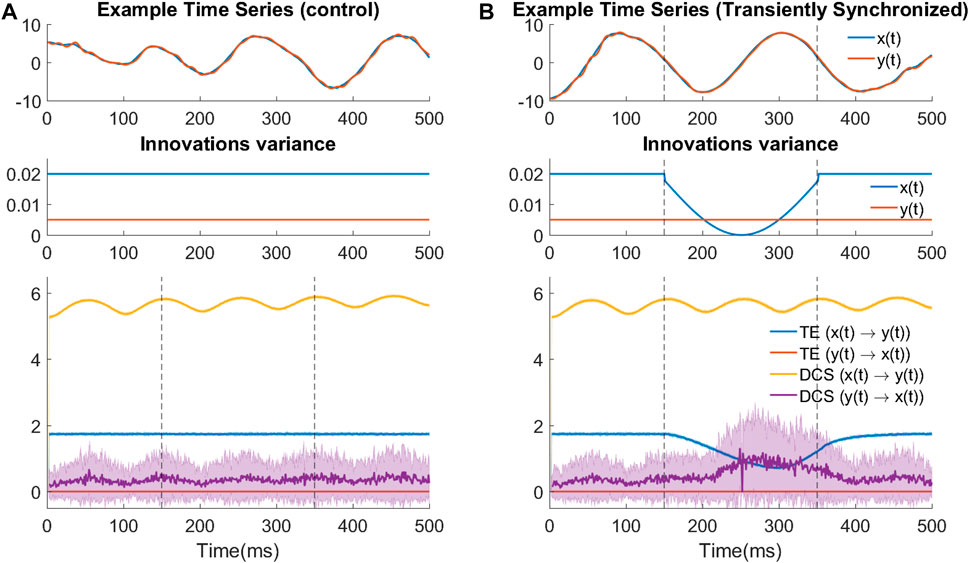

Figure 4A shows the results of time-varying TE and DCS for assessing the causal effects between x(t) and y(t). Calculation is performed in both the ground truth direction (x(t) → y(t)) and the opposite direction. We first look at the control experiment. Consistent with the system’s stationarity, TE is constant in both directions while being higher in the ground-truth direction. DCS in the ground-truth direction stays at a relatively high level, despite some small oscillation under a frequency similar to the intrinsic oscillation frequency of x(t).

FIGURE 4. TE fails when the signals are strongly synchronized. (A) Control experiments where synchrony is not changed. (top) Example trace of the bivariate signal in the control experiment. (middle) Time-varying design of innovation’s variance for both variables in the control experiment. (bottom) Time-varying TE and DCS results in the control experiment. (B) TE underperforms during transient increased synchrony induced by a tiny change in noise variance. The transient change can be seen as an event. Subfigure designs are the same as (A).

With respect to the detection of causal direction, both measures are able to detect the correct direction (i.e., causation for x(t) → y(t) is much larger than in the opposite direction). It is also reasonable that DCS in both directions is higher than TE, according to its definition in section 2.2.3. However, from the control experiment, we cannot conclude that the smaller TE values are due to its definition or due to the strong synchrony in the signals.

Therefore, we introduced a transient decrease of the noise variance in the cause signals (x(t)). The logic of designing this transient change is the following: the level of synchronization will increase with weaker noise, but the system and input magnitude remain the same because the contribution of the noise change to the signal amplitude is negligible; thus if TE is insensitive to the level of synchronization of signals, its values are expected to stay constant. However, as the results show in Figure 4B, there is a transient decrease of TE during the interval where noise variance is decreased, suggesting that TE performs poorly in the cases where the cause and effect signals are strongly synchronized. As such strong synchronized oscillations are common phenomena in the context of transient neural events, one would need to pay extra attention when using TE (as a widely-applied causality measure) to investigate the direction of causation during these transient phenomena.

3.2 The case of deterministic perturbations

In this section, we directly address the benefits of rDCS over TE and DCS when applied to signals driven by deterministic perturbations. To illustrate this specific property, we designed some simple transient events perturbing the innovation parameters of a stationary SVAR process with uni-directional coupling. The events are generated by feeding the cause signal with innovations with non-zero time-varying means, such that both signals will exhibit temporal oscillations. We refer to these events as perturbation events in the following sections. These perturbations intrinsically define a hidden state that parametrizes the ground truth distribution of peri-event data. We exploit the hidden state and demonstrate that the proposed alignment method in Section 2.5 is efficient for recovering the time-varying causal direction between the two variables.

3.2.1 Simulation procedure

We simulated a non-stationary uni-directionally-coupled autoregressive system defined in Eq. 2a and Supplementary Eq. 2b. The causal direction is X2 → X1. The system is designed as a bivariate SVAR(4) process with a time-invariant coefficient matrix: a⊤ = [−0.55, − 0.45, − 0.55, − 0.85], b⊤ = [1.4, − 0.3, 1.5, 1.7], c⊤ = [0, 0, 0, 0] and d⊤ = [0.9, − 0.25, 0, 0.25]. These coefficients were randomly generated and kept after checking the stability of the SVAR(4) system. Uni-directional interactions are ensured by setting the autoregressive coefficients associated to interactions in the opposite direction (i.e., c) to zero for all lags.

We enforce non-stationarity of

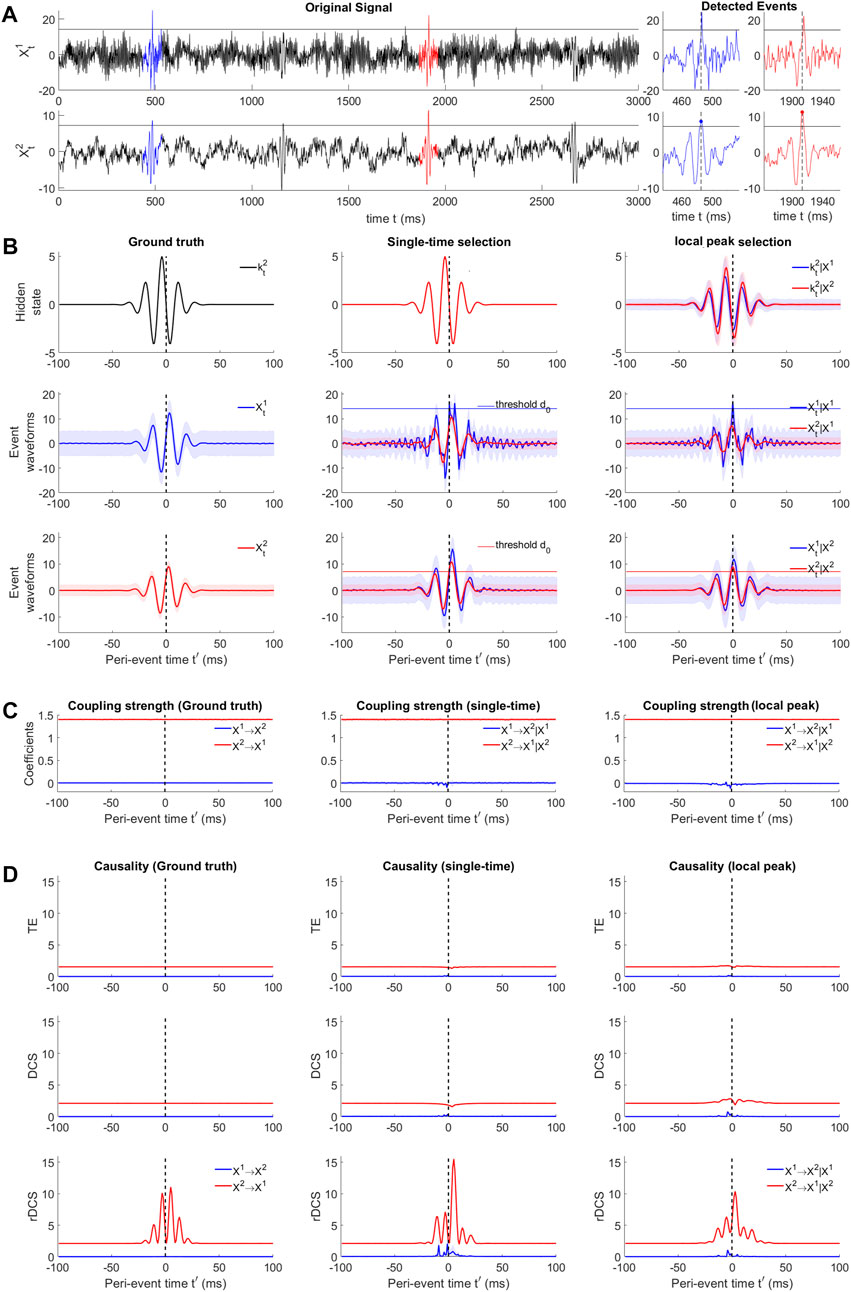

FIGURE 5. Causal analysis for simulated perturbation events with non-zero innovations. (A) Example signal traces of the bi-variate SVAR(4) system (black). Blue and red traces mark two example events detected by thresholding over the cause

We generated this bi-variate SVAR(4) process for 1300s consisting of 5,000 trials of perturbation events by transiently varying

3.2.2 Effect of trial selection and alignment on model estimation and causality measures

The designed deterministic innovation (i.e., identical across trials), can be seen as imposing a hidden state evolving across the peri-event interval. The event ensembles obtained by this ground truth model define a dataset where no event selection and alignment is needed. We can compare the SVAR model estimation and causality measures resulting from this dataset to the outcomes obtained by selecting and aligning events based on either variable X2 or X1, as discussed in Section 2.5.

To validate the recoverability theory in the presence of selection bias due to the event detection procedure, we test the single-time selection setting (see Section 2.5) where sub-threshold trials are removed from the ground truth peri-event dataset (as illustrated in Figure 3D(Top right)), thus preserving the ground-truth hidden states (Figure 5B(Top middle)). The peri-event trials having reference point values higher than a threshold d0 = 3SD for the chosen variable are selected, where the standard deviation is computed from the whole signal. The selected event ensembles are shown in Figure 5A(middle center) for thresholding based on

Next we demonstrate the appropriateness of the approach performed on real data (i.e., selection and smoothed alignment based on putative cause), we set d0 as a threshold and performed smoothed alignment over the original signal itself. We obtain an event ensemble by selecting local peaks for points over d0 as new reference points, which is shown in Figure 5B(middle right and bottom right). This can be seen as a smoothed version of the ground-truth dynamics, which is also confirmed by checking the aligned hidden states (Figure 5B(Top right)).

While inferring SVAR model parameters of the event ensembles according to Shao et al. (2022), the true model order 4) can be recovered for all five ensembles. Figure 5C demonstrates the recoverability of conditional probabilities for ensembles aligned by the putative cause. Coupling strengths from the putative cause to the putative effect are plotted in red. As described in the simulation procedure in Section 3.2.1, the coupling strength is constant over time, which is reflected in Figure 5C(left). Consistent with the theory in Section 2.5, biased selection of event trials on the samples at t′ = 0 leads to unbiased estimation of the coupling strength

Figure 5D (Top, middle, bottom) shows the corresponding results of how causality measures perform in the three alignment scenarios. For clearer visualization of TE and DCS with a zoomed vertical scale, see Supplementary Figure S8. During the periods where no transient events occur, all three measures are able to infer a time-invariant causal effect in the ground-truth direction (X2 → X1) compared to the opposite direction. Besides, in line with theoretical predictions, DCS is higher than TE and is equal to rDCS. During the perturbation events, in the ground truth direction TE and DCS remain constant and rDCS exhibit a rhythmic pattern. These results match the theoretical predictions: TE and DCS measures the connectivity strength, which does not change, while rDCS measures the combined causal effect related to the connectivity and the event-based changes at the cause, yielding larger variations transmitted to the effect node.

In the transient time scale, thresholding leads to selection bias in estimating causality measures. In the case where event ensembles are aligned by single-time selection of the cause

Thus, this simulation experiment of perturbation events demonstrates the effectiveness of rDCS in reflecting the causal influence when the cause is perturbed by a deterministic exogenous input compared to TE and DCS, validating that rDCS is a better measure to address event-based causal interactions. More importantly, we highlight here the trial alignment problem when dealing with event-based data, especially when events occur spontaneously. Supplementary Figure S7 is a clear example showing the impact of alignment on information-theoretic measures: aligning on the actual effect could reverse the detected direction of causation. Thus, by contrasting the different impacts of alignment on information-theoretic measures, we show that in practice, selection via thresholding and aligning the event ensemble with the local peaks of the putative cause is a good way to assess the ground truth event-based causality given uni-directional connections. This approach will be further applied to real data in the next section.

3.3 Validation on SWRs-based causality between CA3 and CA1 regions

Sharp Wave-Ripple (SWR) events, hypothesized as a key element in implementing memory consolidation in the brain, have been reported in the electrophysiological recordings within the hippocampus of both macaques and rodents. In this section we detect SWRs in an experimental dataset to investigate the behavior of TE, DCS and rDCS in a neuroscientific context where the event-hosting brain regions are uni-directionally coupled, i.e., in a situation where the causal direction is known a priori.

SWRs are primarily generated in the CA1 area of the hippocampus. The somas of CA1 pyramidal cells are located in the pyramidal layer (‘pl’) while their dendritic trees are rooted in the stratum radiatum (‘sr’). It is hypothesized that the dendritic trees receive strong excitatory inputs from the pyramidal cells in CA3 which generate post-synaptic activities in the dendritic trees. This results in LFP activities at low frequencies (0–30Hz, due to the sharp-wave) and in the gamma band (30–80Hz, due to CA3 oscillations). Then the dendritic activities propagate to the soma, where recurrent interactions between inhibitory and excitatory cells generate a fast oscillation, the ripples (80–250 Hz).

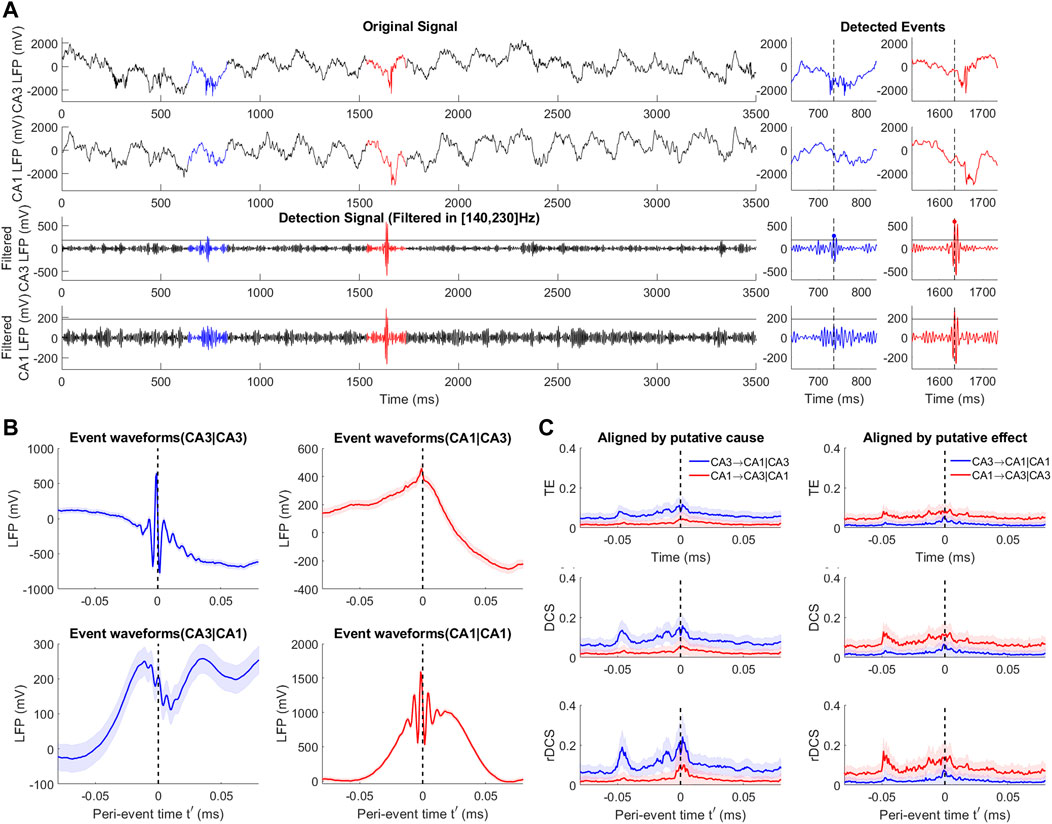

We applied the event-based causality analysis to an open source dataset where electrophysiological recordings in the CA3 and CA1 regions of rodent hippocampus have been performed with 4 shanks of 8 channels simultaneously in each region (Mizuseki et al., 2014). In agreement with the SWR generation mechanism explained in the above paragraph, anatomical studies (Csicsvari et al., 2000) support uni-directional anatomical coupling between these two regions within the hippocampal formation, i.e., the ground truth direction is known to be CA3 → CA1. The analysis is based on two Local Field Potential (LFP) data sessions recorded from the rat named ‘vvp01’ with a sampling rate of 1252 Hz. An example trace of a channel pair of both CA3 and CA1 regions is shown in Figure 6A. As SWRs are more challenging to observe during behavioral sessions, we perform our analysis only on a session of sleep which lasts 4943.588s.

FIGURE 6. Event-based causal analysis for SWRs in rodent hippocampal CA3 and CA1 regions. (A) Examples signal traces of the original signals and bandpass filtered signals of CA3 and CA1 regions (black). Blue and red traces mark two example events detected by thresholding over the cause CA3 and aligned by the local peak. Blue and red dots show other reference points. (B) Event waveforms of SWR event ensembles at CA3 (left) and CA1 (right) regions aligned by CA3 (Top) and CA1 (Bottom) signals. Shades repensent the ensemble standard error averaged over 1024 channel pairs. (C) Peri-event causality measured by TE (Top), DCS (middle) and rDCS (Bottom) for event ensembles aligned by the putative cause (left) and the putative effect (right). Shades reflect standard deviation of 100 repeated bootstrapped ensembles.

Following Mizuseki et al. (2009), we detect SWRs by applying an 49-ordered FIR filter in the frequency band [140, 230]Hz to each channel of signals in both regions. The detailed detection procedure has been elaborated in Section 2.6 for the reference of readers and is similar to what is performed in Section 3.2. We set a threshold over the mean of the filtered signals (5 SD) to locate the events and align them according to the local peak time points over threshold.

Figure 6A(Bottom) shows the case aligned on the CA3 signals. The peri-event window for display has been chosen to be [-79.9, 79.9]ms, while VAR model estimation and the BIC-based model order selection are performed according to Shao et al. (2022). For each channel pair, we obtain two bi-variate event ensembles, corresponding to the two alignment conditions; thus, in total, we extract 2*1024 event ensembles (1024 channel pairs and 2 alignment conditions). The event waveforms and statistics of an example channel pair for different alignments are illustrated in Figure 6B.

SWR-based causality measures shown in Figure 6C compare the alignment by the putative cause and by the putative effect. The reference states used for estimating rDCS are the averaged states over the first 16 ms time points in the window. The standard deviation plotted in the figure originates from 100 times bootstrapped ensembles and the variability is averaged over 1024 channel pairs. In line with the theoretical predictions, the ground truth direction (CA3 → CA1) is well recovered when using an alignment by the putative cause, but not when aligning by the putative effect. TE, DCS and rDCS in the opposite of the truth direction are not significantly different from zero, which is consistent with the uni-directionality of anatomical connections posited by anatomical studies. Significantly stronger causal influences in the ground truth direction are shown by TE, DCS and rDCS before the alignment point (t′ = 0), matching the hypothesized SWR generating mechanism that the CA3 region drives the SWR interactions in CA1 region. The lack of difference between the two directions in more stationary states might be explained by the ineffectivity of causal measures based on linear VAR models to capture non-linearity (Shajarisales et al., 2015). The transient increase in the non-ground truth direction when using alignment on the putative cause might be explained by the selection bias elaborated on in Section 2.5.

4 Discussion

In summary, we have discussed the benefits and shortcomings of two time-varying causality measures (TE and DCS) in characterizing causal interactions based on peri-event data. To address their insensitivity to deterministic perturbations, we proposed a novel measure, rDCS, justified within the SCM framework. We compared the performance of these causality measures on perturbation events with innovations having time-varying means and electrophysiological recordings of hippocampal SWRs. The benefits of rDCS are supported by the perturbation events presented in Section 3.2. As causality analysis of transient events aims at uncovering the network mechanisms underlying these phenomena (e.g., addressing whether one event drives the other), we argue for the use of rDCS as it provably captures causal influences due to event-related changes in the cause that propagate to target regions through anatomical connections, even if these changes have little variability across trials. The outcome of rDCS is further illustrated on in vivo recordings of SWRs events in two hippocampal subfields.

Transient events are nonstationary signals that likely occur when the brain undergoes a transition from one state to another. Studying the “local” properties of the underlying non-equilibrium dynamics in regions of the state space might provide insights into the mechanism driving this transition. Earlier methods investigating such local dynamic properties include the local Lyapunov exponent (Pikovsky, 1993), while other common methods characterize local interactions between state variables within a short sliding time window, e.g., the local cross correlation (Buchner et al., 2009) or piecewise Granger causality (Ding et al., 2006). Our approach, although focused on the meaningful quantification of causal strength, is in line with the latter idea, where the time-varying SVAR model finds a 1-step local linear mapping in the trajectory formed by event trajectories, thus enabling to reveal transient causal interactions at a fast time scale, which may differ from the results obtained at equilibrium. As the measures are based on SVAR models, they can also be easily extended to a spectral form in order to capture the rich spectral properties in transient dynamics.

Contrasting the three measures of causal strength, TE is designed to assess conditional dependencies in observational data, while DCS and rDCS exploit this information to infer the impact of performing interventions of the SCM. In theory and as shown in the experiment of Section 3.1, TE can lead to counterintuitive outcomes applied to strongly synchronized events (a widely observed nonlinear phenomenon). While support has been provided for DCS and rDCS to be more appropriate measures of causal strength, they still require, like TE, certain assumption to be met (see also Section 2.1). A major concern is unobserved confounding, which might bias the estimated causal directions (e.g., the Simpson’s paradox in Pearl (2000)). Confounding effects can be corrected for by including activities from other regions, and there are also a few theoretical approaches to account for unobserved confounding under strong assumptions (Geiger et al., 2015; Mastakouri et al., 2021).

Selection bias is a fundamental issue for analyzing spontaneous neural activities, especially in case of any unsupervised detection or analysis. In this study we have demonstrated its impact on the alignment of the detected transient events and the resulting bias in causal inference. However, our proposal of putative cause alignment to estimate causal effect is theoretically supported only in the case of uni-directional coupling. Future work should assess the effect of selection bias in the case of bidirectional interactions and establish a framework to correct for such bias, not only in the context of causal strength inference but more generally for recovering the underlying event dynamics.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: https://github.com/KaidiShao/event_causality_frontiers, https://crcns.org/data-sets/hc/hc-3/about-hc-3.

Author contributions

Conceptualization, MB and NL; Methodology, MB and KS; Software, KS; Validation, KS; Formal Analysis, KS and MB; Resources, NL; Writing—Original Draft Preparation, KS and MB; Writing—Review and Editing, KS, and MB; Supervision, MB. All authors contributed to the article and approved the submitted version.

Funding

All authors were supported by the Max Planck Society. NL and KS acknowledge the support from the Shanghai Municipal Science and Technology Major Project (Grant No. 2019SHZDZX02). MB was also supported by the German Federal Ministry of Education and Research (BMBF): Tübingen AI Center, FKZ: 01IS18039B.

Acknowledgments

MB and KS would like to thank Philipp Geiger and Mingyu Yang for useful discussions.

Conflict of interest

The author NL declared that he was an editorial board member of Frontiers, at the time of submission.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnetp.2023.1085347/full#supplementary-material

Footnotes

1Because initial conditions of this deterministic linear system are set to zero before the intervention at t0

References

Abadie, A. (2021). Using synthetic controls: Feasibility, data requirements, and methodological aspects. J. Econ. Literature 59, 391–425. doi:10.1257/jel.20191450

Ay, N., and Polani, D. (2008). Information flows in causal networks. Adv. complex Syst. 11, 17–41. doi:10.1142/S0219525908001465

Bareinboim, E., and Pearl, J. (2012). Controlling selection bias in causal inference. Artif. Intell. Statistics 22, 100–108.

Bareinboim, E., Tian, J., and Pearl, J. (2014). Recovering from selection bias in causal and statistical inference. AAAI 28, 2410–2416. doi:10.1609/aaai.v28i1.9074

Barnett, L., Barrett, A. B., and Seth, A. K. (2009). Granger causality and transfer entropy are equivalent for Gaussian variables. Phys. Rev. Lett. 103, 238701. doi:10.1103/PhysRevLett.103.238701

Barnett, L., and Seth, A. K. (2015). Granger causality for state-space models. Phys. Rev. E Stat. Nonlin Soft Matter Phys. 91, 040101. doi:10.1103/PhysRevE.91.040101

Barnett, L., and Seth, A. K. (2014). The MVGC multivariate Granger causality toolbox: A new approach to Granger-causal inference. J. Neurosci. methods 223, 50–68. doi:10.1016/j.jneumeth.2013.10.018

Barrett, A. B., Barnett, L., and Seth, A. K. (2010). Multivariate Granger causality and generalized variance. Phys. Rev. E 81, 041907. doi:10.1103/PhysRevE.81.041907

Bauer, A., Kantelhardt, J. W., Bunde, A., Barthel, P., Schneider, R., Malik, M., et al. (2006). Phase-rectified signal averaging detects quasi-periodicities in non-stationary data. Phys. A Stat. Mech. its Appl. 364, 423–434. doi:10.1016/j.physa.2005.08.080

Besserve, M., Lowe, S. C., Logothetis, N. K., Schölkopf, B., and Panzeri, S. (2015). Shifts of gamma phase across primary visual cortical sites reflect dynamic stimulus-modulated information transfer. PLoS Biol. 13, e1002257. doi:10.1371/journal.pbio.1002257

Besserve, M., Schölkopf, B., Logothetis, N. K., and Panzeri, S. (2010). Causal relationships between frequency bands of extracellular signals in visual cortex revealed by an information theoretic analysis. J. Comput. Neurosci. 29, 547–566. doi:10.1007/s10827-010-0236-5

Brodersen, K. H., Gallusser, F., Koehler, J., Remy, N., and Scott, S. L. (2015). Inferring causal impact using Bayesian structural time-series models. Ann. Appl. Statistics 9, 247–274. doi:10.1214/14-aoas788

Buchner, T., Zebrowski, J. J., Gielerak, G., and Grzeda, M. (2009). “Direction of information flow between heart rate, blood pressure and breathing,” in Complex dynamics in physiological systems: From heart to brain (Berlin, Germany: Springer), 33–47.

Cekic, S., Grandjean, D., and Renaud, O. (2018). Time, frequency, and time-varying Granger-causality measures in neuroscience. Statistics Med. 37, 1910–1931. doi:10.1002/sim.7621

Correa, J., and Bareinboim, E. (2020). A calculus for stochastic interventions: Causal effect identification and surrogate experiments. Proc. AAAI Conf. Artif. Intell. 34, 10093–10100. doi:10.1609/aaai.v34i06.6567

Csicsvari, J., Hirase, H., Mamiya, A., and Buzsáki, G. (2000). Ensemble patterns of hippocampal CA3-CA1 neurons during sharp wave–associated population events. Neuron 28, 585–594. doi:10.1016/S0896-6273(00)00135-5

Diba, K., and Buzsaki, G. (2007). Forward and reverse hippocampal place-cell sequences during ripples. Nat. Neurosci. 10, 1241–1242. doi:10.1038/nn1961

Diks, C., and Wolski, M. (2016). Nonlinear Granger causality: Guidelines for multivariate analysis. J. Appl. Econ. 31, 1333–1351. doi:10.1002/jae.2495

Ding, M., Chen, Y., and Bressler, S. L. (2006). Granger causality: Basic theory and application to neuroscience. Handbook of Time Series Analysis: Recent Theoretical Developments and Applications 2006, 437–460.

Ego-Stengel, V., and Wilson, M. A. (2010). Disruption of ripple-associated hippocampal activity during rest impairs spatial learning in the rat. Hippocampus 20, 1–10. doi:10.1002/hipo.20707

Faes, L., Nollo, G., and Porta, A. (2011). Information-based detection of nonlinear Granger causality in multivariate processes via a nonuniform embedding technique. Phys. Rev. E 83, 051112. doi:10.1103/PhysRevE.83.051112

Fries, P. (2015). Rhythms for cognition: Communication through coherence. Neuron 88, 220–235. doi:10.1016/j.neuron.2015.09.034

Geiger, P., Zhang, K., Schoelkopf, B., Gong, M., and Janzing, D. (2015). Causal inference by identification of vector autoregressive processes with hidden components. International Conference on Machine Learning (PMLR) (Lille, France). 1917–1925.

Geweke, J. F. (1984). Measures of conditional linear dependence and feedback between time series. J. Am. Stat. Assoc. 79, 907–915. doi:10.1080/01621459.1984.10477110

Granger, C. W. J. (1969). Investigating causal relations by econometric models and cross-spectral methods. Econometrica 37, 424. doi:10.2307/1912791

Janzing, D., Balduzzi, D., Grosse-Wentrup, M., and Schölkopf, B. (2013). Quantifying causal influences. Ann. Stat. 41, 2324–2358. doi:10.1214/13-aos1145

Lee, A. K., and Wilson, M. A. (2002). Memory of sequential experience in the hippocampus during slow wave sleep. Neuron 36, 1183–1194. doi:10.1016/s0896-6273(02)01096-6

Lizier, J. T. (2014). JIDT: An information-theoretic toolkit for studying the dynamics of complex systems. Front. Robotics AI 1, 11. doi:10.3389/frobt.2014.00011

Marinazzo, D., Liao, W., Chen, H., and Stramaglia, S. (2011a). Nonlinear connectivity by Granger causality. Neuroimage 58, 330–338. doi:10.1016/j.neuroimage.2010.01.099

Marinazzo, D., Liao, W., Chen, H., and Stramaglia, S. (2011b). Nonlinear connectivity by Granger causality. Neuroimage 58, 330–338. doi:10.1016/j.neuroimage.2010.01.099

Marinazzo, D., Pellicoro, M., and Stramaglia, S. (2008). Kernel method for nonlinear Granger causality. Phys. Rev. Lett. 100, 144103. doi:10.1103/PhysRevLett.100.144103

Mastakouri, A. A., Schölkopf, B., and Janzing, D. (2021). “Necessary and sufficient conditions for causal feature selection in time series with latent common causes,” in International Conference on Machine Learning (PMLR). July 18 - 24, 2021 7502–7511.

McAllester, D., and Stratos, K. (2020). “Formal limitations on the measurement of mutual information,” in International Conference on Artificial Intelligence and Statistics (PMLR). 875–884.

Mizuseki, K., Diba, K., Pastalkova, E., Teeters, J., Sirota, A., and Buzsáki, G. (2014). Neurosharing: Large-scale data sets (spike, LFP) recorded from the hippocampal-entorhinal system in behaving rats. F1000Research 3, 98. doi:10.12688/f1000research.3895.1

Mizuseki, K., Sirota, A., Pastalkova, E., and Buzsáki, G. (2009). Theta oscillations provide temporal windows for local circuit computation in the entorhinal-hippocampal loop. Neuron 64, 267–280. doi:10.1016/j.neuron.2009.08.037

Moneta, A., Chlass, N., Entner, D., and Hoyer, P. (2011). “Causal search in structural vector autoregressive models,” in Proceedings of the neural information processing systems mini-symposium on causality in time series. Editors F. Popescu, and I. Guyon (Vancouver, Canada: PMLR).

Montalto, A., Faes, L., and Marinazzo, D. (2014). MuTE: A MATLAB toolbox to compare established and novel estimators of the multivariate transfer entropy. PloS one 9, e109462. doi:10.1371/journal.pone.0109462

Peters, J., Janzing, D., and Schölkopf, B. (2017). Elements of causal inference – foundations and learning algorithms. Cambridge: MIT Press.

Pikovsky, A. S. (1993). Local Lyapunov exponents for spatiotemporal chaos. Chaos Interdiscip. J. Nonlinear Sci. 3, 225–232. doi:10.1063/1.165987

Rubin, D. B. (1974). Estimating causal effects of treatments in randomized and nonrandomized studies. J. Educ. Psychol. 66, 688–701. doi:10.1037/h0037350

Runge, J., Heitzig, J., Petoukhov, V., and Kurths, J. (2012). Escaping the curse of dimensionality in estimating multivariate transfer entropy. Phys. Rev. Lett. 108, 258701. doi:10.1103/PhysRevLett.108.258701

Schreiber, T. (2000). Measuring information transfer. Phys. Rev. Lett. 85, 461–464. doi:10.1103/PhysRevLett.85.461

Shajarisales, N., Janzing, D., Schölkopf, B., and Besserve, M. (2015). Telling cause from effect in deterministic linear dynamical systems. International Conference on Machine Learning (PMLR), 285–294.

Shao, K., Logothetis, N. K., and Besserve, M. (2022). Bayesian information criterion for event-based multi-trial ensemble data. arXiv preprint arXiv:2204.14096. doi:10.48550/ARXIV.2204.14096

Shojaie, A., and Fox, E. B. (2022). Granger causality: A review and recent advances. Annu. Rev. Statistics Its Appl. 9, 289–319. doi:10.1146/annurev-statistics-040120-010930

Shorten, D. P., Spinney, R. E., and Lizier, J. T. (2021). Estimating transfer entropy in continuous time between neural spike trains or other event-based data. PLoS Comput. Biol. 17, e1008054. doi:10.1371/journal.pcbi.1008054

Shpitser, I., and Pearl, J. (2008). Complete identification methods for the causal hierarchy. J. Mach. Learn. Res. 9, 1941–1979.

Solo, V. (2016). State-space analysis of Granger-Geweke causality measures with application to fMRI. Neural comput. 28, 914–949. doi:10.1162/NECO_a_00828

Stokes, P. A., and Purdon, P. L. (2017). A study of problems encountered in Granger causality analysis from a neuroscience perspective. Proc. Natl. Acad. Sci. U. S. A. 114, E7063–E7072. doi:10.1073/pnas.1704663114

Tallon-Baudry, C., and Bertrand, O. (1999). Oscillatory gamma activity in humans and its role in object representation. Trends cognitive Sci. 3, 151–162. doi:10.1016/s1364-6613(99)01299-1

Vicente, R., Wibral, M., Lindner, M., and Pipa, G. (2011). Transfer entropy—A model-free measure of effective connectivity for the neurosciences. J. Comput. Neurosci. 30, 45–67. doi:10.1007/s10827-010-0262-3

Wen, X., Rangarajan, G., and Ding, M. (2013). Multivariate granger causality: An estimation framework based on factorization of the spectral density matrix. Philosophical Trans. R. Soc. A Math. Phys. Eng. Sci. 371, 20110610. doi:10.1098/rsta.2011.0610

Wibral, M., Pampu, N., Priesemann, V., Siebenhühner, F., Seiwert, H., Lindner, M., et al. (2013). Measuring information-transfer delays. PLoS ONE 8, e55809. doi:10.1371/journal.pone.0055809

Wibral, M., Vicente, R., and Lindner, M. (2014). Transfer entropy in neuroscience. Directed information measures in neuroscience (Berlin, Heidelberg: Springer).

Wismüller, A., Dsouza, A. M., Vosoughi, M. A., and Abidin, A. (2021). Large-scale nonlinear granger causality for inferring directed dependence from short multivariate time-series data. Sci. Rep. 11, 7817. doi:10.1038/s41598-021-87316-6

Wollstadt, P., Lizier, J. T., Vicente, R., Finn, C., Martinez-Zarzuela, M., Mediano, P., et al. (2019). IDTxl: The information dynamics toolkit xl: A python package for the efficient analysis of multivariate information dynamics in networks. J. Open Source Softw. 4, 1081. doi:10.21105/joss.01081

Keywords: information theory, causal strength, graphical models, transfer entropy, structural equations, neural oscillations

Citation: Shao K, Logothetis NK and Besserve M (2023) Information theoretic measures of causal influences during transient neural events. Front. Netw. Physiol. 3:1085347. doi: 10.3389/fnetp.2023.1085347

Received: 31 October 2022; Accepted: 11 May 2023;

Published: 31 May 2023.

Edited by:

Michal Javorka, Comenius University, SlovakiaReviewed by:

Michele Allegra, Dipartimento di Fisica e Astronomia “G. Galilei”, ItalyTeodor Buchner, Warsaw University of Technology, Poland

Sven Braeutigam, University of Oxford, United Kingdom

Copyright © 2023 Shao, Logothetis and Besserve. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Michel Besserve, bWljaGVsLmJlc3NlcnZlQHR1ZWJpbmdlbi5tcGcuZGU=

Kaidi Shao

Kaidi Shao Nikos K. Logothetis

Nikos K. Logothetis Michel Besserve

Michel Besserve