
HYPOTHESIS AND THEORY article

Front. Comput. Neurosci., 07 May 2024

Volume 18 - 2024 | https://doi.org/10.3389/fncom.2024.1367712

This article is part of the Research Topic Brain-inspired Cognition and Understanding for Next-generation AI: Computational Models, Architectures and Learning Algorithms Volume II

The Causal Cognitive Architecture is a brain-inspired cognitive architecture developed from the hypothesis that the navigation circuits in the ancestors of mammals duplicated to eventually form the neocortex. Thus, millions of neocortical minicolumns are functionally modeled in the architecture as millions of “navigation maps.” An investigation of a cognitive architecture based on these navigation maps has previously shown that modest changes in the architecture allow the ready emergence of human cognitive abilities such as grounded, full causal decision-making, full analogical reasoning, and near-full compositional language abilities. In this study, additional biologically plausible modest changes to the architecture are considered and show the emergence of super-human planning abilities. The architecture should be considered as a viable alternative pathway toward the development of more advanced artificial intelligence, as well as to give insight into the emergence of natural human intelligence.

The Causal Cognitive Architecture (Schneider, 2023, 2024) is a brain-inspired cognitive architecture (BICA). It is hypothesized that the navigation circuits in the amniotic ancestors of mammals duplicated many times to eventually form the neocortex (Rakic, 2009; Butler et al., 2011; Chakraborty and Jarvis, 2015; Fournier et al., 2015; Kaas, 2019; Güntürkün et al., 2021; Burmeister, 2022). Thus, the millions of neocortical minicolumns are modeled in the Causal Cognitive Architecture as millions of spatial maps, which are termed “navigation maps.”

The architecture is not a rigid replication of the mammalian brain at the lower level of the spiking neurons, nor does it attempt to behaviorally replicate the higher-level psychological properties of the mammalian brain. Instead, it considers, given the postulations above, the properties and behaviors that emerge from a cognitive architecture based on navigation maps. The architecture represents a more functionalist system, as per Lieto (2021a,b), although a continuum exists between functionalist and structuralist models. Even in this study, where an enhancement to the architecture is considered, the constraints of biology and anatomy are taken into account.

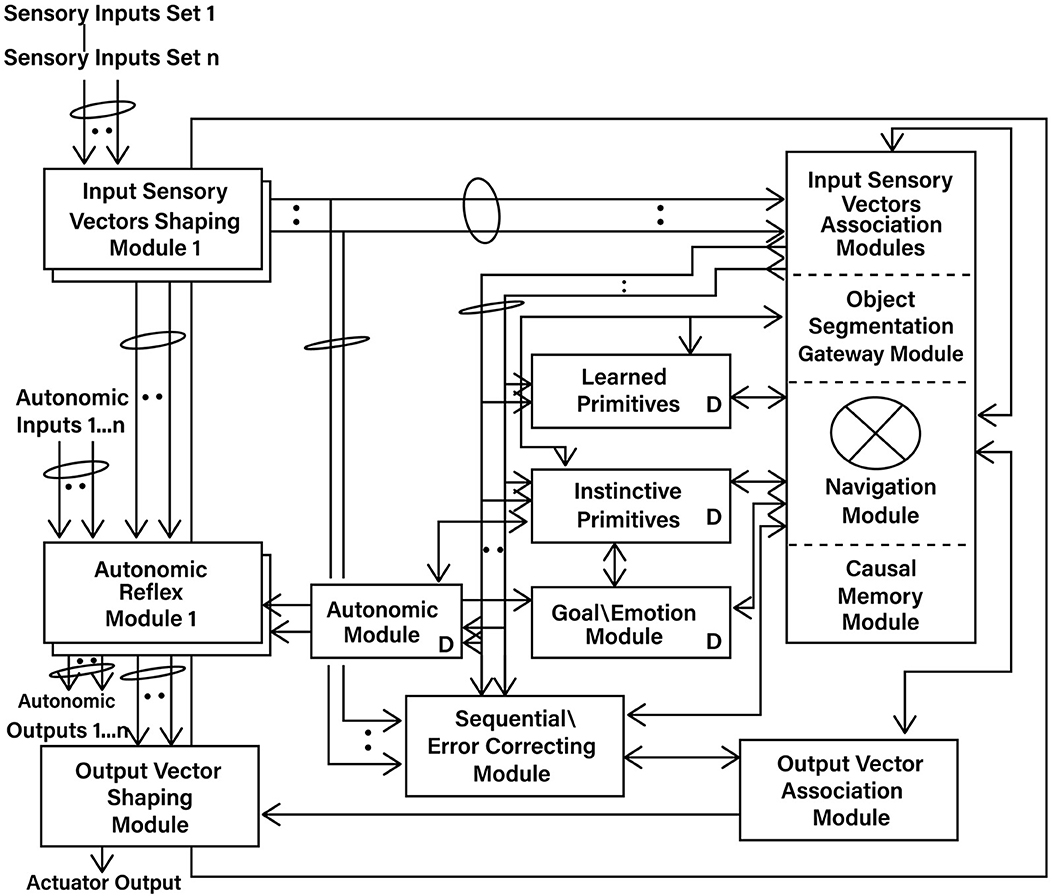

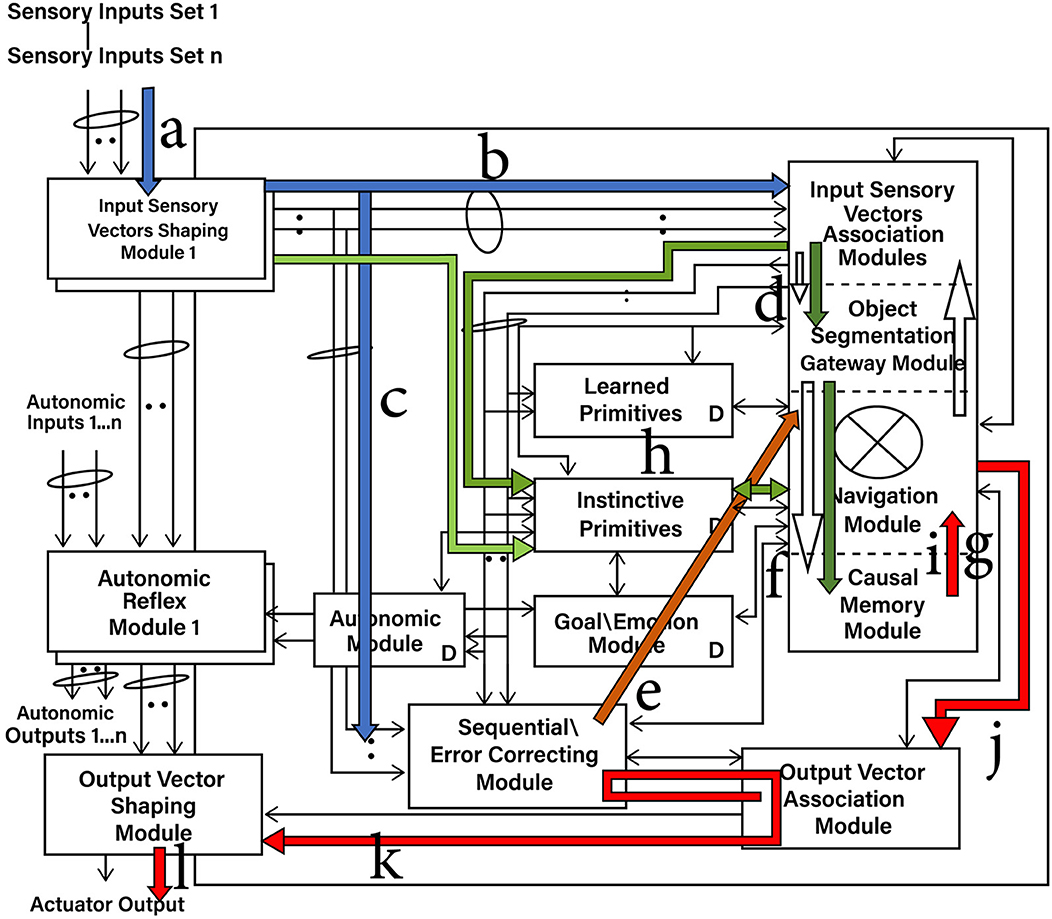

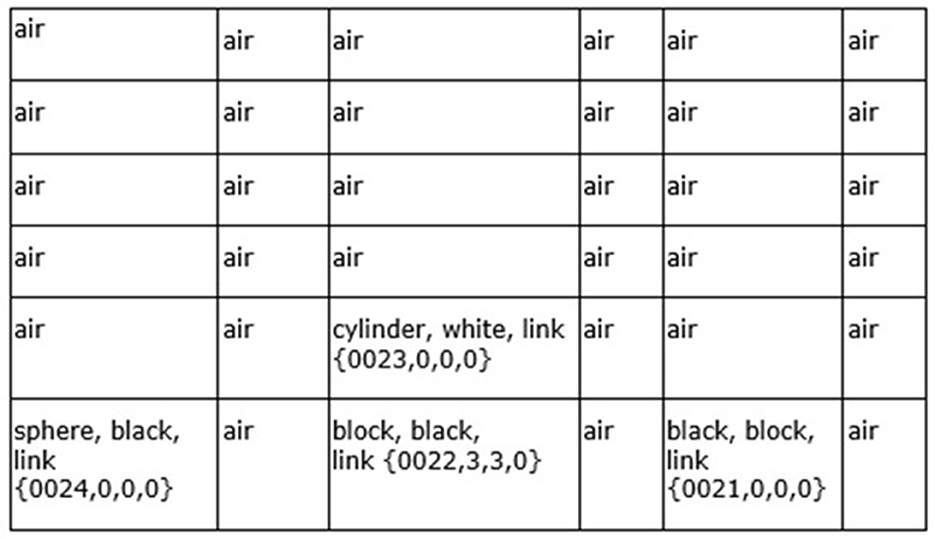

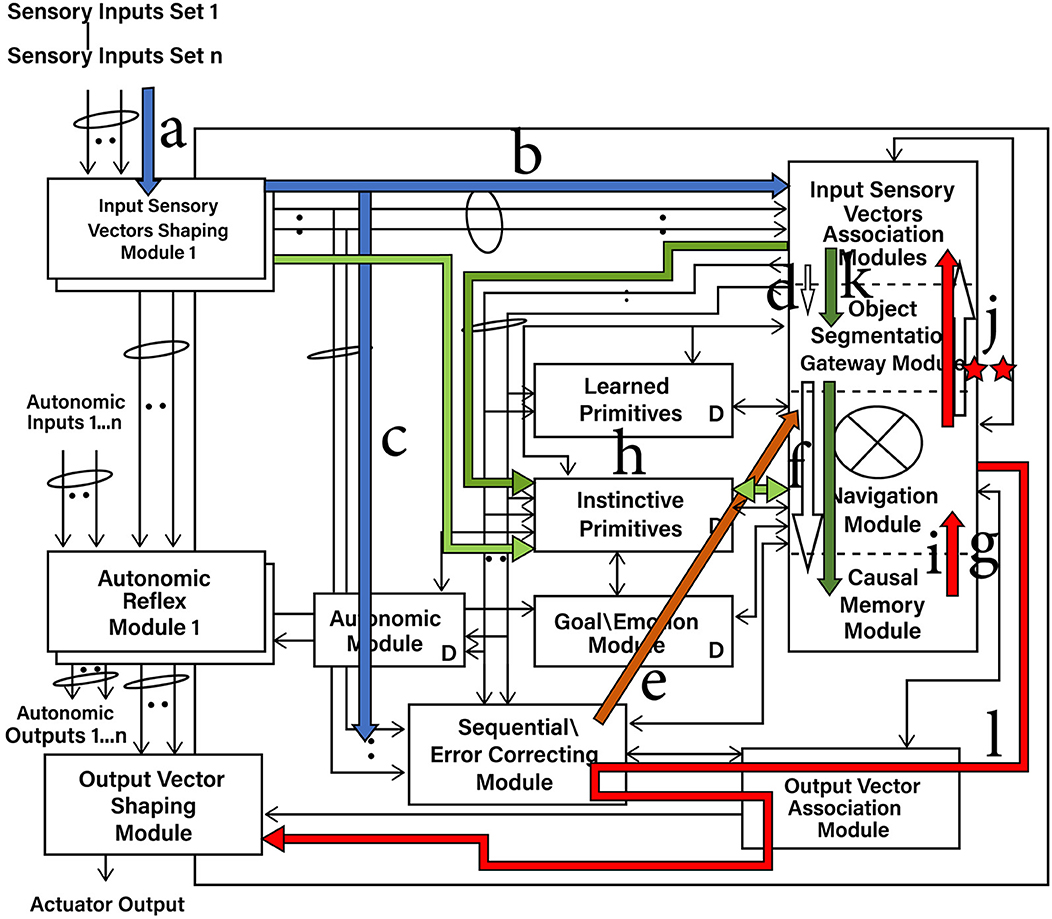

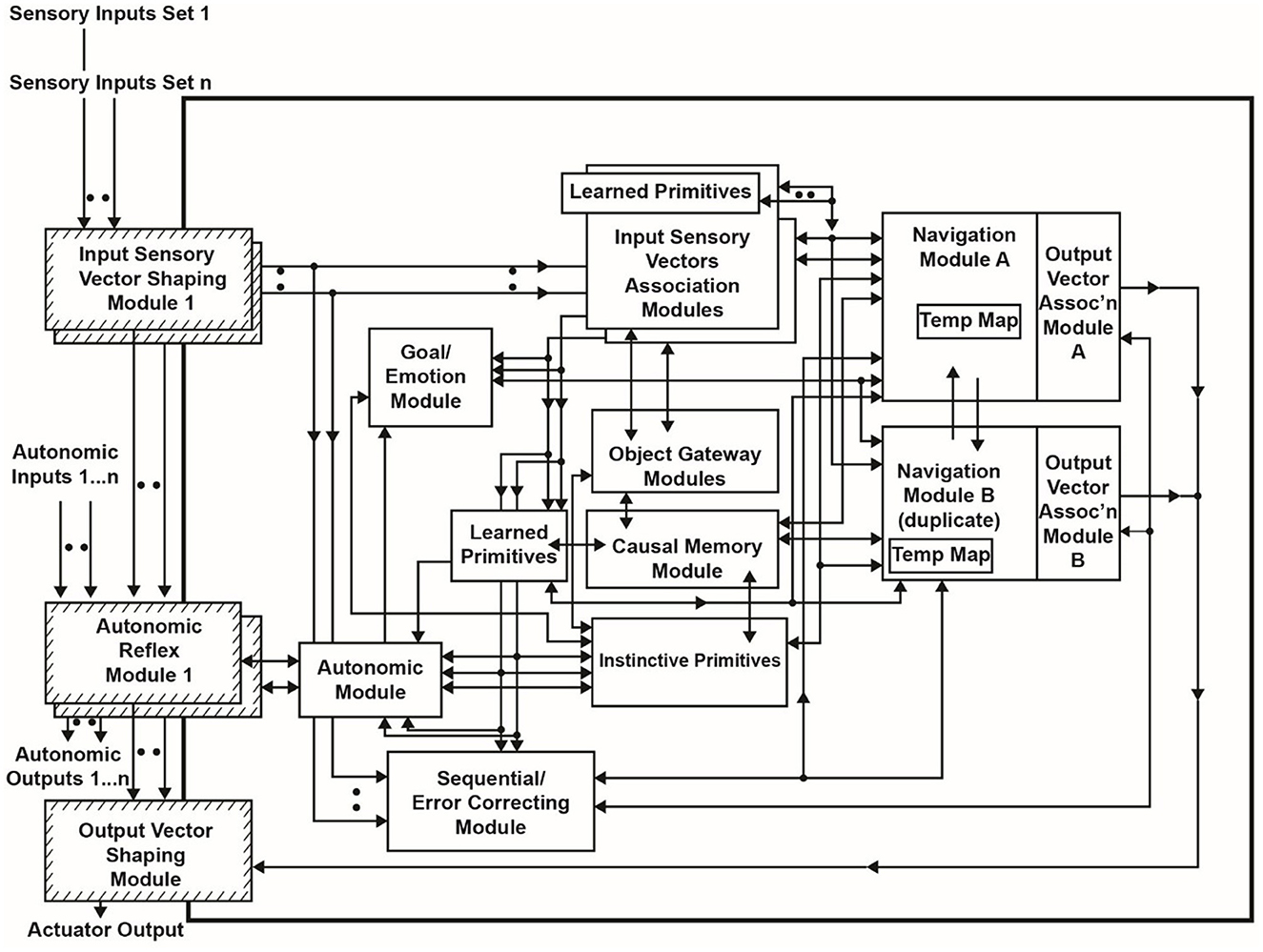

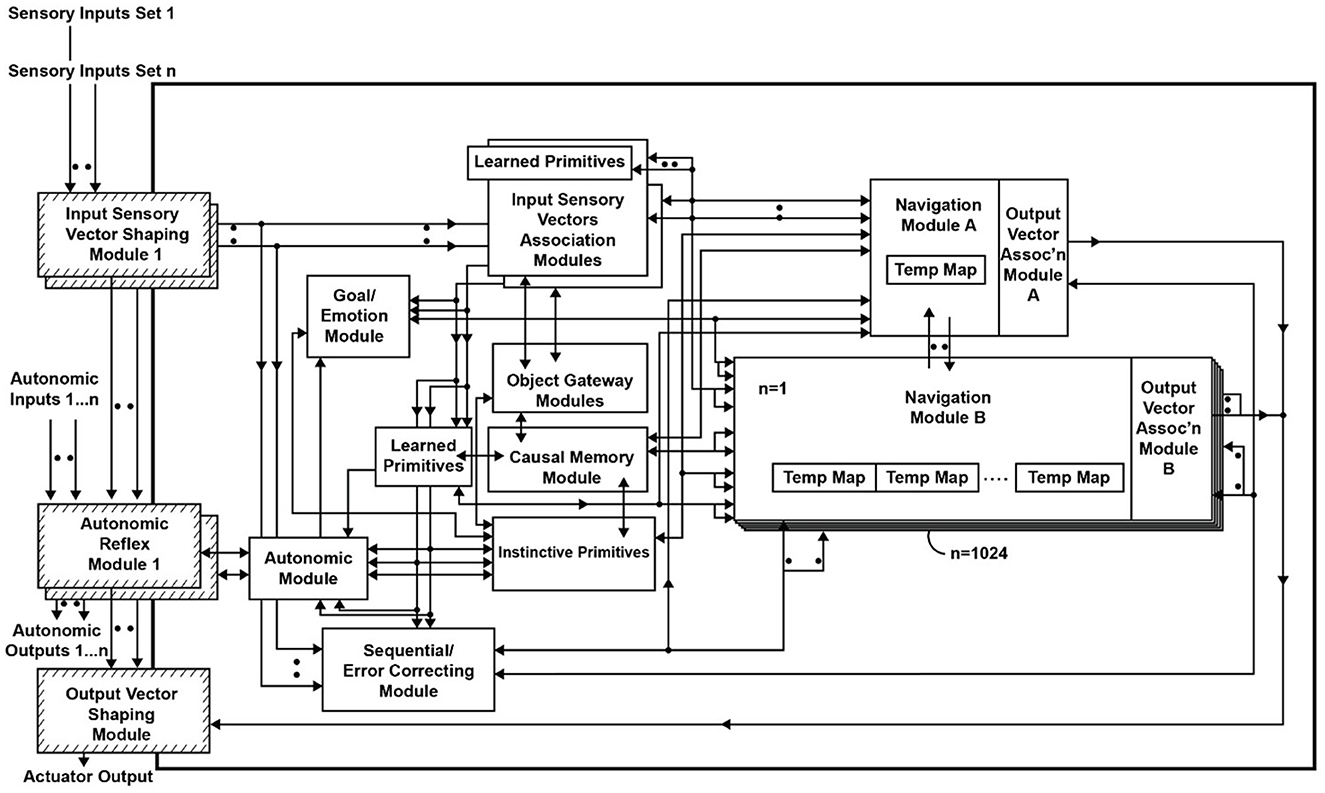

Figure 1 shows an overview of the Causal Cognitive Architecture 5 (Schneider, 2023). Figure 2 shows the operation of the architecture through a cognitive cycle—a cycle of sensory inputs processed and then an output (or null output) resulting. As noted above, the millions of neocortical minicolumns are modeled in the Causal Cognitive Architecture as millions of spatial maps, termed navigation maps. An example of a simplified navigation map used in a simulation of the architecture is shown in Figure 3. The operation of the Causal Cognitive Architecture is given in more detail below; here, a simple overview of its function is provided.

Figure 1. Overview of the Causal Cognitive Architecture 5 (CCA5). See text for a description of modules and their operation (“D” indicates the module has developmental function, i.e., changes algorithms with experience; ovals indicate pathways that are n = 0, 1, 2… where there are sets of modules providing signals).

Figure 2. Cognitive cycle: sensory inputs are processed and an output occurs. a—Sensory inputs stream into the Input Sensory Vectors Shaping Modules. b—Processed and normalized sensory inputs propagate to the Input Sensory Vectors Association Modules and best-matching Local Navigation Maps (LNMs) for each sensory system produced (spatial binding). c—Processed and normalized sensory inputs propagate to the Sequential/Error-Correcting Module (temporal binding). d—Segmentation of objects in the input sensory scenes. e—Spatial mapping of the temporal mapping of the sensory inputs from the Sequential/Error-Correcting Module. f—Match to the best-matching Multi-Sensory Navigation Map from the Causal Memory Module, producing the Working Navigation Map (WNM) for the Navigation Module. g—Working Navigation Map (WNM) in/accessible by Navigation Module. h—Selecting a best-matching primitive (an Instinctive Primitive in this case). i—Instinctive Primitive operating on the Working Navigation Map (WNM). j—action signal produced by operation of Instinctive Primitive on WNM. k—output_vector signal, motion corrected by the Sequential/Error Correcting Module. l—Signal to actuators or for electronic transmission.

Figure 3. Example of a Navigation Map—the 6x6x0 spatial dimensions are shown, containing sensory features and links to other navigation maps. This represents the sensory scene of Figure 6A. Although this navigation map only contains visual sensory features, other navigation maps can contain combinations of visual, auditory, olfactory, and so on, sensory features.

In each cognitive cycle, sensory inputs stream into the Input Sensory Vectors Shaping Modules (Figure 2). One shaping module exists for each sensory system, e.g., vision, auditory, etc. The shaping module normalizes the sensory inputs into a form that can be used within the architecture. The normalized sensory inputs then move to the Input Sensory Vectors Association Modules. Here, sensory features are spatially mapped onto navigation maps dedicated to one sensory system. Again, there is one association module for each sensory system, e.g., vision, auditory, etc. The navigation maps holding sensory inputs of a given sensory system (e.g., vision) are matched against the best-matching stored navigation map in that (e.g., visual) Input Sensory Vectors Association Module. That best-matching stored navigation map is retrieved and then updated with the actual sensory input of that sensory system (or a new navigation map is created if there are too many changes). As such, there is a type of predictive coding occurring—the architecture anticipates what it is sensing and then considers the differences with the actual input signal. This works well for the perception of noisy, imperfect sensory inputs.

The navigation maps are then propagated to the Object Gateway Modules (Figure 2), where portions and the entire navigation maps are matched against stored multisensory navigation maps (i.e., contain features from multiple sensory systems) in the Causal Memory Module. The best-matched navigation map from the Causal Memory Module is then considered and moved to the Navigation Module. This best-matching navigation map is updated with the actual sensory information sensed from the environment. The updated navigation map is termed the Working Navigation Map. An example of a Working Navigation Map is shown in Figure 3.
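As a rough illustration of the data structure described above, a navigation map can be pictured as a small grid of cells, each holding sensory features and links to other maps. The following is a minimal Python sketch under stated assumptions, not the architecture's actual implementation: the class name `NavMap`, its fields, the method `update_with`, and the example features are all hypothetical names introduced here for illustration.

```python
from dataclasses import dataclass, field

Coord = tuple[int, int, int]  # (x, y, z) address of a cell within a map


@dataclass
class NavMap:
    """Hypothetical, simplified navigation map: a sparse grid of cells."""
    map_id: int
    # cell -> set of feature labels stored in that cell
    features: dict[Coord, set[str]] = field(default_factory=dict)
    # cell -> list of links, each link being (target map id, target cell)
    links: dict[Coord, list[tuple[int, Coord]]] = field(default_factory=dict)

    def add_feature(self, cell: Coord, label: str) -> None:
        self.features.setdefault(cell, set()).add(label)

    def add_link(self, cell: Coord, target_map: int, target_cell: Coord) -> None:
        self.links.setdefault(cell, []).append((target_map, target_cell))

    def update_with(self, sensed: dict[Coord, set[str]]) -> "NavMap":
        """Return a copy in which sensed cells overwrite the stored cells."""
        merged = {cell: set(labels) for cell, labels in self.features.items()}
        for cell, labels in sensed.items():
            merged[cell] = set(labels)
        copied_links = {cell: list(ls) for cell, ls in self.links.items()}
        return NavMap(self.map_id, merged, copied_links)


# A stored multisensory map retrieved from memory (contents are assumed).
stored = NavMap(map_id=1)
stored.add_feature((0, 0, 0), "sphere")
stored.add_feature((0, 0, 0), "black")
stored.add_link((0, 0, 0), target_map=24, target_cell=(0, 0, 0))

# Updating the retrieved map with what was actually sensed yields the
# working navigation map in this toy model; the stored map is unchanged.
working = stored.update_with({(1, 0, 0): {"cylinder", "white"}})
```

Returning a copy from `update_with` keeps the stored map intact, which mirrors the distinction in the text between the map retrieved from memory and the Working Navigation Map derived from it.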

Instinctive primitives (pre-programmed) and learned primitives (learned) are small procedures that can perform operations on the Working Navigation Map. They are selected by a similar matching process in terms of the sensory inputs as well as signals from the Goal/Emotion Module and from the previous values of the Navigation Modules. The arrow (i) in Figure 2 shows the actions of the best-matching instinctive primitive on the Working Navigation Map in the Navigation Module. These operations are essentially matrix operations, such as comparing arrays, adding a vector to an array, and other straightforward operations that could be expected of brain-inspired circuitry. The result is an output signal to the Output Vector Association Module and then to the Output Vector Shaping Module. This results in the activation of an actuator or the transmission of an electronic communication signal. Then, the cognitive cycle repeats: sensory inputs are processed again, the Navigation Module may produce an output action, and so on.

Feedback pathways, only partially shown in Figures 1, 2, exist throughout the architecture. As noted above, there is a type of predictive coding occurring—the architecture anticipates what it is sensing and then considers the differences with the actual input or intermediate signal. This is advantageous for the perception of noisy, imperfect sensory inputs. In the prior literature, it has been shown how, by enhancing some of these feedback pathways, causal reasoning, analogical inductive reasoning, and compositional language readily emerge from the architecture (Schneider, 2023, 2024). The mechanisms behind these emergent properties are discussed in more detail below.

This study asks the following question: if the evolution of the human brain were to continue as it has in the past, and given an environment for such evolution, what advantageous changes could occur, as reflected in a model such as the Causal Cognitive Architecture? More specifically, the study considers an evolution where there are increased intelligence abilities (e.g., Legg and Hutter, 2007; Adams et al., 2012; Wang, 2019; or the ability to better solve complex problems which humans encounter in their lives).

A vast number of cognitive architectures exist (Samsonovich, 2010; Kotseruba and Tsotsos, 2020). However, given that this study considers the further biological evolution of the brain modeled via the Causal Cognitive Architecture, this section focuses on the Causal Cognitive Architecture. The purpose of this section is to review the architecture so that the reader is provided with an understanding of its properties and operation before the new work is discussed in this article in the following section. In the last sub-section of this section, the Causal Cognitive Architecture is then compared with other existing cognitive architectures as well as previous work on cognitive maps.

In this section, the Causal Cognitive Architecture is considered in more detail. Appendix A gives a more formal description of the Causal Cognitive Architecture. Modified equations are used to describe the architecture. They are “modified” in the sense that many of the equations contain pseudocode. Pseudocode is a natural-language (e.g., English) description of the logic of a software routine (Olsen, 2005; Kwon, 2014). Traditional pseudocode tends to reproduce program structure: although written in English, it includes constructs such as While, Repeat-Until, For, If-Then, Case, and so on. However, describing the Causal Cognitive Architecture in this way would be quite lengthy. Using more abstracted pseudocode in the equations provides a more understandable description of the architecture without sacrificing much accuracy.

The subject of this study is the CCA7 version of the architecture. However, the operation of the CCA5 (Causal Cognitive Architecture 5) version of the architecture will first be described. Then, in the sections further below, the evolution to the CCA6 (Causal Cognitive Architecture 6) version will be briefly considered. Then, the focus of the study will be on the evolution, operation, and properties of the CCA7 version of the architecture. The early sections of the formal description of the Causal Cognitive Architecture 7 (CCA7) in Appendix A apply to the CCA5, CCA6, and CCA7 versions of the architecture.

An overview of the CCA5 version of the architecture is shown in Figures 1, 2. Sensory inputs stream into the Input Sensory Vectors Shaping Modules, with one module for each sensory system, e.g., visual and auditory. As noted above, the Causal Cognitive Architecture works in terms of cognitive cycles. These cycles are biologically inspired. For example, Madl et al. (2011) hypothesize that the essence of human cognition is cascading cycles of operations. In each cognitive cycle of the CCA5, whatever information streamed into the Input Sensory Vectors Shaping Modules during the time period of the previous cognitive cycle (arrow “a,” Figure 2) is further processed, normalized, and then propagated to the Input Sensory Vectors Association Modules (arrow “b,” Figure 2), as well as to the Sequential/Error Correcting Module (arrow “c,” Figure 2).

The details of sensory perception, i.e., sensory signal processing, from the quantum level to the output produced by a transducer after possibly multi-layered initial signal processing, are largely abstracted away in this formalization. This does not diminish the importance of better signal processing. However, the architecture is concerned with whatever processed sensory inputs stream in, and that is what is considered here.

Equations (9, 10) from Appendix A are shown below. S1, t is an array of sensory inputs of sensory system 1 (visual in the current simulation), which has accumulated since the last cognitive cycle to the present cognitive cycle at time t. S2, t, S3, t, and so on are arrays of sensory inputs from other sensory systems. Input_Sens_Shaping_Mods.normalize is a method operating on arrays S in the Input Sensory Shaping Modules, processing and normalizing the raw input sensory data. S' is a normalized array, i.e., the raw array of the streams of sensory inputs for that sensory system has been normalized to a size and form that allows straightforward operations with the navigation maps within the architecture, which are also arrays. The vector s'(t) holds the normalized arrays of sensory inputs at time t for the different sensory systems.
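The code below is not Equations (9, 10) themselves. It is a minimal Python sketch of the normalization step just described, written under stated assumptions: the raw stream of each sensory system is padded or truncated to a fixed length and scaled by its peak magnitude. The function names `normalize` and `shape_inputs`, the `size` parameter, and the scaling rule are all hypothetical choices made for illustration.

```python
def normalize(raw: list[float], size: int = 36) -> list[float]:
    """Hypothetical stand-in for Input_Sens_Shaping_Mods.normalize.

    Pads with zeros or truncates the raw stream to `size` values, then
    divides by the peak magnitude so values fall in [-1, 1]. Both the
    fixed size and the scaling rule are assumptions of this sketch.
    """
    fixed = (list(raw) + [0.0] * size)[:size]
    peak = max(abs(v) for v in fixed) if fixed else 0.0
    if peak == 0.0:
        return [0.0] * size
    return [v / peak for v in fixed]


def shape_inputs(raw_by_system: dict[str, list[float]],
                 size: int = 36) -> dict[str, list[float]]:
    """Build a toy s'(t): one normalized array per sensory system."""
    return {name: normalize(raw, size) for name, raw in raw_by_system.items()}


# Two sensory systems delivering streams of different lengths.
s_prime = shape_inputs(
    {"visual": [2.0, 4.0, 6.0], "auditory": [1.0] * 50},
    size=4,
)
```

After this step every sensory system contributes an array of the same length, which is what allows the later stages to treat visual, auditory, or any added artificial sense uniformly.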

In simulations of the CCA5, CCA6, and CCA7 versions of the architecture, visual, auditory, and olfactory simulated inputs have been considered. However, the architecture is very flexible in accepting any number of different sensory system inputs. For example, a radar sensory system (or most other artificial sensory systems) could easily be added to the architecture—its inputs, once processed and normalized, will be treated as any other sensory inputs. Similarly, a particular sensory system can be rendered inoperable, and if the other sensory systems are sufficient, often there will be limited impact on the architecture's final perception and behavior. As will be seen below, there is a very flexible approach toward the processing of sensory input data from a number of different sensory systems.

The Causal Cognitive Architecture is inspired by the mammalian brain. However, to simplify the system of equations used to model the architecture, the olfactory sensory system and any additional non-biological senses are treated the same as the other senses, which in the brain relay through the thalamus to the neocortex. Similarly, the architecture does not model the left-right sides and the interhemispheric movement of working memory in the biological brain.

As can be seen from Figure 1, outputs from the Input Sensory Vectors Shaping Module are also propagated to the Autonomic Reflex Modules. These Reflex Modules perform straightforward actions in response to certain input stimuli, analogous to reflex responses in mammals. In this study, there is more of a focus on the higher-level cognition occurring in the architecture. Similarly, this study does not fully consider or model the repertoire of lower-level learning and behavior routines that exist in humans.

Vector s'(t) (Equation 10), holding the normalized arrays of sensory inputs, is propagated to the Input Sensory Vectors Association Modules (arrow “b,” Figure 2) and the Sequential/Error Correcting Module (arrow “c,” Figure 2). Spatial binding of the sensory inputs will occur in the Input Sensory Vectors Association Modules, i.e., each sensory system's inputs will be mapped onto a navigation map (essentially an array) where the spatial relationships of different sensory inputs are maintained to some extent (e.g., the navigation map in Figure 3 which represents the sensory scene of Figure 6A).

In the Sequential/Error Correcting Module, temporal binding of the sensory inputs occurs—temporal relationships of different sensory inputs, i.e., those of the current cognitive cycle time t = t and those of previous cycles t = t-1 (i.e., 1 cognitive cycle ago), t = t-2, and t = t-3 are bound spatially onto a navigation map and later combined with the spatial navigation maps (Schneider, 2022a,b). This is discussed in more detail in Section A-4 in Appendix A. The need to store snapshots of the input sensory navigation maps every 30th of a second, for example, to track and make memories of motion (as well as changes in higher level cognitive processes), is obviated by transforming the temporal changes into spatial features that are then mapped onto the same navigation map with the other spatial features.

Temporal binding is an essential feature of the Causal Cognitive Architecture. As mentioned above, it is described in more detail in Appendix A and the literature (Schneider, 2022a). If there is a motion of an object, the temporal binding allows the creation of a vector representing this motion, which can then be mapped onto the existing spatial binding navigation map representing the input sensory data. In addition, the motion of ideas, i.e., changes in navigation maps, can also be similarly mapped onto other navigation maps. However, this study will not focus on temporal binding but rather consider in more detail the flow of sensory inputs s'(t) into the Input Sensory Vectors Association Modules and then to the Navigation Module and related modules (Figure 1).
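The idea of turning motion into a spatial feature can be illustrated with a small Python sketch. It assumes, purely for illustration, that an object's cell position is known for the cycles t-3 to t, that the motion vector is the average displacement per cycle, and that the vector is stored as a feature in the cell the object currently occupies. The names `motion_vector` and `bind_motion` and the dictionary layout are hypothetical and are not taken from Appendix A.

```python
Cell = tuple[int, int]


def motion_vector(positions: list[Cell]) -> tuple[float, float]:
    """Average displacement per cycle; positions are ordered oldest first."""
    if len(positions) < 2:
        return (0.0, 0.0)
    steps = len(positions) - 1
    dx = (positions[-1][0] - positions[0][0]) / steps
    dy = (positions[-1][1] - positions[0][1]) / steps
    return (dx, dy)


def bind_motion(nav_map: dict[Cell, dict], positions: list[Cell]) -> dict[Cell, dict]:
    """Return a copy of nav_map with the motion vector stored as a feature
    in the cell where the object is currently located."""
    updated = {cell: dict(feats) for cell, feats in nav_map.items()}
    current = positions[-1]
    updated.setdefault(current, {})["motion"] = motion_vector(positions)
    return updated


# Object positions at cycles t-3, t-2, t-1, and t (assumed values).
track = [(0, 0), (1, 0), (2, 0), (3, 0)]
lnm = {(3, 0): {"shape": "sphere"}}
lnm_with_motion = bind_motion(lnm, track)
```

In this toy model a single map carries both where the object is and how it is moving, so no sequence of snapshots needs to be kept.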

Equation (18) from Appendix A is shown below. In each Input Sensory Vectors Association Module (there is a separate one for each sensory system), the sensory input features (S'σ, t) for that sensory system σ are spatially mapped onto a navigation map. Each such navigation map is then matched (“match_best_local_navmap”) against the best navigation maps stored in that Input Sensory Vectors Association Module for that sensory system σ (all_mapsσ, t). That best-matching stored navigation map is retrieved and then updated with the actual sensory input of that sensory system (or a new navigation map is created if there are too many changes). LNM(σ, γ, t) is the updated navigation map (where σ is the sensory system, γ is the map address, and t is the time corresponding to the current cognitive cycle). LNM stands for “Local Navigation Map,” referring to this being the updated input sensory navigation map for that local sensory system (e.g., visual, auditory, olfactory, and so on). The vector lnmt (Equation 23) contains the best-matching and updated navigation maps for each sensory system.

In predictive coding, the brain or artificial agent makes a prediction about the environment, and then this prediction is sent down to lower levels of sensory inputs. Actual sensory inputs are compared to the prediction, and the prediction errors are then used to update and refine future predictions. Essentially, the brain or the artificial agent effectively functions to minimize prediction errors (Rao and Ballard, 1999; Friston, 2010; Millidge et al., 2021; Georgeon et al., 2024).

In Equation (18), WNM't − 1 refers to the Working Navigation Map, i.e., the navigation map the architecture was operating on in the Navigation Module (Figure 1) in the previous cognitive cycle t = t-1, which will influence the matching of sensory inputs to the best local sensory navigation map stored in the particular Input Sensory Vectors Association module in the current cognitive cycle t = t. The architecture anticipates to some extent what it will be sensing and then considers the differences with the actual input signal, i.e., which stored navigation map of that sensory system's stored navigation maps (all_mapsσ, t) is the closest match based on the actual sensory input (S'σ, t) and based on what the Navigation Module expects to see (WNM't − 1). The architecture essentially matches sensory inputs with navigation maps it already has experience with and then considers the differences (i.e., the error signal) with updated navigation maps (i.e., error signal resolved) saved in memory. This works well for the perception of noisy, imperfect sensory inputs, and this is easy to implement with the navigation map data structure (e.g., Figure 3) used by the architecture.
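The matching behavior described above can be sketched in Python. This is an illustrative approximation and not Equation (18): it assumes maps are dictionaries from cells to a single feature label, scores each stored map by its overlap with the sensed input plus a weighted overlap with the previous Working Navigation Map, and creates a new map when too many sensed cells disagree. The parameters `bias` and `max_changes`, the helper `overlap`, and the scoring rule itself are hypothetical.

```python
from typing import Optional

Cell = tuple[int, int, int]
Map = dict[Cell, str]  # cell -> feature label (a simplifying assumption)


def overlap(a: Map, b: Map) -> int:
    """Number of cells of `a` that hold the same feature in `b`."""
    return sum(1 for cell, label in a.items() if b.get(cell) == label)


def match_best_local_navmap(sensed: Map, all_maps: dict[int, Map], prev_wnm: Map,
                            max_changes: int = 2, bias: float = 0.5) -> tuple[int, Map]:
    """Pick the stored map that best fits the input and the expectation."""
    def score(map_id: int) -> float:
        stored = all_maps[map_id]
        return overlap(sensed, stored) + bias * overlap(prev_wnm, stored)

    best_id: Optional[int] = max(all_maps, key=score, default=None)
    if best_id is not None:
        changes = len(sensed) - overlap(sensed, all_maps[best_id])
        if changes <= max_changes:
            # Resolve the error signal: sensed cells overwrite stored ones.
            all_maps[best_id] = {**all_maps[best_id], **sensed}
            return best_id, all_maps[best_id]
    # Too many changes (or empty memory): store the input as a new map.
    new_id = max(all_maps, default=0) + 1
    all_maps[new_id] = dict(sensed)
    return new_id, all_maps[new_id]


all_maps = {
    1: {(0, 0, 0): "sphere", (1, 0, 0): "cube"},
    2: {(0, 0, 0): "sphere", (1, 0, 0): "cylinder"},
}
noisy_input = {(0, 0, 0): "sphere"}   # second cell was not sensed
expectation = {(1, 0, 0): "cylinder"}  # from the previous cycle's WNM
best_id, lnm = match_best_local_navmap(noisy_input, all_maps, expectation)

novel_input = {(0, 0, 0): "pyramid", (1, 0, 0): "cone", (2, 0, 0): "ring"}
new_id, _ = match_best_local_navmap(novel_input, all_maps, {})
```

In the first call the sensed input alone cannot distinguish the two stored maps; the expectation carried over from the previous cycle breaks the tie, and the retrieved map fills in the cell that was not sensed.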

While this process has a number of similar aspects to predictive coding, it was not designed as a predictive coding architecture. For example, the architecture does not actively attempt to minimize free energy or minimize prediction errors, although this effect often results. Instead, this arrangement emerged in the attempt to model the evolution of the brain, albeit in a loosely functionalist approach (e.g., Lieto, 2021b).

The Local Navigation Maps [i.e., the best-matching navigation maps for each “local” sensory system updated by the actual sensory inputs, represented by lnmt in Equation (23)] are then propagated to the Object Gateway Modules (arrow “d,” Figure 2), with one module for each sensory system. In the Object Gateway Modules, the architecture attempts, as best it can, to segment portions of each navigation map into different objects. If there is, for example, a black sphere in a sensory scene, the Object Gateway Module, when processing the visual LNM (local navigation map), will readily segment out a black sphere from the rest of the sensory scene. [The navigation map shown in Figure 3 was constructed in this manner from various visual close-up sensory inputs. For example, in cell (0,0,0), the link shown, i.e., {0024, 0,0,0}, refers to another navigation map where the various lines and colors of a sphere were extracted from the navigation map as a black sphere, and to which the descriptive labels “sphere” and “black” were linked.]

The temporal binding (i.e., motion) of the sensory inputs that have occurred in the Sequential/Error Correcting Module is spatially mapped to each of the Local Navigation Maps (arrow “e,” Figure 2). For example, Equation (62) taken from Appendix A shows the Local Navigation Map for the visual sensory inputs LNM(1, γ, t) being updated with a “Vector Navigation Map” “VNM”t (i.e., the motion prediction vector created in the Sequential/Error Correcting Module and applied to an array), with the updated Local Navigation Map LNM'(1, γ, t) resulting as follows:

The different sensory system-updated Local Navigation Maps LNM'(1…., γ, t) are then matched against the multisensory (i.e., have features from all sensory systems as well as perhaps other features created and stored on the maps) navigation maps stored in the Causal Memory Module (arrow “f,” Figure 2). WNMt is the best-matching multisensory navigation map. Equation (67) from Appendix A shows that the Object Segmentation Gateway Module (“Object_Seg_Mod”) built-in method (i.e., part of the circuitry of the Object Segmentation Gateway Module) “differences” compares the number of differences between actual sensory information on the Local Navigation Maps represented by actualt to the features represented by WNMt.

As Equation (67) shows, if the number of differences is low enough, then the best-matching multisensory navigation map WNMt is updated with actual sensory information from the Local Navigation Maps represented by actualt [if there are too many differences between the best-matching map and the actual input maps, i.e., >h' as shown in Equation (67), then in another equation in Appendix A, there will be the creation of a new multisensory navigation map and updating it, rather than the modification of the existing WNMt]. The resulting multisensory navigation map WNM't is called the “Working Navigation Map” and is the navigation map upon which instinctive primitives and learned primitives operate in the Navigation Module (arrow “g,” Figure 2). Figure 3 is an example of a Working Navigation Map.
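The threshold logic described for Equation (67) can be illustrated with a short Python sketch. It is a simplified reading and not the equation itself: it assumes each cell of a multisensory map stores one feature per sensory system, that `differences` counts sensed features that disagree with the retrieved map, and that a count above `h_prime` leads to a new map. The helpers `combine` and `working_map` and the example features are hypothetical.

```python
Cell = tuple[int, int, int]
MultiMap = dict[Cell, dict[str, str]]  # cell -> {sensory system: feature}


def combine(lnms: dict[str, dict[Cell, str]]) -> MultiMap:
    """Merge per-system Local Navigation Maps into one 'actual' map."""
    actual: MultiMap = {}
    for system, lnm in lnms.items():
        for cell, label in lnm.items():
            actual.setdefault(cell, {})[system] = label
    return actual


def differences(actual: MultiMap, wnm: MultiMap) -> int:
    """Count sensed features that the retrieved map does not already hold."""
    return sum(
        1
        for cell, feats in actual.items()
        for system, label in feats.items()
        if wnm.get(cell, {}).get(system) != label
    )


def working_map(actual: MultiMap, wnm: MultiMap, h_prime: int) -> tuple[MultiMap, bool]:
    """Return (Working Navigation Map, created_new_map)."""
    if differences(actual, wnm) > h_prime:
        return {cell: dict(f) for cell, f in actual.items()}, True
    updated = {cell: dict(f) for cell, f in wnm.items()}
    for cell, feats in actual.items():
        updated.setdefault(cell, {}).update(feats)
    return updated, False


actual = combine({
    "visual": {(0, 0, 0): "sphere"},
    "olfactory": {(0, 0, 0): "smoke"},
})
retrieved = {(0, 0, 0): {"visual": "sphere", "auditory": "hum"}}

updated_wnm, is_new = working_map(actual, retrieved, h_prime=2)
fresh_wnm, is_fresh = working_map(actual, retrieved, h_prime=0)
```

With a tolerant threshold the retrieved map is kept and enriched with the new olfactory feature; with a threshold of zero the single mismatch is enough to start a new map from the sensed input alone.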

In the CCA5 version of the architecture, there is a single Navigation Module (Figure 1). However, the Navigation Modules are increased in the CCA6 version (Figure 5) and the CCA7 version (Figure 9). Nonetheless, this section applies to all these versions of the architecture.

Instinctive primitives are small procedures that can perform operations on the Working Navigation Map (WNM't) in the Navigation Module. Instinctive primitives are pre-existing—they come preprogrammed with the architecture. Learned primitives are similarly small procedures that can perform operations on the Working Navigation Map (WNM't). However, learned primitives are learned by the architecture, rather than being preprogrammed.

The instinctive primitives are inspired by the instinctive behaviors present in human infants and children, as well as in some non-human primates (Spelke, 1994; Kinzler and Spelke, 2007). Spelke groups these instinctive behaviors in terms of the physics of objects, agents, numbers, geometry, and reasoning about social group members.

As can be seen from Figure 2 (arrow “h”), the processed Input Sensory Vectors Association Modules' navigation maps, as well as inputs from other parts of the architecture, propagate to the store of both learned primitives and instinctive primitives in the architecture. Either an instinctive primitive or a learned primitive will be selected. The primitive is selected by a similar matching process to the one discussed above, but here in terms of the sensory inputs as well as signals from the Goal/Emotion Module and the previous values of the Navigation Modules.

Arrow “i” in Figure 2 shows the best-matching instinctive primitive acting on the Working Navigation Map (WNM't) in the Navigation Module. These operations are essentially matrix/tensor operations, such as comparing arrays, adding a vector to an array, and other straightforward operations that could be expected of brain-inspired circuitry. Equation (82) is taken from Appendix A. In the Navigation Module, the best-matching primitive (it can be either an instinctive primitive or a learned primitive), WPRt is applied against the Working Navigation Map WNM' (in the CCA6 and CCA7 versions of the architecture where there is more than one Navigation Module, this occurs in Navigation Module A).

In Equation (82), “apply_primitive” is a built-in method (i.e., part of the circuitry of the Navigation Module) that does the actual operations of applying the primitive WPRt on the Working Navigation Map WNM'. The result is an output signal actiont (Equation 82). As indicated by arrow “j” in Figure 2, output signal actiont is propagated to the Output Vector Association Module. As indicated by arrow “k” in Figure 2, there is a modification of the output signal actiont with respect to temporal motions introduced in the Output Vector Association Module, creating an intermediate output_vectort signal. This is further modified by the motion_correctiont signal produced by the Sequential/Error Correcting Module. The result is the output_vectort signal, as shown in Equation (87). The output_vectort signal propagates to the Output Vector Shaping Module. The signal is further transformed for interface with the real world (arrow “l,” Figure 2), where it can result in the activation of an actuator or the transmission of an electronic communication signal.
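A toy version of this output path is sketched below in Python. It is an assumption-laden illustration rather than Equations (82) and (87): a primitive is modeled as a plain function from a Working Navigation Map to an optional action vector, and the motion correction is modeled as a simple vector addition. The primitive `step_toward_goal`, the labels "self" and "goal", and the helper `output_vector` are hypothetical.

```python
from typing import Callable, Optional

Cell = tuple[int, int]
Vector = tuple[float, float]
WNM = dict[Cell, str]
Primitive = Callable[[WNM], Optional[Vector]]


def find(wnm: WNM, label: str) -> Optional[Cell]:
    """Return the first cell holding `label`, or None if absent."""
    return next((cell for cell, value in wnm.items() if value == label), None)


def sign(d: int) -> float:
    return float((d > 0) - (d < 0))


def step_toward_goal(wnm: WNM) -> Optional[Vector]:
    """Toy instinctive primitive: one step from 'self' toward 'goal'."""
    here, goal = find(wnm, "self"), find(wnm, "goal")
    if here is None or goal is None:
        return None  # no meaningful action can be produced
    return (sign(goal[0] - here[0]), sign(goal[1] - here[1]))


def apply_primitive(primitive: Primitive, wnm: WNM) -> Optional[Vector]:
    """Apply the selected primitive to the Working Navigation Map."""
    return primitive(wnm)


def output_vector(action: Vector, motion_correction: Vector) -> Vector:
    """Assumed form of the correction: add the two vectors."""
    return (action[0] + motion_correction[0], action[1] + motion_correction[1])


wnm = {(0, 0): "self", (3, 2): "goal"}
action = apply_primitive(step_toward_goal, wnm)
corrected = output_vector(action, motion_correction=(0.0, -0.25))
no_action = apply_primitive(step_toward_goal, {(0, 0): "self"})
```

Returning `None` when no action can be produced corresponds to the case, discussed later in the text, where the contents of the Working Navigation Map are fed back instead of being sent to the output modules.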

Then, the cognitive cycle repeats—sensory inputs are processed, the Navigation Module may produce an output action which results in an actuator output, and then the cycle repeats again, and so on.

Step-by-step examples of the architecture processing a particular sensory scene are given by Schneider (2022a, 2023). In addition, more details on the processes that occur are given in Appendix A.

Feedback pathways, only partially shown in Figures 1, 2, exist throughout the Causal Cognitive Architecture. These feedback pathways are essential—the architecture considers the differences with the actual input or intermediate signal compared to its existing internal representations, as discussed above. This is advantageous for the perception of noisy, imperfect sensory inputs.

Schneider (2022a) describes how, by enhancing some of these feedback pathways, causal properties readily emerge from the architecture. In Figure 4A, the feedback pathways from the Navigation Module back to the Input Sensory Vectors Association Modules are enhanced. Biologically, such a change could have occurred in the evolution of the brain through a number of genetic mechanisms (e.g., Rakic, 2009; Chakraborty and Jarvis, 2015).

Figure 4. Consider the above figure under these situations: (A) (arrows a→j) When the operation of the selected instinctive or learned primitive on the Working Navigation Map in the Navigation Module does not produce an output action or a meaningful one, the results, i.e., the new contents of the Working Navigation Map, can instead be fed back to the Input Sensory Vectors Association Modules. (B) (arrows k→l) Instead of actual sensory inputs, the intermediate results from the Navigation Module (previous figure), which have been temporarily stored in the Input Sensory Vectors Association Modules, are now automatically propagated to the Navigation Module. In this new cognitive cycle, perhaps a new instinctive primitive will end up being selected (or the same ones used) and applied to previous intermediate results, possibly producing a valid output action. If so, then the output action goes to the Output Vector Association Module and then to the real world. However, if no valid output action occurs, the new intermediate results can be fed back again and, in the next cognitive cycle, processed again. (C) (j**) (Note: Although not shown, assume there is a TempMap memory storage area in the Navigation Module, which is shown more explicitly in Figure 5.) No meaningful output was produced by the interaction of the primitive on the Working Navigation Map (WNM) in the Navigation Module. As before, the Working Navigation Map (i.e., the intermediate results) is fed back and stored in the Input Sensory Vectors Association Modules. However, the operations at j** now also occur (described below and in the text). Effectively, induction by analogy automatically occurs in these steps, allowing the production of an actionable output in many situations. a—Sensory inputs stream into the Input Sensory Vectors Shaping Modules. 
b—Processed and normalized sensory inputs propagate to the Input Sensory Vectors Association Modules and best-matching Local Navigation Maps (LNMs) for each sensory system produced (spatial binding). c—Processed and normalized sensory inputs propagate to the Sequential/Error Correcting Module (temporal binding). d—Segmentation of objects in the input sensory scenes. e—Spatial mapping of the temporal mapping of the sensory inputs from the Sequential/Error Correcting Module. f—Match to the best-matching Multi-Sensory Navigation Map from the Causal Memory Module, producing the Working Navigation Map (WNM) for the Navigation Module. g—Working Navigation Map (WNM) in/accessible by Navigation Module. h—Selecting a best-matching primitive (an Instinctive Primitive in this case). i—Instinctive Primitive operating on the Working Navigation Map (WNM). j—No meaningful action signal is produced by the operation of Instinctive Primitive on WNM; thus, the contents of WNM are fed back to the Input Sensory Vectors Association Modules. k—In the new cognitive cycle, the stored WNM, i.e., the previous cycle's intermediate results, are now reprocessed through the Navigation Module. l—Perhaps a meaningful action signal is now produced, and an output signal results. j**—No meaningful action signal is produced by the operation of Instinctive Primitive on WNM; thus, the following happens (represented above by ** since there is not enough space to show the various arrows required): 1. The contents of WNM are fed back to the Input Sensory Vectors Association Modules, like before. 2. The contents of WNM are also fed to trigger a match with the best-matching nav map in the Causal Memory Module (WNM't-best_match). 3. The nav map that WNM't-best_match links to is placed in “TempMap” memory of the Navigation Module. 4. The difference (WNM't-difference) between WNM't-best_match and “TempMap” is kept in the Navigation Module. 5. The original WNM being stored in the Input Sensory Vectors Association Modules in the next cognitive cycle propagates forward and is added to WNM't-difference, resulting in a new Working Navigation Map WNM, which will be processed again (i.e., action by primitive against it) in the new cognitive cycle, and this time may (or may not) result in an actionable output from the Navigation Module.

The result of enhancing this feedback pathway is that when the operation of the instinctive or learned primitive on the Working Navigation Map in the Navigation Module does not produce an output action, or does not produce a meaningful one, the results, i.e., the new contents of the Working Navigation Map, can be fed back to the Input Sensory Vectors Association Modules instead of being sent to the Output Vector Association Module.

As shown in Figure 4A, the Navigation Module in this cognitive cycle did not produce any output signal action_t. However, as arrow “j” (Figure 4A) shows, the contents of the Navigation Module are fed back to the Input Sensory Vectors Association Modules. These saved contents essentially now represent the intermediate results of the Navigation Module. In the next cognitive cycle, they can be fed forward to the Navigation Module and operated on again.

As Figure 4B shows, in the next cognitive cycle, instead of processing the actual sensory inputs, these stored intermediate results will automatically propagate forward to the Navigation Module and be processed again (arrow “k”). These saved intermediate results again become the current Working Navigation Map (WNM') in the Navigation Module. The advantage of reprocessing intermediate results is that another operation of the instinctive (or learned) primitive on these results can often result in a better, actionable output signal action_t (Equation 82). If not, the new intermediate results (i.e., the new contents of the Working Navigation Map) can be fed back and re-processed in the next cognitive cycle, or over many repeated cognitive cycles (at present, whether a result is meaningful is determined in part by the procedures of a learned or instinctive primitive, and in part by whether the action signal sent to the Output Vector Association Module can be acted upon).

Equations (88, 89) taken from Appendix A show that if the action signal produced by the Navigation Module is not actionable (i.e., action_t ≠ “move*”), then the Working Navigation Map in the Navigation Module is fed back to the various sensory system Input Vector Association Modules (Equation 88). In the next cognitive cycle t = t+1, the best-matching Local Navigation Map in each module now becomes the sensory features extracted from the fed-back Working Navigation Map (Equation 89), and so, these Local Navigation Maps will be automatically propagated forward to the Navigation Module, where they will constitute the Working Navigation Map again. Thus, intermediate results of the previous cycle will be operated on a second time by whatever instinctive or learned primitives are selected in this cognitive cycle.
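The feedback of intermediate results described above can be illustrated with a minimal Python sketch. It is not the implementation given in Appendix A: the names `run_cycles`, `toy_primitive`, and `is_actionable`, the representation of a navigation map as a dictionary of features, and the cap on the number of cycles are all assumptions made for illustration. The toy primitive loosely mirrors the falling-patient example: a first pass yields only an intermediate result, and a second pass over that result yields an actionable “move*” signal.

```python
from typing import Callable, Optional, Tuple

NavMap = dict  # hypothetical stand-in for a Working Navigation Map


def is_actionable(action: Optional[str]) -> bool:
    """Treat any signal beginning with 'move' as an actionable 'move*' output."""
    return action is not None and action.startswith("move")


def toy_primitive(wnm: NavMap) -> Tuple[Optional[str], NavMap]:
    """Hypothetical primitive: returns (action signal or None, new map contents)."""
    features = set(wnm["features"])
    if "object_falling" in features:
        # A physics-style primitive can act on the intermediate result.
        return "move: push back against falling object", wnm
    if "patient_falling" in features:
        # No output action yet; only the map contents change.
        return None, {"features": features | {"object_falling"}}
    return None, wnm


def run_cycles(
    sensory_map: NavMap,
    primitive: Callable[[NavMap], Tuple[Optional[str], NavMap]],
    max_cycles: int = 5,  # assumed cap so that the sketch always terminates
) -> Tuple[Optional[str], int]:
    fed_back: Optional[NavMap] = None
    for cycle in range(1, max_cycles + 1):
        # Fed-back intermediate results take the place of new sensory inputs.
        wnm = fed_back if fed_back is not None else sensory_map
        action, wnm = primitive(wnm)
        if is_actionable(action):
            return action, cycle
        fed_back = wnm  # not actionable: feed the map contents back
    return None, max_cycles


action, cycles = run_cycles({"features": {"patient_falling"}}, toy_primitive)
print(action, "after", cycles, "cognitive cycles")
```

In this sketch the sensory input is simply ignored while intermediate results are pending, which corresponds to the text's remark that less attention is paid to external stimuli during re-operation.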

While this seems like an inelegant way to re-operate on intermediate results, evolving this mechanism in the brain takes modest changes, i.e., enhancement of particular feedback pathways. Indeed, in humans, when the brain switches from the automatic operations of System 1 to the more complex operations of System 2 (Kahneman, 2011), which is similar to re-operating on intermediate results, less attention can be paid to the normal stimuli around us, which is what happens in the Causal Cognitive Architecture during re-operating on intermediate results.

Schneider (2022a) shows that by re-operating on the intermediate results, the architecture can generate causal behavior by exploring possible causes and effects of the actions. An example is where the CCA3 version of the Causal Cognitive Architecture controls a hospital patient assistant robot. A new robot has never seen a patient fall to the ground and has never been trained on a closely identical case. However, it has a learned primitive (i.e., procedure) from a rudimentary education before doing this work that it should not allow any patient it is with to fall down on the ground.

The robot one day happens to be assisting a patient who begins to fall toward the ground. The learned primitive concerning a person falling is triggered but does not produce an actual output signal. The intermediate result calls the physics instinctive primitive (i.e., a general knowledge procedure pre-programmed in the architecture), which pushes back against something falling or moving to stop the movement. Thus, the robot pushes back against the falling patient and stops the patient from falling, even though it has never actually done this before in training.

Even with reprocessing of intermediate results, there are still many situations where the Navigation Module will not produce any actionable output. Schneider (2023) shows that with biologically feasible, modest modifications to the feedback operations, analogical reasoning readily emerges. Although not explicitly shown, note that a temporary memory area, “TempMap,” now also exists in the Navigation Module (Figure 4C; this memory area is treated equivalently to an array in the equations, hence the bolding of its name).

Given the existence of a temporary memory area, why, for causal behavior, as shown above, is it necessary to feed back the intermediate results of the Navigation Module to the Input Sensory Vectors Association Modules rather than just store them in the temporary memory area? As Schneider (2023) notes, the reason is that the Causal Cognitive Architecture is biologically inspired, and from an evolutionary perspective, it seems more reasonable that storage of intermediate results could occur by enhancing feedback pathways rather than by creating new memory areas. To efficiently carry out analogical operations, as described below, the evolutionary usurpation of some brain regions as a temporary storage region would have been advantageous at this point. Thus, the CCA5 and higher versions of the architecture possess a temporary memory area associated with the Navigation Module.

As before, the Working Navigation Map (WNM't; i.e., the intermediate results) is fed back and stored in the Input Sensory Association Modules (arrow “j” in Figures 4B, C). However, the Working Navigation Map is also propagated to the Causal Memory Module, where it will automatically match the best stored multisensory navigation map, which becomes the new Working Navigation Map in the Navigation Module. The navigation map that this navigation map recently linked to is triggered and retrieved and then propagated to and subtracted from the new Working Navigation Map in the Navigation Module (“**” in Figure 4C). In the next cognitive cycle, as before, the original Working Navigation Map is automatically propagated, although now added to the differences in the Navigation Module. As a result of a few modifications to the feedback pathways and operations on the navigation maps here, effectively induction by analogy automatically occurs in these steps.

Equations (95–99) are taken from Appendix A and show these operations. If there is no actionable output from the Navigation Module (i.e., action_t ≠ “move*,” where “move*” is an output signal giving instructions about moving something or moving a message), then these operations are automatically triggered, i.e., these equations occur [note that there is only one Working Navigation Map WNM't in the Navigation Module (Figure 4C) at any time. However, since the contents of what is WNM't change several times in these operations, for better clarity to the reader, a small descriptor is appended to its name, e.g., “WNM't-original,” etc.].

In Equation (95), the contents of the Navigation Module, i.e., WNM't, which for better readability is called “WNM't-original” here, are fed back to be stored in the Input Sensory Vectors Association Modules, the same as before. However, “WNM't-original” is also propagated to the Causal Memory Module, where the “Causal_Mem_Mod.match_best_map” built-in method will automatically occur, matching it with the best-matching multisensory map, which becomes the new Working Navigation Map, called here “WNM't-best_match” (Equation 96).

In Equation (97), the built-in method “Nav_ModA.use_linkaddress1_map” retrieves the navigation map that “WNM't-best_match” most recently linked to and puts this map into the TempMap memory. Then, as Equation (98) shows, the difference between “WNM't-best_match” and TempMap gets stored in the Navigation Module as “WNM't-difference.” Then, a new cognitive cycle starts, and WNM't-original is automatically fed forward but now added to “WNM't-difference” (Equation 99). The new Working Navigation Map WNM't is termed “WNM't-analogical” since it represents an analogic inductive result.
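The sequence of Equations (95–99) can be sketched in a few lines of Python. This is a toy illustration under stated assumptions and not the code of the architecture. Navigation maps are flattened to small integer vectors, the hypothetical `MEMORY` dictionary stands in for the Causal Memory Module, matching uses squared distance, and the difference is taken as best match minus TempMap, following the order of the wording above. The actual matching and difference operations in Appendix A may be defined differently.

```python
from typing import Dict, List, Optional

NavMap = List[int]  # toy stand-in: a navigation map flattened to a vector

# Hypothetical Causal Memory Module: name -> stored map and its most recent link.
MEMORY: Dict[str, dict] = {
    "A": {"map": [1, 2, 1], "link": "B"},
    "B": {"map": [0, 2, 1], "link": None},
    "C": {"map": [5, 5, 5], "link": None},
}


def match_best_map(wnm: NavMap, memory: Dict[str, dict]) -> str:
    """Name of the stored map closest to wnm (squared distance is an assumption)."""
    def squared_distance(name: str) -> int:
        return sum((a - b) ** 2 for a, b in zip(wnm, memory[name]["map"]))
    return min(memory, key=squared_distance)


def analogical_step(wnm_original: NavMap, memory: Dict[str, dict]) -> Optional[NavMap]:
    # Eq. 95: wnm_original is kept (fed back) for use in the next cycle.
    best_name = match_best_map(wnm_original, memory)        # Eq. 96
    best_match = memory[best_name]["map"]
    link = memory[best_name]["link"]
    if link is None:
        return None                                         # nothing to induce from
    temp_map = memory[link]["map"]                          # Eq. 97: TempMap
    difference = [b - t for b, t in zip(best_match, temp_map)]    # Eq. 98
    return [o + d for o, d in zip(wnm_original, difference)]      # Eq. 99


wnm_analogical = analogical_step([1, 2, 0], MEMORY)
print(wnm_analogical)
```

Here the map `[1, 2, 0]` best matches stored map “A,” whose linked map “B” is loaded into the TempMap stand-in; the resulting difference is then added back to the original map to give the analogical result.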

Consider a navigation map x, which is represented by “WNM't-original.” Given that there was no actionable output in the last cognitive cycle, it is advantageous to induce what this navigation map x will do next, i.e., which navigation map it will call. In Equation (100), it is shown that x has properties/features P1…Pn. Consider navigation map y, represented as “WNM't-best_match” in Equation (96). It is the best-matching navigation map to navigation map x and is thus assumed to share many properties with x, as shown in Equation (101).

Navigation map y has previously linked to (i.e., it was pulled into the Navigation Module) the navigation map in TempMap (Equation 97), i.e., as given by the built-in method “use_linkaddress1_map” [Schneider (2023) discusses other links and groups of links to use as the basis for analogical induction]. The difference between navigation map y and TempMap is “WNM't-difference” (Equation 98). Thus, consider this difference, i.e., “WNM't-difference,” to be property N, as noted in Equation (102).

Since navigation map y has property N, by induction by analogy, it can be said that navigation map x also has property N (Equation 103). Thus, add property N, which is actually “WNM't-difference,” to navigation map x, which is actually “WNM't-original,” producing the result of navigation map x with property N as being “WNM't-analogical” (Equation 99).

As noted above, Equations (100–103) essentially define induction by analogy. In Equation (100), x has properties/features P1 to Pn. y is similar and also has properties/features P1 to Pn (Equation 101). y also has the feature “N” (Equation 102). Thus, by induction by analogy, x has the feature “N” (Equation 103). As shown above, a ready mechanism now exists in the Causal Cognitive Architecture, which follows this definition. If an actionable resolution of a Working Navigation Map (WNM't) does not immediately occur (i.e., a primitive applied to WNM't does not produce an actionable output from the Navigation Module), the architecture can follow the analogical mechanism above to produce an analogical result which can be operated on in the next cognitive cycle.

Of interest is that analogical intermediate results are useful in typical day-to-day functioning rather than being considered as something only used in exceptional high-level problem-solving tasks (e.g., writing an intelligence test). For example, in the study by Schneider (2023), there is an example of a robot controlled by a CCA5 version of the architecture. The robot needs to cross a river and has instinctive primitives that guide it to stay on solid ground to do so. However, there are piles of leaves floating on the river, which appear solid and for which the robot has no experience nor any instinctive primitives. By analogical reasoning, it is shown how the robot automatically uses a previous navigation map (i.e., experience) of stepping on pieces of newspaper floating in a puddle and its leg going into the puddle to not use the leaves to cross the river. The robot has no knowledge whatsoever about newspapers or leaves other than they appear to be solid, yet by automatically using its analogical reasoning mechanism, it successfully crosses the river via another path and not by stepping on the piles of floating leaves.

Hofstadter (2001) provides evidence that supports the use of analogies as the core of human cognition. Of interest, full analogical reasoning does not appear to be present in chimpanzees (Penn et al., 2008), although more recent reports show some animals capable of some aspects of analogical reasoning (Flemming et al., 2013; Hagmann and Cook, 2015). The mechanisms described above for the Causal Cognitive Architecture show theoretically that modest modifications can result in the emergence of analogical reasoning from a chimpanzee–human last common ancestor, albeit a loosely functionalist model thereof.

Given the usefulness of the Navigation Module, duplicating it into two Navigation Modules might be more advantageous. Again, biologically, such a change could have readily occurred in the evolution of the brain through a number of genetic mechanisms (e.g., Rakic, 2009; Chakraborty and Jarvis, 2015). Figure 5 shows the duplication of the Navigation Module into Navigation Module A and Navigation Module B. This new version of the architecture is called the Causal Cognitive Architecture 6 (CCA6).

Figure 5. The Causal Cognitive Architecture 6 (CCA6). The Navigation Module of the CCA5 architecture has been duplicated into Navigation Module A and Navigation Module B, along with other changes, resulting in the CCA6 version of the architecture.

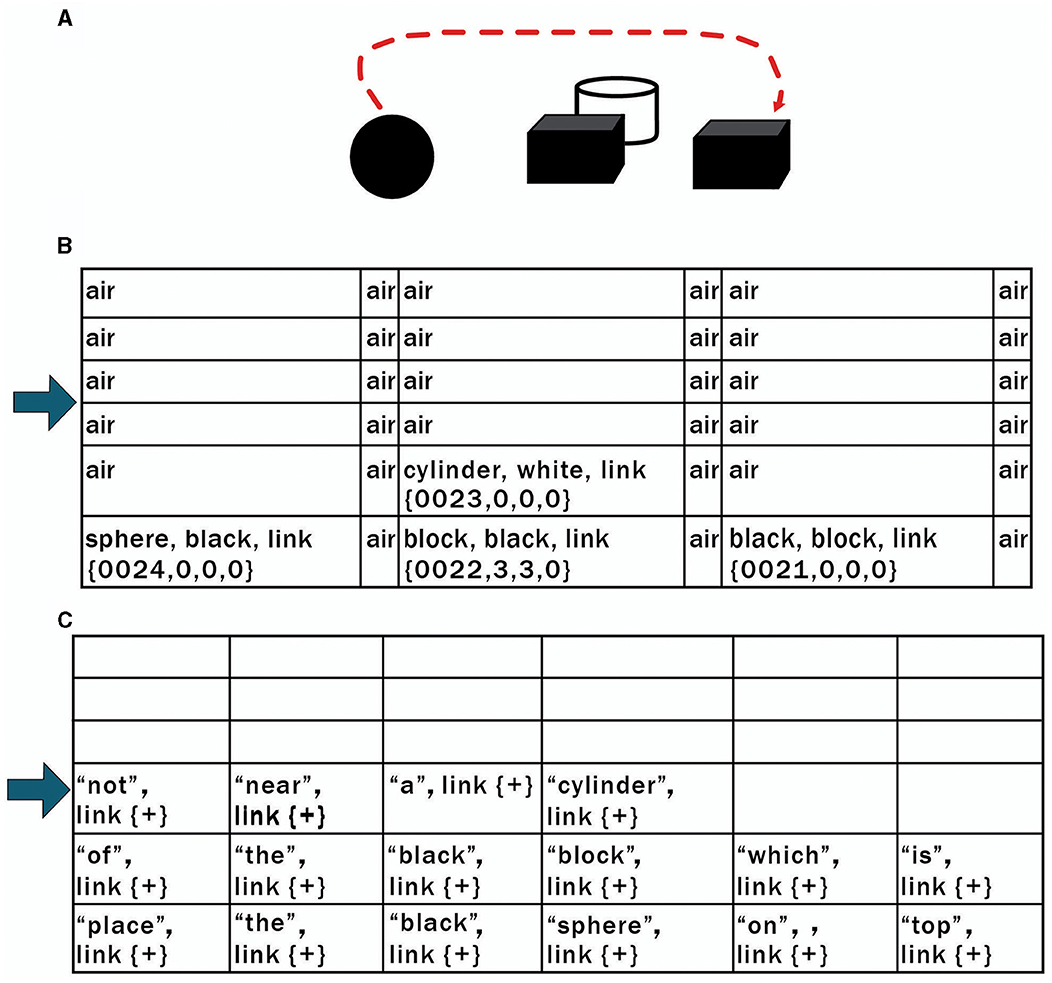

Consider the compositional problem shown in Figure 6A, such as following the command to “place the black sphere on top of the black block which is not near a cylinder” (the arrow shows the correct solution to this problem. Of course, the arrow is not shown to the system being asked to solve this problem). Connectionist systems have trouble with such compositional problems. For example, Marcus et al. (2022) give a similar example to DALL-E2 and prompt it to place a red ball on top of a blue pyramid behind a car above a toaster. DALL-E2 tries 10 times and produces various output images in response to the command, but none of these actually depict the requested relationships correctly.

Figure 6. (A) Connectionist systems have difficulty solving problems such as “place the black sphere on top of the black block which is not near a cylinder” (the arrow shows the correct solution to this problem, but of course, it is not shown to the system being asked to solve this problem). (B, C) The sensory scene of (A) is loaded in Navigation Module A (B). The instruction associated with (A) (“place the black sphere on top of the black block which is not near a cylinder”) is loaded in Navigation Module B (C). In subsequent cognitive cycles, the contents of Navigation Module B are processed against the contents of Navigation Module A via operations of various instinctive primitives discussed in the text.

However, Schneider (2024) shows that if the Navigation Module is duplicated into Navigation Module A and Navigation Module B, as shown in Figure 5, then compositionality and compositional language readily emerge from this CCA6 version of the architecture.

In the CCA6 architecture shown in Figure 5, compositional operations tend to occur in Navigation Module B. Instinctive primitives (as well as learned primitives) involved in compositional operations and language operations will generally operate on the navigation map in Navigation Module B. Consider the example shown in Figure 6A of “placing the black sphere on top of the black block which is not near a cylinder.” The sensory scene of the spheres and blocks will propagate through the architecture (Figure 5) and be mapped to a navigation map in Navigation Module A, as shown in Figure 6B [it actually takes a few cognitive cycles and close-up views of the objects, as evidenced by the links in some of the cells (e.g., link to {map = 24, x = 0, y = 0, z = 0} for cell (0,0,0) with the labels “sphere” and “black”), to create this navigation map].

Equations (109–114) are taken from Appendix A. The instinctive primitive “parse_sentence” is triggered by the instruction (“instruction sentence”) associated with this sensory image. In Equation (109), “parse_sentence.copy()” maps the instruction (“place the black sphere on top of the black block which is not near a cylinder”) to a navigation map (WNMB't) in Navigation Module B (“Nav_ModB”). This is shown in Figures 6B, C. The “link{+}” in the cells in the Navigation Map (WNMB't) in Navigation Module B just means that the cell links to its neighbor to the right.

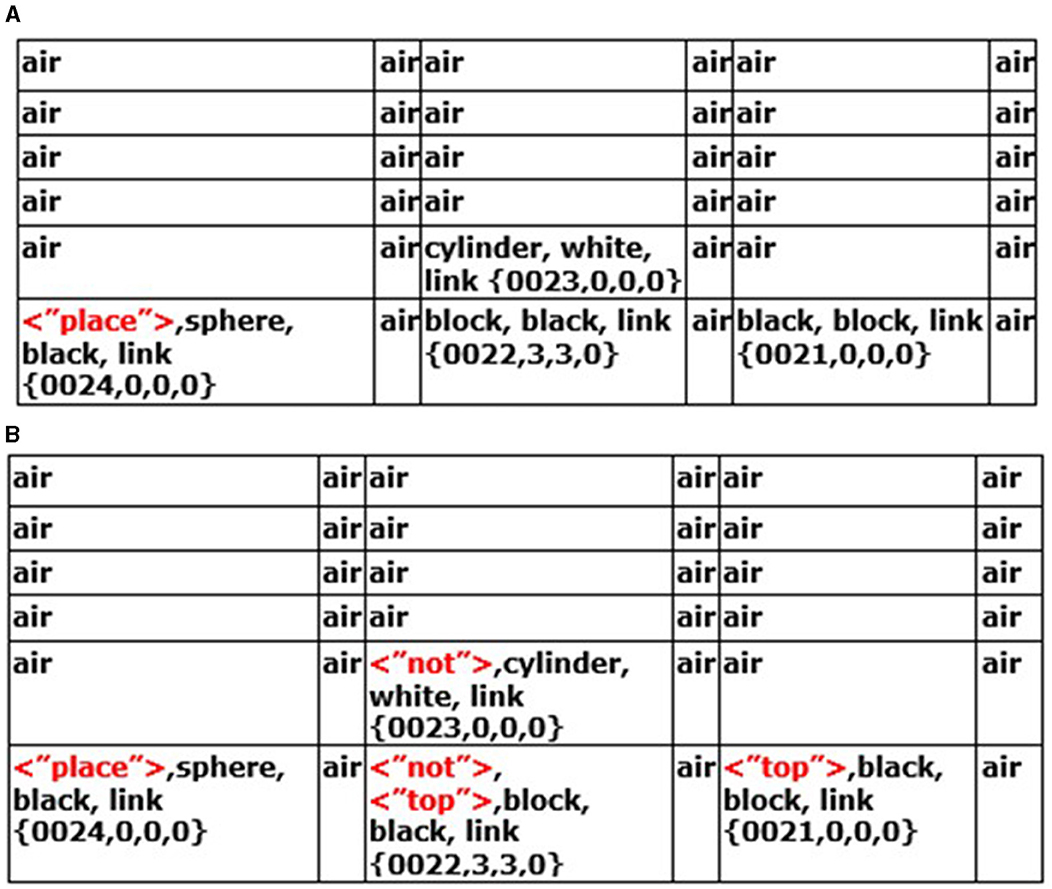

The “parse_sentence.parse()” instinctive primitive parses through Navigation Map B WNMB', i.e., the instruction sentence (Equation 110). Each word is matched against the Causal Memory Module “parse_sentence.parse.match()” (Equation 111). If what is called an “action word” is found (i.e., it matches some specific action to do to the other cells), then it is mapped to cells in Navigation Map A WNM' in Navigation Module A (Figure 7A) containing features associated with or mapping to the action word. In Figure 7A, it can be seen, for example, that “place” has been matched to cell (0,0,0) in Navigation Module A. This makes sense since this action word is associated with the black sphere in the instruction sentence (this is described in more detail in Appendix A).

Figure 7. (A) The instinctive primitive “parse_sentence()” has entered the tag < “place”> in the cell containing the black sphere. (B) After a few more cognitive cycles, the instinctive primitive “parse_sentence()” and then the instinctive primitive “physics_near_object()” have now written these tags in the various cells of the navigation map in Navigation Module A.

A “near_trigger” is a feature that is spatially near something else or not near something else that can trigger various physics instinctive primitives. The instruction sentence word “near” triggers instinctive primitive “Nav_ModB.physics_near_object()” (Equation 112). The result of this instinctive primitive is to place the tag “not” in the cells “not near” the white cylinder, as seen in the Navigation Map of Navigation Module A in Figure 7B.

In each cognitive cycle, the CCA6 architecture will continue to parse through the instruction sentence. When it reaches the “end_of_communication” (i.e., the end of the sentence), it then parses through Navigation Module A, looking for a “place” tag. If there is a “place” tag (e.g., cell (0,0,0)) in Navigation Module A in Figure 7B, then the instinctive primitive “Nav_ModA.place_object()” is triggered (Equation 113). This instinctive primitive will go through the navigation map looking for other tagged notations such as “top” in cells (2,0,0) and (4,0,0) in Navigation Module A (Figure 7B). It will ignore (2,0,0) since there is a “not” tag there, but it will consider (4,0,0) valid. It will then trigger the instinctive primitive “Nav_ModA.move(),” which then sends the action signals to the Output Vector Association Module A, which in turn sends a motion-corrected signal to the Output Vector Shaping Module, which instructs the actuators to move the black sphere to the cell (4,0,0) with the black block on the right (Equation 114).
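The tagging and selection steps described above can be sketched as follows. This is a heavily simplified Python illustration and not the “parse_sentence” or “physics_near_object” primitives of Appendix A: the parsed meaning of the instruction is hard-coded rather than parsed from the sentence, the position of the cylinder and the nearness radius are hypothetical, and the function names `tag_cells` and `place_object` are chosen here only for readability.

```python
from typing import Dict, Optional, Set, Tuple

Cell = Tuple[int, int, int]

# Toy scene for Navigation Module A. The sphere and block cells follow the text;
# the cylinder position is a hypothetical choice for illustration.
SCENE: Dict[Cell, Set[str]] = {
    (0, 0, 0): {"sphere", "black"},
    (2, 0, 0): {"block", "black"},
    (2, 1, 0): {"cylinder", "white"},
    (4, 0, 0): {"block", "black"},
}
NEAR_RADIUS = 1  # assumed Manhattan distance that counts as "near"


def manhattan(a: Cell, b: Cell) -> int:
    return sum(abs(p - q) for p, q in zip(a, b))


def tag_cells(scene: Dict[Cell, Set[str]]) -> Dict[Cell, Set[str]]:
    """Write 'place', 'top', and 'not' tags for the instruction
    'place the black sphere on top of the black block which is not near a cylinder'."""
    tags: Dict[Cell, Set[str]] = {cell: set() for cell in scene}
    cylinders = [cell for cell, labels in scene.items() if "cylinder" in labels]
    for cell, labels in scene.items():
        if {"black", "sphere"} <= labels:
            tags[cell].add("place")        # object of the action word
        if {"black", "block"} <= labels:
            tags[cell].add("top")          # candidate destination
            if any(manhattan(cell, c) <= NEAR_RADIUS for c in cylinders):
                tags[cell].add("not")      # fails the "not near" condition
    return tags


def place_object(tags: Dict[Cell, Set[str]]) -> Optional[Tuple[Cell, Cell]]:
    """Return (source, destination), skipping destinations carrying a 'not' tag."""
    sources = [c for c, t in tags.items() if "place" in t]
    targets = [c for c, t in tags.items() if "top" in t and "not" not in t]
    if len(sources) != 1 or len(targets) != 1:
        return None  # ambiguous or unsatisfiable in this toy version
    return sources[0], targets[0]


print(place_object(tag_cells(SCENE)))
```

With the assumed cylinder position, the block at (2,0,0) receives the “not” tag and is skipped, so the sketch selects a move from (0,0,0) to (4,0,0), as in the example in the text.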

Compositionality is a key property of an intelligent system. Without compositionality, such a system would need to experience every (or very many) permutations of a vast number of sensory scenes and actions to learn them. Above, it was shown how, with the duplication of the navigation modules, compositional abilities can readily emerge. This is discussed in more detail in Schneider (2024), including the emergence of language.

Samsonovich (2010) and Kotseruba and Tsotsos (2020) review the many cognitive architectures proposed in the literature. Kotseruba and Tsotsos note the large diversity of cognitive architectures proposed and the difficulty of defining the term. They consider cognitive architectures broadly as programs that “could reason about problems across different domains” and attempt to help determine what “particular mechanisms succeed in producing intelligent behavior” in terms of modeling the human mind.

Laird et al. (2017) attempt to unify the field of cognitive architectures with what they term a “standard model of the mind.” In their standard model, perception feeds into working memory, while motor outputs feed out of it. There is bidirectional movement of information between a declarative long-term memory and the working memory. Similarly, there is a bidirectional movement of information between procedural long-term memory and working memory. This is a very generic model of a cognitive architecture, and it would be expected to encompass most of the models listed by Samsonovich (2010) or Kotseruba and Tsotsos (2020). However, the CCA7 version of the Causal Cognitive Architecture surprisingly does not fit within this “standard model.”

In this standard model of the mind, there are separate areas for declarative long-term memory and procedural long-term memory. However, in the CCA7, there can be both declarative long-term memory (i.e., navigation maps of experiences) and procedural long-term memory (i.e., instinctive and learned primitives) mixed together in the different Input Sensory Vectors Association Modules and within multisensory navigation maps which are operated on in the Navigation Modules A and B.

The CCA7 architecture is largely defined by its binding of sensory inputs into navigation maps and comparing these inputs with pre-stored information. The CCA7 architecture is also largely defined by its heavy usage of feedback of intermediate results of navigation maps. Again, these operations are not typical for most of the architectures defined by the standard model of the mind or included by Samsonovich (2010) or by Kotseruba and Tsotsos (2020).

As noted above, the Causal Cognitive Architecture hypothesizes that the navigation circuits in the amniotic ancestors of mammals duplicated many times to eventually form the neocortex. Thus, the millions of neocortical minicolumns are modeled in the Causal Cognitive Architecture as millions of navigation maps. As noted above, using this postulation, it has been possible to show the emergence of causal reasoning, analogical reasoning, and compositionality from a brain based on such navigation maps (Schneider, 2022a, 2023, 2024), albeit not by rigidly replicating the mammalian brain but rather at a more functionalist level, as per Lieto (2021b).

Similar to the concept of navigation maps, cognitive maps were proposed by Tolman (1948). A cognitive map is considered the way the brain represents the world and allows navigation and operations on paths and objects in the world. Thus, cognitive maps can hold geographical information as well as information from personal experiences. Before the work by O'Keefe and Nadel (1978), there was much debate about the existence of cognitive maps in a large spectrum of the animal world. This debate still continues, for example, whether cognitive maps exist in insects (Dhein, 2023).

In mammals, experimental work has largely found evidence for cognitive maps existing in terms of spatial navigation (e.g., O'Keefe and Nadel, 1978; Alme et al., 2014). However, Behrens et al. (2018) and Whittington et al. (2022) review extensions of cognitive maps into other domains of cognition. Hawkins et al. (2019) note evidence within the mammalian neocortex for the equivalent of grid cells. Schafer and Schiller (2018) have also hypothesized that the mammalian neocortex contains maps of spatial objects and maps of social interactions.

Buzsaki and Moser (2013) consider cognitive maps in planning, an area in which the new work on the Causal Cognitive Architecture below has developed. They propose that the memory and planning properties of the mammalian brain actually evolved from the same mechanisms used for navigation of the physical world. With regard to the neuroanatomical and neurophysiological basis for cognitive maps in the brain, the study by Schuck et al. (2016) suggests that the human orbitofrontal cortex holds a cognitive map of the current states of a task being performed.

As noted in the Introduction section, the Causal Cognitive Architecture is a brain-inspired cognitive architecture (BICA) that was developed from the hypothesis that the navigation circuits in the amniotic ancestors of mammals duplicated many times to eventually form the neocortex. The thousands or millions (depending on the organism) of neocortical minicolumns are modeled in the architecture as navigation maps. The modeling of the mammalian brain and its evolution is done in a loosely functionalist approach (e.g., Lieto, 2021b) with constraints imposed by structuralist concerns. Small modifications in the architecture, akin to what could have been reasonable genetic and developmental changes, have been postulated and explored in the development of the versions of the architecture from the Causal Cognitive Architecture CCA1 version to the CCA6 version.

This very functionalist and theoretical approach to mammalian brain functioning and evolution is quite different from approaches that have attempted to more faithfully replicate brain structure and function (e.g., Markram, 2012; Frégnac, 2023). However, the approach taken by the Causal Cognitive Architecture does allow the emergence of mechanisms that could hypothetically explain the functioning of the mammalian brain as well as how ordinary genetic and developmental mechanisms could have readily allowed the emergence, i.e., evolution, of the seemingly discontinuous features in humans (i.e., the sharp cognitive and behavioral differences between humans and our closest evolutionary relatives). In addition, the approach taken by the Causal Cognitive Architecture creates a mechanism (i.e., a particular cognitive architecture) that can be used as the basis of building intelligent artificial systems.

As noted above, this study asks the following question: if the evolution of the human brain were to continue as it has in the past, in an environment selecting for the ability to better solve the complex problems that humans encounter in their lives (very roughly indicated by measures of intelligence, e.g., Legg and Hutter, 2007; Adams et al., 2012; Wang, 2019), what advantageous changes could occur, as reflected in a model such as the Causal Cognitive Architecture?

A computer engineer interested in enhancing the “intelligence” (as per the definitions above) capabilities of the CCA6 version of the architecture (Figure 5) could readily add a large language model (LLM) module to the architecture or even just add a simple calculator module to the architecture. If one assumes that the CCA6 could be developed to the point of human intelligence (i.e., with adequate instinctive and learned primitives and with adequate experiences stored throughout the architecture), then adding even a calculator module could create a super-human intelligence (albeit, given the assumptions above). For example, in computing various strategies or outcomes, numerical answers would be readily available for many problems in such an architecture, unlike in the CCA6 version shown in Figure 5 or unlike in the case of an actual human.

Adding a calculator module or, even more advantageously, adding complete or multiple LLM modules to the Causal Cognitive Architecture in Figure 5 could be considered in future work. Indeed, adding LLMs to cognitive architectures is an active area of research at the time of writing (e.g., Joshi and Ustun, 2023; Laird et al., 2023; Sun, 2024). However, in this study, the assumption is that there will be an environment selecting for the ability to better solve complex problems. Thus, although this study does not construct, mutate, and test millions of copies of the CCA6, it does consider what advantageous modifications could emerge next, rather than designing in modules (e.g., a calculator module, an LLM, and so on) that would not emerge naturally on their own (a calculator module or a complex LLM module would not readily emerge on its own from the CCA6 version of the architecture shown in Figure 5).

It is hypothesized that a first step in such an evolution could be the duplication of the TempMap temporary memory area in Navigation Module B into many such TempMap temporary memory areas. As noted above, various mechanisms are feasible for the duplication of brain structures (e.g., Rakic, 2009; Chakraborty and Jarvis, 2015). Thus, as a first step in the continued evolution of the CCA6 version of the architecture, there are multiple duplications of the TempMap temporary memories in Navigation Module B. This is shown in Figure 8. Previously, there was a single TempMap temporary memory area in Navigation Module B; now, there are many.

Figure 8. The CCA6 architecture with the duplication of the TempMap temporary memory areas in Navigation Module B.

The temporary memory area TempMap was discussed above in its use for allowing analogical reasoning (Equation 97). Mammalian brain working memory, particularly human working memory, is the inspiration for the architecture's Navigation Modules and the TempMap temporary memory. There is, in fact, variability in human working memory capacity in the population. The study by Friedman et al. (2008) claims that individual differences in executive function and, by implication, human working memory are almost completely genetic in origin. However, despite decades of research on working memory, its measurement still remains uncertain in many regards (Ando et al., 2001; Cowan, 2001; Carruthers, 2013; Ma et al., 2014; Oberauer et al., 2016; Friedman and Miyake, 2017; Chuderski and Jastrzȩbski, 2018). Although only a single TempMap memory was required by the CCA5 or CCA6 versions of the architecture for compositional language processing (Schneider, 2024), it is known that in humans, higher working memory capacity is associated with higher intellectual performance (e.g., Aubry et al., 2021). As noted above, Navigation Module B is associated with compositional operations. Thus, the additional temporary memories incorporated into Navigation Module B, as shown in Figure 8, could allow more complex instinctive primitives and more complex learned primitives to emerge that require additional temporary memory storage. This will be explored below.

While it is possible to navigate by simple rules/heuristics or similarly generate words of communication by simple rules/heuristics, planning a navigation route, planning words to generate in communication, or planning any other similar task can be advantageous. In any task where planning can improve the outcome, there are usually many possible paths that can be chosen, and it can be very advantageous to run possible plans in parallel.

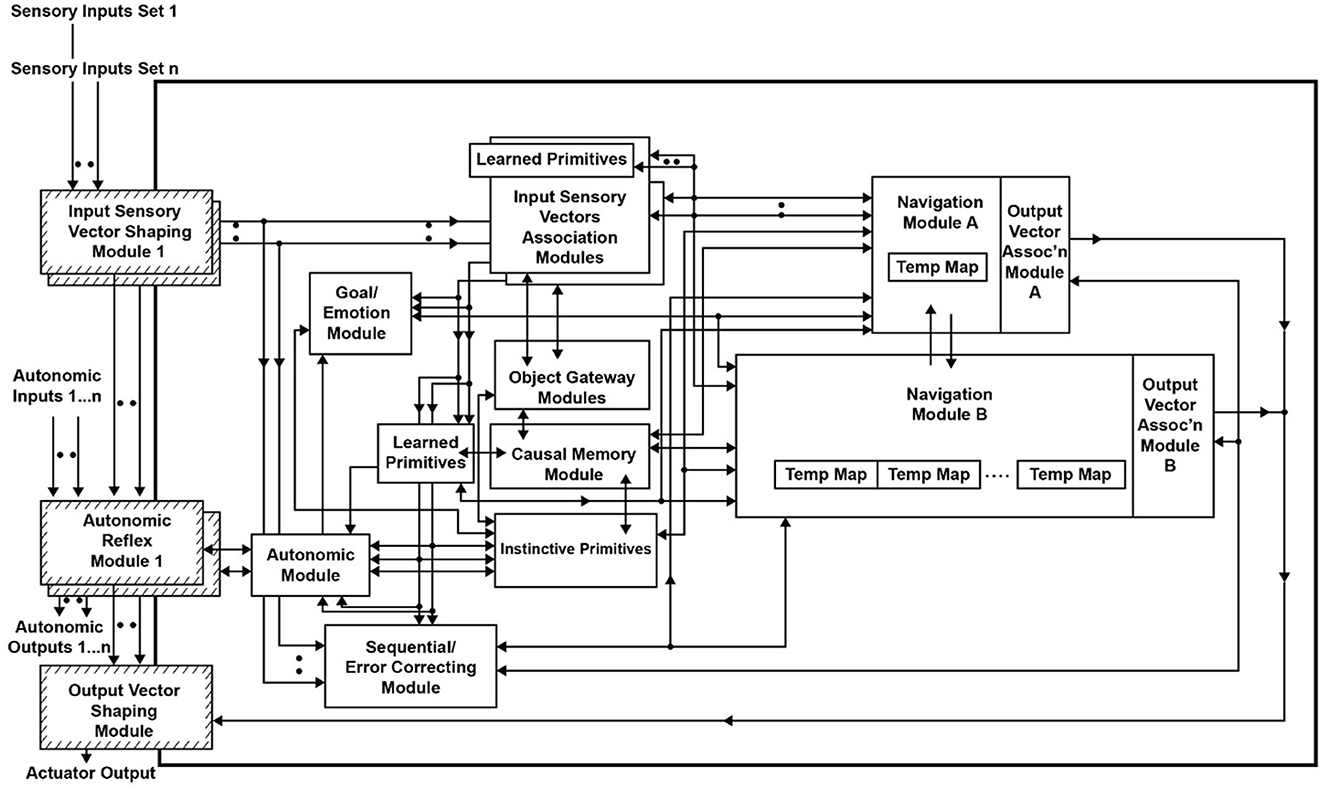

Thus, it is hypothesized that another step in the evolution of the Causal Cognitive Architecture could be the duplication of Navigation Module B into many such Navigation Module Bs. As noted above, various mechanisms are feasible for the duplication of brain structures (e.g., Rakic, 2009; Chakraborty and Jarvis, 2015). Thus, as the next step in the continued evolution of the CCA6 version of the architecture, there are multiple duplications of Navigation Module B. This is shown in Figure 9. The evolved architecture (i.e., multiple Navigation Module Bs and multiple temporary memories within each of the Navigation Module Bs) is named the Causal Cognitive Architecture 7 (CCA7).

Figure 9. The Causal Cognitive Architecture 7 (CCA7). This is the architecture shown in Figure 8 with duplication (1,024 copies) of Navigation Module B.

In the CCA7 version of the architecture shown in Figure 9, there are 1,024 copies of Navigation Module B. In every single Navigation Module B, there are 1,024 TempMap temporary memories (the number is not shown in the figure). Temporary memories are accessible for the operations of present and future instinctive primitives and learned primitives. Each TempMap temporary memory is capable of storing and representing a navigation map.

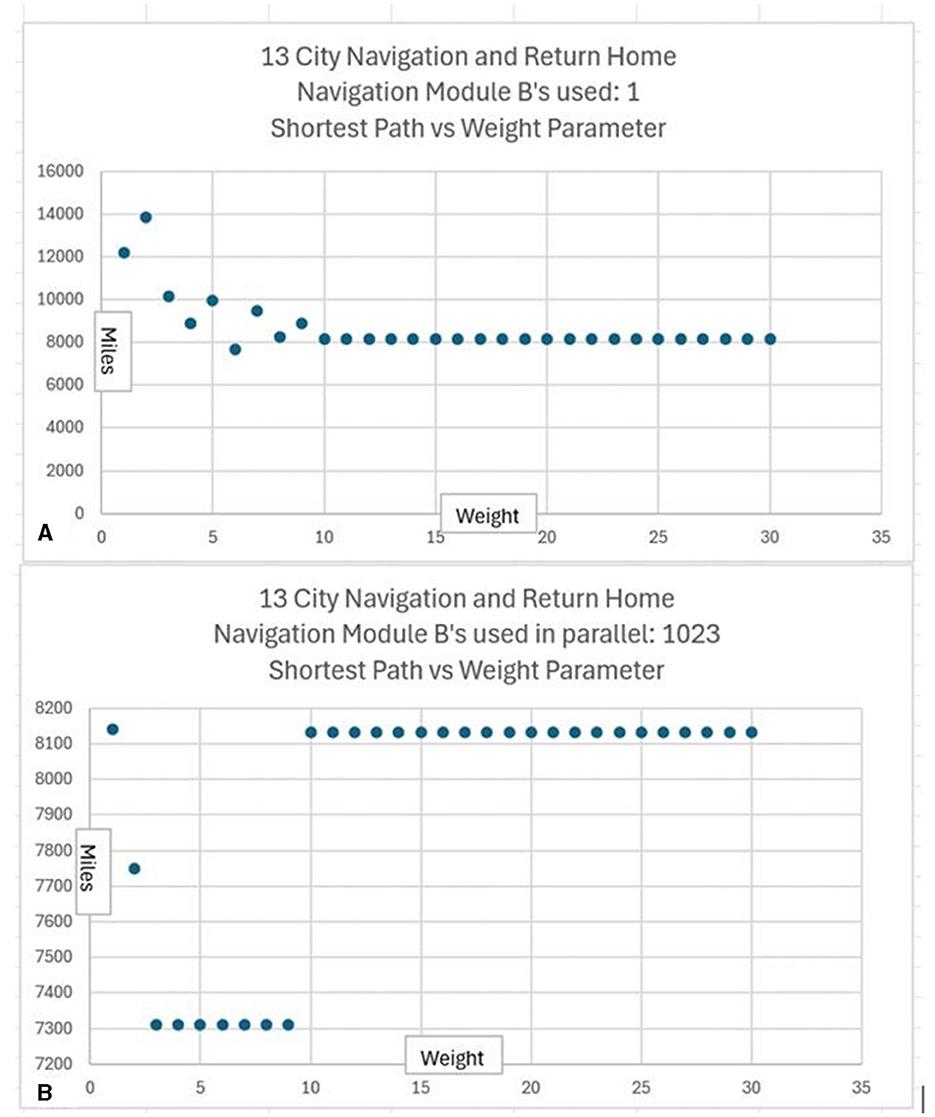

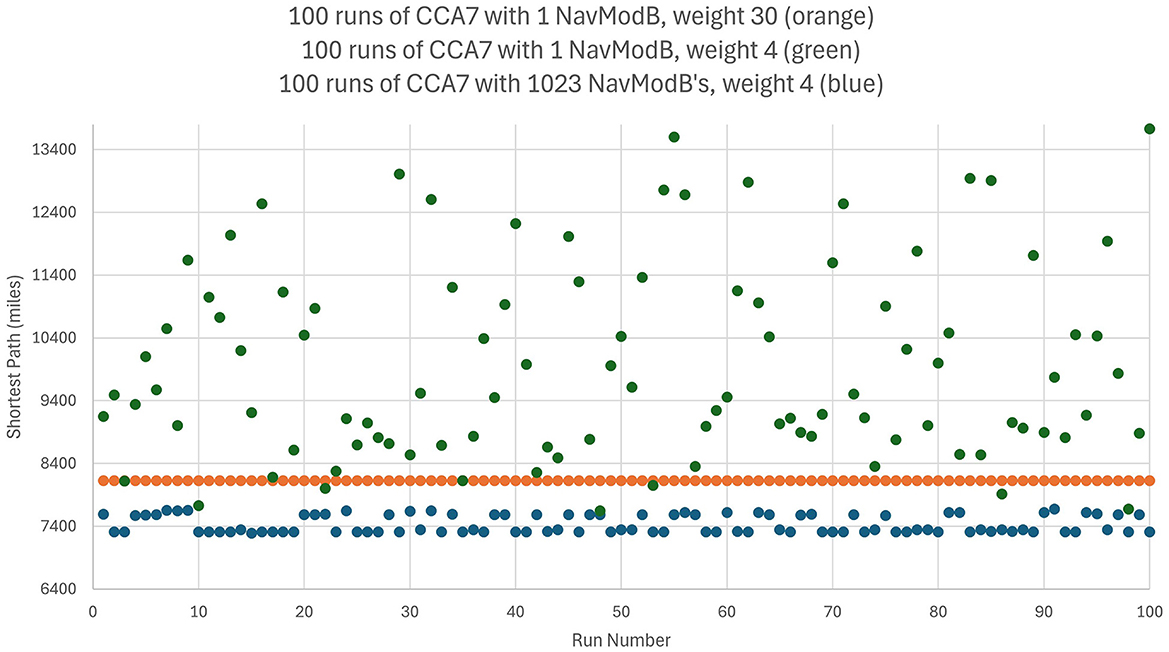

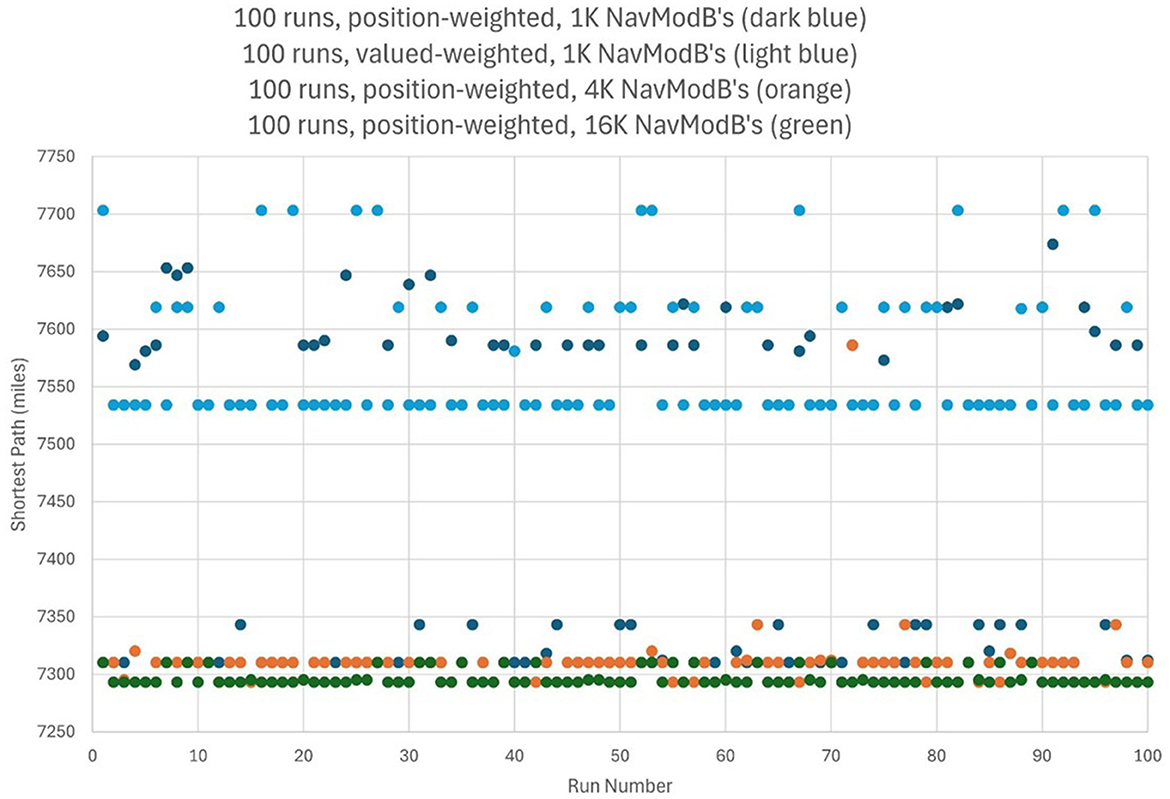

Consider the well-known traveling salesperson problem where a salesperson, or in this case an agent controlled by a CCA7 version of the architecture, must find the shortest route to visit only once each of a number of different cities and then return to the starting city. This is an NP-hard problem where the number of possible navigation routes to consider in finding the best solution increases exponentially with the number of cities. However, in the CCA7 version of the architecture, given that there are now over 1,000 Navigation Module Bs, then many of the possible routes (or promising routes given the exponential nature of the problem) can be evaluated in parallel, and a more optimal route planned ahead of time. This will be explored below in more detail, including a detailed examination of the CCA7 version of the architecture's internal operations and internal navigation maps.

As noted above, in the traveling salesperson example, the salesperson (or, in this case, an agent controlled by a CCA7 version of the architecture) must find the shortest route to visit only once each of a number of different cities and then return to the starting city. The many Navigation Module Bs should allow the CCA7 to evaluate many of the possible (or promising) routes in parallel and plan a more optimal route ahead of time.

For example, if there are a half-dozen cities (or locations or other equivalent destinations) that need to be visited, then this represents (6-1)! or 120 navigation routes (actually, only 60 of these routes need to be considered, since returning home to the original city creates a cyclic graph that can be navigated forwards or backwards). If each possible route can be represented in a separate navigation module and there are hundreds of navigation modules available in the architecture, each running a different route, then this problem can be solved much faster than if only a single navigation module were available.
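The route counts above can be checked with a few lines of Python. This is only an illustrative sketch: the function name `distinct_tours` and the constant `USABLE_MODULES` (the 1,023 non-reserved Navigation Module Bs described later in the text) are labels chosen here, not part of the architecture's specification.

```python
from math import factorial

# Assumption for illustration: 1,024 Navigation Module Bs, one of which is reserved.
USABLE_MODULES = 1023

def distinct_tours(n_cities: int) -> int:
    """Closed tours through n_cities with a fixed start, ignoring direction of travel."""
    if n_cities < 3:
        return 1
    return factorial(n_cities - 1) // 2

for n in (4, 6, 8, 12):
    tours = distinct_tours(n)
    fits = "yes" if tours <= USABLE_MODULES else "no"
    print(f"{n:2d} cities: {tours:>10,d} distinct tours; one tour per module possible: {fits}")
```

With six cities, each of the 60 distinct tours can be given its own module; the count quickly exceeds the number of modules as more cities are added.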

If there were, for example, a dozen cities (or locations) that need to be visited, then this represents (12-1)!/2 or nearly 20 million navigation routes to explore to find the best solution. Even a thousand navigation modules would not be enough to run each possible navigation route in a separate navigation module. However, the thousand navigation modules, in combination with other instinctive primitives and learned primitives of the architecture, can still greatly accelerate the search for a reasonable solution in this case. For example, the nearest neighbor algorithm is a relatively simple algorithm in which the agent chooses the nearest unvisited city (or location) as the next city to visit (Rosenkrantz et al., 1977). This algorithm can sometimes give very poor solutions, i.e., very lengthy navigation routes (Bang-Jensen et al., 2004). Since there are over 1,000 different navigation modules, however, it is possible to consider over a thousand different variations of the nearest neighbor algorithm. Even without any sophisticated algorithms (e.g., simply making random choices for some cities rather than the nearest, or simply applying various local properties such as avoiding or not avoiding crossings of paths), using the over 1,000 navigation modules to run slightly different solutions to the problem makes it less likely that the solution produced is one of the worst solutions.
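The idea of running many slightly perturbed nearest neighbor searches and keeping the shortest route can be sketched as follows. This is a minimal illustration under stated assumptions, not the architecture's implementation: the modules are simulated sequentially rather than in parallel, the perturbation is a simple probability `p_random` of picking a random unvisited city, and all function names are hypothetical.

```python
import math
import random

def tour_length(order, dist):
    """Length of the closed tour 0 -> order[0] -> ... -> order[-1] -> 0."""
    path = [0, *order, 0]
    return sum(dist[a][b] for a, b in zip(path, path[1:]))

def noisy_nearest_neighbor(dist, rng, p_random):
    """Nearest neighbor tour from city 0; with probability p_random a step
    picks a random unvisited city instead of the nearest one."""
    unvisited = list(range(1, len(dist)))
    current, order = 0, []
    while unvisited:
        if rng.random() < p_random:
            nxt = rng.choice(unvisited)
        else:
            nxt = min(unvisited, key=lambda c: dist[current][c])
        order.append(nxt)
        unvisited.remove(nxt)
        current = nxt
    return order

def plan_with_modules(dist, n_modules=1023, p_random=0.2, seed=0):
    """Simulate n_modules modules: the first runs plain nearest neighbor,
    the rest run perturbed variants; return the best tour and the plain length."""
    rng = random.Random(seed)
    plain = noisy_nearest_neighbor(dist, rng, 0.0)
    candidates = [plain]
    candidates += [noisy_nearest_neighbor(dist, rng, p_random)
                   for _ in range(n_modules - 1)]
    best = min(candidates, key=lambda order: tour_length(order, dist))
    return best, tour_length(plain, dist)

# Hypothetical instance: 12 random locations on a 100 x 100 grid.
rng = random.Random(1)
cities = [(rng.uniform(0, 100), rng.uniform(0, 100)) for _ in range(12)]
dist = [[math.hypot(ax - bx, ay - by) for (bx, by) in cities] for (ax, ay) in cities]

best_order, plain_length = plan_with_modules(dist)
print("plain nearest neighbor:", round(plain_length, 1))
print("best of 1,023 variants:", round(tour_length(best_order, dist), 1))
```

Because the unperturbed nearest neighbor tour is always among the candidates, the selected route can never be longer than the plain nearest neighbor route; the perturbed variants can only improve on it.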

There is a very large body of literature on solutions to the traveling salesperson problem. A myriad of algorithms have been proposed, including many parallel solutions (e.g., Tschoke et al., 1995). For example, Dorigo and Gambardella (1997) describe using an algorithm based on a colony of ants to find successively shorter routes by laying down pheromone trails. Of interest, for certain variants of the problems, humans can visually produce solutions that are close to the optimal solution (Dry et al., 2006). While the literature gives much more sophisticated possible solutions, the traveling salesperson is considered here simply as an example to illustrate that having multiple navigation modules can be greatly advantageous to various planning strategies the architecture is required to perform.

As discussed above, instinctive primitives are effectively small procedures operating on the contents of the navigation map(s) in the navigation module(s). The instinctive primitives are inspired by the work of Spelke et al., who have described many innate behaviors in human infants (Spelke, 1994; Spelke and Kinzler, 2007). Human infants do have innate behaviors with regard to simple planning (e.g., Claxton et al., 2003; McCormack and Atance, 2011; Liu et al., 2022). Thus, given the brain-inspired origins of the architecture, it is reasonable that the CCA7 architecture contains an instinctive primitive capable of simple planning (as opposed to learning how to do simple planning via a learned primitive). The CCA7 architecture now includes an additional instinctive primitive “small_plan()” for simple planning.

The instinctive primitive “small_plan()” can use a single Navigation Module B as in the case of the CCA6 version of the architecture (Figure 5), or in the case of the CCA7, it will make use of all the Navigation Module Bs present (which in Figure 9 consists of 1,024 modules). The simultaneous usage of over a thousand navigation modules does not reflect, of course, a similar innate behavior described by Spelke et al. The details and operation of “small_plan()” are discussed in the section below. (Of course, with education, the CCA7 can acquire learned primitives that give it better planning strategies, including better algorithms for the solution of the traveling salesperson problem; this is beyond the scope of this paper and is not discussed here.)

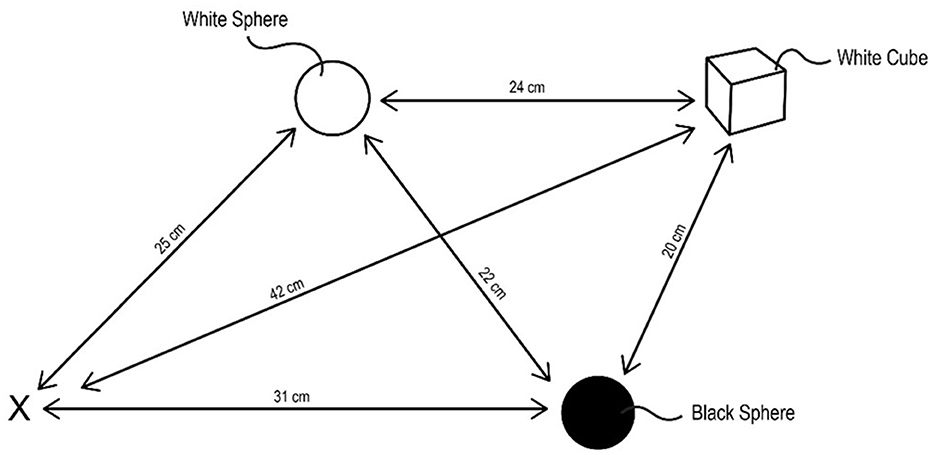

Consider an agent, i.e., a robot, controlled by the CCA7 architecture shown in Figure 9. For simplicity, the CCA7 architecture and the robot embodiment will be called the “CCA7” or “CCA7 robot.” The CCA7 robot comes to location “X” in Figure 10. It receives the instruction that starting at its existing position (i.e., “X”), it must visit each object and then return to the starting location.

Figure 10. Starting at location “X,” the CCA7 robot must go to the white sphere, the black sphere, and the white cube in any order and then return back to the starting location. The instruction does not specify that the CCA7 should visit each object only once, but this will be implicit in the instinctive primitive triggered, as described in the text. Similarly, the instruction does not specify this, but implicit in the instinctive primitive triggered, it should attempt to do this using the navigation path with the shortest distance.

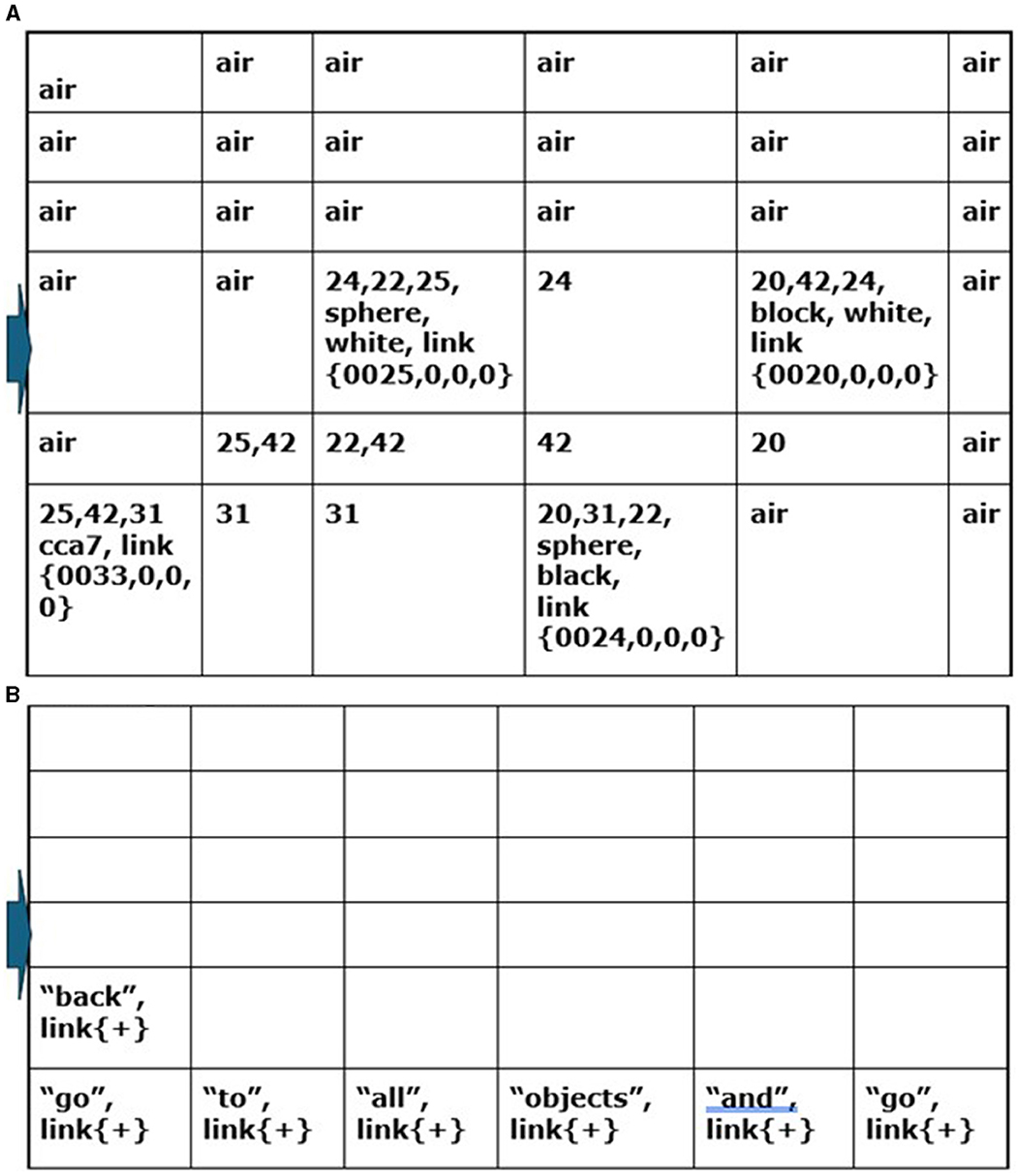

While in location “X,” the CCA7 robot maps a sensory scene into the navigation map in Navigation Module A, which is what it automatically does in each available cognitive cycle when there are new sensory inputs to process. The resulting navigation map in Navigation Module A is shown in Figure 11A. The CCA7 robot receives distances (either with the visual sensory information or via a separate ultrasonic distance sensory system). The numbers refer to the distance (in centimeters) between the objects in the different cells (the distance number can be determined by matching the same number in the path between two cells. In addition, note a clockwork recording of distances in each cell). The instruction “go to all objects and go back” is placed in Navigation Module B, as shown in Figure 11B. These operations are similar in nature to ones already described above for the CCA6 version of the architecture in its initial processing of the example of the sensory scene and instruction concerning the “placing a black sphere on top of the black block which is not near a cylinder” (Figures 6, 7).

Figure 11. (A) While in location “X,” the CCA7 robot maps the sensory scene of Figure 10 into the navigation map in Navigation Module A. The numbers refer to the distance (in centimeters) between the objects in the different cells (the distance number can be determined by matching the same number in the path between two cells. In addition, note a clockwork recording of distances in each cell). (B) The instruction “go to all objects and go back” is placed in Navigation Module B n = 1, as shown.

However, as described above and shown in Figure 9, there are now in the CCA7 multiple Navigation Modules: one Navigation Module A and over a thousand (1,024) duplicated Navigation Module Bs. Equation (115) (taken from Appendix A) indicates that the Working Navigation Map B' WNMB' (upon which primitives operate in Navigation Module B) is an array like before, but now can be one of 1,024 different navigation maps (corresponding to a different navigation map in each of the Navigation Module Bs).

The Navigation Module Bs are numbered n = 1 to n = 1,023. The top (or first) Navigation Module B appears to be the n = 1 Navigation Module B, as shown in Figure 9. However, an n = 0 Navigation Module B exists and is used to store a copy of the compositional instructions so that if the other layers are overwritten, there is still a copy of the instructions. Layer n = 0 is considered “reserved” and will not be overwritten. If there is other information that an instinctive or learned primitive needs to ensure remains intact for the current operations, other Navigation Module Bs can be temporarily designated “reserved” as well.
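The numbering and “reserved” convention can be pictured with a small data-structure sketch. Everything here is hypothetical and for illustration only: the class names, the dictionary representation of a navigation map, and the `broadcast` method are assumptions, not the data structures defined in the paper's appendix.

```python
from dataclasses import dataclass, field

N_MODULES = 1024  # n = 0 (reserved) plus n = 1 .. 1,023

@dataclass
class NavModuleB:
    index: int
    nav_map: dict = field(default_factory=dict)  # stand-in for a navigation map
    reserved: bool = False

class NavModuleBBank:
    """Hypothetical container for the duplicated Navigation Module Bs."""

    def __init__(self, n_modules: int = N_MODULES):
        self.modules = [NavModuleB(i) for i in range(n_modules)]
        self.modules[0].reserved = True  # layer n = 0 is always reserved

    def store_instruction(self, instruction: dict) -> None:
        # The instruction goes to n = 1, with a protected copy in n = 0.
        self.modules[0].nav_map = dict(instruction)
        self.modules[1].nav_map = dict(instruction)

    def reserve(self, n: int) -> None:
        self.modules[n].reserved = True

    def release(self, n: int) -> None:
        if n != 0:  # n = 0 stays reserved
            self.modules[n].reserved = False

    def writable(self):
        return [m for m in self.modules if not m.reserved]

    def broadcast(self, nav_map: dict) -> None:
        # Overwrite every non-reserved module with a copy of nav_map.
        for module in self.writable():
            module.nav_map = dict(nav_map)

bank = NavModuleBBank()
instruction = {"words": ["go", "to", "all", "objects", "and", "go", "back"]}
bank.store_instruction(instruction)
bank.broadcast({"scene": "copy of Navigation Module A"})
print(len(bank.writable()), bank.modules[0].nav_map == instruction)
```

After the broadcast, the instruction in n = 1 has been overwritten, while the copy in the reserved n = 0 module is still intact.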

Equation (116) indicates that the same instinctive primitive or the same learned primitive (i.e., procedure) is initially applied to all of the Navigation Module Bs. As discussed below, random fluctuations can be introduced in the different Navigation Module Bs to produce a variety of results to choose from. In the example below (i.e., a traveling salesperson problem), the same instinctive primitives are used by all the Navigation Module Bs, and this does not cause any particular issues. However, in other types of problems, in subsequent cognitive cycles, the initial primitive applied may trigger different primitives in different Navigation Modules. This issue is discussed below in Section 6.

At present, there is no energy-saving operation or Autonomic Module (Figure 9) interaction to turn off the multiple Navigation Module Bs and use only a sole Navigation Module B n = 0 or n = 1, i.e., much like the previous CCA6 version of the architecture functioned. However, if an instinctive primitive or learned primitive does not require the thousand-plus Navigation Module Bs, it can simply ignore the results in the multiple modules and use the results of operations in Navigation Module B n = 1. This is also discussed below in Section 6.

As shown in Figure 11A, Navigation Module A contains the Working Navigation Map (WNMA) of the sensory scene of the various places the agent has to navigate to. In Navigation Module B, n = 1 (Figure 11B) is a Working Navigation Map (WNMBn = 1) of the instruction sentence to “go to all the objects and go back.”

The word “go” in the first cell of the navigation map in Navigation Module B (Figure 11B) is matched against the Causal Memory Module as an action word and triggers the instinctive primitive “goto()” (Equation 117). “WNMA't = Nav_ModA.goto()” indicates that this instinctive primitive, i.e., “goto(),” is being applied to the Working Navigation Map A in Navigation Module A.

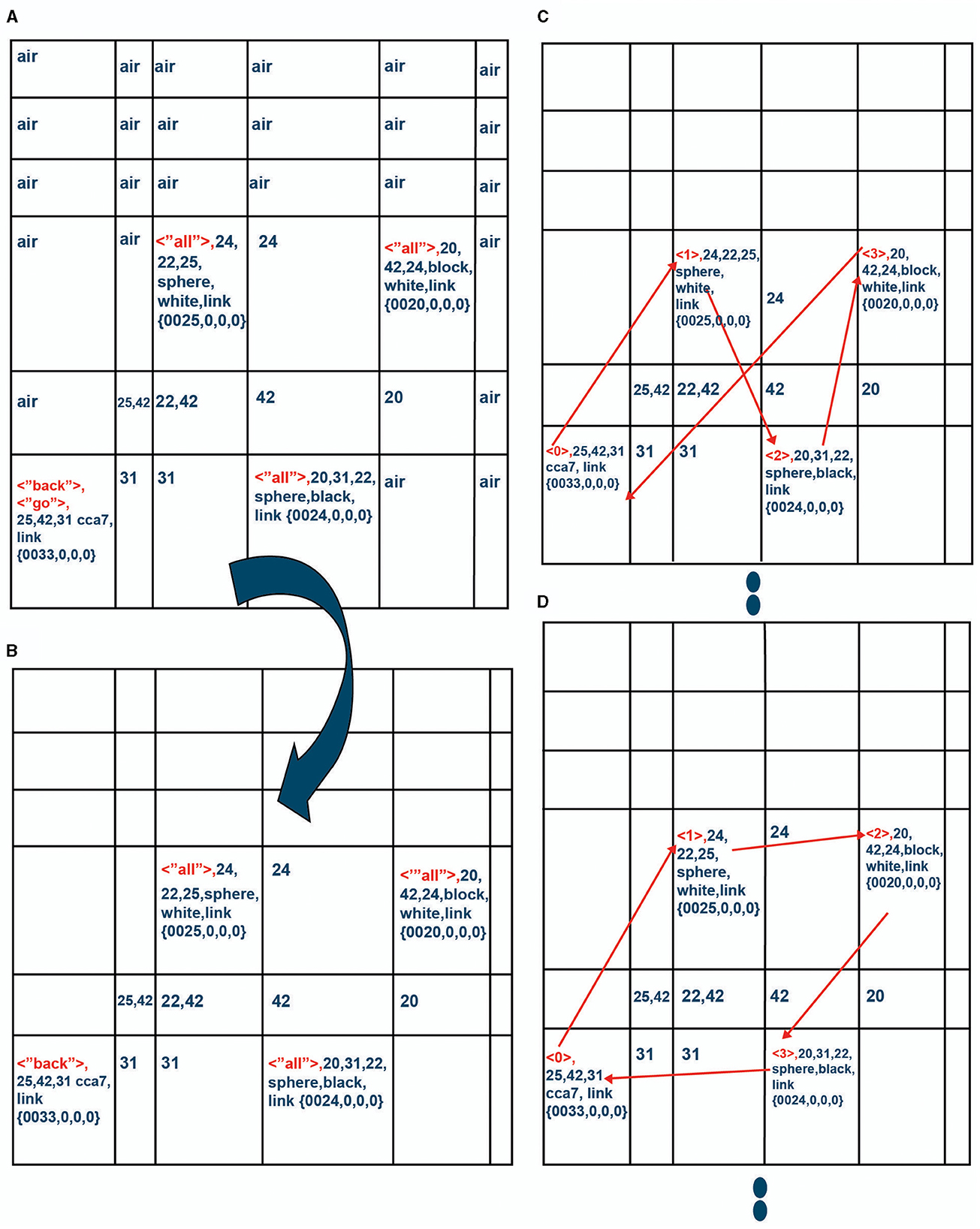

The instinctive primitive “goto()” causes the CCA7 robot to tag a location(s) and then essentially move to whatever location is indicated by the tag(s). The word “all,” which is associated with the action word < “go”> (until another action word is encountered, as in an earlier example above), will cause the tag < “all”> to be placed in all the cells with objects in the navigation map in Navigation Module A (Figure 12A).

Figure 12. (A) The navigation map in Navigation Module A is tagged via the operation of the “goto()” instinctive primitive operating on the navigation maps in Navigation Module A and Navigation Module B. (B) The instinctive primitive “small_plan()” copies Navigation Module A into the 1,023 (n = 1..1023) navigation maps in Navigation Module Bs, removing any action words and just leaving the tagged cells to navigate to. One of the navigation maps of Navigation Module B is shown here. (C) Navigation Module B n = 1: The instinctive primitive “small_plan()” takes the starting tagged cell as the starting point and considers which distance to another tagged cell (i.e., representing an object) is the shortest. The cell that has the shortest distance is tagged with a < 1>. Then, it considers cell < 1> as the starting point and considers which distance to another tagged cell is the shortest. This continues until all the tagged cells in the navigation map are considered and re-tagged with a number indicating in which order they should be navigated. After all cells are navigated, there is a return to the starting position, which has been tagged with a < 0>. This simple nearest neighbor algorithm occurs in the navigation map in the n = 1 Navigation Module B. Note that the sum of the distances is 25+22+20+42 = 109 cm in this navigation map. (D) Navigation Module B n = 2: The simple nearest neighbor algorithm occurs again in the navigation map in the n = 2 Navigation Module B; however, random fluctuations have been introduced (see text). The instinctive primitive “small_plan()” again takes the starting tagged cell as the starting point and considers which distance to another tagged cell (i.e., representing an object) is the shortest, but random fluctuations are now introduced. Note that when deciding which cell to navigate to after cell (2,2,0) containing the white sphere, the random fluctuation introduced here causes “small_plan()” to choose cell (4,2,0) even though the distance of 24 to that cell was not the nearest neighbor. Note that the sum of the distances is 25+24+20+31 = 100 cm in this navigation map.
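The two route sums in the Figure 12 caption can be reproduced with a short sketch. The six pairwise distances below are inferred from the two sums in the caption, assuming both routes visit the white sphere first; they are not read from the figure itself. The labels are hypothetical: “WS” is the white sphere in cell (2,2,0), “B” is the object in cell (4,2,0), and “A” is the remaining object.

```python
from itertools import permutations

# Distances in cm, inferred from the caption sums 25+22+20+42 and 25+24+20+31.
DIST = {
    frozenset(("X", "WS")): 25,
    frozenset(("WS", "A")): 22,
    frozenset(("WS", "B")): 24,
    frozenset(("A", "B")): 20,
    frozenset(("B", "X")): 42,
    frozenset(("A", "X")): 31,
}
OBJECTS = ("WS", "A", "B")

def d(a, b):
    return DIST[frozenset((a, b))]

def tour_length(order):
    """Length of the closed route X -> order... -> X."""
    path = ["X", *order, "X"]
    return sum(d(a, b) for a, b in zip(path, path[1:]))

def nearest_neighbor():
    """Plain nearest neighbor route, as in panel (C)."""
    current, remaining, order = "X", list(OBJECTS), []
    while remaining:
        nxt = min(remaining, key=lambda obj: d(current, obj))
        order.append(nxt)
        remaining.remove(nxt)
        current = nxt
    return order

route_c = nearest_neighbor()          # panel (C)
route_d = ["WS", "B", "A"]            # panel (D): perturbed choice after the white sphere
best = min(permutations(OBJECTS), key=tour_length)

print(route_c, tour_length(route_c))  # ['WS', 'A', 'B'] 109
print(route_d, tour_length(route_d))  # ['WS', 'B', 'A'] 100
print(tour_length(best))              # 100
```

Under these inferred distances, the perturbed route of panel (D) is not only shorter than the plain nearest neighbor route of panel (C) but also matches the shortest of the three distinct tours through the four locations.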

The words “go back” are also associated with the instruction word < “go”> and will cause the tag < “back”> to be placed in the starting cell [which is (0,0,0) in this example]. This can be seen in Figure 12A.

Once the instinctive primitive “goto()” tags the cell(s) where it has to move to, it then decides if it will move to (i.e., “go to”) the cell with the tag. However, if there are multiple tags, i.e., multiple locations to navigate to [“locations > 1” in Equations (118–123)], then the “small_plan()” instinctive primitive is activated instead of moving to a single location. As discussed above, this instinctive primitive will plan a navigation route to whatever multiple tagged locations are indicated on the navigation map(s).
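The decision between moving directly and activating “small_plan()” can be sketched as a simple dispatch on the number of tagged locations. This is a hedged illustration only: the dictionary layout of the navigation map, the function names, and the placeholder cell coordinate (9,9,0) are assumptions made here, and counting only the cells tagged “all” as locations is one possible reading of “locations > 1.”

```python
def tag_cells(nav_map, start_cell):
    """Tag every cell holding an object with 'all' and the start cell with 'back'."""
    for content in nav_map.values():
        if content["objects"]:
            content["tags"].add("all")
    nav_map[start_cell]["tags"].add("back")

def goto(nav_map, start_cell):
    """Tag the map, then either move to the single target or hand over to planning."""
    tag_cells(nav_map, start_cell)
    targets = [cell for cell, content in nav_map.items() if "all" in content["tags"]]
    if len(targets) > 1:            # "locations > 1": plan a route instead of moving
        return "small_plan", targets
    return "move", targets

def make_map(objects_by_cell):
    """Build a hypothetical navigation map with an empty start cell at (0,0,0)."""
    nav_map = {(0, 0, 0): {"objects": [], "tags": set()}}
    for cell, obj in objects_by_cell.items():
        nav_map[cell] = {"objects": [obj], "tags": set()}
    return nav_map

# Cells (2,2,0) and (4,2,0) appear in the text; (9,9,0) is a placeholder coordinate.
scene = make_map({(2, 2, 0): "white sphere", (4, 2, 0): "object B", (9, 9, 0): "object A"})
action, targets = goto(scene, (0, 0, 0))
print(action, len(targets))
```

With three tagged objects the sketch hands control to planning; with a single tagged object it would return the “move” action for that one cell.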