94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Comput. Neurosci., 15 June 2023

Volume 17 - 2023 | https://doi.org/10.3389/fncom.2023.1189949

This article is part of the Research TopicSymmetry as a Guiding Principle in Artificial and Brain Neural Networks, Volume IIView all 7 articles

Parts of this article's content have been modified or rectified in:

Erratum: Covariance properties under natural image transformations for the generalised Gaussian derivative model for visual receptive fields

The property of covariance, also referred to as equivariance, means that an image operator is well-behaved under image transformations, in the sense that the result of applying the image operator to a transformed input image gives essentially a similar result as applying the same image transformation to the output of applying the image operator to the original image. This paper presents a theory of geometric covariance properties in vision, developed for a generalised Gaussian derivative model of receptive fields in the primary visual cortex and the lateral geniculate nucleus, which, in turn, enable geometric invariance properties at higher levels in the visual hierarchy. It is shown how the studied generalised Gaussian derivative model for visual receptive fields obeys true covariance properties under spatial scaling transformations, spatial affine transformations, Galilean transformations and temporal scaling transformations. These covariance properties imply that a vision system, based on image and video measurements in terms of the receptive fields according to the generalised Gaussian derivative model, can, to first order of approximation, handle the image and video deformations between multiple views of objects delimited by smooth surfaces, as well as between multiple views of spatio-temporal events, under varying relative motions between the objects and events in the world and the observer. We conclude by describing implications of the presented theory for biological vision, regarding connections between the variabilities of the shapes of biological visual receptive fields and the variabilities of spatial and spatio-temporal image structures under natural image transformations. Specifically, we formulate experimentally testable biological hypotheses as well as needs for measuring population statistics of receptive field characteristics, originating from predictions from the presented theory, concerning the extent to which the shapes of the biological receptive fields in the primary visual cortex span the variabilities of spatial and spatio-temporal image structures induced by natural image transformations, based on geometric covariance properties.

The image and video data, that a vision system is exposed to, is subject to geometric image transformations, due to variations in the viewing distance, the viewing direction and the relative motion of objects in the world relative to the observer. These natural image transformations do, in turn, cause a substantial variability, by which an object or event in the world may appear in different ways to a visual observer:

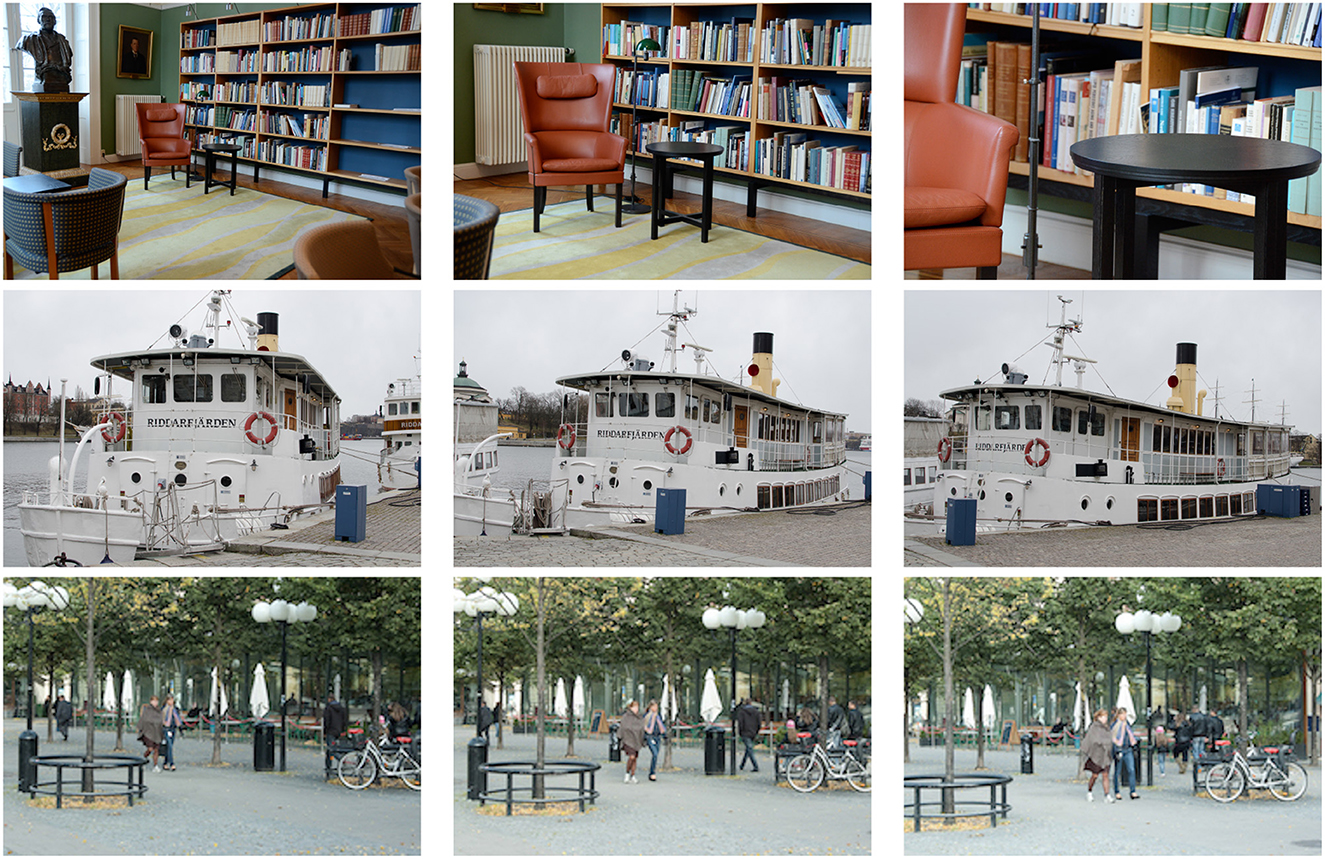

• Variations in the distance between objects in the world and the observer lead to variations in scale, often up to over several orders of magnitude, which, to first order of approximation of the perspective mapping, can be modelled as (uniform) spatial scaling transformations (see Figure 1 top row).

• Variations in the viewing direction between the object and the observer will lead to a wider class of local image deformations, with different amount of foreshortening in different spatial directions, which, to first order of approximation, can be modelled as local spatial affine transformations, where the monocular foreshortening will depend upon the slant angle of a surface patch, and correspond to different amount of scaling in different directions, also complemented by a skewing transformations (see Figure 1 middle row).

• Variations in the relative motion between objects in the world and the viewing direction will additionally transform the joint spatio-temporal video domain in a way that, to first order of approximation, can be modelled as local Galilean transformations. (see Figure 1 bottom row).

Figure 1. Due to the effects of natural image transformations, the perspective projections of spatial objects and spatio-temporal events in the world may appear in substantially different ways depending on the viewing conditions. This figure shows images from natural scenes under variations in (top row) the viewing distance, (middle row) the viewing direction and (bottom row) the relative motion between objects in the world and the observer. By approximating the non-linear perspective mapping by a local linearization (the derivative), these geometric image transformations can, to first order of approximation, be modelled by spatial scaling transformations, spatial affine transformations and Galilean transformations.

In this paper, we will study the transformation properties of receptive field1 responses in the primary visual cortex (V1) under these classes of geometric image transformations, as well as for temporal scaling transformations that correspond to objects in the world that move as well as spatio-temporal events that occur faster or slower. We will also study the transformation properties of neurons in the lateral geniculate nucleus (LGN) under a lower variability over spatial scaling transformations, spatial rotations, temporal scaling transformations and Galilean transformations. An overall message that we will propose is that if the family of visual receptive fields is covariant (or equivariant2) under these classes of natural image transformations, as the family of generalised Gaussian derivative based receptive fields that we will study is, then these covariance properties make it possible for the vision system to, up to first order of approximation, match the receptive field responses under the huge variability caused by these geometric image transformations, which, in turn, will make it possible for the vision system to infer more accurate cues to the structure of the environment.

We will then use these theoretical results to express predictions concerning biological receptive fields in the primary visual cortex and the lateral geniculate nucleus, to characterize to what extent the variability of receptive field shapes, in terms of receptive fields tuned to different orientations, motion directions as well as spatial and temporal scales, as has been found by neurophysiological cell recordings of biological neurons (DeAngelis et al., 1995; Ringach, 2002, 2004; DeAngelis and Anzai, 2004; Conway and Livingstone, 2006; Johnson et al., 2008; De and Horwitz, 2021), could be explained by the primary visual cortex computing a covariant image representation over the basic classes of natural geometric image transformations.

For spatial receptive fields, that integrate spatial information over non-infinitesimal regions over image space, the specific way that the receptive fields accumulate evidence over these non-infinitesimal support regions will crucially determine how well they are able to handle the huge variability of visual stimuli caused by geometric image transformations.

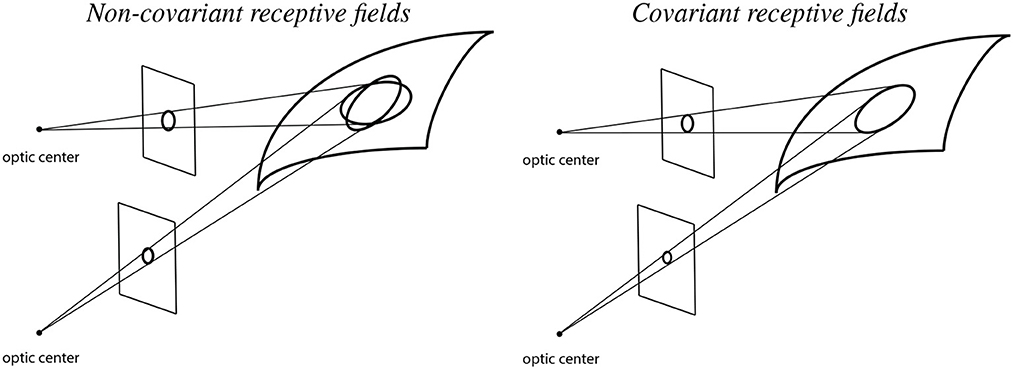

To illustrate the importance of natural image transformations with respect to image measurements in terms of receptive fields, consider a visual observer that views a 3-D scene from two different viewing directions as shown in Figure 2.

Figure 2. Illustration of backprojected receptive fields for a visual observer that observes a smooth surface from two different viewing directions in the cases of (Left) non-covariant receptive fields and (Right) covariant receptive fields. When using non-covariant receptive fields, the backprojected receptive fields will not match, which may cause problems for higher level visual processes that aim at computing estimates of local surface orientation, whereas when using covariant receptive fields, the backprojected receptive fields can be adjusted to match, which in turn enable more accurate estimates of local surface orientation (See the text in Section 1.1. for a more detailed explanation.).

Let us initially assume that the receptive fields for the two different viewing conditions are based on image measurements over rotationally symmetric support regions in the two image domains only. (More precisely, with respect to the theory that is to be developed next, concerning receptive fields that are defined from spatial derivatives of spatial smoothing kernels, the first crucial factor in this model concerns the support regions of the underlying spatial smoothing kernels of the spatial receptive fields in the two image domains).

If we backproject these rotationally symmetric support regions in the two image domains to the tangent plane of the surface in the world, then these backprojections will, to first order of approximation, be ellipses. Those ellipses will, however, not coincide in the tangent plane of the surface, implying that if we try to make use of the difference between the image measurements over the two spatial image domains, then there will be an unavoidable source of error, caused by the difference between the backprojected receptive fields, which will substantially affect the accuracy when computing cues to the structure of the world.

If we instead allow ourselves to consider different shapes of the support regions in the two image domains, and also match the parameters that determine their shapes such that they coincide in the tangent plane of the surface, then we can, on the other hand, to first order of approximation, reduce the mismatch source to the error. For the affine Gaussian derivative model for spatial receptive fields developed in this article, that matching property is achieved by matching the spatial covariance matrices of the affine Gaussian kernels, in such a way that the support regions of the affine Gaussian kernels may be ellipses in the respective image domains, and may, for example, in the most simple case correspond to circles in the tangent plane of the surface.

Of course, a complementary problems then concerns how to match the actual values of the receptive field parameters in a particular viewing situation. That problem can, however, be seen as a complementary more algorithmic task, in contrast to the fundamental problem of eliminating an otherwise inescapable geometric source of error. In Lindeberg and Gårding (1997) one example of such a computational algorithm was developed for the task of estimating local surface orientation from either monocular or binocular cues. It was demonstrated that it was possible to design an iterative procedure for successively adapting the shapes of the receptive fields, in such a way that it reduced the reconstruction error by about an order of magnitude after a few (2–3) iterations.

While this specific example for illustrating the influence of the backprojected support regions of the receptive fields, when deriving cues to the structure of the world from local image measurements, is developed for the case of spatial affine transformations under a binocular or multi-view viewing situation, a similar geometric problem regarding the backprojected support regions of the receptive fields arises also under monocular projection, as well as for spatio-temporal processing under variations in the relative motion between objects or events in the world and the observer, then concerning the backprojections of the spatio-temporal receptive fields in the 2+1-D video domain to the surfaces of the objects embedded in the 3+1-D world.

A main subject of this paper is to describe a theory for covariant receptive fields under natural image transformations, which makes it possible to perfectly match the backprojected receptive field responses under natural image transformations, approximated by spatial scaling transformations, spatial affine transformations, Galilean transformations and temporal scaling transformations. Since these image transformations may be present in essentially every natural imaging situation, they constitute essential components to include in models of visual perception.

The receptive fields of the neurons in the visual pathway constitute the main computational primitives in the early stages of the visual sensory system. These visual receptive fields integrate and process visual information over local regions over space and time, which is then passed on to higher layers. Capturing the functional properties of visual receptive fields, as well as trying to explain what determines their computational function, constitute key ingredients in understanding vision.

In this work, we will for our theoretical studies build upon the Gaussian derivative model for visual receptive fields, which was initially theoretically proposed by Koenderink (1984), Koenderink and van Doorn (1987, 1992), used for modelling biological receptive fields by Young (1987), Young and Lesperance (2001), Young et al. (2001) and then generalized by Lindeberg (2013, 2021). With regard to the topic of the thematic collection on “Symmetry as a Guiding Principle in Artificial and Brain Neural Networks”, the subject of this article is to describe how symmetry properties in terms of covariance properties under natural image transformations constitute an essential component in that normative theory of visual receptive fields, as well as how such covariance properties may be important with regard to biological vision, specifically to understand the organization of the receptive fields in the primary visual cortex.

It will be shown that a purely spatial version of the studied generalised Gaussian derivative model for visual receptive fields allows for spatial scale covariance and spatial affine covariance, implying that it can, to first order of approximation, handle variations in the distance between objects in the world and the observer, as well as first-order approximations of the geometric transformations induced by viewing the surface of a smooth 3-D object from different viewing distances and viewing directions in the world. It will also be shown that for a more general spatio-temporal version of the resulting generalised Gaussian derivative model, the covariance properties do, in addition, extend to local Galilean transformations, which makes it possible to perfectly handle first-order approximations of the geometric transformations induced by viewing objects in the world under different relative motions between the object and the observer. The spatio-temporal receptive fields in this model do also obey temporal scale covariance, which makes it possible to handle objects that move as well as spatio-temporal events that occur faster or slower in the world. In these ways, the resulting model for visual receptive fields respects the main classes of geometric image transformations in vision.

We argue that if the goal is to build realistic computational models of biological receptive fields, or more generally a computational model of a visual system that should be able to handle general classes of natural image or video data in a robust and stable manner, it is essential that the internal visual representations obey sufficient covariance properties under these classes of geometric image transformations, to, in turn, make it possible to achieve geometric invariance properties at the systems level of the visual system.

We conclude by using predictions from the presented theory to describe implications for biological vision. Specifically, we state experimentally testable hypotheses to explore to what extent the variabilities of receptive field shapes in the primary visual cortex span corresponding variabilities as described by the basic classes of natural image transformations, in terms of spatial scaling transformations, spatial affine transformations, Galilean transformations and temporal scaling transformations. These hypotheses are, in turn, intended for experimentalists to explore and characterize to what extent the primary visual cortex computes a covariant representation of receptive field responses over those classes of natural image transformations.

In this context, we do also describe theoretically how receptive field responses at coarser levels of spatial and temporal scales can be computed from finer scales using cascade smoothing properties over spatial and temporal scales, meaning that it could be sufficient for the visual receptive fields in the first layers to only implement the receptive fields at the finest level of spatial and temporal scales, from which coarser scale representations could then be inferred at higher levels in the visual hierarchy.

In this section, we will describe the generalised Gaussian derivative model for linear receptive fields, in the cases of either (i) image data defined over a purely spatial domain, or (ii) video data defined over a joint spatio-temporal domain, including brief conceptual overviews of how this model can be derived in a principled axiomatic manner, from symmetry properties in relation to the first layers of the visual hierarchy. We do also give pointers to previously established results that demonstrate how these models do qualitatively very well model biological receptive fields in the lateral geniculate nucleus (LGN) and the primary visual cortex (V1), as established by comparisons to results of neurophysiological recordings of visual neurons. This theoretical background will then constitute the theoretical background for the material in Section 3, concerning covariance properties of the visual receptive fields according to the Gaussian derivative model, and the implications of such covariance properties for modelling and explaining the families of receptive field shapes found in biological vision.

For image data defined over a 2-D spatial domain with image coordinates , an axiomatic derivation in Lindeberg (2011); Theorem 5, based on the assumptions of linearity, translational covariance, semi-group3 structure over scale and non-creation of new structures from finer to coarser levels of scales in terms of non-enhancement of local extrema4, combined with certain regularity assumptions, shows that under evolution of a spatial scale parameter s, that reflects the spatial size of the receptive fields, the spatially smoothed image representations L(x1, x2; s) that underlie the output from the receptive fields must satisfy a spatial diffusion equation of the form

with initial condition L(x1, x2; 0) = f(x1, x2), where f(x1, x2) is the input image, is the spatial gradient vector, Σ(x1, x2) is a spatial covariance matrix and δ(x1, x2) is a spatial drift vector.

The physical interpretation of this equation is that if we think of the intensity distribution L in the image plane as a heat distribution, then this equation describes how the heat distribution will evolve over the virtual time variable s, where hot spots will get successively cooler and cold spots will get successively warmer. The evolution will in this sense serve as a spatial smoothing process over the variable s, which we henceforth will refer to as a spatial scale parameter of the receptive fields.

The spatial covariance matrix Σ(x1, x2) in this equation determines how much spatial smoothing is performed in different directions in the spatial domain, whereas the spatial drift vector δ(x1, x2) implies that the smoothed image structures may move spatially over the image domain during the smoothing process. The latter property can, for example, be used for aligning image structures under variations in the disparity field for a binocular visual observer. For the rest of the present treatment, we will, however, focus on monocular viewing conditions and disregard that term in the case of purely spatial image data. For the case of spatio-temporal data, to be considered later, a correspondence to this drift term will on the other hand be used for handling motion, to perform spatial smoothing of objects that change the positions of their projections in the image plane over time, without causing excessive amounts of motion blur, if desirable, as it will be for a receptive field tuned to a particular motion direction.

In terms of the spatial smoothing kernels that underlie the definition of a family of spatial receptive fields, that integrate information over a spatial support region in the image domain, this smoothing process corresponds to smoothing with spatially shifted affine Gaussian kernels of the form

for a scale dependent spatial covariance matrix Σs and a scale-dependent spatial drift vector δs of the form Σs = s Σ(x1, x2) and δs = s δ(x1, x2). Requiring these spatial smoothing kernels to additionally be mirror symmetric around the origin, and removing the parameter s from the notation, does, in turn, lead to the regular family of affine Gaussian kernels of the form

Considering that spatial derivatives of the output of affine Gaussian convolution also satisfy the diffusion equation (1), and incorporating scale-normalised derivatives of the form (Lindeberg, 1998)

where α = (α1, α2) denotes the order of differentiation, as well as combining multiple partial derivatives into directional derivative operators according to

where we choose the directions φ for computing the directional derivative to be aligned with the directions of the eigenvectors of the spatial covariance matrix Σ, we obtain the following canonical model5 for oriented spatial receptive fields of the form [Lindeberg, 2021; Equation (23)]

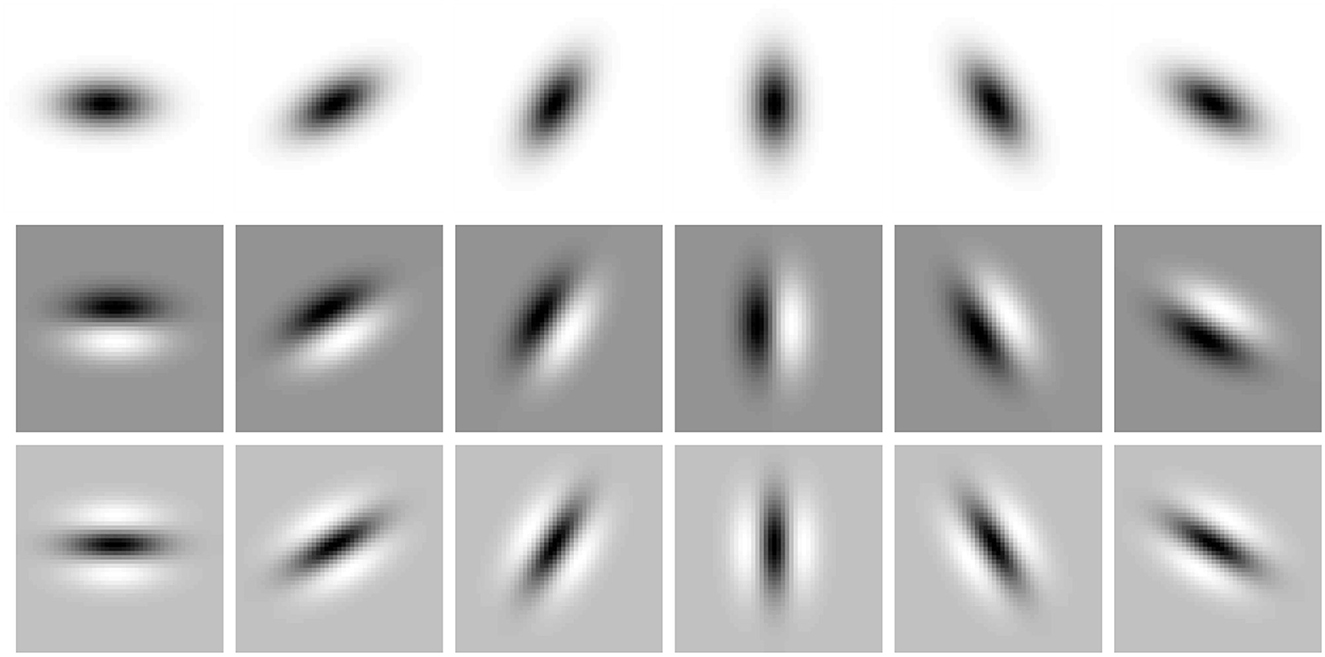

Figure 3 shows examples of such affine Gaussian kernels with their directional derivatives up to order two, for different orientations in the image domain, expressed for one specific choice of the ratio between the eigenvalues of the spatial covariance matrix Σ. More generally, this model also comprises variations of the ratio between the eigenvalues, as illustrated in Figure 4 Left, which visualizes a uniform distribution of zero-order affine Gaussian kernels on a hemisphere. The receptive fields in the latter illustration should then, in turn, be complemented by directional derivatives of such kernels up to a given order of spatial differentiation, see Figure 4 Right for an illustration of such directional derivative receptive fields of order one. In the most idealised version of the theory, one could think of all these receptive fields, with their directional derivatives up to a certain order, as being present at every point in the visual field (if we disregard the linear increase in minimal receptive field size from the fovea towards the periphery in a foveal vision system). With respect to a specific implementation of this model in a specific vision system, it then constitutes a complementary design choice, to sample the variability of that parameter space in an efficient manner.

Figure 3. Illustration of the variability of receptive field shapes over spatial rotations and the order of spatial differentiation. This figure shows affine Gaussian kernels g(x1, x2; Σ) with their directional derivatives ∂φg(x1, x2; Σ) and ∂φφg(x1, x2; Σ) up to order two, here with the eigenvalues of Σ being λ1 = 64, λ2 = 16 and for the image orientations φ = 0, π/6, π/3, π/2, 2π/3, 5π/6. With regard to the classes of image transformations considered in this paper, this figure shows an expansion over the rotation group. In addition, the family of affine Gaussian kernels also comprises an expansion over a variability over the ratio between the eigenvalues of the spatial covariance matrix Σ, that determines the shape of the affine Gaussian kernels, see Figure 4 for complementary illustrations (Horizontal dimension: x ∈ [−24, 24]. Vertical dimension: y ∈ [−24, 24].).

Figure 4. Illustration of the variability of receptive field shapes over spatial affine transformations that also involve a variability of the eccentricity of the receptive fields. (Left) Zero-order affine Gaussian kernels for different orientations φ in the image domain as well as for different ratios between the eigenvalues λ1 and λ2 of the spatial covariance matrix Σ, here illustrated in terms of a uniform distribution of isotropic receptive field shapes on a hemisphere, which will correspond to anisotropic affine Gaussian receptive fields when mapping rotationally symmetric Gaussian receptive fields from the tangent planes of the sphere to the image domain by orthographic projection. (Right) First-order directional derivatives of the zero-order affine Gaussian kernels according to that distribution, with the spatial direction for computing the directional derivatives aligned with the orientation of one of the eigenvectors of the spatial covariance matrix Σ that determines the shape of the affine Gaussian kernel. When going from the center of each of these images to the boundaries, the eccentricity, defined as the ratio between the square roots of the smaller and larger eigenvalues λmin and λmax of Σ varies from 1 to 0, where the receptive fields at the center are maximally isotropic, whereas the receptive fields at the boundaries are maximally anisotropic.

For rotationally symmetric receptive fields over the spatial domain, a corresponding model can instead be expressed of the form [Lindeberg, 2021; Equation (39)]

In Lindeberg (2013) it is proposed that the purely spatial component of simple cells in the primary visual cortex can be modelled by directional derivatives of affine Gaussian kernels of the form (6); see Figures 16 and 17 in Lindeberg (2021) for illustrations. It is also proposed that the purely spatial component of LGN neurons can be modelled by Laplacians of Gaussians of the form (7); see Figure 13 in Lindeberg (2021).

The affine Gaussian derivative model of simple cells in (6) goes beyond the previous theoretical studies by Koenderink and van Doorn (1987, 1992) and as well as the previous biological modelling work by Young (1987), in that the underlying spatial smoothing kernels in our model are anisotropic, as opposed to isotropic in Young's and Koenderink and van Doorn's models. This anisotropy leads to better approximation of the biological receptive fields, which are more elongated (anisotropic) over the spatial image domain than can be accurately captured based on derivatives of rotationally symmetric Gaussian kernels. The generalised Gaussian derivative model based on affine Gaussian kernels does also enable affine covariance, as opposed to mere scale and rotational covariance for the regular Gaussian derivative model, based on derivatives of the rotationally symmetric Gaussian kernel.

For video data defined over a 2+1-D non-causal spatio-temporal domain with coordinates , a corresponding axiomatic derivation, also based on Theorem 5 in Lindeberg (2011), while now formulated over a joint spatio-temporal domain, shows that under evolution over a joint spatio-temporal scale parameter u, the spatio-temporally smoothed video representations L(x1, x2, t; s, τ, v, Σ), that underlie the output from the receptive fields, must satisfy a spatio-temporal diffusion equation of the form

for u being some convex combination of the spatial scale parameter s and the temporal scale parameter τ, and with initial condition L(x1, x2, t; 0, 0, v, Σ) = f(x1, x2, t), where f(x1, x2, t) is the input video, is the spatio-temporal gradient, Σ(x1, x2, t) is a spatio-temporal covariance matrix and δ(x1, x2, t) is a spatio-temporal drift vector.

If we think of the intensity distribution L over joint space-time as a heat distribution, then this equation describes how the heat distribution will evolve over an additional virtual time variable u (operating at a higher meta level than the physical time variable t), with the spatio-temporal covariance matrix Σ(x1, x2, t) describing how much smoothing of the virtual heat distribution will be performed in different directions in joint (real) space-time, whereas the spatio-temporal drift vector δ(x1, x2, t) describes how the image structures may move in joint (real) space-time as function of the virtual variable u, which is important for handling the perspective projections of objects that move in the real world.

In Lindeberg (2021); Appendix B.1 it is shown that the solution of this equation can be expressed as the convolution with spatio-temporal kernels of the form

where Σ is a spatial covariance matrix, v = (v1, v2) is a velocity vector and h(t; τ) is a 1-D temporal Gaussian kernel. Combining this model with spatial directional derivatives in a similar way as for the spatial model, and introducing scale-normalised temporal derivatives (Lindeberg, 2016) of the form

as well as corresponding velocity-adapted temporal derivatives according to

then leads to a non-causal spatio-temporal receptive field model of the form [Lindeberg, 2021; Equation (32)]

Regarding the more realistic case of video data defined over a 2+1-D time-causal spatio-temporal domain, in which the future cannot be accessed, theoretical arguments in Lindeberg (2016, 2021); [Appendix B.2; see Equation (33)] lead to spatio-temporal smoothing kernels of a similar form as in (12),

but now with the non-causal temporal Gaussian kernel replaced by a time-causal temporal kernel referred to as the time-causal limit kernel

defined by having a Fourier transform of the form

and corresponding the an infinite set of truncated exponential kernels coupled in cascade, with specifically chosen time constants to obtain temporal scale covariance (Lindeberg, 2016, Section 5; Lindeberg, 2023b, Section 3.1).

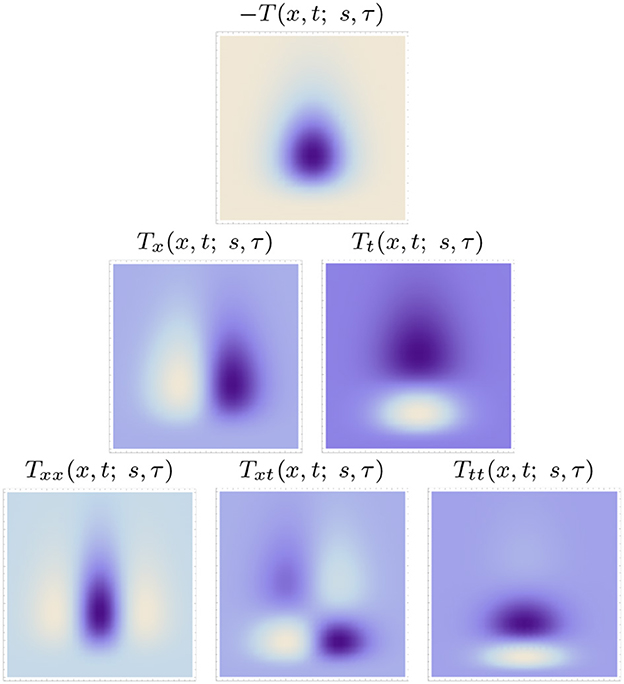

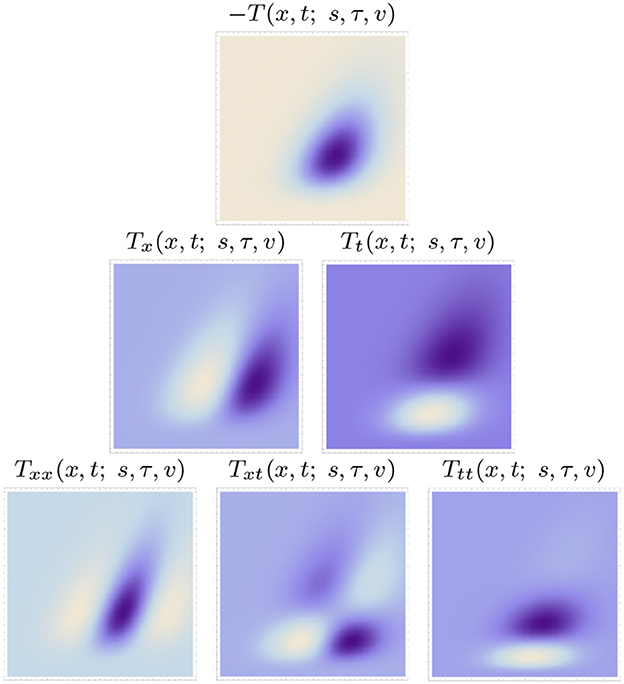

Figures 5, 6 show examples of such spatio-temporal receptive fields up to order two, for the case of a 1+1-D spatio-temporal domain. In Figure 5, where the image velocity v is zero, the receptive fields are space-time separable, whereas in Figure 6, where the image velocity is non-zero, the receptive fields are not separable over space-time. In the most idealised version of the theory, we could think of these velocity-adapted receptive fields, for all velocity values within some range, as being present at every point in the image domain, see Figure 7 for an illustration.

Figure 5. Space-time separable kernels according to the time-causal spatio-temporal receptive field model in (13) up to order two over a 1+1-D spatio-temporal domain for image velocity v = 0, using the time-causal limit kernel (14) with distribution parameter for smoothing over the temporal domain, at spatial scale s = 1 and temporal scale τ = 1. (Horizontal dimension: space x ∈ [−3.5, 3.5]. Vertical dimension: time t ∈ [0, 5].)

Figure 6. Non-separable spatio-temporal kernels according to the time-causal spatio-temporal receptive field model in (13) up to order two over a 1+1-D spatio-temporal domain for image velocity v = 1/2, using the time-causal limit kernel (14) with distribution parameter for smoothing over the temporal domain, at spatial scale s = 1 and temporal scale τ = 1 (Horizontal dimension: space x ∈ [−3.5, 3.5]. Vertical dimension: time t ∈ [0, 5].).

Figure 7. Illustration of the variability of receptive field shapes over Galilean motions. This figure shows zero-order spatio-temporal receptive fields of the form (13), for a self-similar distribution of the velocity values v, using Gaussian smoothing over the spatial domain and smoothing with the time-causal limit kernel (14) with distribution parameter over the temporal domain, in the case of a 1+1-D time-causal spatio-temporal domain, for spatial scale parameter s = 1 and temporal scale parameter τ = 4. These primitive spatio-temporal smoothing operations should, in turn, be complemented by spatial differentiation and velocity-adapted temporal differentiation operators according to for different orders m and n of spatial and temporal differentiation, respectively, to generate the corresponding family of spatio-temporal receptive fields of the form (13). The central receptive field in this illustration, for zero image velocity is space-time separable, whereas the other receptive fields, for velocity values v = −0.4, −0.2, −0.1, 0.1, 0.2 and 0.4 from left to right, are not separable (Horizontal dimension: space x ∈ [−4, 4]. Vertical dimension: time t ∈ [0, 8].).

In this way, the receptive field family will be Galilean covariant, which makes it possible for the vision system to handle observations of moving objects and events in the world, irrespective of the relative motion between the object or the event in the world and the viewing direction.

For rotationally symmetric receptive fields over the spatial domain, a corresponding model can instead be expressed of the form [Lindeberg, 2021; Equation (39)]

In Lindeberg (2016) it is proposed that simple cells in the primary visual cortex can be modelled by spatio-temporal kernels of the form (13) for h(t; τ) = ψ(t; t) according to (15); see Figure 18 in Lindeberg (2021) for illustrations. It is also proposed that lagged and non-lagged LGN neurons can be modelled by temporal derivatives of Laplacians of Gaussians of the form (16); see Figure 12 in Lindeberg (2021).

These models go beyond the previous modelling work by Young and Lesperance (2001), Young et al. (2001) in that our models are truly time-causal, as opposed to Young's non-causal model, and also that the parameterization of the filter shapes in our model is different, more in line with the geometry of the spatio-temporal image transformations.

Both of the above spatial and spatio-temporal models can be extended from operating on pure grey-level image information to operating on colour image data, by instead applying these models to each channel of a colour-opponent colour space; see Lindeberg (2013, 2021) for further details as well as modelling results. Since the geometric image transformations have the same effect on colour-opponent channels as on grey-level information, we do here in this article, without loss of generality, develop the theory for the case of grey-level images only, while noting that similar algebraic transformation properties will hold for all the channels in a colour-opponent representation of the image or video data.

In this section, we will describe covariance properties of the above described generalised Gaussian derivative model, in the cases of either (i) spatial receptive fields over a purely spatial domain, or (ii) spatio-temporal receptive fields over a joint spatio-temporal domain.

An initial treatment of this topic, in less developed form, was given in the supplementary material of Lindeberg (2021), however, on a format that may not be easy to digest for a reader with neuroscientific background. In the present treatment, we develop the covariance properties of the spatial and spatio-temporal receptive field models in an extended as well as more explicit manner, that should be more easy to access. Specifically, we incorporate the transformation properties of the full derivative-based receptive field models as opposed to only the transformation properties of the spatial or spatio-temporal smoothing processes in the previous treatment, and also comprising more developed interpretations of these covariance properties with regard to the associated variabilities in image and video data under natural image transformations. Then, in Section 3.2. we will use these theoretical results to formulate predictions with regard to implications for biological vision, including the formulation of a set of testable biological hypotheses as well as needs for characterising the distributions of biological receptive field shapes.

It will be shown that the purely spatial model for simple cells in (16) is covariant under affine image transformations of the form

which includes the special cases of covariance under spatial scaling transformations with

and spatial rotations with

For the spatial model of LGN neurons (7), affine covariance cannot be achieved, because the underlying spatial smoothing kernels are rotationally symmetric. That model does instead obey covariance under spatial scaling transformations and spatial rotations.

It will also be shown that the joint spatio-temporal model of simple cells in (13) is, in addition to affine covariant, also covariant under Galilean transformations of the form

as well as covariant under temporal scaling transformations

For the joint spatio-temporal model of LGN neurons (16), the covariance properties are, however, restricted to spatial scaling transformations, spatial rotations and temporal scaling transformations.6

The real-world significance of these covariance properties is that:

• If we linearize the perspective mapping from a local surface patch on a smooth surface in the world to the image plane, then the deformation of a pattern on the surface to the image plane can, to first order of approximation, be modelled as a local affine transformation of the form (17).

• If we linearize the projective mapping between two views of a surface patch from different viewing directions, then the projections of a pattern on the surface to the two images can, to first order of approximation, be related by an affine transformation of the form (17).

• If we view an object or a spatio-temporal event in the world in two situations, that correspond to different relative velocities in relation to the viewing direction, then the two spatio-temporal image patterns can, to first order of approximation, be related by local Galilean transformations of the form (20).

• If an object moves or an event in the world occurs faster or slower, then the spatio-temporal image patterns arising from that moving object or event are related by a temporal scaling transformation of the form (21).

By the family of receptive fields being covariant under these image transformation implies that the vision system will have the potential ability to perfectly match the output from the receptive field families, given two or more views of the same object or event in the world. In this way, the vision system will have the potential of substantially reducing the measurement errors, when inferring cues about properties in the world from image measurements in terms of receptive fields. For example, in an early work on affine covariant and affine invariant receptive field representations based on affine Gaussian kernels, it was demonstrated that it is possible to design algorithms that reduce the error in estimating local surface orientation from monocular or binocular cues by an order of magnitude, compared to using receptive fields based on the rotationally symmetric Gaussian kernel (Lindeberg and Gårding, 1997; see the experimental results in Tables 1–3).

Based on this theoretical reasoning, in combination with previous experimental support, we argue that it is essential for an artificial or biological vision system to obey sufficient covariance properties, or sufficient approximations thereof, in order to robustly handle the variabilities in image and video data caused by the natural image transformations that arise when viewing objects and events in a complex natural environment.

When applying an image transformation between two image domains, not only the spatial or the spatio-temporal representations of the receptive field output need to be transformed, but also the parameters for the receptive field models need to be appropriately matched. In other words, it is necessary to also derive the transformation properties of the spatial scale parameter s and the spatial covariance matrix Σ for the spatial model, and additionally the transformation properties of the temporal scale parameter τ and the image velocity v for the spatio-temporal model.

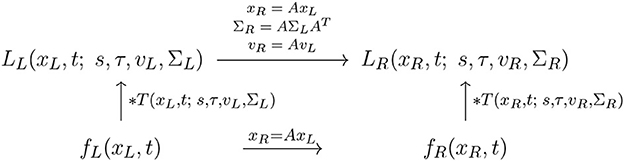

In the following subsections, we will derive the covariance properties of the receptive field models under the respective classes of image transformations. To highlight how the parameters are also affected by the image transformations, we will therefore express these covariance properties in terms of explicit image transformations regarding the output from the receptive field models, complemented by explicit commutative diagrams. To reduce the complexity of the expressions, we will focus on the transformation properties of the output from the underlying spatial and spatio-temporal smoothing kernels only.

The output from the actual derivative-based receptive fields can then be obtained by also complementing the transformation properties of the output from the spatial and spatio-temporal smoothing operations with the transformation properties of the respective spatial and temporal derivative operators, where spatial derivatives transform according to

under an affine transformation (17) of the form

whereas spatio-temporal derivatives transform according to

under a Galilean transformation (20) of the form

with and .

By necessity, the treatment that follows next in this section will be somewhat technical. The hasty reader may skip the mathematical derivations in the following text and instead focus on the resulting commutative diagrams in Figures 8–13, which describe the essence of the transformation properties of the receptive fields in the generalised Gaussian derivative model.

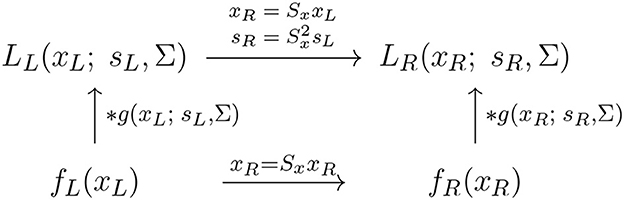

Figure 8. Commutative diagram for the spatial smoothing component in the purely spatial receptive field model (16) under spatial affine transformations of the image domain. This commutative diagram, which should be read from the lower left corner to the upper right corner, means that irrespective of the input image fL(xL) is first subject to a spatial affine transformation xR = AxL and then smoothed with an affine Gaussian kernel g(xR; s, ΣR), or instead directly convolved with an affine Gaussian kernel g(xL; s, ΣL) and then subject to a similar affine transformation, we do then get the same result, provided that the spatial covariance matrices are related according to (and assuming that the spatial scale parameters for the two domain sR = sL = s are the same).

To model the essential effect of the receptive field output in terms of the spatial smoothing transformations applied to two spatial image patterns fL(xL) and fR(xR), that are related according to a spatial affine transformation (17) of the form

let us define the corresponding spatially smoothed representations LL(xL; s, ΣL) and LR(xL; s, ΣR) obtained by convolving fL(xL) and fR(xR) with affine Gaussian kernels of the form (3), using spatial scale parameters sL and sR as well as spatial covariance matrices ΣL and ΣR, respectively.

Then, according to Lindeberg and Gårding (1997); Section 4.1, these spatially smoothed representations7 are related according to

for

which constitutes an affine covariant transformation property, as illustrated in the commutative diagram in Figure 8. Specifically, the affine covariance property implies that for every receptive field output from the image fL(xL) computed with spatial covariance matrix ΣL, there exists a corresponding spatial covariance matrix ΣR, such that the receptive field output from the image fR(xR) using that covariance matrix can be perfectly matched to the receptive field output from image fL(xL) using the spatial covariance matrix ΣL.

This property thus means that the family of spatial receptive fields will, up to first order of approximation, have the ability to handle the image deformations induced between the perspective projections of multiple views of a smooth local surface patch in the world.

For the spatial LGN model in (7), a weaker rotational covariance property holds, implying that under a spatial rotation

where R is a 2-D rotation matrix, the corresponding spatially smoothed representations are related according to

This property implies that image structures arising from projections of objects that rotate around an axis aligned with the viewing direction are handled in a structurally similar manner.

In the special case when the spatial affine transformation represented by the affine matrix A reduces to a scaling transformation, having scaling matrix S with scaling factor Sx according to (18)

the above affine covariance relation reduces to the form

provided that the spatial scale parameters are related according to

and assuming that the spatial covariance matrices are the same

With regard to the previous treatment in Section 2.1.1, this reflects the scale covariance property of the spatial smoothing transformation underlying the simple cell model in (6), as illustrated in the commutative diagram in Figure 9.

Figure 9. Commutative diagram for the spatial smoothing component in the purely spatial receptive field model (16) under spatial scaling transformations of the image domain. This commutative diagram, which should be read from the lower left corner to the upper right corner, means that irrespective of the input image fL(xL) is first subject to spatial scaling transformation xR = SxxL and then smoothed with an affine Gaussian kernel g(xR; sR, Σ), or instead directly convolved with an affine Gaussian kernel g(xL; sL, Σ) and then subject to a similar affine transformation, we do then get the same result, provided that the spatial scale parameters are related according to (and assuming that the spatial covariance matrices for the two image domains are the same ΣR = ΣL = Σ).

A corresponding spatial scale covariance property does also hold for the spatial smoothing component in the spatial LGN model in (7), and is of the form

provided that the spatial scale parameters are related according to

This scale covariance property implies that the spatial receptive field family will, up to first order of approximation, have the ability to handle the image deformations induced by viewing an object from different distances relative to the observer, as well as handling objects in the world that have similar spatial appearance, while being of different physical size.

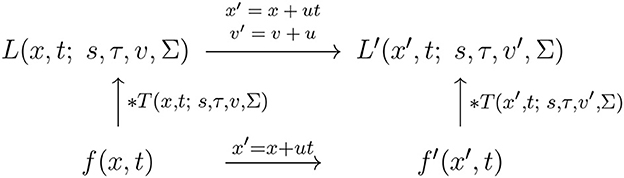

To model the essential effect of the receptive field output in terms of the spatio-temporal smoothing transformation applied to two spatio-temporal video patterns f(p) and f′(p′), that are related according to a Galilean transformation (20) according to

for t′ = t′ and

let us define the corresponding spatio-temporally smoothed video representations8 L(x, t; s, τ, v, Σ) and L′(x′, t′; s′, τ′, v′, Σ′) obtained by convolving f(p) and f′(p) with spatio-temporal smoothing kernels of the form (9) using spatial scale parameters s and s′, temporal scale parameters τ and τ′, velocity vectors v and v′, and spatial covariance matrices Σ and Σ′, respectively:

By relating these two representations to each other, according to a change of variables, it then follows that the spatio-temporally smoothed video representations are related according to the Galilean covariance property

provided that the velocity parameters for the two different receptive field models are related according to

and assuming that the other receptive field parameters are the same, i.e., that s′ = s, Σ′ = Σ and τ′ = τ, see the commutative diagram in Figure 10 for an illustration.

Figure 10. Commutative diagram for the spatio-temporal smoothing component in the joint spatio-temporal receptive field model (13) under Galilean transformations over a spatio-temporal video domain. This commutative diagram, which should be read from the lower left corner to the upper right corner, means that irrespective of the input video f(x, t) is first subject to Galilean transformation x′ = x′+u and then smoothed with a spatio-temporal kernel kernel T(x′, t; s, τ, v′, Σ), or instead directly convolved with the spatio-temporal smoothing kernel T(x, t; s, τ, v, Σ) and then subject to a similar Galilean transformation, we do then get the same result, provided that the velocity parameters of the spatio-temporal smoothing kernels are related according to v′ = v + u (and assuming that the other parameters in the spatio-temporal receptive field models are the same).

The regular LGN model (7), based on space-time separable receptive fields, is not covariant under Galilean transformations. Motivated by the fact that there are neurophysiological results showing that that there also exist neurons in the LGN that are sensitive to motion directions (Ghodrati et al., 2017, Section 1.4.2), we could, however, also formulate a tentative Galilean covariant receptive field model for LGN neurons, having rotationally symmetric receptive fields over the spatial domain, according to

Then, the underlying purely spatio-temporally smoothed representations, disregarding the Laplacian operator and the temporal derivative operator , will under a Galilean transformation (38) be related according to

provided that the velocity parameters for the two different receptive field models are related according to

and assuming that the other receptive field parameters are the same, i.e., that s′ = s and τ′ = τ.

These two Galilean covariance properties mean that the corresponding families of spatio-temporal receptive fields will, up to first order of approximation, have the ability to handle the image deformations induced between objects that move, as well as spatio-temporal events that occur, with different relative velocities between the object/event and the observer.

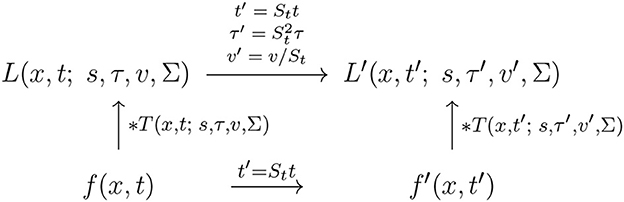

To model the essential effect of the receptive field output in terms of the spatio-temporal smoothing transformations applied to two spatio-temporal video patterns f(p) and f′(p′), that are related according to a temporal scaling transformation (21) according to

for x′ = x and

with the temporal scaling factor St restricted9 to being an integer10 power of the distribution parameter c > 1 of the time-causal limit kernel ψ(t; τ, c) in the time-causal spatio-temporal receptive field model

let us analogously to above define the corresponding spatio-temporally smoothed video representations L(x, t; s, τ, v, Σ) and L′(x′, t′; s′, τ′, v′, Σ′, v′) of f(p) and f′(p′), respectively, according to (39) and (40).

Then, due to the scale-covariant property of the time-causal limit kernel [Lindeberg, 2016, Equation (47); Lindeberg, 2023b, Equation (34)], these spatio-temporally smoothed video representations are related according to the temporal scale covariance property

provided that the temporal scale parameters and the velocity parameters for the two receptive field models are matched according to

and assuming that the other receptive field parameters are the same, i.e., that s′ = s and Σ′ = Σ, see the commutative diagram in Figure 11 for an illustration.

Figure 11. Commutative diagram for the spatio-temporal smoothing component in the joint spatio-temporal receptive field model (13) under temporal scaling transformations over a spatio-temporal video domain. This commutative diagram, which should be read from the lower left corner to the upper right corner, means that irrespective of the input video f(x, t) is first subject to a temporal scaling transformation and then smoothed with a spatio-temporal kernel kernel T(x, t′; s, τ′, v′, Σ), or instead directly convolved with the spatio-temporal smoothing kernel T(x, t; s, τ, v, Σ) and then subject to a similar temporal scaling transformation, we do then get the same result, provided that the temporal scale parameters as well as the velocity parameters of the spatio-temporal smoothing kernels are matched according to and (and assuming that the other parameters in the spatio-temporal receptive field models are the same).

A corresponding temporal covariance property does also hold for the space-time separable spatio-temporal LGN model in (16), of the form

provided that the temporal scale parameters are related according to

These temporal scaling covariance properties mean that the families of spatio-temporal receptive fields will be able to handle objects that move and events that occur faster or slower in the world.

To model the essential effect of the receptive field output in terms of the spatio-temporal smoothing transformation applied to two spatio-temporal video patterns f(pL) and f(pR), that are related according to a spatial affine transformation (17) according to

let us analogously to in Section 3.1.3.1. define the corresponding spatio-temporally smoothed video representations LL(xL, tL; sL, τL, vL, ΣL) and LR(xR, tR; sR, τT, vR, ΣR) of f(pL) = f(p) and , respectively, according to (39) and (40).

Then, based on similar transformation properties as are used for deriving the affine covariance property of the purely spatial receptive field model in Section 3.1.2.1., it follows that these spatio-temporally smoothed video representations are related according to the spatial affine covariant transformation property

for

provided that the other receptive field parameters are the same, i.e., that sR = sL, τR = τL and vR = vL.

With regard to the previous treatment in Section 2.1.1, this property reflects the spatial affine covariance property of the spatio-temporal smoothing transformation underlying the simple cell model in (13), as illustrated in the commutative diagram in Figure 12.

Figure 12. Commutative diagram for the spatio-temporal smoothing component in the joint spatio-temporal receptive field model (13) under spatial affine transformations over a spatio-temporal video domain. This commutative diagram, which should be read from the lower left corner to the upper right corner, means that irrespective of the input video f(xL, t) is first subject to a spatial affine transformation xR = AxL and then smoothed with a spatio-temporal kernel kernel T(xR, t; s, τ, vR, ΣR), or instead directly convolved with the spatio-temporal smoothing kernel T(xL, t; s, τ, vL, ΣL) and then subject to a similar temporal scaling transformation, we do then get the same result, provided that the spatial covariance matrices as well as the velocity parameters of the spatio-temporal smoothing kernels are matched according to and vR = AvL (and assuming that the other parameters in the spatio-temporal receptive field models are the same).

This affine covariance property implies that, also under non-zero relative motion between an object or event in the world and the observer, the family of spatio-temporal receptive fields will have the ability to, up to first order of approximation, handle the image deformations caused by viewing the surface pattern of a smooth surface in the world under variations in the viewing direction relative to the observer.

For the spatio-temporal LGN model with velocity adaptation in (43), a weaker rotational covariance property holds, implying that under a spatial rotation

where R is a 2-D rotation matrix, the corresponding spatially smoothed representations are related according to

provided that

For the spatio-temporal LGN model without velocity adaptation in (16), a similar result holds, with the conceptual difference that vR = vL = 0.

As for the previously studied purely spatial case, these rotational covariance properties imply that image structures arising from projections of objects that rotate around an axis aligned with the viewing direction are handled in a structurally similar manner.

In the special case when the spatial affine transformation represented the affine matrix A reduces to a scaling transformation with a spatial scaling matrix S, with spatial scaling factor Sx according to (18)

the above spatial affine covariance relation reduces to the form

provided that the spatial scale parameters and the velocity vectors are related according to

and assuming that the other receptive field parameters are the same, i.e., that τR = τL = τ, ΣR = ΣL = Σ and vR = vL.

With regard to the previous treatment in Section 2.1.1, this instead reflects the scale covariance property of the spatio-temporal smoothing transformation underlying the simple cell model in (6), as illustrated in the commutative diagram in Figure 13.

Figure 13. Commutative diagram for the spatio-temporal smoothing component in the joint spatio-temporal receptive field model (13) under spatial scaling transformations over a spatio-temporal video domain. This commutative diagram, which should be read from the lower left corner to the upper right corner, means that irrespective of the input video f(xL, t) is first subject to a spatial scaling transformation xR = SxxL and then smoothed with a spatio-temporal kernel kernel T(xR, t; sR, τ, vR, Σ), or instead directly convolved with the spatio-temporal smoothing kernel T(xL, t; sL, τ, vL, Σ) and then subject to a similar temporal scaling transformation, we do then get the same result, provided that the spatial scale parameters as well as the velocity parameters of the spatio-temporal smoothing kernels are matched according to and vR = SxvL (and assuming that the other parameters in the spatio-temporal receptive field models are the same).

A corresponding spatial scale covariance property does also hold for the space-time separable spatio-temporal LGN model in (16), of the form

provided that the spatial scale parameters are related according to

Similar to the previous spatial scale covariance property for purely spatial receptive fields in Section 3.1.2.2, this spatial covariance property means that the spatio-temporal receptive field family will, up to first order of approximation, have the ability to handle the image deformations induced by viewing an object from different distances relative to the observer, as well as handling objects in the world that have similar spatial appearance, while being of different physical size.

Concerning biological implications of the presented theory, it is sometimes argued that the oriented simple cells in the primary visual cortex serve as mere edge detectors. In view of the presented theory, the oriented receptive fields of the simple cells can, on the other hand, also be viewed as populations of receptive fields, that together make it possible to capture local image deformations in the image domain, to, in turn, serve as a cue for deriving cues to surface orientation and surface shape in the world. In addition, the spatio-temporal dependencies of the simple cells are also essential to handle objects that move as well as spatio-temporal events that occur, with possibly different relative motions in relation to the observer.

According to the presented theory, the spatial and spatio-temporal receptive fields are expanded over their associated filter parameters, whereby the population of receptive fields becomes able to handle the different classes of locally linearised image and video deformations. This is done in such a way that the output from the receptive fields can be perfectly matched under these image and video transformations, provided that the receptive field parameters are properly matched to the actual transformations that occur in a particular imaging situation. Specifically, an interesting follow-up question of this work to biological vision research concerns how well biological vision spans corresponding families of receptive fields, as predicted by the presented theory.

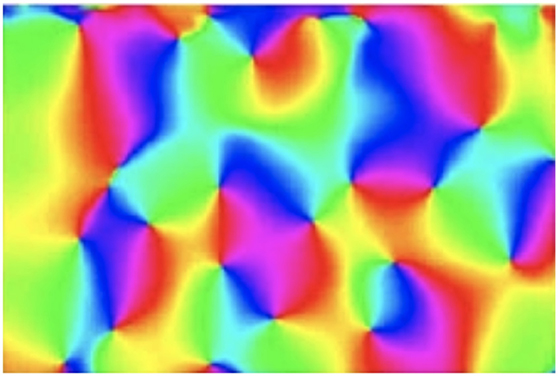

In the study of orientation maps of receptive field families around pinwheels (Bonhoeffer and Grinvald, 1991), it has been found that for higher mammals, oriented receptive fields in the primary visual cortex are laid out with a specific organization regarding their directional distribution, in that neurons with preference for a similar orientation over the spatial domain are grouped together, and in such a way that the preferred orientation varies continuously around the singularities in the orientation maps known as pinwheels (see Figure 14). First of all, such an organization shows that biological vision performs an explicit expansion over the group of spatial rotations, which is a subgroup of the affine group.

Figure 14. Orientation map in the primary visual cortex (in cat area 17), as recorded by Koch et al. (2016) (OpenAccess), which by a colour coding shows how the preferred spatial orientations for the visual neurons vary spatially over the cortex. In terms of the theory presented in this article, regarding connections between the influence of natural image transformation on image data and the shapes of the visual receptive fields of the neurons that process the visual information, these results can be taken as support that the population of visual receptive fields in the visual cortex perform an expansion over the group of spatial rotations. Let us take into further account that the receptive fields of the neurons near the pinwheels are reported to be comparably isotropic, whereas the receptive fields of the neurons further away from the pinwheels are more anisotropic. Then, we can, from the prediction of an expansion over affine covariance matrices of directional derivatives of affine Gaussian kernels, proposed as a model for the spatial component of simple cells, raise the question if the population of receptive fields can also be regarded as spanning some larger subset of the affine group than mere rotations. If the receptive fields of the neurons span a larger part of the affine group, involving non-uniform scaling transformations that would correspond to spatial receptive fields with different ratios between the characteristic lengths of the receptive fields in the directions of the orientation of the receptive field and its orthogonal direction, then they would have better ability to derive properties, such as the surface orientation and the surface shape of objects in the world, compared to a population of receptive fields that does not perform such an expansion.

Beyond variations in mere orientation selectivity of neurons, neurophysiological investigations by Blasdel (1992) have, however, also shown that the degree of orientation selectivity varies regularly over the cortex, and is different near vs. further away from the center of a pinwheel; see also Nauhaus et al. (2008) and Koch et al. (2016). Specifically, the orientation selectivity is lowest near the positions of the centers of the pinwheels, and then increases with the distance from the pinwheel. In view of the spatial model for simple cells (6), such a behaviour would be a characteristic property, if the spatial receptive fields would be laid out over the cortex according to a distribution over the spatial covariance matrices of the affine Gaussian kernels, which determine the purely spatial smoothing component in the spatial receptive field model.

For the closest to isotropic affine Gaussian kernels, a small perturbation of the spatial covariance matrix of the spatial smoothing kernel could cause a larger shift in the preferred orientation than for a highly anisotropic Gaussian kernel. The singularity (the pinwheel) in such a model would therefore correspond to the limit case of a rotationally symmetric Gaussian kernel, alternatively an affine Gaussian kernel as near as possible to a rotationally symmetric Gaussian kernel, within some complementary constraint not modelled here, if the parameter variation in the biological system does not reach the same limits as in our idealised theoretical model.

Compare with the orientation maps that would be generated from the distribution of spatial receptive fields shown in Figure 4, although do note that in that illustration, the same spatial orientation is represented at two opposite spatial positions in relation to the origin, whereas the biological orientation maps around the pinwheels only represent a spatial orientation once. That minor technical problem can, however, be easily fixed, by mapping the angular representation in Figure 4 to a double angle representation, which would then identify the directional derivatives of affine Gaussian kernels with opposite polarity, in other words kernels that correspond to flipping the sign of the affine Gaussian derivatives.

An interesting follow-up question for biological vision research does thus concern if it can be neurophysiologically established if the distribution of the spatial shapes of the the spatial smoothing component of the simple cells spans a larger part of the affine group than a mere expansion over spatial rotations?11 What would then the distribution be over different eccentricities, i.e, different ratios12 between the eigenvalues of the spatial covariance matrix Σ, if the spatial component of each simple cells is modelled as a directional derivative (of a suitable order) of an affine Gaussian kernel? Note, in relation to the neurophysiological measurements by Nauhaus et al. (2008), which show a lower orientation selectivity at the pinwheels and increasing directional selectivity when moving further away from the pinwheel, that the spatial receptive fields based on the maximally isotropic affine Gaussian kernels in the centers of Figure 4 (for eccentricity ϵ = 1) would have the lowest degree of orientation selectivity, whereas the spatial receptive fields towards the periphery (for ϵ decreasing towards 0) would have the highest degree of orientation selectivity, see Lindeberg (2023a)13 for an in-depth treatment of this topic.

If an expansion over eccentricities for simple cells could be established over the spatial domain, it would additionally be interesting to investigate if such an expansion would be coupled also to the expansion over image velocities v in space-time14 for joint spatio-temporal receptive fields, or if a potential spatial expansion over eccentricities in the affine group (alternatively over some other subset of the affine group) is decoupled from the image velocities in the Galilean group. If those expansions are coupled, then the dimensionality of the parameter space would be substantially larger, while the dimensionality over two separate expansions over eccentricities vs. motion directions would be substantially lower. Is it feasible for the earliest layers of receptive field to efficiently represent those dimensions of the parameter space jointly, which would then enable higher potential accuracy in the derivation of cues to local surface orientation and surface shape from moving objects that are not fixated by the observer, and thus moving with non-zero image velocity relative to the viewing direction. Or do efficiency arguments call for a separation of those dimensions of the parameter space, into separate spatial and motion pathways, so that accurate surface orientation and shape estimation can only be performed with respect to viewing directions that are fixated by the observer in relation to a moving object?

It would additionally be highly interesting to characterize to what extent the early stages in the visual system perform expansions of receptive fields over multiple spatial and temporal scales. In the retina, the spatial receptive fields mainly capture a lowest spatial scale level, which increases linearily with the distance from the fovea (see Lindeberg, 2013, Section 7). Experimental evidence do on the other hand demonstrate that biological vision achieves scale invariance over wide ranges of scale (Biederman and Cooper, 1992; Ito et al., 1995; Logothetis et al., 1995; Furmanski and Engel, 2000; Hung et al., 2005; Isik et al., 2013). Using the semi-group properties of the rotationally symmetric as well as the affine Gaussian kernels,15 it is in principle possible to compute coarser scale representations from finer scale levels by adding complementary spatial smoothing stages in cascade. The time-causal limit kernel (14) used for temporal smoothing in our spatio-temporal receptive field model does also obey a cascade smoothing property over temporal scales, which makes it possible to compute representations at coarser temporal scales from representations at finer spatial scales, by complementary (time-causal) temporal filtering, in terms of first-order temporal integrators coupled in cascade16 (Lindeberg, 2023b). Do the earliest layers in a biological visual system explicitly represent the image and video data by expansions of receptive fields over multiple spatial and temporal scales, or do the earliest stages in the vision system instead only represent a lowest range of spatial and temporal scales explicitly, to then handle coarser spatial and temporal scales by other mechanisms?

The generalised Gaussian derivative model for visual receptive fields can finally be seen as biologically plausible, in the respect that the computations needed to perform the underlying spatial smoothing operations and spatial derivative computations can be performed by local spatial computations. The spatial smoothing is modelled by diffusion equations (1), which can be implemented by local computations based on connections between neighbours, and thus be performed by groups of neurons that interact with each other spatially by local connections. Spatial derivatives can also be approximated by local nearest neighbour computations. The temporal smoothing operation in the time-causal model based on smoothing with the time-causal limit kernel corresponds to first-order temporal integrators coupled in cascade, with very close relations to our understanding of temporal processing in neurons. Temporal derivatives can, in turn, be computed from linear combinations of temporally smoothed scale channels over multiple temporal scales, based on a similar recurrence relation over increasing orders of temporal differentiation as in Lindeberg and Fagerström (1996) [Equation (18)]. Thus, all the individual computational primitives needed to implement the spatial as well as the spatio-temporal receptive fields according to the generalised Gaussian derivative model can, in principle, be performed by computational mechanisms available to a network of biological neurons.

For a visual neuron in the primary visual cortex that can be well modelled as a simple cell, let us assume that the spatio-temporal dependency of its receptive field can be modelled as a combination of a dependency on a spatial function hspace(x1, x2) and a dependency on a temporal function htime(t) of the joint form17

for some value of a velocity vector v = (v1, v2), where we here in this model assume that both the spatial and the temporal dependency functions hspace(x1, x2) and htime(t) as well as the velocity vector v are individual for each visual neuron. Then, we can formulate testable hypotheses for the above theoretical predictions in the following ways:

Hypothesis 1 (Expansion of spatial receptive field shapes over a larger part of the affine group than mere rotations or uniform scale changes): Define characteristic lengths σφ and σ⊥φ that measure the spatial extent of the spatial component hspace(x1, x2) of the receptive field in the direction φ representing the orientation of the oriented simple cell as well as in its orthogonal direction ⊥φ, respectively. Then, if the hypothesis about an expansion over a larger part of the affine group than mere rotations is true, there should be a variability in the ratio of these characteristic lengths between different neurons

If the spatial component of the receptive field hspace(x1, x2) can additionally be well modelled as a directional derivative of an affine Gaussian kernel for some order of spatial differentiation, then this ratio between the characteristic lengths can be taken as the ratio between the effective scale parameters18 of the affine Gaussian kernel in the directions of the orientation of the receptive field and its orthogonal direction

Hypothesis 2 (Joint expansion over image velocities and spatial eccentricities of the spatio-temporal receptive fields): Assuming that there is a variability in the ratio ϵ between the orthogonal characteristic lengths of the spatial components of the receptive fields between different neurons, as well as also a variability of the absolute velocity values

between different neurons, then these variabilities should together span a 2-D region in the composed 2-D parameter space, and not be restricted to only a set of 1-D subspaces, which could then be seen as different populations in a joint scatter diagram or a histogram over the characteristic length ratio ϵ and the absolute velocity value vspeed.

Hypothesis 3 (Separate expansions over image velocities and spatial eccentricities of the spatio-temporal receptive fields): The neurons that show a variability over the velocity values vspeed all have a ratio ϵ of the characteristic lengths in two orthogonal spatial directions for the purely spatial component of the receptive field that is close to constant, or confined within a narrow range.

Note that Hypotheses 2 and 3 are mutually exclusive.

In addition to investigating if the above working hypotheses hold, which would then give more detailed insights into how the spatial and spatio-temporal receptive field shapes of simple cells are expanded over the degrees of freedom of the geometric image transformations, it would additionally be highly interesting to characterize the distributions of the corresponding receptive field parameters, in other words, the distributions of

• the spatial characteristic length ratio ϵ,

• the image velocity vspeed,

• a typical spatial size parameter σspace, which for a spatial dependency function hspace(x1, x2) in (13) corresponding to a directional derivative of an affine Gaussian kernel for a specific normalization of the spatial covariance matrix Σ would correspond to the square root of the spatial scale parameter, i.e., , and

• a typical temporal duration parameter σtime, which for the temporal dependency function htime(t) in (13) corresponding to a temporal derivative of the time-causal limit kernel would correspond to the square root of the temporal scale parameter, i.e., .

Characterising the distributions of these receptive field parameters, would give quantitative measures on how well the variabilities of the receptive field shapes of biological simple cells span the studied classes of natural image transformations, in terms of spatial scaling transformations, spatial affine transformations, Galilean transformations and temporal scaling transformations.

When performing a characterization of these distributions, as well as investigations of the above testable hypotheses, it should, however, be noted that special care may be needed, to only pool statistics over biological receptive fields that have a similar qualitative shape, in terms of the number of dominant positive and negative lobes over space and time. For the spatial and spatio-temporal receptive fields according to the studied generalised Gaussian derivative model, this would imply initially only collecting statistics for receptive fields that correspond to the same orders of spatial and temporal differentiation. Alternatively, if a unified model can be expressed for receptive fields of differing qualitative shape, as it would be possible if the biological receptive fields can be well modelled by the generalised Gaussian derivative model, statistics could also be pooled over different orders of spatial and/or temporal differentiation, by defining the characteristic spatial lengths from the spatial scale parameter s according to , and the characteristic temporal durations from the temporal scale parameter τ according to .

We have presented a generalised Gaussian derivative model for modelling visual receptive fields that can be modelled as linear, and which can be derived in an axiomatic principled manner from symmetry properties of the environment, in combination with structural constraints on the first stages of the visual system, to guarantee internally consistent visual representations over multiple spatial and temporal scales.

In a companion work (Lindeberg, 2021), it has been demonstrated that specific instances of receptive field models obtained within this general family of visual receptive fields do very well model properties of LGN neurons and simple cells in the primary visual cortex, as established in neurophysiological cell recordings by DeAngelis et al. (1995), DeAngelis and Anzai (2004), Conway and Livingstone (2006), Johnson et al. (2008). Indeed, based on the generalised Gaussian derivative model for visual receptive fields, it is possible to reproduce the qualitative shape of all the main types of receptive fields reported in these neurophysiological studies, including space-time separable neurons in the LGN, and simple cells in the primary visual cortex with oriented receptive fields having strong orientation preference over the spatial domain, as well as being either space-time separable over the joint spatio-temporal domain, or with preference to specific motion directions in space-time.

Specifically, we have in this paper focused on the transformation properties of the receptive fields in the generalised Gaussian derivative model under geometric image transformations, as modelled by local linearizations of the geometric transformations between single or multiple views of (possibly moving) objects or events in the world, expressed in terms of spatial scaling transformations, spatial affine transformations and Galilean transformations, as well as temporal scaling transformations. We have shown that the receptive fields in the generalised Gaussian derivative model possess true covariance properties under these classes of natural image transformations. The covariance properties do, in turn, imply that a vision system, based on populations of these receptive fields, will have the ability to, up to first order of approximation, handle: (i) the perspective mapping from objects or events in the world to the image or video domain, (ii) handle the image deformations induced by viewing image patterns of smooth surfaces in the world from multiple views, as well as (iii) the video patterns arising from viewing objects and events in the world, that move with different velocities relative to the observer, or (iv) spatio-temporal events that occur faster or slower in the world.