- Institute of Cognitive Sciences and Technologies (ISTC), National Research Council of Italy (CNR), Padua, Italy

We present a normative computational theory of how the brain may support visually-guided goal-directed actions in dynamically changing environments. It extends the Active Inference theory of cortical processing, according to which the brain maintains beliefs over the environmental state, and motor control signals try to fulfill the corresponding sensory predictions. We propose that the neural circuitry in the Posterior Parietal Cortex (PPC) computes flexible intentions, or motor plans derived from a belief over targets, to dynamically generate goal-directed actions, and we develop a computational formalization of this process. A proof-of-concept agent embodying visual and proprioceptive sensors and an actuated upper limb was tested on target-reaching tasks. The agent behaved correctly under various conditions, including static and dynamic targets, different sensory feedback, sensory precisions, intention gains, and movement policies; limit conditions were also identified. Active Inference driven by dynamic and flexible intentions can thus support goal-directed behavior in constantly changing environments, and the PPC might putatively host its core intention mechanism. More broadly, the study provides a normative computational basis for research on goal-directed behavior in end-to-end settings and further advances mechanistic theories of active biological systems.

1. Introduction

Traditionally, sensorimotor control in goal-directed actions like object-reaching is viewed as a sensory-response mapping involving several steps: perception, movement planning in the body posture domain, translation of this plan into muscle commands, and finally movement execution (Erlhagen and Schöner, 2002). However, each of these steps is affected by noise and delays, which make this serial approach impractical in changing environments (Franklin and Wolpert, 2011). Instead, Predictive Coding or “Bayesian Brain” theories propose that prior knowledge and expectations over the environmental and bodily contexts provide crucial anticipatory information (Rao and Ballard, 1999). Under this perspective, motor control begins with target anticipation and motor planning even before sensory evidence is obtained. Here, we adopt this view and extend an increasingly popular Predictive Coding-based theory of action, Active Inference (Friston et al., 2010), with a formalization of flexible target-dependent motor plans. Moreover, based on extensive neural evidence for the role of the PPC in goal coding and motor planning (Snyder et al., 2000; Galletti et al., 2022), we propose that this cortical structure is the most likely neural correlate of the core intention manipulation process.

In primates, the dorsomedial visual stream provides critical support for continuously monitoring the body posture and the spatial location of objects to specify and guide actions, and for performing visuomotor transformations in the course of the evolving movement (Cisek and Kalaska, 2010; Fattori et al., 2017; Galletti and Fattori, 2018). The PPC, located at the apex of the dorsal stream, is also bidirectionally connected to frontal areas and to motor and somatosensory cortex. This places it in a privileged position to set goal-directed actions and to continuously adjust motor plans by tracking moving targets and posture (Andersen, 1995; Gamberini et al., 2021) in a common reference frame (Cohen and Andersen, 2002). The PPC undoubtedly plays a crucial role in visually-guided motor control (Desmurget et al., 1999; Filippini et al., 2018; Gamberini et al., 2021), with the subregion V6A specifically involved in the control of reach-to-grasp actions (Galletti et al., 2022), but its precise role is still disputed. The most widely held view is that the PPC estimates the states of both body and environment and optimizes their interactions (Medendorp and Heed, 2019). Others see the PPC as a task estimator (Haar and Donchin, 2020) or as being involved in endogenous attention and task setting (Corbetta and Shulman, 2002). Its underlying computational mechanism is not fully understood, especially regarding how goals are defined in motor planning and integrated within the control process (Shadmehr and Krakauer, 2008). For example, the prevailing Optimal Feedback Control theory defines motor goals through task-specific cost functions (Todorov, 2004). Neural-level details of motor goal coding are becoming increasingly important in light of the growing demand for neural interfaces that provide information about motor intents (Gallego et al., 2022) in support of intelligent assistive devices (Velliste et al., 2008; Srinivasan et al., 2021).

Intentions encode motor goals, or plans, set before the beginning of the motor acts themselves, and could therefore be viewed as memory holders of voluntary actions (Andersen, 1995; Snyder et al., 1997; Lau et al., 2004; Fogassi et al., 2005). Several cortical areas handle different aspects of this process: the Premotor cortex (PM) encodes structuring, while the Supplementary Motor Area (SMA) controls phasing (Gallego et al., 2022). In turn, the PPC plays a role in building motor plans and tuning them dynamically, as different PPC neurons are sensitive to different intentions (Snyder et al., 2000). Notably, intention neurons respond not only when performing a given action but also during its observation, allowing observers to predict the goal of the observed action and thus to “read” the intention of the acting individual (Fogassi et al., 2005). Motor goals have also been observed further down the motor hierarchy, consistent with Hierarchical Predictive Coding in the motor domain (Friston et al., 2011).

To investigate from a computational point of view how neural circuitry in the PPC supports sensory-guided actions through motor intentions, we adopted the Active Inference theory of cognitive and motor control. This theory offers increasingly influential insights into the computational role and principles of the nervous system, especially regarding the perception-action loop (Friston and Kiebel, 2009; Friston et al., 2010; Bogacz, 2017; Parr et al., 2022). Indeed, Active Inference formalizes both perception and action. It views both as serving the critical goal of all organisms: to survive in uncertain environments by operating within preferred states (e.g., maintaining a constant temperature). Accordingly, both are implemented by dynamic minimization of a quantity called free energy. This process generally corresponds to the minimization of high- and low-level prediction errors, that is, the satisfaction of prior and sensory expectations. There are two branches of Active Inference, suited to two different levels of control. The discrete framework can explain high-level cognitive control processes such as planning and decision-making, i.e., it evaluates expected outcomes to select actions over discrete states (Pezzulo et al., 2018). In turn, the dynamic adjustment of action plans in the PPC functionally matches the Active Inference framework in continuous state space (Friston et al., 2011, 2012). In short, this theory departs from classical views of perception, motor planning (Erlhagen and Schöner, 2002), and motor control (Todorov, 2004), unifying them as a dynamic probabilistic inference problem (Toussaint and Storkey, 2006; Kaplan and Friston, 2018; Levine, 2018; Millidge et al., 2020).
The biologically implausible cost functions typical of Optimal Control theories are replaced by high-level priors defined in the extrinsic state space, allowing complex movements such as walking or handwriting (Friston, 2011; Adams et al., 2013).

In the following, we first outline the background computational framework and then elaborate on movement planning and intentionality in continuous Active Inference. Our main contributions concern the formalization of goal-directed behavior and the processes linking dynamic goals (e.g., moving visual targets) with motor plans through the definition of flexible intentions. We also investigate a more parsimonious approach to motor control based solely on proprioceptive predictions. We then provide implementation details and a practical demonstration of the theoretical contribution in terms of a simulated Active Inference agent, which we show can detect and reach static visual goals and track moving targets. We also provide detailed performance statistics and investigate the effects of system parameters whose balance is critical to movement stability. Additionally, gradient analysis provides crucial insights into the causes of the movements performed. Finally, we discuss how intentions could be selected to perform a series of goal-directed steps (e.g., a multi-phase action) and illustrate conditions relevant to neurological disorders.

2. Computational background

We first outline the computational principles of the underlying probabilistic and Predictive Coding approach. We then provide the background on variational inference, free energy minimization, Active Inference, and variational autoencoders needed to follow the main contribution.

2.1. The Bayesian brain hypothesis

An interesting visual phenomenon, called binocular rivalry, occurs when two different images are presented simultaneously, one to each eye: perception does not conform to the visual input but alternates between the two images. How and why does this happen? It is well known that priors play a fundamental role in driving the dynamics of perceptual experience. However, dominant views of the brain as a feature detector that passively receives sensory signals and computes motor commands have so far failed to explain how such illusions could arise.

In recent years, there has been increasing attention to a radically new theory of the mind called the Bayesian brain. According to this theory, our brain is a sophisticated machine that constantly makes use of Bayesian reasoning to capture causal relationships in the world and deliver optimal behavior in an uncertain environment (Doya, 2007; Hohwy, 2013; Pezzulo et al., 2017). At the core of the theory is Bayes' theorem. Applied here, it implies that posterior beliefs about the world are proportional to the product of prior beliefs and the likelihood of the sensory input. In this view, perception is more than a simple bottom-up feedforward mechanism that detects features and objects from the current sensorium. Rather, it comprises a predictive top-down generative model that continuously anticipates the sensory input to test hypotheses and explain away ambiguities.
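As a minimal worked example of this product rule, the sketch below assumes a scalar Gaussian prior over a latent z and a Gaussian likelihood of s with identity mapping; the function names and numbers are illustrative only. In this case the posterior has a closed form: precisions add, and the posterior mean is the precision-weighted average of prior mean and observation. The sketch checks this closed form against a brute-force normalization of prior times likelihood on a grid.

```python
import numpy as np

def gaussian_posterior(mu_prior, var_prior, s, var_lik):
    """Exact posterior for z ~ N(mu_prior, var_prior) and s | z ~ N(z, var_lik).

    Precisions (inverse variances) add, and the posterior mean is the
    precision-weighted average of the prior mean and the observation.
    """
    prec_post = 1.0 / var_prior + 1.0 / var_lik
    mu_post = (mu_prior / var_prior + s / var_lik) / prec_post
    return mu_post, 1.0 / prec_post

def grid_posterior(mu_prior, var_prior, s, var_lik, z=np.linspace(-10, 10, 20001)):
    """The same posterior obtained numerically as normalized prior x likelihood."""
    dz = z[1] - z[0]
    prior = np.exp(-0.5 * (z - mu_prior) ** 2 / var_prior)
    likelihood = np.exp(-0.5 * (s - z) ** 2 / var_lik)
    post = prior * likelihood
    post = post / (post.sum() * dz)          # divide by the evidence p(s) (up to constants)
    mean = np.sum(z * post) * dz
    var = np.sum((z - mean) ** 2 * post) * dz
    return mean, var

print(gaussian_posterior(0.0, 1.0, 2.0, 1.0))  # (1.0, 0.5)
print(grid_posterior(0.0, 1.0, 2.0, 1.0))      # numerically close to (1.0, 0.5)
```

With equal prior and likelihood precision, the belief lands halfway between the prior expectation (0) and the sensation (2), and it is more certain than either source alone.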

According to the Bayesian brain hypothesis, this complex task is accomplished by Predictive Coding, implemented through message passing of top-down predictions and bottom-up prediction errors between adjacent cortical layers (Rao and Ballard, 1999). The former are generated from latent states maintained at the highest levels, which represent beliefs about the causes of sensory input. The latter are computed by comparing sensory-level predictions with the actual observations. Each prediction then acts as a cause for the layer below, while each prediction error conveys information to the layer above. Thanks to this hierarchical organization and to error minimization at every layer, the cortex is supposed to be able to mimic and capture the inherently hierarchical relationships that model the world. In this view, sensations are needed only insofar as they provide, through the computation of prediction errors, a measure of how good the model is and a cue to correct future predictions. Thus, ascending projections do not encode the features of a stimulus, but rather how much the brain is surprised by it, given the close relationship between surprise and model uncertainty.
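The message passing described above can be sketched with a hypothetical two-level linear network. The weights `W1` and `W2`, the top-level prior, and the unit precisions are illustrative assumptions, not values from this work. Level 1 predicts the sensation, level 2 predicts level 1, and each state is updated only from the prediction error arriving from below and the error it must explain toward the level above. The test checks that these local updates reach the same solution as minimizing the total precision-weighted squared error directly.

```python
import numpy as np

def predictive_coding(s, W1, W2, prior, pi=(1.0, 1.0, 1.0), lr=0.05, n_iter=2000):
    """Two-level linear predictive coding (hypothetical toy network).

    x1 predicts the sensation s through W1 @ x1; x2 predicts x1 through
    W2 @ x2; x2 is expected to be close to `prior`. States change only
    through locally available prediction errors.
    """
    x1 = np.zeros(W1.shape[1])
    x2 = np.zeros(W2.shape[1])
    for _ in range(n_iter):
        e0 = s - W1 @ x1        # bottom-up sensory prediction error
        e1 = x1 - W2 @ x2       # error between level 1 and the top-down prediction
        e2 = x2 - prior         # error with respect to the top-level prior
        # Each state is pushed by the (transposed-weight) error from below
        # and pulled back by the error it has to explain toward the level above.
        x1 = x1 + lr * (pi[0] * W1.T @ e0 - pi[1] * e1)
        x2 = x2 + lr * (pi[1] * W2.T @ e1 - pi[2] * e2)
    return x1, x2

W1 = np.array([[1.0, 0.5], [0.0, 1.0]])
W2 = np.array([[1.0], [0.5]])
x1, x2 = predictive_coding(np.array([1.0, 2.0]), W1, W2, prior=np.array([0.0]))
print(x1, x2)
```

The updates are exact gradient descent on the sum of squared prediction errors, so with a small enough step size the settled states balance the bottom-up evidence against the top-down expectations at every level.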

2.2. Variational Bayes

Organisms are supposed to implement model fitting, or error minimization, by some form of variational inference. This is a broad family of techniques, based on the calculus of variations, used to approximate posteriors that would otherwise be intractable to compute analytically or even with classical sampling methods like Monte Carlo (Bishop, 2006). Under the Bayesian brain hypothesis, we can assume that the nervous system maintains latent variables z about both the unknown state of the external world and the internal state of the organism. By combining prior knowledge p(z) with the partial evidence about the environment provided by its sensors, it can apply Bayesian inference to improve its knowledge (Ma et al., 2006). To do so, given the observation s, the nervous system needs to evaluate the posterior p(z|s):

$$p(z|s) = \frac{p(s|z)\,p(z)}{p(s)}$$

However, directly computing this quantity is infeasible due to the intractability of the marginal p(s) = ∫ p(z, s)dz, which involves integration over the joint density p(z, s). The variational approach instead approximates the posterior with a recognition distribution q(z) ≈ p(z|s) that is simpler to compute. It does so by minimizing the Kullback-Leibler (KL) divergence between them:

$$D_{KL}[q(z) \,\|\, p(z|s)] = \int q(z) \ln \frac{q(z)}{p(z|s)}\, dz$$

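The KL divergence can be rewritten as the difference between the log evidence ln p(s) and a quantity known as the evidence lower bound, or ELBO (Bishop, 2006):

$$D_{KL}[q(z) \,\|\, p(z|s)] = \ln p(s) - \mathcal{L}(q), \qquad \mathcal{L}(q) = \int q(z) \ln \frac{p(z, s)}{q(z)}\, dz$$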

Since the KL divergence is always nonnegative, the ELBO provides a lower bound on the log evidence, i.e., $\mathcal{L}(q) \leq \ln p(s)$. Therefore, minimizing the KL divergence with respect to q(z) is equivalent to maximizing $\mathcal{L}(q)$. Its maximum corresponds to the approximate density closest to the true posterior within the family of distributions chosen for q(z). In general, few assumptions are made about the form of this distribution (a multivariate Gaussian is a typical choice), trading off the tractability of the optimization against the quality of the approximate posterior.

2.3. Free energy and prediction errors

How can Bayesian inference be implemented through simple message passing of prediction errors? Friston (2002, 2005) proposed an elegant solution based on the so-called free energy, a concept borrowed from thermodynamics and defined as the negative ELBO. Accordingly, Equation (3) can be rewritten as:

$$F = -\mathcal{L}(q) = D_{KL}[q(z) \,\|\, p(z|s)] - \ln p(s)$$

Minimizing the free energy with respect to the latent states z, a process called perceptual inference, is then equivalent to ELBO maximization, and the free energy provides an upper bound on surprise:

$$F \geq -\ln p(s)$$

In this way, the organism indirectly minimizes model uncertainty and is able to learn the causal relations between unknown states and sensory input, and to generate predictions based on its current representation of the environment. Free energy minimization is simpler than dealing with the KL divergence between the approximate and true posteriors. This is because the free energy depends only on quantities that the organism has access to, namely the approximate posterior and the generative model.
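These identities can be checked numerically on a hypothetical discrete model with three latent states, where the evidence is still tractable. The probability values below are invented for illustration. The sketch verifies three things for an arbitrary q(z): the KL divergence equals ln p(s) minus the ELBO; the free energy is an upper bound on surprise; and the bound becomes tight when q(z) equals the exact posterior.

```python
import numpy as np

# Hypothetical toy model: discrete latent z in {0, 1, 2} and one observed s.
p_z = np.array([0.5, 0.3, 0.2])            # prior p(z)
p_s_given_z = np.array([0.1, 0.6, 0.3])    # likelihood p(s|z) of the observed s
p_joint = p_z * p_s_given_z                # joint p(z, s)
p_s = p_joint.sum()                        # evidence p(s), tractable in this toy case
posterior = p_joint / p_s                  # exact posterior p(z|s)

def elbo(q):
    """ELBO L(q) = sum_z q(z) [ln p(z, s) - ln q(z)]."""
    return np.sum(q * (np.log(p_joint) - np.log(q)))

q = np.array([0.2, 0.5, 0.3])              # an arbitrary recognition density q(z)
kl = np.sum(q * np.log(q / posterior))     # KL[q(z) || p(z|s)]
free_energy = -elbo(q)                     # F = -L(q)

print(np.isclose(kl, np.log(p_s) - elbo(q)))     # KL = ln p(s) - L(q)
print(free_energy >= -np.log(p_s))               # F is an upper bound on surprise
print(np.isclose(elbo(posterior), np.log(p_s)))  # the bound is tight at q = p(z|s)
```

Note that `elbo` only needs the joint `p_joint` and `q`, never the posterior, which is the practical advantage of working with free energy.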

In this regard, it is necessary to distinguish the generative model from the real process producing sensory data. This process, called the generative process, can be modeled with the following non-linear stochastic equations:

$$s = g(z) + w_s, \qquad \dot{z} = f(z) + w_z$$

Where the function g maps latent states or causes z to observed states or sensations s, the function f encodes the dynamics of the system, i.e., the evolution of z over time, while $w_s$ and $w_z$ are noise terms that describe system uncertainty.
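To make the generative process concrete, here is a minimal Euler-Maruyama simulation of these two equations for a scalar state. The choices f(z) = -0.5 z (relaxation toward zero) and g(z) = tanh(z) (a saturating sensor), as well as the noise levels, are hypothetical and serve only to show how hidden dynamics and noisy observations are generated.

```python
import numpy as np

def simulate_generative_process(z0, n_steps, dt, sigma_z, sigma_s, rng):
    """Euler-Maruyama simulation of s = g(z) + w_s, dz/dt = f(z) + w_z.

    f and g below are hypothetical choices made for illustration only.
    """
    def f(z):
        return -0.5 * z          # dynamics: exponential relaxation toward 0

    def g(z):
        return np.tanh(z)        # non-linear, saturating sensory mapping

    z = np.empty(n_steps + 1)
    s = np.empty(n_steps + 1)
    z[0] = z0
    s[0] = g(z0) + sigma_s * rng.standard_normal()
    for t in range(n_steps):
        # State noise scales with sqrt(dt), as in Euler-Maruyama integration.
        z[t + 1] = z[t] + dt * f(z[t]) + np.sqrt(dt) * sigma_z * rng.standard_normal()
        s[t + 1] = g(z[t + 1]) + sigma_s * rng.standard_normal()
    return z, s

rng = np.random.default_rng(0)
z, s = simulate_generative_process(z0=2.0, n_steps=500, dt=0.01,
                                   sigma_z=0.1, sigma_s=0.05, rng=rng)
print(z[-1], s[-1])
```

The agent never sees `z`, only `s`; its generative model has to infer the hidden trajectory from these noisy observations.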

Nervous systems are supposed to approximate the generative process by making a few assumptions. First, under the mean-field approximation, the recognition density can be partitioned into independent distributions, $q(z) = \prod_i q(z_i)$. Second, under the Laplace approximation, each of these partitions is Gaussian, $q(z_i) = \mathcal{N}(\mu_i, \Pi_i^{-1})$, where $\mu_i$ represents the most plausible hypothesis (also called the belief about the hidden state $z_i$) and $\Pi_i$ is its precision matrix (Friston et al., 2007). In this way, the free energy no longer depends on z and simplifies as follows:

$$F \approx -\ln p(s, \mu) + C$$

Where C is a constant term. A more precise description of the unknown environmental dynamics can be achieved by considering not only the 1st order of Equation 6 but also higher temporal orders of the corresponding approximations, $\tilde{\mu} = [\mu^{[0]}, \mu^{[1]}, \mu^{[2]}, \dots]$, called generalized coordinates (Friston, 2008; Friston et al., 2008). This allows us to better represent the environment with the following generalized model:

$$\tilde{s} = \tilde{g}(\tilde{\mu}) + \tilde{w}_s, \qquad D\tilde{\mu} = \tilde{f}(\tilde{\mu}) + \tilde{w}_\mu$$

Where D is the differential (shift) operator matrix such that $D\tilde{\mu} = [\mu^{[1]}, \mu^{[2]}, \dots, 0]$, $\tilde{s}$ denotes the generalized sensors, while $\tilde{g}$ and $\tilde{f}$ denote the generalized model functions of all temporal orders. Note that in this system, the sensory data at a particular dynamical order $s^{[d]}$ (where [d] is the order) engage only with the same order of belief $\mu^{[d]}$, while the generalized equation of motion, or system dynamics, specifies the coupling between adjacent orders. Such equations are generated from the generalized likelihood and prior distributions, which can be expanded as follows:

$$p(\tilde{s}, \tilde{\mu}) = p(\tilde{s}|\tilde{\mu})\, p(\tilde{\mu}) = \prod_d p(s^{[d]}|\mu^{[d]}) \prod_d p(\mu^{[d+1]}|\mu^{[d]})$$

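As defined above, these variational probability distributions are assumed to be Gaussian:

$$p(s^{[d]}|\mu^{[d]}) = \frac{|\Pi_s|^{1/2}}{(2\pi)^{L/2}} \exp\left(-\frac{1}{2}\,\varepsilon_s^{[d]\top} \Pi_s\, \varepsilon_s^{[d]}\right), \qquad p(\mu^{[d+1]}|\mu^{[d]}) = \frac{|\Pi_\mu|^{1/2}}{(2\pi)^{M/2}} \exp\left(-\frac{1}{2}\,\varepsilon_\mu^{[d+1]\top} \Pi_\mu\, \varepsilon_\mu^{[d+1]}\right)$$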

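Where L and M are the dimensions of sensations and internal beliefs, respectively, with precisions $\Pi_s$ and $\Pi_\mu$. Note that the probability distributions are expressed in terms of sensory and dynamics prediction errors:

$$\varepsilon_s^{[d]} = s^{[d]} - g^{[d]}(\mu^{[d]})$$

$$\varepsilon_\mu^{[d+1]} = \mu^{[d+1]} - f^{[d]}(\mu^{[d]})$$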

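The factorized probabilistic approximation of the dynamic model allows easy state estimation by iterative gradient descent over the generalized coordinates. This descent is performed in a moving frame of reference, hence the $D\tilde{\mu}$ term, and amounts to changing the belief over the hidden states at every temporal order:

$$\dot{\tilde{\mu}} = D\tilde{\mu} - \frac{\partial F}{\partial \tilde{\mu}}$$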

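Gradient descent is tractable because the Gaussian variational functions are smooth and differentiable, and the derivatives are easily computed in terms of generalized prediction errors. Taking the logarithm in Equation (7) cancels the exponential of the Gaussian, leaving precision-weighted quadratic terms in the errors. The belief update thus becomes:

$$\dot{\mu}^{[d]} = \mu^{[d+1]} + \left(\frac{\partial g^{[d]}}{\partial \mu^{[d]}}\right)^{\top} \Pi_s\, \varepsilon_s^{[d]} + \left(\frac{\partial f^{[d]}}{\partial \mu^{[d]}}\right)^{\top} \Pi_\mu\, \varepsilon_\mu^{[d+1]} - \Pi_\mu\, \varepsilon_\mu^{[d]}$$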

It is crucial to keep in mind the nature of the three components that compose this update equation: a likelihood error computed at the sensory level, a backward error arising from the next temporal order, and a forward error coming from the previous order. These terms represent the free energy gradients relative to the belief μ[d] of Equation (11) for the likelihood, and μ[d+1] and μ[d] of Equation (12) for the dynamics errors.
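The three error terms can be traced in a minimal scalar sketch. It assumes generalized coordinates truncated at the 1st order, $\tilde{\mu} = [\mu^{[0]}, \mu^{[1]}]$, an identity sensory mapping g observed only at order 0, and a hypothetical decay prior on the dynamics, f(μ) = -kμ. The shift operator D is built explicitly, and the belief follows $\dot{\tilde{\mu}} = D\tilde{\mu} - \partial F/\partial\tilde{\mu}$ with F written as a sum of precision-weighted squared prediction errors. All constants are illustrative.

```python
import numpy as np

K_DECAY, PI_S, PI_MU = 1.0, 1.0, 1.0   # hypothetical decay rate and precisions
D = np.eye(2, k=1)                     # shift operator: D @ [mu0, mu1] = [mu1, 0]

def free_energy(mu_tilde, s):
    """F (up to constants) for g(mu) = mu and f(mu) = -K_DECAY * mu."""
    mu0, mu1 = mu_tilde
    return 0.5 * PI_S * (s - mu0) ** 2 + 0.5 * PI_MU * (mu1 + K_DECAY * mu0) ** 2

def free_energy_gradient(mu_tilde, s):
    mu0, mu1 = mu_tilde
    eps_s = s - mu0                     # sensory (likelihood) error at order 0
    eps_mu = mu1 - (-K_DECAY * mu0)     # dynamics error linking orders 0 and 1
    df_dmu0 = -K_DECAY
    # dF/dmu0: likelihood error + backward error coming from order 1
    d0 = -PI_S * eps_s - df_dmu0 * PI_MU * eps_mu
    # dF/dmu1: forward error (order 2 is truncated, so there is no backward term)
    d1 = PI_MU * eps_mu
    return np.array([d0, d1])

def infer(s, dt=0.01, n_steps=2000):
    """Euler integration of the generalized belief update."""
    mu_tilde = np.zeros(2)
    for _ in range(n_steps):
        mu_tilde = mu_tilde + dt * (D @ mu_tilde - free_energy_gradient(mu_tilde, s))
    return mu_tilde

print(infer(1.0))   # settles near [0.5, -0.5]
```

For a constant sensation s = 1, the belief settles halfway at μ[0] = 0.5 with velocity μ[1] = -0.5. The sensory error keeps pulling it toward the observation, while the prior insists that the state should be decaying. This is the same kind of compromise between likelihood and dynamics errors that the update equation describes.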

In short, by making a few plausible simplifying assumptions, the complexity of free energy minimization reduces to the generation of predictions, which are constantly compared with sensory observations to determine a prediction error signal. This error then flows back through the cortical hierarchy to adjust the distribution parameters accordingly and minimize sensory surprise—or maximize evidence—in the long run.

2.4. Active Inference

Describing the relationship between Predictive Coding and Bayesian inference still does not explain why the cortex has evolved in such a peculiar way. The answer comes from the so-called free energy principle (FEP), of which the Bayesian brain hypothesis is supposed to be a corollary. Indeed, learning the causal relationships of some observed data (e.g., what causes an increase in body temperature) is insufficient to keep organisms alive (e.g., maintaining the temperature in a vital range).

The FEP states that, for an organism to maintain a state of homeostasis and survive, it must constantly and actively restrict the set of latent states in which it lives to a narrow range of life-compatible possibilities. In doing so, it counteracts the natural tendency toward disorder (Friston, 2012), hence the relationship with thermodynamics. If these states are defined by the organism's phenotype, then from the point of view of its internal model they are exactly the states that it expects to be least surprising. Perceptual inference optimizes the belief about hidden causes to explain away sensations. Action works the other way around: if the assumptions defined by the phenotype are treated as the true causes of the world, then interacting with the external environment means that the agent will try to sample those sensations that make the assumptions true, fulfilling its needs and beliefs. Active inference thus becomes a self-fulfilling prophecy. In this view, there is no difference between a desire and a belief: we simply seek the states in which we expect to find ourselves (Friston et al., 2010; Buckley et al., 2017).

To achieve goal-directed behavior, it is then sufficient to also minimize the free energy with respect to the action (see Equation 7):

$$\dot{a} = -\frac{\partial F}{\partial a}$$

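Given that motor control signals affect the free energy only through sensory information, we obtain:

$$\dot{a} = -\left(\frac{\partial \tilde{s}}{\partial a}\right)^{\top} \frac{\partial F}{\partial \tilde{s}} = -\left(\frac{\partial \tilde{s}}{\partial a}\right)^{\top} \Pi_s\, \tilde{\varepsilon}_s$$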

Minimizing the free energy of all sensory signals is certainly useful, as every likelihood contribution drives the belief update. However, it requires knowledge of an inverse mapping from exteroceptive sensations to actions (Baltieri and Buckley, 2019). This is considered a "hard problem" because the mapping is generally highly non-linear and not unique (Friston et al., 2010). In a more realistic scenario, only proprioception drives the minimization of free energy with respect to the motor signals. This process is easier to realize since the corresponding sensory prediction is already in the intrinsic domain. Control signals sent from the motor cortex are then not motor commands as in classical Optimal Control theories; rather, they are predictions that define the desired trajectory. Under this perspective, proprioceptive prediction errors computed locally at the spinal cord serve two purposes that differ only in how these signals are conveyed. Like exteroceptive errors, they drive the current belief toward sensory observations, thereby realizing perception. But they also drive sensory observations toward the current belief, being suppressed in simple reflex arcs that activate the corresponding muscles, thereby realizing movement (Adams et al., 2013; Parr and Friston, 2018; Versteeg et al., 2021).
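The dual role of the proprioceptive error can be illustrated with a hypothetical one-joint agent. The same error `eps_p` pulls the belief toward the sensed angle (perception) and drives the action by gradient descent (a reflex arc). Several simplifying assumptions are made for this sketch only: the action sets the joint velocity, proprioception is noiseless, the sensory Jacobian ∂s/∂a is a positive constant absorbed into the gain `eta`, and the belief is biased toward a desired angle `rho` by a simple attractor term instead of full generalized coordinates.

```python
import numpy as np

def simulate_reflex_arc(rho=0.8, lam=1.0, eta=4.0, pi_p=1.0, dt=0.01, n_steps=6000):
    """Toy 1-DoF Active Inference loop (all parameters hypothetical).

    x   : true joint angle in the generative process, moved by action a (a velocity)
    mu  : belief about the joint angle, biased toward the desired angle rho
    """
    x, a, mu = 0.0, 0.0, 0.0
    for _ in range(n_steps):
        s_p = x                                  # noiseless proprioception (assumption)
        eps_p = s_p - mu                         # proprioceptive prediction error
        dmu = pi_p * eps_p + lam * (rho - mu)    # perception: sensory pull + prior pull
        da = -eta * pi_p * eps_p                 # action: descend F through the sensed angle
        x += dt * a                              # environment integrates the action
        a += dt * da
        mu += dt * dmu
    return x, mu

x_final, mu_final = simulate_reflex_arc()
print(round(x_final, 3), round(mu_final, 3))
```

The action never receives an explicit target; it only suppresses the proprioceptive error. Because the biased belief keeps predicting a joint angle closer to `rho`, the arm is dragged along until sensation, belief, and desired state coincide. This is the self-fulfilling prophecy described above.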

In conclusion, perception and action can be seen as two sides of the same coin implementing the common vital goal of minimizing entropy or average surprise. In this view, what we perceive never tries to perfectly match the real state of affairs of the world, but is constantly biased toward our preferred states. This means that action only indirectly fulfills future goals; instead, it continuously tries to fill the gap between sensations and predictions generated from our already biased beliefs.

2.5. Variational autoencoders

Variational Autoencoders (VAEs) belong to the family of generative models, since they learn the joint distribution p(z, s) and can generate synthetic data similar to the input, given a prior distribution p(z) over the latent space. VAEs use the variational Bayes approach to approximate the posterior distribution p(z|s) of the latent representation of the inputs when the computation of the marginal is intractable (Goodfellow et al., 2016). A VAE is composed of two probability distributions, both of which are assumed to be Gaussian. The first is a probabilistic encoder corresponding to the recognition distribution q(z|s). The second is a generative function p(s|z), called the probabilistic decoder, that computes a distribution over the input space given a latent representation z (Figure 3C):

$$q_\phi(z|s) = \mathcal{N}\left(z;\, \mu_\phi(s),\, \mathrm{diag}(\sigma^2_\phi(s))\right), \qquad p_\theta(s|z) = \mathcal{N}\left(s;\, \mu_\theta(z),\, \mathrm{diag}(\sigma^2_\theta(z))\right)$$

Although VAEs have many similarities with traditional autoencoders, they are actually an instance of the Auto-Encoding Variational Bayes (AEVB) algorithm in which a neural network is used for the recognition distribution (Kingma and Welling, 2014). Unlike in mean-field variational techniques, the approximate posterior is not necessarily assumed to be factorial. Moreover, the naive Monte Carlo estimator of the ELBO gradient with respect to ϕ has very high variance. For this reason, a method called the reparametrization trick is used, which makes the source of randomness independent of the parameters ϕ. This method works by expressing the latent variable z as a deterministic function:

$$z = g_\phi(\epsilon, s), \qquad \epsilon \sim p(\epsilon)$$

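Where ϵ is an auxiliary variable independent of ϕ and s. The ELBO for a single data point can thus be expressed as:

$$\mathcal{L}(\theta, \phi; s) = -D_{KL}[q_\phi(z|s) \,\|\, p(z)] + \mathbb{E}_{q_\phi(z|s)}\left[\ln p_\theta(s|z)\right]$$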

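Its negative can be minimized through backpropagation, which is equivalent to maximizing the ELBO. Here, the KL divergence acts as a regularizer. The second RHS term is the expected reconstruction log-likelihood (i.e., a negative reconstruction error), which is estimated by averaging over samples of the latent variable z drawn through the reparametrization.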
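The two ingredients above, the reparametrized sample and the single-datapoint ELBO, can be sketched in NumPy. The sketch assumes a diagonal Gaussian encoder whose outputs `mu` and `log_var` are given directly (a real VAE would compute them with a neural network), a standard normal prior p(z), and a hypothetical linear decoder with fixed Gaussian output variance `var_s`. No gradients are computed here. The point is only that all randomness enters through `eps`, which does not depend on the encoder parameters, so automatic differentiation could pass through `mu` and `log_var`.

```python
import numpy as np

def reparametrize(mu, log_var, eps):
    """z = g_phi(eps, s) = mu + sigma * eps, with eps independent of phi."""
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL[N(mu, diag(exp(log_var))) || N(0, I)]."""
    return 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var)

def gaussian_log_lik(s, s_hat, var_s):
    """ln N(s | s_hat, var_s * I), summed over input dimensions."""
    return -0.5 * np.sum((s - s_hat) ** 2 / var_s + np.log(2 * np.pi * var_s), axis=-1)

def elbo_estimate(s, mu, log_var, decode, var_s, rng, n_samples=1000):
    """Monte Carlo ELBO: E_q[ln p(s|z)] - KL[q(z|s) || p(z)]."""
    eps = rng.standard_normal((n_samples, mu.size))
    z = reparametrize(mu, log_var, eps)                 # (n_samples, latent_dim)
    reconstruction = gaussian_log_lik(s, decode(z), var_s).mean()
    return reconstruction - kl_to_standard_normal(mu, log_var)

# Hypothetical usage: 2-D latent space, 3-D input, linear decoder.
W = np.array([[1.0, 0.5], [-0.3, 0.8], [0.2, 0.1]])
s = np.array([0.4, -0.1, 0.3])
mu, log_var = np.array([0.5, -0.3]), np.array([-0.5, 0.2])   # stand-in encoder outputs
rng = np.random.default_rng(0)
print(elbo_estimate(s, mu, log_var, lambda z: z @ W.T, var_s=0.1, rng=rng))
```

The KL term is computed in closed form because both q and the prior are Gaussian. Only the reconstruction term needs sampling, which is where the reparametrization matters.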

3. A framework for flexible intentions

In what follows, we develop a computational theory of the circuitry controlling goal-directed actions in a dynamically changing environment through flexible intentions and discuss its putative neural basis in the PPC and related areas. We first elaborate on intentionality in Active Inference, then provide a proof-of-concept agent endowed with visual input. The theory is exemplified and assessed in the following sections through simulations of visually-guided behaviors. The theoretical work is motivated by basic research showing the critical role of the PPC in goal-directed sensorimotor control through intention coding (Andersen, 1995; Desmurget et al., 1999; Galletti and Fattori, 2018) and extends previous theoretical and applied research on Active Inference (Friston et al., 2009; Pio-Lopez et al., 2016; Lanillos and Cheng, 2018; Limanowski and Friston, 2020) and VAE-based vision support (Rood et al., 2020; Sancaktar et al., 2020). The simulations are inspired by a classical monkey reaching task (Breveglieri et al., 2014).

3.1. Flexible intentions

State-of-the-art implementations of continuous Active Inference have successfully tackled a wide range of tasks, from oculomotor dynamics (Adams et al., 2015) to the well-known mountain car problem (Friston et al., 2009). Most simulations involve reaching movements in robotic experiments, where several strategies have been tried for designing goal states, which are expressed in terms of an attractor embedded in the system dynamics. However, these strategies raise a few issues of biological plausibility. First, the goal state is usually static, so the agent cannot deal with continuously changing environments because it expects the world to always evolve in the same way (Baioumy et al., 2020). For dynamic goals, low-level sensory information (e.g., a visual input about a moving target) has to be fed directly into the high-level dynamics function (Friston, 2011). Second, when goals are specified in an exteroceptive domain, sensory predictions are used to obtain a belief update direction through backpropagation of the corresponding error (Oliver et al., 2019; Sancaktar et al., 2020). In this case, the same generative model that produces predictions and compares them with the actual observations has to be duplicated in the system dynamics to further compare the belief with the desired cue. In other words, two mirror-image mechanisms are used for the same model, raising additional concerns when the model can change through learning.

A common question seems to underlie these two related issues: how does dynamic sensory information become available for generating high-level dynamic goals? The same inference process of environmental causes should be at work for the same signal flow, and a goal state should be computed locally, without information being passed inconsistently across levels. How, then, can we design a flexible exteroceptive attractor that avoids implausible scenarios?

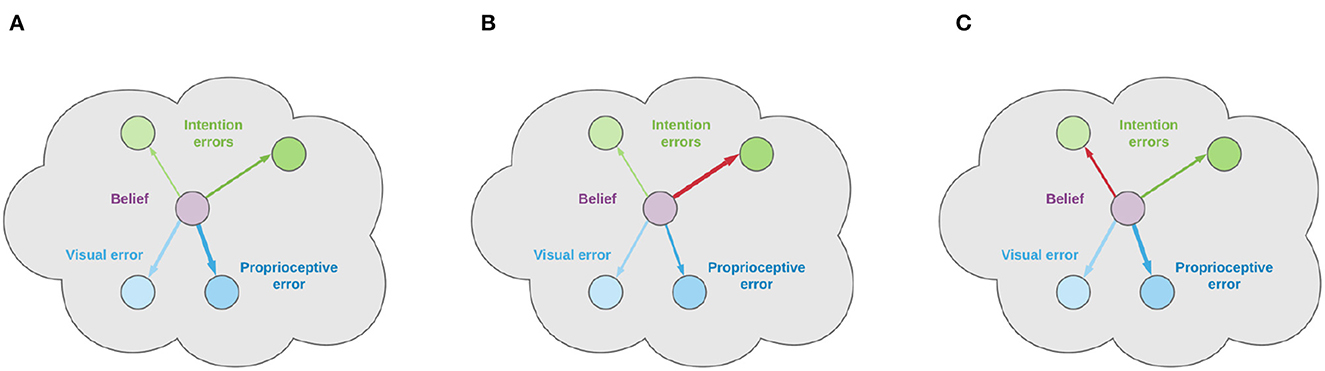

Although the high-level latent state could be as simple as encoding body configurations only, an agent could also maintain a dynamically estimated belief over moving objects in the scene. An intention can then exploit this information to define a future action goal in terms of body posture. The attractor (defined either in the belief domain or at the sensory level) is then not fixed but depends on current perceptual and internal representations of the world, as well as on memorized past experiences. This intention may also depend on priors generated from higher-level areas (Friston et al., 2011). The considered belief is thus located at an intermediate level between the generative models that produce sensory predictions and those that define its evolution over time. In a non-trivial task, the belief dynamics may generally comprise several contributions rather than a single intention. We thus propose to decompose them into a set of functions, each providing an independent expectation that the agent will find itself in a particular state. The belief is then constantly subject to forces of two kinds. One, from lower hierarchical levels and proportional to sensory prediction errors, pulls it toward what the agent is currently perceiving. The other, from lateral or higher connections, consists of what we call intention prediction errors and pulls it toward what the agent expects to perceive in the future.
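The interplay of the two kinds of forces can be sketched with a deliberately simplified belief update. The sensory force is taken as π_s(s − μ), assuming an identity sensory mapping. Each intention contributes a force gain_k · (h_k − μ). The intentions are held fixed here for clarity, although in the proposed framework they are recomputed from the belief itself. The function name, gains, and values are illustrative assumptions, not the model used in the simulations.

```python
import numpy as np

def settle_belief(s, intentions, pi_s, gains, dt=0.01, n_steps=5000):
    """Belief mu under one sensory force and several intention forces.

    Sensory force: pi_s * (s - mu), assuming an identity sensory mapping.
    Intention force k: gains[k] * (intentions[k] - mu), intentions held fixed.
    """
    mu = np.zeros_like(s, dtype=float)
    for _ in range(n_steps):
        sensory_force = pi_s * (s - mu)                      # pull toward what is perceived
        intention_force = sum(g * (h - mu) for g, h in zip(gains, intentions))
        mu = mu + dt * (sensory_force + intention_force)     # pull toward expected states
    return mu

s = np.array([0.0, 0.0])
intentions = [np.array([1.0, 0.0]), np.array([0.0, 2.0])]
print(settle_belief(s, intentions, pi_s=2.0, gains=[1.0, 1.0]))   # approaches [0.25, 0.5]
```

At equilibrium, the belief is the precision- and gain-weighted average (π_s s + Σ_k gain_k h_k) / (π_s + Σ_k gain_k). Raising an intention gain relative to the sensory precision shifts the belief, and hence the downstream predictions that drive action, further toward that intention.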

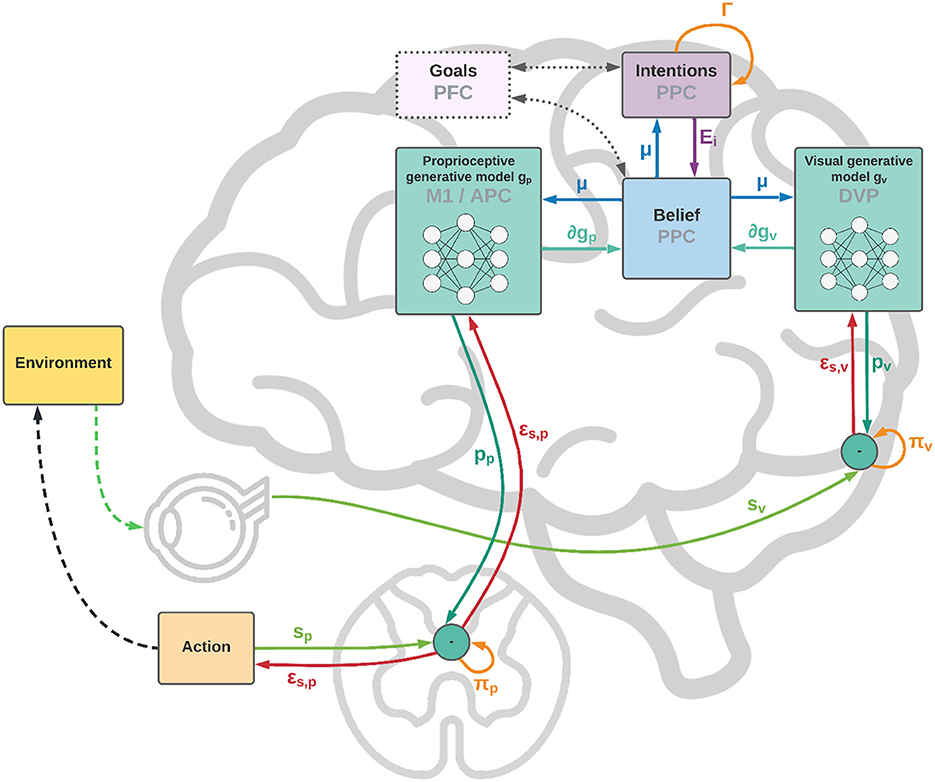

As shown in Figure 1, from a neural perspective the PPC is the ideal candidate for a cortical structure computing beliefs over bodily states and flexible intentions. On the one hand, it sits at the apex of the Dorsal Visual Stream (DVS) and of other sensory generative models. On the other, it is linked with motor and frontal areas that produce continuous trajectories and plans of discrete action chunks. The PPC is known to be an associative region that integrates information from multiple sensory modalities and encodes visuomotor transformations; e.g., area V6A is thought to encode object affordances during reaching and grasping tasks (Fattori et al., 2017; Filippini et al., 2017). Moreover, evidence suggests that the PPC encodes multiple goals in parallel during sequences of actions, even when there is a considerable delay between different goals (Baldauf et al., 2008).

Figure 1. Functional architecture and cortical overlay. The process starts with the computation of future intentions h (not explicitly represented in the figure) in the PPC under the coordination of frontal and motor areas. In the middle of the sensorimotor hierarchy, the PPC maintains beliefs μ over the latent causes of sensory observations sp and sv, and computes proprioceptive and visual predictions through the somatosensory and dorsal visual pathways (for simplicity, we have omitted the somatomotor pathway and considered a single mechanism for both motor control and belief inference). The lower layers of the hierarchy compute sensory prediction errors εsp and εsv, while the higher layers compute intention prediction errors Ei; both are propagated back toward the PPC, which thus integrates information from multiple sensory modalities and intentions. Free energy is minimized throughout the cortical hierarchy by changing the belief about the causes of the current observation (perception) and by sending proprioceptive predictions from the motor cortex to the reflex arcs (action). An essential element of this process is the computation of gradients ∂gp and ∂gv by inverse mappings from the sensations toward the deepest latent states. In this process, intentions act as high-level attractors and the belief propagated down to compute sensorimotor predictions embeds a component directing the body state toward the goals.

In short, the agent constantly maintains plausible hypotheses over the causes of its percepts, either bodily states or objects in exteroceptive domains; by manipulating them, the agent dynamically constructs representations of future states, i.e., intentions, which in turn act as priors over the current belief. Thus, if the job of the sensory pathways is to compute sensory-level predictions, we hypothesize that higher levels of the sensorimotor control hierarchy integrate into the PPC previous states of belief with flexible intentions, each predicting the next plausible belief state.

3.2. Dynamic goal-directed behavior in Active Inference

For a more formal definition, we assume that the neural system perceives the environment and receives motor feedback through J noisy sensors S spanning multiple domains (most critically, proprioceptive and visual). Under the variational Bayes (VB) and Gaussian approximations of the recognition density, we also assume that the nervous system operates on beliefs $\mu \in \mathbb{R}^M$ that define an abstract internal representation of the world. Furthermore, we assume that the agent maintains generalized coordinates up to the 1st order, $\tilde{\mu} = [\mu, \mu']$, and that free energy is minimized in this generalized belief space.

We then define intentions hk as predictions of target goal states over the current belief μ, computed with the help of K functions ik, i.e., hk = ik(μ). Although both belief and intentions could be abstract representations of the world (comprising states in extrinsic and intrinsic coordinates), we assume a simpler scenario in which the intentions operate on beliefs in a common intrinsic motor-related domain, e.g., the joint angles space. As explained before, we assume that there are two conceptually different components in both the belief μ and the output of the intention functions ik. The first component could represent the bodily states and serve to drive actions, while the second one could represent the state of other objects (mostly targets to interact with), which can be internally encoded in the joint angles space as well (the reason for this particular encoding will become clear later). These targets could be observed, but they could also be imagined or set by higher-level cognitive control frontal areas such as the PFC or PMd (Genovesio et al., 2012; Stoianov et al., 2016).

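For the sake of notational simplicity, we group all intentions into a single matrix H ∈ ℝ^{M×K}:

$$H = \begin{bmatrix} h_1 & \cdots & h_K \end{bmatrix} = \begin{bmatrix} i_1(\mu) & \cdots & i_K(\mu) \end{bmatrix}$$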

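Intention prediction errors eik are then defined as the difference between every intention and the current belief:

$$E_i = \begin{bmatrix} e_{i,1} & \cdots & e_{i,K} \end{bmatrix} = \begin{bmatrix} h_1 - \mu & \cdots & h_K - \mu \end{bmatrix}$$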

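In turn, sensory predictions are produced by a set of generative models gj, one for each sensory modality. We group the predictions into a prediction matrix P:

$$P = \begin{bmatrix} p_1 & \cdots & p_J \end{bmatrix} = \begin{bmatrix} g_1(\mu) & \cdots & g_J(\mu) \end{bmatrix}$$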

Note that each term pj is a multidimensional sensory-level representation that provides predictions for a particular sensory domain, with its own dimensionality; we group these terms into a single quantity only for notational simplicity. Sensory prediction errors εsj are then computed as the difference between the sensations from each domain and the corresponding sensory-level predictions:

$$E_s = \begin{bmatrix} \varepsilon_{s,1} & \cdots & \varepsilon_{s,J} \end{bmatrix} = \begin{bmatrix} s_1 - p_1 & \cdots & s_J - p_J \end{bmatrix}$$

Under the assumption of independence among intentions and sensations, we can factorize the joint probability of the generative model into a product of distributions for each sensory modality and intention, which expands as follows:

In the following, we will not consider the prior probability over the 0th order belief p(μ). The other probability distributions are assumed to be Gaussian:

Where γk and πj are, respectively, the precisions of intention k and sensor j. Here, and fk correspond to the kth component of the 1st order dynamics function:

Where λ is the gain of intention prediction errors Ei. Note that the goal states are embedded into these functions, acting as belief-level attractors for each intention, so that the agent expects to be pulled toward target states with a velocity proportional to the error. Although the generalized belief allows encoding information about the dynamics of the true generative process, in the simple case outlined here the agent has no such prior. For example, the agent does not know the trajectory of a moving target in advance (in a more realistic scenario, such a prior could be acquired by learning from past experience) and updates the belief relying only on incoming sensory information. Nevertheless, the agent maintains (false) expectations about target dynamics, and it is precisely the discrepancy between the evolution of the (real) generative process and that of the (internal and biased) generative model that enables goal-directed behavior.
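The error and dynamics terms introduced above can be sketched numerically with NumPy. This is a minimal illustration, not the authors' implementation: it assumes the sign conventions eik = hk − μ and εsj = sj − pj, a linear dynamics function fk(μ) = λ eik (one simple choice consistent with λ acting as the gain of Ei and with a velocity proportional to the error), and hypothetical toy maps `reach` and `proprio` on a two-dimensional belief [arm angle, target angle].

```python
import numpy as np

def intention_errors(mu, intention_fns):
    """Stack h_k = i_k(mu) as the columns of H (M x K); return H and E_i = H - mu."""
    H = np.stack([i_k(mu) for i_k in intention_fns], axis=1)
    E_i = H - mu[:, None]          # column k is e_ik = h_k - mu (assumed sign)
    return H, E_i

def sensory_errors(mu, observations, generative_fns):
    """Predictions p_j = g_j(mu) and errors eps_sj = s_j - p_j, one per modality.
    Lists are used because each modality has its own dimensionality."""
    P = [g_j(mu) for g_j in generative_fns]
    E_s = [s_j - p_j for s_j, p_j in zip(observations, P)]
    return P, E_s

def dynamics(mu, H, lam):
    """Assumed linear 1st order dynamics f_k(mu) = lam * (h_k - mu), one column per intention."""
    return lam * (H - mu[:, None])

# Toy belief: mu = [arm angle, target angle]; one intention, one sensor.
mu = np.array([0.0, 1.0])
reach = lambda m: np.array([m[1], m[1]])   # hypothetical i_t: arm belief -> target belief
proprio = lambda m: m[:1]                   # hypothetical g_p: reads the arm angle
H, E_i = intention_errors(mu, [reach])
P, E_s = sensory_errors(mu, [np.array([0.2])], [proprio])

# Dynamics prediction errors eps_mu_k = mu' - f_k(mu), here with mu' = 0.
mu_prime = np.zeros(2)
E_mu = mu_prime[:, None] - dynamics(mu, H, lam=0.5)

# Following the expected velocity alone pulls the arm belief toward the target.
for _ in range(200):
    H, _ = intention_errors(mu, [reach])
    mu = mu + 0.1 * dynamics(mu, H, lam=0.5)[:, 0]
```

Integrating only the expected velocity moves the arm component of the belief exponentially toward the target component, while the target component stays put because its intention error is zero.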

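The prediction errors of the dynamics functions, obtained by subtracting each dynamics function from the 1st order belief, can be grouped into a single matrix:

$$E_\mu = \begin{bmatrix} \varepsilon_{\mu,1} & \cdots & \varepsilon_{\mu,K} \end{bmatrix} = \begin{bmatrix} \mu' - f_1(\mu) & \cdots & \mu' - f_K(\mu) \end{bmatrix}$$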

From Equation (14), we can now compute the free energy derivative with respect to the belief:

Here, ⊙ is the element-wise product, G and F enclose the gradients of all sensory generative models and dynamics functions, while Π and Γ comprise all sensory and intention precisions:

In the following, we will neglect the backward error in the 0th order of Equation (28) since it has a much smaller impact on the overall dynamics, and treat as the actual attractor force the forward error at the 1st order:

Where ϵs and ϵi, respectively, stand for the contributions (in the belief domain) of precision-weighted sensory and intention prediction errors. Considering the 1st order forward error as the attractive force instead of the 0th order backward error results in simpler computations, since no gradient of the dynamics functions needs to be considered. However, further studies are needed to understand the relationship between these two forces in goal-directed behavior. We can interpret γk as a quantity that determines the relative attractor gain of intention k, so that intentions with greater strength have a more significant impact on the overall update direction; these gains could also be modulated by projections from higher-level areas applying cognitive control. In turn, πj corresponds to the confidence about each sensory modality j, so that the agent relies more on sensors with higher precision.
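The following sketch illustrates how the belief is pulled by sensations on one side and intentions on the other. It performs Euler steps of the simplified update under the assumption that ϵs = Σj πj ∂gjᵀ εsj and ϵi = λ Σk γk eik; the step size, the scalar belief, and the identity generative model are illustrative choices. Since the observation is held fixed (no action), the belief settles where the two contributions cancel, at (π s + λγ h)/(π + λγ).

```python
import numpy as np

def belief_step(mu, sensory, intentions, lam, dt):
    """One Euler step of the simplified update d(mu)/dt = eps_s + eps_i (assumed form).
    sensory: list of (pi_j, s_j, g_j, dg_j), with dg_j(mu) the Jacobian of g_j.
    intentions: list of (gamma_k, h_k)."""
    eps_s = sum(pi * dg(mu).T @ (s - g(mu)) for pi, s, g, dg in sensory)
    eps_i = lam * sum(gamma * (h - mu) for gamma, h in intentions)
    return mu + dt * (eps_s + eps_i)

identity = lambda m: m                       # illustrative generative model g(mu) = mu
jac_identity = lambda m: np.eye(m.size)      # its Jacobian

mu = np.zeros(1)
sensory = [(1.0, np.zeros(1), identity, jac_identity)]   # pi = 1, fixed observation s = 0
intentions = [(1.0, np.ones(1))]                         # gamma = 1, goal h = 1
for _ in range(500):
    mu = belief_step(mu, sensory, intentions, lam=1.0, dt=0.05)
# mu is now about 0.5 = (pi*s + lam*gamma*h) / (pi + lam*gamma)
```

Raising γ (or λ) shifts the fixed point toward the goal, while raising π shifts it toward the observation, which is the relative-gain interpretation given above.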

Similarly, we can compute control signals by minimizing the free energy with respect to the actions, expressing the mapping from sensations to actions by:

Where ∂aμ is an inverse model from belief to actions. If motor signals are defined in terms of joint velocities, we can decompose and approximate the inverse model as follows:

Where θ are the joint angles, the subscript p indicates the proprioceptive contribution and we approximated ∂agp by a time constant Δt (Oliver et al., 2019). If we assume that the belief over hidden states is encoded in joint angles, the computation of the inverse model may be as simple as finding the pseudoinverse of a matrix. However, if the belief is specified in a more generic reference frame and the proprioceptive generative model is a non-linear function, it could be harder to compute the corresponding gradient, causing additional control problems like temporal delays on sensory signals (Friston, 2011). Alternatively, we can consider a motor control driven only by proprioceptive predictions, so that the control signal is already in the correct domain and may be achieved through simple reflex arc pathways (Adams et al., 2013; Versteeg et al., 2021). In this case, all that is needed is a mapping from proprioceptive predictions to actions:

Expressing the mapping from sensations to actions in Equation (31) as the product of the inverse model ∂aμ and the gradient of the generative models allows the control signals to be defined in terms of the weighted sensory contribution ϵs, which is already computed during inference. This approach may have some computational advantages (as will be explained later), but it is unlikely to be implemented in the nervous system, since control signals are supposed to convey predictions, not prediction errors (Adams et al., 2013).
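The two control options can be compared on a toy belief [arm angle, target angle]. In this sketch, which rests on our own assumptions, the inverse model for full control is taken as Δt times the pseudoinverse of Gpᵀ (in the spirit of the pseudoinverse remark above), with a minus sign because actions must bring sensations toward predictions rather than the belief toward sensations; the reflex-arc variant only sees the proprioceptive prediction and the observation. The constant `DT` is a hypothetical value.

```python
import numpy as np

DT = 0.1  # time constant approximating the gradient of g_p w.r.t. the actions (assumed value)

def action_full(eps_s, G_p):
    """Full control: map the belief-domain sensory contribution eps_s to joint velocities.
    The inverse model is assumed to be DT * pinv(G_p^T); the minus sign reflects that
    actions must move sensations toward predictions, i.e., opposite to eps_s."""
    return -DT * np.linalg.pinv(G_p.T) @ eps_s

def action_proprio(mu, s_p, G_p, pi_p=1.0):
    """Proprioception-only control (reflex arc): cancel the gap between the
    proprioceptive prediction G_p mu and the observation s_p."""
    return DT * pi_p * (G_p @ mu - s_p)

# Belief = [arm angle, target angle]; proprioception reads only the arm angle.
G_p = np.array([[1.0, 0.0]])
mu = np.array([1.0, 1.0])
s_p = np.array([0.0])
eps_s = 1.0 * G_p.T @ (s_p - G_p @ mu)   # proprioceptive contribution pi_p * G_p^T eps_sp
a_full = action_full(eps_s, G_p)
a_prop = action_proprio(mu, s_p, G_p)
```

With proprioception as the only sensory source the two commands coincide (both equal 0.1 here); once visual errors also enter ϵs, full control moves the arm to reduce them as well, which is the difference examined in Section 5.2.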

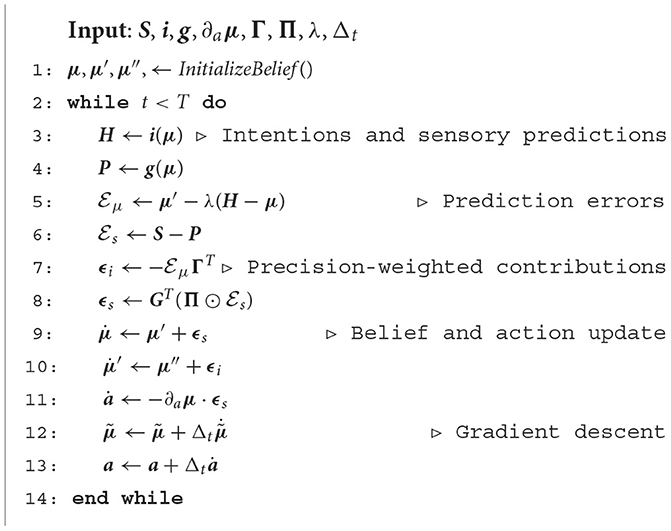

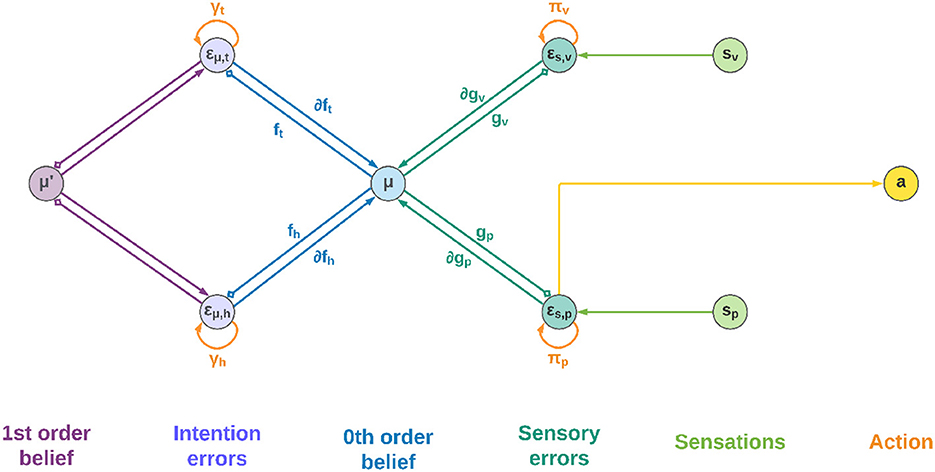

Algorithm 1 outlines the flow of dynamic computations. For simplicity, we also use the term "intention" when describing the dynamics functions and their precisions, but one should keep in mind the difference between the intention prediction errors Ei, which directly encode the direction toward target states, and the dynamics prediction errors, which arise from differentiating the corresponding probability distributions.

3.3. Neural implementation

Figure 2 shows a schematic neuronal representation of the proposed agent, which further extends earlier perceptual inference schemes (Bogacz, 2017) to full-blown Active Inference. In this simple model, the intentions consist of a single layer with two neurons, and the goal states are implicitly defined in the dynamics functions; however, in a realistic setting the latter would be composed of networks of neurons where these states are explicitly encoded, and non-linear functions could also be used to achieve more advanced behaviors. Note also that intentions hk and sensory generative models gj are all part of the same architecture, the only difference being the location in the cortical hierarchy.

Figure 2. Neuronal representation with two intentions. Small squares stand for inhibitory connections.

Low-level prediction errors for each sensory modality are represented by neurons whose dynamics depend on both the observations and the predictions of the sensory generative models:

Upon convergence of the neural activity (that is, when the time derivative of the activity of these error neurons vanishes), we obtain the prediction error computation derived above. In turn, the internal activity of the neurons encoding high-level prediction errors is obtained by subtracting the generated dynamics function from the 1st order belief:

Having received information coming from the top and bottom of the hierarchy, the belief is updated by integrating every signal:

Which parallels the update formula derived above (Equation 28). Correspondingly, the 1st order component of the belief is updated as follows:

The belief is thus constantly pushed in a direction that matches sensations on one side and intentions on the other. We adopted the idea that the slowly varying precisions are encoded as synaptic strengths (Bogacz, 2017), but alternative views consider them as gains of superficial pyramidal neurons (Bastos et al., 2012). In either case, they could be dynamically optimized during inference in a direction that minimizes free energy; e.g., if a sensory modality does not help predict sensations, its weight will decrease. The same holds for the intention weights: by changing dynamically during the movement, they can act as modulatory signals that select the best intention to realize at every moment, which can be useful for solving simultaneous or sequential tasks. Nonetheless, the distinction is purely conceptual, as the agent does not discriminate between modulating a future intention and increasing the confidence in a sensory signal. At the belief level, every element simply follows the rules of free energy minimization.
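The convergence argument for the prediction-error neurons can be checked with a few lines of code. The sketch below follows the style of the perceptual scheme of Bogacz (2017), assuming error-neuron dynamics dε/dt = s − g(μ) − ε/π, where the variance 1/π acts as a leak; at equilibrium the activity equals the precision-weighted error π(s − g(μ)). The step size `rate` and the number of iterations are illustrative.

```python
import numpy as np

def settle_error_neuron(s, pred, pi, rate=0.1, steps=500):
    """Euler-integrate d(eps)/dt = s - pred - eps / pi starting from eps = 0.
    The fixed point is eps = pi * (s - pred); the iteration is stable for rate / pi < 2."""
    eps = np.zeros_like(s, dtype=float)
    for _ in range(steps):
        eps = eps + rate * (s - pred - eps / pi)
    return eps

eps = settle_error_neuron(np.array([1.0, -0.5]), np.array([0.2, 0.0]), pi=2.0)
# eps is approximately pi * (s - pred) = [1.6, -1.0]
```

In this reading, a precision encoded as a synaptic strength simply rescales the equilibrium activity of the error neuron.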

4. Method

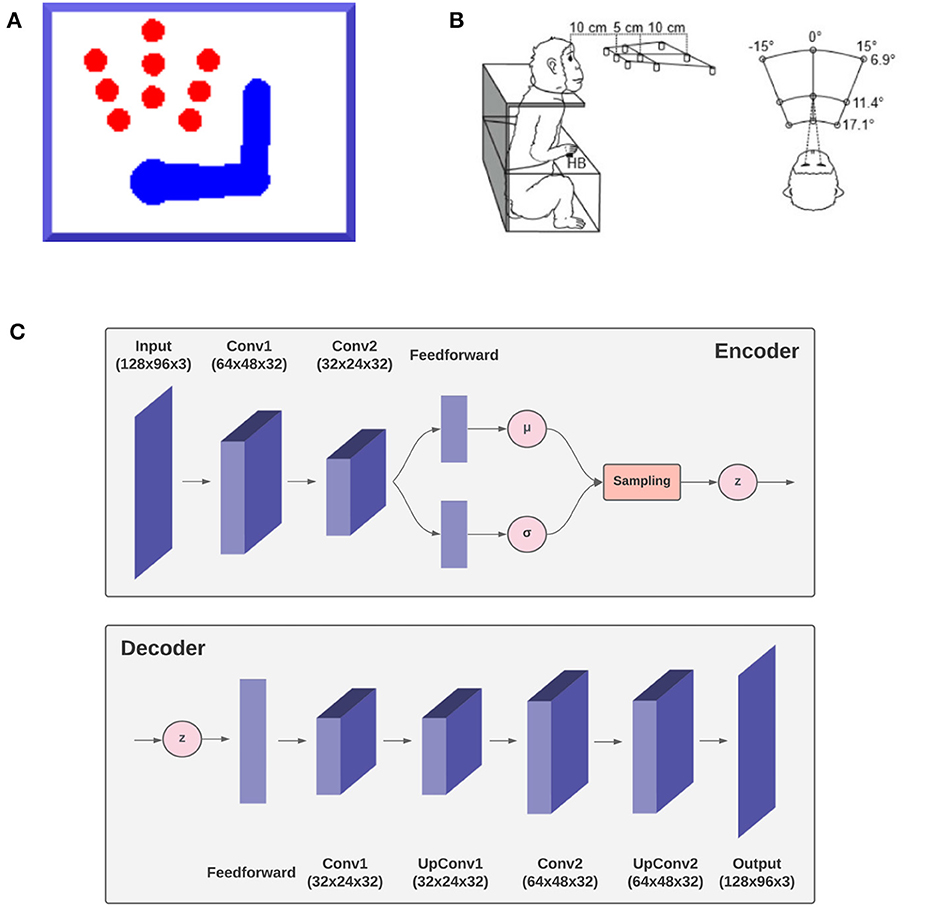

To demonstrate the feasibility of the approach and its capacity to successfully implement goal-directed behavior in dynamic environments, we simulated an agent consisting of an actuated upper limb with visual and proprioceptive sensors that allow it to perceive and reach static and moving targets within its reach.¹ Figure 3A shows the size and position of the targets, as well as the limb size and a sample posture. Since the focus here is on theoretical aspects, we simulated only a coarse 3-DoF limb model moving on a 2D plane; however, the approach easily generalizes to a more elaborate limb model and 3D movements. In the following, we describe the agent, the specific implementation, and the simulated task. Then, in the Results section, we assess the agent's perceptual and motor control capabilities in static and dynamic conditions. The static condition simulated a typical monkey task of reaching peripersonal targets, as in Figure 3 (Breveglieri et al., 2014). In turn, the dynamic condition involved a moving target that the agent had to track continuously.

Figure 3. Simulation outline. The agent, a simulated 3-DoF actuated upper limb shown in (A) is set to reach one of the nine red circle targets as in the reference monkey experiment (Breveglieri et al., 2014) outlined in (B). The agent is equipped with a fixed virtual camera providing peripersonal visual input and a visual model, the decoder gv of a VAE shown in (C) simulating functions of the DVS.

4.1. Delayed reaching task

The primary testbed task is a simplified version of a delayed reaching monkey task in which a static target must be reached with a movement that can only start after a delay period (Breveglieri et al., 2014). Delayed actions are used to separately investigate neural processes related to action preparation (e.g., perception and planning) and execution in goal-directed behavior, and are thus useful to analyze the two main computational components of free energy minimization, namely, perceptual and active inference, which otherwise work in parallel. Delayed reaching could be implemented in various ways. One option, implemented here, is to block the update of the posture component of the belief dynamics by setting the intention gain λ to zero during the delay period: in this way, there are no active intentions and the belief only follows sensory information. Alternatively, action execution could be temporarily suspended by setting the proprioceptive precision to zero, so that the agent still produces proprioceptive predictions but does not trust their prediction errors; in this scenario, the belief dynamics includes a small component directed toward the intention, but the resulting discrepancy is not minimized through movement.

Reach trials start with the hand placed on a home button (HB) located in front of the body center (i.e., the “neck”), and the belief is initialized with this configuration. Then one of the 9 possible targets of the reference experiment (Figure 3) is lit red. This is followed by a delay period of 100 time steps, during which the agent is only allowed to perceive the visible target and the limb, and the inference process can only change the belief. After that, the limb is allowed to move and the joint angles are updated according to Equation (38). As in the reference task, upon reaching the target the agent stops for a sufficiently long period (each trial lasts 300 time steps in total). After that, the agent returns to the HB (not analyzed here). The simulation included 100 repetitions per target, i.e., 900 trials in total.
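The delayed reaching protocol can be reproduced in miniature. The sketch below is a hypothetical 1-DoF reduction of the task, not the simulated 3-DoF limb: the belief is [arm angle, target angle], an identity map stands in for the VAE decoder, proprioception is noise-free by default, and the gains, step size, and target value are arbitrary. During the first 100 steps λ = 0, so only the target belief moves; afterwards, the intention pulls the arm belief and the reflex arc makes the joint follow it.

```python
import numpy as np

def delayed_reach(target=1.2, delay=100, steps=300, lam=1.0, dt=0.1,
                  pi_p=1.0, pi_v=1.0, motor_noise=0.0, seed=0):
    """Toy 1-DoF delayed reaching. The belief is mu = [arm angle, target angle]; a
    hypothetical identity 'visual model' observes both angles directly (standing in
    for the VAE decoder). Returns the joint angle after every time step."""
    rng = np.random.default_rng(seed)
    theta = 0.0                      # true joint angle, starting at the home position
    mu = np.array([theta, theta])    # belief initialized at the home configuration
    history = []
    for t in range(steps):
        gain = 0.0 if t < delay else lam          # lambda = 0 during the delay period
        s_p = theta                               # proprioception (noise-free here)
        s_v = np.array([theta, target])           # vision of arm and target
        eps_s = np.array([pi_p * (s_p - mu[0]), 0.0]) + pi_v * (s_v - mu)
        e_t = np.array([mu[1] - mu[0], 0.0])      # intention error h_t - mu
        mu = mu + dt * (eps_s + gain * e_t)
        # Reflex arc: the velocity command reduces the proprioceptive prediction error.
        a = pi_p * (mu[0] - s_p)
        theta += dt * (a + motor_noise * rng.standard_normal())
        history.append(theta)
    return np.array(history)

traj = delayed_reach()
```

The joint stays at the home position for the whole delay and then converges to the target angle within the remaining 200 steps; setting `motor_noise > 0` adds the velocity-level noise described in Section 4.2.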

4.2. Body

The body consists of a simulated monkey upper limb composed of a moving torso attached to an anchored neck, an upper arm, and a lower arm, as shown in Figure 3. The three moving segments are schematized as rectangles, each with unit mass, while the joints (shoulder, elbow) and the tips (neck, hand) are schematized as circles. The proportions of the limb segments and the operating range of the joint angles were derived from Macaca mulatta data (Kikuchi and Hamada, 2009). The state of the limb and its dynamics are described by the joint angles θ and their velocities θ′. We assume noisy velocity-level motor control, whereby the motor efferents a control the joint velocities subject to zero-centered Gaussian noise:

$$\theta' = a + w_a, \qquad w_a \sim \mathcal{N}(0, \sigma_a^2)$$

4.3. Sensors

The agent receives information about its proprioceptive state and visual context. Simplified peripersonal visual input sv was provided by a virtual camera that included three 2D color planes, each of them 128 x 96 pixels in size. The location and orientation of the camera were fixed so that the input provided full vision of peripersonal targets and the entire limb in any possible limb state within its operating range. The limb could occlude the target in some configurations.

As in the simulated limb, the motor control system also receives proprioceptive feedback through sensors sp, providing noisy information on the true state of the limb (Tuthill and Azim, 2018; Versteeg et al., 2021). We further assumed that sp provides a noisy reading of the state of all joints only in terms of joint angles, ignoring other proprioceptive signals such as force and stretch (Srinivasan et al., 2021), which the Active Inference framework can natively incorporate.

4.4. Belief

We assume that both orders of the generalized belief comprise three components: (i) beliefs over arm joint angles, or posture; (ii) beliefs over the target location, again represented in the joint angles space, i.e., the posture corresponding to the arm touching the target; and (iii) beliefs over a memorized HB configuration. Thus, μ = [μa, μt, μh]. Note that the last two components can be interpreted as affordances, allowing the agent to implement interactions in terms of bodily configurations (Pezzulo and Cisek, 2016).
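Because the target belief is expressed as the posture that touches the target, recovering a Cartesian position requires the geometric (forward) model used in the Results section. A minimal planar sketch follows, together with the noisy velocity-level motor step described in Section 4.2. It assumes relative joint angles in radians and hypothetical unit segment lengths; the actual proportions derive from Macaca mulatta data and are not reproduced here.

```python
import numpy as np

def forward_kinematics(theta, lengths, base=(0.0, 0.0)):
    """Planar chain with relative joint angles (radians): returns an array with the
    base position followed by the endpoint of each segment (the last row is the hand)."""
    base = np.asarray(base, dtype=float)
    angles = np.cumsum(theta)                       # absolute orientation of each segment
    segments = np.stack([lengths * np.cos(angles), lengths * np.sin(angles)], axis=1)
    return np.vstack([base, base + np.cumsum(segments, axis=0)])

def motor_step(theta, a, dt, sigma, rng):
    """Noisy velocity-level control: theta' = a + w with w ~ N(0, sigma^2)."""
    return theta + dt * (a + sigma * rng.standard_normal(theta.shape))

lengths = np.ones(3)                                 # hypothetical segment lengths
rng = np.random.default_rng(0)
theta = motor_step(np.zeros(3), np.array([0.5, 0.0, 0.0]), dt=0.1, sigma=0.01, rng=rng)
hand = forward_kinematics(theta, lengths)[-1]        # Cartesian hand position
```

Applying the same function to the target belief μt gives the estimated target position used in the perception measures.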

4.5. Sensory model

The sensory generative distribution has two components, one for each sensory modality: a simplified proprioceptive model gp(μ) and a full-blown visual model gv(μ):

Since the belief is already in the joint angles domain, we implemented a simple proprioceptive generative model gp(μ) = Gpμ = μa, where Gp is a mapping that only extracts the first component of the belief:

$$G_p = \begin{bmatrix} \mathbb{I} & 0 & 0 \end{bmatrix}$$

Where 0 and 𝕀 are respectively 3 x 3 zero and identity matrices. Note that gp(μ) could be easily extended to a more complex proprioceptive mapping if the body and/or joint sensors have a more complex structure and the belief has a richer and abstract representation.
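The block structure of Gp can be checked directly with NumPy's `np.block`, using illustrative belief values: Gp extracts the arm component, and its transpose routes a proprioceptive error back into the arm slot only.

```python
import numpy as np

I3, Z3 = np.eye(3), np.zeros((3, 3))
G_p = np.block([[I3, Z3, Z3]])           # 3 x 9 map: mu -> mu_a

# Illustrative belief components (joint angles in radians).
mu_a = np.array([0.1, 0.2, 0.3])
mu_t = np.array([0.4, 0.5, 0.6])
mu_h = np.zeros(3)
mu = np.concatenate([mu_a, mu_t, mu_h])  # mu = [mu_a, mu_t, mu_h]

prediction = G_p @ mu                    # g_p(mu) = G_p mu = mu_a
eps_p = np.array([0.01, -0.02, 0.0])     # an example proprioceptive prediction error
back = G_p.T @ eps_p                     # gradient path: the error lands in the arm slot only
```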

In turn, the visual generative model gv is the decoder component of a VAE (see Figure 3C). It consists of one feedforward layer, two transposed convolutional layers, and two standard convolutional layers that smooth the output. Its latent space is composed of two elements, representing the joint angles of the arm and of the target (example in Figure 13). The first component is used to generate, in the visual output, an arm with a specific joint configuration, while the second component is used to produce only the image of the target, located through the direct kinematics of its joint angles. The VAE was trained in a supervised manner for 100 epochs on a dataset comprising 20,000 randomly drawn body-target configurations that uniformly spanned the entire operational space, together with the corresponding visual images. The target radius varied from 5 to 12 pixels.

The proprioceptive gradient ∂gp simplifies to the mapping Gp itself, while the visual component ∂gv is the gradient of the decoder computed by backpropagation. Since the Cartesian position of the target is encoded in joint angles, this gradient implicitly performs a kinematic inversion. Therefore, the predictions P and prediction errors take the form:

$$P = \begin{bmatrix} G_p\mu & g_v(\mu) \end{bmatrix}, \qquad E_s = \begin{bmatrix} s_p - G_p\mu & s_v - g_v(\mu) \end{bmatrix}$$

Note that defining sensory predictions on both proprioceptive and visual sensory domains allows the agent to perform efficient goal-directed behavior also in conditions of visual uncertainty, e.g., due to low visibility. Indeed, since the belief is maintained over time, the agent remembers the last known target position and can thus accomplish reaching tasks also in case of temporarily occluded targets.

4.6. Intentions

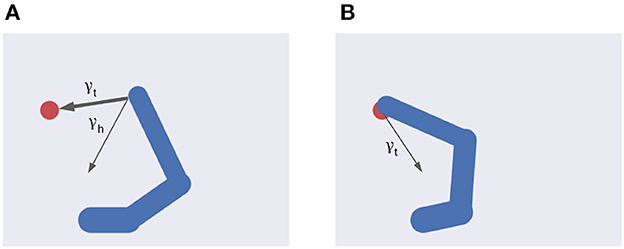

Building on the proposed formalization (Equation 20), we define two specific intentions (Figure 4) as follows:

$$h_t = i_t(\mu) = I_t\,\mu, \qquad h_h = i_h(\mu) = I_h\,\mu$$

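Here ht = it(μ) defines the agent's expectation that the arm belief is equal to the joint configuration corresponding to the target to be reached, and it is implemented as a simple mapping It that sets the first belief component equal to the second one. In turn, the intention hh = ih(μ) encodes the future belief of the agent that the arm will be at the HB position. The two intention mappings are defined by:

$$I_t = \begin{bmatrix} 0 & \mathbb{I} & 0 \\ 0 & \mathbb{I} & 0 \\ 0 & 0 & \mathbb{I} \end{bmatrix}, \qquad I_h = \begin{bmatrix} 0 & 0 & \mathbb{I} \\ 0 & \mathbb{I} & 0 \\ 0 & 0 & \mathbb{I} \end{bmatrix}$$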

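The corresponding intention prediction errors are then:

$$e_{i,t} = h_t - \mu = \begin{bmatrix} \mu_t - \mu_a \\ 0 \\ 0 \end{bmatrix}, \qquad e_{i,h} = h_h - \mu = \begin{bmatrix} \mu_h - \mu_a \\ 0 \\ 0 \end{bmatrix}$$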

These errors provide an update direction respectively toward the target and HB joint angles. As there is no intention to move the target or the HB, the second and third components of the prediction errors will be zero.
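Similarly, the two intention mappings can be written as 9 × 9 block matrices. The sketch below assumes the arrangement implied by the text (the arm block copies the target or HB block, and the other blocks are left unchanged) and uses illustrative belief values.

```python
import numpy as np

I3, Z3 = np.eye(3), np.zeros((3, 3))
I_t = np.block([[Z3, I3, Z3], [Z3, I3, Z3], [Z3, Z3, I3]])   # arm <- target
I_h = np.block([[Z3, Z3, I3], [Z3, I3, Z3], [Z3, Z3, I3]])   # arm <- home button

mu_a = np.array([0.1, 0.2, 0.3])   # illustrative arm posture
mu_t = np.array([0.4, 0.5, 0.6])   # illustrative target posture
mu_h = np.array([0.0, 0.1, 0.0])   # illustrative HB posture
mu = np.concatenate([mu_a, mu_t, mu_h])

e_t = I_t @ mu - mu   # [mu_t - mu_a, 0, 0]
e_h = I_h @ mu - mu   # [mu_h - mu_a, 0, 0]
```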

Figure 4. Multiple intentions. Intention prediction errors of variable strength (arrow width) controlled by intention precisions point at different target states. (A) A stronger target attraction (the red circle) implements the reaching action. (B) A stronger attraction of the invisible but previously memorized HB implements the return-to-home action.

4.7. Precisions

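Free energy minimization, and Predictive Coding in general, heavily depend on precision modulation. To investigate its role, we parameterized the relative precisions of each intention and sensory domain with parameters α and β as follows:

$$\Pi = \begin{bmatrix} \pi_p & \pi_v \end{bmatrix} = \begin{bmatrix} 1-\alpha & \alpha \end{bmatrix}, \qquad \Gamma = \begin{bmatrix} \gamma_t & \gamma_h \end{bmatrix} = \begin{bmatrix} 1-\beta & \beta \end{bmatrix}$$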

The parameter α controls the relative strength of the error update due to proprioception and vision, while the parameter β controls the relative attraction by each intention. With these parameters, the sensory and intention weighted contributions are unpacked as follows:

Equation (46) shows the balance between visual and proprioceptive information. For example, if α = 0 the agent will only use proprioceptive feedback, while for α = 1 the belief will be updated only relying on visual feedback. Note that these are extreme conditions—e.g., the former may correspond to null visibility—and typical sensory systems provide balanced feedback. In turn, Equation (47) spells out the control of belief attraction. The agent will follow the first intention when β = 0, or the second one when β = 1 (Figure 4). Note that the introduction of a possible competing reach movement creates a conflict among intentions aiming to fulfill opposing goals (e.g., for intermediate values of β) while the agent can physically realize only one of them at a time (Figure 4). Thus, we assume that the control of intention selection is realized through mutual inhibition and higher-level bias. Finally, the parameter λ controls the overall attractor magnitude (see also Equation 26).
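The role of α and β can be illustrated with a sketch that assumes the parameterization πp = 1 − α, πv = α, γt = 1 − β, γh = β (consistent with the limit cases described above) and the contributions ϵs = πp Gpᵀ εsp + πv ∂gvᵀ εsv and ϵi = λ(γt eit + γh eih). The visual Jacobian `dgv` is a hypothetical linear stand-in for the decoder gradient.

```python
import numpy as np

def weighted_contributions(alpha, beta, lam, G_p, dgv, eps_p, eps_v, e_t, e_h):
    """Belief-domain contributions under the assumed parameterization
    pi_p = 1 - alpha, pi_v = alpha, gamma_t = 1 - beta, gamma_h = beta."""
    eps_s = (1 - alpha) * G_p.T @ eps_p + alpha * dgv.T @ eps_v
    eps_i = lam * ((1 - beta) * e_t + beta * e_h)
    return eps_s, eps_i

# Scalar components: belief = [arm, target, HB].
G_p = np.array([[1.0, 0.0, 0.0]])
dgv = np.array([[1.0, 0.0, 0.0],      # hypothetical linear visual model: sees the arm...
                [0.0, 1.0, 0.0]])     # ...and the target
eps_p, eps_v = np.array([0.5]), np.array([0.2, -0.1])
e_t, e_h = np.array([1.0, 0.0, 0.0]), np.array([-1.0, 0.0, 0.0])

# alpha = 0, beta = 0: proprioception only, reach toward the target.
s_prop, i_target = weighted_contributions(0.0, 0.0, 2.0, G_p, dgv, eps_p, eps_v, e_t, e_h)
# alpha = 1, beta = 1: vision only, return to the HB.
s_vis, i_home = weighted_contributions(1.0, 1.0, 2.0, G_p, dgv, eps_p, eps_v, e_t, e_h)
```

Intermediate values of β blend the two attractors; with opposing errors, as in this example, β = 0.5 cancels them, which illustrates the conflict between intentions discussed above.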

We can also use the precision parameter α to manipulate the strength of the free energy derivative with respect to the actions as follows:

Note that by increasing α (i.e., relying more on vision), the magnitude of the belief update remains constant, while action updates decrease because the agent becomes less confident about its proprioceptive information. One could also investigate separately the effect of precision strength on belief and action by directly manipulating the precisions; e.g., the visual precision πv may include different components that follow the belief structure:

Where we used the parameter α only for the arm belief. For example, when α = 0 and πvt > 0, the target belief is updated using visual input while the arm moves only using proprioception, a scenario that emulates movement in darkness with a lit target.

5. Results

In the following, we assess the capacity of the intention-driven Active Inference agent to perceive and perform goal-directed actions in reaching tasks with static and dynamic visual targets. The main testbed task was delayed reaching, but we also simulated several other conditions.

Sensorimotor control that implements goal-directed behavior was investigated in various sensory feedback conditions, including pure proprioceptive or mixed visual and proprioceptive, in which the VAE decoder provided support for dynamic estimation of visual targets and bodily states. The latter is the typical condition of performing reaching actions and allows greater accuracy (Keele and Posner, 1968). In an additional baseline (BL) condition, the target was estimated by the decoder, but the movement was performed without visual feedback or proprioceptive noise, to allow comparisons with the typical approach in previous continuous Active Inference studies, e.g., Pio-Lopez et al. (2016). We also investigated the effects of sensory and intention precisions, motor control type, and movement onset policy. Finally, we analyzed the visual model and the nature of its gradients to provide critical information about the causes of the observed motor behavior.

Action performance was assessed with the help of several measures: (i) reach accuracy: success in approaching the target within 10 pixels of its center, i.e., the hand touching the target; (ii) reach error: L2 hand-target distance at the end of the trial; (iii) reach stability: standard deviation of the L2 hand-target distance during the period from target reach to the end of the trial, in successful trials; (iv) reach time: number of time steps needed to reach the target in successful trials. We also assessed target perception through analogous measures based on the L2 distance between the target location and its estimate, transformed from joint angles into visual position by applying the geometric (forward) model. Specifically, we defined the following measures: (v) perception accuracy: success in estimating the target location within 10 pixels; (vi) perception error: L2 distance between the true and estimated target position at the end of the trial; (vii) perception stability: standard deviation of the L2 distance between the target position and its estimate during the period from successful estimation until the end of the trial; (viii) perception time: number of time steps needed to successfully estimate the target position.
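The reach measures can be computed from the per-step hand-target distance of a single trial, as in the sketch below. Two details are assumptions, since the text does not pin them down: a trial counts as successful if the final distance is within the 10-pixel criterion, and the reach time is the first step at which the distance falls within it. The perception measures follow by passing the distance between the true and estimated target positions instead.

```python
import numpy as np

def reach_metrics(dist, threshold=10.0):
    """Reach measures for one trial from the hand-target L2 distance (pixels) per step.
    Assumptions: success = final distance within threshold; reach time = first step
    within threshold; stability = std of the distance from that step to trial end."""
    dist = np.asarray(dist, dtype=float)
    success = bool(dist[-1] <= threshold)
    metrics = {"accuracy": success, "error": float(dist[-1])}
    if success:
        t_reach = int(np.flatnonzero(dist <= threshold)[0])
        metrics["time"] = t_reach
        metrics["stability"] = float(np.std(dist[t_reach:]))
    return metrics

m = reach_metrics([30.0, 20.0, 12.0, 8.0, 6.0, 6.0, 7.0])
```

Averaging the `accuracy` flags over trials gives the accuracy percentages reported below.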

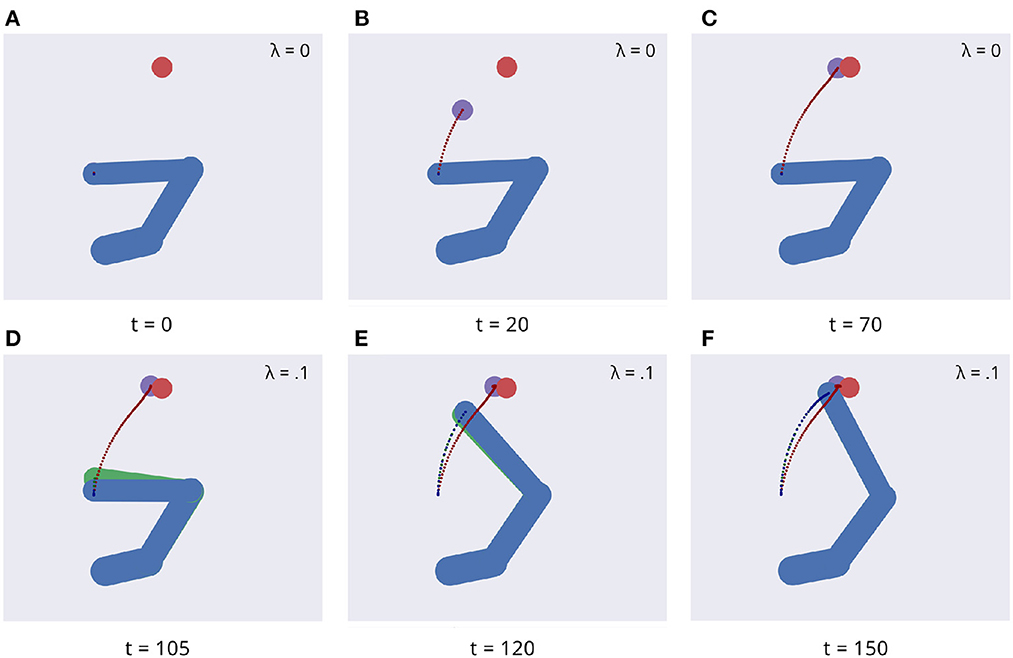

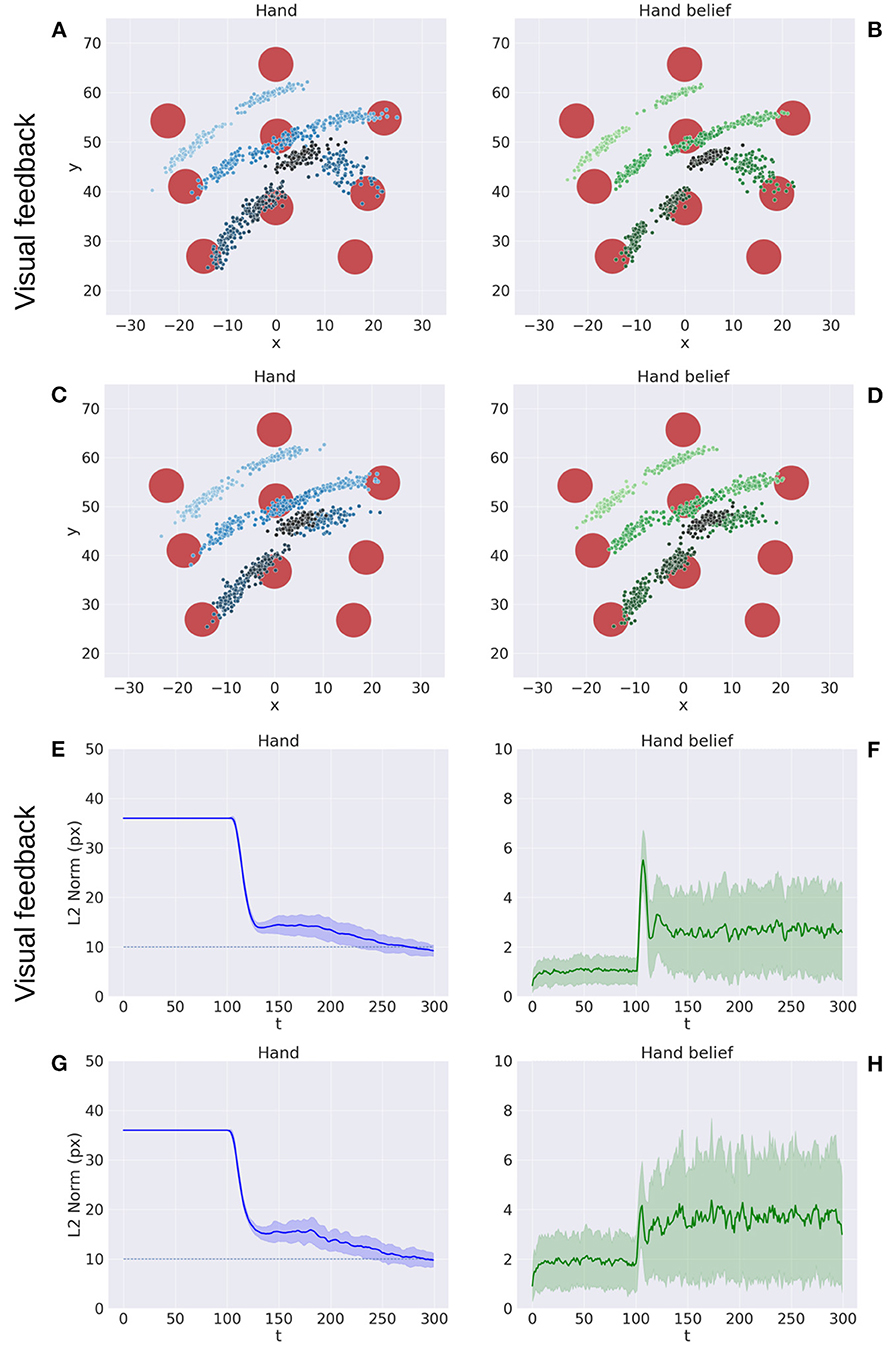

Figure 5 illustrates key points of the delayed reaching task. During the delay period (Figures 5A–C), the posture does not change since the joint angles only follow the arm belief, which is kept fixed, while the target belief is attracted by the sensory evidence and gradually shifts toward it. When movement is allowed (Figures 5D–F) by setting λ > 0 and β = 0.1, the combined attractor produces a force that moves the arm belief toward the target, generating proprioceptive predictions—therefore motor commands—that let the real arm follow this trajectory. Reaching performance is summarized in Figure 6. Panels A-D show spatial statistics of the final hand location (with the corresponding belief) for each target, separately for reaching with proprioception only or proprioceptive and visual sensory feedback. Descriptive statistics revealed an important benefit of visual feedback (Figures 6E–H), in parallel with classical behavioral observations (Keele and Posner, 1968): reach accuracy was higher (with: 88.28%; without: 83.72%) and both reach stability and arm belief error were considerably better with visual feedback as well (stability: 1.35; error: 1.98px) compared to the condition with only proprioception (stability: 1.78; error: 2.87px).

Figure 5. Dynamics of the delayed reaching task. At trial onset (A) a visual target (red circle) appears, the arm (in blue) is located on the HB position, and the arm belief (in green) is set at the true arm state. During the delay period, the perceptual inference process gradually drives the target belief (purple circle) toward the real position (B, C). During this phase, the intention gain λ is set to 0, so that movement is inhibited and the arm belief does not change given the unchanged proprioceptive evidence. After movement onset, the arm freely follows its belief (D, E) until they both arrive at the goal state (F).

Figure 6. Performance of the delayed reaching task. (A–D) Spatial distribution of hand positions (A, C) and corresponding beliefs (B, D) per target at the end of the reach movements, with (A, B) and without (C, D) visual feedback. Each point represents a trial (100 trials per target). Reach error (E, G) and belief error (F, H) over time, with (E, F) and without (G, H) visual feedback (bands represent C.I.). The reach criterion of the hand-target distance is visualized as a dotted line. L2 norm for the hand belief is computed by the difference between real and estimated hand positions. Reaching with visual feedback resulted in a more stable hand belief.

5.1. Precision balance

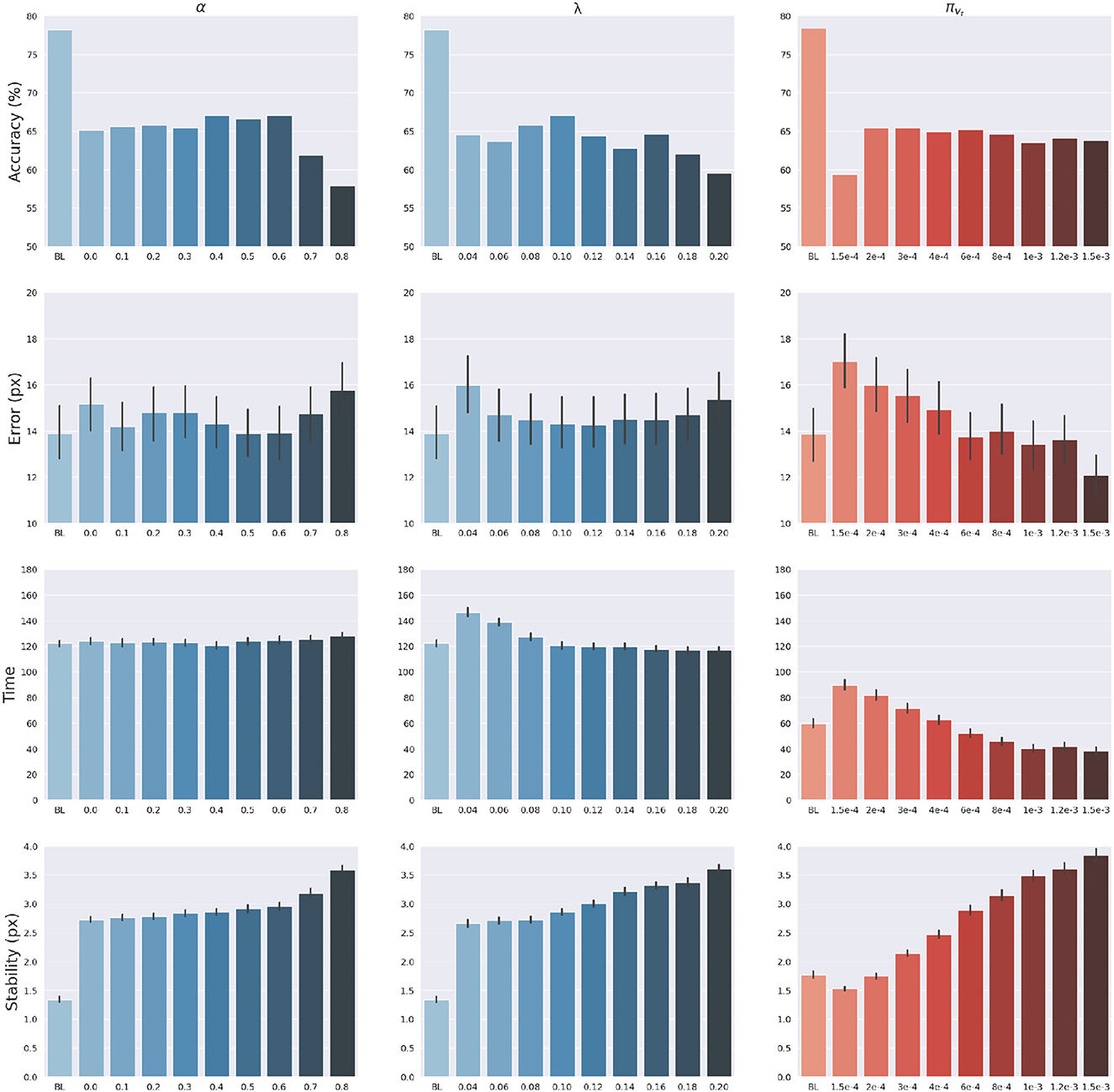

The effects of sensory feedback motivated a further systematic assessment of the effects of the sensory and intention precisions α, πvt, and λ (see Equations 45, 49). The assessment followed the structure of the delayed reaching task. We varied the above precisions one at a time, using the levels shown on the abscissas in Figure 7, while keeping the other precisions at their default values. Note that α = 0 corresponds to reaching without visual feedback, while the conditions α > 0 may be interpreted as reaching with different levels of arm visibility. Recall that the baseline condition (BL) performs reaching movements without visual feedback and proprioceptive noise, i.e., α = 0 and wp = 0. To obtain a systematic evaluation, each condition was run on a set of 1,000 randomly selected targets covering the entire operational space. Finally, we only considered the target-reaching intention, i.e., β = 0; everything else was the same as in the main task.

Figure 7. Effects of precision balance. Reach accuracy (1st row), reach error, i.e., L2 hand-target distance (2nd row), reach time (3rd row), and reach stability (4th row). Reach performance is shown as a function of arm sensory precision α (left) and attractor strength λ (middle). In turn, perception performance is shown as a function of target sensory precision πvt (right). Vertical bars represent C.I.

The results are shown in Figure 7. The panels in the left column show the effect of α compared to the BL agent with noiseless proprioception. Active Inference with only proprioception (i.e., α = 0) has a lower reach accuracy and a higher error, while the best performance is obtained with balanced proprioceptive and visual input, in line with the observations of the basic delayed reaching task. In the latter case, the motor control circuitry continuously integrates all available sensory sources to implement visually-guided behavior (Saunders and Knill, 2003). However, accuracy and stability rapidly decrease for excessively high values of α, due to the discrepancy in update directions between the belief (which makes use of all available sensory information, including the more precise visual feedback) and the action (which in this case relies on excessively noisy proprioception). Furthermore, as in the main experiment, the effects of visual precision are evident in the stability of the arm belief, which gradually improves with increasing values of α (Figure 8). In addition to the reliability of the visual input, this effect is also a consequence of the smaller action updates due to the reduced proprioceptive precision.

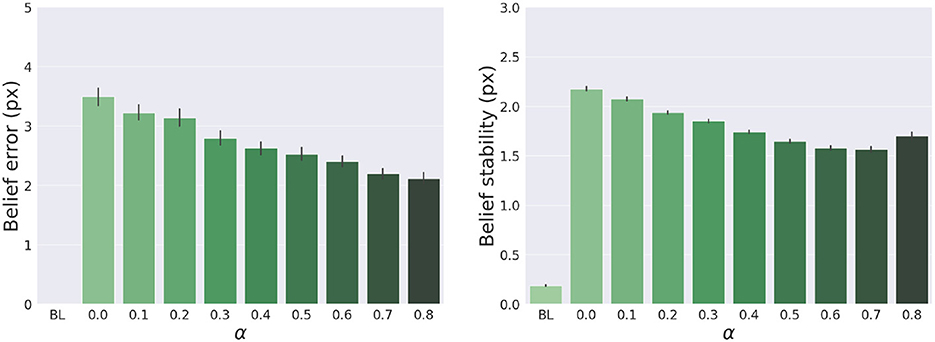

Figure 8. Belief error and stability—representing the difference between real and estimated hand positions—for different values of α. Vertical bars represent C.I.

In turn, the panels in the middle column of Figure 7 reveal the effects of the attractor gain λ; recall that the greater the gain, the greater the contribution of intention prediction errors to the belief updates. The results show that as λ increases, reach accuracy generally improves and the number of time steps needed to reach the target decreases. However, beyond a certain level, accuracy tends to decrease because the trajectory dynamics become unstable; thus, an excessively strong action drag is counterproductive for implementing smooth movements. Finally, the panels in the right column of Figure 7 show the effects of the target precision πvt, which directly affects the quality of target perception. Note that better performance in terms of accuracy, error, and perception time is generally obtained for values of πvt higher than the arm visual precision; this corresponds to a classical effect of contrast on perception, but here it also means that the arm and target beliefs follow different dynamics.

5.2. Motor control

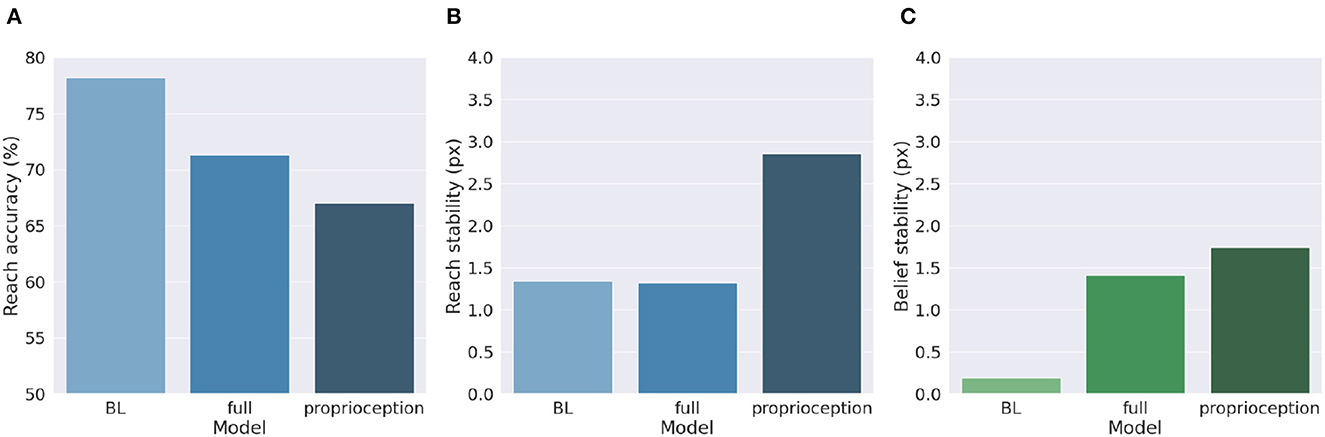

We described earlier two different ways of implementing motor control in Active Inference: using all sensory information, or proprioception only. The first method requires significantly more computation, since the agent needs to know the inverse mapping from every sensory domain to compute the control signals. However, given our assumptions, this approach is potentially more stable because it updates both belief and action with the same information. On the other hand, a purely proprioception-based control mechanism could produce incorrect movements, because the motor commands result from comparing proprioceptive predictions with noisy observations. The greater cost-effectiveness of the second method might thus come at the price of worse performance, which we investigate here.

Figure 9 shows a comparison of the two control methods and the BL agent, evaluated under the same conditions we used to investigate precision balance, including 1,000 random targets. Performance was measured in terms of belief stability, reach stability, and reach accuracy. The results reveal, first, that the expected decrease in belief stability of the full model with respect to the BL agent (Figure 9C) does not affect hand stability (Figure 9B), although proprioceptive noise apparently contributed to a decreased reach accuracy (Figure 9A). More importantly, the results confirm our expectation that pure proprioceptive control has considerably lower reach stability, caused by incorrect update directions of the motor control signals, resulting in a larger decrease in reach accuracy relative to the full model.

Figure 9. Motor control methods. Reach accuracy (A), reach stability (B), and belief stability (C) for a BL agent and the two different implementations of motor control, based either on all sensory information (full control) or on proprioception only.

5.3. Movement onset policy

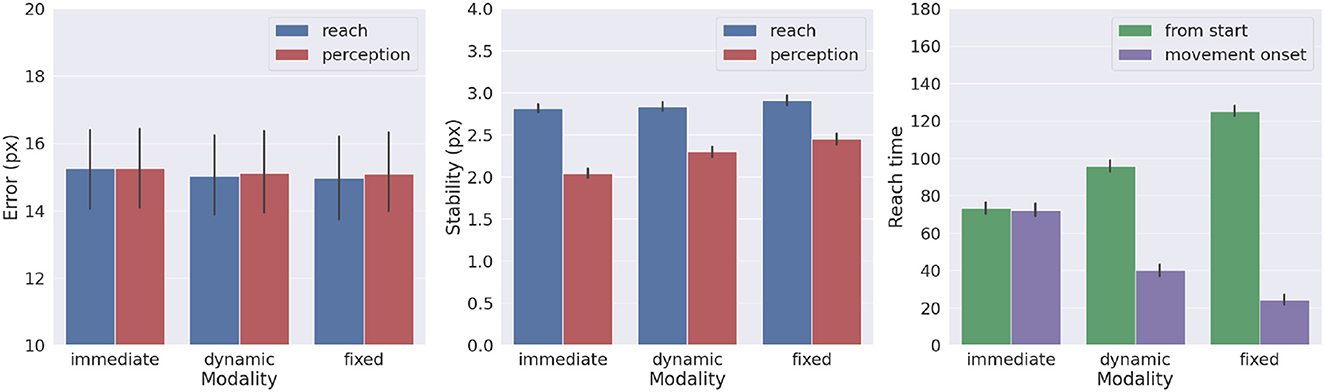

We also investigated the effects of movement onset using several policies, which differ in the duration of the pure-perception period preceding full Active Inference. The first policy is characteristic of actions performed under time pressure: movement starts together with perception, i.e., action is immediate. A second policy, arguably typical of acting under normal conditions, starts the movement once a perception criterion is satisfied, so the onset of movement is deliberated dynamically. Various perception criteria could be used; here, the action starts when the norm of the target belief has remained below a given threshold (0.01) for a certain period (5 time steps). These parameters were chosen heuristically, based on exploratory delayed-reaching simulations. Finally, we include the previously used delayed-action policy, in which movement onset is delayed by a fixed period (here, 100 time steps, sufficient to obtain a precise target estimate). To obtain systematic observations, each policy was again run on 1,000 randomly selected targets. Measurements included reach and perception accuracy, motor control stability after reach, target perception stability, and reach time measured either from the beginning of the trial or from movement onset.
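The three onset policies can be expressed as a single rule returning the time step at which action begins. This is a minimal sketch: the function name and its input (a per-step sequence of the monitored target-belief norm) are hypothetical, while the default threshold (0.01), window (5 time steps), and fixed delay (100 time steps) are the values given above.

```python
def movement_onset(belief_norms, policy, threshold=0.01, window=5, delay=100):
    """Return the time step at which action starts (None if it never starts).

    belief_norms: per-step values of the monitored target-belief norm.
    policy: "immediate", "dynamic", or "fixed".
    """
    if policy == "immediate":
        return 0                    # act from the very first time step
    if policy == "fixed":
        return delay                # act after a fixed pure-perception period
    if policy == "dynamic":
        run_length = 0              # consecutive steps below threshold
        for t, value in enumerate(belief_norms):
            run_length = run_length + 1 if value < threshold else 0
            if run_length >= window:
                return t + 1        # first step after the criterion is met
        return None                 # criterion never met within the trial
    raise ValueError(f"unknown policy: {policy!r}")

norms = [0.5, 0.2, 0.05, 0.009, 0.008, 0.02, 0.005, 0.004, 0.003, 0.002, 0.001]
print(movement_onset(norms, "dynamic"))
```

Note that a single value above threshold resets the counter, so the criterion requires the norm to stay low for the whole window.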

Figure 10 shows the results for the three policies. Although the reach error is approximately the same in all conditions, the agent controlled by the immediate policy reached the target within the lowest total number of time steps, since target perception and intention setting were computed dynamically along with the movement. However, if we consider only the time after movement onset, the number of time steps is highest in this condition, since the arm belief and the arm itself move along with the slow visual target estimation. In turn, if the agent starts moving when the uncertainty about the target position is already minimized (in either the dynamic or the fixed condition), the movement time decreases, although adding it to the perception time results in slower actions than in the immediate condition. Finally, we note that target perception stability is somewhat lower for the dynamic and fixed policies; this unexpected result is encouraging for dynamic target-tracking tasks, in which immediate movement onset is mandatory.

Figure 10. Effects of movement onset policy. Reach error (left), stability (middle), and time (right) across several policies (immediate, dynamic, and fixed delay). Vertical bars represent confidence intervals (C.I.).

5.4. Tracking dynamic targets

In a second testbed task, the agent was required to track a smoothly moving target whose initial location was randomly chosen from the entire operational space. In each trial, the target received an initial velocity of 0.1 px per time step in a direction sampled uniformly from the 0–360° range; when the target reached a border, its motion was reflected. As in the previous simulations, the belief was initialized at the HB configuration and the trial time limit was 300 time steps. However, for the agent to follow the target correctly, belief and action were inferred continuously and in parallel, i.e., without a pure perceptual period.
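The target motion can be sketched as follows. The arena size (100 × 100 px) and the function name are placeholders of ours, while the speed (0.1 px per step), the uniformly drawn direction, the reflection at the borders, and the 300-step limit follow the text.

```python
import numpy as np

def simulate_target(start, speed=0.1, steps=300, width=100.0, height=100.0,
                    angle=None, seed=None):
    """Move a point target at constant speed, reflecting its velocity at the borders.

    The arena size is a placeholder; `angle` (radians) is drawn uniformly
    from [0, 2*pi) when not given. Returns an array of shape (steps + 1, 2).
    """
    if angle is None:
        angle = np.random.default_rng(seed).uniform(0.0, 2.0 * np.pi)
    pos = np.array(start, dtype=float)
    vel = speed * np.array([np.cos(angle), np.sin(angle)])
    bounds = np.array([width, height])
    path = [pos.copy()]
    for _ in range(steps):
        pos += vel
        for axis in range(2):
            if pos[axis] < 0.0:            # mirror back inside and flip velocity
                pos[axis] = -pos[axis]
                vel[axis] = -vel[axis]
            elif pos[axis] > bounds[axis]:
                pos[axis] = 2.0 * bounds[axis] - pos[axis]
                vel[axis] = -vel[axis]
        path.append(pos.copy())
    return np.array(path)

trajectory = simulate_target([10.0, 10.0], seed=1)
print(trajectory.shape, trajectory[-1])
```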

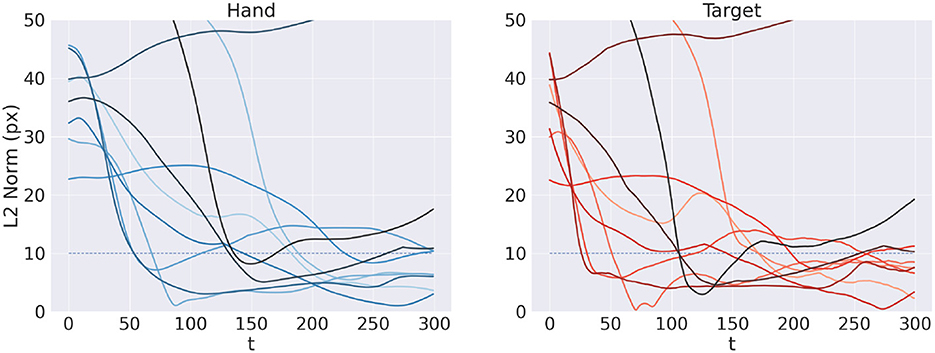

Figure 11 shows reach and perception errors during dynamic target tracking for 10 random trials. The left panel shows the evolution over time of the L2 hand-target distance, while the right panel shows the error between the estimated and true target positions. The results suggest that the agent is generally able to estimate the beliefs over both target and arm correctly and dynamically in almost every trial, even with moving targets. In some cases, however, mainly when the target is out of reach, the target is temporarily or permanently "lost" by the belief, and consequently also lost by the reaching arm. Additional analyses with a more realistic body configuration and visual sensory system, as well as comparisons with actual kinematic data, should provide further insights into the capability of Active Inference to perform dynamic reaching.

Figure 11. Tracking dynamic targets. Reach (left) and perception (right) error over time for 10 random trials.

5.5. Free energy minimization

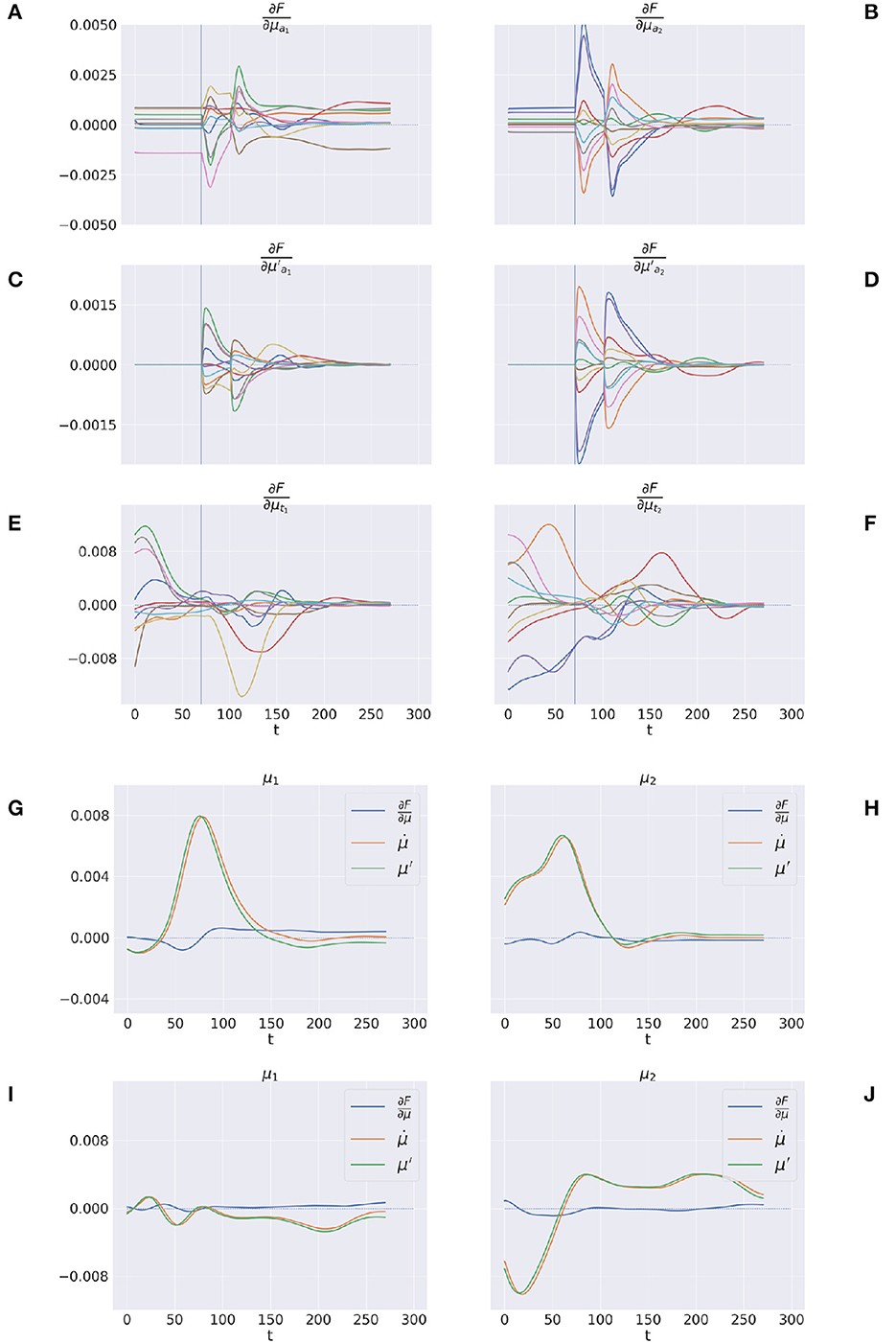

Here, we illustrate the dynamics of free energy minimization during reaching, which is at the heart of continuous Active Inference. To this aim, we ran 10 new reaching trials with static and dynamic targets and recorded the free energy derivatives with respect to the generalized belief and action.

Figures 12A–F show the trajectories of the free energy derivatives with respect to the arm and target components during delayed reaching of a static target; the two columns show the trends for the last two joints, i.e., the arm and forearm segments, which most strongly articulate the reaching action. Note that the gradients of the free energy with respect to the target belief are rapidly minimized during the initial perceptual phase, while the arm gradients remain nearly unchanged. Upon action onset (indicated by a vertical line), the arm gradients change rapidly as well, resulting in updated proprioceptive predictions that drive arm movements. However, arm movements change the visual scene, producing a secondary effect on the just-minimized free energy of the target belief. Figures 12G–J go even deeper, showing a direct comparison between μ̇, μ′, and their difference μ̇ − μ′ on sample static (G, H) and dynamic (I, J) targets. Recall that free energy minimization implies that the two reference frames (the path of the mode μ̇ and the mode of the path μ′) should overlap at some point in time, when the agent has inferred the correct trajectory of the generalized hidden states. This is especially crucial in dynamic reaching, where the aim is to capture the instantaneous trajectory of every object in the scene. The decreasing free energy gradients (blue lines) show that this aim is indeed achieved in both static and dynamic tasks.
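For reference, the relation between the two frames follows from the standard belief update of continuous Active Inference in generalized coordinates (Friston et al., 2010). The notation below is the conventional one (D shifts each generalized order up by one, F is the free energy) and may differ in detail from this paper's own equations:

$$
\dot{\tilde{\mu}} = D\tilde{\mu} - \frac{\partial F}{\partial \tilde{\mu}},
\qquad D\tilde{\mu} = [\mu', \mu'', \ldots]^{\top}
\quad\Longrightarrow\quad
\dot{\mu} - \mu' = -\frac{\partial F}{\partial \mu}
$$

When the gradient vanishes, μ̇ = μ′: the path of the mode coincides with the mode of the path, and the plotted difference μ̇ − μ′ is the negative free energy gradient with respect to the 0th-order belief.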

Figure 12. Free energy minimization. (A–F) Free energy derivative with respect to the 0th- and 1st-order belief for arm (A–D) and target (E, F). (G–J) Comparison between the reference frames of the belief, i.e., the path of the mode μ̇ and the mode of the path μ′, for sample static (G, H) and dynamic (I, J) trials. The left/right columns refer to the arm/forearm segments. Trial data are smoothed with a 30-time-step moving average.

5.6. Visual model analysis

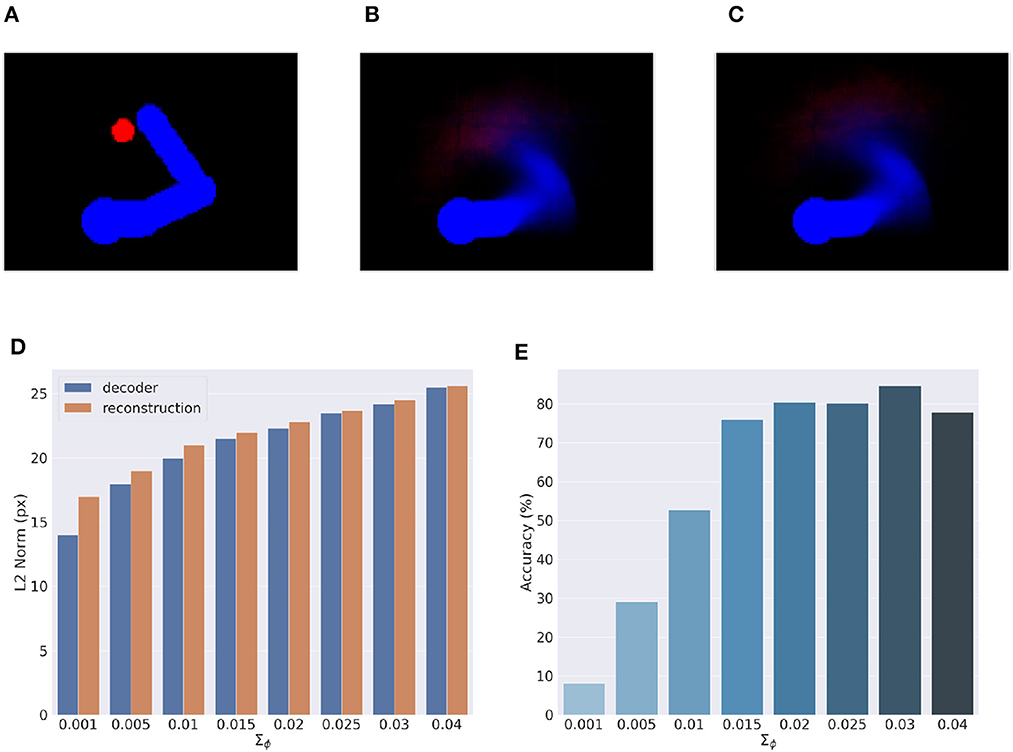

Here, we assess the visual model, whose performance is critical for accurate visually-guided motor control. Recall that the visual model is implemented as a VAE trained offline to reconstruct images of arm-target configurations such as the one in Figure 13A. A critical VAE parameter is the variance of the recognition (encoder) density Σϕ (see Equation 17). We therefore evaluated its effect on perception and action by training several VAEs with different variance levels. VAE performance was assessed on 10,000 additional randomly selected configurations uniformly sampling the space, with a target size of 5 pixels (the default condition for the Active Inference tests).
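To make the role of Σϕ concrete, the sketch below shows how a fixed, isotropic recognition variance could enter a VAE's reparameterized sampling step and its KL term. It assumes a standard-normal prior and a single variance shared by all latent dimensions; the function names are hypothetical and need not match Equation 17.

```python
import numpy as np

def sample_latent(mu, var, rng):
    """Reparameterized sample z = mu + sqrt(var) * eps, with a fixed scalar variance."""
    eps = rng.standard_normal(np.shape(mu))
    return mu + np.sqrt(var) * eps

def kl_to_standard_normal(mu, var):
    """KL( N(mu, var * I) || N(0, I) ), summed over latent dimensions."""
    mu = np.asarray(mu, dtype=float)
    return 0.5 * np.sum(var + mu ** 2 - 1.0 - np.log(var))

rng = np.random.default_rng(0)
mu = np.array([0.3, -0.5])
for var in (1e-4, 1e-2, 1e-1):
    z = sample_latent(mu, var, rng)
    print(var, z, kl_to_standard_normal(mu, var))
```

With the variance fixed, its contribution to the KL term is a constant, so during training Σϕ acts mainly through the sampling noise injected into the decoder's inputs.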

Figure 13. Visual model analysis. (A–C) Sample visual observation (A) and its decoding from joint angles (B) and through a complete encoding-decoding process (C). (D, E) Visual model performance. Quality of perception is measured as the L2 norm between observed and reconstructed images (D) and accuracy of Active Inference (E), as a function of the recognition density variance Σϕ.

The most critical aspect was the VAE's capacity to generate adequate images of joint arm-target configurations, which we measured as the L2 norm between visual observations and VAE-generated images. To provide more insight into the two VAE processes, decoding and encoding, we proceeded as follows. First, decoding was assessed by generating images for given body-target states, such as that in Figure 13B; the decoded images were compared with the ground-truth images produced by applying the geometric model to the same body-target state (Figure 13A). Second, full VAE performance was assessed by computing the average L2 norm between observed images and their full VAE reconstruction, i.e., after first encoding and then decoding them (as in Figure 13C). Third, we directly assessed the specific effects of the recognition density variance on Active Inference, using accuracy in the BL condition of the delayed reaching task as a measure.
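The first two assessments amount to two averaged L2 comparisons. In this sketch, `render` (the geometric model), `encode`, and `decode` are hypothetical callables standing in for the paper's components, and images are assumed to be NumPy arrays of equal shape.

```python
import numpy as np

def mean_l2(images_a, images_b):
    """Average L2 norm between paired images (array-like of shape [n, H, W])."""
    diff = np.asarray(images_a, dtype=float) - np.asarray(images_b, dtype=float)
    return float(np.mean(np.linalg.norm(diff.reshape(len(diff), -1), axis=1)))

def decoding_error(decode, render, states):
    """Decode each body-target state and compare with the geometrically rendered image."""
    return mean_l2([decode(s) for s in states], [render(s) for s in states])

def reconstruction_error(encode, decode, images):
    """Encode and then decode each observed image, and compare with the original."""
    return mean_l2([decode(encode(x)) for x in images], images)

print(mean_l2(np.zeros((3, 2, 2)), np.ones((3, 2, 2))))
```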

Figure 13D shows the results of the perceptual assessment tests, i.e., the L2 norm between original and generated images as a function of the recognition density variance. As expected, lower variances generally resulted in lower errors for both pure decoding and full encoding-decoding. Surprisingly, however, the accuracy of Active Inference in the reaching task behaved differently: the best accuracy was obtained not with low variance, but with intermediate variance levels (Figure 13E). A possible explanation is that low variances produce highly nonlinear gradients that prevent correct gradient descent on free energy. As the variance increases, the reconstructed image becomes somewhat blurred, which yields a smoother gradient that correctly drives free energy minimization and therefore improves movement accuracy (more on this in the next section). However, as the variance increases further, the reconstructed images become too blurry, degrading both belief inference and motor control.

5.7. Visual gradient analysis

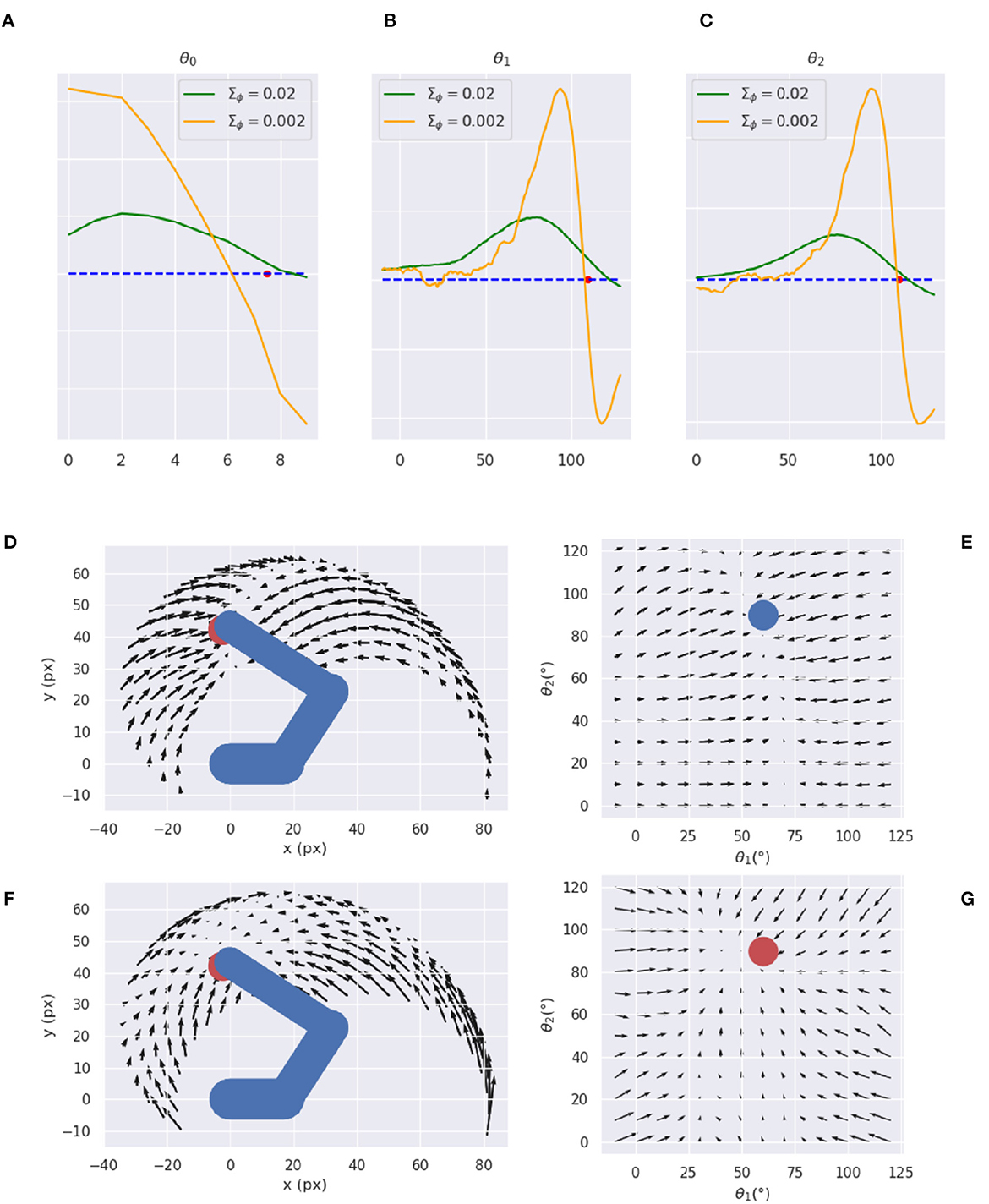

To further investigate why low variances unexpectedly degraded performance, we analyzed the consistency of the decoder's visual gradient ∂gv for several encoder variance values. To this aim, we computed the gradients for different reference states over the entire operational space.

Figures 14A–C reveal that a decoder with an intermediate variance (green line) yields smaller but smoother gradients, while a too-low variance (orange line) produces sharp peaks near the reference point and, in some cases, even incorrect gradient directions. Too low an encoder variance therefore seems to make the decoder prone to overfitting, while higher variance values help extract a smoother relationship between the irregular, high-dimensional sensory domain and its regular, low-dimensional causes. Figures 14D–G further illustrate the arm and target gradients relative to a sample reference posture and target location (the result is similar for other configurations) in both Cartesian and polar coordinates; the polar plots show the two joints most relevant to the reaching action.
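The qualitative effect can be reproduced with a one-dimensional analogy of our own: the "image" is a Gaussian blob whose width stands in for the blurriness induced by the recognition variance, and central finite differences replace backpropagation through a decoder. All names and values here are illustrative assumptions, not the paper's visual model.

```python
import numpy as np

X = np.arange(-100.0, 201.0)  # pixel grid, wide enough that blobs are never clipped

def render(position, width):
    """1-D 'image' of a Gaussian blob; `width` stands in for decoder blurriness."""
    return np.exp(-((X - position) ** 2) / (2.0 * width ** 2))

def image_error(position, reference, width):
    """Squared L2 error between the image at `position` and a reference image."""
    return np.sum((render(position, width) - render(reference, width)) ** 2)

def error_gradient(position, reference, width, h=1e-4):
    """Central finite-difference derivative of the image error w.r.t. position."""
    return (image_error(position + h, reference, width)
            - image_error(position - h, reference, width)) / (2.0 * h)

for width in (2.0, 15.0):
    grads = [error_gradient(p, 50.0, width) for p in (20.0, 45.0, 49.0)]
    print(width, np.round(grads, 4))
```

Far from the reference (position 20 vs. 50), the narrow blob's gradient is numerically zero, while the wide blob still points toward the reference; close to it (position 49), the narrow blob gives the steeper, more peaked gradient.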

Figure 14. Visual gradient analysis. Marginal gradients for each joint, i.e., neck (A), shoulder (B), and elbow (C), and for two values of the recognition density variance Σϕ (green/orange line) computed by backpropagating the error between images with different arm configurations (abscissa: joint angle) and a reference image (whose angle is represented by the red dot on the abscissa). (D–G) Gradients for arm (D, E) and target (F, G) in both Cartesian (D, F) and joint (E, G) space.

The plots reveal greater arm gradients (upper panels) in the vicinity of the target location; in that subspace, the decoder has less uncertainty about which direction minimizes the error. Notably, the gradients tend to follow curved paths, a characteristic of biological motion. The polar plot provides critical insight into the causes of this circular pattern: the gradients are mostly parallel to the horizontal axis, which corresponds to a movement consisting essentially of pure shoulder rotation. Thus, they provide a strong driving force on the shoulder almost throughout the operational space, while the area in which the elbow is controlled is limited to the vicinity of the target location. These gradients result in a two-phase reaching of static targets, in which the agent first rotates the shoulder (resulting in a horizontal positioning) and then starts to rotate the elbow as soon as the latter enters its attraction area. The same gradients can explain the linear motion pattern of an arm tracking dynamically moving targets when the arm is close to the target: in that case, both shoulder and elbow gradients drive the motion, as explained above. On the other hand, the gradients of the target belief (bottom panels) behave differently: since this belief is unconstrained and can move freely in the environment, its update directions approach the target more directly in all angular coordinates (see the polar plot on the right). Yet, linear belief updates in the polar space still translate into curved directions in the Cartesian space.
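The last point, that straight updates in an angular space map to curved Cartesian paths, can be checked with a simple polar parameterization (radius, angle) around the shoulder. This parameterization is an assumption for illustration (the paper's polar plots use joint angles), and the function names are hypothetical.

```python
import numpy as np

def polar_to_cartesian(r, phi):
    """Convert radius/angle arrays to an (n, 2) array of Cartesian points."""
    return np.stack([r * np.cos(phi), r * np.sin(phi)], axis=-1)

def path_deviation(start, end, n=101):
    """Max distance from the straight chord of a path that is linear in (radius, angle).

    start, end: (radius, angle) pairs, angle in radians.
    """
    s = np.linspace(0.0, 1.0, n)
    r = start[0] + s * (end[0] - start[0])
    phi = start[1] + s * (end[1] - start[1])
    points = polar_to_cartesian(r, phi)
    a, b = points[0], points[-1]
    chord = b - a
    # perpendicular distance of every point from the line through a and b
    cross = chord[0] * (points[:, 1] - a[1]) - chord[1] * (points[:, 0] - a[0])
    return float(np.max(np.abs(cross)) / np.linalg.norm(chord))

print(path_deviation((1.0, 0.5), (2.0, 0.5)), path_deviation((1.0, 0.0), (1.0, np.pi / 2)))
```

Interpolating only the radius gives a straight radial segment, while interpolating the angle bends the path away from the chord, by 1 − cos(π/4) ≈ 0.29 for a quarter turn at unit radius.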

6. Discussion

We presented a normative computational theory, based on Active Inference, of how the neural circuitry in the PPC and DVS may support visually-guided actions in a dynamically changing environment. Our focus was on the computational basis of encoding dynamic action goals through flexible motor intentions, and on its putative neural basis in the PPC. The theory is based on Predictive Coding (Doya, 2007; Hohwy, 2013), Active Inference (Friston, 2010), and evidence suggesting that the PPC performs visuomotor transformations (Cisek and Kalaska, 2010; Fattori et al., 2017; Galletti and Fattori, 2018) and encodes motor plans (Andersen, 1995; Snyder et al., 1997). Accordingly, the PPC is proposed to maintain dynamic expectations of both current and desired latent states of the environment and to use them to generate proprioceptive predictions that ultimately drive movements through reflex arcs (Adams et al., 2013; Versteeg et al., 2021). In turn, the DVS encodes a generative model that translates latent state expectations into visual predictions. Discrepancies between sensory-level predictions and actual sensations produce prediction errors that are sent back through the cortical hierarchy to improve the internal representation. The theory unifies research on intention coding (Snyder et al., 1997) and current views that the PPC estimates the body and environmental states (Medendorp and Heed, 2019), providing specific computational hypotheses regarding its involvement in goal-directed behavior. It also extends some perception-bound Predictive Coding interpretations of PPC dynamics (FitzGerald et al., 2015) and provides a more comprehensive account of movement planning (Erlhagen and Schöner, 2002), tightly integrated into the overall sensorimotor control process.