
ORIGINAL RESEARCH article

Front. Comput. Neurosci., 03 October 2022

Volume 16 - 2022 | https://doi.org/10.3389/fncom.2022.994161

This study describes the construction of a new algorithm in which image processing is combined with two-step quasi-Newton methods for biomedical image analysis. Medical informatics is an essential component of health care. Image processing and imaging technology are recent advances in medical informatics; they include image content representation, image interpretation, and image acquisition, and they focus on image information in the medical field. For this purpose, an algorithm was developed based on an image processing method that uses principal component analysis to find the image value of a particular test function and then directs the function to the best method for its evaluation. To validate the proposed algorithm, two functions, namely, the modified trigonometric and Rosenbrock functions, are tested in variable space.

Imaging informatics plays a significant role in the medical and engineering fields. In diagnostic application software, different tools are used during segmentation to interact with a visualized image, and a graphical user interface (GUI) is used to parameterize the algorithms and to visualize multi-modal images and segmentation results in 2D and 3D. Toolkits such as the Medical Imaging Interaction Toolkit (Wolf et al., 2005) or MeVisLab (Ritter et al., 2011) are therefore used to build appropriate GUIs, providing an interface for moving new algorithms from research into application. To produce better results, different sensors are used to generate high-quality images for denoising tasks, with a focus on massive image denoising, because noisy images are sometimes difficult for both humans and computers to recognize. Furthermore, different filters are used to achieve better denoising (Wang, 2018). A commonly used mathematical method for this purpose is the quasi-Newton method, which is preferred because it performs better than other classical methods. Schröter and Sauer (2010) investigated quasi-Newton algorithms for medical image registration. Mannel and Rund (2021) implemented a hybrid semismooth quasi-Newton method for nonsmooth optimal control problems with PDEs and demonstrated its efficiency. More recently, Moghrabi et al. (2022) derived self-scaling quasi-Newton methods and showed that they are more efficient than the non-scaled versions. Among the quasi-Newton methods, the Broyden-Fletcher-Goldfarb-Shanno (BFGS) method is widely used because of its good performance. This has motivated researchers to develop these methods further; one such development is the two-step quasi-Newton method.

The two-step quasi-Newton methods are considered for minimizing the unconstrained optimization problem

$$\min_{x \in \mathbb{R}^n} f(x),$$

where $g(x) = \nabla f(x)$ denotes the gradient of $f$.

The multi-step quasi-Newton methods introduced by Ford and Moghrabi (1993, 1994) obtained better results than the single-step quasi-Newton method. In the single-step quasi-Newton method, the updated Hessian approximation $B_{i+1}$ is required to satisfy the secant equation

$$B_{i+1} s_i = y_i,$$

where $s_i$ is the step in the variable space,

$$s_i = x_{i+1} - x_i,$$

and $y_i$ is the corresponding step in the gradient space,

$$y_i = g(x_{i+1}) - g(x_i).$$

The quasi-Newton equation mimics the relation satisfied by the true Hessian $M_{i+1}$ in Newton's method:

$$M_{i+1} s_i \approx y_i,$$

which holds exactly when $f$ is quadratic.

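In the case of the two-step quasi-Newton methods, the secant equation (2) is replaced by

$$B_{i+1}\left(s_i - \alpha_i s_{i-1}\right) = y_i - \alpha_i y_{i-1}$$

or

$$B_{i+1} r_i = w_i, \qquad r_i = s_i - \alpha_i s_{i-1}, \quad w_i = y_i - \alpha_i y_{i-1},$$

which is derived by interpolating a quadratic curve $x(\tau)$ in the variable space through $x_{i-1}$, $x_i$, $x_{i+1}$ and a curve $g(\tau)$ in the gradient space through the corresponding gradients.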

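For this interpolation, the Lagrange polynomial is used, which Jaffar and Aamir (2020) found suitable. Therefore,

$$x(\tau) = \sum_{k=0}^{2} L_k(\tau)\, x_{i+k-1},$$

$$g(\tau) = \sum_{k=0}^{2} L_k(\tau)\, g(x_{i+k-1}),$$

and the derivatives of Equations (10) and (11) at $\tau = \tau_2$ are

$$x'(\tau_2) = \sum_{k=0}^{2} L_k'(\tau_2)\, x_{i+k-1}, \qquad g'(\tau_2) = \sum_{k=0}^{2} L_k'(\tau_2)\, g(x_{i+k-1}).$$

These relations are substituted into Equation (14), which is the two-step form of Equation (5):

$$M_{i+1}\, x'(\tau_2) \approx g'(\tau_2).$$

Since $x'(\tau_2) = L_2'(\tau_2)\, r_i$ and $g'(\tau_2) = L_2'(\tau_2)\, w_i$, the common factor cancels.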

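The new secant condition for the two-step quasi-Newton method is thus obtained in the form of Equation (6/7), which must be satisfied by the updated Hessian approximation $B_{i+1}$. The value of $\alpha_i$ in Equation (6) is given by

$$\alpha_i = \frac{\delta_i^{2}}{1 + 2\delta_i},$$

where

$$\delta_i = \frac{\tau_2 - \tau_1}{\tau_1 - \tau_0}.$$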
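The sketch below is a minimal NumPy illustration, not the authors' code. It computes $\delta_i$, $\alpha_i$, $r_i$ and $w_i$ under two assumptions: $\alpha_i = \delta_i^2/(1 + 2\delta_i)$ with $\delta_i = (\tau_2 - \tau_1)/(\tau_1 - \tau_0)$, and accumulative distances with $N = I$ and $\tau_2 = 0$. The helper names `two_step_vectors` and `lagrange_derivative_at` are hypothetical. As a check, it compares $r_i$ with the derivative $x'(\tau_2)$ of the quadratic Lagrange curve through $x_{i-1}$, $x_i$, $x_{i+1}$, which should be a positive multiple of $r_i$.

```python
import numpy as np


def two_step_vectors(s_prev, s_cur, y_prev, y_cur, tau0, tau1, tau2=0.0):
    """Return (alpha, r, w) for the two-step secant condition B_{i+1} r = w.

    Assumption: alpha = delta^2 / (1 + 2 delta), delta = (tau2 - tau1) / (tau1 - tau0).
    """
    delta = (tau2 - tau1) / (tau1 - tau0)
    alpha = delta ** 2 / (1.0 + 2.0 * delta)
    r = s_cur - alpha * s_prev
    w = y_cur - alpha * y_prev
    return alpha, r, w


def lagrange_derivative_at(taus, points, tau):
    """Derivative at tau of the quadratic Lagrange curve with points[k] at taus[k]."""
    t0, t1, t2 = taus
    d0 = (2 * tau - t1 - t2) / ((t0 - t1) * (t0 - t2))
    d1 = (2 * tau - t0 - t2) / ((t1 - t0) * (t1 - t2))
    d2 = (2 * tau - t0 - t1) / ((t2 - t0) * (t2 - t1))
    return d0 * points[0] + d1 * points[1] + d2 * points[2]


# Illustrative iterates x_{i-1}, x_i, x_{i+1} (hypothetical values).
x_prev, x_cur, x_next = np.array([0.0, 0.0]), np.array([1.0, 0.0]), np.array([1.0, 2.0])
s_prev, s_cur = x_cur - x_prev, x_next - x_cur
tau1 = -np.linalg.norm(s_cur)          # accumulative distances with N = I, tau2 = 0
tau0 = tau1 - np.linalg.norm(s_prev)
# Gradient differences do not affect alpha or r, so zeros are passed here.
alpha, r, _ = two_step_vectors(s_prev, s_cur, np.zeros(2), np.zeros(2), tau0, tau1)
x_dot = lagrange_derivative_at((tau0, tau1, 0.0), (x_prev, x_cur, x_next), 0.0)
print(alpha, r, x_dot)
```

For these illustrative points, $\delta_i = 2$, so $\alpha_i = 0.8$ and $x'(\tau_2) = \tfrac{5}{6} r_i$.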

Hence, the Broyden-Fletcher-Goldfarb-Shanno (BFGS) formula for the two-step method is

$$B_{i+1} = B_i - \frac{B_i r_i r_i^{T} B_i}{r_i^{T} B_i r_i} + \frac{w_i w_i^{T}}{w_i^{T} r_i}.$$
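A direct NumPy transcription of the two-step BFGS formula $B_{i+1} = B_i - \frac{B_i r_i r_i^T B_i}{r_i^T B_i r_i} + \frac{w_i w_i^T}{w_i^T r_i}$ might look as follows. The function name `bfgs_two_step_update` and the curvature safeguard (leaving $B_i$ unchanged when $w_i^T r_i$ is not sufficiently positive) are assumptions added for illustration. The updated matrix should satisfy the two-step secant condition $B_{i+1} r_i = w_i$.

```python
import numpy as np


def bfgs_two_step_update(B, r, w, tol=1e-10):
    """BFGS update of the Hessian approximation B with the two-step pair (r, w).

    Assumed safeguard: if w^T r is not sufficiently positive, B is returned
    unchanged so that it stays symmetric positive definite.
    """
    Br = B @ r
    wr = w @ r
    if wr <= tol * np.linalg.norm(w) * np.linalg.norm(r):
        return B
    return B - np.outer(Br, Br) / (r @ Br) + np.outer(w, w) / wr


B = np.eye(3)
r = np.array([1.0, 2.0, 3.0])
w = np.array([2.0, 1.0, 1.0])       # w^T r = 7 > 0
B_new = bfgs_two_step_update(B, r, w)
print(B_new @ r)                    # equals w up to rounding
```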

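The standard Lagrange polynomial depends on the values of $\{\tau_k\}_{k=0}^{m}$ and is defined as

$$L_k(\tau) = \prod_{j=0,\, j \ne k}^{m} \frac{\tau - \tau_j}{\tau_k - \tau_j}.$$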

The parametric values $\tau_k$, for $k = 0, 1, \ldots, m$, used in computing the vectors $r_i$ and $w_i$ are found using a metric of the form

$$\|z_1 - z_2\|_N = \left[(z_1 - z_2)^{T} N (z_1 - z_2)\right]^{1/2},$$

where $N$ is a symmetric positive definite matrix and $z_1, z_2 \in \mathbb{R}^n$. Three choices are considered for $N$ in the variable space: $I$, $B_i$, and $B_{i+1}$.

The distances between the iterates in the current interpolation are measured by either the fixed-point or the accumulative approach (Ford and Moghrabi, 1994). In this study, the accumulative approach is used to find the parametric values.

• Accumulative Methods

These methods accumulate the distances between consecutive iterates in their natural sequence. The latest iterate $x_{i+1}$, corresponding to the value $\tau_m$ of $\tau$, is taken as the origin (base point), and the other values of $\tau$ are calculated by accumulating the distances between consecutive pairs of iterates. Therefore, with $\|z\|_N = (z^{T} N z)^{1/2}$, we have

$$\tau_m = 0,$$

$$\tau_k = \tau_{k+1} - \left\|x_{i-m+k+2} - x_{i-m+k+1}\right\|_N, \qquad k = m-1, \ldots, 0.$$

In the two-step method, the accumulative variants are denoted A1, A2, and A3, and the parametric values are found from Equation (21) for $k = 0, 1$, with the base point $\tau_2 = 0$ for $m = 2$ from Equation (20).
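To make the accumulative approach concrete, here is a small sketch, assuming the metric $\|z_1 - z_2\|_N = [(z_1 - z_2)^T N (z_1 - z_2)]^{1/2}$ and the recursion $\tau_m = 0$, $\tau_k = \tau_{k+1} - \|x_{i-m+k+2} - x_{i-m+k+1}\|_N$. The names `metric_n` and `accumulative_taus` are hypothetical; passing $N = I$ corresponds to Algorithm A1.

```python
import numpy as np


def metric_n(z1, z2, N):
    """Distance ||z1 - z2||_N = sqrt((z1 - z2)^T N (z1 - z2)) for SPD N."""
    d = z1 - z2
    return float(np.sqrt(d @ N @ d))


def accumulative_taus(iterates, N):
    """Accumulative values [tau_0, ..., tau_m] for iterates [x_{i-m+1}, ..., x_{i+1}].

    The latest iterate is the base point (tau_m = 0); each earlier value
    subtracts the N-distance between consecutive iterates.
    """
    m = len(iterates) - 1
    taus = np.zeros(m + 1)
    for k in range(m - 1, -1, -1):
        taus[k] = taus[k + 1] - metric_n(iterates[k + 1], iterates[k], N)
    return taus


pts = [np.array([0.0, 0.0]), np.array([1.0, 0.0]), np.array([1.0, 2.0])]
print(accumulative_taus(pts, np.eye(2)))            # A1-style choice N = I
print(accumulative_taus(pts, np.diag([4.0, 1.0])))  # any SPD N
```

For these three illustrative iterates, $N = I$ gives $(\tau_0, \tau_1, \tau_2) = (-3, -2, 0)$.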

• Algorithm A1

The identity matrix $I$ is taken as $N$ in this algorithm, so that $\tau_1 = -\|s_i\|_2$ and $\tau_0 = \tau_1 - \|s_{i-1}\|_2$.

• Algorithm A2

In this algorithm, $N$ is taken as the current Hessian approximation $B_i$, so that

$$\tau_1 = -\left(s_i^{T} B_i s_i\right)^{1/2}.$$

This expression involves a matrix-vector product, which is computationally expensive. However, using the search direction, the same parameter can be computed cheaply. Since $s_i = t_i p_i$ with $p_i = -B_i^{-1} g_i$,

$$B_i s_i = -t_i g_i.$$

Substituting this into the expression for $\tau_1$ gives

$$\tau_1 = -\left(-t_i\, s_i^{T} g_i\right)^{1/2}.$$

This expression is easy to calculate, but the corresponding expression for $\tau_0$, which requires $s_{i-1}^{T} B_i s_{i-1}$, is difficult to compute at every iteration. To reduce the computational cost, Ford and Moghrabi (1994) argued that, in multi-step methods, the quasi-Newton equation (2) is not satisfied exactly by $B_i$ (here $N = B_i$), but it can be assumed to hold approximately with $i+1$ replaced by $i$. Therefore, we obtain

$$B_i s_{i-1} \approx y_{i-1},$$

and, using this approximation,

$$\tau_0 = \tau_1 - \left(s_{i-1}^{T} y_{i-1}\right)^{1/2}.$$
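The A2 shortcut can be checked numerically. The sketch below assumes $x_{i+1} = x_i + t_i p_i$ with $p_i = -B_i^{-1} g_i$, so that $s_i^T B_i s_i = -t_i s_i^T g_i$, and uses $s_{i-1}^T y_{i-1}$ in place of $s_{i-1}^T B_i s_{i-1}$. The function name `a2_taus` is hypothetical and the test data are random. When $B_i s_{i-1} = y_{i-1}$ holds exactly, both values should match the explicit metric with $N = B_i$.

```python
import numpy as np


def a2_taus(t_i, g_i, s_i, s_prev, y_prev):
    """Approximate accumulative values for N = B_i without touching B_i.

    Assumptions: s_i = t_i p_i with p_i = -B_i^{-1} g_i, so s_i^T B_i s_i = -t_i s_i^T g_i;
    and B_i s_{i-1} ~= y_{i-1} for the older step.
    """
    tau1 = -np.sqrt(-t_i * (s_i @ g_i))
    tau0 = tau1 - np.sqrt(s_prev @ y_prev)
    return tau0, tau1


rng = np.random.default_rng(0)
A = rng.standard_normal((3, 3))
B_i = A @ A.T + 3.0 * np.eye(3)          # an SPD Hessian approximation
g_i = rng.standard_normal(3)
t_i = 0.5                                 # step length from the line search
s_i = t_i * np.linalg.solve(B_i, -g_i)    # s_i = t_i p_i
s_prev = rng.standard_normal(3)
y_prev = B_i @ s_prev                     # case where B_i s_{i-1} = y_{i-1} exactly

tau0, tau1 = a2_taus(t_i, g_i, s_i, s_prev, y_prev)
tau1_explicit = -np.sqrt(s_i @ B_i @ s_i)
tau0_explicit = tau1_explicit - np.sqrt(s_prev @ B_i @ s_prev)
print(tau0, tau0_explicit, tau1, tau1_explicit)
```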

• Algorithm A3

In this algorithm, $N$ is chosen as $B_{i+1}$, the Hessian approximation at $x_{i+1}$.

Since $\tau_1$ and $\tau_0$ are expensive to compute in this case, Equation (2) is used to approximate them.

Aamir and Ford (2021) investigated multi-step skipping techniques, in which one-step and two-step skipping strategies were tested and produced better results than the corresponding methods without skipping. The authors also modified the search direction and implemented it with and without the skipping technique to achieve good performance in less time. We tested two test functions of different dimensions with the two-step quasi-Newton methods under different techniques, namely one-step skipping with no modified search direction and one-step skipping with modified search direction, in variable space, which required considerable computational effort. Therefore, to reduce the computational burden and increase efficiency, an algorithm was developed that executes a particular function with only the best method. Section 2 discusses the two-step quasi-Newton method with different techniques in detail. Section 3 proposes a self-decisive algorithm, based on an image processing method, that finds the image values of different test functions. Section 4 describes the experimental setup of the proposed strategy. Section 5 analyzes the numerical results for each test function, which help the algorithm execute a particular function with only the best method. The last section draws conclusions from the numerical simulations.

Different techniques in two-step methods, namely the one-step skipping technique with no modified search direction and the one-step skipping technique with modified search direction, are applied to the selected test functions for minimization. Their performance is measured by the number of function evaluations, the number of iterations, and the computation time in seconds. The notation for the methods under each technique is given in Table 1.

In quasi-Newton methods, updating the inverse Hessian approximation $H_i$ to $H_{i+1}$ can be very expensive in certain circumstances. Tamara et al. (1998) introduced the idea of skipping updates at certain steps to reduce the computational cost. They investigated the question of "how much and which information can be dropped in BFGS and other quasi-Newton methods without destroying the property of quadratic termination" and called this procedure "backing up." They applied this idea in their algorithm when the step length is 1.0 or the current iteration is odd.

Aamir and Ford (2021) investigated the skipping technique in single-step and multi-step methods. Their comparison of skipping and non-skipping methods showed that the skipping algorithms outperformed the non-skipping ones.

• Algorithm of the Multi-step Skipping Method

The general algorithm of the skipping technique is as follows:

1. Select initial approximations $x_0$ and $H_0$, and set $i = 1$.

2. For $j = 1, \ldots, m$ (where $m$ is the number of steps to be skipped):

• Calculate the search direction $p_{i+j-2} = -H_{i-1} g_{i+j-2}$.

• Perform a line search along $x_{i+j-2} + t\,p_{i+j-2}$ to obtain the step length $t_{i+j-2}$.

• Calculate the new approximation $x_{i+j-1} = x_{i+j-2} + t_{i+j-2}\, p_{i+j-2}$.

End for.

3. Using one of the methods, update $H_{i-1}$ to give $H_{i+m-1}$.

4. If $\|g_{i+m-1}\| \le \epsilon$, stop; otherwise set $i := i + m$ and go to step 2.
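The loop above can be sketched in NumPy for $m = 2$ on the two-dimensional Rosenbrock function. This is an illustrative sketch under stated assumptions, not the authors' implementation: it uses an Armijo backtracking line search and, in step 3, a standard single-step inverse BFGS update built only from the most recent $(s, y)$ pair of each block. The multi-step updates discussed in this article would replace `inverse_bfgs`, and all function names are hypothetical.

```python
import numpy as np


def rosenbrock(x):
    return 100.0 * (x[1] - x[0] ** 2) ** 2 + (1.0 - x[0]) ** 2


def rosenbrock_grad(x):
    return np.array([-400.0 * x[0] * (x[1] - x[0] ** 2) - 2.0 * (1.0 - x[0]),
                     200.0 * (x[1] - x[0] ** 2)])


def armijo(f, x, fx, g, p, c=1e-4, shrink=0.5, max_halvings=60):
    """Backtracking line search (assumption: the article's line search is not specified)."""
    t = 1.0
    for _ in range(max_halvings):
        if f(x + t * p) <= fx + c * t * (g @ p):
            break
        t *= shrink
    return t


def inverse_bfgs(H, s, y):
    """Single-step inverse BFGS update; skipped if the curvature s^T y is not positive."""
    sy = s @ y
    if sy <= 1e-12:
        return H
    V = np.eye(len(s)) - np.outer(s, y) / sy
    return V @ H @ V.T + np.outer(s, s) / sy


def skipping_quasi_newton(f, grad, x0, m=2, eps=1e-6, max_iter=5000):
    """Keep H fixed for m steps, then update it once (the other pairs are skipped)."""
    x = np.asarray(x0, dtype=float)
    H = np.eye(x.size)
    g = grad(x)
    k = 0
    while np.linalg.norm(g) > eps and k < max_iter:
        for _ in range(m):
            p = -H @ g                    # every step in the block uses the same H
            t = armijo(f, x, f(x), g, p)
            x_new = x + t * p
            g_new = grad(x_new)
            s, y = x_new - x, g_new - g
            x, g, k = x_new, g_new, k + 1
            if np.linalg.norm(g) <= eps:
                break
        H = inverse_bfgs(H, s, y)         # assumption: only the latest pair is used
    return x, k


x_star, iters = skipping_quasi_newton(rosenbrock, rosenbrock_grad, [-1.2, 1.0])
print(x_star, iters)
```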

• Application of the skipping technique on the two-step method

At $x_{i+1}$, the matrix $B_{i-1}$ is updated using $s_i$, $s_{i-1}$, $y_i$, and $y_{i-1}$ in the following steps:

1. Using these quantities, compute $\tau_k$ and then $\delta$.

2. Using all of the above values, compute $r_i$ and $w_i$:

$$r_i = s_i - \alpha_i s_{i-1}, \qquad w_i = y_i - \alpha_i y_{i-1}.$$

3. Update the Hessian approximation using all of the above values.

Next, $\tau_k$ and/or $\delta$ are computed under the different methods.
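Putting steps 1 to 3 together, a minimal sketch of one-step skipping in the two-step method with the A1 choice ($N = I$) is shown below. Two steps are taken with the same $B_{i-1}$; then $\delta = \|s_i\|/\|s_{i-1}\|$, $\alpha = \delta^2/(1 + 2\delta)$, $r_i$, $w_i$ and the two-step BFGS update are applied once. The Armijo line search, the curvature safeguards, and the fallback to the single-step pair $(s_i, y_i)$ when $w_i^T r_i$ is not positive are assumptions of this sketch, and all names are hypothetical.

```python
import numpy as np


def rosenbrock(x):
    return 100.0 * (x[1] - x[0] ** 2) ** 2 + (1.0 - x[0]) ** 2


def rosenbrock_grad(x):
    return np.array([-400.0 * x[0] * (x[1] - x[0] ** 2) - 2.0 * (1.0 - x[0]),
                     200.0 * (x[1] - x[0] ** 2)])


def armijo(f, x, fx, g, p, c=1e-4, shrink=0.5, max_halvings=60):
    """Backtracking line search (assumed; not specified in the article)."""
    t = 1.0
    for _ in range(max_halvings):
        if f(x + t * p) <= fx + c * t * (g @ p):
            break
        t *= shrink
    return t


def skipping_two_step_a1(f, grad, x0, eps=1e-6, max_iter=5000):
    """Two steps with the same B, then one two-step BFGS update with A1 parameters."""
    x = np.asarray(x0, dtype=float)
    B = np.eye(x.size)
    g = grad(x)
    k = 0
    while np.linalg.norm(g) > eps and k < max_iter:
        pairs = []
        for _ in range(2):                       # the update at x_i is skipped
            p = np.linalg.solve(B, -g)
            t = armijo(f, x, f(x), g, p)
            x_new = x + t * p
            g_new = grad(x_new)
            pairs.append((x_new - x, g_new - g))
            x, g, k = x_new, g_new, k + 1
            if np.linalg.norm(g) <= eps:
                break
        if len(pairs) < 2:
            break
        (s_prev, y_prev), (s, y) = pairs
        r, w = s, y                              # assumed fallback: single-step pair
        norm_prev = np.linalg.norm(s_prev)
        if norm_prev > 0:
            # A1 (N = I, tau_2 = 0): delta = (tau_2 - tau_1)/(tau_1 - tau_0) = ||s_i|| / ||s_{i-1}||
            delta = np.linalg.norm(s) / norm_prev
            alpha = delta ** 2 / (1.0 + 2.0 * delta)
            r2, w2 = s - alpha * s_prev, y - alpha * y_prev
            if w2 @ r2 > 1e-10 * np.linalg.norm(w2) * np.linalg.norm(r2):
                r, w = r2, w2
        if w @ r > 1e-10 * np.linalg.norm(w) * np.linalg.norm(r):
            Br = B @ r
            B = B - np.outer(Br, Br) / (r @ Br) + np.outer(w, w) / (w @ r)
    return x, k


x_star, iters = skipping_two_step_a1(rosenbrock, rosenbrock_grad, [-1.2, 1.0])
print(x_star, iters)
```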

Here, we explain the derivation of the modified search direction. The following notation is used in the derivation of the modified search direction for the skipping technique.

$\hat{H}_i$ denotes a matrix that is never computed explicitly.

$\hat{p}_i$ denotes the modified search direction.

Now, suppose that the single-step BFGS formula was used to update the matrix $H_{i-1}$. The search direction $p_{i-1}$ is defined as

$$p_{i-1} = -H_{i-1} g_{i-1}.$$

Using the skipping technique, the next search direction is

$$p_i = -H_{i-1} g_i.$$

The modified search direction is found with the help of $\hat{H}_i$. We define

where

Now, the modified search direction is

$$\hat{p}_i = -\hat{H}_i g_i,$$

and by Equation (35), we get

From the above equation, the terms $H_{i-1} y_{i-1}$ and $\lambda_{i-1}$ are harder to compute than the others because they involve a matrix-vector product. However, with the help of Equations (34) and (35), the expression $H_{i-1} y_{i-1}$ can be written as

$$H_{i-1} y_{i-1} = p_{i-1} - p_i,$$

since $y_{i-1} = g_i - g_{i-1}$. Therefore, by using this expression in Equation (37), the modified search direction can be calculated efficiently without explicitly computing $\hat{H}_i$.
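The identity $H_{i-1} y_{i-1} = p_{i-1} - p_i$, which follows from $y_{i-1} = g_i - g_{i-1}$, $p_{i-1} = -H_{i-1} g_{i-1}$ and $p_i = -H_{i-1} g_i$, lets the modified direction be formed from vectors only. The sketch below assumes that $\hat{H}_i$ is the standard inverse BFGS update of $H_{i-1}$ with $(s_{i-1}, y_{i-1})$ and expands $-\hat{H}_i g_i$ accordingly; the article's Equation (37) may group the terms differently (for example through $\lambda_{i-1}$). The name `modified_direction` is hypothetical, and the check against an explicitly formed $\hat{H}_i$ uses random data.

```python
import numpy as np


def modified_direction(p_prev, p_cur, g_cur, s_prev, y_prev):
    """Return p_hat = -H_hat g_i without forming H_hat or multiplying by H_{i-1}.

    Assumption: H_hat is the inverse BFGS update of H_{i-1} with (s_{i-1}, y_{i-1}),
    p_prev = -H_{i-1} g_{i-1} and p_cur = -H_{i-1} g_i.
    """
    rho = 1.0 / (y_prev @ s_prev)
    Hy = p_prev - p_cur              # H_{i-1} y_{i-1}, since y_{i-1} = g_i - g_{i-1}
    sg = s_prev @ g_cur
    return (p_cur
            + rho * (Hy @ g_cur) * s_prev           # rho * s (y^T H g)
            + rho * sg * Hy                         # rho * (H y)(s^T g)
            - (rho ** 2 * (y_prev @ Hy) + rho) * sg * s_prev)


# Check against the explicit inverse BFGS matrix on random data.
rng = np.random.default_rng(1)
A = rng.standard_normal((4, 4))
H = A @ A.T + np.eye(4)                       # H_{i-1}, symmetric positive definite
g_prev, g_cur = rng.standard_normal(4), rng.standard_normal(4)
y_prev = g_cur - g_prev
s_prev = np.diag([1.0, 2.0, 3.0, 4.0]) @ y_prev   # guarantees s^T y > 0
p_prev, p_cur = -H @ g_prev, -H @ g_cur

V = np.eye(4) - np.outer(s_prev, y_prev) / (y_prev @ s_prev)
H_hat = V @ H @ V.T + np.outer(s_prev, s_prev) / (y_prev @ s_prev)
p_hat = modified_direction(p_prev, p_cur, g_cur, s_prev, y_prev)
print(p_hat, -H_hat @ g_cur)
```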

• Algorithm of the Multi-step Skipping Quasi-Newton method with Modified Search Direction

The general algorithm is given as follows:

1. Select $x_0$ and $H_0$ as initial approximations, and set $i = 1$.

2. For $j = 1, \ldots, m$, where $m$ is the number of steps:

3. Calculate $p_{i+j-2} = -H_{i-1} g_{i+j-2}$.

4. Calculate the modified search direction from Equation (37).

5. Perform a line search along $x_{i+j-2} + t\,p_{i+j-2}$ to obtain the step length $t_{i+j-2}$.

6. Calculate the new approximation $x_{i+j-1}$. End for.

7. Update $H_{i-1}$ to produce $H_{i+m-1}$ using the methods discussed in the previous sections.

8. Check for convergence; if the method has not converged, set $i := i + 1$ and go to step 2.

From the viewpoint of image processing, "an image is an array or matrix of numeric values called pixels (Picture Element) arranged in columns and rows." In mathematics, an image is defined as "a graph of a spatial function" or "it is a two-dimensional function f(x,y), where x and y are the spatial (plane) coordinates, and the amplitude at any pair of coordinates (x,y) is called the intensity of the image at that level." If x, y, and the amplitude values of f are all finite, discrete quantities, the image is called a digital image. A digital image is composed of a finite number of pixels, each of which has a particular location and value. Image processing applies mathematical operations to an image, depending on the application, to improve it or to extract significant information from it for subsequent processing. When this process is applied to digital images, it is called digital image processing.

Digital image processing offers researchers wide scope for work in various areas of science (such as agriculture, biomedicine, and engineering). Previous studies have applied and investigated different image processing techniques for analysis and problem solving, such as detection and measurement of paddy leaf disease symptoms using image processing (Narmadha and Arulvadivu, 2017), breast cancer detection using image processing techniques (Christian et al., 2000), a novel outlier detection method for monitoring data in dam engineering (Shao et al., 2022), and counterfeit electronics detection using image processing and machine learning (Navid et al., 2017).

In the proposed strategy, an algorithm is developed that obtains the image values of the images I(x, y) of different test functions using a statistical technique, thereby achieving the desired objective. In the first step, images of the test functions are obtained with a resolution of 600 × 600 pixels. In the second step, a window of size W × W is generated around each pixel of the image I(x, y); the suggested window size is 3 × 3, and this window is treated as the matrix S. The rows of S are treated as observations and its columns as variables. Figure 1 shows a schematic diagram of the generation of S.

In the third step, the covariance matrix C(x, y) of S is computed with the following equation:

In the fourth step, the eigenvalues of the covariance matrix are calculated. The sum of the eigenvalues is directly proportional to the edge strength, which is calculated as follows:

The third and fourth steps are performed twice: first for the horizontal edge strength and then for the vertical edge strength. Equations (39) and (40) are therefore used to calculate the horizontal and vertical edge strengths as follows:

The sum of the horizontal and vertical edge strengths gives the value of a pixel of I(x, y). Hence, the value of each pixel of an image is calculated as

$$V(x, y) = \text{strength}_h(x, y) + \text{strength}_v(x, y),$$

and the sum of all pixel values gives the value of the image I(x, y):

$$V(I) = \sum_{x} \sum_{y} V(x, y).$$
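A vectorized NumPy sketch of the image value computation described above (3 × 3 windows, covariance, eigenvalue sums, horizontal and vertical passes) follows. Several details are assumptions because the covariance and edge-strength equations are not reproduced here: the covariance uses the usual $1/(W-1)$ normalization, the vertical pass simply transposes each window, and only interior pixels (where a full window fits) are evaluated. The eigenvalue sum is computed with `numpy.linalg.eigvalsh` to mirror the text, although it equals the trace of the covariance matrix. The function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def edge_strength(windows):
    """Sum of eigenvalues of each window's covariance (rows = observations, columns = variables)."""
    n_obs = windows.shape[-2]
    centered = windows - windows.mean(axis=-2, keepdims=True)
    cov = np.swapaxes(centered, -1, -2) @ centered / (n_obs - 1)   # assumed 1/(W-1) scaling
    return np.linalg.eigvalsh(cov).sum(axis=-1)


def image_value(image, W=3):
    """Per-pixel values V(x, y) for interior pixels and the total image value V(I)."""
    img = np.asarray(image, dtype=float)
    S = sliding_window_view(img, (W, W))                 # one W x W window per interior pixel
    strength_h = edge_strength(S)                         # columns of S as variables
    strength_v = edge_strength(np.swapaxes(S, -1, -2))    # assumption: transposed window
    V = strength_h + strength_v
    return V, float(V.sum())


stripes = np.zeros((5, 5))
stripes[:, 1::2] = 1.0                   # vertical stripes
V, total = image_value(stripes)
print(V, total)
```

For the vertical-stripe test image, each interior pixel receives $V(x, y) = 1$ (all of it from the transposed pass), so $V(I) = 9$ over the $3 \times 3$ interior; a constant image gives $V(I) = 0$.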

Two test functions were selected from the literature and minimized with different techniques of the two-step quasi-Newton methods in variable space. Running every test function with every technique was computationally expensive. Therefore, an algorithm is needed that allows researchers to run a particular function with only the best method, reducing the computational cost.

Hence, our objective is to develop an algorithm that computes the image value of each input test-function image and forwards each function to the method that performed best on it. The algorithm works in the following steps:

1. Obtain the images of each test function.

2. Compute the image value of each image.

3. Classify test functions by their image values.

4. Forward the particular function toward the best method.

To check the performance of the different techniques used in two-step methods, we considered two test functions of different dimensions with four different starting points and the tolerance ε from the literature (Hillstrom et al., 1981), as reported in Tables 2 and 3. The problems are categorized by dimension n into three classes, namely soft, medium, and hard, together with a combined class:

1. Soft: (2 ≤ n ≤ 20);

2. Medium: (20 ≤ n ≤ 60);

3. Hard: (61 ≤ n ≤ 150);

4. Combined: (2 ≤ n ≤ 150).

The equations of both test functions are given below. They were used to generate the 600 × 600 images displayed in Figure 2, from which the image value of each function (reported in Table 4) was calculated, and both functions were programmed into the self-decisive algorithm.

1. Extended Rosenbrock function:

$$f(x) = \sum_{i=1}^{n/2} \left[ 100\left(x_{2i} - x_{2i-1}^{2}\right)^{2} + \left(1 - x_{2i-1}\right)^{2} \right]$$

2. Modified Trigonometric function:
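As an illustration of how such images might be produced, the sketch below evaluates the extended Rosenbrock function, assuming the standard form $f(x) = \sum_{i=1}^{n/2}[100(x_{2i} - x_{2i-1}^2)^2 + (1 - x_{2i-1})^2]$, and renders its two-dimensional case on a $600 \times 600$ grid as 8-bit gray levels. The plotting window $[-2, 2] \times [-1, 3]$, the min-max scaling, and the function names are hypothetical; the article does not state how the images in Figure 2 were rendered. The modified trigonometric function is omitted because its equation is not available here.

```python
import numpy as np


def extended_rosenbrock(x):
    """Extended Rosenbrock function for even n (assumed standard form)."""
    x = np.asarray(x, dtype=float)
    odd, even = x[0::2], x[1::2]          # x_{2i-1} and x_{2i} in 1-based notation
    return float(np.sum(100.0 * (even - odd ** 2) ** 2 + (1.0 - odd) ** 2))


def function_image(f2d, xlim, ylim, size=600):
    """Sample a 2-D function on a size x size grid and min-max scale it to uint8."""
    xs = np.linspace(*xlim, size)
    ys = np.linspace(*ylim, size)
    X, Y = np.meshgrid(xs, ys)
    F = f2d(X, Y)
    F = (F - F.min()) / (F.max() - F.min())
    return (255 * F).astype(np.uint8)


# Hypothetical plotting window [-2, 2] x [-1, 3] for the n = 2 case.
img = function_image(lambda X, Y: 100.0 * (Y - X ** 2) ** 2 + (1.0 - X) ** 2,
                     (-2.0, 2.0), (-1.0, 3.0))
print(extended_rosenbrock([-1.2, 1.0]), img.shape, img.dtype)
```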

An outline of the self-decisive algorithm is as follows:

Step 0: Obtain the image I(x, y) and generate the window (matrix) S.

Step 1: Compute the covariance matrix C(x, y) of S.

Step 2: Find the eigenvalues of C(x, y).

Step 3: Calculate the value of each pixel, $V(x, y) = \text{strength}_h + \text{strength}_v$.

Step 4: Compute the value of each image, $V(I) = \sum_x \sum_y V(x, y)$.

Step 5: Set thresholds on V(I).

Step 6: Execute the function with the indicated (best) method.
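Steps 5 and 6 amount to a lookup from image value to method. The sketch below assumes that thresholds are expressed as half-open intervals on $V(I)$. The interval bounds and the labels in `RULES` are hypothetical placeholders, not the values in Table 4 or the notation of Table 1, and `select_method` is an illustrative name.

```python
def select_method(image_value, rules, default):
    """Return the method of the first interval [low, high) that contains image_value."""
    for low, high, method in rules:
        if low <= image_value < high:
            return method
    return default


# Hypothetical thresholds and method labels (placeholders only).
RULES = [
    (0.0, 1.0e6, "technique_1"),
    (1.0e6, float("inf"), "technique_2"),
]
print(select_method(2.5e5, RULES, default="run_all_techniques"))
```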

Two test functions, namely, the Rosenbrock and modified trigonometric functions, are selected from the literature (Hillstrom et al., 1981) to evaluate the performance of two different two-step techniques, i.e., one-step skipping with no modified search direction and one-step skipping with modified search direction. The functions are tested in dimensions ranging from 2 to 150.

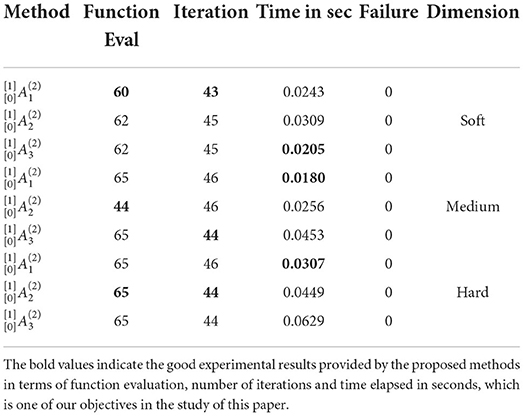

• It is evident from Table 5 that, in the soft dimension, the method performed best in function evaluations and the number of iterations, and the method reduced the time. In the medium dimension, the respective methods outperformed in function evaluations and the number of iterations, while the method reduced the time. In the hard dimension, the method gave the best results in minimizing function evaluations and the number of iterations, and the method reduced the computational effort.

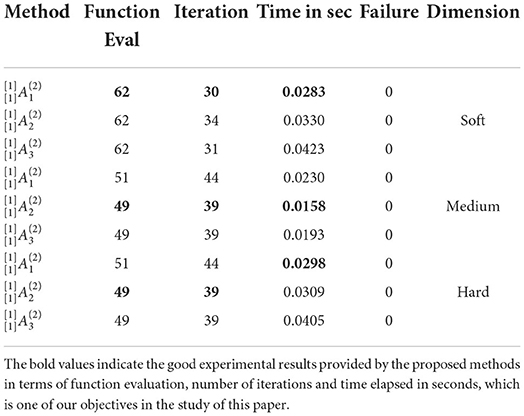

• The results of Table 6 show that the method outperformed in terms of function evaluations, the number of iterations, and computational time in the soft dimension. In the medium dimension, the method gave the best results in function evaluations, the number of iterations, and computational time. The method showed a reduction in function evaluations and the number of iterations, and the method executed in less time.

Table 5. Results of the Rosenbrock function for all dimension problems in the two-step method of the first technique.

Table 6. Results of the Rosenbrock function for all dimension problems in the two-step method of the second technique.

• Comparative analysis of both techniques

• The two techniques were compared, and our analysis showed that one-step skipping with no modified search direction performed better in function evaluations and computational time, while the second technique, i.e., one-step skipping with modified search direction, reduced the number of iterations in all dimension problems.

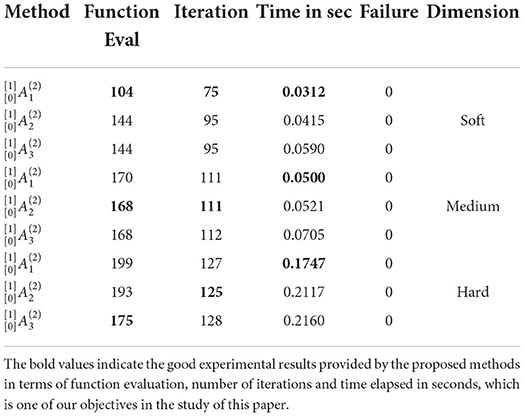

• Table 7 shows that, in the soft dimension, the method outperformed in function evaluations, the number of iterations, and computational time. In the medium dimension, the method outperformed in function evaluations and the number of iterations, while the method required less computational time. In the hard dimension, the method gave better results in function evaluations, while the respective methods outperformed in the number of iterations and time.

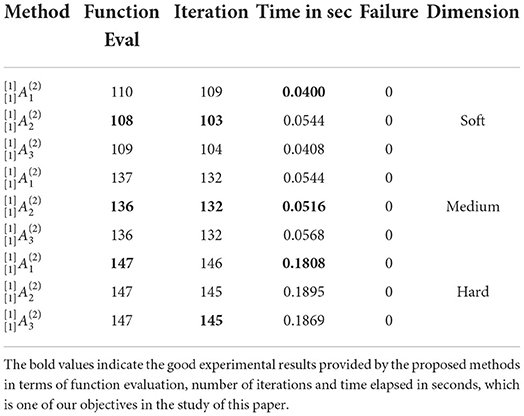

• In Table 8, the method gave the best results in function evaluations and the number of iterations in the soft and medium dimensions, and the time reduction was achieved by the method in the soft dimension and by the method in the medium dimension. In the hard dimension, the method gave the lowest function evaluations and computational time, and the method reduced the number of iterations.

Table 7. Results of the modified trigonometric function for all dimension problems in the two-step method of the first technique.

Table 8. Results of the modified trigonometric function for all dimension problems in the two-step method of the second technique.

• Comparative analysis of both techniques

• Both techniques were compared and analyzed based on the experimental results. The analysis shows that one-step skipping with no modified search direction performed better in function evaluations, the number of iterations, and computational time, except in one medium-dimension case, where the second technique, i.e., one-step skipping with modified search direction, reduced the number of function evaluations.

An algorithm was developed to compute the image value of a particular test function and direct the function to its best method for execution. The two-step quasi-Newton methods with two techniques (one-step skipping with no modified search direction and one-step skipping with modified search direction) were tested on two test functions, namely, the Rosenbrock and modified trigonometric functions. The best method was determined from the experimental results in terms of function evaluations, the number of iterations, and computational time. This study found that the one-step skipping technique without a modified search direction outperformed the one-step skipping technique with a modified search direction on both test functions. Hence, the algorithm directs all functions having the same image value as the Rosenbrock and modified trigonometric functions to the one-step skipping technique with no modified search direction.

To further strengthen the algorithm reported in this study, we propose investigating image recognition based on the picture or graph itself instead of the image value, and then directing the function (or medical image) to the best available method for obtaining the solution. Based on the literature, we plan to collaborate with biomedical laboratories in future work to validate the practicality of the proposed algorithm.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

FJ, NA, and SA-m developed the main concept and performed the experimental work. WM and MA made critical revisions, reviewed the manuscript, helped with writing and analysis, and approved the final version. All authors contributed to the article and approved the submitted version.

The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University for funding this work through Large Groups RGP.2/212/1443. WM is thankful to the Directorate of ORIC, Kohat University of Science and Technology, for awarding the project titled "Advanced Soft Computing Methods for Large-Scale Global Optimization Problems."

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Aamir, N., and Ford, J. (2021). Two-step skipping techniques for solution of nonlinear unconstrained optimization problems. Int. J. Eng. Works 8, 170–174. doi: 10.34259/ijew.21.806170174

Christian, C. T., Sutton, M. A., and Bezdek, J. C. (2000). “Breast cancer detection using image processing techniques,” in Ninth IEEE International Conference on Fuzzy Systems. FUZZ-IEEE 2000 (Cat. No. 00CH37063), Vol. 2 (Vellore: IEEE), 973–976.

Ford, J., and Moghrabi, I. (1993). Alternative parameter choices for multi-step quasi-Newton methods. Optim. Meth. Softw. 2, 357–370. doi: 10.1080/10556789308805550

Ford, J., and Moghrabi, I. (1994). Multi-step quasi-Newton methods for optimization. J. Comput. Appl. Math. 50, 305–323. doi: 10.1016/0377-0427(94)90309-3

Hillstrom, K., More, J., and Garbow, B. (1981). Testing unconstrained optimization software. ACM Trans. Math. Softw. 7, 136–140. doi: 10.1145/355934.355943

Jaffar, F., and Aamir, N. (2020). Comparative analysis of single/multi-step quasi-Newton methods at different delta values. Punjab Univ. J. Math. 52, 65–77.

Mannel, F., and Rund, A. (2021). A hybrid semismooth quasi-Newton method for nonsmooth optimal control with PDEs. Optim. Eng. 22, 2087–2125. doi: 10.1007/s11081-020-09523-w

Moghrabi, I. A., Hassan, B. A., and Askar, A. (2022). New self-scaling quasi-Newton methods for unconstrained optimization. Int. J. Math. Comput. Sci. 17, 1061–1077. doi: 10.11591/ijeecs.v21.i3.pp1830-1836

Narmadha, R. P., and Arulvadivu, G. (2017). “Detection and measurement of paddy leaf disease symptoms using image processing,” in 2017 International Conference on Computer Communication and Informatics (ICCCI) (Coimbatore: IEEE), 1–4.

Navid, A., Tehranipoor, M., and Forte, D. (2017). Counterfeit electronics detection using image processing and machine learning. J. Phys. Conf. Ser. 787, 012023. doi: 10.1088/1742-6596/787/1/012023

Ritter, F., Laue, T. B. A. H. H., Schwier, M., Link, F., and Peitgen, H.-O. (2011). Medical image analysis. IEEE Pulse 2, 60–70. doi: 10.1109/MPUL.2011.942929

Schröter, M., and Sauer, O. A. (2010). Quasi-Newton algorithms for medical image registration. IFMBE Proc. 25, 433–436. doi: 10.1007/978-3-642-03882-2_115

Shao, C., Zheng, S., Gu, C., Hu, Y., and Qin, X. (2022). A novel outlier detection method for monitoring data in dam engineering. Expert Syst. Appl. 193, 116476. doi: 10.1016/j.eswa.2021.116476

Tamara, D. P. O., Kolda, G., and Nazareth, L. (1998). BFGS with update skipping and varying memory. SIAM J. Optim. 8, 1060–1083. doi: 10.1137/S1052623496306450

Wang, J. (2018). Application of improved quasi-Newton method to the massive image denoising. Multimed. Tools Appl. 77, 12157–12170. doi: 10.1007/s11042-017-4863-y

Keywords: quasi-Newton method, multi-step quasi-Newton method, medical informatics, image processing, covariance matrix, eigenvalues

Citation: Jaffar F, Mashwani WK, Al-marzouki SM, Aamir N and Abiad M (2022) Self-decisive algorithm for unconstrained optimization problems as in biomedical image analysis. Front. Comput. Neurosci. 16:994161. doi: 10.3389/fncom.2022.994161

Received: 14 July 2022; Accepted: 27 July 2022;

Published: 03 October 2022.

Edited by:

Abdul Mueed Hafiz, University of Kashmir, India

Reviewed by:

Asim Muhammad, Guangdong University of Technology, China

Copyright © 2022 Jaffar, Mashwani, Al-marzouki, Aamir and Abiad. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Wali Khan Mashwani, mashwanigr8@gmail.com; Mohammad Abiad, Mohammad.abiad@aum.edu.kw
