- 1Normandie Univ, UNIHAVRE, LMAH, FR-CNRS-3335, ISCN, Le Havre, France

- 2The Hudson School of Mathematics, New York, NY, United States

- 3Courant Institute of Mathematical Science and Center for Neural Science, New York University, New York, NY, United States

- 4School of Mathematics, School of Natural Sciences, Institute for Advanced Study, Princeton, NJ, United States

The brain produces rhythms in a variety of frequency bands. Some are likely by-products of neuronal processes; others are thought to be top-down. Produced entirely naturally, these rhythms have clearly recognizable beats, but they are very far from periodic in the sense of mathematics. The signals are broad-band, episodic, wandering in amplitude and frequency; the rhythm comes and goes, degrading and regenerating. Gamma rhythms, in particular, have been studied by many authors in computational neuroscience, using reduced models as well as networks of hundreds to thousands of integrate-and-fire neurons. All of these models captured successfully the oscillatory nature of gamma rhythms, but the irregular character of gamma in reduced models has not been investigated thoroughly. In this article, we tackle the mathematical question of whether signals with the properties of brain rhythms can be generated from low dimensional dynamical systems. We found that while adding white noise to single periodic cycles can to some degree simulate gamma dynamics, such models tend to be limited in their ability to capture the range of behaviors observed. Using an ODE with two variables inspired by the FitzHugh-Nagumo and Leslie-Gower models, with stochastically varying coefficients designed to control independently amplitude, frequency, and degree of degeneracy, we were able to replicate the qualitative characteristics of natural brain rhythms. To demonstrate model versatility, we simulate the power spectral densities of gamma rhythms in various brain states recorded in experiments.

1. Introduction

Rhythms, or oscillatory patterns of neural activity, occur ubiquitously in many parts of the central nervous system. One typically classifies them by their frequency bands. For example, β-band rhythms (12–30 Hz) are related to muscles and movements, and γ-rhythms (30–90 Hz) are implicated in information transfer and are associated with cognitive processes. Because brain rhythms have unique signatures and are relatively easy to record, they have been studied in hundreds of experimental article. The origins and functional roles of these rhythms, as well as their connections to various brain disorders, are active topics of current research, though much remains to be understood.

This article is not concerned with the biological origins of brain rhythms. Our interest lies in the signal itself, and our challenge is to generate mathematically signals that possess characteristics of rhythms produced naturally by the brain. We will focus on gamma rhythms, and for definiteness, we will base our study on gamma-band activity in the visual cortex, which has been the subject of detailed experimental studies e.g., Gray et al., 1989; Henrie and Shapley, 2005; Xing et al., 2012. Refer also to the review article by Cardin (2016), and the modeling article by Chariker et al. (2018).

Experimental data show that there are two aspects to the character of gamma rhythms: one is their oscillatory nature, and the other is their irregularity. In spite of their being called “a rhythm,” gamma rhythms are far from periodic in the sense of mathematics. There is a recognizable beat, to be sure, but spectral power density studies show that gamma rhythms are broad-band, with wandering frequencies and phases. Activity patterns are episodic; the beats are uneven in magnitude, degrading from time to time before the resumption of oscillatory behavior.

Several earlier theoretical studies (Ermentrout and Kopell, 1998; Brunel and Hakim, 1999; Brunel, 2000; Whittington et al., 2000; Tiesinga et al., 2001; Börgers and Kopell, 2003; Brunel and Wang, 2003; Fries, 2005) captured well the oscillatory behavior of gamma rhythms without delving into their irregular character. The broad-band, episodic nature of gamma rhythms was captured in biologically realistic models of the visual cortex, e.g., Rangan and Young (2013); Chariker and Young (2014); Chariker et al. (2018) using networks of hundreds to thousands of integrate-and-fire neurons. A more detailed exposition of earlier studies is given in the Section 6. In this article, we are interested in the following question: How can one generate, using reduced models or dynamical systems with few degrees of freedom, irregular rhythms with the variability in frequency and amplitude seen in natural brain rhythms?

We investigated the use of limit cycles perturbed by white noise as has been proposed by several authors and found that at the right noise level, these models do reproduce some gamma characteristics. The use of shear and anisotropic noise appeared to further improve the realism of the signal produced. We have found, however, that the most straightforward way to simulate the irregular character of gamma rhythms is to use a system of Ordinary Differential Equations (ODE) parameterized by quantities designed to control directly amplitude, frequency, and degree of degeneracy, and to allow these parameters to wander randomly.

For illustration, we present a specific example, consisting of a 2D slow-fast system inspired by the well-known FitzHugh-Nagumo and Leslie-Gower models. Viewing the two variables as Excitatory (E) and Inhibitory (I) conductances of typical neurons in a local population, this model produces results that resemble gamma-band activity in the real cortex. Bonuses of the model include moment-to-moment balancing of E and I-currents seen in experiments and known to theorists. To further demonstrate the versatility of this model, we challenged it to reproduce several sets of experimental data, including the power spectral densities and spectrograms recorded from awake vs. anesthetized monkeys, to simulate the changes in gamma-band activity associated with increased contrast and the repeated presentation of visual stimuli.

The rest of this article is organized as follows: In Section 2, we examine the use of limit cycles with noise to produce gamma-like rhythms. The main model is presented in Sections 3 and 4: Section 3 describes the deterministic model; a stochastic component is introduced in Section 4. In Section 5, we demonstrate the model's capabilities to simulate experimental data. This is followed by Section 6.

2. Rhythms Produced by Noisy Limit Cycles

As discussed in the Introduction, experimental data of gamma rhythms show a good deal of irregularity (Gray et al., 1989; Henrie and Shapley, 2005; Xing et al., 2012; Cardin, 2016); refer also to the modeling paper (Chariker et al., 2018). They show that gamma rhythms are far from periodic in the sense of mathematics but are broad-band, with wandering amplitudes and frequencies, their time courses punctuated by intermittent degradations in the rhythm. Reduced models that depict gamma rhythms as limit cycles (e.g., Ermentrout and Kopell, 1998; Fries, 2005) are not intended to possess irregular features. Several other authors (e.g., Brunel, 2000; Brunel and Wang, 2003) proposed to model gamma rhythms by limit cycles perturbed by white noise. Below we investigate the effectiveness of these stochastic models as a tool for simulating the irregular character of gamma rhythms, but first, we review quickly the idea of PSD, an important tool used by neuroscientists.

2.1. Quick Review of PSD

For periodic signals, Fourier coefficients provide the right mathematical tool for extracting the main frequencies. To capture the pseudo-periodicity of gamma rhythms, neuroscientists have used the following computational tool (Henrie and Shapley, 2005; Chariker et al., 2018).

The idea is to fix a time interval of suitable length T and to compute Fourier coefficients on [0, T] as if the signal was periodic with period T, i.e., let

and define the power concentrated at frequency k to be . To capture gamma-band frequencies, the time interval T is usually chosen to be between 100 and 500 ms: too short of an interval will fail to capture the relevant frequencies, and too long of an interval is ineffective since the signal is not truly periodic.

For a fixed initial time t0, we perform the computation above repeated with many time shifts, i.e., we sample the signal on [t, t+T] for t = t0, t0+dt, t0+2dt, ⋯ for small dt, and the squares of the computed Fourier coefficients, , are averaged over all of the samples to obtain what is called the power spectral density (PSD) and often referred as the Welch method in computational software, refer to Welch (1967).

2.2. Limit Cycles + White Noise

We consider here the system

which in polar coordinates is

In this system, the unit circle has period 2π, and it attracts all the trajectories distinct from the origin. To put the rhythm in the gamma range, we slow time by a factor of 3 to obtain a frequency of ~50 Hz. Adding white noise, we obtain the following system of stochastic differential equations (SDE):

where (B1, B2) denotes a two dimensional Wiener process.

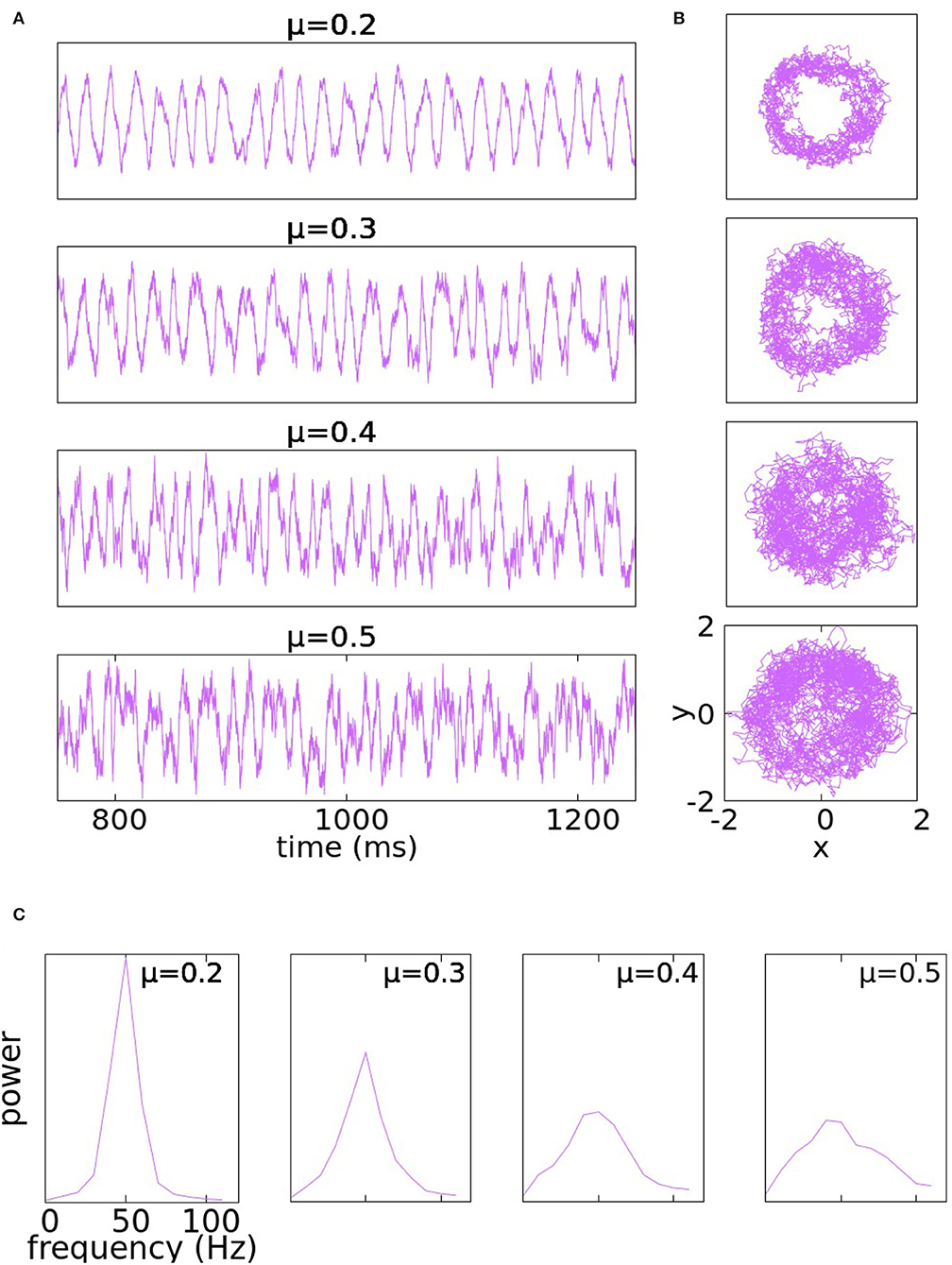

Typical solutions of Equation (4)- with μx = μ = μy at various levels of noise are shown in Figure 1. The traces are plots of the y-coordinate; the wriggly curves are sample paths of the SDE over many cycles. PSDs at corresponding noise levels are also shown.

Figure 1. Panel (A) illustrates the traces of the y-coordinate for Equation (4) with μx = μ = μy at various levels of noise: from top to bottom the parameter μ takes the values 0.2, 0.3, 0.4, and 0.5. Panel (B) illustrates the trajectories of the same solutions in the x − y plane. At the bottom, in panel (C) are PSDs of the corresponding noise levels.

At μ = 0.2, the peaks of the y-trace are somewhat irregularly spaced thanks to the noise, but the rhythm is too regular to resemble gamma rhythms produced by the real cortex. Increasing μ to 0.3 increases the amount of variability, but the rhythm is still too regular; in particular, it does not degenerate as can be seen by the empty spot near the origin in the phase plane trajectory. At μ = 0.4, the noise has significantly broadened the PSD (which is desirable), but local properties of Brownian paths also begin to manifest in the y-traces in the form of short rises and falls occurring at rapid successions. Such high-frequency oscillations on top of the main gamma rhythm are not typical of the behavior of membrane potentials in gamma activity. At μ = 0.5, the range of signal frequency becomes a little too broad, threatening to obstruct the main rhythm.

Our conclusion from the study above is that the addition of noise to purely periodic dynamics produces variability that goes some distance toward simulating the irregular character of gamma rhythms, but the use of a single parameter, namely μ= noise level, is too rigid: it is not irregular enough at low noise-levels and introduces undesirable features when noise-level is tuned too high.

2.3. Two Variants

Continuing to work with limit cycles subjected to white-noise forcing, we give two examples below to show the beneficial effects of an additional parameter.

Our first example adds shear to the deterministic dynamics. We introduce a new parameter α to Equation (4) to give

The deterministic of this equation, reads in polar coordinates as,

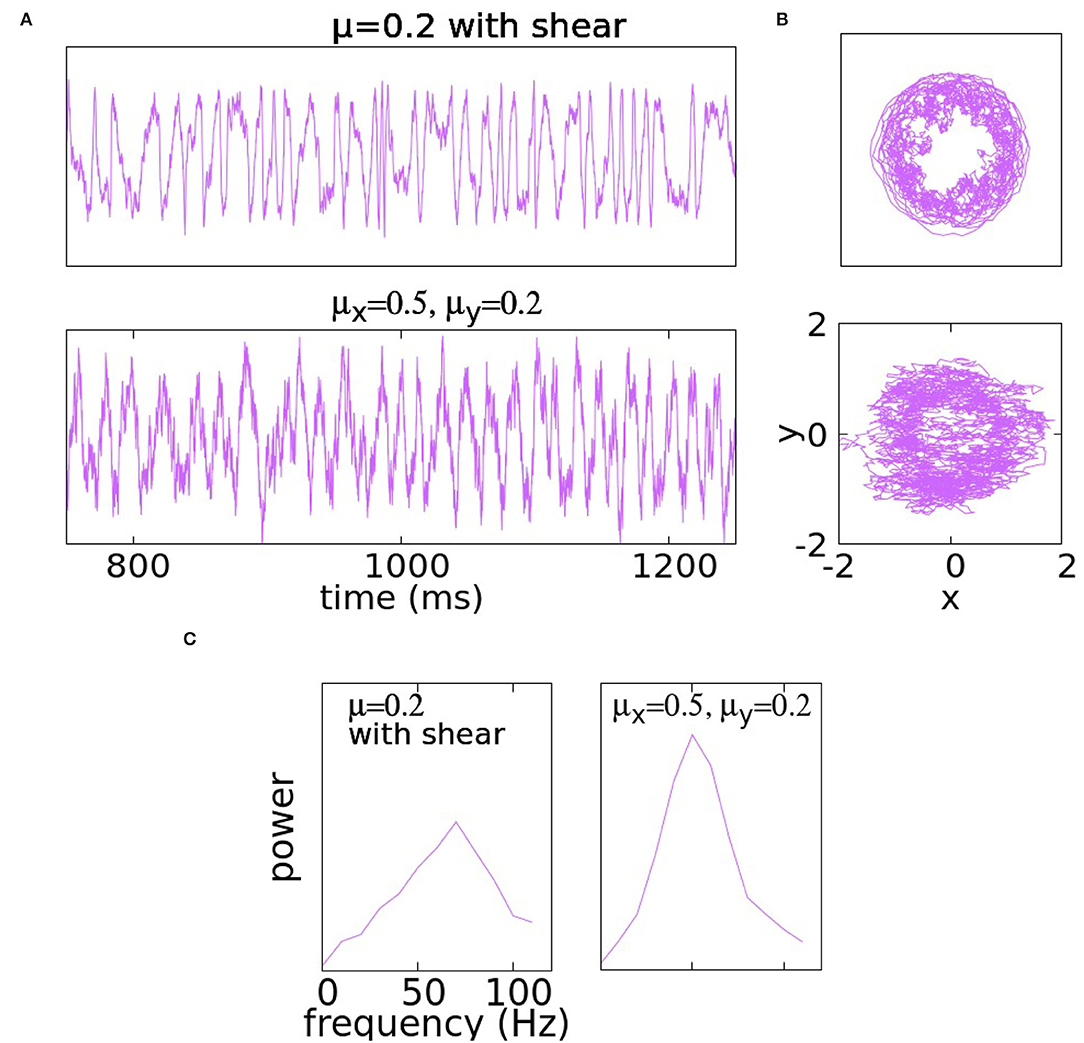

The idea is that the farther a trajectory is from the center, the higher its angular velocity. Figure 2A shows the case of μ = 0.2 with α = 3. It is evident that at this noise level, shear produces significantly more varied behaviors, a fact confirmed by a much broader spectrum.

Figure 2. Panel (A) shows traces of the y-coordinate for Equation (5) (i.e., with shear) with μx = μ = μy = 0.2 in the first row and for (4) with asymmetric coefficients μx = 0.5 and μy = 0.2. Panel (B) illustrates the trajectories of the same solutions in the x − y plane. At the bottom, the PSD is illustrated in (C).

In a second example, we use anisotropic noise, i.e., the equation is as in (4) with μx = 0.5 and μy = 0.2. The idea is that the larger μx would add variability, while the smaller μy would not result in the unwanted Brownian structures in y-traces. These expectations are confirmed in Figure 2B.

Remark 1. We conclude from the study above that because gamma signals are multi-faceted, to properly simulate them one needs to be able to control—independently—their frequencies, amplitudes, and degrees of degeneracy (i.e., the way the rhythm degrades from time to time and re-emerges). There has to be a mechanism for the system to switch from one regime to another at seemingly random times. Limit cycles with noise controlled by one or two parameters can reproduce certain aspects of the signal but do not possess sufficient flexibility. We propose in the sections to follow a system of ODEs with several parameters designed to control directly the properties we think are important and to produce the irregularity of gamma characteristics via stochastic motion in parameter space.

3. Proposed Model: Deterministic Part

In this section and the next, we present our main model, consisting of a simple system of ODE with randomly varying coefficients. The deterministic part of the model, its key features, together with the quantities to be varied are presented in Section 3; the stochastic component is introduced in Section 4.

3.1. Model Equations and Basic Dynamical Features

Below u and v represent the absolute values of the E and I-conductances of a typical neuron in a local population. We propose that their dynamics be described by

where a1, a2, b, and c are fixed parameters with . These values are chosen to reproduce the nullclines in Figures 3, 4, the dynamical significance of which are explained below. The key parameters are ϵ, γ, and K. They will be discussed at length below; for now, think of them as taking values in

We will adopt the following notation:

The nullclines of Equation (7) are then given by

for the first equation, and

for the second equation. Note that the polynomial f(u) = −K(u−a1)(u−a2) reaches its maximum for u = 0.5(a1+a2) with a value of .

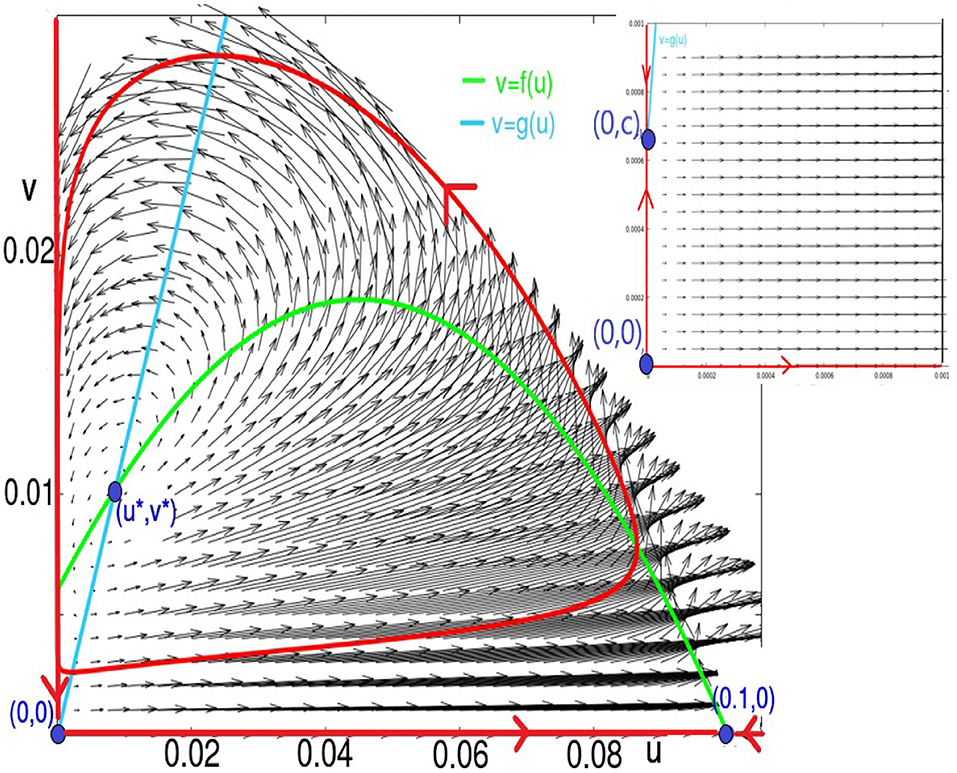

Figure 3. This figure shows the fixed points, nullclines, and vector field, as well as the trajectories lying in the sets u = 0, v = 0, and the limit-cycle for K = 60 and ϵ = 0.1. For these values, the parameter (a2, 0) is a saddle, and (u*, v*) is a source. The two fixed points (0, 0) and (0, c) are too close to be discernible in this figure. In the inset, we zoom in to visualize them: (0, 0) is a source, and (0, c) is a saddle.

Figure 4. This figure illustrates the effect of varying ϵ, from small to large values. The parameter K is set to K = 60. The top left panel illustrates ϵ = 0.001, the top right, ϵ = 0.1, bottom left, ϵ = 1, and bottom right ϵ = 10.

Our choice of parameters allows only one intersection between the quadratic function f(u) and the linear function g(u) in the positive quadrant. This is ensured by the condition:

which gives K < 176.8.

There are four stationary points in the positive quadrant, the region of interest. They are

where u* is the positive solution of

and v* = bu*+c. Refer to Figure 1, which gives a sense of the global dynamics and basic structures of the system.

Theorem 1 establishes rigorously the region of interest for this system.

Theorem 1. The positive (u, v)-quadrant is invariant under the dynamics defined by Equation (7), and there exists a bounded absorbing set to which all solutions enter.

Proof. The fact that the positive quadrant is positively invariant follows from the fact that u = 0, vt = γv(−v+c) and ϵut = −u(K(u−a1)(u−a2)), v = 0, are solutions lying on the u and v-axes. For the existence of an absorbing set, we compute

By using Young inequality, for u, v > 0,

we can split the right hand side of (8) into a polynomial in u of degree 4, and a polynomial in v of degree 3, with both negative leading coefficients. Since the solution lies in the positive quadrant, by polynomial comparison, we obtain:

where M > 0 and N > 0 can be chosen independently of initial conditions. Integrating (9) leads to the existence of an absorbing set attracting all trajectories.

Remark 2. Equation (7) is inspired by the FitzHugh-Nagumo (FHN) and Leslie-Gower (LG) models. Starting from the FHN system, a well-studied system with a slow-fast structure, we replaced the cubic nullcline with a parabola in the first equation and added factors of u and v respectively in front of the first and second equations to constrain the solutions to the upper-right quadrant. Such modifications were necessary because in the classical FHN system, one variable describes voltage and the other is the so-called recovery variable, but the dynamics of voltage excursions and recovery follow different time courses than E- and I-conductances. Also, both variables in FHN take negative values, which the magnitudes of conductances do not. The variables in the LG system, on the other hand, have been shown (refer to Ambrosio et al., 2018) to have a dynamical character closer to that of the REI mechanism in Chariker et al. (2018). Relaxing the fast constraint (i.e., allowing ϵ to be larger) induces correlated dynamics in u and v that are strikingly similar to those observed in experimental and numerical simulations of conductance dynamics; compare, e.g., Figure 1 in Okun and Lampl (2009) and Figures 2B,E in Chariker et al. (2018).

3.2. Varying the Parameter ϵ

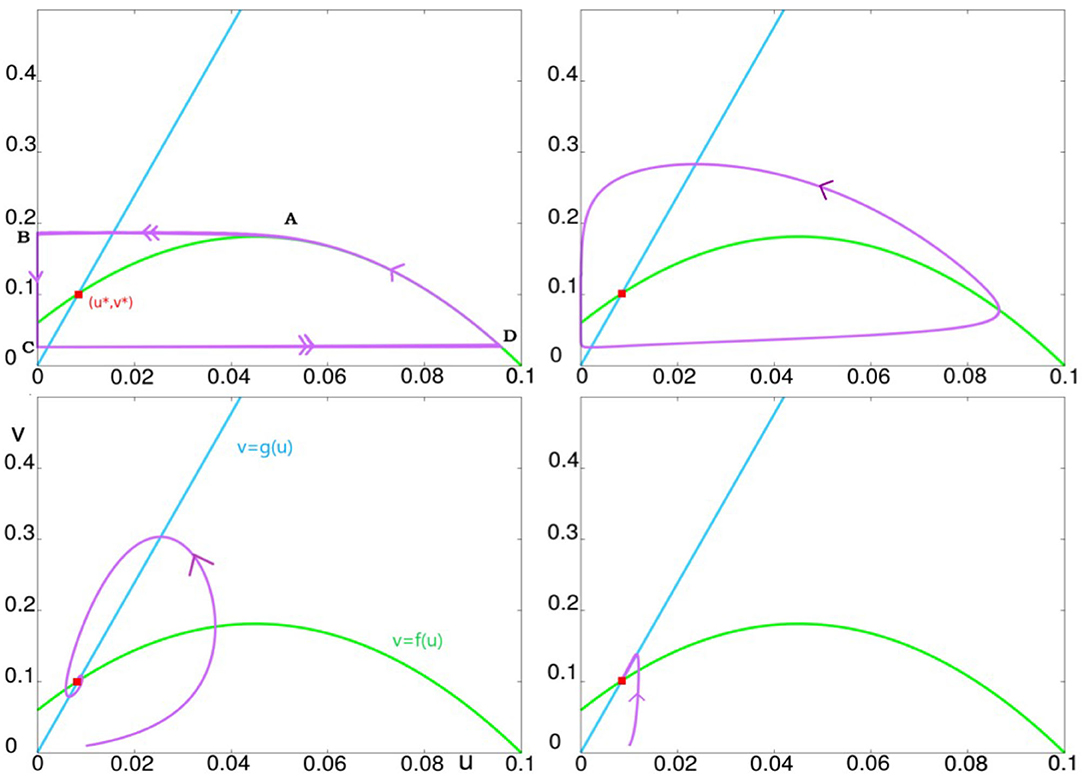

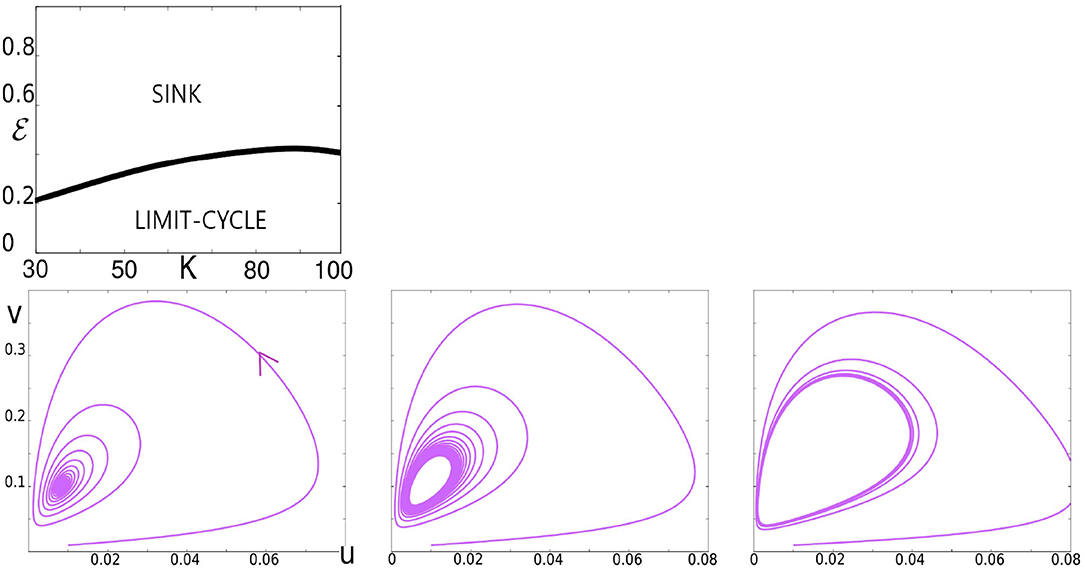

Recall that we have three parameters: ϵ, γ, and K. We first explain the role of ϵ, fixing for now γ = 1, and studying the dynamics as ϵ is varied for each value of K. The dynamics of the system from ϵ very small to very large for K = 60 (a fairly typical value of K) are summarized in Figure 2. For ϵ ≪ 1, Equation (7) describes a slow-fast system with a limit cycle as can be seen in the top two panels of Figure 2. As ϵ is increased, this limit cycle turns into a sink somewhere between ϵ = 0.1 and ϵ = 1. For large ϵ, e.g., at ϵ = 10, one can show that the critical attractive manifold is the line Δ:v = g(u). All the trajectories reach this line fast and then follow it slowly toward the fixed point (u*, v*) as can be seen in the bottom right panel.

Below we will give the analysis for ϵ very small, as well as the Hopf bifurcation that takes the limit cycle to the sink.

3.2.1. The Case of ϵ ≪ 1

For ϵ small enough a slow-fast analysis allows to compute the limit-cycle up to an O(ϵ) order. The behavior can be described geometrically as follows. We denote by the curve v = f(u). For ϵ small enough, a trajectory starting from the right side of (i.e., at any point on the curve between A and D), will increase along the curve (vt > 0 there) until it reaches the maximum point . Refer to Figure 2 top left panel. This is a jump point, refer to Krupa and Szmolyan (2001), i.e., from there the trajectory leaves and goes at high speed to reach a neighborhood of the point . After that, since at first ut < 0, the trajectory remains stuck near the line u = 0. It goes down (vt < 0) until it crosses the point (0, f(0)) = (0, −Ka1a2), at which point ut becomes positive. This is a fold point but not a jump point, refer to Krupa and Szmolyan (2001). Dynamics near this point have been analyzed in Ambrosio et al. (2018). Refer also to Wang and Zhang (2019) and references therein cited. The trajectory continues to follow the axis u = 0 until it reaches a point C on the axis u = 0 which is significantly below (0, f(0)). Here, there is the possibility of the so-called canard phenomenon, refer to Benoît et al. (1981); Krupa and Szmolyan (2001); Szmolyan and Wechselberger (2001). At C, the trajectory leaves the axis u = 0 and goes very quickly toward the point D on with the same ordinate as C. This gives a qualitative description of the limit-cycle. For ϵ sufficiently small, precise statements can be rigorously deduced from Geometrical Singular Perturbation Theory. Good reviews can be found in Hek (2010); Jones (1995); Kaper (1999); Krupa and Szmolyan (2001).

Let Γ′ be the closed curve defined by:

where is the arc from D to A.

Theorem 2. For ϵ > 0 sufficiently small, there is a limit cycle Γ within distance O(ϵ) of Γ′.

For proof of the uniqueness of the limit-cycle in the case ϵ small, refer to Wang and Zhang (2019).

We point out that the system defined by (7) provides a simple example, in a Neuroscience context, in which canard solutions emerge and can be computed explicitly. As a result of the polynomial expression of the vector field, the computations performed in Ambrosio et al. (2018) become simpler and explicit around the point (0, f(0)).

3.2.2. Hopf Bifurcations

As indicated in Section 2.1, the range of ϵ of interest is [0.1, 1], and it is in this range of ϵ that the limit cycle turns into a sink as shown in Figure 2. We now give more detail on this bifurcation, specifically, the Hopf bifurcation that occurs at (u*, v*) where (u*, v*) is the unique fixed point in the interior of the positive quadrant; refer to Section 2.1.

The Jacobian matrix at fixed points is

Substituting in v* = bu*+c, we obtain at (u*, v*), that

which gives

while

From the above expressions, we deduce the following proposition.

Proposition 1. For K ∈ [30, 100], det(J*) > 0. It follows that for each K there exists a value of ϵ at which a Hopf bifurcation occurs. This value is given by:

We close this section with an application of the Poincare-Bendixon theorem to our system.

Theorem 3. Each trajectory starting in the region {u > 0, v > 0} either converges to (u*, v*) or evolves toward a limit-cycle. For , it converges toward a limit-cycle.

Proof. The proof follows from the analysis of the nullclines and the nature of fixed points.

3.3. Dependence of Dynamics on the Parameters ϵ, K, and γ

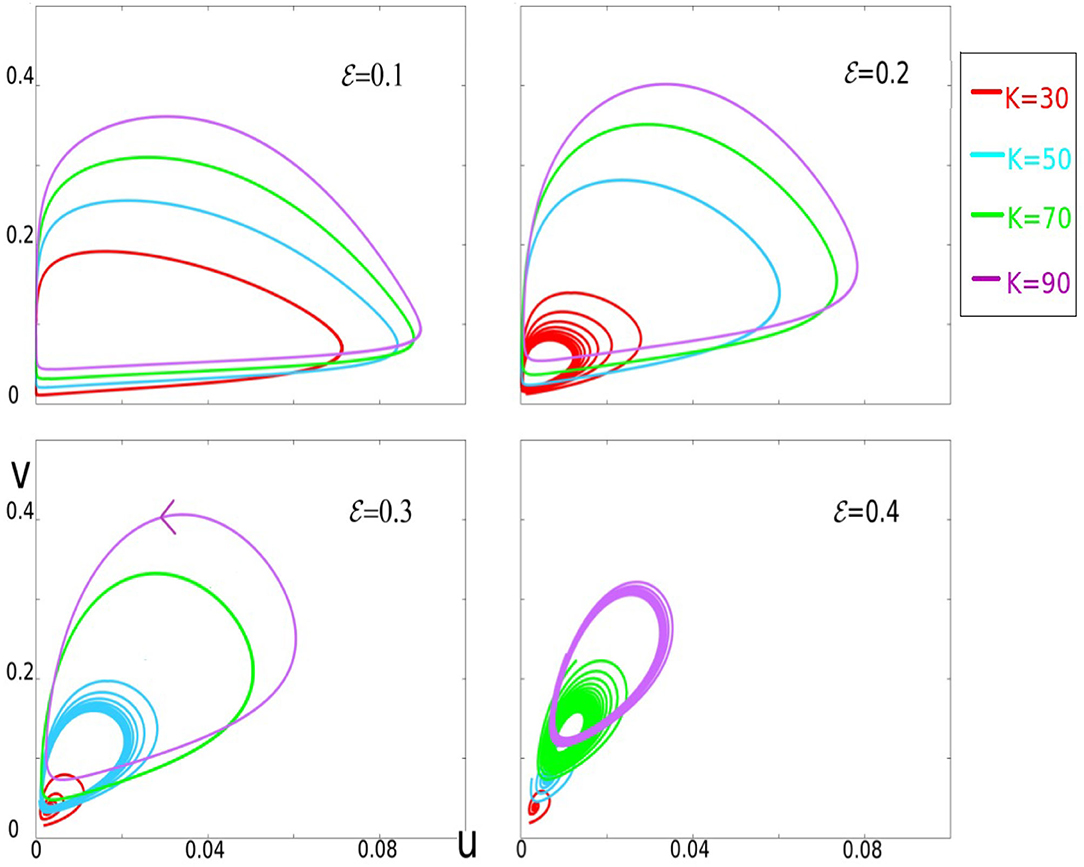

Continuing to keep γ = 1, we first examine the dynamics of Equation (7) as functions of K and ϵ. Simulation results are shown in Figure 4. Notice first that these results are consistent with those in Figure 3 with regard to increasing ϵ for fixed K. What is new here is the effect of varying K for each ϵ. Figure 4 shows clearly that larger K corresponds to larger excursions by u and v. This means

(i) When solutions are attracted to a limit cycle, the limit cycle has a larger diameter for larger K; and

(ii) Whether solutions eventually tend to a limit cycle or a sink, this attracting set is located closer to u = 0, v = 0 for smaller values of K.

Finally, we examine the effect of varying γ. From the equations, it is clear that trajectories of Equation (7) will trace out the same curves as long as γϵ remains constant; and that varying γ keeping γϵ fixed corresponds to changing the speed with which one moves along these curves. For example, at K = 60, for values of ϵ = 0.1 and γ = 1, numerical simulation gives a limit cycle with period ~44 ms (equivalently frequency around 22 Hz). For ϵ = 0.01, γ = 10, the period becomes 4.4 ms (frequency around 225 Hz).

Proposition 2. For each fixed K, the curves traced out by the trajectories of Equation (7) depend only on ϵγ. Fixing K and ϵγ, and varying γ, velocities are proportional to γ; in particular, the frequency of the limit cycle is proportional to γ−1.

The meaning and main general effects of the variation of parameters ϵ, K, and γ in Equation (7) can be summarized as follows:

• Increasing ϵ changes the dynamical regime from one with a limit cycle in a slow-fast system to one with an attractive fixed point;

• K controls the sizes of the excursion of (u, v) in the system's oscillatory behavior: in general, the larger K, the larger the excursions; while

• For each fixed value of ϵγ, the magnitude of γ controls the frequencies of the limit cycle.

As we will show momentarily, these are the parameters we need to vary to produce the irregularities seen in gamma rhythms.

4. Proposed Model: Stochastic Components

As discussed in the Section 1, there are two facets to gamma rhythms as observed in the real cortex: one is their oscillatory nature; the other is their irregular, episodic character. The deterministic system in Section 2 provided the underlying oscillations. Here, we create irregularity by adding randomness to the deterministic model. Instead of adding white noise to the system of ODE, we have found that allowing its key parameters (as described in Section 3) to drift freely, performing random walks within designated parameter ranges, produces better results. This wandering parameters paradigm is especially effective for modeling dynamical behavior that samples different regimes, drifting from one regime to another after seemingly random time durations. By choosing a deterministic model capable of supporting the relevant dynamical regimes at different parameter values, one can control the sampling of different regimes by controlling the way the model's parameters wander.

As discussed earlier, gamma-band activity is quite varied in character: when the rhythm is robust, the dynamics appear to be following periodic orbits, the amplitudes and frequencies of which vary with time in a way that is partially history-dependent. When the rhythm degrades, it is as though the trajectory is near a (weakly) stable equilibrium. Our deterministic model supports these regimes; moreover, we have learned in Section 3.3 how to switch between sinks and limit cycles, and how to vary the amplitudes and frequencies of the cycles by changing parameters. By allowing these parameters to wander, we ensure that the amplitudes and frequencies of the gamma cycles will wander. We then choose the ranges of parameters and the speeds with which they vary as we see fit.

In more detail, we first specify parameter ranges [Kmin, Kmax], [ϵmin, ϵmax], and [fmin, fmax] for K, ϵ, and ϵγ, respectively. For example, for the simulations shown in Figure 5, we used [Kmin, Kmax] = [30, 100], [ϵmin, ϵmax] = [0.04, 0.1], and [fmin, fmax] = [0.2, 0.5]. Let be independent random variables uniformly distributed on [−1, 1]. Starting from initial values of K, ϵ, and γ within the specified ranges, we update these parameters every 0.1 ms. At the ith step, we let

constraining K to [Kmin, Kmax] according to the rule that if and K(1+0.1u) falls outside of [Kmin, Kmax], then we set K = K(1−0.1u). Next, we update ϵ by letting

constraining ϵ to [ϵmin, ϵmax] as before.

Figure 5. Illustration of the Hopf bifurcation. In the top panel, we have plotted the Hopf bifurcation diagram in the K, ϵ plane. The bottom panels illustrate the bifurcation for K = 60 as ϵ decreases. Left: ϵ = 0.4, a trajectory spiraling toward a sink. Middle: ϵ = 0.36, trajectories accumulating on a limit cycle following the sink's loss of stability. Right: ϵ = 0.3, the limit cycle growing in size.

Finally, we set

if ϵγ ∈ [fmin, fmax]. If not, if ϵγ > fmax we set

and if ϵγ < fmin, we set

Recall that it is the product ϵγ that determines the curves traced out by the trajectories of the system (Proposition 2), and ϵγ ∈ [0.2, 0.5] corresponds to ϵ ∈ [0.2, 0.5] in Figures 3–6, where γ was set = 1. Thus, to simulate gamma rhythms, the parameters above are chosen so that most but not all of the time, the dynamics are oscillatory. Once parameters that produce suitable qualitative behaviors are located, it is generally simpler to adjust the values of u, v, or the mean frequencies of the oscillations by modifying slightly the two equations of Equation (7) (e.g., by inserting a scaling coefficient in front).

Figure 6. This figure gives a panorama of sample trajectories within the parameter's range of interest. Four panels corresponding to ϵ = 0.1, 0.2, 0.3, and 0.4 are shown. In each panel, trajectories for different values of K are depicted in different colors: K = 30 (red), 50 (cyan), 70 (green), and 90 (purple). For each value of ϵ, the larger K, the larger excursions in the phase-space.

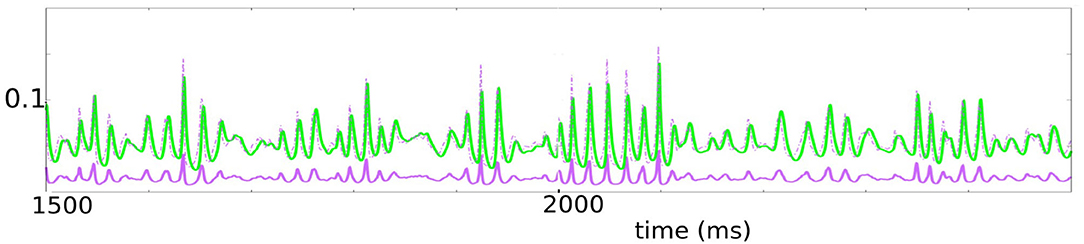

Figure 7 shows a solution to the stochastic version of Equation (7). The irregular nature of the rhythm is clearly visible, and it possesses features remarkably similar to those in experimental data, refer to e.g., Burns et al. (2011) (Figure 1B): One sees the trajectory switching between robustly periodic and more degenerate regimes as the parameters controlling degeneracy are varied; the use of random walks with adjustable speeds has allowed us to control the degree of history dependence. The amplitudes and frequencies sampled are also controllable.

Figure 7. This figure represents the evolution of the stochastic version of the system (7). The parameters used are K ∈ [30, 50], ϵ ∈ [0.04, 0.1], γϵ ∈ [0.2, 0.5]. We plotted ū = 1.96u+0.00672 in solid purple, to be thought of as representing E-conductance, v in green, representing I-conductance. Since the ratio of E-current to E-conductance is roughly three to four times that of the ratio of I-current to I-conductance, we have plotted also 3.5ū in dashed purple. Note the tight relationship between 3.5ū and v.

As discussed earlier, a bonus of this model is that it is more than just a phenomenological model of gamma: the two variables u and v suitably adjusted simulate the E and I-conductances of a typical neuron in a local population under drive. By definition, the E-current entering a neuron is defined to be its E-conductance times a factor proportional to the distance of membrane potential to the E-reversal potential, and the same is true for I-currents. As this factor for E-current is 3 to 4 times that for I, we have also plotted (in dash) a graph that is 3.5 times the height of the E-conductance. Modulo a multiplicative constant, then, the dashed purple and green plots can be thought of as approximations of E and I-currents, respectively, and it is striking how the two currents track one another.

We remark on the tightness with which the green plots (I-current) follow the dashed purple plots (E-current). There is a well-known theory of balanced states (van Vreeswijk and Sompolinsky, 1998) that asserts that in the limit as system size tends to infinity, E-currents and I-currents are balanced when averaged over time. Experimental results of Okun and Lampl (2008) and subsequent theory (Denève and Machens, 2016) and modeling paper (Chariker et al., 2018) show that much more than that is true, namely that these currents are in fact roughly balanced from moment to moment, not just when averaged over time. The tight relationship between our dashed purple and green curves in Figure 5 captured well this phenomenon.

5. Demonstration of Model Versatility

Below we give three examples to demonstrate that the ODE system with stochastically varying coefficients presented in Sections 3 and 4 can be used to generate signals that simulate rhythmic activity in the real cortex.

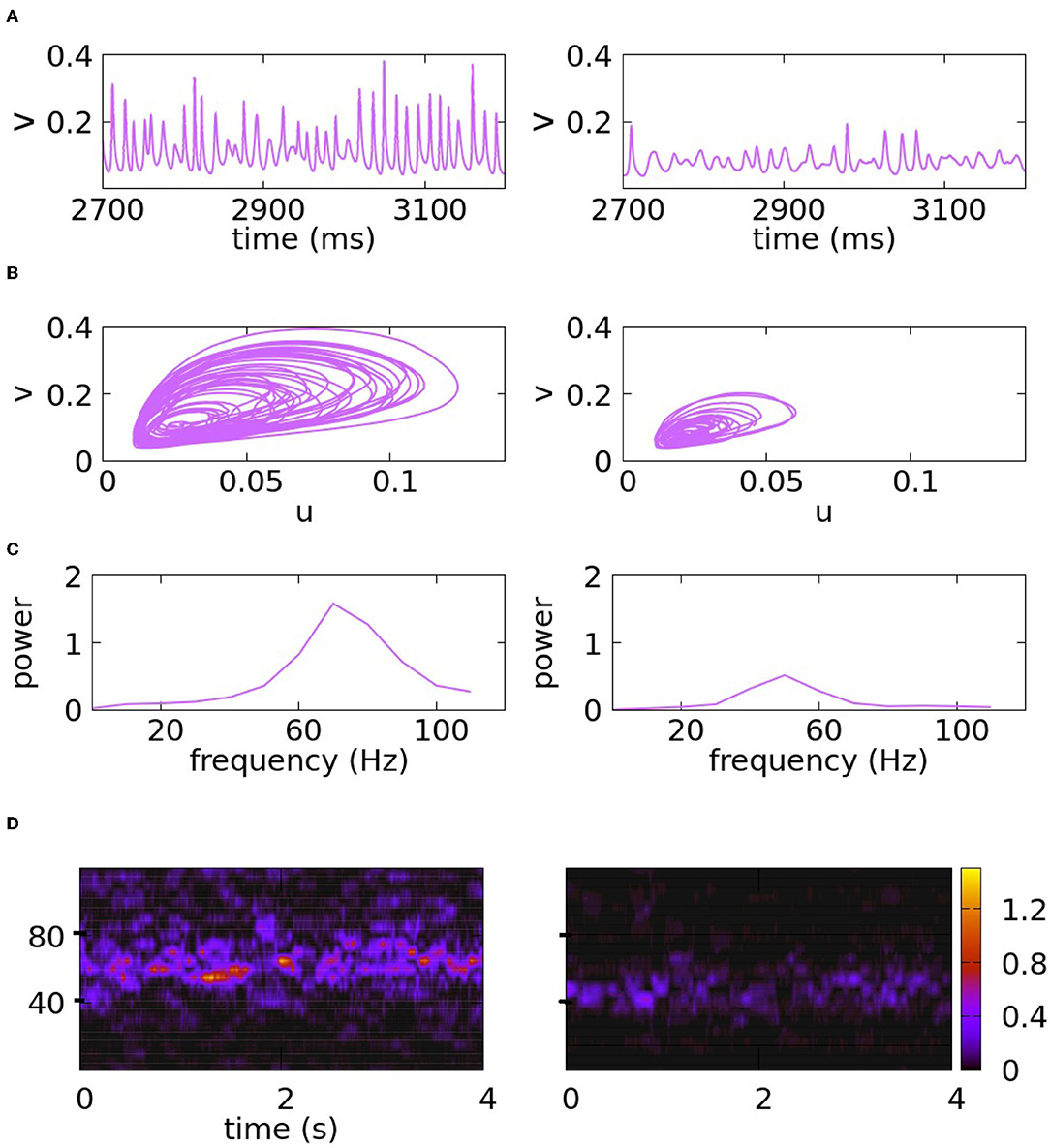

5.1. Example 1. Gamma Rhythms for Awake vs. Anaesthetized Monkey

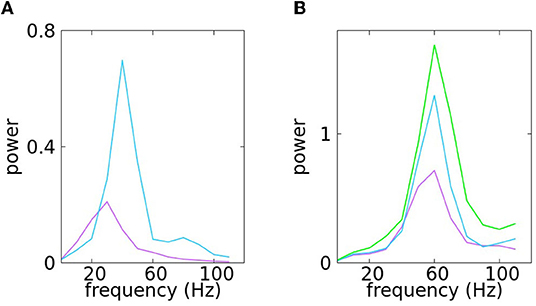

The first set of experimental results we used to challenge our model was that reported in Xing et al. (2012). In this article, the authors studied gamma rhythms in awake vs. anesthetized monkeys. LFP from V1 (the primary visual cortex) in response to high contrast sinusoidal grating patches were recorded and the resulting data was analyzed. After the initial power increase (which we do not model), peak gamma frequency was found to be about of 60 Hz in the awake and 40 Hz in the anesthetized monkeys studied (Figure 1 of Xing et al., 2012). Time frequency analysis of single trials confirmed that oscillations in the awake animal were faster (Figure 2). Also, for anesthetized monkeys, the signal was weaker, having an amplitude about 60% that of the awake (Figure 1). In addition to the PSD, which describes spectral power averaged over time, the authors of Xing et al. (2012) (Figure 2) studied temporal structures of gamma-band activity. They found intermittent bursts of activity lasting for small fractions of a second suggesting some short term history dependence.

As proof of concept, we challenged our model to produce two signals with the characteristics of brain rhythms of awake and anesthetized monkeys as reported in the experimental article above. Our goal is not a perfect match with data but to demonstrate how these quantities can be manipulated through parameter selection in a model like the one presented in Sections 3 and 4. Time traces, PSDs, and spectrograms (Fourier power computed on shifted time intervals and plotted as a function of time, refer to Section 2.1) for two signals intended to simulate these two very different cortical states are presented in Figure 8; the exact parameters used are given in the legend. The amplitudes and peak frequencies of the PSD plots are in agreement with the data and the spectrogram shows small bursts of activity.

Figure 8. Reproducing signals with the characteristics of awake and anesthetized monkeys. The figures on the left side correspond to the awake state, and the figures on the right side correspond to the anesthetized state. The figures result from the simulation of Equation (7). In (A), the traces of v are plotted as a function of time. In (B), we represent the trajectories in the phase space. Panel (C) corresponds to the PSD and panel (D) to the spectrograms. The parameters are as follows: Km = 50, KM = 90, ϵm = 0.07, ϵM = 0.16, fm = 0.35, fM = 0.4 for the left side. For the right side, the following changes are made: Km = 40, KM = 68, ϵm = 0.08, ϵM = 0.18.

5.2. Example 2. PSD in Primate Visual Response: High vs. Low Contrast

It is well-known to visual neuroscientists that in primate contrast response, peak frequency and spectral power increase with contrast (Henrie and Shapley, 2005). For definiteness, we challenged the model to reproduce characteristics of the signals in Jia et al. (2013). Figure 2C of Jia et al. (2013) shows that in response to a large grating (10° in diameter), peak frequency increased from about 30 to 44 Hz, and stimulus-induced gamma power was enhanced more than 3-fold as the contrast was increased from about 6 to 50%. Selecting suitable parameters from our model, we were able to build two signals having these spectral characteristics. They are shown in Figure 9A.

Figure 9. In (A), we reproduce low contrast vs. high contrast as in Jia et al. (2013). This results for simulation of equation (7) with: Km = 25, KM = 55.0, ϵm = 0.09, ϵM = 0.19, ϵγ ∈ [0.35, 0.4] for low contrast and Km = 40, KM = 70.0, ϵm = 0.11, ϵM = 0.21, ϵγ ∈ [0.35, 0.4] for high contrast. In (B), we illustrate the increase of power related to repeated stimulations as in Brunet et al. (2014). The set of parameters is as follows, low power: Km = 40, KM = 75.0, ϵm = 0.075, ϵM = 0.155, ϵγ ∈ [0.35, 0.4], mean power: Km = 45, KM = 80.0, ϵm = 0.09, ϵM = 0.16, ϵγ ∈ [0.35, 0.4], and Km = 50, KM = 90.0, ϵm = 0.09, ϵM = 0.19, ϵγ ∈ [0.35, 0.4] for high power.

5.3. Example 3. Effect of Stimulus Repetition on Gamma-Band Activity

It is shown by Brunet et al. (2014) that the repeated presentation of a visual grating stimulus to monkeys resulted in a steady increase of visually induced gamma-band activity in V1 and V4 (and in the synchronization of the two rhythms in these two areas). The authors proposed this as a plausible way to maintain effective stimulus signaling in the face of dwindling firing rates, presumably due to adaptation. Figure 1 in Brunet et al. (2014), which shows LFP traces and power spectra from a recording session with an awake monkey, shows peak frequencies increasing slightly but hovering mostly around 60 Hz, consistent with the values in Example 1. A striking feature here is that the PSDs become increasingly sharply peaked, with significant increases in gamma power at these frequencies with stimulus repetition. In Figure 9B, we present the PSD of 3 signals with these properties generated by our model.

The examples above demonstrate that the two dimensional ODE system with stochastically varying coefficients presented in Sections 3 and 4 of this article is sufficiently flexible that through parameter selection, one can reproduce, on demand, a variety of characteristics observed in gamma-band rhythms in the real cortex.

6. Discussion

Oscillatory behaviors are among the most widely observed dynamical phenomena in physiology. They occur in the spontaneous beating of heart cells (Glass et al., 1984), in central pattern generators in animal locomotion (Cohen et al., 1988), and in calcium oscillations that underlie a plethora of cellular responses (Thul et al., 2008). For more examples refer to Glass and Mackey (1988); Françoise (2005); Winfree (2000). Physiological processes can also be more complex than just purely oscillatory, sometimes they can even be mildly chaotic, as has been observed in several studies, such as Bondarenko (1994); Freeman (1987); van Vreeswijk and Sompolinsky (1998), and Lin et al. (2012). Brain rhythms, gamma-band oscillations, in particular, are neither purely oscillatory nor chaotic but somewhere in between, and their simulation has been much studied by theorists. We review below a sample of the main results prior to this study.

6.1. Previous Study on Models of Gamma Rhythms

An early and well-known model is PING (Whittington et al., 2000; Börgers and Kopell, 2003); similar models include (Ermentrout and Kopell, 1998; Tiesinga et al., 2001) among others. These models were the first to use non-linear dynamics to explain gamma rhythms. The original PING model produces highly regular population spikes, capturing successfully the oscillatory behavior of gamma rhythms but not their irregular character. There were several follow-up studies e.g., (Borgers et al., 2005; Börgers, 2017) in which network models were used to produce more nuanced spike patterns.

Another body of study that received much attention is (Brunel and Hakim, 1999; Brunel, 2000). In these studies, the authors started with networks of sparsely coupled integrate-and-fire neurons, and let system size tend to infinity while keeping the number of connections an infinitely small fraction of system size. Arguing that in such a limit distinct neurons are likely to have disjoint sets of presynaptic cells, the authors of Brunel and Hakim (1999); Brunel (2000) modeled neuronal dynamics by an equation consisting of a deterministic part describing meanfield activity plus a Gaussian noise that is independent of the neuron to neuron, and gamma rhythms were modeled as regimes following a supercritical Hopf bifurcation. These are the first reduced models of gamma rhythms that we know of. Another much cited article is by Brunel and Wang (2003). Here, the authors assumed that gamma rhythms consisted of purely periodic motion plus a noise term, and focused on the dependence of the period on various factors.

Experimental studies from the last 15 years brought to light some intriguing features of gamma rhythms produced by the real cortex, stressing their broad-band nature (Henrie and Shapley, 2005). They show that the amplitudes of the oscillations in local field potentials can be quite large (Henrie and Shapley, 2005), far from regimes that emerge following Hopf bifurcations. Another feature of interest revealed by experimental results is that the power and frequencies of the oscillations wander (Xing et al., 2012), with the same patterns often persisting for tens, sometimes up to 200 ms, indicative of some form of short-term memory.

More recently, a number of detailed biological network models have appeared showing that gamma rhythms with the properties above occur naturally as a consequence of Excitatory-Inhibitory interaction in local neuronal processes, calling attention to concepts such as multiple firing events (MFE) (Rangan and Young, 2013) and recurrent excitation-inhibition (REI) (Chariker et al., 2018). The analysis in these recent articles provided an understanding, at least qualitatively, of how both the oscillatory and irregular characters of gamma rhythms come about.

6.2. What This Article Is About: Goals and Conclusion

This article is concerned not with physiological processes associated with gamma rhythms but with the mathematical properties of the signal itself. As these rhythms are naturally produced and have very intriguing signatures, we sought to understand how they can be produced using reduced neuronal models defined by low dimensional dynamical systems. As noted above, reduced models of gamma rhythms have been studied before, but how well they capture the broad-band, irregular nature of gamma rhythms had not been evaluated up until now.

Thus, we began in Section 2 with a study of the main group of reduced models in the literature, namely those described by periodic dynamics perturbed by white noise (Brunel, 2000; Brunel and Wang, 2003). We found that these models do reproduce some aspects of the irregular side of gamma behavior but the multi-faceted nature of these behaviors required more multi-dimensional control. To that end, we proposed, in Sections 3 and 4, a model inspired by the FitzHugh-Nagumo system. We do not claim that the model we proposed is the only viable model, far from it, but the following properties of this model are of note: As parameters are varied, the dynamical regimes described range from stable equilibria to Hopf bifurcations to robust limit cycles. Other parameters offer direct control of the amplitudes and frequencies of the periodic regimes. We found also that varying parameter randomly but continuously, such as random walks in parameter space, capture more realistically the wandering nature of gamma characteristics.

In the final section, to demonstrate versatility we challenged the model to reproduce several sets of experimental data, and the model performed satisfactorily. We remark that the modeling approach employed here can be used to study other rhythms provided that we adjust augment the model to accommodate the characteristics of the rhythm in question. A challenging example is signals with superposition of rhythms. In this case, an idea would be to consider two coupled models of ours, the strength of coupling depending on the interaction of these rhythms.

7. Methods

Simulations of the system (7), in the deterministic case, were performed using a standard RK4 method with a time step of dt = 0.01, on the time interval [0, T], with T = 5, 000ms. The code is a personal code written by the first author in the C++ language.

More explicitly, this means that, classically, the time interval [0, T] is divided into sub-intervals [jdt, (j+1)dt], j ∈ {0, ..., T/dt−1}, and the solution of (7) is approximated by the sequence (uj, vj) j ∈ {0, ..., T/dt} with (uj, vj) computed from (uj−1, vj−1) by using the RK4 iteration.

For the stochastic version of Equation (7), the numerical method remains the same, with the specification that for j = 10, 20, ⋯ the parameters K, ϵ, and γ are changed randomly according to the description given in paragraph 3, i.e., let be independent random variables uniformly distributed on [−1, 1]. For j = 10i, i ∈ {1, ..., 49990}, we let

constraining K to [Kmin, Kmax] according to the rule that if and K(1+0.1u) falls outside of [Kmin, Kmax], then we set K = K(1−0.1u). Next, we update ϵ by letting

constraining ϵ to [ϵmin, ϵmax] as before. Finally, we set

if ϵ γ ∈ [fmin, fmax]. If not, if ϵ γ >fmax we set

and if ϵγ < fmin we set

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author Contributions

BA and L-SY set up the mathematical model, performed the research, provided the mathematical analysis, and wrote the manuscript. BA did the numerical simulations. All authors contributed to the article and approved the submitted version.

Funding

Part of the research has been funded by Région Normandie France, ERDF (European Regional Development Fund) XTERM, CNRS International Emerging Actions program, and the Hudson School of Mathematics. Part of the research of L-SY was funded by NSF Grants 1901009 and 1734854.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

E, Excitatory; I, Inhibitory; LIF, leaky integrate and fire; PING model, Pyramidal-interneuronal network gamma model; ODE, Ordinary Differential Equation; FHN, FitzHugh-Nagumo; REI, Recurrent Excitation Inhibition; SDE, Stochastic Differential equation; PSD, Power Spectral Density; KAM, Kolmogorov-Arnold-Moser.

References

Ambrosio, B., Aziz-Alaoui, M., and Yafia, R. (2018). Canard phenomenon in a slow-fast modified Leslie-Gower model. Math. Biosci. 295, 48–54. doi: 10.1016/j.mbs.2017.11.003

Benoît, E., Callot, J. F., Diener, F., and Diener, M. (1981). Chasse au canard. Collectanea Mathematica 32, 37–119.

Bondarenko, V. E.. (1994). A simple neural network model produces chaos similar to the human EEG. Phys. Lett. A 196, 195–200. doi: 10.1016/0375-9601(94)91225-4

Börgers, C.. (2017). “Weak PING rhythms,” in An Introduction to Modeling Neuronal Dynamics (Cham: Springer International Publishing), 281–292. doi: 10.1007/978-3-319-51171-9_32

Borgers, C., Epstein, S., and Kopell, N. J. (2005). Background gamma rhythmicity and attention in cortical local circuits: a computational study. Proc. Natl. Acad. Sci. U.S.A. 102, 7002–7007. doi: 10.1073/pnas.0502366102

Börgers, C., and Kopell, N. (2003). Synchronization in networks of excitatory and inhibitory neurons with sparse, random connectivity. Neural Comput. 15, 509–538. doi: 10.1162/089976603321192059

Brunel, N.. (2000). Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J. Comput. Neurosci. 8, 183–208. doi: 10.1023/A:1008925309027

Brunel, N., and Hakim, V. (1999). Fast global oscillations in networks of integrate-and-fire neurons with low firing rates. Neural Comput. 11, 1621–1671. doi: 10.1162/089976699300016179

Brunel, N., and Wang, X.-J. (2003). What determines the frequency of fast network oscillations with irregular neural discharges? I. Synaptic dynamics and excitation-inhibition balance. J. Neurophysiol. 90, 415–430. doi: 10.1152/jn.01095.2002

Brunet, N. M., Bosman, C. A., Vinck, M., Roberts, M., Oostenveld, R., Desimone, R., et al. (2014). Stimulus repetition modulates gamma-band synchronization in primate visual cortex. Proc. Natl. Acad. Sci. U.S.A. 111, 3626–3631. doi: 10.1073/pnas.1309714111

Burns, S. P., Xing, D., and Shapley, R. M. (2011). Is gamma-band activity in the local field potential of v1 cortex a “clock” or filtered noise? J. Neurosci. 31, 9658–9664. doi: 10.1523/JNEUROSCI.0660-11.2011

Cardin, J. A.. (2016). Snapshots of the brain in action: Local circuit operations through the lens of oscillations. J. Neurosci. 36, 10496–10504. doi: 10.1523/JNEUROSCI.1021-16.2016

Chariker, L., Shapley, R., and Young, L.-S. (2018). Rhythm and synchrony in a cortical network model. J. Neurosci. 38, 8621–8634. doi: 10.1523/JNEUROSCI.0675-18.2018

Chariker, L., and Young, L.-S. (2014). Emergent spike patterns in neuronal populations. J. Comput. Neurosci. 38, 203–220. doi: 10.1007/s10827-014-0534-4

Cohen, A. H., Rossignol, S., and Grillner, S. (1988). Neural Control of Rhythmic Movements in Vertebrates. New York, NY: John Wiley.

Denéve, S., and Machens, C. K. (2016). Efficient codes and balanced networks. Nat. Neurosci. 19, 375–382. doi: 10.1038/nn.4243

Ermentrout, G. B., and Kopell, N. (1998). Fine structure of neural spiking and synchronization in the presence of conduction delays. Proc. Natl. Acad. Sci. U.S.A. 95, 1259–1264. doi: 10.1073/pnas.95.3.1259

Françoise, J.-P.. (2005). Oscillations en Biologie. Berlin; Heidelberg: Springer. doi: 10.1007/3-540-37670-4

Freeman, W. J.. (1987). Simulation of chaotic EEG patterns with a dynamic model of the olfactory system. Biol. Cybernet. 56, 139–150. doi: 10.1007/BF00317988

Fries, P.. (2005). A mechanism for cognitive dynamics: neuronal communication through neuronal coherence. Trends Cogn. Sci. 9, 474–480. doi: 10.1016/j.tics.2005.08.011

Glass, L., Guevara, M., Belair, J., and Shrier, A. (1984). Global bifurcations of a periodically forced oscillator. Phys. Rev. A 29, 1348–1357. doi: 10.1103/PhysRevA.29.1348

Glass, L., and Mackey, M. C. (1988). From Clocks to Chaos: The Rhythms of Life. Princeton: Princeton University Press. doi: 10.1515/9780691221793

Gray, C. M., König, P., Engel, A. K., and Singer, W. (1989). Oscillatory responses in cat visual cortex exhibit inter-columnar synchronization which reflects global stimulus properties. Nature 338, 334–337. doi: 10.1038/338334a0

Hek, G.. (2010). Geometric singular perturbation theory in biological practice. J. Math. Biol. 60, 347–386. doi: 10.1007/s00285-009-0266-7

Henrie, J. A., and Shapley, R. (2005). LFP power spectra in v1 cortex: the graded effect of stimulus contrast. J. Neurophysiol. 94, 479–490. doi: 10.1152/jn.00919.2004

Jia, X., Xing, D., and Kohn, A. (2013). No consistent relationship between gamma power and peak frequency in macaque primary visual cortex. J. Neurosci. 33, 17–25. doi: 10.1523/JNEUROSCI.1687-12.2013

Jones, C. K. R. T.. (1995). “Geometric singular perturbation theory,” in Dynamical Systems ed Johnson R (Berlin; Heidelberg: Springer), 44–118. doi: 10.1007/BFb0095239

Kaper, T. J.. (1999). An Introduction to Geometric Methods and Dynamical Systems Theory for Singular Perturbation Problems. American Mathematical Society. eds J. Cronin and R. E. O'Malley Jr. New Brunswick, NJ; Seattle, WA: Rutgers University; University of Washington. doi: 10.1090/psapm/056/1718893

Krupa, M., and Szmolyan, P. (2001). Extending geometric singular perturbation theory to nonhyperbolic points–fold and canard points in two dimensions. SIAM J. Math. Anal. 33, 286–314. doi: 10.1137/S0036141099360919

Lin, K. K., Wedgwood, K. C. A., Coombes, S., and Young, L.-S. (2012). Limitations of perturbative techniques in the analysis of rhythms and oscillations. J. Math. Biol. 66, 139–161. doi: 10.1007/s00285-012-0506-0

Okun, M., and Lampl, I. (2008). Instantaneous correlation of excitation and inhibition during ongoing and sensory-evoked activities. Nat. Neurosci. 11, 535–537. doi: 10.1038/nn.2105

Okun, M., and Lampl, I. (2009). Balance of excitation and inhibition. Scholarpedia. 4:7467. doi: 10.4249/scholarpedia.7467

Rangan, A. V., and Young, L.-S. (2013). Emergent dynamics in a model of visual cortex. J. Comput. Neurosci. 35, 155–167. doi: 10.1007/s10827-013-0445-9

Szmolyan, P., and Wechselberger, M. (2001). Canards in R3. J. Diff. Equat. 177, 419–453. doi: 10.1006/jdeq.2001.4001

Thul, R., Bellamy, T. C., Roderick, H. L., Bootman, M. D., and Coombes, S. (2008). “Calcium oscillations,” in Cellular Oscillatory Mechanisms. Advances in Exper-imental Medicine and Biology, eds M. Maroto and N. Monk (New York, NY: Springer), 1–27.

Tiesinga, P. H., Fellous, J.-M., José, J. V., and Sejnowski, T. J. (2001). Computational model of carbachol-induced delta, theta, and gamma oscillations in the hippocampus. Hippocampus 11, 251–274. doi: 10.1002/hipo.1041

van Vreeswijk, C., and Sompolinsky, H. (1998). Chaotic balanced state in a model of cortical circuits. Neural Comput. 10, 1321–1371. doi: 10.1162/089976698300017214

Wang, C., and Zhang, X. (2019). Relaxation oscillations in a slow-fast modified Leslie-Gower model. Appl. Math. Lett. 87, 147–153. doi: 10.1016/j.aml.2018.07.029

Welch, P.. (1967). The use of fast fourier transform for the estimation of power spectra: a method based on time averaging over short, modified periodograms. IEEE Trans. Audio Electroacoust. 15, 70–73. doi: 10.1109/TAU.1967.1161901

Whittington, M., Traub, R., Kopell, N., Ermentrout, B., and Buhl, E. (2000). Inhibition-based rhythms: experimental and mathematical observations on network dynamics. Int. J. Psychophysiol. 38, 315–336. doi: 10.1016/S0167-8760(00)00173-2

Winfree, A.. (2000). The Geometry of Biological Time. New York, NY: Springer-Verlag. doi: 10.1007/978-1-4757-3484-3

Keywords: brain rhythms, gamma-band activity, E/I-conductances, slow-fast dynamics, randomly varying coefficients, power spectral densities

Citation: Ambrosio B and Young L-S (2022) The Use of Reduced Models to Generate Irregular, Broad-Band Signals That Resemble Brain Rhythms. Front. Comput. Neurosci. 16:889235. doi: 10.3389/fncom.2022.889235

Received: 03 March 2022; Accepted: 19 April 2022;

Published: 13 June 2022.

Edited by:

Ranjit Kumar Upadhyay, Indian Institute of Technology Dhanbad, IndiaReviewed by:

Jun Ma, Lanzhou University of Technology, ChinaVitaly Volpert, UMR5208 Institut Camille Jordan (ICJ), France

Copyright © 2022 Ambrosio and Young. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Benjamin Ambrosio, YmVuamFtaW4uYW1icm9zaW9AdW5pdi1sZWhhdnJlLmZy

Benjamin Ambrosio

Benjamin Ambrosio Lai-Sang Young3,4

Lai-Sang Young3,4