ORIGINAL RESEARCH article

Front. Comput. Neurosci., 11 March 2022

Volume 16 - 2022 | https://doi.org/10.3389/fncom.2022.852281

This article is part of the Research Topic Nonlinear Connectivity, Causality and Information Processing in Neuroscience.

A crucial question in neuroscience is how to correctly decode cognitive information from brain dynamics for motion control and neural rehabilitation. However, because electroencephalogram (EEG) recordings are unstable and high-dimensional, it is difficult to obtain information directly from the raw data. In this work, we therefore design visual experiments and propose a novel decoding method based on the neural manifold of cortical activity to extract critical visual information. First, we study four major EEG frequency bands and find that the responses of the alpha band (8–15 Hz) in the frontal and occipital lobes to visual stimuli are the most prominent. The essential features of the alpha-band EEG are then further mined with two manifold learning methods. Connecting temporally consecutive brain states in the t-distributed stochastic neighbor embedding (t-SNE) map at the trial-by-trial level, we find that the brain state dynamics form a cyclic manifold, with different tasks forming distinct loops. We further show that the latent factors of brain activity estimated by t-SNE support more accurate decoding, and a stable neural manifold is found. Taking the latent factors of the manifold as independent inputs, a fuzzy system-based Takagi-Sugeno-Kang (TSK) model is established and trained to identify visual EEG signals. The combination of t-SNE and fuzzy learning improves the accuracy of visual cognitive decoding to 81.98%. Moreover, by optimizing the features, we find that the combination of the frontal, parietal, and occipital lobes is the most effective for visual decoding, with 83.05% accuracy. This work provides a potential tool for decoding visual EEG signals with the help of low-dimensional manifold dynamics, contributing especially to brain–computer interface (BCI) control, brain function research, and neural rehabilitation.

The human brain readily makes sense of visual images in a complex environment through specific dynamics, but how to quantify the visual response remains poorly understood (Kourtzi and Kanwisher, 2000; Pasley et al., 2012). Over the past decade, the anatomy of visual conduction in the brain has become well known: visual information is transmitted through neural pathways from the retina to the cortex, where it triggers specific dynamics that support cognitive functions such as memory and imagery (de Beeck et al., 2008; Wen et al., 2018). Accordingly, decoding human brain activity triggered by visual stimuli has a significant impact on brain–computer interface (BCI), brain-inspired computing, and machine vision research (Hogendoorn and Burkitt, 2018). Although recent research has demonstrated that human brain activity can be decoded from neurological data (Zheng et al., 2020), such data tend to be high-dimensional and unstable, which makes it difficult to decode useful information from them directly. How to decode visual information from brain activity therefore remains a tantalizing unsolved problem in neuroscience.

The specific links between brain activity and cognitive behavior, and the decoding of information from the brain, have gained increasing attention. Early studies focused on the level of single neurons. More recently, Cunningham and Byron pointed out that most sensory, cognitive, and motor functions depend on interactions among many neurons, so the data cannot be fundamentally understood from single neurons alone (Cunningham and Byron, 2014). Consequently, the study of the neural system is undergoing a transition from the single-neuron level to the population level (Pandarinath et al., 2018). With the development of electrophysiology and neuroimaging techniques, it has been acknowledged that neural population activity recorded with neurophysiological techniques [electroencephalography (EEG)/magnetoencephalography (MEG)] and neuroimaging techniques [e.g., functional magnetic resonance imaging (fMRI)] is influenced by the category of the visual object presented as a stimulus (Spampinato et al., 2017). Neural recordings with high temporal resolution are now readily obtained with EEG (Nunez and Srinivasan, 2006; Müller et al., 2008; Schirrmeister et al., 2017). However, these recordings pose severe decoding problems because of their time-varying spectral components, highly non-stationary properties, and multiple unknown noise sources. So far, brain activity recordings have mostly been analyzed with time-frequency analysis, complex networks, and similar methods, which extract features that capture only one aspect of the activity and make direct decoding difficult (Yu et al., 2019a). A new analytical method is therefore needed to decode brain activity directly from a population perspective and to investigate visual mechanisms.

Recent advances in neuroscience have demonstrated that neural manifolds are present across the brain, which suggests a way to decode brain activity. A neural manifold is a subregion of the neural activity space that captures the behavior in a given task. Because the activities of individual neurons are highly correlated and redundant, the dimension of the neural system is smaller than the number of neurons (Levina and Bickel, 2005). Complex population activity can therefore be explained by a few unobservable latent factors, which change over time to form a manifold. Seung and Lee (2000) showed through experiments that there is a stable balance state in brain cognitive activity and proposed that manifold learning might be a natural mode of human cognition. Degenhart et al. (2020) demonstrated that several neural recording instabilities, such as baseline shifts, unit dropout, and tuning changes, can be overcome by leveraging the low-dimensional structure present in neural activity. The existence of manifolds in the brain thus provides a novel idea and an effective tool for decoding neural population activity with dimensionality reduction techniques.

Dimensionality reduction techniques allow us to investigate neural population dynamics by drawing the neural manifold and identifying relevant population features. Depending on the objective of each dimensionality reduction method, several explanatory variables can be discovered and extracted from high-dimensional data. Because these explanatory variables are not directly observed, they are often referred to as latent factors (Gallego et al., 2017). Gallego et al. have confirmed that brain function is based on latent factors rather than on the activity of single neurons (Gallego et al., 2017), and they proposed that latent factors are the elemental units of volitional control and neural computation in the brain (Gallego et al., 2018). In addition, the time courses of neural responses may vary substantially across trials of the same task, especially in cognitive tasks such as attention and decision-making. In this case, averaging responses across trials tends to obscure the neural time course of interest, and single-trial analyses are essential. It is therefore necessary to extract the latent factors of neural population activity at the single-trial level.

A well-established approach to data dimensionality reduction is manifold learning, which can extract intrinsic structural information from data in a way that is consistent with human cognition. Common dimensionality reduction techniques can be divided into two categories (Wu and Yan, 2009). Linear methods extract linear manifolds; their mapping function is linear and usually has an explicit expression, as in principal component analysis (PCA) and multidimensional scaling (MDS) (Seung and Lee, 2000; Flint et al., 2020). Non-linear methods extract manifolds with non-linear structure; their mapping function is non-linear and usually has no explicit expression. They produce a low-dimensional embedding of the original high-dimensional data through an implicit mapping, as in locally linear embedding (LLE) and t-distributed stochastic neighbor embedding (t-SNE) (Lin et al., 2020). Although these methods share deep similarities, the choice of method can significantly affect the scientific interpretation of brain research.

Based on these analyses, this study investigates visual decoding at the single-trial level with the neural manifold of cortical activity found by PCA or t-SNE. EEG signals are recorded from the scalp during rest and several visual tasks. We compare the two types of manifold learning methods for decoding visual information, each combined with four types of decoders: Takagi-Sugeno-Kang (TSK), linear Kalman filter (LKF), long short-term memory recurrent neural network (LSTM-RNN), and naïve Bayes (NB). We also examine the differences in performance among brain regions. The decoding methods are applied to multichannel EEG signals from healthy participants performing a visual cognitive task. Combining t-SNE with fuzzy learning, using features extracted from the frontal, parietal, and occipital lobes, achieves the highest performance, with an accuracy of 83.05%.

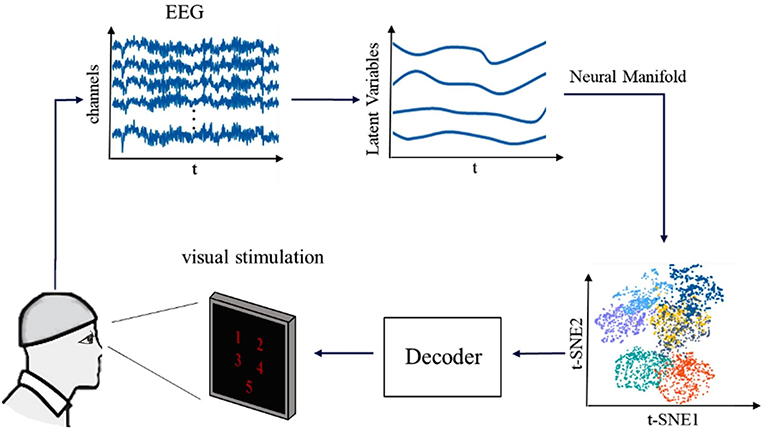

We designed an experiment to study the decoding of visual information from brain activity (Figure 1). Ten volunteers (five women and five men) aged between 22 and 25 years participated in the experiment. All volunteers signed informed consent forms, and all protocols conformed to the guidelines of the Declaration of Helsinki. None of the participants had neurological or psychological disorders or any other condition that might influence EEG activity, and all verbally agreed to cooperate with the experiment. In each experiment, the participant's brain activity was recorded with an EEG amplifier (NIHON KOHDEN) from 64 Ag/AgCl scalp electrodes. The sampling rate of the EEG device was 1,000 Hz, and the passband of the hardware filter was 0.5–70 Hz. Each participant was informed of the experimental procedure before the experiment began. Participants sat in a comfortable chair about 0.5 m in front of a 22-inch display. Each trial began with a blank display for 120 s, during which the participants rested. Then, the numbers 1 to 5 were presented on the screen, each viewed for 30 s and followed by a 60-s rest (blank display). The numbers were presented in random order to prevent participants' expectations from influencing the results. Participants were asked to focus on what they saw during viewing and to try to recognize the number. Throughout the experiment, the participants sat calmly in a darkened room, looked at the screen, and kept as still as possible.

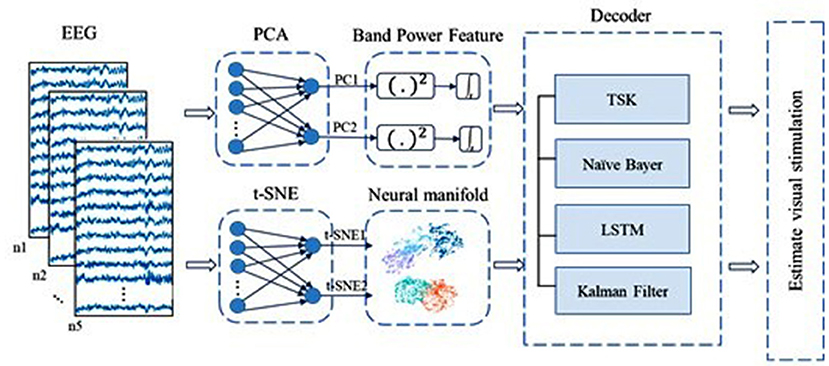

Figure 1. The framework of our research for decoding visual information from brain activity. First, multichannel EEG signals of volunteers viewing five visual stimuli are acquired and preprocessed. Second, the latent factors are extracted, and the neural manifold dynamics are obtained. Finally, various decoders are combined to decode the visual information.

Raw EEG data are contaminated by artifacts from many non-physiological (power line, bad electrode contact, broken electrodes, etc.) and physiological (cardiac pulse, muscle activity, sweating, movement, etc.) sources. These artifacts have to be carefully identified and either removed or excluded from further analysis (Michel and Brunet, 2019). We performed temporal filtering with a Butterworth band-pass filter with a passband of 0.1–50 Hz. Independent component analysis (ICA) was then applied to detect and correct artifacts. Finally, the data were visually inspected, and bad epochs affected by transient artifacts were rejected.
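A minimal sketch of the temporal filtering step follows, assuming SciPy and a 4th-order design (the text specifies only a Butterworth filter with a 0.1–50 Hz band). The filter is applied forward and backward so that EEG waveforms are not shifted in time. The ICA correction and visual epoch rejection are not shown, and the function name `bandpass_eeg` is hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass_eeg(eeg, fs=1000.0, low=0.1, high=50.0, order=4):
    """Zero-phase Butterworth band-pass along the last (time) axis.

    eeg: array of shape (n_channels, n_samples).
    order=4 is an assumed design order (the text gives only the band).
    Second-order sections keep the very low 0.1 Hz edge numerically stable,
    and forward-backward filtering removes the phase shift.
    """
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)


# Synthetic check: a 10 Hz rhythm plus 200 Hz interference, 20 s at 1 kHz.
fs = 1000.0
t = np.arange(20000) / fs
clean = np.sin(2 * np.pi * 10 * t)
raw = clean + 0.5 * np.sin(2 * np.pi * 200 * t)
filtered = bandpass_eeg(raw[np.newaxis, :], fs)[0]
```

Forward-backward filtering squares the magnitude response; a single forward pass (`sosfilt`) would instead introduce a frequency-dependent delay.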

To investigate the changes in brain dynamics across tasks, 30-s EEG segments were selected from the prestimulus, stimulus, and poststimulus states. A previous study showed that EEG changes during visual stimulation may occur between 0 and 30 Hz (Adebimpe et al., 2016). We therefore studied four main EEG subbands: delta (1–4 Hz), theta (4–8 Hz), alpha (8–15 Hz), and beta (15–30 Hz), which are thought to be associated with cognitive activity and are typically used in synchronization analysis (Müller et al., 2008; Adebimpe et al., 2016; Kobak et al., 2016; Shin et al., 2020). A band-pass finite impulse response (FIR) digital filter based on the fast Fourier transform was used to decompose the EEG of each channel into the four subbands. All analyses in this paper were performed in MATLAB 2017b.
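The subband decomposition can be sketched as below, assuming SciPy's `firwin` for a windowed linear-phase FIR design and `fftconvolve` for FFT-based filtering. The filter length, the Hamming window (the `firwin` default), and the helper name `split_subbands` are assumptions rather than the authors' MATLAB settings; the band edges follow the text.

```python
import numpy as np
from scipy.signal import firwin, fftconvolve

# Subband edges in Hz, as listed in the text.
BANDS = {"delta": (1.0, 4.0), "theta": (4.0, 8.0),
         "alpha": (8.0, 15.0), "beta": (15.0, 30.0)}


def split_subbands(x, fs=1000.0, numtaps=3001):
    """Split one EEG channel into the four subbands.

    Each band uses a linear-phase FIR band-pass (Hamming window) applied by
    FFT convolution. With an odd, symmetric kernel, mode="same" removes the
    (numtaps - 1) / 2 sample group delay, so outputs stay time-aligned.
    numtaps=3001 is an assumption, long enough to resolve the 1 Hz delta
    edge at fs = 1000 Hz; the first and last numtaps // 2 samples are
    affected by edge effects.
    """
    out = {}
    for name, (lo, hi) in BANDS.items():
        h = firwin(numtaps, [lo, hi], pass_zero=False, fs=fs)
        out[name] = fftconvolve(x, h, mode="same")
    return out


fs = 1000.0
t = np.arange(10000) / fs
x = np.sin(2 * np.pi * 10 * t)      # synthetic 10 Hz (alpha-band) oscillation
bands = split_subbands(x, fs)
```

In this synthetic example the 10 Hz input passes almost unchanged through the alpha filter and is strongly attenuated in the delta output.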

The autoregressive (AR) Burg approach was applied to estimate the absolute power spectral density (PSD) of each channel (Faust et al., 2008). To compare power across the four frequency bands and the experimental states, the EEG data were segmented into 1-s epochs with 0.5-s overlap, and a power spectrum analysis was performed for each time window. For a given subband, the power at a given time is the average of all PSD values within that band in the current time window. The AR method assumes that the signal is the output of a linear system driven by white noise:

$$x(n) = -\sum_{i=1}^{k} a_i\, x(n-i) + u(n)$$

where $a_i$ are the AR coefficients, k is the order of the AR model, and u(n) is white noise with variance σ². A model of order k is therefore described by the AR parameters $a_1, \ldots, a_k$. The PSD is defined as

$$P(f) = \frac{\sigma^2 T_s}{\left|1 + \sum_{i=1}^{k} a_i\, e^{-j 2\pi f i T_s}\right|^2}$$

where $T_s$ is the sampling interval.
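A minimal NumPy sketch of this procedure: Burg's recursion estimates the AR coefficients and σ² in each 1-s window with 0.5-s overlap, the AR spectrum σ²T_s/|1 + Σ a_i e^(−j2πfiT_s)|² is evaluated on a frequency grid, and the band power is the mean PSD within the band. The model order of 16, the 50-point grid, and all function names are illustrative assumptions, not values reported in the paper. The sign convention is x(n) + a_1 x(n−1) + … + a_k x(n−k) = u(n).

```python
import numpy as np


def arburg(x, order):
    """Burg estimate of AR coefficients a (a[0] = 1) and noise variance sigma2.

    Sign convention: x(n) + a1*x(n-1) + ... + ak*x(n-k) = u(n).
    """
    x = np.asarray(x, dtype=float)
    a = np.array([1.0])
    sigma2 = x @ x / x.size
    ef, eb = x.copy(), x.copy()                 # forward / backward errors
    for _ in range(order):
        efp, ebp = ef[1:], eb[:-1]
        k = -2.0 * (efp @ ebp) / (efp @ efp + ebp @ ebp)   # reflection coeff.
        ef, eb = efp + k * ebp, ebp + k * efp
        a = np.concatenate([a, [0.0]])
        a = a + k * a[::-1]                     # Levinson-Durbin update
        sigma2 *= 1.0 - k * k
    return a, sigma2


def ar_psd(a, sigma2, freqs, fs):
    """AR spectrum sigma2 * Ts / |1 + sum_i a_i exp(-j 2 pi f i Ts)|^2."""
    lags = np.arange(a.size)
    A = np.exp(-2j * np.pi * np.outer(freqs, lags) / fs) @ a
    return sigma2 / (fs * np.abs(A) ** 2)


def band_power_timecourse(x, fs, band, order=16, win_s=1.0, step_s=0.5):
    """Mean AR-Burg PSD inside `band` for each 1-s window (0.5-s overlap)."""
    win, step = int(win_s * fs), int(step_s * fs)
    freqs = np.linspace(band[0], band[1], 50)   # evaluation grid (assumed)
    powers = []
    for start in range(0, x.size - win + 1, step):
        seg = x[start:start + win]
        a, s2 = arburg(seg - seg.mean(), order)
        powers.append(ar_psd(a, s2, freqs, fs).mean())
    return np.array(powers)


# Synthetic 3-s channel dominated by a 10 Hz (alpha) rhythm.
rng = np.random.default_rng(0)
fs = 1000.0
t = np.arange(3000) / fs
x = np.sin(2 * np.pi * 10 * t) + 0.3 * rng.standard_normal(t.size)
alpha = band_power_timecourse(x, fs, (8.0, 15.0))
delta = band_power_timecourse(x, fs, (1.0, 4.0))
```

A 3-s signal yields five overlapping 1-s windows, so each band produces a five-point power time course.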

Principal component analysis is a common linear dimensionality reduction technique (Duffy et al., 1992). In this research, PCA was applied to the EEG of all subjects. To prevent channels with excessive fluctuations from affecting decoding, the EEG data were smoothed with a Gaussian kernel before dimensionality reduction. PCA was performed on single-trial EEG data rather than on data averaged across trials and subjects, to avoid the inaccuracy caused by averaging responses across trials. PCA extracts principal components (PCs) from the smoothed EEG data, ordered by decreasing explained variance. The first two PCs were selected to decode the visual stimuli.
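The single-trial PCA step can be sketched as follows, assuming NumPy/SciPy, a Gaussian smoothing width of 20 samples (not reported in the text), and SVD-based PCA with time points as observations and channels as features. The helper `n_components_for` shows how a statement such as "at least 9 dimensions explain 80% of the variance" is read off the cumulative explained variance; the synthetic data only exercise the code, and both function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter1d


def single_trial_pca(eeg, smooth_sigma=20.0, n_keep=2):
    """Gaussian-smooth ONE trial, then run PCA via SVD.

    eeg: (n_channels, n_samples). smooth_sigma is in samples (assumed value).
    Time points are observations and channels are features. Returns the
    first n_keep PC time courses and the explained-variance ratio of every
    PC, in descending order.
    """
    x = gaussian_filter1d(np.asarray(eeg, dtype=float), sigma=smooth_sigma, axis=1)
    X = x.T - x.T.mean(axis=0)                  # centre each channel
    U, S, _ = np.linalg.svd(X, full_matrices=False)
    ratio = S ** 2 / np.sum(S ** 2)             # singular values are sorted
    scores = U[:, :n_keep] * S[:n_keep]         # latent-factor time courses
    return scores, ratio


def n_components_for(ratio, target=0.8):
    """Smallest number of PCs whose cumulative explained variance >= target."""
    return int(np.searchsorted(np.cumsum(ratio), target) + 1)


# Synthetic trial: 64 channels mixing two slow sources plus noise.
rng = np.random.default_rng(1)
t = np.arange(5000) / 1000.0
sources = np.vstack([np.sin(2 * np.pi * 1.0 * t), np.cos(2 * np.pi * 0.5 * t)])
eeg = rng.standard_normal((64, 2)) @ sources + 0.05 * rng.standard_normal((64, 5000))
scores, ratio = single_trial_pca(eeg)
```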

Unlike PCA, t-SNE is a non-linear dimensionality reduction technique. t-SNE computes probabilistic similarities between points using a normalized Gaussian kernel in the high-dimensional space (Van der Maaten and Hinton, 2008), whereas in the low-dimensional space the similarities are computed with a Student t-distribution. The similarity between sample points in the high-dimensional space is expressed by the asymmetric conditional probability distribution $p_{j|i}$, defined as

$$p_{j|i} = \frac{\exp\left(-\lVert r_i - r_j \rVert^2 / 2\sigma_i^2\right)}{\sum_{k \neq i} \exp\left(-\lVert r_i - r_k \rVert^2 / 2\sigma_i^2\right)}$$

where $\sigma_i$ is the width of the Gaussian centered on $r_i$. Because the density of sample points differs across the dataset, $\sigma_i$ also differs between points: the denser the data around a point, the smaller $\sigma_i$; the sparser the data, the larger $\sigma_i$. The value of $\sigma_i$ is found by binary search so that the perplexity of each conditional distribution matches a user-specified value.
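The high-dimensional side of t-SNE can be sketched as below: a binary search on β_i = 1/(2σ_i²) for each point until the row's perplexity matches a target, followed by the usual symmetrization and the Student-t affinities of the low-dimensional map. The perplexity value and the function names are assumptions, since the text does not report the t-SNE settings. The gradient-descent optimization of the embedding is omitted; in practice a library implementation such as scikit-learn's `sklearn.manifold.TSNE` would normally be used.

```python
import numpy as np


def conditional_p(r, perplexity=30.0, tol=1e-5, max_iter=200):
    """p_{j|i} with a Gaussian kernel whose width sigma_i is set by binary
    search so that each row's perplexity exp(H_i) matches `perplexity`.

    r: (n_points, n_dims). Works with beta_i = 1 / (2 sigma_i^2).
    """
    n = r.shape[0]
    d2 = np.sum((r[:, None, :] - r[None, :, :]) ** 2, axis=-1)
    target = np.log(perplexity)                 # entropy in nats
    P = np.zeros((n, n))
    for i in range(n):
        di = np.delete(d2[i], i)                # exclude j == i
        beta, lo, hi = 1.0, 0.0, np.inf
        for _ in range(max_iter):
            w = np.exp(-(di - di.min()) * beta)   # shift for stability
            p = w / w.sum()
            H = -np.sum(p * np.log(p + 1e-300))
            if abs(H - target) < tol:
                break
            if H > target:                      # too flat: shrink sigma_i
                lo = beta
                beta = beta * 2.0 if np.isinf(hi) else 0.5 * (beta + hi)
            else:                               # too peaked: widen sigma_i
                hi = beta
                beta = 0.5 * (beta + lo)
        P[i, np.arange(n) != i] = p
    return P


def joint_p(P_cond):
    """Symmetrized high-dimensional affinities p_ij used by t-SNE."""
    return (P_cond + P_cond.T) / (2.0 * P_cond.shape[0])


def student_t_q(y):
    """Low-dimensional affinities q_ij from a Student t kernel (1 d.o.f.)."""
    d2 = np.sum((y[:, None, :] - y[None, :, :]) ** 2, axis=-1)
    w = 1.0 / (1.0 + d2)
    np.fill_diagonal(w, 0.0)
    return w / w.sum()
```

Denser neighborhoods reach the target perplexity with a larger β_i, that is, a smaller σ_i, which is the behavior described in the text.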

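Takagi-Sugeno-Kang (TSK) is a fuzzy model (Takagi and Sugeno, 1985). Consider an input dataset $U = \{u_1, u_2, \ldots, u_n\}$, $u_j \in \mathbb{R}^d$, with corresponding class labels $O = \{o_1, o_2, \ldots, o_n\}$, $o_i \in \{0, 1\}^m$, where m is the number of classes. When the ith sample belongs to the jth class, $o_{i,j} = 1$; otherwise, $o_{i,j} = 0$. The kth fuzzy inference rule is usually described as

$$\text{IF } u_1 \text{ is } A_1^k \wedge u_2 \text{ is } A_2^k \wedge \cdots \wedge u_d \text{ is } A_d^k, \ \text{THEN } f^k(u) = p_0^k + p_1^k u_1 + \cdots + p_d^k u_d, \qquad k = 1, \ldots, K$$

where $u = (u_1, \ldots, u_d)^T$ is the input vector of each fuzzy rule, $A_1^k, \ldots, A_d^k$ are the Gaussian antecedent fuzzy sets associated with the input variables $u_i$ of rule k, K is the number of fuzzy rules, ∧ is a fuzzy conjunction operator, the consequent $f^k(u)$ of the kth rule is a linear combination of the input vector, and $p_0^k, \ldots, p_d^k$ are the linear coefficients.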

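For an input vector u, the output of the TSK model is calculated as

$$y(u) = \sum_{k=1}^{K} \tilde{\mu}^k(u)\, f^k(u), \qquad \tilde{\mu}^k(u) = \frac{\mu^k(u)}{\sum_{k'=1}^{K} \mu^{k'}(u)}, \qquad \mu^k(u) = \prod_{i=1}^{d} \mu_{A_i^k}(u_i)$$

where $\mu^k(u)$ is the fuzzy membership (firing strength) of the kth fuzzy rule and $\tilde{\mu}^k(u)$ is its normalized value. $\mu_{A_i^k}(u_i)$ is the Gaussian membership function of fuzzy set $A_i^k$, defined as

$$\mu_{A_i^k}(u_i) = \exp\left(-\frac{(u_i - c_i^k)^2}{2\delta_i^k}\right)$$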

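where $c_i^k$, the ith element of the kth cluster center, can be obtained with the classical fuzzy c-means (FCM) clustering algorithm (Bezdek et al., 1984):

$$c_i^k = \frac{\sum_{j=1}^{n} x_{jk}\, u_{ji}}{\sum_{j=1}^{n} x_{jk}}$$

and the width parameter $\delta_i^k$ can be estimated by

$$\delta_i^k = l \cdot \frac{\sum_{j=1}^{n} x_{jk}\,(u_{ji} - c_i^k)^2}{\sum_{j=1}^{n} x_{jk}}$$

where $x_{jk} \in [0, 1]$ is the fuzzy membership of the jth input vector in the kth cluster (k = 1, 2, …, K), and the constant l is a scale parameter.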

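Given $u_n$ as the input sample, we obtain

$$x_g = (1, u_n^T)^T, \qquad \tilde{x}^k = \tilde{\mu}^k(u_n)\, x_g, \qquad x_p = \left((\tilde{x}^1)^T, (\tilde{x}^2)^T, \ldots, (\tilde{x}^K)^T\right)^T$$

The output $\tilde{o}_n$ of the TSK fuzzy decoder for the input vector $u_n$ can then be written as

$$\tilde{o}_n = \sum_{k=1}^{K} \tilde{\mu}^k(u_n)\, f^k(u_n) = p_g^T x_p, \qquad p_g = \left((p^1)^T, \ldots, (p^K)^T\right)^T, \quad p^k = (p_0^k, p_1^k, \ldots, p_d^k)^T$$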
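To make the TSK pipeline concrete, here is a minimal first-order TSK classifier in NumPy. It assumes product conjunction of Gaussian memberships, antecedents estimated from fuzzy c-means memberships as described above (centers as membership-weighted means, widths scaled by l), and consequents fitted by ridge regression against one-hot labels. The text does not say how the consequent parameters were trained, so the ridge step, the fuzzifier m = 2, and all names (`fcm`, `TSKDecoder`) are assumptions; this is not the authors' implementation.

```python
import numpy as np


def fcm(U, K, m=2.0, n_iter=100, seed=0):
    """Plain fuzzy c-means; returns memberships x_jk of shape (n, K)."""
    rng = np.random.default_rng(seed)
    X = rng.random((U.shape[0], K))
    X /= X.sum(axis=1, keepdims=True)
    for _ in range(n_iter):
        W = X ** m
        C = (W.T @ U) / W.sum(axis=0)[:, None]                  # (K, d) centres
        D = np.linalg.norm(U[:, None, :] - C[None], axis=2) + 1e-12
        X = 1.0 / np.sum((D[:, :, None] / D[:, None, :]) ** (2.0 / (m - 1.0)), axis=2)
    return X


class TSKDecoder:
    """First-order TSK classifier (hypothetical helper, not the authors' code).

    Antecedents: FCM memberships -> centres c_i^k and widths delta_i^k.
    Consequents: ridge regression of x_p onto one-hot labels (assumed).
    """

    def __init__(self, n_rules=5, scale=1.0, ridge=1e-3):
        self.K, self.l, self.lam = n_rules, scale, ridge

    def _norm_firing(self, U):
        # log of prod_i exp(-(u_i - c_i^k)^2 / (2 delta_i^k)), shape (n, K)
        z = -np.sum((U[:, None, :] - self.c[None]) ** 2 / (2.0 * self.delta[None]), axis=2)
        mu = np.exp(z - z.max(axis=1, keepdims=True))           # stable exp
        return mu / mu.sum(axis=1, keepdims=True)               # normalized mu~^k

    def _x_p(self, U):
        x_g = np.hstack([np.ones((U.shape[0], 1)), U])          # (1, u^T)^T
        mu = self._norm_firing(U)
        return (mu[:, :, None] * x_g[:, None, :]).reshape(U.shape[0], -1)

    def fit(self, U, labels, n_classes):
        X = fcm(U, self.K)
        w = X.sum(axis=0)[:, None]                               # (K, 1)
        self.c = (X.T @ U) / w                                   # centres
        sq = (U[:, None, :] - self.c[None]) ** 2                 # (n, K, d)
        self.delta = self.l * np.einsum("nk,nkd->kd", X, sq) / w + 1e-12
        O = np.eye(n_classes)[labels]                            # one-hot o_i
        Xp = self._x_p(U)
        A = Xp.T @ Xp + self.lam * np.eye(Xp.shape[1])
        self.p_g = np.linalg.solve(A, Xp.T @ O)                  # one column per class
        return self

    def predict(self, U):
        # Decision rule: the class with the largest output is set to 1.
        return np.argmax(self._x_p(U) @ self.p_g, axis=1)


# Synthetic two-class data in a 2-D latent space (e.g. two t-SNE factors).
rng = np.random.default_rng(0)
U = np.vstack([rng.normal(-2.0, 0.5, (100, 2)), rng.normal(2.0, 0.5, (100, 2))])
labels = np.repeat([0, 1], 100)
model = TSKDecoder(n_rules=3).fit(U, labels, n_classes=2)
acc = np.mean(model.predict(U) == labels)
```

The `argmax` in `predict` mirrors the decision rule used later for Figure 8B, where the largest element of the output is set to 1 and the others to 0.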

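The NB decoder is a Bayesian decoder that estimates the probabilities of the possible outcomes and predicts the most probable one. For an input dataset with m classes $\omega_1, \ldots, \omega_m$ and a sample with features $C = \{c_1, c_2, \ldots, c_n\}$, Bayes' theorem gives

$$P(\omega_j \mid C) = \frac{P(C \mid \omega_j)\, P(\omega_j)}{P(C)}$$

Because P(C) is the same for all classes, and the naïve Bayes assumption treats the features as conditionally independent given the class, the probability that C belongs to each class can be estimated by

$$P(\omega_j \mid C) \propto P(\omega_j) \prod_{i=1}^{n} P(c_i \mid \omega_j)$$

The class with the highest probability is taken as the class of C.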
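A minimal Gaussian naïve Bayes sketch in NumPy follows. The Gaussian class-conditional likelihood for each feature is an assumption (the text does not specify the likelihood model), and the class name `GaussianNaiveBayes` is hypothetical. Working in log space turns the product of likelihoods into a sum and avoids numerical underflow.

```python
import numpy as np


class GaussianNaiveBayes:
    """Naive Bayes with Gaussian class-conditional likelihoods per feature.

    The Gaussian form of P(c_i | w_j) is an assumption; the text does not
    state which likelihood model was used.
    """

    def fit(self, X, y):
        self.classes = np.unique(y)
        self.log_prior = np.log([np.mean(y == c) for c in self.classes])
        self.mean = np.array([X[y == c].mean(axis=0) for c in self.classes])
        self.var = np.array([X[y == c].var(axis=0) for c in self.classes]) + 1e-9
        return self

    def log_posterior(self, X):
        # log P(w_j) + sum_i log P(c_i | w_j); log P(C) is omitted because it
        # is the same for every class and does not change the arg max.
        diff2 = (X[:, None, :] - self.mean[None]) ** 2
        loglik = -0.5 * (np.log(2.0 * np.pi * self.var)[None] + diff2 / self.var[None])
        return self.log_prior[None] + loglik.sum(axis=2)

    def predict(self, X):
        return self.classes[np.argmax(self.log_posterior(X), axis=1)]


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2.0, 0.5, (100, 2)), rng.normal(2.0, 0.5, (100, 2))])
y = np.repeat([0, 1], 100)
nb = GaussianNaiveBayes().fit(X, y)
acc = np.mean(nb.predict(X) == y)
```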

An LSTM-RNN, built on the RNN architecture, is well suited to decoding brain activity (Tsiouris et al., 2018). The LSTM-RNN consists of a memory cell c, a forget gate f, an input gate i, and an output gate o. These gates regulate the flow of information through the cell, and each gate can be expressed as

$$f_t = \sigma_{\text{sigmoid}}\left(W_f [h_{t-1}, u_t] + b_f\right)$$
$$i_t = \sigma_{\text{sigmoid}}\left(W_i [h_{t-1}, u_t] + b_i\right)$$
$$o_t = \sigma_{\text{sigmoid}}\left(W_o [h_{t-1}, u_t] + b_o\right)$$

where b is the vector of biases, h denotes the hidden-layer vector, and W denotes the weight matrices applied to the concatenation of the previous hidden state and the current input. The input vector $u_t$ contains the latent factors at time t, and $\sigma_{\text{sigmoid}}(\cdot)$ is the sigmoid activation function. The subscripts of W and b indicate the corresponding gate: forget gate f, input gate i, or output gate o. The cell memory and hidden state are updated by

$$c_t = f_t \otimes c_{t-1} + i_t \otimes \sigma_{\tanh}\left(W_c [h_{t-1}, u_t] + b_c\right)$$
$$h_t = o_t \otimes \sigma_{\tanh}(c_t)$$

where σtanh and ⊗ represent the hyperbolic tangent function and the element-wise product, respectively.
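The gate and cell updates can be sketched in NumPy for a single time step, assuming the common formulation in which each gate acts on the concatenation [h_(t−1), u_t]. The weights below are random placeholders; training by backpropagation through time and the classification layer on top of the final hidden state are not shown, and the function names are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(u_t, h_prev, c_prev, W, b):
    """One LSTM step. W[g]: (n_hidden, n_hidden + n_input), b[g]: (n_hidden,)
    for g in {"f", "i", "o", "c"}; each acts on the concatenation [h_{t-1}, u_t]."""
    z = np.concatenate([h_prev, u_t])
    f = sigmoid(W["f"] @ z + b["f"])          # forget gate
    i = sigmoid(W["i"] @ z + b["i"])          # input gate
    o = sigmoid(W["o"] @ z + b["o"])          # output gate
    c = f * c_prev + i * np.tanh(W["c"] @ z + b["c"])   # cell update (element-wise)
    h = o * np.tanh(c)                        # new hidden state
    return h, c


def run_lstm(U, W, b, n_hidden):
    """Feed a sequence of latent-factor vectors U (T, n_input); return final h."""
    h, c = np.zeros(n_hidden), np.zeros(n_hidden)
    for u_t in U:
        h, c = lstm_step(u_t, h, c, W, b)
    return h


# Random placeholder weights; a trained network would learn these.
rng = np.random.default_rng(0)
n_in, n_hid = 2, 8
W = {g: 0.5 * rng.standard_normal((n_hid, n_hid + n_in)) for g in "fioc"}
b = {g: np.zeros(n_hid) for g in "fioc"}
h_T = run_lstm(rng.standard_normal((100, n_in)), W, b, n_hid)
```

Because the output gate lies in (0, 1) and tanh in (−1, 1), every hidden unit stays strictly inside (−1, 1).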

The linear Kalman filter is a commonly used generative decoding method based on a linear dynamical system, in which the hidden state $y_t$ is a linear function of the previous hidden state $y_{t-1}$ (Wu et al., 2003). The system model can be described as

$$y_t = A\, y_{t-1} + q_t, \qquad q_t \sim \mathcal{N}(0, Q)$$
$$l_t = H\, y_t + v_t, \qquad v_t \sim \mathcal{N}(0, V)$$

where A is the system model parameter matrix, H is the observation model parameter matrix, and $q_t$ and $v_t$ are the process and observation noise, which follow zero-mean Gaussian distributions with covariances Q and V, respectively. The observation vector $l_t$ is the vector of latent factors. Because the Kalman gain converges to its steady state early, we initialized $y_0$ to the average of the observation vector at the beginning of every trial to predict $y_t$.
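A minimal NumPy sketch of Kalman decoding follows. It assumes that A, H, Q, and V are fitted by least squares on training data (a common choice for Kalman decoders, assumed here rather than stated in the text) and that decoding uses the standard predict/update recursion. The synthetic one-dimensional system and the helper names are hypothetical; y0 is passed in explicitly, so the trial-wise initialization described above would be supplied by the caller.

```python
import numpy as np


def fit_linear_models(Y, L):
    """Least-squares estimates of A, H, Q, V from training data.

    Y: (T, p) hidden states, L: (T, q) observations (latent factors).
    """
    Y1, Y2 = Y[:-1], Y[1:]
    A = np.linalg.lstsq(Y1, Y2, rcond=None)[0].T
    Rq = Y2 - Y1 @ A.T
    Q = Rq.T @ Rq / len(Rq)
    H = np.linalg.lstsq(Y, L, rcond=None)[0].T
    Rv = L - Y @ H.T
    V = Rv.T @ Rv / len(Rv)
    return A, H, Q, V


def kalman_decode(L, A, H, Q, V, y0, P0):
    """Standard predict/update recursion; returns filtered states (T, p)."""
    y, P = y0.astype(float), P0.astype(float)
    I = np.eye(y.size)
    out = []
    for l_t in L:
        y = A @ y                               # predict state
        P = A @ P @ A.T + Q
        S = H @ P @ H.T + V                     # innovation covariance
        K = np.linalg.solve(S, H @ P).T         # gain K = P H^T S^-1
        y = y + K @ (l_t - H @ y)               # update with observation
        P = (I - K @ H) @ P
        out.append(y.copy())
    return np.array(out)


# Synthetic system: 1-D hidden state, 2-D noisy observations.
rng = np.random.default_rng(0)
T = 2000
y_true = np.zeros((T, 1))
for t in range(1, T):
    y_true[t] = 0.95 * y_true[t - 1] + rng.normal(0.0, np.sqrt(0.1))
L = y_true @ np.array([[1.0, 0.5]]) + rng.normal(0.0, 1.0, (T, 2))
A, H, Q, V = fit_linear_models(y_true[:1000], L[:1000])
y_hat = kalman_decode(L[1000:], A, H, Q, V, y0=np.zeros(1), P0=np.eye(1))
naive = L[1000:] @ np.linalg.pinv(H).T          # per-step inversion, no dynamics
mse_kf = np.mean((y_hat - y_true[1000:]) ** 2)
mse_naive = np.mean((naive - y_true[1000:]) ** 2)
```

Comparing against a per-step pseudo-inverse of H shows what the dynamics model adds: the filter pools evidence over time instead of inverting each observation independently.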

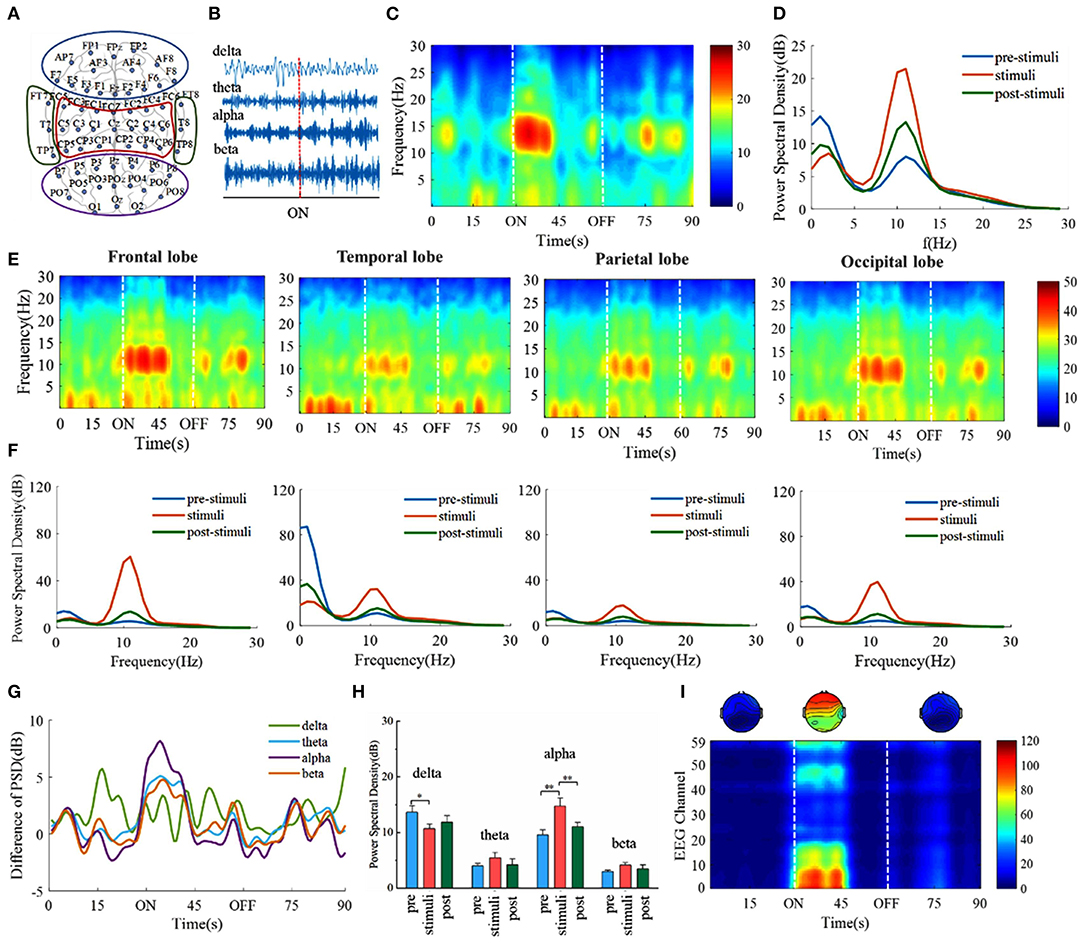

We first investigate whether specific frequency bands dominate the digit cognition process. EEG is recorded from awake volunteers to explore the changes evoked by digit cognition (Figure 2A). The EEG signals are divided into four major frequency bands involved in sensory consciousness, information coding, cognitive memory, and selective attention: delta (0.5–4 Hz), theta (4–8 Hz), alpha (8–15 Hz), and beta (15–30 Hz) (Figure 2B). In the darkened room, the 30-s visual stimuli induce alpha oscillations, but these oscillations persist for only ~10 s of the digit stimulus period (Figures 2C,G). The frequency spectra show that alpha band power increases during the stimulus period compared with the prestimulus and poststimulus periods (Figure 2D). The spectral power of the four frequency bands is further compared in Figures 2G,H: alpha band power increases during the stimulus period (p < 0.01), whereas delta band power decreases (p < 0.05) (one-way ANOVA followed by Bonferroni's post-hoc test).
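The statistical comparison can be sketched with SciPy: a one-way ANOVA across the three periods, followed by pairwise comparisons whose p-values are multiplied by the number of pairs (Bonferroni). Using unpaired t-tests as the post-hoc test is an assumption, the function name is hypothetical, and the data are synthetic placeholders rather than the study's measurements.

```python
import numpy as np
from itertools import combinations
from scipy import stats


def anova_bonferroni(groups):
    """One-way ANOVA across conditions, then Bonferroni-corrected pairwise
    t-tests. groups: dict name -> 1-D array (e.g. per-subject band power)."""
    p_anova = stats.f_oneway(*groups.values()).pvalue
    pairs = list(combinations(groups, 2))
    posthoc = {}
    for a, b in pairs:
        p_raw = stats.ttest_ind(groups[a], groups[b]).pvalue
        posthoc[(a, b)] = min(1.0, p_raw * len(pairs))   # Bonferroni
    return p_anova, posthoc


# Synthetic per-subject alpha power (10 subjects) for the three periods.
rng = np.random.default_rng(0)
power = {"pre": rng.normal(1.0, 0.2, 10),
         "stim": rng.normal(2.0, 0.2, 10),
         "post": rng.normal(1.0, 0.2, 10)}
p_anova, posthoc = anova_bonferroni(power)
```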

Figure 2. Using the number 1 as an example visual stimulus: frontal-lobe alpha power is saliently correlated with visual cognition. (A) Schematic of the EEG electrode locations over the cerebral cortex. The frontal lobe is outlined in blue, and the temporal, parietal, and occipital lobes are outlined in red, green, and purple, respectively. (B) Example EEG divided into four frequency subbands: delta (0.5–4 Hz), theta (4–8 Hz), alpha (8–15 Hz), and beta (15–30 Hz). The red dotted line indicates the onset of the visual stimulus. (C) EEG time-frequency spectrogram of an 80-s raw EEG time series; the onset (30 s) and end (60 s) of the visual stimulus are marked with white lines. (D) Power spectral density estimates for the three states. (E) EEG time-frequency spectrograms of the four lobes. (F) Power spectral density of the four lobes. (G) Change in power, averaged across subjects, in the four frequency bands relative to the initial average power. (H) EEG power in the four frequency bands. *p < 0.05, **p < 0.01, one-way ANOVA followed by Bonferroni's post-hoc test. Error bars indicate the standard error across subjects. (I) Variation in alpha power for each EEG channel.

A previous study has shown that specific brain areas may participate in specific cognitive functions (Gatti et al., 2020). The spectral power in the three periods is calculated for the four brain lobes (Figures 2E,F). During the stimulus period, alpha band power increases to different degrees in different lobes, especially in the frontal and occipital lobes. Further analysis of the alpha power variation of each EEG channel shows that channels with relatively high power values are mainly concentrated in the frontal and occipital lobes (Figure 2I). These results demonstrate that alpha is the dominant frequency band and that the frontal and occipital lobes might be closely related to digit cognition. The subsequent dimensionality reduction and decoding analyses therefore focus on the alpha band and the first 10 s of EEG during the visual tasks.

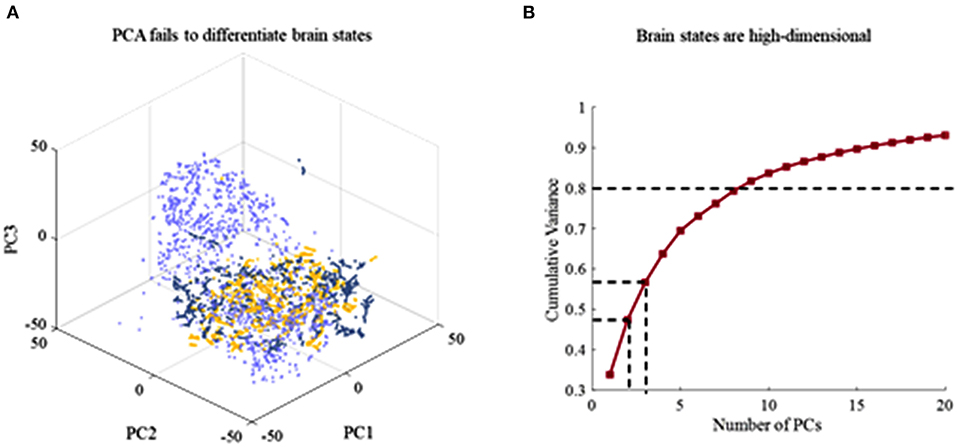

To identify the dynamics of brain states in different cognitive processes, two dimensionality reduction techniques, PCA and t-SNE, are used to estimate latent factors that reflect the subject's brain state. Linear dimensionality reduction with PCA is performed first. PCA does not effectively separate the brain dynamics of the different cognitive task epochs (Figure 3A): the three states cannot be distinguished from the chosen viewing angle. Figure 3B shows the cumulative explained variance of the brain states as a function of the number of PCs. The brain states are high-dimensional, since at least 9 dimensions are required to explain 80% of the total variance. PCA therefore struggles to capture the key differences between task epochs and fails to provide an effective low-dimensional representation. To overcome this issue, a non-linear dimensionality reduction technique, t-distributed stochastic neighbor embedding (t-SNE), is applied to the time series of brain activity.

Figure 3. The result of dimensionality reduction using PCA. (A) Ineffective separation of brain dynamics at different task epochs by PCA. (B) Cumulative explained variance of brain states as a function of the number of PCs included. Explaining 80% of the total variance requires 9 dimensions.

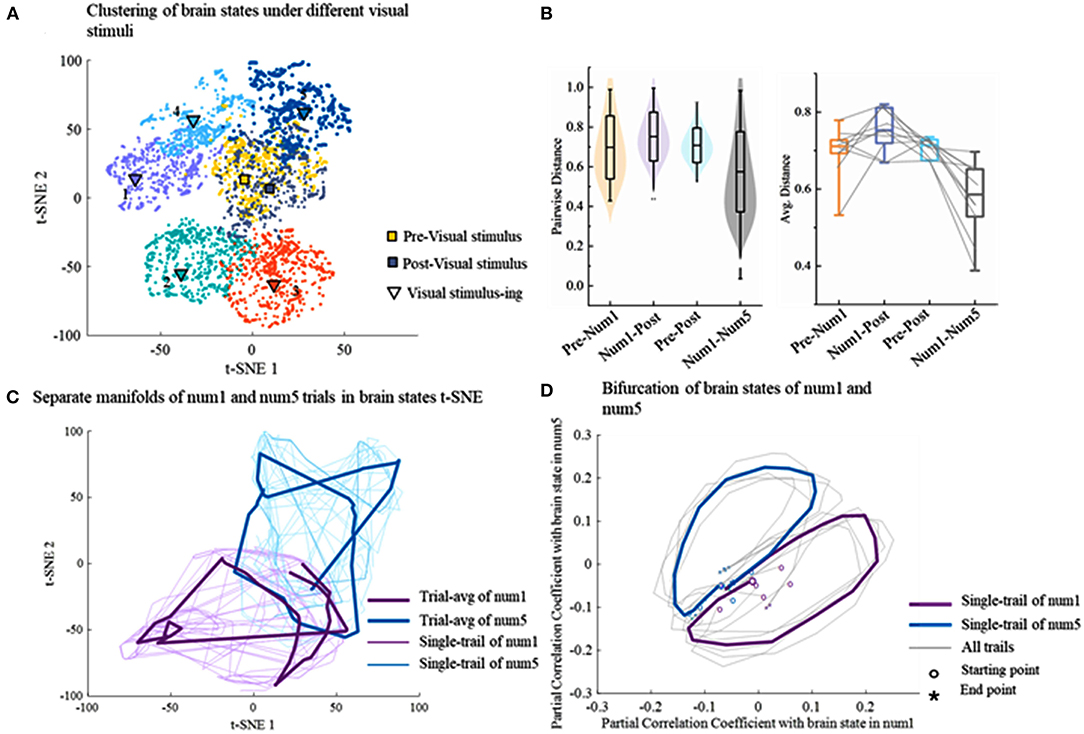

The non-linear dimensionality reduction technique t-SNE is applied to single trials, and the distributions of the latent factors are shown in Figure 4A. Because t-SNE is a manifold learning method, the latent factors after dimensionality reduction fall into different manifolds. Except for a very small number of latent factors, the latent factors of the same task gather in one region, and the t-SNE clusters coincide well with the distinct tasks. There are clear classification boundaries among the different tasks, which provides good conditions for the subsequent decoding. More importantly, the clusters of the rest states, including the prestimulus and poststimulus states, are located in the same area. Latent factors from 10 experiments are further analyzed in the same way. Similarity is measured via the pairwise Euclidean distances between brain states in the t-SNE space. The similarity between the clusters of two different tasks (Pre–Num1, Num1–Post, Num1–Num5) is lower than that between the same task (Pre–Post) (Figure 4B). Different visual stimuli drive different transitions among brain states, resulting in a lower similarity between the brain states of two different visual stimuli (Num1–Num5).

Figure 4. Results of dimensionality reduction using t-SNE. (A) Applying t-SNE to the EEG data of a single trial reveals seven distinct clusters corresponding to the different tasks. (B) Similarity of latent factor clusters within and across tasks, calculated from the pairwise Euclidean distances between brain states in the t-SNE space. Gray lines represent single trials. (C) The temporal evolution of brain states during the different tasks lies on separate cyclic manifolds. Thin lines represent single trials, and thick lines represent the trial average. (D) Bifurcation of brain states for different visual stimuli.

To investigate the transitions between brain states, temporally consecutive brain states are connected in the t-SNE map at the trial-by-trial level (Figure 4C). Over time, the brain states evolve along cyclic manifolds that are distinct for different tasks, whereas the evolution paths of brain states are highly similar within the same task. To further analyze the differences among the temporal evolutions of brain states during different cognitive tasks, the partial correlation coefficient is calculated between each brain state in a trial and a reference brain state obtained by averaging the brain states of each of the five tasks. The brain states are then projected into the 2D space spanned by the reference brain states for the number 1 and the number 5. Strikingly, a bifurcation toward the different cognitive tasks is clearly observed at the onset of visual stimulation at the single-trial level (Figure 4D), which further suggests that different visual cognitive tasks drive different transitions among brain states.

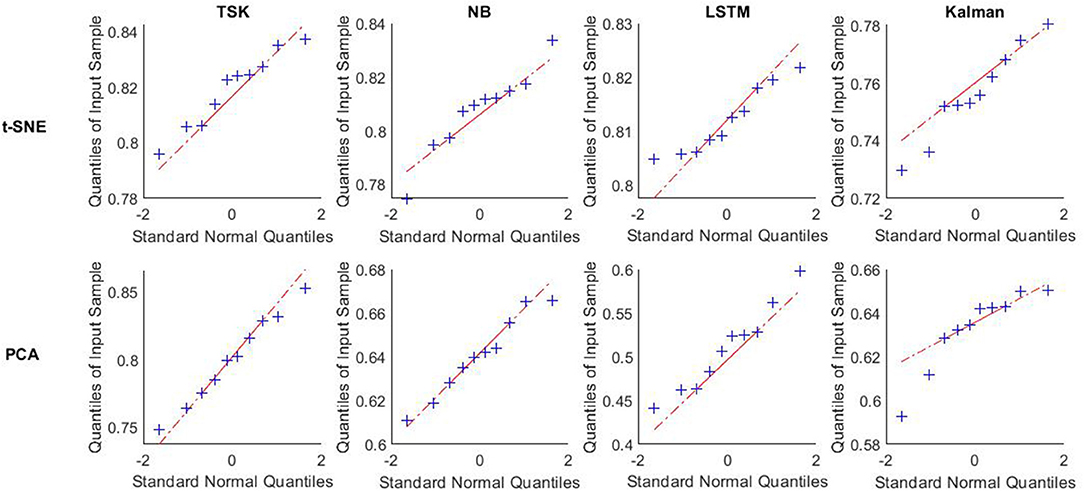

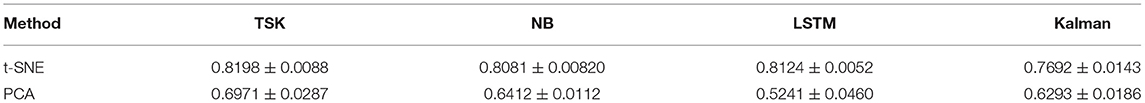

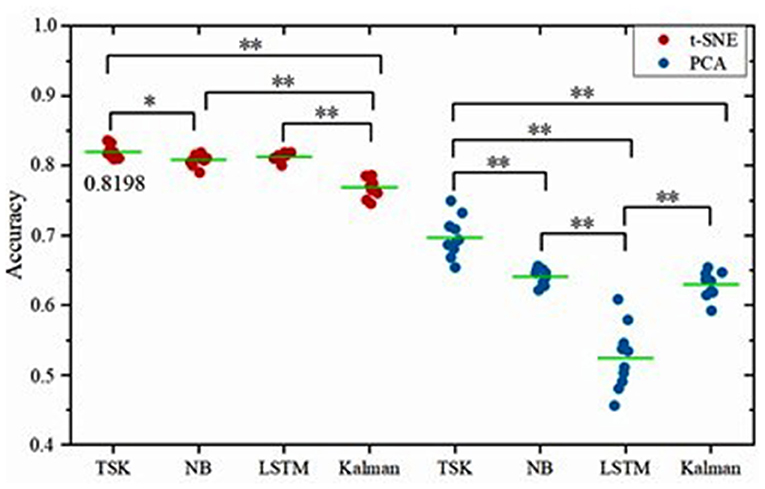

Given our analyses of the brain states and latent factors under visual stimulation, we conclude that decoding brain activity with the non-linear dimensionality reduction technique is reliable. After dimensionality reduction, four machine learning decoders are employed to decode brain activity: the linear Kalman filter (KF), the non-linear long short-term memory recurrent neural network (LSTM), the fuzzy learning Takagi-Sugeno-Kang fuzzy system (TSK), and the naïve Bayes decoder (NB). Decoding performance is evaluated for each combination of the two dimensionality reduction methods and the four decoders (Figure 5). For the PCA method, the frequency band energy of the first two principal components is extracted, and the values x1, x2 are used as the input vector x of the decoders. For the t-SNE method, the two t-SNE dimensions x1, x2 are used directly as the neural manifold and form the input vector x of the decoders. Decoding is evaluated with 10-fold cross-validation: in each classification run, nine-tenths of the samples form the training set and the remaining tenth forms the test set. The classification process is repeated ten times, and the average over the ten runs is taken as the final result. The TSK decoder achieves an encouraging accuracy of 0.8198 with t-SNE features and 0.6971 with PCA features, so the features extracted by the non-linear manifold learning method decode better than those extracted by PCA. The performance of TSK is compared with three common EEG decoding methods (NB, LSTM, LKF). The accuracy data are approximately normally distributed, as the points of the Q-Q plot lie close to a straight line (Figure 6). The classification results are shown in Table 1 and Figure 7; Table 1 lists the classification accuracy of the different decoders with the two whole-brain features extracted by PCA and t-SNE.
TSK also achieves high performance in decoding EEG. Figure 7 compares the decoding accuracy for visual stimuli across the different latent factors and decoders. Friedman's test with Bonferroni correction is used to analyze the differences in decoding accuracy among the four decoders. Friedman's test reveals a main effect of decoder on decoding accuracy both with t-SNE (χ2 = 20.97, p < 0.01) and with PCA (χ2 = 25.51, p < 0.01). With t-SNE, the linear decoder KF shows lower accuracy than the other decoders (p < 0.01), and TSK shows higher accuracy than NB (p < 0.05), whereas there is no difference between TSK and NB or LSTM. With PCA, TSK shows higher accuracy than the other decoders (p < 0.01), and NB and KF also show higher accuracy than LSTM (p < 0.01). These results indicate that TSK decodes well with both linear and non-linear dimensionality reduction techniques. Moreover, for all decoders, t-SNE yields better decoding than PCA.
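A sketch of this analysis with SciPy: `friedmanchisquare` tests for a main effect of decoder across repetitions, and pairwise Wilcoxon signed-rank tests with Bonferroni correction serve as the post-hoc step. The text does not name the post-hoc test, so the Wilcoxon choice is an assumption, the function name is hypothetical, and the accuracies below are synthetic placeholders for four unnamed decoders.

```python
import numpy as np
from itertools import combinations
from scipy import stats


def friedman_with_posthoc(acc):
    """Friedman test for a main effect of decoder, then pairwise Wilcoxon
    signed-rank tests with Bonferroni correction.

    acc: dict decoder -> accuracies over the same repetitions (the blocks).
    """
    res = stats.friedmanchisquare(*acc.values())
    pairs = list(combinations(acc, 2))
    posthoc = {}
    for a, b in pairs:
        p_raw = stats.wilcoxon(acc[a], acc[b]).pvalue
        posthoc[(a, b)] = min(1.0, p_raw * len(pairs))
    return res.statistic, res.pvalue, posthoc


# Synthetic accuracies for four placeholder decoders over 10 repetitions.
rng = np.random.default_rng(0)
acc = {f"decoder_{j}": 0.80 - 0.05 * j + rng.normal(0.0, 0.01, 10) for j in range(4)}
chi2, p, posthoc = friedman_with_posthoc(acc)
```

The Friedman test ranks the decoders within each repetition, so it does not rely on the normality that the Q-Q plots examine.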

Figure 5. Overview of decoding based on two dimensionality reduction techniques, PCA and t-SNE. EEG signals are reduced to two dimensions by PCA and t-SNE, respectively. For the two latent variables captured by PCA, their short-time band power features are used as the code, because the raw latent variables perform poorly. The latent variables from t-SNE are used directly as the code. The decoders are TSK, NB, LSTM, and LKF.

Figure 6. Q-Q plots of the accuracy of the different decoders after t-SNE and PCA against the standard normal distribution. The accuracies of each decoder across the ten repetitions are tested for normality with these Q-Q plots. The decoders are TSK, NB, LSTM, and Kalman filter.

Table 1. Decoding accuracy of different decoders with two whole-brain features extracted by PCA and t-SNE.

Figure 7. Comparison of the decoding accuracy for visual stimuli between the two types of latent factors for each decoder. Green lines indicate the mean accuracy, and asterisks denote the significance of differences (*p < 0.05, **p < 0.01). The left column corresponds to t-SNE and the right column to PCA.

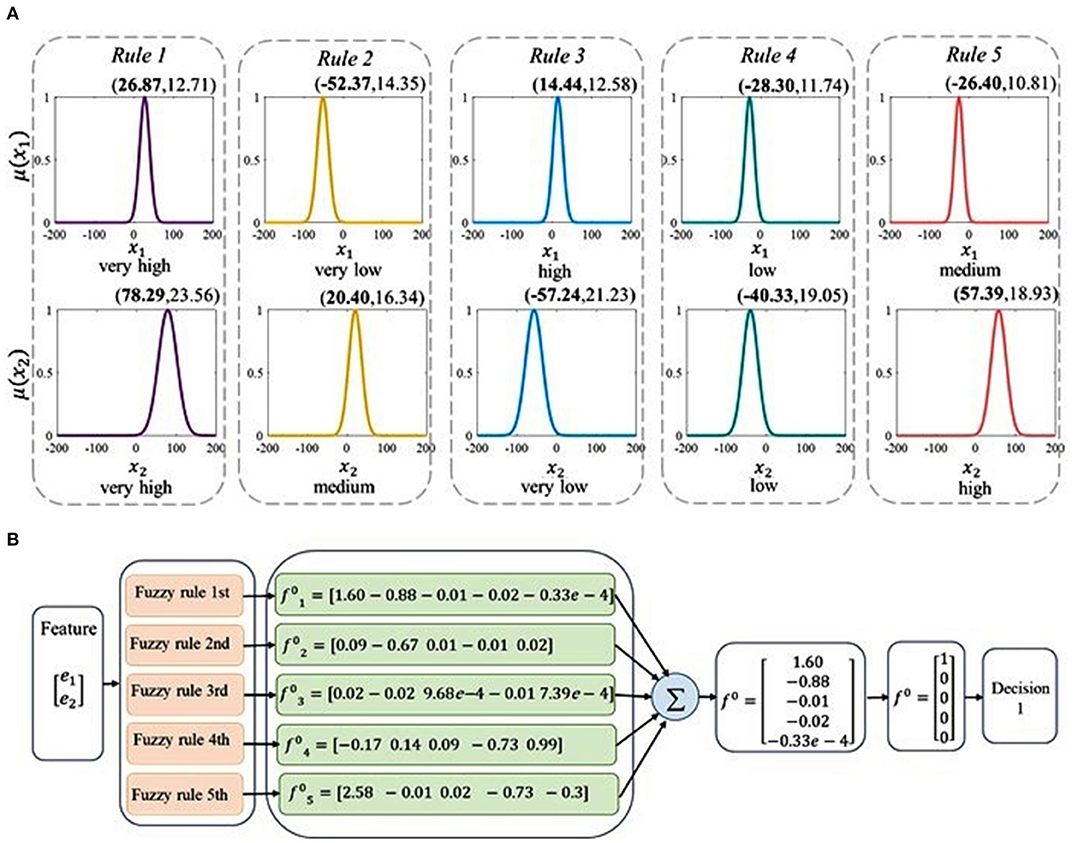

Brain activity is first decoded with the TSK decoder; an example of TSK with five rules is illustrated in Figure 8. Figure 8A shows the membership functions of each fuzzy set obtained from the five fuzzy rules. Each membership function corresponds to a fuzzy linguistic description: very high, high, medium, low, or very low. For further explanation, the antecedent parameters, namely the centers and standard deviations, are calculated and presented in Figure 8A. Combining the five linguistic descriptions for the two inputs gives up to twenty-five (5 × 5) rules; five of them are taken as an example. Based on the linguistic expressions and the corresponding linear functions, the fuzzy rules can be written as follows:

Rule 1: IF x1 is very high AND x2 is very high,

Rule 2: IF x1 is very low AND x2 is medium,

Rule 3: IF x1 is high AND x2 is very low,

Rule 4: IF x1 is low AND x2 is low,

Rule 5: IF x1 is medium AND x2 is high,
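The antecedent side of these rules can be sketched as follows. The sketch assumes Gaussian membership functions (suggested by the center and standard-deviation parameters) and the product t-norm for AND; the centers and widths below are hypothetical, since the fitted values appear only in Figure 8A.

```python
import itertools

import numpy as np

LABELS = ("very low", "low", "medium", "high", "very high")

# Two inputs with five labels each give 5 x 5 = 25 possible antecedents.
ALL_ANTECEDENTS = list(itertools.product(LABELS, repeat=2))

# The five example rules above, as (label of x1, label of x2).
RULES = [("very high", "very high"), ("very low", "medium"), ("high", "very low"),
         ("low", "low"), ("medium", "high")]


def gaussian_mf(x, center, sigma):
    """Gaussian membership degree of x in the fuzzy set (center, sigma)."""
    return np.exp(-((x - center) ** 2) / (2.0 * sigma ** 2))


def firing_strengths(x, rules, params):
    """Product t-norm for AND: w_r = mu_r1(x1) * mu_r2(x2).

    params[i][label] -> (center, sigma) for input i.
    """
    return np.array([
        np.prod([gaussian_mf(x[i], *params[i][label]) for i, label in enumerate(rule)])
        for rule in rules
    ])


# Hypothetical antecedent parameters: evenly spaced centers, shared width.
centers = dict(zip(LABELS, np.linspace(-1.0, 1.0, 5)))
params = [{lab: (c, 0.25) for lab, c in centers.items()} for _ in range(2)]
w = firing_strengths(np.array([0.95, 1.0]), RULES, params)
print(np.round(w, 3))
```

For the input (0.95, 1.0), both latent factors sit near the "very high" center, so Rule 1 fires far more strongly than the other four.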

Based on the five fuzzy rules above, the decoding mechanism is depicted in Figure 8B. The low-dimensional features of the different tasks are taken as the inputs of the decoder, and the label vector is predicted from the decision output of the TSK system. The sum of the decision outputs of the five rules is denoted by f0, and the final decision is obtained by setting the maximal element of f0 to 1 and all others to 0. In this example, the decision output is class 1.
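The consequent side and the final decision can be sketched as a first-order TSK forward pass: firing strengths are normalized, each rule's linear function produces one score per class, the weighted scores are summed into f0, and the maximal element becomes 1. The number of classes (five, following the five clusters mentioned in the discussion) and the toy consequent coefficients are assumptions, not the trained decoder.

```python
import numpy as np


def tsk_decide(x, firing, consequents):
    """First-order TSK decision output followed by a one-hot label vector.

    x:           (d,) input, e.g. the two latent factors
    firing:      (R,) rule firing strengths
    consequents: (R, C, d + 1) linear consequent coefficients; for rule r and
                 class c the output is consequents[r, c] @ [1, x1, ..., xd]
    """
    w_bar = firing / firing.sum()                      # normalized firing strengths
    x_ext = np.concatenate(([1.0], x))                 # prepend the bias term
    rule_outputs = consequents @ x_ext                 # (R, C)
    f0 = (w_bar[:, None] * rule_outputs).sum(axis=0)   # weighted sum over rules
    label = np.zeros_like(f0)
    label[np.argmax(f0)] = 1.0                         # maximal element -> 1, others -> 0
    return f0, label


# Toy example: rule r adds its normalized weight to class r via the bias term.
R = C = 5
consequents = np.zeros((R, C, 3))
consequents[np.arange(R), np.arange(R), 0] = 1.0
firing = np.array([0.90, 0.05, 0.03, 0.01, 0.01])
f0, label = tsk_decide(np.array([0.95, 1.0]), firing, consequents)
print(label)  # -> [1. 0. 0. 0. 0.]
```

Index 0 of the label vector corresponds to class 1 in the 1-based numbering used above. The soft scores in `f0` are kept alongside the hard label, which is what lets TSK report graded outputs between 0 and 1.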

Figure 8. The decoding principle of the TSK method. (A) The antecedent linguistic descriptions and membership functions of the five rules in the TSK decoder. In each subgraph, the horizontal and vertical coordinates denote the independent input and the membership value, respectively. The antecedent parameters (center, standard deviation) are presented above the corresponding membership function curve, and the fuzzy linguistic descriptions are shown below the membership functions. (B) The identification of visual information via the five fuzzy rules and the fuzzy decoder.

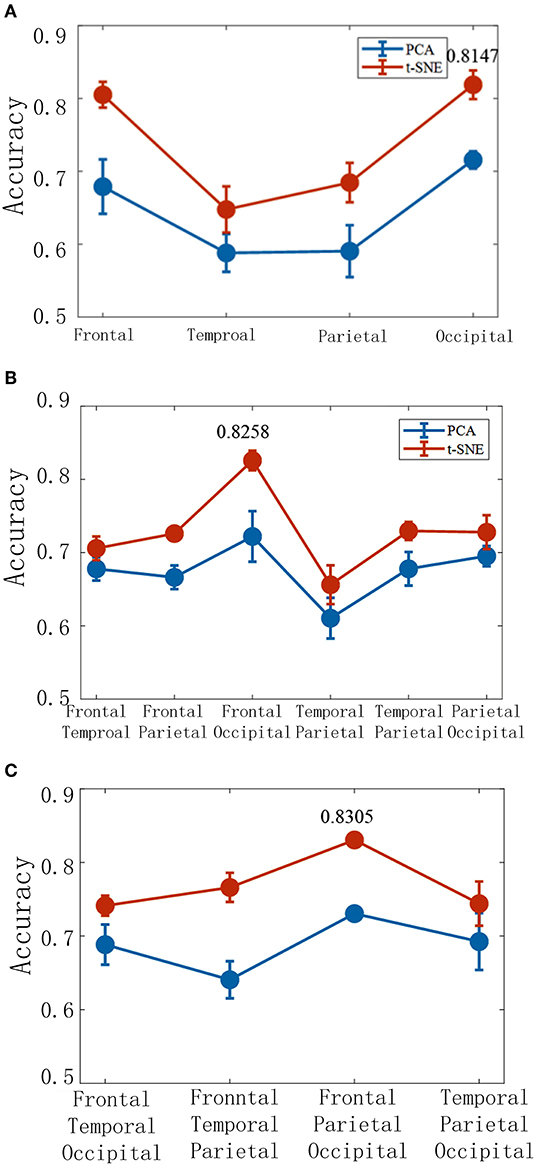

Brain activity is decoded with the low-dimensional features extracted from a single brain lobe, two brain lobes, and three brain lobes (Figure 9). When the features are extracted from a single brain lobe, the frontal and the occipital lobes yield the two highest accuracies. Among two-lobe combinations, the combination of the frontal and the occipital lobes achieves the highest accuracy. Among three-lobe combinations, the frontal, parietal, and occipital lobes are the most effective. We hence infer that the frontal and the occipital lobes may be key areas in the brain's visual cognition process. These results suggest that identifying the dominant brain regions can improve decoding efficiency and accuracy, which is essential for BCI.
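The lobe-combination search behind Figure 9 amounts to scoring every subset of lobes of a given size and keeping the best one. The sketch below assumes four lobes and takes a user-supplied scoring function; `best_lobe_combinations` and `dummy_score` are hypothetical names, and the dummy weights are arbitrary, not decoding results.

```python
from itertools import combinations

# Assumed lobe grouping; the channel-to-lobe assignment is not given here.
LOBES = ("frontal", "parietal", "temporal", "occipital")


def best_lobe_combinations(score_fn, lobes=LOBES, max_size=3):
    """For each subset size k, return (subset, score) with the highest score.

    score_fn(subset) is a hypothetical user-supplied function that would
    extract features from the subset's channels, reduce them to two latent
    factors, train the TSK decoder and return its validation accuracy.
    """
    best = {}
    for k in range(1, max_size + 1):
        scored = [(subset, score_fn(subset)) for subset in combinations(lobes, k)]
        best[k] = max(scored, key=lambda item: item[1])
    return best


def dummy_score(subset):
    # Placeholder scorer for demonstration only; not a real accuracy.
    weights = {"frontal": 0.1, "parietal": 0.4, "temporal": 0.3, "occipital": 0.2}
    return sum(weights[lobe] for lobe in subset)


best = best_lobe_combinations(dummy_score)
for k, (subset, score) in best.items():
    print(k, subset, round(score, 2))
```

With four lobes this evaluates 4 + 6 + 4 = 14 subsets, so an exhaustive search stays cheap even though each evaluation involves retraining the decoder.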

Figure 9. Decoding results for different combinations of brain lobes. The accuracy of the TSK decoder is based on two latent factors extracted from a single brain lobe (A), two brain lobes (B), and three brain lobes (C).

In this work, we propose a method to decode cortical activity under visual stimuli at the single-trial level using the neural manifold. Power spectrum analysis shows that visual cognition induces significant changes in the alpha band (Hogendoorn and Burkitt, 2018). Different visual stimuli lead to different neuronal spiking patterns, which represent different information encoded by the human brain. This information is recorded in the EEG and is revealed as a bifurcated manifold by dimensionality reduction methods. By further analyzing the latent factors extracted from the alpha band and using them to decode brain activity, we find that five major clusters associated with the visual stimuli occupy distinct, separate subregions.
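The power spectrum step can be illustrated with Welch's method: for each channel, the share of power falling in the alpha band. The 8–15 Hz alpha range comes from the abstract; the 1–45 Hz reference range, the 2-s segment length, and the sampling rate are assumptions, and the signals are synthetic.

```python
import numpy as np
from scipy.signal import welch


def relative_band_power(eeg, fs, band=(8.0, 15.0), total=(1.0, 45.0)):
    """Fraction of each channel's power in `band`, relative to the `total` range.

    eeg: (channels, samples). Uses Welch's method with 2-s segments.
    """
    freqs, psd = welch(eeg, fs=fs, nperseg=int(2 * fs), axis=-1)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    in_total = (freqs >= total[0]) & (freqs <= total[1])
    # Frequency bins are equally spaced, so summing PSD values is
    # proportional to integrating them over each range.
    return psd[:, in_band].sum(axis=-1) / psd[:, in_total].sum(axis=-1)


# Usage: one synthetic channel dominated by 10 Hz, one by 25 Hz.
fs = 250.0
rng = np.random.default_rng(3)
t = np.arange(2500) / fs
eeg = np.vstack([np.sin(2 * np.pi * 10 * t), np.sin(2 * np.pi * 25 * t)])
eeg += 0.05 * rng.standard_normal(eeg.shape)
rel = relative_band_power(eeg, fs)
print(np.round(rel, 3))
```

Comparing this ratio between stimulus conditions, channel by channel, is one way to localize where the alpha response is strongest before the manifold analysis.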

The dimensionality reduction techniques are compared in terms of decoding performance. As expected, the latent factors obtained by t-SNE decode brain activity well. Decoding visual information with latent factors estimated by t-SNE outperforms decoding with PCA-estimated latent factors regardless of decoder type (TSK, NB, LSTM, and LKF). Research on the neurodynamics of the cerebral cortex has consistently uncovered low-dimensional manifolds that capture a crucial portion of neural variability (Arieli et al., 1996). PCA is widely used in research on motor cortex dynamics; Gallego et al. (2018) confirmed the existence of a neural manifold in the motor cortex of monkeys using PCA. However, for behaviors whose dynamics involve non-motor brain areas, estimating neural manifolds with linear techniques requires latent factors of higher dimension than the true dimension of the data. Because EEG is commonly considered to have significant chaotic characteristics, linear methods provide poor estimates of its neural manifold (Figure 3). When brain activity is reduced to the same dimensions with xx, the latent factors obtained by t-SNE yield better recognition performance across the different cognitive tasks (Figure 4). The improvement in decoding brain activity with t-SNE suggests that brain activity lies on a low-dimensional, non-linear manifold within the high-dimensional space and that t-SNE can effectively model the hidden state space of the brain during the cognitive process.
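The claim that linear techniques need more latent dimensions than the true dimension of a curved manifold can be checked on a toy example. The sketch below, with synthetic data rather than EEG and an assumed 95% explained-variance criterion, counts the principal components PCA needs for a closed loop that has a single intrinsic degree of freedom, and compares it with a straight line carrying the same degree of freedom.

```python
import numpy as np


def n_components_for_variance(data, threshold=0.95):
    """Number of principal components needed to explain `threshold` of the variance.

    data: (n_samples, n_features).
    """
    centered = data - data.mean(axis=0)
    singular_values = np.linalg.svd(centered, compute_uv=False)
    explained = singular_values ** 2 / np.sum(singular_values ** 2)
    return int(np.searchsorted(np.cumsum(explained), threshold) + 1)


# A closed loop with one intrinsic degree of freedom (the phase t),
# embedded non-linearly in six dimensions.
t = np.linspace(0.0, 2.0 * np.pi, 400, endpoint=False)
loop = np.column_stack([f(k * t) for k in (1, 2, 3) for f in (np.cos, np.sin)])

# The same single degree of freedom embedded linearly (a straight line).
line = np.outer(t, [1.0, 2.0, 3.0, 0.5, -1.0, 2.5])

print(n_components_for_variance(loop))  # -> 6
print(n_components_for_variance(line))  # -> 1
```

PCA recovers the line with one component but needs all six for the loop, even though both are one-dimensional; a non-linear method such as t-SNE does not have to spend extra dimensions on curvature in the same way.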

TSK's strong decoding performance may be due to its good non-linear approximation ability, as the performance of the decoders improves significantly more with latent factors estimated by t-SNE than with those estimated by PCA. Additionally, TSK transforms the decision output into a value between 0 and 1 through fuzzy rules, which is closer to human thinking than a strict dichotomy of either 0 or 1 (Yu et al., 2019a). These findings suggest that a simple fuzzy learning decoder (TSK) combined with t-SNE can achieve good performance. Multiple supervised machine learning methods, including TSK, NB, LSTM, and KF, are employed to classify the different cognitive tasks based on the two dimensionality reduction techniques. Using latent factors estimated by t-SNE to decode visual information yields higher accuracy than using those estimated by PCA. Among these decoders, TSK, which gives promising classification results, has proved useful in the decoding analysis of neuroimaging data in many applications (Yu et al., 2019b).

According to the classification results, the number of brain lobes is not proportional to classification performance (Figure 9). The frontal and the occipital lobes are found to be the most effective areas for classifying the different cognitive tasks. Compared with traditional methods, the proposed visual decoding approach is easier to implement and performs better. Future work will consider identifying key channels and frequencies to further reduce the amount of data to analyze and to improve decoding accuracy. Although the dominant brain lobes are identified from decoding accuracy, more efficient and accurate feature selection needs further consideration. In future work, we will combine common spatial patterns (CSPs) with deep learning to identify the optimal channels and latent factors closely related to cognitive processes and thereby achieve higher decoding accuracy.

In this work, a novel decoding model combining manifold-based dimensionality reduction with machine learning is designed. By capturing EEG signals only from key brain regions, researchers can obtain the same or better decoding performance. Meanwhile, the overall computational efficiency of visual information decoding can be improved by reducing the originally collected dataset. In particular, the TSK method integrates the advantages of fuzzy rules and membership functions, which gives it higher interpretability and robustness (Takagi and Sugeno, 1985; Azeem et al., 2000; Kuncheva, 2000). The work provides effective algorithms for accurate BCI control and is of great significance for the rehabilitation training and treatment of brain-related neurological diseases.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

JW and HY designed the study. QZ, SL, and KL performed the research. QZ wrote the manuscript.

This work was supported in part by the National Natural Science Foundation of China under Grant 62173241, in part by the Natural Science Foundation of Tianjin, China (Grant Nos. 19JCYBJC18800 and 20JCQNJC01160), the Foundation of Tianjin University under Grant 2020XRG-0018, and Tianjin Research Innovation Project for Postgraduate Students (Grant No. 2021YJSB188). The authors also gratefully acknowledge the financial support provided by the Opening Foundation of Key Laboratory of Opto-technology and Intelligent Control (Lanzhou Jiaotong University), Ministry of Education (KFKT2020-01).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Adebimpe, A., Aarabi, A., Bourel-Ponchel, E., Mahmoudzadeh, M., and Wallois, F. (2016). EEG resting state functional connectivity analysis in children with benign epilepsy with centrotemporal spikes. Front. Neurosci. 10, 143. doi: 10.3389/fnins.2016.00143

Arieli, A., Sterkin, A., Grinvald, A., and Aertsen, A. (1996). Dynamics of ongoing activity: explanation of the large variability in evoked cortical responses. Science 273, 1868–1871. doi: 10.1126/science.273.5283.1868

Azeem, M. F., Hanmandlu, M., and Ahmad, N. (2000). Generalization of adaptive neuro-fuzzy inference systems. IEEE Trans. Neural Netw. 11, 1332–1346. doi: 10.1109/72.883438

Bezdek, J. C., Ehrlich, R., and Full, W. (1984). FCM: the fuzzy c-means clustering algorithm. Comput. Geosci. 10, 191–203. doi: 10.1016/0098-3004(84)90020-7

Cunningham, J. P., and Yu, B. M. (2014). Dimensionality reduction for large-scale neural recordings. Nat. Neurosci. 17, 1500–1509. doi: 10.1038/nn.3776

de Beeck, H. P. O., Torfs, K., and Wagemans, J. (2008). Perceived shape similarity among unfamiliar objects and the organization of the human object vision pathway. J. Neurosci. 28, 10111–10123. doi: 10.1523/JNEUROSCI.2511-08.2008

Degenhart, A. D., Bishop, W. E., Oby, E. R., Tyler-Kabara, E. C., Chase, S. M., Batista, A. P., et al. (2020). Stabilization of a brain–computer interface via the alignment of low-dimensional spaces of neural activity. Nat. Biomed. Eng. 4, 672–685. doi: 10.1038/s41551-020-0542-9

Duffy, F. H., Jones, K., Bartels, P., McAnulty, G., and Albert, M. (1992). Unrestricted principal components analysis of brain electrical activity: issues of data dimensionality, artifact, and utility. Brain Topogr. 4, 291–307. doi: 10.1007/BF01135567

Faust, O., Acharya, R. U., Allen, A. R., and Lin, C. M. (2008). Analysis of EEG signals during epileptic and alcoholic states using AR modeling techniques. IRBM 29, 44–52. doi: 10.1016/j.rbmret.2007.11.003

Flint, R. D., Tate, M. C., Li, K., Templer, J. W., Rosenow, J. M., Pandarinath, C., et al. (2020). The representation of finger movement and force in human motor and premotor cortices. eNeuro 7:ENEURO.0063-20.2020. doi: 10.1523/ENEURO.0063-20.2020

Gallego, J. A., Perich, M. G., Miller, L. E., and Solla, S. A. (2017). Neural manifolds for the control of movement. Neuron 94, 978–984. doi: 10.1016/j.neuron.2017.05.025

Gallego, J. A., Perich, M. G., Naufel, S. N., Ethier, C., Solla, S. A., and Miller, L. E. (2018). Cortical population activity within a preserved neural manifold underlies multiple motor behaviors. Nat. Commun. 9, 1–13. doi: 10.1038/s41467-018-06560-z

Gatti, D., Van Vugt, F., and Vecchi, T. (2020). A causal role for the cerebellum in semantic integration: a transcranial magnetic stimulation study. Sci. Rep. 10, 1–12. doi: 10.1038/s41598-020-75287-z

Hogendoorn, H., and Burkitt, A. N. (2018). Predictive coding of visual object position ahead of moving objects revealed by time-resolved EEG decoding. Neuroimage 171, 55–61. doi: 10.1016/j.neuroimage.2017.12.063

Kobak, D., Brendel, W., Constantinidis, C., Feierstein, C. E., Kepecs, A., Mainen, Z. F., et al. (2016). Demixed principal component analysis of neural population data. Elife 5, e10989. doi: 10.7554/eLife.10989

Kourtzi, Z., and Kanwisher, N. (2000). Cortical regions involved in perceiving object shape. J. Neurosci. 20, 3310–3318. doi: 10.1523/JNEUROSCI.20-09-03310.2000

Kuncheva, L. I. (2000). How good are fuzzy if-then classifiers? IEEE Trans. Syst. Man Cybern. Part B 30, 501–509. doi: 10.1109/3477.865167

Levina, E., and Bickel, P. J. (2005). Maximum likelihood estimation of intrinsic dimension. Adv. Neural Inf. Process. Syst.

Lin, Q., Manley, J., Helmreich, M., Schlumm, F., Li, J. M., Robson, D. N., et al. (2020). Cerebellar neurodynamics predict decision timing and outcome on the single-trial level. Cell 180, 536–551.e17. doi: 10.1016/j.cell.2019.12.018

Michel, C. M., and Brunet, D. (2019). EEG source imaging: a practical review of the analysis steps. Front. Neurol. 10:325. doi: 10.3389/fneur.2019.00325

Müller, K.-R., Tangermann, M., Dornhege, G., Krauledat, M., Curio, G., and Blankertz, B. (2008). Machine learning for real-time single-trial EEG-analysis: from brain–computer interfacing to mental state monitoring. J. Neurosci. Methods 167, 82–90. doi: 10.1016/j.jneumeth.2007.09.022

Nunez, P. L., and Srinivasan, R. (2006). Electric Fields of the Brain: the Neurophysics of EEG. Oxford: Oxford University Press.

Pandarinath, C., Ames, K. C., Russo, A. A., Farshchian, A., Miller, L. E., Dyer, E. L., et al. (2018). Latent factors and dynamics in motor cortex and their application to brain–machine interfaces. J. Neurosci. 38, 9390–9401. doi: 10.1523/JNEUROSCI.1669-18.2018

Pasley, B. N., David, S. V., Mesgarani, N., Flinker, A., Shamma, S. A., Crone, N. E., et al. (2012). Reconstructing speech from human auditory cortex. PLoS Biol. 10, e1001251. doi: 10.1371/journal.pbio.1001251

Schirrmeister, R. T., Springenberg, J. T., Fiederer, L. D. J., Glasstetter, M., Eggensperger, K., Tangermann, M., et al. (2017). Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 38, 5391–5420. doi: 10.1002/hbm.23730

Seung, H. S., and Lee, D. D. (2000). The manifold ways of perception. Science 290, 2268–2269. doi: 10.1126/science.290.5500.2268

Shin, Y., Lee, M., and Cho, H. (2020). Analysis of EEG, cardiac activity status, and thermal comfort according to the type of cooling seat during rest in indoor temperature. Appl. Sci. 11:97. doi: 10.3390/app11010097

Spampinato, C., Palazzo, S., Kavasidis, I., Giordano, D., Souly, N., and Shah, M. (2017). “Deep learning human mind for automated visual classification,” in Proceedings of the IEEE conference on Computer Vision and Pattern Recognition. doi: 10.1109/CVPR.2017.479

Takagi, T., and Sugeno, M. (1985). Fuzzy identification of systems and its applications to modeling and control. IEEE Trans. Syst. Man Cybern. 15, 116–132. doi: 10.1109/TSMC.1985.6313399

Tsiouris, K. M., Pezoulas, V. C., Zervakis, M., Konitsiotis, S., Koutsouris, D. D., and Fotiadis, D. I. (2018). A long short-term memory deep learning network for the prediction of epileptic seizures using EEG signals. Comput. Biol. Med. 99, 24–37. doi: 10.1016/j.compbiomed.2018.05.019

Van der Maaten, L., and Hinton, G. (2008). Visualizing data using t-SNE. J. Mach. Learn. Res. 9, 2579–2605.

Wen, H., Shi, J., Zhang, Y., Lu, K.-H., Cao, J., and Liu, Z. (2018). Neural encoding and decoding with deep learning for dynamic natural vision. Cereb. Cortex 28, 4136–4160. doi: 10.1093/cercor/bhx268

Wu, W., Black, M. J., Gao, Y., Bienenstock, E., Serruya, M., Shaikhouni, A., et al. (2003). Neural decoding of cursor motion using a Kalman filter. Adv. Neural Inf. Process. Syst. 2003, 133–140.

Wu, X., and Yan, D.-Q. (2009). Analysis and research on method of data dimensionality reduction. Appl. Res. Comput. 26, 2832–2835. doi: 10.4028/www.scientific.net/AMR.97-101.2832

Yu, H., Lei, X., Song, Z., Liu, C., and Wang, J. (2019a). Supervised network-based fuzzy learning of EEG signals for Alzheimer's disease identification. IEEE Trans. Fuzzy Syst. 28, 60–71. doi: 10.1109/TFUZZ.2019.2903753

Yu, H., Li, X., Lei, X., and Wang, J. (2019b). Modulation effect of acupuncture on functional brain networks and classification of its manipulation with EEG signals. IEEE Trans. Neural Syst. Rehabil. Eng. 27, 1973–1984. doi: 10.1109/TNSRE.2019.2939655

Keywords: neural manifold, visual stimulation, brain dynamics, decoding, machine learning

Citation: Yu H, Zhao Q, Li S, Li K, Liu C and Wang J (2022) Decoding Digital Visual Stimulation From Neural Manifold With Fuzzy Leaning on Cortical Oscillatory Dynamics. Front. Comput. Neurosci. 16:852281. doi: 10.3389/fncom.2022.852281

Received: 11 January 2022; Accepted: 03 February 2022;

Published: 11 March 2022.

Edited by:

Fei He, Coventry University, United Kingdom

Copyright © 2022 Yu, Zhao, Li, Li, Liu and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chen Liu, liuchen715@tju.edu.cn

