ORIGINAL RESEARCH article

Front. Comput. Neurosci., 08 December 2022

Volume 16 - 2022 | https://doi.org/10.3389/fncom.2022.1079155

Classification of dry and wet macular degeneration based on the ConvNeXT model

Maonian Wu¹,², Ying Lu¹, Xiangqian Hong³, Jie Zhang⁴, Bo Zheng¹,², Shaojun Zhu¹,², Naimei Chen⁵, Zhentao Zhu⁵* and Weihua Yang³*

Purpose: To assess the value of an automated classification model for dry and wet macular degeneration based on the ConvNeXT model.

Methods: A total of 672 fundus images (normal, dry macular degeneration, and wet macular degeneration) were collected from the Affiliated Eye Hospital of Nanjing Medical University, and the dry macular degeneration images were expanded. The ConvNeXT three-category model was trained on the original and expanded datasets and compared with the VGG16, ResNet18, ResNet50, EfficientNetB7, and RegNet three-category models. The models were tested on 289 fundus images, and their classification results after training on the different datasets were compared. The main evaluation indicators were sensitivity, specificity, F1-score, area under the curve (AUC), accuracy, and kappa.

Results: Three-category models trained on the original and expanded datasets were assessed on the 289 test images. The ConvNeXT model trained on the expanded dataset was the most effective, with a diagnostic accuracy of 96.89% and a kappa value of 94.99%, indicating high agreement with the expert diagnoses. For normal fundus images, its sensitivity, specificity, F1-score, and AUC were 100.00, 99.41, 99.59, and 99.80%, respectively; for dry macular degeneration, 87.50, 98.76, 90.32, and 97.10%, respectively; and for wet macular degeneration, 97.52, 97.02, 96.72, and 99.10%, respectively.

Conclusion: The ConvNeXT-based three-category model automatically identified dry and wet macular degeneration and can aid rapid and accurate clinical diagnosis.

Macular degeneration, a neurodegenerative and blinding eye disease, is the leading cause of irreversible central vision loss in developed countries and is expected to affect 288 million people worldwide by 2040 (Wong et al., 2014; Rozing et al., 2020). It is divided into dry and wet macular degeneration, which differ in prevalence, clinical symptoms, speed of onset, and treatment, so distinguishing between them is crucial in clinical diagnosis (Gelinas et al., 2022; Sarkar et al., 2022; Shen et al., 2022). Traditional diagnosis relies on stereo-ophthalmoscopy, fundus photography, and optical coherence tomography retinal imaging. These methods are inefficient because they demand substantial medical resources, and some patients cannot obtain a timely diagnosis, which delays treatment (Qu et al., 2020; Rubner et al., 2022). An automatic classification model for dry and wet macular degeneration would therefore aid large-scale screening, improve diagnostic efficiency, compensate for the uneven distribution of primary medical resources, and facilitate early detection, diagnosis, and treatment of the disease.

Artificial intelligence technology has recently shown broad application prospects in ophthalmology. Current research hotspots center on the automated analysis of fundus images, including segmentation of anatomical structures, detection and classification of lesions, diagnosis of ocular diseases, and image quality assessment (Zheng et al., 2021a,b; Zhu et al., 2022), for common eye diseases such as diabetic retinopathy (Bora et al., 2021; Sungheetha and Sharma, 2021; Wan et al., 2021), glaucoma (Mahum et al., 2021; Ran et al., 2021), age-related macular degeneration (AMD) (Moraes et al., 2021; Thomas et al., 2021), and cataracts (Khan et al., 2021; Gutierrez et al., 2022). Artificial intelligence has made breakthrough progress in macular degeneration, where research has focused mainly on identifying the disease and grading its severity. Celebi et al. (2022) achieved automatic detection of macular degeneration with an accuracy of 96.39%. Serener and Serte (2019) classified macular degeneration with 94% accuracy using a ResNet18 model. Abdullahi et al. (2022) classified macular degeneration with ResNet50 at an accuracy of 96.56%. Few studies have classified dry versus wet macular degeneration. Priya and Aruna (2014) used machine learning methods, such as probabilistic neural networks, to extract retinal features and classify dry and wet macular degeneration, with a maximum accuracy, sensitivity, and specificity of 96, 96.96, and 94.11%, respectively, while Chen et al. (2021) used a multimodal deep learning framework to detect dry and wet macular degeneration automatically, with a maximum accuracy, sensitivity, and specificity of 90.65, 68.92, and 98.53%, respectively. However, some of these studies did not report sufficiently comprehensive evaluation indicators, some models had widely differing sensitivity and specificity, and some models addressed only binary classification, which limits their practical application.

Based on clinically collected normal, dry, and wet macular degeneration fundus images, this study trained the ConvNeXT (Liu et al., 2022) three-category model on the original and expanded datasets and compared its results with those of the VGG16 (Simonyan and Zisserman, 2014), ResNet18 (He et al., 2016), ResNet50 (Khan et al., 2018), EfficientNetB7 (Tan and Le, 2019), and RegNet (Radosavovic et al., 2020) three-category models, with the aim of finding a model suitable for assisting AMD diagnosis.

The macular fundus image dataset used in this study was obtained from the Eye Hospital of Nanjing Medical University and consisted of good-quality JPG RGB color fundus images. Because factors such as sex and age did not affect the results of this study, and to prevent leakage of patients’ personal information, all images were de-identified before delivery and contained no private patient information. The partner hospital provided each image together with its true diagnosis, which was used as the result of the expert diagnostic group. All images were reviewed by two ophthalmologists: if their diagnoses agreed, that diagnosis was final; if they disagreed, a third ophthalmologist made the final diagnosis. Each fundus image provided by the partner hospital belongs to exactly one of the following categories: normal, dry macular degeneration, or wet macular degeneration; that is, every image has a single diagnosis.
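The two-reader rule with third-reader arbitration can be written as a small function. This is a minimal sketch for illustration only: the function name `adjudicate`, the label strings, and passing the third reader as a callable (so that the third ophthalmologist is consulted only when the first two disagree) are assumptions, not software described by the authors.

```python
from typing import Callable

# Hypothetical label strings for the three mutually exclusive diagnoses.
LABELS = ("normal", "dry", "wet")


def _check(label: str) -> str:
    """Reject anything that is not one of the three allowed diagnoses."""
    if label not in LABELS:
        raise ValueError(f"unknown label: {label!r}")
    return label


def adjudicate(reader1: str, reader2: str, third_reader: Callable[[], str]) -> str:
    """Return the final diagnosis for one image (illustrative sketch).

    If the two ophthalmologists agree, their shared label is final;
    otherwise the third ophthalmologist is asked and decides.
    """
    _check(reader1)
    _check(reader2)
    if reader1 == reader2:
        return reader1
    return _check(third_reader())


if __name__ == "__main__":
    print(adjudicate("dry", "dry", lambda: "wet"))  # agreement: third reader not consulted
    print(adjudicate("dry", "wet", lambda: "wet"))  # disagreement: third reader decides
```

The script prints `dry` and then `wet`.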

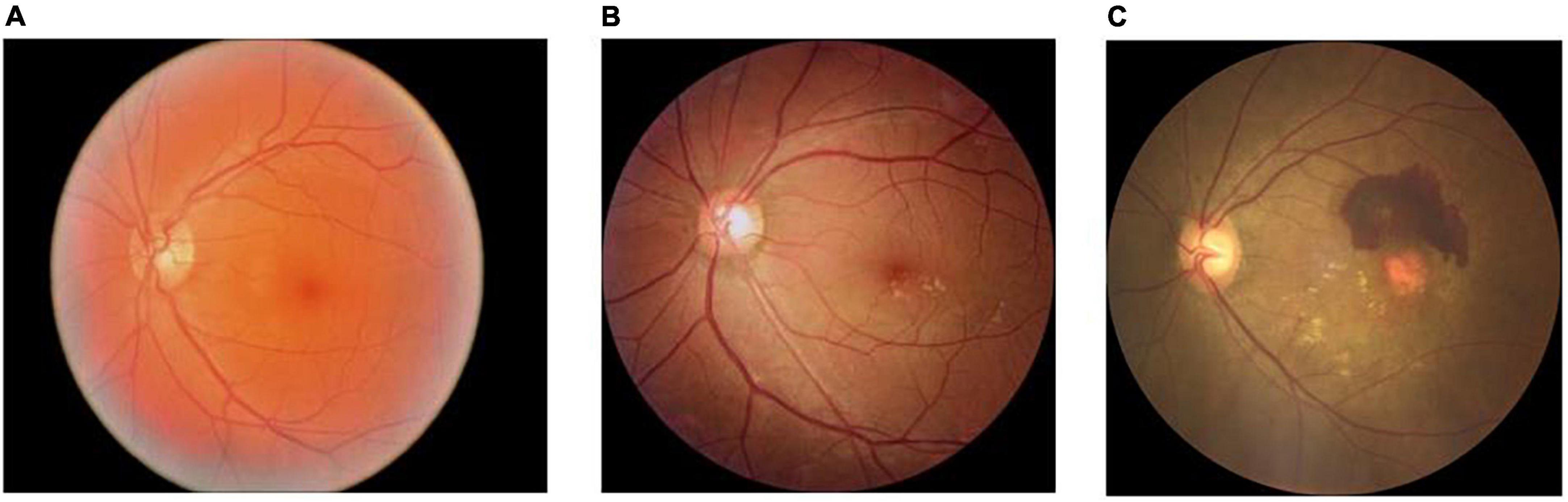

In this study, the original dataset consisted of 672 fundus images, which were divided into a training set and a validation set at a ratio of 9:1. The training set consisted of 604 images (252 normal, 100 dry macular degeneration, and 252 wet macular degeneration fundus images), and the validation set consisted of 68 images (28 normal, 12 dry macular degeneration, and 28 wet macular degeneration fundus images). A further 289 fundus images obtained from the clinic were used as the test set; the experts diagnosed 120 of them as normal fundus, 48 as dry macular degeneration, and 121 as wet macular degeneration. The original dataset is therefore small and its classes are unevenly distributed. The number of images per category in each dataset is shown in Table 1. Fundus images of normal, dry, and wet macular degeneration are shown in Figure 1.
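The per-class counts above are consistent with splitting each class 9:1 separately and rounding the training share down (280 normal → 252/28, 112 dry → 100/12, 280 wet → 252/28). The sketch below assumes such a stratified, floor-rounded split with a seeded shuffle; the paper does not state how images were assigned, so `stratified_split` and its parameters are hypothetical.

```python
import random


def stratified_split(class_counts, train_parts=9, total_parts=10, seed=0):
    """Split each class separately into train/validation index lists.

    The training share of each class is floor(n * 9 / 10), computed with
    integer arithmetic to avoid floating-point rounding surprises.
    """
    rng = random.Random(seed)  # seeded so the split is reproducible
    splits = {}
    for label, n in class_counts.items():
        indices = list(range(n))  # stand-ins for the image files of this class
        rng.shuffle(indices)
        n_train = n * train_parts // total_parts
        splits[label] = (indices[:n_train], indices[n_train:])
    return splits


# Class totals of the original 672-image dataset (training + validation).
ORIGINAL = {"normal": 280, "dry": 112, "wet": 280}

if __name__ == "__main__":
    for label, (train, val) in stratified_split(ORIGINAL).items():
        print(label, len(train), len(val))
```

Splitting per class keeps the validation set's class proportions close to those of the training set, which matters when one class (here, dry macular degeneration) is much smaller than the others.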

Figure 1. Normal, dry, and wet macular fundus images. (A) The normal fundus image; (B) the dry macular degeneration fundus image; and (C) the wet macular degeneration fundus image.

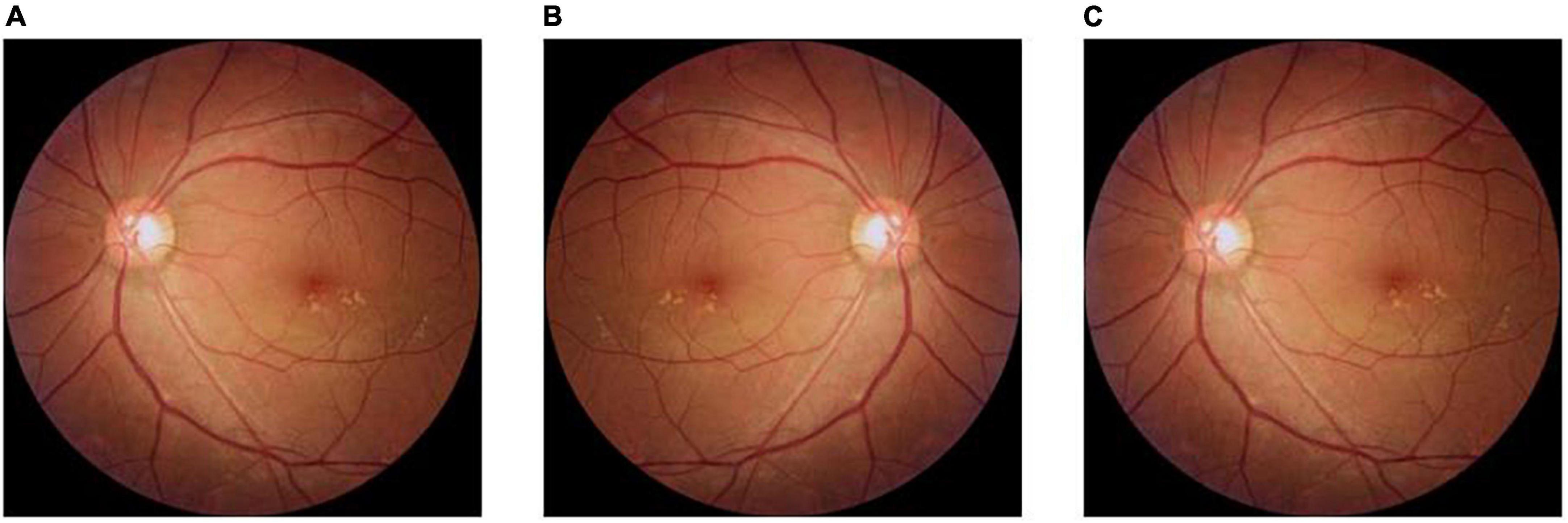

In this study, the amount of labeled data in the fundus image dataset is limited and the three categories are unbalanced, which may lead to under-fitting of the category with little data and over-fitting of the categories with more data during model training. Although deep learning has shown great potential in smart healthcare, most existing methods perform well only on data of high quality, sufficient volume, balanced categories, and a single source. To make the model more useful for the initial classification and diagnosis of macular degeneration, the dry macular degeneration fundus images, the smallest class in the training set, were expanded. First, all 100 original dry fundus images were flipped horizontally, and then half of the original images were rotated 3° counterclockwise, giving 250 dry fundus images in total (100 original + 100 flipped + 50 rotated). This balanced the number of images across categories and was intended to improve the generalization ability of the model. The original image, the image after a horizontal flip, and the image after a 3° counterclockwise rotation are shown in Figure 2.
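The expansion arithmetic (100 originals + 100 flips + 50 rotations = 250) and the two geometric operations can be sketched in plain Python. This is an illustration under stated assumptions: images are represented as hypothetical nested lists of grayscale pixel values, the 3° rotation uses nearest-neighbour sampling about the image centre with out-of-frame pixels set to 0, and the rotated half is chosen at random because the paper does not say which half was used. Real fundus images would normally be processed with an imaging library.

```python
import math
import random


def hflip(img):
    """Mirror an image (a list of pixel rows) left-to-right."""
    return [row[::-1] for row in img]


def rotate_ccw(img, degrees, fill=0):
    """Rotate counterclockwise about the centre with nearest-neighbour sampling.

    Each output pixel is mapped back into the source image by the inverse
    rotation; positions that fall outside the source are set to `fill`.
    Row index y grows downwards, as in image coordinates.
    """
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    t = math.radians(degrees)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = x - cx, y - cy
            sx = round(cx + dx * cos_t - dy * sin_t)
            sy = round(cy + dx * sin_t + dy * cos_t)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = img[sy][sx]
    return out


def expand_dry_class(images, rng, degrees=3):
    """Originals + a horizontal flip of every image + a rotation of half of them."""
    flipped = [hflip(im) for im in images]
    half = rng.sample(images, len(images) // 2)  # which half is an assumption
    rotated = [rotate_ccw(im, degrees) for im in half]
    return images + flipped + rotated


if __name__ == "__main__":
    tiny = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(rotate_ccw(tiny, 90))  # [[3, 6, 9], [2, 5, 8], [1, 4, 7]]
    dry = [tiny] * 100
    print(len(expand_dry_class(dry, random.Random(0))))  # 250
```

Mapping output pixels back to the source (rather than pushing source pixels forward) guarantees that every output pixel receives a value and avoids holes in the rotated image.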

Figure 2. Method of expanding fundus images in dry macular degeneration. (A) The original image; (B) the image after horizontal flip; and (C) the image after a 3° counterclockwise rotation.

The images were also augmented during training to improve the robustness and accuracy of the model. The augmentation methods were as follows: randomly cropping each image, with the crop area set to 0.08–1 times that of the original image and the crop aspect ratio drawn from 0.75–1.33; and randomly flipping the image horizontally with a probability of 50%.
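These settings match the defaults of torchvision's `RandomResizedCrop` (`scale=(0.08, 1.0)`, `ratio=(3/4, 4/3)`) and `RandomHorizontalFlip(p=0.5)`, so the authors may have used those transforms, although the paper does not say. The dependency-free sketch below is modelled on how such a crop is commonly sampled: the crop area is drawn uniformly as a fraction of the image area and the aspect ratio (width/height) log-uniformly, with up to 10 attempts before falling back to a centre crop. The function names and the attempt count are assumptions, and resizing the crop to the 224 × 224 network input is omitted.

```python
import math
import random


def sample_crop(height, width, rng, scale=(0.08, 1.0), ratio=(3 / 4, 4 / 3), attempts=10):
    """Return (top, left, crop_height, crop_width) for a random crop.

    The crop area is a uniform fraction `scale` of the image area and the
    aspect ratio (width / height) is drawn log-uniformly from `ratio`.
    """
    area = height * width
    log_lo, log_hi = math.log(ratio[0]), math.log(ratio[1])
    for _ in range(attempts):
        target_area = area * rng.uniform(scale[0], scale[1])
        aspect = math.exp(rng.uniform(log_lo, log_hi))
        w = round(math.sqrt(target_area * aspect))
        h = round(math.sqrt(target_area / aspect))
        if 0 < w <= width and 0 < h <= height:
            return rng.randint(0, height - h), rng.randint(0, width - w), h, w
    # Fallback: a centre crop whose aspect ratio is clamped into `ratio`.
    in_ratio = width / height
    if in_ratio < ratio[0]:
        w, h = width, round(width / ratio[0])
    elif in_ratio > ratio[1]:
        w, h = round(height * ratio[1]), height
    else:
        w, h = width, height
    return (height - h) // 2, (width - w) // 2, h, w


def sample_flip(rng, p=0.5):
    """True with probability p, meaning: flip this image horizontally."""
    return rng.random() < p


if __name__ == "__main__":
    rng = random.Random(42)
    print(sample_crop(1024, 1024, rng), sample_flip(rng))
```

Sampling the aspect ratio in log space makes a crop that is 4/3 wide exactly as likely as one that is 4/3 tall.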

The classification models were trained on the original dataset and on the expanded dataset, and all models were tested on the same test set. The number of images per category in the original and expanded datasets is shown in Table 2. The models were optimized with the Adam algorithm for 40 iterations over the data, with an initial learning rate of 0.0005. The learning rate was adjusted dynamically: a multiplication factor was first calculated from the number of training iterations and then multiplied by the initial learning rate. The ConvNeXT model was initialized with the parameters of ConvNeXT-T pretrained on ImageNet-1K. The VGG16, ResNet18, ResNet50, EfficientNetB7, and RegNet models, all of which contain convolutional, pooling, and fully connected layers, were selected as comparison models.
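The paper states only that a multiplication factor is computed from the training iteration and multiplied by the initial learning rate of 0.0005; it does not give the form of that factor. As one common choice, the sketch below assumes an optional linear warm-up followed by cosine decay. `lr_factor`, `warmup_steps`, `warmup_factor`, `end_factor`, and the total step count are all hypothetical. In PyTorch, a function of this kind would typically be passed to `torch.optim.lr_scheduler.LambdaLR`, which multiplies the optimizer's initial learning rate by the returned factor.

```python
import math


def lr_factor(step, total_steps, warmup_steps=0, warmup_factor=1e-3, end_factor=1e-6):
    """Multiplier applied to the initial learning rate at a given iteration."""
    if warmup_steps > 0 and step < warmup_steps:
        # Linear ramp from warmup_factor up to 1.0 over the warm-up iterations.
        alpha = step / warmup_steps
        return warmup_factor * (1 - alpha) + alpha
    # Cosine decay from 1.0 down to end_factor over the remaining iterations.
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(max(progress, 0.0), 1.0)
    return end_factor + (1 - end_factor) * 0.5 * (1 + math.cos(math.pi * progress))


def learning_rate(step, total_steps, base_lr=5e-4, **kwargs):
    """Initial learning rate (0.0005 in the study) times the schedule factor."""
    return base_lr * lr_factor(step, total_steps, **kwargs)


if __name__ == "__main__":
    total = 40 * 100  # 40 passes over the data x a hypothetical 100 iterations each
    for step in (0, total // 2, total):
        print(step, learning_rate(step, total))
```

Keeping the schedule as a pure function of the step count makes it easy to plot or unit-test independently of any training framework.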

The ConvNeXT model takes a 224 × 224 input image, which a convolutional stem downsamples to 56 × 56. The features then pass through four stages in turn, each consisting of a series of ConvNeXT blocks, followed by a global average pooling layer, a normalization layer, and a fully connected layer that produces the final classification result. The structure of the ConvNeXT model is shown in Figure 3. Each ConvNeXT block is a residual block with two branches: the main branch applies a depthwise convolution followed by two 1 × 1 (pointwise) convolutions, and its output is added to the block's input through the shortcut branch to give the output feature map.
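To make the stage layout concrete, the sketch below traces feature-map shapes and counts parameters for the standard ConvNeXt-T configuration of Liu et al. (2022): stage depths (3, 3, 9, 3), widths (96, 192, 384, 768), a 4 × 4 stride-4 stem, 2 × 2 stride-2 downsampling between stages, and blocks built from a 7 × 7 depthwise convolution, LayerNorm, two pointwise layers with 4× expansion, and a per-channel layer-scale vector. It assumes, without confirmation from the paper, that the 1000-class ImageNet head was simply replaced by a three-class head.

```python
DEPTHS = (3, 3, 9, 3)       # ConvNeXt-T blocks per stage
DIMS = (96, 192, 384, 768)  # ConvNeXt-T channels per stage


def stage_shapes(image_size=224):
    """(channels, height, width) of the feature map produced by each stage."""
    size = image_size // 4  # 4x4 stride-4 stem: 224 -> 56
    shapes = []
    for i, dim in enumerate(DIMS):
        if i > 0:
            size //= 2  # 2x2 stride-2 downsampling between stages
        shapes.append((dim, size, size))
    return shapes


def block_params(c):
    """Parameters in one ConvNeXt block with c channels."""
    dwconv = c * 7 * 7 + c       # 7x7 depthwise conv (weights + bias)
    norm = 2 * c                 # LayerNorm (weight + bias)
    pw1 = c * 4 * c + 4 * c      # pointwise layer expanding to 4c channels
    pw2 = 4 * c * c + c          # pointwise layer projecting back to c
    layer_scale = c              # per-channel layer-scale gamma
    return dwconv + norm + pw1 + pw2 + layer_scale


def convnext_t_params(num_classes):
    """Total parameter count of ConvNeXt-T with a num_classes-way linear head."""
    total = 3 * DIMS[0] * 4 * 4 + DIMS[0] + 2 * DIMS[0]  # stem conv + LayerNorm
    for i, (depth, dim) in enumerate(zip(DEPTHS, DIMS)):
        if i > 0:
            prev = DIMS[i - 1]
            total += 2 * prev + prev * dim * 2 * 2 + dim  # LayerNorm + 2x2 conv
        total += depth * block_params(dim)
    total += 2 * DIMS[-1]                          # final LayerNorm
    total += DIMS[-1] * num_classes + num_classes  # linear classifier
    return total


if __name__ == "__main__":
    print(stage_shapes())
    print(f"{convnext_t_params(1000):,} (ImageNet-1K head)")
    print(f"{convnext_t_params(3):,} (three-class head)")
```

The script reports 28,589,128 parameters with a 1000-class head and 27,822,435 with a three-class head, so under this assumption swapping the head removes less than 3% of the parameters; almost all capacity sits in the pretrained convolutional stages.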

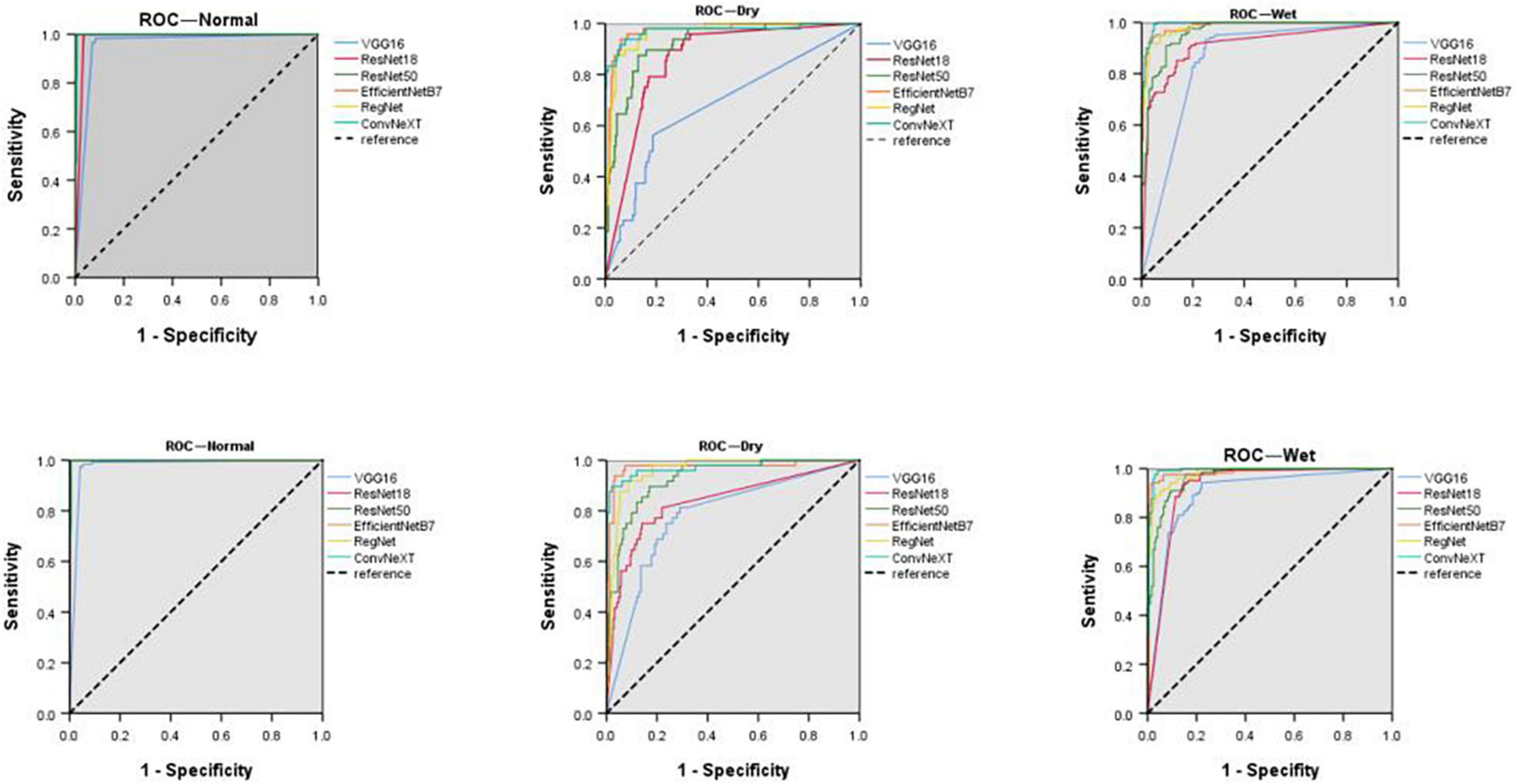

Statistical analysis was performed using IBM SPSS Statistics for Windows version 25.0 (IBM Corp., Armonk, NY, USA). Count data are expressed as numbers of images and percentages. The sensitivity, specificity, F1-score, and area under the curve (AUC) of the intelligent diagnostic aid model for normal fundus, dry macular degeneration, and wet macular degeneration were calculated; receiver operating characteristic (ROC) curves were plotted; and the agreement between the diagnoses of the expert diagnostic group and the model was assessed using the kappa test. The results of the expert diagnostic group were used as the clinical reference standard. Kappa values of 0.61–0.80 were considered substantial agreement and values >0.80 high agreement. ROC curves were used to analyze the diagnostic performance of the different models; AUC values of 0.50–0.70 were considered low diagnostic value, 0.70–0.85 average, and >0.85 good.
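One common way to obtain a per-class AUC in a three-category problem is one-vs-rest: treat one class as positive and the other two as negative, then compute the AUC as the probability that a randomly chosen positive image scores higher than a randomly chosen negative one (the Mann–Whitney formulation, with ties counted as one half). The sketch below assumes that approach, since the paper does not describe how its per-class AUCs were computed, and also encodes the study's interpretation bands for AUC and kappa. The function names and the toy scores are hypothetical.

```python
def auc_mann_whitney(scores, labels):
    """ROC AUC = P(positive score > negative score) + 0.5 * P(tie)."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(probs, true_labels, cls):
    """AUC for class index `cls` against the other classes combined."""
    scores = [p[cls] for p in probs]
    labels = [t == cls for t in true_labels]
    return auc_mann_whitney(scores, labels)


def auc_band(auc):
    """Interpretation bands used in the study."""
    if auc > 0.85:
        return "good"
    if auc >= 0.70:
        return "average"
    if auc >= 0.50:
        return "low"
    return "below chance"


def kappa_band(kappa):
    """Agreement bands used in the study."""
    if kappa > 0.80:
        return "high agreement"
    if kappa >= 0.61:
        return "substantial agreement"
    return "not classified by the study's thresholds"


if __name__ == "__main__":
    print(auc_mann_whitney([0.9, 0.8, 0.3, 0.2], [True, False, True, False]))  # 0.75
    print(auc_band(0.971), kappa_band(0.9499))
```

The pairwise loop is quadratic in the number of images, which is fine for a 289-image test set; larger sets would normally use a rank-based formula instead.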

In this study, 289 clinical fundus images were used as the test set to assess the three-category models of dry and wet macular degeneration. The expert diagnostic group diagnosed 120 images as normal fundus, 48 as dry macular degeneration, and 121 as wet macular degeneration. The ConvNeXT three-category model trained on the original dataset correctly identified 120 normal fundus, 34 dry macular degeneration, and 118 wet macular degeneration images; the model trained on the expanded dataset correctly identified 120 normal fundus, 42 dry macular degeneration, and 118 wet macular degeneration images. The diagnostic results of the model are presented in Tables 2, 3.

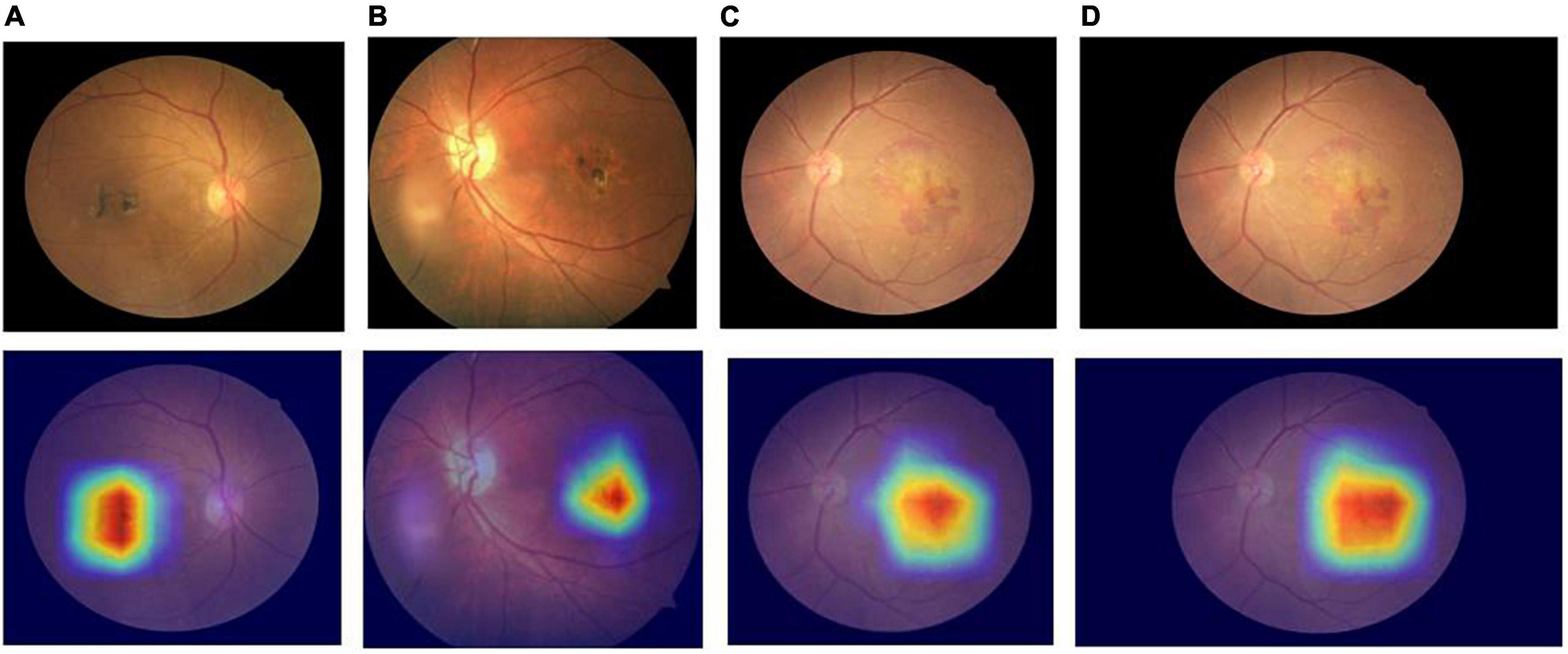

Comparing the diagnostic results of the VGG16, ResNet18, ResNet50, EfficientNetB7, RegNet, and ConvNeXT three-category models on the original dataset, the EfficientNetB7 model performed best, followed by the ConvNeXT model. After data expansion, the ConvNeXT model performed best, surpassing the EfficientNetB7 model. On the expanded dataset, ConvNeXT achieved an accuracy of 96.89% and a kappa value of 94.99%. Its sensitivity, specificity, F1-score, and AUC for the diagnosis of normal fundus images were 100.00, 99.41, 99.59, and 99.80%, respectively. For dry macular degeneration, the sensitivity, specificity, F1-score, and AUC were 87.50, 98.76, 90.32, and 97.10%, respectively. For wet macular degeneration, the sensitivity, specificity, F1-score, and AUC were 97.52, 97.02, 96.72, and 99.10%, respectively. The evaluation indicators for the diagnostic results of each model are listed in Tables 4, 5. The ROC curves are shown in Figure 4. The heat maps of the ConvNeXT model are shown in Figure 5.
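Because the test set contains 120 normal, 48 dry, and 121 wet images and the expanded-dataset ConvNeXT model correctly identified 120, 42, and 118 of them, the reported specificities determine where the nine errors went: one dry image predicted as normal, five dry images predicted as wet, and three wet images predicted as dry. The confusion matrix below is our reconstruction from those published figures, not a table from the paper. Computing the study's metrics from it reproduces the reported values and shows how each metric follows from the counts.

```python
CLASSES = ("normal", "dry", "wet")

# Rows: expert diagnosis; columns: model prediction (order as in CLASSES).
# Reconstructed from the reported counts and metrics; not a published table.
CONFUSION = [
    [120, 0, 0],
    [1, 42, 5],
    [0, 3, 118],
]


def accuracy(cm):
    """Fraction of images on the diagonal (model agrees with the experts)."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))


def per_class_metrics(cm, classes=CLASSES):
    """One-vs-rest sensitivity, specificity and F1-score for each class."""
    n = sum(map(sum, cm))
    k = len(cm)
    out = {}
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # class c predicted as another class
        fp = sum(cm[r][c] for r in range(k)) - tp  # other classes predicted as c
        tn = n - tp - fn - fp
        out[classes[c]] = {
            "sensitivity": tp / (tp + fn),
            "specificity": tn / (tn + fp),
            "f1": 2 * tp / (2 * tp + fp + fn),
        }
    return out


def cohen_kappa(cm):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = sum(map(sum, cm))
    k = len(cm)
    p_o = accuracy(cm)
    rows = [sum(cm[i]) for i in range(k)]
    cols = [sum(cm[r][c] for r in range(k)) for c in range(k)]
    p_e = sum(rows[i] * cols[i] for i in range(k)) / (n * n)
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    print(f"accuracy {accuracy(CONFUSION):.2%}, kappa {cohen_kappa(CONFUSION):.2%}")
    for name, m in per_class_metrics(CONFUSION).items():
        print(name, {key: f"{v:.2%}" for key, v in m.items()})
```

Running the script prints an accuracy of 96.89% and a kappa of 94.99%, and per-class values matching those reported above (for example, 87.50% sensitivity and 98.76% specificity for dry macular degeneration). Most of the remaining errors are dry images labeled as wet, which is consistent with the discussion of why the two degeneration classes are harder to separate than normal fundus images.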

Figure 4. Receiver operating characteristic (ROC) curves of normal fundus, dry, and wet macular degeneration for the six models trained on the original and expanded datasets.

Figure 5. Heat maps of the ConvNeXT model for dry and wet macular degeneration. Panels (A,B) show heat maps of dry macular degeneration; panels (C,D) show heat maps of wet macular degeneration.

Macular degeneration is a relatively common, irreversible, blinding fundus disease worldwide. Studies have shown that, as life expectancy increases, macular degeneration has become one of the leading causes of vision loss in the elderly (Gong et al., 2021). However, in less economically developed countries and regions, the uneven distribution of medical resources and the long training period for professional ophthalmologists prevent many AMD patients from being diagnosed and treated in a timely manner. Manual diagnostic screening requires considerable time and training costs. An automated diagnostic system can provide a good platform for regular screening, effectively meet the medical needs of a large number of patients, and help reduce the rate of blindness or impaired visual function due to macular degeneration (Cai et al., 2020). The automatic classification model trained in this study may therefore assist ophthalmologists in the initial classification and diagnosis of macular degeneration, improving diagnostic efficiency and benefiting patients.

Compared with the other classification models, the ConvNeXT-based classification model for dry and wet macular degeneration obtained excellent results. ConvNeXT borrows design ideas from the Swin Transformer, such as depthwise convolution, patch-style downsampling of the image, and larger convolution kernels, which help it extract more features at higher semantic levels from fundus images while reducing computational effort, accelerating feature extraction, and stabilizing model training, thus enhancing the expressive power of the model (Zhang et al., 2022). This study also found that the EfficientNetB7 model gave the best results on the original dataset and the ConvNeXT model gave the best results on the expanded dataset, with only slight differences between the two. EfficientNetB7 is a conventional convolutional neural network whose accuracy is improved by scaling network dimensions such as width and depth, whereas ConvNeXT is a newer convolution-based architecture that incorporates design elements of transformers. ConvNeXT may therefore perform slightly better than EfficientNetB7 as the amount of data increases. Meanwhile, the EfficientNetB7 model was nearly 20 times larger than ConvNeXT, and EfficientNetB7 had nearly three times as many parameters as ConvNeXT, so the ConvNeXT model is more suitable for practical medical diagnosis. The remaining models performed less well, mainly because fundus images are complex and these models, whose structures are simpler than those of ConvNeXT and EfficientNetB7, do not extract image features sufficiently.

As shown in Tables 5, 6, the evaluation indices for normal fundus images are generally higher than the corresponding indices for dry and wet macular degeneration fundus images. The main reason is that normal fundus images differ markedly from macular degeneration fundus images and are easy to distinguish, whereas dry and wet macular degeneration are harder to separate: even professional ophthalmologists have difficulty diagnosing every case accurately from fundus images alone, so the model's results for these two classes are slightly lower. In future work, we will consider combining features extracted by deep learning with manually selected features to obtain more comprehensive image features and further improve the sensitivity and specificity of the model. Fusing manually selected features could also make the features more interpretable.

In this study, the original data were limited and unevenly distributed, and data expansion was used to equalize the number of fundus images in each category. Comparing Tables 5, 6, the evaluation metrics of all models improved after data expansion: ResNet18 improved the most, with a 5.19% increase in accuracy and a 6.66% increase in kappa value, and VGG16 improved the least, with a 0.49% increase in accuracy and a 1.17% increase in kappa value. Data expansion thus allows the model to learn features effectively for every category of images rather than focusing on the categories with more samples, improving its expressiveness. Intelligent medical diagnosis generally faces problems such as scarce annotated data and class imbalance. Our team will gather more training data and follow research progress on training models with small sample datasets in future work.

Priya and Aruna (2014) used a machine learning approach to extract retinal image features for classification, and their support vector machine (SVM) classifier achieved a maximum accuracy of 96%. Chen et al. (2021) used a multimodal deep learning framework to classify macular degeneration automatically, with a maximum accuracy of 90.65%. Traditional machine learning algorithms can generalize poorly and are prone to over-fitting, whereas the advantage of deep learning becomes more prominent as the amount of data increases. Multimodal deep learning frameworks require datasets containing several imaging modalities, such as color fundus images, optical coherence tomography, and fundus autofluorescence images, which are difficult to acquire and add to the burden on human and medical resources. In this study, the ConvNeXT model classified dry and wet macular degeneration using only color fundus images, which are widely used in clinical practice and easy to obtain, and it achieved a superior accuracy of 96.89%. In addition, the ConvNeXT model has fewer parameters and requires less memory than EfficientNetB7, the strongest comparison model, making it easier to deploy on end devices.

In this study, automatic classification of normal fundus, dry macular degeneration, and wet macular degeneration was implemented based on the ConvNeXT model. Six models were each trained on the original and expanded datasets (12 trained models in total). The ConvNeXT model trained on the expanded dataset achieved high sensitivity, specificity, and accuracy and could be used to develop an automatic classification system for dry and wet macular degeneration. Such a system may provide a good platform for regular screening in primary care and could help serve the many patients in less economically developed areas with limited medical resources.

The original contributions presented in this study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

MW and YL wrote the manuscript. BZ planned the experiments and the manuscript. SZ guided the experiments. YL and BZ trained the model. WY and MW reviewed the manuscript. XH, JZ, and ZZ collected and labeled the data. All authors issued final approval for the version to be submitted.

This study was supported by the National Natural Science Foundation of China (No. 61906066), Natural Science Foundation of Zhejiang Province (No. LQ18F020002), Postgraduate Research and Innovation Project of Huzhou University (No. 2022KYCX37), Shenzhen Fund for Guangdong Provincial High-level Clinical Key Specialties (No. SZGSP014), Sanming Project of Medicine in Shenzhen (No. SZSM202011015), and Shenzhen Science and Technology Planning Project (No. KCXFZ20211020163813019).

JZ was employed by Brightview Medical Technologies (Nanjing) Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abdullahi, M. M., Chakraborty, S., Kaushik, P., and Sami, B. S. (2022). “Detection of dry and wet age-related macular degeneration using deep learning,” in Proceedings of the 2nd international conference on industry 4.0 and artificial intelligence (ICIAI 2021) (Amsterdam: Atlantis Press). doi: 10.2991/aisr.k.220201.037

Bora, A., Balasubramanian, S., Babenko, B., Virmani, S., Venugopalan, S., Mitani, A., et al. (2021). Predicting the risk of developing diabetic retinopathy using deep learning. Lancet Digit. Health 3, e10–e19. doi: 10.1016/S2589-7500(20)30250-8

Cai, L., Hinkle, J. W., Arias, D., Gorniak, R. J., Lakhani, P. C., Flanders, A. E., et al. (2020). Applications of artificial intelligence for the diagnosis, prognosis, and treatment of age-related macular degeneration. Int. Ophthalmol. Clin. 60, 147–168. doi: 10.1097/IIO.0000000000000334

Celebi, A. R. C., Bulut, E., and Sezer, A. (2022). Artificial intelligence based detection of age-related macular degeneration using optical coherence tomography with unique image preprocessing. Eur. J. Ophthalmol. 4:11206721221096294. doi: 10.1177/11206721221096294

Chen, Q., Keenan, T. D. L., Allot, A., Peng, Y., Agrón, E., Domalpally, A., et al. (2021). Multimodal, multitask, multiattention (M3) deep learning detection of reticular pseudodrusen: Toward automated and accessible classification of age-related macular degeneration. J. Am. Med. Inform. Assoc. 28, 1135–1148. doi: 10.1093/jamia/ocaa302

Gelinas, N., Lynch, A. M., Mathias, M. T., Palestine, A. G., Mandava, N., Christopher, K. L., et al. (2022). Gender as an effect modifier in the relationship between hypertension and reticular pseudodrusen in patients with early or intermediate age-related macular degeneration. Int. J. Ophthalmol. 15, 461–465. doi: 10.18240/ijo.2022.03.14

Gong, D., Kras, A., and Miller, J. B. (2021). Application of deep learning for diagnosing, classifying, and treating age-related macular degeneration. Semin. Ophthalmol. 36, 198–204. doi: 10.1080/08820538.2021.1889617

Gutierrez, L., Lim, J. S., Foo, L. L., Ng, W. Y., Yip, M., Lim, G. Y. S., et al. (2022). Application of artificial intelligence in cataract management: Current and future directions. Eye Vis. 9:3. doi: 10.1186/s40662-021-00273-z

He, K. M., Zhang, X., Ren, S., and Sun, J. (2016). “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, Las Vegas, NV. doi: 10.1109/CVPR.2016.90

Khan, M. S. M., Ahmed, M., Rasel, R. Z., and Khan, M. M. (2021). “Cataract detection using convolutional neural network with VGG-19 model,” in Proceedings of the 2021 IEEE world AI IoT congress (AIIoT) (Seattle, WA: IEEE).

Khan, R. U., Zhang, X., Kumar, R., and Aboagye, E. O. (2018). “Evaluating the performance of ResNet model based on image recognition,” in Proceedings of the 2018 international conference on computing and artificial intelligence, Chengdu. doi: 10.1145/3194452.3194461

Liu, Z., Mao, H., Wu, C. Y., Feichtenhofer, C., Darrell, T., and Xie, S. (2022). A ConvNet for the 2020s. arXiv [Preprint]. arXiv:2201.03545. doi: 10.1109/CVPR52688.2022.01167

Mahum, R., Rehman, S. U., Okon, O. D., Alabrah, A., Meraj, T., and Rauf, H. T. (2021). A novel hybrid approach based on deep CNN to detect glaucoma using fundus imaging. Electronics 11:26. doi: 10.3390/electronics11010026

Moraes, G., Fu, D. J., Wilson, M., Khalid, H., Wagner, S. K., Korot, E., et al. (2021). Quantitative analysis of OCT for neovascular age-related macular degeneration using deep learning. Ophthalmology 128, 693–705. doi: 10.1016/j.ophtha.2020.09.025

Priya, R., and Aruna, P. (2014). Automated diagnosis of age-related macular degeneration using machine learning techniques. Int. J. Comput. Appl. Technol. 49, 157–165. doi: 10.1504/IJCAT.2014.060527

Qu, Y., Zhang, H. K., Song, X., and Chu, B. R. (2020). Research progress of artificial intelligence diagnosis system in retinal diseases. J. Shandong Univ. Health Sci. 58, 39–44.

Radosavovic, I., Kosaraju, R. P., Girshick, R., He, K., and Dollar, P. (2020). “Designing network design spaces,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (Seattle, WA). doi: 10.1109/CVPR42600.2020.01044

Ran, A. R., Tham, C. C., Chan, P. P., Cheng, C.-Y., Tham, Y.-C., Rim, T. H., et al. (2021). Deep learning in glaucoma with optical coherence tomography: A review. Eye 35, 188–201. doi: 10.1038/s41433-020-01191-5

Rozing, M. P., Durhuus, J. A., Nielsen, M. K., Subhi, Y., Kirkwood, T. B., Westendorp, R. G., et al. (2020). Age-related macular degeneration: A two-level model hypothesis. Prog. Retin. Eye Res. 76:100825. doi: 10.1016/j.preteyeres.2019.100825

Rubner, R., Li, K. V., and Canto-Soler, M. V. (2022). Progress of clinical therapies for dry age-related macular degeneration. Int. J. Ophthalmol. 15, 157–166. doi: 10.18240/ijo.2022.01.23

Sarkar, A., Sodha, S. J., Junnuthula, V., Kolimi, P., and Dyawanapelly, S. (2022). Novel and investigational therapies for wet and dry age-related macular degeneration. Drug Discov. Today 27, 2322–2332. doi: 10.1016/j.drudis.2022.04.013

Serener, A., and Serte, S. (2019). “Dry and wet age-related macular degeneration classification using OCT images and deep learning,” in Proceedings of the 2019 scientific meeting on electrical-electronics & biomedical engineering and computer science (EBBT) (Istanbul: IEEE). doi: 10.1109/EBBT.2019.8741768

Shen, J. J., Wang, R., Wang, L. L., Lyu, C. F., Liu, S., Xie, G. T., et al. (2022). Image enhancement of color fundus photographs for age-related macular degeneration: The Shanghai Changfeng study. Int. J. Ophthalmol. 15, 268–275. doi: 10.18240/ijo.2022.02.12

Simonyan, K., and Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv [Preprint]. arXiv:1409.1556

Sungheetha, A., and Sharma, R. (2021). Design an early detection and classification for diabetic retinopathy by deep feature extraction based convolution neural network. J. Trends Comput. Sci. Smart Technol. 3, 81–94. doi: 10.36548/jtcsst.2021.2.002

Tan, M. X., and Le, Q. (2019). “EfficientNet: Rethinking model scaling for convolutional neural networks,” in Proceedings of the 36th international conference on machine learning (PMLR), Long Beach, CA.

Thomas, A., Harikrishnan, P. M., Krishna, A. K., Palanisamy, P., and Gopi, V. P. (2021). A novel multiscale convolutional neural network based age-related macular degeneration detection using OCT images. Biomed. Signal Process. Control 67:102538. doi: 10.1016/j.bspc.2021.102538

Wan, C., Chen, Y., Li, H., Zheng, B., Chen, N., Yang, W., et al. (2021). EAD-net: A novel lesion segmentation method in diabetic retinopathy using neural networks. Dis. Mark. 2021:6482665. doi: 10.1155/2021/6482665

Wong, W. L., Su, X., Li, X., Cheung, C. M., Klein, R., Cheng, C. Y., et al. (2014). Global prevalence of age-related macular degeneration and disease burden projection for 2020 and 2040: A systematic review and meta-analysis. Lancet Glob. Health 2, e106–e116. doi: 10.1016/S2214-109X(13)70145-1

Zhang, H. K., Hu, W. Z., and Wang, X. Y. (2022). EdgeFormer: Improving light-weight ConvNets by learning from vision transformers. arXiv [Preprint]. arXiv:2203.03952

Zheng, B., Jiang, Q., Lu, B., He, K., Wu, M. N., Hao, X. L., et al. (2021a). Five-category intelligent auxiliary diagnosis model of common fundus diseases based on fundus images. Transl. Vis. Sci. Technol. 10:20. doi: 10.1167/tvst.10.7.20

Zheng, B., Liu, Y. F., He, K., Wu, M., Jin, L., Jiang, Q., et al. (2021b). Research on an intelligent lightweight-assisted pterygium diagnosis model based on anterior segment images. Dis. Mark. 2021:7651462. doi: 10.1155/2021/7651462

Keywords: dry and wet macular degeneration classification models, intelligent assisted diagnosis, deep learning, ConvNeXT model, artificial intelligence

Citation: Wu M, Lu Y, Hong X, Zhang J, Zheng B, Zhu S, Chen N, Zhu Z and Yang W (2022) Classification of dry and wet macular degeneration based on the ConvNeXT model. Front. Comput. Neurosci. 16:1079155. doi: 10.3389/fncom.2022.1079155

Received: 25 October 2022; Accepted: 24 November 2022;

Published: 08 December 2022.

Edited by:

Jin Hong, Guangdong Provincial People’s Hospital, China

Reviewed by:

Lei Liu, Guangdong Provincial People’s Hospital, China

Copyright © 2022 Wu, Lu, Hong, Zhang, Zheng, Zhu, Chen, Zhu and Yang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zhentao Zhu, jshayyzzt@163.com; Weihua Yang, benben0606@139.com
