- 1Department of Computational Neuroscience, Institute of Physiology, Czech Academy of Sciences, Prague, Czechia

- 2Second Medical Faculty, Charles University, Prague, Czechia

The apparent stochastic nature of neuronal activity significantly affects the reliability of neuronal coding. To quantify the encountered fluctuations, both in neural data and simulations, the notions of variability and randomness of inter-spike intervals have been proposed and studied. In this article we focus on the concept of the instantaneous firing rate, which is also based on the spike timing. We use several classical statistical models of neuronal activity and we study the corresponding probability distributions of the instantaneous firing rate. To characterize the firing rate variability and randomness under different spiking regimes, we use different indices of statistical dispersion. We find that the relationship between the variability of interspike intervals and the instantaneous firing rate is not straightforward in general. Counter-intuitively, an increase in the randomness (based on entropy) of spike times may either decrease or increase the randomness of instantaneous firing rate, in dependence on the neuronal firing model. Finally, we apply our methods to experimental data, establishing that instantaneous rate analysis can indeed provide additional information about the spiking activity.

1. Introduction

One of the primary research areas of computational neuroscience is dedicated to understanding the principles of neuronal coding, i.e., the way information is embedded into neuronal signals. It is generally understood that neurons use brief electrical impulses (called action potentials or spikes) to convey information. The way information is presented in the time sequence of spikes, however, is still a matter of debate (Shadlen and Newsome, 1994; Stein et al., 2005).

A widely accepted answer to the problem is the rate coding hypothesis, which says that the neurons transmit information through the average number of spikes sent along the axon per a certain time window (this is called the mean firing rate). The origin of this theory is credited to Edgar Adrian who found out that the firing rate of the stretch receptor of a frog's muscle changes as a function of stimuli (Adrian, 1926). However, since then, many studies have shown that neurons can encode information without necessarily changing the mean firing rate in response to a stimulus (Perkel and Bullock, 1968; Gerstner and Kistler, 2002; Rigotti et al., 2013; Dettner et al., 2016) prompting the temporal coding hypothesis, which states that the temporal structure of the ISIs is also employed in the embedding of neural information in the spike train (Theunissen and Miller, 1995). Theoretically, information can be encoded in the temporal pattern of the ISIs in an infinite number of ways (Thorpe and Gautrais, 1997; Jacobs et al., 2009; Ainsworth et al., 2012), therefore measures are needed to quantify the features of spiking neuronal signals from different perspectives (Perkel and Bullock, 1968; Victor and Purpura, 1997; Buračas and Albright, 1999; Rieke et al., 1999; Nemenman et al., 2004). A possible way of looking at the role of temporal structures can be through variability coding hypothesis (Perkel and Bullock, 1968) which states that neuronal variability may not be entirely noise, and part of it might reflect the aspects of neural code that is not yet understood; whether it is the variability of the ISIs or of the firing rate. A standard measure for comparing variability in spike trains is through the coefficient of variation (CV) which is defined as the ratio of standard deviation to the mean of ISIs (Barbieri and Brunel, 2007). Variability of the firing rate is measured by the Fano factor (Ditlevsen and Lansky, 2011; Rajdl and Lansky, 2013; Stevenson, 2016) which is defined as the ratio of variance to the mean number of spikes in a fixed time window.

Another concept that is similar to variability but not equivalent, is the notion of randomness (Kostal et al., 2007b). Variability and randomness both are used as a differentiating measure in the cases where spike trains might seem similar from the rate coding perspective. It is important to distinguish between the two quantities because highly variable data might not be random at all if it consists of relatively predictable values. For example multi-modal data with well separated peaks may have higher variability than uniformly distributed data where the outcomes are the least predictable. Shannon's entropy (Shannon and Weaver, 1949) is widely used to measure randomness (Steuer et al., 2001; McDonnell et al., 2011; Watters and Reeke, 2014), however it is not suitable for continuous distributions. Few other randomness measures based on entropy have been used in neural context recently. In Kostal et al. (2007a) and Kostal et al. (2013) the authors propose a randomness measure for ISIs, creating an alternative to CV; whereas an entropy based randomness measure of spike counts is introduced in Rajdl et al. (2017), analogous to the Fano factor.

The instantaneous rate is often ambiguously defined as the inverse of a certain inter-spike interval, e.g., of the first complete ISI after stimulus onset, or of a combination of first n intervals, etc. (Bessou et al., 1968; Awiszus, 1988; Lansky et al., 2004). However, statistically consistent definition of the instantaneous rate (Kostal et al., 2018) cannot depend on the “intrinsic” timing given by the particular spike train realization (e.g., the first evoked spike). Instead, it must be evaluated with respect to the “external” time frame, consistently across trials, i.e., asynchronously with respect to individual spike train realizations. In this paper we consider models of spike trains described by renewal processes, and investigate the dependence between the ISI dispersion coefficients and instantaneous rate dispersion coefficients and emphasize that instantaneous rate provides another perspective in the evaluation of neuronal data.

The paper is divided as follows: first, the concept of instantaneous rate is introduced. Next, the concepts of variability and randomness are defined formally. In section 2, we derive the instantaneous rate distribution and the related dispersion measures for a few of the most commonly used models of steady-state neuronal activity. Included models are restricted to the framework of renewal spiking activity for the purpose of this paper. In section 3, we compare the dispersion characteristics of the neuronal models from rate coding and temporal coding perspectives. Upon comparison we find that for some neuronal models, the firing patterns have a different level of randomness in different settings whereas for others, the changed perspective of observation (from rate to temporal) does not affect the randomness of the data. More details on this is available in section 4.

2. Materials and Methods

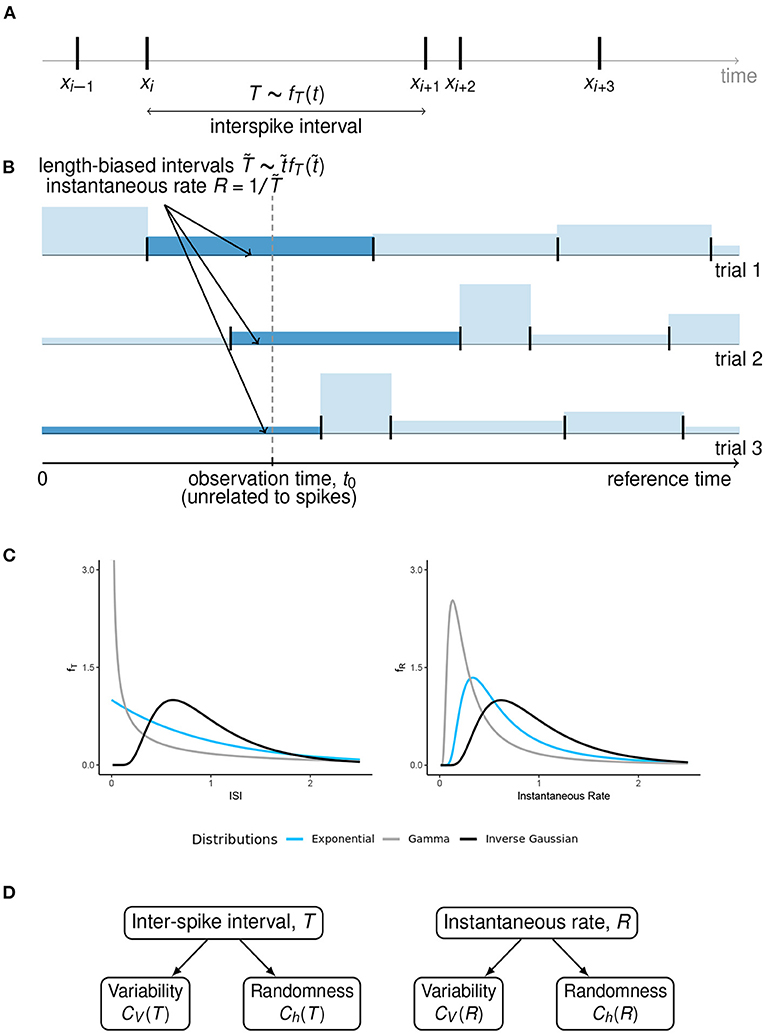

2.1. Instantaneous Rate

The class of stochastic processes whose realization consists of a sequence of point events in time is called stochastic point processes (Cox and Miller, 1965). Neuronal spike trains are often described as stochastic point processes, with spikes corresponding to events. There are essentially two ways of describing point processes, either in terms of the number of events occurring in a time window, or in terms of the intervals between these events. Consequently, a spike train can be described either by using a sequence of the occurrence times of its individual spikes, X1, X2, … (see Figure 1A) or through the ISIs defined as Ti = Xi+1 − Xi. Generally, the point process is stationary if the underlying joint probability distribution of the numbers of the spikes in k time intervals does not depend on displacement variable h (Cox and Lewis, 1966, p. 59). In this paper, we consider an important class of stationary point processes, the renewal point processes, in which the length T of consequent ISIs is an independently and identically distributed random variable with the probability distribution function (pdf) fT, T ~ fT(t). Renewal processes are often used to model the activity of spontaneously active cells (Tuckwell, 1988).

Figure 1. Length-biased ISIs and instantaneous rate. (A) An overview of the ISIs when the spikes occur at times Xi−1, Xi, Xi+1, …. We assume that the ISIs are independent and identically distributed with pdf fT(t), under steady state conditions. (B) When the observation time t0 is fixed with respect to some reference time, unrelated to the spike times, the probability of observing a particular ISI is proportional to its length. These “length-biased” intervals () are used to define the instantaneous rate R with the property 𝔼(R) = 1/𝔼(T). (C) A graphical representation of how the ISI distributions can visually differ from the instantaneous rate distributions, for some well-known ISI models with equal mean firing rate. (D) A summary of the concepts of variability and randomness for the two ways of spike train description that we have considered in this article.

Let the number of spikes that occur in the time interval [0, w] be denoted by N(w). A natural way of calculating the firing rate is to divide the number of spikes N(w) by the time window w. The mean of ISIs, 𝔼(T), satisfies the following relationship with the mean of the counting process 𝔼[N(w)] (Cox and Lewis, 1966; Rudd and Brown, 1997),

where λ is the point process intensity (Cox and Lewis, 1966).

For finite w, Equation (1) holds true for renewal processes only if time origin t0 is arbitrary, i.e., it is not related to the renewal point process realization (Figure 1B). In this case, t0 falls into some ISI, say Tk. Then the sequence of random variables (W, Tk+1, Tk+2, …), where W is the time to the first spike from the origin, is not stationary. Moreover, the point process intensity λ is equal to the mean firing rate. The corresponding renewal process is referred to as equilibrium renewal process, as opposed to the ordinary renewal process which starts from an arbitrary spiking event and all the ISIs follow the same renewal pdf (Cox and Miller, 1965).

The instantaneous firing rate is typically defined as the inverse of the ISIs (1/T) (Pauluis and Baker, 2000). However, as proven in Lansky et al. (2004), the mean instantaneous firing rate is higher than or equal to the mean firing rate,

with equality if all the ISIs are of the same length.

The undesirable inequality in Equation (2) becomes equality once we realize that the “time instant” (at which the instantaneous rate is measured) does not generally coincide with a spike. As shown in Kostal et al. (2018), the instant is chosen with respect to the “external” time frame, across trials, i.e., asynchronously with respect to individual spike train realizations. Consequently, the probabilities of observed ISIs () are proportional to their length, (Figure 1B). The mean inverse of these length-biased ISIs equals to the mean firing rate λ.

The instantaneous rate observed, in this case, is a random variable described by the pdf,

For a detailed overview of the derivations, refer to Kostal et al. (2018).

An immediate consequence of Equation (3) is,

Hence, for the purpose of the derivation of instantaneous rate distributions for different models of steady-state firing (Figure 1C), we will restrict ourselves to the case of equilibrium renewal process. The variability of instantaneous rate is explored in the next section.

2.2. Quantification of Variability and Randomness

The most common method to measure statistical dispersion of ISIs, described by a continuous positive random variable T, is the standard deviation σ(T), defined as

The relative dispersion measure quantity is known as coefficient of variation CV(T),

where λ = 1/𝔼(T). The coefficient of variation CV(T) is a dimensionless quantity and its value does not depend on the choice of the units of ISIs or on linear scaling; hence it can be used to meaningfully compare the ISI distributions with different means, unlike σ(T) (Softky and Koch, 1993; Doron et al., 2014).

From Equation (3), we can write the standard deviation for the instantaneous rate as

which leads to the relative dispersion measure,

From Equation (5), it follows that σ or CV measure how much the probability distribution is “spread” with respect to the mean value but these quantities do not describe all possible differences between spike trains with equal rate coding characteristics. Spike trains of equal variability may still differ in higher than second statistical moments. Moreover, neither σ nor CV quantifies how random or unpredictable the outcomes are Kostal et al. (2007a).

To quantify randomness as a further describing characteristic of a spike train, entropy based measures like differential entropy (Shannon and Weaver, 1949), h, have been proposed

where X is a continuous random variable with pdf fX. However, h(fX) by itself can not be used as a measure of randomness since it can take both positive and negative values and depends on the scaling of the random variable X. Kostal and Marsalek (2010) proposed the entropy-based dispersion coefficient σh,

Entropy-based dispersion σh can be interpreted with the help of asymptotic equipartition property (Principe, 2010; Cover and Thomas, 2012), the details of which can be found in Kostal et al. (2013). Analogous to Equation (6), the relative entropy-based measure of dispersion, Ch is defined as

An immediate consequence of the above equation is that the maximum value of Ch is Ch = 1, which occurs only in the case of exponential fT.

3. Results

Among the different point process models of stationary neuronal activity, we have chosen the classically used Gamma, lognormal, inverse Gaussian, shifted exponential, and the mixture of exponential distributions (Tuckwell, 1988).

First three distributions are part of the scale family (Casella and Berger, 2002), i.e., their ISI pdfs fT(t; λ), explicitly parameterized by the intensity λ, satisfy the relationship

We explore these neuronal models in the following subsections (Figure 1D). Detailed derivations of the following results are provided in the Supplementary Material for better legibility.

3.1. Gamma Distribution

Gamma distribution is frequently used as a descriptor of ISIs in experimental data analysis (Levine, 1991; McKeegan, 2002; Reeke and Coop, 2004; Pouzat and Chaffiol, 2009), its pdf is

where is the gamma function and a > 0, b > 0 are the parameters. The mean firing rate and the coefficient of variation are,

Using Equation (9), the expression for the entropy of the ISI distribution is derived as

where ψ(x) = Γ′(x)/Γ(x) is the digamma function.

Substituting the values from Equations (14) and (15) into Equation (11), gives the dispersion coefficient of randomness,

The instantaneous rate distribution fR(r) is obtained through Equation (3) and it follows the inverted gamma distribution,

Coefficient of variation CV(R) is evaluated through Equation (8),

The differential entropy for fR(r) is,

and the dispersion coefficient of randomness is,

The dispersion measures CV(T) and CV(R) are related through the following equation,

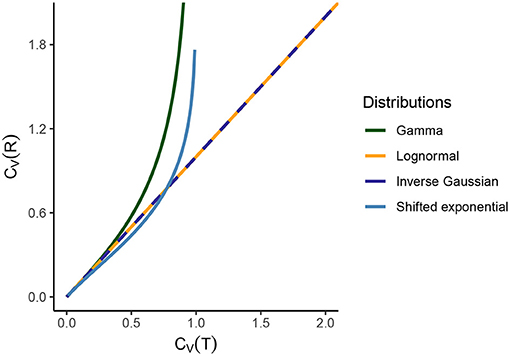

This relationship is illustrated in Figure 2 and we see that CV(R) → ∞ as CV(T) → 1. For CV(T) = 1, gamma distribution becomes exponential distribution with pdf,

Note that the firing intensity λ completely characterizes the exponential distribution and that CV(T) = 1, regardless of the value of λ. The entropy h(fT) of the exponential distribution is

One of the key features of the exponential distribution is that, for a fixed mean firing rate λ, it maximizes the differential entropy among all probability distributions on the real positive half line (Cover and Thomas, 2012). Deriving from Equation (11),

The corresponding instantaneous rate distribution, follows from Equation (3),

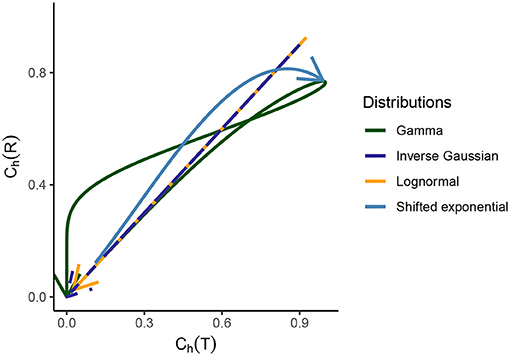

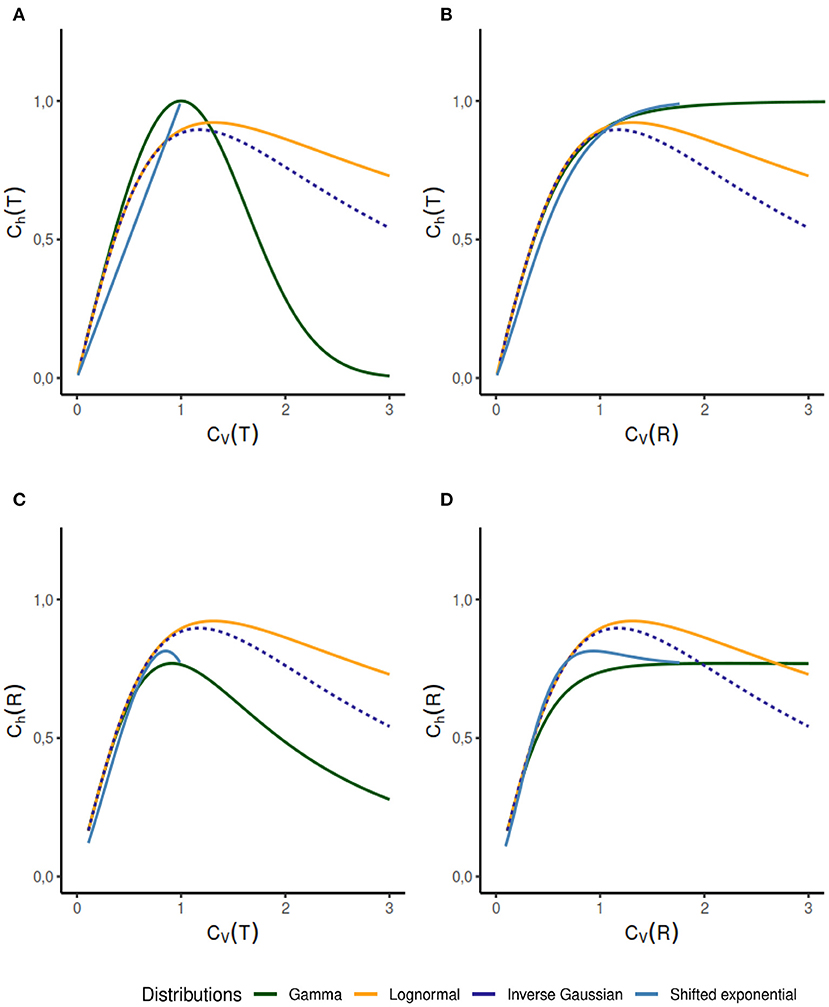

which is the inverse gamma distribution mentioned in Equation (17) with a = 1 and b = λ. For the inverse gamma distribution, the second moment exists only when a > 1. In Figure 3, we can see where gamma distribution becomes exponential and has Ch(T) = 1.

Figure 2. Comparison of CV(T) and CV(R) for several standard statistical ISI models. For the gamma distribution, CV(R) → ∞ as CV(T) → 1, since gamma distribution becomes exponential for CV(T) = 1. For lognormal and inverse Gaussian distribution, the relationship between the dispersion coefficients is an identity. For shifted exponential distribution, CV(T) and CV(R) depend on the mean firing rate λ and the refractory period τ. Hence, we vary the value of CV(T) and CV(R) (consequently of λ and τ) and we see that CV(R) > CV(T) until CV(T) = 0.7715 but then instantaneous rate distribution maintains a higher variability than ISIs. As CV(T) → 1, the shifted exponential becomes exactly exponential and therefore CV(R) → ∞.

Figure 3. Relationship between the dispersion coefficients of randomness for the gamma, lognormal, inverse Gaussian, and shifted exponential distribution of ISIs. Starting from the origin, the arrow indicated to the end corresponds to CV(T) values ranging from 0 to ∞. For the first three distributions, which are part of the scale family and for which we consider λ = 1 for a meaningful comparison, it holds that for CV(T) → 0, we see that Ch(T) → 0 for Ch(R) → 0. As CV(T) grows, the randomness grows for ISIs and instantaneous rate in the beginning. After a certain point, the randomness starts to decline and as CV(T) → ∞, we have Ch(T) → 0 and Ch(R) → 0. For the shifted exponential distribution where we have to consider a varying CV(T) and for that a varying mean firing rate λ and refractory period τ, Ch(T) increases monotonously as a function of CV(T), whereas Ch(R) obtains its maximum for CV(T) = 0.85 and then declines.

3.2. Lognormal Distribution

The lognormal distribution represents a common ISI descriptor in experimental data analysis (Levine, 1991; Pouzat and Chaffiol, 2009), even though it is rarely presented as a result of any of the neuronal models (Bershadskii et al., 2001). The pdf is,

where m is the scale parameter and σ > 0 is the shape parameter. The mean firing rate and coefficient of variation are as follows,

We compute the differential entropy

and the dispersion coefficient of randomness,

The instantaneous rate distribution follows the pdf,

with

The expression for the differential entropy is derived from Equation (11),

The dispersion coefficient is evaluated as,

For the lognormal distribution, there is a “symmetric” relationship between fT(t) and fR(r) (Kostal et al., 2018),

i.e., the shape of the probability distributions of ISI and instantaneous rates are exactly the same for λ = 1. Furthermore, the relationship between CV(T) and CV(R) is that of an identity (Figure 2),

and from Equations (29) and (33), we have

For lognormal distribution, the randomness and variability of instantaneous rate and ISI are the same, regardless of the perspective, as seen in Figures 2, 3.

3.3. Inverse Gaussian Distribution

Inverse Gaussian distribution is often fitted to experimentally observed ISIs (Gerstein and Mandelbrot, 1964; Berger et al., 1990; Levine, 1991; Pouzat and Chaffiol, 2009). The time that a Weiner process with positive drift takes to reach the first passage time is distributed according to the inverse Gaussian distribution. The probability density of inverse Gaussian distribution can be expressed as a function of its mean a > 0 and scale parameter b > 0

with

From Equation (9), the expression for differential entropy is,

where is the derivative of the modified Bessel function of the second kind (Abramowitz and Stegun, 1972).

Equation (11) gives,

The instantaneous firing rate follows the distribution

From Equation (8),

and from Equation (9), differential entropy is,

The expression for the dispersion coefficient of randomness is as follows,

Analogous to the lognormal distribution, we observe that the inverse Gaussian distribution also satisfies the “symmetrical” property (Equation 34) and,

Results from Figures 2, 3 illustrate this curious property that the randomness and variability of this distribution is equal for ISIs and instantaneous rate perspective.

3.4. Distribution Involving a Refractory Period

The refractory period is a state of the neuron, occurring right after a spike, where it is impossible for another spike to be emitted. A shifted exponential distribution function is used as an ISI descriptor for neurons with refractory period τ (Reeke and Coop, 2004). The probability density function of the shifted exponential function with parameter a > 0 and refractory period τ ≥ 0 is

with

We observe that CV(T) < 1 for a > 0 and τ > 0. The differential entropy for the shifted exponential distribution is evaluated as

and substituting these values into Equation (11), we arrive at

The pdf of the instantaneous rate is,

with

where is the upper incomplete gamma function (Abramowitz and Stegun, 1972).

Evaluation of the differential entropy from Equation (9) yields,

Expression for the dispersion coefficient is derived through Equation (11),

For the shifted exponential distribution, we observe that from Equation (48),

which leads to,

i.e., CV(T) depends on λ and τ. Hence, in order to analyze the relationship between CV(T) and CV(R) we have to vary the values of λ and τ (Figure 2).

Substituting the values from Equations (55) and (56) into Equation (54), we get

The dispersion coefficient of randomness Ch(R) increases until it attains its maximum value 0.8137 for CV(T) = 0.85, whereas Ch(T) keeps increasing monotonously. This behavior can be better understood by looking at the behavior of Ch(R) while CV(T) increases in Figure 6C. It is observed that Ch(R) attains its maximum value and then declines as CV(T) → 1, whereas Ch(T) monotonously increases.

3.5. Mixture of Two Exponential Distributions With Refractory Period and Application to Experimental Data

Throughout the years, empirical studies have produced evidence of bimodal or multimodal trends in ISI data (Rodieck et al., 1962; Nakahama et al., 1968; Obeso et al., 2000; Dorval et al., 2008). The underlying assumption is that a neuron might be in one of the several “states,” with each state being characterized by a different ISI distribution (Tuckwell, 1988). A mixture of two distributions is commonly used to modal such data, for example, Bhumbra and Dyball (2004) used a mixture of two lognormal distributions as an ISI descriptor of supraoptic nucleus neurons, and recently gamma-exponential mixture distribution has been used to characterize the ISI distribution in the auditory system (Heil et al., 2007; Neubauer et al., 2009).

In this section, we consider the mixture of two exponential distributions (Tuckwell, 1989), which is often used to describe the bursting activity in neurons, where a sequence of short ISIs is dispersed among comparatively longer ISIs. In 1965, Smith and Smith (1965) used the mixture of two exponential distributions to explain the bursting activity in the isolated cortical neurons of an unanesthetized cat. Thomas (1966) used mixed exponential distributions to describe the ISIs in their study of the clustered firing of cortical neurons. Trapani and Nicolson (2011) found that in the lateral line organs of a zebrafish, when the depolarizing currents were blocked, the ISI data of afferent neurons was best described by a mixture of exponential distributions.

The pdf of the mixed exponential distribution with refractory period τ ≥ 0 and mixture components with parameter a > 0, b > 0, a ≠ b is given by

where p ∈ (0, 1). In this case,

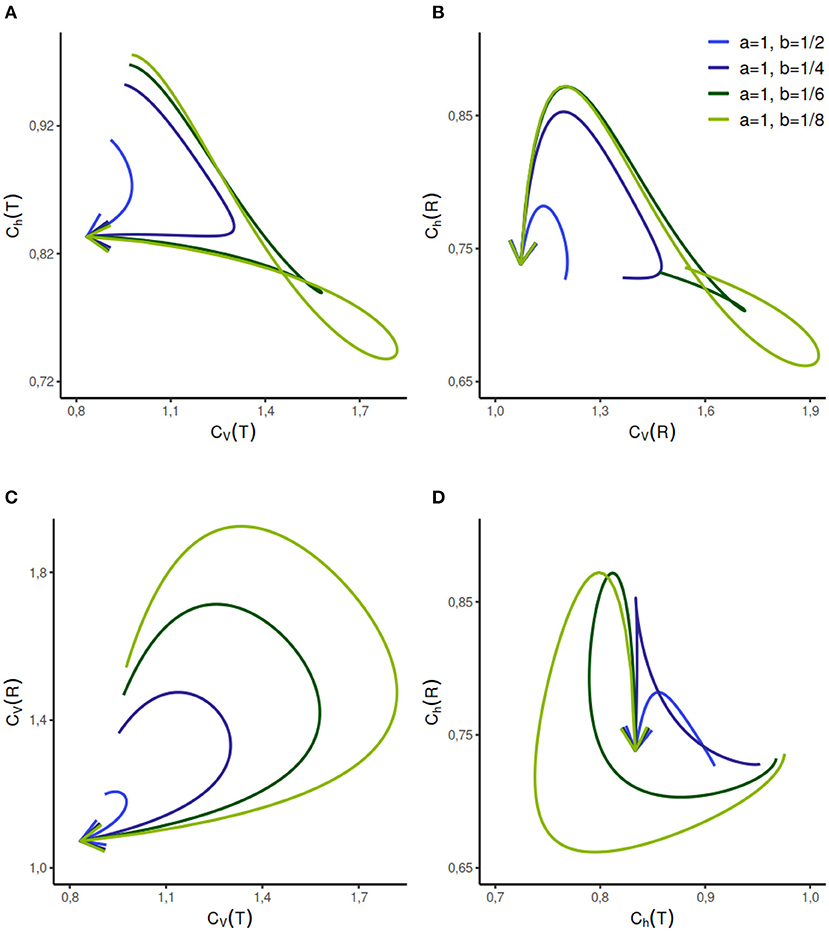

The analytical expressions for the CVs and Chs are difficult to obtain, however, they can be calculated numerically for a given set of parameters (Figure 4). The parameter range for this distribution can be vast, we analyze the dispersion coefficients for a few different sets of component rate parameters a and b, given a value of τ and p ∈ [0, 1]. We study the change in randomness and variability as probability variable p increases in the direction of the arrows. The behavior of this model is relatively complicated but for selected cases, such as when a = 1, b = 1/2, or a = 1, b = 1/4, different firing regimes are uniquely described by Ch(T) but not by CV(T).

Figure 4. Dispersion measures of the mixed exponential distribution with refractory period randomly set to τ = 0.2 and variable weight of components as p ∈ [0, 1] in the direction of the arrows. The rate parameter of one component is fixed at a = 1 whereas the rate parameter b of the second component varies. When p = 0 or p = 1, the distribution is shifted exponential with refractory period τ. The dispersion measure of randomness Ch(T) describes CV(T) uniquely when the a = 1, b = 1/2, whereas the reverse is not true (A). This relationship gets complicated with the increasing separation between the rate parameters of the components. As seen in (B–D), the relationship between the other dispersion measures is relatively complicated and non-unique for the given set of parameters.

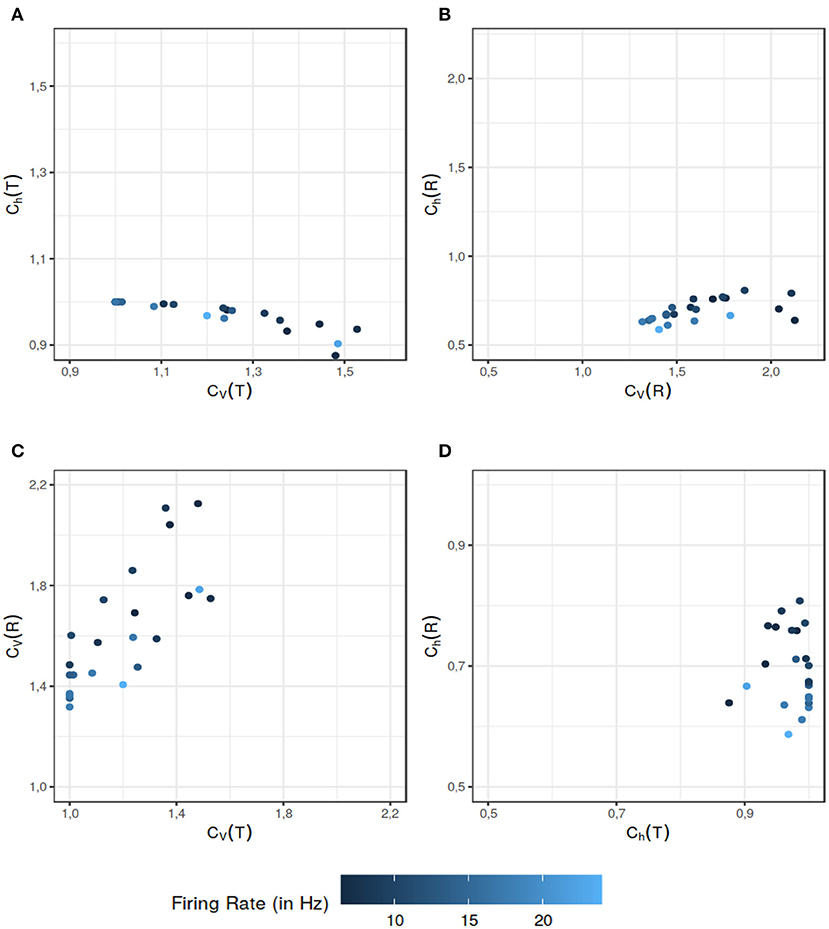

Song et al. (2018) model the spike generation in the spontaneously active afferent neurons of the Zebrafish lateral line, as a renewal process. The authors propose that a spike is generated if the neuron is recovered from the refractory period and a synaptic release (excitatory input) from hair cells has arrived and thus ISI T = τ + TE where τ is the absolute refractory period and TE is the time to excitation (we omit the small relative refractory period used in the original paper, for computational simplicity). It is demonstrated in the paper that the mixture of exponential distributions used to model the hair cell synaptic release time TE, yields the best fit for the ISI data. The pdf of the ISI, in this case is given by Equation (58). We calculated Ch and CV for ISIs and the instantaneous rate of each data set fitted with a combination of absolute refractory period and mixture of exponential distributions. For the given data sets, CV(T) and CV(R) offer more information than their randomness counterparts Ch(T) (Figure 5A) and Ch(R) (Figure 5B) respectively. When it comes to the dispersion measures of variability, as seen in Figure 5C, in some situations CV(R) offers additional information to distinguish further nuances in the data. The data sets which might seem of similar ISI variability, differ when it comes to the variability of their instantaneous rate. Similar results follow for the randomness (Figure 5D). This example supports our assertion that the dispersion measures based on the instantaneous rate can provide additional information to help differentiate among the data sets.

Figure 5. Comparison between the dispersion coefficients of variability and randomness of the experimental data for the data sets used in Song et al. (2018). The ISI data has two components: the refractory period and the hair-cell synaptic release time (the time to excitation input). Latter is modeled by a mixture distribution of two exponentials. The color gradient indicates the mean firing rate of the particular data set. The comparison of various dispersion quantities reveal various aspect to differentiate the data sets. As seen in (A), Ch(T) classifies the data sets in a similar category from the randomness perspective even if their ISI variability are on a wider scale. The dispersion measure of variability CV(R) also does a better job of differentiating among the data sets in (B) when their randomness dispersion measure would put them in a similar category. In (C), CV(T) and CV(R) reveal separate aspects of the data sets. Overall CV(R) helps differentiate among the data sets with similar CV(T) values. Finally in (D) we can see that Ch(R) further differentiates the data sets with equal Ch(T). The data sets which might have similar randomness on the ISI scale, can be differentiated on the basis of their instantaneous rate randomness. All of these figures support our assertion that dispersion measures of instantaneous rate provide additional information that can be helpful in distinguishing the data sets.

4. Discussion

We studied the spiking activity described by the renewal processes from two perspectives, the temporal point of view (in terms of ISIs) and the frequency point of view (in terms of instantaneous rate). We found that for a given spike train the temporal characteristics and the instantaneous rate characteristics, can either follow the same trend or opposite trends. This is due to the fact that the instantaneous rate distribution is obtained from length-biased sampling of ISIs (Equation 3). Spike trains described by non-renewal processes have been studied widely (Eden and Kass, 2016) but are beyond the scope of this paper. For the special case of serially correlated ISIs, the results of our analysis apply to marginal distributions and therefore remain unchanged (Kostal and Lansky, 2006).

1. We observe several different relationships between the variability from temporal and instantaneous rate perspectives (Figure 2). For gamma distribution, the variability of instantaneous rate is higher than the variability in ISIs whereas for lognormal and inverse Gaussian distribution it stays the same, CV(T) = CV(R). On the other hand, for shifted exponential distribution CV(R) < CV(T) until CV(T) = 0.7715 but after that CV(R) increases rapidly compared to CV(T).

2. In the case of gamma distribution, both the randomness measures Ch(T) and Ch(R) decline eventually as a function of CV(T) but they become constant as a function of CV(R) (Figures 6A–D). The randomness for gamma distribution differs in each particular case, and for small values of CV(T), Ch(R) < Ch(T). Lognormal and inverse Gaussian distributions attain their maximum for CV(T) values close to 1 and then their randomness keeps declining (Figures 6A,C). For these two distributions, Ch(T) = Ch(R) whether it is mapped against CV(T) or CV(R). For shifted exponential distribution, Ch(T) increases as a function of CV(T) (Figure 6A) whereas Ch(R) attains its maximum for CV(T) = 0.85 and then declines fast as CV → 1 (Figure 6C).

3. We studied the case of mixed exponential distribution with a refractory period. Although the theoretical analysis is complicated, we use the experimental data obtained from Song et al. (2018) to inspect the temporal and instantaneous rate perspectives. The dispersion measures for instantaneous rate provide a novel outlook on the data, different from the one provided by the dispersion measures for the ISIs (Figure 6). In cases like these, the instantaneous rate may be helpful in distinguishing further nuances in the data.

Figure 6. Dispersion measures of the gamma, lognormal, inverse Gaussian, and shifted exponential distributions. Each randomness dispersion measure Ch(R) and Ch(T) is categorized by their dependence on ISI variability CV(T) and on instantaneous rate variability Ch(T). Firing rate is fixed at λ = 1 for the scale family distributions. For the gamma distribution, Ch(T) declines as a function of CV(T) (A) but increases as a function of CV(R) (B). For lognormal and inverse Gaussian distribution Ch(T) = Ch(R), regardless of the perspective change (A–D). The shifted exponential distribution has a monotonously increasing Ch(T), when it is a function of CV(T) or CV(R) (A,B); whereas Ch(R) attains its maximum for CV(T) = 0.85 and CV(R) = 0.9282 respectively, and then declines (C,D).

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found at: https://static-content.springer.com/esm/art%3A10.1038%2Fs41598-018-33064-z/MediaObjects/41598_2018_33064_MOESM1_ESM.pdf.

Author Contributions

LK proposed the concept of the article. RT and LK wrote the manuscript. RT performed the calculations, numerical simulations, and analysis. All authors contributed to the article and approved the submitted version.

Funding

This research was supported by the Czech Science Foundation project 20-10251S.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Prof. Josef Trapani from Amherst College, MA and Prof. Nessy Tania from Smith College, MA for their helpful discussions and for kindly providing the experimental data. We would also like to thank Joseph Farrow from Durham University for his useful insights.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2021.620410/full#supplementary-material

References

Abramowitz, M., and Stegun, I. (1972). Handbook of Mathematical Functions. New York, NY: American Association of Physics Teachers.

Adrian, E. D. (1926). The impulses produced by sensory nerve-ending. J. Physiol. 62, 33–51. doi: 10.1113/jphysiol.1926.sp002334

Ainsworth, M., Lee, S., Cunningham, M. O., Traub, R. D., Kopell, N. J., and Whittington, M. A. (2012). Rates and rhythms: a synergistic view of frequency and temporal coding in neuronal networks. Neuron 75, 572–583. doi: 10.1016/j.neuron.2012.08.004

Awiszus, F. (1988). Continuous functions determined by spike trains of a neuron subject to stimulation. Biol. Cybern. 58, 321–327. doi: 10.1007/BF00363941

Barbieri, F., and Brunel, N. (2007). Irregular persistent activity induced by synaptic excitatory feedback. Front. Comput. Neurosci. 1:5. doi: 10.3389/neuro.10.005.2007

Berger, D., Pribram, K., Wild, H., and Bridges, C. (1990). An analysis of neural spike-train distributions: determinants of the response of visual cortex neurons to changes in orientation and spatial frequency. Exp. Brain Res. 80, 129–134. doi: 10.1007/BF00228854

Bershadskii, A., Dremencov, E., Fukayama, D., and Yadid, G. (2001). Probabilistic properties of neuron spiking time-series obtained in vivo. Eur. Phys. J. B Condens. Matter Complex Syst. 24, 409–413. doi: 10.1007/s10051-001-8691-4

Bessou, P., Laporte, Y., and Pages, B. (1968). A method of analysing the responses of spindle primary endings to fusimotor stimulation. J. Physiol. 196:37. doi: 10.1113/jphysiol.1968.sp008492

Bhumbra, G., and Dyball, R. (2004). Measuring spike coding in the rat supraoptic nucleus. J. Physiol. 555, 281–296. doi: 10.1113/jphysiol.2003.053264

Buraças, G. T., and Albright, T. D. (1999). Gauging sensory representations in the brain. Trends Neurosci. 22, 303–309. doi: 10.1016/S0166-2236(98)01376-9

Cover, T. M., and Thomas, J. A. (2012). Elements of Information Theory. New York, NY: John Wiley & Sons.

Cox, D., and Miller, H. (1965). The Theory of Stochastic Processes. New York, NY: John Wiley & Sons.

Cox, D. R., and Lewis, P. A. W. (1966). The Statistical Analysis of Series of Events. London: Methuen & Co Ltd. doi: 10.1007/978-94-011-7801-3

Dettner, A., Münzberg, S., and Tchumatchenko, T. (2016). Temporal pairwise spike correlations fully capture single-neuron information. Nat. Commun. 7:13805. doi: 10.1038/ncomms13805

Ditlevsen, S., and Lansky, P. (2011). Firing variability is higher than deduced from the empirical coefficient of variation. Neural Comput. 23, 1944–1966. doi: 10.1162/NECO_a_00157

Doron, G., Von Heimendahl, M., Schlattmann, P., Houweling, A. R., and Brecht, M. (2014). Spiking irregularity and frequency modulate the behavioral report of single-neuron stimulation. Neuron 81, 653–663. doi: 10.1016/j.neuron.2013.11.032

Dorval, A. D., Russo, G. S., Hashimoto, T., Xu, W., Grill, W. M., and Vitek, J. L. (2008). Deep brain stimulation reduces neuronal entropy in the MPTP-primate model of Parkinson's disease. J. Neurophysiol. 100, 2807–2818. doi: 10.1152/jn.90763.2008

Eden, U. T., and Kass, R. E. (2016). “Chapter 107: Statistical models of spike train data,” in Neuroscience in the 21st Century, eds D. W. Pfaff and N. Volkow (New York, NY: Springer), 3137–3151. doi: 10.1007/978-1-4939-3474-4_167

Gerstein, G. L., and Mandelbrot, B. (1964). Random walk models for the spike activity of a single neuron. Biophys. J. 4, 41–68. doi: 10.1016/S0006-3495(64)86768-0

Gerstner, W., and Kistler, W. M. (2002). Spiking Neuron Models: Single Neurons, Populations, Plasticity. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511815706

Heil, P., Neubauer, H., Irvine, D., and Brown, M. (2007). Spontaneous activity of auditory-nerve fibers: insights into stochastic processes at ribbon synapses. J. Neurosci. 27, 8457–8474. doi: 10.1523/JNEUROSCI.1512-07.2007

Jacobs, A. L., Fridman, G., Douglas, R. M., Alam, N. M., Latham, P. E., Prusky, G. T., et al. (2009). Ruling out and ruling in neural codes. Proc. Natl. Acad. Sci. U.S.A. 106, 5936–5941. doi: 10.1073/pnas.0900573106

Kostal, L., and Lansky, P. (2006). Classification of stationary neuronal activity according to its information rate. Network 17, 193–210. doi: 10.1080/09548980600594165

Kostal, L., Lansky, P., and Pokora, O. (2013). Measures of statistical dispersion based on shannon and fisher information concepts. Info. Sci. 235, 214–223. doi: 10.1016/j.ins.2013.02.023

Kostal, L., Lansky, P., and Rospars, J. P. (2007a). Neuronal coding and spiking randomness. Eur. J. Neurosci. 26, 2693–2701. doi: 10.1111/j.1460-9568.2007.05880.x

Kostal, L., Lansky, P., and Stiber, M. (2018). Statistics of inverse interspike intervals: the instantaneous firing rate revisited. Chaos 28:106305. doi: 10.1063/1.5036831

Kostal, L., Lansky, P., and Zucca, C. (2007b). Randomness and variability of the neuronal activity described by the ornstein-uhlenbeck model. Network 18, 63–75. doi: 10.1080/09548980701243134

Kostal, L., and Marsalek, P. (2010). Neuronal jitter: can we measure the spike timing dispersion differently. Chin. J. Physiol. 53, 454–464. doi: 10.4077/CJP.2010.AMM031

Lansky, P., Rodriguez, R., and Sacerdote, L. (2004). Mean instantaneous firing frequency is always higher than the firing rate. Neural Comput. 16, 477–489. doi: 10.1162/089976604772744875

Levine, M. W. (1991). The distribution of the intervals between neural impulses in the maintained discharges of retinal ganglion cells. Biol. Cybern. 65, 459–467. doi: 10.1007/BF00204659

McDonnell, M. D., Ikeda, S., and Manton, J. H. (2011). An introductory review of information theory in the context of computational neuroscience. Biol. Cybern. 105:55. doi: 10.1007/s00422-011-0451-9

McKeegan, D. E. F. (2002). Spontaneous and odour evoked activity in single avian olfactory bulb neurones. Brain Res. 929, 48–58. doi: 10.1016/S0006-8993(01)03376-5

Nakahama, H., Suzuki, H., Yamamoto, M., Aikawa, S., and Nishioka, S. (1968). A statistical analysis of spontaneous activity of central single neurons. Physiol. Behav. 3, 745–752. doi: 10.1016/0031-9384(68)90146-7

Nemenman, I., Bialek, W., and Van Steveninck, R. D. R. (2004). Entropy and information in neural spike trains: progress on the sampling problem. Phys. Rev. E 69:056111. doi: 10.1103/PhysRevE.69.056111

Neubauer, H., Koppl, C., and Heil, P. (2009). Spontaneous activity of auditory nerve fibers in the barn owl (tyto alba): analyses of interspike interval distributions. J. Neurophysiol. 101, 3169–3191. doi: 10.1152/jn.90779.2008

Obeso, J. A., Rodriguez-Oroz, M. C., Rodriguez, M., Lanciego, J. L., Artieda, J., Gonzalo, N., et al. (2000). Pathophysiology of the basal ganglia in Parkinson's disease. Trends Neurosci. 23, S8–S19. doi: 10.1016/S1471-1931(00)00028-8

Pauluis, Q., and Baker, S. N. (2000). An accurate measure of the instantaneous discharge probability, with application to unitary joint-event analysis. Neural Comput. 12, 647–669. doi: 10.1162/089976600300015736

Pouzat, C., and Chaffiol, A. (2009). Automatic spike train analysis and report generation. an implementation with R, R2HTML and star. J. Neurosci. Methods 181, 119–144. doi: 10.1016/j.jneumeth.2009.01.037

Principe, J. C. (2010). Information Theoretic Learning: Renyi's Entropy and Kernel Perspectives. New York, NY: Springer Science & Business Media. doi: 10.1007/978-1-4419-1570-2

Rajdl, K., and Lansky, P. (2013). Fano factor estimation. Math. Biosci. Eng. 11:105. doi: 10.3934/mbe.2014.11.105

Rajdl, K., Lansky, P., and Kostal, L. (2017). Entropy factor for randomness quantification in neuronal data. Neural Netw. 95, 57–65. doi: 10.1016/j.neunet.2017.07.016

Reeke, G. N., and Coop, A. D. (2004). Estimating the temporal interval entropy of neuronal discharge. Neural Comput. 16, 941–970. doi: 10.1162/089976604773135050

Rieke, F., Warland, D., Van Steveninck, R., and Bialek, W. S. (1999). Spikes: Exploring the Neural Code, Vol 7. Cambridge: MIT Press.

Rigotti, M., Barak, O., Warden, M. R., Wang, X. J., Daw, N. D., Miller, E. K., et al. (2013). The importance of mixed selectivity in complex cognitive tasks. Nature 497:585. doi: 10.1038/nature12160

Rodieck, R., Kiang, N.-S., and Gerstein, G. (1962). Some quantitative methods for the study of spontaneous activity of single neurons. J. Biophys. 2, 351–368. doi: 10.1016/S0006-3495(62)86860-X

Rudd, M. E., and Brown, L. G. (1997). Noise adaptation in integrate-and-fire neurons. Neural Comput. 9, 1047–1069. doi: 10.1162/neco.1997.9.5.1047

Shadlen, M. N., and Newsome, W. T. (1994). Noise, neural codes and cortical organization. Curr. Opin. Neurobiol. 4, 569–579. doi: 10.1016/0959-4388(94)90059-0

Shannon, C. E., and Weaver, W. (1949). The Mathematical Theory of Communication. Urbana, IL: University of Illinois Press.

Smith, D. R., and Smith, G. K. (1965). A statistical analysis of the continual activity of single cortical neurones in the cat unanaesthetized isolated forebrain. Biophys. J. 5, 47–74. doi: 10.1016/S0006-3495(65)86702-9

Softky, W. R., and Koch, C. (1993). The highly irregular firing of cortical cells is inconsistent with temporal integration of random EPSPS. J. Neurosci. 13, 334–350. doi: 10.1523/JNEUROSCI.13-01-00334.1993

Song, S., Lee, J. A., Kiselev, I., Iyengar, V., Trapani, J. G., and Tania, N. (2018). Mathematical modeling and analyses of interspike-intervals of spontaneous activity in afferent neurons of the Zebrafish lateral line. Sci Rep. 8, 1–14. doi: 10.1038/s41598-018-33064-z

Stein, R. B., Gossen, E. R., and Jones, K. E. (2005). Neuronal variability: noise or part of the signal? Nat. Rev. Neurosci. 6:389. doi: 10.1038/nrn1668

Steuer, R., Ebeling, W., Russell, D. F., Bahar, S., Neiman, A., and Moss, F. (2001). Entropy and local uncertainty of data from sensory neurons. Phys. Rev. E 64:061911. doi: 10.1103/PhysRevE.64.061911

Stevenson, I. H. (2016). Flexible models for spike count data with both over-and under-dispersion. J. Comput. Neurosci. 41, 2-9-43. doi: 10.1007/s10827-016-0603-y

Theunissen, F., and Miller, J. P. (1995). Temporal encoding in nervous systems: a rigorous definition. J. Comput. Neurosci. 2, 149–162. doi: 10.1007/BF00961885

Thomas, E. (1966). Mathematical models for the clustered firing of single cortical neurones. Br. J. Math. Stat. Psychol. 19, 151–162. doi: 10.1111/j.2044-8317.1966.tb00365.x

Thorpe, S. J., and Gautrais, J. (1997). “Rapid visual processing using spike asynchrony,” in Advances in Neural Information Processing Systems, Cambridge, 901–907.

Trapani, J., and Nicolson, T. (2011). Mechanism of spontaneous activity in afferent neurons of the Zebrafish lateral-line organ. J. Neurosci. 31, 1614–1623. doi: 10.1523/JNEUROSCI.3369-10.2011

Tuckwell, H. C. (1988). Introduction to Theoretical Neurobiology: Vol. 2, Nonlinear and Stochastic Theories, Vol. 8. Cambridge: Cambridge University Press.

Tuckwell, H. C. (1989). Stochastic processes in the neurosciences. SIAM. doi: 10.1137/1.9781611970159

Victor, J. D., and Purpura, K. P. (1997). Metric-space analysis of spike trains: theory, algorithms and application. Network 8, 127–164. doi: 10.1088/0954-898X_8_2_003

Keywords: variability, randomness, firing rate, entropy, rate coding, neural coding, temporal coding, instantaneous firing rate

Citation: Tomar R and Kostal L (2021) Variability and Randomness of the Instantaneous Firing Rate. Front. Comput. Neurosci. 15:620410. doi: 10.3389/fncom.2021.620410

Received: 22 October 2020; Accepted: 26 April 2021;

Published: 07 June 2021.

Edited by:

Mayank R. Mehta, University of California, Los Angeles, United StatesReviewed by:

Ergin Yilmaz, Bulent Ecevit University, TurkeyCharles Smith, North Carolina State University, United States

Taro Tezuka, University of Tsukuba, Japan

Cristina Zucca, University of Turin, Italy

Copyright © 2021 Tomar and Kostal. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rimjhim Tomar, cmltamhpbS50b21hckBmZ3UuY2FzLmN6

Rimjhim Tomar

Rimjhim Tomar Lubomir Kostal

Lubomir Kostal