- School of Computer and Communication Sciences and School of Life Sciences, École Polytechnique Fédérale de Lausanne, Lausanne, Switzerland

Synaptic changes induced by neural activity need to be consolidated to maintain memory over a timescale of hours. In experiments, synaptic consolidation can be induced by repeating a stimulation protocol several times and the effectiveness of consolidation depends crucially on the repetition frequency of the stimulations. We address the question: is there an understandable reason why induction protocols with repetitions at some frequency work better than sustained protocols—even though the accumulated stimulation strength might be exactly the same in both cases? In real synapses, plasticity occurs on multiple time scales from seconds (induction), to several minutes (early phase of long-term potentiation) to hours and days (late phase of synaptic consolidation). We use a simplified mathematical model of just two times scales to elucidate the above question in a purified setting. Our mathematical results show that, even in such a simple model, the repetition frequency of stimulation plays an important role for the successful induction, and stabilization, of potentiation.

1. Introduction

Synaptic plasticity, i.e., the modification of the synaptic efficacies due to neural activity, is considered the neural correlate of learning (Hebb, 1949; Martin et al., 2000; Caroni et al., 2012; Nabavi et al., 2014; Hayashi-Takagi et al., 2015; Holtmaat and Caroni, 2016). It involves several biochemical mechanisms which interact on multiple timescales. The induction protocols for short-term plasticity (STP, on the order of hundreds of milliseconds) (Turrigiano et al., 1996; Markram et al., 1998) and for the early phase of long-term potentiation or depression (LTP or LTD, on the order of minutes to hours) (Levy and Stewart, 1983; Brown et al., 1989; Artola et al., 1990; Bliss and Collingridge, 1993; Markram et al., 1997; Sjöström et al., 2001) are well-established and have led to numerous models (Bienenstock et al., 1982; Gerstner et al., 1996; Song et al., 2000; Van Rossum et al., 2000; Senn et al., 2001; Shouval et al., 2002; Rubin et al., 2005; Pfister and Gerstner, 2006; Brader et al., 2007; Graupner and Brunel, 2007; Clopath et al., 2010; Gjorgjieva et al., 2011; Nicolas and Gerstner, 2016). On the other hand, various experiments have shown that the further evolution of synaptic efficacies on the timescale of hours depends in a complex way on the stimulation protocol (Frey and Morris, 1997; Dudai and Morris, 2000; Nader et al., 2000; Redondo and Morris, 2011). This phenomenon is called synaptic consolidation, to be distinguished from memory consolidation, which is believed to take place through the interaction between hippocampus and cortex and which occurs on an even longer timescale (Hasselmo, 1999; Roelfsema, 2006; Brandon et al., 2011). Such a richness of plasticity mechanisms across multiple timescales has been hypothesized to be fundamental in explaining the large storage capacity of memory networks (Fusi et al., 2005; Benna and Fusi, 2016).

Synaptic consolidation is often studied in hippocampal or cortical slices, in which it is induced by extra-cellular stimulation of afferent fibers with short current pulses (Frey and Morris, 1997; Sajikumar and Frey, 2004a,b). Experimental protocols are typically organized in multiple repetitions of stimulation episodes, with variable repetition frequency and duration of each episode (Figure 1A). The dependence of the consolidation dynamics on the parameters of the experimental protocol is complex and has remained elusive. Both the intra-episode pulse frequency and the inter-episode delay play an important role in determining whether a synapse gets potentiated or not after the stimulation (Kumar and Mehta, 2011; Larson and Munkácsy, 2015). Furthermore, recent evidence suggests the existence of optimal parameters to achieve consolidation (Kumar and Mehta, 2011; Larson and Munkácsy, 2015). Existing models succeeded in reproducing experimental results on early and late LTP (Clopath et al., 2008; Barrett et al., 2009; Ziegler et al., 2015; Kastner et al., 2016), by a mathematical description of the interaction of different synaptic mechanisms. However, the complexity of those models prevents a complete characterization of the dynamics, that links stimulation protocols to synaptic consolidation. Here we address the following question: why is the temporal structure of stimulation, i.e., the timing of repetitions, so important for synaptic consolidation? (Zhou et al., 2003; Kramár et al., 2012; Benna and Fusi, 2016).

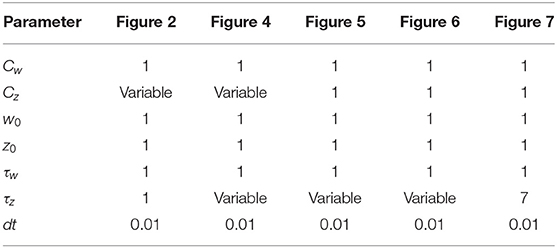

Figure 1. Schematic experimental setup and modeling framework. (A) Schematic of extra-cellular stimulation in experiments. The plasticity-inducing stimulus consists of several episodes of duration ton with inter-episode interval toff. Zoom: Each episode contains several high-frequency pulses. (B) Schematic of single-synapse consolidation model. The synapse is described by a weight variable w with time constant τw and a slower consolidation variable z with time constant τz ≥ τw. Each episode corresponds to a rectangular plasticity-inducing stimulus I(t). (C) Phase-plane for a specific choice of f(w, z) and g(w, z), I(t) = 0, and τz = 7τw. The fixed points in (w, z) = (−1, −1) and (w, z) = (1, 1) are stable and correspond to an unpotentiated and potentiated synapse, respectively. The black line separates the basins of attraction of the two stable fixed points. (D) Evolution of the system dynamics in the phase-plane. The system is initialized in the unpotentiated state and it evolves under the effect of a plasticity-inducing stimulus made of three pulses.

We introduce a phenomenological model of synaptic consolidation (Figures 1B–D) in which, as suggested by experiments (Petersen et al., 1998; O'Connor et al., 2005; Bosch et al., 2014), both model variables are bistable. We find that, despite the simplicity of our model, potentiation of a synapse depends in a complex way on the temporal profile of the stimulation protocol. Our results suggest that not just the total number of stimulation pulses, but also the precise timing within an episode and across repetitions of episodes are important, in agreement with anecdotal evidence that changes in protocols can have unexpected consequences.

2. Methods

In what follows, we introduce the synaptic consolidation model that we analyze in the Results section. Since describing the details of molecular interactions inside a synapse as a system of differential equations (Bhalla and Iyengar., 1999; Lisman and Zhabotinsky, 2001) would be far too complicated for our purpose, we aim to capture the essential dynamics responsible for synaptic consolidation with an effective low-dimensional dynamical system. In this view, variables are mathematical abstractions that represent the global state of a network of biochemical molecules inside a synapse, e.g., during a transition from one metastable configuration to another (Bosch et al., 2014).

2.1. Choice of the Model

A one-dimensional dynamical system is not expressive enough to capture experimental data. Indeed, in a one-dimensional differential equation, it would be sufficient to know the instantaneous state of a single variable of the synapse (such as the weight) to predict its evolution, while this is not the case in experiments. As a natural step toward more complexity, we consider a general autonomous two-dimensional model

where w represents the measured efficacy of a synaptic contact point (e.g., the amplitude of the EPSP caused by pre-synaptic spike arrival), while z is an abstract auxiliary variable. For simplicity, both variables will be considered unit-less. We choose the functions f and g, such that

where (w, z) = ±(w0, z0) are the stable fixed points of the two-dimensional system in the presence of a fixed coupling Cw ≥ 0, Cz ≥ 0 and in the absence of a drive, i.e., I = 0. In our simulations, we always choose w0 = z0 = 1. For Kw ≠ 0 and Kz ≠ 0, we could divide Equation (2) by Kw and Kz to further reduce the numbers of parameters. However, we will stick to a notation with explicit Kw and Kz since we do not want to exclude the choice Kw = 0 or Kz = 0. Without loss of generality, we will choose Kw, z ∈ {0, 1}, i.e., either zero or unity. Note that the choice Kz = 0 implies that the dynamics of the auxiliary variable z are linear, while Kz = 1 implies full non-linearity. The choice of the model is explained in the next section.

2.2. Simplification Steps of the 2D-Dynamics

In this section we present the arguments leading from Equation (1) to (2). Readers not interested in the details may jump to the next sections. One way to tackle the very general system in Equation (1) is to perform a Taylor expansion around w = 0 for the first equation

and around z = 0 for the second one

An expansion up to the third order enables us to implement the bistable dynamics (Petersen et al., 1998; O'Connor et al., 2005) of single contact points. Bistability requires the system to have at least two stable fixed points at finite value. This condition cannot be met by degree 1 or degree 2 polynomials since they can have at most one stable fixed point. Therefore, bistability requires a polynomial of degree 3 or higher in at least one equation. To be more general, we will consider a system in which both polynomials are of degree 3. We restrict our analysis to the situation in which we have linear coupling between the two variables, of the form A(z) = A0 + A1 · z, B(z) = B, C(z) = C, and D(z) = D. Analogously, in the second equation we set , B′(w) = B′, C′(w) = C′, and D′(w) = D′.

Bistability is be obtained with a negative coefficient of the third power in both equations. Before we start the analysis, we rewrite Equations (3) and (4) in a more symmetric form. To do so we proceed in three steps. (i) Assuming that the degree 3 polynomial has three real roots, we rewrite our system in the more intuitive form

where C1 and C2 are coupling constants and the roots w1, w2, w3 correspond to the fixed points of the equations in the uncoupled case (C1 = C2 = 0). The parameters τw and τz can be interpreted as time constants since they do not influence the location of the fixed points but only the speed of the dynamics. K1 and K2 are two positive constants that scale the whole polynomial, while C1 and C2 are positive constants that control the amount of coupling between the two variables. If we exclude the coupling terms, each equation corresponds to an over-damped particle moving in a double-well potential (Strogatz, 2014). The parameters K1, K2, τw, τz, C1, C2, w1, w2, w3 are simple transformations of the parameters A0, A1, B, C, D, , , B′, C′, D′ of the original system. For example K1 = D. (ii) In order to further simplify our study, we assume that in both equations one of the three roots is zero, one is positive and one negative, equally distant from zero. Following (Zenke et al., 2015), we add a plasticity induction term to the first equation that describes the drive provided by an LTP induction protocol. The equations now read

In the absence of coupling, the double well potential related to Equation (6) has minima in , and a local maximum in w = 0 (z = 0). Notice that this seems to imply that a synaptic weight can take both positive and negative values, which is biologically implausible. However, this choice simplifies the calculations without loss of generality, since it is always possible to go back to a system with positive weights by applying a coordinate translation.

(iii) In the absence of a drive (I = 0), the system has eight free parameters, which all influence the location of the fixed points. In a final transformation step we rewrite Equation (6) such that the location of two stable fixed points becomes independent of the coupling constants C1 and C2. The reason for doing this is that the stable fixed points of the system are easier to access experimentally than other constants. In particular, the value of w at the stable fixed point should be related to the synaptic weight measured experimentally. We, therefore, rewrite the system in the form of Equation (2), where w0 and z0 are the absolute values of the stable fixed point and the parameters can be mapped from Equation (6) to (2), for example, Kw = K1 and and analogously for Cz and Kz.

2.3. Nullclines and Phase-Plane Analysis

Since the system is two-dimensional, it can be studied using phase-plane analysis, following a well-established tradition in computational neuroscience (Wilson and Cowan, 1972; Ermentrout, 1996, 2002; Rinzel and Ermentrout, 1998). The fixed points of the system are graphically represented by the intersections of the nullclines (i.e., the curves defined by either or ), which in our system are:

The maximum number of fixed points for the system in Equation (2) can be easily computed. To do so, consider a more general form of two nullclines:

where Pn(z) is a polynomial of degree n in w and, analogously, Qm(w) is a polynomial of degree m in z (cf. Equation 7). To find the fixed points of the system (Equation 8) we need to solve:

Equation (9) is a polynomial equation of degree n · m in w and therefore it allows a number of real solutions s, 0 ≤ s ≤ n · m. Applying this formula to our case, we find that we can have a maximum of nine fixed points.

In order to reduce the number of parameters from 8 to 4, we first consider the symmetric case (section 2.4) in which the two equations have the same parameters. Moreover, since we make the choice z0 = w0 = 1, the actual number of free parameters is three. In the next section, we show the effect of changing the coupling coefficients. Then, we briefly comment on the effect of the time constants and of a constant plasticity-inducing stimulus I. We will move to the analysis of the asymmetric cases in section 2.5.

2.4. Symmetric Changes of Coupling Coefficients Reveal Two Bifurcations

We study the case of symmetric coupling Cw = Cz = C and analyze how a change of coupling strength influences the dynamics of the system. As an aside, we note that for symmetric coupling we can define a pseudopotential (Cohen and Grossberg, 1983)

in which the dynamical variables move according to and .

We fix τw = τz, Kw = Kz = 1, I = 0, w0 = z0 = 1 and vary C in Equation (2). In the case C = 1, the system is in a rather simple regime: there are two stable fixed points in (w, z) = (−1, −1) and (w, z) = (1, 1) and a saddle fixed point at the origin (Figure 2). The basins of attraction of the stable fixed points are separated by the z = −w diagonal.

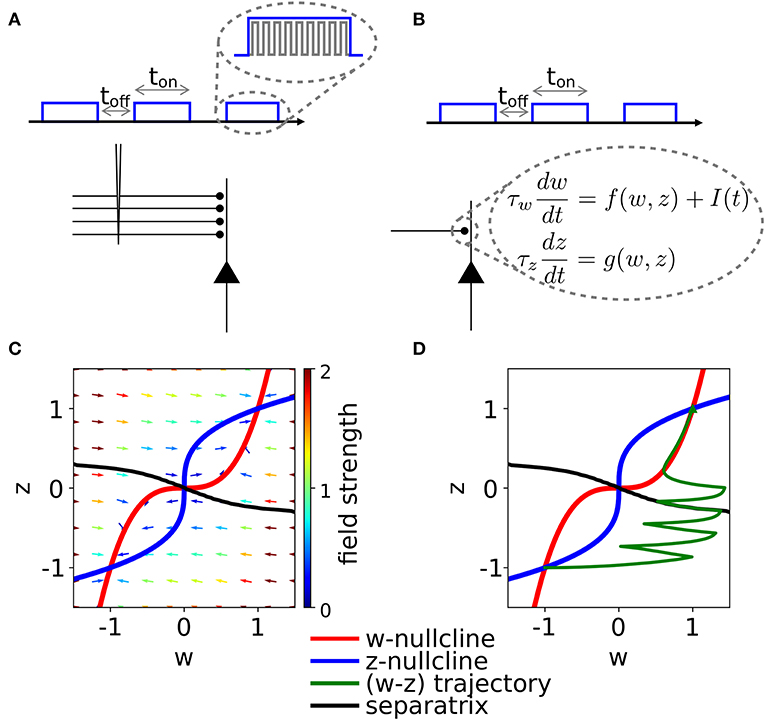

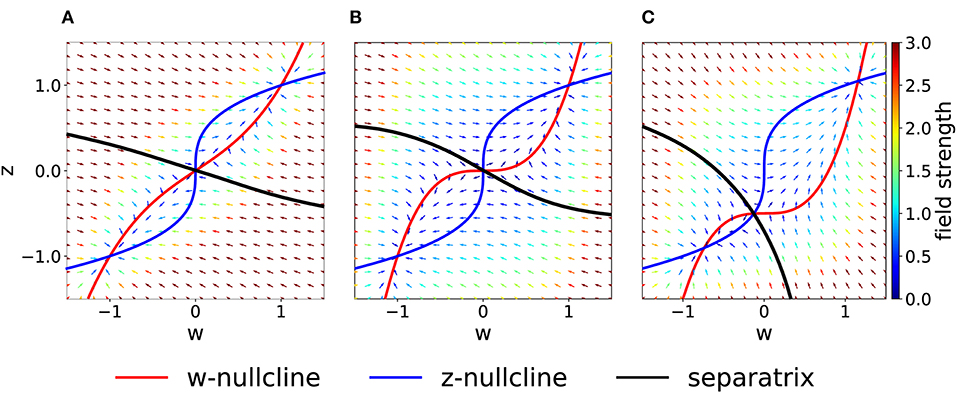

Figure 2. Phase-plane diagram and basins of attractions for the symmetric case with equal coupling constants, Cw = Cz = C. The plasticity-inducing stimulus is null, I = 0. (A) C = 1, phase-plane with field arrows. The color of the arrows is proportional to the field strength. w− and z− nullclines are indicated in red and blue, respectively. The line that separates the two basins of attraction is indicated in black. (B) Same as (A), but C = 0.4. Compared to (A), we notice the creation of two saddle points. (C) Same as (A), but C = 0.2. The maximum number of fixed points is achieved. In this case we have four basins of attraction.

If we decrease the coupling C, we encounter two bifurcations. A first pitchfork bifurcation takes place at C = 1/2, when the two nullclines are tangent to each other in the saddle point. Beyond the bifurcation point of the coupling coefficient, we observe the creation of two additional saddle points (Figure 2B). The stability properties, the location and the basins of attraction of the other two fixed points remain unchanged, but the local field strength changes, as shown by the colored arrows. The second pitchfork bifurcation takes place at C = 1/3. For this coupling value, each of the two new saddle points splits into a stable fixed point and two further saddle points. Therefore, for very weak coupling we observe four basins of attractions, whose shape is shown in Figure 2C. The stability of the fixed points in (w, z) = (−1, −1) and (w, z) = (1, 1) is not affected by the bifurcations.

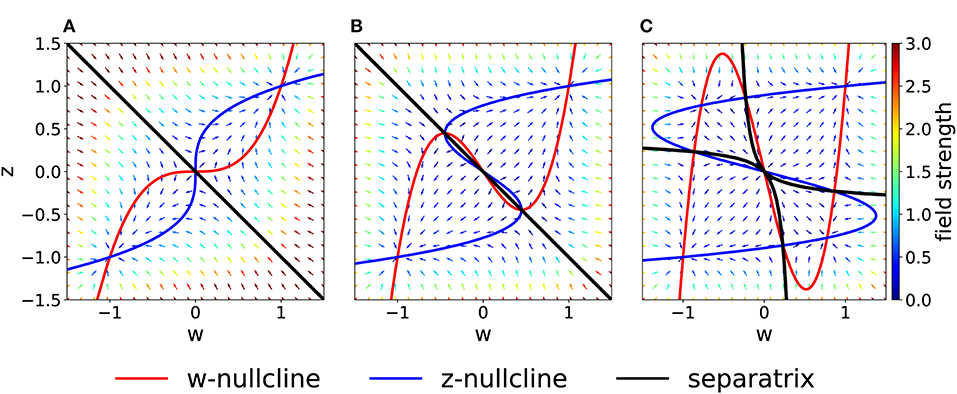

On the other hand, if we increase the coupling coefficient to a value C > 1, then the two nullclines will progressively flatten, but the location of the three fixed points is unchanged with respect to the case C = 1. These observations have been summarized in the bifurcation diagram of Figure 3A. We observe that there are actually three pitchfork bifurcations, but that two of them are degenerate since they happen for the same value of C.

Figure 3. Bifurcations diagrams. (A) Fixed points in the symmetric case. Dashed lines indicate unstable fixed points while continuous lines indicate stable fixed points. Orange and green dots indicate bifurcation points. (B) Bifurcation points in the Cw – Cz plane (black) for the general (asymmetric) case. The dashed gray line corresponds to Cw = Cz. The orange and green dots indicate the corresponding bifurcations in (A). Note that, in (B), the bifurcation at (green dot) is a degenerate point.

2.5. Asymmetric Parameter Choices Shape the Basins of Attraction

As a more general case, we consider asymmetric coupling C or timescale τ. When the coupling coefficients are asymmetric, we can plot the position of the bifurcation points in the Cw—Cz plane (Figure 3B). The choice Cw = Cz of the previous section corresponds to the dashed gray line. We notice that in the asymmetric case it is possible to have three distinct bifurcations (for example, we can fix Cw = 0.3 and decrease Cz, from 1 to 0). We find that, for Cw + Cz > 1, the number of fixed points is always three and no bifurcation is possible. On the other hand, if Cw + Cz < 1, the system enters in the regime with minimum five fixed points. Moreover, we can analytically compute the bifurcation value of one coupling constant, given the other. An asymmetric choice Cw ≠ Cz influences the shape of the basins of attraction (Figure 4A).

Figure 4. Asymmetric parameter choices. (A) In the case Cw = 3 > Cz = 1, the curvature of the w−nullcline (red) is smaller than that of the z−nullcline (blue) and the basins of attraction are deformed compared to Figure 2A. (τz = τw = 1) (B) For τz/τw = 3 and Cw = Cz = 1, nullclines are not affected (compare to Figure 2A) but the basins of attraction are. (C) For I = 0.5 (all other parameters set to 1), the basin of attraction of the fixed point at (−1, −1) is smaller than of the fixed point at (1, 1).

If we keep Cw = Cz but consider instead τz > τw, the system in Equation (2) may be interpreted as two different molecular mechanisms that act on different timescales. For example, the variable z can be interpreted as a tagging mechanism or a consolidation variable while w is the weight variable or amplitude of a post-synaptic potential. A comparison of Figure 2A and Figure 4B shows that the changes in τ do not affect the nullclines but change the flow field and the basin of attraction.

Another way by which we can introduce asymmetry in the system is by adding a plasticity-inducing stimulus I. It follows from Equation (7) that a value I > 0 will cause a down shift of the w−nullcline. The case of Cw = Cz = 1, τw = τz = 1 s, Kw = Kz = 1 and I > 0 is shown in Figure 4C. A plasticity-inducing stimulus I > 0 also implies a reduction of the basin of attraction of the lower stable fixed point in favor of an increase of the basin of attraction of the upper stable fixed point. For high values of I, the basin of attraction of the lower fixed point disappears via a steady state bifurcation. Therefore, when I > 0 is large enough, the system is forced to move to the upper fixed point that can be interpreted as a potentiated state of the synapse. Analogously, when I < 0, the attraction basin of the lower fixed point is enlarged and leads, eventually, to a bifurcation in which the upper fixed point and the saddle point are lost.

A possible generalization of the model would be to consider the coupling coefficients Cw and Cz as dynamical variables, as it has been explored in previous work (Ziegler et al., 2015). In these models, the coupling parameters Cw and Cz of the two dynamical variables alternates between Cw = 0 and Cz = 1 or Cw = 1 and Cz = 0, implementing a write-protection mechanism. The price we pay is the introduction of additional differential equations and parameters for the dynamics of the coupling coefficients. In the specific implementation of Ziegler et al. (2015), the dynamical coupling is controlled by a low-pass filter of the plasticity-inducing stimulus I and the concentration of neuromodulators on plasticity.

2.6. Numerical Simulations

All figures were obtained using Python 2.7, except for the bifurcation plot in Figure 3, which was created with Wolfram Mathematica. In the phase-plane plots, the separatrix between the basins of attraction was obtained doing a mesh-grid search: we initialized the dynamical system (Equation 2) in each point of a 100 × 100 grid in the w, z space (w, z ∈ [−1.5, 1.5]) and checked to which stable fixed point it converges. Therefore we interpolated the separation line. The trajectory of the system in the phase-plane was obtained by solving the system in Equation (2) using the Runge-Kutta 4 method with integration step dt = 0.01. In Figures 6, 7, we inject an external stimulus into the dynamical equations. The system trajectory is always initialized in the depotentiated state (−1, −1) and the simulation is stopped when the trajectory enters into the basin of attraction of the potentiated state (1, 1). The position of the stable fixed points depends on the choice w0 = z0 = 1, which we made for simplicity. In fact, we can remap the values of the synaptic weight w the desired (positive range) with an affine transformation, without loss of generality.

3. Results

The two-dimensional model, introduced Methods section, predicts a complex dependence of the synaptic consolidation dynamics upon the parameters of the experimental protocol. This complex dependence has similarities with the behavior observed in experiments (Sajikumar et al., 2005; Larson and Munkácsy, 2015; cf. Figure 1). First, we describe how we abstract the experimental protocol into a time-dependent plasticity-inducing stimulus I(t). Then, we show the response of our model to different stimulation protocols. In our model, the plasticity-inducing stimulus I(t) drives the synaptic weight w via a non-linear equation characterized by a time constant τw. The weight w is coupled to a second variable z with time constant τz (Equation 2). The variable z is an abstract description of the complex metastable states (potentiated or unpotentiated) caused by consolidation (Redondo and Morris, 2011; Bosch et al., 2014). After an analysis of a single rectangular stimulation (one episode), in section 3.2, we will move to the more realistic case of repetitive stimulation across multiple episodes. Throughout the results section, we will focus on synaptic potentiation. Since the self-interaction term in Equation (2) is symmetric with respect to w = z = 0, synaptic depression of a potentiated state is the mirror image of synaptic potentiation of a unpotentiated state.

3.1. Abstraction of the Stimulation Protocol

In their seminal work, Bliss and Lømo (1973) showed that repeated high-frequency stimulation of afferent fibers can lead to long-lasting synaptic potentiation. In later work it was shown that low-frequency stimulation can lead to long-lasting synaptic depression (Bashir and Collingridge, 1994). In order to keep the analysis transparent, we use a time-dependent, real-valued quantity I(t) as an abstraction for such experimental protocols. In what follows, we will refer to I(t) as to the plasticity-inducing stimulus. Note that, we do not perform an explicit mapping from the electrical current used in LTP experiments for the stimulation of pre-synaptic fibers onto the plasticity-inducing stimulus I(t) that influences the dynamics of Equation (2). A precise mapping would require additional assumptions on (i) how extra-cellular stimulation triggers axonal spikes in multiple fibers, (ii) how pre-synaptic spike arrivals cause post-synaptic firing and (iii) how pre- and post-synaptic neural activity leads, potentially via a Hebbian model, to the induction of early-LTP. This means that, in principle, the model's dynamics is rich enough to reproduce the four classical synaptic-consolidation experiments (Frey and Morris, 1997; Nader et al., 2000), however, we would need to set at least four free parameters, corresponding to the amplitudes of the external input I, needed for strong and weak LTP and LTD. Instead, we model a set of extra-cellular high-frequency pulses as a single rectangular plasticity-inducing stimulus of positive amplitude (Figure 1B). The larger the stimulation frequency, the larger the amplitude of I(t). Analogously, a set of extra-cellular current pulses at low frequency is modeled as a single negative rectangular plasticity-inducing stimulus. The compression of multiple extra-cellular pulses into a single rectangular episode I(t) is justifiable since the time between single pulses, even in the case of low-frequency stimulation, is very short compared to the timescale of plasticity. This implies that multiple short pulses in experiments can be well approximated by a single episode, described by one prolonged rectangular stimulus in our model (Figures 1A,B). In agreement with well-established plasticity models (Bienenstock et al., 1982; Senn et al., 2001; Pfister and Gerstner, 2006; Clopath et al., 2010; Gjorgjieva et al., 2011), we use I > 0 to describe a high-frequency stimulation since a positive I leads to potentiation (see section Methods and Figure 4C). Conversely, a negative I favors depotentiation. On the other hand, experiments that involve global variables, such as cross-tagging (Sajikumar and Frey, 2004a,b), can not be explained by our model.

3.2. One Episode

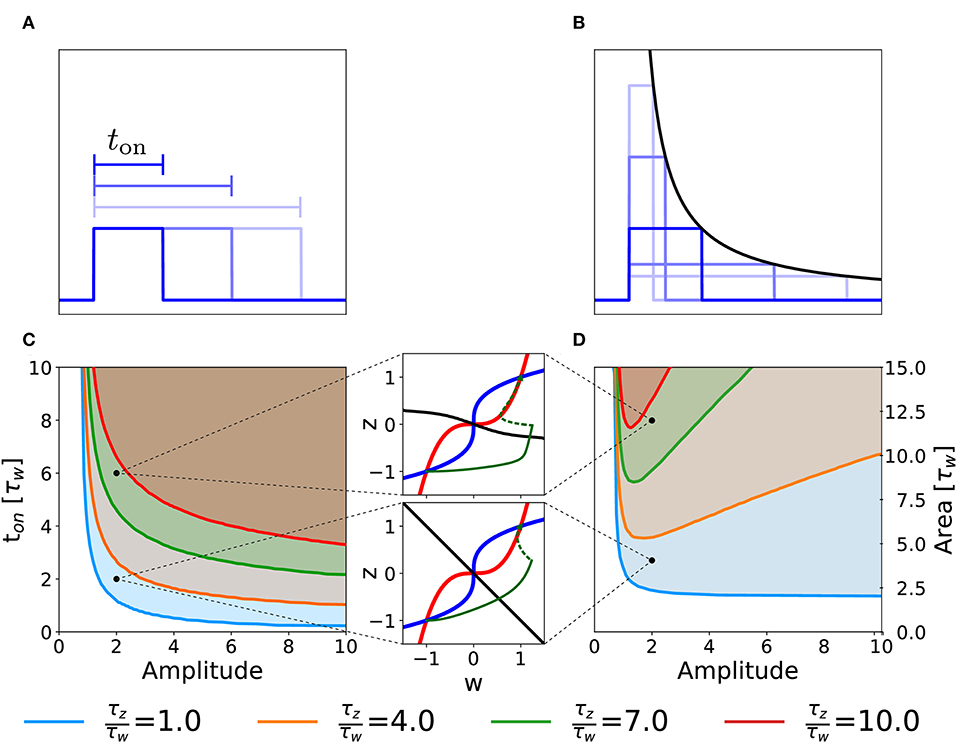

We consider the case in which our two-variable synapse model is stimulated with a single rectangular plasticity-inducing stimulus I(t) of variable amplitude and duration ton (Figure 5A). Experimentally, this would correspond to single-episode, high-frequency protocols of variable stimulation intensity (i.e., pulse frequency) and duration. For each choice of duration and amplitude, we initialize the system in the unpotentiated state, defined by the initial value (w, z) = (−1, −1) and we numerically integrate the system dynamics until convergence. We then measure the final state of the synapse, i.e., whether it converged to the potentiated or returned to the unpotentiated state. In Figure 5, we plot the curve that separates the region of the parameter space that yields potentiation (shaded area) from the one that does not. Different curves correspond to different time constants τw and τz of the synaptic variables w and z in Equation (2).

Figure 5. Potentiation during a single episode. Different curves correspond to different ratios of the time constant τz and τw in Equation (2). (A) Schematic representation of single-episode stimulations, corresponding to different choices of ton. (B) Schematic representation of different single-episode stimulations with constant area. The black line is proportional to 1/amplitude in order to stress that all pulses have the same area. (C) Separation curves between regions of successful or unsuccessful potentiation as a function of amplitudes and duration ton of a the plasticity-inducing stimulus. The initial state is always the unpotentiated synapse (w = z = −1). The shaded region of the parameter space is the one in which the synapse gets potentiated. (D) Same as (C) but as a function of amplitude and area of the stimulus during the episode. The two insets show examples of trajectories (green lines) in the phase-plane for two different parameters choices. The solid green lines represent the dynamical evolution of the system during the application of the external stimulus, while the dotted green line shows the relaxation of the system to a stable fixed point after the stimulation. Red: w-nullcline; blue: z-nullcline; black: separatrix. The parameters that are not specified in the figure are: Cw = Cz = 1, I = 0.

Figure 5C illustrates a rather intuitive result, i.e., if the amplitude of the plasticity-inducing stimulus is increased, the duration needed for potentiation decreases. Moreover, if the amplitude is too small, we cannot achieve potentiation, even for an infinite pulse duration. The limit of infinite pulse duration is in the following called the “DC” limit. The effect of DC-stimulation can be easily understood from a phase-plane analysis (Figure 4). Indeed, the introduction of a constant term I > 0 in the w equation (DC term), yields a shift in the w-nullcline vertically downward. However, if the term is too small to cause the loss of the low fixed point, potentiation cannot be achieved (Figure 4C).

The separation curves in Figure 5C indicate that the minimal duration of an episode necessary for potentiation decreases as the intensity of the plasticity-inducing stimulus increases. We wondered whether the relevant parameter for potentiation is the area under the rectangular plasticity-inducing stimulus. To study this, we performed a similar analysis, with the amplitude of the plasticity-inducing stimulus and its area as independent variables. For each choice of area and amplitude, the duration of the episode is given by ton = area/amplitude (see Figure 5B). The results are shown in Figure 5D. If there were a regime in which the relevant parameter is the area of the pulse, then the curve separating parameters of successful from unsuccessful potentiation would be horizontal. However, we find a near-horizontal curve only for τz = τw, limited to the high-amplitude region. For τz > τw we find the existence of an optimal value of the amplitude that yields potentiation with the minimal area. If we increase the amplitude beyond this optimal value, the necessary area under the stimulus curve I(t) starts to increase again.

In order to understand this effect, we look again at the phase-plane, in particular at the dependence of the separatrix on the timescale separation. In the limit in which the amplitude goes to infinity and the duration goes to 0 while the area of the whole plasticity-inducing stimulus stays the same, the stimulus can be described by a Dirac-δ function. In Figure 5D, we can see that, if τz ≫ τw, the separatrix tends to an horizontal line for w ≫ 1. Since a δ-pulse input is equivalent to an instantaneous horizontal displacement of the momentary system state in the phase-plane, a single δ-pulse cannot bring the system across the separatrix. The δ-pulse stimulation is, of course, a mathematical abstraction. In a real experimental protocol, such a stimulation can be approximated by a short episode of very intense high frequency stimulation. Due to the necessary finite duration of an episode, the system response in the phase-plane will not be a perfectly horizontal displacement. However, achieving potentiation with short pulses can still be considered as difficult, because it would require a disproportionately large stimulation amplitude.

Our findings highlight the fact that changing parameters, such as the ratio of τw and τz, gives rise to different behaviors of the model in response to changes in the stimulation protocols. We may use this insight to design optimal experimental protocols for single-episode plasticity induction. In particular, a model with timescale separation would predict the existence of an optimal stimulus intensity for which the total stimulus area necessary for potentiation is minimized. We emphasize that any model where consolidation works on a timescale that is slower than that of plasticity induction will exhibit timescale separation and be therefore sensitive to details of the stimulation protocol.

3.3. Repeated Episodes

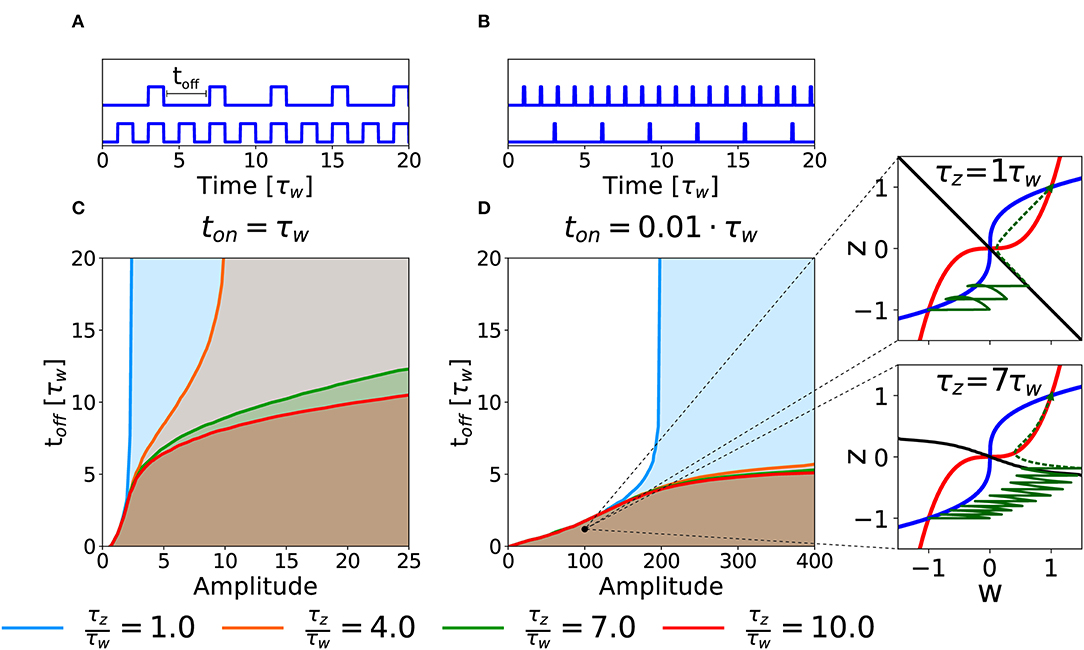

As a second case, we consider the potentiation of a synapse induced by repetitions of several stimulation episodes. In an experimental setting, this type of stimulation would correspond to several episodes of high-frequency stimulations, characterized by three parameters: the intensity of stimulation during each episode (amplitude), the duration (ton) of each episode and the inter-episode interval, toff (cf. Figures 6A,B). To keep the analysis transparent we apply a number of repetitions large enough to decide whether potentiation is successful or not given the three parameters. Notice that if toff = 0 we are back to the DC stimulation as defined in the previous section.

Figure 6. Potentiation with repeated episodes. (A) Schematic representation of stimulation protocols characterized by different toff, while ton = τw is fixed. (B) Schematic representation of stimulation protocols with ton = 0.01τw. (C) Potentiation region for stimulation with long episodes for fixed ton = τw. The curves for different ratios τz/τw (see color code) indicate the separation between the region that yields potentiation (shaded) and the region that does not (white) as a function of intensity of stimulation in each episode (amplitude) and inter-episode interval (toff). (D) Potentiation regions for protocols with shorter episode ton = 0.01τw. The potentiation region is shaded. The two insets show examples of trajectories (green lines) in the phase-plane for the same choice of stimulation parameters but different timescale separation. The solid green lines represent the dynamical evolution of the system during the application of the external stimulus, while the dotted green line shows the relaxation of the system to a stable fixed point after the end of the stimulation protocol. The parameters that are not specified otherwise are: Cw = Cz = 1, I = 0.

The curves in Figures 6C,D show the separation between parameters that lead to successful potentiation (shaded) or not (white) in the amplitude-toff space for fixed values of ton and for different τz/τw ratios. In Figure 6C, we fix ton = τw. We observe that, at least for low timescale ratios, it exists an amplitude above which the synapse gets potentiated independently of toff, which suggests that, for this intensity of the stimulation, the potentiation happens during the first episode. The amplitude necessary to obtain potentiation in one pulse, however, increases rapidly with the τz/τw ratio (see section 3.2). On the other hand, if the value of toff is small enough (i.e., for high repetition frequency), potentiation can be achieved with smaller amplitudes and the timescale ratio is less important (notice the superimposed lines in the bottom left part of the plot). If we decrease the pulse duration to ton = 0.01τw, we obtain qualitatively similar separation curves, but potentiation now requires much larger values for the amplitude of the plasticity-inducing stimulus (see Figure 6B), than ton = τw (see Figure 6A). Importantly, in the case of timescale separation (e.g., τz = 7τw) several repetitions are needed before the consolidation variable z has sufficiently increased so that the synapse state crosses the separatrix (Figure 6, insert).

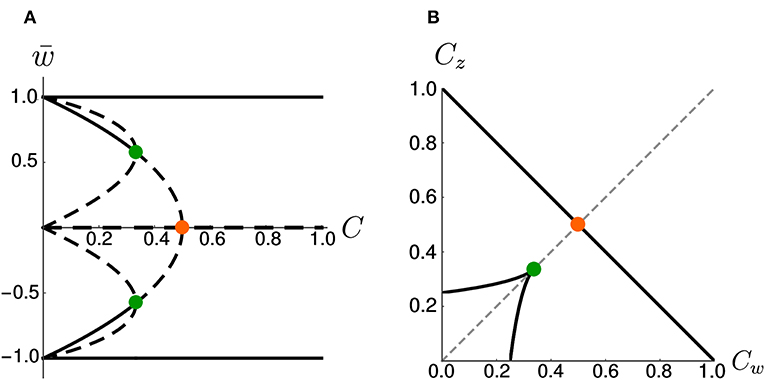

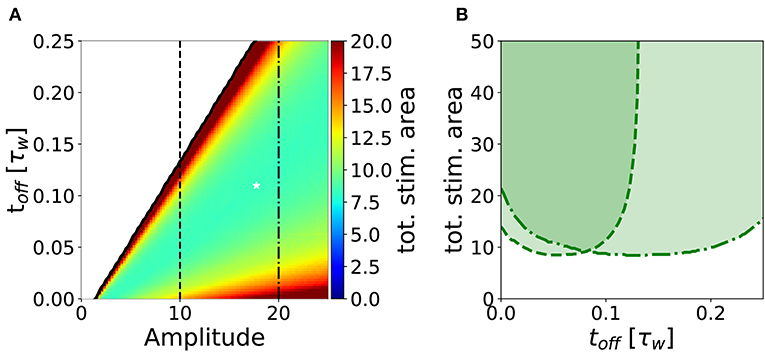

In analogy to the analysis performed in section 3.2, we search for an optimal stimulation protocol in the case of repeated episodes. In order to allow a direct comparison between single and repetitive episodes, we measured the total area under the stimulation curve I(t) in the repetitive episode scenario, limited to the minimal number of episodes sufficient to achieve potentiation. In Figure 7A, we show the minimum stimulation area (number of episodes times the area of each rectangular plasticity inducing stimulus) required to achieve potentiation, as a function of the amplitude and the frequency of the stimulus for strong timescale separation (τz/τw = 7). We notice that the minimum stimulation area (white star) corresponds to toff ~ 10ton, i.e., the waiting time between episodes is ten times long than each episode. In real experimental conditions, however, it might be difficult to control the intensity of the stimulation. For this reason, we consider a fixed intensity (e.g., amplitude I = 10 in Figure 7A) and vary the inter-episode time toff. We find that there exists an optimal stimulation frequency to obtain potentiation with minimal total area (see Figure 7B). These results highlight two main facts: (i) for many stimulation intensities (only two are shown in the graph), one can find an optimal repetition frequency, (ii) there is a broad region in the parameter space (ton, amplitude, and area) where the number of pulses needed to achieve consolidation is constant. Indeed, the broad region around the minima in Figure 7B (fixed amplitude and ton) where the area is approximately constant corresponds a constant number of pulses (npulses = area/ton).

Figure 7. Stimulation effort needed to achieve potentiation for τz/τw = 7 and ton = 0.01τw. (A) The potentiation domain (shaded region in Figure 6) is colored proportionally to the stimulation area needed to achieve potentiation with a repetitive pulse stimulus. The minimum stimulation area is ≃ 8.34, it is indicated by the white star and corresponds to the parameters values toff = 0.11τw, amplitude = 17.75 and 47 pulses. (B) Slices of the diagram in (A) for amplitude = 10 (dashed line) and for amplitude = 20 (dash-dotted line) are plotted against toff. One can notice that for a fixed stimulation amplitude, there is an optimal repetition frequency that minimizes the stimulus area required to achieve potentiation. The parameters that are not specified otherwise are: Cw = Cz = 1, I = 0.

4. Discussion

We introduced and analyzed a minimal mathematical model of synaptic consolidation, that consists of two ODEs with linear coupling terms and cubic non-linearity. Since it is a two-dimensional model, the system can be studied using phase-plane techniques. While our model can have up to four stable fixed points, we focused on the case of two stable fixed points, to allow the physical interpretation of the fixed points as an unpotentiated or potentiated synapse. The weight variable w should be seen as the bistable building block of complex synapses. While there is evidence that the potentiation of a single synapse is a all-or-none process (Petersen et al., 1998; O'Connor et al., 2005; Bosch et al., 2014), recent results challenge this view in favor of a modular structure of the synapse (Lisman, 2017). Either way, it is possible to identify a bistable basic element of the synapse.

We showed that our minimal model responds to stimulation protocols in a non-trivial way: we quantified the total stimulation strength by the stimulus area defined as duration times intensity, where intensity is a combination of intra-episode frequency and current amplitude of extra-cellular pulses. We found that the minimal stimulus area necessary to induce potentiation depends non-monotonically on the choice of stimulus parameters. In particular, we found that, for both single-episode and multiple-episode stimulation, it is possible to choose the stimulation parameters (intensity, duration, and inter-episode frequency) optimally, so as to minimize the stimulus area (Figures 5, 7 and Table 1). Figure 7 can be used to compare the minimum stimulation area needed to achieve potentiation in a single episode (corresponding to the choice toff = 0) to the case of repetitive pulses (toff ≠ 0). We conclude that, for a fixed stimulation area, stimulation over several episodes is advantageous to achieve potentiation, in agreement with some widely used protocols (Larson and Munkácsy, 2015). The effect is stronger if the consolidation variable z is slow compared to the weight variable (τz ≫ τw). Note that in experiments it is often impossible to have a fine control on the stimulation amplitude: extra-cellular stimulation of fibers must be strong enough to excite the post-synaptic neuron, but there is no control of the post-synaptic firing rate, which could undergo adaptation or exhibit other time-dependent mechanisms. In summary, the existence of an optimal stimulation frequency is the direct consequence of two very fundamental synaptic properties: (i) the bistability of a synaptic basic element, and (ii) the time scale separation between the internal synaptic mechanisms.

The minimum of the total stimulus area is particularly pronounced in the regime of strong separation of timescale (τz ≫ τw), which is the relevant regime in view of the experimental consolidation literature which suggests multiple consolidation mechanisms with a broad range of timescales (Bliss and Collingridge, 1993; Reymann and Frey, 2007). Assuming that the timescale τw is on the order of a few seconds, as suggested by some plasticity induction experiments at the level of single contact points (Petersen et al., 1998), we can interpret a short stimulation episode of duration 0.01 · τw ~ 20 ms as a burst of few pulses at high frequency. For example, one particularly interesting protocol is the theta burst stimulation, where each burst consists of 4 pulses at 100 Hz corresponding to a burst of 30 ms duration (Larson and Munkácsy, 2015). Assuming that this stimulation does not correspond to an extremely small amplitude value (a reasonable assumption since the experimentalists want to induce LTP), our model predicts an optimal frequency (see Figure 7) on the order of toff = 0.11τw ~ 200 ms, which is in rough agreement with the experimental protocols where theta bursts are repeated every 200 ms (Larson and Munkácsy, 2015). When comparing the optimal stimulation frequency obtained by our model to experimental data, we should keep in mind that in experiments timing effect come from different sources. In Larson and Munkácsy (2015), the key factor that determines the optimal stimulation protocol is the feed-forward inhibition. Moreover, in Sajikumar et al. (2005), Kumar and Mehta (2011), and Larson and Munkácsy (2015) the position of the stimulation (apical vs. basal) plays a fundamental role, together with priming of NMDA channels. Finally, the fraction of NMDA vs. AMPA receptors is another fundamental element. None of these factors is taken into account in our simplified model.

We have described the simplified dynamics of a bistable basic element of synaptic consolidation (which can be interpreted as a single contact point or a synaptic sub-unit). However, in most experiments, we can only observe the collective effect of many such elements together (Malenka, 1991; Bliss and Collingridge, 1993). Such a collective effect can be interpreted as the average number of potentiated contact points. For a detailed comparison between our model and these experiments, it would be needed to simulate the dynamics of the pre- and post-synaptic neuron groups and of their contact points, in order to obtain an average quantity that can be compared with the continuous change of EPSP observed in experiments. Such approach has been taken in Ziegler et al. (2015) and it requires several assumptions, among others the specification of the dependence of the plasticity induction current I on the pre- and post-synaptic activity, the parameters of the two populations and possible recurrent interactions (see also 3.1) (see Supplementary Material, for a qualitative comparison). For these reasons, such a comparison goes beyond the aim of this work. Moreover, since the model describes a single synaptic contact, it cannot be applied to more complex experiments that involve cross-tagging, where effects of protein synthesis are shared between several synapses (Sajikumar and Frey, 2004b). On the other hand, our results highlight the fact that our model shares similar response properties with the population-averaged quantities measured in experiments, such as its sensitivity to the stimulation frequency and the preference for multiple repetitions. Altogether, these findings suggest that our model possesses the necessary dynamical repertoire to reproduce some of the experimental results (such as Malenka, 1991; Bliss and Collingridge, 1993).

Using our model we can only make some qualitative predictions on experimentally measurable quantities. For example, by comparing Figures 6C,D, we can see that the optimal stimulation parameters change when varying the episode duration ton. More precisely, our model predicts that for shorter ton the optimal stimulus requires a large stimulus intensity during each episode.

The proposed framework is related to a number of previous modeling approaches to synaptic consolidation. In particular, the memory formation in networks of excitatory and inhibitory neurons in Zenke et al. (2015) is based on a synaptic plasticity model with a linear weight variable and a slower consolidation variable, corresponding to a choice of Kw = 0 in Equation (2) of our model. If we exploit this relation between the two models, the coupling term Cw should depend on the post-synaptic activity. Such a time-dependent coupling coefficient is similar to the gating variable in the write-protected model (Ziegler et al., 2015). The write-protected model (Ziegler et al., 2015) can be considered as a three-dimensional generalization of our framework. In our model the weight variable w is directly coupled to the consolidation variable z whereas in the write-protected model w is coupled to an intermediate tag-related variable which is then coupled to z.

The dynamical understanding of the interplay between stimulation protocol and autonomous dynamics gained here by studying the two-dimensional system can be also applied to a three-(or higher-)dimensional generalization, under the assumption that coupling exists only between pairs of variables and that there is timescale separation. Using such a multi-dimensional generalization, it would be possible to explain a much larger set of experimental results. In addition, the model presented in Ziegler et al. (2015) features coupling coefficients that are dynamically adjusted as a function of the induction protocol itself. A change of coupling C makes a model at the same time more expressive and harder to analyze (cf. section 2.3).

The cascade model (Fusi et al., 2005) can be related to the model in the present paper by introducing several slow variables z1, …, zn with time constants τ1, …, τn. The coupling from k to k + 1 is analogous to the coupling of w to z in Equation (2). Even though this extended model and the cascade model share the concept of slower variables, there are some important differences between the two. First, the cascade model (Fusi et al., 2005) is intrinsically stochastic, i.e., the stochasticity due to spiking events is combined with the stochasticity of plasticity itself. Second, the transitions among states in the cascade model are instantaneous (Fusi et al., 2005). In our framework instead, even though there are discrete stable states, the transitions need some time to happen and this is exactly why the frequency of a repetitive stimulus matters in our model.

Similarly to the cascade model (Fusi et al., 2005), the “communicating vessels” model (Benna and Fusi, 2016) relies on multiple hidden variables. However, in contrast to the cascade model (Fusi et al., 2005), the dynamics in the “communicating-vessels” model are determined by continuous variables that obey continuous-time differential equations (Benna and Fusi, 2016). If we truncate the “communication-vessels” model to a single hidden variable, the resulting dynamics fall into our framework, with the simple choice Kw = Kz = 0. Extensions to multiple hidden variables with progressively bigger timescales is possible analogously to our discussion above. Indeed experimental results show that the internal bio-chemical mechanisms of the synapse are characterized by different timescales (Reymann and Frey, 2007; Bosch et al., 2014).

Similar to the cascade model, the “state based model” proposed in Barrett et al. (2009), consist of synapses whose state can shift from e-LTP to l-LTP according to some transition-rates. The model captures two internal mechanisms (tagging and anchor for AMPAR). The probability that a particular synapse is in a specific state is a continuous quantity that depends on the transition probabilities. The main similarity to our model is the presence of a bistable basic synaptic element.

Finally the synaptic plasticity model proposed in Shouval et al. (2002) proposes a non-linear dynamics for the synaptic weights, similarly to our model. The main goal of Shouval et al. (2002) is to relate the amount of NMDAR with the Calcium level in the synapse. However, their model is not bistable and no attempt is made to capture the internal synaptic state.

To summarize, our model focuses on a single transition using two variables. If these variables have different intrinsic timescales, the temporal pattern of the stimulation protocol plays a crucial role. We believe that these insights are applicable beyond our two-variable model in situations where multiple variables covering multiple timescales are pair-wise coupled to each other. This includes well-known consolidation models such as the cascade model (Fusi et al., 2005), the communicating vessels model (Benna and Fusi, 2016), and the write-protected synapse model (Ziegler et al., 2015).

Data Availability Statement

All datasets analyzed for this study are included in the article/Supplementary Material.

Author Contributions

All authors contributed to the conception of the study. CG implemented the code needed to produce all figures, except for Figure 3. SM performed the bifurcation analysis presented in Figure 3 and contributed to the revision and improvement of the figures. CG and SM wrote the first draft of the manuscript. All authors contributed to correcting and improving the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

Funding

This research was supported by the Swiss national science foundation, grant agreement 200020_165538 and by the HBP.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank Tilo Schwalger for useful comments and discussions.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2019.00078/full#supplementary-material

References

Artola, A., Bröcher, S., and Singer, W. (1990). Different voltage dependent thresholds for inducing long-term depression and long-term potentiation in slices of rat visual cortex. Nature 347, 69–72. doi: 10.1038/347069a0

Barrett, A. B., Billings, G. O., Morris, R. G. M., and van Rossum, M. C. W. (2009). State based model of long-term potentiation and synaptic tagging and capture. PLoS Comput. Biol. 5:e1000259. doi: 10.1371/journal.pcbi.1000259

Bashir, Z. I., and Collingridge, G. L. (1994). An investigation of depotentiation of long-term potentiation in the CA1 region of the hippocampus. Exp. Brain Res. 79, 437–443. doi: 10.1007/BF02738403

Benna, M. K., and Fusi, S. (2016). Computational principles of synaptic memory consolidation. Nat. Neurosci. 19, 1697–1706. doi: 10.1038/nn.4401

Bhalla, U. S., and Iyengar, R. (1999). Emergent properties of networks of biological signaling pathways. Science 283, 381–387. doi: 10.1126/science.283.5400.381

Bienenstock, E. L., Cooper, L. N., and Munro, P. W. (1982). Theory for the development of neuron selectivity: orientation specificity and binocular interaction in visual cortex. J. Neurosci. 2, 32–48. doi: 10.1523/JNEUROSCI.02-01-00032.1982

Bliss, T. V. P., and Collingridge, G. L. (1993). A synaptic model of memory: long-term potentiation in the hippocampus. Nature 361, 31–39. doi: 10.1038/361031a0

Bliss, T. V. P., and Lømo, T. (1973). Long-lasting potentation of synaptic transmission in the dendate area of anaesthetized rabbit following stimulation of the perforant path. J. Physiol. 232, 351–356. doi: 10.1113/jphysiol.1973.sp010273

Bosch, M., Castro, J., Saneyoshi, T., Matsuno, H., Sur, M., and Hayashi, Y. (2014). Structural and molecular remodeling of dendritic spine substructures during long-term potentiation. Neuron 82, 444–459. doi: 10.1016/j.neuron.2014.03.021

Brader, J. M., Senn, W., and Fusi, S. (2007). Learning real-world stimuli in a neural network with spike-driven synaptic dynamics. Neural Comput. 19, 2881–2912. doi: 10.1162/neco.2007.19.11.2881

Brandon, M. P., Bogaard, A. R., Libby, C. P., Connerney, M. A., Gupta, K., and Hasselmo, M. E. (2011). Reduction of theta rhythm dissociates grid cell spatial periodicity from directional tuning. Science 332, 595–599. doi: 10.1126/science.1201652

Brown, T. H., Ganong, A. H., Kairiss, E. W., Keenan, C. L., and Kelso, S. R. (1989). “Long-term potentation in two synaptic systems of the hippocampal brain slice,” in Neural Models of Plasticity, eds J. H. Byrne and W. O. Berry (San Diego, CA: Academic Press Inc.), 266–306.

Caroni, P., Donato, F., and Muller, D. (2012). Structural plasticity upon learning: regulation and functions. Nat. Rev. Neurosci. 13, 478–490. doi: 10.1038/nrn3258

Clopath, C., Busing, L., Vasilaki, E., and Gerstner, W. (2010). Connectivity reflects coding: a model of voltage-based spike-timing-dependent-plasticity with homeostasis. Nat. Neurosci. 13, 344–352. doi: 10.1038/nn.2479

Clopath, C., Ziegler, L., Vasilaki, E., Busing, L., and Gerstner, W. (2008). Tag-trigger-consolidation: a model of early and late long-term-potentiation and depression. PLoS Comput. Biol. 4:e1000248. doi: 10.1371/journal.pcbi.1000248

Cohen, M. A., and Grossberg, S. (1983). Absolute stability of global pattern formation and parallel memory storage by competitive neural networks. IEEE Trans. Syst. Man. Cybern. Syst. 13, 815–823. doi: 10.1109/TSMC.1983.6313075

Dudai, Y., and Morris, R. G. M. (2000). “To consolidate or not to consolidate: what are the questions,” in Brain, Perception, Memory Advances in Cognitive Sciences, ed J. J. Bolhuis (Oxford: Oxford University Press), 149–162.

Ermentrout, B. (1996). Type I membranes, phase resetting curves, and synchrony. Neural Comput. 8, 979–1001. doi: 10.1162/neco.1996.8.5.979

Ermentrout, B. (2002). Simulating, Analyzing, and Animating Dynamical Systems: A Guide to XPPAUT for Researchers and Students, Vol. 14. Philadelphia, PA: Siam.

Frey, U., and Morris, R. G. M. (1997). Synaptic tagging and long-term potentiation. Nature 385, 533–536. doi: 10.1038/385533a0

Fusi, S., Drew, P. J., and Abbott, L. F. (2005). Cascade models of synaptically stored memories. Neuron 45, 599–611. doi: 10.1016/j.neuron.2005.02.001

Gerstner, W., Kempter, R., van Hemmen, J. L., and Wagner, H. (1996). A neuronal learning rule for sub-millisecond temporal coding. Nature 383, 76–78. doi: 10.1038/383076a0

Gjorgjieva, J., Clopath, C., Audet, J., and Pfister, J. P. (2011). A triplet spike-timing–dependent plasticity model generalizes the Bienenstock–Cooper–Munro rule to higher-order spatiotemporal correlations. Proc. Natl. Acad. Sci. U.S.A. 108, 19383–19388. doi: 10.1073/pnas.1105933108

Graupner, M., and Brunel, N. (2007). STDP in a bistable synapse model based on CaMKII and associate signaling pathways. PLoS Comput. Biol. 3:e221. doi: 10.1371/journal.pcbi.0030221

Hasselmo, M. (1999). Neuromodulation: acetylcholine and memory consolidation. Trends Cogn. Sci. 3, 351–359. doi: 10.1016/S1364-6613(99)01365-0

Hayashi-Takagi, A., Yagishita, S., M. Nakamura, F. S., Wu, Y. I., Loshbaugh, A. L., Kuhlman, B., et al. (2015). Labelling and optical erasure of synaptic memory traces in the motor cortex. Nature 525, 333–338. doi: 10.1038/nature15257

Holtmaat, A., and Caroni, P. (2016). Functional and structural underpinnings of neuronal assembly formation in learning. Nat. Neurosci. 19, 1553–1562. doi: 10.1038/nn.4418

Kastner, D. B., Schwalger, T., Ziegler, L., and Gerstner, W. (2016). A model of synaptic reconsolidation. Front. Neurosci. 10:206. doi: 10.3389/fnins.2016.00206

Kramár, E. A., Babayan, A. H., Gavin, C. F., Cox, C. D., Jafari, M., Gall, C. M., et al. (2012). Synaptic evidence for the efficacy of spaced learning. Proc. Natl. Acad. Sci. U.S.A. 109, 5121–5126. doi: 10.1073/pnas.1120700109

Kumar, A., and Mehta, M. R. (2011). Frequency-dependent changes in nmdar-dependent synaptic plasticity. Front. Comput. Neurosci. 5:38. doi: 10.3389/fncom.2011.00038

Larson, J., and Munkácsy, E. (2015). Theta-burst LTP. Brain Res. 1621, 38–50. doi: 10.1016/j.brainres.2014.10.034

Levy, W. B., and Stewart, D. (1983). Temporal contiguity requirements for long-term associative potentiation/depression in hippocampus. Neuroscience 8, 791–797.

Lisman, J. (2017). Glutamatergic synapses are structurally and biochemically complex because of multiple plasticity processes: long-term potentiation, long-term depression, short-term potentiation and scaling. Philos. Trans. R. Soc. B Biol. Sci. 372:20160260. doi: 10.1098/rstb.2016.0260

Lisman, J. E., and Zhabotinsky, A. M. (2001). A model of synaptic memory: a CaMKII/PP1 switch that potentiates transmission by organizing an AMPA receptor anchoring assembly. Neuron 31, 191–201. doi: 10.1016/S0896-6273(01)00364-6

Malenka, R. C. (1991). Postsynaptic factors control the duration of synaptic enhancement in area CA1 of the hippocampus. Neuron 6, 53–60. doi: 10.1016/0896-6273(91)90121-F

Markram, H., Lübke, J., Frotscher, M., and Sakmann, B. (1997). Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science 275, 213–215. doi: 10.1126/science.275.5297.213

Markram, H., Wu, Y., and Tosdyks, M. (1998). Differential signaling via the same axon of neocortical pyramidal neurons. Proc. Natl. Acad. Sci. U.S.A. 95, 5323–5328. doi: 10.1073/pnas.95.9.5323

Martin, S. J., Grimwood, P. D., and Morris, R. G. M. (2000). Synaptic plasticity and memory: an evaluation of the hypothesis. Annu. Rev. Neurosci. 23, 649–711. doi: 10.1146/annurev.neuro.23.1.649

Nabavi, S., Fox, R., Proulx, C. D., Lin, J. Y., Tsien, R. Y., and Malinow, R. (2014). Engineering a memory with LTD and LTP. Nature 511, 348–352. doi: 10.1038/nature13294

Nader, K., Schafe, G. E., and LeDoux, J. E. (2000). Reply-reconsolidation: the labile nature of consolidation theory. Nat. Rev. Neurosci. 1, 216–219. doi: 10.1038/35044580

Nicolas, F., and Gerstner, W. (2016). Neuromodulated spike-timing-dependent plasticity, and theory of three-factor learning rules. Front. Neural Circuits 9:85. doi: 10.3389/fncir.2015.00085

O'Connor, D. H., Wittenberg, G. M., and Wang, S. S. H. (2005). Graded bidirectional synaptic plasticity is composed of switch-like unitary events. Proc. Natl. Acad. Sci. U.S.A. 102, 9679–9684. doi: 10.1073/pnas.0502332102

Petersen, C. C. H., Malenka, R. C., Nicoll, R. A., and Hopfield, J. J. (1998). All-or-none potentiation at CA3-CA1 synapses. Proc. Natl. Acad. Sci. U.S.A. 95, 4732–4737. doi: 10.1073/pnas.95.8.4732

Pfister, J. P., and Gerstner, W. (2006). Triplets of spikes in a model of spike timing-dependent plasticity. J. Neurosci. 26, 9673–9682. doi: 10.1523/JNEUROSCI.1425-06.2006

Redondo, R. L., and Morris, R. G. M. (2011). Making memories last: the synaptic tagging and capture hypothesis. Nat. Rev. Neurosci. 12, 17–30. doi: 10.1038/nrn2963

Reymann, K. G., and Frey, J. U. (2007). The late maintenance of hippocampal LTP: requirements, phases,synaptic tagging, late associativity and implications. Neuropharmacology 52, 24–40. doi: 10.1016/j.neuropharm.2006.07.026

Rinzel, J., and Ermentrout, G. B. (1998). Analysis of neural excitability and oscillations. Methods Neuronal Model. 2, 251–292.

Roelfsema, P. R. (2006). Cortical algorithms for perceptual grouping. Annu. Rev. Neurosci. 29, 203–227. doi: 10.1146/annurev.neuro.29.051605.112939

Rubin, J. E., Gerkin, R. C., Bi, G. Q., and Chow, C. C. (2005). Calcium time course as a signal for spike-timing-dependent plasticity. J. Neurophysiol. 93, 2600–2613. doi: 10.1152/jn.00803.2004

Sajikumar, S., and Frey, J. U. (2004a). Late-associativity, synaptic tagging, and the role of dopamine during LTP and LTD. Neurobiol. Learn. Mem. 82, 12–25. doi: 10.1016/j.nlm.2004.03.003

Sajikumar, S., and Frey, J. U. (2004b). Resetting of synaptic tags is time- and activity dependent in rat hippocampal CA1 in vitro. Neuroscience 129, 503–507. doi: 10.1016/j.neuroscience.2004.08.014

Sajikumar, S., Navakkode, S., Sacktor, T. C., and Frey, J. U. (2005). Synaptic tagging and cross-tagging: the role of protein kinase Mζ in maintaining long-term potentiation but not long-term depression. J. Neurosci. 25, 5750–5756. doi: 10.1523/JNEUROSCI.1104-05.2005

Senn, W., Tsodyks, M., and Markram, H. (2001). An algorithm for modifying neurotransmitter release probability based on pre- and postsynaptic spike timing. Neural Comput. 13, 35–67. doi: 10.1162/089976601300014628

Shouval, H. Z., Bear, M. F., and Cooper, L. N. (2002). A unified model of NMDA receptor-dependent bidirectional synaptic plasticity. Proc. Natl. Acad. Sci. U.S.A. 99:10831. doi: 10.1073/pnas.152343099

Sjöström, P. J., Turrigiano, G., and Nelson, S. B. (2001). Rate, timing, and cooperativity jointly determine cortical synaptic plasticity. Neuron 32, 1149–1164. doi: 10.1016/S0896-6273(01)00542-6

Song, S., Miller, K. D., and Abbott, L. F. (2000). Competitive Hebbian learning through spike-time-dependent synaptic plasticity. Nat. Neurosci. 3, 919–926. doi: 10.1038/78829

Strogatz, S. H. (2014). Nonlinear Dynamics and Chaos: With Applications to Physics, Biology, Chemistry, and Engineering. Boca Raton, FL: Hachette.

Turrigiano, G. G., Marder, E., and Abbott, L. F. (1996). Cellular short-term memory from a slow potassium conductance. J. Neurophysiol. 75, 963–966. doi: 10.1152/jn.1996.75.2.963

Van Rossum, M. C. W., Bi, G. Q., and Turrigiano, G. G. (2000). Stable Hebbian learning from spike timing-dependent plasticity. J. Neurosci. 20, 8812–8821. doi: 10.1523/JNEUROSCI.20-23-08812.2000

Wilson, H. R., and Cowan, J. D. (1972). Excitatory and inhibitory interactions in localized populations of model neurons. Biophys. J. 12, 1–24. doi: 10.1016/S0006-3495(72)86068-5

Zenke, F., Agnes, E. J., and Gerstner, W. (2015). Diverse synaptic plasticity mechanisms orchestrated to form and retrieve memories in spiking neural networks. Nat. Commun. 6:6922. doi: 10.1038/ncomms7922

Zhou, Q., Tao, H. W., and Poo, M. M. (2003). Reversal and stabilization of synaptic modifications in a developing visual system. Science 300, 1953–1957. doi: 10.1126/science.1082212

Keywords: synaptic consolidation, plasticity, LTP, stimulation frequency, bistability

Citation: Gastaldi C, Muscinelli S and Gerstner W (2019) Optimal Stimulation Protocol in a Bistable Synaptic Consolidation Model. Front. Comput. Neurosci. 13:78. doi: 10.3389/fncom.2019.00078

Received: 10 April 2019; Accepted: 21 October 2019;

Published: 13 November 2019.

Edited by:

Mayank R. Mehta, University of California, Los Angeles, United StatesCopyright © 2019 Gastaldi, Muscinelli and Gerstner. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chiara Gastaldi, Y2hpYXJhLmdhc3RhbGRpQGVwZmwuY2g=

Chiara Gastaldi

Chiara Gastaldi Samuel Muscinelli

Samuel Muscinelli Wulfram Gerstner

Wulfram Gerstner