94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Comput. Neurosci., 06 September 2019

Volume 13 - 2019 | https://doi.org/10.3389/fncom.2019.00062

This article is part of the Research TopicRecurrence Analysis of Complex Systems DynamicsView all 9 articles

Peter beim Graben1

Peter beim Graben1 Antonio Jimenez-Marin2

Antonio Jimenez-Marin2 Ibai Diez3,4,5

Ibai Diez3,4,5 Jesus M. Cortes2,6,7

Jesus M. Cortes2,6,7 Mathieu Desroches8,9

Mathieu Desroches8,9 Serafim Rodrigues6,10*

Serafim Rodrigues6,10*Metastability refers to the fact that the state of a dynamical system spends a large amount of time in a restricted region of its available phase space before a transition takes place, bringing the system into another state from where it might recur into the previous one. beim Graben and Hutt (2013) suggested to use the recurrence plot (RP) technique introduced by Eckmann et al. (1987) for the segmentation of system's trajectories into metastable states using recurrence grammars. Here, we apply this recurrence structure analysis (RSA) for the first time to resting-state brain dynamics obtained from functional magnetic resonance imaging (fMRI). Brain regions are defined according to the brain hierarchical atlas (BHA) developed by Diez et al. (2015), and as a consequence, regions present high-connectivity in both structure (obtained from diffusion tensor imaging) and function (from the blood-level dependent-oxygenation—BOLD—signal). Remarkably, regions observed by Diez et al. were completely time-invariant. Here, in order to compare this static picture with the metastable systems dynamics obtained from the RSA segmentation, we determine the number of metastable states as a measure of complexity for all subjects and for region numbers varying from 3 to 100. We find RSA convergence toward an optimal segmentation of 40 metastable states for normalized BOLD signals, averaged over BHA modules. Next, we build a bistable dynamics at population level by pooling 30 subjects after Hausdorff clustering. In link with this finding, we reflect on the different modeling frameworks that can allow for such scenarios: heteroclinic dynamics, dynamics with riddled basins of attraction, multiple-timescale dynamics. Finally, we characterize the metastable states both functionally and structurally, using templates for resting state networks (RSNs) and the automated anatomical labeling (AAL) atlas, respectively.

Mapping the brain's functional-structural relationship remains one of the most complex challenges in modern neuroscience (Park and Friston, 2013), in part due to the highly dynamic multi-scale nature of the brain's processes and structures as observed by different measurement modalities, which leads to technical and mathematical difficulties for establishing dynamically invariant relations across scales. As a result, which precise function emerges at the macro-scale (as measured by BOLD signals) from the underlying static neuronal architecture is not yet fully understood. In fact, this rests on a many-to-one function-structure relationship, which is hard to resolve and therefore novel methodologies are demanded. The present study addresses aspects of this macro-scale question by leveraging on recent developments of novel data-driven computational methods, which weeds out recurrent dynamical states from times series and associates to optimal brain structures, thus resolving the function-structure of the so-called resting state networks (RSNs) (Raichle et al., 2001; Fox et al., 2005; Diez et al., 2015; Smitha et al., 2017).

Several methodological advances are making strides in unveiling the macro-scale organization of the brain, comprising both hierarchical brain network structures and functions. These methods classify brain's macro-scale organization under the following terms: structural connectivity (resolved from diffusion tensor imaging techniques—DTI), functional connectivity (determined by statistical dependencies from BOLD signals) and effective connectivity (deduced by causality measures from BOLD signals). The neural structures and patterns of dynamical similarity are represented by e.g., the connectivity matrices. Specifically, for structural connectivity, functional connectivity, and effective connectivity the entries of the network's connectivity matrix indicate the anatomical links (white-matter tracts connecting different gray matter regions), the correlation strength and the causal strength between pairs of imaging regions of interest, respectively (Alonso Montes et al., 2015). Subsequently, features of this matrix can be exploited by using for example standard tools from linear algebra that rely upon spectral analysis (e.g., invariants such as eigenvalues) and other related/complementary methods, such as independent component analysis (ICA) (Bell and Sejnowski, 1997), partial least squares (PLS) (Krishnan et al., 2011), and many more. These procedures extract meaningful and independent quantities and thus decompose features within the connectivity matrix. However, mapping between these different matrices and additionally accounting for temporal dynamics is of paramount interest and an active research area in brain mapping.

To reduce the complexity of this issue, neuroscientists have primarily focused on a precise context provided by the RSNs. This global brain dynamics (measured from the observable fMRI) emerges while a subject is at rest and can be decomposed as a superposition of multiple activation patterns (Bell and Sejnowski, 1995; Beckmann and Smith, 2005; Vergun et al., 2016). Despite the simplicity of the context in which these brain activity patterns are generated, RSNs dynamics is rich and complex. Indeed, different RSNs have been associated to specific cognitive networks, for example, visual networks, sensory-motor networks, auditory networks, memory (default mode) networks, executive control networks, and some others (see for instance Beckmann et al., 2005 and references therein). This has led to the hypothesis that underlying such activation patterns (often recurring) is the existence of stable switching attractors that enhance information maintenance and facilitates cognitive transitions (Vidaurre et al., 2017; Iraji et al., 2018; Shine et al., 2018). Moreover, it is observed that the base resting state network is well conserved across subjects (Damoiseaux et al., 2006).

The mapping between structural connectivity and functional connectivity of RSNs (without considering temporal dynamics) was recently investigated by some authors of the present study and, as a by-product, a novel brain hierarchical atlas was identified (Diez et al., 2015). This was achieved by starting from the hypothesis that segregated functions are associated with distinct brain regions and both structure and functions have a hierarchical modular organization. This provided a tree (dendrogram) structure where the leaves of the tree (first-order nodes) associated to voxel measurements represent self-correlation (i.e., singleton) for the functional connectivity matrix and self-connectivity for the structural connectivity matrix. Moving up the tree corresponds to pairing up tree leaves that have strong correlations or connectivity (for functional connectivity and structural connectivity, respectively), thus forming higher-order nodes (or modules). Recursively, these modules are paired until the entire brain is represented by a mother node. It was shown that for about twenty modules maximal similarity between structural modules and functional ones was achieved and precisely these modules define the novel brain hierarchical atlas (BHA), which also explains the structure-function mapping in RSNs. However, the dynamical evolution of RSNs over time was not investigated and in fact has never been exploited directly from data, though indirect approaches have been explored. For example, computational brain network models have been proposed in attempt to reveal fundamental principles of RSNs (Hansen et al., 2015; Deco et al., 2017; Surampudi et al., 2019), and some of these models predict (for instance) that the human brain during resting state operates at maximum metastability, i.e., in a state of maximal network switching (also observed in EEG Roberts et al., 2019). Moreover, it is conjectured that information flow in the brain is guided by ordered sequences of metastable states (Friston, 1997; Rabinovich et al., 2008; Kelso, 2012; Tognoli and Kelso, 2014; Fingelkurts and Fingelkurts, 2017).

The present work takes a step further and considers a direct approach by employing a data-driven computational method to investigate the stable switching attractors hypotheses of RSNs, leveraging a recently developed method, called recurrence structure analysis (RSA) (beim Graben and Hutt, 2013, 2015; beim Graben et al., 2016). We note however that the proposed framework is not the only method for extracting reproducible time-resolved networks from data as very recently a competing framework based on dynamic mode decomposition was proposed (Kunert-Graf et al., 2018). Briefly, our method utilizes advanced theories of dynamical systems and time series analysis, attempting to extract optimal symbolic dynamics from time series observations that display transient and recurrent states (i.e., metastable states). This is achieved by building upon Poincaré's recurrence theorem, on the one hand, which states that trajectories of a complex dynamical system visit frequently certain regions of their available state space in the course of time and by the so-called recurrence plot method (RP), on the other hand, allowing visualization and matrix identification of recurrent states. These are then transformed to symbolic space by introducing recurrence grammars, which map state space trajectories onto symbolic sequences (beim Graben and Hutt, 2013, 2015; beim Graben et al., 2016). This is carried out by constructing state space partitions that are maximally metastable, based on the assumption that the discretized symbolic dynamics should be approximately Markovian. Thus combining the structure-function modules of the novel brain hierarchical atlas with optimized recurrence structure analysis, opens for the first time new avenues to identifying resting state networks with time-dependent recurrent cognitive states.

In this section we review our methods for the data acquisition, preprocessing, structure-function clustering and recurrence structure analysis.

Data from N = 30 healthy subjects (14 males) with age between 22 and 35 were used in this study. Data were provided by the Human Connectome Project, WU-Minn Consortium (Principal Investigators: David Van Essen and Kamil Ugurbil; 1U54MH091657) funded by the 16 NIH Institutes and Centers that support the NIH Blueprint for Neuroscience Research; and by the McDonnell Center for Systems Neuroscience at Washington University. The acquisitions were conducted on the Connectome Skyra, which is a customized Skyra platform with 100mT/m gradients for diffusion encoding and 42mT/m gradients for imaging. High resolution T1 anatomical images and functional images were used in this study.

High-resolution anatomical MRI was acquired using a T1-weighted 3D MPRAGE sequence with the following parameters: TR= 2,400 ms; TE = 2.14ms; TI = 1, 000ms; Flip angle = 8°; FOV = 224 × 224; Voxel size = isotropic 0.7mm; BW = 210Hz/Px; iPAT: 2; Acquisition time 7 min and 40 s.

To measure changes in blood-oxygenation-level-dependent (BOLD) T2* signals a gradient-echo EPI sequence was used to acquire 1,200 volumes (approximately 15 min). The acquisitions were performed with the following parameters: TR = 720ms, TE = 33.1ms; Flip Angle 52; field of view 208 × 180mm (RO × PE); 104 90 (RO × PE) matrix; 72 slices with 2.0mm isotropic voxels; multiband factor 8; echo spacing 0.58ms; and BW 2, 290Hz/Px.

For further information on acquisition parameters and scanning paradigms see the Human connectome documentation1.

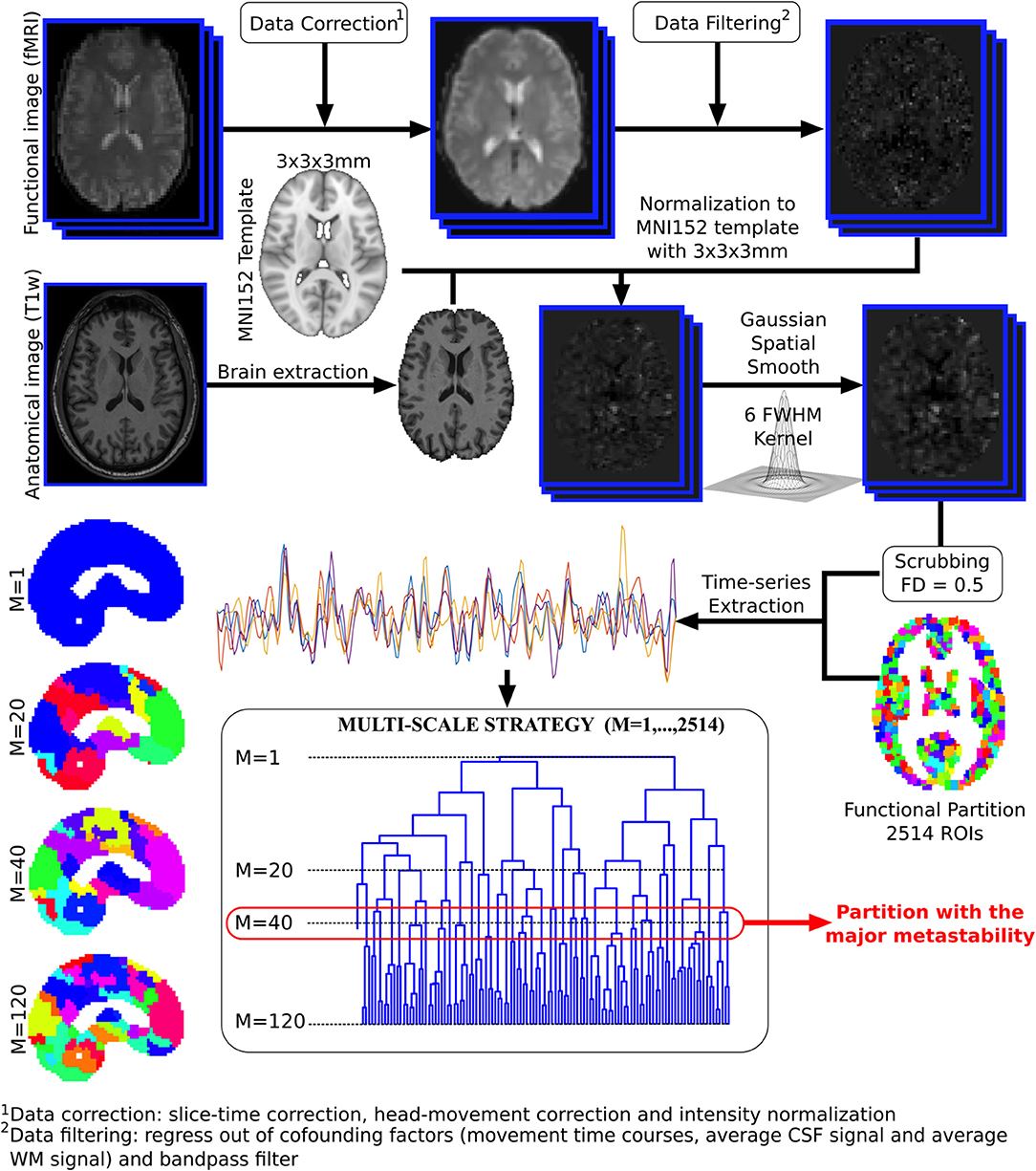

Functional data were preprocessed using FSL (FMRIB Software Library, version 5.0) and AFNI, following a procedure similar to previous work (Diez et al., 2017; Rasero et al., 2017, 2019; Stramaglia et al., 2017; Bonifazi et al., 2018; Camino-Pontes et al., 2018). First, slice-time correction was applied to the fMRI. Next, each volume was aligned to the middle volume to correct for head movement artifacts. After intensity normalization, we removed the effect of confounding factors: movement time courses, the average cerebrospinal fluid (CSF) signal and the average white matter signal, followed by a bandpass filter between 0.01 and 0.08Hz. The functional data were normalized to the MNI152 brain template, with a voxel size of 3 × 3 × 3mm3 and spatially smoothed with a 6mm full-width-at-half-maximum (FWHM) isotropic Gaussian kernel. In addition to head motion correction, we performed scrubbing, which means that all time points with framewise displacement greater than 0.5 were interpolated by a cubic spline (Yan et al., 2013).

Voxel time series were grouped using the brain hierarchical atlas (BHA), recently developed by Diez et al. (2015)2. The use of the BHA guarantees three conditions simultaneously: (1) That the dynamics of voxels belonging to the same module is very similar, (2) that those voxels belonging to the same module are structurally wired by white-matter streamlines; and (3) when varying the level of the hierarchical tree, it provides a multi-scale brain partition, where the highest dendrogram level M = 1 corresponds to all 2,514 regions belonging to a single module (coincident with the entire brain), whereas the lowest level M = 2,514 corresponds to 2,514 isolated modules (all of them composed of only one region). Figure 1 illustrates the functional image preprocessing pipeline.

Figure 1. Functional image preprocessing pipeline. Dual acquisition is needed, high-resolution anatomical images (T1) and functional images at rest. Following state-of-the-art pipeline of neuroimaging preprocessing, time series of the blood oxygenation level dependent (BOLD) signal were obtained for each region of interest (ROI), defined by a functional atlas of 2,514 ROIs. Finally, the time series were averaged using different partitions of the brain hierarchical atlas (BHA). The partition with maximal metastability was the one with M = 40 modules (see section 2.4 for details).

The recurrence structure analysis (RSA) exploits the Poincaré recurrence theorem, the recurrence plot (RP) technique introduced by Eckmann et al. (1987) and Markov state modeling to extract symbolic dynamics and recurrent metastable states from time series data3.

Consider a discretely sampled trajectory of duration T where ℝM denotes the M-dimensional phase space of the system. In our case, the states are obtained as normalized, voxel-averaged resting state fMRI (rsfMRI BOLD) measurements such that M is the number of structure-function modules (SFM) from the BHA analysis of section 2.3 (Diez et al., 2015).

The RP is a binary time-by-time matrix R indicating recurrence events, Rik = 1, when two states at times i > j are detected as being recurrent, i.e., state xj falls into a ball of radius ε > 0 centered around state xi:

with and an appropriately chosen metric or distance function d(x,y). Here, we use the cosine distance

for normalized states, .

According to beim Graben and Hutt (2013, 2015), the RP (1) can be interpreted as a rewriting grammar, called the recurrence grammar, acting on sequences of time indices si = i in the following sense. If the system is recurrent at time points i, j (Rij = 1) and if i > j, then a grammar rule i → j is defined, replacing the larger time index i in the sequence s by its smaller recurrent counterpart j. Moreover, if the system is recurrent at time points i, j, k (Rij = 1, Rik = 1) and if i > j > k, two grammar rules i → k and j → k are introduced that replace the two larger indices i, j in the sequence s by the smallest one k. Applying this grammar at least twice to the sequence s yields a transformed sequence s′ whose indices indicate the distinguished metastable system states.

A metastable state Sk is then rendered as a cloud of all states from the trajectory X that have the same index k in the sequence s′, that is,

The metastable states partition the phase space of the system into mutually disjoint equivalence classes. However, the discretization of the phase space and segmentation of system's trajectories into metastable states depends on a free parameter, the ball size ε. Determining the optimal ε value is pivotal as it enables to explain the time series observations. Several methods have been proposed to optimize ε (beim Graben and Hutt, 2013, 2015) but a more robust approach is based on Markovian optimization (beim Graben et al., 2016). Specifically, it assumes that the time series can be described by a Markov state model expressed via a transition matrix, , which specifies conditional transition probabilities from metastable state Sj into state Si,

where is the state at time t and its immediate successor in the given sampling. Moreover, it is assumed that the system spends most time in its respective metastable states and that the transitions from one metastable state into a transient regime and back into (another) metastable state are uniformly distributed (according to a maximum entropy principle). These combined assumptions enable the derivation of the following utility function

where tr P(ε) is the trace of the transition matrix and

are entropies (for the row and column of the transition matrix) with renormalized transition probabilities

An optimal partition is then obtained through

entailing a maximally metastable Markov state model; for more details see beim Graben et al. (2016). The number of segments n(ε*) characterizes the “complexity” of the transition model comprising one distinguished transient and hence n − 1 metastable states (beim Graben et al., 2016).

Next, we have to consider ensemble analysis. Instead of looking at a single trajectory X we are concerned with an ensemble of N trajectories E = {Xm | 1 ≤ m ≤ N}, which in our case refers to rsfMRI time series from N = 30 individual subjects. As our data are normalized to a unit hypersphere, we are able to compare metastable segments from different subjects. This is achieved by collecting all metastable states from all individuals m and calculating their pairwise Hausdorff distances

according to Hutt and beim Graben (2017). Here,

measures the “distance” of the point x from the compact set A ⊂ X. Note that the Hausdorff distance of two overlapping compact sets vanishes. Again, we use the cosine distance (2) for our normalized data here. Thresholding the distance matrix D with respect to a parameter θ > 0, yields another binary matrix Q as follows

which induces another recurrence grammar from which a Hausdorff clustering of metastable states is obtained; for further details see Hutt and beim Graben (2017). The threshold θ must be chosen in such a way that the entire ensemble E is covered by a minimal set of metastable states.

Finally, we have to express the metastable states either in terms of resting state networks (RSN) or in terms of anatomical structures (AAL). To that aim, Diez et al. (2015) have presented overlap matrices relating M structure-function modules to K nodes in the RSN or AAL representations, respectively. Here we consider the matrix M as a mixing matrix mapping a module state vector onto a network state through

For each metastable state Si, which is actually given as a spherical distribution of sampling points, we select a representative point. Hutt and beim Graben (2017) suggested to use the ensemble mean, i.e., the barycenter of the distribution. Here, however, we choose the vector

maximizing the maximum norm of the distribution for enhancing the contrast between metastable states. We project these particular representatives onto the RSN and AAL representations, according to

with M either the RSN or AAL mixing matrix, respectively.

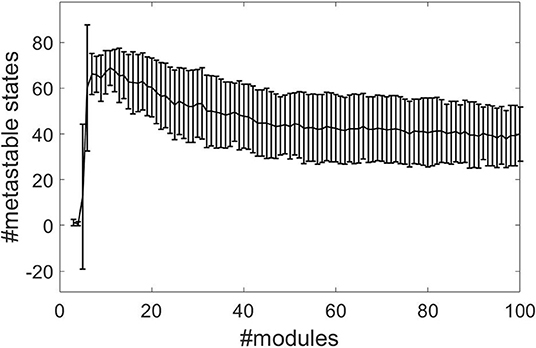

We combine the time-independent structure-function and HBA analysis of Diez et al. (2015) with the time-dependent recurrence structure analysis. We compute the optimal number of metastable states n − 1 determined by the RSA as a measure of complexity (beim Graben et al., 2016) for all subjects and for module numbers ranging from 3 to 100 from normalized rsfMRI BOLD signals. Figure 2 shows the results, where the error bars indicate one standard deviation of the complexity averaged over all subjects. The RSA stabilizes around M = 40 metastable states.

Figure 2. Segmentation complexities measured as number of metastable states against number of structure-function modules for normalized data using the cosine distance.

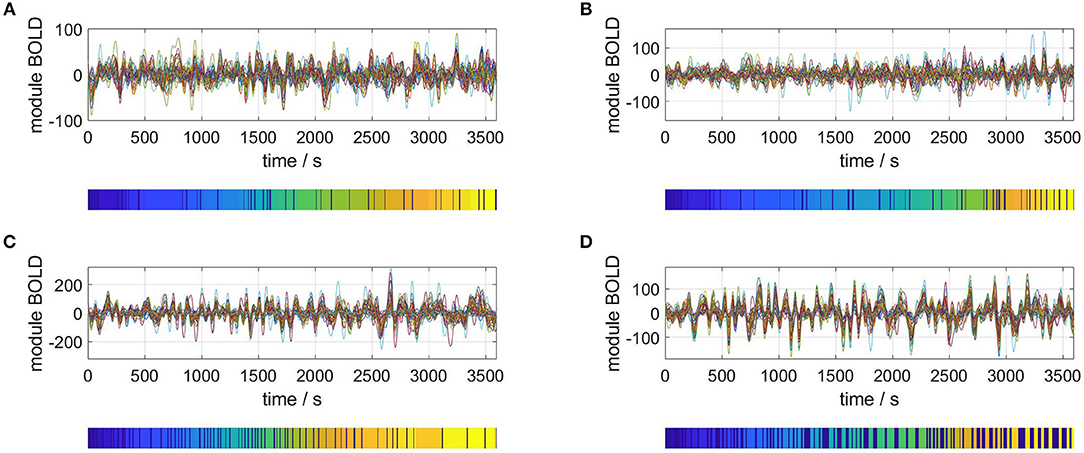

Carrying out the RSA of an M = 40 dimensional phase space for four representative subjects gives the results plotted in Figure 3. For each plot, the upper panel shows the M time series of the rsfMRI BOLD signal before normalization. The lower panels depict the resulting segmentation into metastable states, i.e., the symbolic sequences s′.

Figure 3. Selection of segmented rsfMRI time series. Upper panels: rsfMRI time series averaged over 40 modules, lower panels: symbolic sequences s′ resulting from optimal partitions into metastable states. Selected subjects: (A) #1, (B) #7, (C) #18, (D) #30.

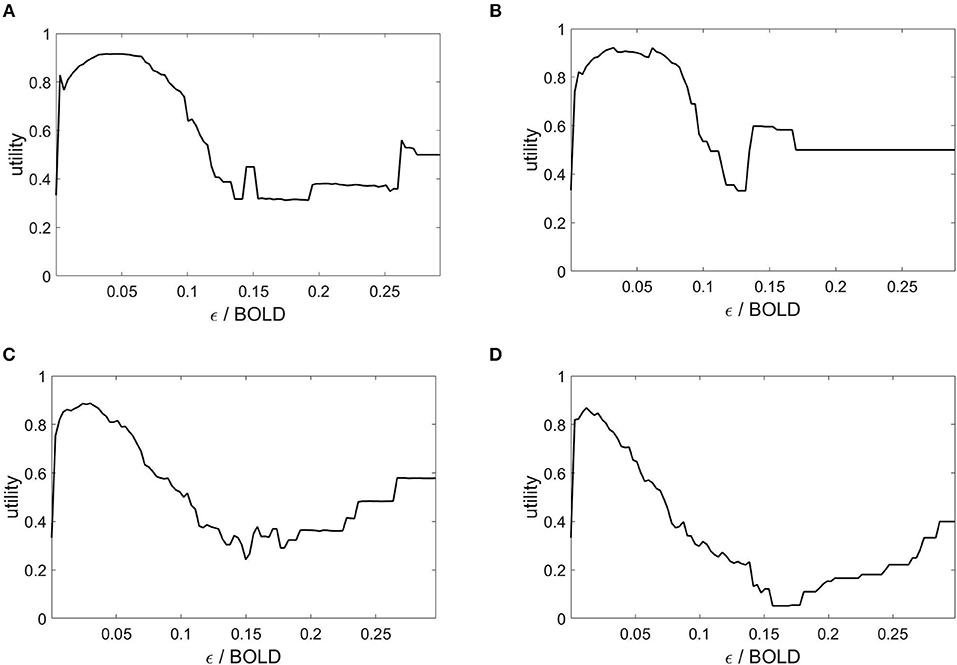

The state space partitioning and symbolic segmentation is achieved by optimizing the Markov criterion (8). The corresponding utility functions are plotted in Figure 4 and we observe that for each plot there exists a global maximum, establishing an optimal ball size for the symbolic segmentation around ε = 0.05.

Figure 4. Markov utility functions from averaged rsfMRI time series over 40 modules. Subjects: (A) #1, (B) #7, (C) #18, (D) #30.

We next consider the ensemble dynamics from the population of N = 30 subjects by employing the Hausdorff clustering (9) and optimally thresholding the distance matrix (11) to obtain a minimal set of metastable states that covers the entire ensemble. For the choice θ = 0.8, we get a bistable segmentation of the resting state dynamics, specifically, into two metastable states and one transient state, which is depicted in Figure 5. Here, yellow and turquoise indicate the two metastable states, while dark blue represents transients.

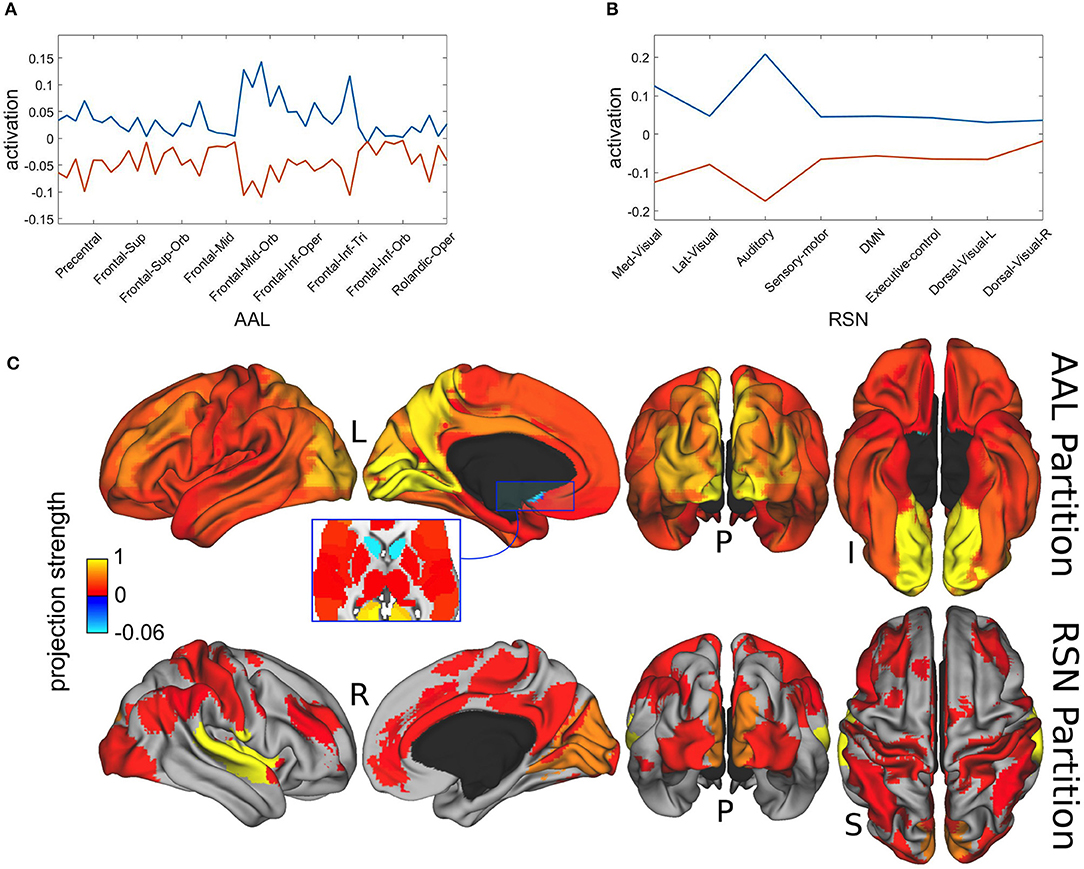

In Figure 6 we present the spatial distributions of both metastable population states, resulting from the projection of the most distinctive representatives onto the AAL atlas in Figure 6A and with respect to the resting state network (RSN) in Figure 6B. Finally, Figure 6C displays the same results as brain map projections for the first metastable state (plotted in blue) in Figures 6A,B, because the second one is largely its complement.

Figure 6. Spatial projections of metastable brain resting states. (A) Projection onto AAL regions. Notice that just for illustration purposes, although the spatial projection has been performed over all the 45 AAL brain regions, some of the labels have been removed from the x-axis. Brain areas corresponding to maximum projections (written in text) were lingual, calcarine, precuneus, occipital and cingulate cortices. (B) Projection onto RSN regions. Maximum projections occurred for the auditory and medial visual networks. (C) Brain localization of blue attractor with regard to AAL and RSN partitions. Notice that projection strength might have positive or negative values depending on the direction of the basis vectors.

Maximal metastability occurred bilaterally at lingual, calcarine, precuneus, occipital and cingulate cortices. The projection over the functional networks revealed maximum participation of the auditory and medial visual networks.

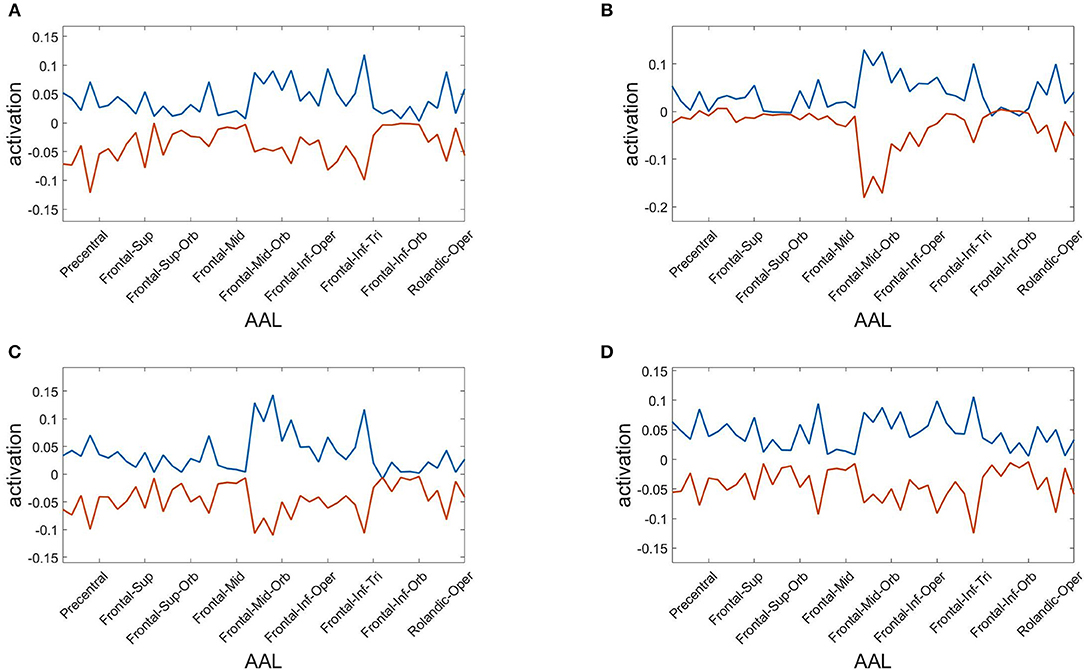

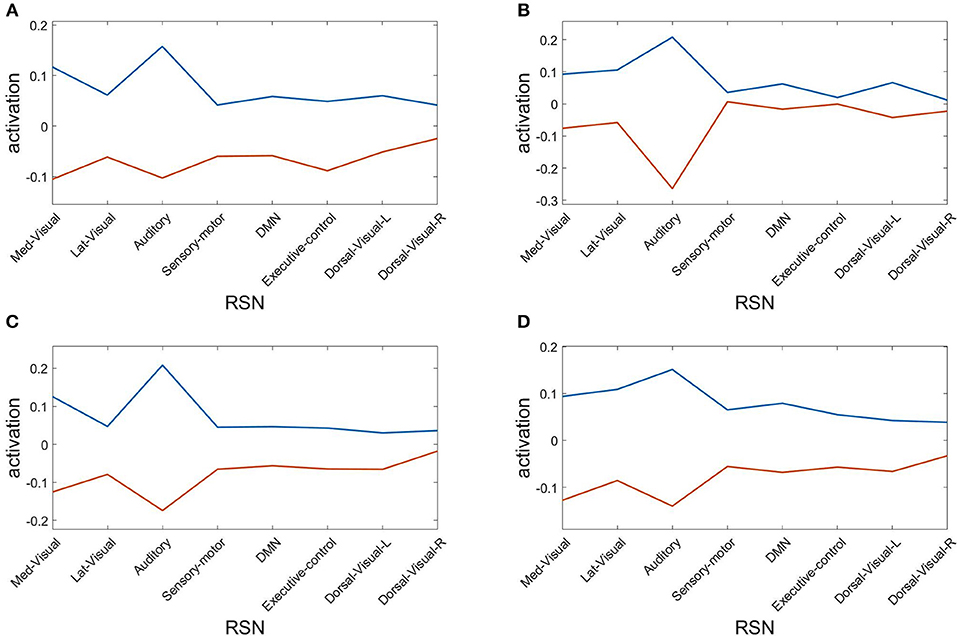

Finally, we assess the robustness of the population analysis by breaking it down to the four selected subjects shown in Figures 3, 4. Figure 7 displays the AAL projections, whereas Figure 8 shows the corresponding RSN projections.

Figure 7. Anatomical AAL projections of the two metastable states obtained from optimizing RSA over 40 modules. Subjects: (A) #1, (B) #7, (C) #18, (D) #30.

Figure 8. Resting state network (RSN) projections of the two metastable states obtained from optimizing RSA over 40 modules. Subjects: (A) #1, (B) #7, (C) #18, (D) #30.

Both analyses indicate that these findings for single subjects are consistent with the population results.

We introduced a novel framework to track the spatiotemporal dynamics of resting state fMRI BOLD signals. Specifically, a time dependent functional-structural brain mapping is achieved by combining the structural-functional brain hierarchical atlas (BHA) partitioning with the recurrence structure analysis (RSA). The combined method is applied to resting state fMRI BOLD signals and successfully identifies their corresponding time dependent metastable states, which are finally mapped to the anatomical brain structures and functional networks. Maximum metastability was found anatomically at lingual, calcarine, precuneus, occipital and cingulate cortices, which encompass both primary and high-order visual and auditory networks, together with the precuneus, that forming part of the default mode network is well-known to be one of the major functional hubs of the human brain. Although this is an observation rather than an interpretation, future studies are needed to really understand the brain organization of the metastable circuits at the large-scale.

The overall framework, in particular the time dependent aspect, relies fundamentally on Poincaré's recurrence theorem, which demands two conditions to be met for the underlying system under study. Specifically, if the system (typically an isolated or autonomous system) is volume preserving and has only bounded trajectories then for each open set (in phase space) there exist orbits that intersect the set infinitely often and are hence recurrent. It is critical to be cautious in drawing immediate interpretations of our results, however we will risk a tentative interpretation under the constraints made by the aforementioned theorem. To begin, we first assume that the brain (and associated processes) can be explained by some suitable complex multi-scale dynamical system. However, under the experimental fMRI condition in which the subjects are at rest, we will further assume that the underlying brain's dynamical system is autonomous (or at least approximately). Moreover, since fMRI BOLD relates to blood oxygenation and indirectly to local energy consumption through brain neural circuits, and thus correlates with neural activity, we assume that the values taken by the brain for its energy consumption are bounded. Finally, energy is not quantized, that is, it can take any value within this bounded domain. These assumptions satisfy the premises of the aforementioned theorem, which ultimately enables us to identifying time dependent recurrent states of resting state networks.

Under this setup, we find convergence toward an optimal segmentation of about 40 metastable states (for normalized data). This convergence may reflect preferred oxygen/energy levels (and switching between these levels) among all possible energy levels consumed across all structure-function brain modules. Thus at rest, it is likely that the energy is equally distributed globally across all networks (i.e., the whole brain), which in feedback entrains locally each module. However, each local module (or a set of few communicating modules) may have high transient use of energy consumption. These preferred energy levels and switching, indirectly reflect time dependent cognitive states mediated by the neural circuits of the resting state networks. To compare across all subjects we consider normalized data and following the Hausdorff clustering we find that across all subjects they share a common transition between two oxygen/energy levels (possibly interpreted as bistability). It is premature yet to draw any hard conclusions, however, we could extrapolate that there are two fundamental dwelling states that could represent a common homeostatic switching process that gateways the remaining energy levels, driving the dynamical transitions of the resting state structure-function network modules.

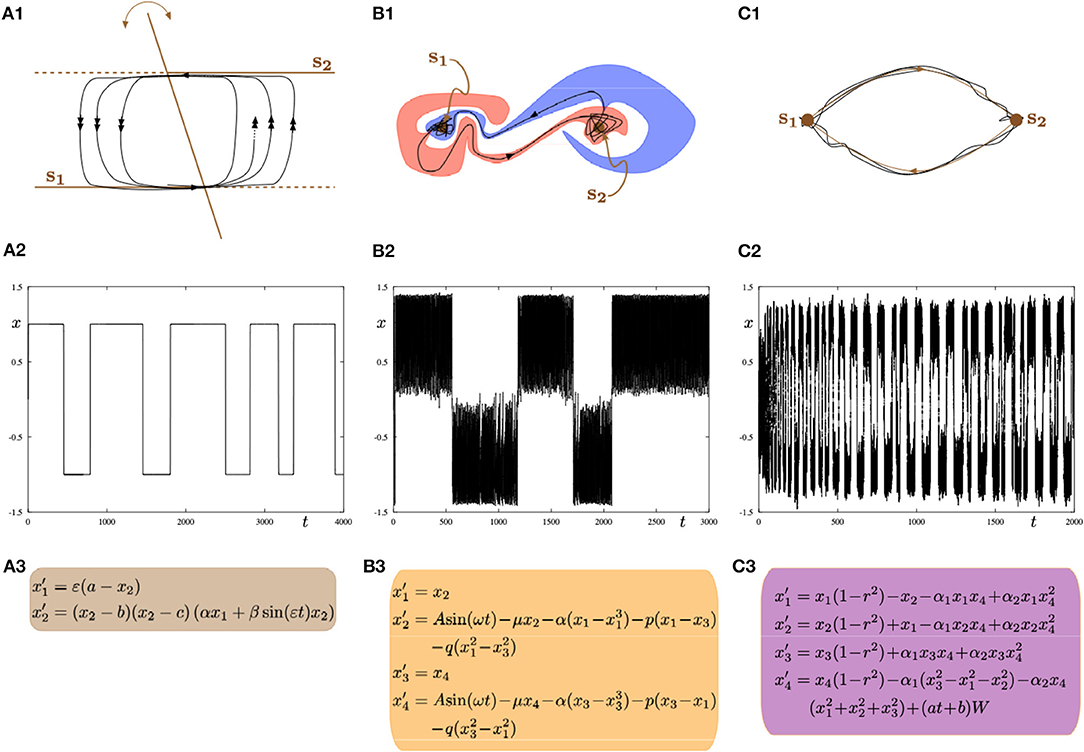

We subsequently contemplate on the dynamical systems point of view, the mechanistic alternatives that could explain switching between metastable states. Typically, within the brain mapping literature (specifically in computational modeling studies) it is often emphasized that transitions are explained via multistability. However, we would like to argue that again, it is crucial to be cautious since there are a multitude of mechanisms that can equally explain transition between states. For the sake of discussion, without wanting to exhaust all possible scenarios and avoiding modeling neurophysiological processes as it would go beyond the scope of the present manuscript, we showcase three canonical alternative mechanisms (as depicted in Figure 9). Moreover, we only focus on transitions between two states (to simplify the discussion). However, the proposed mechanisms can be easily extended to account for a larger number of metastable states. Nonetheless, these canonical mechanisms could be part of neural and hemodynamic biophysical models that explain, electrical-fMRI activity, such as those described via the dynamic causal modeling (DCM) framework (Friston et al., 2000), and/or other macroscopic modeling approaches (Breakspear et al., 2005; Chizhov et al., 2007; Rodrigues et al., 2007, 2009; Marten et al., 2009; beim Graben and Rodrigues, 2013).

Figure 9. Three possible mathematical mechanisms to model switching dynamics: (A1–A3) slow-fast systems with a hysteresis loop; (B1–B3) systems with intermingled basins of attraction; (C1–C3) systems with a robust heteroclinic cycle. In all parts (left, central, right), the top panel shows a sketch of the phase space; the middle panel shows a time series of the minimal model representing one of the frameworks displaying alternating switches between two metastable states s1 and s2; the bottom panel shows minimal ODEs for a given framework. All equations are phenomenological but the resulting dynamics can be found in biophysical models of brain activity.

The first canonical mechanism shown in Figure 9A is that of multiple-timescale dynamical systems, which are ubiquitous in neural modeling (Desroches et al., 2012, 2013, 2016). In this example, we consider a planar slow-fast system (Figure 9A3) with one slow variable x1 and one fast variable x2, where the separation of timescales between these variables is captured by the small timescale parameter 0 < ε≪1. The phase plane (x1, x2) (Figure 9A1) displays the fast nullcline or so-called critical manifold of the system, which consists of two horizontal lines and a third line intersecting the other two with a slowly-time-varying angle with respect to the vertical direction. This slowly-time-varying angle is arbitrary but could represent slow fluctuations inducing varying dwell times along a given attractor. The structure (i.e., fast nullcline) is the bifurcation diagram of the fast subsystem obtained by freezing the dynamics of the slow variable x1 (by setting ε = 0) and hence considering it as a parameter. In this context, the intersection points between the two horizontal components and the third component of the critical manifold correspond to transcritical bifurcation points (Rodrigues et al., 2016). Therefore, the fast subsystem possesses a hysteresis loop with two stable levels of activity, which in the full system (ε > 0 small) correspond to two metastable states s1 and s2 that a given trajectory (black curve) will visit recurrently in alternation. The single (respectively double) arrows along the trajectory correspond to slow (respectively fast) dynamics. Thus the transition between the slow and fast dynamics allows metastability. Parameter values for the simulation shown in Figure 9A2 are: a = 0.3, b = −1, c = 1, α = −2, β = −0.5, ε = 0.004.

A second canonical mechanism that captures switching dynamics between two metastable states is that of a dynamical system with two stable equilibria via so-called intermingled or riddled basins of attraction (Ding and Yang, 1996); this scenario is presented in Figure 9B. As illustrated in panel Figure 9B1 the basins of attraction of the both system's attractors can be arbitrary close to each other and they can even overlap in some region (and projections) of the phase plane of interest. This complex intermingling of basins of attraction enables the trajectory of the system to switch in a complex way between metastable states s1 and s2, as shown in Figure 9B2. The specific model that we consider is formed by two coupled second-order differential equations with a sinusoidal forcing; see panel Figure 9B3. Parameter values for the simulation shown in panel (b2) are: A = 1.011, μ = 0.632, α = −4, p = 0.1, q = 0.005.

The third and last proposed mechanism shown in panels Figure 9C corresponds to a system possessing a robust heteroclinic cycle between two saddle equilibria (Rabinovich et al., 2008; Rodrigues and Labouriau, 2014; beim Graben and Hutt, 2015; Hutt and beim Graben, 2017). In this case, the metastable states are in fact saddles where the unstable manifold of each saddle connects to the other saddle, hence allowing for a stable robust heteroclinic cycle to exist. In this case, the system's trajectory as shown in Figure 9C2 displays recurrent switching between the two saddles that correspond to the two metastable states s1 and s2. The heteroclinic cycle formed by these two states is stable and attracts trajectories. However, each new passage near one of the states brings the trajectory closer to one underlying saddle, hence passage times increase monotonically. However, fluctuations such as system noise (as is often the case in real systems) can disrupt or counteract these monotonic increase in passage times. The specific model is depicted in Figure 9C2, which is a four-dimensional dynamical systems on the 3-sphere possessing a robust stable heteroclinic cycle between two equilibria located at (0, 0, 0, ±1). The two saddles have only their last coordinate different. We add noise in the last equation with a time-dependent amplitude of the Brownian term and the resulting trajectory is depicted in Figure 9C2. Parameter values for the switching trajectory shown in panel (c2) are: α1 = 1, α2 = −0.1, a = 5·10−5, b = 0.33.

To conclude, there are potentially infinitely many models (and a multitude of dynamical mechanisms) that can equally explain the same fMRI BOLD observables. Thus a fundamental question for future research is what signatures within the data could potentially exclude cases and narrow down the possibilities enabling biophysical and parsimonious models to be derived. Finally, the present manuscript shows that is feasible to extract temporal information of the resting state networks, however it also opens up novel questions and avenues in brain mapping research.

The datasets generated for this study are available on request to the corresponding author.

The sample included 30 subjects from the MGH-USC Human Connectome Project. The research was performed in compliance with the Code of Ethics of the World Medical Association (Declaration of Helsinki). All subjects provided written informed consent, approved by the ethics committee in accordance with guidelines of HCP WU-Minn.

SR designed the project. PG did the recurrence analysis. AJ-M, ID, and JC did the data processing. MD did computational models. All authors wrote the paper.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

SR would like to acknowledge Ikerbasque (The Basque Foundation for Science) and moreover, this research is supported by the Basque Government through the BERC 2018-2021 program and by the Spanish State Research Agency through BCAM Severo Ochoa excellence accreditation SEV-2017-0718 and through project RTI2018-093860-B- C21 funded by (AEI/FEDER, UE) and acronym MathNEURO. JC acknowledges financial support from Ikerbasque, Ministerio Economia, Industria y Competitividad (Spain) and FEDER (grant DPI2016-79874-R) and the Department of Economical Development and Infrastructure of the Basque Country (Elkartek Program, KK-2018/00032). Finally, PG acknowledges BCAM's hospitality during a visiting fellowship in fall 2017.

1. ^http://www.humanconnectome.org/

2. ^Available for download at http://www.nitrc.org/projects/biocr_hcatlas/. A new Python version, that was developed during Brainhack Global 2017 – Bilbao, can be downloaded at https://github.com/compneurobilbao/bha

3. ^A toolbox is freely available at https://www.researchgate.net/publication/317597921_RSA_Recurrence_Structure_Analysis_Toolbox_in_MATLAB

Alonso Montes, C., Diez, I., Remaki, L., Escudero, I., Mateos, B., Rosseel, Y., et al. (2015). Lagged and instantaneous dynamical influences related to brain structural connectivity. Front. Psychol. 6:1024. doi: 10.3389/fpsyg.2015.01024

Beckmann, C. F., DeLuca, M., Devlin, J. T., and Smith, S. M. (2005). Investigations into resting-state connectivity using independent component analysis. Philos. Trans. R. Soc. Lond. B 360, 1001–1013. doi: 10.1098/rstb.2005.1634

Beckmann, C. F., and Smith, S. M. (2005). Tensorial extensions of independent component analysis for multisubject fMRI analysis. NeuroImage 25, 294–311. doi: 10.1016/j.neuroimage.2004.10.043

beim Graben, P., and Hutt, A. (2013). Detecting recurrence domains of dynamical systems by symbolic dynamics. Phys. Rev. Lett. 110:154101. doi: 10.1103/PhysRevLett.110.154101

beim Graben, P., and Hutt, A. (2015). Detecting event-related recurrences by symbolic analysis: applications to human language processing. Philos. Trans. R. Soc. Lond. A 373:20140089. doi: 10.1098/rsta.2014.0089

beim Graben, P., and Rodrigues, S. (2013). A biophysical observation model for field potentials of networks of leaky integrate-and-fire neurons. Front. Comput. Neurosci. 6:100. doi: 10.3389/fncom.2012.00100

beim Graben, P., Sellers, K. K., Fröhlich, F., and Hutt, A. (2016). Optimal estimation of recurrence structures from time series. EPL 114:38003. doi: 10.1209/0295-5075/114/38003

Bell, A. J., and Sejnowski, T. (1995). An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 7, 1129–1159. doi: 10.1162/neco.1995.7.6.1129

Bell, A. J., and Sejnowski, T. J. (1997). The "independent components" of natural scenes are edge filters. Vis. Res. 37, 3327–3338. doi: 10.1016/S0042-6989(97)00121-1

Bonifazi, P., Erramuzpe, A., Diez, I., Gabilondo, I., Boisgontier, M. P., Pauwels, L., et al. (2018). Structure-function multi-scale connectomics reveals a major role of the fronto-striato-thalamic circuit in brain aging. Hum. Brain Mapp. 39, 4663–4677. doi: 10.1002/hbm.24312

Breakspear, M., Roberts, J. A., Terry, J. R., Rodrigues, S., Mahant, N., and Robinson, P. A. (2005). A unifying explanation of primary generalized seizures through nonlinear brain modeling and bifurcation analysis. Cereb. Cortex 16, 1296–1313. doi: 10.1093/cercor/bhj072

Camino-Pontes, B., Diez, I., Jimenez-Marin, A., Rasero, J., Erramuzpe, A., Bonifazi, P., et al. (2018). Interaction information along lifespan of the resting brain dynamics reveals a major redundant role of the default mode network. Entropy 20:742. doi: 10.3390/e20100742

Chizhov, A. V., Rodrigues, S., and Terry, J. R. (2007). A comparative analysis of a firing-rate model and a conductance-based neural population model. Phys. Lett. A 369, 31–36. doi: 10.1016/j.physleta.2007.04.060

Damoiseaux, J. S., Rombouts, S. A., Barkhof, F., Scheltens, P., Stam, C. J., Smith, S. M., et al. (2006). Consistent resting-state networks across healthy subjects. Proc. Natl. Acad. Sci. U.S.A. 103, 13848–13853. doi: 10.1073/pnas.0601417103

Deco, G., Kringelbach, M. L., Jirsa, V. K., and Ritter, P. (2017). The dynamics of resting fluctuations in the brain: metastability and its dynamical cortical core. Sci. Rep. 7:3095. doi: 10.1038/s41598-017-03073-5

Desroches, M., Guckenheimer, J., Krauskopf, B., Kuehn, C., Osinga, H. M., and Wechselberger, M. (2012). Mixed-mode oscillations with multiple time scales. SIAM Rev. 54, 211–288. doi: 10.1137/100791233

Desroches, M., Krupa, M., and Rodrigues, S. (2013). Inflection, canards and excitability threshold in neuronal models. J. Math. Biol. 67, 989–1017. doi: 10.1007/s00285-012-0576-z

Desroches, M., Krupa, M., and Rodrigues, S. (2016). Spike-adding in parabolic bursters: the role of folded-saddle canards. Physica D 331, 58–70. doi: 10.1016/j.physd.2016.05.011

Diez, I., Bonifazi, P., Escudero, I., Mateos, B., Muńoz, M. A., Stramaglia, S., et al. (2015). A novel brain partition highlights the modular skeleton shared by structure and function. Sci. Rep. 5:10532. doi: 10.1038/srep10532

Diez, I., Drijkoningen, D., Stramaglia, S., Bonifazi, P., Marinazzo, D., Gooijers, J., et al. (2017). Enhanced pre-frontal functional-structural networks to support postural control deficits after traumatic brain injury in a pediatric population. Netw. Neurosci. 1, 116–142. doi: 10.1162/NETN_a_00007

Ding, M., and Yang, W. (1996). Observation of intermingled basins in coupled oscillators exhibiting synchronized chaos. Phys. Rev. E 54:2489. doi: 10.1103/PhysRevE.54.2489

Eckmann, J. P., Kamphorst, S. O., and Ruelle, D. (1987). Recurrence plots of dynamical systems. Europhys. Lett. 4, 973–977. doi: 10.1209/0295-5075/4/9/004

Fingelkurts, A. A., and Fingelkurts, A. A. (2017). Information flow in the brain: ordered sequences of metastable states. Information 8:22. doi: 10.3390/info8010022

Fox, M. D., Snyder, A. Z., Vincent, J. L., Corbetta, M., Van Essen, D. C., and Raichle, M. E. (2005). The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proc. Natl. Acad. Sci. U.S.A. 102:9673. doi: 10.1073/pnas.0504136102

Friston, K. J. (1997). Transients, metastability, and neuronal dynamics. NeuroImage 5, 164–171. doi: 10.1006/nimg.1997.0259

Friston, K. J., Mechelli, A., Turner, R., and Price, C. J. (2000). Nonlinear responses in fMRI: the Balloon model, Volterra kernels, and other hemodynamics. NeuroImage 12, 466–477. doi: 10.1006/nimg.2000.0630

Hansen, E. C., Battaglia, D., Spiegler, A., Deco, G., and Jirsa, V. K. (2015). Functional connectivity dynamics: modeling the switching behavior of the resting state. NeuroImage 105, 525–535. doi: 10.1016/j.neuroimage.2014.11.001

Hutt, A., and beim Graben, P. (2017). Sequences by metastable attractors: interweaving dynamical systems and experimental data. Front. Appl. Math. Stat. 3:11. doi: 10.3389/fams.2017.00011

Iraji, A., DeRamus, T. P., Lewis, N., Yaesoubi, M., Stephen, J. M., Erhardt, E., et al. (2018). The spatial connectome reveals a dynamic interplay between functional segregation and integration. bioRxiv [Preprint]. doi: 10.1101/427450

Kelso, J. A. (2012). Multistability and metastability: understanding dynamic coordination in the brain. Philos. Trans. R. Soc. Lond. B 367, 906–918. doi: 10.1098/rstb.2011.0351

Krishnan, A., Williams, L. J., McIntosh, A. R., and Abdi, H. (2011). Partial least squares (PLS) methods for neuroimaging: a tutorial and review. NeuroImage 56, 455–475. doi: 10.1016/j.neuroimage.2010.07.034

Kunert-Graf, J. M., Eschenburg, K. M., Galas, D. J., Kutz, J. N., Rane, S. D., and Brunton, B. W. (2018). Extracting reproducible time-resolved resting state networks using dynamic mode decomposition. bioRxiv [Preprint]. doi: 10.1101/343061

Marten, F., Rodrigues, S., Benjamin, O., Richardson, M. P., and Terry, J. R. (2009). Onset of polyspike complexes in a mean-field model of human electroencephalography and its application to absence epilepsy. Philos. Trans. R. Soc. Lond. 367, 1145–1161. doi: 10.1098/rsta.2008.0255

Park, H. J., and Friston, K. (2013). Structural and functional brain networks: from connections to cognition. Science 342:1238411. doi: 10.1126/science.1238411

Rabinovich, M. I., Huerta, R., Varona, P., and Afraimovich, V. S. (2008). Transient cognitive dynamics, metastability, and decision making. PLoS Comput. Biol. 4:e1000072. doi: 10.1371/journal.pcbi.1000072

Raichle, M. E., MacLeod, A. M., Snyder, A. Z., Powers, W. J., Gusnard, D. A., and Shulman, G. L. (2001). A default mode of brain function. Proc. Natl. Acad. Sci. U.S.A. 98:676. doi: 10.1073/pnas.98.2.676

Rasero, J., Diez, I., Cortes, J. M., Marinazzo, D., and Stramaglia, S. (2019). Connectome sorting by consensus clustering increases separability in group neuroimaging studies. Netw. Neurosci. 3, 325–343. doi: 10.1162/netn_a_00074

Rasero, J., Pellicoro, M., Angelini, L., Cortes, J. M., Marinazzo, D., and Stramaglia, S. (2017). Consensus clustering approach to group brain connectivity matrices. Netw. Neurosci. 1, 242–253. doi: 10.1162/NETN_a_00017

Roberts, J. A., Gollo, L. L., Abeysuriya, R. G., Roberts, G., Mitchell, P. B., Woolrich, M. W., et al. (2019). Metastable brain waves. Nat. Commun. 10:1056. doi: 10.1038/s41467-019-08999-0

Rodrigues, A. A. P., and Labouriau, I. S. (2014). Spiralling dynamics near heteroclinic networks. Physica D 268, 34–49. doi: 10.1016/j.physd.2013.10.012

Rodrigues, S., Barton, D., Szalai, R., Benjamin, O., Richardson, M. P., and Terry, J. R. (2009). Transitions to spike-wave oscillations and epileptic dynamics in a human cortico-thalamic mean-field model. J. Comput. Neurosci. 27, 507–526. doi: 10.1007/s10827-009-0166-2

Rodrigues, S., Desroches, M., Krupa, M., Cortes, J. M., Sejnowski, T. J., and Ali, A. B. (2016). Time-coded neurotransmitter release at excitatory and inhibitory synapses. Proc. Natl. Acad. Sci. U.S.A. 113, E1108–E1115. doi: 10.1073/pnas.1525591113

Rodrigues, S., Gonçalves, J., and Terry, J. R. (2007). Existence and stability of limit cycles in a macroscopic neuronal population model. Physica D 233, 39–65. doi: 10.1016/j.physd.2007.06.010

Shine, J. M., Breakspear, M., Bell, P., Martens, K. E., Shine, R., Koyejo, O., et al. (2018). The low dimensional dynamic and integrative core of cognition in the human brain. bioRxiv [Preprint]. doi: 10.1101/266635

Smitha, K. A., Akhil Raja, K., Arun, K. M., Rajesh, P. G., Thomas, B., Kapilamoorthy, T. R., et al. (2017). Resting state fMRI: a review on methods in resting state connectivity analysis and resting state networks. Neuroradiol. J. 30, 305–317. doi: 10.1177/1971400917697342

Stramaglia, S., Pellicoro, M., Angelini, L., Amico, E., Aerts, H., Cortés, J. M., et al. (2017). Ising model with conserved magnetization on the human connectome: implications on the relation structure-function in wakefulness and anesthesia. Chaos 27:047407. doi: 10.1063/1.4978999

Surampudi, S. G., Misra, J., Deco, G., Bapi, R. S., Sharma, A., and Roy, D. (2019). Resting state dynamics meets anatomical structure: temporal multiple kernel learning (tMKL) model. NeuroImage 184, 609–620. doi: 10.1016/j.neuroimage.2018.09.054

Tognoli, E., and Kelso, J. (2014). The metastable brain. Neuron 81, 35–48. doi: 10.1016/j.neuron.2013.12.022

Vergun, S., Gaggl, W., Nair, V. A., Suhonen, J. I., Birn, R. M., Ahmed, A. S., et al. (2016). Classification and extraction of resting state networks using healthy and epilepsy fMRI data. Front. Neurosci. 10:440. doi: 10.3389/fnins.2016.00440

Vidaurre, D., Smith, S. M., and Woolrich, M. W. (2017). Brain network dynamics are hierarchically organised in time. Proc. Natl. Acad. Sci. U.S.A. 114, 12827–12832. doi: 10.1073/pnas.1705120114

Keywords: resting state, recurrence structure analysis, metastability, BOLD fMRI, diffusion tensor imaging, brain hierarchical atlas

Citation: beim Graben P, Jimenez-Marin A, Diez I, Cortes JM, Desroches M and Rodrigues S (2019) Metastable Resting State Brain Dynamics. Front. Comput. Neurosci. 13:62. doi: 10.3389/fncom.2019.00062

Received: 29 May 2019; Accepted: 23 August 2019;

Published: 06 September 2019.

Edited by:

Germán Mato, Bariloche Atomic Centre (CNEA), ArgentinaReviewed by:

Xintao Hu, Northwestern Polytechnical University, ChinaCopyright © 2019 beim Graben, Jimenez-Marin, Diez, Cortes, Desroches and Rodrigues. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Serafim Rodrigues, c3JvZHJpZ3Vlc0BiY2FtYXRoLm9yZw==

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.