- 1Laboratory of Computational Neuroscience, School of Computer and Communication Sciences and Brain Mind Institute, School of Life Sciences, École Polytechnique Fédérale de Lausanne, Lausanne, Switzerland

- 2Faculty of Mathematics and Natural Sciences, Institute for Zoology, University of Cologne, Cologne, Germany

- 3Laboratory of Sensory Processing, Brain Mind Institute, School of Life Sciences, École Polytechnique Fédérale de Lausanne, Lausanne, Switzerland

Experimental measurements of pairwise connection probability of pyramidal neurons together with the distribution of synaptic weights have been used to construct randomly connected model networks. However, several experimental studies suggest that both wiring and synaptic weight structure between neurons show statistics that differ from random networks. Here we study a network containing a subset of neurons which we call weight-hub neurons, that are characterized by strong inward synapses. We propose a connectivity structure for excitatory neurons that contain assemblies of densely connected weight-hub neurons, while the pairwise connection probability and synaptic weight distribution remain consistent with experimental data. Simulations of such a network with generalized integrate-and-fire neurons display regular and irregular slow oscillations akin to experimentally observed up/down state transitions in the activity of cortical neurons with a broad distribution of pairwise spike correlations. Moreover, stimulation of a model network in the presence or absence of assembly structure exhibits responses similar to light-evoked responses of cortical layers in optogenetically modified animals. We conclude that a high connection probability into and within assemblies of excitatory weight-hub neurons, as it likely is present in some but not all cortical layers, changes the dynamics of a layer of cortical microcircuitry significantly.

Introduction

Is it possible to uniquely constrain a model network of point neurons with experimental data? First, suppose that we have access to experimental measurements of electrophysiological properties of single neurons. Indeed, a wealth of single-neuron data exists (Markram et al., 2004, 2015) and methods have been developed that enable a rapid and reliable extraction of parameters of generalized integrate-and-fire neuron models from such experimental data (Jolivet et al., 2006; Pillow et al., 2008; Mensi et al., 2012; Pozzorini et al., 2013, 2015). Thus, parameters of neuron models, including spread of parameters caused by heterogeneity, can be completely constrained by experiments. Second, suppose that we have access to experimental measurements of the distribution of synaptic weights. Indeed, experimental data suggests a unimodal, possibly log-normal, distribution of EPSP amplitudes (Feldmeyer et al., 1999, 2002, 2006; Song et al., 2005; Frick et al., 2008; Lefort et al., 2009). Thus, we can constrain the distribution of synaptic weights in a network model with the data collected over many pairs of neurons. Third, suppose that we know the probability that two neurons (say, of types A and B located in layers n and m of the same cortical column) make a short-range connection from A to B. Again, such data exists (Lefort et al., 2009; Avermann et al., 2012) and should be used to constrain a network model. But is data collected on single neurons and pairs of neurons sufficient to constrain the parameters of a network model?

The answer is negative. There are at least two reasons: (i) The distribution of synaptic weights does not indicate whether a single neuron is driven by a random combination of strong and weak synapses, or whether one neuron receives all the strong input synapses and another one all the weak ones. Similarly, (ii) an average connection probability of say, 20 percent, is consistent with a network of a 1000 neurons where each neuron receives exactly 200 connections, but also equally consistent with a network where half the neurons receive 100 inputs and the other half 300. In this paper, we systematically explore network variants that implement the variations indicated under (i) and (ii) while keeping all single-neuron parameters, number of neurons, as well as pairwise connection probabilities and synaptic weight distributions fixed. To keep the arguments as transparent, consistent, and precise as possible, we focus on a single cortical layer of mouse barrel cortex and use data from a single lab (Lefort et al., 2009; Avermann et al., 2012).

The hypothetical variations (i) and (ii) make our networks different from a classical Erdős–Rényi random network. Indeed, experimental data from various labs indicate non-random features in network connectivity (Song et al., 2005; Yoshimura et al., 2005; Kampa et al., 2006; Perin et al., 2011). The influence of some of these features on activity patterns in neuronal networks has already been studied in a set of modeling papers (Koulakov et al., 2009; Roxin, 2011; Litwin-Kumar and Doiron, 2012; Hu et al., 2013, 2014; Pernice et al., 2013; Vasquez et al., 2013; Jahnke et al., 2014; Luccioli et al., 2014; McDonnell and Ward, 2014; Mazzucato et al., 2015, 2016). We introduce two features of network connectivity which we call degree-hub and weight-hub. These concern two types of neurons and are made based on the hypothetical variations (i) and (ii), respectively. In particular, if a few neurons receive more synaptic input connections than others (Roxin, 2011; Pernice et al., 2013; Tomm et al., 2014), we will refer to these neurons as degree-hubs. On the other hand, even in a network where there are no degree-hubs, there can still be a few neurons (which we will call weight-hubs) that receive all the strong connections while others receive all the weak connections (Koulakov et al., 2009; Tomm et al., 2014), but chosen such that the total distribution of synaptic weights across the network remains consistent with experimental data. More generally, such non-random features can be described as “correlations” in the connectivity matrix or synaptic weight distribution. For example, the input connectivity in a network with weight-hubs is correlated: It is more likely to find a second strong input connection in a neuron in which you have already found a strong synapse than in a neuron for which you have found a weak synapse.

Several experiments studied the existence of both degree-hub and weight-hub neurons in different regions of the brain. In the hippocampus GABAergic neurons that receive more synapses than average were detected (Bonifazi et al., 2009). Excitatory neurons which receive many synapses from inhibitory neurons were found in mouse frontal cortex (Fino and Yuste, 2011). Recently, Okun et al. (2015) found that neurons that are strongly correlated to the population-averaged firing rate receive larger numbers of synapses from their neighbors. Neurons receiving stronger connections from several other neurons were observed in experiments (Song et al., 2005; Perin et al., 2011). Yassin et al. (2010) and Cossart et al. (2003) investigated neocortical excitatory neurons that systematically fire more than other neurons. Such a high firing rate can be due to different intrinsic neuronal properties or more frequent or stronger excitatory synapses onto the neurons. In the latter case, the receiving neurons can be considered as candidates of degree- or weight-hubs. Even though experimental evidence for the existence of weight-hubs within pyramidal neurons is not yet convincing, we explore here signatures of hypothetical weight-hub neurons in neuronal activity.

Another experiment unravels a related but different phenomenon in the cortex. Yoshimura et al. (2005) suggest that excitatory neurons in the cortex can form assemblies and that neurons inside each assembly share common synaptic input. Here we explore a hypothetical network where connectivity between weight-hub neurons is higher than average. We show that such an elevated connection probability between weight-hub neurons significantly changes the dynamics of the network. Note that a subnetwork of densely connected weight-hub neurons can be interpreted as a neuronal assembly (Hebb, 1949).

We build a neuronal network which models layer 5A (L5) of a mouse barrel cortex. Neuron numbers, pairwise connection probabilities, and distribution of synaptic weights are matched to experimental data (Lefort et al., 2009; Avermann et al., 2012). Parameters of the neuron model such as membrane time constants, firing threshold and adaptation have been extracted from experimental data (Mensi et al., 2012). Our model is able to generate up-state/down-state oscillations (Steriade et al., 1993; Cowan and Wilson, 1994; Lampl et al., 1999). We show that in our network of adaptive integrate-and-fire neurons, the existence of weight-hub neurons is not sufficient for producing metastable up- and down-states. For oscillations to appear, weight-hub neurons need to form assemblies with dense internal connectivity. Another phenomenon that we address here is the different light-evoked responses of cortical supra-granular and infra-granular layers. Experiments (Beltramo et al., 2013) show that optogenetic stimulation in L5 leads to a large depolarization and a notable number of emitted spikes in non-stimulated neurons. In contrast after stimulation of a group of neurons in layer 2/3 (L2/3), non-stimulated neurons do not show significant responses. We show that such a difference can be explained by the presence or absence of an assembly of hub neurons. We hypothesize that in L5 weight-hub neurons are connected together densely and form assemblies while in L2/3 their connections are sparse. This may explain experimental observations (Sakata and Harris, 2009; Chauvette et al., 2010; Beltramo et al., 2013) which indicate that up-states are initiated in L5, and that L2/3 largely follows the oscillation passively.

Materials and Methods

Neuron Model and Population Parameters

In each simulation we model one cortical layer, either layer 5A (L5) or layer 2/3 (L2/3) from mouse somatosensory cortex. Based on experimental data (Lefort et al., 2009), our model of a L5 barrel column contains 454 excitatory and 90 inhibitory neurons while L2/3 contains 1691 excitatory and 230 inhibitory neurons. The two layers are studied separately and are not connected to each other.

As a neuron model, we use a current-based Generalized Integrate-and-Fire (GIF) model (Mensi et al., 2012) that features both an adaptation current and a dynamic threshold for spike-frequency adaptation. The GIF model parameters that we use in our simulations have been previously extracted from experimental data (Mensi et al., 2012). Importantly, the GIF model has been shown to capture with high accuracy both the subthreshold dynamics of the membrane potential and the spiking activity recorded from neurons in mouse barrel cortex slices during current injection (Mensi et al., 2012; Pozzorini et al., 2013). In this model, the subthreshold membrane potential V(t) is described by the differential equation:

where the parameters C, gL and EL define the passive properties of the neuron (for parameter values see Table 1), I(t) is the input current and are the spike times. Each time a spike is emitted, an intrinsic current with stereotypical shape η(t) is triggered (see Table 2). Currents triggered by different spikes accumulate and produce spike-frequency adaptation. Immediately after firing, the membrane potential is reset to Vreset, integration of Equation (1) restarts and the neuron goes through an absolute refractory period of duration tref.

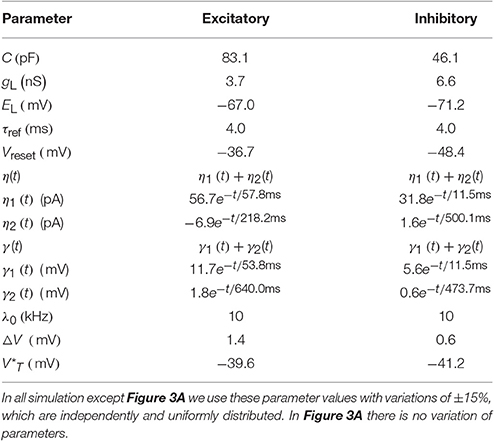

Table 1. The mean of GIF neuron model parameters extracted from Mensi et al. (2012).

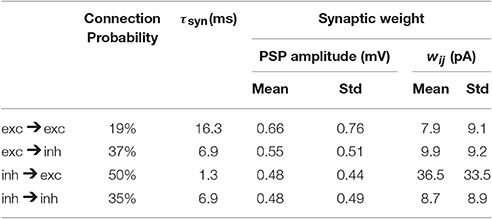

Table 2. Network parameters as extracted from mouse barrel cortex (Lefort et al., 2009; Avermann et al., 2012).

Spikes are produced stochastically according to a point process with the firing intensity

where λ0 is the stochastic intensity at the firing threshold VT, △V is a constant which defines the level of stochasticity and VT is a time-dependent firing threshold:

where is a constant and γ(t) describes the stereotypical time course of the firing threshold after the emission of an action potential (see Table 1).

For all neuronal parameters, we use the values given in Table 1 with ±15% uniformly distributed variations in all simulations, except for Figure 3A. For comparison in Figure 3A all neuron parameters are as in Table 1 without any variation. The values of Table 1 are extracted from experimental data from mouse barrel cortex (Mensi et al., 2012) and no parameter tuning of neuronal parameters was done for the network simulations reported here.

In the network, the input current Ii(t) in Equation (1) is generated by synaptic current pulses into a specific neuron i

where is the fth spike of a presynaptic neuron j and is the spike train of neuron j where δ denotes the Dirac δ-function. We choose an exponential shape for post-synaptic currents (PSC) α with a time constant τsyn: for t ≥ Δ. The transmission delay (Δ) of synaptic connections in all our simulations is 1 ms. The symbol wij denotes the synaptic weight from neuron j to neuron i. The term synaptic weight is commonly used for either of two different quantities, either the amplitude of the PSC or the amplitude of the post-synaptic potential (PSP). In this study we take the first definition, i.e., wij denotes the PSC amplitude; see Equation 4. However, the experimental datasets we used report the synaptic weight based on the second definition (PSP amplitude). Given the neuronal parameters, one can easily relate the two quantities. We report the synaptic weight we used in our simulations according to both of the definitions in Table 2.

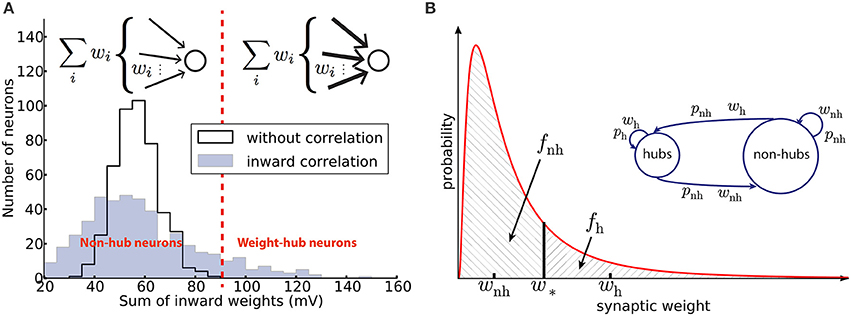

All network parameters (Table 2), e.g., connection probabilities, the distribution of synaptic weights and number of neurons are chosen based on previously published data extracted from mouse barrel cortex (Lefort et al., 2009; Avermann et al., 2012). At present there is no comparable dataset for L5 inhibitory neurons. Therefore, for inhibitory neurons, we use neuronal and network parameters similar to those of L2/3 inhibitory neurons (Avermann et al., 2012). Since, these neurons do not play a crucial role in initiating the up/down oscillations and stimulus-evoked responses, the exact choice of their parameters does not strongly affect the results. Moreover, the study of Pfeffer et al. (2013) explored some aspects of connectivity pattern between different subsets of inhibitory neurons and highlighted more similarities than differences between L2/3 and L5 inhibitory network. In the model, all neurons receive external Poisson noise whose properties are described in Table 3.

In order to reproduce the light-evoked stimulation (Figure 4), we randomly select 15% of the neurons and inject a step current with amplitude 100 pA for 300 ms. For simulating the light-evoked response in L2/3, the connection probability and the mean synaptic weights inside excitatory population are 16.8% and 0.37 mV, respectively (Avermann et al., 2012). The other network parameters are the same as L5. For simulating the active cortical state (Figure 6), during the active period each neuron receives synaptic input from 70 Poisson process neurons firing with a rate of 5 Hz. The synaptic weights of synapses from Poisson neurons to assemblies, non-hubs and inhibitory neurons are 25, 5, and -25 pA, respectively.

All simulations were run using the Brian simulator (Goodman and Brette, 2008).

Partitioning the Excitatory Population into Weight-Hubs and Non-hubs Subpopulations

In order to distinguish between weight-hub and other neurons (“non-hubs”), and to capture the properties of weight-hub neuron subpopulations (assemblies), we use two methods explained in the following. The first method (heterogeneity approach) maintains the heterogeneity of synaptic weights in the population. The experimentally obtained probability density function of synaptic weights p(w) is well-approximated by a lognormal distribution (Lefort et al., 2009):

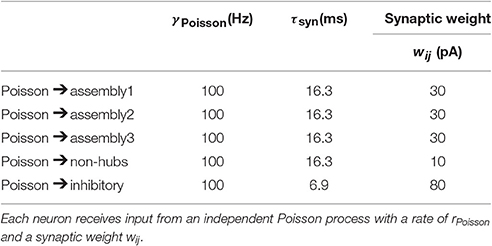

where μ and s are the two parameters of the distribution and wm is the median of synaptic weights (Figure 1).

Figure 1. Networks with weight-hub neurons. (A) Histogram of the sum of inward weights for a random (solid line, network without weight-hubs) and inward correlated (filled, network with weight-hubs) network topology. While the random topology (without weight-hubs) shows an approximately normal distribution, the inward-correlated topology has a broader, lognormal-like distribution. Weight-hub neurons form the tail of this distribution. Both networks, without and with weight-hubs, have the same lognormal distribution of individual weights shown in (B) (red line). Inset: In a heterogeneous network with inward correlations, most neurons receive many weak (thin arrows) connections (top) whereas weight-hub neurons (bottom) receive many strong connections. (B) Fitting the experimental distribution (red line) of synaptic weights (EPSP amplitudes) by a two-element “homogeneous” distribution (dashed areas). The lognormal distribution (solid line) was fitted to experimental data (Lefort et al., 2009) and is used to find the values of weak and strong weights, wnh and wh, respectively. Inset: Splitting the excitatory population into two subpopulations. Weight-hub neurons receive strong synaptic weights (wh) and non-hub neurons receive weak synaptic weights (wnh). All connection probabilities are low (pnh; nh: non-hub) except for the hub-to-hub connections (ph).

Following Tomm et al. (2014), we first generate a synaptic weight matrix with local inward weight correlations, as described in the following. We start by generating an initial random connectivity matrix (connection probability p = 19%, see Table 2) with weights drawn from Equation (5). Let Ŵ = [ŵij]N×N be the initial weight matrix, where N is the total number of excitatory neurons. We continue by generating a vector A = [ai]N×1 using another lognormal distribution. Now, we define a new weight matrix W by

A high value of ai increases the weight of all synapses received by the i-th neuron. Therefore, a high value of ai tends to convert the neuron to a weight-hub neuron, because it will have many large inward weights. Moreover, since multiplication of two lognormal variables yields a lognormal variable, we can be sure that new weights wij are drawn from a lognormal distribution. We choose the parameters of the two lognormal distributions (μ = 0.141, s = 0.924 and wm = 0.372 mV for the distribution of ŵij, and μ = 1.4·10−4, s = 0.15 and am = 1 (median) for the distribution of ai) to set the mean and variance of the final weights equal to the values found in the experimental data (Lefort et al., 2009) (μ = 0.005, s = 0.936 and wm = 0.419 mV). The mean weight is then 0.66 mV and the standard deviation is 0.76 mV with the above choice of parameters. Nh = 95 neurons out of Ne = 454 excitatory neurons (20.9%) were labeled as “weight-hubs,” by choosing those with the highest sum of inward weights (Figure 1A). Note that other values may have been used for the distribution parameters of ŵij and ai, as long as the distribution of wij matches the experimental data (Lefort et al., 2009). We chose the mentioned parameters to achieve biologically plausible network dynamics (skewed firing rate distribution and low correlations between neurons). Note that if we only consider weight matrix Ŵ (without multiplying by ai), then our network does not contain weight-hub neurons. We used this approach in Figure 4C.

In a second step we rewire the network to increase the number of connections between weight-hub neurons in the assembly (such that connection probability between weight-hub neurons increases from to ph = 50%), while keeping the total number of connections fixed. To do so, we randomly select two unconnected weight-hub neurons and add a connection between them. The weight of this new synapse is again drawn from the lognormal distribution described earlier. Then we randomly select a connected neuron pair which contains at least one non-hub neuron and remove the synapse connecting them. This procedure is repeated until we reach the desired connection probability between weight-hubs. An issue here is that, since we remove a weak weight and add a strong one, the overall average weight increases slightly. However, the number of replaced synapses is very small compared to the overall number of synapses: The number of synapses between weight-hub neurons before the rewiring is and after that it should be . Therefore, the fraction of replaced synapses equals:

where is the total number of synapses between excitatory neurons. That means the rewiring concerns only 7.1% of all excitatory synapses, and therefore causes only a small increase of the average weight (average exc. weight is 0.659 mV before and is 0.666 mV after rewiring). Note that choosing a higher fraction of assembly neurons in the network increases the fraction of replaced synapses. For example, if assembly neurons form 35% of all excitatory neurons, 20% of all excitatory synapses will be affected. Clearly, it leads to a significant change in the average of excitatory weighs (0.659 mV before and 0.701 mV after rewiring).

The rewiring procedure can be modified in order to make several connected weight-hub assemblies instead of just one. To this end, we randomly assign weight-hub neurons into several groups. Then new synapses are added inside the groups and the same number of existing synapses between the groups or between a pair of non-hub neurons are removed. Using this procedure, each group becomes an assembly of densely connected weight-hub neurons.

As we observed in the previous method, we could choose different levels of heterogeneity in synaptic weight structure by choosing different values for the distribution parameters of ŵij and ai. However, this heterogeneity does not affect the main outcome of the model, which is oscillation. We support this idea by showing that a model with homogenous weight structure is able to generate oscillations. Here we explain the second method for building weight-hub neurons in the excitatory population. This method produces homogenous synaptic weights within each subpopulation. Hence, we call it homogenous approach. We use it for the analytical results and for Figure 3A. The method splits the excitatory population into two subpopulations. The first one (the assembly of densely connected weight-hubs) contains Nh weight-hub neurons, the second one contains Nnh non-hub neurons. Weight-hubs are those neurons that receive strong synapses from other weight-hubs and from other excitatory neurons; all input weights onto weight-hubs have the same value wh. Non-hubs receive weak synapses, all with identical input weights wnh. Let us assume that all connection probabilities between and inside subpopulations are the same (pnh) except for the weight-hub to weight-hub connection probability (ph) which is larger. Figure 1A summarizes the parameters of this network structure.

The experimental data (Lefort et al., 2009) do not distinguish between weight-hubs and non-hubs but reports an overall synaptic weight distribution (p(w)) and an average connection probability (). We adjust the network parameters of the two homogeneous subpopulations using these data. The average connection probability () should be maintained, despite the split of the population into weight-hubs and non-hubs. Computing the average connection probability in this model yields the equation:

We approximate the synaptic weight distribution p(w) obtained by experiments (Lefort et al., 2009) with a two-element distribution formed by wh and wnh (Figure 1A). The strategy is simple: a classification boundary (w*) divides the synaptic weights into two disjoint sets, i.e., synaptic weights lower than w* and synaptic weights higher than w*. All weights lower than w* are set to wnh and the others to wh.

In order to obtain the value of w*, we introduce the fraction of weak connections fnh as a parameter:

The classification boundary w* follows from the condition that the probability mass of weak connections must account for the fraction of weak connections:

Because p(w) is positive there is a unique solution for w* which we determine numerically. Once the boundary w* is fixed, averaging w over the respective support yields the synaptic weights of weight-hubs wh and non-hubs wnh:

where fh = 1−fnh. A similar procedure may be applied in the case of several connected weight-hub neurons subpopulations. Choosing ph = 50% and Nh = 95, we obtain the remaining parameter values: wh = 1.42 mV, wnh = 0.34 mV for PSP amplitudes, and pnh = 18%. The PSC amplitudes can be calculated using the PSP amplitudes: wh = 16.9 pA and wnh = 4.0 pA.

Rate-Current Relations

Consider a population or a subpopulation of neurons. We can obtain a relation between the average firing rate of all neurons and the average synaptic input current using two different approaches. The first approach employs the neurons' gain function, a generalization of the frequency-current (f−I) curve (the terms firing frequency and firing rate are used interchangeably here). Injecting a weakly fluctuating current Isyn into a neuron causes an average firing rate of

where g is the gain function and 〈Isyn〉 and sI are the average and standard deviation of synaptic current over time, respectively. Although there are ways to compute the firing rate of adaptive integrate-and-fire neuron models in closed-form (Fourcaud-Trocmé et al., 2003; Hertäg et al., 2014) or by using a self-consistent numerical approach (La Camera et al., 2004; Richardson, 2007, 2009), we obtain it here by numerical simulations, using a certain amount of fluctuations in the input:

where α(t) is the shape of an elementary postsynaptic current (PSC) defined in Equation (4), ξ(t) is white noise of unit standard deviation and . If the current is injected for short episodes of 10 ms or less, we can estimate the firing rate in the non-adapted state by averaging over several trials. If it is injected for a longer time, we can divide the time into intervals of 10 ms and extract the frequency-current relation in the different, progressively more adapted states.

The second relation between the average firing rate and the average synaptic current follows from the network activity; see Amit and Brunel (1997) and Gerstner et al. (2014). Each neuron i receives the synaptic current produced by the input spike train:

where Sj(t) is the spike train of j-th neuron, and wij is the synaptic weight of this input onto the receiving neuron. By averaging both sides over time and input neurons we obtain the average input current (also known as the mean field) , where N and p are the number of neurons in the population and the connection probability between them, respectively. Here is the total charge of one PSC pulse, is the average synaptic weight and r is the average firing rate of neurons in this population. Rearranging this equation yields the network feedback relation:

which is a linear relation of 〈Isyn〉 and r with slope . We refer to the denominator as the network feedback (Cfb) of the population:

Equations (13, 17) will be used in the “Results” Section to get insight into the network dynamics.

K-Means Clustering Method

K-means is a machine learning method for assigning data samples of a dataset to K clusters. In this method, each data sample is represented by a vector of numbers.

The algorithm works as follows. It initializes the center vectors of the K clusters randomly. Then, K clusters are created by assigning each data sample to the nearest center vector (using Euclidean distance). Afterward, the new center of each cluster is calculated by averaging over all data samples to the cluster. The algorithm repeats the assignment and averaging steps until it converges (i.e., until no change happens to the clusters by repeating these steps).

An important issue is how to determine the number of clusters (K) to begin with. This has generally to be done with the task in mind. Here we use the algorithm for two tasks. The first one is distinguishing weight-hubs from non-hubs. Clearly, in this task K = 2, because we are looking for two different classes of neurons. The second task is assigning weight-hubs to different clusters. Here, we use the so-called elbow method for choosing the value of K: We run the algorithm for different values of K (2, 3, …). Generally, the error of clustering (sum of squared distances between each data sample and the center of its cluster) decreases with increasing K. However, we choose the K at which the error decreases abruptly and a greater K does not decrease the error that much. This method leads us to K = 3 for the second task.

Results

Layer 5-Model Network Produces Irregular Oscillations

Both during anesthesia and slow-wave sleep cortical neurons show slow oscillations (~1 Hz) between two states (Steriade et al., 1993; Cowan and Wilson, 1994; Lampl et al., 1999; Sanchez-Vives and McCormick, 2000; Sanchez-Vives et al., 2000; Petersen et al., 2003), the active up-state and the quiescent down-state. The underlying mechanism of this phenomenon is not fully understood, but several neuronal network models have been suggested, mostly based on short-term plasticity (Holcman and Tsodyks, 2006; Melamed et al., 2008; Ghorbani et al., 2012).

Here we model cortical L5 with neuron model parameters and network parameters extracted from experimental data of a single column of somatosensory cortex in mice (see Section Materials and Methods and Tables 1, 2). An important feature of our model is that its excitatory population (which consists of 454 neurons) contains three assemblies of densely (p = 50%) connected weight-hubs which consist of 45, 30, and 20 weight-hub neurons, respectively. A weight-hub neuron, or simply weight-hub, is defined here as a neuron receiving many strong synapses so that the sum of incoming synaptic weights across all connections from other neurons in the network is large compared to that of other neurons (Figure 1A).

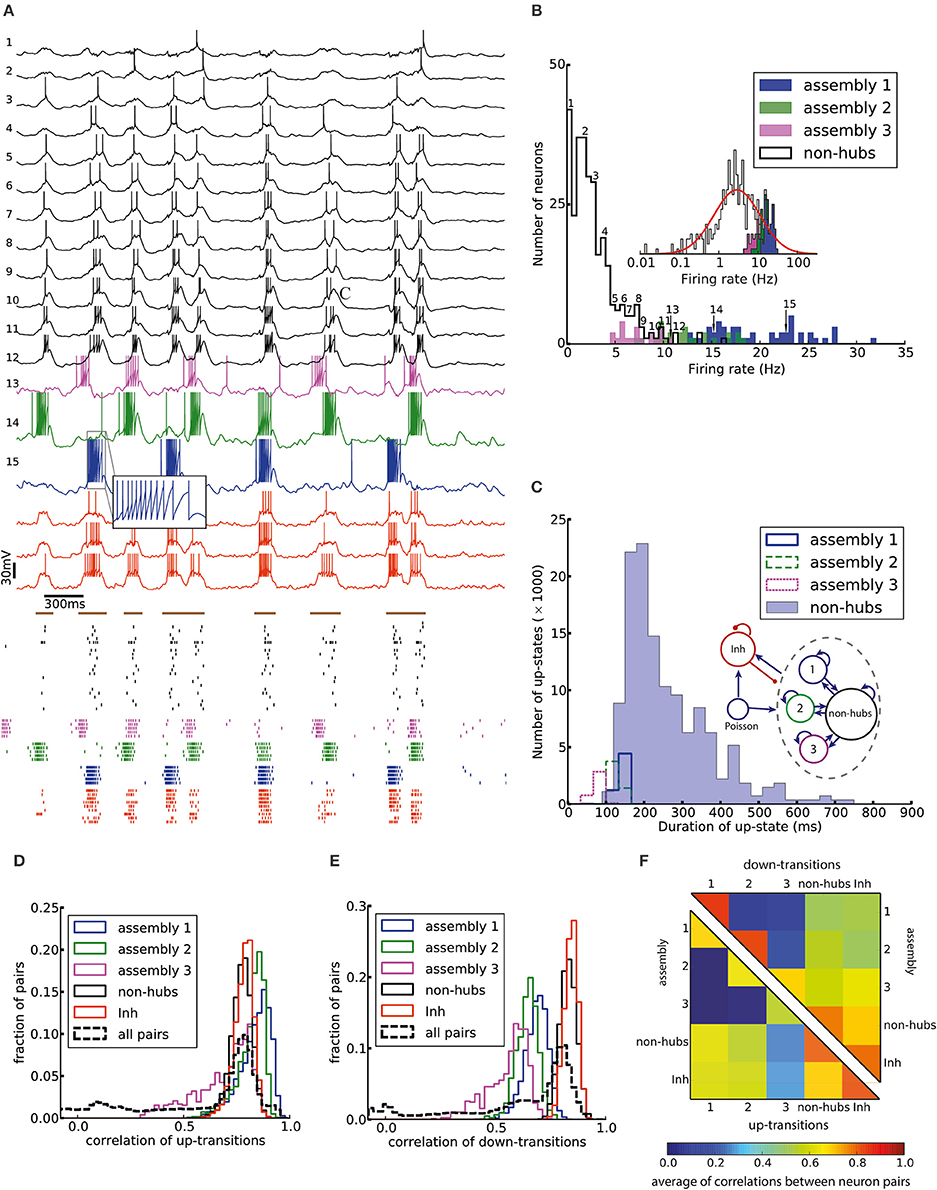

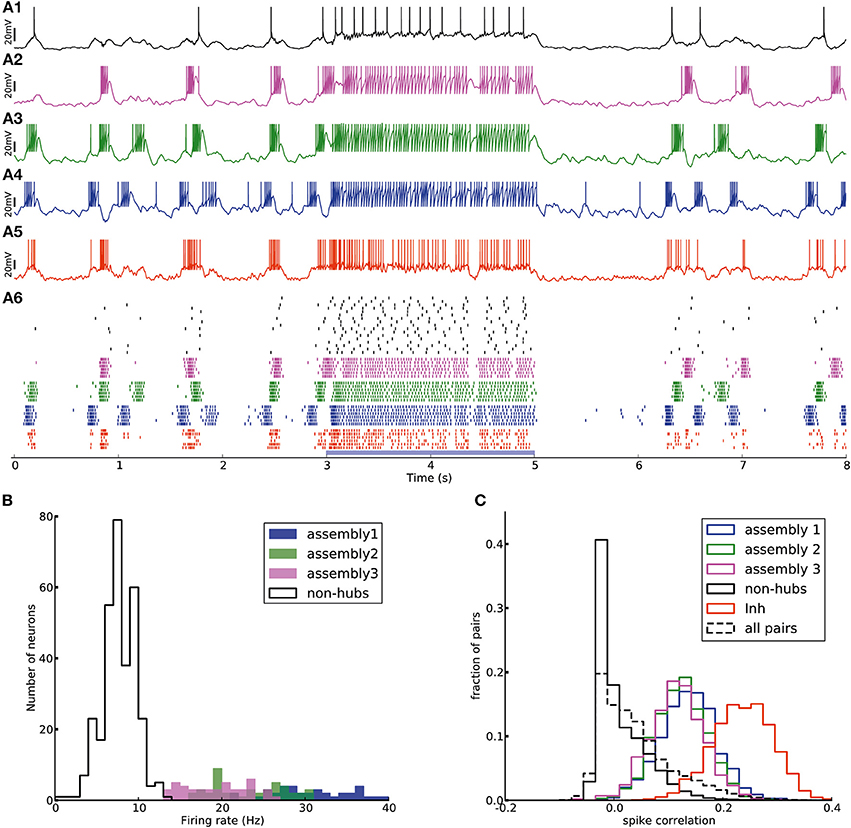

Figure 2A shows the membrane potential and spike raster of several sample neurons. Simulations show that the model exhibits irregular up/down state transitions reminiscent of irregular slow oscillations in anesthetized cortex (Stern et al., 1997; Lampl et al., 1999). In order to compare the up-state durations in the model with experimental data (Stern et al., 1997; Cossart et al., 2003), we quantify the variability of the duration T of the up-state by the coefficient of variation, defined as std(T)/mean(T), where the up-state duration T is measured as the duration for which the membrane potential of a neuron stays at least 10 mV above the resting potential (EL). To this end, the membrane potential is smoothed by filtering with a Gaussian filter kernel (of width 20 ms) in order to remove rapid fluctuations. The coefficient of variation of this presumed up-state duration, averaged over all neurons that have not been classified as weight-hub, is 0.42, which shows that their up-state duration is rather irregular (Figure 2C). Similarly, if we repeatedly select 10 excitatory neurons (choosing randomly from both weight-hubs and other neurons) and measure the average coefficient of variation of the up-state duration, we find a coefficient of variation of 0.40 ± 0.06.

Figure 2. Irregular up- and down-state transitions in a network with three assemblies of densely connected weight-hubs. (A-Top) Membrane potential of sample non-hub (black, labeled 1–12) and weight-hub (labeled 13–15) and inhibitory neurons (red, without label). Inside each group neurons have been sorted by their firing rate. Brown bars indicate time intervals which are considered as up-states for bottommost inhibitory neuron. (A-Bottom) Raster plot of several neurons of each population (same color). (B) Rate distribution of excitatory neurons. Numbered labels indicate the firing rate of neurons whose membrane potential traces are shown in (A). Inset: The distribution of firing rates is close to a normal distribution (red curve) on a (semi-) logarithmic scale. (C) Histograms of the up-state duration for each group of excitatory neurons. The coefficients of variation for the assemblies are 0.06, 0.10, and 0.16, which signifies regular durations. The non-hub neurons (filled histogram) exhibit a broad distribution of up-state durations with coefficient of variation of 0.42. Inset: The Excitatory population contains three assemblies of weight-hubs and a large population of non-hubs. (D,E) Distribution of pairwise Pearson correlation coefficients of transition times from down- to up-state (D) and from up- to down-state (E) inside each subpopulation (solid lines) and over all 145,530 pairs of neurons (dashed lines). Transitions of two neurons are counted as coincident if they happen in the same time bin of 20 ms. (F) Averaged Pearson correlation coefficients of transitions from down- to up-state (upper triangle) and up- to down-state (lower triangle).

The L5-network model produces a skewed and long tailed distribution of firing rates in the whole population (Figure 2B) that is approximately lognormal (Figure 2B, inset). Weight-hub neurons have a high firing rate and form the tail of the distribution, whereas non-hubs have a low firing rate. The overall shape of the firing rate distribution is consistent with observations in in-vivo experiments (Hromádka et al., 2008; Vijayan et al., 2010).

We investigated the correlations of transition times from down- to up-state and vice versa (Figures 2D–F). Transitions inside subpopulations are highly correlated. The mean correlation coefficient for transition from down- to up-state is 0.84, 0.82, 0.69 for neurons within assemblies 1, 2, and 3, respectively, 0.77 for non-hub neurons and 0.78 for inhibitory neurons (Student's t-test for difference in mean: all p-values are smaller than 10−10). The mean cross-correlation for all pairs of neurons is 0.58, indicating a high correlation between randomly chosen pairs of neurons. The corresponding values for transition from up- to down-state are 0.68, 0.64, 0.56 for assemblies 1, 2, and 3, respectively, 0.83 for non-hub neurons, 0.84 for inhibitory neurons and 0.60 for all pairs of neurons (Student's t-test for difference in mean: all p-values are smaller than 10−10). These results indicate that an overall synchrony between neurons in the up-/down state oscillations is maintained, consistent with recordings from multiple extracellular electrodes (Petersen et al., 2003; Fucke et al., 2011). Note that assemblies oscillate out of phase, but not in anti-phase, because they do not strongly compete with each other, as opposed to a network in a winner-takes-all mode. Therefore, occasionally more than one assembly is active at a time (see raster plot in Figure 2A). Competitive neurons with anti-phase oscillations would instead lead to reduced correlation of up/down state transitions, averaged across all neuron pairs in the network. High correlations inside each subpopulation also increase the overall correlation. The peak of the overall correlation distribution mostly belongs to correlations of pairs inside subpopulations (Figure 2B). Another noteworthy point is that since the size of assembly 3 is small (20 neurons), its properties differ from other assemblies. In particular, the firing rate of assembly 3 neurons is less than that of the two other assemblies, and even less than several non-hub neurons (Figure 2B). Also, the correlation of up/down transitions is less than other assemblies and non-hub neurons.

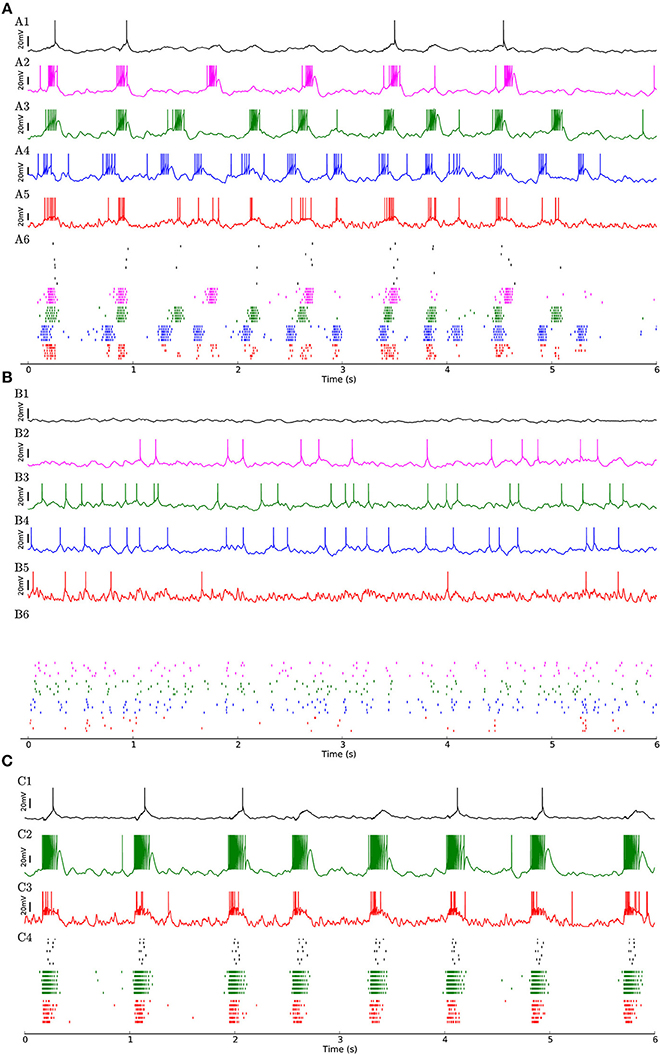

To investigate whether the network structure, the broad distribution of synaptic weights, and the variation of neural parameters values are important for these dynamics, we built a similar model in which all synaptic weights inside a given subpopulation (i.e., weight-hubs, non-hubs, and inhibitory) are identical (See Section Materials and Methods) and values of neural parameters inside each subpopulation are identical (Table 1). The model still produces the irregular up/down state oscillations (Figure 3A).

Figure 3. (A) Network of 454 excitatory and 90 inhibitory neurons with identical neuron parameters, organized into homogenous subpopulations with dense connectivity within each assembly and non-hubs. Membrane potential traces of a non-hub neuron (A1), neurons from each of the three weight-hub assemblies (A2, A3, A4) and an inhibitory neuron (Inh) (A5). (A6) Raster plot of several neurons of each population (same colors). Oscillations of the assemblies are different in terms of the up-state and down-state durations. Non-hub and inhibitory neurons receive input from the three oscillating assemblies and exhibit irregular oscillations. Note that there are 359 non-hub neurons in the network, which is the majority of cells. (B) Heterogeneous network as in Figure 2, but sparse connectivity (p = 20%) inside assemblies. Membrane potential of non-hub (B1), three weight-hub neurons (B2–B4) and inhibitory neurons (B5) and the raster plot of several neurons of each population (B6). The Up-state/down-state oscillation vanishes. Weight-hubs (B2–B4) occasionally emit spikes since they receive stronger synapses from Poisson neurons, while non-hub neurons (B1) do not spike at all. (C) Oscillations in a network with a single assembly of densely connected weight-hubs are more regular than in Figure 2. Membrane potential of a non-hub (C1), a weight-hub (C2) and an inhibitory neuron (C3). (C4) Raster plot of randomly selected neurons of each population. While weight-hub neurons (green ticks) exhibit a high firing rate in the up-state, non-hub neurons (black ticks) show only a small number of spikes. Inhibitory neurons (Inh) are shown in red.

On the other hand, the reduction of the connection probability inside the assemblies of weight-hub neurons from 50 to 20% (while maintaining the overall connection probability such that it agrees with experimental data) causes the dynamics to change significantly, and the irregular oscillations vanish (Figure 3B) even though network and neuronal parameters are heterogeneous. Thus, the connection probability inside weight-hub neurons assemblies plays an important role in the model dynamics. This is consistent with the model of Litwin-Kumar and Doiron (2012), in which clusters of neurons were predefined. Also in their study, decreasing the connection probability inside the neuronal clusters causes the transitions of clusters between active and inactive states to cease. In a related model (Mazzucato et al., 2015), in which inter- and intra-cluster connection probabilities are equal, decreasing the synaptic weights inside the clusters leads to a loss of oscillations.

Finally, we also simulate a network with a single assembly of densely connected weight-hubs (Figure 3C). While transitions between up and down states occur, the oscillations in non-hub neurons (and therefore the majority of neurons) are much more regular than in the full model of Figure 2 (the coefficient of variation of up-state duration for non-hub neurons in Figure 3C is 0.08, while it is 0.42 for Figure 2).

Simulation of Optogenetic Stimulation

Here, we explore the light-evoked response of our model of barrel cortex networks. Mimicking a recent experiment (Beltramo et al., 2013), a small subset of model neurons is stimulated to fire. Then, the activity of several non-stimulated neurons is recorded to investigate the relation and effect of the stimulated subset on the other neurons in the network. In our framework, an increased connection probability between weight-hub neurons can potentially explain the observation of the experiment: Optogenetic stimulation of a group of L5 neurons causes a long-lasting depolarization in non-stimulated L5 excitatory neurons, while the same experiment shows different results in L2/3 (Beltramo et al., 2013). Non-stimulated neurons in L2/3 show little depolarization and a smaller number of emitted spikes upon optogenetic stimulation of L2/3. These results imply that the L5 excitatory population is able to spread the optically induced activation more than the L2/3 one. In principle, this difference could be due to neuron parameters, neuronal morphology or the structure of neuronal networks. Here we argue that the presence or absence of densely connected weight-hubs assemblies, which is a property of the network structure, can explain the difference in the spread of activation within each layer. We propose that weight-hubs may be densely connected in L5, but their connectivity may be sparse in L2/3. This difference can be considered as one of several possible ways for interpretation of different light-evoked response in L2/3 and L5. Another noteworthy point is that although both connection probability and average synaptic weights in L2/3 are lower than L5 (see Section Materials and Methods), the number of neurons in L2/3 is much larger than L5 in our framework (1691 vs. 454). Therefore, the number and total strength of inward synapses into L2/3 neurons are not lower than L5 neurons.

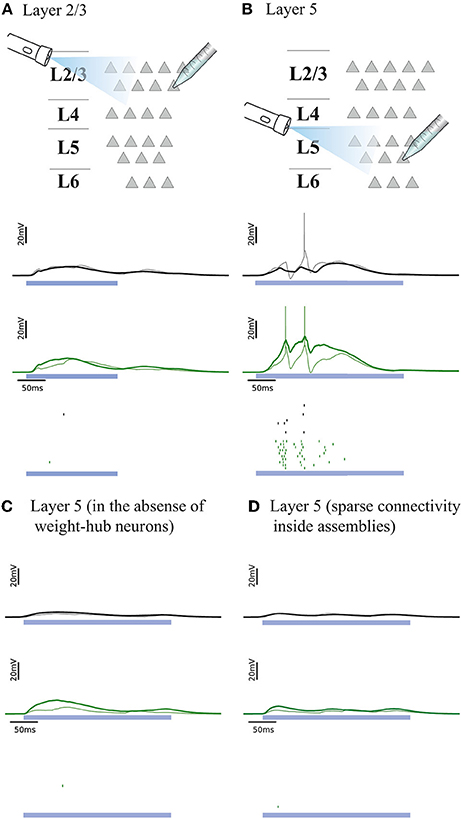

We examined whether the experiments in L2/3 and L5 can be explained by our cortical network model. Figures 4A,B, respectively, show the responses of L2/3 and L5 excitatory population models to a transient direct current stimulus, which we used to model optogenetic stimulation (Beltramo et al., 2013). The stimulus is received by a random subset (15%) of excitatory neurons, to account for the fact that about 15% of experimentally observed neurons express the light-sensitive ion channel. In the L2/3 population model, the connections within the weight-hubs assembly are sparse, whereas in the L5 model they are dense. Non-stimulated model neurons in L2/3 show little depolarization while L5 ones show a high depolarization and a substantial number of spikes, in agreement with experiments in mouse visual cortex (Beltramo et al., 2013). Therefore, we conclude that dense connectivity between weight-hub neurons in L5 and sparse weight-hub connectivity in L2/3 can generate biologically plausible light-evoked responses.

Figure 4. Simulated response to light-evoked stimulation of non-stimulated excitatory neurons in cortical L2/3 (A) and in L5 (B). Approximately 15% of all neurons (weight-hubs, non-hubs and inhibitory) are stimulated in each layer for a time period of 300 ms (blue bar). Membrane potentials (lines) and spikes (ticks) of weight-hubs (green) and non-hubs (black). L2/3 neurons (A) show little depolarization due to sparse connectivity between weight-hubs, while L5 neurons (B) display a long-lasting depolarization and a significant number of spikes. This effect is due to the dense connectivity between weight-hubs in the L5 network model, but not in the L2/3 model. (C) Simulation of L5 in case of a modified assembly model that only has strong internal synaptic weights but is not innervated as strongly from other neurons as the weight-hub assembly in (B), see main text for details. In the absence of weight-hub neurons, L5 does not generate long-lasting depolarization in response to the stimulation. (D) Simulation of L5 in case of having sparse connectivity (p = 20%) inside assemblies. The network is unable to produce long-lasting depolarization.

In the simulations of Figures 2, 3, we observed that densely connected weight-hubs produce up-state/down-state oscillations while sparsely connected ones do not. In the simulation of Figure 4, we set up L5 to contain densely connected weight-hubs and L2/3 to contain sparsely connected ones. Therefore, in this model L5 is the main source of the up/down oscillations in the cortex, while L2/3 is subsidiary. Other experimental studies (Sakata and Harris, 2009; Chauvette et al., 2010; Beltramo et al., 2013) provide further support of this conclusion.

To assess whether the concept of weight-hubs is necessary to explain the light-evoked responses, we modified the structure of L5 network and repeated the simulation. In the new structure, we did not use weight-hub neurons (neurons that receive strong synaptic weights from all other neurons) but we instead implemented an assembly with high connection probability and strong synaptic weights internally. In other words, in the new structure the neurons of the assembly receive strong synaptic weights from each other while they receive normal weights (broadly distributed weak and strong weights, as shown in Figure 1B) from the neurons outside the assembly. Our simulation shows that this structure is unable to produce a long-lasting depolarization and a notable number of spikes (Figure 4C), indicating that the weight-hub property is important to explain the light-evoked response of L5. We also simulated light-evoked response of another variant of L5 model (Figure 4D). We showed that L5 in presence of sparsely connected weight-hub neurons cannot generate long-lasting depolarization.

Correlations

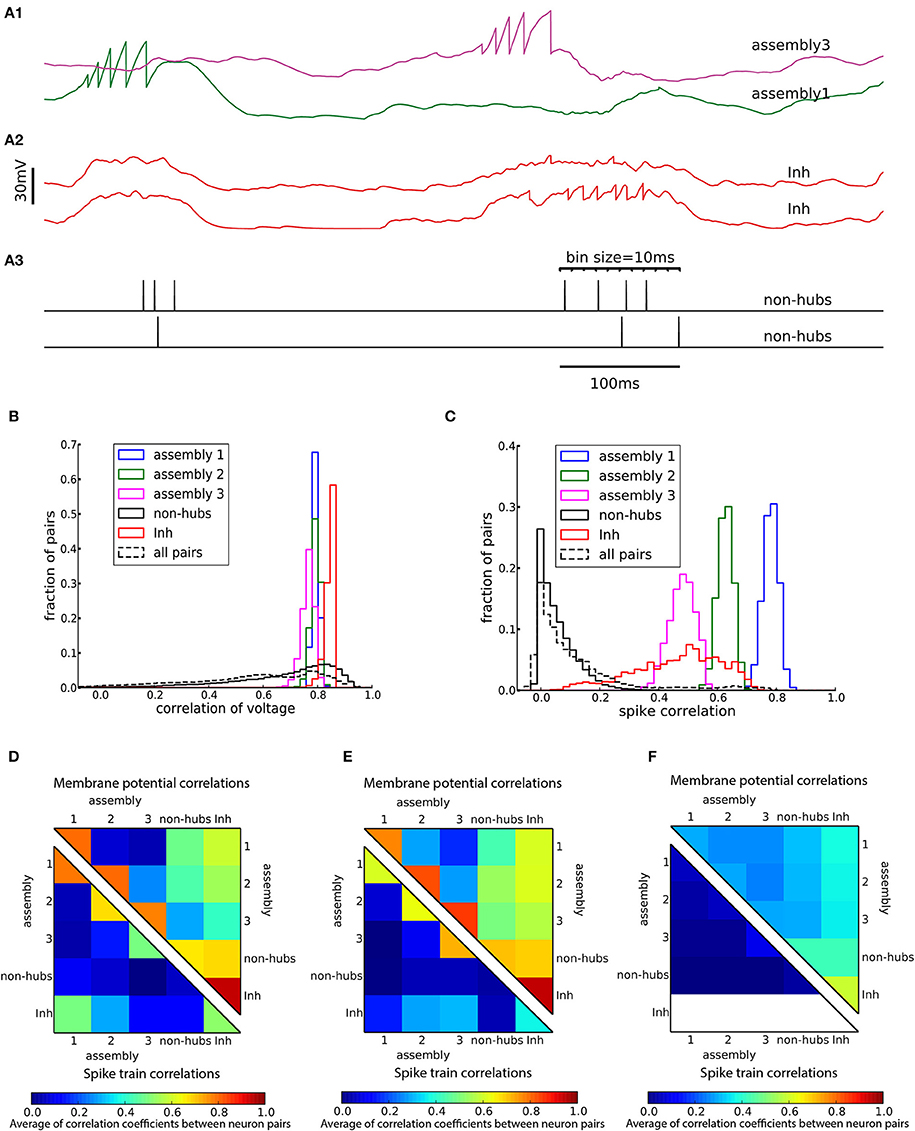

A characteristic of weight-hub assemblies in the model (Figure 2) is that the activity of hub neurons is strongly correlated. We quantified correlations by computing the correlation coefficients of pairs of neurons, both of the subthreshold membrane potentials and the spike trains, binned into a 10 ms time window (Figure 5A). In the heterogeneous network of Figure 2 the subthreshold membrane potentials of neurons inside each subpopulation are strongly correlated (Figures 5B,D; mean correlation coefficient 0.80, 0.79, 0.75 for assemblies 1, 2, and 3, respectively, and 0.94 for inhibitory neurons) and significantly smaller (Student's t-test for difference in mean: all p-values are smaller than 10−10) for non-hubs (mean correlation coefficient 0.65). In contrast, correlations between neurons of different weight-hub assemblies are small, because their oscillations are not synchronized (Figure 5D). The correlation analysis of the spikes generated in the heterogenous network of Figure 2 also shows correlated behavior inside subpopulations except for non-hubs (Figures 5C,D). The mean correlation coefficients for the spike trains of the non-hubs is 0.06, smaller (Student's t-test for difference in mean: all p-values are smaller than 10−10) than that of other subpopulations (mean correlation coefficient 0.79, 0.65, 0.42 for assemblies and 0.52 for inhibitory neurons). If we randomly select two neurons in the network, we find a broad distribution of pairwise spike correlations (Figure 5C, dashed line) with a peak close to zero, consistent with experimental data (Reich et al., 2001) and previous model of metastable dynamics (Mazzucato et al., 2016). Heterogeneous (Figure 5D) and homogeneous (Figure 5E) variants of the model show very similar correlation structure, but in the network with sparsely connected weight-hub neurons (Figure 5F), the correlations disappear, because assemblies are mainly driven by external noise and do not show any joint transitions to the up-state (Figure 3B).

Figure 5. Cross-correlations of neuronal activity. (A) Two pairs of subthreshold membrane potentials (spikes have been removed) with low (A1) and high (A2) correlation and a pair of spike trains (A3). Spikes are counted as coincident if they fall within the same bin of 10 ms. (B,C) Distribution of Pearson correlation coefficients of subthreshold membrane potentials (B) and spike trains (C) of pairs of neurons inside each subpopulation (solid lines) and over all 145,530 pairs of neurons (dashed lines). (D–F) Averaged Pearson correlation coefficients between the membrane potentials (upper triangle) and the spike trains (lower triangle). Correlations are computed for pairs of neurons in the respective subpopulations of (A–D) the heterogeneous network of Figure 2, (E) the homogeneous network of Figure 3A and (F) the sparsely connected weight-hubs network of Figure 3B. Because inhibitory neurons do not fire any spikes in the sparsely connected weight-hubs network (F), the spike train correlations of them are not defined (white area).

Active Cortical State

Our network model can switch from the oscillatory state (which resembles slow-wave sleep or anesthesia) to an active state and vice versa without any change of network properties. In particular, the assemblies of weight-hub neurons, which are responsible for producing the up-down state oscillations, are always embedded in the network. In the active state, cortical neurons receive sensory input predominantly from layer 4 and layer 6 neurons which relay the sensory signals between thalamus and other cortical layers (Binzegger et al., 2004; Poulet et al., 2012). Here we simulate the active state by injecting an external stimulus (homogenous Poisson process to generate excitatory spike trains) to all neurons of the model. Figure 6A shows that the network stops oscillating immediately after receiving the stimulus, and switches back to the up-down state oscillations when the stimulation stops.

Figure 6. Transition from up-down-state oscillations to “active” state. (A1–A6) Network of Figure 2 receiving external Poisson process stimulus from t = 3 s to t = 5 s (blue bar). Neurons show up-down state oscillation before and after the stimulus, while they exhibit higher firing rate (20.9 Hz for inhibitory neurons and 12.4 Hz for excitatory neurons, split into 37.4, 33.6, 33.0, 6.4 Hz for assemblies 1, 2, 3 and non-hubs neurons respectively) during the stimulation period. (B) Distribution of firing rates across neurons in the network during stimulation interval (blue bar in A). (C) Distribution of Pearson pairwise correlation coefficients (bin size = 10 ms) of spike trains of pairs of neurons inside each subpopulation (solid lines) and over all pairs of neurons (dashed lines) during stimulation interval.

The effect of the external input can be explained as follows. When there is no strong external input, the network is driven by the dynamics of the weight-hub assemblies. Since they show self-sustained oscillations, the whole network is affected by the oscillations and follows them. In case the external input is present, however, the network is driven mainly by the input (even if the input is stationary, as in the case driving our stimulus with a homogenous Poisson process) and not the assembly dynamics. Therefore, all neurons, including the weight-hub neuron assemblies, are governed by the input and stop oscillating. During the input-driven active state, the firing rate distribution is narrow (Figure 6B) and the correlation between neurons is very low (Figure 6C). The average correlation coefficients for the active state (0.15, 0.14, 0.12 for assemblies 1, 2, and 3, respectively, and 0.25 for inhibitory neurons) are smaller than in the oscillatory state (Figure 5C; Student's t-test for difference in mean: all p-values are smaller than 10−10). The very low average correlations between (over all neuron pairs, 0.04 ± 0.05, Figure 6C, dashed line) and inside excitatory neurons (Figure 6C, black line) are consistent with recent experimental observations (Ecker et al., 2010). Therefore, the network preserves important aspects of biologically plausibility (such as skewed firing rate distribution and low value of pairwise correlations) also in the active cortical state.

The Role of the Weight-Hub Neurons Assembly in the Slow Oscillations

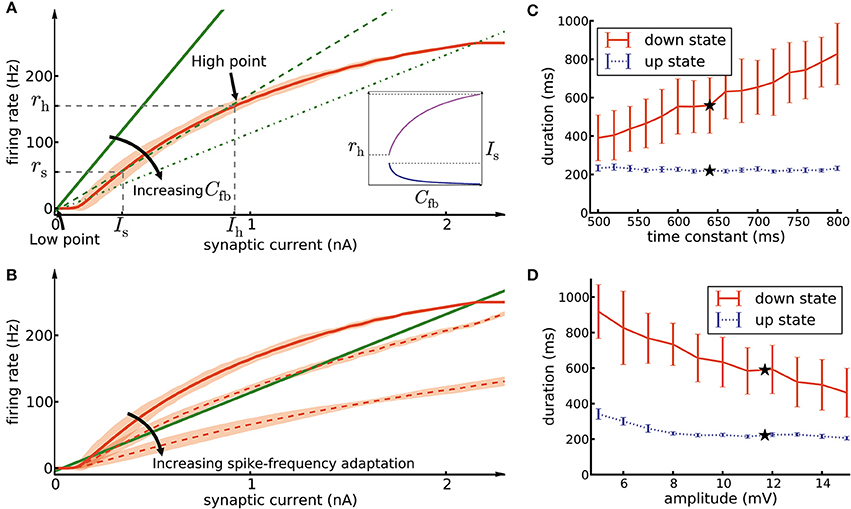

In order to understand why the assembly of densely connected weight-hub neurons generates oscillations, we use methods from network analysis (Amit and Brunel, 1997; Laing and Chow, 2002; Giugliano et al., 2004, 2008; Moreno-Bote et al., 2007; Shpiro et al., 2009; Gerstner et al., 2014; Mazzucato et al., 2015). We relate the mean firing rate of neurons of the assembly to the mean synaptic current received by them. The first relation is given by the neuronal gain function (Equation 13, curve in Figure 7A), i.e., the firing rate that each neuron produces when it is driven by a certain input current. The second relation is given by the feedback of the network (Equation 16, lines in Figure 7A), i.e., how much synaptic current is produced by the activity of the neurons. In the absence of adaptation, intersection points between the two curves form candidates of fixed points of network activity. We define the “network feedback” (Cfb, Equation 17) as the strength of synaptic feedback within the assembly. This quantity is the inverse of the slope of the feedback line (lines in Figure 7A). A high (low) value of Cfb leads to a strong (weak) response of the assembly to synaptic currents.

Figure 7. Mean-field analysis. (A) The network feedback (Cfb, Equation 17) affects the quasi-stationary dynamics of the system. The red curve is the noisy gain function g of the GIF neuron model (mean spike count in a group of 50 independent neurons over 10 ms, divided by 50 × 10 ms, shaded area marks ±3 SEM) measured during the initial 10ms after switching on a synaptic current of mean 〈Isyn〉 (see Section Materials and Methods, Equation 14). The green lines (solid, dashed, and dash-dotted) show the relation of firing rate and synaptic current caused by network feedback (see Section Materials and Methods, Equation 16) for increasing Cfb. The slope of the green lines has an inverse relation with the effective coefficient Cfb of the population. Intersections of the red curve with one of the green lines indicate potential stationary states (fixed points) of a network of non-adapting neurons. Populations with a high Cfb (dashed and dash-dotted green lines) have three fixed points, stable low point, high point and unstable switch point. If the population described by a network feedback given by the dashed lines is driven by a mean current higher than Is, it rapidly converges to the high point. On the other hand, a population with a low Cfb (solid green line) has only one intersection at the low point. Inset: Increasing Cfb causes an increase in the high firing rate rh (magenta curve, left vertical scale) and a decrease of the switch current Is (blue curve, right vertical scale). (B) The noisy gain function of adaptive neurons is different during the first 10 ms after stimulus onset (solid red curve) than later (dashed red curves) (C) The duration of up- and down-states as a function of the time constant of the excitatory neuron firing threshold kernel γ(s). Only the time constant of γ2(s) (the exponential with the longer time constant) was manipulated, while γ1(s) remained as reported in Table 1. The black stars indicate the experimentally extracted value of the time constant, which was used in the other figures. (D) Same as C, except that here we manipulated the amplitude of γ1(s) (the exponential with the larger amplitude). The error bars show the standard deviation of up/down state durations over 10 simulation trials of 10 s duration each.

In the framework of up- and down-state oscillations, an assembly (or any subpopulation of neurons) with low value of Cfb is not able to oscillate and remains in a low firing rate fixed point. This can be explained as follows: A low value of Cfb implies that the feedback line has a large slope (solid line in Figure 7A). Therefore, the two curves have only one intersection point whose rate is close to zero. We refer to this fixed point as “low-point.” In the case of a high value of Cfb, the slope of the feedback line is low (dashed lines in Figure 7A) and there are two additional fixed points of the system. The middle fixed point is unstable and is called the “switch point,” and the upper one is typically stable (neglecting adaptation and oscillatory instabilities; Brunel, 2000; Gerstner, 2000) and is called the “high point.” Let us refer to their positions with symbols (Is, rs) and (Ih, rh) with indices s and h for “switch” and “high,” respectively. Is acts as a threshold for the behavior of the assembly. In case of driving neurons with a current lower than Is, the assembly converges to the low-point and remains quiescent. In contrast, currents higher than Is bring the assembly to the high-point and force it to exhibit a high firing rate. In our framework external Poisson noise occasionally provides a transient synaptic current larger than the current of the switch point. The mechanism described above is the reason that the assembly switches from the low-point to high-point. Switching back from the high-point to the low-point is due to spike-frequency adaptation and will be discussed further below.

As discussed, a sufficiently large value of Cfb gives rise to two stable fixed points of the network activity, the low-point and the high-point. Since the assembly of connected weight-hub neurons exhibits high average synaptic weights (wh) and high connection probability (ph), the value of Cfb ∝ whph for this subpopulation is high (see Section Materials and Methods, Equation 17). But is such an assembly of weight-hub neurons necessary for producing the oscillations? Or can any highly connected group of neurons (not necessarily weight-hub neurons) generate the oscillation? Or even a group of neurons with very strong synaptic weights but sparse connectivity (similar to Mazzucato et al., 2015)? The relevant parameters for the configuration of fixed points is the value of Cfb, which can in principle be increased by an increase of either wh or ph, or both. However, we found some of these possibilities to be not consistent with the existing experimental data. In particular, fixing ph and increasing wh by a large factor yields a set of very strong synapses out of the range of reported experimental PSP values (Lefort et al., 2009). On the other hand, by fixing wh we require a very high ph (close to full connectivity, ph = 1) which does not look biologically plausible for cortical networks (for more details of relation between connection probability and other population parameters see (Klinshov et al., 2014)). For example, in the case of the smallest assembly (containing 20 neurons), choosing sparse connectivity ph = 0.2 yields to very high synaptic weight value wh = 3.55 mV, similarly choosing synaptic weight wh = 0.71 mV (average synaptic weight in L5 excitatory population is 0.66 mV) leads to full connectivity (ph = 1). Our solution was to increase both wh and ph by a moderate factor, so that both remain realistic and still lead to a sufficiently high value of Cfb.

We would like to highlight another characteristic of Cfb. Increasing its value increases the firing rate rh in the high fixed point, and lowers the minimal value of the switching current Is (Figure 7A, inset). Consequently, a high value of Cfb implies that only a small amount of transient external current is required to bring the population above the switch point. In our model, different assemblies have different numbers of neurons, therefore different values of Cfb, and different switching points. The different feedback coefficients of weight-hubs assemblies and non-hub neurons also explain the skewed distribution of firing rates (Figure 2B) in the model. Larger assemblies of weight-hub neurons switch more often to the high-point and produce higher firing rates than smaller assemblies. Hence, weight-hub neurons from different assemblies form the tail of firing rate distribution. Non-hub neurons do not switch to the high-point and are not able to produce high firing rate. Therefore, they form the peak of the firing rate distribution at low rates.

Let us now focus on the return of the assembly from the high-point to the low-point. At the high-point neurons exhibit a high firing rate but spike-frequency adaptation continuously decreases the probability of spike emission. Therefore, the neurons' gain function changes gradually (dashed curves in Figure 7B). The system eventually makes the transition to a new configuration where the low-point is the only fixed point. When the system is at the low-point, both adaptation current and dynamic threshold decay, and eventually the dynamics of the subpopulation go back to the initial configuration in which both high- and low-point exist. In other words, the neurons recover from adaptation while they are in the low-point. Stochastic spike arrivals from other areas, described as Poisson neurons with constant firing rate here, provide the excitation necessary to make the assembly switch to the high-point. The resulting process of repetitive switching between the low-point and the high-point forms the oscillation in the system. The high point, corresponding to high firing rates of the weight-hub neurons in our model, can be interpreted as the up-state of a cortical network, and similarly the low-point corresponding to low firing rates of the down-state. Previous studies (Giugliano et al., 2004, 2008) addressed these dynamics for a simpler adaptive integrate-and-fire neuron model with similar analytical approaches.

Because spike-frequency adaptation in our model is responsible for progressively changing the gain function during the up-state, and eventually for its termination, we investigate the effects of the adaptation parameters on the duration of the up- and down states. Each time a neuron emits a spike, several adaptation processes are added to its firing threshold and spike-triggered current (denoted as “kernels” γ(t) and η(t) in Table 1). Each kernel has an exponential form of be−t/τ, where b and t are amplitude and time constant of the kernel, respectively, and t is the time elapsed since the emission of the spike. By modifying the time constant t of the firing-threshold kernel γ2(t) (which has a longer time constant than γ1(t)) for the excitatory neurons, we are able to change the down-state duration strongly without affecting the up-state duration (Figure 7C). A longer time constant implies that neurons need more time to recover from adaptation, which leads to a longer duration of the down-state. On the other hand, manipulation of the kernel amplitude affects both up- and down-state durations, as shown by changing the amplitude of the exponential term of the kernel γ1(t) (Figure 7D). Longer values of the amplitude cause shorter up- and down-states. Note that because the switch from the down-state to the up-state in our model is caused by stochastic Poisson inputs, the down-state durations have a greater variability compared to the up-state durations (see error bars in Figures 7C,D). In general one could manipulate amplitudes and time constants of all exponential kernels in γ(t) and η(t) as well as other neuronal parameters. However, since all neural parameters of our model (including the adaptation parameters) are extracted from experiments (Mensi et al., 2012), we did not investigate manipulating them any further.

In order to examine whether the above network feedback mechanism is indeed causing the up-down state transitions, we simplified the model of L5 such that it contains a single assembly of densely connected weight-hubs (see Section Materials and Methods) embedded in a network of non-hubs with weak random connections. Figure 3C shows the dynamics of the network. Note that in this model the assembly acts as the driving force of the system and generates an oscillation by switching between its two stable fixed points. The non-hub neurons are enslaved by this oscillation and show only the passive behavior of followers. However, while embedding only one assembly in the excitatory population makes the system oscillate, the duration of up-states is short and has a narrow distribution (regular duration). In contrast, a combination of several assemblies, as in Figures 2, 3A, results in several oscillations with different frequencies, each of which is generated by one assembly. Non-hub and inhibitory neurons receive these excitatory input signals and superimpose them. The result of the superposition are rather irregular up-states with a longer duration (Figures 2, 3A). The role of inhibitory neurons in the model is to regulate the firing rate of the assemblies in the up-state. This regulation is necessary because an excessively high firing rate in each assembly would cause rapid spike-frequency adaptation, and would therefore substantially reduce the duration of the up-states in that assembly. Consequently, the superimposed oscillations would also show short up-state durations.

We can also explain the light-evoked response of L5 and L2/3 (Figure 4) by the dynamics of the assemblies of weight-hub neurons. In order to understand the differences between the layers, let us suppose that most of the neurons that express the light-sensitive ion channel generate one or several spikes in response to the stimulus. Assuming a uniform spatial distribution of weight-hubs in the excitatory population, we estimate that ~15% of both weight-hub and non-hub neurons are stimulated. Since synaptic weights among non-hubs are weak, non-hub neurons do not strongly excite neighboring non-hub neurons. However, since they send strong synapses to weight-hubs (according to our definition of weight-hubs), they contribute to the initial activation of weight-hub neurons. Recall that a densely connected assembly needs only little initial activation (to reach the switch point) to generate a high firing rate via self-excitation. Therefore, a densely connected assembly switches to the high point more easily so that each weight-hub neuron fires several spikes.

In contrast, since the connections from weight-hub neurons to non-hub neurons are weak, the stimulation does not generate a high overall firing rate in the network (non-hub neurons depolarize but do not show a high firing rate). After the weight-hub neurons have fired several spikes, spike-frequency adaptation changes the neuronal gain function. This switches the mean-field dynamics of the weight-hub assembly from a three-fixed-point to a one-fixed-point regime and brings the assembly to the low-point (Figure 7B), as discussed above. Consequently, both firing in weight-hubs and depolarization in non-hubs cease. In contrast, in the case of sparse connectivity between weight-hub neurons, due to a low value of the Cfb, this subpopulation is unlikely to transition to the high-point. Either such a fixed point does not exists because the system has only one fixed point (the low-point), or the value of the switch current (Is) is very high and stimulated neurons cannot provide sufficient input current to reach it. Therefore, the absence of a densely connected weight-hub assembly leads to weak spreading of the induced activation in the population (Figure 4B). For the case of Figure 4C, since synaptic weight from non-hub neurons onto assembly neurons are weakened, assembly neurons do not receive enough synaptic current to cross the switch current. Consequently, they cannot produce notable number of spikes.

Discussion

In this paper, we suggest cortical microcircuits with a particular non-random network feature called assembly of densely connected weight-hub neurons, to explain two different experimental observations: Firstly, spontaneous slow oscillations (irregular up- and down-state) and, secondly, stimulus-evoked responses of cortical layers.

We argue that in our framework the existence of weight-hub neurons in a cortical network alone is not enough to cause significant changes in network dynamics. Since, we want the values of network parameters (synaptic weights and connection probabilities) to remain in the experimentally observed range, we may not increase the synaptic weight of connections on weight-hub neurons by a huge factor. Therefore, the value of network feedback cannot become high enough to produce oscillations by only modifying the weights. But if the connection probability between weight-hubs is also high (at least twice the connection probability between two arbitrary non-hub neurons), the emerging assembly of densely connected weight-hubs shapes the dynamics and the activity of the cortical layer. We have shown both qualitatively and quantitatively that a small but sufficient amount of initial activation brings the assembly of model neurons to a transient high-rate state that resembles cortical up-states.

A single assembly of weight-hub neurons together with a small amount of external noise (which here is provided by constant rate Poisson inputs) forms a slow oscillator. The reason is that this assembly switches between a high-rate state and a low-rate state repetitively. Fluctuations caused by external noise bring the assembly to the high-rate state, and spike-frequency adaptation brings it back to the near-zero, low-rate state. Several experimental studies (Sanchez-Vives and McCormick, 2000; Sakata and Harris, 2009; Chauvette et al., 2010; Beltramo et al., 2013) indicate that the cortical oscillations originate in infra-granular layers (mainly L5), and that supra-granular layers (L2/3) are subsidiary, i.e., the up-state is initiated in L5 and rises from the depth to L2/3. We suggest that the connectivity of weight-hub neurons in L5 is dense, while it is sparse in L2/3. Thus, L2/3 follows oscillations generated in L5, but is not able to sustain oscillations on its own.

In slice cultures slow oscillations are rather regular (Sanchez-Vives and McCormick, 2000), whereas experiments done in the anesthetized animals (Stern et al., 1997; Lampl et al., 1999) show irregular up-down state transitions. In order to reproduce this irregularity, we embedded several densely connected weight-hub assemblies in the excitatory population. Non-hubs, the majority of excitatory cells, and inhibitory neurons receive synaptic input from these oscillations and superimpose them. Consequently a large fraction of model neurons show an irregular oscillation with a broad distribution of up-state durations.

The presence of one or several assemblies of weight-hub neurons may also explain layer-dependent differences of stimulus-evoked responses (Beltramo et al., 2013). While L2/3 exhibits weak depolarization in response to stimulation of a small fraction of it, in L5 the same stimulus induces a strong and long-lasting depolarization and a substantial number of spikes. Since the assembly of weight-hub neurons needs just a small amount of activation to switch to a high firing rate, and may propagate it within the network, we suggest that the connectivity of weight-hub neurons underlies the long-lasting response of L5. Conversely, we would hypothesize that weight-hub neurons in L2/3 are not strongly connected to each other.

While our multi-assembly architecture produces long tailed distribution of firing rates, there is at least one other way to produce such a skewed distribution. In a balanced network in the asynchronous state (Roxin et al., 2011) showed that a Gaussian input distribution can lead to a lognormal firing rate distribution via an exponential non-linearity of the current-frequency relation. In our network model, however, neurons are not in the balanced stationary regime but participate in synchronous transitions between up and down states. Similarly, in the study of Mazzucato et al. (2015) the long tailed distribution of firing rates results from metastable activity not from the balanced stationary state.

Definition of Hub Neuron

The term hub can have two meanings: Firstly, degree-hub, i.e., a neuron that receives more synaptic connections than an average neuron, and secondly, weight-hub, i.e., a neuron that receives stronger synapses than average. The second definition was used in the current work. The common definition of a hub (degree-hub) as a neuron that receives more synapses (Bullmore and Sporns, 2009; Feldt et al., 2011; Prettejohn et al., 2011) does not take into account the strength of synapses, called synaptic weights here. This topological definition of hubs is common in computer sciences, where the issue of degree and connections between nodes is more important than the weight structure. However, in neuronal microcircuit modeling, synaptic weights are as important as degree and connectivity. Surprisingly, the amount of previous modeling work done on degree manipulation and connectivity structure (Roxin, 2011; Hu et al., 2013, 2014; Pernice et al., 2013; Vasquez et al., 2013; Jahnke et al., 2014; Potjans and Diesmann, 2014; Rudolph-Lilith and Muller, 2014) by far exceeds work on non-homogeneous weight structure (Koulakov et al., 2009; Iyer et al., 2013; Tomm et al., 2014).

Here we adopted the less-common definition of a hub in terms of synaptic weights, to shed light on this less-well understood aspect of non-random neuronal network features. While manipulation of the degree distribution in the network and creating degree-hubs has the same effect as creating weight-hubs in producing a skewed firing rate distribution (Roxin, 2011), the two are not always interchangeable. Tomm et al. (2014) showed that for reproducing light-evoked responses in mouse barrel cortex slices, manipulation of both degree and weight distributions are needed under the simulated network conditions of this study. In our case, it would be possible to keep the connectivity random (without rewiring) and manipulate the weights more strongly to produce the oscillations. However, this would entail very strong synaptic weights outside of the experimentally observed range (Lefort et al., 2009), and thus would reduce the biological plausibility of the model. Our combined approach of introducing weight-hubs with dense connectivity overcomes this problem with minimal changes to both weight and degree distribution.

Several electrophysiological experiments indicate that the distribution of synaptic weights (EPSP amplitudes) has a lognormal shape (Lefort et al., 2009; Ko et al., 2011; Avermann et al., 2012; Chapeton et al., 2012). Therefore, we used a lognormal distribution for modeling synaptic weights in neuronal populations. Using random (Erdős–Rényi) networks to define whether a synaptic connection is present or not, together with a lognormal distribution for the synaptic weight of the connection, entails that the sum of inward synaptic weights is similar for all neurons (Figure 1B). However, by modifying the topology of the excitatory network to have local inward correlation in synaptic weights (see Section Materials and Methods), we could produce a more broadly distributed sum of inward synaptic weights (Figure 1B). Therefore, a few excitatory neurons receive larger total synaptic weights, while others receive smaller values. For the sake of concreteness, we have defined a classification boundary such that the 20% of neurons that receive the strongest inputs are called weight-hubs (see Figure 1B). The model is robust against varying this fraction, and shows similar dynamics for 15 and 25% of neurons (data not shown).

Throughout this article, only excitatory to excitatory connections were manipulated and all other connections (inhibitory to inhibitory, inhibitory to excitatory and excitatory to inhibitory) remained unchanged. However, evidence of hubs in inhibitory populations has been found in experiments (Bonifazi et al., 2009). Therefore, the effects of hubs in inhibitory networks remain to be investigated. Since in our model excitatory hubs suffice to explain the aforementioned aspects of cortical dynamics, we neglected inhibitory hubs here in favor of model simplicity. Another reason for not exploring the structure of inhibitory connections is the lack of experimental datasets for inhibitory connectivity in L5. In this work, we use the inhibitory connection properties of L2/3 as a substitute for L5 (see Section Materials and Methods). Therefore, any investigation on inhibitory connectivity of L5 would be based on this unconfirmed hypothesis.

Identifying Weight-Hub Neurons from Data

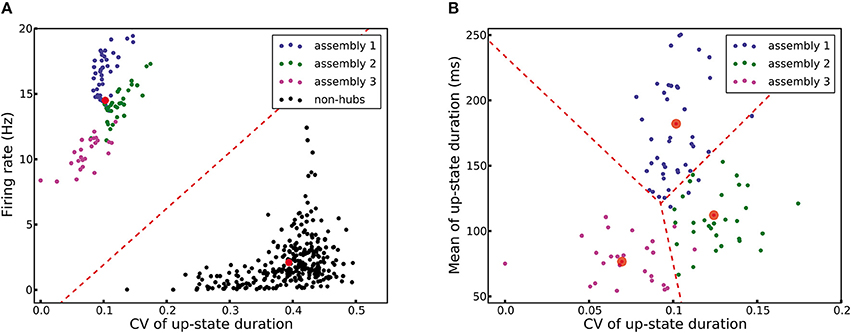

A network structure with densely connected weight-hubs is hypothetical. In this section, we propose a method using machine learning tools which can help experimentalists to label neurons as weight-hub or non-hub using a set of recorded membrane potential. In order to perform such a classification, we need to consider distinct properties of weight-hub neurons. First, weight-hub neurons in our model receive a larger amount of excitation than non-hubs and therefore exhibit a higher firing rate. Therefore, we might label neurons with relatively higher firing rate as weight-hubs. However, this property alone does not yield a robust way of identification, because besides the synaptic input, the firing rate of a neuron also depends on its electrophysiological parameters, such as its firing threshold. A non-hub neuron may therefore occasionally exhibit a higher firing rate than a weight-hub (Figure 2B). A second property of weight-hub neurons predicted in the context of our model is the regularity of transitions between up- and down-states. According to our model, regular up-state durations indicate that the neuron is a weight-hub.

Here we use a combined approach for identifying subpopulations of weight-hub and non-hub neurons. In the first step, we characterize each neuron by a vector of two elements: the overall firing rate and the CV of up-state durations of the neuron. We pass these vectors to a K-means clustering algorithm (see Section Materials and Methods), which clusters neurons into weight-hubs and non-hubs with 100% accuracy (Figure 8A). We identify the group with the lower coefficient of variation of up-state duration as the weight-hubs and other group as the non-hubs. In order to distinguish the assembly that each identified weight-hub neuron belongs to, we perform a second step and run the algorithm again on the weight-hub neurons found in the previous step. Here we define the feature vector of each neuron by the mean and the CV of up-state durations. The algorithm assigns the correct assembly to 89 out of 95 weight-hub neurons. Accordingly the accuracy of the second step is 93.7% (Figure 8B).

Figure 8. K-means clustering identifies weight-hub neuron assemblies. Each dot represents one neuron and its color denotes the corresponding subpopulation in the simulation shown in Figure 2. (A) Clustering of all neurons into two clusters. The first stage of the classification algorithm successfully identifies weight-hubs and non-hubs, but does not distinguish between different assemblies of weight-hubs. The red circles show the center of the clusters and the dashed line displays the classification boundary. (B) Clustering of weight-hub neurons (identified in A) into different assemblies. The K-means algorithm with three clusters identifies the assembly of each weight-hub neuron with 93.7% accuracy.