ORIGINAL RESEARCH article

Front. Comput. Neurosci., 02 April 2014

Volume 8 - 2014 | https://doi.org/10.3389/fncom.2014.00029

Many biological systems are modulated by unknown slow processes. This can severely hinder analysis – especially in excitable neurons, which are highly non-linear and stochastic systems. We show the analysis simplifies considerably if the input matches the sparse “spiky” nature of the output. In this case, a linearized spiking Input–Output (I/O) relation can be derived semi-analytically, relating input spike trains to output spikes based on known biophysical properties. Using this I/O relation we obtain closed-form expressions for all second order statistics (input – internal state – output correlations and spectra), construct optimal linear estimators for the neuronal response and internal state and perform parameter identification. These results are guaranteed to hold, for a general stochastic biophysical neuron model, with only a few assumptions (mainly, timescale separation). We numerically test the resulting expressions for various models, and show that they hold well, even in cases where our assumptions fail to hold. In a companion paper we demonstrate how this approach enables us to fit a biophysical neuron model so it reproduces experimentally observed temporal firing statistics on days-long experiments.

Neurons are modeled biophysically using Conductance-Based Models (CBMs). In CBMs, the membrane time constant and the timescales of fast channel kinetics determine the timescale of Action Potential (AP) generation in the neuron. These are typically around 1–20 ms. However, there are various modulating processes that affect the response on slower timescales. Many types of ion channels exist, and some change with a timescale as slow as 10 s (Channelpedia), and possibly even minutes (Toib et al., 1998). Additional new sub-cellular kinetic processes are being discovered at an explosive rate (Bean, 2007; Sjöström et al., 2008; Debanne et al., 2011). This variety is particularly large for very slow processes (Marom, 2010).

For example, ion channels are known to be regulated over the course of long timescales (Levitan, 1994; Staub et al., 1997; Jugloff, 2000; Monjaraz et al., 2000), which could cause changes in ion channel numbers, conductances and kinetics. Also, the ionic concentrations in the cell depend on the activity of the ionic pumps, which can be affected by the metabolism of the network (Silver et al., 1997; Kasischke et al., 2004). Finally, the cellular neurites (De Paola et al., 2006; Nishiyama et al., 2007) and even the spike initiation region (Grubb and Burrone, 2010) can shift their location with time. All these changes can have a large effect on excitability.

Therefore, current CBMs can be considered as strictly accurate only below a certain timescale, since they do not incorporate most of these slow processes. A main reason for this “neglect” is that such slow processes are not well characterized. This is especially problematic since neurons are excitable, so their dynamics are far from equilibrium, highly non-linear and contain feedback. Because many of these processes are unknown or lack known parameters, such models are hard to simulate or analyze. It is therefore hard to predict quantitatively how adding and tuning slow processes in the model would affect the dynamics at longer timescales.

In order to allow CBMs with many slow processes to be fitted and analyzed, it is desirable to have general expressions that describe their Input–Output (I/O) relation explicitly, based on biophysical parameters. In a previous paper (Soudry and Meir, 2012b), we found that this becomes possible if we use sparse spike inputs, similar to the typical output of the neuron (Figures 1A,B); such inputs are experimentally relevant (Elul and Adey, 1966; Kaplan et al., 1996; De Col et al., 2008; Gal et al., 2010; Goldwyn et al., 2012). In this case, we derived semi-analytically a discrete piecewise linear map describing the neuronal dynamics between stimulation spikes, for a general deterministic neuron model with a few assumptions (mainly, a timescale separation assumption). Based on this reduced map, we were able to derive expressions for the “mean” behavior of the neuron (e.g., firing modes, firing rate and mean latency).

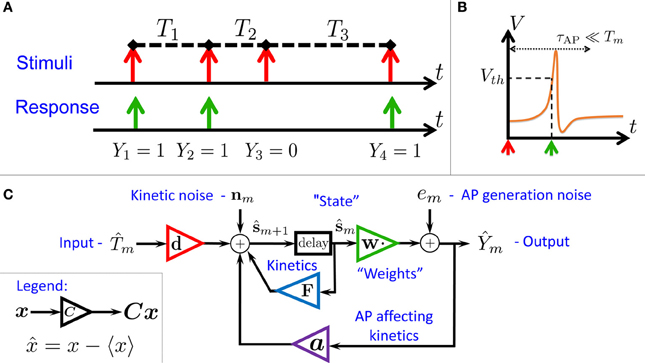

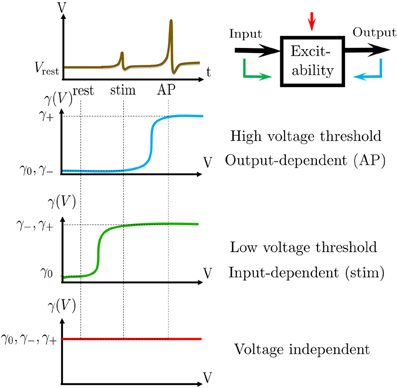

Figure 1. Schematic summary. (A) Aim: find the I/O relation between inter-stimulus intervals (Tm) and Action Potential (AP) occurrences (Ym) – for a general biophysical neuron model (Equations 1–3). (B) An AP “occurred” if the voltage V crossed a threshold Vth following the (sparse) stimulus, with Tm ≫ τAP. (C) Result: Biophysical neuron model reduced to a simple linear system with feedback (Equations 11, 12), and biophysically meaningful parameters (F,d,a and w).

In this paper, we find that stronger and more general analytical results can be obtained if we take into account the stochasticity of the neuron – arising from ion channel noise (Neher and Sakmann, 1976; Hille, 2001). Due to the presence of this noise, the discrete map describing the neuronal dynamics is “smoothed out,” and can be linearized. This linearized map constitutes a concise description for the neuronal I/O (Equations 11, 12) based on biophysically meaningful parameters. This I/O is well described by an “engineering-style” block diagram with feedback (Figure 1C), where the input is the process of stimulation intervals and the output is the AP response (Figure 1A). Note that the response is affected both by internal noise and by the input. Beyond conceptual lucidity, such a linear I/O allows the utilization of well known statistical tools to derive all second order statistics, to construct linear optimal estimators and to perform parameter identification. These results hold numerically (Figure 3), sometimes even when our assumptions break down (Figure 4).

In our previous paper, Soudry and Meir (2012b), we used our results to model recent experiments (Gal et al., 2010) where synaptically isolated individual neurons, from rat cortical culture, were stimulated with extra-cellular sparse current pulses for an unprecedented duration of days. Our results enabled us to explain the “mean” response of these neurons. However, the second-order statistics in the experiment seem particularly puzzling. The neurons exhibited 1/f^α statistics (Keshner, 1982), responding in a complex and irregular manner from seconds to days. In a companion paper (Soudry and Meir, 2014), we demonstrate the utility of our new results. These results allow us to reproduce and analyze the origins of this 1/f^α behavior on very long timescales.

This section describes our main results in outline. The details of each sub-section here appear in the corresponding sub-section in section 4. For readers who do not wish to go through the detailed derivations, the present section is self-contained. Readers who do wish to follow the mathematical derivations should first read section 4, where, for convenience, each subsection (except for the last one) can be read independently. In our notation 〈·〉 is an ensemble average, a non-capital boldfaced letter x ≜ (x1, …, xn)⊤ is a column vector (where (·)⊤ denotes transpose), and a boldfaced capital letter X is a matrix (with components Xmn).

The voltage dynamics of an isopotential neuron are determined by ion channels, protein pores which change their conformations stochastically with voltage-dependent rates (Hille, 2001). At the population level, such dynamics are generically described by a CBM (Fox and Lu, 1994; Goldwyn et al., 2011; Soudry and Meir, 2012b)

with voltage V, stimulation current I(t), rapid variables r (e.g., m, n, h in the Hodgkin–Huxley (HH) model; Hodgkin and Huxley, 1952), slow “excitability” variables s (e.g., slow sodium inactivation; Chandler and Meves, 1970), rate matrices Ar/s, white noise processes ξr/s (with zero mean and unit variance), and matrices Br/s which can be written explicitly using the rates and ion channel numbers (Orio and Soudry, 2012); D = BB⊤ is the diffusion matrix (Orio and Soudry, 2012). For simplicity, we assumed that r and s are not coupled directly, but this is non-essential (Contou-Carrere, 2011; Wainrib et al., 2011). The parameter space can be constrained (Soudry and Meir, 2012b), since we consider here only excitable, non-oscillatory neurons which do not fire spontaneously and which have a single resting state – as is common for cortical cells, e.g., Gal et al. (2010).
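Written out, a CBM of the kind described above can be sketched as follows. This is a generic form consistent with the symbols just defined; the name f for the voltage dynamics and the argument lists of the B matrices are assumptions made here for illustration.

```latex
\begin{align}
\dot{V} &= f\left(V, \mathbf{r}, \mathbf{s}, I(t)\right), \\
\dot{\mathbf{r}} &= \mathbf{A}_r(V)\,\mathbf{r} + \mathbf{B}_r(V, \mathbf{r})\,\boldsymbol{\xi}_r, \\
\dot{\mathbf{s}} &= \mathbf{A}_s(V)\,\mathbf{s} + \mathbf{B}_s(V, \mathbf{s})\,\boldsymbol{\xi}_s .
\end{align}
```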

Since the components of r and s usually represent fractions, in some cases it is more convenient to use the normalization constraint (i.e., that fractions sum to one), and reduce the dimensions of r, s, and ξr/s. After this reduction, the form of Equations (1–3) changes to

where all the variables and parameters have been redefined (with their size decreased). Note that we have slightly abused notation by using the same symbols in Equations (4–6) and in Equations (1–3). The specific set of equations used will always be stated. We call Equations (4–6) the “compressed form” of the CBM.
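For orientation, the slow dynamics in the compressed form can be sketched as below. The offset vector b_s(V) and its sign are assumptions, chosen so that the fixed-point condition reads A s = b, which matches the expression for s* in the compressed form given later in the text.

```latex
\begin{equation}
\dot{\mathbf{s}} = \mathbf{A}_s(V)\,\mathbf{s} - \mathbf{b}_s(V) + \mathbf{B}_s(V, \mathbf{s})\,\boldsymbol{\xi}_s
\end{equation}
```

Under the same assumption, the rapid variables r would take the same form with r-subscripted quantities.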

Such biophysical neuronal models (either Equations 1–3 or 4–6) are generally complex and non-linear, containing many variables and unknown parameters (sometimes ranging in the hundreds Koch and Segev, 1989; Roth and Häusser, 2001), not all of which can be identified (Huys et al., 2006). Therefore, such models are notoriously difficult to tune, highly susceptible to over-fitting and computationally expensive (Migliore et al., 2006; Gerstner and Naud, 2009; Druckmann et al., 2011). Also, the high degree of non-linearity usually prevents exact mathematical analysis of such models at their full level of complexity (Ermentrout and Terman, 2010). However, much of the complexity in such models can be overcome under well defined and experimentally relevant settings (Elul and Adey, 1966; Kaplan et al., 1996; De Col et al., 2008; Gal et al., 2010; Goldwyn et al., 2012), if we use sparse inputs, similar in nature to the spikes commonly produced by the neuron.

We consider a stimulation setting motivated by the experiments described in Gal et al. (2010) and further elaborated on in section 3. Specifically, suppose I(t) consists of a train of pulses arriving at times {tm} (Figure 1A, top), so Tm = t_{m+1} − t_m ≫ τAP with τAP being the timescale of an AP (Figure 1B). Our aim is to describe the AP occurrences Ym, where Ym = 1 if an AP occurred immediately after the m-th stimulation, and 0 otherwise (Figure 1A, bottom). Recall again that we assume the neuron does not generate APs unless stimulated (as observed in Gal et al., 2010).
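As a concrete illustration of these definitions, the sketch below extracts the intervals Tm and the binary responses Ym from a sampled voltage trace. The function name `ap_occurrences`, the threshold value and the detection window (taken to be of the order of τAP) are hypothetical choices, not values from the paper.

```python
import numpy as np

def ap_occurrences(t, v, stim_times, v_th=0.0, window=0.01):
    """Return inter-stimulus intervals T_m and AP indicators Y_m.

    t, v       : sampled time [s] and voltage [mV] arrays (same length)
    stim_times : stimulation times t_m [s]
    v_th       : voltage threshold (assumed value)
    window     : time after each stimulus in which a threshold
                 crossing counts as an AP (assumed, ~ tau_AP)
    """
    t = np.asarray(t, dtype=float)
    v = np.asarray(v, dtype=float)
    stim_times = np.asarray(stim_times, dtype=float)

    # T_m = t_{m+1} - t_m
    T = np.diff(stim_times)

    # Y_m = 1 if V exceeded v_th shortly after the m-th stimulus
    Y = np.zeros(len(stim_times), dtype=int)
    for m, tm in enumerate(stim_times):
        mask = (t >= tm) & (t < tm + window)
        Y[m] = int(np.any(v[mask] > v_th))
    return T, Y
```

Because the neuron is assumed not to fire spontaneously, only the windows that follow a stimulus need to be inspected.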

In this section we “average out” Equations (1–3) using a semi-analytical method similar to that in Soudry and Meir (2012b). To do so, we need to integrate Equations (1–3) between t_m and t_{m+1}. Since Tm ≫ τAP, the rapid AP generation dynamics of (V, r) relax to a steady state before t_{m+1}. Therefore, the neuron “remembers” any history before t_m only through sm = s(t_m). Given sm, the response of the fast variables (V, r) to the m-th stimulation spike will determine the probability to generate an AP. This probability,

collapses all the relevant information from Equations (1, 2), and can be found numerically from the pulse response of Equations (1, 2) with s held fixed (section 4.2.4).

In order to integrate the remaining Equation (3), we define X+, X− and X0 to be the averages of a quantity Xs during an AP response, a failed AP response and rest, respectively. Also, we denote

as the steady state mean value of Xs. For analytical simplicity we assume Tm ≪ τs. We obtain, to first order

where nm is a white noise process with zero mean and variance TmD (Ym, Tm). For the compressed form (Equations 4–6) we have instead

Note that such a simplified discrete time map, which describes the excitability dynamics of the neuron, has far fewer parameters than the full model, since it is written explicitly only using the averaged microscopic rates of s (through A and D), population sizes (through D), the probability to generate an AP given s, pAP (s), and the relevant timescales. This effective model exposes the large degeneracy in the parameters of the full model and leads to significantly reduced simulation times and mathematical tractability. Notably, the dynamics of the state sm (Equation 8) depends on the input Tm and the output Ym – and this feedback affects all of our following results.
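To make the structure of such a reduced map concrete, here is a minimal sketch for a single slow variable s (an available fraction with inactivation rate γ and recovery rate δ). It assumes the averaged drift over one interval is τAP times the rate during an AP (or a failed AP) plus (Tm − τAP) times the rate at rest, noise with variance Tm·D, and a probit response pAP(s) = Φ((s − a)/b) as in the fit of Figure 6. All numerical values, and the constant D, are hypothetical.

```python
import math
import numpy as np

# Hypothetical toy parameters (not taken from the paper).
TAU_AP = 0.01                              # AP timescale [s]
GAMMA = {"+": 3.0, "-": 0.0, "0": 0.0}     # inactivation rates [1/s]
DELTA = {"+": 0.0, "-": 0.05, "0": 0.05}   # recovery rates [1/s]
D = 1e-5                                   # diffusion coefficient [1/s], assumed constant
A_FIT, B_FIT = 0.5, 0.05                   # probit fit parameters of p_AP(s)

def p_ap(s):
    """Probability of an AP given the slow state s (probit form)."""
    return 0.5 * (1.0 + math.erf((s - A_FIT) / (B_FIT * math.sqrt(2.0))))

def simulate_map(intervals, s0=0.5, seed=0):
    """Iterate the toy excitability map over inter-stimulus intervals T_m."""
    rng = np.random.default_rng(seed)
    s = np.empty(len(intervals) + 1)
    Y = np.empty(len(intervals), dtype=int)
    s[0] = s0
    for m, T in enumerate(intervals):
        # Output: Bernoulli draw with probability p_AP(s_m)
        Y[m] = int(rng.random() < p_ap(s[m]))
        key = "+" if Y[m] else "-"
        # Averaged drift: tau_AP in the (failed) AP state, the rest of T_m at rest
        drift = (TAU_AP * (DELTA[key] * (1 - s[m]) - GAMMA[key] * s[m])
                 + (T - TAU_AP) * (DELTA["0"] * (1 - s[m]) - GAMMA["0"] * s[m]))
        # White noise with variance T_m * D
        noise = math.sqrt(T * D) * rng.standard_normal()
        s[m + 1] = s[m] + drift + noise
    return s, Y
```

For example, `simulate_map(np.full(20000, 0.05))` iterates the map under periodic 20 Hz stimulation. Note how the state update depends on both the input Tm and the output Ym, which is the feedback discussed above.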

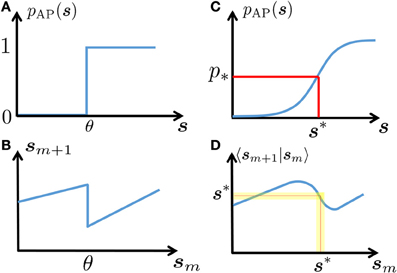

In this section we exploit the intrinsic ion channel noise to linearize the neuronal dynamics, rendering it more tractable than the (less realistic) noiseless case (Soudry and Meir, 2012b). Suppose that the inter-stimulus intervals {Tm} have stationary statistics with mean T* so that τAP ≪ Tm ≪ τs with high probability. Since s is slow and AP generation is rather noisy in this regime (Soudry and Meir, 2012b) (so pAP (sm) is slowly varying), we assume that a stable excitability fixed point s* exists (Figure 2). Therefore, the perturbations are small and we can linearize

Figure 2. Schematic explanation of linearization. In a deterministic neuron, an AP will be generated in response to stimulation if and only if the neuronal excitability (here, s) is above a certain threshold (A). This generates discontinuous dynamics in the neuronal excitability (B; see Equations 7, 8). In a stochastic neuron, the response probability is a smooth function of s (C). In turn, this “smooths” the dynamics (D). Note that if the noise is sufficiently high (as is true in many cases, for biophysically realistic levels of noise), then this generates a stable fixed point s* – which gives the mean response probability p*, and around which the dynamics can be linearized (yellow region).

Denoting X* = X(p*, T*), the mean AP firing rate can be found self consistently from the location of the fixed point s*,

where s* depends on p* through A*s* = 0, or s* = A*^−1 b* in the compressed form.
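The self-consistency condition can be solved numerically. The sketch below does so by bisection for a scalar toy model, assuming the condition takes the form p* = pAP(s*(p*)), with s*(p) obtained by setting the averaged drift to zero and pAP given by a probit function. All parameter values and function names are hypothetical.

```python
import math

# Hypothetical toy parameters (not taken from the paper).
TAU_AP, T_STAR = 0.01, 0.05                # AP timescale, mean interval [s]
GAMMA = {"+": 3.0, "-": 0.0, "0": 0.0}     # inactivation rates [1/s]
DELTA = {"+": 0.0, "-": 0.05, "0": 0.05}   # recovery rates [1/s]
A_FIT, B_FIT = 0.5, 0.05                   # probit fit parameters of p_AP(s)

def p_ap(s):
    """Probability of an AP given the slow state s (probit form)."""
    return 0.5 * (1.0 + math.erf((s - A_FIT) / (B_FIT * math.sqrt(2.0))))

def mean_rate(rates, p, T):
    """Rate averaged over AP, failed-AP and rest periods of one interval."""
    return (TAU_AP * (p * rates["+"] + (1 - p) * rates["-"])
            + (T - TAU_AP) * rates["0"]) / T

def s_star(p, T=T_STAR):
    """Fixed point of the averaged slow dynamics for firing probability p."""
    g = mean_rate(GAMMA, p, T)
    d = mean_rate(DELTA, p, T)
    return d / (d + g)

def solve_p_star(T=T_STAR, tol=1e-12):
    """Bisection on p_AP(s*(p)) - p, which decreases with p in this toy model."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if p_ap(s_star(mid, T)) - mid > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Bisection suffices here because a higher firing probability lowers s* in this toy model, so the residual is monotone. With a vector-valued s, a general root finder would be needed instead.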

The perturbations around the fixed point s* are described by the linear system

where F ≜ I + T*A*, 〈n_m n_m⊤〉 = T*D*, em is a (non-Gaussian) white noise process, 〈em〉 = 〈em nm〉 = 0, σ_e^2 ≜ 〈e_m^2〉 = p*(1 − p*), d ≜ A0s* and a ≜ τAP(A+ − A−)s*. If we use the compressed form instead, then these results remain valid, except we need to re-define d ≜ A0s* − b0 and a ≜ τAP[(A+ − A−)s* − (b+ − b−)].
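A linear system consistent with these definitions can be sketched as follows. Hats denote deviations from the fixed point (of s_m from s*, T_m from T*, and Y_m from p*), and w is assumed to be the gradient of p_AP(s) evaluated at s*; both conventions are assumptions made here for illustration.

```latex
\begin{align}
\hat{\mathbf{s}}_{m+1} &= \mathbf{F}\,\hat{\mathbf{s}}_m + \mathbf{d}\,\hat{T}_m + \mathbf{a}\,\hat{Y}_m + \mathbf{n}_m, \\
\hat{Y}_m &= \mathbf{w}^{\top}\hat{\mathbf{s}}_m + e_m .
\end{align}
```

In this form, d is the sensitivity of the averaged drift to the interval T_m (the rate at rest acting on s*), and a is its sensitivity to the response Y_m (the difference between the AP and failed-AP rates over a duration τAP), in line with the definitions above.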

The linear I/O for the fluctuations in Equations (11, 12), which contains feedback from the “output” to the state variable (Figure 1C), can be very helpful mathematically and its parameters are directly related to biophysical quantities.

Using standard tools, this formulation makes it now possible to construct optimal linear estimators for Ym and sm (Anderson and Moore, 1979), perform parameter identification (Ljung, 1999), and find all second order statistics in the system (Papoulis and Pillai, 1965; Gardiner, 2004), such as correlations or Power Spectral Densities (PSD). For example, for f ≪ T*^−1, the PSD of the output is

where

Similarly, the PSD of the state variables is

and the input–output cross-PSD is

Again, note the large degeneracy here – many different sets of parameters will generate the same PSD. Using similar methods, the PSDs of various response features, such as the AP latency or amplitude, can also be derived (Equation 124).
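For periodic stimulation (no input perturbation), the output spectrum implied by a linear state-space system with output feedback can also be evaluated numerically. The sketch below assumes the system ŝ_{m+1} = F ŝ_m + a Ŷ_m + n_m, Ŷ_m = w⊤ŝ_m + e_m, with 〈n n⊤〉 = T*D* and 〈e²〉 = p*(1 − p*), and returns a per-sample spectrum. The function name and normalization are assumptions, and this is not a transcription of Equation (13).

```python
import numpy as np

def output_psd(freqs, T_star, F, a, w, D, p_star):
    """Per-sample PSD of the output fluctuations for periodic input.

    Closing the loop in the z-domain, with z = exp(2*pi*i*f*T_star) and
    H(z) = (z I - F)^{-1}, gives
        S_Y = (w^T H Q H^dagger w + sigma_e^2) / |1 - w^T H a|^2,
    where Q = T_star * D and sigma_e^2 = p_star * (1 - p_star).
    """
    F = np.atleast_2d(np.asarray(F, dtype=float))
    a = np.asarray(a, dtype=float).reshape(-1)
    w = np.asarray(w, dtype=float).reshape(-1)
    Q = T_star * np.atleast_2d(np.asarray(D, dtype=float))
    M = F.shape[0]
    sigma_e2 = p_star * (1.0 - p_star)

    out = np.empty(len(freqs))
    for k, f in enumerate(freqs):
        z = np.exp(2j * np.pi * f * T_star)
        H = np.linalg.inv(z * np.eye(M) - F)
        u = w @ H                       # row vector w^T (zI - F)^{-1}
        loop = 1.0 - u @ a              # closed-loop denominator
        num = np.real(u @ Q @ u.conj()) + sigma_e2
        out[k] = num / abs(loop) ** 2
    return out
```

With negative feedback (w⊤a < 0 in a scalar model) the loop term suppresses low frequencies, which is one way to see why the output spectrum can take a high-pass shape. Different parameter sets that leave these combinations unchanged yield the same spectrum.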

Finally, we note Equations (11) and (12) can be re-arranged as a direct I/O relation. First, we define the filters (transfer functions)

where K = a + FPwσ−2v and σ2v = w⊤ Pw + σ2e, with P being the solution of

Using these filters, we obtain, in the frequency domain,

where Y(f), T(f) and z(f) are the Fourier transforms of the perturbations in Ym and Tm, and of zm, respectively, with zm being a white noise process with zero mean and unit variance. Notably, these transfer functions can be identified from the spiking input–output of the neuron, without access to the underlying dynamics or biophysical parameters. Specifically, Equation (20) has the form of an ARMAx(M, M, M) model (Ljung, 1999) (recall M is the dimension of s), which can be estimated using standard tools (e.g., the system identification toolbox in Matlab).
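The gain K and the variance σ_v^2 defined above can be computed numerically once P is known. The sketch below assumes that P satisfies the standard discrete-time Riccati equation of a one-step predictor, P = F P F⊤ + T*D* − F P w w⊤ P F⊤ / σ_v^2, which is what the definitions of K and σ_v^2 suggest; the function name and the fixed-point iteration are illustrative choices, not the authors' procedure.

```python
import numpy as np

def steady_state_gain(F, a, w, Q, sigma_e2, n_iter=10000, tol=1e-12):
    """Iterate the Riccati recursion to steady state.

    Q is the state-noise covariance (T* D*), sigma_e2 the output-noise
    variance p*(1 - p*). Returns (P, K, sigma_v2) with
        sigma_v2 = w^T P w + sigma_e2,
        K        = a + F P w / sigma_v2.
    """
    F = np.atleast_2d(np.asarray(F, dtype=float))
    a = np.asarray(a, dtype=float).reshape(-1, 1)
    w = np.asarray(w, dtype=float).reshape(-1, 1)
    Q = np.atleast_2d(np.asarray(Q, dtype=float))

    P = Q.copy()
    for _ in range(n_iter):
        sigma_v2 = (w.T @ P @ w).item() + sigma_e2
        FPw = F @ P @ w
        P_next = F @ P @ F.T + Q - (FPw @ FPw.T) / sigma_v2
        converged = np.max(np.abs(P_next - P)) < tol
        P = P_next
        if converged:
            break

    sigma_v2 = (w.T @ P @ w).item() + sigma_e2
    K = a + (F @ P @ w) / sigma_v2
    return P, K, sigma_v2
```

The feedback vector a enters K additively because the observed output is fed back into the state equation, so it acts as a known input rather than as an additional source of estimation error.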

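As we have argued so far, a main asset of the present approach is its applicability to a broad range of models of various degrees of complexity and realism. Recall that our three assumptions are: (1) the input is temporally sparse, τAP ≪ Tm; (2) the slow variables are much slower than the stimulation intervals, Tm ≪ τs (timescale separation); and (3) the dynamics of sm can be linearized around a stable fixed point s*.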

In this section we will demonstrate that our analytical approximations agree very well with the numerical solution of Equations (1–3), even in some cases where the assumptions 2 and 3 do not hold. Therefore, these assumptions are sufficient, but not necessary.

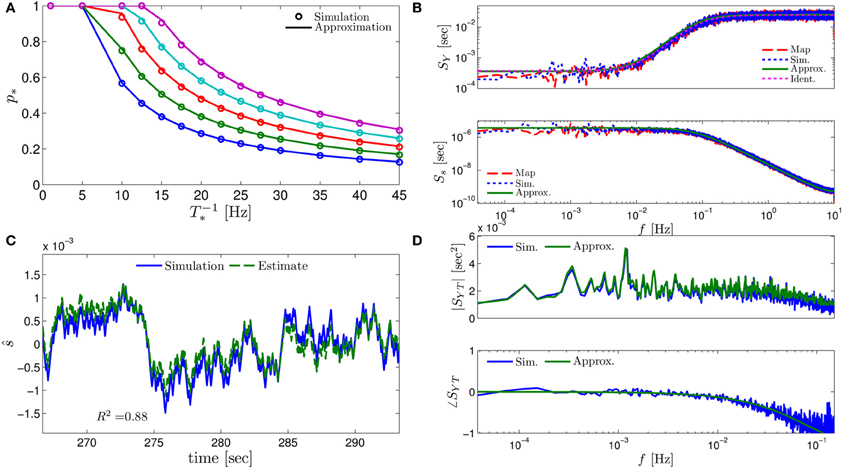

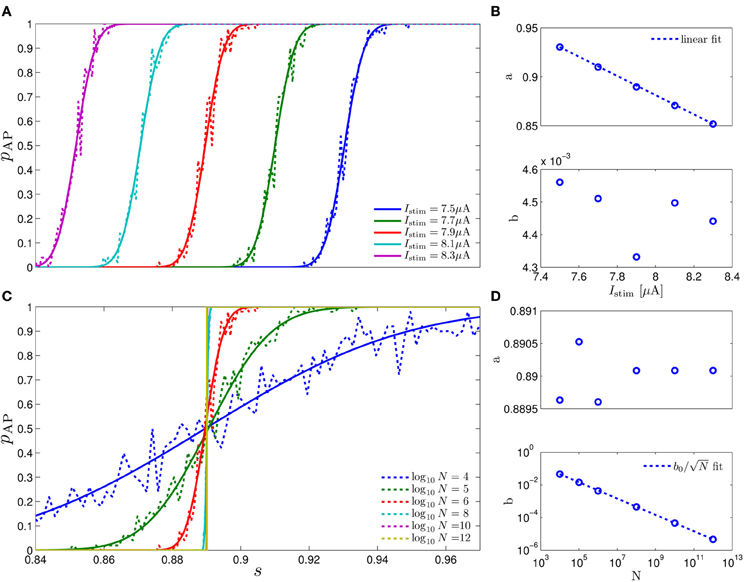

First, in Figure 3 we tested our results on the HH model with Slow sodium inactivation. This “HHS” model (Soudry and Meir, 2012b, and see section 4.5.1 for parameter values) augments the classic HH model (Hodgkin and Huxley, 1952) with an additional slow inactivation process of the sodium conductance (Chandler and Meves, 1970; Fleidervish et al., 1996). The HHS model includes the uncoupled stochastic Hodgkin–Huxley (HH) model equations (Fox and Lu, 1994), and is written in the compressed formulation (Equations 4–6)

for r = m, n and h, with the additional kinetic equation for slow sodium inactivation

where V is the membrane voltage, I(t) is the input current, m, n and h are ion channel “gating variables,” αr(V), βr(V), δ(V), and γ(V) are the voltage dependent kinetic rates of these gating variables, ϕ is an auxiliary dimensionless number, C is the membrane's capacitance, EK, ENa and EL are ionic reversal potentials, gK, gNa and gL are ionic conductances and N is the number of ion channels. Note that in this model τs is between 20 s (at rest) and 40 s (during an AP).

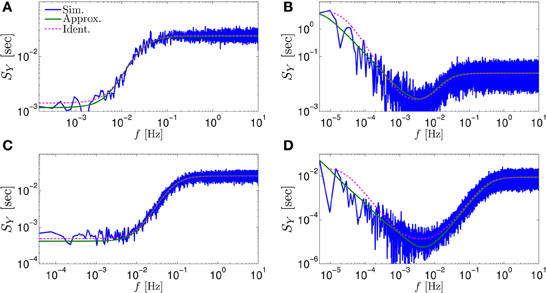

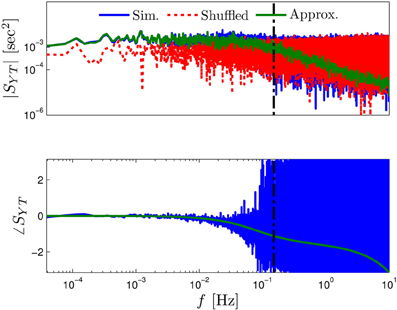

Figure 3. Comparing the mathematical results with the numerical simulation of the full model (Equations 1–3) for the stochastic HHS model (section 4.5.1). (A) Firing probability p*(T*^−1) (Equation 10) for different currents (Istim = 7.5, 7.7, 7.9, 8.1, 8.3 μA from bottom to top). (B) The PSDs SY(f) and Ss(f). “Sim” is a simulation of the full model, “Map” is a (10^4 times faster) simulation of Equation (8) together with pAP (sm), while “Approx” refers to the analytical expressions (Equations 13–15). “Ident” is the PSD SY(f) of the linear system identified from the spiking data. Note the high/low-pass filter shapes of SY(f) and Ss(f), respectively. (C) Optimal linear estimation of sm. (D) Amplitude and phase of the cross-spectrum SYT(f) for Poisson stimulation (Equation 16). Note that the frequency range was cut due to spectral estimation noise (see Figure 8). Parameters: I0 = 7.9 μA and T* = 50 ms in (B–D), and also stimulation is periodical in (A–C). Note the low-pass filter shapes of SYT(f).

In Figure 3A we show that through Equation (10) we can accurately calculate p*, the mean probability to generate an AP (so p*T*^−1 is the firing rate of the neuron). In Figure 3B we demonstrate that either the analytical expressions (Equations 13, 15) or a simulation of the reduced model (Equation 8) reproduces the PSDs SY(f) and Ss(f) of the full model (Equations 1–3). In Figure 3D we do the same for the analytical expression (Equation 16) of the cross-PSD SYT(f). In Figure 3C we show that a linear optimal filter estimates the internal state sm quite well, with low mean square error (section 4.4.4). Finally, back in Figure 3B, top, we infer the linear model parameters from the spike output using system identification tools [here, with ARMAx(1, 1, 1)], and present the PSD of the identified model (“Ident”). Since SY(f) = |Hint(f)|^2 (see Equation 111) for periodical input (in which Tm = T* for all m), this allows us to confirm that the linear model was identified. As can be seen, the identified filter matches well with that of the linear system.

Next, we demonstrate that our analytical expressions hold also for various other models. Specifically, in the following scenarios: (1) when the kinetics of the neuron are extended to arbitrarily slow timescales, (2) when the assumptions 2 and 3 break down, (3) when the rapid and slow kinetics are coupled, (4) when “physiological” synaptic inputs are used. These results are presented in Figures 4, 5, with specific model parameters given in section 4.5.

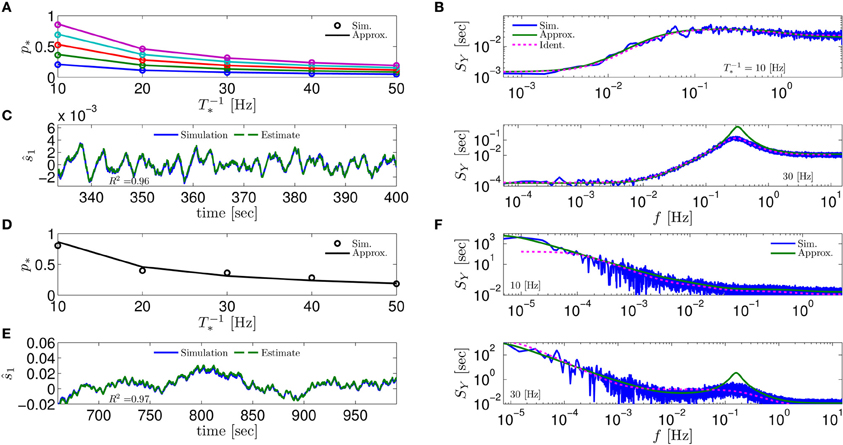

Figure 4. Comparing mathematical results with full model simulation when the assumptions fail to hold. In the HHSIP model (HHS with potassium inactivation) we plot (A) p*(T*^−1) for different currents (I0 = 7.5, 7.7, 7.9, 8.1, 8.3 μA from bottom to top). (B) SY(f) for two values of T*. As before, “Sim” is a simulation of the full model, “Approx” is the analytical approximation, and “Ident” is the PSD SY(f) of the linear system identified from the spiking data. Upper figure shows the case when T* ≈ 0.5τs so the timescale separation assumption breaks down. In the lower figure the parameters are close to a Hopf bifurcation where a limit cycle is formed so the fixed point assumption breaks down, and the estimation of the limit cycle frequency component is less accurate. (C) The estimation of sm for T*^−1 ≈ 30 Hz is even better than in the HHS case. Similarly to (A–C) we plot the results of the HHMSIP model (HHSIP with many additional slow sodium inactivation kinetics) in (D–F), which has considerably more noise in the slow kinetics, and so even larger fluctuations (which further invalidates the fixed point assumption). See section 4.5 for various model details.

Figure 5. Comparing mathematical results (green) with full model simulation (blue) for various models. (A) Coupled HHS (HHS coupled slow and rapid kinetics) (B) HHMS (HHS with many additional slow sodium inactivation kinetics) (C) HHSTM (HHS with a synapse) (D) Multiplicative HHMS (variant of HHMS). As before, “Sim” is a simulation of the full model, “Approx” is the analytical approximation, and “Ident” is the PSD SY(f) of the linear system identified from the spiking data. See section 4.5 for various model details.

First, we tested whether or not the model can be extended to arbitrarily slow timescales. We added to the HHS model four types of slow sodium inactivation processes with increasingly slower kinetics and smaller channel numbers. In the first case, those processes were added additively (as different currents), so s was replaced with ∑i si in the voltage equation (Equation 21). This model was denoted “HHMS” (HH with Many Sodium slow inactivation processes, section 4.5.4). In the second case, those processes were added in a multiplicative manner (as different processes affecting the same channel, in the uncoupled approximation), so s was replaced with ∏i si in the voltage equation (Equation 21). We denote this model as “Multiplicative HHMS” (section 4.5.5). In both cases, our analytical approximations seemed to hold quite well. For example, the approximated SY (f) (Equation 13) corresponded rather well with the numerical simulation of the full model (Figures 5B,D, respectively).

Next, to test the limits of our assumptions we extended the HHS model to the HHSIP model (from Soudry and Meir, 2012b, see section 4.5.6) and added a potassium inactivation current which had faster kinetics (so τs^−1 ≈ 5 Hz). So if T*^−1 = 10 Hz, we get T* ≈ 0.5τs, so the timescale separation assumption 2 is not strictly valid here. Also, for certain parameter values we get a limit cycle in the dynamics of sm, so the fixed point assumption 3 fails. However, it seems that our approximations still follow the numerical simulation of the full model: for p* at various stimulation frequencies T*^−1 and currents I0 (Figure 4A), for SY(f) at T*^−1 = 10 Hz when assumption 2 breaks down (Figure 4B, top), for SY(f) at T*^−1 = 30 Hz when assumption 3 breaks down (near a Hopf bifurcation) and a limit cycle begins to form (see Figure 4B, bottom), and for state estimation of sm using a linear optimal filter, again at T*^−1 = 10 Hz (Figure 4C).

The only discrepancy seemed to appear in the limit cycle case, where the frequency of the limit cycle “sharpens” the peak in SY(f) (Figure 4B, bottom). This may suggest that, in this case, the perturbations of the system near the limit cycle could be linearized, and that the eigenvalues of that linearized system might be related to the eigenvalues of the linearized system around the (now unstable) fixed point s*. More generally, the results so far indicate that even if our assumptions are inaccurate, it is possible that the resulting error will not accumulate and will remain small in comparison with the intrinsic noise in the model.

Next, to challenge the approximation even more, we added to the HHSIP model four types of sodium currents with increasingly slower kinetics and fewer channels, similarly to the HHMS model (so this is the “HHMSIP” model, section 4.5.7). This significantly increased the variance of the dynamic noise nm, rendering the dynamics more “noisy.” These random fluctuations in sm (Figure 4E) are of similar magnitude to the width of the threshold (non-saturated) region in pAP (sm) (see Figure 6). This renders the fixed point assumption 3 inaccurate, since now the linear approximation breaks down most of the time. However, even in this case, the approximations seem to hold quite well with simulations of the full neuronal model (Figures 4D–F).

Figure 6. Fitting of pAP(s) = Φ((s − a)/b) in the HHS model. (A) Fitting of pAP (s) for various values of I0. (B) Fitting shows that a is linearly decreasing in I0. (C) Fitting of pAP (s) for various values of N. (D) Fitting shows that .

In Figure 5A we used a coupled version of the HHS model (“coupled HHS” model, section 4.5.2), in which the equations for r and s in the full model are tangled together, and not separated as we assumed in Equations (2, 3). Even in this case, our approximations seemed to hold well.

Finally, in Figure 5C, we extend the HHS model so that the stimulations are not given directly, but through a synapse. We used the biophysical Tsodyks–Markram model (Tsodyks and Markram, 1997) of a synapse with short-term depression, with added stochasticity (“HHSTM” model, section 4.5.3). This also seemed to work well.

In all simulations we also added the PSD of the linear model identified from the spike output (“Ident.”), to show that it can be estimated reasonably well. Note that the performance at the lowest frequencies seems to be significantly worse when they contain relatively high power. This is not surprising, since it is typically harder to estimate model parameters when the data has such a (1/f^α) PSD shape – which indicates long-term correlations (Beran, 1992).

In this work we found that under a temporally sparse (“spike-like”) stimulation regime (Figures 1A,B) we can perform accurate semi-analytical linearization of the spiking input–output relation of a CBM (Figure 1C), while retaining biophysical interpretability of the parameters (e.g., Figure 7). This linearization considerably reduces model complexity and parameter degeneracy, and enables the use of standard analysis and estimation tools. Importantly, this method is rather general, since it can be applied to any stochastic CBM, with only a few assumptions.

Figure 7. The averaged kinetic rates. Left: The averaged rates demonstrated for three common kinetic rates γ(V) with sigmoidal shapes. Right: The voltage threshold of the sigmoid determines whether the process is sensitive to APs (the output), stimulation pulse (the input), or neither. Note that a similar classification of biophysical processes affecting excitability was previously suggested in Wallach (2012, Figure 3.1).

To the best of our knowledge, such results are novel, as no previous work examined analytically the response of general stochastic CBMs to temporally sparse input for extended durations. However, the connection between sparse inputs and slow timescales has been previously made. It was previously suggested (Linaro et al., 2011) that sparse inputs could be used to identify neuronal parameters in a network of integrate and fire neurons with spike frequency adaptation. Interestingly, using different methods we reach a qualitatively similar conclusion here, though not in a network setting, and for a different class of neuron models.

Additionally, in Soudry and Meir (2012b) we modeled neurons under periodical stimulation using deterministic CBMs with slow kinetics, which are completely uncoupled from each other, and slower than the stimulation rate. Using a reduction scheme similar in nature to that described here, we were able to describe the deterministic CBM's excitability and response using a discrete-time map – which “samples” the neuronal state at each stimulation. Analyzing this map, we obtained analytical results describing the neuronal activation modes, spike latency dynamics, mean firing rate and short-time firing patterns. Stochastic CBMs were then examined numerically, and were shown to lead to qualitatively different responses, which are more similar to the experimentally observed responses.

The current work, therefore, generalizes this previous work. Here, we analyze the general case of stochastic CBMs, under general sparse stimulation patterns and with coupled slow kinetic dynamics. Therefore, the framework in the previous work (Soudry and Meir, 2012b) could be considered as a special case of this work, in which there is an infinite number of ion channels (N → ∞, so Br/s = Dr/s = 0), Tm = T* (so the input perturbation vanishes) and As (V) (the rate matrix) is a diagonal matrix. In the current work we similarly show that, in the generalized framework, the CBM's excitability and responses can be succinctly described using a discrete-time map. It is then straightforward to derive results paralleling those in Soudry and Meir (2012b) in this more general setting, such as the mean firing rate (Equation 10).

However, the main novelty lies in our additional results, that could not be derived in Soudry and Meir (2012b). Specifically, due to the presence of noise, we were able to linearize the map's dynamics, and derive an explicit input–output relation. Such a linearization became possible because we made the (unusual) choice that the “input” to the CBM consists of the time-intervals between stimulation pulses, while the “output” is a binary series indicating whether or not an AP happened immediately after a stimulation pulse. The linearized input–output relation can be expressed either in biophysically interpretable “state space” (Equations 11, 12 and Figure 1C), or as a sum of the filtered input and filtered noise (Equation 20). Note that the overall I/O includes the mean output (Equation 10) which is non-linear. However, the linear part of the response, allows the derivation of the power spectral densities (Equation 13), the construction of linear optimal estimators (e.g., Figure 3C) and blind identification of the (linearized) system parameters (“Ident.” in Figures 3–5).

Our results rely on three main assumptions. The temporal sparseness of the input τAP ≪ Tm ensures that the slow variables sm effectively represent the “neuronal state” alone (as V and r always relax to a steady state before the next stimulation is given). The additional assumption Tm ≪ τs allowed us to integrate the model dynamics and derive the reduced map (Equation 8) for the dynamics of sm, which is linear in Tm. The last assumption is that the dynamics of sm can be linearized around a stable fixed point s*. This fixed point is generated due to the noisiness of the rapid variables (Figure 2), and the assumption Tm ≪ τs ensures that the stochastic fluctuations around s* are small. We performed extensive numerical simulations (section 2.5) that indicate that our analytical results are accurate – sometimes even if our assumptions break down.

However, clearly there are cases, beyond our assumptions, in which our results cannot hold. For example, if sm has very large fluctuations, then the response of the neuron cannot be completely linear, since the AP probability is confined to [0, 1]. Such cases may require an extension of the formalism described here. There are many possible extensions which we did not pursue here. For example, one can extend the modeling framework (e.g., multi-compartment neurons), stimulation regime (e.g., heterogeneous pulse amplitudes), or the type of neurons modeled (e.g., bursting and spontaneously firing neurons). However, it seems that an important assumption, that cannot be easily removed, is that the input is temporally sparse (τAP ≪ Tm).

Is such a temporally sparse stimulation regime “physiologically relevant” for the soma of a neuron? Currently, such a question cannot be answered directly, since it is impossible to accurately measure all the current arriving at the soma from the dendrites under completely physiological conditions. However, there is some indirect evidence. Recent studies have shown that the distribution of synaptic efficacies in the cortex is log-normal (Song et al., 2005) – so a few synapses are very strong, while most are very weak. This indicates that the neuronal firing patterns might in fact be dominated by a small number of very strong synapses while the sum of the weak synapses sets the voltage baseline (Ikegaya et al., 2012). Such a possibility is supported by the fact that individual APs can trigger complex network events in humans (Molnár et al., 2008; Komlósi et al., 2012). Also, in rats, individual cortical cells can elicit whisker movements (Brecht et al., 2004) and even modify the global brain state (Li et al., 2009). Taken together, these observations suggest that the above-threshold stimulation reaching the soma may be temporally sparse in some cases.

There are other obvious cases where our results are immediately applicable. First, in an axonal compartment, the relevant input current is indeed sparse – an AP spike train arriving from a previous compartment. Second, a direct pulse-like stimulation is used in cochlear implants (Goldwyn et al., 2012, and references therein). Lastly, such stimulation is used as an experimental probe (De Col et al., 2008; Gal et al., 2010; Gal and Marom, 2013). Specifically, since we now have a precise expression for the power spectral density of the response, we are now ready to use these analytical results in Soudry and Meir (2014) to reproduce the experimentally observed 1/fα behavior of the neuron and explore its implications on its input–output relation.

In this section we provide the details of the results presented in the paper. Sections 4.1–4.5 here respectively correspond to Sections 2.1–2.5. The first four (theoretical) sections can be read independently of each other (except when we discuss the repeating “HHS model” example). The last section gives the details of the numerical simulations.

As we explained in section 2.1, a general model for a biophysical isopotential neuron is given by the following equations

with voltage V, stimulation current I(t), rapid variables r (e.g., m, n, h in the Hodgkin–Huxley (HH) model Hodgkin and Huxley, 1952), slow variables s (e.g., slow sodium inactivation Chandler and Meves, 1970), rate matrices Ar/s, white noise processes ξr/s (with zero mean and unit variance), and matrices Br/s which can be written explicitly using the rates and ion channel numbers (Orio and Soudry, 2012) (D = BB⊤ is the diffusion matrix Gardiner, 2004; Orio and Soudry, 2012). In this section we give the specific forms of Ar/s and Br/s, and their origin based on neuronal biophysics.

Such a model is commonly called a stochastic Conductance Based Model (CBM). In a non-stochastic CBM, the dynamics of the membrane voltage V (Equation 36) are deterministically determined by some general function of V, the stimulation current I(t), and some internal state variables r and s. In contrast, the dynamical equations for r and s here adhere to a specific Stochastic Differential Equation (SDE) form, since these variables describe the “population state” of all the ion channels in the neuron. We now explain the biophysical interpretation of those equations.

At the microscopic level, each ion channel has several states, and it switches between those states with voltage dependent rates (Hille, 2001). This is usually modeled using a Markov model framework (Colquhoun and Hawkes, 1981). Formally, suppose we index by c the different types of channels, c = 1, …, C. For each channel type c there exist N(c) channels, where each channel of type c possesses K(c) internal states. In the Markov framework, for each ion channel that resides in state i, the probability that the channel will be in state j after an infinitesimal time dt is given by

where A(c) (V) is called the “rate matrix” for that channel type.

To facilitate mathematical analysis and efficient numerical simulation, we preferred to model stochastic CBMs using a compressed, SDE form. This method was initially suggested by Fox and Lu (1994), but their method suffered from several problems (Goldwyn et al., 2011). In a recent paper (Orio and Soudry, 2012) a more general method was derived, which had none of the previous problems, and was shown numerically to produce a very accurate approximation of the original Markov process description.

According to Orio and Soudry (2012), if we define x(c)k to be the fraction of c-type channels in state k, and x(c) to be a column vector composed of x(c)k, then

where ξ(c) is a white noise vector process – meaning it has zero mean and auto-covariance

where I is the identity matrix, δ(t) is the Dirac delta function, and δc, c′ = 1 if c = c′ and 0 otherwise. Furthermore, B(c) is defined so that in Equation (28) each component of ξ(c), which is associated with a transition pair i ⇋ j, is multiplied by , and appears in the equation for and with opposite signs. Note that B(c) is not necessarily square since it has K(c) rows but the number of columns is equal to the number of transition pairs.
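As a minimal sketch of this SDE form, consider a hypothetical two-state channel (closed ⇋ open) held at a fixed voltage, so that the opening rate `alpha` and closing rate `beta` are constants (both are illustrative values, not taken from the paper). Following the transition-pair construction described above, the single noise term is scaled by the square root of the summed transition fluxes divided by the channel number; this scaling is our reading of Orio and Soudry (2012) and should be checked against that paper. The integration uses a first order Euler–Maruyama step.

```python
import numpy as np

def simulate_two_state(alpha, beta, n_channels, dt, steps, seed=0):
    """Euler-Maruyama integration of the open fraction x of a two-state channel.

    Assumed form (not copied from the paper):
        dx = (alpha*(1-x) - beta*x) dt + sqrt((alpha*(1-x) + beta*x) / N) dW
    """
    rng = np.random.default_rng(seed)
    x = alpha / (alpha + beta)          # start at the deterministic steady state
    xs = np.empty(steps)
    for k in range(steps):
        drift = alpha * (1.0 - x) - beta * x
        flux = alpha * (1.0 - x) + beta * x   # summed flux of the single transition pair
        noise = np.sqrt(max(flux, 0.0) * dt / n_channels) * rng.standard_normal()
        x = min(max(x + drift * dt + noise, 0.0), 1.0)   # keep the fraction in [0, 1]
        xs[k] = x
    return xs

# Hypothetical parameters: rates in 1/ms, time step in ms.
alpha, beta, n_channels = 2.0, 1.0, 1000
xs = simulate_two_state(alpha, beta, n_channels, dt=0.005, steps=200_000)

p_open = alpha / (alpha + beta)
print("mean open fraction:", xs.mean(), "expected:", p_open)
print("variance:", xs.var(), "binomial value:", p_open * (1 - p_open) / n_channels)
```

In this toy case the stationary mean and variance of the diffusion approximation should be close to the binomial values of the underlying Markov description, which is a quick sanity check for any implementation of the noise matrix.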

We now need to combine Equation (28) for all c to obtain Equations (1–3). For simplicity, assume now that all ion channel types can be classified as either “rapid” or “slow” (this assumption can be relaxed). In this case we can concatenate all vectors related to rapid channels, r ≜ (x⊤(1), …, x⊤(R))⊤, and to slow channels, s ≜ (x⊤(R+1), …, x⊤(R+S))⊤, where R + S = C. We similarly define ξr and ξs together with the block matrices

and similarly for Br and Bs. Note that Ar is square with size rows while As is square with size rows.

In some cases, it is more convenient to re-write Equations (1–3) in a compressed form (this is always possible)

where r, s, and ξr/s have been redefined (their dimension has decreased), as we will show next. First, we comment that the main disadvantage of these equations is that they are less compact and the notation is somewhat more cumbersome. However, there are also several advantages to this approach: (1) The vectors and matrices are smaller, (2) The rate and diffusion matrices do not have “troublesome” zero eigenvalues and can be diagonal (which is analytically convenient), (3) Most CBMs are written using this form (e.g., the HH model), so it is easier to apply our results using this formalism.

To derive these compressed equations, we use the fact that the x(c)k denote fractions, so ∑kx(c)k = 1, for all c. We can use this constraint, together with the irreducibility of the underlying ion channel process, to reduce by one the dimensionality of Equation (28) (see Soudry and Meir, 2012a for further details). Defining I to be the identity matrix, J to be I with its last row removed, e ≜ (0, 0, …, 1)⊤, u ≜ (1, 1, …, 1)⊤, G ≜ (I − eu⊤) J⊤, , (with x(c)K(c) replaced by 1 − x1 − x2 … −xK(c)−1) and b(c) ≜ −JA(c) e ( is invertible), we obtain the following equation for the reduced state vector y(c) = Jx(c) (which has only K(c) − 1 states)

Again assuming that all channels can be classified as either “rapid” or “slow,” we concatenate all vectors related to rapid channels r ≜ (y⊤(1), …, y⊤(R))⊤ and to slow channels s ≜ (y⊤(R+1), …, y⊤(R+S))⊤, where R + S = C. We obtain Equations (30, 31) by similarly defining br, bs, ξr and ξs together with the block matrices

and similarly for and . Note that is square with rows while is square with rows. Furthermore, it can be shown (Soudry and Meir, 2012a) that is a strictly stable matrix (all its eigenvalues are also eigenvalues of A(c) except its zero eigenvalue, and so have a strictly negative real part), and is positive definite (so all its eigenvalues are real and strictly positive). Therefore, also and are both strictly stable and and are positive definite. Therefore, if V is held constant, 〈s〉 and 〈r〉 tend to and , respectively.

The HHS model can be easily written using the compressed formulation. For example, comparing Equation (23) with Equation (31) we find that

Note that all the parameters are scalar in the HHS model, and so are not boldfaced, as in the general case.

In this section we give additional technical details on section 2.2. Specifically, we show how, given sparse spike stimulation and a few assumptions, it is possible to derive a simple reduced dynamical system (Equation 8) from the full equations of a general biophysical model for an isopotential neuron (Equations 1–3),

For more details on how its parameters and variables map to microscopic biophysical quantities, see section 4.1.

As explained in section 2.1, we focus on models for excitable neurons describable by equations of the general form of Equations (36–38), rather than on arbitrary dynamical systems. This imposes some constraints on the parameters (Soudry and Meir, 2012b). Formally, recall that τAP and τs are the respective kinetic timescales of {V, r} and s, and that τAP < τs. Suppose we “freeze” the dynamics of s (so that effectively τs = ∞) and allow only V and r to evolve in time. We say the original model describes an excitable neuron, if the following conditions hold in this “semi-frozen” model:

Note that due to condition 1, such an excitable neuron is not oscillatory and does not spontaneously fire APs.

Formally, suppose an excitable neuron receives a train of identical stimuli, so

where ⊓(x) is a pulse, of width tw (so ⊓(x) = 0 for x outside [0, tw]). We denote by {Ym}∞m=−∞ the occurrence events of AP responses at times {tm}∞m=−∞, i.e., immediately after each stimulation time tm (Figure 1A),

Defining Tm ≜ tm + 1 − tm, the inter-stimulus interval, and τAP as the upper timescale of an AP event (Figure 1B) we make the following assumption.

Assumption 1. (a) The stimulation pulse width is small, tw < τAP. (b) The spike times {tm}∞m = 0 are temporally sparse, i.e., τAP ≪ Tm for every m (“no collisions”).
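The stimulation protocol and Assumption 1 can be sketched as follows. This is only an illustration: the function names are hypothetical, the pulse is taken to be square, and the numerical factor used to stand in for "≪" is an arbitrary choice, not something the paper specifies.

```python
import numpy as np

def pulse_train(t, stim_times, i0, tw):
    """I(t) = I0 * sum_m pulse(t - t_m), with a square pulse supported on [0, tw]."""
    t = np.asarray(t, dtype=float)[:, None]
    starts = np.asarray(stim_times, dtype=float)[None, :]
    inside = (t >= starts) & (t <= starts + tw)
    return i0 * inside.any(axis=1)

def satisfies_assumption_1(stim_times, tw, tau_ap, factor=3.0):
    """Check (a) tw < tau_AP and (b) tau_AP << T_m for every interval.

    `factor` is a hypothetical stand-in for the "much smaller than" relation.
    """
    intervals = np.diff(stim_times)          # T_m = t_{m+1} - t_m
    return bool(tw < tau_ap and np.all(intervals >= factor * tau_ap))

# Values quoted in the text: periodic stimulation with T* = 50 ms,
# pulse width 0.5 ms, amplitude 7.9 (uA), and tau_AP = 15 ms.
stim_times = np.arange(0.0, 500.0, 50.0)     # ms
t = np.arange(0.0, 500.0, 0.1)               # ms
current = pulse_train(t, stim_times, i0=7.9, tw=0.5)

print(satisfies_assumption_1(stim_times, tw=0.5, tau_ap=15.0))
print("fraction of time the stimulus is on:", np.mean(current > 0))
```

With these values the stimulus is on for only about 1% of the time, which is the sense in which the input is temporally sparse.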

Our main objective here is to mathematically characterize the relation between {Ym} and {Tm} under the most general conditions.

We define the sampled quantities Vm ≜ V(tm), rm ≜ r(tm), sm ≜ s(tm), xm ≜ (Vm, r⊤m, s⊤m)⊤ and the history set ℋm of all quantities up to the m-th stimulation (note that ℋm ⊂ ℋm + 1). The Stochastic Differential Equation (SDE) description in Equations (36–38) implies that xm is a “state vector” with the Markov property, namely it is a sufficient statistic on the history to determine the probability of generating an AP at each stimulation,

and, together with Ym and Tm, its own dynamics

which implies the following causality relations

This causality structure is reminiscent of the well known Hidden Markov Model (Rabiner, 1989), except that in the present case the output Ym affects the transition probability, and we have input Tm. Theoretically, if we knew the distributions in Equations (40) and (41), as well as the initial condition P (x0), we could integrate and find an exact probabilistic I/O relation P({Yk}mk=0|{Tk}mk=0). However, since it may be hard to find an expression for P(xm + 1|xm, Tm, Ym) in general, we make a simplifying assumption.

Assumption 2. Tm ≪ τs for every m.

This assumption, together with Assumption 1 and the excitable nature of the CBM, renders the dynamics between stimulations relatively easy to understand. Specifically, between two consecutive stimulations, the fast variables (V(t), r(t)) follow stereotypically either the “AP response” (Ym = 1) or the “no-AP response” (Ym = 0), then equilibrate rapidly (within time τAP) to some quasi-stationary distribution q(V, r|sm). Meanwhile, the slow variable s (t), starting from its initial condition at the time of the previous stimulation, changes slowly according to Equation (38), affected by the voltage trace of V(t) (through As (V)).

Summarizing this mathematically, we obtain the following approximations

Using these equations together with Equations (40) and (41), we obtain

Therefore, the “excitability” vector sm can now replace the full state vector xm = (Vm, r⊤m, s⊤m)⊤ as the sufficient statistic that retains all the relevant information about the history of previous stimuli. Given the input {Tm}∞m=−∞, Equations (45) and (46) together completely specify a Markov process with the causality structure

Since the function pAP (s) is not affected by the kinetics of s, it can be found by numerical simulation of a single AP using only Equations (36, 37), when s is held constant (see section 4.2.4). Now, instead of finding P(sm + 1|sm, Ym, Tm) directly, we calculate the increments Δsm ≜ sm + 1 − sm by integration of the SDE in Equation (38) between tm and tm + 1. First, we integrate the “predictable” part of the increment

to first order, where 〈X|Y〉 denotes the conditional expectation of X given Y. Note that As ~ O(τs−1), so second order corrections are of order O((Tmτs−1)2). Due to assumption 2, we have Tmτs−1 ≪ 1, so these corrections are negligible. Now,

where we defined

which are the average rates during rest, during an AP response and during a no-AP response, respectively. Note that a similar notation was also used in Soudry and Meir (2012b) (e.g., Equations 2.15, 2.16 there), where the +/ − /0 were replaced with H/M/L.

Next, we calculate the remaining part of the increment, which is the “innovation,”

Obviously, 〈nm|sm, Tm, Ym〉 = 0, and also

to first order. Note that Ds ~ O(τs−1/N), where N = mincN(c)(N(c) is the channel number of the c-type channel, as we defined in section 4.1), while Equation (53) has corrections of size O((Tmτs−1/N)2). Since N ≥ 1 (usually N ≫ 1), and due to assumption 2, we have Tmτs−1/N ≪ 1, so these corrections are also negligible. Now,

where we defined

Additionally, we note that A±/0 (sm) generally tend to be rather insensitive to changes in sm. This is because the kinetic transition rates (which are used to construct As (V), as explained in section 4.1) tend to demonstrate this insensitivity when similarly averaged (see Figures 4B, 5 in Soudry and Meir, 2012b). The usual reasons behind this are (see appendix section B1 of Soudry and Meir, 2012b): (1) The common sigmoidal shape of the voltage dependency of the kinetic rates reduces their sensitivity to changes in the amplitude of the AP or the resting potential; (2) The shape of the AP is relatively insensitive to s; (3) The resting voltage is relatively insensitive to s. Therefore, in most cases we can approximate A±/0 (sm) to be constant for simplicity (though this is not critical to our subsequent results), as we shall henceforth do.

Additionally, we note that, strictly speaking, the voltage traces during an AP and at rest are stochastic, and therefore, A+, A−, A0, D+, D− and D0 are stochastic. However, there are two factors that render this stochasticity negligible. First, the sigmoidal shape of the kinetic rates implies that A(V) is rather insensitive to fluctuations in the voltage (Figure 4 in Soudry and Meir, 2012b). Second, noise mainly plays a role in the timing of AP initiation, but does not much affect the AP shape above threshold (see AP voltage traces in Schneidman et al., 1998, p. 1687). Therefore, we shall approximate A+, A−, A0, D+, D− and D0 to be deterministic.

In summary, defining

and

we can write

with 〈nm|sm, Tm, Ym〉 = 0 and

These equations correspond to the result presented in Equation (8).

Finally, we note that the distribution of nm given sm, Tm, Ym can be generally computed using the approach described in Orio and Soudry (2012). For example, it can be well approximated to have a normal distribution if channel numbers are sufficiently high and channel kinetics are not too slow (Orio and Soudry, 2012). In that case only knowledge of the variance (Equation 60) is sufficient to generate nm. And so, using Equations (45), (59) and the full distribution of nm, we can now simulate the neuronal response using a reduced model, more efficiently and concisely (with fewer parameters) than the full model (Equations 36, 38), since every time step is a stimulation event. The simulation time should shorten approximately by a factor of 〈Tm〉/dt, where dt is the full model simulation step. Note that the reduced model parameters, having been deduced from the full model itself, still retain a biophysical interpretation.
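The reduced simulation described above can be sketched for a scalar model of the HHS type. Several ingredients here are assumptions rather than quotations from the paper: the update rule is our reading of the reduced map (drift and noise variance both linear in Tm, with the inactivation rate averaged over the AP or no-AP window of length τAP and the remaining rest period), the innovation nm is taken to be Gaussian, and pAP (s) is given a probit shape with a hypothetical threshold `THETA`, width `SLOPE_SD` and channel number `N_CHANNELS`. The averaged rates are picked from within the ranges quoted in section 4.2.6.

```python
import math
import numpy as np

# Averaged rates in Hz, chosen from within the ranges quoted in the text.
GAMMA_PLUS, GAMMA_MINUS, GAMMA_ZERO = 22.5e-3, 1.1e-6, 0.285e-6
DELTA = 25.5e-3
TAU_AP = 0.015                  # s
# Hypothetical values, used only for illustration:
THETA, SLOPE_SD = 0.85, 0.02    # threshold and width of the assumed probit p_AP(s)
N_CHANNELS = 10_000

def p_ap(s):
    """Assumed probit form of the AP probability."""
    return 0.5 * (1.0 + math.erf((s - THETA) / (SLOPE_SD * math.sqrt(2.0))))

def gamma_avg(y, t_m):
    """Inactivation rate averaged over one inter-stimulus interval."""
    during = y * GAMMA_PLUS + (1 - y) * GAMMA_MINUS
    return (TAU_AP * during + (t_m - TAU_AP) * GAMMA_ZERO) / t_m

def simulate_reduced_map(intervals, s0=1.0, seed=0):
    """One time step per stimulation: draw Y_m, then update s_m."""
    rng = np.random.default_rng(seed)
    s = s0
    ys = np.empty(len(intervals), dtype=int)
    ss = np.empty(len(intervals))
    for m, t_m in enumerate(intervals):
        ss[m] = s
        y = int(rng.random() < p_ap(s))
        ys[m] = y
        g = gamma_avg(y, t_m)
        drift = t_m * (DELTA * (1.0 - s) - g * s)
        var = t_m * (DELTA * (1.0 - s) + g * s) / N_CHANNELS
        step = drift + math.sqrt(max(var, 0.0)) * rng.standard_normal()
        s = min(max(s + step, 0.0), 1.0)
    return ys, ss

intervals = np.full(100_000, 0.05)      # periodic stimulation, T* = 50 ms
ys, ss = simulate_reduced_map(intervals)
print("firing probability:", ys[5000:].mean())
print("mean excitability:", ss[5000:].mean())
```

Each loop iteration here advances the model by a full inter-stimulus interval, which is where the speed-up of order 〈Tm〉/dt over the full model comes from.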

We numerically calculated pAP (s) by disabling all the slow kinetics in the model – i.e., we only used Equations (1, 2) in main text, while ṡ = 0. Then, for every value of s we simulated this “semi-frozen” model numerically by first allowing r to relax to a steady state and then giving a stimulation pulse with amplitude I0. We repeated this procedure 200 times for each s, and calculated pAP (s) as the fraction of simulations that produced an AP. A few comments are in order: (1) In some cases (e.g., the HHMS model) we can use a shortcut and calculate pAP (s) based on previous results. For example, suppose we know the probability function AP (s) for some model with a scalar s and we make the substitution s = h (s) where the components of s represent independent and uncoupled channel types (Orio and Soudry, 2012) – then pAP (s) = AP (h (s)) in the new model. (2) The timescale separation assumption τAP ≪ Tm ≪ τs implies that all the properties of the generated AP (amplitude, latency etc.) maintain similar causality relations with sm as does Ym, so we can find their distribution using the same simulation we used to find pAP (s), similarly to the approach taken to compute L (s) in the deterministic setting (Soudry and Meir, 2012b). (3) Numerical results (Figure 6) suggest that we can generally write

where Φ is the cumulative distribution function of the normal distribution, E (s) is some “excitability function” (as defined in Soudry and Meir (2012b), so pAP (s) = 0.5 on the threshold Θ = {s|E (s) = 0}), and N−1/2r, the “noisiness” of the rapid sub-system, directly affects the slope of pAP (s) (Figure 6D, bottom). Also, as explained in Soudry and Meir (2012b), E (s) is usually monotonic in each component separately and increasing in I0 (Figure 6C, top) – which could be considered as just another component of s which has zero rates.
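The Monte Carlo procedure for pAP (s) can be sketched as below. The function `trial_fires` is a hypothetical stand-in for one run of the semi-frozen model (a real implementation would integrate the V and r equations and test for a threshold crossing); here it simply adds Gaussian noise to a linear excitability, so that the estimate can be compared with a probit curve of the kind suggested by Figure 6. The threshold and noise width are illustrative values.

```python
import math
import numpy as np

THETA, NOISE_SD = 0.85, 0.02     # hypothetical threshold and noise width

def trial_fires(s, rng):
    """Stand-in for one semi-frozen simulation: True if an AP was produced."""
    return (s - THETA) + NOISE_SD * rng.standard_normal() > 0.0

def estimate_p_ap(s_values, n_trials=200, seed=0):
    """Fraction of trials producing an AP, for each frozen value of s."""
    rng = np.random.default_rng(seed)
    return np.array([
        np.mean([trial_fires(s, rng) for _ in range(n_trials)])
        for s in s_values
    ])

def probit(s):
    """Normal CDF evaluated at the scaled excitability."""
    return 0.5 * (1.0 + math.erf((s - THETA) / (NOISE_SD * math.sqrt(2.0))))

s_values = np.linspace(0.75, 0.95, 9)
p_hat = estimate_p_ap(s_values)
for s, p in zip(s_values, p_hat):
    print(f"s = {s:.3f}  estimated = {p:.3f}  probit = {probit(s):.3f}")
```

With 200 trials per point the standard error of each estimate is at most about 0.035, which gives a sense of the resolution of the original procedure near the threshold.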

We can perform a very similar model reduction and linearization using the compressed formalism presented in section 4.1.1. We just need to define (or re-define) A±,0, b±,0, D±,0 (sm), A (Ym, Tm), b (Ym, Tm) and D (Ym, Tm, sm) in the obvious way and repeat very similar derivations, arriving at

instead of Equation (59) (or Equation 8). Next, we demonstrate this for the HHS model.

We derive the parameters of the HHS reduced map. Recall that the HHS model is based on the compressed formulation. Following the reduction technique described in the previous sections, we numerically find the average rates γ±,0 and δ±,0 (as in Equations (2.15, 2.16) of Soudry and Meir (2012b), where H/M/L were used instead of the +/ − /0 used here), τAP and pAP (s) (section 4.2.4).

From Equations (32, 35), we find,

and so A (Ym, Tm) and D (Ym, Tm, sm) are defined as in Equations (57) and (58), and similarly

We give for example some specific values: if τAP = 15 ms, then in the range I0 = 7.5−8.3 μA, we have δ±,0 = 25.5−25.6 mHz, γ+ = 22.9−22.1 mHz, γ− = 0.9−1.3μ Hz and γ0 = 0.29−0.28μ Hz.

Recall that these averaged kinetic rates are determined by the shape of the voltage dependent rates (γ (V) and δ (V), see Equation 125) (Soudry and Meir, 2012b). The relative values of the averaged kinetic rates determine what kind of information can be stored in s (which retains the “memory” of the neuron between stimulation). We qualitatively demonstrate this in Figure 7 depicting the values of γ±,0 for three different shapes of γ (V): when γ (V) is sigmoidal with high threshold, when it is sigmoidal with low threshold and when it is constant. These determine whether γ (V) is affected by the output (APs), the input (stimulation pulses) or neither. Therefore: (1) if γ (V) and δ (V) are independent of the voltage, then s cannot store any information on input or output. (2) if γ (V) or δ (V) have low voltage threshold, then s can directly store information on the input. (3) if γ (V) or δ (V) have high voltage threshold, then s can directly store information about the output. In the HHS model the inactivation rate γ has high threshold, while δ is voltage independent – therefore, s directly stores information on the output.

In this section we present a more detailed account on how to arrive from the reduced model (mainly, Equation 8) to its linearized version (the results in Equations 11, 12).

First, we write the complete reduced model, using Equations (59), (60), and (45). The reduced model is a non-linear stochastic dynamic “state-space” system with Tm, the inter-stimulus interval lengths, serving as inputs, sm representing the neuronal state, and Ym the output. We have

where 〈nmn⊤m|sm, Tm, Ym〉 = TmD (Ym, Tm, sm),

and

and we defined

Based on the causality structure in Equation (42), it is straightforward to prove that em and nm are uncorrelated white noise processes – i.e., 〈em〉 = 0, 〈nm〉 = 〈ennm〉 = 0 and 〈nmn⊤n〉 = 〈nmn⊤m〉 δmn, 〈emen〉 = 〈e2m〉 δmn where δnm = 1 if n = m and 0 otherwise.

We now examine the case where {Tm} is a Wide Sense Stationary (WSS) process (i.e., the first and second order statistics of the process are invariant to time shifts), with mean T*, so that the assumptions τAP ≪ Tm ≪ τs are fulfilled with high probability. In this case the processes {sm} and {Ym} are also WSS, with constant means 〈sm〉 = s* and 〈Ym〉 = p*. Also, it is straightforward to verify that , and .

In order to linearize the system in Equations (59–66) we denote , , , . In order for this linearization to be accurate we require that is “small enough.”

Assumption 3. With high probability (component-wise) and.

This assumption essentially means that s* = s*(p*, T*) is a stable fixed point of the system (Equations 59–66), and stochastic fluctuations around it are small, compared to the size of the region {s|pAP (sm) ≠ 0, 1} (usually determined by the noise level of the rapid system {V, r}, see section 4.2.4). Note that the region is usually rather narrow (Figure 6) and therefore is often implied by this description. Given Assumption 3, we can approximate to first order

which allows us to linearize Equation (66). This essentially means that the components of determine the neuronal response linearly, with the components of w serving as the effective weights (related to the relevant conductances in the original full neuron model).

Next, we wish to linearize Equation (59). Using our assumptions, we obtain to first order

Taking expectations and using Equations (66) and (68), we obtain

to zeroth order. Denoting the solution of this equation by s*(p*, T*), we can find p* implicitly from

We write the explicit solution of this equation as p*(T*). Next, using , Equation (70) and defining

we can approximate Equation (69) as

which, together with

yields a simple linear state space representation with as the input, as the state, as the output and two uncorrelated white noise sources with variances

to first order.
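The fixed point and the linearized parameters can be computed numerically. The sketch below does this for a scalar model of the HHS type, under assumptions that are ours rather than the paper's: the steady state is taken as s* = δ/(γ* + δ) with γ* the inactivation rate averaged over AP, no-AP and rest periods, pAP (s) is given a hypothetical probit shape, and the linearized coefficients are written in the scalar form F = 1 − T*(γ* + δ), d = δ − (γ0 + δ)s*, a = −τAP (γ+ − γ−)s* and w = dpAP/ds at s*. The self-consistency condition p* = pAP (s*(p*, T*)) is solved by bisection.

```python
import math

# Averaged rates in Hz, chosen from within the ranges quoted in the text.
GAMMA_PLUS, GAMMA_MINUS, GAMMA_ZERO = 22.5e-3, 1.1e-6, 0.285e-6
DELTA = 25.5e-3
TAU_AP = 0.015                  # s
THETA, SLOPE_SD = 0.85, 0.02    # hypothetical probit parameters for p_AP(s)

def p_ap(s):
    return 0.5 * (1.0 + math.erf((s - THETA) / (SLOPE_SD * math.sqrt(2.0))))

def gamma_star(p, t_star):
    """Mean inactivation rate for firing probability p and mean interval t_star."""
    during = p * GAMMA_PLUS + (1.0 - p) * GAMMA_MINUS
    return (TAU_AP * during + (t_star - TAU_AP) * GAMMA_ZERO) / t_star

def s_star_of(p, t_star):
    return DELTA / (gamma_star(p, t_star) + DELTA)

def solve_fixed_point(t_star, tol=1e-12):
    """Bisection on p_AP(s*(p)) - p, which decreases monotonically in p."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if p_ap(s_star_of(mid, t_star)) - mid > 0.0:
            lo = mid
        else:
            hi = mid
    p = 0.5 * (lo + hi)
    return p, s_star_of(p, t_star)

def linearize(p, s, t_star):
    """Scalar coefficients of the linearized map around (p*, s*)."""
    z = (s - THETA) / SLOPE_SD
    w = math.exp(-0.5 * z * z) / (SLOPE_SD * math.sqrt(2.0 * math.pi))
    F = 1.0 - t_star * (gamma_star(p, t_star) + DELTA)
    d = DELTA - (GAMMA_ZERO + DELTA) * s
    a = -TAU_AP * (GAMMA_PLUS - GAMMA_MINUS) * s
    return F, d, a, w

T_STAR = 0.05
p_fix, s_fix = solve_fixed_point(T_STAR)
F, d, a, w = linearize(p_fix, s_fix, T_STAR)
print("p* =", p_fix, " s* =", s_fix)
print("F =", F, " d =", d, " a =", a, " w =", w)
print("closed-loop pole F + a*w =", F + a * w)
```

The closed-loop pole F + aw lying inside the unit circle is the numerical counterpart of the requirement that s* be a stable fixed point.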

From Equation (61), we note that generally we can write

where in many cases the excitability function E (s) has the form E (s) = μ⊤ s − θ, where the components of μ are proportional to the relevant conductances (Soudry and Meir, 2012b). Therefore, if

then E (s) → ±∞, so in this case (assuming E (s*) is not a particularly “pathological” function) we have

In the compressed formulation (introduced in sections 4.1.1 and 4.2.5), we can perform similar linearization by re-defining F ≜ I + T*A (p*, T*), d ≜ A0s* − b0, a ≜ τAP ((A+ − A−)s* − (b+ − b−)), and repeat very similar derivations, where now we can write more explicitly

instead of Equation (70).

Note again that all the parameters are scalar now, and so are not boldfaced, as in the general case. From Equations (71) and (81) we obtain s* and p* for a given T*. Once s* is known, from Equation (79) w can be obtained. Next, we denote the average inactivation rate at steady state by

and similarly for the recovery rate δ*. And so, s* = δ*/(γ* + δ*), and

Denoting γ1 ≜ γ+ − γ− and similarly for δ1, we obtain

Finally, from Equations (77, 78) we find

In section 2.4 we describe the neuronal dynamics using a linear system for the fluctuations, as depicted in Figure 1. This linear description allows us to use standard engineering tools to analyze the system. In this section we provide an easy to follow description on how this was done, for those unfamiliar with these topics.

We start with a short reminder on some known results for stochastic processes (Papoulis and Pillai, 1965; Gardiner, 2004); these results are standard but are provided for completeness. These results will be used in later sections.

Assume {xm} and {ym} are two real-valued vector stochastic processes that are jointly wide-sense stationary (i.e., a simultaneous time shift of both processes will not change their first and second order statistics). We define the cross-covariance (recall that )

and the Cross-Power Spectral Density (CPSD), given by its Fourier transform

Additionally, the auto-covariance is defined as Rx ≜ Rxx and the corresponding Power Spectral Density (PSD) as Sx ≜ Sxx. Also, note that Ryx(k) = Rxy⊤(−k) and so Syx(ω) = Sxy⊤(−ω).

Suppose now that {ym} is generated from a process {xm} using a linear system: i.e., if the Fourier transform x(ω) ≜ ∑∞k = −∞ xke−iωk exists, then in the frequency domain

where H(ω) is a matrix-valued “transfer” function. Therefore, under some regularity conditions (allowing us to switch the order of integration and expectation),

And similarly

where in the second equality here we used an almost identical derivation as for Sxy(ω).
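The relation between the PSD of the input and output of a linear system can be checked numerically. The sketch below uses a scalar example of our own choosing (a first order autoregressive filter with coefficient `c` driven by unit-variance white noise), for which the transfer function is H(ω) = 1/(1 − c e^{−iω}) and the output PSD is |H(ω)|². The PSD is estimated with a plain averaged periodogram, normalized to match the definition of the PSD as the Fourier transform of the auto-covariance.

```python
import numpy as np

def averaged_periodogram(y, seg_len):
    """Estimate S_y(omega) = sum_k R_y(k) exp(-i*omega*k) by averaging segments."""
    n_seg = len(y) // seg_len
    segs = y[: n_seg * seg_len].reshape(n_seg, seg_len)
    segs = segs - segs.mean(axis=1, keepdims=True)
    spec = np.abs(np.fft.rfft(segs, axis=1)) ** 2 / seg_len
    omega = 2.0 * np.pi * np.fft.rfftfreq(seg_len)
    return omega, spec.mean(axis=0)

rng = np.random.default_rng(0)
c = 0.8                                   # hypothetical filter coefficient
n = 2 ** 17
x = rng.standard_normal(n)                # white input, S_x(omega) = 1
y = np.empty(n)
prev = 0.0
for m in range(n):                        # y_m = c * y_{m-1} + x_m
    prev = c * prev + x[m]
    y[m] = prev

omega, s_est = averaged_periodogram(y, seg_len=256)
H = 1.0 / (1.0 - c * np.exp(-1j * omega))
s_theory = np.abs(H) ** 2                 # S_y = H S_x H^dagger with S_x = 1

ratio = s_est[1:] / s_theory[1:]          # skip omega = 0 (segment mean removed)
print("mean estimate/theory ratio:", ratio.mean())
```

The same check extends to the matrix-valued case by replacing |H|² with H Sx H† evaluated at each frequency.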

Note that if instead

where x and z are two uncorrelated signals, then we can write

where

Thus Equations (90) and (91) respectively give

Previously, we derived Equations (11, 12), which describe the neuronal dynamics using a linear system, written in “state-space” form

where nm, em and are uncorrelated, zero mean processes with the PSDs Σn ≜ T*D (p*, T*, s*), σ2e = p* (1 − p*) and ST(ω), respectively.

In order to apply Equations (92) and (93) to our system we first need to find the transfer function of the system. Applying the Fourier transform to Equations (94, 95) gives

Re-arranging terms, we obtain

where we denoted

This gives the “closed loop” transfer functions of the system (including the effect of the feedback ). Next, combining Equations (98, 99) and Equations (92, 93) leads to explicit expressions for the PSDs and CPSDs.
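The closed-loop PSD of the output can be evaluated and checked against a direct simulation. The sketch assumes the linear system has the form ŝm+1 = Fŝm + aŶm + nm, Ŷm = w⊤ŝm + em under periodic stimulation (no input fluctuations), which is our reading of the state-space equations and is consistent with the closed-loop transfer function Hc(ω) = (e^{iω}I − F − aw⊤)^{−1} implied by the Sherman–Morrison remark in section 4.4.3. All numerical parameter values are hypothetical.

```python
import numpy as np

def closed_loop_psd_y(omega, F, w, a, sigma_n, sigma_e2):
    """S_Y(omega) = w^T Hc Sigma_n Hc^dagger w + |1 + w^T Hc a|^2 sigma_e^2."""
    dim = F.shape[0]
    psd = np.empty(len(omega))
    for i, om in enumerate(omega):
        hc = np.linalg.inv(np.exp(1j * om) * np.eye(dim) - F - np.outer(a, w))
        g = w @ hc                                   # row vector w^T Hc(omega)
        from_n = np.real(g @ sigma_n @ g.conj())     # contribution of n_m
        from_e = abs(1.0 + g @ a) ** 2 * sigma_e2    # contribution of e_m
        psd[i] = from_n + from_e
    return psd

def simulate_output(F, w, a, sigma_n, sigma_e2, steps, seed=0):
    """Direct simulation of the assumed linear state-space system."""
    rng = np.random.default_rng(seed)
    chol = np.linalg.cholesky(sigma_n)
    s = np.zeros(F.shape[0])
    y = np.empty(steps)
    for m in range(steps):
        y[m] = w @ s + np.sqrt(sigma_e2) * rng.standard_normal()
        s = F @ s + a * y[m] + chol @ rng.standard_normal(F.shape[0])
    return y

# Hypothetical scalar example (one slow variable).
F = np.array([[0.95]])
w = np.array([0.5])
a = np.array([-0.2])
sigma_n = np.array([[0.01]])
sigma_e2 = 0.2

omega = 2.0 * np.pi * np.arange(4096) / 4096
psd = closed_loop_psd_y(omega, F, w, a, sigma_n, sigma_e2)
y = simulate_output(F, w, a, sigma_n, sigma_e2, steps=100_000)

# The variance equals the PSD averaged over one period of omega.
print("variance from PSD:", psd.mean(), " from simulation:", y.var())
```

With a negative feedback coefficient `a`, the e-driven term |1 + w⊤Hc a|² is suppressed at low frequencies, which reproduces the high-pass shape of SY(f) discussed for periodic stimulation.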

For low frequencies it is sometimes more convenient to use the “continuous-time” versions of the PSDs, Sxy(f) ≜ T*Sxy(ω)|ω = 2πfT* for f ≪ T*−1, which are approximated by

where

and we used the fact that F = I + T*A (p*, T*) (Equation 72) and Σn = T*D (p*, T*, s*) (Equation 77).

Note that if the dimension of s is finite and there is no degeneracy, we can always write

where λj, the poles of SY(f), are determined solely by the poles of Hc(f) and ST(f), while all the other parameters in Equation (106) affect only the constants cj. Commonly, ST(f) has no poles – for example, when Tm is a renewal process, so that ST(f) is constant (e.g., the stimulation is periodic or Poisson). Therefore all poles of SY(f) (or the other PSDs) are determined by Hc(f), i.e., λj are the roots of the characteristic polynomial

Equations (96) and (97) can be re-arranged as a direct I/O relation, formulated, for convenience, in the frequency domain (this can be either f or ω – in this section we use ω for brevity of notation, and f in other places). Specifically, this relation is of the form

so vm = ℱ−1 (v(ω)) is a single scalar “noise” process with zero mean and PSD σ2v (here ℱ−1 is the inverse Fourier transform). This vm process combines the contributions of em and nm, which are the noise processes in the original system (in Equations 96, 97). Such a description, as in Equation (109), describes concisely the contributions of the input and noise to the output (an ARMAX model, Lejung, 1999). Using Equations (92) and (93) we respectively find that

Comparing Equation (102) with (110) we obtain

Comparing Equation (103) with (111), while using Equation (112), will yield the equation

This is a “spectral factorization” problem (Anderson and Moore, 1979), with solution

where

and

with P the solution of

derived from the general discrete-time algebraic Riccati equation. This can be verified by substitution

where in (1) we used the fact that w⊤ Hc(ω) = w⊤ Ho(ω) (1 − w⊤ Ho(ω) a)−1 from the Sherman–Morrison lemma, with Ho(ω) = (eiω I − F)−1 being the “open loop” version of Hc(ω) (i.e., if a was zero), and in (2) we used the fact that Ho(ω) = e−iω(FHo(ω) + I).

Given that the neuronal dynamics are given by the linear system in Equations (96, 97), there are two different estimation problems one may be interested in. We may want to estimate, based on the history of the previous inputs and outputs , either the parameters of the model (F, w, a, d, σe and Σn), or the variables in the model ( or ). The first problem is generally termed a “system identification” problem (Lejung, 1999), while the second is a “filtering” (or prediction) problem (Anderson and Moore, 1979). Both are intimately related, and sometimes the solution of the second problem can yield a method of solving the first problem (e.g., section 3.3 in Anderson and Moore, 1979).

A relatively simple way to approach the second (filtering) problem involves the output decomposition we have found in section 4.4.3

Using this decomposition we can now write a new state-space representation for the system in terms of new state variable ,

which has the same output in the frequency domain (recall, from linear systems theory, that a single I/O relation can be generated by multiple state space realizations). This “innovation form” is particularly useful, since, given the entire history of the previous inputs and outputs , we can recursively estimate the current state precisely (with zero error) (Anderson and Moore, 1979)

Given this precise estimate of , the best linear estimate of is simply

and the estimation error is simply

Since both the innovation form and the original form have the same second order statistics for the input–output, the optimal linear estimator (and its error) for in the original system would be the same. Moreover, one can show (Anderson and Moore, 1979) that Equation (118) will also give the optimal linear estimate of in the original system, and with error P (Equation 117). This solution is the well-known “Kalman filter.”

Substituting the parameters for the linearized map (Equations 85–89) into the expressions for the power-spectral densities (Equations 104–106), gives

Note that when ST(f) ≡ 0 (i.e., periodic spike stimulus), SY(f) has the shape of a high pass filter (Figure 3B, top). In contrast, Ss(f) (Figure 3B, bottom) and SYT(f) both have the shape of a low pass filter (Figure 3D, top). From Equations (111) and (110) we know that SY(f) = |Hint(f)|2σ2v and SYT(f) = Hext(f)ST(f), respectively. Therefore, this indicates that Hint(f) and Hext(f) are high pass and low pass filters, respectively.

So far we have concentrated on the PSD of the response Ym. However, it is easy to extend our formalism to derive the PSDs of different features of the AP, such as its latency or amplitude. We exemplify this on the latency. In Soudry and Meir (2012b) we showed (Figure 3) that for deterministic CBMs, the latency of the AP generated in response to the m-th stimulation can be written as a function of the excitability Lm = L (sm). In a stochastic model, we have instead

where ϕm is a zero mean, white noise process generated by the stochasticity of the rapid system. Since it is problematic to define the PSD of Lm if sometimes Ym = 0, we focus on the case that p* = 1, so we always have Ym = 1. In this case, assuming again that the perturbations in are small, we can linearize

where l = ∇L (s)s = s*, to obtain (using Equation 11)

where F = I + T*A (1, T*). Therefore, it is straightforward to show that the PSD of the latency is

where σϕ² = 〈ϕm²〉. Note that if latency is a good indicator of excitability, i.e., L (s) changes similarly to p (s) so that l ∝ w, then SL(f) = c1SY(f) + c2 for some constants c1, c2, when the input is periodic (Tm = T*) and p* → 1.

MATLAB (2010b) code is available on the ModelDB website, with accession number 144993. In all numerical simulations of the full stochastic biophysical neuron model we used Equations (1–3) in the main text. We used first-order Euler–Maruyama integration with a time step of dt = 5 μs (quantitative results were also verified at dt = 0.5 μs). Each stimulation pulse was given as a square pulse with a width of tstim = 0.5 ms and amplitude I0 (which were respectively named t0 and I0 in Soudry and Meir, 2012b). The results are not affected qualitatively by our choice of a square pulse shape. We define an AP to have occurred if, after the stimulation pulse is given, the measured voltage crosses a threshold Vth (we use Vth = −10 mV in all cases). In all cases where direct stimulation is given, unless stated otherwise, we used periodic stimulation with I0 = 7.9 μA and T* = 50 ms. Note that for the parameter values used, no APs are spontaneously generated, consistent with experimental results (Gal et al., 2010).
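The integration and detection scheme described above can be sketched as follows. This is not the authors' MATLAB code and not the conductance-based model of Equations (1–3): it integrates a hypothetical leaky membrane with additive noise, chosen only to show the Euler–Maruyama update, the square stimulation pulse and the threshold-crossing criterion. The parameters `tau`, `gain`, `sigma` and `v_rest` are illustrative assumptions; only dt = 5 μs, tstim = 0.5 ms and Vth = −10 mV are taken from the text.

```python
import math
import random


def simulate_trial(i0, sigma=0.5, dt=5e-3, t_stim=0.5, t_end=5.0,
                   v_rest=-65.0, tau=1.0, gain=20.0, v_th=-10.0, seed=0):
    """Euler-Maruyama integration of a toy leaky membrane (time in ms, V in mV):
        dV = (-(V - v_rest)/tau + gain*I(t)) dt + sigma dW,
    where I(t) = i0 during the square pulse 0 <= t < t_stim and 0 afterwards.
    Returns (crossed, latency_ms); latency_ms is None if v_th is never crossed.
    The membrane model and its parameters are assumptions, not the paper's CBM."""
    rng = random.Random(seed)
    v = v_rest
    n_steps = int(round(t_end / dt))
    sqrt_dt = math.sqrt(dt)
    for k in range(n_steps):
        t = k * dt
        i_t = i0 if t < t_stim else 0.0
        drift = -(v - v_rest) / tau + gain * i_t
        # Euler-Maruyama step: deterministic drift plus sqrt(dt)-scaled Gaussian noise
        v += drift * dt + sigma * sqrt_dt * rng.gauss(0.0, 1.0)
        if v >= v_th:
            # threshold-crossing criterion; latency measured from pulse onset
            return True, (k + 1) * dt
    return False, None


if __name__ == "__main__":
    print(simulate_trial(7.9))  # suprathreshold pulse in this toy model
    print(simulate_trial(0.0))  # no stimulation, no crossing
```

The time step enters the noise term through its square root, which is the feature that distinguishes Euler–Maruyama from the deterministic Euler scheme.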

The PSDs were estimated using the Welch method and averaged over eight windows, unless 1/f behavior was observed, in which case we used a single window instead, since long-term correlations may generate bias if averaging is used (Beran, 1994). Numerical estimation of the cross-PSD is more problematic: the estimation noise level can be quite high (proportional to the inverse coherence, according to Bendat and Piersol, 2000, p. 321). To gauge this noise level, we estimated the cross-spectrum with the input randomly shuffled (Figure 8). Since in this case there is no input–output correlation, this new estimate is pure noise. Finally, as suggested by the reviewer, we smoothed the resulting PSD (or cross-PSD) in all figures (except in Figure 8, where we aimed to show the level of estimation noise). To achieve uniform variance with low bias, we divided the spectrum into 30 logarithmically spaced segments from f = 10⁻³ Hz to the maximal frequency, 1/(2T*). In each segment n the PSD (or cross-PSD) was smoothed using a window of size n.
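One possible reading of this smoothing step is sketched below. The text does not specify the window shape or the treatment of the spectrum edges, so the sketch assumes a centered moving average (boxcar) that is truncated at the ends of the array and leaves frequencies below the first segment untouched; the function name `log_segment_smooth` is hypothetical.

```python
import math


def log_segment_smooth(freqs, psd, f_min=1e-3, f_max=None, n_segments=30):
    """Smooth a spectrum with a window whose size grows with frequency.
    The band [f_min, f_max] is split into n_segments logarithmically spaced
    segments; a sample in segment n (1-based) is replaced by the mean of a
    window of n neighboring samples. Boxcar shape and edge handling are
    assumptions of this sketch."""
    if f_max is None:
        f_max = max(freqs)
    log_lo = math.log10(f_min)
    width = (math.log10(f_max) - log_lo) / n_segments
    smoothed = []
    for j, f in enumerate(freqs):
        if f < f_min:
            smoothed.append(psd[j])  # below the first segment: left as is
            continue
        # 1-based segment index, clipped so that f == f_max lands in the last one
        n = min(n_segments, int((math.log10(f) - log_lo) / width) + 1)
        lo = max(0, j - n // 2)
        hi = min(len(psd), lo + n)   # at most n samples, fewer at the array end
        window = psd[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed


if __name__ == "__main__":
    freqs = [0.01 * k for k in range(1001)]  # 0 to 10 Hz, as in T* = 50 ms
    flat = [2.0] * len(freqs)
    print(log_segment_smooth(freqs, flat)[:3])
```

Because the window size equals the segment index, low frequencies, where few samples are available, are barely smoothed, while high frequencies are averaged over up to 30 samples.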

Figure 8. Estimation noise in the cross-power spectral density. To estimate the level of this noise in Figure 3D, we added where is a shuffled version of {Tm}. Only when the estimated SYT(f) is above , is its estimation valid. Therefore, in Figure 3D we show only this region (left of dashed black line), where estimation is valid.
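The shuffling procedure can be illustrated with a small sketch. It uses a simplified estimator (non-overlapping, untapered windows with a direct DFT) rather than the full Welch method, and toy signals in place of {Tm} and {Ym}; the function names and the first-order filter in the demo are assumptions made only for illustration.

```python
import cmath
import math
import random


def dft(x):
    """Direct DFT for non-negative frequencies (slow, but dependency-free)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n // 2 + 1)]


def cross_psd(x, y, n_windows=8):
    """Cross-periodogram E[Y X*] averaged over non-overlapping windows.
    Simplified stand-in for a Welch estimate (no taper, no overlap)."""
    seg = len(x) // n_windows
    acc = [0j] * (seg // 2 + 1)
    for w in range(n_windows):
        xs = x[w * seg:(w + 1) * seg]
        ys = y[w * seg:(w + 1) * seg]
        mx, my = sum(xs) / seg, sum(ys) / seg
        fx = dft([v - mx for v in xs])
        fy = dft([v - my for v in ys])
        for k in range(len(acc)):
            acc[k] += fy[k] * fx[k].conjugate() / seg
    return [a / n_windows for a in acc]


def shuffled_noise_floor(x, y, seed=0, n_windows=8):
    """Magnitude of the cross-PSD after randomly shuffling the input.
    Shuffling destroys input-output correlation, so what remains is
    estimation noise."""
    rng = random.Random(seed)
    x_shuffled = list(x)
    rng.shuffle(x_shuffled)
    return [abs(c) for c in cross_psd(x_shuffled, y, n_windows)]


if __name__ == "__main__":
    rng = random.Random(1)
    x = [rng.gauss(0.0, 1.0) for _ in range(1024)]
    y, prev = [], 0.0
    for v in x:                      # toy low-pass relation between x and y
        prev = 0.9 * prev + v
        y.append(prev)
    estimate = [abs(c) for c in cross_psd(x, y)]
    floor = shuffled_noise_floor(x, y)
    valid = [e > f for e, f in zip(estimate, floor)]
    print(sum(valid), "of", len(valid), "frequency bins exceed the noise floor")
```

Bins where the estimate does not exceed the shuffled floor would be discarded, mirroring the validity criterion stated in the caption.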

Next, we describe the models used in Figures 3–5 and provide their parameter values. These models have either been studied in the literature or are extensions of such models, which are meant to explore the limits of validity of our analytic approximations. In all cases where direct stimulation is given, unless stated otherwise, we use periodic stimulation with I0 = 7.9 μA and T* = 50 ms. Note that the models are given in the (more popular) compressed formalism (section 4.1.1), which employs the normalization of state occupation probabilities to reduce the dimensionality of Equations (2, 3) in the main text.

The HHS model combines the Hodgkin–Huxley equations (Hodgkin and Huxley, 1952) with slow sodium inactivation (Chandler and Meves, 1970; Fleidervish et al., 1996). The model equations (Soudry and Meir, 2012b), which employ the uncoupled stochastic noise approximation, are

Most of the parameters are given their original values (as in Hodgkin and Huxley, 1952; Fleidervish et al., 1996):

where in all the rate functions V is used in units of mV. In order to obtain the specific spike shape and the latency transients observed in cortical neurons, some of the parameters were modified to

We emphasize that these specific choices do not affect any of our general arguments, but were chosen for consistency with experimental results (Gal et al., 2010). Estimates of channel number vary greatly (Soudry and Meir, 2012b). For simplicity, we chose N = Nn = Nh = Nm = Ns, and unless stated otherwise, we chose by default N = 10⁶, as in Soudry and Meir (2012b). Note that the HHS model is the same model presented in the paper with M = 1, ϕs,1 = 1, Ns,1 = N, Nr, j = N and ϕr = ϕ.

The coupled version of the HHS model uses the same parameters as the uncoupled version, and a similar voltage equation

where the variables n0 and s0m0h0 describe the respective fractions of potassium and sodium channels residing in the “open” state. To obtain the coupled model equations, we need to assume something about the structure of the ion channels. The original assumption by Hodgkin and Huxley was that the channel subunits (e.g., m, n and h) are independent. Over the years, it became apparent that this assumption is inaccurate, and the sodium channel kinetic subunits are, in fact, not independent (Ulbricht, 2005). However, it is not yet clear how the slow sodium inactivation is coupled to the rapid channel kinetics (e.g., Menon et al., 2009; Milescu et al., 2010), so we nevertheless used the original naive HH model assumption that the subunits are independent. In that case the potassium channel structure is given by (for brevity, the voltage dependence of the rates is henceforth omitted for this model)

while for the sodium channel it is described by

In this diagram, transition rates indicated between two boxed regions imply that the same rates are used between all corresponding states in the boxed regions. The corresponding 32 SDEs are derived using the method described in Orio and Soudry (2012) (or 30 equations if we use the compressed formalism). In this model we used I0 = 8.3 μA.

In order to investigate the effect of a more “physiological” stimulation, we changed the HHS model and added synapses. We used the popular Tsodyks–Markram model for the effect of a synapse with short-term-depression on the somatic voltage (the model first appeared in Tsodyks and Markram (1997) and was slightly corrected in Tsodyks et al. (1998)). In the model x, y and z are the fractions of resources in the recovered, active and inactive states respectively, interacting through the system

Here the z → x rate is τrec⁻¹, the y → z rate is τin⁻¹, and the x → y rate is USEδ(t − tsp), where δ(·) is the Dirac delta function and tsp is the pre-synaptic spike arrival time. The post-synaptic current is given by Is(t) = ASEy(t), where ASE is a parameter. Additionally, we added noise to the model using the coupled SDE method (Orio and Soudry, 2012), assuming that the diagram in Equation (126), with the corresponding rates, hints at the underlying Markov kinetic structure, with N = 10⁶. As in Figure 1B of Tsodyks and Markram (1997), we used τin = 3 ms, τrec = 800 ms and USE = 0.67. Additionally, we set ASE = 70 μA to obtain an AP response in our model.
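A deterministic sketch of these synaptic dynamics may help make the rates concrete. It assumes the standard Tsodyks–Markram resource cycle x → y → z → x (recovered, active, inactive), integrates it with a plain Euler step, and applies each pre-synaptic spike as an instantaneous transfer of a fraction USE of x to y. The channel noise added in the paper through the coupled SDE method is omitted, and the function name and time step are illustrative assumptions.

```python
def simulate_tm(spike_times, t_end, dt=0.01, tau_in=3.0, tau_rec=800.0,
                u_se=0.67, a_se=70.0):
    """Deterministic Tsodyks-Markram short-term depression (times in ms).
    x, y, z are the recovered, active and inactive resource fractions:
        dx/dt = z/tau_rec               (plus a jump -u_se*x at each spike)
        dy/dt = -y/tau_in               (plus a jump +u_se*x at each spike)
        dz/dt = y/tau_in - z/tau_rec
    Returns the post-synaptic current a_se*y right after each spike and the
    final (x, y, z). Noise is omitted; this is a sketch, not the paper's model."""
    spike_steps = {int(round(ts / dt)) for ts in spike_times}
    x, y, z = 1.0, 0.0, 0.0
    peaks = []
    for k in range(int(round(t_end / dt))):
        if k in spike_steps:
            jump = u_se * x          # delta-function input moves resources x -> y
            x -= jump
            y += jump
            peaks.append(a_se * y)   # current Is = ASE * y just after the spike
        dx = z / tau_rec
        dy = -y / tau_in
        dz = y / tau_in - z / tau_rec
        x += dt * dx
        y += dt * dy
        z += dt * dz
    return peaks, (x, y, z)


if __name__ == "__main__":
    spikes = [50.0 * m for m in range(10)]   # periodic input with T* = 50 ms
    peaks, state = simulate_tm(spikes, t_end=500.0)
    print([round(p, 2) for p in peaks])
```

With periodic input at T* = 50 ms and τrec = 800 ms, the recovered fraction cannot refill between spikes, so successive current peaks decrease toward a depressed steady state.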

The HHMS model consists of many sodium currents, each with a different slow kinetic variable. The equations are identical to the HHS model, except that $g_{Na} s$ is replaced by $g_{Na} M^{-1} \sum_{i=1}^{M} s_i$, where $s_1$ has the same equation as s in the HHS model, and for i ≥ 2,

with $\phi_{s,i} = \epsilon^{i}$ and $N_{s,i} = N_0 \epsilon^{i\eta}$, where γ (V) and δ (V) are taken from the HHS model. Unless mentioned otherwise, we chose as default ϵ = 0.2, η = 1.5, M = 5, and N0 = N, as in Figure 5.

The Multiplicative HHMS model is identical to the HHMS model with η = 1, except that is replaced with .

The HHSIP model equations (Soudry and Meir, 2012b) are identical to the HHS model equations, except that s is renamed to s1 and an Inactivating Potassium current was added to the voltage equation, where

with gM = 0.05gK and

where Ns2 = N and

Again, in all the rate functions V is used in mV units. In this model we used I0 = 8.3 μA and T* = 33 ms.

The HHMSIP model combines HHSIP and HHMS. Its equations are identical to the HHMS model with η = 2, except they also contain the IK current from the HHSIP model. In this model we used I0 = 8.3 μA and T* = 33 ms, unless otherwise specified.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors are grateful to O. Barak, N. Brenner, Y. Elhanati, A. Gal, T. Knafo, Y. Kafri, S. Marom, and J. Schiller for insightful discussions and for reviewing parts of this manuscript. The research was partially funded by the Technion V.P.R. fund and by the Intel Collaborative Research Institute for Computational Intelligence (ICRI-CI).

1. A semi-analytic derivation is an analytic derivation in which some terms are obtained by relatively simple numerics. See section 2.2 for our implementation.

2. We demonstrated that such noise should strongly affect the neuronal response to sparse stimulation (Soudry and Meir, 2012b).

3. I.e., if ∀t:I(t) = 0, then the probability that a neuron will fire is negligible – on any relevant finite time interval (e.g., minutes or days).

4. E.g., as in Equations (50–52). Note that a similar notation was also used in Soudry and Meir (2012b) (e.g., Equations 2.15, 2.16), where we used H/M/L instead of +/−/0.

5. Later we shall demonstrate numerically that this is not a necessary condition.

6. Note a more general Box–Jenkins model is not required, since the poles of Hext(f) and Hint(f) are identical (assuming no pole-zero cancelation).

7. Also, as explained in section 4.3.1, we approximately have w ∝ gNa, from Equation (21).

Anderson, B. D. O., and Moore, J. B. (1979). Optimal Filtering, Vol. 11. Englewood Cliffs, NJ: Prentice Hall.

Bean, B. P. (2007). The action potential in mammalian central neurons. Nat. Rev. Neurosci. 8, 451–465. doi: 10.1038/nrn2148

Bendat, J. S., and Piersol, A. G. (2000). Random Data Analysis and Measurement Procedures, Vol. 11, 3rd edn. New York, NY: Wiley.

Beran, J. (1992). A goodness-of-fit test for time series with long range dependence. J. R. Stat. Soc. Ser. B 54, 749–760.

Brecht, M., Schneider, M., Sakmann, B., and Margrie, T. W. (2004). Whisker movements evoked by stimulation of single pyramidal cells in rat motor cortex. Nature 427, 704–710. doi: 10.1038/nature02266

Chandler, W. K., and Meves, H. (1970). Slow changes in membrane permeability and long-lasting action potentials in axons perfused with fluoride solutions. J. Physiol. 211, 707–728.

Channelpedia. Available online at: http://channelpedia.epfl.ch/

Colquhoun, D., and Hawkes, A. G. (1981). On the stochastic properties of single ion channels. Proc. R. Soc. Lond. Ser. B Biol. Sci. 211, 205–235. doi: 10.1098/rspb.1981.0003

Contou-Carrere, M. N. (2011). Model reduction of multi-scale chemical Langevin equations. Syst. Control Lett. 60, 75–86. doi: 10.1016/j.sysconle.2010.10.011

De Col, R., Messlinger, K., and Carr, R. W. (2008). Conduction velocity is regulated by sodium channel inactivation in unmyelinated axons innervating the rat cranial meninges. J. Physiol. 586, 1089–1103. doi: 10.1113/jphysiol.2007.145383

De Paola, V., Holtmaat, A., Knott, G., Song, S., Wilbrecht, L., Caroni, P., et al. (2006). Cell type-specific structural plasticity of axonal branches and boutons in the adult neocortex. Neuron 49, 861–875. doi: 10.1016/j.neuron.2006.02.017

Debanne, D., Campanac, E., Bialowas, A., and Carlier, E. (2011). Axon physiology. Physiol. Rev. 91, 555–602. doi: 10.1152/physrev.00048.2009

Druckmann, S., Berger, T. K., Schürmann, F., Hill, S., Markram, H., and Segev, I. (2011). Effective stimuli for constructing reliable neuron models. PLoS Comput. Biol. 7:e1002133. doi: 10.1371/journal.pcbi.1002133

Elul, R., and Adey, W. R. (1966). Instability of firing threshold and “remote” activation in cortical neurons. Nature 212, 1424–1425. doi: 10.1038/2121424a0

Ermentrout, B., and Terman, D. (2010). Mathematical Foundations of Neuroscience, Vol. 35. New York, NY: Springer. doi: 10.1007/978-0-387-87708-2

Fleidervish, I. A., Friedman, A., and Gutnick, M. J. (1996). Slow inactivation of Na+ current and slow cumulative spike adaptation in mouse and guinea-pig neocortical neurones in slices. J. Physiol. 493, 83–97.

Fox, R. F., and Lu, Y. N. (1994). Emergent collective behavior in large numbers of globally coupled independently stochastic ion channels. Phys. Rev. E 49, 3421–3431. doi: 10.1103/PhysRevE.49.3421

Gal, A., Eytan, D., Wallach, A., Sandler, M., Schiller, J., and Marom, S. (2010). Dynamics of excitability over extended timescales in cultured cortical neurons. J. Neurosci. 30, 16332–16342. doi: 10.1523/JNEUROSCI.4859-10.2010

Gal, A., and Marom, S. (2013). Entrainment of the intrinsic dynamics of single isolated neurons by natural-like input. J. Neurosci. 33, 7912–7918. doi: 10.1523/JNEUROSCI.3763-12.2013

Gardiner, C. W. (2004). Handbook of Stochastic Methods, 3rd edn. Berlin: Springer-Verlag. doi: 10.1007/978-3-662-05389-8

Gerstner, W., and Naud, R. (2009). How good are neuron models? Science 326, 379–380. doi: 10.1126/science.1181936

Goldwyn, J. H., Imennov, N. S., Famulare, M., and Shea-Brown, E. (2011). Stochastic differential equation models for ion channel noise in Hodgkin-Huxley neurons. Phys. Rev. E 83, 041908. doi: 10.1103/PhysRevE.83.041908