- 1Consiglio Nazionale delle Ricerche, Istituto dei Sistemi Complessi, Sesto Fiorentino, Italy

- 2INFN—Sezione di Firenze and CSDC, Sesto Fiorentino, Italy

- 3SUPA and Institute for Complex Systems and Mathematical Biology, King's College, University of Aberdeen, Aberdeen, UK

In a first step toward the comprehension of neural activity, one should focus on the stability of the possible dynamical states. Even the characterization of an idealized regime, such as that of a perfectly periodic spiking activity, reveals unexpected difficulties. In this paper we discuss a general approach to linear stability of pulse-coupled neural networks for generic phase-response curves and post-synaptic response functions. In particular, we present: (1) a mean-field approach developed under the hypothesis of an infinite network and small synaptic conductances; (2) a “microscopic” approach which applies to finite but large networks. As a result, we find that there exist two classes of perturbations: those which are perfectly described by the mean-field approach and those which are subject to finite-size corrections, irrespective of the network size. The analysis of perfectly regular, asynchronous, states reveals that their stability depends crucially on the smoothness of both the phase-response curve and the transmitted post-synaptic pulse. Numerical simulations suggest that this scenario extends to systems that are not covered by the perturbative approach. Altogether, we have described a series of tools for the stability analysis of various dynamical regimes of generic pulse-coupled oscillators, going beyond those that are currently invoked in the literature.

1. Introduction

Networks of oscillators play an important role in both biological (neural systems, circadian rhythms, population dynamics) (Pikovsky et al., 2003) and physical contexts (power grids, Josephson junctions, cold atoms) (Hadley and Beasley, 1987; Filatrella et al., 2008; Javaloyes et al., 2008). It is therefore comprehensible that many studies have been and are still devoted to understanding their dynamical properties. Since the development of sufficiently powerful tools and the resulting discovery of general laws is an utterly difficult task, it is convenient to start from simple setups.

The first issue to consider is the model structure of the single oscillators. Since phases are typically more sensitive than amplitudes to mutual coupling, they are likely to provide the most relevant contribution to the collective evolution (Pikovsky et al., 2003). Accordingly, here we restrict our analysis to oscillators characterized by a single, phase-like, variable. This is typically done by reducing the neuronal dynamics to the evolution of the membrane potential and introducing the corresponding velocity field which describes the single-neuron activity. Equivalently, one can map the membrane potential onto a phase variable and simultaneously introduce a phase-response curve (PRC) [Upon changing variables, the velocity field can be made independent of the local variable (as intuitively expected for a true phase). When this is done, the phase dependence of the velocity field is moved to the coupling function, i.e., to the PRC] to take into account the dependence of the neuronal response on the current value of the membrane potential (i.e., the phase). In this paper we adopt the first point of view, with a few exceptions, when the second one is mathematically more convenient.

As for the coupling, two mechanisms are typically invoked in the literature, diffusive and pulse-mediated. While the former mechanism is pretty well understood [see e.g., the very many papers devoted to Kuramoto-like models (Acebrón et al., 2005)], the latter one, more appropriate in neural dynamics, involves a series of subtleties that have not yet been fully appreciated. This is why here we concentrate on pulse-coupled oscillators.

Finally, for what concerns the topology of the interactions, it is known that they can heavily influence the dynamics of the neural systems leading to the emergence of new collective phenomena even in weakly connected networks (Timme, 2006), or of various types of chaotic behavior, ranging from weak chaos for diluted systems (Popovych et al., 2005; Olmi et al., 2010) to extensive chaos in sparsely connected ones (Monteforte and Wolf, 2010; Luccioli et al., 2012). We will, however, limit our analysis to globally coupled identical oscillators, which provide a much simplified, but already challenging, test bed. The high symmetry of the corresponding evolution equations simplifies the identification of the stationary solutions and the analysis of their stability properties. The two most symmetric solutions are: (1) the fully synchronous state, where all oscillators follow exactly the same trajectory; (2) the splay state (also known as “ponies on a merry-go-round,” antiphase state or rotating waves) (Hadley and Beasley, 1987; Ashwin et al., 1990; Aronson et al., 1991), where the oscillators still follow the same periodic trajectory, but with different (evenly distributed) time shifts. The former solution is the simplest representative of the broad class of clustered states (Golomb and Rinzel, 1994), where several oscillators behave in the same way, while the latter is the prototype of asynchronous states, characterized by a smooth distribution of phases (Renart et al., 2010).

In spite of the many restrictions on the mathematical setup, the stability of the synchronous and splay states still depend significantly on additional features such as the synaptic response-function, the velocity field, and the presence of delay in the pulse transmission. As a result, one can encounter splay states that are either strongly stable along all directions, or that present many almost-marginal directions, or, finally, that are marginally stable along various directions (Nichols and Wiesenfield, 1992; Watanabe and Strogatz, 1994). Several analytic results have been obtained in specific cases, but a global picture is still missing: the goal of this paper is to recompose the puzzle, by exploring the role of the velocity field (or, equivalently, of the phase response curve) and of the shape of the transmitted post-synaptic potentials. Although we are neither going to discuss the role of delay nor that of the network topology, it is useful to recall the stability analysis of the synchronous state in the presence of delayed δ-pulses and for arbitrary topology, performed by Timme and Wolf in Timme and Wolf (2008). There, the authors show that even the complete knowledge of the spectrum of the linear operator does not suffice to address the stability of the synchronized state.

The stability analysis of the fully synchronous regime is far from being trivial even for a globally coupled network of oscillators with no delay in the pulse transmission: in fact, the pulse emission introduces a discontinuity which requires separating the evolution before and after such event. Moreover, when many neurons spike at the same time, the length of some interspike intervals is virtually zero but cannot be neglected in the mathematical analysis. In fact, the first study of this problem was restricted to excitatory coupling and δ-pulses (Mirollo and Strogatz, 1990). In that context, the stability of the synchronous state follows from the fact that when the phases of two oscillators are sufficiently close to one another, they are instantaneously reset to the same value (as a result of a non-physical lack of invertibility of the dynamics). The first, truly linear stability analyses have been performed later, first in the case of two oscillators (van Vreeswijk et al., 1994; Hansel et al., 1995) and then considering δ-pulses with continuous PRCs (Goel and Ermentrout, 2002). Here, we extend the analysis to generic pulse-shapes and discontinuous PRCs [such as for leaky integrate and fire (LIF) neurons].

As for the splay states, their stability can be assessed in two ways: (1) by assuming that the number of oscillators is infinite (i.e., taking the so called thermodynamic limit) and thereby studying the evolution of the distribution of the membrane potentials—this approach is somehow equivalent to dealing with (macroscopic) Liouville-type equations in statistical mechanics; (2) by dealing with the (microscopic) equations of motion for a large but finite number N of oscillators. As shown in some pioneering works (Kuramoto, 1991; Treves, 1993), the former approach corresponds to develop a mean field theory. The resulting equations have been first solved in Abbott and van Vreeswijk (1993) for pulses composed of two exponential functions, in the limit of a small effective coupling [A small effective coupling can arise also when PRC has a very weak dependence on the phase (see section 3)]. Here, following Abbott and van Vreeswijk (1993), we extend the analysis to generic pulse-shapes, finding that substantial differences exist among δ, exponential and the so-called α-pulses (see the next section for a proper definition).

Direct numerical studies of the linear stability of finite networks suggest that the eigenfunctions of the (Floquet) operator can be classified according to their wavelength ℓ (where ℓ refers to the neuronal phase—see section 4.1 for a precise definition). In finite systems, it is convenient to distinguish between long (LW) and short (SW) wavelengths. Upon considering that ℓ = n/N (1 ≤ n ≤ N), LW can be identified as those for which n « N, while SW correspond to larger n values. Numerical simulations suggest also that the time scale of a LW perturbation typically increases upon increasing its wavelength, starting from a few milliseconds (for small n values) up to much longer values (when n is on the order of the network size N) which depend on “details” such as the continuity of the velocity field, or the pulse shape. On the other hand, SW are characterized by a slow size-dependent dynamics.

For instance, in LIF neurons coupled via α-pulses, it has been found (Calamai et al., 2009) that the Floquet exponents of LW decrease as 1/ℓ2 (for large ℓ), while the time scale of the SW component is on the order of N2. In practice the LW spectral component as determined from the finite N analysis coincides with that one obtained with the mean field approach (i.e., taking first the thermodynamic limit). As for the SW component, it cannot be quantitatively determined by the mean-field approach, but it is nevertheless possible to infer the correct order of magnitude of this time scale. In fact, upon combining the 1/ℓ2 decay (predicted by the mean-field approach) with the observation that the minimal wavelength is 1/N, it naturally follows that the SW time scale is N2, as analytically proved in Olmi et al. (2012). Furthermore, it has been found that the two spectral components smoothly connect to each other and the predictions of the two theoretical approaches coincide in the crossover region.

It is therefore important to investigate whether the same agreement extends to more generic pulse shapes and velocity fields. The finite-N approach can, in principle, be generalized to arbitrary shapes, but the analytic calculations would be quite lengthy, due to the need of distinguishing between fast and slow scales and the need of accounting for higher order terms. For this reason, here we limit ourselves to give a positive answer to this question with the help of numerical studies.

The only, important, exception to this scenario is obtained for quasi δ-like pulses (Zillmer et al., 2007), i.e., for pulses whose width is smaller than the average time separation between any two consecutive spikes, in which case all the SW eigenvalues remain finite for increasing N.

In section 2 we introduce the model and derive the corresponding event-driven map, a necessary step before undertaking the analytic calculations. Section 3 is devoted to a perturbative stability analysis of the splay state in the infinite-size limit for generic velocity fields and pulse shapes. The following section 4 reports a discussion of the stability in finite networks. There we briefly recall the main results obtained in Olmi et al. (2012) for the splay state and we extensively discuss the method to quantify the stability of the fully synchronous regime. The following two sections are devoted to a numerical analysis of various setups. In section 5 we study splay states in finite networks for generic velocity fields and three different classes of of pulses, namely, with finite, vanishing (≈1/N), and zero width. In section 6 we study periodically forced networks. Such studies show that the scaling relations derived for the splay states apply also to such a microscopically quasi-periodic regime. A brief summary of the main results together with a recapitulation of the open problem is finally presented in section 7. In the first appendix we derive the Fourier components needed to assess the stability of a splay state for a generic PRC. In the second appendix the evaporation exponent is determined for the synchronous state in LIF neurons.

2. The Model

The general setup considered in this paper is a network of N identical pulse-coupled neurons (rotators), whose evolution is described by the equation

where Xj represents the membrane potential, g is the coupling constant and the mean field E(t) denotes to the synaptic input, common to all neurons in the network. When Xj reaches the threshold value Xj = 1, it is reset to Xj = 0 and a spike contributes to the mean field E in a way that is described here below. The resetting procedure is an approximation of the discharge mechanism operating in real neurons. The function F(X) (the velocity field) is assumed to be everywhere positive, thus ensuring that the neuron is repetitively firing. For F0(X) = a − X the model reduces to the well-known case of LIF neurons.

The mean field E(t) arises from the linear superposition of the pulses emitted by the single neurons. In order to describe its time evolution, it is sufficient to introduce a suitable ordinary differential equation (ODE), such that its Green function reproduces the expected pulse shape,

where the superscript (i) denotes the ith time derivative, L the order of the differential equation and K = ∏i αi, (−αi being the poles of the differential equation), so as to ensure that the single pulses have unit area (for N = 1). The δ-functions appearing on the right hand side of Equation (2) correspond to the spikes emitted at times {tn}: each time a spike is emitted, the term E(L − 1) has a finite jump of amplitude K/N. Therefore L controls the smoothness of the pulses: L − 1 is the order of the lowest derivative that is discontinuous. L = 0 corresponds to the extreme case of δ-pulses with no field dynamics; L = 1 corresponds to discontinuous exponential pulses; L = 2 (with α1 = α2) to the so-called α-pulses (Es(t) = α2te−αt). Since α-pulses will be often referred to, it is worth being a little more specific. In this case, Equation (2) reduces to

and it is convenient to transform this equation into a system of two ODEs, namely

where we have introduced, for the sake of simplicity, the auxiliary variable P ≡ αE + Ė.

2.1. Event-Driven Map

By following Zillmer et al. (2006) and Calamai et al. (2009), it is convenient to pass from a continuous—to a discrete-time evolution rule, by deriving the event-driven map which connects the network configuration at consecutive spike times. For the sake of simplicity, in the following part of this section we refer to α-pulses, but there is no conceptual limitation in extending the approach to L > 2.

By integrating Equation (4), we obtain

where we have taken into account the effect of the incoming pulse (see the term α2/N in the second equation) while  n = tn + 1 − tn is the interspike interval; tn+1 corresponds to the time when the neuron with the largest membrane potential reaches the threshold.

n = tn + 1 − tn is the interspike interval; tn+1 corresponds to the time when the neuron with the largest membrane potential reaches the threshold.

Since all neurons follow the same first-order differential equation (this is a mean-field model), the ordering of their membrane potentials is preserved [neurons “rotate” around the circle [0, 1] without overtaking each other (Jin, 2002)]. It is, therefore, convenient to order the potentials from the largest to the smallest one and to introduce a co-moving reference frame, i.e., to shift backward the label j, each time a neuron reaches the threshold. By formally integrating Equation (1),

Moreover, since X1n is always the largest potential, the interspike interval is defined by the threshold condition

Altogether, the model now reads as a discrete-time map, involving N + 1 variables, En, Pn, and Xjn (1 ≤ j < N), since one degree of freedom has been eliminated as a result of having taken the Poincaré section (XNn ≡ 0 due to the resetting mechanism). The advantage of the map description is that we do not have to deal any longer with δ-like discontinuities, or with formally infinite sequences of past events.

In this framework, the splay state is a fixed point of the event-driven map. Its coordinates can be determined in the following way. From Equation (5), one can express and Ẽ as a function of the yet unknown interspike interval  ,

,

The value of the membrane potentials k are then obtained by iterating backward in j Equation (7) (the n dependence is dropped for the fixed point) starting from the initial condition N = 0. The interspike interval  is finally obtained by imposing the condition 0 = 0. In practice the computational difficulty amounts to finding the zero of a one dimensional function and, even though

is finally obtained by imposing the condition 0 = 0. In practice the computational difficulty amounts to finding the zero of a one dimensional function and, even though  (Xj + 1,

(Xj + 1,  ) can, in most cases, be obtained only through numerical integration, the final error can be very well kept under control.

) can, in most cases, be obtained only through numerical integration, the final error can be very well kept under control.

3. Theory (N = ∞)

The stability of a dynamical state can be assessed by either first taking the infinite-time limit and then the thermodynamic limit, or vice versa. In general it is not obvious whether the two methods yield the same result and this is particularly crucial for the splay state, as many eigenvalues tend to 0 for N → ∞. In this section we discuss the scenarios that have to be expected when the thermodynamic limit is taken first. We do that by following Abbott and van Vreeswijk (1993).

As a first step, it is convenient to introduce the phase-like variable

where, for later convenience, we have defined G(X) ≡ g + T0 F(X), T0 = N being the period of the splay state (i.e., the single-neuron interspike interval). The phase yi evolves according to the equation

being the period of the splay state (i.e., the single-neuron interspike interval). The phase yi evolves according to the equation

where Ẽ = 1/T0 is the amplitude of the field in the splay state, ε(t) = E(t) − Ẽ. In the splay state, since ε = 0, yi grows linearly in time, as indeed expected for a well-defined phase. In the thermodynamic limit, the evolution is ruled by the continuity equation

where ρ(y, t)dy is the fraction of neurons whose phase yi lies in (y, y + dy) at time t, and

is the corresponding flux. As the resetting implies that the outgoing flux J(1, t) (which coincides with the firing rate) equals the incoming flux at the origin, the above equation has to be complemented with the boundary condition J(0, t) = J(1, t). Finally, in this macroscopic representation, the field equation writes

while the splay state corresponds to the fixed point ρ = 1, ε = 0, J = Ẽ. The smoothness of the splay state justifies the use of a partial differential equation such as (Equation 12). Its stability can be studied by introducing the perturbation j(y, t)

and linearizing the continuity equation,

while the field equation simplifies to

By now introducing the Ansatz

in Equations (16) and (17) and, thereby solving the resulting ODE, one can obtain an implicit expression for jf(y),

where −αk and K are defined as below Equation (2). By imposing the boundary condition for the flux, jf(1) = jf(0) = 1, one finally obtains the eigenvalue equation (Abbott and van Vreeswijk, 1993),

In the case of a constant G(X(y)) = σ, L eigenvalues correspond to the zeroes of the following polynomial equation

For g = 0 such solutions are the poles which define the field dynamics, while for g = σ, λ = 0 is a solution: this corresponds to the maximal value of the (positive) coupling strength beyond which the model does no longer support stationary states, as the feedback induces an unbounded growth of the spiking rate. Besides such L solution, the spectrum is composed of an infinite set of purely imaginary eigenvalues,

The existence of such marginally stable directions reflects the fact that all yi phases experience the same velocity field, independently of their current value (see Equation 11), so that no effective interaction is present among the oscillators. In the limit of small variations of G(X(y)), one can develop a perturbative approach. Here below, we proceed under the more restrictive assumption that the coupling constant g is itself small: we have checked that this restriction does not change the substance of our conclusions, while requiring a simpler algebra.

A small g value implies that λ is close to 2πinẼ and thereby expand the exponential in Equation (19). Up to first order, we find

where

are the Fourier components of the phase-response curve 1/G(X(y)).

In order to estimate the leading terms of the real part of λn in the large n limit, let us rewrite Equation (22) as

where γn = 2πnẼ = (2πn)/T0. Since γn is proportional to n, the leading terms in the product at numerator of Equation (24) are

where while the leading term in the product at denominator in Equation (24) is γ2Ln. Accordingly, the main contribution to the real part of the eigenvalues is, in the case of even L,

and, for odd L,

An exact expression for the Fourier components An and Bn appearing in Equation (23) can be derived in the large n limit. In particular, the integral over the interval [0, 1] appearing in Equation (23) can be rewritten as a sum of integrals, each performed on a sub-interval of vanishingly small length 1/n. Furthermore, since the phase-response 1/G has a limited variation within each sub-interval, it can be replaced by its polynomial expansion up to second order. Finally, as shown in Appendix A, the following expression are obtained at the leading order in 1/n for a discontinuous F(X)

Therefore, for even L, the leading term for n → ∞ is

For even L, the stability of the short-wavelength modes (large n) is controlled by the sign of (F(0) − F(1)): for even (odd) L/2 and excitatory coupling, i.e., g > 0, the splay state is stable whenever F(1) > F(0) (F(1) < F(0)). Obviously the stability is reversed for inhibitory coupling.

Notice that for L = 0, i.e., δ-spikes, the eigenvalues do not decrease with n, as previously observed in Zillmer et al. (2007). This is the only case where all modes exhibit a finite stability even in the thermodynamic limit.

For odd L, the real part of the eigenvalues is

in this case the value of F(X) and of its derivative F′(X) at the extrema mix up in a non-trivial way.

Finally, as for the scaling behavior of the leading terms we observe that

where ⌊·⌋ stays for the integer part of the number. Therefore the scaling of the short-wavelength modes for discontinuous F(X) is dictated by the post-synaptic pulse profile.

For a continuous but non-differentiable F(X), (i.e., F′(1) ≠ F′(0)), if L is even, it is necessary to go two orders beyond in the estimate of the Fourier coefficients (see Appendix A). As a result, the eigenvalues scale as

For odd L, it is instead sufficient to assume F(0) = F(1) in Equation (31).

Altogether, we have seen that the non-smoothness of both the post-synaptic pulse and of the velocity field (or, equivalently, of the phase response curve) play a crucial role in determining the degree of stability of the splay state. The smoother are such functions and the slower short-wavelength perturbations decay, although the changes occur in steps which depend on the parity of the order of the discontinuity (at least for the pulse structure). Moreover, the overall stability of the spectral components depends in a complicate way on the sign of the discontinuity itself.

4. Theory (Finite N)

4.1. The Splay State

The stability for finite N can be investigated by linearizing Equations (5–7). A thorough analysis has been developed in Olmi et al. (2012); here we limit ourselves to review the key ideas as a guide for the numerical analysis.

We start by introducing the vector W = ({xj}, ϵ, p) (j = 1, N − 1), whose components represent the infinitesimal perturbations of the solution {Xj}, E, P. The Floquet spectrum can be determined by constructing the matrix A which maps the initial vector W(0) onto W( ),

),

where  corresponds to the time separation between two consecutive spikes. This is done in two steps, the first of which corresponds to evolving the components of a Cartesian basis according to the equations obtained from the linearization of Equations (1, 4) (in the comoving reference frame),

corresponds to the time separation between two consecutive spikes. This is done in two steps, the first of which corresponds to evolving the components of a Cartesian basis according to the equations obtained from the linearization of Equations (1, 4) (in the comoving reference frame),

The second step consists in accounting for the spike emission, which amounts to add the vector

where τ is obtained from the linearization of the threshold condition (8),

The diagonalization of the resulting matrix A, gives N + 1 Floquet eigenvalues μk, which we express as

where , k = 1, …, N − 1, and ϕN = ϕN−1 = 0, while λk and ωk are the real and imaginary parts of the Floquet exponents. The variable ϕk plays the role of the wavenumber k in the linear stability analysis of spatially extended systems.

Previous studies (Olmi et al., 2012) have shown that the spectrum can be decomposed into two components: (1) k ~  (1); (2) k/N ~

(1); (2) k/N ~  (1). The former one is the LW component and can be directly obtained in the thermodynamic limit (see the previous section). For L = 2 and α1 = α2 (i.e., for α pulses), it has been found that the results reported in Abbott and van Vreeswijk (1993) match does obtained for 1« k « N in Olmi et al. (2012). The latter one corresponds to the SW component: it depends on the system size and cannot, indeed, be derived from the mean field approach discussed in the previous section. In the next section, we illustrate some examples that go beyond the analytic studies carried out in Olmi et al. (2012).

(1). The former one is the LW component and can be directly obtained in the thermodynamic limit (see the previous section). For L = 2 and α1 = α2 (i.e., for α pulses), it has been found that the results reported in Abbott and van Vreeswijk (1993) match does obtained for 1« k « N in Olmi et al. (2012). The latter one corresponds to the SW component: it depends on the system size and cannot, indeed, be derived from the mean field approach discussed in the previous section. In the next section, we illustrate some examples that go beyond the analytic studies carried out in Olmi et al. (2012).

4.2. The Synchronized State

In this section we address the problem of measuring the stability of the fully synchronized state for a generic oscillator dynamics F(x). The task is non-trivial, because of the resetting mechanism, which acts simultaneously on all neurons. On the one side, we extend the results obtained in Goel and Ermentrout (2002) which are restricted to a continuous PRC, on the other side we extend the results of Mirollo and Strogatz (1990) which refer to excitatory coupling and δ pulses. In order to make the analysis easier to understand we start considering α-pulses. Other cases are discussed afterward.

The starting point amounts to writing the event driven map in a comoving frame,

where the function  is obtained by formally integrating the equations of motion over the time interval

is obtained by formally integrating the equations of motion over the time interval  n. Notice that the field dynamics has been, instead, explicitly obtained from the exact integration of the equations of motion [compare with Equations (3, 4)]. The interspike time interval

n. Notice that the field dynamics has been, instead, explicitly obtained from the exact integration of the equations of motion [compare with Equations (3, 4)]. The interspike time interval  n is finally determined by solving the implicit equation

n is finally determined by solving the implicit equation

In order to determine the stability of the synchronized state, it is necessary to assume that the neurons have an infinitesimally different membrane potentials, even though they coincide with one another. As a result, the full period must be broken into N steps. In the first one, of length T, all neurons start in X = 0 and arrive at 1, but only the “first” reaches the threshold; in the following N − 1 steps, of 0-length, one neuron after the other passes the threshold and it is accordingly reset in 0.

With this scheme in mind we proceed to linearize the equations, writing the evolution equations for the infinitesimal perturbations xjn, ϵn, pn, and τn around the synchronous solution. From Equations (39–41) we obtain,

with the boundary condition xNn + 1 = 0 (due to the reset mechanism) and where the subscripts X, E, P, and  denote a partial derivative with respect to the given variable. Moreover, the dependence on j + 1 is a shorthand notation to remind that the various derivatives depend on the membrane potential of the (j + 1)st neuron. Finally, we have left the n-dependence in the variable P as it changes (in α2/N steps, when the neurons progressively cross the threshold), while Ẽ refers to the field amplitude, which, instead, stays constant.

denote a partial derivative with respect to the given variable. Moreover, the dependence on j + 1 is a shorthand notation to remind that the various derivatives depend on the membrane potential of the (j + 1)st neuron. Finally, we have left the n-dependence in the variable P as it changes (in α2/N steps, when the neurons progressively cross the threshold), while Ẽ refers to the field amplitude, which, instead, stays constant.

The above equations must be complemented by the condition

where  Z =

Z =  Z(1)/

Z(1)/

(1) (Z = X, E, P). Equation (46) is obtained by differentiating Equation (42) which defines the period of the splay state.

(1) (Z = X, E, P). Equation (46) is obtained by differentiating Equation (42) which defines the period of the splay state.

We now proceed to build the Jacobian for each of the N steps, starting from the first one. In order not to overload the notations, from now on, the time index n corresponds to the step of the procedure. It is convenient to order all the variables, starting from xj (j = 1, N − 1), and then including ϵ and p, into a single vector, so that the evolution is described by an (N + 1) × (N + 1) matrix with the following structure,

where 0 is an (N − 1) × 2 null matrix; Γ(n) is a quadratic (N − 1) × (N − 1) matrix, whose only non-zero elements are those in the first column and along the supradiagonal; Ψ(n) is a 2 × (N − 1) matrix whose elements are all zero except for the first column; finally Ω(n) is a 2 × 2 matrix.

Since in the first step all neurons start from the same position X = 0, one can drop the j dependence in  . With the help of Equations (46, 43)

. With the help of Equations (46, 43)

Moreover, with the help of Equations (44–46)

where we have also made use that P1 = . Finally,

In the next steps,  n vanishes, so that

n vanishes, so that  E =

E =  P = 0, while

P = 0, while  X = 1 and

X = 1 and

(1) = F(1) + gẼ: = V1. Moreover,

(1) = F(1) + gẼ: = V1. Moreover,

(j) depends on whether the jth neuron has passed the threshold or not. In the former case

(j) depends on whether the jth neuron has passed the threshold or not. In the former case

(j + 1) = F(0) + gẼ: = V0, otherwise

(j + 1) = F(0) + gẼ: = V0, otherwise

(j + 1) = V1. As a result,

(j + 1) = V1. As a result,

where Vj = V0 if j < n and Vj = V1, otherwise. At the same time, from the equations for the field variables, we find that

while Ω(n) reduces to the identity matrix.

From the multiplication of all matrices, we find that the structure is preserved, namely

where Ψ(n) is a 2 × (N − 1) matrix, whose elements are all zero except for those of the first column, namely

Furthermore, Λ is a diagonal matrix, with

Therefore, it is evident that the stability of the orbit is measured by the diagonal elements Λjj together with the eigenvalues of Ω which are associated to the pulse structure. In practice,  X corresponds to the expansion rate from X = 0 to X = 1 under the action of the mean field E and we recover a standard result in globally coupled identical oscillators: the spectrum is degenerate, all eigenvalues being equal and independent of the network size. The result is, however, not obvious in this context, due to the care that is needed in taking into account the various discontinuities. We have separately verified that the same conclusion holds for exponential spikes.

X corresponds to the expansion rate from X = 0 to X = 1 under the action of the mean field E and we recover a standard result in globally coupled identical oscillators: the spectrum is degenerate, all eigenvalues being equal and independent of the network size. The result is, however, not obvious in this context, due to the care that is needed in taking into account the various discontinuities. We have separately verified that the same conclusion holds for exponential spikes.

The stability of the synchronized state can be also addressed by determining the evaporation exponent Λe (van Vreeswijk, 1996; Pikovsky et al., 2001), which measures the stability of a probe neuron subject to the mean field generated by the synchronous neurons with no feedback toward them. By implementing this approach for a negative perturbation, van Vreeswijk found that Λe is equal to Λjj (for α-functions). By further assuming that F′ < 0, he was able to prove that the synchronized state is stable for inhibitory coupling and sufficiently small α-values. The situation is more delicate for exponential pulse-shapes. As shown in di Volo et al. (2013), Λe > 0 (Λe < 0) depending whether the perturbation is positive (negative). In this case, the Floquet exponent reported in Equation (54) coincides with the evaporation exponent estimated for negative perturbations. In Appendix B we show that the difference between the left and right stability is to be attributed to the discontinuous shape of the pulse: no anomaly is expected for α pulses.

5. Numerical Analysis

The theoretical approaches discussed in the previous sections allow determining: (1) the SW components of the Floquet spectrum for discontinuous velocity fields; (2) the leading LW exponents directly in the thermodynamic limit for generic velocity fields and pulse shapes, in the weak coupling limit. It would be possible to extend the finite N results to other setups, but we do not think that the effort is worth, given the huge amount of technicalities. We thus prefer to illustrate the expected behavior with the help of some simulations which, incidentally, cover a wider range than possibly accessible to the analytics.

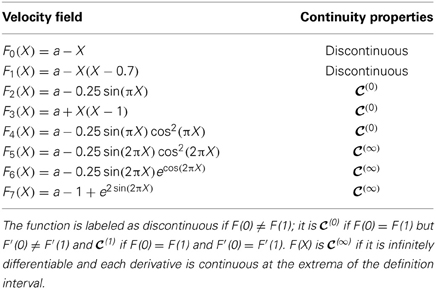

More precisely, in this and the following section we study the models listed in Table 1 in a standard set up (splay states) and under the effect of periodic external perturbations.

Table 1. In the first column is reported the list of the velocity fields F(X) analyzed in the paper. All the considered fields are everywhere positive within the definition interval X ∈ [0, 1], thus ensuring that the neuron is supra-threshold. The second column refers to the continuity properties of the fields within the interval [0, 1].

5.1. Finite Pulse Width

Here, we discuss the stability of the splay state for different degrees of smoothness of the velocity field at the borders of the unit interval for post-synaptic pulses of α-function type.

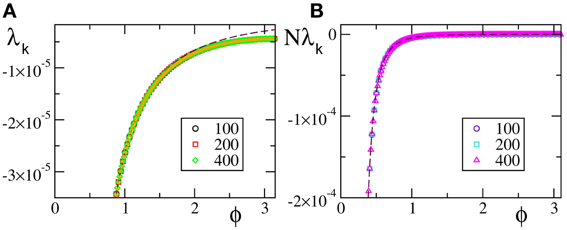

We start from discontinuous velocity fields. They have been the subject of an analytic study which proved that the SW component scales as 1/N2 (Olmi et al., 2012). The data reported in Figure 1A for F1(X) confirms the expected scaling: the agreement with the theoretical curve derived in Olmi et al. (2012) is impressive over the entire spectral range, while the mean field Equation (30) gives a very good estimation of the spectrum except for the shortest wavelengths, where it overestimates the numerical data. The mean field approximation turns out to be more accurate for continuous velocity fields (with a discontinuity of the first derivative at the borders of the definition interval). Indeed the agreement between the theoretical expression Equation (A10) and the numerical data is very good for the entire range [see Figure 1B which refers to F4(X)].

Figure 1. Floquet spectra for α-pulses for (A) a discontinuous field F1(X) and (B) a continuous field F4(X). The orange dotted line in (A) represents the theoretical curve estimated by using Equation (7) in Olmi et al. (2012), while the dashed maroon curve represents the theoretical curve estimated by using Equation (30) in section 3. In (B) the dashed maroon curve is calculated by using Equation (A10). All data refer to a = 1.3 and α = 3.

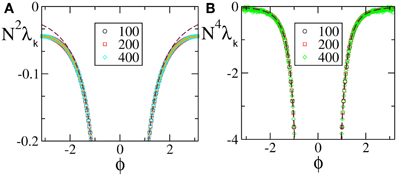

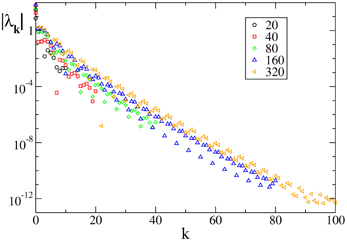

The numerical Floquet spectra for fields that are  (0), but not

(0), but not  (1) (F(0) = F(1), F′(0)≠ F′(1)), are reported in Figure 2 [the curves in panels (A, B) refer to F2(X) and F4, respectively]. For these velocity fields, we have also verified that the spectra scale as 1/N4, confirming the observation reported in Calamai et al. (2009) for a different velocity field with the same analytical properties. The data displayed in Figures 2A,B refer to the LW components: they indeed confirm to be independent of the system size and scale as 1/k4 (see the dashed line) as predicted by the perturbative theory discussed in section 3.

(1) (F(0) = F(1), F′(0)≠ F′(1)), are reported in Figure 2 [the curves in panels (A, B) refer to F2(X) and F4, respectively]. For these velocity fields, we have also verified that the spectra scale as 1/N4, confirming the observation reported in Calamai et al. (2009) for a different velocity field with the same analytical properties. The data displayed in Figures 2A,B refer to the LW components: they indeed confirm to be independent of the system size and scale as 1/k4 (see the dashed line) as predicted by the perturbative theory discussed in section 3.

Figure 2. Floquet spectra for α-pulses for two continuous sinusoidal fields, namely F2(X) (A) and F4(X) (B); and two analytic fields, namely F6(X) (C) and F7(X) (D). The dashed blue line in (B) indicates a scaling 1/k4. All data refer to a = 1.3 and α = 3.

The spectra reported in the other two panels refer to analytic velocity fields: in all cases the initial part of the Floquet spectra is again independent of N and scales approximately exponentially with k, confirming that the scaling behavior of the exponents is related to the analyticity of the velocity field. The fluctuating background with approximate height 10−12 is just a consequence of the finite numerical accuracy. This is the reason why we did not dare to estimate the SW components that would be exceedingly small.

5.2. Vanishing Pulse-Width

Here, we analyze the intermediate case between finite pulse-width and δ-like impulses. Similarly to what done in Zillmer et al. (2007) for the LIF, we consider α pulses, where α = βN, with β independent of N.

In Figure 3A we report the spectra for a discontinuous velocity field, F1(x). In this case the Floquet spectra remain finite, so that the corresponding states remain robustly stable even in the thermodynamic limit. Also in this case the agreement with the theoretical expression reported in Equation (7) in Olmi et al. (2012) is extremely good, while Equation (30) overestimates the spectra for large phases. The field considered in panel (b) (F2(X)) is  (0) but not

(0) but not  (1). In this case, the Floquet spectra scale as 1/N: this scaling is predicted by the analysis reported in section 3 and the whole spectrum is very well reproduced by Equation (A10).

(1). In this case, the Floquet spectra scale as 1/N: this scaling is predicted by the analysis reported in section 3 and the whole spectrum is very well reproduced by Equation (A10).

Figure 3. Floquet spectra for β-pulses with a discontinuous field [F1(X)] (A) and a  (0) field [F2(X)] (B). The orange dotted line in (A) represents the theoretical curve estimated by using Equation (7) in Olmi et al. (2012). The dashed line in (A) [resp. (B)] represents the theoretical curve computed by using Equation (30) [resp. Equation (A10)] for β-pulses. The data refer to a = 1.3 and β = 0.03.

(0) field [F2(X)] (B). The orange dotted line in (A) represents the theoretical curve estimated by using Equation (7) in Olmi et al. (2012). The dashed line in (A) [resp. (B)] represents the theoretical curve computed by using Equation (30) [resp. Equation (A10)] for β-pulses. The data refer to a = 1.3 and β = 0.03.

Last but not least, we have studied an analytic field, namely F7(X). In this case the Floquet spectra appear to scale exponentially to zero with the wavevector k, similarly to what observed for the finite pulse width, as shown in Figure 4.

Figure 4. Floquet spectra for β-pulses for the analytic field F7(X). The data refer to a = 1.3 and β = 0.03.

5.3. δ Pulses

Finally we considered the case of δ-pulses: whenever the potential Xj reaches the threshold value, it is reset to zero and a spike is sent to and instantaneously received by all neurons. We studied just two cases: (1) the analytic field F7(X); (2) a leaky integrate-and fire neuron model with F0(X). The results, obtained for inhibitory coupling [since the splay state is known to be stable only in such a case (van Vreeswijk, 1996; Zillmer et al., 2006)] are consistent with the expectation for the β model.

In particular we found, in the analytic case (1), that the Floquet spectra decay exponentially to zero. The exponential scaling is not altered if a phase shift ζ is introduced in the velocity field (i.e., for F(X) = a − 1 + e2sin(2πX + ζ)). In the case of the LIF model (F0), we already know that the Lyapunov spectrum tends, in the δ-pulse limit, to Zillmer et al. (2007)

This result is confirmed by our simulations which also reveal that the splay state is stable even for small, excitatory coupling values, extending previous results limited to inhibitory coupling (Zillmer et al., 2006).

6. Periodic Forcing

In this section we numerically investigate the scaling behavior of the Floquet spectrum in the presence of a periodic forcing, to test the validity of the previous analysis in a more general context. We have restricted our studies to splay-state-like regimes, where it is important to predict the behavior of the many almost marginally stable directions. Moreover, we have considered only the smooth α-pulses. In this case, the dynamical equations read

They have been written in an autonomous form, since it is more convenient to perform the Poincaré section according to the spiking times, rather than introducing a stroboscopic map. The interspike interval is determined by the equation

where X1 is the membrane potential of the first neuron (the closest to threshold), and Xold is its initial value.

We analyzed only those setups where the unperturbed splay state is stable. More precisely: the two discontinuous fields F0(X) and F1(X), the two  (0) fields (F2(X) and F3(X)), and the analytic field F7(X). In all cases the external modulation induces a periodic modulation of the mean field E with a period Ta = 2π/ω equal to the period of the modulation. At the same time, we have verified that, although the forcing term has zero average (i.e., it does not change the average input current), the average interspike interval is slightly self-adjusted and, what is more important, there is no evidence of locking between the modulation and the frequency of the single neurons. In other words, the behavior is similar to the spontaneous partial synchronization observed in van Vreeswijk (1996) (where the modulation is self-generated).

(0) fields (F2(X) and F3(X)), and the analytic field F7(X). In all cases the external modulation induces a periodic modulation of the mean field E with a period Ta = 2π/ω equal to the period of the modulation. At the same time, we have verified that, although the forcing term has zero average (i.e., it does not change the average input current), the average interspike interval is slightly self-adjusted and, what is more important, there is no evidence of locking between the modulation and the frequency of the single neurons. In other words, the behavior is similar to the spontaneous partial synchronization observed in van Vreeswijk (1996) (where the modulation is self-generated).

Because of the unavoidable oscillations of the interspike intervals, it is necessary to identify the spike times with great care. In practice we integrate Equation (56) with a fixed time step Δt, by employing a standard fourth-order Runge–Kutta integration scheme. At each time step we check if X1 > 1, in which case we go one step back and adopt the Hénon trick, which amounts to exchanging t and X1 in the role of independent variable (Henon, 1982).

The linear stability analysis can be performed by linearizing the system (56), to obtain

and by thereby estimating the corresponding Lyapunov spectrum.

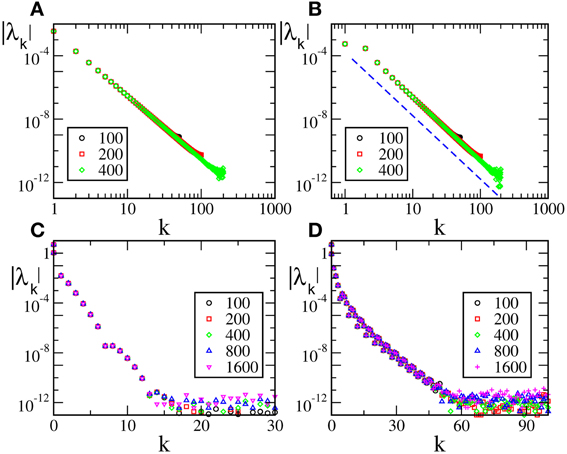

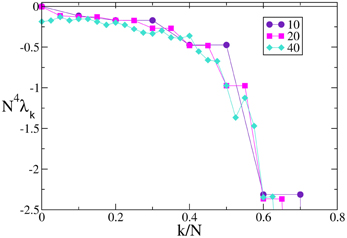

In the case of F0 and F1, we have always found that the Lyapunov spectrum scales as 1/N2 as theoretically predicted in the absence of external modulation (see Figure 5 for one instance of each of the two velocity fields).

Figure 5. Lyapunov spectra for neurons forced by an external periodic signal, we observe the scaling 1/N2 for the discontinuous velocity fields (A) F0(X) and (B) F1(X). In both cases A = 0.1, Ta = 2.

A similar agreement is also found for F3, where the Lyapunov spectrum scales as 1/N4, exactly as in the absence of external forcing (see Figure 6). Analogous results have been obtained for the other velocity fields (data not shown), which confirm that the validity of the previous analysis extends to more complex dynamical regimes, as long as the membrane potentials are smoothly distributed.

Figure 6. Lyapunov spectra for neurons forced by an external periodic signal, we observe the scaling 1/N4 for the continuous velocity field F3(X). The data refer to A = 0.1, Ta = 2.

7. Summary and Open Problems

In this paper we have discussed the linear stability of both fully synchronized and splay states in pulse-coupled networks of identical oscillators. By following Abbott and van Vreeswijk (1993), we have obtained analytic expressions for the long-wavelength components of the Floquet spectra of the splay state for generic velocity fields and post synaptic potential profiles. The structure of the spectra depends on the smoothness of both the velocity field and the transmitted pulses. The smoother they are and the faster the eigenvalues decrease with the wavelength of the corresponding eigenvectors. In practice, while splay states arising in LIF neurons with δ-pulses have a finite degree of (in)stability along all directions, those emerging in analytic velocity fields have many exponentially small eigenvalues. These results have been derived in the mean field framework, where the system is assumed to be infinite. Although realistic neural networks are finite, the present analysis predicts correctly, even for finite systems, the stability of the eigenmodes associated to the fastest scales and the order of magnitude of the eigenvalues corresponding to slower time scales. Interestingly, the scaling behavior of the eigenvalues carries over to that of the Lyapunov exponents, when the network is periodically forced, suggesting that our results have a relevance that goes beyond the highly symmetric solutions studied in this paper.

Finally, we derived an analytic expression for the Floquet spectra for the fully synchronous state. In this case the exponents associated to the dynamics of the membrane potentials are all identical, as it happens for the diffusive coupling, but here the result is less trivial, due to the fact that one must take into account that arbitrarily close to the solution, the ordering of the neurons may be different. Moreover, the value of the (degenerate) Floquet exponent coincides with the evaporation exponent (van Vreeswijk, 1996; Pikovsky et al., 2001) whenever the pulses are sufficiently smooth, while for discontinuous pulses (like exponential and δ-spikes) the equivalence is lost (see also di Volo et al., 2013).

For discontinuous velocity fields, another important property that has been confirmed by our analysis is the role of the ratio R = N/(T0α) between the width of the single pulse (1/α) and the average interspike interval of the whole network ( = T0/N). In fact, it turns out that the asynchronous regimes can be strongly stable along all directions only when R remains finite in the thermodynamic limit (and is possibly small). This includes the idealized case of δ-like pulses, but also setups where the single pulses are so short that they can be resolved by the single neurons. Mathematically speaking, this result implies that the thermodynamic limit does not commute with the limit of a zero pulse-width. It would be interesting to check to what extent this property extends to more realistic models. A first confirmation result is contained in Pazó and Montbrió (2013), where the authors find a similar property in a network of Winfree oscillators.

= T0/N). In fact, it turns out that the asynchronous regimes can be strongly stable along all directions only when R remains finite in the thermodynamic limit (and is possibly small). This includes the idealized case of δ-like pulses, but also setups where the single pulses are so short that they can be resolved by the single neurons. Mathematically speaking, this result implies that the thermodynamic limit does not commute with the limit of a zero pulse-width. It would be interesting to check to what extent this property extends to more realistic models. A first confirmation result is contained in Pazó and Montbrió (2013), where the authors find a similar property in a network of Winfree oscillators.

Among possible extensions of our analysis, one should definitely mention the inclusion of delay in the pulse transmission. This generalization is far from trivial as it modifies the phase diagram of the possible states (see Bär et al., 2012 for a recent brief overview of the possible scenarios) and it complicates noticeably the stability analysis of the synchronized phase. An analytic treatment of this latter case is reported in Timme et al. (2002) for generic velocity fields and excitatory δ-pulses.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank David Angulo Garcia for the help in the use of symbolic algebra software. Alessandro Torcini acknowledges financial support from the European Commission through the Marie Curie Initial Training Network “NETT,” project N. 289146, as well as from the Italian Ministry of Foreign Affairs for the activity of the Joint Italian-Israeli Laboratory on Neuroscience. Simona Olmi and Alessandro Torcini thanks the Italian MIUR project CRISIS LAB PNR 2011–2013 for economic support and the German Collaborative Research Center SFB 910 of the Deutsche Forschungsgemeinschaft for the kind hospitality at Physikalisch-Technische Bundesanstalt in Berlin during the final write up of this manuscript.

References

Abbott, L. F., and van Vreeswijk, C. (1993). Asynchronous states in networks of pulse-coupled oscillators. Phys. Rev. E 48, 1483. doi: 10.1103/PhysRevE.48.1483

Acebrón, J. A., Bonilla, L. L., Pérez Vicente, C. J., Ritort, F., and Spigler, R. (2005). The Kuramoto model: a simple paradigm for synchronization phenomena. Rev. Mod. Phys. 77, 137–185. doi: 10.1103/RevModPhys.77.137

Aronson, D. G., Golubitsky, M., and Krupa, M. (1991). Coupled arrays of Josephson junctions and bifurcation of maps with SN symmetry. Nonlinearity 4, 861–902. doi: 10.1088/0951-7715/4/3/013

Ashwin, P., King, G. P., and Swift, J. W. (1990). Three identical oscillators with symmetric coupling. Nonlinearity 3, 585–601. doi: 10.1088/0951-7715/3/3/003

Bär, M., Schöll, E., and Torcini, A. (2012). Synchronization and complex dynamics of oscillators with delayed pulse-coupling. Angew. Chem. Int. Ed. 51, 9489–9490. doi: 10.1002/anie.201205214

Calamai, M., Politi, A., and Torcini, A. (2009). Stability of splay states in globally coupled rotators. Phys. Rev. E 80: 036209. doi: 10.1103/PhysRevE.80.036209

di Volo, M., Livi, R., Luccioli, S., Politi, A., and Torcini, A. (2013). Synchronous dynamics in the presence of short-term plasticity. Phys. Rev. E. 87: 032801. doi: 10.1103/PhysRevE.87.032801

Filatrella, G., Nielsen, A. H., and Pedersen, N. F. (2008). Analysis of a power grid using a Kuramoto-like model. Eur. Phys. J. B 61, 485–491. doi: 10.1140/epjb/e2008-00098-8

Goel, P., and Ermentrout, B. (2002). Synchrony, stability and firing patterns in pulse-coupled oscillators. Physica D 163, 191–216. doi: 10.1016/S0167-2789(01)00374-8

Golomb, D., and Rinzel, J. (1994). Clustering in globally coupled neurons. Physica D 72, 259–282. doi: 10.1016/0167-2789(94)90214-3

Hadley, P., and Beasley, M. R. (1987). Dynamical states and stability of linear arrays of Josephson junctions. App. Phys. Lett. 50, 621–623. doi: 10.1063/1.98100

Hansel, D., Mato, G., and Meunier, C. (1995). Synchrony in excitatory neural networks. Neural Comput. 7, 307. doi: 10.1162/neco.1995.7.2.307

Henon, M. (1982). On the numerical computation of Poincaré maps. Physica D 5, 412–414. doi: 10.1016/0167-2789(82)90034-3

Javaloyes, J., Perrin, M., and Politi, A. (2008). Collective atomic recoil laser as a synchronization transition. Phys. Rev. E 78: 011108. doi: 10.1103/PhysRevE.78.011108

Jin, D. Z. (2002). Fast convergence of spike sequences to periodic patterns in recurrent networks. Phys. Rev. Lett. 89, 208102. doi: 10.1103/PhysRevLett.89.208102

Kuramoto, Y. (1991). Collective synchronization of pulse-coupled oscillators and excitable units. Physica D 50, 15–30. doi: 10.1016/0167-2789(91)90075-K

Luccioli, S., Olmi, S., Politi, A., and Torcini, A. (2012). Collective dynamics in sparse networks. Phys. Rev. Lett. 109: 138103. doi: 10.1103/PhysRevLett.109.138103

Monteforte, M., and Wolf, F. (2010). Dynamical entropy production in spiking neuron networks in the balanced state. Phys. Rev. Lett. 105: 268104. doi: 10.1103/PhysRevLett.105.268104

Mirollo, R. E., and Strogatz, S. H. (1990). Synchronization of pulse-coupled biological oscillators. SIAM Journal on Applied Mathematics 50, 1645–1662. doi: 10.1137/0150098

Nichols, S., and Wiesenfield, K. (1992). Ubiquitous neutral stability of splay-phase states. Phys. Rev. A 45, 8430–8345. doi: 10.1103/PhysRevA.45.8430

Olmi, S., Livi, R., Politi, A., and Torcini, A. (2010). Collective oscillations in disordered neural network. Phys. Rev. E 81: 046119. doi: 10.1103/PhysRevE.81.046119

Olmi, S., Politi, A., and Torcini, A. (2012). Stability of the splay state in networks of pulse-coupled neurons. J. Math. Neurosci. 2, 12. doi: 10.1186/2190-8567-2-12

Pazó, D., and Montbrió, E. (2013). Low-dimensional dynamics of populations of pulse-coupled oscillators. arXiv:1305.4044 [nlin.AO].

Pikovsky, A., Popovych, O., and Maistrenko, Y. (2001). Resolving clusters in chaotic ensembles of globally coupled identical oscillators. Phys. Rev. Lett. 87: 044102. doi: 10.1103/PhysRevLett.87.044102

Pikovsky, A., Rosenblum, M., and Kurths, J. (2003). Synchronization: A Universal Concept in Nonlinear Sciences. Cambridge: Cambridge University Press.

Popovych, O. V., Maistrenko, Y. L., and Tass, P. A. (2005). Phase chaos in coupled oscillators. Phys. Rev. E. 71: 065201(R). doi: 10.1103/PhysRevE.71.065201

Renart, A., de la Rocha, J., Bartho, P., Hollender, L., Parga, N., Reyes, A., et al. (2010). The asynchronous state in cortical circuits. Science 327, 587–590. doi: 10.1126/science.1179850

Timme, M. (2006). Does dynamics reflect topology in directed networks? Europhys. Lett. 76, 367. doi: 10.1209/epl/i2006-10289-y

Timme, M., and Wolf, F. (2008). The simplest problem in the collective dynamics of neural networks: is synchrony stable? Nonlinearity 21, 1579. doi: 10.1088/0951-7715/21/7/011

Timme, M., Wolf, F., and Geisel, T. (2002). Coexistence of regular and irregular dynamics in complex networks of pulse-coupled oscillators. Phys. Rev. Lett. 89, 258701. doi: 10.1103/PhysRevLett.89.258701

Treves, A. (1993). Mean-field analysis of neuronal spike dynamics. Network Comput. Neural Syst. 4, 259–284. doi: 10.1088/0954-898X/4/3/002

van Vreeswijk, C. (1996). Partial synchronization in populations of pulse-coupled oscillators. Phys. Rev. E 54, 5522. doi: 10.1103/PhysRevE.54.5522

van Vreeswijk, C., Abbott, L. F., and Ermentrout, G. B. (1994). When inhibition not excitation synchronizes neural firing. J. Comput. Neurosci. 1, 313. doi: 10.1007/BF00961879

Watanabe, S., and Strogatz, S. H. (1994). Constants of motion for superconducting Josephson arrays. Physica D 74, 197–253. doi: 10.1016/0167-2789(94)90196-1

Zillmer, R., Livi, R., Politi, A., and Torcini, A. (2006). Desynchronization in diluted neural networks. Phys. Rev. E 75: 036203. doi: 10.1103/PhysRevE.74.036203

Zillmer, R., Livi, R., Politi, A., and Torcini, A. (2007). Stability of the splay state in pulse–coupled networks. Phys. Rev. E 76: 046102. doi: 10.1103/PhysRevE.76.046102

Appendices

A. Fourier Components of the Phase Response Curve

In this appendix we briefly outline the way the explicit expression of An and Bn, defined in Equation (23), can be derived in the large n limit for a velocity field F(X) that is either discontinuous, or continuous with discontinuous first derivatives at the border of the definition interval.

The integration interval [0, 1] appearing in Equation (23) is splitted in n sub-intervals of length 1/n, and the original equation can be rewritten as

For n sufficiently large we can assume that the variation of 1/G(y) is quite limited within each sub-interval, and we can approximate the function as follows, up to the second order

where y0 = (k − 1)/n is the lower extremum of the nth sub-interval.

By inserting these expansions into Equation (A1) and by performing the integration over the n sub-intervals, we can determine an approximate expression for An and Bn. The estimation of An involves integrals containing cos(2πny); it is easy to show that the integral over each sub-interval is zero if the integrand, which multiplies the cosinus term, is constant or linear in y; therefore the only non-zero terms are,

This allows to rewrite

where

It is easy to verify that H2(x) admits an exact primitive and therefore to perform the integral appearing in Equation (A3) and to arrive at the expression reported in Equation (28).

The estimation of Bn is more delicate, since now integrals containing sin(2πny) are involved. The only vanishing integrals over the sub-intervals are those with a constant integrand multiplied by the sinus term and therefore the estimation of Bn reduces to

where

and the non-zero integrals are

and

This allows to rewrite Bn as

We can then return to a continuous variable by rewriting (A8), up to the  (1/n3), as

(1/n3), as

The expression Equation (29) is finally obtained by noticing that the primitive of H2(x) is H1(x)/2, and that

For continuous velocity fields, Bn = 0 so that, we can derive from Equation (26) an exact expression for the real part of the Floquet spectrum in the case of even L (for odd L the equivalent expression is given by Equation (31))

A rigorous validation of the above formula would require going one order beyond in the 1/n expansion of Bn, a task that is utterly complicated. In the specific case of the Quadratic Integrate and Fire neuron (or Θ-neuron) F(X) = a − X(X − 1), it can be, however, analytically verified that Bn is exactly zero. Moreover, Equation (A10) is in very good agreement with the numerically estimated Floquet spectra for two other continuous velocity fields, namely F4(X) and F2(X) as shown in Figures 1, 3, respectively. As a consequence, it is reasonable to conjecture that Equation (29) is correct up to order  (1/n4).

(1/n4).

B. Evaporation Exponent for the LIF Model

In this appendix we determine the (left and right) evaporation exponent for a synchronous state of a network of LIF neurons. This is done by estimating how the potential of a probe neuron, forced by the mean field generated by the network activity, converges toward the synchronized state. The stability analysis is performed by following the evolution of a perturbed probe neuron. Let us first consider an initial condition, where the synchronized cluster has just reached the threshold (Xc = 1), while the probe neuron is lagging behind at a distance δi. Such a distance is equivalent to a delay td

where the subscript “+” means that the velocity field is estimated just after the pulses have been emitted. Over the time td, the potential of the cluster increases from the reset value 0 to

From now on (in LIF neurons), the distance decreases exponentially, reaching the value

after a period T. As a result,

The logarithm of the expansion factor gives the left evaporation exponent

Let us now consider a probe neuron which precedes the synchronized cluster by an amount δi. After a time T the distance becomes

since no reset event has meanwhile occurred. Such a distance corresponds to a delay

where the subscript “−” means that the velocity has now to be estimated just before the pulse emission. By proceeding as before one obtains,

so that the right evaporation exponent writes

It is easy to see that the left and right exponents differ if and only if E− ≠ E+, i.e., if the pulses themselves are not continuous: this is, for instance, the case of exponential and δ pulses.

Keywords: linear stability analysis, splay states, synchronization, neural networks, pulse coupled neurons, Floquet spectrum

Citation: Olmi S, Torcini A and Politi A (2014) Linear stability in networks of pulse-coupled neurons. Front. Comput. Neurosci. 8:8. doi: 10.3389/fncom.2014.00008

Received: 12 November 2013; Paper pending published: 13 December 2013;

Accepted: 13 January 2014; Published online: 04 February 2014.

Edited by:

Tobias A. Mattei, Ohio State University, USAReviewed by:

Marc Timme, Max Planck Institute for Dynamics and Self Organization, GermanyTobias A. Mattei, Ohio State University, USA

Copyright © 2014 Olmi, Torcini and Politi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alessandro Torcini, Consiglio Nazionale delle Ricerche, Istituto dei Sistemi Complessi, Via Madonna del Piano 10, I-50019 Sesto Fiorentino, Italy e-mail:YWxlc3NhbmRyby50b3JjaW5pQGNuci5pdA==

Simona Olmi

Simona Olmi Alessandro Torcini

Alessandro Torcini Antonio Politi

Antonio Politi