94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

HYPOTHESIS AND THEORY article

Front. Comput. Neurosci., 26 April 2012

Volume 6 - 2012 | https://doi.org/10.3389/fncom.2012.00024

We consider approaches to brain dynamics and function that have been claimed to be Darwinian. These include Edelman’s theory of neuronal group selection, Changeux’s theory of synaptic selection and selective stabilization of pre-representations, Seung’s Darwinian synapse, Loewenstein’s synaptic melioration, Adam’s selfish synapse, and Calvin’s replicating activity patterns. Except for the last two, the proposed mechanisms are selectionist but not truly Darwinian, because no replicators with information transfer to copies and hereditary variation can be identified in them. All of them fit, however, a generalized selectionist framework conforming to the picture of Price’s covariance formulation, which deliberately was not specific even to selection in biology, and therefore does not imply an algorithmic picture of biological evolution. Bayesian models and reinforcement learning are formally in agreement with selection dynamics. A classification of search algorithms is shown to include Darwinian replicators (evolutionary units with multiplication, heredity, and variability) as the most powerful mechanism for search in a sparsely occupied search space. Examples are given of cases where parallel competitive search with information transfer among the units is more efficient than search without information transfer between units. Finally, we review our recent attempts to construct and analyze simple models of true Darwinian evolutionary units in the brain in terms of connectivity and activity copying of neuronal groups. Although none of the proposed neuronal replicators include miraculous mechanisms, their identification remains a challenge but also a great promise.

Edelman (1987) published a landmark book with Neural Darwinism and The Theory of Neuronal Group Selection as its title and subtitle, respectively. The view advocated in the book follows, in general, arguably a long tradition, ranging from James (1890) up to Edelman himself, operating with the idea that complex adaptations in the brain arise through some process similar to natural selection (NS). The term “Darwinian” in the title cannot be misunderstood to indicate this fact. Interestingly, the subtitle by the term “group selection” seems to refer to a special kind of NS phenomenon, called group selection [the reader may consult the textbook by Maynard Smith (1998) for many of the concepts in evolutionary biology that we use in this paper]. The expectation one has is then that the mapping between aspects of neurobiology and evolutionary biology has been clearly laid out. This is rather far from the truth, however. This is immediately clear from two reviews of Edelman’s book: one by Crick (1989), then working in neuroscience and another by Michod (1988, 1990), an eminent theoretical evolutionary biologist. The appreciation by these authors of Edelman’s work was almost diametrically opposite. Michod could not help being baffled himself. In a response to Crick he wrote; “Francis Crick concludes that ‘I have not found it possible to make a worthwhile analogy between the theory of NS and what happens in the developing brain and indeed Edelman has not presented one’ (p. 246). This came as a surprise to me, since I had reached a completely opposite conclusion” (Michod, 1990, p. 12). Edelman, Crick, and Michod cannot be right at the same time. But they can all be wrong at the same time. The last statement is not meant to be derogatory in any sense: we are dealing with subtle issues that do matter a lot! It is easy to be led astray in this forest of concepts, arguments, models, and interpretations. We are painfully aware of the fact that the authors of the present paper are by no means an exception. The aim of this paper is fourfold: (i) to show how all three authors misunderstood Darwinian dynamics in the neurobiological context; (ii) to show that at least two different meanings of the term “selection” are confused and intermingled; (iii) to propose that a truly Darwinian approach is feasible and potentially rewarding; and (iv) to discuss to what extent selection (especially of the Darwinian type) can happen at various levels of neurobiological organization.

We believe that a precondition to success is to have some professional training in the theory (at least) of both the neural and cognitive sciences as well as of evolution. Out of this comes a difficulty: neurobiologists are unlikely to follow detailed evolutionary arguments and, conversely, evolutionary biologists may be put off by many a detail in the neurosciences. Since the readership of this paper is expected to sit in the neurobiology/cognitive science corner, we thought that we should explain some of the evolutionary items involved in sufficient detail. It is hard to define in advance what “sufficient” here means: one can easily end up with a book (and one should), but a paper has size limitations. If this analysis were to stimulate a good number of thinkers in both fields, the authors would be more than delighted.

Edelman argued for the applicability of the concepts of selection at the level of neuronal groups. Put simply, it is a group of neurons that have a sufficiently tightly knit web of interactions internally so that they can be regarded as a cohesive unit (“a set of more or less tightly connected cells that fire predominantly together,” Edelman, 1987, p. 198), demonstrated by the fact that some groups react to a given stimulus differentially, and groups that react better get strengthened due to plasticity of the synapses in the group, whereas others get weakened. There are two assumptions in the original Edelman model: synaptic connections are given, to begin with (the primary repertoire); and groups form and transform through the modifications of their constituent synapses. Where does selection come in?

As Crick (1989) noted: “What can be altered, however, is the strength of a connection (or a set of connections) and this is taken to be analogous to an increase in cell number in (for example) the immune system… This idea is a legitimate use of the selectionist paradigm… Thus a theory might well be called… ‘The theory of synaptic selection (TSS)’. But this would describe almost all theories of neural nets” (Crick, 1989, p. 241). We shall come back to this issue because in the meantime TSS has advanced. Let us for the time being assume that whereas a selectionist view of synapse dynamics might be trivially valid, such a view at levels above the synapse is highly questionable. Let us read Michod on the relation between NS and neuronal group selection (NGS): “We can now state the basic analogy between NS and NGS. In NS, differences in the adaptation of organisms to an environment lead to differences in their reproductive success, which, when coupled with rules of genetic transmission, lead to a change in frequency of genotypes in a population. In NGS, differences in receptive fields and connectivity of neuronal groups lead to differences in their initial responses to a stimulus, which, when coupled with rules of synaptic change, lead to a change in probabilities of further response to the stimulus.” Note that reproductive success (fitness) is taken to be analogous to the probability of responding to a stimulus. It should be clear that Michod thinks that the analogy is sufficiently tight, although neuronal groups (and normally their constituent neurons) do not reproduce. How can then NGS be Darwinian, one might ask? What is meant by selection here? We propose that sense can be made in terms of a special formalism, frequently used in evolutionary biology, that of the eminent Price (1970), who made two seminal contributions to evolutionary biology. One of them is the Price equation of selection.

If it is possible to describe a trait (e.g., activation of a neuronal group) and the covariance between that trait and its probability of it occurring again in the future, then Price’s equation applies. It states that the change in some average trait z is proportional to the covariance between that trait zi and its relative fitness wi in the population and other transmission biases E (e.g., due to mutation, or externally imposed instructed changes)…

where w is average fitness in the population. It is the first term that explains the tendency for traits that are positively correlated with fitness to increase in frequency. Note that there is no reference to reproduction here, except through implication by the term “fitness” that is not necessarily reproductive fitness in general. This is a subtle point of the utmost importance, without the understanding of which it is useless to read this paper further. The Price equation (in various forms) has been tremendously successful in evolutionary biology, one of Price’s friends: Hamilton (1975) used it also for a reformulation of his theory of kin selection (one of the goals of which is to explain the phenomenon of altruism in evolution). Approaches to multilevel selection (acting, for example, at the level of organisms and groups at the same time) tend to rely on this formulation also (Damuth and Heisler, 1988). Note that Michod is a former student of Hamilton, and that he is also an expert on the theory of kin selection. Although he does not refer to Price in the context of NGS, he does express a view that is very much in line with the Price equation of selection.

One might suspect, having gotten thus far, that there is some “trouble” with the Price equation, and indeed that is the case, we believe, and this unrecognized or not emphasized feature has generated more trouble, including the problems around NGS. Let us first state, however, where we think the trouble is not. Crick (1990) writes, responding to Michod: “It is very loose talk to call organisms or populations ‘units of selection,’ especially as they behave in rather different ways from bits of DNA or genes, which are genuine units of selection…” This is an interesting case when the molecular biologist/neurobiologist Crick teaches something on evolution to a professional evolutionary biologist. One should at least be a bit skeptical at this point. If one looks at models of multilevel selection, there is usually not much loose talk there, to begin with. Second, Crick (without citation) echoes Dawkins’ (1976) view of the selfish gene. We just mention in passing the existence of the famous paper with the title: “Selfish DNA: the ultimate parasite” by Orgel and Crick(1980, where the authors firmly tie their message to the view of the Selfish Gene; Orgel and Crick, 1980). We shall come back to the problems of levels of selection; suffice to say it here that we are not really worried about this particular concern of Crick, at least not in general.

Our concern lies with the algorithmic deficiency of the Price equation. The trouble is that it is generally “dynamically insufficient”: one cannot solve it progressively across an arbitrary number of generations because of lack of the knowledge of the higher-order moments: one ought to be able either to follow the fate of the distribution of types (as in standard population genetics), or have a way of calculating the higher moments independent of the generation. This is in sharp contrast to what a theoretical physicist or biologist would normally understand under “dynamics.” As Maynard Smith (2008) explains in an interview he does “not understand” the Price equation because it is based on an aggregate, statistical view of evolution; whereas he prefers mechanistic models. Moreover, in a review of Dennett’s, 1995 book “Darwin’s Dangerous Idea,” Maynard Smith writes: “Dennett’s central thesis is that evolution by NS is an algorithmic process. An algorithm is defined in the OED as “a procedure or set of rules for calculation and problem-solving” (Maynard Smith, 1996). The rules must be so simple and precise that it does not matter whether they are carried out by a machine or an intelligent agent; the results will be the same. He emphasizes three features of an algorithmic process. First, “substrate neutrality”: arithmetic can be performed with pencil and paper, a calculator made of gear wheels or transistors, or even, as was hilariously demonstrated at an open day at one of the authors son’s school, jets of water. It is the logic that matters, not the material substrate. Second, mindlessness: each step in the process is so simple that it can be carried out by an idiot or a cogwheel. Third, guaranteed results: whatever it is that an algorithm does, it does every time (although, as Dennett emphasizes, an algorithm can incorporate random processes, and so can generate unpredictable results)”. Clearly, Price’s approach does not give an algorithmic view of evolution. Price’s equation is dynamically insufficient. It is a very high level (a computational level) description of how frequencies of traits should change as a function of covariance between traits and their probability of transmission, and other transmission bias effects that alter traits. It does not constrain the dynamical equations that should determine transmission from one generation to another, i.e., it is not an algorithmic description.

In fact, Price’s aim was to have an entirely general, non-algorithmic approach to selection. This has its pros and cons. In a paper published well after his death, Price (1995) writes: “Two different main concepts of selection are employed in science… Historically and etymologically, the meaning select (from se-aside + legere to gather or collect) was to pick out a subset from a set according to a criterion of preference or excellence. This we will call subset selection… Darwin introduced a new meaning (as Wallace, 1916, pointed out to him), for offspring are not subsets of parents but new entities, and Darwinian NS… does not involve intelligent agents who pick out… These two concepts are seemingly discordant. What is needed, in order to make possible the development of a general selection theory, is to abstract the characteristics that Darwinian NS and the traditional subset selection have in common, and then generalize” (Price, 1995, p. 390). It is worth quoting Gardner (2008) who, in a primer on the Price equation, writes: “The importance of the Price equation lies in its scope of application. Although it has been introduced using biological terminology, the equation applies to any group of entities that undergoes a transformation. But despite its vast generality, it does have something interesting to say. It separates and neatly packages the change due to selection versus transmission, giving an explicit definition for each effect, and in doing so it provides the basis for a general theory of selection. In a letter to a friend, Price explained that his equation describes the selection of radio stations with the turning of a dial as readily as it describes biological evolution” (Gardner, 2008, p. R199). In short, the Price equation was not intended to be specific to biological selection; hence it is no miracle that it cannot substitute for replicator dynamics.

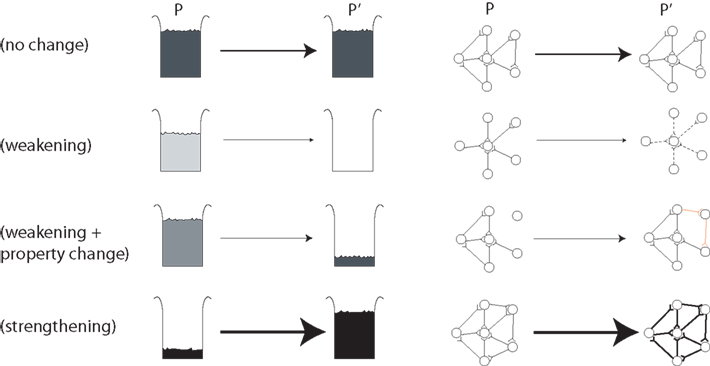

Before we move on we have to show how this generalized view of selection exactly coincides with the view of how the neuronal groups of Edelman are thought to increase or decrease in weight (Figure 1).

Figure 1. The general selection model of price (left) and its application to neuronal groups (right).

A population P of beakers contains amounts wi of solution of varying concentrations xi (dark = high concentration, light = low concentration). In standard selection for higher concentration liquid, low concentration liquids have a lower chance of transmission to the next generation P′ (top two rows). In “Darwinian selection” two elements are added. The first is the capacity for property change (or transmission bias), see row 3 in which the liquid is “mutated” between generations. The second is strengthening in which the offspring can exceed parents in number and mass, see row 4 in which the darkest liquid has actually increased in quantity. To quote Price (1995): “Selection on a set P in relation to property x is the act or process of producing a corresponding set P′ in a way such that the amounts wi′ (or some function of them such as the ratios wi′/wi) are non-randomly related to the corresponding xi values.” (p. 392). The right side of Figure 1 shows one interpretation of neuronal groups within the same general selection framework in which the traits are the pattern of connectivity of the neuronal group, and the amounts are the probability of activation of that neuronal group. In the top row there is no change in the neuronal group between populations P and P′. In the second row the neuronal group is weakened, shown as lighter synaptic connections between neurons, although the trait (connectivity pattern) does not change. In the third row the neuronal group is weakened (reduced probability of being activated) but is also “mutated” or undergoes property change (transmission bias) with the addition of two new synaptic connections in this case. In the final row a neuronal group with the right connectivity but a low probability of being activated gets strengthened. We conclude that Edelman’s theory of NGS is firmly selectionist in this sense of Price!

What is then the approach that is more mechanistic, suggestive of an algorithm that could come as a remedy? We suggest it is the units of evolution approach. There are several alternative formulations (itself a nice area of study); here we opt for Maynard Smith’s formulation that seems to us the most promising for our present purposes. JMS (Maynard Smith, 1986) defined a unit of evolution as any entity that has the following properties. The first property is multiplication; the entity produces copies of itself that can make further copies of themselves: one entity produces two, two entities produce four, four entities produce eight, in a process known as autocatalytic growth. Most living things are capable of autocatalytic growth, but there are some exceptions, for example, sterile worker ants and mules do not multiply and so whilst being alive, they are not units of evolution (Szathmary, 2000; Gánti, 2003; Szathmáry, 2006). The second requirement is inheritance, i.e., there must be multiple possible kinds of entity, each kind propagating itself (like begets like). Some things are capable of autocatalytic growth and yet do not have inheritance, for example fire can grow exponentially for it is the macroscopic phenomenon arising from an autocatalytic reaction, yet fire does not accumulate adaptations by NS. The third requirement is that there must be variation (more accurately: variability): i.e., heredity is not completely exact. If among the hereditary properties we find some that affect the fecundity and/or survival of the units, then in a population of such units of evolution, NS can take place. There is a loose algorithmic prescription here because the definition explicitly refers to operations, such as multiplication, information transmission, variation, fitness mapping, etc. We can conclude that neuronal groups are not units of evolution in this sense. It then follows that the picture portrayed by Edelman cannot be properly named neural Darwinism! This being so despite the fact that it fits Price’s view of general selection, but not specifically Darwinian natural selection. We shall see that this difference causes harsh algorithmic differences in the efficiency of search processes.

Now that we see what is likely to have been behind the disagreements, we would like to consider another aspect in this section: the problem of transmission bias (property change). In biological evolution this can be caused by, for example, environmental change, mutation, or recombination (whenever heredity is not exact). And this can create a problem. Adaptations arise when the covariance term in Eq. 1is significant relative to the transmission bias. One of Crick’s criticisms can again be interpreted in this framework: “I do not consider that in selection the basic repertoire must be completely unchanging, though Edelman’s account suggests that he believes this is usually true at the synaptic level. I do feel that in Edelman’s simulation of the somatosensory cortex (Neural Darwinism, p. 188 onward) the change between an initial confused mass of connections and the final state (showing somewhat distinct neuronal groups) is too extreme to be usefully described as the selection of groups, though it does demonstrate the selection of synapses” (Crick, 1990, p. 13). He also proposes: “If some terminology is needed in relation to the (hypothetical) neuronal groups, why not simply talk about ‘group formation’?” (Crick, 1989, p. 247). First, we concede that indeed there is a problem here with the relative weight of the covariance and transmission bias in terms of the Price formulation. There are two possible answers. One is that as soon as the groups solidify, there is good selection sensu Price in the population of groups. But the more exciting answer is that such group formation is not unknown in evolutionary biology either. One of us has spent by now decades analyzing what is called the major transitions in evolution (Maynard Smith and Szathmáry, 1995). One of the crucial features of major transitions is the emergence of higher-level units from lower level ones, or – to borrow Crick’s phrase – formation of higher units (such as protocells from naked genes or eukaryotic cells from separate microbial lineages). The exciting question is this one: could it be that the formation of Edelman’s groups is somehow analogous to a major transition in evolutionary biology? Surely, it cannot be closely analogous because neuronal groups do not reproduce. But if we take a Pricean view, the answer may turn out to be different. We shall return to this question, we just wet the appetite of the reader now by stating that in fact there is a Pricean approach to major transitions! Indeed, the recent formation of a secondary repertoire of neuronal groups arising from formation and selection of connectivity patterns between neuronal groups appears to be an example of such a transition. However, we note that whether we are referring to the primary repertoire of assemblies, or the secondary repertoire of super assemblies (Perin et al., 2011), there is no replication of these forms at either level in NGS.

Although admittedly not in the focus of either Edelman or Crick, it is worthwhile to have a look at synaptic changes to have a clearer view on whether they are subject to selection or evolution, and in what sense. As we have seen, there is a view that selectionism at the level of the synapse is always trivial, but the different expositions have important differences. In this section we briefly look at some of the important alternatives; there is an excellent survey of this and related matters in Weiss (1994).

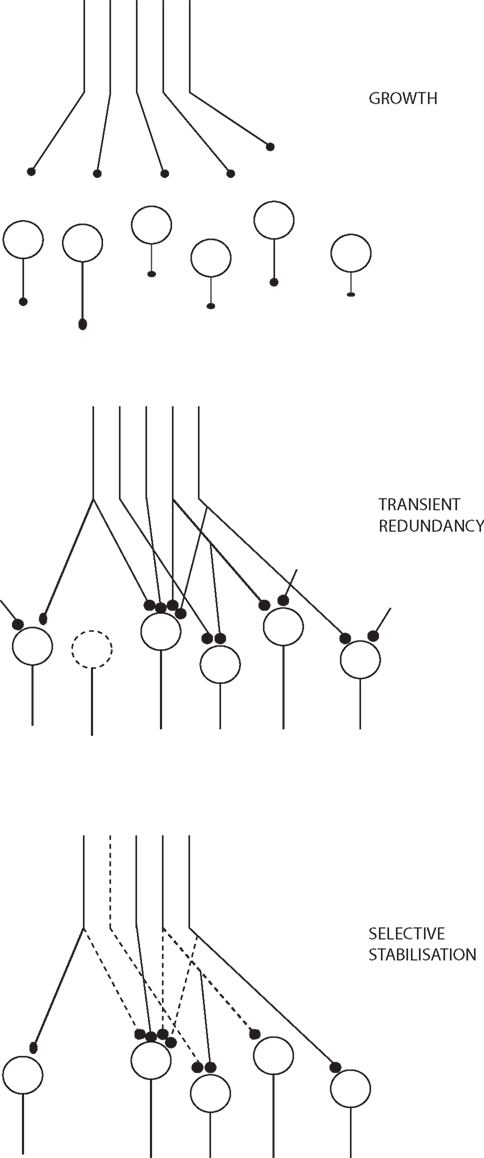

Changeux (1985), Changeux et al. (1973) in his TSS primarily focuses on how the connectivity of the networks becomes established during epigenesis within the constraints set by genetics, based on functional criteria. There is structural transformation of the networks during this maturation. There is a period of structural redundancy, where the number of connections and even neurons is greater than in the mature system (Figure 2). Synapses can exist in stable, labile, and degenerate form. Selective stabilization of functional connections prunes the redundancy to a considerable degree.

Figure 2. Growth and stabilization of synapses, adapted from Changeux (1985).

The question is again to what extent this is selection or a truly Darwinian process. One can readily object that as it is portrayed the process is a one-shot game. An extended period of redundancy formation is followed by an extended period of functional pruning. Since Darwinian evolution unfolds through many generations of populations of units, the original view offered by Changeux is selectionist, but not Darwinian. Again, the whole picture can be conveniently cast in terms of the Price formulation, however.

If it were not a one-shot game, and there were several rounds of synapse formation and selective stabilization, one could legitimately raise the issue of whether one is dealing with generations of evolutionary units in some sense. But this is exactly the picture that seems to be emerging under the modern view of structural plasticity of the adult brain (Chklovskii et al., 2004; Butz et al., 2009; Holtmaat and Sovoboda, 2009). We shall see in a later section that this view has some algorithmic benefits, but for the time being we consider a formulation of synaptic Darwinism that is a more rigorous attempt to build a mapping between some concepts of neuroscience and evolutionary theory. Adams (1998) proposes (consonant with several colleagues in this field) that synaptic strengthening (LTP) is analogous to replication, synaptic weakening (LTD) is analogous to death (disappearance of the copy of a gene), the input array to a neuron corresponds to the genotype, the specification of the output vector by the input vector is analogous to genotype–phenotype mapping, and a (modified) Hebb rule corresponds to the survival of the fittest (selection; Adams, 1998). There is some confusion, though, in an otherwise clear picture, since Adams proposes that something like an organism corresponds to the kind of bundling one obtains when “all neurons within an array receive the same neuromodulatory signal” (p. 434). Here one is uncertain whether the input vector as the “neuronal genotype” is that of these bundled group of axons, or whether he means the input vector of one neuron, or whether it is a matter of context which understanding applies.

We elaborate a bit on the suggestion that Darwin and Hebb shake hands in a modified Hebb rule. We have shown (Fernando and Szathmáry, 2010) that the Oja rule (a modified Hebb rule) is practically isomorphic to an Eigen equation describing replication, mutation, and selection a population of macromolecules. The Eigen (1971) equation reads:

where xi is the concentration of sequence i (of RNA for example), mij is the mutation rate from sequence j to i, Ai is the gross replication rate of sequence i and Qi is its copying fidelity, N is the total number of different sequences, and formally mij= AiQi. The negative term introduces the selection constraint which keeps total concentration constant at the value of c (which can be taken as unity without loss of generality). The relation between the Oja rule and the Eigen equation is tricky. The Oja rule corresponds to a very peculiar configuration of parameters in the Eigen equation. For example, in contrast to the molecular case, here the off-diagonal elements (the mutation rates) are not by orders of magnitude smaller than the diagonal ones (the fitnesses). Moreover, mutational coupling between two replicators is strictly the product of the individual fitness values! In short, Eigen’s equation can simulate Hebbian dynamics with the appropriate parameter values, but the reverse is not generally true: Oja’s rule could not, for example, simulate the classical molecular quasispecies of Eigen in general. This hints at the more general possibility that although formal evolutionary dynamics could hold widely in brain dynamics, it is severely constrained in parameter space so that the outcome is behaviorally useful. A remarkable outcome of the cited derivation is that although there was no consideration of “mutation” in the original setting, there are large effective mutation rates in the corresponding Eigen equation: this coupling ensures correlation detection between the units (synapses or molecules). (Coupling must be represented somehow: in the Eigen equation the only way to couple two different replicators is through mutation. Hence if a molecular or biological population with such strange mutation terms were to exist, it would detect correlation between individual fitnesses.)

The formalistic appearance of synaptic weight change to mutation might with some justification be regarded as gimmickry, i.e., merely an unhelpful metaphor for anything that happens to change through time. So what could be analogous in the case of synapses to genetic mutations? We believe the obvious analog to genetic mutation is structural synaptic change, i.e., the formation of topologies that did not previously exist. Whereas the Eigen equation is a description of molecular dynamics, it is a deterministic dynamical system with continuous variables in which the full state space of the system has been defined at the onset, i.e., the vector of chemical concentrations. It is worth emphasizing that nothing replicates when one numerically solves the Eigen equation. There are no real units of evolution when one solves the Eigen equation, instead the Eigen equation is a model of the concentration changes that could be implemented by units of evolution. It is a model of processes that occur when replicators exist. Real mutation allows the production of entities that did not previously exist, i.e., it allows more than mere subset selection. For example this is the case where the state space being explored is so large that it can only be sparse sampled, e.g., as in a 100 nucleotide sequence, and it is also the case when new neuronal connectivity patterns are formed by structural mutation.

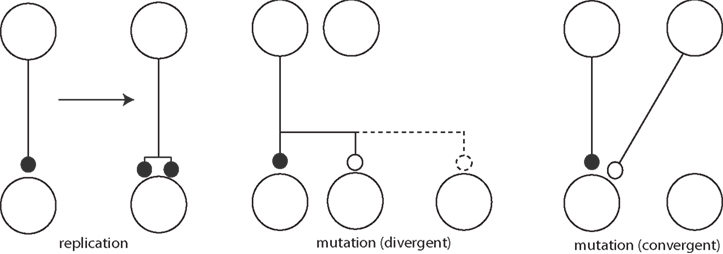

In Hebbian dynamics there are also continuous variables, but in the simplest case there is only growth and no replication of individuals. As Adams put it, “the synaptic equivalent of replication is straightforward… It corresponds to strengthening. If a synapse becomes biquantal, it has replicated” (Adams, 1998, p. 421). Yet this is different from replication in evolution where the two copies normally separate from each other. This aspect will turn out to be crucially important later when we consider search mechanisms.

Adams draws pictures of real replication and mutation of synapses (Figure 3) also. Clearly, these figures anticipate component processes of the now fashionable structural plasticity (Chklovskii et al., 2004). It is this picture that is closely analogous to the dynamics of replicators in evolution. In principle this allows for some very interesting, truly Darwinian dynamics.

Figure 3. Synaptic mutation replication (left) and synaptic mutations (right), adapted from Adams (1998).

The last item in this section is the concept of a “hedonistic synapse” by Seung (2003). This hypothetical mechanism was considered in the context of reinforcement learning. The learning rule is as follows: (1) the probability of release is increased if reward follows release and is decreased if reward follows failure, (2) the probability of release is decreased if punishment follows release and is increased if punishment follows failure. Seung writes: “randomness is harnessed by the brain for learning, in analogy to the way genetic mutation is utilized by Darwinian evolution” (p. 1063) and that “dynamics of learning executes a random walk in the parameter space, which is biased in a direction that increases reward. A picturesque term for such behavior is “hill-climbing,” which comes from visualizing the average reward as the height of a landscape over the parameter space. The formal term is “stochastic gradient ascent” ” (p. 1066). This passage is of crucial importance for our discussion of search algorithms in this paper. The analogy seems to be crystal-clear, especially since it can be used to recall the notion of an “adaptive landscape” by Wright (1932), arguably the most important metaphor in evolution (Maynard Smith, 1988). We shall see later that Seung’s synapse may be hedonistic, but not Darwinian.

We have already touched upon the functioning of the dynamics of neuronal groups as portrayed by Edelman (1987). We shall come back to one key element of NGS at the end of this section.

Now we turn to a complementary approach offered by Changeux (1985), the Theory of Selective Stabilization of Pre-representations (TSSP), which builds on TSS. TSSP elaborates on the question how somatic selection contributes to the functioning of the adult brain (Changeux et al., 1984; Heidmann et al., 1984), i.e., after transient redundancy has been functionally pruned. The first postulate of TSSP is that there are mental object (representations) in the brain, which is a physical state produced by an assembly (group) of neurons. Pre-representations are generated before and during interaction with the environment, and they come in very large numbers due to the spontaneous but correlated activity of neurons. Learning is the transformation, by selective stabilization, of some labile pre-representations into stored representations. Primary percepts must resonate (in space or time) with pre-representations in order to become selected. To quite him: “These pre-representations exist before the interaction with the outside world. They arise from the recombination of pre-existing sets of neurons or neuronal assemblies, and their diversity is thus great. On the other hand, they are labile and transient. Only a few of them are stored. This storage results from a selection!” (Changeux, 1985, p. 139). No explanation is given of how a beneficial property of one group would be transmitted when it is “recombined” with another group. The reticular formation is proposed to be responsible for the selection, by re-entry of signals from cortex to thalamus and back to cortex, which is a means of establishing resonance between stored mental objects and percepts.

Changeux assumes the formation of pre-representations occurs spontaneously from a large number of neurons such that the number of possible combinations is astronomical, and that this may be sufficient to explain the diversity of mental representations, images, and concepts. But how can such a large space of representations be searched rapidly and efficiently? Changeux addresses this by suggesting that heuristics act on the search through pre-representations, notably, he allows recombination between neuronal assemblies, writing “this recombining activity would represent a ‘generator of hypotheses,’ a mechanism of diversification essential for the geneses of pre-representations and subsequent selection of new concepts.” (Changeux, 1985, p167). However, no mechanism for recombination of functions is presented.

Changeux and Dehaene (1989) offer a unified account of TSS and TSSP and their possible contributions to cognitive functions. “The interaction with the outside world would not enrich the landscape, but rather would select pre-existing energy minima or pre-representations and enlarge them at the expense of other valleys.” (p. 89). In an elegant model of temporal sequence learning, Dehaene et al. (1987) show that “In the absence of sensory inputs, starting from any initial condition, sequences are spontaneously produced. Initially these pre-representations are quasirandom, although they partially reveal internal connectivity, but very small sensory weights (inferior to noise level) suffice to influence these productions.” (p. 2731). “The learnable sequences must thus belong both to the pre-representations and to the sensory percepts received” (pp. 2730–2731). Noise plays a role in the dynamics of the system.

Later models incorporate stabilization of the configurations in a global workspace by internal reward and attention signals (Dehaene et al., 1998). In a model of the Stroop task, a global workspace is envisaged as having a repertoire of discrete activation patterns, only one of which can be active at once, and which can persist independent of inputs with some stability. This is meant to model persistent activity of neurons in prefrontal cortex. These patterns constitute the selected entity (pre-representation), which “if negatively evaluated, or if attention fails, may be spontaneously and randomly replaced.” Reward allows restructuring of the weights in the workspace. The improvement in performance depends on the global workspace having sufficient variation in patterns at the onset of the effortful task, perhaps with additional random variability, e.g., Dehaene and Changeux (1997) write that “in the absence of specific inputs, prefrontal clusters activate with a fringe of variability, implementing a ‘generator of diversity’.” The underlying search algorithm is nothing more sophisticated than a random walk through pre-representation space, biased by reward! It truly stretches one’s imagination how such a process could be sufficient for language learning, for example, which is much more complex than the Stoop task but not effortful in the sense of Changeux and Dehaene.

A final note on a common element of the similar theories of Changeux and Edelman is in order. Sporns and Edelman (1993) present a tentative solution the Bernstein problem in the development of motor control. Besides the already discussed selective component processes of NGS, they state: “The ‘motor cortex’ generates patterns of activity corresponding to primary gestural motions through a combination of spontaneous activity (triggered by a component of Gaussian noise) and by responses to sensory inputs from vision and kinesthetic signals from the arm.” Thus noise is again a source of the requisite variety (p. 971).

So, again, “how much” Darwinism is there in these theories? Changeux and Dehaene (1989) insist: “the thesis we wish to defend in the following is the opposite; namely, that the production and storage of mental representations, including their chaining into meaningful propositions and the development of reasoning, can also be interpreted, by analogy, in variation–selection (Darwinian) terms within psychological time-scales.” We actually agree with that, but the trouble is that algorithmically the search mechanisms they present are very different from that of any evolutionary algorithm proper, and seem to correspond to stochastic gradient ascent, as explained by Seung (2003) for his hedonistic synapses, even if there is a population of stochastic hill-climbers. Something is crucially missing!

The reader might think that this is a digression. Not so, there are some crucial lessons to be learnt from this example. The production of functional molecules is critical for life and also for an increasing proportion of industry. It is also important that genes represent what in cognitive science has been called a “physical symbol system” (Fodor and Pylyshyn, 1988; Nilsson, 2007; Fernando, 2011a). Today, the genetic code is an arguably symbolic mapping between nucleotide triplets and amino acids (see Maynard Smith, 2000 for a characteristically lucid account of the concept of information in biology; Maynard Smith, 2000). Moreover, enzymes “know” how to transform a substrate into a product, much like a linguistic rule “knows” how to act on some linguistic constructions to produce others. How can such functionality arise? We must understand both, how the combinatorial explosion of possibilities (sequences) is generated, and how selection for adaptive sequences is implemented.

Combinatorial chemistry is one of the possible approaches. The aim is to generate-and-test a complete library of molecules up to a certain length. The different molecules must be tested for functionality, be identified as distinct sequences, and then amplified for lab or commercial production. It is easy to see that this approach is limited by combinatorial explosion. Whereas complete libraries of oligopeptides can be produced, this is impossible for polypeptides (proteins). The snag is that enzymes tend to be polymers. For proteins, there are 20100 possible polypeptide sequences of length 100, which equal 10130, a hyper-astronomically large number. In any realistic system an extremely tiny fraction of these molecules can be synthesized. The discrete space of possible sequences is heavily under-occupied, or – to use a phrase that should ring a bell for neuroscientists – sparsely populated. In order to look for functional sequences one needs an effective search mechanism. That search mechanism is in vitro genetics and selection. Ultimately, it is applied replicator dynamics. This technology yielded spectacular results. We just mention the case of ribozymes, catalytic RNA molecules that are very rare in contemporary biochemistry but may have been dominating in the “RNA world” before the advent of the genetic code (c.f. Maynard Smith and Szathmáry, 1995). An impressive list of such ribozymes has been generated by in vitro evolution (Ellington et al., 2009).

Of course, the mapping of RNA sequence to a functional 3D molecule is highly degenerate, meaning that many different sequences can perform the same function. But what does this mean in terms of the probability of finding a given function in a library of random RNA molecules? The number of random sequences in a compositionally unbiased pool of RNA molecules, 100 nucleotides long, required for a 50% probability of finding at least one functional molecule is, in the case of the isoleucine aptamer on the order 109, and in case of the hammerhead ribozyme on the order of 1010 (Knight et al., 2005). This is now regarded as an inflated estimate, due to the existence of essential but not conserved parts (Majerfeld et al., 2010); thus the required numbers are at least an order of magnitude larger. Note that these are simple functionalities: the first case is just binding rather than catalysis, and the second case is an “easy” reaction to catalyze for RNA molecules. Simulation studies demonstrate that when mutation and selection are combined, a very efficient search for molecular functionality is possible: typically, 10,000 RNA molecules going through about a 100 generations of mutation and selection are sufficient to find, and often fix, the target (Stich and Manrubia, 2011).

The reason for us presenting the molecular selection/evolution case is as follows. Given the unlimited information potential of the secondary repertoire, configurations from which can only be sparsely sampled as in RNA sequence space, the advantages of Darwinian search are likely to also apply. Molecular technologies show that parallel search for molecular functionalities is efficient with replication with mutation and selection if the search space is vast, and occupation of sequence space is sparse. We shall return to the algorithmic advantages of this paradigm later.

We have already raised the issue whether the developmental origin and consolidation of neuronal groups might be analogous in some sense to the major transitions in evolution. Based on the foregoing analysis this cannot apply in the Darwinian sense since while synapses can grow and reproduce in the sense of Adams (1998), neuronal groups do not reproduce. Yet, the problem is more tricky than this, because – as we have seen – selection does apply to neuronal groups in terms of the Price equation, and the Price equation has been used to describe aspects of multilevel selection (Heisler and Damuth, 1987; Damuth and Heisler, 1988), including those of the major transitions in evolution (Okasha, 2006). In concrete terms, if one defines a Price equation at the level of the groups, the effect of intra-group selection can be substituted for the transmission bias (see Marshall, 2011 for a technical overview), which makes sense because selection within groups effectively means group identity can be changed due to internal dynamics.

As Damuth and Heisler (1988) write: “A multilevel selection situation is one in which we wish to consider simultaneously selection occurring among entities at two or more different levels in a nested biological hierarchy (such as organisms within groups)” (p. 408). “There are two perspectives in this two-level situation from which we may ask questions about selection. First, we may be interested in the relative fitnesses of the individuals and in how their group membership may affect these fitnesses and thus the evolution of individual characters in the whole population of individuals. Second, we may be interested in the changing proportions of different types of groups as a result of their different propensities to go extinct or to found new groups (i.e., the result of different group fitnesses); of interest is the evolution of group characters in the population of groups. In this case, we have identified a different kind of fitness than in the first, a group-level fitness that is not simply the mean of the fitnesses of the group’s members. Of course, individual fitnesses and group fitnesses may be correlated in some way, depending on the biology. But in the second case we are asking a fundamentally different question that requires a focus on different properties – a question explicitly about differential success of groups rather than individuals” (p. 409). It is now customary to call these two perspectives multilevel selection I (MLS1) and multilevel selection II (MLS2) in the biological literature. In the view of Okasha (2006) major transitions can be mirrored by the degree to which these two perspectives apply: in the beginning there is MLS1, and in the end there is MLS2. In between he proposes to have intermediates stages where “collective fitness is not defined as average particle fitness but is proportional to average particle fitness” (p. 238).

It is tempting to apply this picture to the synapses → neuronal group transition, but one should appreciate subtle, tacit assumptions of the evolutionary models. Typically it is assumed that in MLS1 the groups are transient, that there is no population structure within groups, and that each generation of new groups is formed according to some probabilistic distribution. However, in the brain the process does not begin with synapses reproducing before the group is formed, since the topology and strength of synaptic connections defines the group. Synapses, even if labile, are existing connections not only topologically, but also topographically.

In sum, one can formally state that there is a major transition when neuronal groups emerge and consolidate in brain dynamics, and that there are two levels of selection, but only if one adopts a generalized (as opposed to strictly Darwinian) Pricean view of the selection, since neither neurons nor neuronal groups replicate. It is also worth saying that a formal analysis of synapses and groups in terms the Price equation, based on dynamical simulations of the (emerging) networks has never been performed. We might learn something by such an exercise.

Many have drawn analogies between learning and evolution. Bayesian inference has proven a very successful model to characterize aspects of brain function at the computational level, as have Darwinian dynamics accounts for evolution of organisms at the algorithmic level. It is natural to seek a relationship between the two. A few people (Zhang, 1999; Harper, 2009; Shalizi, 2009) have realized the connection in formal terms. Here we follow Harper’s (2009) brief and lucid account. Let H1, H2 …, Hn be a collection of hypotheses; then according to Bayes’ theorem:

where the process iteratively adjusts the probability of the hypotheses in line with the evidence from each new observation E. There is a prior distribution [P(H1), …, P(Hn)], the probability of the event given a hypothesis is given by P(E|Hi), P(E) serves as normalization, and the posterior distribution is [P(H1|E), …, P(Hn|E)]. Compare this with the discrete-time replicator equation:

where xi is the relative frequency of type in the population, prime means next generation, and fi is its per capita fitness that in general may depend on the population state vector x. (Note that this is a difference to Eq. 3where the probability of a hypothesis does not depend on any other hypothesis). It is fairly straightforward to appreciate the isomorphism between the two models. Both describe at a purely computational (not algorithmic) level what happens during Bayesian reasoning and NS, and both equations have the same form. The following correspondences apply: prior distribution ←→ population state now, new evidence ←→ fitness landscape, normalization ←→ mean fitness, posterior distribution ←→ population state in the next generation. This isomorphism is not vacuously formalistic. There is a continuous-time analog of the replicator Eq. 4, of which the Eigen Eq. 2is a concrete case. It can be shown that the Kullback–Leibler information divergence between the current population vector and the vector corresponding to the evolutionarily stable state (ESS) is a local Lyapunov function of the continuous-time replicator equation; the potential information plays a similar role for discrete-time dynamics in that the difference in potential information between two successive states decreases in the neighborhood of the ESS along iteration of the dynamic. Moreover, the solutions of the replicator Eq. 4can be expressed in terms of exponential families (Harper, 2009), which is important because exponential families play an analogous role in the computational approach to Bayesian inference.

Recalling that we said that Darwinian NS that takes place when there are units of evolution is an algorithm that can do computations described by the Eigen equation, one feels stimulated to raise the idea: if the brain is computationally a Bayesian device, than it might be doing Bayesian computation by using a Darwinian algorithm (a “Darwin machine”; Calvin, 1987) containing units of evolution. Given that it is also having to search in a high dimensional space, perhaps the same benefits of a Darwinian algorithm will accrue? The isomorphisms do not give direct proof of this, because of the following reason. Whereas Eq. 3 is a Bayesian calculation, Eq. 4is not an evolutionary calculation, it is a model of a population doing, potentially, an evolutionary calculation.

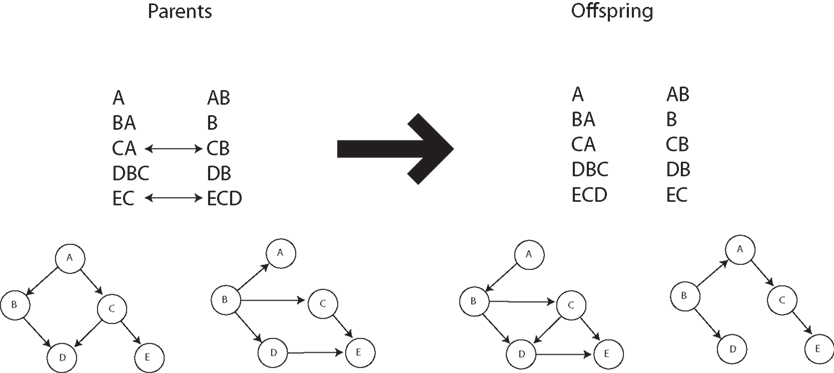

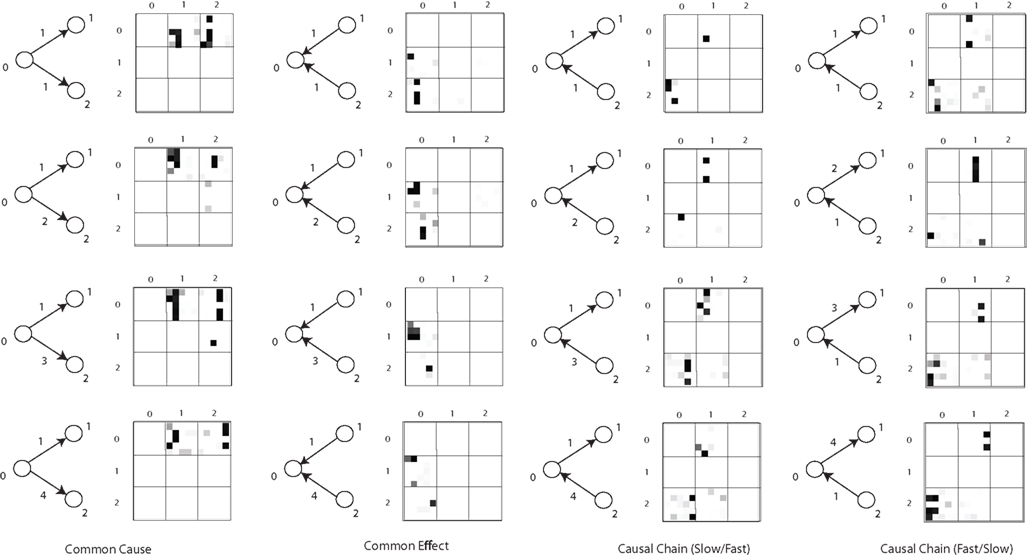

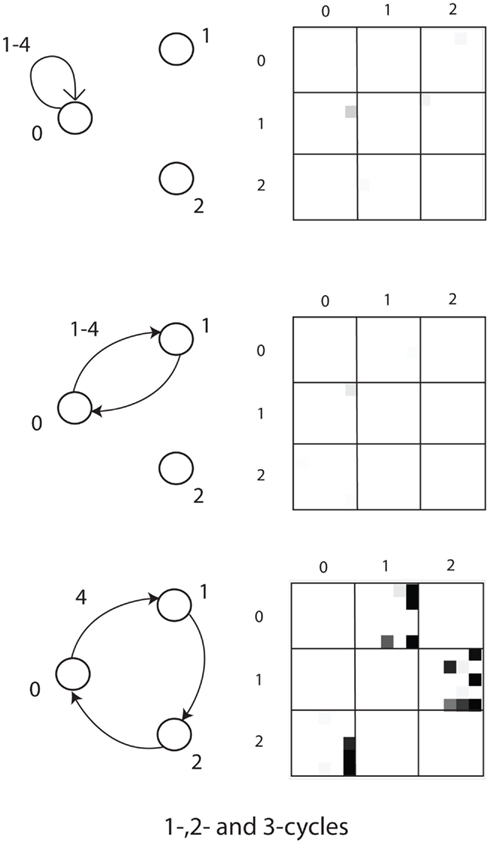

Our recently proposed neuronal replicator hypothesis (NRH) states that there are units of evolution in the brain (Fernando et al., 2008, 2010). If the NRH holds any water, the brain must harbor real replicators, not variables for the frequencies of replicators. In other words, there must be information transfer between units of evolution. This is crucially lacking in Edelman’s proposal of Neural Darwinism. It is molecules and organisms that can evolve, not population counts thereof. Of course, based on the foregoing it must be true that replicating populations can perform Bayesian calculations with appropriate parameters and fitness landscapes. Is any advantage gained from this insight? The answer seems to be yes. Kwok et al. (2005) show the advantages of an evolutionary particle filter algorithm to alleviate the sample impoverishment problem; Muruzábal and Cotta (2007) present an evolutionary programming solution to the search for Bayesian network graph structures; Myers et al. (1999) report a similar study (see Figure 4); Strens (2003) shows the usefulness of evolutionary Markov-Chain Monte Carlo (MCMC) sampling and optimization; and Huda et al. (2009) report on a constraint-based evolutionary algorithm approach to expectation minimization that does not get trapped so often in local optima. Thus it seems that not only can evolutionary algorithms do Bayesian inference, for complex problems they are likely to be better at it. In fact, Darwinian algorithms have yet to be fully investigated within the new field of rational process models that study how optimal Bayesian calculations can be algorithmically approximated in practice (Sanborn et al., 2010).

Figure 4. Crossover operation for Bayesian networks. Adapted from Myers et al. (1999).

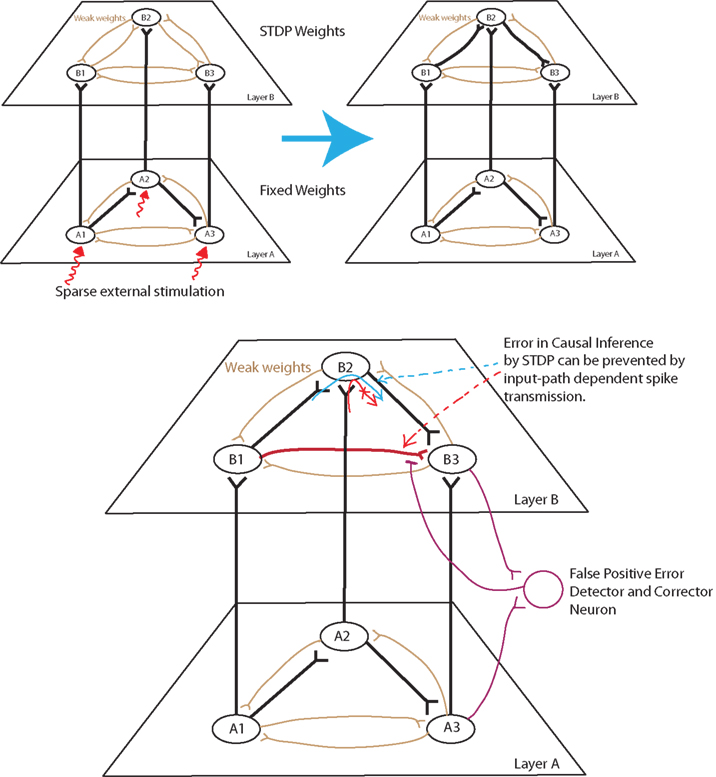

A burning question is how Bayesian calculations can be performed in the brain. George and Hawkins (2009) present a fairly detailed, but tentative account in terms of cortical microcircuits. Recent work by Nessler et al. (2009) shows that Bayesian computations can be implemented in spiking neural networks with first order spike-time-dependent plasticity (STDP). Another possibility is the implementation of Deep Belief Networks which carry out approximate hierarchical Bayesian inference (Hinton et al., 2006). The research program for NRH is to do the same for evolutionary computation, and to determine whether Bayesian inference may be carried out in a related way.

One could object to using NS in the neurobiological context that it is an imperfect search algorithm since there is no guarantee that the optimal solution can be found; the population might get stuck on a local instead of a global peak. This is true but by itself irrelevant. No search algorithm is perfect in this sense. The question is whether we on average gain something important in comparison with other search algorithms. “It is true that the optimization approach starts from the idea, already familiar to Darwin, Wallace, and Weismann… that adaptation is a pervasive feature of living organisms, and that it is to be explained by NS. It is not our aim to add to this claim that adaptation is perfect. Rather, the aim is to understand specific examples of adaptation, in terms of selective forces and the historical and developmental constraints operating. This requires that we have an explicit model, in each specific case, that tells us what to expect from a given assumption… We distinguish between general models and specific models, though in reality they form part of a continuum. General models have a heuristic function; they give qualitative insights into the range and forms of solution for some common biological problem. The parameters used may be difficult to measure biologically, because the main aim is to make the analysis and conclusions as simple and direct as possible” (Parker and Maynard Smith, 1990, p. 27).

Evolution by NS is an optimum-seeking process, but this does not guarantee that it will always find it. There are constraints on adaptation (which can be genetic, developmental, etc.) but the living world is full of spectacular adaptations nevertheless. And in many cases the solution is at, or very close to, the engineering optimum. For example, many enzymes are optimally adapted in the sense that the rate of catalysis is now constrained by the diffusion rates of substrates and products, so in practical terms those enzymes cannot be faster than they are. It is the same for senses (photon detection by the eye), or the boosted efficiency of photosynthesis by quantum entanglement. True, performance just has to be “good enough,” but good enough means relative to the distribution in the population, but as selection acts, the average is increasing, so the level of “good enough” is raising as well, as standard population genetics demonstrates (e.g., Maynard Smith, 1998). It is in this sense that we believe the applicability of evolutionary models of brain function warrant serious scrutiny, even if for the time being their exploration is at the rather “general” level.

A non-trivial aspect of neuronal groups is degeneracy (Edelman, 1987): structurally different networks can do the same calculations. Usually degeneracy is not a feature of minimalist models but it is certainly important for dynamics. Changeux and Dehaene (1989) called attention to this in their landmark paper: “In the course of the proposed epigenesis, diversification of neurons belonging to the same category occurs. Each one acquires its individuality or singularity by the precise pattern of connections it establishes (and neurotransmitters it synthesizes)… A major consequence of the theory is that the distribution of these singular qualities may also vary significantly from one individual to the next. Moreover, it can be mathematically demonstrated that the same afferent message may stabilize different connective organizations, which nevertheless results in the same input–output relationships… The variability referred to in the theory, therefore may account for the phenotypic variance observed between different isogenic individuals. At the same time, however, it offers a neural implementation for the often-mentioned paradox that there exists a non-unique mapping of a given function to the underlying neural organization.” (Changeux and Dehaene, 1989, p. 81).

The important point for NRH is that degeneracy plays a crucial role in the evolvability of replicators (Toussaint, 2003; Wagner, 2007; Parter et al., 2008). Evolvability has several different definitions; for our purposes here the most applicable approach is the measure of how fast a population can respond to directional selection. It is known that genetic recombination is a key evolvability component in this regard (Maynard Smith, 1998). It has been found that neutral networks also play a role in evolvability. Neutral networks are a web of connections in genotype space among degenerate replicators having the same fitness in a particular context. By definition two replicators at different nodes of such a network are selectively neutral, but their evolvability may be very different: one may be far, but the other may be close to a “promising” region of the fitness landscape; their offspring might then have very different fitnesses. Parter et al. (2008) show that under certain conditions variation becomes facilitated: random genetic changes can be unexpectedly more frequent in directions of phenotypic usefulness. This occurs when different environments present selective goals composed of the same subgoals, but in different combinations. Evolving replicator populations can “learn” about the deep structure of the landscape so that their variation ceases to be entirely “random” in the classical neo-Darwinian sense. This occurs if there is non-trivial neutrality as described by Toussaint (2003) and demonstrated for gene regulatory networks (Izquierdo and Fernando, 2008). We propose that this feature will turn out to be critical for neuronal replicators if they exist. This is closely related to how hierarchical Bayesian models find deep structure in data (Kemp and Tenenbaum, 2008; Tenenbaum et al., 2011).

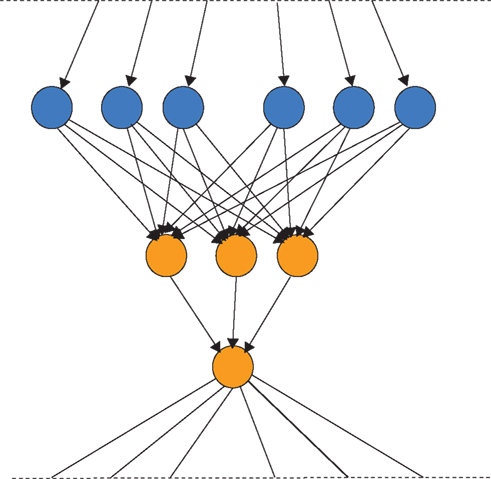

Finally, an important aspect is the effect of population structure on the dynamics of evolution (c.f. Maynard Smith and Szathmáry, 1995; Szathmáry, 2011). Previously we (Fernando and Szathmáry, 2009) have noted that neuronal evolutionary dynamics could turn out to be the best field of application of evolutionary graph theory (Lieberman et al., 2005). It has been shown that some topologies speed up, whereas others retard adaptive evolution. Figure 5 shows an example of favorable topologies (selection amplifiers). The brain could well influence the replacement topologies by gating, thereby realizing the most rewarding topologies.

Figure 5. A selection amplifier topology from Lieberman et al. (2005). Vertices that change often, due to replacement from the neighbors, are colored in orange. In the present context each vertex can be a neuron or neuronal group that can inherit its state from its upstream neighbors and pass on its state to the downstream neighbors. Neuronal evolution would be evolution on graphs.

Thorndike (1911) formulated the “law of effect” stating that beneficial outcomes increase and negative outcomes decrease the occurrence of a particular type of behavior. It has been noted (Maynard Smith, 1986) that there is a similarity between the dynamics of genetic selection and the operant conditioning paradigm of Skinner (1976). Börgers and Sarin (1997) pioneered a formal link between replicator dynamics and reinforcement learning. It could be shown that in the continuous-time limit the dynamics can be approximated by a deterministic replicator equation, formally describing the dynamics of a reinforcement learner.

The most exciting latest development is due to Loewenstein (2011) who shows that if reinforcement follows a synaptic rule that establishes a covariance between reward and neural activity, the dynamics follows a replicator equation, irrespective of the fine details of the model. Let pi(t) be the probability of choosing alternative i at time t. A simple expression of the dynamics of the probabilities postulates:

where η is the learning rate A denotes the action, R is reward, and E[R] is the average return; and the form is that of a continuous-time replicator equation. Probabilities depend on the synaptic weight vector W(t), i.e.,

The learning rule in discrete-time is:

and the change in synaptic strength in a trial is:

where φ is the plasticity rate and N is any measure of neural activity. The expectation value for this change can be shown to obey:

which is the covariance rule (the form of the synaptic weight change in Eq. 8can take different forms, while the covariance rule still holds). Using the average velocity approximation the stochastic dynamics can be replaced by a deterministic one:

Now we can differentiate Eq. 6 with respect to time, and after several operations we obtain exactly Eq. 5, with a calculable learning rate! This is by definition a selectionist view in terms of Price, and arguably there are units of selection at the synapse level, but no units of evolution, since “mutation” in a sense of an evolutionary algorithm does not play a role in this elegant formulation. There is selection from a given stock, exactly as in many other models we have seen so far, but there is no generation and testing of novelty. NGS does provide a mechanism for the generation and testing of novelty, i.e., the formation of the secondary repertoire and stochastic search at the level of one neuronal group itself. Such dynamics can be seen during the formation and destruction of polychronous groups (Izhikevich, 2006; Izhikevich and Hoppensteadt, 2009) and can be modulated by dopamine based value systems (Izhikevich, 2007).

A relevant comparison is that of evolutionary computation with temporal-difference based reinforcement learning algorithms. Neural Darwinism has been formulated as a neuronal implementation of temporal-difference reinforcement learning based on neuromodulation of STDP by dopamine reward (Izhikevich, 2007). Neuronal groups are considered to be polychronous groups, where re-entry is merely recurrence in a recurrent neural network from which such groups emerge (Izhikevich et al., 2004). An elaboration of Izhikevich’s paper by Chorley and Seth considers extra re-entrant connections between basal ganglia and cortex, showing that further TD-characteristics of the dopaminergic signals in real brains can be captured with this model.

We have made the comparison between temporal-difference learning and evolutionary computation extensively elsewhere (Fernando et al., 2008, 2010; Fernando and Szathmáry, 2009, 2010) and we find that there are often advantages in adding units of evolution to temporal-difference learning systems in terms of allowing improved function approximation and search in the space of possible representations of a state–action function (Fernando et al., 2010). We would also expect that adding units of evolution to neuronal models of TD-learning should improve the adaptive potential of such systems.

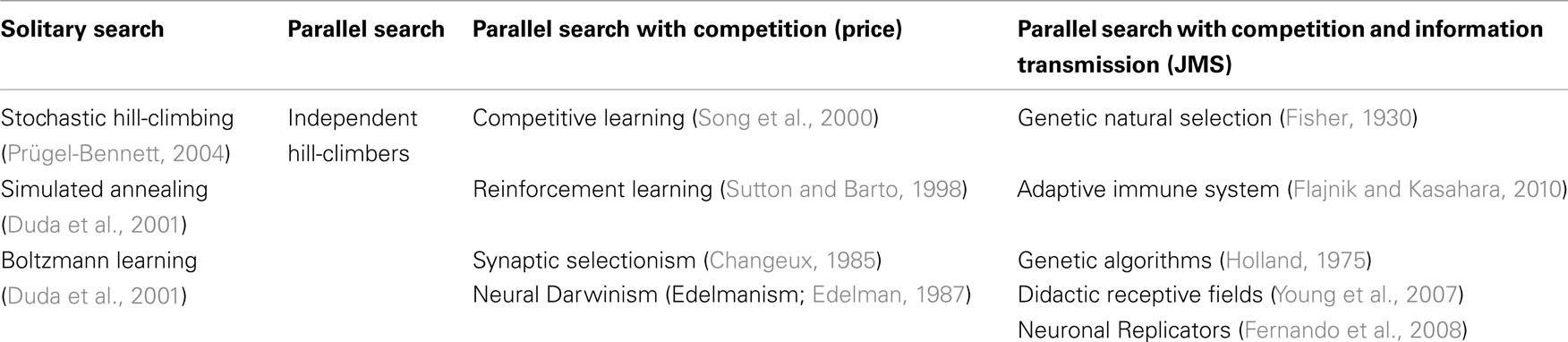

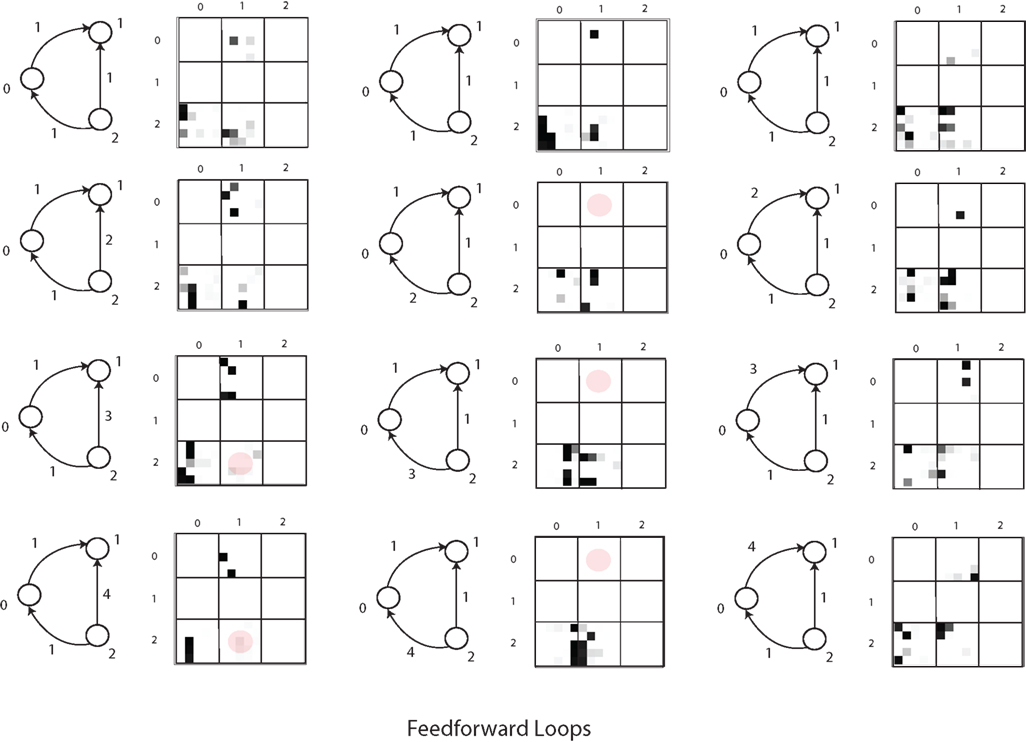

We have argued informally above that in some cases Darwinian NS is superior compared to other stochastic search algorithms that satisfy the Price equation but do not contain units of evolution. Examples of search algorithms are reviewed in the table below.

The left hand column of Table 1 shows the simplest class of search algorithm, solitary search. In solitary search at most two candidate units are maintained at one time. Hill-climbing is an example of a solitary search algorithm in which a variant of the unit (candidate solution) is produced and tested at each “generation.” If the offspring solution’s quality exceeds that of its parent, then the offspring replaces the parent. If it does not, then the offspring is destroyed and the parent produces another correlated offspring. Such an algorithm can get stuck on local optima and does not require replicators for its implementation. For example, it can be implemented by a robot on a mountainous landscape for example. A robot behaving according to stochastic hill-climbing does the same, except that it stays in the new position with a certain probability even if it is slightly lower than the previous position. By this method stochastic hill-climbing can sometimes avoid getting stuck on local optima, but it can also occasionally lose the peak. Simulated annealing is a variant of stochastic hill-climbing in which the probability of accepting a worse solution is reduced over time. Solitary stochastic search has been used by evolutionary biologists such as Fisher to model idealized populations, i.e., where only one mutant exists at any one time in the population (Fisher, 1930). However, a real Darwinian population is a much more complex entity, and cannot be completely modeled by stochastic hill-climbing. Here we should mention Van Belle’s (1997) criticism of Neural Darwinism which makes a subtle point about stochastic search. He points out that replication (units of evolution) permits unmutated parental solutions to persist whilst mutated offspring solutions are generated and tested. If the variant is maladapted, the original is not lost. He claims that such replicators are missing in Neural Darwinism. He demonstrates through a variant of Edelman’s Darwin I simulation that if neuronal groups change without the capacity to revert to their previous form that they cannot even be properly described as undertaking hill-climbing because they cannot revert to the state they were in before taking the unsuccessful exploration step. However, Boltzmann networks (Duda et al., 2001) and other stochastic search processes such as Izhikevich’s (2007) dopamine stabilized reinforcement learning networks and Seung’s (2003) stochastic synapses show that even without explicit memory of previous configurations that optimization is possible. Therefore Van Belle has gone too far in saying that “The individuals of neural Darwinism do not replicate, thus robbing the process of the capacity to explore new solutions over time and ultimately reducing it to random search” because even without replicators, adaptation by stochastic search is clearly possible.

Table 1. A classification of search (generate-and-test) algorithms of the Pricean and true Darwinian types.

Now consider column two of Table 1. What happens if more robots are available on the hillside for finding the global optimum, or more neuronal groups or synapses are available to explore the space of neural representations? What is an efficient way to use these resources? The simplest algorithm for these robots to follow would be that each one behaves completely independently of the others and does not communicate with the others at all. Each of them behave exactly like the solitary robot obeying whichever solitary strategy (hill-climbing, stochastic hill-climbing, etc.) it was using before. This is achieved by simply having multiple instances of the (stochastic) hill-climbing machinery. Multiple-restart hill-climbing is a serial implementation of this same process. It may be clear to the reader that such an algorithm is likely to be wasteful. If a robot becomes stuck on a local optimum then there would be no way of reusing this robot. Its resources are wasted. One could expect only a linear speed up in the time taken to find a global optimum (the highest peak). It is not surprising that no popular algorithm falls into this wasteful class.

Consider now the third column of Table 1. To continue the robot analogy of search on a fitness landscape, we not only have multiple robots available, but there is competition between robots for search resources (the machinery required to do a generate-and-test step of producing a variant and assessing its quality). In the case of robots a step is moving a robot to a new position and reading the altitude there. Such an assessment step is often the bottleneck in time and processing cost in a real optimization process. If such steps were biased so that the currently higher quality solutions did proportionally more of the search, then there would be a biased search dominated by higher quality solutions doing most of the exploration. This is known as competitive learning because candidate solutions compete with each other for reward and exploration opportunities. This is an example of parallel search with resource competition, shown in column 3 of Table 1. It requires no NS as defined by JMS, i.e., it requires no explicit multiplication of information. No robot communicates its position to other robots. Several algorithms fall into the above category. Reinforcement learning algorithms are examples of parallel search with competition (Sutton and Barto, 1998), see the discussion above about the Pricean interpretation of reinforcement learning. Changeux’s synaptic selectionism also falls into this class (Changeux et al., 1973; Changeux, 1985).

Do such systems of parallel search with competition between synaptic slots exhibit NS? Not according to the definition of JMS because there is no replicator; there is no copying of solutions from one robot to another robot, there is no information that is transmitted between synapses. Resources are simply redistributed between synapses (i.e., synapses are strengthened or weakened in the same way that the stationary robots increase or decrease their exploitation of their current location). Notice, there is no transmission of information between robots (e.g., by recruitment) in this kind of search. Similarly there is no information transfer between synapses in synaptic selectionism. Synaptic selectionism is selection in the Price sense, but not in the JMS sense. Edelman’s TNGS falls into this category also. In a recent formulation of Edelman’s theory of NGS, Izhikevich et al. (2004) shows that there is no mechanism by which functional variations in synaptic connectivity patterns can be inherited (transmitted) between neuronal groups. Neural Darwinism is a class of parallel search with competition but no information transfer between solutions, and is thus fundamentally different from Darwinian NS as defined by JMS.

This leads us to the final column in Table 1. Here is a radically different way of utilizing multiple slots that extends the algorithmic capacity of the competitive learning algorithms above. In this case we allow not only the competition of slots for generate-and-test cycles, but we allow slots to pass information (traits/responses) between each other. Returning to the robot analogy, those robots at the higher altitudes can recruit robots from lower altitudes to come and join them. This is equivalent to replication of robot locations. The currently best location can be copied to other slots. There is transmission of information between slots. Note, replication is always of information (patterns), i.e., reconfiguration by matter of other matter. This means that the currently higher quality slots have not only a greater chance of being varied and tested, but that they can copy their traits to other slots that do not have such good quality traits. This permits the redistribution of information between material slots. Crucially, such a system of parallel search, competition, and information transmission between slots does satisfy JMS’ definition of NS. The configuration of a unit of evolution (slot) can reconfigure other material slots. According to this definition, several other algorithms fall into the same class as NS, e.g., particle swarm optimization (Poli et al., 2007) because they contain replicators.

Are there algorithmic advantages of the full JMS-type NS compared to independent stochastic hill-climbers or competitive stochastic hill-climbers without information transmission that satisfies only Price’s formulation of NS? We can ask: for what kinds of search problem is a population of replicators undergoing NS with mutation (but no crossover) superior to a population of independent hill-climbers or stochastic hill-climbers competing for resources?

Note, we are not claiming that Edelman’s Neural Darwinism is exactly equivalent to competitive learning or to independent stochastic hill-climbing It cannot be because Hebbian learning and STDP impose many instructed transmission biases that are underdetermined by the transmission bias term in the Price equation at the level of the neuronal group (and in fact, Hebb, and STDP have been interpreted above as Pricean evolution at the synaptic level thus). The claim is that it does not fall into the far right column of Table 1, but is of the same class as competitive learning algorithms that lack replicators.

So to answer first the question of when JMS-type NS is superior to independent stochastic hill-climbing, a shock to the genetic algorithm community came when it was shown that a hill-climber actually outperforms a genetic algorithm on the Royal Road Function (Mitchell et al., 1994). This was in apparent contradiction to the building-block hypothesis which had purported to explain how genetic algorithms worked (Holland, 1975). But later it was shown that a genetic algorithm (even without crossover) could outperform a hill-climber in a problem which contained a local optimum (Jansen et al., 2001). This was thought to be due to the ability for a population to act almost like an ameba at a local optimum, reaching down into a valley and searching the local solutions more effectively. The most recent explanation for the adaptive power of a Darwinian population is that the population is an ideal data structure for representing a Bayesian prior distribution of beliefs about the fitness landscape (Zhang, 1999). Another possible explanation is that replication allows multiple search points to be recruited to the region of the search space that is currently the best. The entire population (of robots) can acquire the response characteristics (locations) of the currently best unit (robot). Once all the robots have reached the currently best peak, they can all do further exploration to find even higher peaks. In many real-world problems there is never a global optimum; rather further mountain ranges remain to be explored after a plateau has been reached. For example, there is no end to science. Not every system that satisfies Price’s definition of selection can have these special properties of being able to redistribute a variant to all search points, i.e., for a solution to reach fixation in a population.

Here we carefully compare the simplest NS algorithm with independent hill-climbers on a real-world problem. Whilst we do not claim to be able to fully explain why NS works better than a population of independent hill-climbers balanced for the number of solution evaluations (no-one has yet fully explained this) we show that in a representative real-world problem, it does significantly outperform the independent hill-climbers.

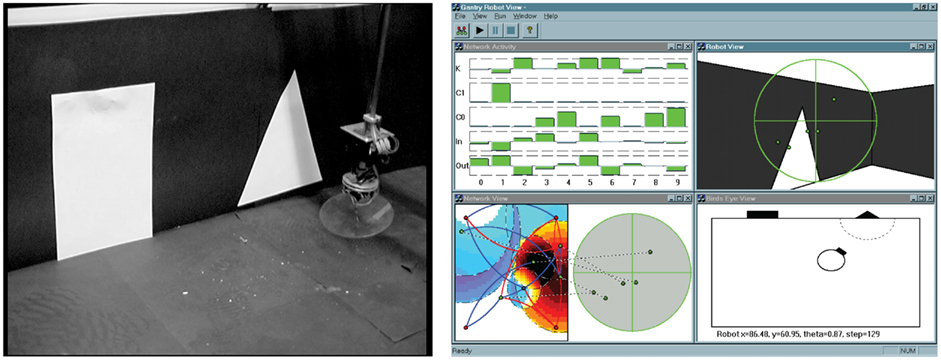

Intuition and empirical evidence (Mitchell et al., 1994; McIlhagga et al., 1996a,b; Keane and Nair, 2005; De Jong, 2006; Harman and McMinn, 2007), suggest that selectionist, population based search (even without crossover) will often outperform hill-climbing in multimodal spaces (those with multiple peaks and local optima). However, in relatively well-behaved search spaces, for example with many smooth peaks which are easily accessible from most parts of the space, a random multi-start hill-climber may well give comparable or better performance (Mitchell et al., 1994; Harman and McMinn, 2007). But as the complexity of the space increases, the advantages of evolutionary search should become more apparent. We explored this hypothesis by comparing mutation-only genetic algorithms with a number of hill-climbing algorithms on a non-trivial evolutionary robotics (ER) problem. The particular ER task has been chosen because it provides a challenging high dimensional search space with the following properties: noisy fitness evaluations, a highly neutral space with very few widely separated regions of high fitness, and variable dimensionality (Smith et al., 2002a). These properties put it among the most difficult class of search problems. Finally, because it is a noisy real-world sensorimotor behavior-generating task, the search space is likely to share some key properties with those of natural brain-based behavior tasks. We should make it clear we do not believe this is how neural networks actually develop. The aim of this demonstration is to add to the molecular example an example of a problem which contains a realistic behavioral fitness landscape.

The task used in the studies is illustrated in Figure 6. Starting from an arbitrary position and orientation in a black-walled arena, a robot equipped with a forward facing camera must navigate under extremely variable lighting conditions to one shape (a white triangle) while ignoring the second shape (a white rectangle). The robot must successfully complete the task over a series of trials in which the relative position and size of the shapes varies. Both the robot control network and the robot sensor input morphology, i.e., the number and positions of the camera pixels used as input and how they were connected into the network, were under evolutionary control as shown in Figure 6. Evolution took place in a special validated simulation of the robot and its environment which made use of Jakobi’s (1998) minimal simulation methodology whereby computationally very efficient simulations are built by modeling only those aspects of the robot–environment interaction deemed important to the desired behavior and masking everything else with carefully structured noise (so that evolution could not come to rely on any of those aspects). These ultra-fast, ultra-lean simulations allow very accurate transfer of behavior from simulation to reality by requiring highly robust solutions that are able to cope with a wide range of noisy conditions. The one used in this work has been validated several times and transfer from simulation to reality is extremely good. The trade-off in using such fast simulations is that the search space is made more difficult because of the very noisy nature of the evaluations.

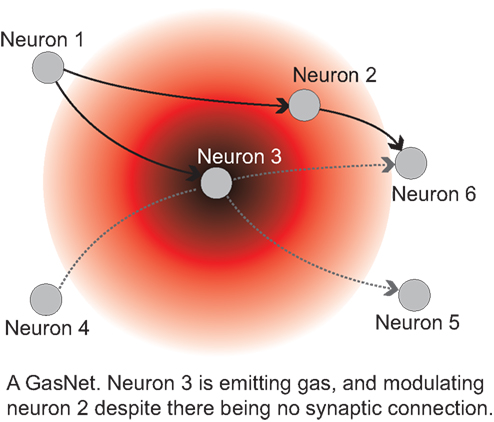

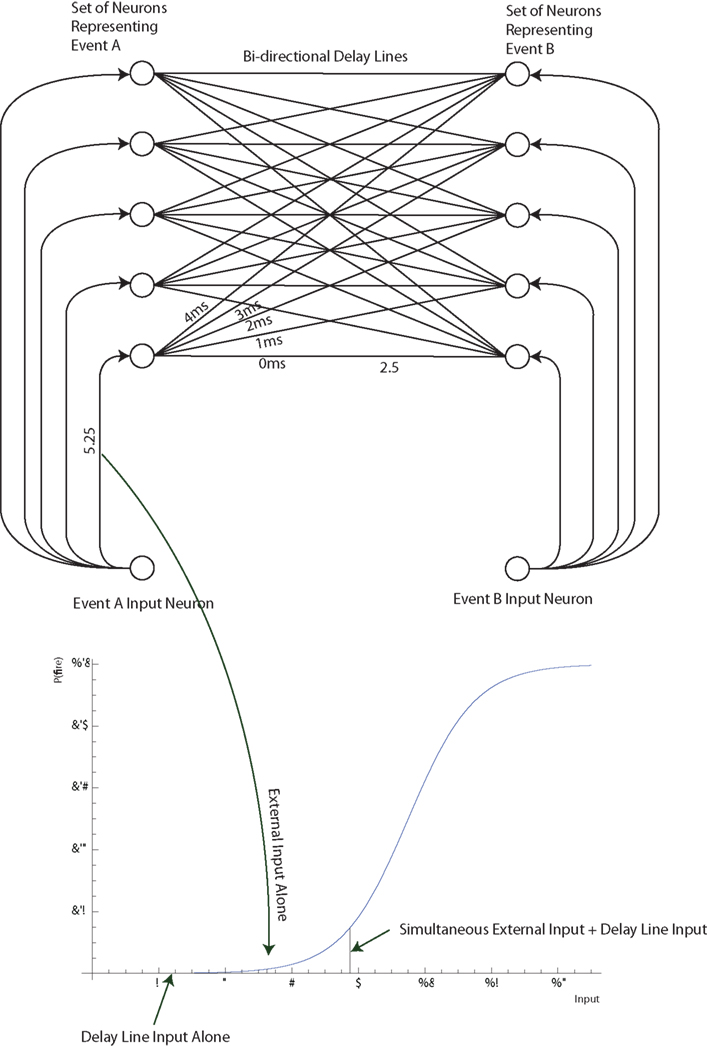

Figure 6. (Left): the gantry robot. A CCD camera head moves at the end of a gantry arm. In the study referred to in the text 2D movement was used, equivalent to a wheeled robot with a fixed forward pointing camera. A validated simulation was used: controllers developed in the simulation work at least as well on the real robot. (Right): the simulated arena and robot. The bottom right view shows the robot position in the arena with the triangle and rectangle. Fitness is evaluated on how close the robot approaches the triangle. The top right view shows what the robot “sees,” along with the pixel positions selected by evolution for visual input. The bottom left view shows how the genetically set pixels are connected into the control network whose gas levels are illustrated. The top left view shows current activity of nodes in the GasNet.