- Department of Electrical Engineering, The Laboratory for Network Biology Research, Technion, Haifa, Israel

In recent experiments, synaptically isolated neurons from rat cortical culture were stimulated with periodic extracellular fixed-amplitude current pulses for extended durations of days. The neuron’s response depended on its own history, as well as on the history of the input, and was classified into several modes. Interestingly, in one of the modes the neuron behaved intermittently, exhibiting irregular firing patterns changing in a complex and variable manner over the entire range of experimental timescales, from seconds to days. With the aim of developing a minimal biophysical explanation for these results, we propose a general scheme that, given a few assumptions (mainly, a timescale separation in kinetics), closely describes the response of deterministic conductance-based neuron models under pulse stimulation, using a discrete time piecewise linear mapping, which is amenable to detailed mathematical analysis. Using this method we reproduce the basic modes exhibited by the neuron experimentally, as well as the mean response in each mode. Specifically, we derive precise closed-form input-output expressions for the transient timescale and firing rates, which are expressed in terms of experimentally measurable variables, and conform with the experimental results. However, the mathematical analysis shows that the resulting firing patterns in these deterministic models are always regular and repeatable (i.e., no chaos), in contrast to the irregular and variable behavior displayed by the neuron in certain regimes. This fact, and the sensitive near-threshold dynamics of the model, indicate that intrinsic ion channel noise has a significant impact on the neuronal response, and may help reproduce the experimentally observed variability, as we also demonstrate numerically. In a companion paper, we extend our analysis to stochastic conductance-based models, and show how these can be used to reproduce the details of the observed irregular and variable neuronal response.

1. Introduction

Current approaches to single neuron modeling are usually concerned with short term dynamics, covering timescales up to seconds (Gerstner and Kistler, 2002; Koch and Schutter, 2004; Izhikevich, 2007; Ermentrout and Terman, 2010 and references therein). Naturally, it is also important to describe the behavior of neurons at longer timescales such as minutes, hours, and days (Marom, 2010). However, experiments on single neurons are difficult to perform for such extended periods of time. Nonetheless, in a recent experiment Gal et al. (2010) performed days-long continuous extracellular recordings of synaptically isolated single neurons, situated in a culture extracted from rat cortex. These neurons, which did not fire in the absence of stimulation, were stimulated periodically with constant amplitude current pulses applied at physiological Action Potential (AP) firing frequencies (1–50 Hz). For strong enough stimulation, the neurons generated APs. As such, they are excitable, non-oscillatory neurons. It was observed (similarly to De Col et al., 2008) that, depending on the AP generation probability and its latency, the neuronal response could be classified into three different modes – “stable,” “transient,” and “intermittent.” At low stimulation rates, APs were generated reliably with a fixed latency (delay) after the stimulus – the stable mode. An increase in the stimulation rate elicited an increase in the latency, building gradually over a few dozen seconds – the transient mode. If the latency climbed above a certain “critical latency,” then AP generation failures began to appear – the intermittent mode. Interestingly, the intermittent mode response was highly irregular and displayed intricate patterns of AP firing. Despite the length of the experiment, these patterns continued to change over its entire duration, never converging to a steady state behavior with predictable stationary statistics.

The present study aims to provide a theoretical explanation for these observed phenomena. Specifically, we were interested in identifying the source of the observed behavior and its implications on the neuronal input-output relation. However, given the huge diversity of biophysical processes taking place at these timescales (Bean, 2007; Sjostrom et al., 2008; Debanne et al., 2011), it is not clear which phenomena should be included in such a modeling effort. Moreover, including a multitude of effects in such a highly non-linear model of an excitable system, necessarily incorporating a large number of poorly identified parameters, would lead to models of large complexity and questionable usefulness, especially when considering such long timescales. Based on these observations, we follow an Occam’s razor approach. We start from the basic Hodgkin-Huxley (HH) model (Hodgkin and Huxley, 1952) and increase its complexity only as needed in order to cover a wider range of experimentally relevant phenomena, within the framework of Conductance-Based Neuron Models (CBNMs). Such models provide the most common formulation used in neuronal modeling (Hille, 2001; Izhikevich, 2007; Ermentrout and Terman, 2010), and are thought to be the simplest possible biophysical representation of an excitable neuron, in which ion channels are represented by voltage-dependent conductances and the membrane by a capacitor. Importantly, by understanding the limitations of a model, we can find the most reasonable way to modify it when some of its predictions are contradicted by the experiment (as tends to happen eventually). However, it is generally hard to find these limitations by numerical simulations, especially if the model contains many parameters. Therefore, it is generally desirable to have an analytically tractable explanation for the behavior of a model.

Fortunately, the simple form of stimulation used in the experiments (periodic pulse train) allowed us to derive simple analytic input-output relations for neuronal responses for a large class of deterministic CBNMs (see section 2). Specifically, we find exact expressions for the transient mode dynamics, and the firing rate and firing patterns during the intermittent mode – all as a function of the neuronal input (stimulation rate and amplitude). These simple relations rely on the analysis of a simplified description for the dynamics of neuronal excitability, derived from the full CBNM, under the assumption of timescale separation [equation (2.9)] and a “step-like behavior of the average kinetic rates” [equation (2.12)]. This simplified version is essentially a piecewise linear recursive map, derived by “averaging out” the fast dynamics. Such an averaging technique is usually used to derive qualitative features of bursting neurons (section 9.2.3 in Izhikevich, 2007, and also Ermentrout and Terman, 2010), and in a few cases, also quantitative results (Ermentrout, 1998). Note that discontinuous maps similar to ours [equation (2.14)] appear in many systems (Di Bernardo, 2008), and recently also in neuroscience (e.g., Medvedev, 2005; Griffiths and Pernarowski, 2006; Touboul and Faugeras, 2007; Juan et al., 2010). However, as explained in Ibarz et al., 2011 (section 1.2), in previous neuroscience works, time is discretized either in fixed steps with some arbitrary size (usually small, as in numerical integration) or in varying step sizes determined by certain internal significant events such as APs. In contrast, our method of discretization relied on the stimulus pulses arrival times, which are both dynamically significant (APs can be generated only at these times) and do not depend on the internal neuronal dynamics. 
This allowed us to achieve greater analytic tractability, interpretability, and generality than was usually obtained using discrete maps in neuronal dynamics. Moreover, this enabled us to directly connect the map’s parameters to the neuronal input. Note that since Gal et al. (2010) used periodic stimulation, our time steps were fixed. However, it is straightforward to extend this method to a general sparse input. Such a general method might be used numerically to approximate the response of complex and computationally expensive CBNMs in a fast and simple way, and with fewer parameters.

In section 3 we relate the derived analytic relations, augmented by extensive numerical simulations, to the experimental results of Gal et al. (2010). We show how, by extending the HH model with a single slow kinetic process that generates “negative feedback” in the neuronal excitability (we use slow sodium inactivation, but other mechanisms are possible) we can reproduce the different modes observed in Gal et al. (2010). We explain how the intermittent mode is generated by the slow kinetic process “self-adjusting” the neural response so that it becomes highly sensitive to perturbations. The mean response in all modes, and its relation to the neuronal input can be explained in most cases using this single slow variable (in some cases an additional “positive feedback” variable is required – we used slow potassium inactivation). However, using our analytic techniques we show that a rather large class of deterministic CBNMs cannot generate the variability and irregularity of the response (e.g., chaotic dynamics), in both the transient and intermittent modes. This result, together with the high sensitivity of the intermittent mode, led us to conclude that ion channel noise (see White et al., 2000; Faisal et al., 2008) must also be added to the model. Examining this numerically, we show that, indeed, the effects of ion channel noise are far more significant here than is usually considered, and lead to a better fit to the variability observed in the experiment. However, the introduction of noise requires us to change the framework of discussion from deterministic to stochastic CBNMs, and develop different analytic tools. Such work is beyond the scope of the present paper. And so, in a companion paper, we complete our mathematical analysis of stochastic CBNMs, and the reproduction of the variability in the experimental results.

2. Materials and Methods

In this section we introduce the CBNMs used, and the methods we employ to analyze them.

The inputs to our model will be similar to those used in the experiment, a train of short fixed-amplitude current pulses, periodically applied with period T (or, equivalently, frequency fin ≜ 1/T):

$$I(t) = I_0 \sum_{n=0}^{\infty} \sqcap(t - nT),$$

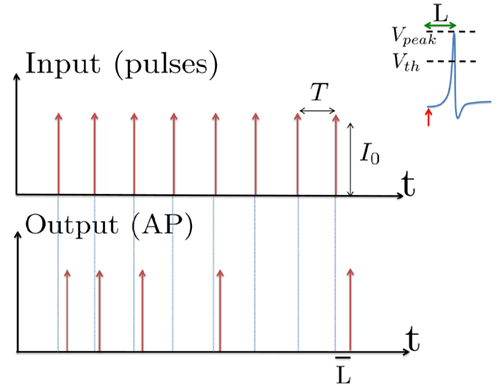

where ⊓(x) is the pulse shape, of width t0 ≪ T (so ⊓(x) = 0 for x outside [0, t0]), and unit magnitude, with I0 being the current amplitude of the pulses (Figure 1). In numerical simulations we used first order Euler integration (or Euler-Maruyama for stochastic simulations) with a time step of dt = 5 μs (quantitative results were verified also at dt = 0.5 μs). Each stimulation pulse was given as a square pulse with a width of t0 = 0.5 ms and amplitude I0. The results are not affected qualitatively by our choice of a square pulse shape. In the following discussion, we analyze the neuronal input/output (I/O) relation quantitatively with respect to fin but only qualitatively with respect to I0. Since the amplitude dependence is of secondary importance here, we do not explicitly write any dependence of the parameters on I0.
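The stimulation protocol described above can be sketched in a few lines. This is a minimal illustration, not the simulation code used for the paper: the function name `pulse_train` and the choice to work in integer microseconds (to avoid floating-point drift at pulse edges) are assumptions made here; only dt = 5 μs, t0 = 0.5 ms, and the square pulse shape come from the text.

```python
import numpy as np

def pulse_train(n_steps, I0, T_us, t0_us=500, dt_us=5):
    """Periodic square-pulse current: I0 on [n*T, n*T + t0), zero elsewhere.

    All times are integer microseconds so that pulse edges fall exactly
    on sample boundaries (an implementation choice, not from the paper).
    Returns (t in seconds, I in the same units as I0).
    """
    t_us = np.arange(n_steps) * dt_us           # sample times in microseconds
    inside_pulse = (t_us % T_us) < t0_us        # True during each pulse
    return t_us * 1e-6, np.where(inside_pulse, I0, 0.0)

# Example: two periods at f_in = 20 Hz (T = 50 ms), amplitude I0 = 15
T_us = 50_000
t, I = pulse_train(n_steps=2 * T_us // 5, I0=15.0, T_us=T_us)
```

With these values each pulse spans 100 samples, so two periods contain 200 nonzero samples.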

Figure 1. Schematic representation of the stimulation and the measured response. No APs are spontaneously generated. When stimulating a neuron with a periodic current pulse train with period T and amplitude I0 we get either an AP-response or no-AP-response after each stimulation. In the former case an AP appears with a latency L, where L ≪ T. Inset: AP voltage trace. An AP is said to occur if Vth was crossed, and the AP latency L is defined as the time to AP peak since the stimulation pulse (more precisely, its beginning).

The two neuronal response features explored in Gal et al. (2010) are the occurrence of Action Potentials (APs) and their latency. Therefore, we too shall focus on these features. More precisely, we define an AP to have occurred if, after the stimulation pulse was given, the measured voltage has crossed some threshold Vth (we use Vth = −10 mV in all cases). The latency of the AP, L, is defined as the time that passed from the beginning of the stimulation pulse to the voltage peak of the AP (Figure 1, inset). Note that no APs are spontaneously generated.

2.1. Basic HH Model

Similarly to many discussions of neuronal excitability, we begin with the classical Hodgkin-Huxley (HH) conductance-based model (Hodgkin and Huxley, 1952):

where I(t) is the input current, V is the membrane’s voltage and m, n, and h are the gating variables of the channels and the parameters are given their standard values (as in Hodgkin and Huxley, 1952; Ermentrout and Terman, 2010):

Cm = 1 μF/cm2, Φ = 1, and we used the standard kinetic rate functions

where in all the rate functions V is used in millivolts units.

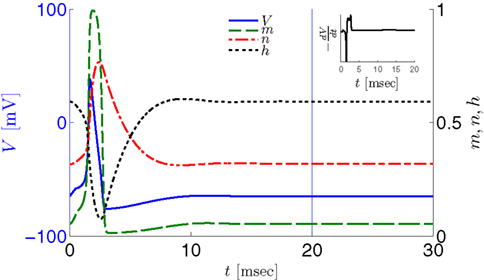

We first note a discrepancy between this model and the experiment – the AP in the model is twice as wide as in the experiment. To compensate for this we set the temperature factor Φ = 2 and the capacitance Cm = 0.5 μF/cm2, increasing the speed of the HH model dynamics by a factor of two, which results in the correct pulse shape (Figure 2, inset). We refer to the model with these fitted parameters as the “fitted HH model,” while the model with the original parameters will be referred to as the “original HH model.”

Figure 2. Fitted HH model variables V, m, n, and h after an AP relax to steady state on a shorter timescale than the minimal stimulation period used (T ≥ 20 ms for Gal et al., 2010, marked as a vertical line). This remains true when no AP is generated, since then the perturbation from steady state is even smaller. This timescale separation indicates that the system has no memory of previous pulses – so all stimulation pulses produce the same response. Note also that the AP voltage pulse width is similar to Figure S1B of Gal et al. (2010). Inset: −dV/dt during an AP, which is proportional to extracellular measured voltage trace (Henze et al., 2011). The AP pulse width is similar to Figures 1B, 2A, and 5C of Gal et al. (2010). Parameters: I0 = 15 μA.

However, in either case, the HH model alone cannot reproduce the results in Gal et al. (2010). In both versions of the HH model, given a short pulse stimulation generating an AP, all the HH variables (V, m, n, and h) relax to close to their original values within ∼20 ms (Figure 2). Therefore, pulse stimulation at frequency fin < 50 Hz (or T > 20 ms), as in the experiment, would result in each stimulation pulse producing exactly the same AP each time. This “memory-less” response is far too simple to account for complex behavior seen in the experiment, and so the model must be extended. In the next section we discuss how this is generally done.
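The memory-less behavior can be checked with a short forward-Euler simulation of the fitted HH model. The sketch below is hedged: the conductances, reversal potentials, and rate functions are the common textbook HH values (rest near −65 mV), assumed here because the document's own parameter list is not reproduced in this text; `run_pulse`, `step`, and `rates` are illustrative names. Only Φ = 2, Cm = 0.5 μF/cm2, dt = 5 μs, t0 = 0.5 ms, I0 = 15, and Vth = −10 mV are taken from the text.

```python
import math

# Assumed textbook HH constants (mS/cm^2 and mV); not taken from this document.
G_NA, G_K, G_L = 120.0, 36.0, 0.3
E_NA, E_K, E_L = 50.0, -77.0, -54.4

def _ratio(x, y):
    """x / (1 - exp(-x/y)), handling the removable singularity at x = 0."""
    return y if abs(x) < 1e-7 else x / (1.0 - math.exp(-x / y))

def rates(V):
    """Standard HH opening/closing rates (1/ms), V in mV."""
    a_m = 0.1 * _ratio(V + 40.0, 10.0)
    b_m = 4.0 * math.exp(-(V + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(V + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(V + 35.0) / 10.0))
    a_n = 0.01 * _ratio(V + 55.0, 10.0)
    b_n = 0.125 * math.exp(-(V + 65.0) / 80.0)
    return a_m, b_m, a_h, b_h, a_n, b_n

def step(state, I, Cm, phi, dt):
    """One forward-Euler step; dt in ms, I in uA/cm^2."""
    V, m, h, n = state
    a_m, b_m, a_h, b_h, a_n, b_n = rates(V)
    I_ion = G_NA * m**3 * h * (E_NA - V) + G_K * n**4 * (E_K - V) + G_L * (E_L - V)
    return (V + dt * (I_ion + I) / Cm,
            m + dt * phi * (a_m * (1 - m) - b_m * m),
            h + dt * phi * (a_h * (1 - h) - b_h * h),
            n + dt * phi * (a_n * (1 - n) - b_n * n))

def run_pulse(I0=15.0, Cm=0.5, phi=2.0, t0=0.5, t_end=20.0, dt=0.005):
    """Relax to rest, apply one square pulse, and track the voltage peak."""
    state = (-65.0, 0.05, 0.6, 0.32)
    for _ in range(int(round(50.0 / dt))):          # settle with no input
        state = step(state, 0.0, Cm, phi, dt)
    V_rest = state[0]
    n_pulse = int(round(t0 / dt))
    V_peak, t_peak = V_rest, 0.0
    for k in range(int(round(t_end / dt))):
        state = step(state, I0 if k < n_pulse else 0.0, Cm, phi, dt)
        if state[0] > V_peak:
            V_peak, t_peak = state[0], (k + 1) * dt  # latency from pulse onset
    return V_rest, V_peak, t_peak, state[0]

V_rest, V_peak, latency, V_end = run_pulse()
fired = V_peak > -10.0   # AP criterion: crossing V_th = -10 mV
```

Under these assumed parameters, the voltage at the end of the 20 ms window should be back near `V_rest`, so a second pulse would see essentially the same initial state as the first.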

2.2. HH Model Deterministic Extensions

The HH model has been extended many times since its original development (Hodgkin and Huxley, 1952), in order to account for the morphology of the neuron and for additional ionic currents as well as other processes that had been discovered (Hille, 2001; Bean, 2007). These extensions are generally done in a similar way, by adding more variables that represent either different neuronal compartments or various cellular processes, such as accumulating changes in the cell’s ionic concentrations or slow inactivation of ion channels (see Izhikevich, 2007; De Schutter, 2010; Ermentrout and Terman, 2010 for a modeling-oriented review of this subject). Such models were used, for example, to explore neuronal adaptation and bursting activity (e.g., Marom and Abbott, 1994; Fleidervish et al., 1996; Powers et al., 1999; Pospischil et al., 2008). The general form of such a conductance-based neuron model must include some spike-generating mechanism with rapid variables r ≜ (r1, …, rm)⊤ ((·)⊤ denotes transpose), along with slow variables s ≜ (s1, …, sn)⊤, modulating the spikes over time:

where ∊ > 0 is some small parameter – rendering the dynamics of s (of timescale τs) much slower than the dynamics of r (of timescale τr). We shall assume for simplicity that all slow variables are normalized to the range [0, 1] (as is usually done for gating variables).

For example, the HH model is a special case of this model where r = (V, m, n, h)⊤, equations (2.5) are given by equations (2.1–2.4) and all other quantities are held fixed, so ∊ = 0. Other basic models of excitable neurons also exist (Fitzhugh, 1961; Morris and Lecar, 1981). In any case, we require that, given a constant value of s (i.e., ∊ = 0), the rapid variables r and equation (2.5) represent an “excitable,” non-oscillating, neuron. By that we mean that:

1. r remains at a unique constant steady state if I(t) = 0 (“resting state”).

2. After each stimulation pulse, for certain values of initial conditions and I0, we get either a stereotypical “strong” response (“AP-response”) or a stereotypical “weak” response in r (“no-AP-response”). Only for a very small set of values of initial conditions and I0, do we get an “intermediate” response (“weak AP-response”).

3. All responses are brief, and r rapidly relaxes back to steady state, within time τr. For example, τr < 20 ms in both versions of the HH model (Figure 2).

Next assume that ∊ > 0. Now s are allowed to change, and to modulate the neuronal activity in a history dependent way. Recall that the dynamics of s are slow since ∊ is small, and therefore ∊−1 ∼ τs ≫ τr. For simplicity, we assume, as is commonly done (Izhikevich, 2007; Ermentrout and Terman, 2010), that hi(r, s) = hi(r, si), a linear function,

where δi and γi are kinetic rate functions of magnitude ∊. Some of this paper’s main results can be extended to general monotonic functions hi(r, si) (see section C). Next we discuss some of the specific models we use in this work. Since we are interested in the temporal rather than the spatial structure of the neuronal response, we shall concentrate on single compartment (isopotential) models which include additional slow kinetic processes.
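For orientation, the fast-slow structure described in this section can be written schematically as below. This is a sketch under stated assumptions, not a reproduction of the displayed equations: the symbol f for the fast vector field is a label introduced here, and the linear form of hi is inferred from the way δ and γ act as recovery and inactivation rates for a slow gate normalized to [0, 1].

```latex
\begin{align}
\dot{\mathbf{r}} &= \mathbf{f}\big(\mathbf{r}, \mathbf{s}, I(t)\big)
  && \text{(fast, spike-generating variables; } \mathbf{f} \text{ is an assumed label)} \\
\dot{\mathbf{s}} &= \epsilon\, \mathbf{h}(\mathbf{r}, \mathbf{s})
  && \text{(slow variables, } 0 < \epsilon \ll 1 \text{)} \\
\epsilon\, h_i(\mathbf{r}, s_i) &= \delta_i(\mathbf{r})\,(1 - s_i) - \gamma_i(\mathbf{r})\, s_i
  && \text{(assumed linear form; } \delta_i, \gamma_i = O(\epsilon) \text{)}
\end{align}
```

In this form each slow variable relaxes toward δi/(δi + γi) at rate δi + γi when r is held fixed, which is the sense in which δi acts as a recovery rate and γi as an inactivation rate.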

2.2.1. HHS model: basic HH + sodium slow inactivation

The work of De Col et al. (2008), which has some similarities with the work in Gal et al. (2010), implicates slow inactivation of sodium channels in the latency changes of the AP generated in response to stimulation pulses – as in the transient phase observed in Gal et al. (2010). Since slow inactivation of sodium channels (Chandler and Meves, 1970) has also been implicated in spike-frequency adaptation (Fleidervish et al., 1996; Powers et al., 1999) and in axonal AP failures (Grossman et al., 1979a), and since in Gal et al. (2010) the latency change is clearly coupled with the spike failures (e.g., the existence of a critical frequency), we first wanted to examine whether slow inactivation of sodium channels alone can explain the experimental results. We incorporate this mechanism into the HH model (as in Chandler and Meves, 1970; Rudy, 1978; Fleidervish et al., 1996), by introducing s, a new slow inactivation gate variable, into the sodium current

We model s as having first order kinetics,

with voltage-dependent inactivation rate $\gamma(V) = 3.4\,(e^{-0.1(V+17)} + 1)^{-1}$ Hz and recovery rate $\delta(V) = e^{-(V+85)/30}$ Hz (as in Fleidervish et al., 1996; again, in all the rate functions V is in millivolts). We call this the “HHS model.” In order to make the timescale of the transient change in the latency comparable to that measured in Gal et al. (2010), Figure 4A, we had to make the rates γ and δ 20 times smaller (such small rates were previously demonstrated, e.g., Toib et al., 1998). Also, using the fitted HH model, which had a narrow AP, required us to increase the inactivation rate γ to three times its original value, so sufficient inactivation could occur. However, this last change made the inactivation level at rest too high, so we compensated by increasing the steepness of the activation curve of γ to three times its original value. The end result of this was $\gamma(V) = 0.51\,(e^{-0.3(V+17)} + 1)^{-1}$ Hz and $\delta(V) = 0.05\,e^{-(V+85)/30}$ Hz. Using these rates together with the fitted HH model, we get the “fitted HHS model,” which reproduces the experimentally measured timescales of the transient response (Figure 8A). Alternatively, if instead we refer to the “original HHS model,” then it is to be understood that we used the original parameters of the HH model and γ(V) and δ(V), as in Fleidervish et al. (1996). Finally, we comment that sodium channels’ recovery from slow inactivation is known to be history dependent and to occur at multiple timescales (Toib et al., 1998; Ellerkmann et al., 2001). And so, one could argue that simple first order kinetics as in equation (2.8) are not accurate enough to describe the channel’s behavior. However, in neurons under pulse stimulation, the linear form of equation (2.8) is actually quite general, since Soudry and Meir (2010) showed how in this case equation (2.8) approximates the dynamics of an ion channel with power law memory, which reproduces the results of Toib et al. (1998) and Ellerkmann et al. (2001). Therefore, the response of the HHS model with slow inactivation of sodium as described in Soudry and Meir (2010) is not significantly different from the response using equation (2.8).
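The effect of each fitting step on the resting inactivation level can be illustrated numerically. The sketch below evaluates the quoted rate functions at an assumed resting voltage of −65 mV (a typical HH rest value, not stated in the text) and computes the steady-state availability s∞ = δ/(γ + δ) implied by first order kinetics. The function names are illustrative.

```python
import math

V_REST = -65.0  # mV; assumed resting voltage, not given in the text

def gamma_rate(V, scale, steepness):
    """Slow inactivation rate (Hz): scale / (exp(-steepness*(V + 17)) + 1)."""
    return scale / (math.exp(-steepness * (V + 17.0)) + 1.0)

def delta_rate(V, scale):
    """Recovery rate (Hz): scale * exp(-(V + 85)/30)."""
    return scale * math.exp(-(V + 85.0) / 30.0)

def s_inf(gamma, delta):
    """Steady state of ds/dt = delta*(1 - s) - gamma*s."""
    return delta / (gamma + delta)

# Original rates (Fleidervish et al., 1996, as quoted in the text)
s_original = s_inf(gamma_rate(V_REST, 3.4, 0.1), delta_rate(V_REST, 1.0))

# Both rates 20x smaller, then gamma tripled: more inactivation at rest
s_tripled = s_inf(gamma_rate(V_REST, 0.51, 0.1), delta_rate(V_REST, 0.05))

# Steepness of gamma tripled as well: the final "fitted HHS" rates
s_fitted = s_inf(gamma_rate(V_REST, 0.51, 0.3), delta_rate(V_REST, 0.05))
```

At this assumed rest voltage, tripling γ alone lowers s∞ from roughly 0.95 to roughly 0.86, while the steeper γ curve brings s∞ back to nearly 1, in line with the compensation described above. Scaling both rates by the same factor of 20 leaves s∞ unchanged and only slows the kinetics.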

2.2.2. HHSAP model: HHS model + potassium activation

Slow activation of potassium current (either voltage or calcium dependent) has been implicated many times in reducing the neuronal excitability after long current step stimulation, termed “spike-frequency adaptation” (e.g., Koch and Segev, 1989; Marom and Abbott, 1994; Pospischil et al., 2008). When we take this into consideration we change the fitted HHS model, rename {s, γ, δ} → {s1, γ1, δ1}, and add a slowly Activating Potassium current with M-current kinetics (similar to those of Koch and Segev, 1989, Chapter 4), so the total potassium current is

with  and

and

where

In Koch and Segev (1989), σ = 40 mV, while we used σ = 15 mV (again, in all the rate functions V is in millivolts). We refer to this model as the “HHSAP model.”

2.2.3. HHSIP model: HHS model + potassium inactivation

Both slow inactivation of sodium channels and slow activation of potassium channels have a similar effect on the neuronal excitability – they act to reduce it after an AP. As we shall see later, this “negative feedback” type of behavior always results in very similar neuronal dynamics. However, “positive feedback” type of behavior is also observed – increased excitability after a depolarization (Hoshi and Zagotta, 1991). To account for this we take the HHSAP model, and switch the potassium rates {γ2, δ2} → {δ2, γ2} – so now potassium is Inactivating (“positive feedback”) instead of Activating (“negative feedback”). We name this the “HHSIP model.”

2.3. Simplifying Deterministic Conductance-Based Models

Deterministic Conductance-Based Neuron Models (CBNMs) are usually explored numerically, with the exception of several dynamical systems reduction methods (Izhikevich, 2007; Ermentrout and Terman, 2010). However, in this work we concentrate only on a specific form of stimulation (T-periodic, short current pulses with amplitude I0) which fulfills a timescale separation assumption [equation (2.9)],

$$\tau_r \ll T \ll \tau_s, \qquad (2.9)$$

and this allows us to replace the full model with a simpler, approximate model. This condition is applicable here, since for all the specific models we use (both fitted and original HH, HHS, HHSAP, and HHSIP models, described in the previous sections), τr ∼ 10 ms and τs ∼ 10 s, and since the stimulation protocol in the experiment used the (physiologically relevant) period range of 20 ms < T < 1 s. Numerically, we found in all these specific models that the analytical results stemming from this assumption remain accurate if fin ≤ 30 Hz.

Since τr ≪ T (e.g., Figure 2) and since we deal with an excitable (non-oscillatory) neuron (as defined in 2.2), then after each stimulation pulse, whether or not there was an AP, the rapid variables relax to a unique steady state and do not directly affect the neuronal response when the next stimulation is given. Only s, a vector representing the slow variables of the system [equation (2.6)], retains memory of past stimulations. Therefore, to determine the neuronal behavior, it is only necessary to consider the dynamics of s and how it affects the system’s response. Thus, we consider how the neuronal response is determined by s.

2.3.1. Firing threshold

In response to a stimulation pulse with amplitude I0 an AP will occur if and only if the “excitability” of the neuron is high enough. Since only s retains memory of past stimulations, we should only care about the dependence of this excitability on the value of s, so we write it as a function E(s) – where we say that an AP will occur if and only if E(s) > 0. We denote by Θ the threshold region – a set of values in which each s fulfills E(s) = 0. We calculated numerically the location of this threshold region from the full conductance-based model [equations (2.5 and 2.6)]. First, we set ∊ = 0, disabling all the slow kinetics in the model. Then, for every value of s we simulated this “half-frozen” model numerically by first allowing r to relax to a steady state and then giving a stimulation pulse with amplitude I0. If an AP was generated then E(s) > 0, and E(s) ≤ 0 otherwise.

For example, in the context of the HHS model, the sodium current is depolarizing. Therefore, the stronger the sodium current, the more likely it is that an AP will be generated after a stimulation pulse. Since the sodium current increases with s, then if and only if s is above a certain threshold, which we denote by θ, the sodium current will be strong enough to create an AP. So, in the HHS model (or anytime s = s, a scalar) we can always write E(s) = s − θ (Figure 3A). In the HHSAP and HHSIP models (for which s = (s1, s2)) we found numerically that the shape of Θ is linear (see Figure 3B), so we can write E(s) = w·s − θ. Generally, we expect E(s) to be monotonic in each component of s separately, and monotonically increasing in I0. Therefore, in the HHS model, we expect the threshold θ to decrease with I0 (Figure 3A).
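For a scalar slow variable, locating θ amounts to finding the value of s at which the half-frozen model switches from no-AP to AP. The text describes scanning over values of s; the sketch below swaps in bisection, which is valid only under the monotonicity assumption stated above (an AP occurs if and only if s exceeds θ). The predicate `fires` is a hypothetical stand-in for one run of the half-frozen simulation, and `toy_fires` with θ = 0.7 is an invented placeholder, not a model result.

```python
def find_threshold(fires, lo=0.0, hi=1.0, tol=1e-4):
    """Bisection for the smallest s in [lo, hi] at which fires(s) is True.

    Assumes fires is monotonic in s (False below the threshold, True above).
    Returns None if no AP is generated even at s = hi.
    """
    if fires(lo):
        return lo
    if not fires(hi):
        return None
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if fires(mid):
            hi = mid      # threshold is at or below mid
        else:
            lo = mid      # threshold is above mid
    return hi

# Placeholder predicate standing in for "simulate with epsilon = 0, apply a pulse,
# report whether V crossed V_th"; the value 0.7 is purely illustrative.
def toy_fires(s):
    return s > 0.7

theta_estimate = find_threshold(toy_fires)
```

Each evaluation of `fires` would cost one relaxation plus one pulse simulation, so bisection needs about 14 runs for a tolerance of 1e-4 on [0, 1], compared with thousands for a uniform scan at the same resolution.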

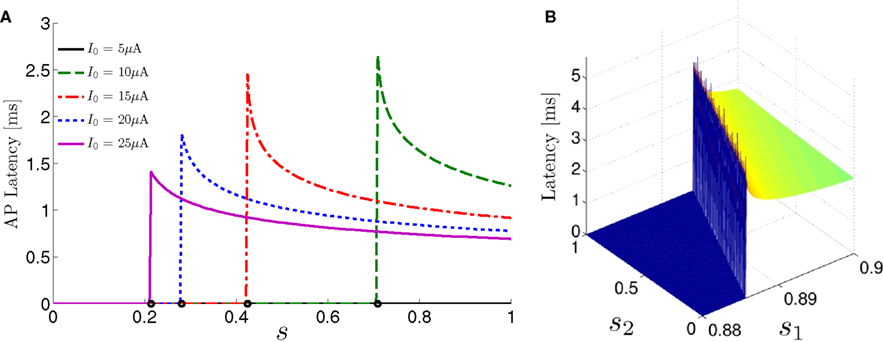

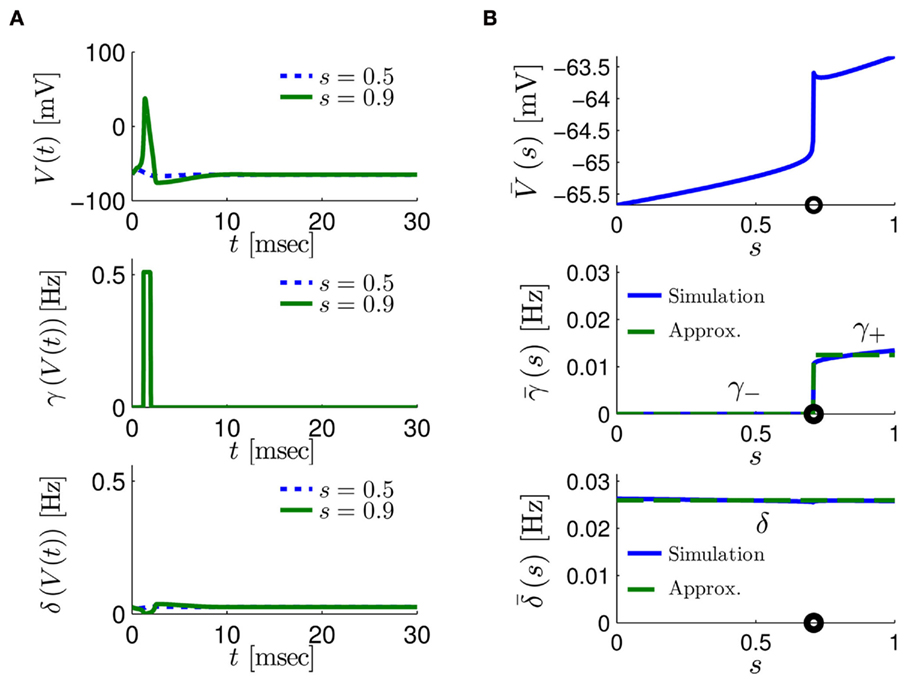

Figure 3. AP threshold and latency. (A) AP latency, L(s), calculated numerically from the fitted HHS model by setting ∊ = 0, and simulating the neuronal response at different values of s and I0. Firing thresholds θ ≜ {s|E(s) = 0} are marked by black circles for each I0. When no AP occurred, we define L ≜ 0. (B) Similarly, we calculated AP latency in the HHSAP and HHSIP models for I0 = 8 μA. Notice the linear shape of the threshold line Θ.

2.3.2. Latency function

When an AP occurs in response to a stimulation pulse, its different characteristics (amplitude, latency, etc.) are also determined by s. We are interested mainly in the latency of the AP, and so we define the latency function L = L(s) for all s for which an AP occurs (when E(s) > 0). This function was also found numerically. For every value of s we simulated the “half-frozen” model (with ∊ = 0) response to a stimulation pulse, and measured the latency of the resulting action potential (when it occurred). For example, in the context of the HHS model, as can be seen in Figure 3A, an AP will have a smaller latency (faster membrane depolarization) the higher are s and I0 – since both the sodium current and the stimulation current are depolarizing. See Figure 3B for the (2D) latency function in the HHSAP and HHSIP models.

2.3.3. Dynamics of s

Having described how s affects the neuronal response, all that remains is to understand how s evolves with time.

Using equations (2.6 and 2.7) we write

$$s_i(t+T) = s_i(t) + T\left[\bar{\delta}_i(t)\,\big(1 - s_i(t)\big) - \bar{\gamma}_i(t)\,s_i(t)\right] + O\!\left((\epsilon T)^2\right), \qquad (2.10)$$

where we have used the following notation for a time-averaged quantity

$$\bar{X}(t) \triangleq \frac{1}{T}\int_{t}^{t+T} X\big(\mathbf{r}(u)\big)\,du. \qquad (2.11)$$

We neglect the $O((\epsilon T)^2)$ correction term, since ∊T ≪ 1. Since the value of s(t) determines the r dynamics in the interval [t, t + T], it also determines $\bar{\gamma}_i(t)$ and $\bar{\delta}_i(t)$. Thus, slightly abusing notation, we write $\bar{\gamma}_i(\mathbf{s}(t))$ and $\bar{\delta}_i(\mathbf{s}(t))$ instead of $\bar{\gamma}_i(t)$ and $\bar{\delta}_i(t)$, respectively. Since the condition E(s) > 0 determines whether or not an AP is generated, we expect that generally the sharpest changes in the values of $\bar{\gamma}_i(\mathbf{s})$ and $\bar{\delta}_i(\mathbf{s})$ occur near the threshold area Θ where E(s) = 0. Following this reasoning, we make the assumption that the kinetic rates may be approximated as step functions with discontinuity near E(s) = 0,

$$\bar{\gamma}_i(\mathbf{s}) = \begin{cases} \gamma_{+,i}, & E(\mathbf{s}) > 0 \\ \gamma_{-,i}, & E(\mathbf{s}) \le 0 \end{cases}, \qquad \bar{\delta}_i(\mathbf{s}) = \begin{cases} \delta_{+,i}, & E(\mathbf{s}) > 0 \\ \delta_{-,i}, & E(\mathbf{s}) \le 0 \end{cases}. \qquad (2.12)$$

The interpretation of this assumption is that the kinetic rates are insensitive to the s-induced changes in the AP shape and in the steady state of r. For example, in the context of the HHS model, as can be seen in Figure 4B, we get for the rate of sodium inactivation

$$\bar{\gamma}(s) = \begin{cases} \gamma_{+}, & s > \theta \\ \gamma_{-}, & s \le \theta \end{cases}$$

where γ+ > γ−, while the recovery rate remains constant

$$\bar{\delta}(s) = \delta .$$

Figure 4. Calculation of the average kinetic rates in the fitted HHS model, where s is held constant. (A) Dynamics of voltage V(t) (top), γ(V(t)) (middle), and δ(V(t)) (bottom) after a stimulation pulse, plotted for two values of s. For s = 0.5 no AP occurred, while for s = 0.9 an AP was generated. Notice how γ(V(t)) increases dramatically during an AP, while δ(V(t)) does not. (B) Time-averaged quantities (top, middle, and bottom panels), including $\bar{\gamma}(s)$ and $\bar{\delta}(s)$, derived using equation (2.11) by simulations as in (A), for many values of s. Firing threshold θ is marked by a black circle. Notice that $\bar{\gamma}$ changes significantly more than $\bar{\delta}$, especially near θ, and that we can approximate $\bar{\gamma}$ to be a γ±-valued step function as in equation (2.12) while $\bar{\delta}$ is a constant. I0 = 10 μA, T = 50 ms, with V, m, n, h initial conditions set at steady state values (which can be somewhat different for each value of s).

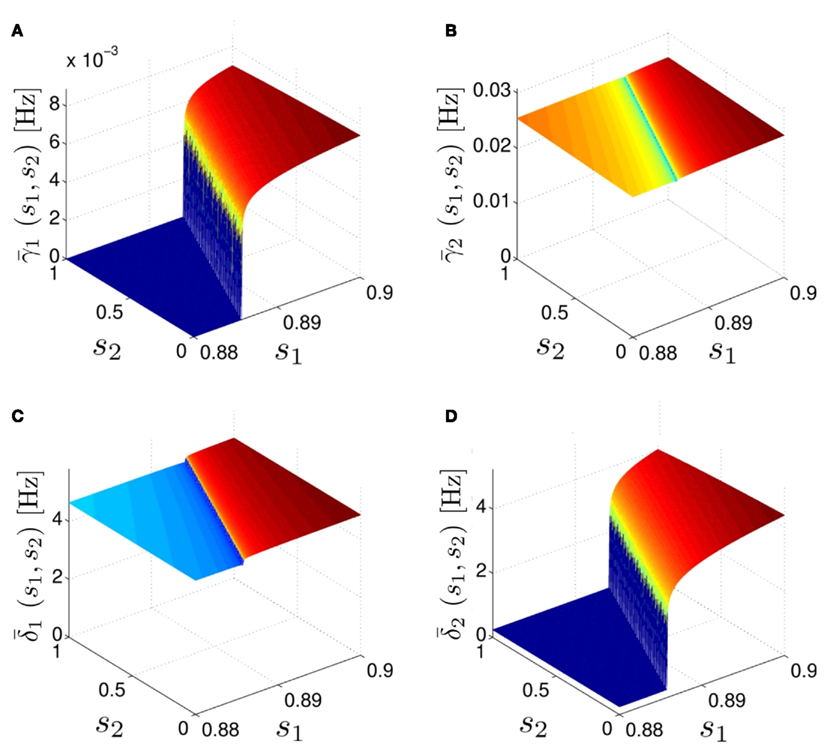

As can be seen in Figure 5, for the HHSAP model, we get

with

and

and

In the HHSIP model, $\bar{\gamma}_2$ and $\bar{\delta}_2$ are switched.

Figure 5. Average activation and inactivation rates for the HHSAP model, where s is held constant. Panels (A–D) show the four averaged rates. Two of them are almost constant, while the other two have a sharp discontinuity near the threshold area Θ (Figure 3B). I0 = 8 μA, T = 50 ms, with V, m, n, h initial conditions set at steady state value.

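In conclusion, combining equations (2.10 and 2.12) gives

$$s_i(t+T) = s_i(t) + T \cdot \begin{cases} \delta_{+,i}\,\big(1 - s_i(t)\big) - \gamma_{+,i}\,s_i(t), & E(\mathbf{s}(t)) > 0 \\ \delta_{-,i}\,\big(1 - s_i(t)\big) - \gamma_{-,i}\,s_i(t), & E(\mathbf{s}(t)) \le 0 \end{cases} \qquad (2.14)$$

which is a diagonal, piecewise linear (or, more accurately, affine) recursive map for s. Similar discontinuous maps appear in many systems (Di Bernardo, 2008), and recently also in neuroscience (Ibarz et al., 2011). However, in contrast to this recent work, our method of discretization relies on the stimulus pulses’ arrival times, thereby allowing greater analytical tractability in our case.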
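The behavior of such a map is easy to explore numerically for a scalar slow variable, as in the HHS model with E(s) = s − θ and a constant recovery rate. The sketch below is illustrative only: all numerical values (θ, δ, γ±, the initial condition) are hypothetical round numbers chosen to show two regimes, not fitted parameters, and `iterate_map` is a name introduced here.

```python
def iterate_map(s0, n_steps, T, theta, delta, gamma_plus, gamma_minus):
    """Iterate s <- s + T*[delta*(1 - s) - gamma(s)*s], one step per stimulus.

    gamma(s) = gamma_plus if s > theta (an AP fires), else gamma_minus.
    Returns the trajectory of s and the list of AP/no-AP outcomes.
    """
    s, trace, aps = s0, [], []
    for _ in range(n_steps):
        ap = s > theta                              # AP iff E(s) = s - theta > 0
        gamma = gamma_plus if ap else gamma_minus
        s = s + T * (delta * (1.0 - s) - gamma * s)
        trace.append(s)
        aps.append(ap)
    return trace, aps

# Hypothetical parameters (rates in Hz, T in seconds)
T, theta, delta, gamma_minus = 0.05, 0.7, 0.025, 0.001

# Case 1: fixed point delta/(delta + gamma_plus) = 0.833 lies above theta,
# so every stimulus elicits an AP and s settles (stable mode after a transient).
trace_stable, aps_stable = iterate_map(0.96, 20000, T, theta, delta, 0.005, gamma_minus)

# Case 2: the AP-branch fixed point (0.333) lies below theta while the
# no-AP-branch fixed point (0.96) lies above it, so s is pinned near theta
# and APs fail on a fraction of the stimuli (intermittent mode).
trace_int, aps_int = iterate_map(0.96, 20000, T, theta, delta, 0.05, gamma_minus)
ap_fraction = sum(aps_int[10000:]) / 10000.0
```

In the second case the AP fraction follows from requiring zero mean drift at s ≈ θ, which for these hypothetical numbers gives about 0.198. The resulting firing pattern is regular, consistent with the statement that the deterministic map does not produce irregular responses.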

2.3.4. Dependence of kinetic rates on stimulation

Next, we show that the inactivation rates γ± and the corresponding recovery rates are all linear in fin. Expressing the integral on [t, t + T] in equation (2.11) as the sum of two integrals on [t, t + τr] and [t + τr, t + T], we exploit the fact that most of the response in r(t) after an AP is confined to [t, t + τr] (since τr ≪ T; Figure 4A). Denoting

and using γH, γM, γL (which are independent of T) we get from equations (2.11 and 2.12)

Similarly defining the corresponding quantities for the recovery rates, we obtain

Notice that since τr fin is small (since τr ≪ T),  can be significantly different from  if and only if  and so, necessarily,  Additionally, since γi(r) are usually monotonic functions, we get  If instead  then we do not care much about  anyway. This reasoning applies also to the recovery rates  , to the HHS model, where we get that  (implied also from Figure 4A), and also in the HHSAP and HHSIP models.

Finally, we note that since I0 has little effect on the steady state of r (e.g., rest voltage), and just a mild effect on the development of r during an AP (“all-or-none response”),  are expected to have a very small dependence on I0. Simulations confirm this low sensitivity to I0 for  and  for the specific models we considered, so hereafter we assume they are all independent of I0. However, we may see an increased sensitivity of  and  to I0 if I0 is increased, or the voltage threshold of the corresponding kinetic rate (V1/2) is decreased.
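As a hedged illustration of why the averaged rates come out linear in fin, the sketch below assumes (as a simplification, not as the definition used in the text) that a kinetic rate takes one constant value on the response window [t, t + τr] and its rest value on the remainder of the period. The function name and all numerical values are hypothetical.

```python
def average_rate(rate_response, rate_rest, tau_r, f_in):
    """Average a piecewise-constant kinetic rate over one period T = 1/f_in.

    Assumption (illustrative): the rate equals `rate_response` on
    [t, t + tau_r] and `rate_rest` on [t + tau_r, t + T].
    """
    period = 1.0 / f_in
    if tau_r > period:
        raise ValueError("the sketch requires tau_r <= T")
    return (tau_r * rate_response + (period - tau_r) * rate_rest) / period


# Hypothetical values: rates in 1/s, tau_r in s, f_in in Hz.
gamma_H, gamma_M, gamma_L, tau_r = 2.0, 0.1, 0.01, 0.005
for f_in in (1.0, 10.0, 20.0):
    gamma_plus = average_rate(gamma_H, gamma_L, tau_r, f_in)   # period with an AP
    gamma_minus = average_rate(gamma_M, gamma_L, tau_r, f_in)  # period without an AP
    print(f_in, gamma_plus, gamma_minus)
```

Under this assumption the average equals rate_rest + τr·fin·(rate_response − rate_rest), so it is linear in fin with a slope set by the difference between the response and rest values.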

2.4. Simplified Deterministic Model Analysis

To summarize the main results of section 2.3, any extension to the HH model of the generic form [equations (2.5–2.7)] can be greatly simplified under pulse stimulation given the following two assumptions:

1. Timescale separation [equation (2.9); Figure 2]

2. Step-like behavior of the average kinetic rates [equation (2.12); Figures 4 and 5].

In this case we can write a simplified model for the dynamics of s = (s1,…, sn)⊤ and the neuronal response:

1. At each stimulation an AP is produced if and only if E(s) > 0, where E(s) is “the excitability function,” calculated numerically (Figure 3).

2. The latency of an AP is also determined by a function – L(s), calculated numerically (Figure 3).

3. The change in s between consecutive stimulations is given by the piecewise linear map in equation (2.14).

4. The inactivation and recovery rates all change linearly with fin, as derived in equations (2.18 and 2.19).
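The four items above can be sketched as a short simulation. This is a minimal 1D illustration under stated assumptions, not the model used for the figures: it assumes E(s) = s − θ, a single slow variable, and an affine update of the form T·[δ(1 − s) − γs] with γ switching between two values; the latency function L(s) of item 2 is omitted. The function name and parameter values are hypothetical.

```python
def simulate_simplified_model(s0, n_periods, period, theta,
                              gamma_plus, gamma_minus, delta):
    """Iterate a 1D version of the simplified model, one step per stimulus.

    Assumed form (illustrative only): E(s) = s - theta, and over one period
    s changes by period * (delta * (1 - s) - gamma * s), where gamma is
    gamma_plus if an AP was generated and gamma_minus otherwise.
    """
    s = s0
    s_trace, ap_trace = [], []
    for _ in range(n_periods):
        ap = s > theta                       # item 1: AP iff E(s) > 0
        gamma = gamma_plus if ap else gamma_minus
        s_trace.append(s)
        ap_trace.append(ap)
        s += period * (delta * (1.0 - s) - gamma * s)   # item 3: affine update
    return s_trace, ap_trace


# Hypothetical parameters (rates in 1/s, period in s).
s_trace, ap_trace = simulate_simplified_model(
    s0=0.9, n_periods=400, period=0.05, theta=0.5,
    gamma_plus=0.5, gamma_minus=0.02, delta=0.1)
print("APs in first 20 periods:", sum(ap_trace[:20]))
print("APs in last 100 periods:", sum(ap_trace[-100:]))
```

With these values the trace first shows a run of reliable APs while s decays toward the threshold, and then a mixture of APs and failures once s starts crossing it back and forth.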

Additionally, we note that for the specific models we examined, as I0 increases, E(s) increases and L(s) decreases, while all the other parameters remain approximately constant (Figure 3). Although this behavior does not follow directly from the assumptions as do the above results, we expect it to remain valid in many cases (see section 2.3). This model is mainly useful in order to analyze and explain the dynamics of the full conductance-based model [equations (2.5–2.7)], as we do next. However, all numerical simulations are performed on the full conductance-based model, to demonstrate the validity of our results. In section B we further explain our assumptions, and in section C we show how we can replace some of them with weaker assumptions.

2.4.1. Transients

In equation (2.14) each si is coupled to the others only through the threshold. Therefore, if s(0) is far from the threshold area E(s) = 0, each si will change independently forever, or until s(t) reaches the threshold area E(s) = 0. Until this happens it is perhaps more intuitive to describe the dynamics by the coarse-grained continuous-time version of equation (2.14),

The solution, for each case, is given by

where

is the timescale of the exponential relaxation, and

so s(t) relaxes toward one of two steady-state values, depending on whether E(s) > 0 or E(s) ≤ 0.
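The relation between the per-stimulus map and its coarse-grained continuous-time version can be checked numerically. The sketch below assumes, for illustration, a single variable obeying ds/dt = δ(1 − s) − γs on one side of the threshold, for which the relaxation timescale is 1/(γ + δ) and the limiting value is δ/(γ + δ). Function names and numbers are hypothetical.

```python
import math


def relaxation(s0, t, gamma, delta):
    """Closed-form solution of ds/dt = delta*(1 - s) - gamma*s (assumed form)."""
    tau = 1.0 / (gamma + delta)          # relaxation timescale
    s_inf = delta / (gamma + delta)      # value approached as t grows
    return s_inf + (s0 - s_inf) * math.exp(-t / tau)


def iterate_map(s0, n_periods, period, gamma, delta):
    """Same dynamics advanced once per stimulation period (discrete map)."""
    s = s0
    for _ in range(n_periods):
        s += period * (delta * (1.0 - s) - gamma * s)
    return s


# Hypothetical values: 20 periods of 50 ms, i.e. t = 1 s.
print(iterate_map(0.9, 20, 0.05, 0.5, 0.1), relaxation(0.9, 1.0, 0.5, 0.1))
```

The two values agree closely as long as the period is much shorter than the relaxation timescale, which is the regime in which the continuous-time description is meant to be used.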

2.4.2. Steady state

Eventually s(t) arrives at some stable steady state behavior. There are several different possible steady state modes, depending on  which are affected by the amplitude and frequency of stimulation. These can be found by the following self-consistency arguments.

1. Stable: if

then s(t) stabilizes at  so APs are generated after each stimulation.

2. Unresponsive: if  ,  then s(t) stabilizes at  so no APs occur.

3. Bi-stable: if

then, depending on the initial condition, s(t) stabilizes either on  (as in the stable mode) or on  (as in the unresponsive mode). This type of behavior is exhibited only in cases when the neuron becomes more excitable after an AP (“positive feedback”).

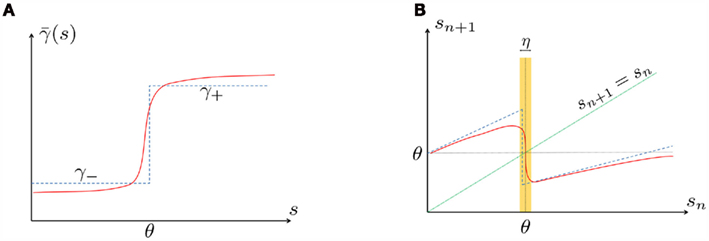

4. Intermittent: if

then steady state is always “on the other side” of the threshold. Thus s(t) will stabilize near the threshold E(s) = 0. In this regime, small changes in s dominate the behavior of the neuron: the neuron alternates between an “on” state, in which E(s) > 0 and the neuron can generate an AP at each stimulation, and an “off” state in which E(s) ≤ 0 and the neuron does not generate any AP. This type of behavior is exhibited in cases when the neuron becomes less excitable after an AP (“negative feedback”).
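The self-consistency argument behind the four modes can be written as a small decision rule. This is a sketch of our reading of the argument, not a transcription of the original conditions: a firing state is self-consistent if the state it relaxes toward still satisfies E > 0, and a silent state is self-consistent if the state it relaxes toward satisfies E ≤ 0. The names `s_inf_plus` and `s_inf_minus` are hypothetical labels for those two states.

```python
def classify_steady_state(E, s_inf_plus, s_inf_minus):
    """Classify the steady-state mode by a self-consistency check.

    `s_inf_plus` / `s_inf_minus` are the (hypothetically named) states that
    s relaxes toward while APs are / are not being generated.
    """
    consistent_on = E(s_inf_plus) > 0      # a firing neuron keeps firing
    consistent_off = E(s_inf_minus) <= 0   # a silent neuron stays silent
    if consistent_on and consistent_off:
        return "bi-stable"
    if consistent_on:
        return "stable"
    if consistent_off:
        return "unresponsive"
    return "intermittent"                  # each state lies on the other side


# Hypothetical 1D example with E(s) = s - 0.5.
E = lambda s: s - 0.5
print(classify_steady_state(E, 0.2, 0.8))
```

In this reading, the bi-stable case requires the firing state to be more excitable than the silent one, and the intermittent case requires the opposite, which matches the positive and negative feedback remarks in the list.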

2.4.3. Firing rate

Suppose we count the number of APs generated over m stimulation periods in steady state, and denote by pm the time-averaged probability of generating an AP. Assuming that the system has indeed arrived at a steady state, for large enough m, pm does not depend on m, so we denote it just by p. The only case where p ∉ {0, 1} is the intermittent mode. In this mode s is near the threshold E(s) = 0, so p can be derived by solving the self-consistent equation

where we defined

and

and  Also, notice that if, for all i,

Also, notice that if, for all i,  and

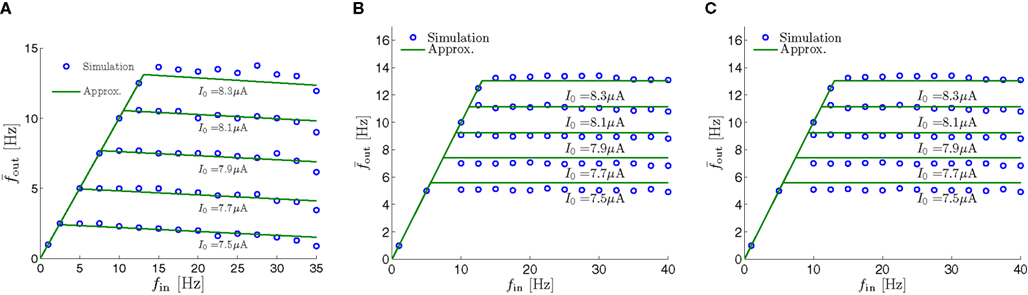

and  then we can rewrite equation (2.24) in the form h(pfin) = 0, which entails that in this case

then we can rewrite equation (2.24) in the form h(pfin) = 0, which entails that in this case
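To make the idea of a self-consistent firing probability concrete, here is a minimal 1D sketch. It assumes (our simplification) that E(s) = s − θ and that s changes per period by T·[δ(1 − s) − γs], with γ = γ+ after an AP and γ− after a failure; p is then estimated by requiring zero mean drift of s at the threshold. All names and values are hypothetical, and this is not claimed to be the exact equation solved in the text.

```python
def intermittent_firing_probability(theta, period, gamma_plus, gamma_minus, delta):
    """Estimate p from zero mean drift of s at the threshold (1D sketch)."""
    step_on = period * (delta * (1.0 - theta) - gamma_plus * theta)    # after an AP
    step_off = period * (delta * (1.0 - theta) - gamma_minus * theta)  # after a failure
    if not (step_on < 0.0 < step_off):
        raise ValueError("not in the intermittent regime")
    # Solve p * step_on + (1 - p) * step_off = 0 for p.
    return step_off / (step_off - step_on)


def simulated_firing_probability(theta, period, gamma_plus, gamma_minus, delta,
                                 n_periods=20000):
    """Fraction of periods with an AP when iterating the assumed map."""
    s, n_ap = theta, 0
    for _ in range(n_periods):
        ap = s > theta
        n_ap += ap
        gamma = gamma_plus if ap else gamma_minus
        s += period * (delta * (1.0 - s) - gamma * s)
    return n_ap / n_periods


params = dict(theta=0.5, period=0.05, gamma_plus=0.5, gamma_minus=0.02, delta=0.1)
print(intermittent_firing_probability(**params), simulated_firing_probability(**params))
```

The estimate and the simulated fraction differ slightly because the step sizes depend weakly on where s sits around the threshold; the estimate evaluates them exactly at θ.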

2.4.4. Firing patterns

Assume that equation (2.23) has been solved for p. Under the assumption that in steady state s remains near s∞(p), from equations (2.12 and 2.14) we get that the change in s over one period is Δs+ if E(s) > 0 and Δs− if E(s) ≤ 0, where

Simple algebra gives that for all i,  This means that Δs+ has the opposite direction to Δs− – so s remains on a simple one-dimensional limit cycle. If

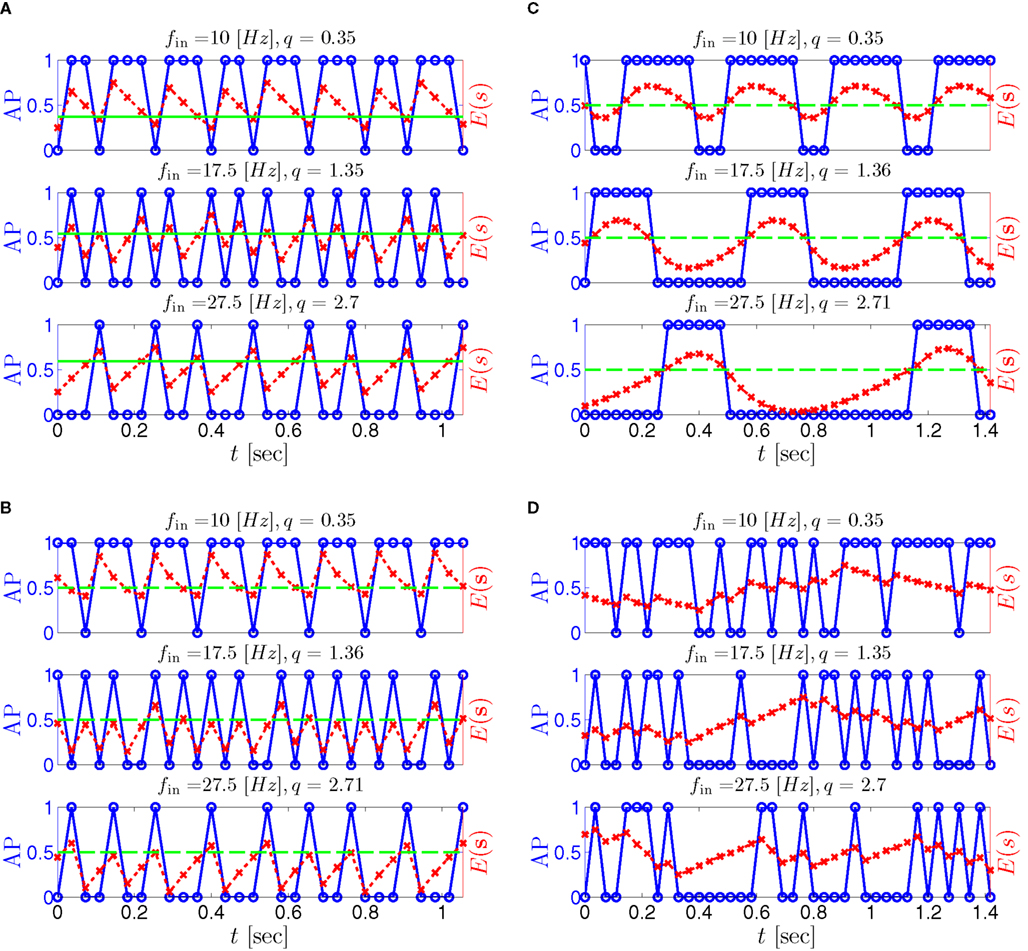

This means that Δs+ has the opposite direction to Δs− – so s remains on a simple one-dimensional limit cycle. If  the AP firing pattern is simple and repetitive: one period with an AP, followed by either ⌊q⌋ (integer part of q) or ⌊q⌋ + 1 periods in which no AP occurred (Figures 6A,C). If instead q < 1, the firing pattern is different, yet still simple and repetitive: one period in which no AP occurred, followed by either ⌊q−1⌋ or ⌊q−1⌋ + 1 periods with APs (Figure 6B). These two simple firing patterns are the only possibilities in the 1D case (s = s). However, in some other cases, this simple description cannot be true, as we explain next. Assume that s remains near s∞(p). In this case we can linearize

the AP firing pattern is simple and repetitive: one period with an AP, followed by either ⌊q⌋ (integer part of q) or ⌊q⌋ + 1 periods in which no AP occurred (Figures 6A,C). If instead q < 1, the firing pattern is different, yet still simple and repetitive: one period in which no AP occurred, followed by either ⌊q−1⌋ or ⌊q−1⌋ + 1 periods with APs (Figure 6B). These two simple firing patterns are the only possibilities in the 1D case (s = s). However, in some other cases, this simple description cannot be true, as we explain next. Assume that s remains near s∞(p). In this case we can linearize  where

where  . Without loss of generality, assume that q > 1 and 0 < w⊤ (s − s∞ (p)) at a certain period, so an AP is produced. In this case, according to the above description, s increases in the next period to s + Δs+, and no AP is produced – so 0 ≥ w⊤ (s − s∞ (p) + Δs+). But this necessarily means that 0 > w⊤Δs+. If this condition is not fulfilled (or, equivalently, 0 < w⊤Δs−), then the above description of the firing patterns cannot be correct. Instead, s will still revolve around s∞(p), but now in a higher-dimensional limit cycle (not 1D). In this case, the firing patterns are somewhat more general than before: s may remain on one side of the threshold Θ for several cycles, so the neuron can fire a continuous AP-response sequence for L+ periods, and then remain silent (no-AP-response sequence) for L− periods, where both L+ > 1 and L− > 1 (Figure 6D). We noticed numerically that even in this case, the value of q still approximates the L+/L− ratio (Figure 9D).

. Without loss of generality, assume that q > 1 and 0 < w⊤ (s − s∞ (p)) at a certain period, so an AP is produced. In this case, according to the above description, s increases in the next period to s + Δs+, and no AP is produced – so 0 ≥ w⊤ (s − s∞ (p) + Δs+). But this necessarily means that 0 > w⊤Δs+. If this condition is not fulfilled (or, equivalently, 0 < w⊤Δs−), then the above description of the firing patterns cannot be correct. Instead, s will still revolve around s∞(p), but now in a higher-dimensional limit cycle (not 1D). In this case, the firing patterns are somewhat more general than before: s may remain on one side of the threshold Θ for several cycles, so the neuron can fire a continuous AP-response sequence for L+ periods, and then remain silent (no-AP-response sequence) for L− periods, where both L+ > 1 and L− > 1 (Figure 6D). We noticed numerically that even in this case, the value of q still approximates the L+/L− ratio (Figure 9D).
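The claim about the 1D firing pattern for q ≥ 1 can be checked with a few lines of code. The sketch assumes constant step sizes near the threshold: s drops by q·d after an AP and rises by d after a failure, so the AP step is −q times the failure step. The threshold, the step size d, and the function name are hypothetical; the function is intended for q ≥ 1 only.

```python
import math


def silent_run_lengths(q, n_periods=1000):
    """Lengths of no-AP runs between consecutive APs in a 1D sketch.

    Assumes constant steps near the threshold: s drops by q*d after an AP
    and rises by d after a failure. Intended for q >= 1.
    """
    theta, d = 0.5, 2.0 ** -10           # hypothetical threshold and step size
    s = theta + 0.25 * d                 # start just above the threshold
    runs, current, seen_ap = [], 0, False
    for _ in range(n_periods):
        if s > theta:                    # AP generated
            if seen_ap:
                runs.append(current)     # close the silent run that just ended
            seen_ap, current = True, 0
            s -= q * d
        else:                            # AP failure
            current += 1
            s += d
    return runs


q = 3.5
runs = silent_run_lengths(q)
print(sorted(set(runs)), math.floor(q), math.floor(q) + 1)
```

For q = 3.5 the silent runs alternate between 3 and 4 periods, i.e. between ⌊q⌋ and ⌊q⌋ + 1, and for an integer q such as 3 every silent run has exactly q periods.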

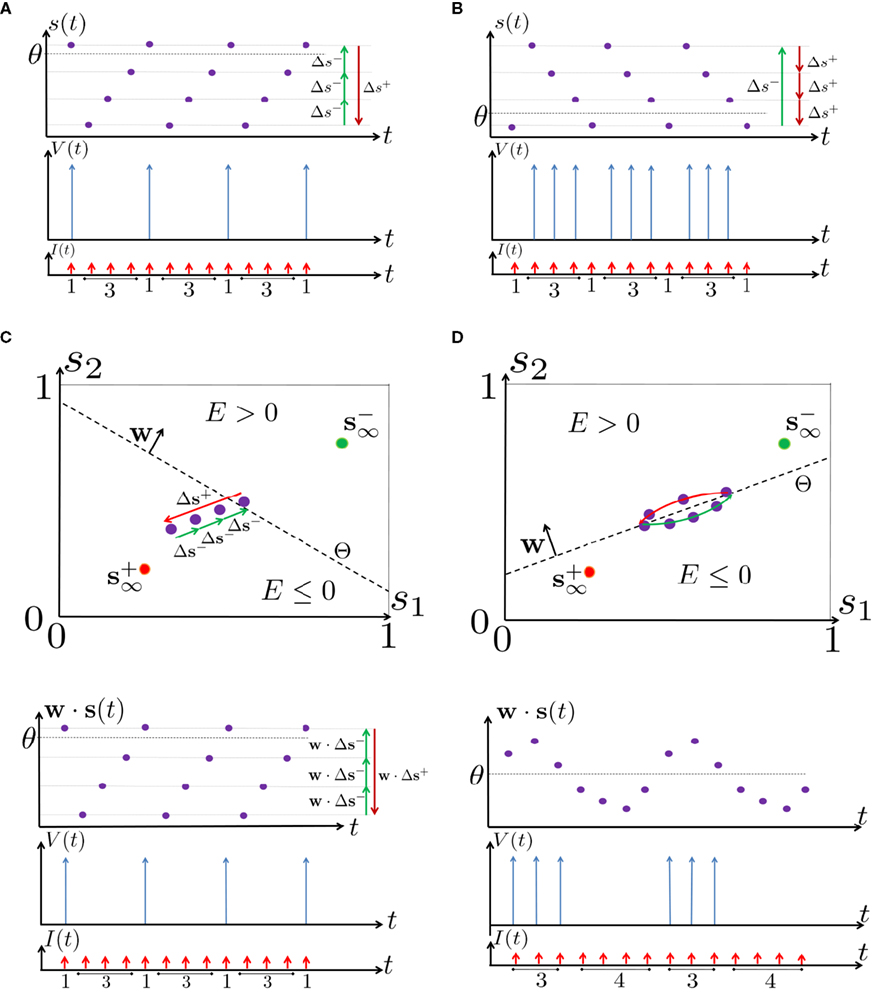

Figure 6. Schematic state space dynamics of s and firing patterns in the intermittent mode. Recall that  The simple dynamics of the 1D model are shown with (A) q = 3 and (B) q = 1/3. For the 2D model, the dynamics can be slightly more complicated. (C) For w⊤Δs+ < 0 we get, from equation (2.25), a 1D limit cycle with Δs+ = −qΔs− (top, with q = 3). This results in a 1:q (AP:No AP) firing pattern (bottom). (D) For w⊤Δs+ > 0 we obtain a 2D limit cycle (top) which results in m:n firing patterns (“bursts”; bottom). See Figures 9A–C for the corresponding numerical results.

In addition to the above approximate analysis, there are several general attributes of the steady state solutions of equation (2.14), that lead us to believe that the firing patterns do not generally exhibit complex patterns. These attributes are the following:

• Finite, periodic: in the 1D case, as was explained above, the firing patterns are periodic and composed of only two basic repeating sub-patterns, but the overall period of a firing pattern may be arbitrarily long. However, as the duration of the overall period increases, the relevant parameter space (that can produce such a period) decreases (Tramontana et al., 2010), so an infinitely long period can be achieved only in an (uncountable) parameter set of measure zero (Keener, 1980). A similar result is expected to hold in higher-dimensional systems, since, as in the 1D case, infinitely long periods seem to be generated only in the rare (measure zero) cases (bifurcations) in which the steady state (finite) limit cycle touches the threshold.

• Stable, non-chaotic: since equation (2.14) is a piecewise linear diagonal contracting map (namely, |d(si + Δsi)/dsi| < 1), its eigenvalues are inside the unit circle, so any finite limit cycle that does not touch Θ must be stable (Thompson and Stewart, 2002, or section A.1). It can be shown that this remains true even when hi(r, si) [equation (2.7)] is not linear (but still monotonic), or when the timescale separation assumption τr ≪ T ≪ τs is relaxed to τr ≪ min(T, τs) (section C).

• Globally stable, unique: in the 1D case it was further proved (Keener, 1980, or section A.2) that any such limit cycle must also be globally stable – and therefore unique. Numerical simulations indicate that such global stability (or, uniqueness) remains true in higher-dimensional systems. However, a direct proof of this might be hard, due to results of Blondel et al. (1999).

• Bounded L± durations: in the intermittent mode (where  ), from equation (2.21), we get  where  And so, the lengths of the L± periods are bounded if  and d± ≠ 0. Again, the latter condition is violated only for a measure zero set of parameters.

We emphasize that even if any of the above-mentioned exceptions is reached (either a limit cycle or  touching Θ), these rare cases will be structurally unstable, meaning they will disappear if infinitesimally small changes occur in almost any parameter, such as stimulation rate or amplitude.

3. Results

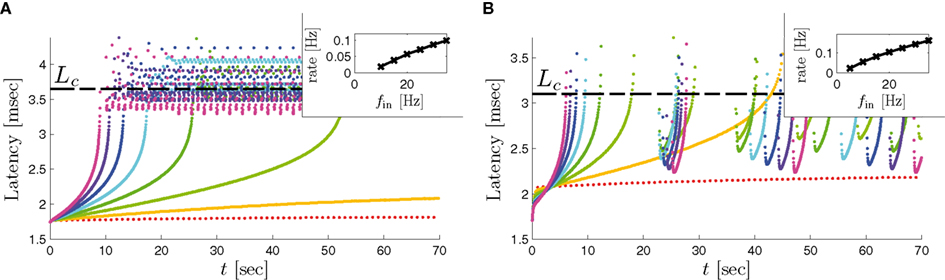

In Gal et al. (2010), a single synaptically isolated neuron, residing in a culture of rat cortical neurons, is stimulated with a train of extracellular periodic current pulses. This neuron is isolated from other neurons by blocking all synaptic activity in the network. For sufficiently strong pulse amplitudes the neuron sometimes responds with a detectable AP. The observed neuronal response was characterized by three different modes (Gal et al., 2010; Figure 2). When the neuron is stimulated at low frequencies (e.g., 1 Hz) it always responds reliably with an AP, which peaks after the stimulus with a constant latency (∼5 ms). This mode is termed a “stable mode.” When the neuron is stimulated at higher frequencies, it begins to adapt and the latency of its APs increases. This phase is termed “transient mode.” If the stimulation frequency is higher than a certain “critical frequency” (2–23 Hz) the transient mode terminates when the latency reaches a certain “critical latency” (∼10 ms). When this critical latency is reached, the neuron enters a phase in which it sometimes “misses,” and an AP is not created in response to the stimulation pulse. When an AP is created, its latency fluctuates around the critical latency. This kind of irregular mode is termed the “intermittent mode.” When the frequency of stimulation is changed back to low frequencies (e.g., 1 Hz) the behavior switches back again to a transient phase, in which the latency now decreases back to its original steady state value in the stable mode.

The main additional observations of Gal et al. (2010) can be summarized as follows:

1. The critical latency of the intermittent mode does not depend on the stimulation frequency (Gal et al., 2010; Figures 4A,D).

2. The rate of change in latency during the transient mode increases linearly with stimulation frequency (Gal et al., 2010; Figure 4B).

3. The firing rate in the intermittent mode decreases moderately with stimulation rate (Gal et al., 2010; Figure 4C).

4. The response of the neuron in the stable and transient mode is almost exactly repeatable (Gal et al., 2010; Figure 9).

5. During the intermittent mode, regular “burst”-like firing patterns appear (Gal et al., 2010; Figure 8D).

6. During the intermittent mode, irregular firing patterns appear (e.g., Gal et al., 2010; Figures 8C,E,F).

7. The irregular patterns of response in the intermittent phase are not repeatable (Gal et al., 2010; Figure 9).

8. The variability of the AP latency fluctuations increases with the latency magnitude during the transients (Gal et al., 2010; Figures 4A,D), and reaches a maximum in the intermittent mode.

9. During long recordings (55 h in Gal et al., 2010; Figures 5A,B,D) it was observed that the firing rate, and the type of firing patterns (see also Gal et al., 2010; Figure 10), change with time. The shape of the AP, however, remains stable throughout the experiment (Gal et al., 2010; Figure 5C).

10. The changes in the firing rate display self-similarity properties (Gal et al., 2010; Figure 6). This remains true for Poisson stimulation (Gal et al., 2010; Figure 7).

Our aim in this section is to find the minimal model capable of reproducing these results. In section 2.1 we have already established that for the classical HH model the response to each stimulation pulse must be the same and therefore cannot reproduce these results. Therefore, we begin by investigating the dynamics of the next simplest model – the HHS model (section 2.2.1), which includes also slow inactivation of sodium channels. As we show in section 3.1, the HHS model reproduces a significant portion of the experimental results (the different modes and observations 1–4), and its dynamics is easily explained using our simplified version (section 2.3).

However, the HHS model fails to generate the specific firing patterns seen in the experiment. In section 3.2 we extend the HHS model to the HHSIP model, enabling us to reproduce some of the burst patterns (observation 5). However, the irregular firing patterns (observation 6) raise a more serious obstacle, which cannot be easily overcome by simply extending the model to take into account additional variables representing the states of other types of channels or ion concentrations. We make the general argument (based on our analytic treatment) that the irregular patterns and variability (observations 6–10) cannot be created by such model extension, in the framework of a generic neuron model of the form of equations (2.5 and 2.6) and under our assumptions [equations (2.9 and 2.12)]. Taking this result into account we try to employ a different form of model extension. In section 3.3, we explore numerically the effects of ion channel noise on the results. We show that the sensitivity of the near-threshold dynamics of the neuron in the intermittent mode renders such noise highly significant. Additionally, the stochastic model seems to better reproduce the variability of the experimental results in both the transient and intermittent modes. Finally, we reach the conclusion that extending from deterministic CBNMs to stochastic CBNMs is crucial if we wish to reproduce the details of the variability and the irregular patterns (observations 6–10). However, the analysis of a stochastic model requires different tools from those developed here. We therefore relegate the complete analysis of the stochastic model, and the reproduction of remaining results, to a companion paper.

3.1. HHS Model

As discussed in section 2.4, the full HHS model can be greatly simplified under pulse stimulation at low enough frequencies, as in Gal et al. (2010). Next, we explain how the different neuronal response modes seen in the experiment were reproduced, with the help of this simplification.

3.1.1. Transient mode

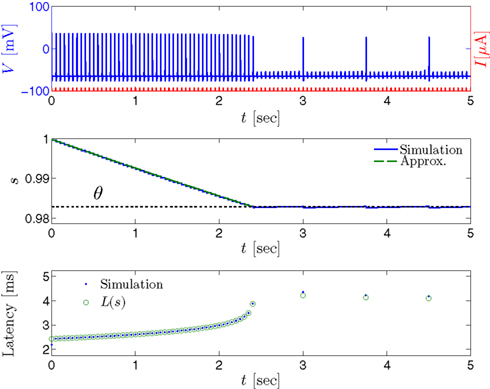

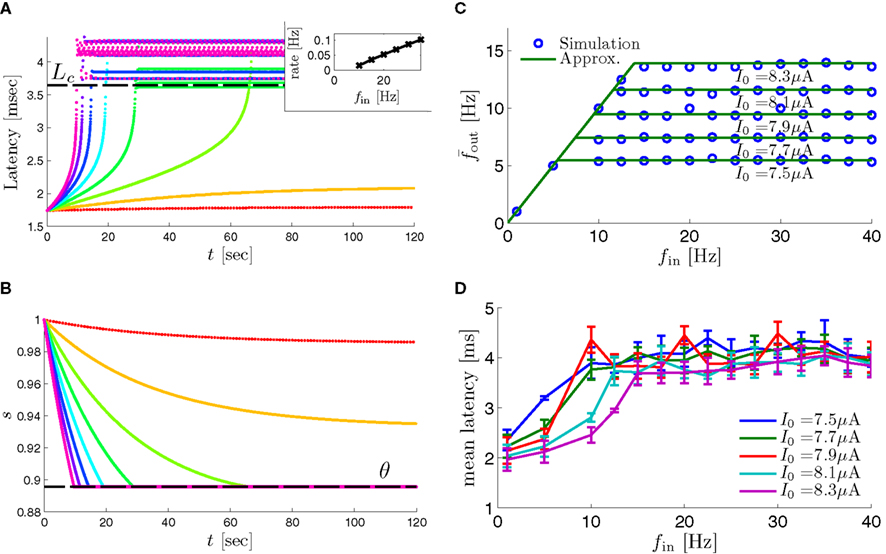

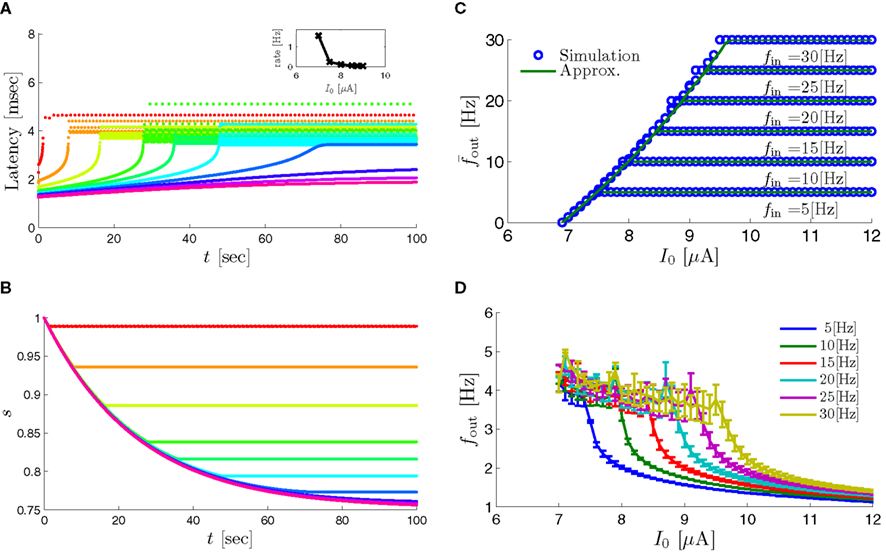

Assume an initial stimulation pulse had a current I0 which was strong enough to generate an AP (as in Gal et al., 2010), so we get the initial condition s(0) > θ on the sodium availability. According to equation (2.21), as long as s(t) > θ, s(t) decreases exponentially. Since the latency increases when s decreases, this seems to conform (Figure 7) to the experimentally observed transient mode (Gal et al., 2010; Figure 2). Additionally, according to the simplified model [equations (2.22 and 2.18)], the rate of the transient is indeed linear in the frequency of stimulation

as in Gal et al. (2010, Figures 4A,B). And indeed, using the fitted HHS model, we were able to numerically reproduce these results in Figure 8A. As can be seen there, the transient mode ends either in a stable steady state (when fin is low), or in an intermittent mode, which occurs when s(t) reaches θ (Figure 8B), or equivalently, when the latency reaches L(θ) (Figure 8A), the critical latency observed in the experiment. Also, as can be seen in Figure A1A, the duration of the transient mode decreases when the stimulation current I0 is increased, since the threshold θ decreases with I0 (Figure A1B). Note also that in all cases the latency of the AP is shorter and less variable than the measured one (Figure 8A). Later we address these discrepancies (in sections 4.4 and 3.3, respectively). Finally, we note that if, instead of the fitted HHS, we used the original HHS, the duration of the transient mode would be considerably shorter (∼1 s), which indicates that perhaps the kinetic variable responsible for these slow transients is significantly slower than the slow sodium inactivation kinetics in Fleidervish et al. (1996).

Figure 7. Fitted HHS model – transient mode and arrival to intermittent mode. Stimulation current pulses generate APs (top). Initially we are at the transient mode where APs are reliably generated each period until we reach intermittent mode, and AP failures start to occur. This happens since (middle) sodium channels availability s(t) decreases during the transient mode, due to slow inactivation caused by APs, until the excitability threshold θ is reached, and then s(t) starts to switch back-and-forth across θ (intermittent mode). Notice that analytical approximation of equation (2.21) closely follows the numerical result during the transient phase. Latency during transient mode (bottom) is increased, as in (Gal et al., 2010) Figure 2. Notice that, as expected, using the latency function L(s) (calculated numerically in Figure 3A) on s yields a similar value as the latency calculated by direct numerical simulation. I0 = 7 μA.

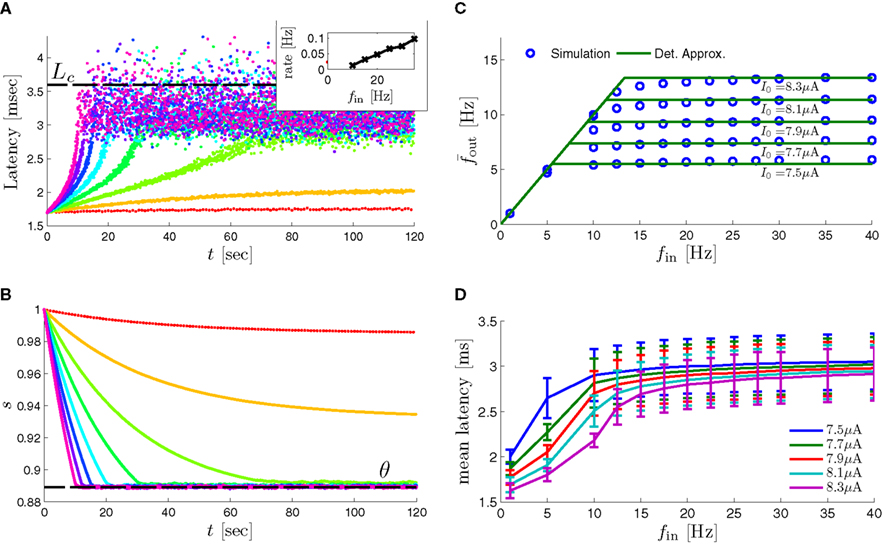

Figure 8. Fitted HHS model – response dependency on fin. (A) Spike latency as a function of time from stimulation onset (each color designates a different stimulation rate): stimulation at I0 = 7.9 μA and rates of fin = 1, 5, 10, 15, 20, 25, 30, 35, 40 Hz (red, orange, …). The transient phase speeds up when fin is increased, and ends at the same critical latency Lc. Similar to Figure 4A of Gal et al. (2010). Inset: the rate is the reciprocal of the timescale of the transient phase, defined as halftime the latency reaches the critical latency, as in Gal et al. (2010). Notice that the rate is linear in fin, as predicted by equation (3.1), and similar to Figure 4B of Gal et al. (2010). Both of these results can be explained by (B) the sodium availability trace, s(t) [using same color code as in (A)] where s transient speeds up when fin is increased, while θ does not change. (C) Dependency of steady state firing rate on stimulation frequency. Comparison between simulation and approximation of equation (3.3), for different values of I0:  in fitted HHS model –  for  (stable mode), and then  for  (intermittent mode). Compare with Figure 4C of Gal et al. (2010). Notice also that in both cases,  increases with I0, as expected from equation (3.2), and the fact that θ decreases in I0. (D) Mean latency at steady state as a function of fin, shows an initial increase [stable mode, where  should indeed increase in fin, by equations (2.23 and 2.18)] and then saturates (intermittent mode, where L ≈ L(θ) is indeed independent of fin), as seen in (Gal et al., 2010) Figure 4D. Also, the error bars indicate the SD of the latency, or the latency fluctuations. These fluctuations increase in the intermittent mode, as seen in (Gal et al., 2010) Figure 4D, due to the back-and-forth motion of s around θ at this mode, and the high sensitivity of L(s) near s = θ.

3.1.2. Steady state

In any case, eventually the transient mode ends, and s(t) arrives at some steady state behavior. Using the results of section 2.4, we first explore analytically all the different types of behaviors feasible in the context of the HHS model. The type of mode that would actually occur depends on the model parameters and the inputs fin and I0. Using equations (2.18, 2.23, and 2.24) we find that there are three different possible steady states, depending on the two parameters  and  given by

Note that  corresponds to the measured critical frequency, while  larger than  is a second critical frequency, which was not observed in Gal et al. (2010). Note that  increases with I0, since θ decreases with I0 (Figure 8C). Specifically,  increases quadratically with I0 (Figure A1C), similarly to θ⁻¹ (not shown).

If  then  and s(t) stabilizes at  namely above the threshold θ, implying that each stimulation generates an AP (as the steady state for fin = 1.5 Hz in Figure 8A). The latency of the AP in this case is stable at  (Figure 8D). Therefore, this mode seems to agree with the experimentally measured stable mode in Gal et al. (2010).

If  then  and s(t) stabilizes on  namely below the threshold θ, and no APs are fired. We refer to this as the “unresponsive mode,” which was not reached in the experiment. It is possible that if a higher fin or I0 were used, then such a mode would be achievable. However, it is also generally possible that  violates the timescale separation assumption τr ≪ T, so that this mode could be unattainable at the specific parameter values used. In the context of the fitted HHS model this violation indeed occurs and also  is much higher than any physiological stimulation rate. However, if other parameters were used (e.g., larger γM) then such a mode could be attainable at relevant frequencies.

If  then  so the steady state is always “on the other side” of the threshold. Therefore, s(t) will always return to θ, whether above or below. Thus, in this case, the threshold has become effectively the new steady state of s(t) (Figure 7, middle). In this regime, small changes in s dominate the behavior of the neuron: the neuron alternates between an “on” state, in which s(t) > θ, where it generates an AP after each stimulation, and an “off” state in which s(t) ≤ θ, where it does not generate any AP. This steady state is reached when s hits the threshold θ, or, equivalently, when the latency reaches a critical latency ≈L(θ). Due to the back-and-forth motion of s around θ at this steady state, and the high sensitivity of L(s) near s = θ (Figure 9A), we get much larger fluctuations in the latency than in the stable mode (Figure 8D). Together, these properties render this type of steady state qualitatively similar to the experimentally measured intermittent mode in Gal et al. (2010).
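The three regimes above are separated by two stimulation rates. As a hedged illustration of how such rates can arise, the sketch below assumes (these forms are ours, for illustration only) that the recovery rate δ does not depend on fin, that the inactivation rate is γ(f) = γL + τr·f·(g − γL) with g = γH after an AP or g = γM after a failure, and that each branch has a steady state δ/(δ + γ). The function name and all parameter values are hypothetical.

```python
def critical_frequencies(theta, delta, gamma_H, gamma_M, gamma_L, tau_r):
    """Stimulation rates at which each steady state crosses the threshold.

    Assumed (illustrative) forms: gamma(f) = gamma_L + tau_r * f * (g - gamma_L)
    with g = gamma_H after an AP or gamma_M after a failure, and a steady
    state s_inf = delta / (delta + gamma). Requires gamma_H > gamma_M > gamma_L.
    """
    gamma_at_threshold = delta * (1.0 / theta - 1.0)   # gamma giving s_inf == theta
    f_first = (gamma_at_threshold - gamma_L) / (tau_r * (gamma_H - gamma_L))
    f_second = (gamma_at_threshold - gamma_L) / (tau_r * (gamma_M - gamma_L))
    return f_first, f_second


# Hypothetical values: rates in 1/s, tau_r in s.
f_first, f_second = critical_frequencies(
    theta=0.5, delta=0.1, gamma_H=2.0, gamma_M=0.1, gamma_L=0.01, tau_r=0.005)
print(f_first, f_second, 1.0 / 0.005)
```

In this toy parameter set the second rate equals 1/τr, so the period would no longer be long compared with τr; this is the kind of situation in which the unresponsive mode lies outside the range where the timescale separation assumption holds. A larger γM lowers the second rate in this sketch.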

Figure 9. Firing patterns and excitability dynamics in the intermittent mode, for different models: (A) Fitted HHS. (B) HHSAP. (C) HHSIP. (D) Stochastic HHS (N = 10⁶). Blue circles – APs (1 – AP generated, 0 – AP failure), red – excitability function E(s) (s in HHS), green line – excitability threshold E(s) = 0 (θ in HHS). Notice that in deterministic models (A–C) APs occur if and only if E(s) > 0, and that the resulting firing patterns are regular, periodic, and depend very simply on q (calculated from model parameters). Specifically, in (A,B), when q ≥ 1 each AP must be followed by either ⌊q⌋ or ⌊q⌋ + 1 periods in which no AP occurred, and when q < 1 each AP failure must be followed by either ⌊q⁻¹⌋ or ⌊q⁻¹⌋ + 1 APs. These rather specific patterns are generated by the “up and down” motion of s around the threshold (as in Figure 6A–C), due to negative feedback. In contrast, the HHSIP model can generate m:n “burst”-like patterns, due to the positive feedback of potassium inactivation. Notice that, as before, q still approximates well the ratio of (AP:No AP) response sequence lengths in the HHSIP. In contrast to deterministic models, the stochastic HHS allows a large variety of irregular patterns. I0 = 7.7 μA

3.1.3. Intermittent mode – firing rate

In the intermittent mode, solving equation (2.24) together with (2.23) and (2.18) gives an approximate expression for the mean firing rate

where we defined  and the mean firing rate as  where p is the time-averaged probability of generating an AP (defined in section 2.4). This linear equation approximates the firing rate of the full HHS model well as long as the timescale separation assumption [equation (2.9)] holds. In the fitted HHS model, this approximation agrees with numerical results up to fin = 40 Hz (Figure 8C). Notice also that in that case we have a ≪ 1, and  since γM ≪ γH. If the sub-threshold inactivation γM is larger, then a is also larger, as can be seen, for example, in the original HHS model (Figure A2A), where γM is not negligible in comparison with γH. In any case, equation (3.3) gives a decreasing linear I/O relation, which, combined with the simple  relation in stable mode, gives a non-monotonic response function. This response function is similar to the experimental results (Gal et al., 2010; Figure 4C).

3.1.4. Intermittent mode – firing patterns

So far, the HHS model seems to give a satisfactory explanation for many of the experimental results observed in Gal et al. (2010). However, there is an important caveat. If the stimulus frequency obeys the timescale separation assumption [equation (2.9)], the HHS model can produce only very simple patterns during intermittent mode (Figure 9A). As explained in section 2.4, in the intermittent mode, s changes in approximately constant increments, Δs±, close to the threshold θ, defined in equation (2.24): inactivation step Δs+ < 0 if s > θ and recovery step Δs− > 0 if s ≤ θ. Defining q ≜ |Δs+|/Δs− as the ratio between step sizes, and assuming that q ≥ 1, we get that in any firing pattern each AP must be followed by either ⌊q⌋ or ⌊q⌋ + 1 periods in which no AP occurred (“no-AP-response sequence”; Figure 6A). If instead q < 1, then each AP failure (a period in which no AP occurred) must be followed by either ⌊q⁻¹⌋ or ⌊q⁻¹⌋ + 1 periods with APs (“AP-response sequence”; Figure 6B). We note also that q is related to the mean firing rate by q = p⁻¹ − 1, and that in the fitted HHS model the step sizes around the threshold are very small  which is an important fact we will use later (in section 3.3). In any case, as explained intuitively above, it can be formally proven that the firing patterns in the intermittent steady state are always regular, periodic, and globally stable – as also seen numerically in Figure 9A. Such firing patterns are not at all similar to the highly irregular firing patterns observed experimentally (e.g., Gal et al., 2010; Figure 8). Also, some of the experimental figures clearly show m:n “burst” patterns in which m > 1 APs are followed by n > 1 AP failures (e.g., Gal et al., 2010; Figures 8D,E) – which cannot be produced by the HHS model. Therefore, in the next section, we revise the model, in an effort to account for these discrepancies.
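The pattern rule stated above can be checked numerically with a minimal sketch of the constant-increment approximation near the threshold. The function names, the step values, and the initial condition below are illustrative assumptions, not the fitted HHS parameters; the map simply subtracts a fixed inactivation step after each AP (s > θ) and adds a fixed recovery step after each failure (s ≤ θ).

```python
from itertools import groupby


def simulate_threshold_map(ds_plus, ds_minus, theta=0.0, s0=0.0037, n_steps=18000):
    """Iterate the piecewise-constant-step map around the threshold.

    ds_plus  < 0 : inactivation step applied when s > theta (an AP is generated)
    ds_minus > 0 : recovery step applied when s <= theta (AP failure)
    Returns a list of 1 (AP) / 0 (no AP), one entry per stimulation period.
    All numerical values are hypothetical, chosen only for illustration.
    """
    if not (ds_plus < 0 < ds_minus):
        raise ValueError("expected ds_plus < 0 < ds_minus")
    s = s0
    spikes = []
    for _ in range(n_steps):
        fired = s > theta
        spikes.append(1 if fired else 0)
        s += ds_plus if fired else ds_minus
    return spikes


def run_lengths(spikes, value):
    """Lengths of complete runs of `value`, ignoring the first and last runs."""
    runs = [(key, len(list(group))) for key, group in groupby(spikes)]
    return [length for key, length in runs[1:-1] if key == value]


# Case q >= 1: q = |ds_plus| / ds_minus = 2.6
spikes_a = simulate_threshold_map(ds_plus=-0.026, ds_minus=0.010)
print("no-AP run lengths (q = 2.6):", sorted(set(run_lengths(spikes_a, 0))))
print("AP probability p:", sum(spikes_a) / len(spikes_a), "vs 1/(1+q):", 1 / 3.6)

# Case q < 1: q = 0.4, so 1/q = 2.5
spikes_b = simulate_threshold_map(ds_plus=-0.004, ds_minus=0.010)
print("AP run lengths (q = 0.4):", sorted(set(run_lengths(spikes_b, 1))))
```

In this sketch the run lengths take only the two values allowed by the rule, and the fraction of periods with an AP approaches 1/(1 + q), which is the relation q = p⁻¹ − 1 rearranged. The initial condition is offset so that s never lands exactly on θ, which avoids floating-point ties at the threshold.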

3.2. The Addition of Kinetic Variables

As we saw in the previous section, the fitted HHS model can reproduce many experimental results. However, it has one major flaw – in the relevant stimulation range it can produce only very simple, regular, and periodic firing patterns in the intermittent mode – in contrast with the irregular firing patterns of Gal et al. (2010). How should the model be extended in order to reproduce this? Suppose we use a more general model for a point neuron [equations (2.5–2.7)]. This general model may include an arbitrary number of slow variables, each corresponding to some slowly changing factor that affects excitability – such as the availability of different types of channels or ionic concentrations. Can such a general model generate more complicated firing patterns? It seems trivial that a system that includes a wide variety of processes, at a large range of timescales, should be able to exhibit arbitrarily complex patterns, and even chaos. However, as explained in section 2.4, if the stimulus and the model adhere to our assumptions [namely, the timescale separation (equation 2.9) and the step-like behavior of the average kinetic rates (equation 2.12)], this is not the case: chaos cannot occur, and again we conclude that only regular, periodic, and stable firing patterns are possible, except perhaps in a very narrow range of stimulus and model parameters (a “zero measure” set). This perhaps surprising result remains true for any arbitrarily complex conductance-based model (with an arbitrarily large number of slow variables, and arbitrary slow timescales) as long as our assumptions remain valid.

What specific types of firing patterns are possible then, under these assumptions? If, for example, only “negative feedback” type slow variables exist (those which reduce excitability after an AP), such as potassium activation and sodium inactivation in the HHSAP model, then we get 1:n or n:1 (AP:No AP) firing patterns as in the HHS model (Figures 6C and 9B). However, if sufficient “positive feedback” also exists (some slow variables contribute to an increased excitability after an AP), such as potassium inactivation in the HHSIP model, we can get more general “burst” firing patterns of m:n (AP:No AP). These firing patterns are also expected to be very regular and periodic (Figures 6D and 9C). Such firing patterns were also observed experimentally (e.g., Gal et al., 2010; Figures 10B,C). The condition that separates the two types of firing pattern dynamics depends on both parameters and stimulation. Therefore, a neuron that has both positive and negative feedback can have “burst” firing patterns in the intermittent mode, for a certain range of fin and I0. We note that the mechanism behind this burst firing pattern is similar in principle to the mechanism behind “slow wave” bursts, which can occur in the standard current step stimulation of neurons (Izhikevich, 2007).

And so, under our assumptions, the addition of more slow variables to the HHS model can only help to reproduce one additional response feature observed in the intermittent mode – namely, the “burst” firing patterns. The simplest extension that achieves this is the HHSIP model. Note also that in the HHSIP model we took the additional potassium current to be relatively weak ( ). Though this weak current can have a large impact in the intermittent mode (e.g., Figure 9C), it has a relatively small effect on the neural dynamics in the transient mode (Figure A3A). In most cases, this is desirable since the HHS model already fits nicely with experimental results in the transient mode, e.g., the existence of a critical latency and the simple linear timescale relation [equation (3.1)]. However, we should be careful not to destroy the fit of the HHS model during the transient phase if we extend it by adding additional slow kinetic variables. For example, by increasing  we can generate an inflection point in the latency transients (Figure A3B), making them more similar to some experimental figures (e.g., Gal et al., 2010; Figure 2A) but less similar to other figures, which do not have an inflection point (e.g., Gal et al., 2010; Figure 4A). We may also want to increase the magnitude of the latency in the fitted HHS model, which is significantly smaller than that in Gal et al. (2010). As explained in section 4, this should be done by extending the model to include several neuronal compartments, through which the AP propagates and the latency accumulates. Such an extension is beyond the scope of this article.

3.3. Ion Channel Noise

Since deterministic CBNMs cannot reproduce the observed irregularity, we now examine the possibility that it could be produced by stochastic effects. Because synaptic activity was blocked in the experiment of Gal et al. (2010), the only other major source of noise is the stochastic ion channel dynamics (White et al., 2000; Hille, 2001; Faisal et al., 2008). The gating variables used in the conductance-based models to account for channel activation or inactivation (such as s in the HHS model) actually represent averages over a large number of discrete channels. Since the population of ion channels in the neuron is finite, the stochasticity in their dynamics is never completely averaged out, and can affect neuronal dynamics (Schneidman et al., 1998; White et al., 1998; Steinmetz et al., 2000; Carelli et al., 2005; Dorval and White, 2005), and, even more so, the dynamics of thin axons (Faisal and Laughlin, 2007) and dendrites (Diba et al., 2004; Cannon et al., 2010).
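To illustrate why a finite channel population never averages out completely, the following sketch assumes the simplest possible case: N independent, identical two-state channels, each open with a fixed probability. This is a static snapshot under stated assumptions, not the channel kinetics of any model in this article, and all names and values are hypothetical. Under these assumptions the open fraction is binomially distributed, so its standard deviation scales as 1/√N.

```python
import math
import random


def open_fraction_std(p_open, n_channels):
    """Analytic (binomial) standard deviation of the fraction of open channels."""
    return math.sqrt(p_open * (1.0 - p_open) / n_channels)


def sampled_open_fraction_std(p_open, n_channels, n_trials=400, seed=0):
    """Monte Carlo estimate of the same quantity, using only the standard library."""
    rng = random.Random(seed)
    fractions = []
    for _ in range(n_trials):
        # Draw each channel independently: open with probability p_open.
        n_open = sum(1 for _ in range(n_channels) if rng.random() < p_open)
        fractions.append(n_open / n_channels)
    mean = sum(fractions) / n_trials
    variance = sum((f - mean) ** 2 for f in fractions) / (n_trials - 1)
    return math.sqrt(variance)


for n in (100, 400):
    print(n, open_fraction_std(0.5, n), sampled_open_fraction_std(0.5, n))

# Analytic value for a hypothetical population of one million channels.
print(10**6, open_fraction_std(0.5, 10**6))
```

Even for a very large population the fluctuation is small but nonzero, so its effect depends on how it compares with the size of the deterministic changes in the gating variable between stimuli.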

Consider the HHS model with channel noise added to it. A naive, yet common way to approximate this noise (Fox and Lu, 1994; Chow and White, 1996; Faisal, 2009; but see Goldwyn et al., 2011; Linaro et al., 2011) is to add a noise term to each of the gating variables representing the channels’ state. For example, equation (2.8) in the HHS model becomes a one-dimensional Langevin equation (Fox and Lu, 1994; Faisal, 2009)