- 1 Department of Chemistry and Biochemistry, UCLA-DOE Institute for Genomics and Proteomics, University of California Los Angeles, Los Angeles, CA, USA

- 2 Department of Computer Science, UCLA-DOE Institute for Genomics and Proteomics, University of California Los Angeles, Los Angeles, CA, USA

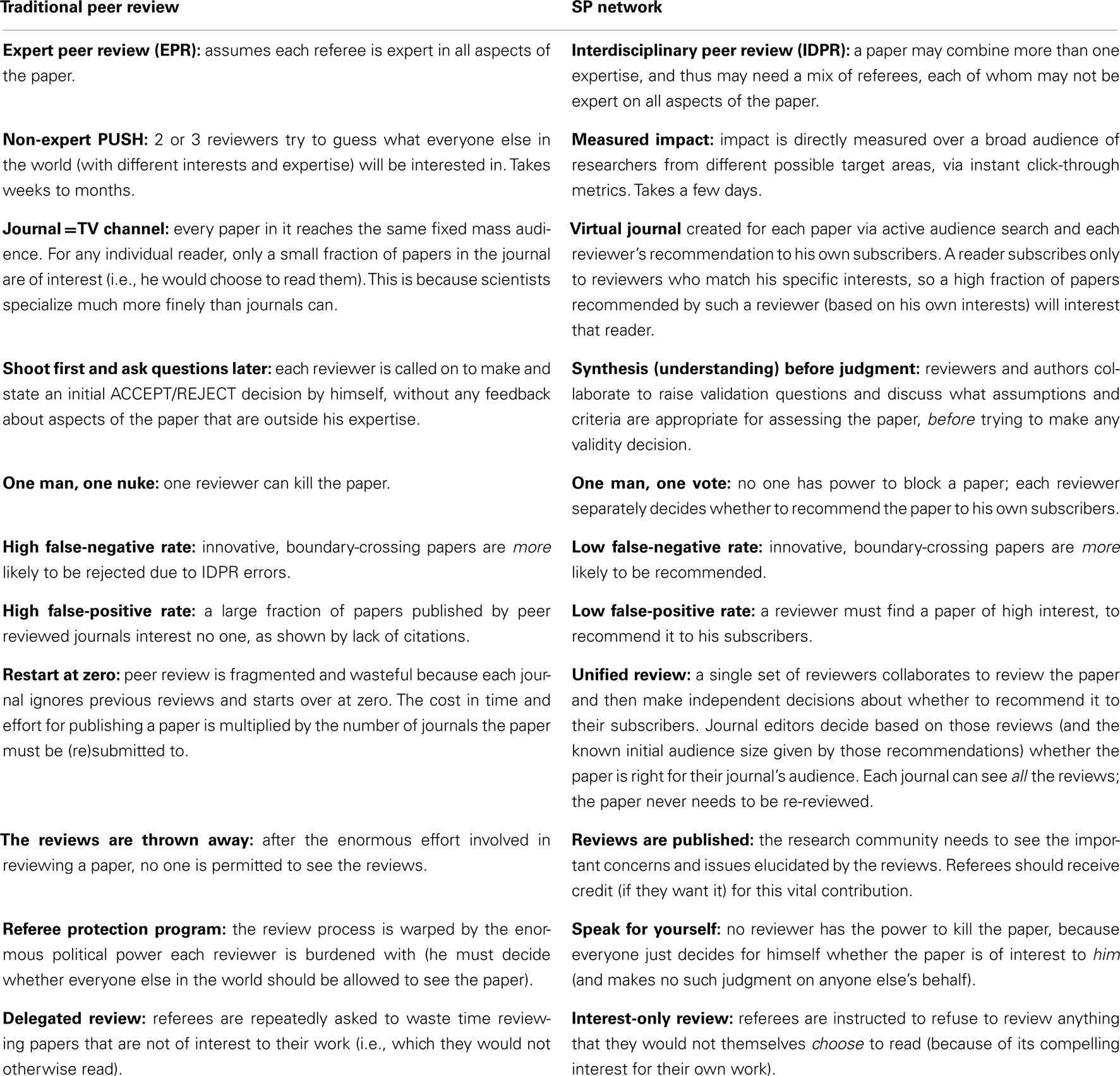

A selected-papers (SP) network is a network in which researchers who read, write, and review articles subscribe to each other based on common interests. Instead of reviewing a manuscript in secret for the Editor of a journal, each reviewer simply publishes his review (typically of a paper he wishes to recommend) to his SP network subscribers. Once the SP network reviewers complete their review decisions, the authors can invite any journal editor they want to consider these reviews and the initial audience size, and to make a publication decision. Since all impact assessment, reviews, and revisions are complete, this decision process should be short. I show how the SP network can provide a new way of measuring impact, catalyze the emergence of new subfields, and accelerate discovery in existing fields, by providing each reader with a fine-grained filter for high-impact work. I present a three-phase plan for building a basic SP network and making it an effective peer review platform that can be used by journals, conferences, users of repositories such as arXiv, and users of search engines such as PubMed. I show how the SP network can greatly improve review and dissemination of research articles in areas that are not well-supported by existing journals. Finally, I illustrate how the SP network concept can work well with existing publication services such as journals, conferences, arXiv, PubMed, and online citation management sites.

1. Introduction

1.1. Goals: What Problems does this Proposal Aim to Solve?

I begin by briefly outlining the problems in existing peer review that this proposal aims to resolve. Here I only define the problem, to motivate the subsequent proposal. I will also briefly state some issues that I explicitly exclude from the proposal's goals, to make my focus clear.

Current peer review suffers from systemic blind spots, bottlenecks, and inefficiencies that retard the advance of research in many areas. These pathologies reflect the petrification of peer review from what it started as (informal discussions of a colleague’s latest report in a club meeting) into a rigid system of assumptions inherited from outdated distribution and communication models (ink-on-paper printing press and postal mail). Peer review started out as a PULL model (i.e., each person decides what to receive – concretely, which talks to attend), but petrified into a PUSH model (i.e., a centralized distribution system decides what everyone else should receive). Most of these pathologies are due to the basic mismatch between the PUSH model and the highly specialized, interdisciplinary, and rapidly evolving nature of scientific research. This proposal seeks to address the following problems:

• Expert peer review (EPR) does not work for interdisciplinary peer review (IDPR). EPR denotes the assumption that the reviewer is an expert in all aspects of the paper, and thus can evaluate both its impact and its validity, and can do so before obtaining answers from the authors or other referees. IDPR denotes the situation where at least one part of the paper lies outside the reviewer’s expertise. Since journals universally assume EPR, this creates artificially high barriers to innovative papers that combine two fields (Lee, 2006) – one of the most valuable sources of new discoveries.

• Shoot first and ask questions later means the reviewer is expected to state a REJECT/ACCEPT position before getting answers from the authors or other referees on questions that lie outside the reviewer’s expertise.

• No synthesis: if review of a paper requires synthesis – combining the different expertise of the authors and reviewers in order to determine what assumptions and criteria are valid for evaluating it – both of the previous assumptions can fail badly (Lee, 2006).

• Journals provide no tools for finding the right audience for an innovative paper. A paper that introduces a new combination of fields or ideas has an audience search problem: it must search multiple fields for people who can appreciate that new combination. Whereas a journal is like a TV channel (a large, pre-defined audience for a standard topic), such a paper needs something more like Google – a way of quickly searching multiple audiences to find the subset of people who can understand its value.

• Each paper’s impact is pre-determined rather than post-evaluated. By “pre-determination” I mean that both its impact metric (which for most purposes is simply the title of the journal it was published in) and its actual readership are locked in (by the referees’ decision to publish it in a given journal) before any readers are allowed to see it. By “post-evaluation” I mean that impact should simply be measured by the research community’s long-term response to, and evaluation of, the paper.

• Non-expert PUSH means that a pre-determination decision is made by someone outside the paper’s actual audience, i.e., the reviewer would not ordinarily choose to read it, because it does not seem to contribute sufficiently to his personal research interests. Such a reviewer is forced to guess whether (and how much) the paper will interest other audiences that lie outside his personal interests and expertise. Unfortunately, people are not good at making such guesses; history is littered with examples of rejected papers and grants that later turned out to be of great interest to many researchers. The highly specialized character of scientific research, and the rapid emergence of new subfields, make this a big problem.

In addition to such false-negatives, non-expert PUSH also causes a huge false-positive problem, i.e., reviewers accept many papers that do not personally interest them and which turn out not to interest anybody; a large fraction of published papers subsequently receive zero or only one citation (even including self-citations; Adler et al., 2008). Note that non-expert PUSH will occur by default unless reviewers are instructed to refuse to review anything that is not of compelling interest for their own work. Unfortunately, journals assert the opposite policy.

• One man, one nuke means the standard in which a single negative review equals REJECT. Whereas post-evaluation measures a paper’s value over the whole research community (“one man, one vote”), standard peer review enforces conformity: if one referee does not understand or like it, prevent everyone from seeing it.

• PUSH makes refereeing a political minefield: consider the contrast between a conference (where researchers publicly speak up to ask challenging questions or to criticize) vs. journal peer review (where it is reckoned necessary to hide their identities in a “referee protection program”). The problem is that each referee is given artificial power over what other people can like – he can either confer a large value on the paper (by giving it the imprimatur and readership of the journal) or consign it to zero value (by preventing those readers from seeing it). This artificial power warps many aspects of the review process; even the “solution” to this problem – shrouding the referees in secrecy – causes many pathologies. Fundamentally, current peer review treats the reviewer not as a peer but as one who wields a diktat: prosecutor, jury, and executioner all rolled into one.

• Restart at zero means each journal conducts a completely separate review process of a paper, multiplying the costs (in time and effort) for publishing it in proportion to the number of journals it must be submitted to. Note that this particularly impedes innovative papers, which tend to aim for higher-profile journals, and are more likely to suffer from referees’ IDPR errors. When the time cost for publishing such work exceeds by several fold the time required to do the work, it becomes more cost-effective to simply abandon that effort, and switch to a “standard” research topic where repetition of a pattern in many papers has established a clear template for a publishable unit (i.e., a widely agreed checklist of criteria for a paper to be accepted).

• The reviews are thrown away: after all the work invested in obtaining reviews, no readers are permitted to see them. Important concerns and contributions are thus denied to the research community, and the referees receive no credit for the vital contribution they have made to validating the paper.

In summary, current peer review is designed to work for large, well-established fields, i.e., where you can easily find a journal with a high probability that every one of your reviewers will be in your paper’s target audience and will be expert in all aspects of your paper. Unfortunately, this is just not the case for a large fraction of researchers, due to the high level of specialization in science, the rapid emergence of new subfields, and the high value of boundary-crossing research (e.g., bioinformatics, which intersects biology, computer science, and math).

I wish to list explicitly some things that this proposal does not seek to change:

• it does not seek to replace conventional journals but rather to complement them by offering an improved peer review process.

• it does not seek to address large audience distribution channels (e.g., marquee journals like Nature, or journals associated with large, well-established fields), or papers that fit these journals well. Instead it focuses on papers that need to actively search for an audience, e.g., because they are at the intersection of multiple audiences.

• it does not address the large fraction of papers published by journals that do not interest anyone (as indicated by lack of subsequent citation). Instead it focuses on papers for which it can find an audience that considers the paper “must-read.”

2. The Proposal in Brief

2.1. What is a Selected-Papers Network?

Here I briefly summarize the proposal, by sketching its system for peer review. My purpose is to define the proposed system clearly, and to highlight its core principles. Note that this section will neither seek to prove that it solves all the problems above, nor address the political question of how to make the current system yield to the proposed system. Those are separate issues that deserve separate treatment. Core principles:

• Instead of reviewing a manuscript in secret for the Editor of a journal, a referee simply publishes his review (typically of a paper he wishes to recommend) on an open Selected-Papers (SP) network, which automatically forwards his review to readers who have subscribed to his selected-papers list because they feel his interests match their own, and trust his judgment. I will refer to such a reviewer as a “selected-paper reviewer” (SPR).

• Instead of submitting a paper to a specific journal, authors submit it to the SP network, which quickly scans a large number of possible reviewers to see if there is an audience that considers it “must-read.” This audience search process should take just a few days using automated e-mail and click-through metrics. This determination is direct: the system simply measures whether seeing the title makes someone click to see the abstract; whether seeing the abstract makes them click to see the text; whether seeing the text makes them click to see the figures etc.

• Reviewers are instructed to only consider papers that are of compelling interest for their own work, i.e., that they would eagerly choose to read even if they were not being asked to review. In other words, each reviewer should represent only his own interests, and should not try to guess whether it will interest other audiences. Following this principle, refusing to review a paper is itself a review (“this paper does not interest me enough to read”). During this pre-review phase, each SPR can informally ask questions or make comments without yet committing to review the paper, and can choose whether each comment is visible to the authors only, visible to the other reviewers as well, or entered into the permanent review record for the paper, which will become public if the paper is published.

• If no one considers the paper must-read and is willing to review it, no further action is needed. (The authors can send it to a regular journal if they wish.)

• Otherwise, the SPRs who agree to act as reviewers begin a Questions/Answers phase where they raise whatever questions or issues they want, to assess the validity of the paper. A reviewer can opt to remain anonymous if he feels this is necessary. The authors and referees work together to identify and resolve these issues in the context of an issue tracking system like those used for debugging a software release. This phase would have a set deadline (e.g., 2 weeks). If the authors undertake a major revision (e.g., with new data), a new 2-week Q/A phase ensues.

• Next, during the assessment phase the reviewers individually negotiate with the authors over validation issues they consider essential, e.g., “If you do this additional control, that would address my concern and I could recommend your paper.”

• The authors decide how much they are willing to do for the final version of the paper, based on their time pressures and other competing interests. They produce this final version.

• Each reviewer decides whether or not to recommend the final paper to their subscribers. This gives the paper a known initial audience size (the total number of subscribers of the reviewers who choose to recommend it).

• Once the reviews are complete, they can now be considered by a journal or conference editor. The authors invite any editor they want to consider these reviews and the initial audience size, and to make a publication decision. Since all impact assessment, reviews, and revisions are complete, this process should be short, e.g., the editor should be given a deadline of a week or so to reach a decision. Note that since many reviewers are also editors, the reviewers’ decisions may already confer a guaranteed publication option. For example, for many fields there is a high probability that one of the reviewers would be a PLoS ONE Academic Editor and thus could unilaterally decide to accept the paper to PLoS ONE. Note that the journal should not seek to re-review the paper using its own procedures or ask the original reviewers to give them a new decision (“is this good enough for Nature?”). This is a clean division of labor: the reviewers decide impact for themselves (and no one else) and assess validity; the journal decides whether the paper is appropriate for the journal’s audience.

• The journal publishing the paper may ask the authors to reformat it, but should not alter the content of the final version (it might be acceptable to have some sections published online but not in the print version).

• When the paper is published online, the reviewers’ recommendations of the paper are forwarded to their subscribers, with a link to view the paper wherever it is published (e.g., the journal’s website). Thus the journal benefits from not only the free review process, but also the free targeted marketing of the paper provided by the SP network.

• The reviews themselves would be published online. Positive recommendations could be published in a “News and Views” style journal created for this purpose; negative reviewers could opt to publish a brief “Letters” style critique in a journal created for this purpose (“Critical Reviews in…”). The community should have access to these important validation assessments, and reviewers should receive credit for this important contribution. All review comments and issues would be available in the SP network page for the paper, which would remain open as the forum for long-term evaluation of the paper’s claims. That is, other users could raise new issues or report data that resolve issues.

• Any online display of the paper’s title, abstract, or full content (e.g., on PubMed, or the journal’s website) should include recommendation links showing who recommended it, each linked to a page showing the text of the review, and that reviewer’s other recommendations/reviews. This would enable readers who find a paper they like to find reviewers who share their interests, and subscribe to receive their future recommendations.

• Furthermore, each reader who considers the paper must-read should add it to their own recommendation list (which at this point does not require writing a review, since the paper is already published). They would simply click a “Like!” icon on any page displaying the paper, with options to simply cite the paper, or recommend it to their own subscribers. In this way the paper can spread far beyond its initial audience – but only if new readers continue to find it “must-read.” This constitutes true impact measurement via post-evaluation: each person decides for themselves what the paper’s value is to them, and the system reports this composite measurement over all audiences.
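Two of the measurements described above are concrete enough to state precisely: the click-through funnel used during audience search (title, then abstract, then text, then figures), and the initial audience size (the total number of subscribers of the reviewers who recommend the paper). The sketch below is a minimal illustration under assumptions of my own; the stage names, the `Reviewer` record, and the sample numbers are hypothetical and not part of any existing SP network implementation. Because one reader may subscribe to several recommending reviewers, it reports both the plain sum of subscriber counts and the number of distinct subscribers.

```python
from dataclasses import dataclass, field

# Hypothetical funnel stages, in the order a prospective reviewer sees them.
STAGES = ["title", "abstract", "text", "figures"]


def funnel_rates(views: dict[str, int]) -> dict[str, float]:
    """Fraction of readers who clicked through from each stage to the next.

    views[stage] is the (assumed) number of distinct readers who saw that stage.
    """
    rates = {}
    for prev, nxt in zip(STAGES, STAGES[1:]):
        shown = views.get(prev, 0)
        # Guard against division by zero when nobody reached the earlier stage.
        rates[f"{prev}->{nxt}"] = views.get(nxt, 0) / shown if shown else 0.0
    return rates


@dataclass
class Reviewer:
    name: str
    subscribers: set[str] = field(default_factory=set)
    recommends: bool = False  # final decision on the revised paper


def initial_audience(reviewers: list[Reviewer]) -> tuple[int, int]:
    """Return (summed subscriber counts, distinct subscribers) over recommenders."""
    recommenders = [r for r in reviewers if r.recommends]
    total = sum(len(r.subscribers) for r in recommenders)
    distinct = set().union(*(r.subscribers for r in recommenders))
    return total, len(distinct)


if __name__ == "__main__":
    views = {"title": 200, "abstract": 50, "text": 20, "figures": 15}
    print(funnel_rates(views))
    # {'title->abstract': 0.25, 'abstract->text': 0.4, 'text->figures': 0.75}

    reviewers = [
        Reviewer("A", {"u1", "u2", "u3"}, recommends=True),
        Reviewer("B", {"u3", "u4"}, recommends=True),
        Reviewer("C", {"u5"}, recommends=False),
    ]
    print(initial_audience(reviewers))  # (5, 4): u3 follows both A and B
```

Which of the two audience figures to report is a design choice the text leaves open; the distinct count avoids crediting the same reader twice.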

2.2. Immediate Payoffs and Reduced Barriers to Entry

Systemic reform always faces a bootstrap problem: early adopters gain little benefit (because no one else is participating in the new system yet) and suffer high costs. I have designed this proposal specifically to solve this bootstrap problem, by giving immediate payoffs to the key players (referees, readers, journals, and authors) and by allowing it to begin working immediately within the existing system.

• For reviewers, there would normally be little incentive to review manuscripts in a new system, because doing so would have little impact (initially they would have no subscribers). The SP network solves this in two ways: first, by simply making itself a peer review platform for submission to existing journals (so the reviewer has just as much impact and incentive as when they review for an existing journal); second, by displaying their paper recommendations on the key sites where readers find papers (e.g., PubMed, journal websites etc.). This would give their recommendations a large audience even before they have any subscribers, and would create a fast path for them to gain subscribers.

• Readers would ordinarily have little incentive to join a new subscription system, if it requires them to change how they find papers (e.g., by having to log in to a new website). After all, there will initially be very few reviewers or recommendations in the system, and therefore little benefit for readers. The SP network solves this by displaying its recommendations within the main websites where readers find papers, e.g., PubMed and journal websites. Readers will see these recommendations even if they are not subscribers, and if they find them valuable, will be able to subscribe with a single click.

• Journals may well look askance at any proposal for change. However, this proposal offers journals immediate benefits while preserving their autonomy and business model. On the one hand, the SP network provides free marketing for the journal’s papers, in the form of recommendations that will send traffic to the journal, and subscribers who provide a guaranteed initial readership for a recommended paper. What journal would not want recommendations of its papers to be shown prominently on PubMed and its own website? On the other hand, the SP network will cut the journal’s costs by providing it with free reviewing services that go far beyond what journals do, e.g., active audience search and direct measurement of impact over multiple audiences. Since reviewers will still have the option to review anonymously, the journal cannot claim the process is less rigorous (actually, it will be more rigorous, due to its greatly improved discussion, and sharing of multiple kinds of expertise). Moreover, the journal preserves complete autonomy over both its editorial decision-making and its business model. It seems reasonable to expect that multiple journals (e.g., the PLoS family) would quickly agree to become SP network partners, i.e., they would accept paper submissions via the SP network review process.

• Authors would ordinarily have little incentive to send their papers to a new subscription system rather than to an existing journal. After all, initially such a system will have few subscribers, and no reputation. The SP network solves this by acting as a peer review platform for submitting a paper to existing journals. Indeed, it offers authors a signal advantage over directly submitting to a journal: a unified review process that guarantees a single round of review; i.e., even if the paper is rejected by one or more journals, it will not need to be re-reviewed. This is a crucial advantage, e.g., for papers whose validity is solid but where the authors want to “gamble” on trying to get it into a high-impact journal.

3. Benefits of a Selected-Papers Network

3.1. Benefits for Readers

The core logic of the SP network idea flows from inherent inefficiencies in the existing system.

For readers, journals no longer represent an efficient way to find papers that match their specific interests. In paper-and-ink publishing, the only way to make distribution cost-effective was to rely on economies of scale, in which each journal must have a large audience of subscribers, and delivers to every subscriber a uniform list of papers that are supposedly all of interest to them. In reality, most papers in any given journal are simply not of direct interest to (i.e., specifically relevant to the work of) each reader. For example, in my own field the journal Bioinformatics publishes a very large number and variety of papers. The probability that any one of these papers is of real interest to my work is low. For this reason, readers no longer find papers predominantly by “reading a journal” from beginning to end (or even just its table of contents). Instead, they have shifted to finding papers mainly from literature searches (PubMed, National Library of Medicine, 1996; Google, Google Scholar, Acharya and Verstak, 2005; etc.) and word of mouth. Note that the latter is just an informal “Selected-Papers network.”

For readers, an SP network offers the following compelling advantages:

• Higher relevance. Instead of dividing attention between a number of journals, each of which publishes only a small fraction of directly relevant papers, a reader subscribes (for free) to the Selected-Papers lists of peers whose work matches his interests, and whose judgment he trusts. Note that since most researchers have multiple interests, you typically subscribe specifically to just the recommendations from a given SPR that are in your defined areas of interest. The advantage is fundamental: whereas journals lump together papers from many divergent subfields, the SP network enables readers to find matches to their interests at the finest granularity – the individuals whose work matches their own interests. For comparison, consider the large volume of e-mail I receive from journals sending me lists of their tables of contents. These e-mails are simply spam; essentially all the paper titles are of zero interest to me, so now I do not even bother to look at them. The subscription model only makes sense if it is specific to the subscriber’s interests (otherwise he is better off just running a literature search). And in this day and age of highly specialized research, that means identifying individual authorities whose work matches your own.

• Real metrics. A key function of the SP network is to record all information about how each paper spreads through the community and to measure interest and opinions throughout this process. This will give readers detailed metrics about both reviewers (e.g., their ability to predict, ahead of the curve, what others will find interesting and important) and papers (e.g., not only their readership and impact but also how interest in them spreads across different communities, and the community’s eventual consensus on them, i.e., whether they are incorporated into the literature through ongoing citations or forgotten).

• Higher quality. Note first that the SPRs are simply the same referees that journals rely on, so the baseline reliability of their judgments is the same in either context. But the SP network aims for a higher level of quality and relevance – it only reports papers that are specially selected by referees as being of high interest to a particular subfield. “Ordinary research” (i.e., work that follows the pattern of work in its field) is typically judged by a standard “checklist” of technical expectations within its field. Unfortunately, a substantial fraction of such papers are technically competent but do not provide important new insights. The sad fact is that the average paper is cited only 1–3 times (over 2 years, even including self-citations), and this distribution is highly skewed: the vast majority of papers have zero or very few citations, and only a small fraction have substantial numbers of citations (Adler et al., 2008). For a large fraction of papers, the verdict of history is that almost nobody would be affected if these papers had not been published; even their own authors rarely get around to citing them!

Since the SP network is driven solely by individual interest (i.e., an SPR getting excited enough about a manuscript to recommend it to his subscribers), it is axiomatic that it will filter out papers that are not of interest to anyone. Since such papers unfortunately constitute a substantial fraction of publications, this is a highly valuable service. A more charitable (but scarier) interpretation is that some fraction of these papers would actually be of interest to someone but, due to the inefficiencies of the journal system as a method for matching papers to readers, simply never find their proper audience. The SP network could “rescue” such papers, because it provides a fine-grained mechanism for small, specialized interest groups to find each other and share their discoveries.

• Better information. In a traditional journal, a great deal of effort is expended to critically review each manuscript, but when the paper is published, all of that information is discarded; readers are not permitted to see it. By contrast, in the SP network the review process is open and visible to all readers; the concerns, critiques and key tests of the paper’s claims are all made available, giving readers a much more complete understanding of the questions involved. Indeed, one good use for the SP network would be for reviewers and/or authors to make public the reviews and responses for papers published in traditional journals.

• Speed. When a new area of research emerges, it takes time for new journals to cover the new area. By contrast, the SP network can cover a new field from the very day that reviewers in its network start declaring that field in their list of interests. Similarly, the actual decision of a reviewer to recommend a paper can be fast: if they feel confident of their opinion, they can do so immediately without anyone else’s approval.

• Long-term evaluation. In a traditional journal, the critical review process ends weeks to months before the paper is published. In the SP network, that process continues as long as someone has something to say (e.g., new questions, new data) about that paper. The SP network provides a standard platform for everyone to enter their reviews, issues, and data, on papers at every stage of the life cycle.
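The fine-grained subscription described under “Higher relevance” (following a given SPR only for recommendations in the reader’s own areas of interest) amounts to a filter on (reviewer, topic) pairs. A minimal sketch, assuming a hypothetical data model in which each recommendation carries topic tags chosen by the reviewer; the names `Recommendation`, `Subscription`, and `feed` are illustrative only, not an existing API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    reviewer: str             # the SPR who recommended the paper
    paper: str                # hypothetical paper identifier
    topics: frozenset[str]    # topic tags assumed to be set by the reviewer


@dataclass(frozen=True)
class Subscription:
    reviewer: str             # the SPR whose list the reader follows
    topics: frozenset[str]    # only the reader's declared areas of interest


def feed(recs: list[Recommendation], subs: list[Subscription]) -> list[str]:
    """Papers delivered to one reader, in recommendation order, without repeats.

    A paper is delivered if a followed reviewer recommended it with at least
    one topic the reader selected for that particular reviewer.
    """
    wanted: dict[str, set[str]] = {}
    for s in subs:
        wanted.setdefault(s.reviewer, set()).update(s.topics)

    seen: set[str] = set()
    out: list[str] = []
    for r in recs:
        if r.topics & wanted.get(r.reviewer, set()) and r.paper not in seen:
            seen.add(r.paper)
            out.append(r.paper)
    return out


if __name__ == "__main__":
    recs = [
        Recommendation("Alice", "paper1", frozenset({"protein evolution"})),
        Recommendation("Alice", "paper2", frozenset({"machine learning"})),
        Recommendation("Bob", "paper3", frozenset({"genomics"})),
        Recommendation("Bob", "paper1", frozenset({"protein evolution"})),
    ]
    subs = [
        Subscription("Alice", frozenset({"protein evolution"})),
        Subscription("Bob", frozenset({"genomics", "protein evolution"})),
    ]
    print(feed(recs, subs))  # ['paper1', 'paper3']
```

Keying interests per reviewer, rather than globally, is what lets a reader follow only the part of a multi-interest reviewer’s list that matches his own work; here Alice’s machine-learning recommendation is never delivered.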

3.2. Benefits for Referees

Referees get all the disadvantages and none of the benefits of their own work in the current system. Journals ask referees to do all the actual work of evaluating manuscripts (for free), but keep all the benefit for themselves. That is, if the referee does a good job of evaluating a manuscript, it is the journal’s reputation that benefits. This is sometimes justified by arguing that every scientist has an inherent obligation to review others’ work, and that failure to do so (for example, for a manuscript that has no interest to the referee) injures the cooperative enterprise of science. This is puzzling. Why should a referee ever review a paper except because of its direct relevance to his own work? If the authors (and the journal) cannot find anyone who actually wants to read the paper, what is the purpose of publishing it?

Reviewing manuscripts is an important contribution and should be credited as such. The SP network would rectify this in several ways:

• Liberate referees to focus on their interests. The SP network would urge referees to refuse to review anything that does not grab their interest, for the simple reason that it is both inefficient and counter-productive to do so. If a paper is not of interest to the referee, it is probably also not of interest to his subscribers (who chose his list because his interests match theirs). Note that the SP network expects authors to “submit” their manuscript simultaneously to multiple reviewers seeking an “audience” that is interested in their paper. If the authors literally cannot find anyone who wants to read the paper, it should not be recommended by the SP network. Note that if referees simply follow their own interests, this principle is enforced automatically.

• Referees earn reputation and influence through their reviews. Manuscript reviews are a valuable contribution to the research community, and they should be treated and valued as such. By establishing a record of fair, insightful reviews, and recommending important new papers “ahead of the curve,” a referee will attract a large audience of subscribers. This in and of itself should be treated as an important metric for professional evaluation. Moreover, the power to communicate directly with a substantial audience in your field itself constitutes influence, and is an important professional advantage. For example, a referee by default will have the right to communicate his own papers to his subscribers; thus, through his earned reputation and influence, a referee builds an audience for his own work.

• Eliminate the politics of refereeing. Note that a traditional journal does not provide referees these benefits because their role is fraught with the political consequences of acting as the journal’s “agent,” i.e., the power to confer or deny the right of publication, so crucial for academics. These political costs are reckoned so serious that journals shroud their referees in secrecy to protect them from retribution. Unfortunately, this political role incurs many other serious costs (see for example the problem of “prestige battles” analyzed in section 3.3).

These problems largely vanish in an SP network, for the simple reason that each referee represents no one but himself, and is not given arbitrary power to block publication of anyone’s work. In many traditional journals, if one reviewer says “I do not like this paper,” it will be rejected and the authors must start over again from scratch (since they are permitted to submit to only one journal at a time, and the paper must typically be re-written, or at a minimum re-formatted, for submission to another journal). By contrast, in an SP network authors submit their paper simultaneously to multiple referees; if one referee declines to recommend it, that has no effect on the other referees. The referee has not “taken anything away” from the authors, and has no power to block the paper from being selected by other referees.

Moreover, the very nature of the “Selected-Papers” idea is positive, that is, it highlights papers of especial interest for a given community. Being “selected” is a privilege and not a right, and is intended to reflect each referee’s idiosyncratic interests. Declining to select a paper is not necessarily a criticism; it might simply mean that the paper is not well-matched to that reviewer’s personal interests. Since most people in a field will also themselves be reviewers, they will understand that objecting to someone else’s personal selections is morally incompatible with preserving their own freedom to make personal choices. Note that standard etiquette will be that authors may submit a paper to as many referees as they like, but at the same time referees are not obligated to respond.

Of course, in certain cases a referee may feel that important concerns have been ignored, and will raise them by publishing a negative review on his SP list. I believe that referees will feel free to express such concerns in this open setting, for the same reasons that scientists often speak out with such concerns at public talks (e.g., at conferences). That is, they are simply expressing their personal opinions in an open, public forum where everyone can judge the arguments on their merits. They are only claiming the same rights as the authors (i.e., the right to argue for their position in a public forum). What creates conflict in peer review by traditional journals is the fact that the journal gives the referee arbitrary power over the authors’ work – specifically, to suppress the authors’ right to present their work in a public forum. This power is made absolute in the sense that it is exercised in secret; the merits of the referees’ arguments are not subject to public scrutiny; and referees have no accountability for whether their assertions prove valid or not. All of these serious problems are eliminated by the SP network, and replaced by the benefits of openness, transparency, and accountability.

• Eliminate the costs of delegated review: currently, researchers are called upon to waste significant amounts of time reviewing papers that are not of direct interest to their own work. Typically, this time constitutes a cost with no associated gain. By contrast, time spent reviewing a paper that is of vital interest for the referee’s research gives him immediate benefit, i.e., early access to an important advance for his own work.

3.3. Benefits for Authors

I now consider the benefits of the SP network review and publication system in terms of readership and cost. These benefits arise from addressing fundamental inefficiencies: first, how poorly traditional journals fit the highly specialized character of research and the emergence of new fields; and second, how journals have implemented peer review. Criticisms of this peer review system are legion, and most tellingly, come from inside the system, from Editors and reviewers (see for example Smith, 2009). While assessment of its performance is generally blocked by secrecy, the studies that have been done are alarming. For example, re-submission of 12 previously published articles was not detected by reviewers in 9 out of 12 cases (showing that reviewers were not familiar with the relevant literature), and 8 of the 9 papers were rejected (showing a nearly total lack of concordance with the previous set of reviewers who published these articles; Peters and Ceci, 1982). While we each can hope that reviewers in our own field would do better, there is evidently a systemic problem. That is, the system itself produces a high rate of errors. I now argue that the SP network can help systematically address some of these errors.

3.3.1. Readership

The SP network can help alleviate bottlenecks that impede publishing innovative work in the current system, e.g., because its specialized audience does not “have its own journal,” or because it is “too innovative” or “too interdisciplinary” to fare well in EPR. Let us consider the case of a paper that introduces a novel combination of two previously separate expertises. In a traditional journal, the paper would be “delegated” to two or three referees who have not been chosen on the basis of a personal interest in its topic. So the probability that they can understand its significance for its target audience is low. For each of these referees, approximately half of the paper goes outside their expertise, and may well not follow the assumptions of their own field. Since they lack the technical knowledge to even evaluate its validity, the probability that they will feel confident in its validity is low. Even if the authors get lucky, and one referee ranks it as both interesting and valid, traditional “false-positive” screening requires that all three reviewers recommend it. Multiplying three poor probabilities yields a low probability of success. In practice, this conservative criterion leads to conservative results: it selects what “everybody agrees is acceptable.” It rewards staying in the average referee’s comfort zone, and penalizes innovation.

By contrast, the SP network explicitly searches for interest in the paper, over a far larger number of possible referees (say 10–50), using fast, automatic click-through metrics. Obviously, if no one is interested, the process just ends. But if the paper is truly innovative, the savviest people in the field will likely be intrigued. Next, the interested reviewers question the authors about points of confusion, prior to stating any judgment about its validity. Instead of requiring all referees to recommend the paper for publication, the SP network will “publish” a paper if just one referee chooses to recommend it. (Of course in that case it will start out with a smaller audience, but can grow over time if any of those subscribers in turn recommend it). A truly innovative, sound paper is likely to get multiple recommendations in this system (out of the 10 or more SPRs to whom it was initially shown). By contrast with traditional publishing, it is optimized for a low false-negative error rate, because it selects what at least one expert says is extraordinary (and allocates a larger audience in proportion to the number of experts who say so). Any reduction the SP network makes in this false-negative rate will produce a dramatic increase in coverage for these papers.
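The contrast between these two decision rules can be made concrete with a short calculation. The Python sketch below assumes, purely for illustration, that referee decisions are independent and that every referee recommends the paper with the same hypothetical probability `p`. Real decisions are neither independent nor identically distributed, and SP reviewers self-select for interest, so holding `p` fixed simply isolates the effect of the decision rule itself.

```python
def p_unanimous(p: float, n_referees: int) -> float:
    """Traditional rule: every one of n independent referees must recommend."""
    return p ** n_referees


def p_at_least_one(p: float, n_reviewers: int) -> float:
    """SP network rule: one recommendation from n independent reviewers suffices."""
    return 1.0 - (1.0 - p) ** n_reviewers


if __name__ == "__main__":
    p = 0.3  # hypothetical chance that one referee both grasps and endorses the paper
    print(f"all 3 referees agree:        {p_unanimous(p, 3):.3f}")
    for n in (10, 50):
        print(f"at least 1 of {n} reviewers: {p_at_least_one(p, n):.3f}")
```

With p = 0.3 the unanimous rule succeeds about 2.7% of the time, whereas needing only one recommendation out of ten succeeds about 97% of the time.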

3.3.2. Cost

The SP network reduces the costs of publishing to the community (in terms of human time and effort) in several ways:

• it eliminates the costs of “restart at zero” and the “non-compete clause”: markets work efficiently only to the extent they actually function as free markets, i.e., via competition. It is worth noting that while papers compete to get into each journal, journals do not compete with each other for each paper. Journals enforce this directly via a “non-compete clause” that simply makes it illegal for authors to submit to more than one journal, and indirectly via incompatible submission systems and incompatible format requirements (even though there is little point in applying such requirements until after the journal has decided to accept the paper). In practice an author must “start over from scratch” by re-writing and re-submitting his paper to another journal. Note that this multiplies the publication cost ratio for a paper by the number of times it must be submitted. It is not uncommon for this to double or triple the publication cost ratio.

From the viewpoint of the SP network, these “restart at zero” strictures are wasteful and illogical. On the one hand, they mean that each editor gets only a small slice of the total review information (since the different reviews are kept separate, rather than pooled). On the other hand, they waste an immense amount of time re-reviewing the same paper over and over. By contrast, the SP network pools the parallel review efforts of all interested SPRs in a single unified process. Each SPR sees the complete picture of information from all SPRs, but makes his own independent decision.

Let us consider the publication cost ratios for different cases. For a paper that is not of strong interest to any audience, traditional journal review typically involves months of “restart at zero” reviews. By contrast, the SP network will simply return the negative result in a few days (“no interested audiences found”). Thus, the SP network reduces the publication cost ratio in this case by at least a factor of ten. Papers that require extensive audience search (either because they are in a specialized subfield, or because they contain “too much innovation” or “too many kinds of expertise”) are again likely to fall into the trap of “restart at zero” re-review, consuming months, and possibly yielding no publication. In the SP network, the authors should be able to find their audience (possibly small) within days, and then go through a single review process leading to publication by one or more SPRs. Because “restart at zero” is avoided, the publication cost ratio should be two to three times less. Finally, for papers with an obvious (easy to find) audience, the SP network still offers some advantages, basically because it guarantees a single round of review. By contrast, traditional peer review requires unanimity. This unavoidably causes a significant false-negative rate. Under the law of “restart at zero,” this means a certain fraction of good papers waste time on multiple rounds of review. For this category overall, I expect the publication cost ratio of traditional publishing to be 1–2 times that of the SP network.

• it eliminates the costs of “gambling for readership”: when researchers discover a major innovation or connection between fields, they become ambitious. They want their discovery published to the largest possible audience. Under the non-compete clause, this means they must take a gamble, by submitting to a journal with a large readership and correspondingly high rejection ratio. Often they start at the top (e.g., a Nature or Nature Genetics level journal) and work their way down until the paper finally gets accepted. Summed over the entire research community, this law of “restart at zero” imposes a vast cost with no productive benefit, i.e., the paper gets published regardless. The SP network avoids this waste, by providing an efficient way for a paper’s readership to grow naturally, as an automatic consequence of its interest to readers. Neither authors nor referees have to “gamble” on predictions of how much readership the paper should be “allocated.” Instead, the paper is simply released into the network, where it will gradually spread, in direct proportion to how many readers it interests.

• it eliminates the costs of “prestige battles”: referees for traditional journals play two roles. They explicitly assess the technical validity of a paper, but they also (often implicitly) judge whether it is “prestigious enough” for the journal. Often referees decide to reject a paper based on prestige, but rather than expressing this subjective judgment (“I want to prevent this paper from being published here”), they justify their position via apparently objective criticisms of technical validity details. The authors doggedly answer these criticisms (often by generating new data). If the response is compelling, referees will commonly re-justify their position simply by finding new technical criticisms. Unfortunately, this process often doubles or triples the length of the review process, and is unproductive, first because the referee’s decision is already set, and second because the “technical criticisms” are just red herrings; answering them does not address the referee’s real concern. Even if the paper is somehow accepted (e.g., the editor intervenes), this will double or triple the publication cost ratio.

By contrast, in the SP network this issue does not even arise. This problem is a pathology of non-expert PUSH – i.e., asking referees to review a paper they are not personally interested in. In the SP network, there is no “prestige factor” for referees to consider at all (first because the SP network simply measures impact long-term, and second because that metric has little dependency on what any individual reviewer decides). Indeed, the only decision a referee needs to make initially is whether they are personally interested in the paper or not. And that decision is measured instantly (via click-through metrics), rather than dragged out through weeks or months of arguments with the authors.
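The “restart at zero” arithmetic discussed in this section can be sketched under a simple, hypothetical model: each full review round at a traditional journal independently rejects a good paper with false-negative probability `f`, and every rejection triggers a complete new round elsewhere. The number of rounds is then geometrically distributed with mean 1/(1 − f). A false-negative rate of 50% therefore doubles the expected review cost, and one of about 67% triples it, compared with the single round the SP network aims to provide. The model is an assumption for illustration; it ignores reformatting time and papers that are never published.

```python
import random


def expected_rounds(f: float) -> float:
    """Mean number of review rounds until acceptance when each round
    independently rejects a good paper with probability f (geometric mean)."""
    if not 0.0 <= f < 1.0:
        raise ValueError("false-negative rate f must be in [0, 1)")
    return 1.0 / (1.0 - f)


def simulate_rounds(f: float, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo check of expected_rounds: resubmit until some round accepts."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        rounds = 1
        while rng.random() < f:  # rejected: restart at zero with another journal
            rounds += 1
        total += rounds
    return total / trials


if __name__ == "__main__":
    for f in (0.0, 0.25, 0.5, 2 / 3):
        print(f"f={f:.2f}: expected {expected_rounds(f):.2f} rounds, "
              f"simulated {simulate_rounds(f):.2f}")
```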

4. Precedents

This proposal is hardly original: it merely synthesizes what many scientists have argued for in a wide variety of forums (Hitchcock et al., 2002; Neylon, 2005; Nielsen, 2008; Kriegeskorte, 2009; Smith, 2009; Baez et al., 2010; The Peer Evaluation Team, 2010; Birukou et al., 2011; von Muhlen, 2011). There is powerful precedent for both a public publishing service, and for a recommendations-based distribution system. For example, arXiv is the preeminent preprint server for math, physics, and computer science (Cornell University Library, 1996). A huge ecology of researchers is using it as a de facto publishing system; it provides the real substance of publishing (lots of papers get posted there, and lots of people read them) without the official imprimatur of a journal.

As usual with such things, the main barrier to realizing the benefits of a new system is simply the entrenchment of the old system. In my view, the advantage of this proposal is that it provides a seamless bridge between the old and new, by working equally well with either. In the context of the old system, it is a social network in which everyone’s recommendations of published papers can flow efficiently. But the very act of using such a network creates a new context, in which every user becomes in a sense as important a “publication channel” as an established journal (at least for his subscribers).

4.1. Examples that an SP Network Could Build Upon

In my view, most of the key ingredients are in place; what is needed is to integrate them as an SP network. Here are some examples, by no means comprehensive:

• Online bibliography managers such as Academia.edu (The Academia.edu Team)1, CiteULike (Cameron et al., 2004), Connotea (Nature Publishing Group)2, Mendeley (The Mendeley Team)3, and ResearchGate (The ResearchGate Team)4. These provide public sites where researchers can save citations, rate papers, and share their ratings. CiteULike also attempts to recommend articles to a user based on his citation list. In principle, users’ lists of favorite papers could be used as a source of recommendations for the SP network.

• open peer review platforms: PeerEvaluation.org has launched an open access manuscript sharing and open peer review site (The Peer Evaluation Team, 2010). Peer review is open (non-anonymous), and it also seeks to provide “qualitative metrics” of impact. It could be viewed as a hybrid of arXiv (i.e., an author self-publishes by simply uploading his paper to the site) plus open, community peer review.

• improved metrics: The LiquidPub Project analyzed a wide variety of metrics for assessing impact and peer review quality; for a review see Birukou et al. (2011).

• journals that support aspects of open peer review: PLoS ONE (Public Library of Science, 2006) represents an interesting precedent for the SP network. In terms of its “back-end,” PLoS ONE resembles some aspects of the SP network. For example, its massive list of “Academic Editors” who each have authority to accept any submitted paper is somewhat similar to the “liberal” definition of SPRs that allows any SPR to recommend a paper to his subscribers. However, on its “front-end” PLoS ONE operates like a traditional journal: reviews are secret; no effort is made to search for a paper’s audience(s); and above all there is no network structure for papers to spread naturally through a community.

Biology Direct (Koonin et al., 2006) is another interesting precedent. It employs a conventional (relatively small) editorial board list. However, like the SP network, it asks authors to contact possible reviewers from this list directly, and reviewers are encouraged to decline a request if the paper does not interest them. Moreover, reviews are made public when a paper is published. Again, however, Biology Direct’s front-end is that of a conventional journal, with no network structure.

4.2. Lessons from These Precedents

Given that these sites already provide important pieces of this proposal, it is interesting to ask why they have not already succeeded in creating an SP network. I see two basic reasons:

• Several pieces must be put together before you have a network that can truly act as a content distribution system. For example, people do not normally think of bibliography management (e.g., CiteULike) as a distribution system, and there are good reasons for this. Bibliography managers do not solve the fundamental problems of publication, namely audience search (finding a channel that will reach the audience of people that would read the paper), validation (identifying all issues which could undercut the paper’s claims, and figuring out how to address them), and distribution (actually reaching the audience). There are certainly aspects of CiteULike, Mendeley, etc., that could be applied to solve the distribution problem (e.g., paper recommendations), but this will not happen until all of the components are present and working together.

• These sites are “yet another thing” a busy scientist would have to do (and therefore is unlikely to do), rather than something that is integrated into what he already does. For example, I think that a scientist is far more likely to view (and make) recommendations linked on PubMed search result pages than to log in to a new website such as CiteULike to do so. The problem is the poor balance of incentives vs. costs for asking the scientist to use a new website: on the one hand, any recommendations he makes are unlikely to be seen by many people (because a new site has few users); on the other hand, he has to go out of his way to remember to use the site.

To create a positive balance of incentives vs. costs, an SP network must (a) make reviewing truly important (i.e., it must gate whether the paper gets published, just like peer review at a journal); (b) reward reviewers by prominently displaying their recommendations directly on PubMed search results and the journal website, etc. (so that recommendations you write will be immediately seen by many readers, even if you do not yet have any subscribers); (c) make it easy for all scientists to start participating, directly from sites they already use (such as PubMed). For example, a page showing a paper at PubMed or the journal website should have a “Like!” link that enables the reader to enter a recommendation directly; (d) help authors search for the specific audience(s) for their paper through automated click-through metrics. This harnesses a real motive force – the quest for your personal scientific interests, both as an author and a reader – in service of getting people to participate in the new peer review system.

These precedents also suggest that an SP network should be open to a wide variety of communication methods – by providing a common interface that many different sites could plug in to – rather than trying to create a single site or mechanism that everyone must use. Ideally, all of these different sites (e.g., CiteULike, PeerEvaluation.org) should be able to both view and enter information into the SP network. In this way, the SP network serves to tie together many different efforts. For example, it might be possible to create mechanisms for the SP network to draw from the large number of researchers who are using blogs to discuss and review their latest finds in the literature, some of which are extremely influential; two examples are Terence Tao’s blog (Tao, 2007) and John Baez and the n-Category Café (Baez, 1993–2010; Baez et al., 2007). As one simple example of allowing many input methods, the SP network should make it easy for a blog user to indicate which of his blog posts are reviews, and what papers they recommend, automatically delivering these recommendations to his subscribers.

In my view, it is very important that the SP network be developed as an open-source, community project rather than as a commercial venture, because its data are freely provided by the research community, and should be freely used for its benefit. To the extent that they become valuable, commercial sites tend to become “walled gardens” in which the community is encouraged to donate content for free, which then becomes the property of the company. That is, the company both controls how that content can be used, and uses that content for its own benefit rather than that of the community. The SP network would provide enormous benefits to the community, but from the viewpoint of a publishing company (e.g., NPG) it might simply look like a threat to their business. The SP network should not be developed as a walled garden, because its data belong to the community and must be used for the community’s benefit. It must be developed “of the people, by the people, for the people,” or it will never come to be in the first place.

5. A Three Phase Plan for Building a Selected-Papers Network

To provide concrete details about how this concept could work, I outline how it could be implemented in three straightforward, practical phases:

• Phase I: the basic SP network. Building a place where reviewers can enter paper selections and post reviews, readers can search and subscribe to reviewers’ selections, and papers’ diffusion through research communities is automatically measured.

• Phase II: A better platform for scientific publishing. This phase will focus on providing a comprehensive platform for open peer review, as an alternative to journals’ in-house peer review. Authors would be invited to submit directly to the SP network peer review platform, and then after its review process was complete, to invite a journal editor to decide whether to accept the paper on the basis of the SPRs’ reviews. To make this an attractive publishing option, it will give authors powerful tools for quickly locating the audience(s) for a paper, and it will give reviewers powerful tools for pooling expertise to assess its validity, in collaboration with the authors. All of this is driven by the SP network’s ability to target specialized audiences far more accurately, flexibly, and quickly than traditional journals. One way of saying this is that the SP network automatically creates a new “virtual journal” (list of subscribers) optimized for each individual paper, and that this is done in the most direct, natural way possible (i.e., by each reviewer deciding whether or not to recommend the paper to his subscribers). Note that this strategy aims not at supplanting traditional journals but at complementing them. This alternative path will be especially valuable for specialized subfields that are not well-served by existing journals, for newly emerging fields, and for interdisciplinary research (which tends to “fall between the cracks” of traditional journal categories).

• Phase III: discovering and measuring the detailed structure of scientific networks. I propose that the SP network should record not only the evolution of the subscription network (revealing sub-communities of people who share a common interest, as shown by cliques who subscribe to each other), but also the exact path of how each paper spreads through the network. Together with a wide range of automatic measurements of each reader’s interest in a paper, these data constitute a golden opportunity for rigorous research on knowledge networks and social networks (e.g., statistical methods for discovering the creation of new subfields directly from the network structure). Properly developed, this dataset would enable new scientometrics research and could produce a wide variety of new algorithms (e.g., Netflix-style prediction of a paper’s level of interest for any given reader) and new metrics (e.g., how big is a reviewer or author’s influence within his field? How accurately does he predict what papers will be of interest to his field, or their validity? How far “ahead of the curve” is a given reviewer or author?). Note that the SP network needs only to capture the data that enables such research; it is the research community that will actually do this research. But the SP network then benefits, because it can put all these algorithms and metrics to work for its readers, reviewers and authors. For example, it will be able to create publishing “channels” for new subfields as soon as new cliques are detected within the SP network structure.
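As a minimal illustration of detecting “cliques who subscribe to each other,” the sketch below keeps only mutual subscriptions (A subscribes to B and B subscribes to A) and enumerates the maximal cliques of that undirected graph with the classic Bron–Kerbosch algorithm. This is one simple technique chosen for illustration, not a method specified by the proposal; on a network of many thousands of reviewers, more scalable community-detection methods would likely be needed. The subscriber names are hypothetical.

```python
def mutual_graph(subscriptions: dict[str, set[str]]) -> dict[str, set[str]]:
    """Undirected graph with an edge u-v only if u and v subscribe to each other."""
    graph: dict[str, set[str]] = {user: set() for user in subscriptions}
    for user, followed in subscriptions.items():
        for other in followed:
            if other != user and user in subscriptions.get(other, set()):
                graph[user].add(other)
                graph[other].add(user)
    return graph


def maximal_cliques(graph: dict[str, set[str]]) -> list[set[str]]:
    """Enumerate all maximal cliques with basic Bron-Kerbosch (no pivoting)."""
    cliques: list[set[str]] = []

    def expand(r: set[str], p: set[str], x: set[str]) -> None:
        if not p and not x:
            cliques.append(r)  # r cannot be extended, so it is a maximal clique
            return
        for v in list(p):
            expand(r | {v}, p & graph[v], x & graph[v])
            p = p - {v}
            x = x | {v}

    expand(set(), set(graph), set())
    return cliques


if __name__ == "__main__":
    subs = {
        "ana": {"ben", "cho"},
        "ben": {"ana", "cho"},
        "cho": {"ana", "ben", "dev"},
        "dev": {"cho"},
        "eve": {"ana"},  # one-way subscription: not a mutual tie
    }
    candidates = [c for c in maximal_cliques(mutual_graph(subs)) if len(c) >= 3]
    print(candidates)  # the mutually subscribing trio ana, ben, cho
```

Filtering by a minimum clique size (three here, an arbitrary choice) separates candidate sub-communities from isolated pairs and singletons.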

5.1. Phase I: Building a Selected-Papers Network

Technically, the initial deployment requires only a few basic elements:

• a mechanism for adding reviewers (“Selected-Paper Reviewer” or SPR): the SP network restricts reviewers in a field simply to those who have published peer-reviewed papers in that field (typically as corresponding author). Initially it will focus on building (by invitation) a reasonably comprehensive group of reviewers within certain fields. In general, any published author from any field can add themselves as a reviewer by linking their e-mail address to one of their published papers (which usually include the corresponding author’s e-mail address). Note that the barrier to entry need not be very high, since the only privilege this confers is the right to present one’s personal recommendations in a public forum (no different than starting a personal blog, which anyone can do). Note also that the initial “field definitions” can be very broad (e.g., “Computational Biology”), since the purpose of the SP network is to enable subfield definitions to emerge naturally from the structure of the network itself.

• a mechanism for publishing reviews: Peer reviews represent an important contribution and should be credited as such. Concretely, substantive reviews should be published, so that researchers can read them when considering the associated paper; and they should be citable like any other publication. Accordingly, the SP network will create an online journal Critical Reviews that will publish submitted reviews. The original paper’s authors will be invited to check that a submitted review follows basic guidelines (i.e., is substantive, on-topic, and contains no inappropriate language or material), and to post a response if desired. Note that this also triggers inviting the paper’s corresponding author to become an SP reviewer (by virtue of having published in this field). Reviews may be submitted as Recommendations (i.e., the reviewer is selecting the paper for forwarding to his subscribers), Comments (neutral: the review is attached to the paper but not forwarded to subscribers), or Critiques (negative: a warning about serious concerns, which the reviewer can opt to forward to his subscribers). Recommendations should be written in “News and Views” style, as that is their function (to alert readers to a potentially important new finding or approach). Comments and Critiques can be submitted in standard “Referee Report” style. Additional categories could be added at will: e.g., Mini Reviews, which cover multiple papers relevant to a specific topic (for an excellent example, see the blog This Week’s Finds in Mathematical Physics; Baez, 1993–2010); Classic Papers, which identify must-read papers for understanding a specific field; etc.

Note also that the SP network can give reviewers multiple options for how to submit reviews: via the Science Select website (the default); via Google Docs; via their personal blog; etc. For example, a reviewer who has already written “News and Views” or mini reviews on his personal blog could simply give the SP network the RSS URL for his blog. He would then use the SP network’s tools (on its website) to select the specific post(s) he wants to publish to his subscribers, and to resolve any ambiguities (e.g., about the exact paper(s) that his review concerns).

• a subscription system: the SP network would define an open standard by which any site that displays paper titles, abstracts, or full-text could link to the ranked set of recommendations for those papers, or let its users easily make paper recommendations. For example, the PubMed search engine could display a “Recommended” link next to any recommended paper title, or (when displaying an abstract) a ranked list of people who recommended the paper. In each case these would be linked to that person’s review of the paper, their other paper recommendations, and the option to subscribe to their future recommendations. Similarly, it would display a “Like!” icon that would let the user recommend the paper. This would give people a natural way to start participating immediately in the SP network by viewing and making recommendations anywhere that they view papers – whether it be on PubMed, a journal’s website, etc.

Subscribers could opt to receive recommendations either as individual e-mails; weekly/monthly e-mail summaries; an RSS feed plugged into their favorite browser; a feed for their Google Reader; or other preferred news service, etc. Invitations will emphasize the unique value of the SP network, namely that it provides the subscriber with reviews of important new papers specifically in his area (whereas traditionally review comments are hidden from readers).

• an automatic history-tracking system: each paper link sent to an individual subscriber will be a unique URL, so that when s/he accesses that URL, the system will record that s/he viewed the paper, as well as the precise path of recommenders via which the paper reached this reader. In other words, whereas the stable internal ID for a paper will consist of its DOI (or arXiv or other database ID), the SP network will send this to a subscriber as a URL like http://doc.scienceselect.net/Tase3DE6w21… that is a unique hash code indicating a specific paper for a specific subscriber, from a specific recommender. Clicking the title of the paper will access this URL, enabling the system to record that this user actually viewed this paper (the system will forward the user to the journal website for viewing the paper in the usual way). If this subscriber then recommends the paper to his own subscribers, the system sends out a new set of unique links and the process begins again. This enables the system to track the exact path by which the paper reached each reader, while at the same time working with whatever sources the user must access to actually read any given paper. Of course, the SP network will take every possible measure to prevent exposure or misuse of these data. The interest metrics derived from these data should include appropriate controls for excluding trivial effects such as an attention-grabbing title. Since the SP network directly measures the probability that someone will recommend the paper after reading it, it should be able to control for such trivial effects.

• an automatic interest-measuring system: click-through rates are a standard measure of audience response in online advertising. The SP network will automatically measure audience interest via click-through rates, in the following simple ways:

– The system will show (send) a user one or more paper titles. The system then measures whether the user clicks to view the abstract or review.

– The system displays the abstract or review, with links to click for more information, e.g., from the review, to view the paper abstract or full paper. Each of these click-through layers (title, review, abstract, full paper) provides a stronger measure of interest.

– The system provides many options for the user to express further interest, e.g., by forwarding the paper to someone else; “stashing” it in their personal cubbyhole for later viewing; rating it; reviewing or recommending it on their SP list, etc.

• a paper submission mechanism: while reviewers are encouraged to post reviews on their own initiative, the SP network will also give authors a way to invite reviews from a targeted set of reviewers. Authors may do this either for a published paper (to increase its audience by getting “selected” by one or more SPRs, and spreading through the SP subscriber network), or for a preprint. Either way, authors must supply a preprint that will be archived on the SP network (unless they have already done so on a standard repository such as arXiv). This both ensures that all reviewers can freely access it, and guarantees Open Access to the paper (the so-called “Green Road” to open access). (Note that over 90% of journals explicitly permit authors to self-archive their paper in this way; Harnad et al., 2004). Authors use the standard SP subscriber tools to search for relevant reviewers, and choose up to 10 reviewers to send the paper link to. Automatic click-through measurements (see the interest-measuring system above) will immediately assess whether each reviewer is interested in the paper; actually proceeding to read the paper (“whoa! I gotta read this!”) triggers an invitation to review the paper. These automatic interest metrics should be complete within a few days. For reviewers who exhibit interest in a paper, the authors follow up with them directly. As always, each reviewer decides at their sole discretion whether or not to recommend the paper to their subscribers. As in traditional review, a reviewer could demand further experiments, analysis, or revisions as a condition for recommending the paper. While each reviewer makes an independent decision, all reviewers considering a paper would see all communications with the authors, and could chime in with their opinions during any part of that discussion.

It is interesting to contrast SP reviewer invitations with the constant stream of review requests that we all receive from journals. While SP reviewers could in principle receive a larger number of “paper title invitations,” these impose no burden on them: no one is asking them to review anything unless it is of burning interest to them. There is no nagging demand for a response; indeed, reviewers will be expressly instructed to ignore anything that does not grab their interest!

5.2. Phase II: The SP Network as a Peer Review Platform

The capabilities developed in Phase I provide a strong foundation for giving authors the choice of submitting their work directly to the SP network as the peer review mechanism (which could result in publication in a traditional journal). To do this, the SP network will make these capabilities available as a powerful suite of tools: (1) for authors to search for the audience(s) that are interested in their work; (2) for authors and referees to combine their different expertises (in synthesis rather than opposition) to identify and address key issues for the paper’s impact and validity; and (3) for long-term evaluation after a paper’s publication, enabling the community to raise new issues, data, or resolutions. This will be particularly useful for newly emerging fields (which lack journals) and for subfields that are not well-served by existing journals.

However, it must be emphasized that this is not an attempt to compete with or replace traditional journals. Instead, the SP network complements the strengths of traditional journals, and its suite of tools could be useful for journals as well. Concretely, the SP network will develop its tools as an open-source project, and will make its software and services freely available to journals as well as to the community at large. For example, journals could use the SP network’s services as their submission and review mechanism, to gain the many advantages it offers over the very limited tools of traditional review (which consist of little more than an ACCEPT/REJECT checkbox for the Editor, and a text box for feedback to the authors).

5.2.1. The SP network publication process

The SP network will provide tools for “market research” (i.e., finding the audience(s) that are interested in a given paper) and for synthesis (integrating multiple expertises to maximize the paper’s value for its audience(s)), culminating in publication of a final version of the paper (by being selected by one or more SPRs). I will divide this into three “release stages”: alpha (market research); beta (synthesis); and post-publication (long-term evaluation). These are analogous to the alpha-testing, beta-testing, and post-release support stages that are universal in the software industry. The alpha release cycle identifies a specific audience that is excited enough about the paper to work on reviewing it. The beta release cycle draws out questions and discussion from all the relevant expertise needed to evaluate the paper and optimize it for its target audience(s). The reviewers and authors work together to raise issues and resolve them. Individual reviewers can demand new data or changes as preconditions for recommending the paper on their SP list. On the one hand, the authors decide when the paper is “done” (i.e., when to declare it the final, public version of the paper). On the other hand, each reviewer decides whether or not to “select” the paper for their SP list. On this basis, authors and reviewers negotiate throughout the beta period what will go into the final release. As long as at least one SPR elects to recommend the paper to his subscribers, the authors have the option of publishing the paper officially in the SP network’s journal (e.g., Selected-Papers in Biology). Regardless of how the paper is published, the same tools for synthesis (mainly an issue tracking system) will enable the entire research community to continue to raise and resolve issues, and to review the published paper (i.e., additional SPRs may choose to “select” the paper).
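The release stages can be sketched as a small state machine, together with the rule that a single SPR selection makes publication in the SP network's journal possible. The class and method names are hypothetical. One simplification is also an assumption, not a rule stated in the text: entry to beta requires at least one SPR to have expressed interest during alpha. This follows from the stated purpose of alpha.

```python
from enum import Enum


class Stage(Enum):
    ALPHA = "alpha"        # market research: find interested audiences
    BETA = "beta"          # synthesis: reviewers and authors raise and resolve issues
    RELEASED = "released"  # final public version; post-publication evaluation continues


class PaperLifecycle:
    """Hypothetical lifecycle of one paper in the SP network."""

    def __init__(self) -> None:
        self.stage = Stage.ALPHA
        self.interested_sprs: set[str] = set()
        self.selected_by: set[str] = set()

    def express_interest(self, spr: str) -> None:
        self.interested_sprs.add(spr)

    def start_beta(self) -> None:
        # Assumption: beta only makes sense once some SPR is excited enough to review.
        if self.stage is not Stage.ALPHA or not self.interested_sprs:
            raise RuntimeError("need at least one interested SPR to enter beta")
        self.stage = Stage.BETA

    def select(self, spr: str) -> None:
        # Each SPR decides independently; selection is allowed in beta and after release.
        if self.stage is Stage.ALPHA:
            raise RuntimeError("selection happens after alpha")
        self.selected_by.add(spr)

    def release(self) -> None:
        # The authors, not the reviewers, decide when the paper is "done".
        if self.stage is not Stage.BETA:
            raise RuntimeError("only a paper in beta can be released")
        self.stage = Stage.RELEASED

    def can_publish_in_sp_journal(self) -> bool:
        # From the text: one recommending SPR is enough.
        return self.stage is Stage.RELEASED and len(self.selected_by) >= 1
```

Selection stays open after release, which mirrors the post-publication stage: additional SPRs may still choose to select the paper.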

5.2.2. Alpha release tools

For alpha, the tools already provided by Phase I are sufficient: e.g., the paper submission mechanism; methods for measuring reader interest; and audience search methods. Here I will simply contrast the alpha stage with traditional peer review.

• assess impact, not validity: I wish to emphasize that alpha focuses entirely on measuring the paper’s impact (interest level) over its possible audiences. It does not attempt to evaluate the paper’s validity (which, by contrast, tends to dominate the bulk of referee feedback in traditional peer review). There are three reasons. First, impact is the key criterion for the SP network: if no SPR is excited about the paper, there is no point wasting time assessing its validity. Second, a paper that combines multiple expertises might have its impact within one field, yet use methods from another. In that case, a referee who is expert in evaluating the validity of the methodology would not be able to assess the paper’s impact (which lies outside his field). Therefore, in IDPR, impact must often be evaluated separately. Third, the SP network is very concerned about failing to detect papers with truly novel approaches. Such papers are both less common and harder for the average referee to understand in their entirety. This makes it more likely that referees will feel doubt about a novel approach’s validity. To avoid this serious risk of false negatives, the SP network first searches for SPRs who are excited about a paper’s potential impact, completely separately from assessing its validity.

• impact-driven review, not non-expert PUSH: traditional journals do essentially nothing to help authors find their real target audience, for the simple reason that journals have no tools to do this. Exploring the space of possible audiences requires far more than a single, small sample (2–3 reviews); it requires efficiently measuring the interest level from a meaningful sample of each audience. The key is that the SP network will directly measure interest (see the metrics described in Phase I and Phase III) over multiple audiences. By contrast, non-expert PUSH tends to produce high false-negative rates, because people are not good at predicting the interest level of papers that they themselves are not interested in. Failing to see a paper’s relevance to a problem outside one’s own knowledge, and being unaware that another group of people is interested in that problem, tend to go together.

• speed: because alpha requires no validity review, it can be fast and automatic. The SP network’s click-through metrics can be measured for 10–100 people over the course of just a few days; advertisers (e.g., Google) measure such rates over vastly larger audiences every day.

• journal recommendation system: whenever a researcher expands the scope of his work into a new area, he may initially be unsure where to publish. The SP network can automatically suggest appropriate journals using its interest measurement data. In the simplest approach, it can relate the set of SPRs who expressed strong interest in the paper to the set of journals that published papers recommended by those same SPRs.
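The alpha-stage measurements in the bullets above can be sketched in two steps. The first ranks candidate audiences by measured interest. The second implements the simple journal recommendation rule. This sketch makes several assumptions that are not in the text. Interest is logged as hypothetical `(spr, audience, event)` triples, and `"read_paper"` counts as "strong interest". Audiences are ranked by the lower bound of a Wilson score interval, a substitute technique chosen so that small samples (10–100 people) are not over-trusted. A journal's score is simply the number of strongly interested SPRs who have recommended a paper it published.

```python
import math
from collections import Counter, defaultdict

STRONG = "read_paper"  # hypothetical event name: the SPR proceeded to read the paper


def wilson_lower_bound(successes: int, n: int, z: float = 1.96) -> float:
    """Lower end of the Wilson score interval for a proportion (about 95% for z=1.96)."""
    if n == 0:
        return 0.0
    p = successes / n
    denom = 1 + z * z / n
    center = p + z * z / (2 * n)
    margin = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return (center - margin) / denom


def rank_audiences(events):
    """Rank audiences by a conservative estimate of the fraction of sampled SPRs
    showing strong interest, so 3 of 3 does not automatically beat 40 of 60."""
    sampled, strong = defaultdict(set), defaultdict(set)
    for spr, audience, event in events:
        sampled[audience].add(spr)
        if event == STRONG:
            strong[audience].add(spr)
    scores = {a: wilson_lower_bound(len(strong[a]), len(sampled[a])) for a in sampled}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


def recommend_journals(events, recommended_venues, top_n=3):
    """recommended_venues maps each SPR to the journals that published the papers
    on that SPR's list; each journal scores one point per interested SPR."""
    interested = {spr for spr, _audience, event in events if event == STRONG}
    counts = Counter()
    for spr in interested:
        counts.update(set(recommended_venues.get(spr, ())))
    return counts.most_common(top_n)
```

For example, an audience in which 40 of 60 sampled SPRs read the paper scores about 0.54. An audience in which 3 of 3 did scores about 0.44, because three responses say little on their own.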

5.2.3. Beta release tools

Beta consists of several steps:

• Q & A: This means that reviewers with different relevant expertise raise questions about the paper, and work with the authors to resolve them, using an online issue tracker that makes it easy to see what issues have already been raised, their status, and detailed discussion. Such systems provide great flexibility for synthesizing a consensus that draws on multiple expertises. For example, one referee may resolve another referee’s issue. (A methodology reviewer might raise the concern that the authors did not follow one of the standard assumptions of his field; a reviewer who works with the data source analyzed in the paper might respond that this assumption actually is not valid for these data). Powerful issue tracking systems are used universally in commercial and open-source software projects, because they absolutely need such synthesis (to find and fix all their bugs). Using a system that actually supports synthesis changes how people operate, because the system makes it obvious they are all working toward a shared goal. Note that such a system is like a structured wiki or “threaded” discussion in that it provides an open forum for anyone to discuss the issues raised by the paper.

The purpose of this phase is to allow referees to ask all the questions they have in a non-judgmental way (as a conversation with the authors and with the other referees) before they even enter the validity assessment phase. The issue tracker should clearly distinguish several types of questions:

– False-positive: Might result/interpretation X be due to some other explanation, e.g., random chance, bias, etc.? Indicate a specific test for the hypothetical problem.

– False-negative: Is it possible your analysis missed some additional results due to problem Y? Indicate a specific test for the hypothetical problem.

– Overlap: How does your work overlap previous study X (citation), and in what ways is it distinct?

– Clarification/elaboration: I did not understand X. Please explain.

– Addition: I suggest that idea X is relevant to your paper (citation). Could that be a useful addition?

Each referee can post as many questions as he wants, and also can “second” other referees’ questions. Authors can immediately answer individual questions, by text or by adding new data/analyses. Referees can ask new questions about these responses and data. Such discussion is important for synthesis (combining the expertise of all the referees and the authors) and for definitive clarification. It should leave no important question unanswered.

• validity assessment: eventually, these discussions culminate in each reviewer deciding whether there are serious doubts about the validity of the paper’s data or conclusions. While each reviewer decides independently (in the sense that only he decides what to recommend on his SP list), reviewers will inevitably influence each other through their discussions.

• improving the paper’s value for its audience: once the critical validity (false-positive) issues are resolved, referees and authors should consider the remaining issues to improve the manuscript, by clarifying points that confused readers and adding material to address their questions. To take an extreme example, if reviewers feel that the paper’s value is obscured by poor English, they might demand that the authors hire a technical writer to polish or rewrite parts of it. Paper versions will be explicitly tracked through the whole process using standard version-control software (e.g., Git; Torvalds and Hamano, 2005).

• public release version: the authors decide when to end this process, and release a final version of the paper. Of course, this is closely tied to what the reviewers demand as conditions for recommending the paper.
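The beta-stage issue tracker described above can be sketched as a minimal data structure. The five question types come from the text. Everything else is an illustrative assumption, not part of the proposal: the class names, the `second`/`reply`/`resolve` methods, and the choice to list unresolved false-positive issues by how many referees seconded them.

```python
from dataclasses import dataclass, field
from enum import Enum


class Kind(Enum):
    FALSE_POSITIVE = "false-positive"
    FALSE_NEGATIVE = "false-negative"
    OVERLAP = "overlap"
    CLARIFICATION = "clarification/elaboration"
    ADDITION = "addition"


@dataclass
class Issue:
    id: int
    kind: Kind
    raised_by: str
    text: str
    seconded_by: set[str] = field(default_factory=set)
    thread: list[tuple[str, str]] = field(default_factory=list)  # (who, message)
    resolved: bool = False


class IssueTracker:
    """Hypothetical open tracker: every referee and author sees every issue."""

    def __init__(self) -> None:
        self.issues: dict[int, Issue] = {}
        self._next_id = 1

    def raise_issue(self, referee: str, kind: Kind, text: str) -> int:
        issue = Issue(self._next_id, kind, referee, text)
        self.issues[issue.id] = issue
        self._next_id += 1
        return issue.id

    def second(self, referee: str, issue_id: int) -> None:
        self.issues[issue_id].seconded_by.add(referee)

    def reply(self, who: str, issue_id: int, message: str) -> None:
        # Authors or any referee may reply, so one referee can resolve another's issue.
        self.issues[issue_id].thread.append((who, message))

    def resolve(self, issue_id: int) -> None:
        self.issues[issue_id].resolved = True

    def open_validity_issues(self) -> list[Issue]:
        """Unresolved false-positive issues, most widely shared concerns first."""
        open_fp = [i for i in self.issues.values()
                   if i.kind is Kind.FALSE_POSITIVE and not i.resolved]
        return sorted(open_fp, key=lambda i: len(i.seconded_by), reverse=True)
```

The sketch orders only the false-positive list this way because the text treats those issues as the critical validity questions that must be settled before the remaining issues.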

5.2.4. Publication

Authors can use the SP network alpha and beta processes to demonstrate their paper’s impact and validity, and then invite a journal editor to consider their paper on that basis. A journal editor can simply join the beta process for such a paper; like the other SPRs, he decides (based on the complete synthesis of issues and resolutions in the issue tracker) whether he wishes to “select” the paper. The only difference is that he is offering the authors publication in his journal, whereas the other referees are offering a recommendation on their SP lists. Of course, the paper will typically have to be re-formatted somewhat to follow the journal’s style guidelines, but that is a minor issue; extra material that does not fit its size limits can be posted as an online Supplement.

Note that this process offers many advantages to the journal. It does not need to do any work for the actual review process (i.e., to find referees, nag them to turn in reviews on time, etc.). More importantly, it gets all of the SP network’s impact measurements for the paper, allowing it to see exactly what the paper’s level of interest is. Indeed, the journal can get a “free ride” on the SP network’s ability to market the paper, by simply choosing papers that multiple (or influential) SPRs have decided to recommend to their subscribers. If the journal decides to publish such a paper, all that traffic will come to its website (remember that the SP network just forwards readers to wherever the paper is published). For a journal, the SP network is a gold mine: an improved review process and improved marketing, all provided to the journal for free.
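A journal's “free ride” on SP network selections can be sketched as a shortlist filter. This sketch assumes that each SPR's subscriber count is available as a proxy for influence. The function name and the thresholds `min_sprs` and `min_reach` are hypothetical tuning parameters. Summing subscriber counts also ignores overlap between subscriber lists, so it overstates the true reach.

```python
def shortlist(selections, subscriber_counts, min_sprs=2, min_reach=1000):
    """
    selections: dict mapping paper id -> set of SPRs who selected the paper.
    subscriber_counts: dict mapping SPR -> number of subscribers (influence proxy).
    A paper qualifies if several SPRs selected it OR the selecting SPRs together
    reach at least min_reach subscribers. Returns (paper, n_sprs, reach) tuples,
    highest reach first.
    """
    ranked = []
    for paper, sprs in selections.items():
        reach = sum(subscriber_counts.get(s, 0) for s in sprs)  # overlap ignored
        if len(sprs) >= min_sprs or reach >= min_reach:
            ranked.append((paper, len(sprs), reach))
    ranked.sort(key=lambda row: (row[2], row[1]), reverse=True)
    return ranked
```

With the defaults, a paper selected by one SPR with 5,000 subscribers makes the shortlist. So does a paper selected by two SPRs with small followings. A single recommendation from a small list does not.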

However, an even greater value of the SP network review system is for areas that are not well-served by journals. If an SPR selects a paper for recommendation to his subscribers, the authors can opt to officially publish the paper in the SP network’s associated journal. Note that this serves mainly to get the paper indexed by search engines such as PubMed, and to give the paper an “official” publication status. After all, the real substance of publication is readership, and being recommended on the SP network already provides that directly.

5.3. Phase III: Analysis and Metrics for Scientific Networks

Here I will only briefly list some basic metrics that the SP network will incorporate into the peer review process. Of course, data collected by the SP network would make possible a wide range of scientometric analyses, far beyond the scope of this paper. There is a large literature exploring new metrics for impact; for a review see Birukou et al. (2011).